
- Slides: 41

2018 OSEP Project Directors’ Conference DISCLAIMER: The contents of this presentation were developed by the presenters for the 2018 Project Directors’ Conference. However, these contents do not necessarily represent the policy of the Department of Education, and you should not assume endorsement by the Federal Government. (Authority: 20 U.S.C. 1221e-3 and 3474)

Evaluating Implementation and Improvement: Getting Better Together
Moderator: Leslie Fox, OSEP
Panelists: Wendy Sawtell, Bill Huennekens, David Merves

Colorado SSIP – Implementation & Improvement
Wendy Sawtell, State Systemic Improvement Plan Coordinator, State Transformation Specialist

ALIGNMENT IS OUR GOAL: The State-identified Measurable Result is based upon the second portion of the Theory of Action.

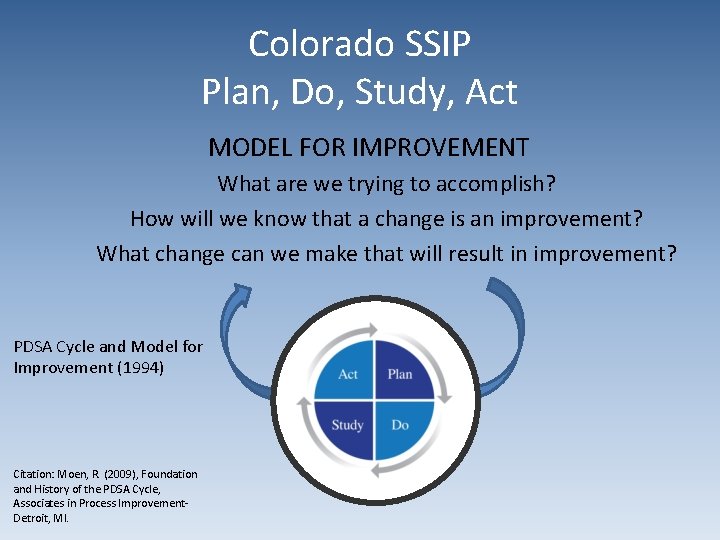

Colorado SSIP: Plan, Do, Study, Act
MODEL FOR IMPROVEMENT
• What are we trying to accomplish?
• How will we know that a change is an improvement?
• What change can we make that will result in improvement?
PDSA Cycle and Model for Improvement (1994). Citation: Moen, R. (2009), Foundation and History of the PDSA Cycle, Associates in Process Improvement. Detroit, MI.

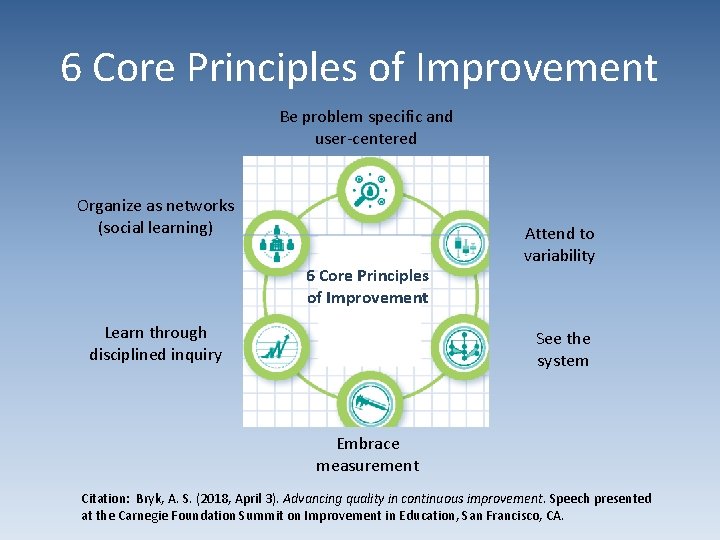

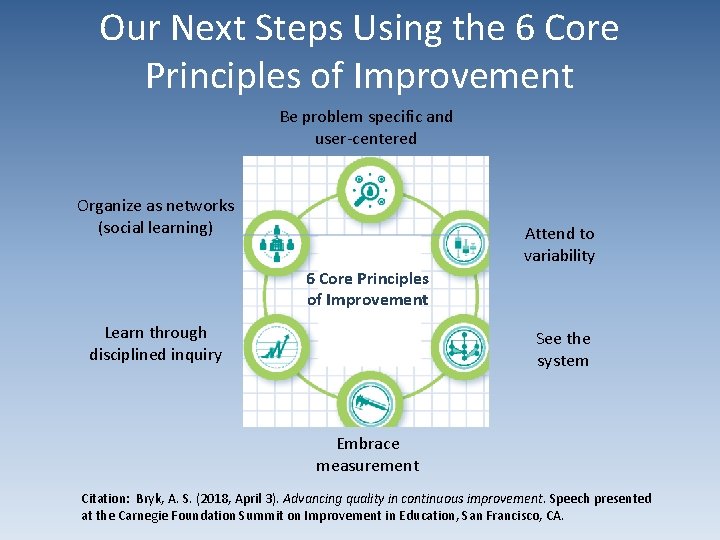

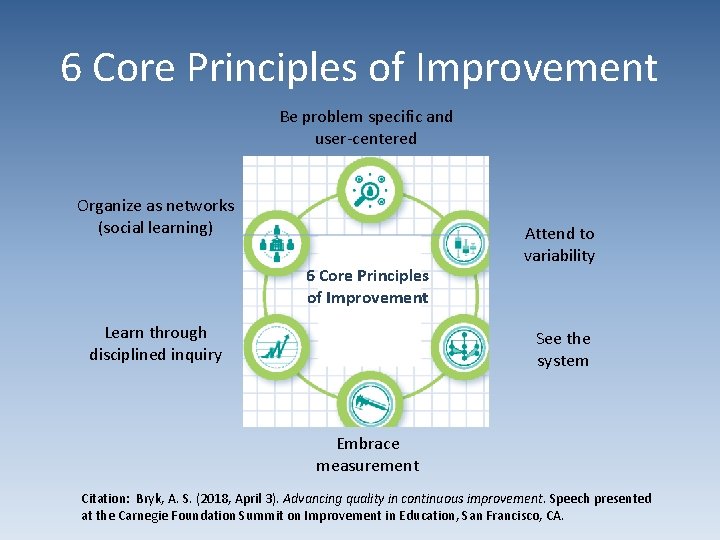

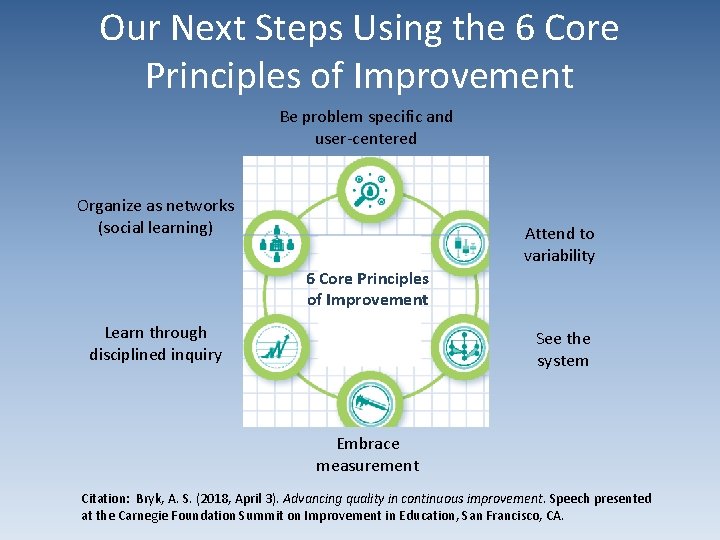

6 Core Principles of Improvement
• Be problem specific and user-centered
• Organize as networks (social learning)
• Learn through disciplined inquiry
• Attend to variability
• See the system
• Embrace measurement
Citation: Bryk, A. S. (2018, April 3). Advancing quality in continuous improvement. Speech presented at the Carnegie Foundation Summit on Improvement in Education, San Francisco, CA.

Anthony Bryk, Keynote Speech: Advancing quality in continuous improvement
• Lesson 1: Take time to really understand the problem you have to solve
• Lesson 2: Develop evidence that truly informs improvement
• Lesson 3: As problems increase in their complexity, engage and activate the diversity of expertise assembled in improvement networks
• Lesson 4: It is a paradigm shift: a cultural transformation in the ways schools work

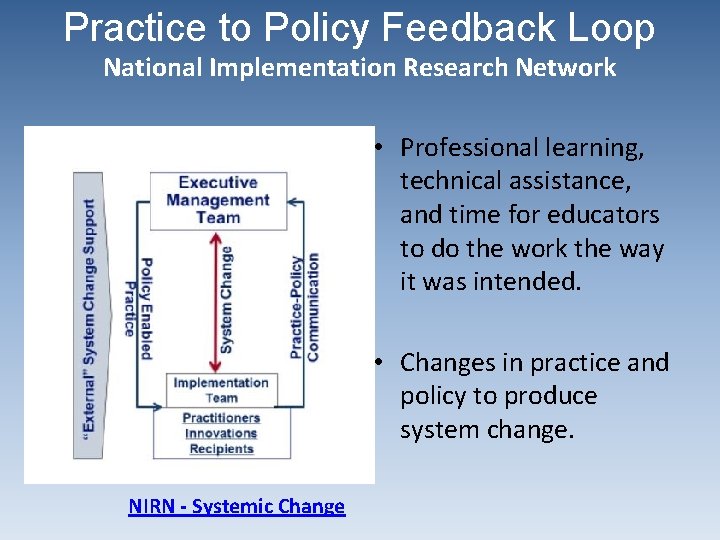

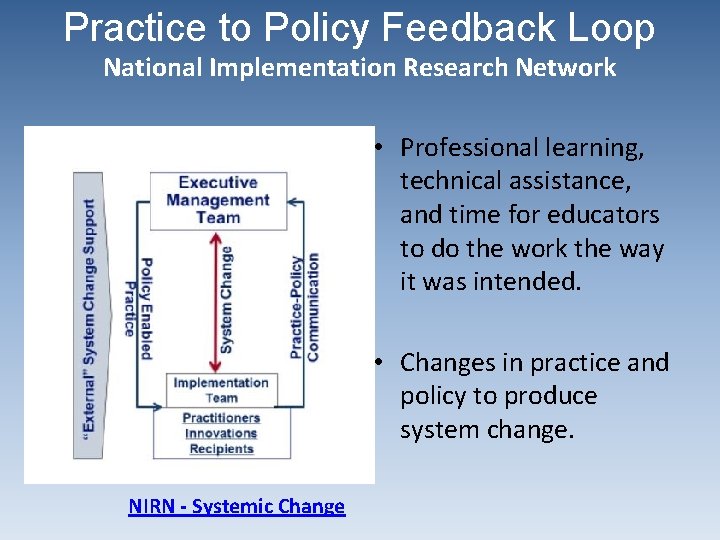

Practice to Policy Feedback Loop (National Implementation Research Network)
• Professional learning, technical assistance, and time for educators to do the work the way it was intended.
• Changes in practice and policy to produce system change.
Source: NIRN, Systemic Change

Fidelity Assessment: Measuring Implementation
“Fidelity assessment is defined as indicators of doing what is intended.”

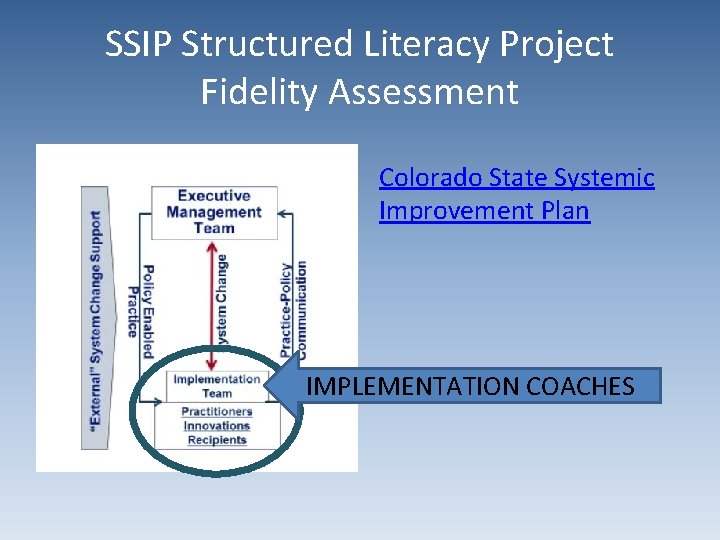

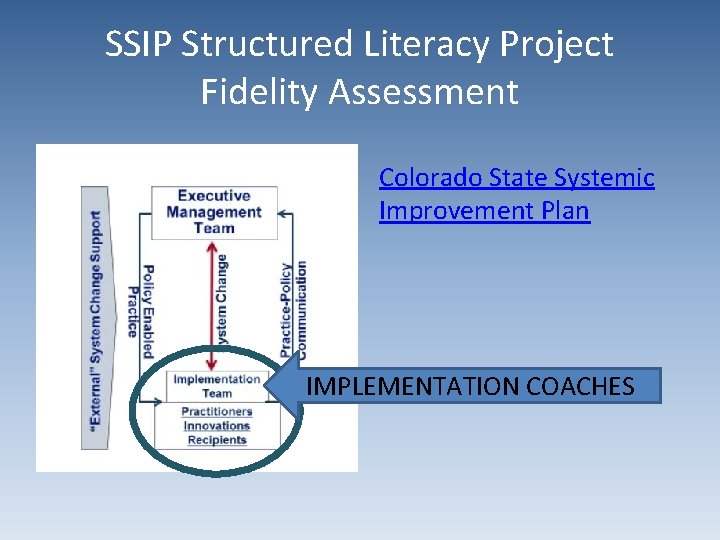

SSIP Structured Literacy Project Fidelity Assessment Colorado State Systemic Improvement Plan IMPLEMENTATION COACHES

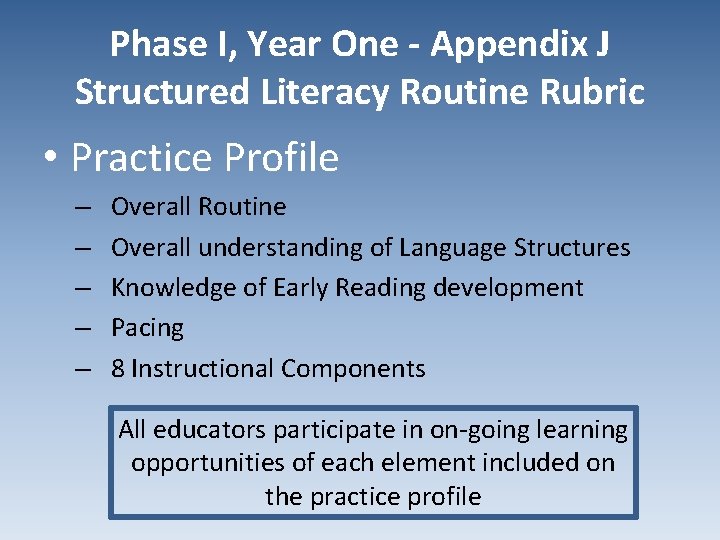

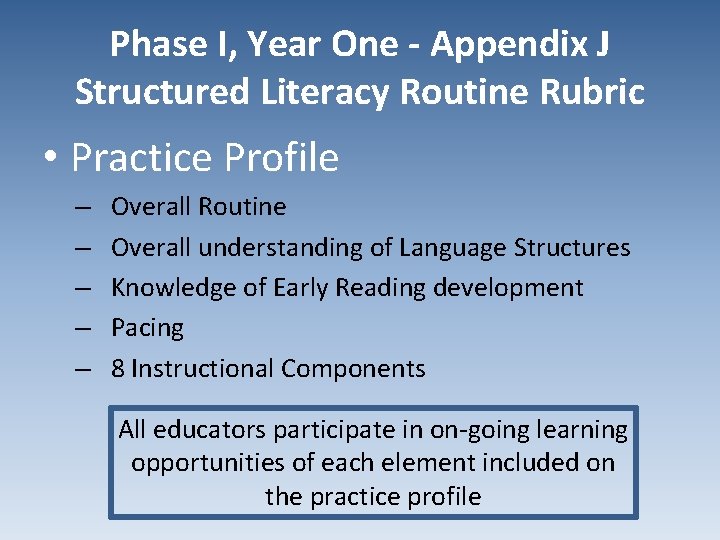

Phase I, Year One - Appendix J: Structured Literacy Routine Rubric
• Practice Profile
  – Overall Routine
  – Overall understanding of Language Structures
  – Knowledge of Early Reading development
  – Pacing
  – 8 Instructional Components
All educators participate in ongoing learning opportunities for each element included on the practice profile.

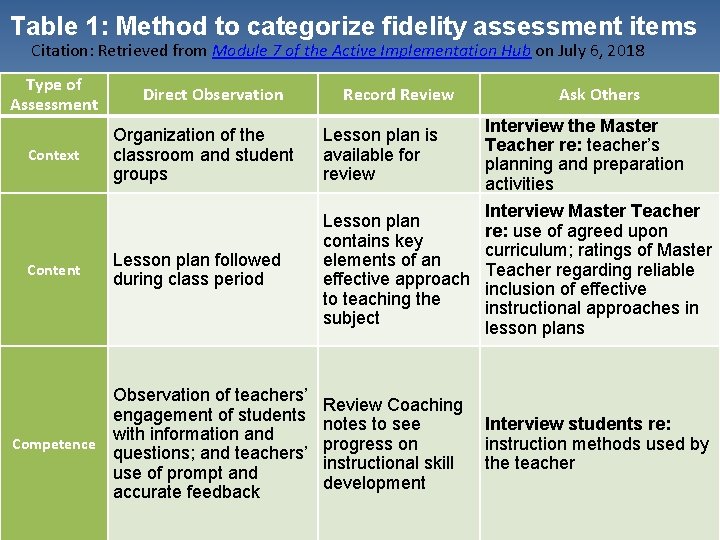

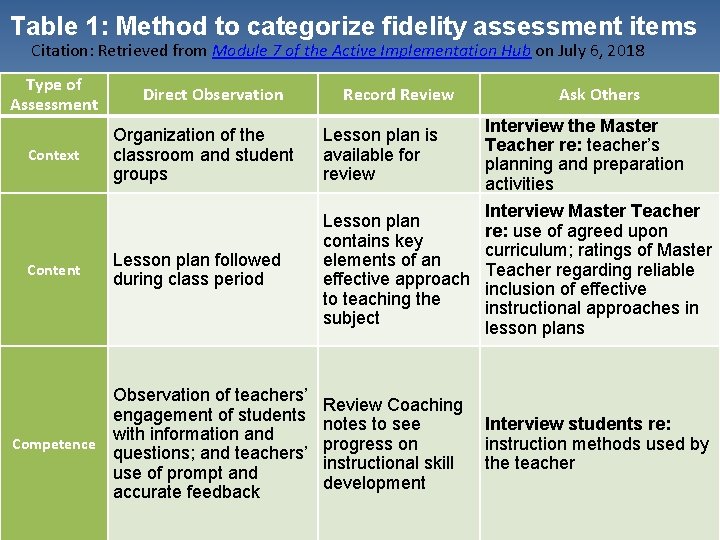

Table 1: Method to categorize fidelity assessment items. Citation: Retrieved from Module 7 of the Active Implementation Hub on July 6, 2018

| Type of Assessment | Direct Observation | Record Review | Ask Others |
|---|---|---|---|
| Context | Organization of the classroom and student groups | Lesson plan is available for review | Interview the Master Teacher re: teacher’s planning and preparation activities |
| Content | Lesson plan followed during class period | Lesson plan contains key elements of an effective approach to teaching the subject | Interview Master Teacher re: use of agreed upon curriculum; ratings of Master Teacher regarding reliable inclusion of effective instructional approaches in lesson plans |
| Competence | Observation of teachers’ engagement of students with information and questions; and teachers’ use of prompt and accurate feedback | Review Coaching notes to see progress on instructional skill development | Interview students re: instruction methods used by the teacher |

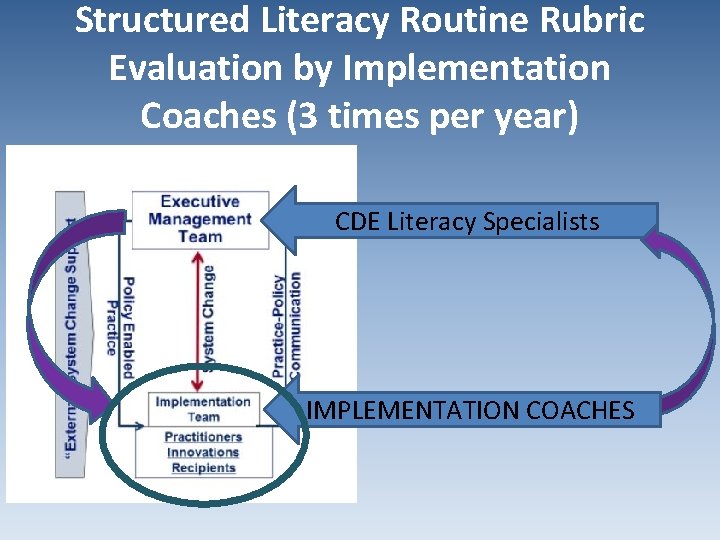

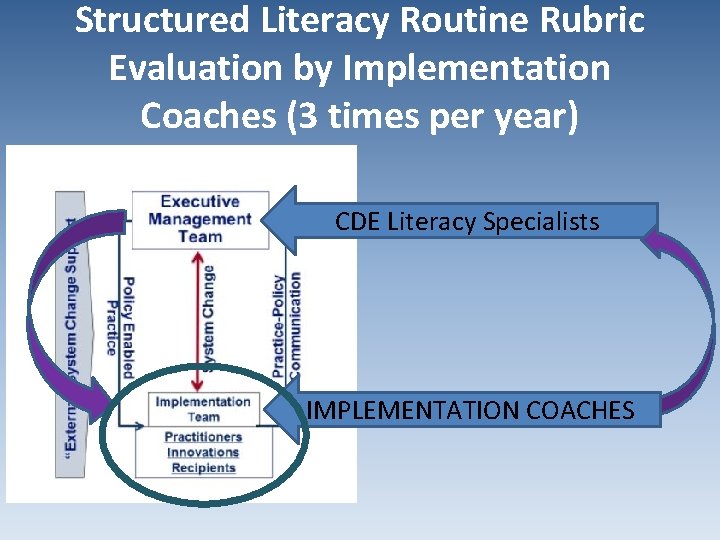

Structured Literacy Routine Rubric Evaluation by Implementation Coaches (3 times per year) CDE Literacy Specialists IMPLEMENTATION COACHES

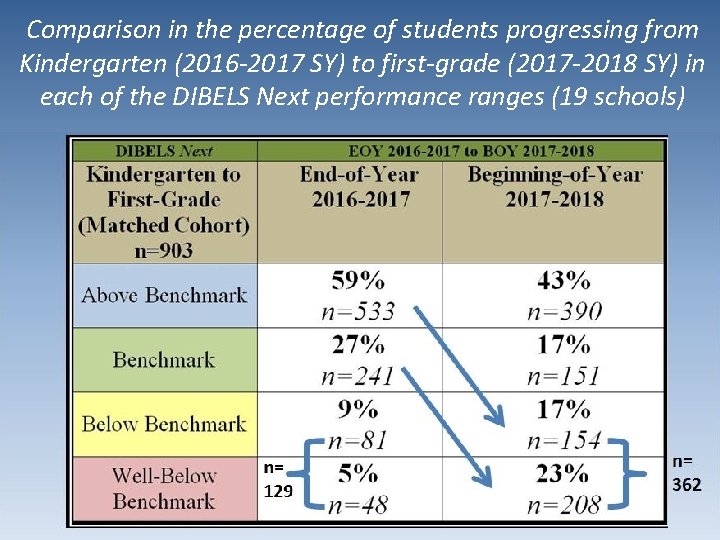

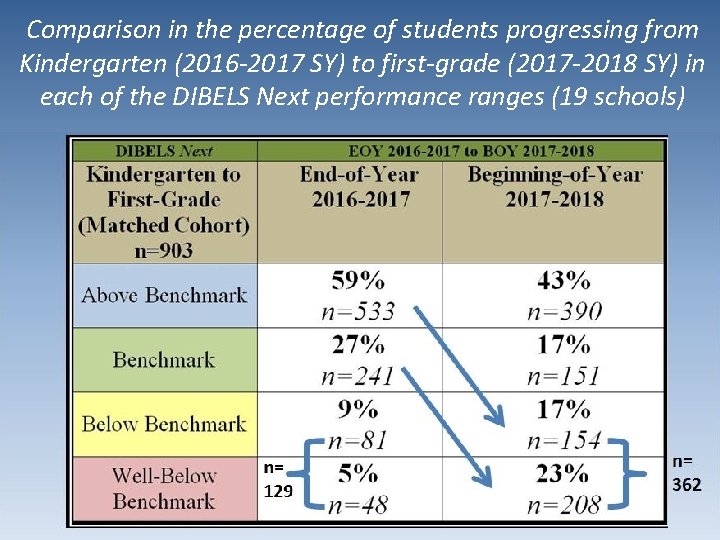

Comparison in the percentage of students progressing from Kindergarten (2016-2017 SY) to first grade (2017-2018 SY) in each of the DIBELS Next performance ranges (19 schools)

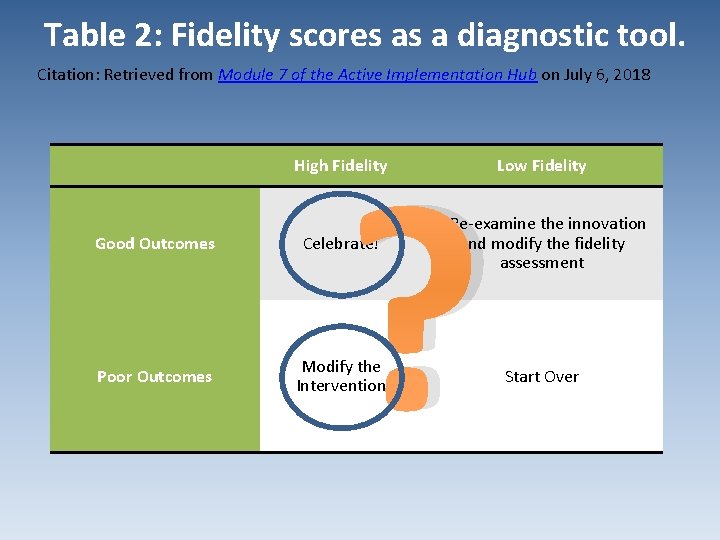

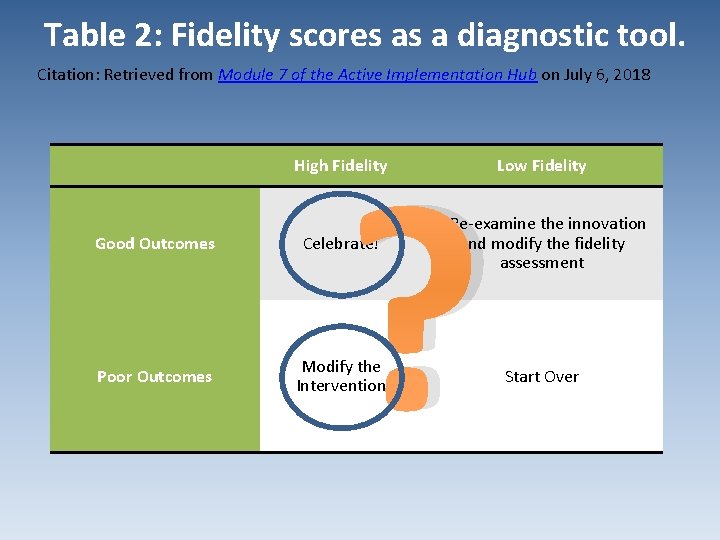

Table 2: Fidelity scores as a diagnostic tool. Citation: Retrieved from Module 7 of the Active Implementation Hub on July 6, 2018

| | High Fidelity | Low Fidelity |
|---|---|---|
| Good Outcomes | Celebrate! | Re-examine the innovation and modify the fidelity assessment |
| Poor Outcomes | Modify the Intervention | Start Over |
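The diagnostic logic of Table 2 can be sketched as a small lookup. This is only an illustration: the function name `diagnose` and the boolean inputs are hypothetical, and deciding what counts as "high" fidelity or "good" outcomes is left to the project's own thresholds.

```python
# Hypothetical sketch of Table 2: map (fidelity, outcomes) to the suggested response.
# The cell assignments follow a reading of the table in the slide; the names are illustrative.
DIAGNOSTIC_TABLE = {
    (True, True): "Celebrate!",
    (True, False): "Modify the intervention",
    (False, True): "Re-examine the innovation and modify the fidelity assessment",
    (False, False): "Start over",
}


def diagnose(high_fidelity: bool, good_outcomes: bool) -> str:
    """Return the suggested next step for a fidelity/outcome combination."""
    return DIAGNOSTIC_TABLE[(high_fidelity, good_outcomes)]


if __name__ == "__main__":
    # Example: practices delivered as intended, but student outcomes are poor.
    print(diagnose(high_fidelity=True, good_outcomes=False))
```

Keeping the four cells in one dictionary makes it easy to check the code against the slide at a glance.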

Our Next Steps: Using the 6 Core Principles of Improvement
• Be problem specific and user-centered
• Organize as networks (social learning)
• Attend to variability
• Learn through disciplined inquiry
• See the system
• Embrace measurement
Citation: Bryk, A. S. (2018, April 3). Advancing quality in continuous improvement. Speech presented at the Carnegie Foundation Summit on Improvement in Education, San Francisco, CA.

EXPLORATION PHASE: Consideration of a new evaluation tool
Network Leadership – Build, Manage, and Evaluate Effective Networks
Citation: Retrieved from the PARTNER Tool website on July 6, 2018

How can I use the PARTNER tool data?
“PARTNER data are meant to be used as a Quality Improvement process, focused on strategic planning (to steer decision-making).”
Citation: Retrieved from the PARTNER Tool website on July 6, 2018

Using the PDSA Cycle with the PARTNER tool
“To do this you will need to:
• identify your goals (plan),
• implement your collaborative activities (do),
• gather PARTNER data (study),
• and develop action steps to get you from where you “are” to where your goals indicate you “should be” (act).”
Citation: Retrieved from the PARTNER Tool website on July 6, 2018

Reflection and Learning
1. To increase your awareness, please read the handout with information about the tool, or access the material directly from the website at https://partnertool.net/about
2. Based upon your knowledge at this point, would this tool be useful to you?
3. Discuss with your elbow partner what may be beneficial and why
Citation: Retrieved from the PARTNER Tool website on July 6, 2018

Agenda
• What is CIID
• Goal of evaluation efforts
• Ensure understanding of CIID approach and work, align the plans with that work
• Execute the plan and collect the data
• Tools used in the effort
• Evaluate the data, review recommendations for making changes and improving our work

What is CIID
• Center for the Integration of IDEA Data
• Resolve data silos
• Improve the quality and efficiency of EDFacts reporting
• Develop and implement automated reporting tool - Generate

Evaluation Effort Goal
Collaborative effort with an external evaluator to establish a comprehensive evaluation program to improve Technical Assistance (TA) services to SEAs

Understanding
• CIID work is a little different – a significant part of the work is IT project management
  • Focus on people, process and systems of data management
• Evaluation team included in the work – help us shape the work
  • Leadership meetings to calls with TA providers to Training
  • Access to all documentation

Data Collection Plan
• Establish timeframes
• Think about work flow in SEAs and with TA efforts
• Determine methods for different activities
• Focus groups
• Manage burden with annual survey
• Ensure coverage of Universal, Targeted and Intensive TA

Execution of the Plan
• Collaborative effort
• Communication – CIID Leadership involved
• Identify audiences
• Challenge across special education, IT and EDFacts staff in SEAs

Evaluation Tools
1. Data System Framework
• Adopted from DaSy Data System Framework used in Part C
• Administered before and after
• Determine if goals and objectives of data integration were met
2. Intensive Technical Assistance Quality Rubric
• David will cover in detail

Evaluation Results
• Review reports in detail with evaluation team
• Reports include recommendations
• Share reports with TA providers
• Explore options for addressing areas that need improvement

Making Changes
• Based on focus group information – changed engagement with stakeholders
• Individual meetings with TA providers
• Trainings with TA providers
• Example - CEDS Tools

Discussion Question
How do we link our collaborative and comprehensive effort to improve services to SEAs to educational outcomes for students with disabilities?

Contact Information
Bill Huennekens, bill.huennekens@aemcorp.com
Follow us on Twitter: @CIIDTA
Sign up for our newsletter: ciidta@aemcorp.com
Visit the CIID website: www.ciidta.org
Follow us on LinkedIn: https://www.linkedin.com/company/CIID

Thank You!

Evaluating Implementation and Improvement: Getting Better Together
Evergreen Evaluation & Consulting, Inc.
David Merves, MBA, CAS

New Evaluation Tools to Inform Project Improvement
• Intensive Technical Assistance Quality Rubric
• PARTNER Tool
• Measuring Fidelity as a Method for Evaluating Intensive Technical Assistance

Intensive Technical Assistance Quality Rubric
• Developed by IDEA Data Center (IDC)
• It is still in DRAFT form
• Guiding Principles for TA
• Four Components of Effective Intensive TA

PARTNER TOOL
• Designed by Danielle Varda with funding from RWJ Foundation
• Housed at University of Colorado Denver
• Program to Analyze, Record, and Track Networks to Enhance Relationships
• Participatory Approach, PARTNER Framework, and FLEXIBLE
• https://partnertool.net/about/

Measuring Fidelity as a Method for Evaluating Intensive Technical Assistance
• Presentation at a TACC meeting in September 2015 by Jill Feldman and Jill Lammert of Westat
• Identify the key components of the intensive TA your Center provides
• Define and operationalize measurable indicators for key intensive TA components in your logic model
• Identify data sources and measures for each indicator
• Create numeric thresholds for determining whether adequate fidelity has been reached for each indicator
• Assign fidelity ratings (scores) for each indicator and combine indicator scores into a fidelity score for the key component
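The last two steps above (numeric thresholds per indicator, then ratings combined into a component score) can be sketched in Python. This is a minimal illustration under stated assumptions, not the Westat method itself: the indicator names, measured values, and thresholds are invented, the rating is a simple 1/0 for meeting the threshold, and the component score is the plain share of indicators that met it. A real project might weight indicators or use multi-level ratings.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """One measurable indicator for a key intensive TA component (hypothetical)."""
    name: str
    measured: float   # value observed from the chosen data source
    threshold: float  # numeric threshold for adequate fidelity

    def rating(self) -> int:
        # Assumed rating rule: 1 if the threshold is met, otherwise 0.
        return 1 if self.measured >= self.threshold else 0


def component_fidelity(indicators: list[Indicator]) -> float:
    """Combine indicator ratings into one score: the share of indicators met."""
    if not indicators:
        raise ValueError("at least one indicator is required")
    return sum(ind.rating() for ind in indicators) / len(indicators)


# Illustrative values only; none of these come from the presentation.
indicators = [
    Indicator("coaching_sessions_held", measured=5, threshold=4),
    Indicator("action_plan_items_completed_pct", measured=60.0, threshold=80.0),
    Indicator("stakeholder_meetings_attended_pct", measured=90.0, threshold=75.0),
]
score = component_fidelity(indicators)

if __name__ == "__main__":
    for ind in indicators:
        print(f"{ind.name}: rating {ind.rating()}")
    print(f"component fidelity score: {score:.2f}")
```

In this made-up example two of the three indicators meet their thresholds, so the component score is two thirds. Whether that counts as adequate fidelity for the component would itself need a threshold set by the evaluation team.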

Discussion Question
How would you use any of these tools/instruments in your work?

THANK YOU
David Merves, Evergreen Evaluation & Consulting, Inc.
david@eecvt.com
(802) 857-5935

