2006 NHC Verification Report Interdepartmental Hurricane Conference 5

- Slides: 41

2006 NHC Verification Report

Interdepartmental Hurricane Conference, 5 March 2007

James L. Franklin, NHC/TPC

Verification Rules

- The system must be a tropical (or subtropical) cyclone at both the forecast time and the verification time. The depression stage is included (except as noted).
- Verification results are final (until we change something).
- Special advisories are ignored; regular advisories are verified.
- The skill baseline for track is the revised CLIPER5 (developmental data updated to 1931-2004 [ATL] and 1949-2004 [EPAC]), run post-storm on operational compute data.
- The skill baseline for intensity is the new Decay-SHIFOR5 model, run post-storm on operational compute data (OCS5). The minimum D-SHIFOR5 forecast is 15 kt.
- New interpolated version of the GFDL: GHMI. The previous GFDL intensity forecast is lagged 6 h as always, but the offset is not applied at or beyond 30 h. Half the offset is applied at 24 h, and the full offset is applied at 6-18 h.
- ICON now uses GHMI.
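The GHMI offset rule can be sketched as follows. This is a minimal illustration, not the NHC implementation. It assumes the 6-h-old GFDL run is stored as a mapping from lead time (h) to intensity (kt) and that the offset is the current analyzed intensity minus the old run's 6-h forecast. The function names `offset_weight` and `interpolate_intensity` are hypothetical.

```python
def offset_weight(tau_h: int) -> float:
    """Fraction of the initial offset applied at new lead time tau_h (hours).

    Follows the 2006 rule: full offset through 18 h, half at 24 h,
    none at or beyond 30 h. Only 6-h multiples are accepted.
    """
    if tau_h < 0 or tau_h % 6 != 0:
        raise ValueError("lead time must be a non-negative multiple of 6 h")
    if tau_h <= 18:
        return 1.0
    if tau_h == 24:
        return 0.5
    return 0.0


def interpolate_intensity(prev_run: dict[int, float], current_vmax: float) -> dict[int, float]:
    """Build an 'early' (interpolated) intensity forecast from the previous late-model run.

    The previous run is 6 h old, so its tau + 6 forecast becomes the new tau forecast.
    Assumption: offset = current analyzed intensity minus the old run's 6-h forecast.
    """
    offset = current_vmax - prev_run[6]
    early = {}
    for old_tau, vmax in sorted(prev_run.items()):
        new_tau = old_tau - 6  # lag the old run by 6 h
        if new_tau < 0:
            continue
        early[new_tau] = vmax + offset_weight(new_tau) * offset
    return early


# Hypothetical old run (kt) and a current analyzed intensity of 60 kt.
old_gfdl = {0: 50.0, 6: 55.0, 12: 60.0, 18: 65.0, 24: 70.0, 30: 75.0, 36: 80.0}
print(interpolate_intensity(old_gfdl, 60.0))
# → {0: 60.0, 6: 65.0, 12: 70.0, 18: 75.0, 24: 77.5, 30: 80.0}
```

With the offset tapered to zero by 30 h, the longer-range interpolated forecast reverts to the lagged model values. The 0-h value matches the current analysis.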

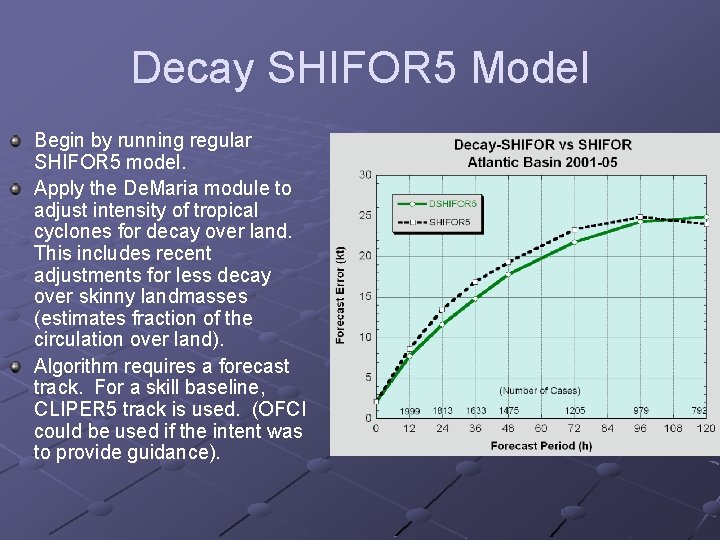

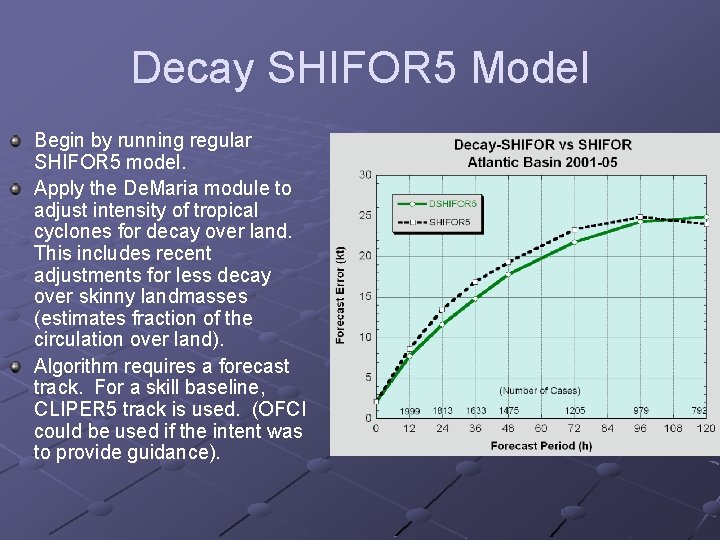

Decay-SHIFOR5 Model

- Begin by running the regular SHIFOR5 model.
- Apply the DeMaria module to adjust the intensity of tropical cyclones for decay over land. This includes recent adjustments for less decay over skinny landmasses (the module estimates the fraction of the circulation over land).
- The algorithm requires a forecast track. For a skill baseline, the CLIPER5 track is used. (OFCI could be used if the intent were to provide guidance.)
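The land-decay adjustment can be illustrated as an exponential relaxation toward a background intensity. The decay rate is scaled by the fraction of the circulation over land, so skinny landmasses produce less weakening. This is a sketch under stated assumptions, not the operational DeMaria module:

- The constants `V_B` (26.7 kt) and `ALPHA` (0.095 per hour) are assumed illustrative values.
- The caller supplies the land fractions per step. Operationally they would come from the forecast track, such as CLIPER5.
- Letting land decay replace the SHIFOR5 change whenever any part of the circulation is over land is a hypothetical simplification.

The 15-kt floor is the minimum D-SHIFOR5 forecast stated in the verification rules.

```python
import math

# Assumed, illustrative constants (not taken from the slides).
V_B = 26.7     # background intensity the storm relaxes toward over land (kt)
ALPHA = 0.095  # decay rate for a circulation entirely over land (1/h)
# Floor from the verification rules: minimum D-SHIFOR5 forecast is 15 kt.
MIN_VMAX = 15.0


def decay_step(v: float, land_frac: float, dt_h: float = 6.0) -> float:
    """Relax intensity toward V_B, with the rate scaled by the fraction of the circulation over land."""
    return V_B + (v - V_B) * math.exp(-ALPHA * land_frac * dt_h)


def decay_shifor(v0: float, shifor_changes: list[float], land_fracs: list[float],
                 dt_h: float = 6.0) -> list[float]:
    """Apply land decay to a SHIFOR5-style intensity forecast (hypothetical combination rule)."""
    v = v0
    out = []
    for dv, frac in zip(shifor_changes, land_fracs):
        if frac > 0.0:
            # Some of the circulation is over land: decay replaces the SHIFOR5 change.
            v = decay_step(v, frac, dt_h)
        else:
            # Entirely over water: follow the statistical SHIFOR5 change.
            v = v + dv
        v = max(v, MIN_VMAX)
        out.append(v)
    return out


# Over water for 12 h, then crossing a narrow island, then fully inland.
print(decay_shifor(90.0, [5.0, 5.0, 0.0, -5.0], [0.0, 0.0, 0.3, 1.0]))
```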

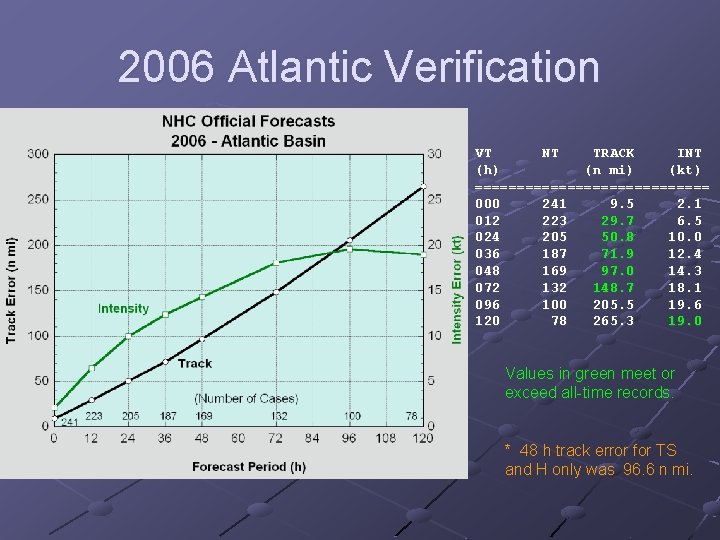

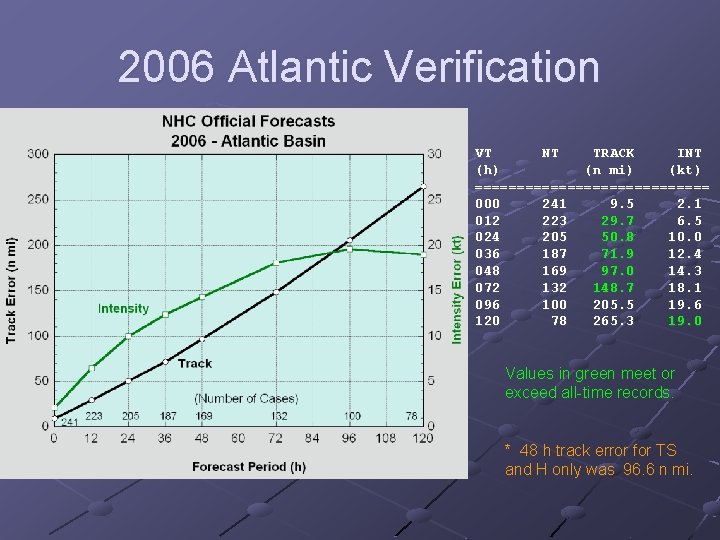

2006 Atlantic Verification

| VT (h) | NT | Track error (n mi) | Intensity error (kt) |
|---|---|---|---|
| 000 | 241 | 9.5 | 2.1 |
| 012 | 223 | 29.7 | 6.5 |
| 024 | 205 | 50.8 | 10.0 |
| 036 | 187 | 71.9 | 12.4 |
| 048 | 169 | 97.0 | 14.3 |
| 072 | 132 | 148.7 | 18.1 |
| 096 | 100 | 205.5 | 19.6 |
| 120 | 78 | 265.3 | 19.0 |

Values in green meet or exceed all-time records.

\*The 48-h track error for tropical storms and hurricanes only was 96.6 n mi.

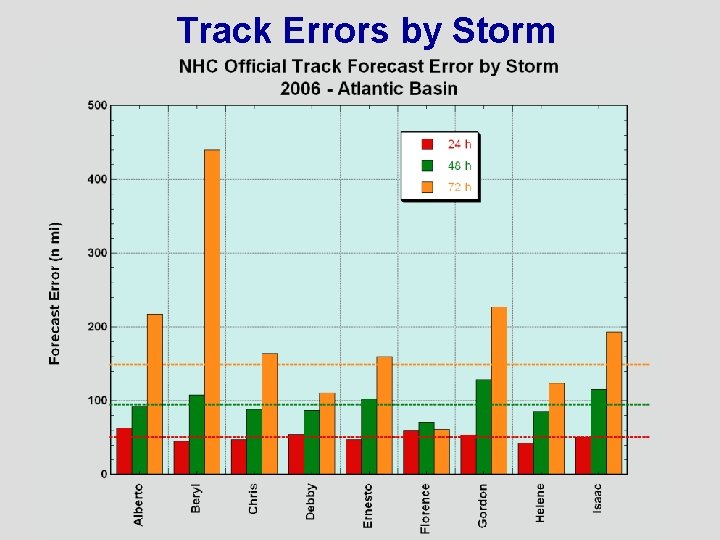

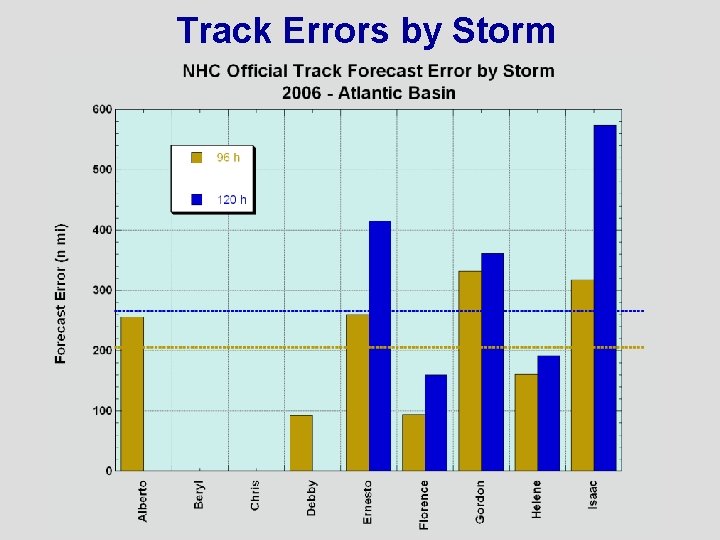

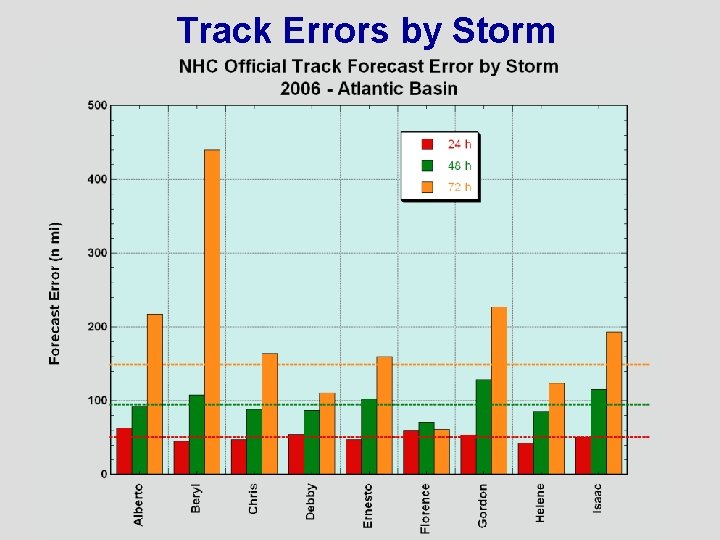

Track Errors by Storm

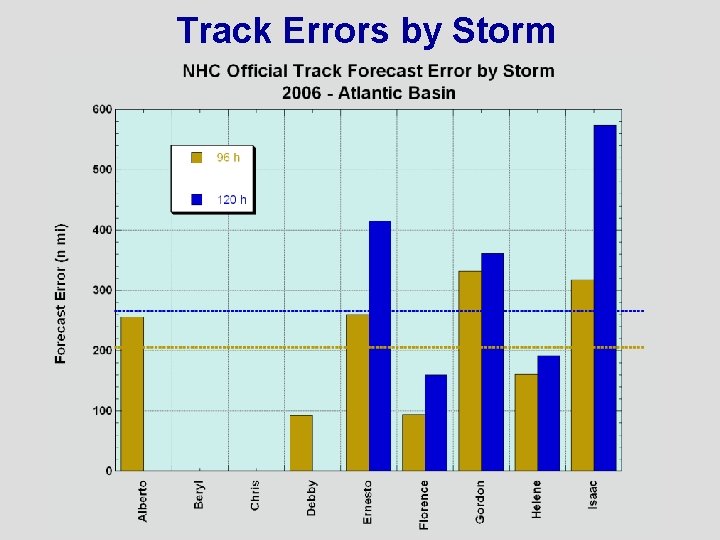

Track Errors by Storm

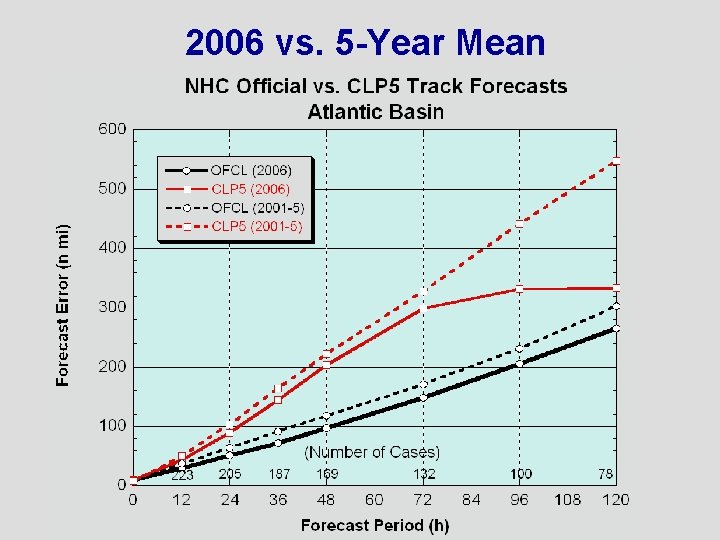

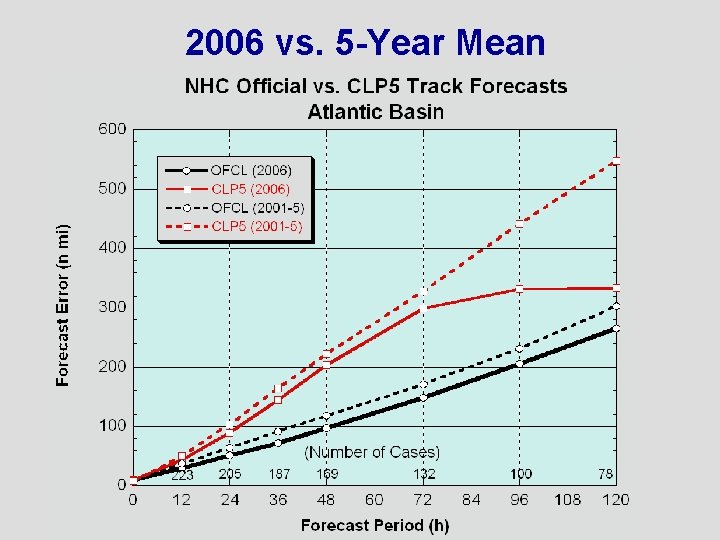

2006 vs. 5-Year Mean

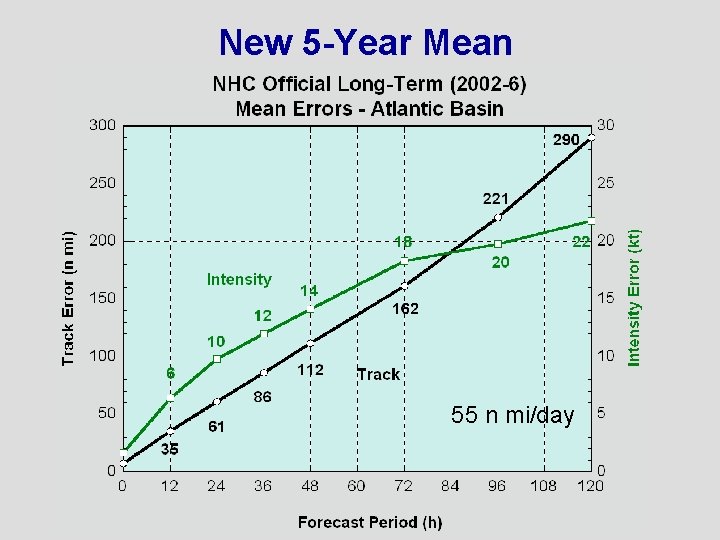

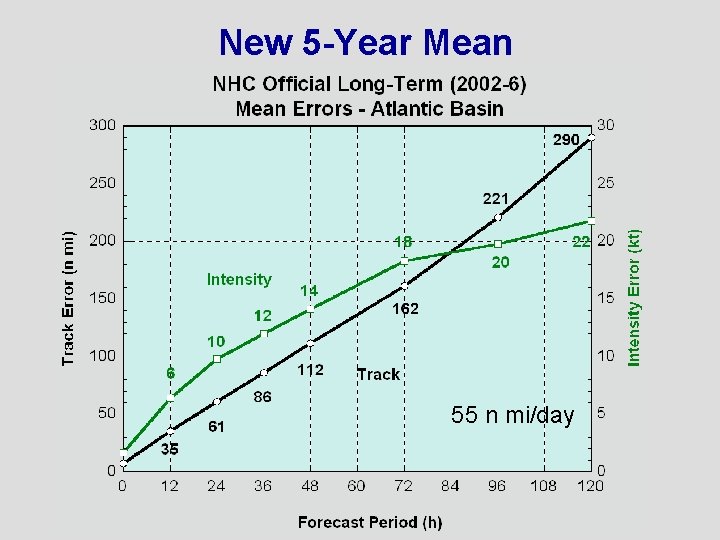

New 5-Year Mean: 55 n mi/day

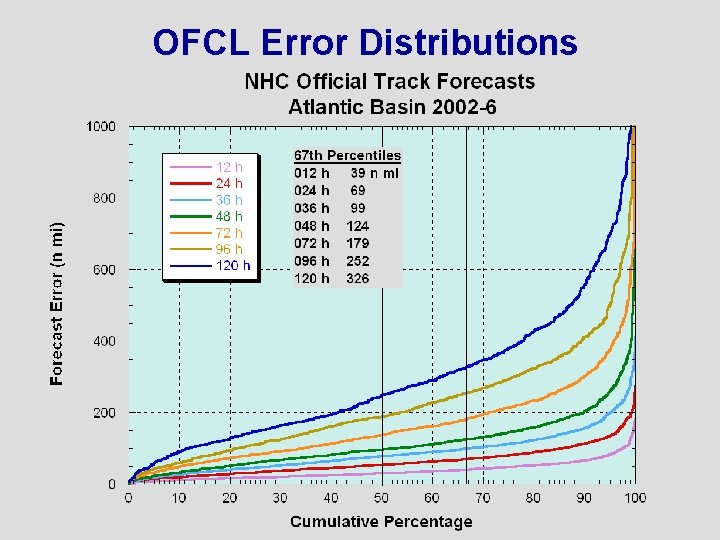

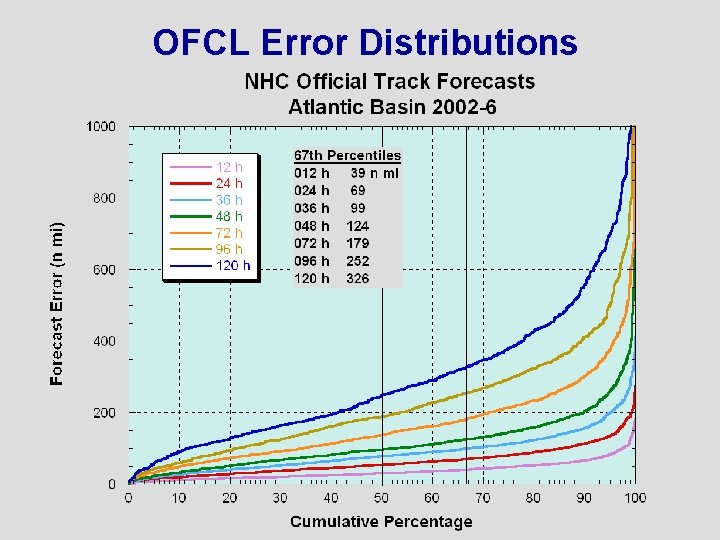

OFCL Error Distributions

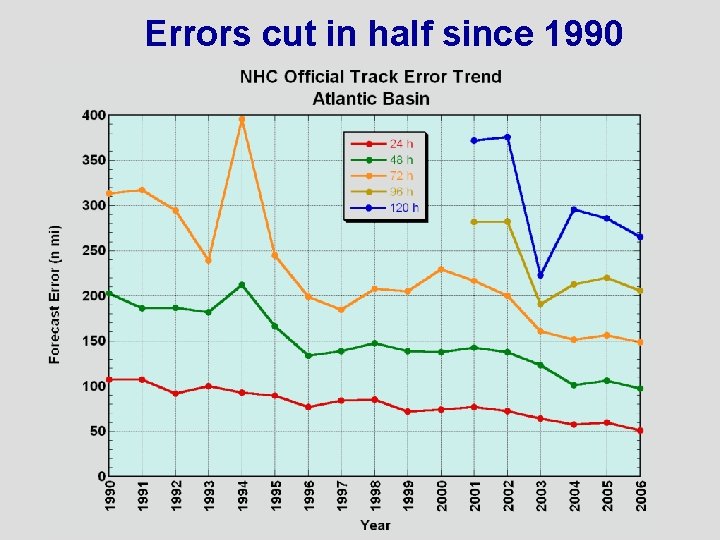

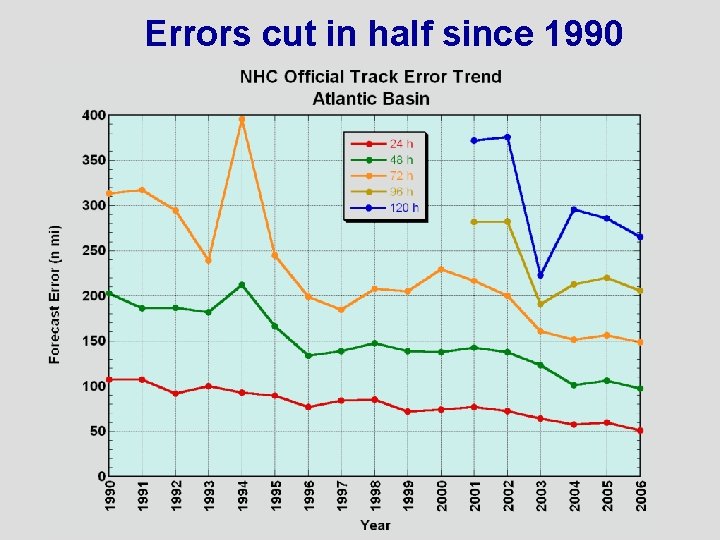

Errors cut in half since 1990

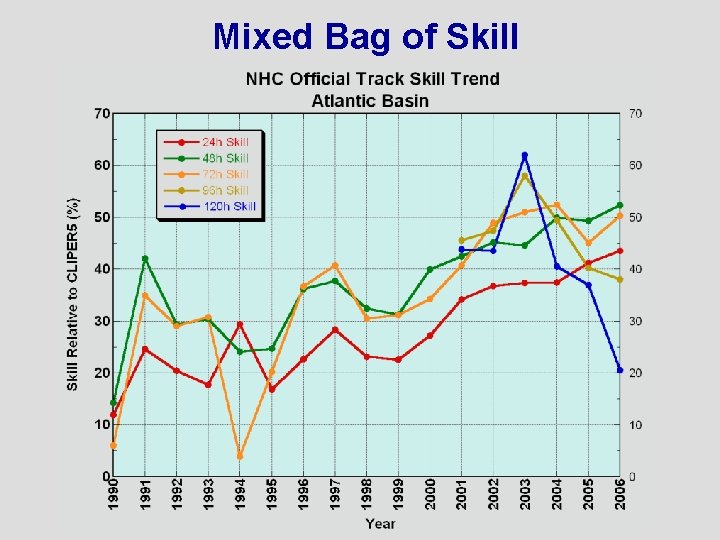

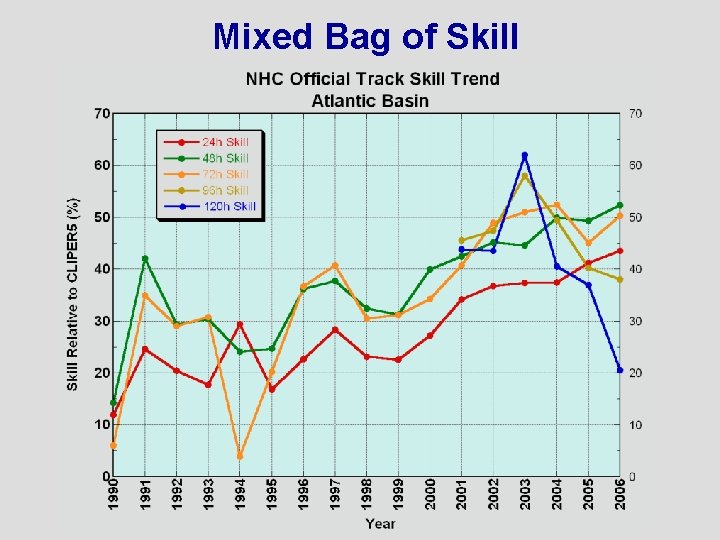

Mixed Bag of Skill

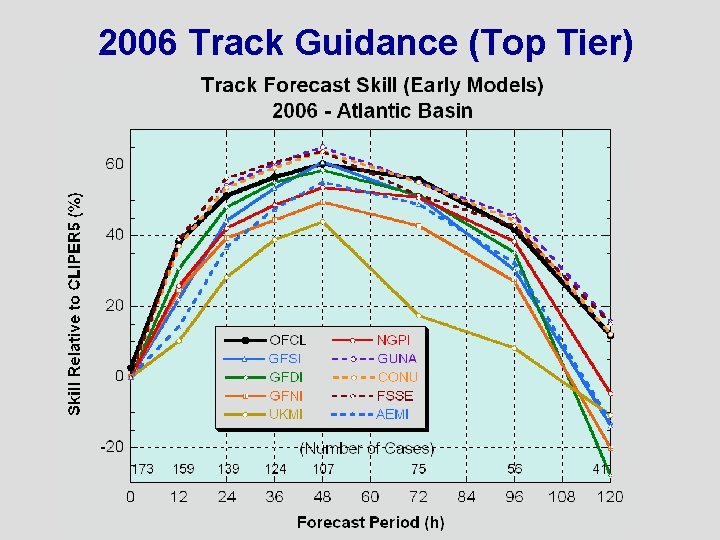

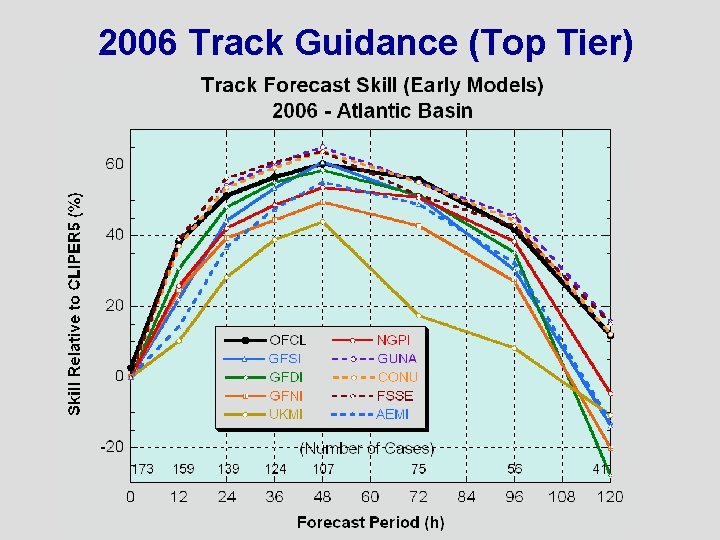

2006 Track Guidance (Top Tier)

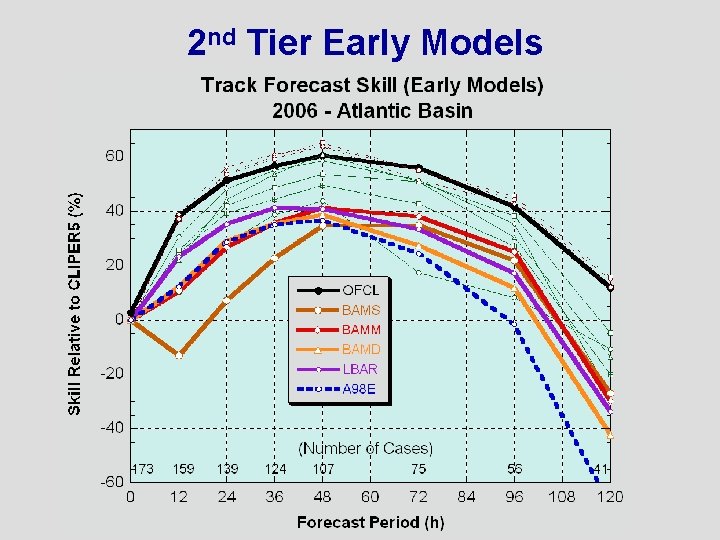

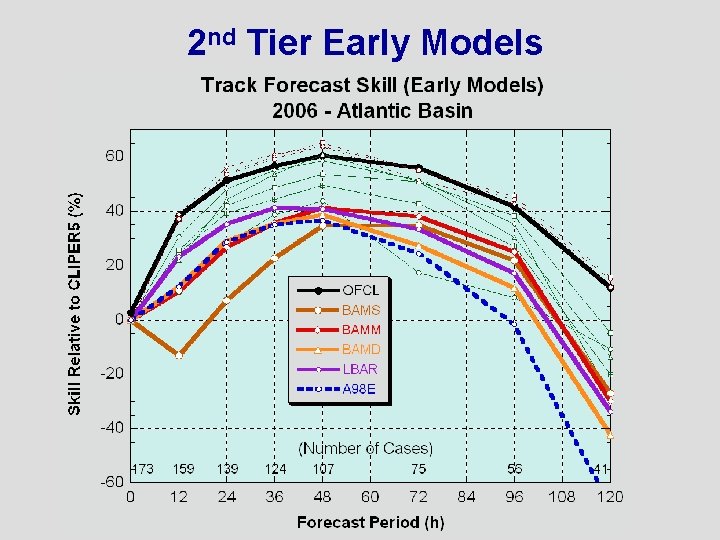

2nd Tier Early Models

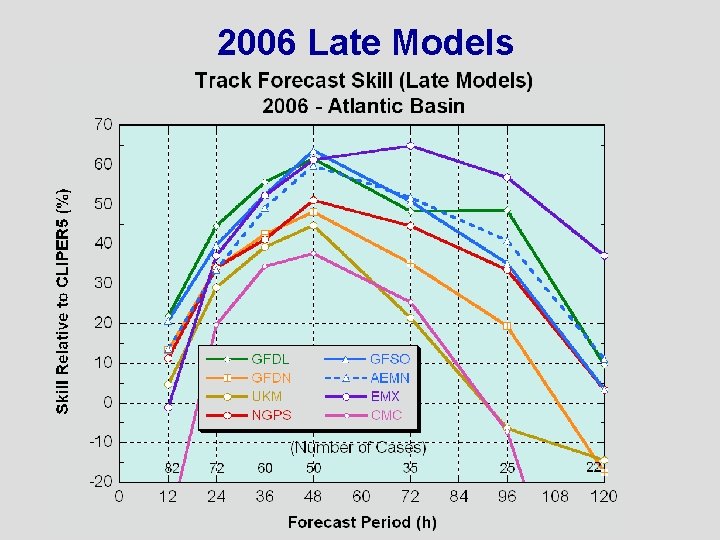

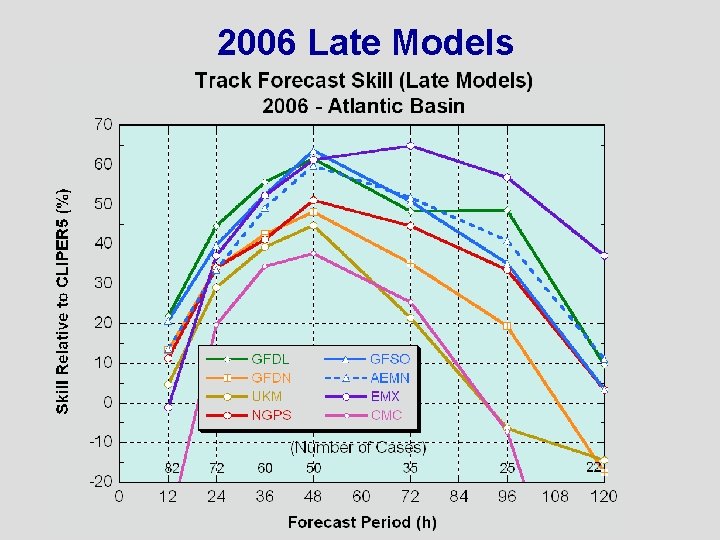

2006 Late Models

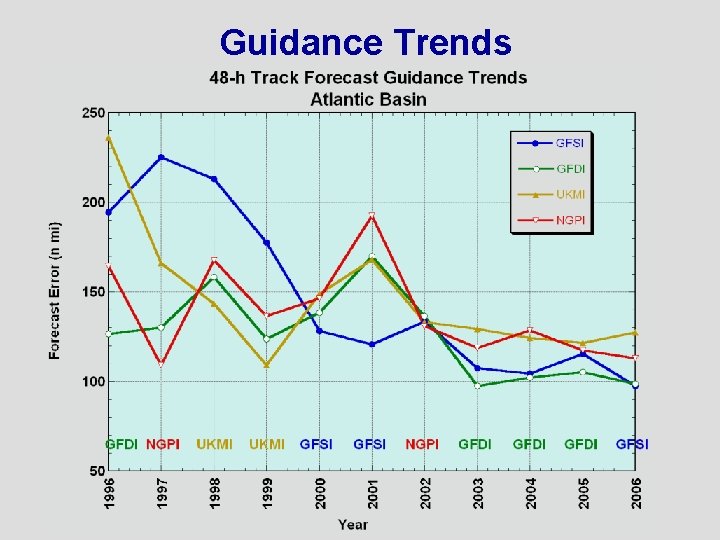

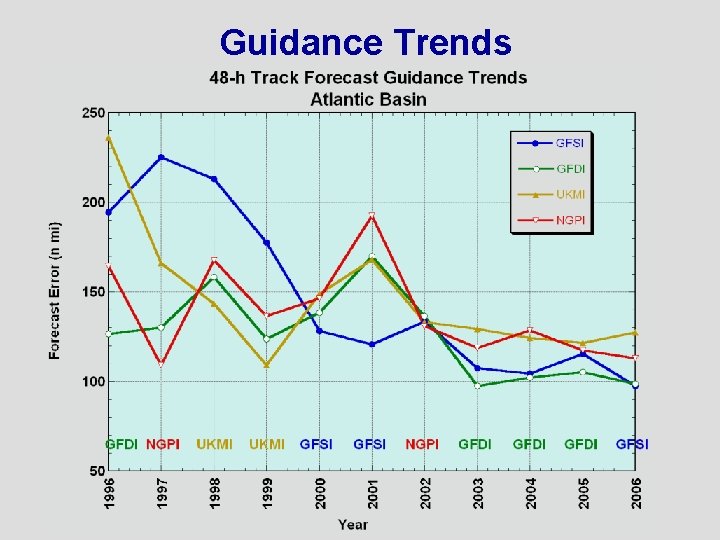

Guidance Trends

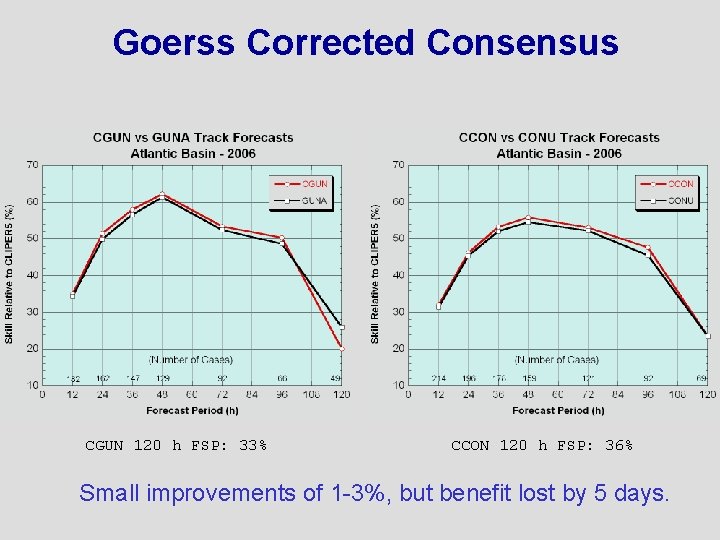

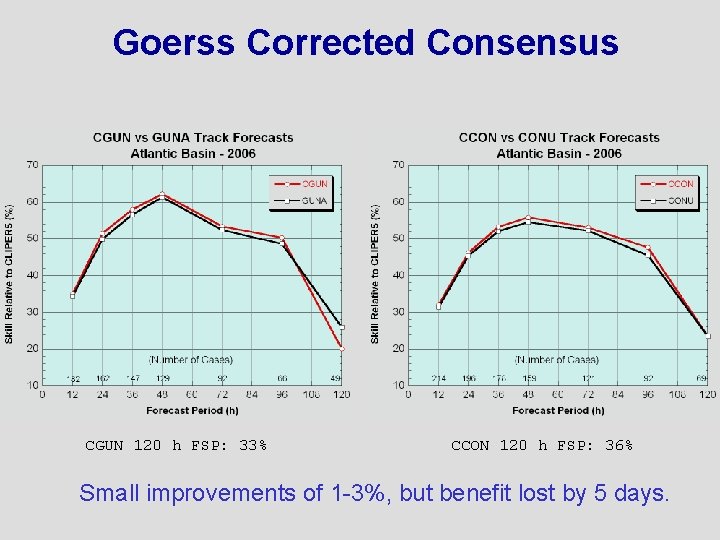

Goerss Corrected Consensus

- CGUN 120-h FSP: 33%
- CCON 120-h FSP: 36%
- Small improvements of 1-3%, but the benefit is lost by 5 days.
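Assume FSP here means the frequency of superior performance: the share of homogeneous cases in which one model's error is smaller than the other's. Under that assumption, it could be computed as below. The function name, the half-credit rule for ties, and the toy errors are assumptions for illustration and are not taken from the slides.

```python
def frequency_of_superior_performance(errors_a: list[float], errors_b: list[float]) -> float:
    """Percent of homogeneous cases in which model A's error is smaller than model B's.

    Ties count as half a win for each model (an assumption of this sketch).
    """
    if len(errors_a) != len(errors_b) or not errors_a:
        raise ValueError("need two equal-length, non-empty homogeneous samples")
    wins = 0.0
    for a, b in zip(errors_a, errors_b):
        if a < b:
            wins += 1.0
        elif a == b:
            wins += 0.5
    return 100.0 * wins / len(errors_a)


# Toy 120-h track errors (n mi) for a corrected and an uncorrected consensus.
corrected = [250.0, 310.0, 180.0, 420.0]
uncorrected = [240.0, 330.0, 180.0, 400.0]
print(frequency_of_superior_performance(corrected, uncorrected))  # → 37.5
```

A value below 50% means the first model loses more head-to-head comparisons than it wins, even if its mean error is only slightly different.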

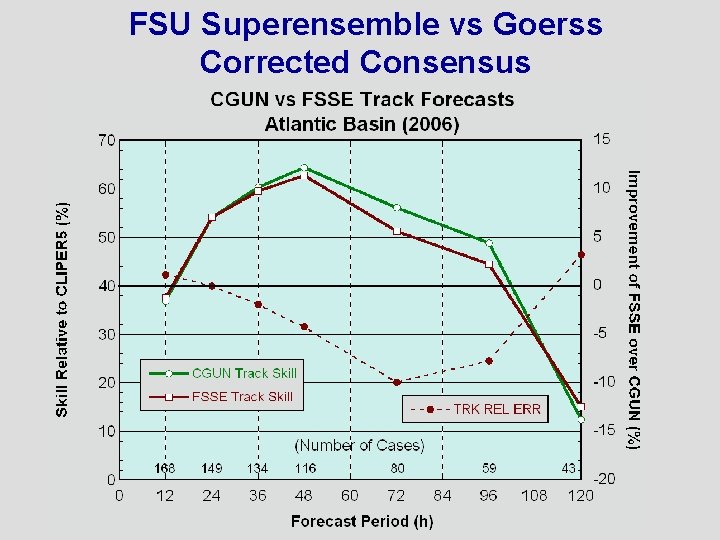

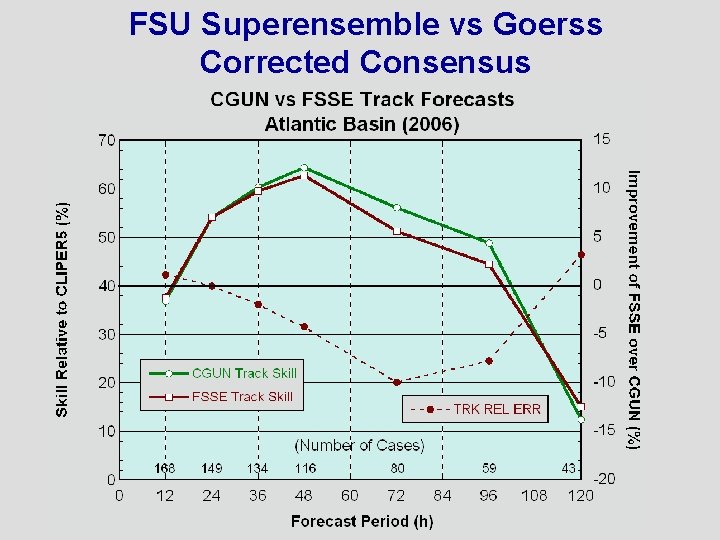

FSU Superensemble vs Goerss Corrected Consensus

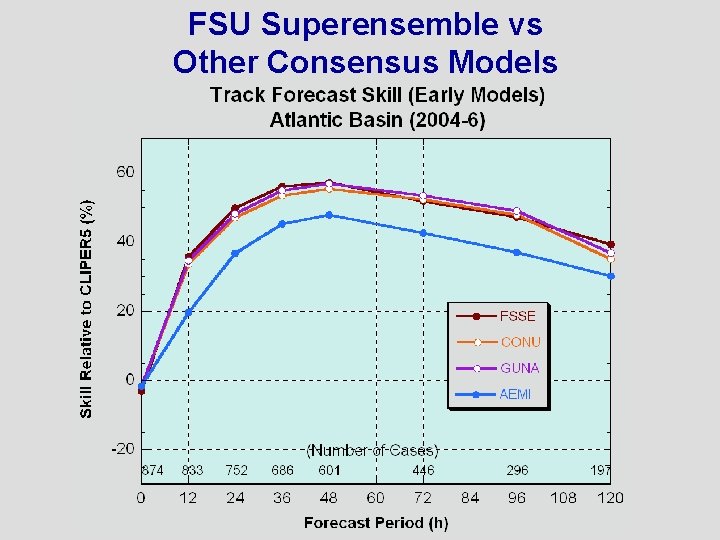

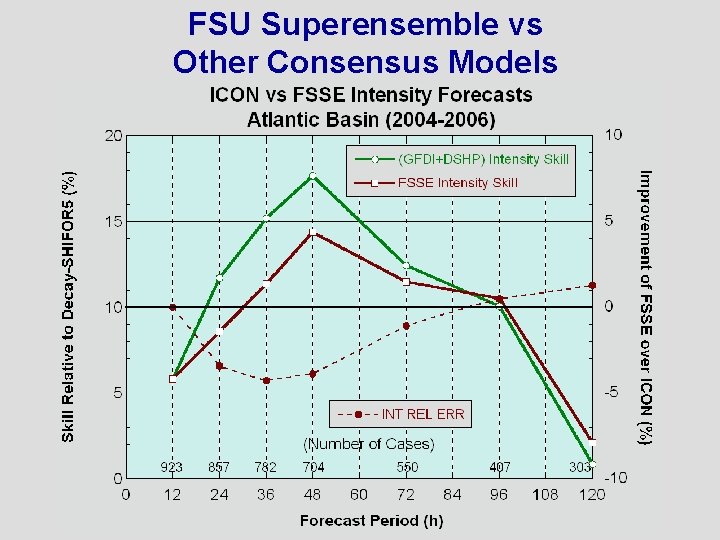

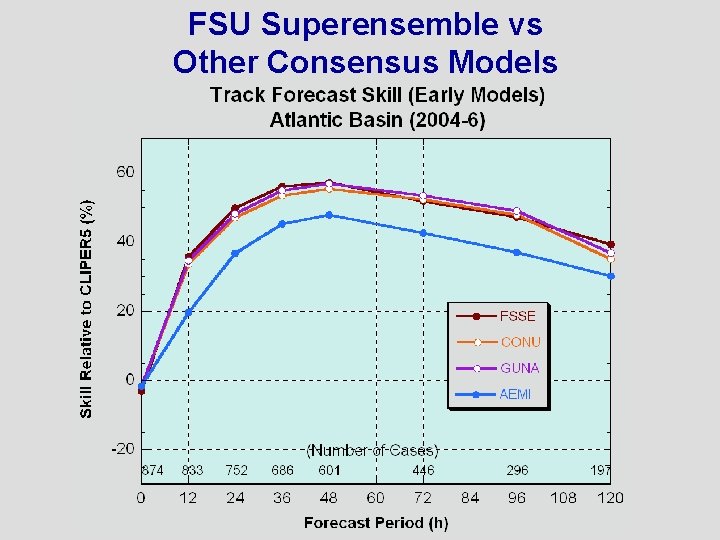

FSU Superensemble vs Other Consensus Models

2006 vs. 5-Year Mean

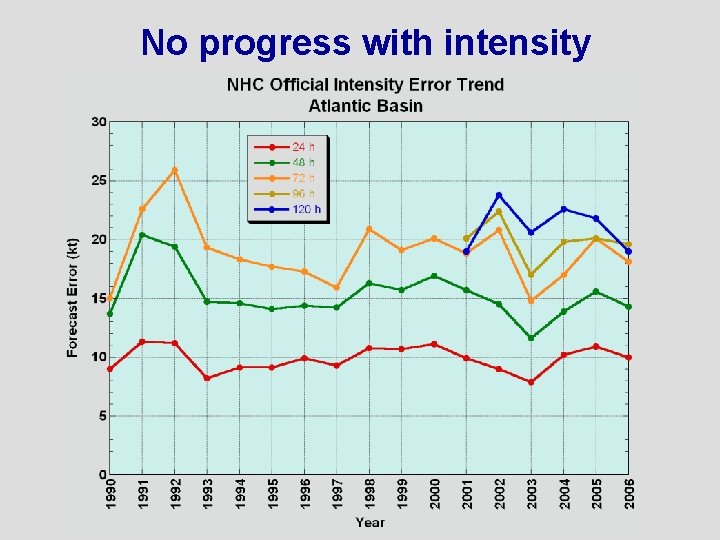

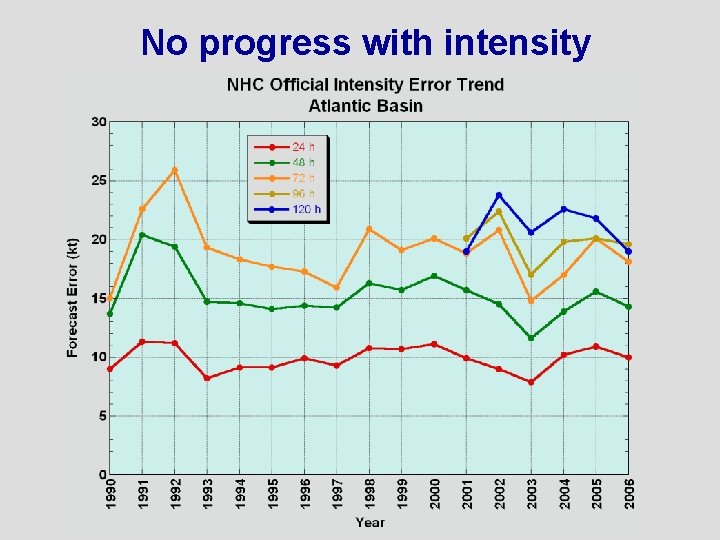

No progress with intensity

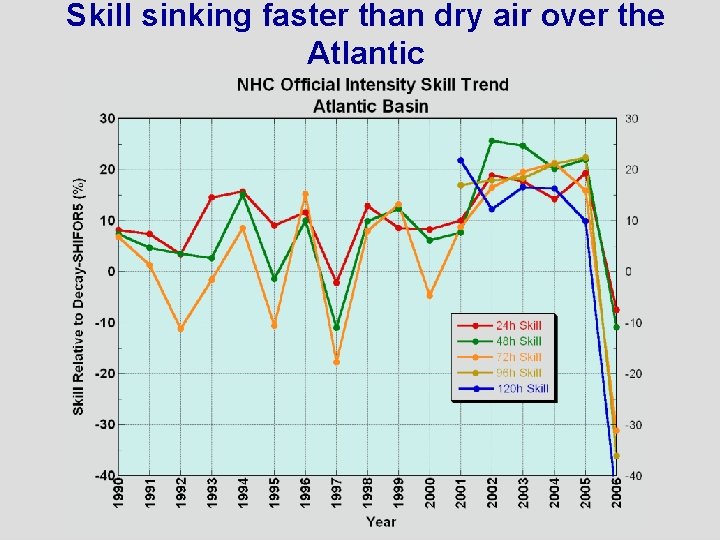

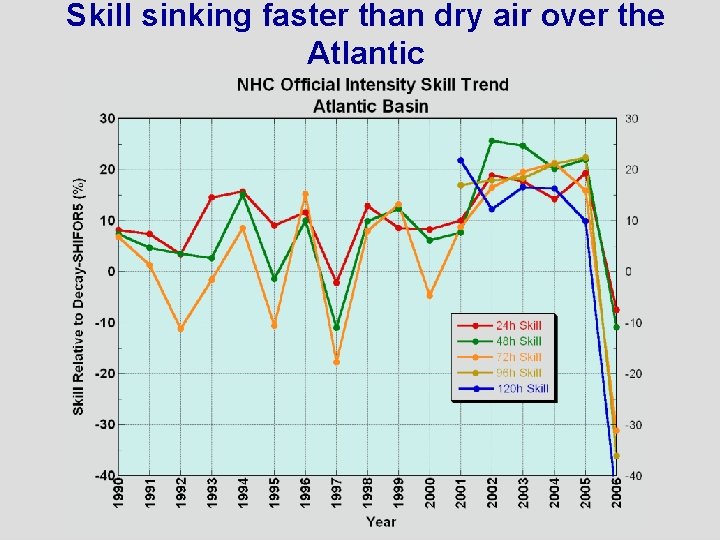

Skill sinking faster than dry air over the Atlantic

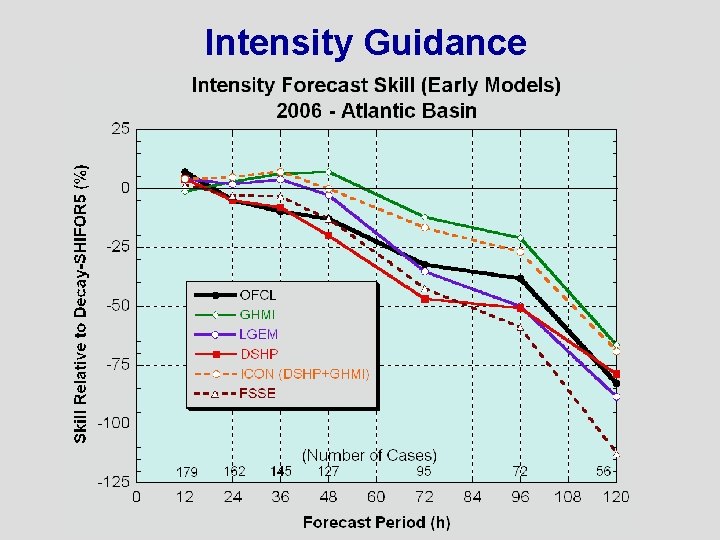

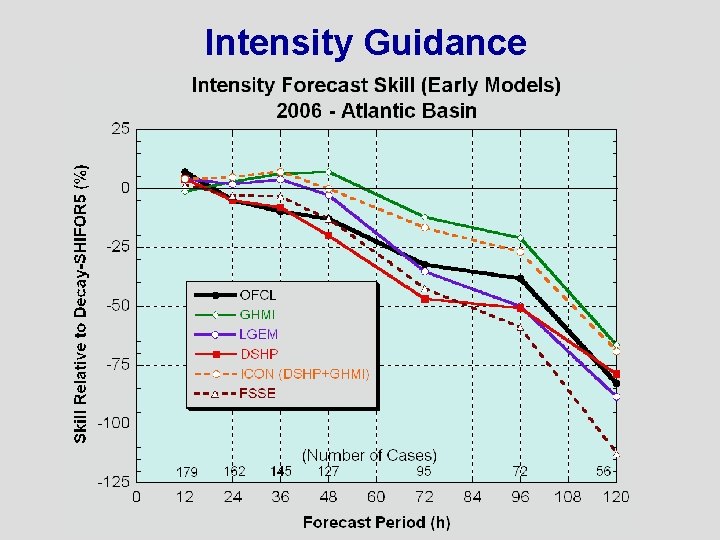

Intensity Guidance

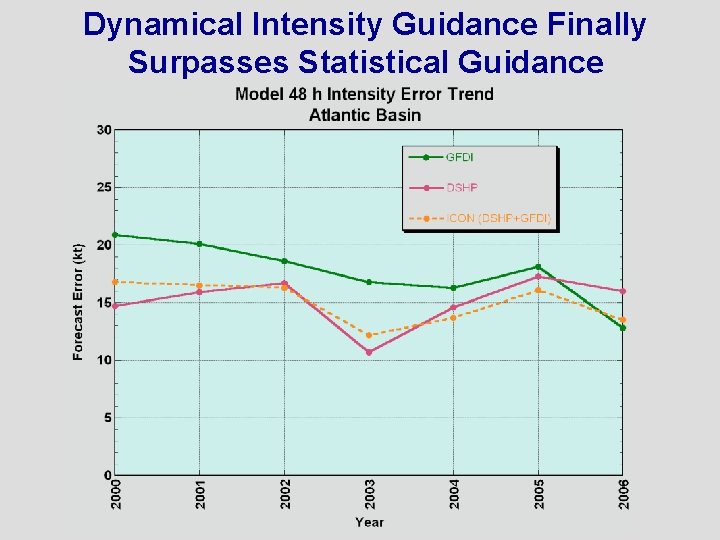

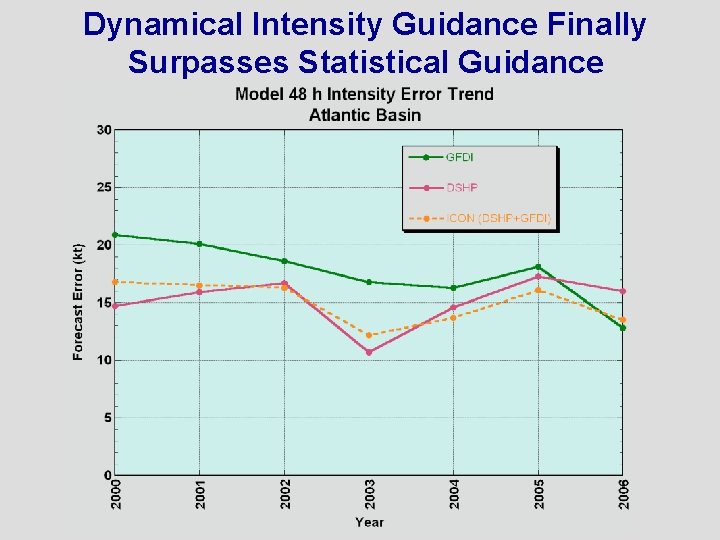

Dynamical Intensity Guidance Finally Surpasses Statistical Guidance

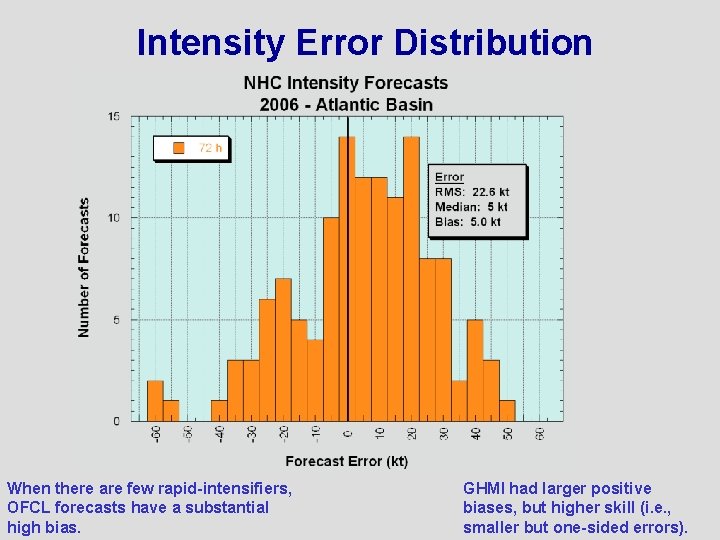

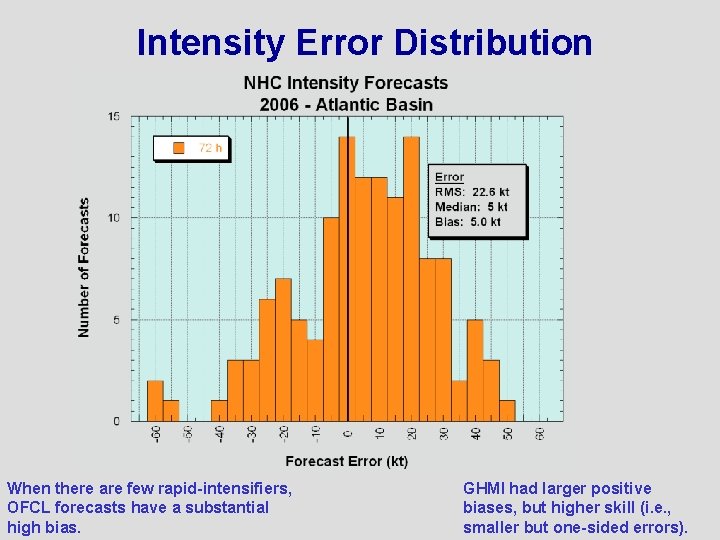

Intensity Error Distribution

When there are few rapid intensifiers, OFCL forecasts have a substantial high bias. GHMI had a larger positive bias but higher skill (i.e., smaller but one-sided errors).
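Toy numbers show how a model can have a larger bias but smaller errors. The sketch below computes two statistics for two hypothetical sets of intensity errors (forecast minus observed, in kt): the mean signed error (bias) and the mean absolute error. The values are invented for illustration and are not the 2006 OFCL or GHMI errors.

```python
from statistics import mean


def bias(errors: list[float]) -> float:
    """Mean signed error (forecast minus observed); positive means over-forecasting."""
    return mean(errors)


def mean_absolute_error(errors: list[float]) -> float:
    """Mean of the error magnitudes, regardless of sign."""
    return mean(abs(e) for e in errors)


# Hypothetical intensity errors (kt).
model_one_sided = [5.0, 6.0, 7.0, 4.0]       # always too strong, but by small amounts
model_scattered = [15.0, -10.0, 12.0, -5.0]  # errors of both signs, larger in size

for name, errs in [("one-sided", model_one_sided), ("scattered", model_scattered)]:
    print(name, bias(errs), mean_absolute_error(errs))
```

The one-sided model has the larger positive bias (5.5 kt vs. 3.0 kt) but the smaller mean absolute error (5.5 kt vs. 10.5 kt).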

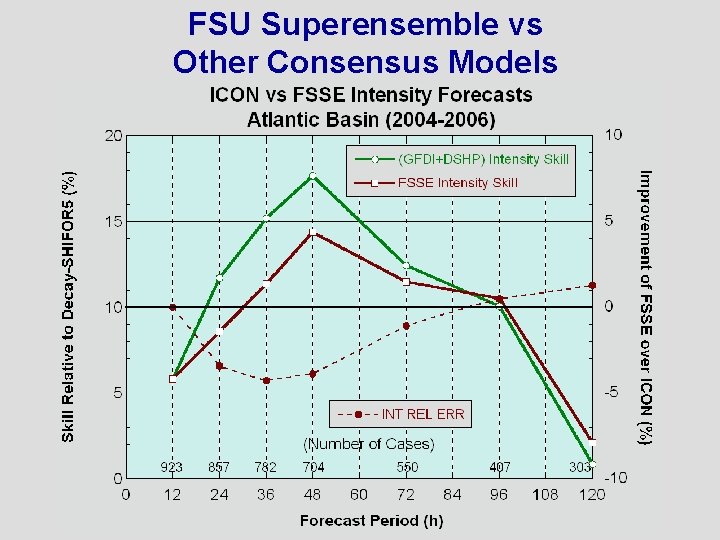

FSU Superensemble vs Other Consensus Models

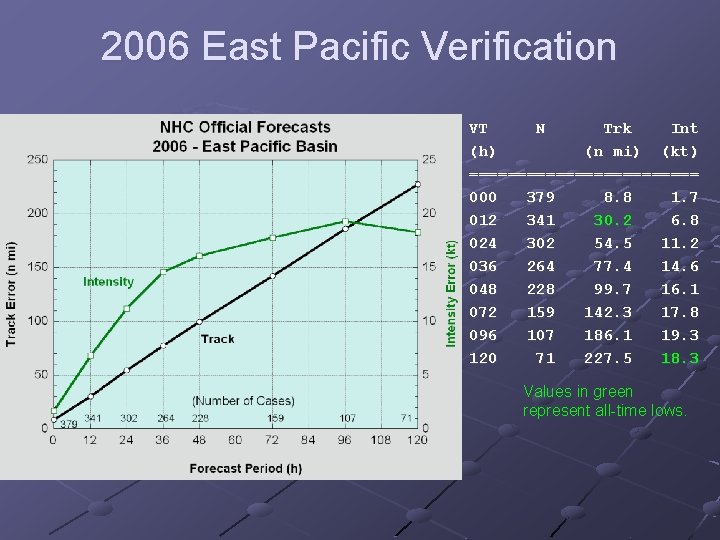

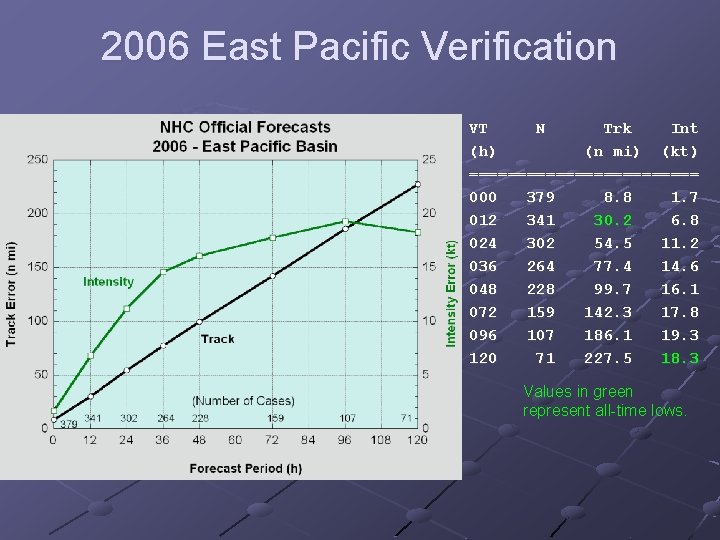

2006 East Pacific Verification

| VT (h) | N | Track error (n mi) | Intensity error (kt) |
|---|---|---|---|
| 000 | 379 | 8.8 | 1.7 |
| 012 | 341 | 30.2 | 6.8 |
| 024 | 302 | 54.5 | 11.2 |
| 036 | 264 | 77.4 | 14.6 |
| 048 | 228 | 99.7 | 16.1 |
| 072 | 159 | 142.3 | 17.8 |
| 096 | 107 | 186.1 | 19.3 |
| 120 | 71 | 227.5 | 18.3 |

Values in green represent all-time lows.

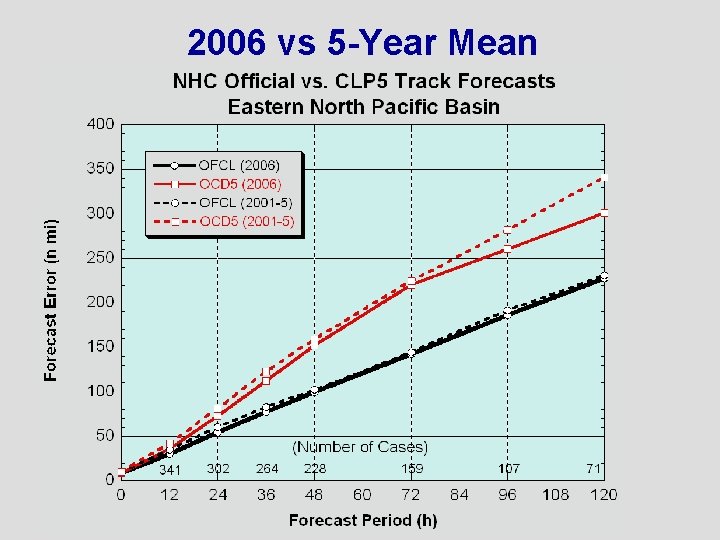

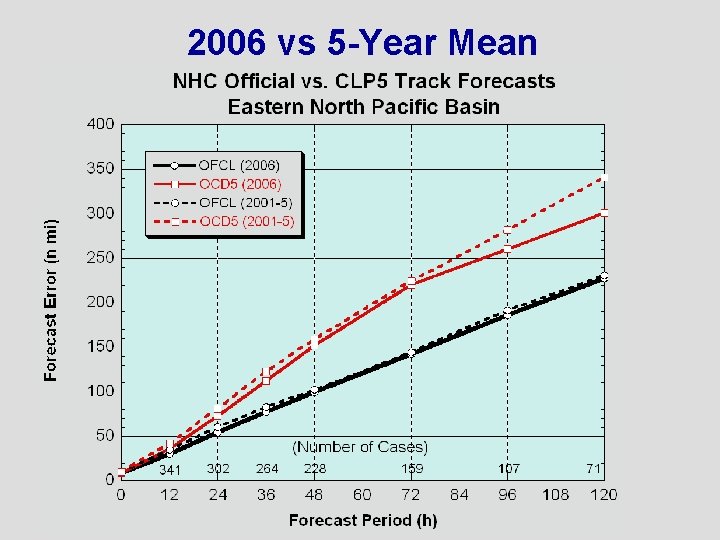

2006 vs. 5-Year Mean

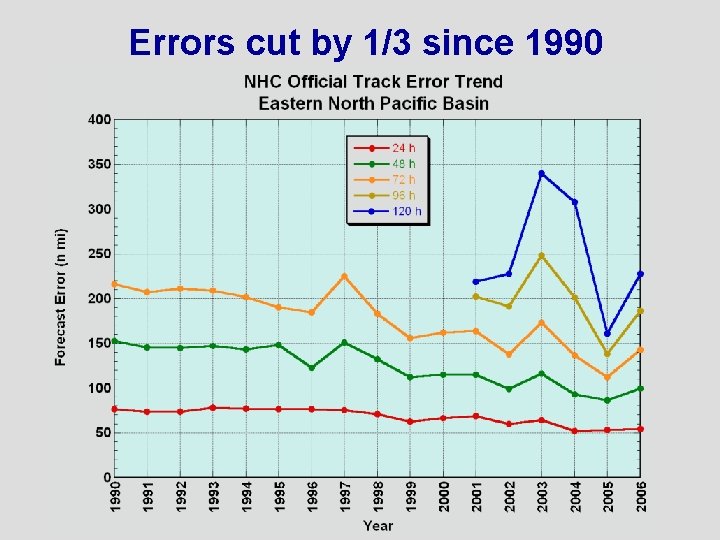

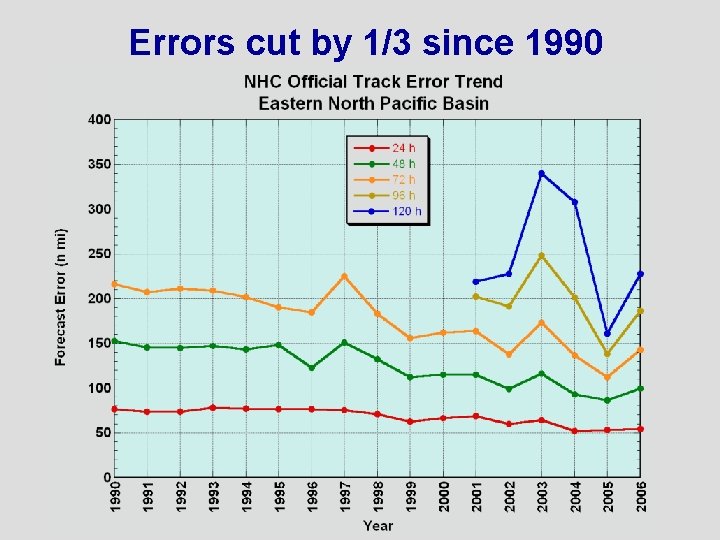

Errors cut by 1/3 since 1990

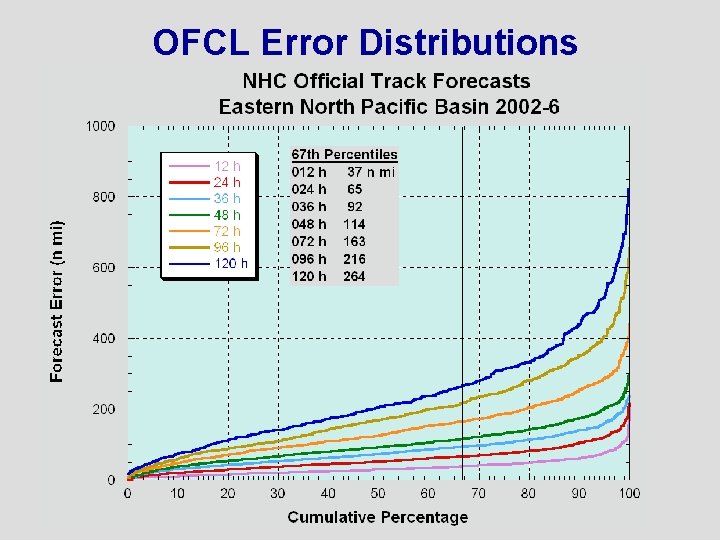

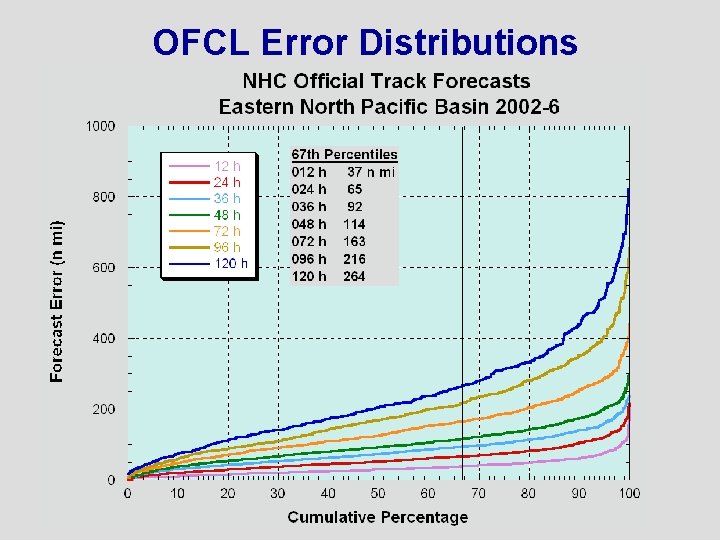

OFCL Error Distributions

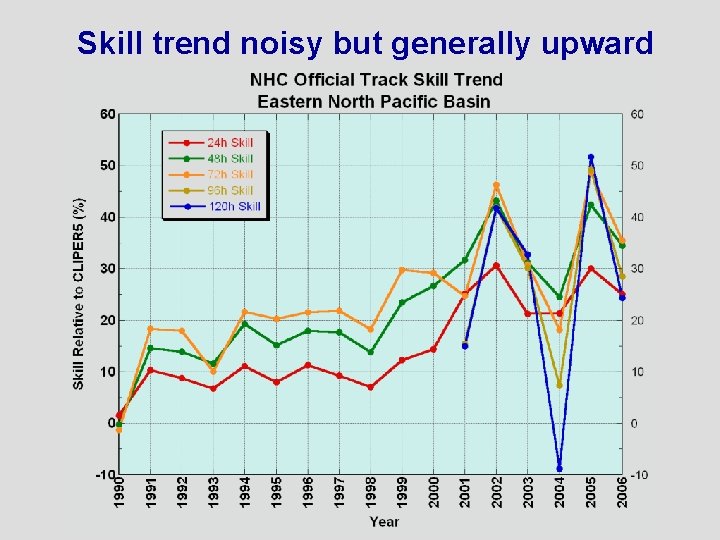

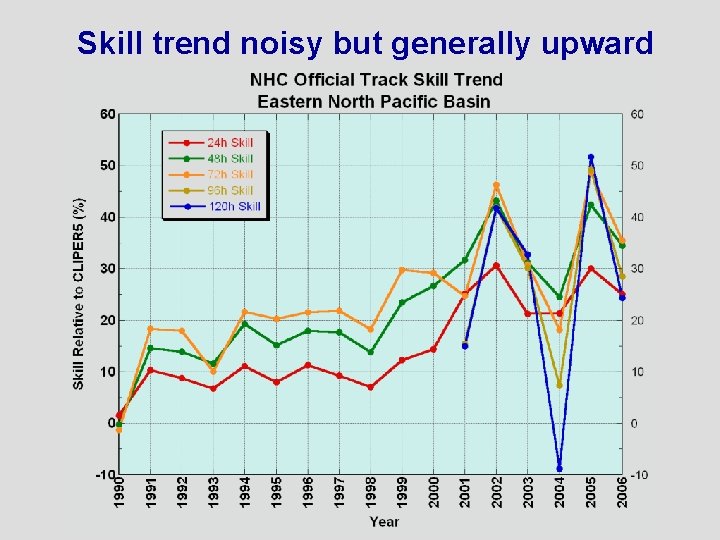

Skill trend noisy but generally upward

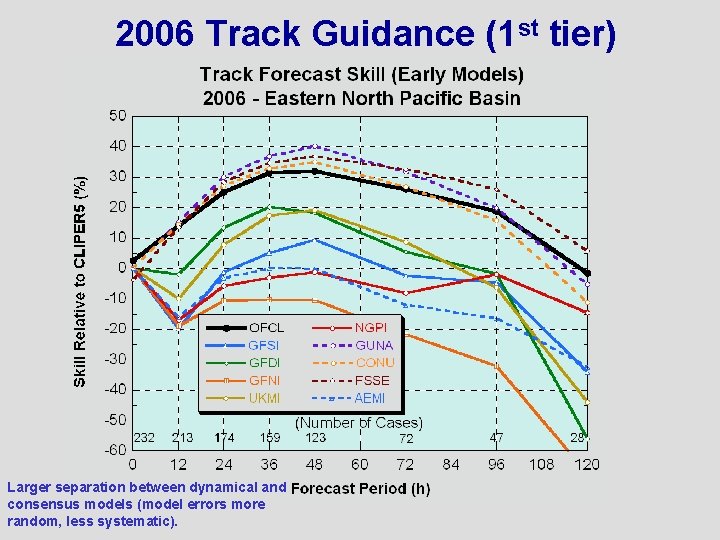

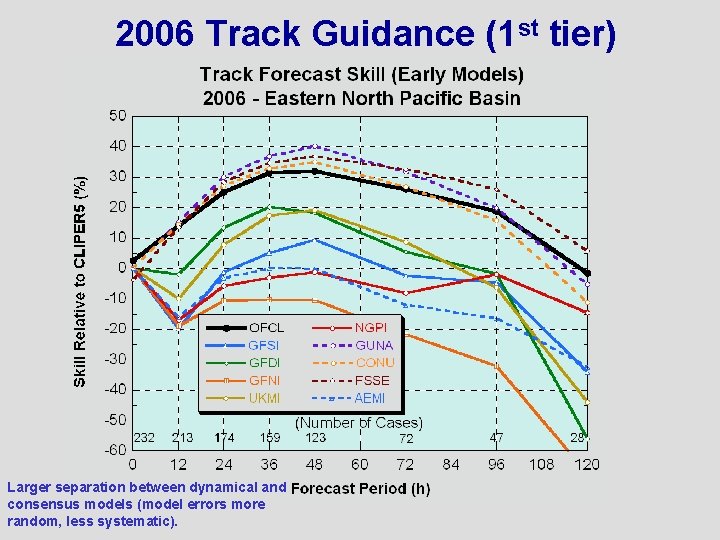

2006 Track Guidance (1st Tier)

Larger separation between the dynamical and consensus models (model errors were more random, less systematic).

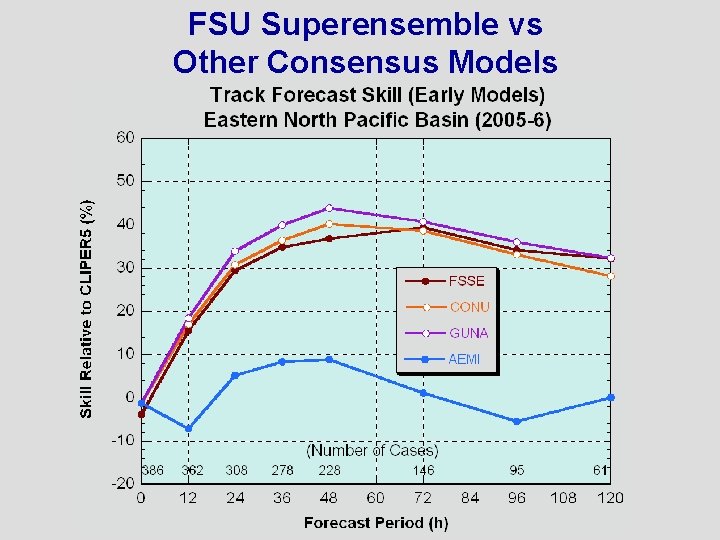

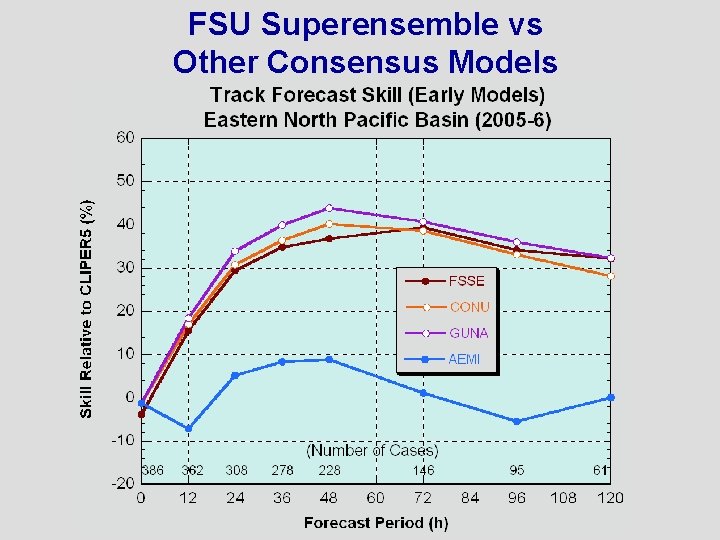

FSU Superensemble vs Other Consensus Models

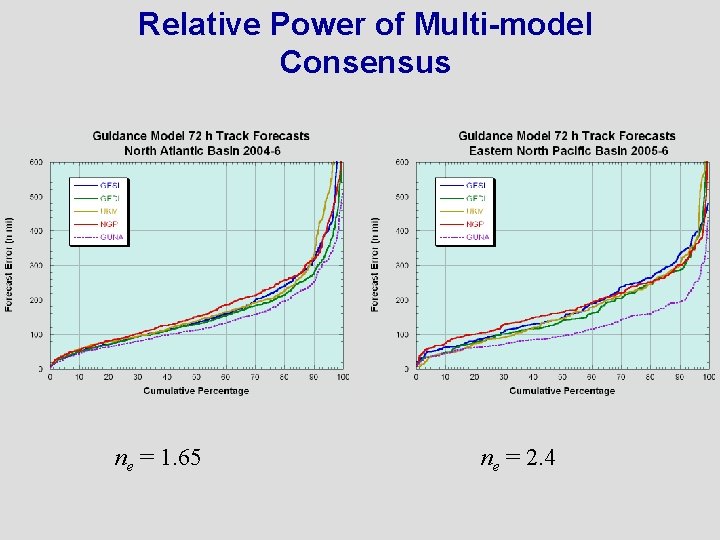

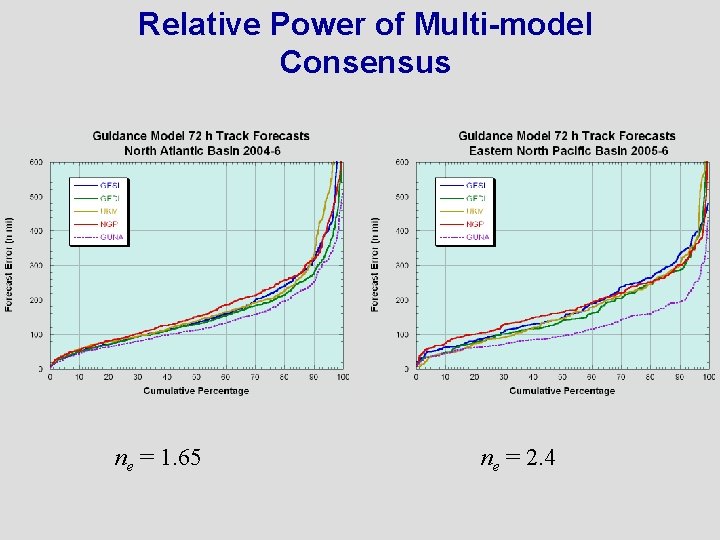

Relative Power of Multi-model Consensus

ne = 1.65; ne = 2.4
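One way to interpret an effective number of members, ne, is through the variance of an average of correlated errors. Assume n members whose errors have equal variance and a common pairwise correlation rho. The variance of the consensus error is then the single-member variance times (1 + (n - 1) rho) / n. That gives ne = n / (1 + (n - 1) rho), which falls from n for independent errors to 1 for perfectly correlated errors. This is why more random, less systematic model errors favor the consensus. The slides do not say whether ne was computed this way, so the formula and the hypothetical functions below are an illustrative assumption.

```python
def effective_members(n: int, rho: float) -> float:
    """Effective number of independent members for n equicorrelated, equal-variance errors."""
    if n < 1:
        raise ValueError("need at least one member")
    return n / (1.0 + (n - 1) * rho)


def implied_correlation(n: int, n_e: float) -> float:
    """Invert effective_members: the common correlation that yields n_e from n members."""
    if n < 2:
        raise ValueError("need at least two members")
    return (n / n_e - 1.0) / (n - 1)


print(effective_members(4, 0.0))  # independent errors: full benefit of 4 members
print(effective_members(4, 1.0))  # identical errors: no benefit from averaging
print(effective_members(4, 0.5))  # partially correlated errors
```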

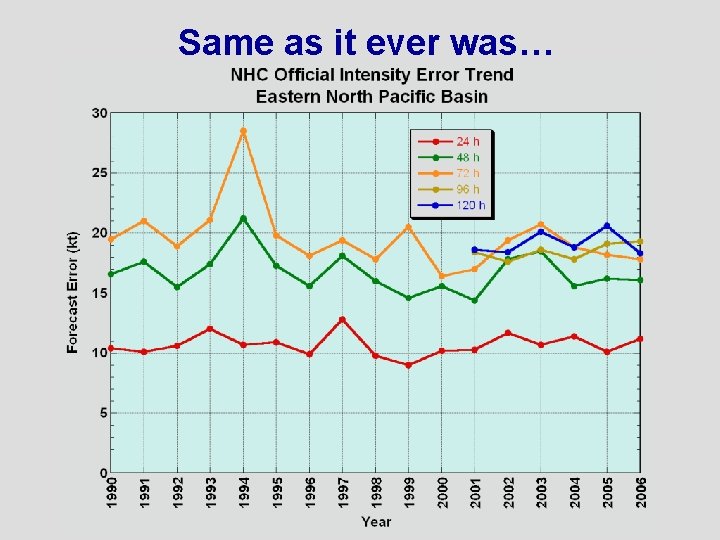

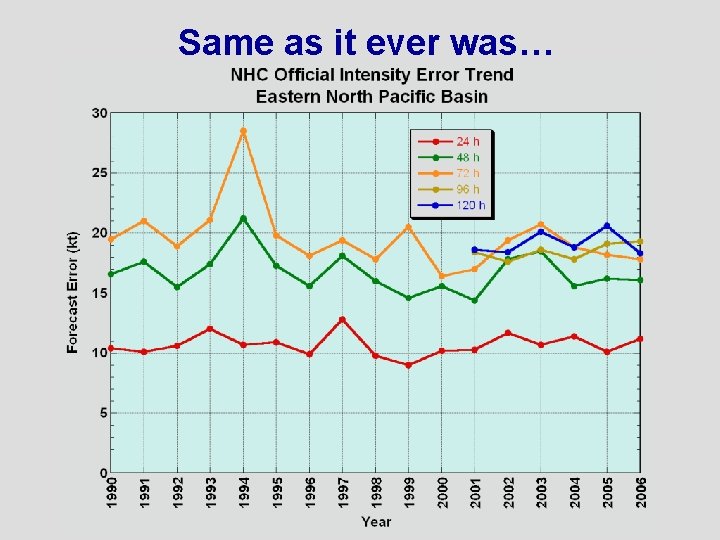

Same as it ever was…

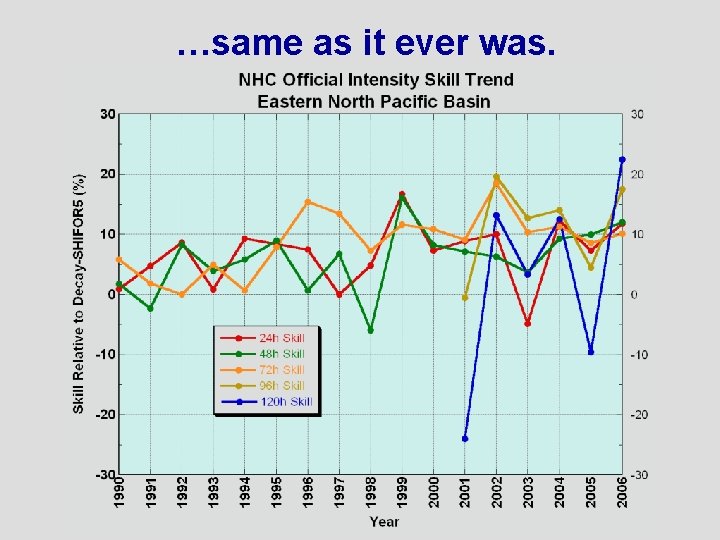

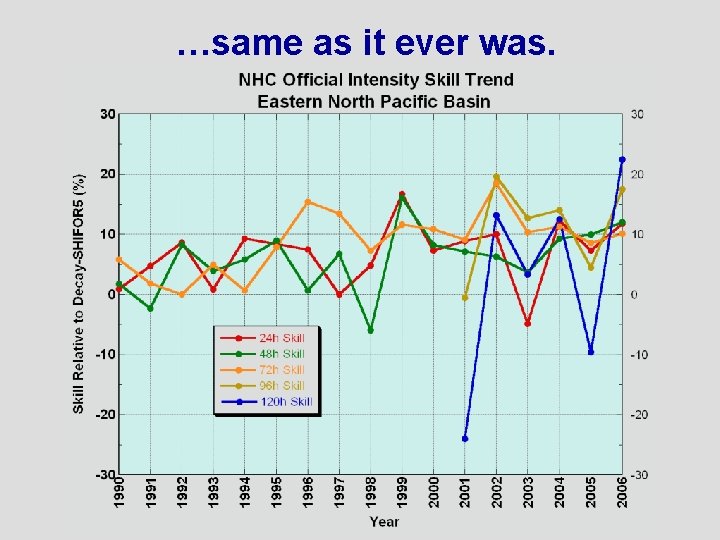

…same as it ever was.

2006 Intensity Guidance

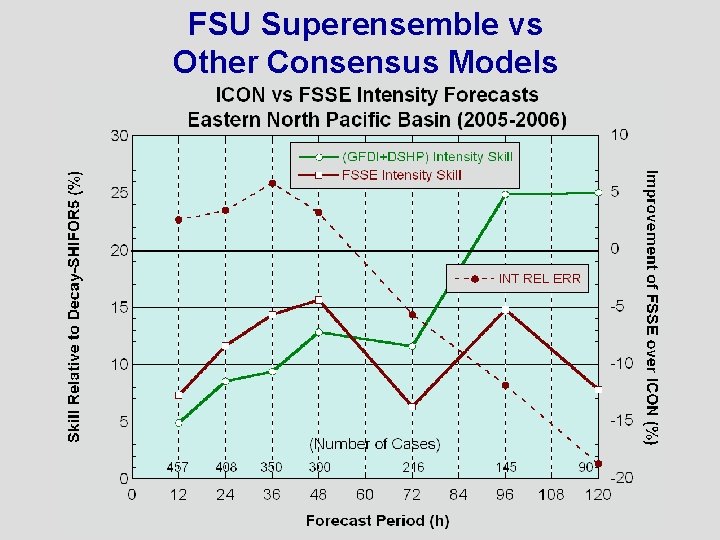

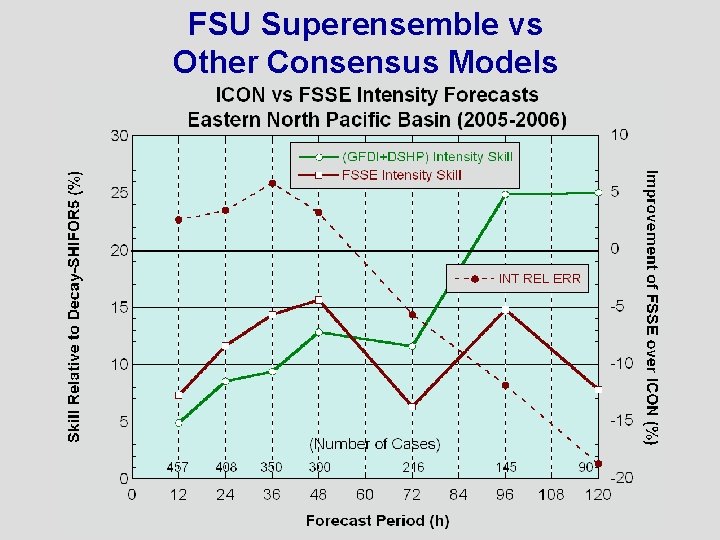

FSU Superensemble vs Other Consensus Models

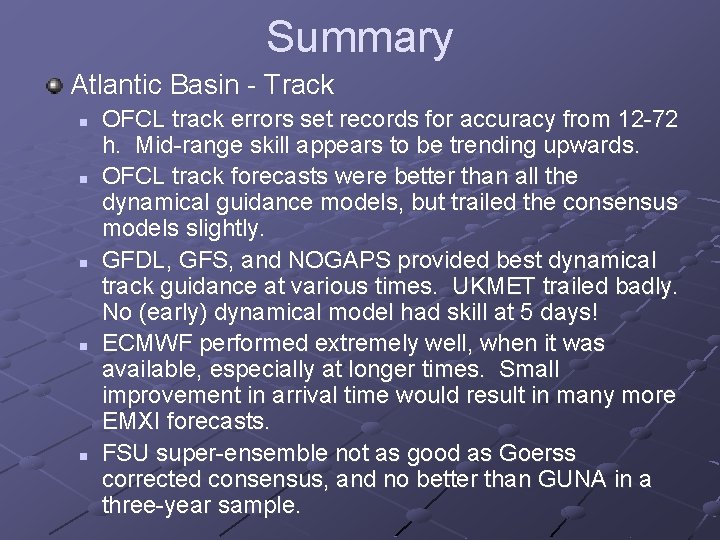

Summary

Atlantic Basin - Track

- OFCL track errors set records for accuracy from 12 to 72 h. Mid-range skill appears to be trending upward.
- OFCL track forecasts were better than all the dynamical guidance models, but trailed the consensus models slightly.
- GFDL, GFS, and NOGAPS provided the best dynamical track guidance at various times. UKMET trailed badly. No (early) dynamical model had skill at 5 days!
- ECMWF performed extremely well when it was available, especially at longer lead times. A small improvement in ECMWF arrival time would result in many more EMXI forecasts.
- The FSU super-ensemble was not as good as the Goerss corrected consensus, and no better than GUNA in a three-year sample.
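GUNA, referenced in the summary, is a simple (unweighted) consensus of several early track models. Below is a minimal sketch of forming such a consensus position at one lead time. The sketch assumes that:

- each member supplies a (latitude, longitude) pair in degrees, with west longitude negative;
- a consensus is produced only when every member is available;
- averaging directly in latitude/longitude is adequate, which holds away from the poles and the 180° meridian.

The function and member names are placeholders, not real forecasts.

```python
from typing import Optional

Position = tuple[float, float]  # (latitude, longitude) in degrees


def consensus_position(members: dict[str, Optional[Position]]) -> Optional[Position]:
    """Average member positions; return None if any member is missing (assumed rule)."""
    if not members or any(pos is None for pos in members.values()):
        return None
    lats = [pos[0] for pos in members.values()]
    lons = [pos[1] for pos in members.values()]
    return (sum(lats) / len(lats), sum(lons) / len(lons))


example = {
    "MODEL_A": (25.0, -75.0),
    "MODEL_B": (26.0, -76.0),
    "MODEL_C": (24.0, -74.0),
    "MODEL_D": (25.0, -75.0),
}
print(consensus_position(example))  # → (25.0, -75.0)
```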

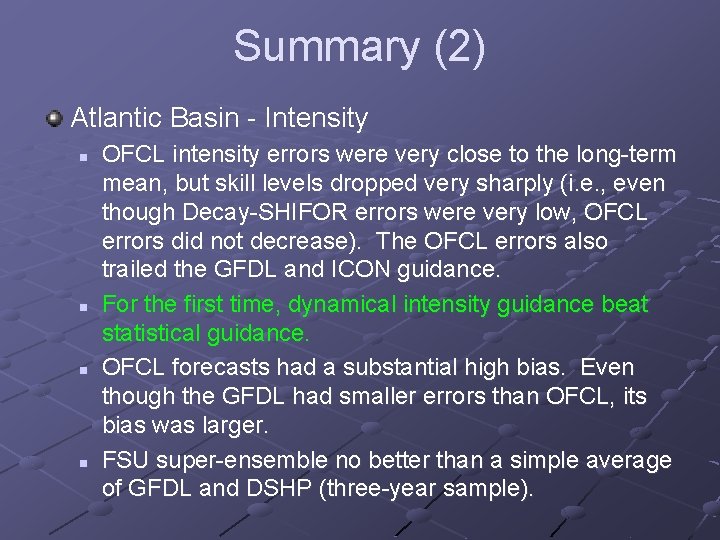

Summary (2)

Atlantic Basin - Intensity

- OFCL intensity errors were very close to the long-term mean, but skill levels dropped very sharply (i.e., even though Decay-SHIFOR errors were very low, OFCL errors did not decrease). OFCL errors also trailed the GFDL and ICON guidance.
- For the first time, dynamical intensity guidance beat statistical guidance.
- OFCL forecasts had a substantial high bias. Even though the GFDL had smaller errors than OFCL, its bias was larger.
- The FSU super-ensemble was no better than a simple average of GFDL and DSHP (three-year sample).
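Skill can drop while errors stay near the long-term mean because of how skill is measured. It is the percent improvement of the forecast error over the baseline error (Decay-SHIFOR5 for intensity). When the baseline has an unusually easy year, the same official error earns less skill. A minimal sketch with made-up numbers, not the 2006 values:

```python
def skill_percent(forecast_error: float, baseline_error: float) -> float:
    """Percent improvement of a forecast over a no-skill baseline (positive = better)."""
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (1.0 - forecast_error / baseline_error)


# Same official error (kt) against an ordinary and an unusually low baseline error.
print(skill_percent(14.0, 20.0))  # typical baseline error
print(skill_percent(14.0, 15.0))  # low baseline error: same OFCL error, less skill
```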

Summary (3)

East Pacific Basin - Track

- OFCL track errors were up and skill was down in 2006, although errors were slightly better than the long-term mean.
- OFCL beat the dynamical models, but not the consensus models. The difference between the dynamical models and the consensus was much larger in the EPAC (same as 2005).
- The FSU super-ensemble was no better than GUNA (two-year sample).

Summary (4)

East Pacific Basin - Intensity

- OFCL intensity errors and skill show little improvement.
- GFDL beat DSHP after 36 h, but ICON generally beat both.
- The FSU super-ensemble was slightly better than ICON at 24-48 h, but worse than ICON after that.
