


www.grid5000.fr

Grid'5000: one of the 30+ ACI Grid projects

Grid'5000\* and a focus on a fault tolerant MPI experiment

\*5000 CPUs

Franck Cappello, INRIA (email: fci@lri.fr)

9/25/2020, CCGSC'06, Asheville

Agenda

- Grid'5000
- Some early results
- Experiment on fault tolerant MPI

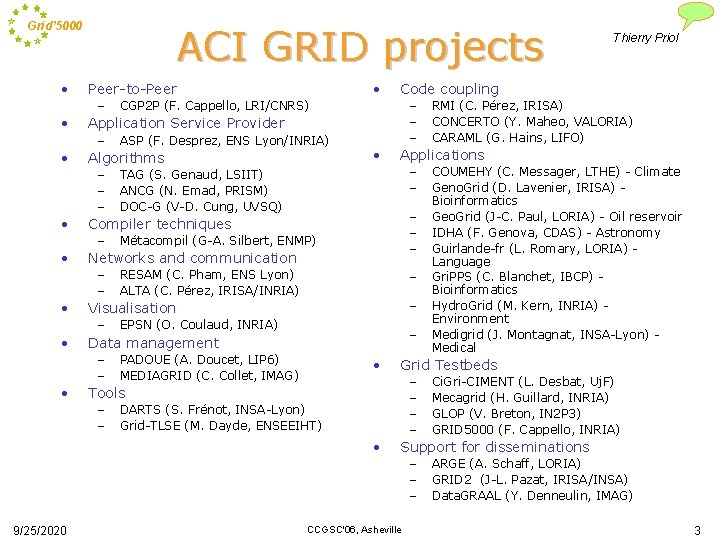

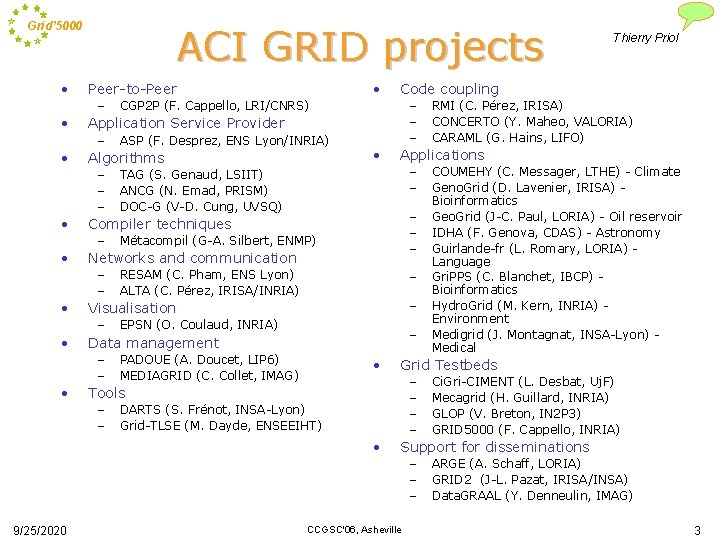

ACI GRID projects (Thierry Priol)

- Peer-to-Peer
  - CGP2P (F. Cappello, LRI/CNRS)
- Application Service Provider
  - ASP (F. Desprez, ENS Lyon/INRIA)
- Algorithms
  - TAG (S. Genaud, LSIIT)
  - ANCG (N. Emad, PRISM)
  - DOC-G (V-D. Cung, UVSQ)
- Compiler techniques
  - Métacompil (G-A. Silbert, ENMP)
- Networks and communication
  - RESAM (C. Pham, ENS Lyon)
  - ALTA (C. Pérez, IRISA/INRIA)
- Visualisation
  - EPSN (O. Coulaud, INRIA)
- Data management
  - PADOUE (A. Doucet, LIP6)
  - MEDIAGRID (C. Collet, IMAG)
- Tools
  - DARTS (S. Frénot, INSA-Lyon)
  - Grid-TLSE (M. Dayde, ENSEEIHT)
- Code coupling
  - RMI (C. Pérez, IRISA)
  - CONCERTO (Y. Maheo, VALORIA)
  - CARAML (G. Hains, LIFO)
- Applications
  - COUMEHY (C. Messager, LTHE): Climate
  - GenoGrid (D. Lavenier, IRISA): Bioinformatics
  - GeoGrid (J-C. Paul, LORIA): Oil reservoir
  - IDHA (F. Genova, CDAS): Astronomy
  - Guirlande-fr (L. Romary, LORIA): Language
  - GriPPS (C. Blanchet, IBCP): Bioinformatics
  - HydroGrid (M. Kern, INRIA): Environment
  - Medigrid (J. Montagnat, INSA-Lyon): Medical
- Grid Testbeds
  - CiGri-CIMENT (L. Desbat, UJF)
  - Mecagrid (H. Guillard, INRIA)
  - GLOP (V. Breton, IN2P3)
  - GRID5000 (F. Cappello, INRIA)
- Support for disseminations
  - ARGE (A. Schaff, LORIA)
  - GRID2 (J-L. Pazat, IRISA/INSA)
  - DataGRAAL (Y. Denneulin, IMAG)

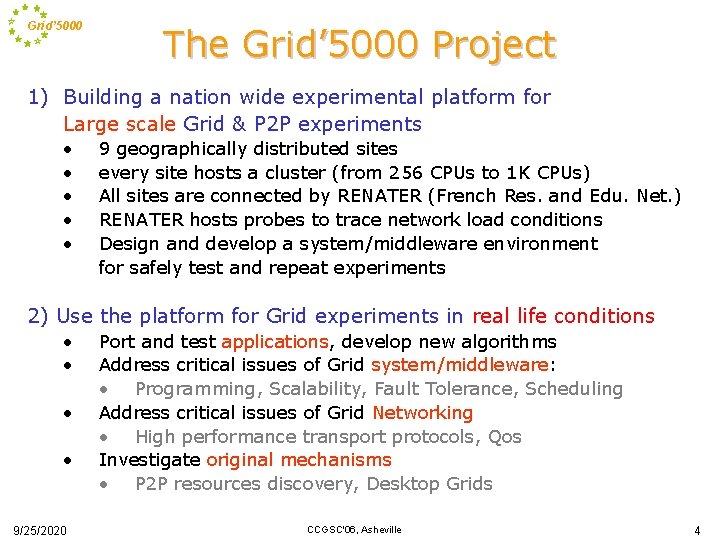

The Grid'5000 Project

1) Building a nationwide experimental platform for large-scale Grid and P2P experiments

- 9 geographically distributed sites
- Every site hosts a cluster (from 256 CPUs to 1K CPUs)
- All sites are connected by RENATER (the French research and education network)
- RENATER hosts probes to trace network load conditions
- Design and develop a system/middleware environment to safely test and repeat experiments

2) Use the platform for Grid experiments in real-life conditions

- Port and test applications, develop new algorithms
- Address critical issues of Grid system/middleware: programming, scalability, fault tolerance, scheduling
- Address critical issues of Grid networking: high-performance transport protocols, QoS
- Investigate original mechanisms: P2P resource discovery, Desktop Grids

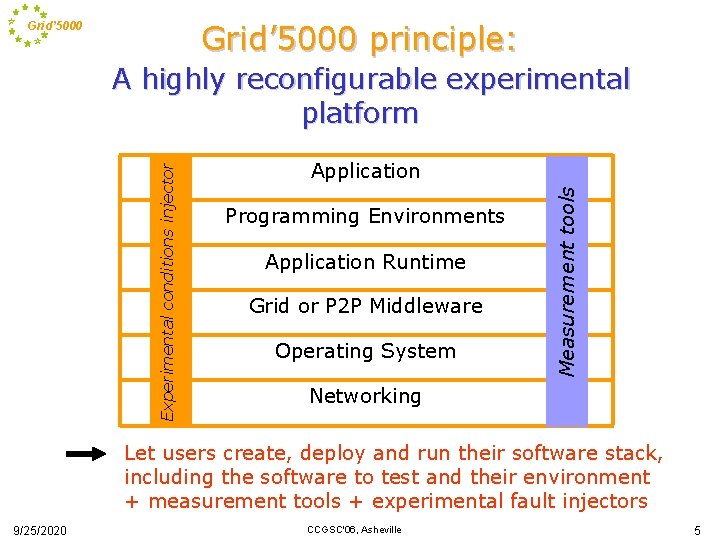

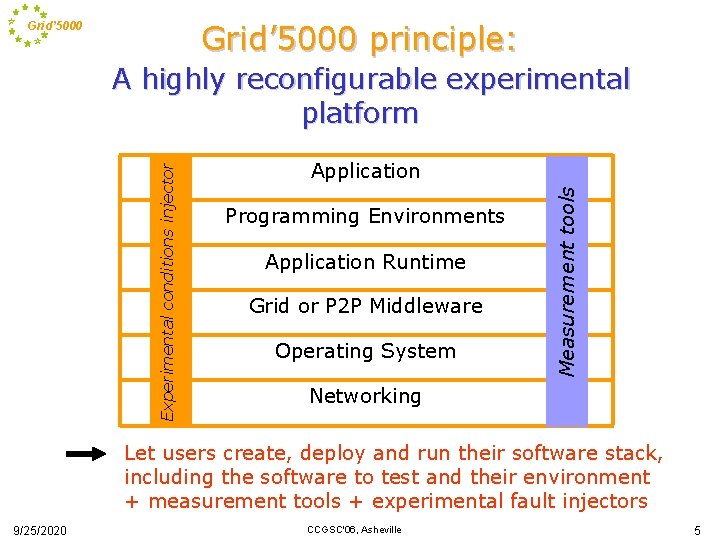

Grid'5000 principle: a highly reconfigurable experimental platform

Layers of the software stack: Application, Programming Environments, Application Runtime, Grid or P2P Middleware, Operating System, Networking. Alongside the stack: measurement tools and an experimental conditions injector.

Let users create, deploy, and run their own software stack, including the software to test and its environment, plus measurement tools and experimental fault injectors.

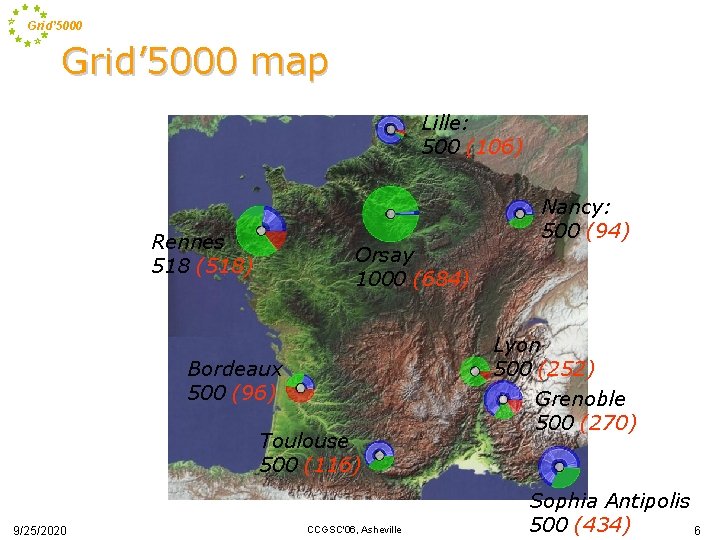

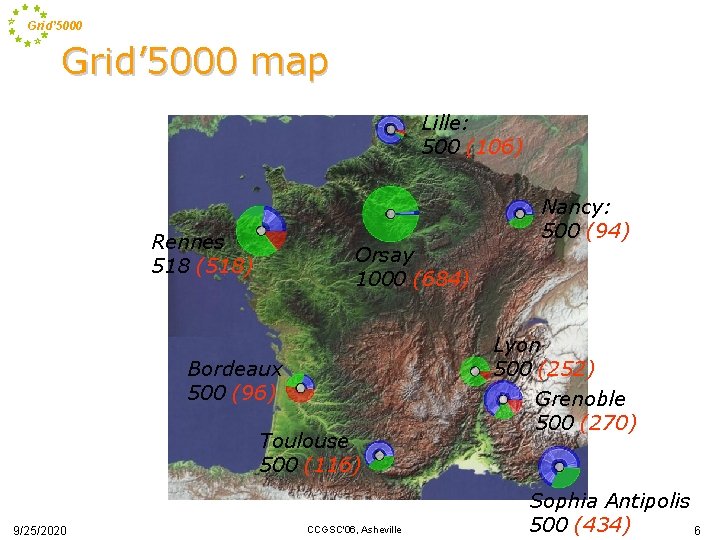

Grid'5000 map

- Lille: 500 (106)
- Rennes: 518 (518)
- Orsay: 1000 (684)
- Bordeaux: 500 (96)
- Toulouse: 500 (116)
- Nancy: 500 (94)
- Lyon: 500 (252)
- Grenoble: 500 (270)
- Sophia Antipolis: 500 (434)

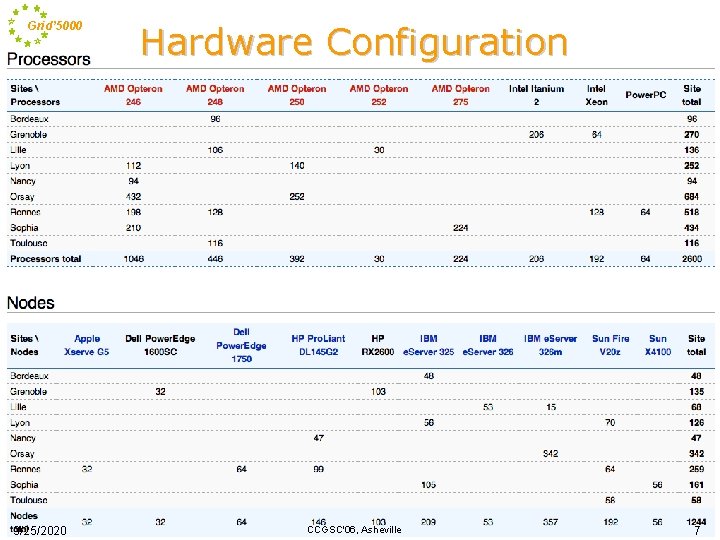

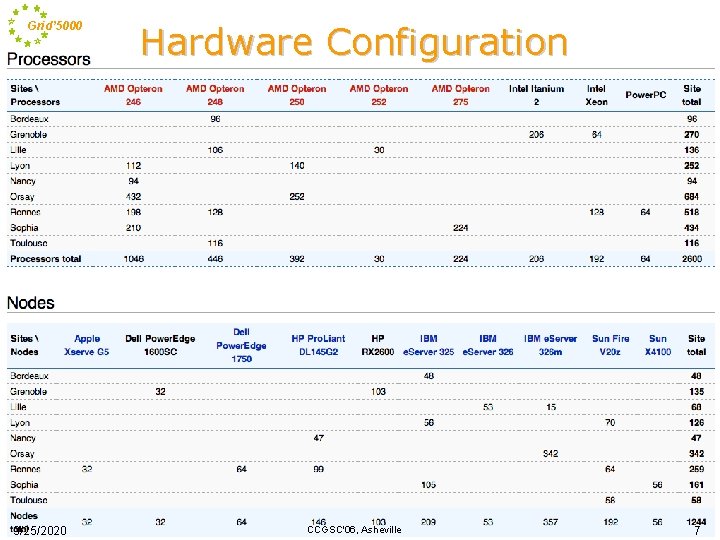

Grid'5000 hardware configuration

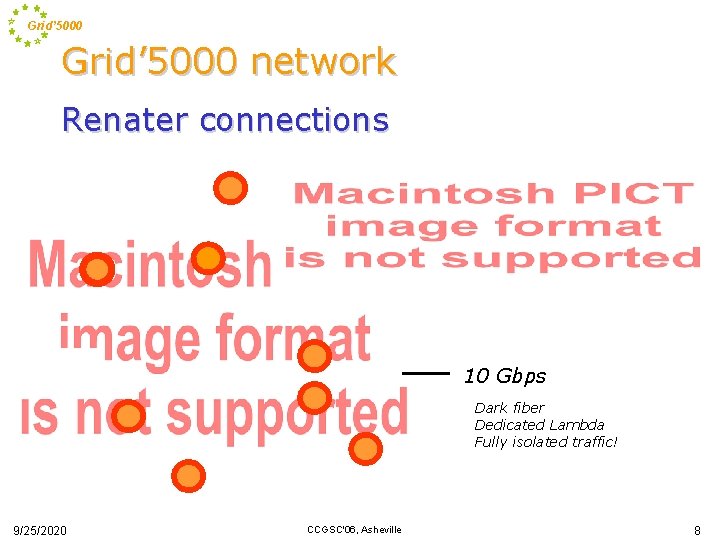

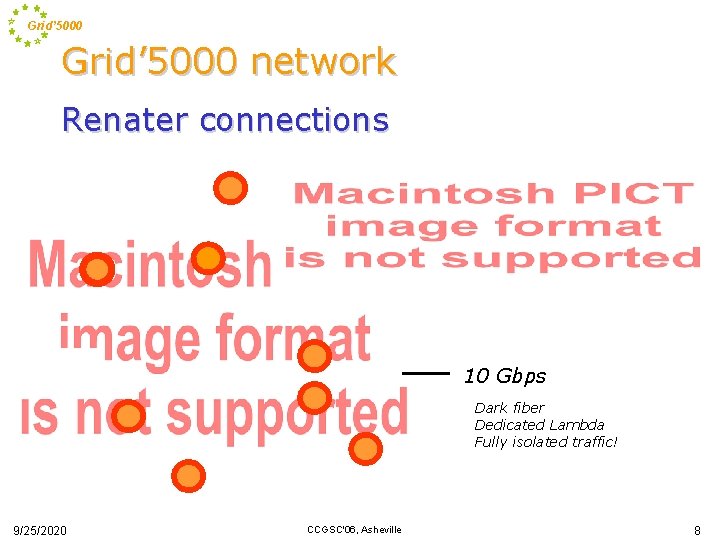

Grid'5000 network

- RENATER connections: 10 Gbps
- Dark fiber
- Dedicated lambda
- Fully isolated traffic!

Grid'5000 as an Instrument

4 main features:

- High security for Grid'5000 and the Internet, despite the deep reconfiguration feature: a confined system
- A software infrastructure allowing users to access Grid'5000 from any Grid'5000 site and to have a home directory at every site: multiple access points, single system view, user-controlled data sync
- Reservation/scheduling tools allowing users to select node sets and schedule experiments
- A user toolkit to reconfigure the nodes and monitor experiments

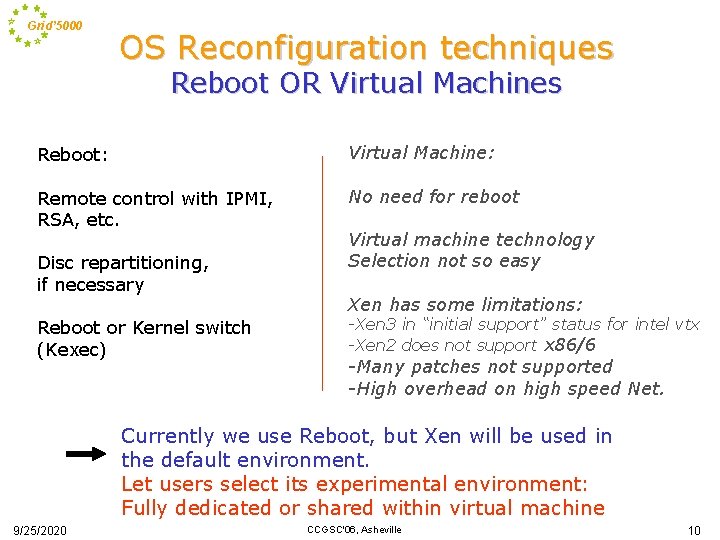

OS reconfiguration techniques: reboot OR virtual machines

Reboot:

- Remote control with IPMI, RSA, etc.
- Disc repartitioning, if necessary
- Reboot or kernel switch (Kexec)

Virtual machine:

- No need for reboot
- Selecting a virtual machine technology is not so easy
- Xen has some limitations:
  - Xen 3 is in "initial support" status for Intel VT-x
  - Xen 2 does not support x86/64
  - Many patches are not supported
  - High overhead on high-speed networks

Currently we use reboot, but Xen will be used in the default environment. Let users select their experimental environment: fully dedicated, or shared within a virtual machine.

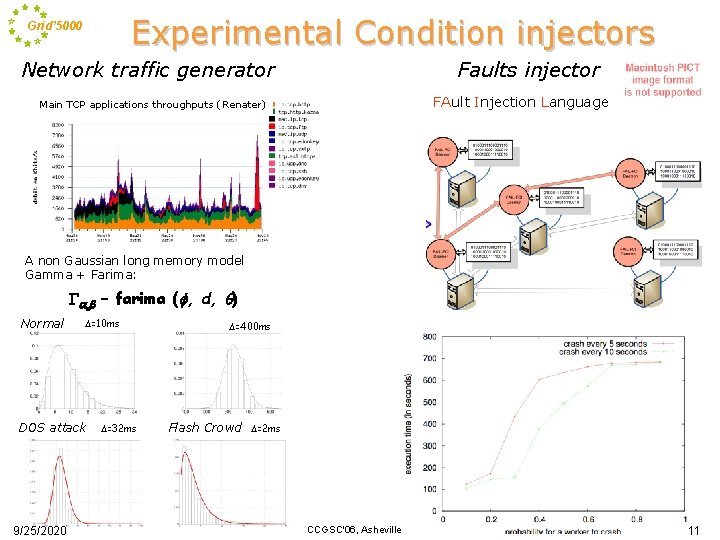

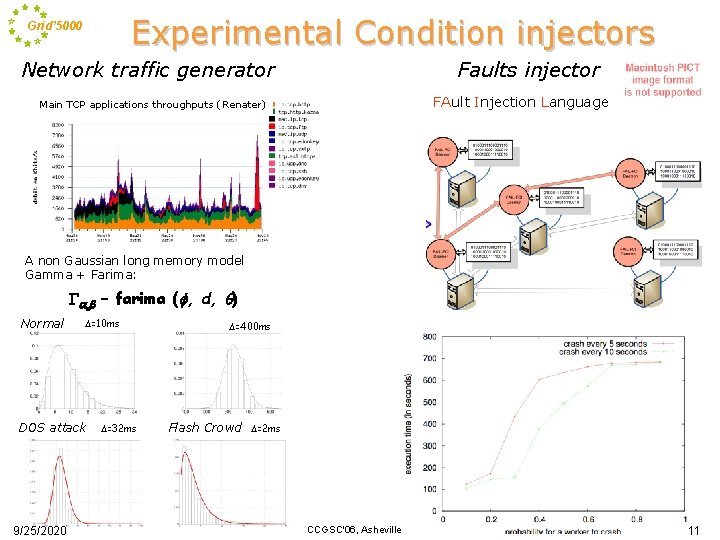

Experimental condition injectors

- Network traffic generator: main TCP applications throughputs (RENATER), using a non-Gaussian long-memory model, Gamma + FARIMA: $\Gamma_{\alpha,\beta}$ – farima$(\phi, d, \theta)$
- Faults injector: FAult Injection Language

[Figure panels: Normal, DOS attack, Flash Crowd; Δ = 2 ms, 10 ms, 32 ms, 400 ms]

Agenda

- Grid'5000
- Early results: communities, platform usage, experiments
- Experiment on fault tolerant MPI

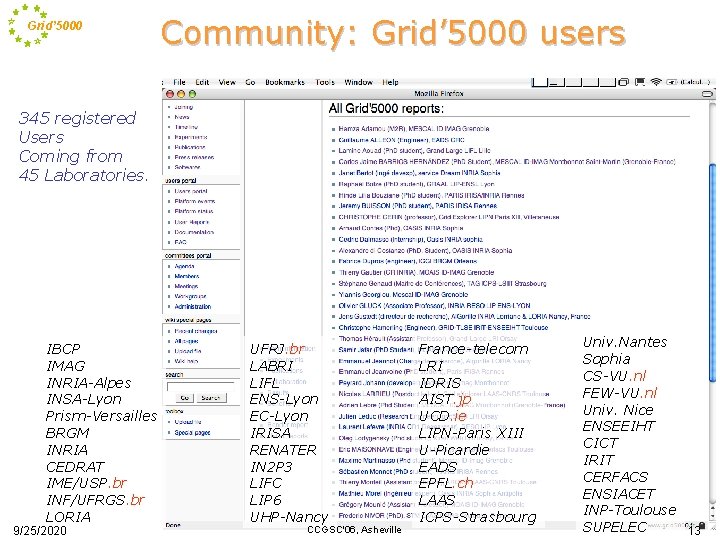

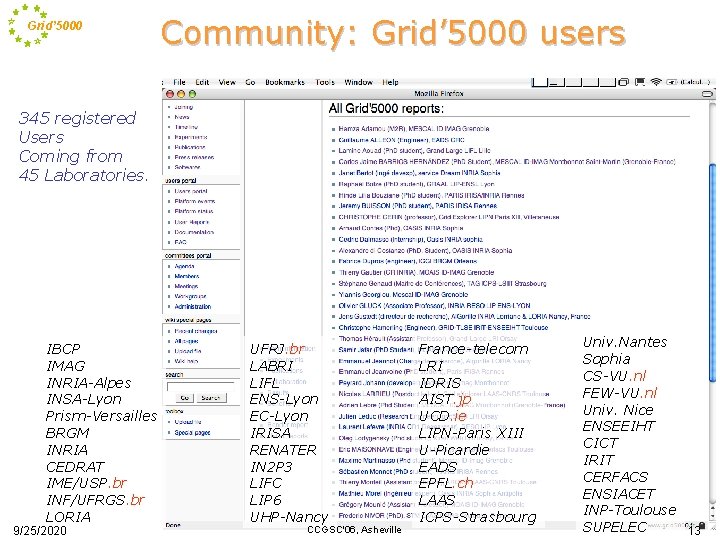

Community: Grid'5000 users

345 registered users, coming from 45 laboratories:

IBCP, IMAG, INRIA-Alpes, INSA-Lyon, Prism-Versailles, BRGM, INRIA, CEDRAT, IME/USP.br, INF/UFRGS.br, LORIA, UFRJ.br, LABRI, LIFL, ENS-Lyon, EC-Lyon, IRISA, RENATER, IN2P3, LIFC, LIP6, UHP-Nancy, France-telecom, LRI, IDRIS, AIST.jp, UCD.ie, LIPN-Paris XIII, U-Picardie, EADS, EPFL.ch, LAAS, ICPS-Strasbourg, Univ. Nantes, Sophia, CS-VU.nl, FEW-VU.nl, Univ. Nice, ENSEEIHT, CICT, IRIT, CERFACS, ENSIACET, INP-Toulouse, SUPELEC

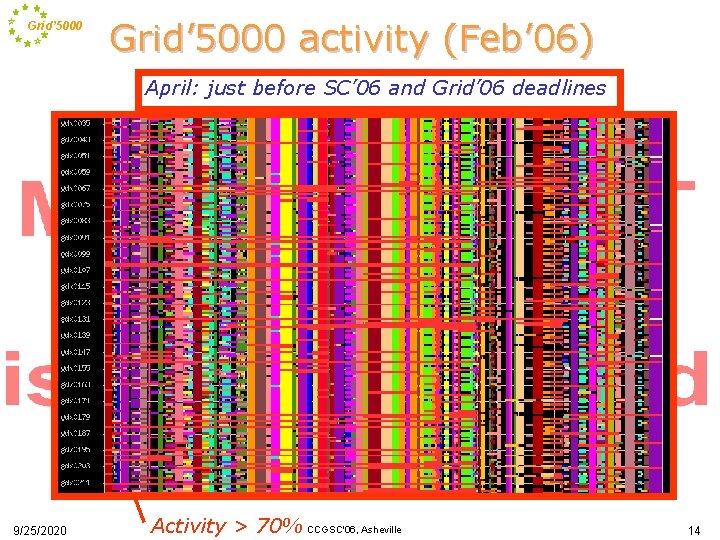

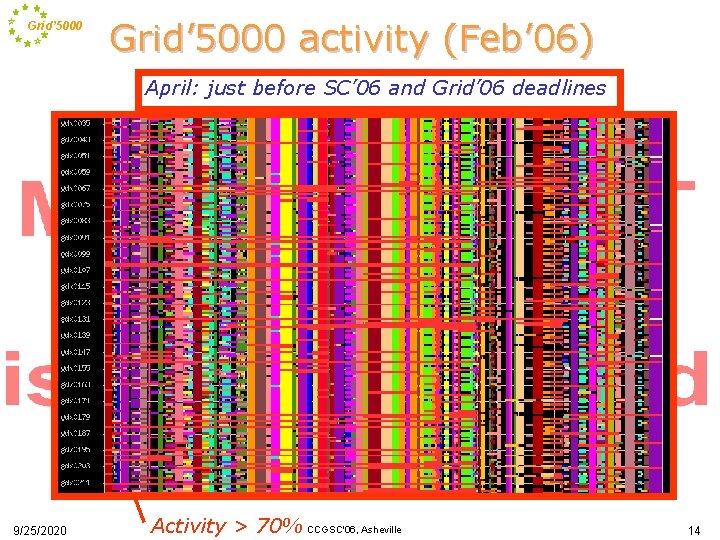

Grid'5000 activity (Feb'06)

- Activity > 70%
- April: just before SC'06 and Grid'06 deadlines

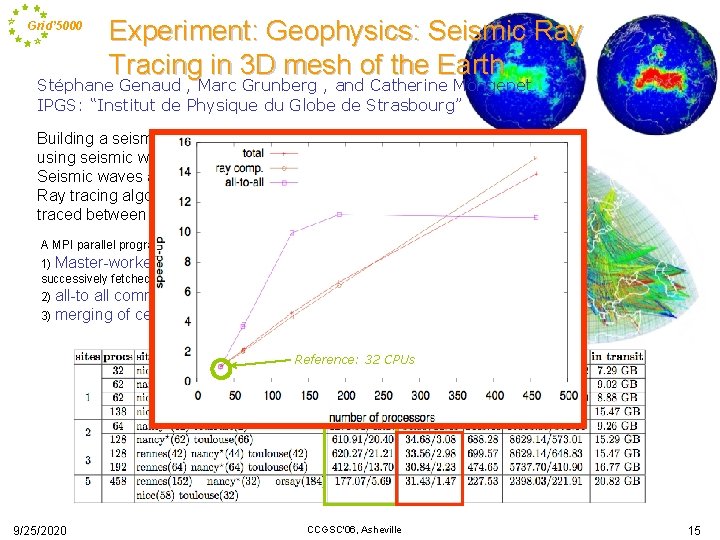

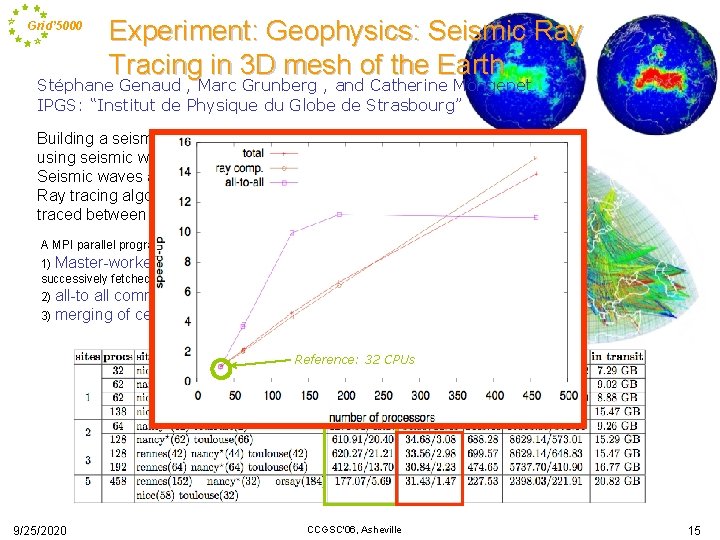

Experiment: Geophysics: Seismic Ray Tracing in a 3D mesh of the Earth

Stéphane Genaud, Marc Grunberg, and Catherine Mongenet. IPGS: "Institut de Physique du Globe de Strasbourg"

Building a seismic tomography model of the Earth's geology using seismic wave propagation characteristics in the Earth. Seismic waves are modeled from events detected by sensors. Ray tracing algorithm: waves are reconstructed from rays traced between the epicenter and the sensors.

An MPI parallel program composed of 3 steps:

1) Master-worker: ray tracing and mesh update by each process, with blocks of rays successively fetched from the master process
2) All-to-all communications to exchange submesh information between the processes
3) Merging of the cell information of the submesh associated with each process

Reference: 32 CPUs

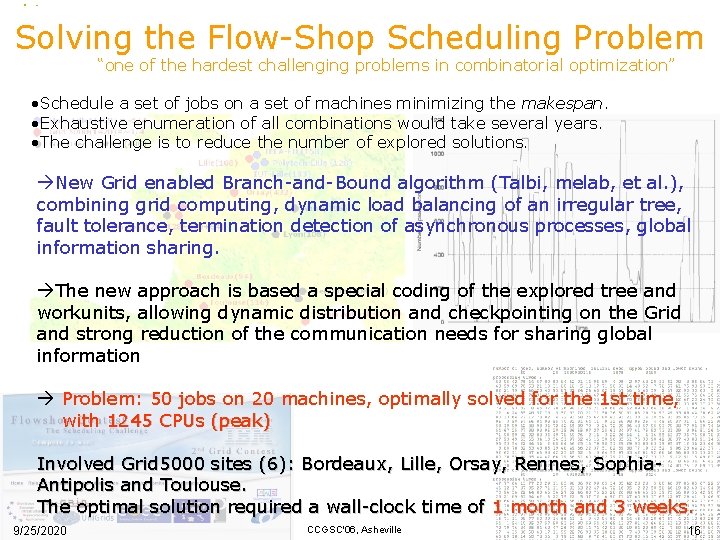

Solving the Flow-Shop Scheduling Problem

"One of the hardest challenging problems in combinatorial optimization"

- Schedule a set of jobs on a set of machines, minimizing the makespan.
- Exhaustive enumeration of all combinations would take several years.
- The challenge is to reduce the number of explored solutions.

→ New Grid-enabled Branch-and-Bound algorithm (Talbi, Melab, et al.), combining grid computing, dynamic load balancing of an irregular tree, fault tolerance, termination detection of asynchronous processes, and global information sharing.

→ The new approach is based on a special coding of the explored tree and work units, allowing dynamic distribution and checkpointing on the Grid and a strong reduction of the communication needed to share global information.

→ Problem: 50 jobs on 20 machines, optimally solved for the first time, with 1245 CPUs (peak).

Involved Grid'5000 sites (6): Bordeaux, Lille, Orsay, Rennes, Sophia Antipolis, and Toulouse. The optimal solution required a wall-clock time of 1 month and 3 weeks.
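To make the objective concrete, here is a minimal sequential sketch of the permutation flow-shop makespan and a depth-first Branch-and-Bound search over job orders. It is not the Grid-enabled algorithm of the slide: the function names, the 3-job instance, and the simple lower bound (work remaining on the last machine) are assumptions chosen for illustration only.

```python
from itertools import permutations


def schedule_job(finish, job_times):
    """Return per-machine finish times after appending one job.

    finish[m] is the time machine m becomes free; job_times[m] is the
    processing time of the job on machine m (machines visited in order).
    """
    new_finish = []
    ready = 0  # time the job leaves the previous machine
    for m, t in enumerate(job_times):
        ready = max(finish[m], ready) + t
        new_finish.append(ready)
    return new_finish


def makespan(order, proc_times):
    """Completion time of the last job on the last machine."""
    finish = [0] * len(proc_times[0])
    for job in order:
        finish = schedule_job(finish, proc_times[job])
    return finish[-1]


def branch_and_bound(proc_times):
    """Explore job orders depth-first, pruning with a simple lower bound."""
    n_jobs, n_machines = len(proc_times), len(proc_times[0])
    best_order, best_value, explored = None, float("inf"), 0

    def visit(prefix, finish, remaining):
        nonlocal best_order, best_value, explored
        explored += 1
        if not remaining:
            if finish[-1] < best_value:
                best_order, best_value = tuple(prefix), finish[-1]
            return
        # Illustrative bound: the last machine still has to process
        # every remaining job after it finishes the current prefix.
        bound = finish[-1] + sum(proc_times[j][-1] for j in remaining)
        if bound >= best_value:
            return  # this subtree cannot improve on the best solution
        for job in sorted(remaining):
            visit(prefix + [job],
                  schedule_job(finish, proc_times[job]),
                  remaining - {job})

    visit([], [0] * n_machines, frozenset(range(n_jobs)))
    return best_order, best_value, explored


# Hypothetical instance: TIMES[j][m] = time of job j on machine m.
TIMES = [[3, 2], [1, 4], [2, 1]]
order, value, explored = branch_and_bound(TIMES)
brute_force = min(makespan(p, TIMES) for p in permutations(range(len(TIMES))))
print(order, value, explored, brute_force)
```

Even on this tiny instance the bound cuts some subtrees before they are fully expanded. The difficulty on the slide is doing such pruning across many sites while sharing the best known solution.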

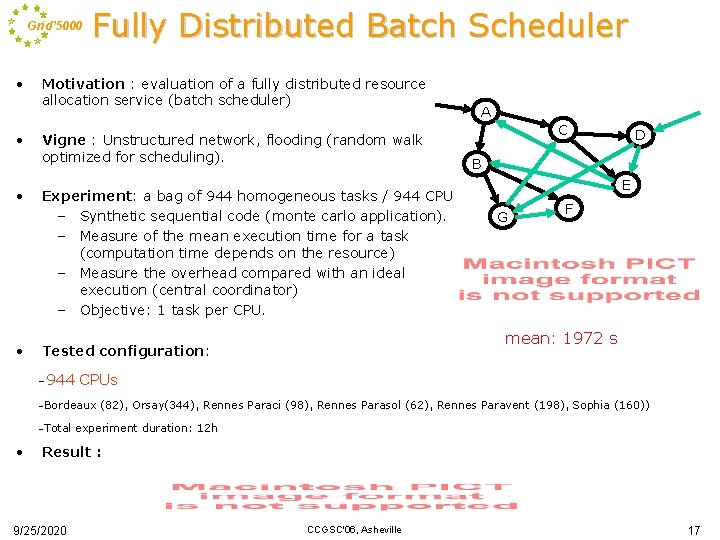

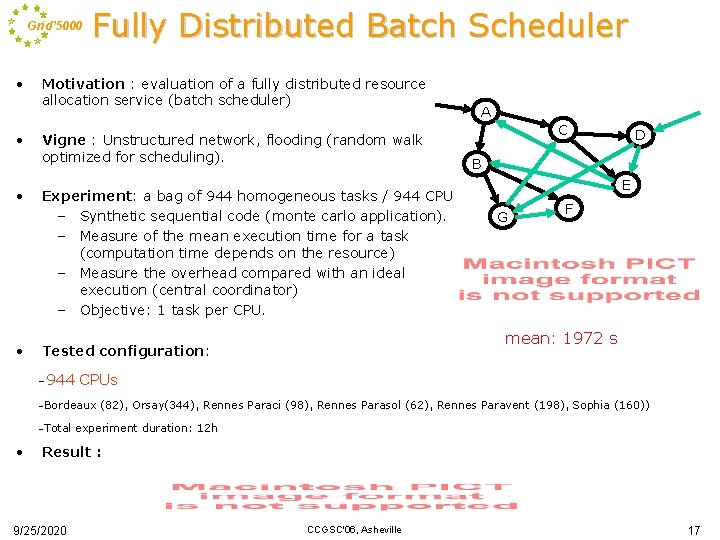

Fully Distributed Batch Scheduler

- Motivation: evaluation of a fully distributed resource allocation service (batch scheduler)
- Vigne: unstructured network, flooding (random walk optimized for scheduling)
- Experiment: a bag of 944 homogeneous tasks on 944 CPUs
  - Synthetic sequential code (Monte Carlo application)
  - Measure the mean execution time for a task (computation time depends on the resource)
  - Measure the overhead compared with an ideal execution (central coordinator)
  - Objective: 1 task per CPU
- Tested configuration: 944 CPUs in total: Bordeaux (82), Orsay (344), Rennes Paraci (98), Rennes Parasol (62), Rennes Paravent (198), Sophia (160)
- Experiment duration: 12 h
- Result (figure): mean: 1972 s

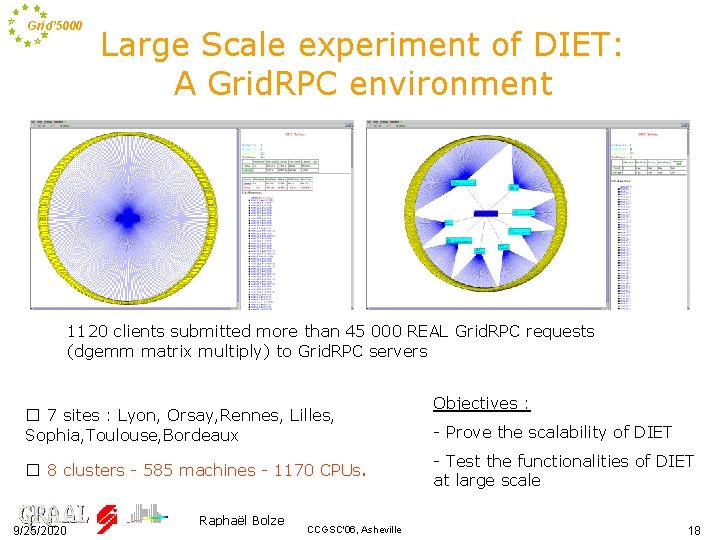

Large-scale experiment of DIET: a GridRPC environment (Raphaël Bolze)

1120 clients submitted more than 45,000 real GridRPC requests (dgemm matrix multiply) to GridRPC servers.

- 7 sites: Lyon, Orsay, Rennes, Lille, Sophia, Toulouse, Bordeaux
- 8 clusters, 585 machines, 1170 CPUs

Objectives:

- Prove the scalability of DIET
- Test the functionalities of DIET at large scale

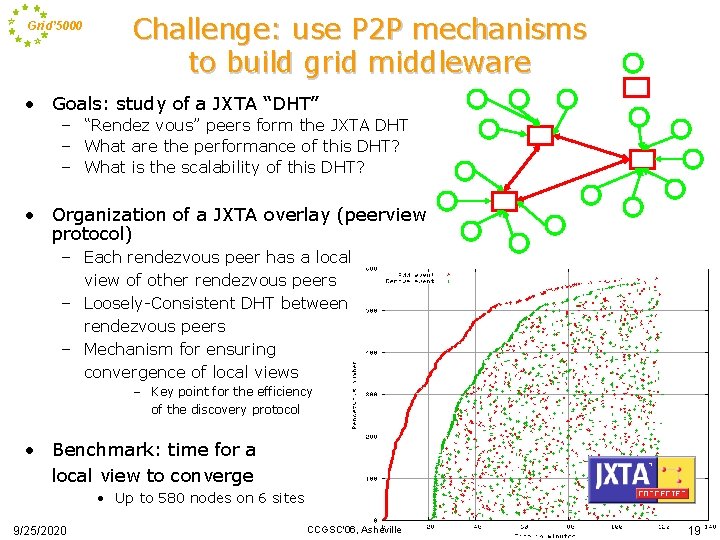

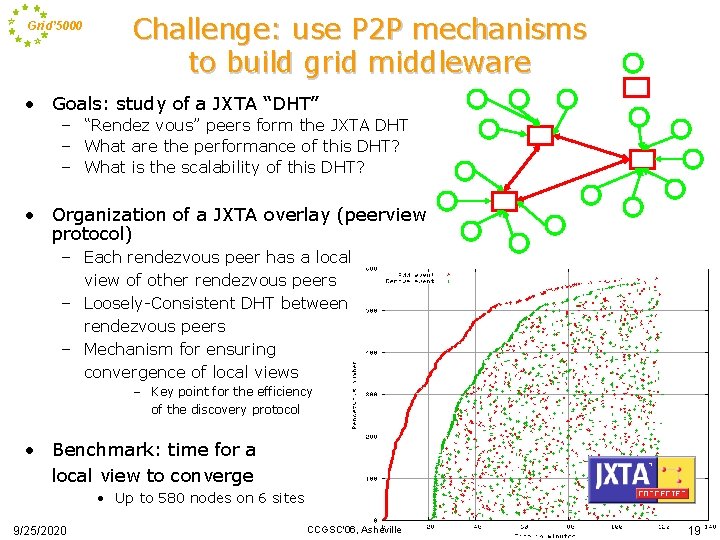

Challenge: use P2P mechanisms to build grid middleware

- Goals: study of a JXTA "DHT"
  - "Rendezvous" peers form the JXTA DHT
  - What is the performance of this DHT?
  - What is the scalability of this DHT?
- Organization of a JXTA overlay (peerview protocol)
  - Each rendezvous peer has a local view of other rendezvous peers
  - Loosely-consistent DHT between rendezvous peers
  - Mechanism for ensuring convergence of local views
  - Key point for the efficiency of the discovery protocol
- Benchmark: time for a local view to converge
- Up to 580 nodes on 6 sites

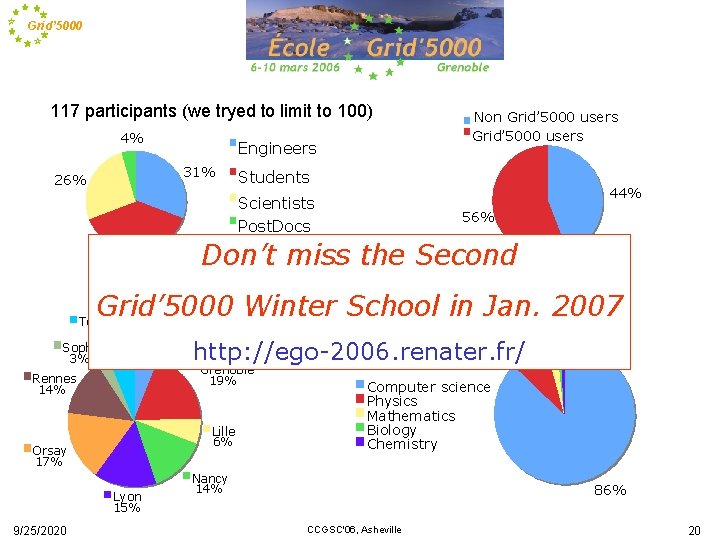

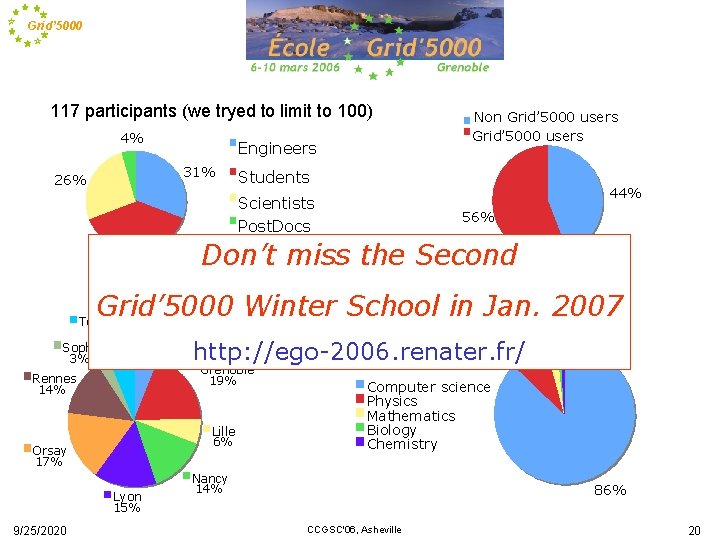

117 participants (we tried to limit to 100)

Topics and exercises:

- Reservation
- Reconfiguration
- MPI on the Cluster of Clusters
- Virtual Grid based on Globus GT4

Participants by site: Toulouse 6%, Sophia 3%, Bordeaux 6%, Grenoble 19%, Rennes 14%, Lille 6%, Orsay 17%, Lyon 15%, Nancy 14%.

[Pie charts: participants by status (Engineers, Students, Scientists, PostDocs; shares of 4%, 26%, 31%, and 39%), Grid'5000 users vs. non-Grid'5000 users (shares of 44% and 56%), and participants by discipline (Computer science, Physics, Mathematics, Biology, Chemistry; shares of 86%, 9%, 3%, 1%, and 1%).]

Don't miss the Second Grid'5000 Winter School in Jan. 2007.

http://ego-2006.renater.fr/

Grid@work (October 10-14, 2005)

- Series of conferences and tutorials, including the Grid PlugTest (N-Queens and Flowshop contests).
- The objective of this event was to bring together ProActive users, to present and discuss current and future features of the ProActive Grid platform, and to test the deployment and interoperability of ProActive Grid applications on various Grids.
- The N-Queens Contest (4 teams), where the aim was to find the number of solutions to the N-queens problem, N being as big as possible, in a limited amount of time.
- The Flowshop Contest (3 teams).
- 1600 CPUs in total: 1200 provided by Grid'5000 + 50 by the other Grids (EGEE, DEISA, NorduGrid) + 350 CPUs on clusters.

Don't miss Grid@work 2006, Nov. 26 to Dec. 1: http://www.etsi.org/plugtests/Upcoming/GRID2006.htm
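For readers unfamiliar with the N-Queens contest task, the sketch below counts the solutions for a given N with a standard bitmask backtracking search. It only illustrates the problem being solved; it says nothing about how the contest teams distributed the search, and the function name is an assumption.

```python
def count_n_queens(n):
    """Count placements of n non-attacking queens on an n x n board."""
    full = (1 << n) - 1  # one bit per column

    def place(cols, diag1, diag2):
        # cols: occupied columns; diag1/diag2: squares of the current
        # row attacked along the two diagonal directions.
        if cols == full:
            return 1  # a queen has been placed in every row
        count = 0
        free = full & ~(cols | diag1 | diag2)
        while free:
            bit = free & -free  # lowest free column in this row
            free -= bit
            count += place(cols | bit,
                           ((diag1 | bit) << 1) & full,
                           (diag2 | bit) >> 1)
        return count

    return place(0, 0, 0)


print(count_n_queens(8))
```

Each first-row choice roots an independent subtree, which is what makes the problem easy to split across many CPUs.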

![Grid 5000 Agenda Grid 5000 Early results Experiment on fault tolerant MPI SC 2006 Grid’ 5000 Agenda Grid’ 5000 Early results Experiment on fault tolerant MPI [SC 2006]](https://slidetodoc.com/presentation_image/67ffd83e85b9ab09fc05a2cd6ce09bb7/image-22.jpg)

Agenda

- Grid'5000
- Early results
- Experiment on fault tolerant MPI [SC 2006]

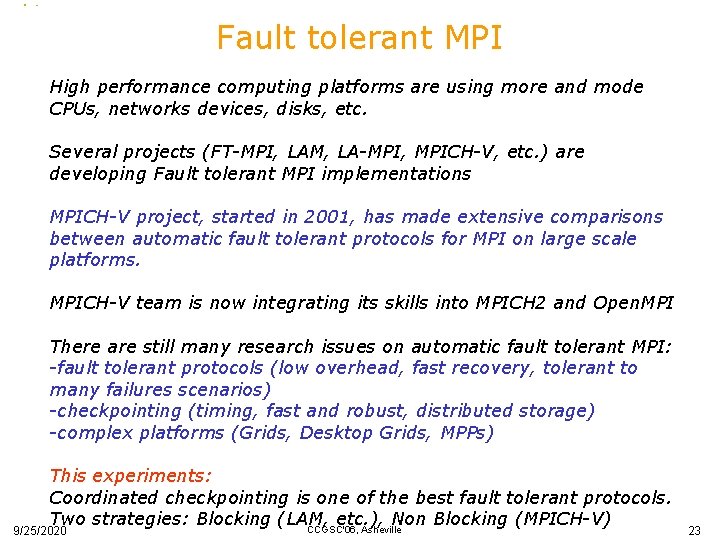

Fault tolerant MPI

High performance computing platforms are using more and more CPUs, network devices, disks, etc.

Several projects (FT-MPI, LAM, LA-MPI, MPICH-V, etc.) are developing fault tolerant MPI implementations.

The MPICH-V project, started in 2001, has made extensive comparisons between automatic fault tolerant protocols for MPI on large-scale platforms. The MPICH-V team is now integrating its skills into MPICH2 and Open MPI.

There are still many research issues on automatic fault tolerant MPI:

- Fault tolerant protocols (low overhead, fast recovery, tolerance to many failure scenarios)
- Checkpointing (timing, fast and robust, distributed storage)
- Complex platforms (Grids, Desktop Grids, MPPs)

This experiment: coordinated checkpointing is one of the best fault tolerant protocols. Two strategies: blocking (LAM, etc.) and non-blocking (MPICH-V).

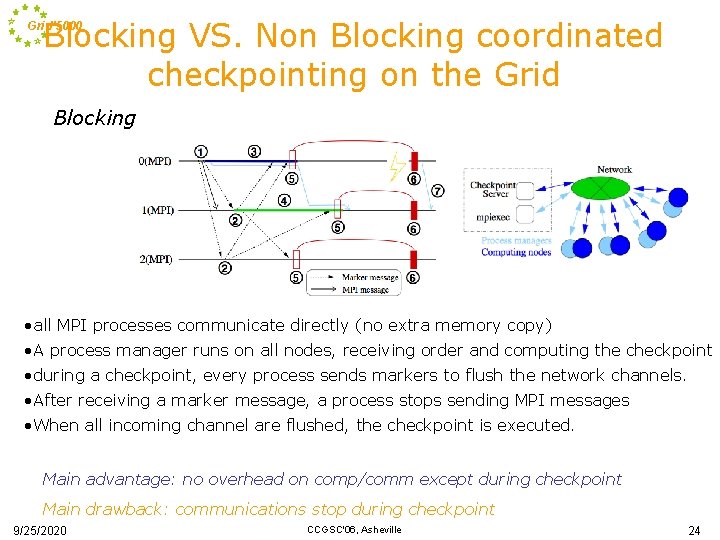

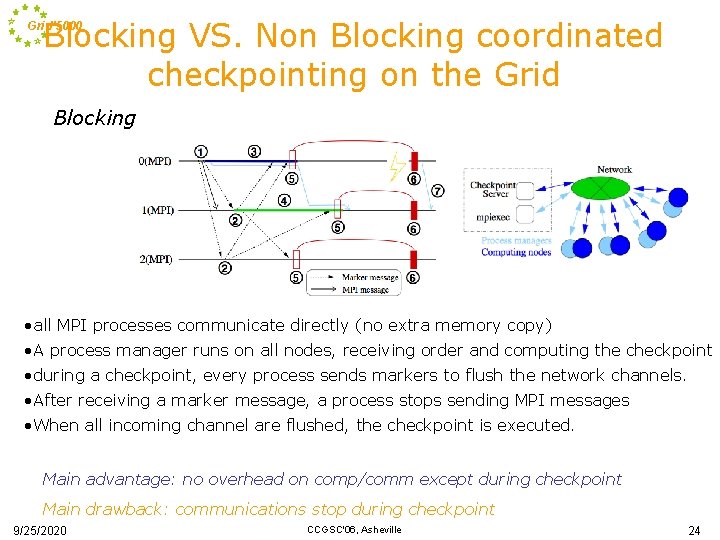

Blocking vs. non-blocking coordinated checkpointing on Grid'5000

Blocking:

- All MPI processes communicate directly (no extra memory copy).
- A process manager runs on every node, receiving the checkpoint order and performing the checkpoint.
- During a checkpoint, every process sends markers to flush the network channels.
- After receiving a marker message, a process stops sending MPI messages.
- When all incoming channels are flushed, the checkpoint is executed.

Main advantage: no overhead on computation/communication except during a checkpoint.

Main drawback: communications stop during a checkpoint.
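The blocking protocol can be illustrated with a toy simulation, sketched here under stated assumptions: processes exchange integer "tokens" over FIFO channels, and for simplicity every process receives the checkpoint order at the same instant and stops sending at once. None of the names below come from LAM or MPICH; they are hypothetical.

```python
import random
from collections import deque

MARKER = object()  # sentinel standing in for a marker message


def blocking_checkpoint(n_procs=4, steps=200, seed=0):
    """Simulate a blocking coordinated checkpoint over FIFO channels."""
    rng = random.Random(seed)
    balance = [100] * n_procs  # application state of each process
    channels = {(s, d): deque() for s in range(n_procs)
                for d in range(n_procs) if s != d}

    # Phase 1: normal execution; some messages remain in flight.
    for _ in range(steps):
        s, d = rng.sample(range(n_procs), 2)
        if rng.random() < 0.5 and balance[s] > 0:
            amount = rng.randint(1, balance[s])
            balance[s] -= amount
            channels[(s, d)].append(amount)
        elif channels[(s, d)]:
            balance[d] += channels[(s, d)].popleft()
    in_flight = sum(sum(ch) for ch in channels.values())

    # Phase 2: checkpoint order. Every process sends a marker on each
    # outgoing channel and sends no further application messages.
    for ch in channels.values():
        ch.append(MARKER)
    markers_seen = [0] * n_procs
    snapshot = {}
    while True:
        busy = [key for key, ch in channels.items() if ch]
        if not busy:
            break
        s, d = rng.choice(busy)
        msg = channels[(s, d)].popleft()
        if msg is MARKER:
            markers_seen[d] += 1
            if markers_seen[d] == n_procs - 1:
                # All incoming channels flushed: checkpoint process d.
                snapshot[d] = balance[d]
        else:
            balance[d] += msg  # flush a message that was in flight
    channels_empty = all(not ch for ch in channels.values())
    return snapshot, in_flight, channels_empty


snapshot, in_flight, channels_empty = blocking_checkpoint()
print(sum(snapshot.values()), in_flight, channels_empty)
```

Because every channel is drained before any process state is saved, the checkpoint needs no channel contents: the saved balances alone add up to the initial total. The cost is that no application message is sent while the flush is in progress.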

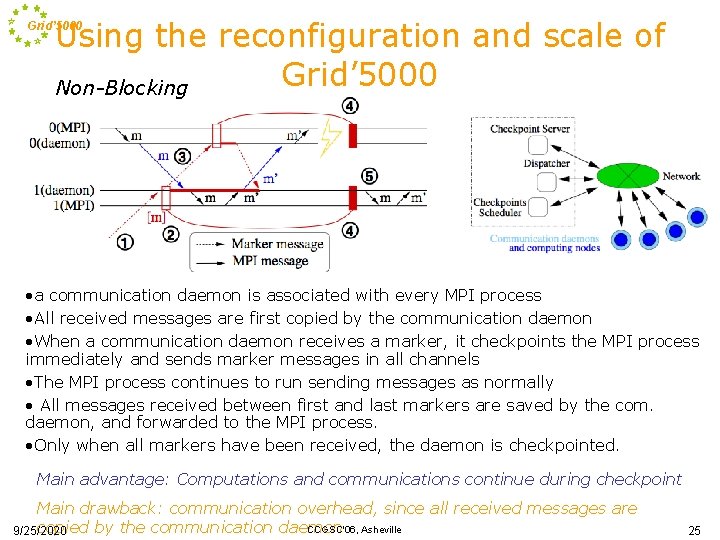

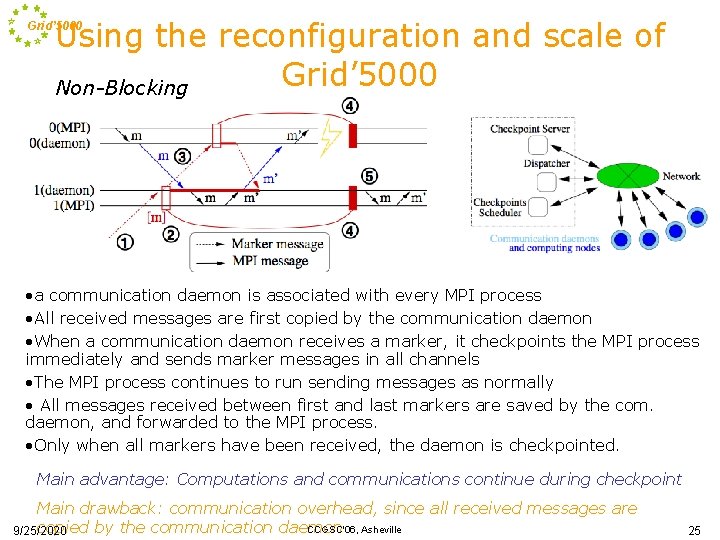

Using the reconfiguration and scale of Grid'5000

Non-blocking:

- A communication daemon is associated with every MPI process.
- All received messages are first copied by the communication daemon.
- When a communication daemon receives a marker, it checkpoints the MPI process immediately and sends marker messages on all channels.
- The MPI process continues to run, sending messages as normal.
- All messages received between the first and last markers are saved by the communication daemon and forwarded to the MPI process.
- Only when all markers have been received is the daemon checkpointed.

Main advantage: computations and communications continue during a checkpoint.

Main drawback: communication overhead, since all received messages are copied by the communication daemon.
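The non-blocking variant follows the marker-based snapshot idea, which can be sketched with the same toy token model: a process is checkpointed on its first marker, keeps sending, and messages that arrive on a channel before that channel's marker are logged (the role the slide gives to the communication daemon). This is an illustrative simulation under those assumptions, not MPICH-V code; all names are hypothetical.

```python
import random
from collections import deque

MARKER = object()  # sentinel standing in for a marker message


def non_blocking_checkpoint(n_procs=4, warmup=100, steps=300, seed=0):
    """Simulate a non-blocking coordinated checkpoint over FIFO channels."""
    rng = random.Random(seed)
    balance = [100] * n_procs
    channels = {(s, d): deque() for s in range(n_procs)
                for d in range(n_procs) if s != d}
    saved_state = {}                         # process -> checkpointed state
    logged = {key: [] for key in channels}   # messages saved per channel
    closed = set()                           # channels whose marker arrived

    def take_checkpoint(p):
        saved_state[p] = balance[p]
        for d in range(n_procs):
            if d != p:
                channels[(p, d)].append(MARKER)

    def send(s, d):
        if balance[s] > 0:
            amount = rng.randint(1, balance[s])
            balance[s] -= amount
            channels[(s, d)].append(amount)

    def deliver(s, d):
        msg = channels[(s, d)].popleft()
        if msg is MARKER:
            if d not in saved_state:
                take_checkpoint(d)  # first marker: checkpoint immediately
            closed.add((s, d))
        else:
            balance[d] += msg
            if d in saved_state and (s, d) not in closed:
                logged[(s, d)].append(msg)  # in transit at checkpoint time

    def random_step():
        s, d = rng.sample(range(n_procs), 2)
        if rng.random() < 0.5:
            send(s, d)
        elif channels[(s, d)]:
            deliver(s, d)

    for _ in range(warmup):
        random_step()
    take_checkpoint(0)          # process 0 initiates the checkpoint
    for _ in range(steps):
        random_step()           # application traffic continues
    while True:                 # finally deliver whatever is left
        busy = [key for key, ch in channels.items() if ch]
        if not busy:
            break
        deliver(*rng.choice(busy))
    return saved_state, logged


saved_state, logged = non_blocking_checkpoint()
total = sum(saved_state.values()) + sum(sum(m) for m in logged.values())
print(total)
```

Here the saved process states alone do not add up to the initial total; the logged messages make up the difference, which is why the daemon has to copy incoming traffic.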

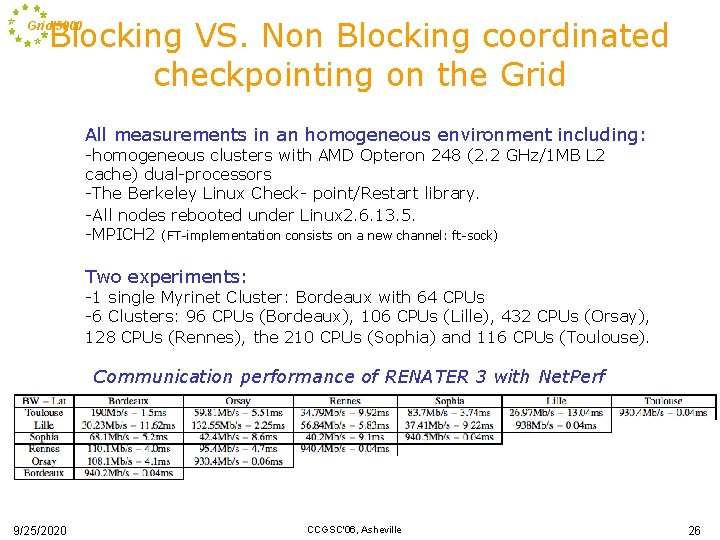

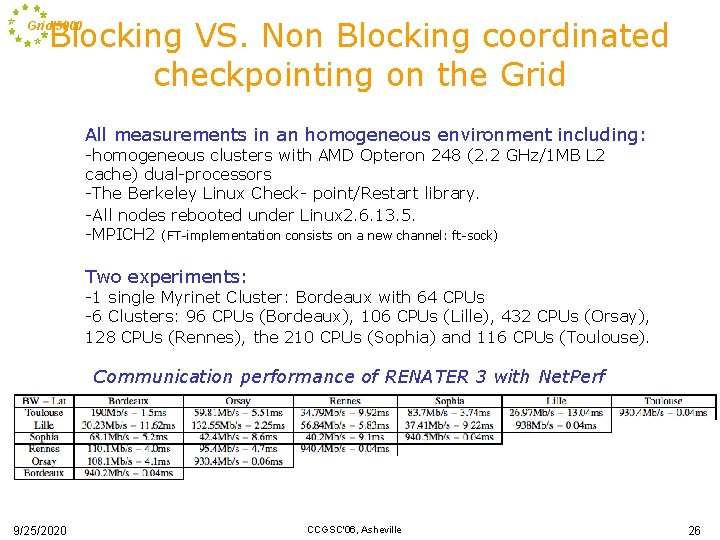

Blocking vs. non-blocking coordinated checkpointing on Grid'5000

All measurements were made in a homogeneous environment, including:

- Homogeneous clusters with dual-processor AMD Opteron 248 nodes (2.2 GHz, 1 MB L2 cache)
- The Berkeley Lab Checkpoint/Restart (BLCR) library
- All nodes rebooted under Linux 2.6.13.5
- MPICH2 (the fault tolerant implementation consists of a new channel: ft-sock)

Two experiments:

- 1 single Myrinet cluster: Bordeaux with 64 CPUs
- 6 clusters: 96 CPUs (Bordeaux), 106 CPUs (Lille), 432 CPUs (Orsay), 128 CPUs (Rennes), 210 CPUs (Sophia), and 116 CPUs (Toulouse)

[Figure: communication performance of RENATER 3 measured with NetPerf]

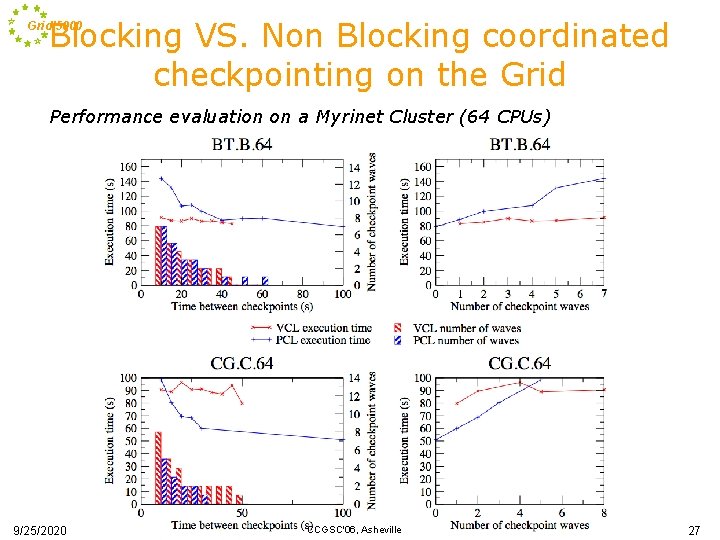

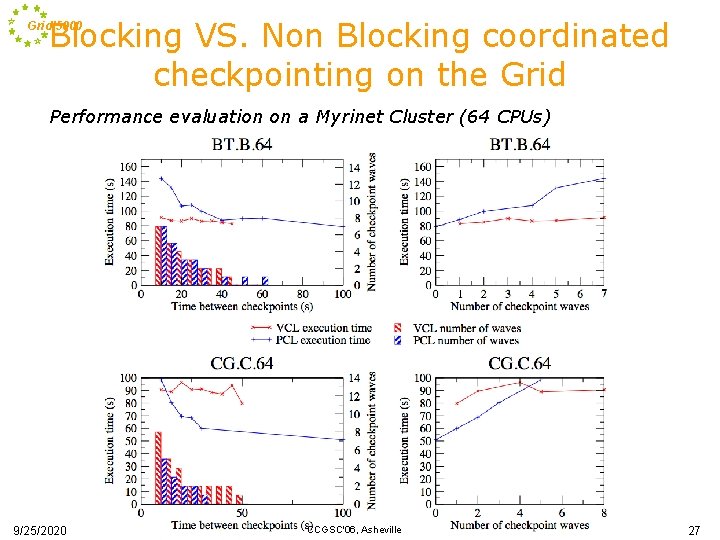

Blocking vs. non-blocking coordinated checkpointing on Grid'5000

Performance evaluation on a Myrinet cluster (64 CPUs)

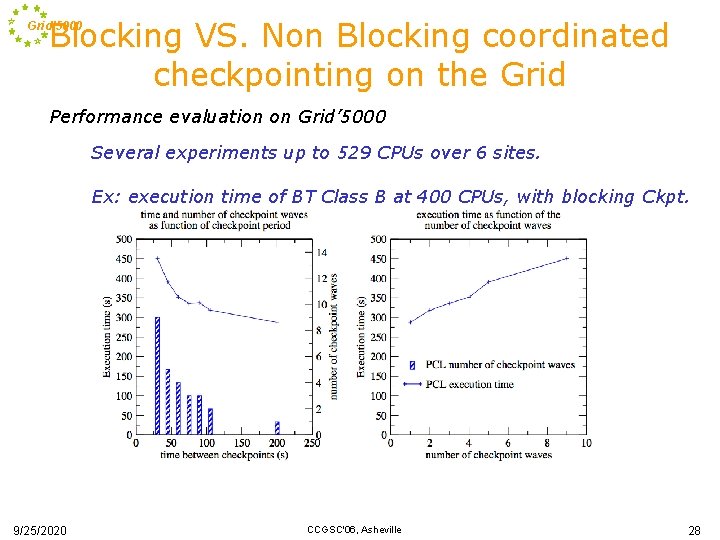

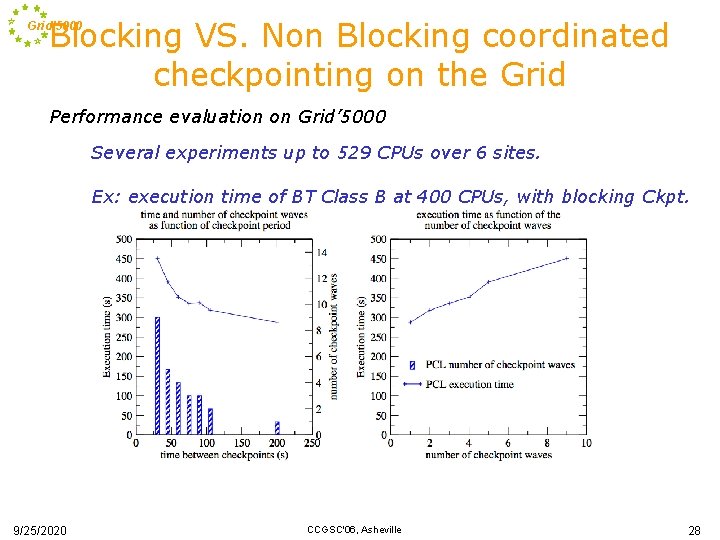

Blocking vs. non-blocking coordinated checkpointing on Grid'5000

Performance evaluation on Grid'5000: several experiments with up to 529 CPUs over 6 sites. Example: execution time of BT Class B at 400 CPUs, with blocking checkpointing.

Conclusion: toward an international platform

[Map labels: Grid'5000; DAS; GENI (NSF); 1500 CPUs; Sept 2006; Grid'5000, 2600 CPUs; Japan (Tsubame, I-Explosion)]

The distributed systems (P2P, Grid) and networking communities recognize the necessity of large-scale experimental platforms (PlanetLab, Emulab, Grid'5000, DAS, I-Explosion, GENI, etc.).

Questions?