Workshop on Charm++ and Applications: Welcome and Introduction


- Slides: 29

Workshop on Charm++ and Applications: Welcome and Introduction

Laxmikant Kale, http://charm.cs.uiuc.edu

Parallel Programming Laboratory, Department of Computer Science, University of Illinois at Urbana-Champaign

3/5/2021 Charm Workshop 2003

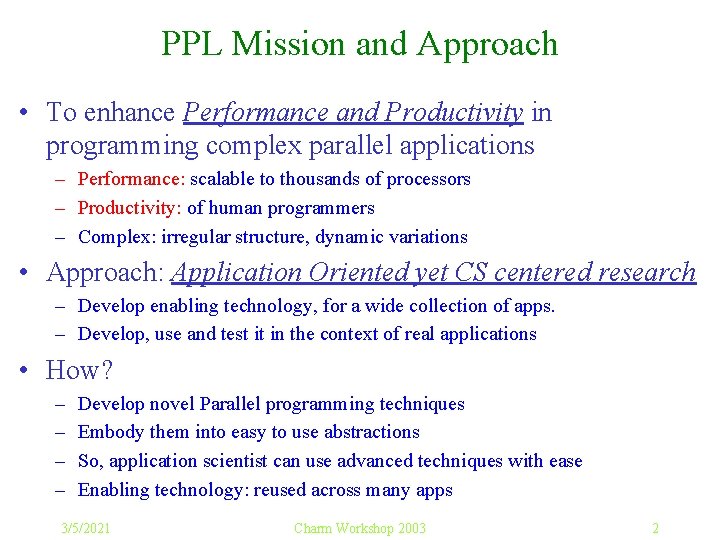

PPL Mission and Approach

- To enhance performance and productivity in programming complex parallel applications
  - Performance: scalable to thousands of processors
  - Productivity: of human programmers
  - Complex: irregular structure, dynamic variations
- Approach: application-oriented yet CS-centered research
  - Develop enabling technology for a wide collection of apps
  - Develop, use, and test it in the context of real applications
- How?
  - Develop novel parallel programming techniques
  - Embody them in easy-to-use abstractions
  - So application scientists can use advanced techniques with ease
  - Enabling technology: reused across many apps

![A Core Idea Processor Virtualization Programmer Over decomposition into virtual processors Runtime Assigns VPs A Core Idea: Processor Virtualization Programmer: [Over] decomposition into virtual processors Runtime: Assigns VPs](https://slidetodoc.com/presentation_image_h/25abb7f0e5e2934c904422f4540e3c11/image-3.jpg)

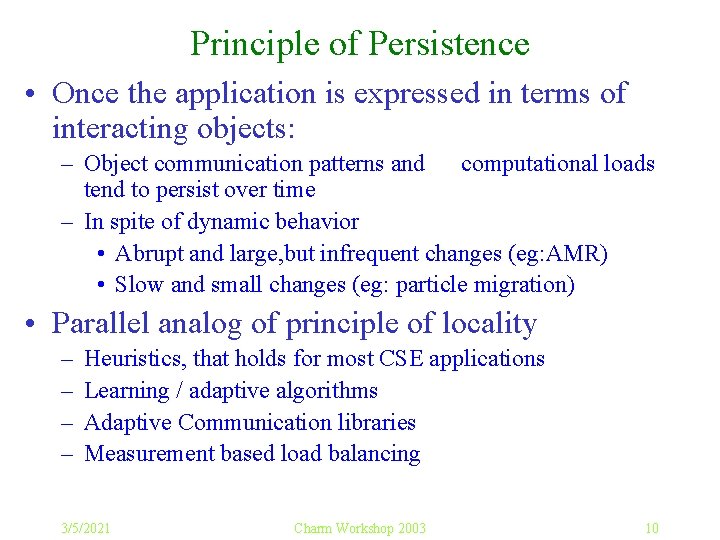

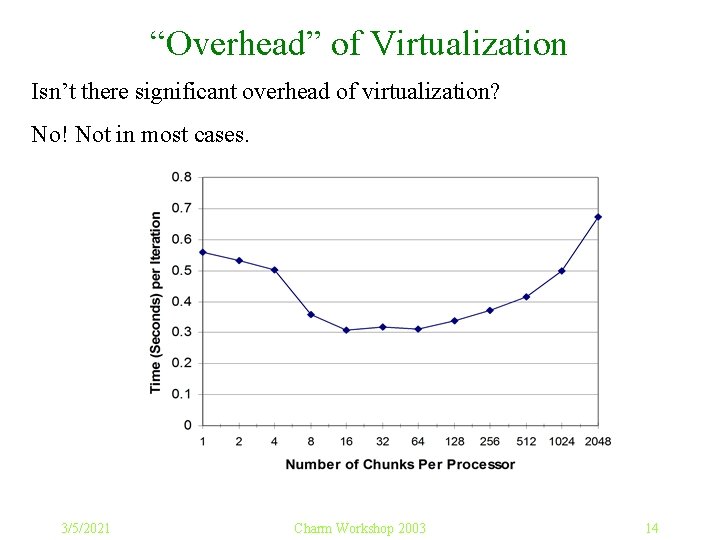

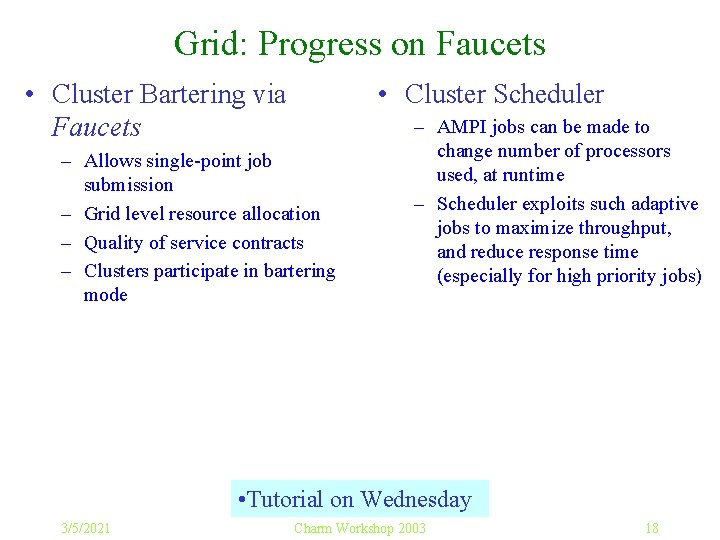

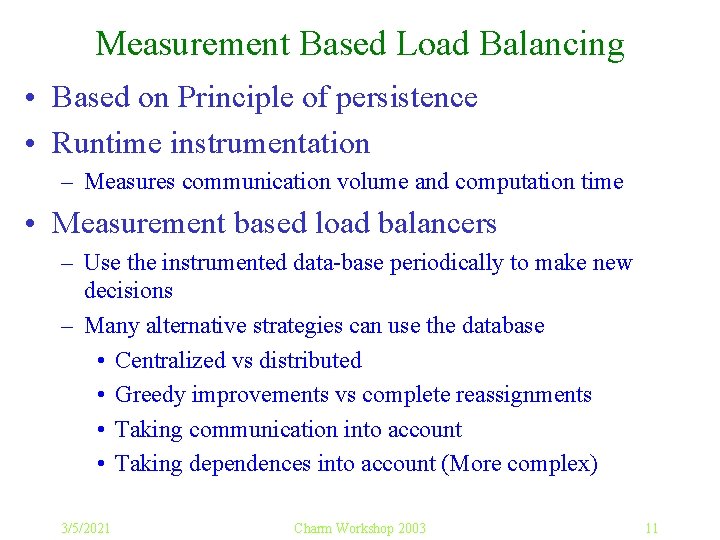

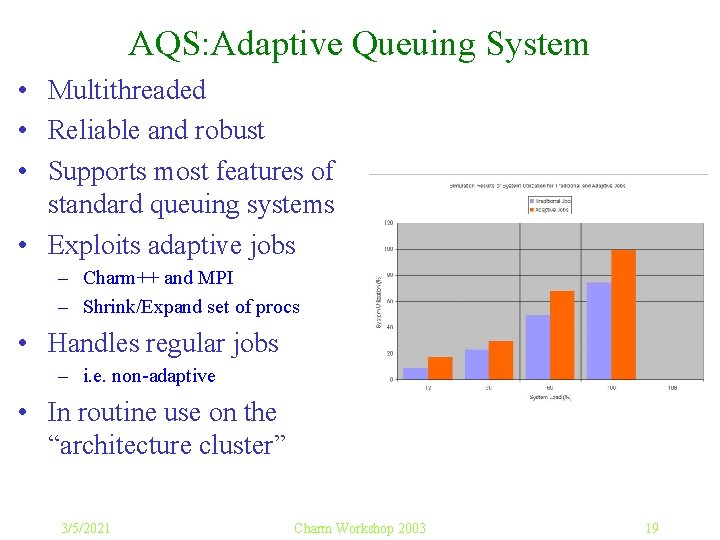

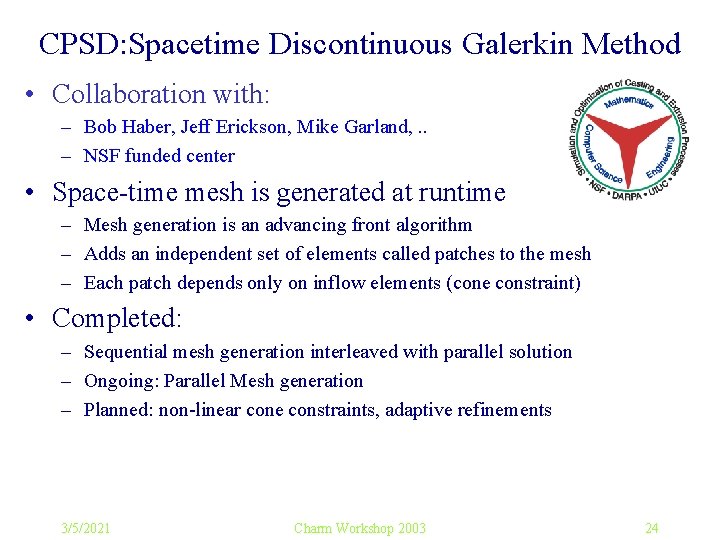

A Core Idea: Processor Virtualization

- Programmer: [over] decomposition into virtual processors
- Runtime: assigns VPs to processors
- Enables adaptive runtime strategies
- Implementations: Charm++, AMPI

Figure labels: processes; virtual processors (user-level migratable threads); real processors.

Benefits

- Software engineering
  - Number of virtual processors can be independently controlled
  - Separate VPs for different modules
- Message-driven execution
  - Adaptive overlap of communication
  - Predictability:
    - Automatic out-of-core
  - Asynchronous reductions
- Dynamic mapping
  - Heterogeneous clusters
    - Vacate, adjust to speed, share
  - Automatic checkpointing
  - Change set of processors used
  - Automatic dynamic load balancing
  - Communication optimization
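The split of responsibilities on this slide can be illustrated with a toy mapping table. This is a minimal sketch, not Charm++ or AMPI code: the function names (`assign_round_robin`, `migrate`, `vps_per_proc`) and the round-robin initial placement are assumptions made for illustration. It shows only that the number of virtual processors is chosen independently of the number of real processors, and that the runtime can move a VP without the program's view of VP ids changing.

```python
# Sketch only: these names are hypothetical, not Charm++ or AMPI API calls.

def assign_round_robin(num_vps, num_procs):
    """Map each virtual processor (VP) id to a physical processor id."""
    if num_procs <= 0:
        raise ValueError("num_procs must be positive")
    return {vp: vp % num_procs for vp in range(num_vps)}


def migrate(mapping, vp, new_proc):
    """Return a new mapping with one VP moved; VP ids themselves do not change."""
    updated = dict(mapping)
    updated[vp] = new_proc
    return updated


def vps_per_proc(mapping, num_procs):
    """Count how many VPs each physical processor currently hosts."""
    counts = [0] * num_procs
    for proc in mapping.values():
        counts[proc] += 1
    return counts


# The programmer picks 16 VPs to suit the problem; the runtime has 4 processors.
mapping = assign_round_robin(num_vps=16, num_procs=4)
counts_before = vps_per_proc(mapping, 4)

# Later the runtime moves VP 0 from processor 0 to processor 3.
remapped = migrate(mapping, vp=0, new_proc=3)
counts_after = vps_per_proc(remapped, 4)
```

Because the program addresses VPs rather than processors, the same table update could serve load balancing, vacating a node, or shrinking the processor set.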

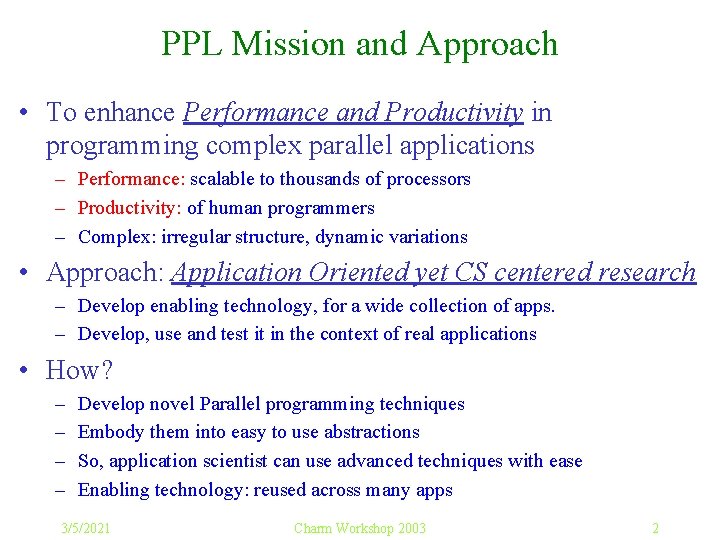

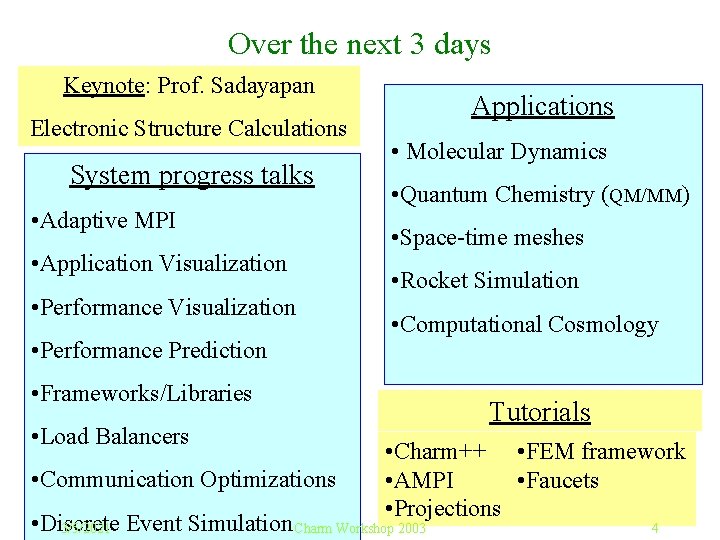

Over the next 3 days

- Keynote: Prof. Sadayappan, Electronic Structure Calculations
- System progress talks
  - Adaptive MPI
  - Application Visualization
  - Performance Prediction
  - Frameworks/Libraries
  - Load Balancers
  - Communication Optimizations
- Applications
  - Molecular Dynamics
  - Quantum Chemistry (QM/MM)
  - Space-time meshes
  - Rocket Simulation
  - Computational Cosmology
- Tutorials
  - Charm++
  - FEM framework
  - AMPI
  - Faucets
  - Projections
  - Discrete Event Simulation

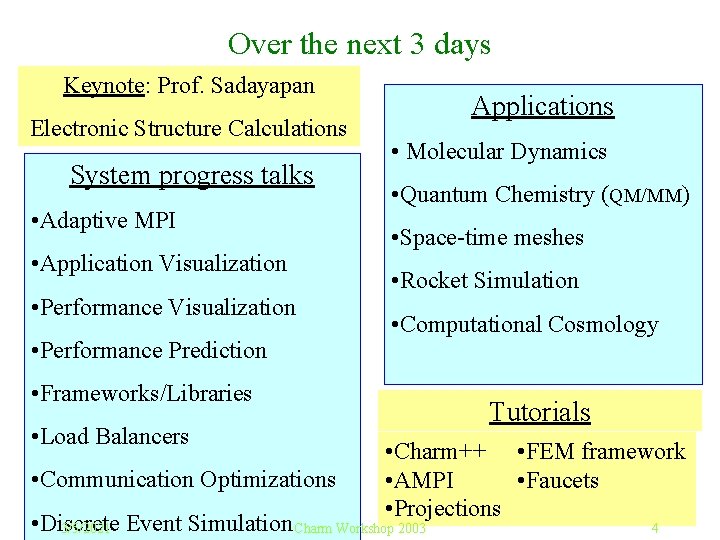

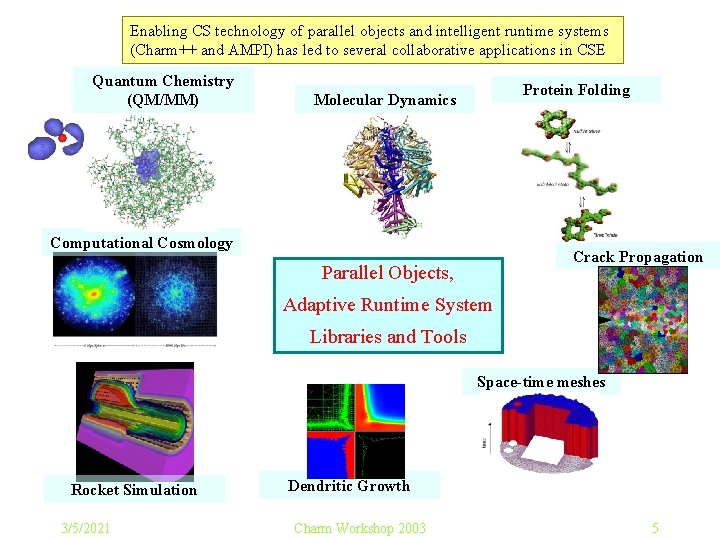

Enabling CS technology of parallel objects and intelligent runtime systems (Charm++ and AMPI) has led to several collaborative applications in CSE.

Diagram labels: Parallel Objects, Adaptive Runtime System, Libraries and Tools; Quantum Chemistry (QM/MM); Protein Folding; Molecular Dynamics; Computational Cosmology; Crack Propagation; Space-time meshes; Rocket Simulation; Dendritic Growth.

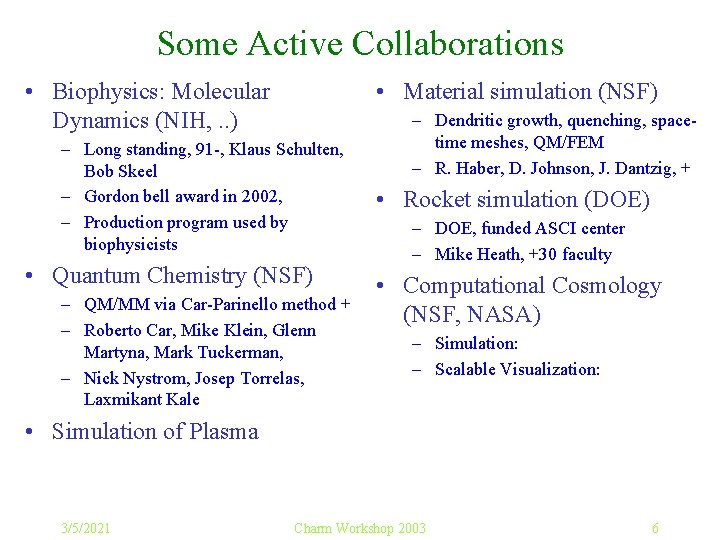

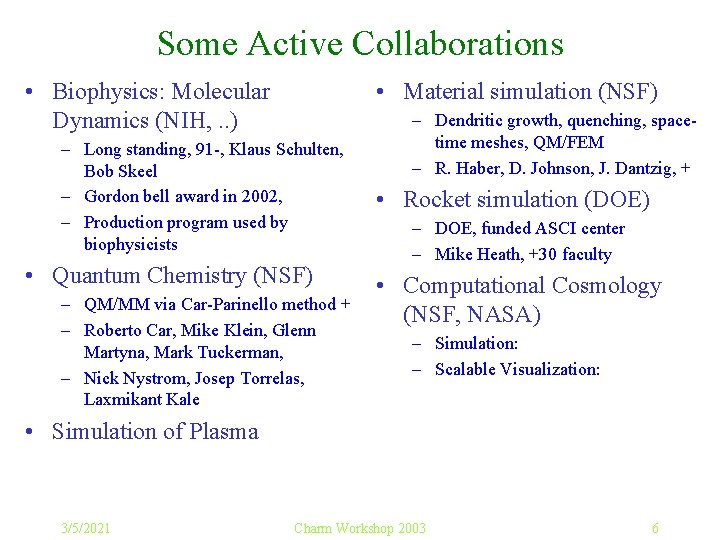

Some Active Collaborations

- Biophysics: Molecular Dynamics (NIH, ...)
  - Long-standing, since 1991: Klaus Schulten, Bob Skeel
  - Gordon Bell award in 2002
  - Production program used by biophysicists
- Quantum Chemistry (NSF)
  - QM/MM via Car-Parrinello method +
  - Roberto Car, Mike Klein, Glenn Martyna, Mark Tuckerman, Nick Nystrom, Josep Torrellas, Laxmikant Kale
- Material simulation (NSF)
  - Dendritic growth, quenching, spacetime meshes, QM/FEM
  - R. Haber, D. Johnson, J. Dantzig, +
- Rocket simulation (DOE)
  - DOE-funded ASCI center
  - Mike Heath, +30 faculty
- Computational Cosmology (NSF, NASA)
  - Simulation
  - Scalable visualization
- Simulation of plasma

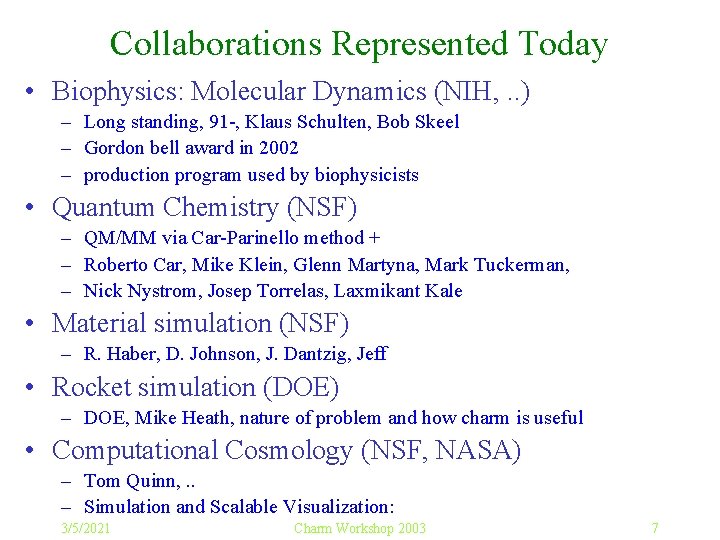

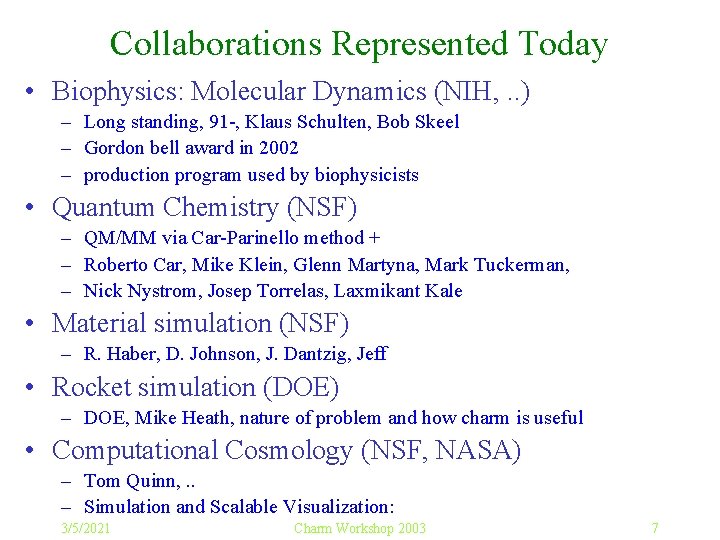

Collaborations Represented Today

- Biophysics: Molecular Dynamics (NIH, ...)
  - Long-standing, since 1991: Klaus Schulten, Bob Skeel
  - Gordon Bell award in 2002
  - Production program used by biophysicists
- Quantum Chemistry (NSF)
  - QM/MM via Car-Parrinello method +
  - Roberto Car, Mike Klein, Glenn Martyna, Mark Tuckerman, Nick Nystrom, Josep Torrellas, Laxmikant Kale
- Material simulation (NSF)
  - R. Haber, D. Johnson, J. Dantzig, Jeff
- Rocket simulation (DOE)
  - Mike Heath: nature of the problem and how Charm++ is useful
- Computational Cosmology (NSF, NASA)
  - Tom Quinn, ...
  - Simulation and scalable visualization

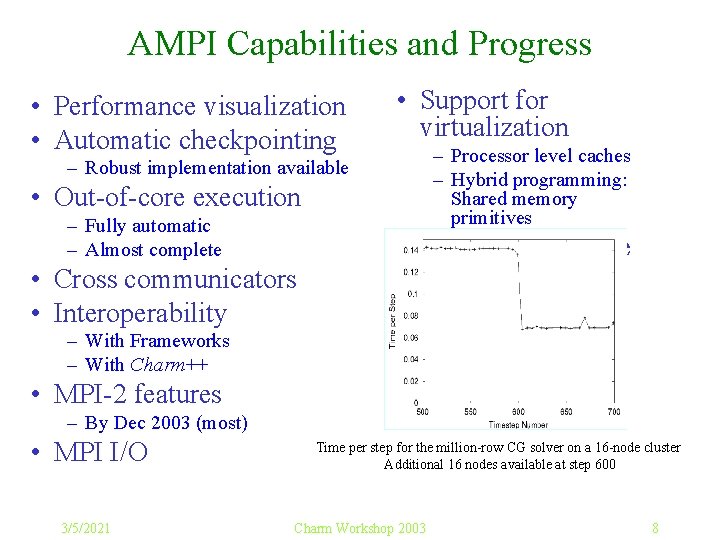

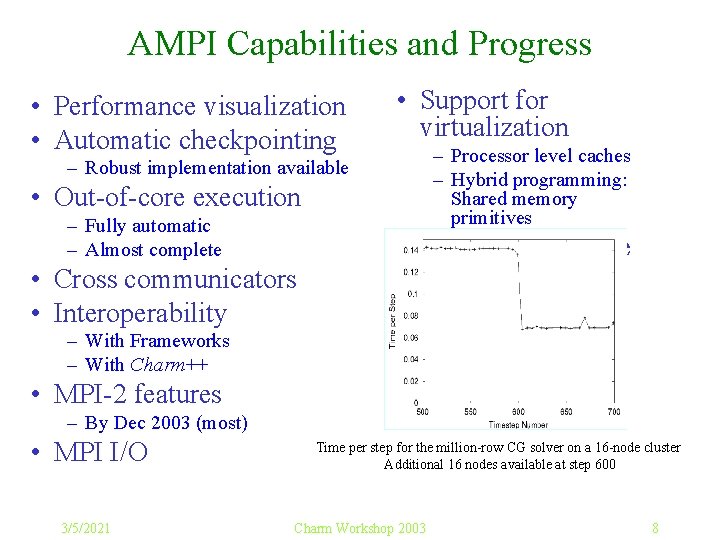

AMPI Capabilities and Progress

- Performance visualization
- Automatic checkpointing
- Support for virtualization
  - Processor-level caches
  - Hybrid programming: shared memory primitives
  - Robust implementation available
- Out-of-core execution
  - Fully automatic
  - Almost complete
- Cross communicators
- Interoperability
- Adjust to available processors
  - With frameworks
  - With Charm++
- MPI-2 features
  - By Dec 2003 (most)
- MPI I/O

Figure: time per step for the million-row CG solver on a 16-node cluster; an additional 16 nodes become available at step 600.

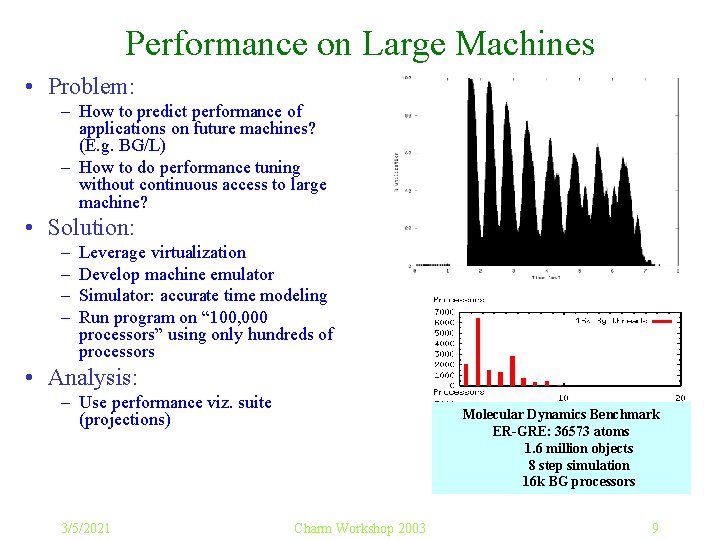

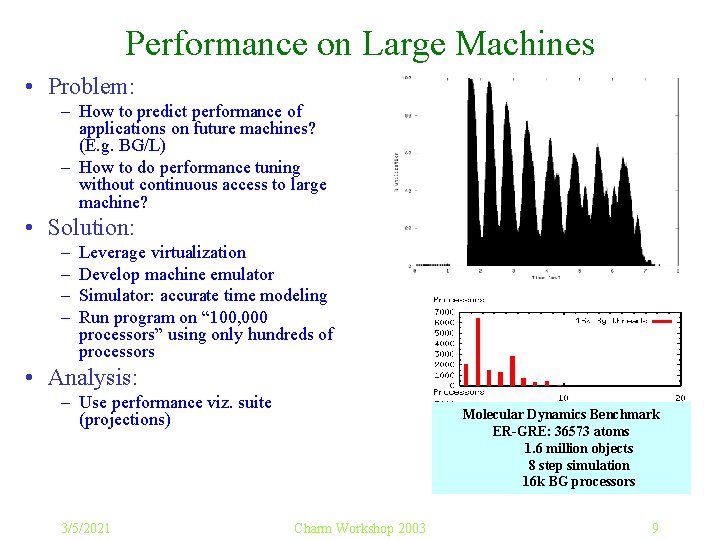

Performance on Large Machines

- Problem:
  - How to predict performance of applications on future machines (e.g., BG/L)?
  - How to do performance tuning without continuous access to a large machine?
- Solution:
  - Leverage virtualization
  - Develop machine emulator
  - Simulator: accurate time modeling
  - Run program on "100,000 processors" using only hundreds of processors
- Analysis:
  - Use performance visualization suite (Projections)

Figure: Molecular Dynamics benchmark ER-GRE: 36,573 atoms, 1.6 million objects, 8-step simulation, 16k BG processors.

Principle of Persistence

- Once the application is expressed in terms of interacting objects:
  - Object communication patterns and computational loads tend to persist over time
  - In spite of dynamic behavior
    - Abrupt and large, but infrequent changes (e.g., AMR)
    - Slow and small changes (e.g., particle migration)
- Parallel analog of the principle of locality
  - A heuristic that holds for most CSE applications
  - Learning / adaptive algorithms
  - Adaptive communication libraries
  - Measurement-based load balancing

Measurement-Based Load Balancing

- Based on the principle of persistence
- Runtime instrumentation
  - Measures communication volume and computation time
- Measurement-based load balancers
  - Use the instrumented database periodically to make new decisions
  - Many alternative strategies can use the database
    - Centralized vs. distributed
    - Greedy improvements vs. complete reassignments
    - Taking communication into account
    - Taking dependences into account (more complex)
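One of the strategies named above, a centralized greedy complete reassignment, can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the Charm++ load balancer: the function names and the sample loads are hypothetical, the "database" is reduced to a dictionary of measured per-object compute times, and communication volume is ignored.

```python
import heapq

# Sketch only: a greedy complete reassignment using measured per-object loads.
# Names and data are hypothetical; communication is deliberately ignored.

def greedy_rebalance(measured_loads, num_procs):
    """Place the heaviest objects first, each on the currently least-loaded processor."""
    heap = [(0.0, proc) for proc in range(num_procs)]  # (accumulated load, proc id)
    heapq.heapify(heap)
    placement = {}
    # Sort by descending load; break ties by object id so the result is deterministic.
    for obj, load in sorted(measured_loads.items(), key=lambda kv: (-kv[1], kv[0])):
        proc_load, proc = heapq.heappop(heap)
        placement[obj] = proc
        heapq.heappush(heap, (proc_load + load, proc))
    return placement


def proc_loads(placement, measured_loads, num_procs):
    """Sum the measured load assigned to each processor."""
    totals = [0.0] * num_procs
    for obj, proc in placement.items():
        totals[proc] += measured_loads[obj]
    return totals


# Loads measured during earlier steps; persistence suggests they predict later steps.
loads = {"a": 8.0, "b": 7.0, "c": 6.0, "d": 5.0, "e": 4.0}
placement = greedy_rebalance(loads, num_procs=2)
totals = proc_loads(placement, loads, num_procs=2)
```

The approach only makes sense because of the principle of persistence: loads measured in the recent past are used as the prediction for the near future.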

Optimizing for Communication Patterns

- The parallel-objects runtime system can observe, instrument, and measure communication patterns
  - Communication is from/to objects, not processors
  - Load balancers use this to optimize object placement
  - Communication libraries can optimize
    - By substituting the most suitable algorithm for each operation
    - Learning at runtime
  - E.g., each-to-all individualized sends
    - Performance depends on many runtime characteristics
    - Library switches between different algorithms

V. Krishnan, MS Thesis, 1996. Ongoing work: Sameer Kumar, G. Zheng, and Greg Koenig.
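The idea of a library that learns at runtime and switches algorithms can be sketched as a selector that tries each candidate once, records its cost, and then keeps the cheapest. Everything here is an assumption made for illustration: the class and function names are hypothetical, the two candidates (direct sends and a two-phase 2D virtual-mesh scheme) stand in for whatever the library actually offers, and the costs come from a simple alpha-beta model with made-up constants instead of real measurements.

```python
import math

# Sketch only: hypothetical names, an assumed alpha-beta cost model, made-up constants.
ALPHA = 10.0  # assumed per-message overhead
BETA = 0.01   # assumed per-byte cost


def direct_cost(p, m):
    """Each of p processors sends p - 1 separate messages of m bytes."""
    return (p - 1) * (ALPHA + BETA * m)


def mesh_cost(p, m):
    """Two phases on a sqrt(p) x sqrt(p) virtual mesh, combining sqrt(p) items per message."""
    side = math.isqrt(p)
    return 2 * (side - 1) * (ALPHA + BETA * m * side)


class StrategySelector:
    """Try every strategy once, then keep choosing the one with the lowest mean cost."""

    def __init__(self, names):
        self.samples = {name: [] for name in names}

    def choose(self):
        for name, costs in self.samples.items():
            if not costs:  # learning phase: this strategy has not been tried yet
                return name
        return min(self.samples, key=lambda n: sum(self.samples[n]) / len(self.samples[n]))

    def record(self, name, cost):
        self.samples[name].append(cost)


def pick(p, m):
    """Run the learning phase with modeled costs and return the preferred strategy."""
    models = {"direct": direct_cost, "mesh": mesh_cost}
    selector = StrategySelector(models)
    for _ in models:
        name = selector.choose()
        selector.record(name, models[name](p, m))
    return selector.choose()


small_choice = pick(p=64, m=100)     # small messages: per-message overhead dominates
large_choice = pick(p=64, m=10_000)  # large messages: bytes moved dominate
```

Under this model the preferred algorithm changes with message size, which is one example of the runtime characteristics the slide refers to.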

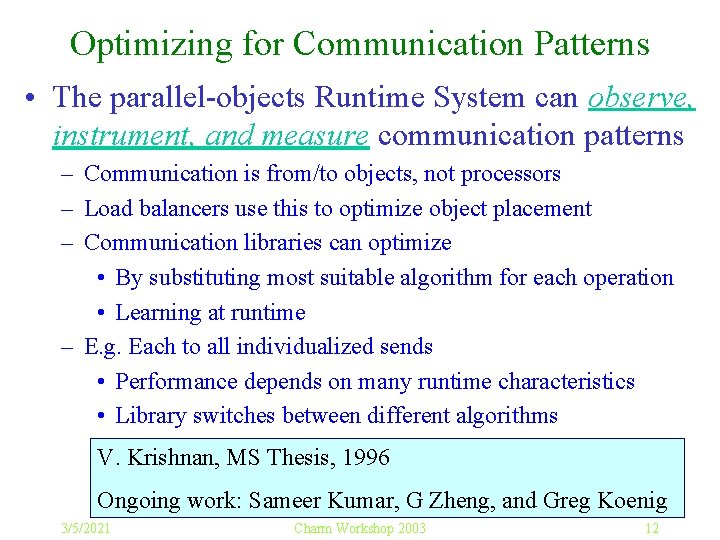

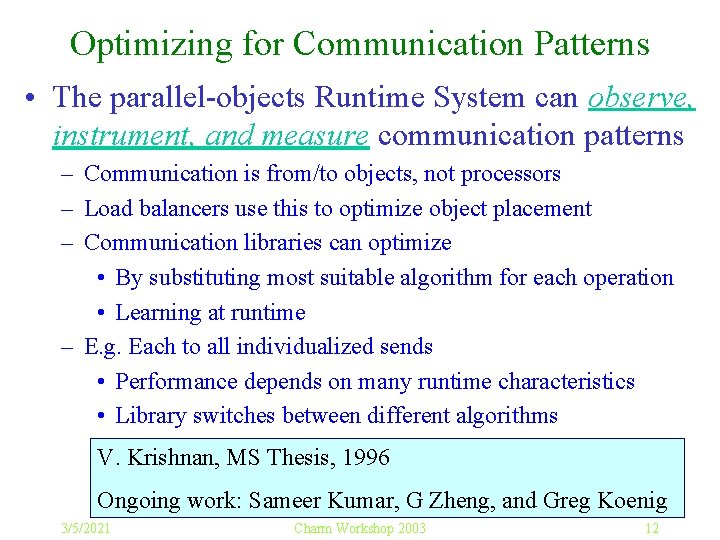

Asynchronous Collectives

Powered by a communication optimization library.

- Figure: time breakdown of all-to-all using the Mesh library
  - Observation: computation is only a small proportion of the elapsed time
- Figure: time breakdown of the 2D FFT benchmark (2D FFT optimized using AMPI)
  - AMPI overlaps useful computation with the waiting time of collective operations
  - Total completion time is reduced

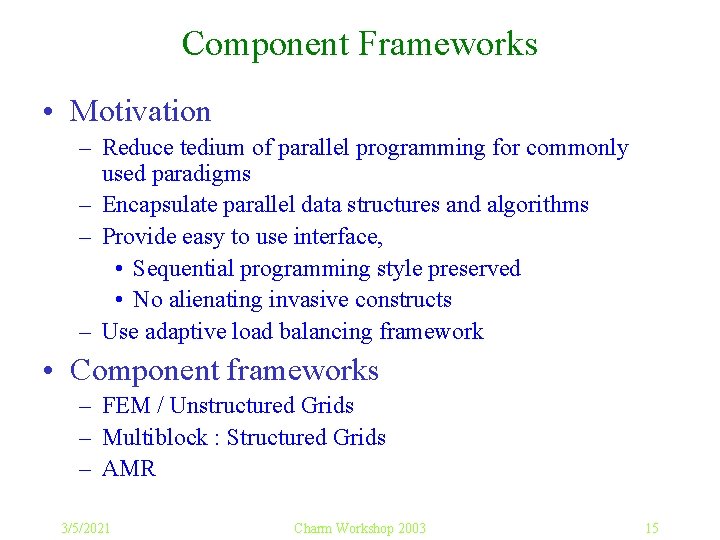

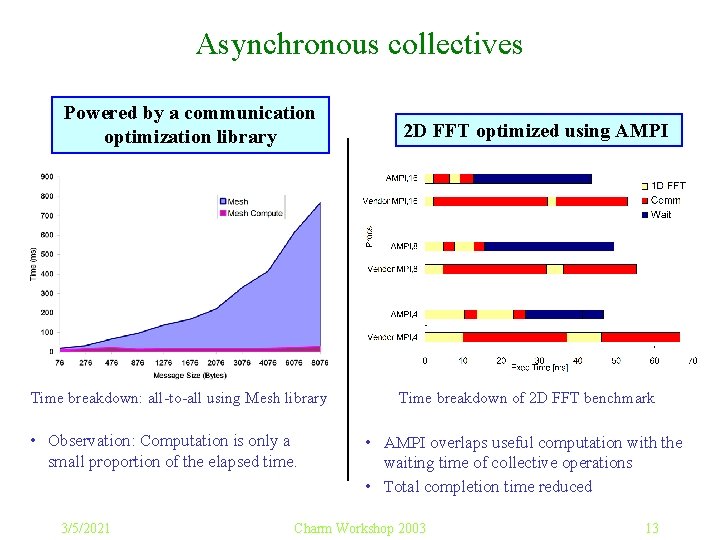

“Overhead” of Virtualization

Isn’t there significant overhead of virtualization? No! Not in most cases.

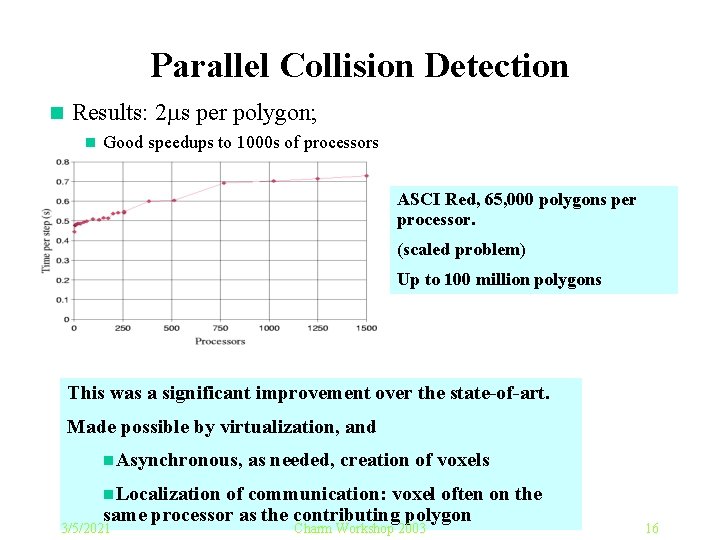

Component Frameworks

- Motivation
  - Reduce tedium of parallel programming for commonly used paradigms
  - Encapsulate parallel data structures and algorithms
  - Provide an easy-to-use interface
    - Sequential programming style preserved
    - No alienating invasive constructs
  - Use adaptive load balancing framework
- Component frameworks
  - FEM / unstructured grids
  - Multiblock: structured grids
  - AMR

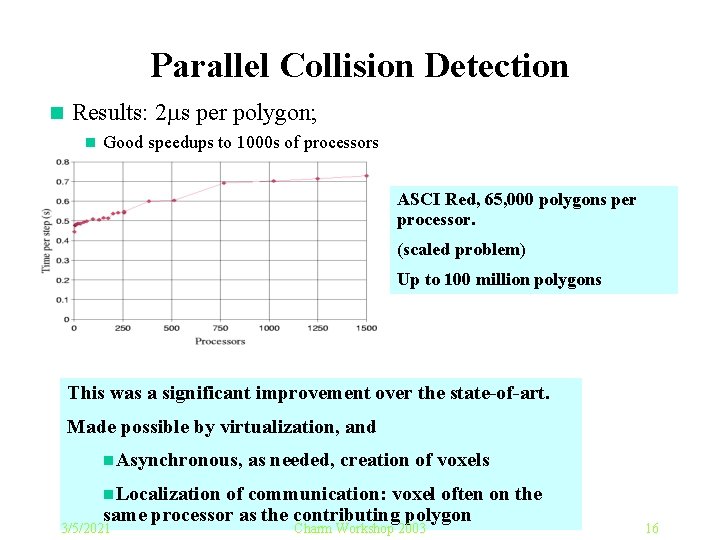

Parallel Collision Detection

- Results: 2 s per polygon
- Good speedups to 1000s of processors
  - ASCI Red, 65,000 polygons per processor (scaled problem)
  - Up to 100 million polygons
- This was a significant improvement over the state of the art
- Made possible by virtualization, and
  - Asynchronous, as-needed creation of voxels
  - Localization of communication: a voxel is often on the same processor as the contributing polygon

Frameworks Progress

- In regular use
- At CSAR (Rocket Center)
  - Finite-volume fluid flow code (Rocflu) developed using it
- AMPI version of the framework developed
- Adaptivity:
  - Mesh refinement: 2D done, 3D in progress
  - Remeshing support
    - Assembly, and parallel solution transfer
- Extended usage planned for space-time meshes

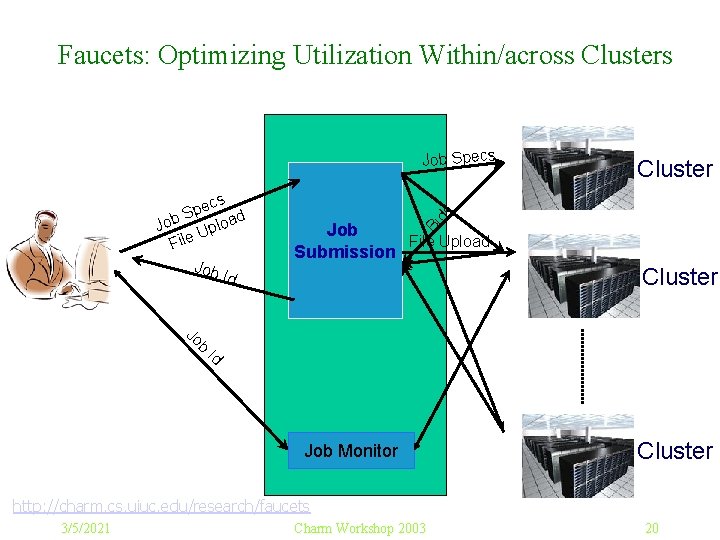

Grid: Progress on Faucets

- Cluster bartering via Faucets
  - Allows single-point job submission
  - Grid-level resource allocation
  - Quality-of-service contracts
  - Clusters participate in bartering mode
- Cluster scheduler
  - AMPI jobs can be made to change the number of processors used at runtime
  - Scheduler exploits such adaptive jobs to maximize throughput and reduce response time (especially for high-priority jobs)
- Tutorial on Wednesday

AQS: Adaptive Queuing System

- Multithreaded
- Reliable and robust
- Supports most features of standard queuing systems
- Exploits adaptive jobs
  - Charm++ and MPI
  - Shrink/expand set of processors
- Handles regular jobs
  - I.e., non-adaptive
- In routine use on the “architecture cluster”

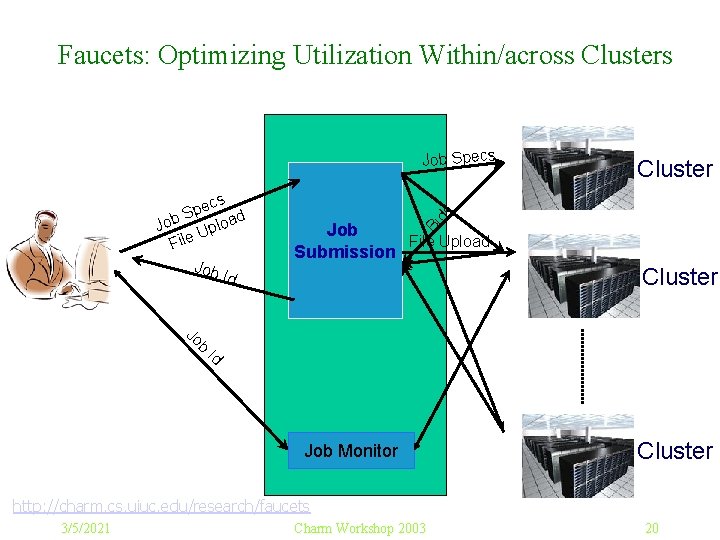

Faucets: Optimizing Utilization Within/Across Clusters

Diagram labels: job submission, job specs, bids, file upload, job ID, cluster ID, job monitor, clusters.

http://charm.cs.uiuc.edu/research/faucets

Other Ongoing and Planned System Projects

- Fault tolerance
- Parallel languages
  - JADE
  - Orchestration
- Parallel debugger
- Automatic out-of-core execution
- Parallel multigrid support

![Molecular Dynamics in NAMD Collection of charged atoms with bonds Newtonian mechanics Molecular Dynamics in NAMD • Collection of [charged] atoms, with bonds – Newtonian mechanics](https://slidetodoc.com/presentation_image_h/25abb7f0e5e2934c904422f4540e3c11/image-22.jpg)

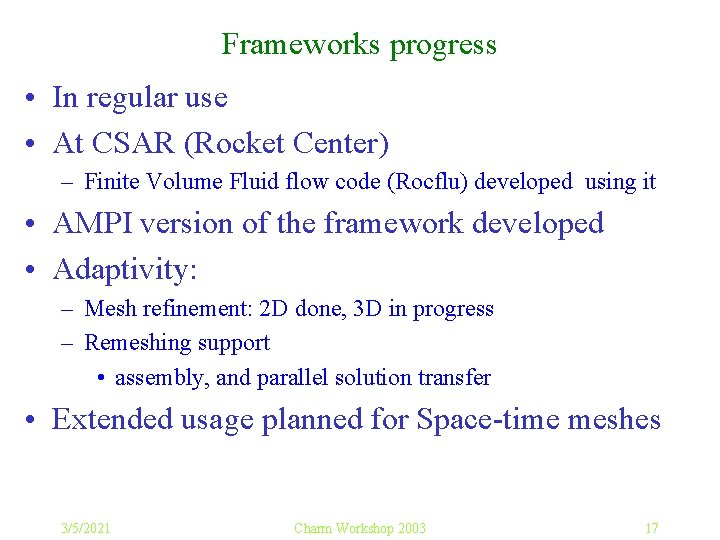

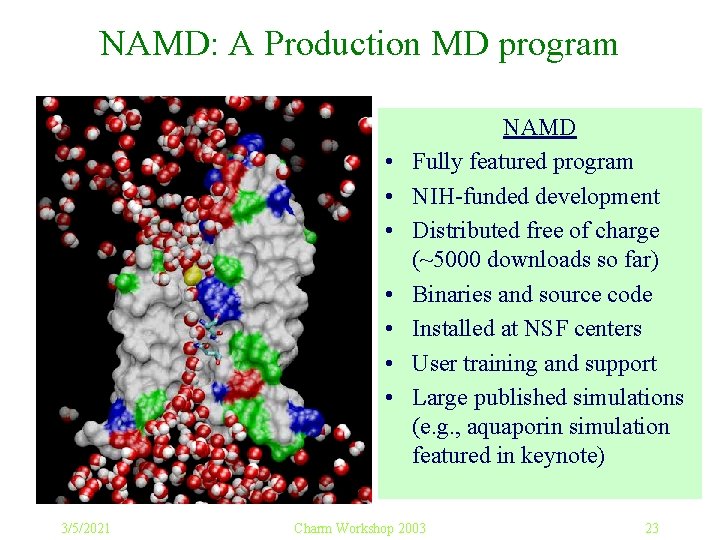

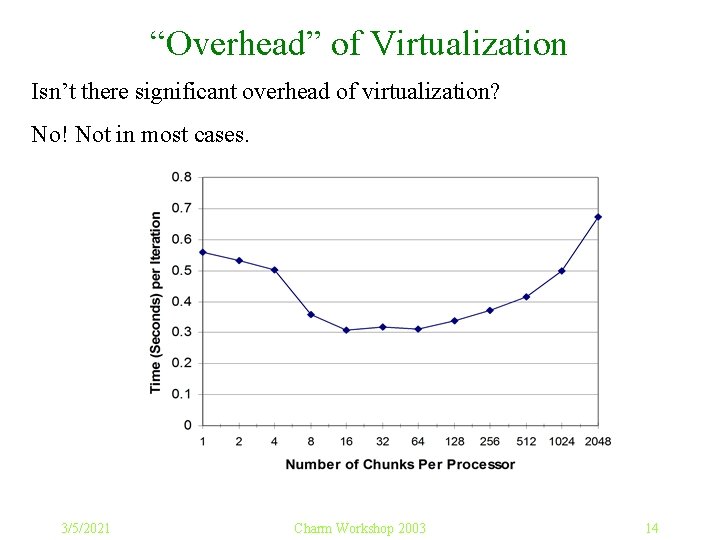

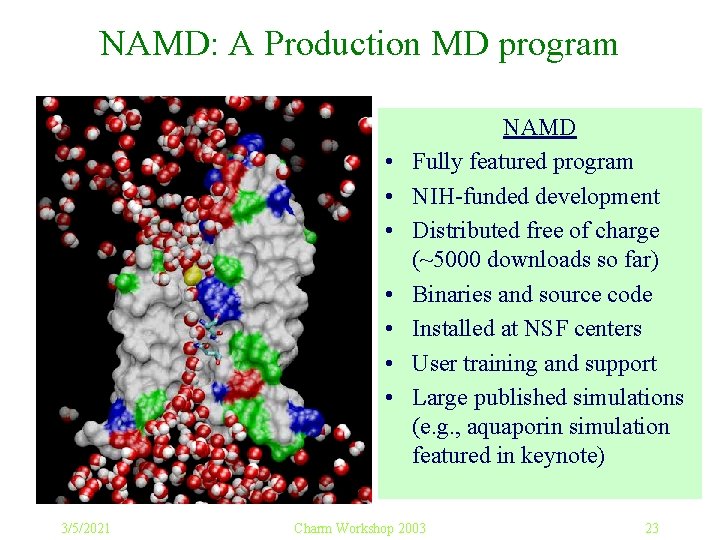

Molecular Dynamics in NAMD

- Collection of [charged] atoms, with bonds
  - Newtonian mechanics
  - Thousands of atoms (1,000 - 500,000)
  - 1 femtosecond time-step, millions needed!
- At each time-step
  - Calculate forces on each atom
    - Bonds
    - Non-bonded: electrostatic and van der Waals
      - Short-distance: every timestep
      - Long-distance: every 4 timesteps using PME (3D FFT)
      - Multiple time stepping
  - Calculate velocities and advance positions

Collaboration with K. Schulten, R. Skeel, and coworkers.
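The per-timestep schedule above can be sketched as a loop that evaluates short-range forces every step and refreshes long-range forces every fourth step. This is a toy illustration, not NAMD's integrator: the function name, the 1D scalar positions, the simple explicit update, and the choice to reuse the most recent long-range force between refreshes are all assumptions made to keep the sketch short. For scale, at 1 femtosecond per step, one million steps cover one nanosecond of simulated time.

```python
# Sketch only: a toy 1D time-stepping loop with hypothetical names, not NAMD code.

def run_md(positions, velocities, masses, short_force, long_force,
           dt, n_steps, long_every=4):
    """Advance particles in place; return how many long-range evaluations were done."""
    long_evals = 0
    f_long = [0.0] * len(positions)
    for step in range(n_steps):
        if step % long_every == 0:
            # Expensive long-distance forces: refreshed only every long_every steps.
            f_long = long_force(positions)
            long_evals += 1
        # Cheap short-distance forces: recomputed every step.
        f_short = short_force(positions)
        for i in range(len(positions)):
            accel = (f_short[i] + f_long[i]) / masses[i]
            velocities[i] += accel * dt          # calculate velocities
            positions[i] += velocities[i] * dt   # advance positions
    return long_evals


def no_force(positions):
    """Placeholder force field: a free particle feels no force."""
    return [0.0] * len(positions)


# A single free particle moving at constant velocity for 8 steps.
x, v, m = [0.0], [1.0], [1.0]
evals = run_md(x, v, m, no_force, no_force, dt=0.5, n_steps=8)
```

With the long-range refresh interval set to 4, an 8-step run performs 2 long-range evaluations instead of 8, which is the saving multiple time stepping is after.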

NAMD: A Production MD Program

- Fully featured program
- NIH-funded development
- Distributed free of charge (~5000 downloads so far)
- Binaries and source code
- Installed at NSF centers
- User training and support
- Large published simulations (e.g., aquaporin simulation featured in keynote)

CPSD: Spacetime Discontinuous Galerkin Method

- Collaboration with:
  - Bob Haber, Jeff Erickson, Mike Garland, ...
  - NSF-funded center
- Space-time mesh is generated at runtime
  - Mesh generation is an advancing-front algorithm
  - Adds an independent set of elements, called patches, to the mesh
  - Each patch depends only on inflow elements (cone constraint)
- Completed: sequential mesh generation interleaved with parallel solution
- Ongoing: parallel mesh generation
- Planned: non-linear cone constraints, adaptive refinements

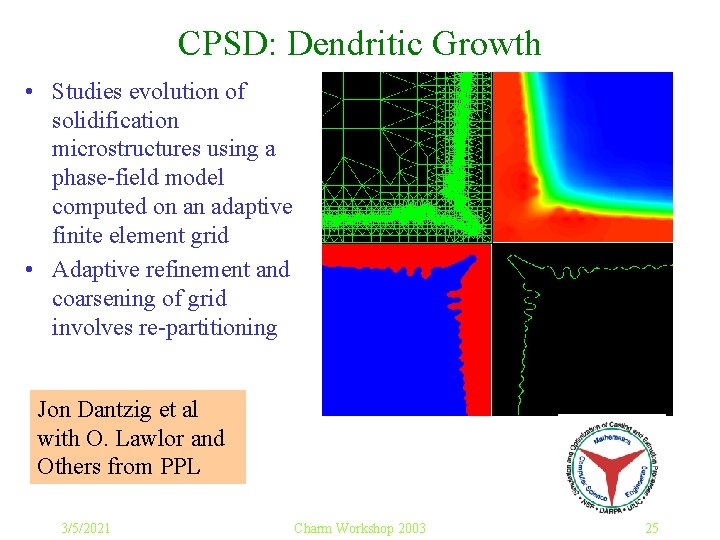

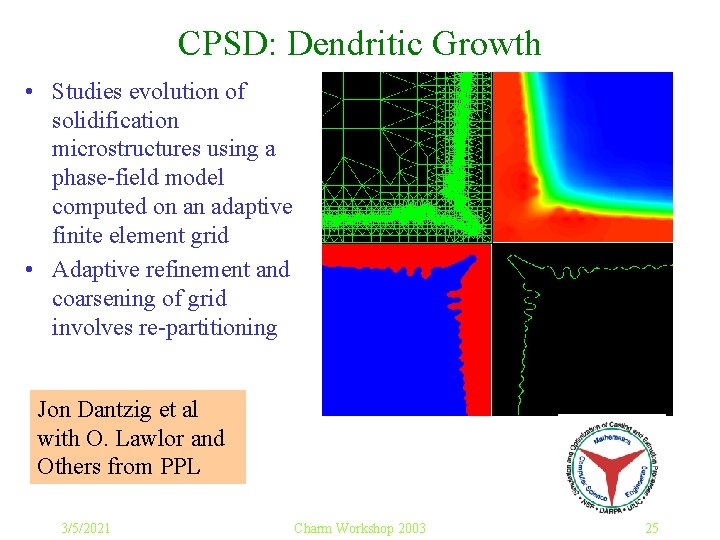

CPSD: Dendritic Growth

- Studies evolution of solidification microstructures using a phase-field model computed on an adaptive finite element grid
- Adaptive refinement and coarsening of the grid involves re-partitioning

Jon Dantzig et al., with O. Lawlor and others from PPL.

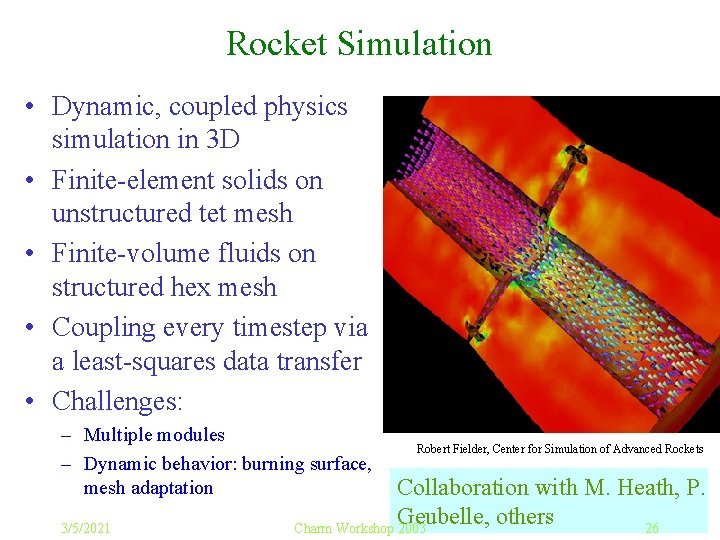

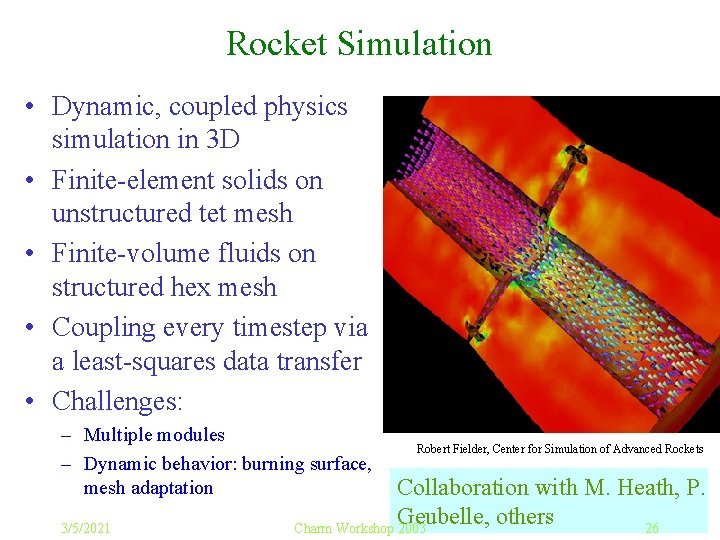

Rocket Simulation

- Dynamic, coupled physics simulation in 3D
- Finite-element solids on unstructured tet mesh
- Finite-volume fluids on structured hex mesh
- Coupling every timestep via a least-squares data transfer
- Challenges:
  - Multiple modules
  - Dynamic behavior: burning surface, mesh adaptation

Robert Fiedler, Center for Simulation of Advanced Rockets. Collaboration with M. Heath, P. Geubelle, others.

Computational Cosmology

- N-body simulation
  - N particles (1 million to 1 billion), in a periodic box
  - Move under gravitation
  - Organized in a tree (oct, binary (k-d), ...)
- Output data analysis: in parallel
  - Particles are read in parallel
  - Interactive analysis
- Issues:
  - Load balancing, fine-grained communication, tolerating communication latencies
  - Multiple time stepping

Collaboration with T. Quinn, Y. Staedel, M. Winslett, others.

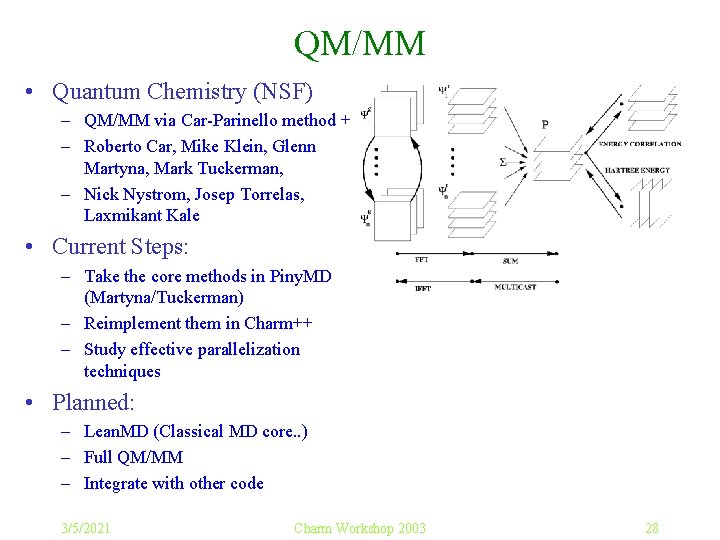

QM/MM

- Quantum Chemistry (NSF)
  - QM/MM via Car-Parrinello method +
  - Roberto Car, Mike Klein, Glenn Martyna, Mark Tuckerman, Nick Nystrom, Josep Torrellas, Laxmikant Kale
- Current steps:
  - Take the core methods in PinyMD (Martyna/Tuckerman)
  - Reimplement them in Charm++
  - Study effective parallelization techniques
- Planned:
  - LeanMD (classical MD core ...)
  - Full QM/MM
  - Integrate with other code

Summary and Messages

- We at PPL have advanced parallel-methods technology
  - We are committed to supporting applications
  - We grow our base of reusable techniques via such collaborations
- Try using our technology:
  - AMPI, (Charm++), Faucets, FEM framework, ...
  - Available via the web (http://charm.cs.uiuc.edu)