

Advanced Charm++ Tutorial

Charm Workshop Tutorial, Sameer Kumar and Orion Sky Lawlor, charm.cs.uiuc.edu, 2004/10/19

How to Become a Charm++ Hacker

- Advanced Charm++
  - Advanced messaging
  - Writing system libraries
    - Groups
    - Delegation
  - Communication framework
  - Advanced load balancing
  - Checkpointing
  - Threads
  - SDAG

Advanced Messaging

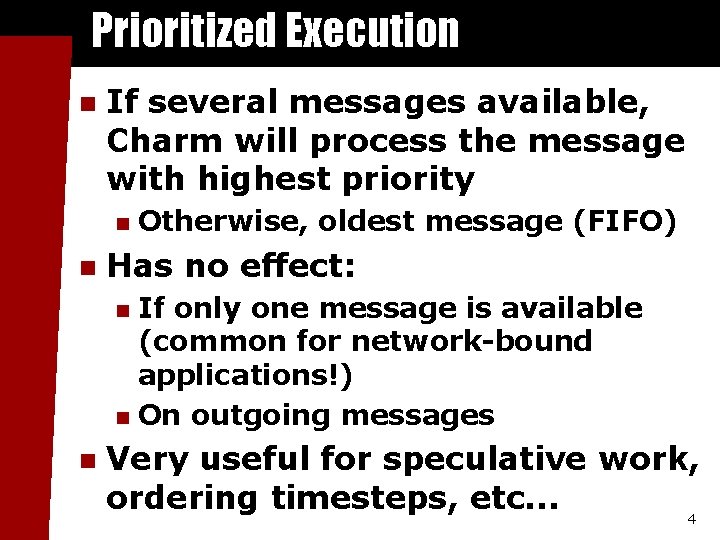

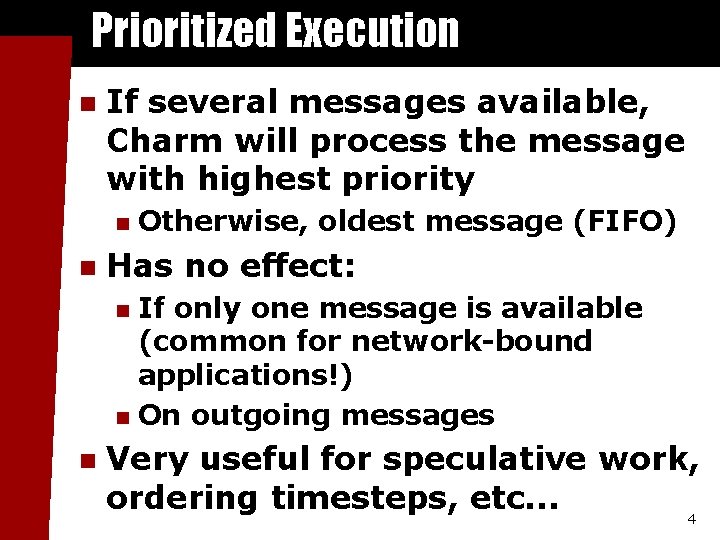

Prioritized Execution

- If several messages are available, Charm++ processes the message with the highest priority
  - Among messages of equal priority, the oldest is processed first (FIFO)
- Has no effect if only one message is available (common for network-bound applications!)
- Priorities are set on outgoing messages
- Very useful for speculative work, ordering timesteps, etc.

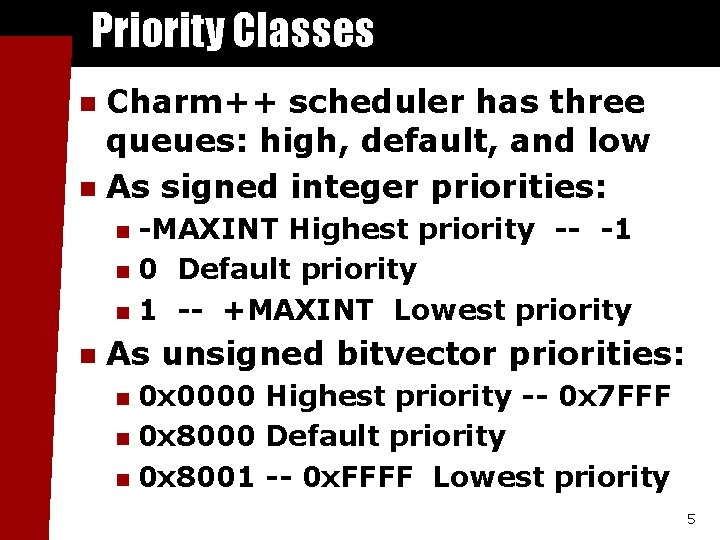

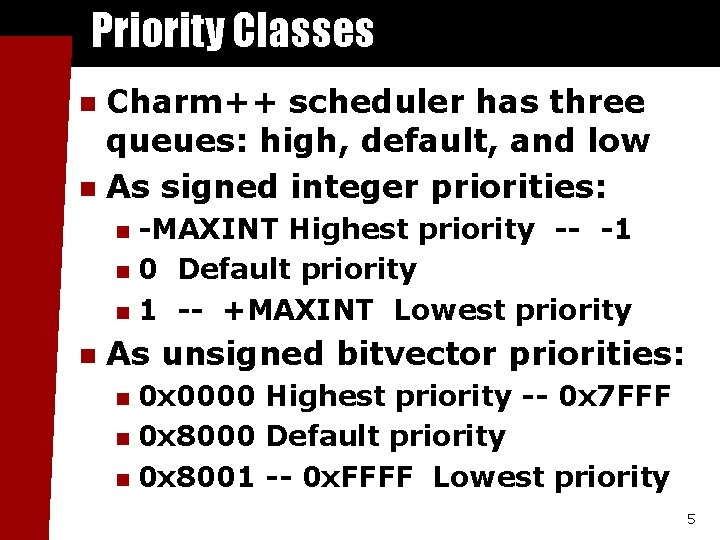

Priority Classes

- The Charm++ scheduler has three queues: high, default, and low
- As signed integer priorities:
  - -MAXINT (highest) to -1: high priority
  - 0: default priority
  - 1 to +MAXINT (lowest): low priority
- As unsigned bitvector priorities:
  - 0x0000 (highest) to 0x7FFF: high priority
  - 0x8000: default priority
  - 0x8001 to 0xFFFF (lowest): low priority
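The ordering rule above (smaller signed value runs first, ties broken FIFO) can be sketched as a single heap. This is a hypothetical model, not the Charm++ scheduler: the names `QueuedMsg`, `RunsLater`, and `PriorityScheduler` are invented here, and the real runtime keeps three separate queues rather than one heap.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>
#include <string>
#include <vector>

// Hypothetical model of prioritized delivery: smaller signed priority values run
// first, and messages with equal priority run in arrival (FIFO) order.
// It uses one heap rather than the three queues (high/default/low) the runtime keeps.
struct QueuedMsg {
  int priority;       // negative = high, 0 = default, positive = low
  std::uint64_t seq;  // arrival order, used as the FIFO tiebreaker
  std::string name;
};

// std::priority_queue keeps the "largest" element on top, so the comparator
// returns true when a should run later than b.
struct RunsLater {
  bool operator()(const QueuedMsg& a, const QueuedMsg& b) const {
    if (a.priority != b.priority) return a.priority > b.priority;
    return a.seq > b.seq;
  }
};

class PriorityScheduler {
 public:
  void enqueue(int priority, const std::string& name) {
    queue_.push(QueuedMsg{priority, next_seq_++, name});
  }
  // Pops every queued message and returns the names in delivery order.
  std::vector<std::string> drain() {
    std::vector<std::string> order;
    while (!queue_.empty()) {
      order.push_back(queue_.top().name);
      queue_.pop();
    }
    return order;
  }

 private:
  std::priority_queue<QueuedMsg, std::vector<QueuedMsg>, RunsLater> queue_;
  std::uint64_t next_seq_ = 0;
};
```

For example, enqueuing `a` (0), `low` (5), `urgent` (-1), and `b` (0) drains as `urgent`, `a`, `b`, `low`. With only one message queued, the priority never matters, which is the "no effect" case above.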

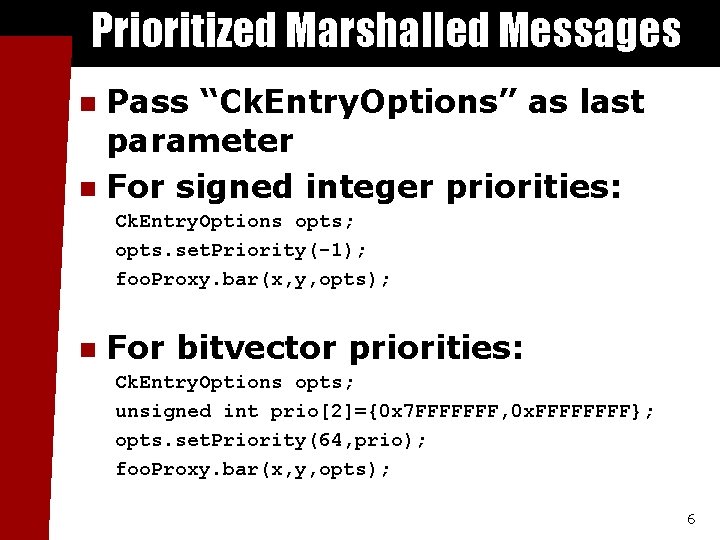

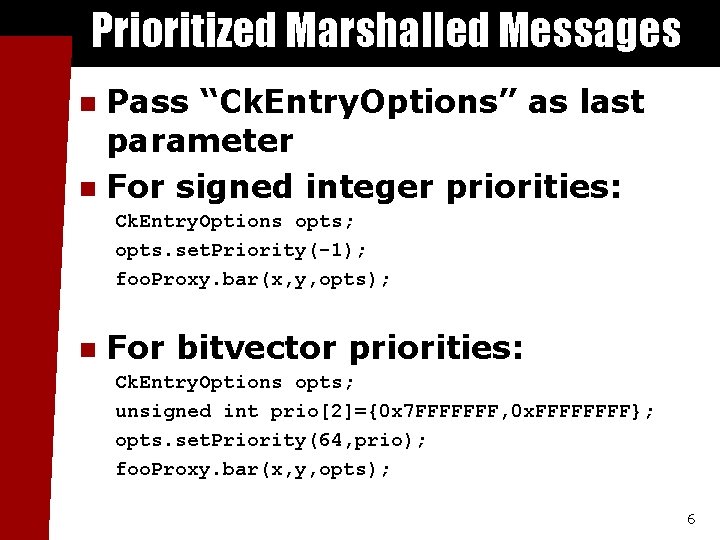

Prioritized Marshalled Messages

- Pass a `CkEntryOptions` object as the last parameter
- For signed integer priorities:
  `CkEntryOptions opts; opts.setPriority(-1); fooProxy.bar(x, y, opts);`
- For bitvector priorities:
  `CkEntryOptions opts; unsigned int prio[2] = {0x7FFFFFFF, 0xFFFF}; opts.setPriority(64, prio); fooProxy.bar(x, y, opts);`

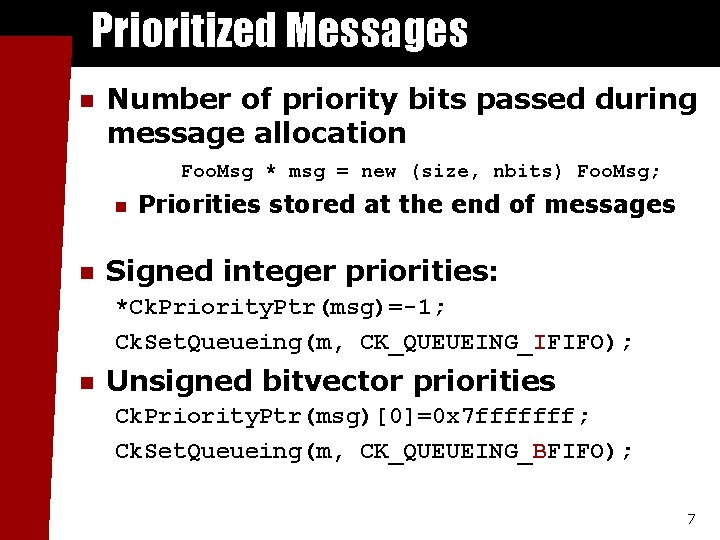

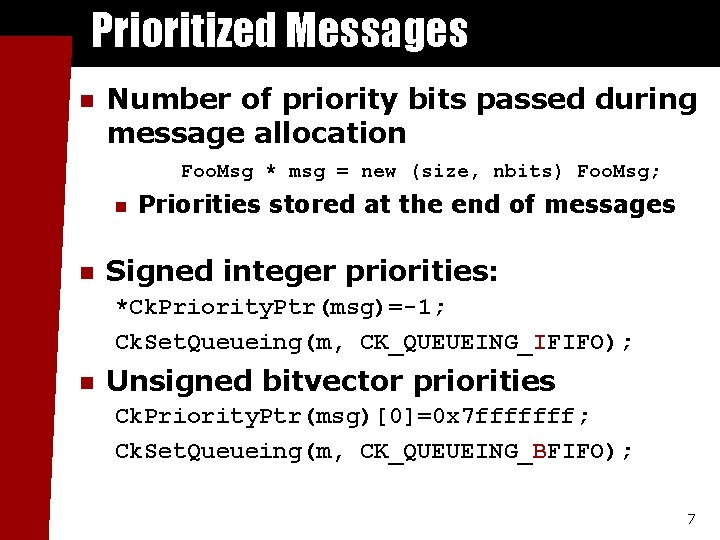

Prioritized Messages

- The number of priority bits is passed during message allocation: `FooMsg *msg = new (size, nbits) FooMsg;`
- Priorities are stored at the end of messages
- Signed integer priorities: `*(int *)CkPriorityPtr(msg) = -1; CkSetQueueing(msg, CK_QUEUEING_IFIFO);`
- Unsigned bitvector priorities: `((unsigned int *)CkPriorityPtr(msg))[0] = 0x7fffffff; CkSetQueueing(msg, CK_QUEUEING_BFIFO);`
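To make "priorities stored at the end of messages" concrete, here is a minimal sketch of such a layout. It assumes a simple `[payload][padding][priority words]` arrangement with 32-bit words; the class `PrioritizedBuffer` is hypothetical and is not the real Charm++ envelope format.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <memory>

// Hypothetical buffer layout: [payload][padding to a word boundary][priority words].
// It only illustrates "priority bits stored at the end of the message"; it is
// not the real Charm++ message envelope.
class PrioritizedBuffer {
 public:
  PrioritizedBuffer(std::size_t payload_bytes, int nbits)
      : prio_offset_(round_up(payload_bytes, sizeof(unsigned int))),
        prio_words_(static_cast<std::size_t>((nbits + 31) / 32)),
        storage_(new unsigned char[total_bytes()]()) {}

  unsigned char* payload() { return storage_.get(); }
  std::size_t priority_words() const { return prio_words_; }
  std::size_t total_bytes() const {
    return prio_offset_ + prio_words_ * sizeof(unsigned int);
  }

  // Write/read priority word i; memcpy sidesteps alignment and aliasing issues.
  void set_priority_word(std::size_t i, unsigned int value) {
    std::memcpy(storage_.get() + prio_offset_ + i * sizeof(unsigned int), &value, sizeof value);
  }
  unsigned int priority_word(std::size_t i) const {
    unsigned int value;
    std::memcpy(&value, storage_.get() + prio_offset_ + i * sizeof(unsigned int), sizeof value);
    return value;
  }

 private:
  static std::size_t round_up(std::size_t n, std::size_t align) {
    return (n + align - 1) / align * align;
  }
  std::size_t prio_offset_;  // where the priority words start
  std::size_t prio_words_;   // nbits rounded up to whole 32-bit words
  std::unique_ptr<unsigned char[]> storage_;
};
```

With a 10-byte payload and 64 priority bits, the priority words start at byte 12 and occupy two words; a signed-integer priority needs only one 32-bit word.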

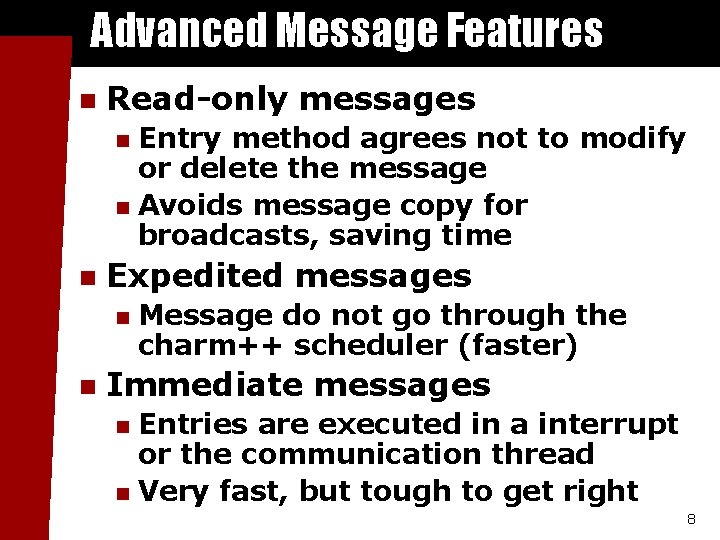

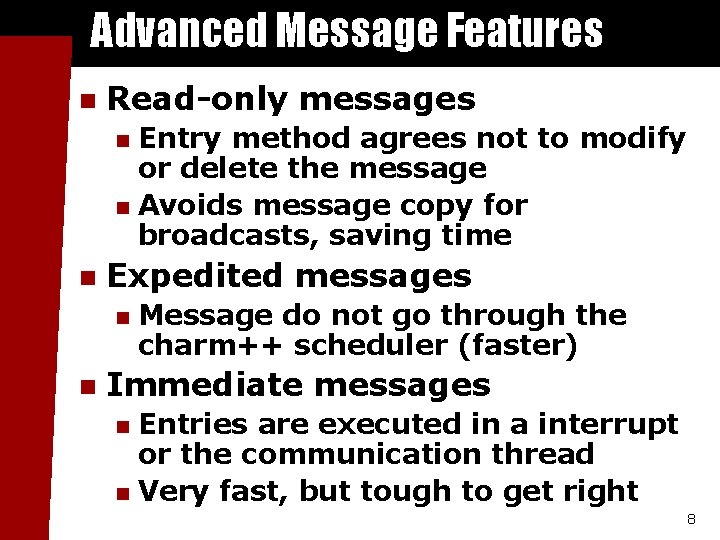

Advanced Message Features

- Read-only messages
  - The entry method agrees not to modify or delete the message
  - Avoids a message copy for broadcasts, saving time
- Expedited messages
  - Messages do not go through the Charm++ scheduler (faster)
- Immediate messages
  - Entry methods are executed in an interrupt or in the communication thread
  - Very fast, but tough to get right

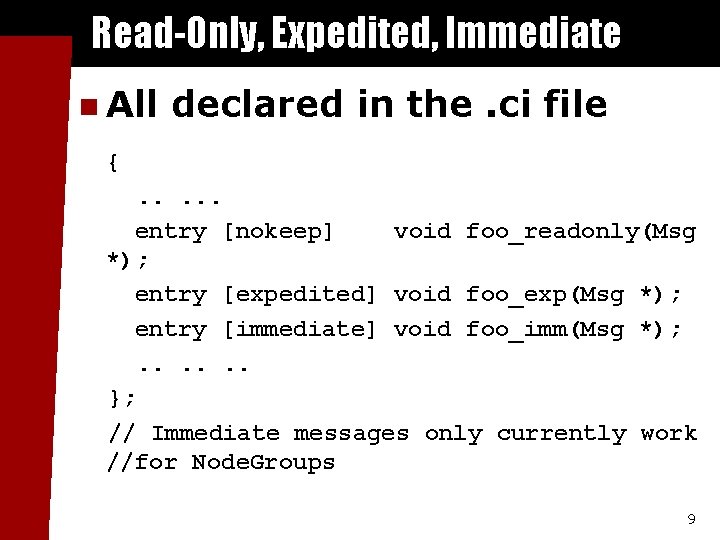

Read-Only, Expedited, Immediate

All are declared in the .ci file, inside the chare's `{ ... };` block:

- `entry [nokeep] void foo_readonly(Msg *);`
- `entry [expedited] void foo_exp(Msg *);`
- `entry [immediate] void foo_imm(Msg *);`

Note: immediate messages currently work only for NodeGroups.

Groups

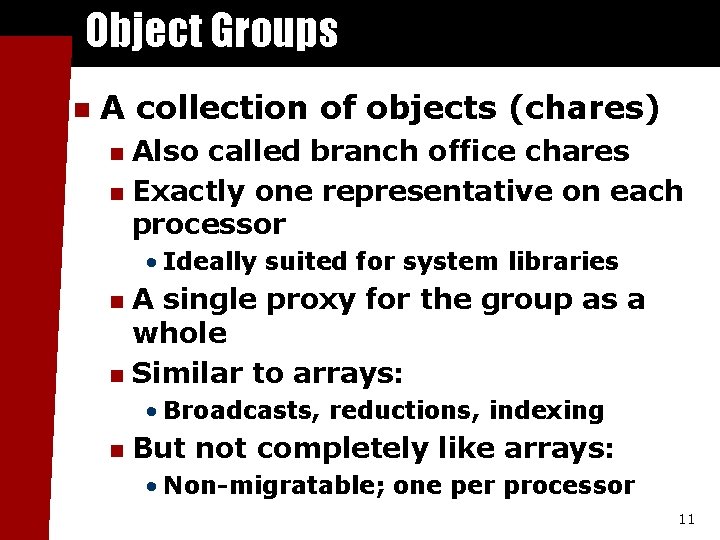

Object Groups

- A collection of objects (chares)
  - Also called branch office chares
- Exactly one representative on each processor
  - Ideally suited for system libraries
- A single proxy for the group as a whole
- Similar to arrays:
  - Broadcasts, reductions, indexing
- But not completely like arrays:
  - Non-migratable; one per processor

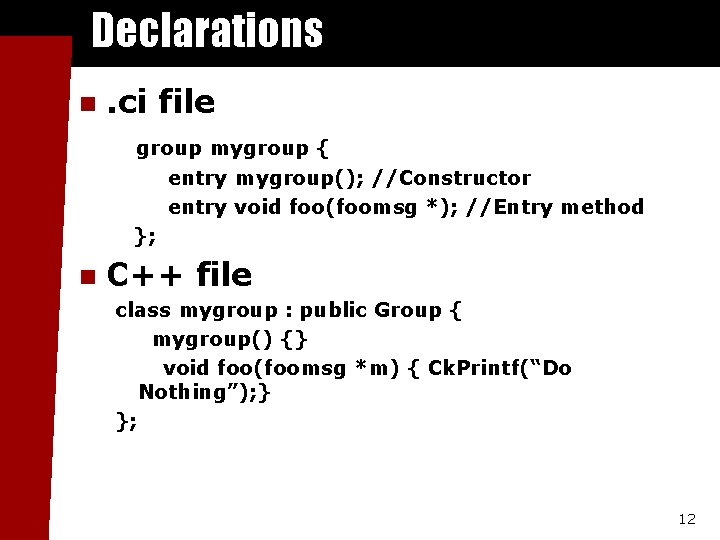

Declarations

- .ci file: `group mygroup { entry mygroup(); /* constructor */ entry void foo(foomsg *); /* entry method */ };`
- C++ file: `class mygroup : public Group { public: mygroup() {} void foo(foomsg *m) { CkPrintf("Do Nothing"); } };`

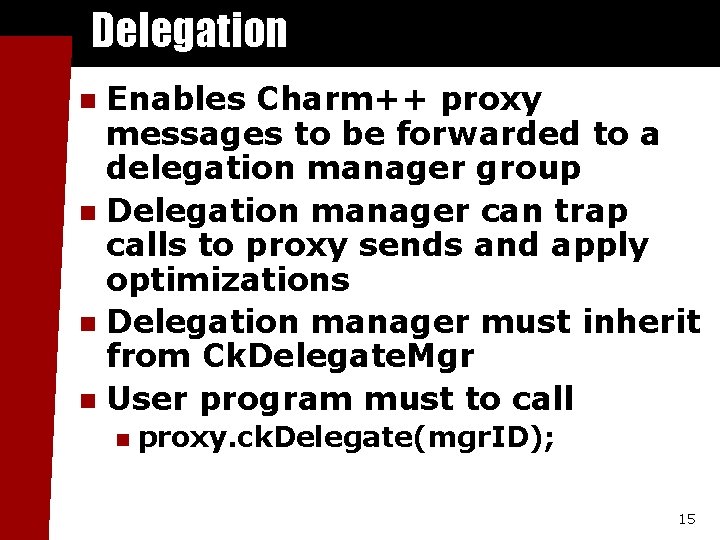

Creating and Calling Groups

- Creation: `p = CProxy_mygroup::ckNew();`
- Remote invocation:
  - `p.foo(msg); // broadcast`
  - `p[1].foo(msg); // asynchronous invocation on processor 1's branch`
- Direct local access:
  - `mygroup *g = p.ckLocalBranch();`
  - `g->foo(…); // local invocation`
- Danger: if you migrate, the group stays behind! A `ckLocalBranch()` pointer is valid only on the processor where it was obtained.
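The three ways of reaching a group (broadcast, indexed, local branch) can be modeled in one process. This is a hypothetical sketch: `MyBranch` and `GroupModel` are invented names, and calls are direct function calls, whereas real group invocations go asynchronously through the runtime.

```cpp
#include <cassert>
#include <vector>

// Hypothetical single-process model of a group: exactly one branch per
// simulated processor, all reached through one proxy-like object. Real Charm++
// groups deliver these calls asynchronously through the scheduler.
struct MyBranch {
  explicit MyBranch(int pe_) : pe(pe_) {}
  int pe;         // processor this branch lives on
  int calls = 0;  // how many times foo() has run on this branch
  void foo(int /*payload*/) { ++calls; }
};

class GroupModel {
 public:
  explicit GroupModel(int num_pes) {
    for (int pe = 0; pe < num_pes; ++pe) branches_.emplace_back(pe);
  }
  // Like p.foo(msg): every branch runs foo once.
  void broadcast_foo(int x) {
    for (MyBranch& b : branches_) b.foo(x);
  }
  // Like p[pe].foo(msg): only processor pe's branch runs foo.
  void foo_on(int pe, int x) { branches_.at(pe).foo(x); }
  // Like p.ckLocalBranch(): meaningful only on the processor that asked.
  MyBranch* local_branch(int my_pe) { return &branches_.at(my_pe); }

 private:
  std::vector<MyBranch> branches_;
};
```

The model also shows why a migrating array element must not keep the pointer returned by `local_branch`: it names the branch of the processor it was obtained on, not the one the element later moves to.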

Delegation

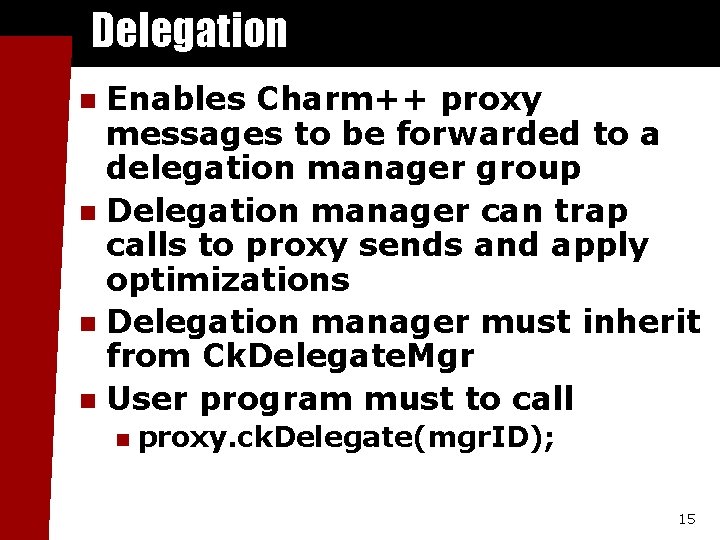

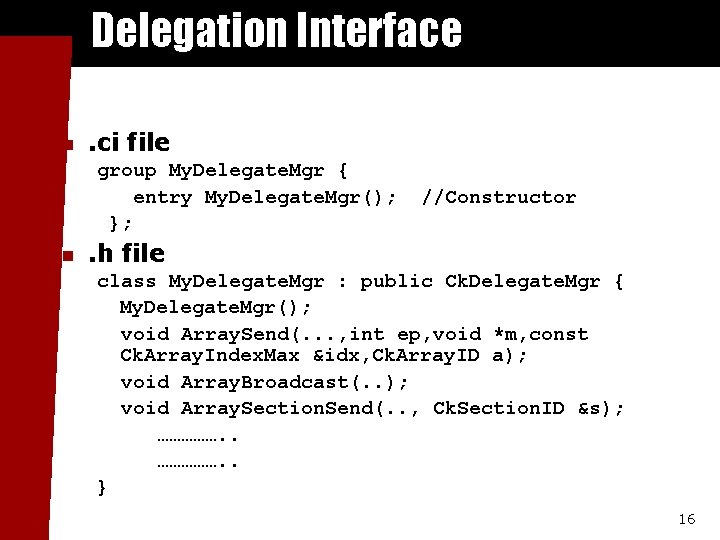

Delegation

- Enables Charm++ proxy messages to be forwarded to a delegation manager group
- The delegation manager can trap calls to proxy sends and apply optimizations
- The delegation manager must inherit from `CkDelegateMgr`
- The user program must call `proxy.ckDelegate(mgrID);`

Delegation Interface

- .ci file: `group MyDelegateMgr { entry MyDelegateMgr(); /* constructor */ };`
- .h file: `class MyDelegateMgr : public CkDelegateMgr { public: MyDelegateMgr(); void ArraySend(..., int ep, void *m, const CkArrayIndexMax &idx, CkArrayID a); void ArrayBroadcast(..); void ArraySectionSend(.., CkSectionID &s); ... };`
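The trapping mechanism can be sketched without the runtime: once a proxy has a manager, every send goes through the manager's hook instead of straight to the network. All names here (`ArrayProxyModel`, `DelegateMgrModel`, `BatchingMgr`, `Network`) are hypothetical stand-ins, and the batching manager is just one example of an optimization a manager might apply.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical model of proxy delegation. Not the CkDelegateMgr API.
struct Send {
  int ep;               // entry point
  int index;            // destination array index
  std::string payload;
};

// Stand-in for the normal, undelegated delivery path.
struct Network {
  std::vector<Send> sent;
  void deliver(const Send& s) { sent.push_back(s); }
};

class DelegateMgrModel {
 public:
  virtual ~DelegateMgrModel() = default;
  virtual void array_send(const Send& s) = 0;  // cf. ArraySend
};

class ArrayProxyModel {
 public:
  explicit ArrayProxyModel(Network* net) : net_(net) {}
  void delegate(DelegateMgrModel* mgr) { mgr_ = mgr; }  // cf. proxy.ckDelegate(mgrID)
  void send(int ep, int index, const std::string& payload) {
    Send s{ep, index, payload};
    if (mgr_) mgr_->array_send(s);  // trapped by the manager
    else net_->deliver(s);          // ordinary direct send
  }

 private:
  Network* net_;
  DelegateMgrModel* mgr_ = nullptr;
};

// Example manager: holds trapped sends and releases them as one batch.
class BatchingMgr : public DelegateMgrModel {
 public:
  explicit BatchingMgr(Network* net) : net_(net) {}
  void array_send(const Send& s) override { pending_.push_back(s); }
  void flush() {
    for (const Send& s : pending_) net_->deliver(s);
    pending_.clear();
  }
  std::size_t pending() const { return pending_.size(); }

 private:
  Network* net_;
  std::vector<Send> pending_;
};
```

The design point is that the application code calling `send` does not change when delegation is switched on; only the path the message takes does.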

Communication Optimization

Automatic Communication Optimizations

- The parallel-objects runtime system can observe, instrument, and measure communication patterns
- Communication libraries can optimize
  - By substituting the most suitable algorithm for each operation
  - By learning at runtime
- E.g., all-to-all communication
  - Performance depends on many runtime characteristics
  - The library switches between different algorithms
- Communication is from/to objects, not processors
  - Streaming messages optimization

Managing Collective Communication

- A communication operation in which all (or most) of the processors participate
  - For example broadcast, barrier, all-reduce, all-to-all communication, etc.
  - Applications: NAMD multicast, NAMD PME, CPAIMD
- Issues
  - Performance impediment
  - Naïve implementations often do not scale
  - Synchronous implementations do not utilize the co-processor effectively

All-to-All Communication

- All processors send data to all other processors
  - All-to-all personalized communication (AAPC): `MPI_Alltoall`
  - All-to-all multicast/broadcast (AAMC): `MPI_Allgather`

Strategies for AAPC

- Short message optimizations
  - High software overhead (α)
  - Message combining
- Large messages
  - Network contention

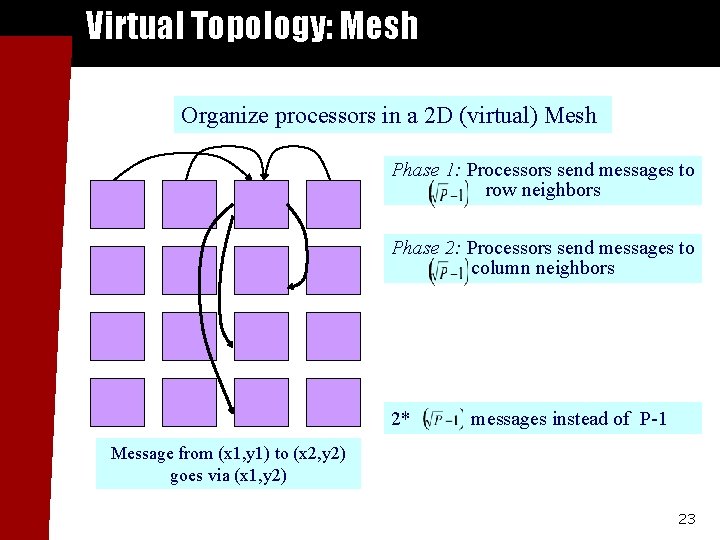

Short Message Optimizations

- Direct all-to-all communication is α-dominated
- Message combining for small messages
  - Reduces the total number of messages
  - A multistage algorithm sends messages along a virtual topology
  - A group of messages is combined and sent to an intermediate processor, which then forwards them to their final destinations
  - An AAPC strategy may therefore send the same message multiple times (once per stage)

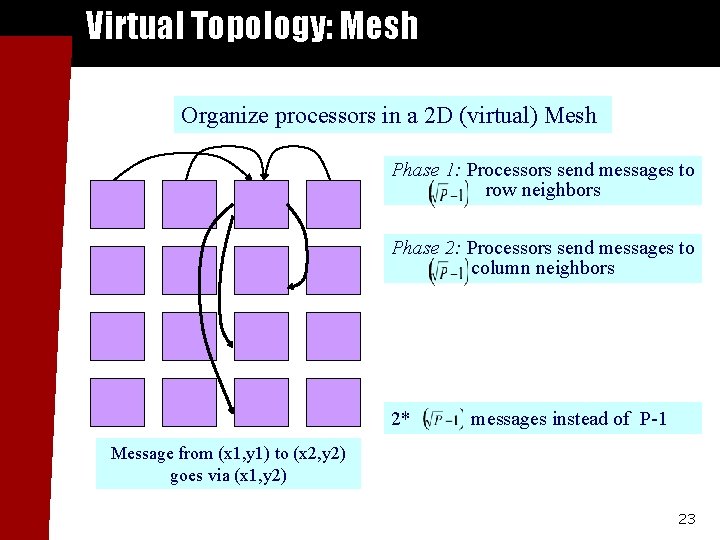

Virtual Topology: Mesh

- Organize processors in a 2D (virtual) mesh
- Phase 1: processors send messages to row neighbors
- Phase 2: processors send messages to column neighbors
- About 2√P messages per processor (2(√P − 1) on a √P × √P mesh) instead of P − 1
- A message from (x1, y1) to (x2, y2) goes via (x1, y2)
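The routing rule and the message counts above can be checked with a few lines of arithmetic. This sketch assumes P is a perfect square and that processor p sits at (row, col) = (p / side, p % side); that numbering is an illustrative choice, not necessarily the library's.

```cpp
#include <cassert>
#include <cmath>

// Sketch of 2D virtual-mesh AAPC routing, assuming P is a perfect square and
// processor p sits at (row, col) = (p / side, p % side).
struct MeshCoord {
  int row;
  int col;
};

inline MeshCoord to_coord(int p, int side) { return MeshCoord{p / side, p % side}; }
inline int to_rank(MeshCoord c, int side) { return c.row * side + c.col; }

// Phase 1 moves along the sender's row to the destination's column; phase 2
// moves along that column to the destination's row. So a message from
// (x1, y1) to (x2, y2) is relayed through (x1, y2).
inline int intermediate(int src, int dst, int side) {
  MeshCoord s = to_coord(src, side);
  MeshCoord d = to_coord(dst, side);
  return to_rank(MeshCoord{s.row, d.col}, side);
}

// Messages each processor sends: direct = P - 1, mesh = (side - 1) per phase.
inline int direct_messages(int P) { return P - 1; }
inline int mesh_messages(int P) {
  int side = static_cast<int>(std::lround(std::sqrt(static_cast<double>(P))));
  return 2 * (side - 1);
}
```

For P = 64 this gives 63 direct messages versus 14 combined ones per processor; the trade-off is that each combined message carries several original messages and some bytes cross the network twice.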

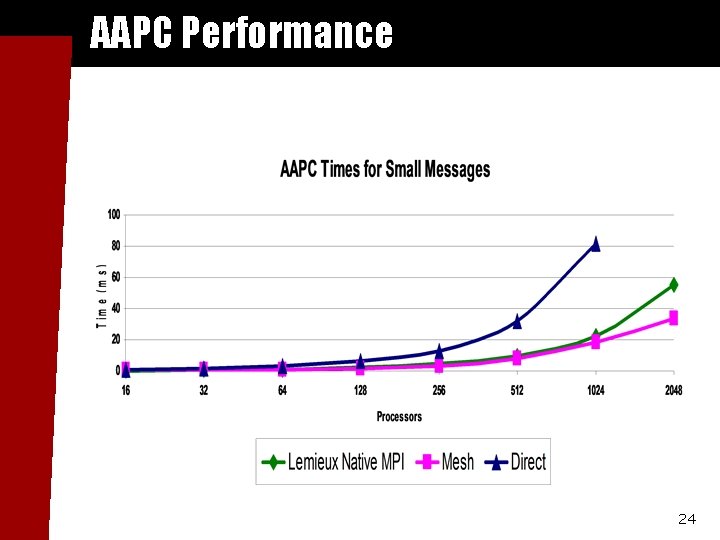

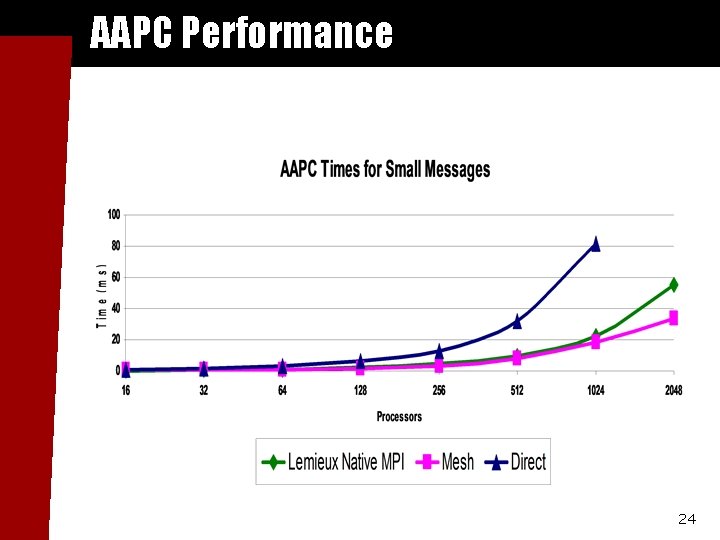

AAPC Performance

Large Message Issues

- Network contention
  - Contention-free schedules
  - Topology-specific optimizations

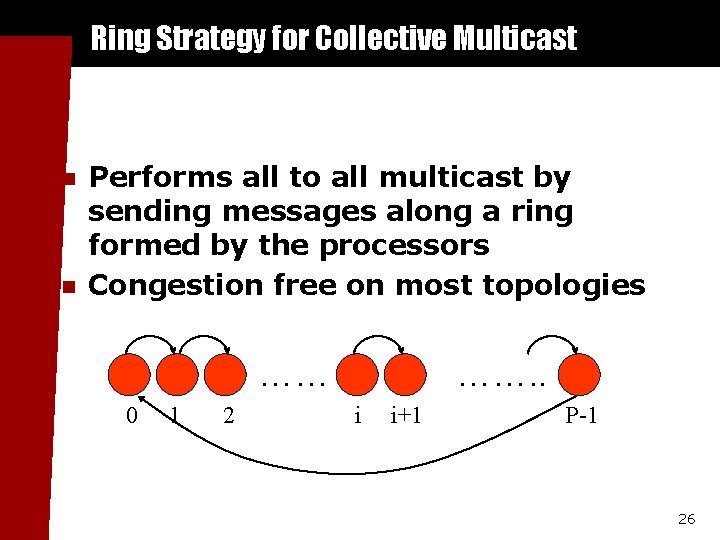

Ring Strategy for Collective Multicast

- Performs all-to-all multicast by sending messages along a ring formed by the processors
- Congestion-free on most topologies

Figure: processors 0, 1, 2, …, i, i+1, …, P−1 connected in a ring.
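A minimal simulation of the ring strategy, assuming one contribution per processor and synchronous steps (an idealization of the real, asynchronous library): in each of P − 1 steps, processor i forwards to (i + 1) mod P whatever it received in the previous step, so every link carries exactly one message per step.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Simulated all-to-all multicast along a ring. have[i] lists the contributions
// processor i holds, in the order it obtained them.
std::vector<std::vector<int>> ring_allgather(const std::vector<int>& contribution) {
  const int P = static_cast<int>(contribution.size());
  std::vector<std::vector<int>> have(P);
  std::vector<int> in_flight(contribution);  // what each processor forwards next
  for (int i = 0; i < P; ++i) have[i].push_back(contribution[i]);
  for (int step = 0; step < P - 1; ++step) {
    std::vector<int> received(P);
    // Each processor sends one message, to its right-hand neighbor only.
    for (int i = 0; i < P; ++i) received[(i + 1) % P] = in_flight[i];
    for (int i = 0; i < P; ++i) have[i].push_back(received[i]);
    in_flight = received;  // forward what just arrived in the next step
  }
  return have;
}
```

With contributions {10, 20, 30, 40}, processor 0 ends with {10, 40, 30, 20}: each value arrives once, from the left neighbor.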

Streaming Messages

- Programs often have streams of short messages
- The streaming library combines a bunch of messages and sends them off together
  - Strips the large Charm++ header
  - Packs short array messages
- Effective per-message cost of about 3 µs
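The combining idea can be sketched as a per-destination buffer that ships one packet per batch. The class `StreamingBuffer` and its size-based flush threshold are assumptions for illustration; the actual streaming strategy may also flush on a timer or at iteration boundaries.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical sketch of message streaming to one destination: short messages
// are buffered and sent as a single combined packet when max_batch is reached
// or when the stream is flushed explicitly.
class StreamingBuffer {
 public:
  explicit StreamingBuffer(std::size_t max_batch) : max_batch_(max_batch) {}

  void send(const std::string& short_msg) {
    pending_.push_back(short_msg);
    if (pending_.size() >= max_batch_) flush();
  }

  // Emit whatever is buffered as one packet (one per-packet overhead).
  void flush() {
    if (pending_.empty()) return;
    packets_.push_back(pending_);
    pending_.clear();
  }

  std::size_t packets_sent() const { return packets_.size(); }
  const std::vector<std::vector<std::string>>& packets() const { return packets_; }

 private:
  std::size_t max_batch_;
  std::vector<std::string> pending_;
  std::vector<std::vector<std::string>> packets_;
};
```

Sending 10 short messages with a batch size of 4 costs two full packets plus one final flush of 2 messages, so the fixed per-packet overhead is paid 3 times instead of 10.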

Using the Communication Library

- Communication optimizations are embodied as strategies:
  - EachToManyMulticastStrategy
  - RingMulticast
  - PipeBroadcast
  - Streaming
  - MeshStreaming

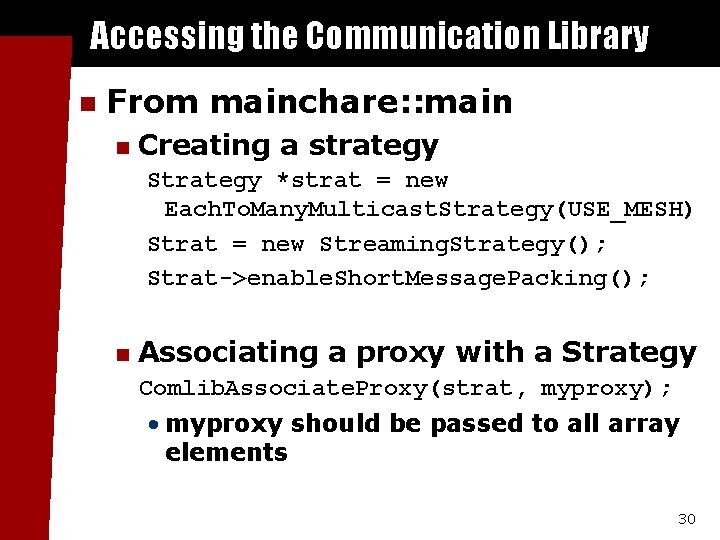

Bracketed vs. Non-bracketed

- Bracketed strategies
  - Require the user to give specific end points for each iteration of message sends
  - End points are declared by calling `ComlibBegin()` and `ComlibEnd()`
  - Examples: EachToManyMulticast
- Non-bracketed strategies
  - No such end points are necessary
  - Examples: Streaming, PipeBroadcast

Accessing the Communication Library

- From `mainchare::main`
- Creating a strategy:
  - `Strategy *strat = new EachToManyMulticastStrategy(USE_MESH);`
  - or: `strat = new StreamingStrategy(); strat->enableShortMessagePacking();`
- Associating a proxy with a strategy: `ComlibAssociateProxy(strat, myproxy);`
  - `myproxy` should be passed to all array elements

Sending Messages

- `ComlibBegin(myproxy); // bracketed strategies`
- `for (...) { ... myproxy.foo(msg); ... }`
- `ComlibEnd(); // bracketed strategies`

Handling Migration

- A migrating array element PUPs its comlib-associated proxy:
  `void FooArray::pup(PUP::er &p) { ... p | myProxy; ... }`

Compiling

- You must include the compile-time option `-module commlib`

Advanced Load Balancers: Writing a Load-Balancing Strategy

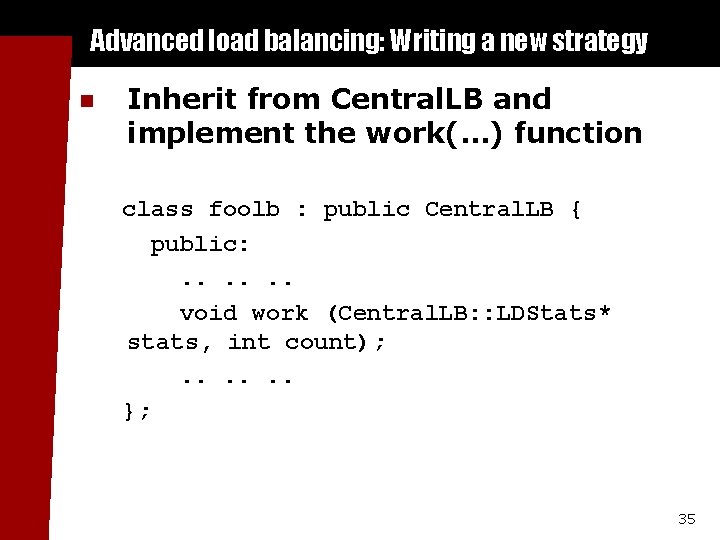

Advanced Load Balancing: Writing a New Strategy

- Inherit from `CentralLB` and implement the `work(...)` function:
  `class fooLB : public CentralLB { public: ... void work(CentralLB::LDStats* stats, int count); ... };`

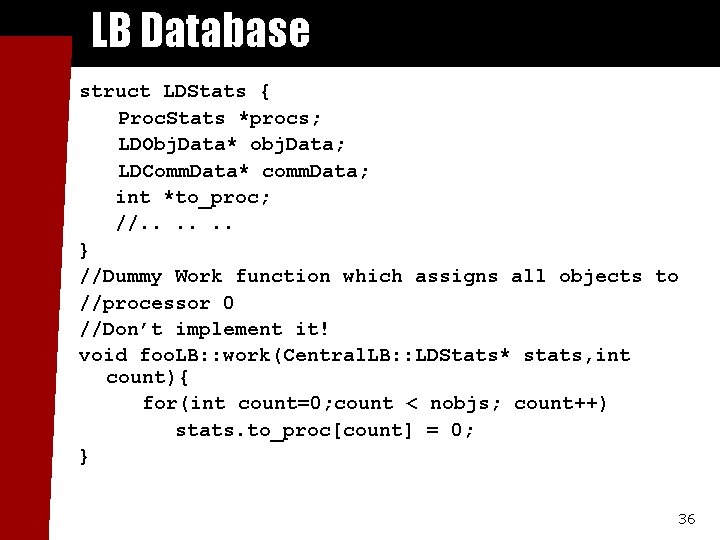

LB Database

`struct LDStats { ProcStats *procs; LDObjData* objData; LDCommData* commData; int *to_proc; /* ... */ };`

A dummy work function that assigns all objects to processor 0 (don't implement it!):

`void fooLB::work(CentralLB::LDStats* stats, int count) { for (int obj = 0; obj < stats->n_objs; obj++) stats->to_proc[obj] = 0; }`
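A more useful `work()` is a greedy assignment: place the heaviest remaining object on the currently least-loaded processor. The sketch below runs on a simplified, hypothetical `SimpleStats` structure (per-object loads in, `to_proc` out) rather than the real `LDStats`, so it shows the algorithm, not the Charm++ integration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for the LB database: obj_load[i] is the measured load
// of object i; the strategy fills to_proc[i] with its new processor.
struct SimpleStats {
  std::vector<double> obj_load;
  std::vector<int> to_proc;
};

// Greedy strategy: heaviest object first, each onto the least-loaded processor.
void greedy_work(SimpleStats& stats, int num_procs) {
  const int n = static_cast<int>(stats.obj_load.size());
  stats.to_proc.assign(n, 0);
  if (num_procs <= 0) return;

  // Object indices sorted by decreasing load.
  std::vector<int> order(n);
  std::iota(order.begin(), order.end(), 0);
  std::sort(order.begin(), order.end(),
            [&](int a, int b) { return stats.obj_load[a] > stats.obj_load[b]; });

  // Min-heap of (current load, processor).
  using Entry = std::pair<double, int>;
  std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> procs;
  for (int p = 0; p < num_procs; ++p) procs.push(Entry{0.0, p});

  for (int obj : order) {
    Entry least = procs.top();
    procs.pop();
    stats.to_proc[obj] = least.second;
    least.first += stats.obj_load[obj];
    procs.push(least);
  }
}
```

For loads {5, 4, 3, 3, 1} on two processors this ends with 8 units on each. Greedy placement ignores communication and migration cost, which a real strategy would weigh using the communication data in the database.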

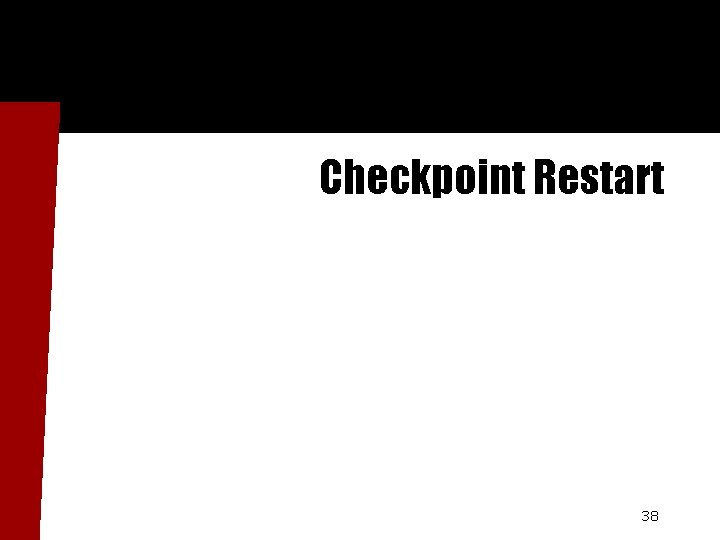

Compiling and Integration

- Edit and run `Makefile_lb.sh`
  - Creates `Make.lb`, which is included by the LDB Makefile
- Run `make depends` to correct dependencies
- Rebuild Charm++

Checkpoint/Restart

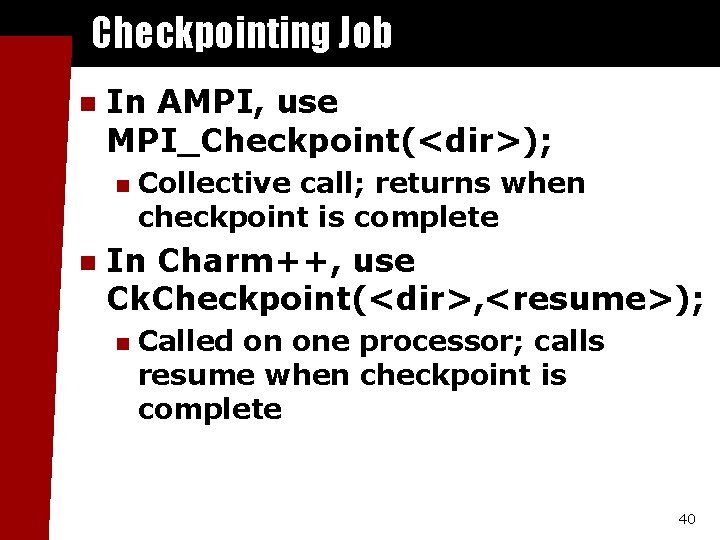

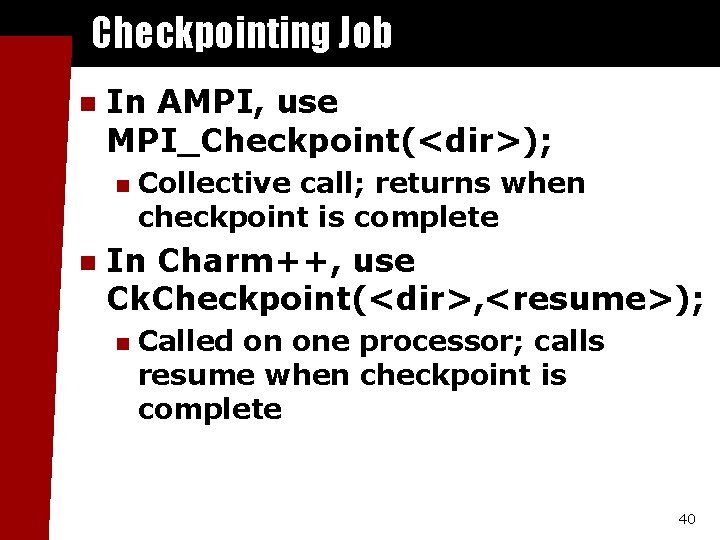

Checkpoint/Restart

- Any long-running application must be able to save its state
- When you checkpoint an application, it uses the pup routine to store the state of all objects
- State information is saved in a directory of your choosing
- Restore also uses pup, so no additional application code is needed (pup is all you need)

Checkpointing a Job

- In AMPI, use `MPI_Checkpoint(<dir>);`
  - Collective call; returns when the checkpoint is complete
- In Charm++, use `CkCheckpoint(<dir>, <resume>);`
  - Called on one processor; calls `resume` when the checkpoint is complete

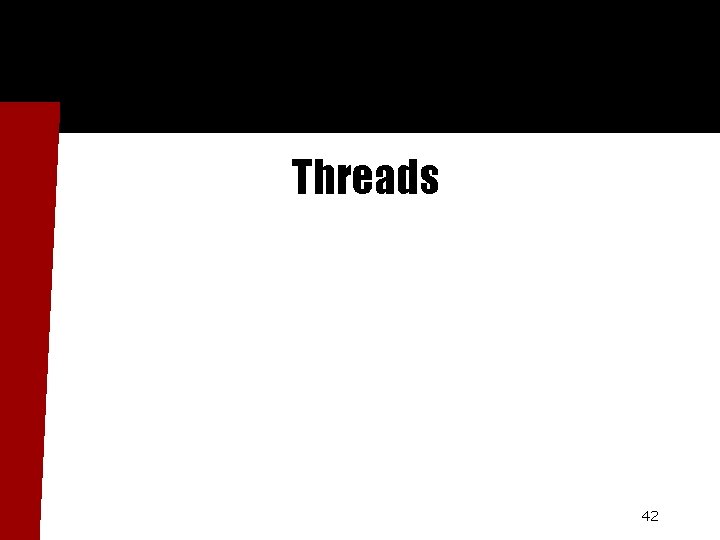

Restarting a Job from a Checkpoint

- The charmrun option `++restart <dir>` is used to restart
  - The number of processors need not be the same
- You can also restart groups by marking them migratable and writing a PUP routine; they still will not load balance, though

Threads

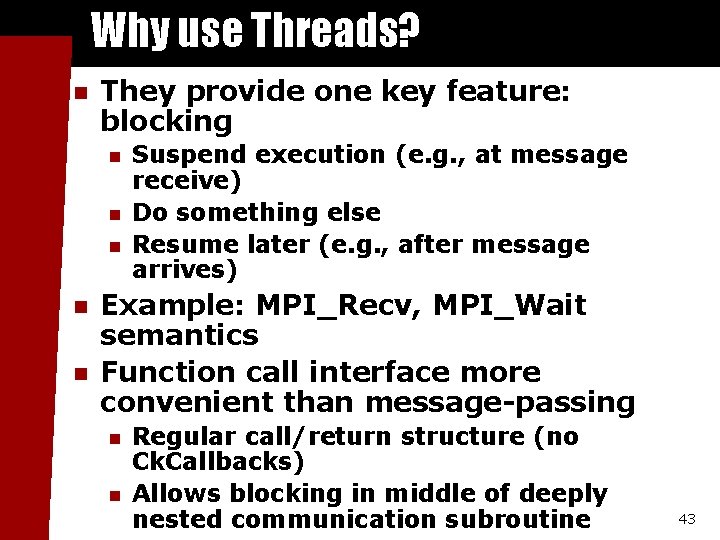

Why Use Threads?

- They provide one key feature: blocking
  - Suspend execution (e.g., at message receive)
  - Do something else
  - Resume later (e.g., after the message arrives)
  - Example: `MPI_Recv`, `MPI_Wait` semantics
- A function call interface is more convenient than message passing
  - Regular call/return structure (no CkCallbacks)
  - Allows blocking in the middle of a deeply nested communication subroutine

Why Not Use Threads?

- Slower
  - Around 1 µs context-switching overhead is unavoidable
  - Creation/deletion perhaps 10 µs
- More complexity, more bugs
  - Breaks a lot of machines! (but we have workarounds)
- Migration is more difficult
  - The state of a thread is scattered through its stack, which is maintained by the compiler
  - By contrast, the state of an object is maintained by users
- These thread disadvantages form the motivation to use SDAG (later)

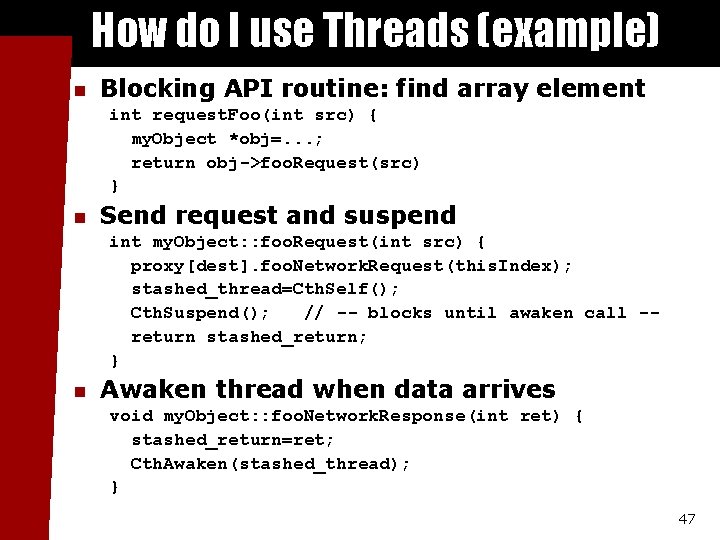

What Are (Charm) Threads?

- One flow of control (instruction stream)
  - Machine registers and program counter
  - Execution stack
- Like pthreads (kernel threads), only different:
  - Implemented at user level (in Converse)
  - Scheduled at user level; non-preemptive
  - Migratable between nodes

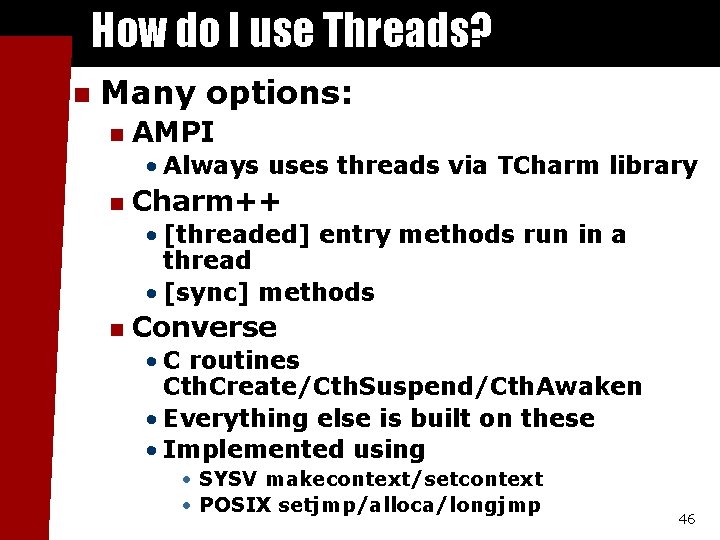

How Do I Use Threads?

- Many options:
  - AMPI
    - Always uses threads via the TCharm library
  - Charm++
    - `[threaded]` entry methods run in a thread
    - `[sync]` methods
  - Converse
    - C routines `CthCreate`/`CthSuspend`/`CthAwaken`
    - Everything else is built on these
    - Implemented using
      - SYSV `makecontext`/`setcontext`
      - POSIX `setjmp`/`alloca`/`longjmp`
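To show what the lowest layer looks like, here is a minimal user-level thread built directly on the SYSV context calls (`getcontext`, `makecontext`, `swapcontext`). The `suspend`/`awaken` helpers are hypothetical and only mimic the roles of `CthSuspend`/`CthAwaken`; this is not the Converse implementation, and it assumes a platform that still provides `<ucontext.h>` (e.g. Linux with glibc).

```cpp
#include <ucontext.h>

#include <cassert>
#include <string>
#include <vector>

// Minimal non-preemptive user-level thread on SYSV ucontext (assumes glibc).
namespace sketch {

std::vector<std::string> trace;      // records the order of events
ucontext_t scheduler_ctx;            // context of the "scheduler" (the caller)
ucontext_t worker_ctx;               // context of the single worker thread
char worker_stack[256 * 1024];       // the worker's private stack
int stashed_return = 0;              // value handed over before awakening

// Save the worker's registers and switch back to the scheduler.
void suspend() { swapcontext(&worker_ctx, &scheduler_ctx); }

// Save the scheduler's registers and switch into the worker.
void awaken() { swapcontext(&scheduler_ctx, &worker_ctx); }

void worker_body() {
  trace.push_back("request sent");
  suspend();  // blocks here until awaken() is called
  trace.push_back("resumed with " + std::to_string(stashed_return));
}  // returning follows uc_link back to the scheduler

void run_demo() {
  trace.clear();
  getcontext(&worker_ctx);
  worker_ctx.uc_stack.ss_sp = worker_stack;
  worker_ctx.uc_stack.ss_size = sizeof(worker_stack);
  worker_ctx.uc_link = &scheduler_ctx;  // resume here when worker_body returns
  makecontext(&worker_ctx, worker_body, 0);

  awaken();  // start the thread; it runs until suspend()
  trace.push_back("scheduler runs other work");
  stashed_return = 42;  // the "reply" arrives
  awaken();             // resume the thread; it finishes
  trace.push_back("done");
}

}  // namespace sketch
```

Calling `sketch::run_demo()` records four events in order: the request, the scheduler's other work, the resumed thread seeing 42, and completion. Every switch saves and restores registers explicitly, which is where the per-switch overhead mentioned earlier comes from.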

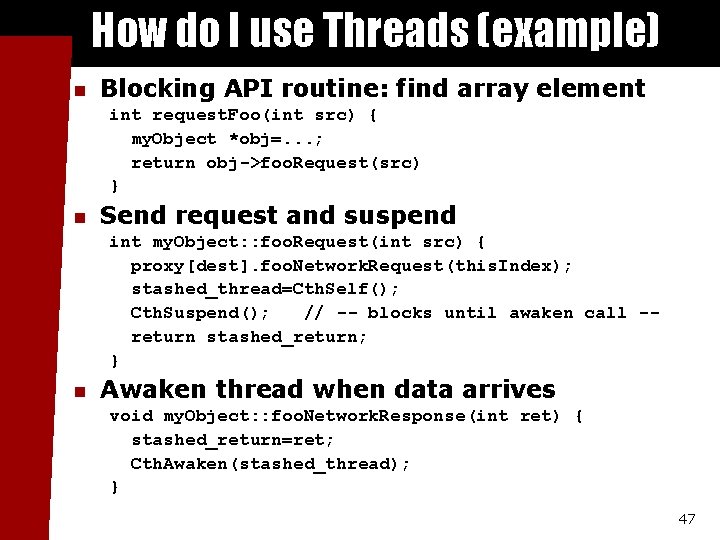

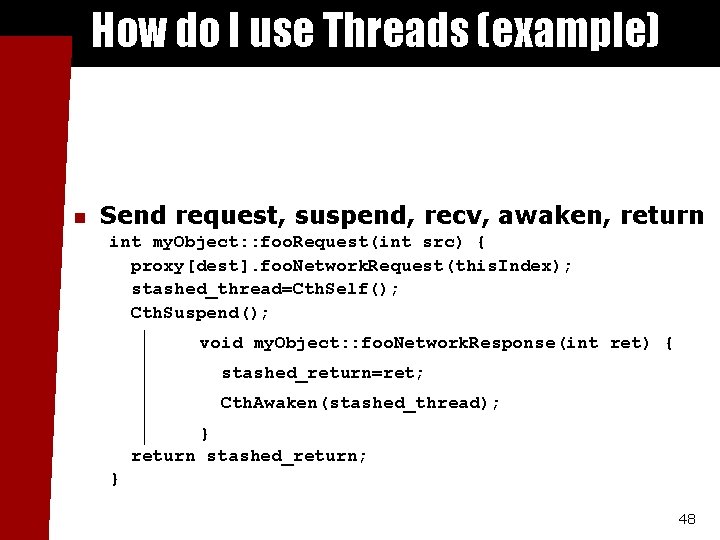

How Do I Use Threads (Example)

- Blocking API routine: find the array element
  `int requestFoo(int src) { myObject *obj = ...; return obj->fooRequest(src); }`
- Send the request and suspend
  `int myObject::fooRequest(int src) { proxy[dest].fooNetworkRequest(thisIndex); stashed_thread = CthSelf(); CthSuspend(); /* blocks until the awaken call */ return stashed_return; }`
- Awaken the thread when the data arrives
  `void myObject::fooNetworkResponse(int ret) { stashed_return = ret; CthAwaken(stashed_thread); }`

How Do I Use Threads (Example)

Send request, suspend, receive, awaken, return:

- `fooRequest` calls `proxy[dest].fooNetworkRequest(thisIndex);`, saves `stashed_thread = CthSelf();`, and calls `CthSuspend();`
- When the reply arrives, `fooNetworkResponse(int ret)` stores `stashed_return = ret;` and calls `CthAwaken(stashed_thread);`
- `fooRequest` resumes just after `CthSuspend()` and executes `return stashed_return;`

The Horror of Thread Migration

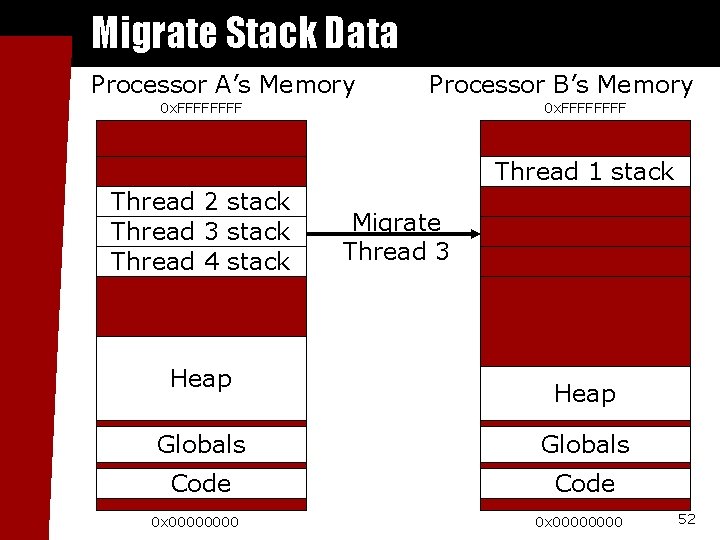

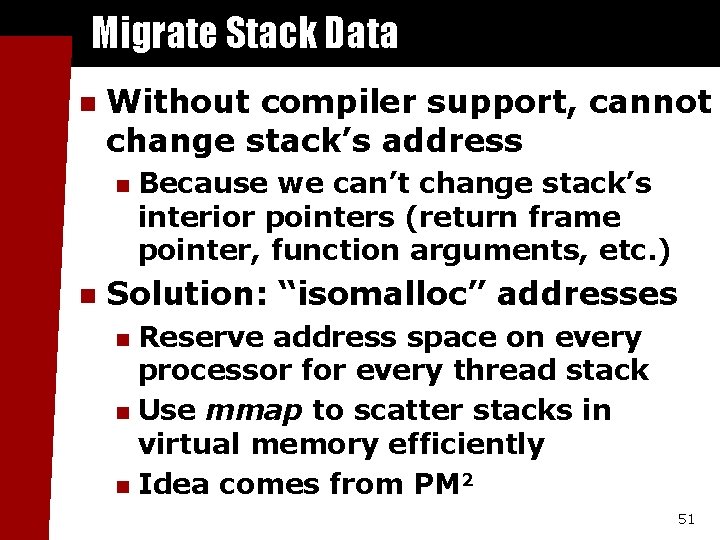

Stack Data

- The stack is used by the compiler to track function calls and provide temporary storage:
  - Local variables
  - Subroutine parameters
  - C "alloca" storage
- Most of the variables in a typical application are stack data
- Users have no control over how the stack is laid out

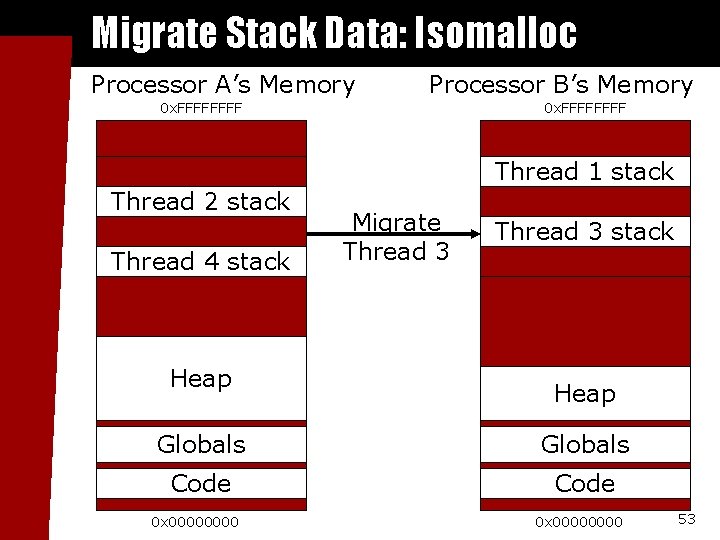

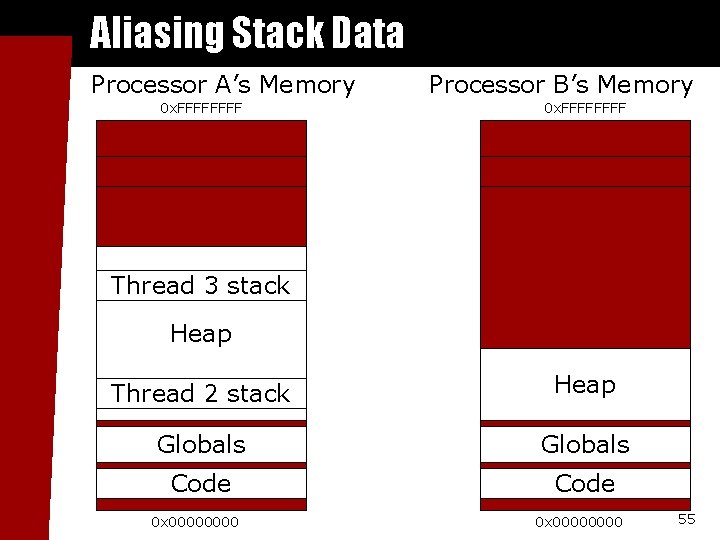

Migrate Stack Data

- Without compiler support, we cannot change the stack's address
  - Because we can't change the stack's interior pointers (return frame pointer, function arguments, etc.)
- Solution: "isomalloc" addresses
  - Reserve address space on every processor for every thread stack
  - Use mmap to scatter stacks in virtual memory efficiently
  - The idea comes from PM2

Figure (Migrate Stack Data): memory maps of Processor A and Processor B, from 0x00000000 (code, globals, heap) to 0xFFFFFFFF (thread stacks); Processor A holds the stacks of threads 1 to 4, and thread 3 is about to migrate to Processor B.

Figure (Migrate Stack Data: Isomalloc): after migration, Processor A holds the stacks of threads 1, 2, and 4, and thread 3's stack sits on Processor B at the address it occupied on Processor A.

Migrate Stack Data

- Isomalloc is a completely automatic solution
  - No changes needed in the application or compilers
  - Just like a software shared-memory system, but with proactive paging
- But it has a few limitations
  - Depends on having large quantities of virtual address space (best on 64-bit)
    - 32-bit machines can only have a few gigabytes of isomalloc stacks across the whole machine
  - Depends on unportable mmap
    - Which addresses are safe? (We must guess!)
    - What about Windows? Or Blue Gene?
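The address-space limitation follows from simple arithmetic: every processor reserves a slot for every thread in the whole machine, so the number of threads is bounded by (reserved range) / (slot size), regardless of how many processors there are. The `IsoRegion` structure and the numbers below are illustrative assumptions, not real Charm++ values.

```cpp
#include <cassert>
#include <cstdint>

// Sketch of the isomalloc address-slot idea: one virtual address range,
// reserved identically on every processor, split into fixed-size slots, one
// per thread in the whole machine. Thread t's stack then lives at the same
// address wherever t runs.
struct IsoRegion {
  std::uint64_t base;        // first address of the reserved range
  std::uint64_t length;      // bytes of address space reserved on every processor
  std::uint64_t slot_bytes;  // address space set aside per thread stack

  // Upper bound on threads machine-wide, since every processor reserves every slot.
  std::uint64_t max_threads() const { return length / slot_bytes; }

  // Address of global thread t's stack; the same no matter which processor asks.
  std::uint64_t slot_address(std::uint64_t t) const { return base + t * slot_bytes; }
};
```

For instance, a 2 GiB range with 1 MiB stack slots (a plausible 32-bit layout) allows only 2048 threads across the whole machine, while a 1 TiB range on a 64-bit machine allows about a million.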

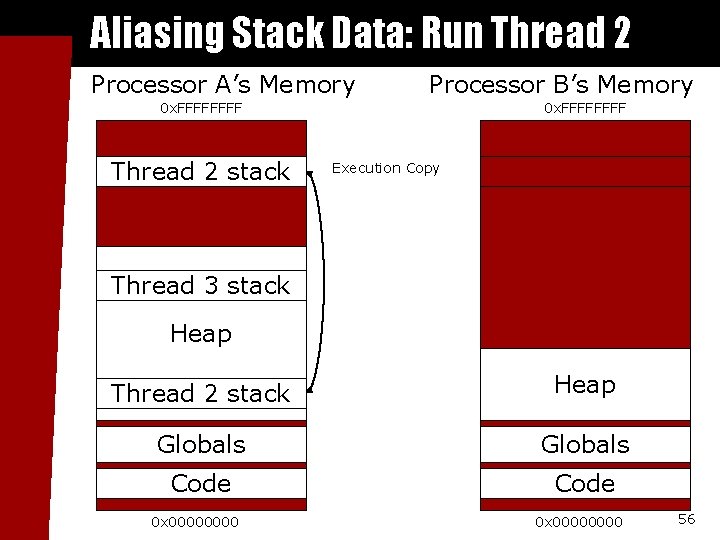

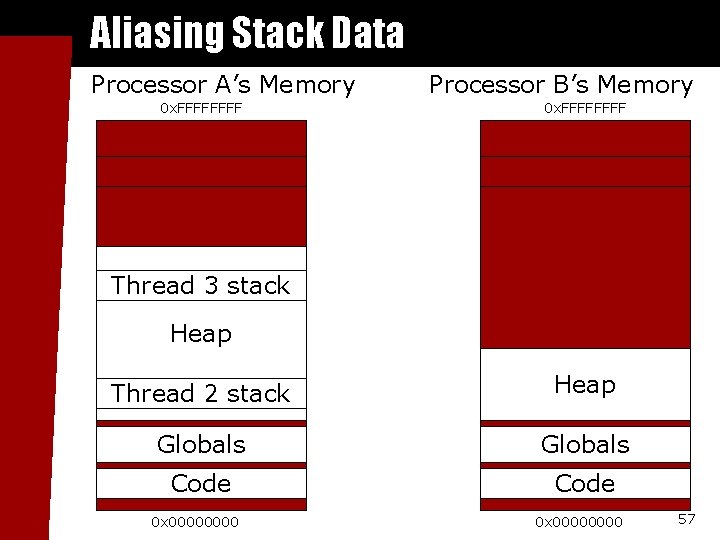

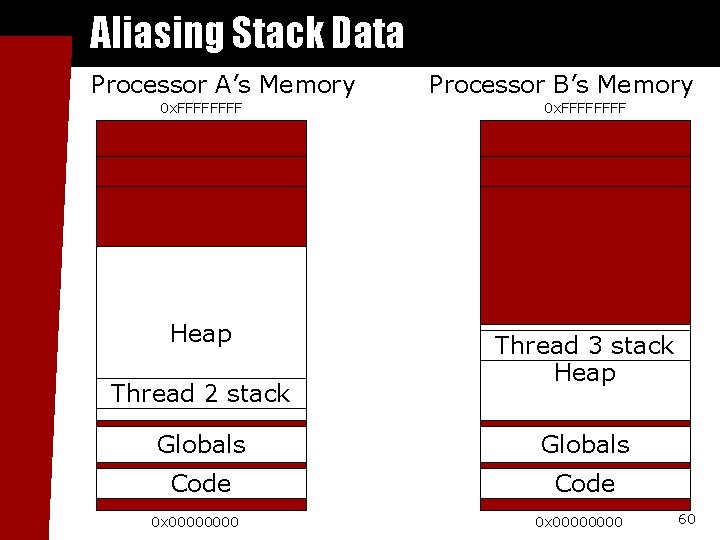

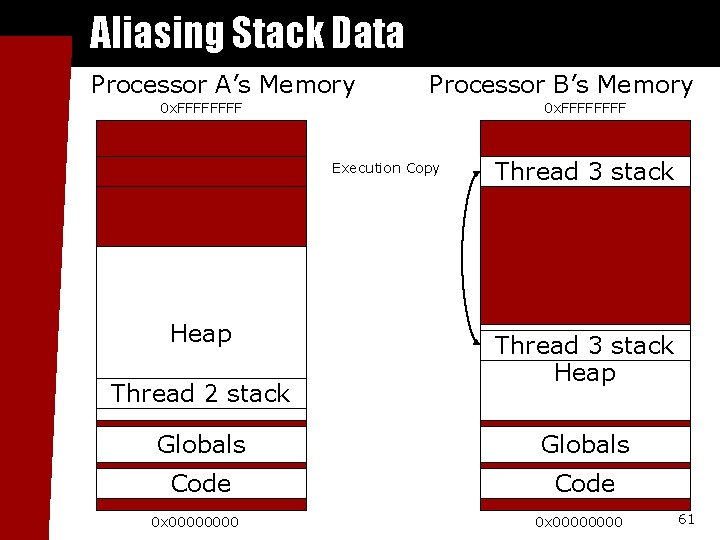

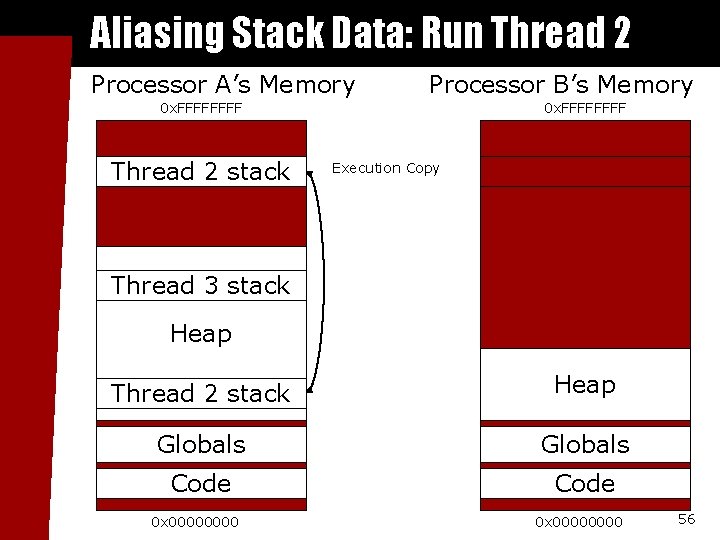

Figure (Aliasing Stack Data): memory maps of Processor A and Processor B from address 0x00000000 upward, showing code, globals, heap, and the stacks of threads 2 and 3.

Figure (Aliasing Stack Data: Run Thread 2): an execution copy of thread 2's stack is placed in the fixed region at the top of memory so that thread 2 can run.

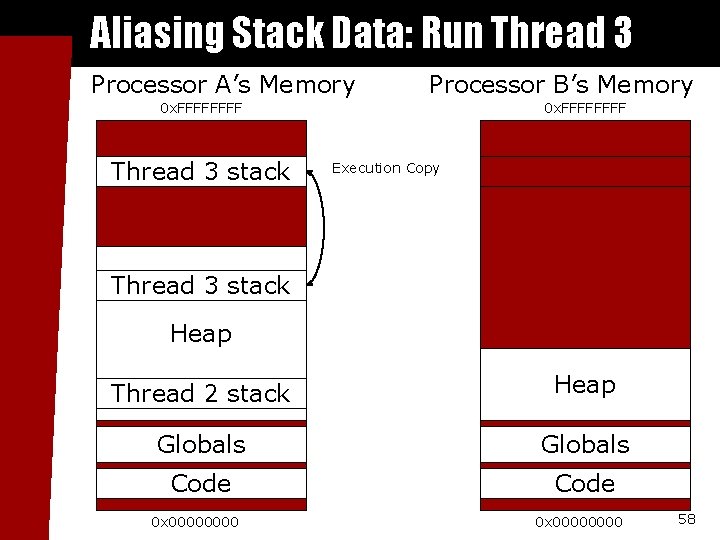


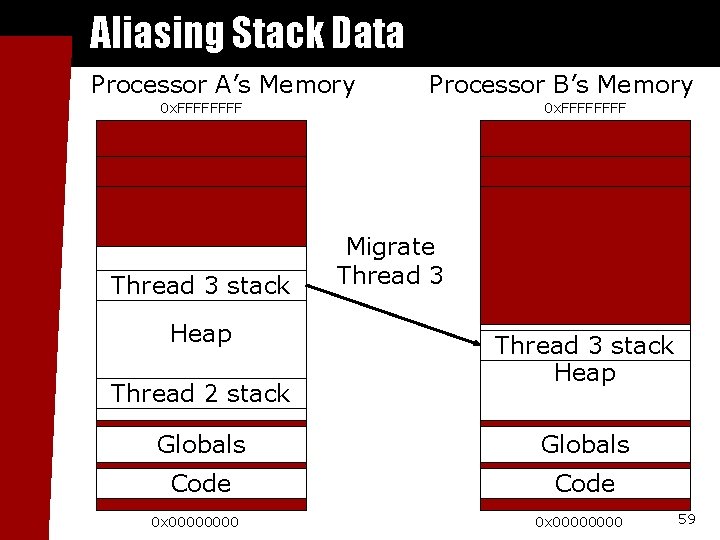

Figure (Aliasing Stack Data: Run Thread 3): an execution copy of thread 3's stack now occupies the fixed region at the top of memory.

Figure (Aliasing Stack Data): thread 3's stack is migrated from Processor A to Processor B.

Figure (Aliasing Stack Data): after migration, Processor A holds thread 2's stack and Processor B holds thread 3's stack.

Figure (Aliasing Stack Data): memory maps after the migration, with an execution copy in the fixed region at the top of memory (up to 0xFFFFFFFF) alongside the stacks of threads 2 and 3.

Aliasing Stack Data

- Does not depend on having large quantities of virtual address space
  - Works well on 32-bit machines
- Requires only one mmap'd region at a time
  - Works even on Blue Gene!
- Downsides:
  - A thread context switch requires munmap/mmap (3 µs)
  - Only one thread can run at a time (so no SMPs!)
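The bookkeeping behind stack aliasing can be modeled with ordinary buffers: all threads execute in one fixed region, and a context switch saves the outgoing thread's contents and installs the incoming thread's. This sketch swaps contents with `memcpy` purely for illustration; the scheme described above remaps memory with `munmap`/`mmap` instead. `AliasedStacks` and the 64-byte stack size are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Model of stack aliasing: one shared execution region plus a private saved
// copy per thread. Only the running thread's data is valid in the region.
constexpr std::size_t kStackBytes = 64;

class AliasedStacks {
 public:
  explicit AliasedStacks(int num_threads) : saved_(num_threads) {}

  // The single execution region every thread "runs" in.
  unsigned char* alias_region() { return alias_.data(); }
  int running() const { return running_; }

  // Context switch: swap the outgoing thread out, the incoming thread in.
  void switch_to(int t) {
    if (t == running_) return;
    if (running_ >= 0) std::memcpy(saved_[running_].data(), alias_.data(), kStackBytes);
    std::memcpy(alias_.data(), saved_[t].data(), kStackBytes);
    running_ = t;
  }

 private:
  std::array<unsigned char, kStackBytes> alias_{};
  std::vector<std::array<unsigned char, kStackBytes>> saved_;  // zero-initialized
  int running_ = -1;  // no thread running yet
};
```

Because every thread uses the same addresses, migrating a suspended thread only means shipping its saved copy; the cost is that each switch touches the whole region and only one thread can occupy it at a time.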

Heap Data

- Heap data is any dynamically allocated data
  - C "malloc" and "free"
  - C++ "new" and "delete"
  - F90 "ALLOCATE" and "DEALLOCATE"
- Arrays and linked data structures are almost always heap data

Migrate Heap Data

- Automatic solution: isomalloc all heap data just like stacks!
  - `-memory isomalloc` link option
  - Overrides malloc/free
  - No new application code needed
  - Same limitations as isomalloc, plus page allocation granularity (huge!)
- Manual solution: the application moves its heap data
  - Need to be able to size the message buffer, pack data into the message, and unpack it on the other side
  - The "pup" abstraction does all three
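The "one routine does all three" idea can be sketched with a tiny driver object whose mode decides whether `pup` sizes, packs, or unpacks. `Puper` and `Particles` are hypothetical and much simpler than Charm++'s `PUP::er` hierarchy; the point is that a single `pup` method serves all three passes, including reallocating heap data on unpack.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Minimal sketch of the pup idea: one routine per class, driven by an object
// that is either sizing, packing, or unpacking.
class Puper {
 public:
  enum class Mode { Sizing, Packing, Unpacking };
  Puper(Mode mode, unsigned char* buf) : mode_(mode), buf_(buf) {}
  bool is_unpacking() const { return mode_ == Mode::Unpacking; }
  std::size_t bytes() const { return offset_; }

  // Handle one plain-old-data value in whichever direction the mode says.
  template <typename T>
  void raw(T& value) {
    if (mode_ == Mode::Packing) std::memcpy(buf_ + offset_, &value, sizeof(T));
    if (mode_ == Mode::Unpacking) std::memcpy(&value, buf_ + offset_, sizeof(T));
    offset_ += sizeof(T);  // sizing only counts
  }

 private:
  Mode mode_;
  unsigned char* buf_;
  std::size_t offset_ = 0;
};

// A heap-owning object: pup records the length first so unpack can allocate.
struct Particles {
  std::vector<double> x;
  void pup(Puper& p) {
    std::size_t n = x.size();
    p.raw(n);
    if (p.is_unpacking()) x.resize(n);
    for (std::size_t i = 0; i < n; ++i) p.raw(x[i]);
  }
};

// Sizing pass, then a packing pass into a buffer of exactly that size.
inline std::vector<unsigned char> pack(Particles& obj) {
  Puper sizer(Puper::Mode::Sizing, nullptr);
  obj.pup(sizer);
  std::vector<unsigned char> buf(sizer.bytes());
  Puper packer(Puper::Mode::Packing, buf.data());
  obj.pup(packer);
  return buf;
}

// Unpacking pass on the receiving side.
inline Particles unpack(std::vector<unsigned char>& buf) {
  Particles obj;
  Puper unpacker(Puper::Mode::Unpacking, buf.data());
  obj.pup(unpacker);
  return obj;
}
```

A round trip through `pack` and `unpack` reproduces the vector exactly. Writing the traversal once is what keeps the three passes consistent with each other.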

SDAG

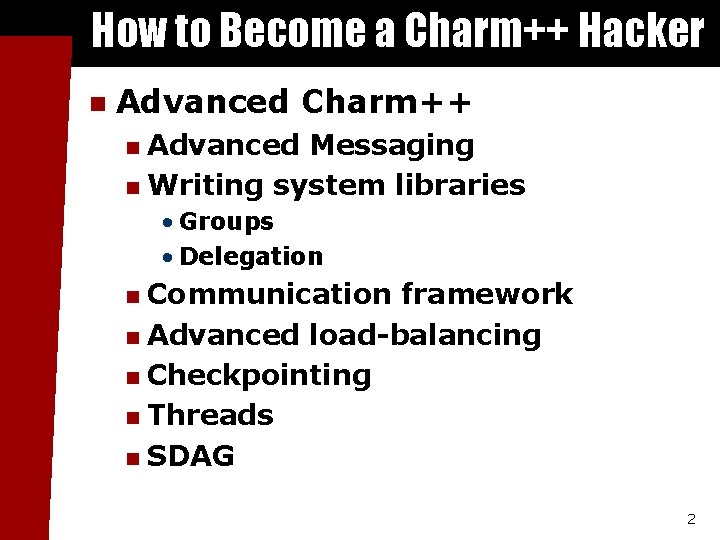

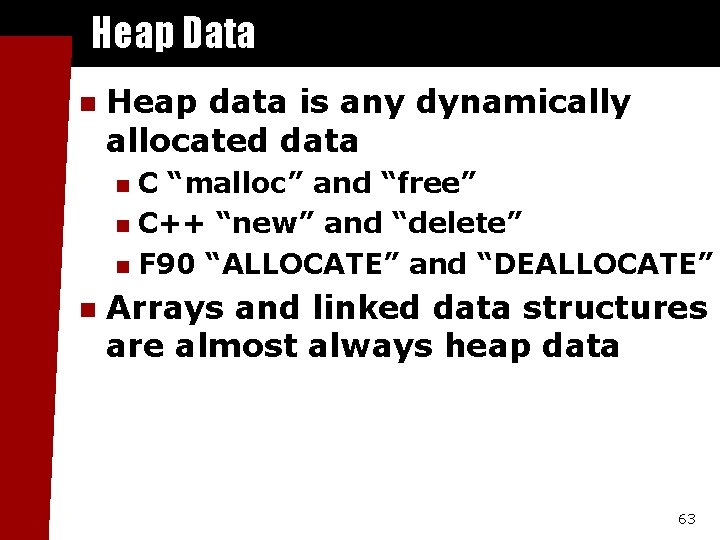

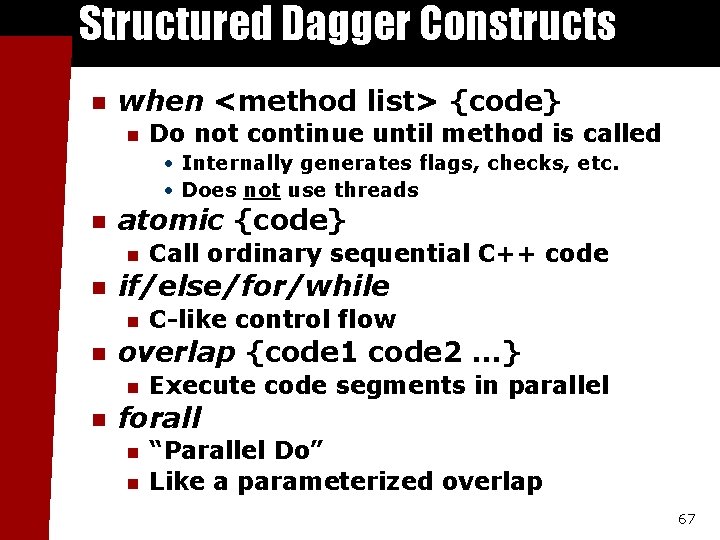

Structured Dagger

- What is it?
  - A coordination language built on top of Charm++
- Motivation
  - Charm++'s asynchrony is efficient and reliable, but tough to program
    - Flags, buffering, out-of-order receives, etc.
  - Threads are easy to program, but less efficient and less reliable
    - Implementation complexity
    - Porting headaches
  - Want the benefits of both!

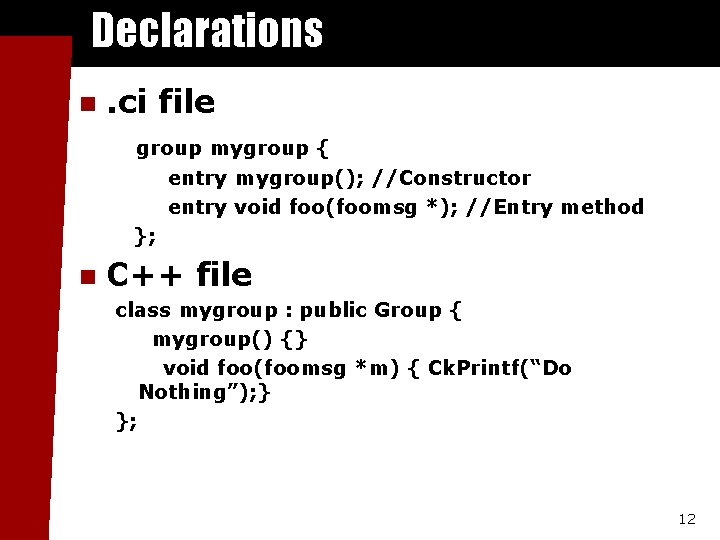

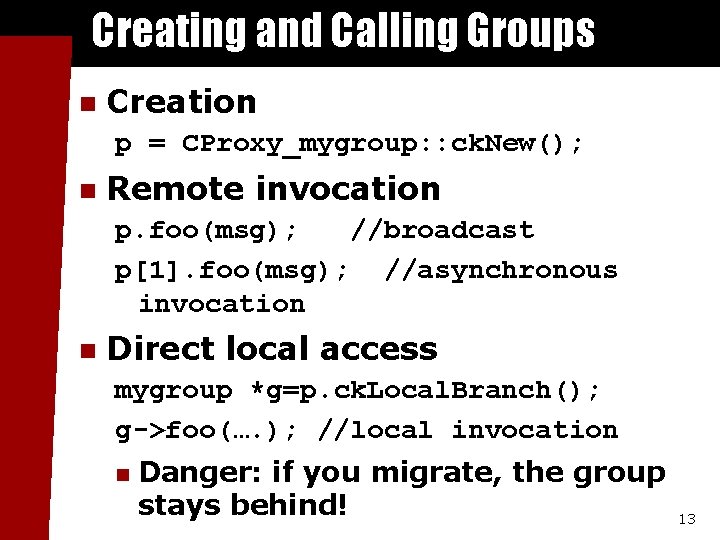

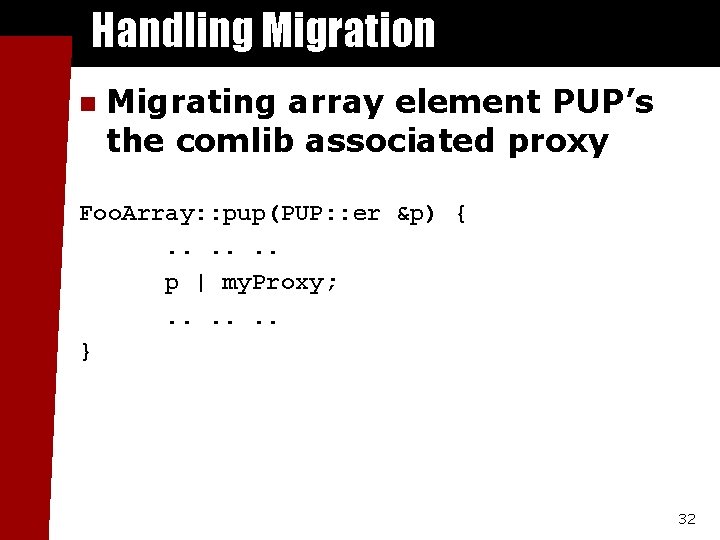

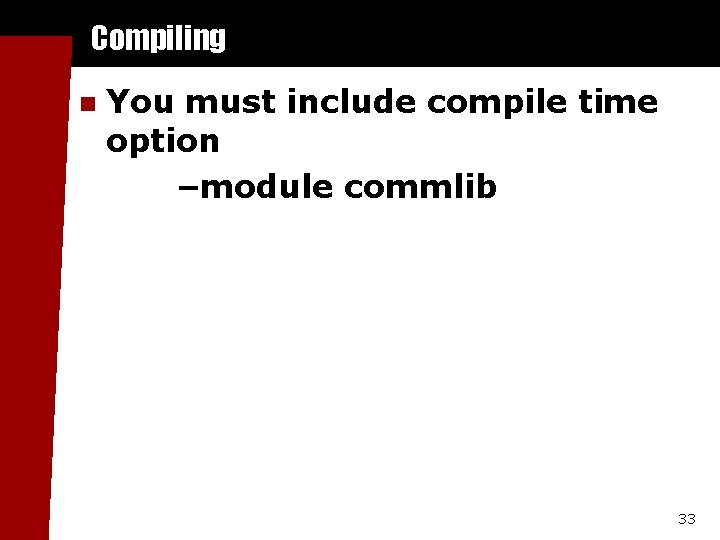

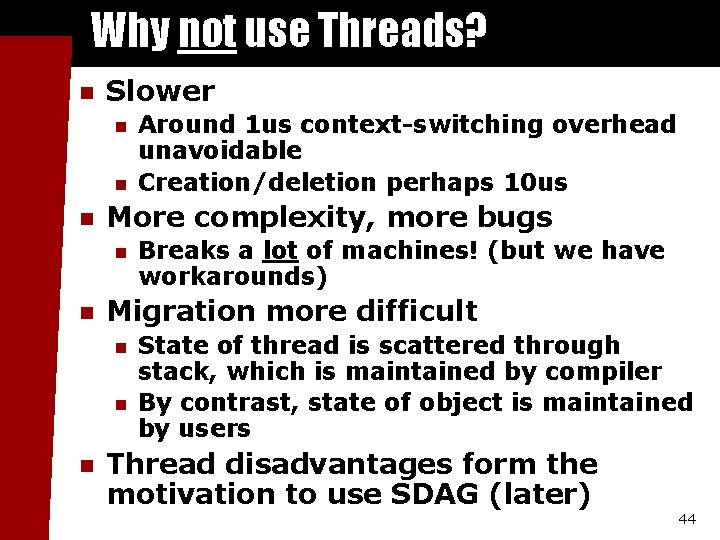

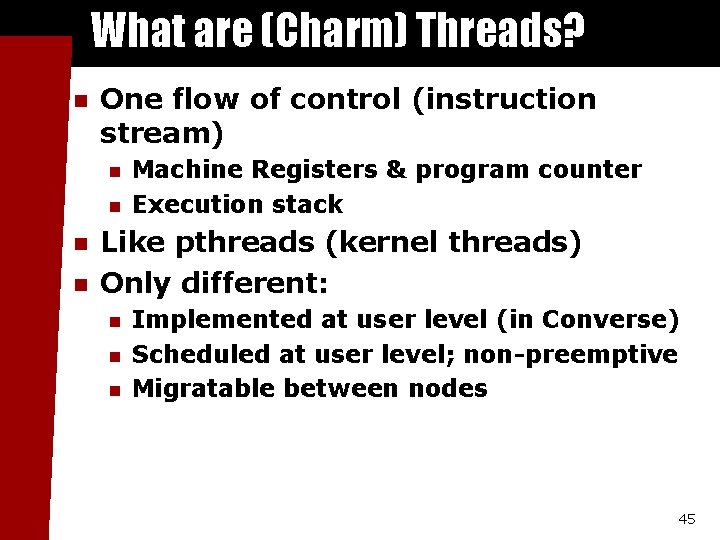

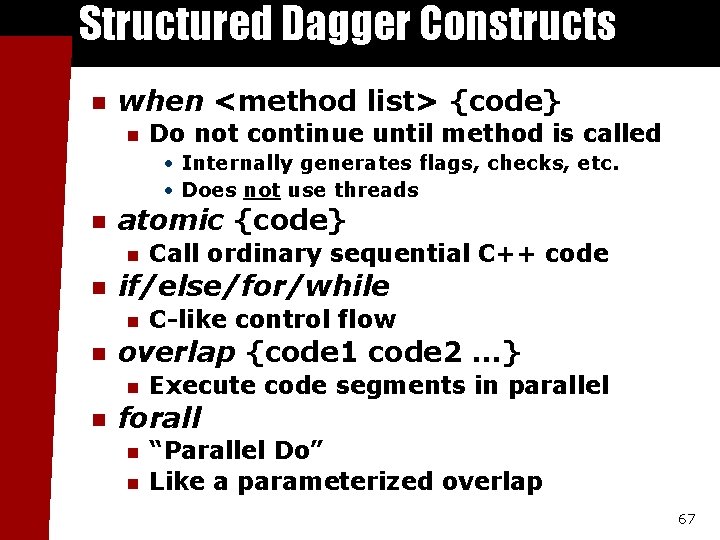

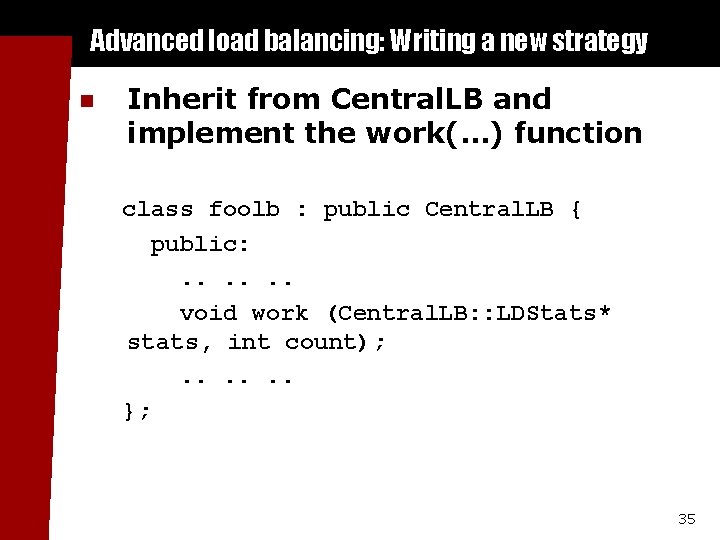

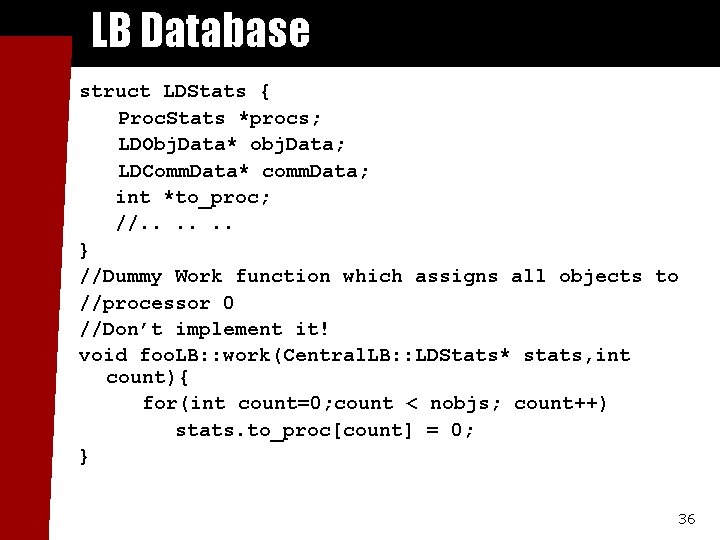

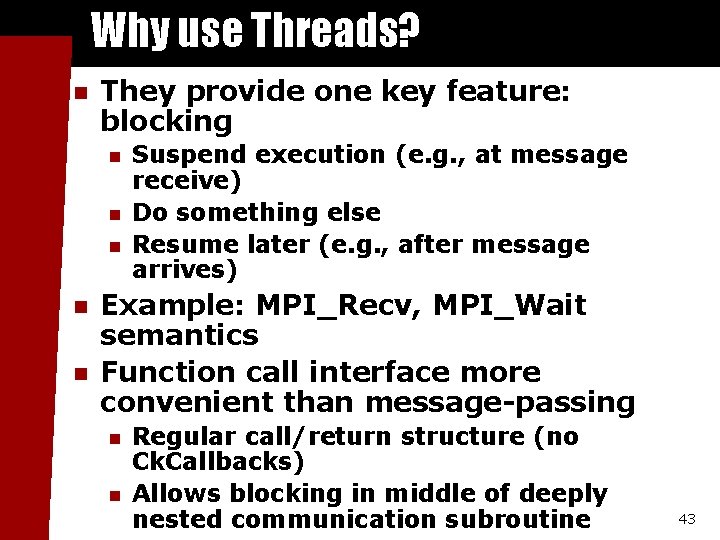

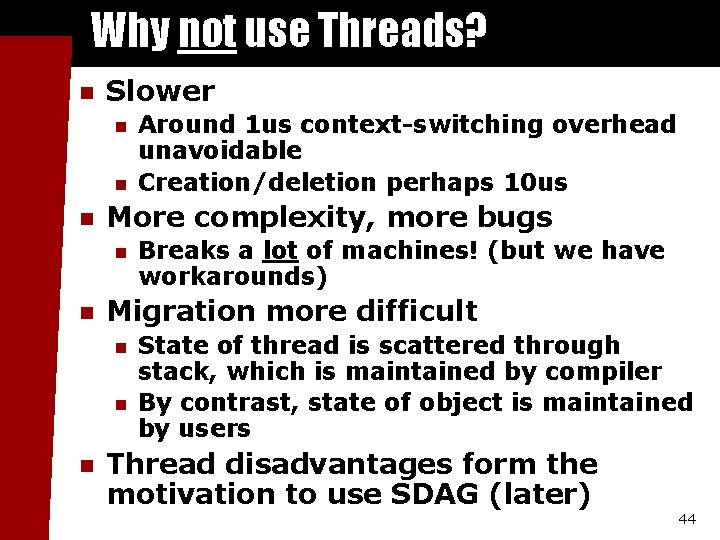

Structured Dagger Constructs

- `when <method list> {code}`
  - Do not continue until the method is called
  - Internally generates flags, checks, etc.
  - Does not use threads
- `atomic {code}`
  - Call ordinary sequential C++ code
- `if/else/for/while`
  - C-like control flow
- `overlap {code1 code2 ...}`
  - Execute code segments in parallel
- `forall`
  - "Parallel do"
  - Like a parameterized overlap

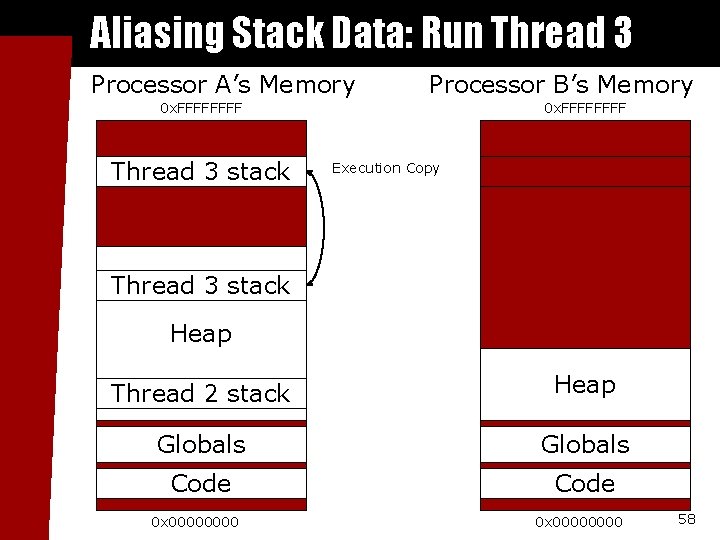


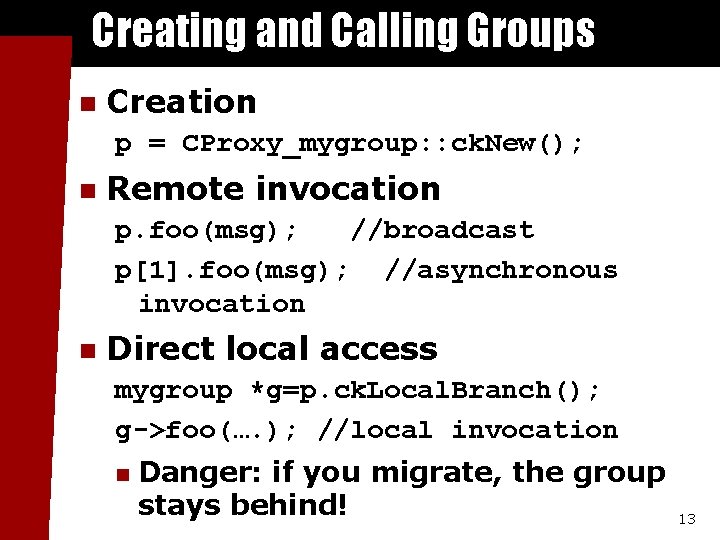

Stencil Example Using Structured Dagger

- `array[1D] myArray { … };` containing:
  - `entry void GetMessages() { when rightmsgEntry(), leftmsgEntry() { atomic { CkPrintf("Got both left and right messages\n"); doWork(right, left); } } };`
  - `entry void rightmsgEntry();`
  - `entry void leftmsgEntry();`
  - `…`
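For comparison, this is roughly the flag-and-check bookkeeping a programmer would write by hand for the `when rightmsgEntry(), leftmsgEntry()` clause. It is an illustration only, not SDAG's generated code: `StencilElement` is hypothetical, messages carry a plain `int`, and unlike SDAG it keeps only one pending message per side instead of buffering early arrivals.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hand-written equivalent of
//   when rightmsgEntry(), leftmsgEntry() { atomic { doWork(right, left); } }
class StencilElement {
 public:
  std::vector<std::string> log;  // one entry per doWork() call

  void rightmsgEntry(int v) { right_ = v; have_right_ = true; try_fire(); }
  void leftmsgEntry(int v) { left_ = v; have_left_ = true; try_fire(); }

 private:
  // Runs the "when" body once both messages have arrived, in either order.
  void try_fire() {
    if (!(have_left_ && have_right_)) return;  // still waiting for the other one
    have_left_ = have_right_ = false;          // re-arm for the next iteration
    doWork(right_, left_);
  }
  void doWork(int right, int left) { log.push_back("work " + std::to_string(left + right)); }

  int left_ = 0, right_ = 0;
  bool have_left_ = false, have_right_ = false;
};
```

Either arrival order triggers `doWork` exactly once per pair, which is the guarantee the single `when` line expresses declaratively.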

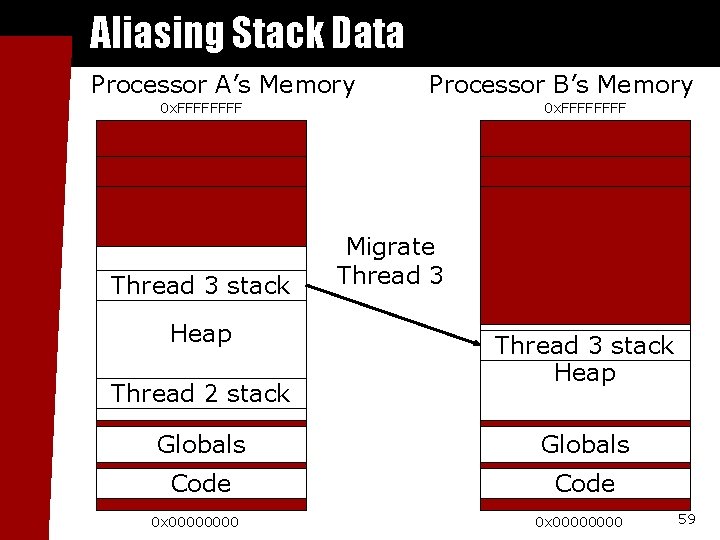


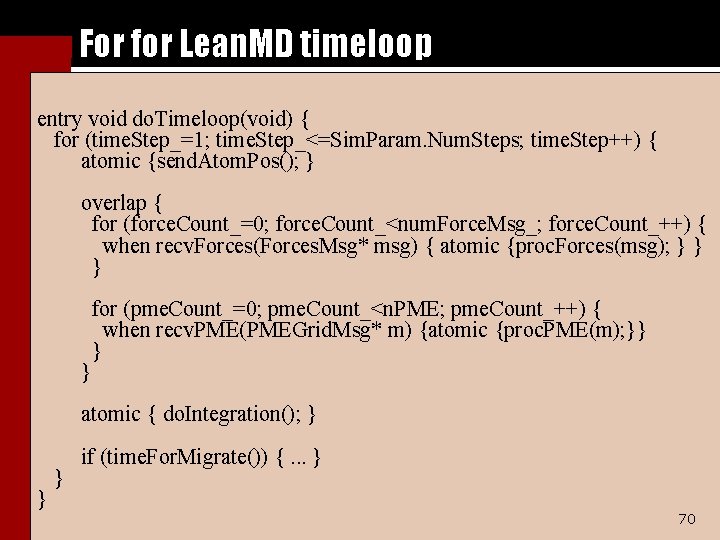

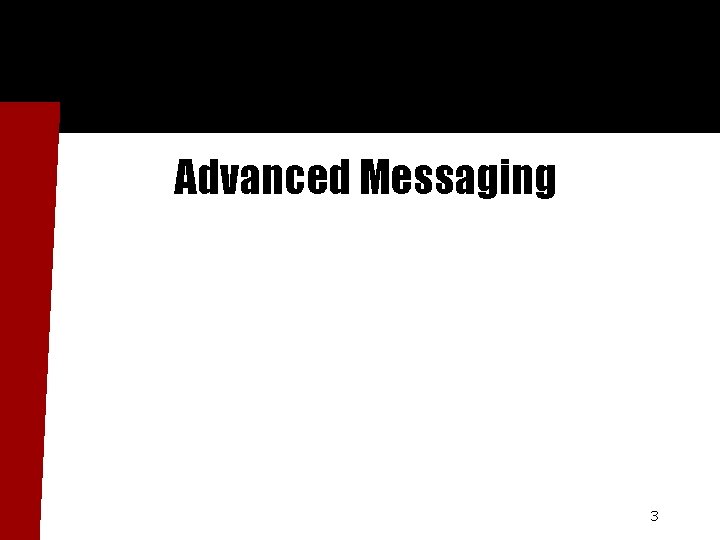

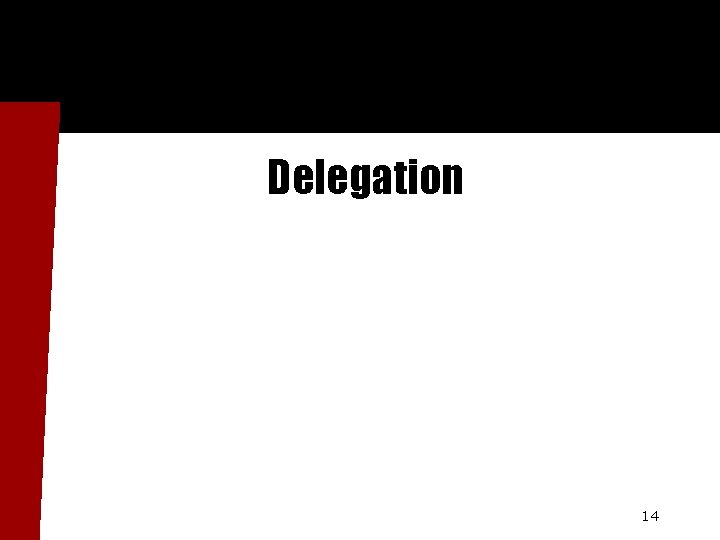

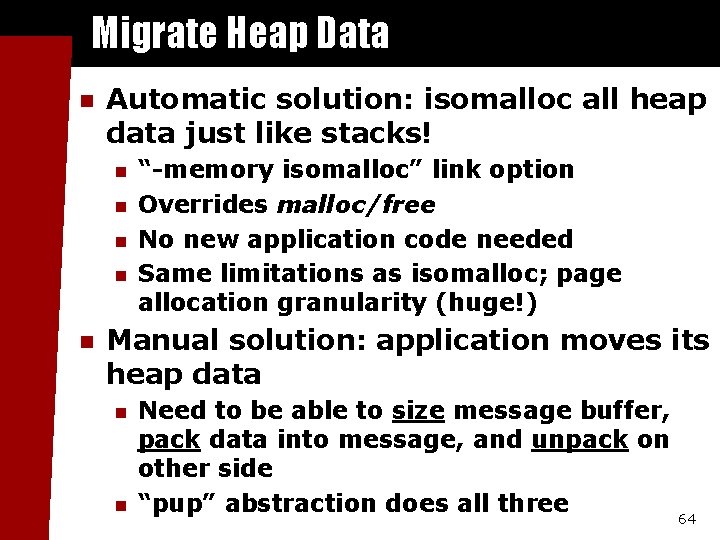

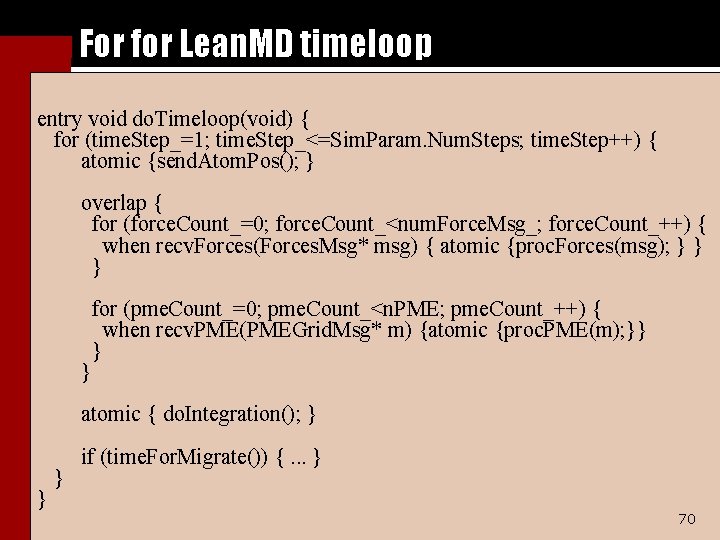

Overlap for LeanMD Initialization

- `array[1D] myArray { … };` containing:
  - `entry void waitForInit(void) { overlap { when recvNumCellPairs(myMsg* pMsg) { atomic { setNumCellPairs(pMsg->intVal); delete pMsg; } } when recvNumCells(myMsg* cMsg) { atomic { setNumCells(cMsg->intVal); delete cMsg; } } } };`

`for` for the LeanMD Timeloop

- `entry void doTimeloop(void) { for (timeStep_ = 1; timeStep_ <= SimParam.NumSteps; timeStep_++) { atomic { sendAtomPos(); } overlap { for (forceCount_ = 0; forceCount_ < numForceMsg_; forceCount_++) { when recvForces(ForcesMsg* msg) { atomic { procForces(msg); } } } for (pmeCount_ = 0; pmeCount_ < nPME; pmeCount_++) { when recvPME(PMEGridMsg* m) { atomic { procPME(m); } } } } atomic { doIntegration(); } if (timeForMigrate()) { ... } } };`
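The same control flow written by hand needs counters and a re-arm step, which is what the `for`/`overlap`/`when` structure hides. This is a hypothetical sketch: `TimeloopModel` only mirrors the slide's counts and method names, omits message processing and migration, and is not SDAG output.

```cpp
#include <cassert>

// Hand-written state machine for the timeloop: each step sends positions, then
// integrates only after num_force_msg force messages and n_pme PME messages
// have arrived, in any interleaving.
class TimeloopModel {
 public:
  TimeloopModel(int num_force_msg, int n_pme, int num_steps)
      : num_force_msg_(num_force_msg), n_pme_(n_pme), num_steps_(num_steps) {}

  void start() { begin_step(); }
  void recvForces() { ++force_count_; maybe_integrate(); }  // procForces(msg) would go here
  void recvPME() { ++pme_count_; maybe_integrate(); }       // procPME(m) would go here

  int steps_done() const { return steps_done_; }
  int positions_sent() const { return positions_sent_; }

 private:
  void begin_step() {
    force_count_ = 0;
    pme_count_ = 0;
    ++positions_sent_;  // stands in for sendAtomPos()
  }
  void maybe_integrate() {
    if (force_count_ < num_force_msg_ || pme_count_ < n_pme_) return;  // keep waiting
    ++steps_done_;                               // stands in for doIntegration()
    if (steps_done_ < num_steps_) begin_step();  // next iteration of the for loop
  }

  int num_force_msg_, n_pme_, num_steps_;
  int force_count_ = 0, pme_count_ = 0;
  int steps_done_ = 0, positions_sent_ = 0;
};
```

With two force messages and one PME message per step, integration happens only after the third message of a step arrives, whatever the arrival order.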

Conclusions

Conclusions

- AMPI and Charm++ provide a fully virtualized runtime system
  - Load balancing via migration
  - Communication optimizations
  - Checkpoint/restart
- Virtualization can significantly improve performance for real applications

Thank You!

Free source, binaries, manuals, and more information at: http://charm.cs.uiuc.edu/

Parallel Programming Lab at University of Illinois