Workshop on Charm++ and Applications: Welcome and Introduction

- Slides: 39

Workshop on Charm++ and Applications: Welcome and Introduction

Laxmikant Kale, http://charm.cs.uiuc.edu

Parallel Programming Laboratory, Department of Computer Science, University of Illinois at Urbana-Champaign

10/27/2021 IITB 2003 1

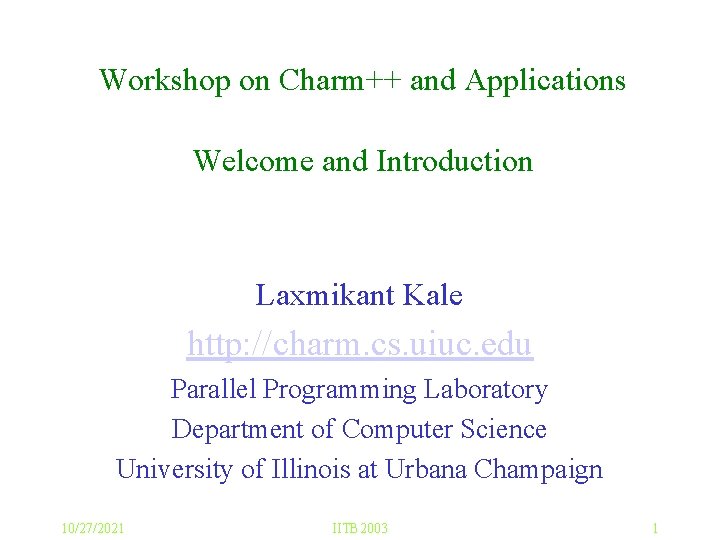

PPL Mission and Approach

- To enhance performance and productivity in programming complex parallel applications
  - Performance: scalable to thousands of processors
  - Productivity: of human programmers
  - Complex: irregular structure, dynamic variations
- Approach: application-oriented yet CS-centered research
  - Develop enabling technology for a wide collection of applications
  - Develop, use, and test it in the context of real applications
- How?
  - Develop novel parallel programming techniques
  - Embody them in easy-to-use abstractions
  - So that application scientists can use advanced techniques with ease
  - Enabling technology: reused across many applications

![Migratable Objects (aka Processor Virtualization)](https://slidetodoc.com/presentation_image_h2/ee3f73870350e22ac35723dce4f7cba1/image-3.jpg)

Migratable Objects (aka Processor Virtualization)

- Programmer: [over] decomposition into virtual processors (VPs)
- Runtime: assigns VPs to processors
- Enables adaptive runtime strategies
- Implementations: Charm++, AMPI

Benefits

- Software engineering
  - Number of virtual processors can be independently controlled
  - Separate VPs for different modules
- Message driven execution
  - Adaptive overlap of communication
  - Predictability:
    - Automatic out-of-core
  - Asynchronous reductions
- Dynamic mapping
  - Heterogeneous clusters
    - Vacate, adjust to speed, share
  - Automatic checkpointing
  - Change set of processors used
  - Automatic dynamic load balancing
  - Communication optimization

Figure labels: System implementation, User View

![Migratable Objects (aka Processor Virtualization)](https://slidetodoc.com/presentation_image_h2/ee3f73870350e22ac35723dce4f7cba1/image-4.jpg)

Migratable Objects (aka Processor Virtualization)

- Programmer: [over] decomposition into virtual processors (VPs)
- Runtime: assigns VPs to processors
- Enables adaptive runtime strategies
- Implementations: Charm++, AMPI

Benefits

- Software engineering
  - Number of virtual processors can be independently controlled
  - Separate VPs for different modules
- Message driven execution
  - Adaptive overlap of communication
  - Predictability:
    - Automatic out-of-core
  - Asynchronous reductions
- Dynamic mapping
  - Heterogeneous clusters
    - Vacate, adjust to speed, share
  - Automatic checkpointing
  - Change set of processors used
  - Automatic dynamic load balancing
  - Communication optimization

Figure labels: MPI processes, Virtual Processors (user-level migratable threads), Real Processors
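
The split between what the programmer decomposes and what the runtime places can be sketched in a few lines of Python. This is only an illustration, not Charm++ or AMPI code: the function names (`assign_vps`, `migrate`, `vps_per_proc`) and the round-robin placement are assumptions chosen for brevity.

```python
from collections import defaultdict


def assign_vps(num_vps, num_procs):
    """Runtime side: place each virtual processor (VP) on a physical processor.

    Round-robin placement is an assumption made for this sketch.
    """
    if num_procs <= 0:
        raise ValueError("need at least one processor")
    return {vp: vp % num_procs for vp in range(num_vps)}


def migrate(mapping, vp, new_proc):
    """Move one VP to another processor; the program's decomposition is untouched."""
    updated = dict(mapping)
    updated[vp] = new_proc
    return updated


def vps_per_proc(mapping):
    """Count how many VPs currently live on each physical processor."""
    counts = defaultdict(int)
    for proc in mapping.values():
        counts[proc] += 1
    return dict(counts)


# The programmer picks 16 VPs; the runtime happens to have 4 processors.
mapping = assign_vps(num_vps=16, num_procs=4)
# Later the runtime may move VP 0 to processor 3 without changing the program.
mapping_after = migrate(mapping, vp=0, new_proc=3)
```

The number of VPs is chosen by the programmer independently of the processor count, which is what leaves the runtime free to remap them later.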

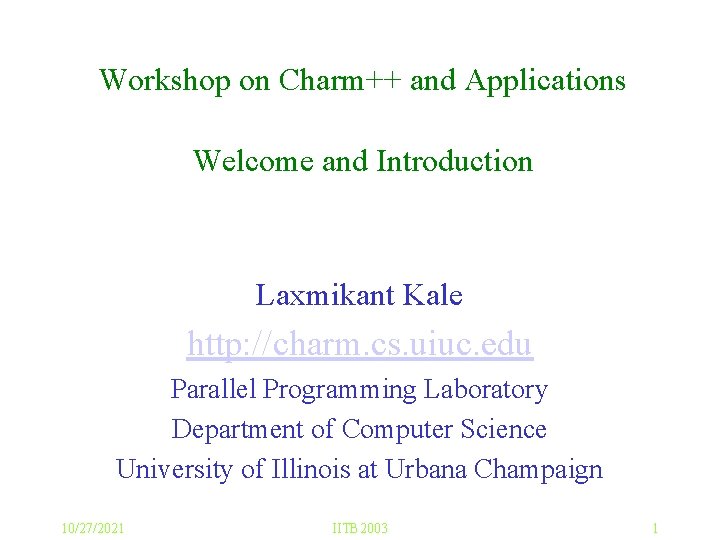

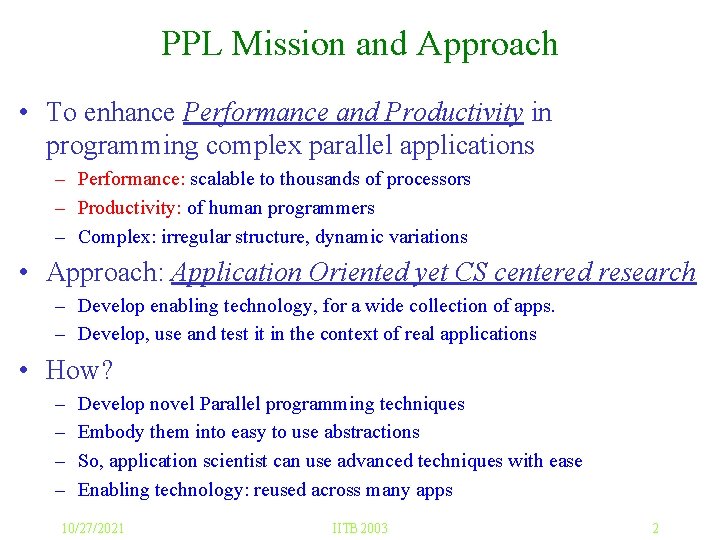

Adaptive overlap and modules

SPMD and Message-Driven Modules (from A. Gursoy, Simplified expression of message-driven programs and quantification of their impact on performance, Ph.D. Thesis, Apr 1994).

Modularity, Reuse, and Efficiency with Message-Driven Libraries: Proc. of the Seventh SIAM Conference on Parallel Processing for Scientific Computing, San Francisco, 1995.

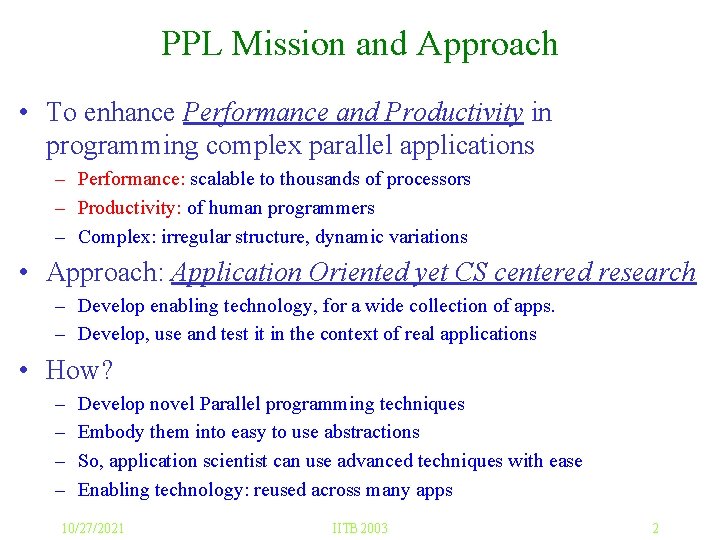

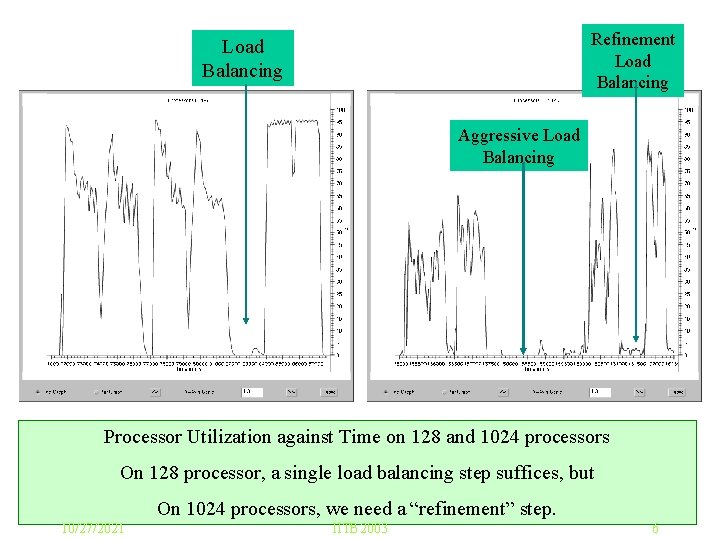

Processor Utilization against Time on 128 and 1024 processors

Figure labels: Aggressive Load Balancing, Refinement Load Balancing

On 128 processors, a single load balancing step suffices, but on 1024 processors, we need a “refinement” step.
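
As a rough illustration of the two kinds of step, the Python sketch below contrasts a from-scratch greedy assignment with a refinement pass that only moves objects off overloaded processors. It is not the strategy code used in Charm++; the function names, the `tolerance` parameter, and the object-selection rule are all assumptions.

```python
import heapq


def greedy_balance(loads, num_procs):
    """Aggressive step: reassign every object, heaviest first, to the least-loaded processor."""
    heap = [(0.0, p) for p in range(num_procs)]
    heapq.heapify(heap)
    placement = {}
    for obj in sorted(loads, key=loads.get, reverse=True):
        total, proc = heapq.heappop(heap)
        placement[obj] = proc
        heapq.heappush(heap, (total + loads[obj], proc))
    return placement


def proc_loads(placement, loads, num_procs):
    """Sum the measured object loads on each processor."""
    totals = [0.0] * num_procs
    for obj, proc in placement.items():
        totals[proc] += loads[obj]
    return totals


def refine(placement, loads, num_procs, tolerance=1.05):
    """Refinement step: keep the current placement and move only a few objects.

    An object leaves the most loaded processor only while that processor
    exceeds tolerance * average load (tolerance is a hypothetical knob).
    Returns the new placement and the number of objects moved.
    """
    placement = dict(placement)
    totals = proc_loads(placement, loads, num_procs)
    limit = tolerance * sum(totals) / num_procs
    moved = 0
    for _ in range(len(loads)):  # bounded number of moves
        heavy = max(range(num_procs), key=totals.__getitem__)
        light = min(range(num_procs), key=totals.__getitem__)
        if totals[heavy] <= limit:
            break
        # Only consider moves that leave the receiver below the sender's old load.
        candidates = [o for o, p in placement.items()
                      if p == heavy and totals[light] + loads[o] < totals[heavy]]
        if not candidates:
            break
        obj = max(candidates, key=loads.get)
        placement[obj] = light
        totals[heavy] -= loads[obj]
        totals[light] += loads[obj]
        moved += 1
    return placement, moved
```

The greedy step may relocate almost every object, while the refinement step starts from the existing placement and usually migrates far fewer.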

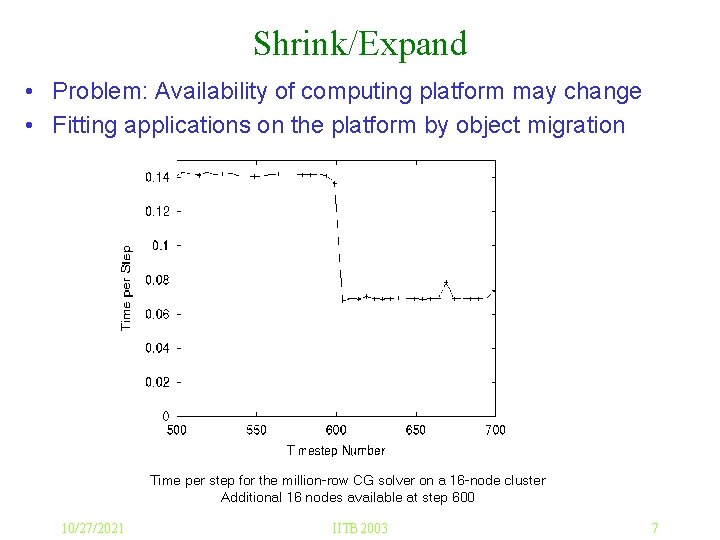

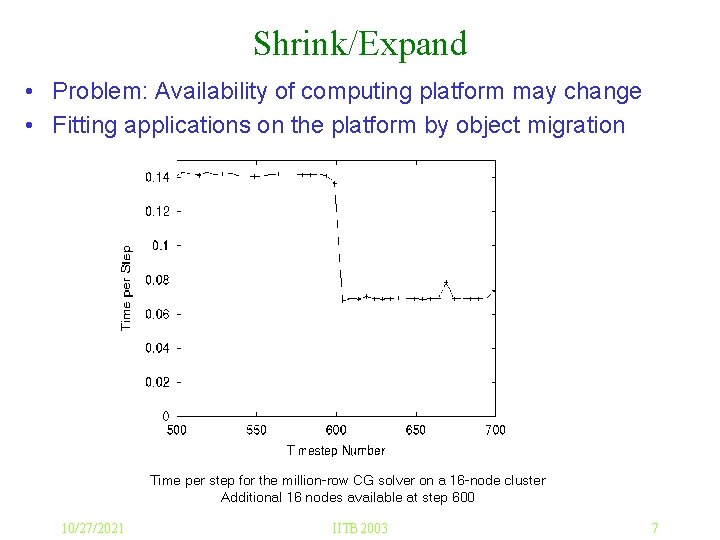

Shrink/Expand

- Problem: availability of the computing platform may change
- Fitting applications on the platform by object migration

Figure: time per step for the million-row CG solver on a 16-node cluster; an additional 16 nodes become available at step 600.

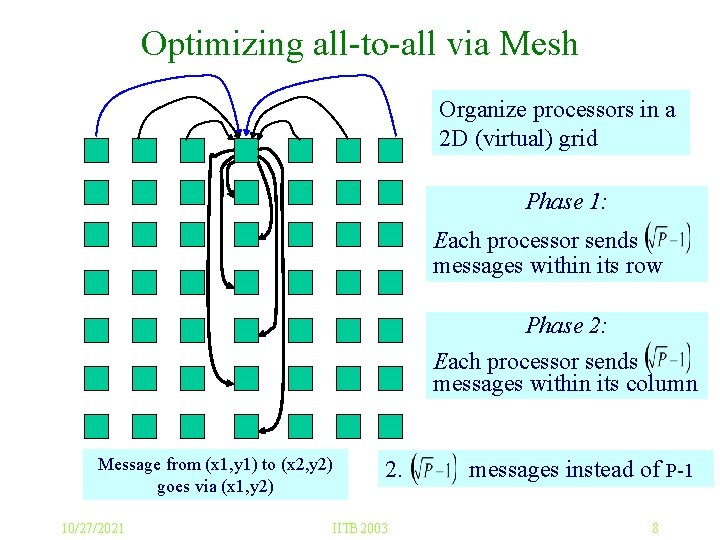

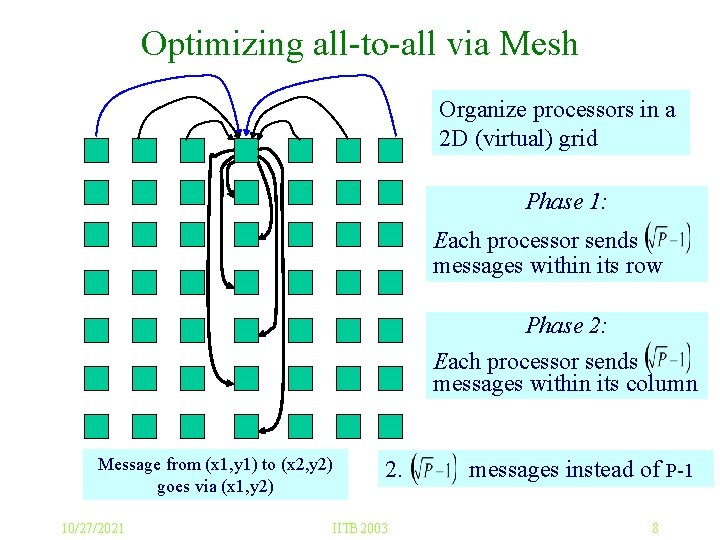

Optimizing all-to-all via Mesh

Organize processors in a 2D (virtual) grid.

- Phase 1: each processor sends messages within its row
- Phase 2: each processor sends messages within its column

A message from (x1, y1) to (x2, y2) goes via (x1, y2).

Each processor sends on the order of 2√P messages instead of P − 1.
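
The two-phase routing can be checked with a small Python simulation. This is a sketch under stated assumptions: P is a perfect square, the first coordinate names the row, and the function names are invented for illustration. Under those assumptions each processor sends 2(√P − 1) combined messages rather than P − 1 direct ones.

```python
import math


def mesh_route(src, dst):
    """Intermediate hop for a message from (x1, y1) to (x2, y2): (x1, y2)."""
    (x1, _y1), (_x2, y2) = src, dst
    return (x1, y2)


def messages_per_processor(num_procs):
    """Return (direct, mesh) message counts per processor for a square grid."""
    side = math.isqrt(num_procs)
    if side * side != num_procs:
        raise ValueError("this sketch assumes P is a perfect square")
    return num_procs - 1, 2 * (side - 1)


def simulate_all_to_all(side):
    """Send one item from every processor to every other one via the mesh.

    Returns (inbox, sends): the sources received by each processor and the
    number of combined messages each processor actually sent.
    """
    procs = [(x, y) for x in range(side) for y in range(side)]
    sends = {p: 0 for p in procs}

    # Phase 1: bundle items by intermediate hop and send along the row (same x).
    staged = {p: [] for p in procs}
    for src in procs:
        by_hop = {}
        for dst in procs:
            if dst != src:
                by_hop.setdefault(mesh_route(src, dst), []).append((src, dst))
        for hop, items in by_hop.items():
            if hop != src:  # items staying on src need no message
                sends[src] += 1
            staged[hop].extend(items)

    # Phase 2: bundle by final destination and send along the column (same y).
    inbox = {p: [] for p in procs}
    for hop in procs:
        by_dst = {}
        for src, dst in staged[hop]:
            by_dst.setdefault(dst, []).append(src)
        for dst, sources in by_dst.items():
            if dst != hop:
                sends[hop] += 1
            inbox[dst].extend(sources)
    return inbox, sends
```

For example, `messages_per_processor(1024)` compares 1023 direct messages with 62 mesh messages per processor, at the cost of each item travelling two hops.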

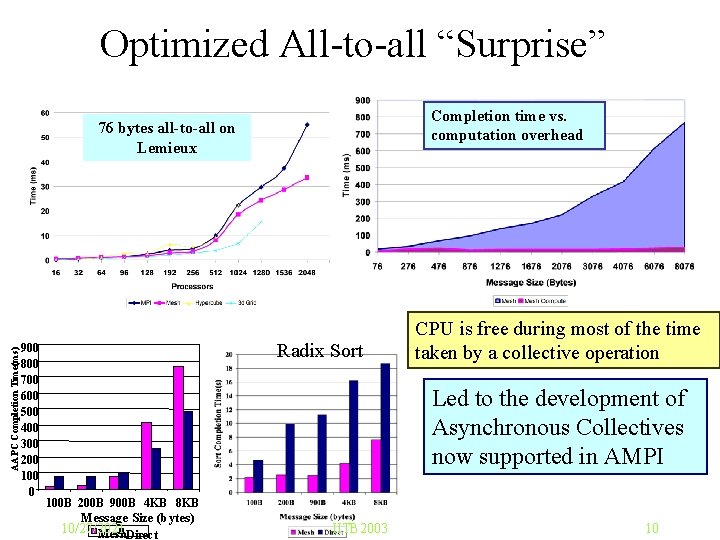

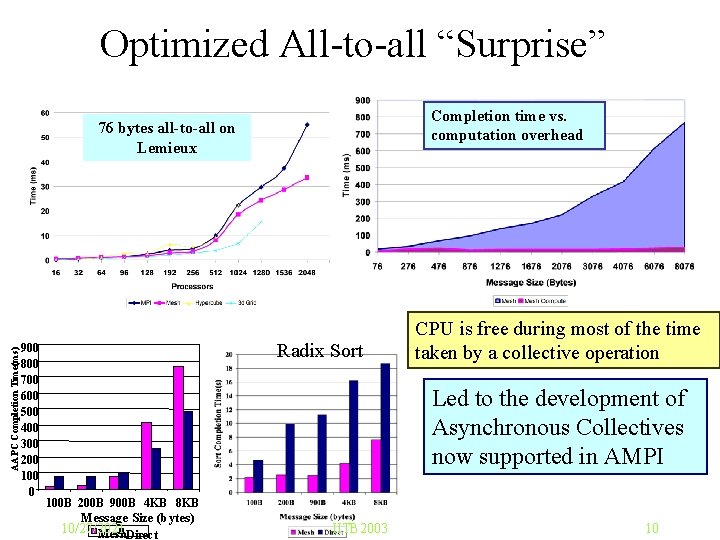

Optimized All-to-all “Surprise”

Chart labels: Completion time vs. computation overhead; 76 bytes all-to-all on Lemieux; Radix Sort; AAPC Completion Time (ms) against Message Size (bytes); series: Mesh, Direct

- The CPU is free during most of the time taken by a collective operation
- This led to the development of Asynchronous Collectives, now supported in AMPI

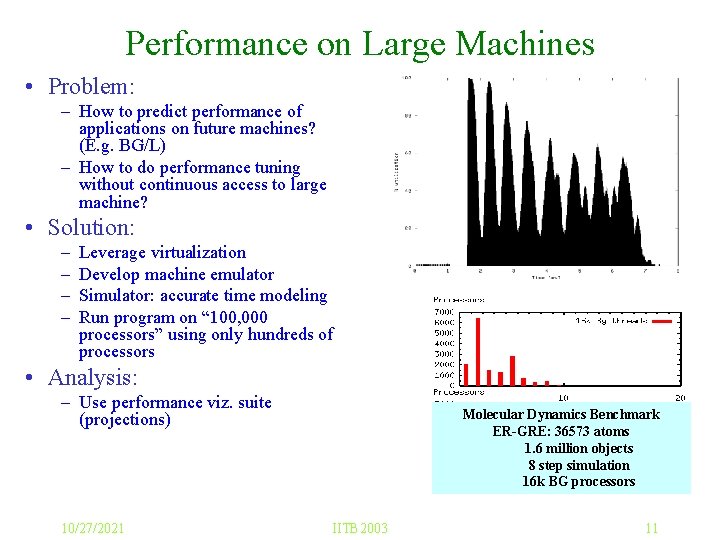

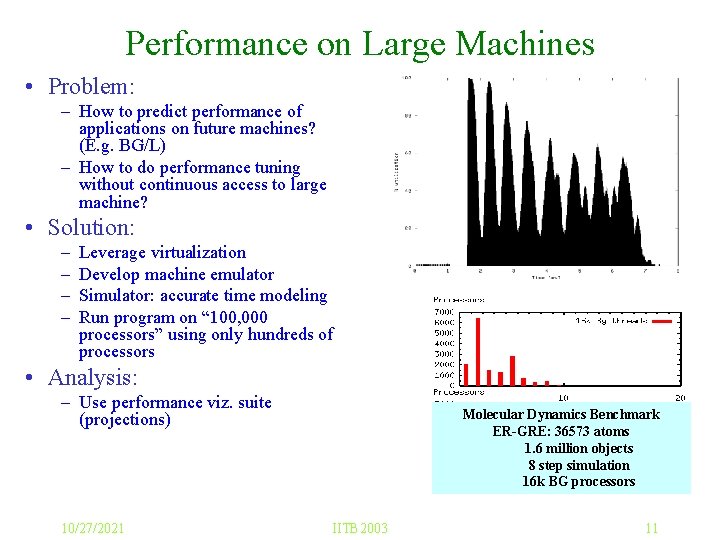

Performance on Large Machines

- Problem:
  - How to predict performance of applications on future machines (e.g., BG/L)?
  - How to do performance tuning without continuous access to a large machine?
- Solution:
  - Leverage virtualization
  - Develop a machine emulator
  - Simulator: accurate time modeling
  - Run the program on “100,000 processors” using only hundreds of processors
- Analysis:
  - Use the performance visualization suite (Projections)

Molecular Dynamics Benchmark ER-GRE: 36573 atoms, 1.6 million objects, 8-step simulation, 16k BG processors

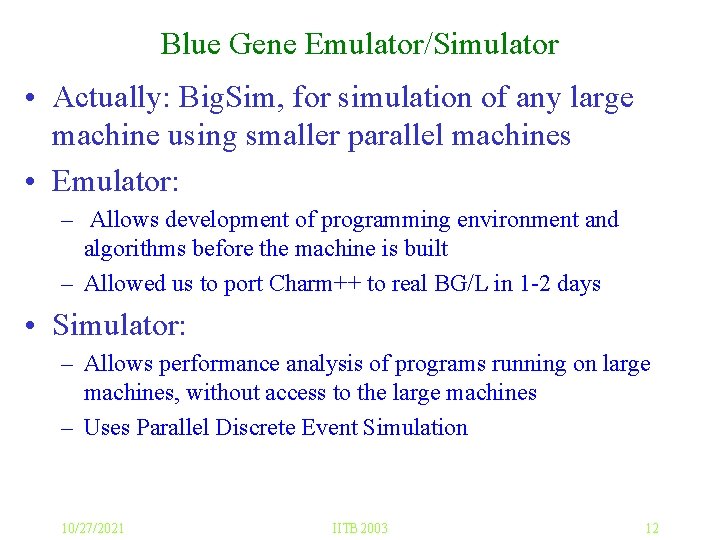

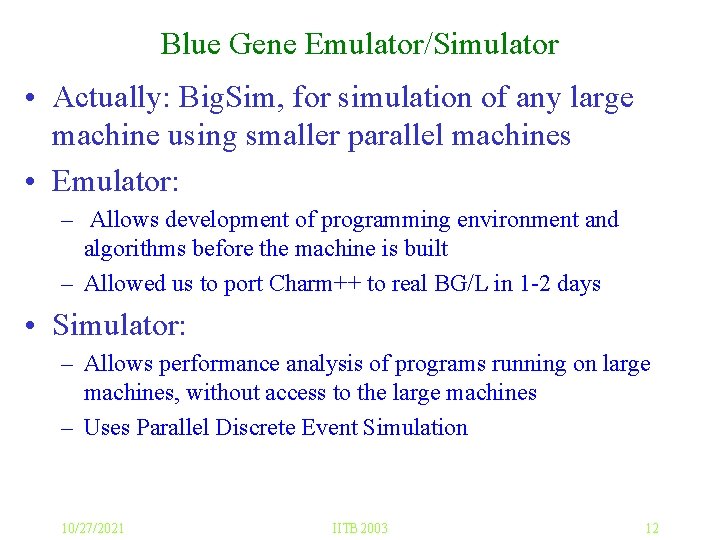

Blue Gene Emulator/Simulator

- Actually: BigSim, for simulation of any large machine using smaller parallel machines
- Emulator:
  - Allows development of programming environment and algorithms before the machine is built
  - Allowed us to port Charm++ to real BG/L in 1-2 days
- Simulator:
  - Allows performance analysis of programs running on large machines, without access to the large machines
  - Uses Parallel Discrete Event Simulation

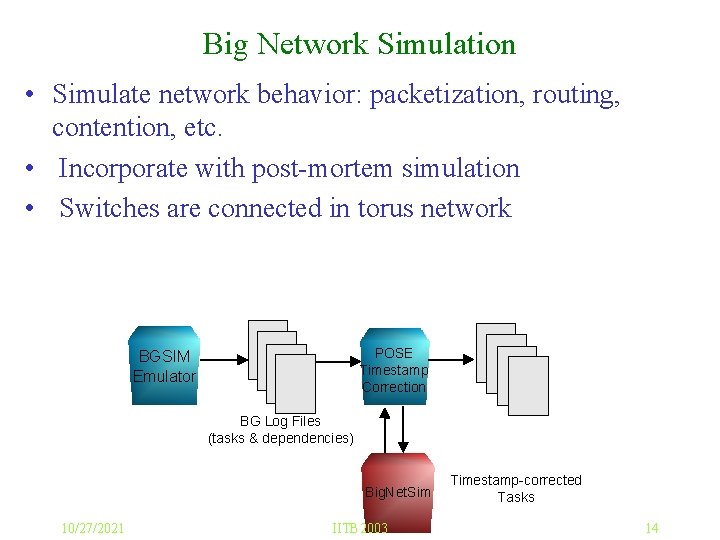

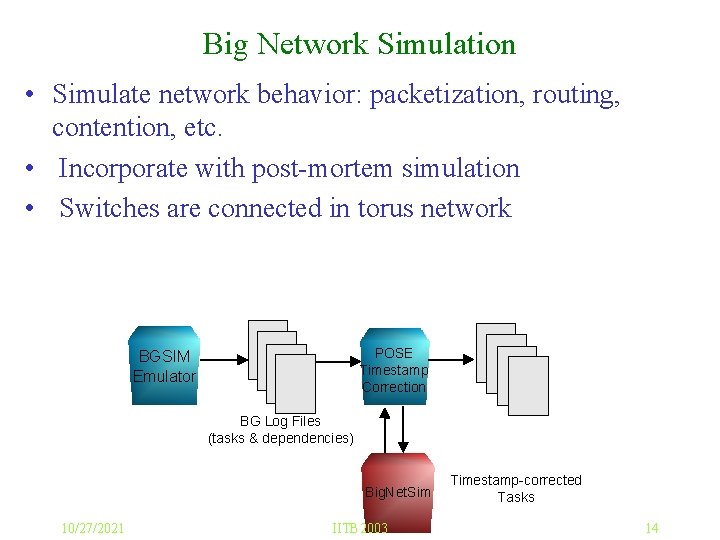

Big Network Simulation

- Simulate network behavior: packetization, routing, contention, etc.
- Incorporate with post-mortem simulation
- Switches are connected in a torus network

Diagram labels: BGSIM Emulator, BG Log Files (tasks & dependencies), POSE Timestamp Correction, BigNetSim, Timestamp-corrected Tasks

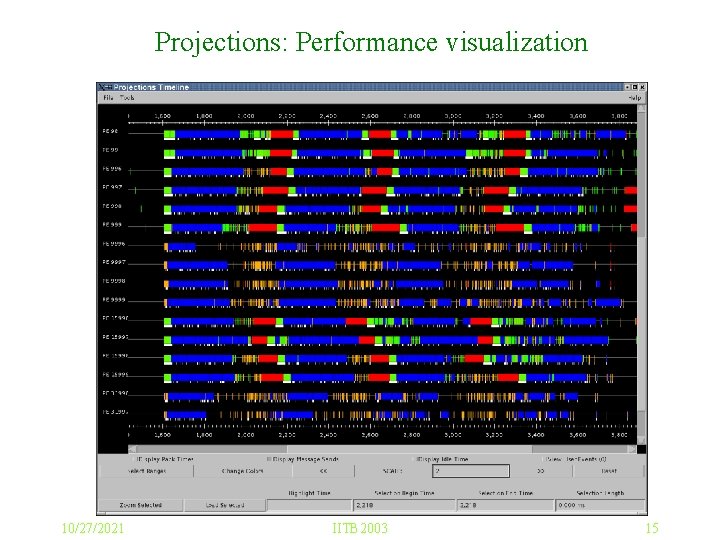

Projections: Performance visualization

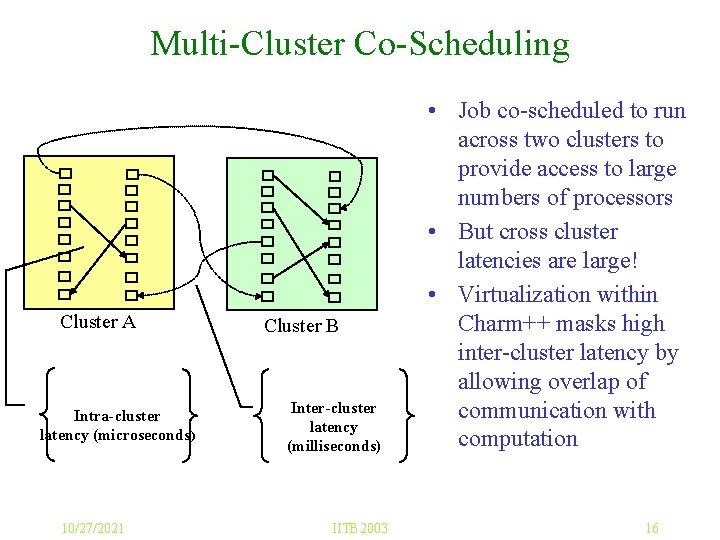

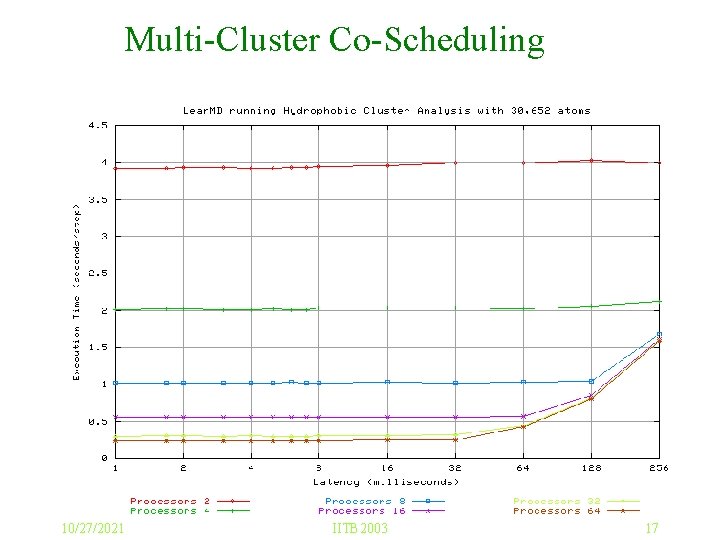

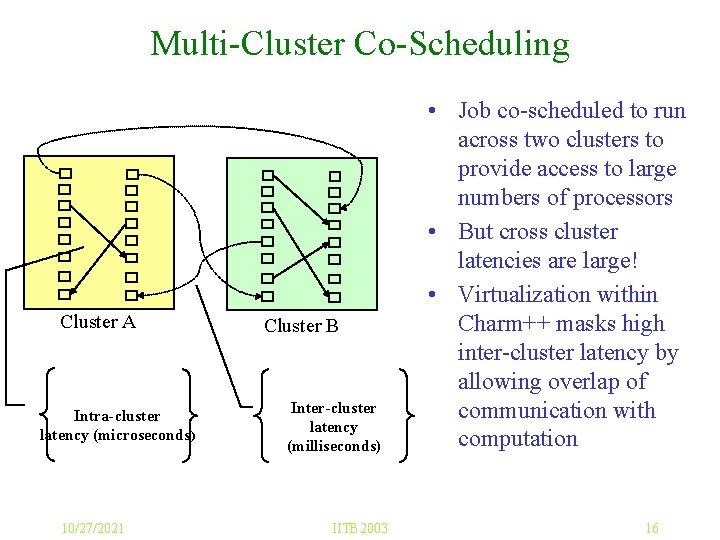

Multi-Cluster Co-Scheduling

Diagram labels: Cluster A, Cluster B; intra-cluster latency (microseconds), inter-cluster latency (milliseconds)

- Job co-scheduled to run across two clusters to provide access to large numbers of processors
- But cross-cluster latencies are large!
- Virtualization within Charm++ masks high inter-cluster latency by allowing overlap of communication with computation

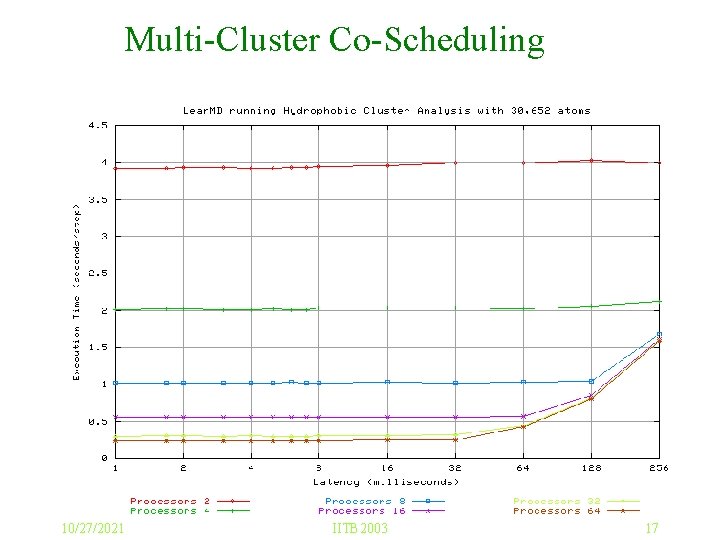

Multi-Cluster Co-Scheduling

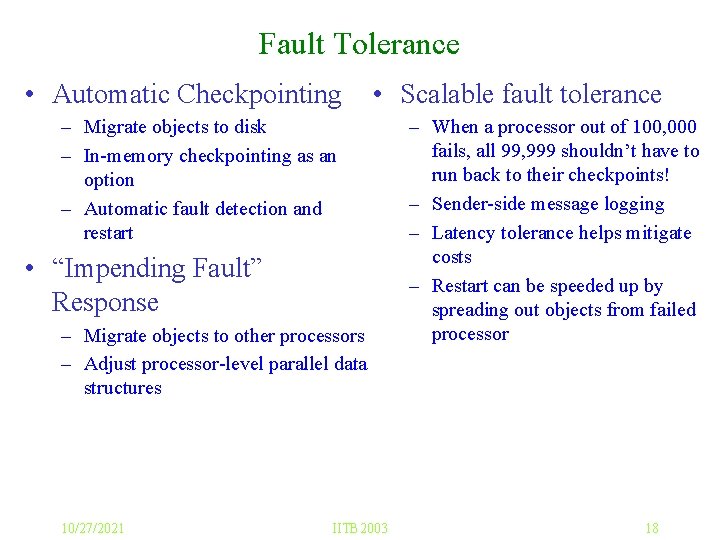

Fault Tolerance

- Automatic Checkpointing
  - Migrate objects to disk
  - In-memory checkpointing as an option
  - Automatic fault detection and restart
- “Impending Fault” Response
  - Migrate objects to other processors
  - Adjust processor-level parallel data structures
- Scalable fault tolerance
  - When a processor out of 100,000 fails, all 99,999 shouldn’t have to run back to their checkpoints!
  - Sender-side message logging
  - Latency tolerance helps mitigate costs
  - Restart can be sped up by spreading out objects from the failed processor

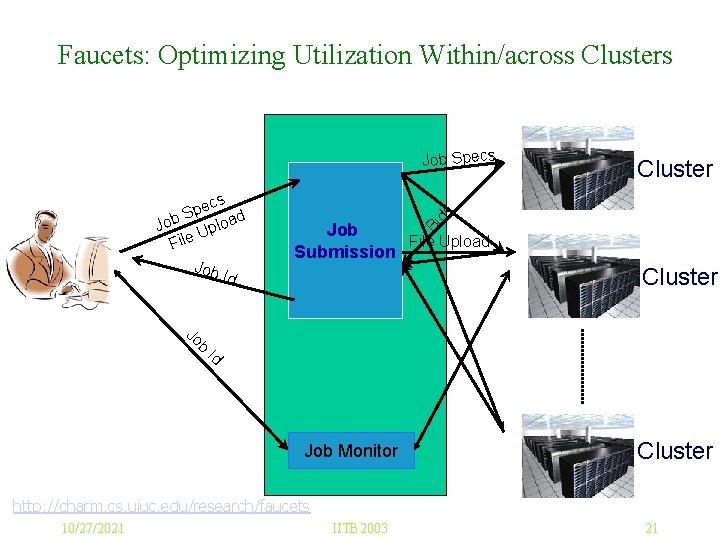

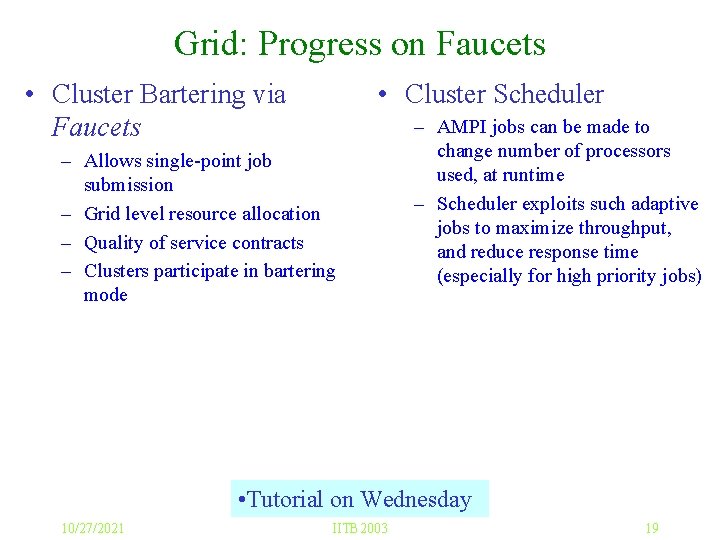

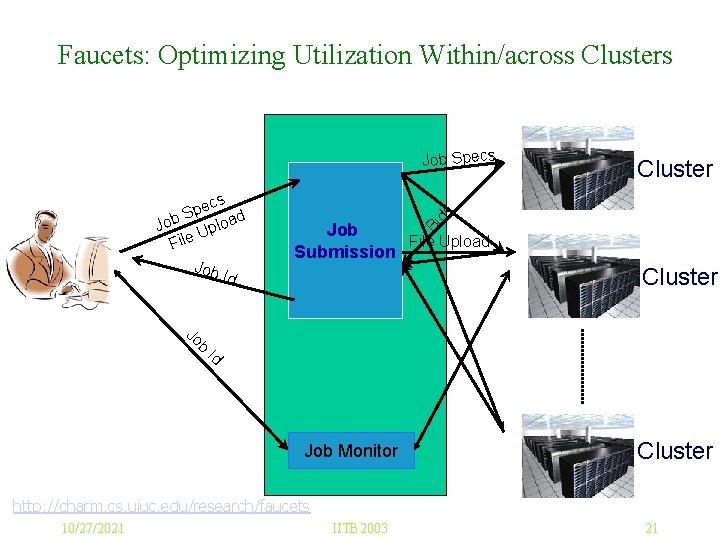

Grid: Progress on Faucets

- Cluster Bartering via Faucets
  - Allows single-point job submission
  - Grid level resource allocation
  - Quality of service contracts
  - Clusters participate in bartering mode
- Cluster Scheduler
  - AMPI jobs can be made to change the number of processors used, at runtime
  - Scheduler exploits such adaptive jobs to maximize throughput and reduce response time (especially for high priority jobs)
- Tutorial on Wednesday

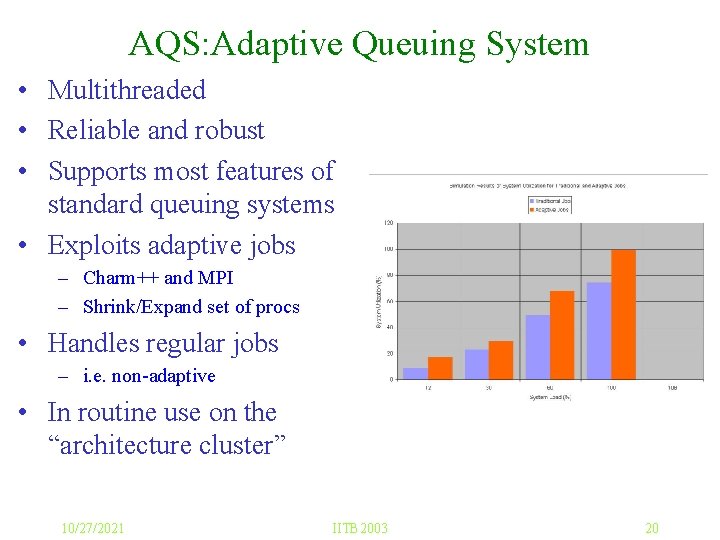

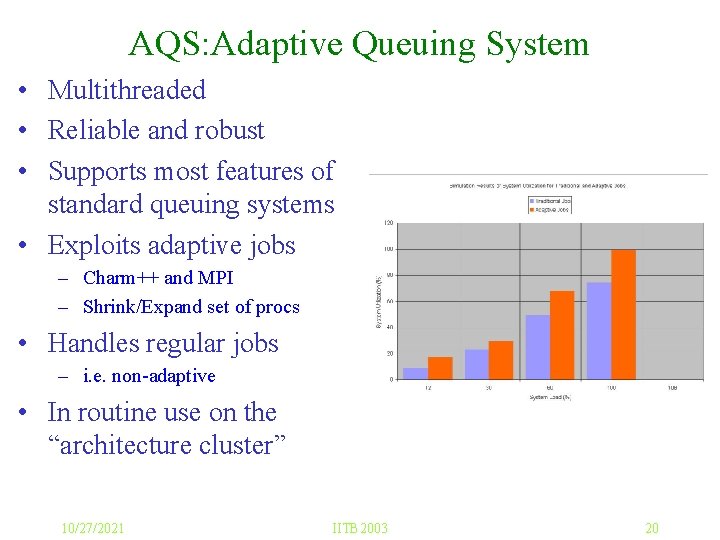

AQS: Adaptive Queuing System

- Multithreaded
- Reliable and robust
- Supports most features of standard queuing systems
- Exploits adaptive jobs
  - Charm++ and MPI
  - Shrink/Expand set of procs
- Handles regular jobs
  - i.e., non-adaptive
- In routine use on the “architecture cluster”

Faucets: Optimizing Utilization Within/across Clusters

Diagram labels: Job Submission, Job Specs, Bids, File Upload, Job Id, Cluster Id, Job Monitor, Cluster

http://charm.cs.uiuc.edu/research/faucets

Higher level programming

- Multiphase Shared Arrays
  - Provides a disciplined use of shared address space
  - Each array can be accessed only in one of the following modes:
    - ReadOnly, Write-by-One-Thread, Accumulate-only
  - Access mode can change from phase to phase
  - Phases delineated by per-array “sync”
- Orchestration language
  - Allows expressing global control flow in a Charm++ program
  - HPF-like flavor, but with Charm++-like processor virtualization and explicit communication
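
The access-mode discipline of Multiphase Shared Arrays can be illustrated with a toy, single-process Python class. This is not the MSA API: the class name `PhasedArray`, its method names, and the choice to add accumulated contributions to the existing value at `sync` are all assumptions made for the sketch.

```python
class PhasedArray:
    """Toy array whose access mode is fixed within a phase and may change at sync()."""

    MODES = ("read_only", "write_by_one", "accumulate")

    def __init__(self, size, mode="read_only"):
        self._data = [0] * size
        self._pending = {}   # index -> sum of contributions made in this phase
        self._writers = {}   # index -> id of the thread that wrote in this phase
        self._set_mode(mode)

    def _set_mode(self, mode):
        if mode not in self.MODES:
            raise ValueError(f"unknown mode: {mode}")
        self.mode = mode

    def read(self, index):
        if self.mode != "read_only":
            raise PermissionError("reads are only allowed in a read_only phase")
        return self._data[index]

    def write(self, index, value, thread_id):
        if self.mode != "write_by_one":
            raise PermissionError("writes are only allowed in a write_by_one phase")
        owner = self._writers.setdefault(index, thread_id)
        if owner != thread_id:
            raise PermissionError("element already written by another thread in this phase")
        self._data[index] = value

    def accumulate(self, index, value):
        if self.mode != "accumulate":
            raise PermissionError("accumulation is only allowed in an accumulate phase")
        self._pending[index] = self._pending.get(index, 0) + value

    def sync(self, next_mode):
        """End the current phase: apply accumulated values, then switch mode."""
        for index, total in self._pending.items():
            self._data[index] += total
        self._pending.clear()
        self._writers.clear()
        self._set_mode(next_mode)


arr = PhasedArray(4, mode="write_by_one")
arr.write(0, 5, thread_id=1)
arr.sync("accumulate")
arr.accumulate(0, 2)
arr.accumulate(0, 3)
arr.sync("read_only")
value = arr.read(0)
```

Because each phase permits only one kind of access, conflicting reads and writes within a phase are rejected outright rather than left as data races.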

Other Ongoing and Planned System Projects

- Parallel languages
  - JADE
  - Orchestration
- Parallel Debugger
- Automatic out-of-core execution
- Parallel Multigrid Support

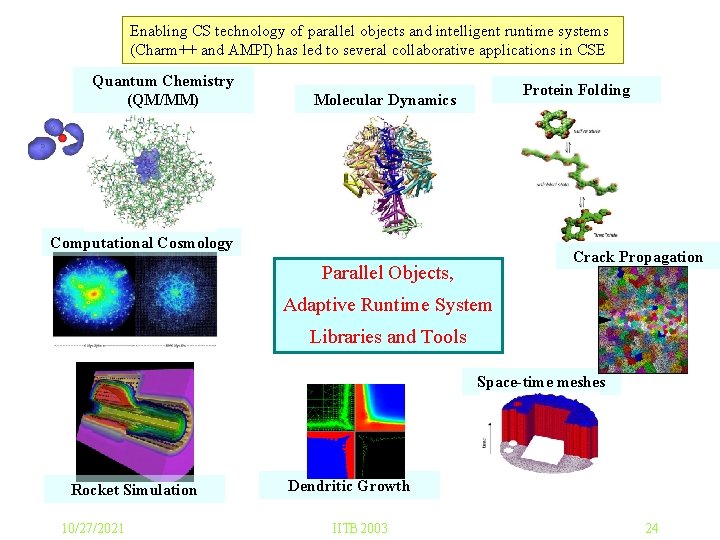

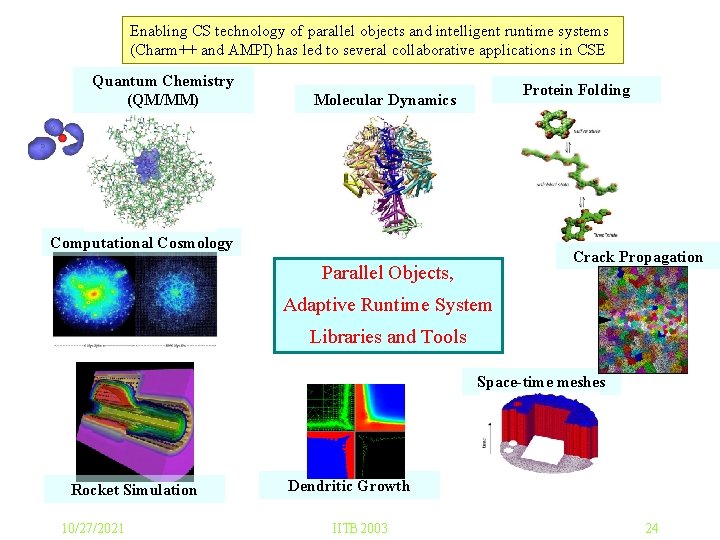

Enabling CS technology of parallel objects and intelligent runtime systems (Charm++ and AMPI) has led to several collaborative applications in CSE.

Core: Parallel Objects, Adaptive Runtime System, Libraries and Tools

Applications:

- Quantum Chemistry (QM/MM)
- Protein Folding
- Molecular Dynamics
- Computational Cosmology
- Crack Propagation
- Space-time meshes
- Rocket Simulation
- Dendritic Growth

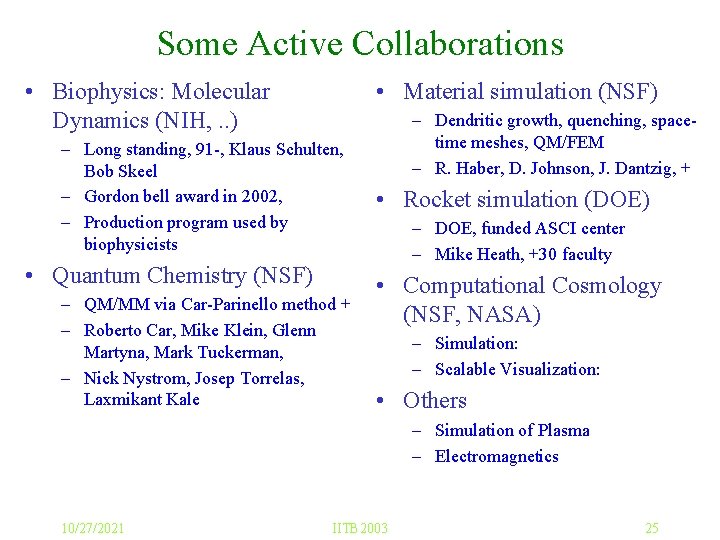

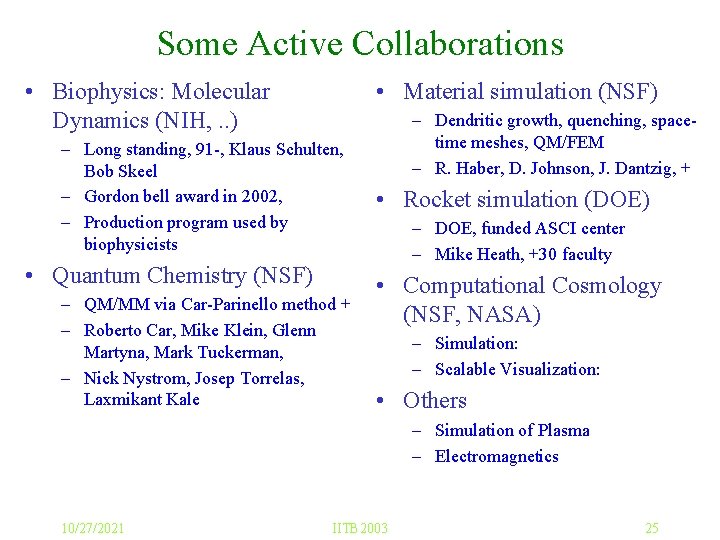

Some Active Collaborations

- Biophysics: Molecular Dynamics (NIH, ..)
  - Long standing, 91-, Klaus Schulten, Bob Skeel
  - Gordon Bell award in 2002
  - Production program used by biophysicists
- Quantum Chemistry (NSF)
  - QM/MM via Car-Parrinello method +
  - Roberto Car, Mike Klein, Glenn Martyna, Mark Tuckerman, Nick Nystrom, Josep Torrellas, Laxmikant Kale
- Material simulation (NSF)
  - Dendritic growth, quenching, spacetime meshes, QM/FEM
  - R. Haber, D. Johnson, J. Dantzig, +
- Rocket simulation (DOE)
  - DOE-funded ASCI center
  - Mike Heath, +30 faculty
- Computational Cosmology (NSF, NASA)
  - Simulation:
  - Scalable Visualization:
- Others
  - Simulation of Plasma
  - Electromagnetics

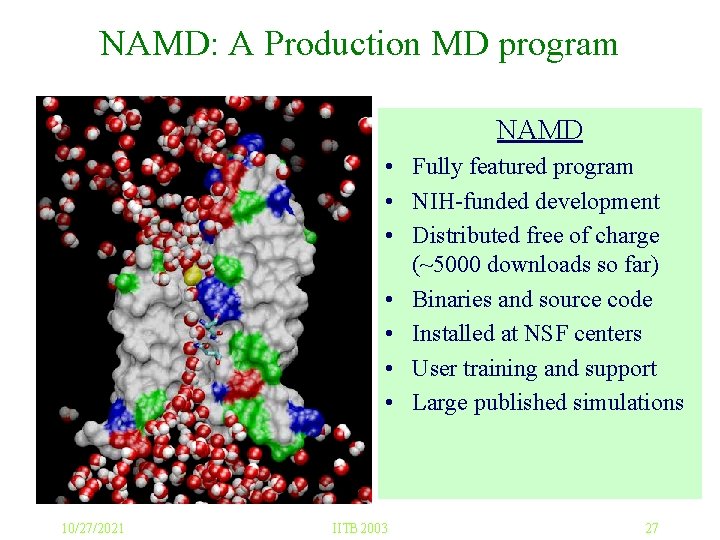

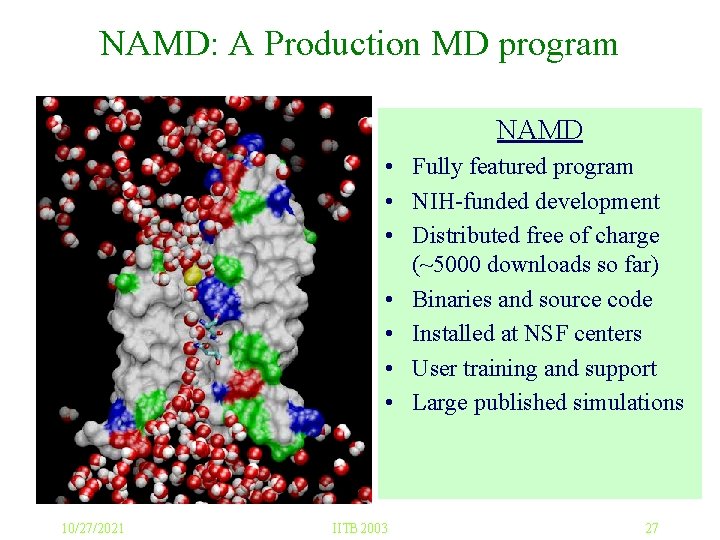

NAMD: A Production MD program

- Fully featured program
- NIH-funded development
- Distributed free of charge (~5000 downloads so far)
- Binaries and source code
- Installed at NSF centers
- User training and support
- Large published simulations

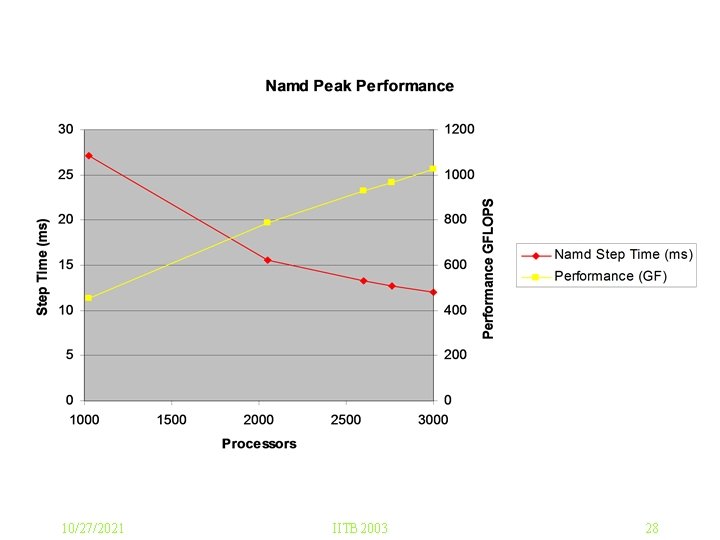

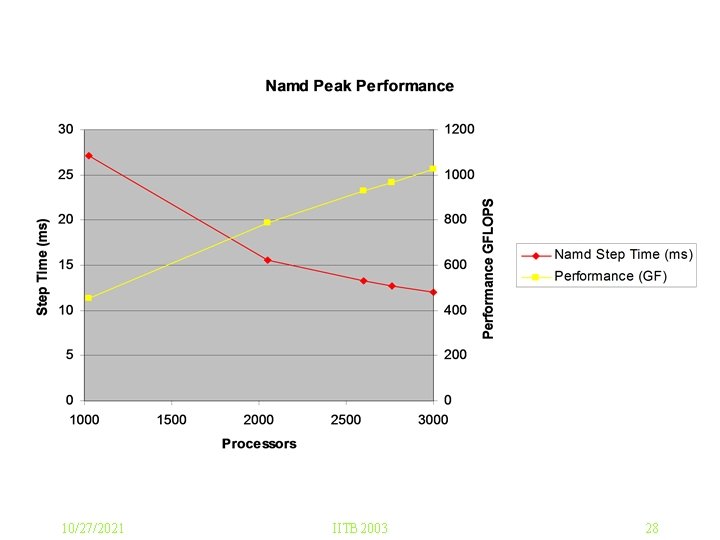

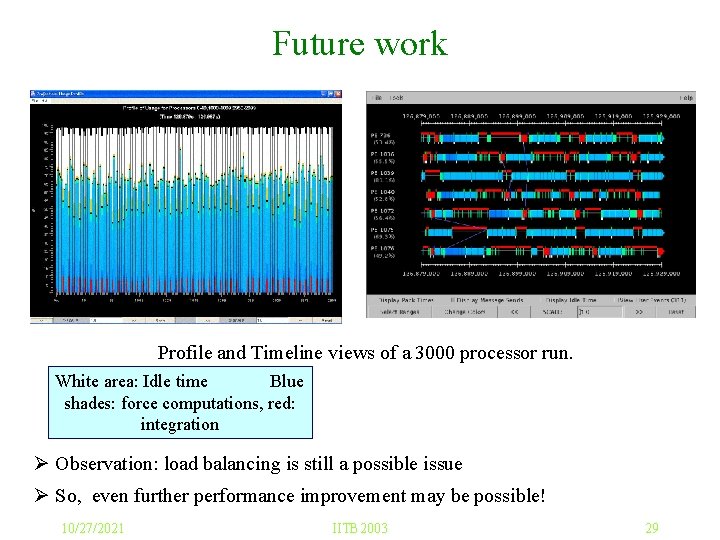

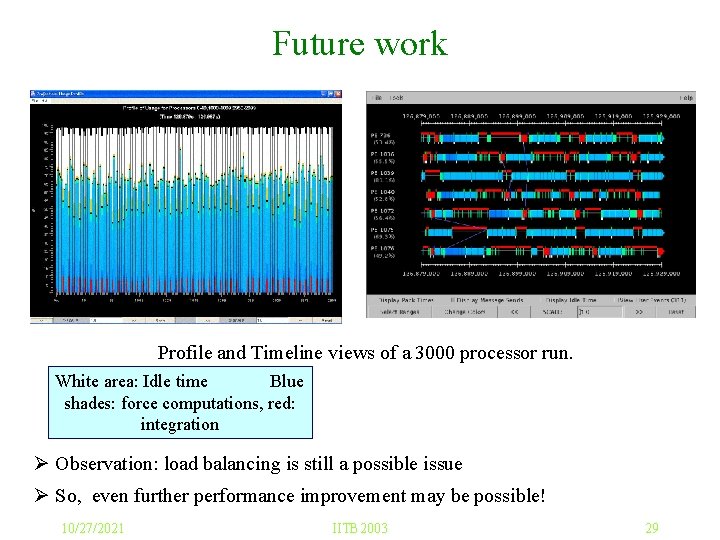

Future work

Profile and Timeline views of a 3000-processor run. White area: idle time. Blue shades: force computations; red: integration.

- Observation: load balancing is still a possible issue
- So, even further performance improvement may be possible!

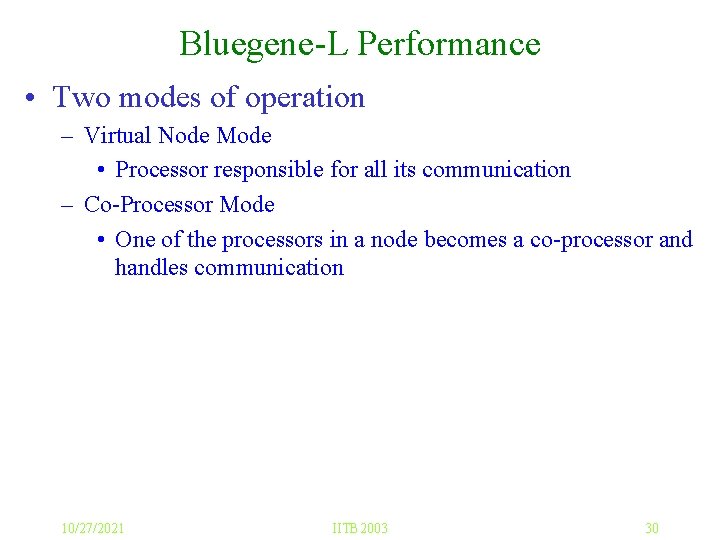

Bluegene-L Performance

- Two modes of operation
  - Virtual Node Mode
    - Processor responsible for all its communication
  - Co-Processor Mode
    - One of the processors in a node becomes a co-processor and handles communication

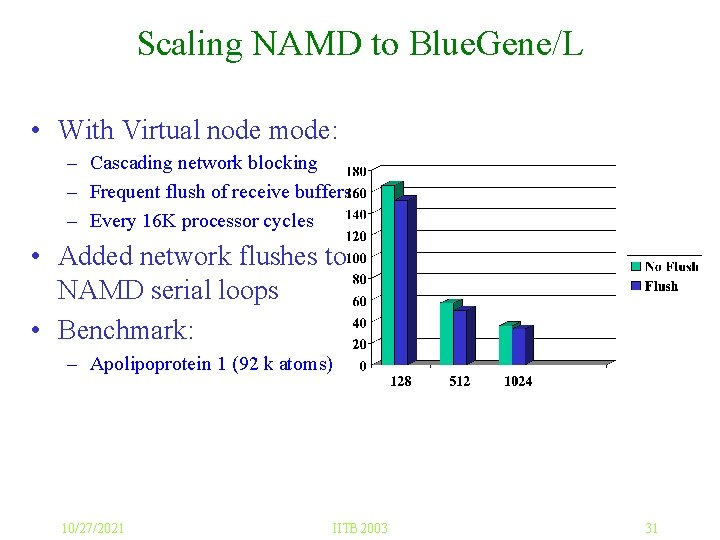

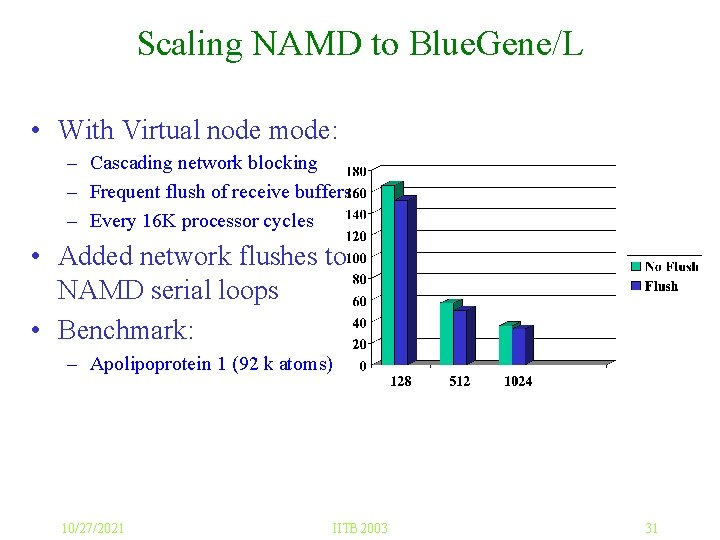

Scaling NAMD to BlueGene/L

- With virtual node mode:
  - Cascading network blocking
  - Frequent flush of receive buffers
  - Every 16K processor cycles
- Added network flushes to NAMD serial loops
- Benchmark:
  - Apolipoprotein 1 (92k atoms)

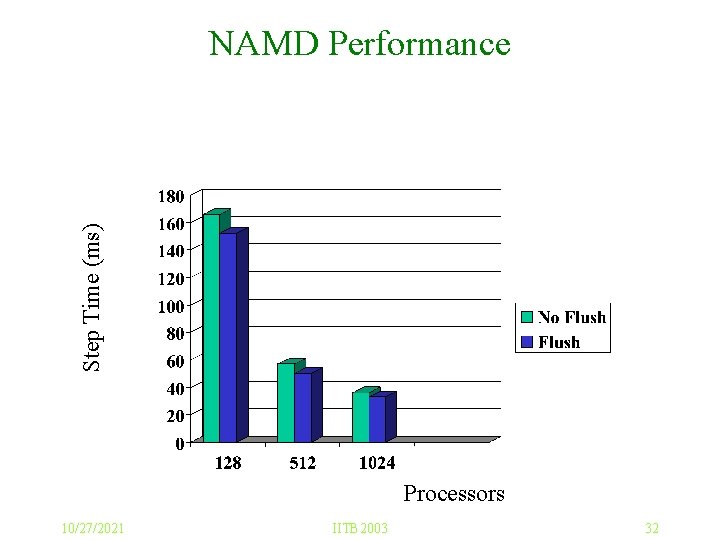

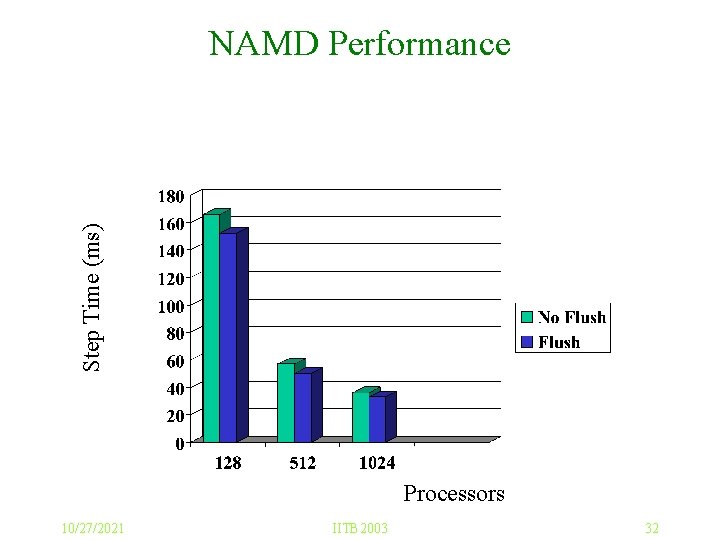

NAMD Performance

Chart axes: step time (ms) against number of processors

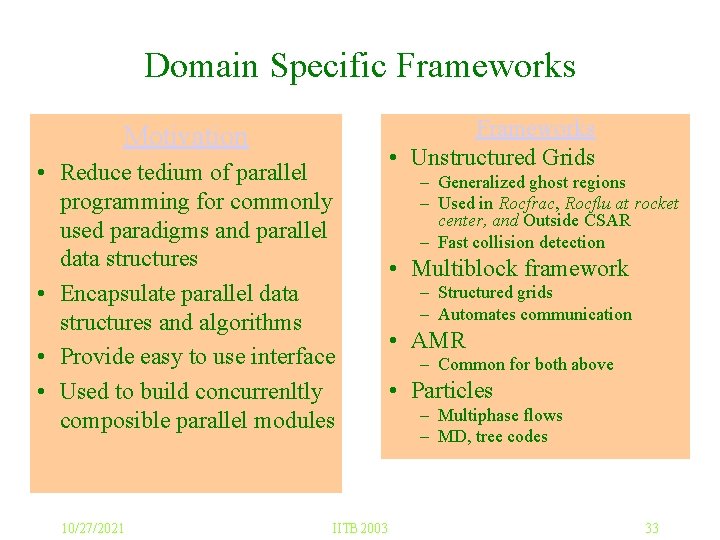

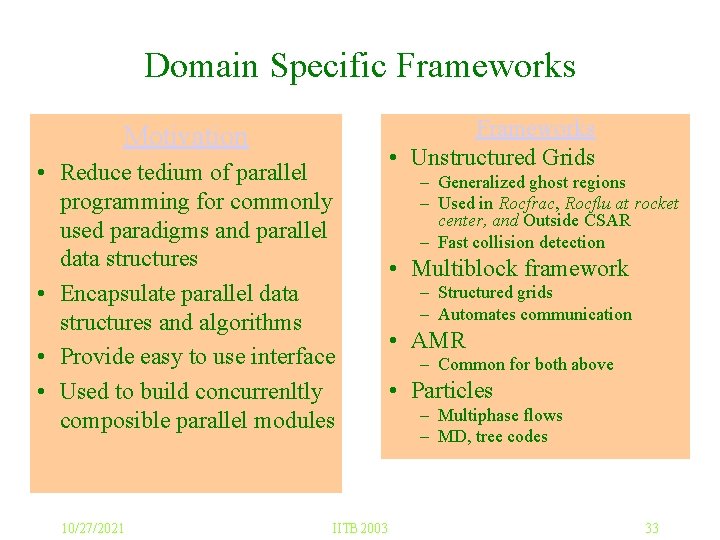

Domain Specific Frameworks

Motivation

- Reduce tedium of parallel programming for commonly used paradigms and parallel data structures
- Encapsulate parallel data structures and algorithms
- Provide an easy-to-use interface
- Used to build concurrently composable parallel modules

Frameworks

- Unstructured Grids
  - Generalized ghost regions
  - Used in Rocfrac, Rocflu at rocket center, and outside CSAR
  - Fast collision detection
- Multiblock framework
  - Structured grids
  - Automates communication
- AMR
  - Common for both above
- Particles
  - Multiphase flows
  - MD, tree codes

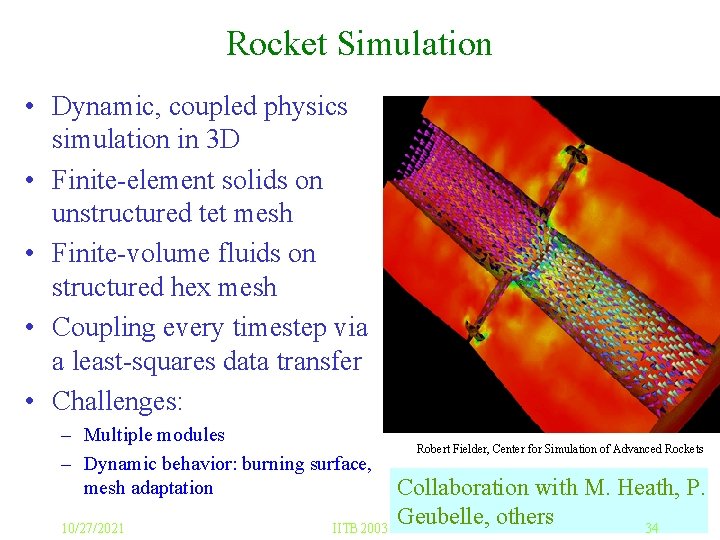

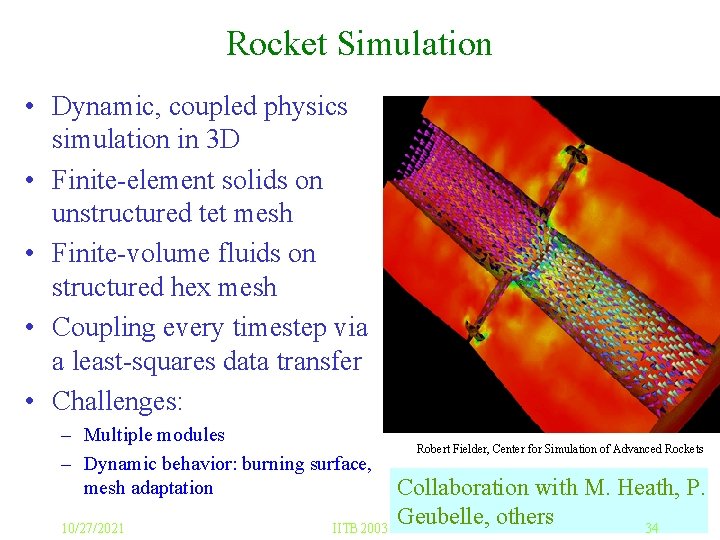

Rocket Simulation

- Dynamic, coupled physics simulation in 3D
- Finite-element solids on unstructured tet mesh
- Finite-volume fluids on structured hex mesh
- Coupling every timestep via a least-squares data transfer
- Challenges:
  - Multiple modules
  - Dynamic behavior: burning surface, mesh adaptation

Robert Fiedler, Center for Simulation of Advanced Rockets. Collaboration with M. Heath, P. Geubelle, others.

Dynamic load balancing in Crack Propagation

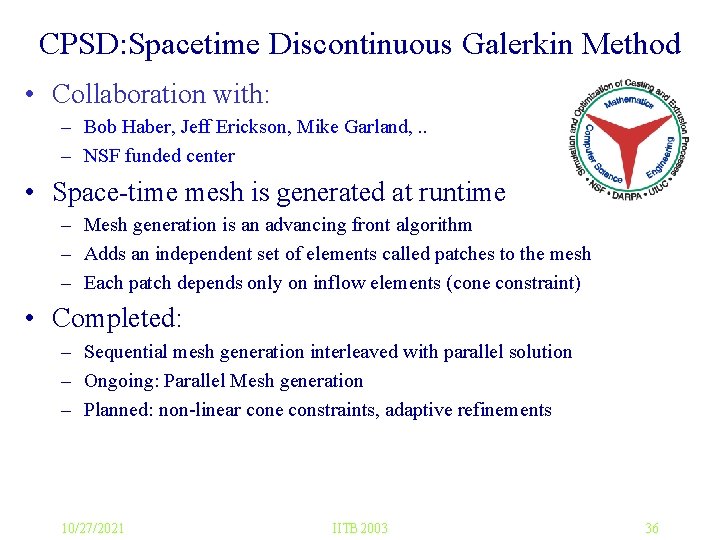

CPSD: Spacetime Discontinuous Galerkin Method

- Collaboration with:
  - Bob Haber, Jeff Erickson, Mike Garland, ..
  - NSF-funded center
- Space-time mesh is generated at runtime
  - Mesh generation is an advancing front algorithm
  - Adds an independent set of elements called patches to the mesh
  - Each patch depends only on inflow elements (cone constraint)
- Completed:
  - Sequential mesh generation interleaved with parallel solution
  - Ongoing: parallel mesh generation
  - Planned: non-linear cone constraints, adaptive refinements

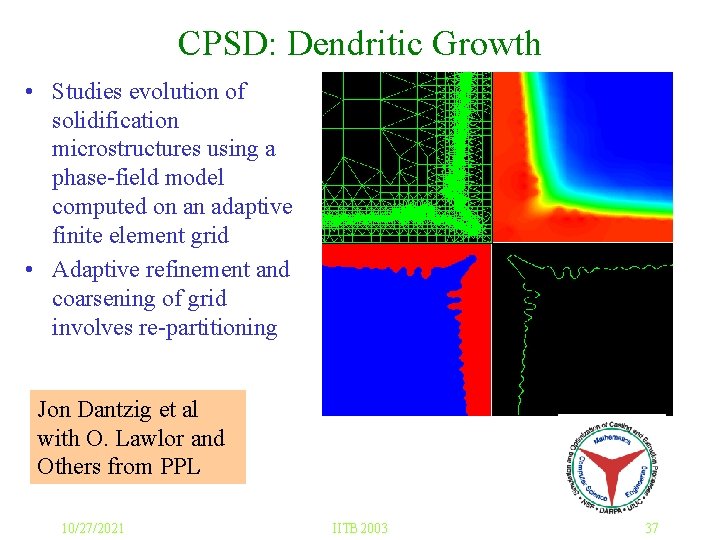

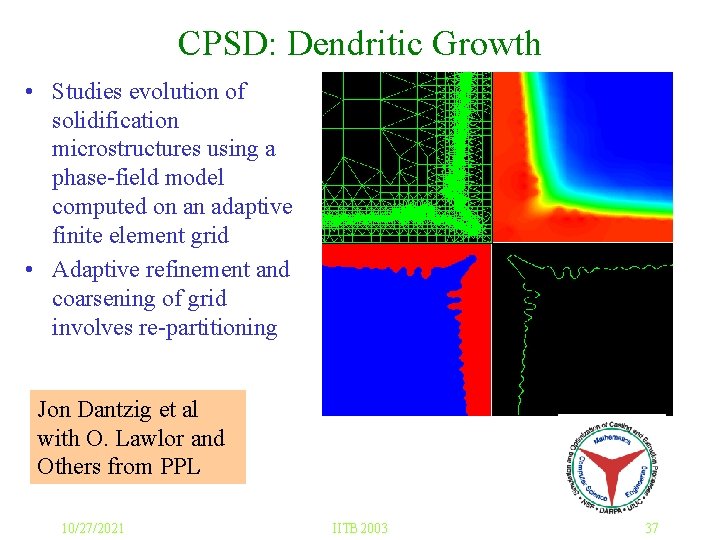

CPSD: Dendritic Growth

- Studies evolution of solidification microstructures using a phase-field model computed on an adaptive finite element grid
- Adaptive refinement and coarsening of the grid involves re-partitioning

Jon Dantzig et al., with O. Lawlor and others from PPL

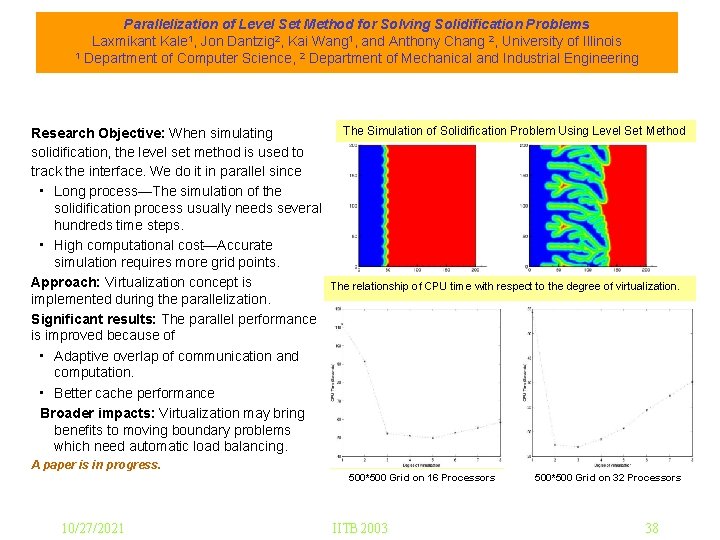

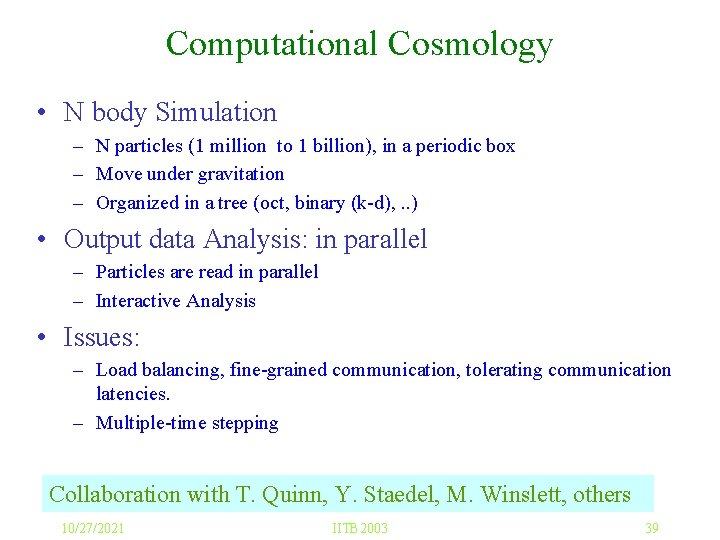

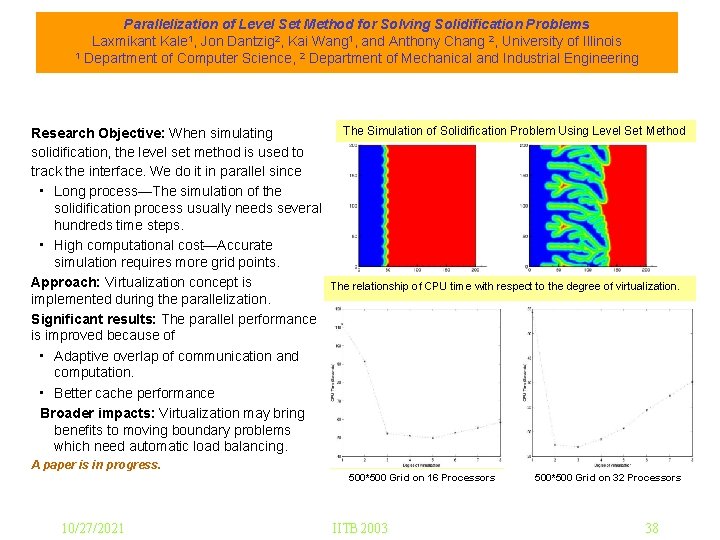

Parallelization of Level Set Method for Solving Solidification Problems

Laxmikant Kale (1), Jon Dantzig (2), Kai Wang (1), and Anthony Chang (2), University of Illinois; (1) Department of Computer Science, (2) Department of Mechanical and Industrial Engineering

Research objective: When simulating solidification, the level set method is used to track the interface. We do it in parallel because of:

- Long process: the simulation of the solidification process usually needs several hundred time steps.
- High computational cost: accurate simulation requires more grid points.

Approach: The virtualization concept is applied during parallelization.

Significant results: Parallel performance improves because of:

- Adaptive overlap of communication and computation
- Better cache performance

Broader impacts: Virtualization may bring benefits to moving boundary problems which need automatic load balancing.

Figures: the simulation of a solidification problem using the level set method; the relationship of CPU time with respect to the degree of virtualization (500*500 grid on 16 processors and on 32 processors). A paper is in progress.

Computational Cosmology

- N-body simulation
  - N particles (1 million to 1 billion), in a periodic box
  - Move under gravitation
  - Organized in a tree (oct, binary (k-d), ..)
- Output data analysis: in parallel
  - Particles are read in parallel
  - Interactive analysis
- Issues:
  - Load balancing, fine-grained communication, tolerating communication latencies
  - Multiple-time stepping

Collaboration with T. Quinn, Y. Staedel, M. Winslett, others

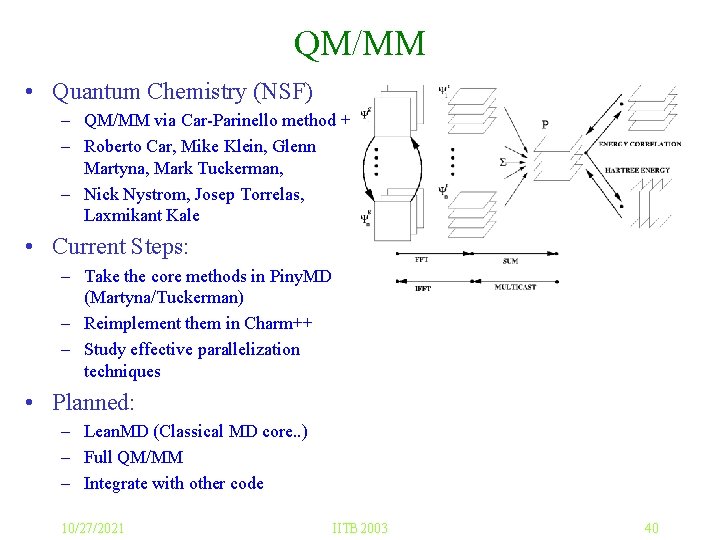

QM/MM

- Quantum Chemistry (NSF)
  - QM/MM via Car-Parrinello method +
  - Roberto Car, Mike Klein, Glenn Martyna, Mark Tuckerman, Nick Nystrom, Josep Torrellas, Laxmikant Kale
- Current steps:
  - Take the core methods in PinyMD (Martyna/Tuckerman)
  - Reimplement them in Charm++
  - Study effective parallelization techniques
- Planned:
  - LeanMD (classical MD core ..)
  - Full QM/MM
  - Integrate with other code

Summary and Messages

- We at PPL have advanced migratable objects technology
  - We are committed to supporting applications
  - We grow our base of reusable techniques via such collaborations
- Try using our technology:
  - AMPI, (Charm++), Faucets, FEM framework, ..
  - Available via the web (http://charm.cs.uiuc.edu)

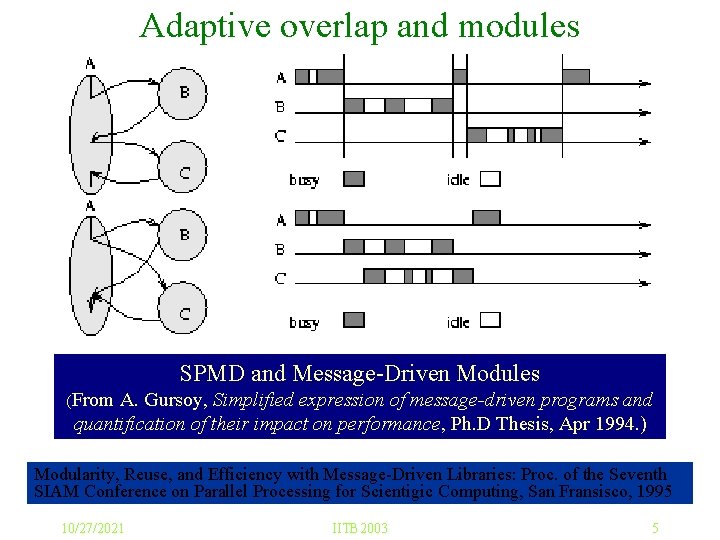

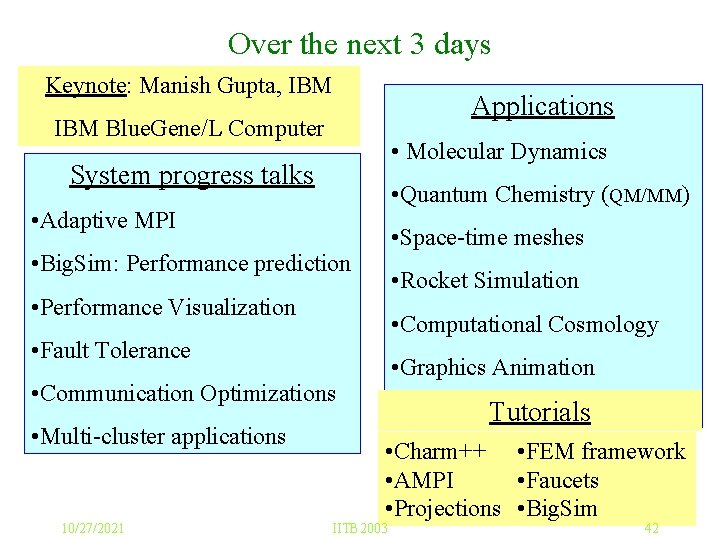

Over the next 3 days

Keynote: Manish Gupta, IBM, on the IBM BlueGene/L Computer

System progress talks

- Adaptive MPI
- BigSim: Performance prediction
- Performance Visualization
- Fault Tolerance
- Communication Optimizations
- Multi-cluster applications

Applications

- Molecular Dynamics
- Quantum Chemistry (QM/MM)
- Space-time meshes
- Rocket Simulation
- Computational Cosmology
- Graphics Animation

Tutorials

- Charm++
- FEM framework
- AMPI
- Faucets
- Projections
- BigSim