Higher Level Parallel Programming Language

Chao Huang
Parallel Programming Lab, University of Illinois
charm.cs.uiuc.edu

Outline

- Motivation
  - Charm++ and Virtualization
- Language Design
  - Program Structure
  - Orchestration Statements
  - Communication Patterns
  - Code Examples
- Implementation
  - Jade and MSA
- Future Work

12/4/2020 charm.cs.uiuc.edu

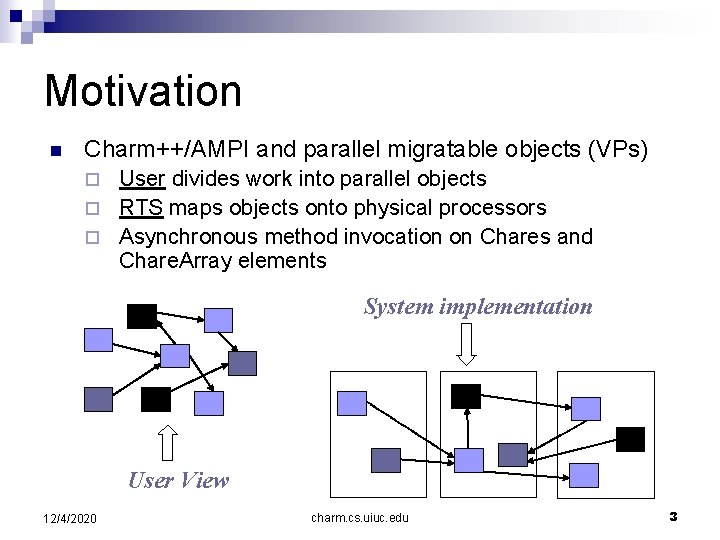

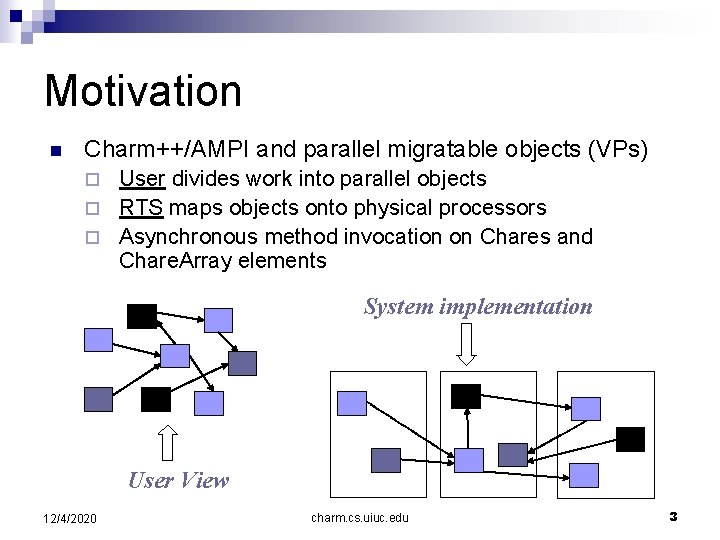

Motivation

- Charm++/AMPI and parallel migratable objects (VPs)
  - The user divides work into parallel objects
  - The runtime system (RTS) maps objects onto physical processors
  - Asynchronous method invocation on Chares and ChareArray elements
- Figure labels: User View, System implementation

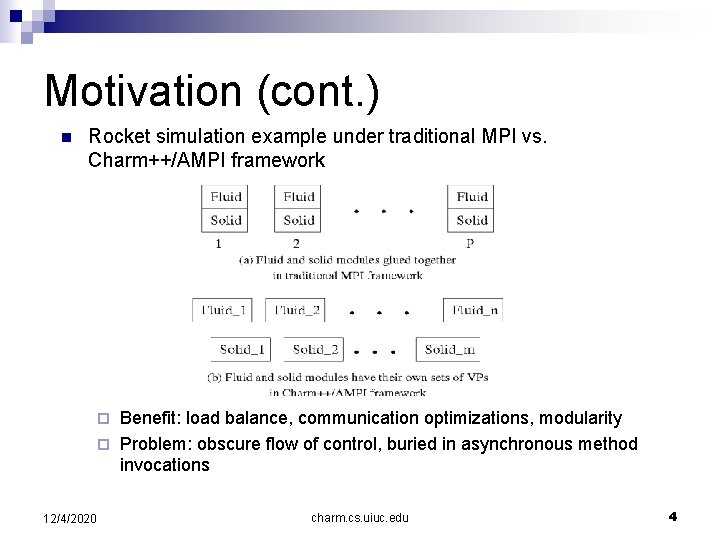

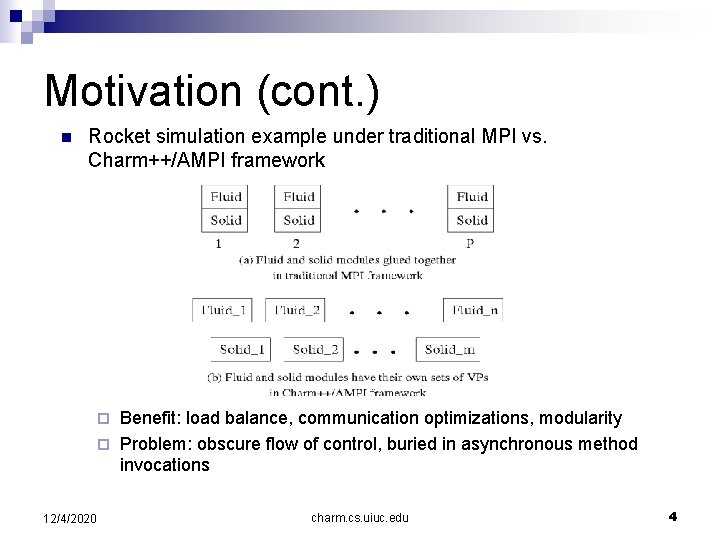

Motivation (cont.)

- Rocket simulation example under traditional MPI vs. the Charm++/AMPI framework
  - Benefit: load balance, communication optimizations, modularity
  - Problem: obscure flow of control, buried in asynchronous method invocations

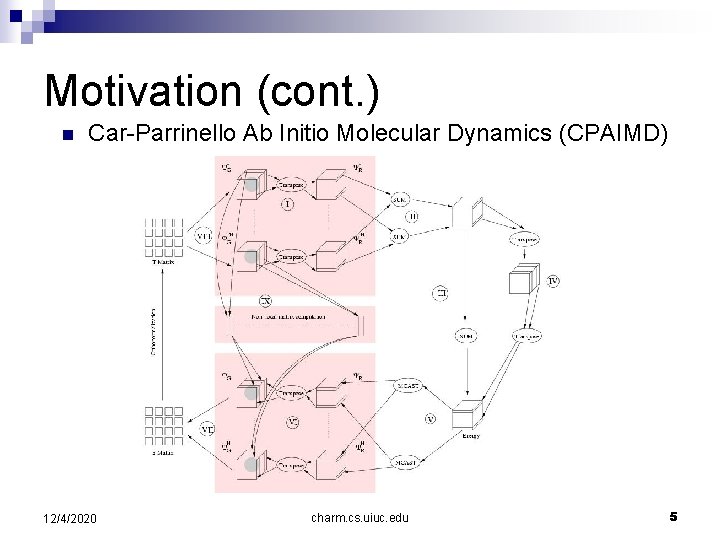

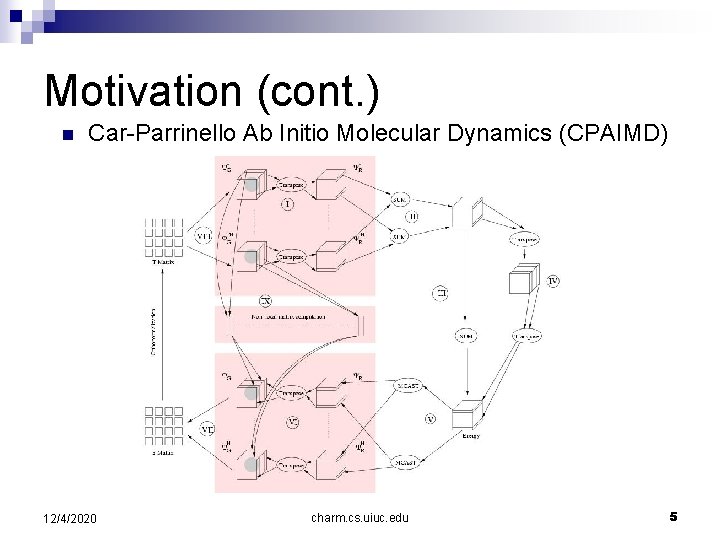

Motivation (cont.)

- Car-Parrinello Ab Initio Molecular Dynamics (CPAIMD)

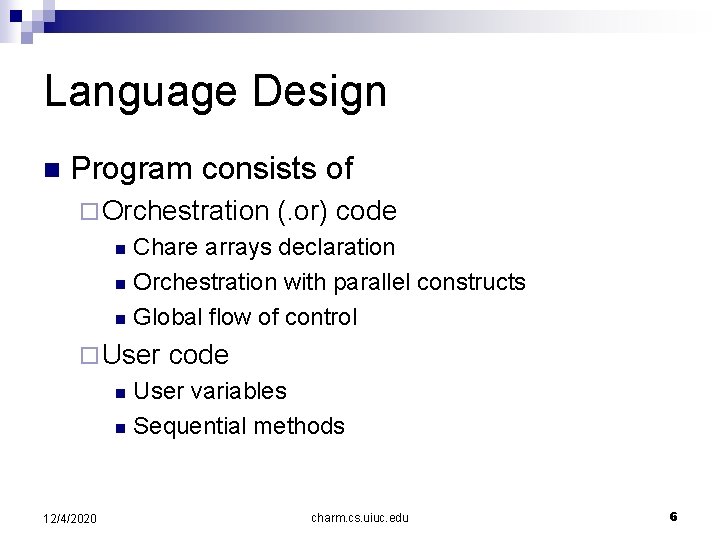

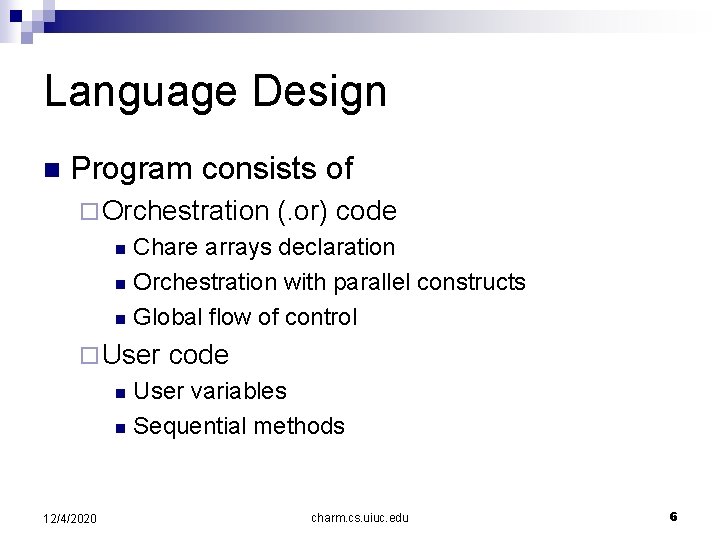

Language Design

- Program consists of
  - Orchestration (.or) code
    - Chare array declarations
    - Orchestration with parallel constructs
    - Global flow of control
  - User code
    - User variables
    - Sequential methods

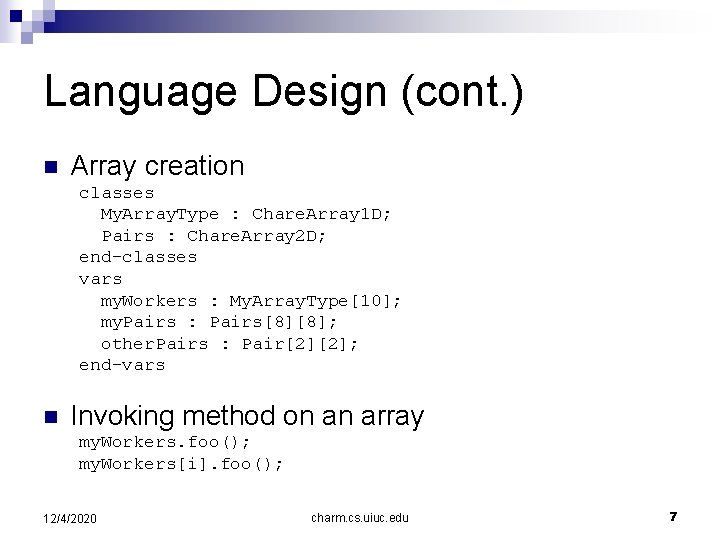

Language Design (cont.)

- Array creation

      classes
        MyArrayType : ChareArray1D;
        Pairs : ChareArray2D;
      end-classes
      vars
        myWorkers : MyArrayType[10];
        myPairs : Pairs[8][8];
        otherPairs : Pairs[2][2];
      end-vars

- Invoking a method on an array (on every element, or on element i)

      myWorkers.foo();
      myWorkers[i].foo();

![Language Design cont n Orchestration Statements forall i in my Workersi do Language Design (cont. ) n Orchestration Statements ¨ forall i in my. Workers[i]. do.](https://slidetodoc.com/presentation_image_h/df1d6c47664c6eeb5181856d9d02003b/image-8.jpg)

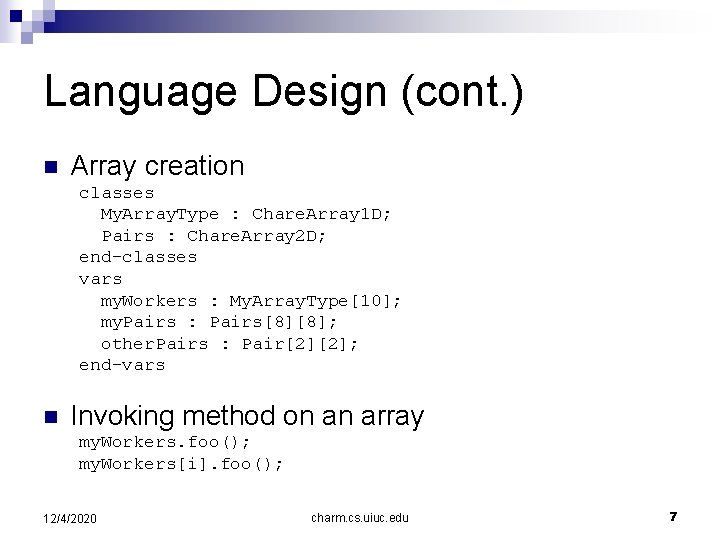

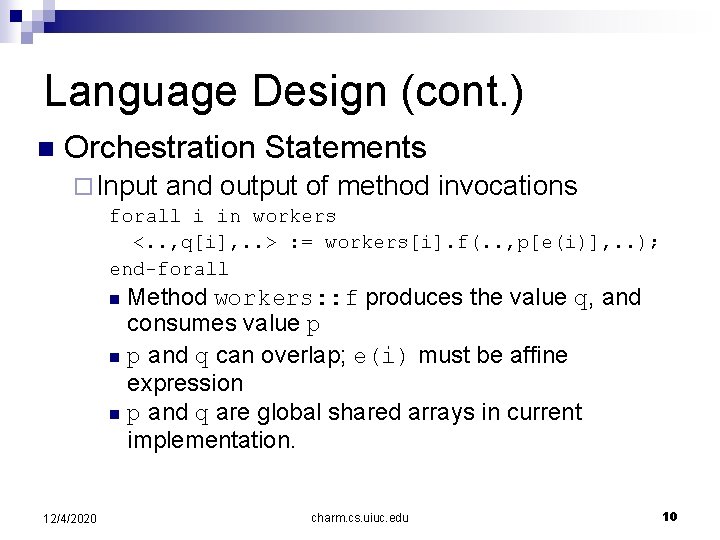

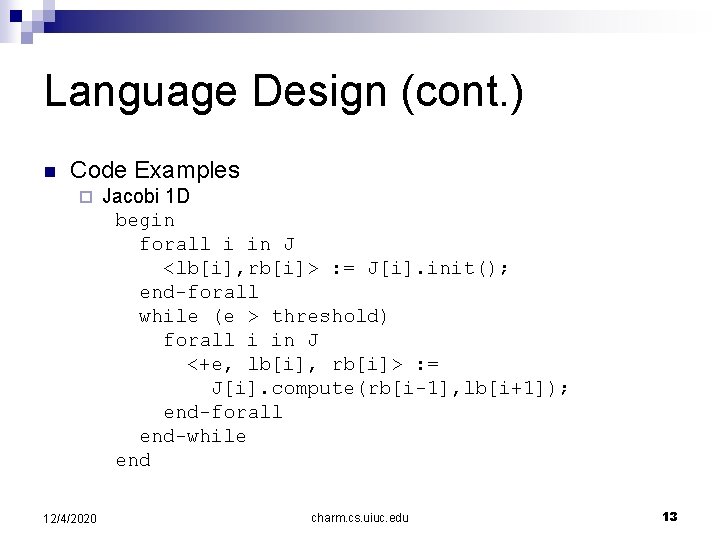

Language Design (cont.)

- Orchestration Statements: forall

      forall i in myWorkers
        myWorkers[i].doWork(1, 100);
      end-forall

- Whole set of elements (abbreviated)

      forall in myWorkers
        doWork(1, 100);
      end-forall

- Subset of elements

      forall i:0:10:2 in myWorkers
      forall <i, j:0:8:2> in myPairs
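
The forall forms above can be mimicked in plain Python to make the index selection concrete. This is only a sequential sketch, not the orchestration language or its runtime: a real forall runs elements in parallel. The names `Worker` and `forall` are hypothetical, and the sketch assumes the range `i:0:10:2` means start:stop:stride with an exclusive stop, which the slide does not state.

```python
class Worker:
    """Hypothetical stand-in for one element of a chare array."""

    def __init__(self, index):
        self.index = index
        self.calls = []  # record of (lo, hi) arguments received

    def do_work(self, lo, hi):
        self.calls.append((lo, hi))


def forall(array, method, *args, start=0, stop=None, stride=1):
    """Invoke `method` on the selected elements and return the indices visited.

    Assumption: `stop` is exclusive, like Python's range().
    """
    stop = len(array) if stop is None else stop
    visited = []
    for i in range(start, stop, stride):
        getattr(array[i], method)(*args)
        visited.append(i)
    return visited


my_workers = [Worker(i) for i in range(10)]

# forall in myWorkers doWork(1, 100): every element
all_indices = forall(my_workers, "do_work", 1, 100)

# forall i:0:10:2 in myWorkers: every second element
even_indices = forall(my_workers, "do_work", 1, 100, start=0, stop=10, stride=2)
```

After both calls, the even-indexed workers have received two invocations and the odd-indexed workers one.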

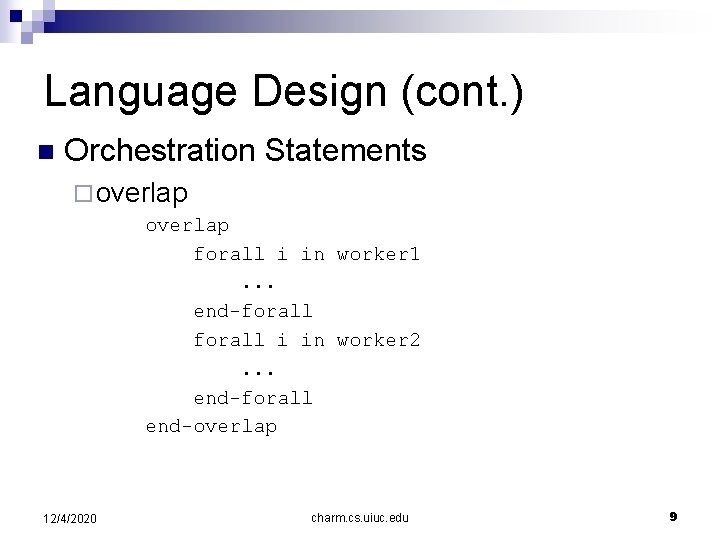

Language Design (cont.)

- Orchestration Statements: overlap

      overlap
        forall i in worker1
          ...
        end-forall
        forall i in worker2
          ...
        end-forall
      end-overlap
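
One way to read the overlap block is that the enclosed forall statements may proceed concurrently and the block finishes when all of them have finished. The slide does not spell out these semantics, so the following Python sketch is an assumption. It uses the standard `concurrent.futures` thread pool, and `forall`, `worker1`, and `worker2` here are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor


def forall(elements, fn):
    """Sequential stand-in for one forall block: apply fn to every element."""
    return [fn(x) for x in elements]


worker1 = [1, 2, 3]
worker2 = [10, 20]

# "overlap": submit both forall blocks, then wait for both to complete.
with ThreadPoolExecutor(max_workers=2) as pool:
    first = pool.submit(forall, worker1, lambda w: w * 2)
    second = pool.submit(forall, worker2, lambda w: w + 1)
    result1, result2 = first.result(), second.result()  # "end-overlap"
```

The two blocks touch disjoint data, so the order in which they actually run does not affect the results.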

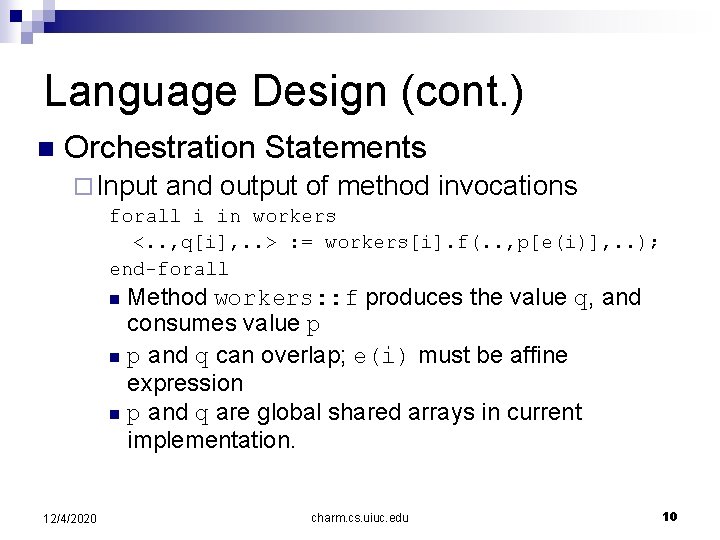

Language Design (cont.)

- Orchestration Statements: input and output of method invocations

      forall i in workers
        <.., q[i], ..> := workers[i].f(.., p[e(i)], ..);
      end-forall

  - Method workers::f produces the value q and consumes the value p
  - p and q can overlap; e(i) must be an affine expression
  - p and q are global shared arrays in the current implementation

![Language Design cont n Communication Patterns Pointtopoint pi Ai f Language Design (cont. ) n Communication Patterns ¨ Point-to-point <p[i]> : = A[i]. f(.](https://slidetodoc.com/presentation_image_h/df1d6c47664c6eeb5181856d9d02003b/image-11.jpg)

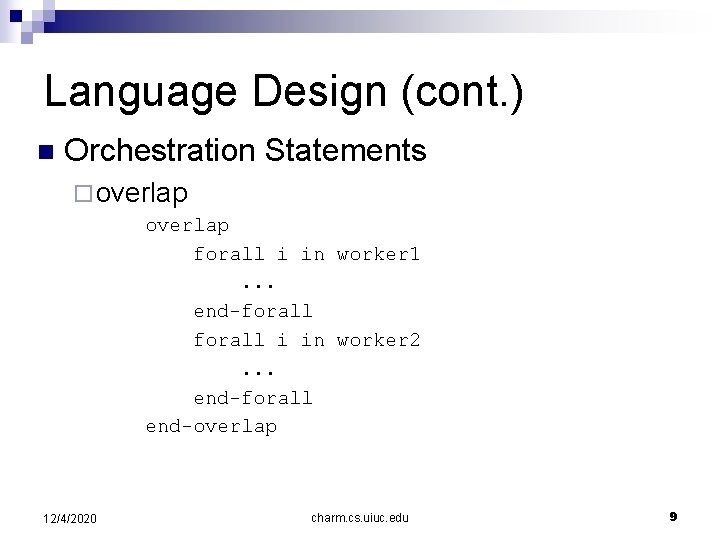

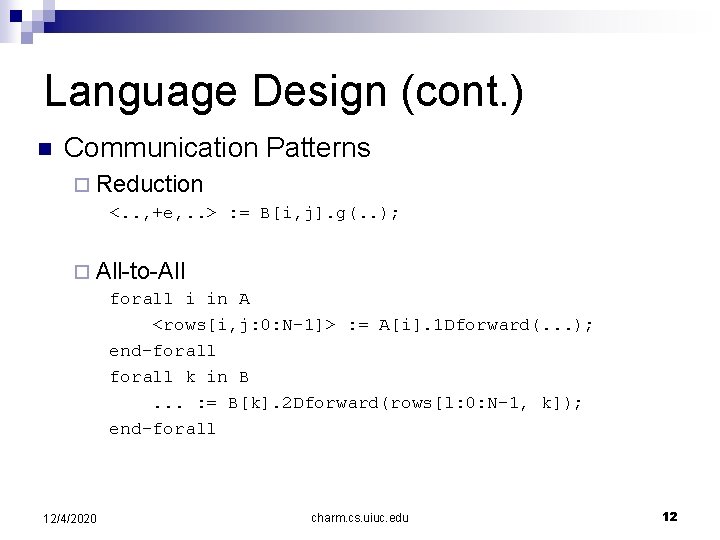

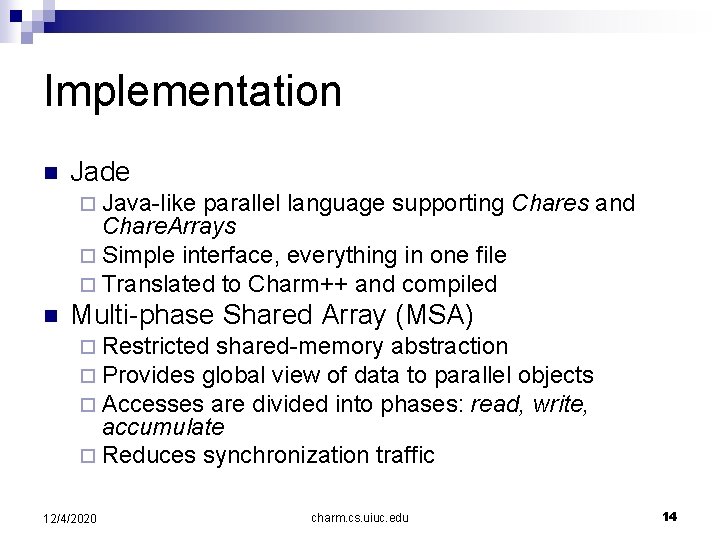

Language Design (cont.)

- Communication Patterns: point-to-point

      <p[i]> := A[i].f(..);
      <..> := B[i].g(p[i]);

- Communication Patterns: multicast

      <p[i]> := A[i].f(...);
      <...> := B[i].g(p[i-1], p[i+1]);
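
The difference between the two patterns comes down to how many consumers read each produced value. As an illustration only, the Python sketch below takes the index expressions used by the consumer B[i] and works out which consumers read each p[j]. The helper `consumers_of` is hypothetical, and out-of-range indices are simply dropped (a non-periodic boundary is an assumption, the slide does not say).

```python
from collections import defaultdict


def consumers_of(n, index_exprs):
    """Map each produced element p[j] to the consumer indices i that read it.

    index_exprs: functions i -> index into p, e.g. lambda i: i - 1.
    Assumption: indices outside 0..n-1 are ignored (no wrap-around).
    """
    readers = defaultdict(list)
    for i in range(n):
        for expr in index_exprs:
            j = expr(i)
            if 0 <= j < n:
                readers[j].append(i)
    return dict(readers)


# B[i].g(p[i]): each value has exactly one reader
point_to_point = consumers_of(4, [lambda i: i])

# B[i].g(p[i-1], p[i+1]): interior values have two readers
multicast = consumers_of(4, [lambda i: i - 1, lambda i: i + 1])
```

In the first case every p[j] goes to a single element, so one message per value suffices; in the second, p[1] is read by both B[0] and B[2], which is what makes it a multicast.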

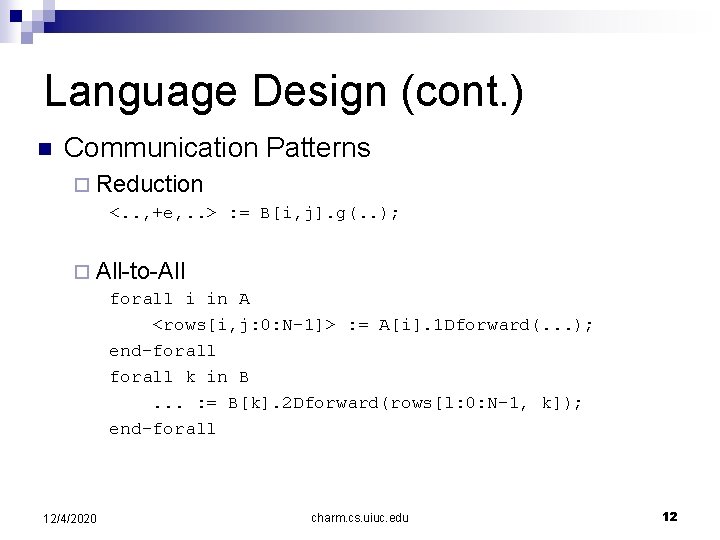

Language Design (cont.)

- Communication Patterns: reduction

      <.., +e, ..> := B[i,j].g(..);

- Communication Patterns: all-to-all

      forall i in A
        <rows[i, j:0:N-1]> := A[i].1Dforward(...);
      end-forall
      forall k in B
        ... := B[k].2Dforward(rows[l:0:N-1, k]);
      end-forall
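
In the all-to-all example each A[i] produces one row and each B[k] consumes one column, so the data movement amounts to a transpose. The Python sketch below shows that shape together with a `+e`-style sum reduction. The functions `one_d_forward` and `two_d_forward` are hypothetical placeholders with made-up bodies (the slide does not say what the real methods compute), and the sketch assumes the range `0:N-1` covers all N indices.

```python
N = 3


def one_d_forward(i):
    """Placeholder for A[i].1Dforward: produce row i of the shared array."""
    return [10 * i + j for j in range(N)]


def two_d_forward(column):
    """Placeholder for B[k].2Dforward: consume one full column."""
    return sum(column)


# First forall: every A[i] writes rows[i, 0..N-1].
rows = [one_d_forward(i) for i in range(N)]

# Second forall: every B[k] reads rows[0..N-1, k], one value from each producer.
column_results = [two_d_forward([rows[l][k] for l in range(N)]) for k in range(N)]

# Reduction: contributions from all elements are combined with +.
e = sum(column_results)
```

Because each consumer needs a value from every producer, every A[i] ends up sending to every B[k], which is the all-to-all pattern.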

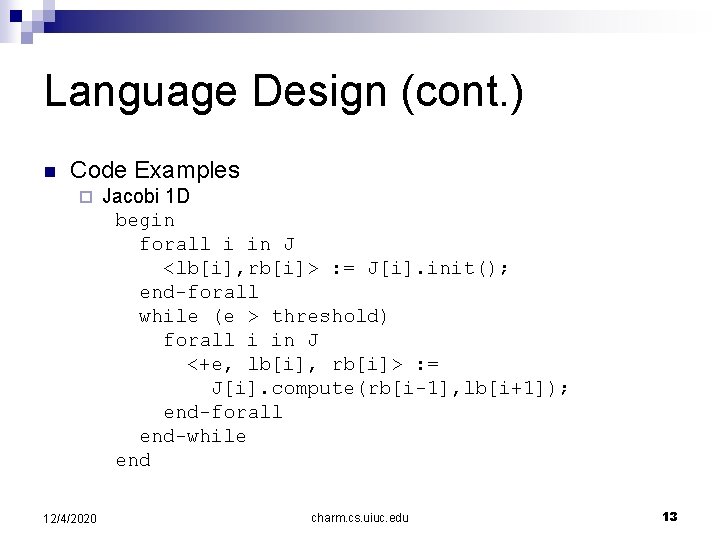

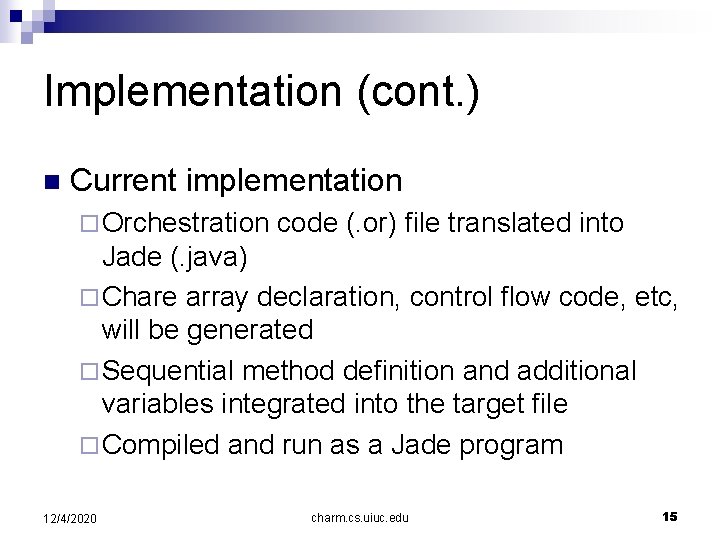

Language Design (cont.)

- Code Examples: Jacobi 1D

      begin
        forall i in J
          <lb[i], rb[i]> := J[i].init();
        end-forall
        while (e > threshold)
          forall i in J
            <+e, lb[i], rb[i]> := J[i].compute(rb[i-1], lb[i+1]);
          end-forall
        end-while
      end
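
To make the data flow of the Jacobi example concrete, here is a sequential Python emulation. It is a sketch under several assumptions that the slide leaves open: each element of J owns a contiguous chunk of a 1D grid, the domain ends have fixed boundary values, e starts at infinity, and the per-element error is the largest pointwise change. The names `JacobiChunk` and `run` are hypothetical.

```python
class JacobiChunk:
    """Hypothetical stand-in for one element J[i], owning part of the grid."""

    def __init__(self, values):
        self.values = list(values)

    def init(self):
        # Publish the left and right boundary values: lb[i], rb[i].
        return self.values[0], self.values[-1]

    def compute(self, left_ghost, right_ghost):
        # One Jacobi sweep: each point becomes the mean of its two neighbours.
        padded = [left_ghost] + self.values + [right_ghost]
        new = [(padded[k - 1] + padded[k + 1]) / 2
               for k in range(1, len(padded) - 1)]
        err = max(abs(a - b) for a, b in zip(new, self.values))
        self.values = new
        return err, new[0], new[-1]


def run(chunks, left_bc, right_bc, threshold, max_iters=10_000):
    n = len(chunks)
    lb, rb = [0.0] * n, [0.0] * n
    for i, chunk in enumerate(chunks):            # first forall
        lb[i], rb[i] = chunk.init()
    e, iters = float("inf"), 0
    while e > threshold and iters < max_iters:
        # Snapshot so every element reads the previous iteration's boundaries.
        old_lb, old_rb = lb[:], rb[:]
        e = 0.0
        for i, chunk in enumerate(chunks):        # second forall
            left = old_rb[i - 1] if i > 0 else left_bc
            right = old_lb[i + 1] if i < n - 1 else right_bc
            err, lb[i], rb[i] = chunk.compute(left, right)
            e += err                              # the "+e" reduction
        iters += 1
    return e, iters


chunks = [JacobiChunk([0.0, 0.0]), JacobiChunk([0.0, 0.0])]
final_error, iterations = run(chunks, left_bc=0.0, right_bc=1.0, threshold=1e-9)
```

With end values 0 and 1 the four interior points relax toward a straight line between them. The snapshot of lb and rb stands in for what the orchestration runtime would have to guarantee: each compute call sees the boundaries published in the previous iteration, not ones updated partway through the current one.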

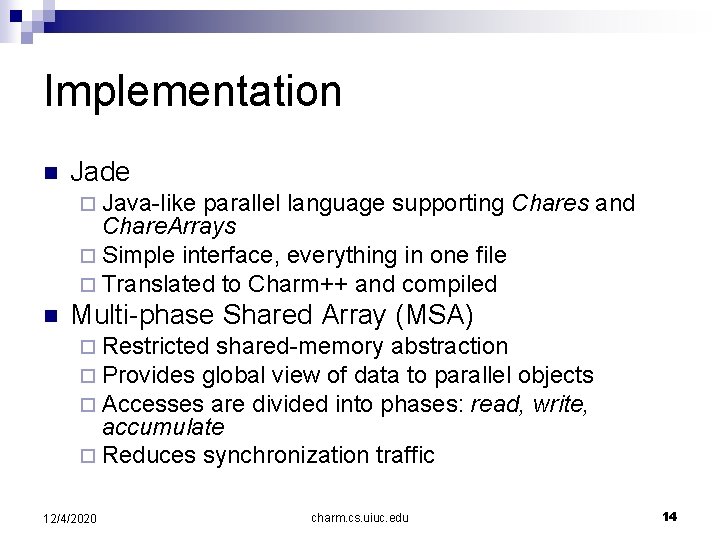

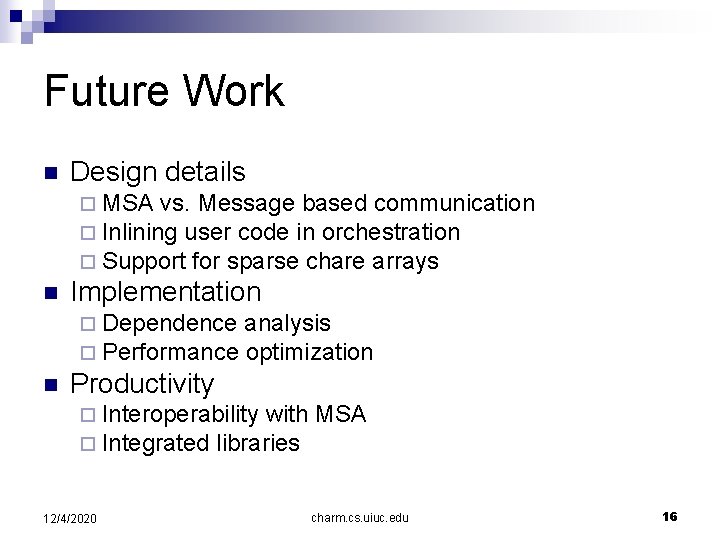

Implementation

- Jade
  - Java-like parallel language supporting Chares and ChareArrays
  - Simple interface, everything in one file
  - Translated to Charm++ and compiled
- Multi-phase Shared Array (MSA)
  - Restricted shared-memory abstraction
  - Provides a global view of data to parallel objects
  - Accesses are divided into phases: read, write, accumulate
  - Reduces synchronization traffic
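
The phase discipline described for MSA can be illustrated with a toy single-process model in Python. This is not the MSA API: `PhasedArray` and its methods are hypothetical, and the sketch only shows the idea that an array is in exactly one access mode at a time and changes mode at an explicit synchronization point.

```python
class PhasedArray:
    """Toy model of a phase-restricted shared array (not the real MSA API)."""

    PHASES = ("read", "write", "accumulate")

    def __init__(self, size):
        self.data = [0] * size
        self.phase = "write"  # assumption: arrays start in the write phase

    def sync(self, next_phase):
        # Stand-in for the point where all accessors agree on the next phase.
        if next_phase not in self.PHASES:
            raise ValueError(f"unknown phase: {next_phase}")
        self.phase = next_phase

    def _require(self, phase):
        if self.phase != phase:
            raise RuntimeError(f"{phase} access during {self.phase} phase")

    def get(self, i):
        self._require("read")
        return self.data[i]

    def set(self, i, value):
        self._require("write")
        self.data[i] = value

    def accumulate(self, i, value):
        self._require("accumulate")
        self.data[i] += value


shared = PhasedArray(4)
shared.set(0, 5)             # write phase
shared.sync("accumulate")
shared.accumulate(0, 2)      # contributions are combined with +
shared.accumulate(0, 3)
shared.sync("read")
total = shared.get(0)
```

Restricting each phase to one kind of access is what lets an implementation avoid per-access coherence traffic: readers never race with writers, and accumulations commute, so coordination is only needed at the phase boundaries.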

Implementation (cont.)

- Current implementation
  - The orchestration code (.or) file is translated into Jade (.java)
  - Chare array declarations, control flow code, etc. are generated
  - Sequential method definitions and additional variables are integrated into the target file
  - The result is compiled and run as a Jade program

Future Work

- Design details
  - MSA vs. message-based communication
  - Inlining user code in orchestration
  - Support for sparse chare arrays
- Implementation
  - Dependence analysis
  - Performance optimization
- Productivity
  - Interoperability with MSA
  - Integrated libraries