Vertical K Median Clustering Amal Perera William Perrizo

- Slides: 23

Vertical K Median Clustering

Amal Perera, William Perrizo, {amal.perera, william.perrizo}@ndsu.edu

Dept. of CS, North Dakota State University.

CATA 2006 – Seattle, Washington. 11/29/2020

Outline

- Introduction
- Background
- Our Approach
- Results
- Conclusions

Introduction

- Clustering: automated identification of groups of objects based on similarity.
- Application areas include: data mining, search engine indexing, pattern recognition, image processing, trend analysis and many other areas.
- Clustering algorithms: partition, hierarchical, density, grid based.
- Major problem: scalability with respect to data set size.
- We propose: a partition based Vertical K Median Clustering.

Background

- Many clustering algorithms work well on small datasets.
- Current approaches for large data sets include:
  - Sampling, e.g.
    - CLARA: choosing a representative sample
    - CLARANS: selecting a randomized sample for each iteration
  - Preserving summary statistics, e.g.
    - BIRCH: tree structure that records the sufficient statistics for the data set
- Requirement for input parameters with prior knowledge.
- The above techniques may lead to suboptimal solutions.

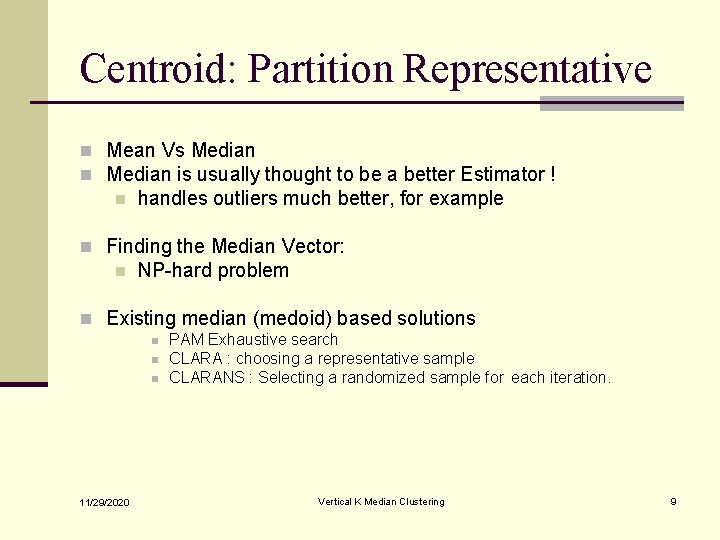

Background

- Partition Clustering (k):
  - n objects in the original data set
  - Broken into k partitions (iteratively, each time resulting in an improved k-clustering) to achieve a certain optimality criterion
- Computational steps:
  1. Find a representative for each cluster component
  2. Assign others to be in the cluster of the best representative
  3. Calculate error (repeat if error is too high)

Our Approach

- Scalability is addressed:
  - it is a partition based approach
  - it uses a vertical data structure (P-tree)
  - the computation is efficient:
    - selects the partition representative using a simple directed search across bit slices rather than down rows
    - assigns membership using bit slices with geometric reasoning
    - computes error using position based manipulation of bit slices
- Solution quality is improved or maintained while increasing speed and scalability.
  - Uses a median rather than a mean

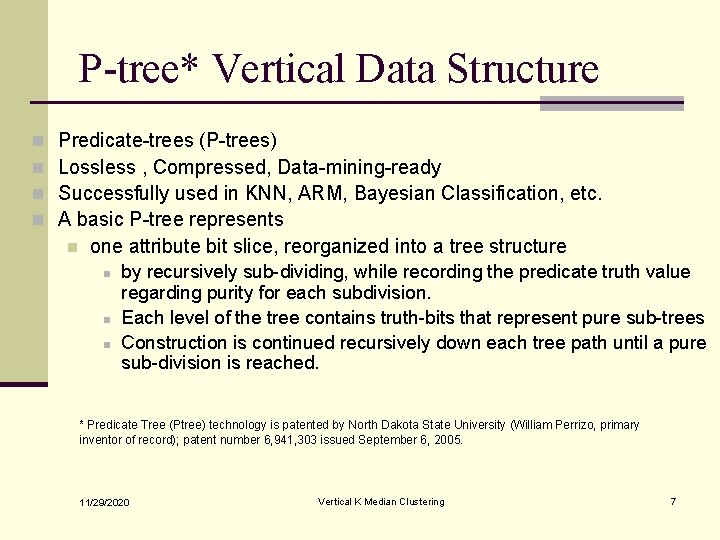

P-tree* Vertical Data Structure

- Predicate-trees (P-trees)
  - Lossless, compressed, data-mining-ready
  - Successfully used in KNN, ARM, Bayesian classification, etc.
- A basic P-tree represents
  - one attribute bit slice, reorganized into a tree structure by recursively sub-dividing, while recording the predicate truth value regarding purity for each subdivision.
  - Each level of the tree contains truth-bits that represent pure sub-trees.
  - Construction is continued recursively down each tree path until a pure sub-division is reached.

\* Predicate Tree (Ptree) technology is patented by North Dakota State University (William Perrizo, primary inventor of record); patent number 6,941,303 issued September 6, 2005.

A file, R(A1..An), contains horizontal structures (horizontal records) that are processed vertically (vertical scans).

Ptrees: vertically partition; then compress each vertical bit slice into a basic Ptree; horizontally process these basic Ptrees using one multi-operand logical AND.

[Figure: the relation R(A1 A2 A3 A4) with 3-bit attribute values, its vertical bit slices R11 ... R43, and the corresponding basic Ptrees P11 ... P43. The individual bit columns did not survive extraction.]

1-Dimensional Ptrees are built by recording the truth of the predicate "pure 1" recursively on halves, until there is purity. For P11:

1. Whole file is not pure 1: 0
2. 1st half is not pure 1: 0 (but it is pure 0, so this branch ends)
3. 2nd half is not pure 1: 0
4. 1st half of 2nd half is not pure 1: 0
5. 2nd half of 2nd half is pure 1: 1
6. 1st half of 1st of 2nd is pure 1: 1
7. 2nd half of 1st of 2nd is not pure 1: 0

E.g., to count the occurrences of 111 000 001 100, use "pure 111000001100":

P11 ^ P12 ^ P13 ^ P'21 ^ P'22 ^ P'23 ^ P'31 ^ P'32 ^ P33 ^ P41 ^ P'42 ^ P'43

[Figure: the resulting Ptree with its 2^3, 2^2 and 2^1 levels, whose truth bits are weighted by level to obtain the count. The node values did not survive extraction.]
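The two ideas on this slide, building a basic Ptree by recursive halving and counting a pattern with one multi-operand AND, can be sketched in a few lines. This is a minimal illustration, not the patented P-tree implementation. The names `build_ptree`, `bit_slices` and `count_pattern` are hypothetical, and the slices are kept as uncompressed NumPy boolean arrays. The bit column `0 0 0 0 1 0 1 1` is an assumption chosen to be consistent with the seven construction steps listed on the slide, and the small relation `R` is made-up data.

```python
import numpy as np

def build_ptree(bits):
    """Nested "pure 1" tree: 1 = pure-1 node, 0 = pure-0 node,
    (0, left, right) = mixed node that is split into halves."""
    bits = list(bits)
    if all(bits):
        return 1                      # pure 1: branch ends
    if not any(bits):
        return 0                      # pure 0: branch ends
    mid = len(bits) // 2
    return (0, build_ptree(bits[:mid]), build_ptree(bits[mid:]))

def bit_slices(column, n_bits):
    """Vertical bit slices of a non-negative integer column, MSB first."""
    column = np.asarray(column)
    return [((column >> b) & 1).astype(bool) for b in range(n_bits - 1, -1, -1)]

def count_pattern(slices_per_attr, pattern, n_bits):
    """Count rows equal to `pattern` by ANDing slices (P) or complements (P')."""
    mask = np.ones(len(slices_per_attr[0][0]), dtype=bool)
    for slices, value in zip(slices_per_attr, pattern):
        for pos, s in enumerate(slices):
            bit = (value >> (n_bits - 1 - pos)) & 1
            mask &= s if bit else ~s
    return int(mask.sum())

# Assumed bit column, consistent with the seven steps on the slide.
tree = build_ptree([0, 0, 0, 0, 1, 0, 1, 1])

# Hypothetical 3-bit, 4-attribute relation (illustrative values only).
R = np.array([
    [7, 0, 1, 4],
    [2, 3, 5, 1],
    [7, 0, 1, 4],
    [2, 7, 6, 1],
])
slices = [bit_slices(R[:, a], 3) for a in range(R.shape[1])]
n_matches = count_pattern(slices, (7, 0, 1, 4), 3)   # pattern 111 000 001 100
```

Reading `tree` from the outside in reproduces the order of the construction steps: the root is mixed, the first half is pure 0 and stops, and only the second half is subdivided further.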

Centroid: Partition Representative

- Mean vs. Median
  - The median is usually thought to be a better estimator!
  - It handles outliers much better, for example.
- Finding the median vector:
  - NP-hard problem
  - Existing median (medoid) based solutions:
    - PAM: exhaustive search
    - CLARA: choosing a representative sample
    - CLARANS: selecting a randomized sample for each iteration

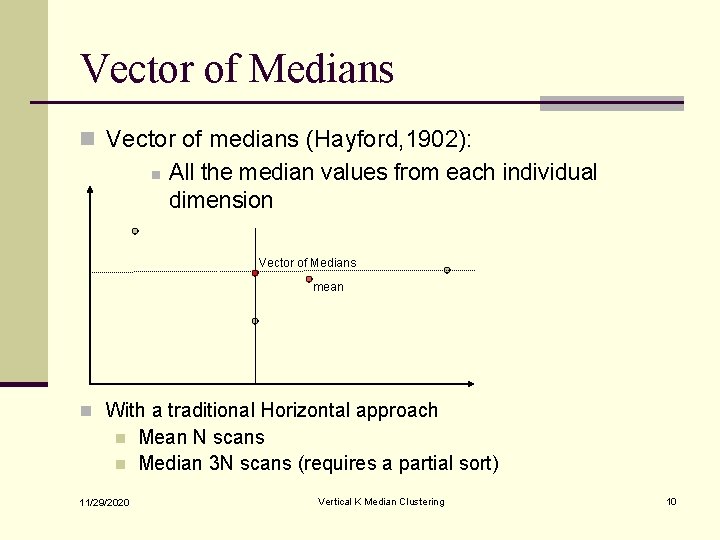

Vector of Medians

- Vector of medians (Hayford, 1902): all the median values from each individual dimension.
- With a traditional horizontal approach:
  - Mean: N scans
  - Median: 3N scans (requires a partial sort)

[Figure: the vector of medians compared with the mean.]
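A small horizontal example shows why the vector of medians is attractive as a cluster representative. It is only a sketch with made-up points: NumPy's `np.median` and `np.partition` stand in for the horizontal approach, and the last point is an assumed outlier.

```python
import numpy as np

# Four nearby points plus one assumed outlier (illustrative data).
points = np.array([
    [1.0, 2.0],
    [2.0, 3.0],
    [3.0, 4.0],
    [4.0, 5.0],
    [100.0, 200.0],
])

mean_vec = points.mean(axis=0)            # pulled toward the outlier
median_vec = np.median(points, axis=0)    # per-dimension medians

# A partial sort is enough to find a median in one dimension:
mid = len(points) // 2
median_dim0 = np.partition(points[:, 0], mid)[mid]
```

Here `mean_vec` is `[22.0, 42.8]` while `median_vec` stays at `[3.0, 4.0]`, which is inside the group of nearby points.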

![Pi 2 Pi 1 Pi 0 0 1 0 1 0 1 Median with Pi, 2 Pi, 1 Pi, 0 0 1 0 1 0 [1] Median with](https://slidetodoc.com/presentation_image_h/ba7064f4460354edaf98be75cd07cf07/image-11.jpg)

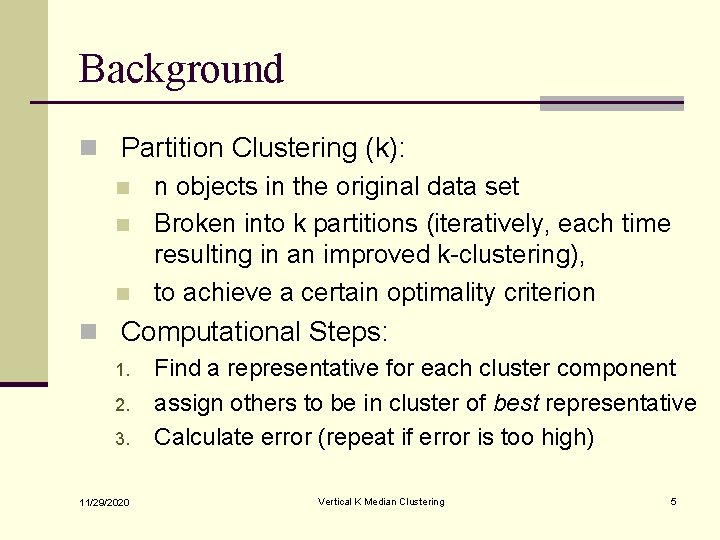

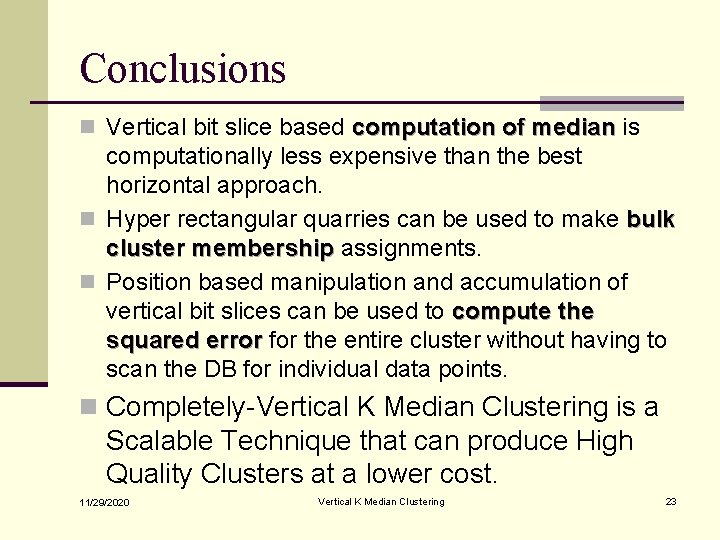

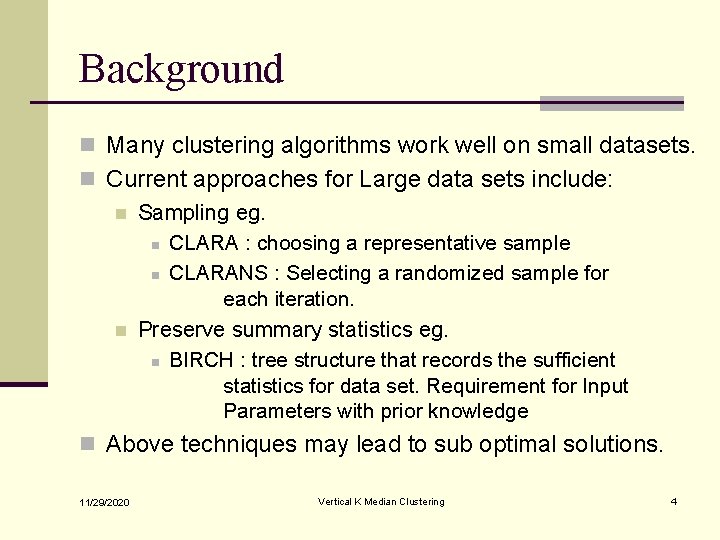

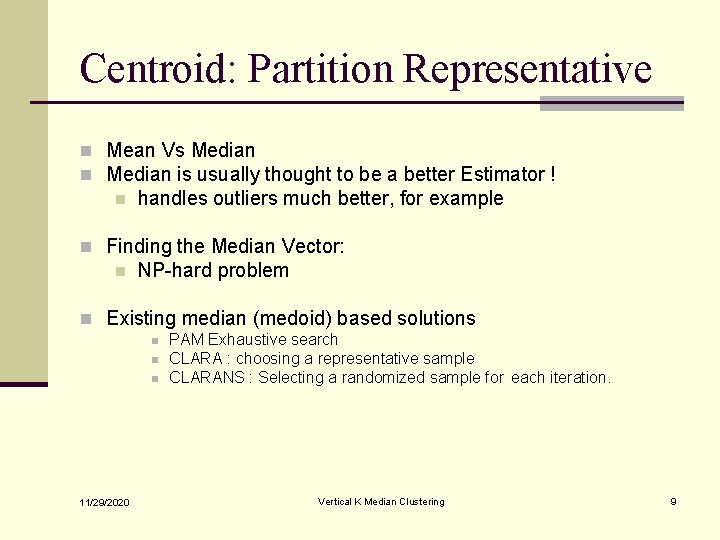

[1] Median with Ptrees

- Starting from the most significant bit, repeatedly AND the appropriate bit slice until the least significant bit is reached, while building the median pattern.

| Step | OneCnt | | ZeroCnt | Corresponding bit of median |
|---|---|---|---|---|
| 1 (hi bit) | 4 | < | 5 | 0 |
| 2 | 6 | > | 3 | 1 |
| 3 | 4 | < | 5 | 0 |

Median pattern: _,_,_ then 0,_,_ then 0,1,_ then 0,1,0, so the median is 010.

[Figure: bit slices Pi,2, Pi,1, Pi,0 and the complement P'i,2, the annotation rc = 4, and the distribution of values over 000 to 111. The individual counts did not survive extraction.]

- Scalability? E.g., if the cardinality is 2^32 = 4,294,967,296: rather than scan 4 billion records, we AND log2(2^32) = 32 P-trees.
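The bit-by-bit search can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: `median_from_bit_slices` is a hypothetical name, the slices are plain boolean arrays rather than compressed P-trees, and the nine input values are made up so that the one/zero counts come out as 4 < 5, 6 > 3, 4 < 5, the counts shown on the slide. Rows already ruled out as larger or smaller than the median are carried along in the counts, which is one reading of how the slide's counts always add up to the full cardinality. Ties are resolved toward 0, so for an even number of rows the lower median is returned.

```python
import numpy as np

def median_from_bit_slices(slices):
    """slices: boolean arrays, MSB first.
    Returns (median, [(one_cnt, zero_cnt) per bit])."""
    mask = np.ones(len(slices[0]), dtype=bool)  # rows matching the prefix so far
    above = below = 0        # rows already known to be larger / smaller
    median, trace = 0, []
    for s in slices:
        ones_in_mask = int((mask & s).sum())
        zeros_in_mask = int((mask & ~s).sum())
        one_cnt = ones_in_mask + above
        zero_cnt = zeros_in_mask + below
        trace.append((one_cnt, zero_cnt))
        if one_cnt > zero_cnt:
            median = (median << 1) | 1   # median bit is 1
            below += zeros_in_mask
            mask &= s                    # AND with the slice
        else:
            median = median << 1         # median bit is 0
            above += ones_in_mask
            mask &= ~s                   # AND with the complement slice
    return median, trace

# Illustrative 3-bit values (assumed, not taken from the slide).
values = np.array([0, 1, 1, 2, 2, 4, 5, 6, 7])
slices = [((values >> b) & 1).astype(bool) for b in (2, 1, 0)]
median, trace = median_from_bit_slices(slices)
```

The loop touches one slice per bit position, so the number of AND and count operations depends on the bit width of the attribute, not on the number of rows scanned one by one.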

![2 Bulk Membership Assignment not 1 by1 n Find perpendicular Bi Sector boundaries from [2] Bulk Membership Assignment (not 1 -by-1) n Find perpendicular Bi- Sector boundaries from](https://slidetodoc.com/presentation_image_h/ba7064f4460354edaf98be75cd07cf07/image-12.jpg)

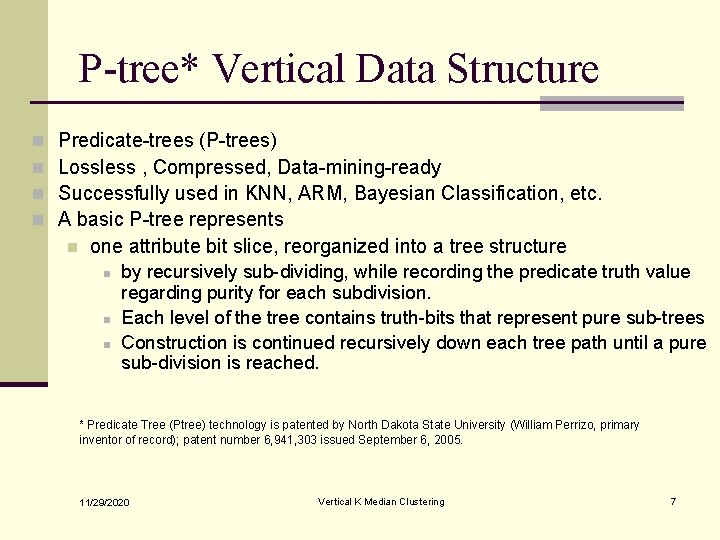

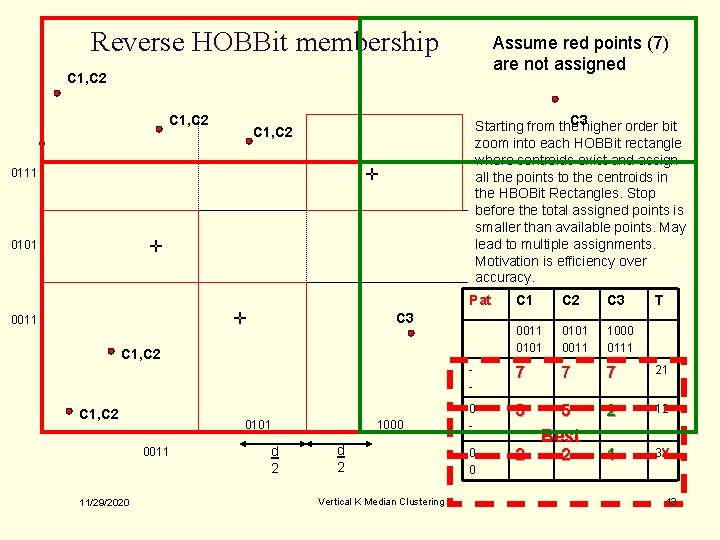

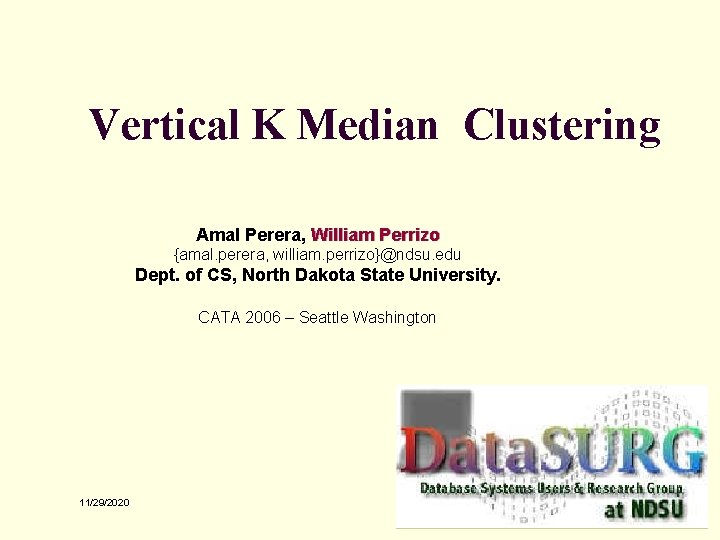

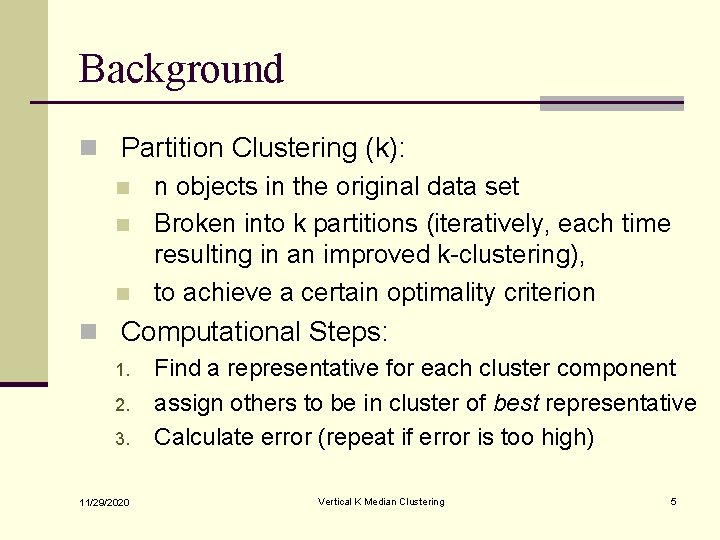

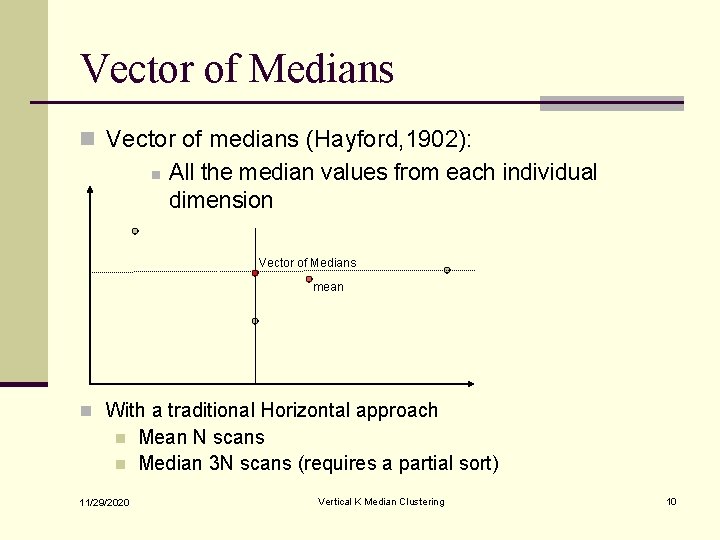

[2] Bulk Membership Assignment (not 1-by-1)

- Find the perpendicular bisector boundaries from the centroids (vectors of attribute medians, which are easily computed as in the previous slide).
- Assign membership to all the points within these boundaries.
- Assignment is done using AND and OR of the respective bit slices, without a scan.
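One way to picture assignment with AND and OR only is a range mask built from bit slices. The sketch below is a simplification under assumptions: it handles an axis-aligned hyper-rectangle rather than a general bisector, `less_than_mask` and `box_mask` are hypothetical helpers, the slices are uncompressed boolean arrays, and the points and the boundary value 7 are made up.

```python
import numpy as np

def to_slices(column, n_bits):
    """Bit slices of a non-negative integer column, MSB first."""
    column = np.asarray(column)
    return [((column >> b) & 1).astype(bool) for b in range(n_bits - 1, -1, -1)]

def less_than_mask(slices, bound, n_bits):
    """Rows with value < bound, using only AND, OR and complement on slices."""
    n = len(slices[0])
    lt = np.zeros(n, dtype=bool)   # rows already known to be smaller than bound
    eq = np.ones(n, dtype=bool)    # rows equal to bound on all bits seen so far
    for pos, s in enumerate(slices):
        if (bound >> (n_bits - 1 - pos)) & 1:
            lt |= eq & ~s          # first differing bit: row has 0, bound has 1
            eq &= s
        else:
            eq &= ~s
    return lt

def box_mask(slices_per_attr, lows, highs, n_bits):
    """Rows with lows[i] <= value_i < highs[i] in every attribute i."""
    mask = np.ones(len(slices_per_attr[0][0]), dtype=bool)
    for slices, lo, hi in zip(slices_per_attr, lows, highs):
        mask &= less_than_mask(slices, hi, n_bits) & ~less_than_mask(slices, lo, n_bits)
    return mask

# Illustrative 4-bit points; the boundary 7 is an assumed midpoint
# between two hypothetical centroids in each attribute.
pts = np.array([[1, 1], [2, 3], [3, 2], [9, 12], [12, 13], [14, 9]])
slices = [to_slices(pts[:, i], 4) for i in range(2)]
cluster0 = box_mask(slices, lows=(0, 0), highs=(7, 7), n_bits=4)
```

Every point inside the box is assigned in one pass over the slices, which is the sense in which membership is assigned in bulk rather than one point at a time.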

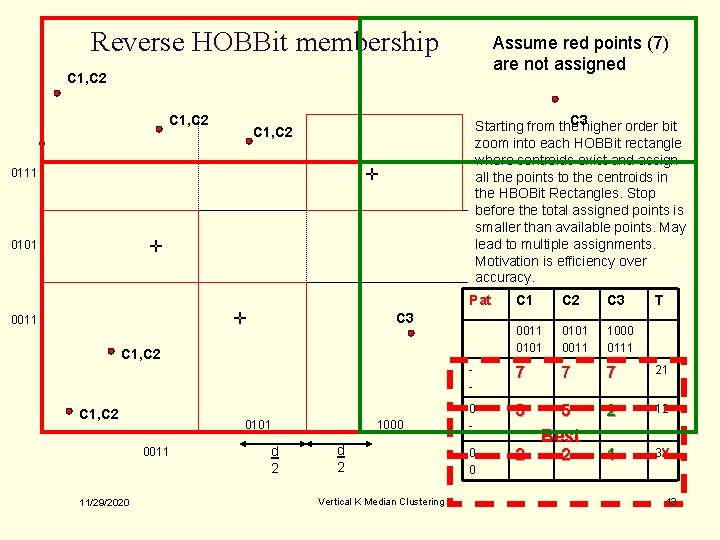

Reverse HOBBit membership

- Assume the red points (7) are not assigned.
- Starting from the higher order bit, zoom into each HOBBit rectangle where centroids exist and assign all the points to the centroids in the HOBBit rectangles.
- Stop before the total number of assigned points becomes smaller than the number of available points.
- May lead to multiple assignments.
- Motivation is efficiency over accuracy.

[Figure and table: centroids C1, C2 and C3 with their bit patterns, and the number of points assigned to each centroid per prefix pattern, with the best level marked. The layout did not survive extraction.]
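The stopping rule can be sketched as a loop over prefix lengths. This reflects one reading of the slide, not a confirmed implementation: `prefix_mask` and `reverse_hobbit` are hypothetical names, integer shifts are used as a shorthand for ANDing the top-k bit slices, and the points and centroids are made up.

```python
import numpy as np

def prefix_mask(points, centroid, n_bits, k):
    """Rows whose top-k bits equal the centroid's top-k bits in every attribute."""
    shift = n_bits - k
    return np.all((points >> shift) == (np.asarray(centroid) >> shift), axis=1)

def reverse_hobbit(points, centroids, n_bits):
    """Zoom in from the highest-order bit; keep the longest prefix length whose
    total number of assignments still covers all available points.
    Returns (prefix_length, masks), or (0, None) if even one bit is too fine."""
    best_k, best = 0, None
    for k in range(1, n_bits + 1):
        masks = [prefix_mask(points, c, n_bits, k) for c in centroids]
        if sum(int(m.sum()) for m in masks) < len(points):
            break                       # too few assignments: stop before this level
        best_k, best = k, masks
    return best_k, best

# Illustrative 4-bit unassigned points and two hypothetical centroids.
points = np.array([[1, 2], [2, 1], [3, 3], [5, 6], [12, 13], [13, 12], [14, 14]])
centroids = [[2, 2], [13, 13]]
level, masks = reverse_hobbit(points, centroids, 4)
```

In this example the one-bit rectangles cover all seven points, while the two-bit rectangles cover only six, so the search stops at level 1. Centroids that share a rectangle would both receive its points, which is the multiple-assignment case the slide mentions.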

![3 Efficient Error computation Error Sum Squared Distance from Centroid a to Points [3] Efficient Error computation Error = Sum Squared Distance from Centroid (a) to Points](https://slidetodoc.com/presentation_image_h/ba7064f4460354edaf98be75cd07cf07/image-14.jpg)

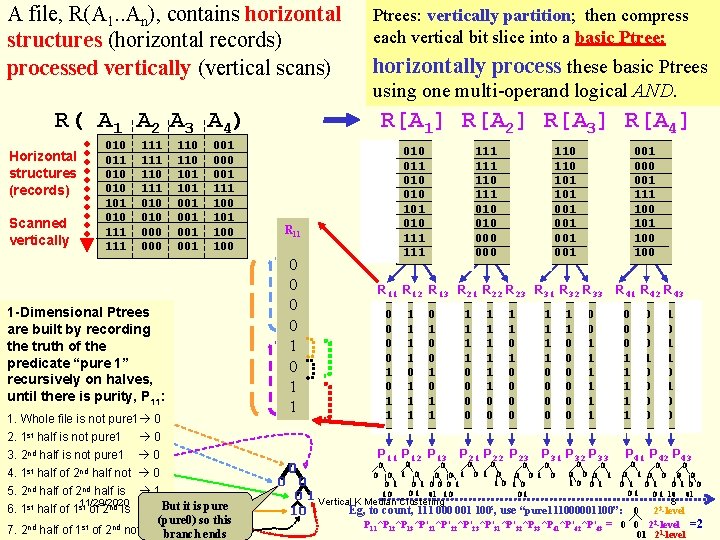

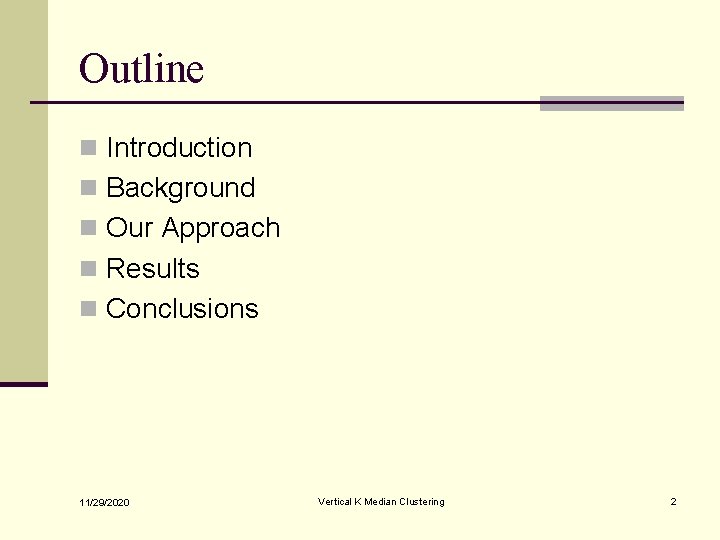

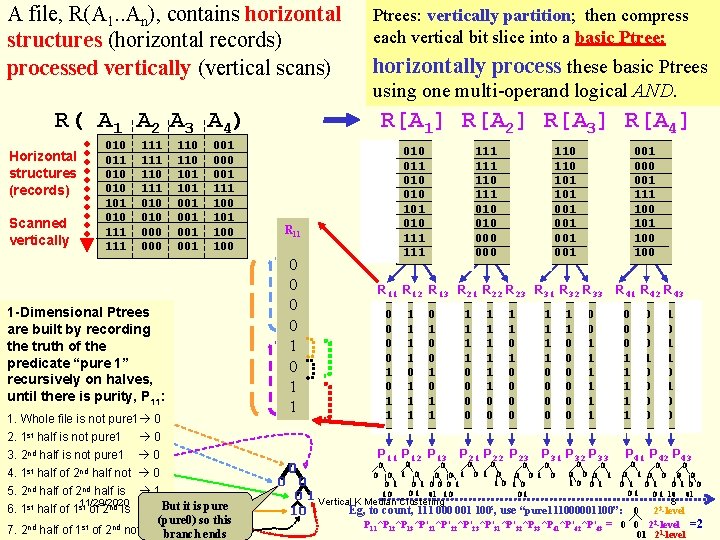

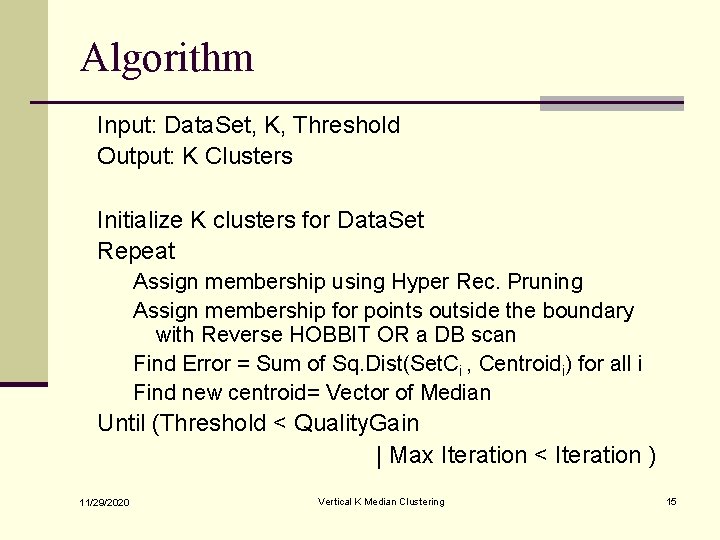

[3] Efficient Error computation

Error = sum of squared distances from the centroid (a) to the points in the cluster (x)

Where:

- Pi,j: P-tree for the jth bit of the ith attribute
- COUNT(P): count of the number of truth bits
- PX: Ptree (mask) for the cluster subset X
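The slide's formula is only available as an image, so the sketch below uses one standard expansion that is consistent with the symbols defined here and may differ in form from the slide's version. Writing each value as a weighted sum of its bits, the squared error for attribute i becomes: sum over j and k of 2^(j+k) COUNT(PX ^ Pi,j ^ Pi,k), minus 2 a_i times the sum over j of 2^j COUNT(PX ^ Pi,j), plus a_i^2 COUNT(PX). The function name `sse_from_slices` and the data are hypothetical, and the slices are uncompressed boolean arrays.

```python
import numpy as np

def sse_from_slices(slices_per_attr, cluster_mask, centroid):
    """Sum of squared distances from `centroid` to the rows selected by
    `cluster_mask`, using only counts of ANDed bit slices.
    slices_per_attr[i][j] is the slice with weight 2**j of attribute i."""
    n_x = int(cluster_mask.sum())                       # COUNT(PX)
    total = 0
    for slices, a in zip(slices_per_attr, centroid):
        sum_sq = sum((1 << (j + k)) * int((cluster_mask & pj & pk).sum())
                     for j, pj in enumerate(slices)
                     for k, pk in enumerate(slices))    # sum of x_i squared
        sum_x = sum((1 << j) * int((cluster_mask & pj).sum())
                    for j, pj in enumerate(slices))     # sum of x_i
        total += sum_sq - 2 * a * sum_x + n_x * a * a
    return total

# Illustrative 3-bit data; the mask selects the cluster subset X.
data = np.array([[1, 2], [3, 5], [6, 7], [2, 2]])
mask = np.array([True, True, False, True])
centroid = (2, 2)
slices = [[((data[:, i] >> j) & 1).astype(bool) for j in range(3)] for i in range(2)]

vertical = sse_from_slices(slices, mask, centroid)
direct = int(((data[mask] - np.array(centroid)) ** 2).sum())  # row-by-row check
```

Both computations give the same value, and the vertical one never visits an individual data point: it only ANDs slices with the cluster mask and counts the resulting bits.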

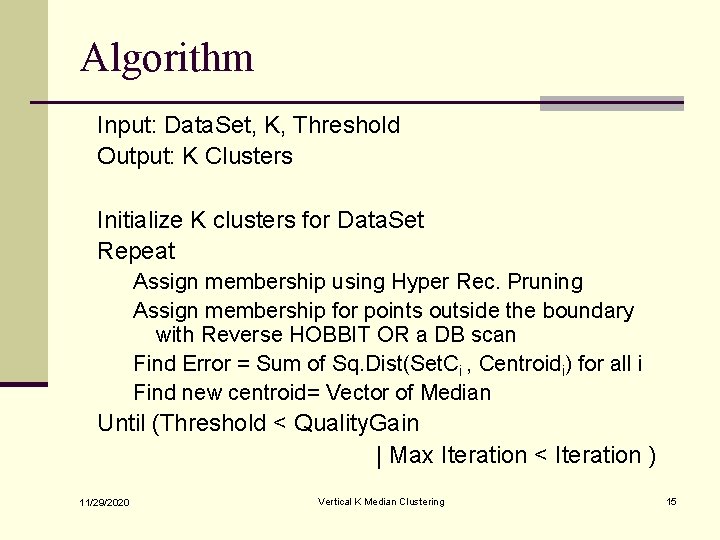

Algorithm

- Input: DataSet, K, Threshold
- Output: K Clusters

1. Initialize K clusters for DataSet
2. Repeat
   - Assign membership using hyper-rectangle pruning
   - Assign membership for points outside the boundary with Reverse HOBBit OR a DB scan
   - Find Error = Sum of SqDist(SetCi, Centroidi) for all i
   - Find new centroid = Vector of Medians
3. Until (Threshold < QualityGain | MaxIteration < Iteration)
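The overall loop can be sketched with a plain horizontal NumPy stand-in. This is not the vertical algorithm: membership is assigned by direct nearest-centroid distance instead of hyper-rectangle pruning and Reverse HOBBit, and the function name `k_median`, the data and the initial centroids are hypothetical. The stopping test here reads the slide's condition as "stop once the gain in error drops below the threshold or the iteration limit is reached", which is an assumption about the intended meaning.

```python
import numpy as np

def k_median(data, init_centroids, threshold=1e-9, max_iter=100):
    """Iterate assignment, error computation and vector-of-medians update.
    Returns (labels, centroids, error of the last assignment)."""
    centroids = np.asarray(init_centroids, dtype=float)
    prev_error = np.inf
    for _ in range(max_iter):
        # Squared distance from every point to every centroid.
        d2 = ((data[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        error = float(d2[np.arange(len(data)), labels].sum())
        # New centroid = per-attribute median; an empty cluster keeps its centroid.
        centroids = np.array([
            np.median(data[labels == c], axis=0) if np.any(labels == c) else centroids[c]
            for c in range(len(centroids))
        ])
        if prev_error - error < threshold:   # gain too small: stop
            break
        prev_error = error
    return labels, centroids, error

# Two small groups plus one assumed outlier (illustrative data).
data = np.array([[1., 1.], [2., 1.], [1., 2.],
                 [9., 9.], [10., 9.], [9., 10.], [50., 50.]])
labels, centroids, error = k_median(data, init_centroids=data[[0, 3]])
```

On this data the second centroid settles at `[9.5, 9.5]` despite the outlier at `[50, 50]`, whereas a mean-based update would be pulled far toward it.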

Experimental Results

- Objective: quality and scalability
- Datasets:
  - Synthetic data: quality
  - Iris Plant Data: quality
  - KDD-99 Network Intrusion Data: quality
  - Remotely Sensed Image Data: scalability
- Quality is measured with the F-measure (the formula is on the slide image and is not recoverable from the text), where F = 1 for perfect clustering. The formula's terms are annotated "some cluster" and "original cluster".

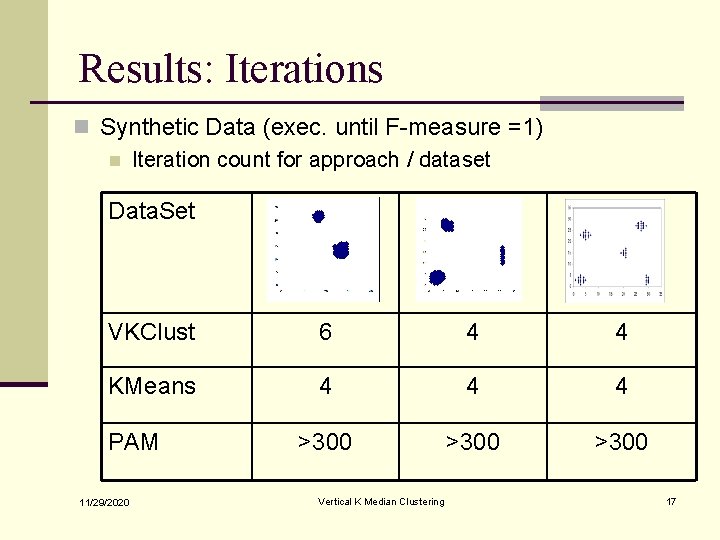

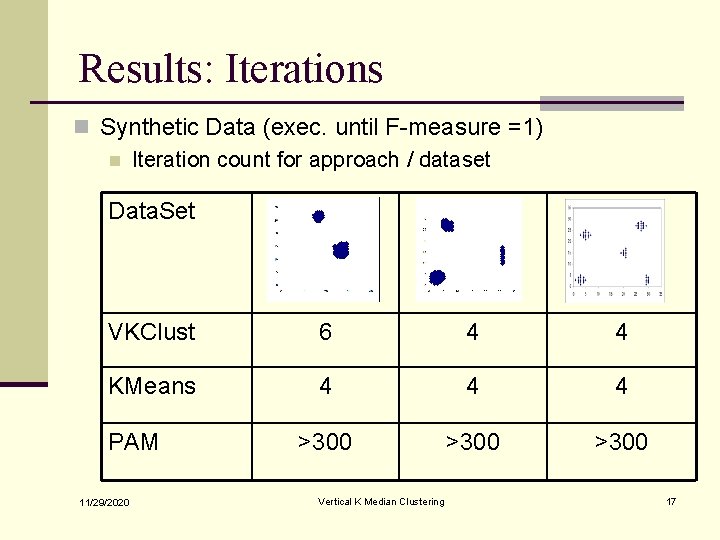

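Results: Iterations

- Synthetic data (executed until F-measure = 1)
- Iteration count per approach and dataset (the datasets are shown as images on the slide):

| Approach | Dataset 1 | Dataset 2 | Dataset 3 |
|---|---|---|---|
| VKClust | 6 | 4 | 4 |
| KMeans | 4 | 4 | 4 |

PAM: >300 iterations.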

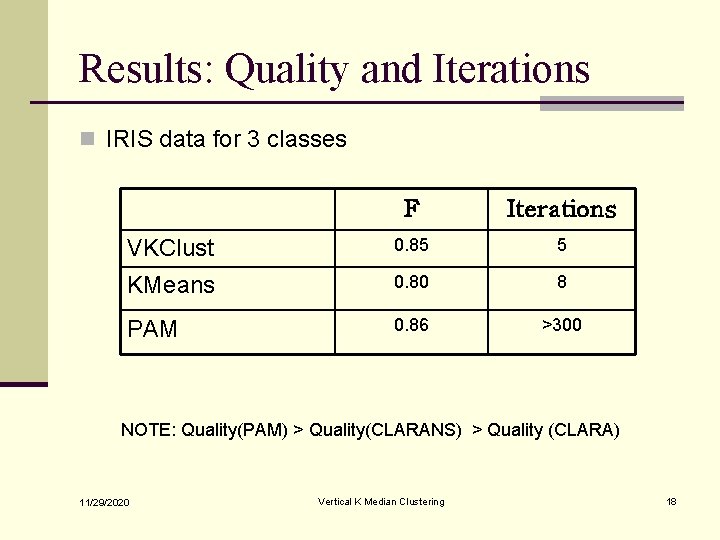

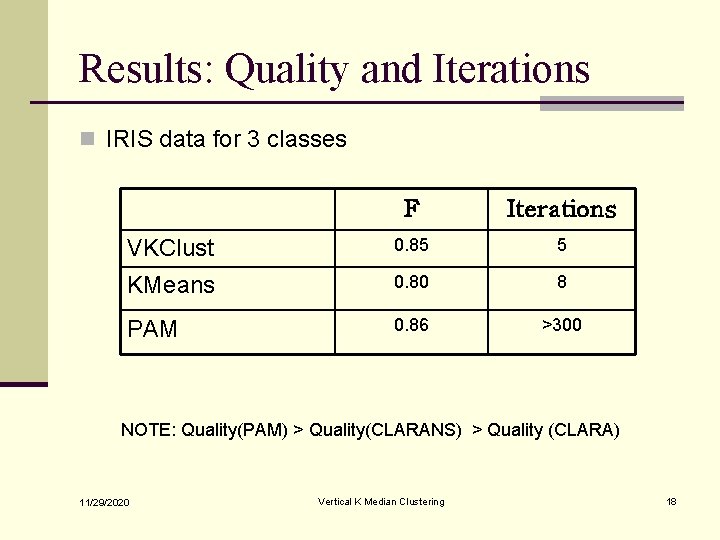

Results: Quality and Iterations

- IRIS data for 3 classes

| Approach | F | Iterations |
|---|---|---|
| VKClust | 0.85 | 5 |
| KMeans | 0.80 | 8 |
| PAM | 0.86 | >300 |

NOTE: Quality(PAM) > Quality(CLARANS) > Quality(CLARA)


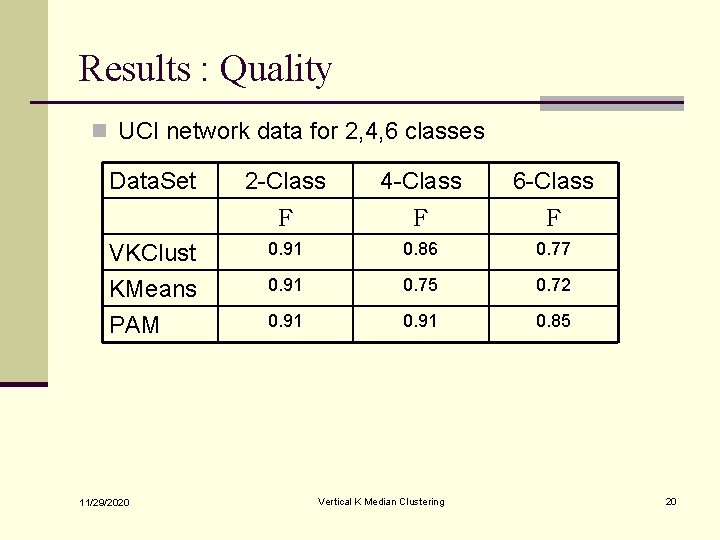

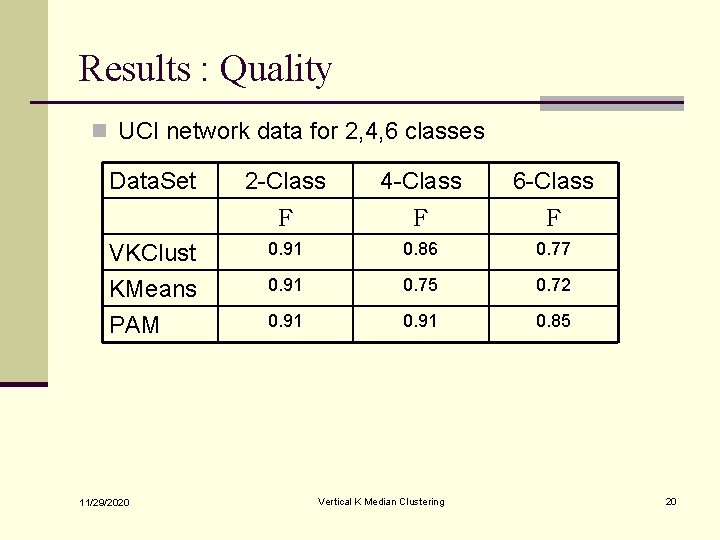

Results: Quality

- UCI network data for 2, 4, 6 classes

| Approach | 2-Class F | 4-Class F | 6-Class F |
|---|---|---|---|
| VKClust | 0.91 | 0.86 | 0.77 |
| KMeans | 0.91 | 0.75 | 0.72 |
| PAM | 0.91 | 0.85 | |

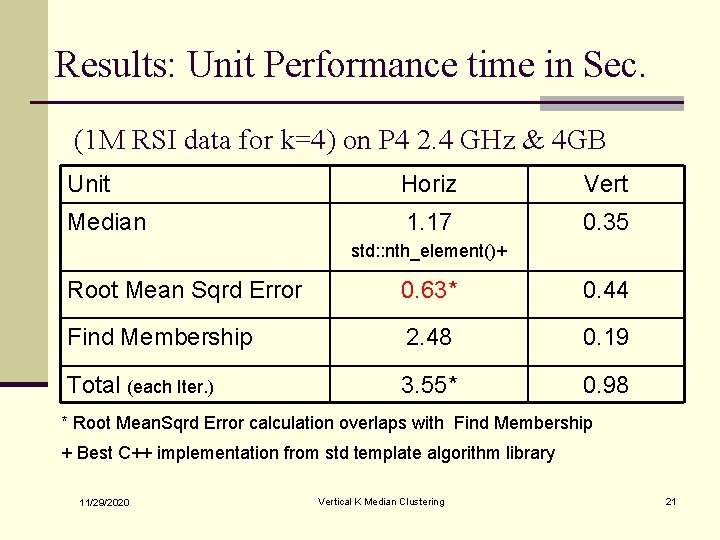

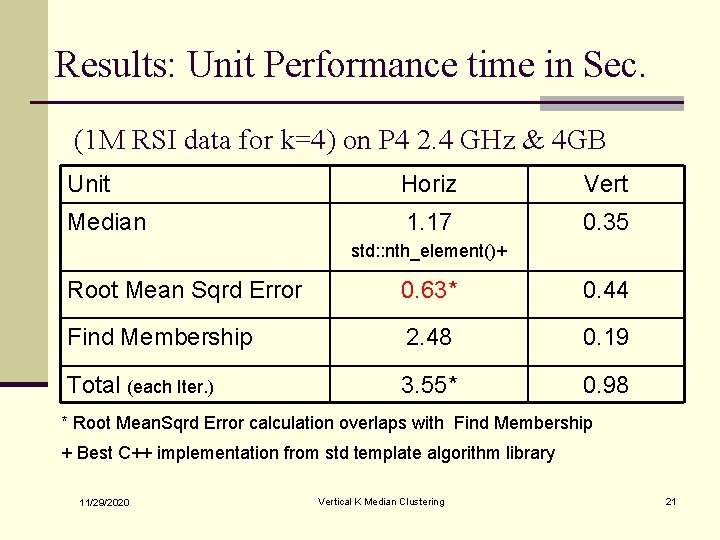

Results: Unit Performance

Time in seconds (1M RSI data for k = 4) on a P4 2.4 GHz with 4 GB.

| Unit | Horiz | Vert |
|---|---|---|
| Median | 1.17 (std::nth_element()+) | 0.35 |
| Root Mean Sqrd Error | 0.63* | 0.44 |
| Find Membership | 2.48 | 0.19 |
| Total (each iteration) | 3.55* | 0.98 |

\* Root Mean Sqrd Error calculation overlaps with Find Membership.

\+ Best C++ implementation from the std template algorithm library.

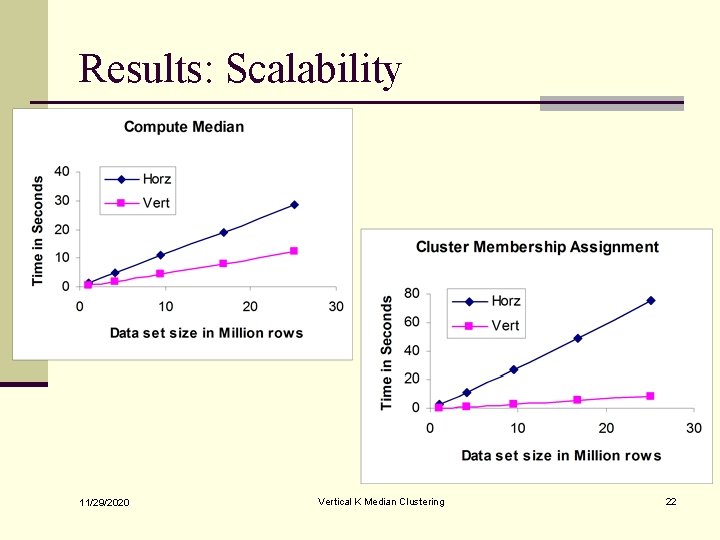

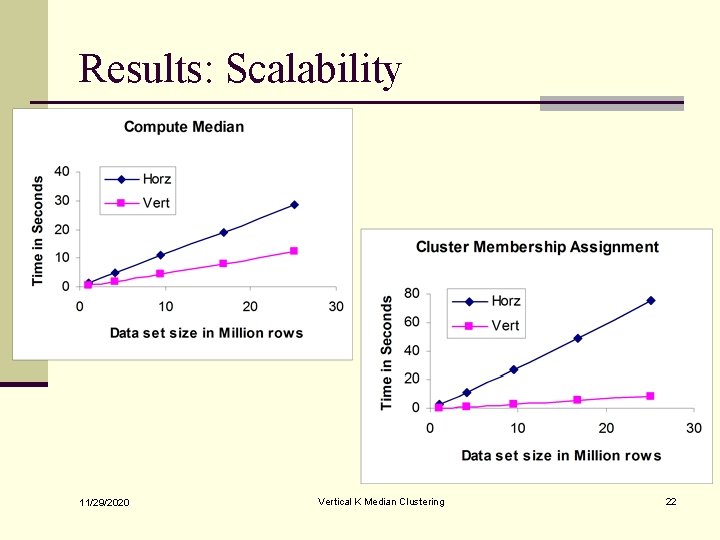

Results: Scalability

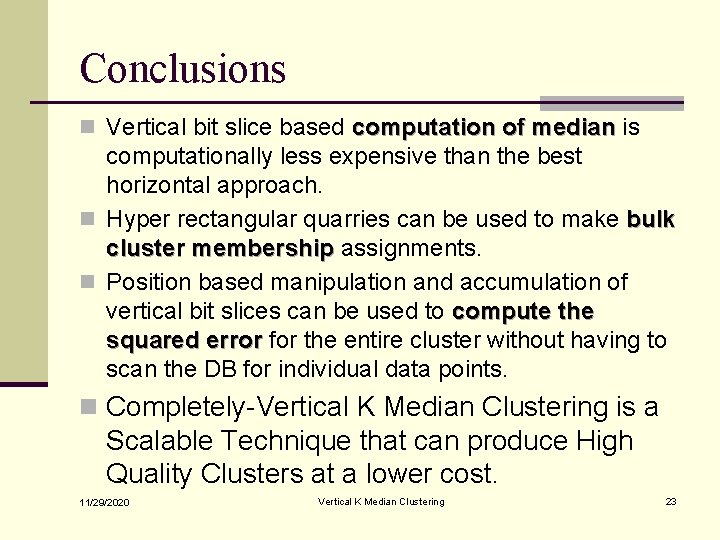

Conclusions

- Vertical bit slice based computation of the median is computationally less expensive than the best horizontal approach.
- Hyper-rectangular queries can be used to make bulk cluster membership assignments.
- Position based manipulation and accumulation of vertical bit slices can be used to compute the squared error for the entire cluster without having to scan the DB for individual data points.
- Completely vertical K Median Clustering is a scalable technique that can produce high quality clusters at a lower cost.
