Turing Halting Theorem P NPC Hamiltonian TSP SAT

- Slides: 62

Turing, Halting Theorem, P, NPC, Hamiltonian, TSP, SAT, Subset Sum…. Dr. Mustafa Sakalli Marmara Univ. Very Preliminary slights to be modified, June of 2009, CS 246 CLRS chapter 34

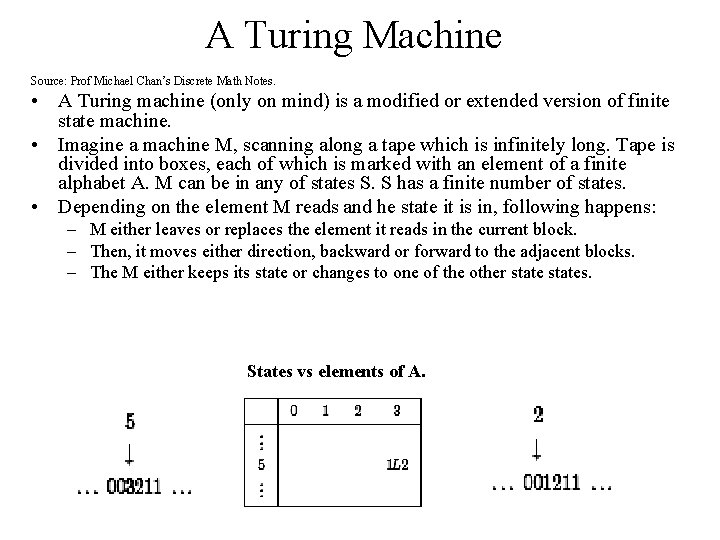

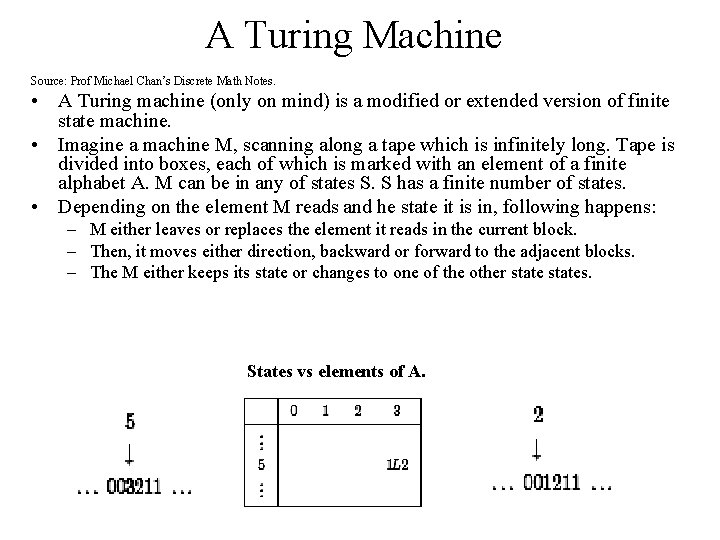

A Turing Machine Source: Prof Michael Chan’s Discrete Math Notes. • A Turing machine (only on mind) is a modified or extended version of finite state machine. • Imagine a machine M, scanning along a tape which is infinitely long. Tape is divided into boxes, each of which is marked with an element of a finite alphabet A. M can be in any of states S. S has a finite number of states. • Depending on the element M reads and he state it is in, following happens: – M either leaves or replaces the element it reads in the current block. – Then, it moves either direction, backward or forward to the adjacent blocks. – The M either keeps its state or changes to one of the other states. States vs elements of A.

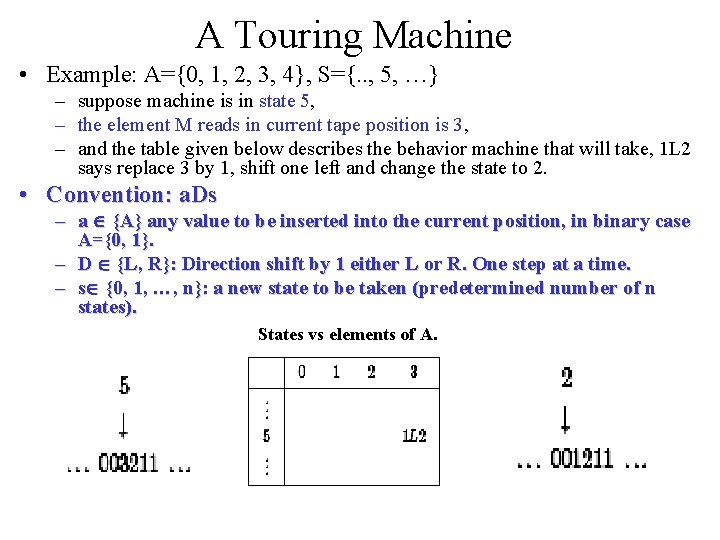

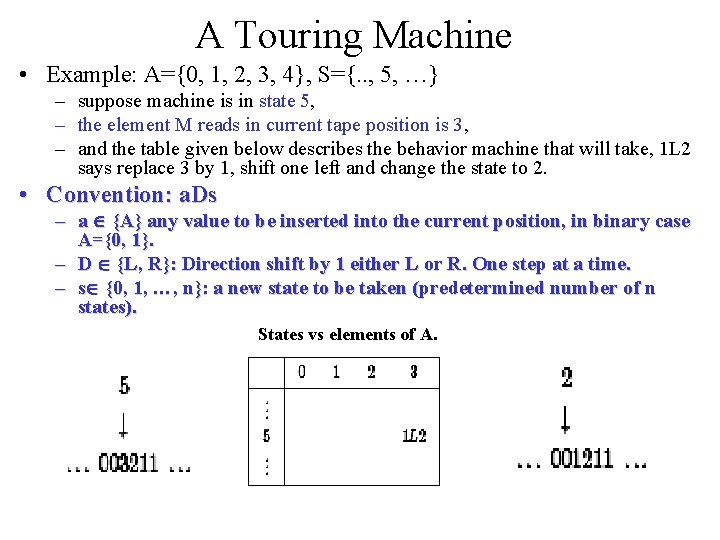

A Touring Machine • Example: A={0, 1, 2, 3, 4}, S={. . , 5, …} – suppose machine is in state 5, – the element M reads in current tape position is 3, – and the table given below describes the behavior machine that will take, 1 L 2 says replace 3 by 1, shift one left and change the state to 2. • Convention: a. Ds – a {A} any value to be inserted into the current position, in binary case A={0, 1}. – D {L, R}: Direction shift by 1 either L or R. One step at a time. – s {0, 1, …, n}: a new state to be taken (predetermined number of n states). States vs elements of A.

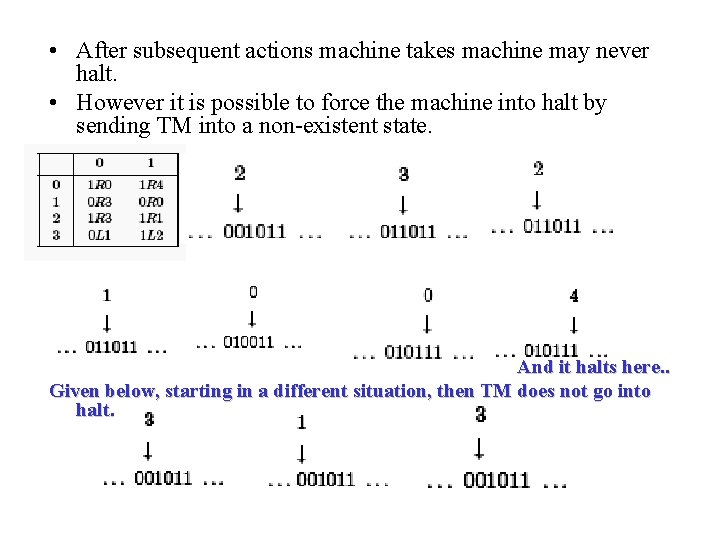

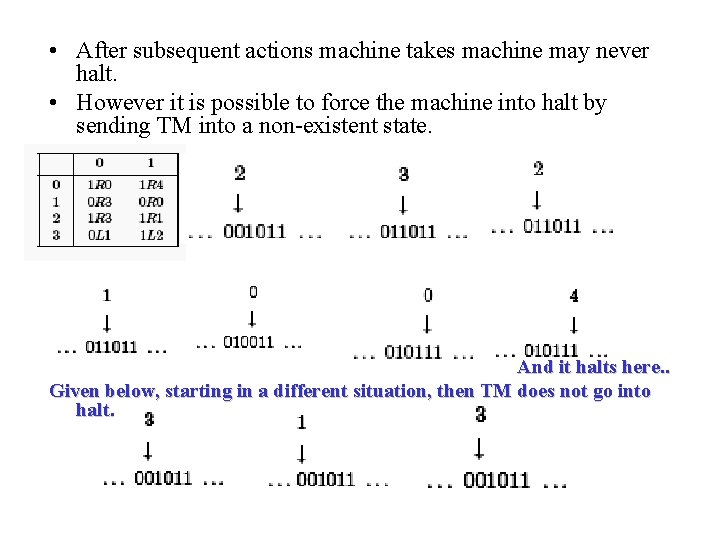

• After subsequent actions machine takes machine may never halt. • However it is possible to force the machine into halt by sending TM into a non-existent state. And it halts here. . Given below, starting in a different situation, then TM does not go into halt.

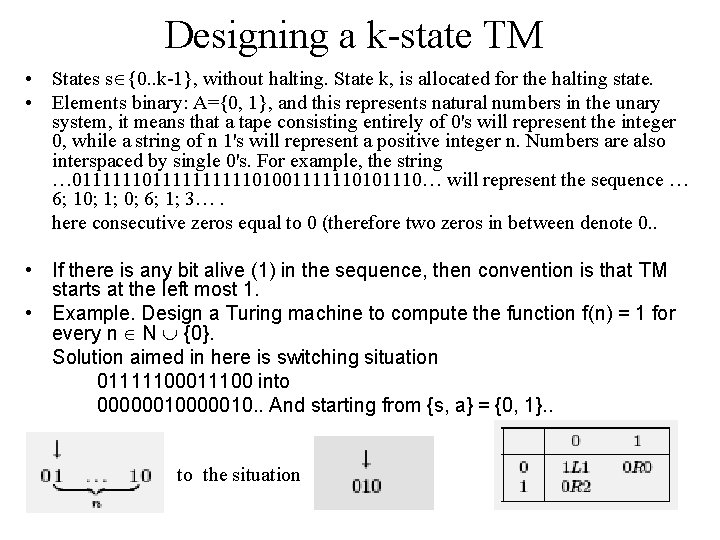

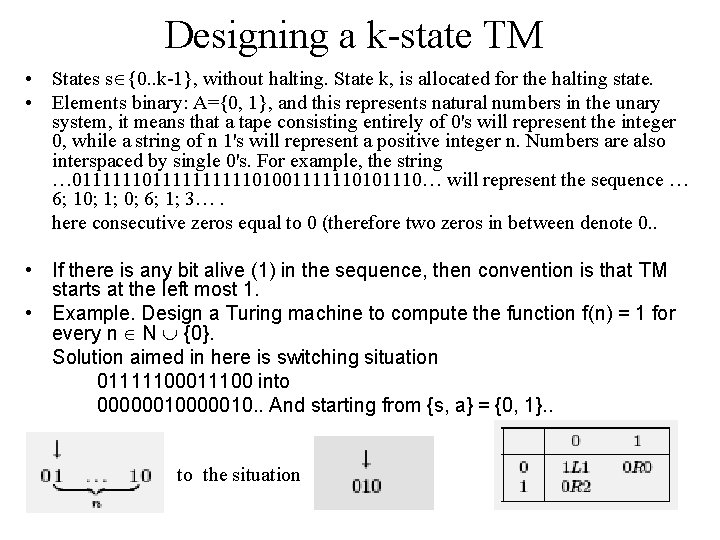

Designing a k-state TM • States s {0. . k-1}, without halting. State k, is allocated for the halting state. • Elements binary: A={0, 1}, and this represents natural numbers in the unary system, it means that a tape consisting entirely of 0's will represent the integer 0, while a string of n 1's will represent a positive integer n. Numbers are also interspaced by single 0's. For example, the string … 0111111111101001111110101110… will represent the sequence … 6; 10; 1; 0; 6; 1; 3…. here consecutive zeros equal to 0 (therefore two zeros in between denote 0. . • If there is any bit alive (1) in the sequence, then convention is that TM starts at the left most 1. • Example. Design a Turing machine to compute the function f(n) = 1 for every n N {0}. Solution aimed in here is switching situation 01111100011100 into 00000010. . And starting from {s, a} = {0, 1}. . to the situation

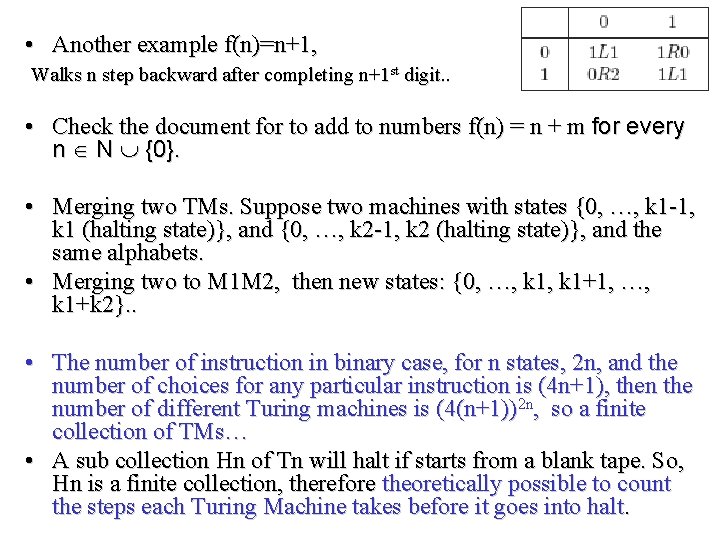

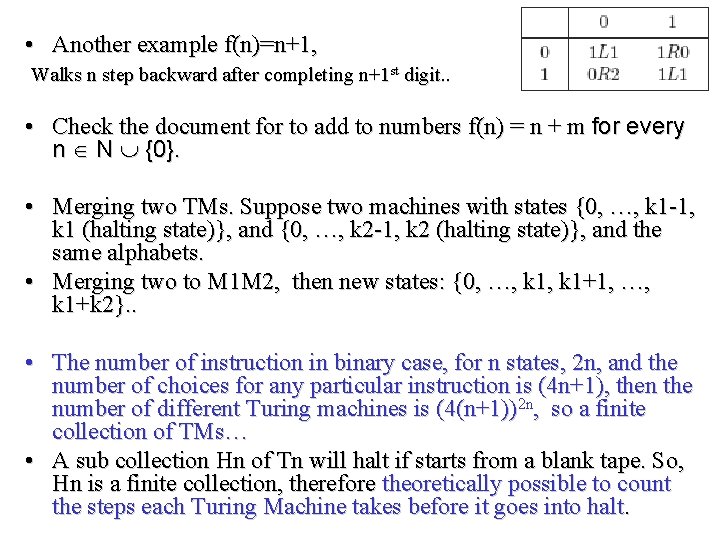

• Another example f(n)=n+1, Walks n step backward after completing n+1 st digit. . • Check the document for to add to numbers f(n) = n + m for every n N {0}. • Merging two TMs. Suppose two machines with states {0, …, k 1 -1, k 1 (halting state)}, and {0, …, k 2 -1, k 2 (halting state)}, and the same alphabets. • Merging two to M 1 M 2, then new states: {0, …, k 1+1, …, k 1+k 2}. . • The number of instruction in binary case, for n states, 2 n, and the number of choices for any particular instruction is (4 n+1), then the number of different Turing machines is (4(n+1))2 n, so a finite collection of TMs… • A sub collection Hn of Tn will halt if starts from a blank tape. So, Hn is a finite collection, therefore theoretically possible to count the steps each Turing Machine takes before it goes into halt.

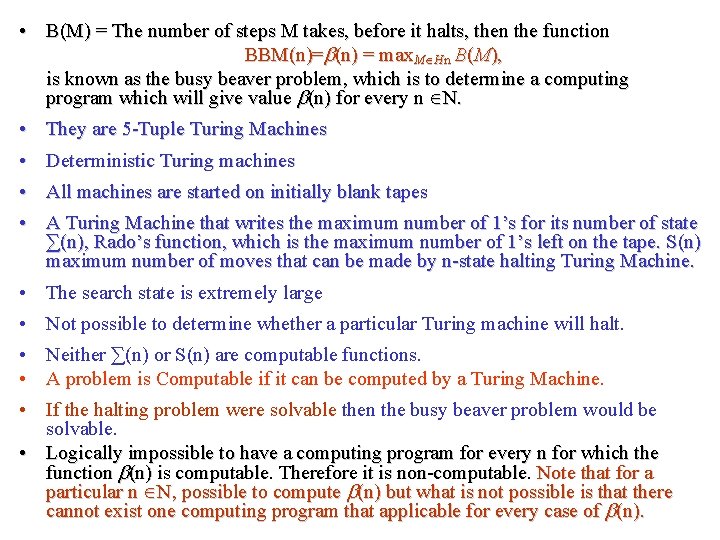

• B(M) = The number of steps M takes, before it halts, then the function BBM(n)= (n) = max. M Hn B(M), is known as the busy beaver problem, which is to determine a computing program which will give value (n) for every n N. • They are 5 -Tuple Turing Machines • Deterministic Turing machines • All machines are started on initially blank tapes • A Turing Machine that writes the maximum number of 1’s for its number of state ∑(n), Rado’s function, which is the maximum number of 1’s left on the tape. S(n) maximum number of moves that can be made by n-state halting Turing Machine. • The search state is extremely large • Not possible to determine whether a particular Turing machine will halt. • Neither ∑(n) or S(n) are computable functions. • A problem is Computable if it can be computed by a Turing Machine. • If the halting problem were solvable then the busy beaver problem would be solvable. • Logically impossible to have a computing program for every n for which the function (n) is computable. Therefore it is non-computable. Note that for a particular n N, possible to compute (n) but what is not possible is that there cannot exist one computing program that applicable for every case of (n).

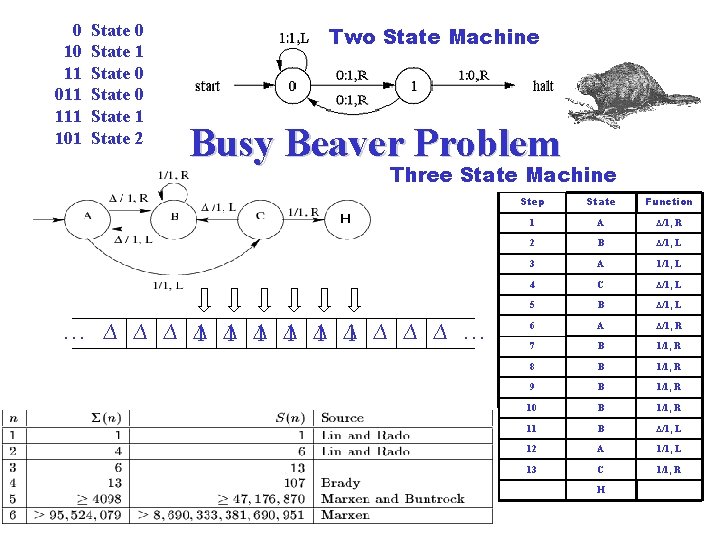

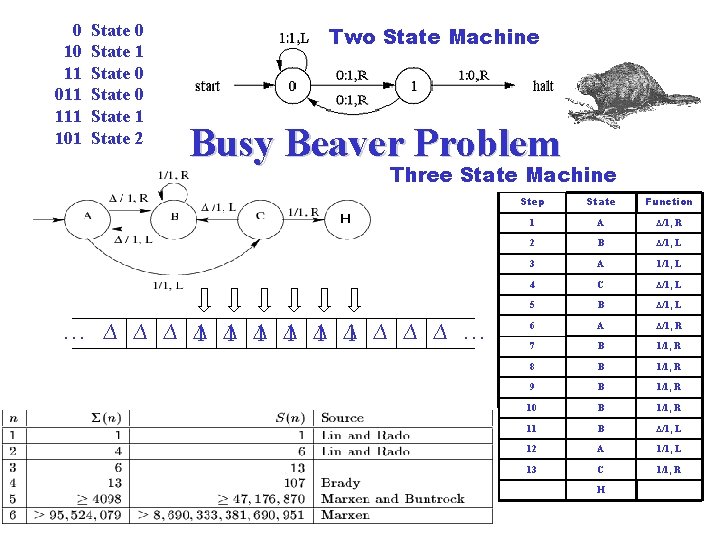

0 State 0 10 State 1 11 State 0 011 State 0 111 State 1 101 State 2 Two State Machine Busy Beaver Problem Three State Machine … ∆ ∆ 1 ∆ 1 ∆ 1 ∆1 ∆ ∆ ∆ … Step State Function 1 A ∆/1, R 2 B ∆/1, L 3 A 1/1, L 4 C ∆/1, L 5 B ∆/1, L 6 A ∆/1, R 7 B 1/1, R 8 B 1/1, R 9 B 1/1, R 10 B 1/1, R 11 B ∆/1, L 12 A 1/1, L 13 C 1/1, R H

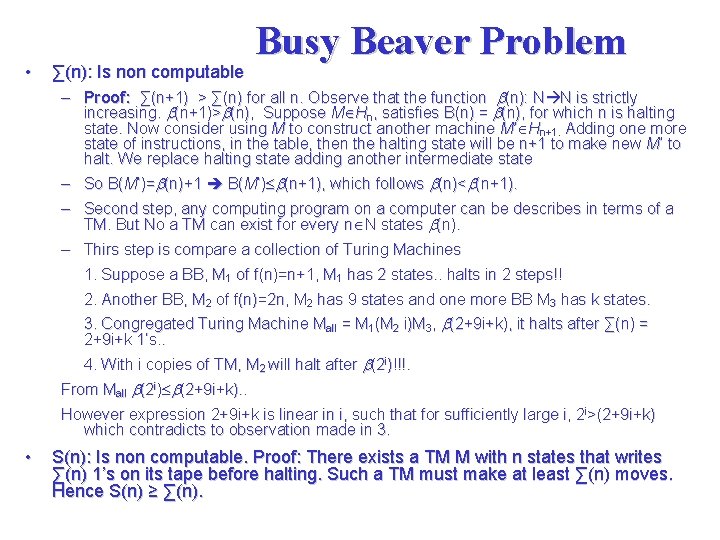

• ∑(n): Is non computable Busy Beaver Problem – Proof: ∑(n+1) > ∑(n) for all n. Observe that the function (n): N N is strictly increasing. (n+1)> (n), Suppose M Hn, satisfies B(n) = (n), for which n is halting state. Now consider using M to construct another machine M’ Hn+1. Adding one more state of instructions, in the table, then the halting state will be n+1 to make new M’ to halt. We replace halting state adding another intermediate state – So B(M’)= (n)+1 B(M’) (n+1), which follows (n)< (n+1). – Second step, any computing program on a computer can be describes in terms of a TM. But No a TM can exist for every n N states (n). – Thirs step is compare a collection of Turing Machines 1. Suppose a BB, M 1 of f(n)=n+1, M 1 has 2 states. . halts in 2 steps!! 2. Another BB, M 2 of f(n)=2 n, M 2 has 9 states and one more BB M 3 has k states. 3. Congregated Turing Machine Mall = M 1(M 2 i)M 3, (2+9 i+k), it halts after ∑(n) = 2+9 i+k 1’s. . 4. With i copies of TM, M 2 will halt after (2 i)!!!. From Mall (2 i) (2+9 i+k). . However expression 2+9 i+k is linear in i, such that for sufficiently large i, 2 i>(2+9 i+k) which contradicts to observation made in 3. • S(n): Is non computable. Proof: There exists a TM M with n states that writes ∑(n) 1’s on its tape before halting. Such a TM must make at least ∑(n) moves. Hence S(n) ≥ ∑(n).

P=NP? • First four pages is the summary of the article “NP completeness of Minesweeper” by Ian Stewart. • An algorithm - a procedure solving some problem - in the computational term: how efficient - the running time - the number of clock time required to get the answer ~ the initial data? • Theoretically the main distinction between problems that are of type P - polynomial time- and those that are not. • A problem is of type P if it can be solved using an algorithm whose running time grows no faster than some fixed power of the number of symbols required to specify the initial data. Problems of this type are easy to solve. • Otherwise the problem is non-P. Non-P problems are hard. Of course it's not quite as simple as that, but it's a good rule of thumb. • Intuitively, problems in P can be solved efficiently, whereas non-P problems cannot be solved algorithmically in any practical manner because any algorithm will take a ridiculously long time to get an answer.

• If a problem is of type P, possible to exhibit it in polynomial time. For example, sorting a list of numbers or and searching a string for some sequence of symbols, “which is why commercial databases can sort data; or word processors can carry out searchand-replace operations”. • In contrast, Traveling Salesman Problem finds the shortest route whereby a salesman can visit every city on some itinerary is believed to be non-P, which has never been proved. • Hard to prove that a problem is non-P? Because requires to contemplate all possible algorithms, and show none of which can solve the problem in polynomial time. A mind-boggling task. • The best that has been done to date is to prove that a broad class of candidate non-P problems are all on the same footing, if any one of them can be solved in polynomial time, then all can be. The problems involved here are said to have 'nondeterministic polynomial' running time: type NP. • NP is not the same as non-P. A problem is NP, for which if it is possible to check a solution in a polynomial time.

P=? NP • a jigsaw puzzle, if solved, usually quick to check whether the solution is right, since the checking runs in polynomial time. But solving the puzzle, trying every potential solution in turn and checking each, the number of potential solutions grows much faster than any fixed power of the number of pieces. • Many of NP problems have 'equivalent' running times. • Specifically, an NP problem is said to be NP-complete if the existence of a polynomial time solution for that problem implies that all (of its sub) NP problems have a polynomial time solution. Solving one in polynomial time, means all the other ones are in polynomial time. • A vast range of problems are known to be NP-complete. The P=NP? problem asks whether types P and NP are (despite all appearances to the contrary) the same. The expected answer is 'no'. However, if any NP-complete problem turns out to be of type P — - having a polynomial time solution — - than NP must equal P. We therefore expect all NP-complete problems to be non-P, not proven yet.

• One simplest known NP-complete problem is SAT, the logical satisfiability of a Boolean condition. • Boolean circuits are logic gates AND, OR and NOT. Each gate may accept a number of inputs, and outputs the logical value of that combination. • The SAT problem asks, for a given Boolean circuit, whethere exist choices of inputs that produce the output T. May sound easy! if a circuit contains a large/or a huge number of gates and inputs. • The link to the computer game comes with the consistency problem, for example consistency of the logical assertion. • Kaye proves that Minesweeper is equivalent to SAT, in the sense that for a given Boolean circuit a SAT can be 'encoded' as a Minesweeper Consistency Problem for some position in the game, using a code procedure that is running in polynomial time. • Therefore, solving the Minesweeper Consistency Problem in polynomial time, would mean solving the SAT problem for that circuit in polynomial time. In other words, Minesweeper is NPcomplete.

Algorithmic complexity is some measure of algorithmic length, “how complex a system is in terms of the length of the shortest computer program, or set of algorithms”, to perform the computation. Rather information theoretical approach is taken, – Kolmogorov complexity. The complexity of a string x as the length of its shortest description p on a universal Turing machine U (K(x)=min{l(p): U(p)=x}). A string is simple if it can be described by a short program, like "the string of one million ones", and is complex if there is no such short description, like for a random string whose shortest description is specifying it bit-by-bit. Independent of the choice of U, computer. Having similar properties with Shannon's entropy (information) S, but K is superior!! to S in many respects!! it says. K is an excellent universal complexity measure, suitable e. g. for quantifying Occam's razor. Entities should not be multiplied unnecessarily William of Occam. Drawback is incomputable, approximated by MML/MDL or Lempel-Ziv compression or others. – Algorithmic Probability. . "Solomonoff“ takes a universal computer and randomly generate an input program. The program will compute some possibly infinite output. The algorithmic probability of any given finite output prefix q is the sum of the probabilities of the programs that compute something starting with q. Certain long objects with short programs have high probability. Incomputable. – Descriptional complexity.

• Computational complexity rather deals with the running time and space efficiencies. Classifies the problems by how hard they are. e. g. the problem of searching an ordered list at most has lgn time complexity. – Universal Search complexity "Levin". Time-bounded, computable, ``Levin'' complexity penalizing a slow program by adding the log of its running time to its length. This leads to computable variants of AC and AP. It solves all inversion problems in optimal (apart from some multiplicative constant) time.

• Solving problems quickly, i. e. , in close to linear time, or at least in some fraction of polynomial time as a function of the input size • Evidence that many important problems can not be solved quickly. • NP-complete problems are hard problems. – Use a heuristic: come up with a method for solving a reasonable fraction of the common cases. – Solve approximately: come up with a solution that you can prove that is close to right. – Use an exponential time solution: if you really have to solve the problem exactly and stop worrying about finding a better solution. • Decision problems – Given an input and a question of a problem, determine if the answer is yes or no • Optimization problems – Find a solution with the “best” value • Optimization problems can be cast as decision problems that are easier to study – E. g. : Shortest path: for a G = unweighted directed • Find a path between u and v that uses the fewest edges • Does a path exist from u to v consisting of at most k edges?

• Class P are called tractable. Consists of (decision) problems (solvable in polynomial time), performance is given i. e worst-case running time as O(nk), for some constant k, O(n 2), O(n 3), O(1), O(n lg n) • Class non-P Problems not in P are called intractable or unsolvable, non-p examples O(2 n), O(n!) – But can be solved in reasonable time only for small inputs – Or, can not be solved at all • Do non-polynomial algorithms always perform worse than polynomial algorithms? – n 1, 000 is technically tractable, but in reality impossible – nlog log n is technically intractable, but in reality it is possible

• Decision problems as sets of encodings of instances; the encodings that are in the set are "yes" instances. Definition: P is the class of decision problems D and there exists an algorithm A returns "yes" if and only if input I is in D. (i. e. , A correctly decides D), and the running time of A = O(nk) where n is the size of the I to be decided and k is a constant. Informally, P is the class of decision problems that can be decided in polynomial time for the given instances. Such an algorithm A is said to run in polynomial time. Examples of class P problems: • Problem: SINGLE-SOURCE-SHORTEST-PATH Instance: A weighted, directed graph G = (V, E), a pair of vertices v and w, both in V, and a number k in R. Question: Is there a path in G from v to w of length at most k? This is in P: Using Dykstra's Algorithm, it runs in time polynomial in the size of the instance. Proving the result of the decision problem, use the sequence of vertices along a path of length at most k as a certificate; verify the length of this path without having to trust Dikstra's Algorithm.

• Problem: LESS-THAN Instance: Two integers, n and m. Question: Is n less than m? The size of the problem is O(log n + log m), using binary representation of the numbers. This problem can be decided in O(log n + log m) time, which is linear in the sizes of the numbers (i. e. , k=1 in the definition of P). • P roughly corresponds to algorithmic notion of efficient computation; if a problem is in P, then it can be solved efficiently. • Some problems are not in P. For example, consider this problem: • Problem: HALTING Instance: A C program A. Question: Will A ever call exit()? However, there is no general algorithm for deciding this problem in polynomial time. (It turns out that there is no algorithm for doing this at all, in any kind of time, but you don't have to believe that until later. ) • It isn't known for many problems whether or not they are in P. For example: Problem: GRAPH-ISOMORPHISM Instance: Two graphs G and H. Question: Are G and H isomorphic? (i. e. , are they the same shape? Can we relabel the vertices of one to make it identical to the other? ) It isn't known whether GRAPH-ISOMORPHISM is in P.

Non-p problems (intractable) • Turing 1930, Problems unsolvable by any algorithm. The most well known is the halting problem: Given an arbitrary algorithm and its input, will that algorithm eventually halt, or will it continue forever in an “infinite loop? ” • Hamiltonian Paths: Given a G(V, E), find a path visiting every vertex just once. Searching such a path is an optimization problem. Decision problem: But deciding if there is such a path is a Decision Problem of yes or no. • TSP: Finding a minimum weight Hamiltonian cycle. TSP whose all the edge weights is set to one, is a special case of Hamiltonian cycle. Decision problem: For a given G and for a total weight of w. Is there a TSP with a total weight at most w. P • Can be classified in various categories based on their degree of difficulty, e. g. , NPC, NP-hard NP-complete NP

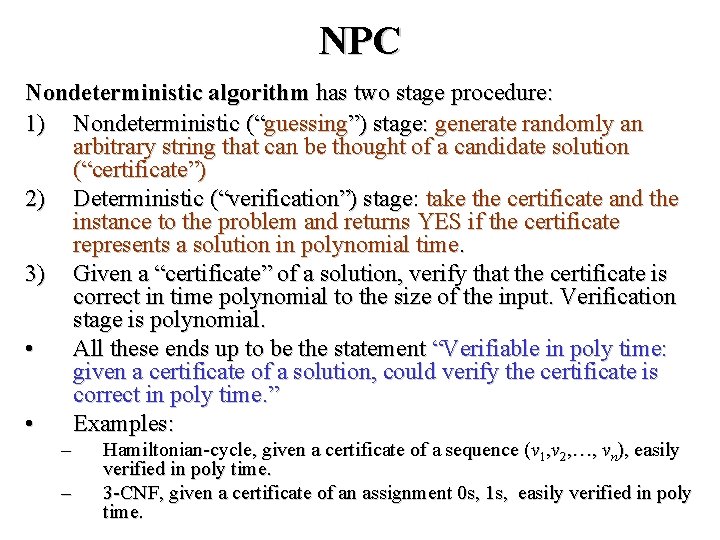

NPC Nondeterministic algorithm has two stage procedure: 1) Nondeterministic (“guessing”) stage: generate randomly an arbitrary string that can be thought of a candidate solution (“certificate”) 2) Deterministic (“verification”) stage: take the certificate and the instance to the problem and returns YES if the certificate represents a solution in polynomial time. 3) Given a “certificate” of a solution, verify that the certificate is correct in time polynomial to the size of the input. Verification stage is polynomial. • All these ends up to be the statement “Verifiable in poly time: given a certificate of a solution, could verify the certificate is correct in poly time. ” • Examples: – – Hamiltonian-cycle, given a certificate of a sequence (v 1, v 2, …, vn), easily verified in poly time. 3 -CNF, given a certificate of an assignment 0 s, 1 s, easily verified in poly time.

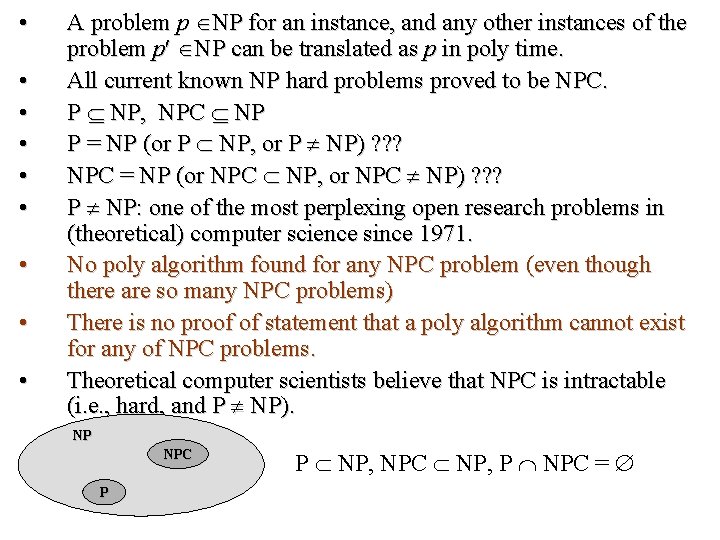

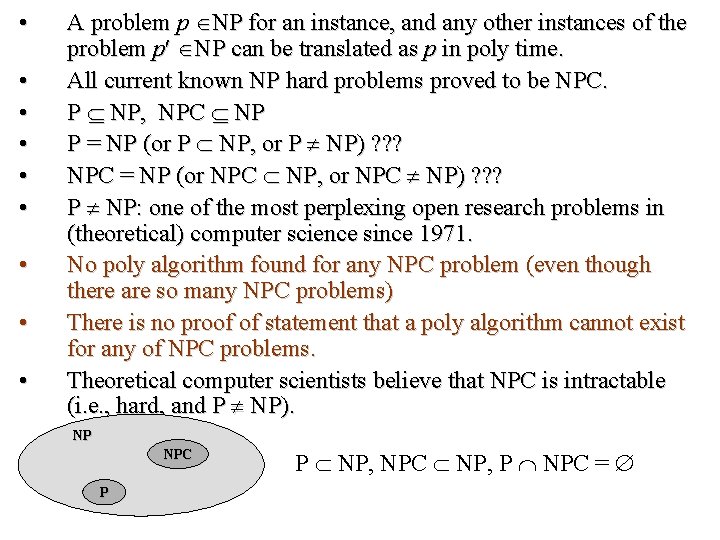

• • • A problem p NP for an instance, and any other instances of the problem p NP can be translated as p in poly time. All current known NP hard problems proved to be NPC. P NP, NPC NP P = NP (or P NP, or P NP) ? ? ? NPC = NP (or NPC NP, or NPC NP) ? ? ? P NP: one of the most perplexing open research problems in (theoretical) computer science since 1971. No poly algorithm found for any NPC problem (even though there are so many NPC problems) There is no proof of statement that a poly algorithm cannot exist for any of NPC problems. Theoretical computer scientists believe that NPC is intractable (i. e. , hard, and P NP). NP NPC P P NP, NPC NP, P NPC =

Why searching for NPC • If proved NPC, a good evidence for its intractability (hardness). • No need to waste time on trying to find out an efficient algorithm, rather focus on an approximate algorithm or solution for a special cases • Some problems might seem to be easy, but in fact, np hard (NPC). Decision VS. Optimization Problems • Decision problem: solution is binary “YES” or “NO” • Optimization problem: seeking solution of an optimal solution. • Easier to define reduction from and between decision problems than optimization. NPC is confined to decision problem. (but also applicable to optimization problem. ) • Examples: – SHORTEST-PATH (optimization) Given G, and vertices u, v, find a path from u to v with minimum number of edges (and with minimum weight). – PATH (decision) Given G, vertices u, v, and k, whether exists a path from u to v consisting of at most k edges. • If there is solution for an optimization problem, the algorithm can be used to solve the corresponding decision problem, and if optimization is easy, its corresponding decision is also easy. Or if provides evidence that decision problem is hard, then the corresponding optimization problem is also hard.

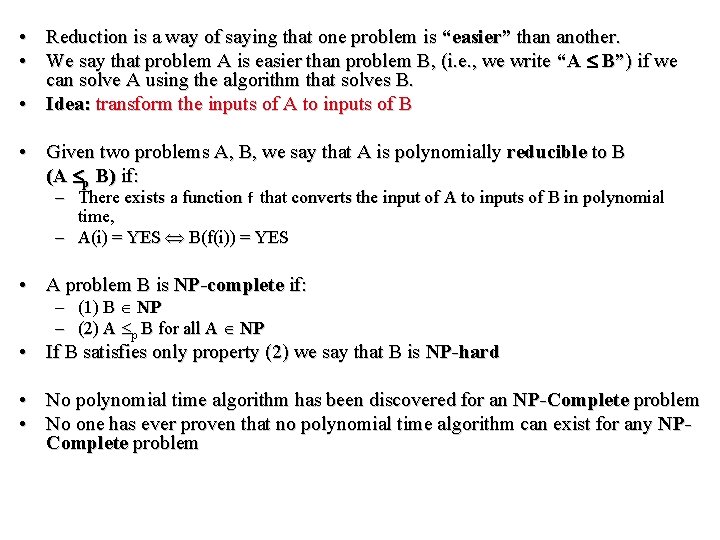

• Reduction is a way of saying that one problem is “easier” than another. • We say that problem A is easier than problem B, (i. e. , we write “A B”) if we can solve A using the algorithm that solves B. • Idea: transform the inputs of A to inputs of B • Given two problems A, B, we say that A is polynomially reducible to B (A p B) if: – There exists a function f that converts the input of A to inputs of B in polynomial time, – A(i) = YES B(f(i)) = YES • A problem B is NP-complete if: – (1) B NP – (2) A p B for all A NP • If B satisfies only property (2) we say that B is NP-hard • No polynomial time algorithm has been discovered for an NP-Complete problem • No one has ever proven that no polynomial time algorithm can exist for any NPComplete problem

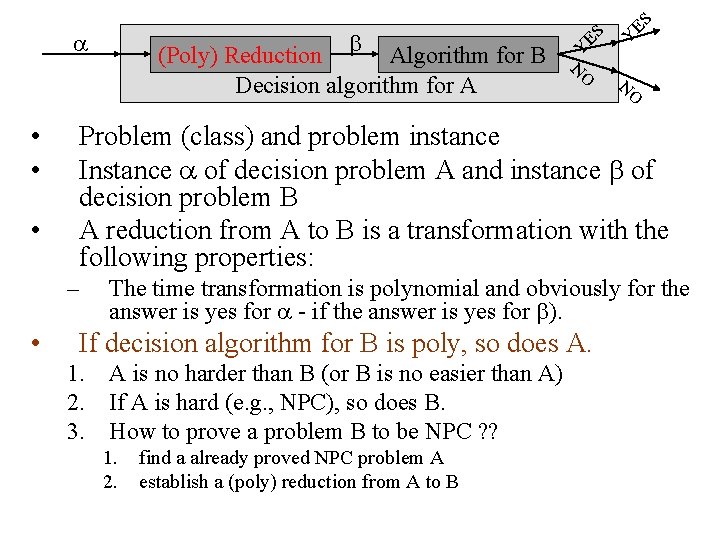

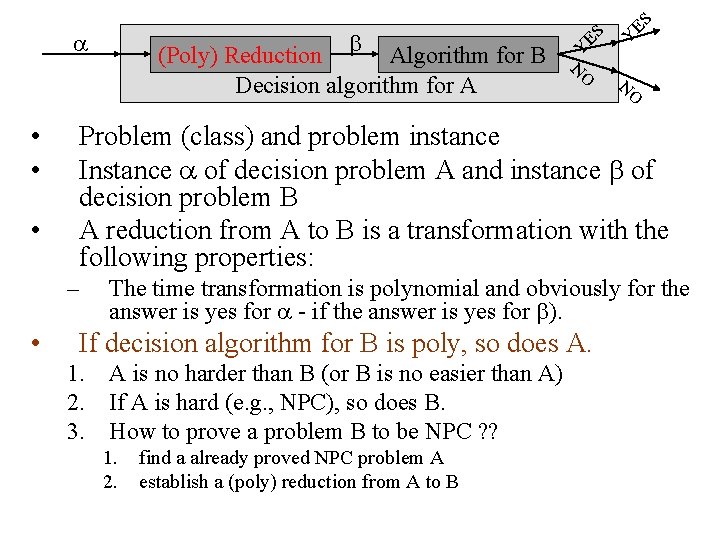

ES ES Y O N Problem (class) and problem instance Instance of decision problem A and instance of decision problem B A reduction from A to B is a transformation with the following properties: – • O • N • • (Poly) Reduction Algorithm for B Decision algorithm for A Y The time transformation is polynomial and obviously for the answer is yes for - if the answer is yes for ). If decision algorithm for B is poly, so does A. 1. A is no harder than B (or B is no easier than A) 2. If A is hard (e. g. , NPC), so does B. 3. How to prove a problem B to be NPC ? ? 1. 2. find a already proved NPC problem A establish a (poly) reduction from A to B

NP-Completeness • Any problem in P is also in NP: P NP • The big (and open question) is whether NP P or P = NP – i. e. , if it is always easy to check a solution, should it also be easy to find a solution? • Most computer scientists believe that this is false but we do not have a proof NP-complete problems are defined as the hardest problems in NP. Most practical problems turn out to be either P or NP-complete.

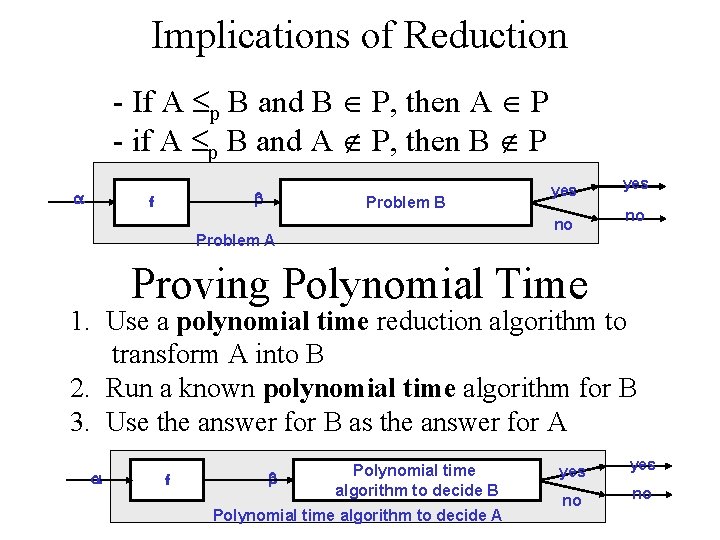

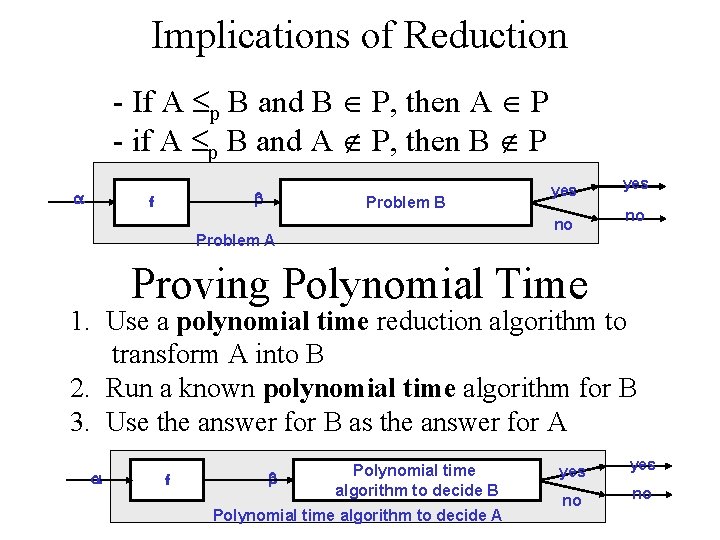

Implications of Reduction - If A p B and B P, then A P - if A p B and A P, then B P f Problem B yes no Problem A yes no Proving Polynomial Time 1. Use a polynomial time reduction algorithm to transform A into B 2. Run a known polynomial time algorithm for B 3. Use the answer for B as the answer for A f Polynomial time algorithm to decide B Polynomial time algorithm to decide A yes no no

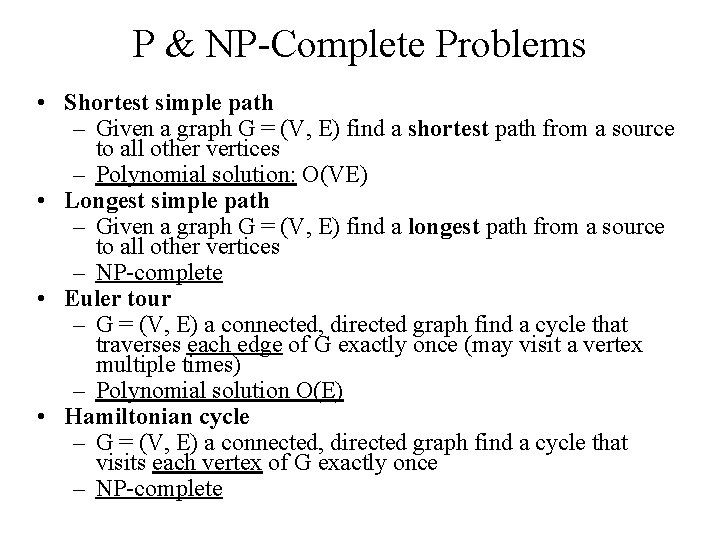

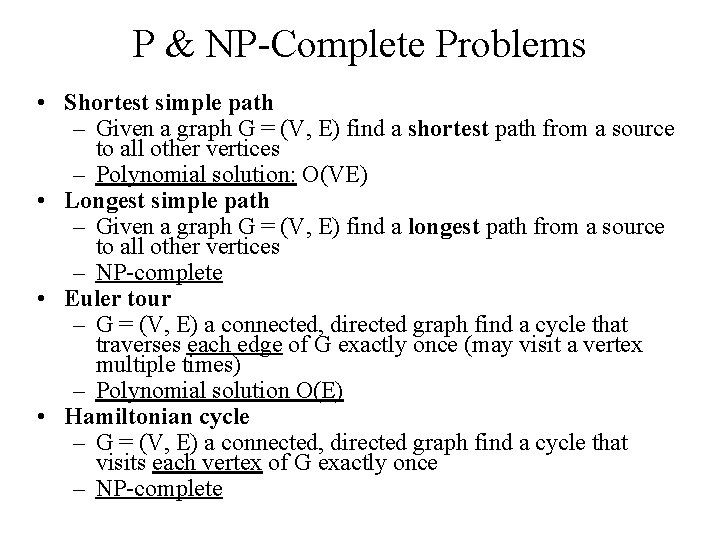

P & NP-Complete Problems • Shortest simple path – Given a graph G = (V, E) find a shortest path from a source to all other vertices – Polynomial solution: O(VE) • Longest simple path – Given a graph G = (V, E) find a longest path from a source to all other vertices – NP-complete • Euler tour – G = (V, E) a connected, directed graph find a cycle that traverses each edge of G exactly once (may visit a vertex multiple times) – Polynomial solution O(E) • Hamiltonian cycle – G = (V, E) a connected, directed graph find a cycle that visits each vertex of G exactly once – NP-complete

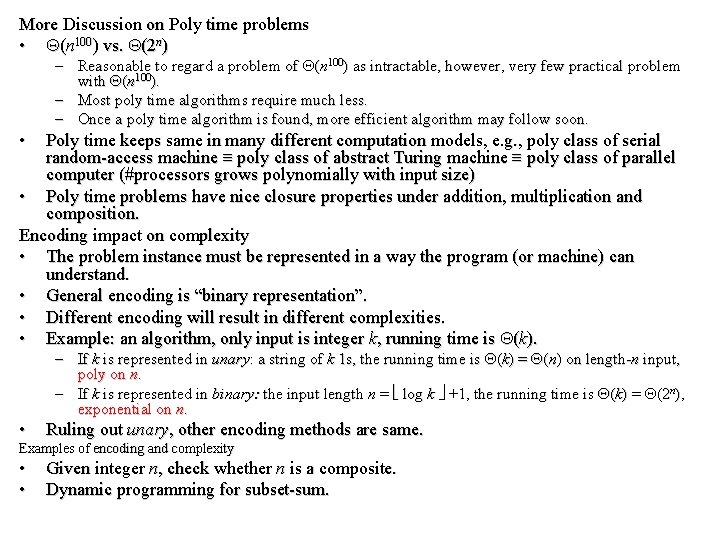

More Discussion on Poly time problems • (n 100) vs. (2 n) – Reasonable to regard a problem of (n 100) as intractable, however, very few practical problem with (n 100). – Most poly time algorithms require much less. – Once a poly time algorithm is found, more efficient algorithm may follow soon. • Poly time keeps same in many different computation models, e. g. , poly class of serial random-access machine poly class of abstract Turing machine poly class of parallel computer (#processors grows polynomially with input size) • Poly time problems have nice closure properties under addition, multiplication and composition. Encoding impact on complexity • The problem instance must be represented in a way the program (or machine) can understand. • General encoding is “binary representation”. • Different encoding will result in different complexities. • Example: an algorithm, only input is integer k, running time is (k). – If k is represented in unary: a string of k 1 s, the running time is (k) = (n) on length-n input, poly on n. – If k is represented in binary: the input length n = log k +1, the running time is (k) = (2 n), exponential on n. • Ruling out unary, other encoding methods are same. Examples of encoding and complexity • • Given integer n, check whether n is a composite. Dynamic programming for subset-sum.

Class P Problems • Let n= the length of binary encoding of a problem (i. e. , input size), T(n) is the time to solve it. • A problem is poly-time solvable if T(n) =O(nk) for some constant k. • Complexity class P=set of problems that are poly-time solvable. Poly Time Verification • PATH problem: Given <G, u, v, k>, whether exists a path from u to v with at most k edges? • Moreover, also given a path p from u to v, verify whether the length of p is at most k? • Easy or not? Easy. . Poly Time Verification, encoding, and language • Hamiltonian cycles – – A simple path containing every vertex. HAM-CYCLE={<G>: G is a Hamiltonian graph, i. e. containing Hamiltonian cycle}. Suppose n is the length of encoding of G. HAM-CYCLE can be considered as a Language after encoding, i. e. a subset of * where ={0, 1}*. • The naïve algorithm for determining HAM-CYCLE runs in (m!)= (2 m) time, where m is the number of vertices, m n 1/2. • However, given an ordered sequence of m vertices (called “certificate”), let you verify whether the sequence is a Hamiltonian cycle. Very easy. In O(n 2) time.

Class NP problems • For a problem p, given its certificate, the certificate can be verified in poly time. • Call this kind of problem an NP one. • Complement set/class: Co-NP. – Given a set S (as a universal) and given a subset A – The complement is that S-A. – When NP problems are represented as languages (i. e. a set), we can discuss their complement set, i. e. , Co-NP. NP-completeness and Reducibility • A (class of) problem P 1 is poly-time reducible to P 2 , written as P 1 p P 2 if there exists a poly-time function f: P 1 P 2 such that for any instance of p 1 P 1, p 1 has “YES” answer if and only if answer to f(x) ( P 2) is also “YES”. • Theorem 34. 3: (page 985) – For two problems P 1, P 2, if P 1 p P 2 then P 2 P implies P 1 P. • A problem p is NP-complete if 1. p NP and 2. p' p p for every p' NP. (if p satisfies 2, then p is said NP-hard. ) Theorem 34. 4 (page 986) if any NP-compete problem is poly-time solvable, then P=NP. Or say: if any problem in NP is not poly-time solvable, then no NP-complete problem is polytime solvable.

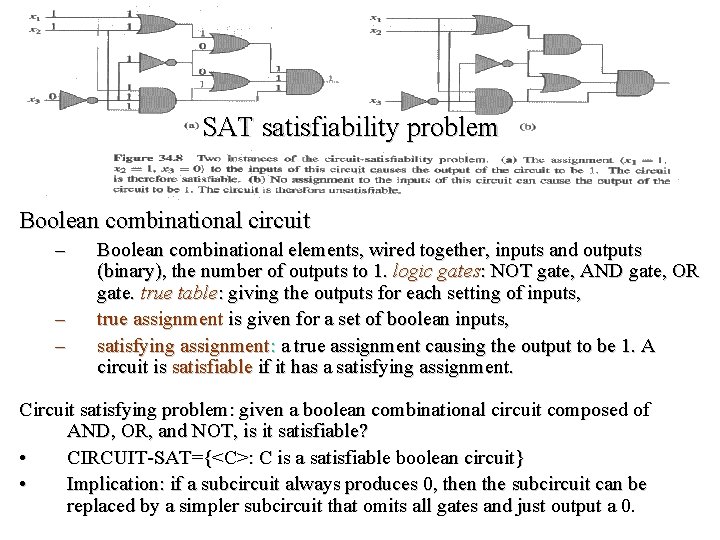

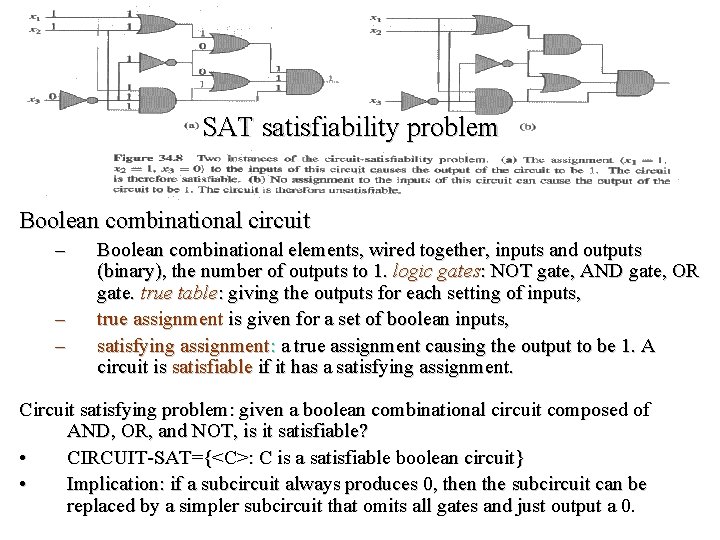

SAT satisfiability problem Boolean combinational circuit – – – Boolean combinational elements, wired together, inputs and outputs (binary), the number of outputs to 1. logic gates: NOT gate, AND gate, OR gate. true table: giving the outputs for each setting of inputs, true assignment is given for a set of boolean inputs, satisfying assignment: a true assignment causing the output to be 1. A circuit is satisfiable if it has a satisfying assignment. Circuit satisfying problem: given a boolean combinational circuit composed of AND, OR, and NOT, is it satisfiable? • CIRCUIT-SAT={<C>: C is a satisfiable boolean circuit} • Implication: if a subcircuit always produces 0, then the subcircuit can be replaced by a simpler subcircuit that omits all gates and just output a 0.

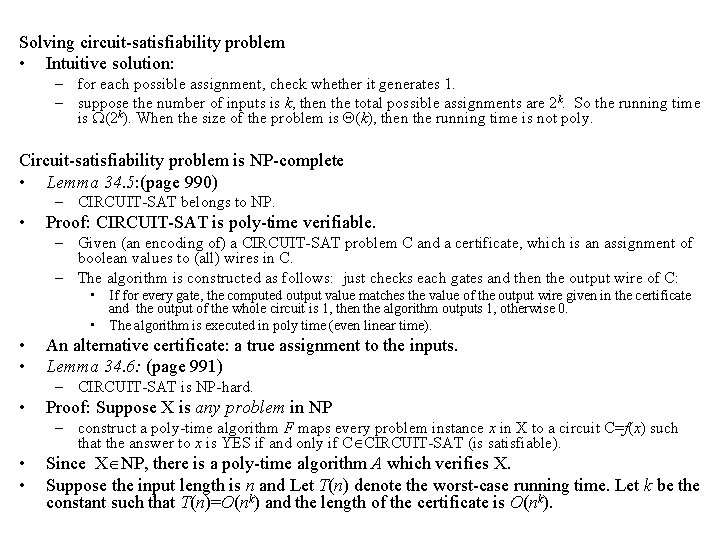

Solving circuit-satisfiability problem • Intuitive solution: – for each possible assignment, check whether it generates 1. – suppose the number of inputs is k, then the total possible assignments are 2 k. So the running time is (2 k). When the size of the problem is (k), then the running time is not poly. Circuit-satisfiability problem is NP-complete • Lemma 34. 5: (page 990) – CIRCUIT-SAT belongs to NP. • Proof: CIRCUIT-SAT is poly-time verifiable. – Given (an encoding of) a CIRCUIT-SAT problem C and a certificate, which is an assignment of boolean values to (all) wires in C. – The algorithm is constructed as follows: just checks each gates and then the output wire of C: • If for every gate, the computed output value matches the value of the output wire given in the certificate and the output of the whole circuit is 1, then the algorithm outputs 1, otherwise 0. • The algorithm is executed in poly time (even linear time). • • An alternative certificate: a true assignment to the inputs. Lemma 34. 6: (page 991) – CIRCUIT-SAT is NP-hard. • Proof: Suppose X is any problem in NP – construct a poly-time algorithm F maps every problem instance x in X to a circuit C=f(x) such that the answer to x is YES if and only if C CIRCUIT-SAT (is satisfiable). • • Since X NP, there is a poly-time algorithm A which verifies X. Suppose the input length is n and Let T(n) denote the worst-case running time. Let k be the constant such that T(n)=O(nk) and the length of the certificate is O(nk).

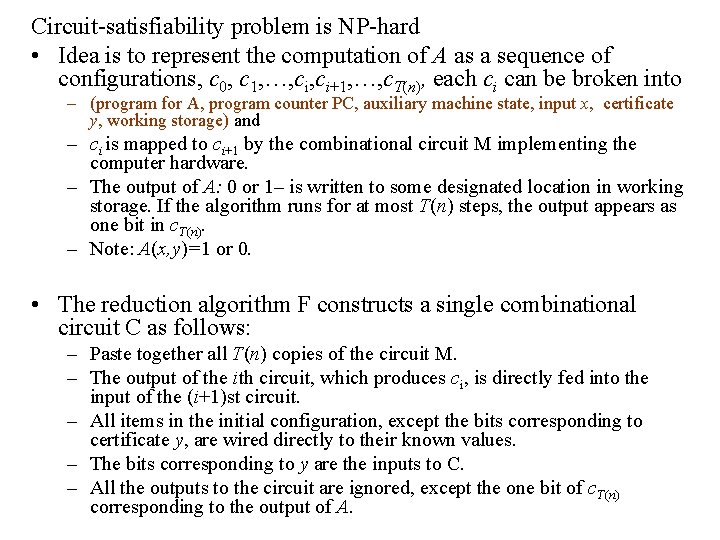

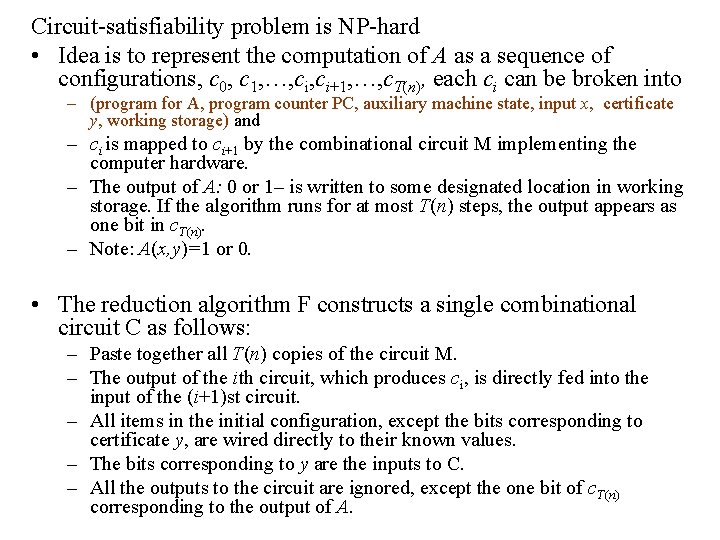

Circuit-satisfiability problem is NP-hard • Idea is to represent the computation of A as a sequence of configurations, c 0, c 1, …, ci+1, …, c. T(n), each ci can be broken into – (program for A, program counter PC, auxiliary machine state, input x, certificate y, working storage) and – ci is mapped to ci+1 by the combinational circuit M implementing the computer hardware. – The output of A: 0 or 1– is written to some designated location in working storage. If the algorithm runs for at most T(n) steps, the output appears as one bit in c. T(n). – Note: A(x, y)=1 or 0. • The reduction algorithm F constructs a single combinational circuit C as follows: – Paste together all T(n) copies of the circuit M. – The output of the ith circuit, which produces ci, is directly fed into the input of the (i+1)st circuit. – All items in the initial configuration, except the bits corresponding to certificate y, are wired directly to their known values. – The bits corresponding to y are the inputs to C. – All the outputs to the circuit are ignored, except the one bit of c. T(n) corresponding to the output of A.

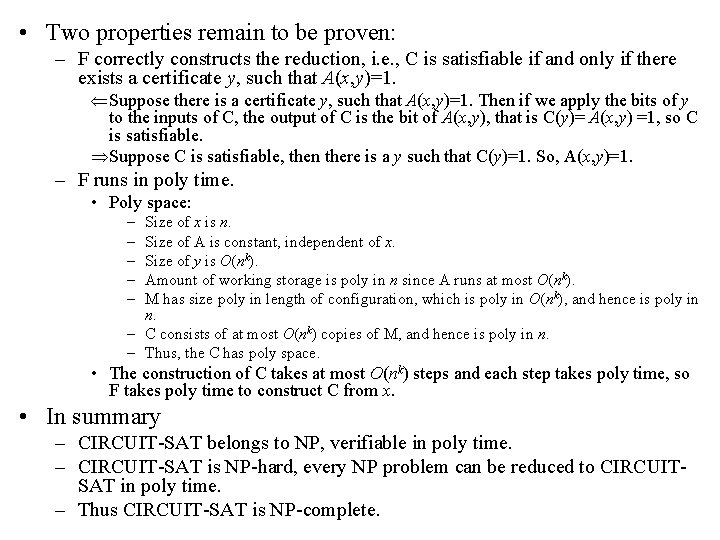

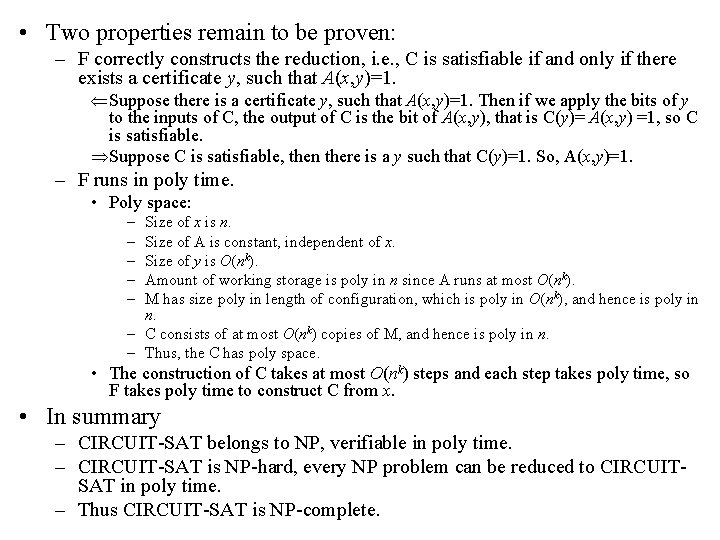

• Two properties remain to be proven: – F correctly constructs the reduction, i. e. , C is satisfiable if and only if there exists a certificate y, such that A(x, y)=1. Suppose there is a certificate y, such that A(x, y)=1. Then if we apply the bits of y to the inputs of C, the output of C is the bit of A(x, y), that is C(y)= A(x, y) =1, so C is satisfiable. Suppose C is satisfiable, then there is a y such that C(y)=1. So, A(x, y)=1. – F runs in poly time. • Poly space: – – – Size of x is n. Size of A is constant, independent of x. Size of y is O(nk). Amount of working storage is poly in n since A runs at most O(nk). M has size poly in length of configuration, which is poly in O(nk), and hence is poly in n. – C consists of at most O(nk) copies of M, and hence is poly in n. – Thus, the C has poly space. • The construction of C takes at most O(nk) steps and each step takes poly time, so F takes poly time to construct C from x. • In summary – CIRCUIT-SAT belongs to NP, verifiable in poly time. – CIRCUIT-SAT is NP-hard, every NP problem can be reduced to CIRCUITSAT in poly time. – Thus CIRCUIT-SAT is NP-complete.

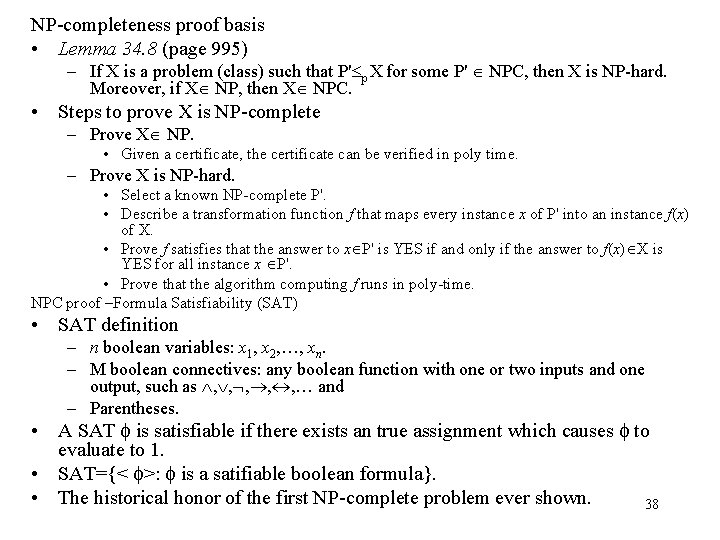

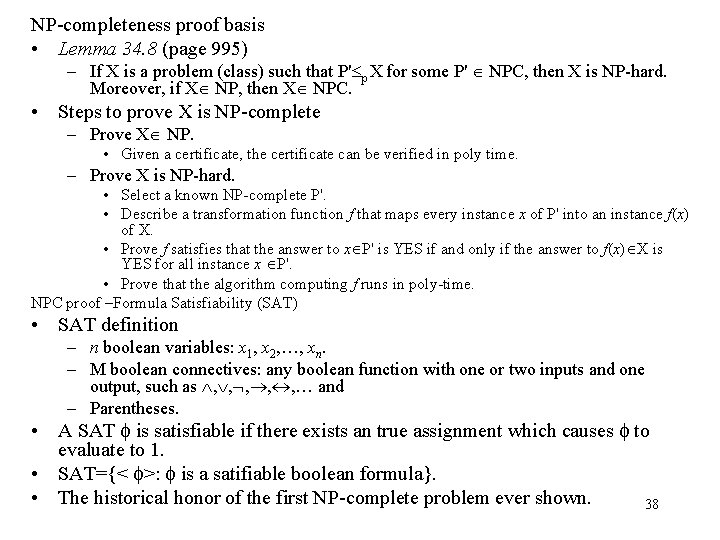

NP-completeness proof basis • Lemma 34. 8 (page 995) – If X is a problem (class) such that P' p X for some P' NPC, then X is NP-hard. Moreover, if X NP, then X NPC. • Steps to prove X is NP-complete – Prove X NP. • Given a certificate, the certificate can be verified in poly time. – Prove X is NP-hard. • Select a known NP-complete P'. • Describe a transformation function f that maps every instance x of P' into an instance f(x) of X. • Prove f satisfies that the answer to x P' is YES if and only if the answer to f(x) X is YES for all instance x P'. • Prove that the algorithm computing f runs in poly-time. NPC proof –Formula Satisfiability (SAT) • SAT definition – n boolean variables: x 1, x 2, …, xn. – M boolean connectives: any boolean function with one or two inputs and one output, such as , , , … and – Parentheses. • A SAT is satisfiable if there exists an true assignment which causes to evaluate to 1. • SAT={< >: is a satifiable boolean formula}. • The historical honor of the first NP-complete problem ever shown. 38

SAT is NP-complete • Theorem 34. 9: (page 997) – SAT is NP-complete. • Proof: – SAT belongs to NP. • Given a satisfying assignment, the verifying algorithm replaces each variable with its value and evaluates the formula, in poly time. – SAT is NP-hard (show CIRCUIT-SAT p SAT). • CIRCUIT-SAT p SAT, i. e. , any instance of circuit satisfiability can be reduced in poly time to an instance of formula satisfiability. • Intuitive induction: – Look at the gate that produces the circuit output. – Inductively express each of gate’s inputs as formulas. – Formula for the circuit is then obtained by writing an expression that applies the gate’s function to its input formulas. 39

• Unfortunately, this is not a poly reduction – Shared formula (the gate whose output is fed to 2 or more inputs of other gates) cause the size of generated formula to grow exponentially. • Correct reduction: – For every wire xi of C, give a variable xi in the formula. – Every gate can be expressed as xo (xi 1 xi 2 … xil) – The final formula is the AND of the circuit output variable and conjunction of all clauses describing the operation of each gate. (example Figure 34. 10) • Correctness of the reduction – Clearly the reduction can be done in poly time. – C is satisfiable if and only if is satisfiable. • If C is satisfiable, then there is a satisfying assignment. This means that each wire of C has a well-defined value and the output of C is 1. Thus the assignment of wire values to variables in makes each clause in evaluate to 1. So is 1. • The reverse proof can be done in the same way. 40

Example of reduction of CIRCUIT-SAT to SAT = x 10 (x 7 x 8 x 9)) (x 9 (x 6 x 7)) (x 8 (x 5 x 6)) (x 7 (x 1 x 2 x 4)) (x 6 x 4)) (x 5 (x 1 x 2)) (x 4 x 3) INCORRECT REDUCTION: = x 10= x 7 x 8 x 9=(x 1 x 2 x 4) (x 5 x 6) (x 6 x 7) =(x 1 x 2 x 4) ((x 1 x 2) x 4) ( x 4 (x 1 x 2 x 4))=…. 41

NPC Proof – 3 -CNF Satisfiability • 3 -CNF definition – A literal in a boolean formula is an occurrence of a variable or its negation. A clause is a logical variable or a number of logical variables. – CNF (Conjunctive Normal Form) is a boolean formula expressed as AND of clauses, each of which is the OR of one or more literals. – 3 -CNF is a CNF in which each clause has exactly 3 distinct literals (a literal and its negation are distinct) • 3 -CNF-SAT: whether a given 3 -CNF is satiafiable? 42

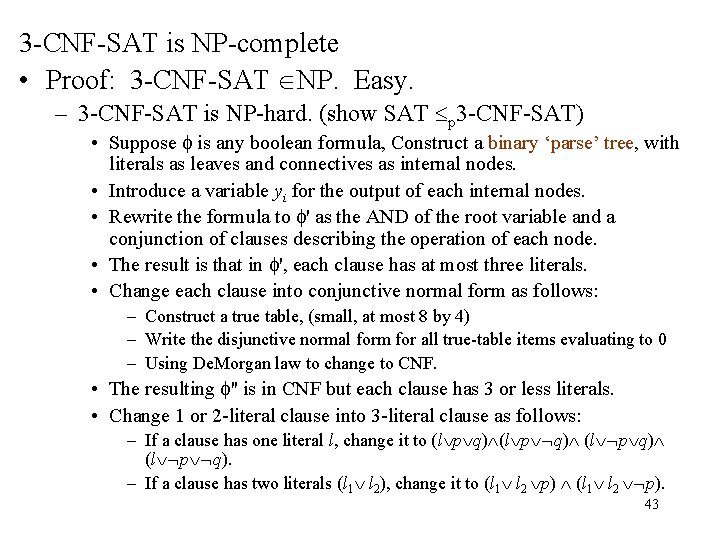

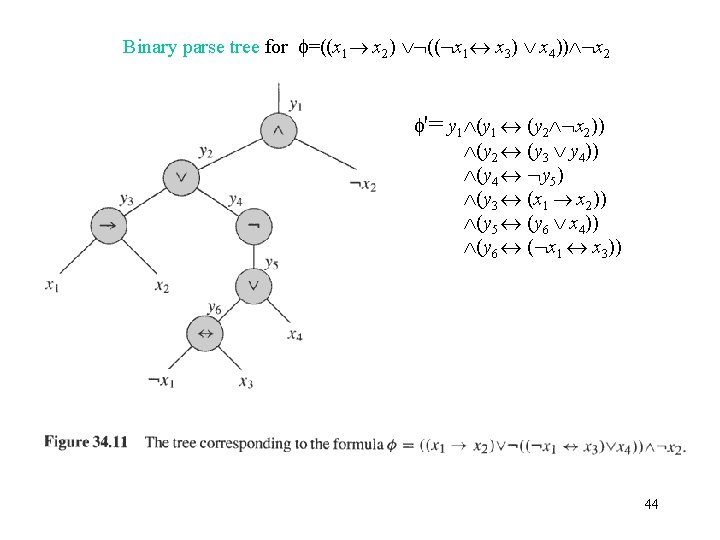

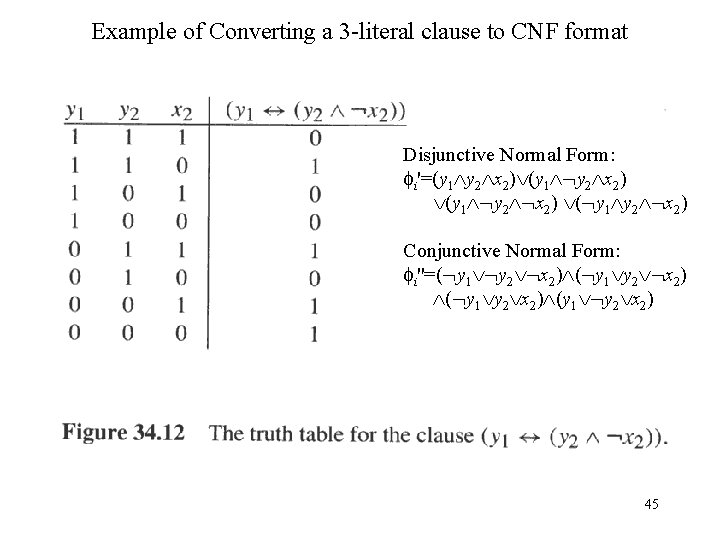

3 -CNF-SAT is NP-complete • Proof: 3 -CNF-SAT NP. Easy. – 3 -CNF-SAT is NP-hard. (show SAT p 3 -CNF-SAT) • Suppose is any boolean formula, Construct a binary ‘parse’ tree, with literals as leaves and connectives as internal nodes. • Introduce a variable yi for the output of each internal nodes. • Rewrite the formula to ' as the AND of the root variable and a conjunction of clauses describing the operation of each node. • The result is that in ', each clause has at most three literals. • Change each clause into conjunctive normal form as follows: – Construct a true table, (small, at most 8 by 4) – Write the disjunctive normal form for all true-table items evaluating to 0 – Using De. Morgan law to change to CNF. • The resulting '' is in CNF but each clause has 3 or less literals. • Change 1 or 2 -literal clause into 3 -literal clause as follows: – If a clause has one literal l, change it to (l p q) (l p q). – If a clause has two literals (l 1 l 2), change it to (l 1 l 2 p) (l 1 l 2 p). 43

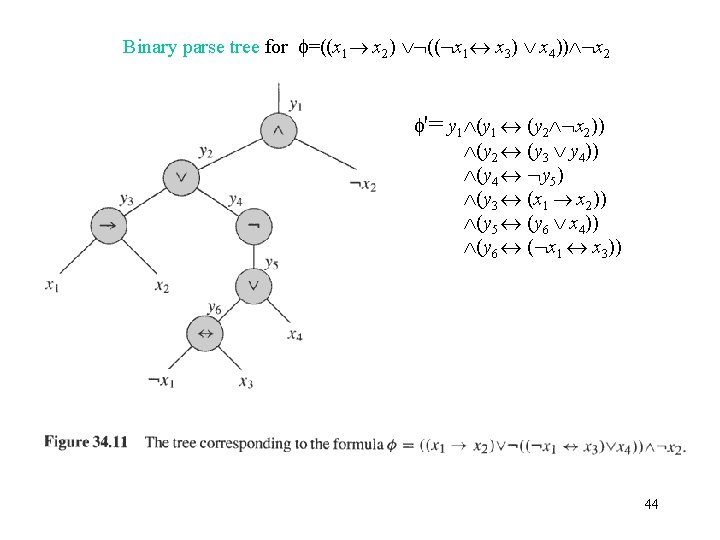

Binary parse tree for =((x 1 x 2) (( x 1 x 3) x 4)) x 2 '= y 1 (y 1 (y 2 x 2)) (y 2 (y 3 y 4)) (y 4 y 5) (y 3 (x 1 x 2)) (y 5 (y 6 x 4)) (y 6 ( x 1 x 3)) 44

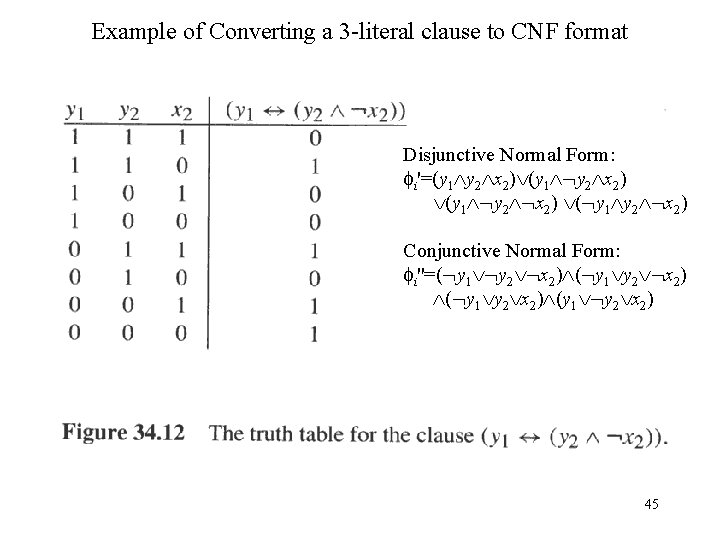

Example of Converting a 3 -literal clause to CNF format Disjunctive Normal Form: i'=(y 1 y 2 x 2) ( y 1 y 2 x 2) Conjunctive Normal Form: i''=( y 1 y 2 x 2) (y 1 y 2 x 2) 45

3 -CNF is NP-complete • and reduced 3 -CNF are equivalent: – From to ' , keep equivalence. – From ' to '' , keep equivalence. – From '' to final 3 -CNF, keep equivalence. • Reduction is in poly time, – From to ' , introduce at most 1 variable and 1 clause per connective in . – From ' to '' , introduce at most 8 clauses for each clause in '. – From '' to final 3 -CNF, introduce at most 4 clauses for each clause in ''. 46

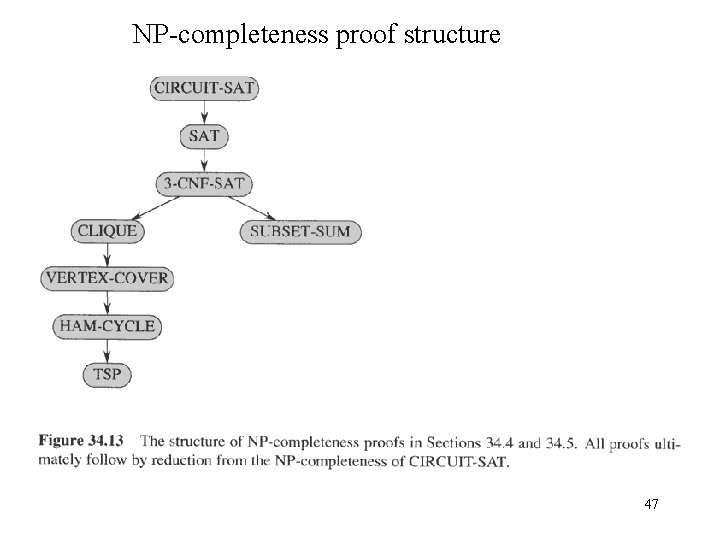

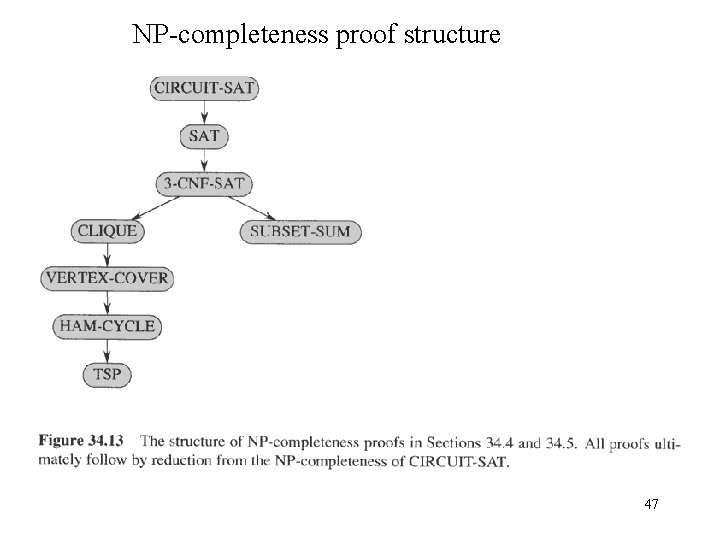

NP-completeness proof structure 47

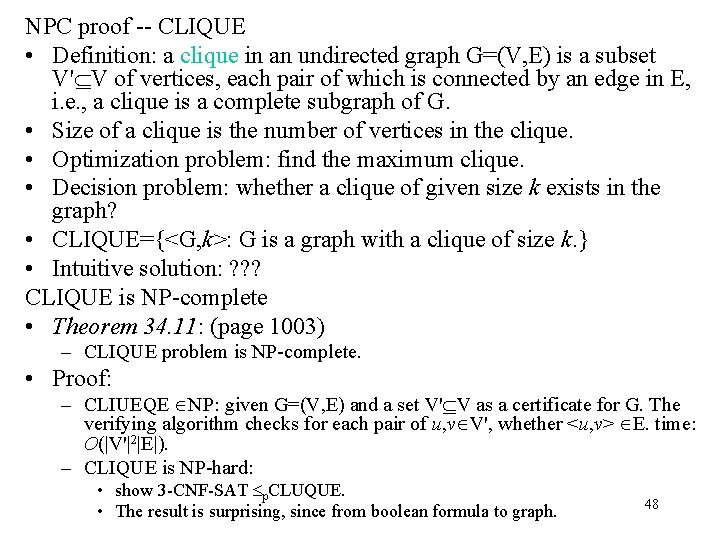

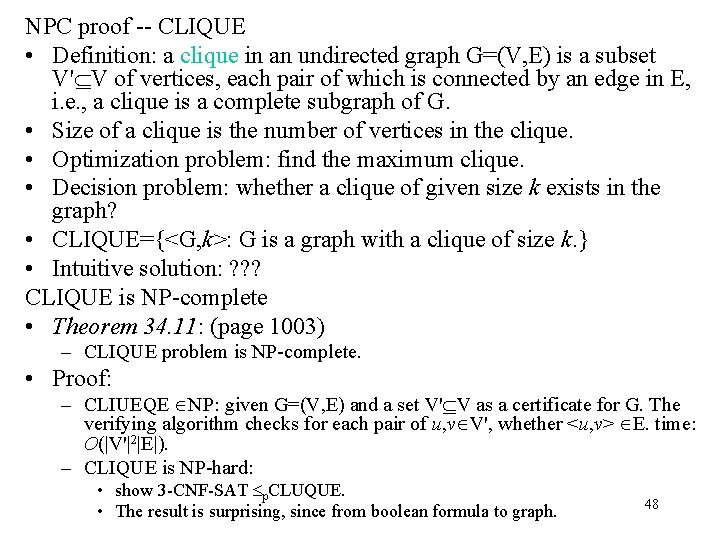

NPC proof -- CLIQUE • Definition: a clique in an undirected graph G=(V, E) is a subset V' V of vertices, each pair of which is connected by an edge in E, i. e. , a clique is a complete subgraph of G. • Size of a clique is the number of vertices in the clique. • Optimization problem: find the maximum clique. • Decision problem: whether a clique of given size k exists in the graph? • CLIQUE={<G, k>: G is a graph with a clique of size k. } • Intuitive solution: ? ? ? CLIQUE is NP-complete • Theorem 34. 11: (page 1003) – CLIQUE problem is NP-complete. • Proof: – CLIUEQE NP: given G=(V, E) and a set V' V as a certificate for G. The verifying algorithm checks for each pair of u, v V', whether <u, v> E. time: O(|V'|2|E|). – CLIQUE is NP-hard: • show 3 -CNF-SAT p. CLUQUE. • The result is surprising, since from boolean formula to graph. 48

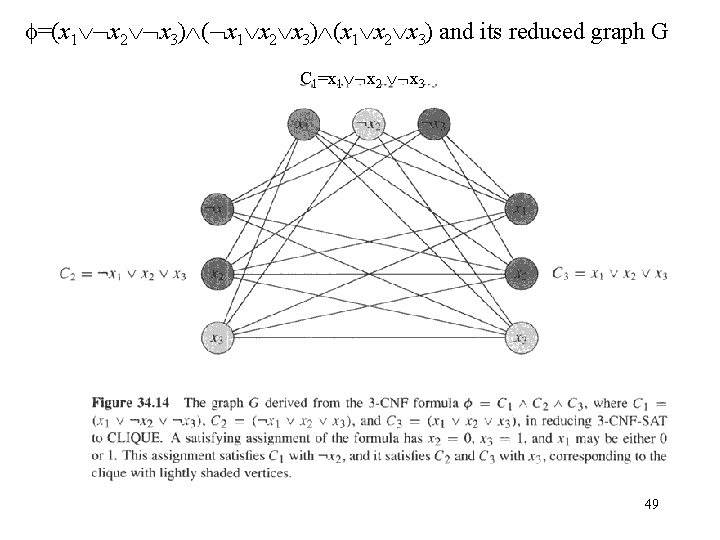

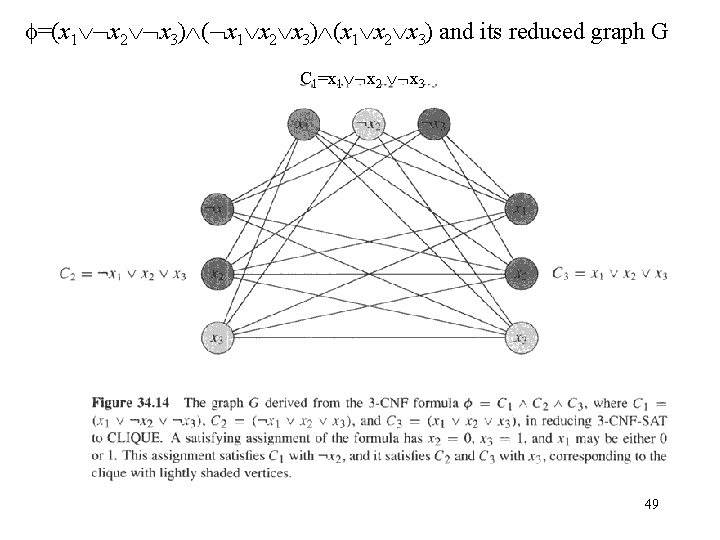

=(x 1 x 2 x 3) (x 1 x 2 x 3) and its reduced graph G C 1=x 1 x 2 x 3 49

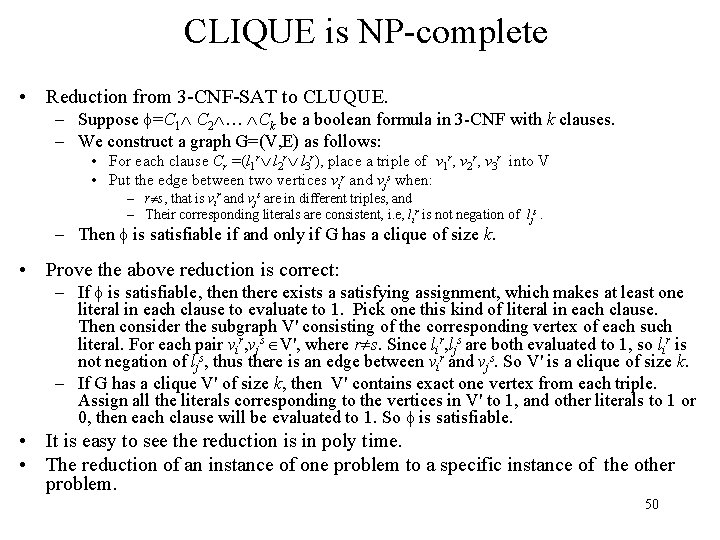

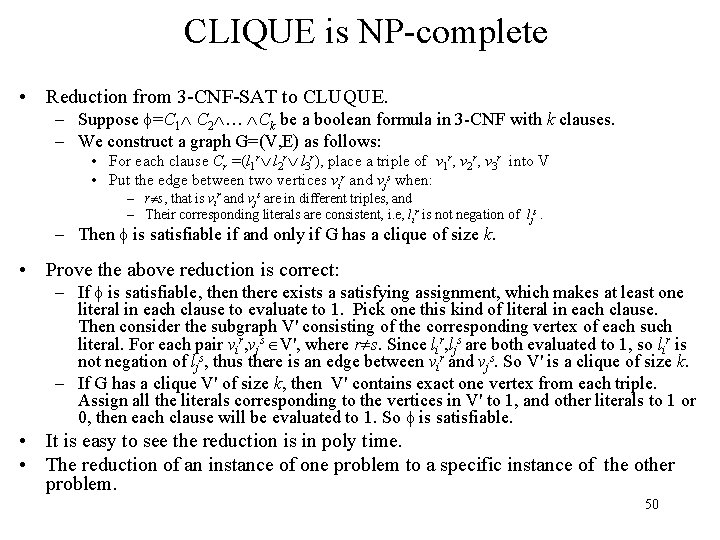

CLIQUE is NP-complete • Reduction from 3 -CNF-SAT to CLUQUE. – Suppose =C 1 C 2 … Ck be a boolean formula in 3 -CNF with k clauses. – We construct a graph G=(V, E) as follows: • For each clause Cr =(l 1 r l 2 r l 3 r), place a triple of v 1 r, v 2 r, v 3 r into V • Put the edge between two vertices vir and vjs when: – r s, that is vir and vjs are in different triples, and – Their corresponding literals are consistent, i. e, lir is not negation of ljs. – Then is satisfiable if and only if G has a clique of size k. • Prove the above reduction is correct: – If is satisfiable, then there exists a satisfying assignment, which makes at least one literal in each clause to evaluate to 1. Pick one this kind of literal in each clause. Then consider the subgraph V' consisting of the corresponding vertex of each such literal. For each pair vir, vjs V', where r s. Since lir, ljs are both evaluated to 1, so lir is not negation of ljs, thus there is an edge between vir and vjs. So V' is a clique of size k. – If G has a clique V' of size k, then V' contains exact one vertex from each triple. Assign all the literals corresponding to the vertices in V' to 1, and other literals to 1 or 0, then each clause will be evaluated to 1. So is satisfiable. • It is easy to see the reduction is in poly time. • The reduction of an instance of one problem to a specific instance of the other problem. 50

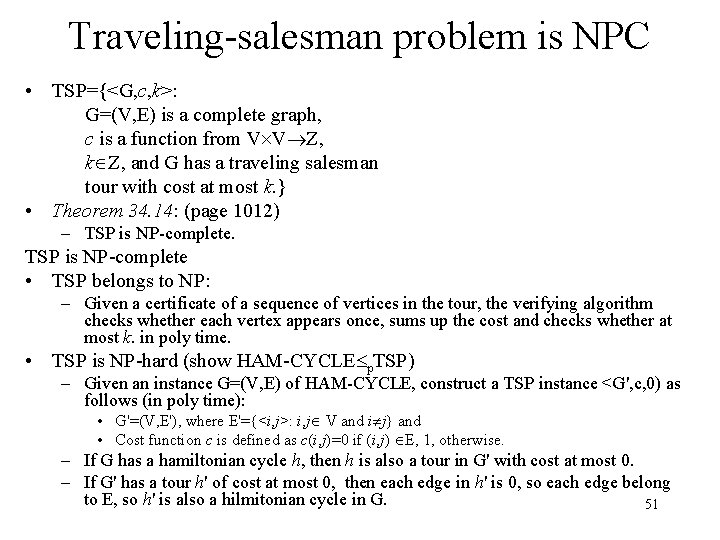

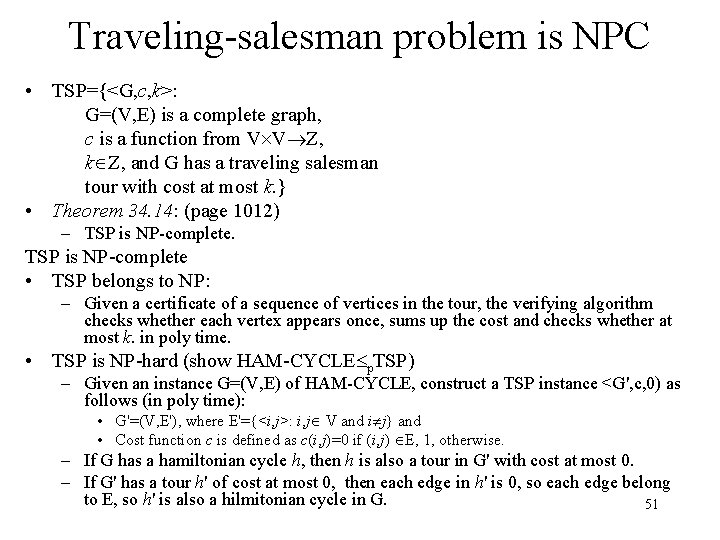

Traveling-salesman problem is NPC • TSP={<G, c, k>: G=(V, E) is a complete graph, c is a function from V V Z, k Z, and G has a traveling salesman tour with cost at most k. } • Theorem 34. 14: (page 1012) – TSP is NP-complete • TSP belongs to NP: – Given a certificate of a sequence of vertices in the tour, the verifying algorithm checks whether each vertex appears once, sums up the cost and checks whether at most k. in poly time. • TSP is NP-hard (show HAM-CYCLE p. TSP) – Given an instance G=(V, E) of HAM-CYCLE, construct a TSP instance <G', c, 0) as follows (in poly time): • G'=(V, E'), where E'={<i, j>: i, j V and i j} and • Cost function c is defined as c(i, j)=0 if (i, j) E, 1, otherwise. – If G has a hamiltonian cycle h, then h is also a tour in G' with cost at most 0. – If G' has a tour h' of cost at most 0, then each edge in h' is 0, so each edge belong to E, so h' is also a hilmitonian cycle in G. 51

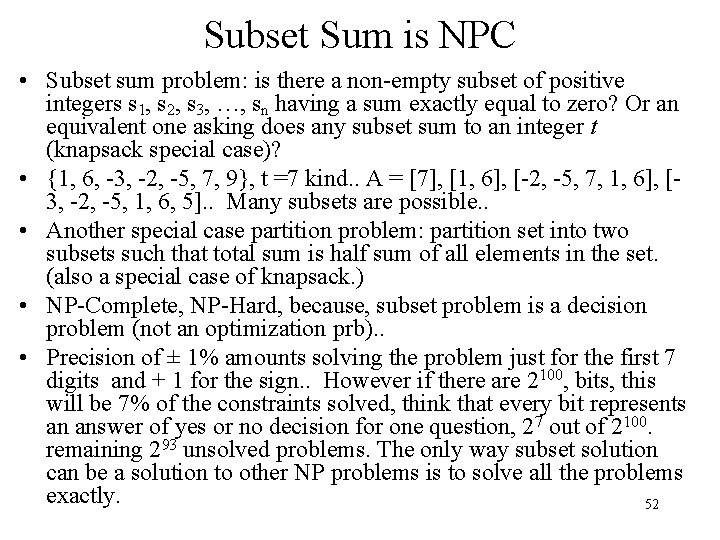

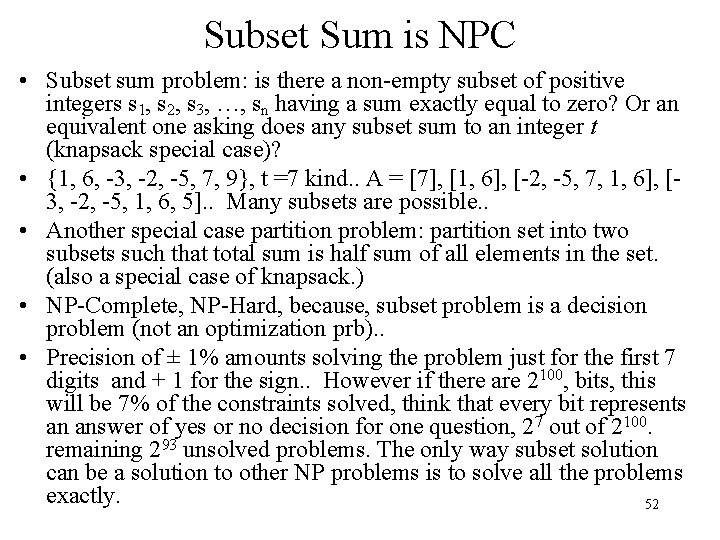

Subset Sum is NPC • Subset sum problem: is there a non-empty subset of positive integers s 1, s 2, s 3, …, sn having a sum exactly equal to zero? Or an equivalent one asking does any subset sum to an integer t (knapsack special case)? • {1, 6, -3, -2, -5, 7, 9}, t =7 kind. . A = [7], [1, 6], [-2, -5, 7, 1, 6], [3, -2, -5, 1, 6, 5]. . Many subsets are possible. . • Another special case partition problem: partition set into two subsets such that total sum is half sum of all elements in the set. (also a special case of knapsack. ) • NP-Complete, NP-Hard, because, subset problem is a decision problem (not an optimization prb). . • Precision of ± 1% amounts solving the problem just for the first 7 digits and + 1 for the sign. . However if there are 2100, bits, this will be 7% of the constraints solved, think that every bit represents an answer of yes or no decision for one question, 27 out of 2100. remaining 293 unsolved problems. The only way subset solution can be a solution to other NP problems is to solve all the problems exactly. 52

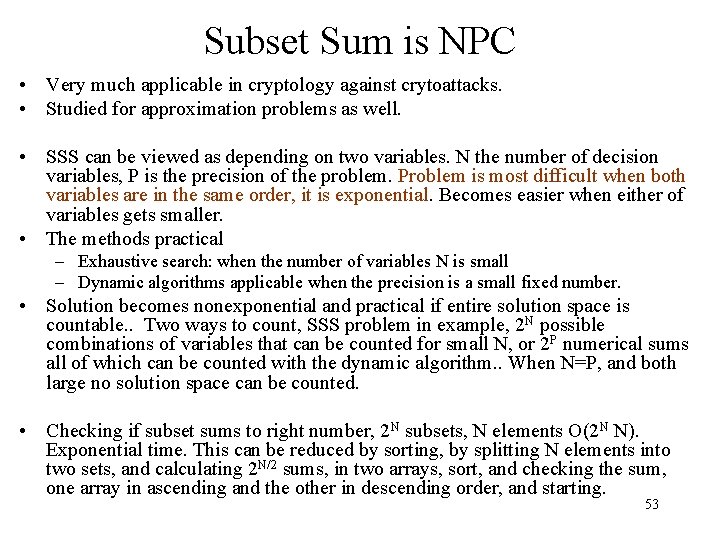

Subset Sum is NPC • Very much applicable in cryptology against crytoattacks. • Studied for approximation problems as well. • SSS can be viewed as depending on two variables. N the number of decision variables, P is the precision of the problem. Problem is most difficult when both variables are in the same order, it is exponential. Becomes easier when either of variables gets smaller. • The methods practical – Exhaustive search: when the number of variables N is small – Dynamic algorithms applicable when the precision is a small fixed number. • Solution becomes nonexponential and practical if entire solution space is countable. . Two ways to count, SSS problem in example, 2 N possible combinations of variables that can be counted for small N, or 2 P numerical sums all of which can be counted with the dynamic algorithm. . When N=P, and both large no solution space can be counted. • Checking if subset sums to right number, 2 N subsets, N elements O(2 N N). Exponential time. This can be reduced by sorting, by splitting N elements into two sets, and calculating 2 N/2 sums, in two arrays, sort, and checking the sum, one array in ascending and the other in descending order, and starting. 53

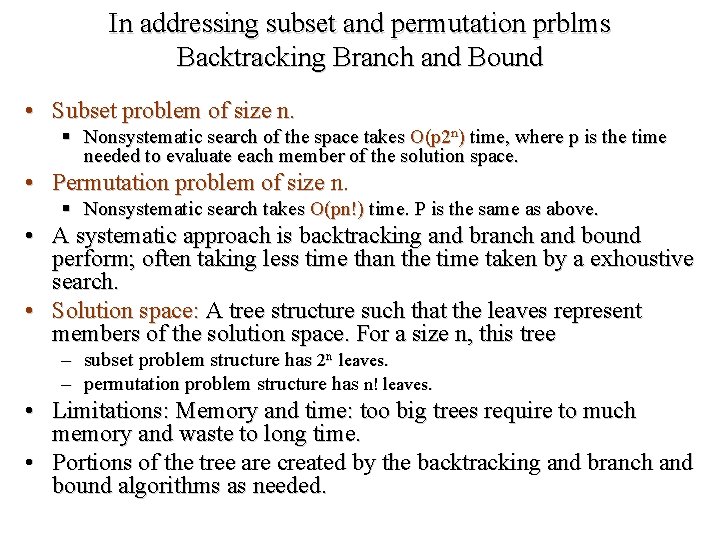

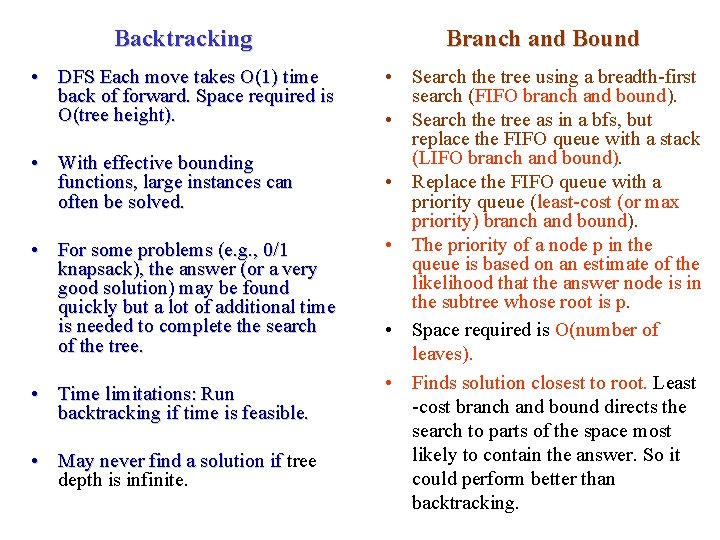

In addressing subset and permutation prblms Backtracking Branch and Bound • Subset problem of size n. § Nonsystematic search of the space takes O(p 2 n) time, where p is the time needed to evaluate each member of the solution space. • Permutation problem of size n. § Nonsystematic search takes O(pn!) time. P is the same as above. • A systematic approach is backtracking and branch and bound perform; often taking less time than the time taken by a exhoustive search. • Solution space: A tree structure such that the leaves represent members of the solution space. For a size n, this tree – subset problem structure has 2 n leaves. – permutation problem structure has n! leaves. • Limitations: Memory and time: too big trees require to much memory and waste to long time. • Portions of the tree are created by the backtracking and branch and bound algorithms as needed.

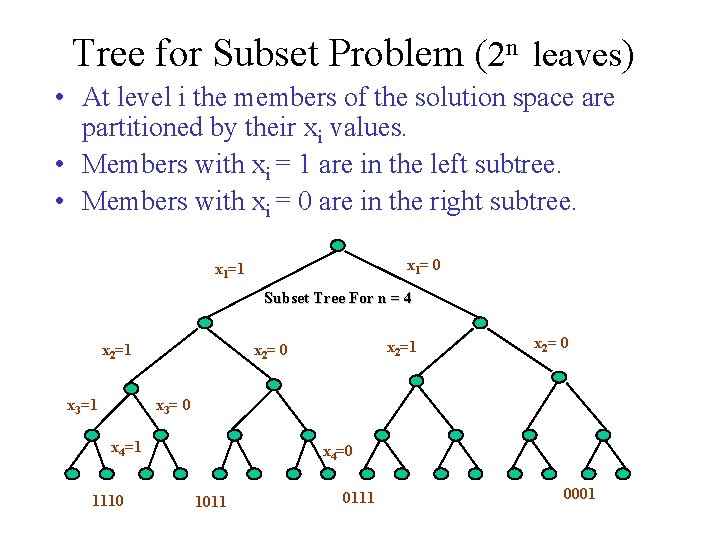

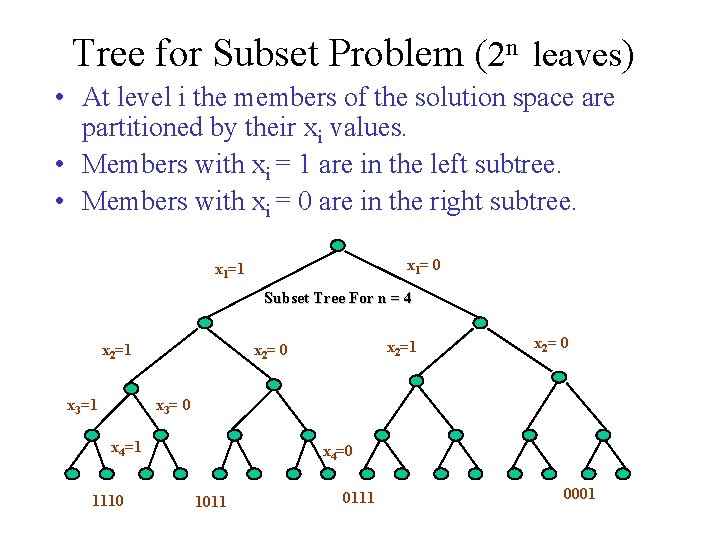

Tree for Subset Problem (2 n leaves) • At level i the members of the solution space are partitioned by their xi values. • Members with xi = 1 are in the left subtree. • Members with xi = 0 are in the right subtree. x 1= 0 x 1=1 Subset Tree For n = 4 x 2=1 x 3=1 x 2= 0 x 3= 0 x 4=1 1110 x 4=0 1011 0111 0001

Permutation Tree (n! Leaves) for n = 3 • At level i the members of the solution space are partitioned by their xi values. • Members (if any) with xi = 1 are in the first subtree. • Members (if any) with xi = 2 are in the next subtree. x 1=1 x 2= 2 x 3=3 x 2= 3 x 3=2 123 x 2= 1 x 3=3 132 x 1= 3 x 1=2 x 2= 3 x 3=1 213 x 2= 1 x 3=2 231 x 2= 2 x 3=1 312 321

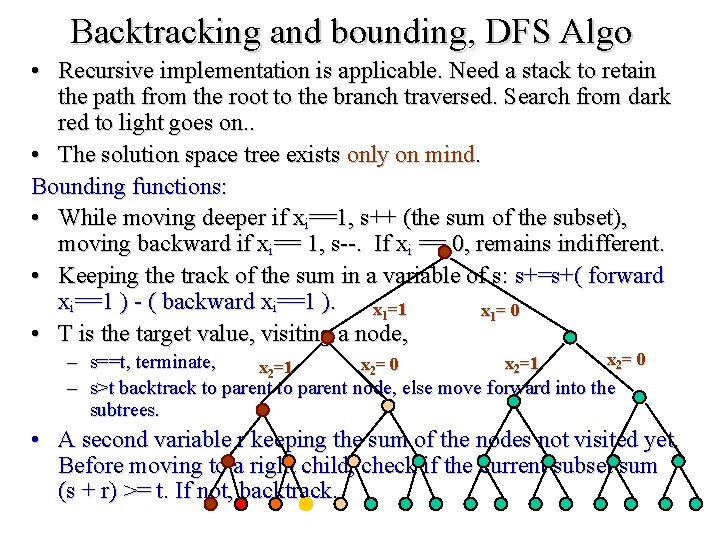

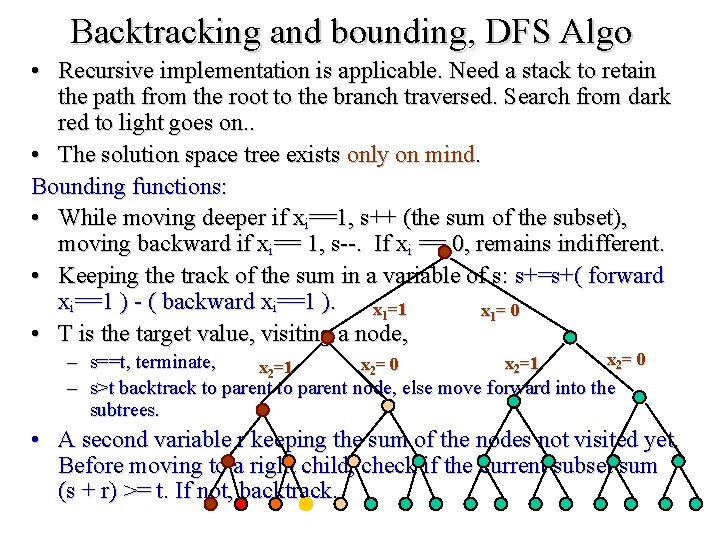

Backtracking and bounding, DFS Algo • Recursive implementation is applicable. Need a stack to retain the path from the root to the branch traversed. Search from dark red to light goes on. . • The solution space tree exists only on mind. Bounding functions: • While moving deeper if xi==1, s++ (the sum of the subset), moving backward if xi== 1, s--. If xi == 0, remains indifferent. • Keeping the track of the sum in a variable of s: s+=s+( forward xi==1 ) - ( backward xi==1 ). x 1=1 x 1= 0 • T is the target value, visiting a node, x 2= 0 – s==t, terminate, x 2=1 x 2= 0 x 2=1 – s>t backtrack to parent node, else move forward into the subtrees. • A second variable r keeping the sum of the nodes not visited yet. Before moving to a right child, check if the current subset sum (s + r) >= t. If not, backtrack.

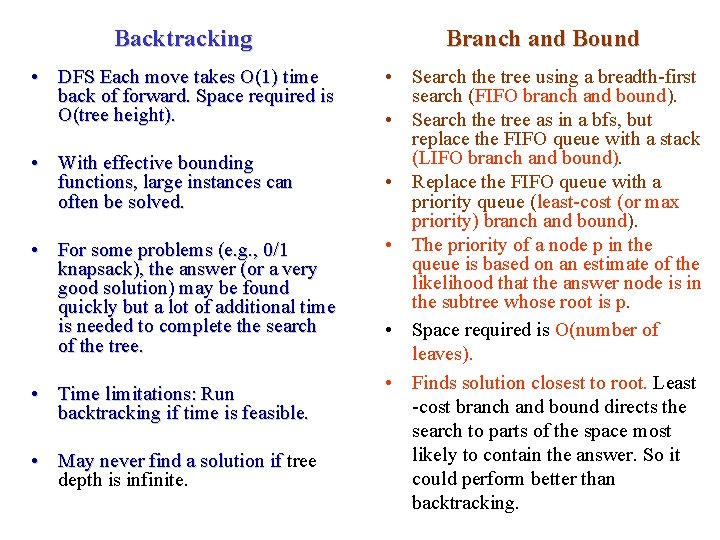

Backtracking Branch and Bound • DFS Each move takes O(1) time back of forward. Space required is O(tree height). • Search the tree using a breadth-first search (FIFO branch and bound). • Search the tree as in a bfs, but replace the FIFO queue with a stack (LIFO branch and bound). • Replace the FIFO queue with a priority queue (least-cost (or max priority) branch and bound). • The priority of a node p in the queue is based on an estimate of the likelihood that the answer node is in the subtree whose root is p. • Space required is O(number of leaves). • Finds solution closest to root. Least -cost branch and bound directs the search to parts of the space most likely to contain the answer. So it could perform better than backtracking. • With effective bounding functions, large instances can often be solved. • For some problems (e. g. , 0/1 knapsack), the answer (or a very good solution) may be found quickly but a lot of additional time is needed to complete the search of the tree. • Time limitations: Run backtracking if time is feasible. • May never find a solution if tree May never find a solution if depth is infinite.

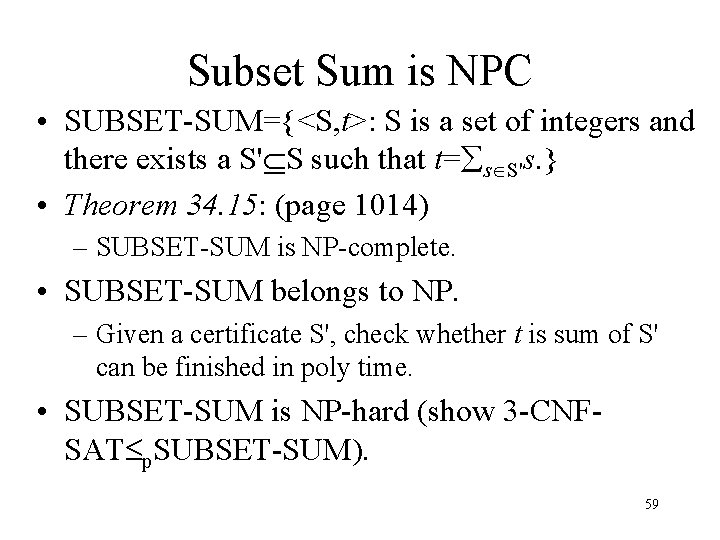

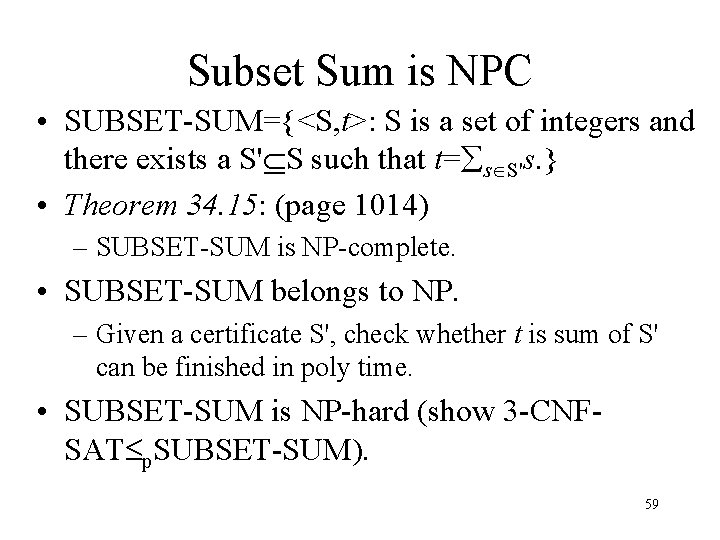

Subset Sum is NPC • SUBSET-SUM={<S, t>: S is a set of integers and there exists a S' S such that t= s S's. } • Theorem 34. 15: (page 1014) – SUBSET-SUM is NP-complete. • SUBSET-SUM belongs to NP. – Given a certificate S', check whether t is sum of S' can be finished in poly time. • SUBSET-SUM is NP-hard (show 3 -CNFSAT p. SUBSET-SUM). 59

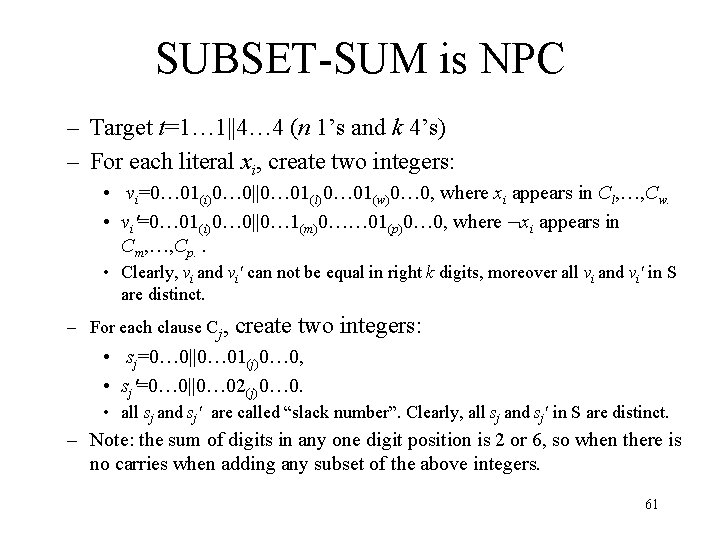

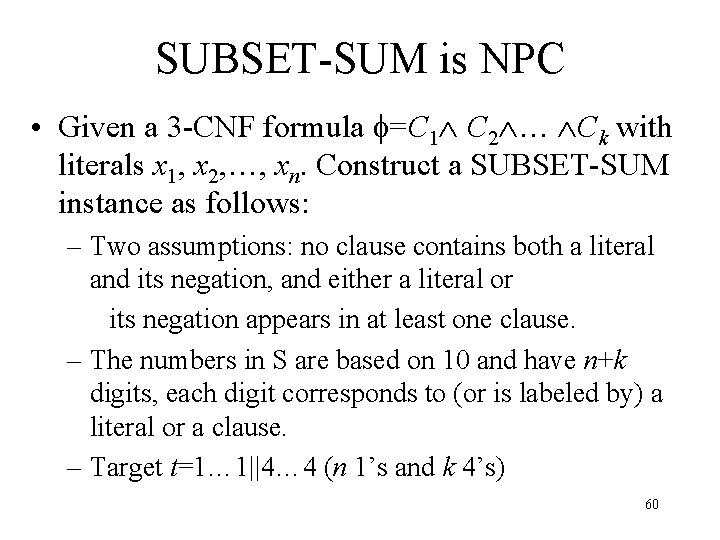

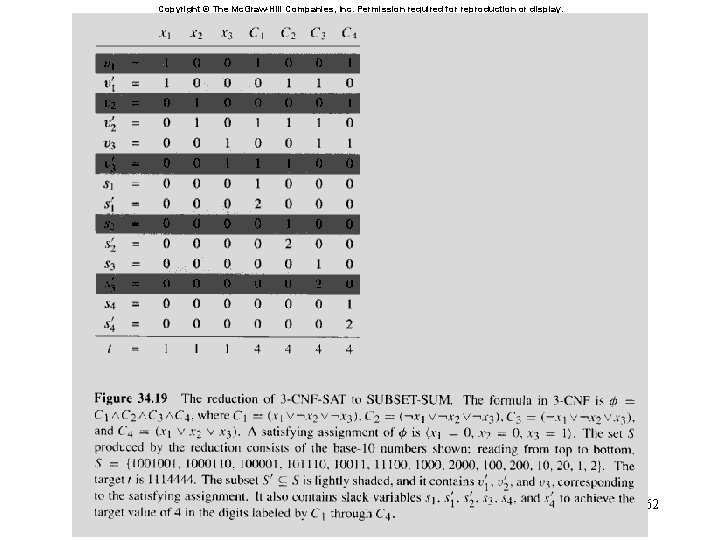

SUBSET-SUM is NPC • Given a 3 -CNF formula =C 1 C 2 … Ck with literals x 1, x 2, …, xn. Construct a SUBSET-SUM instance as follows: – Two assumptions: no clause contains both a literal and its negation, and either a literal or its negation appears in at least one clause. – The numbers in S are based on 10 and have n+k digits, each digit corresponds to (or is labeled by) a literal or a clause. – Target t=1… 1||4… 4 (n 1’s and k 4’s) 60

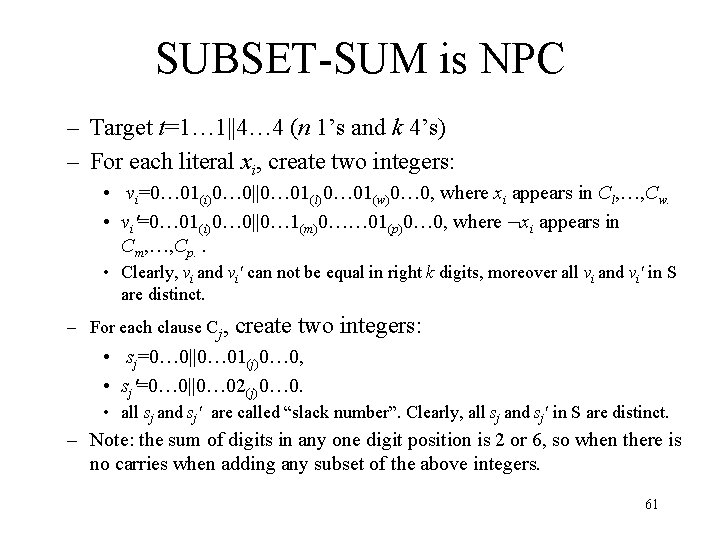

SUBSET-SUM is NPC – Target t=1… 1||4… 4 (n 1’s and k 4’s) – For each literal xi, create two integers: • vi=0… 01(i)0… 0||0… 01(l)0… 01(w)0… 0, where xi appears in Cl, …, Cw. • vi'=0… 01(i)0… 0||0… 1(m)0…… 01(p)0… 0, where xi appears in Cm, …, Cp. . • Clearly, vi and vi' can not be equal in right k digits, moreover all vi and vi' in S are distinct. – For each clause Cj, create two integers: • sj=0… 0||0… 01(j)0… 0, • sj'=0… 0||0… 02(j)0… 0. • all sj and sj' are called “slack number”. Clearly, all sj and sj' in S are distinct. – Note: the sum of digits in any one digit position is 2 or 6, so when there is no carries when adding any subset of the above integers. 61

Copyright © The Mc. Graw-Hill Companies, Inc. Permission required for reproduction or display. 62

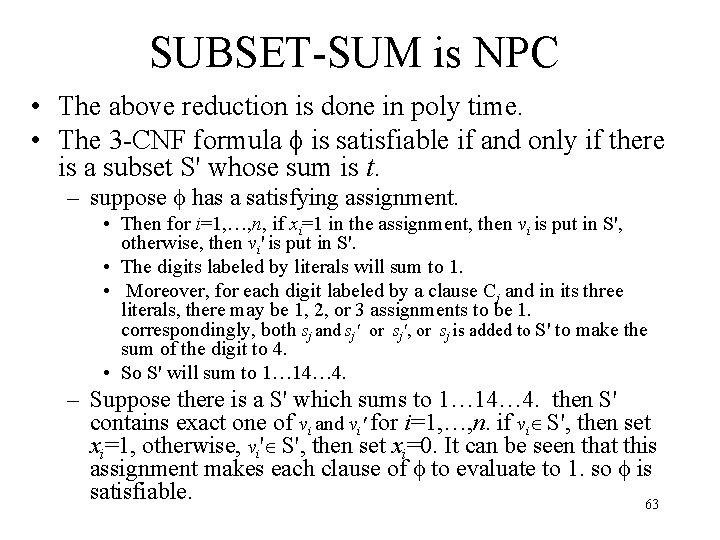

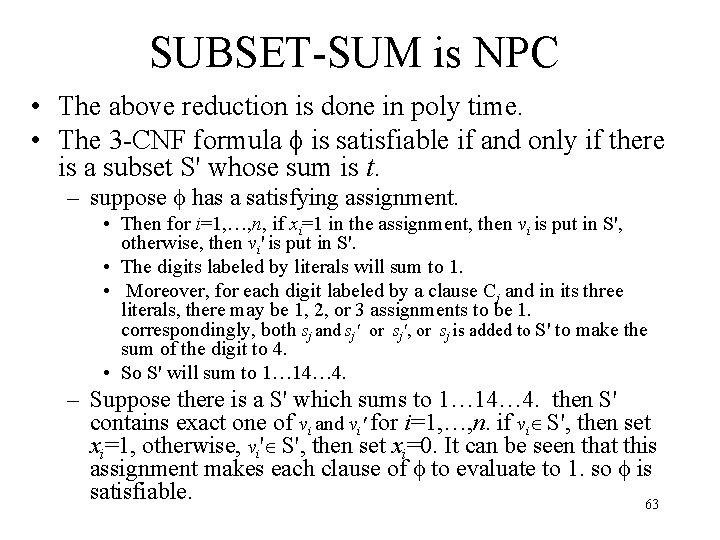

SUBSET-SUM is NPC • The above reduction is done in poly time. • The 3 -CNF formula is satisfiable if and only if there is a subset S' whose sum is t. – suppose has a satisfying assignment. • Then for i=1, …, n, if xi=1 in the assignment, then vi is put in S', otherwise, then vi' is put in S'. • The digits labeled by literals will sum to 1. • Moreover, for each digit labeled by a clause Cj and in its three literals, there may be 1, 2, or 3 assignments to be 1. correspondingly, both sj and sj' or sj', or sj is added to S' to make the sum of the digit to 4. • So S' will sum to 1… 14… 4. – Suppose there is a S' which sums to 1… 14… 4. then S' contains exact one of vi and vi' for i=1, …, n. if vi S', then set xi=1, otherwise, vi' S', then set xi=0. It can be seen that this assignment makes each clause of to evaluate to 1. so is satisfiable. 63