
- Slides: 32

Top-K documents: Exact retrieval

Goal
- Given a query Q, find the exact top K docs for Q, using some ranking function r.
- Simplest strategy:
  1) Find all documents in the intersection of the query terms' posting lists
  2) Compute the score r(d) for all these documents d
  3) Sort the results by score
  4) Return the top K results
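The simplest strategy can be sketched as follows. This is an illustration only: `naive_top_k`, the dict-of-lists index and the callable `r` are hypothetical names, and the ranking function is passed in as a plain lookup because the slide leaves r unspecified.

```python
def naive_top_k(query, postings, r, k):
    """Exhaustive top-k retrieval for a non-empty query.

    postings: term -> list of doc IDs containing the term
    r:        ranking function, doc ID -> score
    """
    # 1) find all documents in the intersection of the query terms' postings
    candidates = set.intersection(*(set(postings[t]) for t in query))
    # 2) compute r(d) for every candidate d
    scored = [(r(d), d) for d in candidates]
    # 3) sort results by score, best first
    scored.sort(reverse=True)
    # 4) return the top k
    return scored[:k]


postings = {"catcher": [1, 3, 5, 7], "rye": [3, 5, 9]}
r = {3: 0.5, 5: 2.0}.get     # toy scores for the two docs in the intersection
print(naive_top_k(["catcher", "rye"], postings, r, 2))  # [(2.0, 5), (0.5, 3)]
```

Every candidate is scored before any is discarded, which is exactly the cost the following slides try to avoid.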

Background
- Score computation is a large fraction of the CPU work on a query.
  - Generally, we have a tight budget on latency (say, 100 ms).
  - We can’t exhaustively score every document on every query!
- Goal: cut CPU usage for scoring, without compromising (too much) on the quality of results.
- Basic idea: avoid scoring docs that won’t make it into the top K.

The WAND technique
- We wish to compute the top K docs.
- It is a pruning method that keeps the K best scores found so far in a heap (a min-heap, whose root is the K-th highest score), and it provably returns the exact top K.
- Basic idea reminiscent of branch and bound:
  - We maintain a running threshold score = the K-th highest score computed so far.
  - We prune away all docs whose scores are guaranteed to be below the threshold.
  - We compute exact scores only for the unpruned docs.

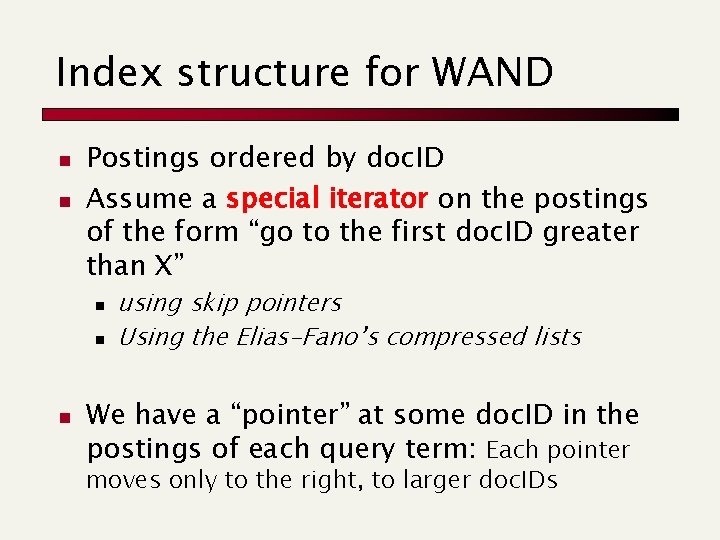

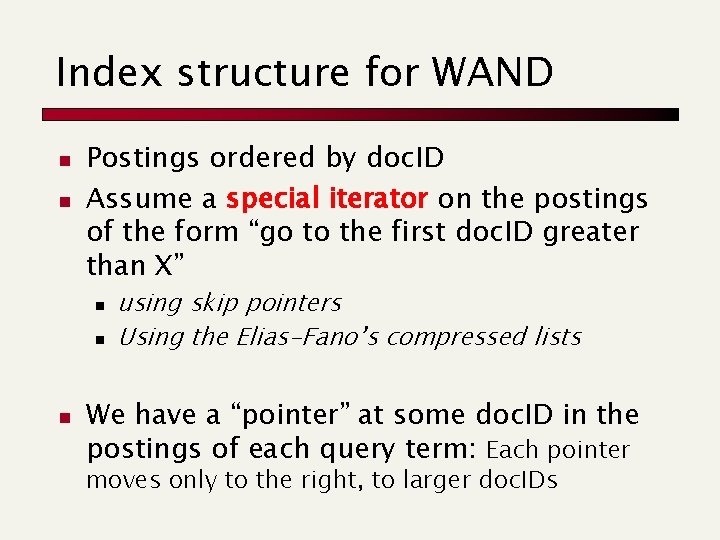

Index structure for WAND
- Postings are ordered by doc ID.
- Assume a special iterator on the postings of the form “go to the first doc ID greater than or equal to X”, implemented
  - using skip pointers, or
  - using Elias-Fano compressed lists.
- We keep a “pointer” at some doc ID in the postings of each query term:
  - Each pointer moves only to the right, to larger doc IDs.
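A minimal sketch of such a pointer, assuming the postings fit in memory as a sorted Python list. `PostingIterator` and `next_geq` are hypothetical names; the "go to" operation is taken here as "first doc ID ≥ x" so that a pointer can land exactly on a target doc, and a binary search stands in for skip pointers or Elias-Fano.

```python
import bisect


class PostingIterator:
    """Pointer into one posting list of doc IDs sorted in increasing order."""

    def __init__(self, doc_ids):
        self.doc_ids = doc_ids
        self.pos = 0

    def current(self):
        """Doc ID under the pointer, or None once the list is exhausted."""
        return self.doc_ids[self.pos] if self.pos < len(self.doc_ids) else None

    def next_geq(self, x):
        """Go to the first doc ID >= x. The search starts at the current
        position, so the pointer never moves left."""
        self.pos = bisect.bisect_left(self.doc_ids, x, lo=self.pos)
        return self.current()


it = PostingIterator([3, 8, 15, 21, 40])
print(it.next_geq(10))  # 15
print(it.next_geq(5))   # 15 (the pointer does not move back)
print(it.next_geq(41))  # None
```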

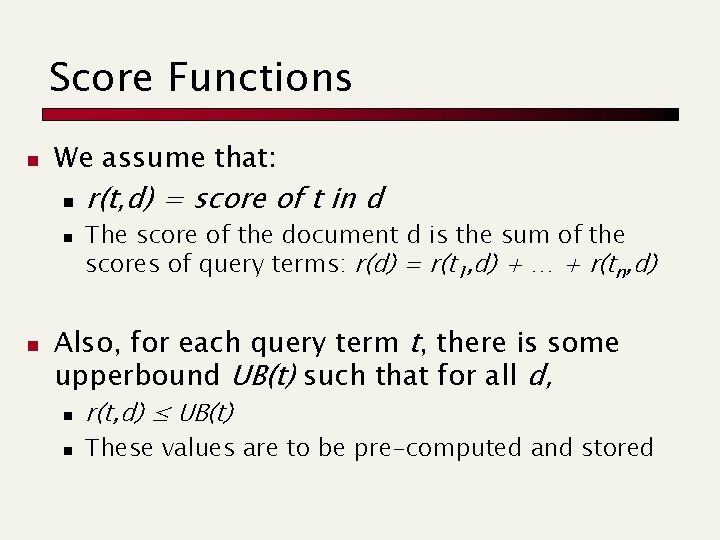

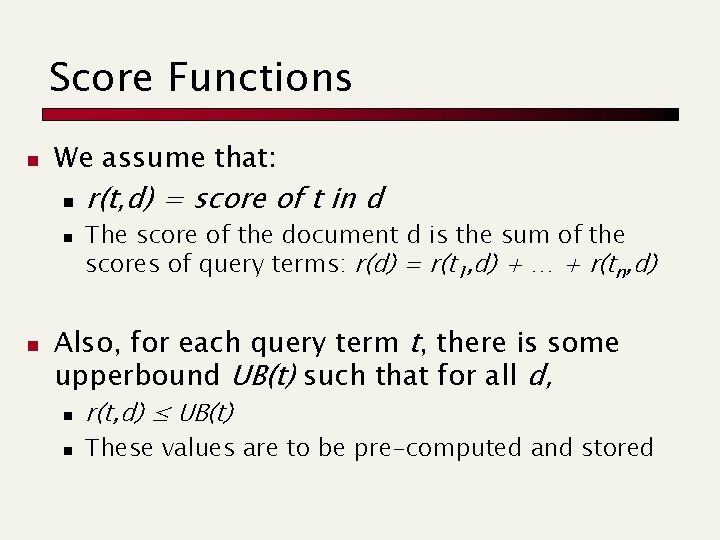

Score functions
- We assume that:
  - $r(t, d)$ = score of term $t$ in document $d$;
  - the score of document $d$ is the sum of the scores of the query terms: $r(d) = r(t_1, d) + \dots + r(t_n, d)$;
  - for each query term $t$ there is an upper bound $UB(t)$ such that $r(t, d) \le UB(t)$ for all $d$.
- These upper bounds are pre-computed and stored.
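The two assumptions can be made concrete with a small sketch. The layout `term -> {doc_id: r(t, d)}` and the function names are hypothetical; the point is only that $UB(t)$ is a maximum taken once at indexing time and that $r(d)$ is additive.

```python
def upper_bounds(index):
    """UB(t) = max over d of r(t, d), computed once at indexing time.

    index: term -> {doc_id: r(t, d)} (a hypothetical in-memory layout)
    """
    return {t: max(postings.values()) for t, postings in index.items() if postings}


def score(doc, query, index):
    """r(d) = r(t_1, d) + ... + r(t_n, d); a term missing from d contributes 0."""
    return sum(index.get(t, {}).get(doc, 0.0) for t in query)


index = {"catcher": {273: 1.5, 589: 2.25}, "rye": {304: 1.75, 589: 0.5}}
print(upper_bounds(index))                     # {'catcher': 2.25, 'rye': 1.75}
print(score(589, ["catcher", "rye"], index))   # 2.75
```

Because every $r(t, d) \le UB(t)$, the sum of the UBs of the terms a doc may contain bounds its score from above; WAND relies on exactly this bound.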

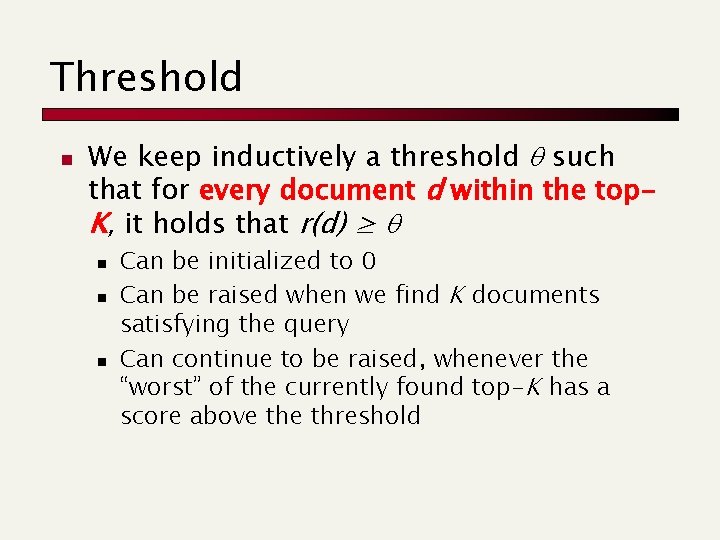

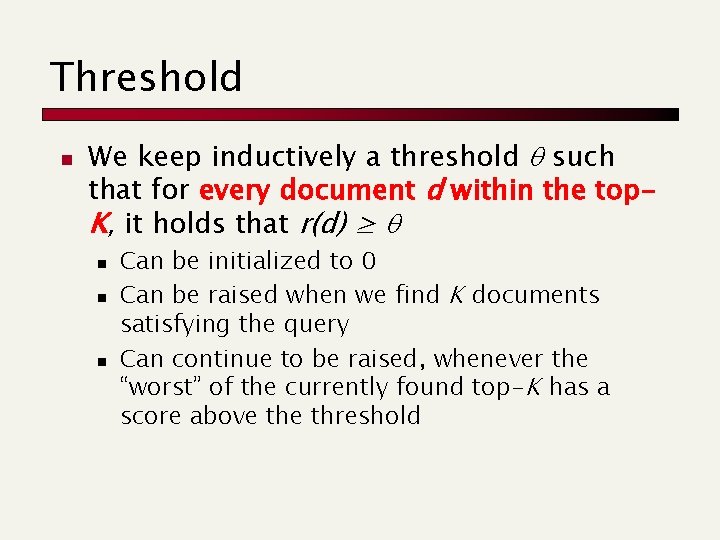

Threshold
- We inductively maintain a threshold $\theta$ such that every document d in the top K satisfies $r(d) \ge \theta$.
  - It can be initialized to 0.
  - It can be raised once we have found K documents satisfying the query.
  - It can continue to be raised whenever the “worst” of the currently found top K has a score above the threshold.
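One way to maintain this threshold, sketched under the assumption that the top K is kept in Python's `heapq` min-heap; the class name `TopK` and its methods are hypothetical. The root of the heap is the worst of the current top K, so reading the threshold is O(1).

```python
import heapq


class TopK:
    """Keeps the K best (score, doc) pairs in a min-heap; the root is the K-th best."""

    def __init__(self, k):
        self.k = k
        self.heap = []

    def threshold(self):
        # 0 until K documents have been found, then the score of the worst of the top K
        return self.heap[0][0] if len(self.heap) == self.k else 0.0

    def offer(self, score, doc):
        """Try to insert a scored doc; return True if it entered the top K."""
        if len(self.heap) < self.k:
            heapq.heappush(self.heap, (score, doc))
            return True
        if score > self.heap[0][0]:
            heapq.heapreplace(self.heap, (score, doc))  # evict the current worst
            return True
        return False


t = TopK(2)
for s, d in [(3.0, 1), (1.0, 2), (2.0, 3), (1.5, 4)]:
    t.offer(s, d)
    print(d, t.threshold())   # thresholds: 0.0, 1.0, 2.0, 2.0
```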

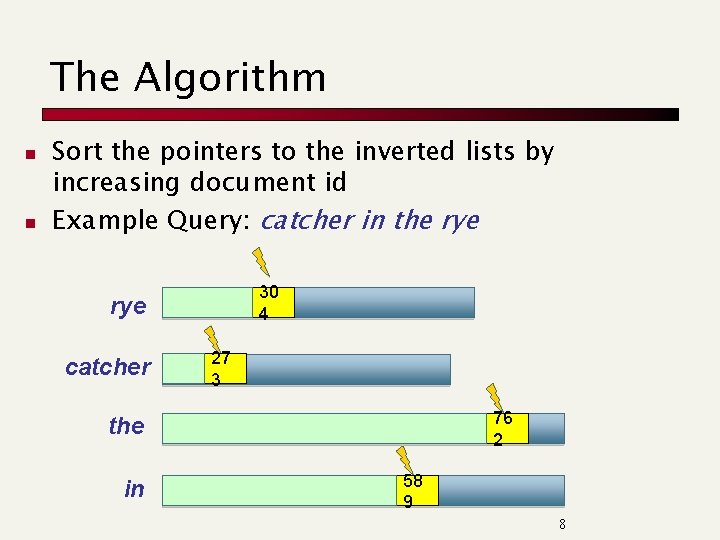

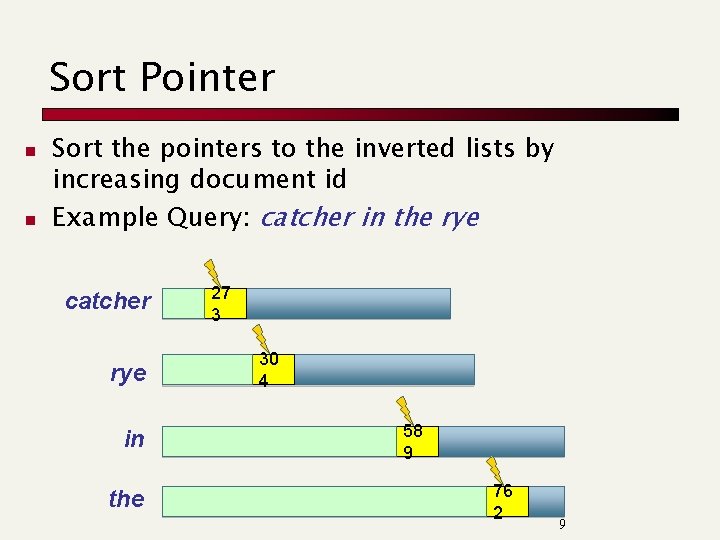

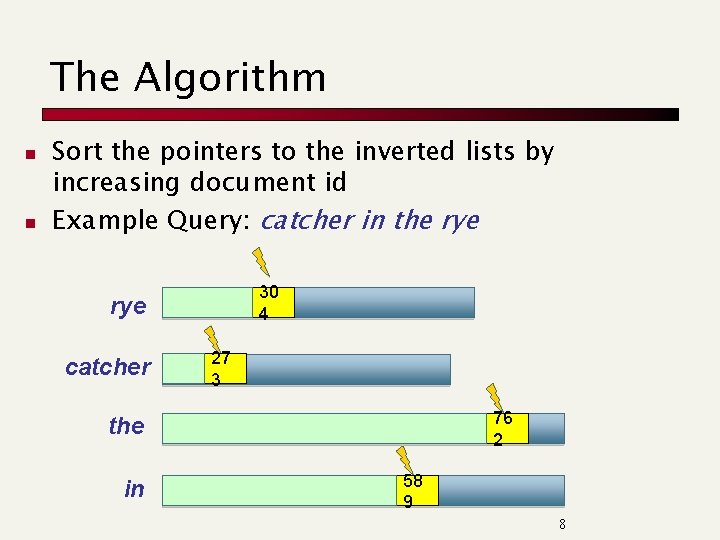

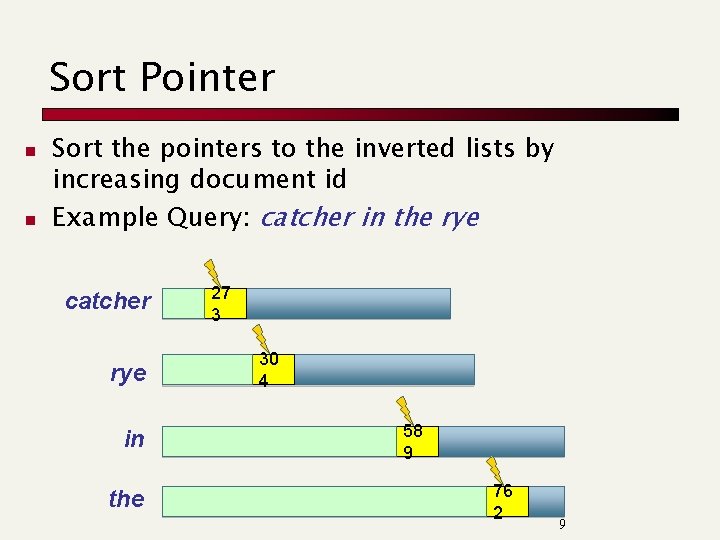

The algorithm
- Sort the pointers to the inverted lists by increasing document ID.
- Example query: catcher in the rye, with the pointers currently at rye → 304, catcher → 273, the → 762, in → 589.

Sort pointers
- Sort the pointers to the inverted lists by increasing document ID.
- Example query: catcher in the rye. After sorting: catcher → 273, rye → 304, in → 589, the → 762.

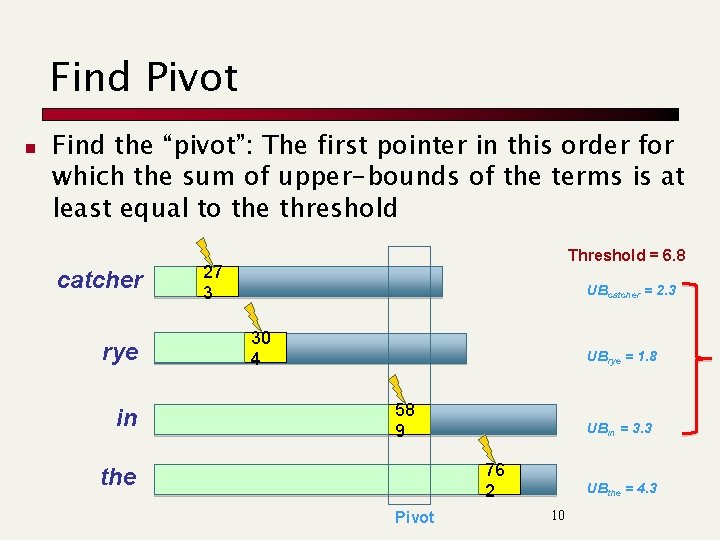

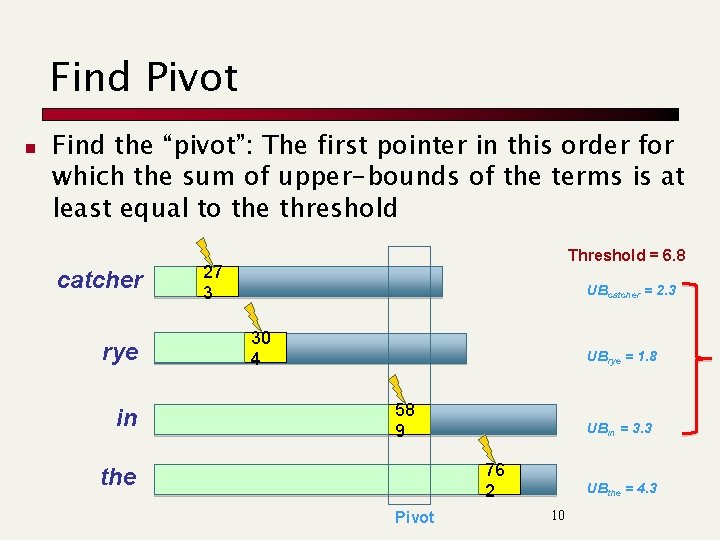

Find pivot
- Find the “pivot”: the first pointer in this order for which the sum of the upper bounds of the terms seen so far is at least equal to the threshold.
- Example: threshold = 6.8, with catcher → 273 (UB = 2.3), rye → 304 (UB = 1.8), in → 589 (UB = 3.3), the → 762 (UB = 4.3). The running sums are 2.3, 4.1 and 7.4 ≥ 6.8, so the pivot is the pointer of “in”, at doc 589.
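The sorting and pivot steps can be sketched on the example values. `find_pivot` is a hypothetical helper; it sorts the terms by the doc ID their pointer sits on and returns the first one at which the running sum of upper bounds reaches the threshold.

```python
def find_pivot(pointers, ub, threshold):
    """Return (term, doc_id) of the pivot, or None if no pointer qualifies.

    pointers: term -> doc ID the term's pointer currently sits on
    ub:       term -> upper bound UB(t) on r(t, d)
    """
    acc = 0.0
    for term in sorted(pointers, key=pointers.get):   # increasing doc ID
        acc += ub[term]
        if acc >= threshold:
            return term, pointers[term]
    return None


pointers = {"rye": 304, "catcher": 273, "the": 762, "in": 589}
ub = {"catcher": 2.3, "rye": 1.8, "in": 3.3, "the": 4.3}
print(find_pivot(pointers, ub, threshold=6.8))   # ('in', 589)
```

If even the sum of all upper bounds stays below the threshold, no remaining document can enter the top K and the search can stop; the `None` result models that case.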

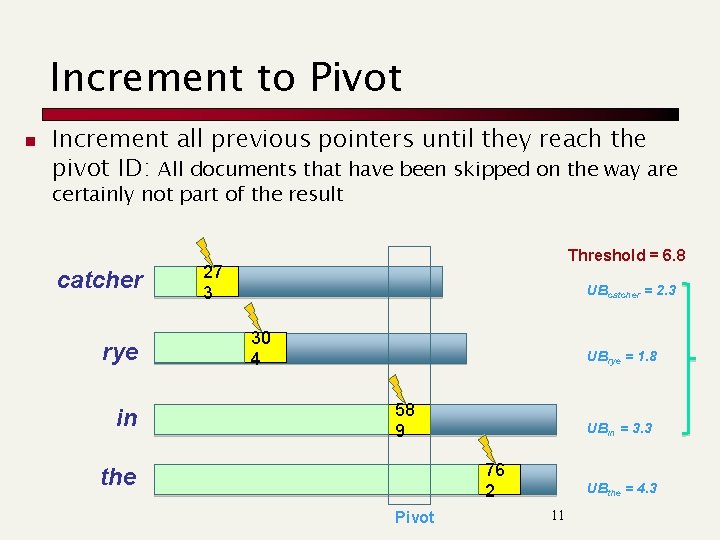

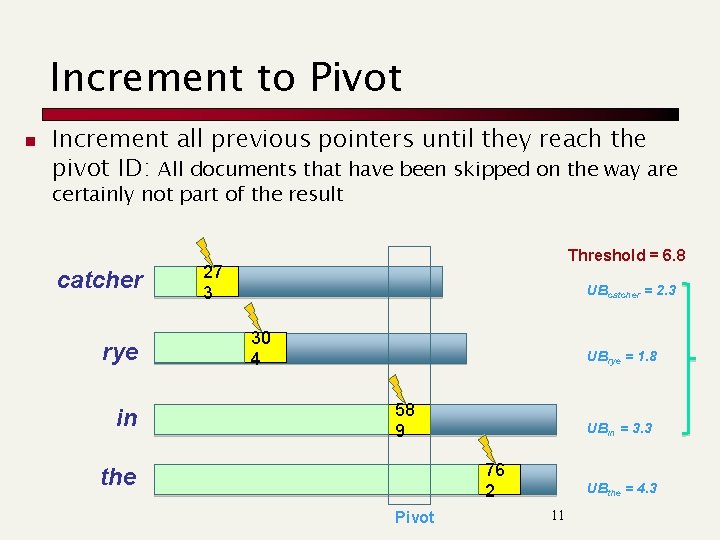

Increment to pivot
- Increment all previous pointers until they reach the pivot ID: all documents that have been skipped on the way are certainly not part of the result.
- Example (threshold = 6.8, pivot = 589): the pointers of catcher (at 273) and rye (at 304) are moved to the first doc ID ≥ 589 in their lists.

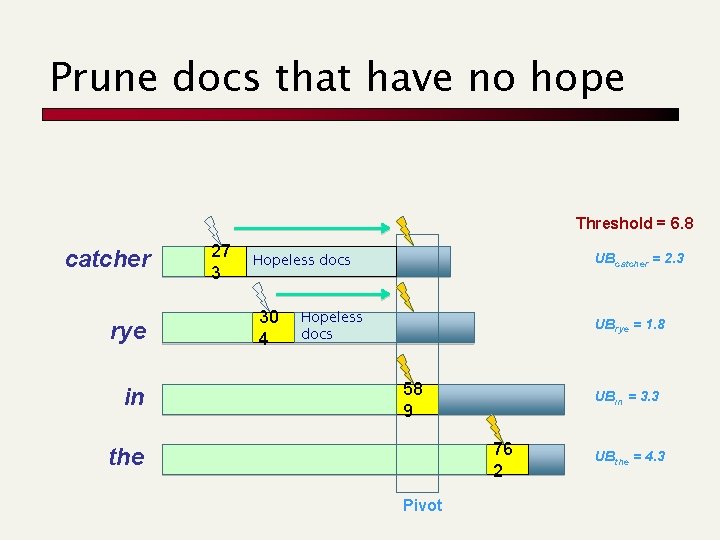

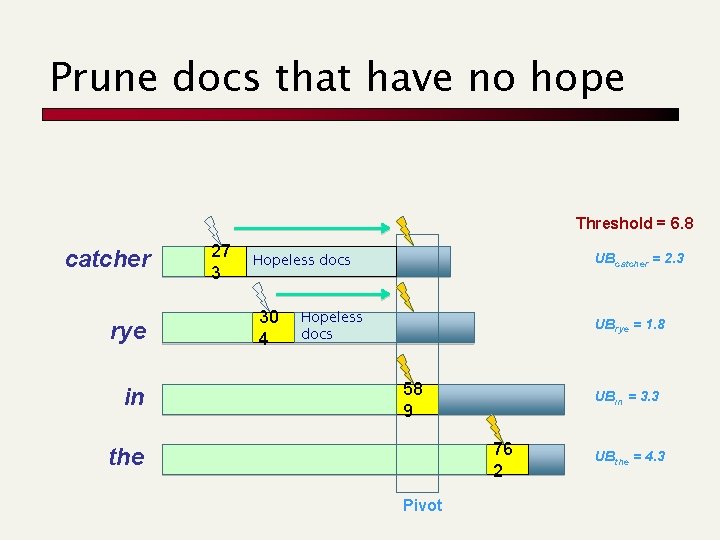

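Prune docs that have no hope
- Threshold = 6.8; UB(catcher) = 2.3, UB(rye) = 1.8, UB(in) = 3.3, UB(the) = 4.3; pivot = 589 (term “in”).
- Hopeless docs: those skipped in the lists of catcher (from 273) and rye (from 304) on the way to 589. Any not-yet-processed doc with ID below 589 can appear only in these two lists, since the pointers of “in” and “the” are already at 589 and 762, so its score is at most 2.3 + 1.8 = 4.1 < 6.8.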
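The skip can be sketched as follows. The posting lists are hypothetical (only the pointer positions 273, 304 and the pivot 589 come from the example), `advance_to_pivot` is an illustrative name, and binary search again stands in for skip pointers or Elias-Fano.

```python
import bisect


def advance_to_pivot(postings, pos, preceding, pivot_doc):
    """Move each preceding term's pointer to its first doc ID >= pivot_doc.

    postings: term -> sorted doc IDs; pos: term -> current index (updated in place).
    Returns, per term, the doc IDs skipped without being scored.
    """
    skipped = {}
    for t in preceding:
        new = bisect.bisect_left(postings[t], pivot_doc, lo=pos[t])
        skipped[t] = postings[t][pos[t]:new]
        pos[t] = new
    return skipped


# Hypothetical posting lists, consistent with the pointers in the example
postings = {"catcher": [273, 401, 589, 900], "rye": [304, 350, 589, 812]}
pos = {"catcher": 0, "rye": 0}
ub = {"catcher": 2.3, "rye": 1.8}

# Any skipped doc can score at most UB(catcher) + UB(rye) = 4.1 < 6.8
assert ub["catcher"] + ub["rye"] < 6.8
print(advance_to_pivot(postings, pos, ["catcher", "rye"], 589))
# {'catcher': [273, 401], 'rye': [304, 350]}
```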

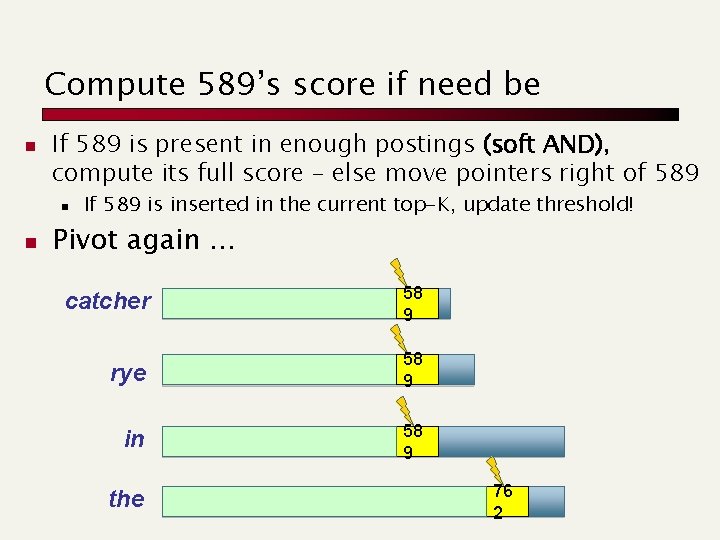

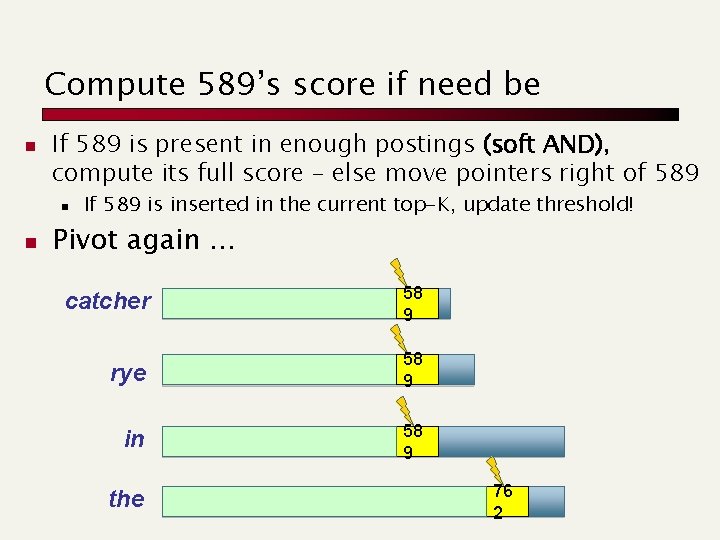

Compute 589’s score if need be
- If 589 is present in enough postings (soft AND), compute its full score; otherwise, move the pointers to the right of 589.
  - If 589 is inserted in the current top K, update the threshold!
- Pivot again…
- Example state after the move: catcher → 589, rye → 589, in → 589, the → 762.
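Putting the steps together, here is a compact end-to-end sketch of WAND as described on these slides. It assumes additive, non-negative scores, an in-memory index of `(doc_id, score)` postings, binary search in place of skip pointers or Elias-Fano, and K ≥ 1; `wand_top_k` and the toy index are hypothetical. A doc is scored only when every pointer up to the pivot sits on it (the "enough postings" test); otherwise all earlier pointers jump to the pivot doc.

```python
import bisect
import heapq
import math


def wand_top_k(index, k):
    """Exact top-k with WAND.

    index: term -> list of (doc_id, score) pairs sorted by doc_id, where
    score = r(t, d) and r(d) is the sum of r(t, d) over the query terms.
    Returns [(score, doc_id), ...] sorted by decreasing score.
    """
    terms = [t for t in index if index[t]]
    docs = {t: [d for d, _ in index[t]] for t in terms}
    scores = {t: [s for _, s in index[t]] for t in terms}
    ub = {t: max(scores[t]) for t in terms}          # UB(t), normally precomputed
    pos = {t: 0 for t in terms}                       # each term's pointer
    heap = []                                         # min-heap of the current top-k

    def cur(t):
        return docs[t][pos[t]] if pos[t] < len(docs[t]) else math.inf

    while True:
        theta = heap[0][0] if len(heap) == k else 0.0
        order = sorted(terms, key=cur)                # sort pointers by doc ID
        acc, pivot = 0.0, None
        for i, t in enumerate(order):                 # find the pivot
            if cur(t) == math.inf:
                break
            acc += ub[t]
            if acc >= theta:
                pivot = i
                break
        if pivot is None:
            break                                     # no remaining doc can enter the top-k
        d = cur(order[pivot])
        if cur(order[0]) == d:
            # every pointer up to the pivot sits on d: compute its full score
            s = sum(scores[t][pos[t]] for t in terms if cur(t) == d)
            if len(heap) < k:
                heapq.heappush(heap, (s, d))
            elif s > heap[0][0]:
                heapq.heapreplace(heap, (s, d))       # the threshold may rise
            for t in terms:
                if cur(t) == d:
                    pos[t] += 1
        else:
            # advance the pointers before the pivot to the first doc ID >= d;
            # every doc skipped this way scores below theta
            for t in order[:pivot]:
                pos[t] = bisect.bisect_left(docs[t], d, lo=pos[t])
    return sorted(heap, reverse=True)


index = {
    "catcher": [(1, 2.0), (5, 1.0), (9, 2.0)],
    "rye": [(1, 1.0), (9, 1.5)],
    "in": [(2, 0.5), (5, 0.5), (7, 3.0), (9, 0.5)],
    "the": [(2, 0.25), (3, 0.25), (5, 0.25), (7, 0.25), (9, 0.25)],
}
print(wand_top_k(index, 2))   # [(4.25, 9), (3.25, 7)]
```

On this toy index the exhaustive totals are 3.0 (doc 1), 0.75 (doc 2), 0.25 (doc 3), 1.75 (doc 5), 3.25 (doc 7) and 4.25 (doc 9), so the output matches exhaustive scoring.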

WAND summary
- In tests, WAND leads to a 90+% reduction in score computation.
  - Better gains on longer queries.
- WAND gives us safe ranking, i.e., exactly the same top K as exhaustive scoring.
  - It is possible to devise “careless” variants that are a bit faster but not safe.

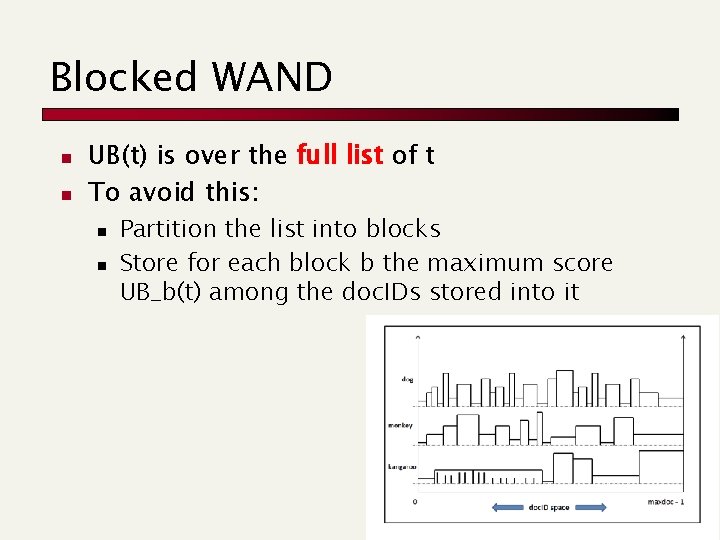

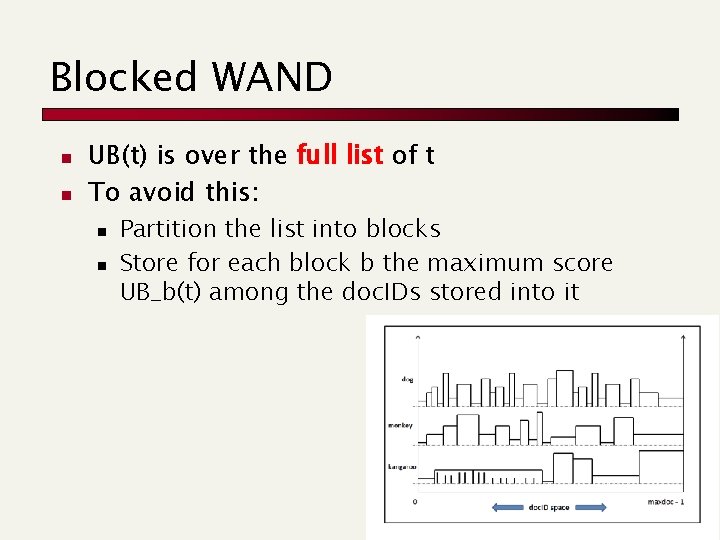

Blocked WAND
- UB(t) is computed over the full list of t, so for most of the list it can be a loose bound.
- To avoid this:
  - Partition the list into blocks.
  - Store for each block b the maximum score UB_b(t) among the doc IDs stored in it.
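A sketch of the per-block maxima, assuming fixed-size blocks over an in-memory list of `(doc_id, score)` pairs; `block_max` and `block_size` are hypothetical names. Each block is summarized by its last doc ID (its right end) and its maximum score UB_b(t).

```python
def block_max(postings, block_size):
    """Split a posting list into fixed-size blocks and record, for each block,
    its last doc ID and the maximum score UB_b(t) inside it.

    postings: list of (doc_id, score) sorted by doc_id
    """
    blocks = []
    for i in range(0, len(postings), block_size):
        block = postings[i:i + block_size]
        blocks.append((block[-1][0], max(s for _, s in block)))
    return blocks


pl = [(3, 0.5), (8, 2.0), (15, 0.75), (21, 0.25), (40, 3.0)]
print(block_max(pl, 2))   # [(8, 2.0), (21, 0.75), (40, 3.0)]
```

Here the global UB(t) would be 3.0 everywhere, while docs 15 and 21 get the much tighter bound 0.75 from their block.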

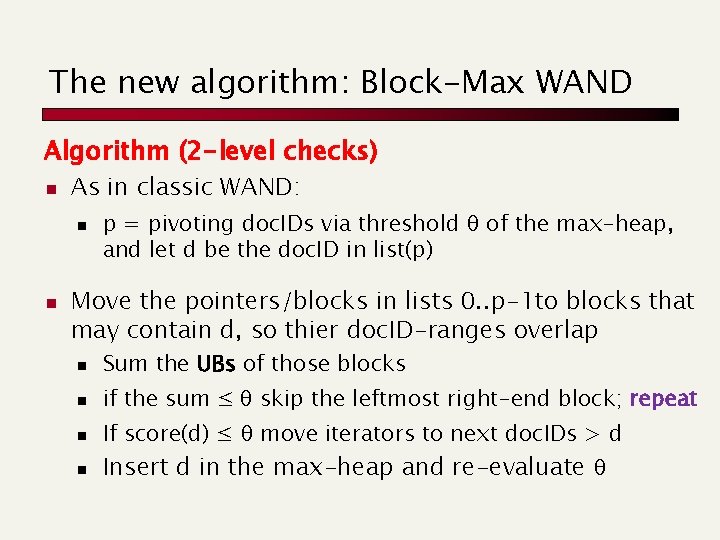

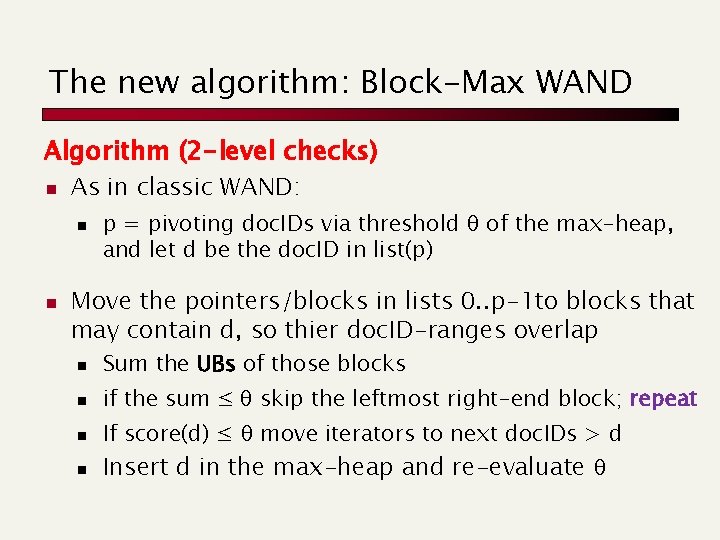

The new algorithm: Block-Max WAND (2-level checks)
- As in classic WAND: find the pivot list p via the threshold q of the top-K heap (its minimum), and let d be the doc ID pointed to in list(p).
- Move the block pointers in lists 0..p-1 to the blocks that may contain d, so that their doc-ID ranges overlap (list p already points at d).
  - Sum the block UBs of lists 0..p.
  - If the sum ≤ q, skip the block whose right end is leftmost; repeat.
- If score(d) ≤ q, move the iterators to the next doc IDs > d.
- Otherwise, insert d in the top-K heap and re-evaluate q.
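The second-level check can be sketched with the block summaries `(last_doc_id, max_score)` of each list. All block values below are hypothetical, chosen so that the global UBs of the earlier example (2.3 + 1.8 + 3.3 = 7.4 ≥ 6.8) would let doc 589 through while the block maxima rule it out; `block_ub` and `passes_block_check` are illustrative names.

```python
import bisect


def block_ub(blocks, d):
    """UB_b(t) of the block of one list whose doc-ID range may contain d.

    blocks: list of (last_doc_id, max_score) pairs, in increasing doc-ID order.
    Returns 0.0 if d lies past the last block (the list cannot contain d).
    """
    last_ids = [last for last, _ in blocks]
    i = bisect.bisect_left(last_ids, d)   # first block whose last doc ID >= d
    return blocks[i][1] if i < len(blocks) else 0.0


def passes_block_check(block_lists, d, q):
    """Second-level test: can d still beat the threshold q, using block maxima
    of lists 0..p instead of the global UB(t)?"""
    return sum(block_ub(blocks, d) for blocks in block_lists) > q


# Hypothetical blocks for catcher, rye and in (pivot d = 589, q = 6.8)
catcher = [(300, 2.3), (600, 0.4)]
rye = [(350, 1.8), (700, 0.6)]
in_ = [(650, 3.3)]
print(passes_block_check([catcher, rye, in_], 589, 6.8))  # False: 0.4 + 0.6 + 3.3 <= 6.8
print(passes_block_check([catcher, rye, in_], 100, 6.8))  # True: 2.3 + 1.8 + 3.3 > 6.8
```

When the check fails, d is skipped without decompressing or scoring any posting, which is where Block-Max WAND saves work over classic WAND.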

Document re-ranking: Relevance feedback

Sec. 9.1 Relevance feedback
- Relevance feedback: user feedback on the relevance of docs in an initial set of results.
  - The user issues a (short, simple) query.
  - The user marks some results as relevant or non-relevant.
  - The system computes a better representation of the information need based on the feedback.
  - Relevance feedback can go through one or more iterations.

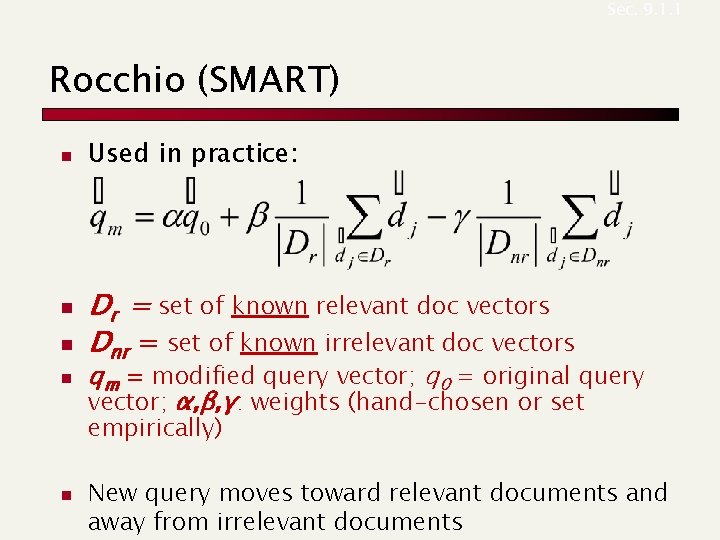

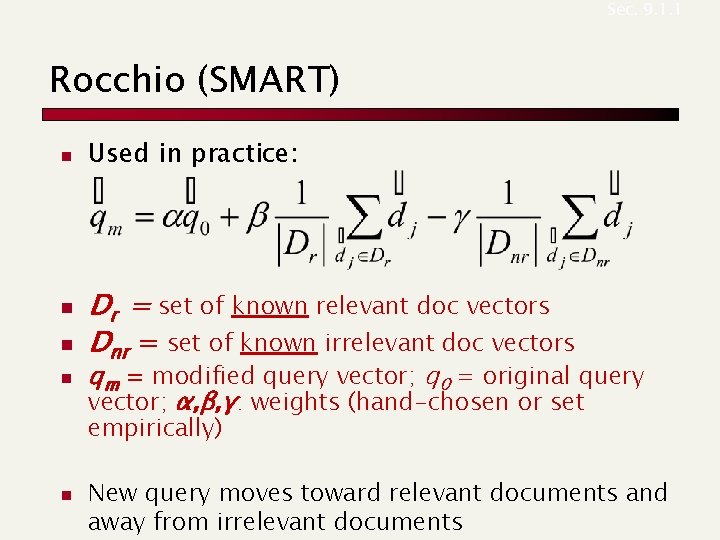

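Sec. 9.1.1 Rocchio (SMART)
- Used in practice:

  $$\vec{q}_m = \alpha \vec{q}_0 + \beta \frac{1}{|D_r|} \sum_{\vec{d}_j \in D_r} \vec{d}_j - \gamma \frac{1}{|D_{nr}|} \sum_{\vec{d}_j \in D_{nr}} \vec{d}_j$$

  - $D_r$ = set of known relevant doc vectors; $D_{nr}$ = set of known irrelevant doc vectors
  - $\vec{q}_m$ = modified query vector; $\vec{q}_0$ = original query vector; α, β, γ: weights (hand-chosen or set empirically)
- The new query moves toward relevant documents and away from irrelevant documents.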
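A minimal sketch of the Rocchio update on dense term-weight vectors, written with plain lists to stay self-contained. The function name and the toy vectors are hypothetical, and treating an empty $D_r$ or $D_{nr}$ as contributing a zero vector is an assumption made here to avoid dividing by zero.

```python
def rocchio(q0, relevant, nonrelevant, alpha, beta, gamma):
    """q_m = alpha*q0 + beta*centroid(D_r) - gamma*centroid(D_nr), on dense lists."""
    def centroid(vectors):
        if not vectors:                        # empty set: contributes nothing (assumption)
            return [0.0] * len(q0)
        return [sum(col) / len(vectors) for col in zip(*vectors)]

    c_r, c_nr = centroid(relevant), centroid(nonrelevant)
    return [alpha * q + beta * r - gamma * n for q, r, n in zip(q0, c_r, c_nr)]


q0 = [1.0, 0.0, 0.0]
D_r = [[0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]
D_nr = [[2.0, 0.0, 0.0]]
print(rocchio(q0, D_r, D_nr, alpha=1.0, beta=0.5, gamma=0.25))  # [0.5, 0.5, 0.5]
```

The query gains weight on the two terms of the relevant docs and loses half its weight on the term of the irrelevant one.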

Relevance feedback: problems
- Users are often reluctant to provide explicit feedback.
- It’s often harder to understand why a particular document was retrieved after applying relevance feedback.
- There is no clear evidence that relevance feedback is the “best use” of the user’s time.

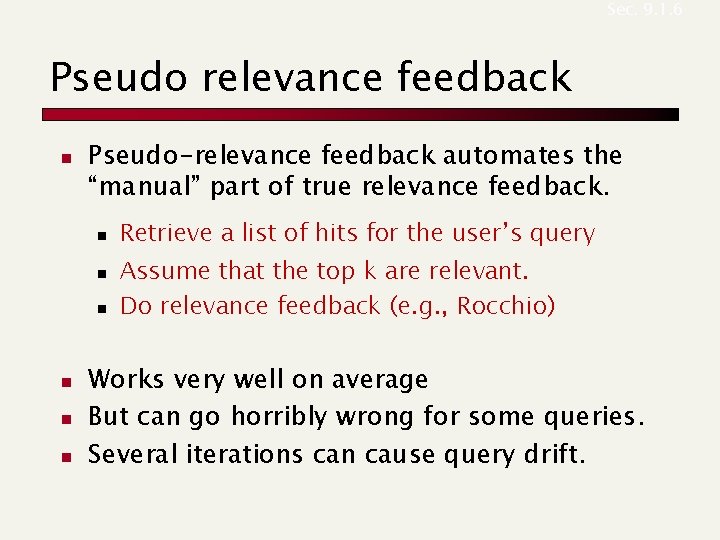

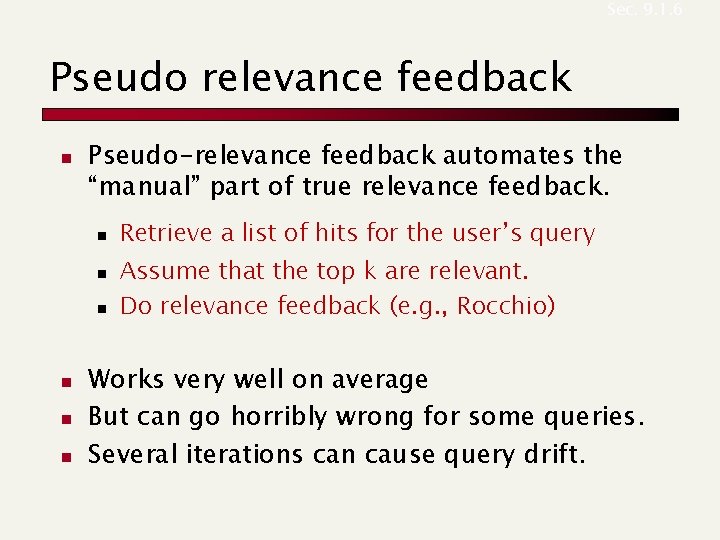

Sec. 9.1.6 Pseudo-relevance feedback
- Pseudo-relevance feedback automates the “manual” part of true relevance feedback:
  - Retrieve a list of hits for the user’s query.
  - Assume that the top k are relevant.
  - Do relevance feedback (e.g., Rocchio).
- Works very well on average,
  - but can go horribly wrong for some queries.
  - Several iterations can cause query drift.
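One round of pseudo-relevance feedback can be sketched as below, assuming a dot-product ranking over dense toy vectors and Rocchio with γ = 0 (no known irrelevant docs). The helper names, the vectors and the values k = 2, α = 1, β = 0.5 are illustrative choices, not taken from the slides.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def search(q, docs):
    """Rank doc IDs by dot product with q (a stand-in ranking function)."""
    return sorted(docs, key=lambda i: dot(q, docs[i]), reverse=True)


def pseudo_relevance_feedback(q0, docs, k=2, alpha=1.0, beta=0.5):
    """One round: treat the top-k hits of q0 as relevant, then apply Rocchio with gamma = 0."""
    top = search(q0, docs)[:k]                                   # assume top k are relevant
    centroid = [sum(col) / len(top) for col in zip(*(docs[i] for i in top))]
    qm = [alpha * q + beta * c for q, c in zip(q0, centroid)]    # move toward the centroid
    return search(qm, docs)


docs = {"d1": [1, 1, 0], "d2": [1, 0, 0], "d3": [0, 0, 1], "d4": [0, 1, 1]}
print(search([1, 0, 0], docs))                     # ['d1', 'd2', 'd3', 'd4']
print(pseudo_relevance_feedback([1, 0, 0], docs))  # ['d1', 'd2', 'd4', 'd3']
```

After feedback, d4 overtakes d3 only because it shares a term with the top hit d1: the same mechanism that helps on average can also pull in unrelated docs, which is how query drift starts.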

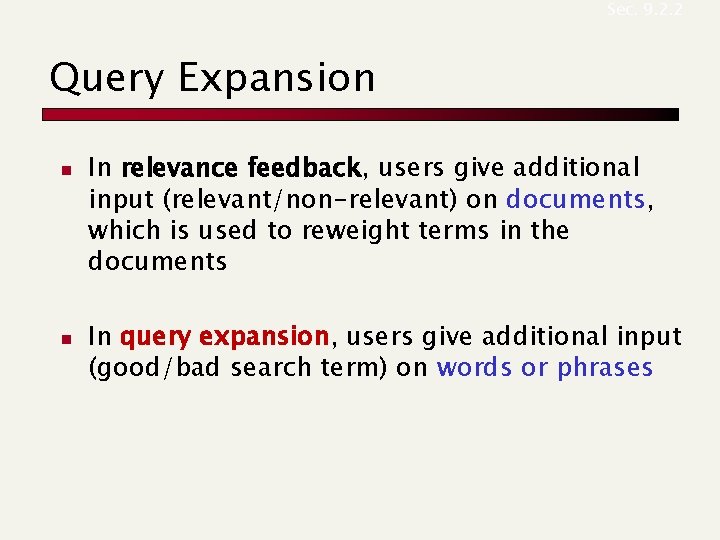

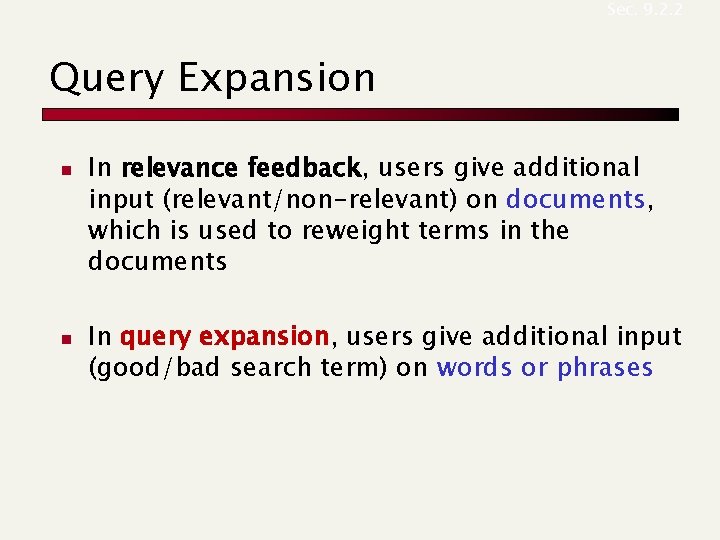

Sec. 9.2.2 Query expansion
- In relevance feedback, users give additional input (relevant/non-relevant) on documents, which is used to reweight terms in the documents.
- In query expansion, users give additional input (good/bad search term) on words or phrases.

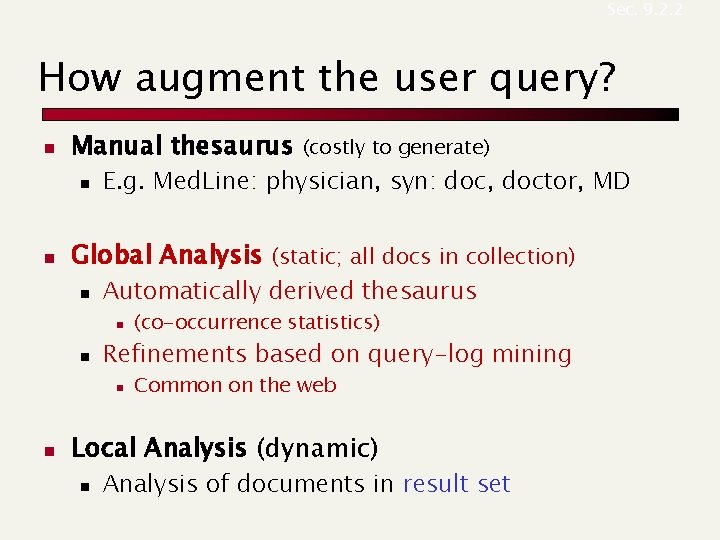

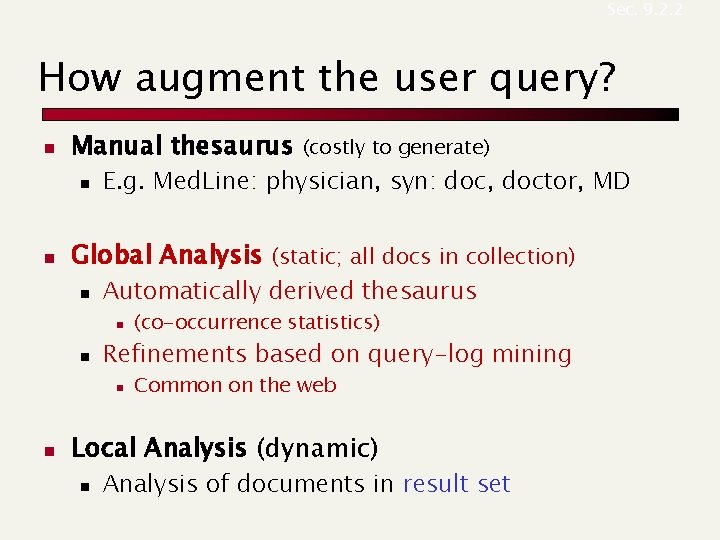

Sec. 9.2.2 How do we augment the user query?
- Manual thesaurus (costly to generate)
  - E.g., MedLine: physician, syn: doc, doctor, MD
- Global analysis (static; all docs in the collection)
  - Automatically derived thesaurus (co-occurrence statistics)
  - Refinements based on query-log mining (common on the web)
- Local analysis (dynamic)
  - Analysis of the documents in the result set
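A toy version of an automatically derived thesaurus from co-occurrence statistics. This sketch counts, for each unordered pair of terms, the number of documents containing both, and proposes the most frequent partners of a query term as expansions; the function names and the three-document collection are hypothetical, and raw counts are a simplifying assumption (real systems usually normalize them).

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(docs):
    """Number of documents in which each (unordered) pair of terms co-occurs."""
    pairs = Counter()
    for doc in docs:
        for a, b in combinations(sorted(set(doc.split())), 2):
            pairs[(a, b)] += 1
    return pairs


def expansion_terms(term, pairs, n=1):
    """The n terms co-occurring most often with `term`."""
    related = Counter()
    for (a, b), count in pairs.items():
        if a == term:
            related[b] += count
        elif b == term:
            related[a] += count
    return [t for t, _ in related.most_common(n)]


docs = ["physician doctor hospital", "doctor hospital nurse", "physician doctor clinic"]
pairs = cooccurrence_counts(docs)
print(expansion_terms("physician", pairs))  # ['doctor']
```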

Quality of a search engine
Paolo Ferragina, Dipartimento di Informatica, Università di Pisa

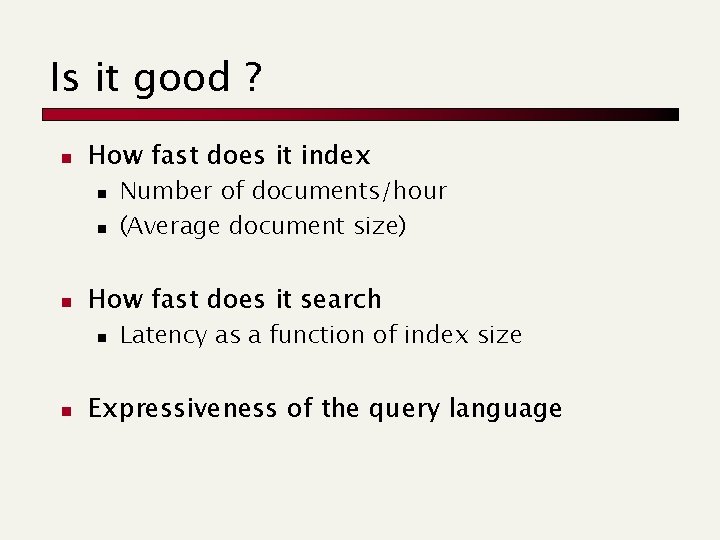

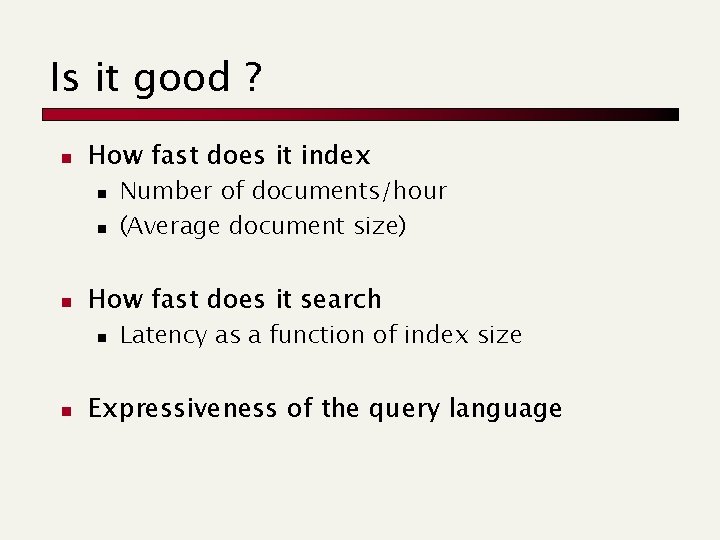

Is it good?
- How fast does it index?
  - Number of documents/hour
  - (Average document size)
- How fast does it search?
  - Latency as a function of index size
- Expressiveness of the query language

Measures for a search engine
- All of the preceding criteria are measurable.
- The key measure: user happiness… useless answers won’t make a user happy.
- User groups for testing!!

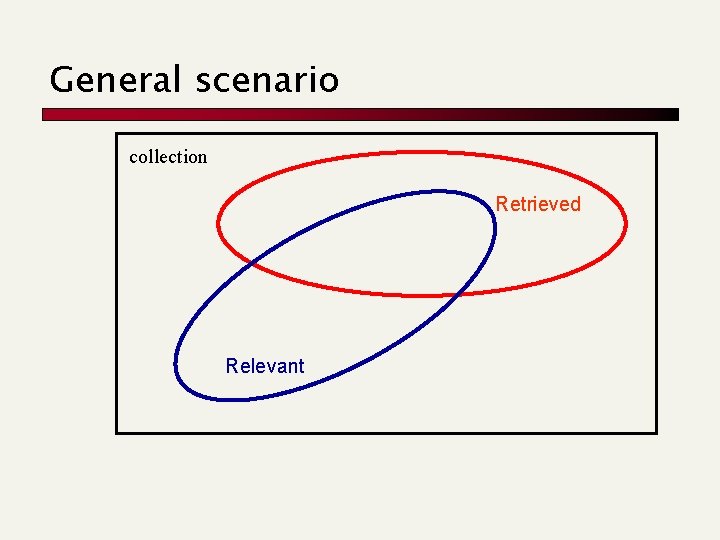

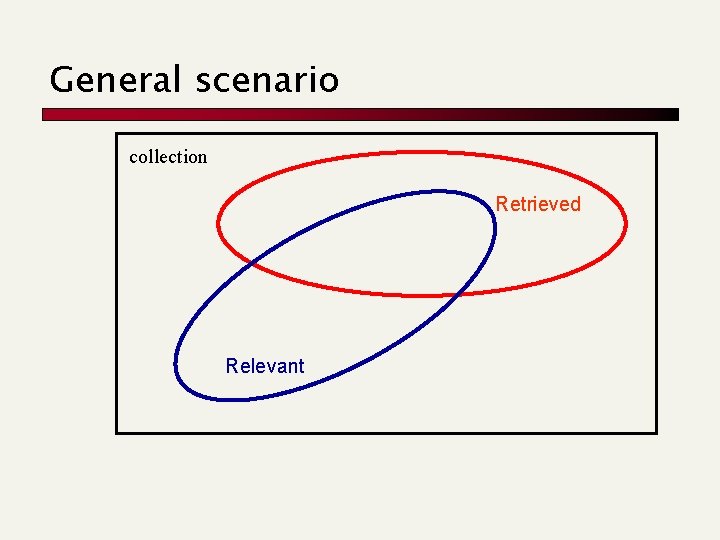

General scenario
[Figure: the collection, containing the set of Retrieved docs and the set of Relevant docs]

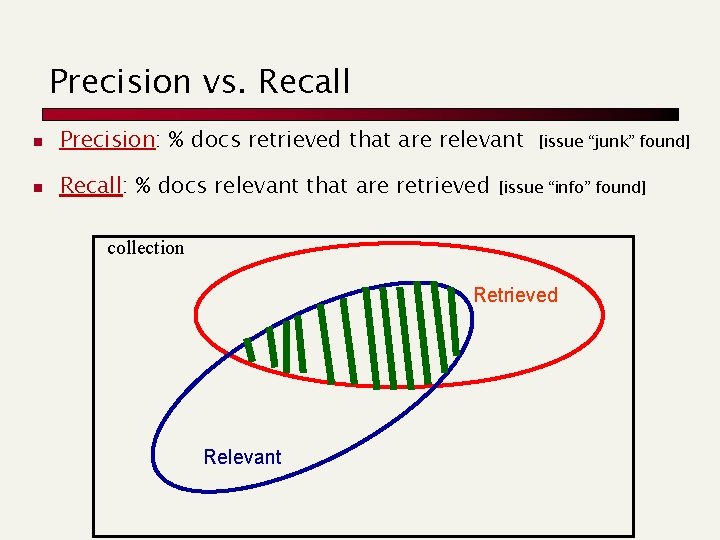

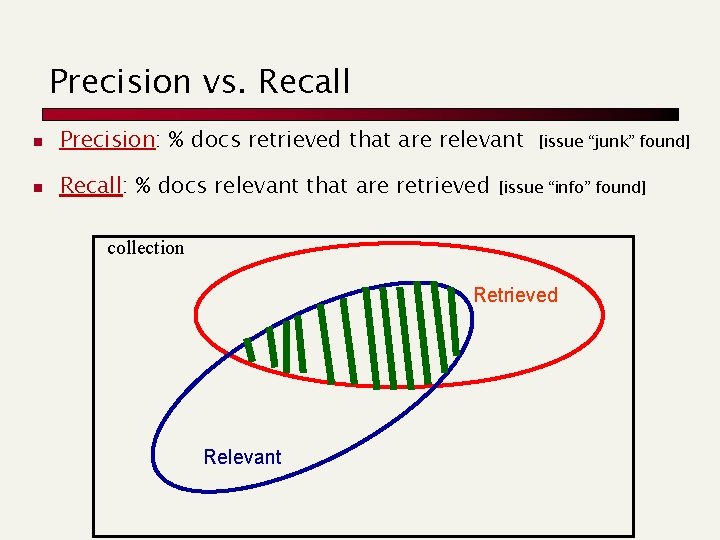

Precision vs. recall
- Precision: % of retrieved docs that are relevant [issue: “junk” found]
- Recall: % of relevant docs that are retrieved [issue: “info” found]

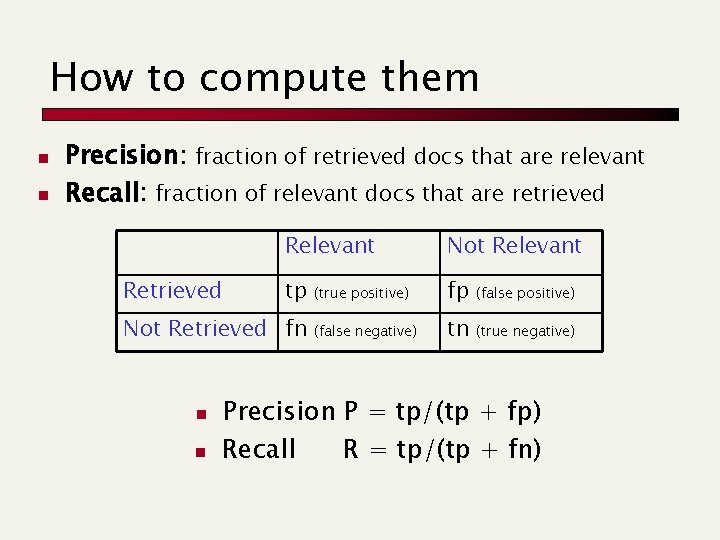

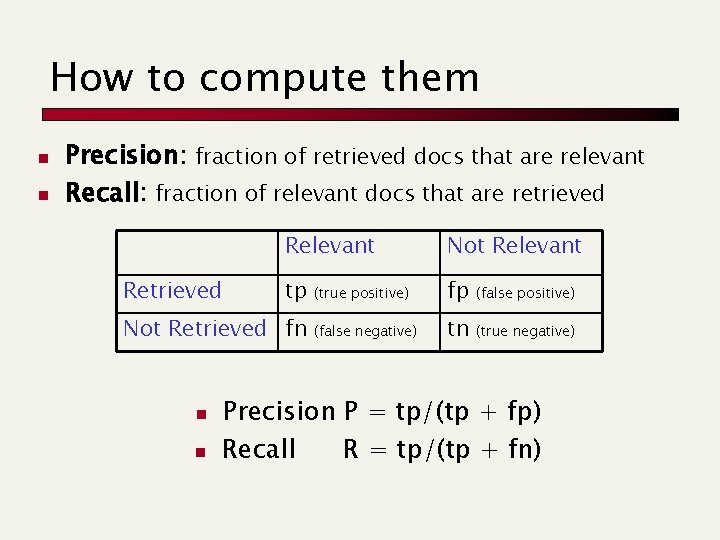

How to compute them
- Precision: fraction of retrieved docs that are relevant
- Recall: fraction of relevant docs that are retrieved

|               | Relevant            | Not relevant        |
|---------------|---------------------|---------------------|
| Retrieved     | tp (true positive)  | fp (false positive) |
| Not retrieved | fn (false negative) | tn (true negative)  |

- Precision $P = tp/(tp + fp)$
- Recall $R = tp/(tp + fn)$
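The two formulas computed from sets of doc IDs, as a small sketch. `precision_recall` is a hypothetical helper, and returning 0.0 when nothing is retrieved (or nothing is relevant) is a convention assumed here, since the formulas are undefined in that case.

```python
def precision_recall(retrieved, relevant):
    """P = tp/(tp + fp), R = tp/(tp + fn), from sets of doc IDs."""
    retrieved, relevant = set(retrieved), set(relevant)
    tp = len(retrieved & relevant)      # retrieved and relevant
    fp = len(retrieved - relevant)      # retrieved but not relevant ("junk")
    fn = len(relevant - retrieved)      # relevant but missed
    precision = tp / (tp + fp) if retrieved else 0.0
    recall = tp / (tp + fn) if relevant else 0.0
    return precision, recall


print(precision_recall([1, 2, 3, 4], [2, 4, 5, 6, 7]))  # (0.5, 0.4)
```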

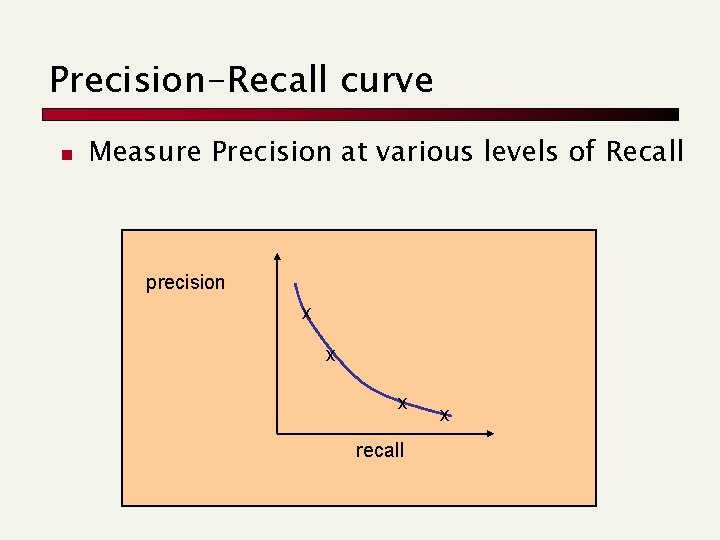

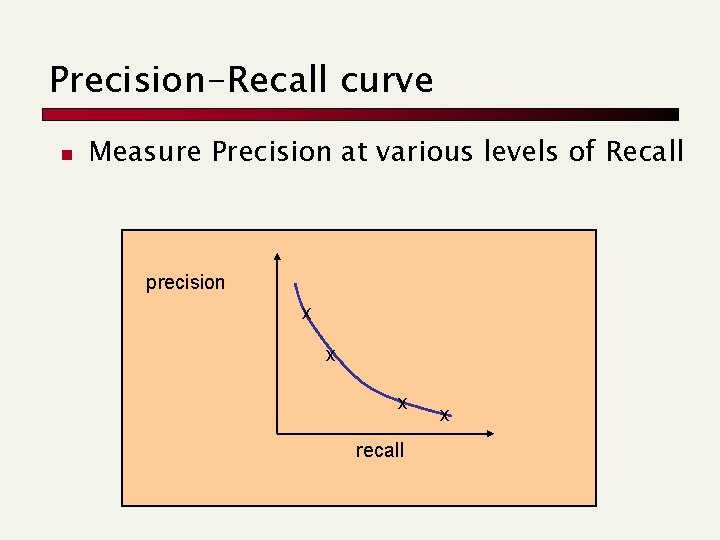

Precision-recall curve
- Measure precision at various levels of recall.
[Figure: precision (y-axis) plotted against recall (x-axis)]
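For a ranked result list, one way to obtain the points of such a curve is to measure recall and precision after each rank position. The sketch below does this for a toy ranking; `pr_points` and the data are hypothetical.

```python
def pr_points(ranking, relevant):
    """(recall, precision) measured after each position of a ranked result list."""
    relevant = set(relevant)
    points, hits = [], 0
    for i, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
        points.append((hits / len(relevant), hits / i))
    return points


for recall, precision in pr_points(["a", "b", "c", "d"], {"a", "c"}):
    print(f"recall={recall:.2f}  precision={precision:.2f}")
```

Recall can only grow as we go down the list, while precision drops each time a non-relevant doc is retrieved.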

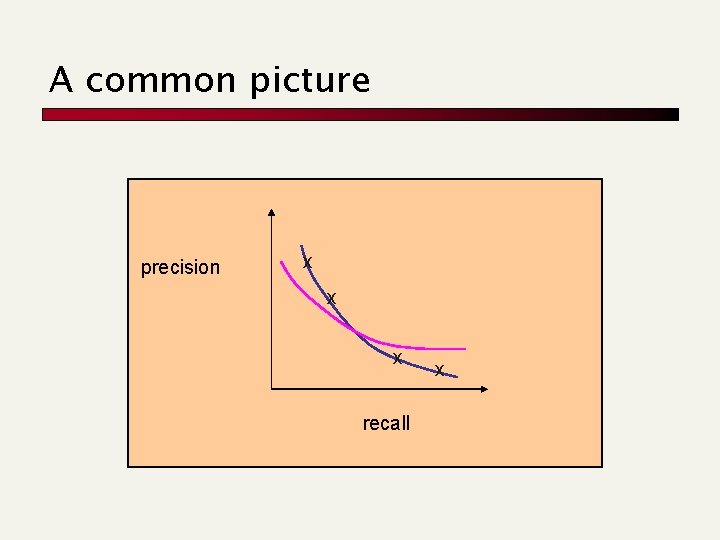

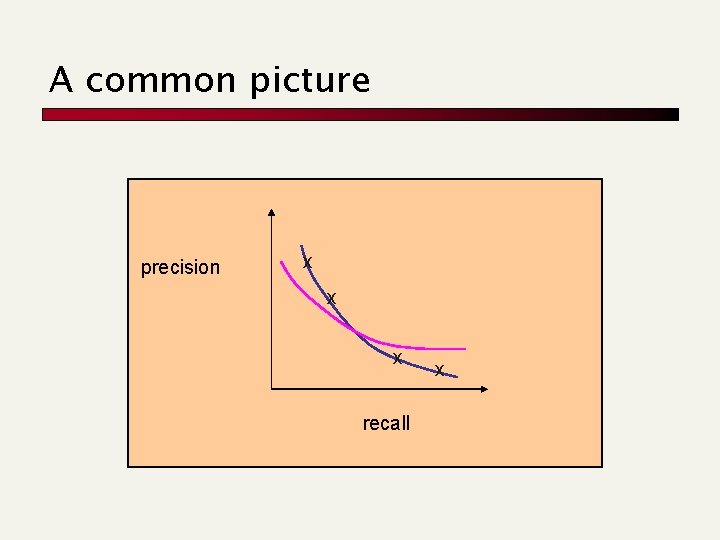

A common picture
[Figure: precision (y-axis) plotted against recall (x-axis)]

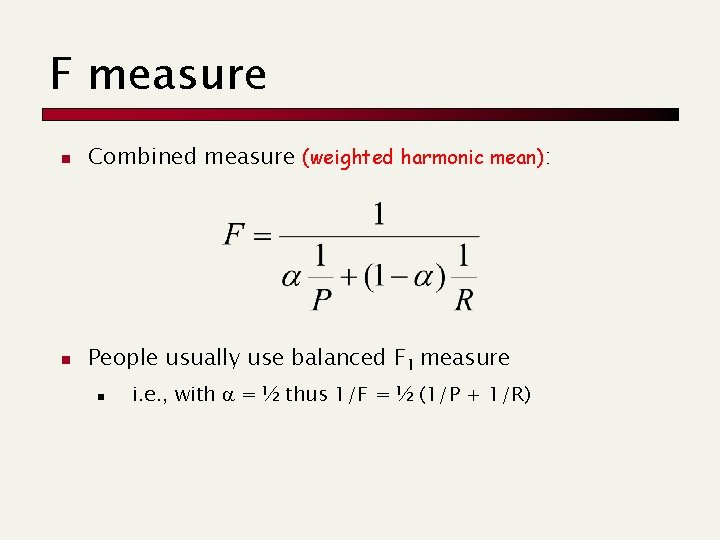

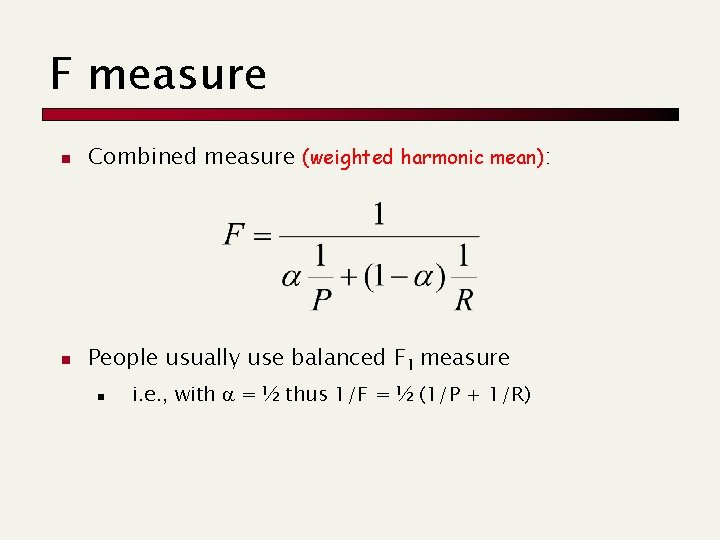

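F measure
- Combined measure (weighted harmonic mean): $\frac{1}{F} = \alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}$
- People usually use the balanced F1 measure,
  - i.e., with $\alpha = \frac{1}{2}$, thus $\frac{1}{F} = \frac{1}{2}\left(\frac{1}{P} + \frac{1}{R}\right)$, i.e., $F_1 = \frac{2PR}{P + R}$.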
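The weighted harmonic mean as a short sketch; `f_measure` is a hypothetical name, and returning 0.0 when P or R is zero is a convention assumed here (the formula divides by zero in that case).

```python
def f_measure(p, r, alpha=0.5):
    """Weighted harmonic mean: 1/F = alpha/P + (1 - alpha)/R; alpha = 1/2 gives F1."""
    if p == 0 or r == 0:
        return 0.0                        # convention assumed for the degenerate case
    return 1.0 / (alpha / p + (1 - alpha) / r)


print(round(f_measure(0.5, 0.4), 3))      # 0.444, i.e. 2PR/(P+R) = 0.4/0.9
print(f_measure(0.25, 1.0, alpha=1.0))    # 0.25: alpha = 1 gives back P
```

Being a harmonic mean, F stays close to the smaller of P and R, so a system cannot score well by maximizing only one of them.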