Topic Model Latent Dirichlet Allocation Ouyang Ruofei May

- Slides: 35

Topic Model: Latent Dirichlet Allocation

Ouyang Ruofei, May 10, 2013

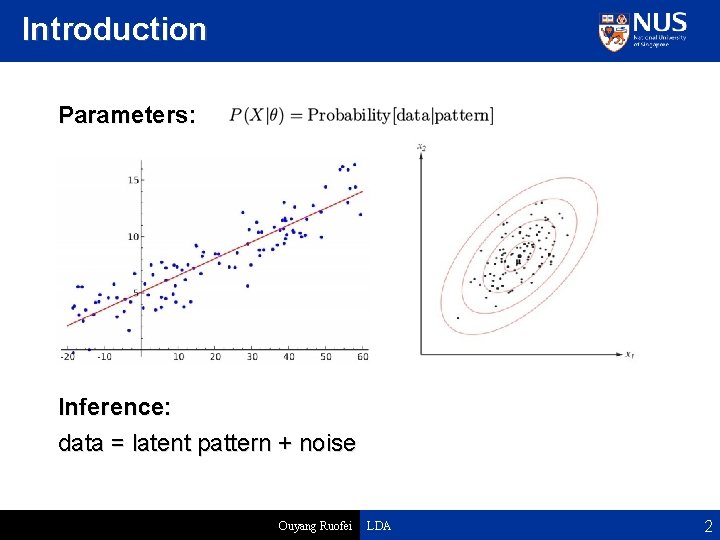

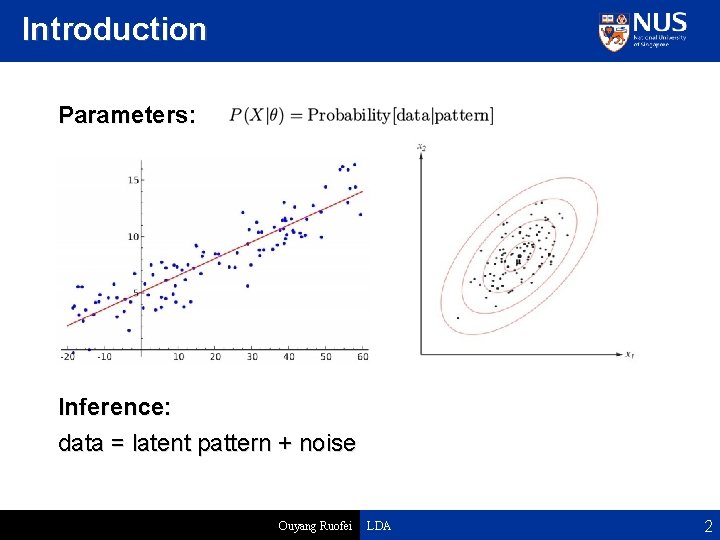

Introduction (parameters, inference)

data = latent pattern + noise

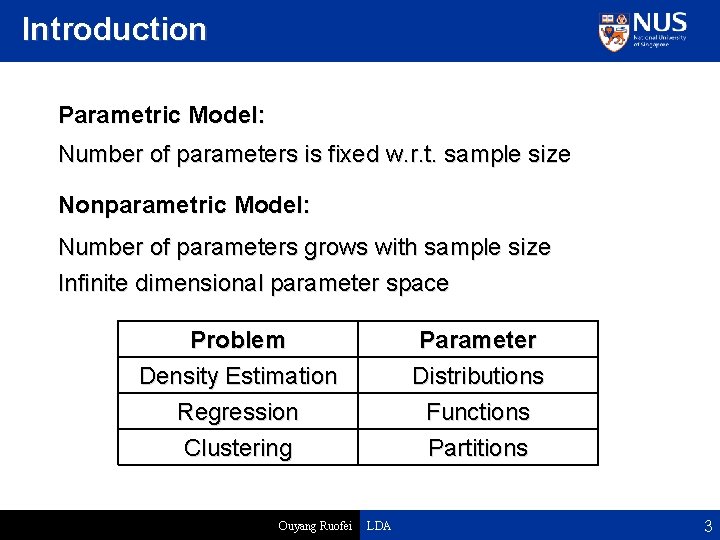

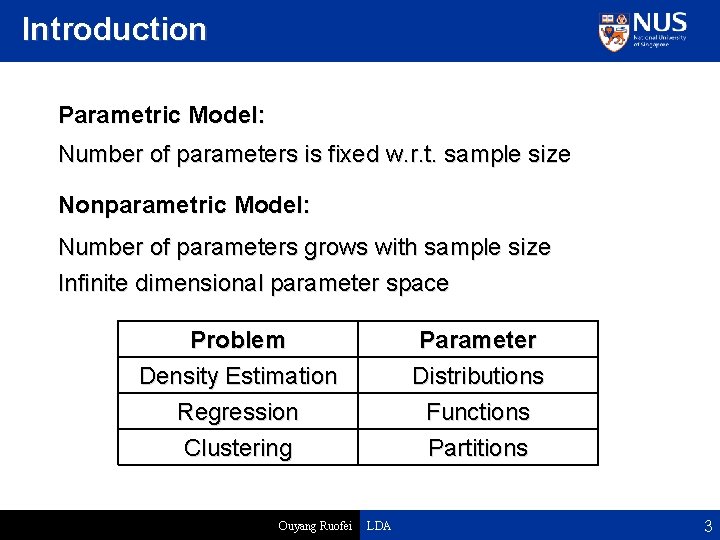

Introduction

- Parametric model: the number of parameters is fixed w.r.t. sample size.
- Nonparametric model: the number of parameters grows with sample size (infinite-dimensional parameter space).

| Problem | Parameter |
|---|---|
| Density estimation | Distributions |
| Regression | Functions |
| Clustering | Partitions |

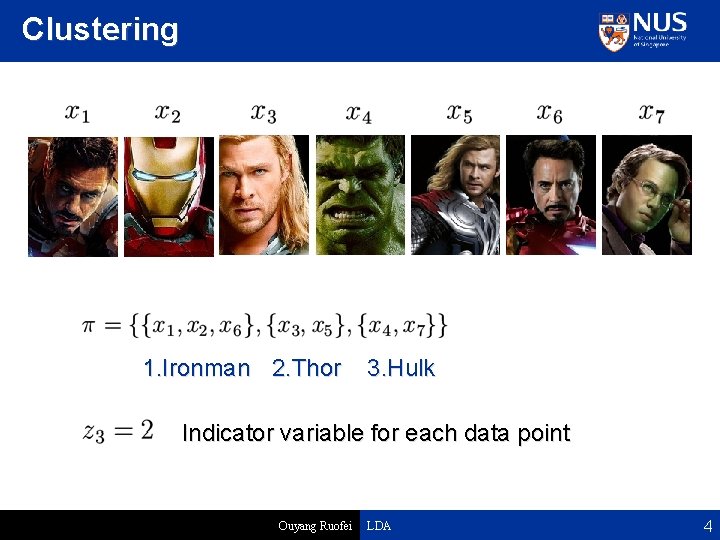

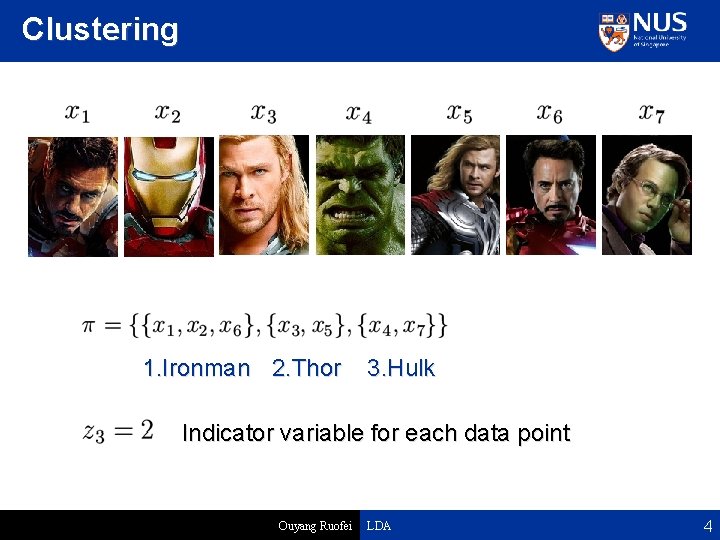

Clustering

1. Ironman
2. Thor
3. Hulk

Indicator variable for each data point.

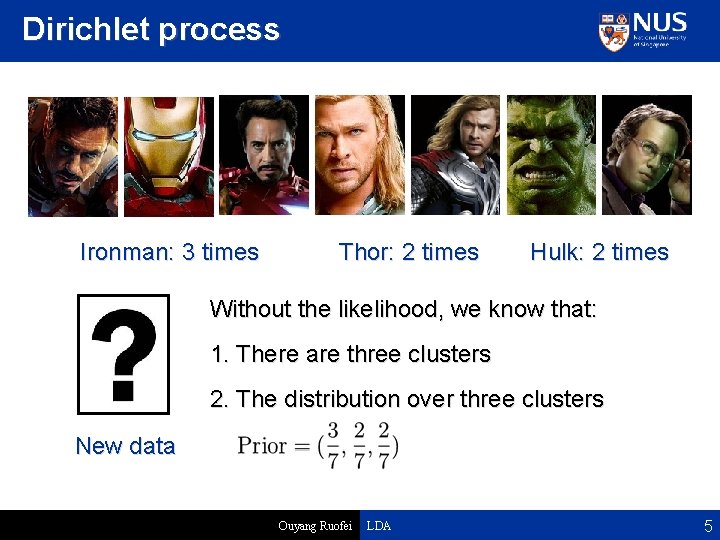

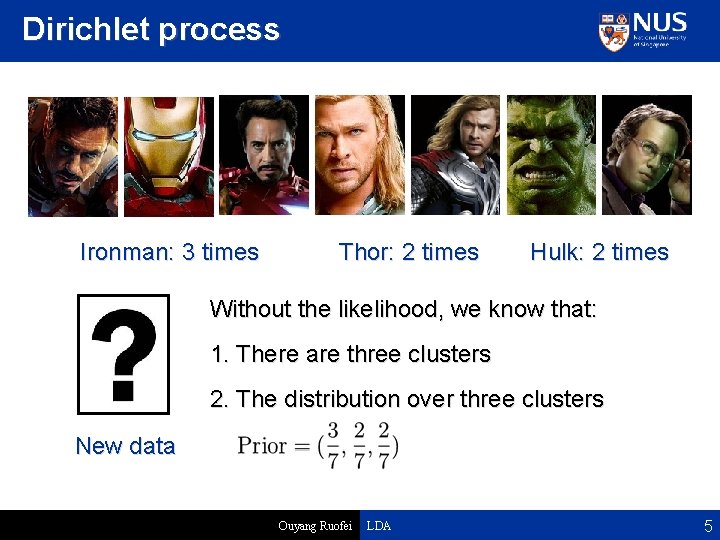

Dirichlet process

- Ironman: 3 times
- Thor: 2 times
- Hulk: 2 times

Without the likelihood, we know that:

1. There are three clusters.
2. The distribution over the three clusters.

New data is assigned according to this distribution.

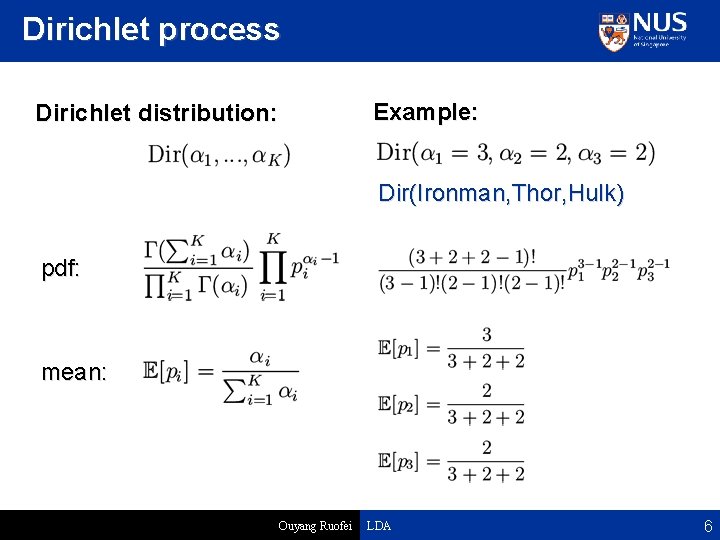

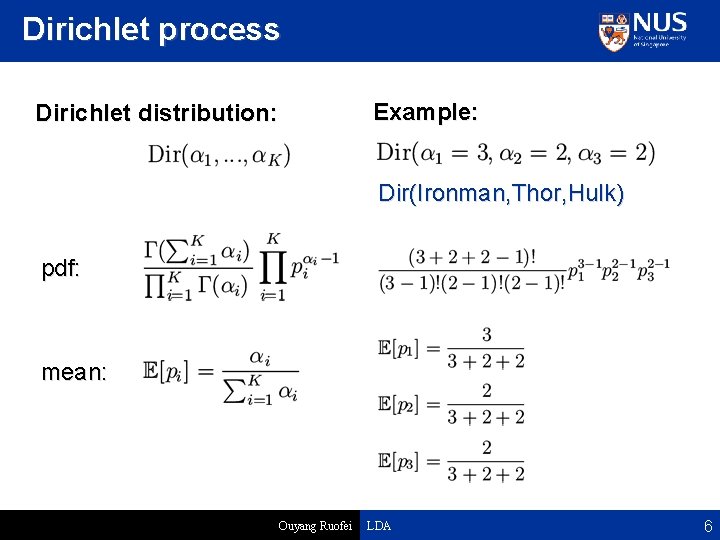

Dirichlet process

Example, Dirichlet distribution: Dir(Ironman, Thor, Hulk), with its pdf and mean.
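The slide's own formulas are not recoverable from the text, so as a reference here are the standard density and mean of a K-dimensional Dirichlet distribution. The symbols θ (the vector of cluster probabilities) and α_k (the parameter of cluster k) are notation assumed here.

```latex
\[
p(\theta \mid \alpha)
  = \frac{\Gamma\!\left(\sum_{k=1}^{K} \alpha_k\right)}{\prod_{k=1}^{K} \Gamma(\alpha_k)}
    \prod_{k=1}^{K} \theta_k^{\alpha_k - 1},
\qquad
\mathbb{E}[\theta_k] = \frac{\alpha_k}{\sum_{j=1}^{K} \alpha_j}
\]
```

With the counts 3, 2, 2 for Ironman, Thor, Hulk used as parameters, the mean would be (3/7, 2/7, 2/7).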

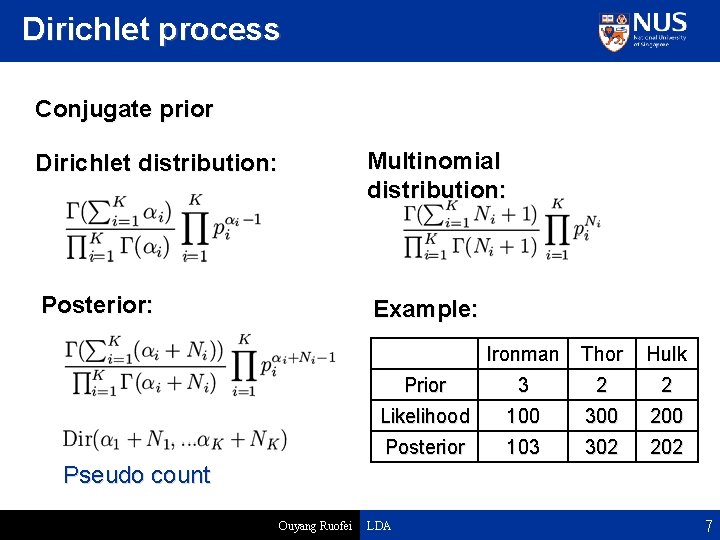

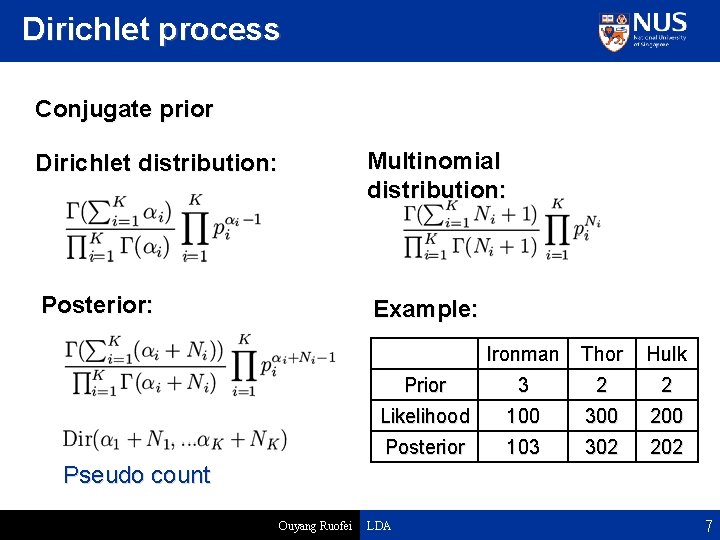

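Dirichlet process: conjugate prior

The Dirichlet distribution is the conjugate prior of the multinomial distribution, so the posterior is again a Dirichlet distribution. The prior parameters act as pseudo counts.

Example:

| | Ironman | Thor | Hulk |
|---|---|---|---|
| Prior | 3 | 2 | 2 |
| Likelihood | 100 | 300 | 200 |
| Posterior | 103 | 302 | 202 |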
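The conjugate update in the example amounts to adding the observed counts to the prior pseudo counts. A minimal sketch of that arithmetic follows; the variable names are illustrative and not from the slides.

```python
# Pseudo counts of the Dirichlet prior, in the order Ironman, Thor, Hulk.
prior = [3, 2, 2]
# Observed counts entering through the multinomial likelihood.
observed = [100, 300, 200]

# Conjugacy: the posterior is Dirichlet with elementwise-summed parameters.
posterior = [a + n for a, n in zip(prior, observed)]

# Posterior mean of each cluster probability (parameter / sum of parameters).
total = sum(posterior)
posterior_mean = [a / total for a in posterior]

print(posterior)
print([round(p, 3) for p in posterior_mean])
```

Because the observed counts are much larger than the pseudo counts, the posterior mean ends up close to the empirical frequencies.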

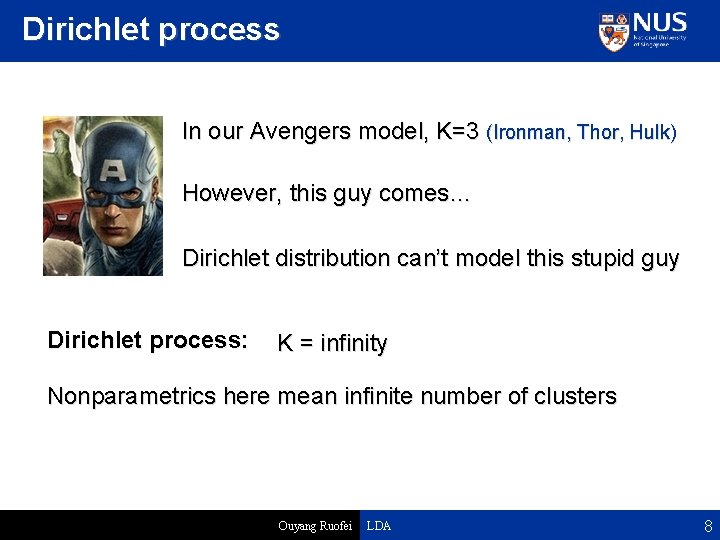

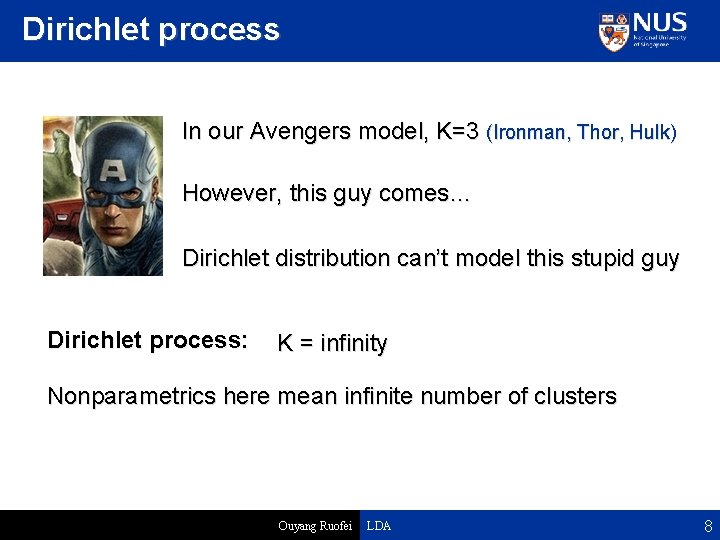

Dirichlet process

In our Avengers model, K = 3 (Ironman, Thor, Hulk). However, this guy comes… A Dirichlet distribution can't model this new guy, because K is fixed.

Dirichlet process: K = infinity. "Nonparametric" here means an infinite number of clusters.

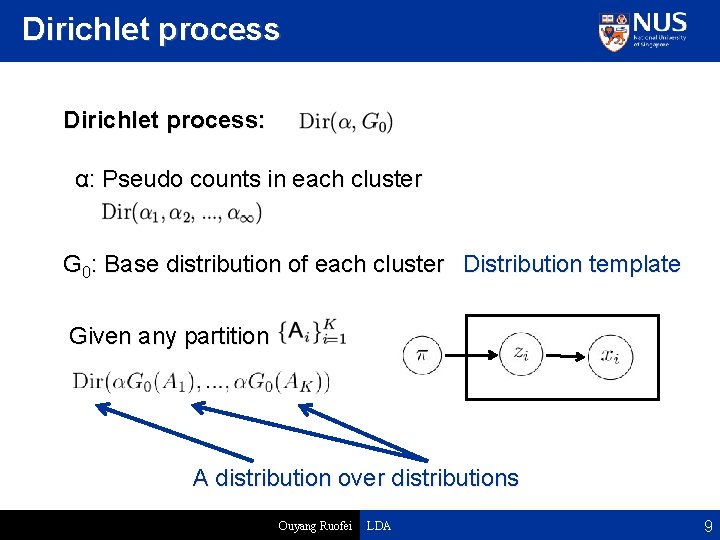

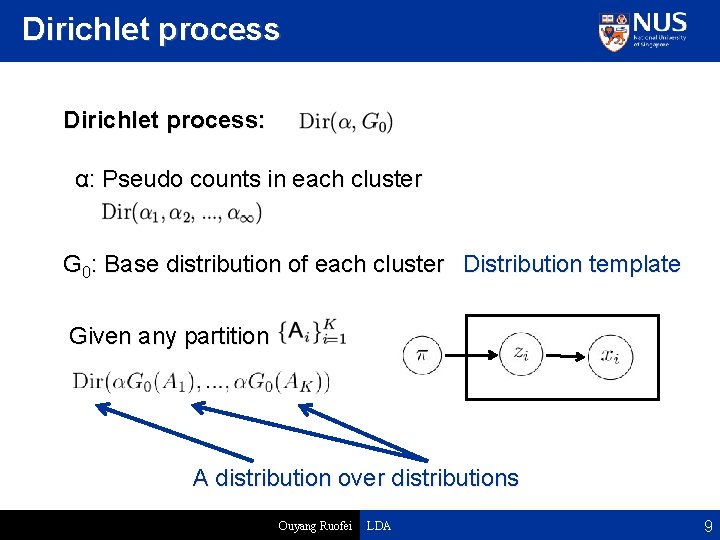

Dirichlet process

- α: pseudo counts in each cluster
- G0: base distribution of each cluster (a distribution template)
- A distribution over distributions, specified for any given partition
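The partition statement can be written out as follows. This is the standard textbook definition of the Dirichlet process, not a formula recovered from the slide; the sets A_1, ..., A_K are an arbitrary finite measurable partition of the space and are notation assumed here.

```latex
\[
G \sim \mathrm{DP}(\alpha, G_0)
\;\Longleftrightarrow\;
\bigl(G(A_1), \dots, G(A_K)\bigr)
  \sim \mathrm{Dir}\bigl(\alpha G_0(A_1), \dots, \alpha G_0(A_K)\bigr)
\quad \text{for every finite partition } (A_1, \dots, A_K)
\]
```

Read this way, G_0 fixes the expected mass of each cell and α scales how many pseudo counts back that expectation.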

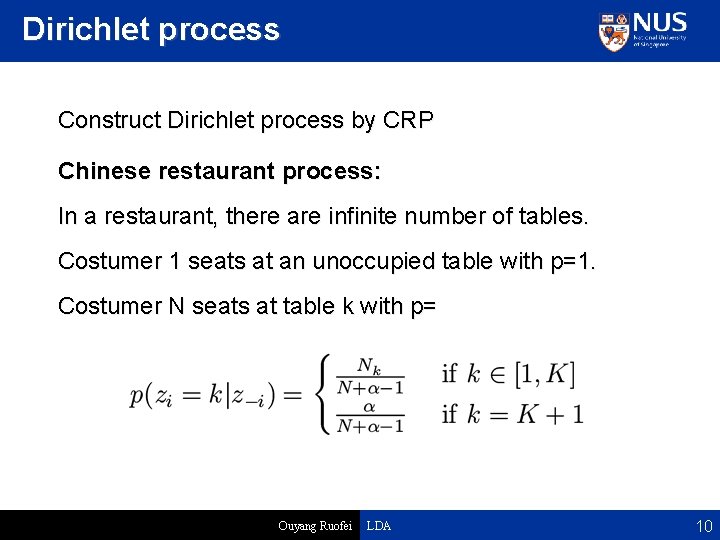

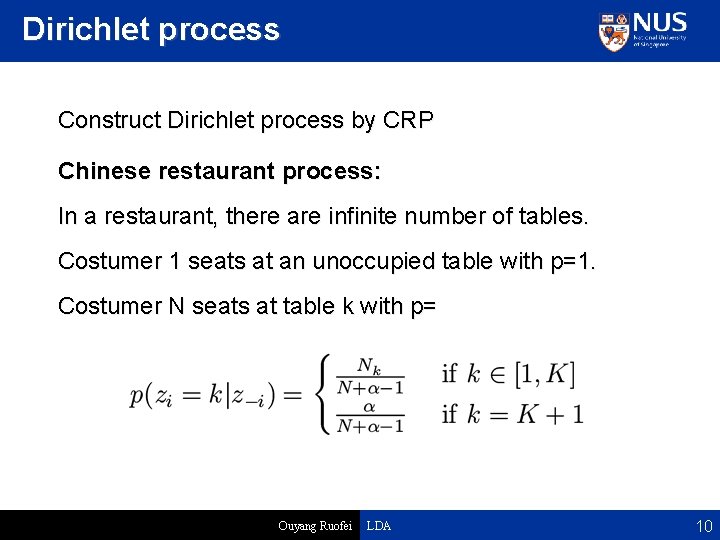

Dirichlet process

Construct the Dirichlet process by the Chinese restaurant process (CRP). In a restaurant, there are an infinite number of tables.

- Customer 1 sits at an unoccupied table with p = 1.
- Customer N sits at table k with some probability p.
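The seating probability is not recoverable from the slide text. In the standard CRP, customer N joins an occupied table k with probability n_k / (N - 1 + α), where n_k is the number of customers already there, and opens a new table with probability α / (N - 1 + α). The sketch below simulates that rule; the function name and the use of Python's `random` module are choices made here, and α is assumed to be positive.

```python
import random


def chinese_restaurant_process(num_customers, alpha, seed=0):
    """Seat customers one by one; return (table index per customer, table sizes)."""
    rng = random.Random(seed)
    table_counts = []  # table_counts[k] = number of customers at table k
    seating = []
    for _ in range(num_customers):
        # Unnormalised weights: n_k for each occupied table, alpha for a new one.
        # random.choices normalises them, which divides by (N - 1 + alpha).
        weights = table_counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(table_counts):
            table_counts.append(1)  # open a new table
        else:
            table_counts[k] += 1    # join an occupied table
        seating.append(k)
    return seating, table_counts


seating, table_counts = chinese_restaurant_process(num_customers=20, alpha=1.0)
print(seating)
print(table_counts)
```

Larger values of α make new tables more likely, so the same number of customers tends to spread over more clusters.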

Dirichlet process

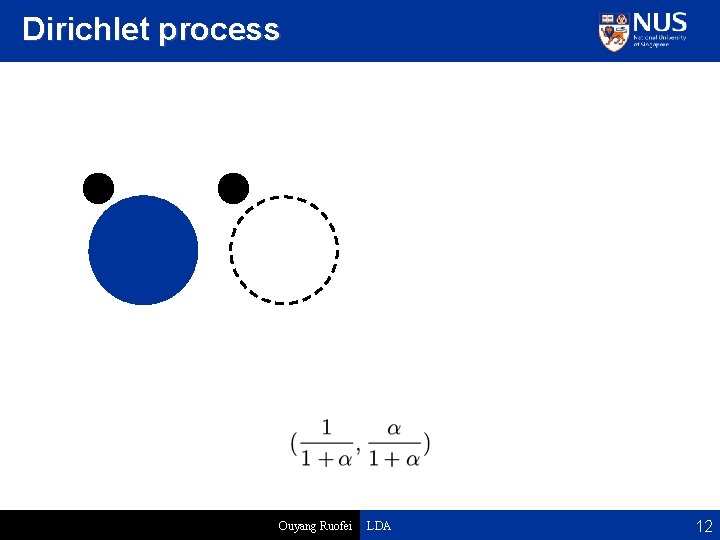

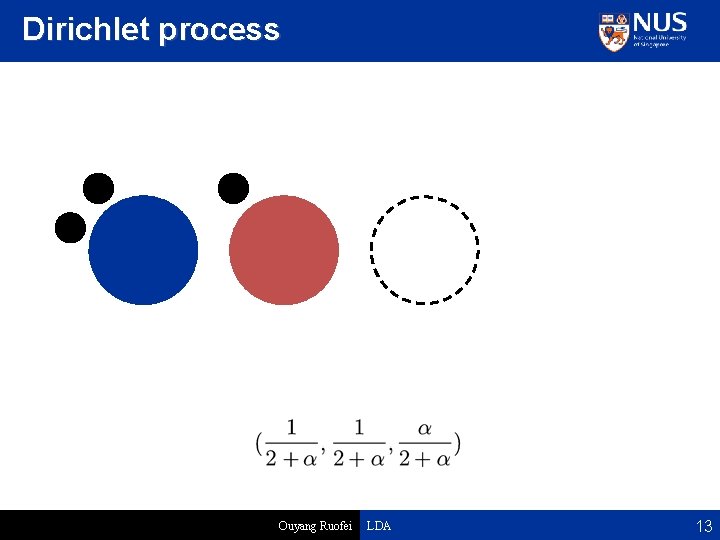

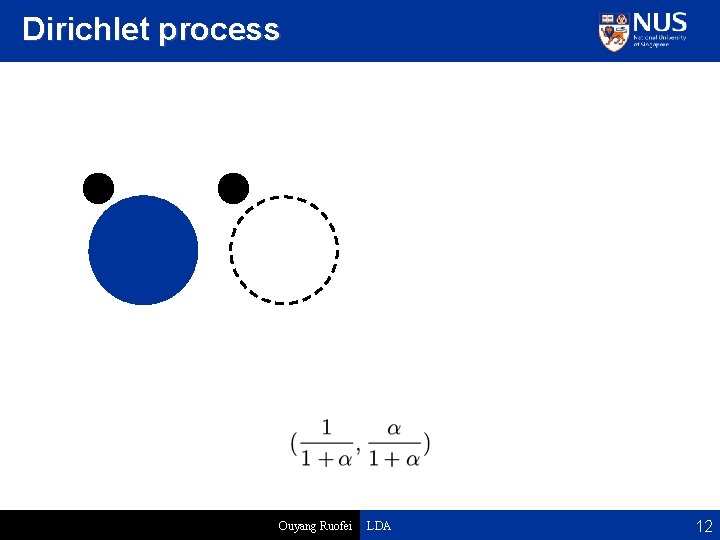

Dirichlet process

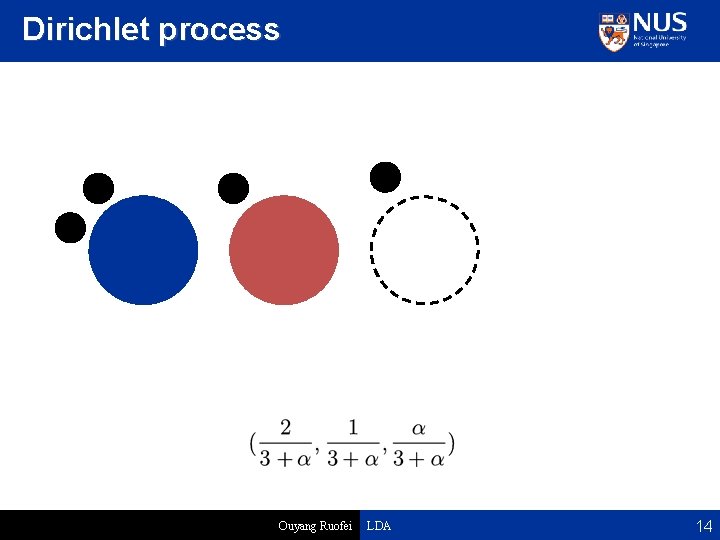

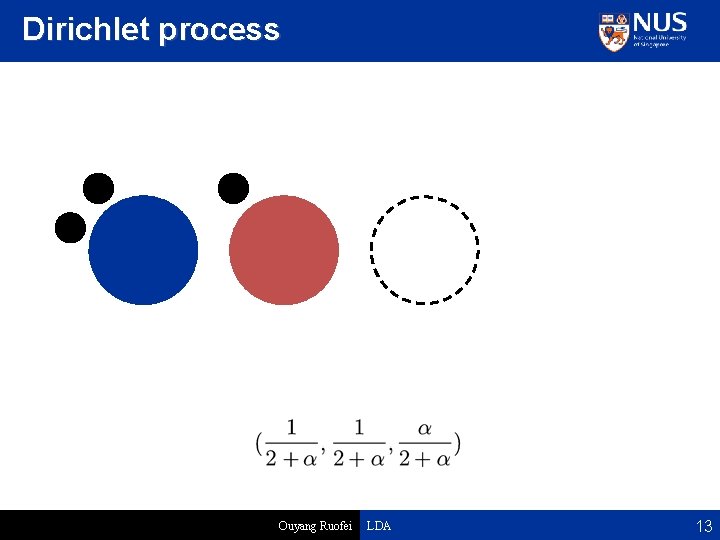

Dirichlet process

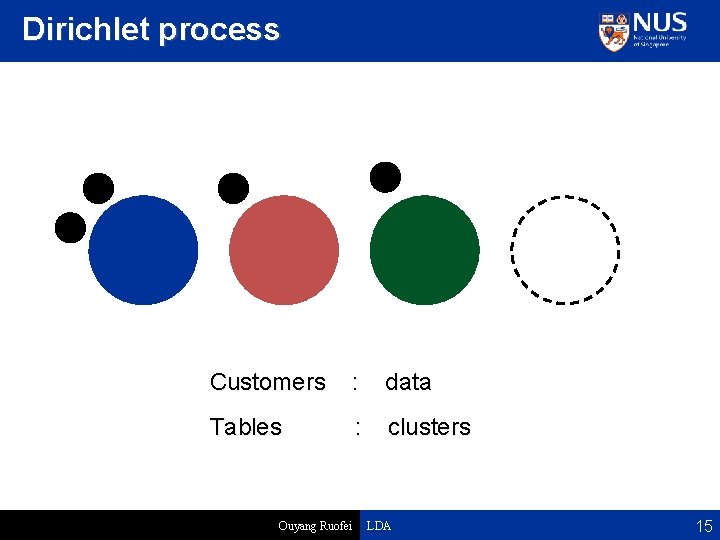

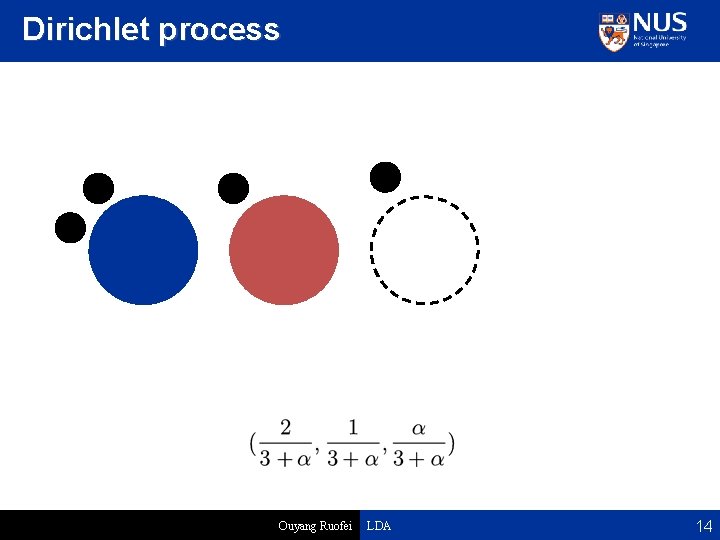

Dirichlet process

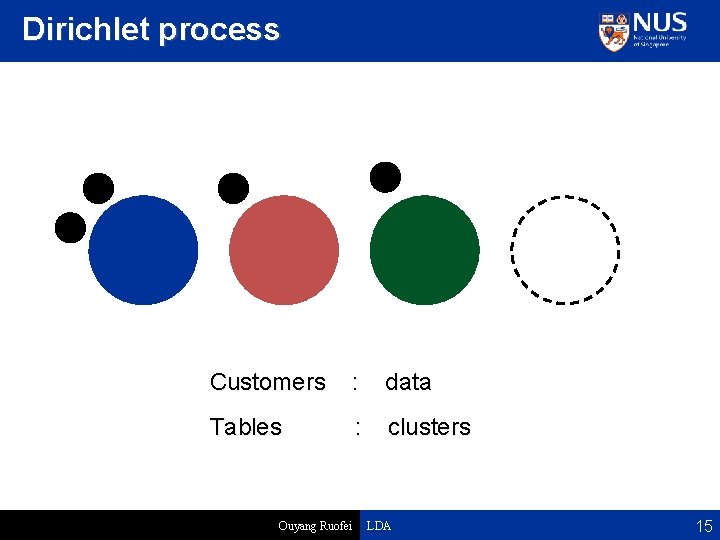

Dirichlet process

- Customers: data
- Tables: clusters

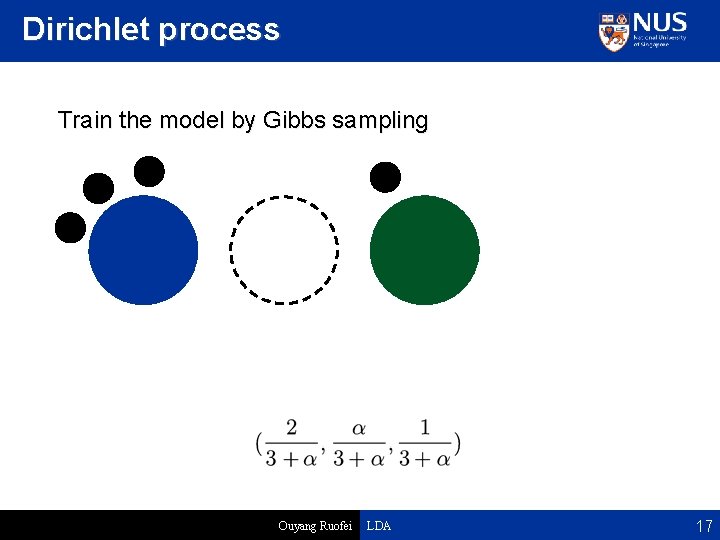

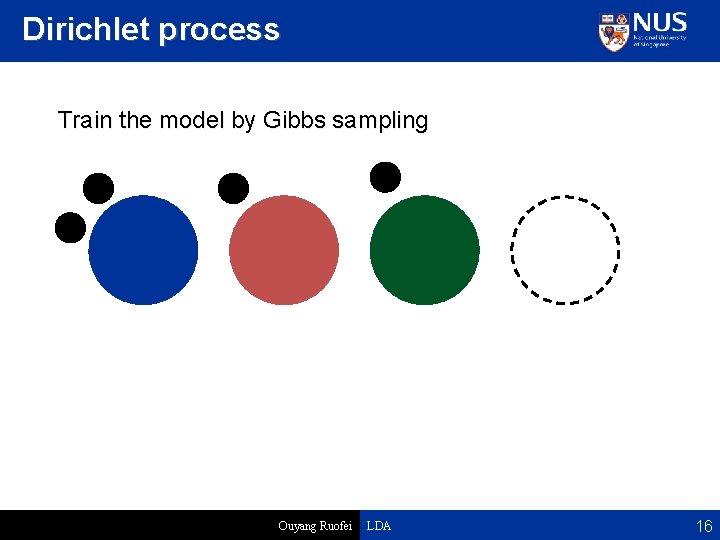

Dirichlet process

Train the model by Gibbs sampling.

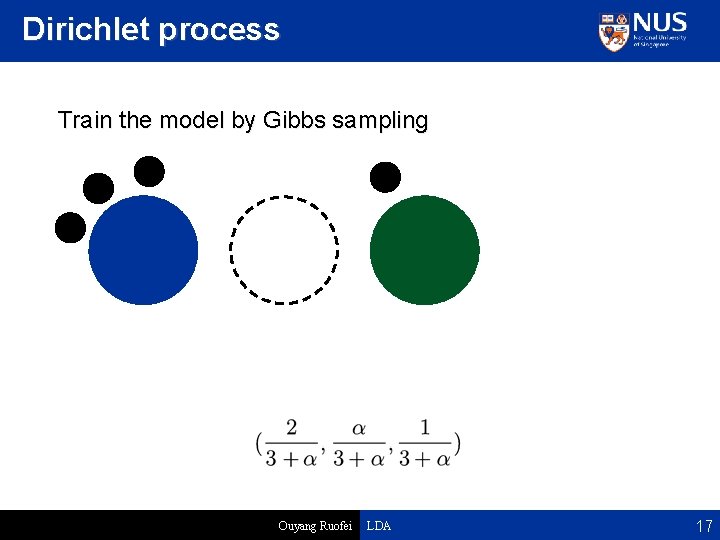

Dirichlet process

Train the model by Gibbs sampling.

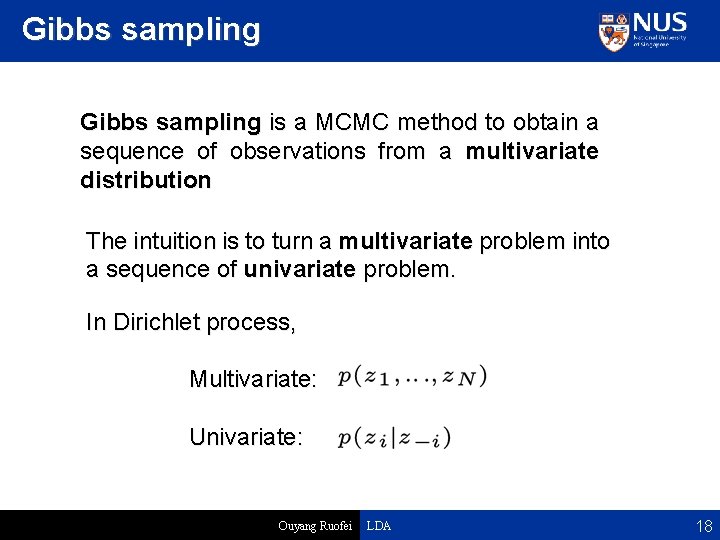

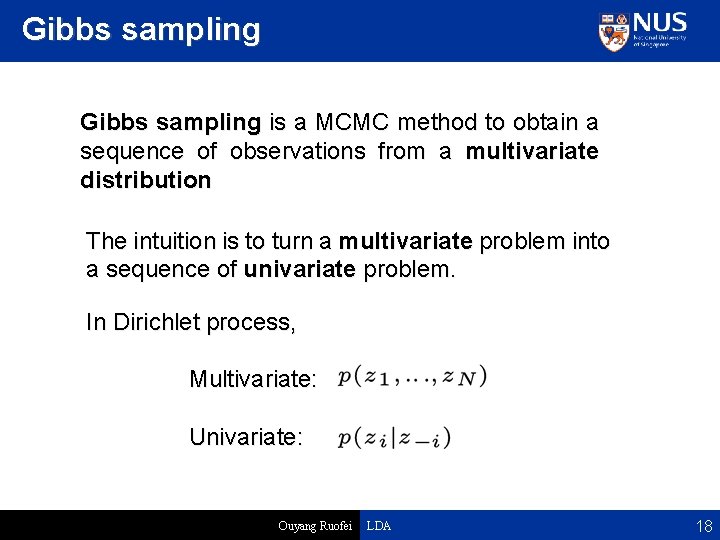

Gibbs sampling is an MCMC method for obtaining a sequence of samples from a multivariate distribution. The intuition is to turn a multivariate problem into a sequence of univariate problems.

In the Dirichlet process, the multivariate problem is sampling all cluster assignments jointly; the univariate problem is sampling one assignment given all the others.
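To make the "sequence of univariate problems" idea concrete, here is a minimal Gibbs sampler for a toy target that is not from the slides: a standard bivariate normal with correlation rho. Its full conditionals are the known univariate normals x | y ~ N(rho·y, 1 - rho²) and y | x ~ N(rho·x, 1 - rho²). The function name, burn-in length, and seed are assumptions made for illustration.

```python
import random


def gibbs_bivariate_normal(rho, num_samples, burn_in=500, seed=0):
    """Draw (x, y) pairs from a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    cond_std = (1.0 - rho * rho) ** 0.5  # std of each full conditional
    x, y = 0.0, 0.0                      # arbitrary starting point
    samples = []
    for step in range(burn_in + num_samples):
        # Univariate step 1: resample x with y held fixed.
        x = rng.gauss(rho * y, cond_std)
        # Univariate step 2: resample y with the new x held fixed.
        y = rng.gauss(rho * x, cond_std)
        if step >= burn_in:
            samples.append((x, y))
    return samples


samples = gibbs_bivariate_normal(rho=0.8, num_samples=5000)
mean_xy = sum(x * y for x, y in samples) / len(samples)
print(round(mean_xy, 2))  # should land near rho for a long enough chain
```

The Dirichlet process sampler follows the same pattern, with each cluster assignment playing the role of one coordinate.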

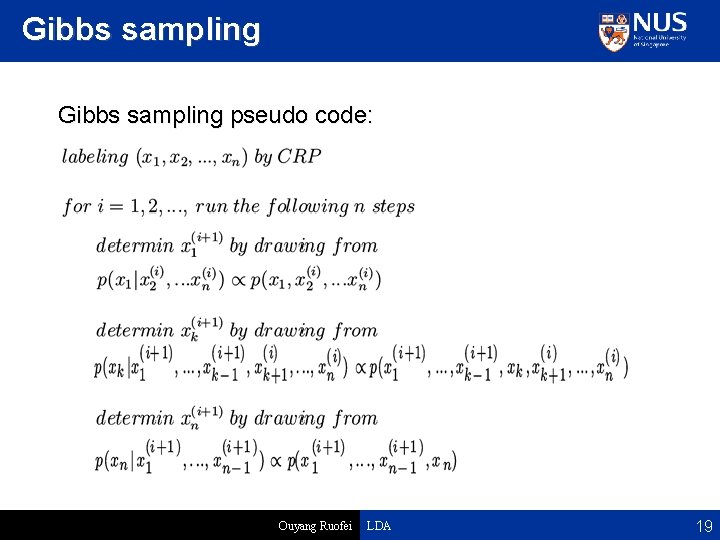

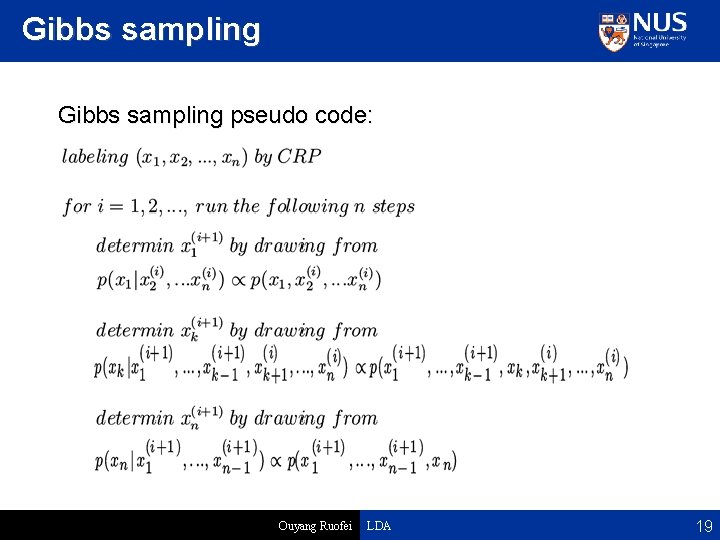

Gibbs sampling pseudo code:

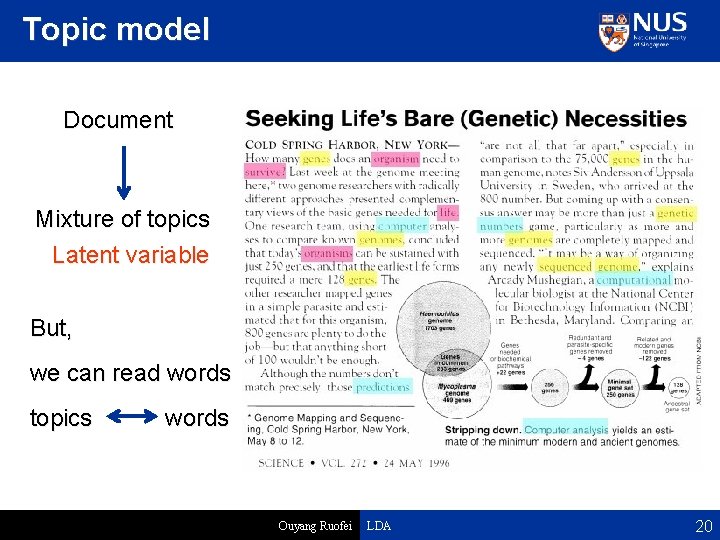

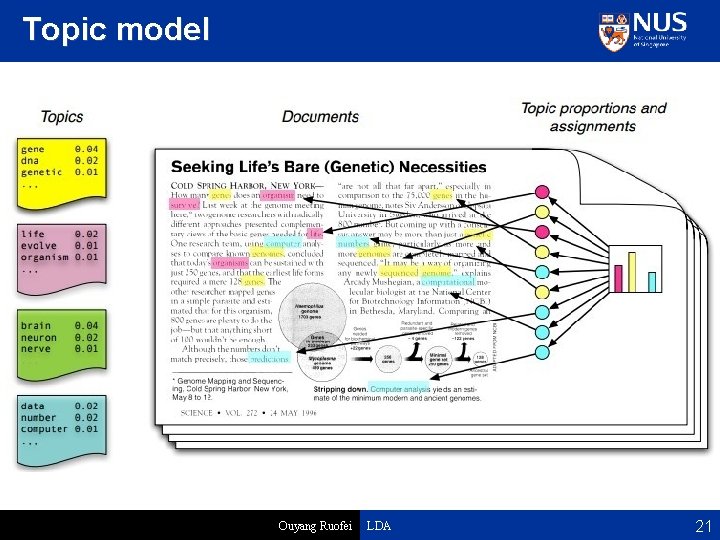

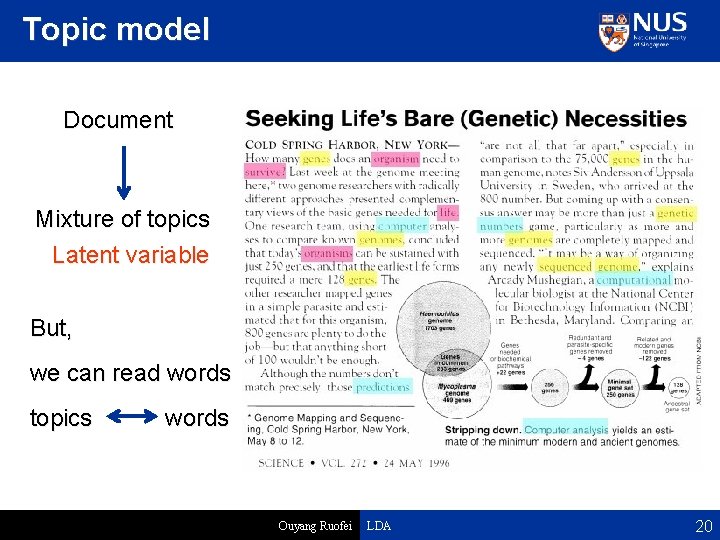

Topic model

- A document is a mixture of topics.
- The topics are latent variables, but we can read the words.

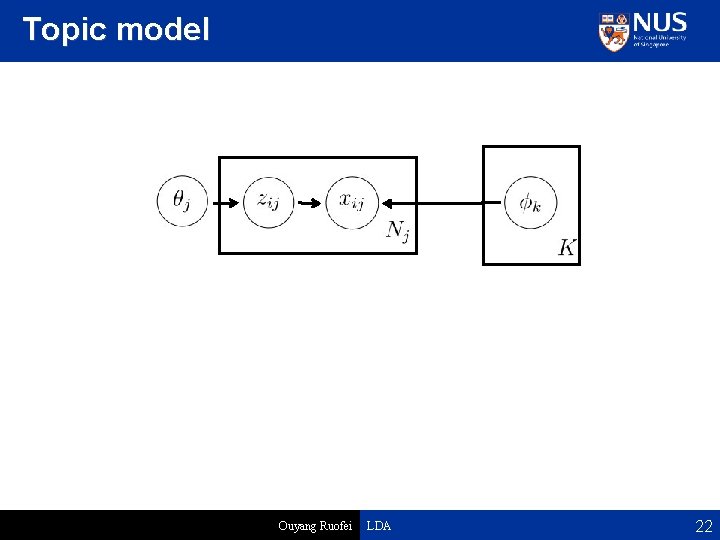

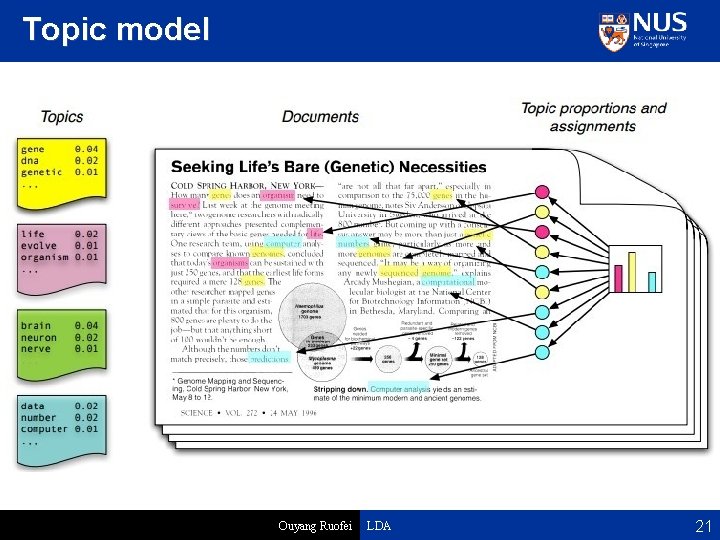

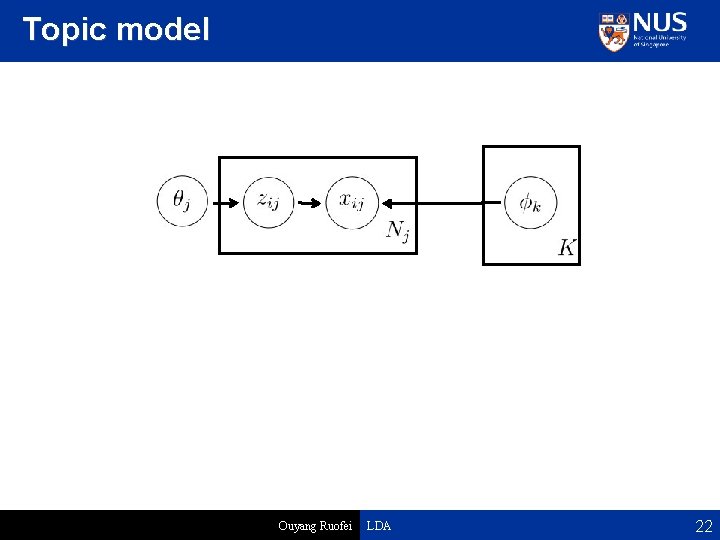

Topic model

Topic model

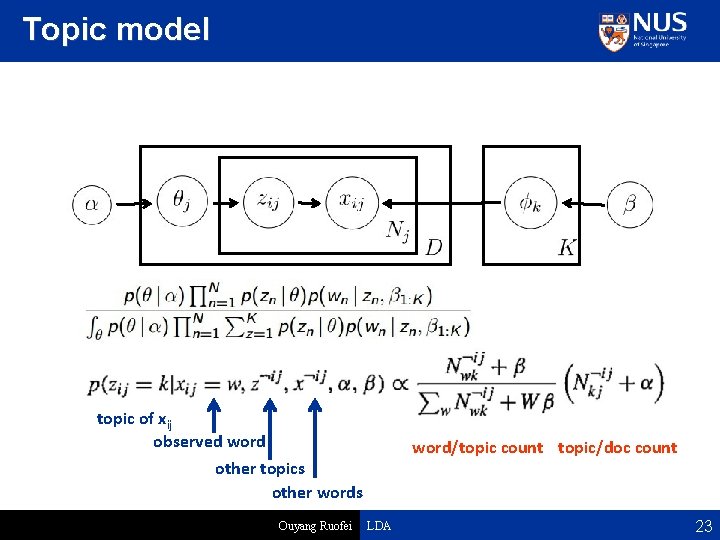

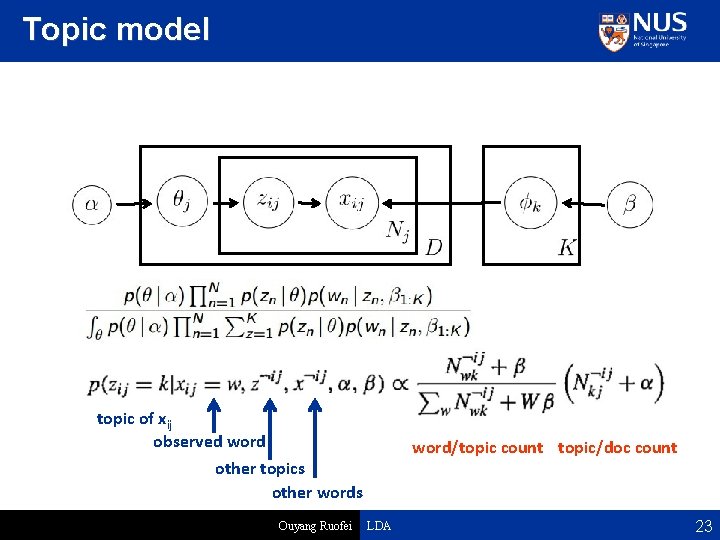

Topic model

Annotated terms: topic of x_ij, observed, word/topic count, topic/doc count, other topics, other words.
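These labels match the terms of the usual collapsed Gibbs conditional for LDA with symmetric priors. The formula below is that standard form, written here as an assumption about what the slide shows. In it, z_ij is the topic of word x_ij (word i of document j), n_{k,w} is the word/topic count, n_{j,k} is the topic/doc count, V is the vocabulary size, and the superscript ¬ij means the current word is excluded from the counts.

```latex
\[
p\bigl(z_{ij} = k \mid \mathbf{z}_{\neg ij}, \mathbf{x}\bigr)
\;\propto\;
\frac{n^{\neg ij}_{k,\,x_{ij}} + \beta}{n^{\neg ij}_{k,\,\cdot} + V\beta}
\,\bigl(n^{\neg ij}_{j,\,k} + \alpha\bigr)
\]
```

The first factor says how much topic k likes this word (based on the other words); the second says how much document j likes topic k (based on the other topics in the document).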

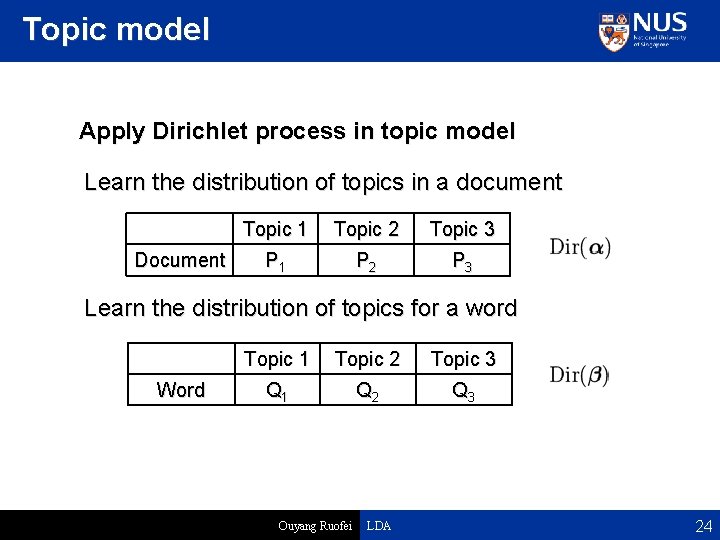

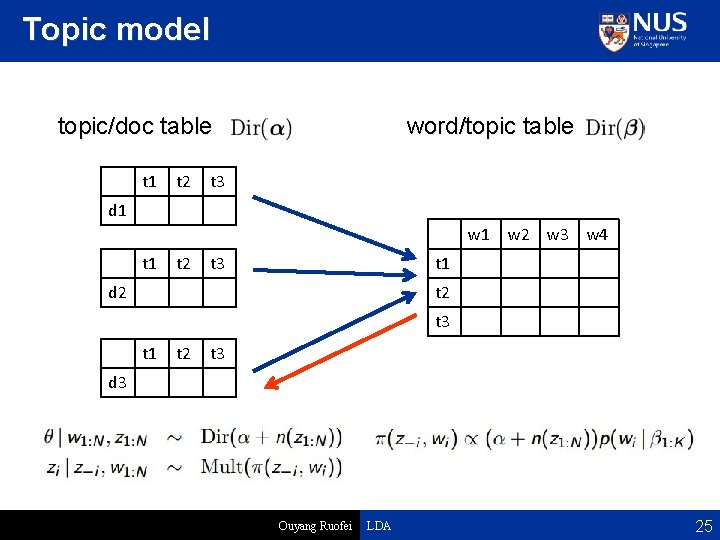

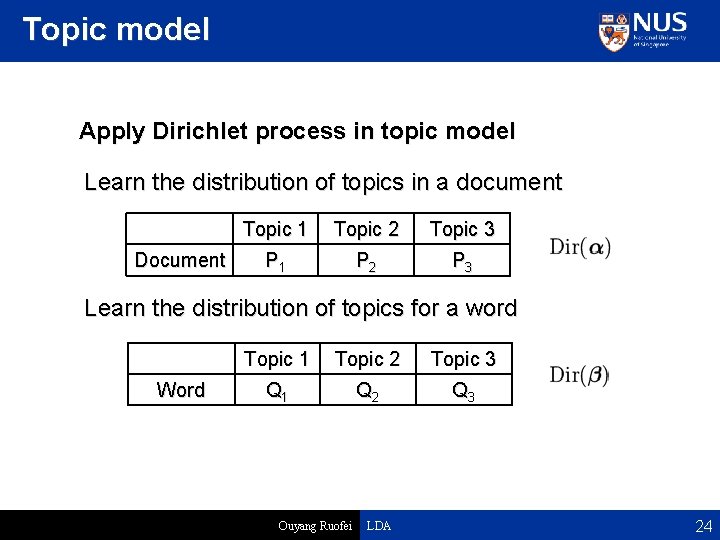

Topic model

Apply the Dirichlet process in the topic model:

- Learn the distribution of topics in a document.
- Learn the distribution of topics for a word.

| | Topic 1 | Topic 2 | Topic 3 |
|---|---|---|---|
| Document | P1 | P2 | P3 |
| Word | Q1 | Q2 | Q3 |

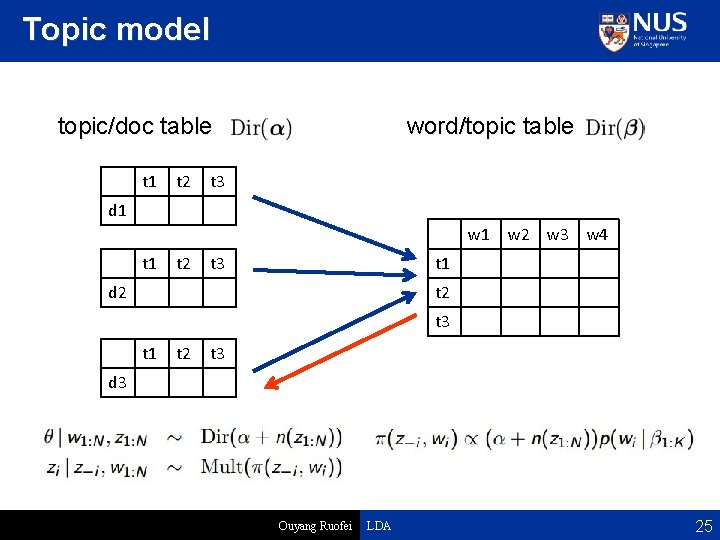

Topic model

Two tables: a topic/doc table (documents d1, d2, d3 against topics t1, t2, t3) and a word/topic table (words such as w2, w3, w4 against topics t1, t2, t3).

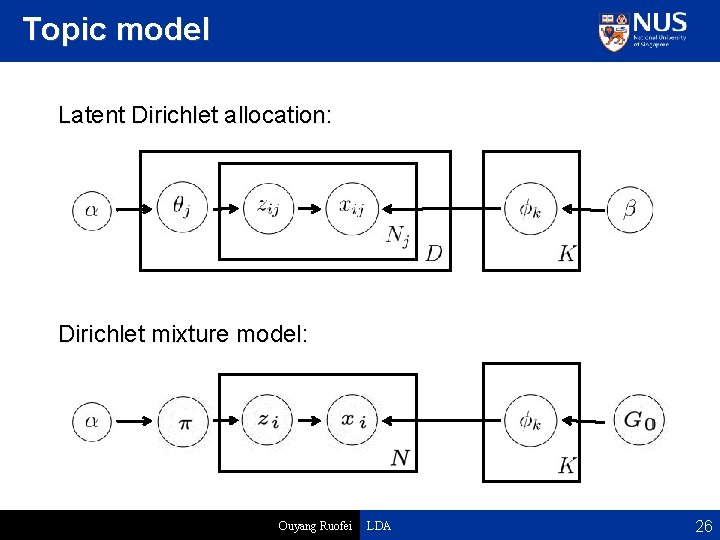

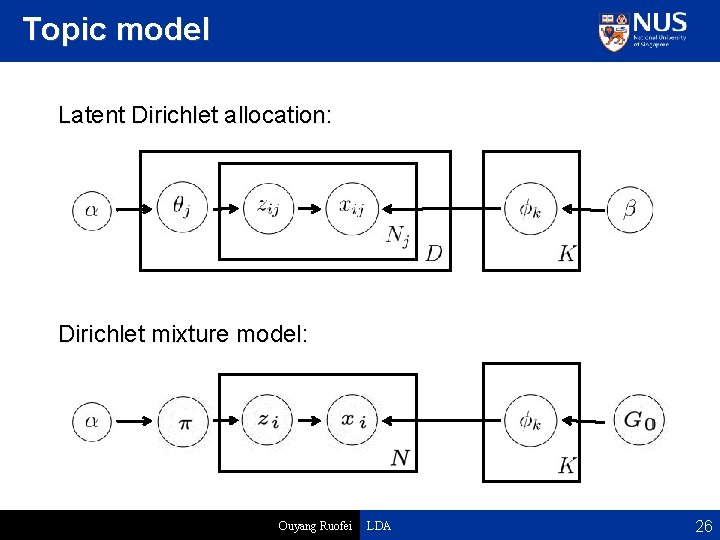

Topic model

Latent Dirichlet allocation and the Dirichlet mixture model.
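The slide's formulas are not recoverable, so the two models are sketched below in their standard generative form. All symbols are notation assumed here: θ_j is the topic mixture of document j, φ_k the word distribution of topic k, z the latent assignment, x the observation, and F a generic observation distribution.

```latex
\begin{align*}
\text{LDA:}\quad
  & \theta_j \sim \mathrm{Dir}(\alpha), \quad
    \phi_k \sim \mathrm{Dir}(\beta), \quad
    z_{ij} \sim \mathrm{Mult}(\theta_j), \quad
    x_{ij} \sim \mathrm{Mult}(\phi_{z_{ij}}) \\
\text{Dirichlet mixture:}\quad
  & \pi \sim \mathrm{Dir}(\alpha), \quad
    \phi_k \sim G_0, \quad
    z_i \sim \mathrm{Mult}(\pi), \quad
    x_i \sim F(\phi_{z_i})
\end{align*}
```

The visible difference is that the mixture model has one set of mixing weights for all data, while LDA gives every document its own weights over a shared set of topics.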

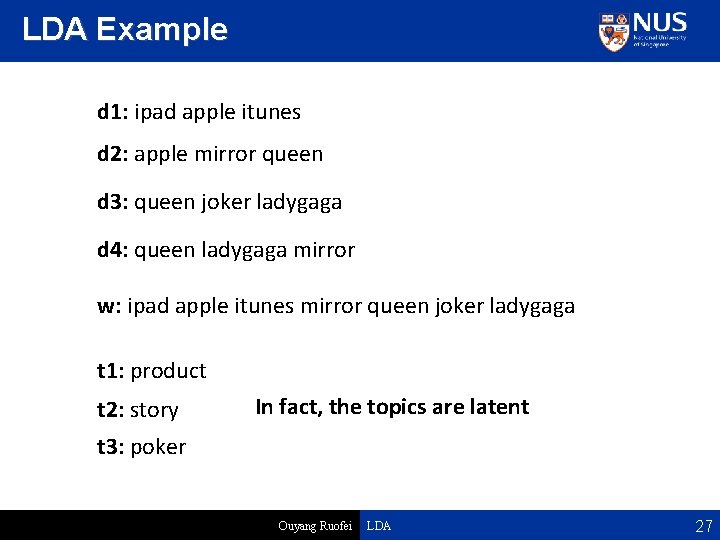

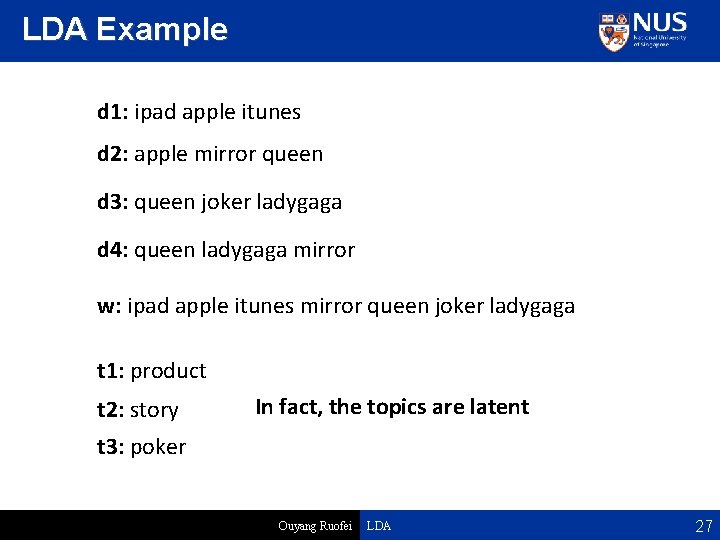

LDA example

- d1: ipad apple itunes
- d2: apple mirror queen
- d3: queen joker ladygaga
- d4: queen ladygaga mirror

Vocabulary w: ipad, apple, itunes, mirror, queen, joker, ladygaga

Topics: t1: product, t2: story, t3: poker. In fact, the topics are latent.

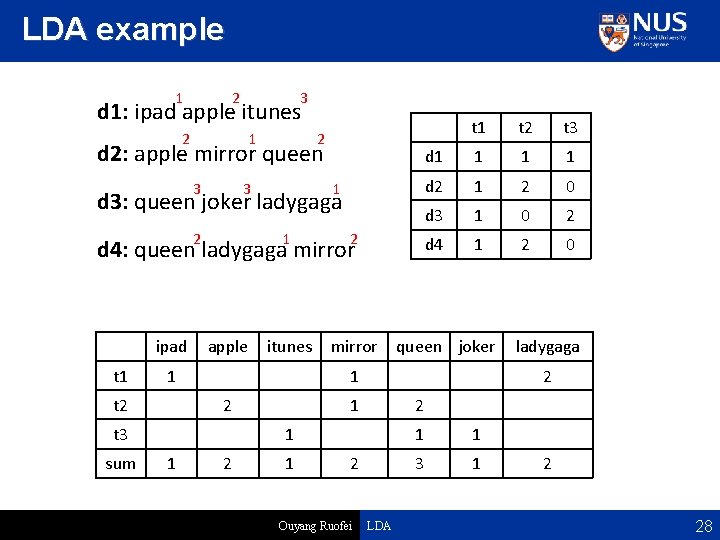

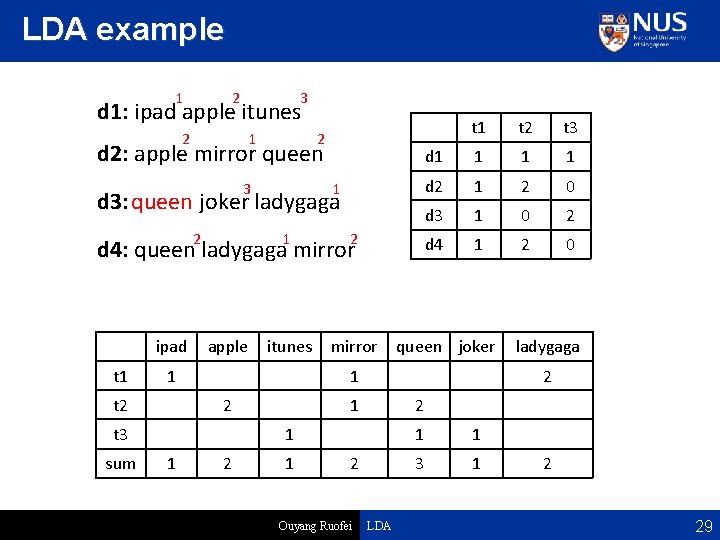

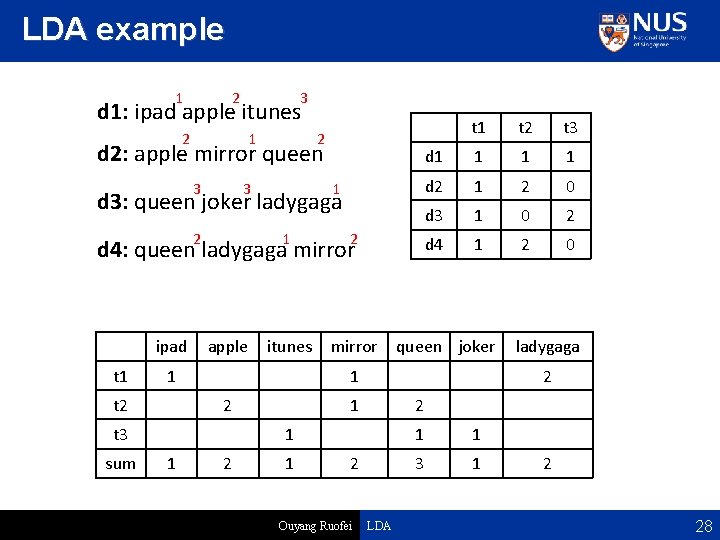

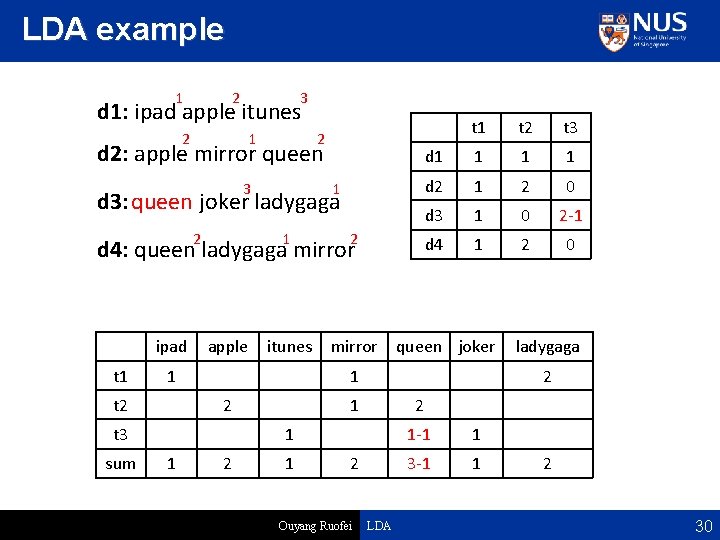

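LDA example

Each word carries a topic assignment, and the assignments are tallied in count tables. The t1 to t3 columns below are the topic/doc counts.

| Document | Words | Topic assignments | t1 | t2 | t3 |
|---|---|---|---|---|---|
| d1 | ipad apple itunes | 1 2 3 | 1 | 1 | 1 |
| d2 | apple mirror queen | 2 1 2 | 1 | 2 | 0 |
| d3 | queen joker ladygaga | 3 3 1 | 1 | 0 | 2 |
| d4 | queen ladygaga mirror | 2 1 2 | 1 | 2 | 0 |

A word/topic count table (ipad, apple, itunes, mirror, queen, joker, ladygaga against t1 to t3) and the per-topic sums are kept alongside.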

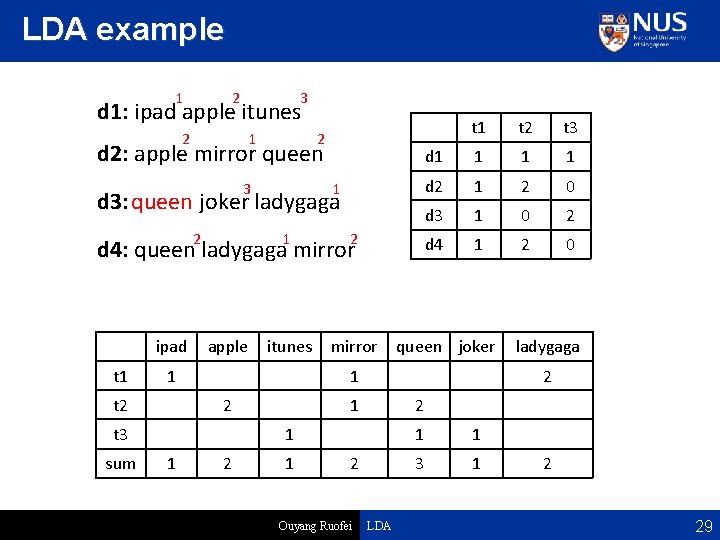

LDA example

Pick one word, here queen in d3, and remove its topic assignment (shown as _).

| Document | Words | Topic assignments | t1 | t2 | t3 |
|---|---|---|---|---|---|
| d1 | ipad apple itunes | 1 2 3 | 1 | 1 | 1 |
| d2 | apple mirror queen | 2 1 2 | 1 | 2 | 0 |
| d3 | queen joker ladygaga | _ 3 1 | 1 | 0 | 2 |
| d4 | queen ladygaga mirror | 2 1 2 | 1 | 2 | 0 |

The word/topic count table and the per-topic sums are not yet updated.

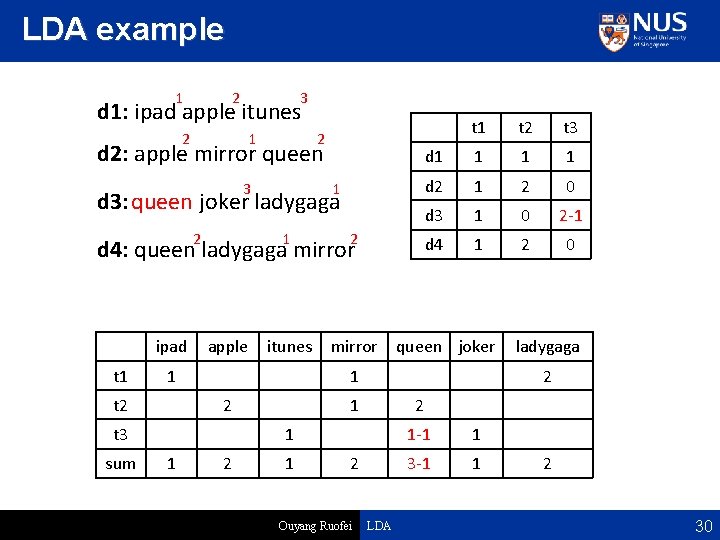

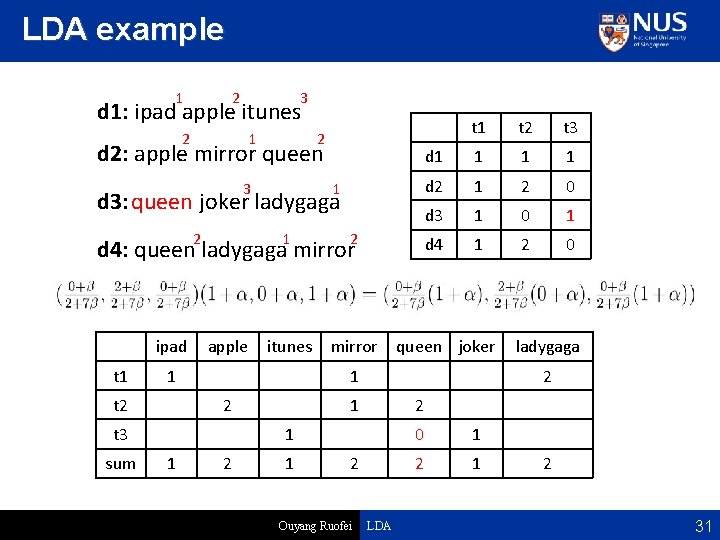

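LDA example

Subtract 1 from every count that included the removed assignment (queen in d3, previously t3).

| Document | Words | Topic assignments | t1 | t2 | t3 |
|---|---|---|---|---|---|
| d1 | ipad apple itunes | 1 2 3 | 1 | 1 | 1 |
| d2 | apple mirror queen | 2 1 2 | 1 | 2 | 0 |
| d3 | queen joker ladygaga | _ 3 1 | 1 | 0 | 2 - 1 |
| d4 | queen ladygaga mirror | 2 1 2 | 1 | 2 | 0 |

The same -1 applies to the queen/t3 cell of the word/topic table and to the t3 sum.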

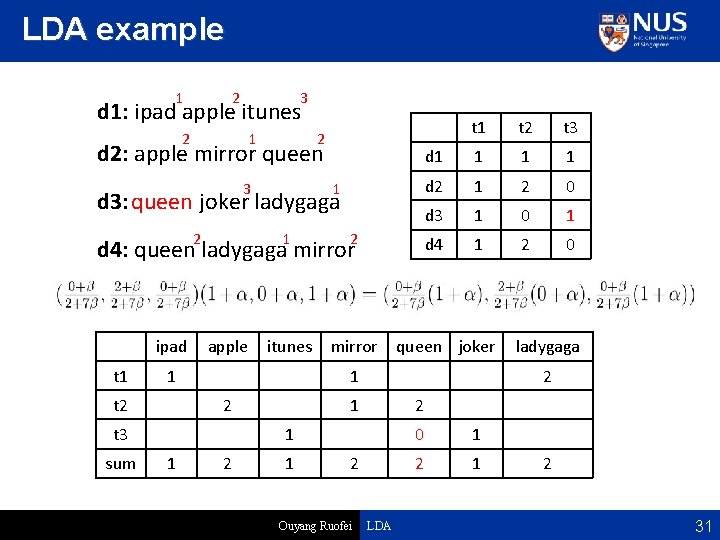

LDA example

Counts after the removal; the word is now unassigned.

| Document | Words | Topic assignments | t1 | t2 | t3 |
|---|---|---|---|---|---|
| d1 | ipad apple itunes | 1 2 3 | 1 | 1 | 1 |
| d2 | apple mirror queen | 2 1 2 | 1 | 2 | 0 |
| d3 | queen joker ladygaga | _ 3 1 | 1 | 0 | 1 |
| d4 | queen ladygaga mirror | 2 1 2 | 1 | 2 | 0 |

In the word/topic table, the queen/t3 count is now 0.

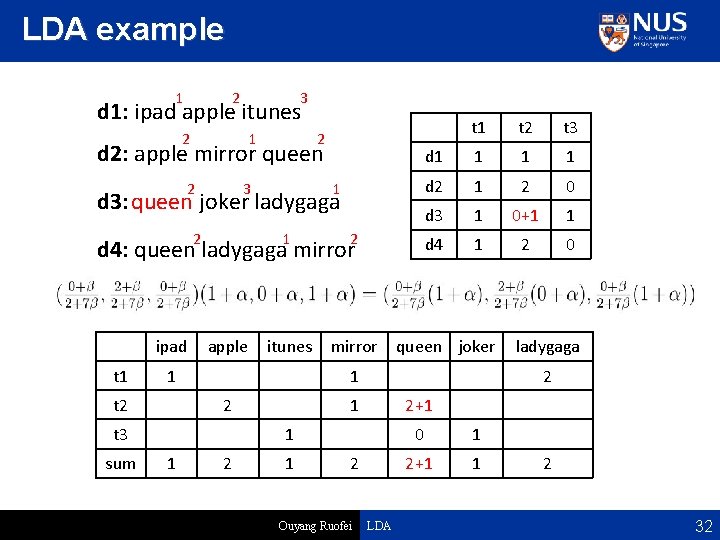

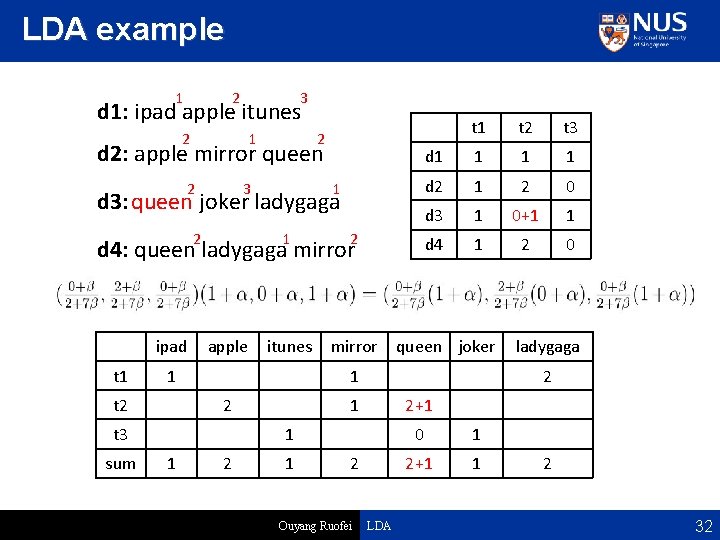

LDA example

Sample a new topic for the word, here t2, and add 1 to the matching counts.

| Document | Words | Topic assignments | t1 | t2 | t3 |
|---|---|---|---|---|---|
| d1 | ipad apple itunes | 1 2 3 | 1 | 1 | 1 |
| d2 | apple mirror queen | 2 1 2 | 1 | 2 | 0 |
| d3 | queen joker ladygaga | 2 3 1 | 1 | 0 + 1 | 1 |
| d4 | queen ladygaga mirror | 2 1 2 | 1 | 2 | 0 |

In the word/topic table, the queen/t2 count becomes 2 + 1.
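The remove, decrement, resample, increment cycle from the example can be sketched as a small collapsed Gibbs sampler over the same four documents. This is an illustration under stated assumptions, not the author's code: the hyperparameter values `ALPHA` and `BETA`, the random initialisation, and the function name `run_gibbs` are all chosen here, and topics are indexed 0 to 2 rather than t1 to t3.

```python
import random

docs = [
    ["ipad", "apple", "itunes"],
    ["apple", "mirror", "queen"],
    ["queen", "joker", "ladygaga"],
    ["queen", "ladygaga", "mirror"],
]
K = 3                      # number of topics
ALPHA, BETA = 0.1, 0.01    # assumed symmetric hyperparameters
vocab = sorted({w for doc in docs for w in doc})
V = len(vocab)


def run_gibbs(num_sweeps=200, seed=0):
    rng = random.Random(seed)
    doc_topic = [[0] * K for _ in docs]        # topic/doc counts
    word_topic = {w: [0] * K for w in vocab}   # word/topic counts
    topic_sum = [0] * K                        # words assigned to each topic
    z = []                                     # topic of every word
    for j, doc in enumerate(docs):             # random initial assignments
        z.append([])
        for w in doc:
            k = rng.randrange(K)
            z[j].append(k)
            doc_topic[j][k] += 1
            word_topic[w][k] += 1
            topic_sum[k] += 1
    for _ in range(num_sweeps):
        for j, doc in enumerate(docs):
            for i, w in enumerate(doc):
                old = z[j][i]
                # Remove the word's current assignment from all counts.
                doc_topic[j][old] -= 1
                word_topic[w][old] -= 1
                topic_sum[old] -= 1
                # Conditional weight of each topic given all other assignments.
                weights = [
                    (word_topic[w][k] + BETA) / (topic_sum[k] + V * BETA)
                    * (doc_topic[j][k] + ALPHA)
                    for k in range(K)
                ]
                new = rng.choices(range(K), weights=weights)[0]
                # Record the new assignment and restore the counts.
                z[j][i] = new
                doc_topic[j][new] += 1
                word_topic[w][new] += 1
                topic_sum[new] += 1
    return z, doc_topic, word_topic, topic_sum


z, doc_topic, word_topic, topic_sum = run_gibbs()
print(doc_topic)
```

Each pass through the inner loop is one instance of the four-step update walked through above; the count tables stay consistent because every decrement is paired with exactly one increment.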

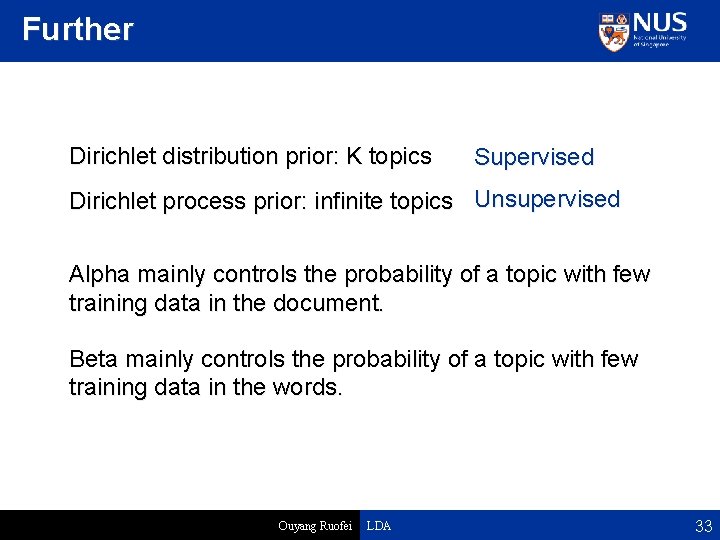

Further

- Dirichlet distribution prior: K topics (supervised: K is fixed in advance)
- Dirichlet process prior: infinite topics (unsupervised: the number of topics is not fixed)
- Alpha mainly controls the probability of a topic that has little training data in the document.
- Beta mainly controls the probability of a topic that has little training data for the word.
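The role of alpha can be seen with a little arithmetic on the document side. The sketch below uses the smoothed estimate (n_k + alpha) / (N + K·alpha) for a document's topic probabilities, which is the standard Dirichlet-multinomial estimate rather than a formula from the slides; the function name and the alpha values are illustrative.

```python
def smoothed_topic_probs(counts, alpha):
    """Topic probabilities for one document with a symmetric pseudo count alpha."""
    total = sum(counts) + alpha * len(counts)
    return [(n + alpha) / total for n in counts]


# Topic/doc counts of a document with no words in the third topic.
doc_counts = [1, 2, 0]
for alpha in (0.01, 0.1, 1.0):
    probs = smoothed_topic_probs(doc_counts, alpha)
    print(alpha, [round(p, 3) for p in probs])
```

As alpha grows, the topic with no words in the document receives a larger share of probability; beta plays the same role for words that a topic has rarely or never been assigned.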

Further

- Unrealistic bag-of-words assumption: TNG, biLDA
- Loses power-law behavior: Pitman-Yor language model
- David Blei has done an extensive survey on topic models: http://home.etf.rs/~bfurlan/publications/SURVEY-1.pdf

Q&A