Tirgul 11: NIO - Java New-IO (nio) classes and the Reactor

- Slides: 35


Java New-IO (nio) classes

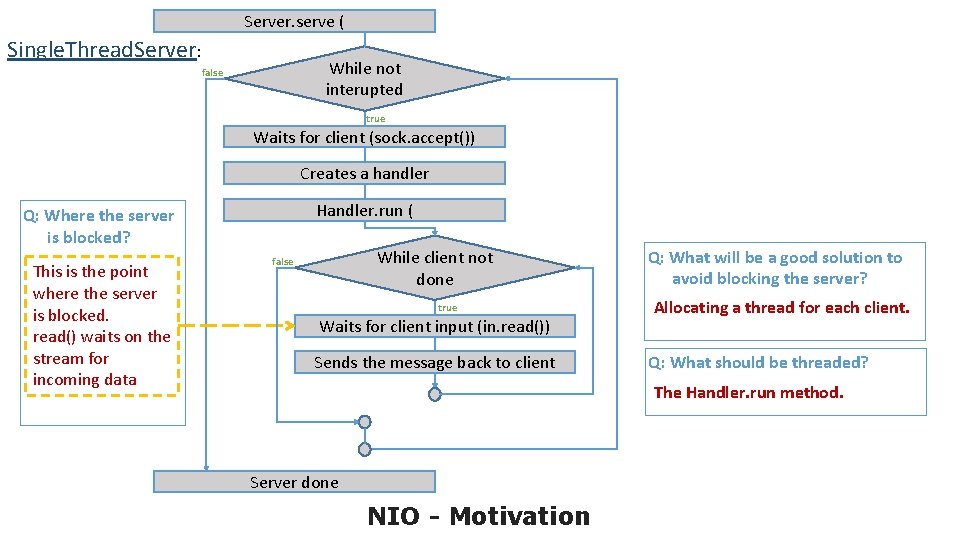

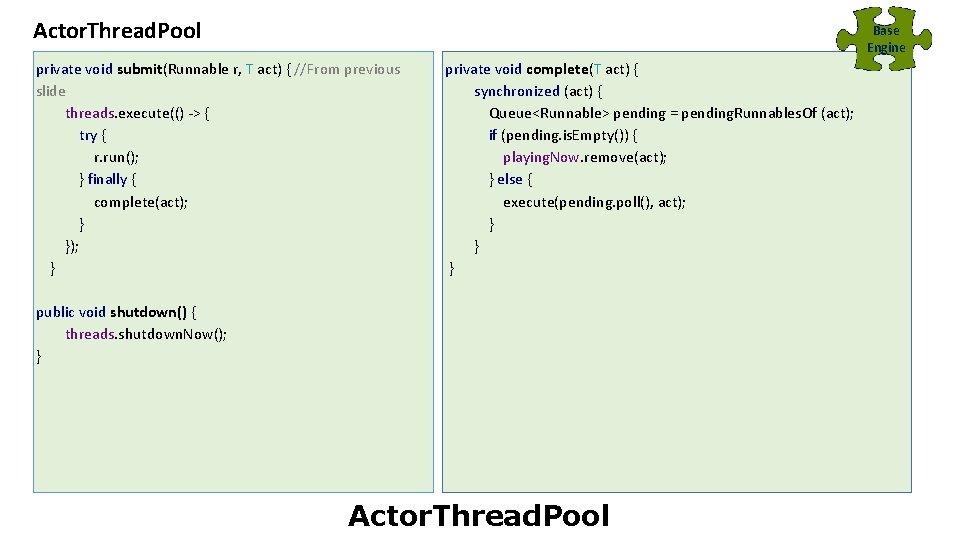

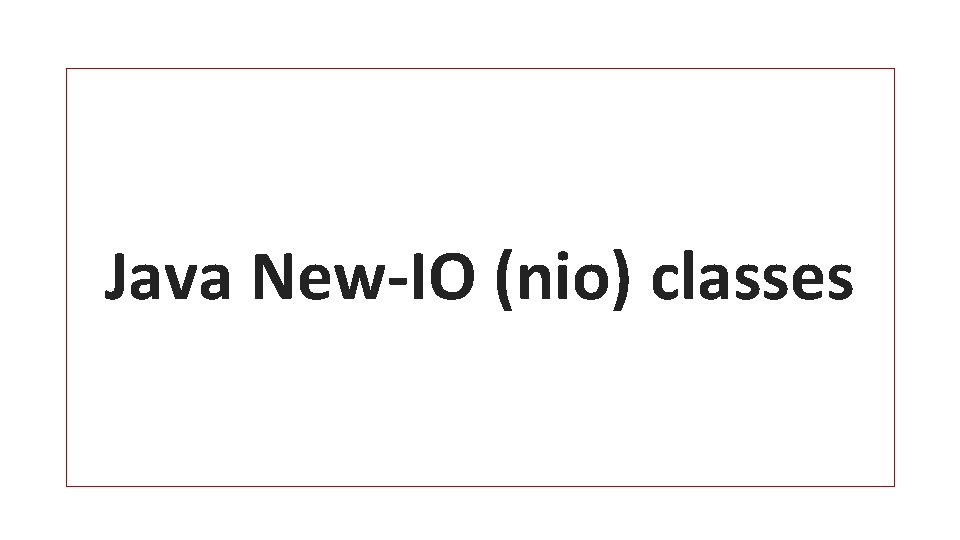

NIO - Motivation: SingleThreadServer

Server.serve():

- While not interrupted:
  - Wait for a client (sock.accept()).
  - Create a handler and call Handler.run().
- When interrupted: server done.

Handler.run():

- While the client is not done:
  - Wait for client input (in.read()).
  - Send the message back to the client.

Q: Where is the server blocked?
A: In Handler.run(). in.read() waits on the stream for incoming data, and because the handler runs on the server's only thread, no other client is served in the meantime.

Q: What would be a good solution to avoid blocking the server?
A: Allocating a thread for each client.

Q: What should be threaded?
A: The Handler.run() method.

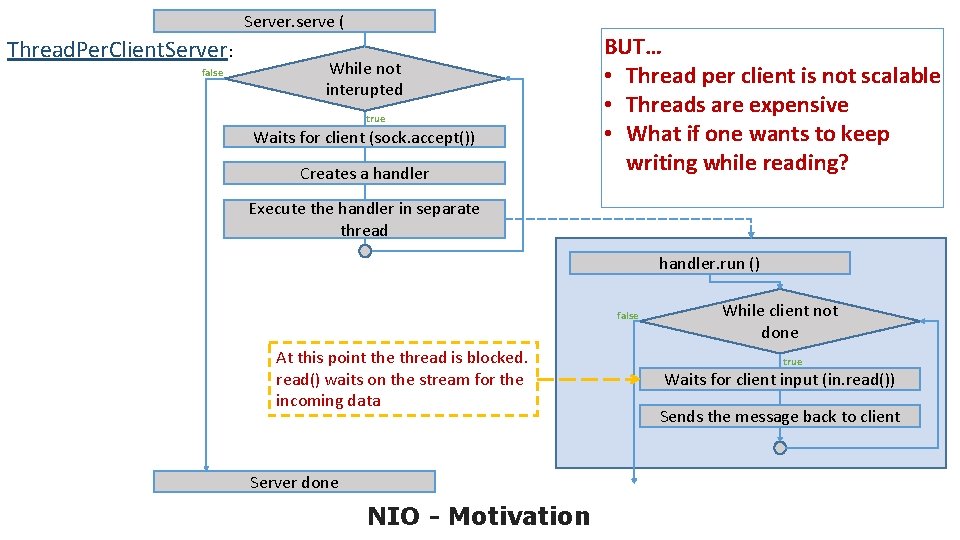

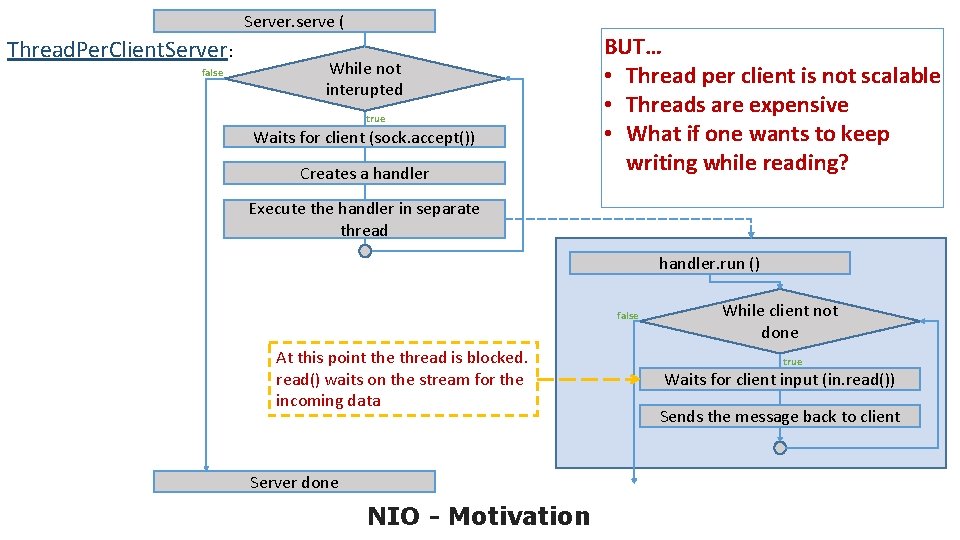

NIO - Motivation: ThreadPerClientServer

Server.serve():

- While not interrupted:
  - Wait for a client (sock.accept()).
  - Create a handler.
  - Execute the handler in a separate thread (handler.run()).
- When interrupted: server done.

handler.run(), in its own thread:

- While the client is not done:
  - Wait for client input (in.read()). At this point the handler's thread is blocked: read() waits on the stream for incoming data.
  - Send the message back to the client.

BUT…

- Thread per client is not scalable.
- Threads are expensive.
- What if one wants to keep writing while reading?

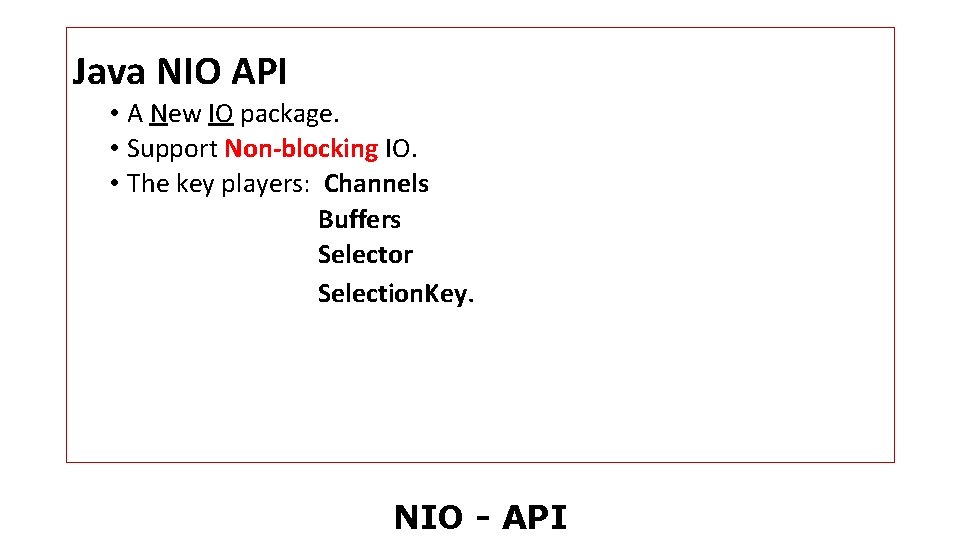

NIO - API

Java NIO API:

- A New IO package.
- Supports non-blocking IO.
- The key players: Channels, Buffers, Selector, SelectionKey.

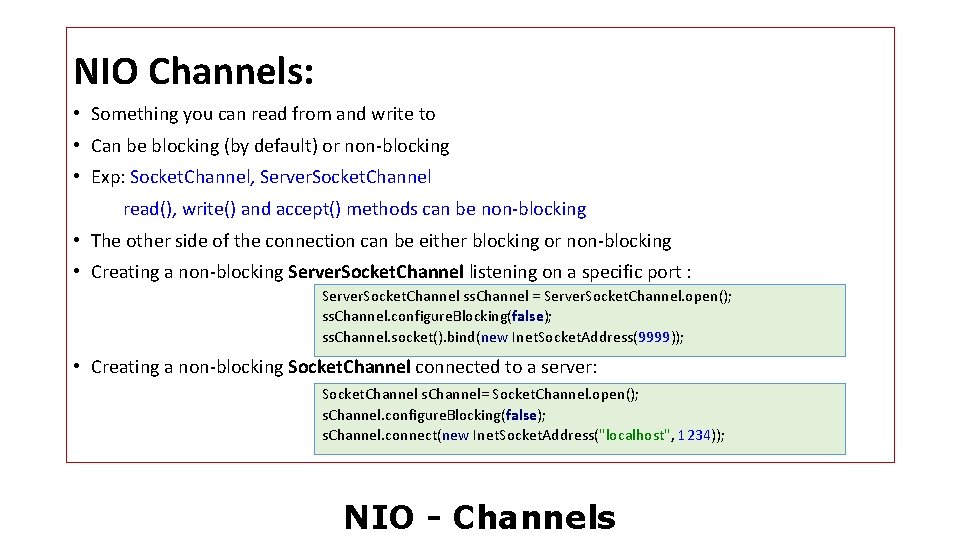

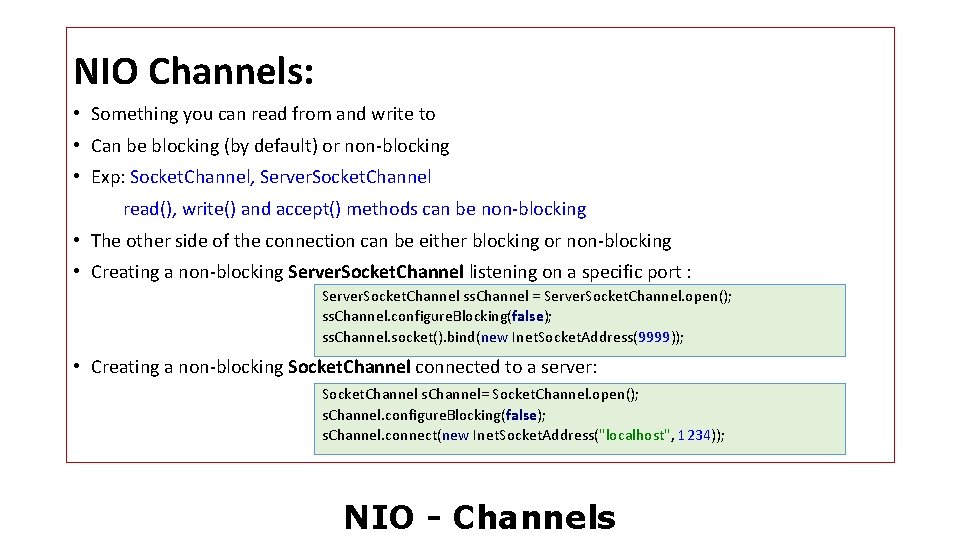

NIO - Channels

NIO Channels:

- Something you can read from and write to.
- Can be blocking (the default) or non-blocking.
- Examples: SocketChannel, ServerSocketChannel. Their read(), write() and accept() methods can be non-blocking.
- The other side of the connection can be either blocking or non-blocking.

Creating a non-blocking ServerSocketChannel listening on a specific port:

    ServerSocketChannel ssChannel = ServerSocketChannel.open();
    ssChannel.configureBlocking(false);
    ssChannel.socket().bind(new InetSocketAddress(9999));

Creating a non-blocking SocketChannel connected to a server:

    SocketChannel sChannel = SocketChannel.open();
    sChannel.configureBlocking(false);
    // In non-blocking mode connect() may return before the connection is
    // established; finishConnect() completes it.
    sChannel.connect(new InetSocketAddress("localhost", 1234));
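To make "accept() can be non-blocking" concrete, here is a minimal sketch. The class and method names are made up for this illustration, and the server binds to an OS-chosen port on the loopback address instead of 9999. It only shows that accept() on a non-blocking ServerSocketChannel returns null right away when no client is waiting.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

// Hypothetical demo class, not part of the course code.
class NonBlockingAcceptDemo {

    // Opens a non-blocking server channel and polls accept() once.
    // Returns true if accept() came back with null instead of blocking.
    static boolean acceptReturnsNullWhenNoClient() {
        try (ServerSocketChannel ssChannel = ServerSocketChannel.open()) {
            ssChannel.configureBlocking(false);
            // Port 0 asks the OS for any free port (an assumption of this sketch).
            ssChannel.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            SocketChannel client = ssChannel.accept(); // nobody has connected yet
            return client == null;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("accept() returned null: " + acceptReturnsNullWhenNoClient());
    }
}
```

A blocking channel would have stayed inside accept() until a client arrived. Polling like this in a loop would waste CPU, which is the gap the Selector fills.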

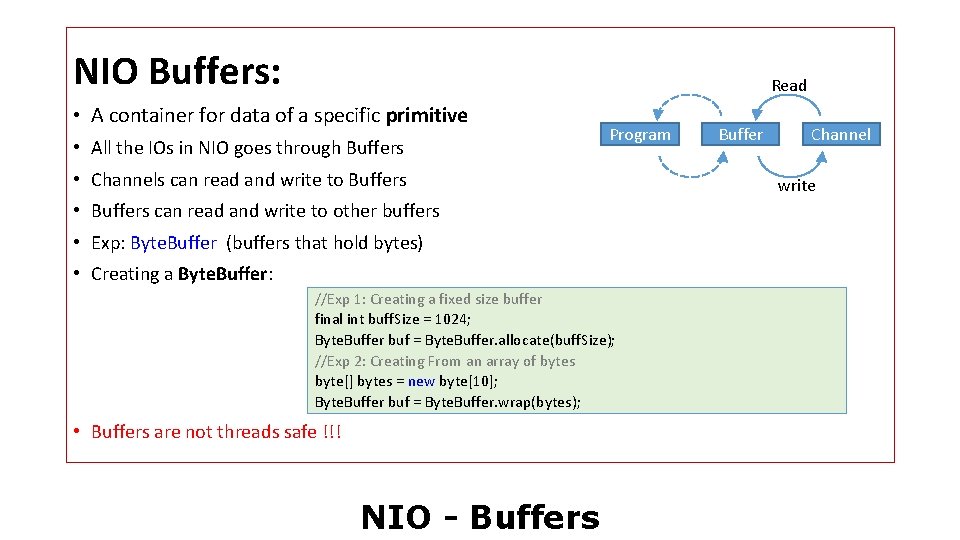

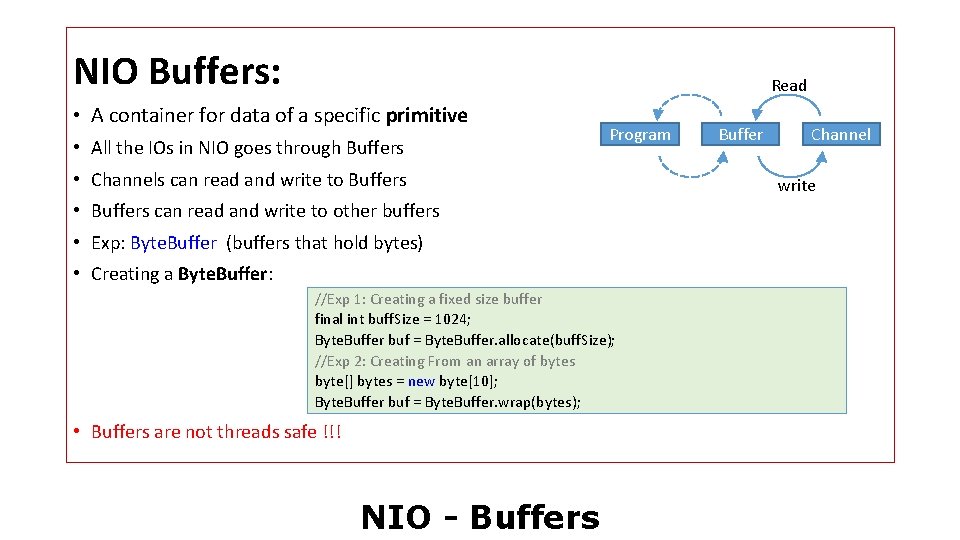

NIO - Buffers

NIO Buffers:

- A container for data of a specific primitive type.
- All IO in NIO goes through buffers.
- Channels can read into buffers and write from them.
- Buffers can read and write to other buffers.
- Example: ByteBuffer (a buffer that holds bytes).
- Buffers are not thread-safe!

Diagram: Program, Buffer and Channel. Data moves between the program and the channel through the buffer, on both read and write.

Creating a ByteBuffer:

    // Example 1: creating a fixed-size buffer
    final int buffSize = 1024;
    ByteBuffer buf = ByteBuffer.allocate(buffSize);

    // Example 2: creating a buffer from an array of bytes
    byte[] bytes = new byte[10];
    ByteBuffer wrapped = ByteBuffer.wrap(bytes);

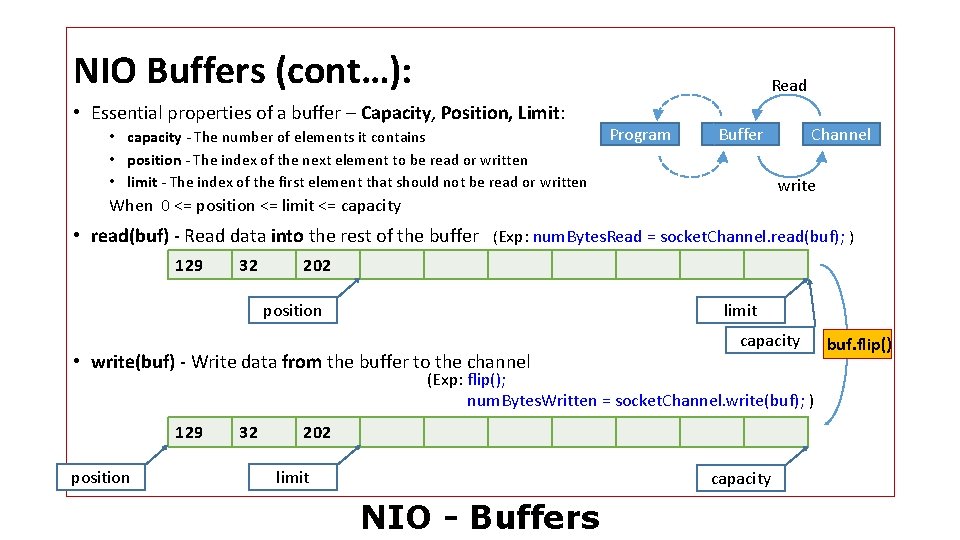

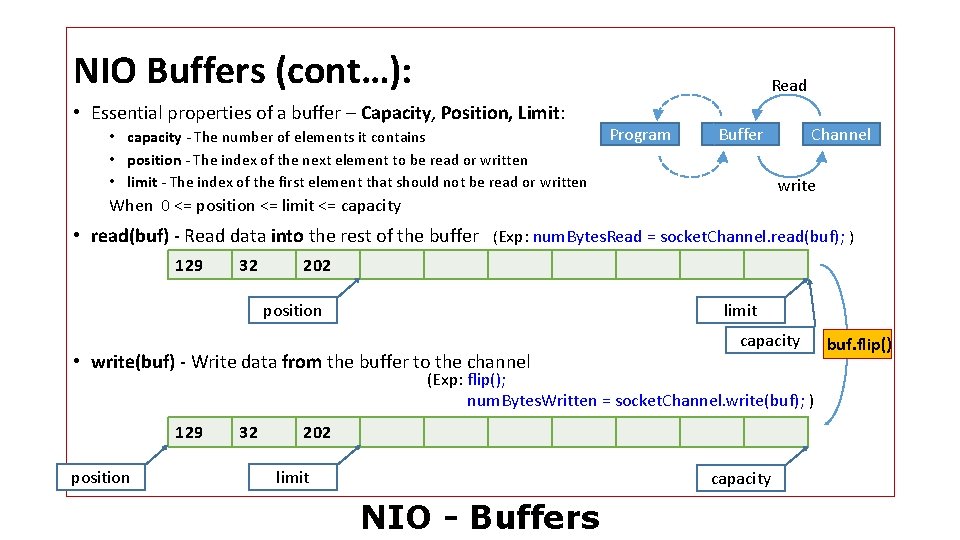

NIO - Buffers (cont.)

Essential properties of a buffer: capacity, position, limit.

- capacity: the number of elements it contains.
- position: the index of the next element to be read or written.
- limit: the index of the first element that should not be read or written.

Invariant: 0 <= position <= limit <= capacity.

- read(buf): reads data from the channel into the rest of the buffer, from position up to limit. Example: numBytesRead = socketChannel.read(buf);
- write(buf): writes data from the buffer to the channel. The buffer must be flipped first. Example: buf.flip(); numBytesWritten = socketChannel.write(buf);

Diagram: a buffer holding 129, 32, 202. After read(buf), position is just past the last byte read and limit equals capacity. After buf.flip(), position is 0 and limit marks the end of the data.

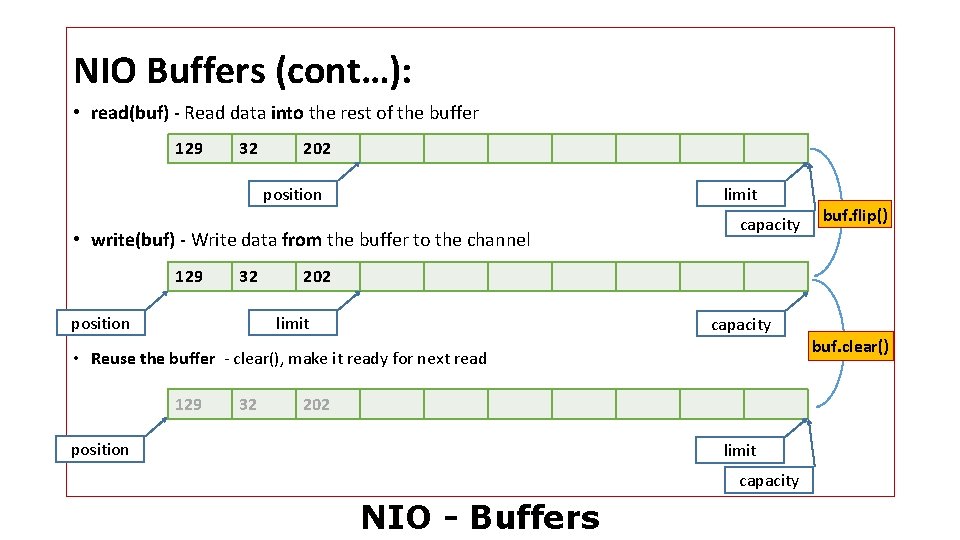

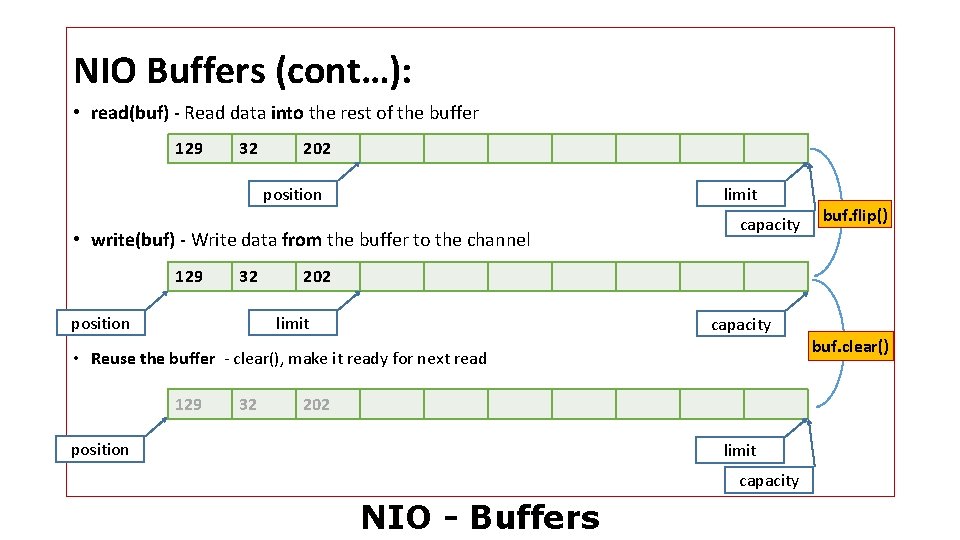

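NIO - Buffers (cont.)

- read(buf): reads data into the rest of the buffer.
- buf.flip(): prepares the buffer for draining. limit is set to the current position and position is set to 0.
- write(buf): writes data from the buffer to the channel.
- buf.clear(): reuses the buffer and makes it ready for the next read. position is set to 0 and limit to capacity. The old contents are not erased, they are simply overwritten by later reads.

Diagram: the same buffer (129, 32, 202) shown after read(buf), after buf.flip(), and after buf.clear().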
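The position/limit bookkeeping is easy to check without any channel. In the sketch below (class and method names are made up for this illustration), put() stands in for channel.read(buf) and get() stands in for channel.write(buf), since they move position in the same way. The buffer capacity of 8 is arbitrary.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class, not part of the course code.
class BufferStateDemo {

    // Formats the three essential properties of a buffer.
    static String state(ByteBuffer buf) {
        return "pos=" + buf.position() + " lim=" + buf.limit() + " cap=" + buf.capacity();
    }

    // Records the buffer state after each step of a fill / flip / drain / clear cycle.
    static List<String> trace() {
        List<String> out = new ArrayList<>();
        ByteBuffer buf = ByteBuffer.allocate(8);
        out.add(state(buf));                                 // fresh buffer
        buf.put((byte) 129).put((byte) 32).put((byte) 202);  // fill, like channel.read(buf)
        out.add(state(buf));
        buf.flip();                                          // limit = position, position = 0
        out.add(state(buf));
        while (buf.hasRemaining()) {
            buf.get();                                       // drain, like channel.write(buf)
        }
        out.add(state(buf));
        buf.clear();                                         // position = 0, limit = capacity
        out.add(state(buf));
        return out;
    }

    public static void main(String[] args) {
        trace().forEach(System.out::println);
    }
}
```

Forgetting flip() before a write is the classic mistake: position would still point past the data, so the channel would be handed the unused tail of the buffer instead of the bytes that were just read.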

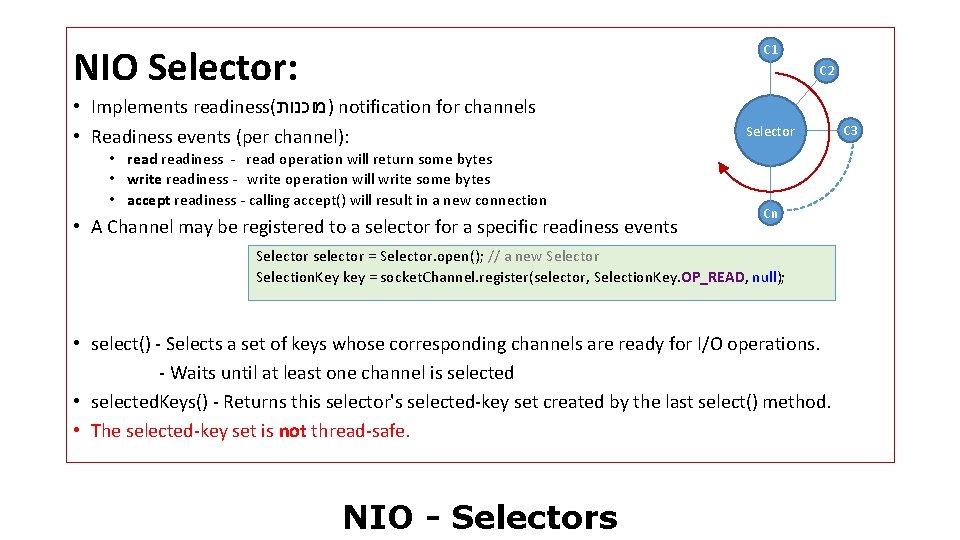

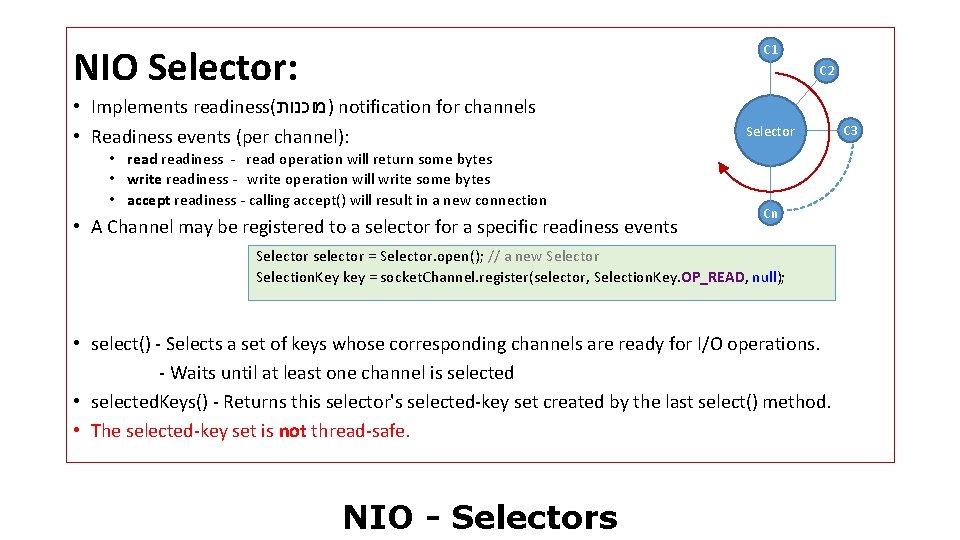

NIO - Selectors

NIO Selector:

- Implements readiness notification for channels.
- Readiness events (per channel):
  - read readiness: a read operation will return some bytes.
  - write readiness: a write operation will write some bytes.
  - accept readiness: calling accept() will result in a new connection.
- A channel may be registered with a selector for specific readiness events.

Registering a channel with a selector:

    Selector selector = Selector.open(); // a new Selector
    SelectionKey key = socketChannel.register(selector, SelectionKey.OP_READ, null);

Using the selector:

- select(): selects a set of keys whose corresponding channels are ready for I/O operations. It waits until at least one channel is selected (or until wakeup() is called or the thread is interrupted).
- selectedKeys(): returns this selector's selected-key set, as filled in by the last select() call.
- The selected-key set is not thread-safe.

Diagram: channels C1, C2, C3, …, Cn registered with a single Selector.
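Read readiness can be tried out without opening any sockets. The sketch below is an illustration only: it uses java.nio.channels.Pipe, whose readable end is a selectable channel, as a stand-in for a client SocketChannel, and the class and method names are made up. It registers the readable end for OP_READ, checks that nothing is ready, writes five bytes to the other end, and selects again.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class, not part of the course code.
class SelectorReadinessDemo {

    // Returns {ready keys before the write, ready keys after the write}.
    static int[] readyBeforeAndAfterWrite() {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SourceChannel source = pipe.source();
                 Pipe.SinkChannel sink = pipe.sink()) {
                source.configureBlocking(false); // only non-blocking channels can be registered
                SelectionKey key = source.register(selector, SelectionKey.OP_READ);

                int before = selector.selectNow(); // nothing written yet, returns at once

                sink.write(ByteBuffer.wrap("hello".getBytes(StandardCharsets.UTF_8)));
                int after = selector.select(1000); // waits at most one second

                boolean readable = selector.selectedKeys().contains(key) && key.isReadable();
                selector.selectedKeys().clear();   // same housekeeping the Reactor does
                return new int[] { before, readable ? after : -1 };
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = readyBeforeAndAfterWrite();
        System.out.println("ready before write: " + r[0] + ", after write: " + r[1]);
    }
}
```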

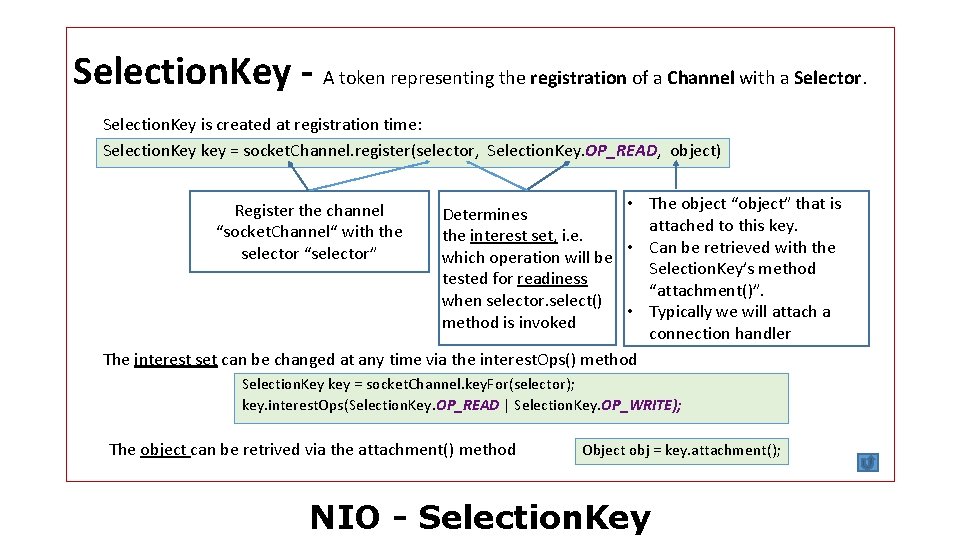

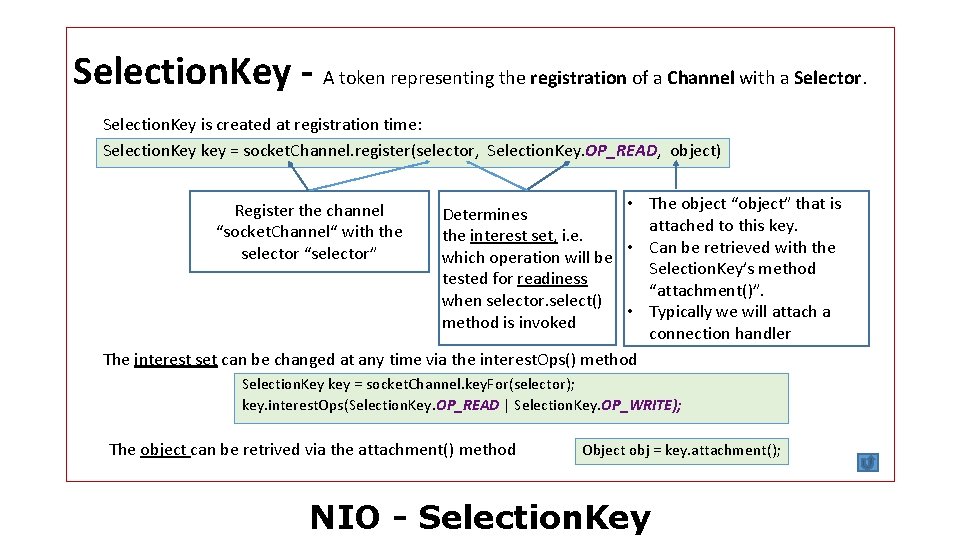

NIO - SelectionKey

SelectionKey: a token representing the registration of a Channel with a Selector. A SelectionKey is created at registration time:

    SelectionKey key = socketChannel.register(selector, SelectionKey.OP_READ, object);

This registers the channel "socketChannel" with the selector "selector".

- The second argument (SelectionKey.OP_READ) determines the interest set, i.e. which operations will be tested for readiness when the selector.select() method is invoked.
- The third argument (object) is attached to this key. It can be retrieved with the SelectionKey's attachment() method. Typically we attach a connection handler.

The interest set can be changed at any time via the interestOps() method:

    SelectionKey key = socketChannel.keyFor(selector);
    key.interestOps(SelectionKey.OP_READ | SelectionKey.OP_WRITE);

The attached object can be retrieved via the attachment() method:

    Object obj = key.attachment();
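The three calls above (register, interestOps, attachment) can be exercised on a channel that is never connected, since registration itself does not need a connection. This is a minimal sketch with made-up names; a plain String stands in for the connection handler that would normally be attached.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.SocketChannel;

// Hypothetical demo class, not part of the course code.
class SelectionKeyDemo {

    // Registers a channel, changes its interest set and reads back the attachment.
    static String describe() {
        try (Selector selector = Selector.open();
             SocketChannel chan = SocketChannel.open()) {
            chan.configureBlocking(false);

            Object handler = "handler-for-client-1"; // stand-in for a connection handler
            SelectionKey key = chan.register(selector, SelectionKey.OP_READ, handler);

            // keyFor() finds the key that represents this channel/selector pair.
            boolean sameKey = chan.keyFor(selector) == key;

            // Turn OP_WRITE on in addition to OP_READ.
            key.interestOps(SelectionKey.OP_READ | SelectionKey.OP_WRITE);
            boolean wantsWrite = (key.interestOps() & SelectionKey.OP_WRITE) != 0;

            return sameKey + " " + wantsWrite + " " + key.attachment();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```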

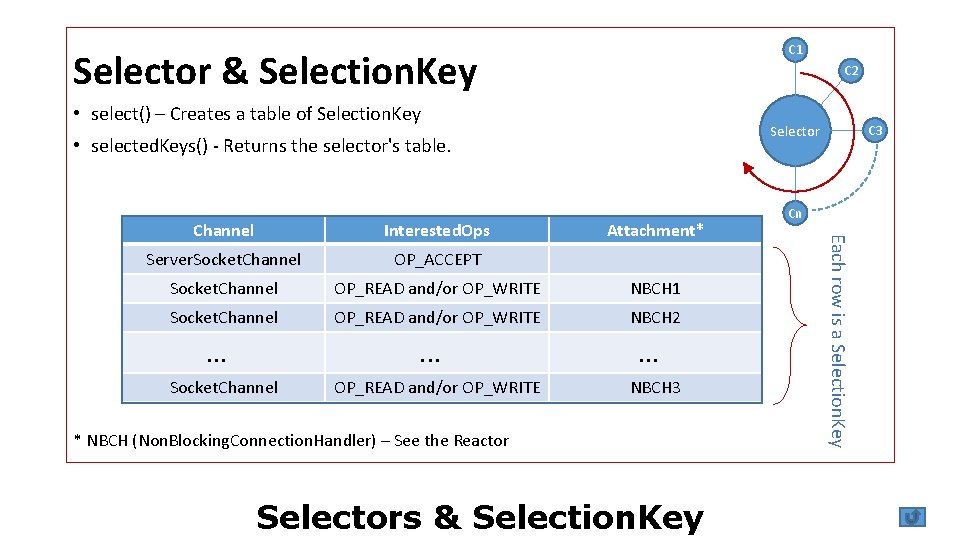

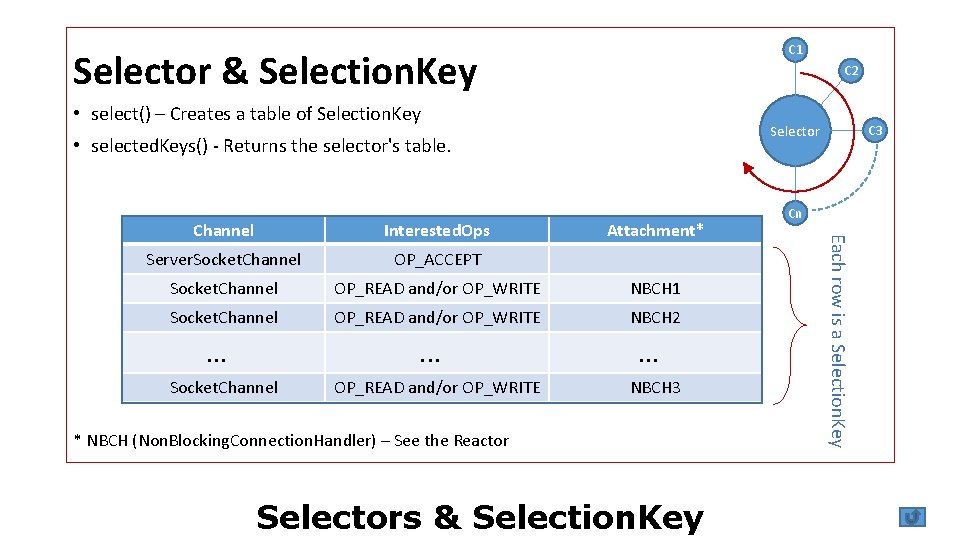

Selectors & SelectionKey

- select(): fills in a table of SelectionKeys.
- selectedKeys(): returns the selector's table.

Each row of the table is a SelectionKey:

| Channel | InterestedOps | Attachment* |
| --- | --- | --- |
| ServerSocketChannel | OP_ACCEPT | |
| SocketChannel | OP_READ and/or OP_WRITE | NBCH 1 |
| SocketChannel | OP_READ and/or OP_WRITE | NBCH 2 |
| ... | ... | ... |
| SocketChannel | OP_READ and/or OP_WRITE | NBCH 3 |

* NBCH (NonBlockingConnectionHandler): see the Reactor.

Diagram: channels C1, C2, C3, …, Cn registered with the Selector.

Reactor

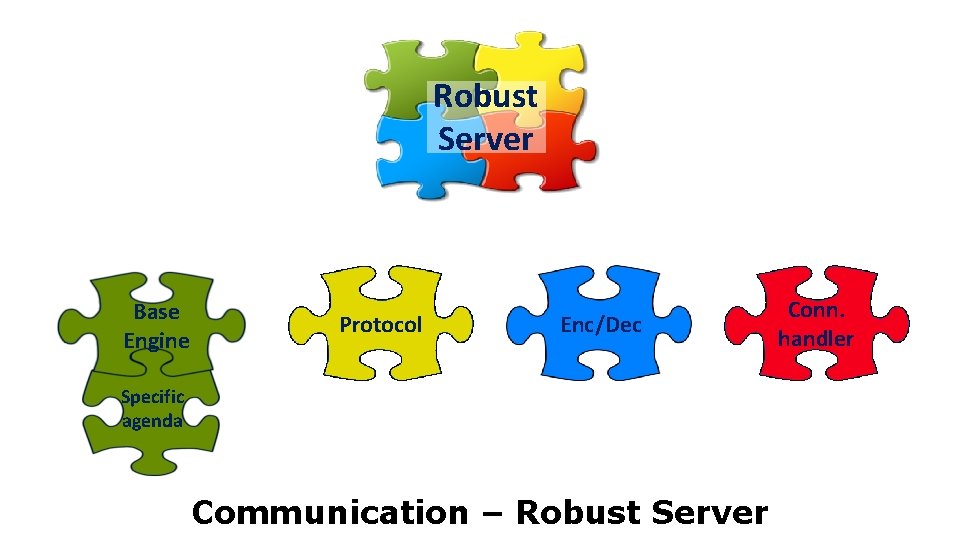

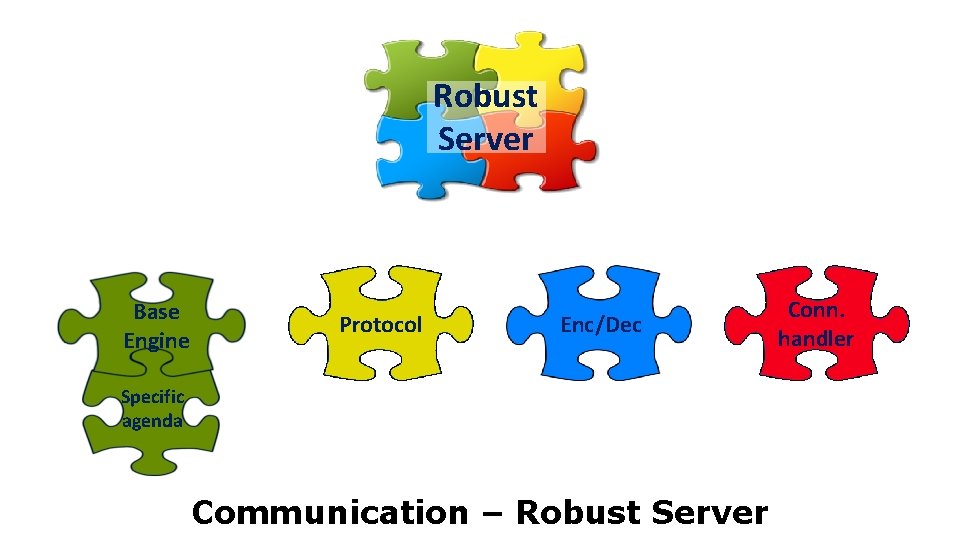

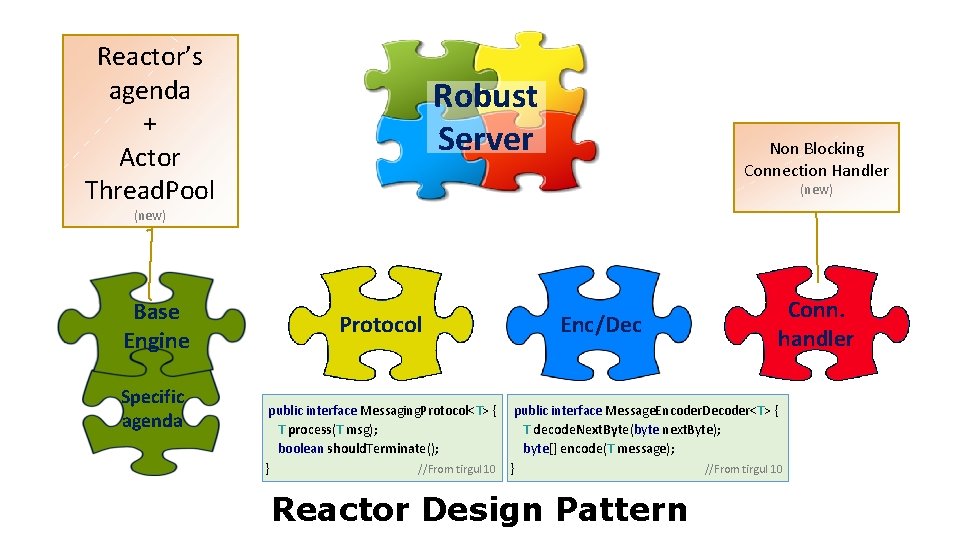

Communication - Robust Server

Diagram: a robust server is made of a base (Engine, Conn. handler) and a specific agenda (Protocol, Enc/Dec).

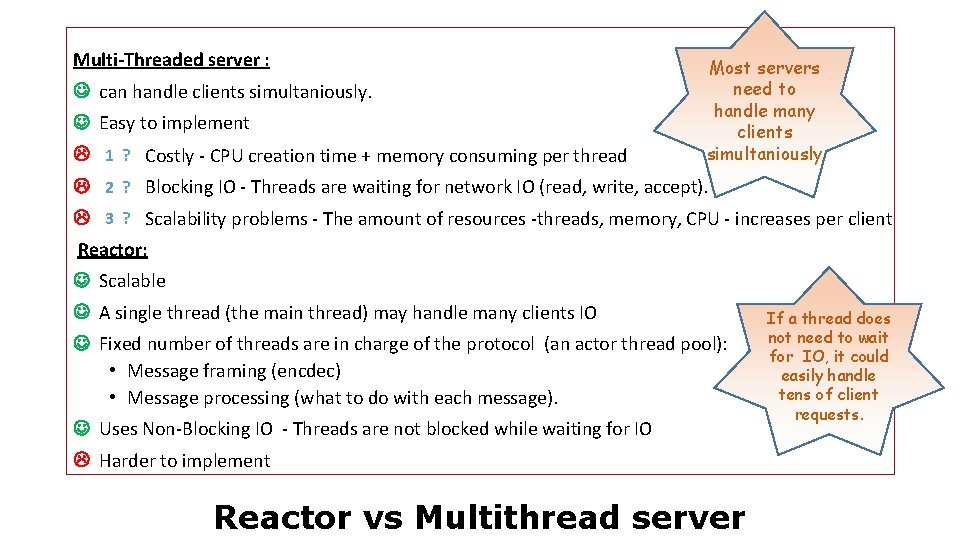

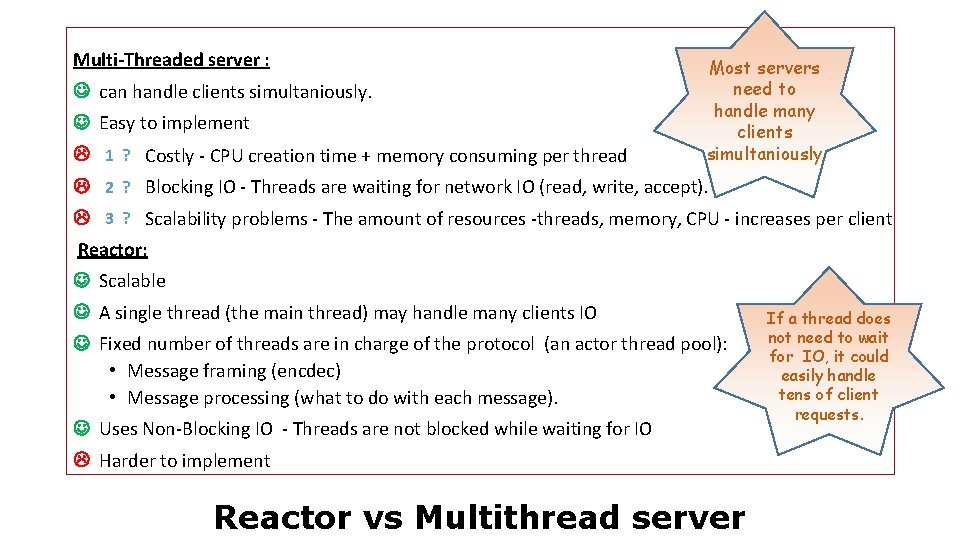

Reactor vs Multithreaded server

Multi-threaded server:

- Can handle clients simultaneously.
- Easy to implement.
- Problems:
  1. Costly: creating a thread takes CPU time, and every thread consumes memory.
  2. Blocking IO: threads wait for network IO (read, write, accept).
  3. Scalability problems: the amount of resources (threads, memory, CPU) grows with every client.

Most servers need to handle many clients simultaneously. If a thread does not need to wait for IO, it could easily handle tens of client requests.

Reactor:

- Scalable.
- A single thread (the main thread) may handle the IO of many clients.
- A fixed number of threads (an actor thread pool) are in charge of the protocol:
  - Message framing (encdec).
  - Message processing (what to do with each message).
- Uses non-blocking IO: threads are not blocked while waiting for IO.
- Harder to implement.

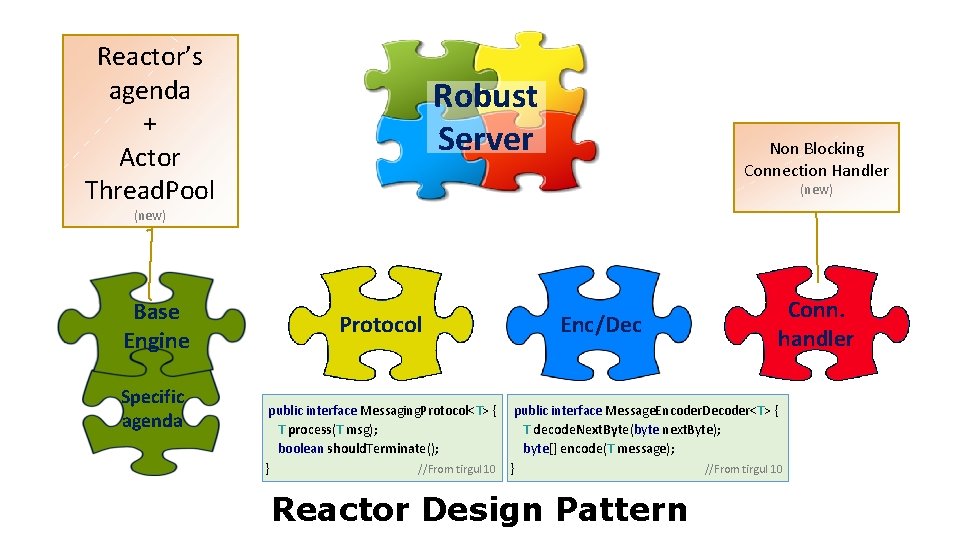

Reactor Design Pattern

Reactor's agenda. Diagram: the robust server again, now with an ActorThreadPool added and a new Non Blocking Connection Handler as the Conn. handler. The specific agenda (Protocol, Enc/Dec) uses the interfaces from tirgul 10:

    public interface MessagingProtocol<T> {
        T process(T msg);
        boolean shouldTerminate();
    } // From tirgul 10

    public interface MessageEncoderDecoder<T> {
        T decodeNextByte(byte nextByte);
        byte[] encode(T message);
    } // From tirgul 10
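To see how the two interfaces divide the work, here is a minimal sketch of one possible implementation pair for String messages. EchoProtocolSketch and LineEncDecSketch are hypothetical names invented for this example, not the course's classes. The newline framing, the "echo: " prefix and the "bye" termination word are all assumptions of the sketch.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// The two interfaces, copied from the slide so the example is self-contained.
interface MessagingProtocol<T> {
    T process(T msg);
    boolean shouldTerminate();
}

interface MessageEncoderDecoder<T> {
    T decodeNextByte(byte nextByte);
    byte[] encode(T message);
}

// Hypothetical protocol: answers every message, ends the session on "bye".
class EchoProtocolSketch implements MessagingProtocol<String> {
    private boolean terminate = false;

    public String process(String msg) {
        terminate = "bye".equals(msg);
        return "echo: " + msg;
    }

    public boolean shouldTerminate() {
        return terminate;
    }
}

// Hypothetical framing: one message per line, terminated by '\n'.
class LineEncDecSketch implements MessageEncoderDecoder<String> {
    private byte[] bytes = new byte[1 << 10];
    private int len = 0;

    // Returns a complete message once '\n' arrives, null while the line is incomplete.
    public String decodeNextByte(byte nextByte) {
        if (nextByte == '\n') {
            String msg = new String(bytes, 0, len, StandardCharsets.UTF_8);
            len = 0;
            return msg;
        }
        if (len == bytes.length) {
            bytes = Arrays.copyOf(bytes, len * 2); // grow the line buffer when full
        }
        bytes[len++] = nextByte;
        return null;
    }

    public byte[] encode(String message) {
        return (message + "\n").getBytes(StandardCharsets.UTF_8);
    }
}

class EncDecDemo {
    // Encodes a line and feeds it back byte by byte, as a connection handler would.
    static String roundTrip(String line) {
        LineEncDecSketch encdec = new LineEncDecSketch();
        String decoded = null;
        for (byte b : encdec.encode(line)) {
            String msg = encdec.decodeNextByte(b);
            if (msg != null) {
                decoded = msg;
            }
        }
        return decoded;
    }

    public static void main(String[] args) {
        System.out.println(new EchoProtocolSketch().process(roundTrip("hello")));
    }
}
```

Because decodeNextByte keeps its partial line between calls, it does not matter how the bytes of a message are split across reads. That property is what lets a non-blocking handler feed it whatever happens to be in the buffer.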

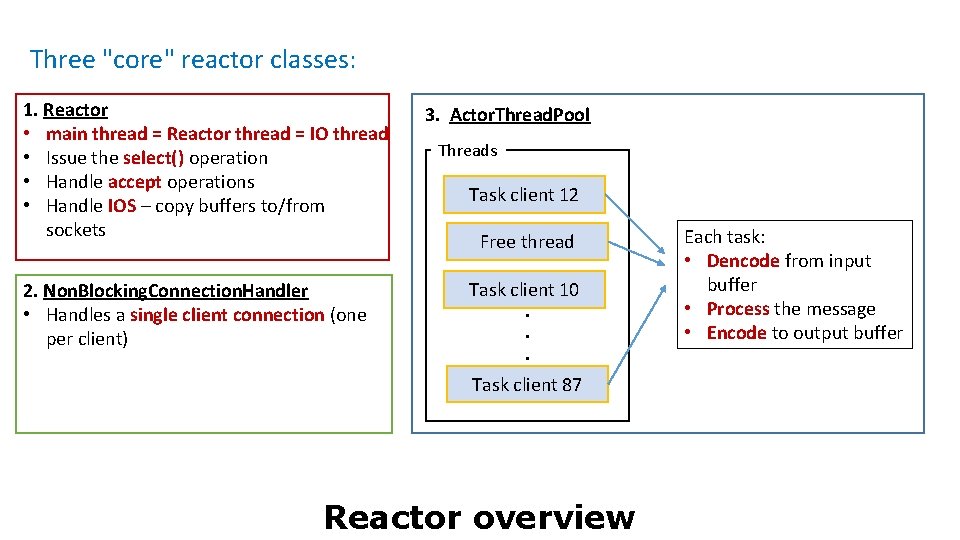

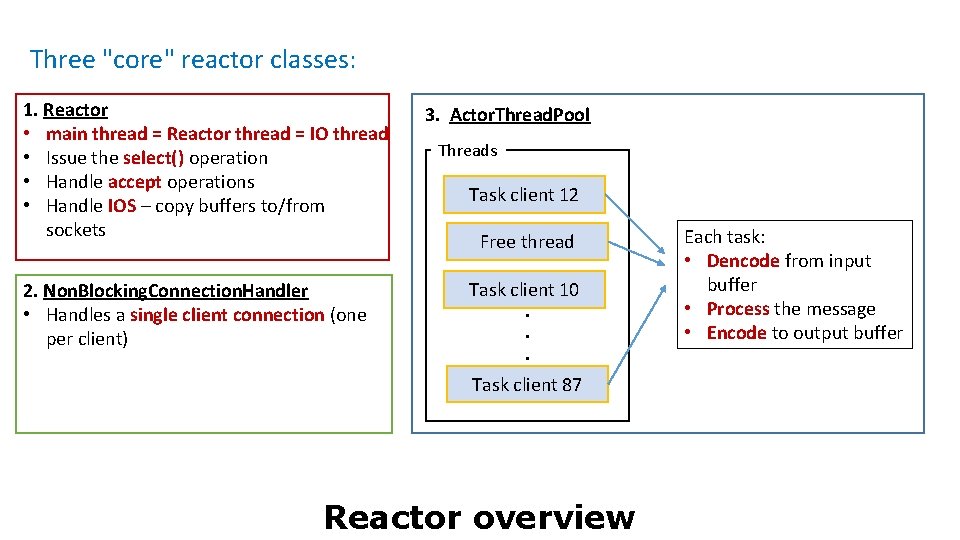

Reactor overview

Three "core" reactor classes:

1. Reactor
   - main thread = Reactor thread = IO thread
   - Issues the select() operation
   - Handles accept operations
   - Handles IO: copies buffers to/from sockets
2. NonBlockingConnectionHandler
   - Handles a single client connection (one per client)
3. ActorThreadPool
   - Its threads run tasks on behalf of clients. In the diagram: a task for client 12, a task for client 10, ..., a task for client 87, and one free thread.
   - Each task:
     - Decodes from the input buffer
     - Processes the message
     - Encodes to the output buffer

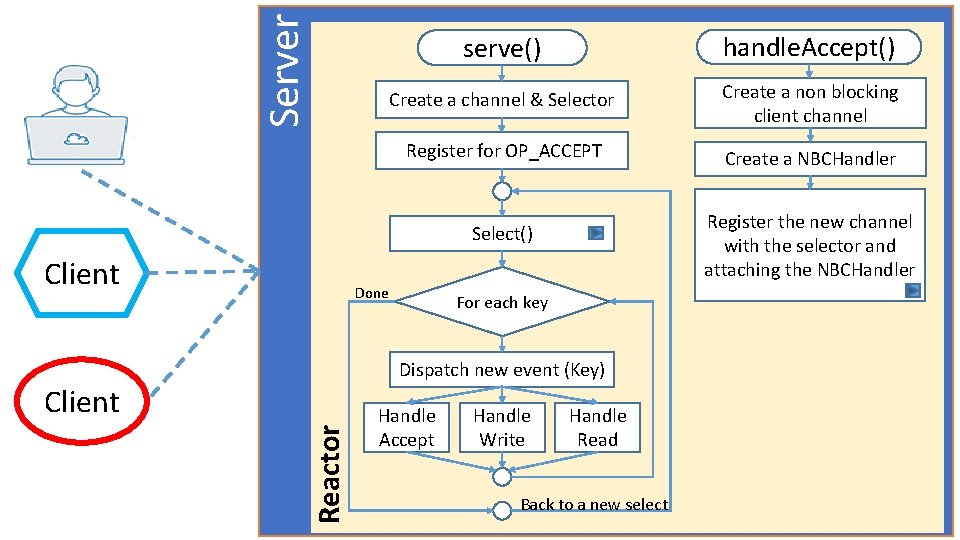

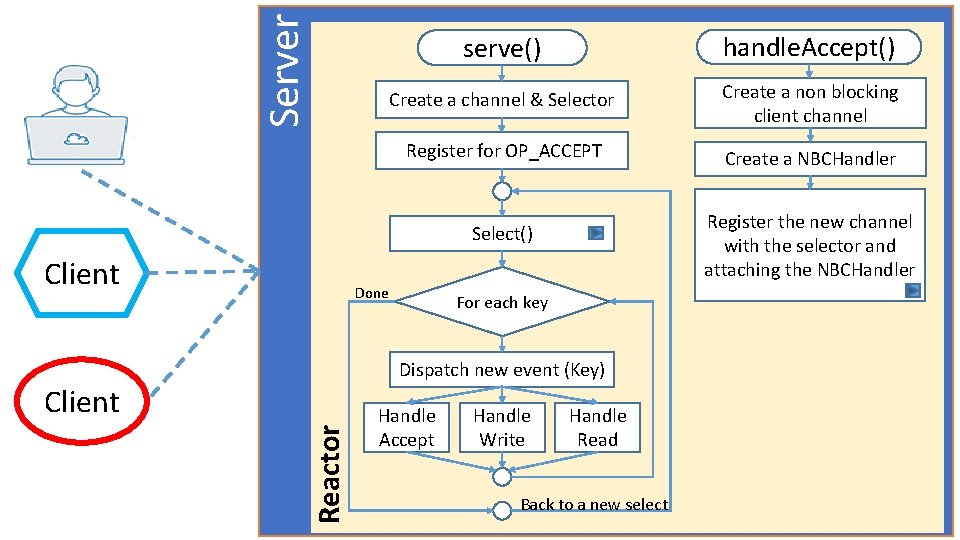

Reactor: serve() and handleAccept()

Server serve():

- Create a channel and a Selector.
- Register the channel for OP_ACCEPT.
- Until done:
  - select()
  - For each key, dispatch the new event (key): handle accept, handle read or handle write.
  - Back to a new select.

handleAccept():

- Create a non-blocking client channel.
- Create an NBCHandler.
- Register the new channel with the selector, attaching the NBCHandler.

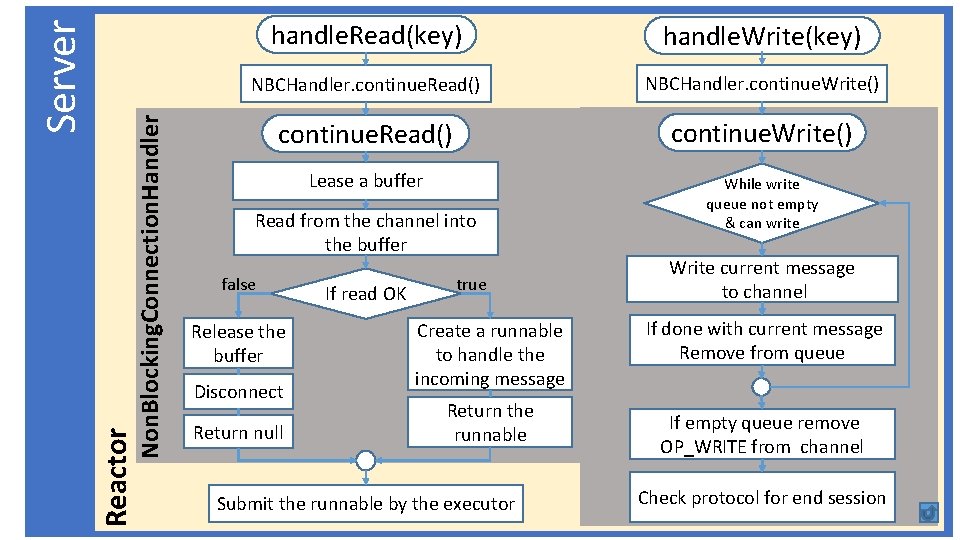

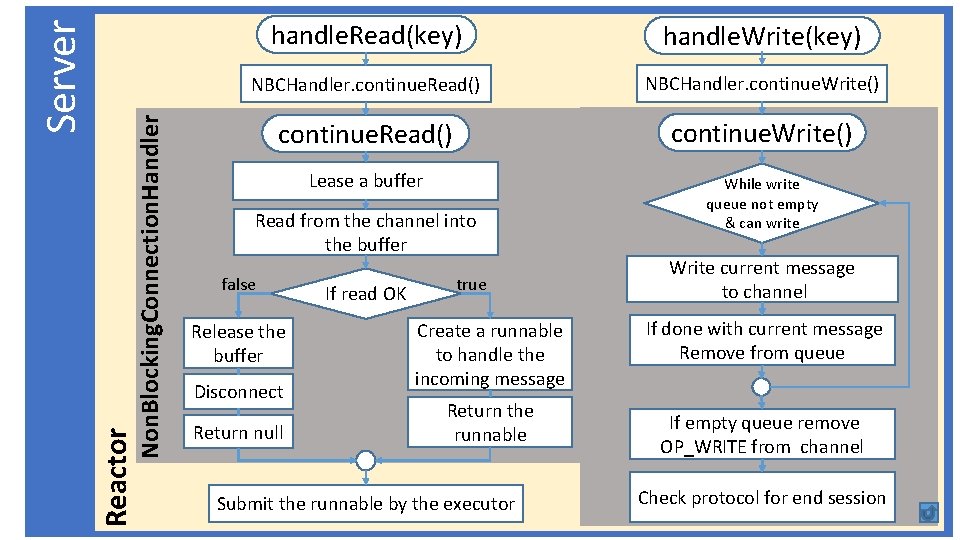

NonBlockingConnectionHandler: read and write flow

Reactor server:

- handleRead(key) calls NBCHandler.continueRead() and submits the returned runnable to the executor.
- handleWrite(key) calls NBCHandler.continueWrite().

continueRead():

- Lease a buffer.
- Read from the channel into the buffer.
- If the read is OK: create a runnable to handle the incoming message and return the runnable.
- Otherwise: release the buffer, disconnect and return null.

continueWrite():

- While the write queue is not empty and the channel can be written to:
  - Write the current message to the channel.
  - If done with the current message, remove it from the queue.
- If the queue is empty, remove OP_WRITE from the channel.
- Check the protocol for end of session.

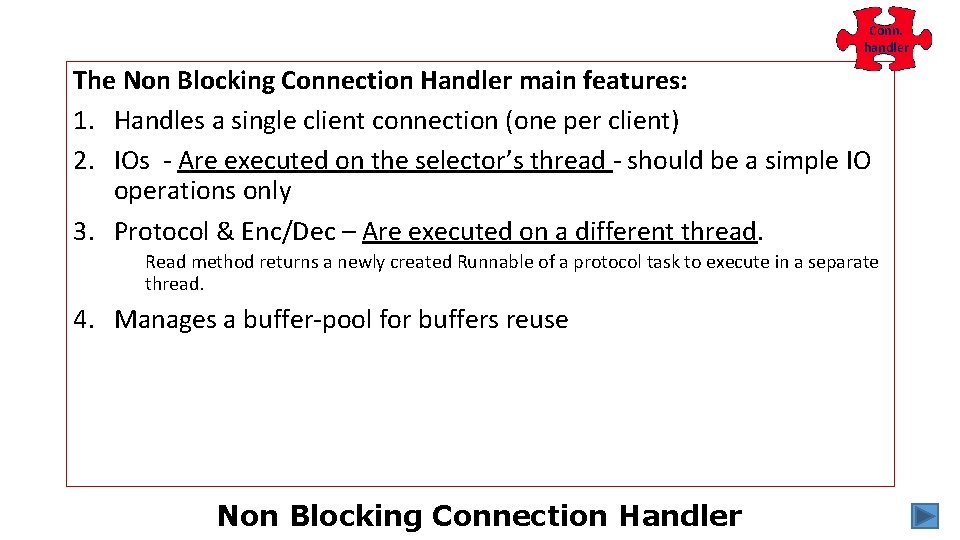

Non Blocking Connection Handler

The Non Blocking Connection Handler's main features:

1. Handles a single client connection (one per client).
2. IO operations are executed on the selector's thread, so they should be simple IO operations only.
3. Protocol and Enc/Dec are executed on a different thread. The read method returns a newly created Runnable: a protocol task to execute in a separate thread.
4. Manages a buffer pool for buffer reuse.

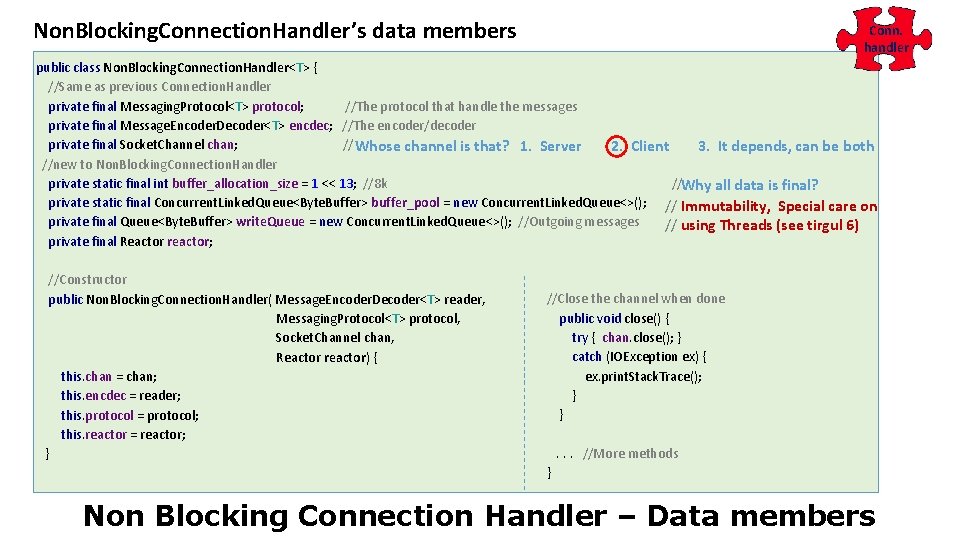

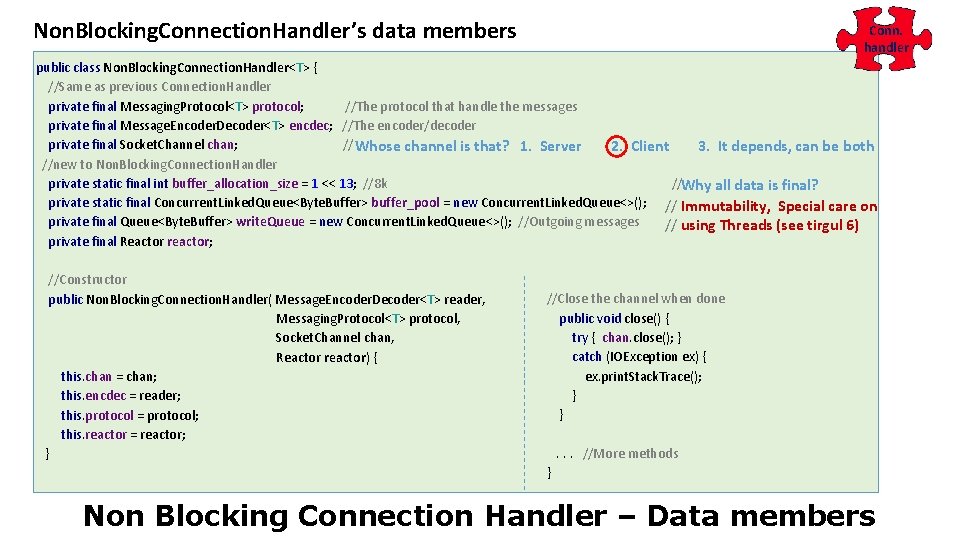

Non Blocking Connection Handler - Data members

NonBlockingConnectionHandler's data members:

    public class NonBlockingConnectionHandler<T> {

        // Same as the previous ConnectionHandler
        private final MessagingProtocol<T> protocol;   // the protocol that handles the messages
        private final MessageEncoderDecoder<T> encdec; // the encoder/decoder
        private final SocketChannel chan;

        // New to NonBlockingConnectionHandler
        private static final int buffer_allocation_size = 1 << 13; // 8k
        private static final ConcurrentLinkedQueue<ByteBuffer> buffer_pool = new ConcurrentLinkedQueue<>();
        private final Queue<ByteBuffer> writeQueue = new ConcurrentLinkedQueue<>(); // outgoing messages
        private final Reactor<T> reactor;

        // Constructor
        public NonBlockingConnectionHandler(
                MessageEncoderDecoder<T> reader,
                MessagingProtocol<T> protocol,
                SocketChannel chan,
                Reactor<T> reactor) {
            this.chan = chan;
            this.encdec = reader;
            this.protocol = protocol;
            this.reactor = reactor;
        }

        // Close the channel when done
        public void close() {
            try {
                chan.close();
            } catch (IOException ex) {
                ex.printStackTrace();
            }
        }

        ... // More methods
    }

Q: Whose channel is chan? 1. Server 2. Client 3. It depends, can be both.

Q: Why are all the data members final?
A: Immutability. Special care is needed when using threads (see tirgul 6).

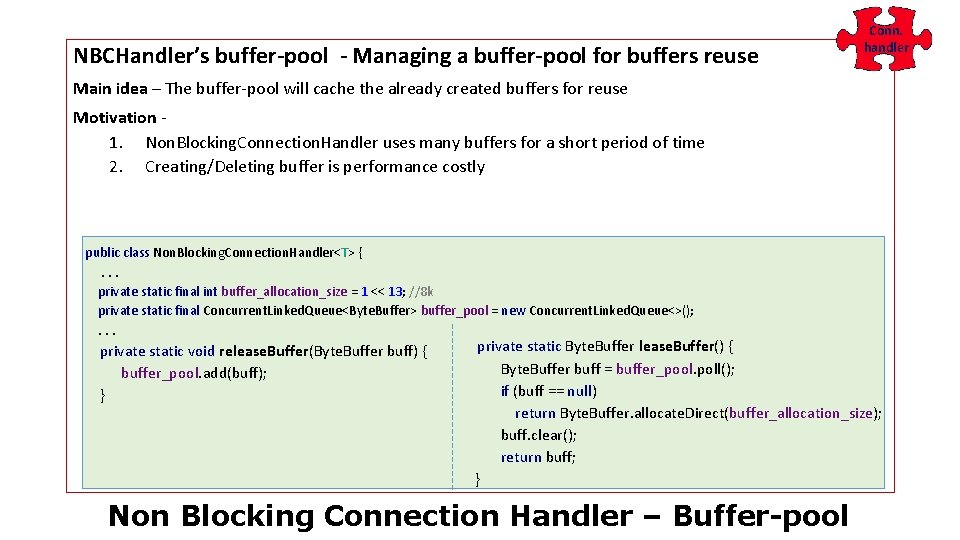

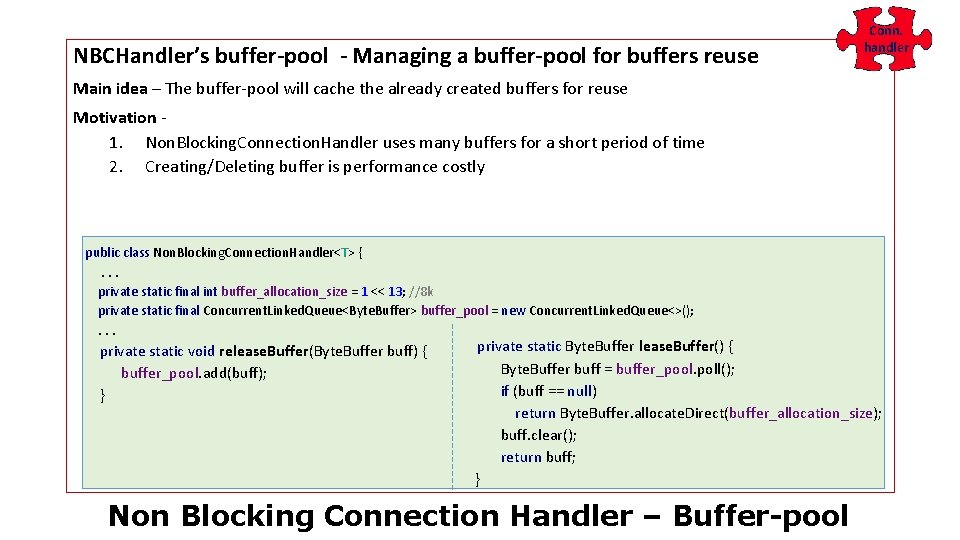

Non Blocking Connection Handler - Buffer pool

NBCHandler's buffer pool: managing a pool of buffers for reuse.

Main idea: the buffer pool caches the already created buffers for reuse.

Motivation:

1. NonBlockingConnectionHandler uses many buffers, each for a short period of time.
2. Creating and deleting buffers is costly in performance.

The pool and its two operations:

    public class NonBlockingConnectionHandler<T> {
        ...
        private static final int buffer_allocation_size = 1 << 13; // 8k
        private static final ConcurrentLinkedQueue<ByteBuffer> buffer_pool = new ConcurrentLinkedQueue<>();
        ...
        private static ByteBuffer leaseBuffer() {
            ByteBuffer buff = buffer_pool.poll();
            if (buff == null) {
                return ByteBuffer.allocateDirect(buffer_allocation_size);
            }
            buff.clear();
            return buff;
        }

        private static void releaseBuffer(ByteBuffer buff) {
            buffer_pool.add(buff);
        }
    }
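The reuse can be observed directly. The sketch below lifts the two pool methods into a stand-alone class (BufferPoolSketch is a made-up name, and the methods are package-private here only so the demo can call them), then checks that a released buffer is the one handed out by the next lease, already cleared.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical stand-alone version of the handler's buffer pool.
class BufferPoolSketch {
    private static final int BUFFER_ALLOCATION_SIZE = 1 << 13; // 8k
    private static final ConcurrentLinkedQueue<ByteBuffer> BUFFER_POOL = new ConcurrentLinkedQueue<>();

    // Hands out a pooled buffer if one is available, otherwise allocates a new one.
    static ByteBuffer leaseBuffer() {
        ByteBuffer buff = BUFFER_POOL.poll();
        if (buff == null) {
            return ByteBuffer.allocateDirect(BUFFER_ALLOCATION_SIZE);
        }
        buff.clear(); // position = 0, limit = capacity: ready for the next read
        return buff;
    }

    // Returns a buffer to the pool so that a later lease can reuse it.
    static void releaseBuffer(ByteBuffer buff) {
        BUFFER_POOL.add(buff);
    }

    public static void main(String[] args) {
        ByteBuffer first = leaseBuffer();
        first.put((byte) 42);
        releaseBuffer(first);
        ByteBuffer second = leaseBuffer();
        System.out.println("same buffer reused: " + (first == second));
        System.out.println("position after lease: " + second.position());
    }
}
```

The pool is a ConcurrentLinkedQueue because buffers are leased on the selector thread but released from pool threads, when the decoding task finishes.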

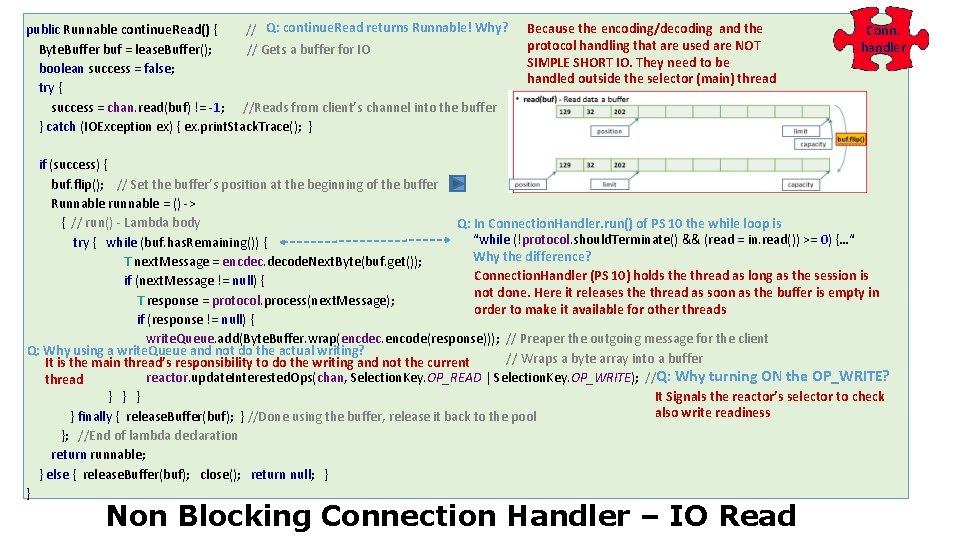

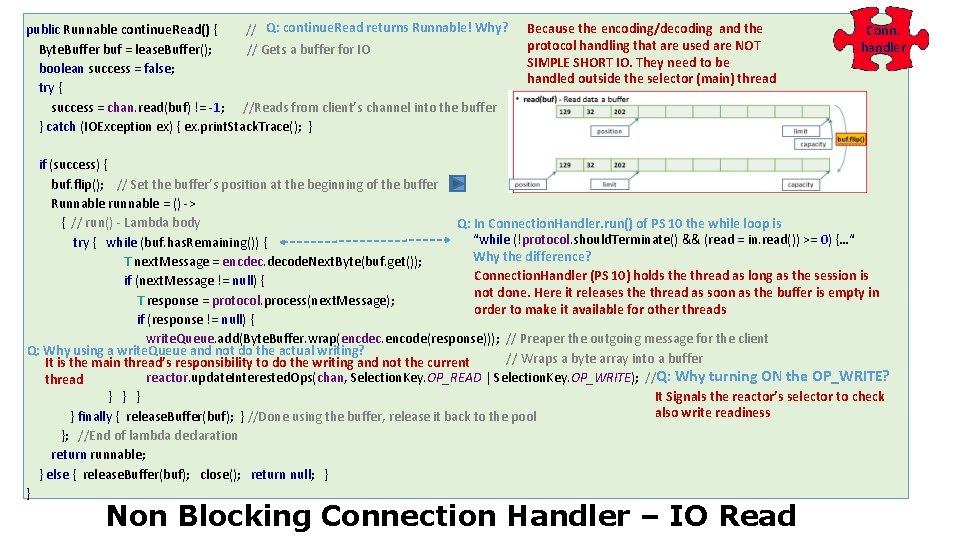

Non Blocking Connection Handler - IO Read

    public Runnable continueRead() {
        ByteBuffer buf = leaseBuffer(); // gets a buffer for IO
        boolean success = false;
        try {
            success = chan.read(buf) != -1; // reads from the client's channel into the buffer
        } catch (IOException ex) {
            ex.printStackTrace();
        }

        if (success) {
            buf.flip(); // limit = position, position = 0: the buffer is ready to be drained
            Runnable runnable = () -> { // run() - lambda body
                try {
                    while (buf.hasRemaining()) {
                        T nextMessage = encdec.decodeNextByte(buf.get());
                        if (nextMessage != null) {
                            T response = protocol.process(nextMessage);
                            if (response != null) {
                                // Prepare the outgoing message for the client:
                                // wrap the encoded byte array in a buffer
                                writeQueue.add(ByteBuffer.wrap(encdec.encode(response)));
                                reactor.updateInterestedOps(chan, SelectionKey.OP_READ | SelectionKey.OP_WRITE);
                            }
                        }
                    }
                } finally {
                    releaseBuffer(buf); // done using the buffer, release it back to the pool
                }
            }; // end of lambda declaration
            return runnable;
        } else {
            releaseBuffer(buf);
            close();
            return null;
        }
    }

Q: continueRead returns a Runnable! Why?
A: Because the encoding/decoding and the protocol handling are NOT simple, short IO operations. They need to be handled outside the selector (main) thread.

Q: In ConnectionHandler.run() of PS 10 the while loop is "while (!protocol.shouldTerminate() && (read = in.read()) >= 0) {…}". Why the difference?
A: ConnectionHandler (PS 10) holds the thread for as long as the session is not done. Here the task releases the thread as soon as the buffer is empty, in order to make it available for other tasks.

Q: Why use a writeQueue instead of doing the actual writing?
A: It is the main thread's responsibility to do the writing, not the current thread's.

Q: Why turn ON OP_WRITE?
A: It signals the reactor's selector to also check write readiness.

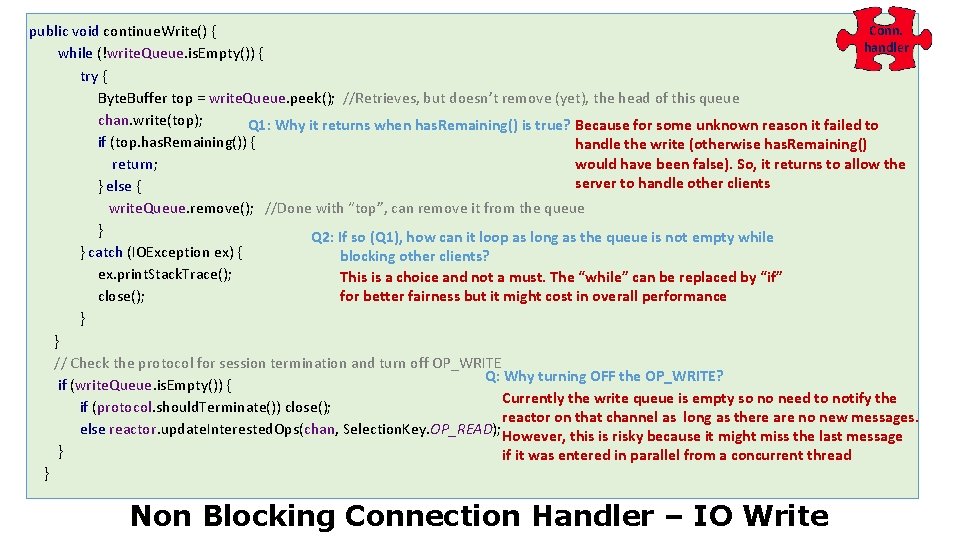

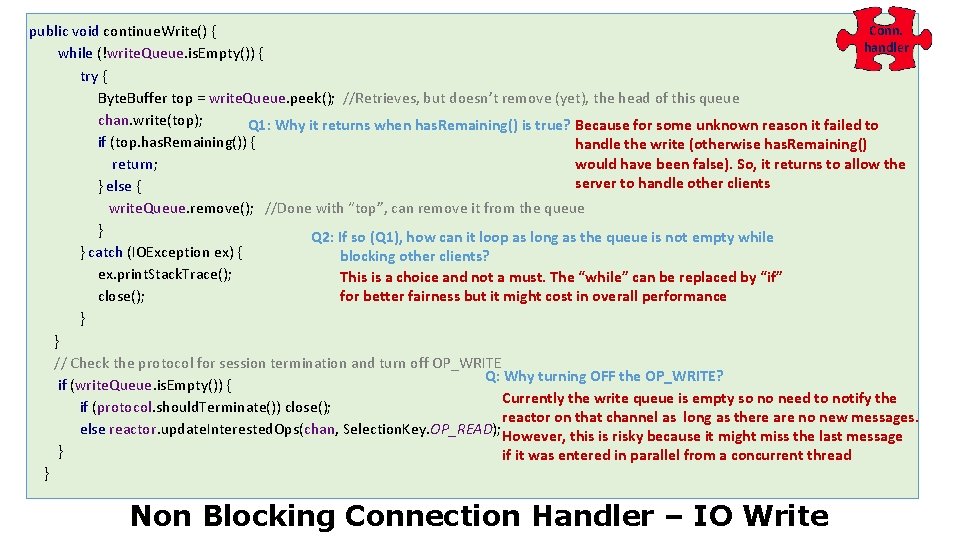

Non Blocking Connection Handler - IO Write

    public void continueWrite() {
        while (!writeQueue.isEmpty()) {
            try {
                ByteBuffer top = writeQueue.peek(); // retrieves, but doesn't remove (yet), the head of this queue
                chan.write(top);
                if (top.hasRemaining()) {
                    return;
                } else {
                    writeQueue.remove(); // done with "top", can remove it from the queue
                }
            } catch (IOException ex) {
                ex.printStackTrace();
                close();
                return; // the channel is closed now, so retrying the write would only fail again
            }
        }

        // Check the protocol for session termination and turn off OP_WRITE
        if (writeQueue.isEmpty()) {
            if (protocol.shouldTerminate()) close();
            else reactor.updateInterestedOps(chan, SelectionKey.OP_READ);
        }
    }

Q1: Why does it return when hasRemaining() is true?
A: Because the channel did not take the whole buffer (otherwise hasRemaining() would have been false). In non-blocking mode write() writes only as many bytes as the socket can accept right now. So the method returns, to allow the server to handle other clients.

Q2: If so (Q1), how can it loop as long as the queue is not empty, while blocking other clients?
A: This is a choice and not a must. The "while" can be replaced by "if" for better fairness, but it might cost in overall performance.

Q: Why turn OFF OP_WRITE?
A: Currently the write queue is empty, so there is no need to notify the reactor about that channel as long as there are no new messages. However, this is risky: it might miss the last message if it was added in parallel by a concurrent thread.

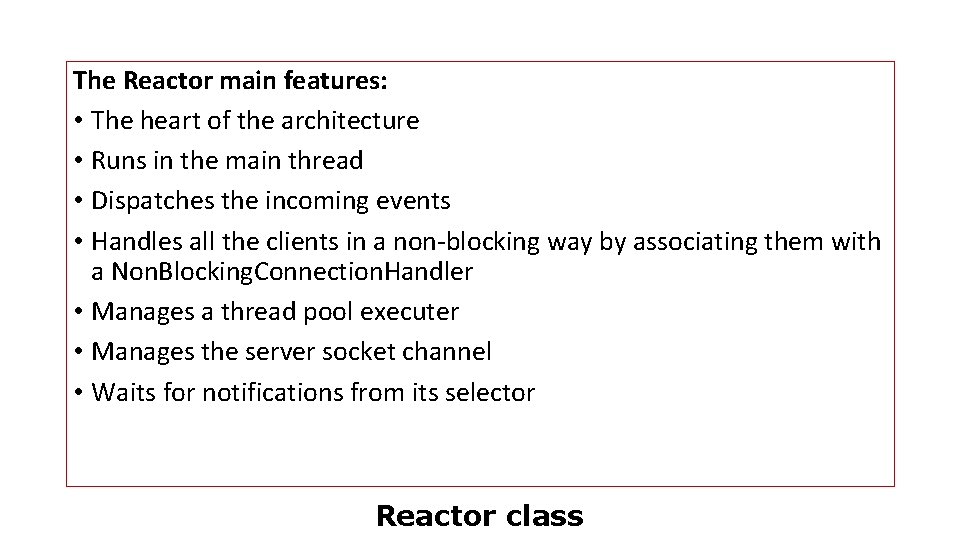

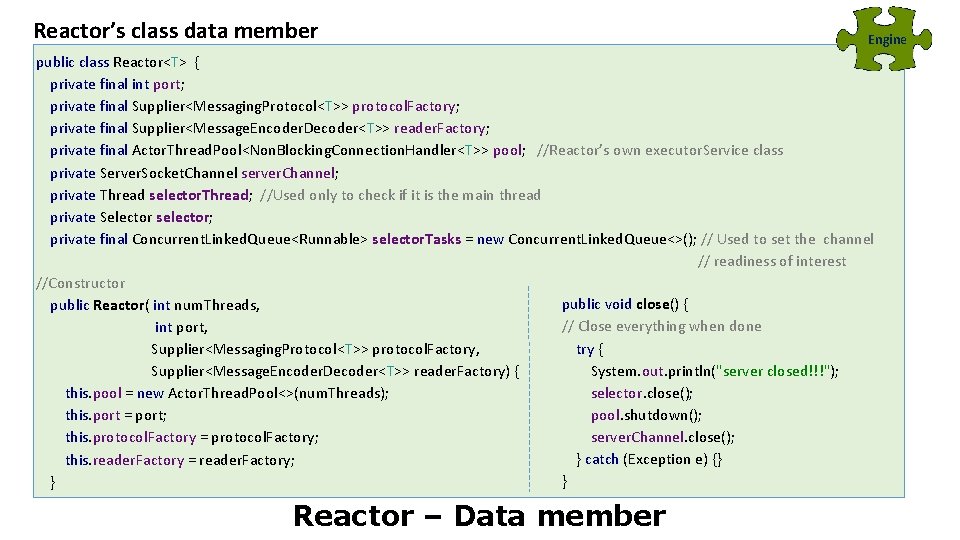

Reactor class

The Reactor's main features:

- The heart of the architecture
- Runs in the main thread
- Dispatches the incoming events
- Handles all the clients in a non-blocking way by associating each of them with a NonBlockingConnectionHandler
- Manages a thread pool executor
- Manages the server socket channel
- Waits for notifications from its selector

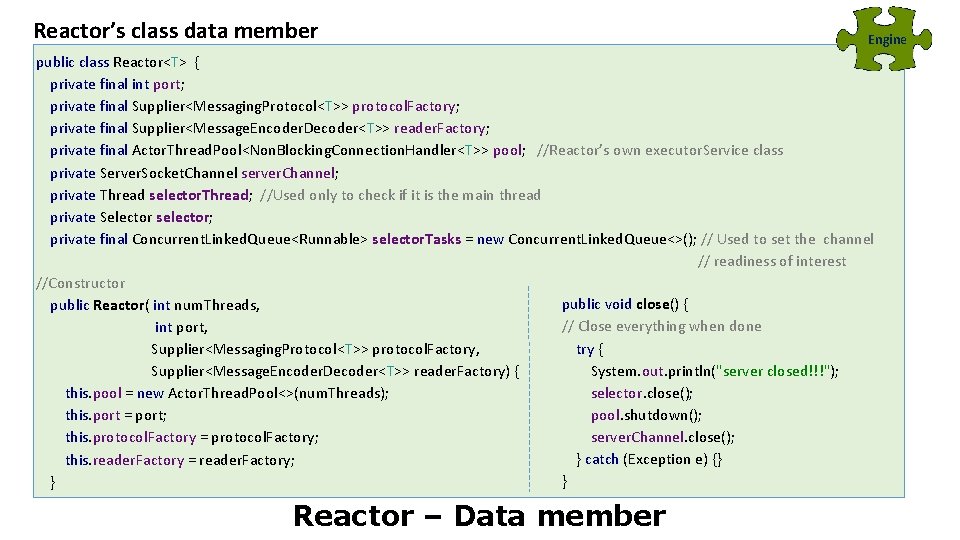

Reactor - Data members

    public class Reactor<T> {

        private final int port;
        private final Supplier<MessagingProtocol<T>> protocolFactory;
        private final Supplier<MessageEncoderDecoder<T>> readerFactory;
        private final ActorThreadPool<NonBlockingConnectionHandler<T>> pool; // Reactor's own executor service class
        private ServerSocketChannel serverChannel;
        private Thread selectorThread; // used only to check if it is the main thread
        private Selector selector;
        // Used to set the channels' readiness of interest
        private final ConcurrentLinkedQueue<Runnable> selectorTasks = new ConcurrentLinkedQueue<>();

        // Constructor
        public Reactor(
                int numThreads,
                int port,
                Supplier<MessagingProtocol<T>> protocolFactory,
                Supplier<MessageEncoderDecoder<T>> readerFactory) {
            this.pool = new ActorThreadPool<>(numThreads);
            this.port = port;
            this.protocolFactory = protocolFactory;
            this.readerFactory = readerFactory;
        }

        // Close everything when done
        public void close() {
            try {
                System.out.println("server closed!!!");
                selector.close();
                pool.shutdown();
                serverChannel.close();
            } catch (Exception e) {}
        }
    }

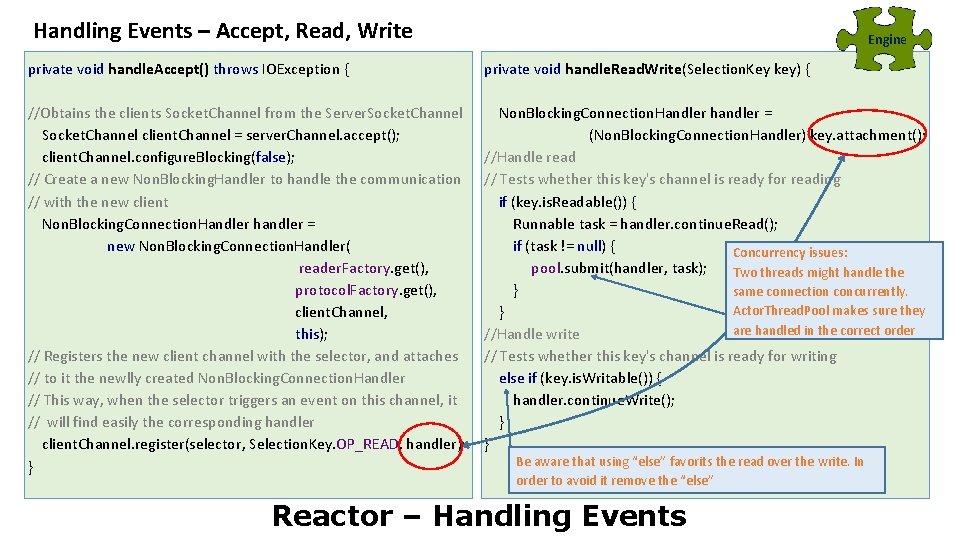

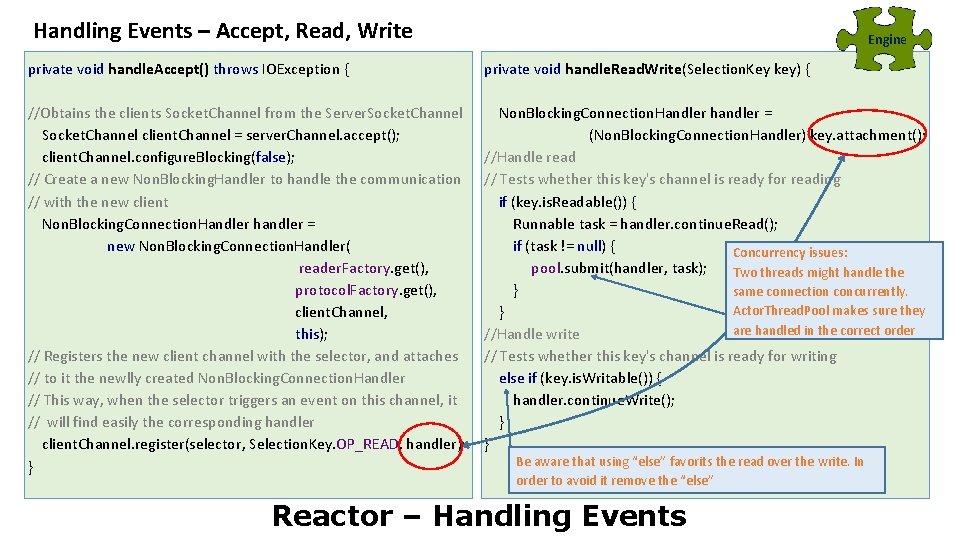

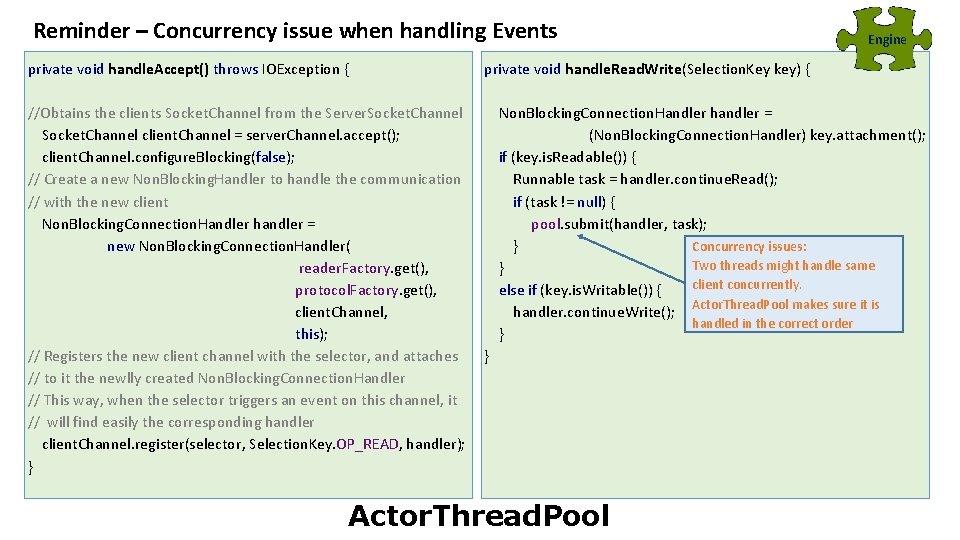

Reactor - Handling Events

Handling events: accept, read, write.

    private void handleAccept() throws IOException {
        // Obtains the client's SocketChannel from the ServerSocketChannel
        SocketChannel clientChannel = serverChannel.accept();
        clientChannel.configureBlocking(false);
        // Create a new NonBlockingConnectionHandler to handle the communication
        // with the new client
        NonBlockingConnectionHandler<T> handler = new NonBlockingConnectionHandler<>(
                readerFactory.get(),
                protocolFactory.get(),
                clientChannel,
                this);
        // Registers the new client channel with the selector, and attaches
        // to it the newly created NonBlockingConnectionHandler.
        // This way, when the selector triggers an event on this channel, it
        // will easily find the corresponding handler
        clientChannel.register(selector, SelectionKey.OP_READ, handler);
    }

    private void handleReadWrite(SelectionKey key) {
        @SuppressWarnings("unchecked")
        NonBlockingConnectionHandler<T> handler = (NonBlockingConnectionHandler<T>) key.attachment();
        // Handle read: tests whether this key's channel is ready for reading
        if (key.isReadable()) {
            Runnable task = handler.continueRead();
            if (task != null) {
                pool.submit(handler, task);
            }
        }
        // Handle write: tests whether this key's channel is ready for writing
        else if (key.isWritable()) {
            handler.continueWrite();
        }
    }

Concurrency issues: two threads might handle the same connection concurrently. ActorThreadPool makes sure its tasks are handled in the correct order.

Be aware that using "else" favors the read over the write: a key that is both readable and writable only gets its read handled in that round. To avoid this, remove the "else" (and then check key.isValid() before key.isWritable(), since continueRead() may have closed the channel).

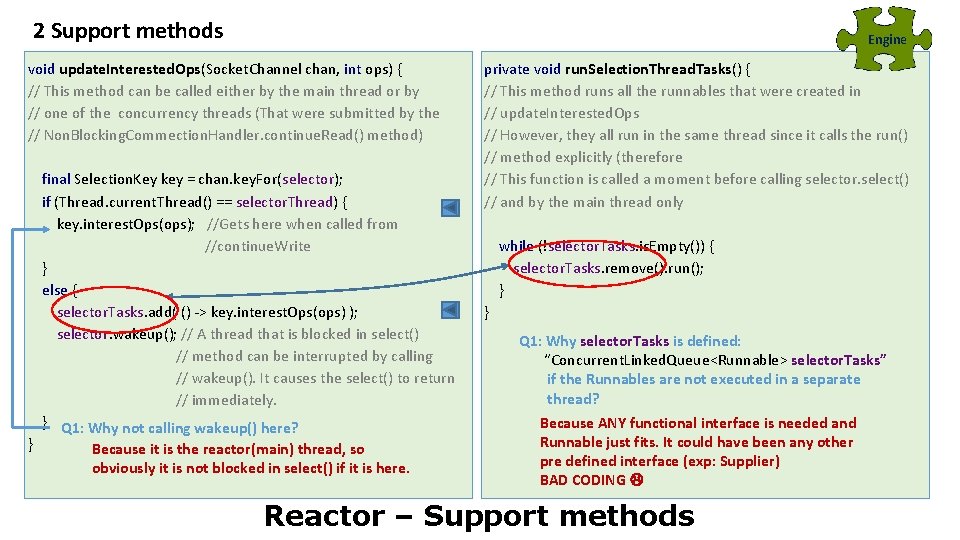

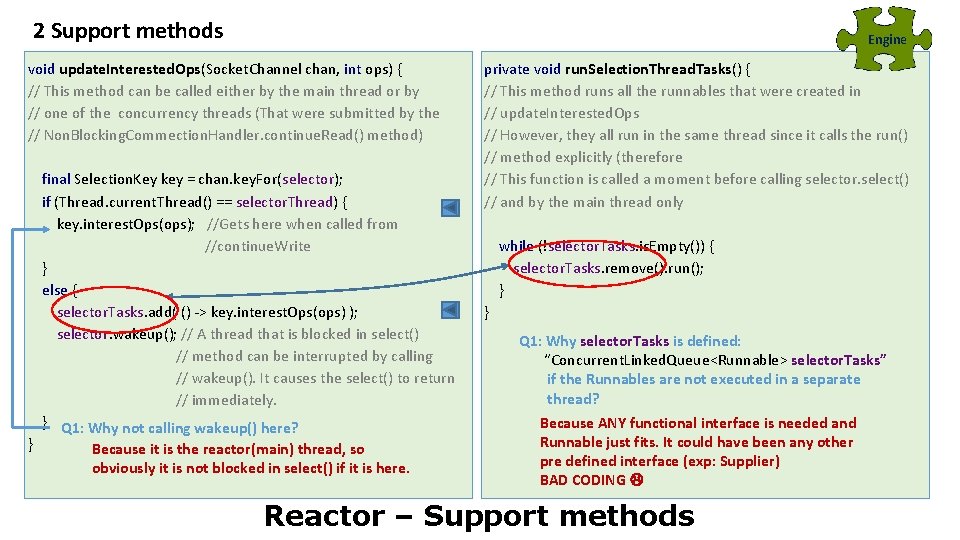

Reactor - Support methods

Two support methods:

    void updateInterestedOps(SocketChannel chan, int ops) {
        // This method can be called either by the main thread or by one of the
        // pool threads (running a task that was created by the
        // NonBlockingConnectionHandler.continueRead() method)
        final SelectionKey key = chan.keyFor(selector);
        if (Thread.currentThread() == selectorThread) {
            key.interestOps(ops); // gets here when called from continueWrite
        } else {
            selectorTasks.add(() -> key.interestOps(ops));
            selector.wakeup(); // a thread that is blocked in select() can be woken up by
                               // calling wakeup(): it causes select() to return immediately
        }
    }

    private void runSelectionThreadTasks() {
        // Runs all the runnables that were created in updateInterestedOps.
        // They all run in the same thread, since run() is called explicitly.
        // Called by the main thread only, between one select() and the next.
        while (!selectorTasks.isEmpty()) {
            selectorTasks.remove().run();
        }
    }

Q1: Why not call wakeup() in the first branch of updateInterestedOps?
A: Because the caller is the reactor (main) thread, so obviously it is not blocked in select() if it got here.

Q2: Why is selectorTasks defined as "ConcurrentLinkedQueue<Runnable> selectorTasks" if the Runnables are not executed in a separate thread?
A: Because ANY functional interface would do, and Runnable just fits. It could have been any other predefined interface (e.g. Supplier). This is bad coding.
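The hand-off between a pool thread and the selector thread can be tried in isolation. In this sketch (class and method names are made up, and a counter increment stands in for key.interestOps(ops)), a worker thread queues a task and calls wakeup(). The main thread, playing the selector thread, returns from select() and runs the queued task itself.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Selector;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class, not part of the course code.
class SelectorWakeupDemo {

    // Returns how many queued tasks the "selector thread" ended up running.
    static int runDemo() {
        ConcurrentLinkedQueue<Runnable> selectorTasks = new ConcurrentLinkedQueue<>();
        AtomicInteger executed = new AtomicInteger();

        try (Selector selector = Selector.open()) {
            // Plays the role of a pool thread calling updateInterestedOps.
            Thread worker = new Thread(() -> {
                selectorTasks.add(() -> executed.incrementAndGet()); // stand-in for key.interestOps(ops)
                selector.wakeup(); // make select() return so the task gets picked up
            });
            worker.start();

            // No channel is registered, so only wakeup() (or the timeout) ends select().
            while (selectorTasks.isEmpty()) {
                selector.select(1000);
            }

            // Same loop as runSelectionThreadTasks: run() is called on this thread.
            while (!selectorTasks.isEmpty()) {
                selectorTasks.remove().run();
            }

            worker.join();
            return executed.get();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("tasks run by the selector thread: " + runDemo());
    }
}
```

The order inside the worker matters: the task is added before wakeup() is called, so by the time select() returns the task is already in the queue. If wakeup() happens before select() is entered, the next select() returns immediately, so the task is not stranded either way.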

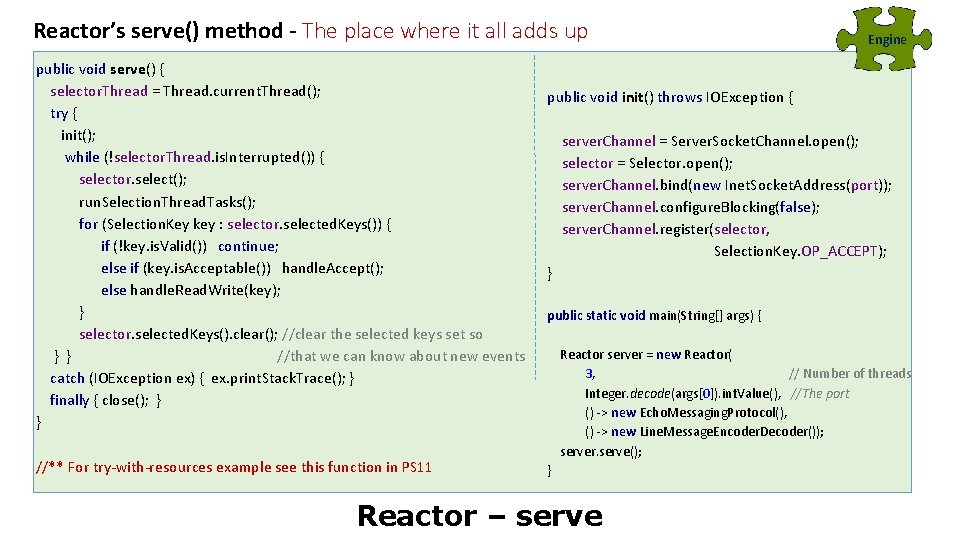

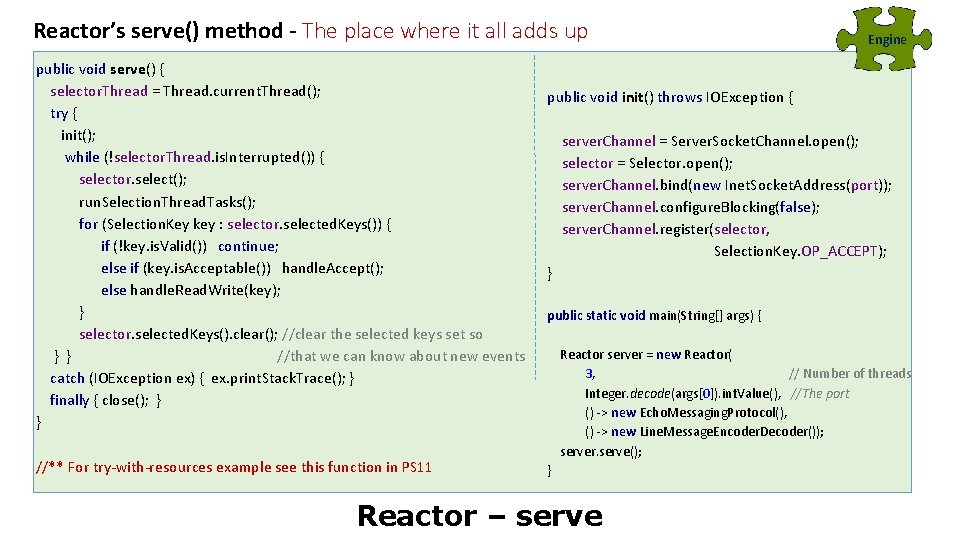

Reactor - serve

Reactor's serve() method: the place where it all adds up.

    public void serve() {
        selectorThread = Thread.currentThread();
        try {
            init();
            while (!selectorThread.isInterrupted()) {
                selector.select();
                runSelectionThreadTasks();
                for (SelectionKey key : selector.selectedKeys()) {
                    if (!key.isValid()) continue;
                    else if (key.isAcceptable()) handleAccept();
                    else handleReadWrite(key);
                }
                selector.selectedKeys().clear(); // clear the selected-keys set so that we can know about new events
            }
        } catch (IOException ex) {
            ex.printStackTrace();
        } finally {
            close();
        }
    } // For a try-with-resources example see this function in PS 11

    public void init() throws IOException {
        serverChannel = ServerSocketChannel.open();
        selector = Selector.open();
        serverChannel.bind(new InetSocketAddress(port));
        serverChannel.configureBlocking(false);
        serverChannel.register(selector, SelectionKey.OP_ACCEPT);
    }

    public static void main(String[] args) {
        Reactor server = new Reactor(
                3,                                   // number of threads
                Integer.decode(args[0]).intValue(),  // the port
                () -> new EchoMessagingProtocol(),
                () -> new LineMessageEncoderDecoder());
        server.serve();
    }

Server Client

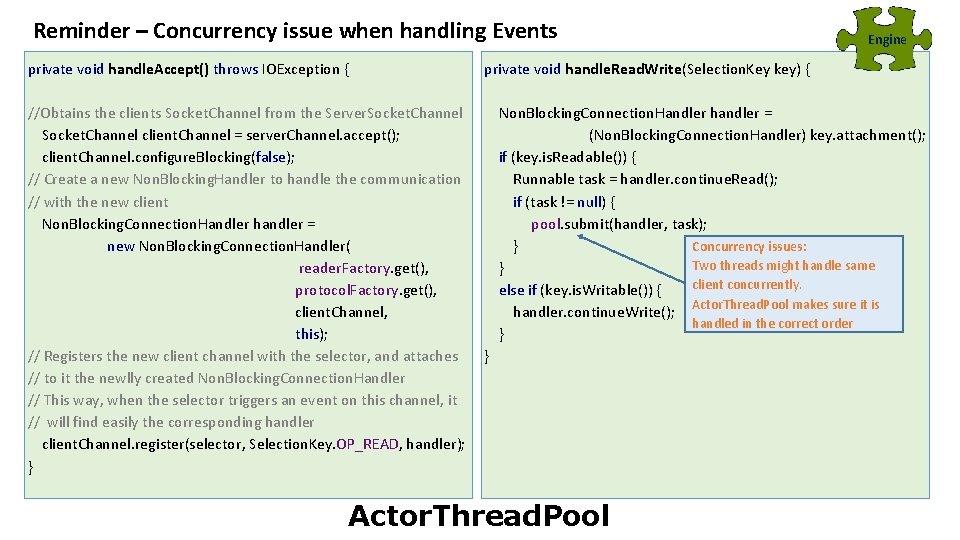

ActorThreadPool

Reminder: the concurrency issue when handling events. handleAccept() is the same as on the Handling Events slide. The issue is in handleReadWrite():

    private void handleReadWrite(SelectionKey key) {
        @SuppressWarnings("unchecked")
        NonBlockingConnectionHandler<T> handler = (NonBlockingConnectionHandler<T>) key.attachment();
        if (key.isReadable()) {
            Runnable task = handler.continueRead();
            if (task != null) {
                pool.submit(handler, task);
            }
        }
        else if (key.isWritable()) {
            handler.continueWrite();
        }
    }

Concurrency issues: two threads might handle the same client concurrently. ActorThreadPool makes sure its tasks are handled in the correct order.

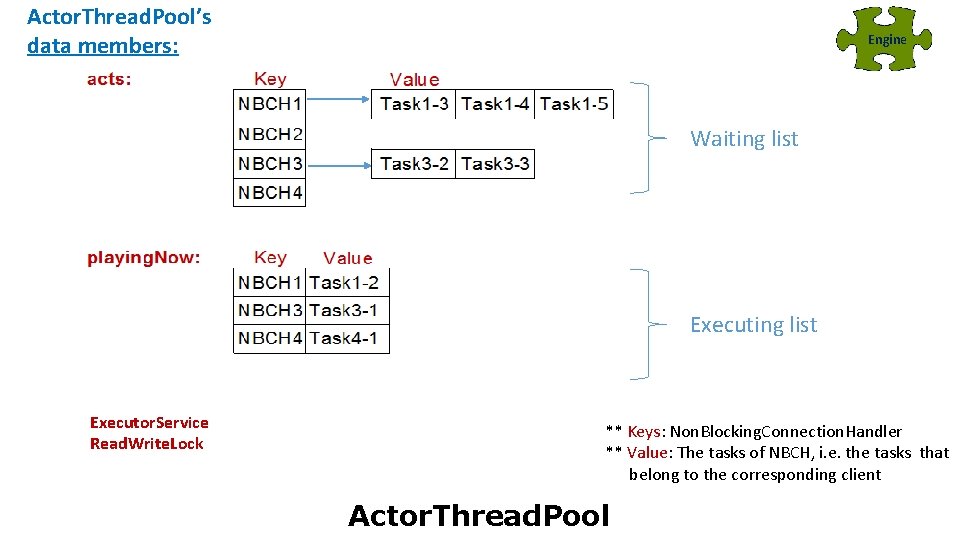

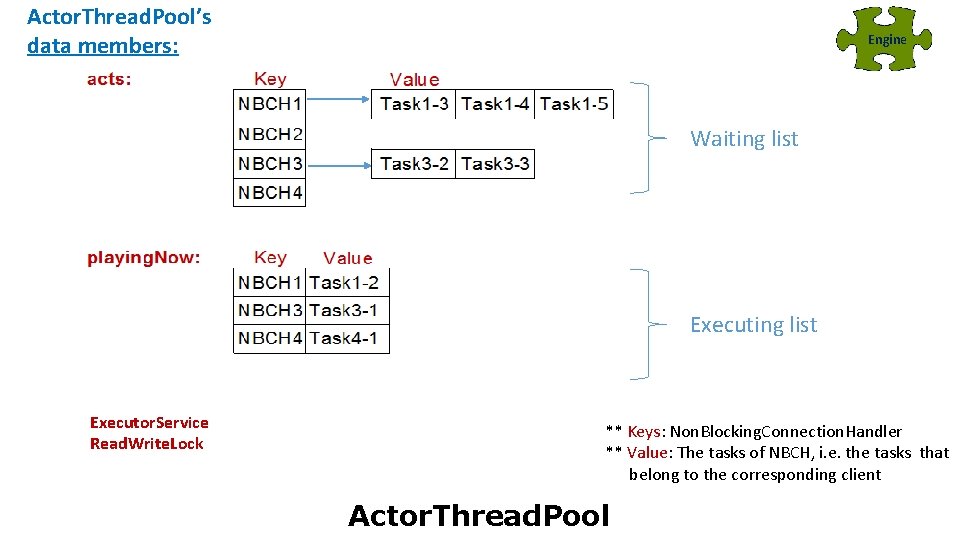

ActorThreadPool

ActorThreadPool's data members:

- A waiting list. Keys: NonBlockingConnectionHandler. Values: the tasks of the NBCH, i.e. the tasks that belong to the corresponding client.
- An ExecutorService.
- A ReadWriteLock.

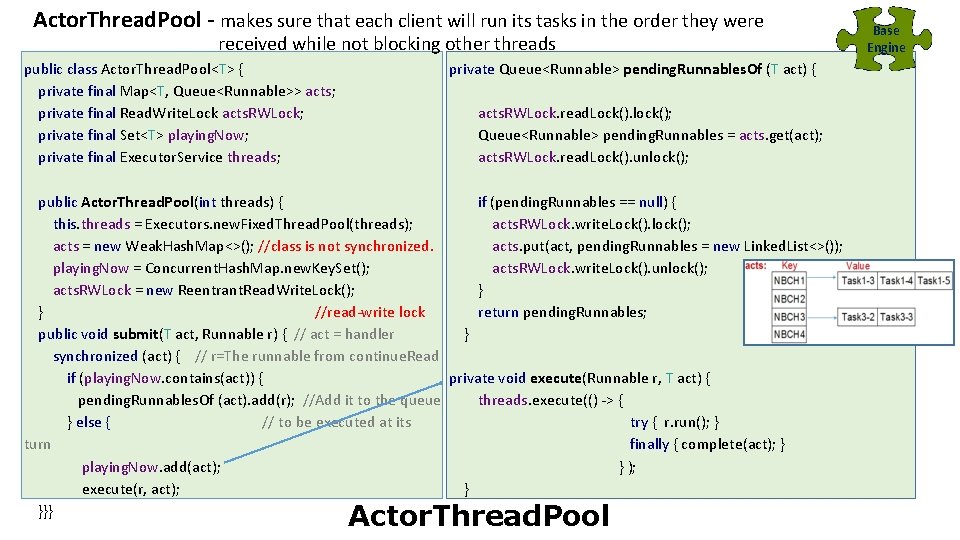

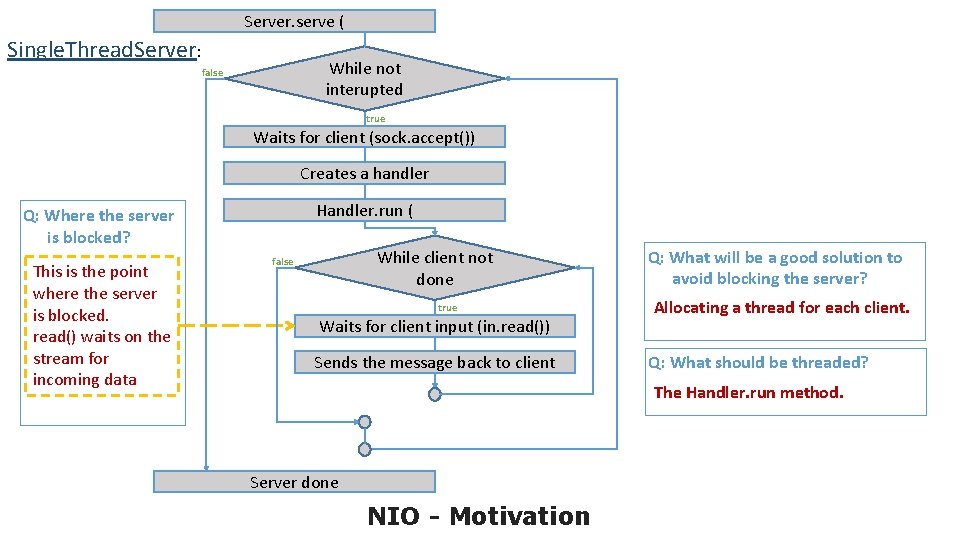

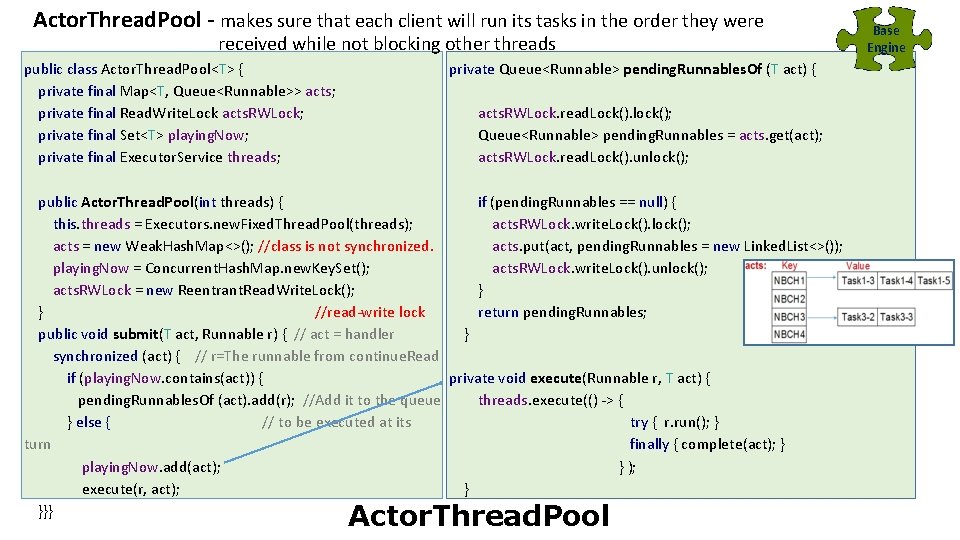

ActorThreadPool

ActorThreadPool makes sure that each client's tasks run in the order they were received, without blocking other threads.

    public class ActorThreadPool<T> {

        private final Map<T, Queue<Runnable>> acts;
        private final ReadWriteLock actsRWLock; // read-write lock
        private final Set<T> playingNow;
        private final ExecutorService threads;

        public ActorThreadPool(int threads) {
            this.threads = Executors.newFixedThreadPool(threads);
            acts = new WeakHashMap<>(); // this class is not synchronized
            playingNow = ConcurrentHashMap.newKeySet();
            actsRWLock = new ReentrantReadWriteLock();
        }

        public void submit(T act, Runnable r) { // act = handler, r = the runnable from continueRead
            synchronized (act) {
                if (playingNow.contains(act)) {
                    pendingRunnablesOf(act).add(r); // add it to the queue, to be executed at its turn
                } else {
                    playingNow.add(act);
                    execute(r, act);
                }
            }
        }

        private Queue<Runnable> pendingRunnablesOf(T act) {
            actsRWLock.readLock().lock();
            Queue<Runnable> pendingRunnables = acts.get(act);
            actsRWLock.readLock().unlock();
            if (pendingRunnables == null) {
                actsRWLock.writeLock().lock();
                acts.put(act, pendingRunnables = new LinkedList<>());
                actsRWLock.writeLock().unlock();
            }
            return pendingRunnables;
        }

        private void execute(Runnable r, T act) {
            threads.execute(() -> {
                try {
                    r.run();
                } finally {
                    complete(act);
                }
            });
        }
    }

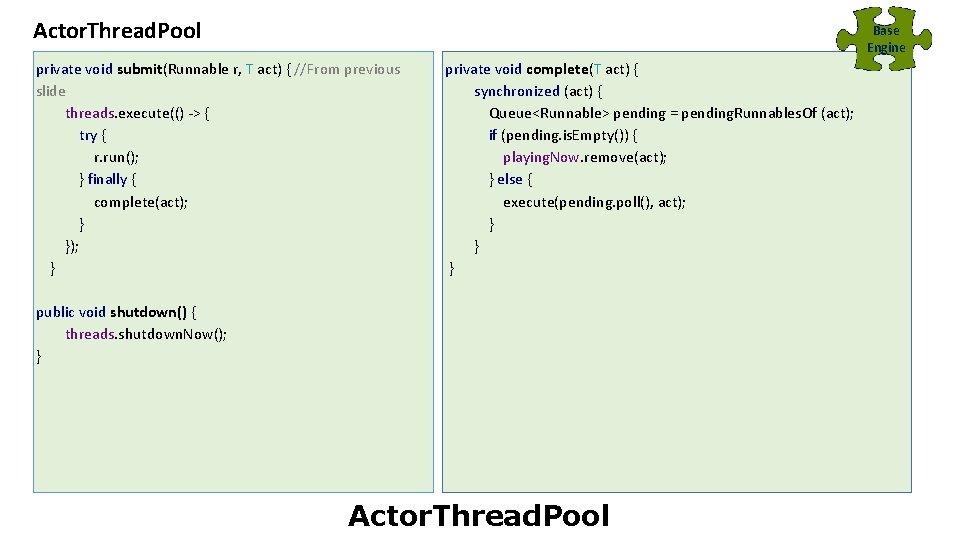

ActorThreadPool (cont.)

        private void execute(Runnable r, T act) { // from the previous slide
            threads.execute(() -> {
                try {
                    r.run();
                } finally {
                    complete(act);
                }
            });
        }

        private void complete(T act) {
            synchronized (act) {
                Queue<Runnable> pending = pendingRunnablesOf(act);
                if (pending.isEmpty()) {
                    playingNow.remove(act);
                } else {
                    execute(pending.poll(), act);
                }
            }
        }

        public void shutdown() {
            threads.shutdownNow();
        }
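The ordering guarantee can be checked with a stripped-down pool. The sketch below is not the class above: PerActorSerialPool is a made-up name, and it swaps the per-actor synchronized block, the WeakHashMap and the read-write lock for a single lock on the pool, which is simpler but less concurrent. It keeps the same idea: an actor with a running task gets its new tasks queued, and complete() starts the next queued task.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical simplified pool: one lock for everything (an assumption of this sketch).
class PerActorSerialPool<T> {
    private final Map<T, Queue<Runnable>> pending = new HashMap<>();
    private final Set<T> playingNow = new HashSet<>();
    private final ExecutorService threads;

    PerActorSerialPool(int numThreads) {
        threads = Executors.newFixedThreadPool(numThreads);
    }

    synchronized void submit(T act, Runnable r) {
        if (playingNow.contains(act)) {
            // The actor is busy: queue the task, to be executed at its turn.
            pending.computeIfAbsent(act, k -> new ArrayDeque<>()).add(r);
        } else {
            playingNow.add(act);
            execute(r, act);
        }
    }

    private void execute(Runnable r, T act) {
        threads.execute(() -> {
            try {
                r.run();
            } finally {
                complete(act);
            }
        });
    }

    private synchronized void complete(T act) {
        Queue<Runnable> q = pending.get(act);
        if (q == null || q.isEmpty()) {
            playingNow.remove(act);
            pending.remove(act);
        } else {
            execute(q.poll(), act); // run the actor's next task only after the previous one ended
        }
    }

    void shutdown() {
        threads.shutdown();
    }
}

class ActorOrderDemo {
    // Submits n numbered tasks for one actor and returns the order in which they ran.
    static List<Integer> runInOrder(int n) {
        PerActorSerialPool<String> pool = new PerActorSerialPool<>(4);
        List<Integer> seen = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch done = new CountDownLatch(n);
        for (int i = 0; i < n; i++) {
            final int id = i;
            pool.submit("client-1", () -> {
                seen.add(id);
                done.countDown();
            });
        }
        try {
            done.await(5, TimeUnit.SECONDS); // wait for all tasks before shutting down
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        pool.shutdown();
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(runInOrder(10));
    }
}
```

Submitting the same tasks straight to a four-thread executor could run them out of order. Here the order is fixed even though different threads may execute consecutive tasks of the same client.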

THE END !! See Practical Session 11