THE INFN GRID PROJECT

- Slides: 16

THE INFN GRID PROJECT
- Scope: study and develop a general INFN computing infrastructure, based on GRID technologies, to be validated (as a first use case) by implementing distributed Regional Center prototypes for the LHC experiments (ATLAS, CMS, ALICE) and, later on, also for other INFN experiments (Virgo, Gran Sasso, …)
- Project status:
  - Outline of proposal submitted to INFN management on 13-1-2000
  - 3-year duration
  - Next meeting with INFN management on 18 February
  - Feedback documents from the LHC experiments by end of February (sites, FTEs, …)
  - Final proposal to INFN by end of March

INFN & “Grid Related Projects”
- Globus tests
- “Condor on WAN” as a general-purpose computing resource
- “GRID” working group to analyze viable and useful solutions (LHC computing, Virgo…)
  - Global architecture that allows strategies for the discovery, allocation, reservation and management of resource collections
- MONARC project related activities

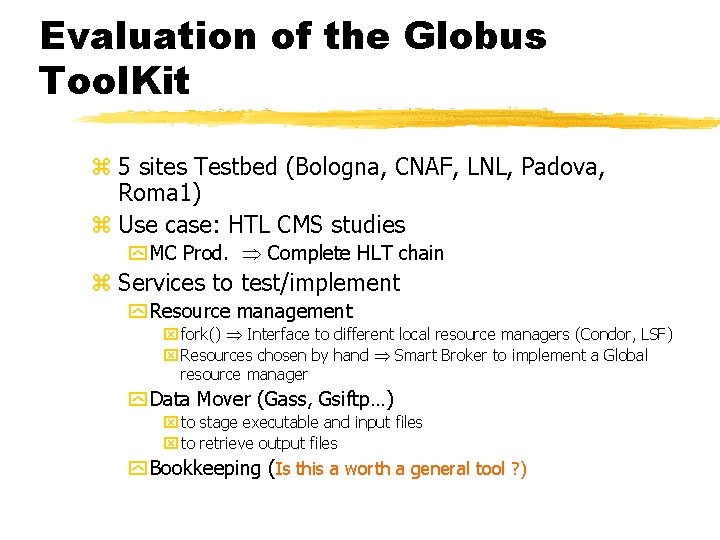

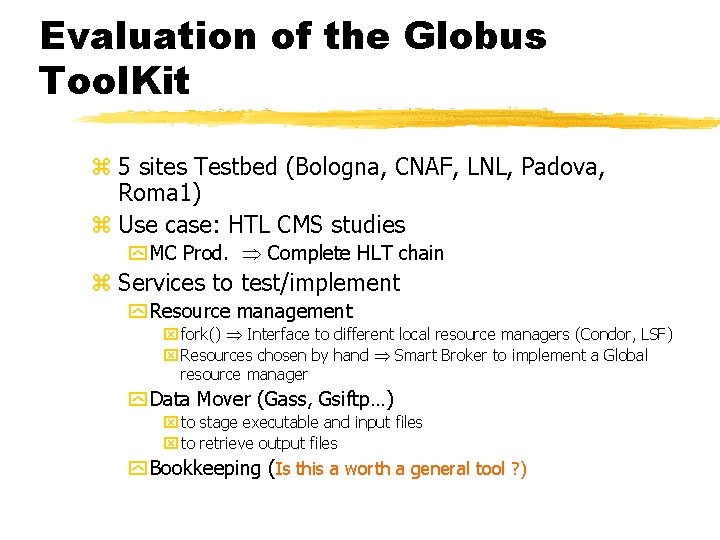

Evaluation of the Globus Toolkit
- 5-site testbed (Bologna, CNAF, LNL, Padova, Roma 1)
- Use case: CMS HLT studies
  - MC production → complete HLT chain
- Services to test/implement
  - Resource management
    - fork() → interface to different local resource managers (Condor, LSF)
    - Resources chosen by hand → smart broker to implement a global resource manager
  - Data mover (GASS, GSIFTP…)
    - to stage executable and input files
    - to retrieve output files
  - Bookkeeping (is this worth a general tool?)
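As a rough illustration of the step from resources chosen by hand to a smart broker, the Python sketch below picks a site automatically. The `Site` record, the `pick_site` function, the "most free CPUs" policy, and the site names and CPU counts are all hypothetical; this is not Globus API and not the broker the project would implement.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    manager: str    # local resource manager: "fork", "condor" or "lsf"
    free_cpus: int  # hypothetical snapshot of currently idle CPUs


def pick_site(sites, cpus_needed, allowed_managers=("fork", "condor", "lsf")):
    """Return the eligible site with the most free CPUs, or None if none fits."""
    candidates = [
        s for s in sites
        if s.manager in allowed_managers and s.free_cpus >= cpus_needed
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.free_cpus)


# Made-up testbed state, for illustration only.
testbed = [
    Site("site-a", "condor", 4),
    Site("site-b", "lsf", 12),
    Site("site-c", "fork", 1),
]

chosen = pick_site(testbed, cpus_needed=8)
print(chosen.name if chosen else "no site available")
```

A real broker would take its site state from an information service rather than a hard-coded list.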

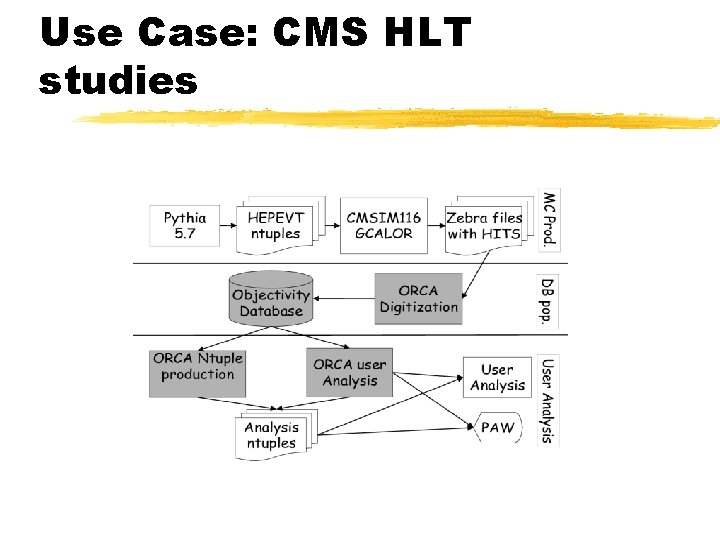

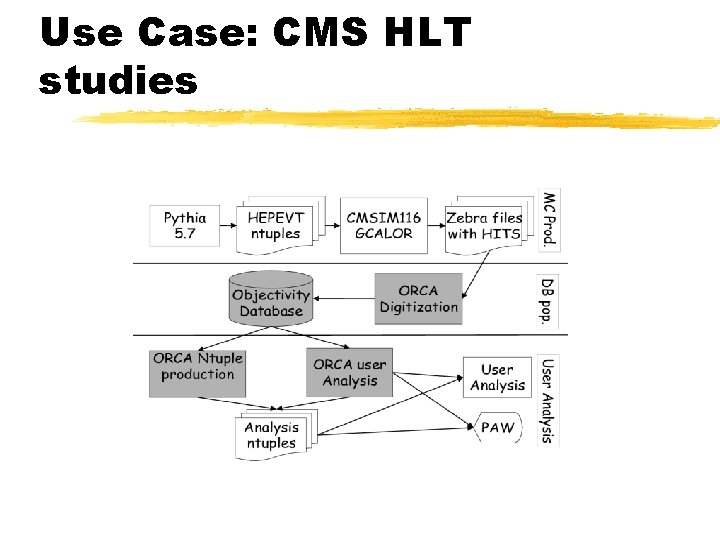

Use Case: CMS HLT studies

Status
- Globus installed on 5 Linux PCs at 3 sites
- Globus Security Infrastructure
  - Works!!
- MDS
  - Initial problems accessing data (long response time and time out)
- GRAM, GASS, Gloperf
  - Work in progress

Condor on WAN: Objectives
- Large INFN project of the Computing Commission involving ~20 sites
- INFN collaboration with the Condor Team (UWISC)
- Goal I: Condor “tuning” on WAN
  - Verify Condor reliability and robustness in a Wide Area Network environment
  - Verify suitability for INFN computing needs
  - Network I/O impact and measurements

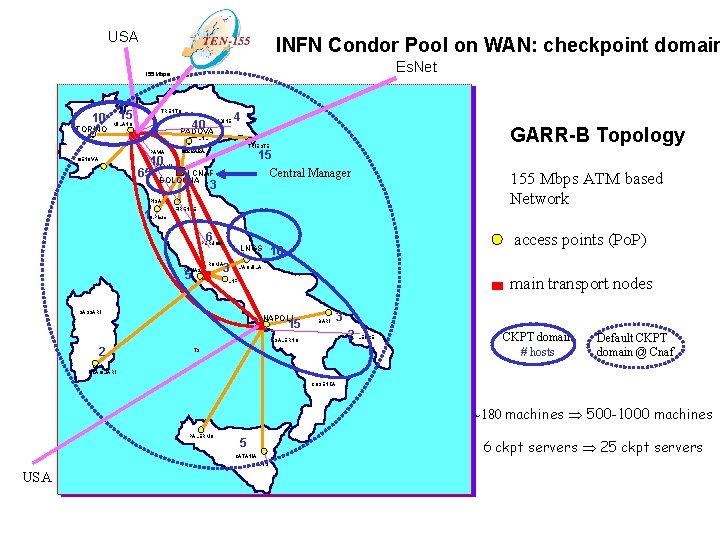

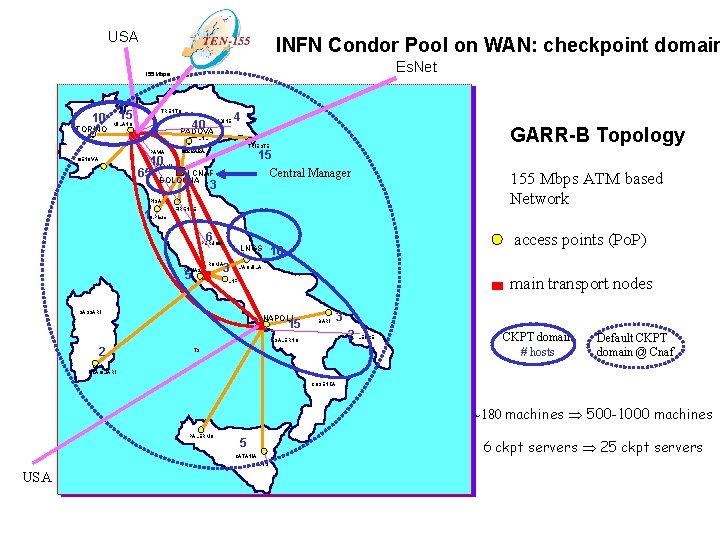

- Goal II: Network as a Condor Resource
  - Dynamic checkpointing and checkpoint domain configuration
  - Pool partitioned into checkpoint domains (a dedicated ckpt server for each domain)
  - Definition of a checkpoint domain according to:
    - Presence of a sufficiently large CPU capacity
    - Presence of a set of machines with efficient network connectivity
    - Sub-pools
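The two criteria for defining a checkpoint domain (enough CPU capacity, efficient network connectivity) can be sketched as a simple rule. In this Python sketch the function `assign_domains`, the thresholds, the site names and the numbers are all hypothetical, and the rule that other sites fall back to a default domain is an assumption made for illustration; it is not Condor configuration syntax.

```python
def assign_domains(sites, min_hosts, min_mbps, default_domain="default"):
    """Map each site to a checkpoint domain.

    sites: dict of site name -> (number of hosts, local bandwidth in Mbps).
    A site forms its own domain only if it meets both thresholds;
    otherwise it is attached to the default domain.
    """
    domains = {}
    for name, (hosts, mbps) in sites.items():
        qualifies = hosts >= min_hosts and mbps >= min_mbps
        domains[name] = name if qualifies else default_domain
    return domains


# Made-up pool description, for illustration only.
pool = {
    "site-a": (40, 100.0),  # many hosts, fast LAN: own domain
    "site-b": (3, 100.0),   # too few hosts
    "site-c": (25, 2.0),    # enough hosts, poor connectivity
}

print(assign_domains(pool, min_hosts=10, min_mbps=10.0))
```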

Checkpointing: next step
- Distributed dynamic checkpointing
  - Pool machines select the “best” checkpoint server (from a network view)
  - Association between execution machine and checkpoint server decided dynamically
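One possible reading of “best from a network view” is the server with the shortest estimated time to transfer a checkpoint image. The Python sketch below assumes that metric; the function names, server names and link figures are hypothetical, and Condor's actual selection mechanism is not described in these slides.

```python
def transfer_time_s(image_mb, rtt_ms, bandwidth_mbps):
    """Estimate seconds to ship an image: one round trip plus payload time."""
    return rtt_ms / 1000.0 + (image_mb * 8.0) / bandwidth_mbps


def best_checkpoint_server(image_mb, links):
    """Return the server with the lowest estimated transfer time.

    links: dict of server name -> (round-trip time in ms, bandwidth in Mbps),
    as measured from the execution machine.
    """
    return min(links, key=lambda name: transfer_time_s(image_mb, *links[name]))


# Made-up measurements from one execution machine, for illustration only.
measured = {
    "ckpt-near": (5.0, 10.0),   # low latency, slow link
    "ckpt-far": (40.0, 155.0),  # higher latency, fast link
}

print(best_checkpoint_server(64.0, measured))
```

Because the choice depends on the measurements at the time of the call, repeating it before each checkpoint gives the dynamic association the slide describes.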

Implementation
Characteristics of the INFN Condor pool:
- Single pool
  - To optimize CPU usage of all INFN hosts
- Sub-pools
  - To define policies/priorities on resource usage
- Checkpoint domains
  - To guarantee the performance and the efficiency of the system
  - To reduce network traffic for checkpointing activity

INFN Condor Pool on WAN: checkpoint domains
Figure: map of the GARR-B topology (155 Mbps ATM-based network, with external links labelled USA, ESnet, 155 Mbps and T3) showing the INFN sites in the pool, the number of hosts at each site, the checkpoint domains, the Central Manager, and the default CKPT domain at CNAF. Legend: access points (PoP), main transport nodes, CKPT domain, # hosts. Totals shown on the slide: ~180 machines with 6 ckpt servers; 500-1000 machines with 25 ckpt servers.

Management
- Central management (condoradmin@infn.it)
- Local management (condor@infn.it)
- Steering committee
- Software maintenance contract with the Condor support team at the University of Wisconsin-Madison

INFN-GRID project requirements
Networked Workload Management:
- Optimal co-allocation of data, CPU and network for a specific “grid/network-aware” job
- Distributed scheduling (data and/or code migration)
- Unscheduled/scheduled job submission
- Management of heterogeneous computing systems
- Uniform interface to various local resource managers and schedulers
- Priorities and policies on resource (CPU, data, network) usage
- Bookkeeping and “web” user interface
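To make the co-allocation requirement concrete, here is a minimal Python sketch that weighs CPU availability against the cost of moving data. The cost model (zero if the dataset is already at the site, otherwise dataset size divided by WAN bandwidth), the function names, and all site and dataset values are assumptions for illustration, not a proposed INFN-GRID design.

```python
def staging_time_s(dataset_mb, bandwidth_mbps):
    """Seconds needed to copy a dataset of dataset_mb megabytes to a site."""
    return dataset_mb * 8.0 / bandwidth_mbps


def co_allocate(job, sites):
    """Pick the site with enough free CPUs and the cheapest data access.

    job: dict with keys "cpus", "dataset", "dataset_mb".
    sites: dict of site name -> dict with keys "free_cpus",
           "datasets" (set of dataset names held locally), "wan_mbps".
    Returns (site name, staging cost in seconds), or (None, None).
    """
    best, best_cost = None, None
    for name, site in sites.items():
        if site["free_cpus"] < job["cpus"]:
            continue  # not enough CPU, skip regardless of data locality
        if job["dataset"] in site["datasets"]:
            cost = 0.0  # data already local, nothing to move
        else:
            cost = staging_time_s(job["dataset_mb"], site["wan_mbps"])
        if best_cost is None or cost < best_cost:
            best, best_cost = name, cost
    return best, best_cost


# Made-up grid state, for illustration only.
grid = {
    "site-a": {"free_cpus": 10, "datasets": set(), "wan_mbps": 155.0},
    "site-b": {"free_cpus": 2, "datasets": {"mc-sample"}, "wan_mbps": 34.0},
}

small_job = {"cpus": 2, "dataset": "mc-sample", "dataset_mb": 1000.0}
print(co_allocate(small_job, grid))
```

A small job goes where the data already sits, while a job needing more CPUs than that site offers is sent elsewhere and pays the staging cost: this is the data-versus-code migration trade-off listed under distributed scheduling.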

Project req. (cont.)
Networked Data Management:
- Universal name space: transparent, location independent
- Data replication and caching
- Data mover (scheduled/interactive at OBJ/file/DB granularity)
- Loose synchronization between replicas
- Application metadata, interfaced with a DBMS, e.g. Objectivity, …
- Network services definition for a given application
- End systems network protocol tuning
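A location-independent name space with replication can be pictured as a catalog that maps one logical name to several physical copies. The Python sketch below is only an illustration of that idea: the `ReplicaCatalog` class, its methods, the logical name and the URLs are hypothetical and do not correspond to any existing grid service.

```python
class ReplicaCatalog:
    """Maps a location-independent logical name to its physical replicas."""

    def __init__(self):
        self._replicas = {}  # logical name -> {site: physical URL}

    def register(self, logical_name, site, url):
        """Record that a copy of logical_name exists at site under url."""
        self._replicas.setdefault(logical_name, {})[site] = url

    def locate(self, logical_name, local_site=None):
        """Return a physical URL, preferring a replica at local_site."""
        replicas = self._replicas.get(logical_name)
        if not replicas:
            raise KeyError(f"no replica registered for {logical_name!r}")
        if local_site in replicas:
            return replicas[local_site]
        # No local copy: fall back to the first replica that was registered.
        return next(iter(replicas.values()))


# Hypothetical names and URLs, for illustration only.
catalog = ReplicaCatalog()
catalog.register("lfn:/cms/hlt/run1", "site-a", "gsiftp://se.site-a.example/data/run1")
catalog.register("lfn:/cms/hlt/run1", "site-b", "gsiftp://se.site-b.example/data/run1")

print(catalog.locate("lfn:/cms/hlt/run1", local_site="site-b"))
```

The application only ever uses the logical name; which copy it reads is decided at lookup time.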

Project req. (cont.)
Application Monitoring/Management:
- Performance: “instrumented systems” with timing information and analysis tools
- Run-time analysis of collected application events
- Bottleneck analysis
- Dynamic monitoring of GRID resources to optimize resource allocation
- Failure management

Project req. (cont.)
Computing Fabric and general utilities for a globally managed Grid:
- Configuration management of computing facilities
- Automatic software installation and maintenance
- System, service and network monitoring, global alarm notification, automatic recovery from failures
- Resource use accounting
- Security of GRID resources and infrastructure usage
- Information service

Grid Tools