Testing: General Requirements, DFT, Multilevel Testing

- Slides: 26

Testing: General Requirements, DFT, Multilevel Testing (System, Black Box, White or Glass Box Tests)

Testing: General Requirements and Strategies

General requirements:
- thorough
- ongoing
- DEVELOPED WITH DESIGN (DFT, design for test). Note: this implies that several LEVELS of testing will be carried out efficiently
- carried out independently of design/implementation

Strategies:
- Fault avoidance: reduce the possibility of faults through techniques such as configuration management and a good development process
- Fault detection: use debugging and testing to find and remove faults
- Fault tolerance (e.g., for remote or safety-critical systems): use techniques such as three or more redundant modules that "vote" on what the output should be
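
The voting idea behind fault tolerance can be sketched in a few lines. This is only an illustration, assuming modules are plain functions that take the same input; the names `majority_vote`, `run_redundant`, and the three `square_*` modules are hypothetical and do not come from the slides.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence


def majority_vote(outputs: Sequence[Hashable]) -> Hashable:
    """Return the value produced by a strict majority of modules.

    Raises ValueError when no value has more than half of the votes.
    """
    if not outputs:
        raise ValueError("no module outputs to vote on")
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise ValueError("no majority among module outputs")
    return value


def run_redundant(modules: Sequence[Callable[[int], int]], x: int) -> int:
    """Run every redundant module on the same input and vote on the result."""
    return majority_vote([module(x) for module in modules])


# Three hypothetical implementations of "square"; the third one is faulty.
def square_a(x: int) -> int:
    return x * x


def square_b(x: int) -> int:
    return x ** 2


def square_faulty(x: int) -> int:
    return x + x  # deliberate fault: wrong for most inputs
```

With three modules, a single faulty module is outvoted by the other two; if all three disagree, the sketch raises an error rather than guessing.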

Testing goals

At different points in the development cycle, tests may have different goals, e.g.:
- verification: the functions are correctly implemented (according to specifications)
- validation: we are implementing the correct functions (according to requirements)

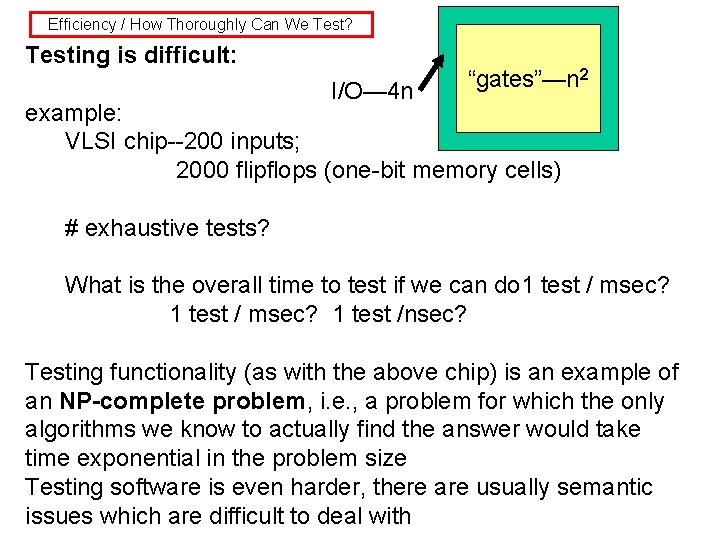

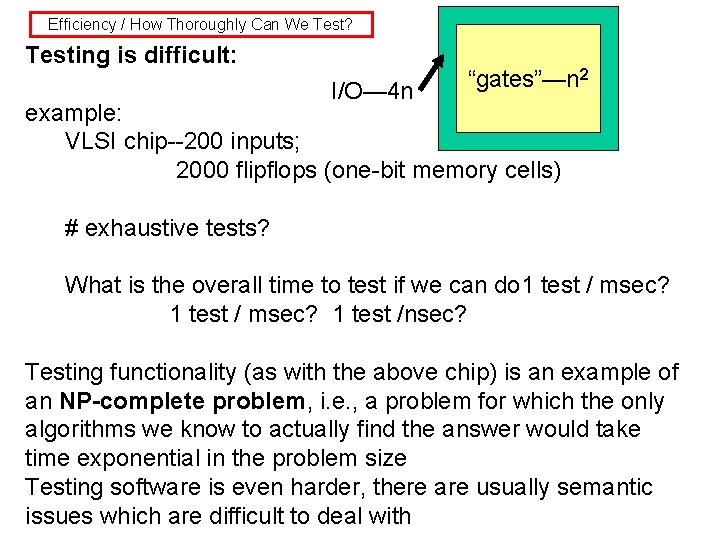

Efficiency / How Thoroughly Can We Test?

Testing is difficult: I/O grows as 4n, while "gates" grow as n^2.

Example: a VLSI chip with 200 inputs and 2000 flip-flops (one-bit memory cells).
- How many exhaustive tests are needed?
- What is the overall time to test if we can do 1 test/msec? 1 test/nsec?

Testing functionality (as with the above chip) is an example of an NP-complete problem, i.e., a problem for which the only algorithms we know that are guaranteed to find the answer take time exponential in the problem size.

Testing software is even harder: there are usually semantic issues that are difficult to deal with.
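
The questions about the VLSI example can be worked through numerically. The sketch below assumes that "exhaustive" means applying every input combination in every possible internal state, i.e. 2^(200 + 2000) = 2^2200 tests, which is on the order of 10^662; the function names are illustrative only.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # ignoring leap years


def exhaustive_tests(inputs: int, flipflops: int) -> int:
    """Number of (input, state) combinations for a binary circuit."""
    return 2 ** (inputs + flipflops)


def years_to_test(tests: int, tests_per_second: int) -> int:
    """Whole years needed to run `tests` at the given rate (integer arithmetic)."""
    return tests // (tests_per_second * SECONDS_PER_YEAR)


tests = exhaustive_tests(inputs=200, flipflops=2000)
for label, rate in [("1 test/msec", 10**3), ("1 test/nsec", 10**9)]:
    years = years_to_test(tests, rate)
    # The numbers are far too large to print in full, so report the digit count.
    print(f"{label}: about 10^{len(str(years)) - 1} years")
```

Speeding up the tester by a factor of a million removes only six digits from the exponent, which is why exhaustive testing is not a practical option here.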

Good, Bad, and Successful Tests

"Psychology of testing":
- good test: has a high probability of finding an error
- "bad test": not likely to find anything new
- successful test: finds a new error

Most Effective Testing Is Independent

The most effective testing is done by an "independent" third party. It is more difficult to "see" the errors you have included in your own work:
- subconsciously you want the system to work
- you may know what you intended and overlook what is actually there
- an independent tester brings a fresh viewpoint to the question of what works and what doesn't
- testing requires expertise; the common practice of putting the most inexperienced team members in charge of testing is not really effective

Question: what does this imply about your team testing strategy for the quarter project? What about in the waterfall model? In XP?

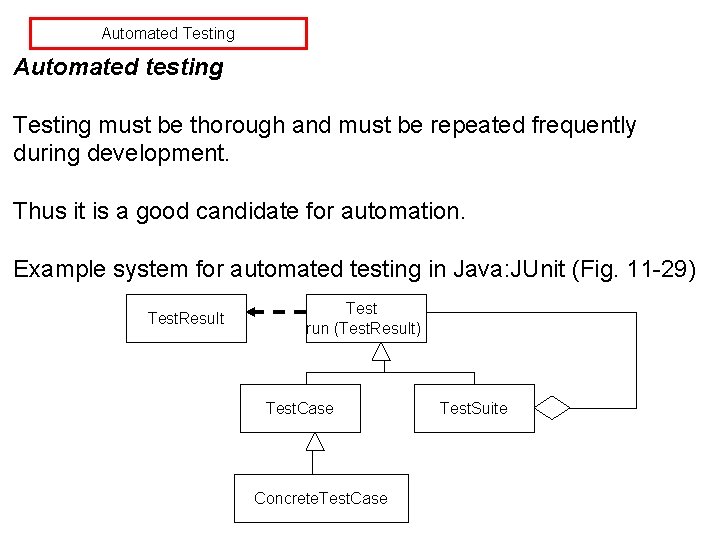

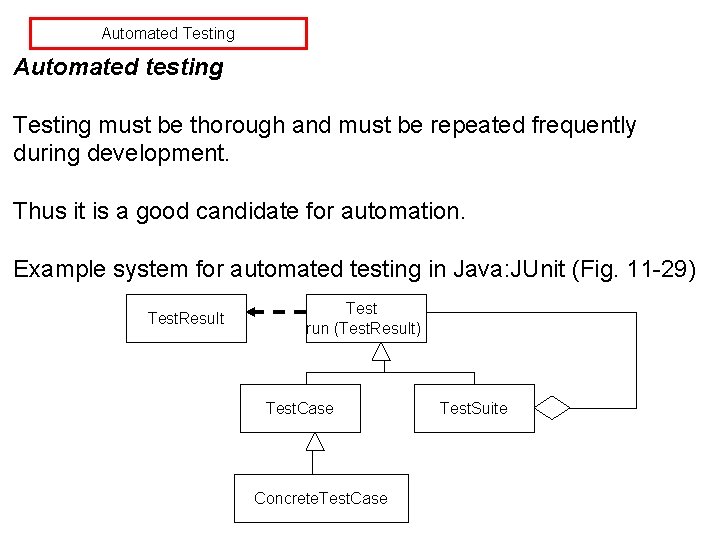

Automated Testing

Testing must be thorough and must be repeated frequently during development. Thus it is a good candidate for automation.

Example system for automated testing in Java: JUnit (Fig. 11-29). Classes shown in the figure: Test, with run(TestResult); TestCase; ConcreteTestCase; TestSuite; TestResult.
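
The same xUnit structure can be tried without any external dependency using Python's standard `unittest` module, which provides `TestCase`, `TestSuite`, and `TestResult` classes with roles similar to those in the JUnit figure. This is a sketch in Python rather than the slide's Java, and `add`, `AddTest`, `build_suite`, and `run_suite` are hypothetical names chosen for illustration.

```python
import unittest


def add(a: int, b: int) -> int:
    """Hypothetical function under test."""
    return a + b


class AddTest(unittest.TestCase):
    """Plays the role of ConcreteTestCase in the figure."""

    def test_small_numbers(self) -> None:
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self) -> None:
        self.assertEqual(add(-2, -3), -5)


def build_suite() -> unittest.TestSuite:
    """Collect the test cases into a suite (the TestSuite role)."""
    return unittest.defaultTestLoader.loadTestsFromTestCase(AddTest)


def run_suite() -> unittest.TestResult:
    """Run the suite; outcomes are accumulated in a TestResult object."""
    result = unittest.TestResult()
    build_suite().run(result)
    return result
```

Because the whole suite runs from one call, it can be repeated after every change, which is the point of automating the tests.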

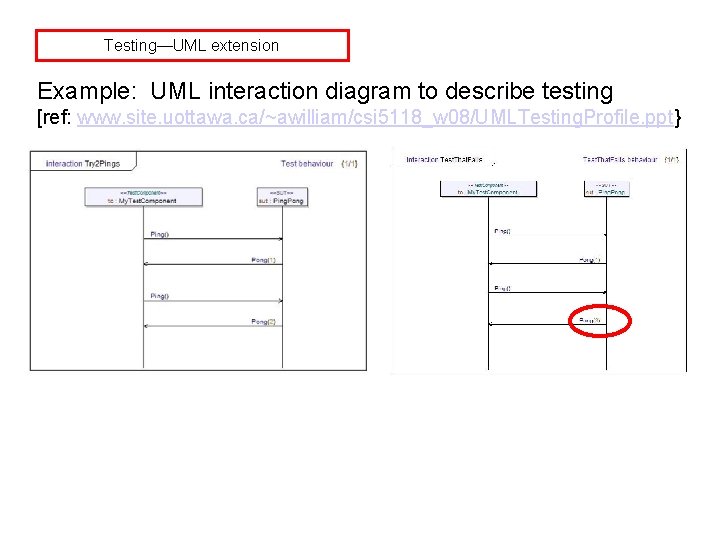

Testing: UML extension

Example: U2TP, the UML 2 Testing Profile (2005 extension to UML)
- extends UML to allow the modeling of testing
- can be used with manual or automated testing

Example: Figure 11-31:
- Context: organizes test cases, test components, and the system under test (SUT)
- Components: set up tests
- SUT: what is being tested

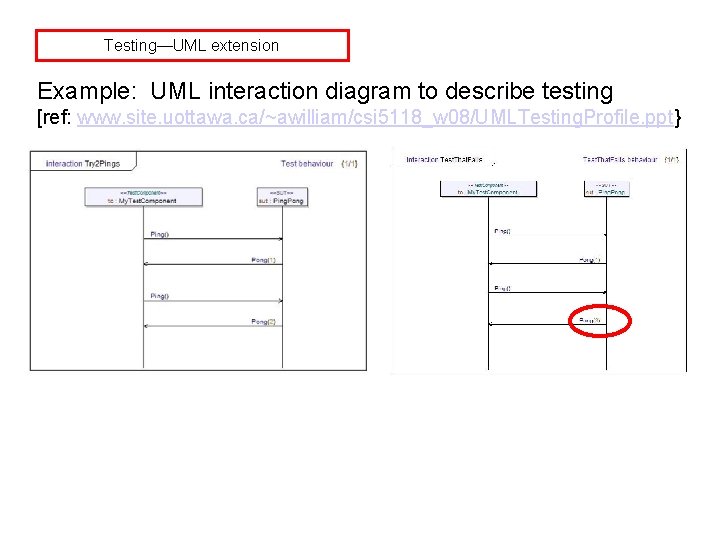

Testing: UML extension

Example: UML interaction diagram to describe testing [ref: www.site.uottawa.ca/~awilliam/csi5118_w08/UMLTestingProfile.ppt]

Matching Testing with Design and Language

How will testing match with design/language? E.g.:
- procedural: example: sort an array; the program has access to all array information
- OO: example: develop an "array class"; only the owner class and "friends" have direct access to the array structure

What kinds of tests need to be done in each case? Can you "trust" that the array class has tested its sorting routine sufficiently?

Design for Testability (DFT): what makes software "testable"?

- understandability: you understand the module, its inputs, and its outputs
- decomposability: you can decompose into smaller problems and test each separately
- operability: only a few errors are possible (incremental test strategy)
- controllability: you can control state and input to test
- observability: you can see the results of the test
- simplicity: you choose the "simplest solution that will work"
- stability: the same test will give the same results each time
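
As one possible illustration of controllability, observability, and stability, the sketch below passes the clock into a function instead of reading it internally, so a test can fix the time and get the same result on every run. The names `greeting` and `fixed_clock` are hypothetical, and injecting a dependency is just one way to obtain these properties.

```python
from datetime import datetime
from typing import Callable


def greeting(now: Callable[[], datetime] = datetime.now) -> str:
    """Return a greeting that depends on the hour supplied by `now`."""
    return "Good morning" if now().hour < 12 else "Good afternoon"


def fixed_clock(hour: int) -> Callable[[], datetime]:
    """Build a clock that always reports the same hour on an arbitrary date."""
    return lambda: datetime(2024, 1, 1, hour, 0)
```

A test can then call `greeting(fixed_clock(9))` and compare the returned string, without depending on when the test happens to run.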

Design for Testability (DFT): useful strategies

Some useful strategies:
- pretest: test before carrying out an action. Example: test that the divisor is not zero before division
- posttest: test that you got a valid result. Example: if you are calculating a grade, test that the result is between 0 and 100
- invariant: test that iterations don't change a value/range. Example: test that the loop counter stays within bounds
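
The three strategies above can be combined in one small routine. This is a minimal sketch using the slide's own examples (nonzero divisor, grade between 0 and 100, loop counter within bounds); the function `average_grade` and its parameters are hypothetical.

```python
from typing import Sequence


def average_grade(points: Sequence[float], max_points: Sequence[float]) -> float:
    """Return the mean percentage grade over several assignments."""
    # Pretest: check inputs before acting on them.
    if len(points) != len(max_points) or not points:
        raise ValueError("need one maximum per score, and at least one score")

    total = 0.0
    for i in range(len(points)):
        # Invariant: the loop counter stays within bounds on every iteration.
        assert 0 <= i < len(points)
        # Pretest: the divisor must not be zero before dividing.
        if max_points[i] == 0:
            raise ValueError("maximum points must be nonzero")
        total += 100.0 * points[i] / max_points[i]

    grade = total / len(points)
    # Posttest: a valid grade lies between 0 and 100.
    if not 0.0 <= grade <= 100.0:
        raise ValueError(f"computed grade {grade} is out of range")
    return grade
```

Building the checks into the code means an invalid input or result is reported where it occurs instead of propagating silently.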

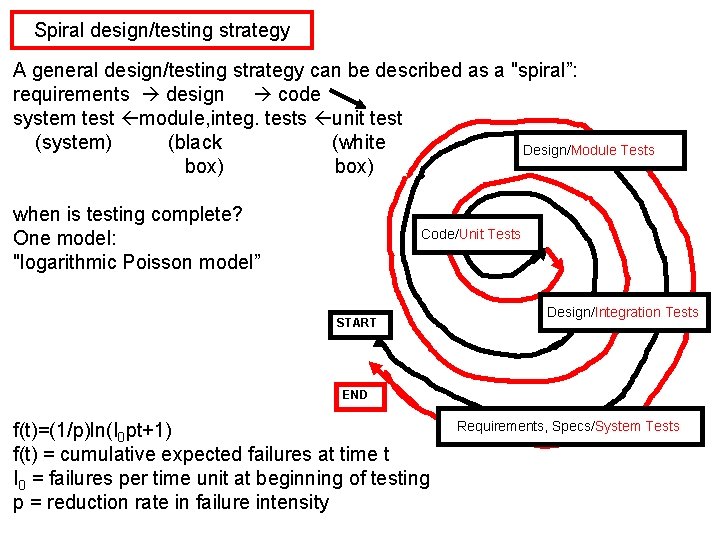

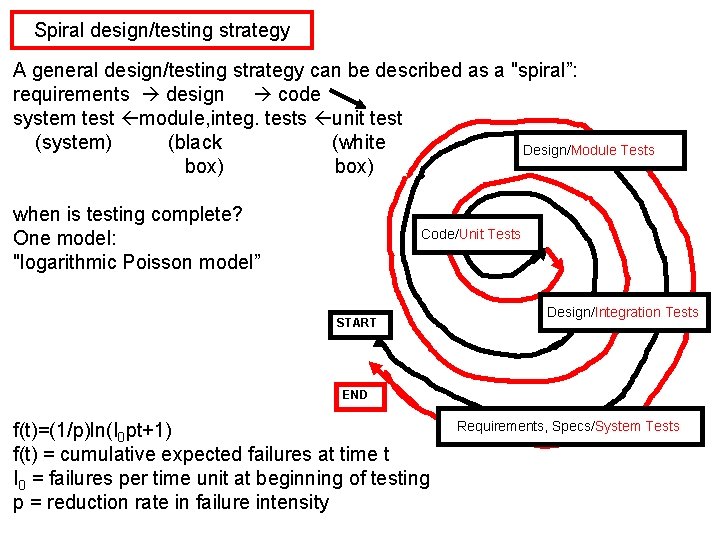

Spiral design/testing strategy

A general design/testing strategy can be described as a "spiral":

[Figure: spiral running from START to END. Development stages paired with tests: requirements / system test (system); design / module and integration tests (black box); code / unit test (white box). Spiral labels: Code/Unit Tests; Design/Module Tests; Design/Integration Tests; Requirements, Specs/System Tests.]

When is testing complete? One model: the "logarithmic Poisson model":

$$f(t) = \frac{1}{p}\,\ln(I_0\, p\, t + 1)$$

- $f(t)$ = cumulative expected failures at time $t$
- $I_0$ = failures per time unit at the beginning of testing
- $p$ = reduction rate in failure intensity
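
The logarithmic Poisson model is easy to evaluate numerically. The sketch below implements f(t) as given, plus its derivative I0 / (I0 p t + 1), the failure intensity at time t. Using a target intensity as the stopping rule is an assumption added here for illustration, and all function and parameter names are hypothetical.

```python
import math


def expected_failures(t: float, initial_intensity: float, decay: float) -> float:
    """Cumulative expected failures f(t) = (1/p) * ln(I0 * p * t + 1)."""
    return math.log(initial_intensity * decay * t + 1.0) / decay


def failure_intensity(t: float, initial_intensity: float, decay: float) -> float:
    """Derivative of f(t): I0 / (I0 * p * t + 1), the failure rate at time t."""
    return initial_intensity / (initial_intensity * decay * t + 1.0)


def time_to_reach_intensity(target: float, initial_intensity: float, decay: float) -> float:
    """Testing time at which the failure intensity falls to `target`."""
    # Solve I0 / (I0 * p * t + 1) = target for t.
    return (initial_intensity / target - 1.0) / (initial_intensity * decay)
```

For example, with I0 = 10 failures per time unit and p = 0.05, `time_to_reach_intensity(1.0, 10.0, 0.05)` gives the testing time after which about one failure per time unit is still expected.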

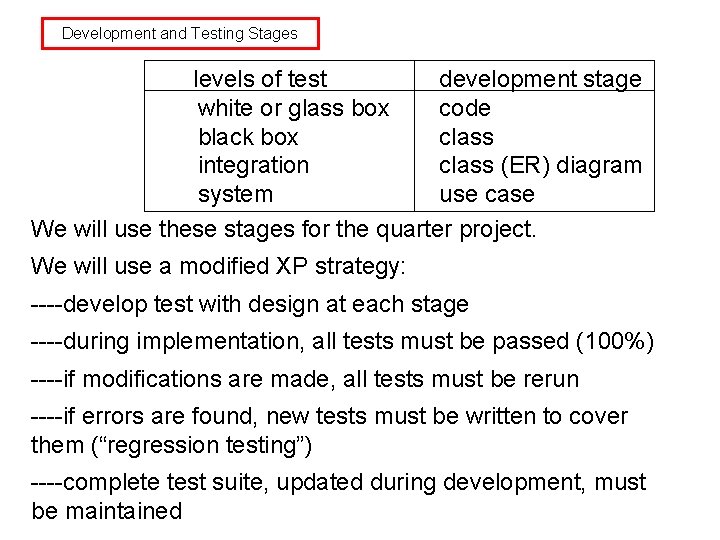

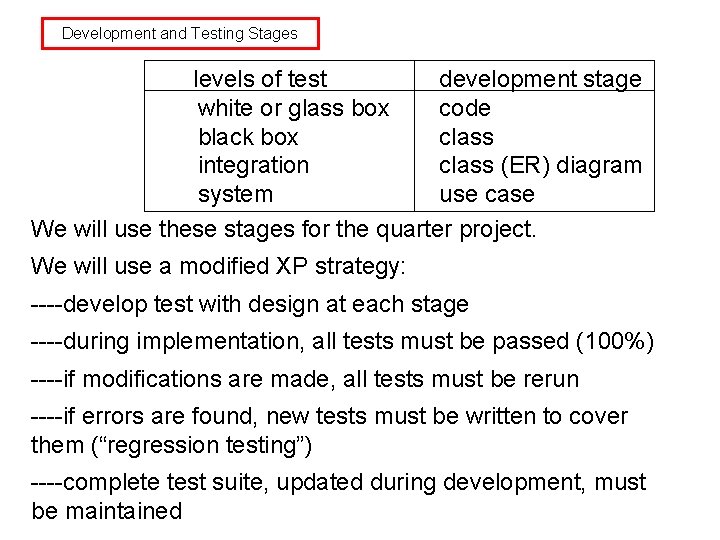

Development and Testing Stages

| level of test | development stage |
| --- | --- |
| white or glass box | code |
| black box | class |
| integration | class (ER) diagram |
| system | use case |

We will use these stages for the quarter project. We will use a modified XP strategy:
- develop tests with the design at each stage
- during implementation, all tests must be passed (100%)
- if modifications are made, all tests must be rerun
- if errors are found, new tests must be written to cover them ("regression testing")
- a complete test suite, updated during development, must be maintained

Types of testing:
- white box: "internals" (also called "glass box")
- black box: modules and their "interfaces" (also called "behavioral")
- system: "functionality" (can be based on specs, use cases)
- application-specific:
  - GUIs
  - client/server
  - real-time (e.g., drug dosage monitor; thermostat)
  - documentation/help

Good testing strategy

Steps in a good test strategy:
- quantified requirements
- test objectives explicit
- user requirements clear
- use "rapid cycle testing"
- build self-testing software
- filter errors by technical reviews
- review test cases and strategy formally as well
- continually improve the testing process

OO testing strategy

OO testing:
- emphasis is on interfaces
- use UML tools to support testing strategies and development of test cases:
  - system tests: use cases; quality measurements
  - black box tests: ER diagrams, object message diagrams, dataflow and state diagrams
  - white box tests: class and state diagrams, CRC cards

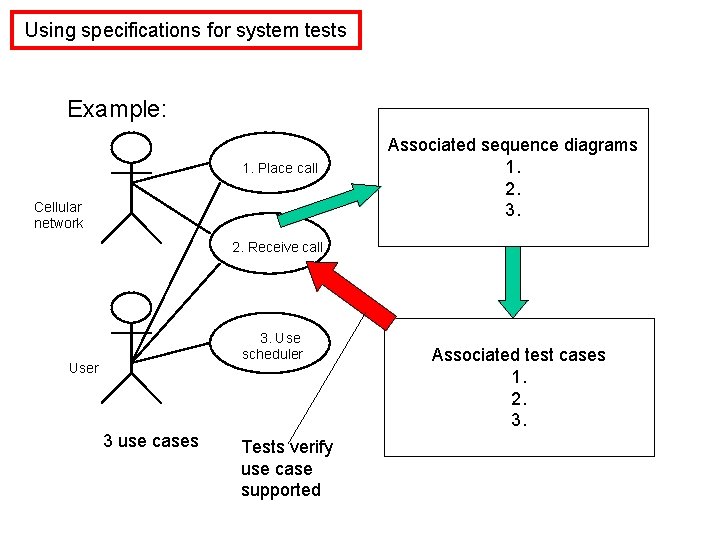

Using specifications for system tests

System tests should verify that specifications have been met.

For a UML-based strategy:
- each use case ---> one or more system tests
- each quality / performance requirement ---> one or more system tests

Additional qualitative or quantitative tests (not from use cases), for example:
- is the system "user-friendly"?
- are timing requirements met?
- are available resources sufficient?

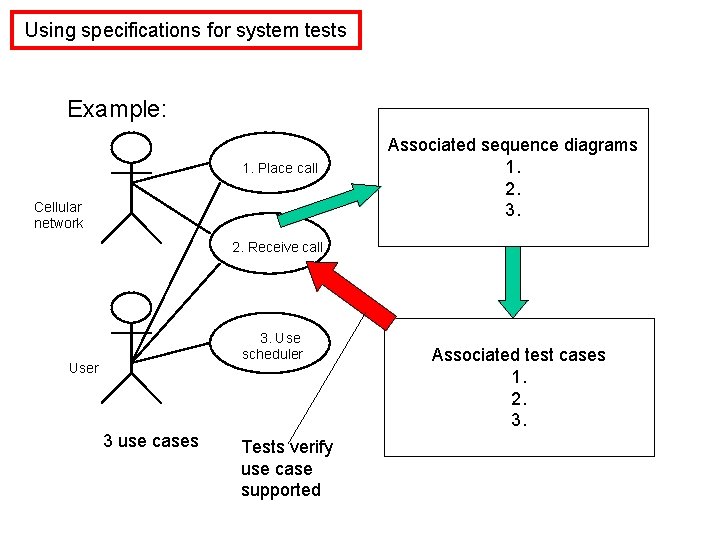

Using specifications for system tests

Example: 3 use cases (actors: User, Cellular network):
1. Place call
2. Receive call
3. Use scheduler

Each use case (1, 2, 3) has an associated sequence diagram and an associated test case. Tests verify that the use case is supported.

Black box testing: what to test

Black box testing tests the functional requirements of components. Must check:
- incorrect or missing components
- interface errors
- data structures or external data access
- behavior/performance errors
- initialization and termination errors

Black box testing: testing graph

The starting point is usually a graph:
- objects to be modeled
- relationships connecting them ("links")
- link properties

Example: can use ER diagrams, object message diagrams, or state diagrams, with additional information on links as necessary.

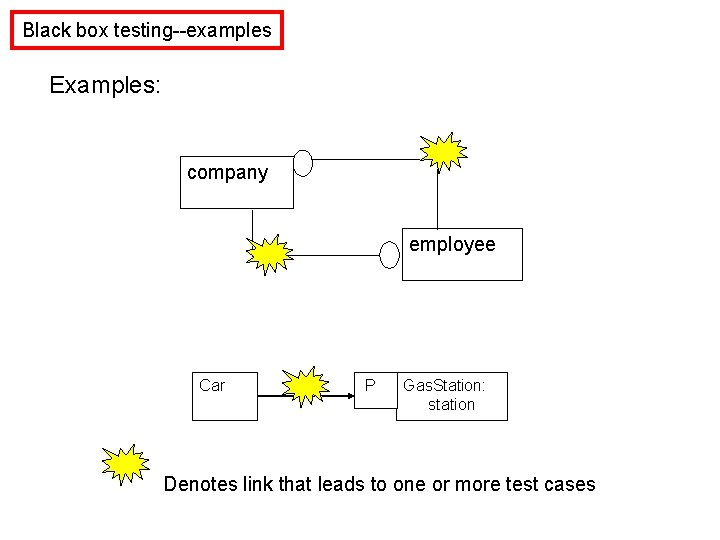

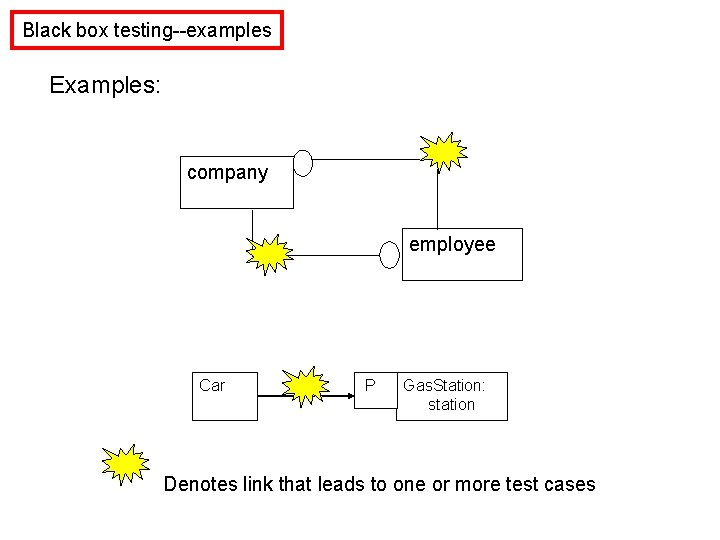

Black box testing: examples

[Figure: example graphs, including company and employee; Car and GasStation: station. A marked link denotes a link that leads to one or more test cases.]

Black box testing techniques

Some useful techniques for black box testing (all "dynamic"):
- transaction flow
- data flow
- state modeling
- timing modeling

Much testing focuses on "boundary values" between components.

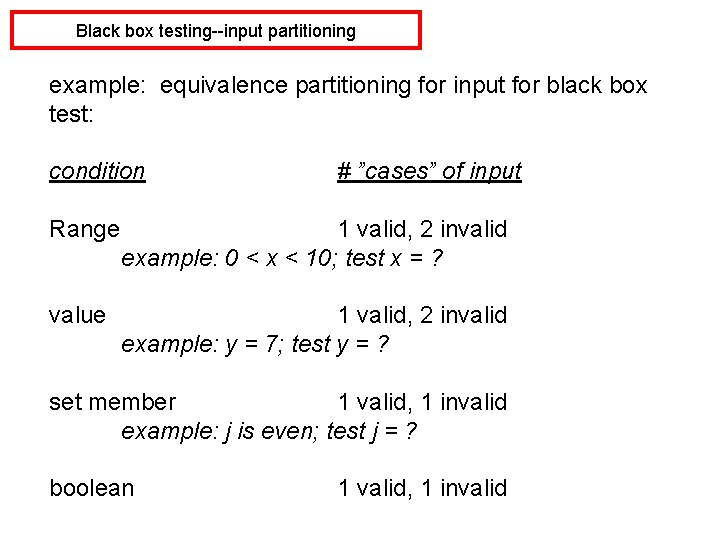

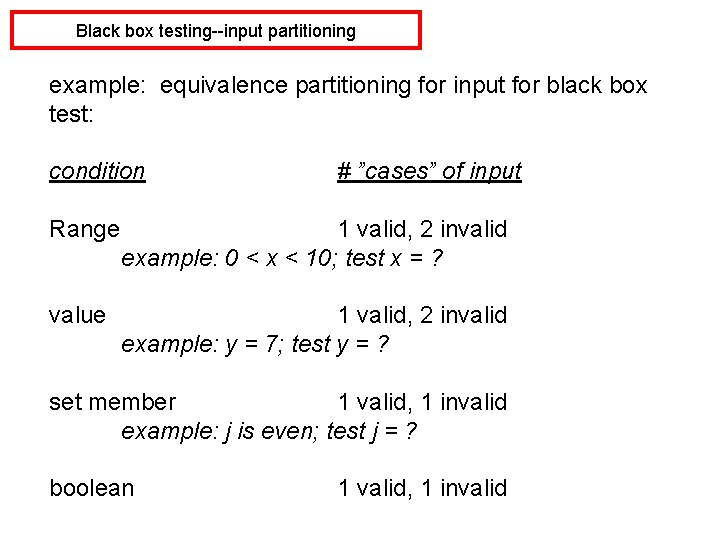

Black box testing: input partitioning

Example: equivalence partitioning of input for a black box test:

| condition | # "cases" of input | example |
| --- | --- | --- |
| range | 1 valid, 2 invalid | 0 < x < 10; test x = ? |
| value | 1 valid, 2 invalid | y = 7; test y = ? |
| set member | 1 valid, 1 invalid | j is even; test j = ? |
| boolean | 1 valid, 1 invalid | |
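
One way to answer the "test x = ?" questions is to pick a single representative from each equivalence class. The sketch below does this for the range and set-member conditions; the chosen values (5, -3, 42, 4, 7) and the helper names are illustrative assumptions, not values given in the slides.

```python
def in_range(x: int) -> bool:
    """Hypothetical unit under test: accepts 0 < x < 10."""
    return 0 < x < 10


def is_even(j: int) -> bool:
    """Hypothetical unit under test: accepts even integers."""
    return j % 2 == 0


# One representative per equivalence class: (input, expected result).
RANGE_CASES = [(5, True), (-3, False), (42, False)]  # 1 valid, 2 invalid
SET_MEMBER_CASES = [(4, True), (7, False)]           # 1 valid, 1 invalid


def run_cases(check, cases) -> list:
    """Return the inputs whose actual result differs from the expected one."""
    return [value for value, expected in cases if check(value) != expected]
```

An empty list from `run_cases` means every partition behaved as expected; the range condition needs two invalid cases because an input can fall below or above the valid interval.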

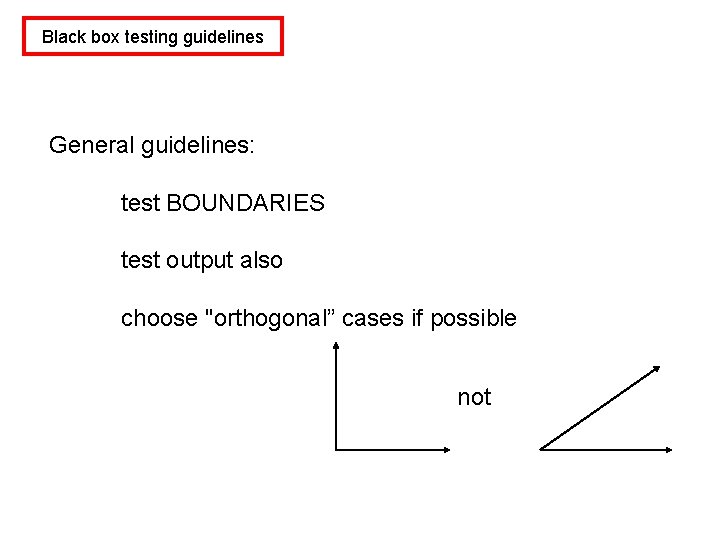

Black box testing guidelines

General guidelines:
- test BOUNDARIES
- test output also
- choose "orthogonal" cases if possible
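
Boundary testing can be sketched for the range condition 0 < x < 10 by testing the values on and next to each edge and recording the output for each. The helper names below are hypothetical, and integer inputs are assumed.

```python
def boundary_values(low: int, high: int) -> list:
    """Integers on and next to each edge of the open interval low < x < high."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def in_range(x: int) -> bool:
    """Hypothetical unit under test: accepts 0 < x < 10."""
    return 0 < x < 10


def boundary_report(check, low: int, high: int) -> dict:
    """Map each boundary input to the output it produces, so outputs are checked too."""
    return {x: check(x) for x in boundary_values(low, high)}
```

Comparing the report with the expected outputs catches off-by-one mistakes such as writing `<=` where `<` was intended.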

White box testing: we will look at specific strategies later on (e.g., path testing)