Process Scheduling: Multilevel Queue Scheduling

- Slides: 13

Process scheduling

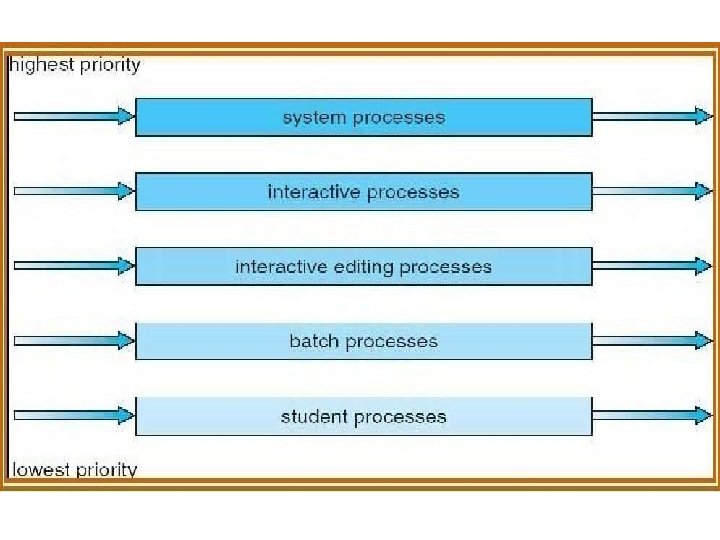

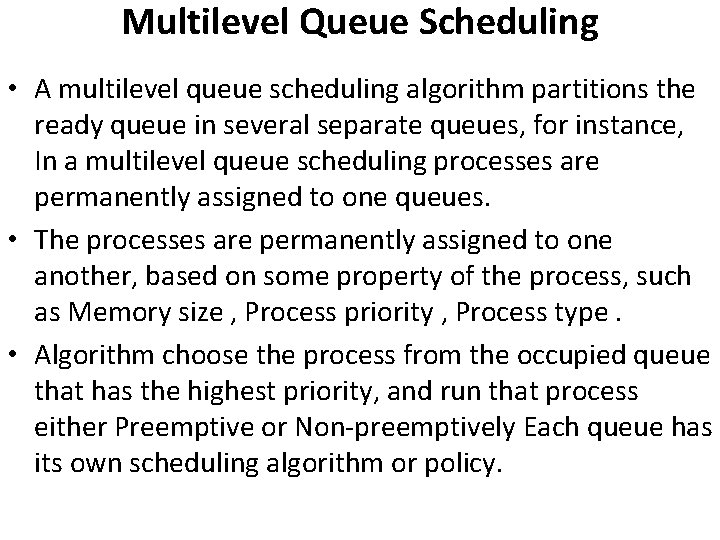

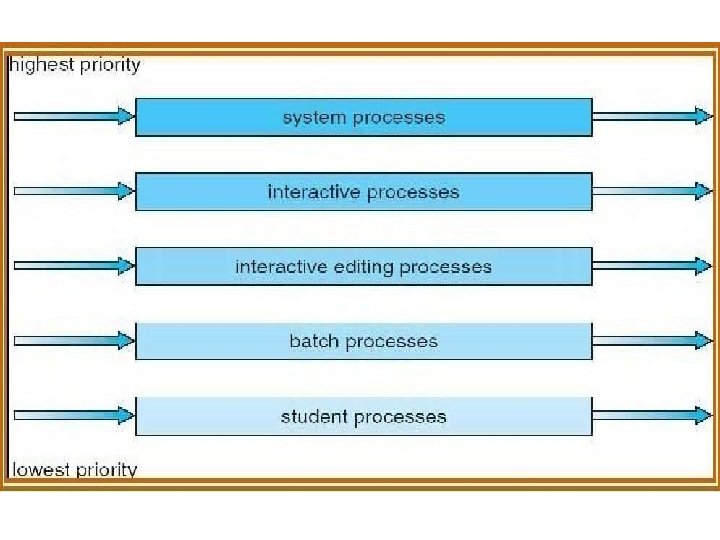

Multilevel Queue Scheduling

- A multilevel queue scheduling algorithm partitions the ready queue into several separate queues.
- Processes are permanently assigned to one queue based on some property of the process, such as memory size, process priority, or process type.
- Each queue has its own scheduling algorithm or policy.
- The algorithm chooses a process from the highest-priority occupied queue and runs that process either preemptively or non-preemptively.
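A minimal sketch of the selection step, assuming fixed-size circular queues of process IDs, three hypothetical levels (0 = system, 1 = interactive, 2 = batch), and plain FCFS order inside each queue for simplicity. In a real system each queue could use its own policy, as noted above.

```c
#include <stddef.h>

#define NUM_LEVELS 3   /* hypothetical: 0 = system, 1 = interactive, 2 = batch */
#define QUEUE_CAP 16   /* hypothetical per-queue capacity */

/* A fixed-size circular FIFO of process IDs; one per priority level. */
struct ready_queue {
    int pids[QUEUE_CAP];
    size_t head;
    size_t count;
};

/* Append a process to the tail of a queue; returns -1 if the queue is full. */
int enqueue(struct ready_queue *q, int pid)
{
    if (q->count == QUEUE_CAP)
        return -1;
    q->pids[(q->head + q->count) % QUEUE_CAP] = pid;
    q->count++;
    return 0;
}

/* Pick the next process: scan levels from highest (0) to lowest priority and
   remove the front process of the first non-empty queue.
   Returns -1 if every queue is empty. */
int pick_next(struct ready_queue queues[], size_t levels)
{
    for (size_t i = 0; i < levels; i++) {
        struct ready_queue *q = &queues[i];
        if (q->count > 0) {
            int pid = q->pids[q->head];
            q->head = (q->head + 1) % QUEUE_CAP;
            q->count--;
            return pid;
        }
    }
    return -1;
}
```

A non-preemptive scheduler would call `pick_next` only when the running process blocks or terminates; a preemptive one would also call it whenever a higher-priority queue becomes non-empty.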

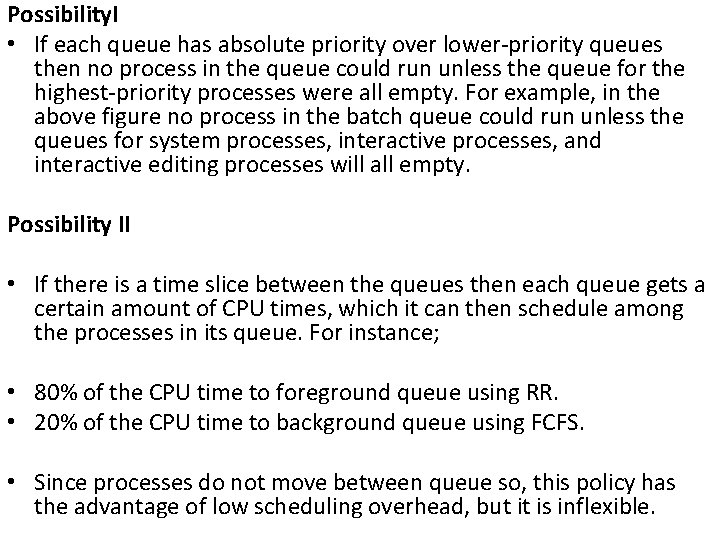

Possibility I

- If each queue has absolute priority over lower-priority queues, then no process in a lower-priority queue can run unless all higher-priority queues are empty. For example, in the figure above, no process in the batch queue could run unless the queues for system processes, interactive processes, and interactive editing processes were all empty.

Possibility II

- If the CPU is time-sliced between the queues, then each queue gets a certain share of CPU time, which it can then schedule among the processes in its queue. For instance:
  - 80% of the CPU time goes to the foreground queue, scheduled with RR.
  - 20% of the CPU time goes to the background queue, scheduled with FCFS.
- Since processes do not move between queues, this policy has the advantage of low scheduling overhead, but it is inflexible.
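The 80%/20% split in Possibility II can be sketched as a repeating cycle of timer ticks. This assumes a hypothetical cycle of 10 ticks, where the first 8 ticks of every cycle belong to the foreground queue and the last 2 to the background queue. How each queue spends its slice (RR or FCFS) is not shown, and the sketch ignores what happens when the owning queue has nothing to run.

```c
#define CYCLE_TICKS 10u  /* hypothetical cycle length in timer ticks */

enum { FOREGROUND = 0, BACKGROUND = 1 };

/* Decide which queue owns a given timer tick. Each cycle of CYCLE_TICKS ticks
   gives the first fg_ticks ticks to the foreground (RR) queue and the rest to
   the background (FCFS) queue; fg_ticks = 8 yields the 80%/20% split. */
int slice_owner(unsigned tick, unsigned fg_ticks)
{
    return (tick % CYCLE_TICKS) < fg_ticks ? FOREGROUND : BACKGROUND;
}

/* Count how many of the first total_ticks ticks go to the foreground queue. */
unsigned foreground_ticks(unsigned total_ticks, unsigned fg_ticks)
{
    unsigned n = 0;
    for (unsigned t = 0; t < total_ticks; t++)
        if (slice_owner(t, fg_ticks) == FOREGROUND)
            n++;
    return n;
}
```

With `fg_ticks = 8`, any whole number of cycles gives the foreground queue exactly 80% of the ticks, for example 80 of the first 100.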

Multilevel Feedback Queue Scheduling

- A multilevel feedback queue scheduling algorithm allows a process to move between queues.
- It uses several ready queues and associates a different priority with each queue.
- The algorithm chooses the process from the highest-priority occupied queue and runs that process either preemptively or non-preemptively.
- If a process uses too much CPU time, it is moved to a lower-priority queue.
- Similarly, a process that waits too long in a lower-priority queue may be moved to a higher-priority queue. This form of aging prevents starvation.
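The two movement rules above (demotion for CPU-heavy processes, promotion through aging) can be sketched as two event handlers. The number of levels and the aging threshold are hypothetical values chosen for illustration, and "too much CPU time" is modeled as using a whole quantum without blocking.

```c
#define LOWEST_LEVEL 2        /* hypothetical: levels 0 (highest) .. 2 (lowest) */
#define AGING_THRESHOLD 100u  /* hypothetical: ticks a task may wait before promotion */

struct task {
    int level;         /* current queue; 0 is the highest priority */
    unsigned waited;   /* ticks spent waiting since the task last ran or moved */
};

/* The task used its entire quantum without blocking: demote it one level. */
void on_quantum_expired(struct task *t)
{
    if (t->level < LOWEST_LEVEL)
        t->level++;
    t->waited = 0;
}

/* Called once per tick for each waiting task: after waiting too long,
   promote it one level (aging), which prevents starvation. */
void on_wait_tick(struct task *t)
{
    if (++t->waited >= AGING_THRESHOLD && t->level > 0) {
        t->level--;
        t->waited = 0;
    }
}
```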

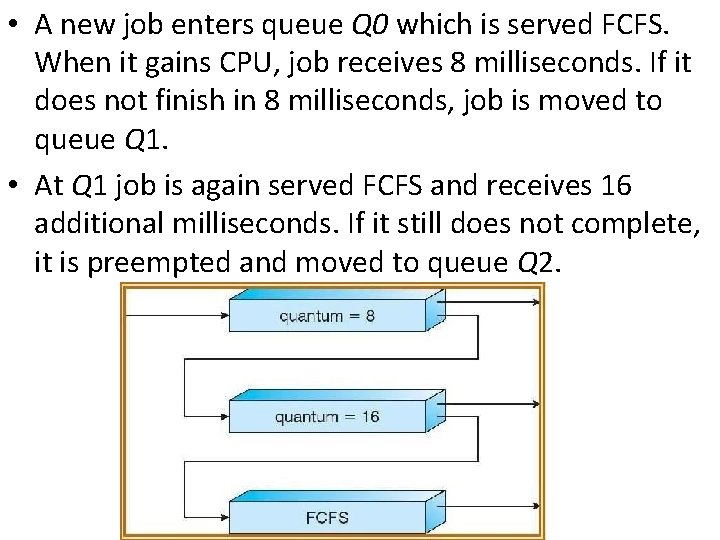

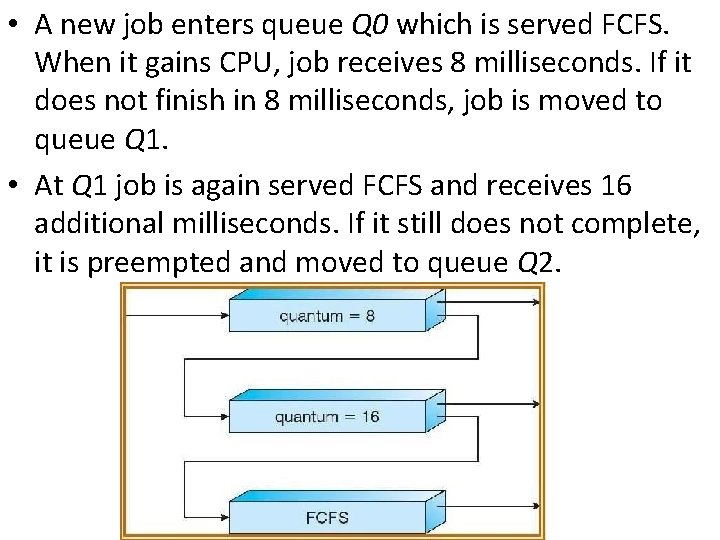

- A process entering the ready queue is placed in queue 0.
- If it does not finish within 8 milliseconds, it is moved to the tail of queue 1.
- If it does not complete within its 16-millisecond quantum in queue 1, it is preempted and placed into queue 2.
- Processes in queue 2 run on an FCFS basis, and only when queues 0 and 1 are empty.

Example: three queues

- Q0: RR with time quantum 8 milliseconds
- Q1: RR with time quantum 16 milliseconds
- Q2: FCFS

- A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, it is moved to queue Q1.
- At Q1, the job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.
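The three-queue example can be traced with a small simulation. This is a sketch under simplifying assumptions not stated in the slides: all jobs arrive at time 0, never block for I/O, and there are at most a hypothetical `MAX_JOBS` of them. With no later arrivals, Q0 drains before Q1 runs and Q1 drains before Q2 runs, so the queues can be processed one after another.

```c
#include <stddef.h>

#define MAX_JOBS 32  /* hypothetical limit for the sketch */

/* Time quanta (ms) for Q0 and Q1; Q2 is FCFS and runs jobs to completion. */
static const unsigned QUANTUM[2] = {8, 16};

/* Simulate the example for n jobs arriving at time 0. burst[i] is job i's
   CPU burst in ms; on return finish[i] holds its completion time.
   Returns -1 if n exceeds MAX_JOBS, 0 otherwise. */
int mlfq_simulate(const unsigned burst[], unsigned finish[], size_t n)
{
    unsigned remaining[MAX_JOBS];
    size_t order[MAX_JOBS];   /* jobs in the current queue, in FCFS order */
    size_t demoted[MAX_JOBS]; /* jobs moved to the tail of the next queue */
    size_t count = n;
    unsigned clock = 0;

    if (n > MAX_JOBS)
        return -1;
    for (size_t i = 0; i < n; i++) {
        remaining[i] = burst[i];
        order[i] = i;
    }

    for (int level = 0; level < 3; level++) {
        size_t next_count = 0;
        for (size_t k = 0; k < count; k++) {
            size_t j = order[k];
            unsigned run = remaining[j];
            if (level < 2 && run > QUANTUM[level])
                run = QUANTUM[level];   /* quantum expires: job is preempted */
            clock += run;
            remaining[j] -= run;
            if (remaining[j] == 0)
                finish[j] = clock;
            else
                demoted[next_count++] = j;
        }
        for (size_t k = 0; k < next_count; k++)
            order[k] = demoted[k];
        count = next_count;
    }
    return 0;
}
```

For bursts of 5, 20, and 40 ms, the first job completes in Q0 at 5 ms; the second uses 8 ms in Q0 and finishes its remaining 12 ms in Q1 at 33 ms; the third uses 8 ms in Q0, 16 ms in Q1, and its last 16 ms in Q2, finishing at 65 ms.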

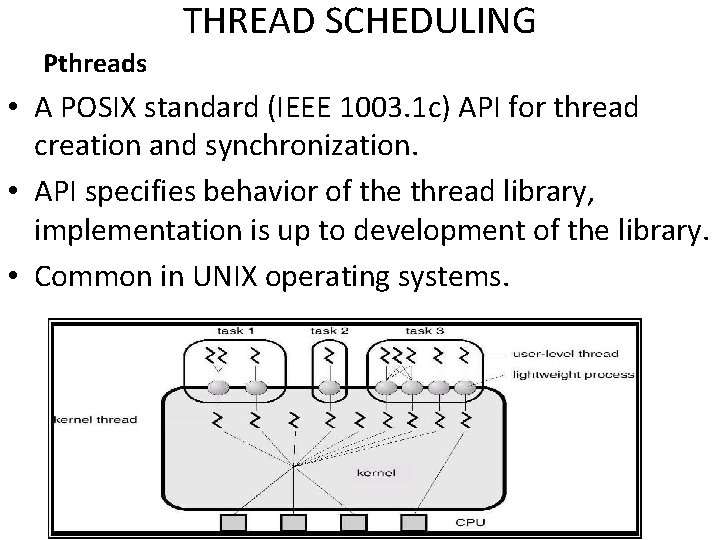

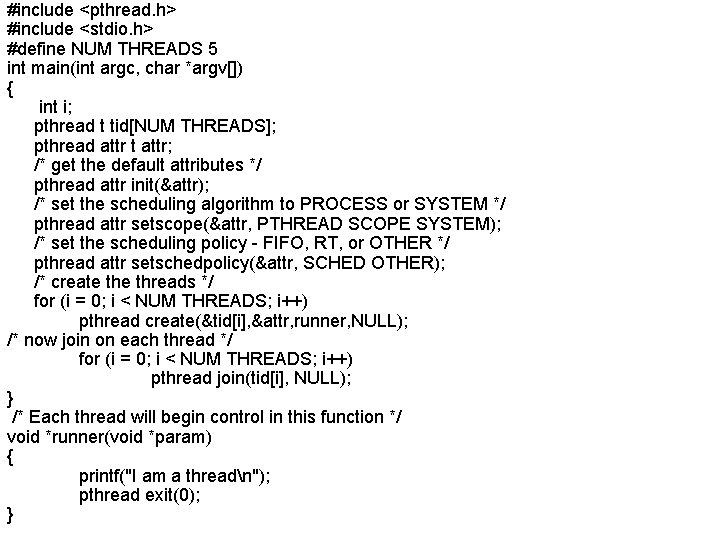

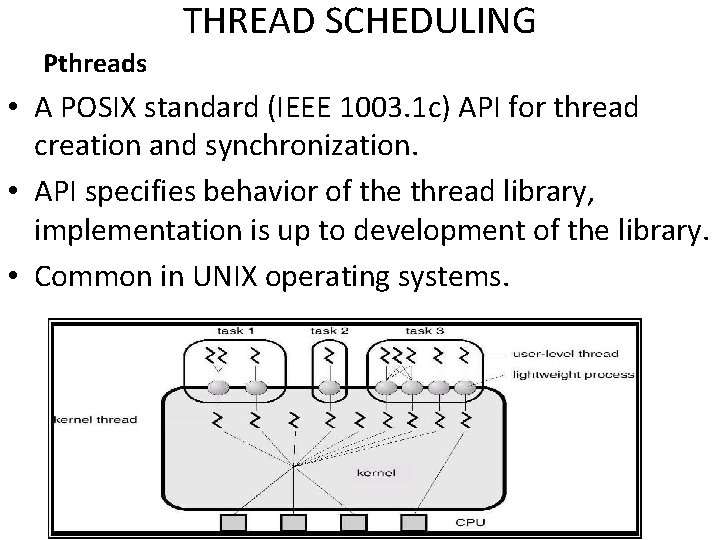

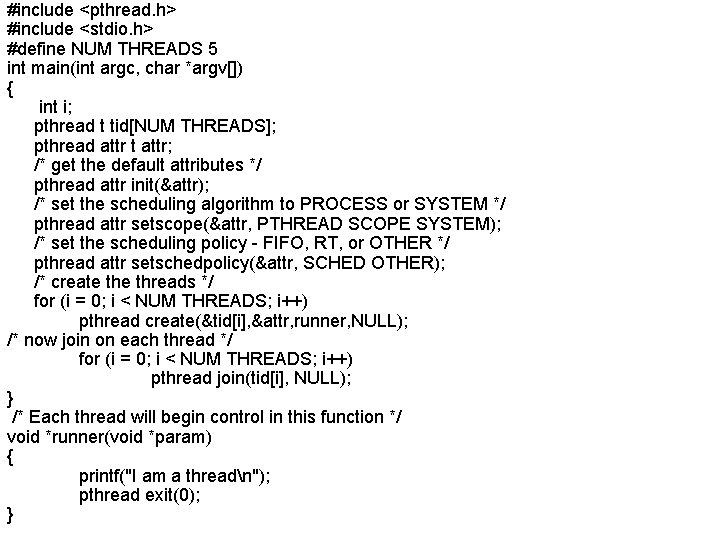

THREAD SCHEDULING

Pthreads

- A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization.
- The API specifies the behavior of the thread library; the implementation is up to the developers of the library.
- Common in UNIX operating systems.

```c
#include <pthread.h>
#include <sched.h>   /* SCHED_OTHER */
#include <stdio.h>

#define NUM_THREADS 5

void *runner(void *param);  /* thread start routine, defined below */

int main(int argc, char *argv[])
{
    int i;
    pthread_t tid[NUM_THREADS];
    pthread_attr_t attr;

    /* get the default attributes */
    pthread_attr_init(&attr);

    /* set the contention scope to PROCESS or SYSTEM */
    pthread_attr_setscope(&attr, PTHREAD_SCOPE_SYSTEM);

    /* set the scheduling policy - FIFO, RR, or OTHER */
    pthread_attr_setschedpolicy(&attr, SCHED_OTHER);

    /* create the threads */
    for (i = 0; i < NUM_THREADS; i++)
        pthread_create(&tid[i], &attr, runner, NULL);

    /* now join on each thread */
    for (i = 0; i < NUM_THREADS; i++)
        pthread_join(tid[i], NULL);

    pthread_attr_destroy(&attr);
    return 0;
}

/* Each thread will begin control in this function */
void *runner(void *param)
{
    printf("I am a thread\n");
    pthread_exit(NULL);
}
```
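Because the contention scope requested above is only a request, it can be useful to check what the library actually provides. A minimal sketch using the standard `pthread_attr_getscope` and `pthread_attr_setscope` calls; the helper names `default_contention_scope` and `scope_supported` are hypothetical. On Linux, for example, only `PTHREAD_SCOPE_SYSTEM` is supported, and requesting `PTHREAD_SCOPE_PROCESS` fails with `ENOTSUP`.

```c
#include <pthread.h>

/* Return the default contention scope of a fresh attribute object
   (PTHREAD_SCOPE_SYSTEM or PTHREAD_SCOPE_PROCESS), or -1 on error. */
int default_contention_scope(void)
{
    pthread_attr_t attr;
    int scope;

    if (pthread_attr_init(&attr) != 0)
        return -1;
    if (pthread_attr_getscope(&attr, &scope) != 0)
        scope = -1;
    pthread_attr_destroy(&attr);
    return scope;
}

/* Return 1 if the implementation accepts the requested scope, 0 if
   pthread_attr_setscope rejects it, or -1 if attributes cannot be created. */
int scope_supported(int scope)
{
    pthread_attr_t attr;
    int ok;

    if (pthread_attr_init(&attr) != 0)
        return -1;
    ok = (pthread_attr_setscope(&attr, scope) == 0);
    pthread_attr_destroy(&attr);
    return ok;
}
```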

Windows 2000 Threads

- Implements the one-to-one mapping.
- Each thread contains:
  - a thread id
  - a register set
  - separate user and kernel stacks
  - a private data storage area

Linux Threads

- Linux refers to them as tasks rather than threads.
- Thread creation is done through the clone() system call.
- clone() allows a child task to share the address space of the parent task (process).

Java Threads

- Java threads may be created by:
  - extending the Thread class
  - implementing the Runnable interface
- Java threads are managed by the JVM.
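A Linux-specific sketch of how clone() lets a child task share its parent's address space, using the glibc `clone()` wrapper with the `CLONE_VM` flag. The helper name `clone_shared_write` and the 64 KiB child stack size are hypothetical choices for illustration. Because of `CLONE_VM`, the value the child writes into a global variable is visible to the parent after the child exits; without that flag the child would modify its own copy.

```c
#define _GNU_SOURCE
#include <sched.h>      /* clone, CLONE_VM */
#include <signal.h>     /* SIGCHLD */
#include <stdlib.h>     /* malloc, free */
#include <sys/types.h>
#include <sys/wait.h>   /* waitpid */

#define CHILD_STACK_SIZE (64 * 1024)  /* hypothetical; ample for this tiny task */

static int shared_value = 0;  /* lives in the parent's address space */

/* Entry point of the child task: writes into memory shared with the parent. */
static int child_task(void *arg)
{
    shared_value = *(const int *)arg;
    return 0;
}

/* Create a child task that shares the parent's address space (CLONE_VM),
   wait for it, and return the value it wrote, or -1 on error. */
int clone_shared_write(int value)
{
    char *stack = malloc(CHILD_STACK_SIZE);
    if (stack == NULL)
        return -1;

    /* The stack grows downward on common architectures, so pass its top.
       SIGCHLD lets the parent reap the child with an ordinary waitpid(). */
    pid_t pid = clone(child_task, stack + CHILD_STACK_SIZE, CLONE_VM | SIGCHLD, &value);
    if (pid == -1) {
        free(stack);
        return -1;
    }
    if (waitpid(pid, NULL, 0) == -1) {
        free(stack);
        return -1;
    }
    free(stack);
    return shared_value;
}
```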

MULTIPLE-PROCESSOR SCHEDULING

- CPU scheduling is more complex when multiple CPUs are available.
- Assumes homogeneous (identical) processors within a multiprocessor, so any available processor can run any process in the queue.
- Load sharing: with identical processors, the load can be shared among them.
- Asymmetric multiprocessing: only one processor accesses the system data structures, alleviating the need for data sharing.
- Hard real-time systems: required to complete a critical task within a guaranteed amount of time.
- Soft real-time computing: requires that critical processes receive priority over less fortunate ones.

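- Symmetric multiprocessing (SMP): each processor is self-scheduling. All processes may be in a common ready queue, or each processor may have its own private queue of ready processes.
- Processor affinity: in SMP, a process has an affinity for the processor on which it is currently running, i.e., the system avoids migrating a process from one processor to another because migration is costly (the first processor's cache contents must be invalidated and the second processor's cache repopulated).
- Load balancing: attempts to keep the workload evenly distributed across all processors in an SMP system.
- Two approaches to load balancing:
  - Push migration: a specific task periodically checks the load on each processor and, if it finds an imbalance, pushes processes from overloaded processors to idle or less-busy ones.
  - Pull migration: an idle processor pulls a waiting task from a busy processor.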
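One pass of push migration can be sketched over an array of per-processor run-queue lengths. This is a simplified model under stated assumptions: load is measured only as the number of runnable tasks, every task costs the same to move, and the balancing rule (move one task from the busiest to the least-loaded processor until they differ by at most one) is a hypothetical choice for illustration, not a specific kernel's policy.

```c
#include <stddef.h>

/* One push-migration pass. load[i] is the number of runnable tasks queued on
   processor i. While the busiest processor has at least two more tasks than
   the least-loaded one, push one task from the former to the latter.
   Returns the number of tasks moved. */
unsigned push_migrate(unsigned load[], size_t ncpus)
{
    unsigned moves = 0;

    if (ncpus == 0)
        return 0;
    for (;;) {
        size_t busiest = 0, idlest = 0;
        for (size_t i = 1; i < ncpus; i++) {
            if (load[i] > load[busiest])
                busiest = i;
            if (load[i] < load[idlest])
                idlest = i;
        }
        if (load[busiest] - load[idlest] < 2)
            break;                 /* balanced to within one task */
        load[busiest]--;           /* migrate one task */
        load[idlest]++;
        moves++;
    }
    return moves;
}
```

Starting from loads of 6, 0, 1, and 1 tasks on four processors, the pass moves four tasks off the first processor and leaves every processor with 2. Each move costs the cache warmth described under processor affinity, which is why balancing stops once loads differ by at most one task.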