Server Hardware Roadmap: Sean McGrane, Program Manager

- Slides: 29

Server Hardware Roadmap. Sean McGrane, Program Manager, Windows Server Platform Architecture, Microsoft Corporation

Agenda

- Windows Server Roadmap
  - Windows Server 2003 (WS03)
  - Windows Server codenamed “Longhorn” (WSL)
- Hardware Roadmap
  - Performance and Scalability
  - Server Consolidation
  - Reliability
  - Security

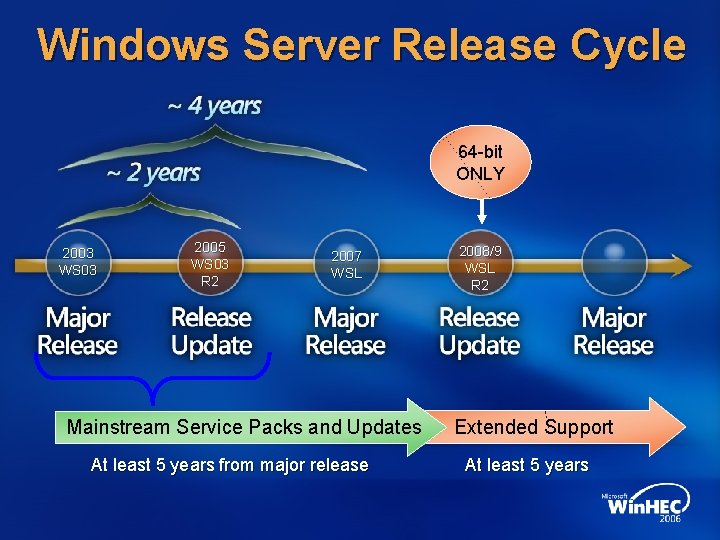

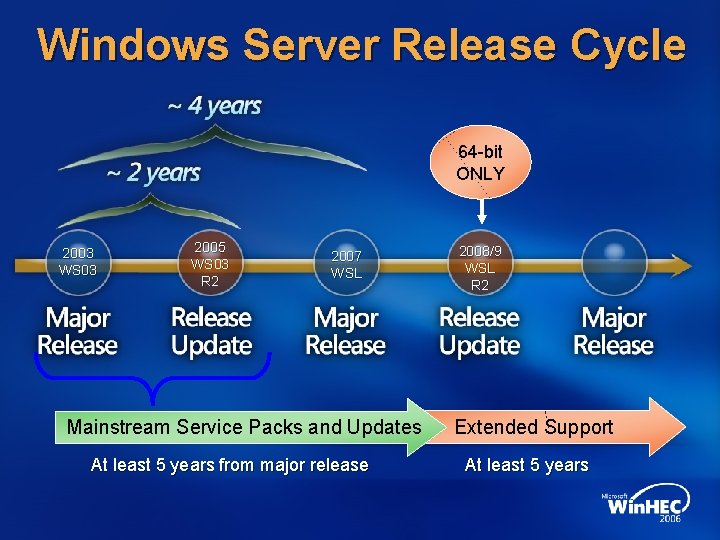

Windows Server Release Cycle

- 2003: WS03
- 2005: WS03 R2
- 2007: WSL
- 2008/9: WSL R2
- 64-bit ONLY
- Mainstream: service packs and updates, at least 5 years from major release
- Extended Support: at least 5 years

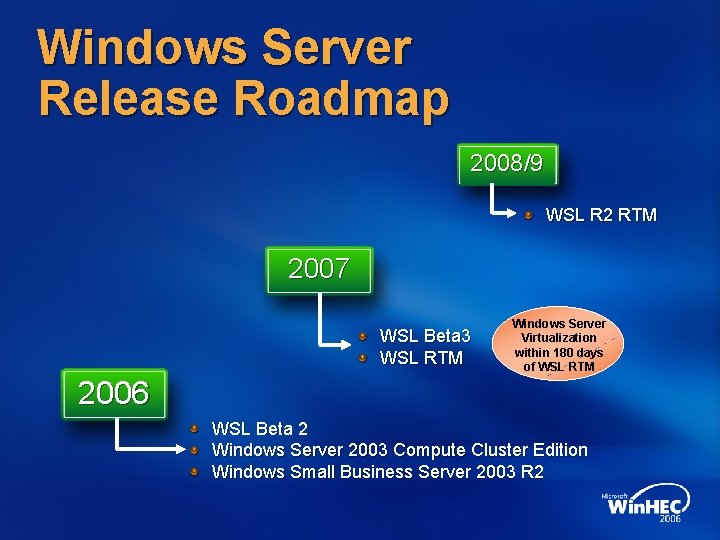

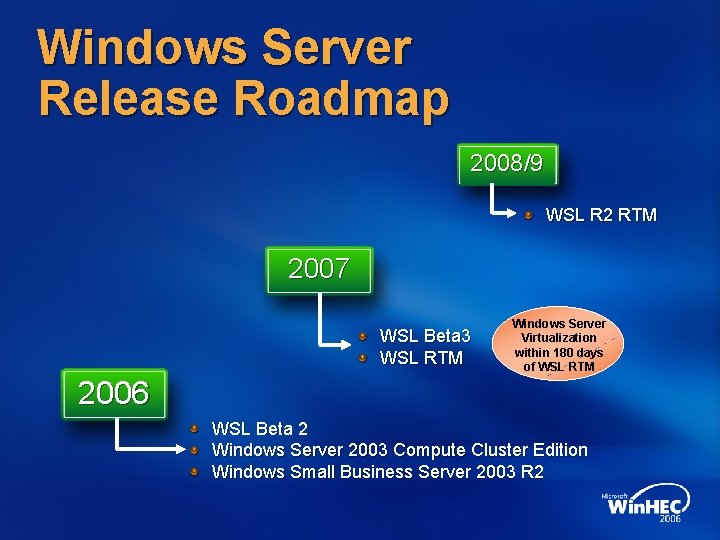

Windows Server Release Roadmap

- 2006: WSL Beta 2; Windows Server 2003 Compute Cluster Edition; Windows Small Business Server 2003 R2
- 2007: WSL Beta 3; WSL RTM; Windows Server Virtualization within 180 days of WSL RTM
- 2008/9: WSL R2 RTM

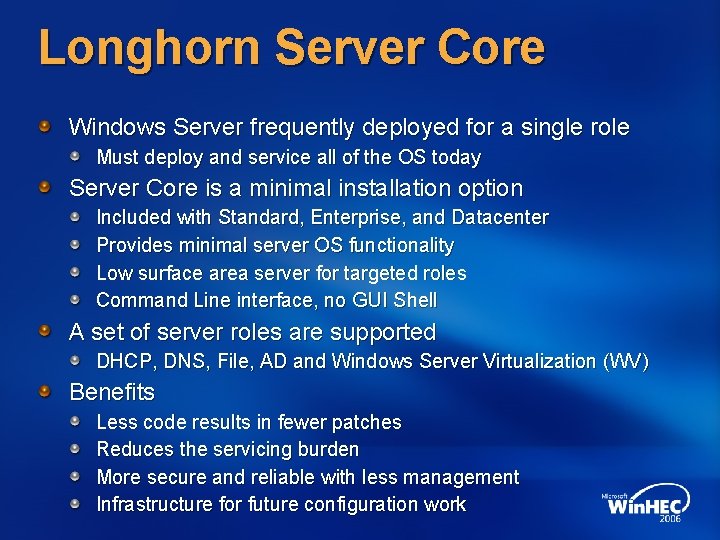

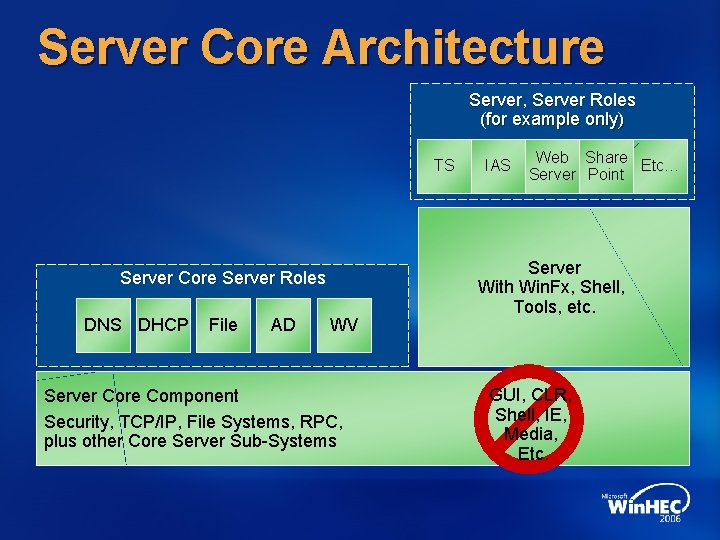

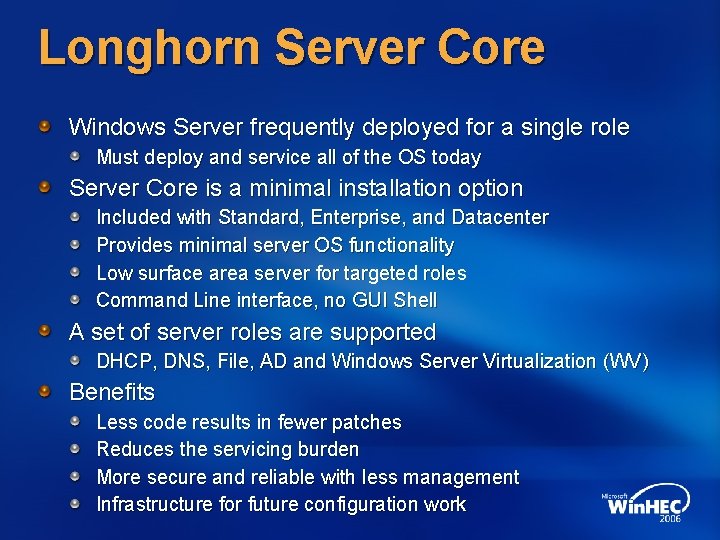

Longhorn Server Core

- Windows Server frequently deployed for a single role
  - Must deploy and service all of the OS today
- Server Core is a minimal installation option
  - Included with Standard, Enterprise, and Datacenter
- Provides minimal server OS functionality
  - Low surface area server for targeted roles
  - Command line interface, no GUI shell
- A set of server roles are supported
  - DHCP, DNS, File, AD and Windows Server Virtualization (WV)
- Benefits
  - Less code results in fewer patches
  - Reduces the servicing burden
  - More secure and reliable with less management
  - Infrastructure for future configuration work

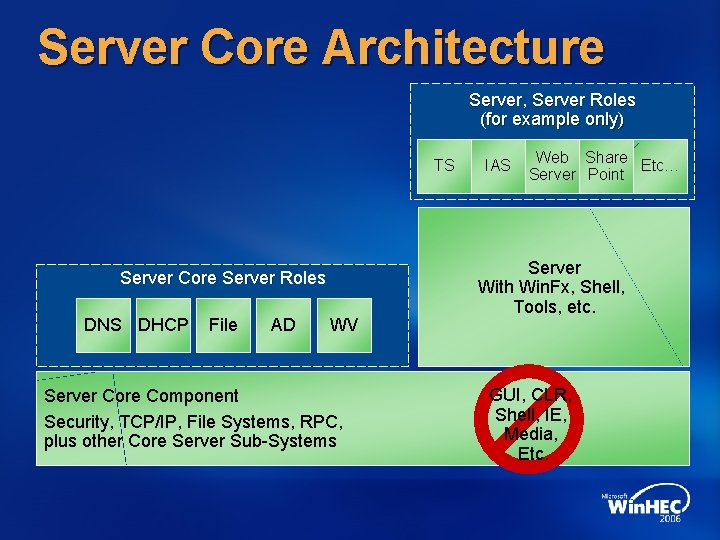

Server Core Architecture (diagram, layers from bottom to top)

- Server Core: Security, TCP/IP, File Systems, RPC, plus other Core Server Sub-Systems
- Server Core Server Roles: DNS, DHCP, File, AD, WV
- Server, with WinFx, Shell, Tools, etc.: GUI, CLR, Shell, IE, Media, etc.
- Server, Server Roles (for example only): TS, IAS, Web Server, SharePoint Server, etc.

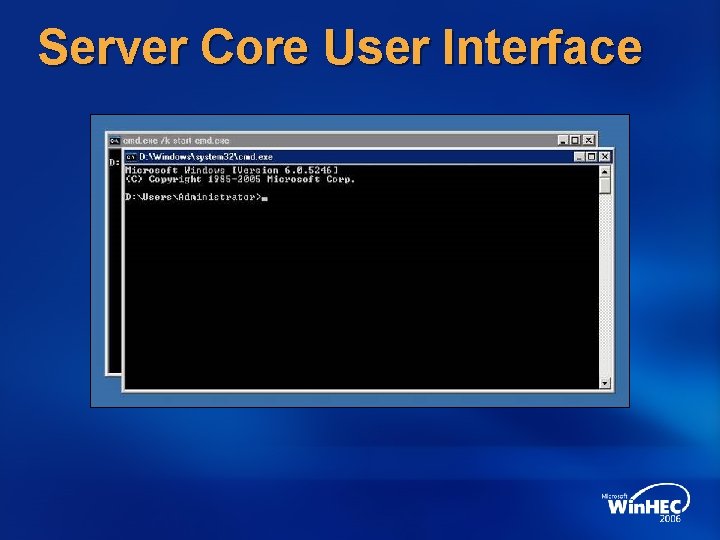

Server Core User Interface

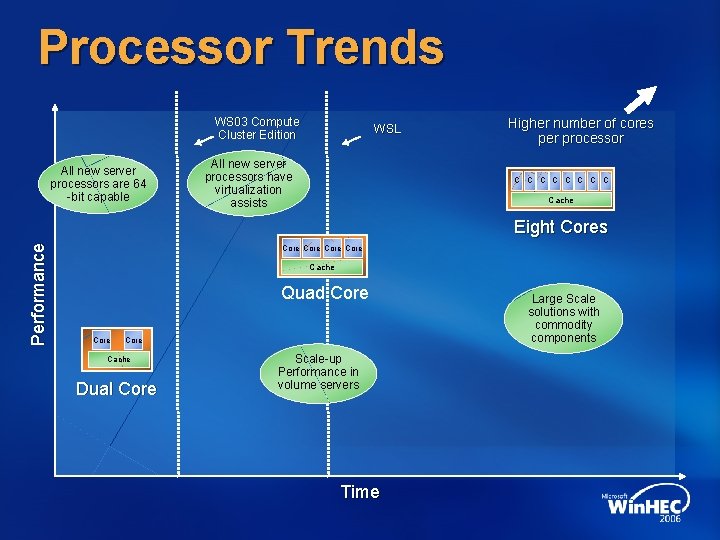

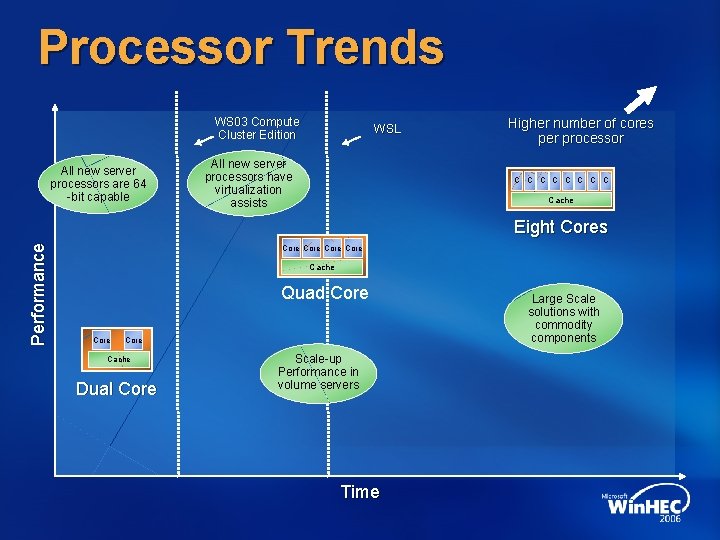

Processor Trends (chart: performance over time, from dual core to quad core to eight cores)

- WS03 Compute Cluster Edition
- All new server processors are 64-bit capable
- WSL
- All new server processors have virtualization assists
- Higher number of cores per processor
- Scale-up performance in volume servers
- Large scale solutions with commodity components

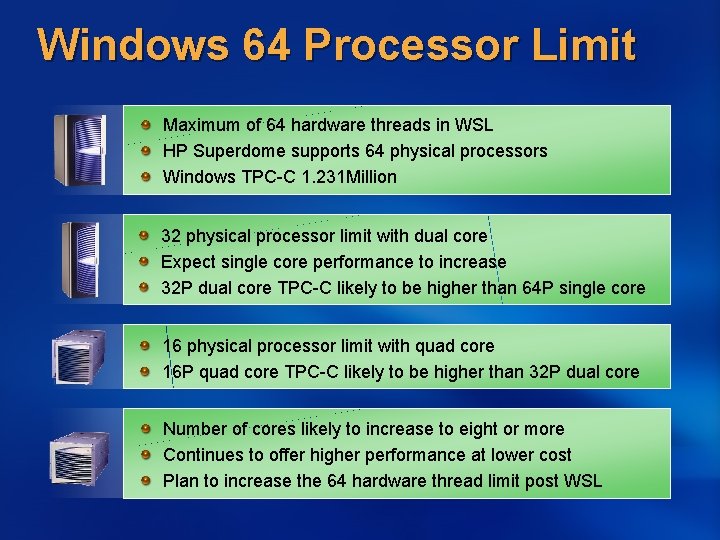

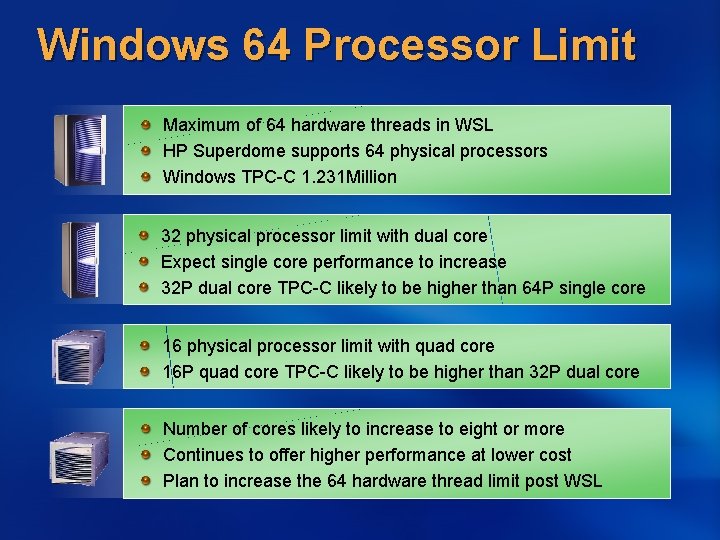

Windows 64 Processor Limit

- Maximum of 64 hardware threads in WSL
  - HP Superdome supports 64 physical processors
  - Windows TPC-C 1.231 million
- 32 physical processor limit with dual core
  - Expect single core performance to increase
  - 32P dual core TPC-C likely to be higher than 64P single core
- 16 physical processor limit with quad core
  - 16P quad core TPC-C likely to be higher than 32P dual core
- Number of cores likely to increase to eight or more
  - Continues to offer higher performance at lower cost
- Plan to increase the 64 hardware thread limit post WSL
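The physical processor limits on this slide follow from dividing the 64 hardware thread limit by the number of hardware threads each processor exposes. A minimal Python sketch of that arithmetic, assuming one hardware thread per core (which is what the slide's figures imply); the function name `max_physical_processors` is illustrative only:

```python
HW_THREAD_LIMIT = 64  # maximum hardware threads in WSL, per the slide


def max_physical_processors(cores_per_processor, threads_per_core=1):
    """Return how many physical processors fit under the hardware thread limit.

    threads_per_core=1 is an assumption that matches the slide's figures.
    """
    threads_per_processor = cores_per_processor * threads_per_core
    if threads_per_processor <= 0:
        raise ValueError("a processor must expose at least one hardware thread")
    return HW_THREAD_LIMIT // threads_per_processor


# Single, dual, quad and eight core processors
for cores in (1, 2, 4, 8):
    print(f"{cores} core(s) per processor: up to {max_physical_processors(cores)}P")
```

With these assumptions, each doubling of the core count halves the number of physical processors a single Windows instance can use.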

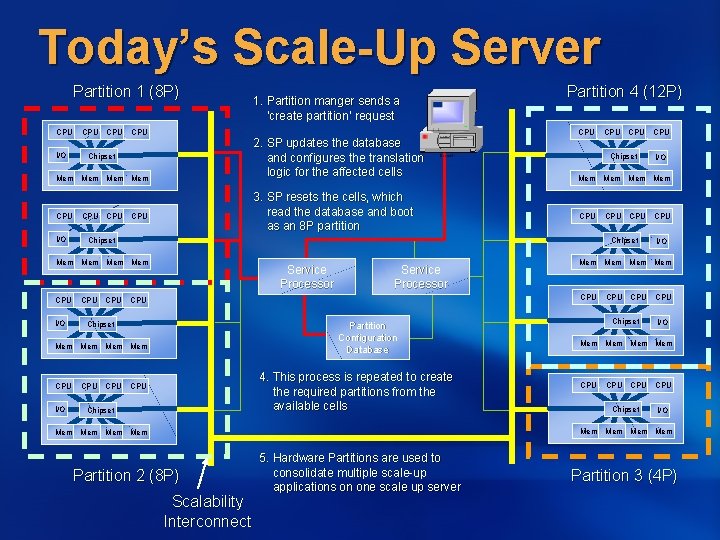

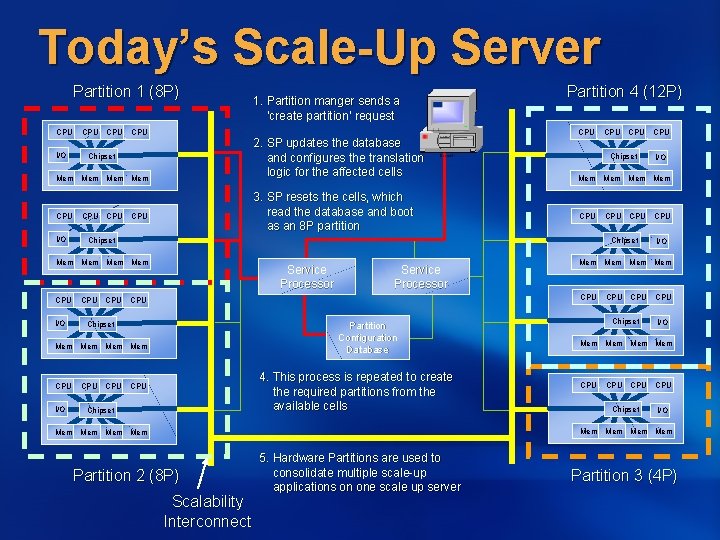

Today’s Scale-Up Server (diagram: cells of CPUs, memory, chipset and I/O joined by a scalability interconnect, with a Service Processor (SP) that holds the Partition Configuration Database)

1. Partition manager sends a ‘create partition’ request
2. SP updates the database and configures the translation logic for the affected cells
3. SP resets the cells, which read the database and boot as an 8P partition
4. This process is repeated to create the required partitions from the available cells
5. Hardware partitions are used to consolidate multiple scale-up applications on one scale-up server

Partitions shown: Partition 1 (8P), Partition 2 (8P), Partition 3 (4P), Partition 4 (12P)
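The partition creation flow on this slide can be sketched as a small model. This is an illustration only, not the firmware's actual design: the `ServiceProcessor` class, its fields, and the cell size of 4 CPUs are all assumptions, since the slide does not state how many processors a cell holds.

```python
from dataclasses import dataclass, field

CPUS_PER_CELL = 4  # assumption: the slide does not state the cell size


@dataclass
class ServiceProcessor:
    """Hypothetical model of the SP and its partition configuration database."""
    free_cells: list
    database: dict = field(default_factory=dict)  # partition name -> cell ids

    def create_partition(self, name, processors):
        # Step 1: the partition manager's 'create partition' request arrives here.
        if name in self.database:
            raise ValueError(f"{name} already exists")
        cells_needed = -(-processors // CPUS_PER_CELL)  # ceiling division
        if cells_needed > len(self.free_cells):
            raise RuntimeError("not enough free cells")
        # Step 2: record the affected cells in the configuration database.
        cells = [self.free_cells.pop(0) for _ in range(cells_needed)]
        self.database[name] = cells
        # Step 3: real hardware would now reset these cells so they boot
        # as one partition; this model only returns the assignment.
        return cells


# Step 4: repeat the request for each partition shown on the slide.
sp = ServiceProcessor(free_cells=list(range(8)))
for name, size in [("Partition 1", 8), ("Partition 2", 8),
                   ("Partition 3", 4), ("Partition 4", 12)]:
    sp.create_partition(name, size)
print(sp.database)
```

Under the assumed cell size, the four partitions on the slide (8P, 8P, 4P and 12P) consume all eight cells of a 32P system.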

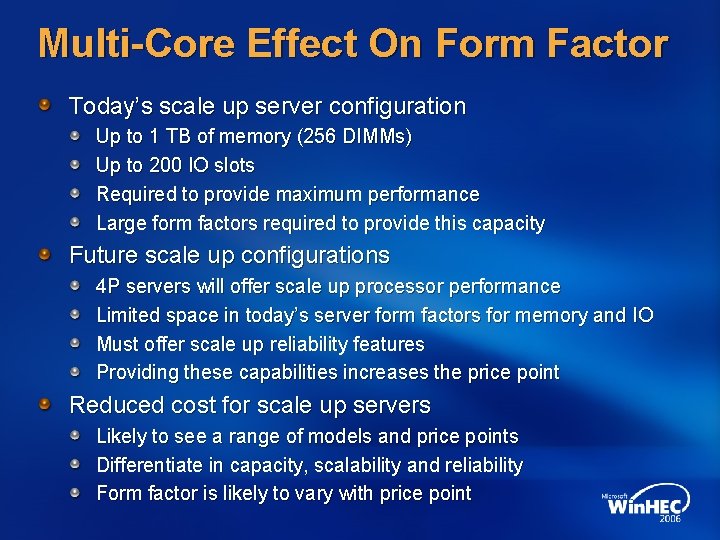

Multi-Core Effect On Form Factor

- Today’s scale up server configuration
  - Up to 1 TB of memory (256 DIMMs)
  - Up to 200 IO slots
  - Required to provide maximum performance
  - Large form factors required to provide this capacity
- Future scale up configurations
  - 4P servers will offer scale up processor performance
  - Limited space in today’s server form factors for memory and IO
  - Must offer scale up reliability features
  - Providing these capabilities increases the price point
- Reduced cost for scale up servers
  - Likely to see a range of models and price points
  - Differentiate in capacity, scalability and reliability
  - Form factor is likely to vary with price point

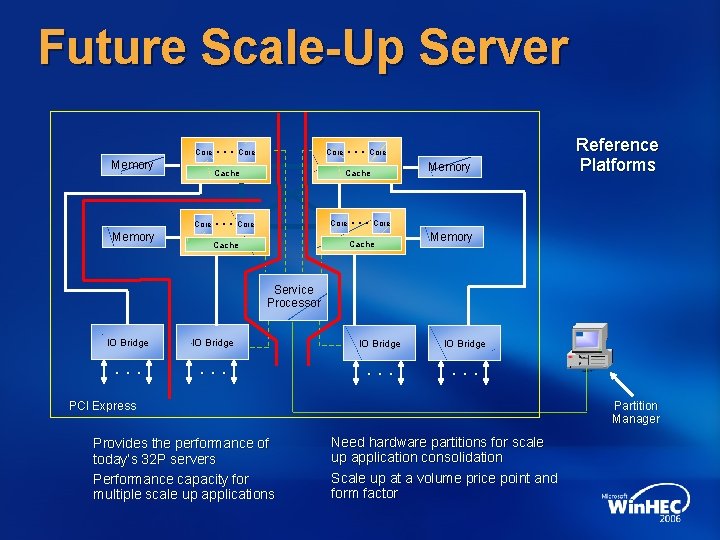

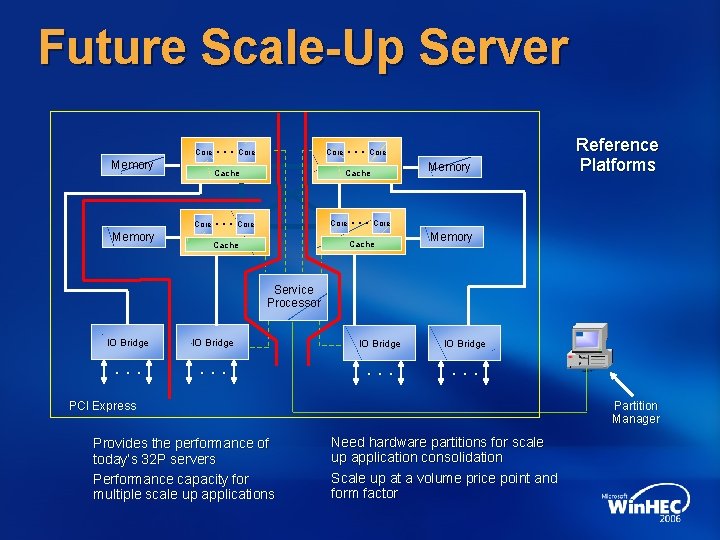

Future Scale-Up Server (diagram: multi-core processors with cache and memory, a Service Processor, a Partition Manager, and an IO Bridge to PCI Express; labeled “Reference Platforms”)

- Provides the performance of today’s 32P servers
- Performance capacity for multiple scale up applications
- Need hardware partitions for scale up application consolidation
- Scale up at a volume price point and form factor

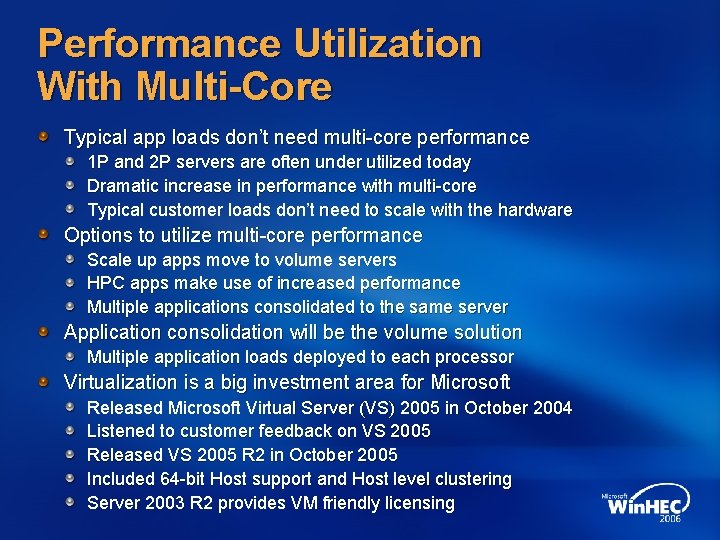

Performance Utilization With Multi-Core

- Typical app loads don’t need multi-core performance
  - 1P and 2P servers are often under utilized today
  - Dramatic increase in performance with multi-core
  - Typical customer loads don’t need to scale with the hardware
- Options to utilize multi-core performance
  - Scale up apps move to volume servers
  - HPC apps make use of increased performance
  - Multiple applications consolidated to the same server
- Application consolidation will be the volume solution
  - Multiple application loads deployed to each processor
- Virtualization is a big investment area for Microsoft
  - Released Microsoft Virtual Server (VS) 2005 in October 2004
  - Listened to customer feedback on VS 2005
  - Released VS 2005 R2 in October 2005
  - Included 64-bit host support and host level clustering
  - Server 2003 R2 provides VM friendly licensing

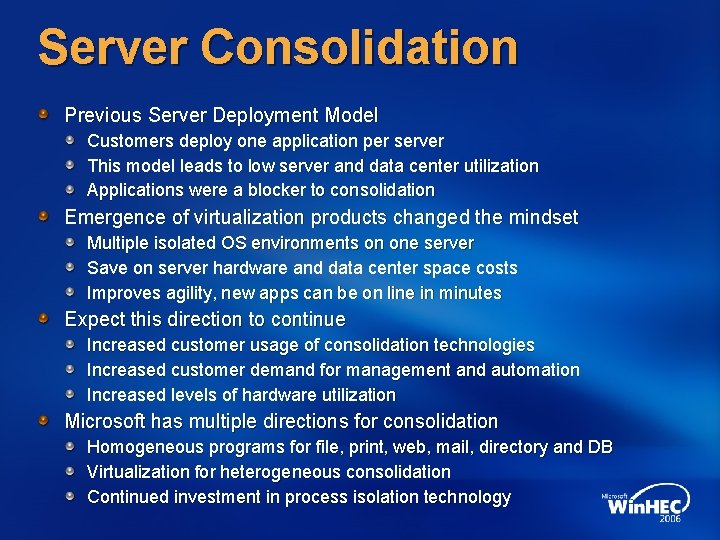

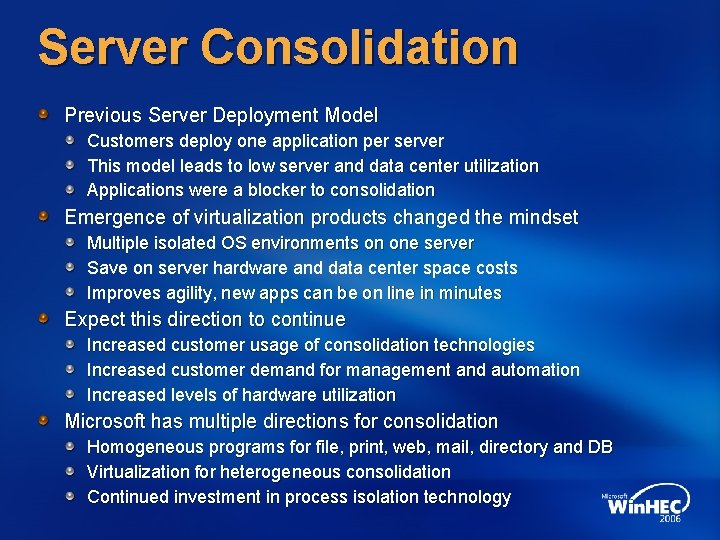

Server Consolidation

- Previous server deployment model
  - Customers deploy one application per server
  - This model leads to low server and data center utilization
  - Applications were a blocker to consolidation
- Emergence of virtualization products changed the mindset
  - Multiple isolated OS environments on one server
  - Save on server hardware and data center space costs
  - Improves agility, new apps can be on line in minutes
- Expect this direction to continue
  - Increased customer usage of consolidation technologies
  - Increased customer demand for management and automation
  - Increased levels of hardware utilization
- Microsoft has multiple directions for consolidation
  - Homogeneous programs for file, print, web, mail, directory and DB
  - Virtualization for heterogeneous consolidation
  - Continued investment in process isolation technology

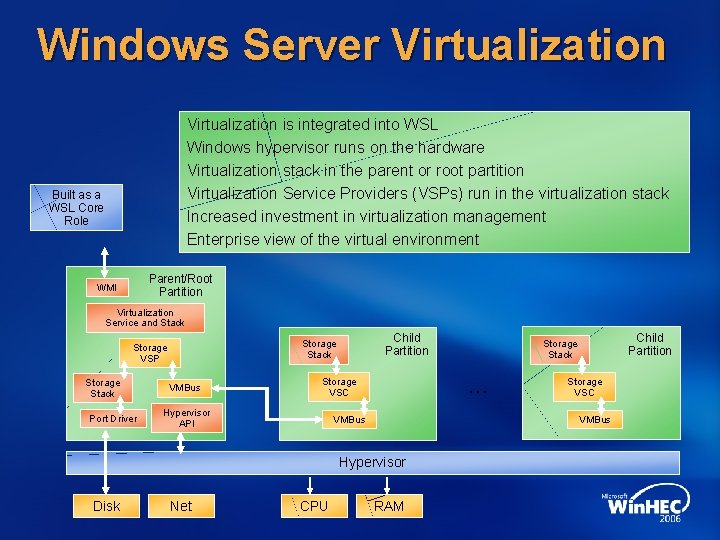

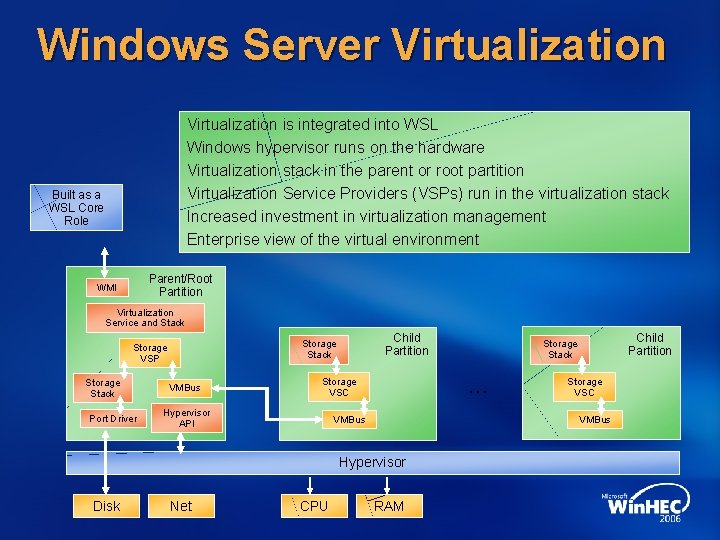

- Windows Server Virtualization is integrated into WSL
- Windows hypervisor runs on the hardware
- Virtualization stack in the parent or root partition
- Virtualization Service Providers (VSPs) run in the virtualization stack
- Increased investment in virtualization management
- Enterprise view of the virtual environment
- Built as a WSL Core Role

Diagram labels: Parent/Root Partition (WMI, Virtualization Service and Stack, Storage Stack, Port Driver, Storage VSP, VMBus); Child Partitions (Storage Stack, Storage VSC, VMBus); Hypervisor (Hypervisor API); hardware (Net, CPU, Disk, RAM)

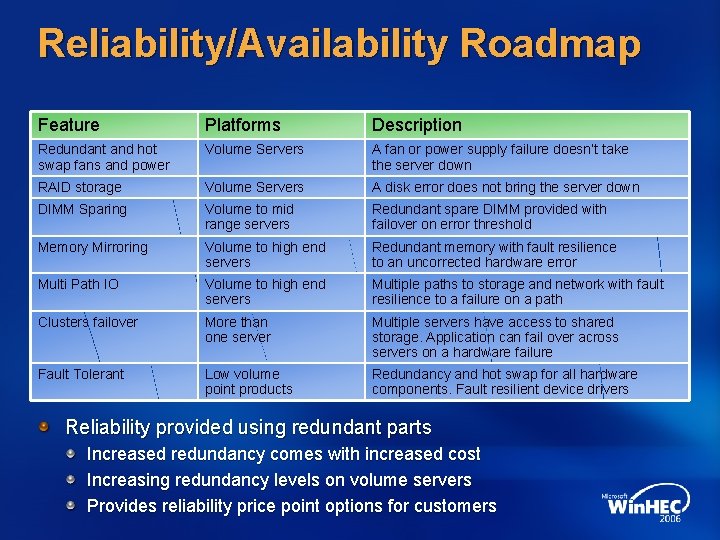

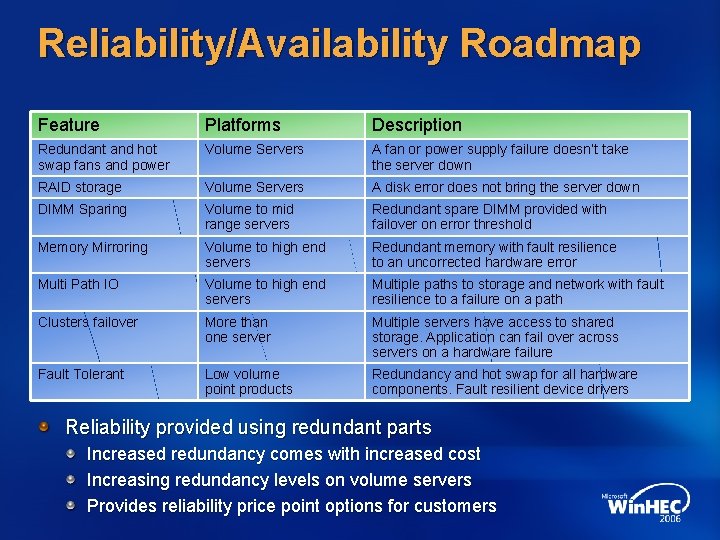

Reliability/Availability Roadmap

| Feature | Platforms | Description |
| --- | --- | --- |
| Redundant and hot swap fans and power | Volume servers | A fan or power supply failure doesn’t take the server down |
| RAID storage | Volume servers | A disk error does not bring the server down |
| DIMM sparing | Volume to mid range servers | Redundant spare DIMM provided with failover on error threshold |
| Memory mirroring | Volume to high end servers | Redundant memory with fault resilience to an uncorrected hardware error |
| Multi Path IO | Volume to high end servers | Multiple paths to storage and network with fault resilience to a failure on a path |
| Cluster failover | More than one server | Multiple servers have access to shared storage. Application can fail over across servers on a hardware failure |
| Fault tolerant | Low volume point products | Redundancy and hot swap for all hardware components. Fault resilient device drivers |

- Reliability provided using redundant parts
- Increased redundancy comes with increased cost
- Increasing redundancy levels on volume servers
- Provides reliability price point options for customers
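The DIMM sparing entry above describes failover to a spare once an error threshold is reached. A minimal sketch of that idea follows; the `DimmSparing` class, its method names and the threshold values are hypothetical, and real platforms implement this in the memory controller and firmware rather than in software like this.

```python
class DimmSparing:
    """Hypothetical model: fail over to the spare DIMM at an error threshold."""

    def __init__(self, active, spare, threshold=10):
        self.active = active
        self.spare = spare
        self.threshold = threshold  # illustrative value, not from the slides
        self.corrected_errors = 0

    def report_corrected_error(self):
        """Record one corrected error; return True if this call caused failover."""
        self.corrected_errors += 1
        if self.spare is not None and self.corrected_errors >= self.threshold:
            # Swap in the spare; no further spare remains after this.
            self.active, self.spare = self.spare, None
            self.corrected_errors = 0
            return True
        return False


bank = DimmSparing(active="DIMM0", spare="SPARE", threshold=3)
events = [bank.report_corrected_error() for _ in range(3)]
print(events, bank.active)
```

The server keeps running throughout: the failing DIMM is retired before its corrected errors become an uncorrected one.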

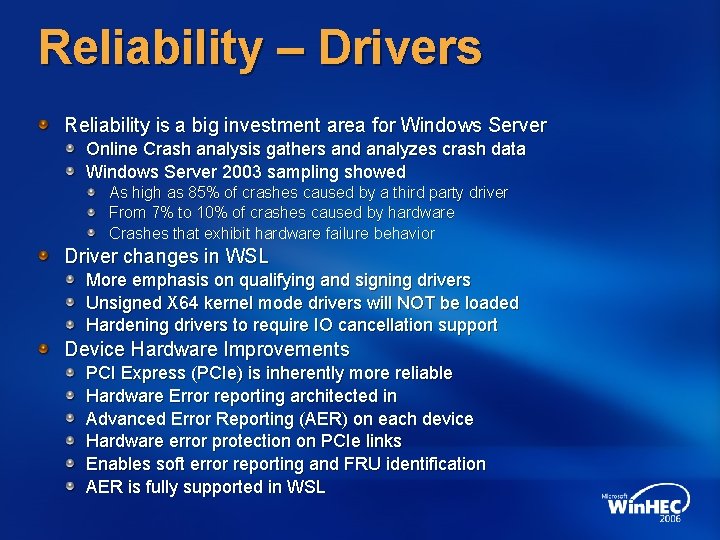

Reliability – Drivers

- Reliability is a big investment area for Windows Server
  - Online Crash Analysis gathers and analyzes crash data
- Windows Server 2003 sampling showed
  - As high as 85% of crashes caused by a third party driver
  - From 7% to 10% of crashes caused by hardware
  - Crashes that exhibit hardware failure behavior
- Driver changes in WSL
  - More emphasis on qualifying and signing drivers
  - Unsigned x64 kernel mode drivers will NOT be loaded
  - Hardening drivers to require IO cancellation support
- Device hardware improvements
  - PCI Express (PCIe) is inherently more reliable
  - Hardware error reporting architected in
  - Advanced Error Reporting (AER) on each device
  - Hardware error protection on PCIe links
  - Enables soft error reporting and FRU identification
  - AER is fully supported in WSL

Windows Hardware Error Architecture (WHEA)

- Motivation – The problem
  - Platform reliability is a big investment area for WSL
  - Limited hardware error handling architecture for x64
  - No standards for hardware error reporting in Windows
  - Limits the ability to improve reliability
  - Hardware improving but limited integration with OS
- Goals of WHEA
  - Create a common hardware error infrastructure in Windows
  - Provide standard error handling interfaces
  - Enable OS participation in hardware error processing
  - Work with the industry to improve hardware error handling
- Scope of WHEA
  - In WSL, WHEA handles system hardware errors
  - Includes processor, chipset, memory and PCIe errors
  - PCIe end device errors are out of scope for WSL
  - WHEA will coexist with Baseboard Management Controller (BMC) error handling
  - WHEA won’t report environmental errors, e.g. fans

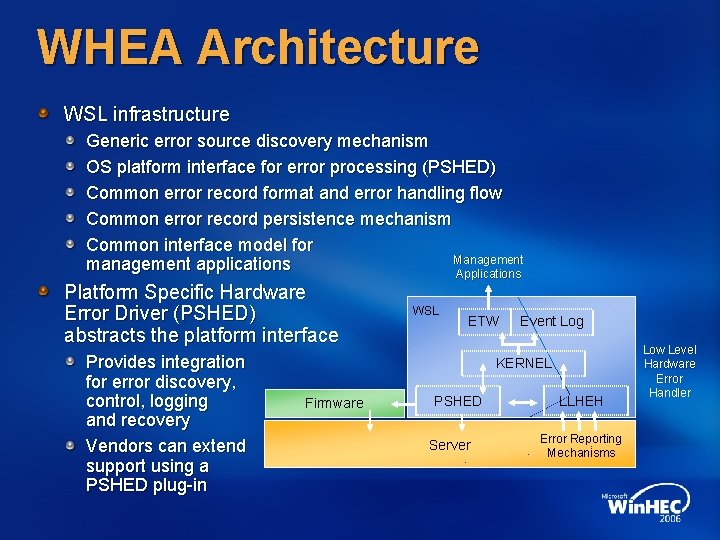

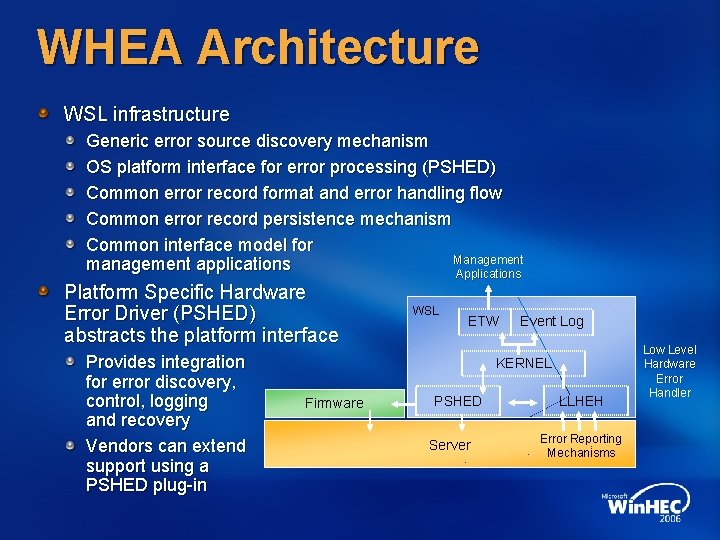

WHEA Architecture

- WSL infrastructure
  - Generic error source discovery mechanism
  - OS platform interface for error processing (PSHED)
  - Common error record format and error handling flow
  - Common error record persistence mechanism
  - Common interface model for management applications
- Platform Specific Hardware Error Driver (PSHED) abstracts the platform interface
  - Provides integration for error discovery, control, logging and recovery
  - Vendors can extend support using a PSHED plug-in

Diagram labels: Management Applications; WSL (ETW, Event Log); kernel; PSHED; LLHEH (Low Level Hardware Error Handler); Firmware; Server Error Reporting Mechanisms

WHEA

- Value of WHEA
  - Reduce mean time to recovery through richer error reporting
  - Enable effective hardware health monitoring
  - Enable powerful error management applications
  - Reduce crashes using OS based error recovery
  - Stop using components that are predicted to fail, e.g. page of physical memory, PCIe device, replace a processor, etc.
  - Effect and utilize existing and future hardware error standards
- Futures
  - Will continue investment in platform reliability post WSL
  - WHEA is the enabler for higher levels of reliability
  - May include IO end point devices in the next release
  - Would require driver participation with WHEA
  - Will build more advanced error recovery mechanisms

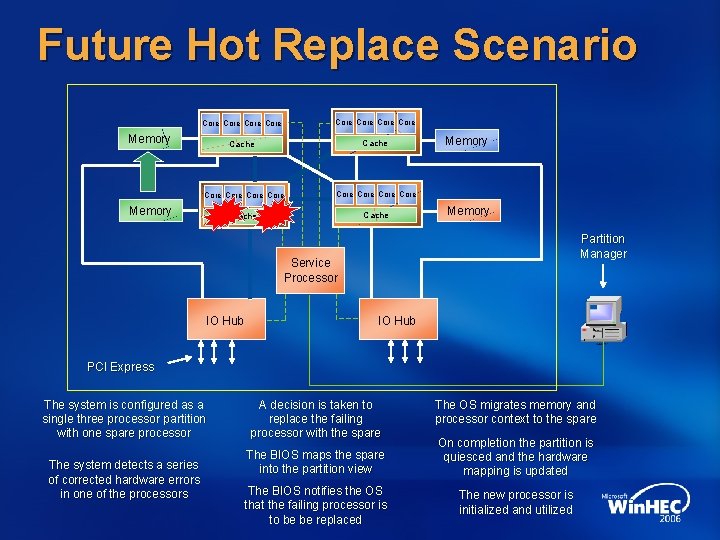

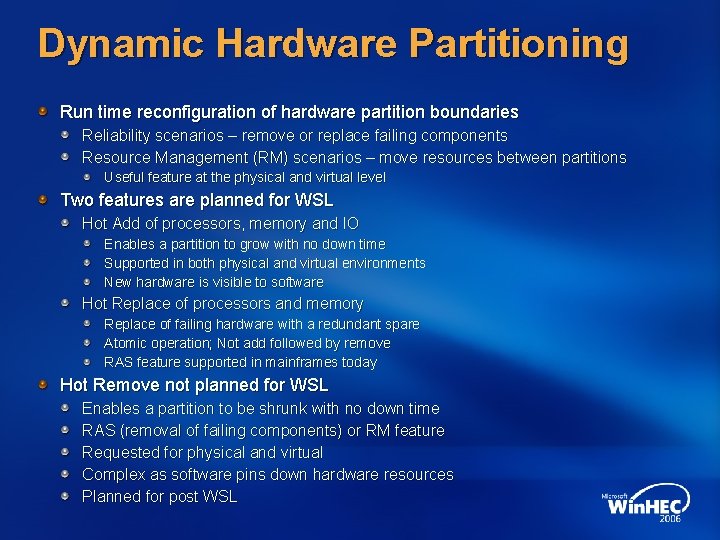

Dynamic Hardware Partitioning

- Run time reconfiguration of hardware partition boundaries
  - Reliability scenarios – remove or replace failing components
  - Resource Management (RM) scenarios – move resources between partitions
  - Useful feature at the physical and virtual level
- Two features are planned for WSL
- Hot Add of processors, memory and IO
  - Enables a partition to grow with no down time
  - Supported in both physical and virtual environments
  - New hardware is visible to software
- Hot Replace of processors and memory
  - Replace of failing hardware with a redundant spare
  - Atomic operation; not add followed by remove
  - RAS feature supported in mainframes today
- Hot Remove not planned for WSL
  - Enables a partition to be shrunk with no down time
  - RAS (removal of failing components) or RM feature
  - Requested for physical and virtual
  - Complex as software pins down hardware resources
  - Planned for post WSL

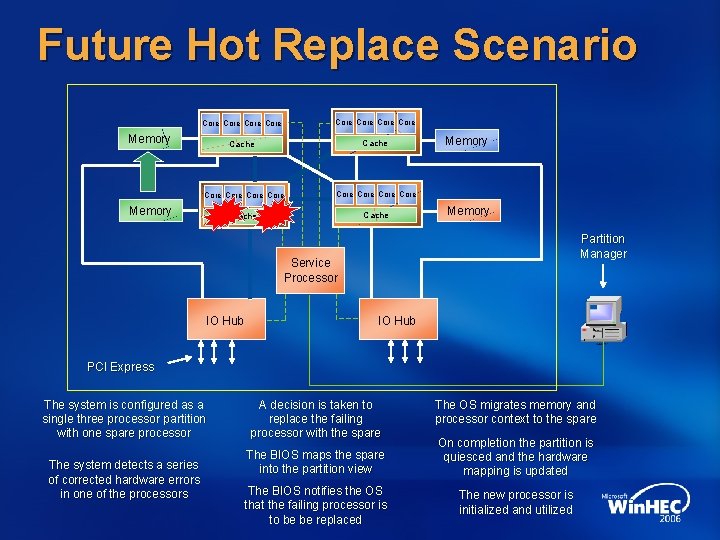

Future Hot Replace Scenario (diagram: multi-core processors with cache and memory, a Partition Manager, a Service Processor, and an IO Hub to PCI Express)

- The system is configured as a single three processor partition with one spare processor
- The system detects a series of corrected hardware errors in one of the processors
- A decision is taken to replace the failing processor with the spare
- The OS migrates memory and processor context to the spare
- The BIOS maps the spare into the partition view
- On completion the partition is quiesced and the hardware mapping is updated
- The BIOS notifies the OS that the failing processor is to be replaced
- The new processor is initialized and utilized

Branch Office Security

- Servers are typically physically secure
  - Reside in data centers or computer rooms
- The exception is small and branch office servers
  - More than 25% of Windows servers are sold into this segment
  - Typically 1P or 2P pedestal servers
- The environment often has no or limited physical security
  - The public and third parties have physical access to servers
- Server drives contain sensitive information
  - Medical, financial or credit card information
- Vulnerable to physical attack from non-authorized parties
  - Might boot the server to another OS and mount the drives
  - Could steal the server or drive
- BitLocker™ Drive Encryption is included in WSL
  - Measures the boot path to enable authenticated boot
  - Encrypts the data at rest on OS or data drives
  - Provides key security using Trusted Platform Module (TPM) hardware
  - Protects drive data against physical attacks

Boot Path Protection

- When BitLocker is enabled
  - BIOS/OS form a static root of trust and create encryption keys
- Early boot components are measured
  - BIOS initializes the TPM and measures itself
  - OS measures Master Boot Block, Boot Manager and OS Loader
  - Measurements are saved to Platform Configuration Registers (PCRs) in the TPM
- Measurements are checked on each boot
  - These are verified against the TPM contents
  - Any changes will cause the measurements to fail
  - On failure the encryption key is not unsealed and the recovery console is invoked
  - This prevents access to the encrypted drive
- Recovery from a lost key
  - A recovery key is provided when BitLocker is enabled
  - Can be stored in Active Directory
  - Recovery console detects and prompts for recovery key
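The measure-then-unseal flow above can be illustrated with a small software simulation. This is a sketch under stated assumptions, not BitLocker's implementation: it folds all measurements into a single register using the TPM 1.2 style extend operation (new value = SHA-1 of the old value concatenated with the SHA-1 of the measured data), whereas a real TPM holds several PCRs and performs sealing inside the hardware. The function names and the component byte strings are placeholders.

```python
import hashlib

PCR_SIZE = 20  # SHA-1 digest length, as used by TPM 1.2 PCRs


def extend(pcr, component):
    """Extend a PCR: new = SHA-1(old || SHA-1(component))."""
    measurement = hashlib.sha1(component).digest()
    return hashlib.sha1(pcr + measurement).digest()


def measure_boot_path(components):
    """Fold every boot component into one PCR value, in boot order."""
    pcr = bytes(PCR_SIZE)  # the register starts at all zeros after reset
    for component in components:
        pcr = extend(pcr, component)
    return pcr


def unseal(sealed_pcr, key, components):
    """Release the key only if the current measurements match the sealed ones."""
    if measure_boot_path(components) == sealed_pcr:
        return key
    return None  # a real system would invoke the recovery console here


# Placeholder images standing in for the real boot components
boot_path = [b"BIOS", b"Master Boot Block", b"Boot Manager", b"OS Loader"]
sealed = measure_boot_path(boot_path)  # recorded when protection is enabled
key = b"volume-key"

tampered = boot_path[:2] + [b"Other Boot Manager"] + boot_path[3:]
print(unseal(sealed, key, boot_path) == key)  # unchanged boot path
print(unseal(sealed, key, tampered) is None)  # modified boot path
```

Because each extend hashes the previous value, a change to any component, or to the order of components, yields a different final value and the key stays sealed.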

BitLocker™ Overview

- Protects data on physically insecure servers
  - Low management overhead
  - Boot path authentication
  - Key protection provided by TPM hardware
  - Data encrypted on disk
- Delivered in WSL
  - Targeted at small and branch office servers
- Post WSL considerations
  - Expand usage models for server
  - More advanced key management
  - New ways to utilize the TPM resources

Call To Action

- Validate WSL on your servers and devices
  - Ask your Microsoft contact for participation in the WSL Beta or TAP programs
  - Validate Core Server roles and Windows Server Virtualization
  - Validate management tools with Core Server
- Prepare for WSL and 64-bit only Windows
  - Provide 64-bit drivers and kernel components
  - Ensure all drivers are digitally signed
- Consider multi-core effect on servers
  - Provide sufficient memory and IO capacity
- Support PCIe AER to improve server reliability
- Extend WHEA support with PSHED plug-ins
  - Provide management solutions around WHEA
- Consider hardware partitioning
  - Ensure device drivers are hot add and hot replace aware
  - Consider spare components for high reliability
- Provide a TPM on branch office servers

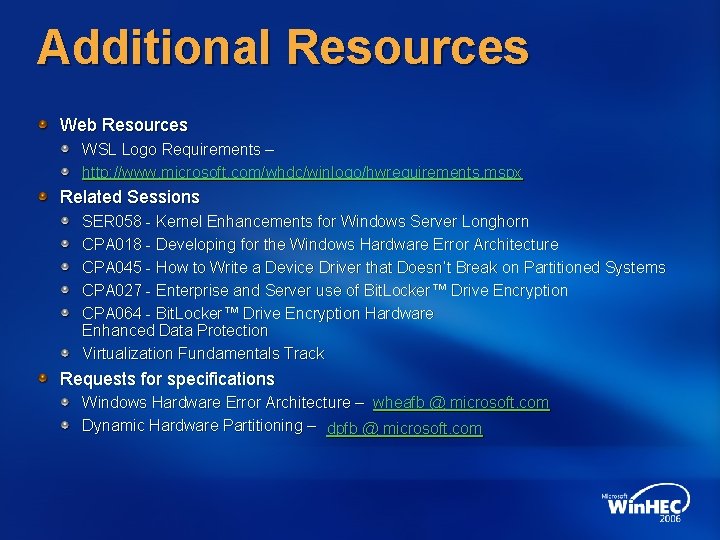

Additional Resources

- Web Resources
  - WSL Logo Requirements – http://www.microsoft.com/whdc/winlogo/hwrequirements.mspx
- Related Sessions
  - SER058 - Kernel Enhancements for Windows Server Longhorn
  - CPA018 - Developing for the Windows Hardware Error Architecture
  - CPA045 - How to Write a Device Driver that Doesn’t Break on Partitioned Systems
  - CPA027 - Enterprise and Server use of BitLocker™ Drive Encryption
  - CPA064 - BitLocker™ Drive Encryption Hardware Enhanced Data Protection
  - Virtualization Fundamentals Track
- Requests for specifications
  - Windows Hardware Error Architecture – wheafb@microsoft.com
  - Dynamic Hardware Partitioning – dpfb@microsoft.com

© 2006 Microsoft Corporation. All rights reserved. Microsoft, Windows Vista and other product names are or may be registered trademarks and/or trademarks in the U.S. and/or other countries. The information herein is for informational purposes only and represents the current view of Microsoft Corporation as of the date of this presentation. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information provided after the date of this presentation. MICROSOFT MAKES NO WARRANTIES, EXPRESS, IMPLIED OR STATUTORY, AS TO THE INFORMATION IN THIS PRESENTATION.