Scale and interest point descriptors T11 Computer Vision

- Slides: 75

Scale and interest point descriptors
T-11 Computer Vision, University of Ioannina
Christophoros Nikou

Images and slides from:
• James Hayes, Brown University, Computer Vision course
• Svetlana Lazebnik, University of North Carolina at Chapel Hill, Computer Vision course
• D. Forsyth and J. Ponce. Computer Vision: A Modern Approach, Prentice Hall, 2011.
• R. Gonzalez and R. Woods. Digital Image Processing, Prentice Hall, 2008.

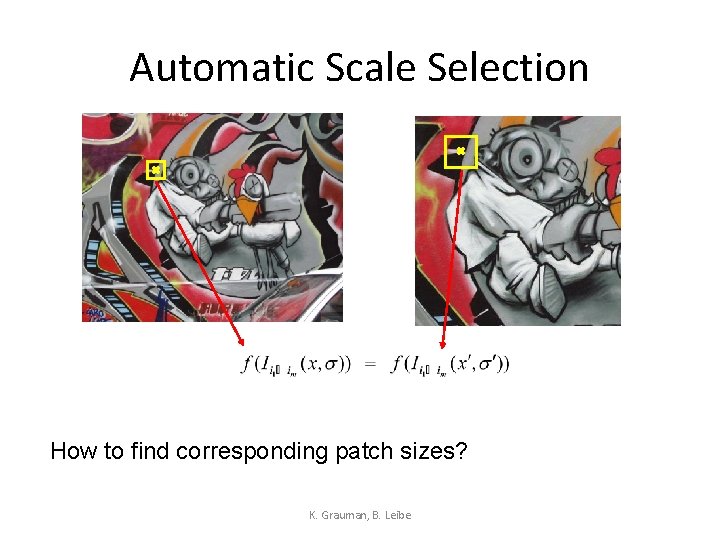

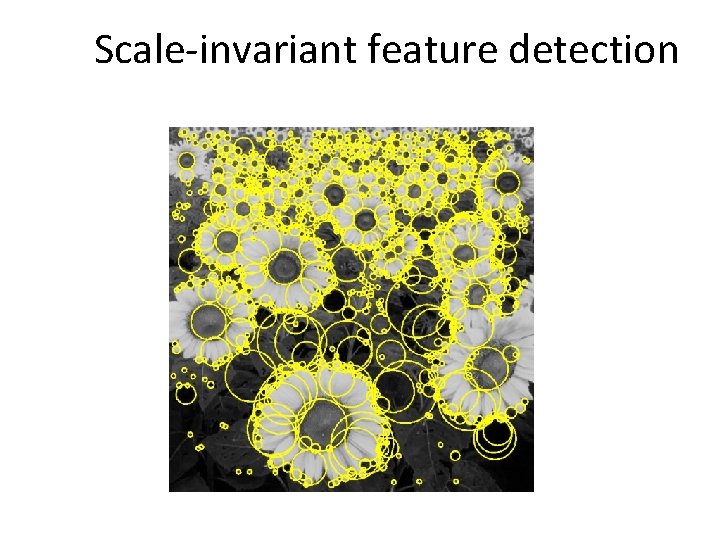

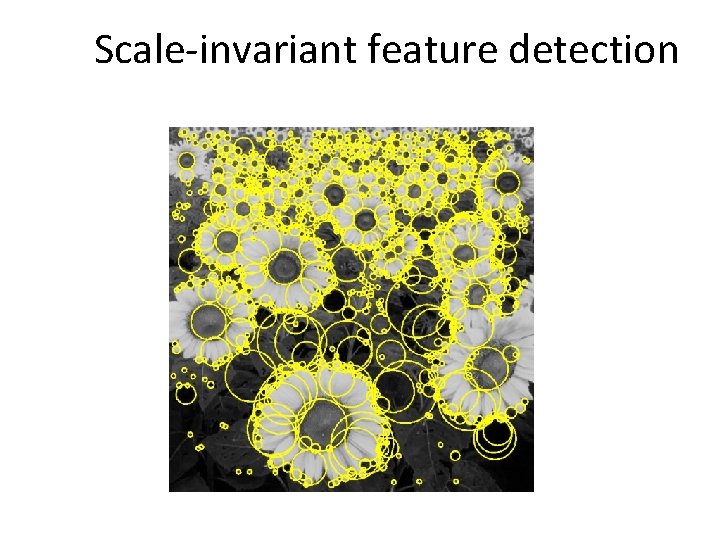

Scale-invariant feature detection: Harris corners can be localized in space but not in scale.

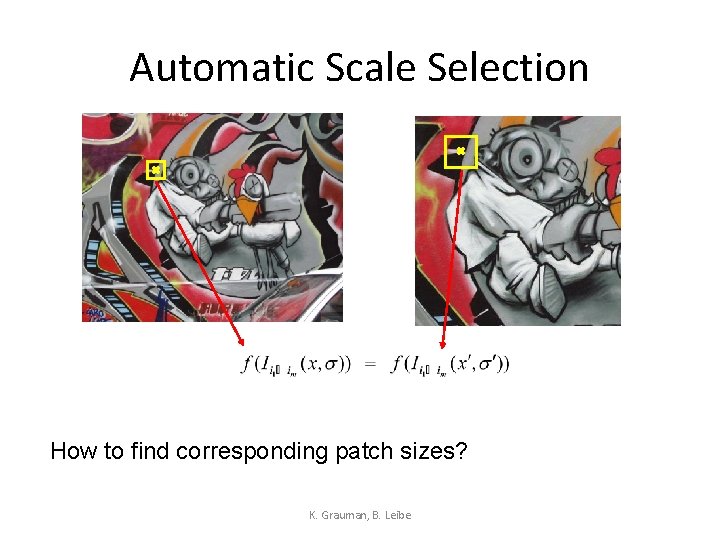

Scale-invariant feature detection
• Goal: independently detect corresponding regions in scaled versions of the same image.
• We need a scale selection mechanism for finding a characteristic region size that is covariant with the image transformation.
• Idea: given a key point in two images, determine whether the surrounding neighborhoods contain the same structure up to scale.
• We could sample each image at a range of scales and compare at every pixel to find a match, but this is impractical.

Automatic Scale Selection How to find corresponding patch sizes? K. Grauman, B. Leibe

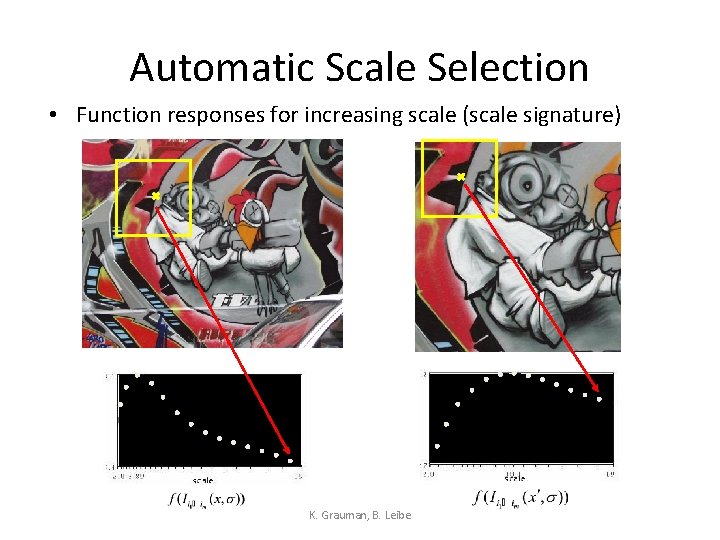

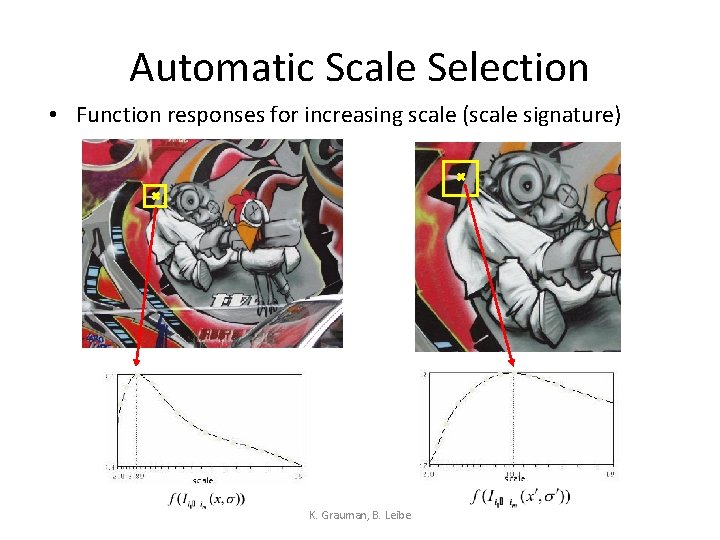

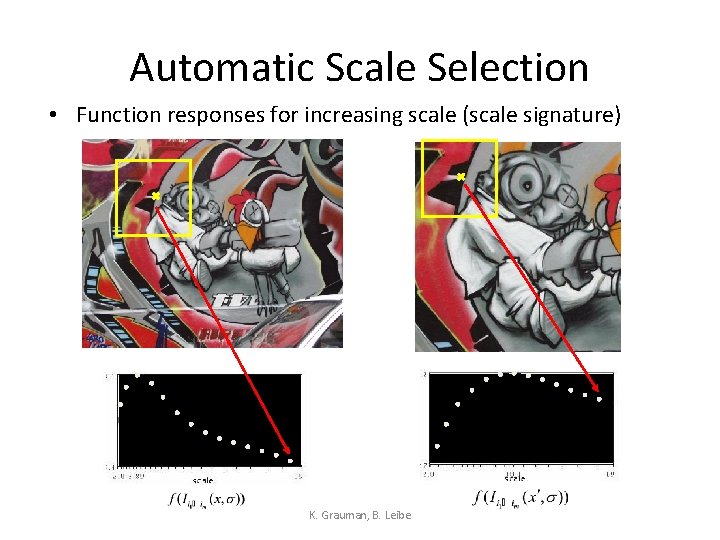

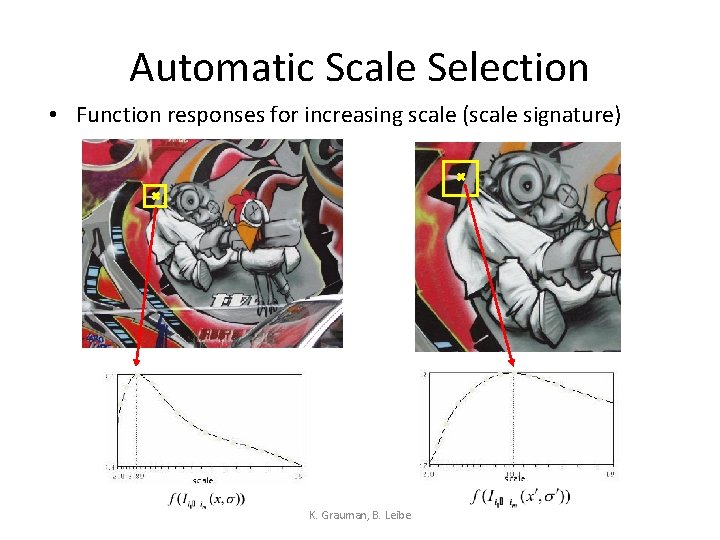

Automatic Scale Selection • Function responses for increasing scale (scale signature) K. Grauman, B. Leibe


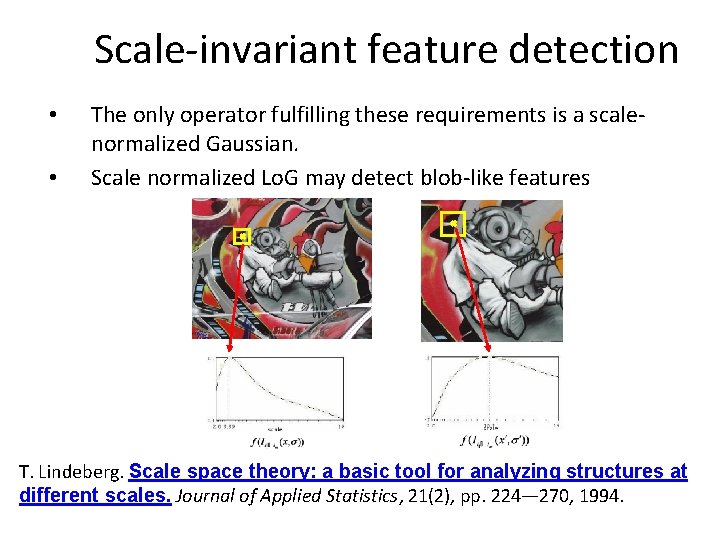

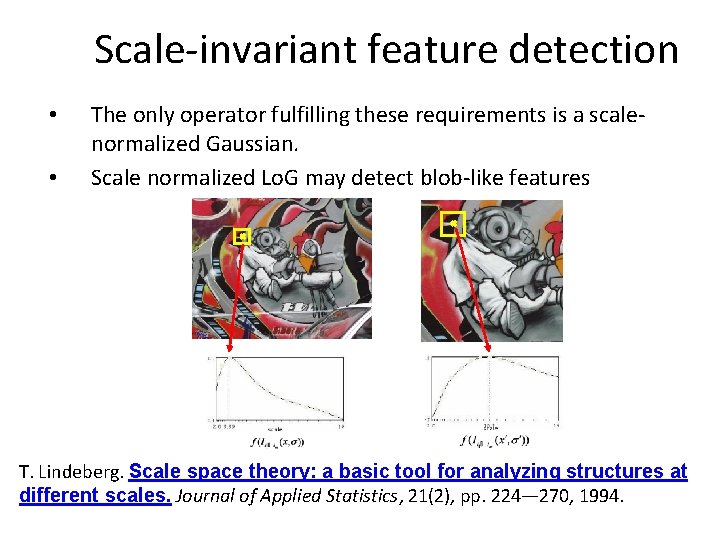

Scale-invariant feature detection
• The only operator fulfilling these requirements is a scale-normalized Gaussian.
• The scale-normalized LoG may detect blob-like features.
T. Lindeberg. Scale space theory: a basic tool for analyzing structures at different scales. Journal of Applied Statistics, 21(2), pp. 224-270, 1994.

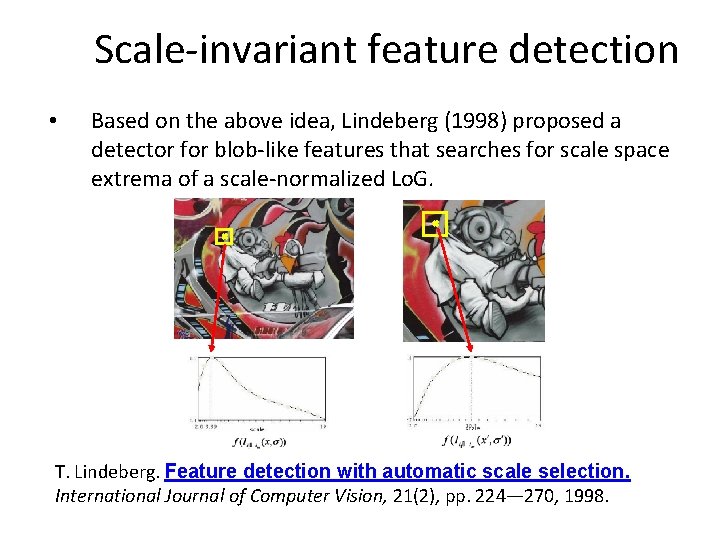

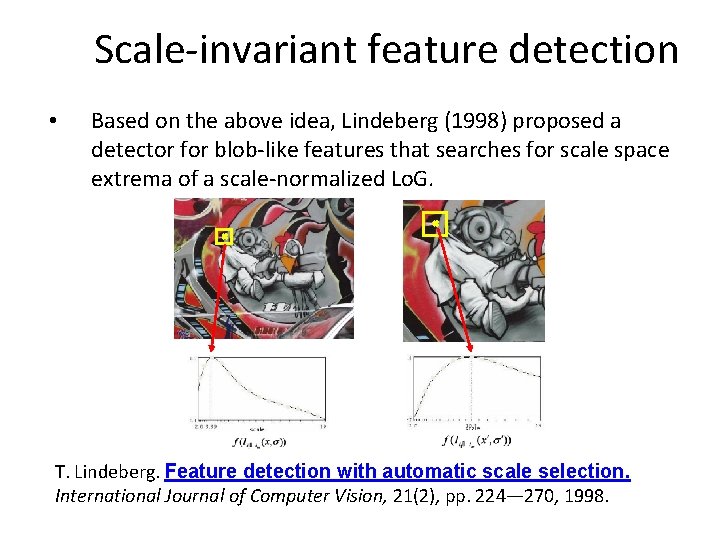

Scale-invariant feature detection
• Based on the above idea, Lindeberg (1998) proposed a detector for blob-like features that searches for scale-space extrema of a scale-normalized LoG.
T. Lindeberg. Feature detection with automatic scale selection. International Journal of Computer Vision, 30(2), pp. 79-116, 1998.

Scale-invariant feature detection

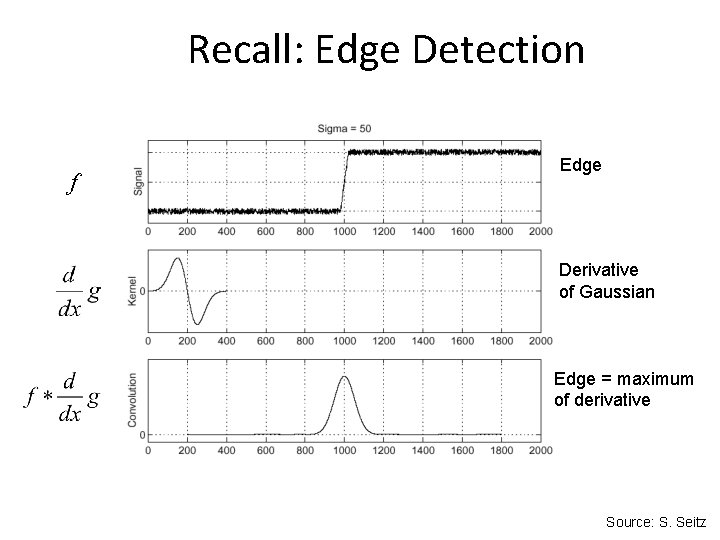

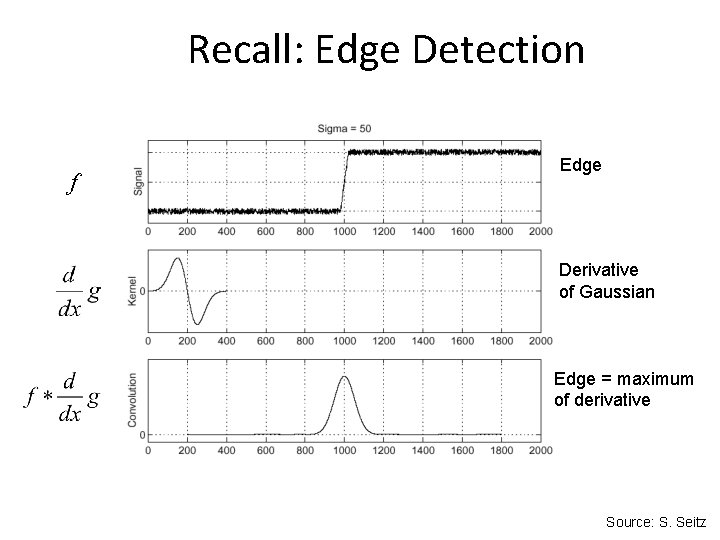

Recall: Edge detection. A signal f containing an edge is filtered with the derivative of a Gaussian; edge = maximum of the derivative. Source: S. Seitz

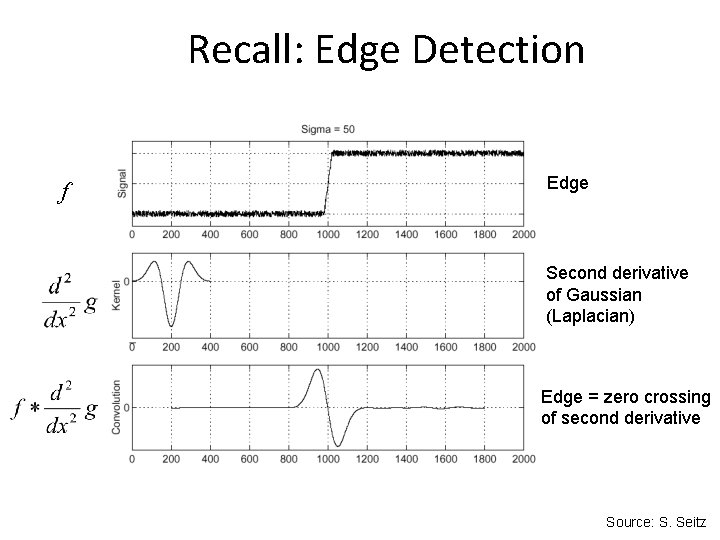

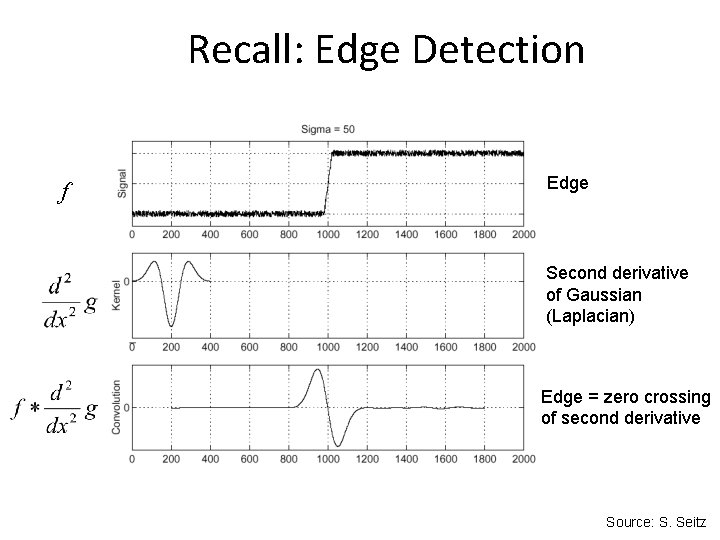

Recall: Edge detection. A signal f containing an edge is filtered with the second derivative of a Gaussian (Laplacian); edge = zero crossing of the second derivative. Source: S. Seitz

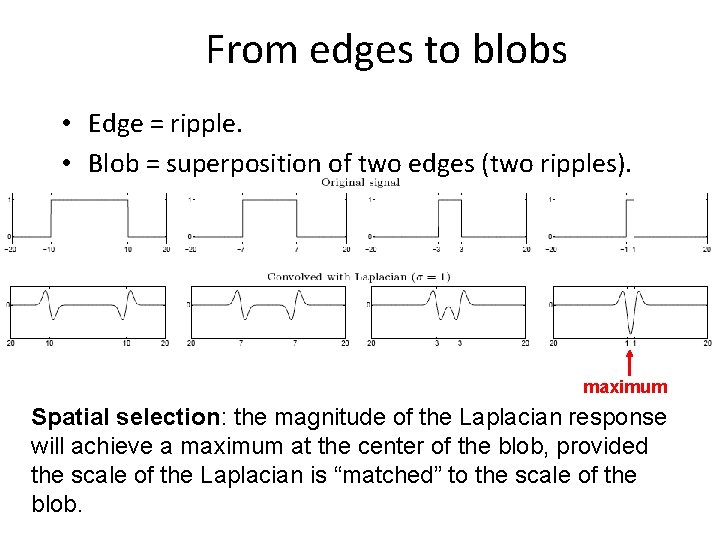

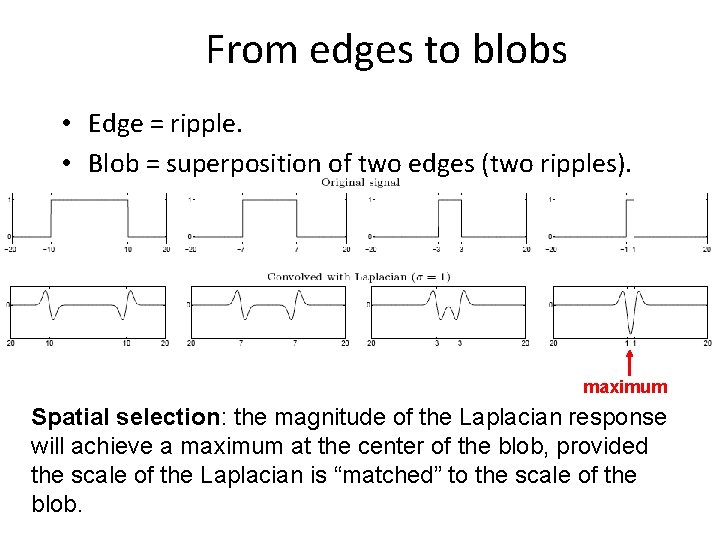

From edges to blobs
• Edge = ripple.
• Blob = superposition of two edges (two ripples).
• Spatial selection: the magnitude of the Laplacian response achieves a maximum at the center of the blob, provided the scale of the Laplacian is “matched” to the scale of the blob.

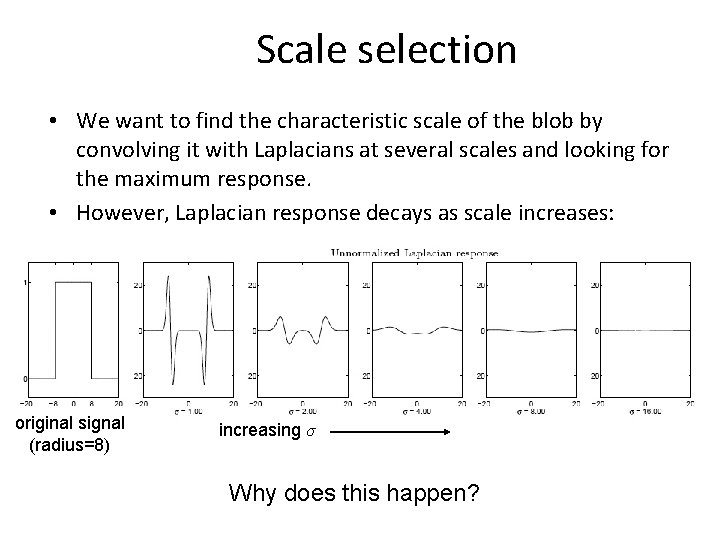

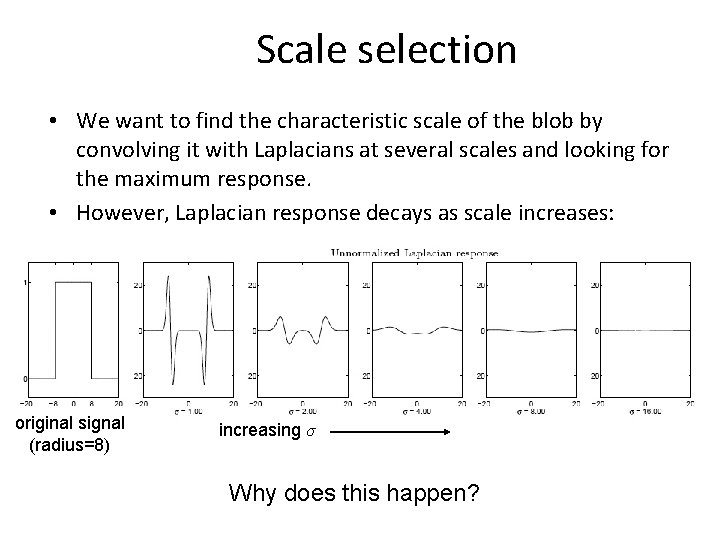

Scale selection
• We want to find the characteristic scale of the blob by convolving it with Laplacians at several scales and looking for the maximum response.
• However, the Laplacian response decays as the scale increases (figure: original signal with radius = 8, filtered with increasing σ). Why does this happen?

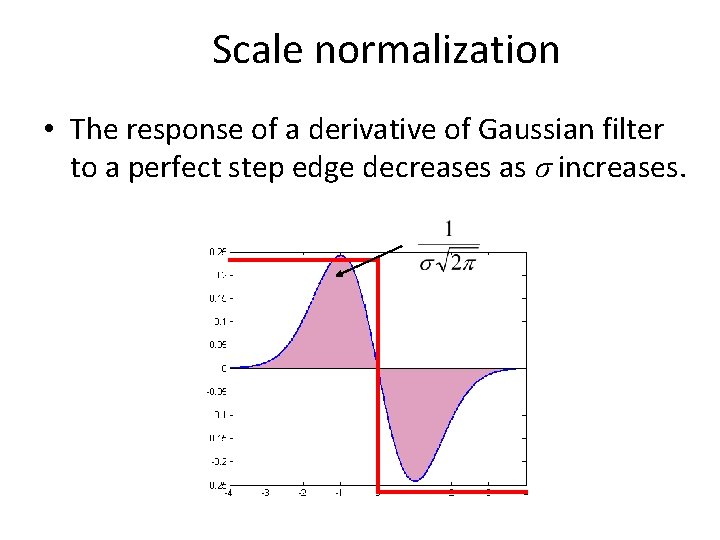

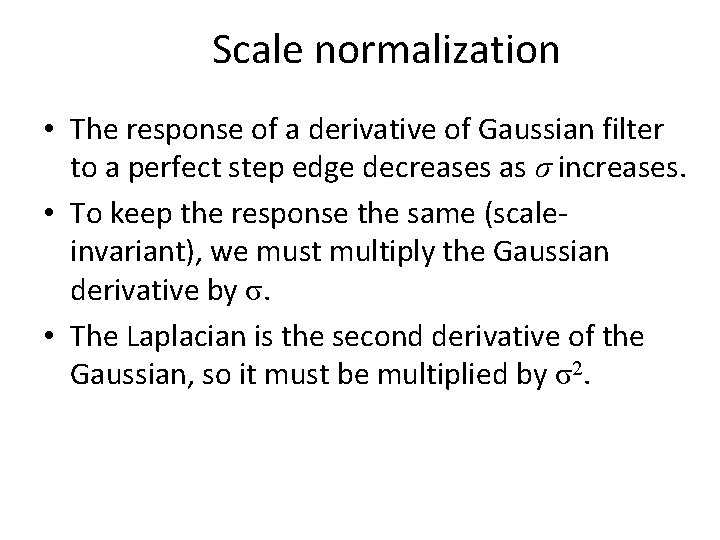

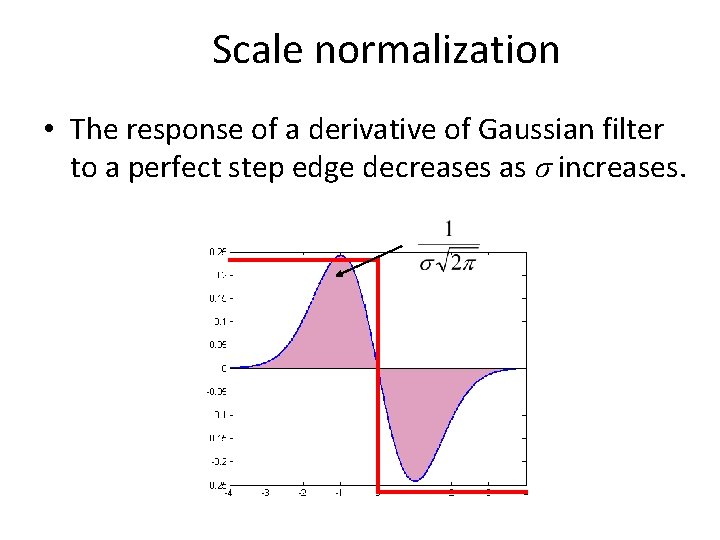


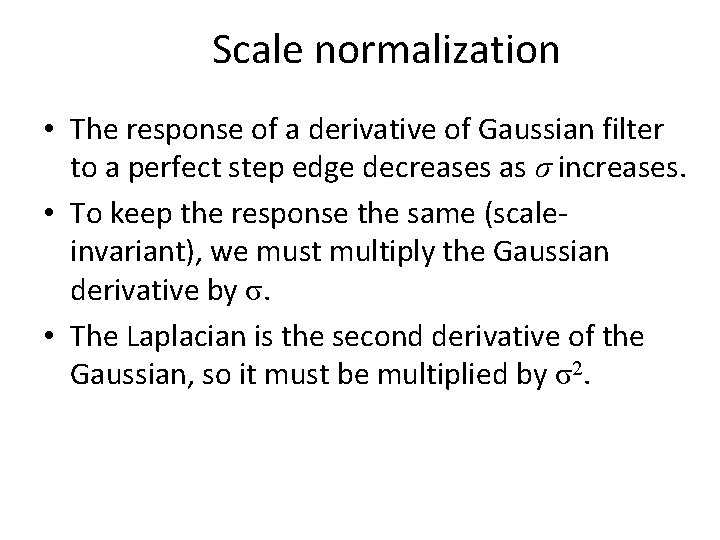

Scale normalization
• The response of a derivative of Gaussian filter to a perfect step edge decreases as σ increases.
• To keep the response the same (scale-invariant), we must multiply the Gaussian derivative by σ.
• The Laplacian is the second derivative of the Gaussian, so it must be multiplied by σ².
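The decay and its correction can be checked numerically. The following is a minimal sketch in plain Python, assuming a sampled 1D derivative-of-Gaussian kernel truncated at 4σ and a unit step edge; the function names are hypothetical and do not come from the slides.

```python
import math

def gaussian_derivative_kernel(sigma):
    """Sampled first derivative of a 1D Gaussian, truncated at 4 sigma (assumption)."""
    radius = int(4 * sigma)
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return [-(i / sigma**2) * norm * math.exp(-i * i / (2.0 * sigma**2))
            for i in range(-radius, radius + 1)]

def peak_edge_response(sigma, normalize):
    """Peak absolute filter response on a unit step edge."""
    kernel = gaussian_derivative_kernel(sigma)
    radius = len(kernel) // 2
    n = 8 * radius + 1
    step = [0.0] * (n // 2) + [1.0] * (n - n // 2)
    best = 0.0
    for x in range(radius, n - radius):
        # Correlation and convolution differ only in sign for this odd kernel.
        r = sum(w * step[x + j - radius] for j, w in enumerate(kernel))
        best = max(best, abs(r))
    # Scale normalization: multiply the first-derivative response by sigma.
    return sigma * best if normalize else best

for sigma in (4, 8, 16):
    print(sigma, peak_edge_response(sigma, False), peak_edge_response(sigma, True))
```

In this sketch the unnormalized peak falls roughly as 1/σ, while the σ-normalized peak stays close to 1/√(2π) ≈ 0.40 at every scale.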

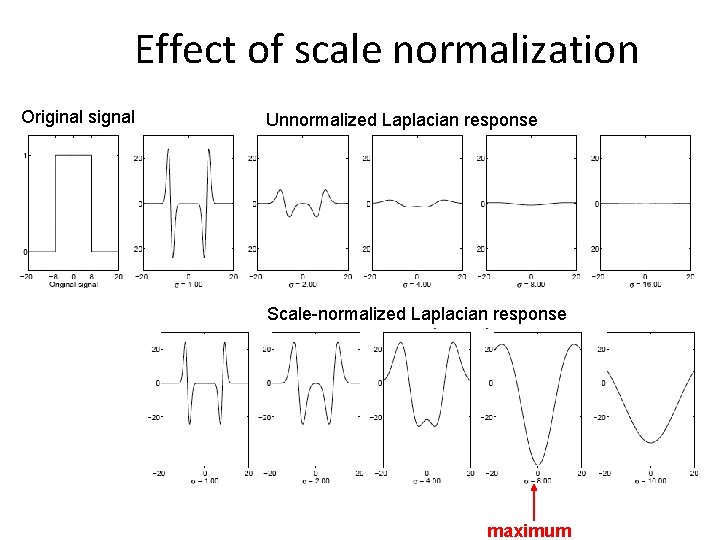

Effect of scale normalization (figure panels: original signal; unnormalized Laplacian response; scale-normalized Laplacian response, with its maximum marked)

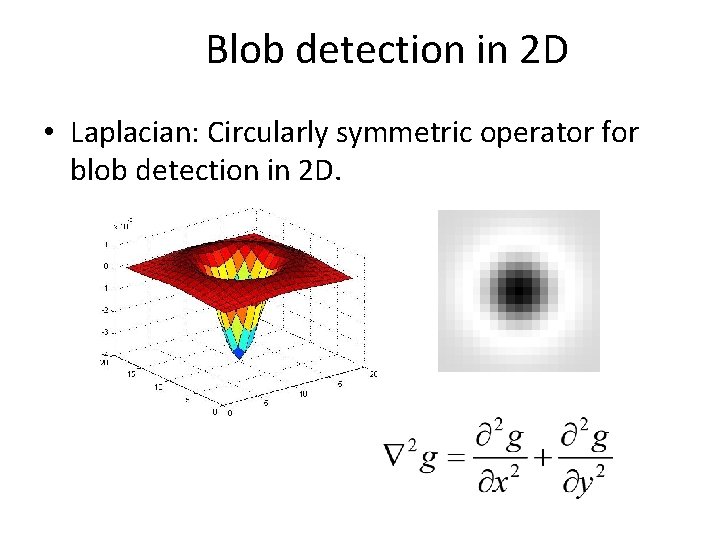

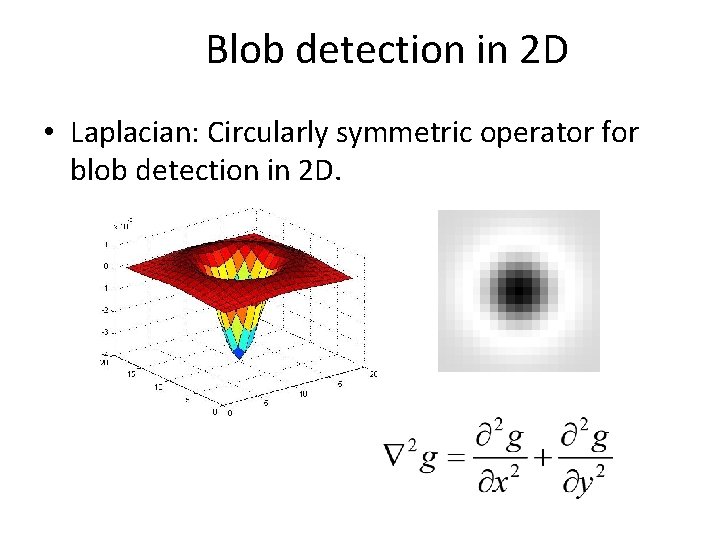


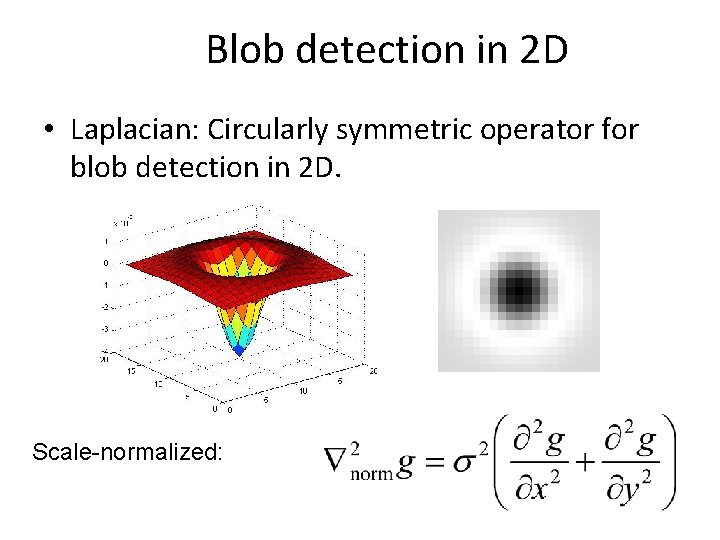

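Blob detection in 2D
• Laplacian: circularly symmetric operator for blob detection in 2D: $\nabla^2 g = \frac{\partial^2 g}{\partial x^2} + \frac{\partial^2 g}{\partial y^2}$
• Scale-normalized: $\nabla^2_{\mathrm{norm}} g = \sigma^2 \left( \frac{\partial^2 g}{\partial x^2} + \frac{\partial^2 g}{\partial y^2} \right)$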

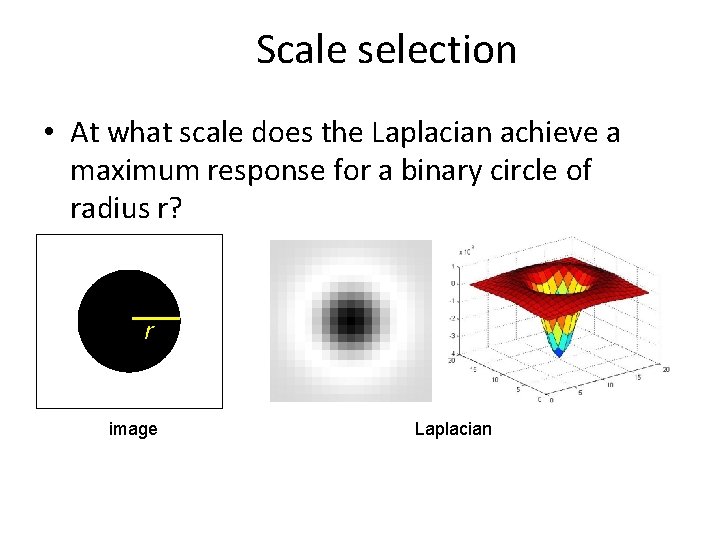

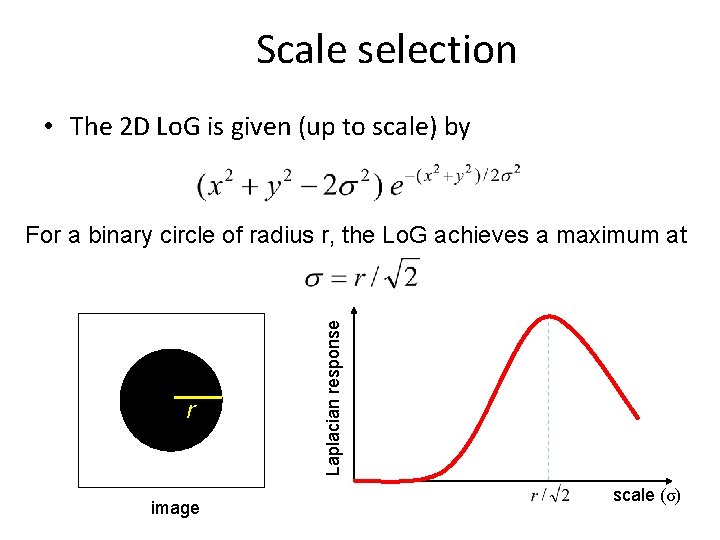

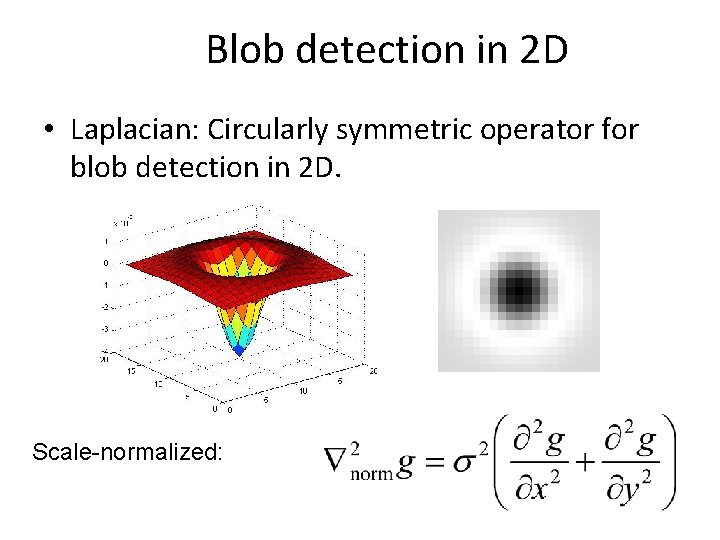

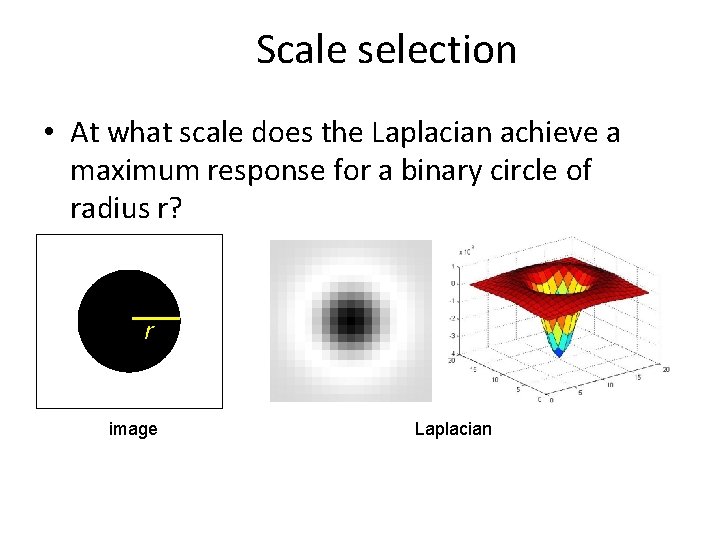

Scale selection
• At what scale does the Laplacian achieve a maximum response for a binary circle of radius r? (Figure: image of a circle of radius r next to the Laplacian filter.)

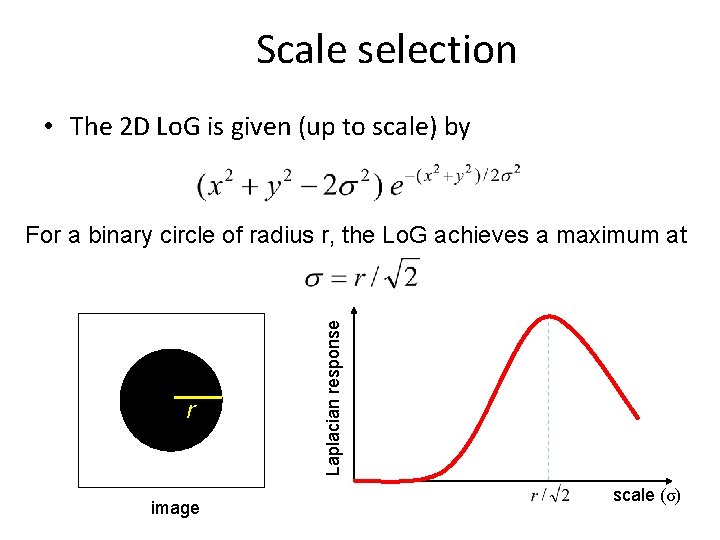

Scale selection
• The 2D LoG is given (up to scale) by $(x^2 + y^2 - 2\sigma^2)\, e^{-(x^2 + y^2)/(2\sigma^2)}$.
• For a binary circle of radius r, the LoG achieves a maximum response at scale $\sigma = r/\sqrt{2}$.
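A short derivation of the maximizing scale, as a sketch. It assumes the response $R(\sigma)$ is measured at the center of an ideal binary disc of radius $r$ and uses the scale-normalized LoG $\sigma^2 \nabla^2 g$ with $\rho^2 = x^2 + y^2$; the symbols $\rho$, $u$, $U$ and $R$ are introduced here for illustration only.

```latex
\sigma^2 \nabla^2 g(\rho)
  = \frac{\rho^2 - 2\sigma^2}{\sigma^2} \cdot \frac{1}{2\pi\sigma^2}\, e^{-\rho^2/(2\sigma^2)}

R(\sigma)
  = \int_0^r \sigma^2 \nabla^2 g(\rho)\, 2\pi\rho \, d\rho
  = \int_0^{U} (2u - 2)\, e^{-u}\, du
  = -2U e^{-U},
\qquad u = \frac{\rho^2}{2\sigma^2}, \quad U = \frac{r^2}{2\sigma^2}

\frac{d}{dU}\left( U e^{-U} \right) = (1 - U)\, e^{-U} = 0
\;\Longrightarrow\; U = 1
\;\Longrightarrow\; \sigma = \frac{r}{\sqrt{2}}
```

The magnitude $|R(\sigma)| = 2U e^{-U}$ therefore peaks when $r^2 = 2\sigma^2$, so the selected scale grows linearly with the radius of the blob.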

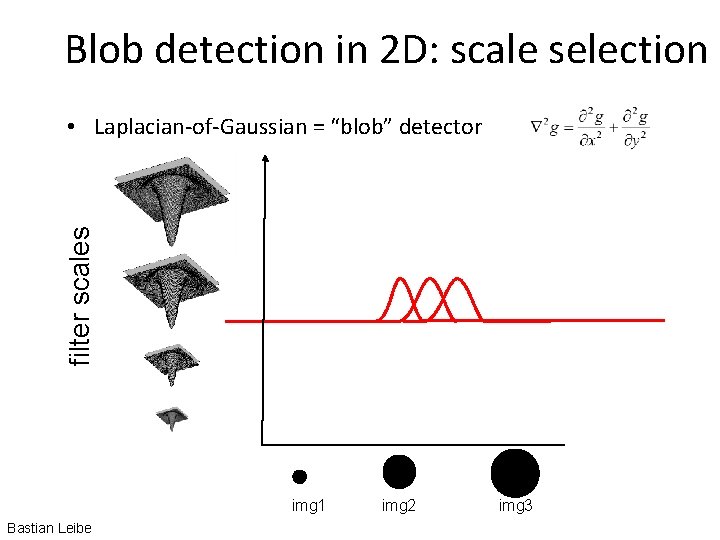

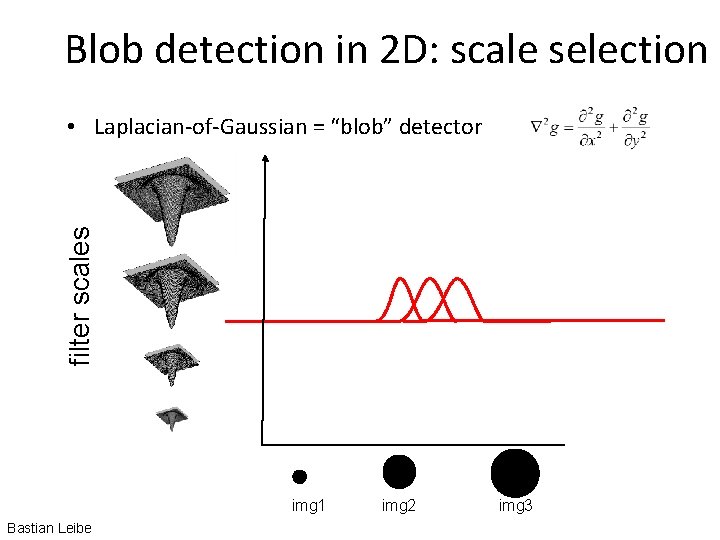

Blob detection in 2D: scale selection
• Laplacian-of-Gaussian = “blob” detector
(Figure: responses of img 1, img 2 and img 3 at several filter scales. Bastian Leibe)

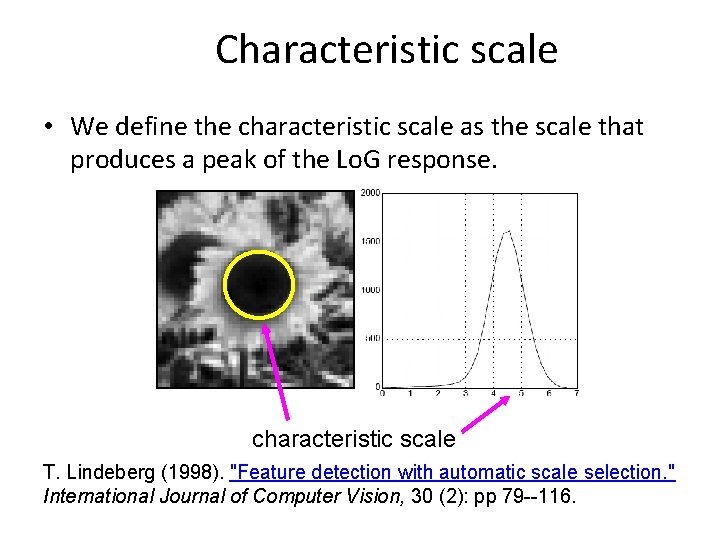

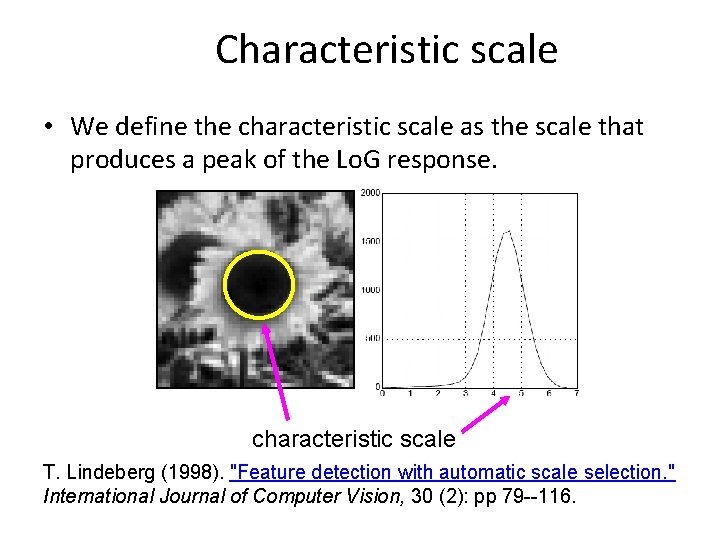

Characteristic scale
• We define the characteristic scale as the scale that produces a peak of the LoG response.
T. Lindeberg (1998). "Feature detection with automatic scale selection." International Journal of Computer Vision, 30(2): pp. 79-116.

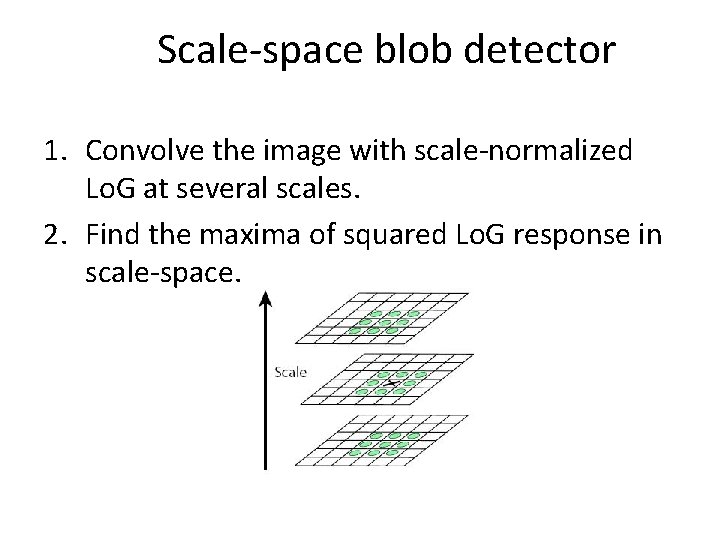

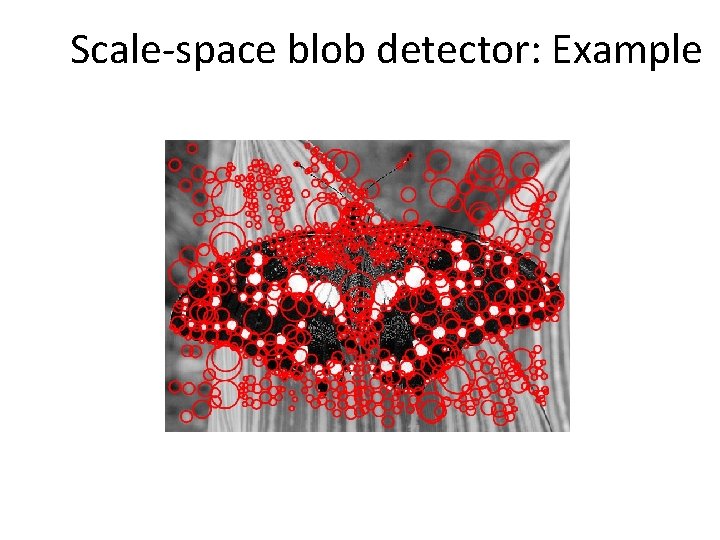

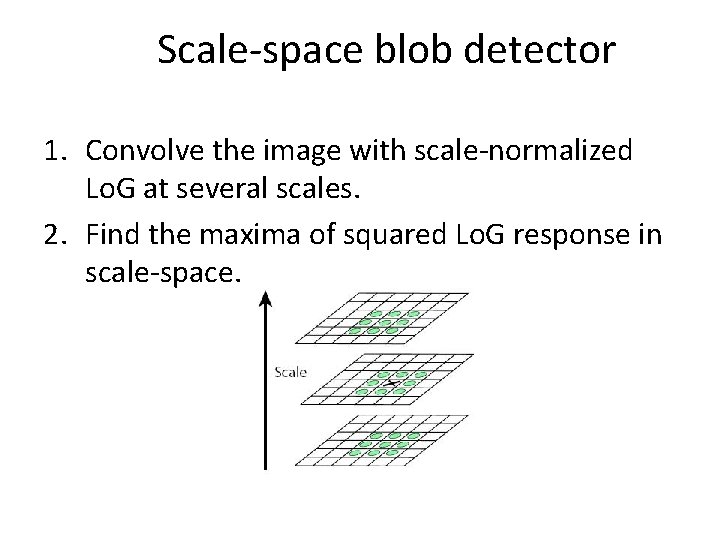

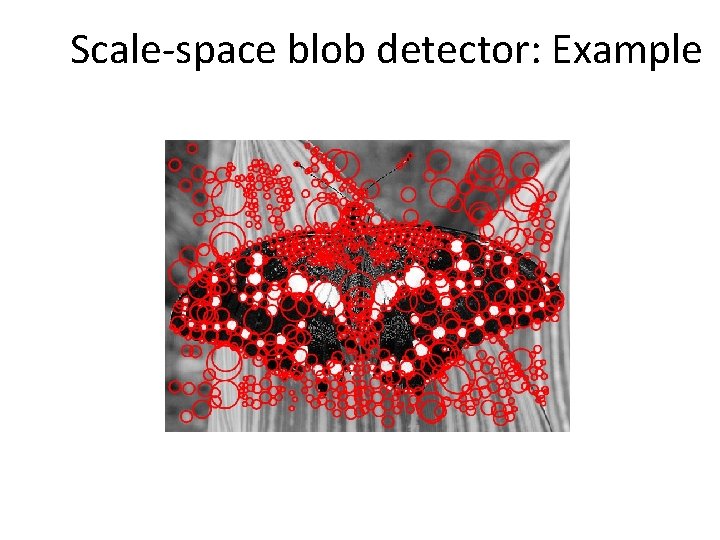

Scale-space blob detector
1. Convolve the image with the scale-normalized LoG at several scales.
2. Find the maxima of the squared LoG response in scale-space.
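The two steps can be sketched on a 1D signal in plain Python. This is an illustration under stated assumptions, not the detector used for the figures: the kernel is a sampled scale-normalized second derivative of a Gaussian truncated at 4σ, borders are zero-padded, only the single strongest response is returned, and all function names are hypothetical.

```python
import math

def normalized_log_kernel(sigma):
    """Sampled sigma^2 * g''(x) for a 1D Gaussian g, truncated at 4 sigma."""
    radius = int(4 * sigma)
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return [((i * i - sigma**2) / sigma**2) * norm
            * math.exp(-i * i / (2.0 * sigma**2))
            for i in range(-radius, radius + 1)]

def filter_zero_padded(signal, kernel):
    """Filter a signal with a symmetric kernel, treating outside samples as 0."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for x in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            k = x + j - radius
            if 0 <= k < n:
                acc += w * signal[k]
        out.append(acc)
    return out

def detect_blob(signal, sigmas):
    """Return (x, sigma) with the largest squared normalized response."""
    best_x, best_sigma, best_value = None, None, -1.0
    for sigma in sigmas:
        # Step 1: filter with the scale-normalized kernel at this scale.
        response = filter_zero_padded(signal, normalized_log_kernel(sigma))
        # Step 2: keep the maximum of the squared response over x and sigma.
        for x, r in enumerate(response):
            if r * r > best_value:
                best_x, best_sigma, best_value = x, sigma, r * r
    return best_x, best_sigma

signal = [0.0] * 201
for x in range(92, 109):  # box of 17 samples centered at x = 100
    signal[x] = 1.0
print(detect_blob(signal, [2, 4, 6, 8, 10, 12]))
```

On this box signal the strongest response lies at the center of the box, at a scale close to its half-width; in 1D the matching scale is about the half-width itself rather than the r/√2 that applies to a 2D disc.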

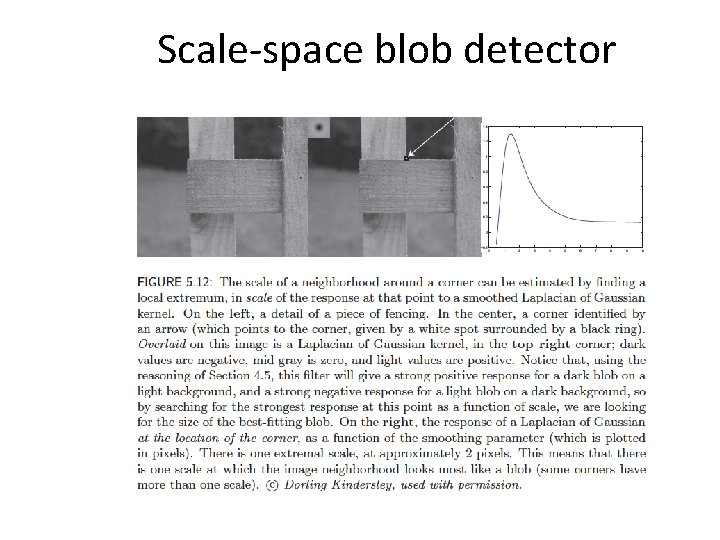

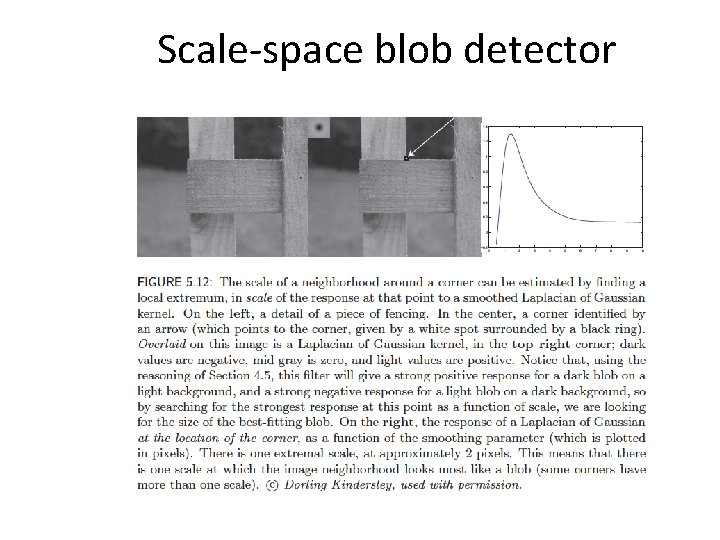

Scale-space blob detector

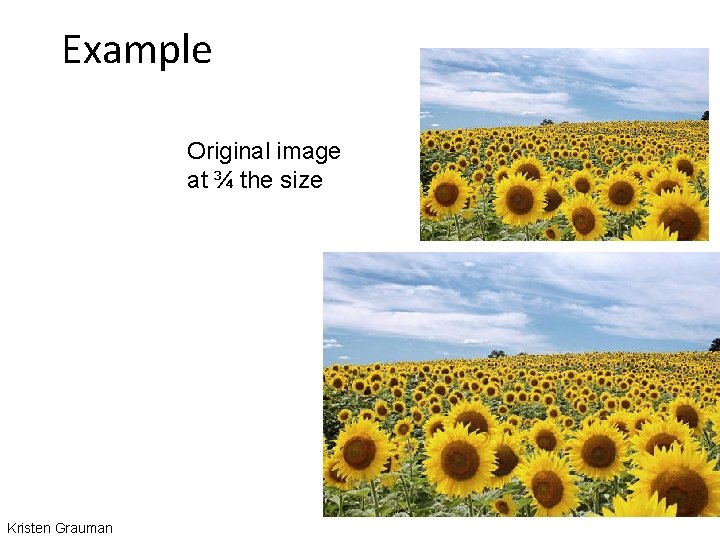

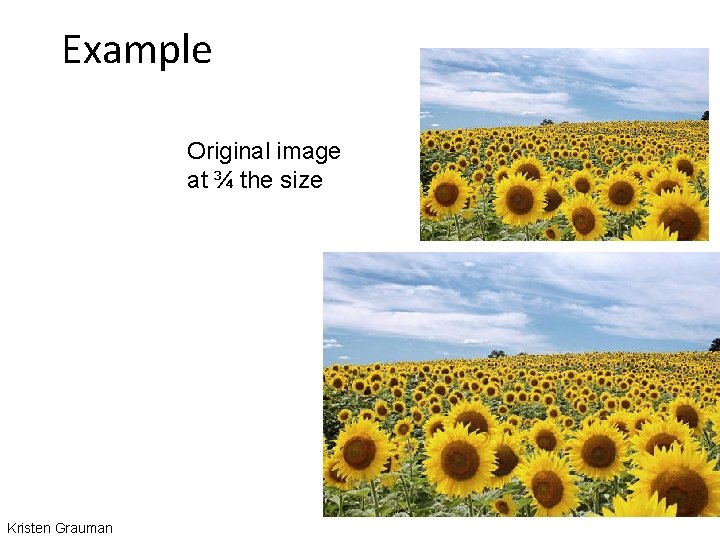

Example Original image at ¾ the size Kristen Grauman

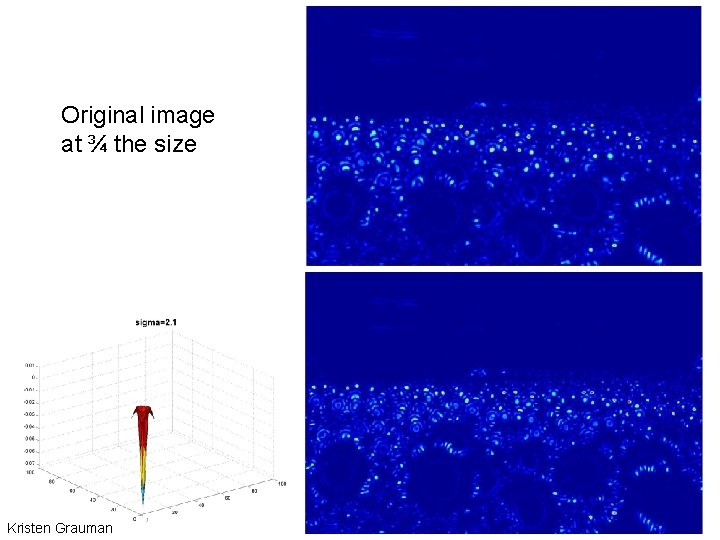

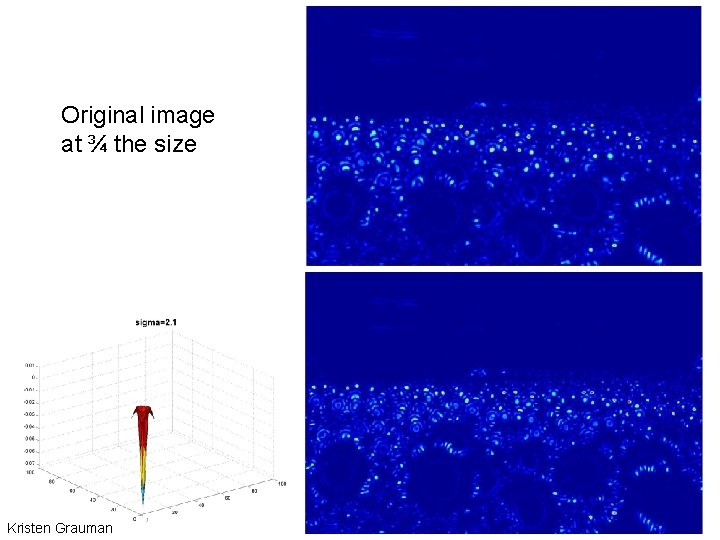


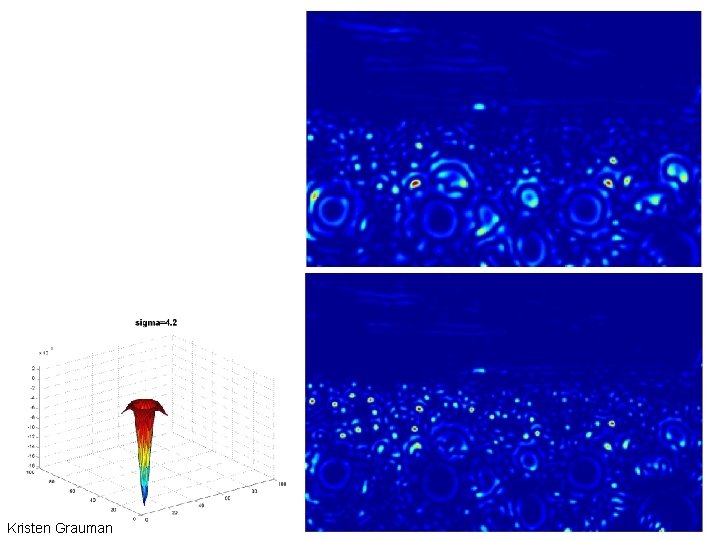

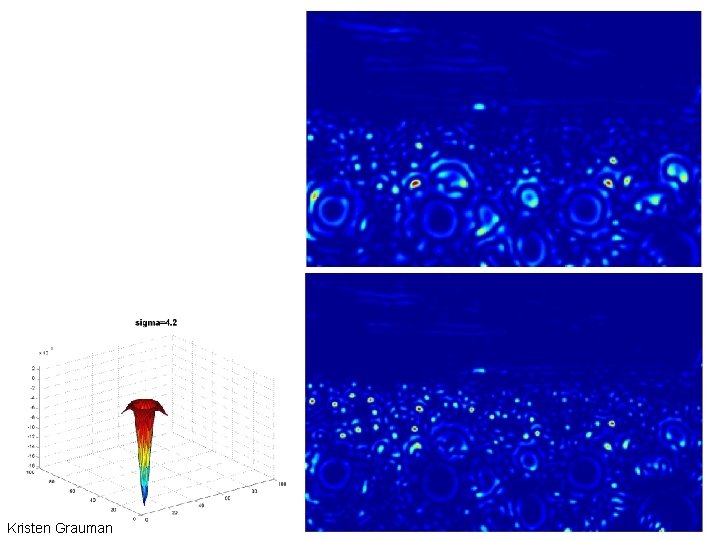


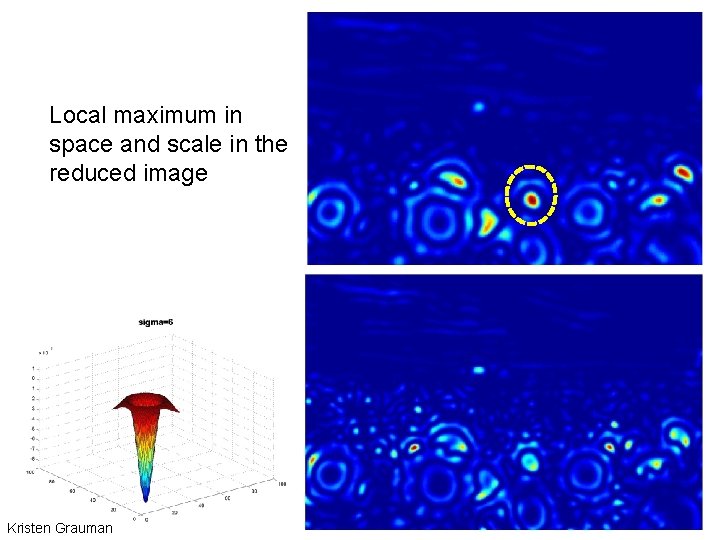

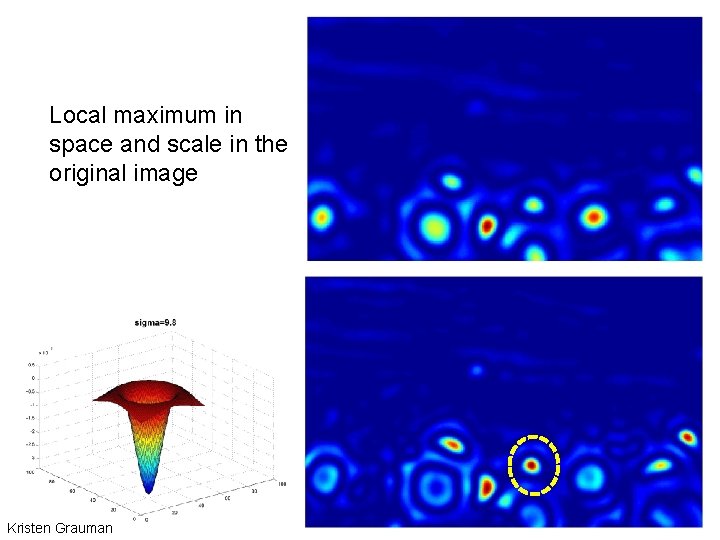

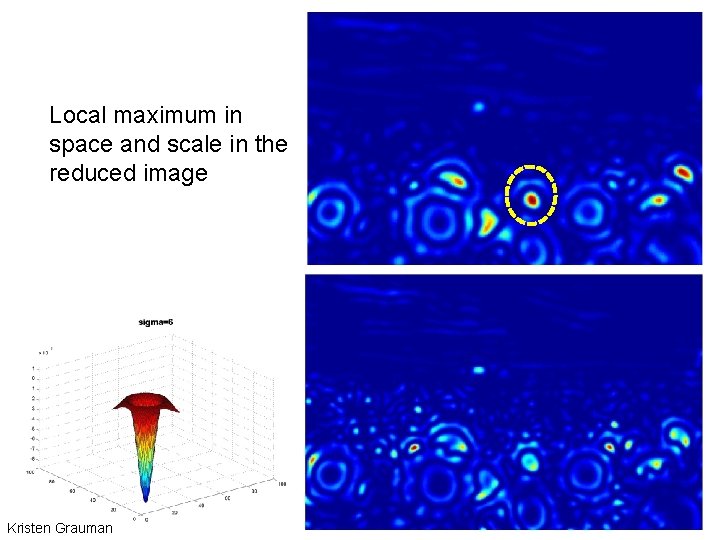

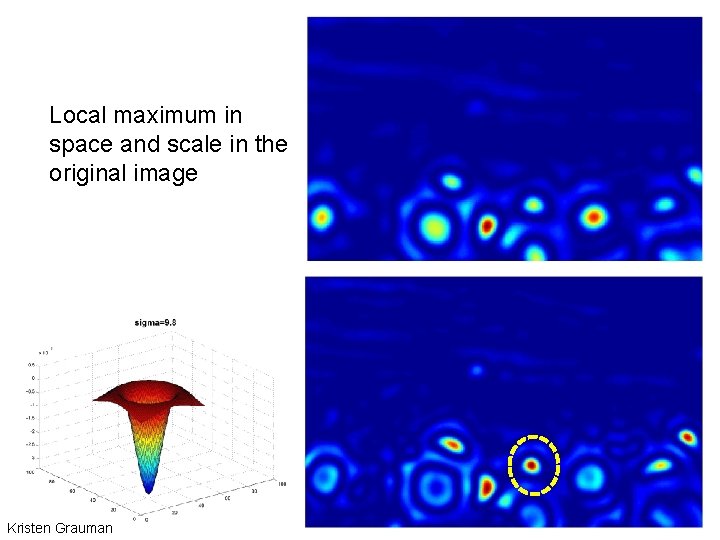

Local maximum in space and scale in the reduced image Kristen Grauman

Local maximum in space and scale in the original image Kristen Grauman

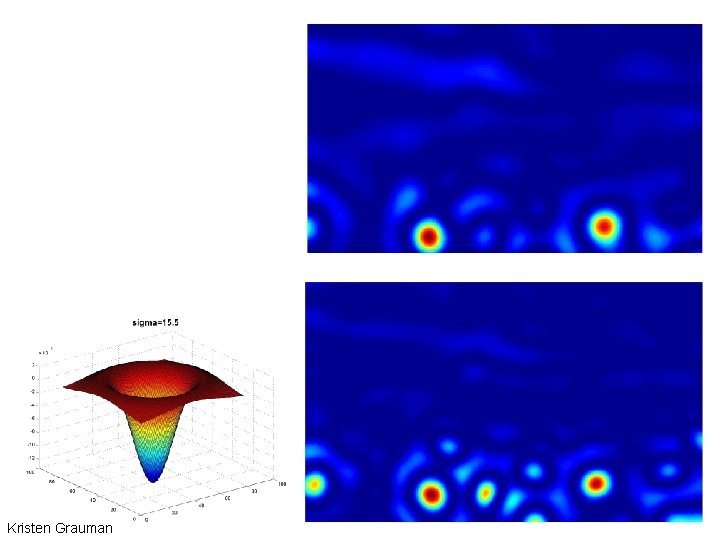

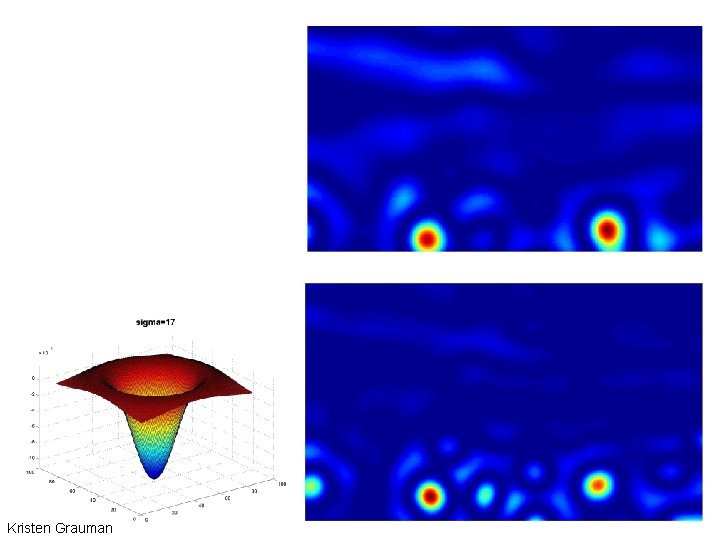

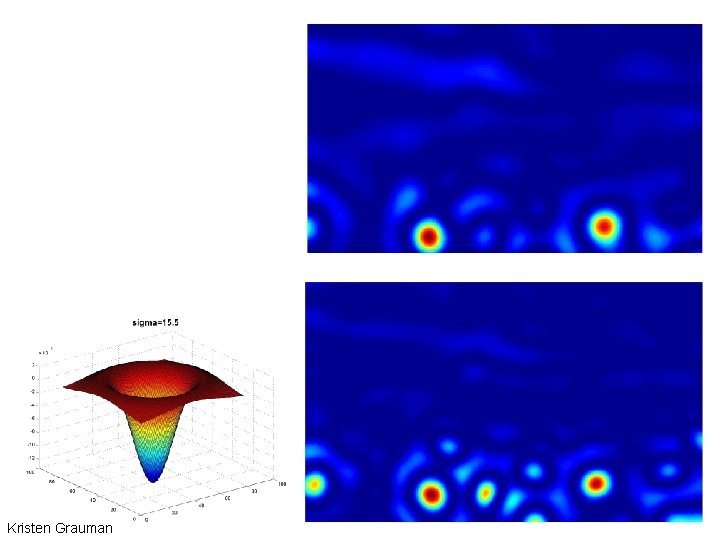

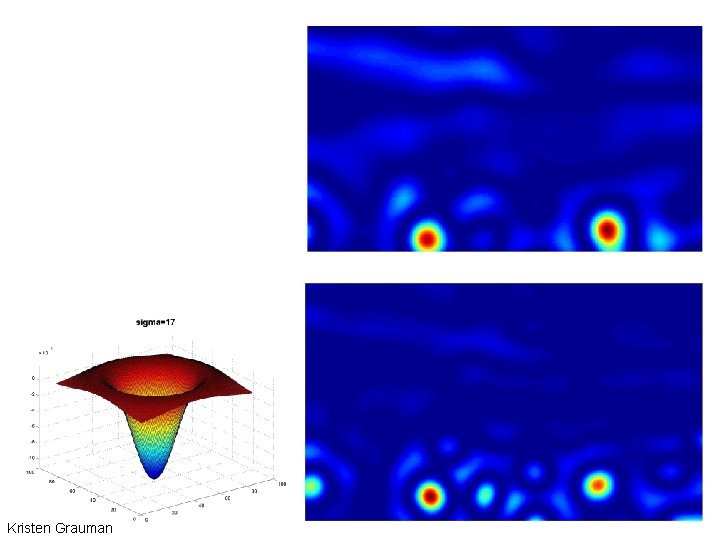


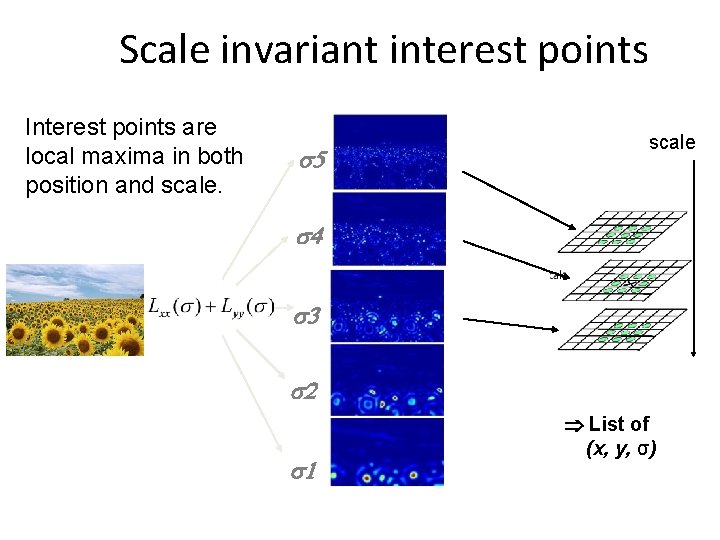

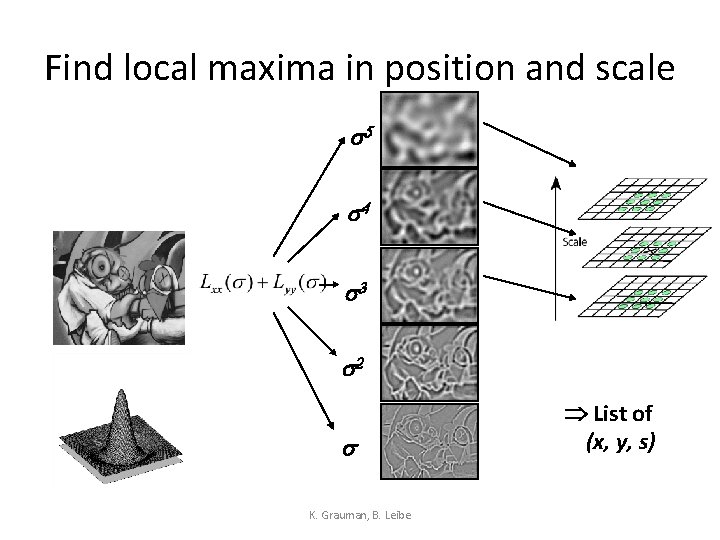

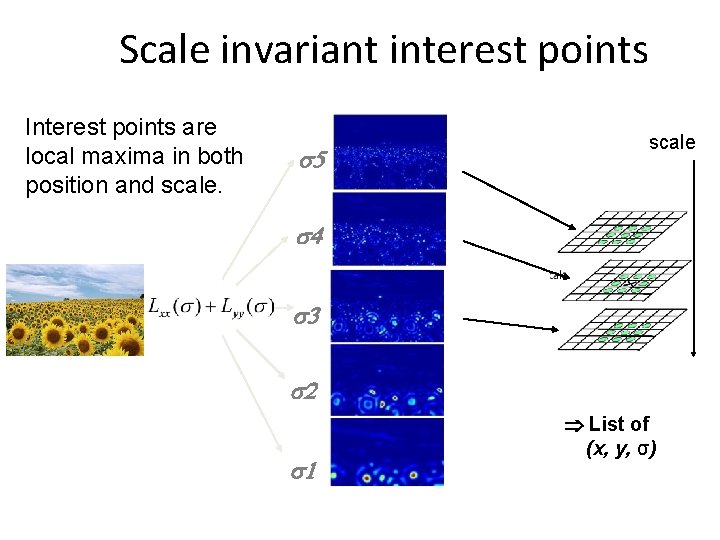

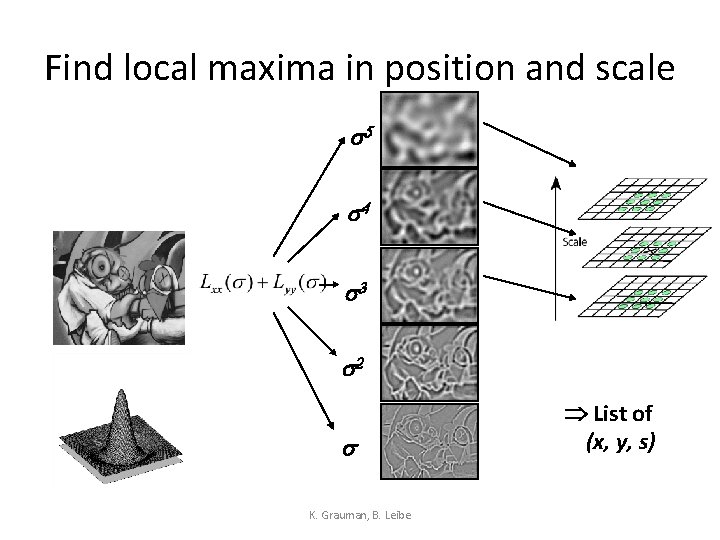

Scale invariant interest points
• Interest points are local maxima in both position and scale (figure: responses stacked over scales s1 to s5).
• Output: list of (x, y, σ)
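Finding maxima in both position and scale can be sketched as a comparison against the 26 neighbors in a 3 x 3 x 3 block of the response stack. The code below is a minimal plain-Python sketch; the layout `stack[s][y][x]`, the `threshold` parameter and the function name are assumptions made for illustration.

```python
def scale_space_maxima(stack, sigmas, threshold=0.0):
    """Return (x, y, sigma) for strict local maxima of stack[s][y][x].

    stack holds one (squared) response image per scale in sigmas.
    Border pixels and the first and last scale are skipped because
    they lack a full 3x3x3 neighborhood.
    """
    points = []
    for s in range(1, len(stack) - 1):
        for y in range(1, len(stack[s]) - 1):
            for x in range(1, len(stack[s][y]) - 1):
                value = stack[s][y][x]
                if value <= threshold:
                    continue
                neighbors = [stack[s + ds][y + dy][x + dx]
                             for ds in (-1, 0, 1)
                             for dy in (-1, 0, 1)
                             for dx in (-1, 0, 1)
                             if (ds, dy, dx) != (0, 0, 0)]
                if value > max(neighbors):
                    points.append((x, y, sigmas[s]))
    return points

# Tiny example: a single peak in the middle scale of a 3-scale stack.
stack = [[[0.0] * 3 for _ in range(3)] for _ in range(3)]
stack[1][1][1] = 1.0
print(scale_space_maxima(stack, [1.0, 2.0, 4.0]))
```

Requiring a strict maximum discards flat plateaus; a practical detector would usually also apply a contrast threshold, which is what the hypothetical `threshold` argument stands in for.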

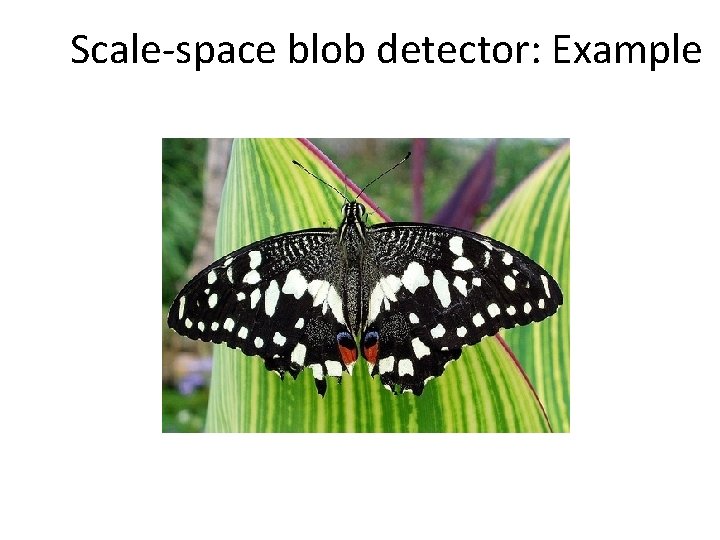

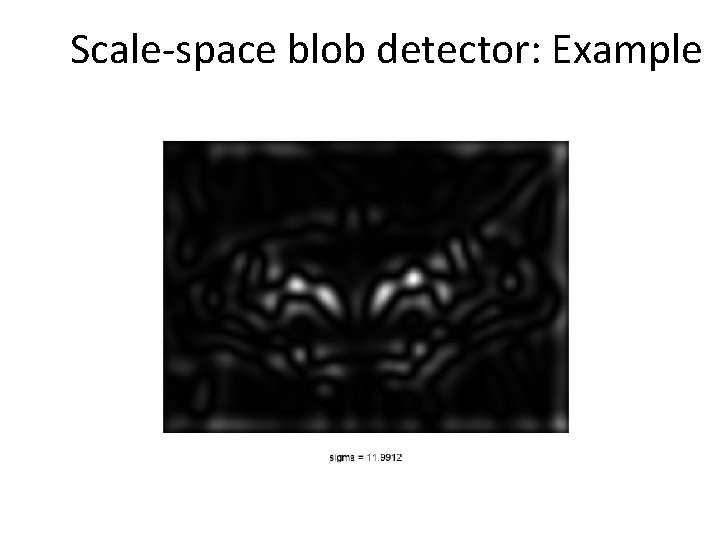

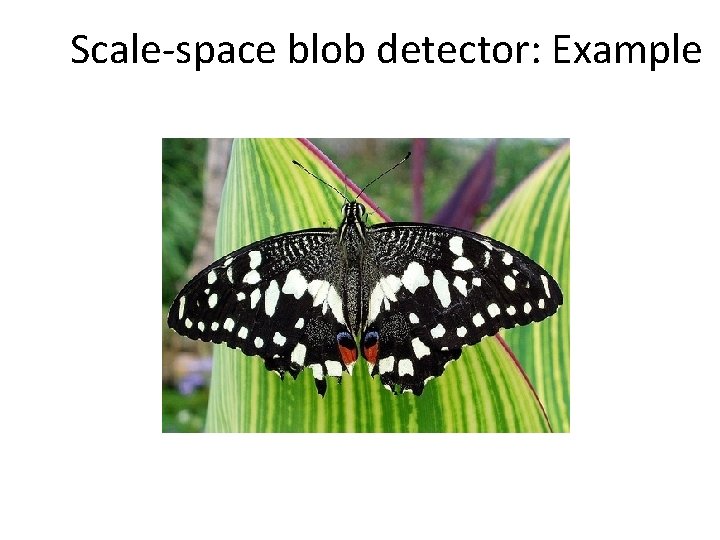

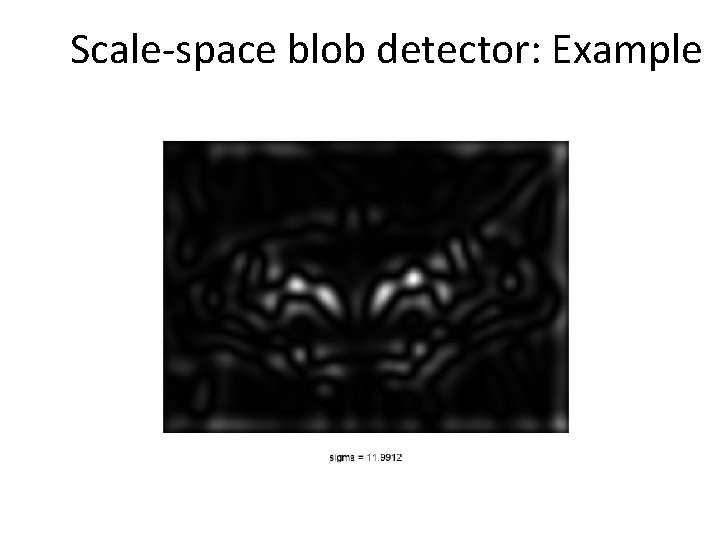

Scale-space blob detector: Example


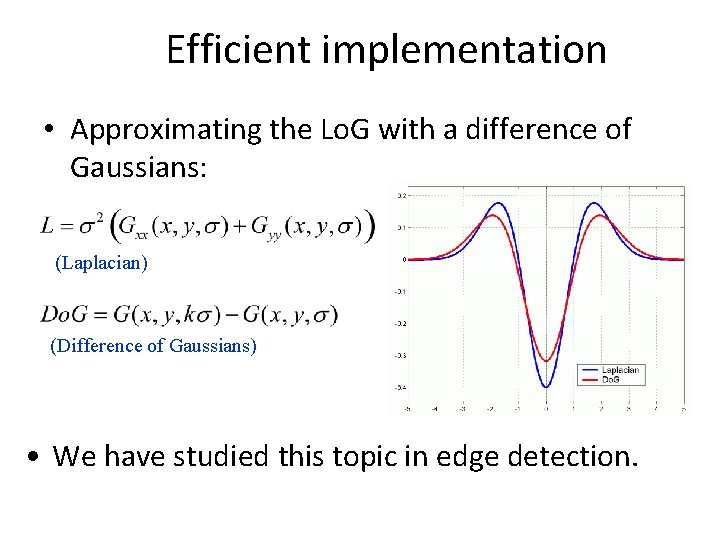

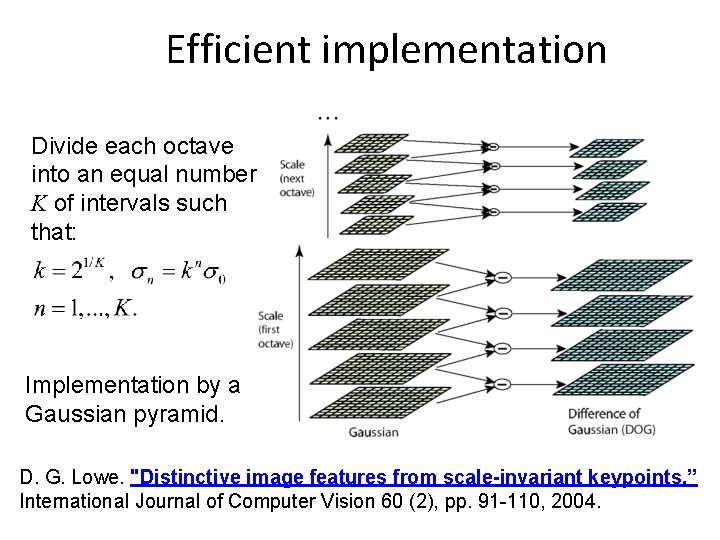

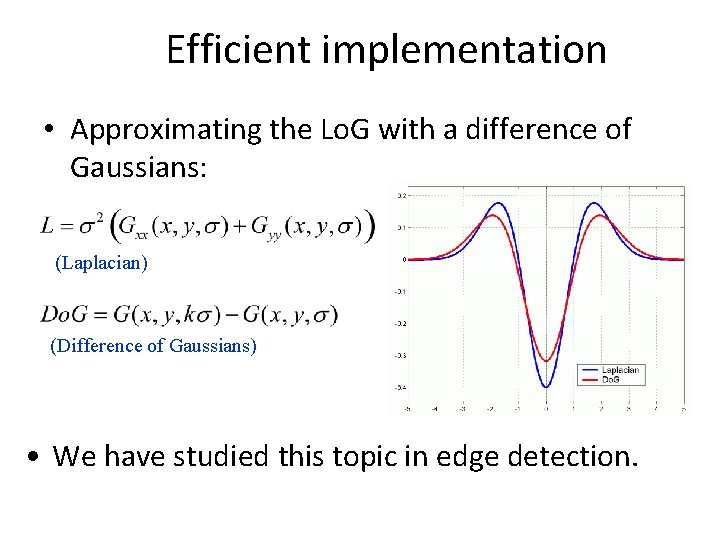

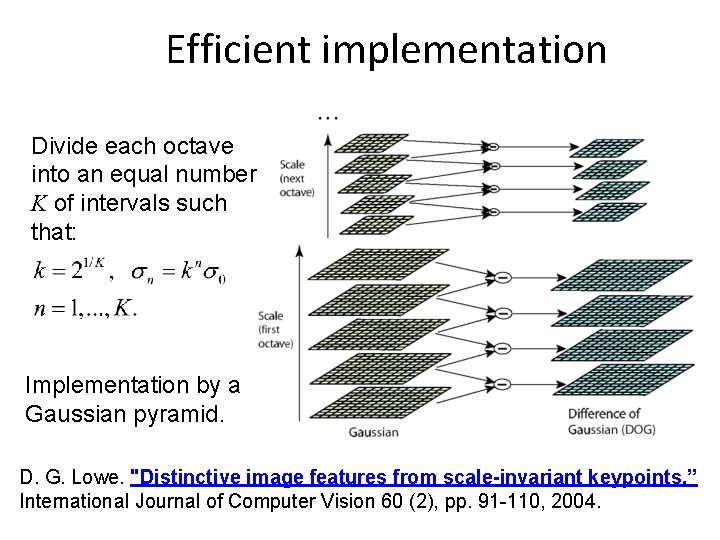

Efficient implementation
• Approximating the LoG (Laplacian) with a difference of Gaussians (DoG).
• We have studied this topic in edge detection.
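The approximation is usually written as G(x, kσ) − G(x, σ) ≈ (k − 1) σ² ∇²G(x, σ), as in Lowe (2004). The sketch below checks this relation numerically in 1D with plain Python; the sampling range, the choice σ = 4 and the function names are assumptions for illustration.

```python
import math

def gaussian(x, sigma):
    """1D Gaussian density with standard deviation sigma."""
    return math.exp(-x * x / (2.0 * sigma**2)) / (sigma * math.sqrt(2.0 * math.pi))

def dog_vs_log_error(sigma, k, radius=40):
    """Largest |DoG - (k-1) sigma^2 g''| relative to the peak of the LoG term."""
    xs = range(-radius, radius + 1)
    dog = [gaussian(x, k * sigma) - gaussian(x, sigma) for x in xs]
    # sigma^2 * g''(x) = ((x^2 - sigma^2) / sigma^2) * g(x)
    log = [(k - 1.0) * ((x * x - sigma**2) / sigma**2) * gaussian(x, sigma)
           for x in xs]
    peak = max(abs(v) for v in log)
    return max(abs(d - l) for d, l in zip(dog, log)) / peak

for k in (1.05, 2.0 ** (1.0 / 3.0)):
    print(k, dog_vs_log_error(4.0, k))
```

The relative error shrinks as k approaches 1, which is consistent with the DoG being a finite difference in σ of the Gaussian; for coarser scale steps the two filters keep the same shape but differ more in amplitude.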

Efficient implementation
• Divide each octave into an equal number K of intervals such that $k = 2^{1/K}$ and $\sigma_n = k^n \sigma_0$.
• Implementation by a Gaussian pyramid.
D. G. Lowe. "Distinctive image features from scale-invariant keypoints." International Journal of Computer Vision 60(2), pp. 91-110, 2004.
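As a small illustration of the scale sampling, the sketch below lists the σ values obtained when each octave (a doubling of σ) is split into K intervals with ratio k = 2^(1/K). The starting scale 1.6 and K = 3 are example values chosen here, and the function name is hypothetical.

```python
def octave_sigmas(sigma0, intervals, octaves):
    """Scales sigma0 * k**n with k = 2**(1/intervals), grouped by octave."""
    k = 2.0 ** (1.0 / intervals)
    return [[sigma0 * k ** (o * intervals + i) for i in range(intervals)]
            for o in range(octaves)]

for row in octave_sigmas(1.6, 3, 2):
    print(row)
```

Each row starts at twice the scale of the previous row, which is why a pyramid implementation can downsample the image by two at every octave and reuse the same small set of filters.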

Find local maxima in position and scale (figure: responses stacked over scales s, s2, ..., s5), giving a list of (x, y, s). K. Grauman, B. Leibe

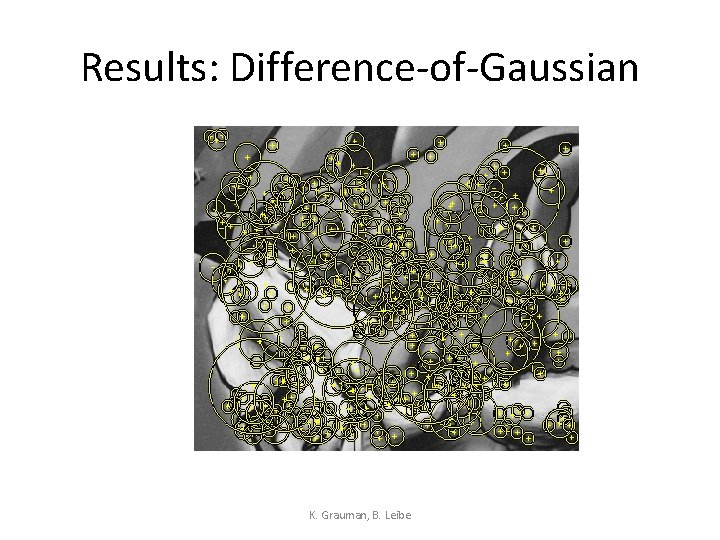

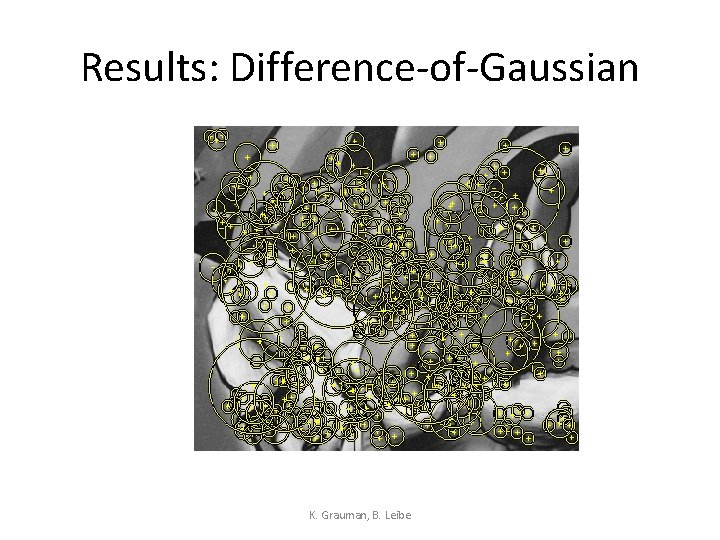

Results: Difference-of-Gaussian K. Grauman, B. Leibe

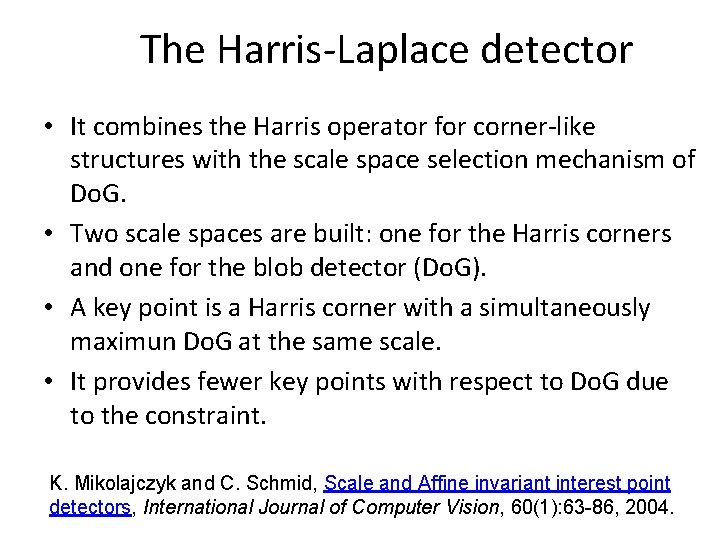

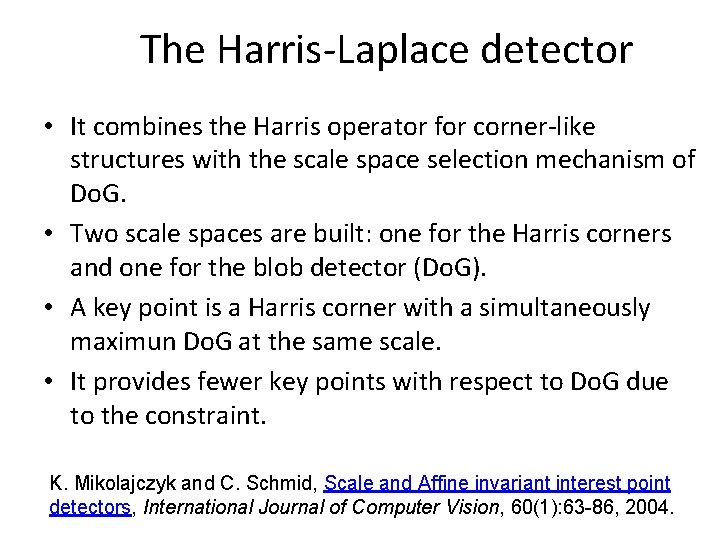

The Harris-Laplace detector
• It combines the Harris operator for corner-like structures with the scale-space selection mechanism of DoG.
• Two scale spaces are built: one for the Harris corners and one for the blob detector (DoG).
• A key point is a Harris corner whose DoG response is simultaneously a maximum at the same scale.
• It provides fewer key points than DoG because of this constraint.
K. Mikolajczyk and C. Schmid, Scale and Affine invariant interest point detectors, International Journal of Computer Vision, 60(1): 63-86, 2004.

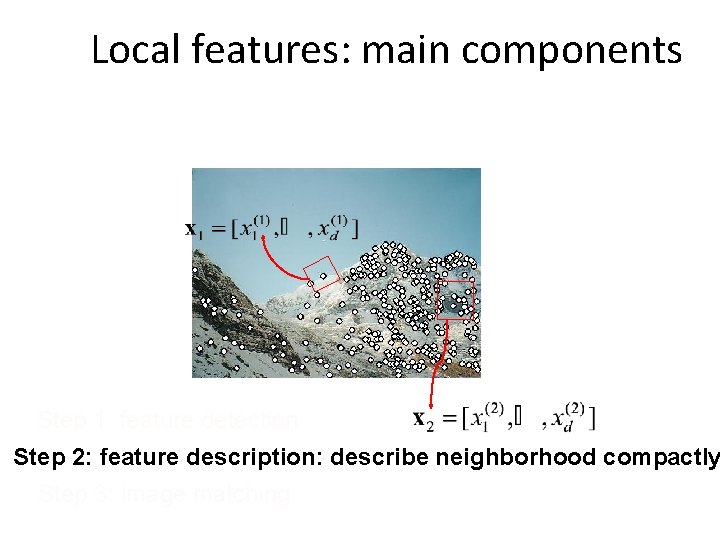

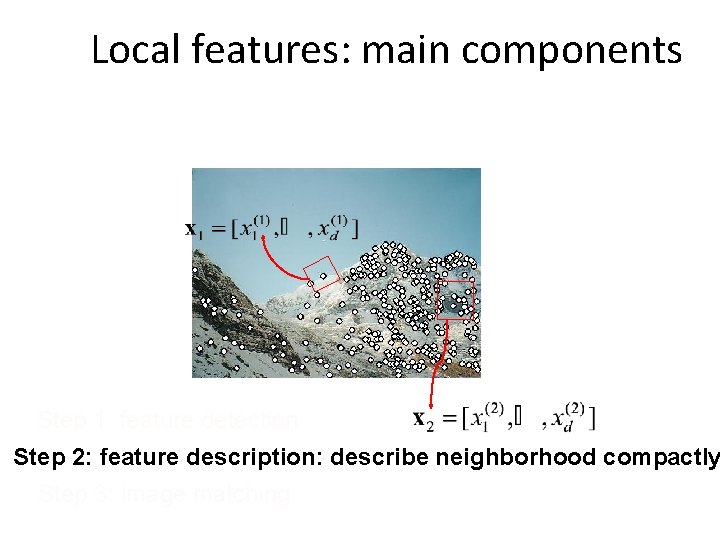

Local features: main components
• Step 1: feature detection
• Step 2: feature description (describe the neighborhood compactly)
• Step 3: image matching

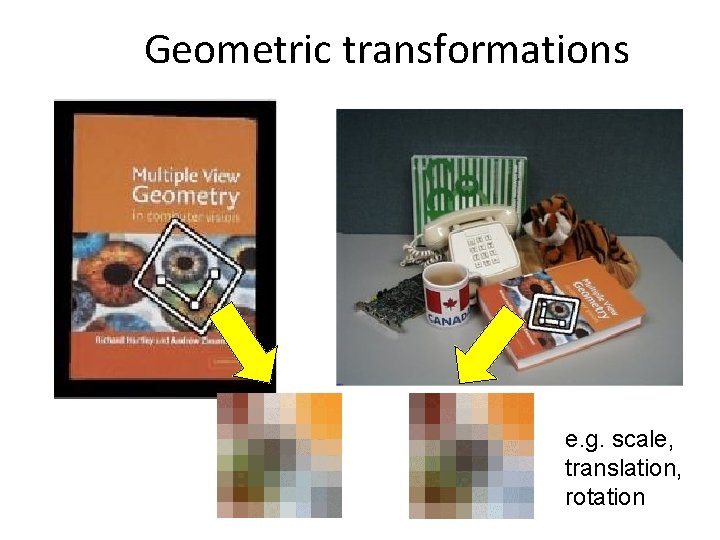

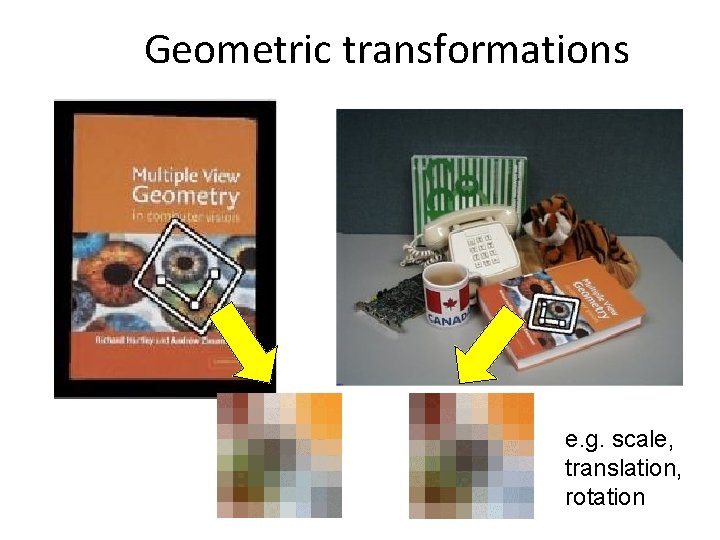

Geometric transformations, e.g., scale, translation, rotation

Photometric transformations Figure from T. Tuytelaars ECCV 2006 tutorial

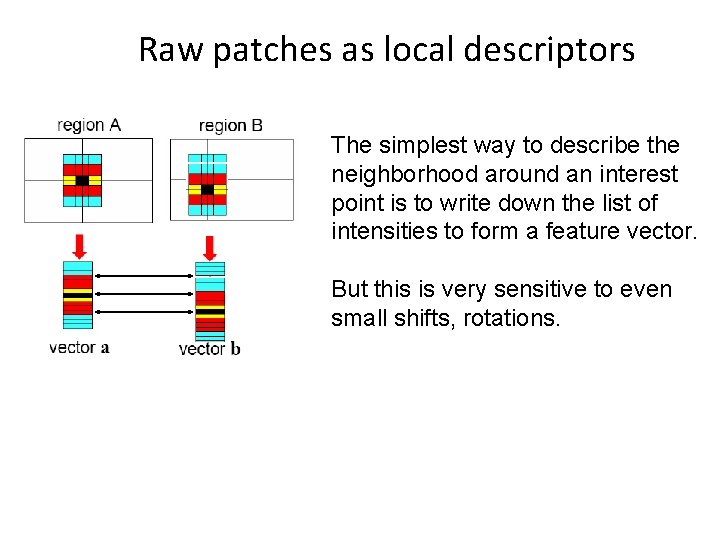

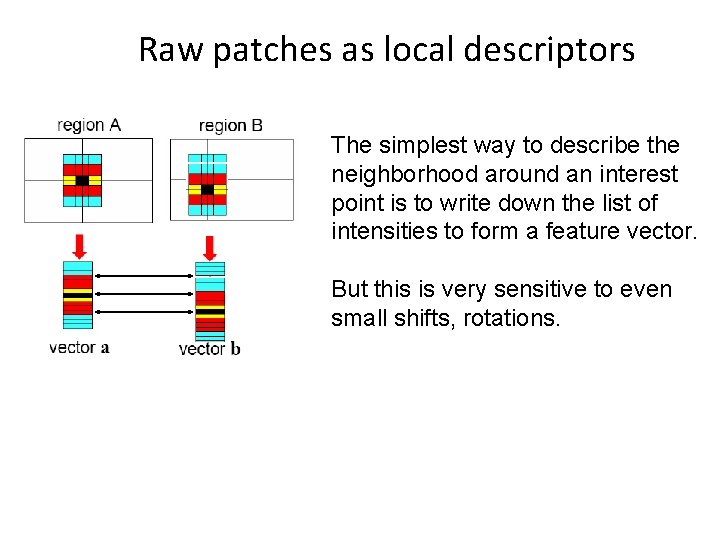

Raw patches as local descriptors
• The simplest way to describe the neighborhood around an interest point is to write down the list of intensities to form a feature vector.
• But this is very sensitive to even small shifts or rotations.

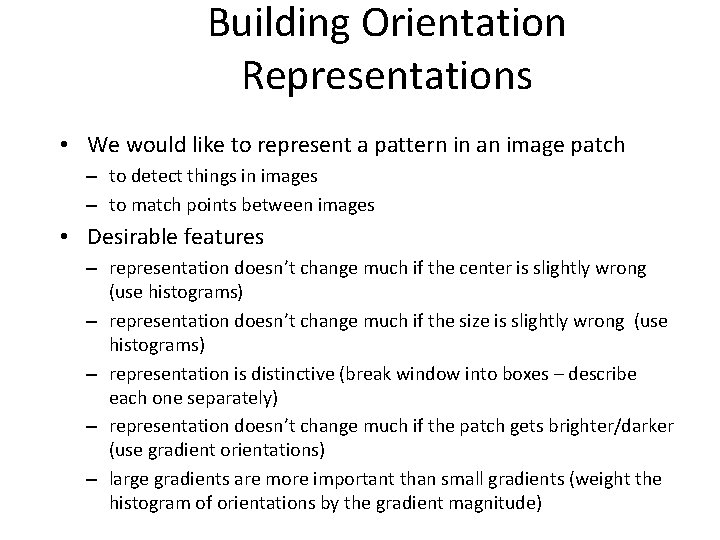

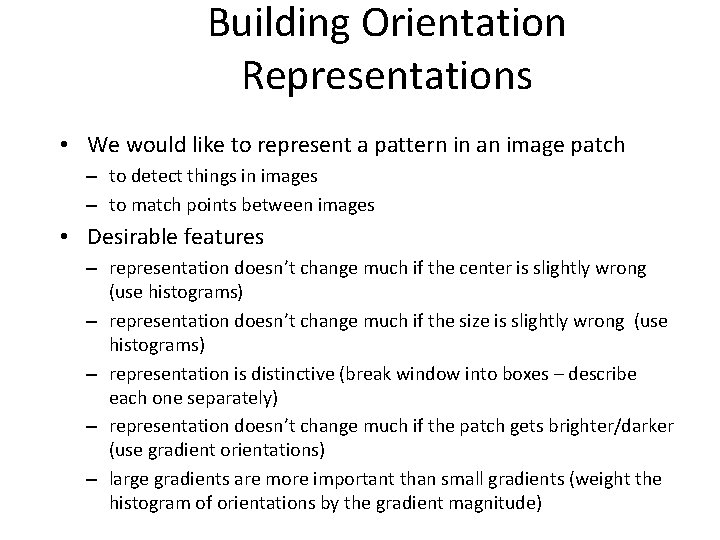

Gradient orientations as descriptors
• Gradient magnitude is affected by illumination scaling (e.g., multiplication by a constant), but the gradient direction is not.
• Gradient direction depends on the smoothing scale.
  – Blurred edges generate new orientations
• Orientation fields are quite characteristic of particular arrangements (e.g., textures).

Building Orientation Representations
• We would like to represent a pattern in an image patch
  – to detect things in images
  – to match points between images
• Desirable features
  – representation doesn’t change much if the center is slightly wrong (use histograms)
  – representation doesn’t change much if the size is slightly wrong (use histograms)
  – representation is distinctive (break the window into boxes and describe each one separately)
  – representation doesn’t change much if the patch gets brighter/darker (use gradient orientations)
  – large gradients are more important than small gradients (weight the histogram of orientations by the gradient magnitude)
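A magnitude-weighted orientation histogram for a single box can be sketched as follows. This is a plain-Python illustration under assumptions: central differences for the gradient, 8 bins over 0 to 2π, a final normalization to unit sum, and a hypothetical function name; it is not the exact SIFT or HOG computation.

```python
import math

def orientation_histogram(patch, bins=8):
    """Histogram of gradient orientations, weighted by gradient magnitude."""
    hist = [0.0] * bins
    height, width = len(patch), len(patch[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = (patch[y][x + 1] - patch[y][x - 1]) / 2.0
            gy = (patch[y + 1][x] - patch[y - 1][x]) / 2.0
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue  # flat pixels carry no orientation
            angle = math.atan2(gy, gx) % (2.0 * math.pi)
            index = int(angle / (2.0 * math.pi) * bins) % bins
            hist[index] += magnitude  # large gradients count more
    total = sum(hist)
    return [v / total for v in hist] if total > 0 else hist

# A horizontal intensity ramp, and a brighter, higher-contrast copy of it.
ramp = [[float(x) for x in range(6)] for _ in range(6)]
brighter = [[3.0 * v + 10.0 for v in row] for row in ramp]
print(orientation_histogram(ramp))
print(orientation_histogram(brighter))
```

Both patches give the same normalized histogram: adding a constant leaves the gradient unchanged, and multiplying by a constant scales all magnitudes equally without changing any orientation.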

![SIFT descriptor Lowe 2004 Use histograms to bin pixels within subpatches according to SIFT descriptor [Lowe 2004] • Use histograms to bin pixels within sub-patches according to](https://slidetodoc.com/presentation_image_h2/7e6de99a05c8b88a740ee35895e4e1fd/image-53.jpg)

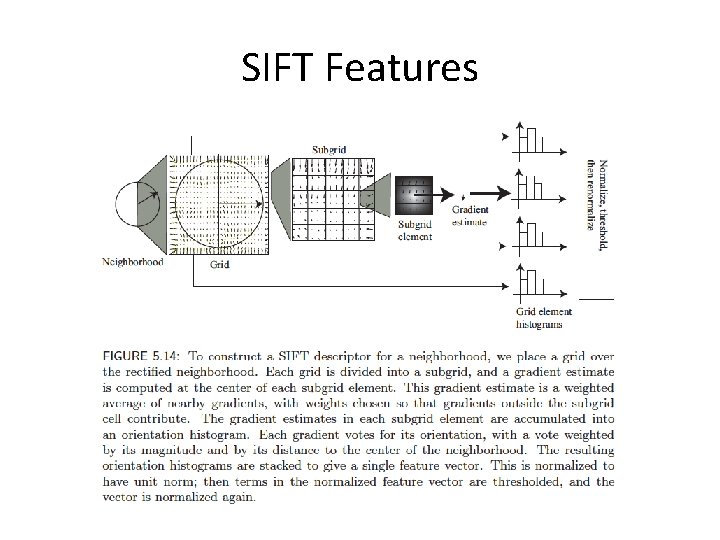

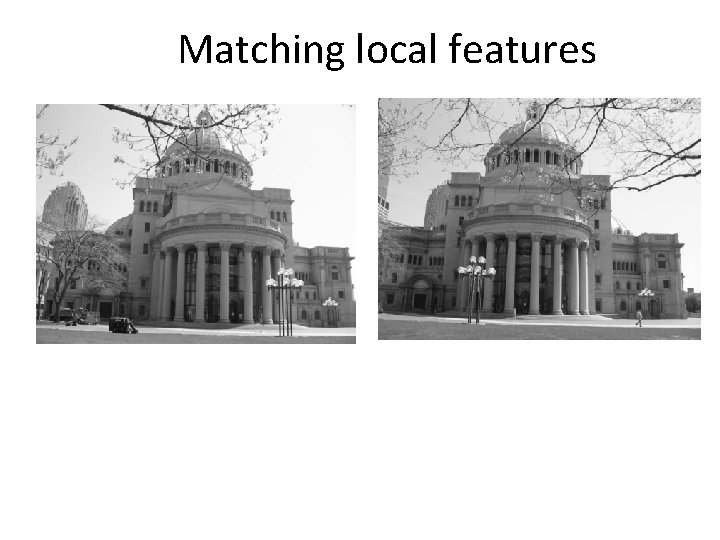

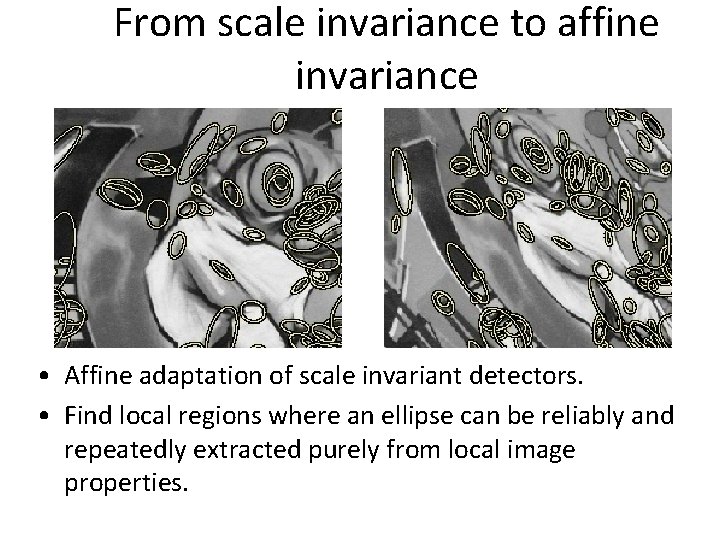

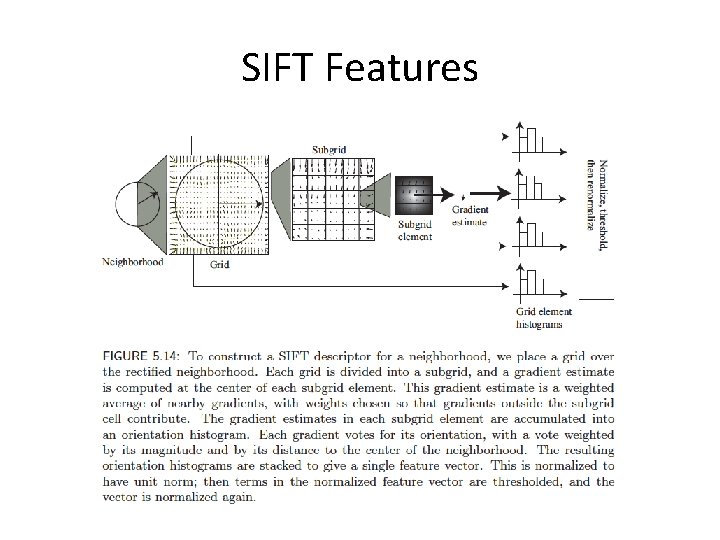

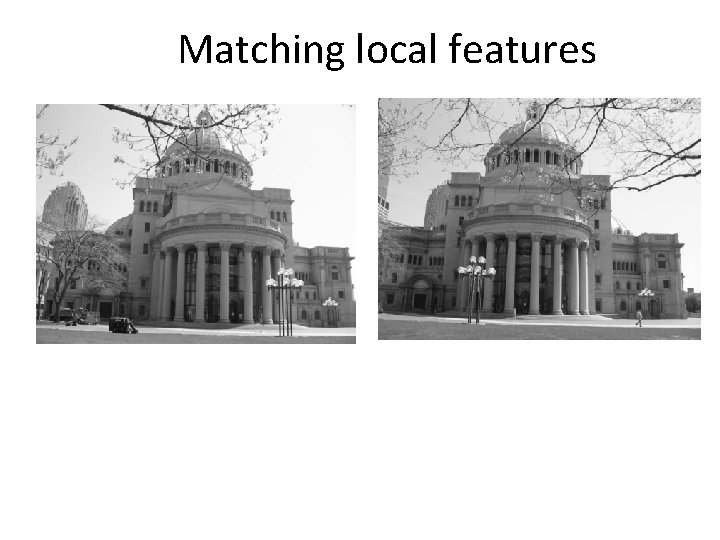

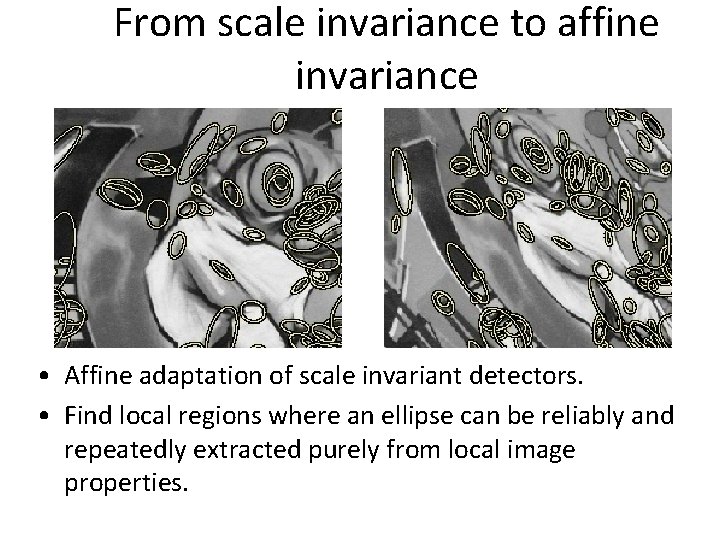

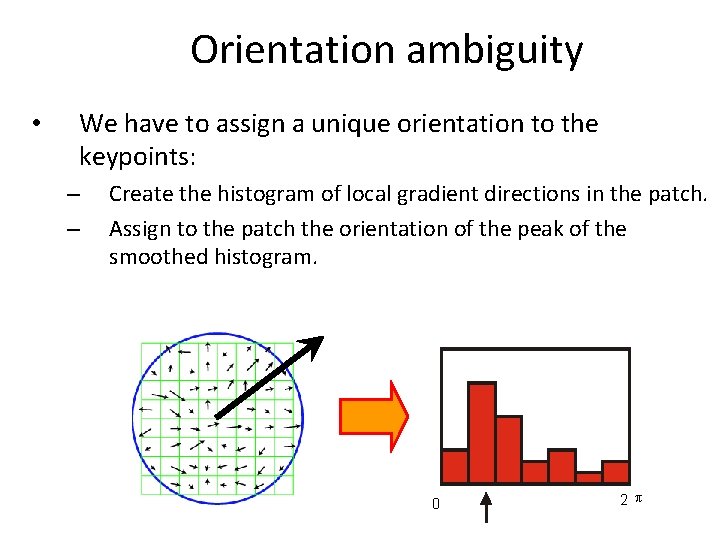

SIFT descriptor [Lowe 2004]
• Use histograms to bin pixels within sub-patches according to their orientation (histogram axis from 0 to 2π).

SIFT Features

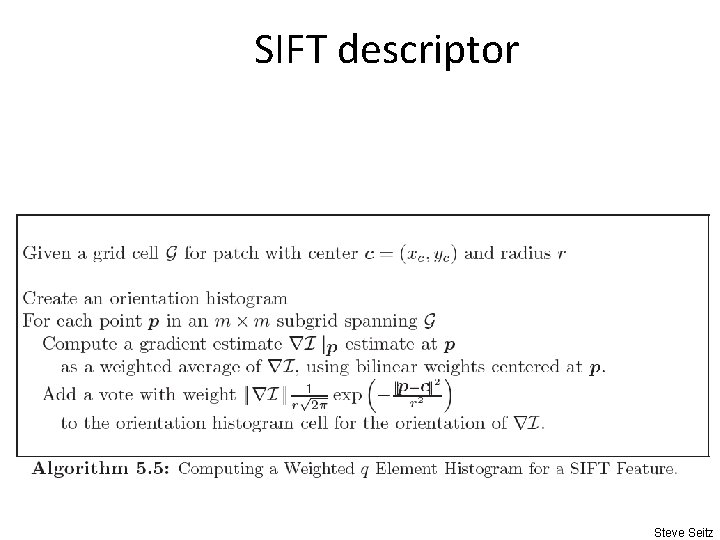

SIFT descriptor Steve Seitz

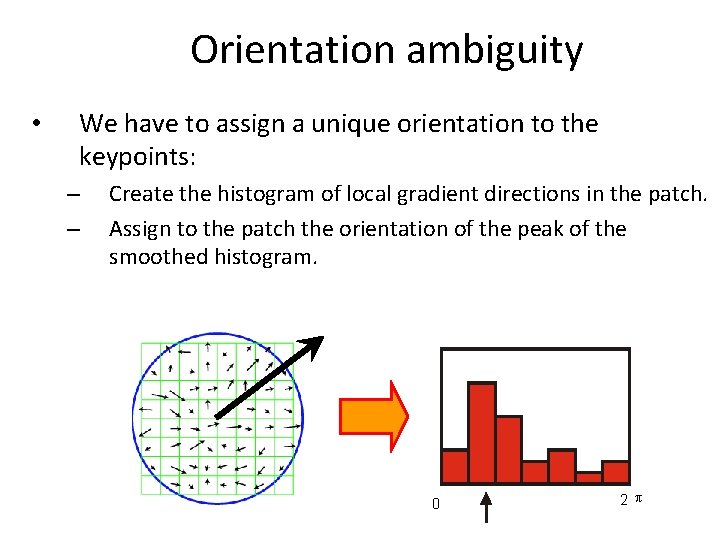

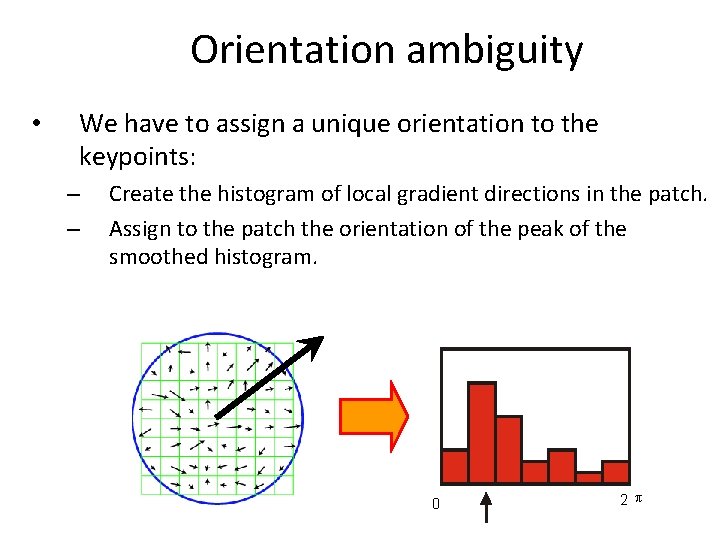

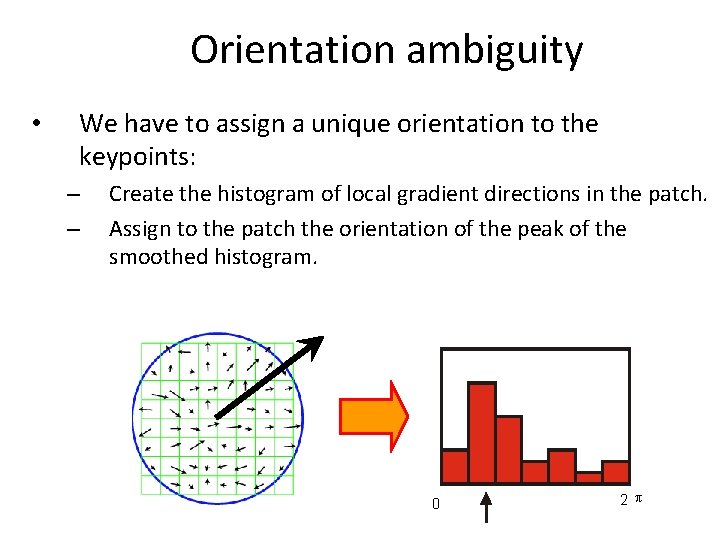

Orientation ambiguity
• We have to assign a unique orientation to the keypoints:
  – Create the histogram of local gradient directions in the patch.
  – Assign to the patch the orientation of the peak of the smoothed histogram (histogram axis from 0 to 2π).
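Picking the peak of the smoothed histogram can be sketched as below. The circular [1, 2, 1]/4 smoothing filter, the use of bin centers as angles and the function name are assumptions for illustration; the slides do not specify the smoothing.

```python
import math

def peak_orientation(hist):
    """Return (bin index, angle in radians) of the smoothed histogram peak."""
    n = len(hist)
    # Circular smoothing: orientation wraps around at 2*pi.
    smoothed = [(hist[(i - 1) % n] + 2.0 * hist[i] + hist[(i + 1) % n]) / 4.0
                for i in range(n)]
    peak = max(range(n), key=lambda i: smoothed[i])
    angle = (peak + 0.5) * 2.0 * math.pi / n  # center of the winning bin
    return peak, angle

# 12 bins: a broad peak around bin 3 and an isolated spike in bin 7.
hist = [0, 0, 5, 6, 5, 0, 0, 9, 0, 0, 0, 0]
print(peak_orientation(hist))
```

In this example the raw maximum is the isolated spike in bin 7, but after smoothing the broad peak around bin 3 wins, which illustrates why the histogram is smoothed before the orientation is assigned.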

![SIFT descriptor Lowe 2004 Extraordinarily robust matching technique Can handle changes in SIFT descriptor [Lowe 2004] • Extraordinarily robust matching technique • Can handle changes in](https://slidetodoc.com/presentation_image_h2/7e6de99a05c8b88a740ee35895e4e1fd/image-57.jpg)

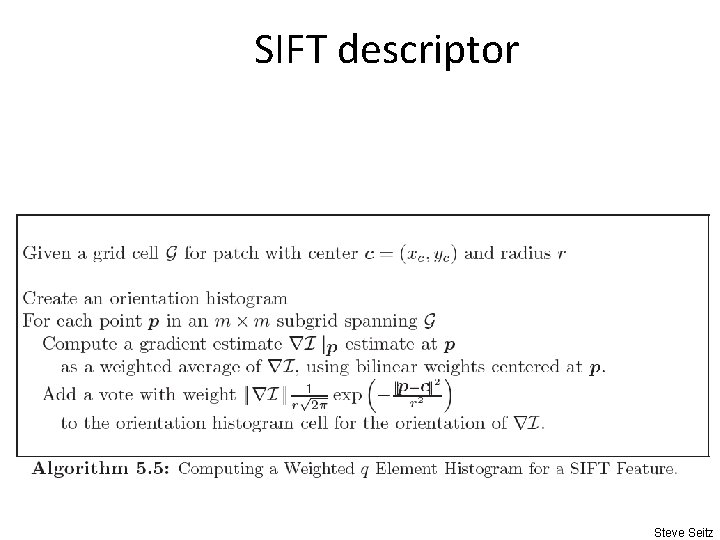

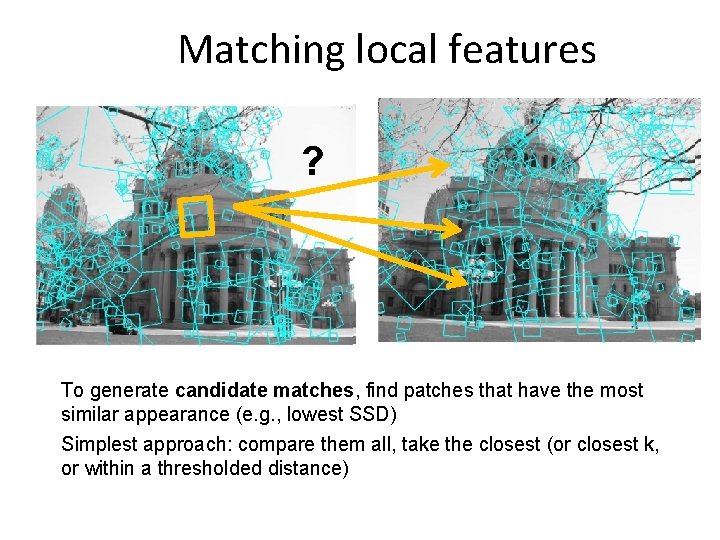

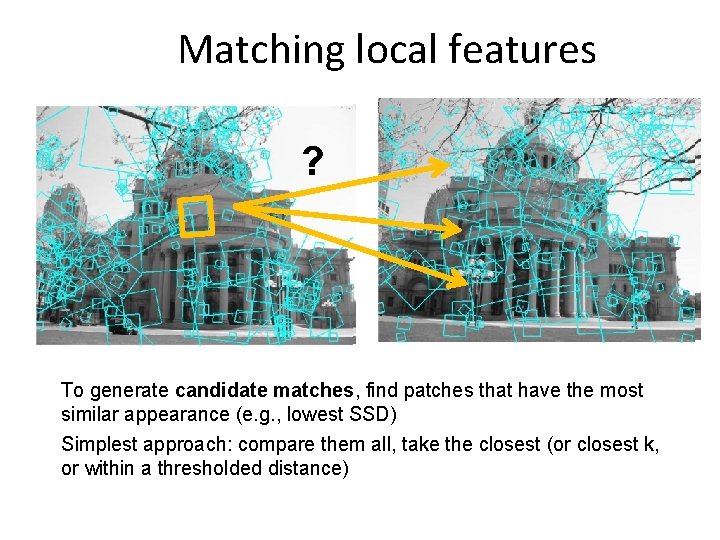

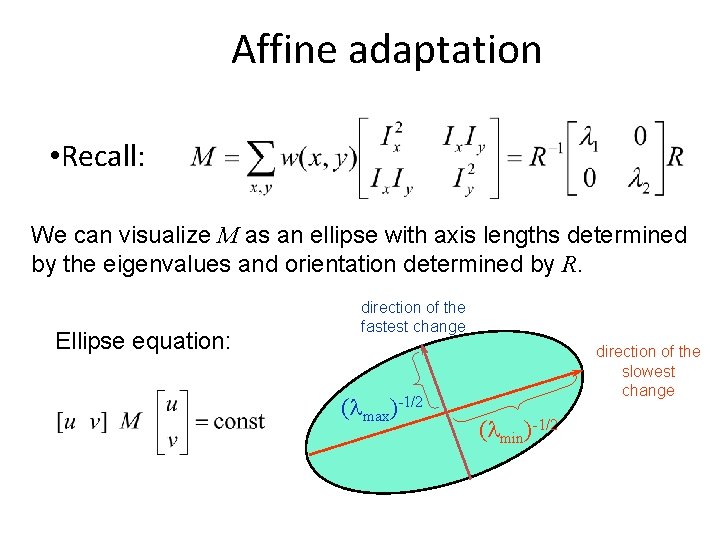

SIFT descriptor [Lowe 2004]
• Extraordinarily robust matching technique
• Can handle changes in viewpoint
  – Up to about 60 degree out of plane rotation
• Can handle significant changes in illumination
  – Sometimes even day vs. night (below)
• Fast and efficient; can run in real time
• Lots of code available
  – http://people.csail.mit.edu/albert/ladypack/wiki/index.php/Known_implementations_of_SIFT
Steve Seitz

![Histogram of oriented gradients HOG Dalal 2005 Variant of SIFT Nearby gradients Histogram of oriented gradients (HOG) [Dalal 2005] • Variant of SIFT • Nearby gradients](https://slidetodoc.com/presentation_image_h2/7e6de99a05c8b88a740ee35895e4e1fd/image-58.jpg)

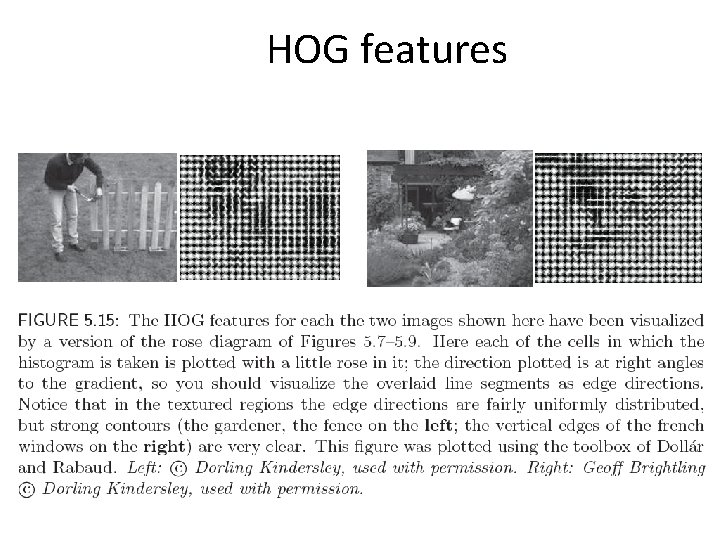

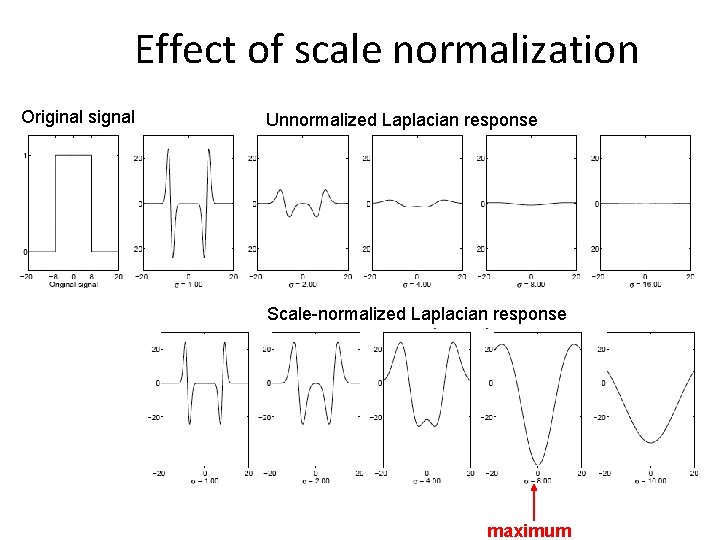

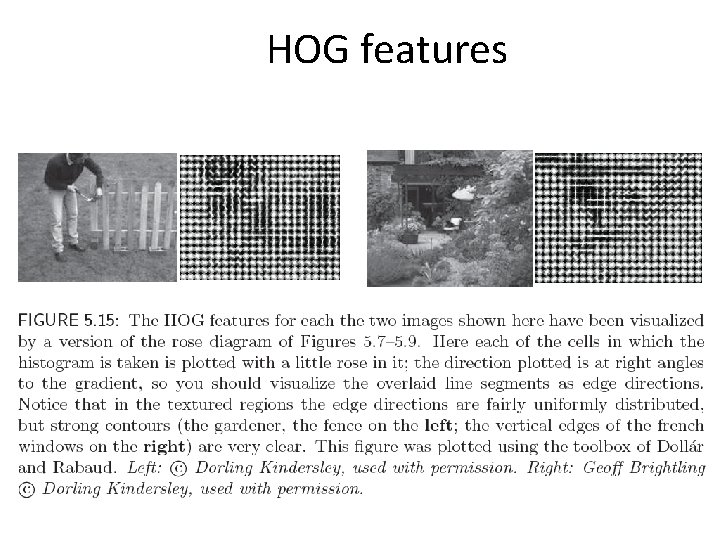

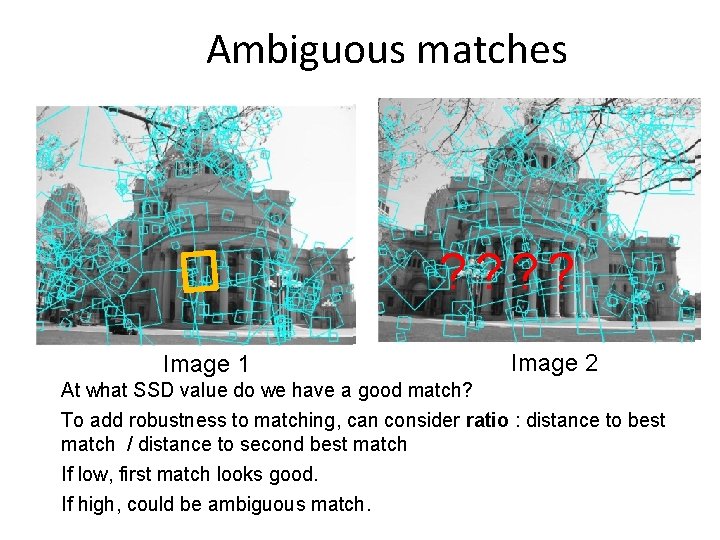

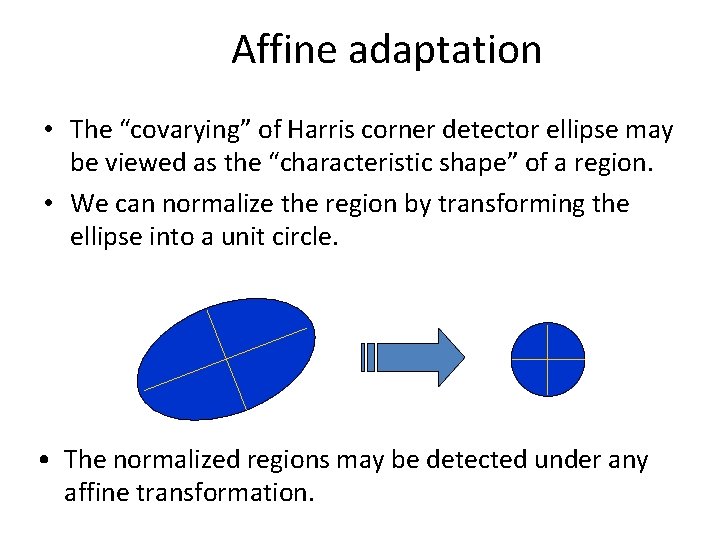

Histogram of oriented gradients (HOG) [Dalal 2005]
• Variant of SIFT
• Only nearby gradients are considered for the histogram computation
• Rather than normalizing gradient contributions over the whole neighborhood, we normalize with respect to nearby gradients only
• A single gradient may contribute to several histograms
Steve Seitz

HOG features

Matching local features

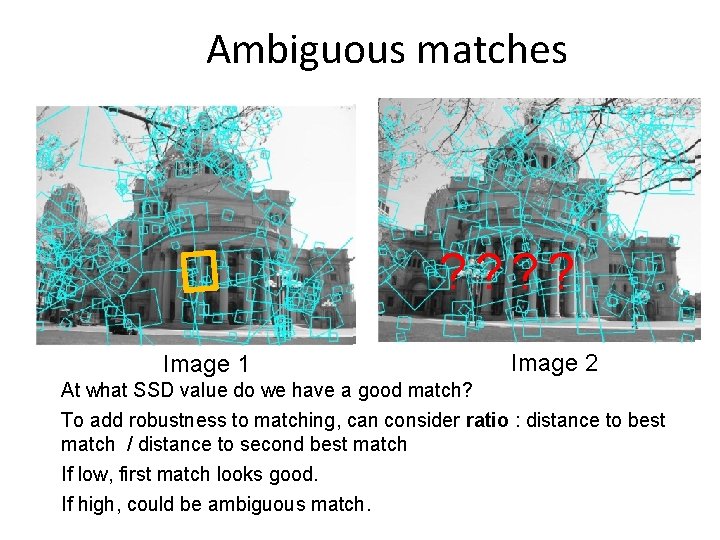

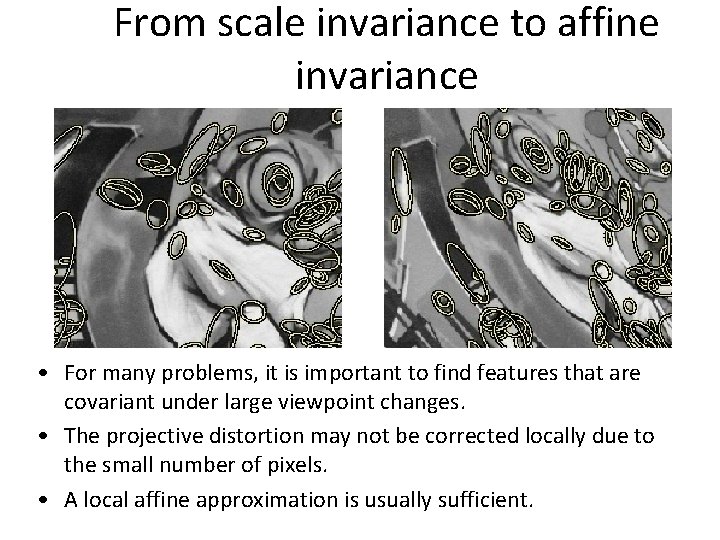

Matching local features
• To generate candidate matches, find patches that have the most similar appearance (e.g., lowest SSD).
• Simplest approach: compare them all and take the closest (or the closest k, or all within a thresholded distance).

Ambiguous matches (Image 1, Image 2)
• At what SSD value do we have a good match?
• To add robustness to matching, we can consider the ratio: distance to best match / distance to second best match.
• If low, the first match looks good. If high, it could be an ambiguous match.
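Brute-force matching with the ratio test can be sketched in plain Python as follows. The threshold `max_ratio=0.8` is an assumed example value applied directly to SSD distances, descriptors are plain lists of numbers, the second set is assumed to hold at least two descriptors, and the function names are hypothetical.

```python
def ssd(a, b):
    """Sum of squared differences between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def ratio_test_matches(desc1, desc2, max_ratio=0.8):
    """Match each descriptor in desc1 to desc2, keeping unambiguous matches."""
    matches = []
    for i, d in enumerate(desc1):
        # Compare against every candidate and sort by distance.
        ranked = sorted((ssd(d, e), j) for j, e in enumerate(desc2))
        (best, j), (second, _) = ranked[0], ranked[1]
        # Low ratio: the best match is clearly better than the runner-up.
        if second > 0 and best / second < max_ratio:
            matches.append((i, j))
    return matches

image1 = [[0.0, 0.0], [10.0, 10.0], [2.0, 2.5]]
image2 = [[0.0, 1.0], [10.0, 10.5], [4.0, 4.0]]
print(ratio_test_matches(image1, image2))
```

The third descriptor of `image1` is equally far from two candidates in `image2`, so its ratio is 1 and the match is rejected as ambiguous, while the first two descriptors are kept.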

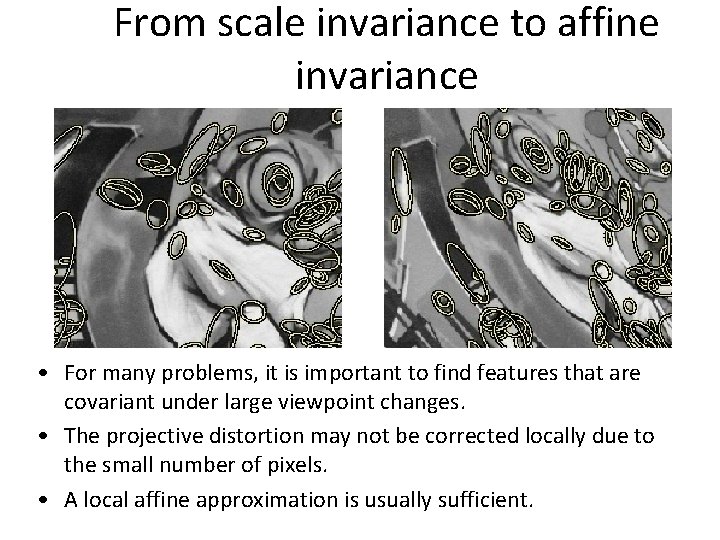

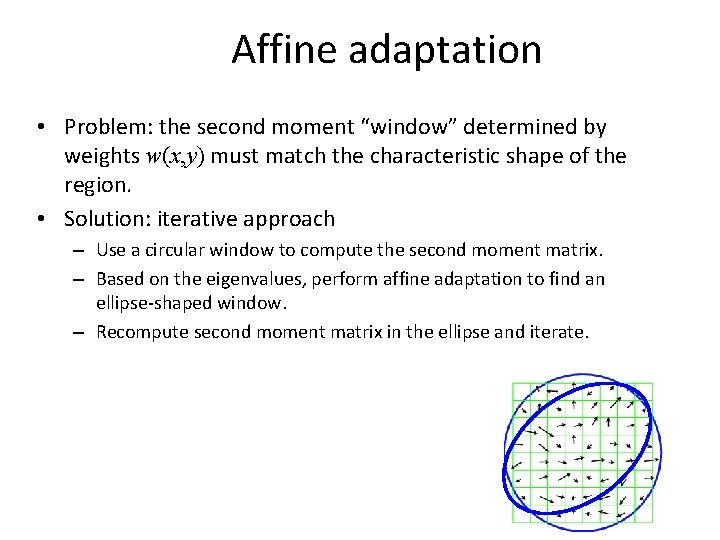

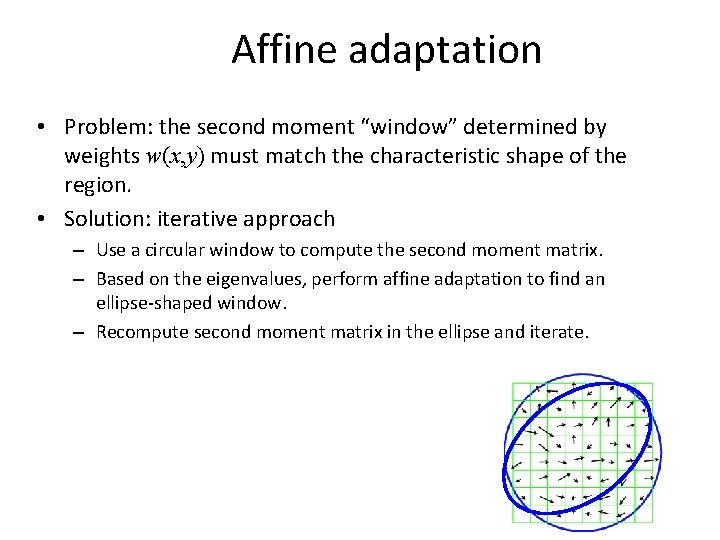

From scale invariance to affine invariance
• For many problems, it is important to find features that are covariant under large viewpoint changes.
• The projective distortion may not be corrected locally due to the small number of pixels.
• A local affine approximation is usually sufficient.
• Affine adaptation of scale-invariant detectors: find local regions where an ellipse can be reliably and repeatedly extracted purely from local image properties.

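Affine adaptation
• Recall: we can visualize the second moment matrix $M = R^{-1} \begin{pmatrix} \lambda_1 & 0 \\ 0 & \lambda_2 \end{pmatrix} R$ as an ellipse with axis lengths determined by the eigenvalues and orientation determined by R.
• Ellipse equation: $\begin{pmatrix} u & v \end{pmatrix} M \begin{pmatrix} u \\ v \end{pmatrix} = \text{const}$
• The axis along the direction of the fastest change has length $(\lambda_{\max})^{-1/2}$; the axis along the direction of the slowest change has length $(\lambda_{\min})^{-1/2}$.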
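The ellipse parameters can be read off a 2 x 2 symmetric matrix in closed form. The sketch below assumes M = [[a, b], [b, c]] is positive definite and fixes the constant of the ellipse equation to 1; the function name and argument layout are hypothetical.

```python
import math

def ellipse_from_second_moment(a, b, c):
    """Axes and orientation of the ellipse [u v] M [u v]^T = 1.

    M = [[a, b], [b, c]] is assumed symmetric positive definite.
    Returns (short_axis, long_axis, theta), where theta is the direction
    of the eigenvector of the largest eigenvalue (fastest change).
    """
    mean = (a + c) / 2.0
    spread = math.hypot((a - c) / 2.0, b)
    lam_max, lam_min = mean + spread, mean - spread
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    # Axis lengths are the inverse square roots of the eigenvalues.
    return lam_max ** -0.5, lam_min ** -0.5, theta

print(ellipse_from_second_moment(4.0, 0.0, 1.0))
```

For M = diag(4, 1) the intensity changes fastest along x, so the ellipse is short along x (length 0.5) and long along y (length 1): strong gradients shrink the corresponding axis.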

Affine adaptation
• The ellipse of the Harris corner detector covaries with the image and may be viewed as the “characteristic shape” of a region.
• We can normalize the region by transforming the ellipse into a unit circle.
• The normalized regions may be detected under any affine transformation.

Affine adaptation
• Problem: the second moment “window” determined by the weights w(x, y) must match the characteristic shape of the region.
• Solution: iterative approach
  – Use a circular window to compute the second moment matrix.
  – Based on the eigenvalues, perform affine adaptation to find an ellipse-shaped window.
  – Recompute the second moment matrix in the ellipse and iterate.

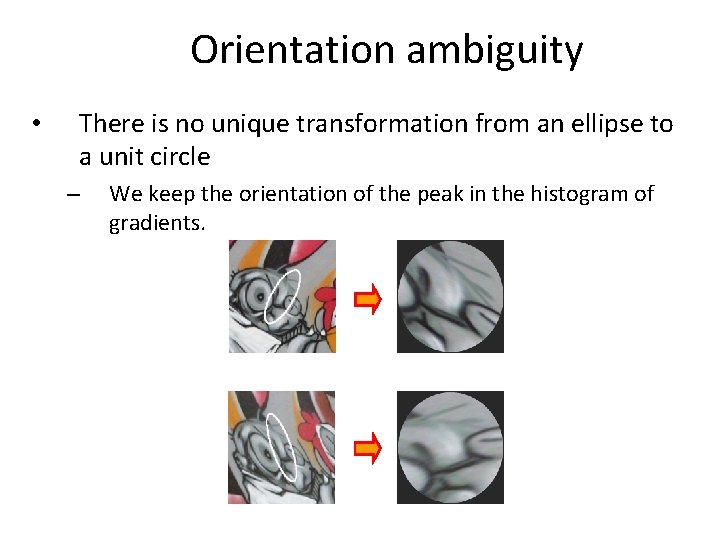


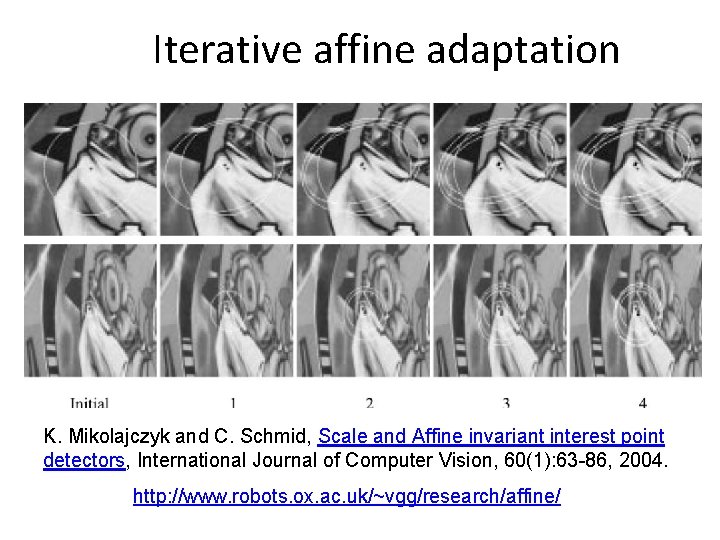

Iterative affine adaptation
K. Mikolajczyk and C. Schmid, Scale and Affine invariant interest point detectors, International Journal of Computer Vision, 60(1): 63-86, 2004.
http://www.robots.ox.ac.uk/~vgg/research/affine/

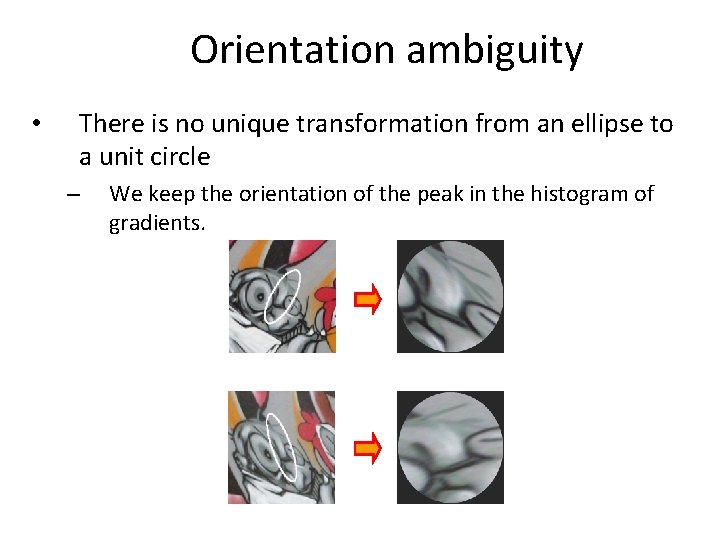

Orientation ambiguity
• There is no unique transformation from an ellipse to a unit circle.
  – We keep the orientation of the peak in the histogram of gradients.

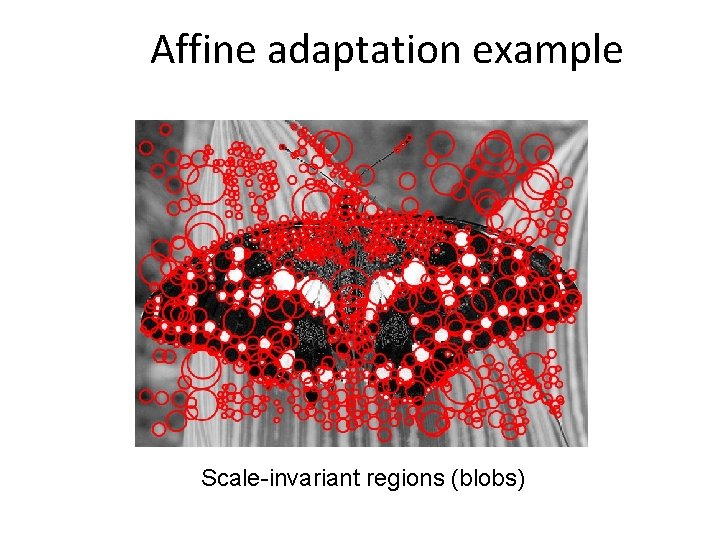

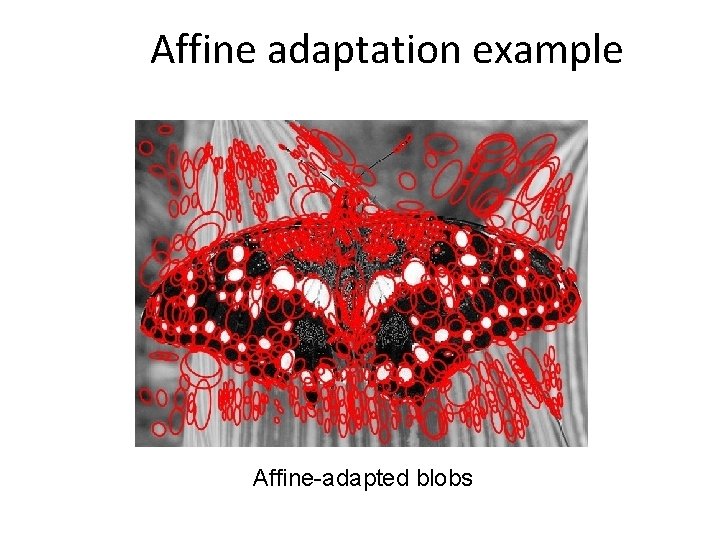

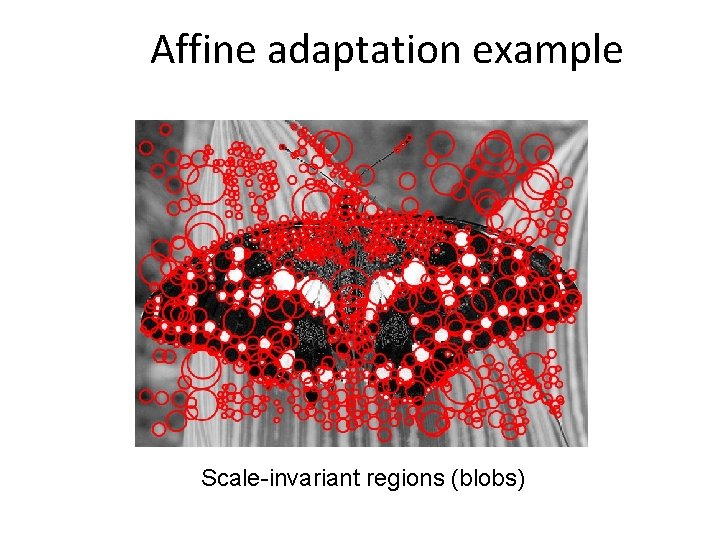

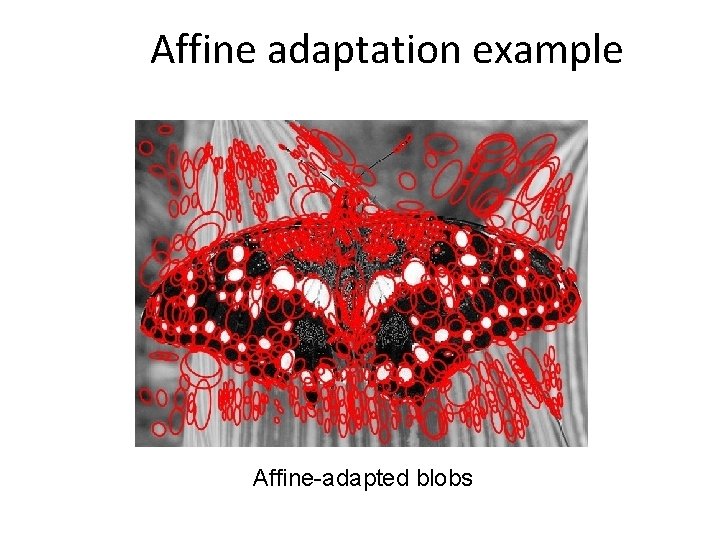

Affine adaptation example Scale-invariant regions (blobs)

Affine adaptation example Affine-adapted blobs

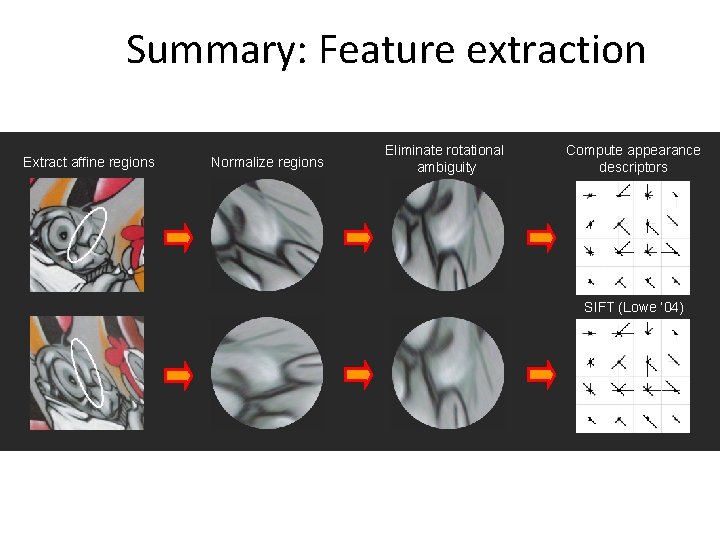

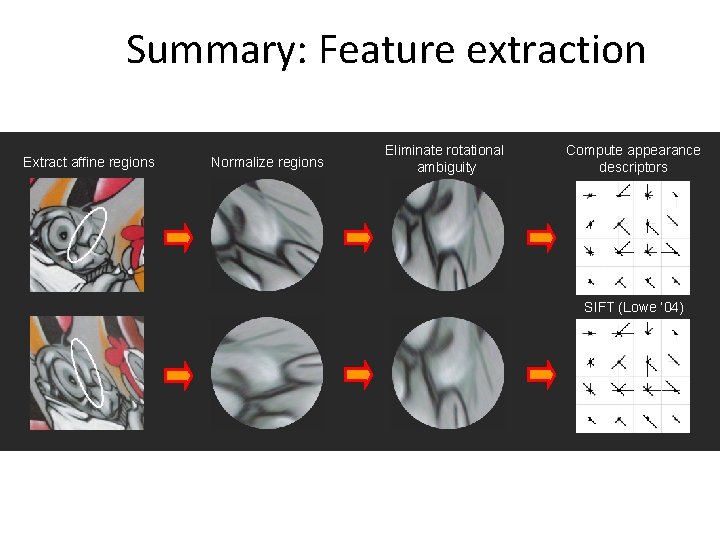

Summary: Feature extraction
1. Extract affine regions
2. Normalize regions
3. Eliminate rotational ambiguity
4. Compute appearance descriptors: SIFT (Lowe ’04)

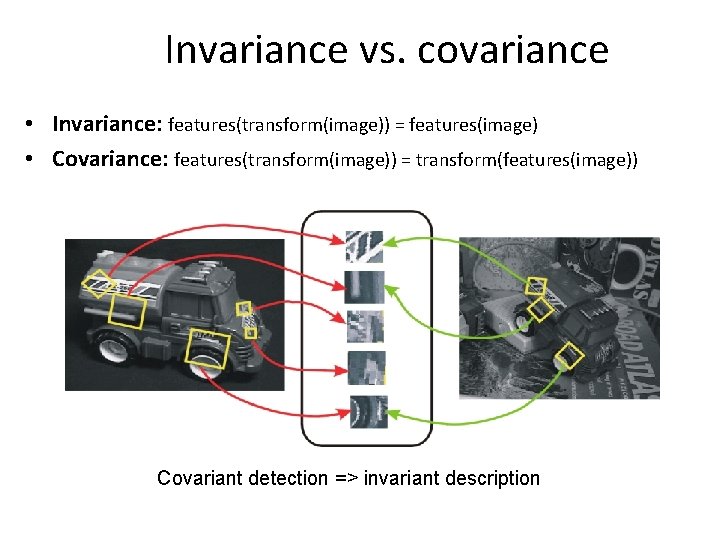

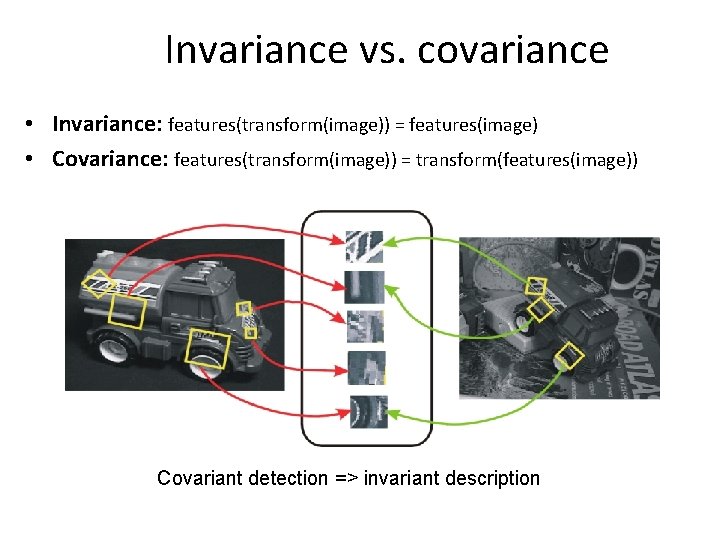

Invariance vs. covariance
• Invariance: features(transform(image)) = features(image)
• Covariance: features(transform(image)) = transform(features(image))
Covariant detection => invariant description

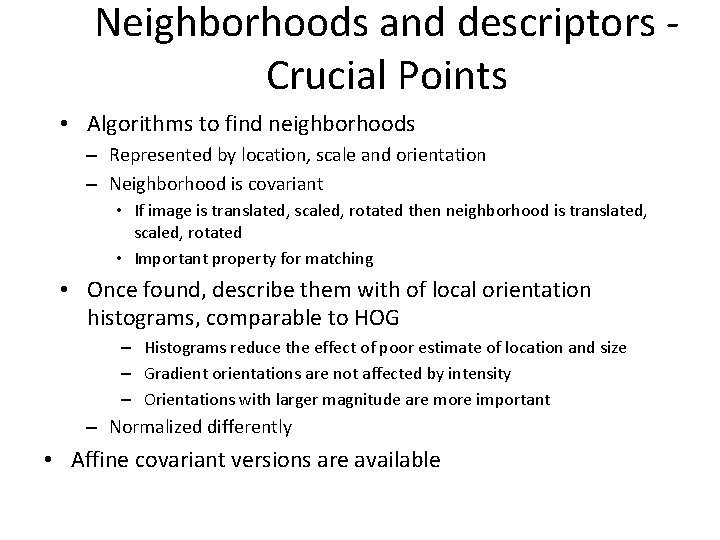

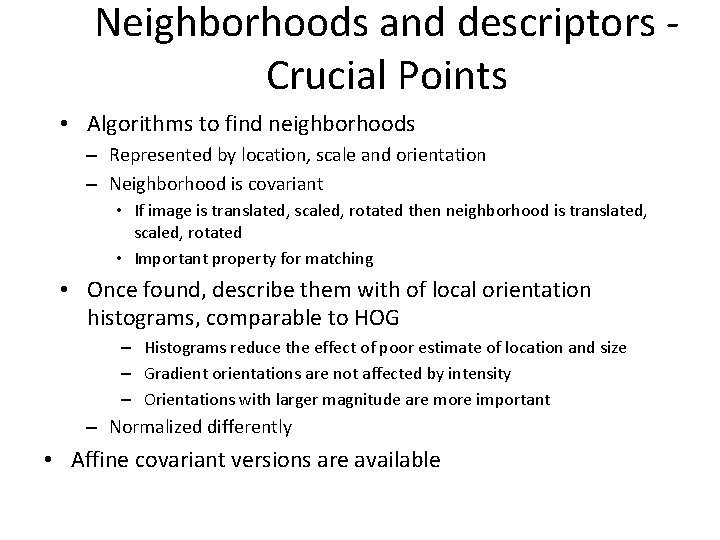

Neighborhoods and descriptors: crucial points
• Algorithms to find neighborhoods
  – Represented by location, scale and orientation
  – Neighborhood is covariant
    • If the image is translated, scaled, rotated, then the neighborhood is translated, scaled, rotated
    • Important property for matching
• Once found, describe them with local orientation histograms, comparable to HOG
  – Histograms reduce the effect of a poor estimate of location and size
  – Gradient orientations are not affected by intensity
  – Orientations with larger magnitude are more important
  – Normalized differently
• Affine covariant versions are available