Review of LZ man gzip gives the following

- Slides: 20

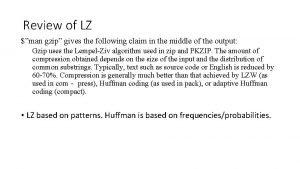

Review of LZ

Running “man gzip” gives the following claim in the middle of the output:

“Gzip uses the Lempel-Ziv algorithm used in zip and PKZIP. The amount of compression obtained depends on the size of the input and the distribution of common substrings. Typically, text such as source code or English is reduced by 60-70%. Compression is generally much better than that achieved by LZW (as used in compress), Huffman coding (as used in pack), or adaptive Huffman coding (compact).”

- LZ is based on patterns. Huffman is based on frequencies/probabilities.

How Does LZ Handle a Pattern Such as “Jesus”?

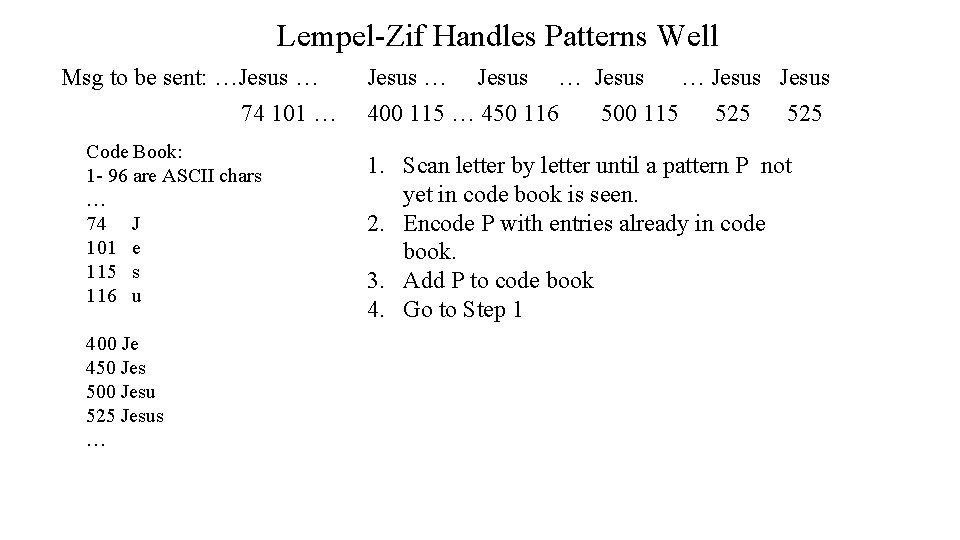

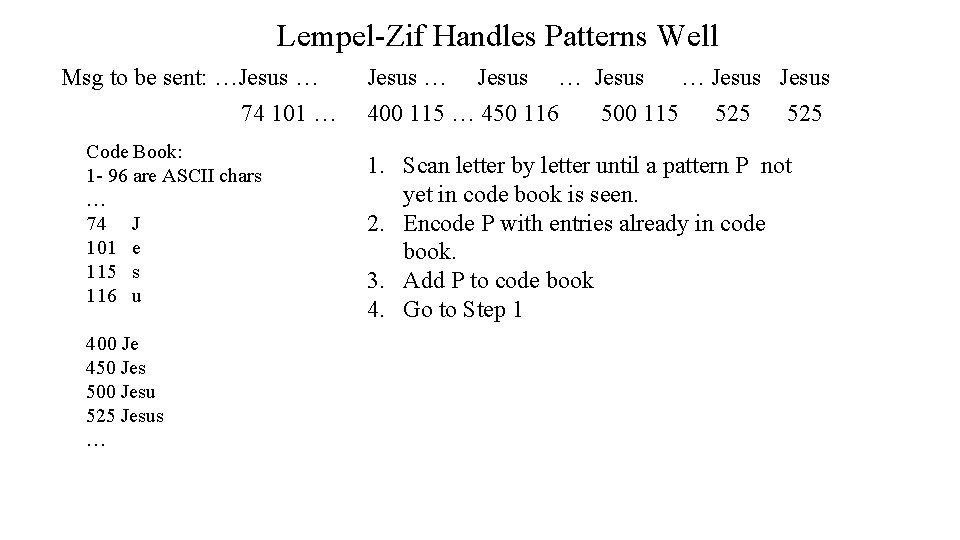

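Lempel-Ziv Handles Patterns Well

Msg to be sent: …Jesus…

Code Book (1 - 96 are ASCII chars):

- 74 = J
- 101 = e
- 115 = s
- 117 = u
- 400 = Je
- 450 = Jes
- 500 = Jesu
- 525 = Jesus

Codes sent as “Jesus” keeps recurring in the message:

- 74 101 … (J, e; Je enters the code book as 400)
- 400 115 … (Je, s; Jes enters as 450)
- 450 117 (Jes, u; Jesu enters as 500)
- 500 115 (Jesu, s; Jesus enters as 525)
- 525 (the whole word is now a single code)

1. Scan letter by letter until a pattern P not yet in the code book is seen.
2. Encode P with entries already in the code book.
3. Add P to the code book.
4. Go to Step 1.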
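The four steps above can be sketched as a small dictionary coder. This is a minimal LZW-style sketch, not the exact variant gzip uses, and it makes several assumptions that are not on the slide: the function name `lzw_encode` is hypothetical, the code book starts with the 256 single-character codes 0 to 255, and new patterns are numbered from 256 upward (the slide's 400, 450, 500, 525 are illustrative numbers only).

```python
def lzw_encode(text, first_new_code=256):
    """LZW-style sketch: emit codes for known patterns, learn longer ones.

    Assumes every character in `text` has a code point below 256.
    """
    # Step 0: the code book starts with all single characters.
    codebook = {chr(i): i for i in range(256)}
    next_code = first_new_code
    output = []
    current = ""
    for ch in text:
        candidate = current + ch
        if candidate in codebook:
            # Still a known pattern: keep scanning (Step 1).
            current = candidate
        else:
            # Pattern P = candidate is new: encode its known part (Step 2),
            # add P to the code book (Step 3), and restart from ch (Step 4).
            output.append(codebook[current])
            codebook[candidate] = next_code
            next_code += 1
            current = ch
    if current:
        output.append(codebook[current])
    return output, codebook


codes, book = lzw_encode("Jesus Jesus Jesus Jesus")
print(len("Jesus Jesus Jesus Jesus"), "characters ->", len(codes), "codes")
```

As the word repeats, longer and longer pieces of it are found in the code book, so fewer codes are needed per repetition.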

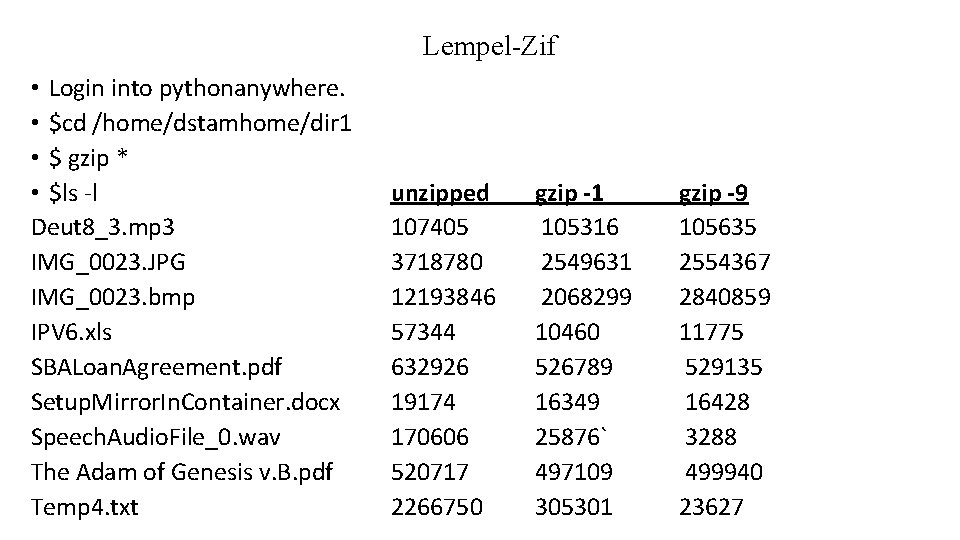

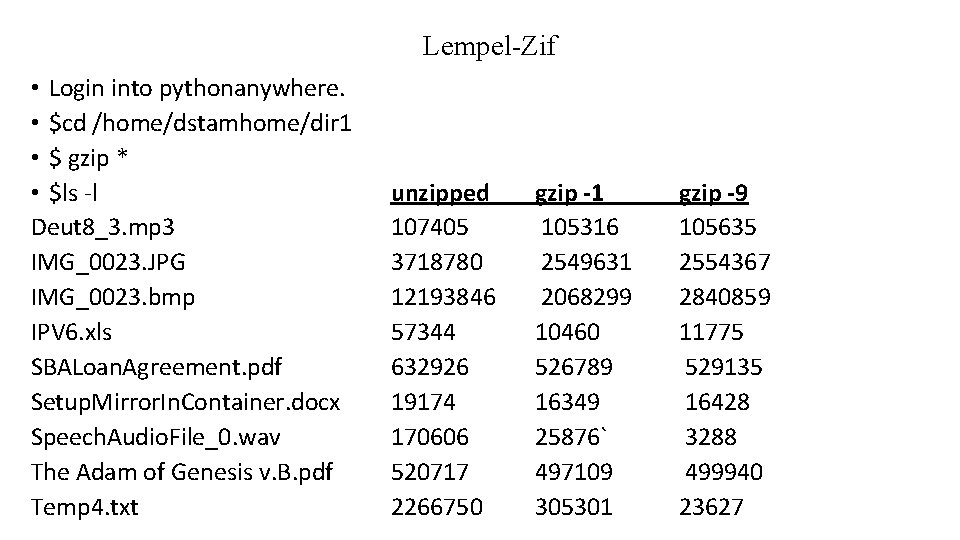

Lempel-Ziv

- Log in to pythonanywhere.
- $ cd /home/dstamhome/dir1
- $ gzip *
- $ ls -l

File sizes as reported by ls -l:

| File | unzipped | gzip -1 | gzip -9 |
| --- | --- | --- | --- |
| Deut8_3.mp3 | 107405 | 105316 | 105635 |
| IMG_0023.JPG | 3718780 | 2549631 | 2554367 |
| IMG_0023.bmp | 12193846 | 2068299 | 2840859 |
| IPV6.xls | 57344 | 10460 | 11775 |
| SBALoan.Agreement.pdf | 632926 | 526789 | 529135 |
| Setup.Mirror.In.Container.docx | 19174 | 16349 | 16428 |
| Speech.Audio.File_0.wav | 170606 | 25876 | 3288 |
| The Adam of Genesis v.B.pdf | 520717 | 497109 | 499940 |
| Temp4.txt | 2266750 | 305301 | 23627 |
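The same comparison can be tried without a shell, using Python's standard `gzip` module. This is a minimal sketch: the helper name `gzip_sizes` and the sample text are assumptions made for illustration, and `compresslevel=1` and `compresslevel=9` are taken to correspond to the `gzip -1` and `gzip -9` command-line options.

```python
import gzip


def gzip_sizes(data: bytes) -> dict:
    """Return the size in bytes of `data` raw and at gzip levels 1 and 9."""
    return {
        "unzipped": len(data),
        "gzip -1": len(gzip.compress(data, compresslevel=1)),
        "gzip -9": len(gzip.compress(data, compresslevel=9)),
    }


# Hypothetical sample: highly repetitive text compresses very well.
sample = b"the quick brown fox jumps over the lazy dog. " * 200
for label, size in gzip_sizes(sample).items():
    print(f"{label:>8}: {size} bytes")
```

Already-compressed inputs such as MP3 or JPEG data would be expected to shrink far less than plain text, which matches the pattern in the listing above.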

Huffman Algorithm

Huffman encoding is a lossless compression method. It is used in the famous JPEG and MPEG formats. It was invented in 1952 by David Huffman while he was a student at MIT. It can be used in all kinds of situations in which the actual frequencies or probabilities of the items to be compressed are known.

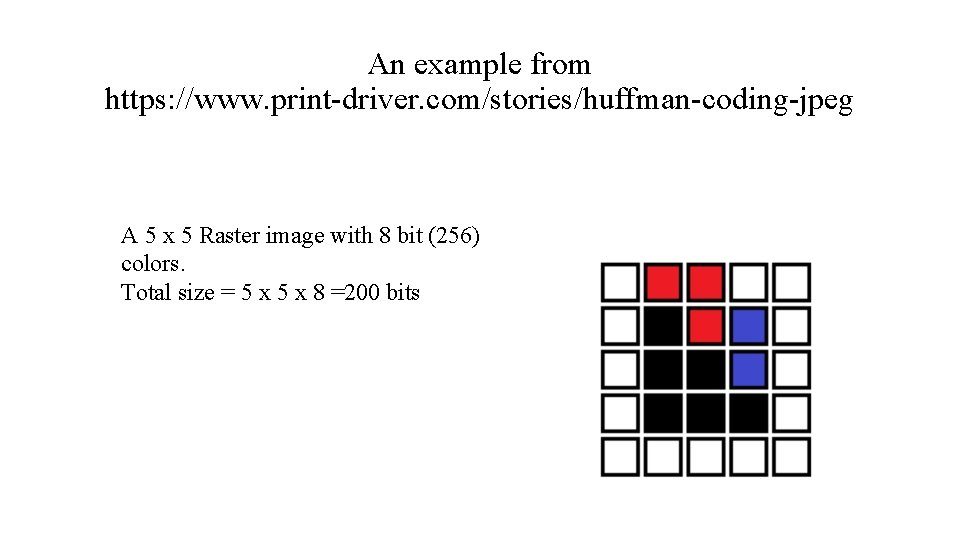

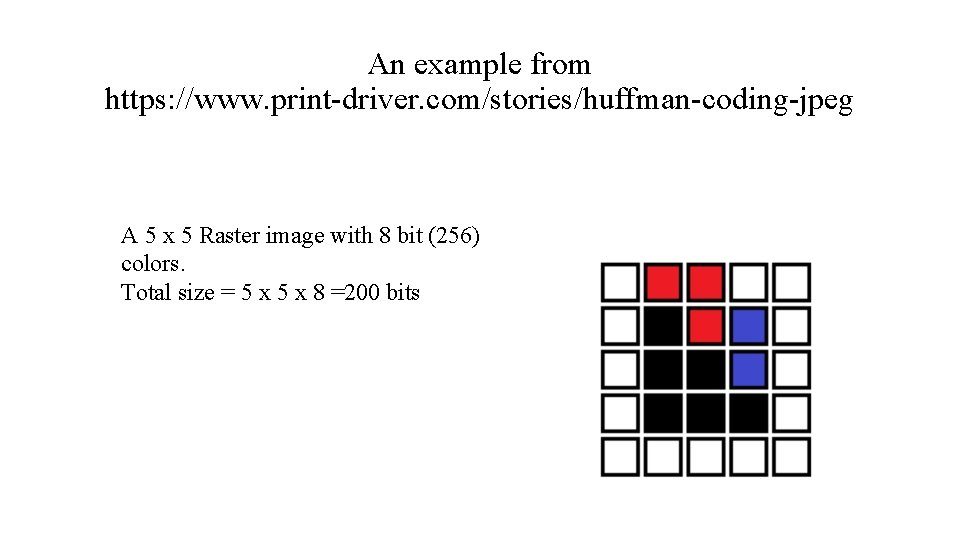

An example from https://www.print-driver.com/stories/huffman-coding-jpeg

A 5 x 5 raster image with 8-bit (256) colors. Total size = 5 x 5 x 8 = 200 bits

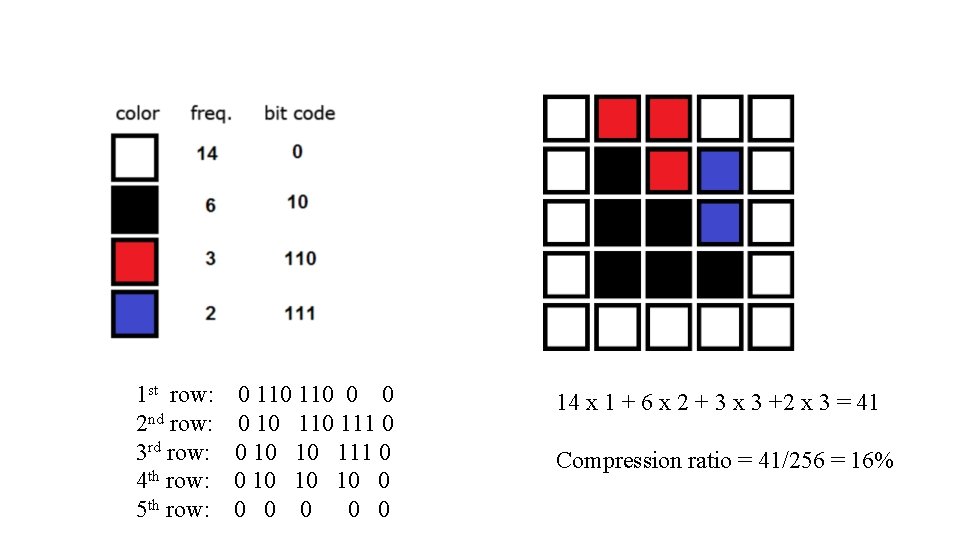

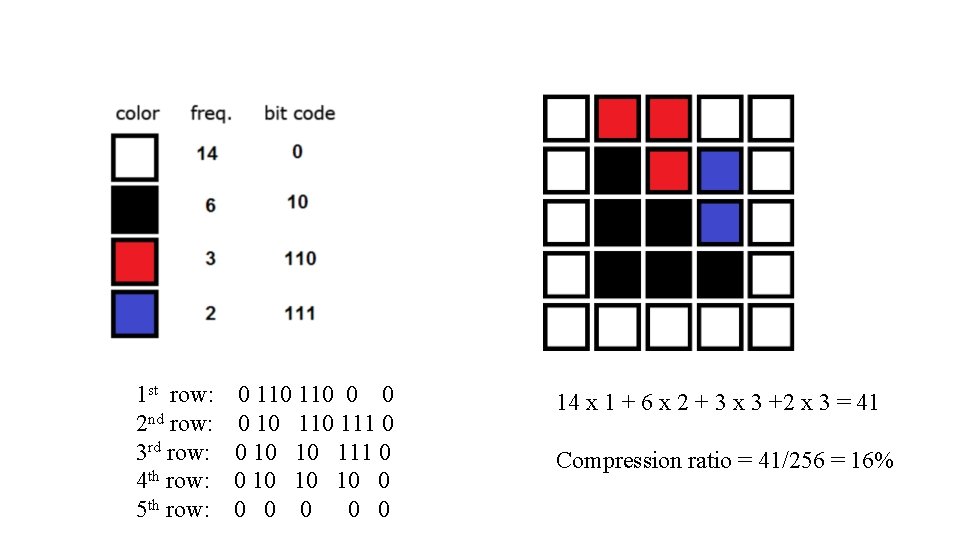

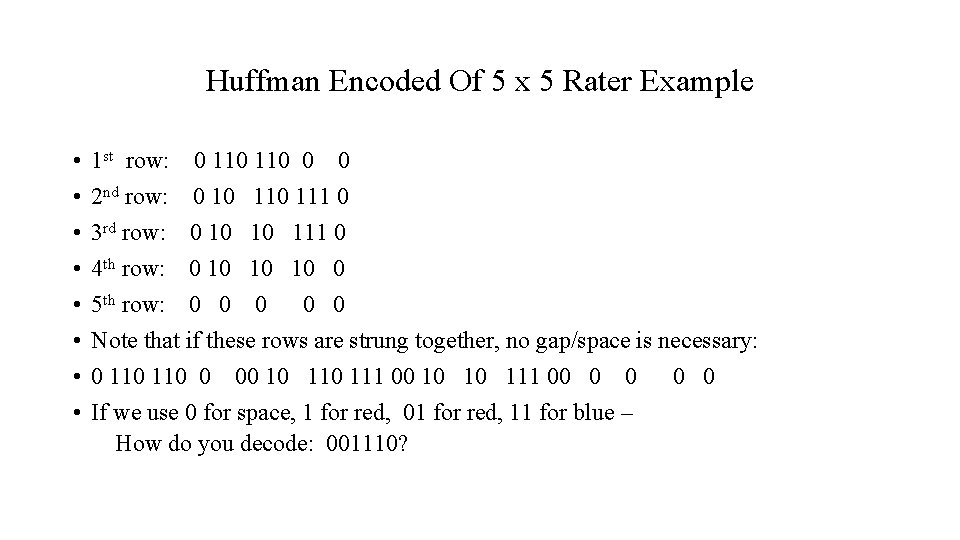

[Figure: the 5 x 5 image with each pixel replaced by its Huffman code, rows labelled 1st to 5th.]

Encoded size = 14 x 1 + 6 x 2 + 3 x 3 + 2 x 3 = 41 bits

Compression ratio = 41/200 = 20.5%

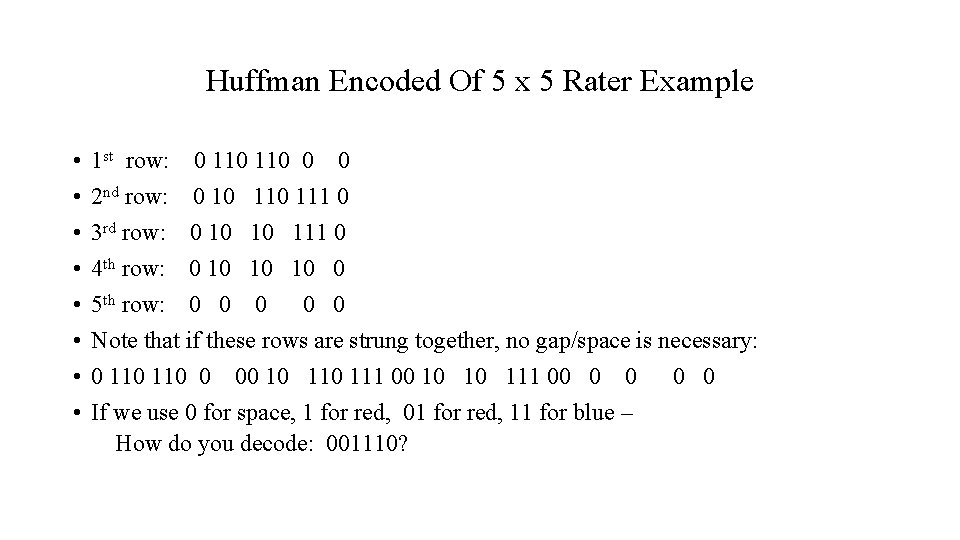

Huffman Encoding of the 5 x 5 Raster Example

- 1st row: 0 110 0 0
- 2nd row: 0 10 111 0
- 3rd row: 0 10 10 111 0
- 4th row: 0 10 10 10 0
- 5th row: 0 0 0

Note that if these rows are strung together, no gap/space is necessary: 0 110 0 00 10 111 00 10 10 111 00 0 0

If we use 0 for space, 1 for red, 01 for red, 11 for blue, how do you decode 001110?

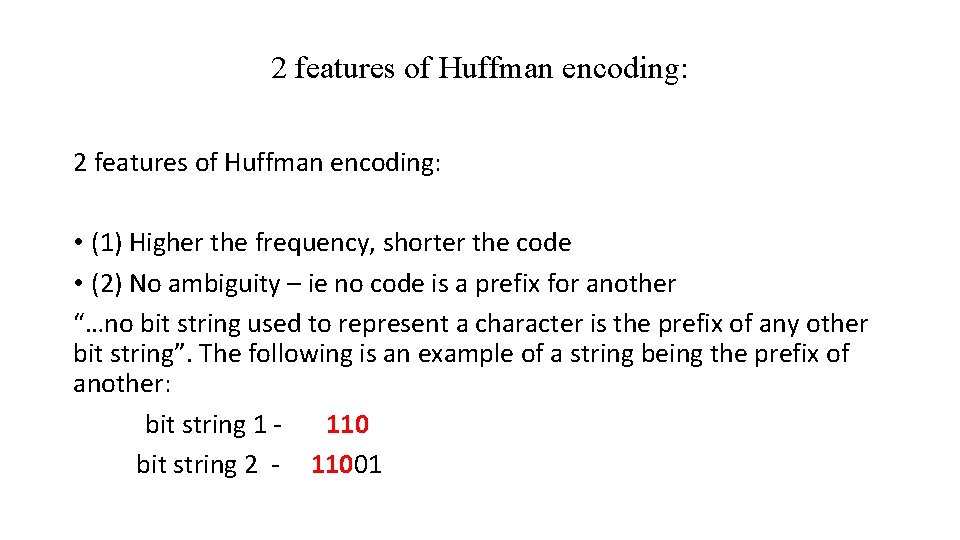

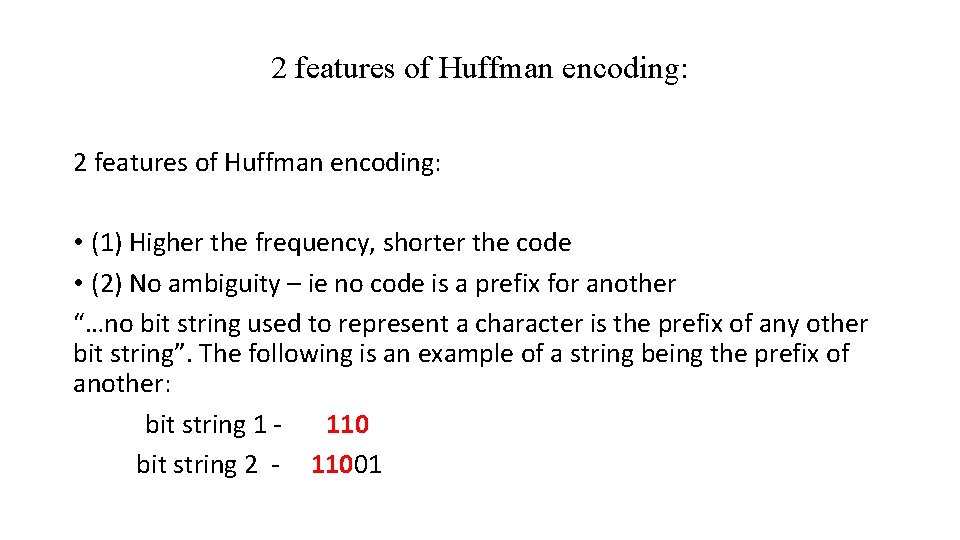

2 features of Huffman encoding:

- (1) The higher the frequency, the shorter the code.
- (2) No ambiguity, i.e. no code is a prefix of another: “…no bit string used to represent a character is the prefix of any other bit string”.

The following is an example of one string being the prefix of another:

- bit string 1: 110
- bit string 2: 11001
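The second feature is what lets a decoder read a bit stream with no gaps between codes. The sketch below checks the prefix property and decodes greedily. The function names `is_prefix_free` and `decode` and the symbol names `c0` to `c3` are hypothetical; only the code words 0, 10, 110 and 111 come from the raster example.

```python
def is_prefix_free(codes):
    """True if no code word is a prefix of another code word."""
    words = sorted(codes.values())
    # After sorting, a word that is a prefix of any other word is
    # always a prefix of its immediate successor.
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))


def decode(bits, codes):
    """Greedy decoder; only unambiguous when `codes` is prefix-free."""
    inverse = {word: symbol for symbol, word in codes.items()}
    symbols, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            symbols.append(inverse[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete code")
    return symbols


# Code words from the raster example; symbol names are placeholders.
raster_codes = {"c0": "0", "c1": "10", "c2": "110", "c3": "111"}
print(is_prefix_free(raster_codes))
print(decode("0110010", raster_codes))
print(is_prefix_free({"x": "110", "y": "11001"}))
```

The last call uses the two bit strings from the slide, where 110 is a prefix of 11001, so the check fails.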

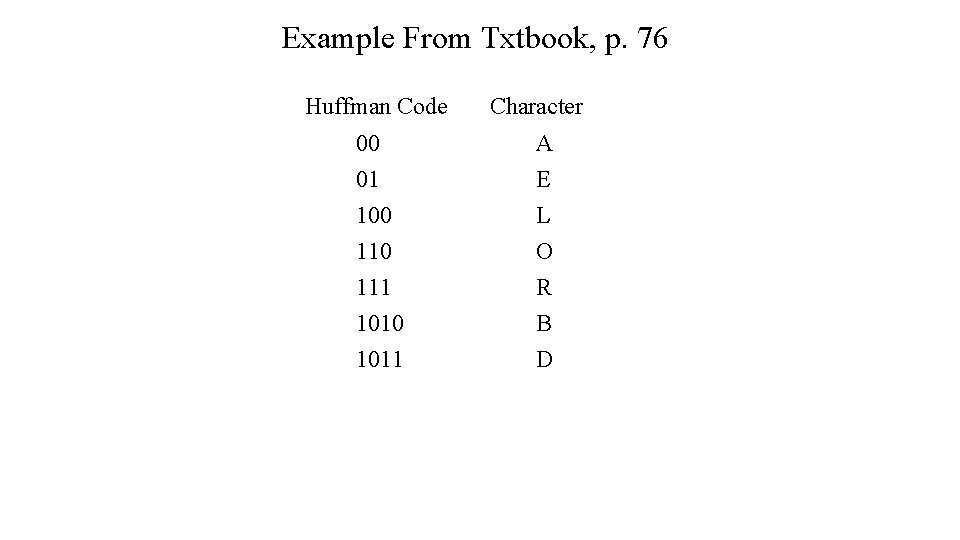

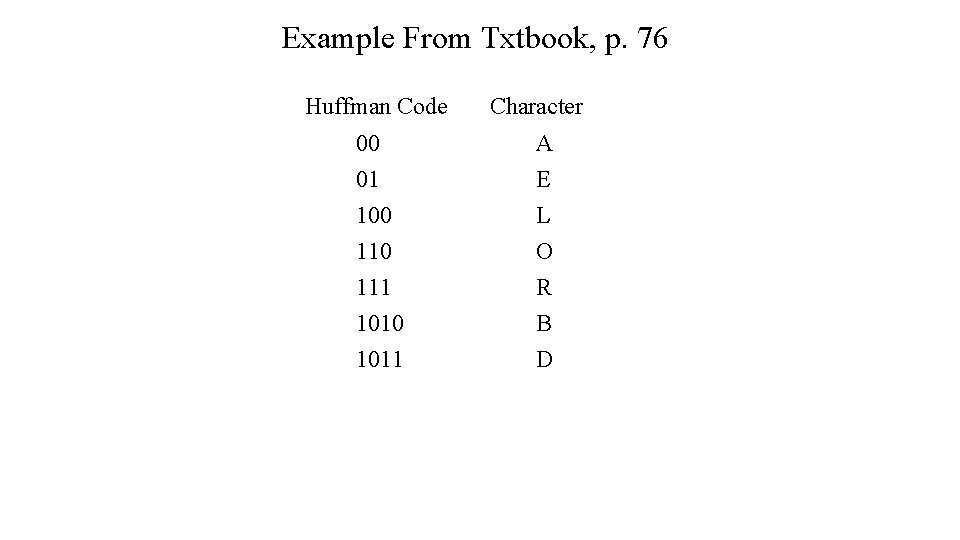

Example From Textbook, p. 76

- Huffman Code: 00 01 100 111 1010 1011
- Character: A E L O R B D

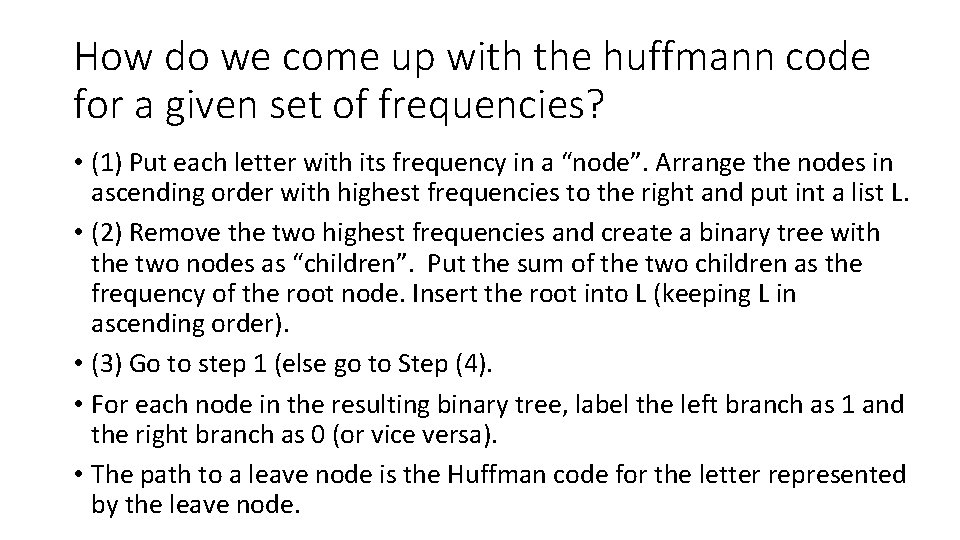

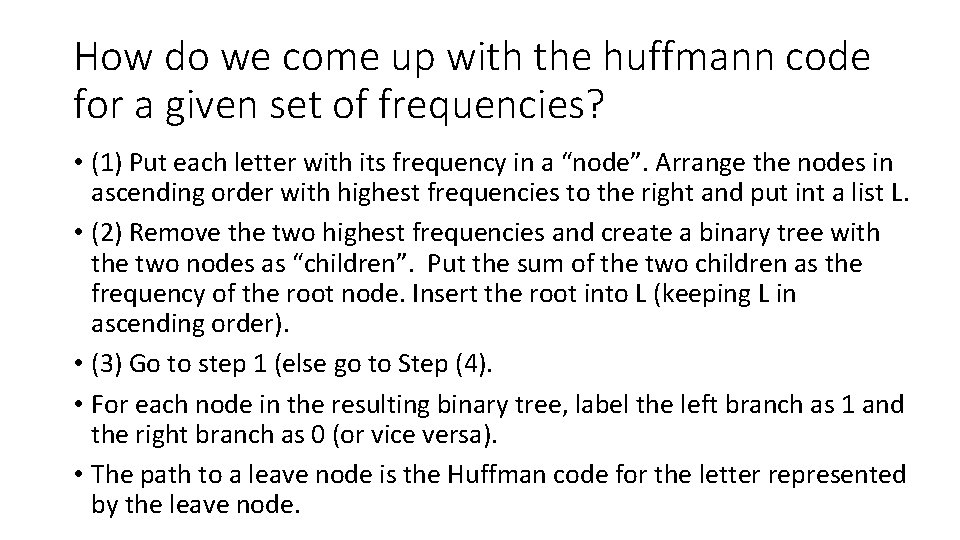

How do we come up with the Huffman code for a given set of frequencies?

- (1) Put each letter with its frequency in a “node”. Arrange the nodes in ascending order, with the highest frequencies to the right, and put them into a list L.
- (2) Remove the two nodes with the lowest frequencies and create a binary tree with the two nodes as “children”. Put the sum of the two children's frequencies as the frequency of the root node. Insert the root into L (keeping L in ascending order).
- (3) If L still contains more than one node, go to Step (2); otherwise go to Step (4).
- (4) For each node in the resulting binary tree, label the left branch as 1 and the right branch as 0 (or vice versa).
- (5) The path from the root to a leaf node is the Huffman code for the letter represented by that leaf node.
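The procedure above can be sketched with a priority queue standing in for the sorted list L. This is a minimal sketch under stated assumptions: the function name `huffman_codes` is hypothetical, symbols are assumed to be strings, left branches are labelled 0 and right branches 1 (the slide allows either), and ties between equal frequencies are broken by insertion order, so other valid Huffman codes exist for the same data.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Build a Huffman code table from a {symbol: frequency} mapping."""
    tie = count()  # tie-breaker so equal frequencies never compare nodes
    heap = [(f, next(tie), sym) for sym, f in freqs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # Degenerate case: a single symbol still needs one bit.
        return {heap[0][2]: "0"}
    while len(heap) > 1:
        # Remove the two lowest-frequency nodes and merge them.
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (f1 + f2, next(tie), (left, right)))

    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):  # internal node: (left, right)
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:  # leaf: the path so far is the symbol's code
            codes[node] = prefix

    walk(heap[0][2], "")
    return codes


# Hypothetical frequencies chosen for illustration.
table = huffman_codes({"a": 5, "b": 2, "c": 1, "d": 1})
for symbol in sorted(table):
    print(symbol, table[symbol])
```

The most frequent symbol ends up closest to the root, which is why it receives the shortest code.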

https://www.tek.com/support/faqs/what-huffman-coding

- Dynamic (2 passes) vs static (1 pass)
- Huffman coding is a method of data compression that is independent of the data type, that is, the data could represent an image, audio or spreadsheet. This compression scheme is used in JPEG and MPEG-2. Huffman coding works by looking at the data stream that makes up the file to be compressed. Those data bytes that occur most often are assigned a small code to represent them (certainly smaller than the data bytes being represented).

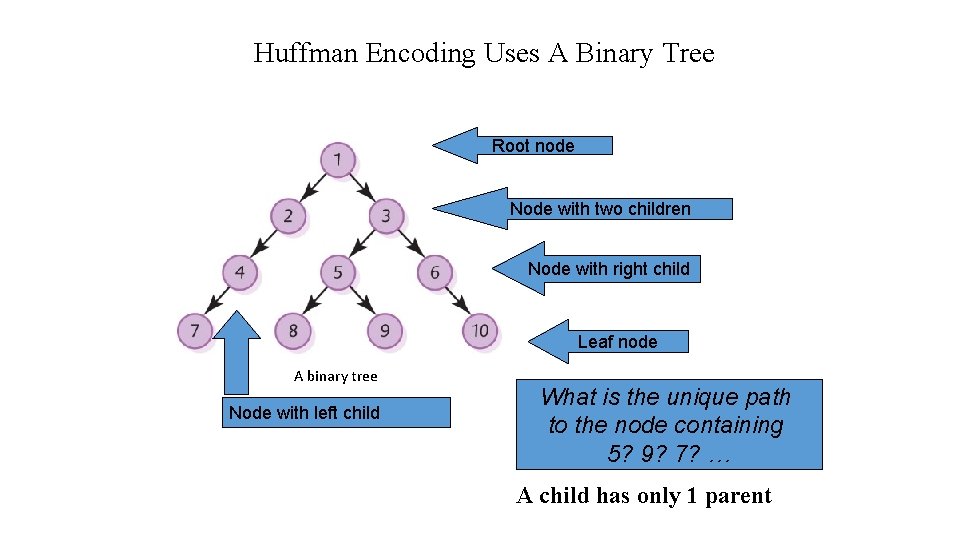

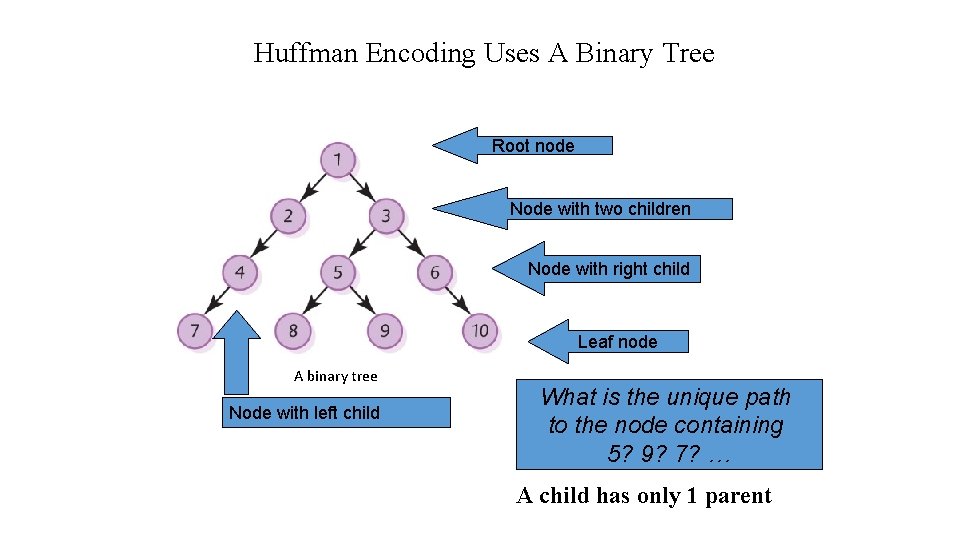

Huffman Encoding Uses a Binary Tree

[Figure: a binary tree, with labels for the root node, a node with two children, a node with a right child, a node with a left child, and a leaf node.]

- What is the unique path to the node containing 5? 9? 7? …
- A child has only 1 parent.

How Can We Develop Huffman Code From Frequency Data?

- https://www.youtube.com/watch?v=JsTptu56GM8
- This video is fast moving but does give a good first impression of how Huffman code can be developed from frequency data.
- A slower-moving talk on developing Huffman code will follow this video.

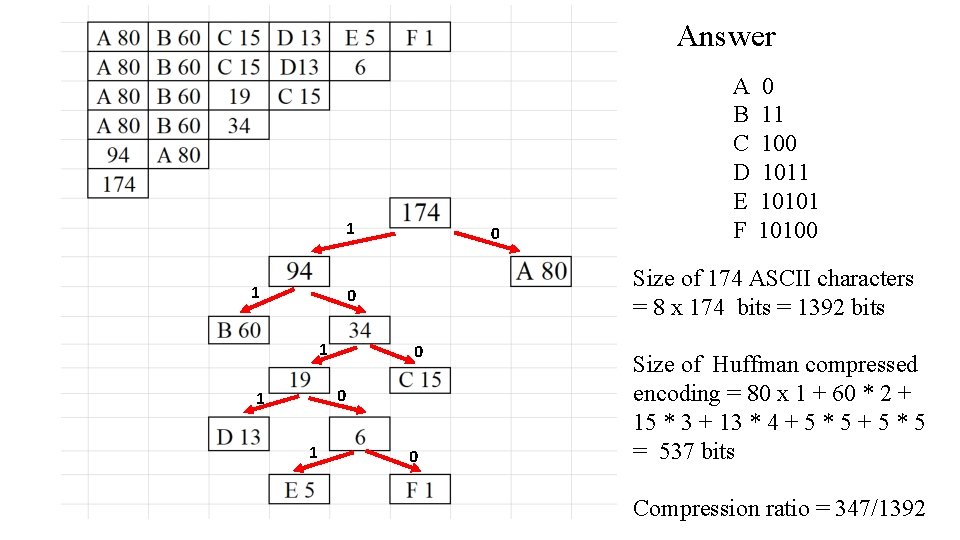

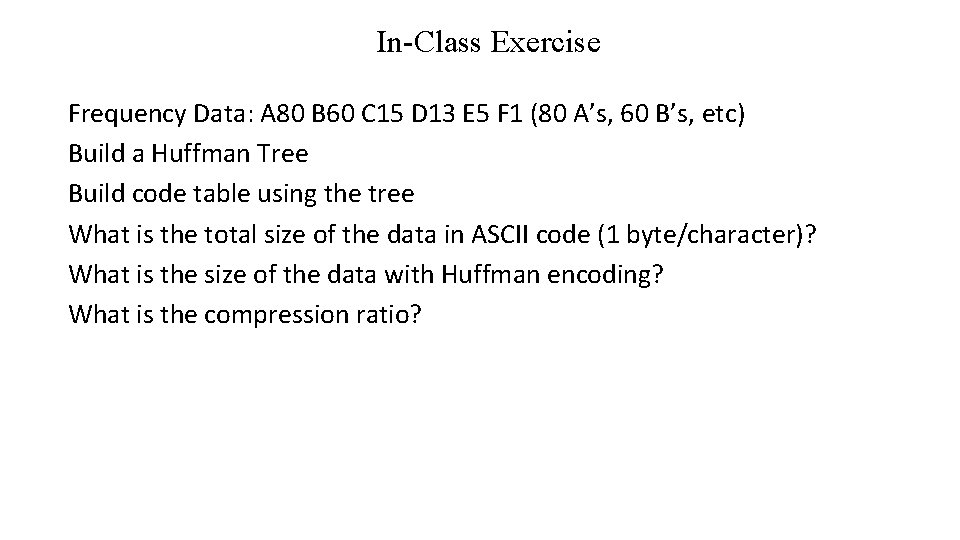

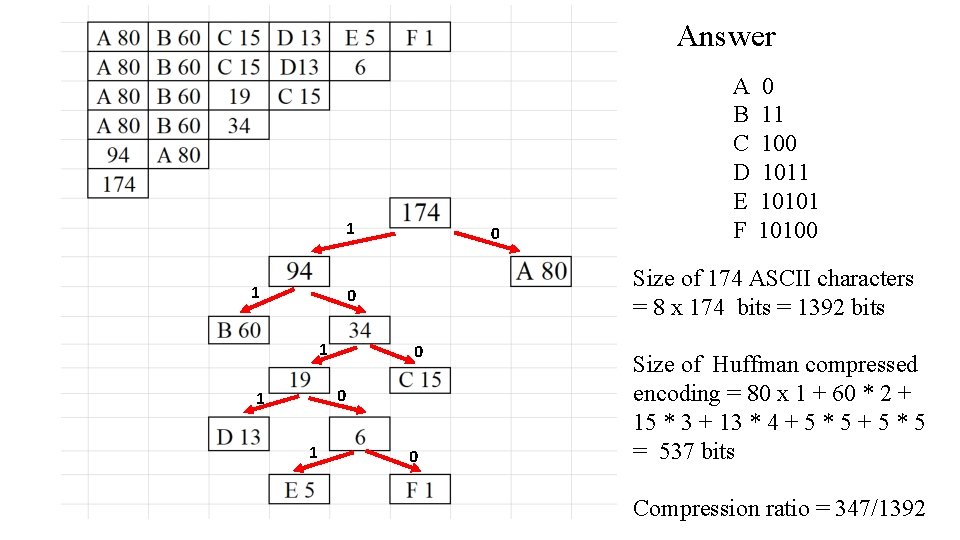

In-Class Exercise

Frequency Data: A 80, B 60, C 15, D 13, E 5, F 1 (80 A’s, 60 B’s, etc.)

- Build a Huffman tree.
- Build a code table using the tree.
- What is the total size of the data in ASCII code (1 byte/character)?
- What is the size of the data with Huffman encoding?
- What is the compression ratio?

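Answer

[Figure: the Huffman tree for A to F, with each branch labelled 0 or 1.]

Code table read from the tree (the codes for A and F are not legible in the extracted slide; they are the only values consistent with the other four):

| Character | Code |
| --- | --- |
| A | 0 |
| B | 11 |
| C | 100 |
| D | 1011 |
| E | 10100 |
| F | 10101 |

Size of 174 ASCII characters = 8 x 174 bits = 1392 bits

Size of Huffman compressed encoding = 80 x 1 + 60 x 2 + 15 x 3 + 13 x 4 + 5 x 5 + 1 x 5 = 327 bits

Compression ratio = 327/1392 = 23.5%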
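The arithmetic for the exercise can be checked in a few lines. This is a sketch, with one assumption stated loudly: the codes for B, C, D and E are the ones visible on the answer slide, while the codes for A (`0`) and F (`10101`) are inferred as the only ones that complete a prefix-free tree with those four. With all six letters counted, the encoded size comes to 327 bits.

```python
# Frequencies from the exercise.
freqs = {"A": 80, "B": 60, "C": 15, "D": 13, "E": 5, "F": 1}

# B..E are from the answer slide; A and F are inferred (assumption).
codes = {"A": "0", "B": "11", "C": "100",
         "D": "1011", "E": "10100", "F": "10101"}

ascii_bits = 8 * sum(freqs.values())  # 1 byte per character
huffman_bits = sum(freqs[s] * len(codes[s]) for s in freqs)
ratio = huffman_bits / ascii_bits

print("ASCII bits:  ", ascii_bits)
print("Huffman bits:", huffman_bits)
print(f"Compression ratio: {ratio:.1%}")
```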

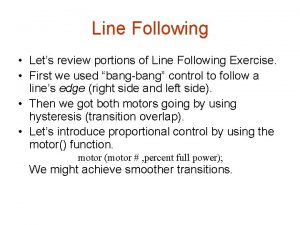

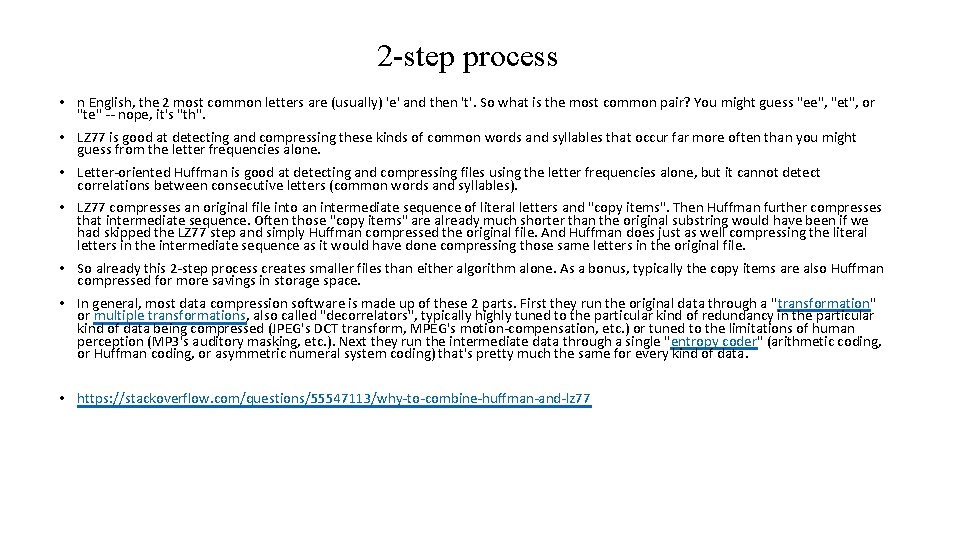

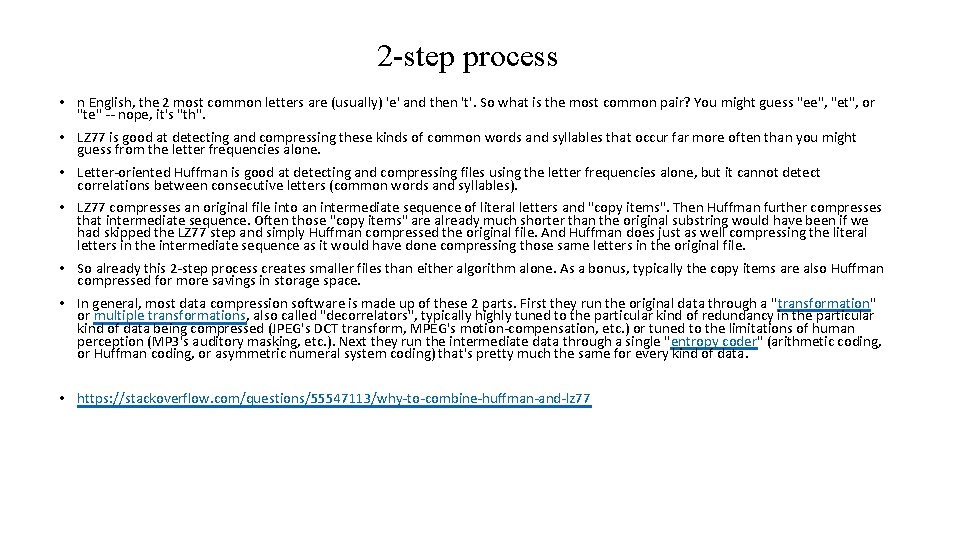

2-step process

- In English, the 2 most common letters are (usually) 'e' and then 't'. So what is the most common pair? You might guess "ee", "et", or "te" -- nope, it's "th".
- LZ77 is good at detecting and compressing these kinds of common words and syllables that occur far more often than you might guess from the letter frequencies alone.
- Letter-oriented Huffman is good at detecting and compressing files using the letter frequencies alone, but it cannot detect correlations between consecutive letters (common words and syllables).
- LZ77 compresses an original file into an intermediate sequence of literal letters and "copy items". Then Huffman further compresses that intermediate sequence. Often those "copy items" are already much shorter than the original substring would have been if we had skipped the LZ77 step and simply Huffman compressed the original file. And Huffman does just as well compressing the literal letters in the intermediate sequence as it would have done compressing those same letters in the original file.
- So already this 2-step process creates smaller files than either algorithm alone. As a bonus, typically the copy items are also Huffman compressed for more savings in storage space.
- In general, most data compression software is made up of these 2 parts. First they run the original data through a "transformation" or multiple transformations, also called "decorrelators", typically highly tuned to the particular kind of redundancy in the particular kind of data being compressed (JPEG's DCT transform, MPEG's motion-compensation, etc.) or tuned to the limitations of human perception (MP3's auditory masking, etc.). Next they run the intermediate data through a single "entropy coder" (arithmetic coding, or Huffman coding, or asymmetric numeral system coding) that's pretty much the same for every kind of data.
- Source: https://stackoverflow.com/questions/55547113/why-to-combine-huffman-and-lz77
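The first of the two steps, turning text into literal letters and "copy items", can be sketched as below. This is a toy illustration, not the matcher gzip actually uses: the names `lz77_tokens` and `lz77_decode`, the 255-character window, the minimum match length of 3, and the tuple layout of the tokens are all assumptions chosen for readability. The second step (Huffman coding the tokens) is not shown.

```python
def lz77_tokens(data, window=255, min_len=3):
    """Toy LZ77 pass: split `data` into literals and (distance, length) copies."""
    tokens, i = [], 0
    while i < len(data):
        best_len, best_dist = 0, 0
        # Brute-force search of the recent window for the longest match.
        for j in range(max(0, i - window), i):
            length = 0
            while i + length < len(data) and data[j + length] == data[i + length]:
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        if best_len >= min_len:
            tokens.append(("copy", best_dist, best_len))
            i += best_len
        else:
            tokens.append(("lit", data[i]))
            i += 1
    return tokens


def lz77_decode(tokens):
    """Rebuild the text by replaying literals and copy items."""
    out = []
    for token in tokens:
        if token[0] == "lit":
            out.append(token[1])
        else:
            _, dist, length = token
            for _ in range(length):
                out.append(out[-dist])  # copy one character from `dist` back
    return "".join(out)


text = "the cat and the hat"
tokens = lz77_tokens(text)
print(tokens)
print(lz77_decode(tokens) == text)
```

In this example the second "the " becomes a single copy item pointing 12 characters back, which is the kind of repeated word or syllable that letter frequencies alone cannot capture.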

DCT

- The discrete cosine transform (DCT) represents an image as a sum of sinusoids of varying magnitudes and frequencies. For this reason, the DCT is often used in image compression applications. For example, the DCT is at the heart of the international standard lossy image compression algorithm known as JPEG.
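To make "a sum of sinusoids" concrete, here is a minimal one-dimensional sketch. It computes an unnormalized DCT-II of a short list of samples; the function name `dct_ii` and the sample values are assumptions for illustration. JPEG applies a two-dimensional version of this transform to 8 x 8 pixel blocks, which is not shown here.

```python
import math


def dct_ii(signal):
    """Unnormalized 1-D DCT-II: one coefficient per cosine frequency k."""
    n = len(signal)
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5) * k)
            for i, x in enumerate(signal))
        for k in range(n)
    ]


# A flat signal has energy only in the k = 0 (constant) coefficient.
flat = dct_ii([1.0, 1.0, 1.0, 1.0])
print([round(c, 6) for c in flat])

# A hypothetical row of pixel values that changes slowly.
smooth = dct_ii([10.0, 11.0, 12.0, 13.0])
print([round(c, 3) for c in smooth])
```

Smooth data tends to concentrate in the low-frequency coefficients, and the small high-frequency ones are what a lossy scheme can afford to store coarsely.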

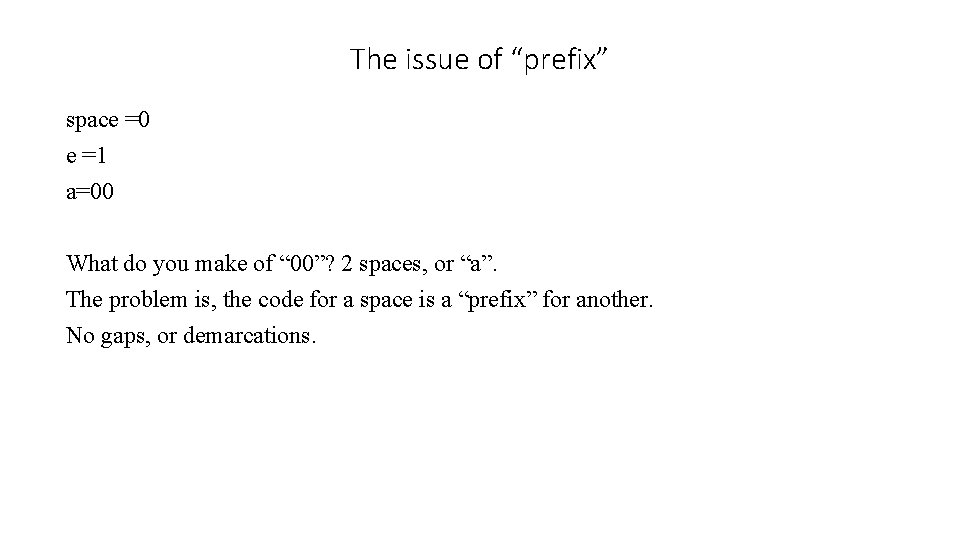

The issue of “prefix”

space = 0, e = 1, a = 00

What do you make of “00”? Two spaces, or “a”? The problem is that the code for a space is a “prefix” of another code, and the bit stream has no gaps or demarcations between codes.
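The ambiguity can be shown by listing every way a bit string can be split into code words. This is a small sketch; the function name `all_decodings` is hypothetical, and the code table is the one from the slide.

```python
def all_decodings(bits, codes):
    """Return every string whose encoding under `codes` equals `bits`."""
    if not bits:
        return [""]
    results = []
    for symbol, word in codes.items():
        if bits.startswith(word):
            # Try this code word first, then decode whatever is left.
            for rest in all_decodings(bits[len(word):], codes):
                results.append(symbol + rest)
    return results


ambiguous = {" ": "0", "e": "1", "a": "00"}
print(all_decodings("00", ambiguous))
```

With this table, “00” has two valid readings (two spaces, or “a”), whereas a prefix-free code always yields exactly one.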

- https://applesaucekids.com/LinkPage/File%20Formats.html
- A Christian site. Good 30-minute talk for a youth group.
- Practical advice about wav vs mp3:
- https://vox.rocks/resources/wav-vs-mp3
- https://blog.online-convert.com/lossless-file-formats/

Images in GIF and JPEG formats are lossy, while PNG, BMP and Raw are lossless formats for images. Audio files in OGG, MP4 and MP3 are lossy formats, while files in ALAC, FLAC and WAV are all lossless.
