Report on Text VQA Challenge Wang Zhaokai Outline

- Slides: 39

Report on Text. VQA Challenge Wang Zhaokai

Outline 0、Text. VQA dataset 1、Lo. RRA (baseline) 2、2019 winner 3、2019 runner-up 4、M 4 C Accuracy: 27. 63% Accuracy: 31. 44% Accuracy: 30. 54% Accuracy: 40. 46%

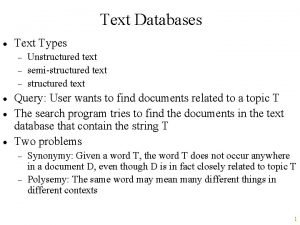

Text. VQA Dataset VQA that are related to extracting text from images.

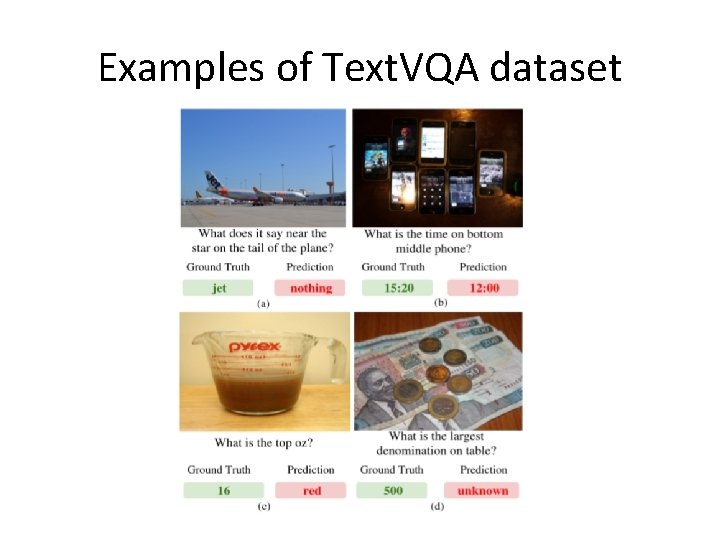

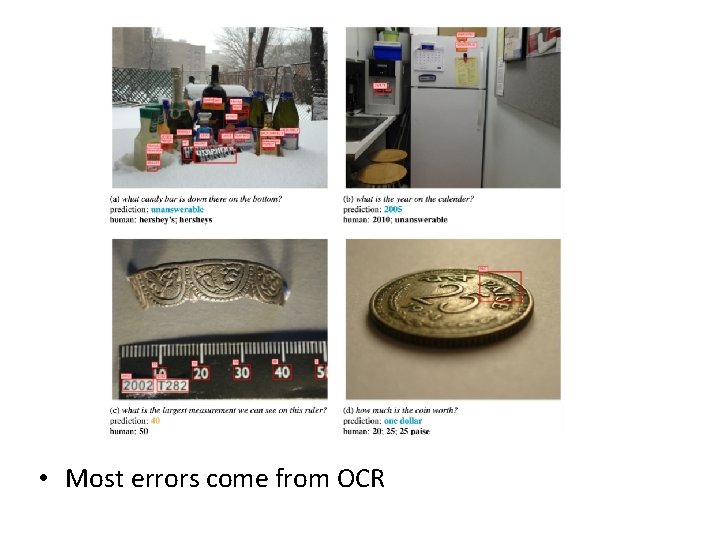

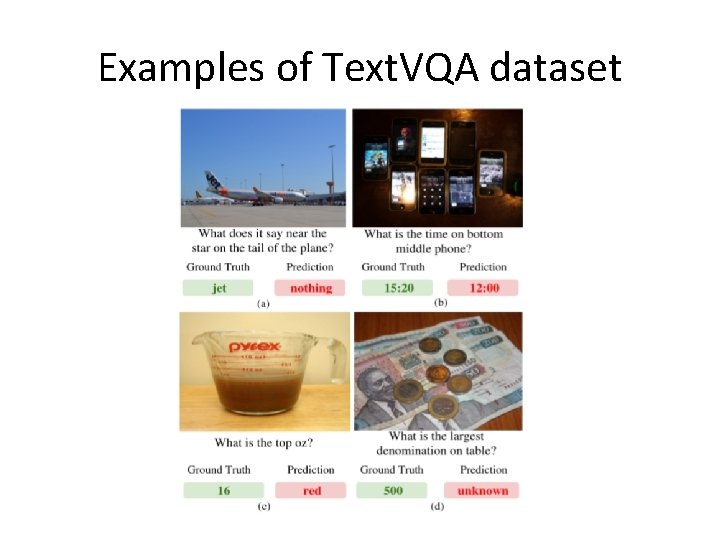

Examples of Text. VQA dataset

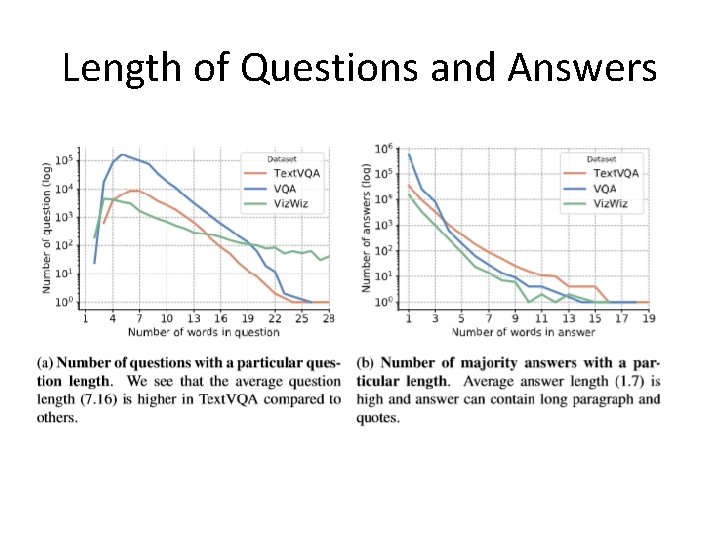

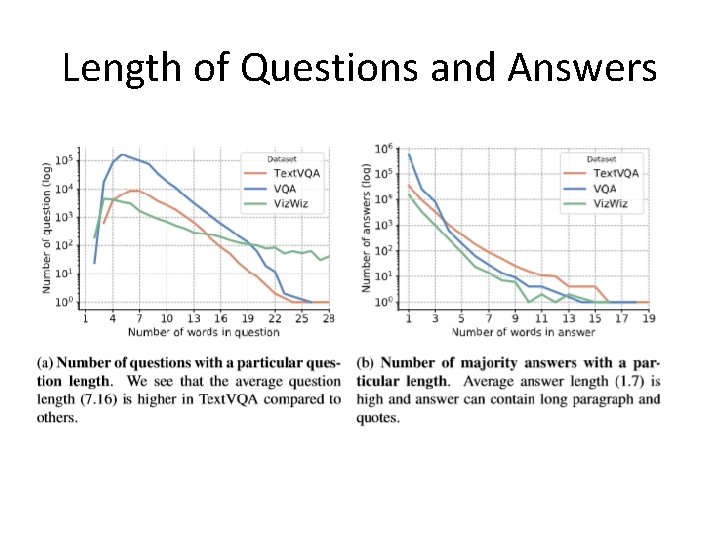

Length of Questions and Answers

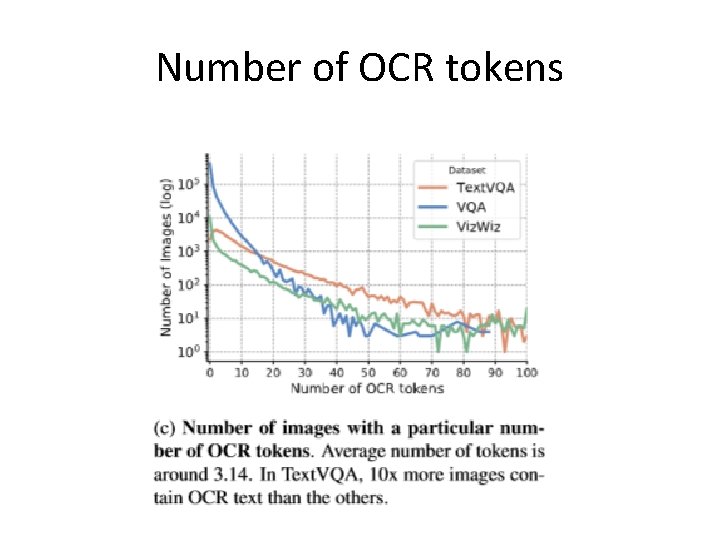

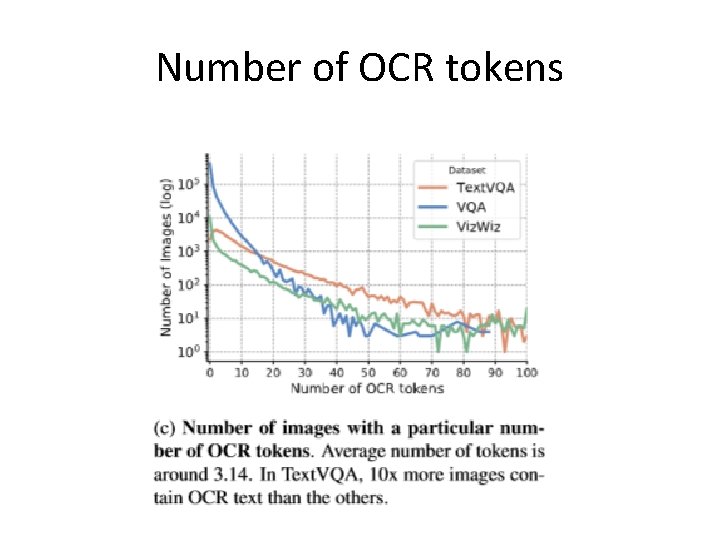

Number of OCR tokens

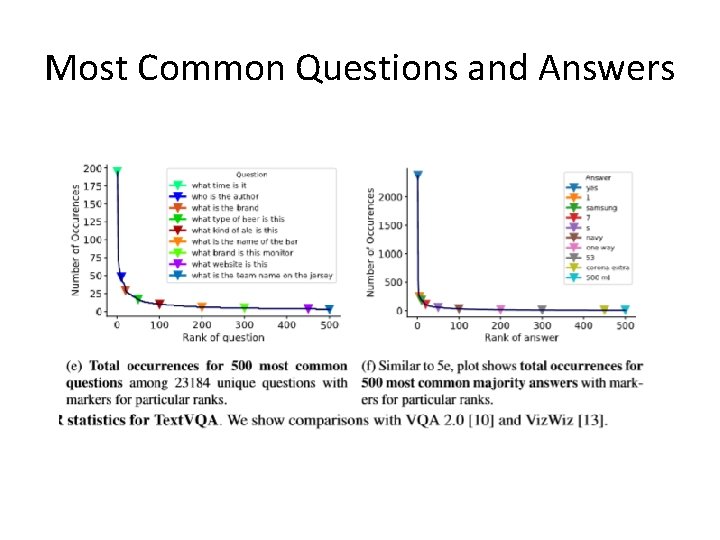

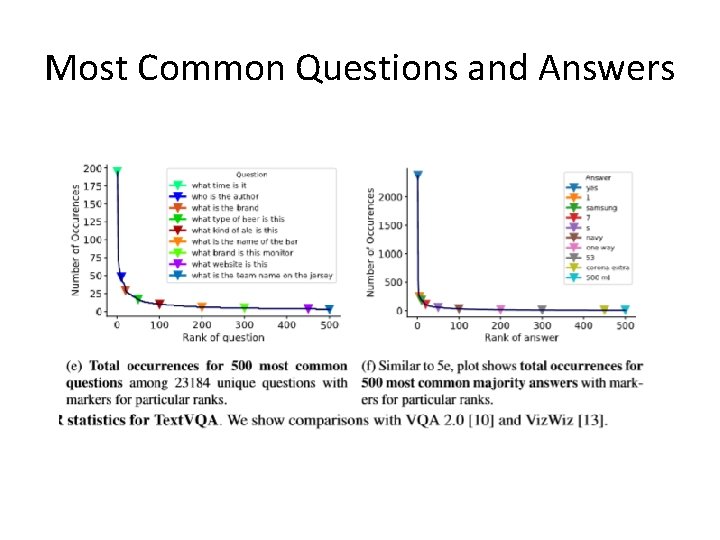

Most Common Questions and Answers

• The model need to combine question, image and text, and choose between OCR results or fixed vocabulary. • VQA models do not perform well on Text. VQA.

1、Lo. RRA (Baseline) CVPR 2019

2、2019 Text. VQA Challenge Winner VQA-Dial Workshop 2019

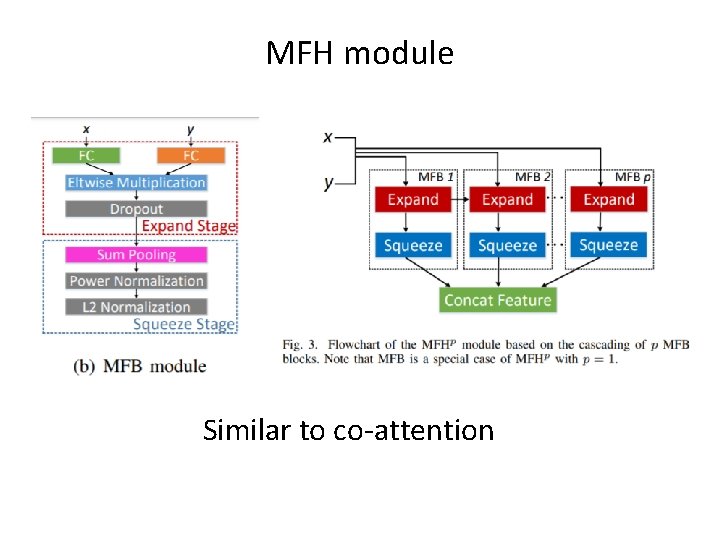

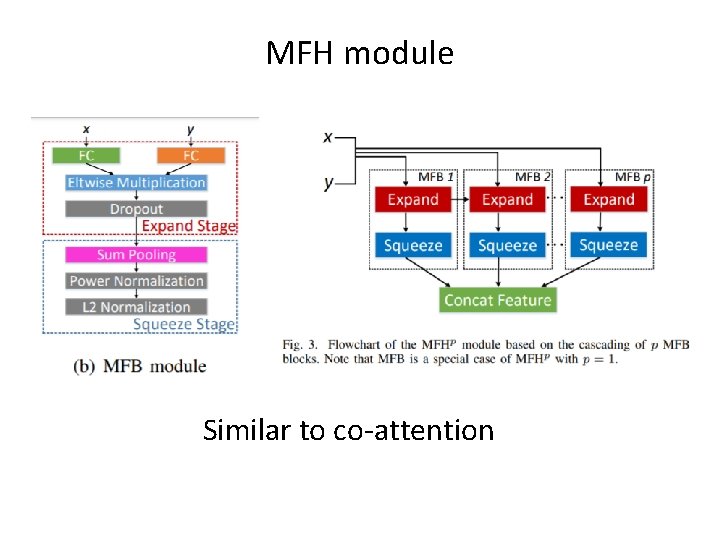

MFH module Similar to co-attention

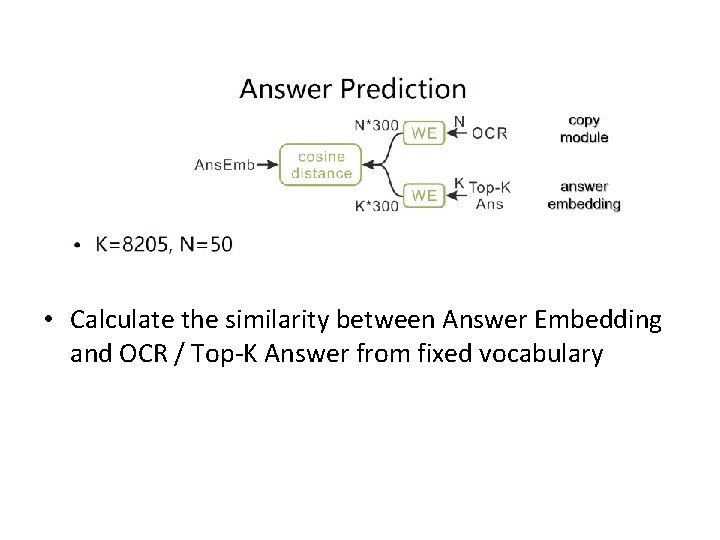

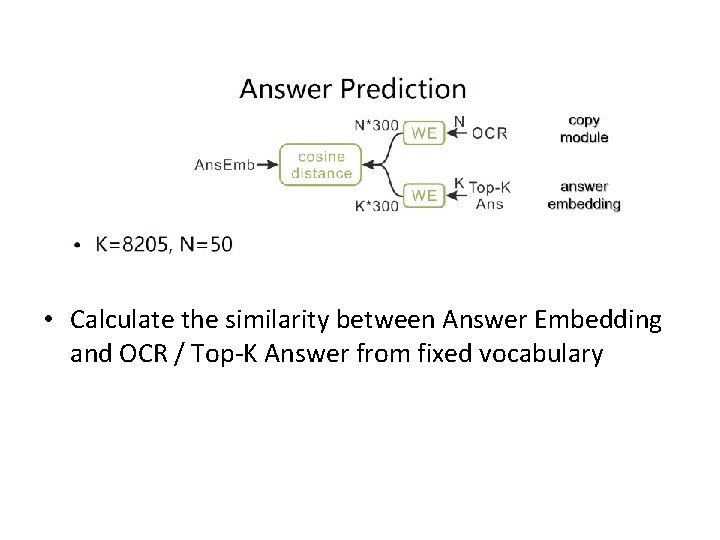

• Calculate the similarity between Answer Embedding and OCR / Top-K Answer from fixed vocabulary

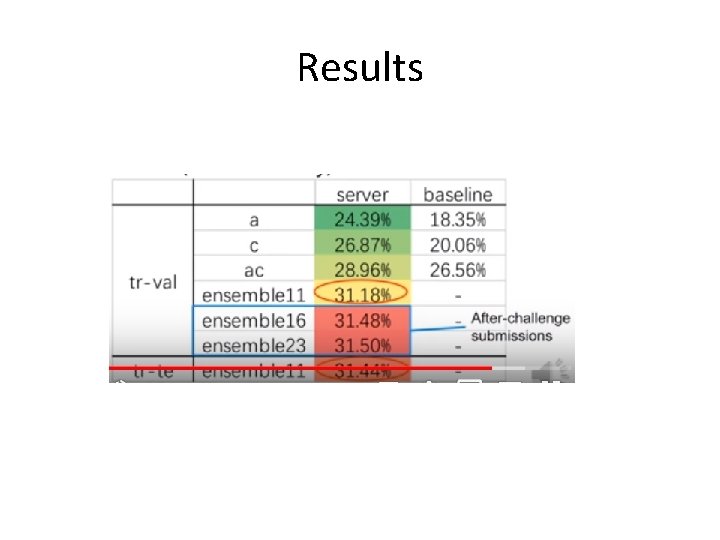

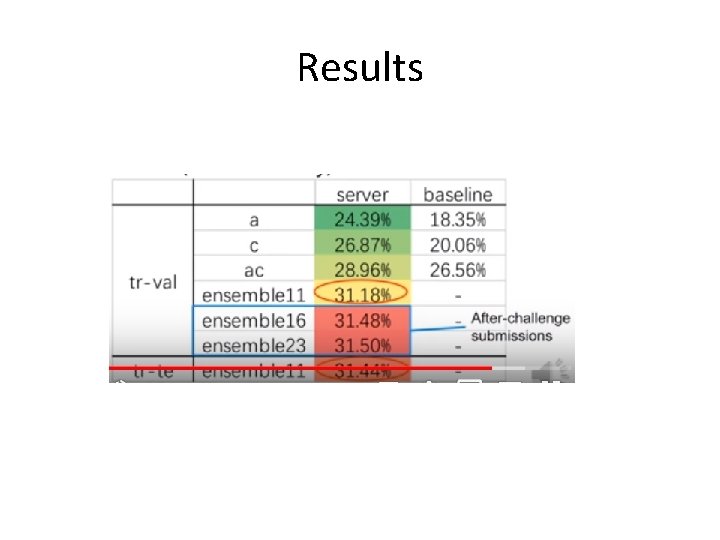

Results

3、2019 Text. VQA Challenge Runner-up VQA-Dial Workshop 2019

4、M 4 C From arxiv

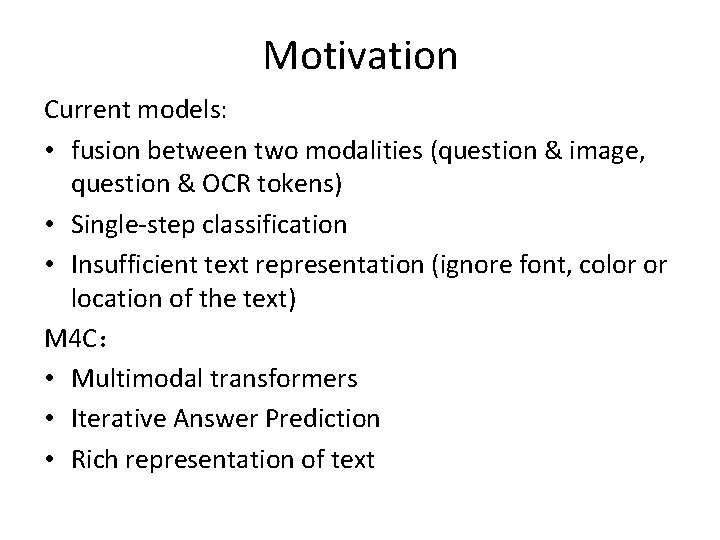

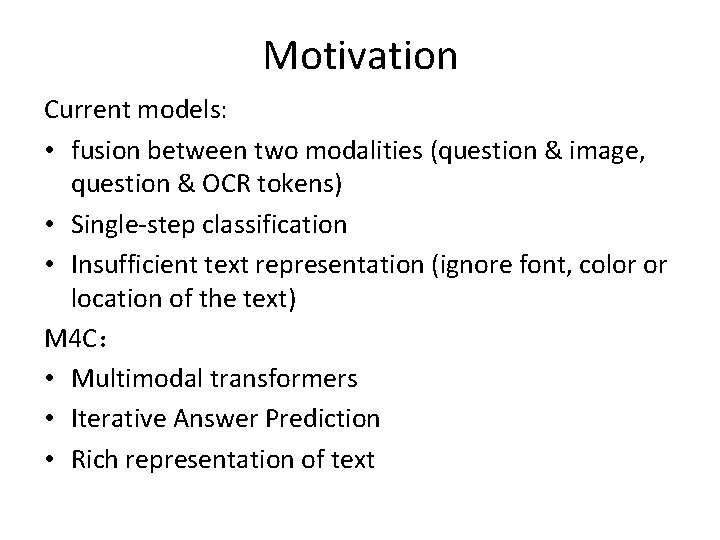

Motivation Current models: • fusion between two modalities (question & image, question & OCR tokens) • Single-step classification • Insufficient text representation (ignore font, color or location of the text) M 4 C: • Multimodal transformers • Iterative Answer Prediction • Rich representation of text

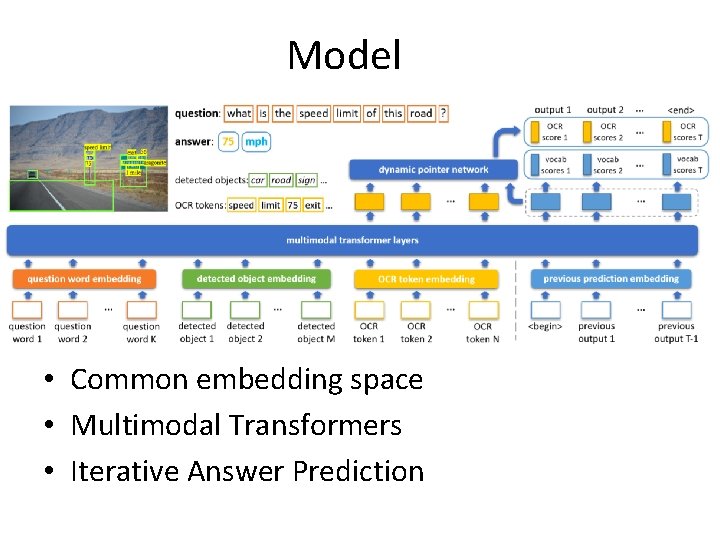

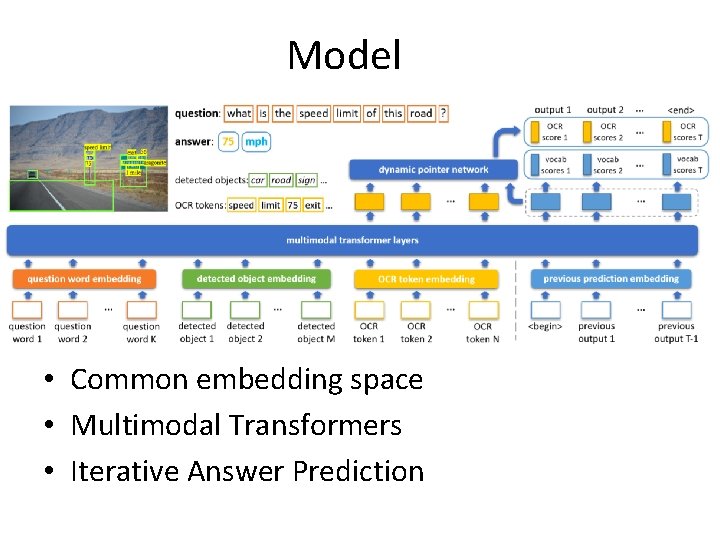

Model • Common embedding space • Multimodal Transformers • Iterative Answer Prediction

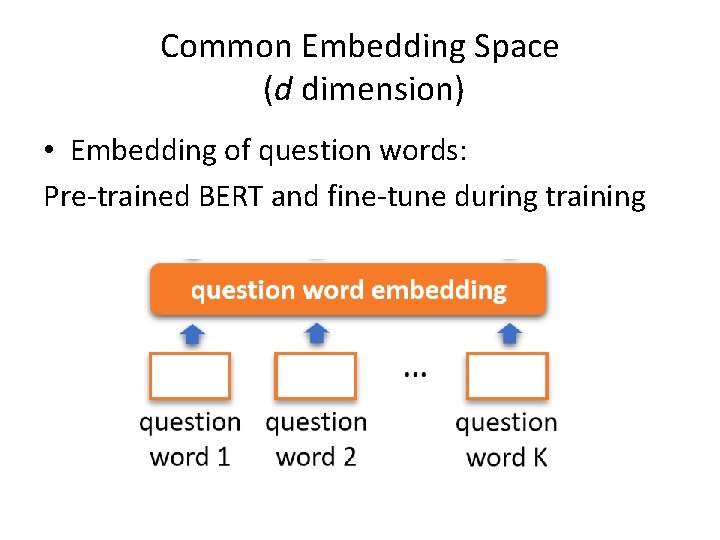

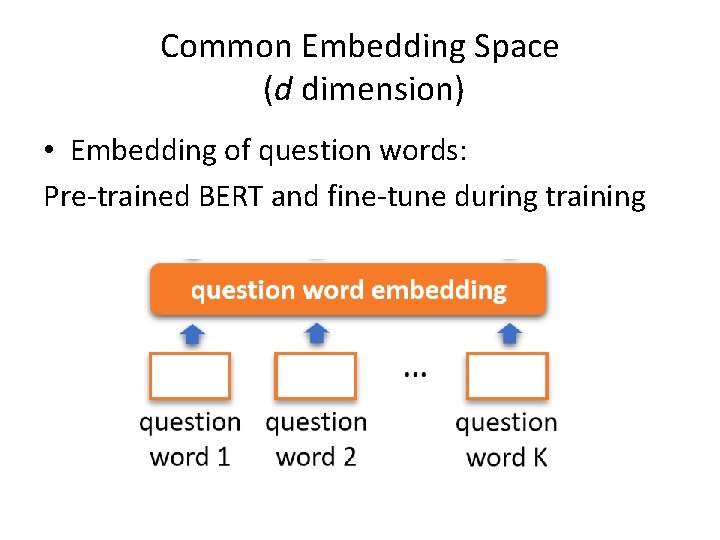

Common Embedding Space (d dimension) • Embedding of question words: Pre-trained BERT and fine-tune during training

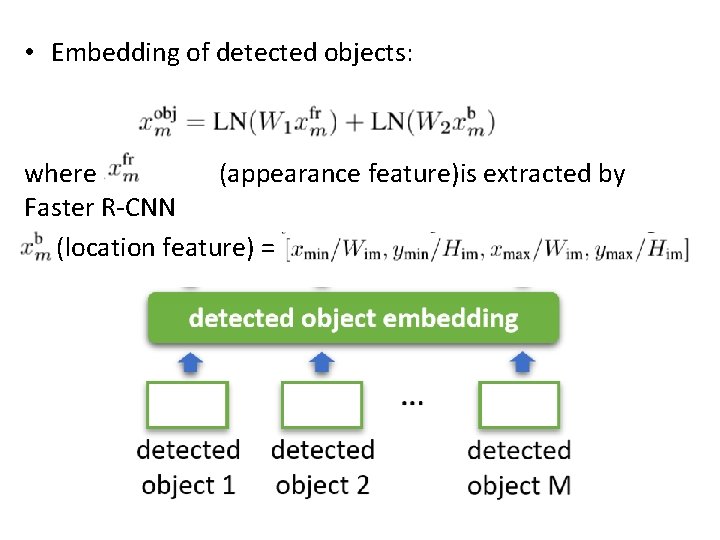

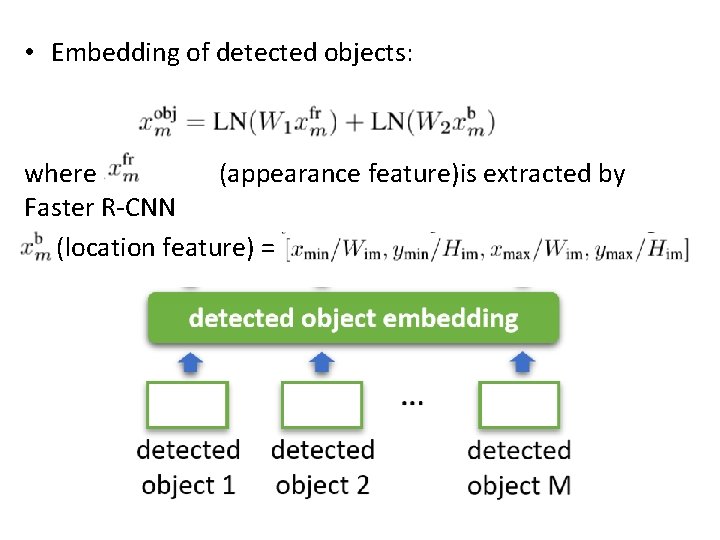

• Embedding of detected objects: where (appearance feature)is extracted by Faster R-CNN (location feature) =

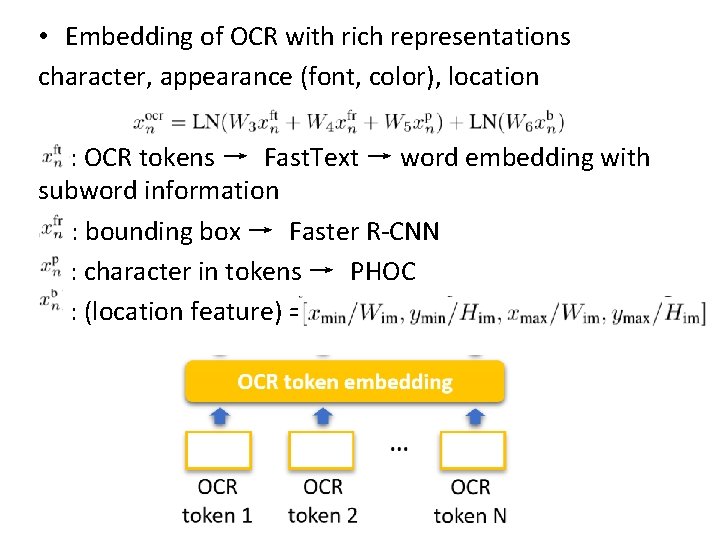

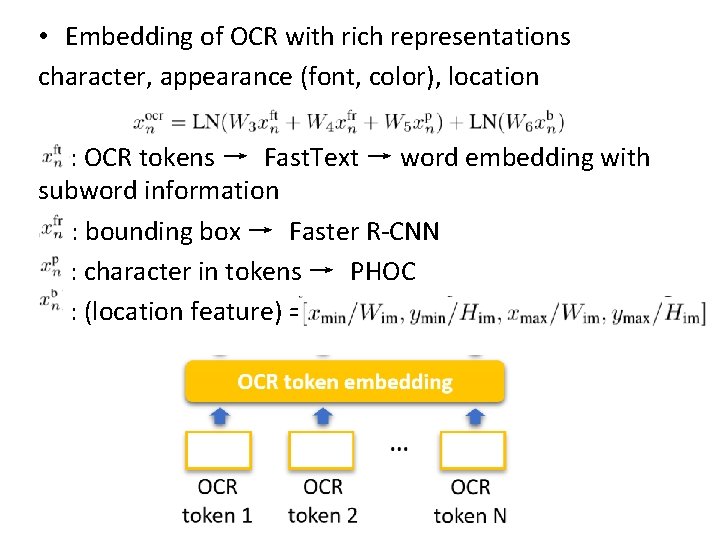

• Embedding of OCR with rich representations character, appearance (font, color), location : OCR tokens → Fast. Text → word embedding with subword information : : bounding box → Faster R-CNN : character in tokens → PHOC : (location feature) =

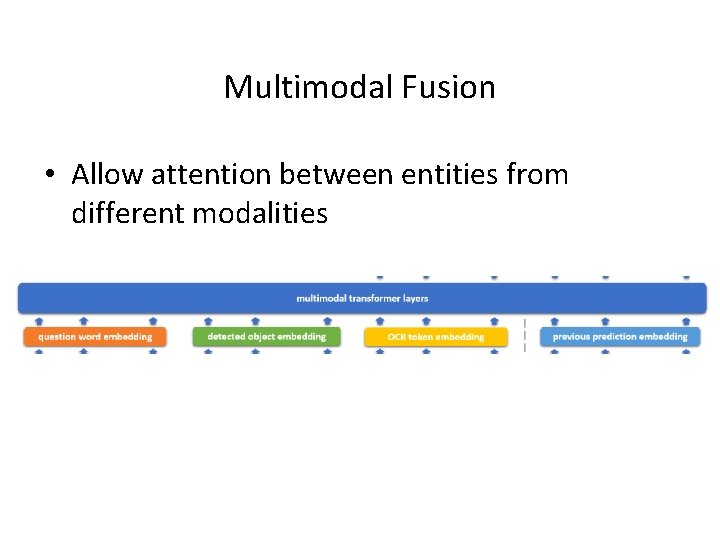

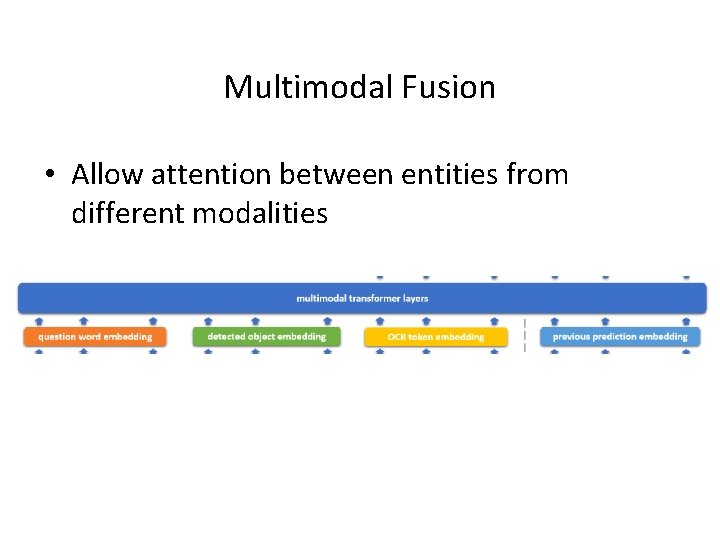

Multimodal Fusion • Allow attention between entities from different modalities

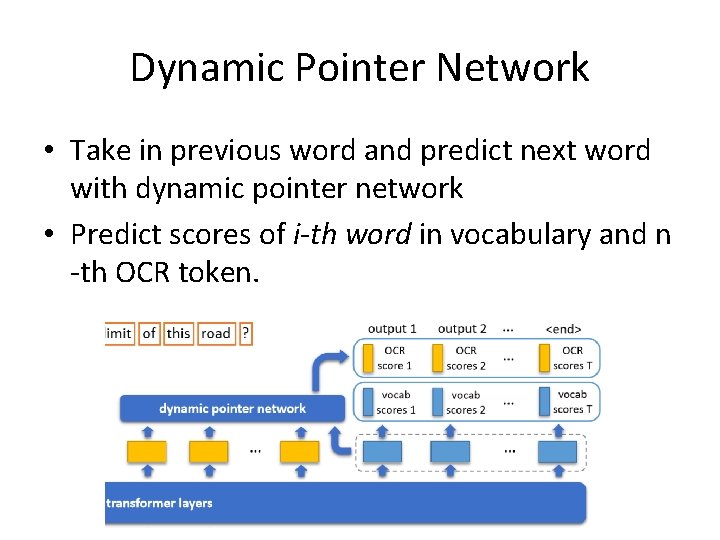

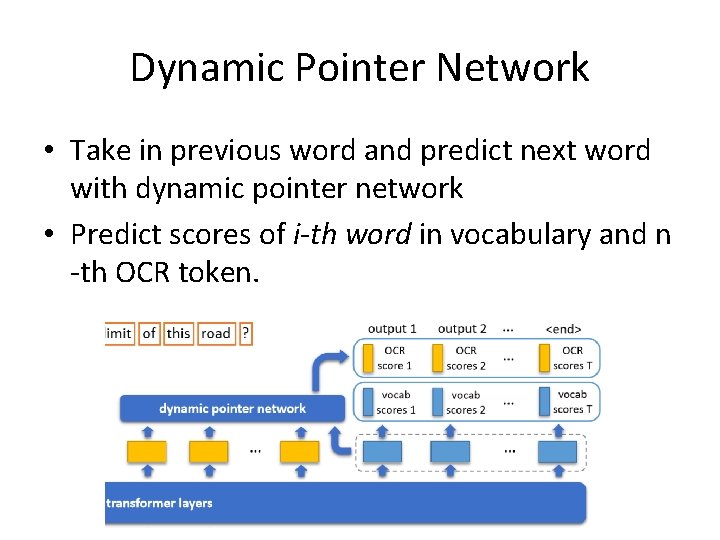

Dynamic Pointer Network • Take in previous word and predict next word with dynamic pointer network • Predict scores of i-th word in vocabulary and n -th OCR token.

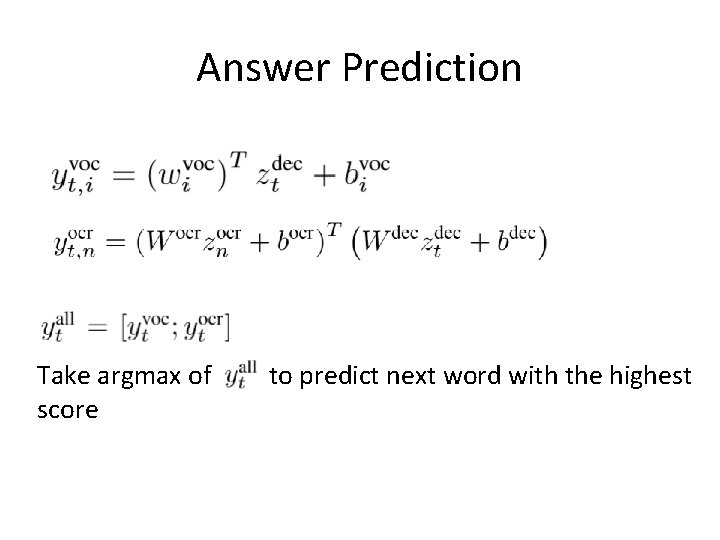

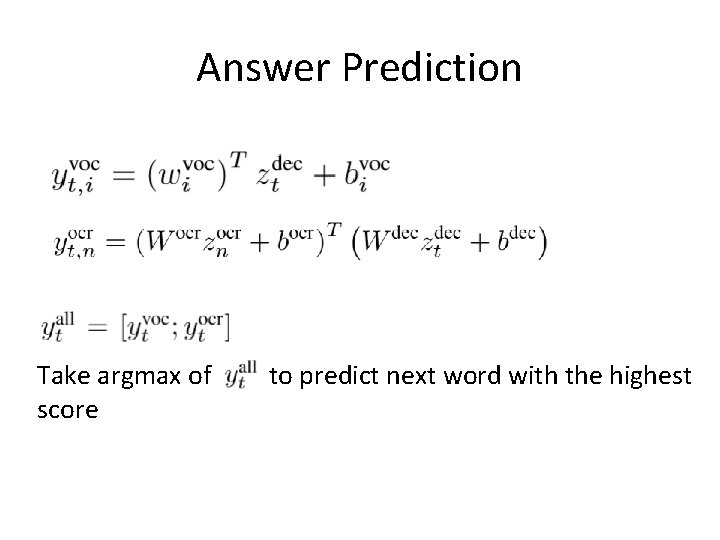

Answer Prediction Take argmax of score , to predict next word with the highest

Extra Token • Add position embedding of step t • Add type embedding (whether previous word is from vocabulary or OCR) • Add <start> and <end> token • Use multi-label sigmoid loss

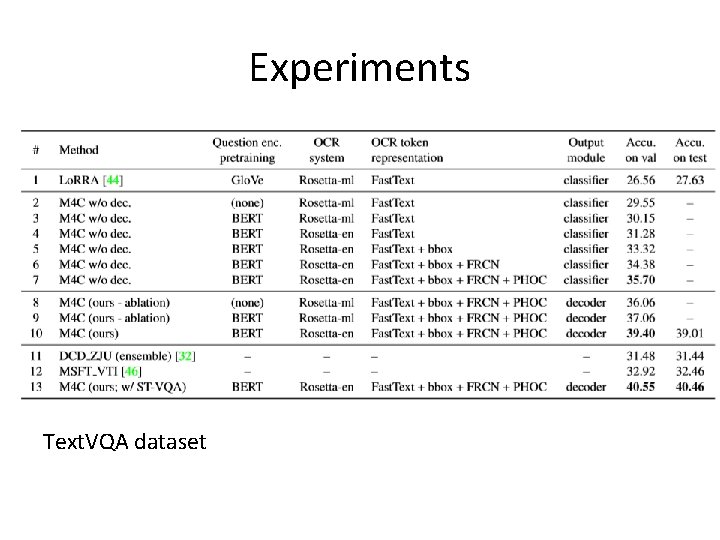

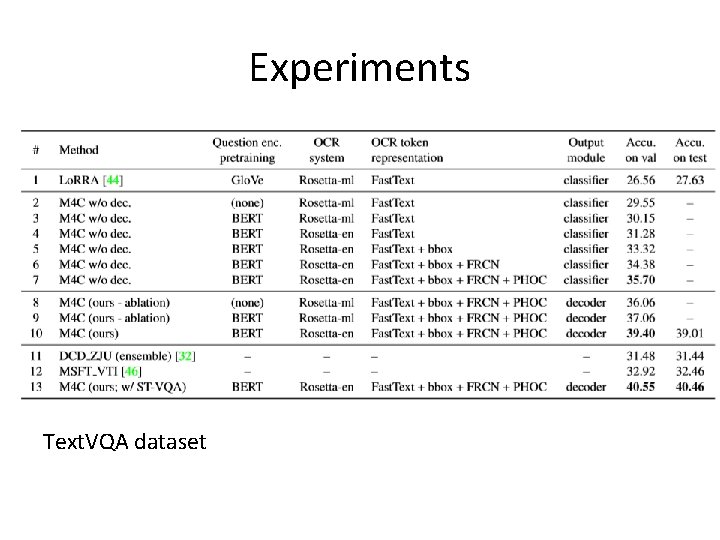

Experiments Text. VQA dataset

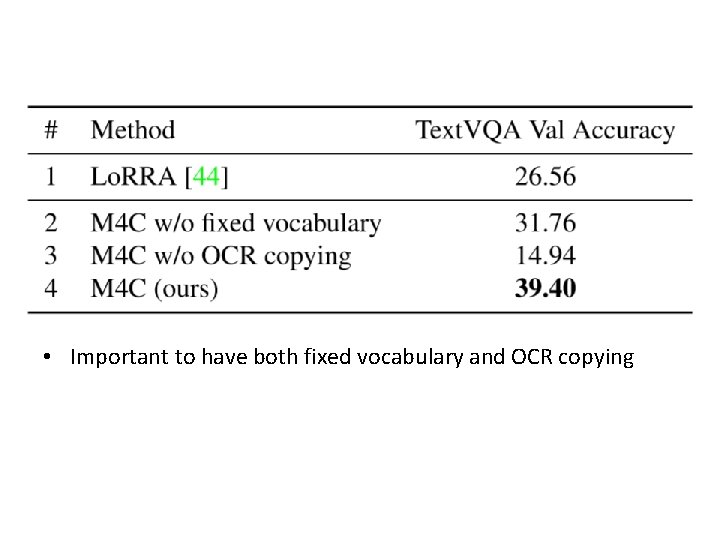

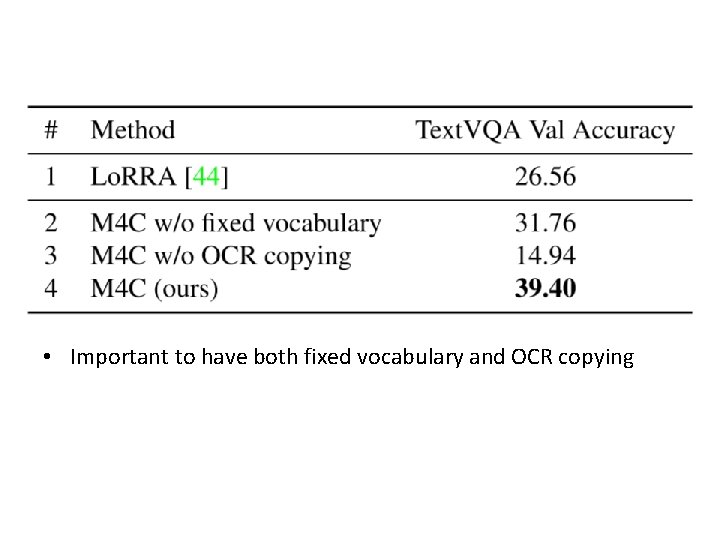

• Important to have both fixed vocabulary and OCR copying

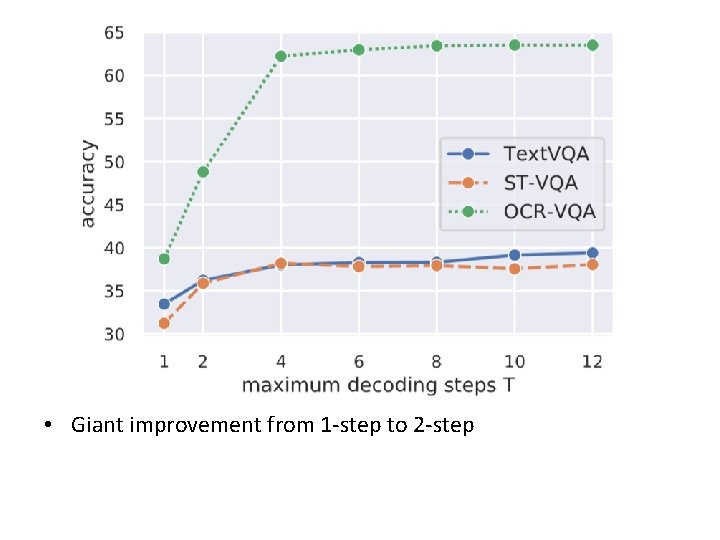

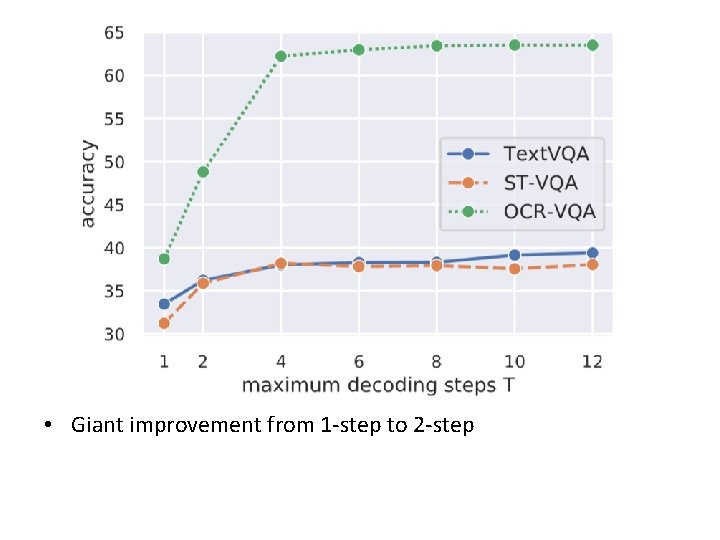

• Giant improvement from 1 -step to 2 -step

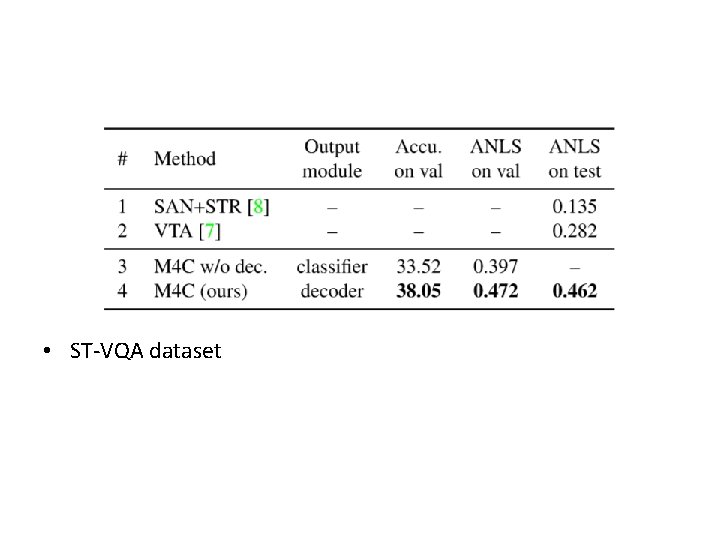

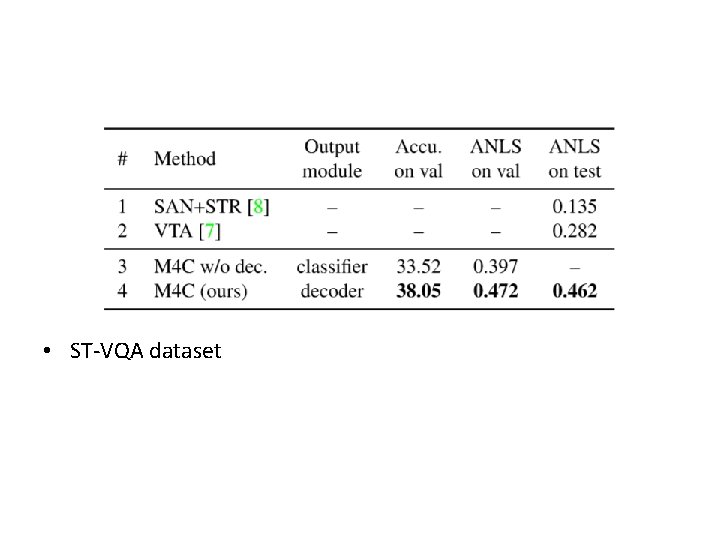

• ST-VQA dataset

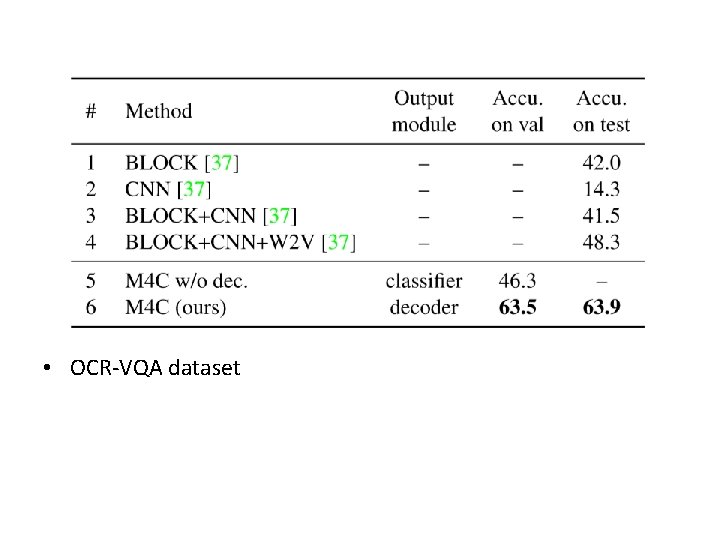

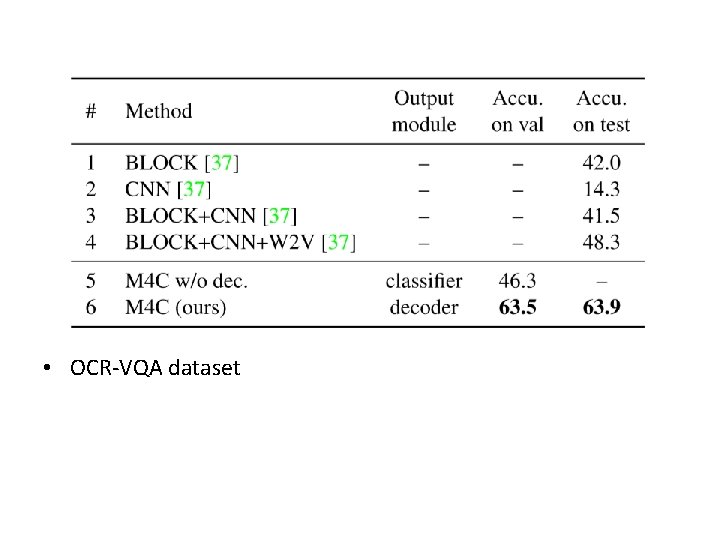

• OCR-VQA dataset

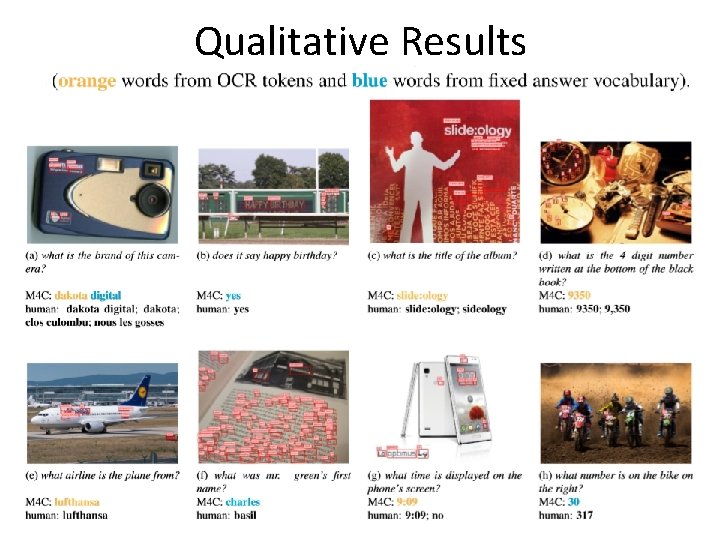

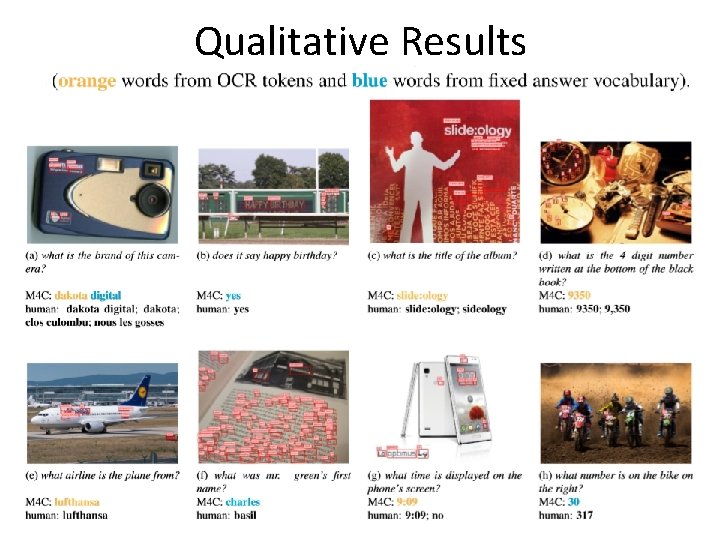

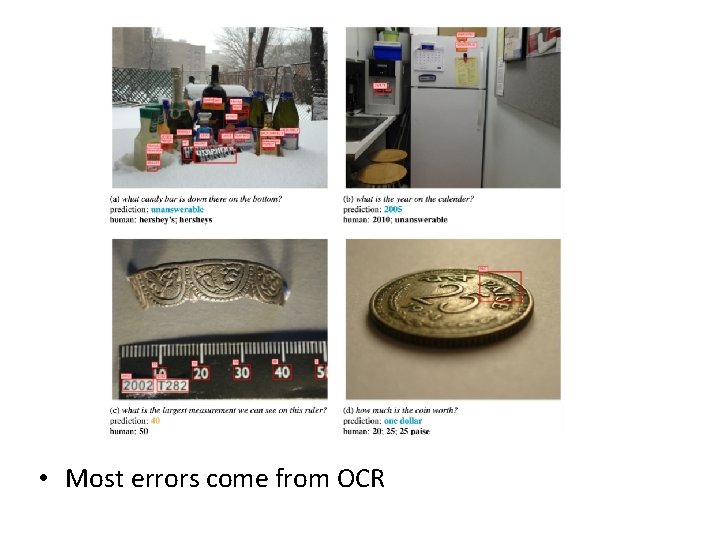

Qualitative Results

- • Most errors come from OCR

My Understanding • Key point: how to combine 3 modalities (question, image, OCR tokens) and how to choose answers from OCR or fixed vocabulary. • (compared to VQA:only 2 modalities; answers only from fixed vocabulary)

Preparations • 2020. 2 Basic Knowledge (cs 231 n, cs 224 n) Papers (Lo. RRA, M 4 C) Environment (pythia) • 2020. 3 Run Lo. RRA model, achieve same accuracy • 2020. 4 – 2020. 5 Train model and fine-tune, improve accuracy by 2% • Deadline: 5. 27

Devi parikh

Devi parikh What is an example of a text-to-media connection?

What is an example of a text-to-media connection? Whitchester house

Whitchester house What is the text structure of report text

What is the text structure of report text Cfa institute research challenge 2018

Cfa institute research challenge 2018 Cfa research challenge report template

Cfa research challenge report template Example of sentence outline

Example of sentence outline Outline of the research

Outline of the research Writing a status report

Writing a status report Using the partial report method sperling

Using the partial report method sperling Outline critical approaches to interpreting text

Outline critical approaches to interpreting text Report text definition

Report text definition Pengertian report

Pengertian report Report text bats

Report text bats Karakteristik factual report text

Karakteristik factual report text Fungsi business report

Fungsi business report Exemplification text structure

Exemplification text structure What does functional text mean

What does functional text mean Regina wang md

Regina wang md Stealing hyperparameters in machine learning

Stealing hyperparameters in machine learning Yongge wang

Yongge wang Caroline c wang

Caroline c wang Amos wang

Amos wang Ap 70 pengeluaran resit rasmi

Ap 70 pengeluaran resit rasmi Wang panjar runcit

Wang panjar runcit Huazheng wang

Huazheng wang Salinan surat pengesahan asal entiti serahan

Salinan surat pengesahan asal entiti serahan Holtek semiconductor inc

Holtek semiconductor inc Hongning wang

Hongning wang Dr xia wang

Dr xia wang Dr kenneth wang

Dr kenneth wang Minmei wang

Minmei wang Gyomor 36 pont

Gyomor 36 pont Social media trend analysis

Social media trend analysis Dr john wang

Dr john wang Ryan wang hsbc

Ryan wang hsbc Guanhua wang

Guanhua wang Landy wang microsoft

Landy wang microsoft Jyhwen wang

Jyhwen wang Dr robert wang

Dr robert wang