Reducing Mechanism Design to Optimization Constantinos Costis Daskalakis



Reducing Mechanism Design to Optimization

Constantinos (Costis) Daskalakis (MIT), Yang Cai (McGill), Matt Weinberg (Princeton)

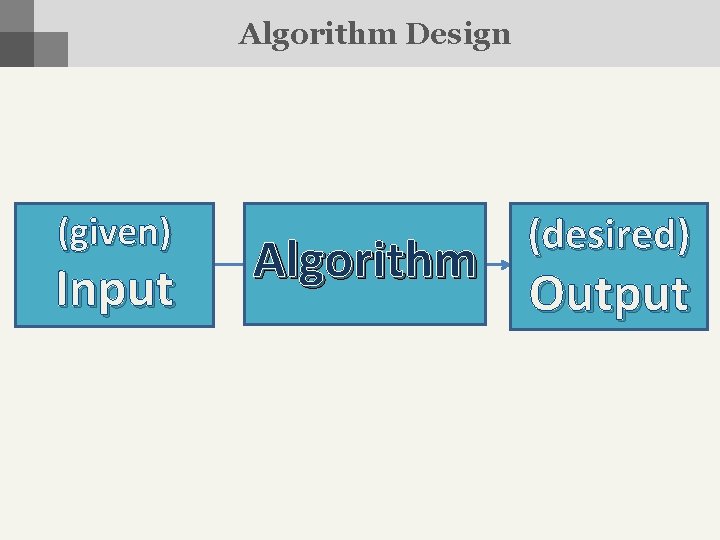

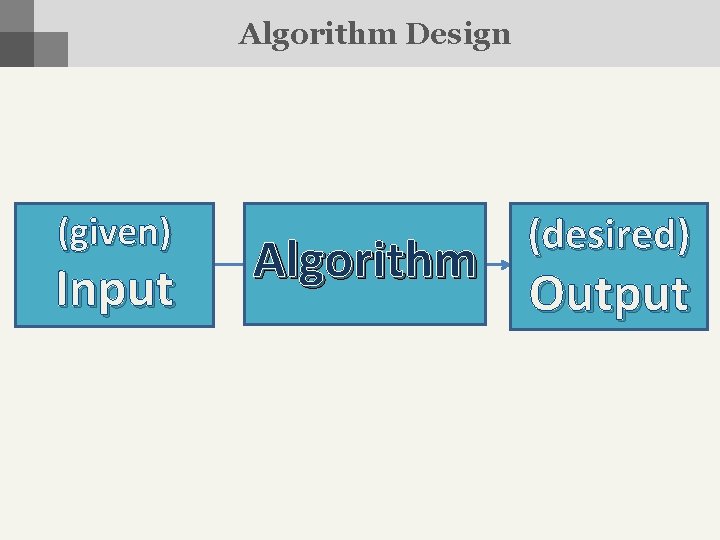

Algorithm Design: Input (given) → Algorithm (desired) → Output

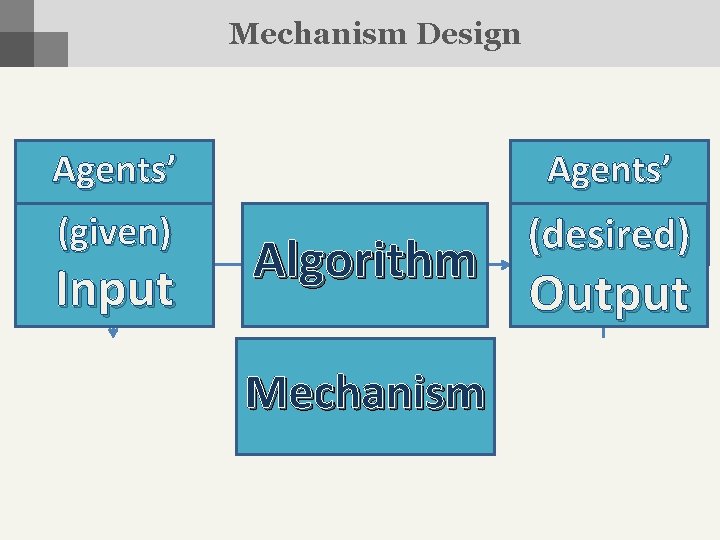

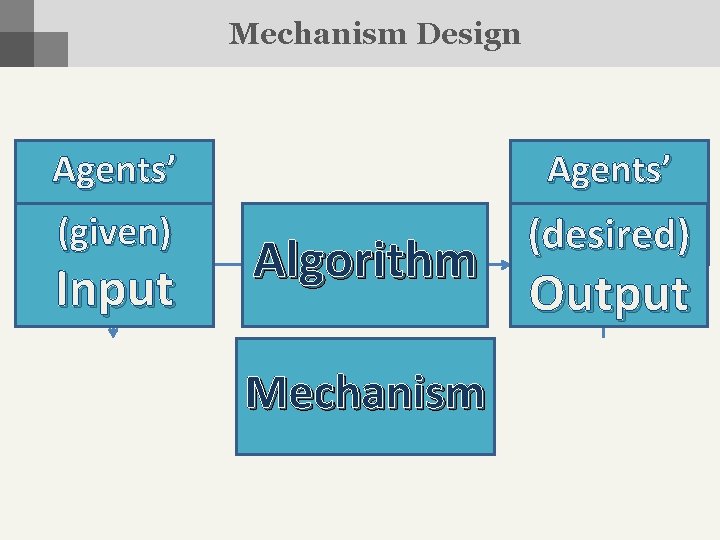

Mechanism Design: Agents (with private Payoffs) submit Reports (given) as Input → Mechanism (desired) → Output

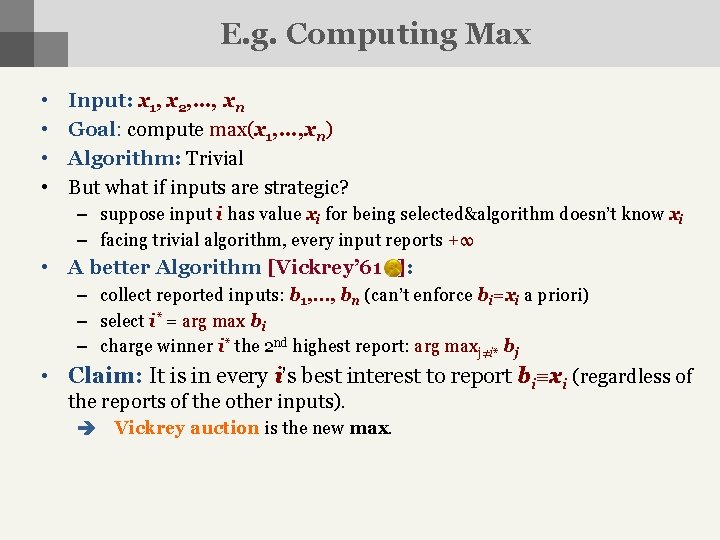

E.g. Computing Max

- Input: $x_1, x_2, \dots, x_n$. Goal: compute $\max(x_1, \dots, x_n)$. Algorithm: trivial.
- But what if inputs are strategic?
  - suppose input $i$ has value $x_i$ for being selected & the algorithm doesn't know $x_i$
  - facing the trivial algorithm, every input reports $+\infty$
- A better algorithm [Vickrey’61]:
  - collect reported inputs $b_1, \dots, b_n$ (can't enforce $b_i = x_i$ a priori)
  - select $i^* = \arg\max_i b_i$
  - charge winner $i^*$ the 2nd highest report: $\max_{j \neq i^*} b_j$
- Claim: it is in every $i$'s best interest to report $b_i = x_i$ (regardless of the reports of the other inputs). Vickrey auction is the new max.
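A minimal Python sketch of the second-price rule above, plus a brute-force check on a small grid of misreports that bidder 0 cannot gain by deviating from $b_0 = x_0$. The function names, the lowest-index tie-breaking and the example values are assumptions for illustration, not part of the slide.

```python
def vickrey(bids):
    """Second-price auction: return (winner index, price) for the reported bids.

    Ties go to the lowest index (an assumption; the slide fixes no tie-breaking).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    winner = max(range(len(bids)), key=lambda i: bids[i])  # arg max b_i
    price = max(b for j, b in enumerate(bids) if j != winner)  # max_{j != i*} b_j
    return winner, price


def utility(i, value, bids):
    """Quasi-linear utility of bidder i, whose true value is `value`."""
    winner, price = vickrey(bids)
    return value - price if winner == i else 0.0


# Bidder 0 with true value 7 never does better than reporting 7.
values = [7.0, 5.0, 3.0]
grid = [k / 2 for k in range(0, 21)]  # candidate reports 0.0, 0.5, ..., 10.0
truthful_u = utility(0, values[0], values)
assert all(utility(0, values[0], [r] + values[1:]) <= truthful_u for r in grid)
```

The grid check is only evidence on one instance; the claim on the slide holds for every value profile.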

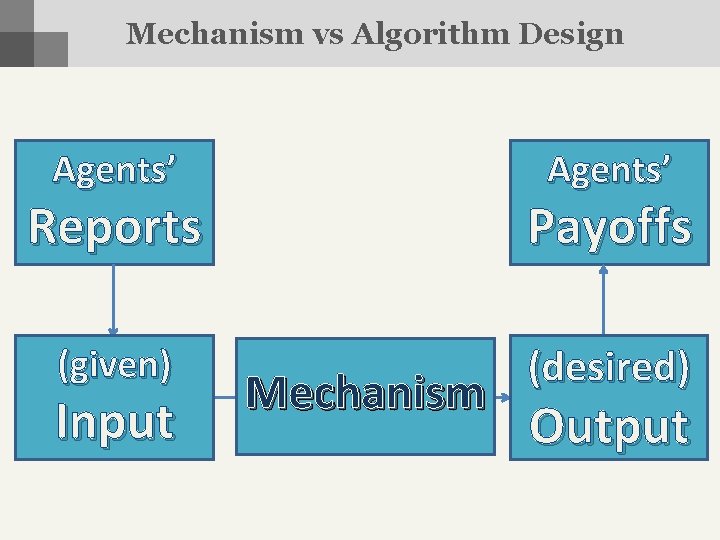

Mechanism vs Algorithm Design: Agents' Reports (given) as Input → Mechanism (desired) → Output; agents have Payoffs over the Output

![Algorithmic Mechanism Design NisanRonen 99 How much more difficult are optimization problems on strategic Algorithmic Mechanism Design [Nisan-Ronen’ 99]: How much more difficult are optimization problems on “strategic”](https://slidetodoc.com/presentation_image_h/1212607c40dda26a0c8133030763c166/image-6.jpg)

Algorithmic Mechanism Design [Nisan-Ronen’99]: How much more difficult are optimization problems on “strategic” input compared to “honest” input?

- Information:
  - what information does the mechanism have about the inputs?
  - what information do the inputs have about each other?
  - does the mechanism also have some private information whose release may influence the inputs’ behavior (e.g. quality of a good in an auction)?
- Complexity:
  - computational, communication, …
  - centralized: complexity to run the mechanism vs distributed: complexity for each input to optimize its own behavior

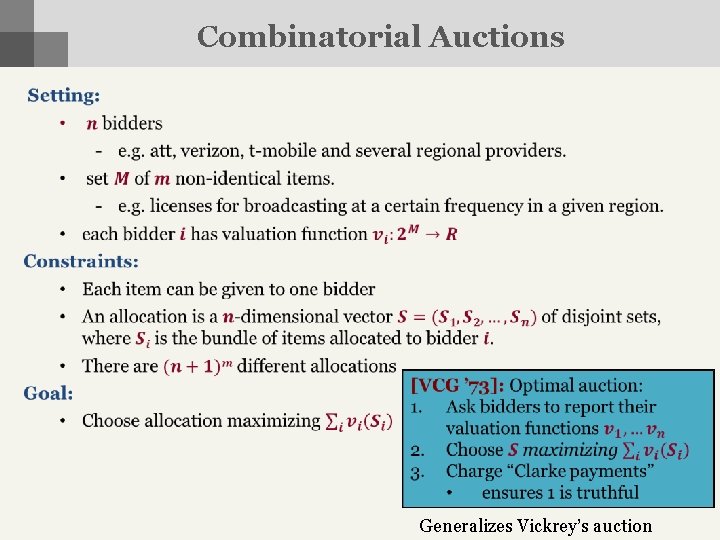

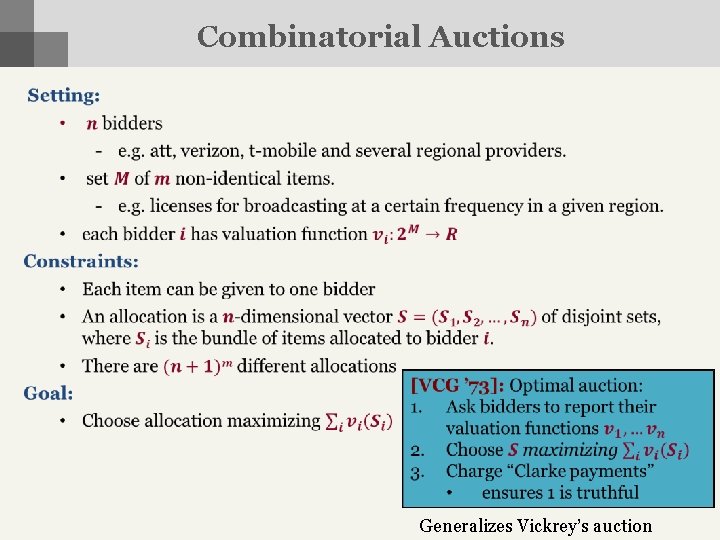

Combinatorial Auctions

- Generalizes Vickrey’s auction

VCG vs Communication vs Computation
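VCG is the combinatorial-auction generalization of Vickrey’s auction: pick a welfare-maximizing allocation of the reported valuations and charge each bidder the welfare loss her presence imposes on the others. The brute-force sketch below only makes the computational side of this slide concrete: exact welfare maximization here enumerates $(n+1)^m$ allocations, and each valuation is an explicit table with $2^m$ entries, which is where communication becomes an issue. All names and the example are assumptions for illustration.

```python
from itertools import product


def vcg(valuations, items):
    """Brute-force VCG for a combinatorial auction (exponential time: a sketch).

    valuations[i] maps every frozenset of `items` to bidder i's reported value
    (non-negative, with the empty bundle worth 0).
    Returns (allocation, payments); allocation[i] is bidder i's bundle.
    """
    def best(bidders):
        # Welfare-maximizing allocation among `bidders`: try every way of giving
        # each item to one of them or to nobody, i.e. (len(bidders)+1)^m options.
        best_w = 0.0
        best_alloc = {i: frozenset() for i in bidders}
        for owners in product([None] + list(bidders), repeat=len(items)):
            alloc = {i: frozenset(it for it, o in zip(items, owners) if o == i)
                     for i in bidders}
            w = sum(valuations[i][alloc[i]] for i in bidders)
            if w > best_w:
                best_w, best_alloc = w, alloc
        return best_w, best_alloc

    everyone = list(range(len(valuations)))
    welfare, alloc = best(everyone)
    payments = []
    for i in everyone:
        w_without_i, _ = best([j for j in everyone if j != i])
        others_with_i = welfare - valuations[i][alloc[i]]
        # Clarke pivot: the harm bidder i's presence imposes on everyone else.
        payments.append(w_without_i - others_with_i)
    return alloc, payments


# Example: bidder 0 wants both items together; bidders 1 and 2 each want one item.
items = ("a", "b")
bundles = [frozenset(), frozenset("a"), frozenset("b"), frozenset("ab")]
v0 = {S: 10.0 if S == frozenset("ab") else 0.0 for S in bundles}
v1 = {S: 6.0 if "a" in S else 0.0 for S in bundles}
v2 = {S: 5.0 if "b" in S else 0.0 for S in bundles}
allocation, payments = vcg([v0, v1, v2], items)
```

With a single item this reduces to the second-price rule from the earlier slide.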

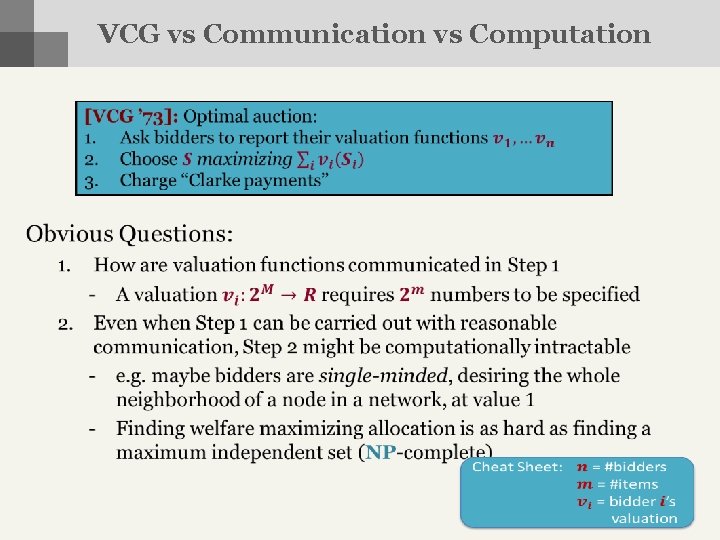

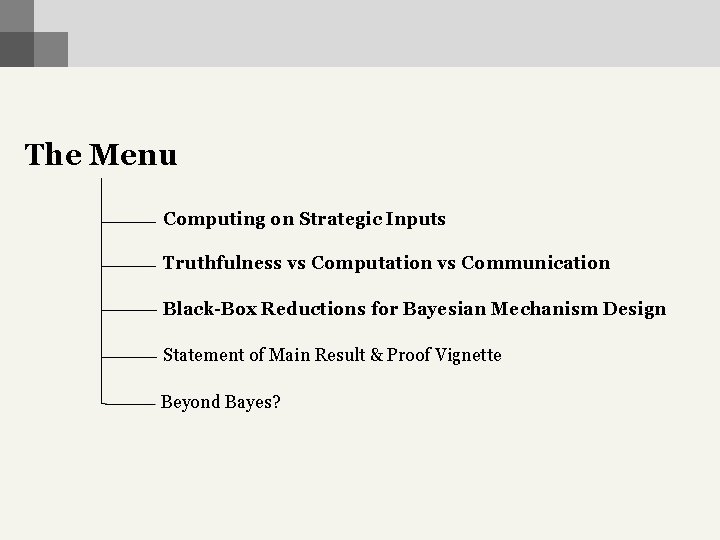

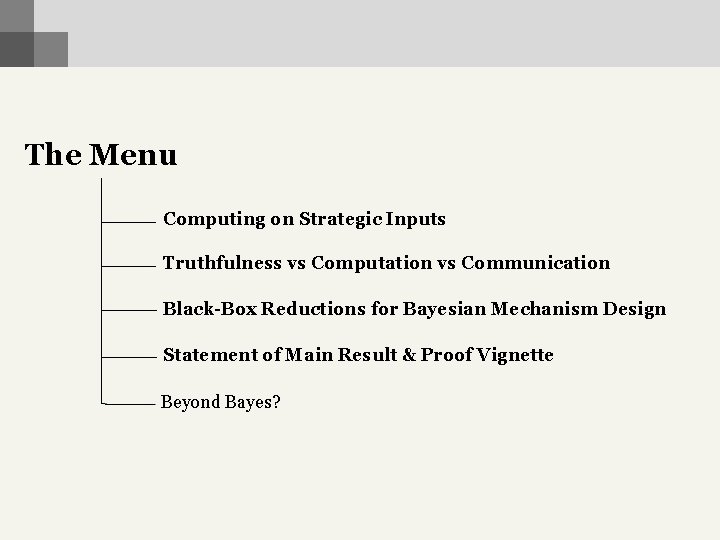

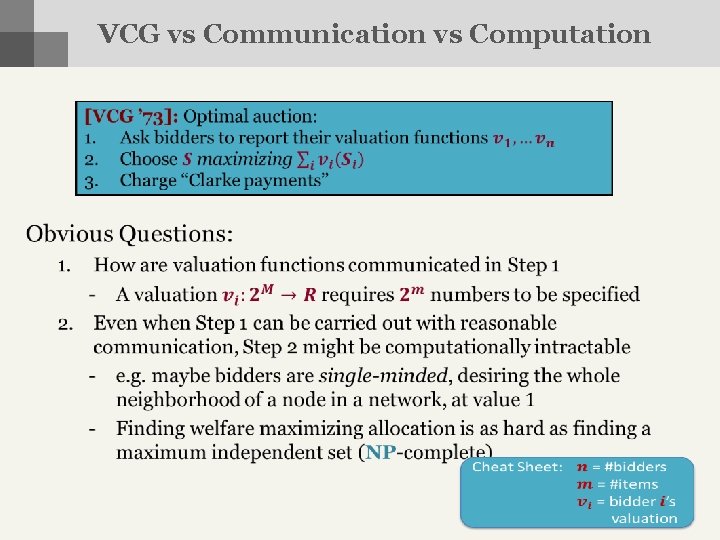

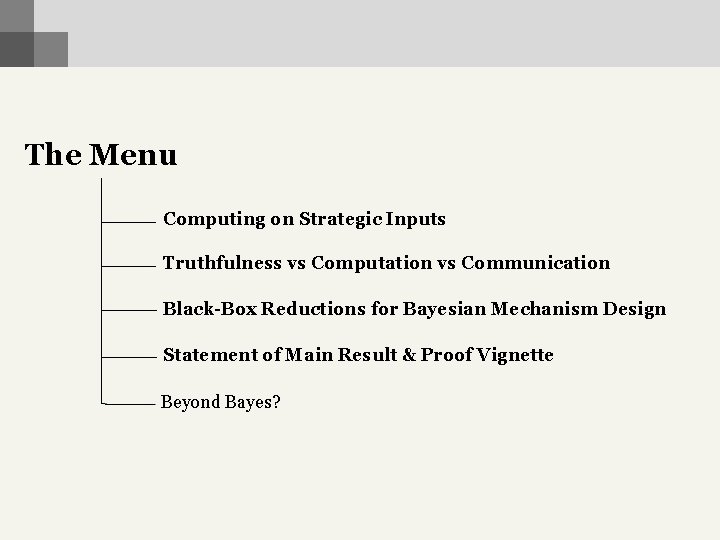

The Menu

- Computing on Strategic Inputs
- Truthfulness vs Computation vs Communication
- Black-Box Reductions for Bayesian Mechanism Design
- Statement of Main Result & Proof Vignette
- Beyond Bayes?


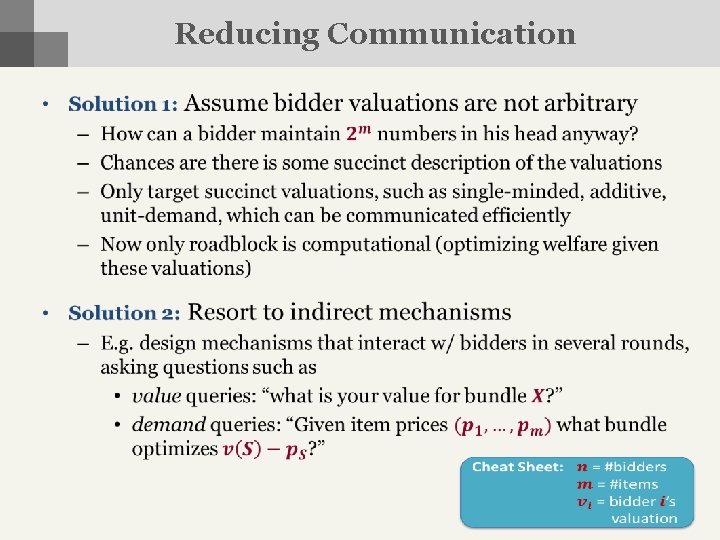

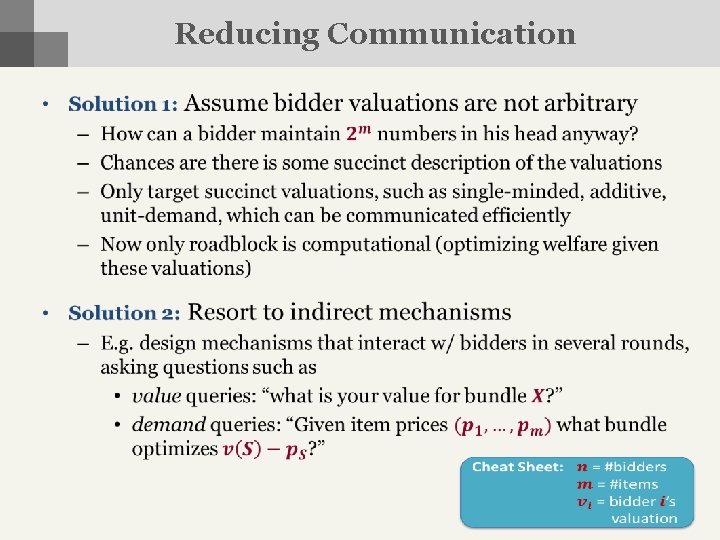

Reducing Communication

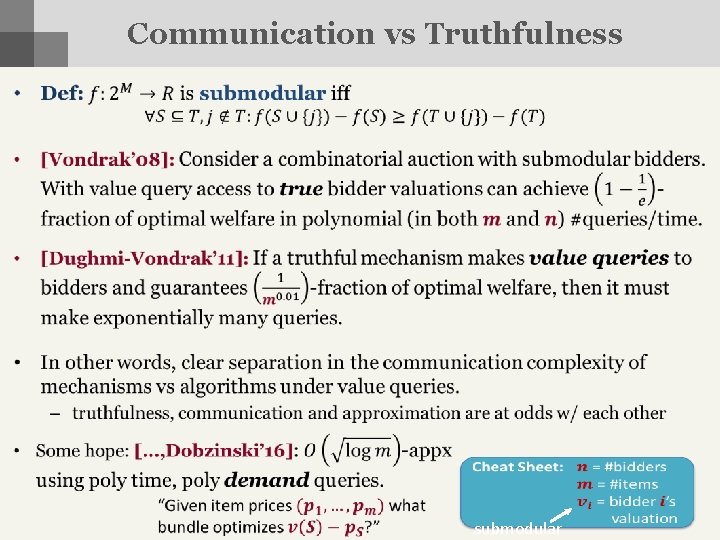

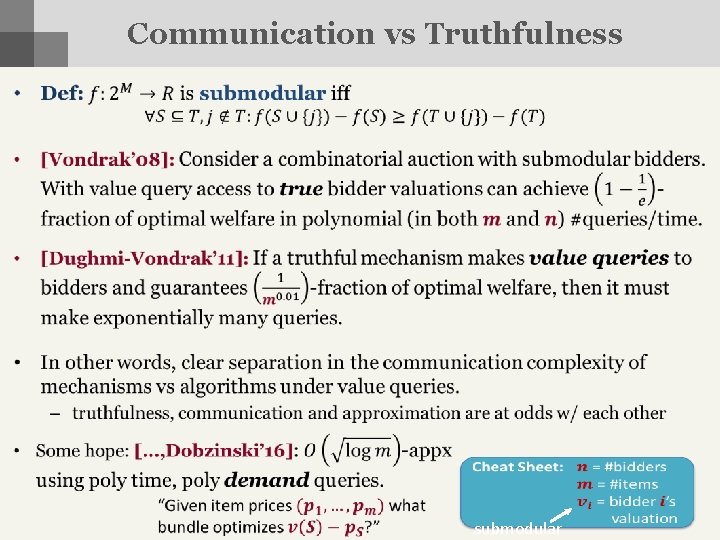

Communication vs Truthfulness

- submodular

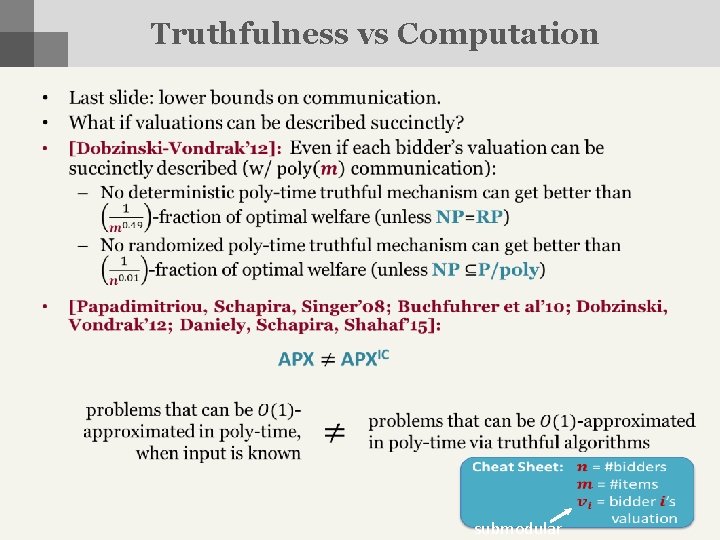

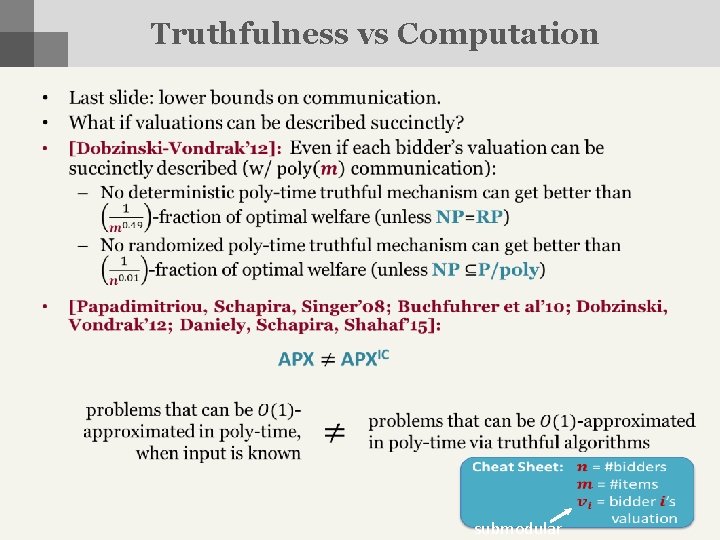

Truthfulness vs Computation

- submodular

Bayes to the Rescue

The Menu

- Computing on Strategic Inputs
- Truthfulness vs Computation vs Communication
- Black-Box Reductions for Bayesian Mechanism Design
- Statement of Main Result & Proof Vignette
- Beyond Bayes?

![Mechanism Design via Reductions NisanRonen 99 How much more difficult are optimization problems on Mechanism Design via Reductions [Nisan-Ronen’ 99]: How much more difficult are optimization problems on](https://slidetodoc.com/presentation_image_h/1212607c40dda26a0c8133030763c166/image-16.jpg)

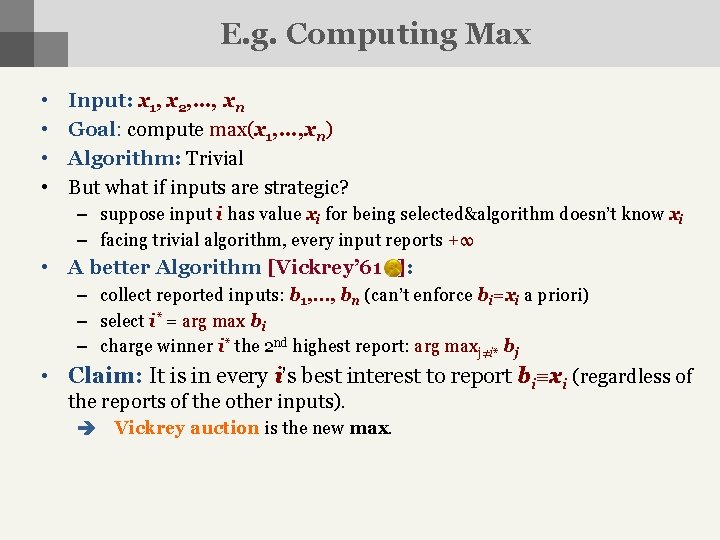

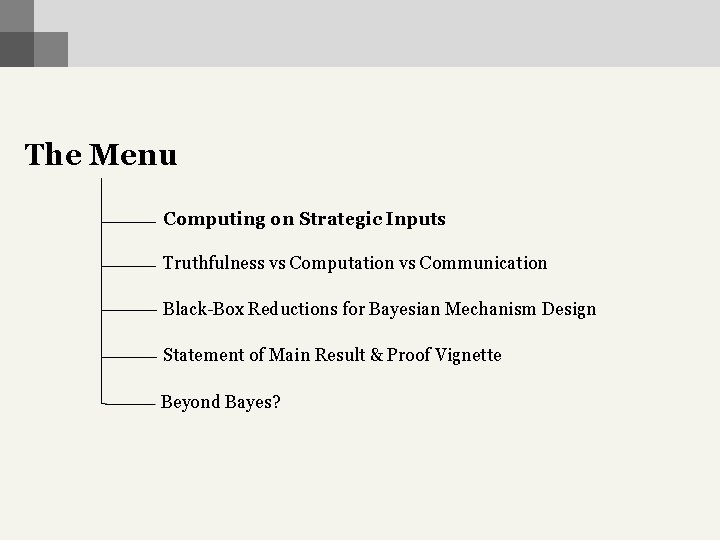

Mechanism Design via Reductions [Nisan-Ronen’99]: How much more difficult are optimization problems on “strategic” input compared to “honest” input?

- Have: Algorithm that works on honest input (Known Input → Output)
- Want: Algorithm that works on strategic input (Agent 1 Input, …, Agent n Input → Output)
- The Dream: Black-box reduction from mechanism- to algorithm-design for all optimization problems

![Mechanism Design via Reductions NisanRonen 99 How much more difficult are optimization problems on Mechanism Design via Reductions [Nisan-Ronen’ 99]: How much more difficult are optimization problems on](https://slidetodoc.com/presentation_image_h/1212607c40dda26a0c8133030763c166/image-17.jpg)


![Mechanism Design via Reductions NisanRonen 99 How much more difficult are optimization problems on Mechanism Design via Reductions [Nisan-Ronen’ 99]: How much more difficult are optimization problems on](https://slidetodoc.com/presentation_image_h/1212607c40dda26a0c8133030763c166/image-18.jpg)

Mechanism Design via Reductions [Nisan-Ronen’99]: How much more difficult are optimization problems on “strategic” input compared to “honest” input?

- Have: Algorithm that works on honest input (Known Input → Output)
- Want: Algorithm that works on strategic input (Agent 1 Input, …, Agent n Input → Output)
- The Dream: Black-box reduction from mechanism- to algorithm-design for all optimization problems: inside the mechanism, the honest algorithm is queried on Chosen Input 1 → Output 1, …, Chosen Input k → Output k
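For the special case of exact welfare maximization, VCG already fits this picture: the mechanism queries the honest algorithm once on the actual reports and once per agent on a chosen input in which that agent's report is zeroed out, so $k = n + 1$. A sketch with a toy additive-bidder algorithm as the black box (all names hypothetical); it relies on the algorithm being exactly optimal and on values being non-negative, and it is not the general reduction the slide asks for.

```python
def additive_welfare_algorithm(vals):
    """Hypothetical honest-input algorithm for additive bidders: give each item
    to a bidder with the highest value for it. vals[i][j] = bidder i's value for item j."""
    n, m = len(vals), len(vals[0])
    return [max(range(n), key=lambda i: vals[i][j]) for j in range(m)]


def welfare(vals, assignment):
    """Total value when item j goes to bidder assignment[j]."""
    return sum(vals[owner][j] for j, owner in enumerate(assignment))


class CountingOracle:
    """Wraps an algorithm as a black box and counts how often it is queried."""

    def __init__(self, algorithm):
        self.algorithm = algorithm
        self.calls = 0

    def __call__(self, vals):
        self.calls += 1
        return self.algorithm(vals)


def vcg_via_black_box(oracle, reports):
    """VCG using only oracle queries on chosen inputs (n + 1 queries in total).

    Assumes non-negative values, so zeroing out bidder i's report is the same,
    welfare-wise, as removing bidder i.
    """
    assignment = oracle(reports)  # chosen input: the actual reports
    total = welfare(reports, assignment)
    payments = []
    for i in range(len(reports)):
        zeroed = [[0.0] * len(r) if k == i else list(r) for k, r in enumerate(reports)]
        without_i = welfare(zeroed, oracle(zeroed))  # chosen input: bidder i silenced
        own = sum(reports[i][j] for j, owner in enumerate(assignment) if owner == i)
        payments.append(without_i - (total - own))
    return assignment, payments


oracle = CountingOracle(additive_welfare_algorithm)
assignment, payments = vcg_via_black_box(oracle, [[5, 1], [3, 4], [2, 2]])
```

For additive bidders the payments coincide with running a separate second-price auction per item (here 3 for item 0 and 2 for item 1).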

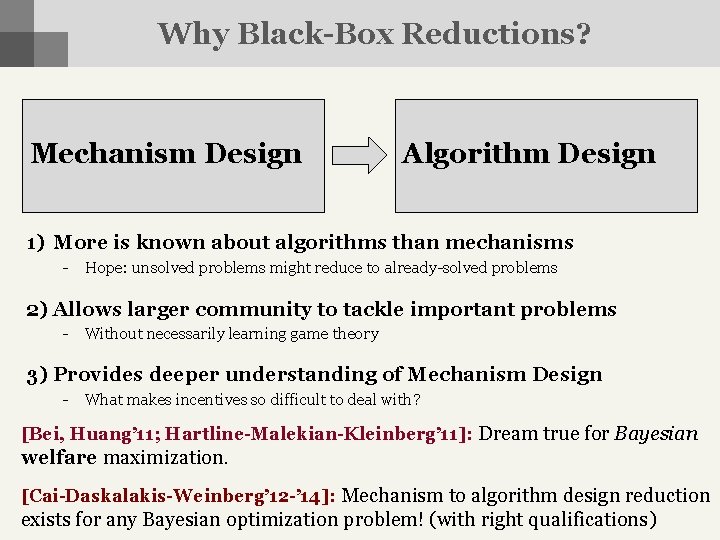

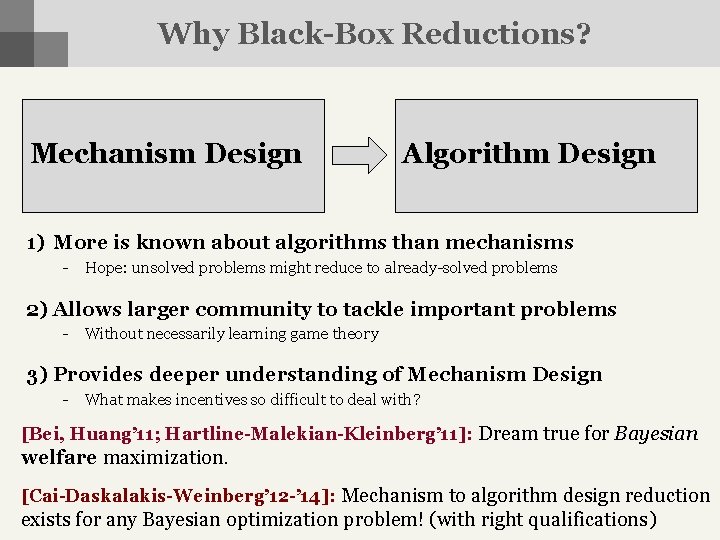

Why Black-Box Reductions? (Mechanism Design → Algorithm Design)

1) More is known about algorithms than mechanisms
   - Hope: unsolved problems might reduce to already-solved problems
2) Allows larger community to tackle important problems
   - Without necessarily learning game theory
3) Provides deeper understanding of Mechanism Design
   - What makes incentives so difficult to deal with?

[Bei-Huang’11; Hartline-Malekian-Kleinberg’11]: Dream true for Bayesian welfare maximization.

[Cai-Daskalakis-Weinberg’12-’14]: Mechanism to algorithm design reduction exists for any Bayesian optimization problem! (with right qualifications)

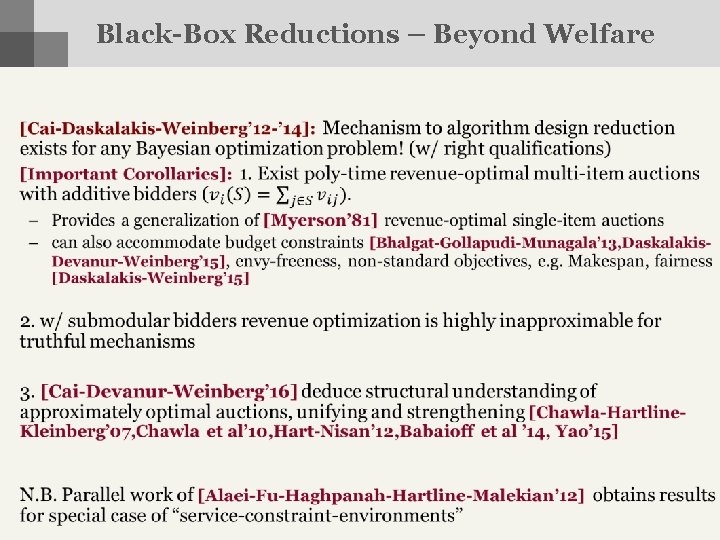

Black-Box Reductions – Beyond Welfare

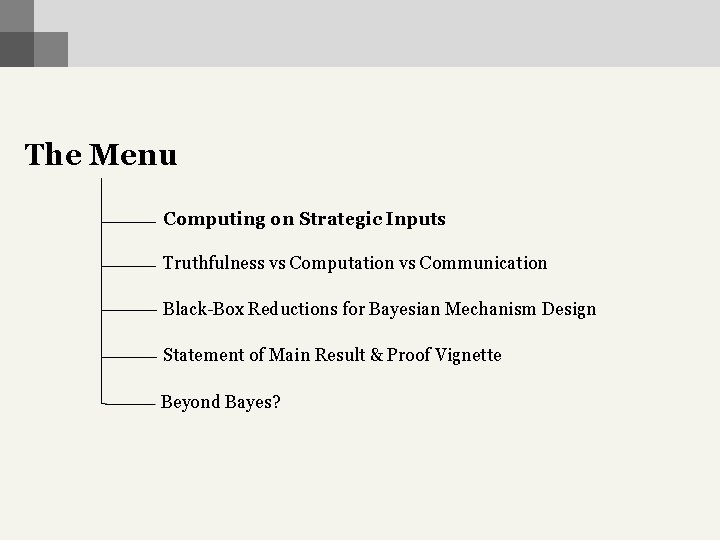

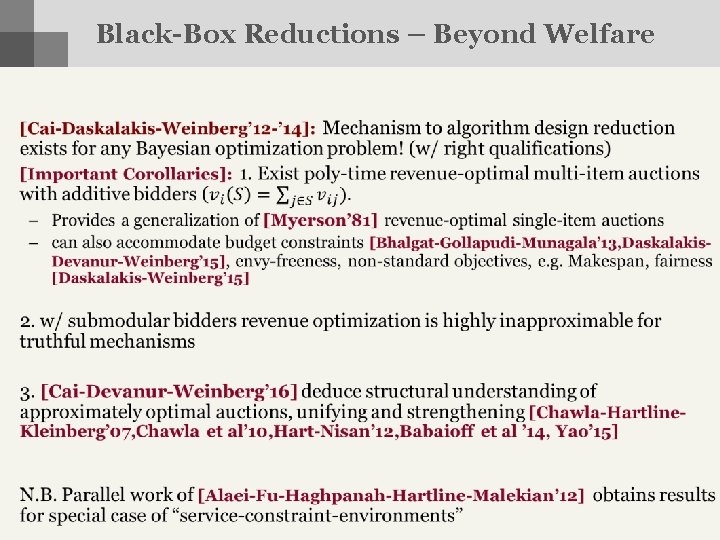

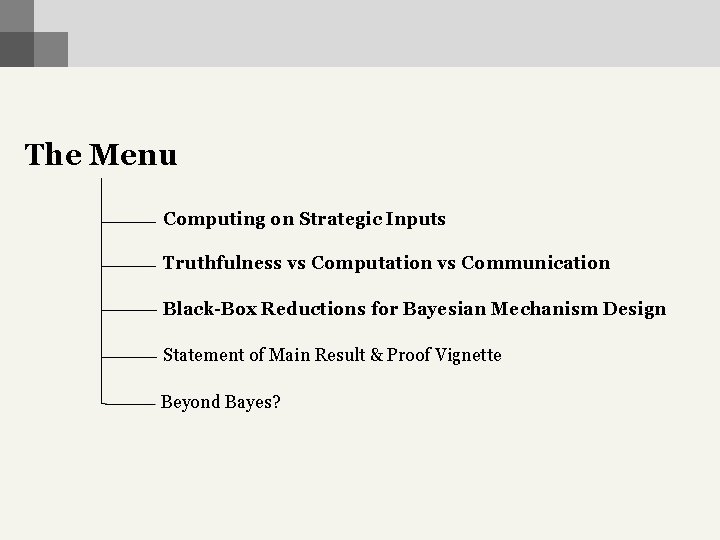

The Menu

- Computing on Strategic Inputs
- Truthfulness vs Computation vs Communication
- Black-Box Reductions for Bayesian Mechanism Design
- Statement of Main Result & Proof Vignette
- Beyond Bayes?


![A General Reduction reduction Impossible CIL 12 Algorithm Design Given 1 same X A General Reduction = reduction Impossible [CIL’ 12] Algorithm Design Given: 1. same X](https://slidetodoc.com/presentation_image_h/1212607c40dda26a0c8133030763c166/image-23.jpg)

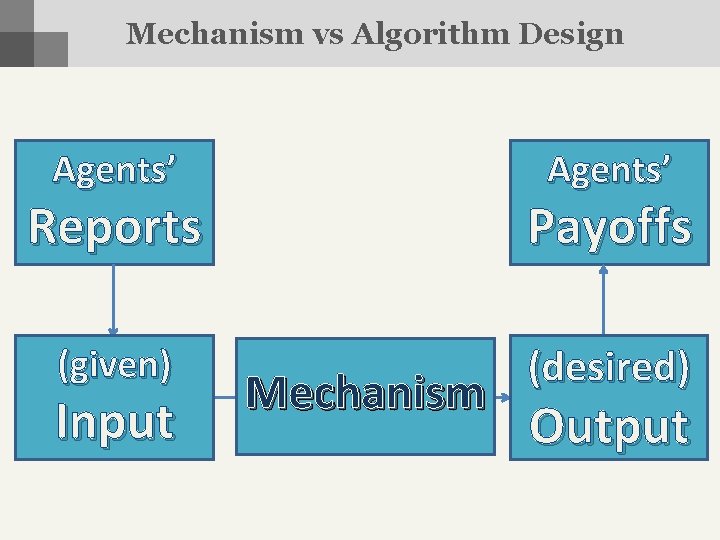

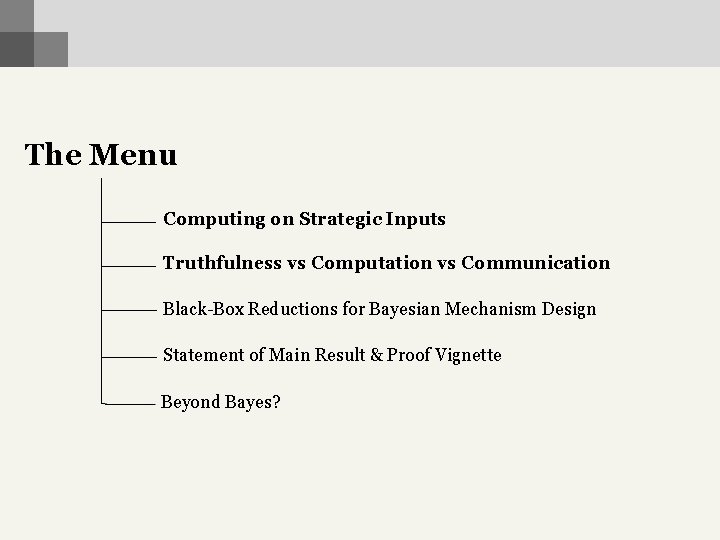

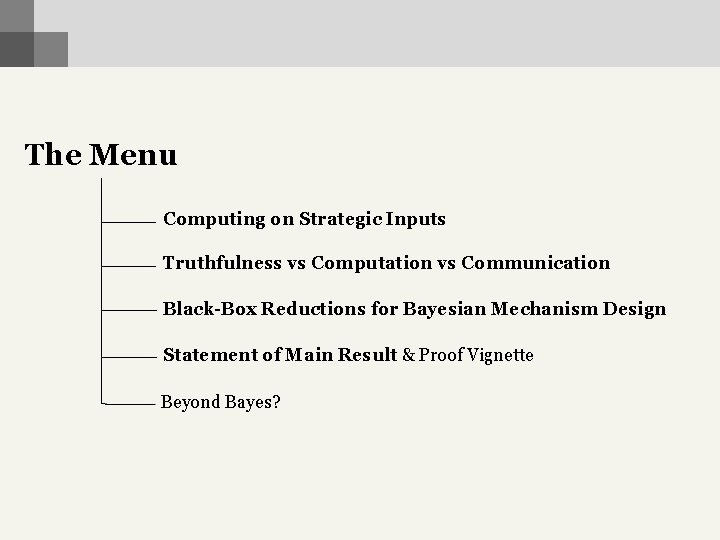

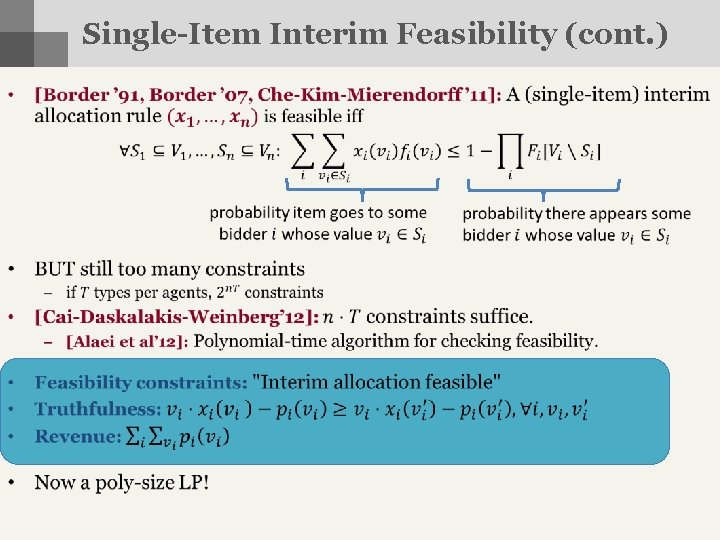

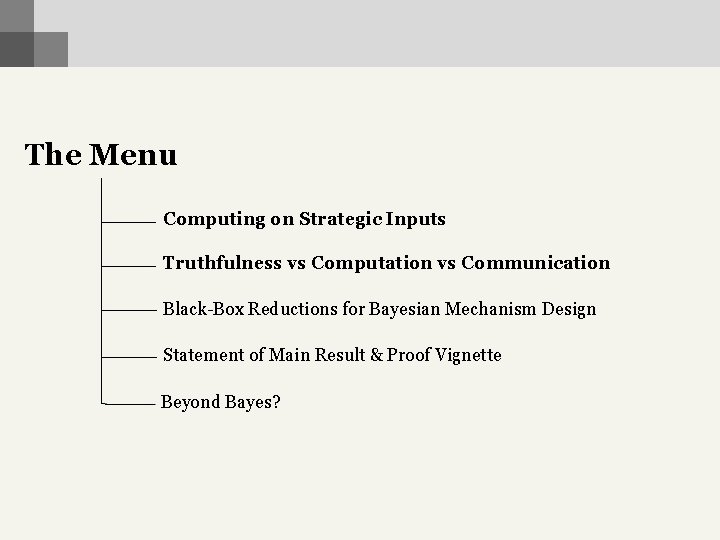

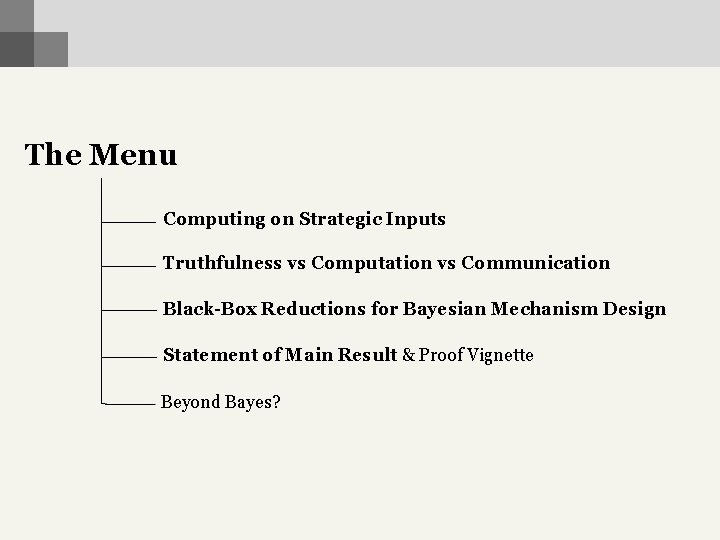

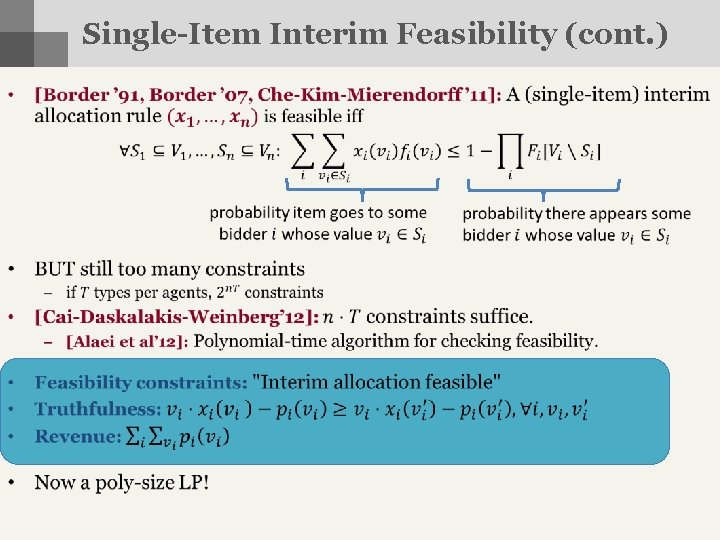

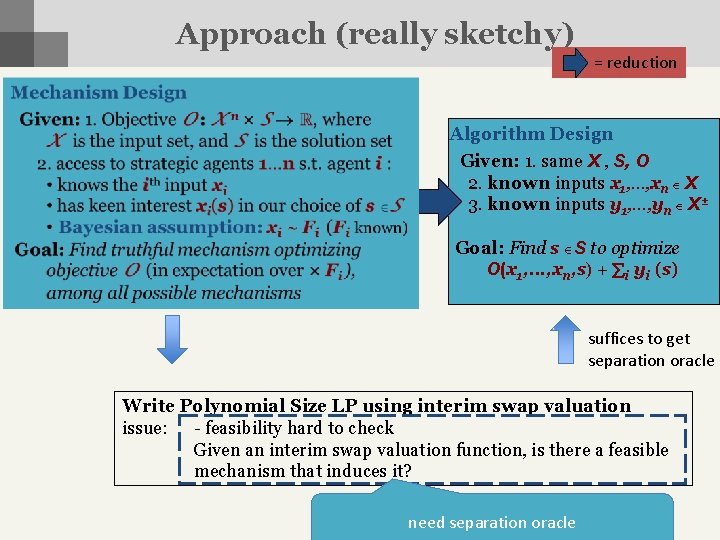

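A General Reduction (a reduction to algorithm design for the same objective $O$ alone is impossible [CIL’12])

- Mechanism Design: strategic inputs $x_i \sim F_i$
- Algorithm Design (target of the reduction). Given:
  1. same $X$, $S$, $O$
  2. known inputs $x_1, \dots, x_n \in X$
  3. known inputs $y_1, \dots, y_n \in X^{\pm}$

  Goal: find $s \in S$ to optimize $O(x_1, \dots, x_n, s) + \sum_i y_i(s)$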

![A General Reduction reduction Impossible CIL 12 Algorithm Design Given 1 same X A General Reduction = reduction Impossible [CIL’ 12] Algorithm Design Given: 1. same X](https://slidetodoc.com/presentation_image_h/1212607c40dda26a0c8133030763c166/image-24.jpg)

A General Reduction (a reduction to algorithm design for the same objective $O$ alone is impossible [CIL’12])

- Algorithm Design (target of the reduction). Given:
  1. same $X$, $S$, $O$
  2. known inputs $x_1, \dots, x_n \in X$
  3. known inputs $y_1, \dots, y_n \in X^{\pm}$

  Goal: find $s \in S$ to optimize $O(x_1, \dots, x_n, s) + \sum_i y_i(s)$
- [Cai-Daskalakis-Weinberg’13]: Polynomial-time, black-box reduction from mechanism design for arbitrary objective $O$ to algorithm design for the same objective $O$ plus a linear cost function.
  - i.e. if the RHS is tractable, then the LHS is tractable
  - also approximation preserving: an α-approximation to the RHS ⇒ an α-approximation to the LHS
  - techniques: probability and convex programming: approximation-sensitive versions of the equivalence of optimization and separation [Grötschel-Lovász-Schrijver’80, Karp-Papadimitriou’80]; constructive versions of Border’s theorem […, Border’91, ’07, CKM’11, CDW’12, Alaei et al’12] and multi-item extensions thereof [CDW’12]
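To make the right-hand side concrete, here is a brute-force solver for one toy instance of "objective plus linear cost". We take $O$ to be makespan (to be minimized) with machines as the agents: `x[i][j]` is machine $i$'s processing time for job $j$, and the linear cost $y_i$ charges machine $i$ `y[i][j]` for receiving job $j$ (entries may be negative). The choice of makespan, the names and the instance are illustrative assumptions; the enumeration is exponential in the number of jobs and only shows the interface of the problem, not how [Cai-Daskalakis-Weinberg’13] solve it.

```python
from itertools import product


def makespan(x, s):
    """Max machine load when job j runs on machine s[j]."""
    loads = [0.0] * len(x)
    for j, i in enumerate(s):
        loads[i] += x[i][j]
    return max(loads)


def linear_cost(y, s):
    """sum_i y_i(s): machine i is charged y[i][j] for each job j it receives."""
    return sum(y[i][j] for j, i in enumerate(s))


def solve_o_plus_linear(x, y, n_jobs):
    """Brute-force min over assignments s of makespan(x, s) + sum_i y_i(s).

    Returns (cost, s); ties are broken by the lexicographically smallest s.
    """
    machines = range(len(x))
    return min(
        (makespan(x, s) + linear_cost(y, s), s)
        for s in product(machines, repeat=n_jobs)
    )


x = [[1, 2], [2, 1]]  # each job is fast on "its own" machine
print(solve_o_plus_linear(x, [[0, 0], [0, 0]], 2))  # no linear cost
print(solve_o_plus_linear(x, [[0, 0], [5, 5]], 2))  # machine 1 is expensive
```

Adding the linear term can change the optimal assignment: with machine 1 charged 5 per job, both jobs move to machine 0 despite the larger makespan.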

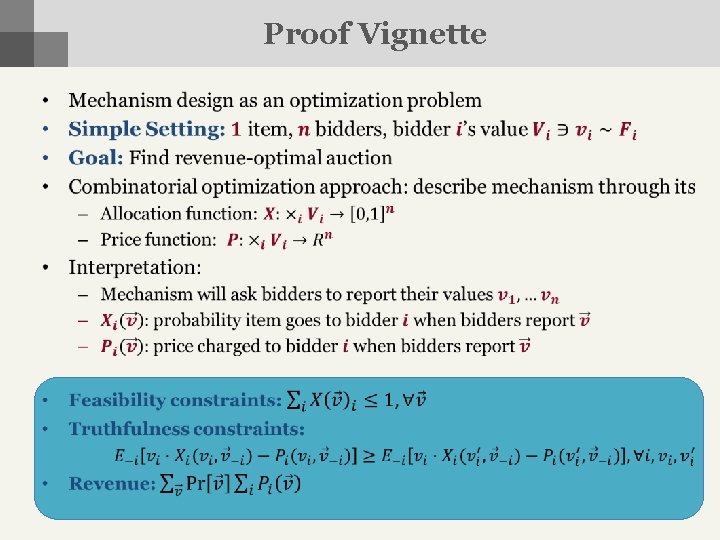

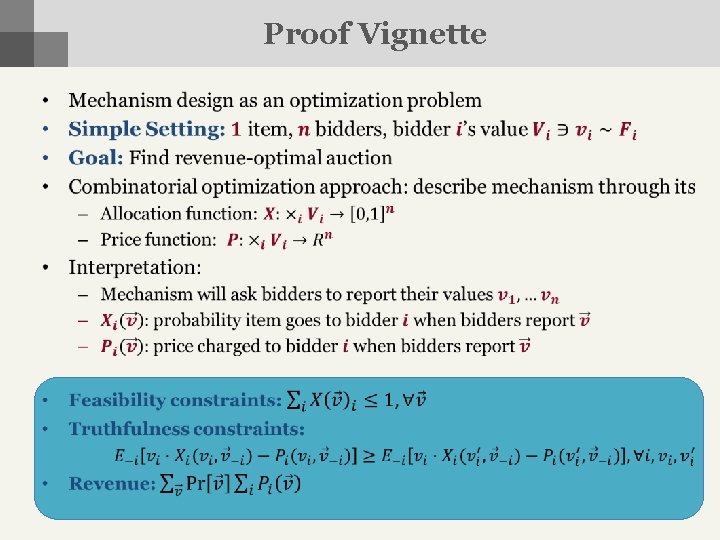

Proof Vignette

Proof Vignette (cont.)

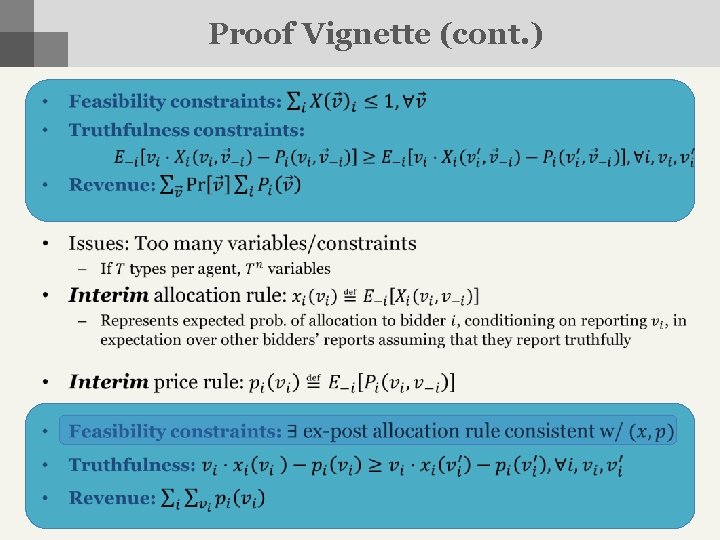

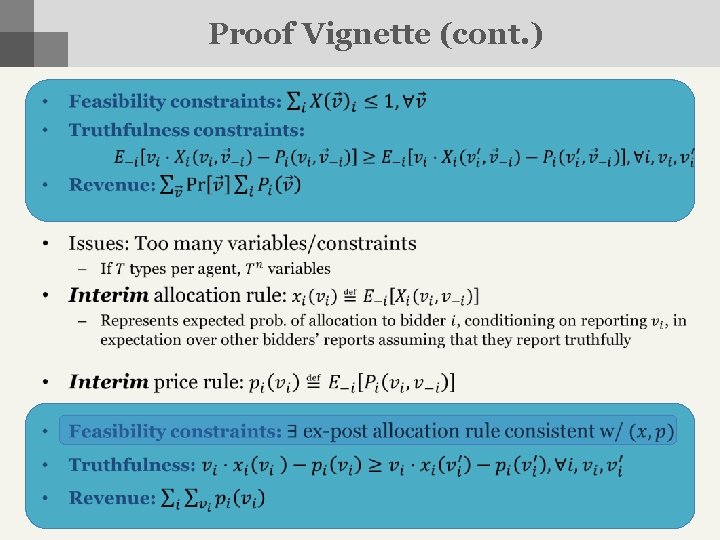

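Single-Item Interim Feasibility

[Figure: an interim allocation example over types A, B, C, D, E, F, with several entries marked ⅓; the depicted interim rule is labeled “infeasible!”]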

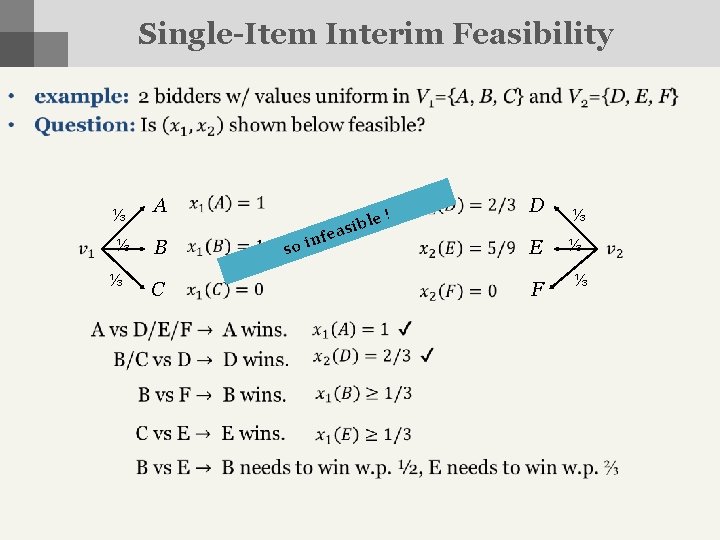

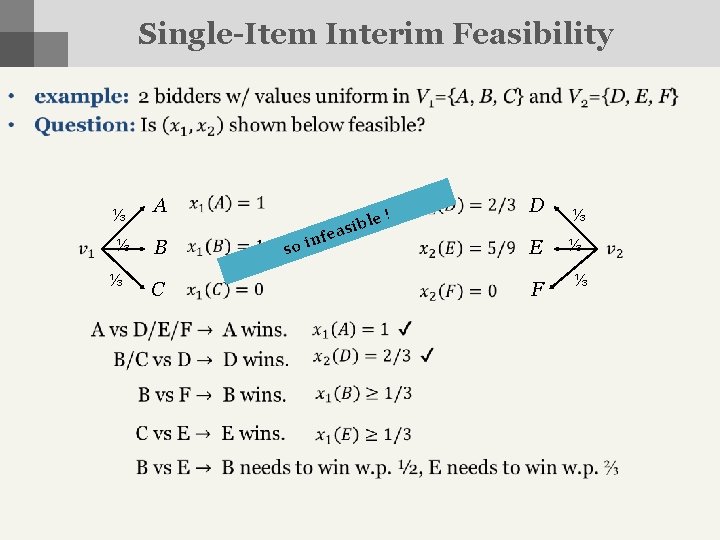

Single-Item Interim Feasibility (cont.)
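Border’s theorem gives a checkable description of single-item interim feasibility with independent bidders: an interim rule $\pi_i(t)$ (probability bidder $i$ gets the item given type $t$) is feasible iff for all $S_1 \subseteq T_1, \dots, S_n \subseteq T_n$,
$\sum_i \sum_{t \in S_i} f_i(t)\,\pi_i(t) \le 1 - \prod_i \bigl(1 - \sum_{t \in S_i} f_i(t)\bigr)$,
i.e. the item can go to a bidder whose type lies in her $S_i$ no more often than such a bidder exists. The sketch below enumerates every such constraint and returns the first violated one, which is what a (very inefficient) separation oracle would report; the efficient constructive versions are the ones cited on the main-result slide. The two-type example is made up for illustration.

```python
from itertools import chain, combinations, product


def subsets(types):
    """All subsets of a list of types, as tuples."""
    return chain.from_iterable(combinations(types, r) for r in range(len(types) + 1))


def border_violation(f, pi, tol=1e-12):
    """Return a violated Border constraint (S_1, ..., S_n), or None if pi is feasible.

    f[i][t]:  probability that bidder i has type t (independent across bidders)
    pi[i][t]: interim probability that bidder i gets the item when her type is t
    Checks all prod_i 2^|T_i| constraints, so only usable for tiny type spaces.
    """
    per_bidder = [list(subsets(list(fi))) for fi in f]
    for choice in product(*per_bidder):
        # Prob. the item goes to some bidder i whose type lies in S_i.
        lhs = sum(f[i][t] * pi[i][t] for i, S in enumerate(choice) for t in S)
        # Prob. that no bidder i has a type in S_i.
        prob_none = 1.0
        for i, S in enumerate(choice):
            prob_none *= 1.0 - sum(f[i][t] for t in S)
        if lhs > 1.0 - prob_none + tol:
            return choice
    return None


f = [{"H": 0.5, "L": 0.5}, {"H": 0.5, "L": 0.5}]
pi_bad = [{"H": 1.0, "L": 0.0}, {"H": 1.0, "L": 0.0}]     # both high types always win
pi_good = [{"H": 0.75, "L": 0.25}, {"H": 0.75, "L": 0.25}]  # highest type wins, fair ties
```

`pi_bad` fails on $S_1 = S_2 = \{H\}$: it promises the item to a high type with probability 1, but some bidder is high only with probability 3/4. `pi_good` is induced by an actual mechanism, so it passes.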

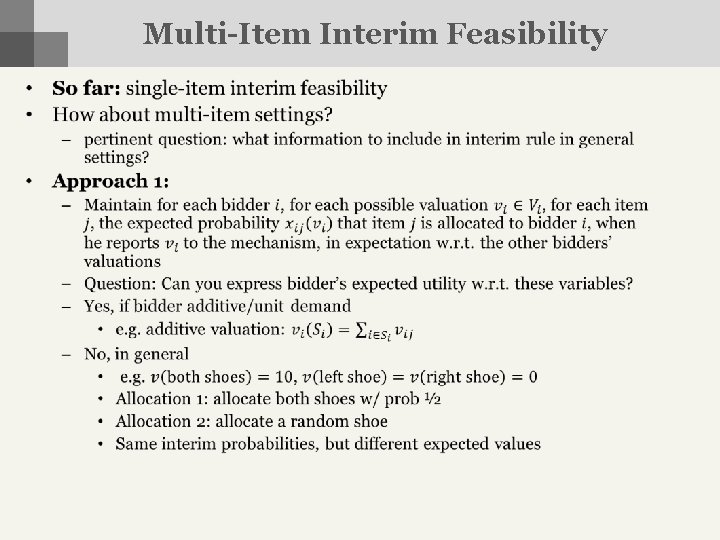

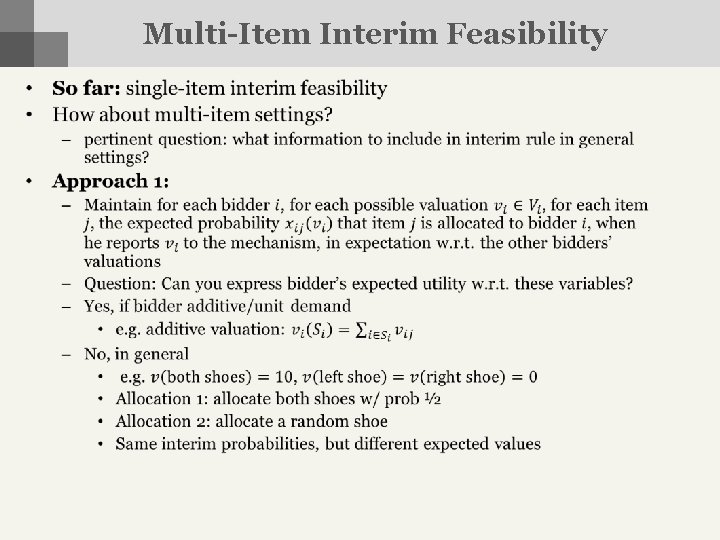

Multi-Item Interim Feasibility

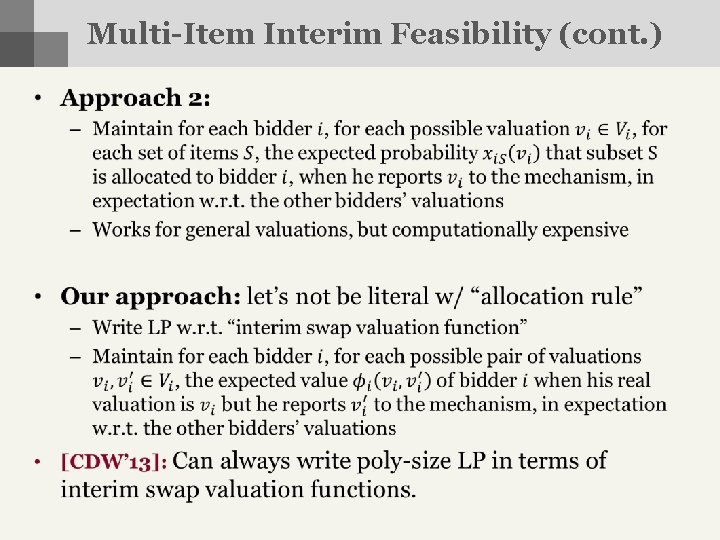

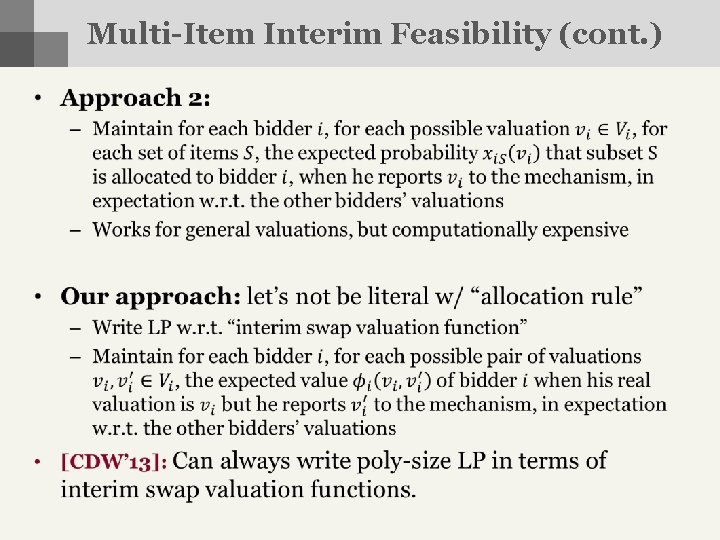

Multi-Item Interim Feasibility (cont.)

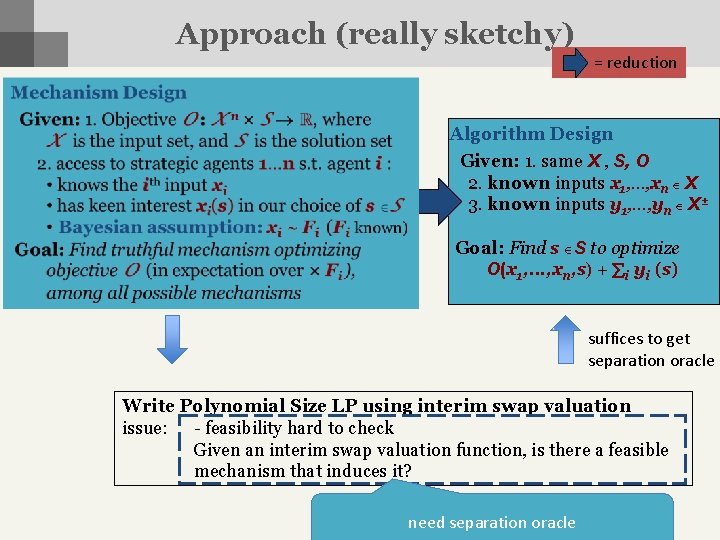

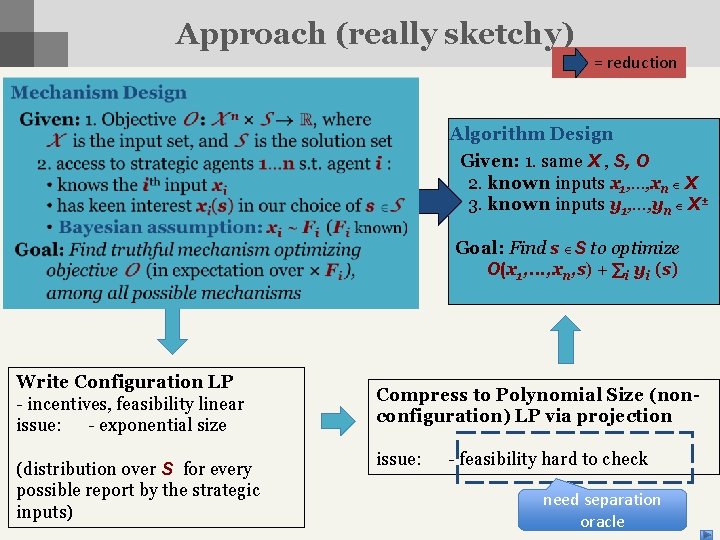

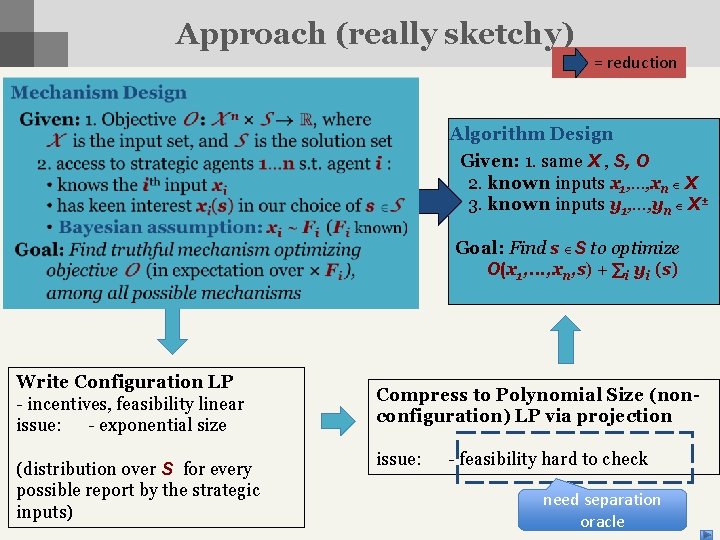

Approach (really sketchy)

- Reduction target (Algorithm Design). Given: 1. same $X$, $S$, $O$; 2. known inputs $x_1, \dots, x_n \in X$; 3. known inputs $y_1, \dots, y_n \in X^{\pm}$. Goal: find $s \in S$ to optimize $O(x_1, \dots, x_n, s) + \sum_i y_i(s)$. Solving this suffices to get a separation oracle.
- Write a Polynomial Size LP using interim swap valuations
  - issue: feasibility hard to check: given an interim swap valuation function, is there a feasible mechanism that induces it?
  - need separation oracle

The Menu

- Computing on Strategic Inputs
- Truthfulness vs Computation vs Communication
- Black-Box Reductions for Bayesian Mechanism Design
- Statement of Main Result & Proof Vignette
- Beyond Bayes?


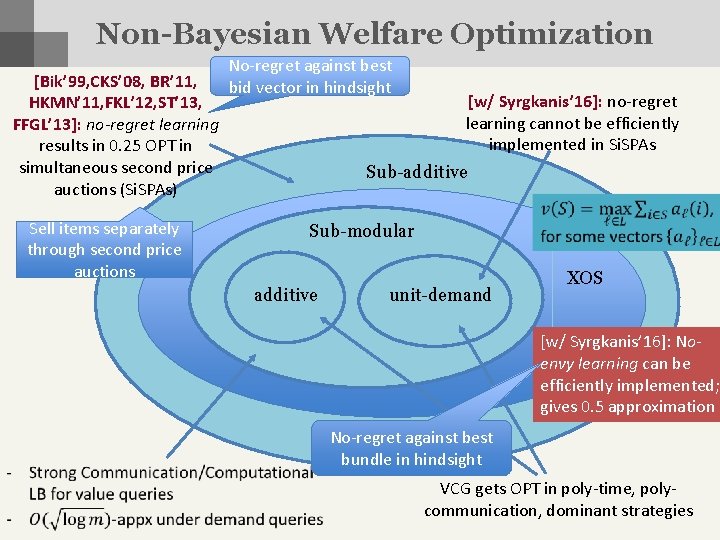

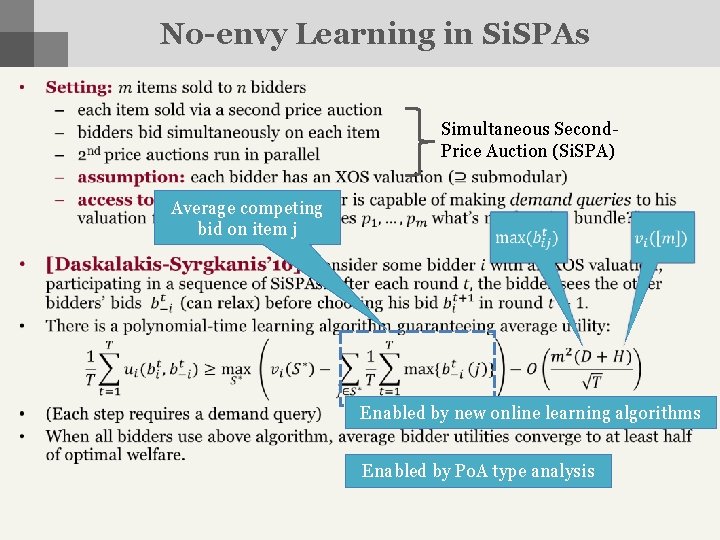

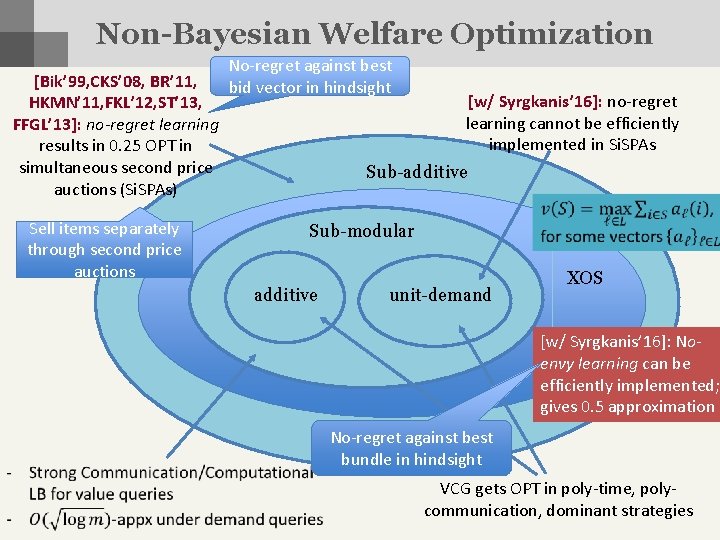

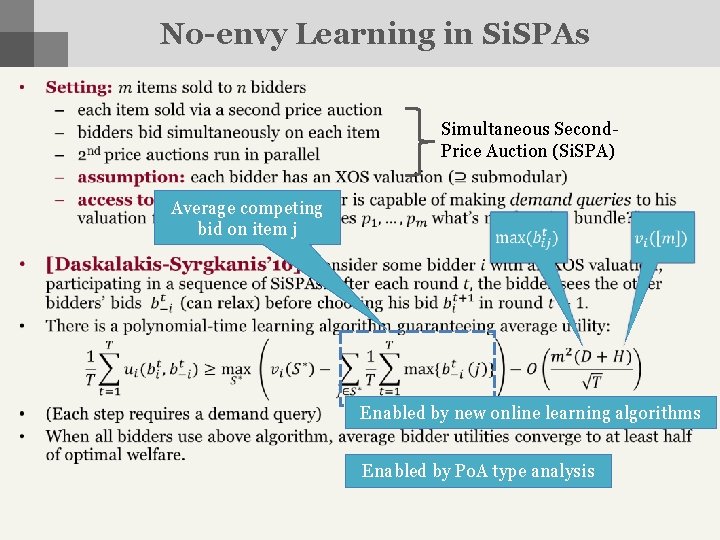

Non-Bayesian Welfare Optimization

- [Bik’99, CKS’08, BR’11, HKMN’11, FKL’12, ST’13, FFGL’13]: no-regret learning results in 0.25 OPT in simultaneous second price auctions (SiSPAs)
  - SiSPA: sell items separately through second price auctions
  - no-regret: against best bid vector in hindsight
- [w/ Syrgkanis’16]: no-regret learning cannot be efficiently implemented in SiSPAs
- [w/ Syrgkanis’16]: no-envy learning can be efficiently implemented; gives 0.5 approximation
  - no-envy: against best bundle in hindsight
- VCG gets OPT in poly-time, poly-communication, dominant strategies
- Valuation classes in the figure: additive, unit-demand ⊂ submodular ⊂ XOS ⊂ subadditive
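The valuation classes in this figure are nested: additive and unit-demand valuations are submodular, and submodular ⊂ XOS ⊂ subadditive. For small item sets the submodular and subadditive conditions can be checked by brute force; the sketch below does that (it does not test XOS membership, which needs a different certificate). The function names and examples are illustrative.

```python
from itertools import combinations


def all_subsets(items):
    """Every bundle of `items`, as frozensets."""
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def is_submodular(v, items, tol=1e-12):
    """Decreasing marginals: v(S+j) - v(S) >= v(T+j) - v(T) whenever S ⊆ T, j ∉ T."""
    subs = all_subsets(items)
    for T in subs:
        for S in subs:
            if not S <= T:
                continue
            for j in items:
                if j not in T and v[S | {j}] - v[S] < v[T | {j}] - v[T] - tol:
                    return False
    return True


def is_subadditive(v, items, tol=1e-12):
    """v(S ∪ T) <= v(S) + v(T) for all bundles S, T."""
    subs = all_subsets(items)
    return all(v[S | T] <= v[S] + v[T] + tol for S in subs for T in subs)


def unit_demand(w, items):
    """v(S) = max_{j in S} w[j]: the bidder wants at most one item."""
    return {S: max((w[j] for j in S), default=0.0) for S in all_subsets(items)}


items = ("a", "b")
ud = unit_demand({"a": 3.0, "b": 2.0}, items)
# Perfect complements: worthless apart, worth 5 together (outside every class above).
complements = {S: 5.0 if len(S) == 2 else 0.0 for S in all_subsets(items)}
```

The complements valuation fails both checks, which is the kind of valuation the positive results on this slide exclude.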

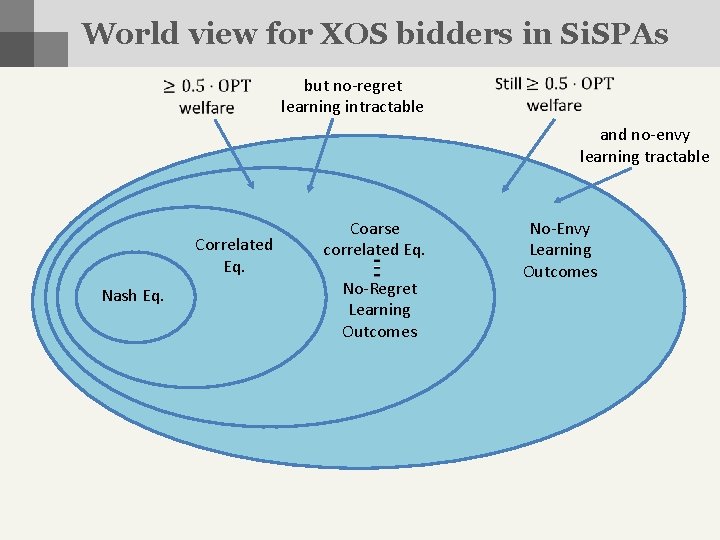

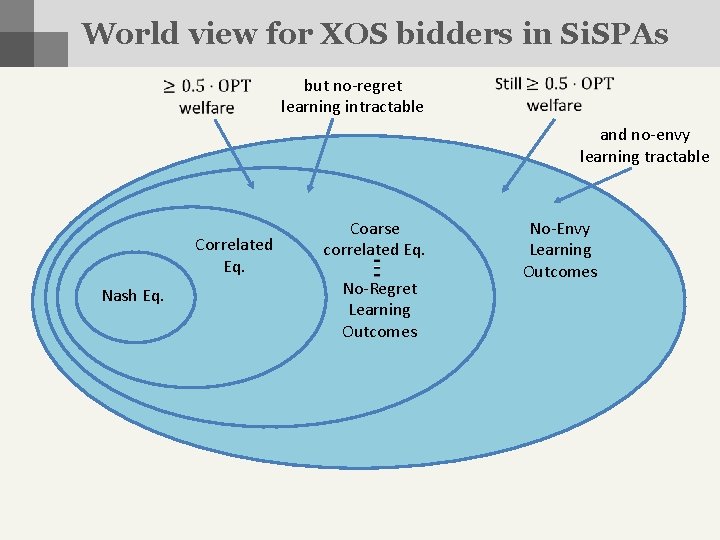

World view for XOS bidders in SiSPAs (figure): Nash Eq., Correlated Eq., Coarse Correlated Eq., No-Regret Learning Outcomes, No-Envy Learning Outcomes; but no-regret learning is intractable and no-envy learning is tractable.

Summary

- This Talk: Optimization on strategic input
- Standard mechanism design theory may not be implementable once computational/communication considerations are introduced
- The fields of communication and computational complexity provide tools for mechanism design w/ these considerations
- Bayesian MD gets around computational intractability barriers
- w/ Cai and Weinberg, we obtain a generic, poly-time reduction from mechanism to algorithm design
  - “optimizing on strategic inputs is no harder than adding linear cost to your objective”
  - Important Corollary: revenue optimal multi-item auctions; generalizes [Myerson’81]
- Interaction of worst-case and Bayesian guarantees not well-understood
  - E.g. dominant strategy truthful mechanisms in Bayesian settings with optimal expected revenue?
- Non-Bayesian Setting: Syrgkanis and I broke the submodular bidder barrier using no-envy learning. Beyond XOS bidders? Other applications?
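For reference, the single-item case that the corollary generalizes fits in a few lines. Assuming (for illustration only) i.i.d. values uniform on $[0,1]$, Myerson’s virtual value is $\varphi(v) = v - \frac{1 - F(v)}{f(v)} = 2v - 1$; the revenue-optimal auction gives the item to the highest non-negative virtual value and charges the smallest bid that would still win, which here amounts to a second-price auction with reserve $1/2$. The uniform prior and the function names are assumptions of this sketch.

```python
def phi_uniform(v):
    """Virtual value for U[0,1]: v - (1 - F(v)) / f(v) = v - (1 - v) / 1 = 2v - 1."""
    return 2.0 * v - 1.0


def phi_inverse_uniform(z):
    """Inverse of phi_uniform."""
    return (z + 1.0) / 2.0


def myerson_uniform(bids):
    """Revenue-optimal single-item auction for i.i.d. U[0,1] bidders.

    Returns (winner, price), or (None, 0.0) if every virtual value is negative.
    """
    virtual = [phi_uniform(b) for b in bids]
    winner = max(range(len(bids)), key=lambda i: virtual[i])
    if virtual[winner] < 0:
        return None, 0.0
    # Threshold price: the lowest bid whose virtual value still beats both 0
    # and every other bidder's virtual value.
    competing = max([0.0] + [z for j, z in enumerate(virtual) if j != winner])
    return winner, phi_inverse_uniform(competing)
```

With bids (0.8, 0.6) bidder 0 wins and pays 0.6; with (0.8, 0.3) she pays the reserve 0.5; with (0.4, 0.3) the item is not sold.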

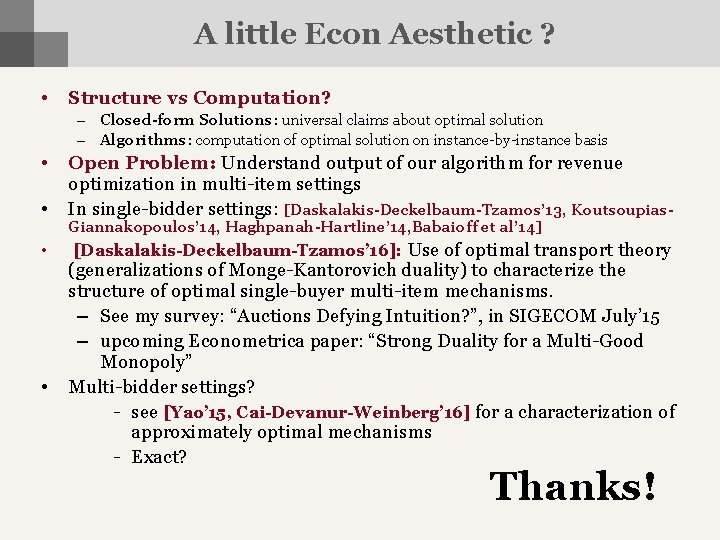

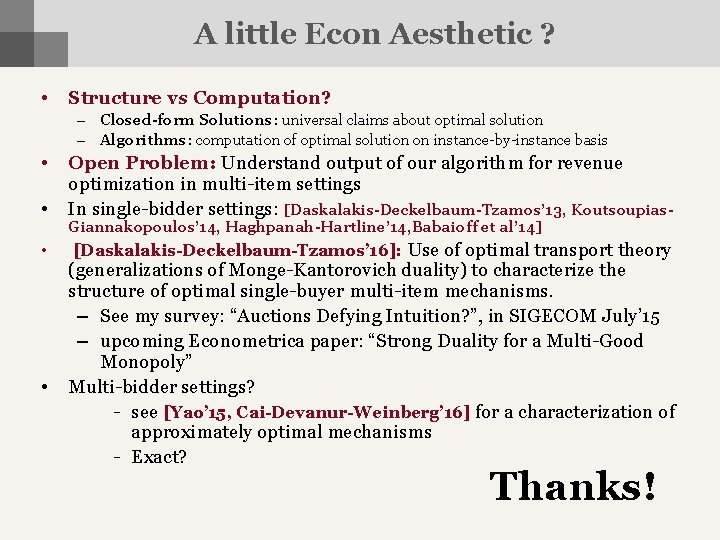

A little Econ Aesthetic?

- Structure vs Computation?
  - Closed-form Solutions: universal claims about optimal solution
  - Algorithms: computation of optimal solution on instance-by-instance basis
- Open Problem: Understand output of our algorithm for revenue optimization in multi-item settings
- In single-bidder settings: [Daskalakis-Deckelbaum-Tzamos’13, Koutsoupias-Giannakopoulos’14, Haghpanah-Hartline’14, Babaioff et al’14]
- [Daskalakis-Deckelbaum-Tzamos’16]: Use of optimal transport theory (generalizations of Monge-Kantorovich duality) to characterize the structure of optimal single-buyer multi-item mechanisms.
  - See my survey: “Auctions Defying Intuition?”, in SIGECOM July’15
  - upcoming Econometrica paper: “Strong Duality for a Multi-Good Monopoly”
- Multi-bidder settings?
  - see [Yao’15, Cai-Devanur-Weinberg’16] for a characterization of approximately optimal mechanisms
  - Exact?

Thanks!

Approach (really sketchy)

- Reduction target (Algorithm Design). Given: 1. same $X$, $S$, $O$; 2. known inputs $x_1, \dots, x_n \in X$; 3. known inputs $y_1, \dots, y_n \in X^{\pm}$. Goal: find $s \in S$ to optimize $O(x_1, \dots, x_n, s) + \sum_i y_i(s)$.
- Write Configuration LP
  - incentives, feasibility linear
  - issue: exponential size (distribution over $S$ for every possible report by the strategic inputs)
- Compress to Polynomial Size (non-configuration) LP via projection
  - issue: feasibility hard to check
  - need separation oracle

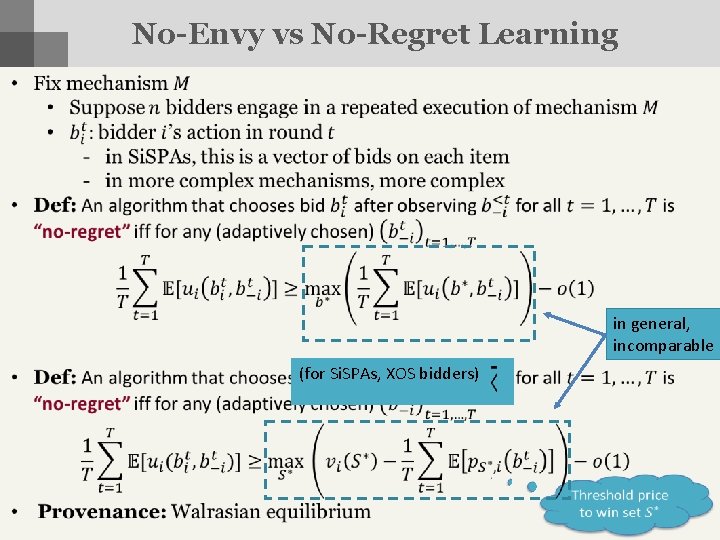

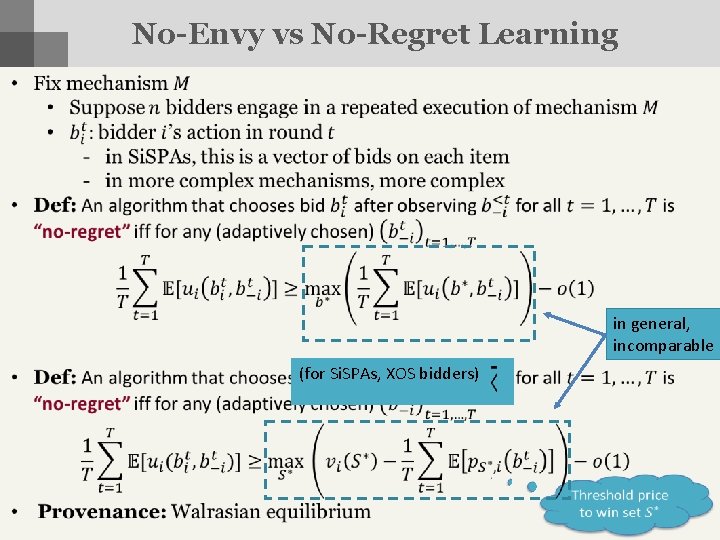

No-Envy vs No-Regret Learning: in general, incomparable (for SiSPAs, XOS bidders)

No-envy Learning in SiSPAs

- Simultaneous Second-Price Auction (SiSPA)
- Average competing bid on item j
- Enabled by new online learning algorithms
- Enabled by PoA type analysis
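One way to read “average competing bid on item j”: over $T$ rounds, no-envy compares the bidder's average realized utility with the best fixed bundle $S$ bought at the average highest competing bids, $\max_S \bigl[v(S) - \sum_{j \in S} \bar\theta_j\bigr]$. This is our interpretation of the slide, stated as an assumption; the sketch below only measures the envy of a given bid history and is not the learning algorithm of [w/ Syrgkanis’16]. Names and numbers are hypothetical.

```python
from itertools import chain, combinations


def bundles(m):
    """All subsets of items 0..m-1, as frozensets."""
    return [frozenset(c) for c in
            chain.from_iterable(combinations(range(m), r) for r in range(m + 1))]


def sispa_utility(v, bids, competing):
    """One round of a SiSPA for a single bidder with valuation v (a function of bundles).

    Item j is won iff bids[j] > competing[j] (ties lost: an assumption) and
    costs competing[j], the highest other bid on that item.
    """
    won = frozenset(j for j in range(len(bids)) if bids[j] > competing[j])
    return v(won) - sum(competing[j] for j in won)


def envy(v, bid_history, competing_history):
    """Best fixed bundle at average competing bids minus average realized utility."""
    T, m = len(competing_history), len(competing_history[0])
    avg = [sum(c[j] for c in competing_history) / T for j in range(m)]
    benchmark = max(v(S) - sum(avg[j] for j in S) for S in bundles(m))
    realized = sum(sispa_utility(v, b, c)
                   for b, c in zip(bid_history, competing_history)) / T
    return benchmark - realized


values = [3.0, 1.0]
v = lambda S: sum(values[j] for j in S)  # additive bidder, for illustration
history = [(3, 1), (3, 1)]               # truthful bids in both rounds
competing = [(2, 0.5), (4, 2)]           # highest other bids per item, per round
```

Here the envy is negative (-0.75): bidding truthfully beat every fixed bundle at the average prices.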