P2P Integration, Concluded, and Data Stream Processing


P2P Integration, Concluded, and Data Stream Processing

Zachary G. Ives, University of Pennsylvania

CIS 650 – Implementing Data Management Systems, November 13, 2008

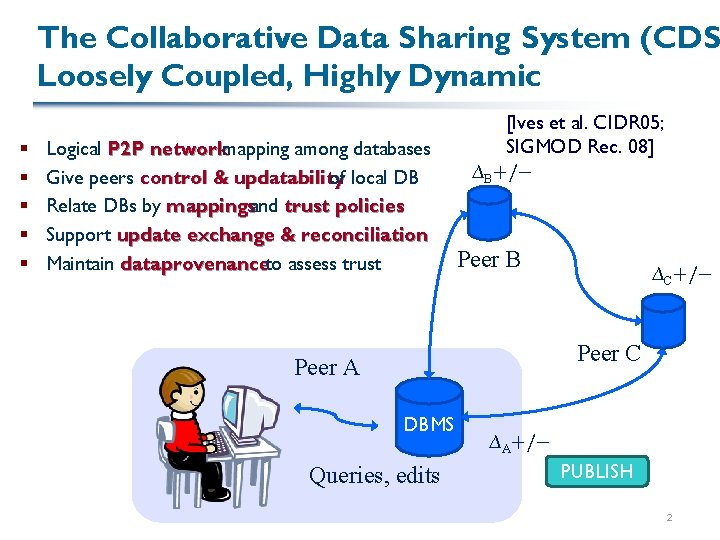

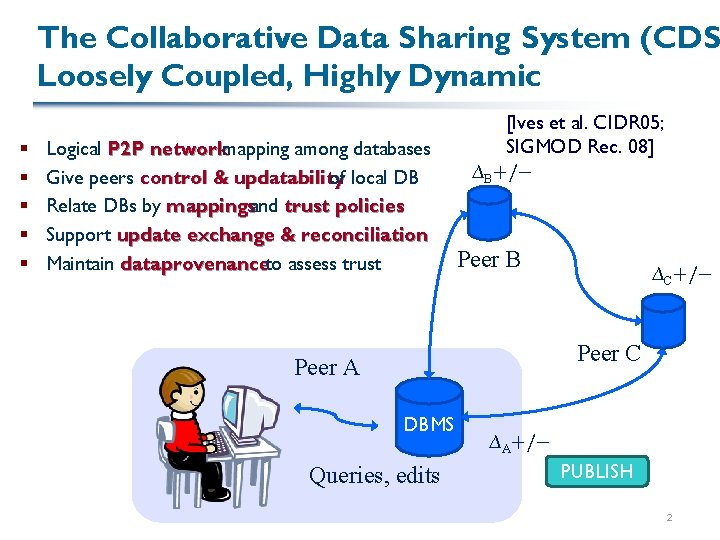

The Collaborative Data Sharing System (CDSS): Loosely Coupled, Highly Dynamic

- Logical P2P network of mappings among databases
- Give peers control & updatability of their local DB
- Relate DBs by mappings and trust policies
- Support update exchange & reconciliation
- Maintain data provenance to assess trust

[Ives et al. CIDR 05; SIGMOD Rec. 08]

[Figure: peers A, B, and C, each running a local DBMS that accepts queries and edits, publish their update sets ∆A+/−, ∆B+/−, ∆C+/−.]

![Dataflow in the CDSS [Taylor & Ives 06], [Green+07], [Karvounarakis & Ives 08]](https://slidetodoc.com/presentation_image_h2/e802fad9964c5211f11dd5ef938aa90c/image-3.jpg)

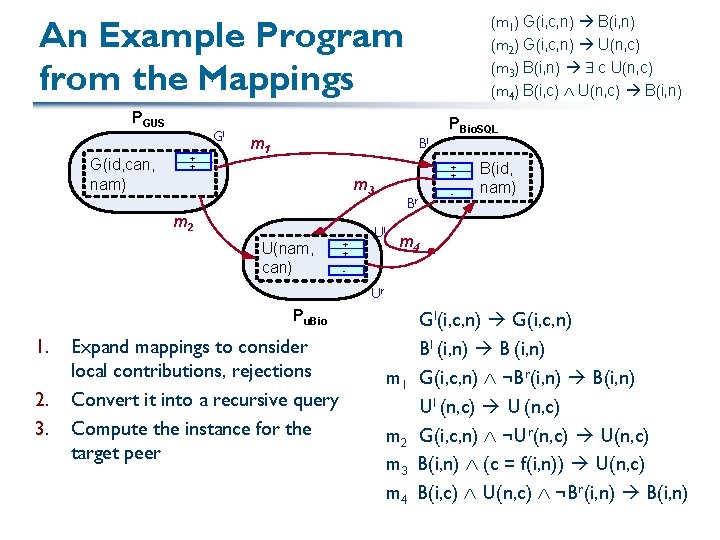

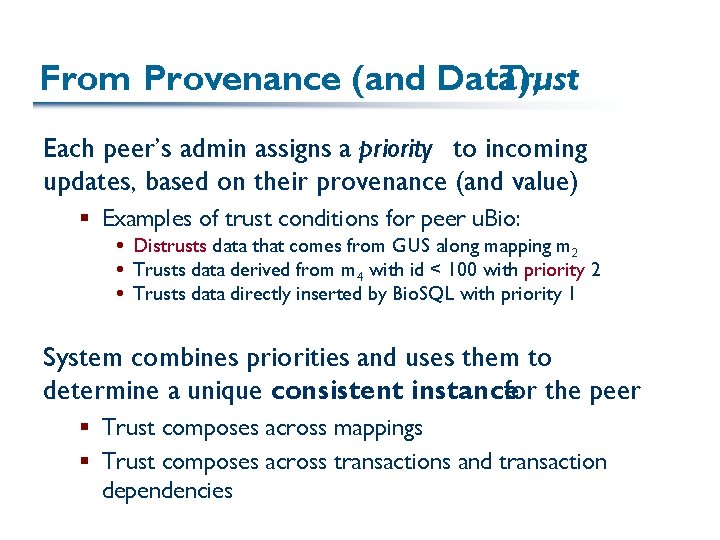

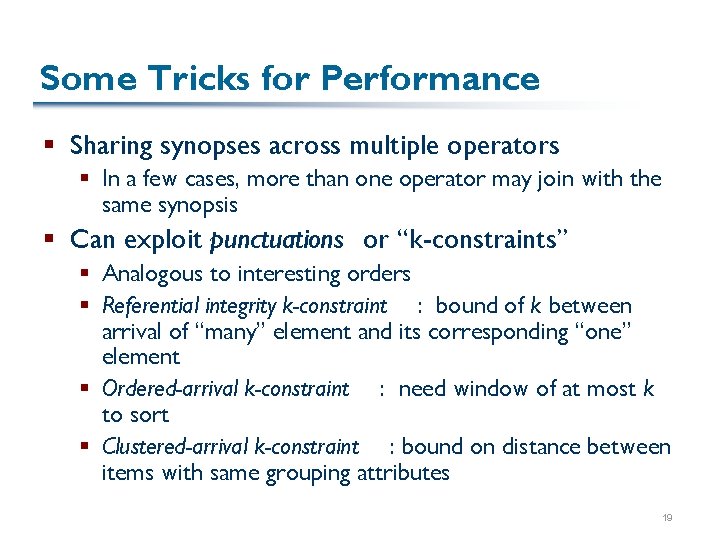

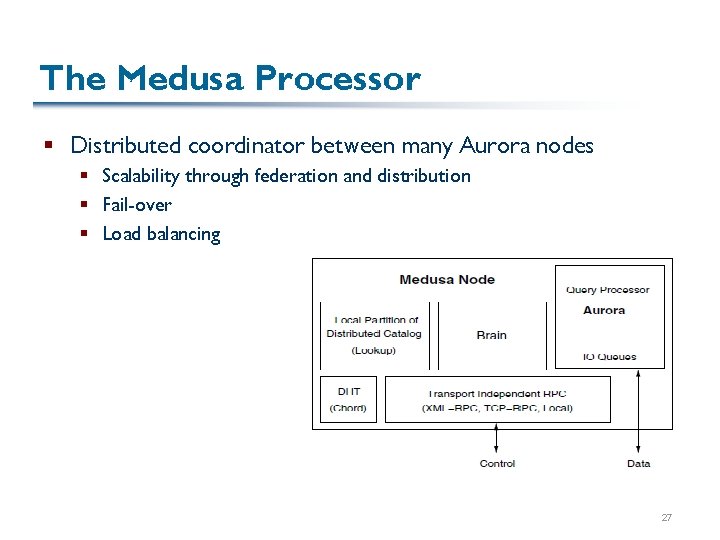

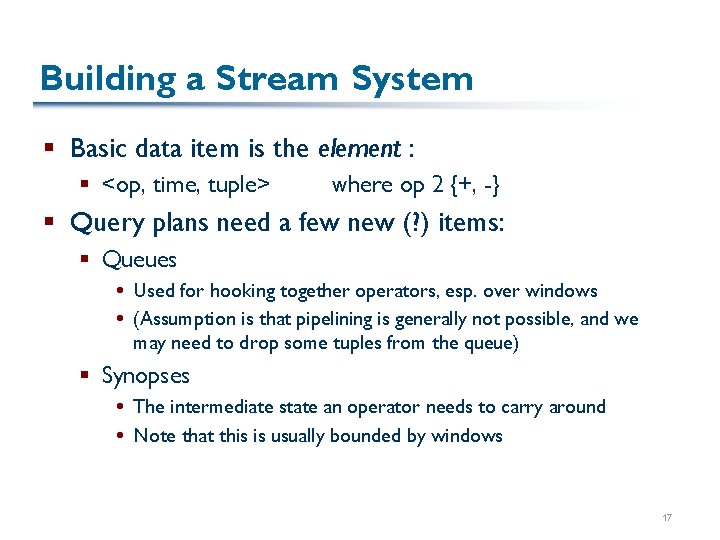

Dataflow in the CDSS [Taylor & Ives 06], [Green+07], [Karvounarakis & Ives 08]

1. Publish: updates from this peer P (∆P_pub) are published to the CDSS archive, a permanent log maintained using P2P replication.
2. Import: the peer fetches updates from all other peers (∆P_other).
3. Translate through mappings with provenance (update exchange), producing mapped updates.
4. Apply trust policies using data + provenance (σ), yielding ∆P_m.
5. Reconcile conflicts to obtain the final updates (+/−) for the peer.
6. Apply the result locally, alongside local curation (∆P).

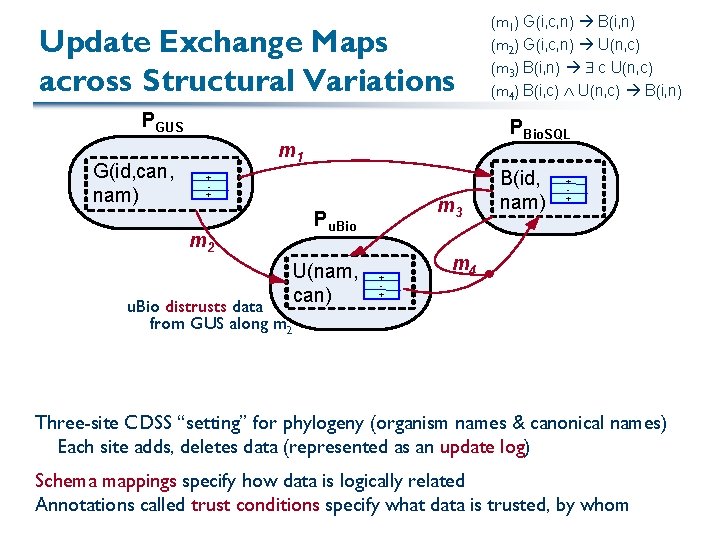

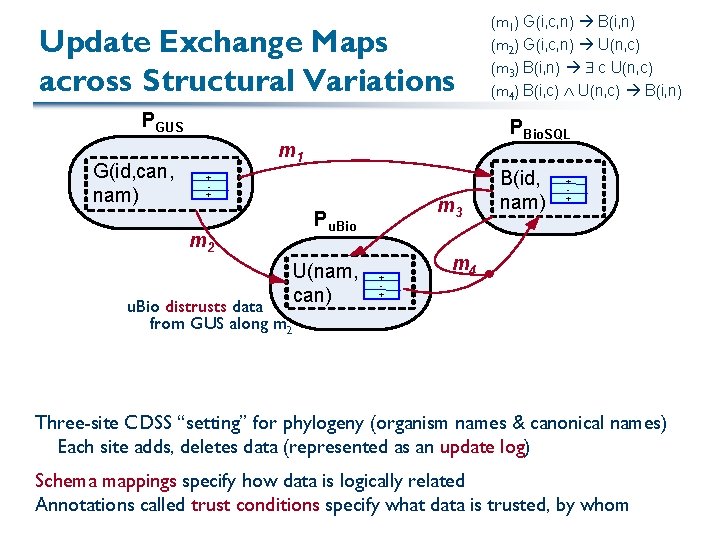

Update Exchange Maps across Structural Variations

[Figure: three peers, PGUS with G(id, can, nam), PBioSQL with B(id, nam), and PuBio with U(nam, can), connected by mappings m1 through m4; uBio distrusts data from GUS along m2.]

$$
\begin{aligned}
(m_1)\quad & G(i,c,n) \rightarrow B(i,n) \\
(m_2)\quad & G(i,c,n) \rightarrow U(n,c) \\
(m_3)\quad & B(i,n) \rightarrow \exists c\; U(n,c) \\
(m_4)\quad & B(i,c) \wedge U(n,c) \rightarrow B(i,n)
\end{aligned}
$$

- Three-site CDSS “setting” for phylogeny (organism names & canonical names)
- Each site adds and deletes data (represented as an update log)
- Schema mappings specify how data is logically related
- Annotations called trust conditions specify what data is trusted, and by whom

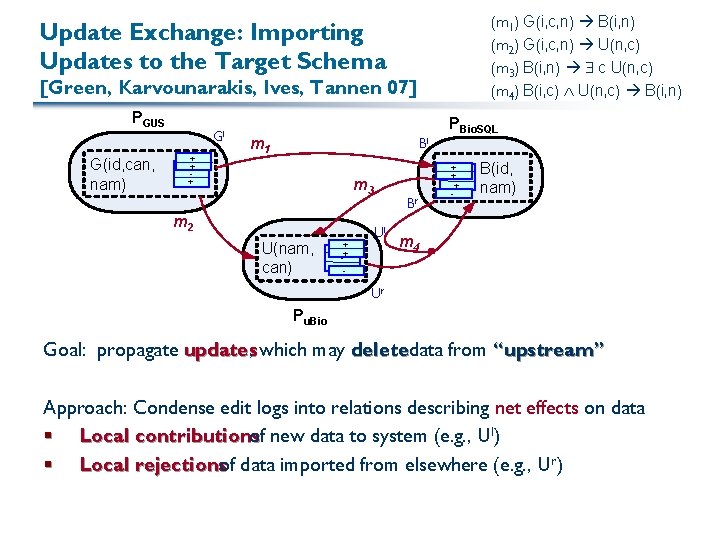

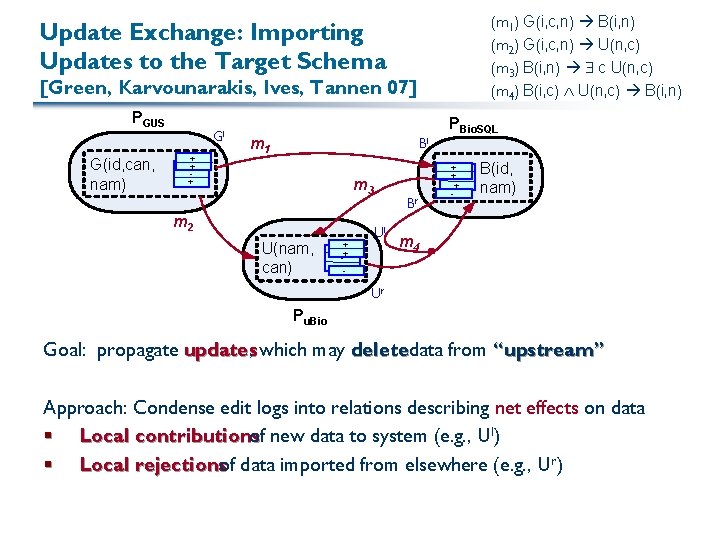

Update Exchange: Importing Updates to the Target Schema [Green, Karvounarakis, Ives, Tannen 07]

[Figure: the three-peer setting (PGUS, PBioSQL, PuBio) linked by mappings m1 through m4, with local-contribution relations Gl, Bl, Ul and local-rejection relations Br, Ur.]

- Goal: propagate updates, which may delete data from “upstream”
- Approach: condense edit logs into relations describing net effects on data
  - Local contributions of new data to the system (e.g., Ul)
  - Local rejections of data imported from elsewhere (e.g., Ur)

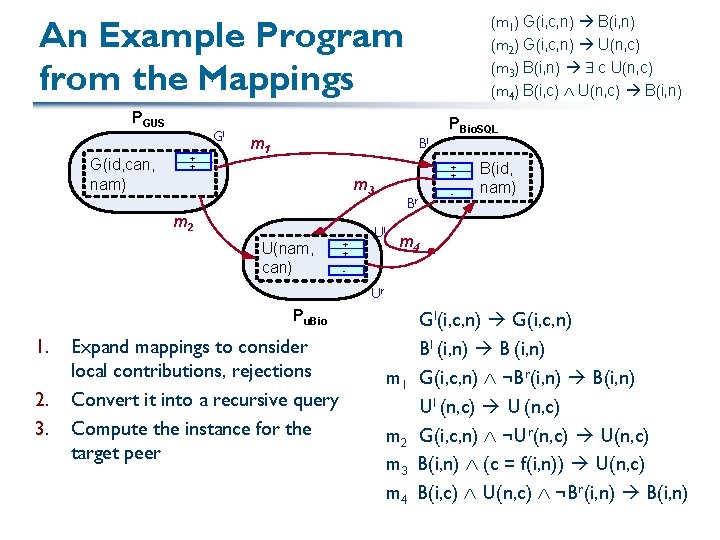

An Example Program from the Mappings

1. Expand mappings to consider local contributions and rejections
2. Convert the result into a recursive query
3. Compute the instance for the target peer

$$
\begin{aligned}
& G_l(i,c,n) \rightarrow G(i,c,n) \\
& B_l(i,n) \rightarrow B(i,n) \\
(m_1)\quad & G(i,c,n) \wedge \neg B_r(i,n) \rightarrow B(i,n) \\
& U_l(n,c) \rightarrow U(n,c) \\
(m_2)\quad & G(i,c,n) \wedge \neg U_r(n,c) \rightarrow U(n,c) \\
(m_3)\quad & B(i,n) \wedge c = f(i,n) \rightarrow U(n,c) \\
(m_4)\quad & B(i,c) \wedge U(n,c) \wedge \neg B_r(i,n) \rightarrow B(i,n)
\end{aligned}
$$
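The three steps can be sketched as a naive bottom-up (fixpoint) evaluation of the expanded rules for m1 through m4. This is a minimal illustration, not ORCHESTRA's implementation: the function name `update_exchange`, the tagged-tuple encoding of the Skolem term f(i, n), and the use of in-memory Python sets are assumptions. As on the slide, m3 carries no rejection test; a production engine would more likely use semi-naive evaluation inside the DBMS.

```python
def skolem_f(i, n):
    # Hypothetical encoding of the labeled null c = f(i, n) that m3 invents
    return ("f", i, n)


def update_exchange(Gl, Bl, Ul, Br=frozenset(), Ur=frozenset()):
    """Naive fixpoint for the expanded program.

    Gl, Bl, Ul: local contributions; Br, Ur: local rejections.
    Returns the computed instances (G, B, U) as sets of tuples.
    """
    G, B, U = set(Gl), set(Bl), set(Ul)  # Gl -> G, Bl -> B, Ul -> U
    changed = True
    while changed:
        new_B, new_U = set(), set()
        for (i, c, n) in G:
            if (i, n) not in Br:       # m1: G(i,c,n), not Br(i,n) -> B(i,n)
                new_B.add((i, n))
            if (n, c) not in Ur:       # m2: G(i,c,n), not Ur(n,c) -> U(n,c)
                new_U.add((n, c))
        for (i, n) in B:               # m3: B(i,n), c = f(i,n) -> U(n,c)
            new_U.add((n, skolem_f(i, n)))
        for (i, c) in B:               # m4: B(i,c), U(n,c), not Br(i,n) -> B(i,n)
            for (n, c2) in U:
                if c2 == c and (i, n) not in Br:
                    new_B.add((i, n))
        # Stop once an iteration derives nothing new
        changed = not (new_B <= B and new_U <= U)
        B |= new_B
        U |= new_U
    return G, B, U
```

With the local contributions p3: G(3, 5, 2), p1: B(3, 5), and p2: U(2, 5), the fixpoint adds B(3, 2) (via m1 and m4) and two U tuples with invented canonical names (via m3). Adding (3, 2) to Br suppresses B(3, 2) and everything derived from it.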

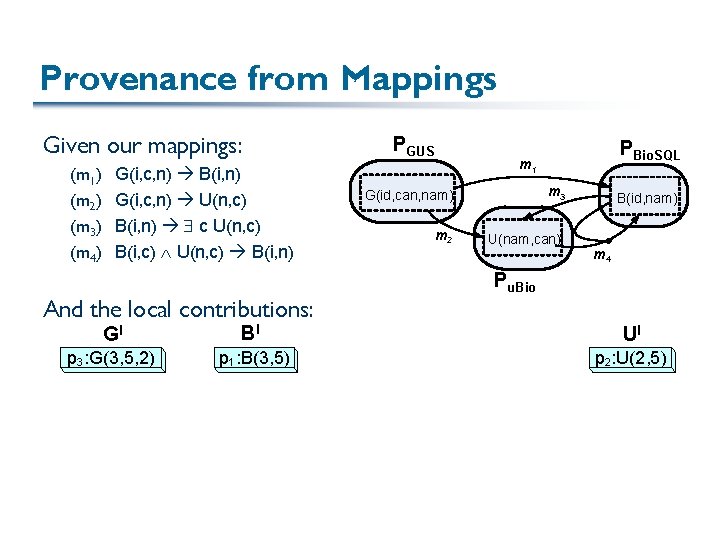

Beyond the Basic Update Exchange Program

- Can generalize to perform incremental propagation given new updates
  - Propagate updates downstream [Green+07]
  - Propagate updates back to the original “base” data [Karvounarakis & Ives 08]
- But what if not all data is equally useful? What if some sources are more authoritative than others?
  - We need a record of how we mapped the data (updates)

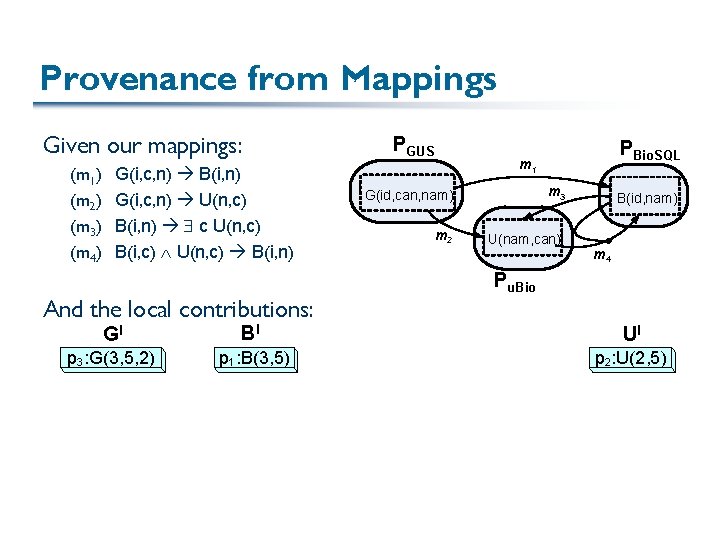

Provenance from Mappings

Given our mappings m1 through m4 among PGUS, PBioSQL, and PuBio, and the local contributions:

- Gl, p3: G(3, 5, 2)
- Bl, p1: B(3, 5)
- Ul, p2: U(2, 5)

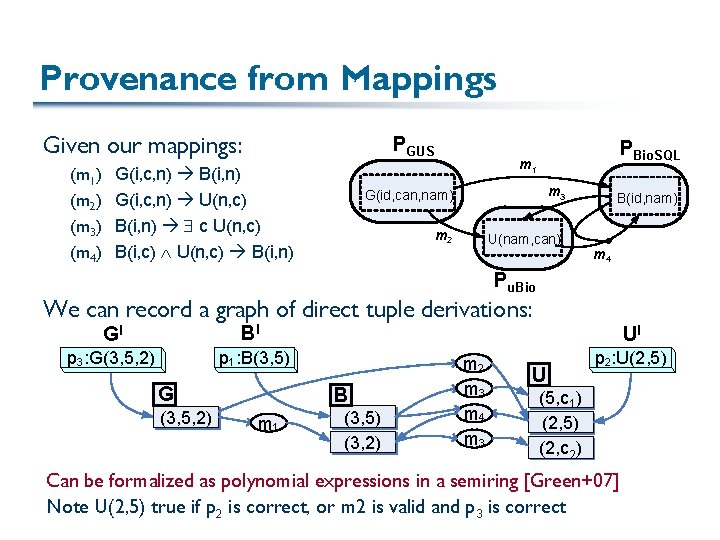

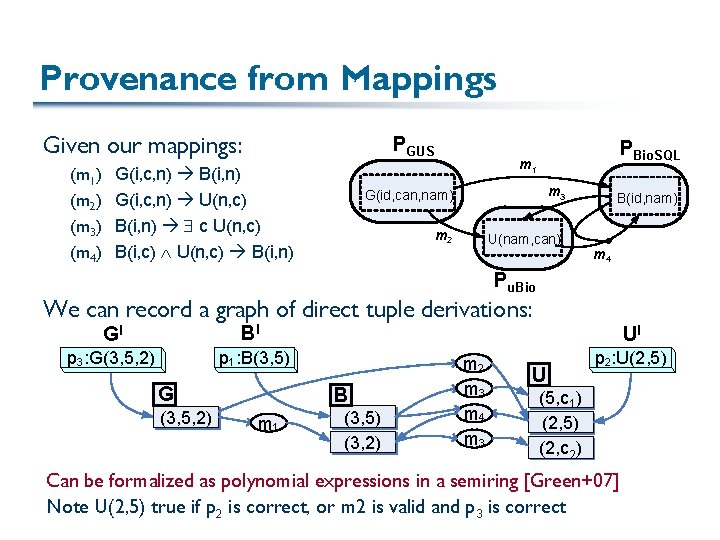

Provenance from Mappings

Given our mappings m1 through m4 and the local contributions p1, p2, p3, we can record a graph of direct tuple derivations:

[Figure: derivation graph. From p3: G(3, 5, 2), mapping m1 derives B(3, 2) and m2 derives U(2, 5), which is also contributed directly as p2. From p1: B(3, 5), m3 derives U(5, c1), and m4 derives B(3, 2) from B(3, 5) and U(2, 5). From B(3, 2), m3 derives U(2, c2). Here c1 and c2 are placeholder values for the canonical names that m3 leaves unspecified.]

- Can be formalized as polynomial expressions in a semiring [Green+07]
- Note: U(2, 5) is true if p2 is correct, or if m2 is valid and p3 is correct
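The derivation graph can be encoded as provenance polynomials over the semiring N[X], in the spirit of [Green+07]: `+` joins alternative derivations and `*` joins the ingredients of one derivation. A minimal sketch, assuming variables p1 to p3 for local contributions and m1 to m4 for mappings; the class `Poly` is hypothetical, and each tuple's polynomial is written by hand from the derivations rather than computed by the system.

```python
from collections import Counter
from itertools import product


class Poly:
    """Polynomial in N[X]: maps a monomial (sorted tuple of variables) to a coefficient."""

    def __init__(self, terms=None):
        self.terms = Counter(terms or {})

    @staticmethod
    def var(name):
        return Poly({(name,): 1})

    def __add__(self, other):  # alternative derivations
        return Poly(self.terms + other.terms)

    def __mul__(self, other):  # joint use within one derivation
        out = Counter()
        for (mono1, k1), (mono2, k2) in product(self.terms.items(), other.terms.items()):
            out[tuple(sorted(mono1 + mono2))] += k1 * k2
        return Poly(out)

    def holds(self, trusted):
        """Boolean evaluation: true if some monomial uses only trusted variables."""
        return any(all(v in trusted for v in mono) for mono in self.terms)


p1, p2, p3 = Poly.var("p1"), Poly.var("p2"), Poly.var("p3")
m1, m2, m4 = Poly.var("m1"), Poly.var("m2"), Poly.var("m4")

U_2_5 = p2 + m2 * p3                   # contributed directly, or via m2 from G(3, 5, 2)
B_3_2 = m1 * p3 + m4 * p1 * U_2_5      # via m1, or via m4 from B(3, 5) and U(2, 5)
```

Evaluating in the Boolean semiring mirrors the note on the slide: if m2 is distrusted, U(2, 5) still holds as long as p2 is trusted.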

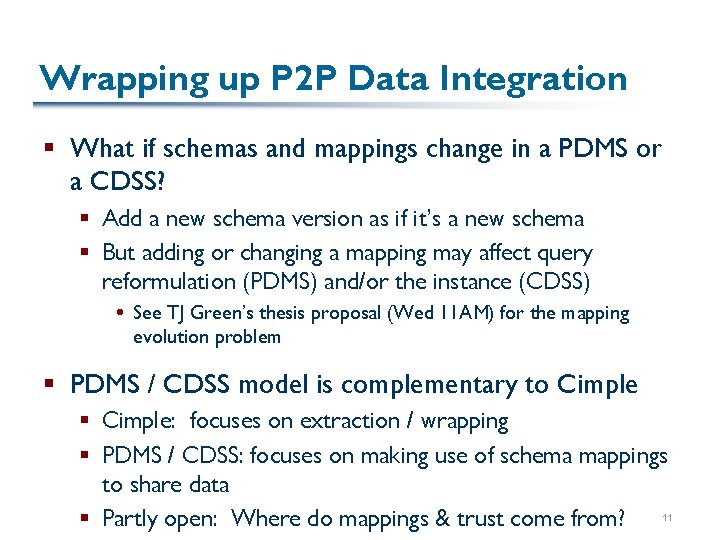

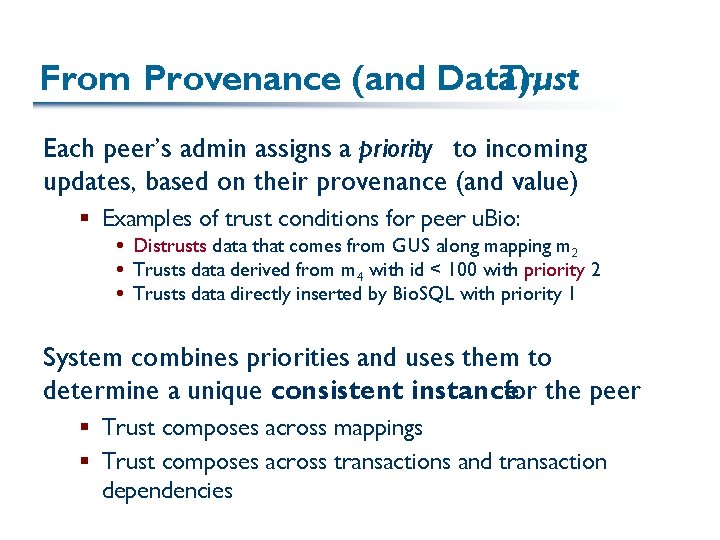

From Provenance (and Data), Trust

Each peer’s admin assigns a priority to incoming updates, based on their provenance (and value).

- Examples of trust conditions for peer uBio:
  - Distrusts data that comes from GUS along mapping m2
  - Trusts data derived from m4 with id < 100 with priority 2
  - Trusts data directly inserted by BioSQL with priority 1
- The system combines priorities and uses them to determine a unique consistent instance for the peer
  - Trust composes across mappings
  - Trust composes across transactions and transaction dependencies

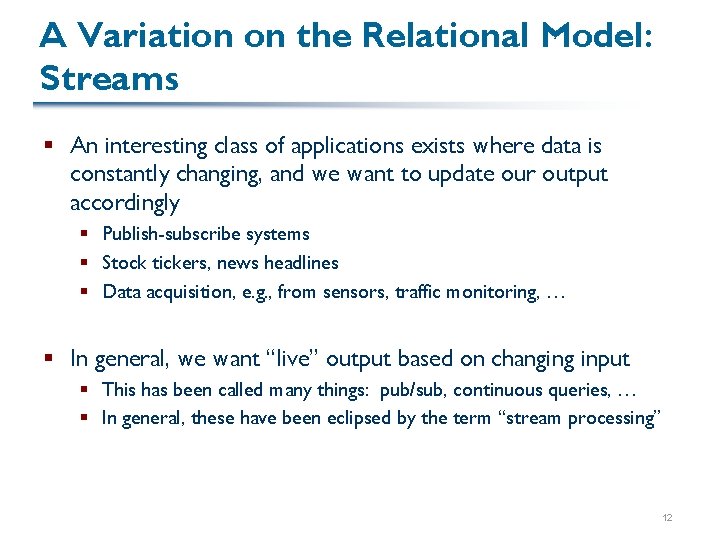

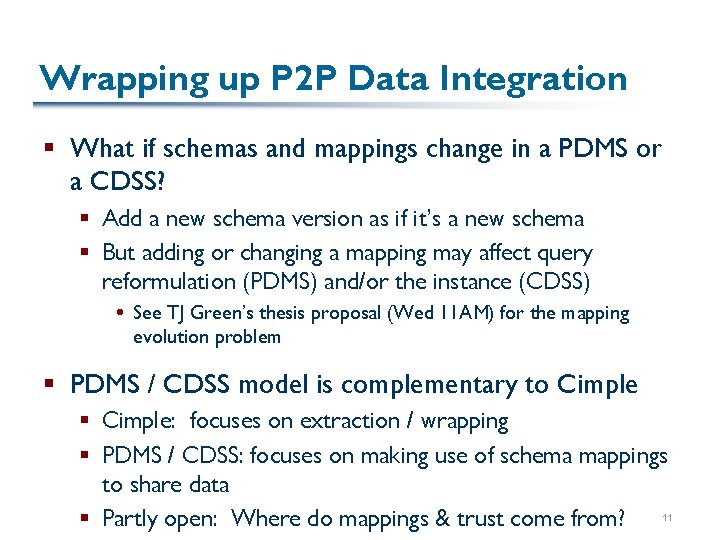

Wrapping up P2P Data Integration

- What if schemas and mappings change in a PDMS or a CDSS?
  - Add a new schema version as if it’s a new schema
  - But adding or changing a mapping may affect query reformulation (PDMS) and/or the instance (CDSS). See TJ Green’s thesis proposal (Wed 11 AM) for the mapping evolution problem
- The PDMS / CDSS model is complementary to Cimple
  - Cimple: focuses on extraction / wrapping
  - PDMS / CDSS: focuses on making use of schema mappings to share data
- Partly open: where do mappings & trust come from?

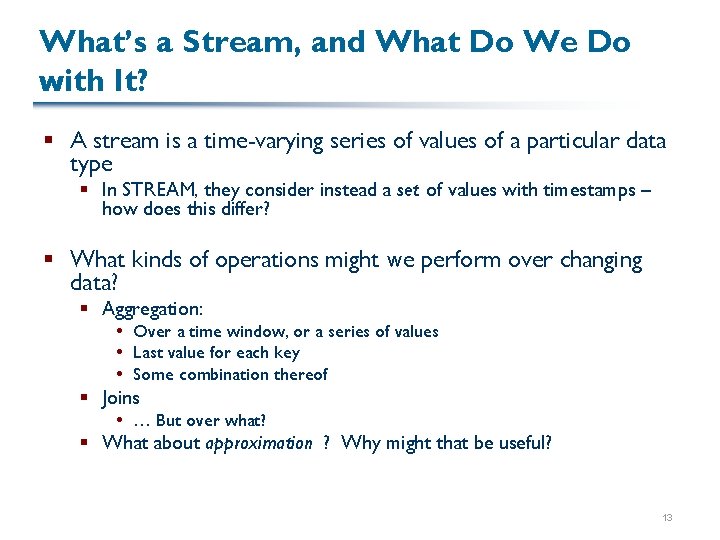

A Variation on the Relational Model: Streams

- An interesting class of applications exists where data is constantly changing, and we want to update our output accordingly
  - Publish-subscribe systems
  - Stock tickers, news headlines
  - Data acquisition, e.g., from sensors, traffic monitoring, …
- In general, we want “live” output based on changing input
  - This has been called many things: pub/sub, continuous queries, …
  - These terms have largely been eclipsed by “stream processing”

What’s a Stream, and What Do We Do with It?

- A stream is a time-varying series of values of a particular data type
  - In STREAM, they instead consider a set of values with timestamps. How does this differ?
- What kinds of operations might we perform over changing data?
  - Aggregation:
    - Over a time window, or a series of values
    - Last value for each key
    - Some combination thereof
  - Joins … but over what?
- What about approximation? Why might that be useful?
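Two of the aggregation cases can be made concrete with small Python generators: a count-based moving average over the last n values, and a “last value for each key” view that is refreshed on every arrival. This is a sketch; the function names and the ticker-style data in the usage are hypothetical.

```python
from collections import deque


def moving_average(values, n):
    """Average over the last n values (a count-based window), emitted after each arrival."""
    window, total = deque(), 0.0
    for v in values:
        window.append(v)
        total += v
        if len(window) > n:
            total -= window.popleft()  # evict the oldest value
        yield total / len(window)


def last_value_per_key(stream):
    """After each (key, value) arrival, emit a snapshot of the latest value per key."""
    latest = {}
    for key, value in stream:
        latest[key] = value
        yield dict(latest)
```

For example, `list(moving_average([2, 4, 6, 8], 2))` yields one average per arrival, and the final snapshot of `last_value_per_key([("IBM", 80), ("MSFT", 30), ("IBM", 82)])` keeps only IBM’s most recent price.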

STREAM’s Model: the CQL Language

- An attempt to extend SQL to handle streams, not to invent a language from the ground up
  - Thus it’s a bit quirky
- In CQL, everything is built around instantaneous relations, which are time-varying bags of tuples
  - Relation-relation operators (normal SQL)
  - Stream-relation operators (convert streams to relations)
  - Relation-stream operators (convert instantaneous relations to streams)
  - No stream-stream operators!

Converting between Streams & Relations

- Stream-to-relation operators:
  - Sliding window: tuple-based (last N rows) or time-based (within a time range)
  - Partitioned sliding window: partitions the stream by key, then applies a sliding window to each partition. Is this necessary or minimal?
- Relation-to-stream operators:
  - Istream: stream-ifies the insertions into a relation
  - Dstream: stream-ifies the deletions
  - Rstream: at each instant, the stream contains the entire set of tuples in the relation
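As a sketch of these operators over bags (Python `Counter`s), following the CQL definitions as we understand them: a [Range T] window at instant τ holds the tuples with timestamps in [τ − T, τ], a [Rows N] window holds the N most recent tuples, and Istream/Dstream at τ are R(τ) − R(τ−1) and R(τ−1) − R(τ). Function names are hypothetical, and streams are assumed to be lists of (timestamp, tuple) pairs sorted by timestamp.

```python
from collections import Counter


def range_window(stream, tau, T):
    """S [Range T] at instant tau: bag of tuples with tau - T <= timestamp <= tau."""
    return Counter(tup for t, tup in stream if tau - T <= t <= tau)


def rows_window(stream, tau, n):
    """S [Rows n] at instant tau: bag of the n most recent tuples up to tau."""
    seen = [tup for t, tup in stream if t <= tau]
    return Counter(seen[-n:]) if n > 0 else Counter()


def istream(prev, cur):
    """Tuples inserted between two successive instants (bag difference)."""
    return cur - prev


def dstream(prev, cur):
    """Tuples deleted between two successive instants."""
    return prev - cur


def rstream(cur):
    """The whole current relation, re-emitted at this instant."""
    return Counter(cur)
```

For a stream S = [(1, "a"), (2, "b"), (3, "c"), (5, "d")] under [Range 1], moving from instant 4 to instant 5 inserts "d" (Istream) and deletes "c" (Dstream).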

![Some Examples](https://slidetodoc.com/presentation_image_h2/e802fad9964c5211f11dd5ef938aa90c/image-16.jpg)

Some Examples

- `Select * From S1 [Rows 1000], S2 [Range 2 minutes] Where S1.A = S2.A And S1.A > 10`
- `Select Rstream(S.A, R.B) From S [Now], R Where S.A = R.A`
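To make the window semantics of the two queries concrete, here is a sketch that evaluates each one at a single instant τ over in-memory lists. Assumptions not in the slides: timestamps are in seconds (so [Range 2 minutes] becomes 120), streams are lists of (timestamp, row) pairs sorted by timestamp, R is treated as unchanging, and the function names are hypothetical.

```python
def query1(s1, s2, tau):
    """Select * From S1 [Rows 1000], S2 [Range 2 minutes]
    Where S1.A = S2.A And S1.A > 10, evaluated at instant tau."""
    w1 = [row for t, row in s1 if t <= tau][-1000:]       # last 1000 rows of S1
    w2 = [row for t, row in s2 if tau - 120 <= t <= tau]  # S2 rows from the last 2 minutes
    return [(r1, r2) for r1 in w1 for r2 in w2
            if r1["A"] == r2["A"] and r1["A"] > 10]


def query2(s, r, tau):
    """Select Rstream(S.A, R.B) From S [Now], R Where S.A = R.A, at instant tau.
    S [Now] holds only the S tuples stamped exactly tau."""
    now = [row for t, row in s if t == tau]
    return [(x["A"], y["B"]) for x in now for y in r if x["A"] == y["A"]]
```

Because the second query uses S [Now] with Rstream, each arriving S tuple is effectively looked up in R once, at the instant it arrives.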

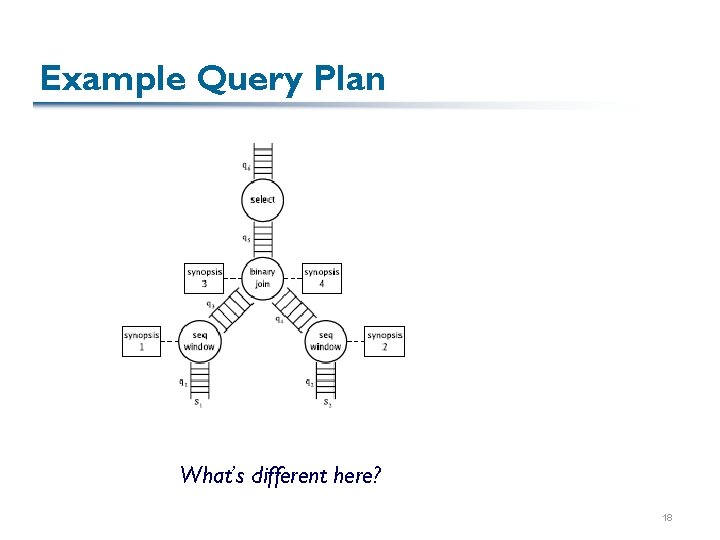

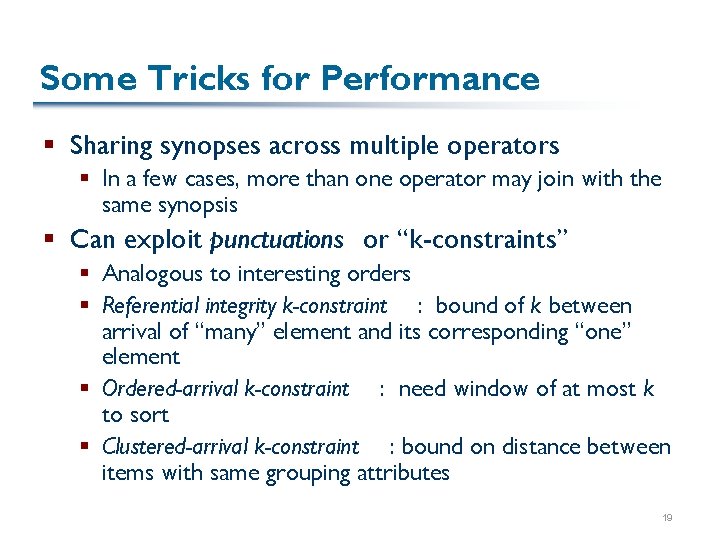

Building a Stream System

- The basic data item is the element: `<op, time, tuple>`, where op ∈ {+, −}
- Query plans need a few new (?) items:
  - Queues: used for hooking together operators, esp. over windows (the assumption is that pipelining is generally not possible, and we may need to drop some tuples from the queue)
  - Synopses: the intermediate state an operator needs to carry around. Note that this is usually bounded by windows
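A sketch of how elements and synopses fit together in one operator: an equi-join over two inputs of `<op, time, tuple>` elements, where each side keeps a synopsis (a bag) of its current window contents. An upstream window operator is assumed to emit a − element when a tuple expires, so the synopses stay bounded by the windows. The `Element` and `WindowJoin` names are hypothetical, and this is not STREAM’s actual code.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Tuple


@dataclass(frozen=True)
class Element:
    op: str               # "+" for insertion, "-" for deletion
    time: int
    tup: Tuple[Any, ...]


class WindowJoin:
    """Equi-join of two element streams; each side keeps a synopsis of its window."""

    def __init__(self, left_key, right_key):
        self.keys = {"L": left_key, "R": right_key}
        self.syn = {"L": Counter(), "R": Counter()}

    def process(self, side, e):
        other = "R" if side == "L" else "L"
        # Maintain this side's synopsis
        if e.op == "+":
            self.syn[side][e.tup] += 1
        else:
            self.syn[side][e.tup] -= 1
            if self.syn[side][e.tup] <= 0:
                del self.syn[side][e.tup]
        # Probe the other side's synopsis; results inherit the element's op and time
        key = self.keys[side](e.tup)
        out = []
        for t, count in self.syn[other].items():
            if self.keys[other](t) == key:
                joined = e.tup + t if side == "L" else t + e.tup
                out.extend([Element(e.op, e.time, joined)] * count)
        return out
```

A deletion on either input produces − elements for every result it previously contributed to, so downstream operators can maintain their own synopses incrementally.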

Example Query Plan

What’s different here?

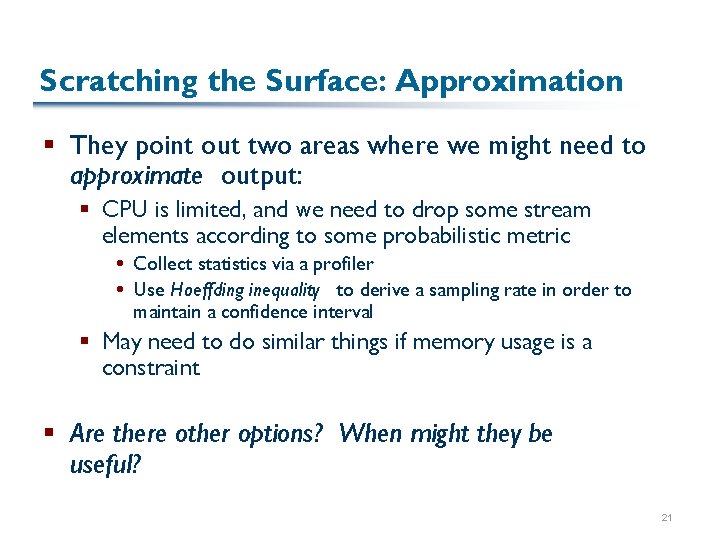

Some Tricks for Performance

- Sharing synopses across multiple operators
  - In a few cases, more than one operator may join with the same synopsis
- Can exploit punctuations or “k-constraints”
  - Analogous to interesting orders
  - Referential integrity k-constraint: bound of k between the arrival of a “many” element and its corresponding “one” element
  - Ordered-arrival k-constraint: need a window of at most k to sort
  - Clustered-arrival k-constraint: bound on the distance between items with the same grouping attributes
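The ordered-arrival case can be illustrated with a bounded sort buffer. We assume the definition “any element arriving k+1 or more positions after s has a value ≥ s”; under that assumption, holding at most k elements between arrivals in a min-heap is enough to emit the stream in sorted order. The function name is hypothetical.

```python
import heapq


def sort_with_k_constraint(stream, k):
    """Emit a stream in sorted order, assuming an ordered-arrival k-constraint.

    At most k elements stay buffered between arrivals: once a new element makes
    the buffer exceed k, the smallest buffered element is safe to emit, because
    every later arrival is at least as large as the oldest buffered element.
    """
    buf = []
    for v in stream:
        heapq.heappush(buf, v)
        if len(buf) > k:
            yield heapq.heappop(buf)
    while buf:  # end of stream: flush what remains
        yield heapq.heappop(buf)
```

The payoff is the memory bound: the buffer never holds more than k elements after an arrival, regardless of stream length, which is what lets the constraint stand in for an unbounded sort.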

Query Processing – “Chain Scheduling”

- Similar in many ways to eddies
- May decide to apply operators as follows:
  - Assume we know how many tuples can be processed in a time unit
  - Cluster groups of operators into “chains” that maximize reduction in queue size per unit time
  - Greedily forward tuples into the most selective chain
  - Within a chain, process in FIFO order
- They also do a form of join reordering
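One way to form the chains, following our reading of Chain scheduling (Babcock et al.): plot a tuple’s “progress chart” of remaining size against cumulative processing time along a pipeline of operators, then repeatedly take the steepest-descending segment of its lower envelope as the next chain. A scheduler would then, at each step, run the highest-priority chain that has tuples waiting. The function name and the inputs (per-tuple time and selectivity for each operator, all times > 0) are assumptions.

```python
def chains(times, selectivities):
    """Split a pipeline of operators into chains along the lower envelope of its
    progress chart.

    times[i]: processing time per tuple for operator i (assumed > 0)
    selectivities[i]: output/input size ratio of operator i
    Returns [(operator_indices, priority)], where priority is the queue-size
    reduction per unit time (the negated envelope slope).
    """
    # Progress chart: (cumulative time, remaining size) for one input tuple
    pts = [(0.0, 1.0)]
    for t, s in zip(times, selectivities):
        pts.append((pts[-1][0] + t, pts[-1][1] * s))

    result, i = [], 0
    while i < len(pts) - 1:
        best_j, best_slope = None, None
        for j in range(i + 1, len(pts)):
            slope = (pts[j][1] - pts[i][1]) / (pts[j][0] - pts[i][0])
            # Steepest descent wins; on ties, prefer the farther point
            if best_slope is None or slope <= best_slope:
                best_j, best_slope = j, slope
        result.append((list(range(i, best_j)), -best_slope))
        i = best_j
    return result
```

For example, with unit times and selectivities 0.9, 0.1, 1.0, the first two operators form one high-priority chain (the selective second operator “pulls” the first along) and the last operator forms a chain of its own with priority 0.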

Scratching the Surface: Approximation

- They point out two areas where we might need to approximate output:
  - CPU is limited, and we need to drop some stream elements according to some probabilistic metric
    - Collect statistics via a profiler
    - Use the Hoeffding inequality to derive a sampling rate that maintains a confidence interval
  - We may need to do similar things if memory usage is a constraint
- Are there other options? When might they be useful?
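The Hoeffding step can be sketched with the textbook two-sided bound for a sample mean: for n independent samples of values spanning a range R, P(|mean − μ| ≥ ε) ≤ 2·exp(−2nε²/R²). Solving for n gives the sample size needed for ±ε accuracy with confidence 1 − δ, and dividing by the tuples arriving per window gives a sampling rate. STREAM’s exact formulation may differ; the function names are hypothetical.

```python
import math


def hoeffding_sample_size(value_range, epsilon, delta):
    """Smallest n with 2 * exp(-2 n epsilon^2 / value_range^2) <= delta, i.e. the
    sample mean is within +/- epsilon of the true mean with probability >= 1 - delta."""
    return math.ceil(value_range ** 2 * math.log(2 / delta) / (2 * epsilon ** 2))


def sampling_rate(n_needed, tuples_per_window):
    """Fraction of stream elements to keep per window (capped at 1)."""
    return min(1.0, n_needed / tuples_per_window)
```

For values in [0, 1], ε = 0.1, and δ = 0.05, the bound asks for 185 samples; if 1,000 tuples arrive per window, keeping about 18.5% of them suffices, and the remaining CPU budget is freed.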

STREAM in General

- “Logical semantics first”
  - Starts with a basic data model: streams as timestamped sets
  - Develops a language and semantics
    - Heavily based on SQL
- Proposes a relatively straightforward implementation
  - Interesting ideas like k-constraints
  - Interesting approaches like chain scheduling
- No real consideration of distributed processing

Aurora

- “Implementation first; mix and match operations from past literature”
- Basic philosophy: most of the ideas in streams existed in previous research
  - Sliding windows, load shedding, approximation, …
  - So let’s borrow those ideas and focus on how to build a real system with them!
- Emphasis is on building a scalable, robust system
- Distributed implementation: Medusa

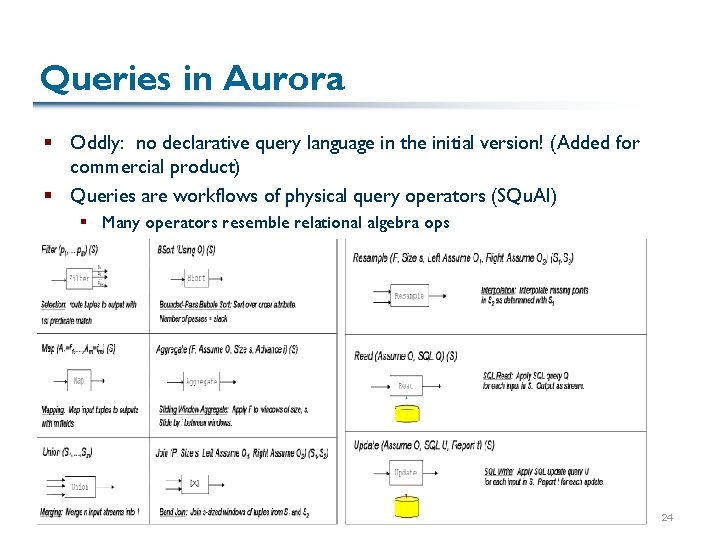

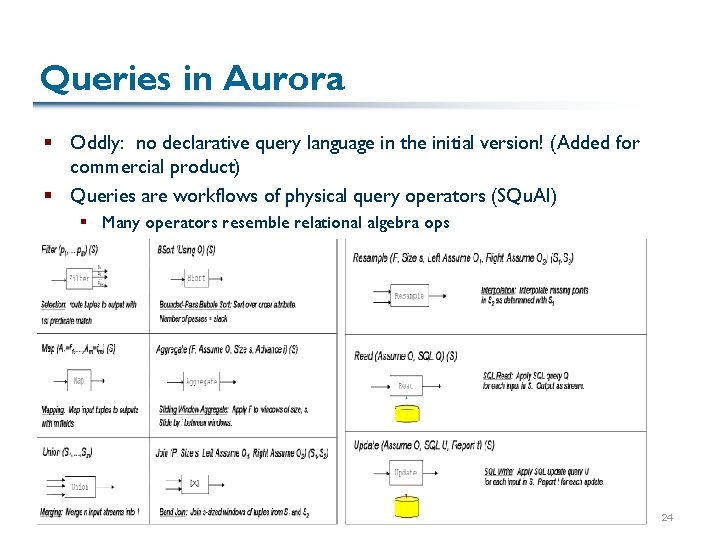

Queries in Aurora

- Oddly: no declarative query language in the initial version! (Added for the commercial product)
- Queries are workflows of physical query operators (SQuAl)
- Many operators resemble relational algebra ops
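Because Aurora queries are assembled by wiring operator “boxes” together rather than written declaratively, a query is essentially a composition of stream transformers. A sketch using Python generators: the box names and signatures are hypothetical (they are not SQuAl’s actual operators), and the aggregate here uses non-overlapping count windows that drop a trailing partial window, a choice made for brevity.

```python
from typing import Callable, Iterable, Iterator


def filter_box(pred: Callable, stream: Iterable) -> Iterator:
    """Pass through only the tuples satisfying pred."""
    return (x for x in stream if pred(x))


def map_box(fn: Callable, stream: Iterable) -> Iterator:
    """Transform each tuple with fn."""
    return (fn(x) for x in stream)


def tumbling_aggregate_box(agg: Callable, size: int, stream: Iterable) -> Iterator:
    """Apply agg to consecutive, non-overlapping groups of `size` tuples."""
    buf = []
    for x in stream:
        buf.append(x)
        if len(buf) == size:
            yield agg(buf)
            buf = []


# Wiring boxes into a workflow: max IBM price per pair of IBM ticks
ticks = [("IBM", 80), ("MSFT", 30), ("IBM", 84), ("IBM", 82), ("IBM", 90)]
workflow = tumbling_aggregate_box(
    max, 2,
    map_box(lambda t: t[1],
            filter_box(lambda t: t[0] == "IBM", ticks)))
```

Since each box only sees an iterator, boxes can be rearranged (e.g., pushing the filter earlier) without changing their code, which is the kind of reordering Aurora’s optimizer performs.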

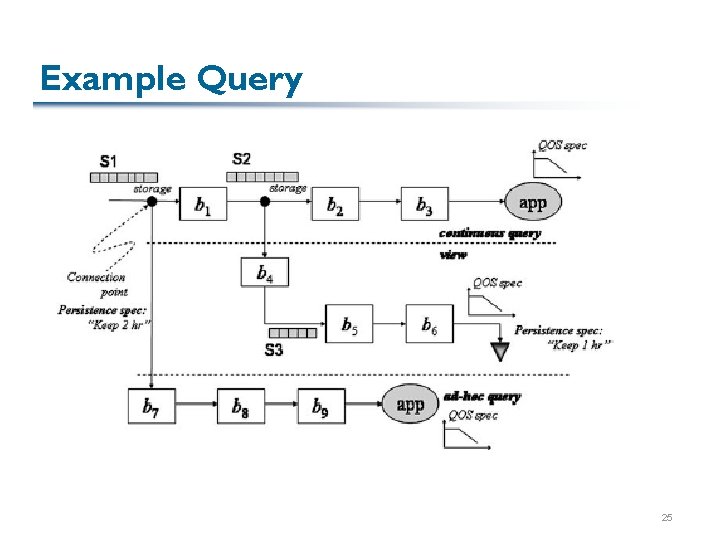

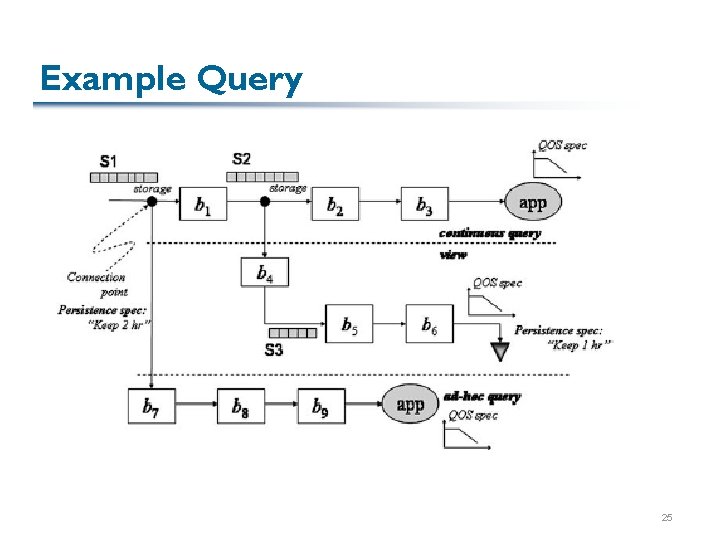

Example Query

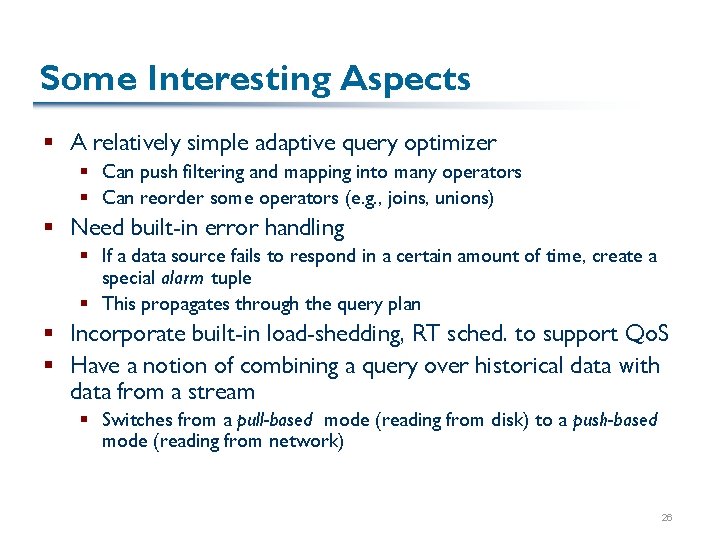

Some Interesting Aspects

- A relatively simple adaptive query optimizer
  - Can push filtering and mapping into many operators
  - Can reorder some operators (e.g., joins, unions)
- Need built-in error handling
  - If a data source fails to respond within a certain amount of time, create a special alarm tuple
  - This propagates through the query plan
- Incorporate built-in load shedding and real-time scheduling to support QoS
- Have a notion of combining a query over historical data with data from a stream
  - Switches from a pull-based mode (reading from disk) to a push-based mode (reading from the network)
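One way to realize the timeout-driven alarm tuple: a monitor per source, driven by a clock, that emits an alarm once the source has been silent longer than its timeout. The class name, the `("ALARM", time)` tuple shape, and the one-alarm-per-silence policy are assumptions for illustration, not Aurora’s design.

```python
class SourceMonitor:
    """Watches one data source and produces an alarm tuple when it goes silent."""

    def __init__(self, timeout, start=0):
        self.timeout = timeout
        self.last_seen = start   # time of the most recent tuple (or of startup)
        self.alarmed = False

    def on_tuple(self, now):
        """Record that the source delivered a tuple at time `now`."""
        self.last_seen = now
        self.alarmed = False

    def on_clock(self, now):
        """Called periodically; returns an alarm tuple at most once per silence."""
        if not self.alarmed and now - self.last_seen > self.timeout:
            self.alarmed = True
            return ("ALARM", now)
        return None
```

The alarm tuple would then be injected into the plan like any other tuple, so downstream operators can react to (or forward) the failure.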

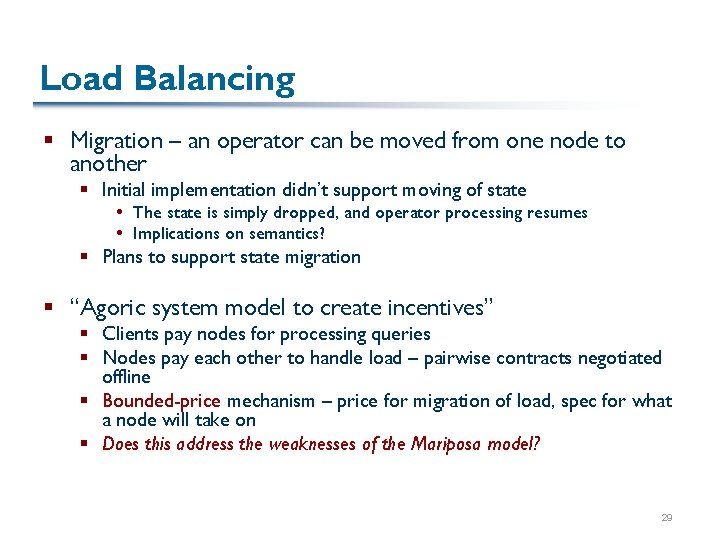

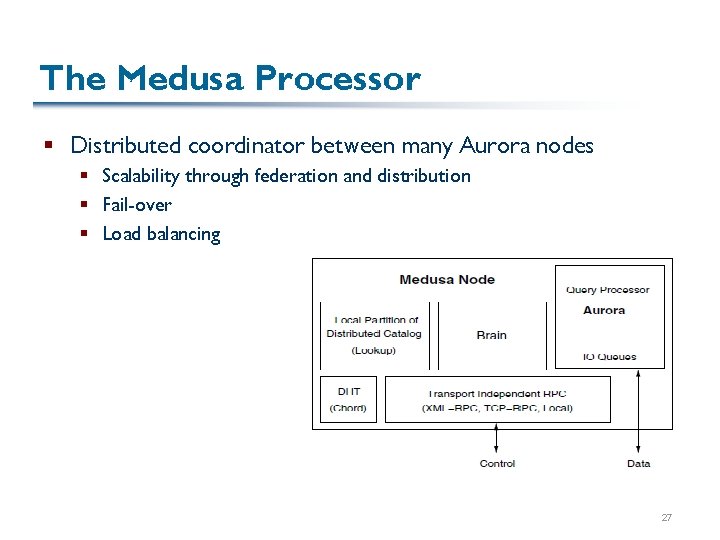

The Medusa Processor

- Distributed coordinator between many Aurora nodes
- Scalability through federation and distribution
- Fail-over
- Load balancing

Main Components

- Lookup
  - Distributed catalog: schemas, where to find streams, where to find queries
- Brain
  - Query setup, load monitoring via I/O queues and stats
  - A load distribution and balancing scheme is used. Very reminiscent of Mariposa!

Load Balancing

- Migration: an operator can be moved from one node to another
  - The initial implementation didn’t support moving state: the state is simply dropped, and operator processing resumes. Implications for semantics?
  - Plans to support state migration
- “Agoric system model to create incentives”
  - Clients pay nodes for processing queries
  - Nodes pay each other to handle load: pairwise contracts negotiated offline
  - Bounded-price mechanism: a price for migration of load, and a spec for what a node will take on
  - Does this address the weaknesses of the Mariposa model?

Some Applications They Tried

- Financial services (stock ticker)
  - The main issue is not volume, but problems with feeds
  - Two-level alarm system, where the higher-level alarm helps diagnose problems
  - Shared computation among queries
  - User-defined aggregation and mapping
- Linear Road (sensor monitoring)
  - Traffic sensors on a toll road: change the toll depending on how many cars are on the road
  - Combination of historical and continuous queries
- Environmental monitoring
  - Sliding-window calculations

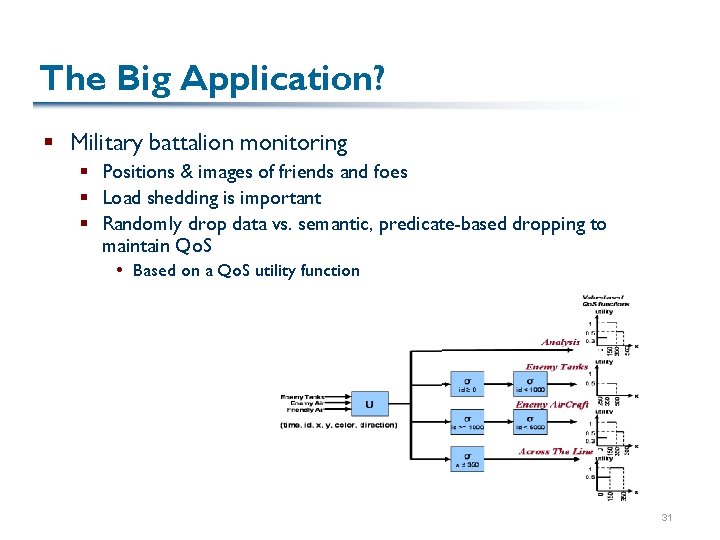

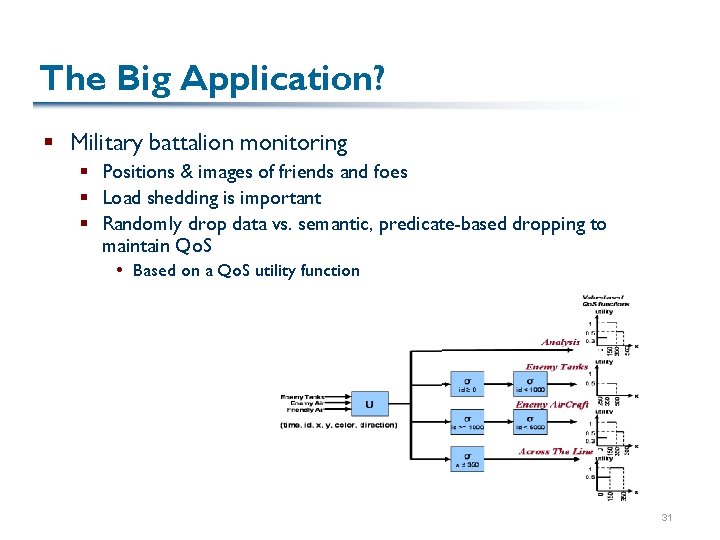

The Big Application?

- Military battalion monitoring
  - Positions & images of friends and foes
  - Load shedding is important
    - Randomly drop data vs. semantic, predicate-based dropping to maintain QoS, based on a QoS utility function
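The two shedding policies can be contrasted in a few lines. Random shedding keeps each tuple with a fixed probability; semantic shedding ranks tuples by a QoS utility function and keeps the most valuable ones that fit the capacity. Both function names, the capacity parameter, and the utility function in the usage (foes matter more than friends) are hypothetical.

```python
import random


def random_shed(tuples, keep_fraction, rng):
    """Drop tuples uniformly at random, keeping each with probability keep_fraction."""
    return [t for t in tuples if rng.random() < keep_fraction]


def semantic_shed(tuples, capacity, utility):
    """Keep the `capacity` tuples with the highest QoS utility, in arrival order."""
    ranked = sorted(range(len(tuples)), key=lambda i: -utility(tuples[i]))  # stable on ties
    keep = set(ranked[:capacity])
    return [t for i, t in enumerate(tuples) if i in keep]


reports = [
    {"unit": "friend", "dist": 50},
    {"unit": "foe", "dist": 5},
    {"unit": "foe", "dist": 40},
    {"unit": "friend", "dist": 2},
]
# Hypothetical utility: reports about foes are worth more than reports about friends
kept = semantic_shed(reports, 2, lambda r: 1.0 if r["unit"] == "foe" else 0.1)
```

With room for only two reports, semantic shedding keeps both foe reports, whereas random shedding would discard them as readily as any other tuple.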

Lessons Learned

- Historical data is important, not just stream data
  - (Summaries?)
- Sometimes need synchronization for consistency
  - “ACID for streams”?
- Streams can be out of order, bursty
  - “Stream cleaning”?
- Adaptors (and also XML) are important
  - … But we already knew that!
- Performance is critical
  - They spent a great deal of time using microbenchmarks and optimizing