Data Integration Concluded and Physical Storage Zachary G

- Slides: 43

Data Integration, Concluded and Physical Storage Zachary G. Ives University of Pennsylvania CIS 550 – Database & Information Systems November 16, 2004

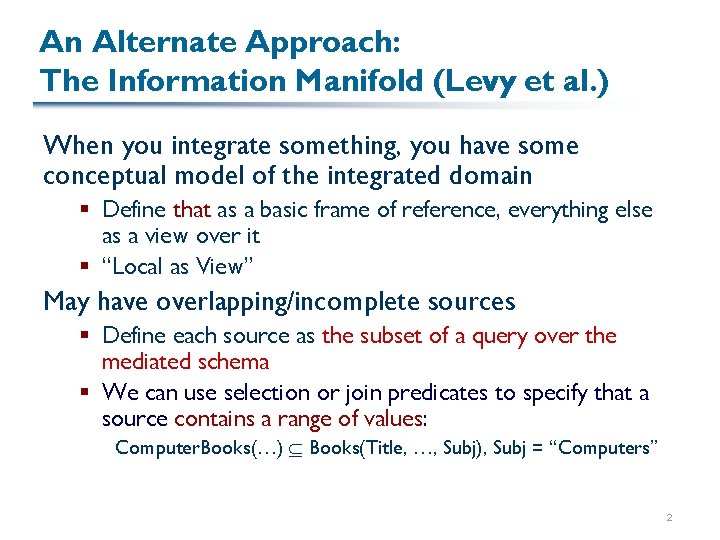

An Alternate Approach: The Information Manifold (Levy et al.)

When you integrate something, you have some conceptual model of the integrated domain:

- Define that as a basic frame of reference, and everything else as a view over it
- “Local as View”

Sources may be overlapping or incomplete:

- Define each source as the subset of a query over the mediated schema
- We can use selection or join predicates to specify that a source contains a range of values:
  ComputerBooks(…) ⊆ Books(Title, …, Subj), Subj = “Computers”

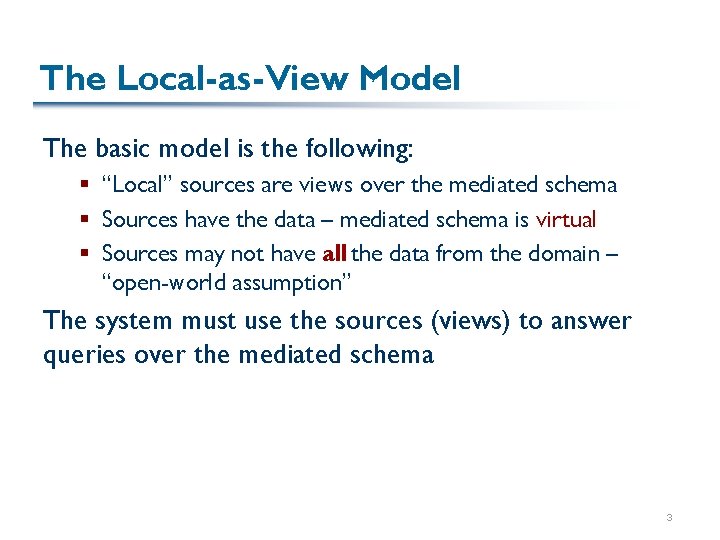

The Local-as-View Model

The basic model is the following:

- “Local” sources are views over the mediated schema
- Sources have the data; the mediated schema is virtual
- Sources may not have all the data from the domain: the “open-world assumption”

The system must use the sources (views) to answer queries over the mediated schema.

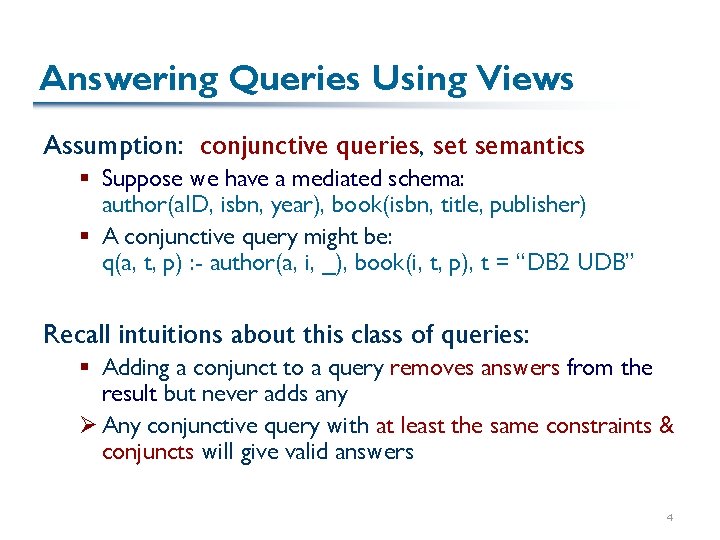

Answering Queries Using Views

Assumption: conjunctive queries, set semantics

- Suppose we have a mediated schema:
  author(aID, isbn, year), book(isbn, title, publisher)
- A conjunctive query might be:
  q(a, t, p) :- author(a, i, _), book(i, t, p), t = “DB2 UDB”

Recall intuitions about this class of queries:

- Adding a conjunct to a query removes answers from the result but never adds any
- Hence any conjunctive query with at least the same constraints & conjuncts will give valid answers

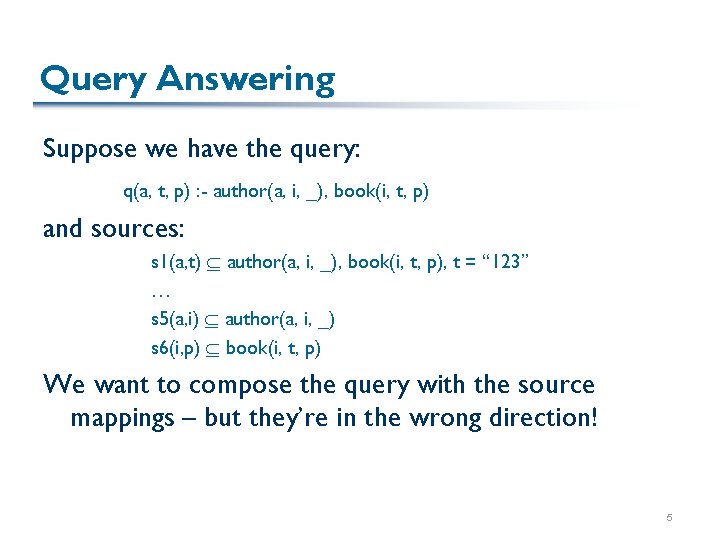

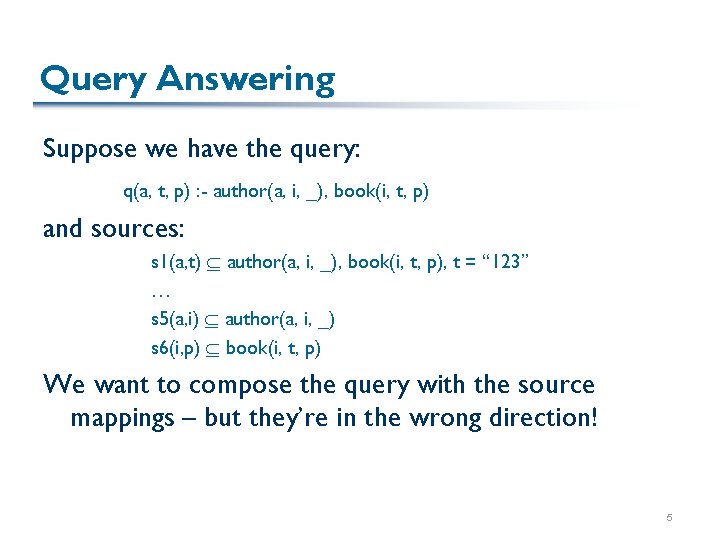

Query Answering

Suppose we have the query:

q(a, t, p) :- author(a, i, _), book(i, t, p)

and sources:

- s1(a, t) ⊆ author(a, i, _), book(i, t, p), t = “123”
- …
- s5(a, i) ⊆ author(a, i, _)
- s6(i, p) ⊆ book(i, t, p)

We want to compose the query with the source mappings, but they’re in the wrong direction!

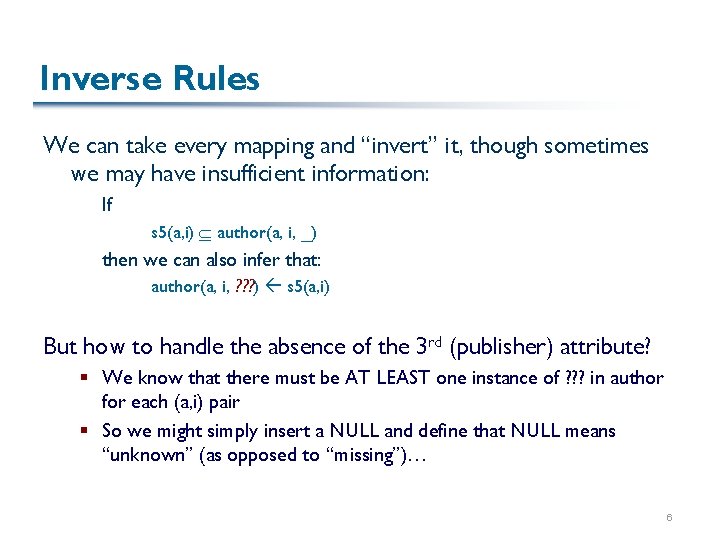

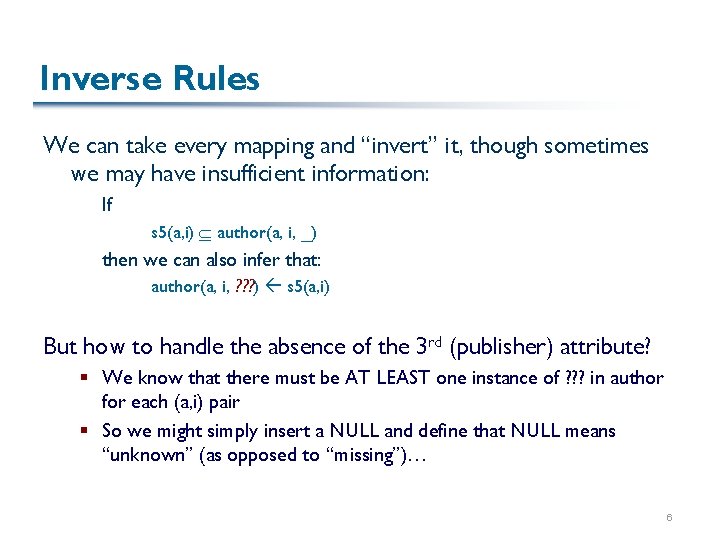

Inverse Rules

We can take every mapping and “invert” it, though sometimes we may have insufficient information:

- If s5(a, i) ⊆ author(a, i, _)
- then we can also infer that author(a, i, ???) ⊇ s5(a, i)

But how do we handle the absence of the 3rd (year) attribute?

- We know that there must be AT LEAST one instance of ??? in author for each (a, i) pair
- So we might simply insert a NULL and define that NULL means “unknown” (as opposed to “missing”)…

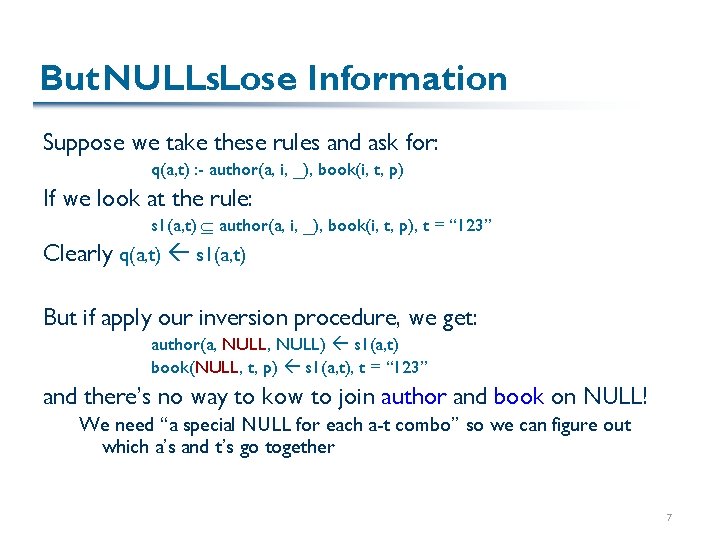

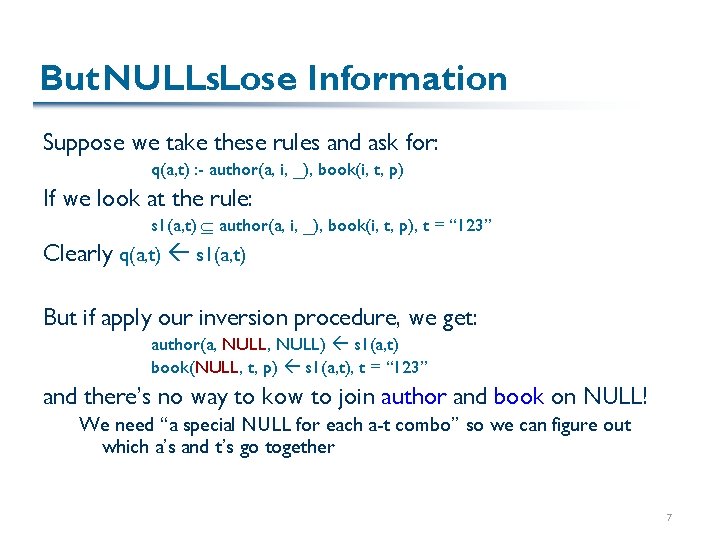

But NULLs Lose Information

Suppose we take these rules and ask for:

q(a, t) :- author(a, i, _), book(i, t, p)

If we look at the rule:

s1(a, t) ⊆ author(a, i, _), book(i, t, p), t = “123”

then clearly q(a, t) ⊇ s1(a, t).

But if we apply our inversion procedure, we get:

- author(a, NULL, NULL) ⊇ s1(a, t)
- book(NULL, t, p) ⊇ s1(a, t), t = “123”

and there’s no way to know to join author and book on NULL! We need “a special NULL for each a-t combo” so we can figure out which a’s and t’s go together.

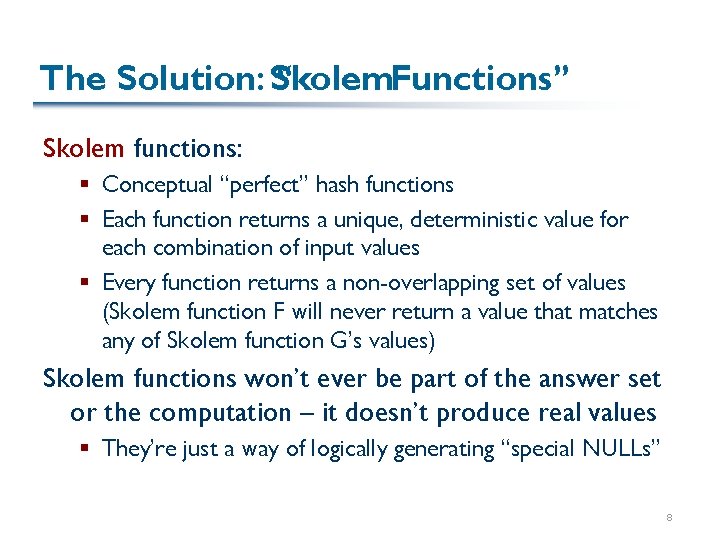

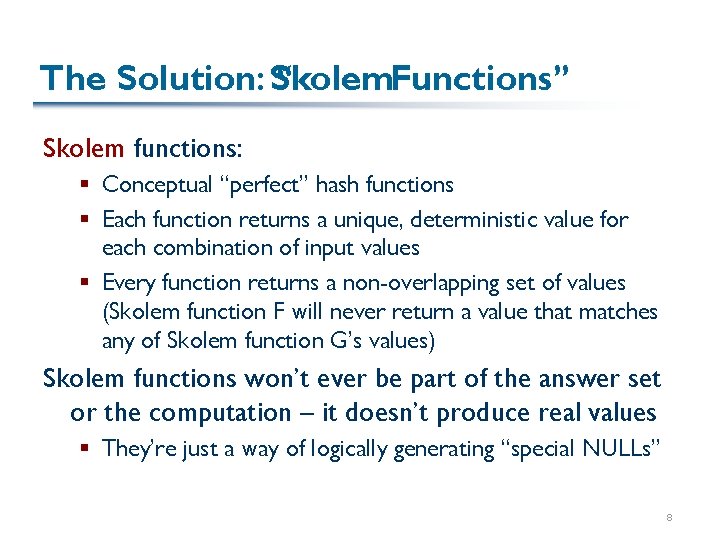

The Solution: Skolem “Functions”

Skolem functions:

- Conceptual “perfect” hash functions
- Each function returns a unique, deterministic value for each combination of input values
- Every function returns a non-overlapping set of values (Skolem function F will never return a value that matches any of Skolem function G’s values)

Skolem functions won’t ever be part of the answer set or the computation, because they don’t produce real values:

- They’re just a way of logically generating “special NULLs”

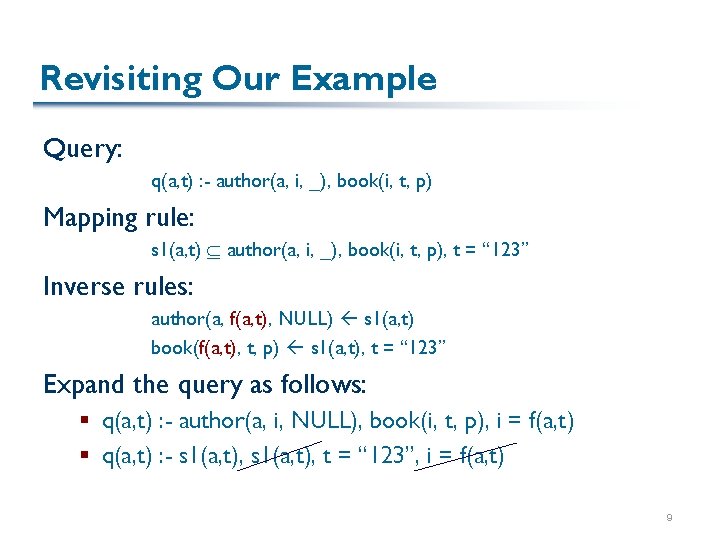

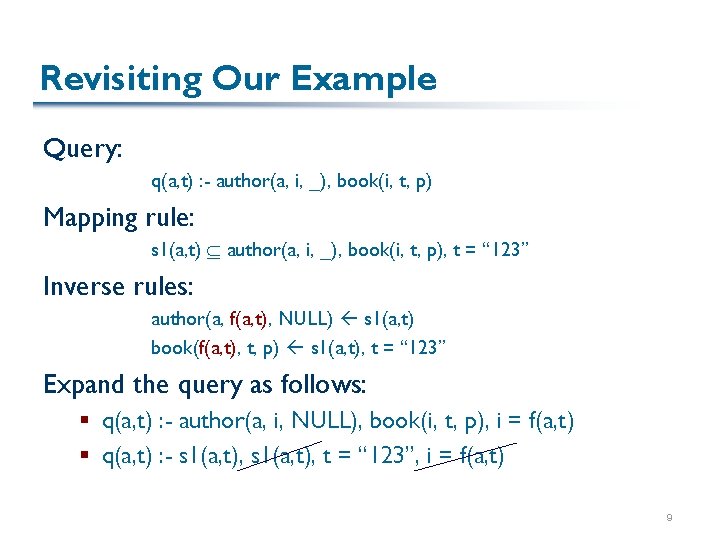

Revisiting Our Example

Query:

q(a, t) :- author(a, i, _), book(i, t, p)

Mapping rule:

s1(a, t) ⊆ author(a, i, _), book(i, t, p), t = “123”

Inverse rules:

- author(a, f(a, t), NULL) ⊇ s1(a, t)
- book(f(a, t), t, p) ⊇ s1(a, t), t = “123”

Expand the query as follows:

- q(a, t) :- author(a, i, NULL), book(i, t, p), i = f(a, t)
- q(a, t) :- s1(a, t), t = “123”, i = f(a, t)
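The expansion above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the contents of `s1` are made-up sample data, `skolem_f` is a hypothetical stand-in for the Skolem function f, and `None` plays the role of a plain NULL for attributes the source does not supply.

```python
# Hypothetical source instance for s1(a, t); the values are invented for illustration.
s1 = {("Smith", "123"), ("Jones", "123")}


def skolem_f(a, t):
    """Skolem term f(a, t): a deterministic placeholder, never a real ISBN."""
    return ("f", a, t)


# Inverse rules: each s1(a, t) tuple implies one author tuple and one book tuple
# that share the same unknown ISBN f(a, t). None stands in for a plain NULL.
author = {(a, skolem_f(a, t), None) for (a, t) in s1}
book = {(skolem_f(a, t), t, None) for (a, t) in s1 if t == "123"}


def q(author_rel, book_rel):
    """q(a, t) :- author(a, i, _), book(i, t, p)."""
    return {
        (a, t)
        for (a, i, _year) in author_rel
        for (i2, t, _pub) in book_rel
        if i == i2  # the join succeeds only when the Skolem terms match
    }


result = q(author, book)
print(sorted(result))
```

The Skolem terms are used only to line up the author and book tuples that came from the same s1 tuple; they are projected away and never appear in the answer.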

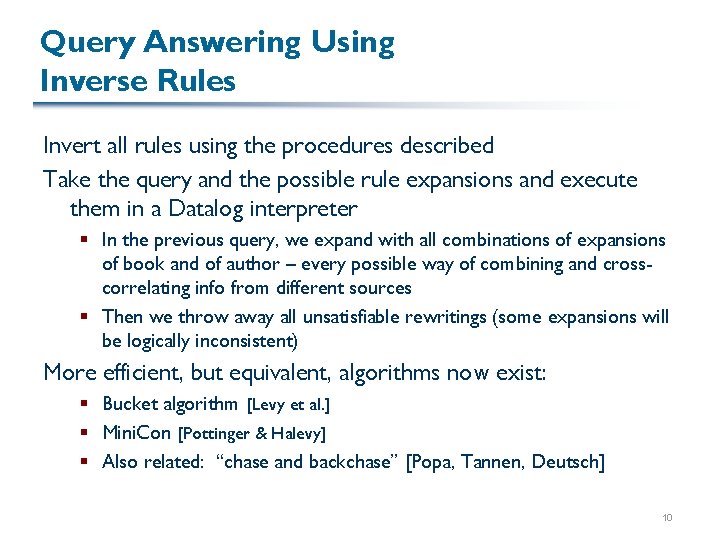

Query Answering Using Inverse Rules

Invert all rules using the procedures described.

Take the query and the possible rule expansions and execute them in a Datalog interpreter:

- In the previous query, we expand with all combinations of expansions of book and of author, i.e., every possible way of combining and cross-correlating info from different sources
- Then we throw away all unsatisfiable rewritings (some expansions will be logically inconsistent)

More efficient, but equivalent, algorithms now exist:

- Bucket algorithm [Levy et al.]
- MiniCon [Pottinger & Halevy]
- Also related: “chase and backchase” [Popa, Tannen, Deutsch]

Summary of Data Integration

Local-as-view integration has replaced global-as-view as the standard:

- More robust way of defining mediated schemas and sources
- Mediated schema is clearly defined, less likely to change
- Sources can be more accurately described

Methods exist for query reformulation, including inverse rules.

Integration requires standardization on a single schema:

- Can be hard to get consensus
- Today we have peer-to-peer data integration, e.g., Piazza [Halevy et al.], Orchestra [Ives et al.], Hyperion [Miller et al.]

Some other aspects of integration were addressed in related papers:

- Overlap between sources; coverage of data at sources
- Semi-automated creation of mappings and wrappers

Data integration capabilities in commercial products: BEA’s Liquid Data, IBM’s DB2 Information Integrator, numerous packages from middleware companies

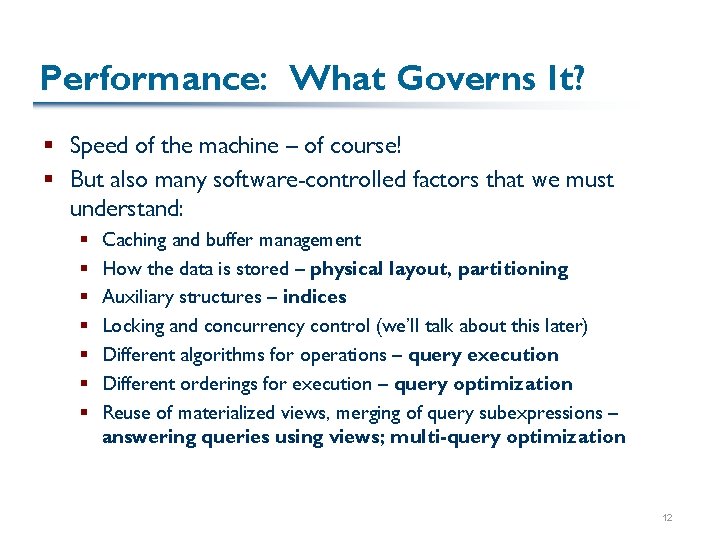

Performance: What Governs It?

- Speed of the machine – of course!
- But also many software-controlled factors that we must understand:
  - Caching and buffer management
  - How the data is stored – physical layout, partitioning
  - Auxiliary structures – indices
  - Locking and concurrency control (we’ll talk about this later)
  - Different algorithms for operations – query execution
  - Different orderings for execution – query optimization
  - Reuse of materialized views, merging of query subexpressions – answering queries using views; multi-query optimization

Our General Emphasis

- Goal: cover basic principles that are applied throughout database system design
- Use the appropriate strategy in the appropriate place
  - Every (reasonable) algorithm is good somewhere
- … And a corollary: database people reinvent a lot of things and add minor tweaks…

What’s the “Base” in “Database”?

- Could just be a file with random access
  - What are the advantages and disadvantages?
- DBs generally require “raw” disk access
  - Need to know when a page is actually written to disk, vs. queued by the OS
  - Predictable performance, less fragmentation
  - May want to exploit striping or contiguous regions
  - Typically divided into “extents” and pages

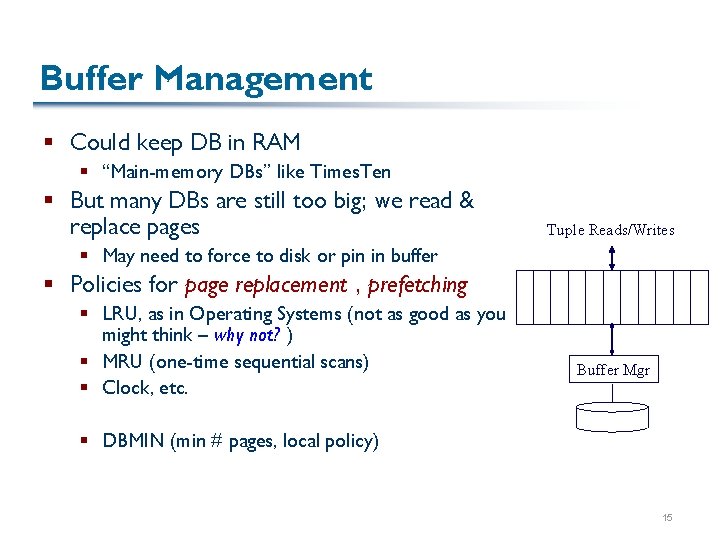

Buffer Management

- Could keep DB in RAM
  - “Main-memory DBs” like TimesTen
- But many DBs are still too big; we read & replace pages
  - May need to force to disk or pin in buffer
- Policies for page replacement, prefetching
  - LRU, as in operating systems (not as good as you might think – why not?)
  - MRU (one-time sequential scans)
  - Clock, etc.
  - DBMIN (min # pages, local policy)

(Figure: tuple reads/writes go through the buffer manager.)
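One commonly given answer to the “why not?” about LRU is sequential flooding: when a file slightly larger than the buffer pool is scanned repeatedly, LRU evicts each page just before it is needed again. The sketch below is a toy simulation, not a real buffer manager; the function name `count_misses`, the 4-frame pool, and the 5-page file are all assumptions chosen for illustration.

```python
from collections import OrderedDict


def count_misses(policy, num_frames, accesses):
    """Count page faults for 'LRU' or 'MRU' replacement over an access trace."""
    pool = OrderedDict()  # page id -> None, ordered least to most recently used
    misses = 0
    for page in accesses:
        if page in pool:
            pool.move_to_end(page)  # hit: mark as most recently used
            continue
        misses += 1
        if len(pool) >= num_frames:
            # LRU evicts the oldest entry (front); MRU evicts the newest (back).
            pool.popitem(last=(policy == "MRU"))
        pool[page] = None
    return misses


# Scan a 5-page file three times with only 4 buffer frames.
trace = list(range(5)) * 3
lru_misses = count_misses("LRU", 4, trace)
mru_misses = count_misses("MRU", 4, trace)
print(lru_misses, mru_misses)  # 15 7
```

With LRU every one of the 15 accesses misses, while MRU keeps most of the file resident and misses only 7 times on this trace.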

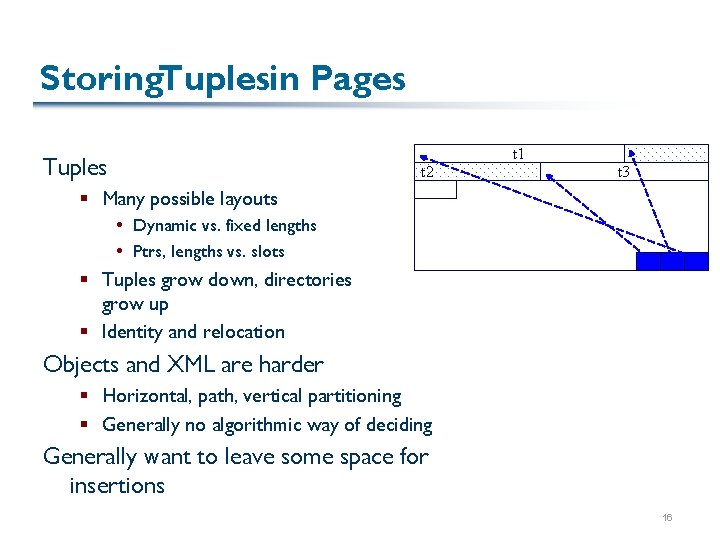

Storing Tuples in Pages

- Many possible layouts
  - Dynamic vs. fixed lengths
  - Ptrs, lengths vs. slots
- Tuples grow down, directories grow up
- Identity and relocation
- Objects and XML are harder
  - Horizontal, path, vertical partitioning
  - Generally no algorithmic way of deciding
- Generally want to leave some space for insertions

(Figure: a page holding tuples t1, t2, t3.)

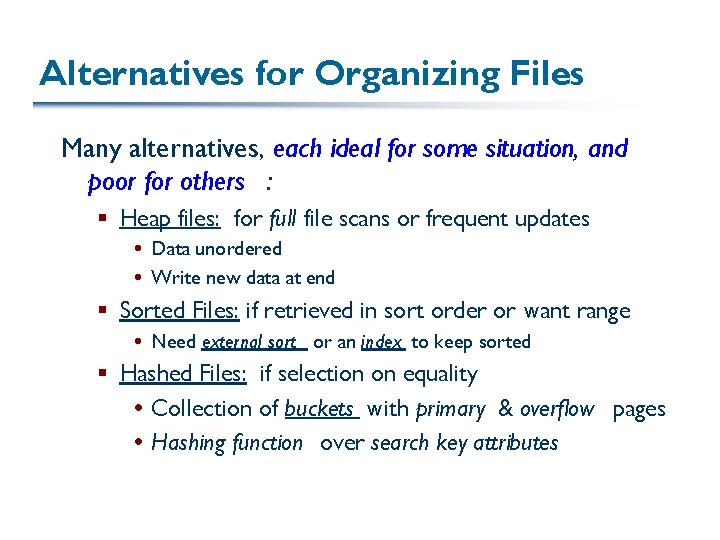

Alternatives for Organizing Files

Many alternatives, each ideal for some situations and poor for others:

- Heap files: for full file scans or frequent updates
  - Data unordered
  - Write new data at end
- Sorted files: if retrieved in sort order or want range
  - Need external sort or an index to keep sorted
- Hashed files: if selection on equality
  - Collection of buckets with primary & overflow pages
  - Hashing function over search key attributes

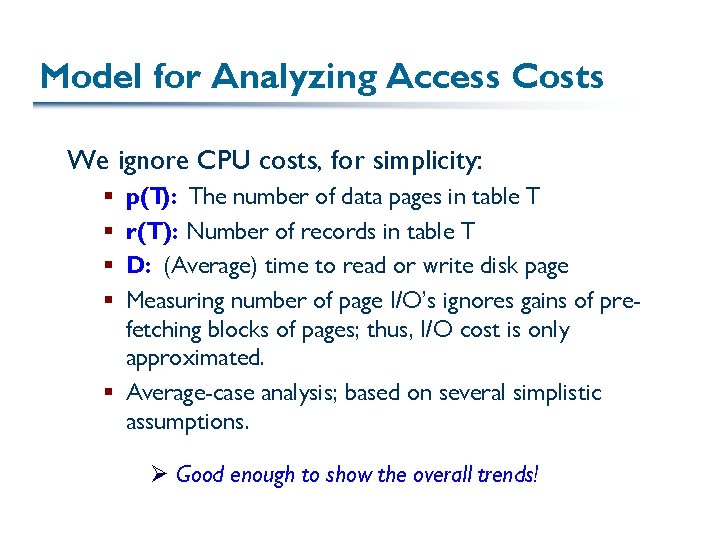

Model for Analyzing Access Costs

We ignore CPU costs, for simplicity:

- p(T): the number of data pages in table T
- r(T): the number of records in table T
- D: (average) time to read or write a disk page
- Measuring the number of page I/Os ignores gains of prefetching blocks of pages; thus, I/O cost is only approximated.
- Average-case analysis, based on several simplistic assumptions.

Good enough to show the overall trends!

Assumptions in Our Analysis

- Single record insert and delete
- Heap files:
  - Equality selection on key; exactly one match
  - Insert always at end of file
- Sorted files:
  - Files compacted after deletions
  - Selections on sort field(s)
- Hashed files:
  - No overflow buckets, 80% page occupancy

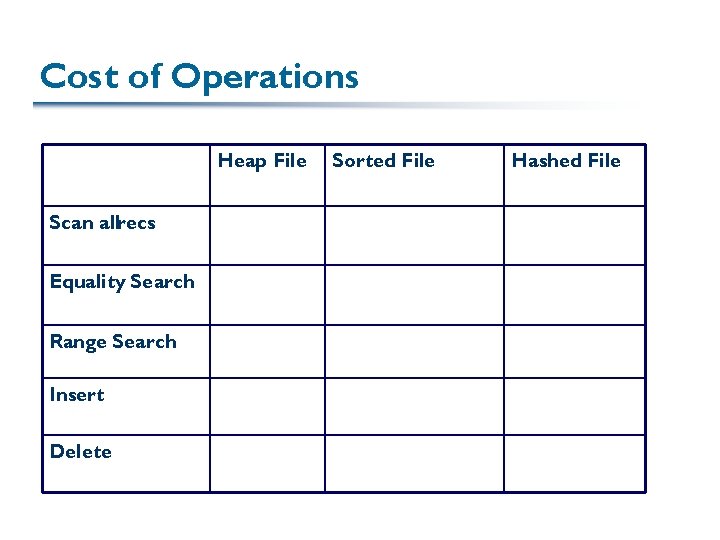

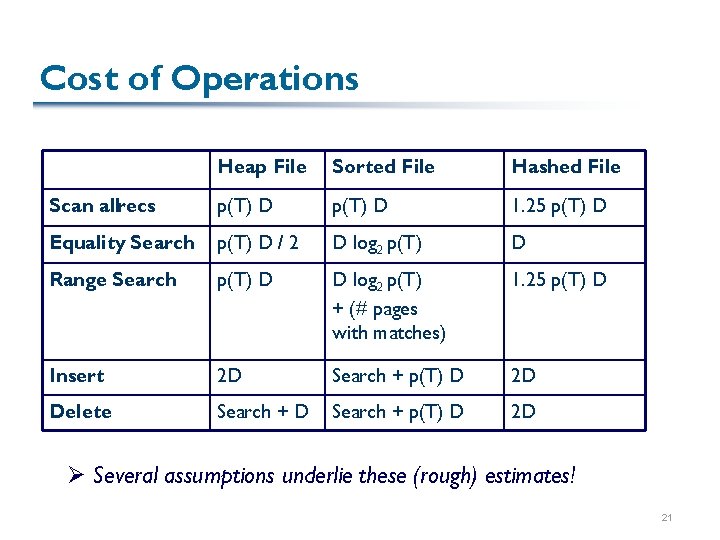

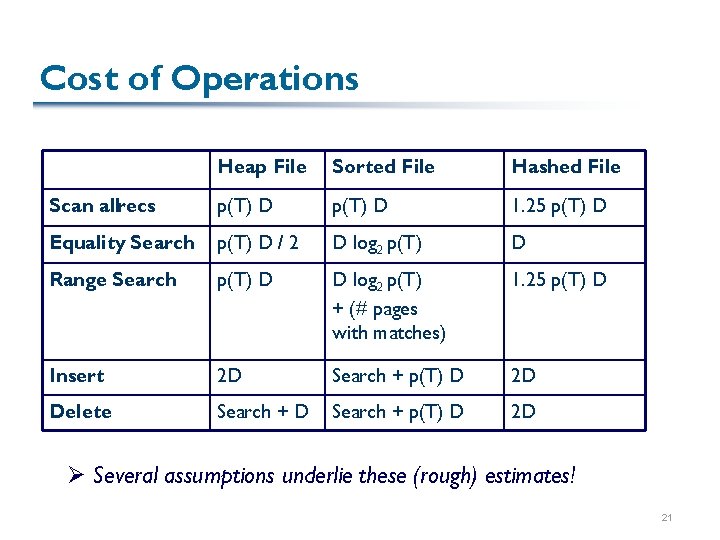


Cost of Operations

| Operation | Heap File | Sorted File | Hashed File |
|---|---|---|---|
| Scan all recs | $p(T)\,D$ | $p(T)\,D$ | $1.25\,p(T)\,D$ |
| Equality Search | $p(T)\,D / 2$ | $D \log_2 p(T)$ | $D$ |
| Range Search | $p(T)\,D$ | $D \log_2 p(T)$ + $D \cdot$ (# pages with matches) | $1.25\,p(T)\,D$ |
| Insert | $2D$ | Search + $p(T)\,D$ | $2D$ |
| Delete | Search + $D$ | Search + $p(T)\,D$ | $2D$ |

Several assumptions underlie these (rough) estimates!
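To get a feel for the magnitudes, the estimates can be evaluated for a concrete table. The sketch below is hypothetical: the function name `access_costs`, the 1024-page table, and the 10 ms page time are made up, “Search” is taken to mean the equality-search cost for the same file type, and the sorted-file scan is assumed to cost p(T) D like a heap scan.

```python
import math


def access_costs(p, d, match_pages=1):
    """Estimated times per file type for p data pages and page I/O time d."""
    heap_search = p * d / 2            # on average, scan half the file
    sorted_search = d * math.log2(p)   # binary search over pages
    return {
        "heap": {
            "scan": p * d,
            "equality": heap_search,
            "range": p * d,
            "insert": 2 * d,
            "delete": heap_search + d,
        },
        "sorted": {
            "scan": p * d,
            "equality": sorted_search,
            "range": sorted_search + d * match_pages,
            "insert": sorted_search + p * d,
            "delete": sorted_search + p * d,
        },
        "hashed": {  # 80% occupancy means 1.25x as many pages to scan
            "scan": 1.25 * p * d,
            "equality": d,
            "range": 1.25 * p * d,
            "insert": 2 * d,
            "delete": 2 * d,
        },
    }


costs = access_costs(p=1024, d=0.01)
for file_type, ops in costs.items():
    print(file_type, {op: round(t, 3) for op, t in ops.items()})
```

Even with these rough numbers the trend is visible: equality search costs about 5 s on the heap file, 0.1 s on the sorted file, and 0.01 s on the hashed file.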

Speeding Operations over Data

Three general data organization techniques:

- Indexing
- Sorting
- Hashing

Technique I: Indexing

- An index on a file speeds up selections on the search key attributes for the index (trade space for speed).
  - Any subset of the fields of a relation can be the search key for an index on the relation.
  - Search key is not the same as key (minimal set of fields that uniquely identify a record in a relation).
- An index contains a collection of data entries, and supports efficient retrieval of all data entries k* with a given key value k.

Alternatives for Data Entry k* in Index

Three alternatives:

1. Data record with key value k
   - Pro: clustered, so fast lookup
   - Con: index is large; only 1 can exist
2. <k, rid of data record with search key value k>, OR
3. <k, list of rids of data records with search key k>
   - Pro: can have secondary indices
   - Pro: smaller index may mean faster lookup
   - Con: often not clustered, so more expensive to use

Choice of alternative for data entries is orthogonal to the indexing technique used to locate data entries with a given key value k.

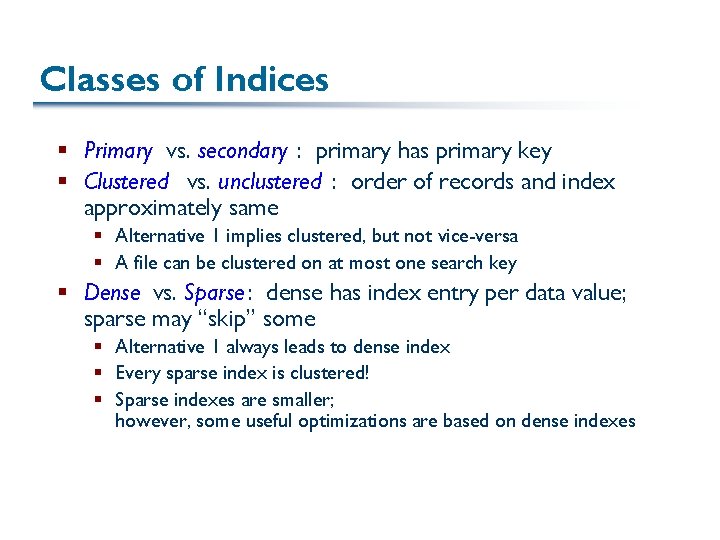

Classes of Indices

- Primary vs. secondary: primary has primary key
- Clustered vs. unclustered: order of records and index approximately same
  - Alternative 1 implies clustered, but not vice-versa
  - A file can be clustered on at most one search key
- Dense vs. sparse: dense has index entry per data value; sparse may “skip” some
  - Alternative 1 always leads to dense index
  - Every sparse index is clustered!
  - Sparse indexes are smaller; however, some useful optimizations are based on dense indexes

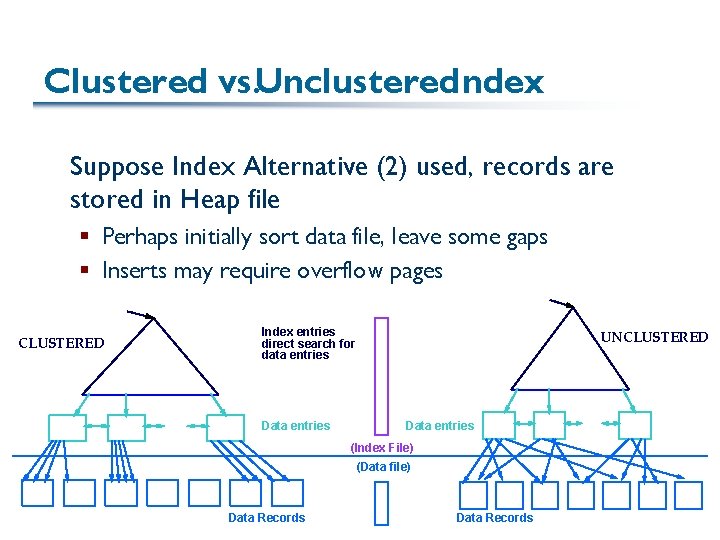

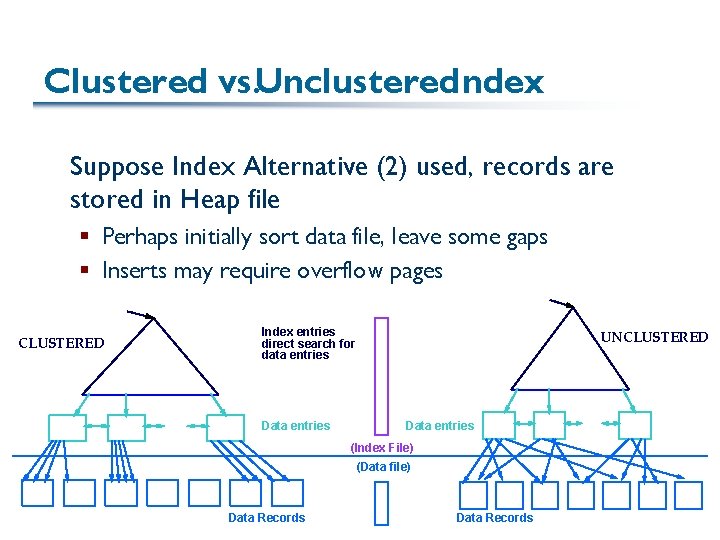

Clustered vs. Unclustered Index

Suppose Index Alternative (2) is used and records are stored in a heap file:

- Perhaps initially sort data file, leave some gaps
- Inserts may require overflow pages

(Figure: CLUSTERED vs. UNCLUSTERED. In both, index entries direct the search for data entries in the index file, and data entries point to data records in the data file.)

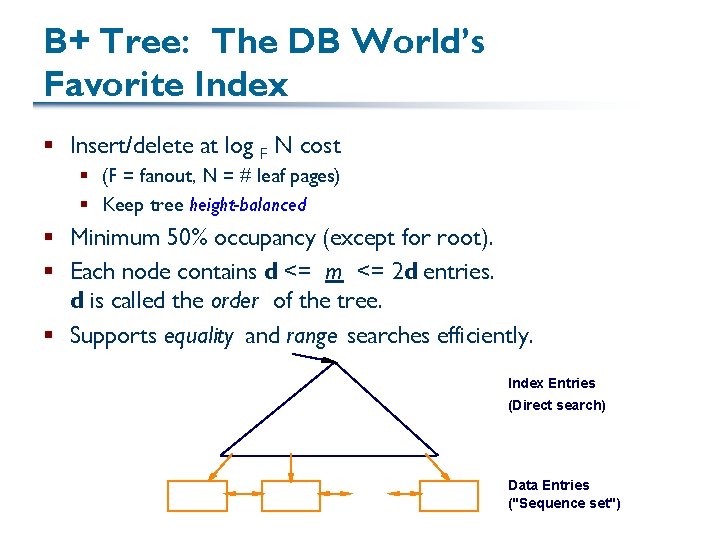

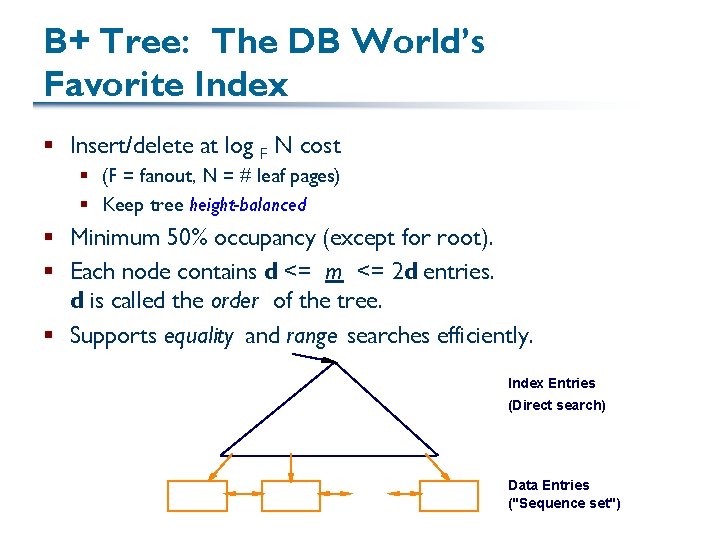

B+ Tree: The DB World’s Favorite Index

- Insert/delete at $\log_F N$ cost
  - (F = fanout, N = # leaf pages)
  - Keep tree height-balanced
- Minimum 50% occupancy (except for root).
- Each node contains $d \le m \le 2d$ entries. d is called the order of the tree.
- Supports equality and range searches efficiently.

(Figure: index entries on top direct the search; data entries at the leaf level form the “sequence set”.)

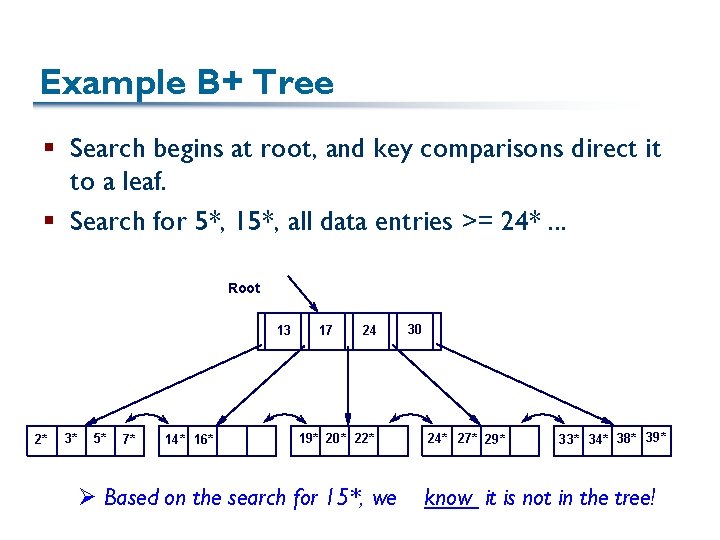

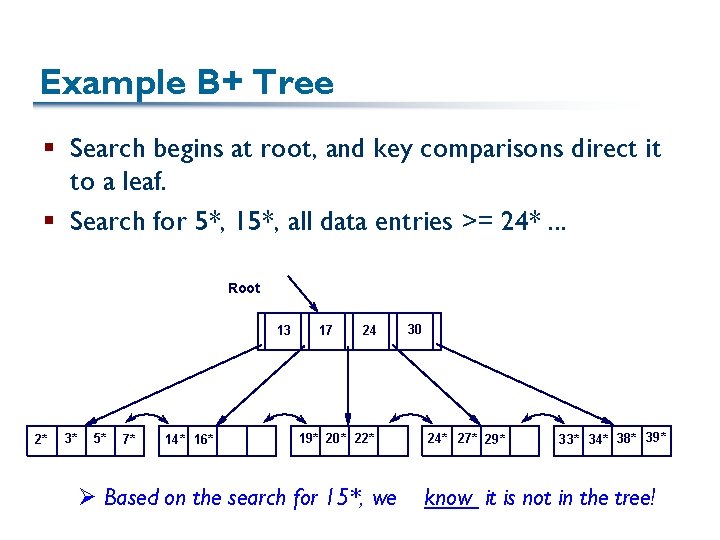

Example B+ Tree

- Search begins at root, and key comparisons direct it to a leaf.
- Search for 5*, 15*, all data entries >= 24* ...
- Based on the search for 15*, we know it is not in the tree!

(Figure: root with keys 13, 17, 24, 30; leaves 2* 3* 5* 7* | 14* 16* | 19* 20* 22* | 24* 27* 29* | 33* 34* 38* 39*.)
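The three searches on this example can be traced with a small sketch. It hard-codes the two-level tree from the figure rather than implementing a general B+ tree; the names `find_leaf`, `search`, and `range_from` are made up for this illustration.

```python
from bisect import bisect_right

# The example tree: one root node with four keys and five leaves.
root_keys = [13, 17, 24, 30]
leaves = [
    [2, 3, 5, 7],
    [14, 16],
    [19, 20, 22],
    [24, 27, 29],
    [33, 34, 38, 39],
]


def find_leaf(key):
    """Child i holds keys k with root_keys[i-1] <= k < root_keys[i]."""
    return bisect_right(root_keys, key)


def search(key):
    """Equality search: follow the root to one leaf, then look inside it."""
    return key in leaves[find_leaf(key)]


def range_from(low):
    """All data entries >= low: find the first leaf, then walk the sequence set."""
    start = find_leaf(low)
    return [k for leaf in leaves[start:] for k in leaf if k >= low]


print(search(5))       # True
print(search(15))      # False: the leaf 14*, 16* is the only place 15* could be
print(range_from(24))  # [24, 27, 29, 33, 34, 38, 39]
```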

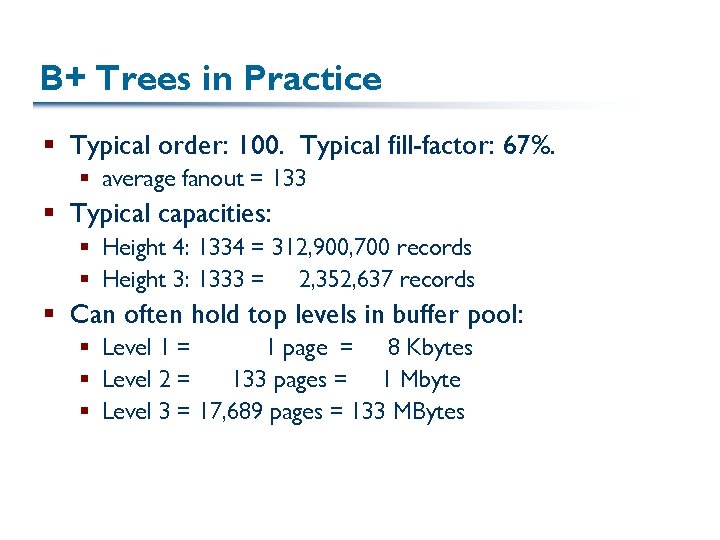

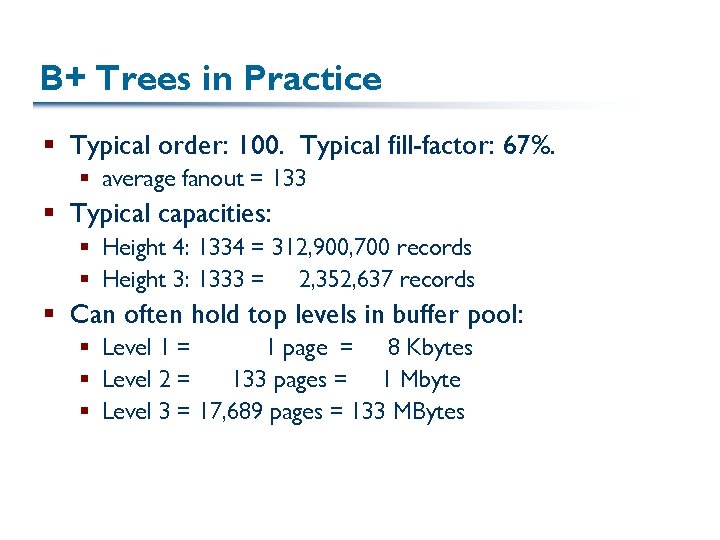

B+ Trees in Practice

- Typical order: 100. Typical fill-factor: 67%.
  - average fanout = 133
- Typical capacities:
  - Height 4: $133^4$ ≈ 312,900,700 records
  - Height 3: $133^3$ = 2,352,637 records
- Can often hold top levels in buffer pool:
  - Level 1 = 1 page = 8 Kbytes
  - Level 2 = 133 pages = 1 Mbyte
  - Level 3 = 17,689 pages = 133 MBytes
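These figures follow from the fanout alone, as the short calculation below shows. It assumes the rounded fanout of 133 and 8 KB pages from the slide; the variable names are made up. The exact value of 133^4 is 312,900,721, and the level sizes on the slide are rounded (133 pages of 8 KB is slightly over 1 MB).

```python
fanout = 133   # rounded average fanout: order 100, nodes about 2/3 full
page_kb = 8    # assumed page size in kilobytes

records_height_3 = fanout ** 3
records_height_4 = fanout ** 4

# Number of pages on levels 1..3 (the root is level 1) and their size in MB.
pages_per_level = [fanout ** level for level in range(3)]
level_mb = [pages * page_kb / 1024 for pages in pages_per_level]

print(records_height_3)   # 2352637
print(records_height_4)   # 312900721
print(pages_per_level)    # [1, 133, 17689]
print([round(mb, 2) for mb in level_mb])
```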

Inserting Data into a B+ Tree

- Find correct leaf L.
- Put data entry onto L.
  - If L has enough space, done!
  - Else, must split L (into L and a new node L2)
    - Redistribute entries evenly, copy up middle key.
    - Insert index entry pointing to L2 into parent of L.
- This can happen recursively
  - To split index node, redistribute entries evenly, but push up middle key. (Contrast with leaf splits.)
- Splits “grow” tree; root split increases height.
  - Tree growth: gets wider or one level taller at top.

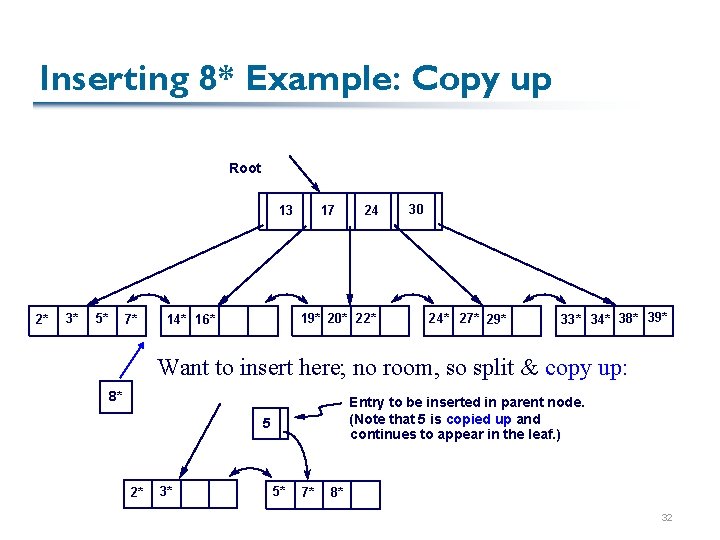

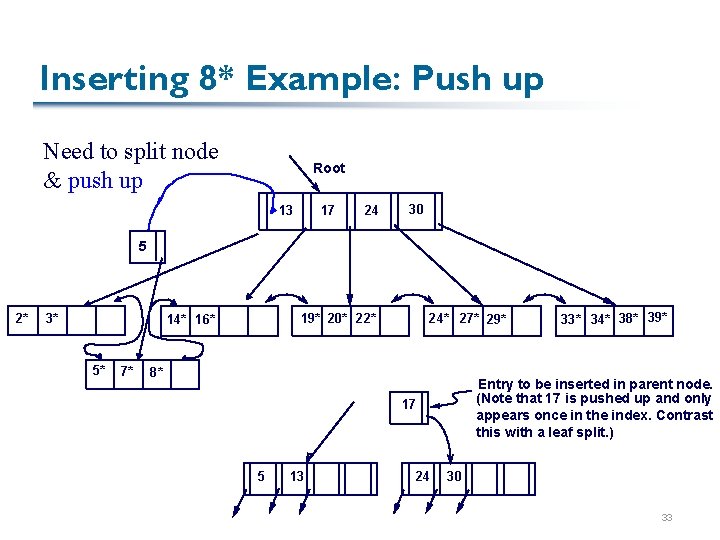

Inserting 8* into Example B+ Tree

- Observe how minimum occupancy is guaranteed in both leaf and index page splits.
- Recall that all data items are in leaves, and partition values for keys are in intermediate nodes.

Note the difference between copy-up and push-up.

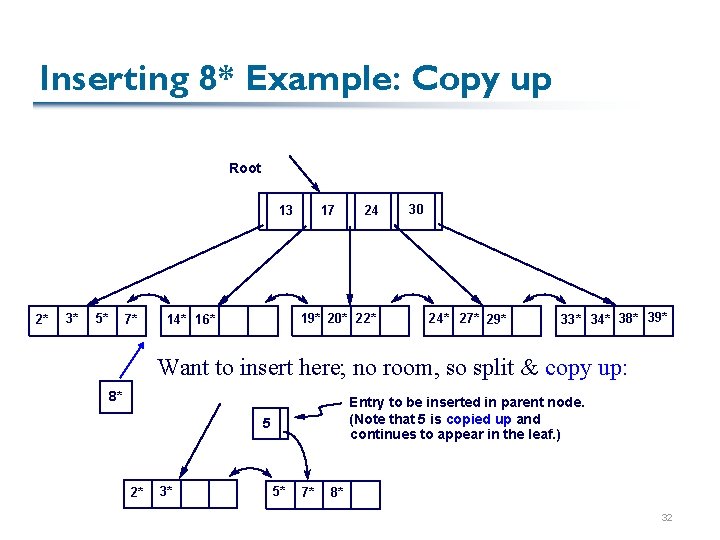

Inserting 8* Example: Copy up

- Tree before the insert: root 13, 17, 24, 30; leaves 2* 3* 5* 7* | 14* 16* | 19* 20* 22* | 24* 27* 29* | 33* 34* 38* 39*
- We want to insert 8* into the first leaf; there is no room, so split & copy up
- The leaf splits into 2* 3* and 5* 7* 8*
- Entry to be inserted in parent node: 5. (Note that 5 is copied up and continues to appear in the leaf.)

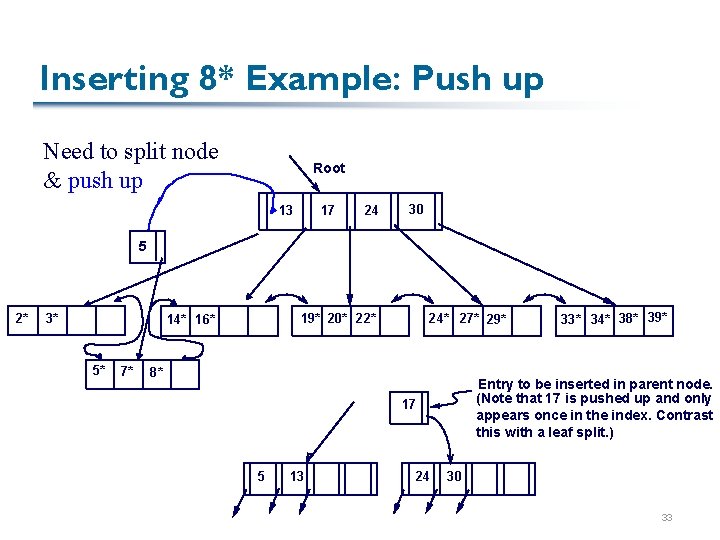

Inserting 8* Example: Push up

- The root would now need to hold 5, 13, 17, 24, 30: need to split the node & push up
- Entry to be inserted in parent node: 17. (Note that 17 is pushed up and only appears once in the index. Contrast this with a leaf split.)
- Resulting tree: new root 17; index nodes 5, 13 and 24, 30; leaves 2* 3* | 5* 7* 8* | 14* 16* | 19* 20* 22* | 24* 27* 29* | 33* 34* 38* 39*
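The difference between the two kinds of split comes down to what happens to the middle key. The sketch below shows only the split step on plain Python lists, under the assumption that the node has already received the new entry and is overfull; `split_leaf` and `split_index` are hypothetical helper names, not part of any library.

```python
def split_leaf(entries):
    """Split an overfull leaf; the middle key is COPIED up and stays in the right leaf."""
    mid = len(entries) // 2
    left, right = entries[:mid], entries[mid:]
    return left, right, right[0]


def split_index(keys):
    """Split an overfull index node; the middle key is PUSHED up and leaves the node."""
    mid = len(keys) // 2
    return keys[:mid], keys[mid + 1:], keys[mid]


# Leaf 2* 3* 5* 7* after adding 8*:
leaf_left, leaf_right, copied = split_leaf([2, 3, 5, 7, 8])
print(leaf_left, leaf_right, copied)   # [2, 3] [5, 7, 8] 5

# Root 13 17 24 30 after receiving the copied-up 5:
idx_left, idx_right, pushed = split_index([5, 13, 17, 24, 30])
print(idx_left, idx_right, pushed)     # [5, 13] [24, 30] 17
```

After the leaf split, 5 appears both in the parent and in the right leaf; after the index split, 17 appears only in the new root.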

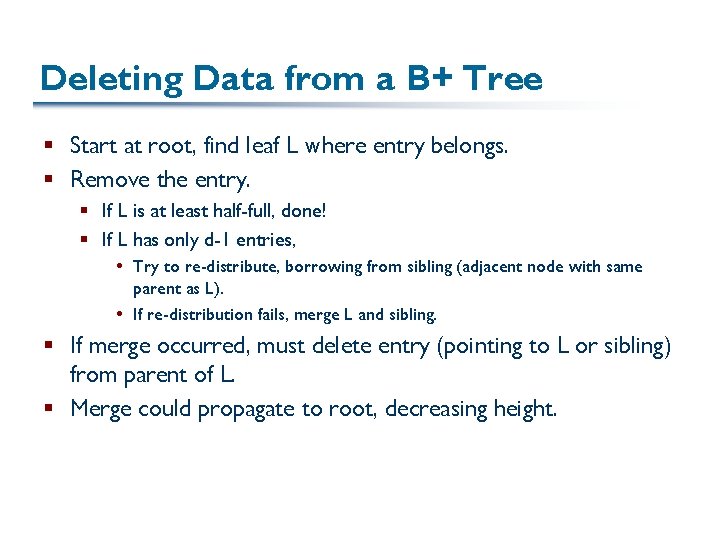

Deleting Data from a B+ Tree

- Start at root, find leaf L where entry belongs.
- Remove the entry.
  - If L is at least half-full, done!
  - If L has only d-1 entries,
    - Try to re-distribute, borrowing from sibling (adjacent node with same parent as L).
    - If re-distribution fails, merge L and sibling.
- If merge occurred, must delete entry (pointing to L or sibling) from parent of L.
- Merge could propagate to root, decreasing height.

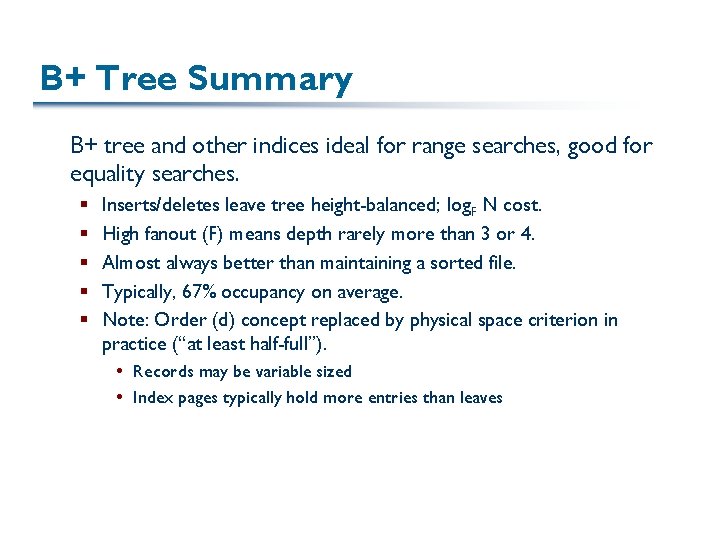

B+ Tree Summary

B+ tree and other indices are ideal for range searches, good for equality searches.

- Inserts/deletes leave tree height-balanced; $\log_F N$ cost.
- High fanout (F) means depth rarely more than 3 or 4.
- Almost always better than maintaining a sorted file.
- Typically, 67% occupancy on average.
- Note: Order (d) concept replaced by physical space criterion in practice (“at least half-full”).
  - Records may be variable sized
  - Index pages typically hold more entries than leaves

Other Kinds of Indices

- Multidimensional indices
  - R-trees, kD-trees, …
- Text indices
  - Inverted indices
- Structural indices
  - Object indices: access support relations, path indices
  - XML and graph indices: dataguides, 1-indices, d(k) indices
  - Describe parent-child, path relationships

Speeding Operations over Data

Three general data organization techniques:

- Indexing
- Sorting
- Hashing

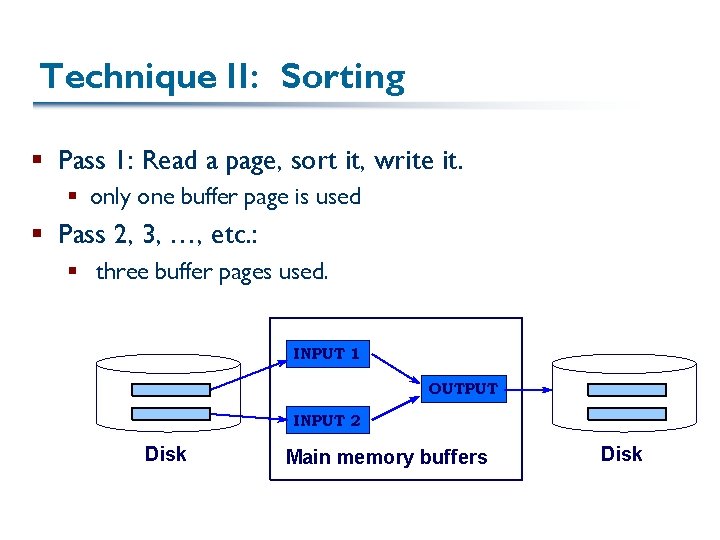

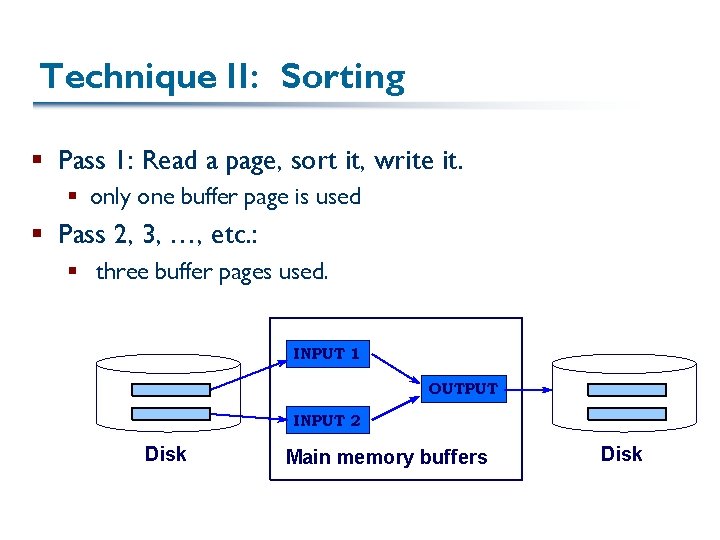

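Technique II: Sorting

- Pass 0: Read a page, sort it, write it.
  - only one buffer page is used
- Pass 1, 2, …, etc.:
  - three buffer pages used.

(Figure: pages flow from disk into two input buffers, INPUT 1 and INPUT 2, are merged into one OUTPUT buffer in main memory, and are written back to disk.)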

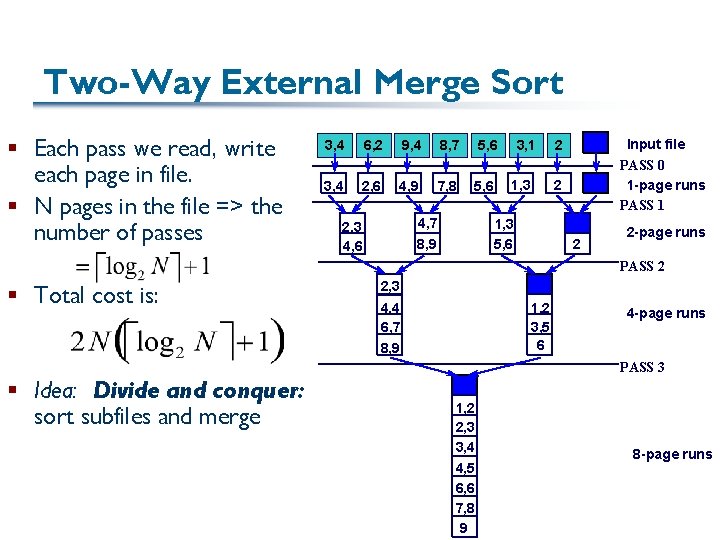

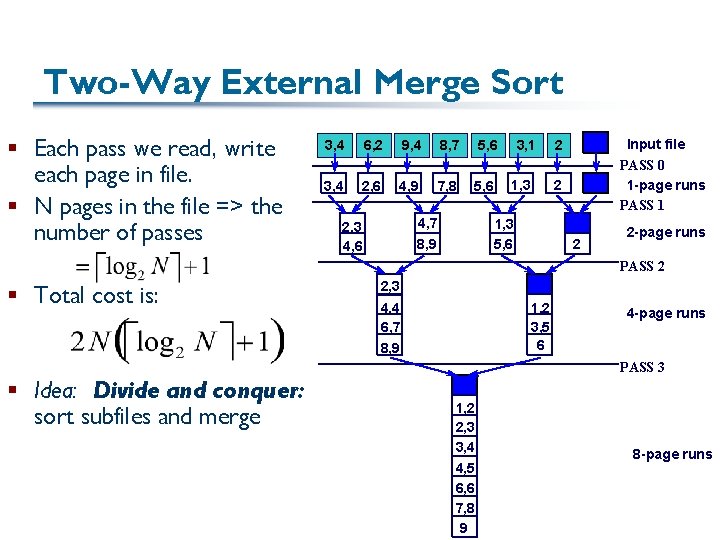

Two-Way External Merge Sort

- Each pass we read and write each page in the file.
- N pages in the file => the number of passes is $\lceil \log_2 N \rceil + 1$
- Total cost is: $2N\,(\lceil \log_2 N \rceil + 1)$
- Idea: divide and conquer: sort subfiles and merge

Example on a 7-page file (pages separated by “|”):

- Input file: 3,4 | 6,2 | 9,4 | 8,7 | 5,6 | 3,1 | 2
- After pass 0 (1-page runs): 3,4 | 2,6 | 4,9 | 7,8 | 5,6 | 1,3 | 2
- After pass 1 (2-page runs): 2,3 4,6 | 4,7 8,9 | 1,3 5,6 | 2
- After pass 2 (4-page runs): 2,3 4,4 6,7 8,9 | 1,2 3,5 6
- After pass 3 (8-page run): 1,2 2,3 3,4 4,5 6,6 7,8 9

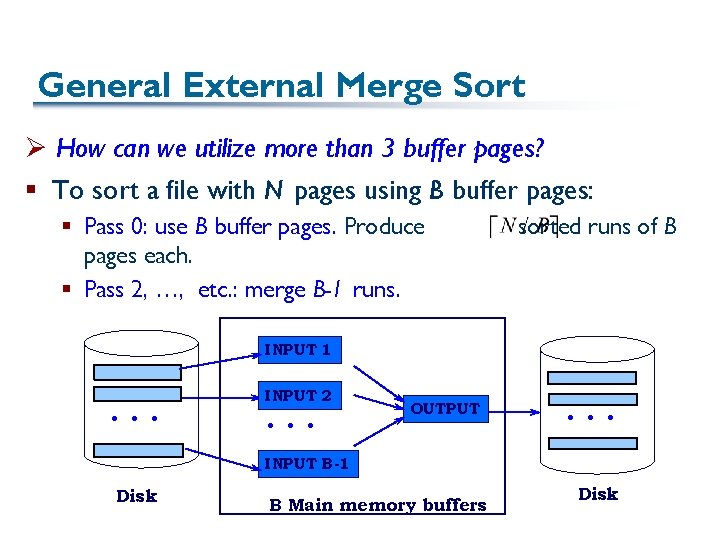

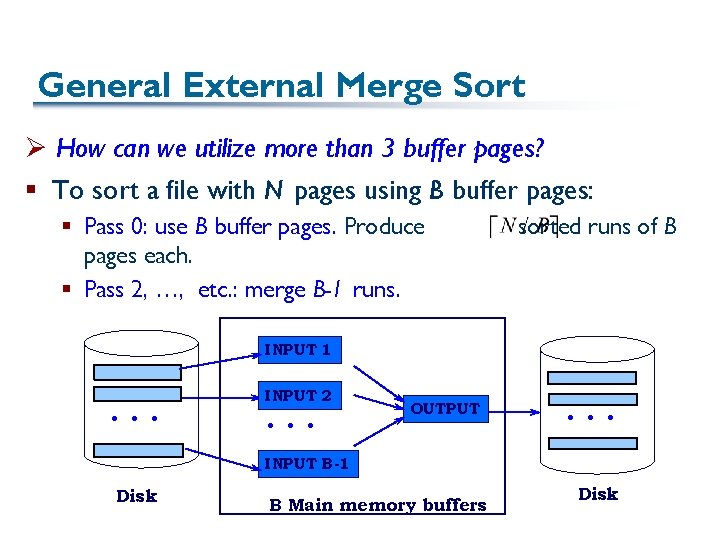

General External Merge Sort

How can we utilize more than 3 buffer pages?

- To sort a file with N pages using B buffer pages:
  - Pass 0: use B buffer pages. Produce $\lceil N/B \rceil$ sorted runs of B pages each.
  - Pass 1, 2, …, etc.: merge B-1 runs.

(Figure: B main-memory buffers; runs are read from disk into INPUT 1 … INPUT B-1, merged into one OUTPUT buffer, and written back to disk.)

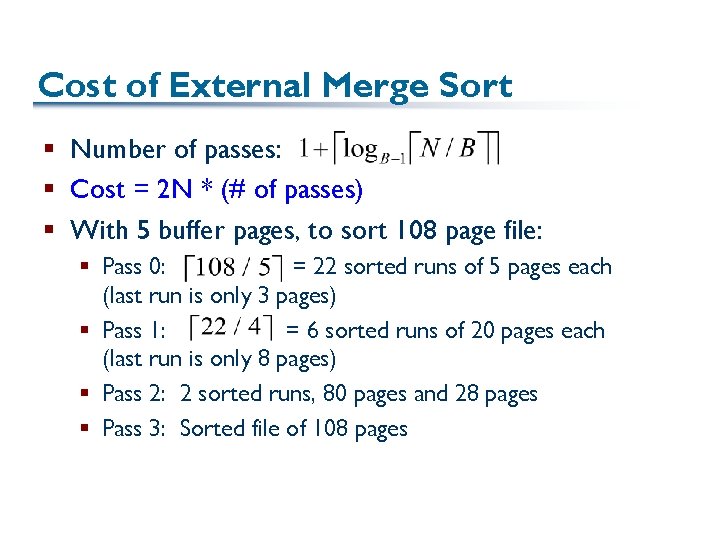

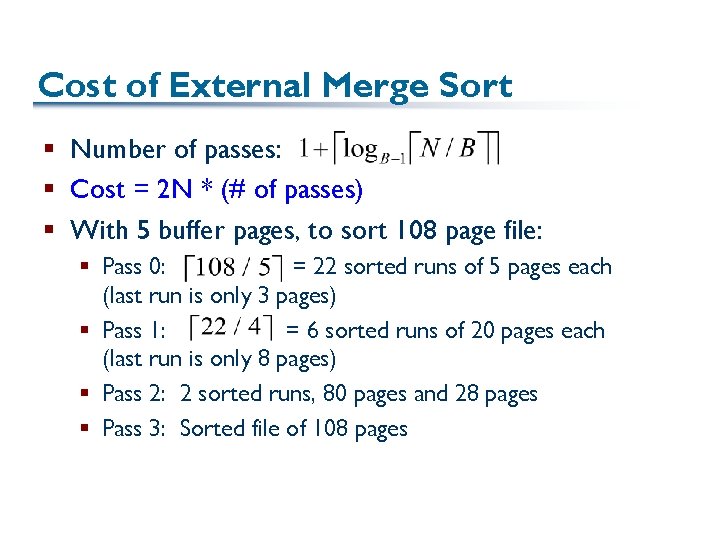

Cost of External Merge Sort

- Number of passes: $1 + \lceil \log_{B-1} \lceil N/B \rceil \rceil$
- Cost = 2N * (# of passes)
- With 5 buffer pages, to sort 108 page file:
  - Pass 0: $\lceil 108/5 \rceil$ = 22 sorted runs of 5 pages each (last run is only 3 pages)
  - Pass 1: $\lceil 22/4 \rceil$ = 6 sorted runs of 20 pages each (last run is only 8 pages)
  - Pass 2: 2 sorted runs, 80 pages and 28 pages
  - Pass 3: Sorted file of 108 pages

Speeding Operations over Data

Three general data organization techniques:

- Indexing
- Sorting
- Hashing

Technique III: Hashing

- A familiar idea:
  - Requires “good” hash function (may depend on data)
  - Distribute data across buckets
  - Often multiple items in same bucket (buckets might overflow)
- Types of hash tables:
  - Static
  - Extendible (requires directory to buckets; can split)
  - Linear (two levels, rotate through + split; bad with skew)
- Can be the basis of disk-based indices!
  - We won’t get into detail because of time, but see text
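The static case can be sketched as a fixed array of buckets, each a chain of one primary page plus overflow pages. Everything here is an illustrative assumption: the class name `StaticHashFile`, integer keys hashed with `key % num_buckets`, and a page capacity of 2 entries.

```python
class StaticHashFile:
    """Toy static hash file: a fixed number of buckets with overflow chains."""

    def __init__(self, num_buckets, page_capacity):
        self.page_capacity = page_capacity
        # Each bucket is a list of pages: the primary page, then overflow pages.
        self.buckets = [[[]] for _ in range(num_buckets)]

    def _bucket(self, key):
        return self.buckets[key % len(self.buckets)]  # assumes integer keys

    def insert(self, key):
        chain = self._bucket(key)
        if len(chain[-1]) >= self.page_capacity:
            chain.append([])  # last page is full: allocate an overflow page
        chain[-1].append(key)

    def lookup(self, key):
        """Return (found, pages_read) for an equality search."""
        chain = self._bucket(key)
        for pages_read, page in enumerate(chain, start=1):
            if key in page:
                return True, pages_read
        return False, len(chain)


f = StaticHashFile(num_buckets=4, page_capacity=2)
for k in [0, 4, 8, 12, 1]:
    f.insert(k)

print(f.lookup(1))   # (True, 1): found on the primary page
print(f.lookup(8))   # (True, 2): found on an overflow page
print(f.lookup(16))  # (False, 2): whole chain read, no match
```

Keys 0, 4, 8, and 12 all land in bucket 0, so its chain grows to two pages; this is the kind of overflow that skewed data causes in a static hash file.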