OVERVIEW OF MULTICORE PARALLEL COMPUTING AND DATA MINING

- Slides: 30

Seung-Hee Bae, Indiana University Computer Science Dept.

OUTLINE
- Motivation
- Multicore
- Parallel Computing
- Data Mining

MOTIVATION
- According to the “How Much Information” project at UC Berkeley, print, film, magnetic, and optical storage media produced about 5 exabytes of new information in 2002 (an exabyte is a billion billion bytes).
  - 5 exabytes is equivalent to about 37,000 libraries the size of the Library of Congress (17 million books).
- The rate of data growth will continue to accelerate through weblogs, digital photos and video, surveillance monitors, scientific instruments (sensors), instant messages, etc.
- Thus, we need more powerful computing platforms to deal with this much data.
- To take advantage of multicore chips, it is critical to build software with scalable parallelism.
- To deal with huge amounts of data and utilize multicore, it is essential to develop data mining tools with highly scalable parallel programming.

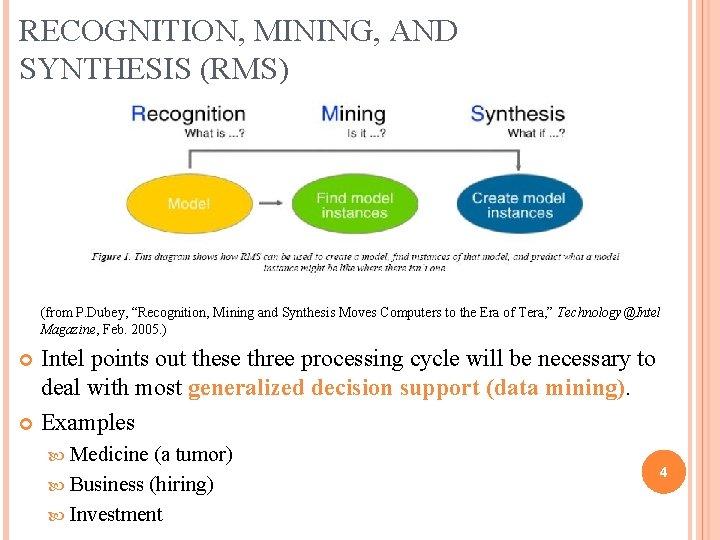

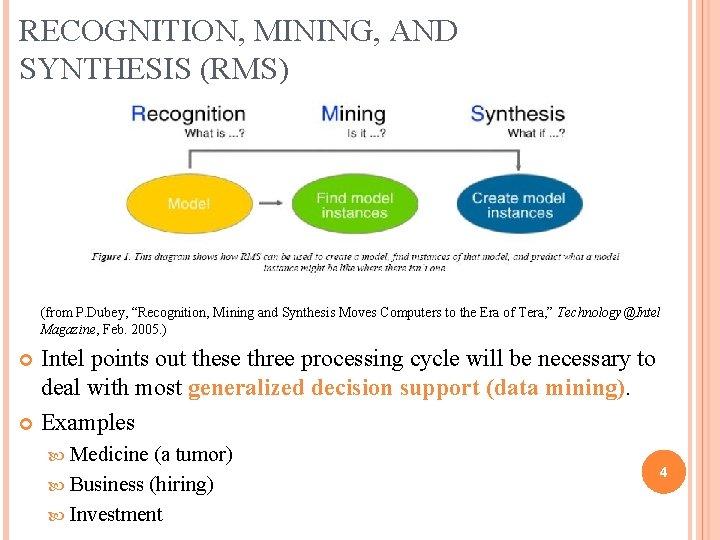

RECOGNITION, MINING, AND SYNTHESIS (RMS)
(from P. Dubey, “Recognition, Mining and Synthesis Moves Computers to the Era of Tera,” Technology@Intel Magazine, Feb. 2005.)
- Intel points out that these three processing cycles will be necessary to deal with most generalized decision support (data mining).
- Examples
  - Medicine (a tumor)
  - Business (hiring)
  - Investment

OUTLINE
- Motivation
- Multicore
  - Toward Concurrency
  - What is Multicore?
- Parallel Computing
- Data Mining

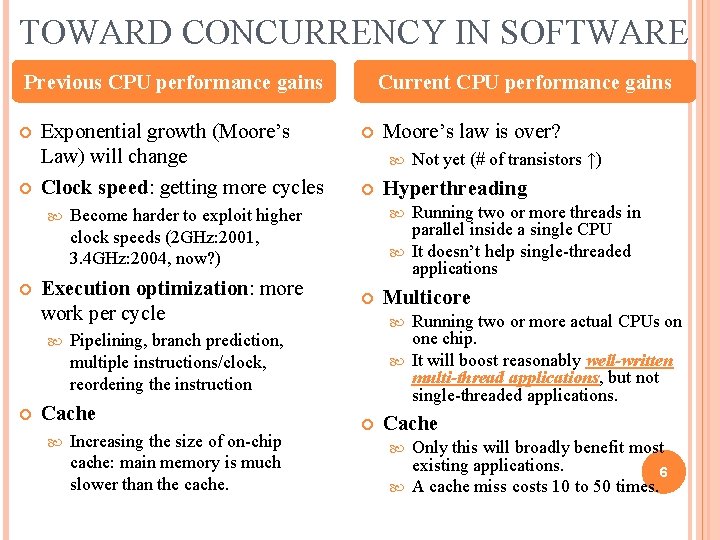

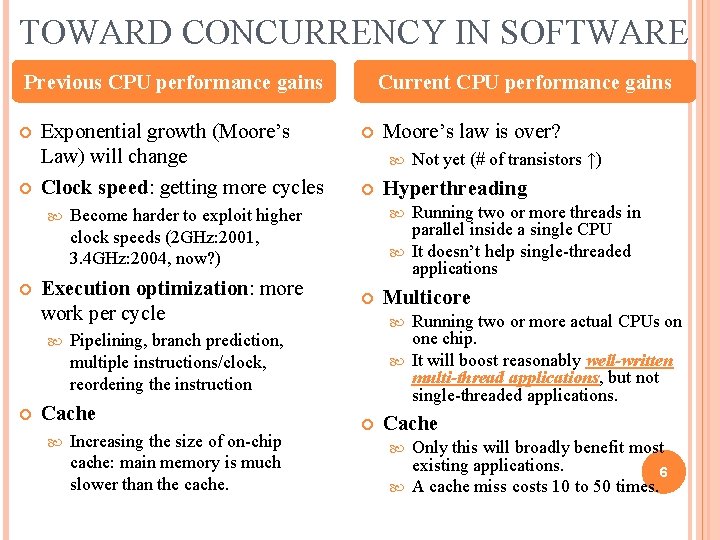

TOWARD CONCURRENCY IN SOFTWARE
- Exponential growth (Moore's Law) will change.
- Previous CPU performance gains
  - Clock speed: getting more cycles.
    - It has become harder to exploit higher clock speeds (2 GHz: 2001, 3.4 GHz: 2004, now?).
  - Execution optimization: more work per cycle.
    - Pipelining, branch prediction, multiple instructions per clock, instruction reordering.
  - Cache: increasing the size of the on-chip cache, because main memory is much slower than the cache.
    - A cache miss costs 10 to 50 times more than a cache hit.
- Is Moore's Law over? Not yet (the number of transistors is still increasing).
- Current CPU performance gains
  - Hyperthreading
    - Running two or more threads in parallel inside a single CPU.
    - It doesn't help single-threaded applications.
  - Multicore
    - Running two or more actual CPUs on one chip.
    - It will boost reasonably well-written multithreaded applications, but not single-threaded applications.
  - Cache
    - Only this will broadly benefit most existing applications.

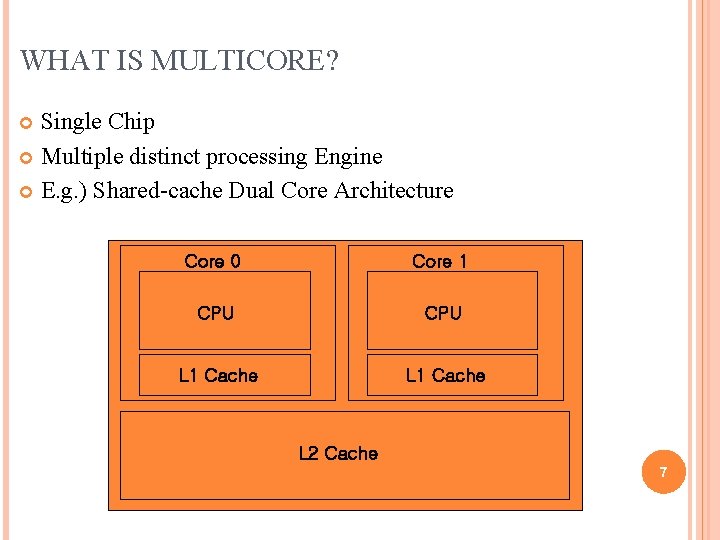

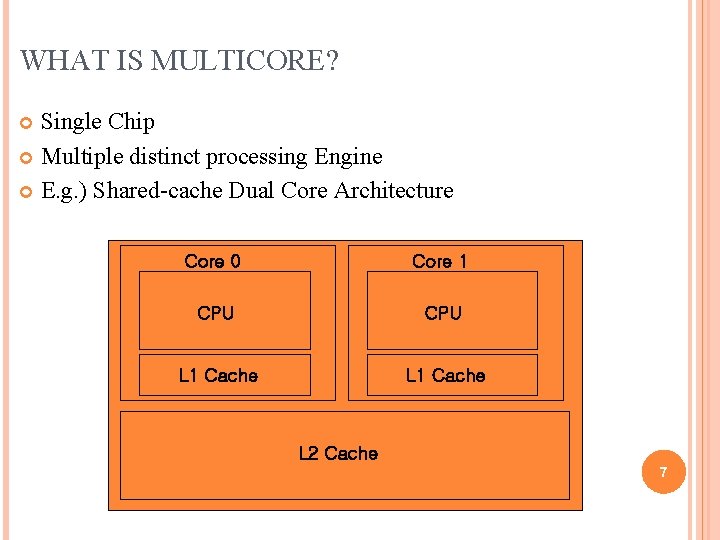

WHAT IS MULTICORE?
- A single chip with multiple distinct processing engines.
- E.g., shared-cache dual-core architecture.

[Figure: shared-cache dual-core architecture. Labels: Core 0, Core 1, CPU, L1 Cache, L2 Cache.]

OUTLINE
- Motivation
- Multicore
- Parallel Computing
  - Parallel architectures (Shared-Memory vs. Distributed-Memory)
  - Decomposing Program (Data Parallelism vs. Task Parallelism)
  - MPI and OpenMP
- Data Mining

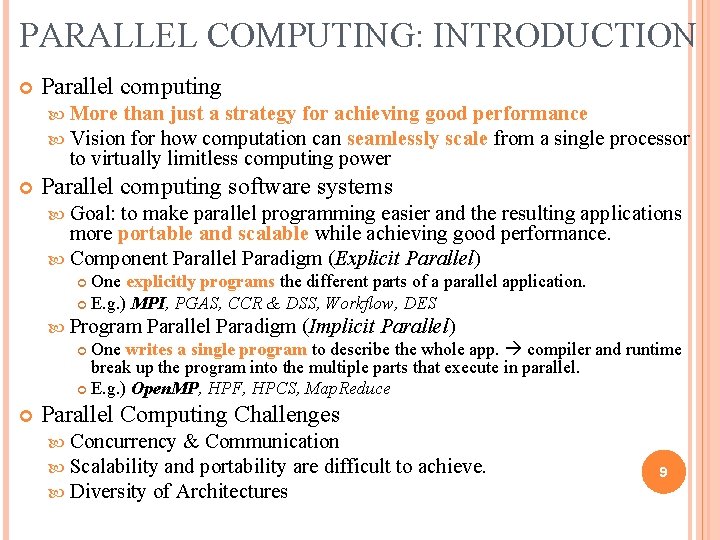

PARALLEL COMPUTING: INTRODUCTION
- Parallel computing
  - More than just a strategy for achieving good performance.
  - A vision for how computation can seamlessly scale from a single processor to virtually limitless computing power.
- Parallel computing software systems
  - Goal: to make parallel programming easier and the resulting applications more portable and scalable while achieving good performance.
  - Component Parallel paradigm (explicit parallel)
    - One explicitly programs the different parts of a parallel application.
    - E.g., MPI, PGAS, CCR & DSS, Workflow, DES
  - Program Parallel paradigm (implicit parallel)
    - One writes a single program to describe the whole application; the compiler and runtime break up the program into multiple parts that execute in parallel.
    - E.g., OpenMP, HPF, HPCS, MapReduce
- Parallel computing challenges
  - Concurrency and communication
  - Scalability and portability are difficult to achieve.
  - Diversity of architectures

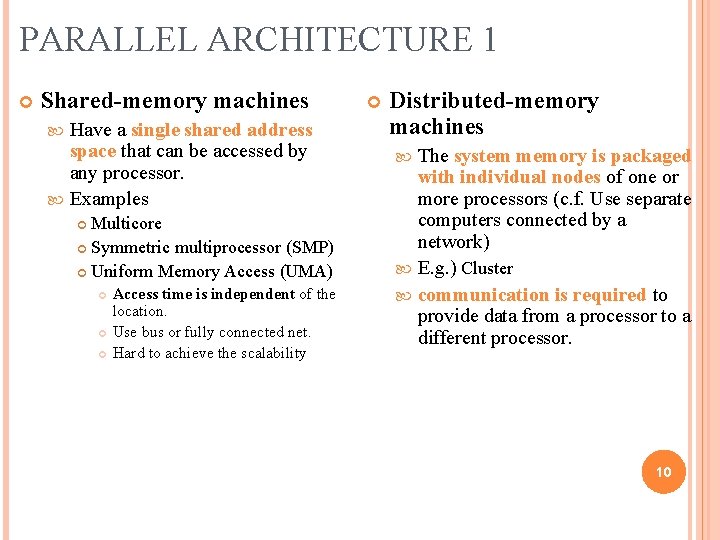

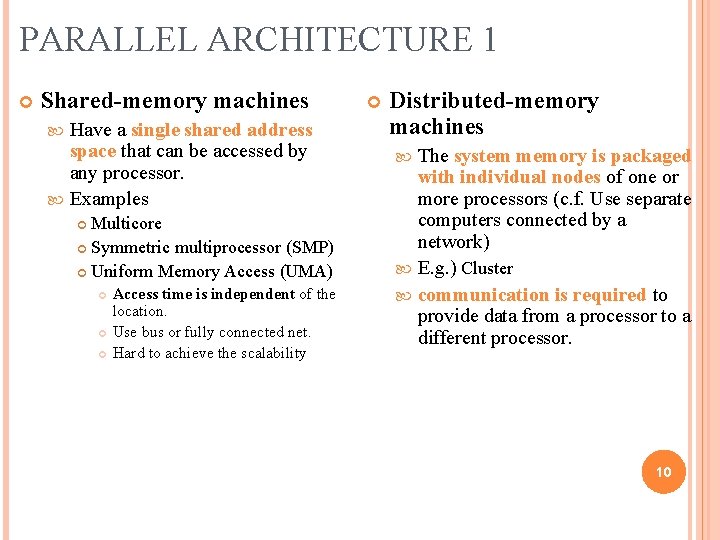

PARALLEL ARCHITECTURE 1
- Shared-memory machines
  - Have a single shared address space that can be accessed by any processor.
  - Examples: multicore, symmetric multiprocessor (SMP)
  - Uniform Memory Access (UMA)
    - Access time is independent of the location.
    - Use a bus or a fully connected network.
    - Scalability is hard to achieve.
- Distributed-memory machines
  - The system memory is packaged with individual nodes of one or more processors (cf. separate computers connected by a network).
  - E.g., cluster
  - Communication is required to provide data from one processor to a different processor.

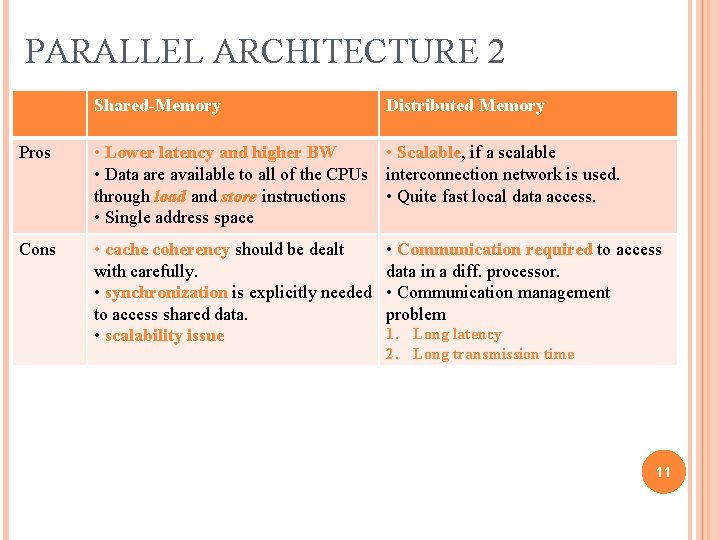

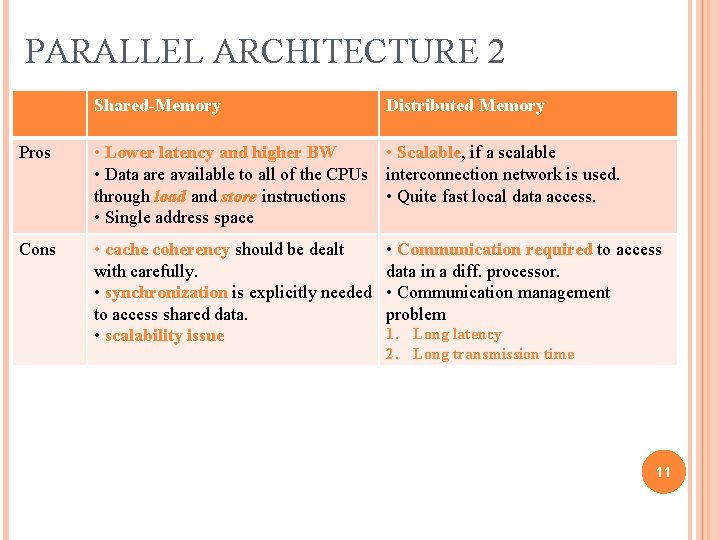

PARALLEL ARCHITECTURE 2
- Shared-memory
  - Pros
    - Lower latency and higher bandwidth.
    - Data are available to all of the CPUs through load and store instructions.
    - Single address space.
  - Cons
    - Cache coherency should be dealt with carefully.
    - Synchronization is explicitly needed to access shared data.
    - Scalability issue.
- Distributed-memory
  - Pros
    - Scalable, if a scalable interconnection network is used.
    - Quite fast local data access.
  - Cons
    - Communication is required to access data in a different processor.
    - Communication management problem: (1) long latency, (2) long transmission time.

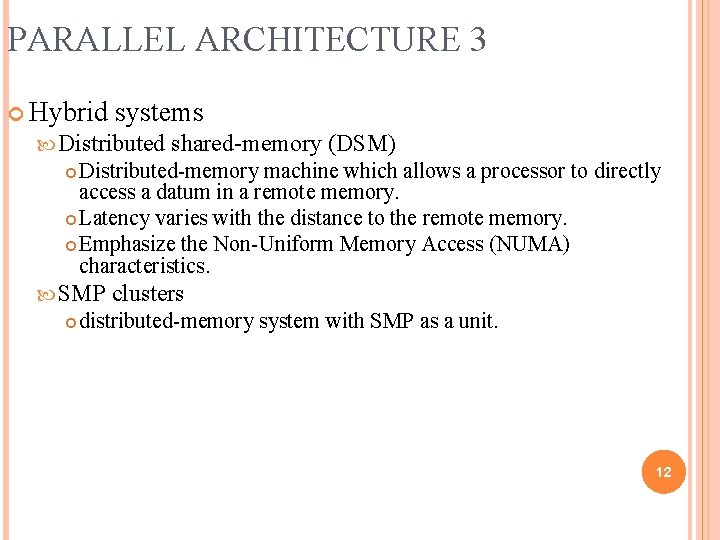

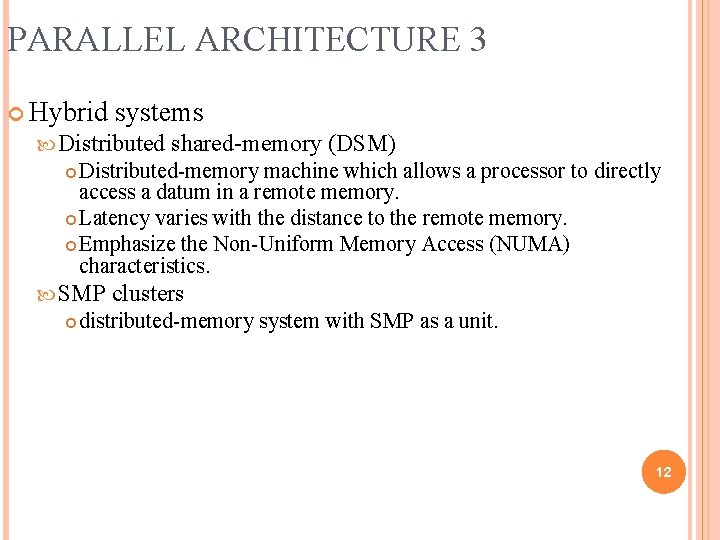

PARALLEL ARCHITECTURE 3
- Hybrid systems
  - Distributed shared-memory (DSM)
    - A distributed-memory machine that allows a processor to directly access a datum in a remote memory.
    - Latency varies with the distance to the remote memory.
    - Emphasizes the Non-Uniform Memory Access (NUMA) characteristics.
  - SMP clusters
    - A distributed-memory system with an SMP as the unit.

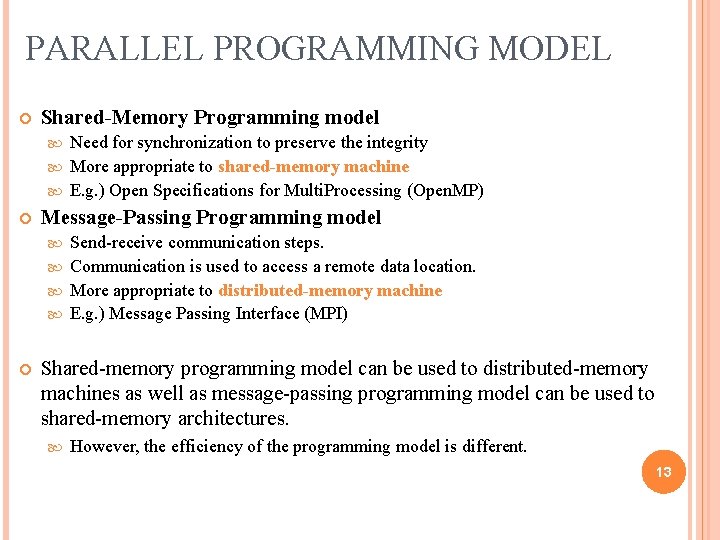

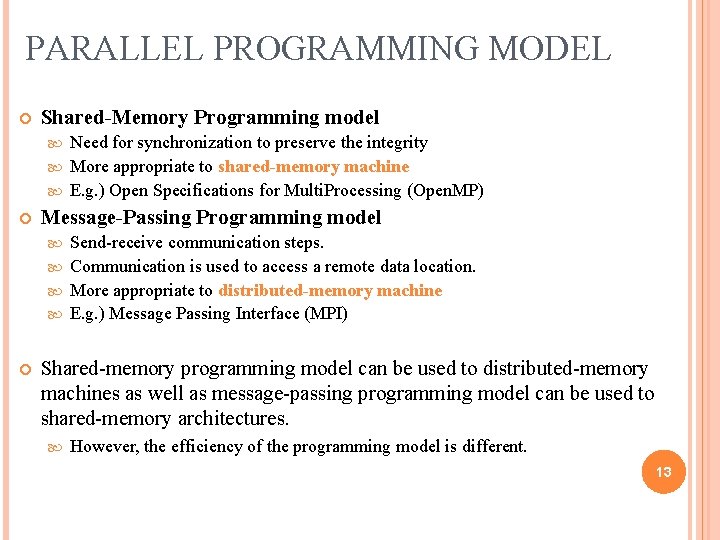

PARALLEL PROGRAMMING MODEL
- Shared-memory programming model
  - Needs synchronization to preserve the integrity of shared data.
  - More appropriate for shared-memory machines.
  - E.g., Open Specifications for MultiProcessing (OpenMP)
- Message-passing programming model
  - Send-receive communication steps.
  - Communication is used to access a remote data location.
  - More appropriate for distributed-memory machines.
  - E.g., Message Passing Interface (MPI)
- The shared-memory programming model can also be used on distributed-memory machines, and the message-passing programming model can also be used on shared-memory architectures. However, the efficiency of each programming model differs between the two.

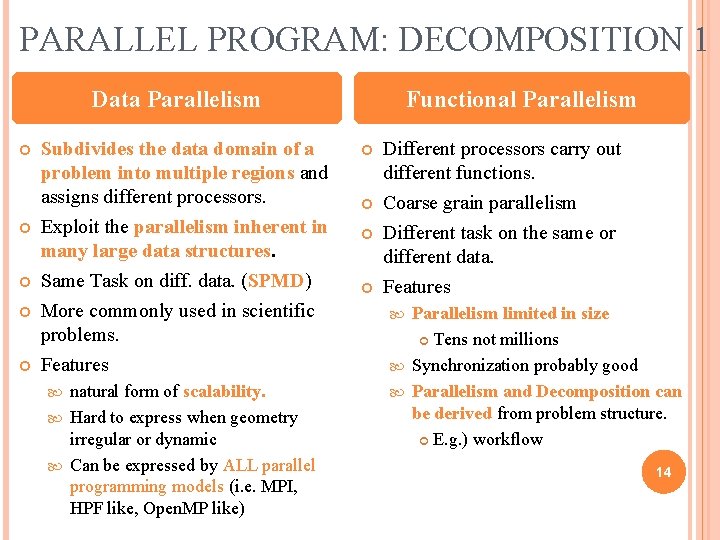

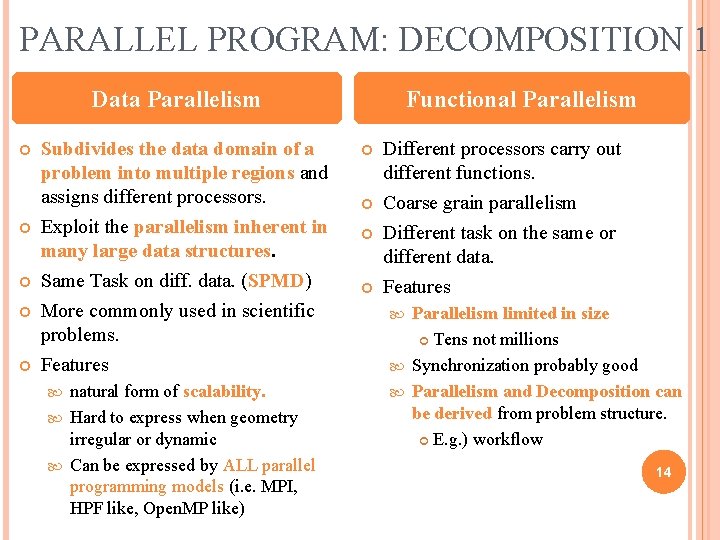

PARALLEL PROGRAM: DECOMPOSITION 1
- Data parallelism
  - Subdivides the data domain of a problem into multiple regions and assigns them to different processors.
  - Exploits the parallelism inherent in many large data structures.
  - Same task on different data (SPMD).
  - More commonly used in scientific problems.
  - Features
    - Natural form of scalability.
    - Hard to express when the geometry is irregular or dynamic.
    - Can be expressed by all parallel programming models (i.e., MPI, HPF-like, OpenMP-like).
- Functional parallelism
  - Different processors carry out different functions.
  - Coarse-grain parallelism.
  - Different tasks on the same or different data.
  - Features
    - Parallelism limited in size: tens, not millions.
    - Synchronization probably good.
    - Parallelism and decomposition can be derived from the problem structure.
  - E.g., workflow
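
The data-parallel decomposition on this slide can be illustrated with a minimal Python sketch. It is not from the original slides: the function names (`split_into_regions`, `sum_of_squares`, `parallel_sum_of_squares`) and the choice of a sum of squares as the per-region task are assumptions made for illustration. The data domain is subdivided into regions, every worker applies the same task to its own region, and the partial results are combined at the end.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_regions(data, n_workers):
    """Subdivide the data domain into n_workers contiguous regions of near-equal size."""
    base, extra = divmod(len(data), n_workers)
    regions, start = [], 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)  # the first `extra` regions get one more item
        regions.append(data[start:start + size])
        start += size
    return regions


def sum_of_squares(region):
    """The single task that every worker applies to its own region (hypothetical example task)."""
    return sum(x * x for x in region)


def parallel_sum_of_squares(data, n_workers=4):
    """Same task on different data: map the task over the regions, then reduce."""
    regions = split_into_regions(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, regions))
    return sum(partial_sums)  # combine the partial results


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1000)), n_workers=4))
```

The sketch only shows the shape of the decomposition. In CPython, threads do not speed up CPU-bound work like this, so a real implementation would more likely use processes, MPI, or OpenMP.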

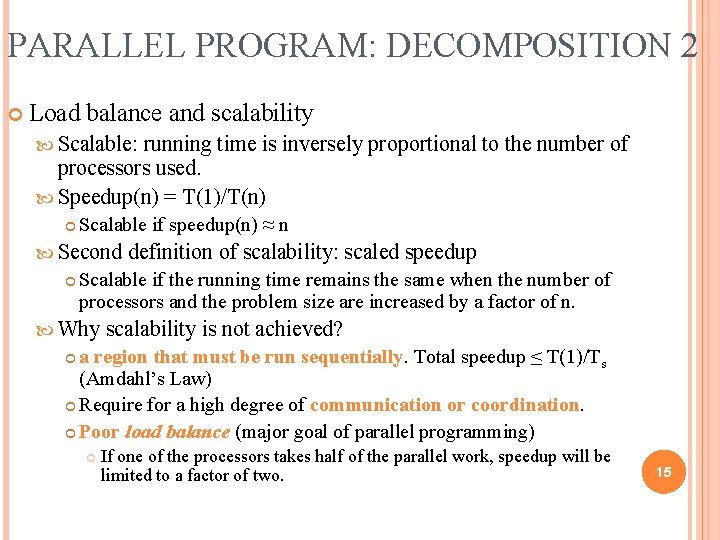

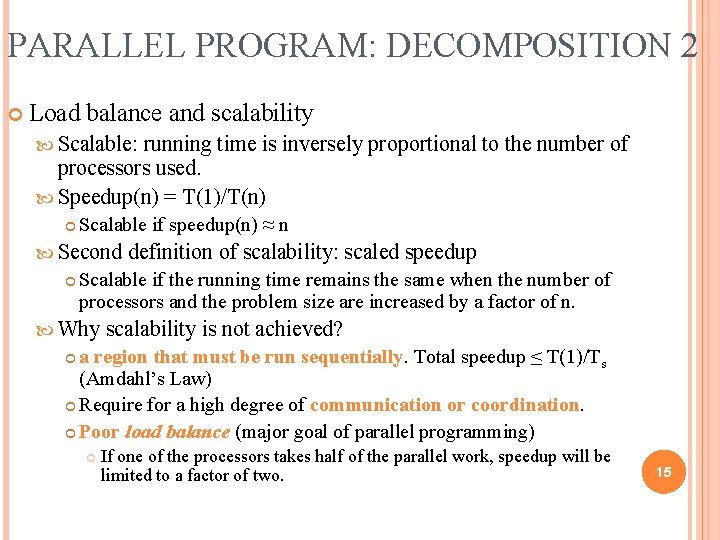

PARALLEL PROGRAM: DECOMPOSITION 2
- Load balance and scalability
  - Scalable: running time is inversely proportional to the number of processors used.
    - Speedup(n) = T(1)/T(n)
    - Scalable if Speedup(n) ≈ n
  - Second definition of scalability: scaled speedup
    - Scalable if the running time remains the same when the number of processors and the problem size are both increased by a factor of n.
- Why is scalability not achieved?
  - A region that must be run sequentially: total speedup ≤ T(1)/Ts, where Ts is the running time of the sequential region (Amdahl's Law).
  - A requirement for a high degree of communication or coordination.
  - Poor load balance (avoiding it is a major goal of parallel programming).
    - If one of the processors takes half of the parallel work, speedup will be limited to a factor of two.
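
The speedup limits on this slide can be checked numerically. The sketch below is an illustration rather than part of the original slides: the function names are hypothetical, and `serial_fraction` is assumed to mean Ts/T(1), the share of the single-processor running time that must run sequentially.

```python
def speedup(t1, tn):
    """Speedup(n) = T(1) / T(n)."""
    return t1 / tn


def amdahl_speedup(serial_fraction, n):
    """Predicted speedup on n processors when serial_fraction = Ts / T(1) cannot be parallelized.

    As n grows, this approaches 1 / serial_fraction = T(1) / Ts, the Amdahl's Law bound.
    """
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n)


def imbalanced_speedup_limit(largest_share):
    """Speedup limit when one processor takes `largest_share` of fully parallel work."""
    return 1.0 / largest_share


if __name__ == "__main__":
    for n in (2, 8, 64, 1024):
        print(n, round(amdahl_speedup(0.1, n), 3))
    print(imbalanced_speedup_limit(0.5))
```

With a 10% sequential region, the predicted speedup stays below 10 however many processors are added, and a processor holding half of the work caps the speedup at two, as the slide states.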

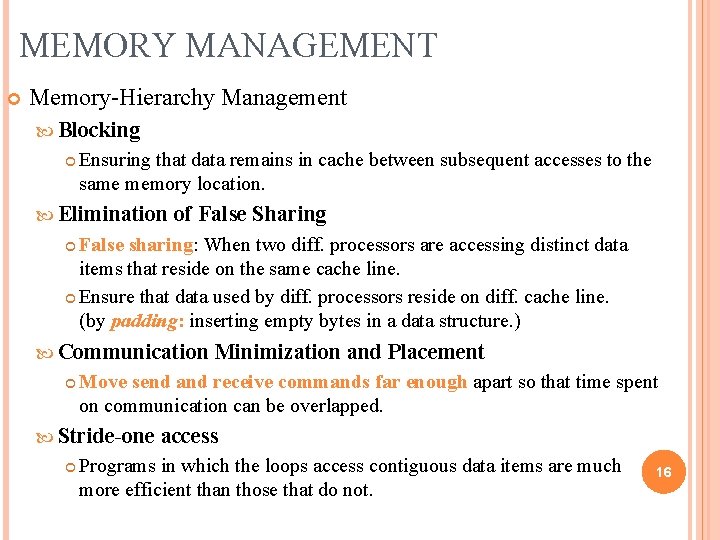

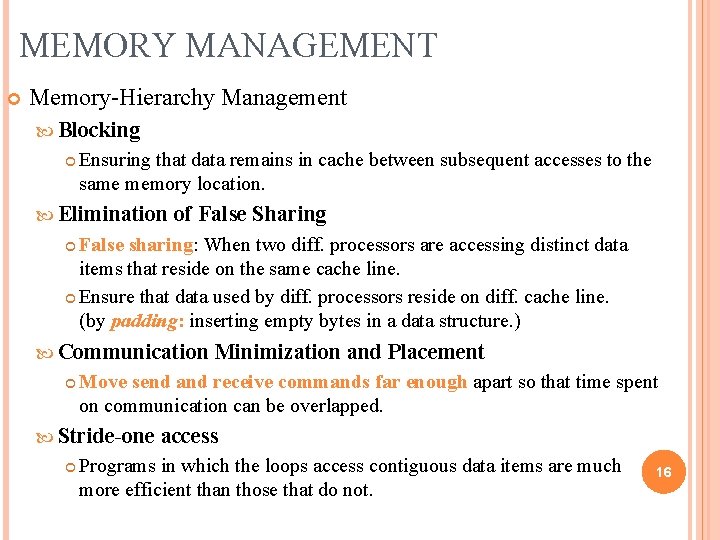

MEMORY MANAGEMENT
- Memory-hierarchy management
  - Blocking
    - Ensuring that data remains in cache between subsequent accesses to the same memory location.
  - Elimination of false sharing
    - False sharing: two different processors access distinct data items that reside on the same cache line.
    - Ensure that data used by different processors reside on different cache lines (by padding: inserting empty bytes in a data structure).
  - Communication minimization and placement
    - Move send and receive commands far enough apart so that the time spent on communication can be overlapped with computation.
  - Stride-one access
    - Programs in which the loops access contiguous data items are much more efficient than those that do not.

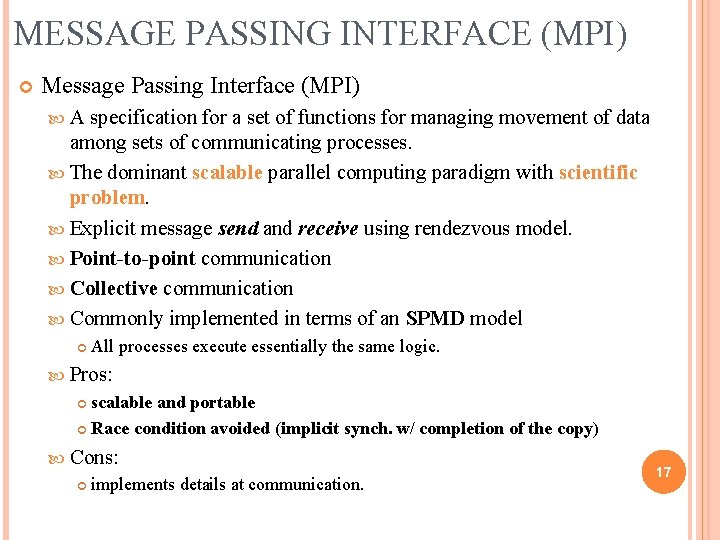

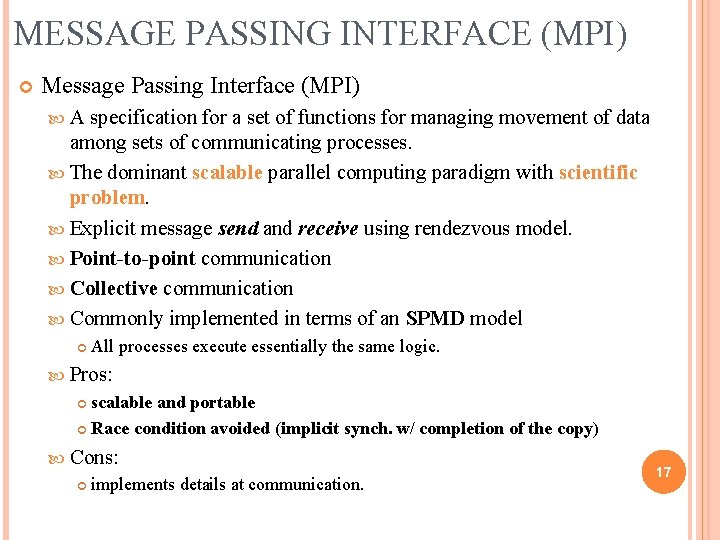

MESSAGE PASSING INTERFACE (MPI)
- A specification for a set of functions for managing the movement of data among sets of communicating processes.
- The dominant scalable parallel computing paradigm for scientific problems.
- Explicit message send and receive using a rendezvous model.
  - Point-to-point communication
  - Collective communication
- Commonly implemented in terms of an SPMD model.
  - All processes execute essentially the same logic.
- Pros
  - Scalable and portable.
  - Race conditions are avoided (implicit synchronization with completion of the copy).
- Cons
  - The programmer must implement the details of communication.

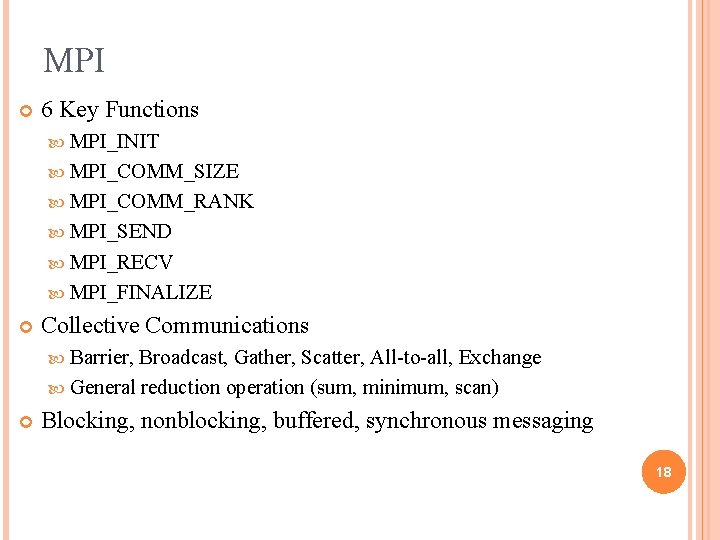

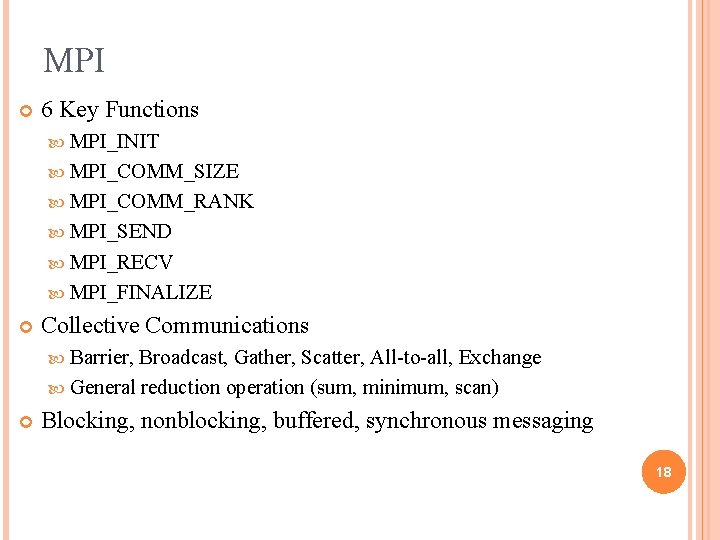

MPI
- 6 key functions
  - MPI_INIT
  - MPI_COMM_SIZE
  - MPI_COMM_RANK
  - MPI_SEND
  - MPI_RECV
  - MPI_FINALIZE
- Collective communications
  - Barrier, Broadcast, Gather, Scatter, All-to-all, Exchange
  - General reduction operations (sum, minimum, scan)
- Blocking, nonblocking, buffered, and synchronous messaging

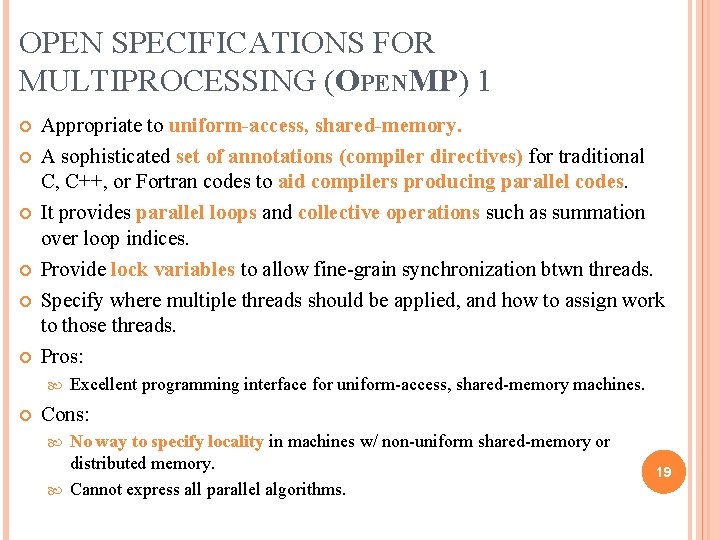

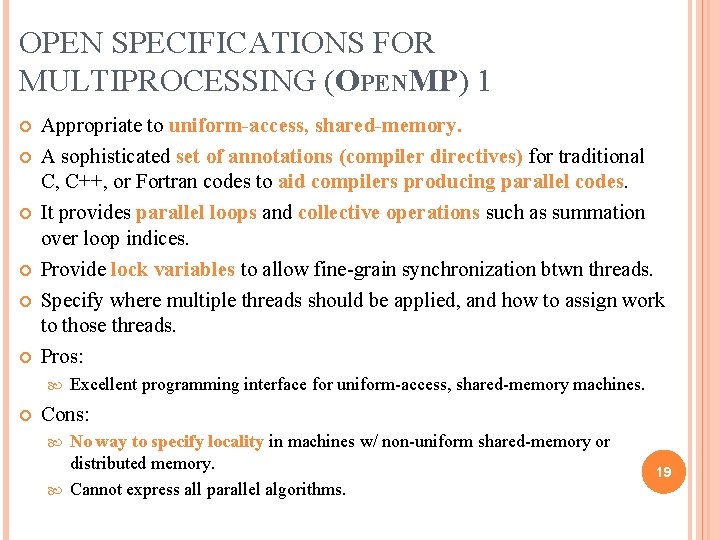

OPEN SPECIFICATIONS FOR MULTIPROCESSING (OPENMP) 1
- Appropriate for uniform-access, shared-memory machines.
- A sophisticated set of annotations (compiler directives) for traditional C, C++, or Fortran codes that help compilers produce parallel code.
- Provides parallel loops and collective operations such as summation over loop indices.
- Provides lock variables to allow fine-grain synchronization between threads.
- Specifies where multiple threads should be applied and how to assign work to those threads.
- Pros
  - Excellent programming interface for uniform-access, shared-memory machines.
- Cons
  - No way to specify locality in machines with non-uniform shared memory or distributed memory.
  - Cannot express all parallel algorithms.

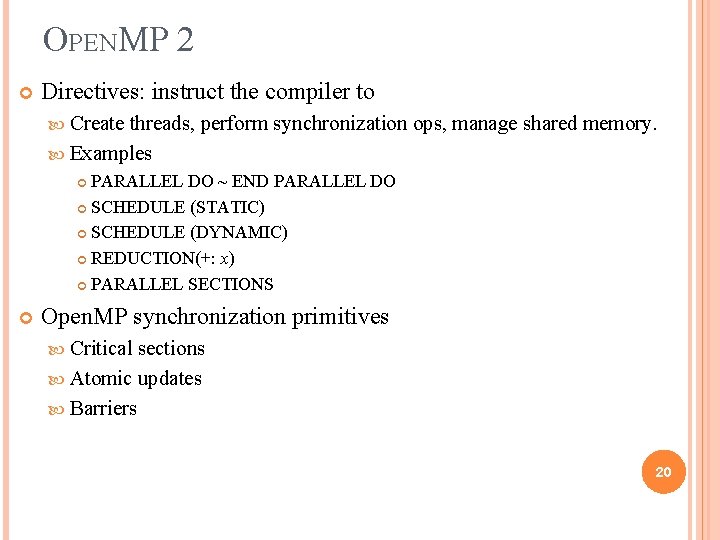

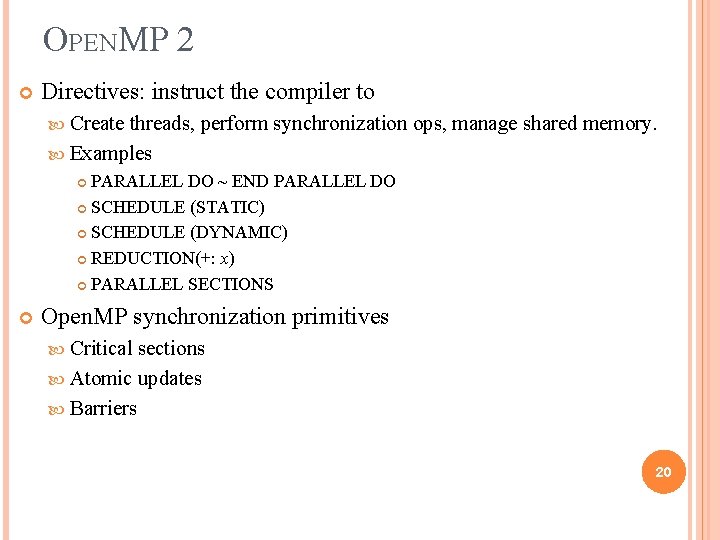

OPENMP 2
- Directives instruct the compiler to create threads, perform synchronization operations, and manage shared memory.
- Examples
  - PARALLEL DO ~ END PARALLEL DO
  - SCHEDULE (STATIC)
  - SCHEDULE (DYNAMIC)
  - REDUCTION(+: x)
  - PARALLEL SECTIONS
- OpenMP synchronization primitives
  - Critical sections
  - Atomic updates
  - Barriers

OUTLINE
- Motivation
- Multicore
- Parallel Computing
- Data Mining
  - Expectation Maximization (EM)
  - Deterministic Annealing (DA)
  - Hidden Markov Model (HMM)
  - Other Important Algorithms

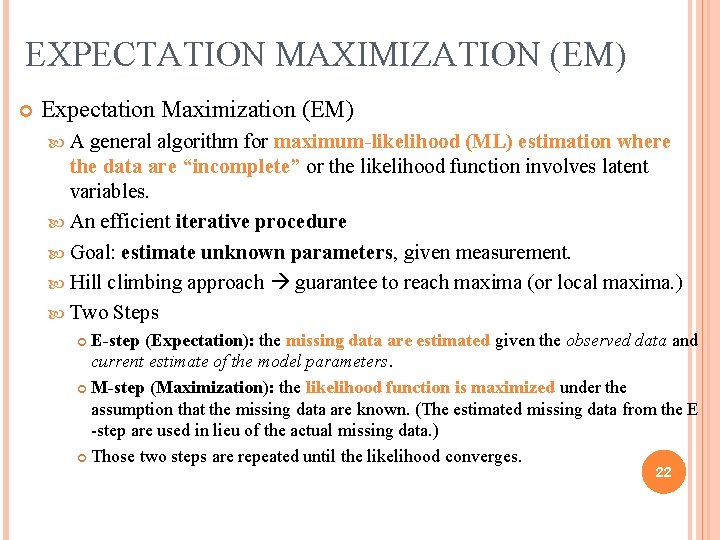

EXPECTATION MAXIMIZATION (EM)
- A general algorithm for maximum-likelihood (ML) estimation where the data are “incomplete” or the likelihood function involves latent variables.
- An efficient iterative procedure.
- Goal: estimate unknown parameters, given measurements.
- A hill-climbing approach: no iteration decreases the likelihood, so the procedure converges to a maximum, which may be only a local maximum.
- Two steps
  - E-step (Expectation): the missing data are estimated given the observed data and the current estimate of the model parameters.
  - M-step (Maximization): the likelihood function is maximized under the assumption that the missing data are known. (The estimated missing data from the E-step are used in lieu of the actual missing data.)
- These two steps are repeated until the likelihood converges.
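
As a concrete illustration of the two steps, here is a minimal sketch of EM for a one-dimensional Gaussian mixture. The slides do not name a specific model, so the mixture model, the function names (`em_step`, `fit_mixture`), the starting values, and the small variance floor are all assumptions made for this example. The "missing data" are the unknown component memberships of each point.

```python
import math


def normal_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def log_likelihood(data, weights, means, variances):
    return sum(
        math.log(sum(w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)))
        for x in data
    )


def em_step(data, weights, means, variances):
    k = len(weights)
    # E-step: estimate the missing memberships (responsibilities) from the current parameters.
    resp = []
    for x in data:
        joint = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
        total = sum(joint)
        resp.append([p / total for p in joint])
    # M-step: maximize the likelihood as if the estimated memberships were known.
    new_weights, new_means, new_variances = [], [], []
    for j in range(k):
        nj = sum(r[j] for r in resp)
        mean = sum(r[j] * x for r, x in zip(resp, data)) / nj
        var = sum(r[j] * (x - mean) ** 2 for r, x in zip(resp, data)) / nj
        new_weights.append(nj / len(data))
        new_means.append(mean)
        new_variances.append(max(var, 1e-6))  # assumed floor to avoid a degenerate zero variance
    return new_weights, new_means, new_variances


def fit_mixture(data, init_means, max_iter=100, tol=1e-10):
    """Repeat the E-step and M-step until the log-likelihood stops improving."""
    k = len(init_means)
    weights, means, variances = [1.0 / k] * k, list(init_means), [1.0] * k
    history = [log_likelihood(data, weights, means, variances)]
    for _ in range(max_iter):
        weights, means, variances = em_step(data, weights, means, variances)
        history.append(log_likelihood(data, weights, means, variances))
        if history[-1] - history[-2] < tol:
            break
    return weights, means, variances, history


if __name__ == "__main__":
    data = [0.8, 1.0, 1.2, 0.9, 1.1, 4.9, 5.0, 5.2, 5.1, 4.8]
    weights, means, variances, history = fit_mixture(data, init_means=[0.0, 6.0])
    print(means, history[-1])
```

The `history` list records the log-likelihood after each iteration, which makes the hill-climbing behavior easy to inspect. A different starting point may lead to a different local maximum.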

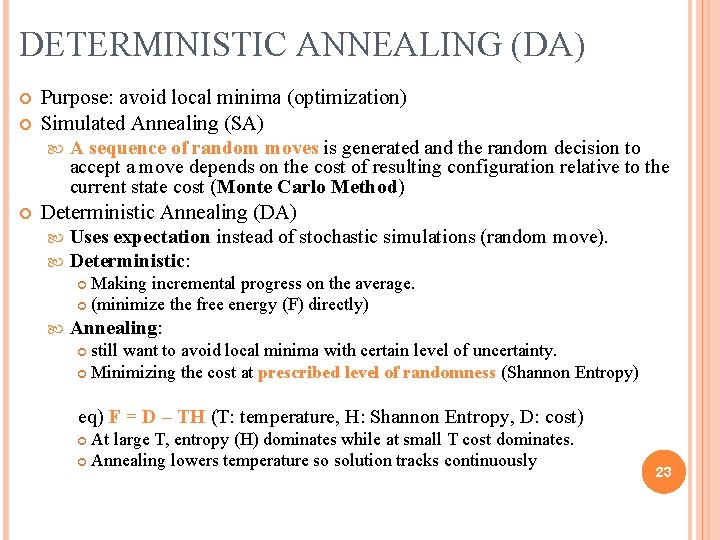

DETERMINISTIC ANNEALING (DA)
- Purpose: avoid local minima (optimization).
- Simulated Annealing (SA)
  - A sequence of random moves is generated, and the random decision to accept a move depends on the cost of the resulting configuration relative to the cost of the current state (Monte Carlo method).
- Deterministic Annealing (DA)
  - Uses expectation instead of stochastic simulation (random moves).
  - Deterministic: makes incremental progress on average (minimizes the free energy $F$ directly).
  - Annealing: still wants to avoid local minima with a certain level of uncertainty.
  - Minimizes the cost at a prescribed level of randomness (Shannon entropy): $F = D - TH$, where $T$ is the temperature, $H$ the Shannon entropy, and $D$ the cost.
  - At large $T$ the entropy $H$ dominates, while at small $T$ the cost dominates.
  - Annealing lowers the temperature so that the solution tracks continuously.

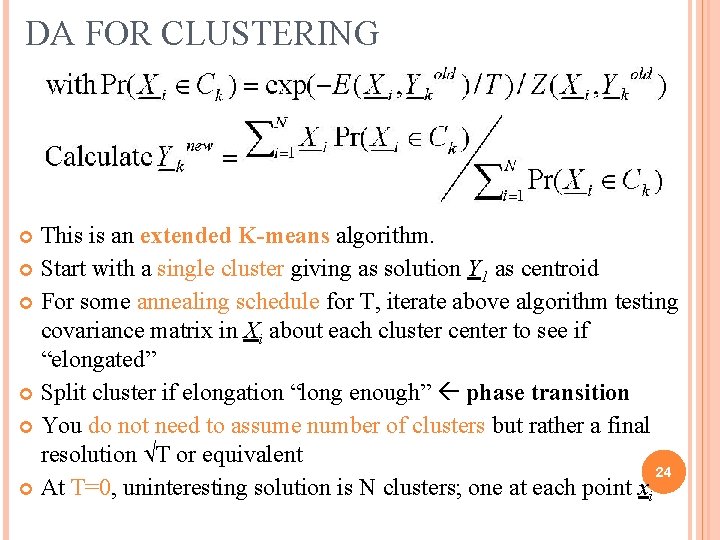

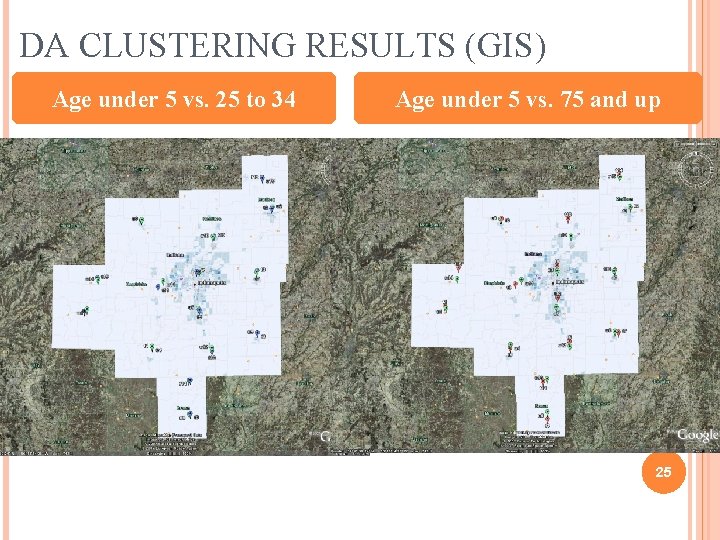

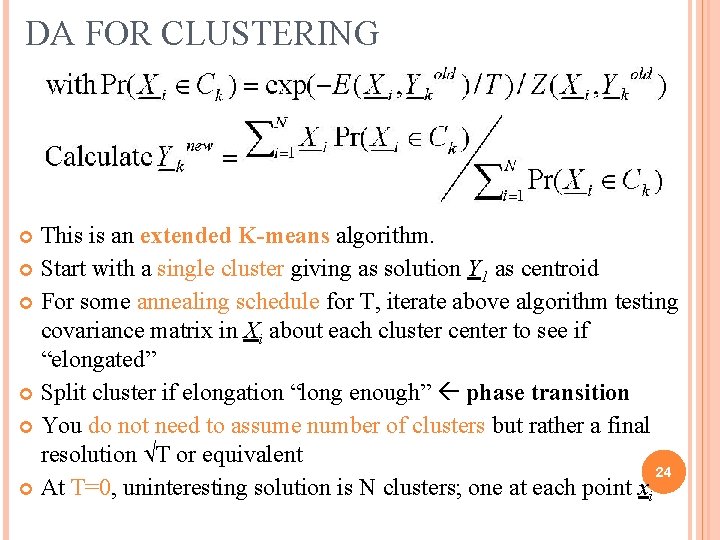

DA FOR CLUSTERING
- This is an extended K-means algorithm.
- Start with a single cluster, whose solution is the centroid $Y_1$.
- For some annealing schedule for $T$, iterate the above algorithm, testing the covariance matrix of the $x_i$ about each cluster center to see whether the cluster is “elongated”.
- Split a cluster if its elongation is “long enough” (a phase transition).
- You do not need to assume the number of clusters, but rather a final resolution $T$ or equivalent.
- At $T = 0$, the uninteresting solution is $N$ clusters, one at each point $x_i$.
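
The role of the temperature can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm from the slide's figure. It assumes one-dimensional data and a fixed pair of centers that start out coincident, and it replaces the covariance-based split test with a tiny deterministic perturbation at each temperature. The soft assignment uses the Gibbs form p(k | x) ∝ exp(-(x - y_k)² / T), which is what minimizing F = D - TH yields for a squared-distance cost. All function names and schedule parameters are hypothetical.

```python
import math


def soft_assign(x, centers, temperature):
    """Gibbs probabilities p(k | x) proportional to exp(-(x - y_k)^2 / T)."""
    dists = [(x - y) ** 2 for y in centers]
    dmin = min(dists)  # subtract the minimum for numerical stability
    weights = [math.exp(-(d - dmin) / temperature) for d in dists]
    total = sum(weights)
    return [w / total for w in weights]


def update_centers(data, centers, temperature):
    """Move each center to the probability-weighted mean of the data."""
    probs = [soft_assign(x, centers, temperature) for x in data]
    new_centers = []
    for k in range(len(centers)):
        mass = sum(p[k] for p in probs)
        new_centers.append(sum(p[k] * x for p, x in zip(probs, data)) / mass)
    return new_centers


def anneal(data, centers, t_start=100.0, t_end=0.01, cooling=0.8, inner_iters=20):
    """Lower T gradually; coincident centers separate once T drops below a critical value."""
    k = len(centers)
    t = t_start
    while t > t_end:
        # Assumed symmetry-breaking step: nudge the centers apart by a tiny fixed offset.
        centers = [c + 1e-3 * (i - (k - 1) / 2.0) for i, c in enumerate(centers)]
        for _ in range(inner_iters):
            centers = update_centers(data, centers, t)
        t *= cooling
    return centers


if __name__ == "__main__":
    data = [0.8, 1.0, 1.2, 0.9, 1.1, 4.9, 5.0, 5.2, 5.1, 4.8]
    print(anneal(data, centers=[0.0, 0.0]))
```

At high temperature every point belongs almost equally to both centers, so both sit at the overall mean and behave like a single cluster. As the temperature falls, the assignments harden and the centers move to the two groups in the data.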

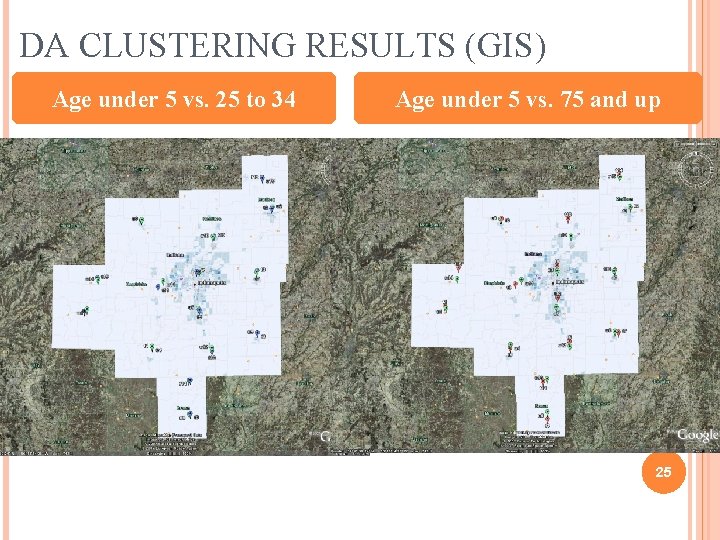

DA CLUSTERING RESULTS (GIS)
- Age under 5 vs. 25 to 34
- Age under 5 vs. 75 and up

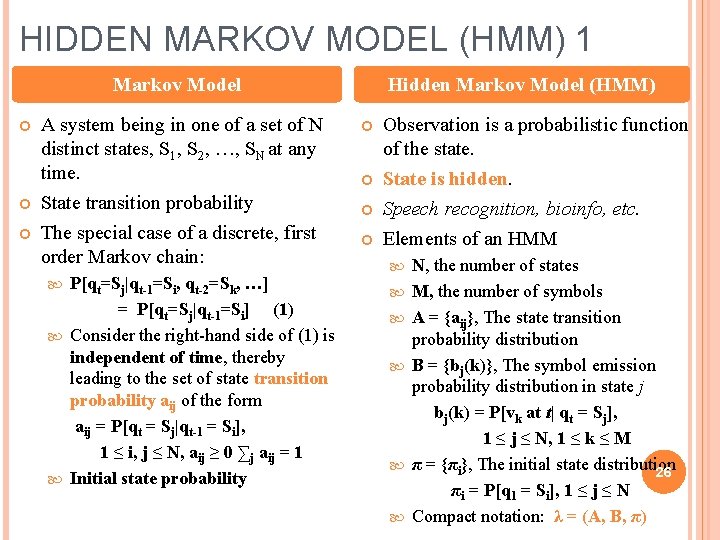

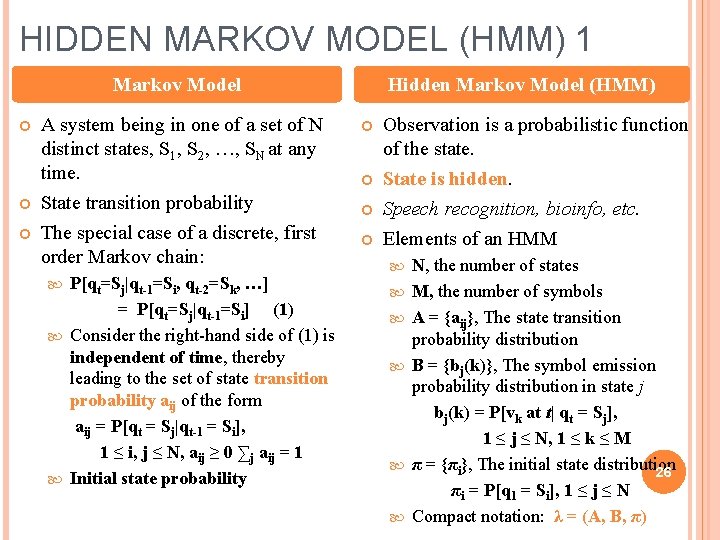

HIDDEN MARKOV MODEL (HMM) 1
- Markov model
  - A system is in one of a set of $N$ distinct states, $S_1, S_2, \dots, S_N$, at any time.
  - State transition probability
    - The special case of a discrete, first-order Markov chain: $P[q_t = S_j \mid q_{t-1} = S_i, q_{t-2} = S_k, \dots] = P[q_t = S_j \mid q_{t-1} = S_i]$ (1)
    - Consider only processes in which the right-hand side of (1) is independent of time, leading to the set of state transition probabilities $a_{ij} = P[q_t = S_j \mid q_{t-1} = S_i]$, $1 \le i, j \le N$, with $a_{ij} \ge 0$ and $\sum_j a_{ij} = 1$.
  - Initial state probability
- Hidden Markov Model (HMM)
  - The observation is a probabilistic function of the state; the state is hidden.
  - Applications: speech recognition, bioinformatics, etc.
- Elements of an HMM
  - $N$, the number of states
  - $M$, the number of symbols
  - $A = \{a_{ij}\}$, the state transition probability distribution
  - $B = \{b_j(k)\}$, the symbol emission probability distribution in state $j$: $b_j(k) = P[v_k \text{ at } t \mid q_t = S_j]$, $1 \le j \le N$, $1 \le k \le M$
  - $\pi = \{\pi_i\}$, the initial state distribution: $\pi_i = P[q_1 = S_i]$, $1 \le i \le N$
  - Compact notation: $\lambda = (A, B, \pi)$

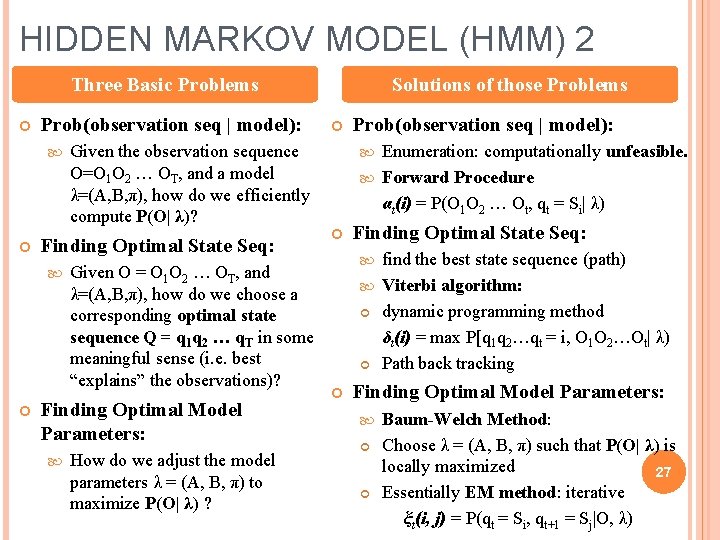

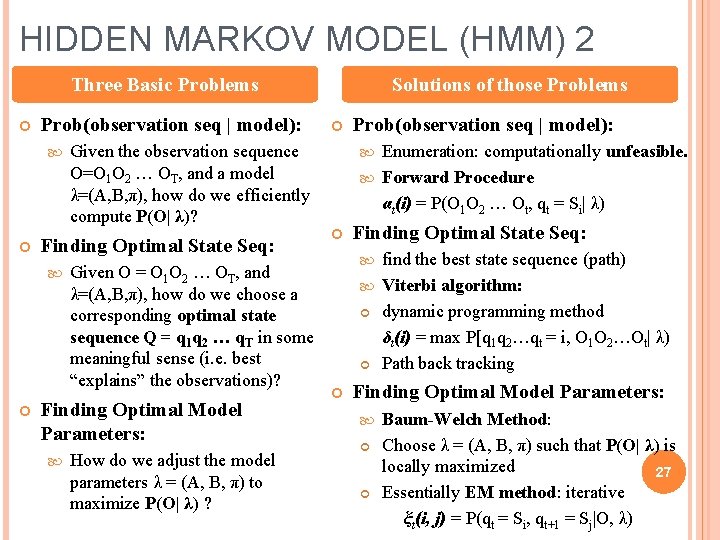

HIDDEN MARKOV MODEL (HMM) 2
- Three basic problems
  - Prob(observation seq | model): given the observation sequence $O = O_1 O_2 \dots O_T$ and a model $\lambda = (A, B, \pi)$, how do we efficiently compute $P(O \mid \lambda)$?
  - Finding the optimal state sequence: given $O = O_1 O_2 \dots O_T$ and $\lambda = (A, B, \pi)$, how do we choose a corresponding state sequence $Q = q_1 q_2 \dots q_T$ that is optimal in some meaningful sense (i.e., best “explains” the observations)?
  - Finding the optimal model parameters: how do we adjust the model parameters $\lambda = (A, B, \pi)$ to maximize $P(O \mid \lambda)$?
- Solutions to these problems
  - Prob(observation seq | model)
    - Enumeration: computationally infeasible.
    - Forward procedure: $\alpha_t(i) = P(O_1 O_2 \dots O_t, q_t = S_i \mid \lambda)$
  - Finding the optimal state sequence: find the best state sequence (path).
    - Viterbi algorithm, a dynamic programming method: $\delta_t(i) = \max_{q_1, \dots, q_{t-1}} P[q_1 q_2 \dots q_t = S_i, O_1 O_2 \dots O_t \mid \lambda]$
    - Path backtracking
  - Finding the optimal model parameters
    - Baum-Welch method: choose $\lambda = (A, B, \pi)$ such that $P(O \mid \lambda)$ is locally maximized.
    - Essentially an EM method (iterative): $\xi_t(i, j) = P(q_t = S_i, q_{t+1} = S_j \mid O, \lambda)$
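
The first two solutions can be sketched compactly in Python. This is a minimal illustration under stated assumptions, not code from the slides: states and symbols are represented as 0-based integer indices, `A[i][j]`, `B[j][k]`, and `pi[i]` are plain nested lists, and all function names are hypothetical. No scaling or log-space arithmetic is used, so it is only suitable for short sequences.

```python
from itertools import product


def path_probability(states, obs, A, B, pi):
    """Joint probability P(Q, O | lambda) of one state path and the observations."""
    p = pi[states[0]] * B[states[0]][obs[0]]
    for t in range(1, len(obs)):
        p *= A[states[t - 1]][states[t]] * B[states[t]][obs[t]]
    return p


def enumerate_likelihood(obs, A, B, pi):
    """P(O | lambda) by enumerating all N^T state paths (infeasible for long sequences)."""
    n = len(pi)
    return sum(path_probability(q, obs, A, B, pi) for q in product(range(n), repeat=len(obs)))


def forward(obs, A, B, pi):
    """P(O | lambda) by the forward procedure; alpha[i] = P(O_1..O_t, q_t = S_i | lambda)."""
    n = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o] for j in range(n)]
    return sum(alpha)


def viterbi(obs, A, B, pi):
    """Best state path and its probability, by dynamic programming with backtracking."""
    n = len(pi)
    delta = [pi[i] * B[i][obs[0]] for i in range(n)]
    backpointers = []
    for o in obs[1:]:
        prev = delta
        best_prev = [max(range(n), key=lambda i: prev[i] * A[i][j]) for j in range(n)]
        delta = [prev[best_prev[j]] * A[best_prev[j]][j] * B[j][o] for j in range(n)]
        backpointers.append(best_prev)
    last = max(range(n), key=lambda i: delta[i])
    path = [last]
    for best_prev in reversed(backpointers):  # path backtracking
        path.append(best_prev[path[-1]])
    path.reverse()
    return path, delta[last]


if __name__ == "__main__":
    A = [[0.7, 0.3], [0.4, 0.6]]   # made-up transition probabilities
    B = [[0.9, 0.1], [0.2, 0.8]]   # made-up emission probabilities
    pi = [0.6, 0.4]
    obs = [0, 1, 1, 0]
    print(forward(obs, A, B, pi), enumerate_likelihood(obs, A, B, pi))
    print(viterbi(obs, A, B, pi))
```

The forward procedure needs on the order of N²T operations, while enumeration sums over N^T paths. On a short sequence the two should agree, which makes enumeration a useful correctness check.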

OTHER IMPORTANT ALGORITHMS
- Other data mining algorithms
  - Support Vector Machine (SVM)
  - K-means (a special case of DA clustering), nearest-neighbor
  - Decision tree, neural network, etc.
- Dimension reduction
  - GTM (Generative Topographic Map)
  - MDS (MultiDimensional Scaling)
  - SOM (Self-Organizing Map)

SUMMARY
- Era of multicore (parallelism is essential).
- Explosion of information from many kinds of sources.
- We are interested in scalable parallel data-mining algorithms.
  - Clustering algorithm (DA clustering)
    - GIS (demographic (census) data): visualization is natural.
    - Cheminformatics: dimension reduction is necessary to visualize.
  - Visualization (dimension reduction)
  - Hidden Markov Models, …

THANK YOU! QUESTIONS?