Grid Computing in Data Mining and Data Mining on Grid Computing

- Slides: 25

Grid Computing in Data Mining and Data Mining on Grid Computing

- David Cieslak (dcieslak@cse.nd.edu)
- Advisor: Nitesh Chawla (nchawla@cse.nd.edu)
- University of Notre Dame

Grid Computing in Data Mining: How you help me

Data Mining Primer

- Data Mining: "The non-trivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns in data" (Fayyad, Piatetsky-Shapiro & Smyth, 1996).
- Classifier: a learning algorithm which trains a predictive model from data.
- Ensemble: a set of classifiers working together to improve prediction.

Applications of Data Mining

- Network Intrusion Detection
- Categorizing Adult Income
- Finding Calcifications in Mammography
- Looking for Oil Spills
- Identifying Handwritten Digits
- Predicting Job Failure on a Computing Grid
- Anticipating Successful Companies

Condor Makes DM Tractable

- I use a small set of algorithms in high volume
  - Ex: Run the same classifier on many datasets
- A single data mining operation may have easily parallelized segments
  - Ex: Learn an ensemble of 10 classifiers on a dataset
- Introducing simple parallelism into data mining saves significant time

Common DM Task: 10 Fold CV

- Original data: network traffic dataset (~30 MB)

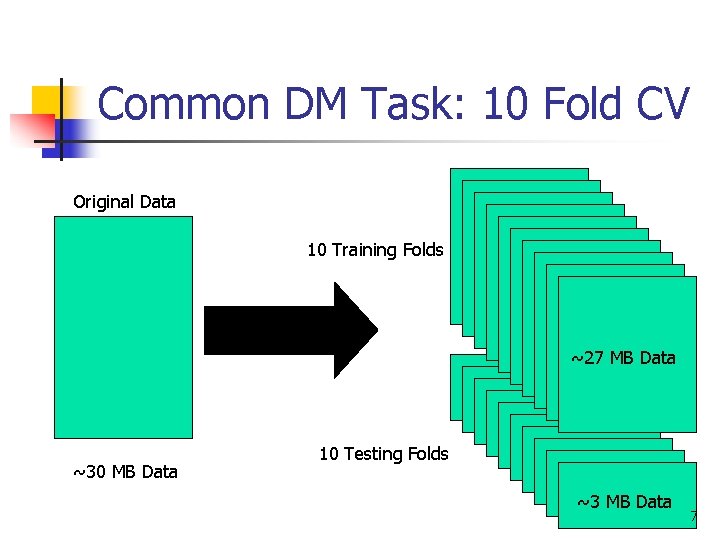

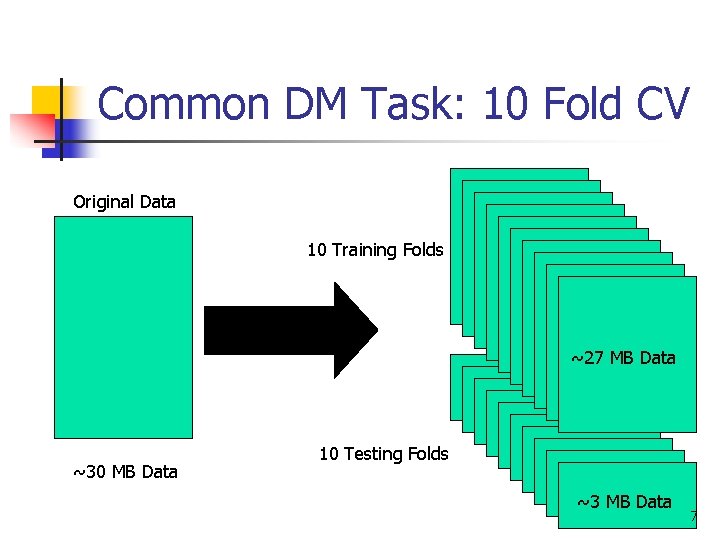

Common DM Task: 10 Fold CV

- Original data (~30 MB) is split into 10 folds
- 10 training folds: ~27 MB of data each
- 10 testing folds: ~3 MB of data each

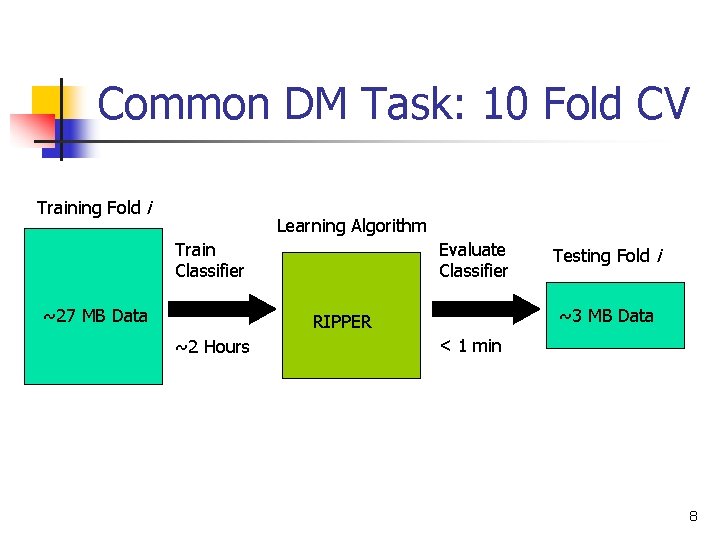

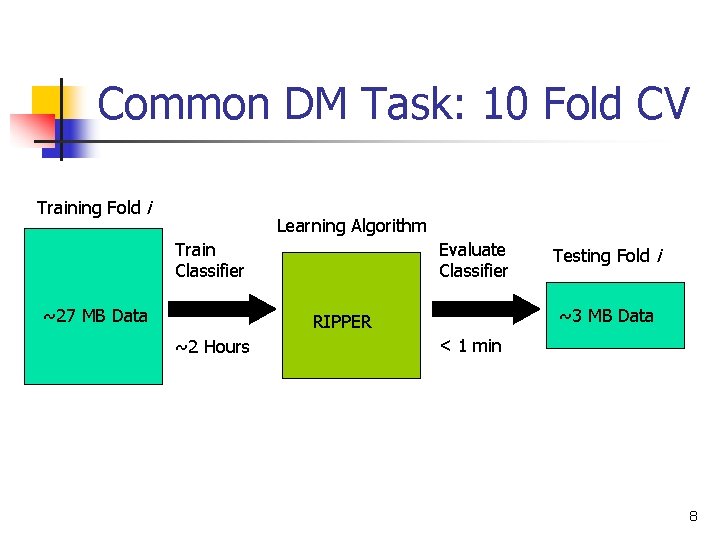

Common DM Task: 10 Fold CV

- Training fold i (~27 MB) -> learning algorithm (RIPPER) trains a classifier: ~2 hours
- Testing fold i (~3 MB) -> evaluate the classifier: < 1 min

Common DM Task: 10 Fold CV

- Each fold pairs ~27 MB of training data with ~3 MB of testing data
- Average and aggregate various statistics and measures across folds

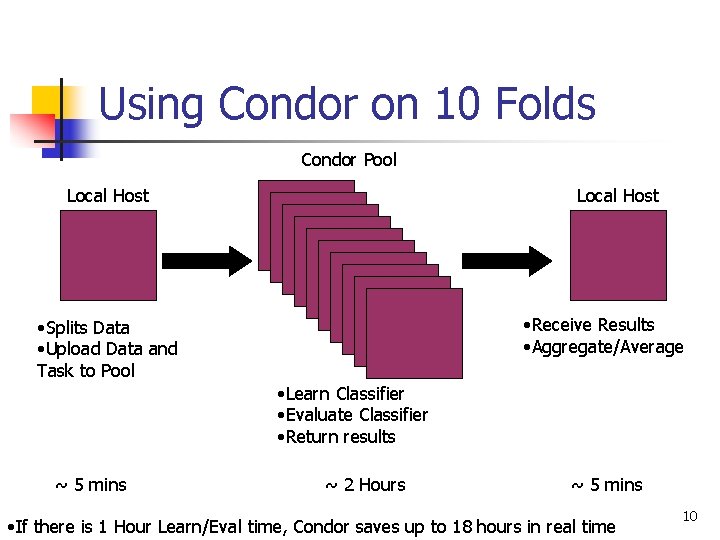

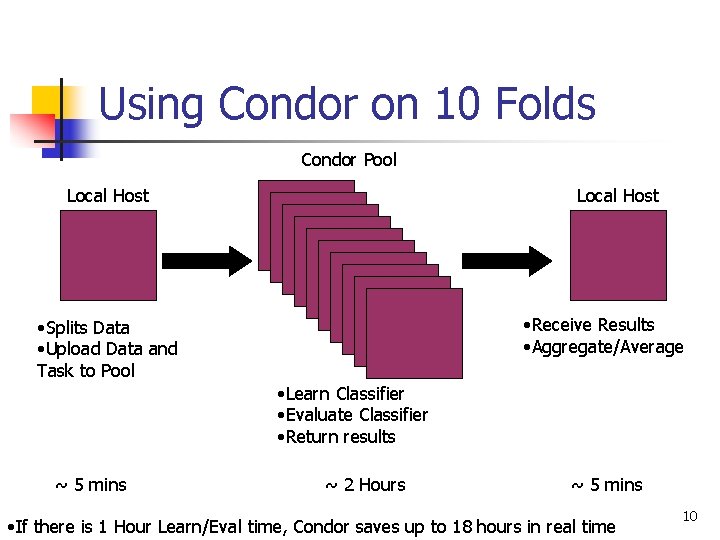
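The 10-fold procedure on the preceding slides can be sketched in a few lines of Python. This is a minimal illustration, not the code behind the talk: the function names, the toy dataset, and the majority-label "learner" (a stand-in for a real algorithm such as RIPPER) are all assumptions made for the example.

```python
import random
from statistics import mean

def k_fold_indices(n_rows, k=10, seed=0):
    """Shuffle row indices and deal them into k disjoint test folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def cross_validate(rows, train_fn, eval_fn, k=10, seed=0):
    """Train on k-1 folds, test on the held-out fold, then average the scores."""
    scores = []
    for test_idx in k_fold_indices(len(rows), k, seed):
        held_out = set(test_idx)
        train = [r for i, r in enumerate(rows) if i not in held_out]
        test = [rows[i] for i in test_idx]
        model = train_fn(train)
        scores.append(eval_fn(model, test))
    return mean(scores), scores

# Hypothetical stand-in learner: always predict the majority training label.
def train_majority(train_rows):
    labels = [label for _, label in train_rows]
    return max(set(labels), key=labels.count)

def accuracy(model, test_rows):
    """Fraction of test rows whose label equals the predicted label."""
    return sum(1 for _, label in test_rows if label == model) / len(test_rows)

# Toy data: 100 rows, one in four labeled "fail".
data = [(i, "fail" if i % 4 == 0 else "ok") for i in range(100)]
avg, per_fold = cross_validate(data, train_majority, accuracy)
print(f"mean accuracy over {len(per_fold)} folds: {avg:.3f}")
```

Each pass through the loop is independent of the others, which is what makes the task easy to hand out to a pool one fold per job.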

Using Condor on 10 Folds

- Local host (~5 mins): splits data; uploads data and task to the pool
- Condor pool (~2 hours): learn classifier; evaluate classifier; return results
- Local host (~5 mins): receive results; aggregate/average
- If there is 1 hour of learn/eval time, Condor saves up to 18 hours in real time
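A back-of-the-envelope check of these timings, assuming all ten folds start at once on the pool and ignoring queueing, eviction, and stragglers. The ~2 hours per fold and the ~5 minutes of local work on each side are the slide's figures; the function names are illustrative only.

```python
def serial_hours(n_folds, hours_per_fold):
    """Wall-clock time when one machine processes the folds back to back."""
    return n_folds * hours_per_fold

def pooled_hours(hours_per_fold, overhead_minutes):
    """Wall-clock time when every fold runs at once, plus local overhead."""
    return hours_per_fold + overhead_minutes / 60

N_FOLDS = 10
HOURS_PER_FOLD = 2.0      # learn + evaluate, per the slide
OVERHEAD_MINUTES = 5 + 5  # split/upload before, receive/aggregate after

serial = serial_hours(N_FOLDS, HOURS_PER_FOLD)
pooled = pooled_hours(HOURS_PER_FOLD, OVERHEAD_MINUTES)
saved = serial - pooled
print(f"serial {serial:.1f} h, pooled {pooled:.1f} h, saved {saved:.1f} h")
```

With these inputs the estimate is 20 hours serially against roughly 2.2 hours through the pool, a saving of about 17.8 hours.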

A More Complex DM Task: Over/Under Sampling Wrapper

1. Split data into 50 folds
2. Generate 10 undersamplings and 20 oversamplings per fold
3. Learn classifier on each undersampling (pool)
4. Evaluate and select best undersampling
5. Learn classifier combining best undersampling with each oversampling
6. Evaluate best combination
7. Obtain results on test folds (single) (pool)

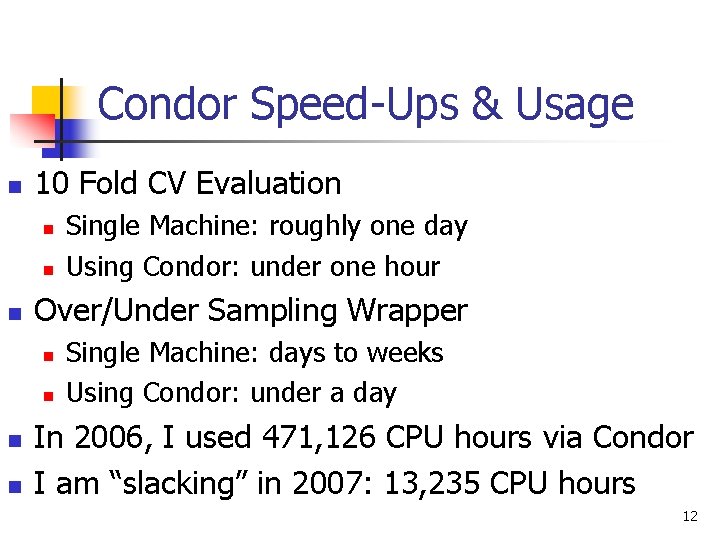

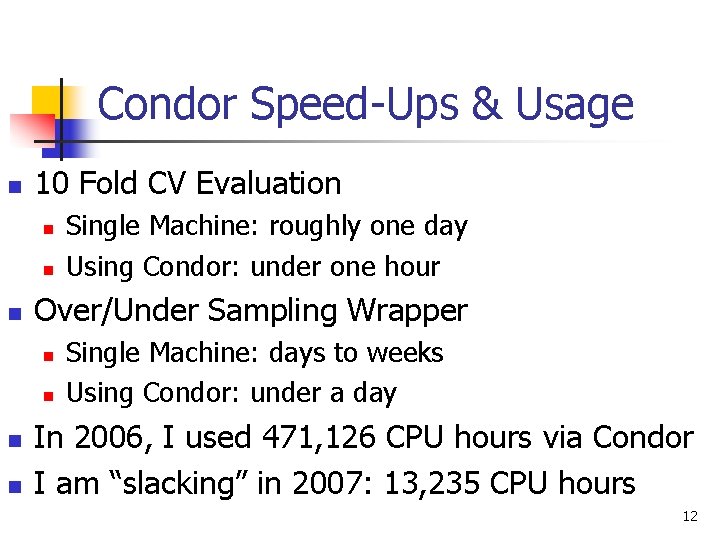
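Step 2 of the wrapper, generating candidate samplings for one fold, might look like the sketch below. The slides do not say how the samplings are produced, so everything here is an assumption: random removal of majority rows for undersampling, random duplication of minority rows for oversampling, the sampling levels, and the toy fold.

```python
import random

def undersample(rows, majority_label, keep_fraction, rng):
    """Keep every minority row and a random fraction of the majority rows."""
    majority = [r for r in rows if r[1] == majority_label]
    minority = [r for r in rows if r[1] != majority_label]
    n_keep = max(1, round(len(majority) * keep_fraction))
    return minority + rng.sample(majority, n_keep)

def oversample(rows, minority_label, extra_fraction, rng):
    """Append random duplicates of minority rows (sampling with replacement)."""
    minority = [r for r in rows if r[1] == minority_label]
    n_extra = round(len(minority) * extra_fraction)
    return rows + [rng.choice(minority) for _ in range(n_extra)]

rng = random.Random(0)

# Toy fold: 200 rows, one in ten belongs to the rare class.
fold = [(i, "rare" if i % 10 == 0 else "common") for i in range(200)]

# Hypothetical levels: keep 10%..100% of the majority; add 50%..1000% minority copies.
under_levels = [(i + 1) / 10 for i in range(10)]
over_levels = [(i + 1) / 2 for i in range(20)]

under_sets = [undersample(fold, "common", f, rng) for f in under_levels]
over_sets = [oversample(fold, "rare", f, rng) for f in over_levels]
print(len(under_sets), "undersamplings,", len(over_sets), "oversamplings")
```

Every candidate sampling is trained and scored independently, so with 50 folds the number of jobs multiplies quickly, which is where the pool pays off.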

Condor Speed-Ups & Usage

- 10 Fold CV Evaluation
  - Single Machine: roughly one day
  - Using Condor: under one hour
- Over/Under Sampling Wrapper
  - Single Machine: days to weeks
  - Using Condor: under a day
- In 2006, I used 471,126 CPU hours via Condor
- I am "slacking" in 2007: 13,235 CPU hours

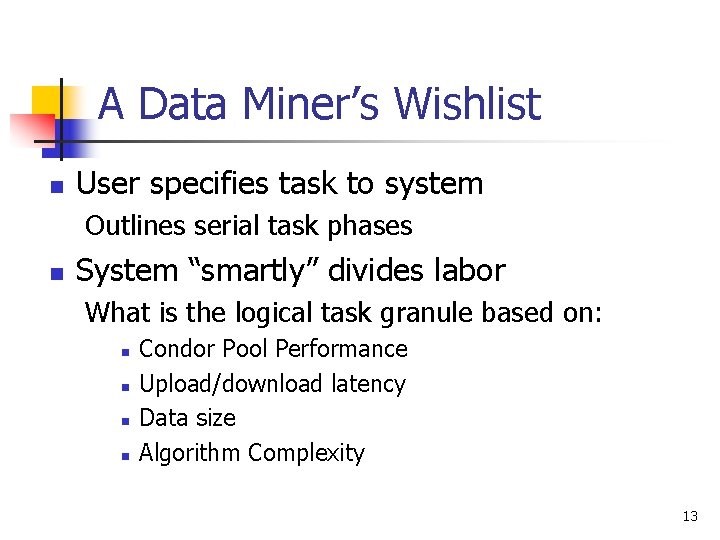

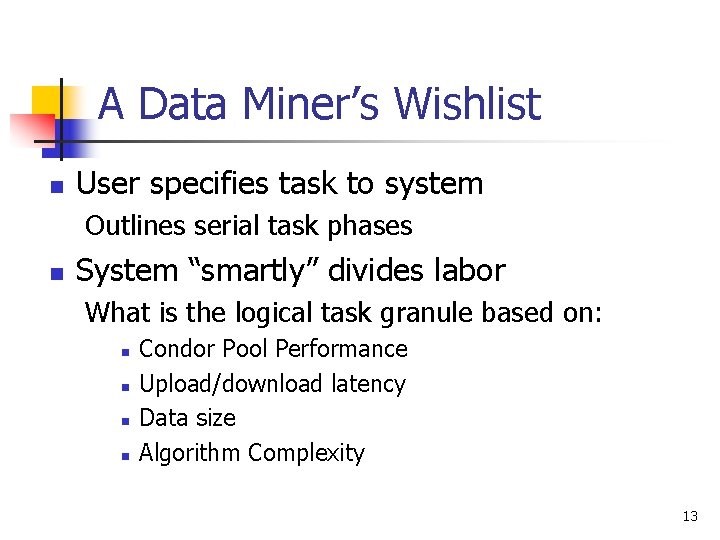

A Data Miner’s Wishlist

- User specifies task to system
  - Outlines serial task phases
- System "smartly" divides labor
  - What is the logical task granule, based on:
    - Condor pool performance
    - Upload/download latency
    - Data size
    - Algorithm complexity

Data Mining on Grid Computing: How I help you

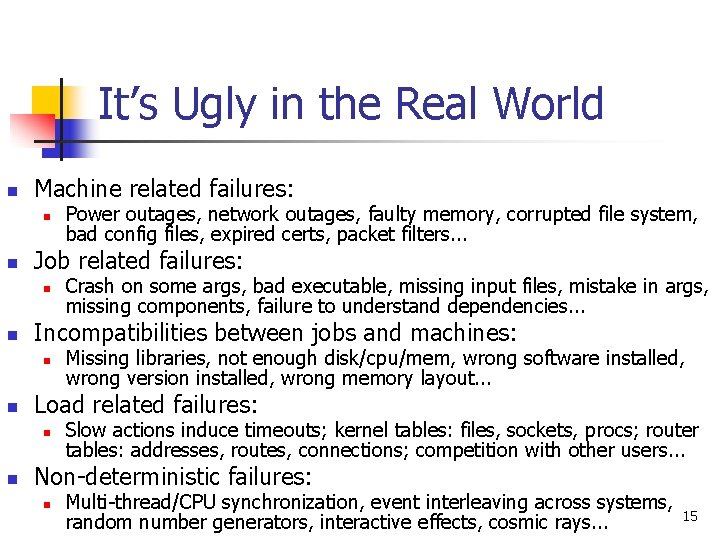

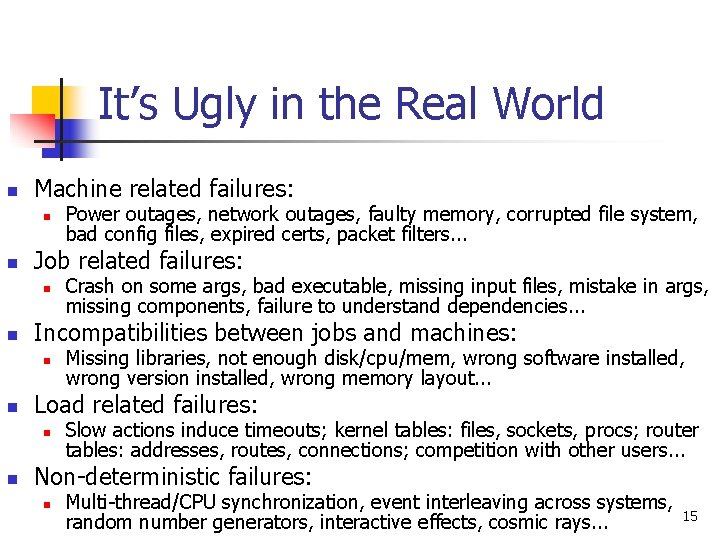

It’s Ugly in the Real World

- Machine related failures:
  - Power outages, network outages, faulty memory, corrupted file system, bad config files, expired certs, packet filters...
- Job related failures:
  - Crash on some args, bad executable, missing input files, mistake in args, missing components, failure to understand dependencies...
- Incompatibilities between jobs and machines:
  - Missing libraries, not enough disk/cpu/mem, wrong software installed, wrong version installed, wrong memory layout...
- Load related failures:
  - Slow actions induce timeouts; kernel tables: files, sockets, procs; router tables: addresses, routes, connections; competition with other users...
- Non-deterministic failures:
  - Multi-thread/CPU synchronization, event interleaving across systems, random number generators, interactive effects, cosmic rays...

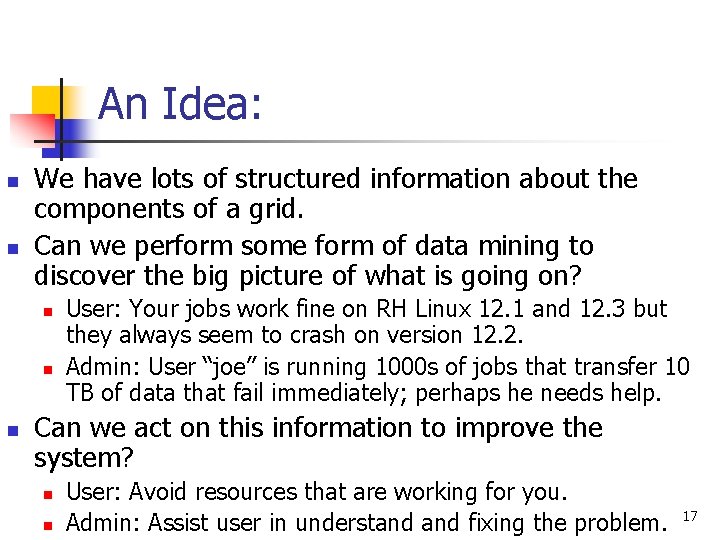

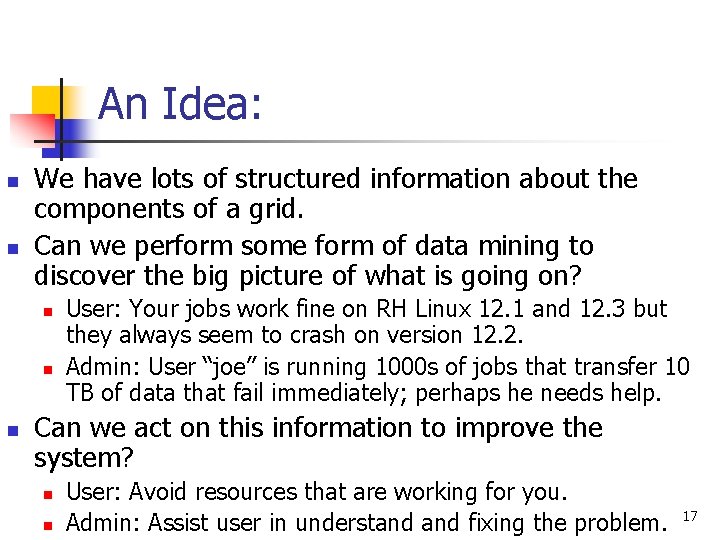

A “Grand Challenge” Problem:

- A user submits one million jobs to the grid.
- Half of them fail.
- Now what?
  - Examine the output of every failed job?
  - Log in to every site to examine the logs?
  - Resubmit and hope for the best?
- We need some way of getting the big picture.
- We need to identify problems not seen before.

An Idea:

- We have lots of structured information about the components of a grid.
- Can we perform some form of data mining to discover the big picture of what is going on?
  - User: Your jobs work fine on RH Linux 12.1 and 12.3 but they always seem to crash on version 12.2.
  - Admin: User "joe" is running 1000s of jobs that transfer 10 TB of data that fail immediately; perhaps he needs help.
- Can we act on this information to improve the system?
  - User: Avoid resources that aren't working for you.
  - Admin: Assist the user in understanding and fixing the problem.

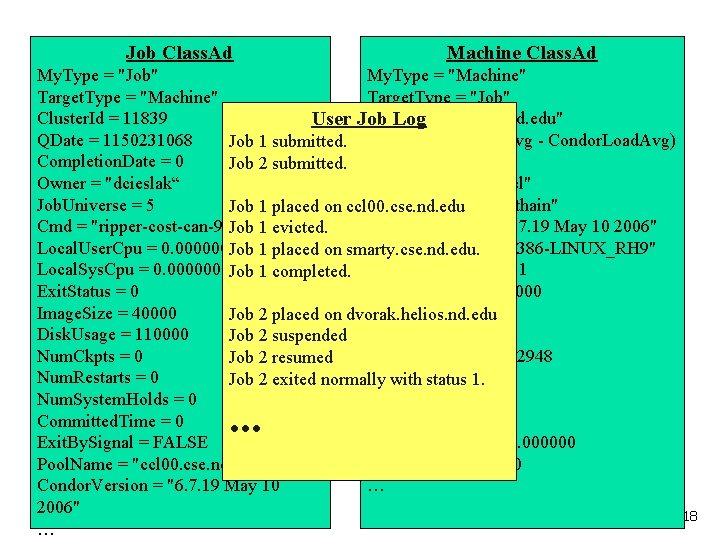

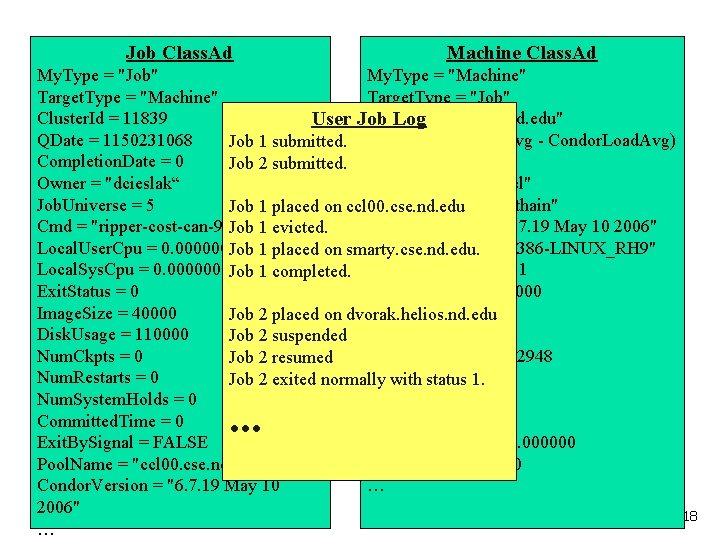

Job ClassAd

    MyType = "Job"
    ClusterId = 11839
    QDate = 1150231068
    CompletionDate = 0
    Owner = "dcieslak"
    JobUniverse = 5
    Cmd = "ripper-cost-can-9-50.sh"
    LocalUserCpu = 0.000000
    LocalSysCpu = 0.000000
    ExitStatus = 0
    ImageSize = 40000
    DiskUsage = 110000
    NumCkpts = 0
    NumRestarts = 0
    NumSystemHolds = 0
    CommittedTime = 0
    ExitBySignal = FALSE
    PoolName = "ccl00.cse.nd.edu"
    CondorVersion = "6.7.19 May 10 2006"
    ...

Machine ClassAd

    MyType = "Machine"
    TargetType = "Job"
    Name = "ccl00.cse.nd.edu"
    CpuBusy = ((LoadAvg - CondorLoadAvg) >= 0.500000)
    MachineGroup = "ccl"
    MachineOwner = "dthain"
    CondorVersion = "6.7.19 May 10 2006"
    CondorPlatform = "I386-LINUX_RH9"
    VirtualMachineID = 1
    ExecutableSize = 20000
    JobUniverse = 1
    NiceUser = FALSE
    VirtualMemory = 962948
    Memory = 498
    Cpus = 1
    Disk = 19072712
    CondorLoadAvg = 1.000000
    LoadAvg = 1.130000
    ...

User Job Log

    Job 1 submitted.
    Job 2 submitted.
    Job 1 placed on ccl00.cse.nd.edu
    Job 1 evicted.
    Job 1 placed on smarty.cse.nd.edu.
    Job 1 completed.
    Job 2 placed on dvorak.helios.nd.edu
    Job 2 suspended
    Job 2 resumed
    Job 2 exited normally with status 1.
    ...
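To mine ads like these, each one first has to become a flat record of attribute values. The sketch below is a deliberately simple reader for `Name = value` lines, not the real ClassAd parser: it handles only quoted strings, booleans, and numbers, and keeps anything else (such as the `CpuBusy` expression) as an unevaluated string. The function names are illustrative.

```python
def parse_value(raw):
    """Convert one ClassAd-style literal; leave expressions as strings."""
    if len(raw) >= 2 and raw.startswith('"') and raw.endswith('"'):
        return raw[1:-1]
    if raw.upper() in ("TRUE", "FALSE"):
        return raw.upper() == "TRUE"
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw  # an expression; this sketch does not evaluate it

def parse_ad(text):
    """Read 'Name = value' lines into a dict, skipping anything else."""
    ad = {}
    for line in text.splitlines():
        line = line.strip()
        if "=" not in line:
            continue
        name, _, raw = line.partition("=")  # split at the first '=' only
        ad[name.strip()] = parse_value(raw.strip())
    return ad

machine_text = """
MyType = "Machine"
Name = "ccl00.cse.nd.edu"
CpuBusy = ((LoadAvg - CondorLoadAvg) >= 0.500000)
NiceUser = FALSE
Cpus = 1
Disk = 19072712
LoadAvg = 1.130000
"""

machine_ad = parse_ad(machine_text)
for name, value in machine_ad.items():
    print(f"{name}: {value!r}")
```

Numeric attributes come out as `int` or `float`, which is the form a rule learner needs for threshold tests such as `Disk<=555320`.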

- Inputs: job ads, machine ads, and the user job log
- Each job is assigned to a success class or a failure class
- Failure criteria: exit != 0, core dump, evicted, suspended, bad output
- Data mining over the two classes yields findings such as: "Your jobs work fine on RH Linux 12.1 and 12.3 but they always seem to crash on version 12.2."

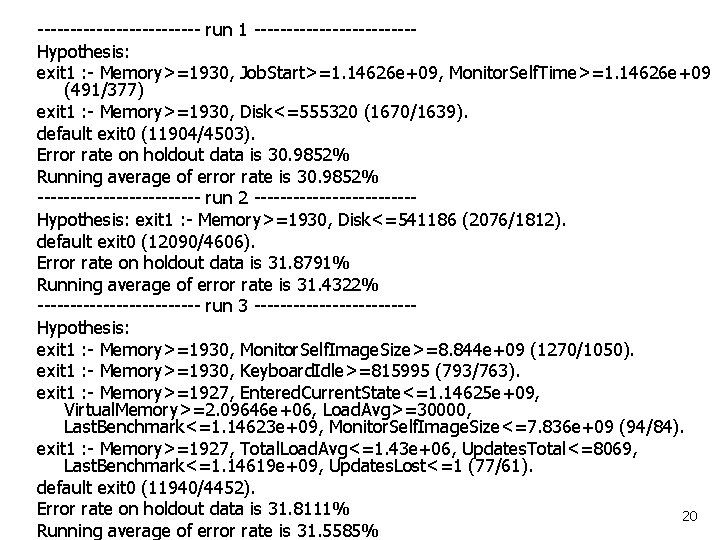

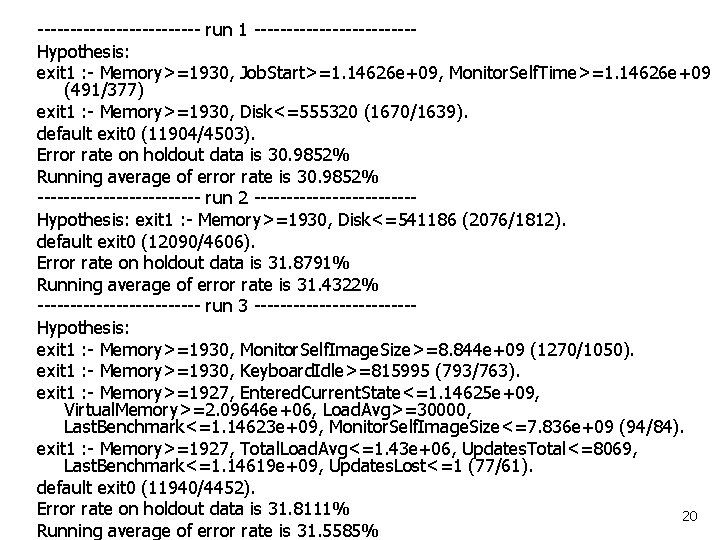
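The labeling step can be sketched as a function that applies the five failure criteria to one job. This is an illustration under stated assumptions: `ExitStatus` and `ExitBySignal` are attribute names from the job ad shown earlier, but treating `ExitBySignal` as a proxy for a core dump, matching log lines by substring, and passing the output check in as a flag are choices made for this example only.

```python
def label_job(job_ad, log_events, output_ok=True):
    """Return ('success' | 'failure', reasons) using the slide's failure criteria."""
    reasons = []
    if job_ad.get("ExitStatus", 0) != 0:
        reasons.append("exit != 0")
    if job_ad.get("ExitBySignal", False):
        reasons.append("core dump")  # assumption: a signal exit stands in for a core dump
    if any("evicted" in event for event in log_events):
        reasons.append("evicted")
    if any("suspended" in event for event in log_events):
        reasons.append("suspended")
    if not output_ok:
        reasons.append("bad output")  # assumption: the caller validates the output
    return ("failure" if reasons else "success"), reasons

job_ad = {"ExitStatus": 0, "ExitBySignal": False}

log_job1 = [
    "Job 1 submitted.",
    "Job 1 placed on ccl00.cse.nd.edu",
    "Job 1 evicted.",
    "Job 1 placed on smarty.cse.nd.edu.",
    "Job 1 completed.",
]
# Hypothetical job with an uneventful log, for contrast.
log_job3 = ["Job 3 submitted.", "Job 3 placed on ccl00.cse.nd.edu", "Job 3 completed."]

print(label_job(job_ad, log_job1))
print(label_job(job_ad, log_job3))
```

Under these criteria a job that was evicted once counts as a failure even though it later completed, so the label describes the placement on a particular machine rather than the job's final outcome.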

    ------------- run 1 -------------
    Hypothesis:
    exit1 :- Memory>=1930, JobStart>=1.14626e+09, MonitorSelfTime>=1.14626e+09 (491/377)
    exit1 :- Memory>=1930, Disk<=555320 (1670/1639).
    default exit0 (11904/4503).
    Error rate on holdout data is 30.9852%
    Running average of error rate is 30.9852%

    ------------- run 2 -------------
    Hypothesis:
    exit1 :- Memory>=1930, Disk<=541186 (2076/1812).
    default exit0 (12090/4606).
    Error rate on holdout data is 31.8791%
    Running average of error rate is 31.4322%

    ------------- run 3 -------------
    Hypothesis:
    exit1 :- Memory>=1930, MonitorSelfImageSize>=8.844e+09 (1270/1050).
    exit1 :- Memory>=1930, KeyboardIdle>=815995 (793/763).
    exit1 :- Memory>=1927, EnteredCurrentState<=1.14625e+09, VirtualMemory>=2.09646e+06, LoadAvg>=30000, LastBenchmark<=1.14623e+09, MonitorSelfImageSize<=7.836e+09 (94/84).
    exit1 :- Memory>=1927, TotalLoadAvg<=1.43e+06, UpdatesTotal<=8069, LastBenchmark<=1.14619e+09, UpdatesLost<=1 (77/61).
    default exit0 (11940/4452).
    Error rate on holdout data is 31.8111%
    Running average of error rate is 31.5585%

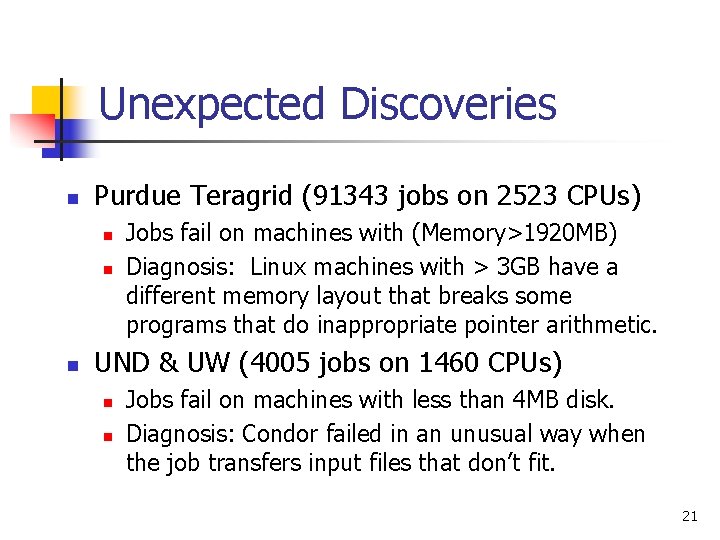

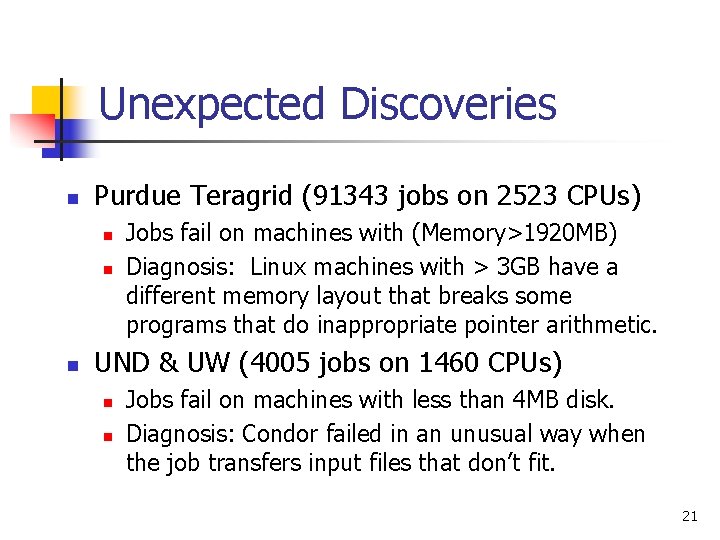
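A learned hypothesis of this kind is an ordered rule list: the first rule whose conditions all hold predicts failure, otherwise the default class applies. The sketch below encodes the run 2 hypothesis by hand and applies it to machine records; the data structure, function names, and the two example machines are assumptions for illustration, and the second function simply recomputes the running average from the three reported holdout error rates.

```python
import operator

OPS = {">=": operator.ge, "<=": operator.le}

# Run 2 hypothesis, transcribed from the output above.
RUN2_RULES = [
    [("Memory", ">=", 1930), ("Disk", "<=", 541186)],
]

def predict(machine_ad, rules, default="exit0"):
    """Return 'exit1' if any rule's conditions all hold, else the default class."""
    for conditions in rules:
        if all(
            attr in machine_ad and OPS[op](machine_ad[attr], threshold)
            for attr, op, threshold in conditions
        ):
            return "exit1"
    return default

def running_average(values):
    """Mean of the first n values, for n = 1..len(values)."""
    total, averages = 0.0, []
    for n, value in enumerate(values, start=1):
        total += value
        averages.append(total / n)
    return averages

# Hypothetical machines, not taken from the talk's data.
big_memory_small_disk = {"Memory": 2048, "Disk": 500000}
small_memory = {"Memory": 512, "Disk": 500000}

print(predict(big_memory_small_disk, RUN2_RULES))
print(predict(small_memory, RUN2_RULES))

holdout_errors = [30.9852, 31.8791, 31.8111]
print([round(a, 4) for a in running_average(holdout_errors)])
```

The recomputed running averages agree with the figures reported after each run.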

Unexpected Discoveries

- Purdue Teragrid (91343 jobs on 2523 CPUs)
  - Jobs fail on machines with (Memory>1920 MB)
  - Diagnosis: Linux machines with > 3 GB have a different memory layout that breaks some programs that do inappropriate pointer arithmetic.
- UND & UW (4005 jobs on 1460 CPUs)
  - Jobs fail on machines with less than 4 MB disk.
  - Diagnosis: Condor failed in an unusual way when the job transfers input files that don’t fit.

Many Open Problems

- Strengths and Weaknesses of Approach
  - Correlation != Causation -> could be enough?
  - Limits of reported data -> increase resolution?
  - Not enough data points -> direct job placement?
- Acting on Information
  - Steering by the end user.
  - Applying learned rules back to the system.
  - Evaluating (and sometimes abandoning) changes.
  - Creating tools that assist with "digging deeper."
- Data Mining Research
  - Continuous intake + incremental construction.
  - Creating results that non-specialists can understand.

Acknowledgements

- Dr. Thain (University of Notre Dame)
  - Local Condor expert
  - Use of some slides for this presentation
- Cooperative Computing Lab
  - Maintain/improve local Condor pool
  - Provide computing resources

Condor Related Publications

- D. Cieslak, D. Thain, N. Chawla, "Troubleshooting Distributed Systems via Data Mining," (HPDC-15), June 2006
- N. Chawla, D. Cieslak, "Evaluating Calibration of Probability Estimation Trees," AAAI Workshop on the Evaluation Methods in Machine Learning, July 2006
- N. Chawla, D. Cieslak, L. Hall, A. Joshi, "Killing Two Birds with One Stone: Countering Cost and Imbalance," Data Mining and Knowledge Discovery, Under Revision

Questions?