Neural Machine Translation
Quinn Lanners¹
Advisor: Dr. Thomas Laurent¹
¹ Department of Mathematics, Loyola Marymount University

Outline
• What is Neural Machine Translation?
• Encoder-Decoder Structure
• Objective: Improve PyTorch Tutorial
• Results
• Future Enhancements

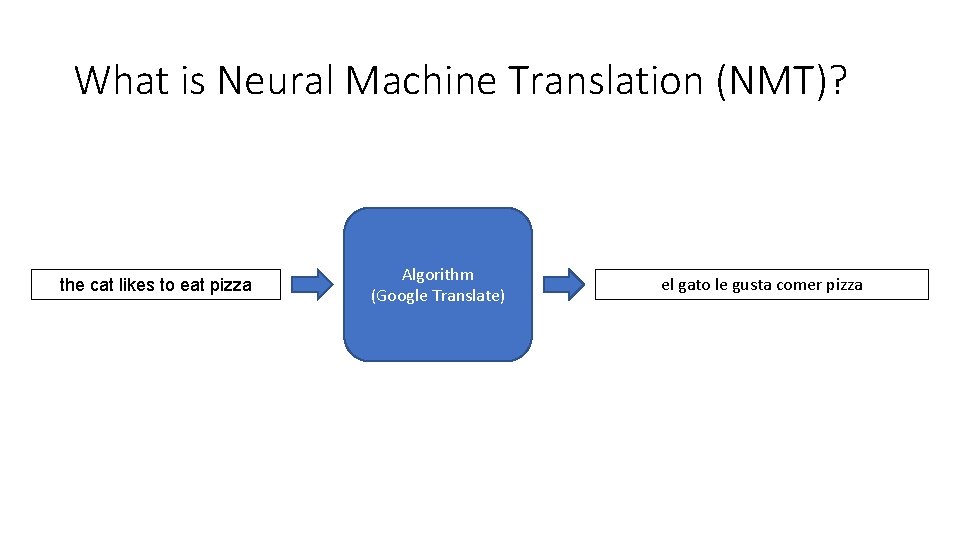

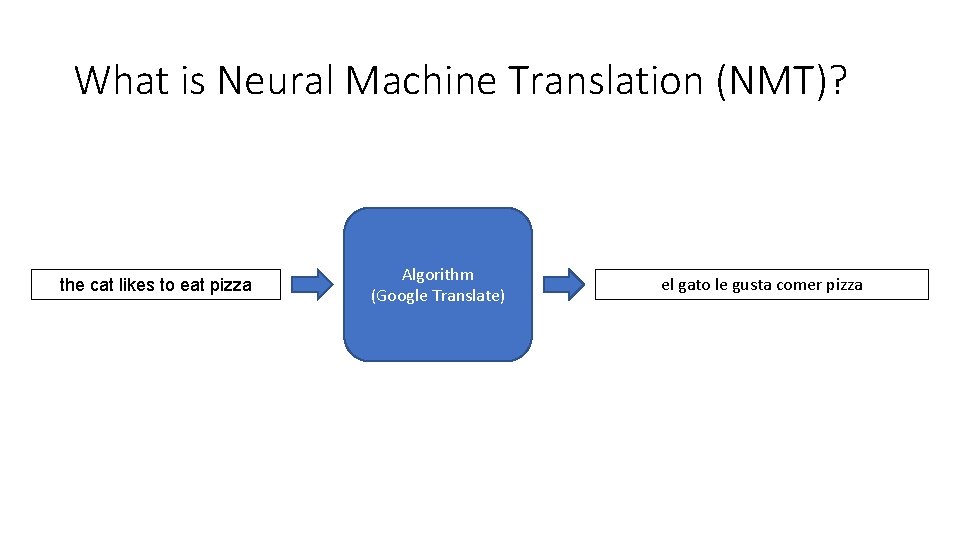

What is Neural Machine Translation (NMT)?
the cat likes to eat pizza → Algorithm (Google Translate) → el gato le gusta comer pizza

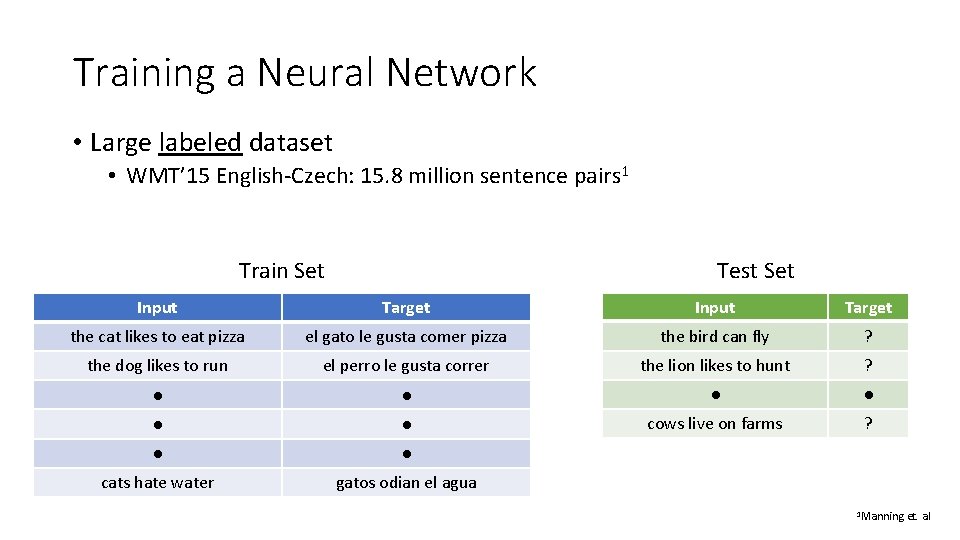

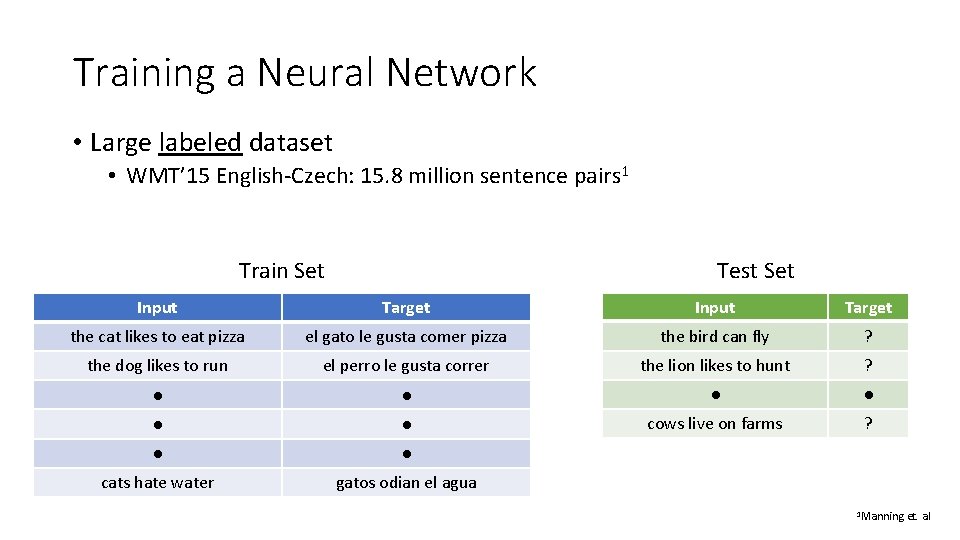

Training a Neural Network
• Large labeled dataset
• WMT'15 English-Czech: 15.8 million sentence pairs¹

Train Set (Input → Target)
• the cat likes to eat pizza → el gato le gusta comer pizza
• the dog likes to run → el perro le gusta correr
• ...
• cats hate water → gatos odian el agua

Test Set (Input → Target)
• the bird can fly → ?
• the lion likes to hunt → ?
• ...
• cows live on farms → ?

¹ Manning et al.
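The train/test idea on this slide can be sketched in a few lines of Python. This is only an illustration: the function name `split_pairs`, the 20% test fraction, and the placeholder sentence pairs are assumptions, not part of the talk.

```python
import random

def split_pairs(pairs, test_fraction=0.2, seed=0):
    """Shuffle (input, target) pairs and hold out a fraction as a test set."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = int(len(shuffled) * test_fraction)
    # The first n_test pairs are held out; the rest are used for training.
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical placeholder data standing in for real sentence pairs.
pairs = [(f"source sentence {i}", f"target sentence {i}") for i in range(10)]
train, test = split_pairs(pairs)
```

The model never sees the held-out pairs during training, so its translations of them show how well it generalizes.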

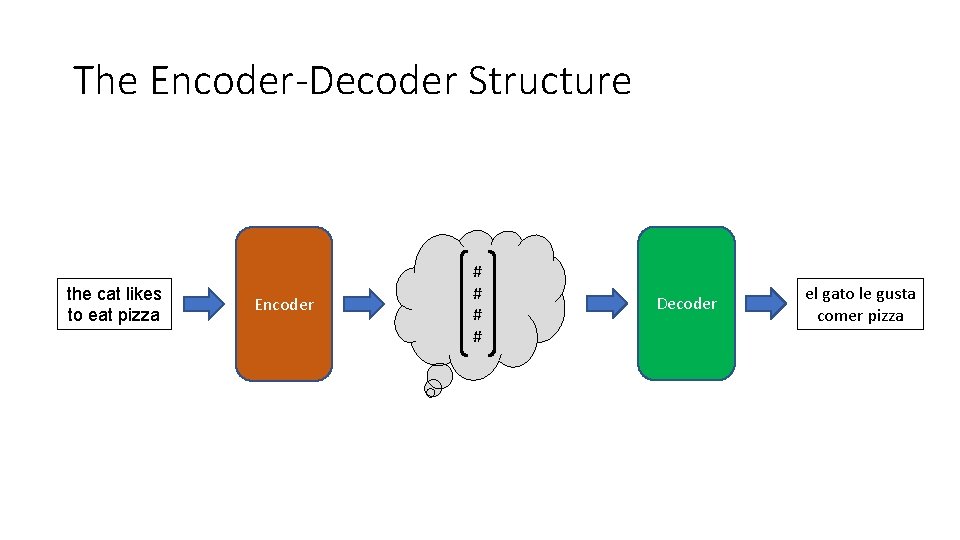

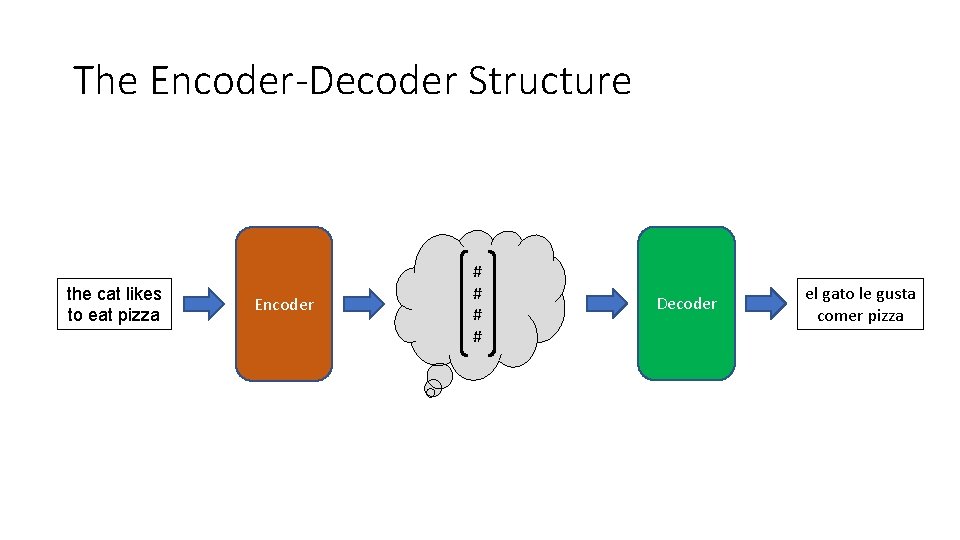

The Encoder-Decoder Structure
the cat likes to eat pizza → Encoder → # # → Decoder → el gato le gusta comer pizza

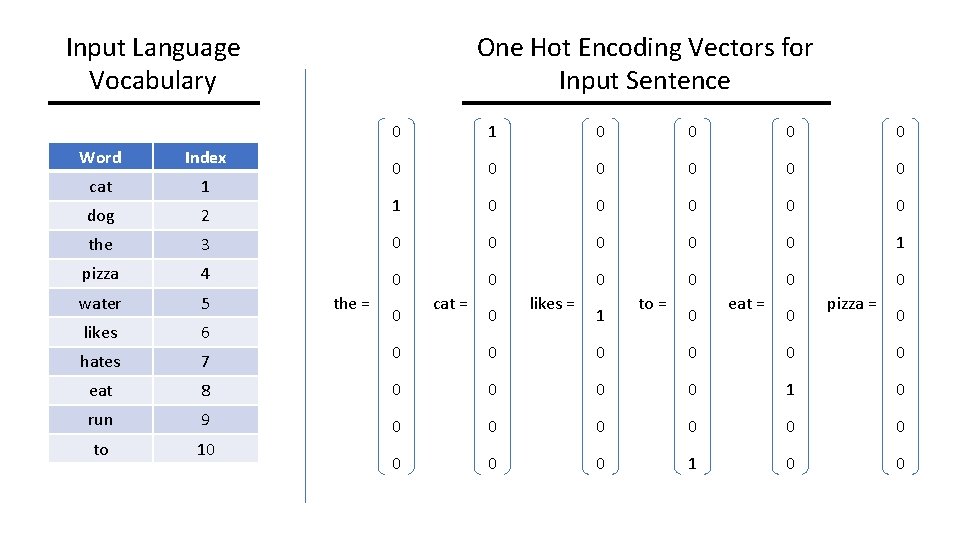

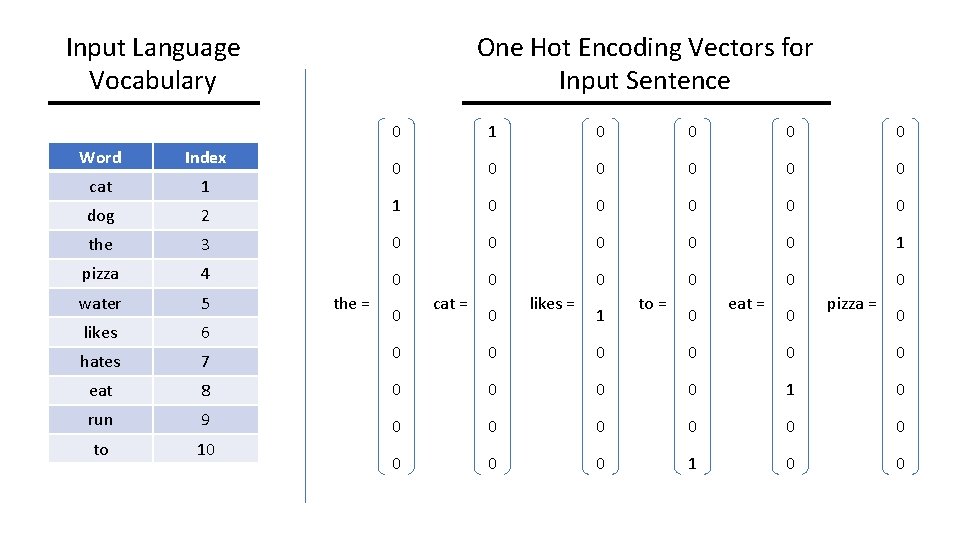

One Hot Encoding Vectors for Input Sentence
Input Language Vocabulary (Word: Index)
• cat: 1
• dog: 2
• the: 3
• pizza: 4
• water: 5
• likes: 6
• hates: 7
• eat: 8
• run: 9
• to: 10
[Figure: one-hot vectors for the input sentence "the cat likes to eat pizza"]
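A minimal sketch of how the one-hot vectors could be built from the vocabulary on this slide. It assumes that a word with index i gets a 1 in position i of a length-10 vector (position i - 1 in Python's 0-based lists); the helper name `one_hot` is hypothetical.

```python
# Vocabulary copied from the slide (indices start at 1).
vocab = {"cat": 1, "dog": 2, "the": 3, "pizza": 4, "water": 5,
         "likes": 6, "hates": 7, "eat": 8, "run": 9, "to": 10}

def one_hot(word, vocab):
    """Return a list of zeros with a single 1 at the word's vocabulary position."""
    vec = [0] * len(vocab)
    vec[vocab[word] - 1] = 1  # assumption: slide index i maps to list position i - 1
    return vec

sentence = "the cat likes to eat pizza".split()
vectors = [one_hot(word, vocab) for word in sentence]
for word, vec in zip(sentence, vectors):
    print(f"{word:>5}: {vec}")
```

Each word becomes a vector as long as the vocabulary, which is why large vocabularies are normally passed through an embedding layer before reaching the recurrent network.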

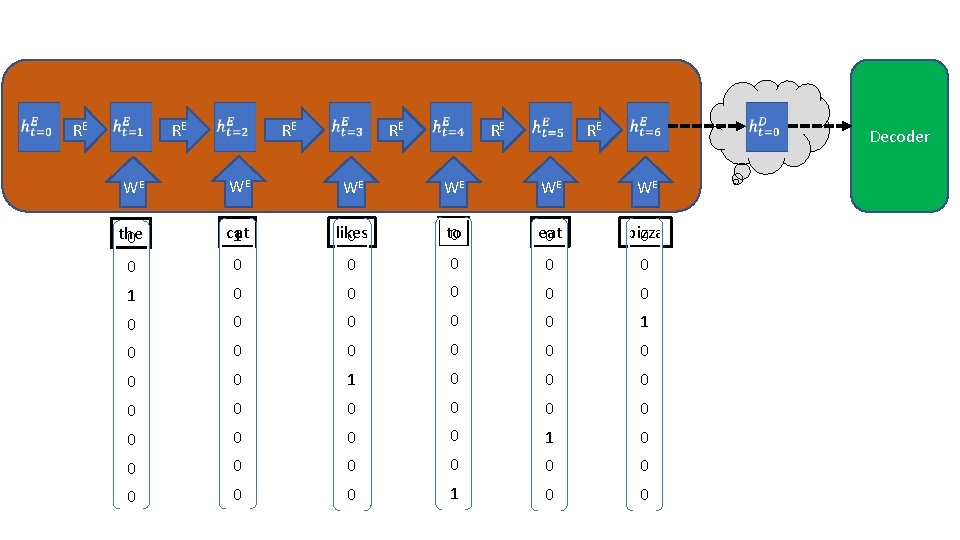

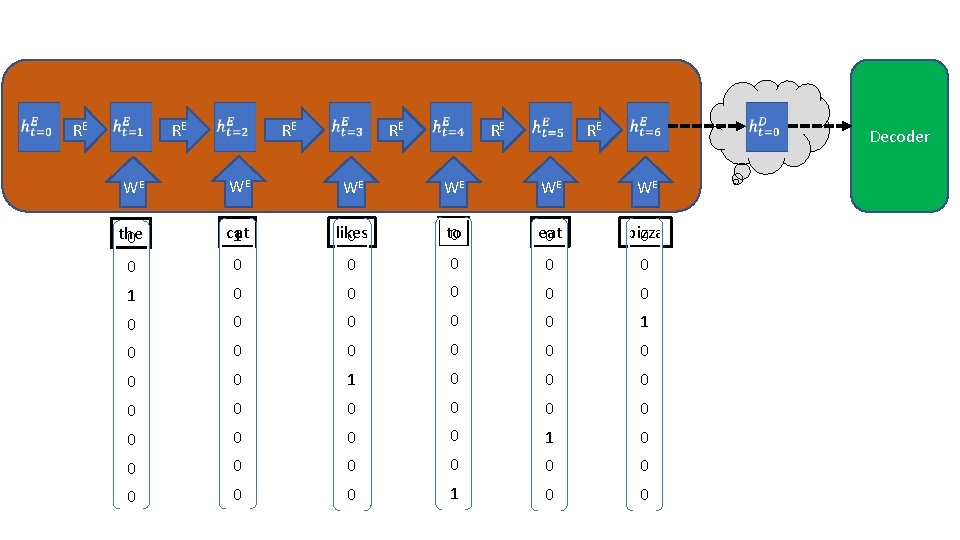

[Figure: encoder diagram. The one-hot vectors for the input words "the cat likes to eat pizza" pass through units labeled WE and RE, and the result feeds the Decoder.]

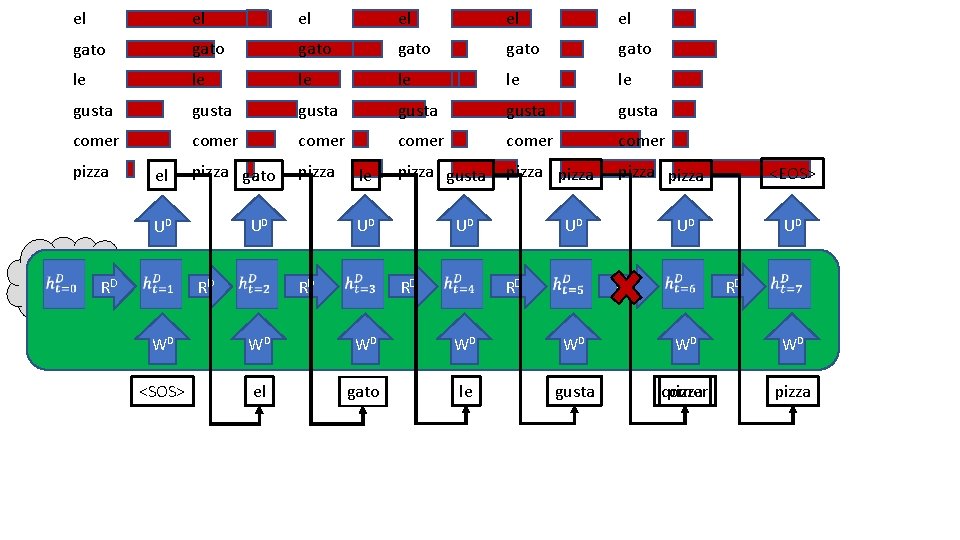

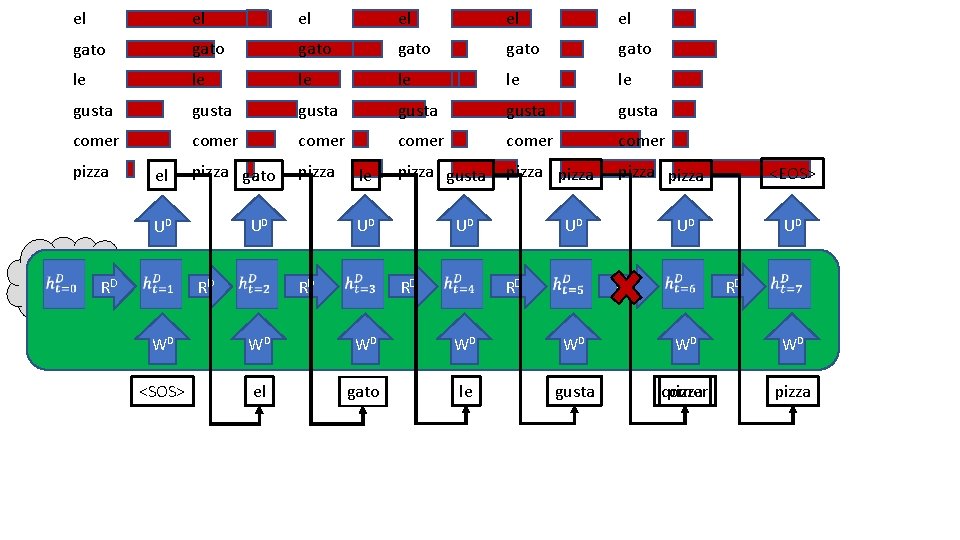

[Figure: decoder diagram. Starting from <SOS>, units labeled WD, RD, and UD produce "el gato le gusta comer pizza" one word at a time, ending with <EOS>.]

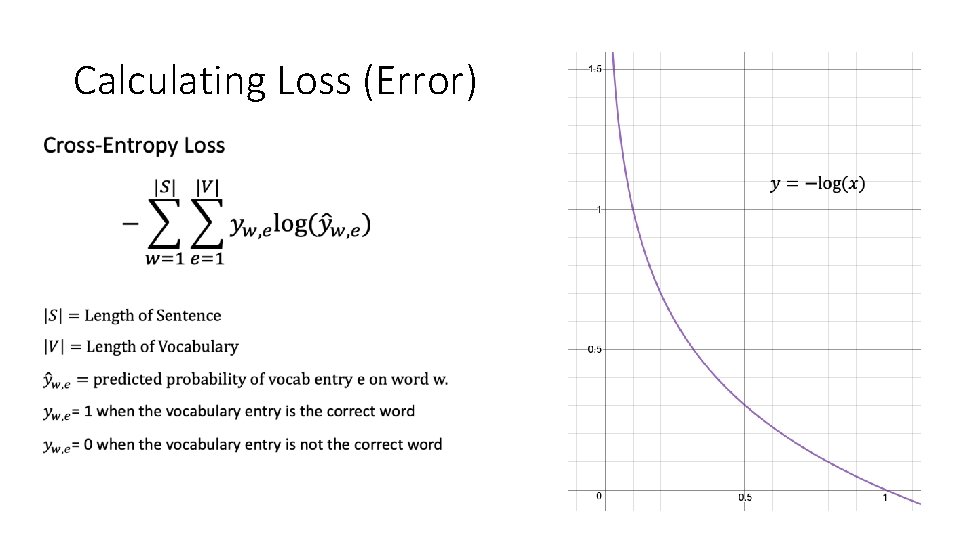

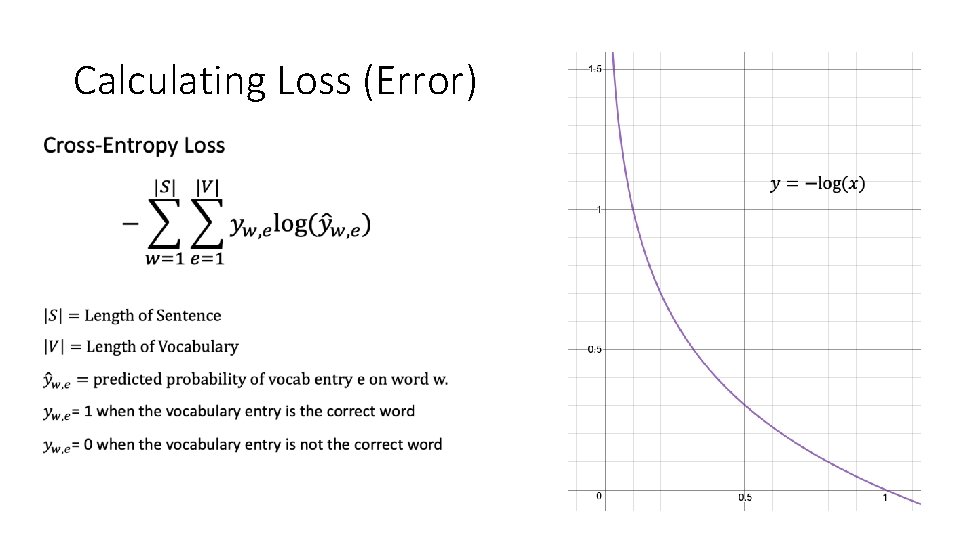

Calculating Loss (Error)
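The formula from this slide did not survive extraction. As a stand-in, here is a sketch of the cross-entropy (negative log-likelihood) loss that is commonly used for this kind of model; treating it as the loss used in the talk is an assumption. The function names and the use of raw score lists are illustrative only.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(scores, target_index):
    """Negative log-probability the model assigns to the correct word."""
    return -math.log(softmax(scores)[target_index])

def sentence_loss(step_scores, target_indices):
    """Average the per-word loss over every decoder step of one sentence."""
    losses = [cross_entropy(s, t) for s, t in zip(step_scores, target_indices)]
    return sum(losses) / len(losses)
```

The loss is small when the decoder puts high probability on the target word at each step and grows as that probability shrinks.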

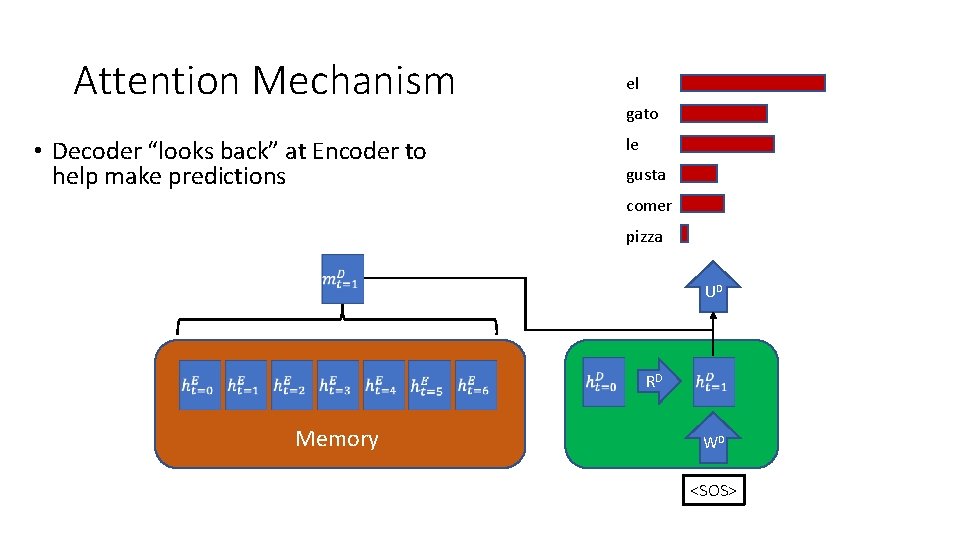

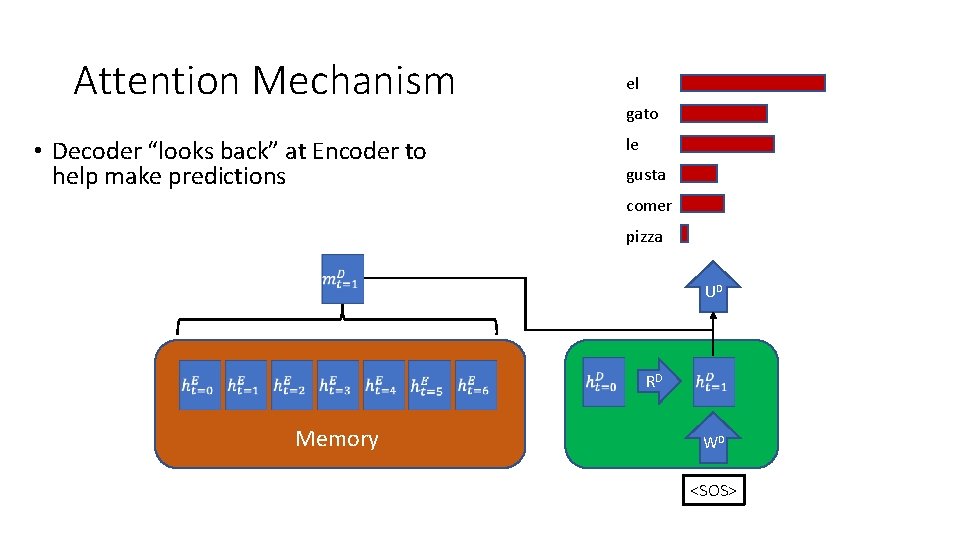

Attention Mechanism
• Decoder "looks back" at Encoder to help make predictions
[Figure: decoder units (WD, RD, UD) starting from <SOS> and reading from a Memory of encoder outputs while producing "el gato le gusta comer pizza"]
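One way to picture the "looking back" step is the dot-product scoring described by Luong et al. (2015). The sketch below is an assumption about the general idea, not necessarily the attention variant used in the talk or in the PyTorch tutorial; `dot_attention` and the toy vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_attention(decoder_state, encoder_states):
    """Weight each encoder state by its dot product with the decoder state."""
    scores = [sum(d * e for d, e in zip(decoder_state, h)) for h in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # The context vector is the weighted average of the encoder states.
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context

# Toy example: the decoder state lines up with the first encoder state.
weights, context = dot_attention([1.0, 0.0], [[10.0, 0.0], [0.0, 10.0]])
```

The decoder then combines the context vector with its own state when predicting the next word, so it can focus on the most relevant input words.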

Objective: Improve PyTorch Tutorial
• 2 main Python Machine Learning packages
  • PyTorch (Facebook)
  • TensorFlow (Google)
• PyTorch Neural Machine Translation Tutorial: "Translation with a Sequence to Sequence Network and Attention"
• Shortcomings
  • No test set
  • Does not batchify
  • GRU rather than LSTM
https://pytorch.org/
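To make the "does not batchify" shortcoming concrete, here is a rough sketch of grouping index sequences into padded batches. The padding index 0, the function name `make_batches`, and sorting by length are assumptions chosen for illustration, not details taken from the talk.

```python
PAD = 0  # hypothetical index reserved for padding (real words start at 1)

def make_batches(sequences, batch_size):
    """Group sequences of word indices into padded, equal-width batches."""
    ordered = sorted(sequences, key=len)  # similar lengths together means less padding
    batches = []
    for start in range(0, len(ordered), batch_size):
        chunk = ordered[start:start + batch_size]
        width = max(len(seq) for seq in chunk)
        padded = [seq + [PAD] * (width - len(seq)) for seq in chunk]
        lengths = [len(seq) for seq in chunk]  # true lengths, so padding can be ignored later
        batches.append((padded, lengths))
    return batches

batches = make_batches([[3, 1, 6], [3, 2], [1, 6, 10, 8, 4], [4]], batch_size=2)
```

Processing several sentences per step lets the hardware work on them in parallel, which matters once the dataset grows beyond a few thousand pairs.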

Data Set for PyTorch Tutorial
• French -> English
• Training Set: 10,853 sentence pairs
• Test Set: 0 sentence pairs
• Vocabulary Sizes
  • French: 4489
  • English: 2925

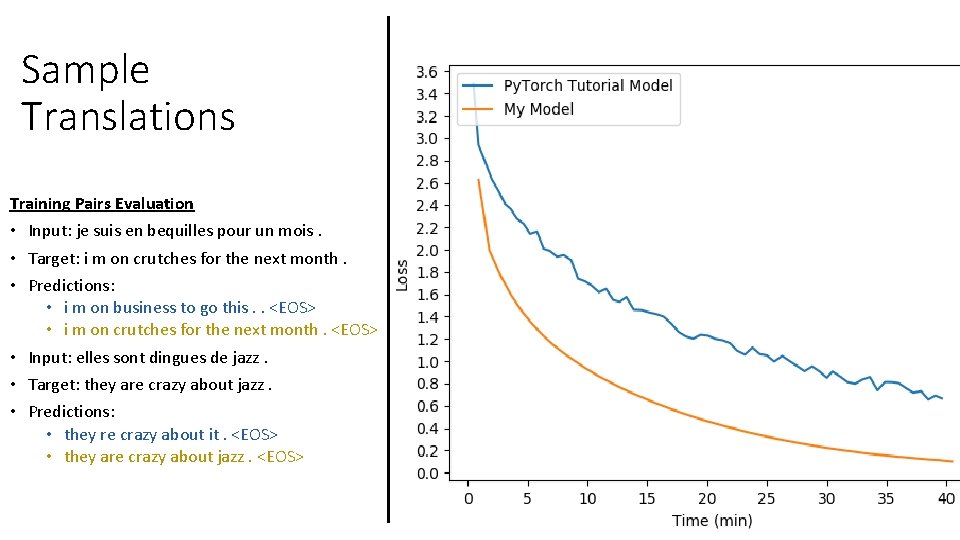

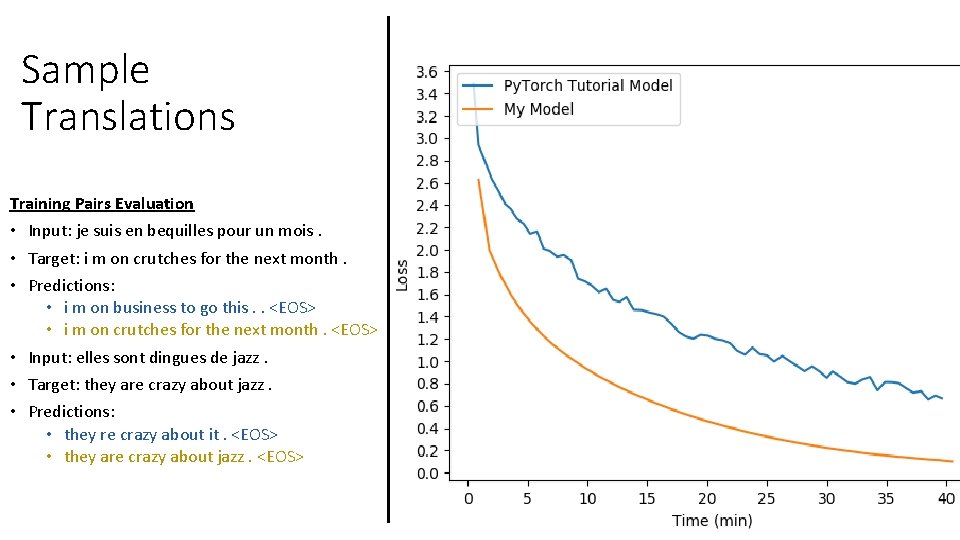

Sample Translations: Training Pairs Evaluation
• Input: je suis en bequilles pour un mois.
• Target: i m on crutches for the next month.
• Predictions:
  • i m on business to go this. . <EOS>
  • i m on crutches for the next month. <EOS>
• Input: elles sont dingues de jazz.
• Target: they are crazy about jazz.
• Predictions:
  • they re crazy about it. <EOS>
  • they are crazy about jazz. <EOS>

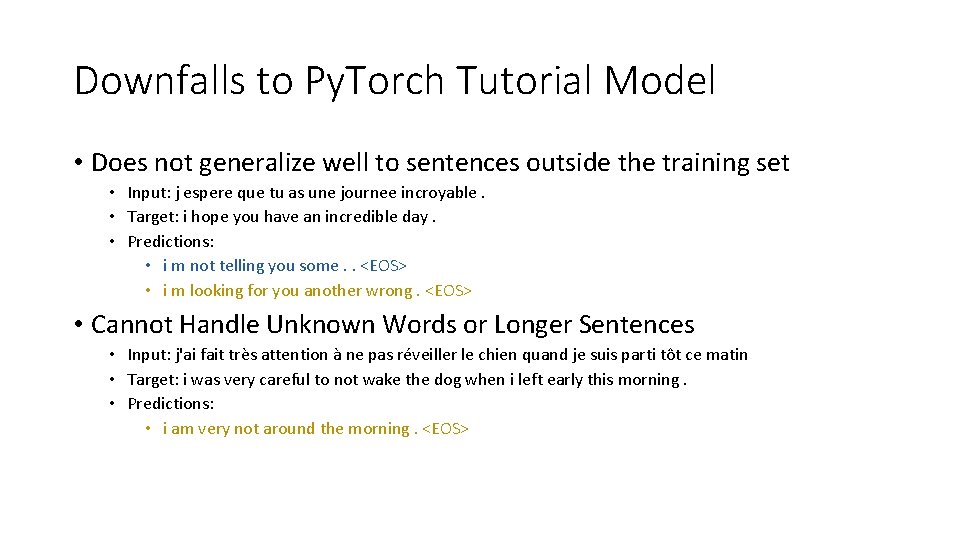

Downfalls to PyTorch Tutorial Model
• Does not generalize well to sentences outside the training set
  • Input: j espere que tu as une journee incroyable.
  • Target: i hope you have an incredible day.
  • Predictions:
    • i m not telling you some. . <EOS>
    • i m looking for you another wrong. <EOS>
• Cannot handle unknown words or longer sentences
  • Input: j'ai fait très attention à ne pas réveiller le chien quand je suis parti tôt ce matin
  • Target: i was very careful to not wake the dog when i left early this morning.
  • Predictions:
    • i am very not around the morning. <EOS>
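One common way to cope with unknown words is to reserve an `<UNK>` token for anything outside the vocabulary. The sketch below shows that idea only; it is not claimed to be the fix used in this project, and `build_vocab`, `encode`, and the `min_count` threshold are hypothetical.

```python
from collections import Counter

UNK = "<UNK>"  # hypothetical placeholder token for out-of-vocabulary words

def build_vocab(sentences, min_count=2):
    """Index every word seen at least min_count times; the rest share <UNK>."""
    counts = Counter(word for s in sentences for word in s.split())
    vocab = {UNK: 0}
    for word, count in counts.items():
        if count >= min_count:
            vocab[word] = len(vocab)
    return vocab

def encode(sentence, vocab):
    """Map words to indices, falling back to <UNK> for unseen words."""
    return [vocab.get(word, vocab[UNK]) for word in sentence.split()]

vocab = build_vocab(["i m on crutches", "i m crazy about jazz"])
encoded = encode("i m tired", vocab)  # "tired" was never seen in training
```

Because rare training words are also mapped to `<UNK>`, the model sees the token during training and learns to keep translating the rest of the sentence around it.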

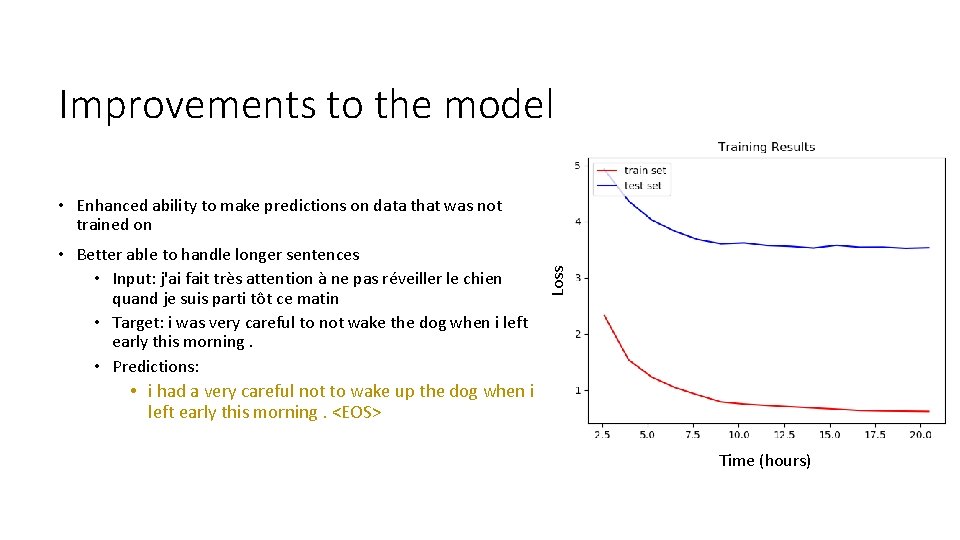

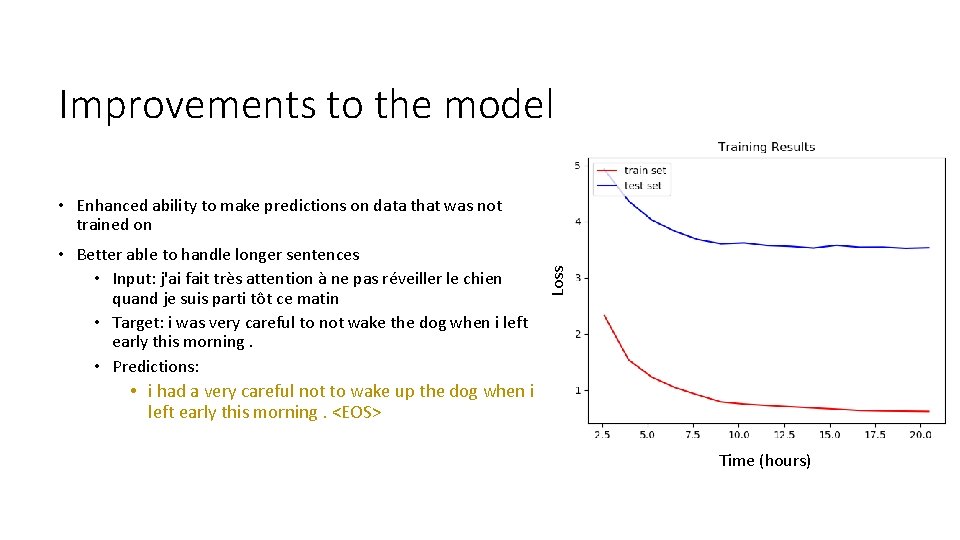

Improvements to the Model
• Better able to handle longer sentences
  • Input: j'ai fait très attention à ne pas réveiller le chien quand je suis parti tôt ce matin
  • Target: i was very careful to not wake the dog when i left early this morning.
  • Predictions:
    • i had a very careful not to wake up the dog when i left early this morning. <EOS>
• Enhanced ability to make predictions on data the model was not trained on
[Figure: loss plotted against time (hours)]

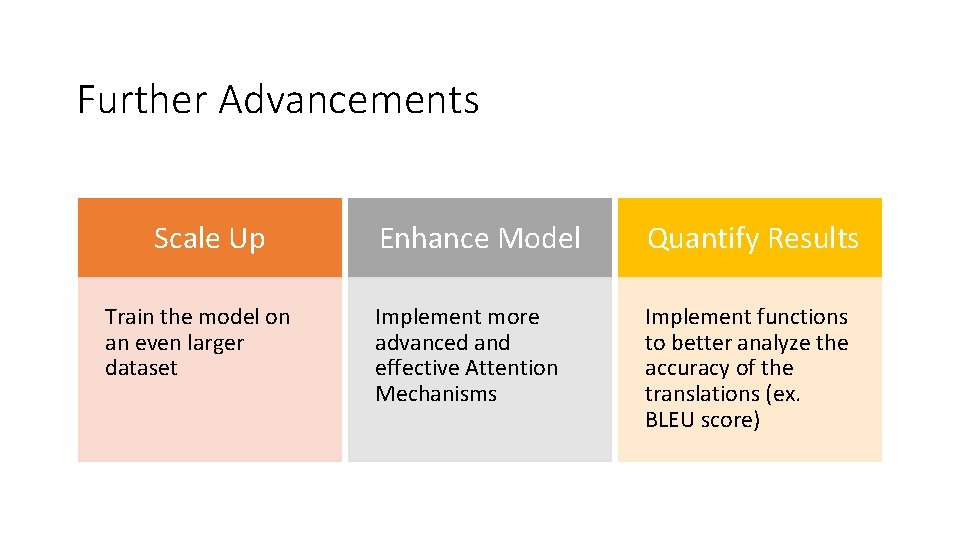

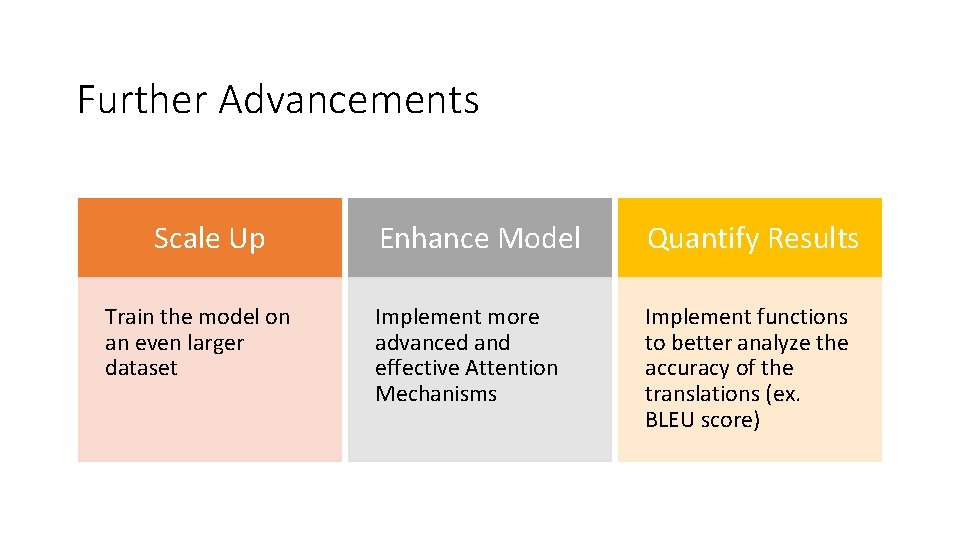

Further Advancements
• Scale Up: Train the model on an even larger dataset
• Enhance Model: Implement more advanced and effective Attention Mechanisms
• Quantify Results: Implement functions to better analyze the accuracy of the translations (e.g., BLEU score)
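As a rough idea of what quantifying results could look like, the sketch below computes clipped n-gram precision, the building block of BLEU. It is not the full BLEU score (no brevity penalty, no averaging over n-gram orders), and the function names are hypothetical. The example sentences come from the sample translations earlier in the deck.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return all runs of n consecutive tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def clipped_precision(candidate, reference, n):
    """Fraction of candidate n-grams that also appear in the reference.

    Each n-gram is credited at most as many times as it occurs in the reference.
    """
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total

reference = "they are crazy about jazz."
candidate = "they re crazy about it."
p1 = clipped_precision(candidate, reference, 1)  # unigram precision
p2 = clipped_precision(candidate, reference, 2)  # bigram precision
```

A score like this gives a single number per translation, so models can be compared over a whole test set rather than by reading individual examples.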

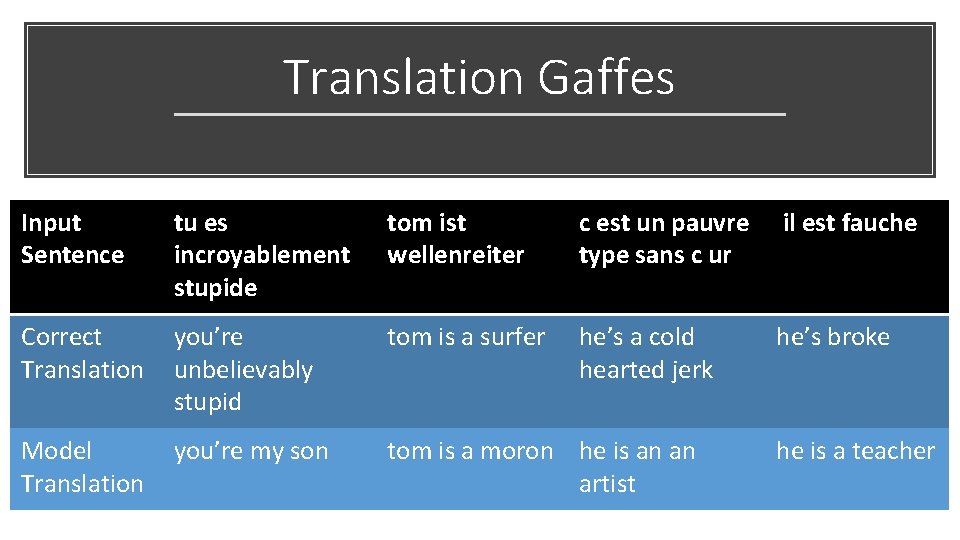

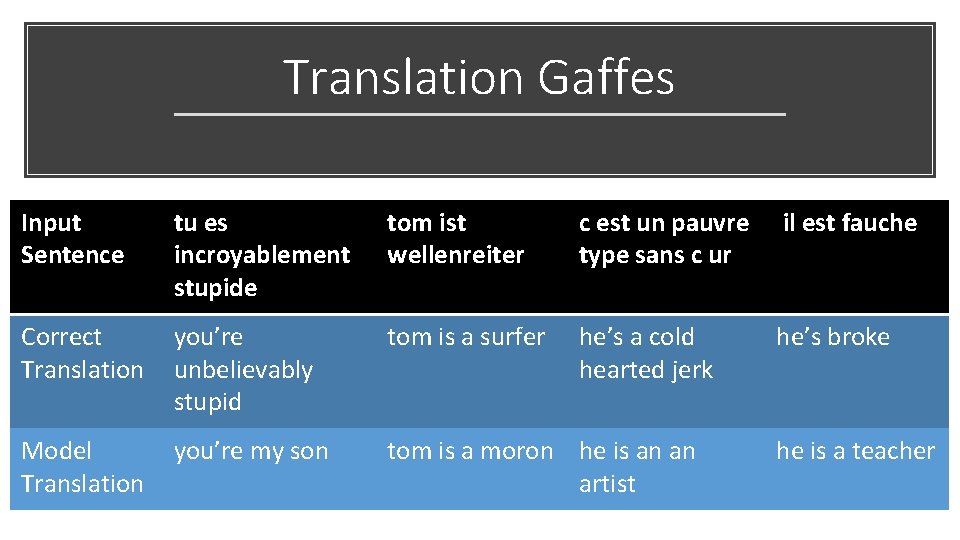

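Translation Gaffes
• Input Sentence: tu es incroyablement stupide
  • Correct Translation: you're unbelievably stupid
  • Model Translation: you're my son
• Input Sentence: tom ist wellenreiter
  • Correct Translation: tom is a surfer
  • Model Translation: tom is a moron
• Input Sentence: c est un pauvre type sans cœur
  • Correct Translation: he's a cold hearted jerk
  • Model Translation: he is an an artist
• Input Sentence: il est fauche
  • Correct Translation: he's broke
  • Model Translation: he is a teacher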

Sources
• Jozefowicz, R., Zaremba, W., & Sutskever, I. (2015, June). An empirical exploration of recurrent network architectures. In International Conference on Machine Learning (pp. 2342-2350).
• Luong, M. T., Pham, H., & Manning, C. D. (2015). Effective approaches to attention-based neural machine translation. arXiv preprint arXiv:1508.04025.
• Manning, Luong, See, & Pham. Neural Machine Translation. The Stanford Natural Language Processing Group. Retrieved on April 15, 2019 from https://nlp.stanford.edu/projects/nmt/
• Zhang, Z., & Sabuncu, M. (2018). Generalized cross entropy loss for training deep neural networks with noisy labels. In Advances in Neural Information Processing Systems (pp. 8778-8788).

Thank You!
• Dr. Thomas Laurent
• LMU Mathematics Department

Questions?
