Mining Frequent Patterns Using FP-Growth Method, Ivan Tanasić


Mining Frequent Patterns Using FP-Growth Method

Ivan Tanasić (itanasic@gmail.com), Department of Computer Engineering and Computer Science, School of Electrical Engineering, University of Belgrade

Mining Frequent Patterns without Candidate Generation: A Frequent-Pattern Tree Approach

- Jiawei Han (UIUC)
- Jian Pei (Buffalo)
- Yiwen Yin (SFU)
- Runying Mao (Microsoft)

Problem Definition

- Mining frequent patterns from a DB
  - Frequent itemsets (milk + bread)
  - Frequent sequential patterns (computer -> printer -> paper)
  - Frequent structural patterns (subgraphs, subtrees)

Problem Importance 1/2

- Basic DM primitive
- Used for mining data relationships
  - Associations
  - Correlations
- Helps with basic DM tasks
  - Classification
  - Clustering

![Problem Importance 2/2: association rules](https://slidetodoc.com/presentation_image_h2/64389004257a1d9ba2603ebc040ebbf9/image-5.jpg)

Problem Importance 2/2

- Association rules
  - buys(“laptop”) => buys(“mouse”) [support = 2%, confidence = 30%]
- For a rule I1 => I2:
  - Support = % of all transactions that contain both I1 and I2
  - Confidence = % of the transactions containing I1 that also contain I2
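The two measures above can be sketched in a few lines of Python. This is only an illustration: the five toy transactions and the helper names `support` and `confidence` are assumptions made up for this example, not data or code from the presentation.

```python
def support(transactions, itemset):
    """Fraction of all transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= set(t))
    return hits / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Fraction of the transactions containing `antecedent` that also contain `consequent`."""
    both = support(transactions, set(antecedent) | set(consequent))
    ante = support(transactions, antecedent)
    return both / ante if ante else 0.0


# Hypothetical toy data, invented for illustration only.
transactions = [
    {"laptop", "mouse"},
    {"laptop"},
    {"mouse", "keyboard"},
    {"laptop", "mouse", "keyboard"},
    {"keyboard"},
]

# Rule: buys("laptop") => buys("mouse")
rule_support = support(transactions, {"laptop", "mouse"})          # 2 of 5 transactions
rule_confidence = confidence(transactions, {"laptop"}, {"mouse"})  # 2 of 3 laptop buyers
```

Here the rule holds in 2 of 5 transactions (support 40%), and 2 of the 3 laptop transactions also contain a mouse (confidence about 67%).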

Problem Trend

- Speeding up Apriori with additional techniques
- New data structures (trees)
- Association-rule-specific algorithms
- Specific AR algorithms (OneR, ZeroR)
- FP-Growth still widely used

Existing Solutions 1/3 (Apriori)

- Agrawal et al. (1994)
- Apriori property (AP): all nonempty subsets of a frequent itemset must also be frequent
- Starts from 1-itemsets
- Join + prune (using AP + min supp)
- Generates a huge number of candidates
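The join and prune steps can be sketched as follows. This is a minimal, unoptimized illustration rather than the original Apriori implementation; the function name `apriori` is an assumption, and the nine transactions are the ones used in the FP-tree construction slides of this deck, with minimum support 2.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support count} for all frequent itemsets."""
    transactions = [frozenset(t) for t in transactions]

    # Level 1: count single items with one scan.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    frequent = {s: c for s, c in counts.items() if c >= min_support}
    result = dict(frequent)

    k = 2
    while frequent:
        prev = set(frequent)
        # Join: merge frequent (k-1)-itemsets that together form a k-itemset.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune (Apriori property): every (k-1)-subset must itself be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in prev for s in combinations(c, k - 1))
        }
        # Count the surviving candidates with another scan of the database.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        frequent = {s: n for s, n in counts.items() if n >= min_support}
        result.update(frequent)
        k += 1
    return result


transactions = [
    {"I2", "I1", "I5"}, {"I2", "I4"}, {"I2", "I3"},
    {"I2", "I1", "I4"}, {"I1", "I3"}, {"I2", "I3"},
    {"I1", "I3"}, {"I2", "I1", "I3", "I5"}, {"I2", "I1", "I3"},
]
frequent_itemsets = apriori(transactions, min_support=2)
```

Each level needs a full pass over the transactions to count candidates, which is the cost that FP-Growth avoids.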

Existing Solutions 2/3 (ECLAT)

- Zaki (2000)
- Equivalence CLass Transformation
- Vertical format: {item, TID_set} instead of {TID, itemset}
- Intersects TID_sets of candidates
- TID_sets hold the support info (no scans)
- Still generates candidates
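A rough sketch of the vertical format and TID_set intersection is shown below. The helper names `to_vertical` and `eclat` are assumptions for this example, and the recursion is a simplified depth-first variant, not Zaki's original implementation. The transactions are the nine from the FP-tree construction slides, keyed by TID.

```python
from collections import defaultdict


def to_vertical(transactions):
    """Convert {TID: itemset} into the vertical format {item: TID_set}."""
    vertical = defaultdict(set)
    for tid, items in transactions.items():
        for item in items:
            vertical[item].add(tid)
    return dict(vertical)


def eclat(prefix, items, min_support, out):
    """Depth-first search; `items` is a list of (item, TID_set) pairs."""
    while items:
        item, tids = items.pop()
        if len(tids) >= min_support:
            new_prefix = prefix | {item}
            # The size of the TID_set is the support, so no database scan is needed.
            out[new_prefix] = len(tids)
            # Extend the prefix by intersecting TID_sets with the remaining items.
            suffix = [(other, tids & other_tids) for other, other_tids in items]
            eclat(new_prefix, suffix, min_support, out)
    return out


transactions = {
    1: {"I2", "I1", "I5"}, 2: {"I2", "I4"}, 3: {"I2", "I3"},
    4: {"I2", "I1", "I4"}, 5: {"I1", "I3"}, 6: {"I2", "I3"},
    7: {"I1", "I3"}, 8: {"I2", "I1", "I3", "I5"}, 9: {"I2", "I1", "I3"},
}
vertical = to_vertical(transactions)
frequent_itemsets = eclat(frozenset(), sorted(vertical.items()), 2, {})
```

For example, `vertical["I5"]` is `{1, 8}`, and intersecting it with the TID_sets of I1 and I2 leaves `{1, 8}`, so {I2, I1, I5} has support 2.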

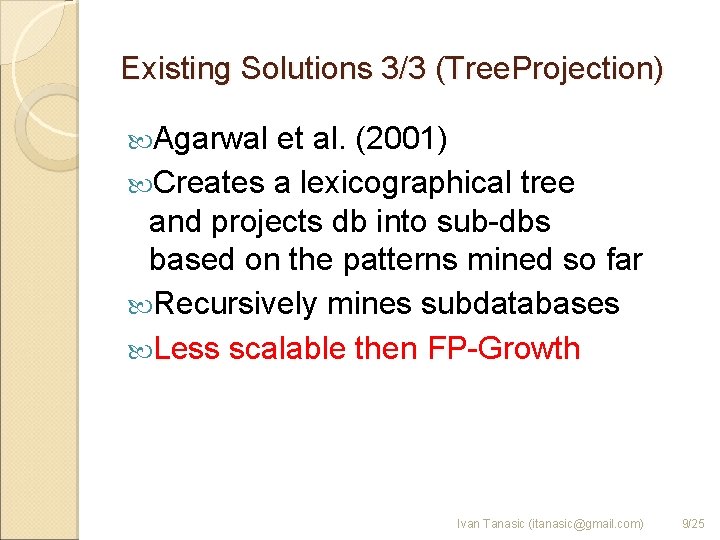

Existing Solutions 3/3 (TreeProjection)

- Agarwal et al. (2001)
- Creates a lexicographical tree and projects the DB into sub-DBs based on the patterns mined so far
- Recursively mines the sub-databases
- Less scalable than FP-Growth

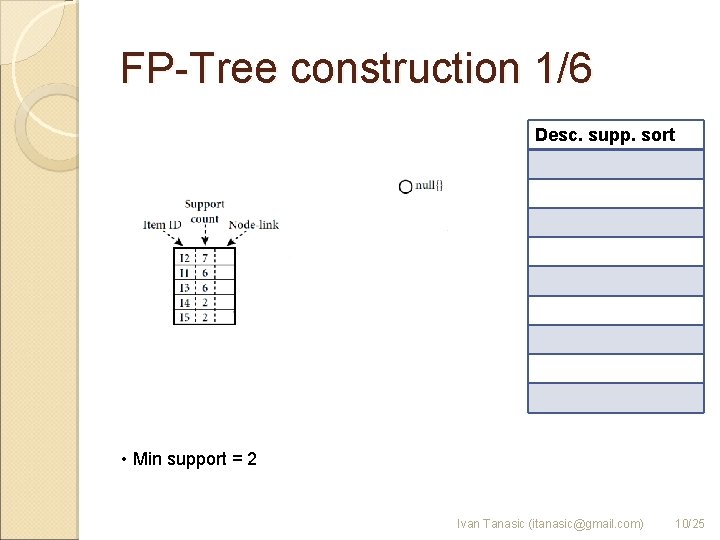

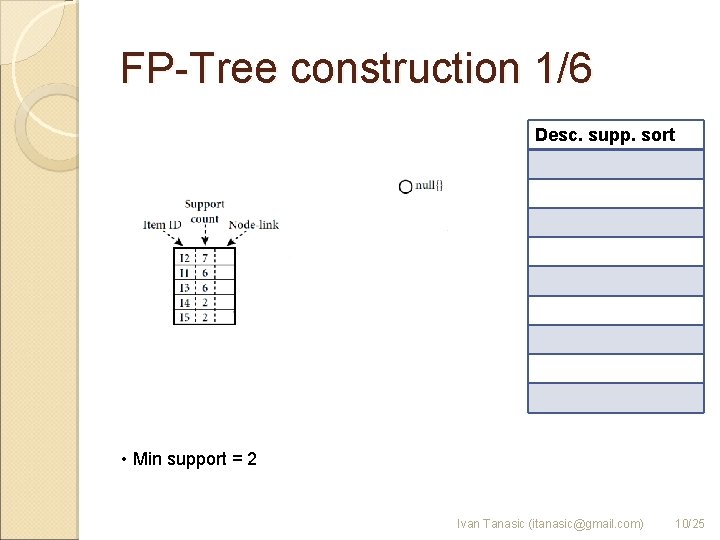

FP-Tree construction 1/6

- Items sorted by descending support (desc. supp. sort)
- Min support = 2

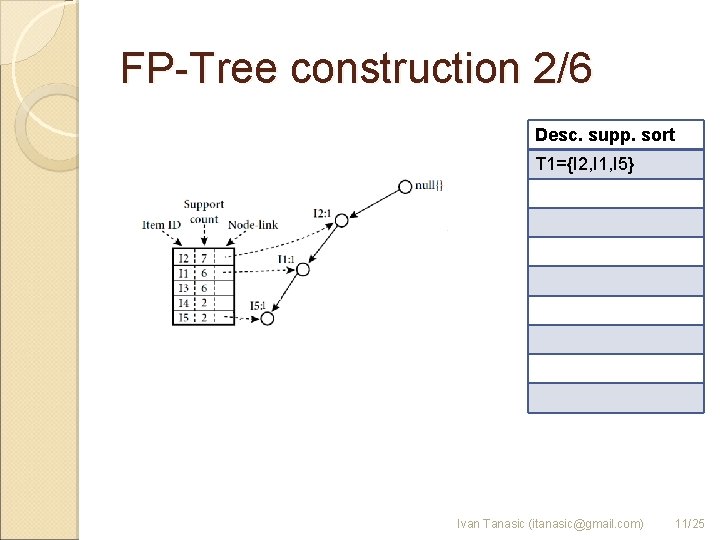

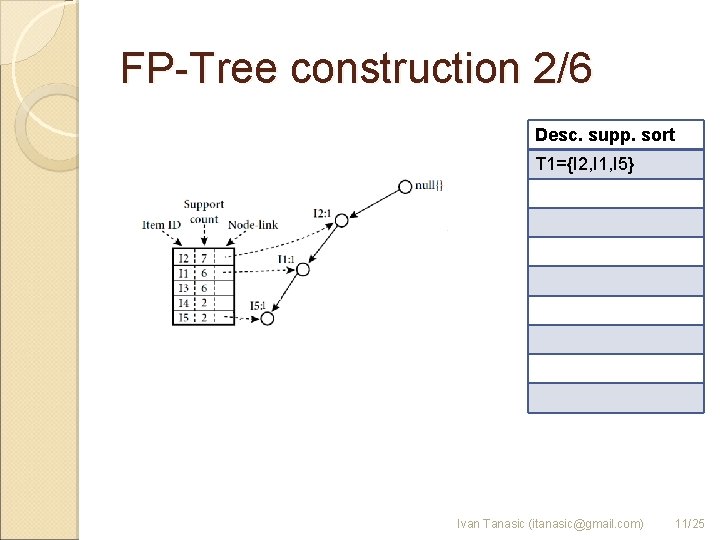

FP-Tree construction 2/6

Desc. supp. sort:

- T1 = {I2, I1, I5}

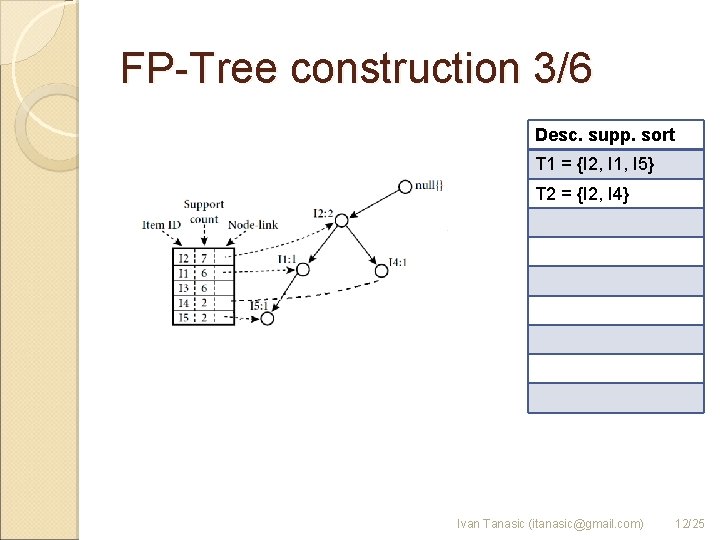

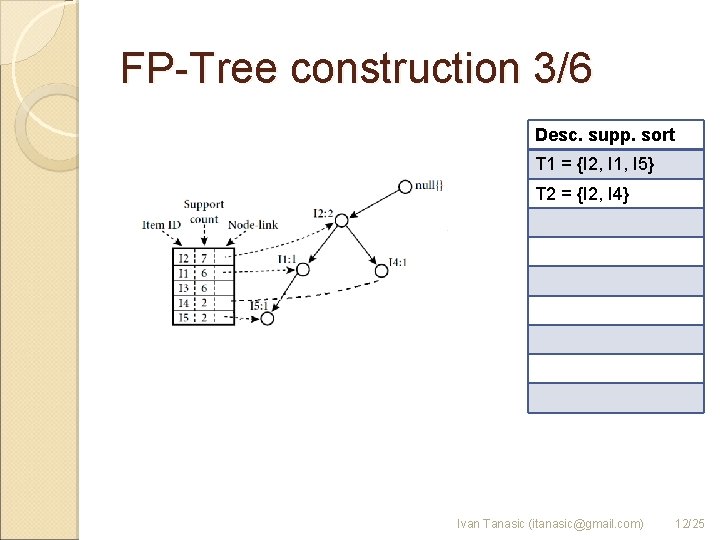

FP-Tree construction 3/6

Desc. supp. sort:

- T1 = {I2, I1, I5}
- T2 = {I2, I4}

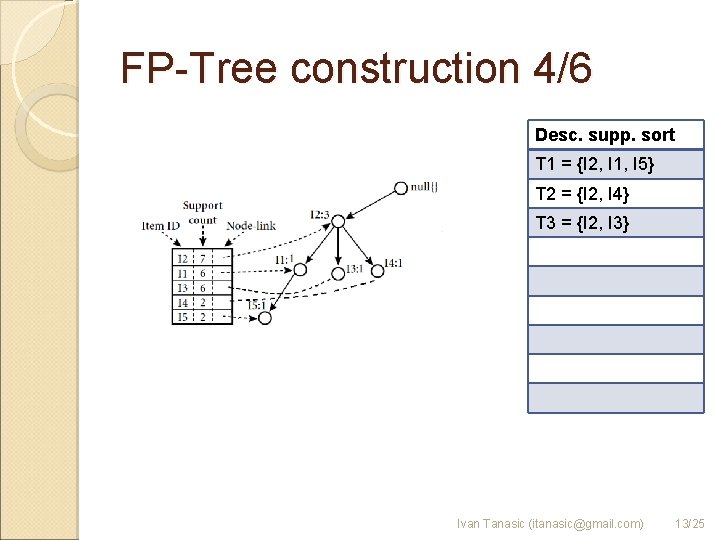

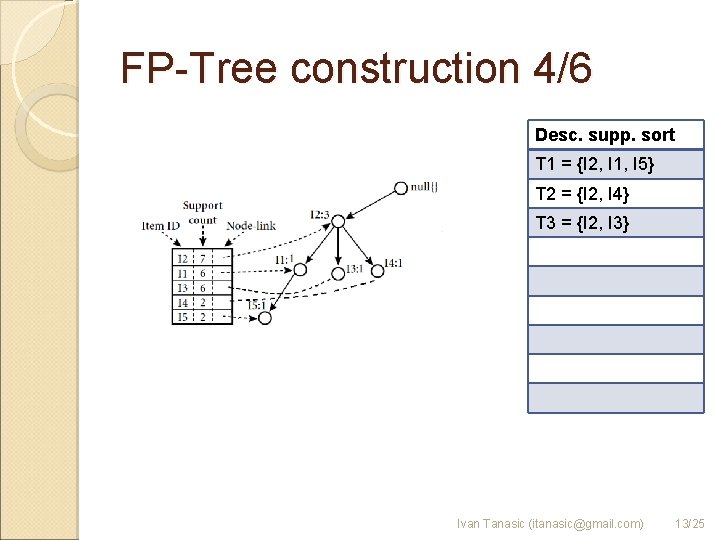

FP-Tree construction 4/6

Desc. supp. sort:

- T1 = {I2, I1, I5}
- T2 = {I2, I4}
- T3 = {I2, I3}

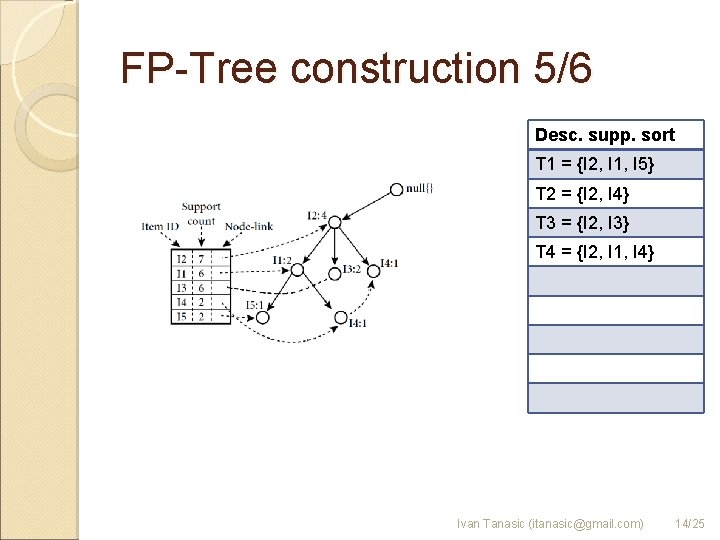

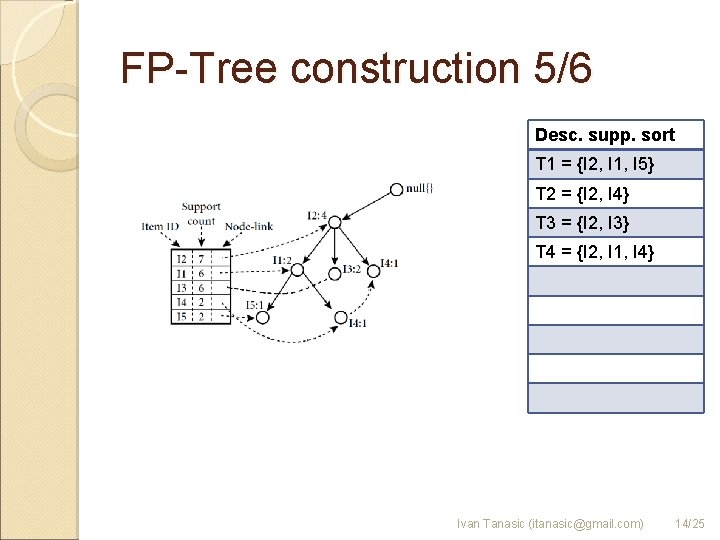

FP-Tree construction 5/6

Desc. supp. sort:

- T1 = {I2, I1, I5}
- T2 = {I2, I4}
- T3 = {I2, I3}
- T4 = {I2, I1, I4}

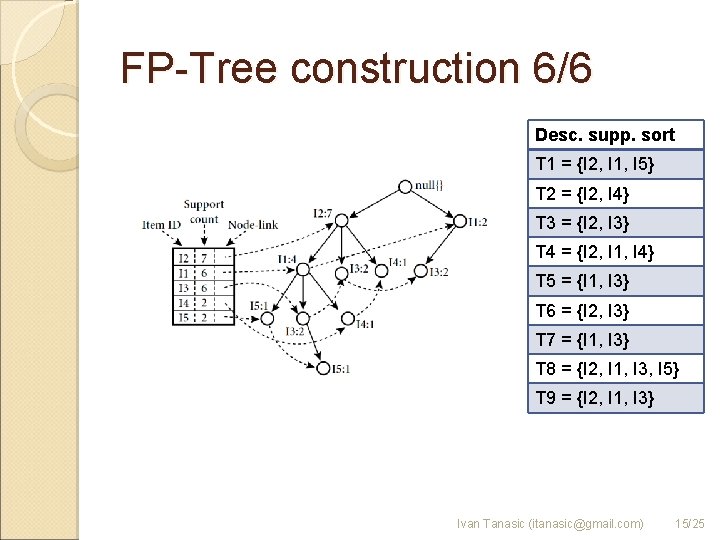

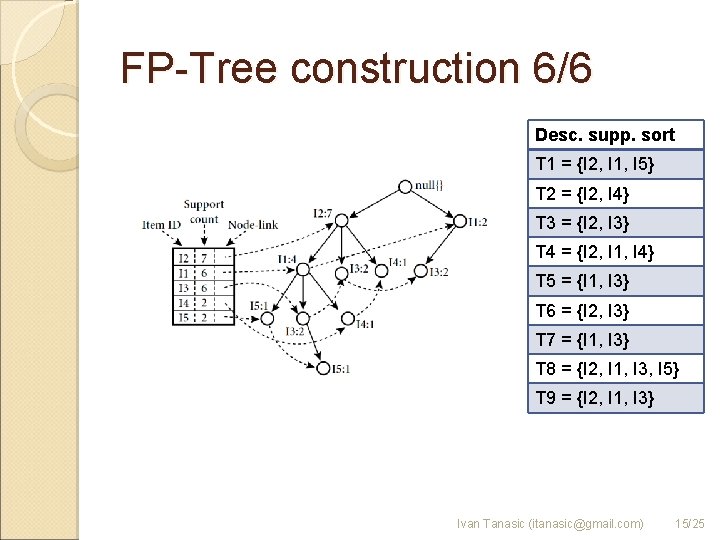

FP-Tree construction 6/6

Desc. supp. sort:

- T1 = {I2, I1, I5}
- T2 = {I2, I4}
- T3 = {I2, I3}
- T4 = {I2, I1, I4}
- T5 = {I1, I3}
- T6 = {I2, I3}
- T7 = {I1, I3}
- T8 = {I2, I1, I3, I5}
- T9 = {I2, I1, I3}
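The construction walked through on these slides can be sketched as below. This is a minimal illustration under stated assumptions: the `FPNode` class and `build_fp_tree` function are hypothetical names, and ties in support are broken alphabetically, which happens to reproduce the item order used on the slides (I2, I1, I3, I4, I5).

```python
from collections import Counter


class FPNode:
    """One tree node: an item, its count, a parent link and child nodes."""

    def __init__(self, item, parent=None):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def build_fp_tree(transactions, min_support):
    # First scan: count the support of every item and drop infrequent ones.
    counts = Counter(item for t in transactions for item in t)
    frequent = {i: c for i, c in counts.items() if c >= min_support}

    root = FPNode(None)
    header = {item: [] for item in frequent}  # item -> all nodes carrying it

    # Second scan: insert each transaction in descending-support order.
    for t in transactions:
        ordered = sorted((i for i in t if i in frequent),
                         key=lambda i: (-frequent[i], i))
        node = root
        for item in ordered:
            child = node.children.get(item)
            if child is None:
                child = FPNode(item, node)
                node.children[item] = child
                header[item].append(child)
            child.count += 1  # shared prefixes only increment counters
            node = child
    return root, header


transactions = [
    {"I2", "I1", "I5"}, {"I2", "I4"}, {"I2", "I3"},
    {"I2", "I1", "I4"}, {"I1", "I3"}, {"I2", "I3"},
    {"I1", "I3"}, {"I2", "I1", "I3", "I5"}, {"I2", "I1", "I3"},
]
root, header = build_fp_tree(transactions, min_support=2)
```

After all nine transactions are inserted, the root has two children: I2 with count 7 and I1 with count 2. The header table links the three I3 nodes and the two I5 nodes that the mining step later follows.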

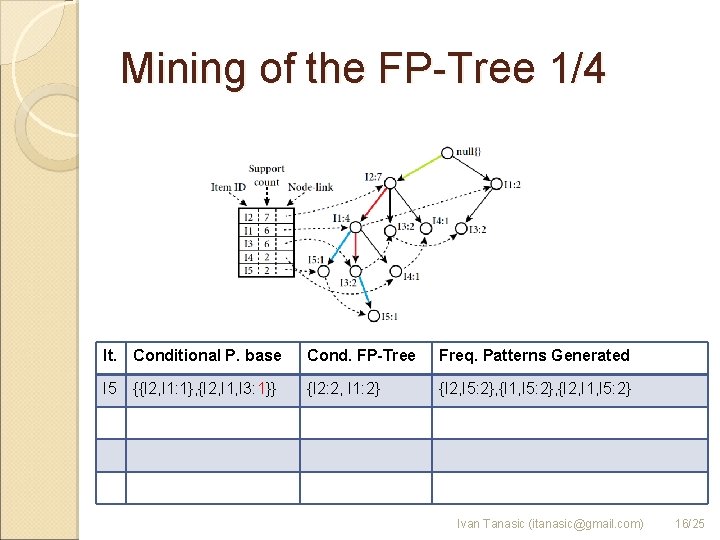

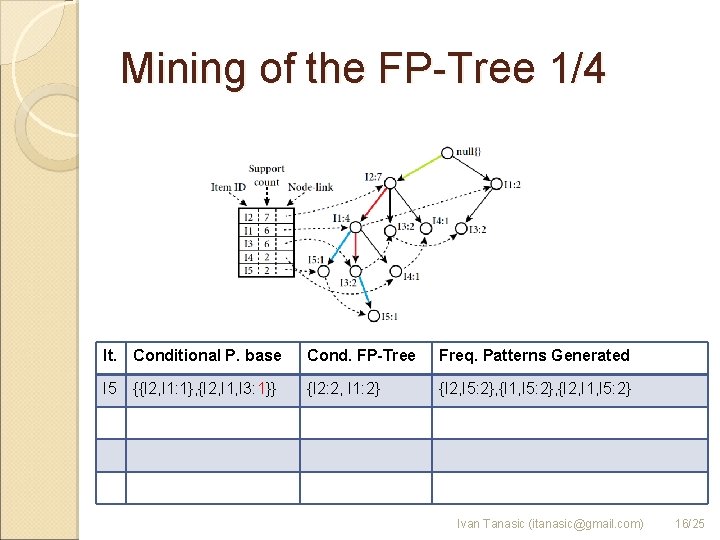

Mining of the FP-Tree 1/4

| Item | Conditional pattern base | Conditional FP-tree | Frequent patterns generated |
| --- | --- | --- | --- |
| I5 | {{I2, I1: 1}, {I2, I1, I3: 1}} | {I2: 2, I1: 2} | {I2, I5: 2}, {I1, I5: 2}, {I2, I1, I5: 2} |

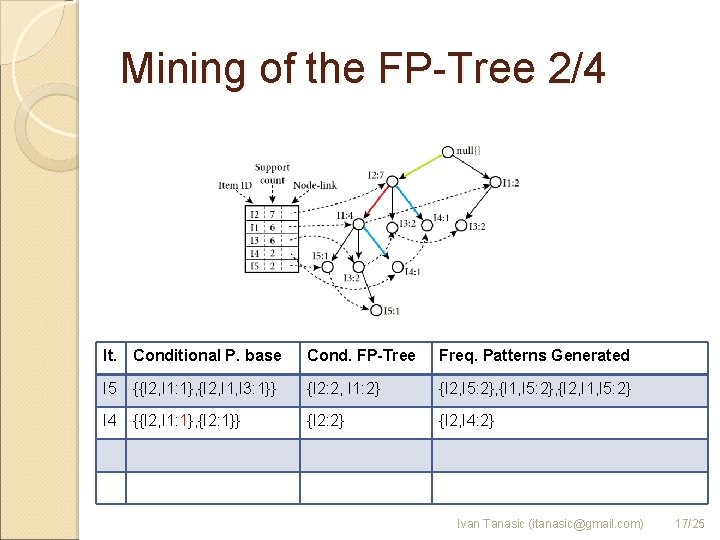

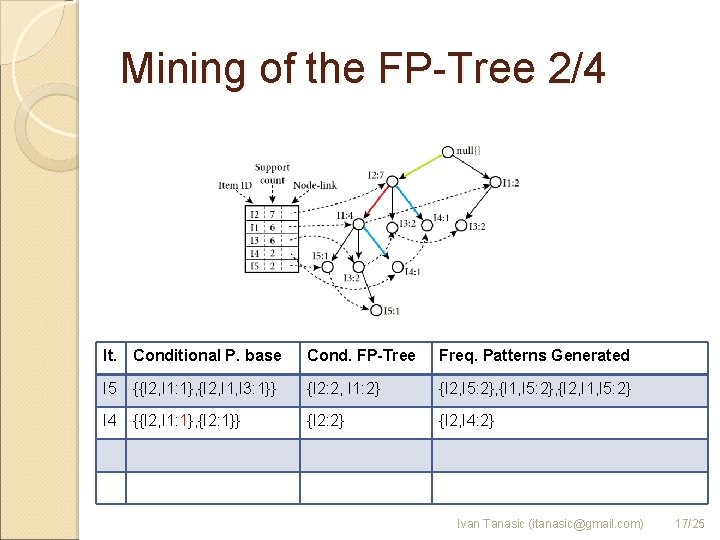

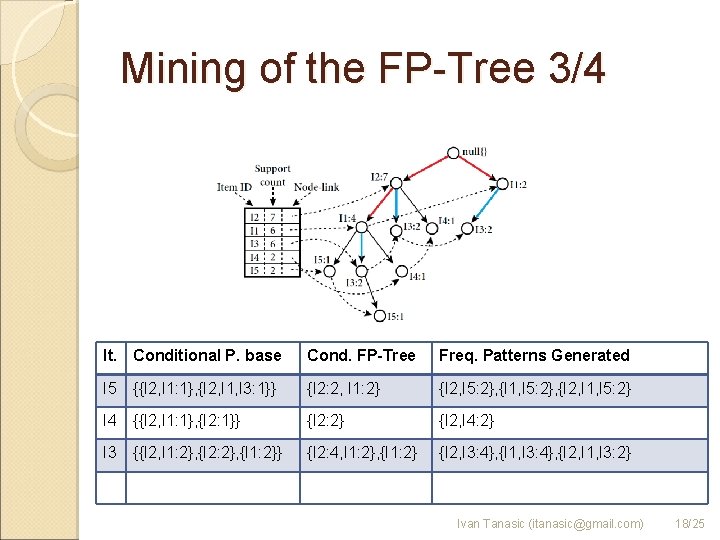

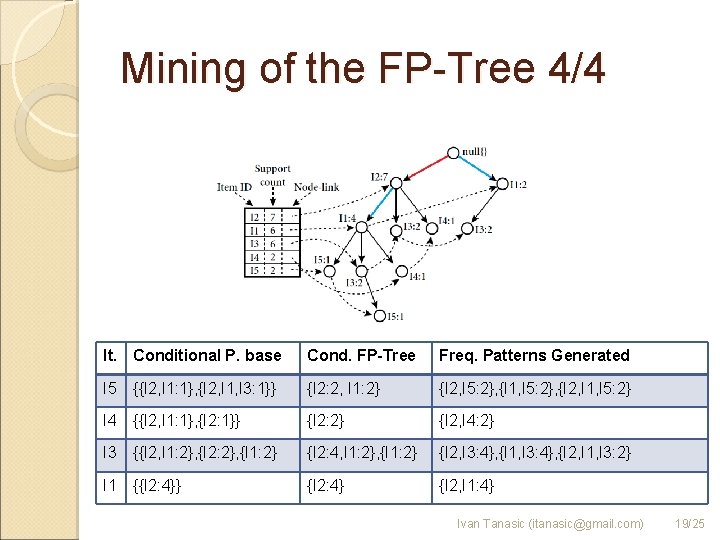

Mining of the FP-Tree 2/4

| Item | Conditional pattern base | Conditional FP-tree | Frequent patterns generated |
| --- | --- | --- | --- |
| I5 | {{I2, I1: 1}, {I2, I1, I3: 1}} | {I2: 2, I1: 2} | {I2, I5: 2}, {I1, I5: 2}, {I2, I1, I5: 2} |
| I4 | {{I2, I1: 1}, {I2: 1}} | {I2: 2} | {I2, I4: 2} |

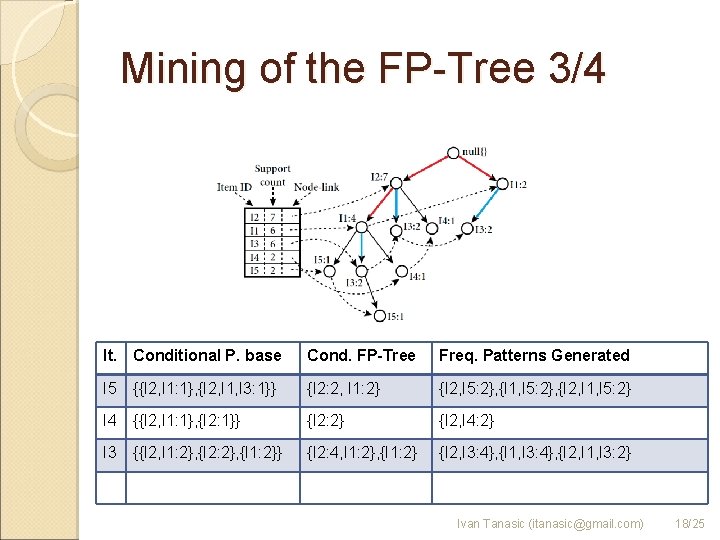

Mining of the FP-Tree 3/4

| Item | Conditional pattern base | Conditional FP-tree | Frequent patterns generated |
| --- | --- | --- | --- |
| I5 | {{I2, I1: 1}, {I2, I1, I3: 1}} | {I2: 2, I1: 2} | {I2, I5: 2}, {I1, I5: 2}, {I2, I1, I5: 2} |
| I4 | {{I2, I1: 1}, {I2: 1}} | {I2: 2} | {I2, I4: 2} |
| I3 | {{I2, I1: 2}, {I2: 2}, {I1: 2}} | {I2: 4, I1: 2}, {I1: 2} | {I2, I3: 4}, {I1, I3: 4}, {I2, I1, I3: 2} |

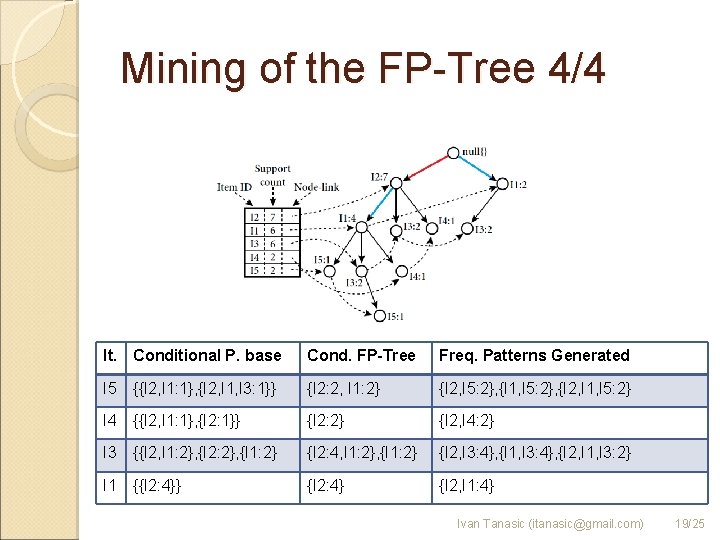

Mining of the FP-Tree 4/4

| Item | Conditional pattern base | Conditional FP-tree | Frequent patterns generated |
| --- | --- | --- | --- |
| I5 | {{I2, I1: 1}, {I2, I1, I3: 1}} | {I2: 2, I1: 2} | {I2, I5: 2}, {I1, I5: 2}, {I2, I1, I5: 2} |
| I4 | {{I2, I1: 1}, {I2: 1}} | {I2: 2} | {I2, I4: 2} |
| I3 | {{I2, I1: 2}, {I2: 2}, {I1: 2}} | {I2: 4, I1: 2}, {I1: 2} | {I2, I3: 4}, {I1, I3: 4}, {I2, I1, I3: 2} |
| I1 | {{I2: 4}} | {I2: 4} | {I2, I1: 4} |
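The mining step can be sketched as a recursion over conditional pattern bases. This is a simplified illustration, not the paper's implementation: the names `Node`, `build_tree`, `conditional_pattern_base` and `fp_growth` are assumptions, and each conditional FP-tree is rebuilt from a weighted list of prefix paths instead of using the single-path shortcut.

```python
from collections import defaultdict


class Node:
    def __init__(self, item, parent):
        self.item, self.parent, self.count, self.children = item, parent, 0, {}


def build_tree(weighted, min_support):
    """Build an FP-tree from (items, count) pairs; return (header table, item supports)."""
    counts = defaultdict(int)
    for items, n in weighted:
        for item in items:
            counts[item] += n
    frequent = {i: c for i, c in counts.items() if c >= min_support}
    root, header = Node(None, None), defaultdict(list)
    for items, n in weighted:
        ordered = sorted((i for i in items if i in frequent),
                         key=lambda i: (-frequent[i], i))
        node = root
        for item in ordered:
            if item not in node.children:
                node.children[item] = Node(item, node)
                header[item].append(node.children[item])
            node = node.children[item]
            node.count += n
    return header, frequent


def conditional_pattern_base(nodes):
    """Collect the prefix path of every node, weighted by that node's count."""
    base = []
    for node in nodes:
        path, parent = [], node.parent
        while parent is not None and parent.item is not None:
            path.append(parent.item)
            parent = parent.parent
        if path:
            base.append((path[::-1], node.count))
    return base


def fp_growth(weighted, min_support, suffix=frozenset(), out=None):
    """Return {frozenset(pattern): support} without generating candidates."""
    out = {} if out is None else out
    header, frequent = build_tree(weighted, min_support)
    for item, nodes in header.items():
        pattern = suffix | {item}
        out[pattern] = frequent[item]
        # Recurse on the conditional FP-tree of the current pattern.
        fp_growth(conditional_pattern_base(nodes), min_support, pattern, out)
    return out


transactions = [
    {"I2", "I1", "I5"}, {"I2", "I4"}, {"I2", "I3"},
    {"I2", "I1", "I4"}, {"I1", "I3"}, {"I2", "I3"},
    {"I1", "I3"}, {"I2", "I1", "I3", "I5"}, {"I2", "I1", "I3"},
]
weighted = [(t, 1) for t in transactions]
header, _ = build_tree(weighted, 2)
base_i5 = conditional_pattern_base(header["I5"])
patterns = fp_growth(weighted, 2)
```

On this data `base_i5` matches the I5 row of the table, with the paths I2, I1 and I2, I1, I3 each weighted 1. The `patterns` dictionary holds the patterns listed in the table plus the five frequent single items.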

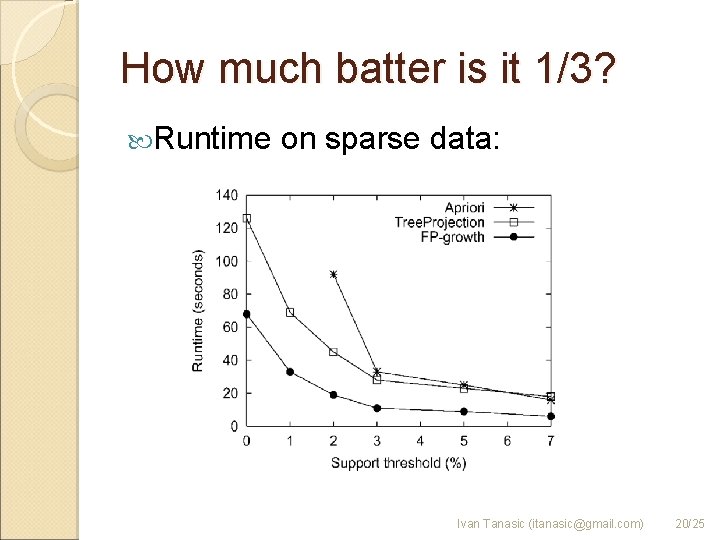

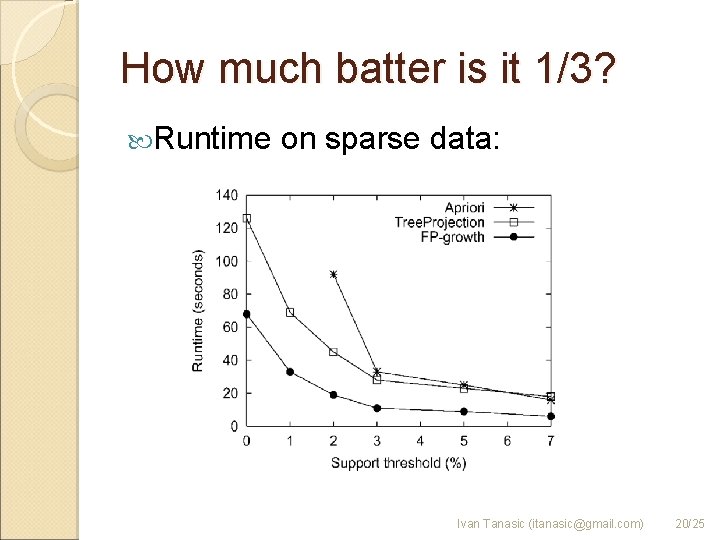

How much better is it? 1/3

Runtime on sparse data:

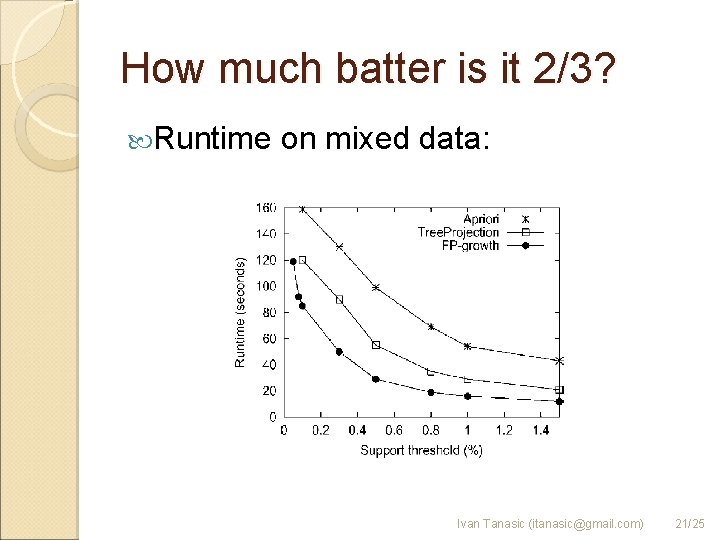

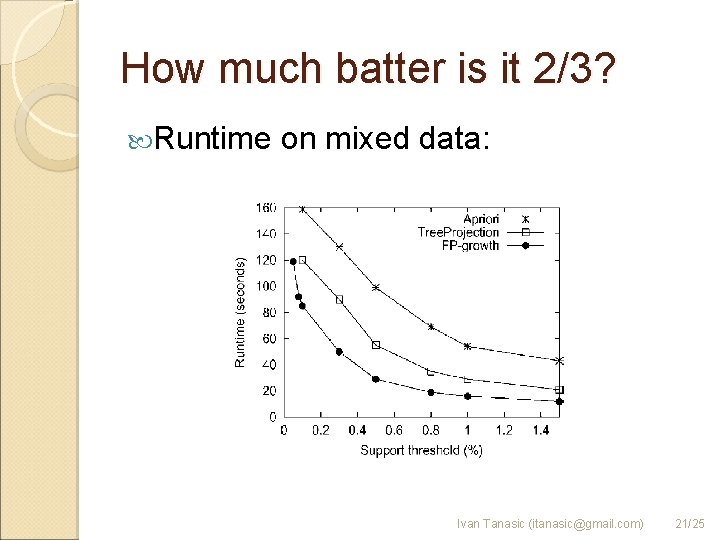

How much better is it? 2/3

Runtime on mixed data:

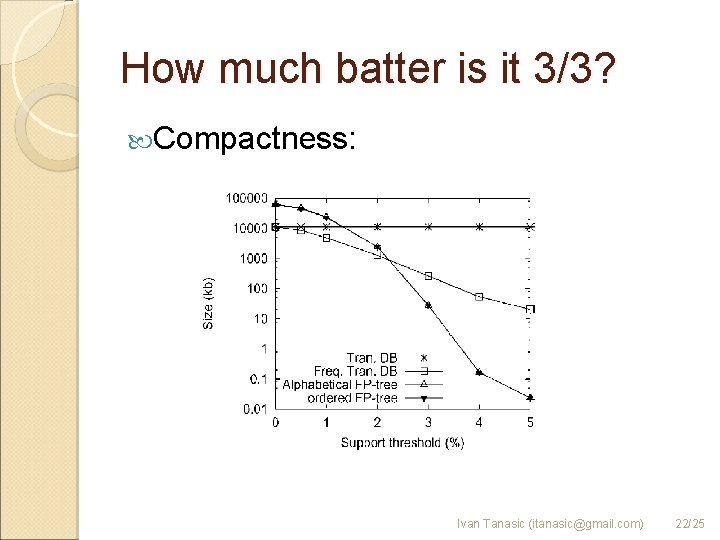

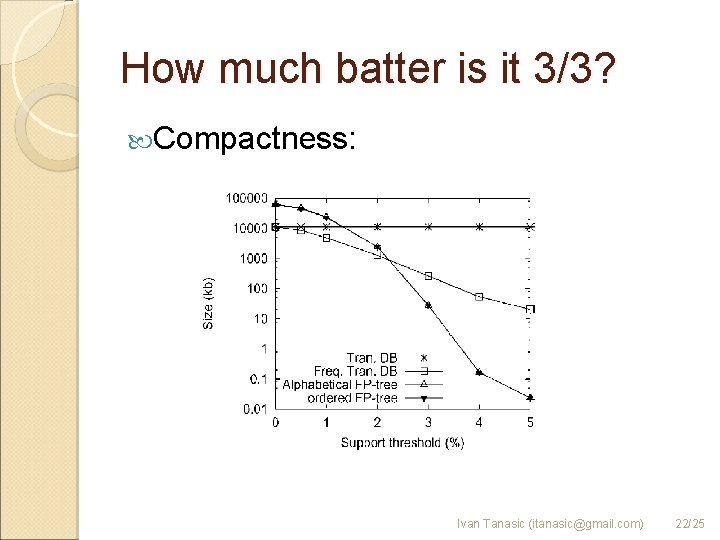

How much better is it? 3/3

Compactness:

Is it Original?

- A lot of methods try to improve Apriori
  - Hashing
  - Transaction reduction
  - Partitioning
  - Sampling
- TreeProjection uses a similar structure, but it is still a different method

Importance over time

- Basic primitive (a strong foundation for a tall building)
- Performance becomes very important as databases grow huge
- So does scalability
- FP-Growth offers both performance and scalability

Conclusion

- An important method for solving important DM tasks
- Fast
- Compact
- Scalable (DB projection / tree on disk)
