LHC current and future proposals for HEP Computing

LHC current and future proposals for HEP Computing. Alessandro De Salvo (Alessandro.DeSalvo@roma1.infn.it), SuperB Computing R&D Workshop, Ferrara, 06-07-2011.

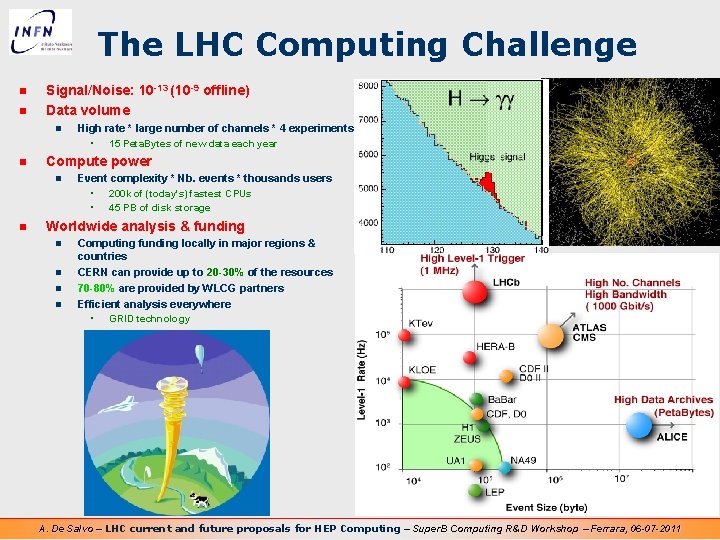

The LHC Computing Challenge

- Signal/Noise: 10^-13 (10^-9 offline)
- Data volume
  - High rate × large number of channels × 4 experiments
  - 15 PetaBytes of new data each year
- Compute power
  - Event complexity × number of events × thousands of users
  - 200k of (today's) fastest CPUs
  - 45 PB of disk storage
- Worldwide analysis & funding
  - Computing funding locally in major regions & countries
  - CERN can provide up to 20-30% of the resources; 70-80% are provided by WLCG partners
  - Efficient analysis everywhere: GRID technology

A. De Salvo – LHC current and future proposals for HEP Computing – SuperB Computing R&D Workshop – Ferrara, 06-07-2011

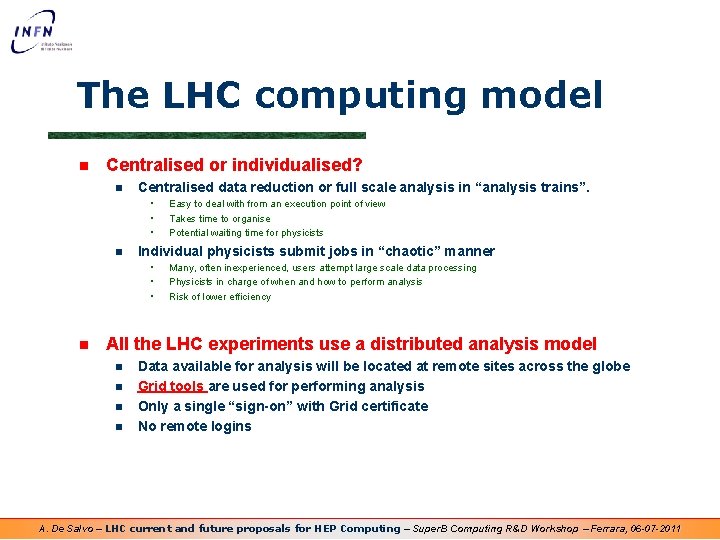

The LHC computing model

- Centralised or individualised?
  - Centralised data reduction or full-scale analysis in "analysis trains":
    - Easy to deal with from an execution point of view
    - Takes time to organise
    - Potential waiting time for physicists
  - Individual physicists submit jobs in a "chaotic" manner:
    - Many, often inexperienced, users attempt large-scale data processing
    - Physicists are in charge of when and how to perform analysis
    - Risk of lower efficiency
- All the LHC experiments use a distributed analysis model
  - Data available for analysis will be located at remote sites across the globe
  - Grid tools are used for performing analysis
  - Only a single "sign-on" with a Grid certificate
  - No remote logins

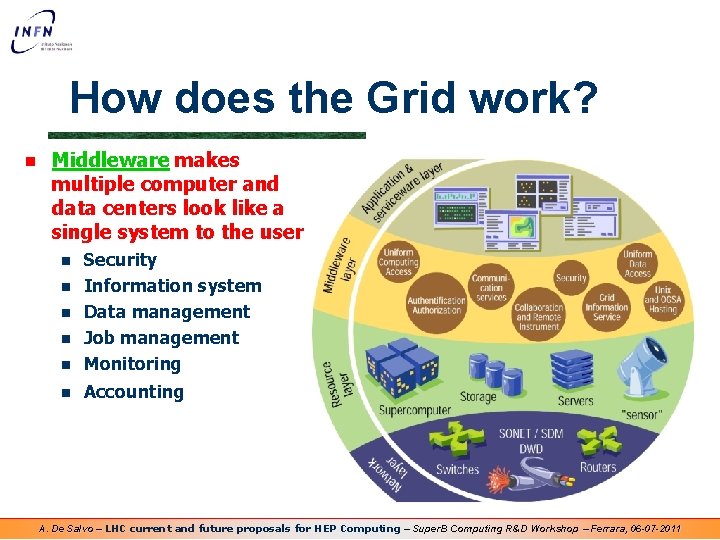

How does the Grid work?

- Middleware makes multiple computer and data centers look like a single system to the user
- Middleware services:
  - Security
  - Information system
  - Data management
  - Job management
  - Monitoring
  - Accounting
- Not easy!

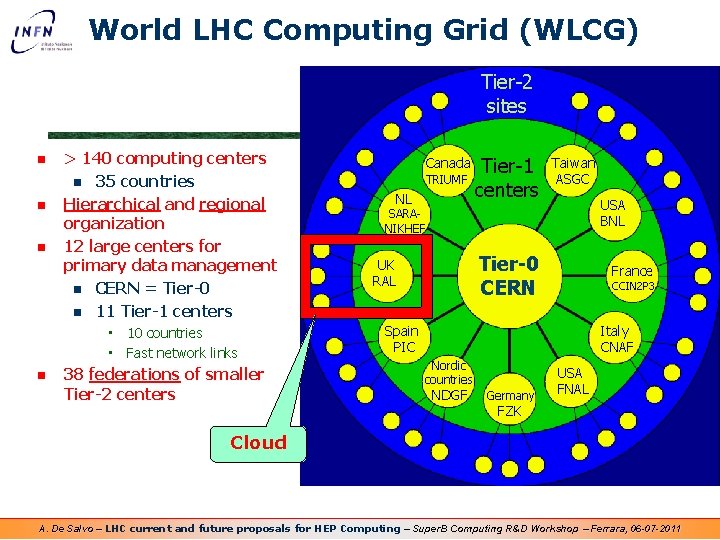

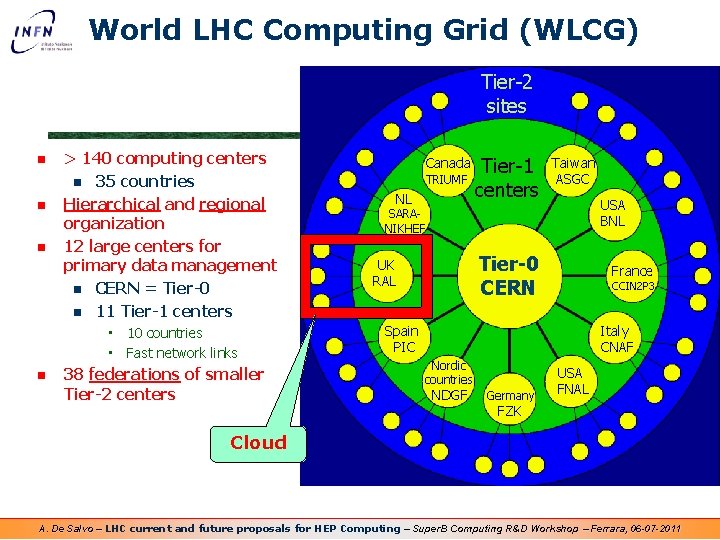

Worldwide LHC Computing Grid (WLCG)

- More than 140 computing centers in 35 countries
- Hierarchical and regional organization
- 12 large centers for primary data management
  - CERN = Tier-0
  - 11 Tier-1 centers in 10 countries, with fast network links
- 38 federations of smaller Tier-2 centers

Map: Tier-0 CERN; Tier-1 centers TRIUMF (Canada), ASGC (Taiwan), BNL and FNAL (USA), SARA-NIKHEF (NL), RAL (UK), CC-IN2P3 (France), PIC (Spain), CNAF (Italy), FZK (Germany), NDGF (Nordic countries); Tier-2 sites; Cloud.

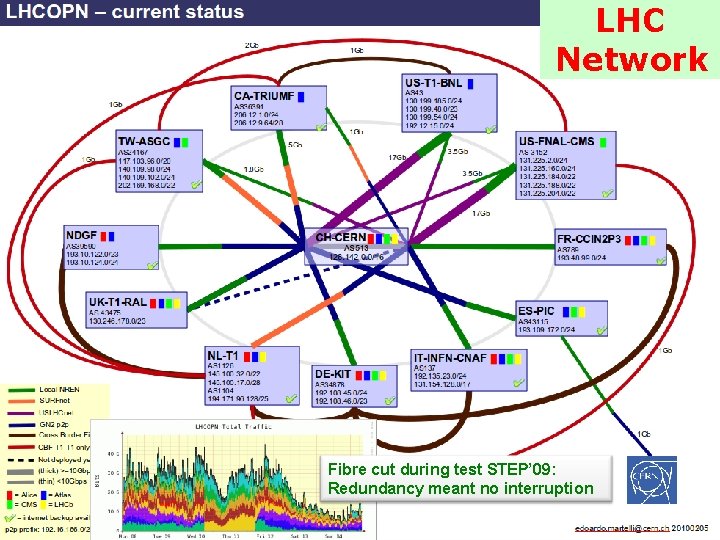

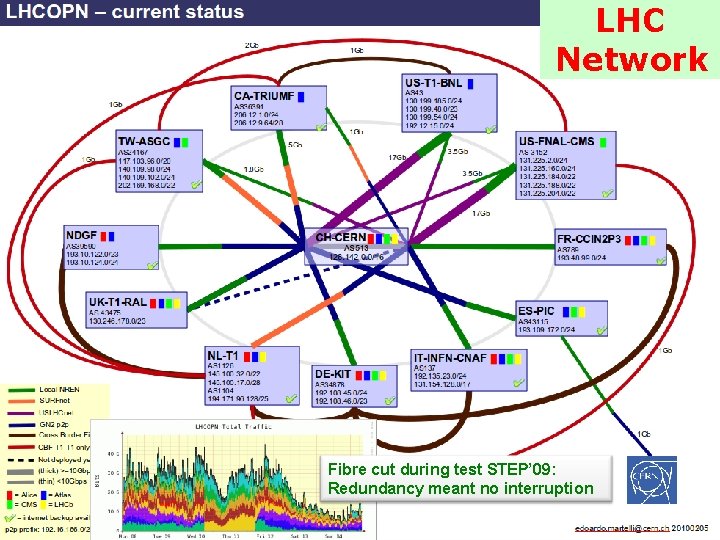

LHC Network

Fibre cut during the STEP'09 test: redundancy meant no interruption (Sergio Bertolucci, CERN).

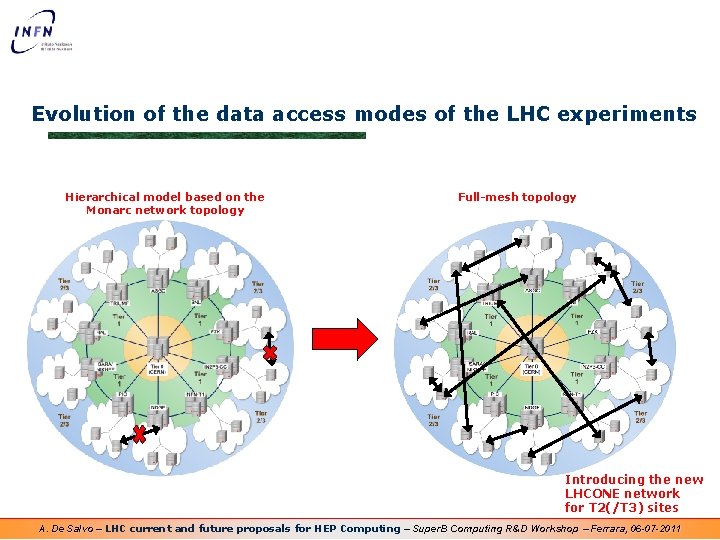

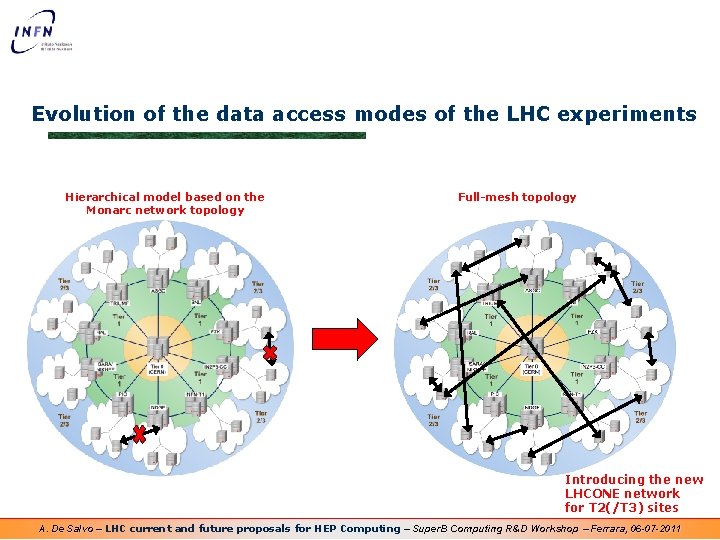

Evolution of the data access modes of the LHC experiments

- Hierarchical model based on the MONARC network topology
- Full-mesh topology
- Introducing the new LHCONE network for T2 (/T3) sites
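
One way to see why the move from the hierarchical to the full-mesh model puts more demand on the network is to count which sources a Tier-2 may read from. The Python sketch below is only an illustration under assumed rules: in the hierarchical model a Tier-2 reads only from the Tier-1 of its own cloud, in the full-mesh model from any Tier-1 or any other Tier-2. The Tier-2 names and the cloud assignment are hypothetical; only the Tier-1 names come from the WLCG map.

```python
# Hypothetical toy grid: Tier-1 names from the WLCG map, Tier-2s invented.
TIER1 = ["CNAF", "CC-IN2P3", "FZK"]
# Each hypothetical Tier-2 belongs to the cloud of one Tier-1
CLOUD_OF = {"T2_A": "CNAF", "T2_B": "CNAF", "T2_C": "CC-IN2P3", "T2_D": "FZK"}


def allowed_sources(tier2: str, topology: str) -> set[str]:
    """Sites a Tier-2 may read data from under the given (assumed) topology."""
    if topology == "hierarchical":
        return {CLOUD_OF[tier2]}                        # only its own Tier-1
    if topology == "full-mesh":
        return set(TIER1) | (set(CLOUD_OF) - {tier2})   # any T1 or any other T2
    raise ValueError(f"unknown topology: {topology}")


def required_paths(topology: str) -> int:
    """Number of (Tier-2, source) pairs the network must serve well."""
    return sum(len(allowed_sources(t2, topology)) for t2 in CLOUD_OF)
```

With four Tier-2s the count grows from 4 paths (hierarchical) to 24 (full mesh), and it grows roughly quadratically with the number of sites, which is the kind of traffic pattern a dedicated Tier-2 network is meant to absorb.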

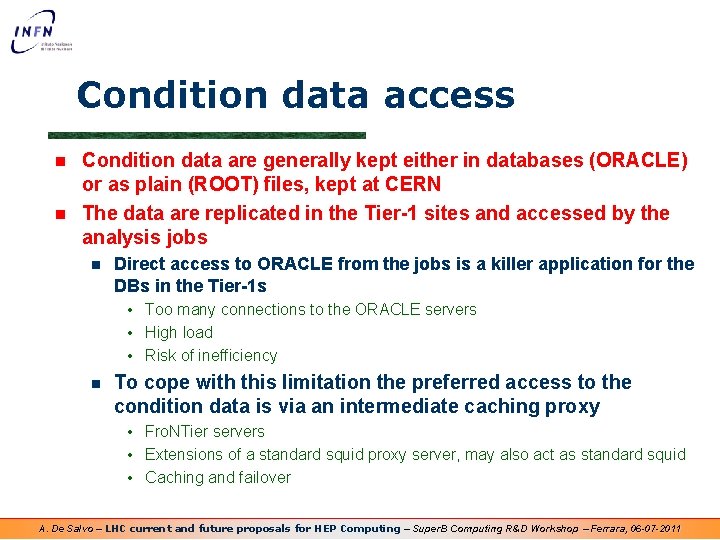

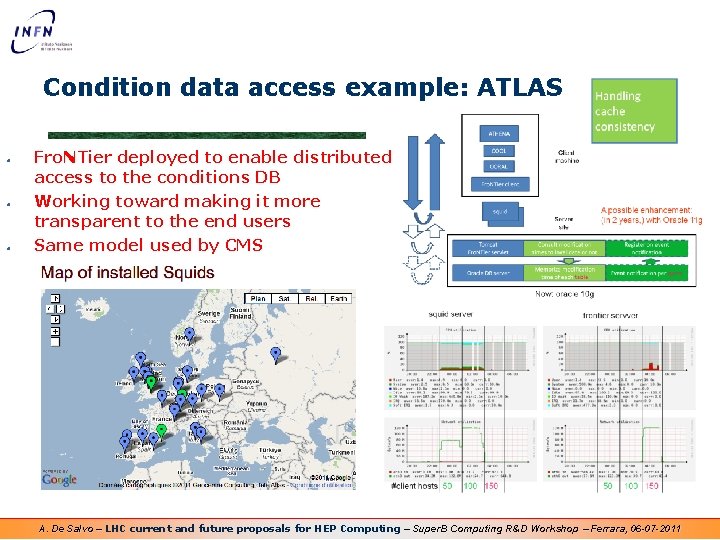

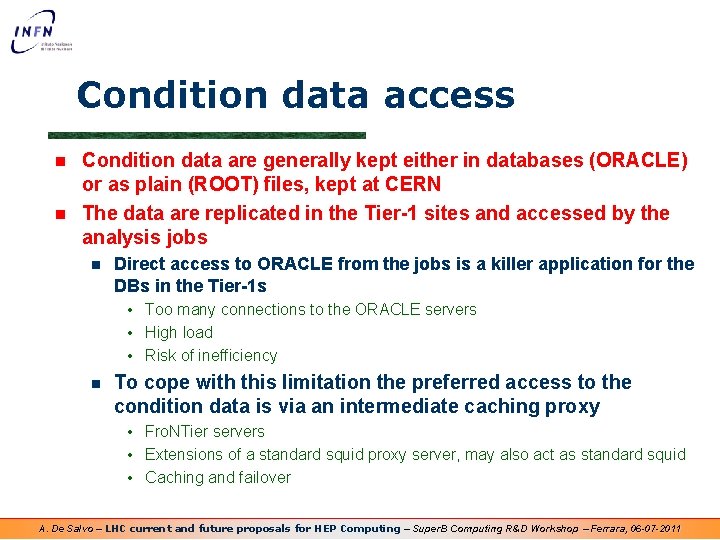

Condition data access

- Condition data are generally kept either in databases (ORACLE) or as plain (ROOT) files, kept at CERN
- The data are replicated in the Tier-1 sites and accessed by the analysis jobs
- Direct access to ORACLE from the jobs would overwhelm the databases at the Tier-1s:
  - Too many connections to the ORACLE servers
  - High load
  - Risk of inefficiency
- To cope with this limitation, the preferred access to the condition data is via an intermediate caching proxy:
  - FroNTier servers
  - Extensions of a standard squid proxy server; they may also act as a standard squid
  - Caching and failover
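
The caching-and-failover idea can be sketched in a few lines of Python. This is not the FroNTier client or squid code: the `servers` callables stand in for requests to hypothetical FroNTier servers, and the default TTL is an arbitrary assumption. Repeated identical queries from many jobs are answered from the cache, so the database behind the servers sees one request per distinct query per TTL period instead of one per job.

```python
from __future__ import annotations

import time
from typing import Callable


class CachingProxy:
    """Read-through cache with a TTL and ordered failover across servers."""

    def __init__(self, servers: list[Callable[[str], str]], ttl_s: float = 300.0) -> None:
        self.servers = servers            # tried in order until one answers
        self.ttl_s = ttl_s
        self._cache: dict[str, tuple[float, str]] = {}
        self.server_calls = 0             # requests that actually left the proxy

    def get(self, query: str) -> str:
        now = time.monotonic()
        cached = self._cache.get(query)
        if cached is not None and now - cached[0] < self.ttl_s:
            return cached[1]              # fresh hit: no load on the database
        last_error: Exception | None = None
        for fetch in self.servers:
            self.server_calls += 1
            try:
                result = fetch(query)
            except ConnectionError as err:
                last_error = err          # fail over to the next server
                continue
            self._cache[query] = (now, result)
            return result
        raise ConnectionError(f"all servers failed for {query!r}") from last_error
```

Putting the failover loop inside the proxy keeps the jobs themselves unaware of which server answered, which mirrors the goal of making conditions access transparent to end users.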

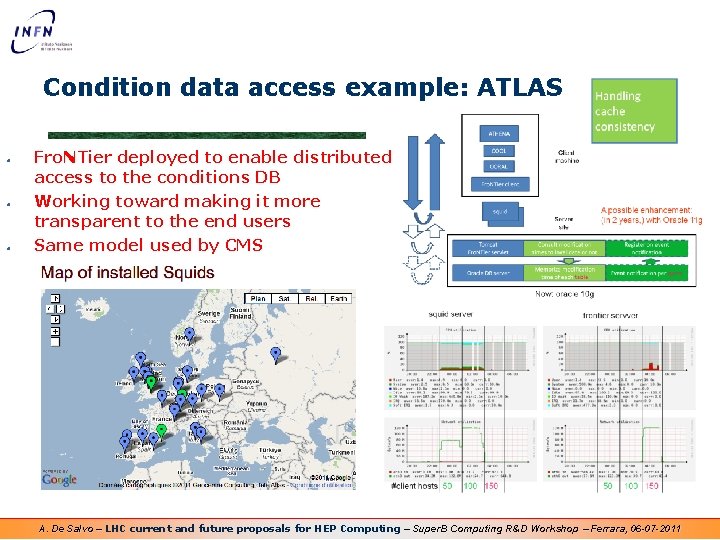

Condition data access example: ATLAS

- FroNTier deployed to enable distributed access to the conditions DB
- Working toward making it more transparent to the end users
- Same model used by CMS

![Database technologies: noSQL databases [1]](https://slidetodoc.com/presentation_image_h/f039f7067e696d1a510c6082a8ae2016/image-10.jpg)

Database technologies: noSQL databases [1]

- Current RDBMS have limitations:
  - Hard to scale
  - De-normalization needed for better performance
  - Complicated setup/configuration with Apache
  - Bad for large, random and I/O-intensive applications
- Exploring the noSQL solutions to overcome the current limitations:
  - "Scale easily", but with a different architecture
  - "Get the work done" on lower-cost infrastructure
  - Same application-level reliability
  - Google, Facebook, Amazon, Yahoo!, open source projects:
    - Bigtable, Cassandra, SimpleDB, Dynamo, MongoDB, CouchDB, Hypertable, Riak, Hadoop HBase, etc.
- noSQL databases are currently not a replacement for any standard RDBMS, but:
  - There are classes of complex queries, including time and date ranges, where RDBMS typically perform rather poorly
  - For example, storing lots of historical data on an expensive transaction-oriented RDBMS does not seem optimal
  - An option to offload significant amounts of archival-type reference data from Oracle to a high-performance, scalable system based on commodity hardware appears attractive

![Database technologies: noSQL databases [2]](https://slidetodoc.com/presentation_image_h/f039f7067e696d1a510c6082a8ae2016/image-11.jpg)

Database technologies: noSQL databases [2]

- Being evaluated or in use for:
  - ATLAS PanDA system
  - ATLAS Distributed Data Management
  - CMS WMCore/WMAgent
  - …
- The ATLAS experience with the DQ2 Accounting service (large tables) shows that a noSQL database like MongoDB can reach almost the same performance as an Oracle RAC cluster, using almost the same amount of disk space but running on cheap hardware:

| | Oracle | MongoDB |
|---|---|---|
| Time to complete the test queries | 0.3 s | 0.4 s |
| Hardware | Oracle RAC (CERN ADCR) | Single machine, 8 cores/16 GB |
| Disk space used | 38 GB | 42 GB |
| Schema | 243 indexes, 2 functions, 365 partitions/year + hints | 1 table, 1 index |
| Time to set up the facility | 5 weeks + DBAs | 4 hours |
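
To put "almost the same performance" in numbers, take the ratios of the figures in the table, with t the time to complete the test queries and D the disk space used:

$$\frac{t_\text{MongoDB}}{t_\text{Oracle}} = \frac{0.4\ \text{s}}{0.3\ \text{s}} \approx 1.33, \qquad \frac{D_\text{MongoDB}}{D_\text{Oracle}} = \frac{42\ \text{GB}}{38\ \text{GB}} \approx 1.11$$

That is, about a third slower on the test queries and roughly 10% more disk space, obtained on a single 8-core machine with a 4-hour setup instead of an Oracle RAC set up in 5 weeks.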

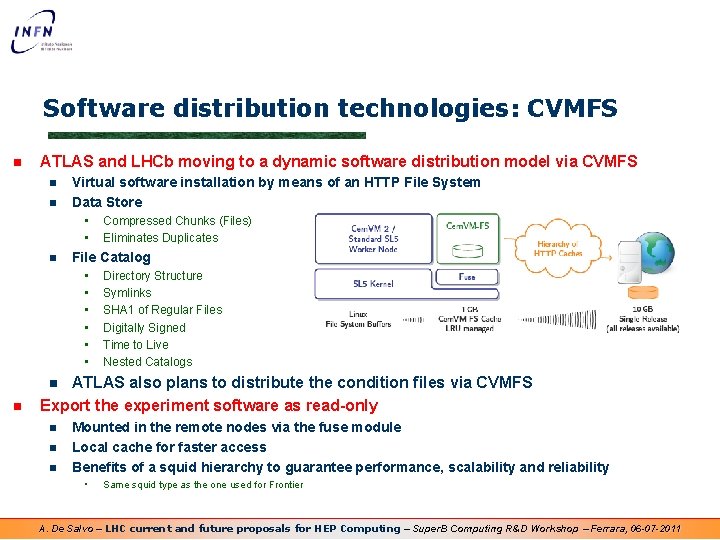

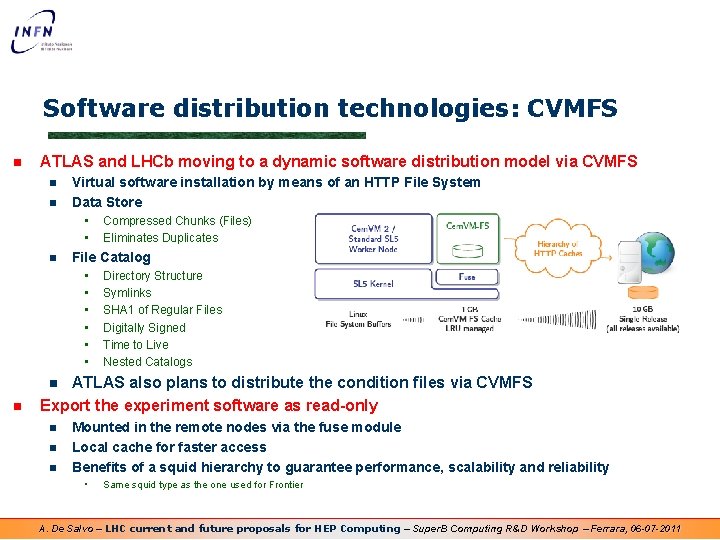

Software distribution technologies: CVMFS

- ATLAS and LHCb moving to a dynamic software distribution model via CVMFS
  - Virtual software installation by means of an HTTP file system
  - Data store:
    - Compressed chunks (files)
    - Eliminates duplicates
  - File catalog:
    - Directory structure
    - Symlinks
    - SHA1 of regular files
    - Digitally signed
    - Time to live
    - Nested catalogs
  - ATLAS also plans to distribute the condition files via CVMFS
- Export the experiment software as read-only
  - Mounted on the remote nodes via the FUSE module
  - Local cache for faster access
  - Benefits from a squid hierarchy to guarantee performance, scalability and reliability
    - Same squid type as the one used for Frontier
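
The data store and catalog items above (compressed chunks, duplicates eliminated, regular files referenced by their SHA1) describe content-addressed storage. A minimal Python sketch, assuming whole files as chunks and in-memory dicts for the store and the catalog; the real CVMFS on-disk layout, catalog format and signing are not modelled here:

```python
import hashlib
import zlib


class ContentStore:
    """Toy content-addressed store: objects keyed by the SHA1 of their content."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}   # sha1 hex -> zlib-compressed bytes
        self.catalog: dict[str, str] = {}     # path -> sha1 hex

    def publish(self, path: str, data: bytes) -> str:
        digest = hashlib.sha1(data).hexdigest()
        if digest not in self.objects:        # identical content is stored once
            self.objects[digest] = zlib.compress(data)
        self.catalog[path] = digest
        return digest

    def read(self, path: str) -> bytes:
        digest = self.catalog[path]
        data = zlib.decompress(self.objects[digest])
        # A client can verify integrity by re-hashing what it fetched
        if hashlib.sha1(data).hexdigest() != digest:
            raise ValueError(f"corrupted object for {path}")
        return data
```

Publishing the same library under two release directories stores a single compressed object. Because each object is immutable and named by its hash, it can also be cached safely at any level, from the squid hierarchy down to the node's local cache.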

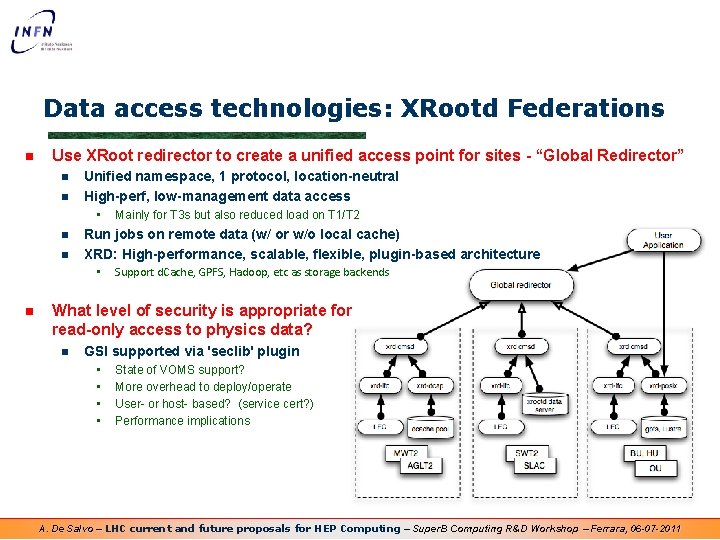

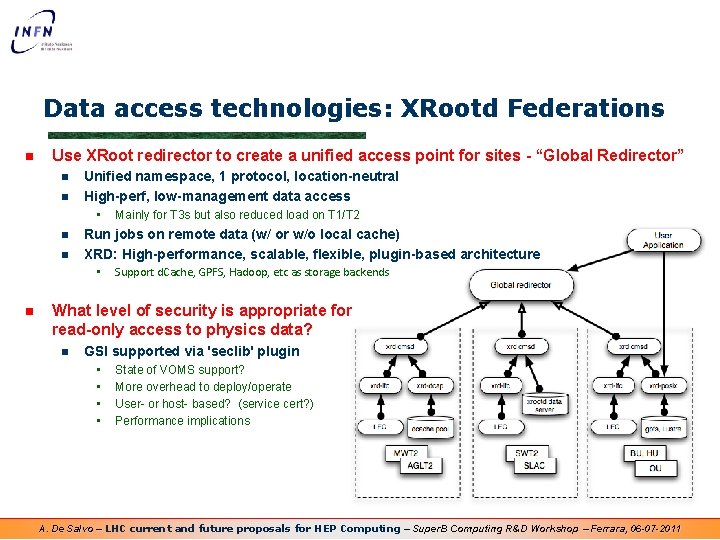

Data access technologies: XRootd Federations

- Use an XRootd redirector to create a unified access point for sites: the "Global Redirector"
  - Unified namespace, 1 protocol, location-neutral
  - High-performance, low-management data access
    - Mainly for T3s, but also reduces the load on T1/T2s
  - Run jobs on remote data (with or without local cache)
  - XRD: high-performance, scalable, flexible, plugin-based architecture
    - Supports dCache, GPFS, Hadoop, etc. as storage backends
- What level of security is appropriate for read-only access to physics data?
  - GSI supported via the 'seclib' plugin
    - State of VOMS support?
    - More overhead to deploy/operate
    - User- or host-based? (service cert?)
    - Performance implications
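
How a global redirector provides a location-neutral namespace can be sketched as follows. This is not the XRootd protocol or its cluster-management logic: the site host names, the `has_file` lookup and the "prefer the client's own site" rule are assumptions made for illustration. The client always asks the same entry point for a logical file name and gets back a URL at a site that actually holds the file.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Site:
    host: str                                   # hypothetical data-server host
    files: set[str] = field(default_factory=set)
    online: bool = True

    def has_file(self, lfn: str) -> bool:
        return self.online and lfn in self.files


class GlobalRedirector:
    """Single entry point mapping a logical file name to a serving site."""

    def __init__(self, sites: list[Site]) -> None:
        self.sites = sites

    def locate(self, lfn: str, client_host: str | None = None) -> str:
        candidates = [s for s in self.sites if s.has_file(lfn)]
        if not candidates:
            raise FileNotFoundError(lfn)
        # Prefer a replica at the client's own site, otherwise read remotely
        for s in candidates:
            if s.host == client_host:
                return f"root://{s.host}/{lfn}"
        return f"root://{candidates[0].host}/{lfn}"
```

If a site goes offline, the same request is simply redirected to another replica, which is what makes running jobs on remote data practical for small sites without a full local copy.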

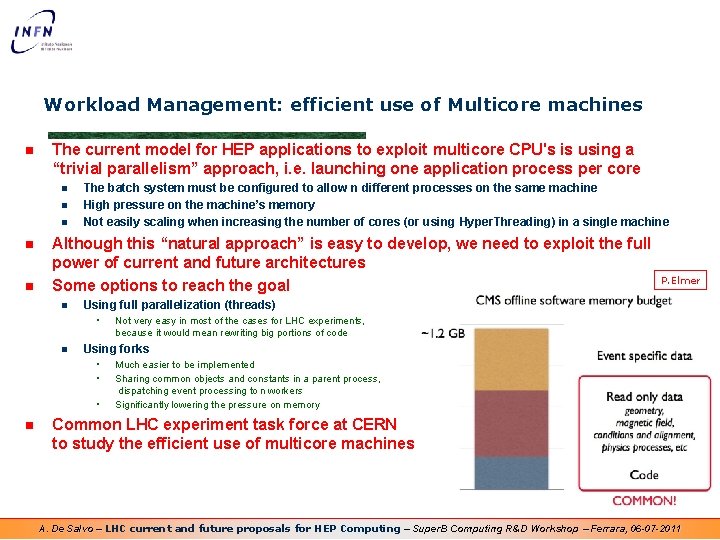

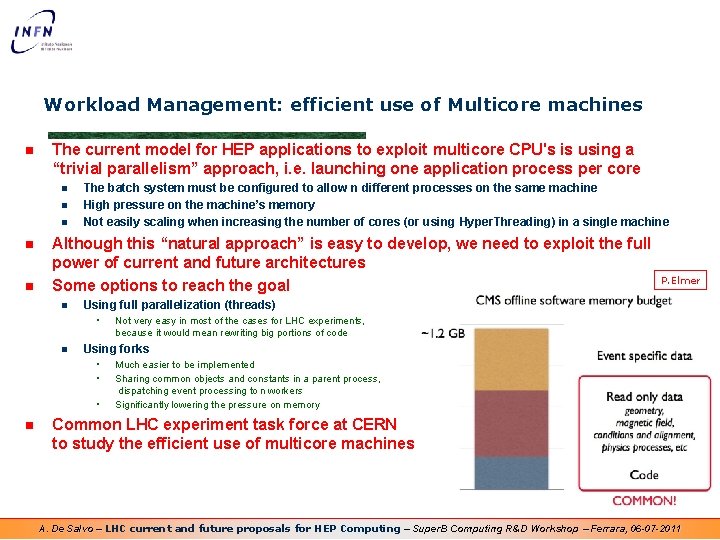

Workload Management: efficient use of Multicore machines

- The current model for HEP applications to exploit multicore CPUs is a "trivial parallelism" approach, i.e. launching one application process per core
  - The batch system must be configured to allow n different processes on the same machine
  - High pressure on the machine's memory
  - Does not scale easily when increasing the number of cores (or using Hyper-Threading) in a single machine
- Although this "natural approach" is easy to develop, we need to exploit the full power of current and future architectures
- Some options to reach the goal:
  - Using full parallelization (threads)
    - Not very easy in most cases for the LHC experiments, because it would mean rewriting big portions of code
  - Using forks (P. Elmer)
    - Much easier to implement
    - Sharing common objects and constants in a parent process, dispatching event processing to n workers
    - Significantly lowering the pressure on memory
- Common LHC experiment task force at CERN to study the efficient use of multicore machines
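
The fork-based option can be illustrated with a minimal, POSIX-only Python sketch; it is not the CMS or ATLAS implementation, and the data and names are hypothetical. The parent loads the shared read-only data once, then forks n workers that each process a round-robin share of the events and send their results back over a pipe. Pages that no worker writes to stay physically shared through copy-on-write. In CPython, reference counting writes to object headers, so sharing is less effective here than in a C++ framework.

```python
import os
import pickle

# Hypothetical large read-only data (geometry, conditions, ...) loaded ONCE
# in the parent before forking; children see it through copy-on-write pages.
CONDITIONS = {"calib": [0.5 * i for i in range(100_000)]}


def process_event(event_id: int) -> float:
    """Toy per-event work that only reads the shared data."""
    calib = CONDITIONS["calib"]
    return calib[event_id % len(calib)] * 2.0


def run_forked(events: list[int], n_workers: int) -> list[float]:
    """Dispatch events to n forked workers and gather the results."""
    children = []
    for k in range(n_workers):
        chunk = events[k::n_workers]          # round-robin event dispatch
        r, w = os.pipe()
        pid = os.fork()
        if pid == 0:                          # child process
            status = 1
            try:
                os.close(r)
                results = {e: process_event(e) for e in chunk}
                with os.fdopen(w, "wb") as out:
                    pickle.dump(results, out)
                status = 0
            finally:
                os._exit(status)              # never fall back into parent code
        os.close(w)                           # parent keeps only the read end
        children.append((pid, r))
    merged: dict[int, float] = {}
    for pid, r in children:
        with os.fdopen(r, "rb") as inp:
            merged.update(pickle.load(inp))
        os.waitpid(pid, 0)
    return [merged[e] for e in events]
```

Compared with starting n independent jobs, only the per-event working memory is duplicated, while the large shared structures exist once in physical memory.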

![Workload Management: efficient use of Multicore machines [2]](https://slidetodoc.com/presentation_image_h/f039f7067e696d1a510c6082a8ae2016/image-15.jpg)

Workload Management: efficient use of Multicore machines [2]

- Evaluating the usage of "whole node" queues
  - Define a single slot for each multicore machine
- The performance of the parallel jobs with respect to n copies of a single job essentially remains the same, but the physical memory used is much lower
  - CMS can save up to ~60% of the total used memory on a 32-core machine
    - Total memory used by 32 children: 13 GB
    - Total memory used by 32 separate jobs: 34 GB
  - ATLAS can save up to 0.5 GB per process, so about the same amount of memory saved by CMS

(Figure: ATLAS memory usage)
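
Working the CMS numbers through shows how the ~60% figure and the per-process comparison with ATLAS fit together:

$$\frac{34\ \text{GB} - 13\ \text{GB}}{34\ \text{GB}} \approx 0.62, \qquad \frac{34\ \text{GB} - 13\ \text{GB}}{32} \approx 0.66\ \text{GB per process}$$

So CMS saves about 0.66 GB per process, while the ATLAS saving of up to 0.5 GB per process would amount to about 0.5 × 32 = 16 GB on the same 32-core machine.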

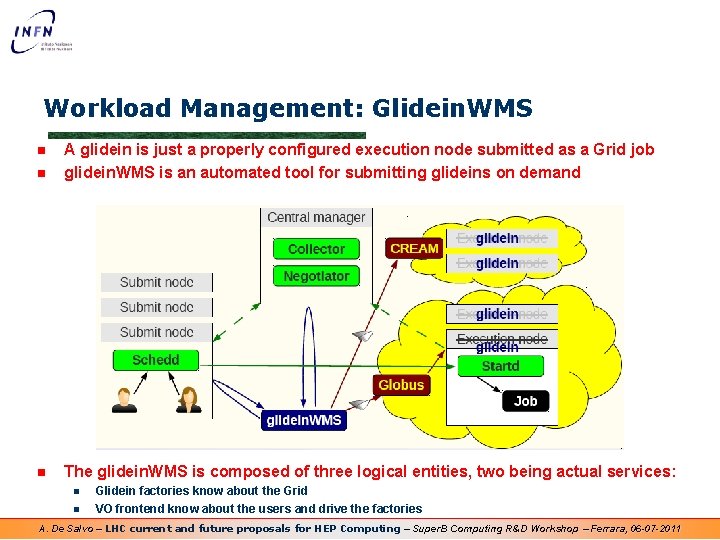

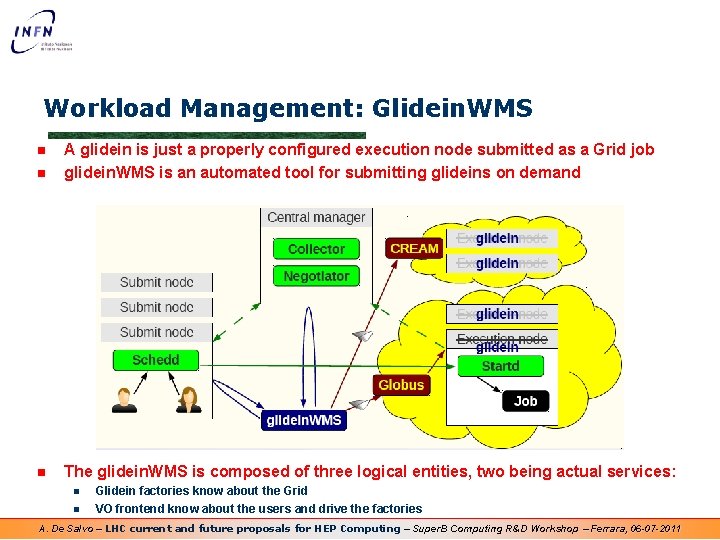

Workload Management: GlideinWMS

- A glidein is just a properly configured execution node submitted as a Grid job
- glideinWMS is an automated tool for submitting glideins on demand
- The glideinWMS is composed of three logical entities, two being actual services:
  - Glidein factories know about the Grid
  - VO frontends know about the users and drive the factories
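
The division of labour between the VO frontend and the glidein factory can be sketched as a simple demand-driven loop. This is not the glideinWMS code or configuration: the function names, the `max_idle_glideins` cap and the matching rule are assumptions. The frontend looks at idle user jobs, works out how many more glideins each Grid site should receive, and the factory turns those requests into Grid submissions.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class IdleJob:
    user: str
    matching_sites: tuple[str, ...]   # Grid sites whose resources fit the job


def frontend_requests(idle_jobs: list[IdleJob],
                      pending_glideins: dict[str, int],
                      max_idle_glideins: int = 10) -> dict[str, int]:
    """VO-frontend side: how many new glideins to request, per site."""
    demand: Counter[str] = Counter()
    for job in idle_jobs:
        for site in job.matching_sites:   # deliberately simple: count every match
            demand[site] += 1
    requests = {}
    for site, n_jobs in demand.items():
        wanted = min(n_jobs, max_idle_glideins)       # cap the pressure per site
        missing = wanted - pending_glideins.get(site, 0)
        if missing > 0:
            requests[site] = missing
    return requests


def factory_submit(requests: dict[str, int]) -> list[str]:
    """Factory side: turn requests into Grid submissions (here just labels)."""
    return [f"glidein@{site}#{i}"
            for site, n in sorted(requests.items()) for i in range(n)]
```

The frontend only needs to know about users and their jobs, and the factory only about Grid sites, which matches the separation of concerns described on the slide.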

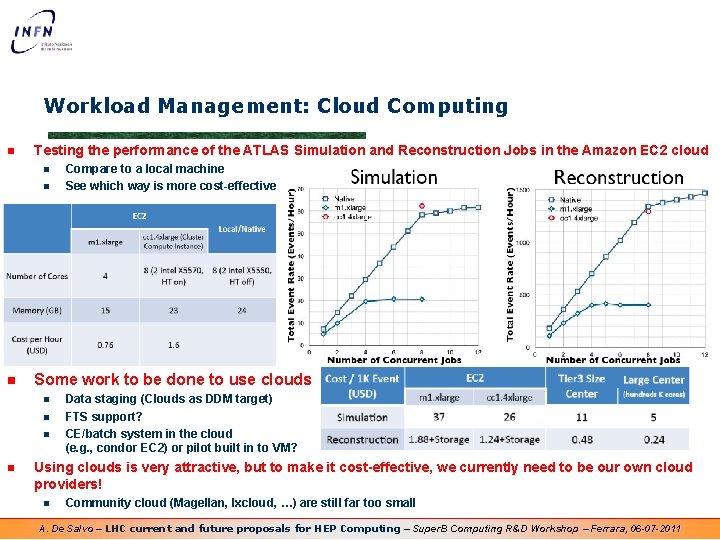

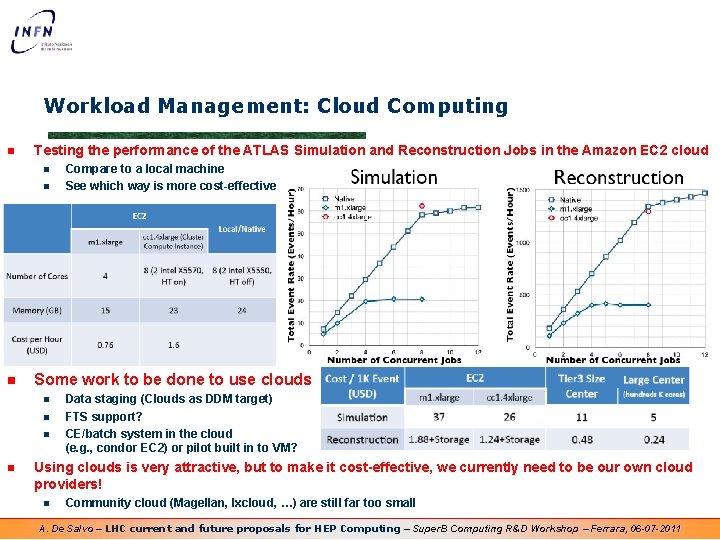

Workload Management: Cloud Computing

- Testing the performance of the ATLAS Simulation and Reconstruction jobs in the Amazon EC2 cloud
  - Compare to a local machine
  - See which way is more cost-effective
- Some work to be done to use clouds:
  - Data staging (clouds as DDM target)
  - FTS support?
  - CE/batch system in the cloud (e.g. Condor EC2) or pilot built into the VM?
- Using clouds is very attractive, but to make it cost-effective, we currently need to be our own cloud providers!
  - Community clouds (Magellan, lxcloud, …) are still far too small

What about the future? A personal view

- My personal view is that future computing will be more oriented to the optimization of the resources rather than a linear expansion of what we have now
- Some items will play an important role in the game:
  - Virtualization and clouds
  - Multicore architectures and true parallel processing
- The trend is to put considerable effort into Cloud Computing
  - Pros:
    - Easy maintenance
    - Portability
    - Isolation
    - Same level of performance as on physical machines
    - Optimization of the resources
    - Map/Reduce algorithms may be applied to some HEP applications
  - Cons:
    - Easily suited to CPU resources only
    - Virtualized storage resources are still a problem; we will probably need to stay with the current Grid-enabled services
- Switching to real parallel processing will require rethinking our way of virtualizing resources
  - Up to now, when we talk about virtualization we mean the segmentation of a physical resource into smaller pieces
  - With the new CPU architectures we'll probably have to think about the reverse too, i.e. aggregating multiple physical resources into a virtual one
    - Bigger multicore machines, no code change at the application level
    - For example ScaleMP's VersatileSMP (http://www.scalemp.com/)
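
As an illustration of how Map/Reduce could apply to some HEP workloads, filling a histogram splits naturally into a map step (each event independently yields one bin entry) and a reduce step (partial histograms are summed, in any order). A minimal Python sketch with made-up event energies and an assumed 10 GeV binning; a real deployment would distribute the map step over many nodes, which is not shown here:

```python
from collections import Counter
from functools import reduce


def map_event(energy_gev: float, bin_width: float = 10.0) -> Counter:
    """Map: one event -> a partial histogram with a single entry."""
    return Counter({int(energy_gev // bin_width): 1})


def reduce_hists(a: Counter, b: Counter) -> Counter:
    """Reduce: merging partial histograms is associative and commutative."""
    return a + b


def histogram(energies: list[float]) -> dict[int, int]:
    """Bin index -> number of events."""
    partials = map(map_event, energies)   # independent per event, so parallelizable
    return dict(reduce(reduce_hists, partials, Counter()))
```

Because the reduce step is associative and commutative, partial histograms from different nodes can be merged in whatever order they arrive, which is the property Map/Reduce frameworks rely on.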

Conclusions

- The WLCG Collaboration prepared, deployed and is now managing the common computing infrastructure of the LHC experiments
  - Coping reasonably well so far with the large amount of data that is distributed, stored and processed every day
- All the LHC experiments are successfully using Grid distributed computing to perform the data analysis
  - MC, real data
  - Central productions and user analysis
- New technologies have been implemented or are under study to overcome the current limitations of the system and to better adapt to the experiments' needs
  - Many R&D developments and studies
- Future computing is difficult to predict, but is going in the direction of virtualized, parallel processing

Credits: D. Barberis, Y. Yao, C. Waldman, V. Garonne, R. Santinelli, A. Sciabà, D. Spiga, P. Elmer

BACKUP SLIDES

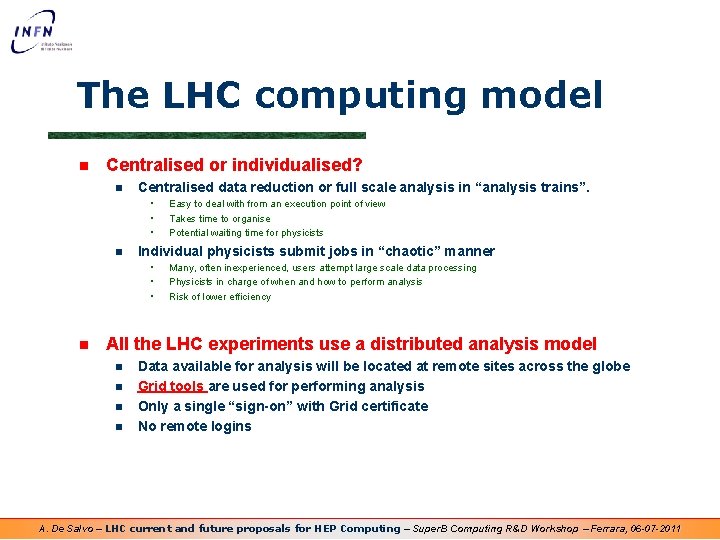

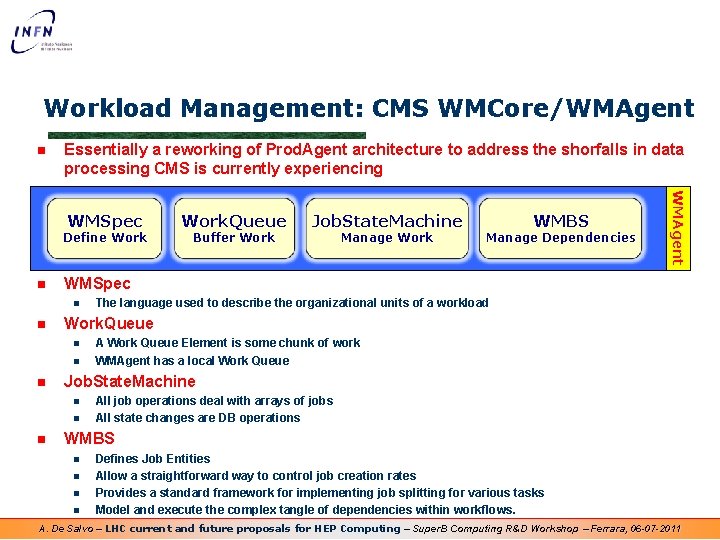

Workload Management: CMS WMCore/WMAgent

- Essentially a reworking of the ProdAgent architecture to address the shortfalls in data processing CMS is currently experiencing
- WMSpec
  - The language used to describe the organizational units of a workload
- WorkQueue
  - A Work Queue Element is some chunk of work
  - WMAgent has a local Work Queue
- JobStateMachine
  - All job operations deal with arrays of jobs
  - All state changes are DB operations
- WMBS
  - Defines job entities
  - Allows a straightforward way to control job creation rates
  - Provides a standard framework for implementing job splitting for various tasks
  - Models and executes the complex tangle of dependencies within workflows

(Diagram labels: Define Work, Buffer Work, Manage Work, Manage Dependencies; WMSpec, WorkQueue, WMAgent, WMBS, JobStateMachine.)
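
The two JobStateMachine principles (operations on arrays of jobs, every state change is a database operation) can be sketched with Python's built-in `sqlite3` module. The state names and allowed transitions below are hypothetical, chosen only to illustrate the pattern; they are not the WMAgent schema. A batch transition is validated and applied in one transaction, so either every job in the array moves or none does.

```python
import sqlite3

# Hypothetical job states and allowed transitions (not the real WMAgent schema)
TRANSITIONS = {
    "new": {"created"},
    "created": {"executing"},
    "executing": {"complete", "jobfailed"},
    "jobfailed": {"created"},            # resubmission
    "complete": {"success"},
}


def open_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE jobs (id INTEGER PRIMARY KEY, state TEXT NOT NULL)")
    return db


def create_jobs(db: sqlite3.Connection, job_ids: list[int]) -> None:
    with db:  # commit the whole batch at once, or roll it all back
        db.executemany("INSERT INTO jobs (id, state) VALUES (?, 'new')",
                       [(j,) for j in job_ids])


def transition(db: sqlite3.Connection, job_ids: list[int], new_state: str) -> None:
    """Move an array of jobs to new_state in one transaction, all or nothing."""
    if not job_ids:
        return
    marks = ",".join(["?"] * len(job_ids))
    with db:
        rows = db.execute(f"SELECT id, state FROM jobs WHERE id IN ({marks})",
                          job_ids).fetchall()
        if len(rows) != len(set(job_ids)):
            raise KeyError("batch contains unknown job ids")
        illegal = sorted(j for j, s in rows if new_state not in TRANSITIONS.get(s, set()))
        if illegal:
            raise ValueError(f"illegal transition to {new_state!r} for jobs {illegal}")
        db.executemany("UPDATE jobs SET state = ? WHERE id = ?",
                       [(new_state, j) for j, _ in rows])


def states(db: sqlite3.Connection) -> dict[int, str]:
    return dict(db.execute("SELECT id, state FROM jobs ORDER BY id"))
```

Keeping the state in the database rather than in process memory means an agent restart loses nothing, and batch updates keep the number of DB round trips independent of how many jobs move together.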