LHC Computing Grid Project (LCG)

- Slides: 30

LHC Computing Grid Project - LCG

The LHC Computing Grid: First steps towards a Global Computing Facility for Physics

18 September 2003

Les Robertson – LCG Project Leader
CERN – European Organization for Nuclear Research, Geneva, Switzerland
les.robertson@cern.ch

last update: 24/09/2021 22:38

LHC Computing Grid Project

The LCG Project is a collaboration of:

- The LHC experiments
- The Regional Computing Centres
- Physics institutes

working together to prepare and deploy the computing environment that will be used by the experiments to analyse the LHC data.

This includes support for applications:

- provision of common tools, frameworks, environment, data persistency

and the development and operation of a computing service:

- exploiting the resources available to LHC experiments in computing centres, physics institutes and universities around the world
- presenting this as a reliable, coherent environment for the experiments

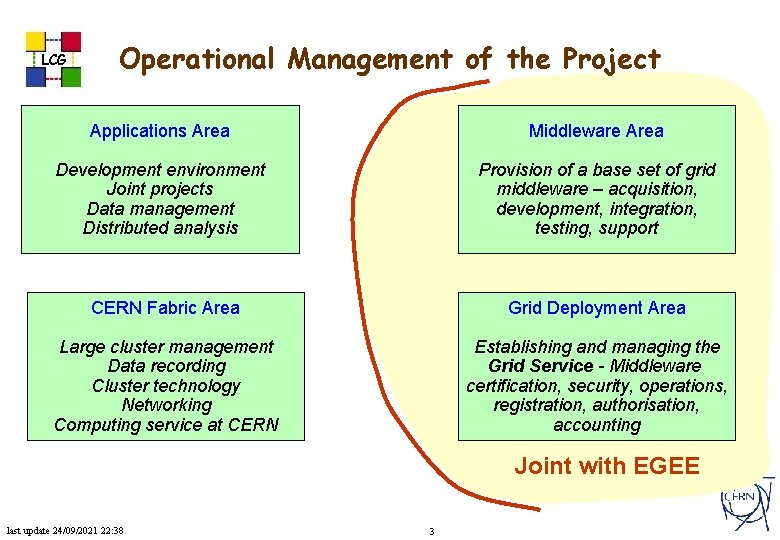

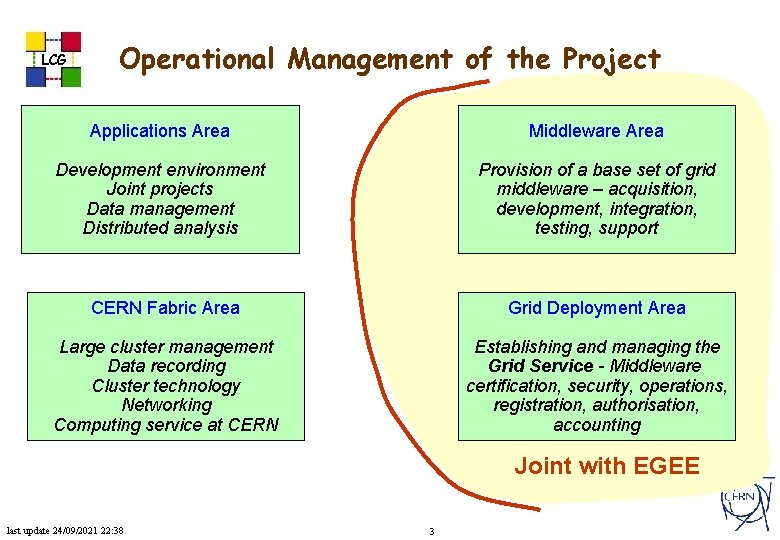

Operational Management of the Project

- Applications Area: development environment, joint projects, data management, distributed analysis
- Middleware Area: provision of a base set of grid middleware – acquisition, development, integration, testing, support
- CERN Fabric Area: large cluster management, data recording, cluster technology, networking, computing service at CERN
- Grid Deployment Area: establishing and managing the Grid Service – middleware certification, security, operations, registration, authorisation, accounting

Joint with EGEE

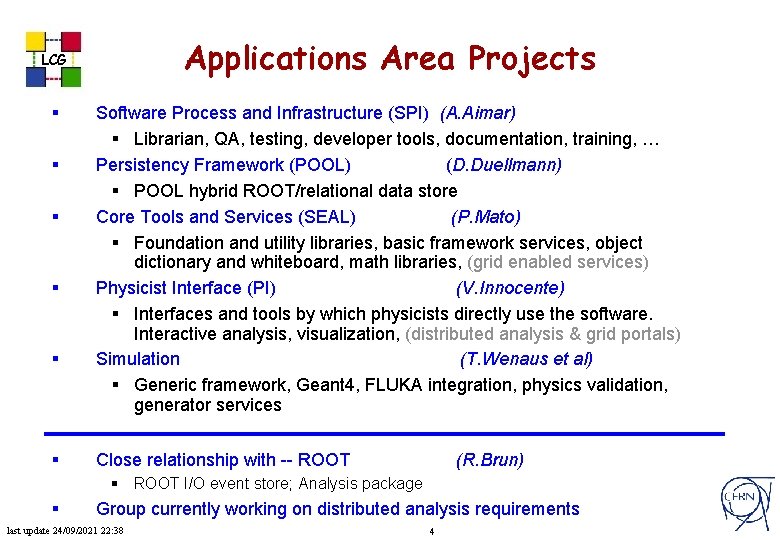

Applications Area Projects

- Software Process and Infrastructure (SPI) (A. Aimar)
  - Librarian, QA, testing, developer tools, documentation, training, …
- Persistency Framework (POOL) (D. Duellmann)
  - POOL hybrid ROOT/relational data store
- Core Tools and Services (SEAL) (P. Mato)
  - Foundation and utility libraries, basic framework services, object dictionary and whiteboard, math libraries, (grid enabled services)
- Physicist Interface (PI) (V. Innocente)
  - Interfaces and tools by which physicists directly use the software. Interactive analysis, visualization, (distributed analysis & grid portals)
- Simulation (T. Wenaus et al)
  - Generic framework, Geant4, FLUKA integration, physics validation, generator services

Close relationship with ROOT (R. Brun):

- ROOT I/O event store; analysis package
- Group currently working on distributed analysis requirements

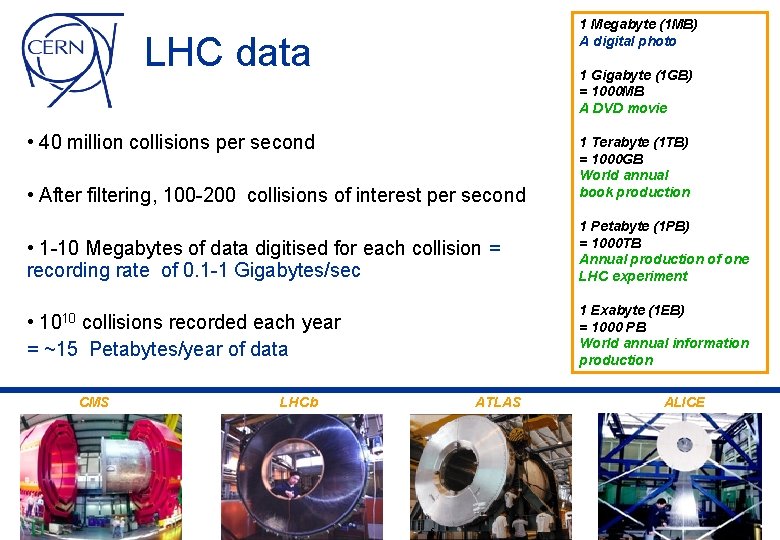

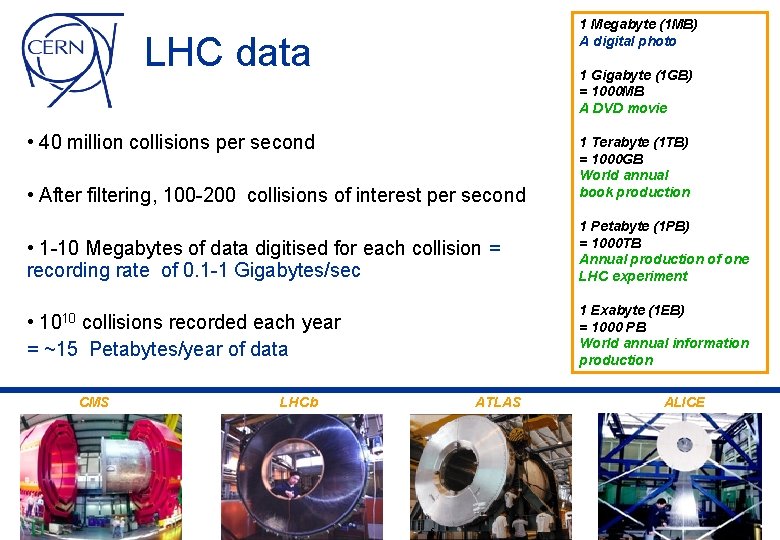

LHC data

- 40 million collisions per second
- After filtering, 100-200 collisions of interest per second
- 1-10 Megabytes of data digitised for each collision = recording rate of 0.1-1 Gigabytes/sec
- 10^10 collisions recorded each year = ~15 Petabytes/year of data

For scale:

- 1 Megabyte (1 MB): a digital photo
- 1 Gigabyte (1 GB) = 1000 MB: a DVD movie
- 1 Terabyte (1 TB) = 1000 GB: world annual book production
- 1 Petabyte (1 PB) = 1000 TB: annual production of one LHC experiment
- 1 Exabyte (1 EB) = 1000 PB: world annual information production

Experiments: CMS, LHCb, ATLAS, ALICE
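The figures on this slide can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the variable names are invented here, and it assumes that the stray "1010" on the slide is 10^10 collisions recorded per year. Multiplying the extremes of the quoted ranges gives 0.1 to 2 GB/s, which brackets the slide's rounded 0.1-1 GB/s.

```python
# Back-of-envelope check of the LHC data figures quoted on the slide.
MB = 1e6
GB = 1e9
PB = 1e15

collision_rate_hz = 40e6        # 40 million collisions per second
kept_per_second = (100, 200)    # collisions of interest after filtering
event_size_mb = (1, 10)         # megabytes digitised per collision

# How strongly the filter reduces the collision rate.
rejection_factor = tuple(collision_rate_hz / k for k in kept_per_second)

# Recording rate at the two extremes of the quoted ranges, in GB/s.
rate_low_gbs = kept_per_second[0] * event_size_mb[0] * MB / GB
rate_high_gbs = kept_per_second[1] * event_size_mb[1] * MB / GB

# Assumption: "1010" on the slide means 10^10 collisions recorded per year.
events_per_year = 1e10
yearly_volume_pb = 15
mean_event_size_mb = yearly_volume_pb * PB / events_per_year / MB

print(rejection_factor, rate_low_gbs, rate_high_gbs, mean_event_size_mb)
```

Under that assumption the implied average event size is 1.5 MB, which sits inside the quoted 1-10 MB range.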

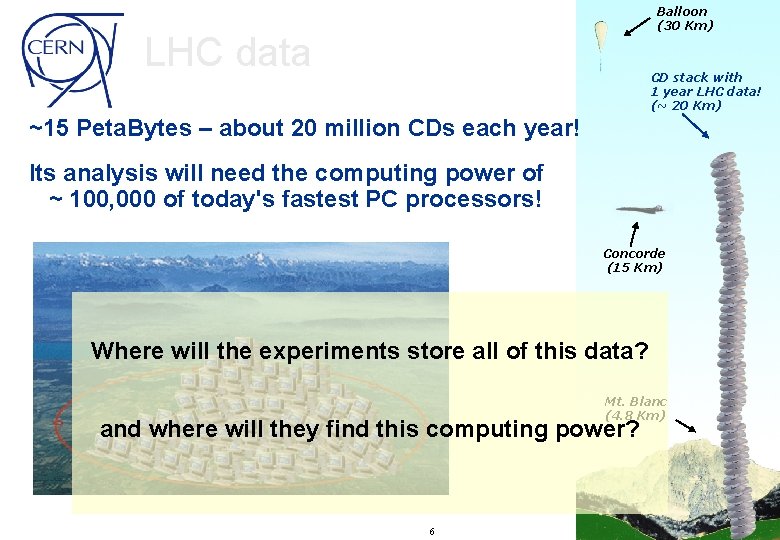

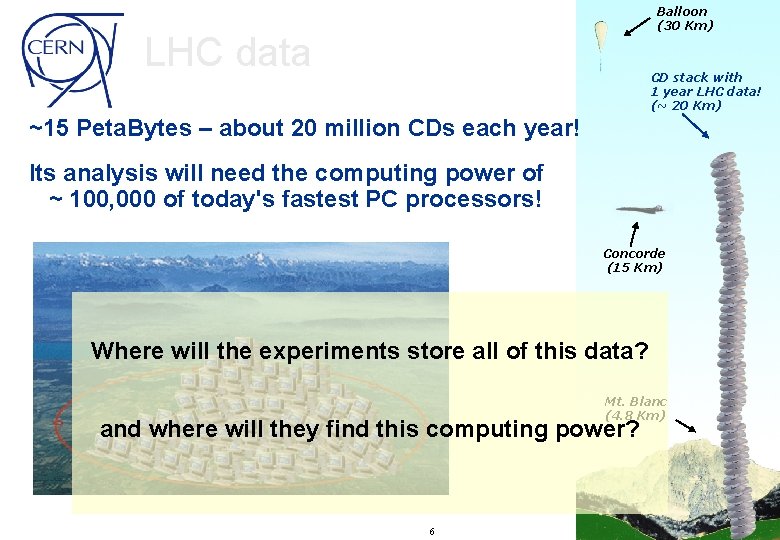

LHC data

~15 PetaBytes – about 20 million CDs each year! Its analysis will need the computing power of ~100,000 of today's fastest PC processors!

Height comparison:

- Balloon (30 km)
- CD stack with 1 year LHC data! (~20 km)
- Concorde (15 km)
- Mt. Blanc (4.8 km)

Where will the experiments store all of this data? And where will they find this computing power?
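As a rough consistency check on the CD comparison, the sketch below derives the capacity and thickness per disc implied by the slide's own numbers. The variable names are made up for illustration; only the three input figures come from the slide.

```python
# Figures quoted on the slide.
yearly_bytes = 15e15      # ~15 petabytes per year
cds_per_year = 20e6       # about 20 million CDs
stack_height_km = 20      # ~20 km stack

# Implied capacity of a single CD, in bytes.
bytes_per_cd = yearly_bytes / cds_per_year

# Implied thickness of a single CD, in millimetres (1 km = 1e6 mm).
mm_per_cd = stack_height_km * 1e6 / cds_per_year

print(bytes_per_cd / 1e6, "MB per CD;", mm_per_cd, "mm per CD")
```

The implied values (750 MB and 1 mm per disc) are close to a real CD, which holds roughly 650-700 MB and is 1.2 mm thick, so the slide's round numbers hold together.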

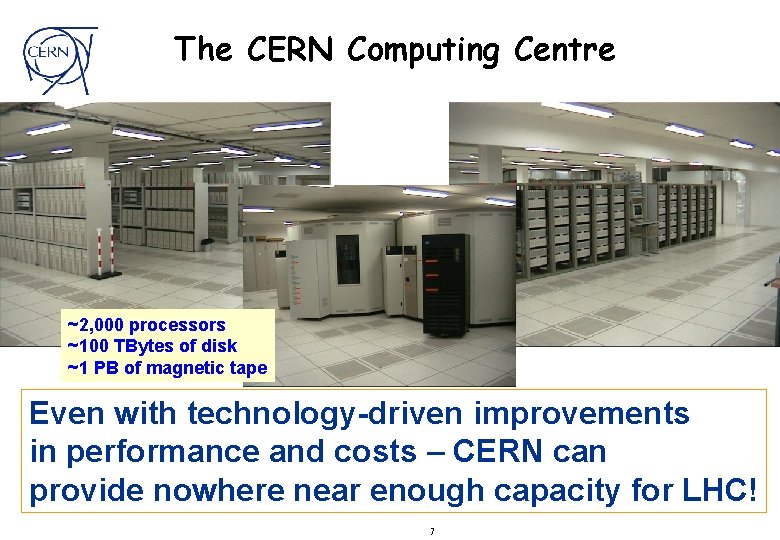

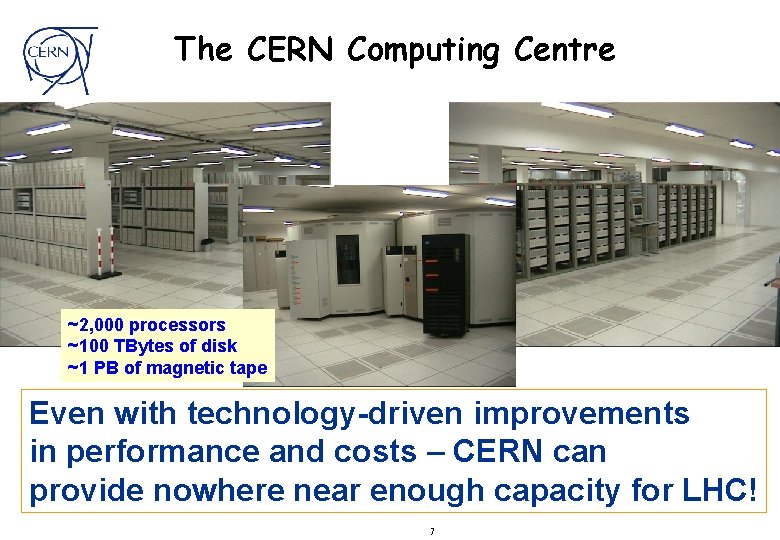

The CERN Computing Centre

- ~2,000 processors
- ~100 TBytes of disk
- ~1 PB of magnetic tape

Even with technology-driven improvements in performance and costs, CERN can provide nowhere near enough capacity for LHC!
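The size of the gap can be put in numbers by comparing these figures with the requirements quoted earlier in the talk (~100,000 processors, ~15 PB per year). This is a minimal sketch with hypothetical variable names, and it assumes that the computing centre's processors are comparable to "today's fastest PC processors", which the slides do not state.

```python
# Capacity of the CERN Computing Centre as quoted on the slide.
cern = {"processors": 2000, "disk_tb": 100, "tape_pb": 1}

# Requirements quoted earlier in the talk.
needed_processors = 100_000
yearly_data_pb = 15

# Fraction of the required processing power available at CERN.
processor_fraction = cern["processors"] / needed_processors

# How many days of LHC data the existing tape store could hold.
days_of_data_on_tape = cern["tape_pb"] / yearly_data_pb * 365

print(f"{processor_fraction:.0%} of the processors, "
      f"{days_of_data_on_tape:.0f} days of data on tape")
```

On these assumptions CERN covers about 2% of the processing requirement, and its tape store would fill in under a month of data taking.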

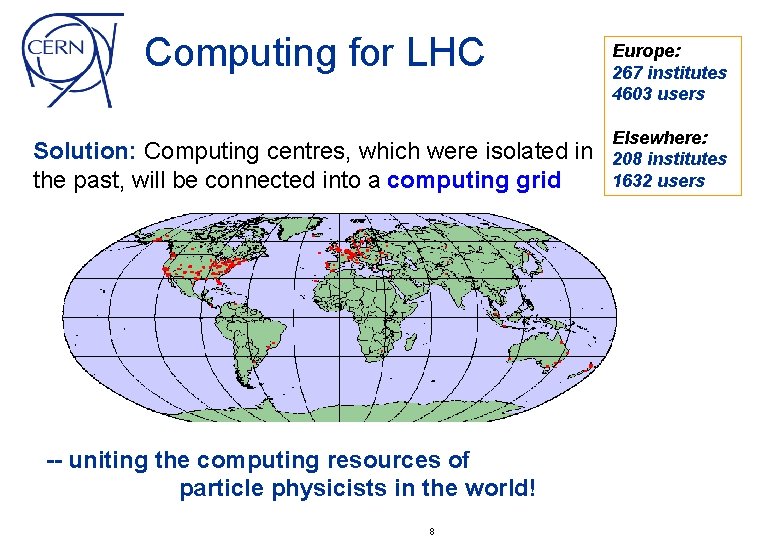

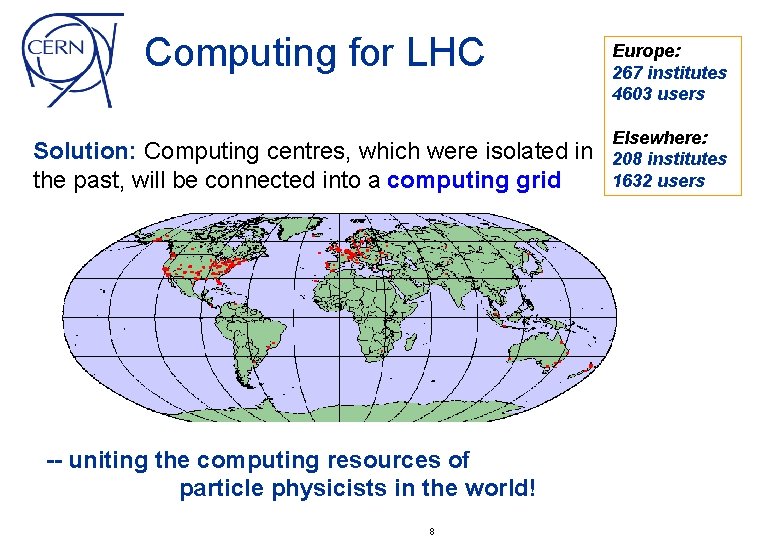

Computing for LHC

Solution: Computing centres, which were isolated in the past, will be connected into a computing grid, uniting the computing resources of particle physicists in the world!

- Europe: 267 institutes, 4603 users
- Elsewhere: 208 institutes, 1632 users

LCG Regional Centres

Pilot production service: 2003 – 2005

First wave centres:

- CERN
- Academia Sinica Taiwan
- Brookhaven National Lab
- PIC Barcelona
- CNAF Bologna
- Fermilab
- FZK Karlsruhe
- IN2P3 Lyon
- KFKI Budapest
- Moscow State University
- University of Prague
- Rutherford Appleton Lab (UK)
- University of Tokyo

Other centres:

- Caltech
- GSI Darmstadt
- Italian Tier 2s (Torino, Milano, Legnaro)
- JINR Dubna
- Manno (Switzerland)
- NIKHEF Amsterdam
- Ohio Supercomputing Centre
- Sweden (NorduGrid)
- Tata Institute (India)
- Triumf (Canada)
- UCSD
- UK Tier 2s
- University of Florida – Gainesville
- ……

Sources for Middleware & Tools used by LCG

The Virtual Data Toolkit - VDT

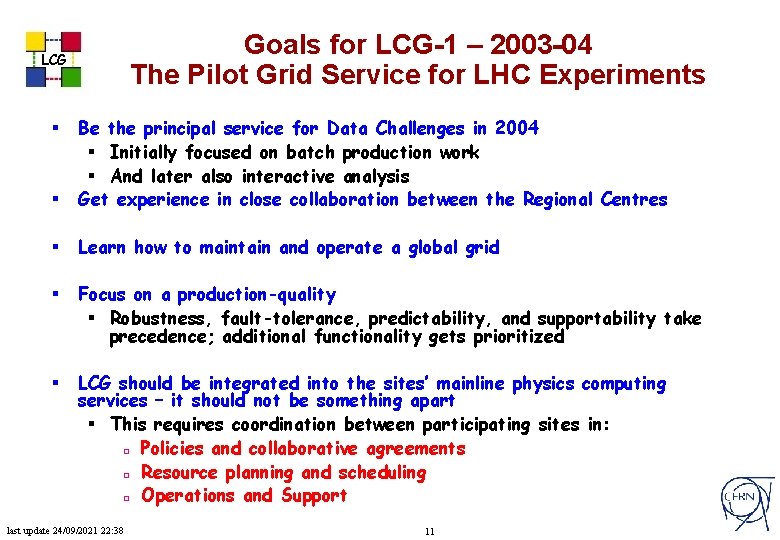

Goals for LCG-1 – The Pilot Grid Service for LHC Experiments, 2003-04

- Be the principal service for Data Challenges in 2004
  - Initially focused on batch production work
  - And later also interactive analysis
- Get experience in close collaboration between the Regional Centres
  - Learn how to maintain and operate a global grid
- Focus on a production-quality service
  - Robustness, fault-tolerance, predictability, and supportability take precedence; additional functionality gets prioritized
- LCG should be integrated into the sites' mainline physics computing services – it should not be something apart
  - This requires coordination between participating sites in:
    - Policies and collaborative agreements
    - Resource planning and scheduling
    - Operations and Support

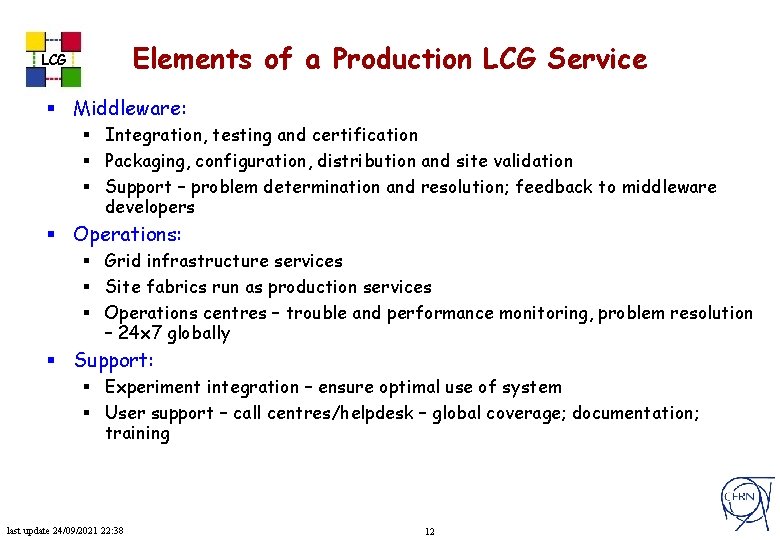

Elements of a Production LCG Service

- Middleware:
  - Integration, testing and certification
  - Packaging, configuration, distribution and site validation
  - Support – problem determination and resolution; feedback to middleware developers
- Operations:
  - Grid infrastructure services
  - Site fabrics run as production services
  - Operations centres – trouble and performance monitoring, problem resolution – 24x7 globally
- Support:
  - Experiment integration – ensure optimal use of system
  - User support – call centres/helpdesk – global coverage; documentation; training

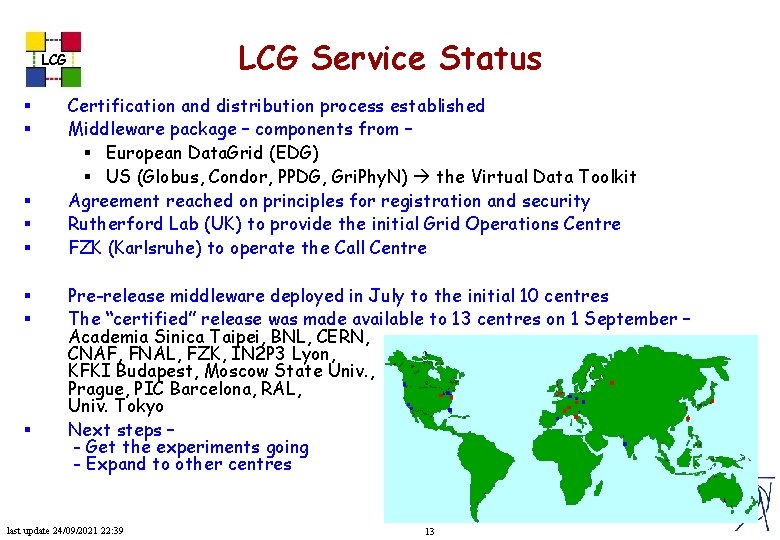

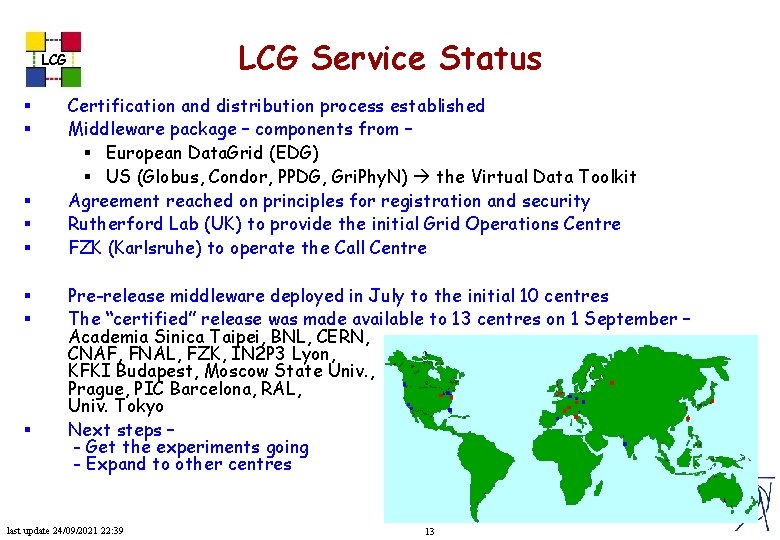

LCG Service Status

- Certification and distribution process established
- Middleware package – components from:
  - European DataGrid (EDG)
  - US (Globus, Condor, PPDG, GriPhyN) – the Virtual Data Toolkit
- Agreement reached on principles for registration and security
- Rutherford Lab (UK) to provide the initial Grid Operations Centre
- FZK (Karlsruhe) to operate the Call Centre
- Pre-release middleware deployed in July to the initial 10 centres
- The "certified" release was made available to 13 centres on 1 September: Academia Sinica Taipei, BNL, CERN, CNAF, FNAL, FZK, IN2P3 Lyon, KFKI Budapest, Moscow State Univ., Prague, PIC Barcelona, RAL, Univ. Tokyo

Next steps:

- Get the experiments going
- Expand to other centres

Preliminary full simulation and reconstruction tests with ALICE

- ALIEN submitting work to the LCG service
- Aliroot 3.09.06 - fully reconstructed events
- CPU-intensive, RAM-demanding (up to 600 MB), long lasting jobs (average 14 hours)

Outcome:

- > 95% successful job submission, execution and output retrieval

LCG-1 at the Time of First Release

- Impressive improvement in stability w.r.t. old 1.x EDG releases and corresponding testbeds
- Lots of room for further improvements
- Additional features to be added before the end of the year, in preparation for the data challenges of 2004
- As more centres join, scalability will surely become a major issue

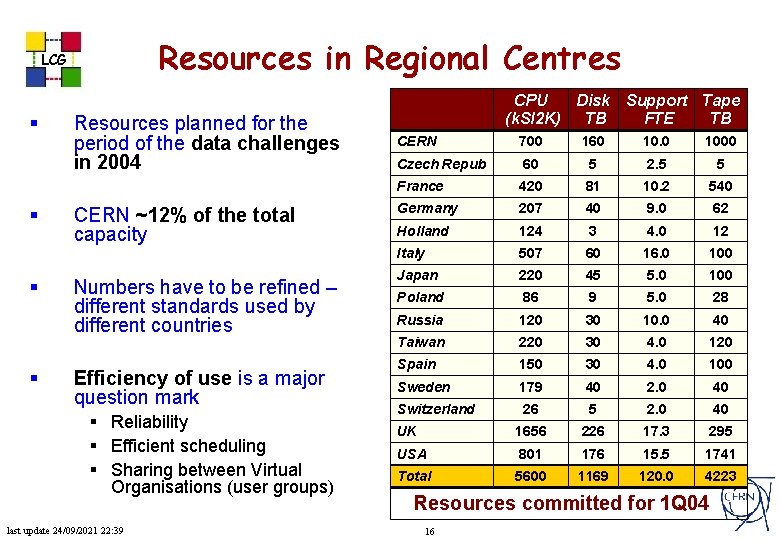

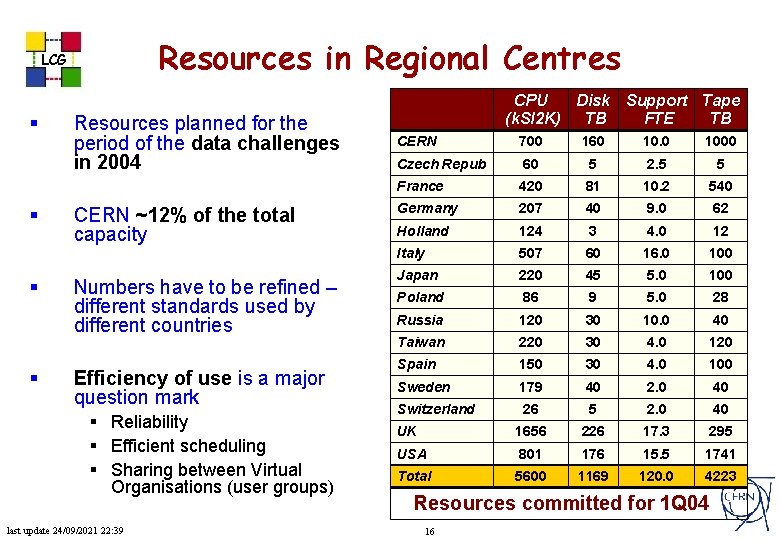

Resources in Regional Centres

- Resources planned for the period of the data challenges in 2004
- CERN ~12% of the total capacity
- Numbers have to be refined – different standards used by different countries
- Efficiency of use is a major question mark
  - Reliability
  - Efficient scheduling
  - Sharing between Virtual Organisations (user groups)

Resources committed for 1Q04:

| | CPU (kSI2K) | Disk (TB) | Support (FTE) | Tape (TB) |
|---|---|---|---|---|
| CERN | 700 | 160 | | 1000 |
| Czech Repub | 60 | 5 | 2.5 | 5 |
| France | 420 | 81 | 10.2 | 540 |
| Germany | 207 | 40 | 9.0 | 62 |
| Holland | 124 | 3 | 4.0 | 12 |
| Italy | 507 | 60 | 16.0 | 100 |
| Japan | 220 | 45 | 5.0 | 100 |
| Poland | 86 | 9 | 5.0 | 28 |
| Russia | 120 | 30 | 10.0 | 40 |
| Taiwan | 220 | 30 | 4.0 | 120 |
| Spain | 150 | 30 | 4.0 | 100 |
| Sweden | 179 | 40 | 2.0 | 40 |
| Switzerland | 26 | 5 | 2.0 | 40 |
| UK | 1656 | 226 | 17.3 | 295 |
| USA | 801 | 176 | 15.5 | 1741 |
| Total | 5600 | 1169 | 120.0 | 4223 |
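The "CERN ~12%" figure can be reproduced from the CPU column of this slide. The sketch below is a minimal illustration with made-up names. Note that the per-site CPU values as they appear here add up to 5476 rather than the stated total of 5600, so the sketch divides by the stated total; that choice is an assumption.

```python
# CPU capacity in kSI2K per site, copied from the figures on this slide.
cpu_ksi2k = {
    "CERN": 700, "Czech Repub": 60, "France": 420, "Germany": 207,
    "Holland": 124, "Italy": 507, "Japan": 220, "Poland": 86,
    "Russia": 120, "Taiwan": 220, "Spain": 150, "Sweden": 179,
    "Switzerland": 26, "UK": 1656, "USA": 801,
}

# Assumption: shares are taken relative to the slide's "Total" row,
# not relative to the sum of the listed rows.
STATED_TOTAL = 5600


def share_percent(site: str) -> float:
    """Return a site's CPU capacity as a percentage of the stated total."""
    return 100.0 * cpu_ksi2k[site] / STATED_TOTAL


cern_share = share_percent("CERN")
largest_site = max(cpu_ksi2k, key=cpu_ksi2k.get)
listed_sum = sum(cpu_ksi2k.values())

print(f"CERN: {cern_share:.1f}%  largest: {largest_site}  listed sum: {listed_sum}")
```

With the stated total, CERN's share comes out at 12.5%, in line with the "~12%" on the slide.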

From LCG-1 to LHC Startup

Where are we now with Grid Technology?

- For LHC, we now understand the basic requirements for batch processing
- And we have a prototype solution developed by Globus and Condor in the US and the DataGrid and related projects in Europe
- It is more difficult than was expected: reliability, scalability, monitoring, operation, ..
- And we are not yet seeing useful industrial products
- But we are ready to start re-engineering the components
  - part of the large EGEE project proposal submitted to the EU
  - re-write of Globus using a web-services architecture is now available
- Many more practical problems will be discovered now that we start running a grid as a sustained round-the-clock service and the LHC experiments begin to use it for doing real work

Grid Middleware for LCG in the Longer Term

Requirements:

- A second round of specification of the basic grid requirements is being completed now - HEPCAL II
- A team has started to specify the higher level requirements for distributed analysis – batch and interactive – and define the HEP-specific tools that will be needed

For basic middleware the current strategy is to assume:

- that the US DoE/NSF will provide a well supported Virtual Data Toolkit based on Globus Toolkit 3
- that the EGEE project, approved for EU 6th framework funding, will develop the additional tools needed by LCG

And the LCG Applications Area will develop higher-level HEP-specific functionality

ARDA – An Architectural Roadmap Towards Distributed Analysis

Work in Progress!

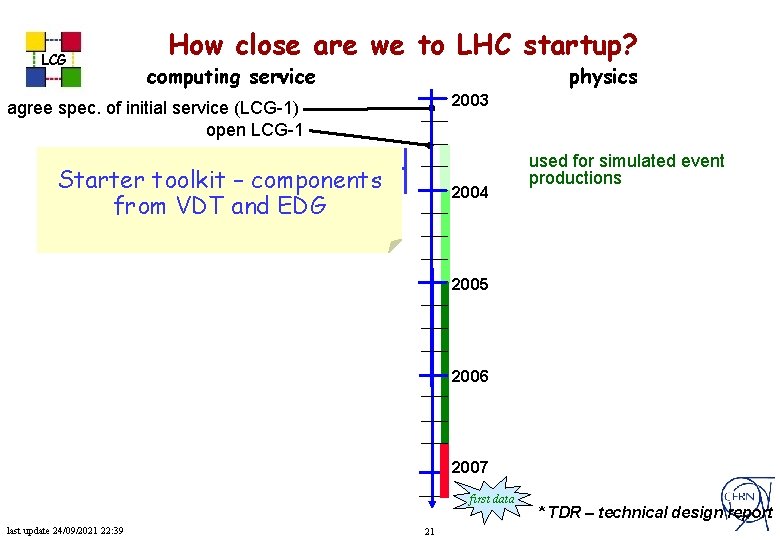

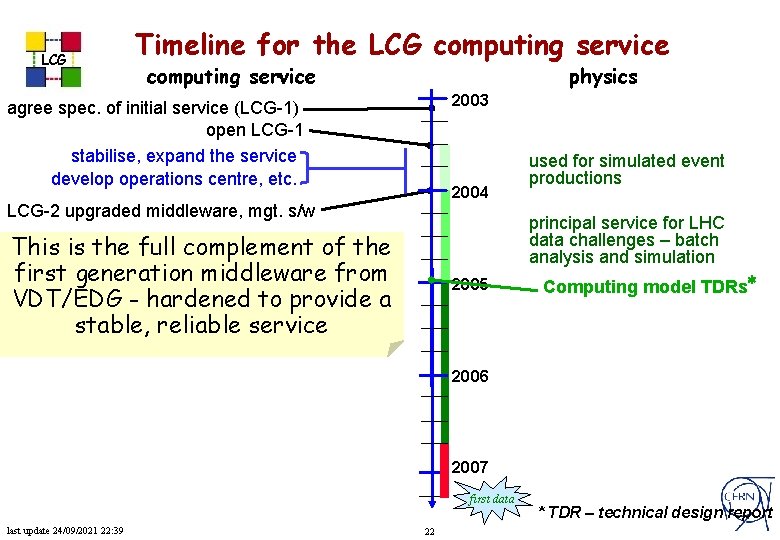

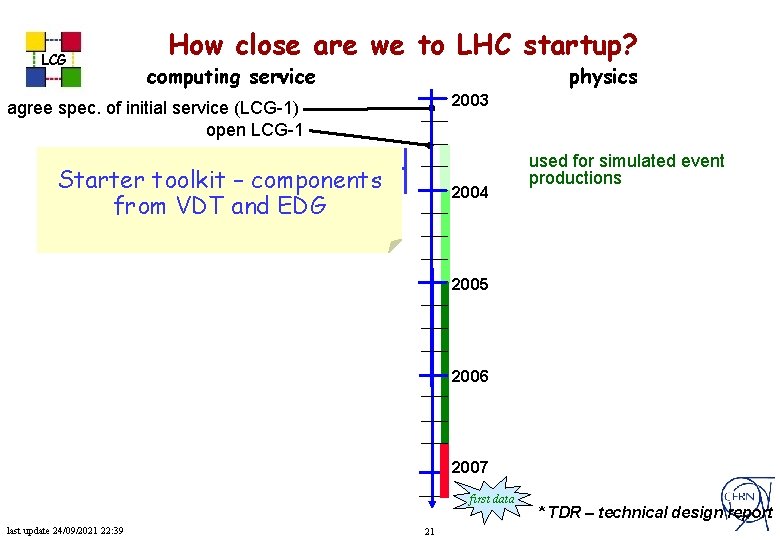

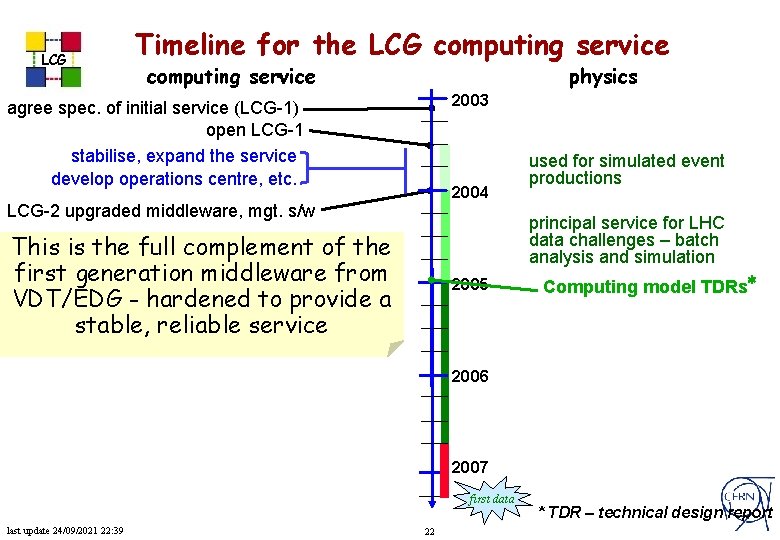

How close are we to LHC startup?

Timeline for the LCG computing service:

- 2003: agree spec. of initial service (LCG-1); open LCG-1; stabilise, expand the service; develop operations centre, etc.
- 2004
- 2005
- 2006
- 2007: first data

Physics: used for simulated event productions

Starter toolkit – components from VDT and EDG

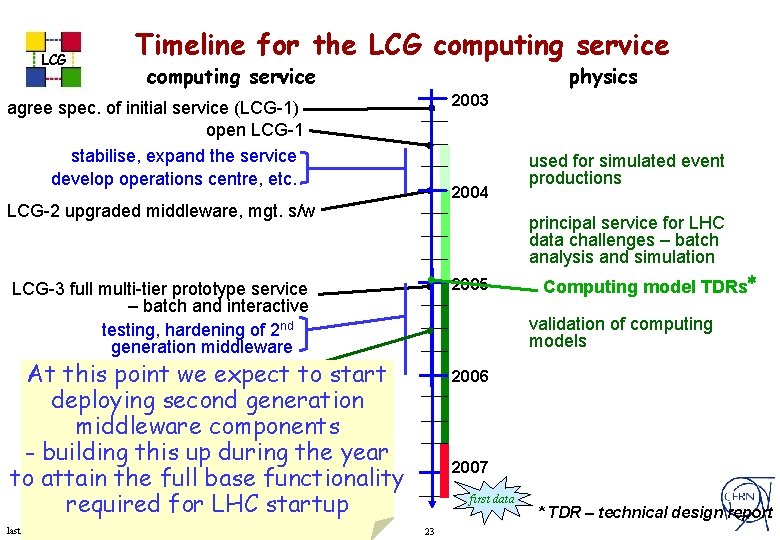

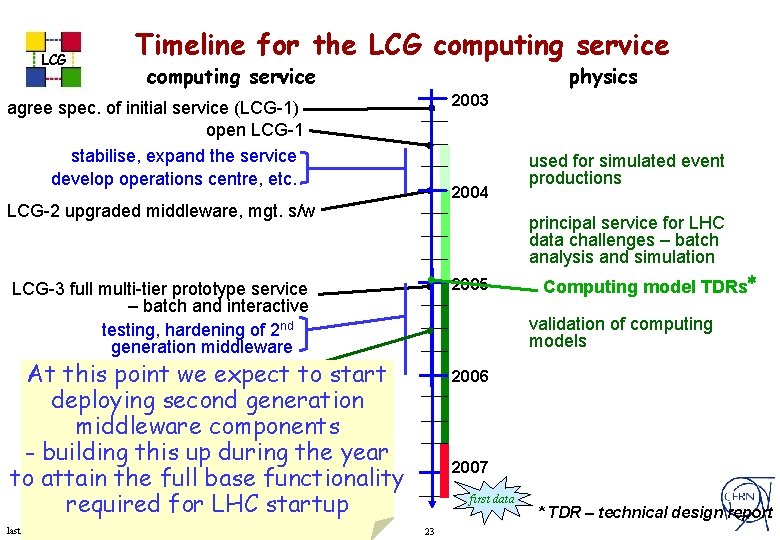

Timeline for the LCG computing service

- 2003: agree spec. of initial service (LCG-1); open LCG-1; stabilise, expand the service; develop operations centre, etc.
- 2004: LCG-2 – upgraded middleware, mgt. s/w; principal service for LHC data challenges – batch analysis and simulation
- 2005
- 2006
- 2007: first data

Milestones and physics use shown alongside:

- used for simulated event productions
- Computing model TDRs*

LCG-2: This is the full complement of the first generation middleware from VDT/EDG - hardened to provide a stable, reliable service

\* TDR – technical design report

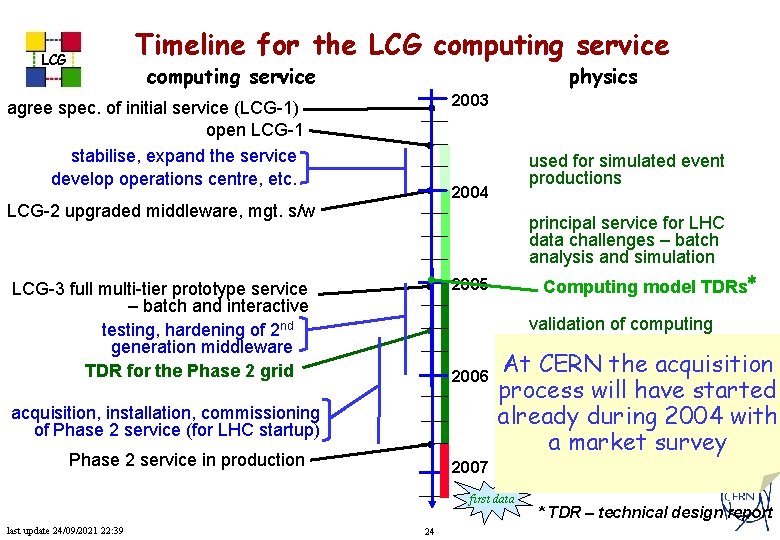

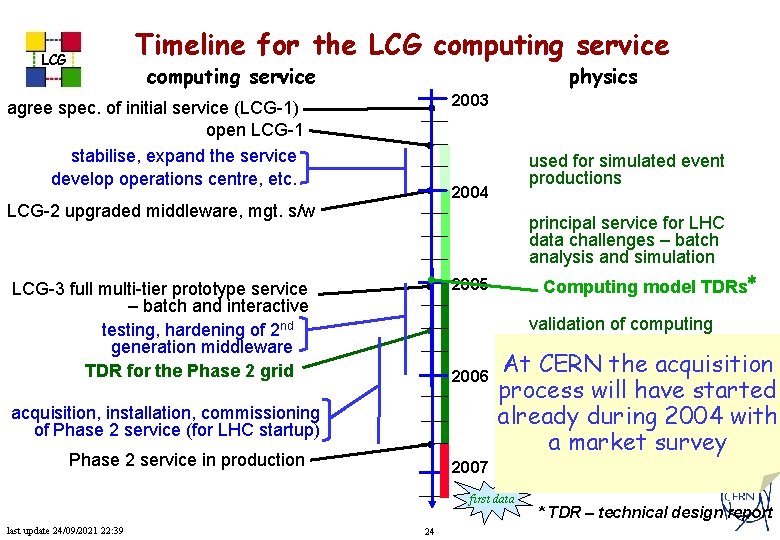

Timeline for the LCG computing service

- 2003: agree spec. of initial service (LCG-1); open LCG-1; stabilise, expand the service; develop operations centre, etc.
- 2004: LCG-2 – upgraded middleware, mgt. s/w; principal service for LHC data challenges – batch analysis and simulation
- 2005: LCG-3 – full multi-tier prototype service – batch and interactive; testing, hardening of 2nd generation middleware
- 2006
- 2007: first data

Milestones and physics use shown alongside:

- used for simulated event productions
- Computing model TDRs*
- TDR for the Phase 2 grid
- validation of computing models

LCG-3: At this point we expect to start deploying second generation middleware components - building this up during the year to attain the full base functionality required for LHC startup

\* TDR – technical design report

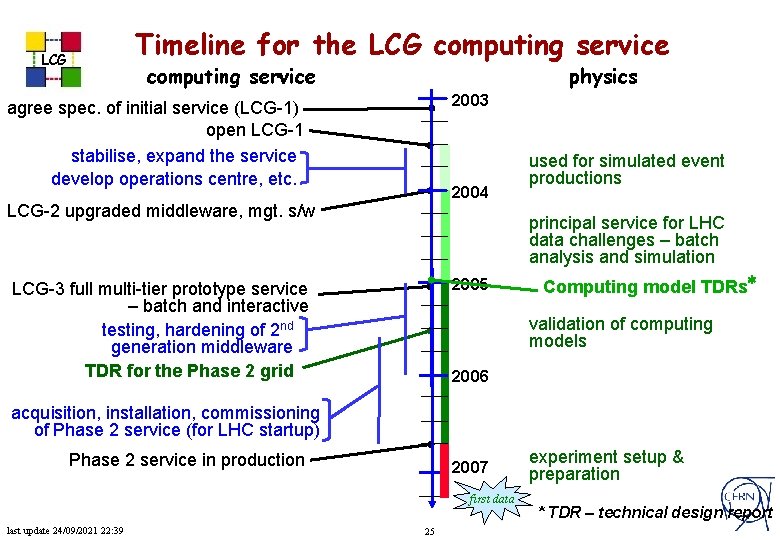

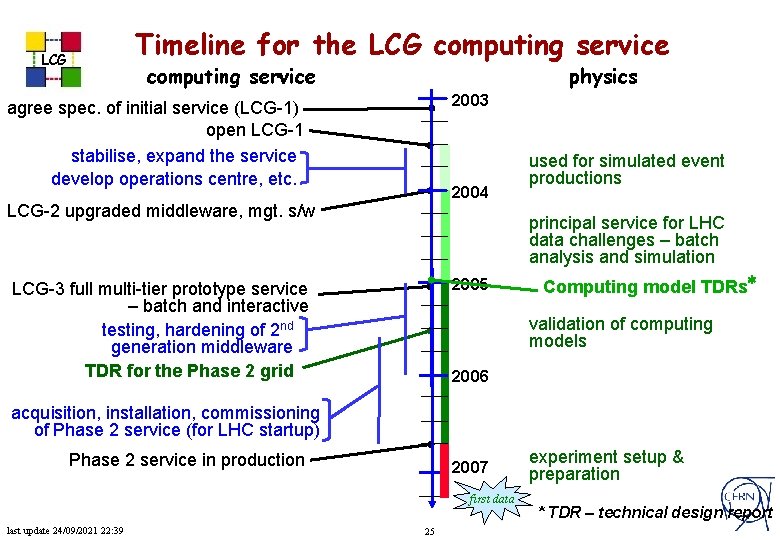

Timeline for the LCG computing service

- 2003: agree spec. of initial service (LCG-1); open LCG-1; stabilise, expand the service; develop operations centre, etc.
- 2004: LCG-2 – upgraded middleware, mgt. s/w; principal service for LHC data challenges – batch analysis and simulation
- 2005: LCG-3 – full multi-tier prototype service – batch and interactive; testing, hardening of 2nd generation middleware
- 2006: acquisition, installation, commissioning of Phase 2 service (for LHC startup); Phase 2 service in production
- 2007: first data

Milestones and physics use shown alongside:

- used for simulated event productions
- Computing model TDRs*
- TDR for the Phase 2 grid
- validation of computing models
- experiment setup & preparation

At CERN the acquisition process will have started already during 2004 with a market survey

\* TDR – technical design report


Evolution of the Base Technology

- These are still very early days, with very few grids providing a reliable, round-the-clock "production" service
- And few applications that are managing gigantic distributed databases
- Although the basic ideas and tools have been around for a long time, we are only now seeing these applied to large scale services
- Developing the grid concept continues to attract substantial interest and public funding
- There are major changes taking place in architecture and frameworks
  - E.g. the Open Grid Services Architecture and Infrastructure (OGSA, OGSI), and there will be more to come as experience grows
- There is a lot of commercial interest from potential software suppliers (IBM, HP, Microsoft, ..), but no clear sight of useful products

Adapting to the changing landscape

- In the short-term there will be many grids and several middleware implementations; for LCG, inter-operability will be a major headache
  - Will we all agree on a common set of tools? Unlikely!
  - Or will we have to operate a grid of grids – some sort of federation?
  - Or will computing centres be part of several grids?
- The Global Grid Forum promises to provide a mechanism for evolving architecture and agreeing on standards, but this is a long-term process
- In the medium-term, until there is substantial practical experience with different architectures and different implementations, de facto standards will emerge
  - How quickly will we recognise the winners?
  - Will we have become too attached to our own developments to change?

Access Rights and Security

- The grid idea assumes global authentication, and authorisation based on the user's role in his virtual organisation: one set of credentials for everything you do
- The agreement for LHC is that all members of a physics collaboration will have access to all of its resources. The political implications of this have still to be tested!
- Could be an attractive target for hackers. This is probably the greatest risk that we take by adopting the grid model for LHC computing
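To make the idea of role-based authorisation within a virtual organisation (VO) concrete, here is a minimal sketch. It is not the LCG or Globus security implementation: the `Credential` class, the `POLICY` table, the role names and the `authorise` function are all hypothetical, invented only to show a single credential being checked against a VO-and-role policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Credential:
    """Hypothetical single credential: who you are, your VO, your role in it."""
    subject: str   # identity established once by global authentication
    vo: str        # virtual organisation, e.g. a physics collaboration
    role: str      # the user's role inside that VO


# Hypothetical policy table: actions allowed for each (VO, role) pair.
POLICY = {
    ("atlas", "member"): {"submit_job", "read_data"},
    ("atlas", "production"): {"submit_job", "read_data", "write_data"},
}


def authorise(cred: Credential, action: str, supported_vos: set) -> bool:
    """Allow the action only if the site supports the user's VO and the
    user's role within that VO permits the action."""
    if cred.vo not in supported_vos:
        return False
    return action in POLICY.get((cred.vo, cred.role), set())


# Usage: the same credential is presented to every site.
user = Credential(subject="some-physicist", vo="atlas", role="member")
print(authorise(user, "read_data", {"atlas", "cms"}))   # allowed by role
print(authorise(user, "write_data", {"atlas", "cms"}))  # role lacks this action
print(authorise(user, "read_data", {"cms"}))            # site does not support the VO
```

The sketch also shows why a single credential is an attractive target: whoever holds it gains every permission the role carries, at every site that supports the VO.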

Key LCG goals for next 12 months

- Take-up by the experiments of the first versions of common applications – starts NOW
- Evolve the LCG-1 service into a production facility for LHC experiments – validated in data challenges
- Establish the requirements and a credible implementation plan for baseline distributed grid analysis for 2007-08
  - the model
  - HEP-specific tools
  - base grid technology - middleware to support the computing models of the experiments – Technical Design Reports due end 2004

Summary

- The LCG Project has a clear goal of providing the environment and services for recording and analysing the LHC data when the accelerator starts operation in 2007
- The computational requirements of LHC dictate a geographically distributed solution, taking maximum advantage of the facilities available to LHC around the world: a computational GRID
- A pilot service – LCG-1 – has been opened to learn how to use this technology to provide a reliable, efficient service encompassing many independent computing centres
- It is already clear that the current middleware will have to be re-engineered or replaced to achieve the goals of reliability and scalability
- In the medium term we expect to get this new middleware from EU and US funded projects
- But the technology is evolving rapidly, and LCG will have to adapt to a changing environment
- While we keep a strong focus on providing a continuing service for the LHC Collaborations