Lecture 26: Case Studies. Topics: processor case studies

- Slides: 18

Lecture 26: Case Studies
• Topics: processor case studies, Flash memory
• Final exam stats:
  § Highest 83, median 67
  § 70+: 16 students, 60-69: 20 students
  § 1st 3 problems and 7th problem: gimmes
  § 4th problem (LSQ): half the students got full points
  § 5th problem (cache hierarchy): 1 correct solution
  § 6th problem (coherence): most got more than 15 points
  § 8th problem (TM): very few mentioned frequent aborts, starvation, and livelock
  § 9th problem (TM): no one got close to full points
  § 10th problem (LRU): 1 “correct” solution with the 1 tree structure

Finals Discussion: LSQ, Caches, TM, LRU

Case Study I: Sun’s Niagara
• Commercial servers require high thread-level throughput and suffer from cache misses
• Sun’s Niagara focuses on:
  Ø simple cores (low power, design complexity, can accommodate more cores)
  Ø fine-grain multi-threading (to tolerate long memory latencies)
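A minimal sketch of why fine-grain multi-threading tolerates long memory latencies: each cycle the core issues from the next ready thread in round-robin order and skips threads that are waiting on a miss. The thread count, miss interval, and miss latency below are illustrative assumptions, not Niagara's measured parameters, and `simulate` is a hypothetical helper.

```python
def simulate(num_threads, miss_every, miss_latency, cycles):
    """Return the fraction of cycles in which the core issued an instruction.

    Hypothetical model: every thread misses in the cache after each
    `miss_every` instructions and is then stalled for `miss_latency` cycles.
    """
    ready_at = [0] * num_threads   # cycle at which each thread may issue again
    issued = [0] * num_threads     # instructions issued so far, per thread
    next_thread = 0                # round-robin pointer
    busy_cycles = 0

    for cycle in range(cycles):
        # Scan threads in round-robin order, starting after the last issuer.
        for offset in range(num_threads):
            t = (next_thread + offset) % num_threads
            if ready_at[t] <= cycle:
                issued[t] += 1
                busy_cycles += 1
                if issued[t] % miss_every == 0:
                    # This instruction missed: stall the thread.
                    ready_at[t] = cycle + 1 + miss_latency
                next_thread = (t + 1) % num_threads
                break
    return busy_cycles / cycles


single = simulate(num_threads=1, miss_every=10, miss_latency=30, cycles=4000)
multi = simulate(num_threads=4, miss_every=10, miss_latency=30, cycles=4000)
print(f"pipeline utilization: 1 thread {single:.2f}, 4 threads {multi:.2f}")
```

In this toy model the single-threaded core sits idle during every miss, while the four-threaded core fills part of that time with work from other threads, so its utilization is higher.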

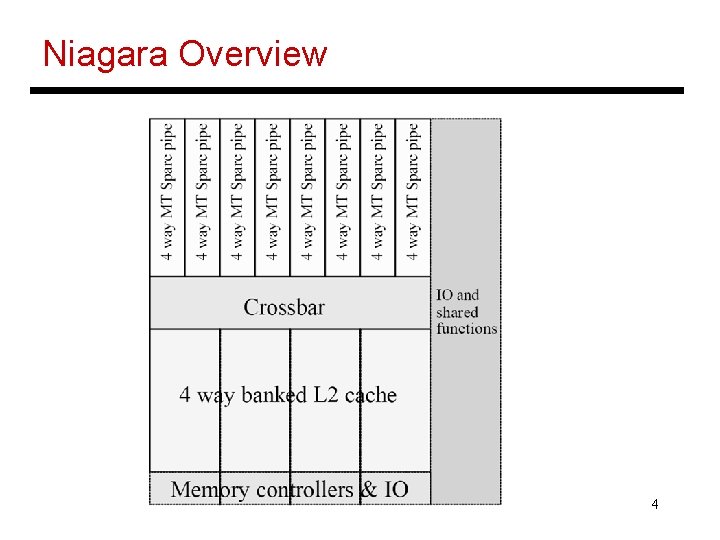

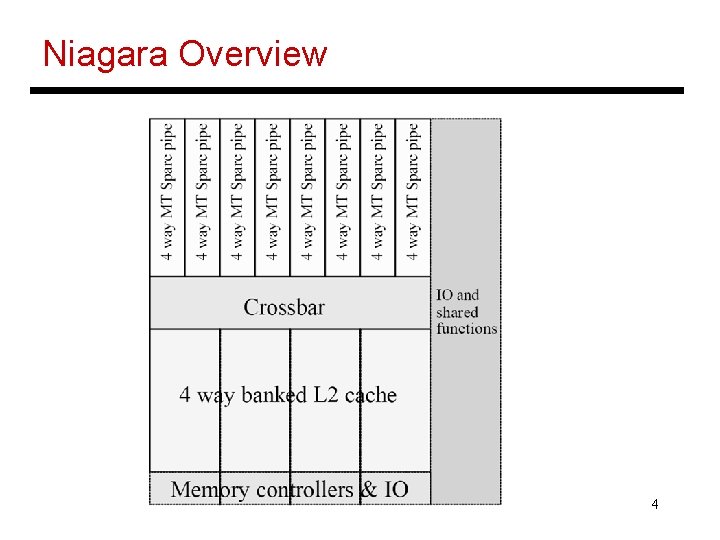

Niagara Overview

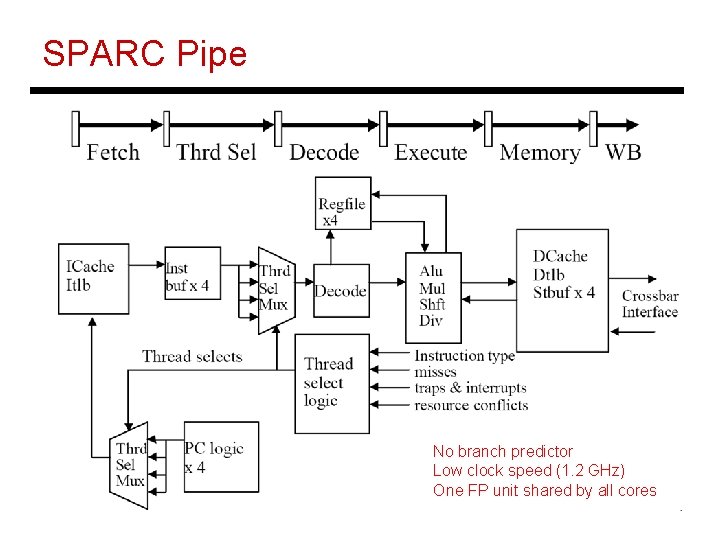

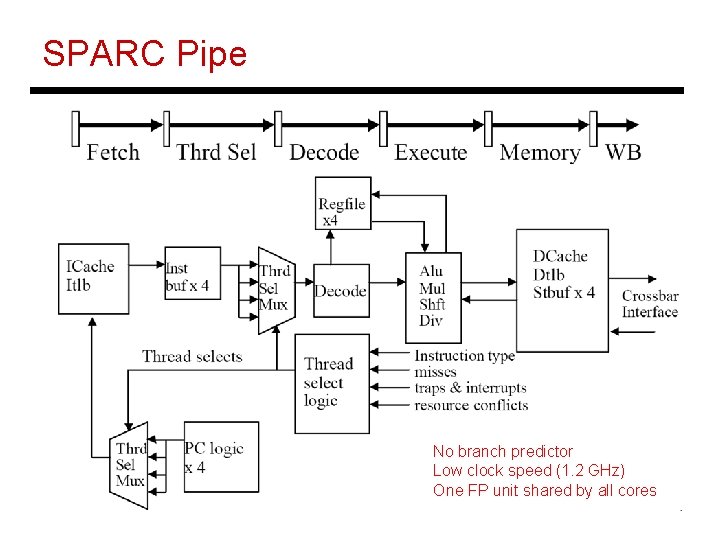

SPARC Pipe
• No branch predictor
• Low clock speed (1.2 GHz)
• One FP unit shared by all cores

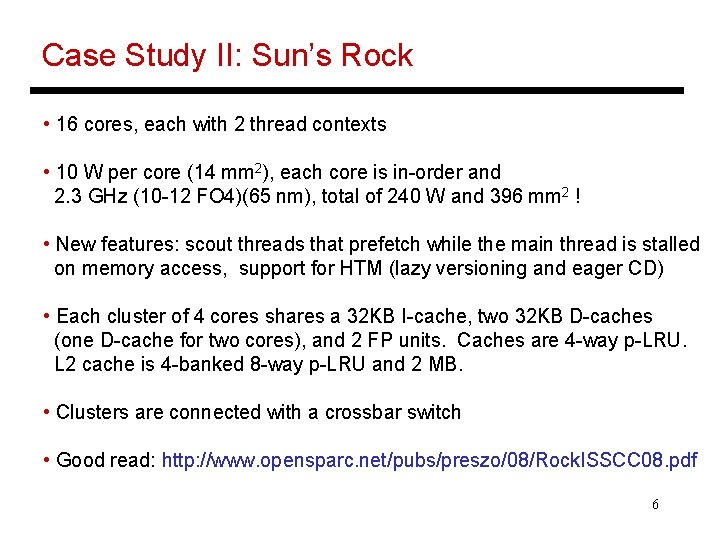

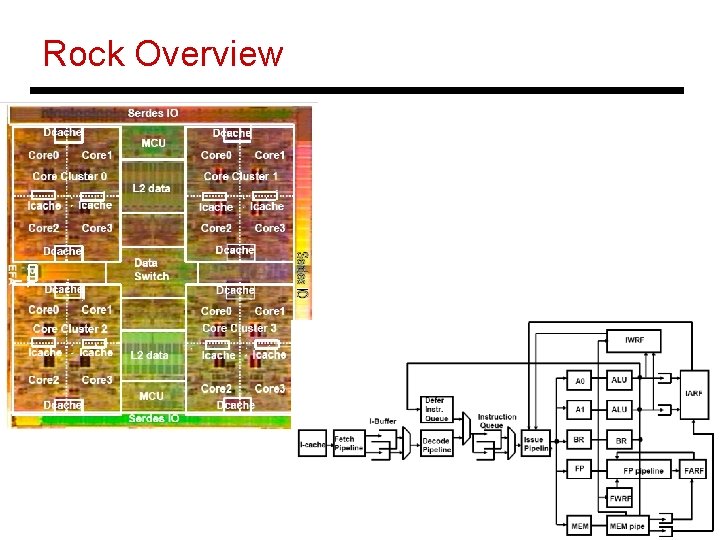

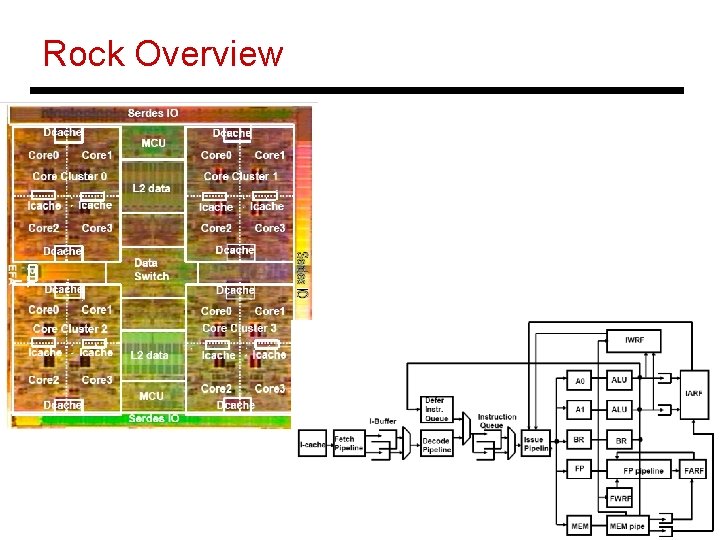

Case Study II: Sun’s Rock
• 16 cores, each with 2 thread contexts
• 10 W per core (14 mm²), each core is in-order and 2.3 GHz (10-12 FO4) (65 nm), total of 240 W and 396 mm²!
• New features: scout threads that prefetch while the main thread is stalled on memory access, support for HTM (lazy versioning and eager CD)
• Each cluster of 4 cores shares a 32 KB I-cache, two 32 KB D-caches (one D-cache for two cores), and 2 FP units. Caches are 4-way p-LRU. L2 cache is 4-banked 8-way p-LRU and 2 MB.
• Clusters are connected with a crossbar switch
• Good read: http://www.opensparc.net/pubs/preszo/08/Rock.ISSCC08.pdf

Rock Overview

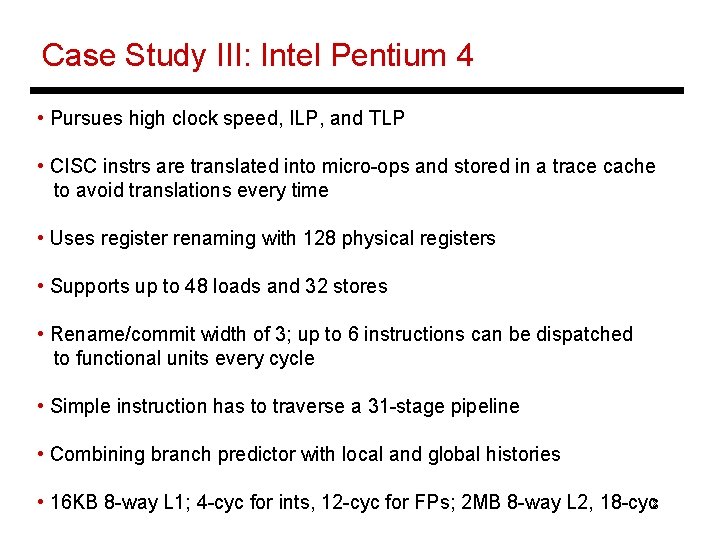

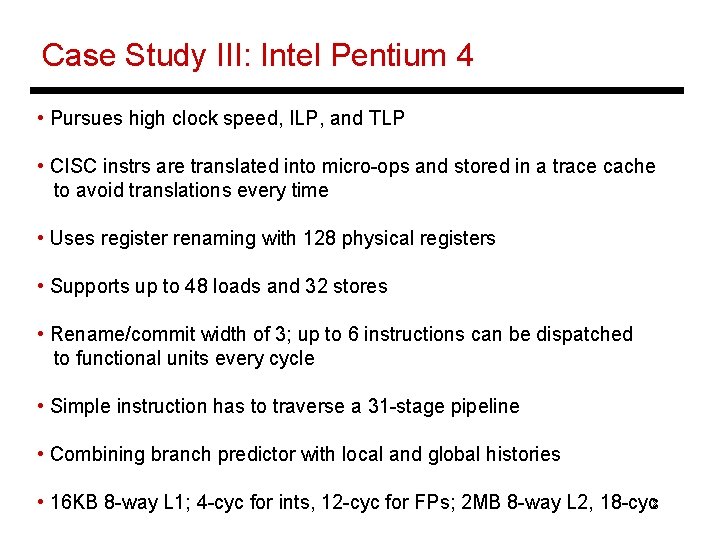

Case Study III: Intel Pentium 4
• Pursues high clock speed, ILP, and TLP
• CISC instrs are translated into micro-ops and stored in a trace cache to avoid translations every time
• Uses register renaming with 128 physical registers
• Supports up to 48 loads and 32 stores
• Rename/commit width of 3; up to 6 instructions can be dispatched to functional units every cycle
• Simple instruction has to traverse a 31-stage pipeline
• Combining branch predictor with local and global histories
• 16 KB 8-way L1; 4-cyc for ints, 12-cyc for FPs; 2 MB 8-way L2, 18-cyc

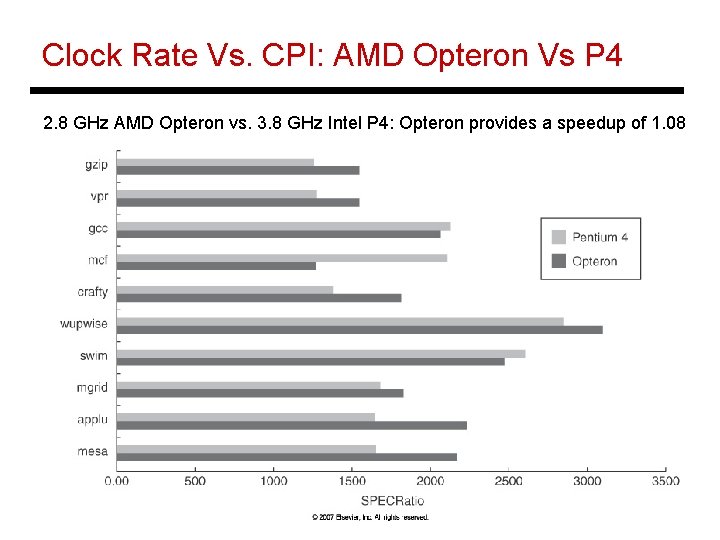

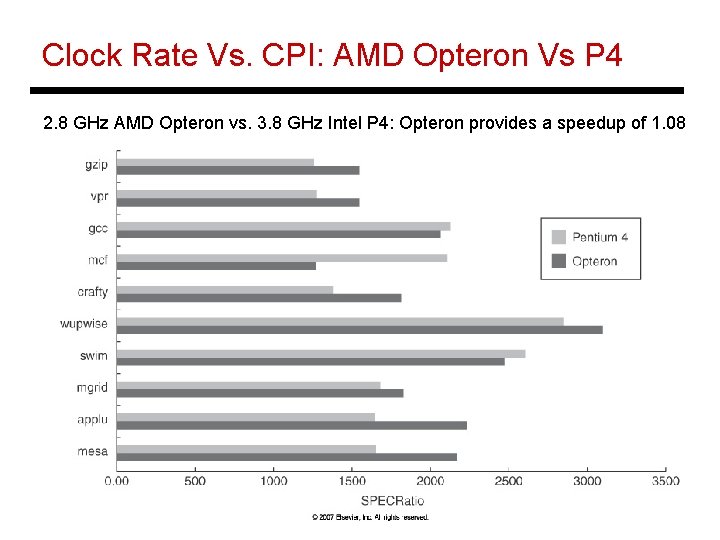

Clock Rate vs. CPI: AMD Opteron vs. P4
2.8 GHz AMD Opteron vs. 3.8 GHz Intel P4: Opteron provides a speedup of 1.08
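The comparison can be turned into an implied CPI ratio with a short calculation. The sketch below assumes both processors execute the same number of instructions for the benchmark, so that execution time = instruction count × CPI / clock rate; that assumption and the helper name `implied_cpi_ratio` are not from the slides.

```python
def implied_cpi_ratio(speedup, clock_fast_ghz, clock_slow_ghz):
    """CPI of the higher-clocked chip divided by CPI of the lower-clocked chip.

    Assumes equal instruction counts, so time = instructions * CPI / clock.
    `speedup` is time(higher-clocked chip) / time(lower-clocked chip).
    """
    # speedup = (CPI_fast / f_fast) / (CPI_slow / f_slow)
    # => CPI_fast / CPI_slow = speedup * f_fast / f_slow
    return speedup * clock_fast_ghz / clock_slow_ghz


ratio = implied_cpi_ratio(speedup=1.08, clock_fast_ghz=3.8, clock_slow_ghz=2.8)
print(f"CPI(P4) / CPI(Opteron) = {ratio:.2f}")  # prints 1.47
```

Under that assumption, the P4 needs roughly 1.47 times as many cycles per instruction as the Opteron, which more than cancels its clock-rate advantage.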

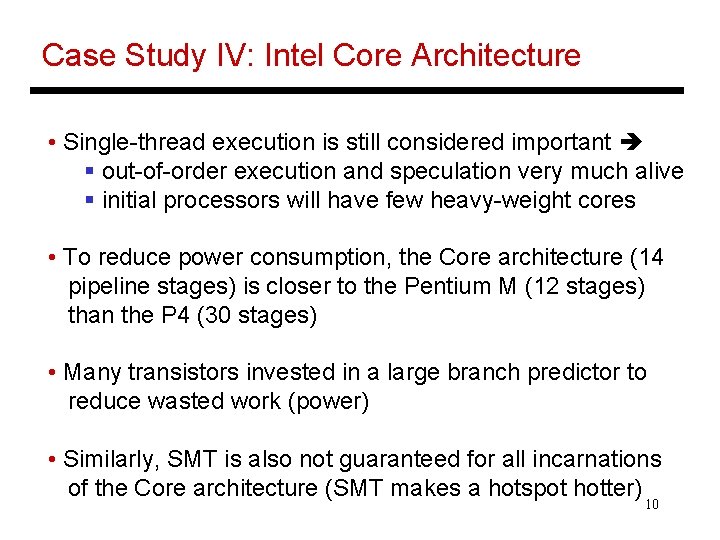

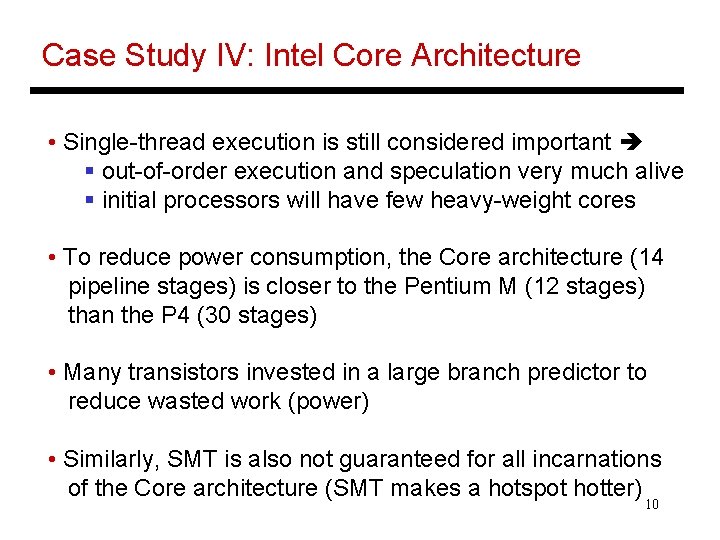

Case Study IV: Intel Core Architecture
• Single-thread execution is still considered important
  § out-of-order execution and speculation very much alive
  § initial processors will have few heavy-weight cores
• To reduce power consumption, the Core architecture (14 pipeline stages) is closer to the Pentium M (12 stages) than the P4 (30 stages)
• Many transistors invested in a large branch predictor to reduce wasted work (power)
• Similarly, SMT is also not guaranteed for all incarnations of the Core architecture (SMT makes a hotspot hotter)

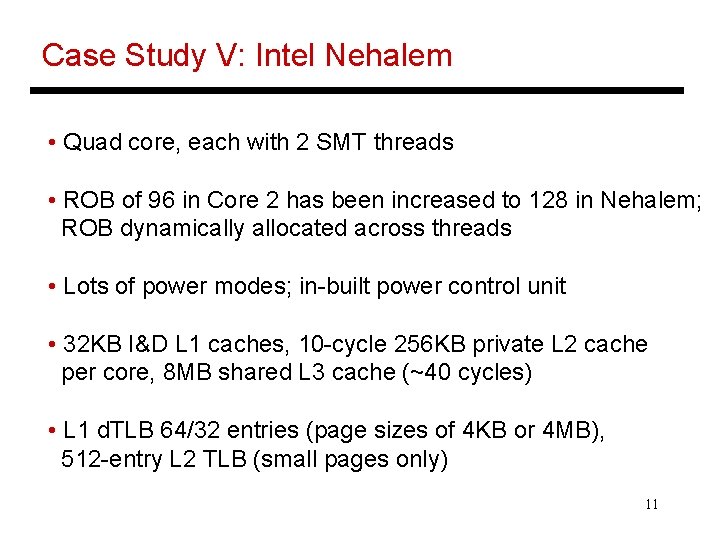

Case Study V: Intel Nehalem
• Quad core, each with 2 SMT threads
• ROB of 96 in Core 2 has been increased to 128 in Nehalem; ROB dynamically allocated across threads
• Lots of power modes; in-built power control unit
• 32 KB I&D L1 caches, 10-cycle 256 KB private L2 cache per core, 8 MB shared L3 cache (~40 cycles)
• L1 dTLB 64/32 entries (page sizes of 4 KB or 4 MB), 512-entry L2 TLB (small pages only)
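A quick way to put the TLB sizes in context is to compute TLB reach, the amount of memory that can be mapped without a TLB miss. The sketch below reads "64/32 entries" as 64 entries for 4 KB pages and 32 entries for 4 MB pages; that reading and the helper name `tlb_reach` are assumptions.

```python
KB = 1024
MB = 1024 * KB


def tlb_reach(entries, page_size_bytes):
    """Bytes of memory that a fully populated TLB can map without a miss."""
    return entries * page_size_bytes


l1_small = tlb_reach(64, 4 * KB)    # L1 dTLB, small pages
l1_large = tlb_reach(32, 4 * MB)    # L1 dTLB, large pages
l2_small = tlb_reach(512, 4 * KB)   # L2 TLB, small pages only

print(f"L1 dTLB reach (4 KB pages): {l1_small // KB} KB")
print(f"L1 dTLB reach (4 MB pages): {l1_large // MB} MB")
print(f"L2 TLB reach  (4 KB pages): {l2_small // MB} MB")
```

Under this reading, the small-page L1 dTLB reach is 256 KB (the same as the private L2 cache capacity), the large-page reach is 128 MB, and the L2 TLB reach of 2 MB is smaller than the 8 MB shared L3.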

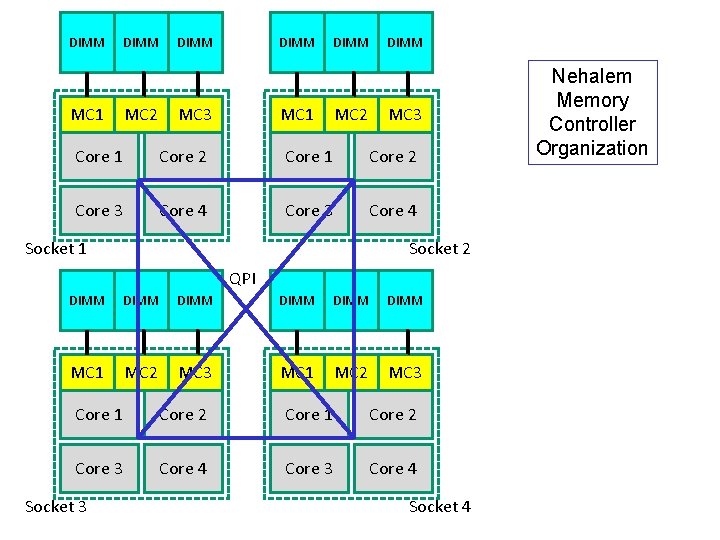

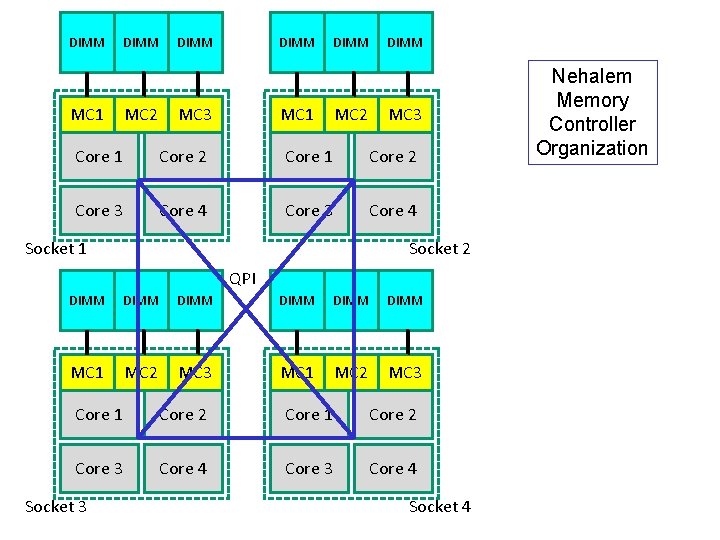

Nehalem Memory Controller Organization
[Figure: Sockets 1-4 connected by QPI; labeled components include Core 1-4, memory controllers MC1-MC3, and the DIMMs attached to them]

Flash Memory
• Technology cost-effective enough that flash memory can now replace magnetic disks on laptops (also known as solid-state disks)
• Non-volatile, fast read times (15 MB/sec) (slower than DRAM); a write requires an entire block to be erased first (about 100K erases are possible) (block sizes can be 16-512 KB)
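The erase-before-write constraint can be illustrated with a toy model of a single flash block. The 100K erase limit is taken from the slide; the class `FlashBlock`, its methods, and the pages-per-block value are hypothetical and do not correspond to any real device interface.

```python
class FlashBlock:
    """Toy model of one flash block (hypothetical interface, not a real API)."""

    def __init__(self, pages_per_block=64, max_erases=100_000):
        self.pages = [None] * pages_per_block   # None means "erased"
        self.erase_count = 0
        self.max_erases = max_erases

    def erase(self):
        """Erase the whole block; each erase wears the block out a little."""
        if self.erase_count >= self.max_erases:
            raise RuntimeError("block worn out")
        self.pages = [None] * len(self.pages)
        self.erase_count += 1

    def program(self, page, data):
        """Write a page, which is only legal if the page is currently erased."""
        if self.pages[page] is not None:
            raise ValueError("page must be erased before it is written")
        self.pages[page] = data

    def overwrite(self, page, data):
        """Update one page in place: copy out, erase the block, rewrite all."""
        saved = list(self.pages)
        saved[page] = data
        self.erase()
        for i, value in enumerate(saved):
            if value is not None:
                self.program(i, value)


block = FlashBlock(pages_per_block=4)
block.program(0, "a")
block.program(1, "b")
block.overwrite(0, "c")   # costs a full block erase plus two page writes
print(block.pages, block.erase_count)
```

Updating a single page this way costs one erase of the entire block, which is why the erase limit matters; real devices typically reduce that cost by writing the new data to a different, already-erased location and remapping.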

Advanced Course
• Spr ’09: CS 7810: Advanced Computer Architecture
  § co-taught by Al Davis and me
  § lots of multi-core topics: cache coherence, TM, networks
  § memory technologies: DRAM layouts, new technologies, memory controller design
  § Major course project on evaluating original ideas with simulators (can lead to publications)
  § One programming assignment, take-home final

Case Studies: More Processors
• AMD Barcelona: 4 cores, issue width of 3, each core has private L1 (64 KB) and L2 (512 KB), shared L3 (2 MB), 95 W (AMD also has announcements for 3-core chips)
• Sun Niagara 2: 8 threads per core, up to 8 cores, 60-123 W, 0.9-1.4 GHz, 4 MB L2 (8 banks), 8 FP units
• IBM Power 6: 2 cores, 4.7 GHz, each core has a private 4 MB L2

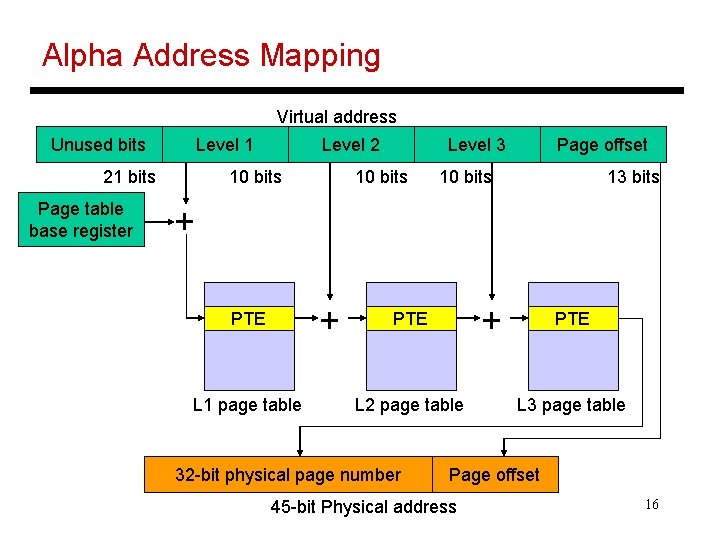

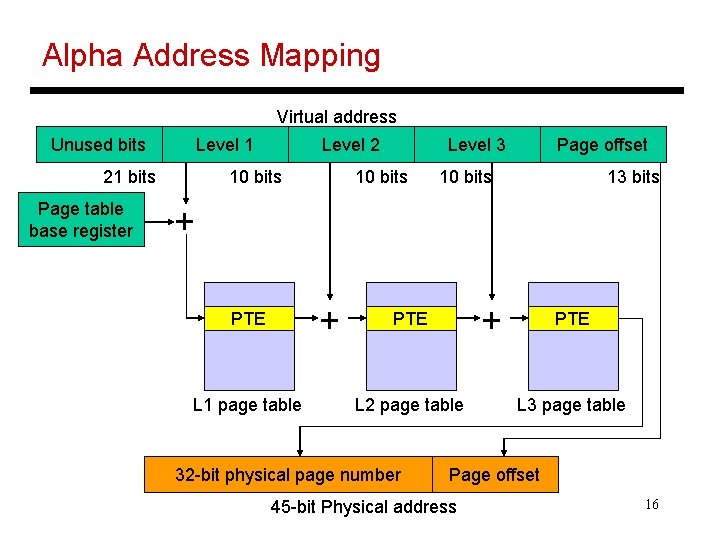

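Alpha Address Mapping
[Figure: the virtual address is split into unused bits (21 bits), Level 1 index (10 bits), Level 2 index (10 bits), Level 3 index (10 bits), and page offset (13 bits). The page table base register plus the Level 1 index selects a PTE in the L1 page table; that PTE plus the Level 2 index selects a PTE in the L2 page table; that PTE plus the Level 3 index selects a PTE in the L3 page table, which supplies a 32-bit physical page number. The physical page number and the page offset form the 45-bit physical address.]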

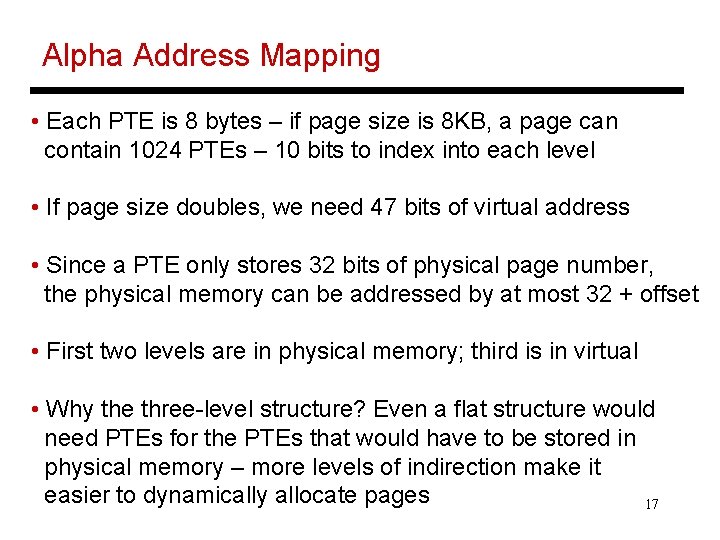

Alpha Address Mapping
• Each PTE is 8 bytes: if page size is 8 KB, a page can contain 1024 PTEs, so 10 bits index into each level
• If page size doubles, we need 47 bits of virtual address (a 16 KB page holds 2048 PTEs, giving 3 × 11 index bits plus 14 offset bits)
• Since a PTE only stores 32 bits of physical page number, a physical address can have at most 32 + offset bits (45 bits with 8 KB pages)
• First two levels are in physical memory; third is in virtual
• Why the three-level structure? Even a flat structure would need PTEs for the PTEs that would have to be stored in physical memory; more levels of indirection make it easier to dynamically allocate pages
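The bit counts above follow from the page size and PTE size, and can be checked with a short calculation. The function names `page_table_layout` and `split_virtual_address` are hypothetical helpers written for this sketch; page sizes are assumed to be powers of two.

```python
def page_table_layout(page_size_bytes, pte_bytes=8, levels=3, ppn_bits=32):
    """Derive address-field widths for a multi-level page table."""
    offset_bits = page_size_bytes.bit_length() - 1     # log2 of the page size
    ptes_per_page = page_size_bytes // pte_bytes
    index_bits = ptes_per_page.bit_length() - 1        # log2 of PTEs per page
    return {
        "ptes_per_page": ptes_per_page,
        "index_bits": index_bits,
        "offset_bits": offset_bits,
        "virtual_bits": levels * index_bits + offset_bits,
        "physical_bits": ppn_bits + offset_bits,
    }


def split_virtual_address(va, index_bits=10, offset_bits=13, levels=3):
    """Split a virtual address into per-level indices and a page offset."""
    offset = va & ((1 << offset_bits) - 1)
    indices = []
    for level in range(levels):
        shift = offset_bits + (levels - 1 - level) * index_bits
        indices.append((va >> shift) & ((1 << index_bits) - 1))
    return indices, offset


layout_8k = page_table_layout(8 * 1024)
layout_16k = page_table_layout(16 * 1024)
print(layout_8k)    # 1024 PTEs, 10 index bits, 43 virtual bits, 45 physical bits
print(layout_16k)   # 2048 PTEs, 11 index bits, 47 virtual bits, 46 physical bits

va = (1 << 33) | (2 << 23) | (3 << 13) | 5
print(split_virtual_address(va))   # ([1, 2, 3], 5)
```

With 8 KB pages, the three 10-bit indices and the 13-bit offset use 43 of the 64 virtual address bits, which leaves the 21 unused bits shown in the figure.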
