In Datacenter Performance, The Only Constant Is Change

- Slides: 25

In Datacenter Performance, The Only Constant Is Change

Dmitry Duplyakin, Alexandru Uta, Aleksander Maricq, Robert Ricci

20th IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing, CCGrid 2020 (presented in May 2021)

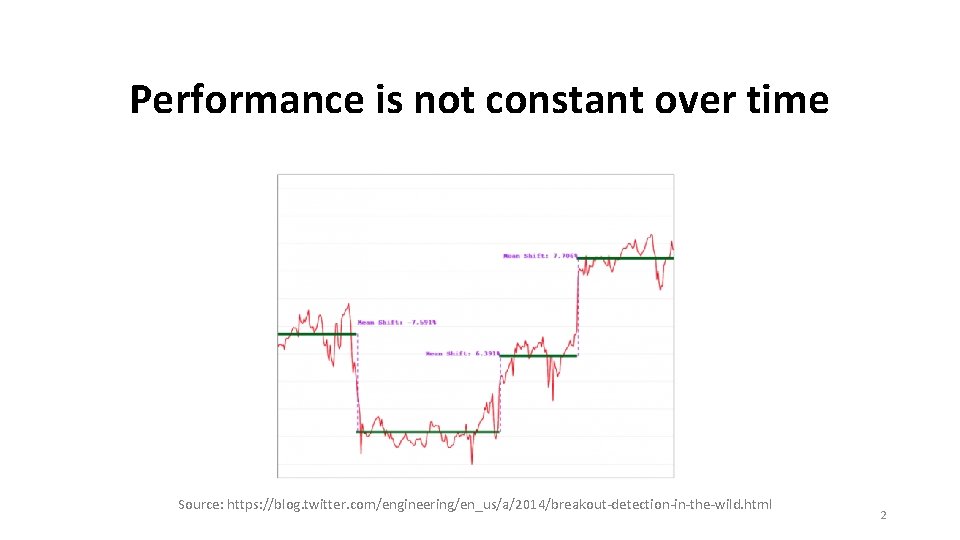

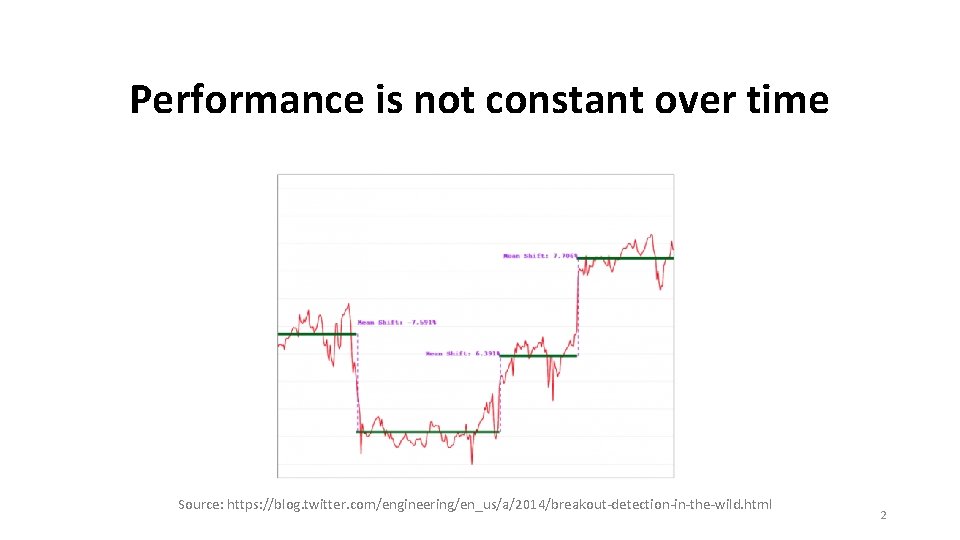

Performance is not constant over time

Source: https://blog.twitter.com/engineering/en_us/a/2014/breakout-detection-in-the-wild.html

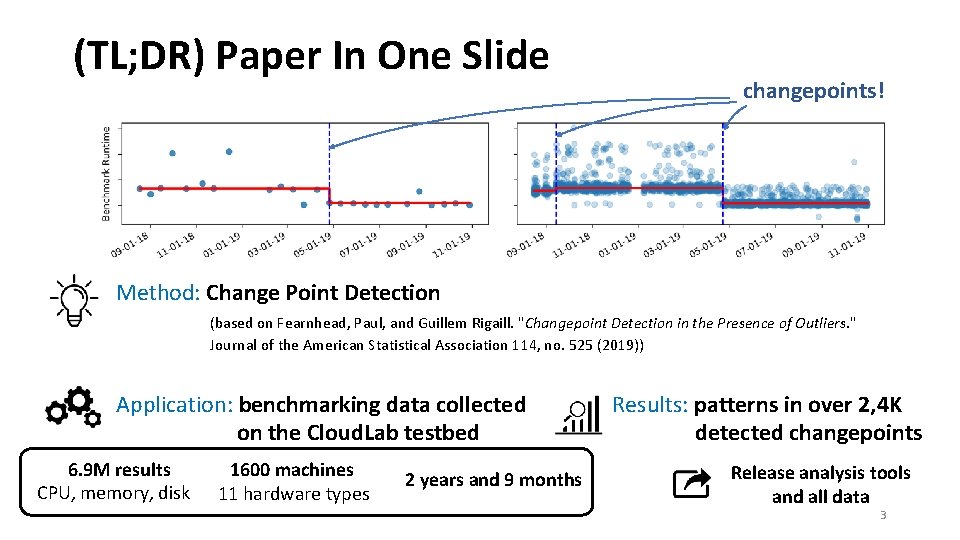

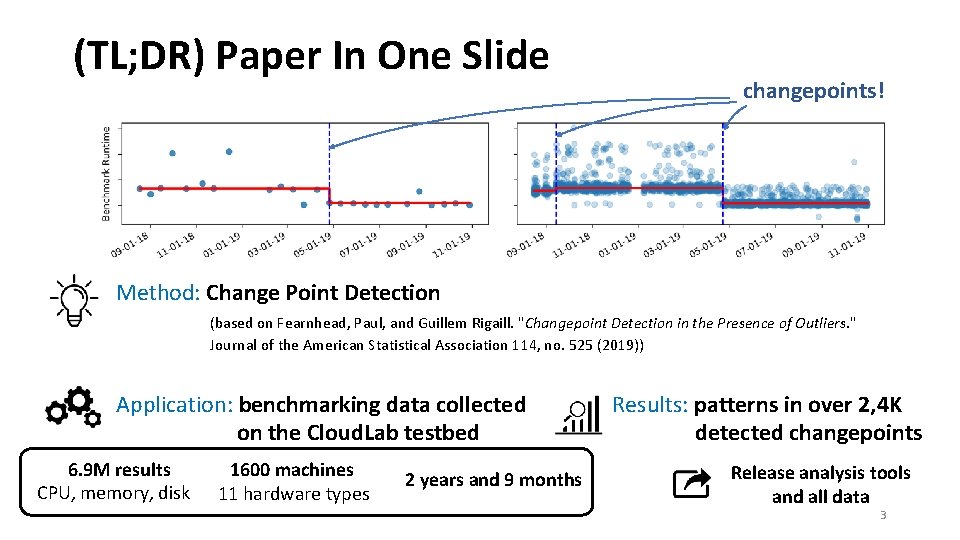

(TL;DR) Paper In One Slide

- Method: Change Point Detection (based on Fearnhead, Paul, and Guillem Rigaill. "Changepoint Detection in the Presence of Outliers." Journal of the American Statistical Association 114, no. 525 (2019))
- Application: benchmarking data collected on the CloudLab testbed
  - 6.9 M results
  - CPU, memory, disk
  - 1600 machines
  - 11 hardware types
  - 2 years and 9 months
- Results: patterns in over 2.4 K detected changepoints
- Release analysis tools and all data

Figure callout: changepoints!

Outline

- What performance numbers did we collect & study?
- Where did we collect the data?
- What changepoint detection method did we use?
- What are the key results and what did we learn from them?

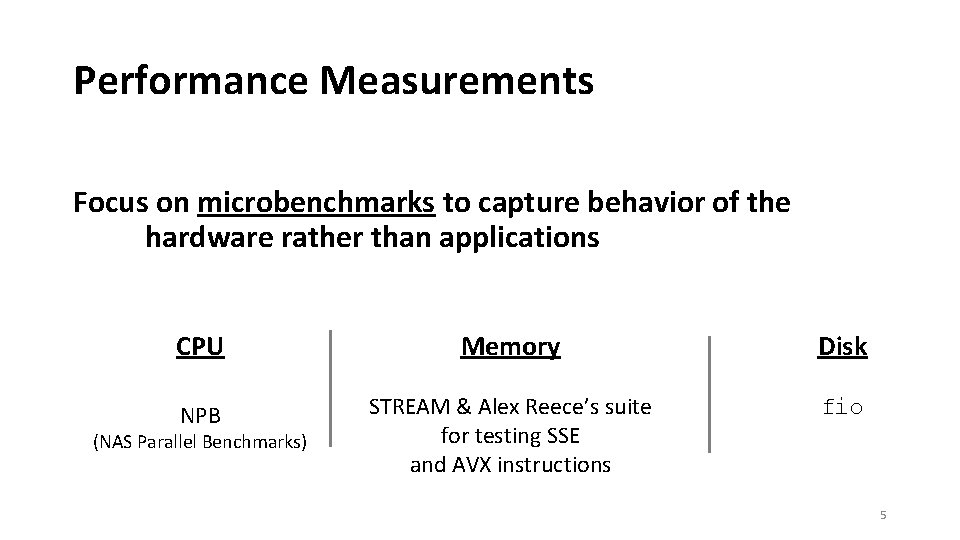

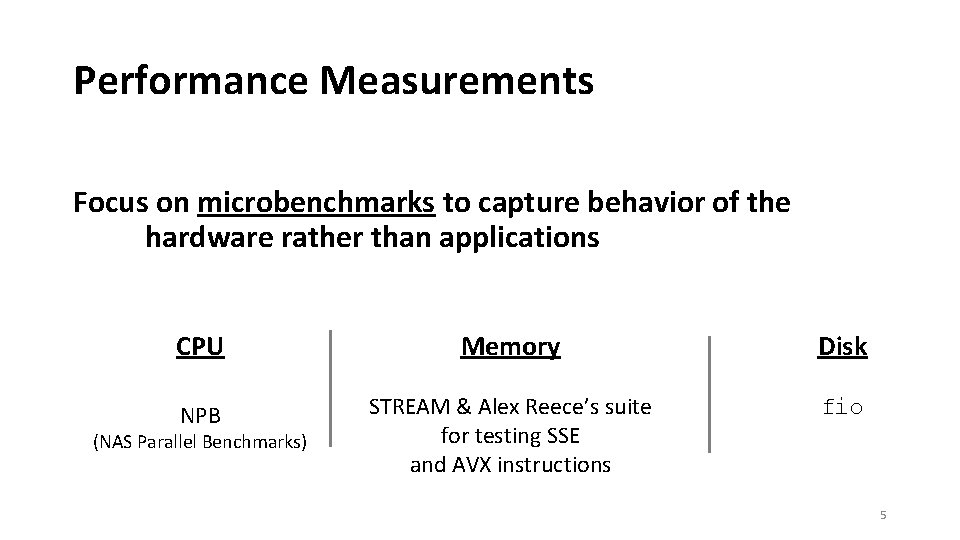

Performance Measurements

Focus on microbenchmarks to capture behavior of the hardware rather than applications:

- CPU: NPB (NAS Parallel Benchmarks)
- Memory: STREAM & Alex Reece’s suite for testing SSE and AVX instructions
- Disk: fio

Where did we collect the data?

On CloudLab. It is a distributed testbed for cloud computing and systems research, where experiments are run on real, physical hardware (not Virtual Machines). No interference!

More at: https://cloudlab.us and in the paper pictured on the slide.

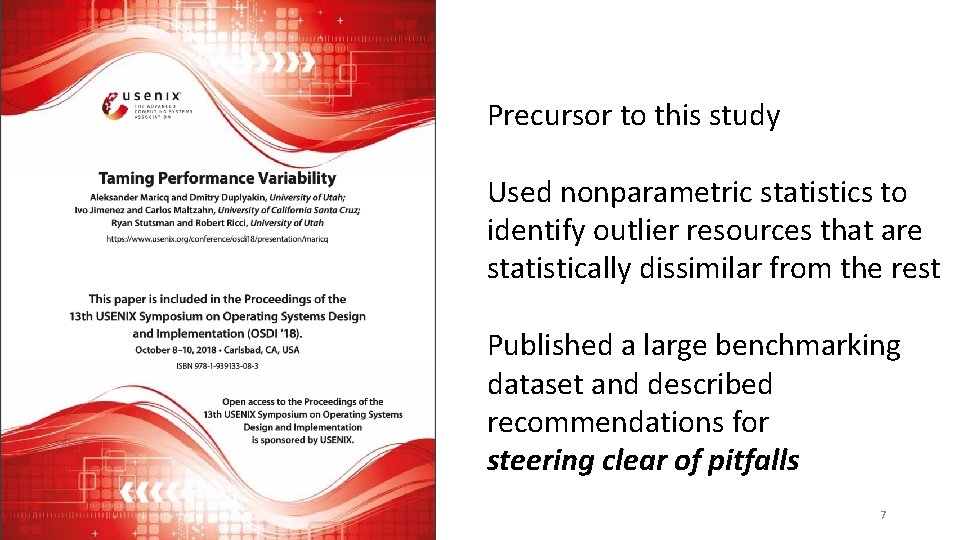

Precursor to this study

- Used nonparametric statistics to identify outlier resources that are statistically dissimilar from the rest
- Published a large benchmarking dataset and described recommendations for steering clear of pitfalls

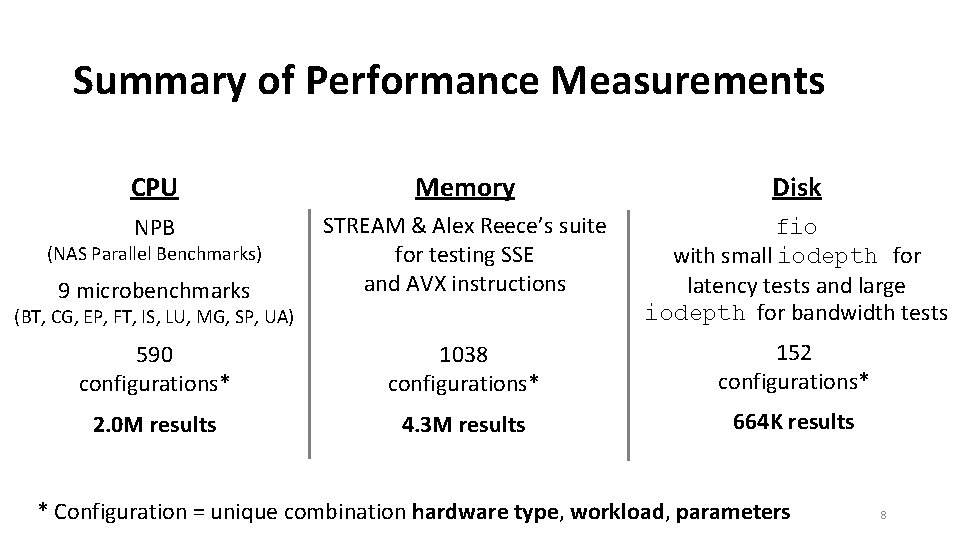

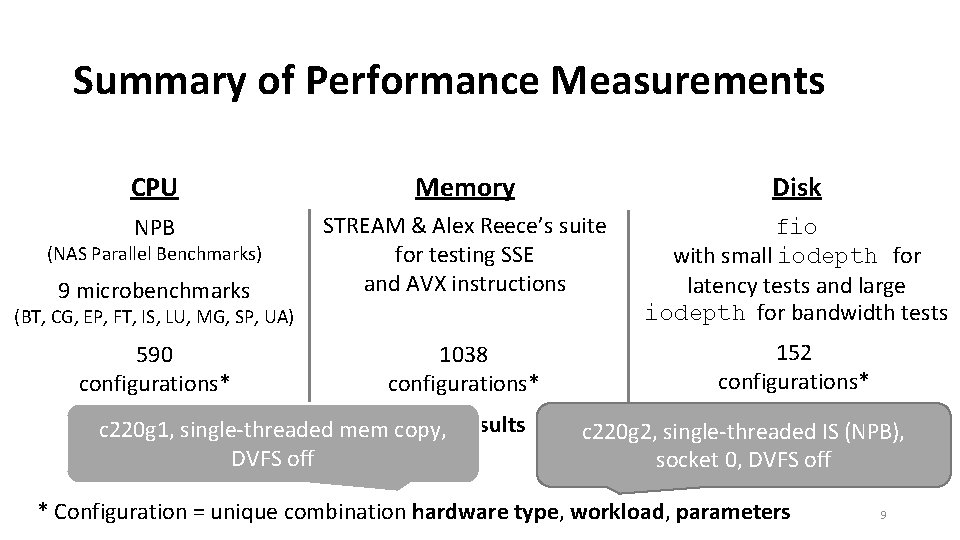

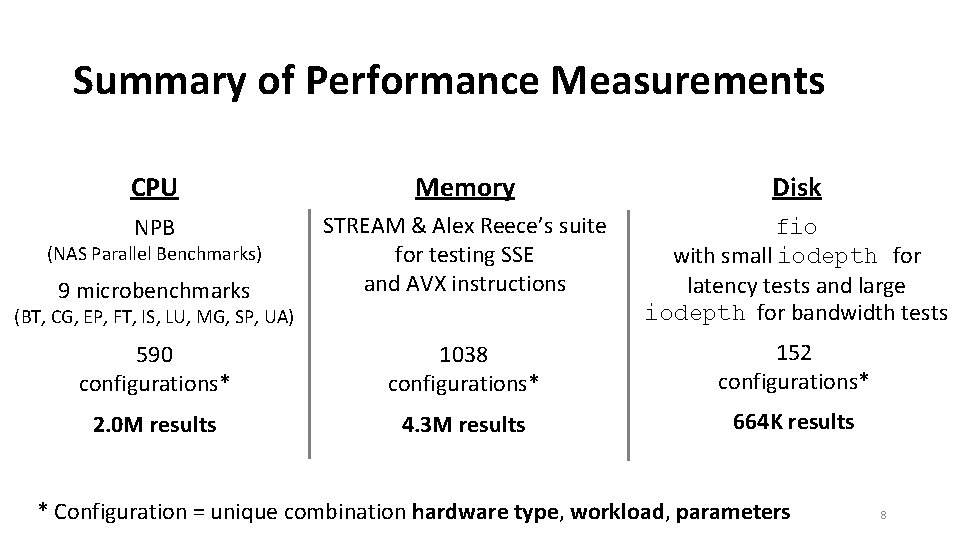

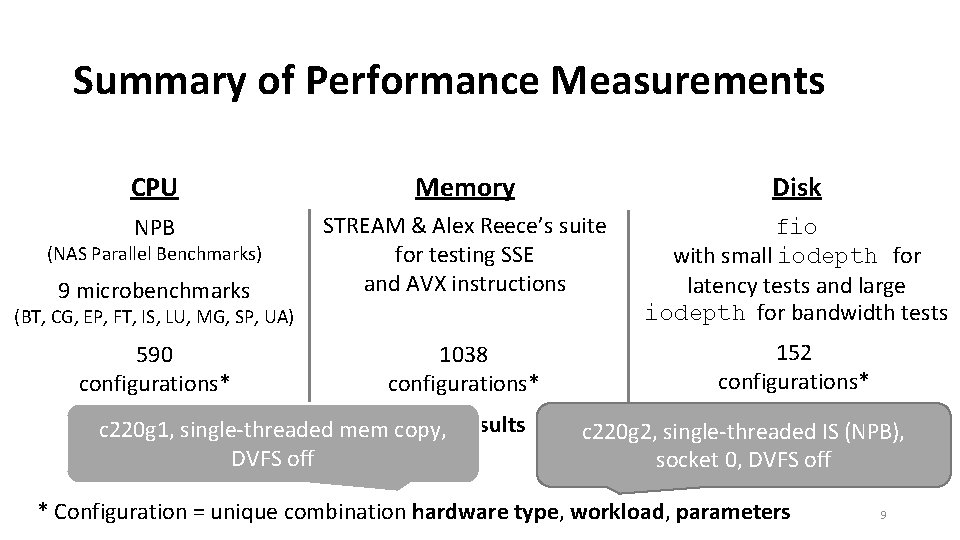

Summary of Performance Measurements

| | CPU | Memory | Disk |
|---|---|---|---|
| Benchmarks | NPB (NAS Parallel Benchmarks): 9 microbenchmarks (BT, CG, EP, FT, IS, LU, MG, SP, UA) | STREAM & Alex Reece’s suite for testing SSE and AVX instructions | fio with small iodepth for latency tests and large iodepth for bandwidth tests |
| Configurations* | 590 | 1038 | 152 |
| Results | 2.0 M | 4.3 M | 664 K |

\* Configuration = unique combination of hardware type, workload, and parameters

Summary of Performance Measurements (continued)

Example configurations plotted on this slide:

- Memory: c220g1, single-threaded mem copy, DVFS off
- CPU: c220g2, single-threaded IS (NPB), socket 0, DVFS off

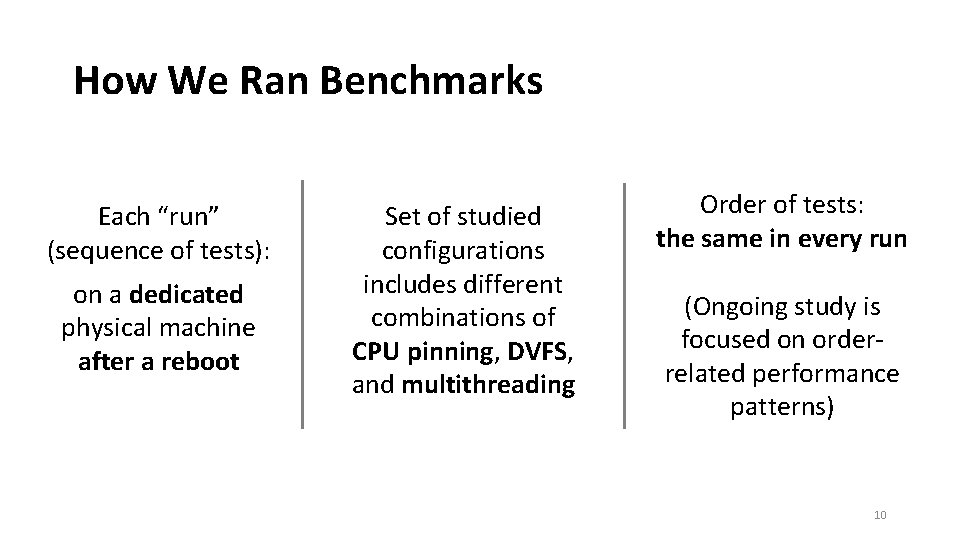

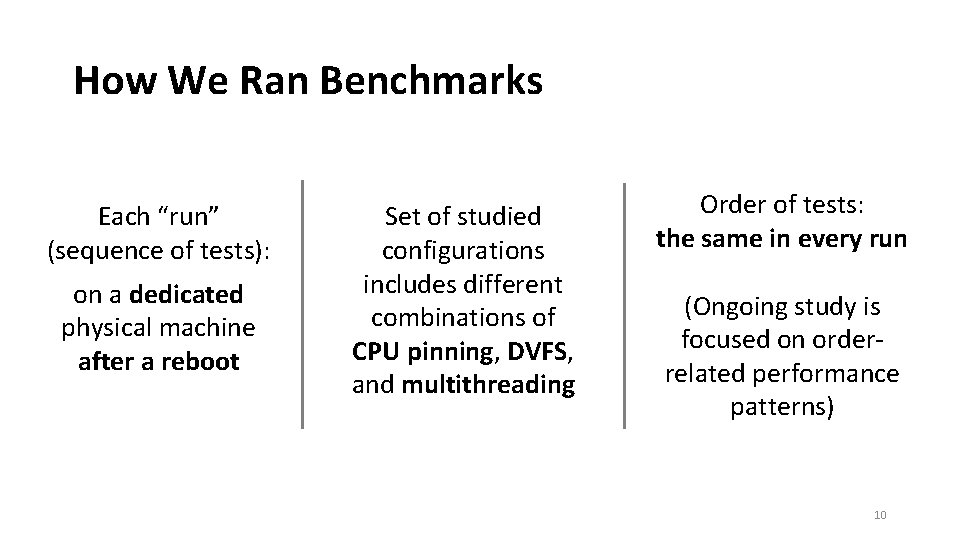

How We Ran Benchmarks

- Each “run” (sequence of tests) executes on a dedicated physical machine after a reboot
- The set of studied configurations includes different combinations of CPU pinning, DVFS, and multithreading
- Order of tests: the same in every run (an ongoing study is focused on order-related performance patterns)

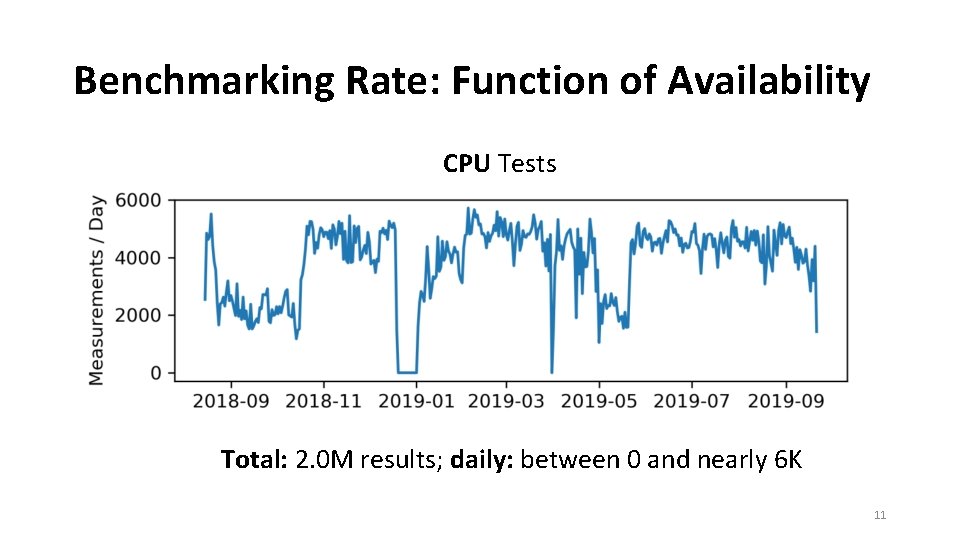

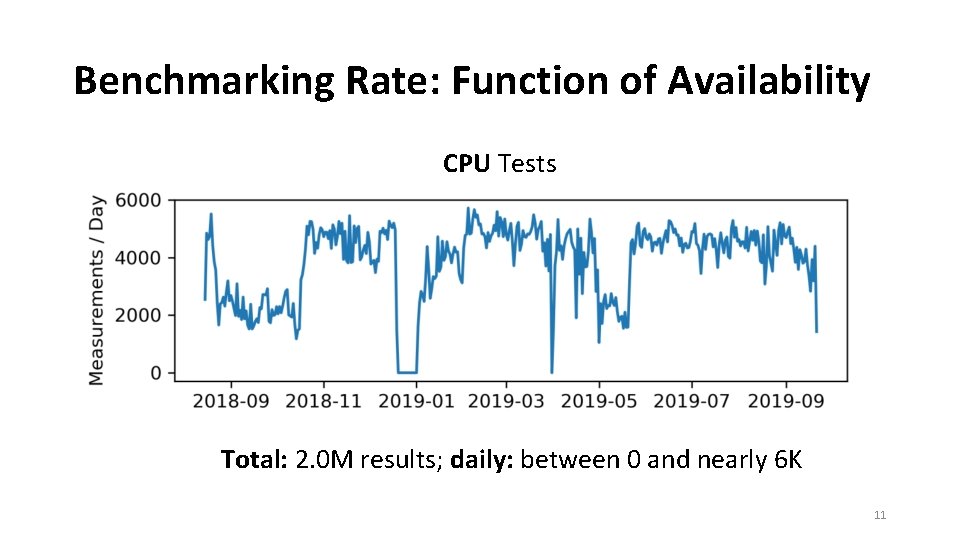

Benchmarking Rate: Function of Availability

CPU tests: 2.0 M results in total; daily count between 0 and nearly 6 K

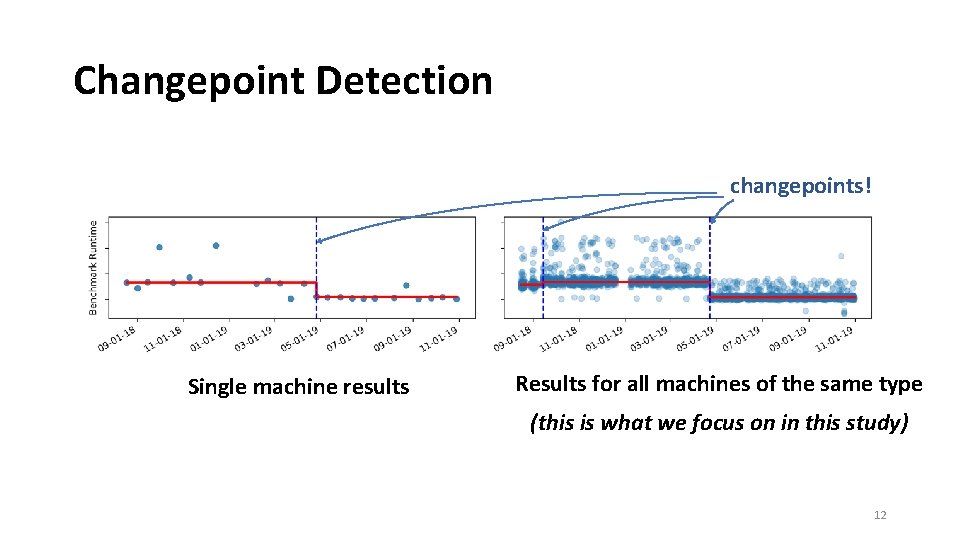

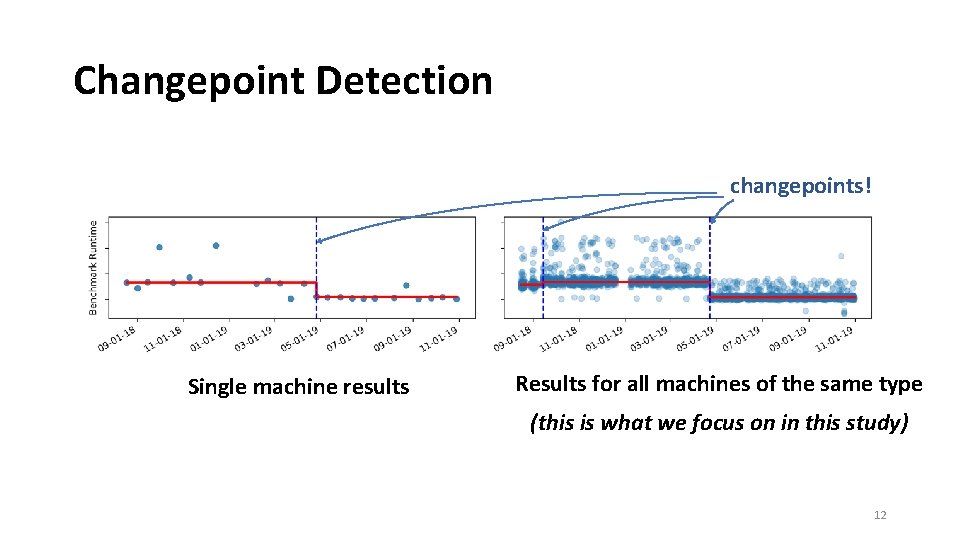

Changepoint Detection

- Single machine results
- Results for all machines of the same type (this is what we focus on in this study)

Figure callout: changepoints!

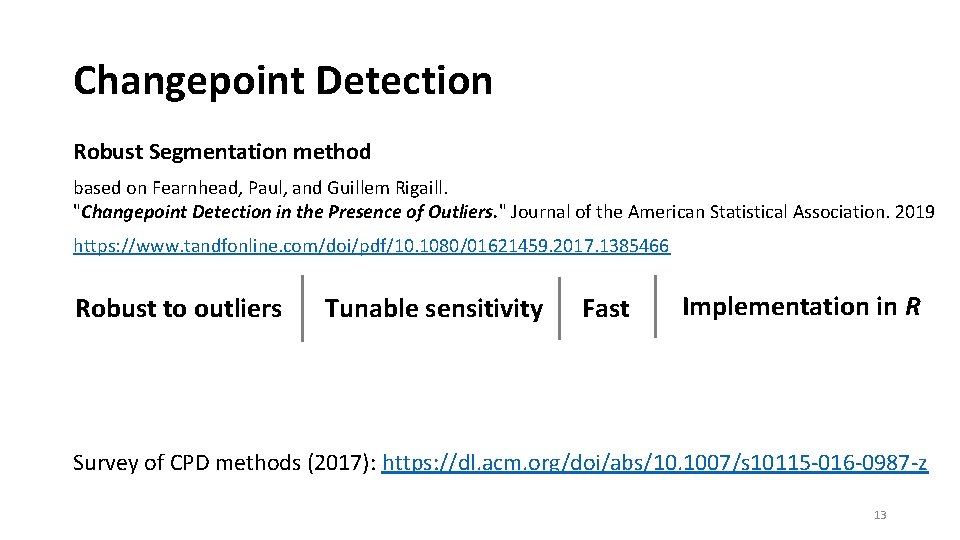

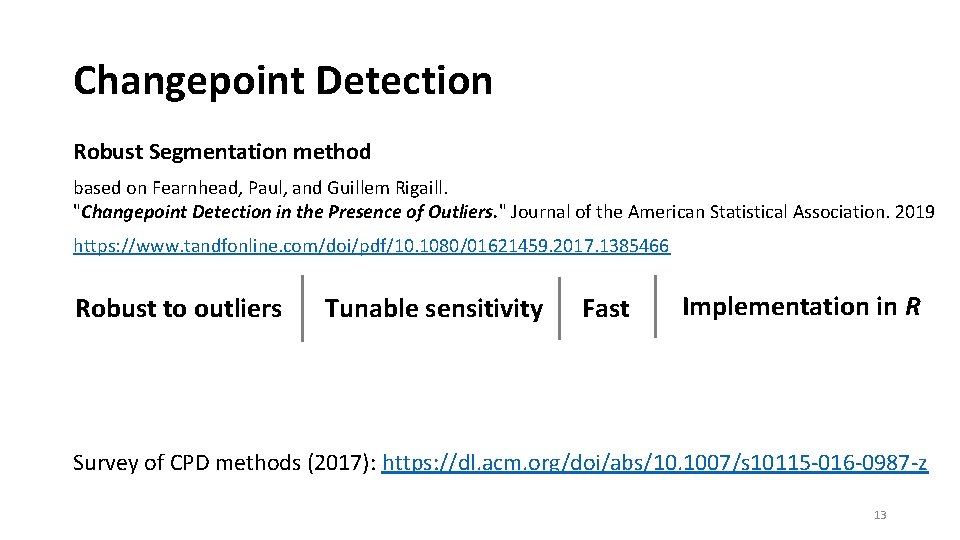

Changepoint Detection

Robust Segmentation method based on Fearnhead, Paul, and Guillem Rigaill. "Changepoint Detection in the Presence of Outliers." Journal of the American Statistical Association. 2019. https://www.tandfonline.com/doi/pdf/10.1080/01621459.2017.1385466

- Robust to outliers
- Tunable sensitivity
- Fast
- Implementation in R

Survey of CPD methods (2017): https://dl.acm.org/doi/abs/10.1007/s10115-016-0987-z

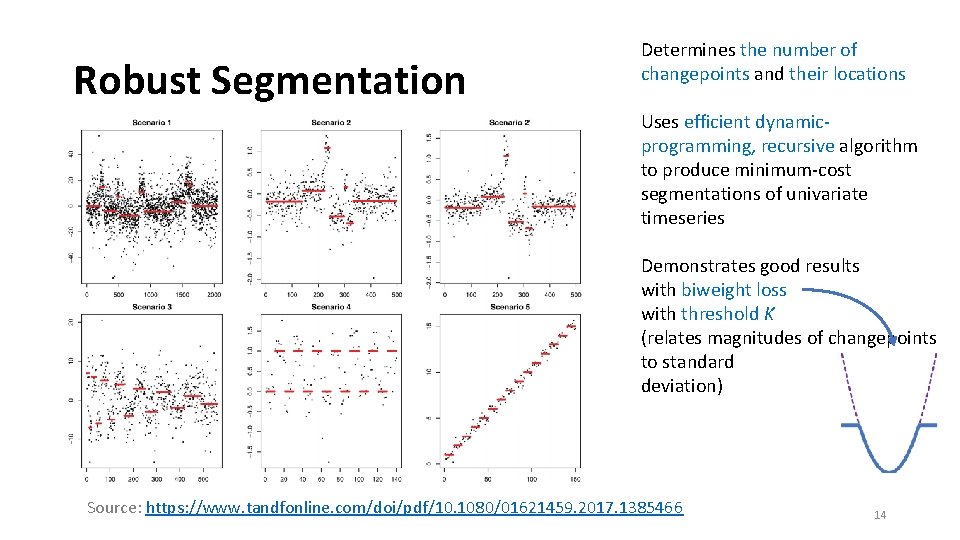

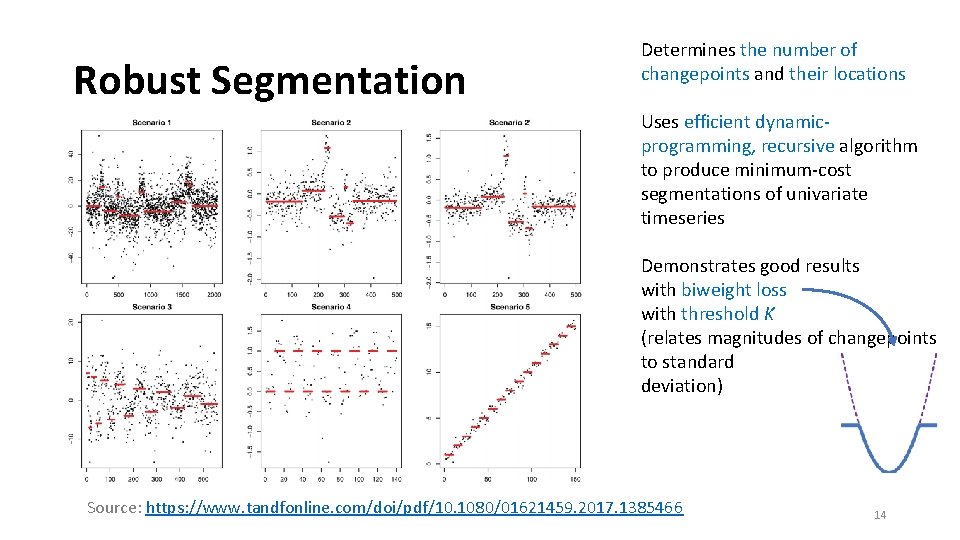

Robust Segmentation

- Determines the number of changepoints and their locations
- Uses an efficient, recursive dynamic-programming algorithm to produce minimum-cost segmentations of univariate time series
- Demonstrates good results with biweight loss with threshold K (relates magnitudes of changepoints to standard deviation)

Source: https://www.tandfonline.com/doi/pdf/10.1080/01621459.2017.1385466
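To make the idea of a minimum-cost segmentation with biweight loss concrete, here is a minimal Python sketch. It is not the authors' R implementation and not the published algorithm, which optimizes segment levels exactly and far more efficiently. The names `biweight_loss`, `robust_segmentation`, `beta` (per-changepoint penalty), and `grid_size` are assumptions made for illustration, as is the shortcut of choosing each segment level from a small grid of observed values. In this sketch K is expressed in the units of the data.

```python
def biweight_loss(residual, K):
    """Squared error capped at K**2, so a single outlier adds a bounded cost."""
    return min(residual * residual, K * K)


def robust_segmentation(y, K, beta, grid_size=25):
    """Return changepoint indices of a penalized minimum-cost segmentation.

    Simplifying assumption of this sketch: each segment's level is picked from
    a small grid of candidate values (evenly spaced order statistics of y)
    instead of being optimized exactly.
    """
    n = len(y)
    ordered = sorted(y)
    step = max(1, n // grid_size)
    grid = ordered[::step]

    # prefix[g][t] = total biweight loss of y[:t] against candidate level grid[g]
    prefix = []
    for level in grid:
        running = [0.0]
        for value in y:
            running.append(running[-1] + biweight_loss(value - level, K))
        prefix.append(running)

    best = [float("inf")] * (n + 1)  # best[t] = minimum cost of segmenting y[:t]
    best[0] = -beta                  # the first segment pays no changepoint penalty
    start = [0] * (n + 1)            # start[t] = where the last segment of y[:t] begins
    for t in range(1, n + 1):
        for s in range(t):
            # cheapest candidate level for the segment y[s:t]
            segment_cost = min(p[t] - p[s] for p in prefix)
            total = best[s] + segment_cost + beta
            if total < best[t]:
                best[t], start[t] = total, s

    # Walk back through segment starts; every start except 0 is a changepoint.
    changepoints = []
    t = n
    while start[t] > 0:
        t = start[t]
        changepoints.append(t)
    return changepoints[::-1]
```

Because the loss is capped at K squared, an isolated outlier can add at most that much to a segment's cost, so with a sufficiently large `beta` it is cheaper to absorb the outlier than to open a new segment around it. A sustained shift in level, by contrast, keeps accumulating cost until a changepoint is placed.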

Outline

- What is CloudLab?
- What performance numbers did we gather & study?
- What changepoint detection method did we use?
- What are the key results and what did we learn from them?

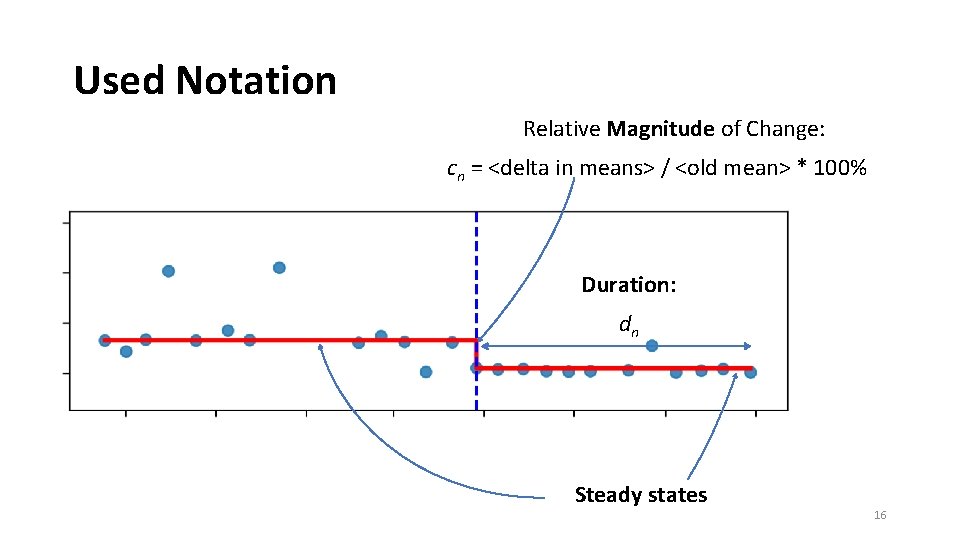

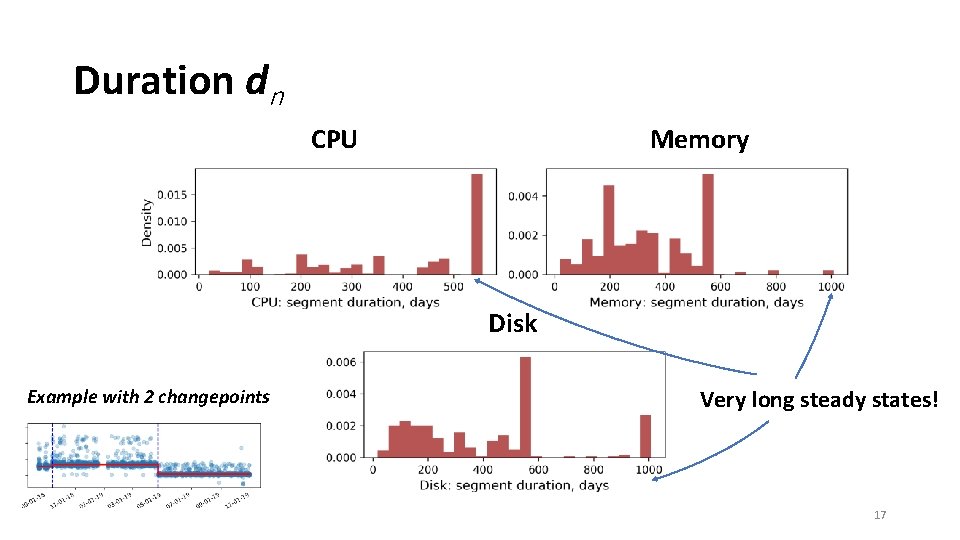

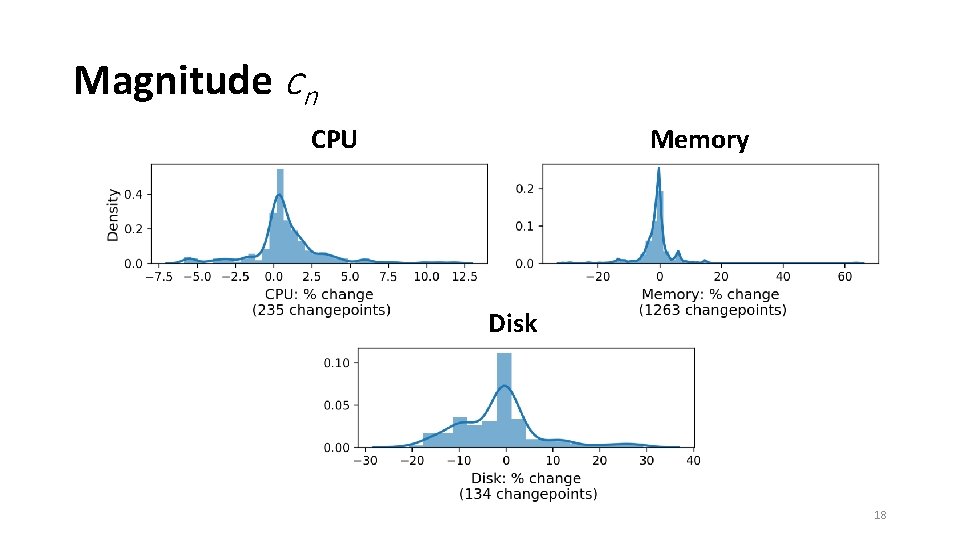

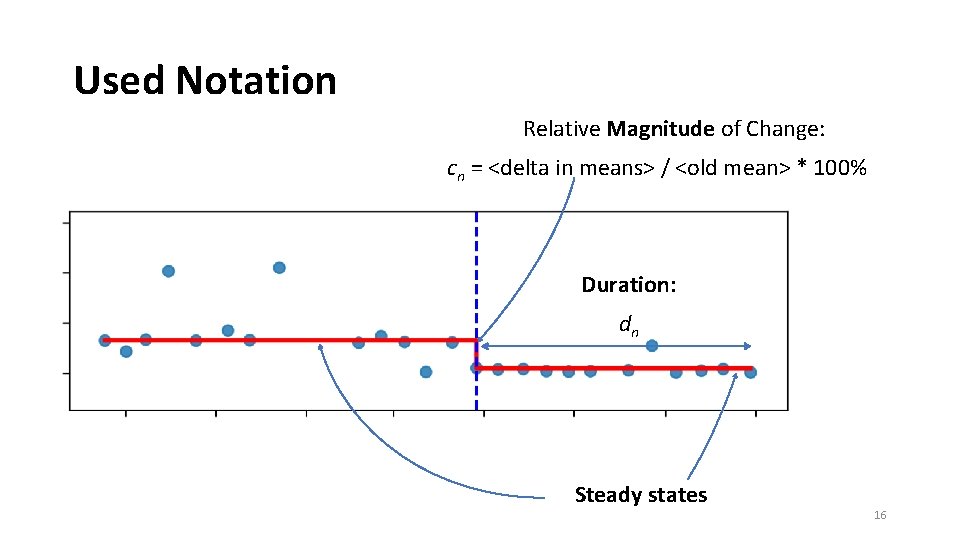

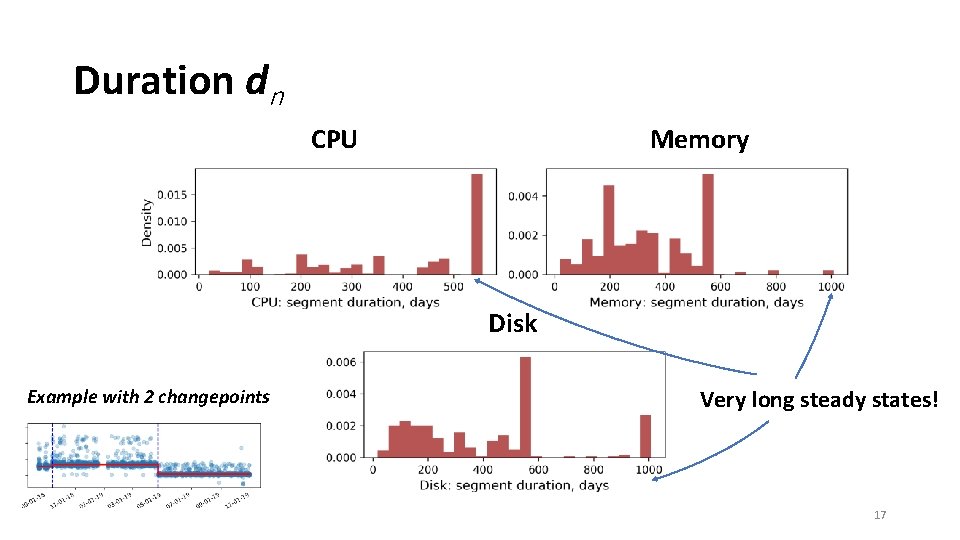

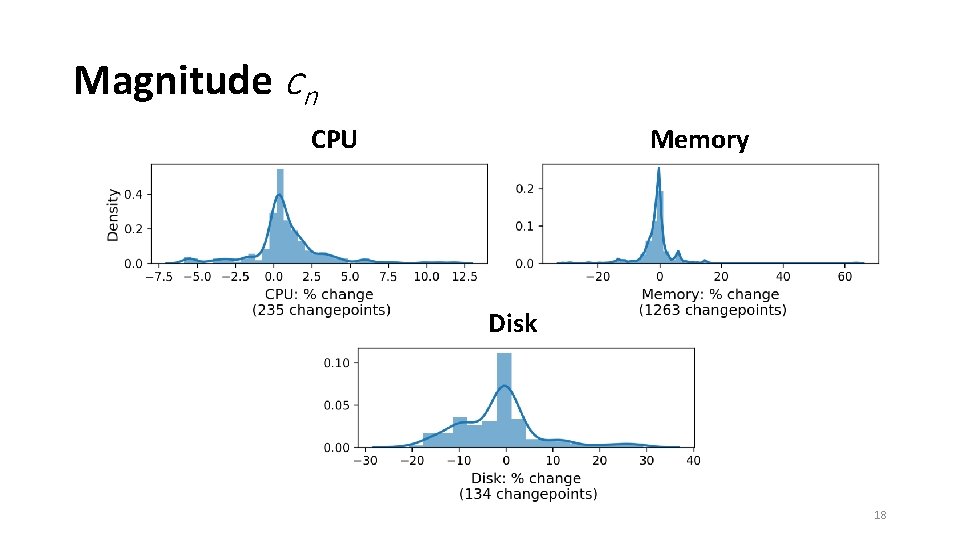

Used Notation

- Relative magnitude of change: c_n = (delta in means) / (old mean) × 100%
- Duration: d_n

Figure callout: steady states
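The notation can be illustrated with a short Python sketch. The function name `describe_changepoints` and its arguments are hypothetical. The slide does not spell out how a duration is measured, so this sketch assumes it is the time between the first and last measurement of a steady state.

```python
from statistics import mean


def describe_changepoints(values, times, changepoints):
    """Return (magnitudes, durations) for an already segmented series.

    values       -- benchmark results in time order
    times        -- matching timestamps as plain numbers (for example, days)
    changepoints -- sorted indices at which a new steady state begins
    """
    bounds = [0] + list(changepoints) + [len(values)]
    segments = list(zip(bounds[:-1], bounds[1:]))

    # d_n (assumed definition): first to last measurement of each steady state
    durations = [times[end - 1] - times[begin] for begin, end in segments]

    # c_n: change in the segment mean, relative to the mean before the changepoint
    means = [mean(values[begin:end]) for begin, end in segments]
    magnitudes = [
        (new - old) / old * 100.0
        for old, new in zip(means[:-1], means[1:])
    ]
    return magnitudes, durations
```

For a series that holds at 100 and then drops to 95, the single changepoint has a relative magnitude of (95 - 100) / 100 × 100% = -5%.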

Duration d_n (panels: CPU, Memory, Disk)

Example with 2 changepoints. Very long steady states!

Magnitude c_n (panels: CPU, Memory, Disk)

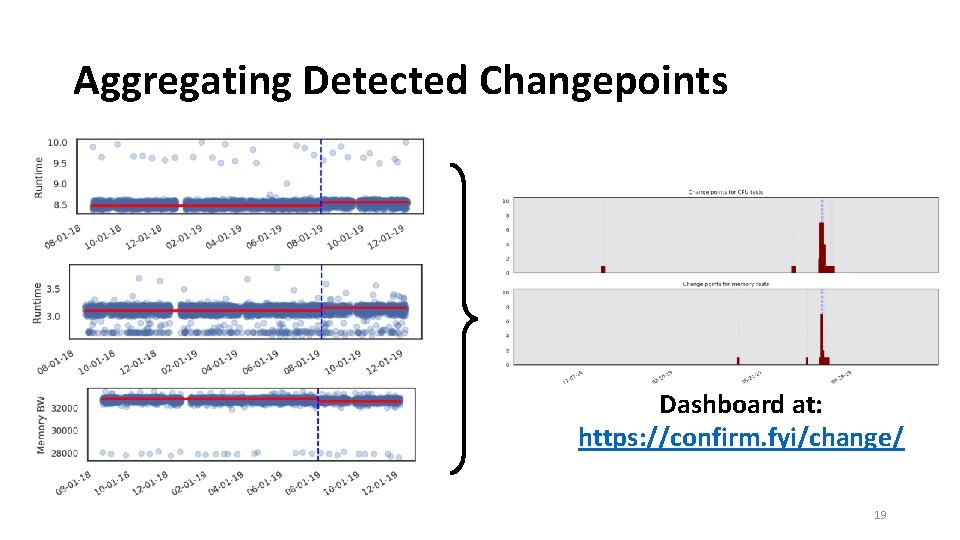

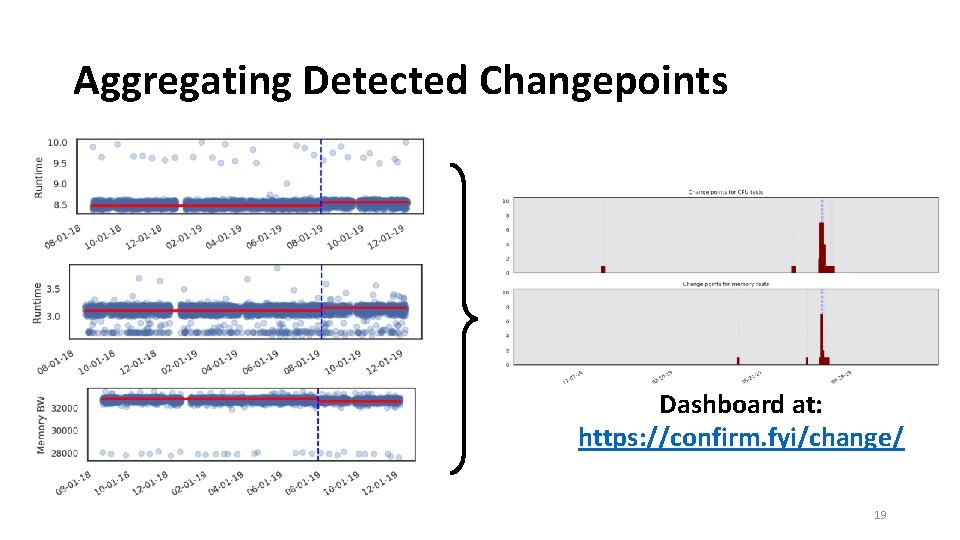

Aggregating Detected Changepoints

Dashboard at: https://confirm.fyi/change/

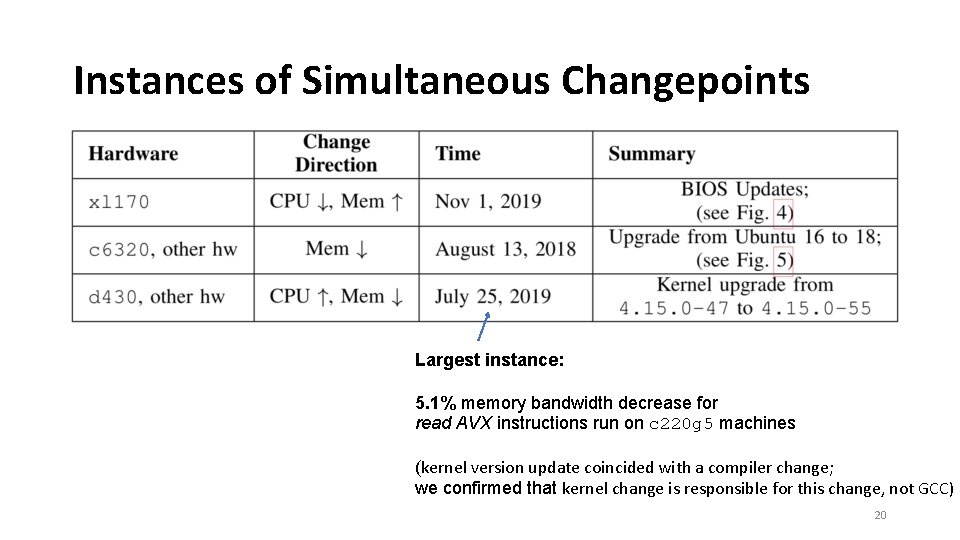

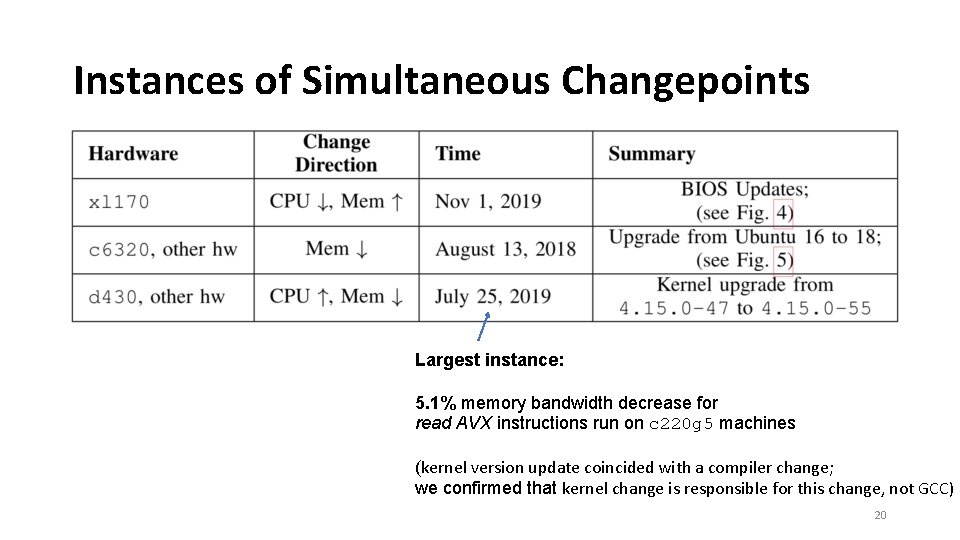

Instances of Simultaneous Changepoints

Largest instance: 5.1% memory bandwidth decrease for read AVX instructions run on c220g5 machines. A kernel version update coincided with a compiler change; we confirmed that the kernel change, not GCC, is responsible for this decrease.
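One simple way to surface such instances is to group detected changepoints by date and keep the dates shared by several configurations. The sketch below assumes that "simultaneous" means "detected on the same day"; the slides do not state the exact criterion used for the dashboard, and the function name and input layout are hypothetical.

```python
from collections import defaultdict


def simultaneous_changepoints(detected, min_configs=2):
    """Group changepoints by day and keep days shared by several configurations.

    detected    -- iterable of (configuration_name, day) pairs, one per changepoint;
                   day can be any hashable, sortable label such as "2019-05-01"
    min_configs -- smallest number of distinct configurations that counts
    """
    by_day = defaultdict(set)
    for config, day in detected:
        by_day[day].add(config)
    return {
        day: sorted(configs)
        for day, configs in sorted(by_day.items())
        if len(configs) >= min_configs
    }
```

Days on which many configurations of one hardware type change together are natural candidates for checking against testbed-wide events such as software updates.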

Steady States

- Disk: 2 hardware types use 1 TB 7200-RPM 6G SATA HDDs and show only a handful of isolated changepoints. In contrast:
  - Micron M500 120 GB SATA 3 flash: 15 changepoints
  - Toshiba XG3 series 256 GB NVMe: 33 changepoints
- CPU: m400 machines (APM X-GENE CPUs): 0 changepoints
- Memory: c6420 machines (Xeon Gold 6142 CPUs): 0 changepoints

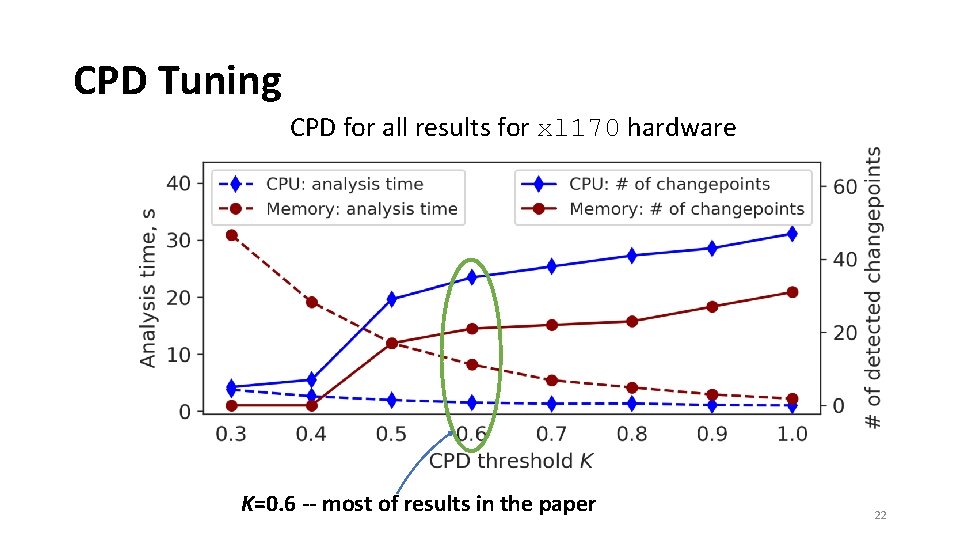

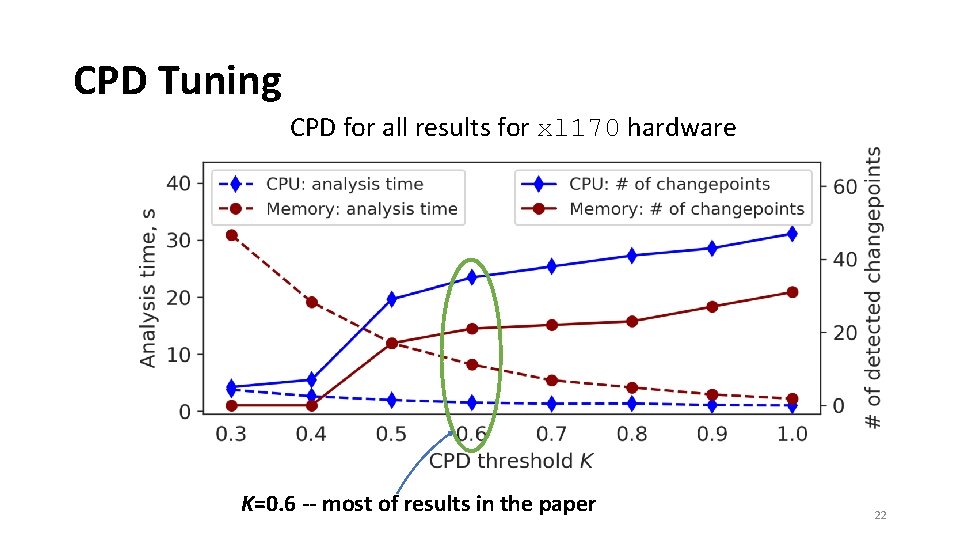

CPD Tuning

CPD for all results for xl170 hardware. K = 0.6 is used for most of the results in the paper.

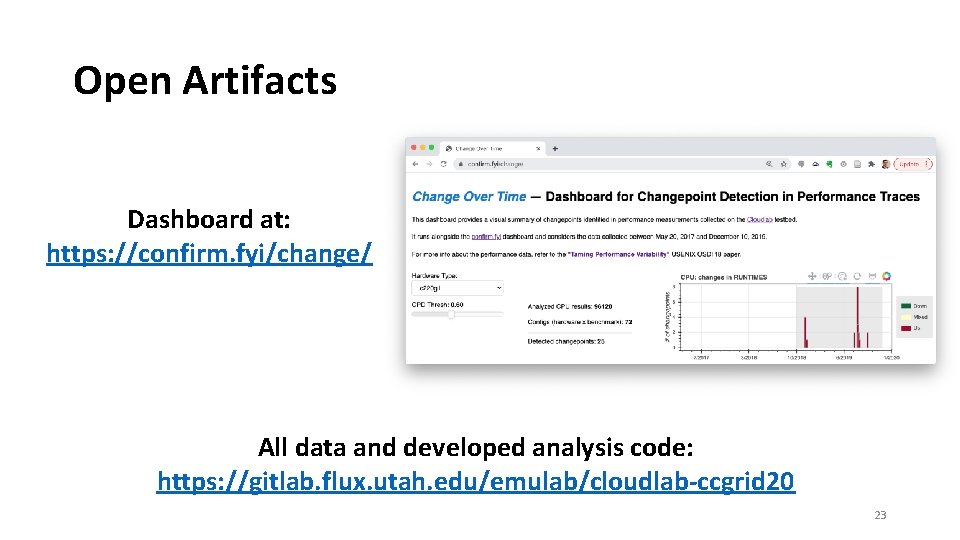

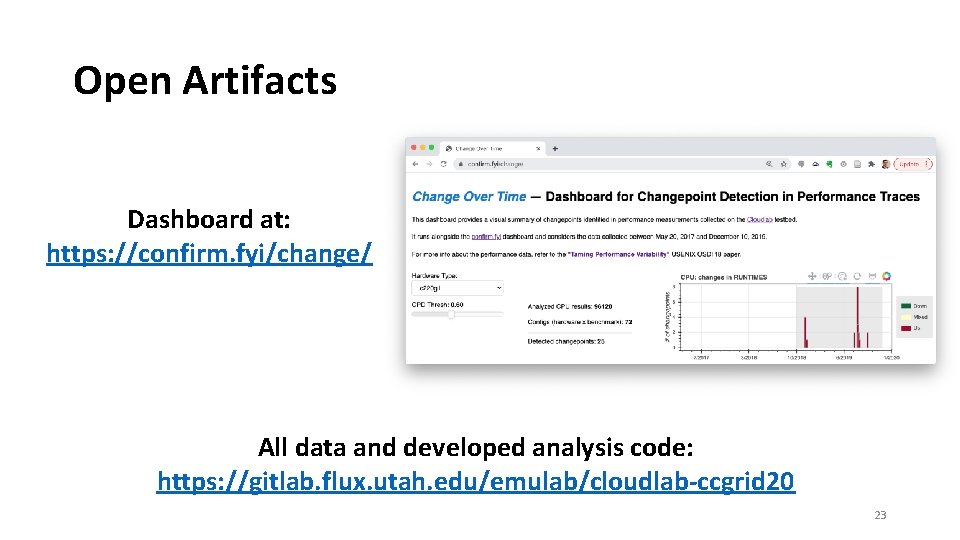

Open Artifacts

- Dashboard at: https://confirm.fyi/change/
- All data and developed analysis code: https://gitlab.flux.utah.edu/emulab/cloudlab-ccgrid20

Summary

- Changepoint Detection applied to benchmarking data from CloudLab (public!)
  - 6.9 M results
  - CPU, memory, disk
  - 1600 machines
  - 11 hardware types
  - 2 years and 9 months
- Over 2,400 detected changepoints
- CPD can be part of:
  - Studies of evolution over time for computing systems and their components
  - Reproducibility studies

Acknowledgements

- Grant #: 1743363
- Collaborators from Leiden University for leading a follow-up study: “Cloud Performance Variability Prediction”, https://dl.acm.org/doi/abs/10.1145/3447545.3451182

Thank you! dmitry.duplyakin@gmail.com