
- Slides: 32

Huug van den Dool and Suranjana Saha: Prediction Skill and Predictability in CFS

Definitions: Prediction Skill and Predictability. Opinion: the literature blurs the distinction between ‘predictability’ and ‘prediction skill’.

Definition 1: Evaluation of the skill of real-time predictions, the old-fashioned way. Problems: (a) sample size!; (b) one has to wait a long time (and funding agencies are impatient).

Definition 2: Evaluation of the skill of hindcasts; hard, but not impossible. Problems: (a) sample size; (b) the ‘honesty’ of the hindcasts.

Definition 3: Predictability of the 1st kind (~ sensitivity to uncertainty in the initial conditions).

Definition 4: Predictability of the 2nd kind, due to variations in external boundary conditions (AMIP; potential predictability; reproducibility; Madden’s approach).
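To make the sample-size problem of Definitions 1 and 2 concrete, the sketch below computes the smallest correlation that is distinguishable from zero for a given number of verification years. It is an illustration only, assuming independent years, Gaussian data, a two-sided test, and the Fisher z-transform; the function name `corr_threshold` is hypothetical.

```python
import math
from statistics import NormalDist


def corr_threshold(n_years: int, alpha: float = 0.05) -> float:
    """Smallest |r| that differs from zero at two-sided level alpha.

    Assumes independent, Gaussian years and uses the Fisher z-transform:
    atanh(r) * sqrt(n - 3) is approximately N(0, 1) when the true r is 0.
    """
    if n_years < 4:
        raise ValueError("need at least 4 years")
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # about 1.96 for alpha = 0.05
    return math.tanh(z_crit / math.sqrt(n_years - 3))


if __name__ == "__main__":
    # Threshold shrinks slowly as the record grows.
    for n in (10, 23, 50, 100):
        print(n, round(corr_threshold(n), 2))
```

For the 23 hindcast years 1981–2003 this gives a threshold of about 0.41, so modest correlations from a record of that length cannot be separated from zero under these assumptions.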

Predictability (theoretical/intrinsic) is a ceiling on actual prediction skill. Are there any other ‘kinds’ of predictability?
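A toy signal-plus-noise model can illustrate the ceiling idea. It assumes observations are `s + e0` and each member is `s_model + e_k`, where `s_model` overlaps the true signal `s` with weight `c` (hypothetical parameter `signal_overlap`). With unit signal variance and noise variance N, member-vs-member correlation is 1/(1+N), while member-vs-observation correlation is c/(1+N). A perfect model (c = 1) has skill equal to its predictability; an imperfect one has less. This is a sketch under those assumptions, not the CFS calculation.

```python
import numpy as np


def corr(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation of two 1-D samples."""
    return float(np.corrcoef(a, b)[0, 1])


def toy_skill_vs_predictability(n=200_000, noise_var=1.0, signal_overlap=0.6, seed=0):
    """Hypothetical toy model (illustration only).

    obs      = s + e0
    member k = s_model + e_k,  s_model = c*s + sqrt(1 - c^2)*w
    s, w, e0, e_k are independent; s and w have unit variance, so
    s_model also has unit variance. c = signal_overlap; c = 1 is a perfect model.
    Returns (predictability, skill).
    """
    rng = np.random.default_rng(seed)
    s = rng.standard_normal(n)   # predictable signal in nature
    w = rng.standard_normal(n)   # spurious signal that exists only in the model
    sd = np.sqrt(noise_var)
    obs = s + sd * rng.standard_normal(n)
    c = signal_overlap
    s_model = c * s + np.sqrt(1.0 - c**2) * w
    m1 = s_model + sd * rng.standard_normal(n)
    m2 = s_model + sd * rng.standard_normal(n)
    predictability = corr(m1, m2)  # member i vs member j
    skill = corr(m1, obs)          # member i vs observation ("member 17")
    return predictability, skill
```

With `noise_var=1.0`, both numbers come out near 0.5 for `signal_overlap=1.0`, while `signal_overlap=0.6` keeps predictability near 0.5 but lowers skill to about 0.3. A model's own predictability can therefore overstate the skill it actually achieves.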

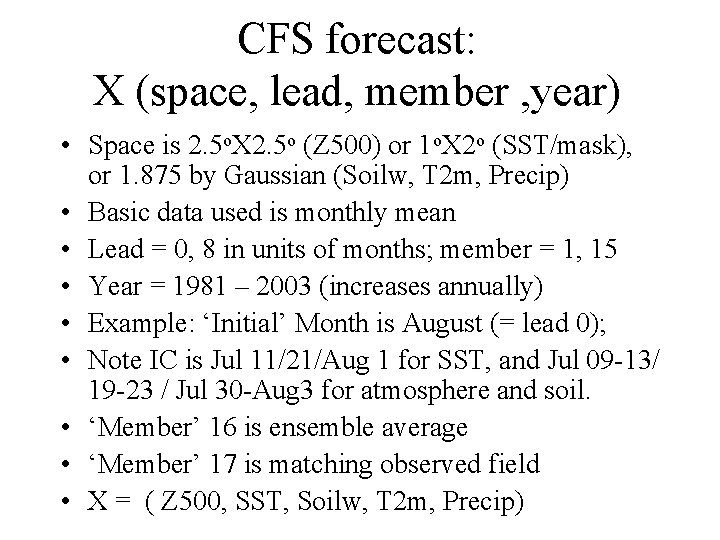

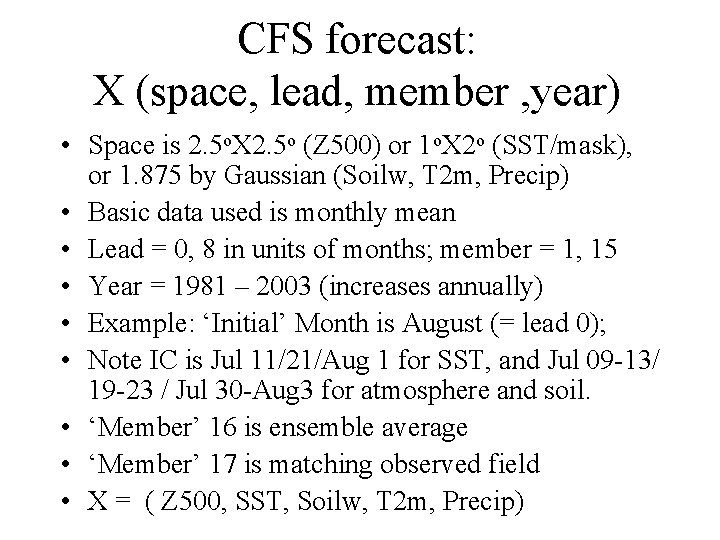

CFS forecast: X(space, lead, member, year)
• Space is 2.5° × 2.5° (Z500), or 1° × 2° (SST/mask), or the 1.875° Gaussian grid (Soilw, T2m, Precip)
• The basic data used are monthly means
• Lead = 0, …, 8 in units of months; member = 1, …, 15
• Year = 1981–2003 (increases annually)
• Example: the ‘initial’ month is August (= lead 0)
• Note: the IC dates are Jul 11 / Jul 21 / Aug 1 for SST, and Jul 9–13 / Jul 19–23 / Jul 30–Aug 3 for the atmosphere and soil
• ‘Member’ 16 is the ensemble average
• ‘Member’ 17 is the matching observed field
• X = (Z500, SST, Soilw, T2m, Precip)
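The array layout above can be sketched with NumPy. Only the lead, member and year ranges and the roles of ‘members’ 16 and 17 come from the slide; the grid size, the helper names `build_x` and `member`, and the use of 0-based storage with 1-based access are assumptions for illustration.

```python
import numpy as np

# Hypothetical sizes; only lead, member and year ranges come from the slide.
N_SPACE = 144 * 73               # e.g. a 2.5 x 2.5 degree global grid, flattened
N_LEAD = 9                       # lead = 0..8 months
N_MEMBER = 15                    # forecast members 1..15
YEARS = np.arange(1981, 2004)    # 1981-2003


def build_x(forecasts: np.ndarray, observed: np.ndarray) -> np.ndarray:
    """Assemble X(space, lead, member, year) with 17 'members'.

    forecasts: (space, lead, 15, year) raw ensemble members
    observed:  (space, lead, year) matching observed field
    'Member' 16 (index 15) holds the ensemble average,
    'member' 17 (index 16) holds the observations.
    """
    n_space, n_lead, n_mem, n_year = forecasts.shape
    if n_mem != N_MEMBER or observed.shape != (n_space, n_lead, n_year):
        raise ValueError("unexpected input shapes")
    x = np.empty((n_space, n_lead, N_MEMBER + 2, n_year), dtype=forecasts.dtype)
    x[:, :, :N_MEMBER, :] = forecasts
    x[:, :, N_MEMBER, :] = forecasts.mean(axis=2)   # 'member' 16: ensemble mean
    x[:, :, N_MEMBER + 1, :] = observed              # 'member' 17: observations
    return x


def member(x: np.ndarray, k: int) -> np.ndarray:
    """Return 1-based 'member' k (1..17) with shape (space, lead, year)."""
    return x[:, :, k - 1, :]
```

Keeping the ensemble mean and the observations in the member axis lets every comparison (member i vs 17, i vs j, 16 vs 17) use the same indexing.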

ASPECTS
• Prediction skill (member i vs member 17)
• Predictability (member i vs member j)
• Monthly mean
• Seasonal mean
• Ensemble average
• Predictability of the 1st kind only
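The aspects listed above can be expressed as a few array operations on X(space, lead, member, year). This is a sketch under assumptions: the inputs are already anomalies, the score is an uncentered anomaly correlation taken over years at each grid point and lead, and seasonal means are running 3-month means along the lead axis. The slides do not specify these choices, and all function names are hypothetical.

```python
import itertools

import numpy as np


def anomaly_corr(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Anomaly correlation over the last (year) axis; inputs are anomalies."""
    num = np.sum(a * b, axis=-1)
    den = np.sqrt(np.sum(a * a, axis=-1) * np.sum(b * b, axis=-1))
    return num / den


def prediction_skill(x: np.ndarray, n_members: int = 15) -> np.ndarray:
    """Average over i of corr(member i, member 17); x is (space, lead, member, year)."""
    obs = x[:, :, n_members + 1, :]
    scores = [anomaly_corr(x[:, :, i, :], obs) for i in range(n_members)]
    return np.mean(scores, axis=0)


def predictability(x: np.ndarray, n_members: int = 15) -> np.ndarray:
    """Average over all pairs i < j of corr(member i, member j)."""
    pairs = itertools.combinations(range(n_members), 2)
    scores = [anomaly_corr(x[:, :, i, :], x[:, :, j, :]) for i, j in pairs]
    return np.mean(scores, axis=0)


def ensemble_mean_skill(x: np.ndarray, n_members: int = 15) -> np.ndarray:
    """corr(member 16 = ensemble average, member 17 = observations)."""
    return anomaly_corr(x[:, :, n_members, :], x[:, :, n_members + 1, :])


def seasonal_means(x: np.ndarray) -> np.ndarray:
    """Running 3-month means along the lead axis: leads (0,1,2), (1,2,3), ..."""
    return (x[:, :-2] + x[:, 1:-1] + x[:, 2:]) / 3.0
```

Because the ensemble average filters out member noise, `ensemble_mean_skill` is typically higher than the single-member `prediction_skill` when the members share a common signal.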

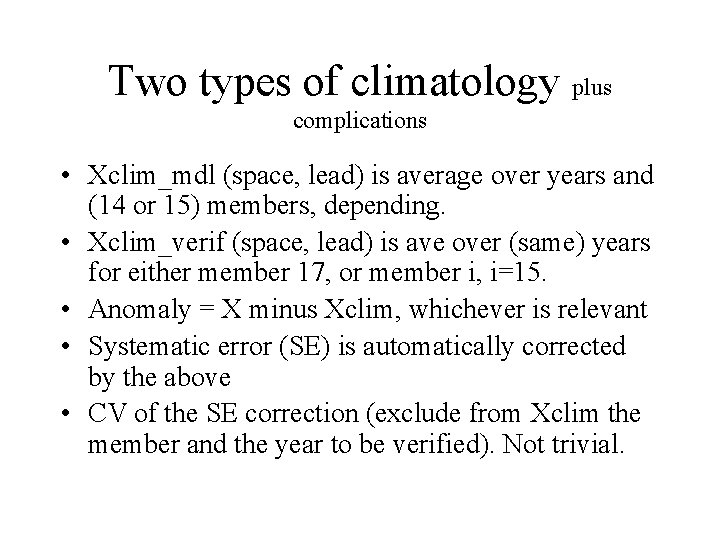

Two types of climatology, plus complications
• Xclim_mdl(space, lead) is the average over years and over (14 or 15) members, depending on the comparison.
• Xclim_verif(space, lead) is the average over the (same) years for either member 17 or member i (i = 1, …, 15).
• Anomaly = X minus Xclim, whichever is relevant.
• The systematic error (SE) is automatically corrected by the above.
• Cross-validation (CV) of the SE correction: exclude from Xclim the member and the year to be verified. Not trivial.
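The cross-validated climatology can be computed without explicit loops. The sketch below works on one grid point and one lead, with the model array shaped (member, year). It assumes the model climatology for (member i, year y) excludes both that member and that year, and the observed climatology excludes year y. The sum over all other members and years follows from inclusion-exclusion: total minus row i minus column y plus the (i, y) element. Function names are hypothetical.

```python
import numpy as np


def cv_model_anomalies(model: np.ndarray) -> np.ndarray:
    """Cross-validated anomalies for model data of shape (member, year).

    The climatology for element (i, y) averages all members except i
    over all years except y, i.e. (M - 1) * (Y - 1) values.
    """
    m, n = model.shape
    total = model.sum()
    row = model.sum(axis=1, keepdims=True)  # per member, summed over years
    col = model.sum(axis=0, keepdims=True)  # per year, summed over members
    clim = (total - row - col + model) / ((m - 1) * (n - 1))
    return model - clim


def cv_obs_anomalies(obs: np.ndarray) -> np.ndarray:
    """Cross-validated anomalies for observations of shape (year,)."""
    n = obs.size
    clim = (obs.sum() - obs) / (n - 1)  # mean of all other years
    return obs - clim
```

Because each set of anomalies is taken against its own climatology, a constant model bias (a simple systematic error) cancels out, which is the automatic SE correction mentioned on the slide.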

Prediction Skill Monthly

Conclusions (monthly data)
• CFS data is a goldmine.
• CFS has enough (?) data for forecast evaluation (and diagnostics).
• Member i vs member j unifies predictability of the 1st and 2nd kind in CFS output.
• CFS has some prediction skill. In order of skill: SST, {tropical variables}, soilw, T2m, Precip.
• CFS has somewhat more predictability (as defined) than skill, but the ceiling is ‘low’ in mid-latitudes.
• Seasonality (no surprise).

To do:
• Identify the interdecadal skill source (if any).
• Identify the soil moisture skill source (are models still too strong on local effects? What about non-local effects?).
• Use daily data for the finer temporal scales in skill/predictability.
• Why do models like CFS have predictability in so few d.o.f. (and is that really all there is)?
• Further ideas about ‘new’ predictability notions.

A case for the importance of knowing the effective number of degrees of freedom (edof) in which we have forecast skill. Considerations:
-) Physical models have one clear strength: they can execute the non-linear terms.
-) A model needs at least 3 degrees of freedom to be non-linear (Lorenz, 1960).
-) A non-linear model with nominally a zillion degrees of freedom, but skill in only ≤ 3 dof, is functionally linear in terms of the skill of its forecasts; and, to its detriment, the non-linear terms add random numbers to the tendencies of the modes with predictability.
==> Therefore: physical models need to have skill in, effectively, > 3 dof before they can be expected to take advantage of non-linearity (in a forecast setting). (Note: not just any 3 degrees of freedom will do.)
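To put a number on edof, one common estimator (the eigenvalue "participation ratio" of Bretherton et al., 1999) is (Σλ)² / Σλ², where λ are the eigenvalues of the spatial covariance matrix. The slides do not say which estimator the authors use, so treat this as a swapped-in technique. Applying it to, for example, skillful ensemble-mean anomalies shaped (time, space) is a hypothetical use; the function names are illustrative.

```python
import numpy as np


def edof_from_eigenvalues(eigvals) -> float:
    """Participation-ratio estimate of effective dof: (sum lam)^2 / sum(lam^2)."""
    lam = np.clip(np.asarray(eigvals, dtype=float), 0.0, None)  # drop round-off negatives
    return float(lam.sum() ** 2 / (lam ** 2).sum())


def edof_from_data(data: np.ndarray) -> float:
    """data: (time, space) anomalies; uses eigenvalues of the spatial covariance."""
    anom = data - data.mean(axis=0)
    cov = anom.T @ anom / (anom.shape[0] - 1)
    return edof_from_eigenvalues(np.linalg.eigvalsh(cov))


if __name__ == "__main__":
    # 3 independent signals copied onto 30 "grid points": nominally 30 dof, effectively ~3.
    rng = np.random.default_rng(4)
    data = np.repeat(rng.standard_normal((5000, 3)), 10, axis=1)
    print(round(edof_from_data(data), 2))
```

The example mirrors the argument above: a field with many nominal grid points but only three independent patterns yields an edof near 3. By the slide's reasoning, that would be too few for non-linearity to pay off.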

‘Lingering memory’: Cai and Van den Dool (2005); the Schemm et al. calibration data set (the CFS daily data set will also be used).

Suranjana saha

Suranjana saha Suranjana saha

Suranjana saha Den gode den onde og den grusomme

Den gode den onde og den grusomme Den gode, den onde og den grusomme

Den gode, den onde og den grusomme Den gode den onde den grusomme musik

Den gode den onde den grusomme musik Gamle og nye karakterskala

Gamle og nye karakterskala In den kopf schauen

In den kopf schauen Daj mi ruku dam ti srdce len ho nezlomí

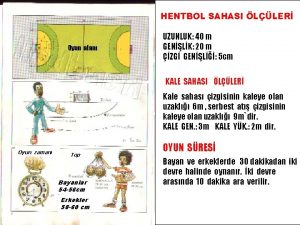

Daj mi ruku dam ti srdce len ho nezlomí Voleybol meydancasi ölçüleri

Voleybol meydancasi ölçüleri What is plasma to gas called

What is plasma to gas called Hentbol saha ölçüleri

Hentbol saha ölçüleri Eskrim saha ölçüleri

Eskrim saha ölçüleri Conto kalimah pananya cara nya éta

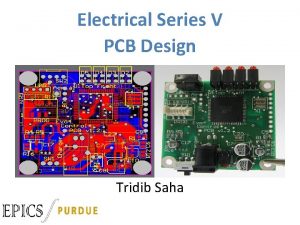

Conto kalimah pananya cara nya éta Ece404 github

Ece404 github Beyzbol sahası ölçüleri

Beyzbol sahası ölçüleri Saha gözlem ziyaretleri

Saha gözlem ziyaretleri Dr soma saha

Dr soma saha Saha chapter 1

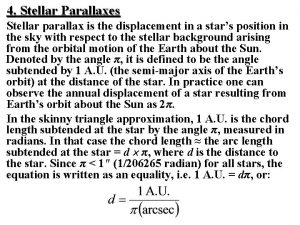

Saha chapter 1 Saha equation

Saha equation Tsg export sa

Tsg export sa Voleybol oyun kuralları

Voleybol oyun kuralları Saha ionization equation

Saha ionization equation Dr prantik saha

Dr prantik saha Riya saha shah

Riya saha shah Deontology death penalty

Deontology death penalty Arnold van den berg biography

Arnold van den berg biography Ernest van den haag

Ernest van den haag Sleutelmanipulatie

Sleutelmanipulatie Nieuwe leerweg

Nieuwe leerweg Eva van den broek

Eva van den broek Bas van den brenk

Bas van den brenk Psychische grundbedürfnisse

Psychische grundbedürfnisse Bram van den hurk

Bram van den hurk