Evaluation Report Template

- Slides: 32


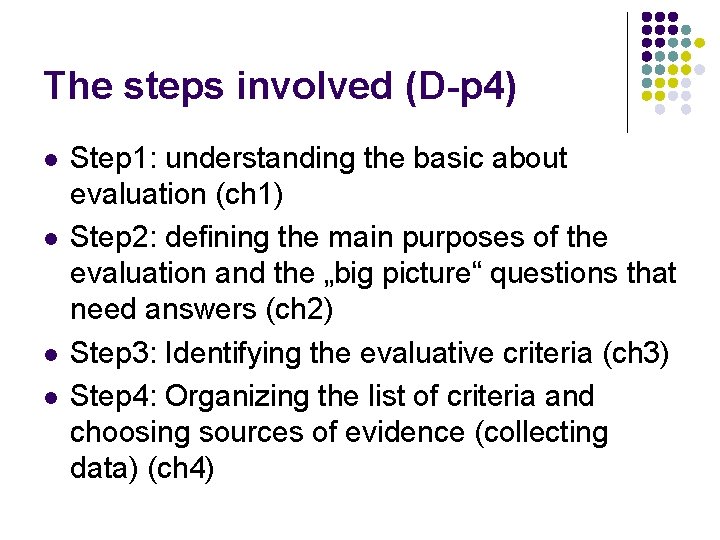

The steps involved (Davidson, p. 4)

- Step 1: Understanding the basics of evaluation (ch 1)
- Step 2: Defining the main purposes of the evaluation and the "big picture" questions that need answers (ch 2)
- Step 3: Identifying the evaluative criteria (ch 3)
- Step 4: Organizing the list of criteria and choosing sources of evidence (collecting data) (ch 4)

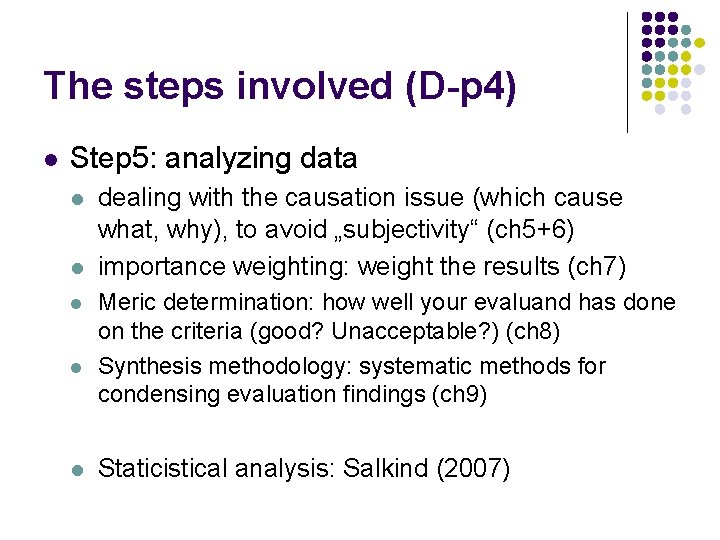

The steps involved (Davidson, p. 4)

- Step 5: Analyzing data
  - Dealing with the causation issue (what causes what, and why), to avoid "subjectivity" (ch 5+6)
  - Importance weighting: weighting the results (ch 7)
  - Merit determination: how well your evaluand has done on the criteria (good? unacceptable?) (ch 8)
  - Synthesis methodology: systematic methods for condensing evaluation findings (ch 9)
  - Statistical analysis: Salkind (2007)

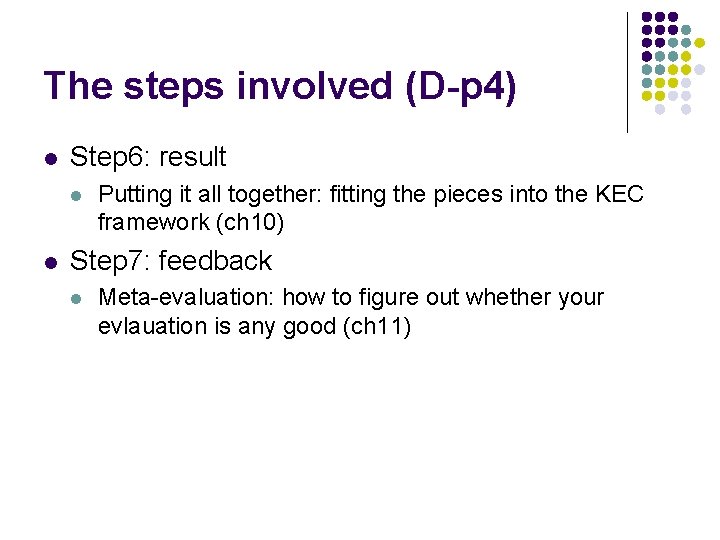

The steps involved (Davidson, p. 4)

- Step 6: Result
  - Putting it all together: fitting the pieces into the KEC framework (ch 10)
- Step 7: Feedback
  - Meta-evaluation: how to figure out whether your evaluation is any good (ch 11)

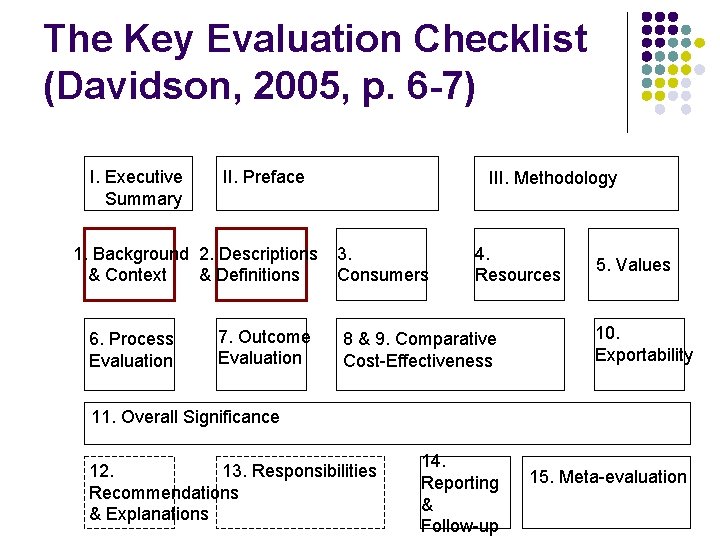

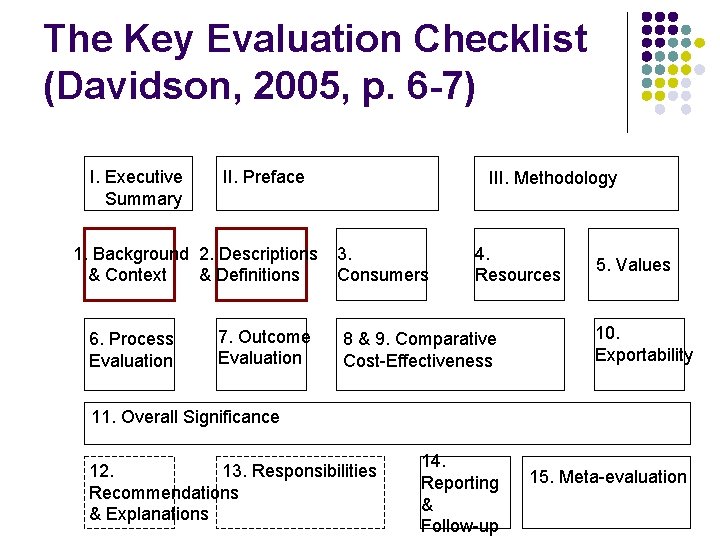

The Key Evaluation Checklist (Davidson, 2005, p. 6-7)

- I. Executive Summary
- II. Preface
- III. Methodology
- 1. Background & Context
- 2. Descriptions & Definitions
- 3. Consumers
- 4. Resources
- 5. Values
- 6. Process Evaluation
- 7. Outcome Evaluation
- 8 & 9. Comparative Cost-Effectiveness
- 10. Exportability
- 11. Overall Significance
- 12. Recommendations & Explanations
- 13. Responsibilities
- 14. Reporting & Follow-up
- 15. Meta-evaluation

Step 1: Understand the basics of evaluation

- Identify the evaluand
- Background and context of the evaluand
  - Why did this program or product come into existence in the first place?
- Descriptions and definitions
  - Describe the evaluand in enough detail that virtually anyone can understand what it is and what it does
- How: collect background information, pay a firsthand visit, or do a literature review

Step 1: Output report

- Output: a one- or two-page overview of the findings about the evaluand
  - What is your evaluand?
  - Background and context of your evaluand
  - Description of your evaluand
    - Try to be as detailed as possible

Step 2: Defining the Purpose of the Evaluation (Davidson, ch 2)

- Who asked for this evaluation, and why?
- What are the main evaluation questions?
- Who are the main audiences?
- Absolute merit or relative merit

Step 2: Output report

Your Step 2 output report should answer the following questions:

- Define the evaluation purpose
  - Do you need to demonstrate to someone (or to yourself) the overall quality of something?
  - Or do you need to find areas for improvement?
  - Or do you need to do both?
- Once you have answered the questions above, work out your big-picture questions
  - Is your evaluation about the absolute merit of your evaluand?
  - Or about its relative merit?

Step 3: Defining evaluative criteria

- To build a criterion list, consider the following procedures:
  - A needs assessment
  - A logic model linking the evaluand to the needs
  - An assessment of other relevant values, such as process, outcomes, and cost
  - A strategy to organize your criterion checklist
- Make sure that you go into the evaluation with a well-thought-out plan, so that you know what you need to know, where to get that information, and how you are going to put it together when you write up your report.

Needs assessment

- Understand the true needs of your evaluation's end users (consumers or impactees)
- Who are your end users?
  - They are the people or entities who buy or use a product or service, enroll in a training program, etc.
    - Upstream stakeholders (i.e., people at the upper levels of the structure: managers, designers)
    - Immediate recipients (i.e., people who directly consume your product or service: consumers, trainees)
    - Downstream consumers (i.e., people who are indirectly involved in your evaluation)

Understanding needs

- Needs vs. wants
  - The difference, and why it matters
  - A need is something without which unsatisfactory functioning occurs.
- Different kinds of needs
  - Context dependence
  - Conscious needs vs. unconscious needs
    - Needs we know about and needs we do not know about
  - Met needs vs. unmet needs
    - Building a factory (increases jobs, but creates pollution)
  - Performance needs vs. instrumental needs
    - A "need to do" something for satisfactory functioning (actual problems) vs. proposed solutions
    - Accessing email vs. a lightweight laptop
    - In most cases, performance needs are considered, but instrumental needs are not

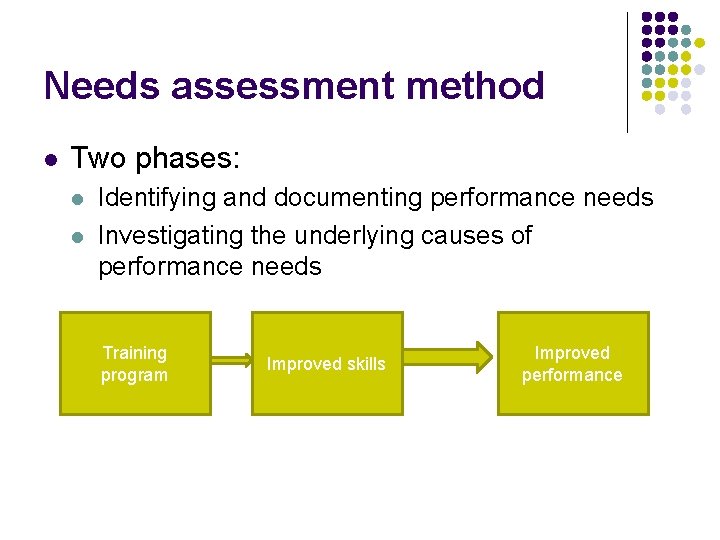

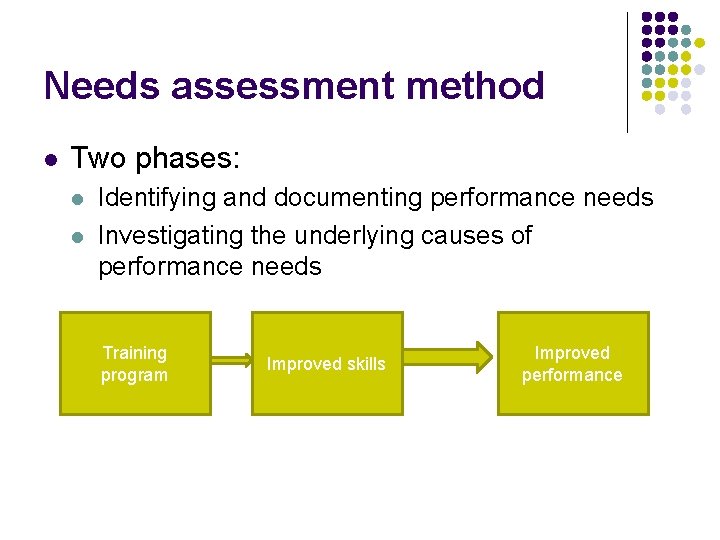

Needs assessment method

- Two phases:
  - Identifying and documenting performance needs
  - Investigating the underlying causes of performance needs
- Training program → Improved skills → Improved performance

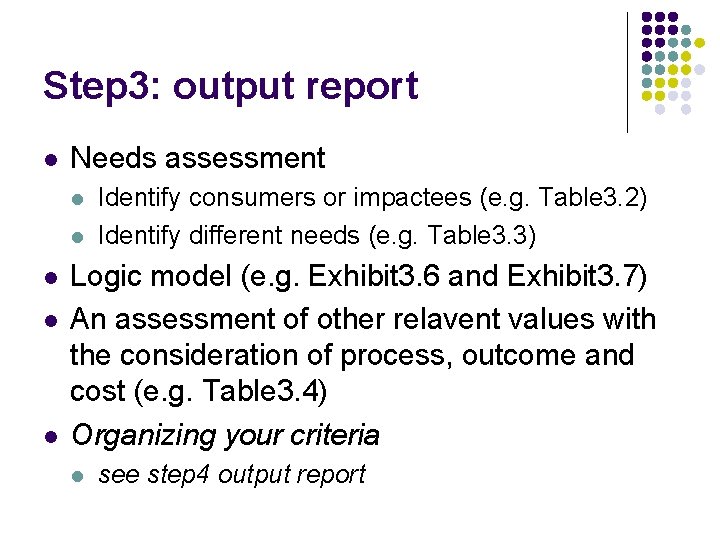

Step 3: Output report

- Needs assessment
  - Identify consumers or impactees (e.g., Table 3.2)
  - Identify different needs (e.g., Table 3.3)
- Logic model (e.g., Exhibit 3.6 and Exhibit 3.7)
- An assessment of other relevant values, considering process, outcome, and cost (e.g., Table 3.4)
- Organizing your criteria
  - See the Step 4 output report

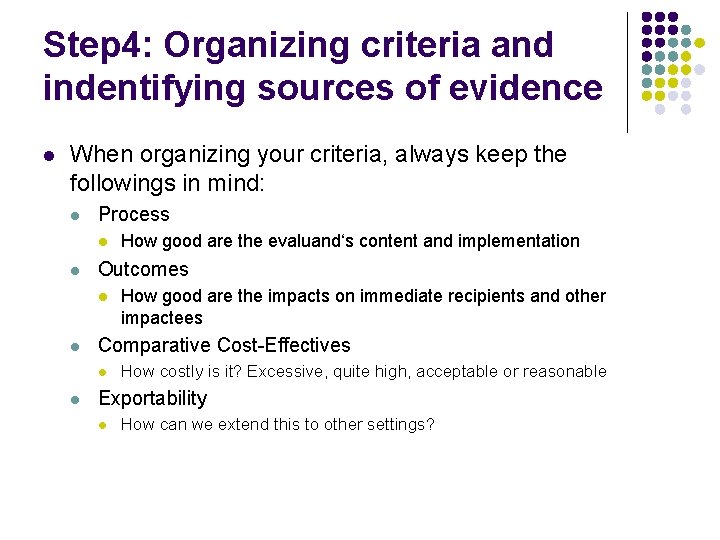

Step 4: Organizing criteria and identifying sources of evidence

When organizing your criteria, always keep the following in mind:

- Process
  - How good are the evaluand's content and implementation?
- Outcomes
  - How good are the impacts on immediate recipients and other impactees?
- Comparative cost-effectiveness
  - How costly is it? Excessive, quite high, acceptable, or reasonable?
- Exportability
  - How can we extend this to other settings?

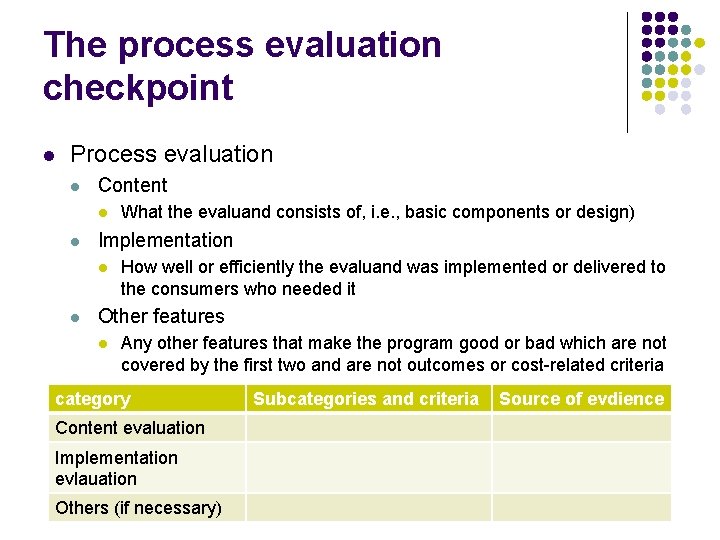

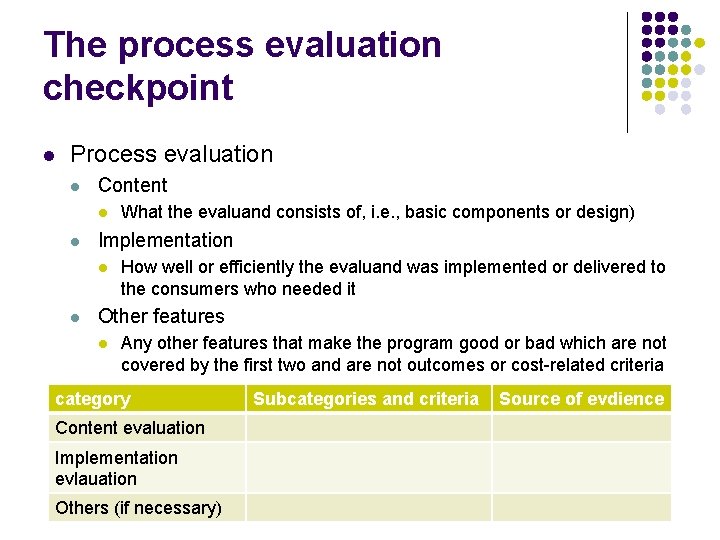

The process evaluation checkpoint

- Process evaluation
  - Content
    - What the evaluand consists of (i.e., basic components or design)
  - Implementation
    - How well or efficiently the evaluand was implemented or delivered to the consumers who needed it
  - Other features
    - Any other features that make the program good or bad, that are not covered by the first two, and that are not outcome- or cost-related criteria

| Category | Subcategories and criteria | Source of evidence |
| --- | --- | --- |
| Content evaluation | | |
| Implementation evaluation | | |
| Others (if necessary) | | |

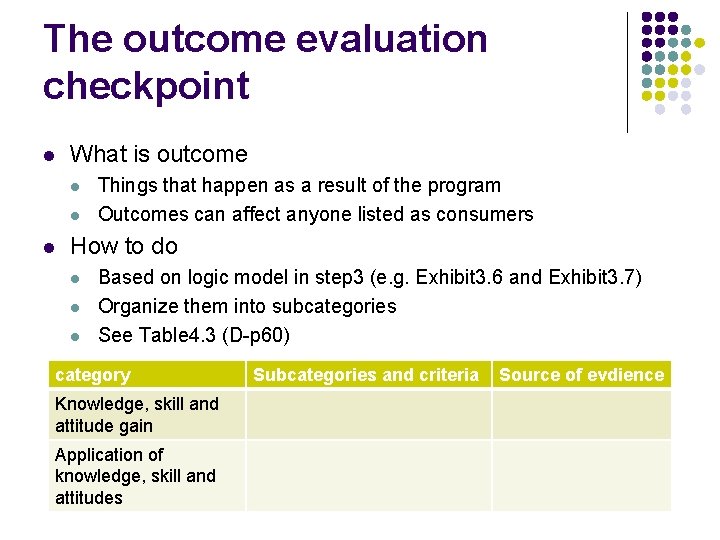

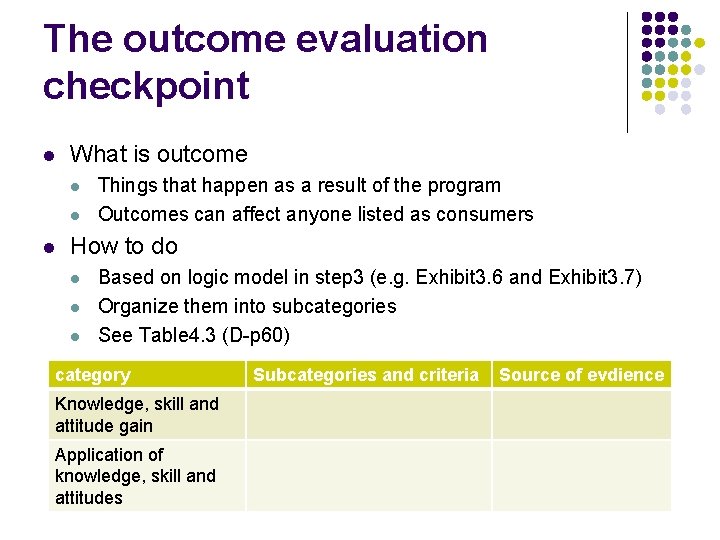

The outcome evaluation checkpoint

- What are outcomes?
  - Things that happen as a result of the program
  - Outcomes can affect anyone listed as a consumer
- How to do it
  - Base it on the logic model from Step 3 (e.g., Exhibit 3.6 and Exhibit 3.7)
  - Organize the outcomes into subcategories
  - See Table 4.3 (Davidson, p. 60)

| Category | Subcategories and criteria | Source of evidence |
| --- | --- | --- |
| Knowledge, skill, and attitude gain | | |
| Application of knowledge, skills, and attitudes | | |

The comparative cost-effectiveness checkpoint

- Any evaluation has to take cost into account
- What are costs?
  - Money
  - Time
  - Effort
  - Space
  - Opportunity costs

The exportability checkpoint

- What elements of the evaluand (e.g., an innovative design or approach) might make it potentially valuable, or a significant contribution or advance, in another setting?

Step 4: Output report

- Checkpoints for
  - Process (e.g., Tables 4.1 and 4.2)
  - Outcomes (e.g., Table 4.3)
  - Comparative cost-effectiveness (e.g., cost cube table)
  - Exportability
    - Short summary of potential areas for exportability

Step 5: Analysing data

- 5.1 Inferring causation
- 5.2 Determining importance
- 5.3 Merit determination
- 5.4 Synthesis

5.1 Certainty about causation (Davidson, ch 5)

- Each decision-making context requires a different level of certainty
- Quantitative or qualitative analysis
  - All-quantitative or all-qualitative
    - Sample selection
    - Sample size
  - A mix of the two
  - More in statistical analysis

Inferring causation: 8 strategies

1. Ask observers
2. Check whether the content of the evaluand matches the outcome
3. Look for other telltale patterns that suggest one cause or another
4. Check whether the timing of outcomes makes sense
5. Check whether the "dose" is related logically to the "response"
6. Make comparisons with a "control" or "comparison" group
7. Control statistically for extraneous variables
8. Identify and check the underlying causal mechanism(s)
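As a rough illustration of strategy 5, the sketch below checks whether a "dose" (hours of training attended) rises together with a "response" (gain in a skills score). The data, the variable names, and the choice of a Pearson correlation are all assumptions made for this example; a strong correlation is only one piece of evidence and does not establish causation by itself.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical data: training hours attended and skills-score gain per trainee.
hours_attended = [0, 2, 4, 6, 8]
score_gain = [1, 3, 4, 7, 9]

r = pearson(hours_attended, score_gain)
print(f"dose-response correlation: {r:.2f}")
```

If more exposure to the evaluand goes with a larger outcome, the causal claim becomes more plausible; a flat or reversed pattern would be a reason to look harder at the other seven strategies.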

5.2 Determining importance (Davidson, ch 7)

- Importance determination is the process of assigning labels to dimensions or components to indicate their importance.
- Different evaluations
  - Dimensional evaluation
  - Component evaluation
  - Holistic evaluation

Determining importance: 6 strategies

1. Having stakeholders or consumers "vote" on importance
2. Drawing on the knowledge of selected stakeholders
3. Using evidence from the literature
4. Using specialist judgment
5. Using evidence from the needs and values assessments
6. Using program theory and evidence of causal linkages
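Strategy 1 can be sketched in a few lines: each stakeholder assigns an importance label to each criterion, and the median label is taken as the result. The label scale, the criteria, the votes, and the use of the median are assumptions for illustration only; the slides do not prescribe a particular tallying rule.

```python
from statistics import median_low

# Ordered importance labels, least to most important (an assumed scale).
LABELS = ["minor", "important", "very important", "critical"]

def importance_from_votes(votes):
    """Return the median importance label for each criterion.

    votes maps a criterion name to the list of labels chosen by voters.
    """
    result = {}
    for criterion, labels in votes.items():
        ranks = [LABELS.index(label) for label in labels]
        result[criterion] = LABELS[median_low(ranks)]
    return result

# Hypothetical votes from five stakeholders on three made-up criteria.
votes = {
    "content accuracy": ["critical", "very important", "critical", "important", "critical"],
    "delivery pace": ["important", "minor", "important", "very important", "important"],
    "venue comfort": ["minor", "minor", "important", "minor", "important"],
}

importance = importance_from_votes(votes)
for criterion, label in importance.items():
    print(f"{criterion}: {label}")
```

The median is used here because it is less sensitive to a single extreme vote than an average would be; other tallying rules are equally possible.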

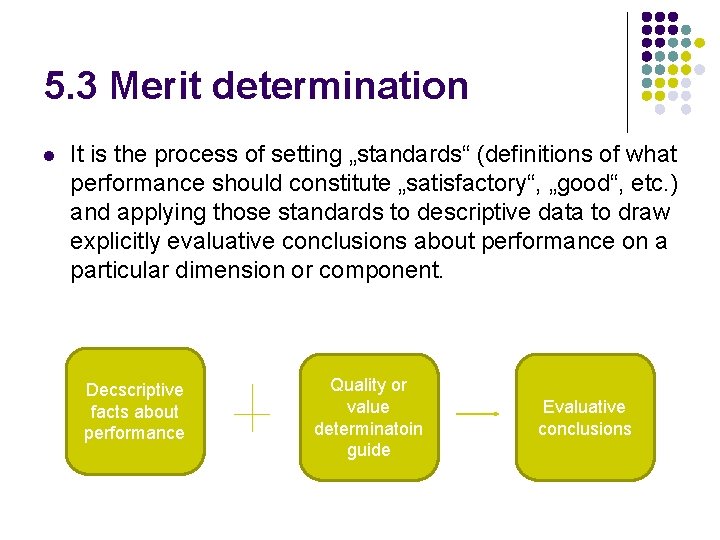

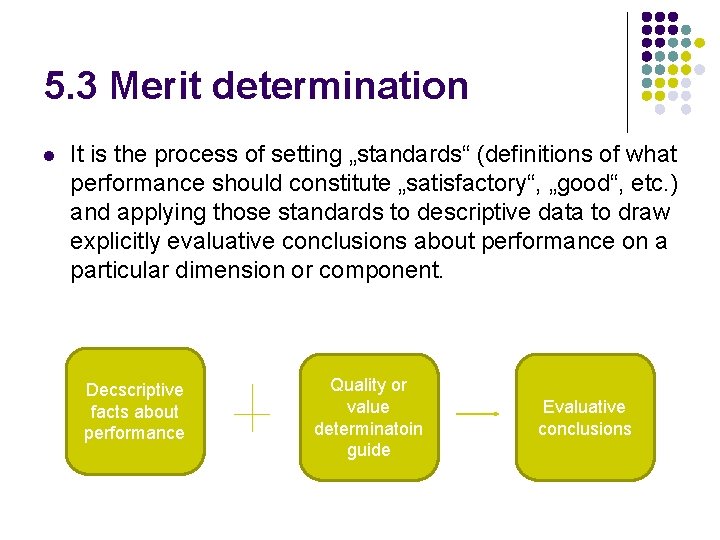

5.3 Merit determination

- Merit determination is the process of setting "standards" (definitions of what performance should constitute "satisfactory", "good", etc.) and applying those standards to descriptive data to draw explicitly evaluative conclusions about performance on a particular dimension or component.
- Descriptive facts about performance + quality or value determination guide → evaluative conclusions

Rubric

- A rubric is a tool that provides an evaluative description of what performance or quality "looks like".
- It comes in two types:
  - A grading rubric is used to determine absolute quality or value (e.g., Table 8.2)
  - A ranking rubric is used to determine relative quality or value
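The difference between grading and ranking can be shown with a small sketch. The cutoffs, the rating labels, the program names, and the pass rates below are hypothetical and are not taken from Table 8.2; a plain sort stands in for a ranking rubric.

```python
# Hypothetical grading rubric: the minimum pass rate (in percent) on a skills
# test required for each rating. Cutoffs are illustrative only.
GRADING_RUBRIC = [
    (90, "excellent"),
    (75, "good"),
    (60, "satisfactory"),
    (0, "unacceptable"),
]

def grade(pass_rate):
    """Absolute merit: apply fixed standards to one descriptive fact."""
    for minimum, rating in GRADING_RUBRIC:
        if pass_rate >= minimum:
            return rating
    raise ValueError("pass_rate must be non-negative")

def rank(pass_rates):
    """Relative merit: order evaluands from best to worst on the same fact."""
    return sorted(pass_rates, key=pass_rates.get, reverse=True)

# Made-up descriptive data for three programs.
pass_rates = {"Program A": 82.0, "Program B": 58.5, "Program C": 91.0}

grades = {name: grade(rate) for name, rate in pass_rates.items()}
ranking = rank(pass_rates)
print(grades)
print(ranking)
```

Grading can conclude that every program is unacceptable or that every program is excellent, whereas ranking only says which one is better than the others.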

5.4 Synthesis methodology

- Synthesis is the process of combining a set of ratings or performances on several components or dimensions into an overall rating.
- Quantitative synthesis
  - Using numerical weights
- Qualitative synthesis
  - Using qualitative labels
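A minimal sketch of quantitative synthesis with numerical weights follows. The dimension names echo the Step 4 checkpoints, but the 1 to 5 rating scale, the ratings, and the weights are assumptions invented for the example.

```python
def weighted_overall(ratings, weights):
    """Weighted average of dimension ratings, using numerical weights."""
    total_weight = sum(weights[d] for d in ratings)
    if total_weight == 0:
        raise ValueError("weights must not all be zero")
    return sum(ratings[d] * weights[d] for d in ratings) / total_weight

# Hypothetical ratings on a 1 (poor) to 5 (excellent) scale.
ratings = {"process": 4, "outcomes": 3, "cost-effectiveness": 2, "exportability": 5}
# Hypothetical importance weights from Step 5.2.
weights = {"process": 2, "outcomes": 3, "cost-effectiveness": 2, "exportability": 1}

overall = weighted_overall(ratings, weights)
print(round(overall, 2))  # 26 / 8 = 3.25
```

A weighted average lets a strong rating on one dimension offset a weak rating on another, which is one reason an evaluator might prefer qualitative synthesis when some dimensions must not fall below a minimum.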

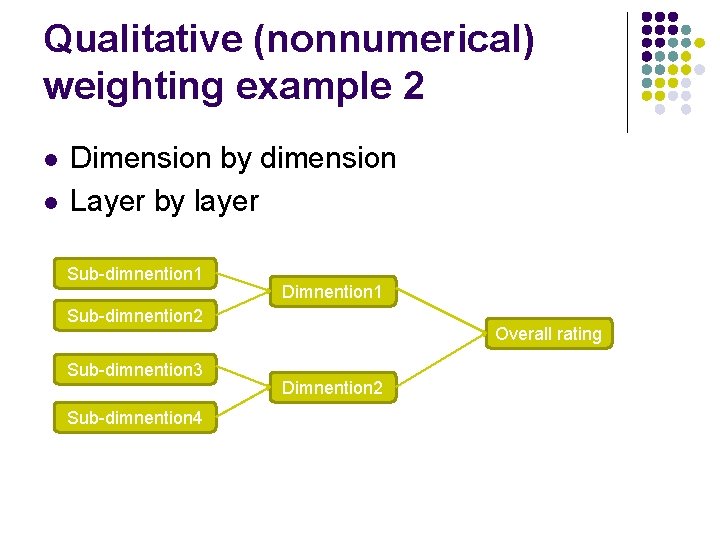

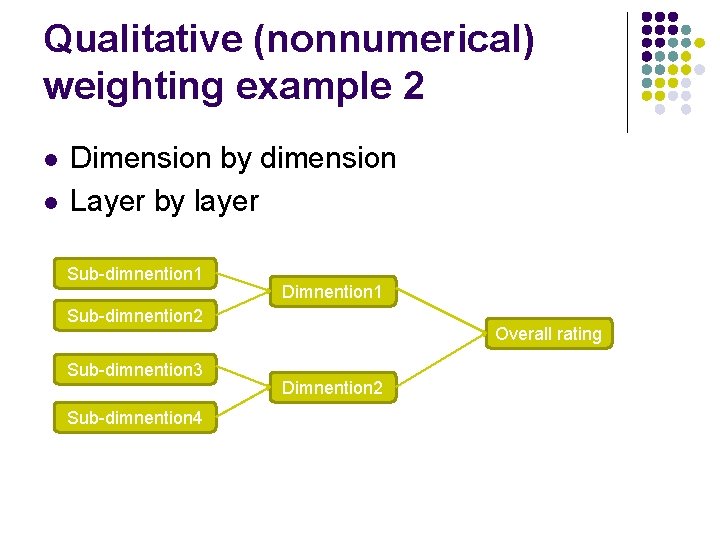

Qualitative (nonnumerical) weighting example 2

- Dimension by dimension
- Layer by layer
- Sub-dimensions 1 to 4 → Dimensions 1 and 2 → Overall rating
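Layer-by-layer qualitative synthesis can be sketched as follows. The label scale, the grouping of sub-dimensions under dimensions, the ratings, and the "weakest part" roll-up rule are all assumptions for illustration; the slide does not specify any of them.

```python
# Ordered qualitative labels, worst to best (an assumed scale).
SCALE = ["poor", "adequate", "good", "excellent"]

def roll_up(labels):
    """Assumed rule: a layer is rated no better than its weakest part."""
    return min(labels, key=SCALE.index)

# Hypothetical grouping and ratings.
sub_ratings = {
    "Dimension 1": {"Sub-dimension 1": "excellent", "Sub-dimension 2": "good"},
    "Dimension 2": {"Sub-dimension 3": "good", "Sub-dimension 4": "adequate"},
}

# Layer 1: sub-dimensions roll up to dimensions.
dimension_ratings = {dim: roll_up(subs.values()) for dim, subs in sub_ratings.items()}
# Layer 2: dimensions roll up to the overall rating.
overall_rating = roll_up(dimension_ratings.values())

print(dimension_ratings)
print(overall_rating)
```

No numbers are averaged at any point: each layer is condensed with a rule stated in terms of the labels themselves, and the same rule is applied again one layer up.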

Step 6: Result

- Putting it all together: fitting the pieces into the KEC framework (ch 10)
- Now we are ready to write our evaluation report.

Step 7: Feedback (optional)

- Meta-evaluation: how to figure out whether your evaluation is any good (ch 11)

Related links

- KEC
  - http://www.wmich.edu/evalctr/checklists/kec.htm
  - http://www.wmich.edu/evalctr/checklists/kec_feb07.pdf
- Questionnaire examples
  - http://www.go2itech.org/HTML/TT06/toolkit/evaluation/forms.html
  - http://enhancinged.wgbh.org/formats/person/evaluate.html
  - http://www.dioceseofspokane.org/policies/HR/Appendix%20II/Sample.Forms.htm
  - http://njaes.rutgers.edu/evaluation/