Efficient Management of Large Clusters with Minimal Manpower

- Slides: 34

CHEP 2010, Taiwan, Oct. 18, 2010

Tony Wong (Brookhaven National Lab)

Background

- Scientific computing support @ BNL
  - RHIC (NP) program in the late 1990s
  - Expanded to cover ATLAS (HEP) Tier 1 (early 2000s)
  - Other BNL-supported (neutrino and astrophysics) activities (mid-2000s)
- Ushered in the era of commodity computing
  - Inexpensive hardware
  - Widespread use of open-source software
    - RH Linux (now Scientific Linux)
    - Condor
    - Ganglia/Nagios
    - MySQL
  - Meteoric growth of computing facility

Cluster Services

- ATLAS computing
  - ~23% of ATLAS international computing capacity
- RHIC computing
  - Bulk of computing capacity
  - Cluster-based distributed storage (~5 PB in 2010) via dCache and Xrootd
- Reliability and redundancy
  - From sheer size
  - Individual worker nodes only attended during daytime (not critical)
  - Critical services (batch, power, cooling, related DB systems) maintained 24x7

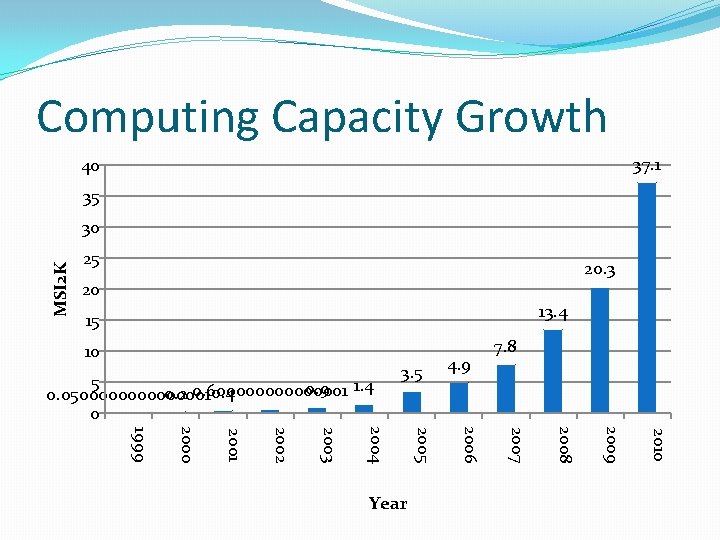

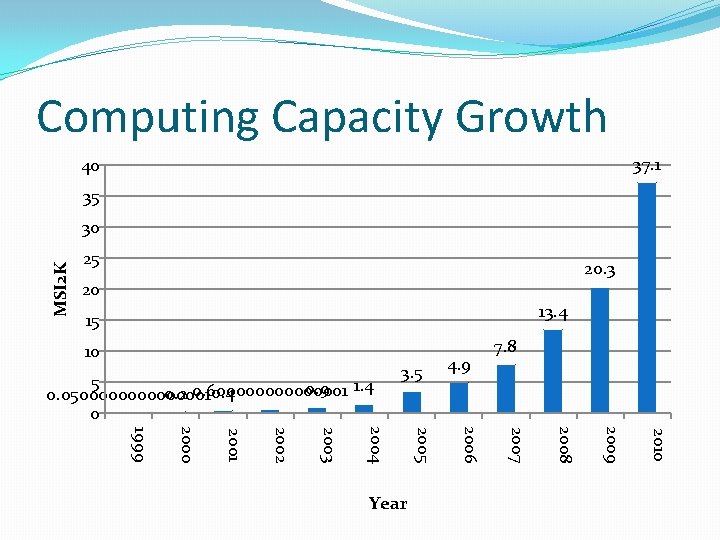

Computing Capacity Growth (chart: computing capacity in MSI2K by year, 1999 to 2010)

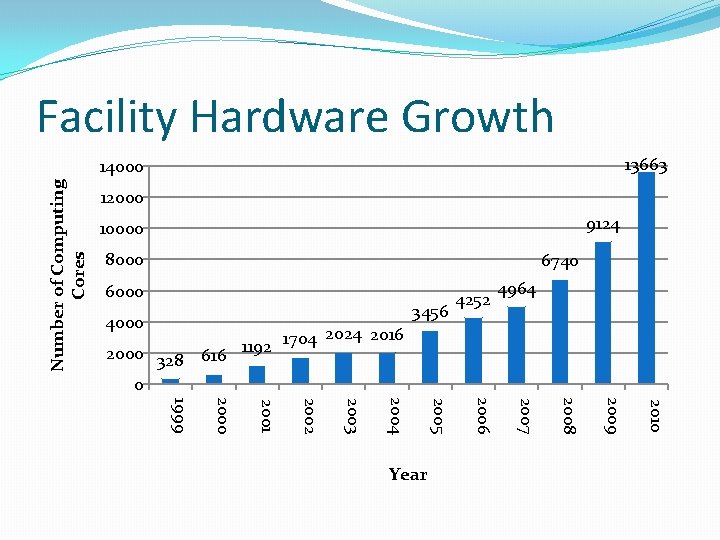

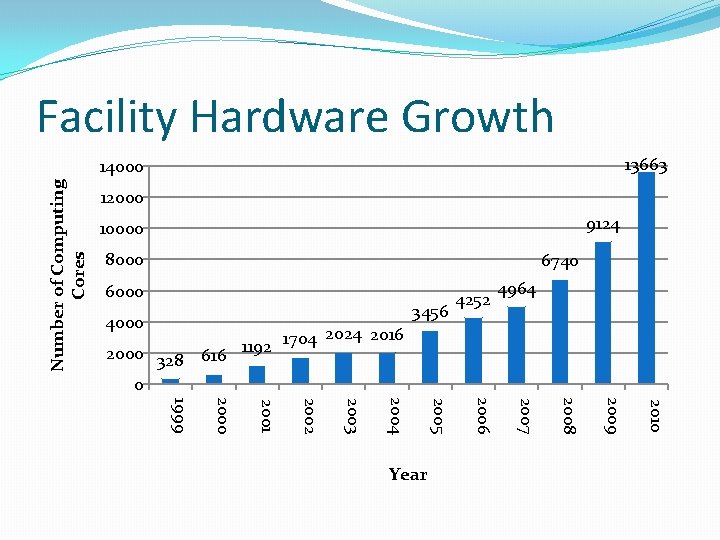

Facility Hardware Growth (chart: number of computing cores by year, 1999 to 2010; data labels range from 328 to 13663)

Linux Farm Cluster

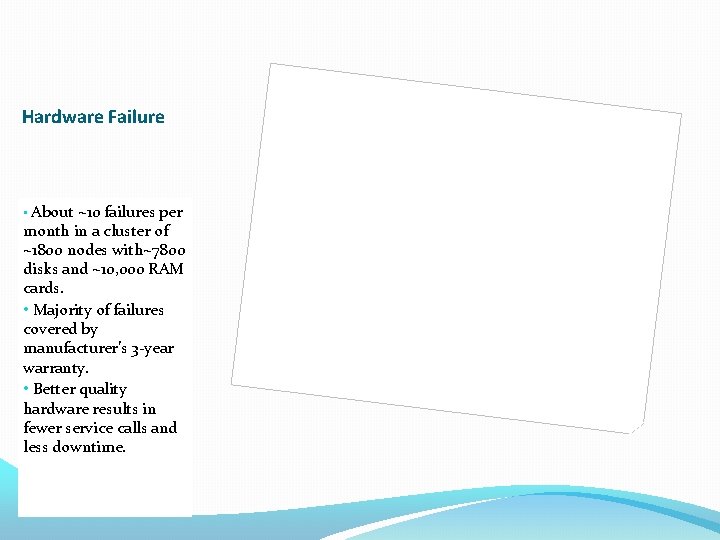

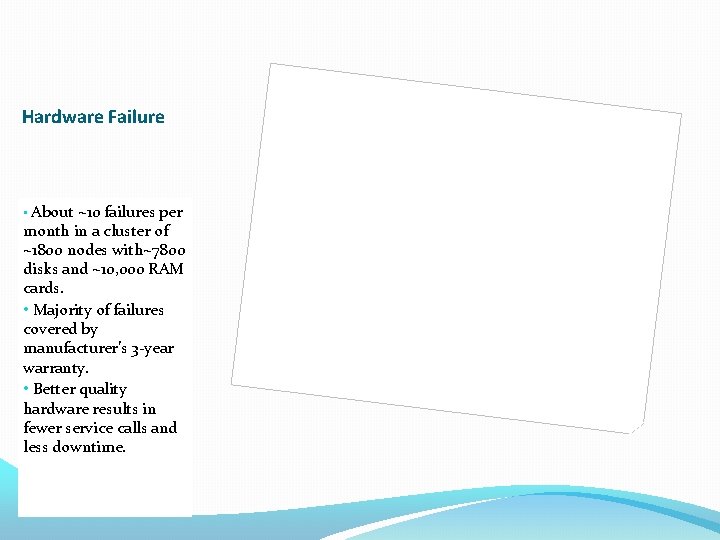

Hardware Failure

- About 10 failures per month in a cluster of ~1800 nodes with ~7800 disks and ~10,000 RAM cards.
- Majority of failures covered by manufacturer's 3-year warranty.
- Better-quality hardware results in fewer service calls and less downtime.
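To put the failure figures in perspective, the following minimal sketch converts them into a per-node annual rate. It assumes the quoted ~10 failures per month is a steady average and that each failure affects one node; both are simplifying assumptions, not statements from the slides.

```python
# Approximate figures quoted on the slide.
FAILURES_PER_MONTH = 10
NODES = 1800
WARRANTY_YEARS = 3


def annual_failure_rate(failures_per_month: float, nodes: int) -> float:
    """Expected hardware failures per node per year, as a fraction."""
    return failures_per_month * 12 / nodes


rate = annual_failure_rate(FAILURES_PER_MONTH, NODES)
print(f"Failures per node per year: {rate:.1%}")

# Assumes the rate stays constant over the warranty period.
calls_over_warranty = rate * WARRANTY_YEARS
print(f"Expected service calls per node over warranty: {calls_over_warranty:.2f}")
```

Under these assumptions, roughly one node in fifteen needs a service call in any given year, which is why warranty coverage matters for a small team.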

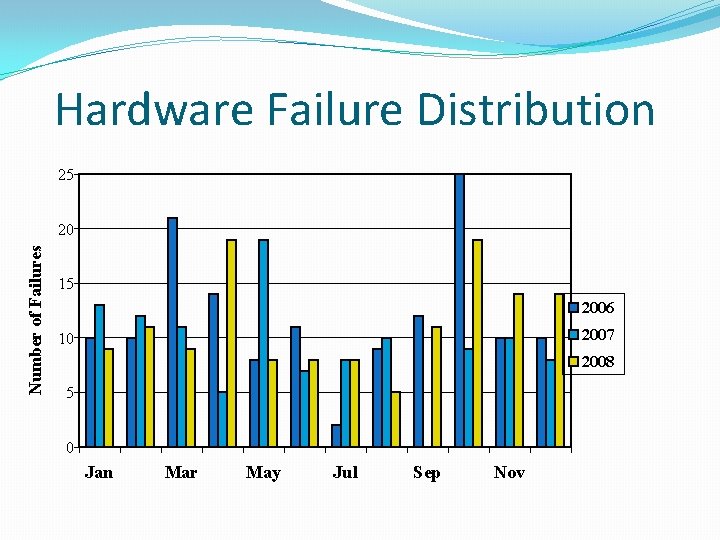

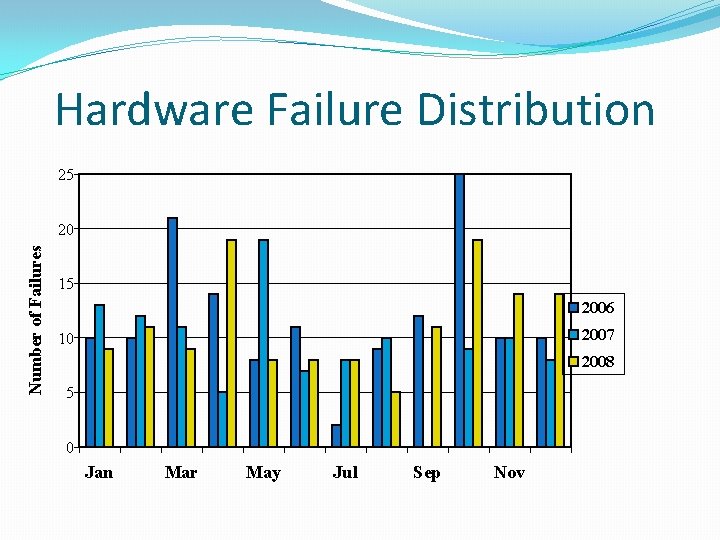

Hardware Failure Distribution (chart: number of failures by month for 2006, 2007 and 2008)

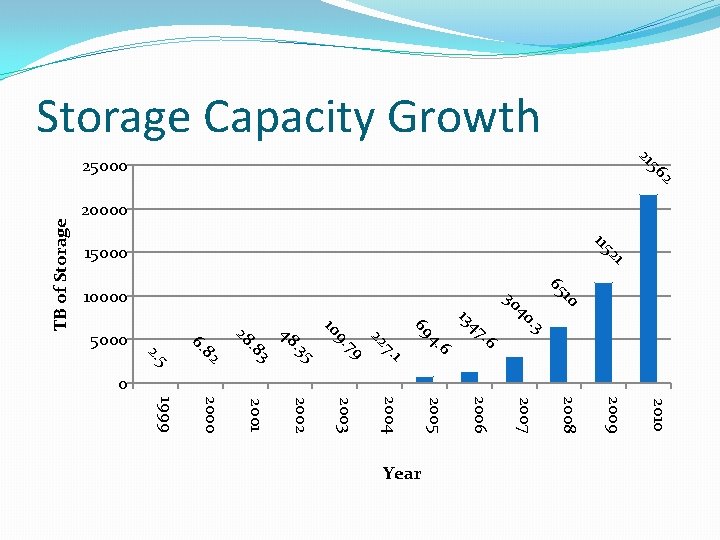

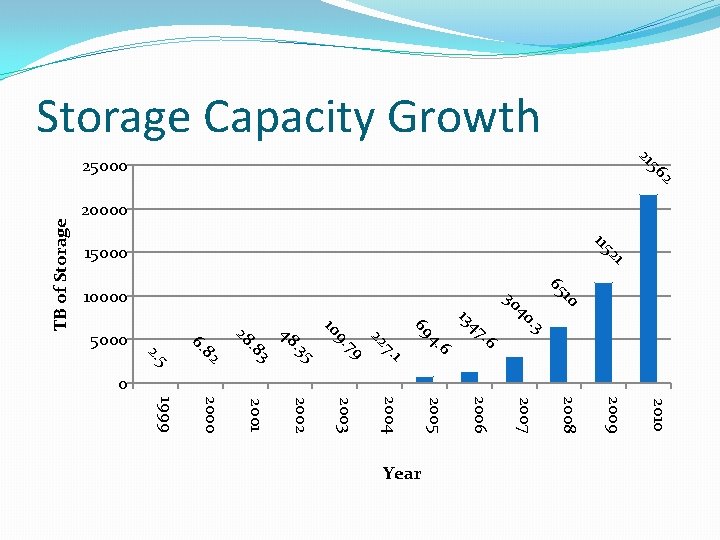

Storage Capacity Growth (chart: storage capacity in TB by year, 1999 to 2010)

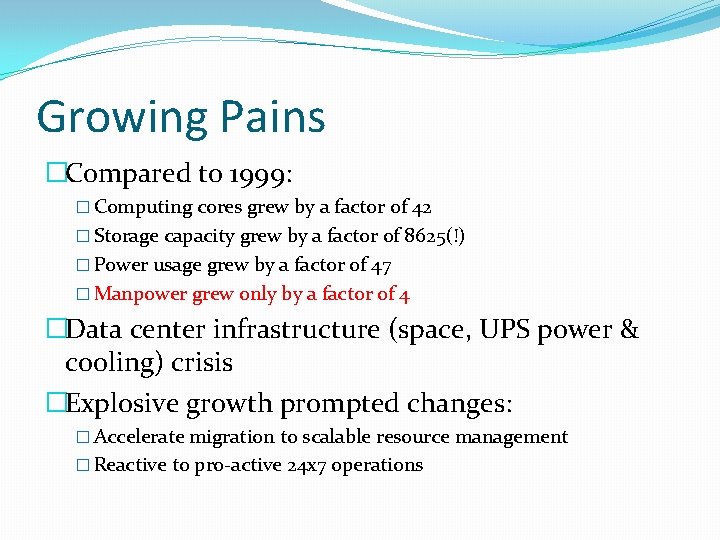

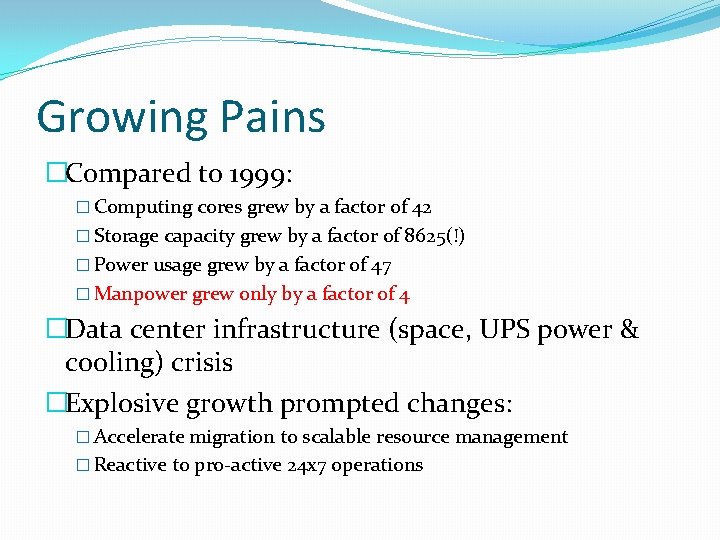

Growing Pains

- Compared to 1999:
  - Computing cores grew by a factor of 42
  - Storage capacity grew by a factor of 8625(!)
  - Power usage grew by a factor of 47
  - Manpower grew only by a factor of 4
- Data center infrastructure (space, UPS power & cooling) crisis
- Explosive growth prompted changes:
  - Accelerate migration to scalable resource management
  - Move from reactive to pro-active 24x7 operations
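The core-count growth factor can be checked against the data labels of the Facility Hardware Growth chart. This sketch assumes that the smallest label (328) is the 1999 value and the largest (13663) is the 2010 value; the chart text does not state the pairing explicitly.

```python
# Assumed endpoints, read from the chart's data labels.
CORES_1999 = 328
CORES_2010 = 13663
STAFF_GROWTH = 4  # manpower growth factor quoted on the slide


def growth_factor(start: float, end: float) -> float:
    """Ratio of the final value to the initial value."""
    return end / start


core_growth = growth_factor(CORES_1999, CORES_2010)
print(round(core_growth))  # 42, matching the slide

# Cores managed per staff member grew by roughly this factor.
print(f"{core_growth / STAFF_GROWTH:.1f}")  # 10.4
```

The second figure is the one that motivates the rest of the talk: each staff member is responsible for about ten times as many cores as in 1999.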

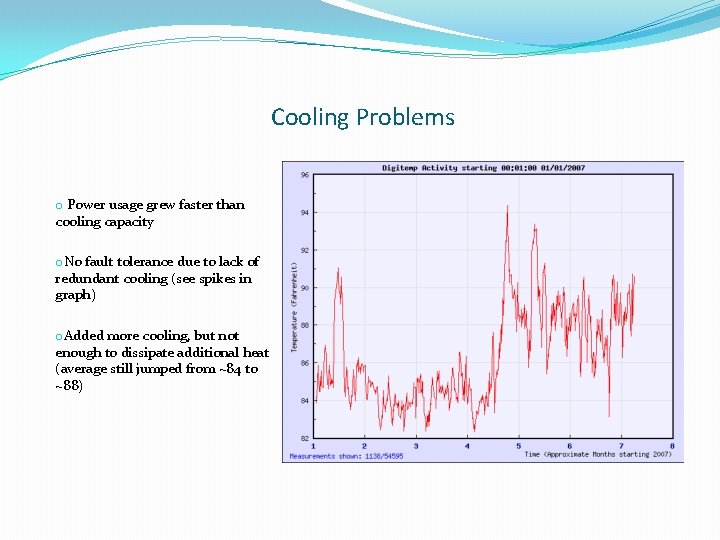

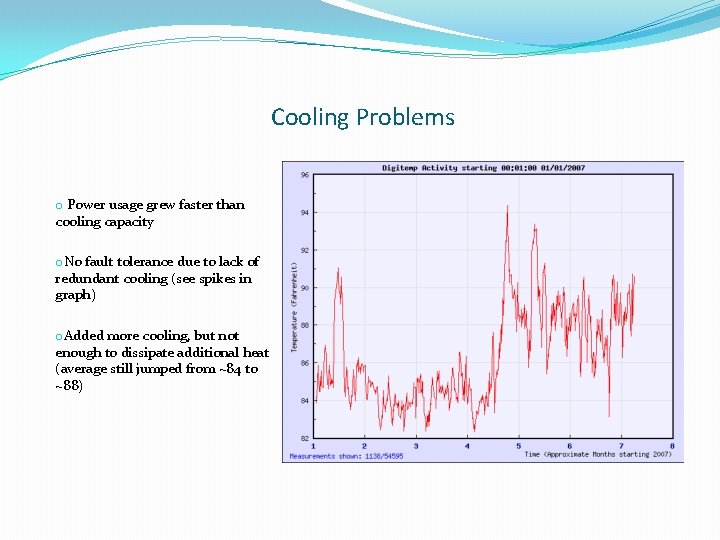

Cooling Problems

- Power usage grew faster than cooling capacity
- No fault tolerance due to lack of redundant cooling (see spikes in graph)
- Added more cooling, but not enough to dissipate additional heat (average still jumped from ~84 to ~88)

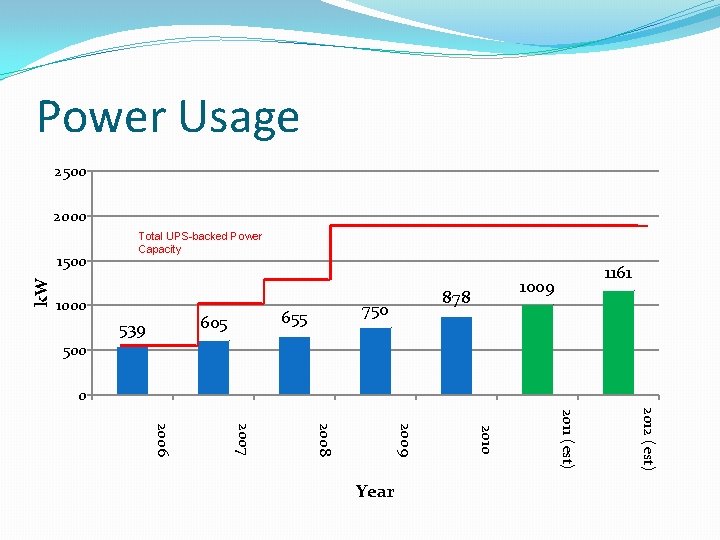

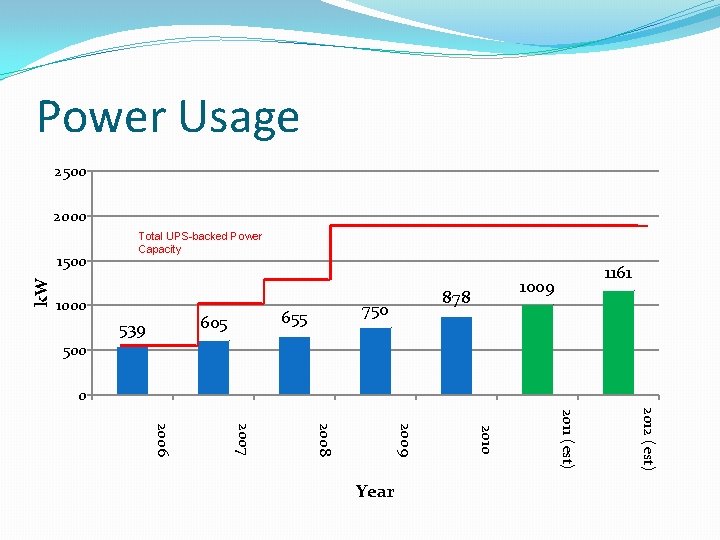

Power Usage (chart: power usage in kW by year, 2006 to 2012, with 2011 and 2012 estimated, shown against total UPS-backed power capacity)

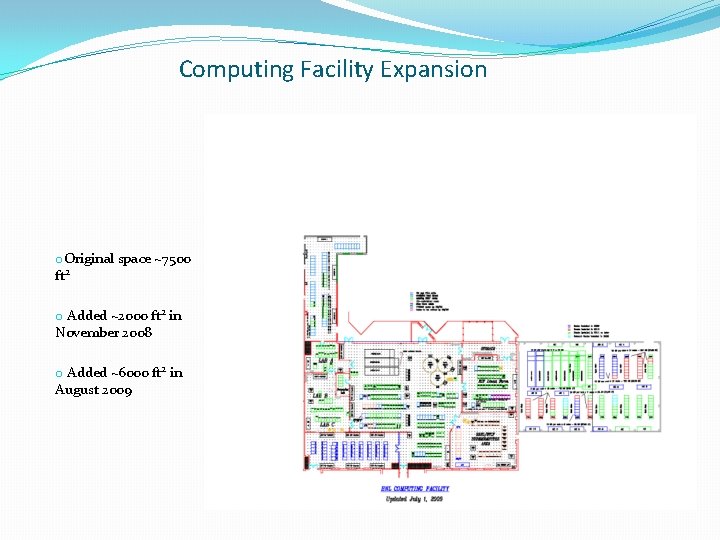

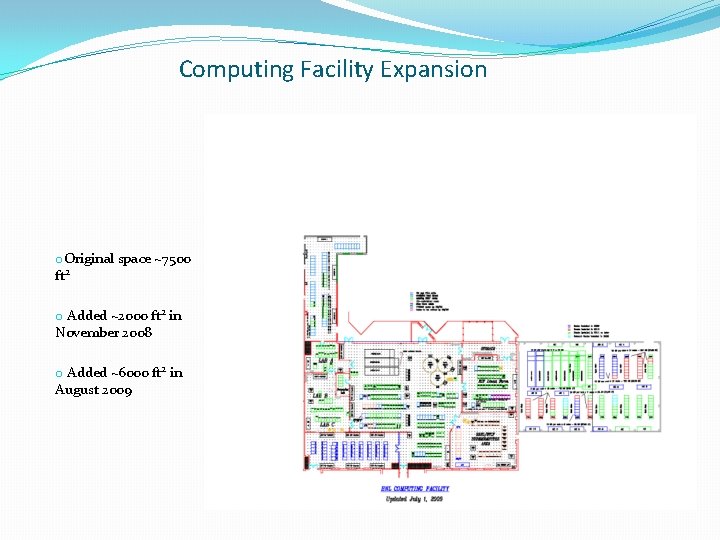

Computing Facility Expansion

- Original space ~7500 ft²
- Added ~2000 ft² in November 2008
- Added ~6000 ft² in August 2009

Data Center Expansion January 14, 2009

Data Center Expansion October 10, 2009

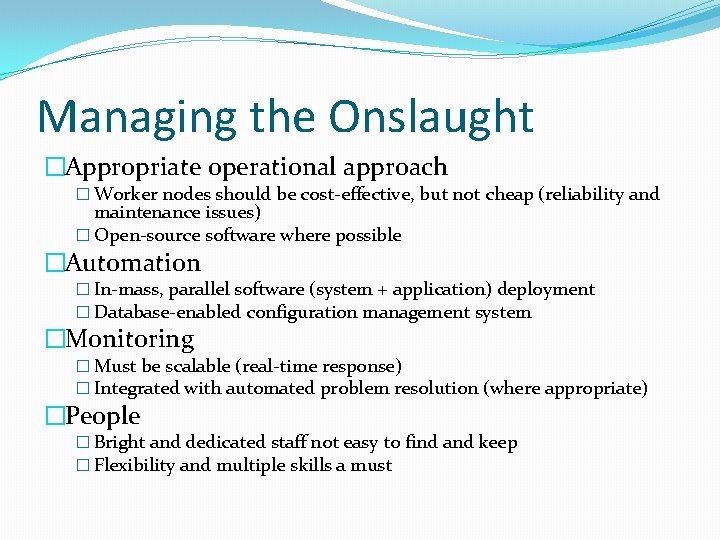

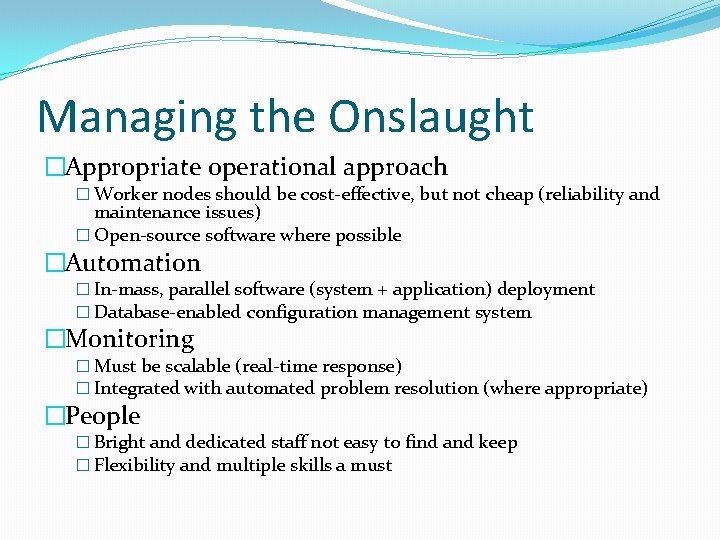

Managing the Onslaught

- Appropriate operational approach
  - Worker nodes should be cost-effective, but not cheap (reliability and maintenance issues)
  - Open-source software where possible
- Automation
  - En masse, parallel software (system + application) deployment
  - Database-enabled configuration management system
- Monitoring
  - Must be scalable (real-time response)
  - Integrated with automated problem resolution (where appropriate)
- People
  - Bright and dedicated staff not easy to find and keep
  - Flexibility and multiple skills a must

Staffing Level (chart: number of FTEs by year, 1999 to 2010)

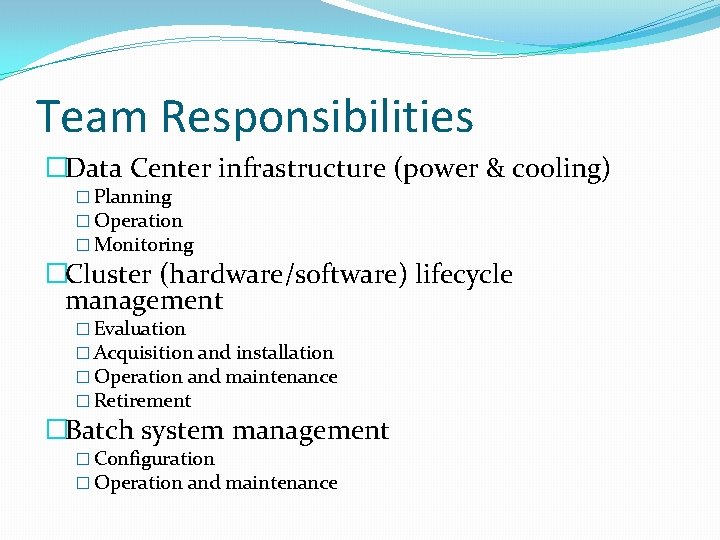

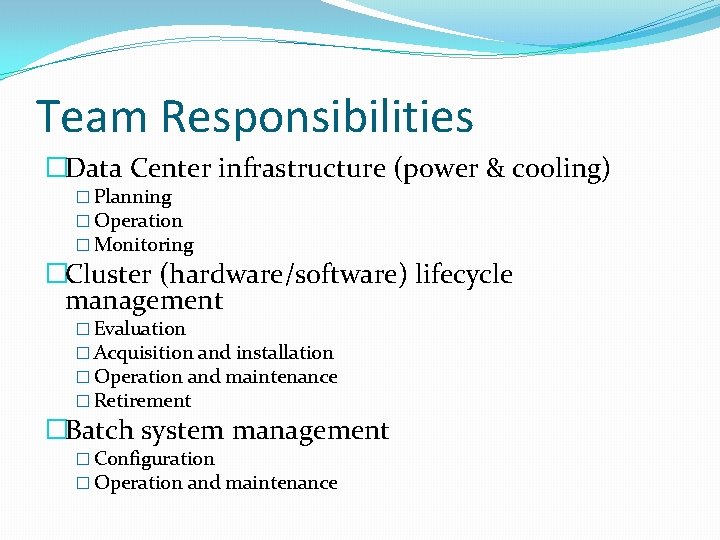

Team Responsibilities

- Data center infrastructure (power & cooling)
  - Planning
  - Operation
  - Monitoring
- Cluster (hardware/software) lifecycle management
  - Evaluation
  - Acquisition and installation
  - Operation and maintenance
  - Retirement
- Batch system management
  - Configuration
  - Operation and maintenance

Scalable Resource Management

- Hardware lifecycle management
  - Leverage supplier's infrastructure and experience to provide turnkey solution
  - Leverage BNL (not RACF) staff to dispose of retired hardware
- System software
  - Hardware validation with QA test
  - MySQL-based asset management system
  - Network-based OS installation
- Application software stack
  - Post-install process after OS installation
  - To be integrated with Puppet configuration management system

Pro-Active Operations

- Automated resource provisioning
  - Opening and blocking resources (with Nagios)
    - Management of disk usage
    - Management of client-side conditions (NFS, AFS, Condor, etc.)
    - Management of poor user practices (memory leaks, infinite loops, etc.)
  - Remote IPMI-based power management
- Redundancy
  - Cold and hot spares for critical services (Condor batch)
  - 24x7 monitoring and notification
- Emergency handling
  - Power & cooling
    - Automated monitoring mechanism
    - Auto shutdown of facility in emergencies
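The "opening and blocking resources" idea can be illustrated with a small decision function. This is only a sketch of the general pattern: the `NodeHealth` record, the function names and the 95% disk threshold are hypothetical and are not taken from the RACF implementation.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical threshold; the slides do not state the value used at RACF.
DISK_FULL_FRACTION = 0.95


@dataclass
class NodeHealth:
    """Snapshot of one worker node, as a monitoring probe might report it."""
    name: str
    disk_used_fraction: float  # 0.0 to 1.0
    nfs_ok: bool
    afs_ok: bool
    condor_ok: bool


def blocking_reasons(node: NodeHealth) -> List[str]:
    """Return the reasons (possibly none) for withholding a node from batch use."""
    reasons = []
    if node.disk_used_fraction >= DISK_FULL_FRACTION:
        reasons.append("disk nearly full")
    checks = (("NFS", node.nfs_ok), ("AFS", node.afs_ok), ("Condor", node.condor_ok))
    for service, healthy in checks:
        if not healthy:
            reasons.append(f"{service} client unhealthy")
    return reasons


def should_block(node: NodeHealth) -> bool:
    """Block while any reason holds; the node reopens once all checks pass."""
    return bool(blocking_reasons(node))
```

Returning the list of reasons rather than a bare boolean keeps the decision auditable, which helps when the same check also feeds a ticketing system.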

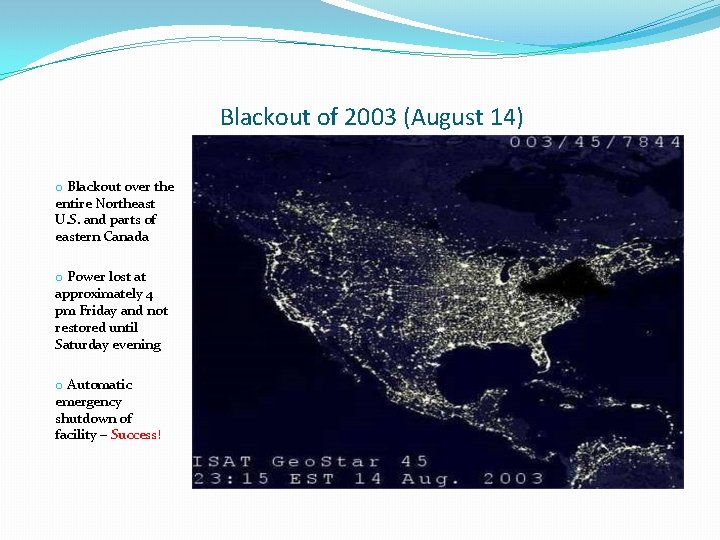

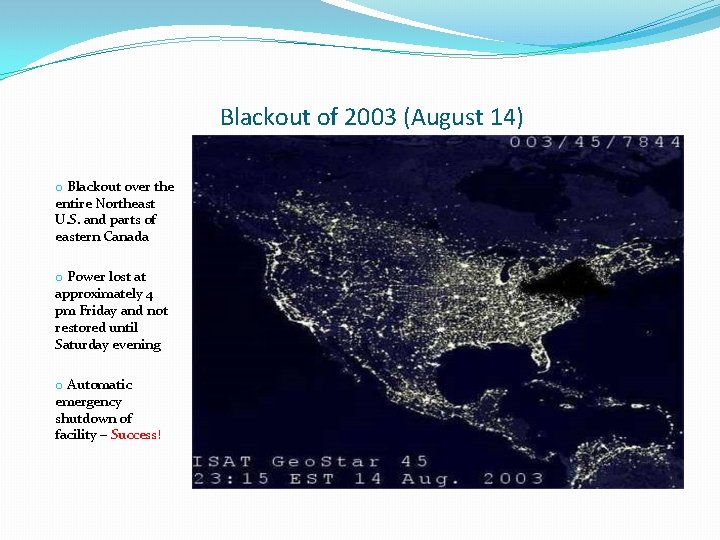

Blackout of 2003 (August 14)

- Blackout over the entire Northeast U.S. and parts of eastern Canada
- Power lost at approximately 4 pm Friday and not restored until Saturday evening
- Automatic emergency shutdown of facility – Success!

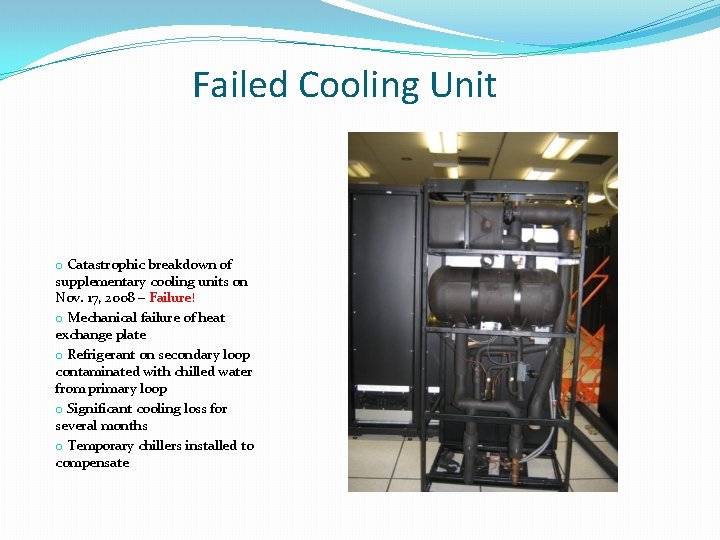

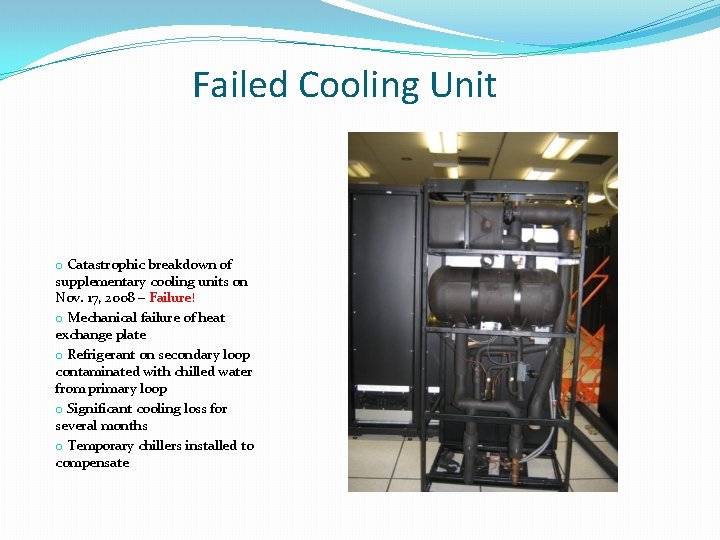

Failed Cooling Unit

- Catastrophic breakdown of supplementary cooling units on Nov. 17, 2008 – Failure!
- Mechanical failure of heat exchange plate
- Refrigerant on secondary loop contaminated with chilled water from primary loop
- Significant cooling loss for several months
- Temporary chillers installed to compensate

Monitoring

- Ready-made open-source tools
  - Nagios: services (Condor, AFS, NFS, etc.)
  - Ganglia: activity and resource utilization
- Written by RACF staff with open-source tools
  - Condor
  - Data center infrastructure
- Criteria for selecting monitoring tools
  - Free
  - Scalable
  - Easy to configure, deploy and maintain

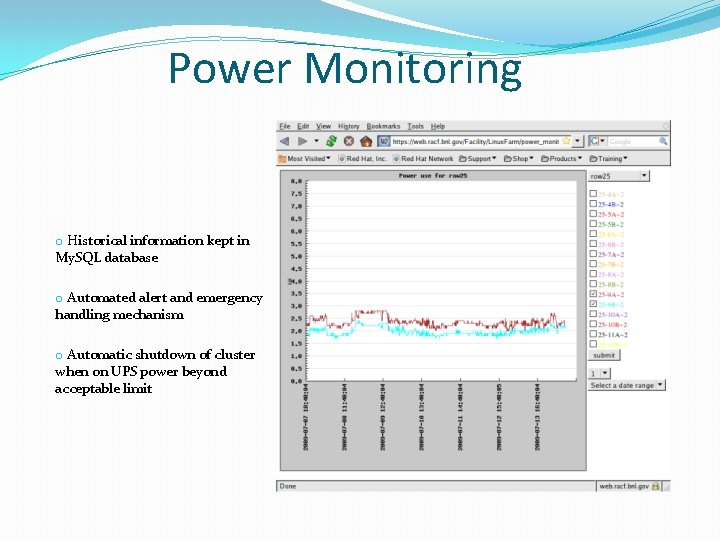

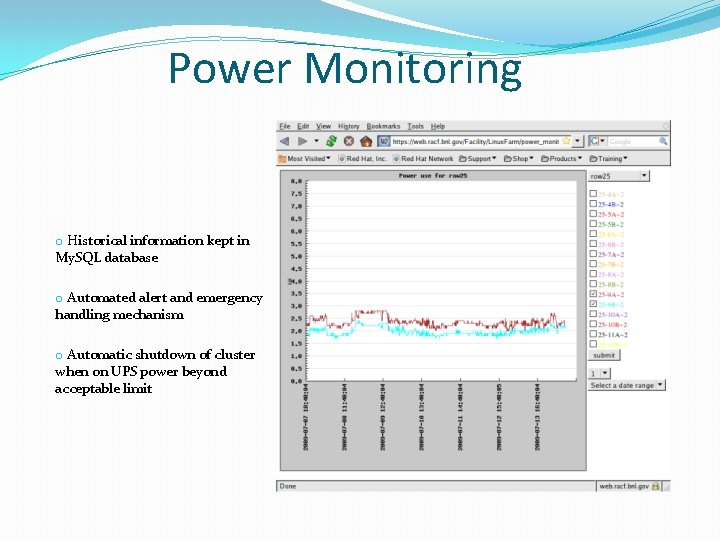

Power Monitoring

- Historical information kept in MySQL database
- Automated alert and emergency handling mechanism
- Automatic shutdown of cluster when on UPS power beyond acceptable limit
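The last rule above, shutting down once the facility has run on UPS power for too long, can be sketched as a small timer. The class name, the polling interface and the 600-second limit in the usage lines are illustrative assumptions; the slides do not give the actual limit or mechanism.

```python
from typing import Optional


class UpsShutdownTimer:
    """Tracks continuous time on UPS power and signals when a limit is reached."""

    def __init__(self, max_seconds_on_ups: float) -> None:
        self.max_seconds_on_ups = max_seconds_on_ups
        self._on_ups_since: Optional[float] = None

    def update(self, on_ups: bool, now: float) -> bool:
        """Record one power reading; return True when shutdown should begin."""
        if not on_ups:
            # Utility power is back, so the clock resets.
            self._on_ups_since = None
            return False
        if self._on_ups_since is None:
            self._on_ups_since = now
        return now - self._on_ups_since >= self.max_seconds_on_ups


# Hypothetical limit of 10 minutes on battery, polled with timestamps in seconds.
timer = UpsShutdownTimer(max_seconds_on_ups=600)
print(timer.update(on_ups=True, now=0))     # False: outage just started
print(timer.update(on_ups=True, now=300))   # False: still within the limit
print(timer.update(on_ups=True, now=600))   # True: limit reached
```

Passing the timestamp in explicitly keeps the logic testable without waiting on a real clock; a deployed monitor would feed it readings from the UPS and trigger the shutdown procedure on `True`.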

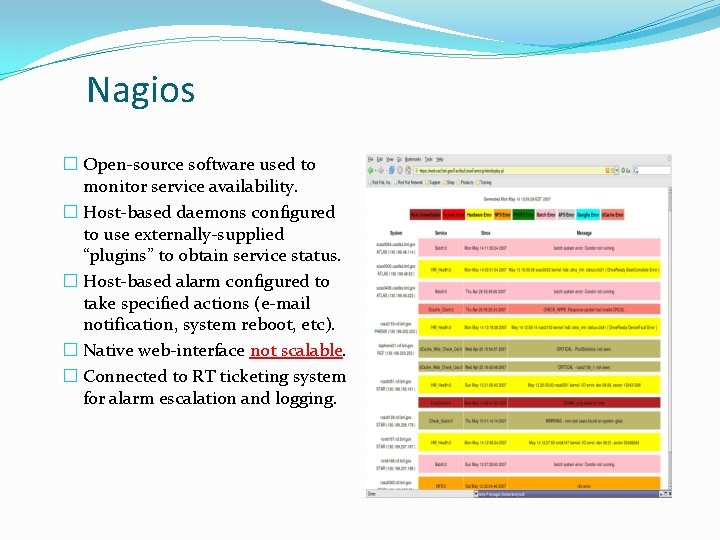

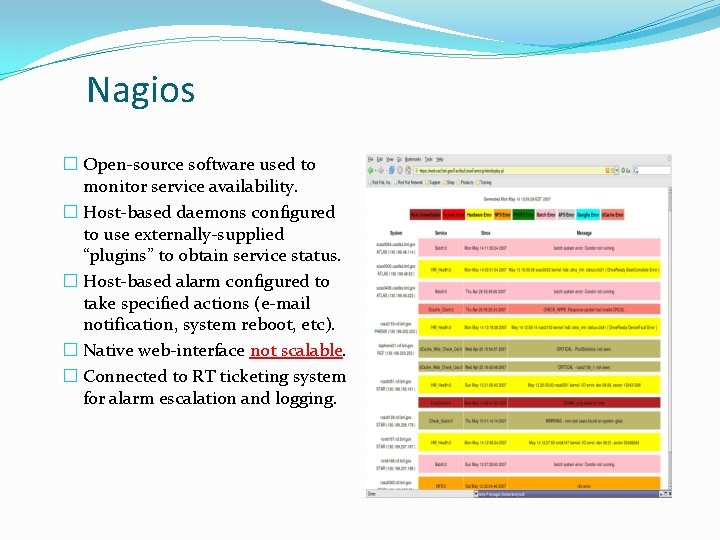

Nagios

- Open-source software used to monitor service availability.
- Host-based daemons configured to use externally-supplied "plugins" to obtain service status.
- Host-based alarms configured to take specified actions (e-mail notification, system reboot, etc.).
- Native web interface not scalable.
- Connected to RT ticketing system for alarm escalation and logging.

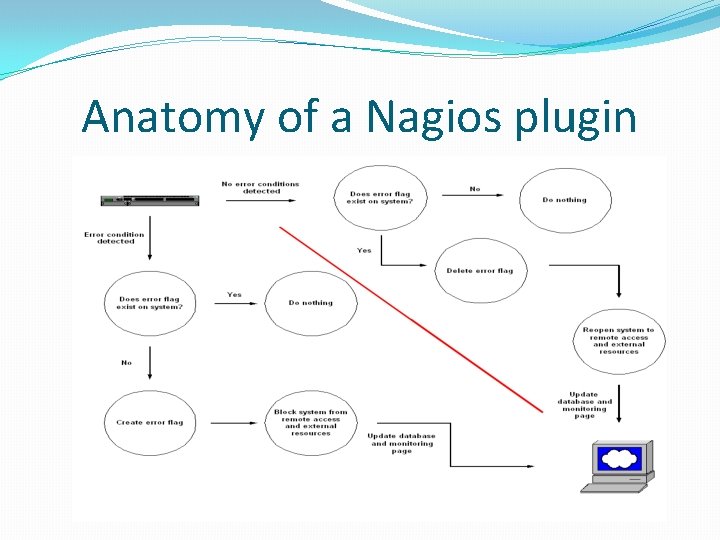

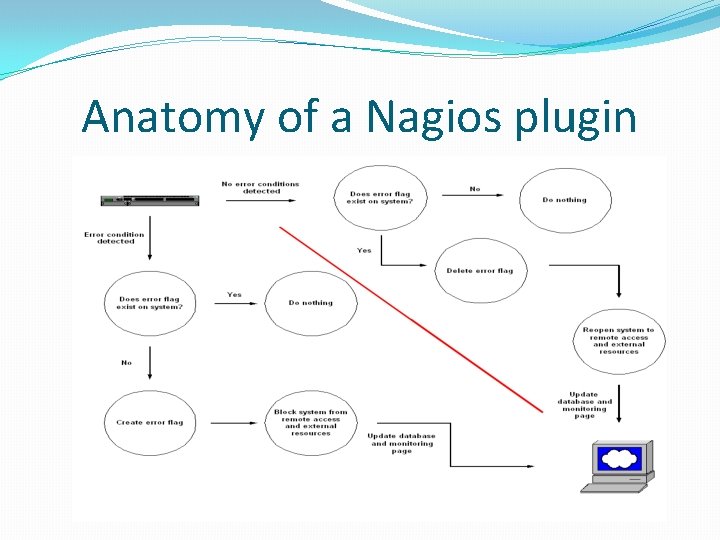

Anatomy of a Nagios plugin

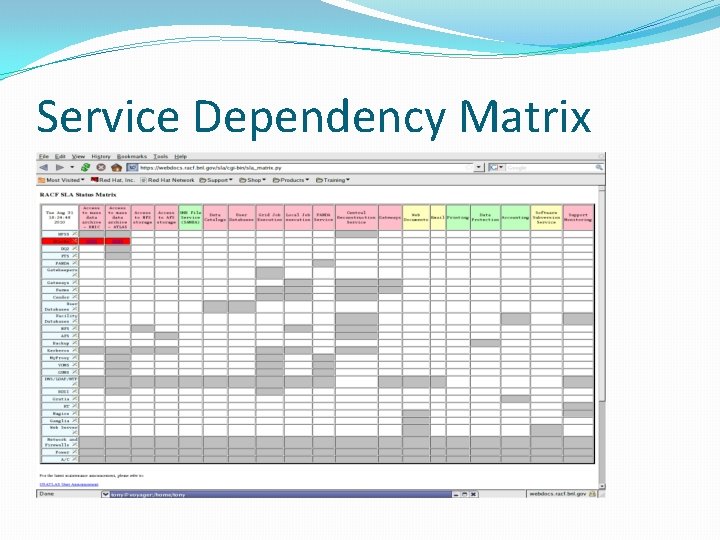

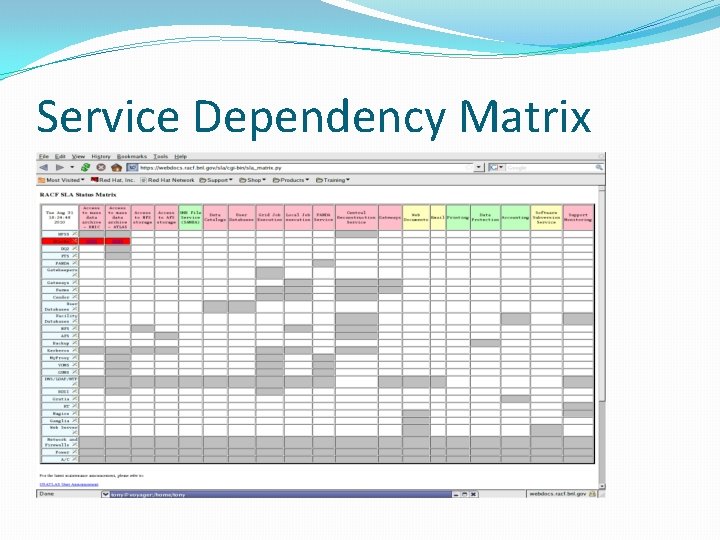
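For readers without the figure, here is a minimal sketch of what a Nagios plugin looks like. It follows the standard plugin convention of one status line on standard output, optionally followed by performance data after a `|`, plus a return code of 0 (OK), 1 (WARNING), 2 (CRITICAL) or 3 (UNKNOWN). The disk-usage check and its 80%/90% thresholds are illustrative choices and are not the plugin shown on the slide.

```python
import shutil
from typing import Tuple

# Return codes defined by the Nagios plugin convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def classify(used_pct: float, warn: float, crit: float) -> int:
    """Map a usage percentage onto a Nagios return code."""
    if used_pct >= crit:
        return CRITICAL
    if used_pct >= warn:
        return WARNING
    return OK


def check_disk(path: str = "/", warn: float = 80.0, crit: float = 90.0) -> Tuple[int, str]:
    """Return (return code, status line) for the filesystem holding `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - cannot read {path}: {exc}"
    used_pct = 100.0 * usage.used / usage.total
    code = classify(used_pct, warn, crit)
    perfdata = f"used_pct={used_pct:.1f}%;{warn:g};{crit:g}"
    return code, f"DISK {LABELS[code]} - {used_pct:.1f}% used on {path} | {perfdata}"


def main() -> int:
    """Print the status line and hand back the return code."""
    code, message = check_disk()
    print(message)
    return code
```

Installed as an actual plugin, the script would end by calling `sys.exit(main())` so that the Nagios daemon receives the return code as the process exit status.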

Service Dependency Matrix

Performance and Reliability

- Average of ~10 trouble tickets/month
- About 5.7 million batch jobs/month
- About 4.8 million CPU-hours/month of effective runtime
- Only 3% ineffective CPU-hours/month due to batch scheduling inefficiency
- Average ~87% CPU occupancy over last 12 months

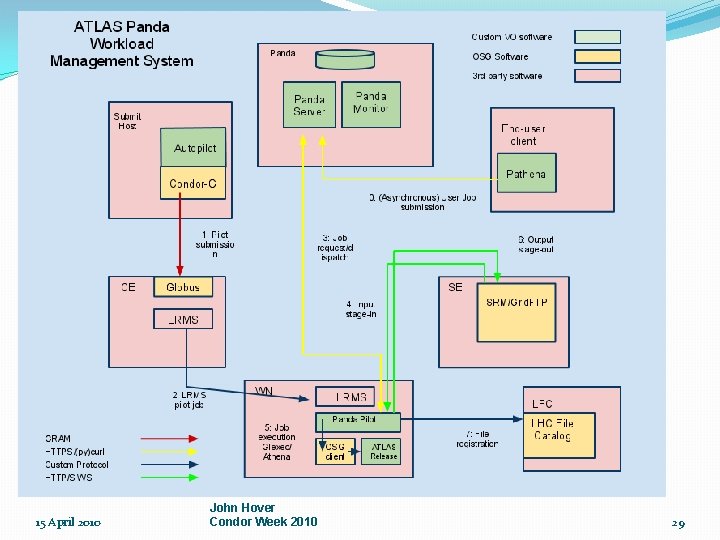

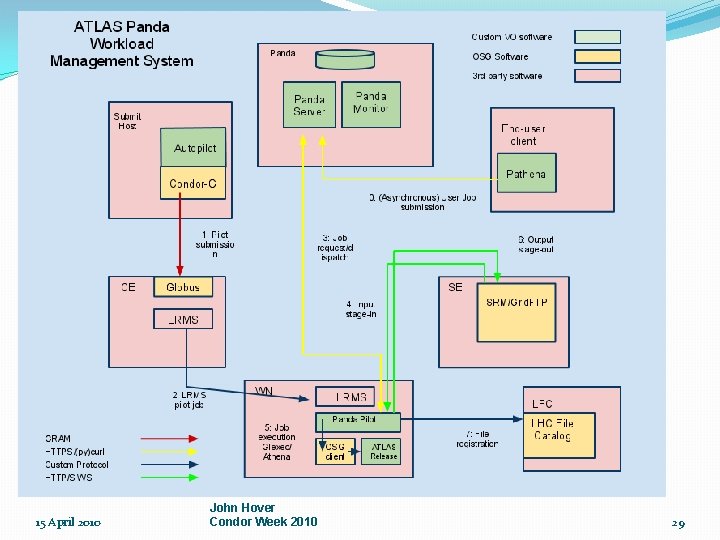

John Hover, Condor Week 2010, 15 April 2010

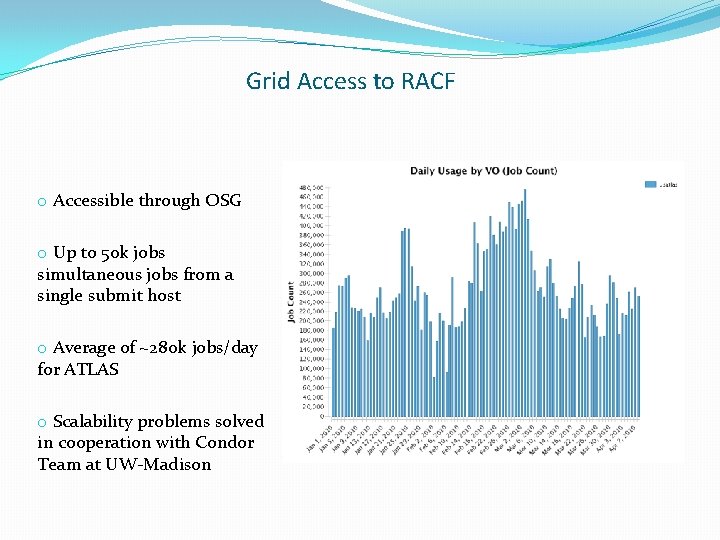

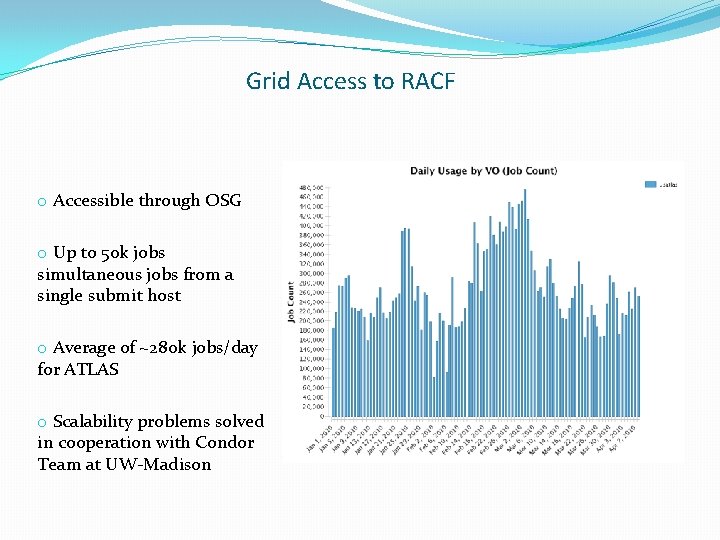

Grid Access to RACF

- Accessible through OSG
- Up to 50k simultaneous jobs from a single submit host
- Average of ~280k jobs/day for ATLAS
- Scalability problems solved in cooperation with Condor Team at UW-Madison

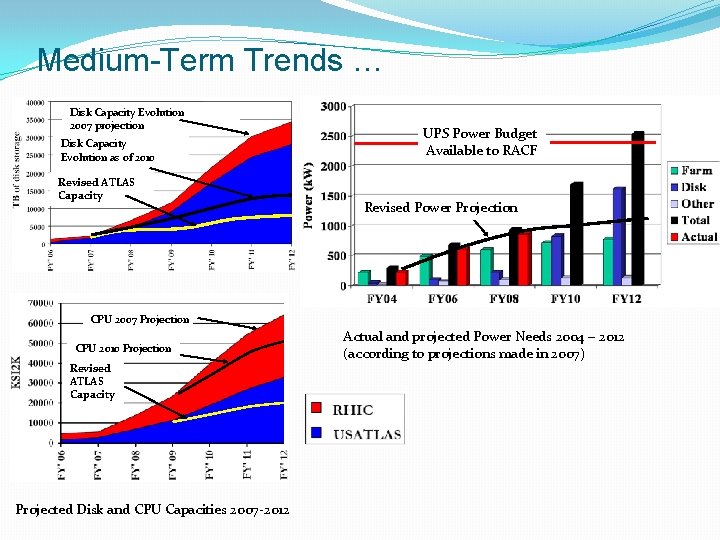

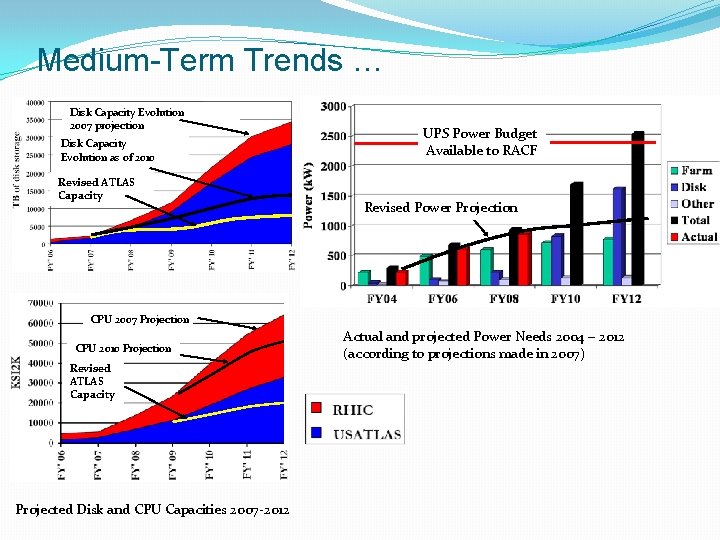

Medium-Term Trends …

- Chart: projected disk and CPU capacities, 2007 to 2012 (2007 projection, 2010 projection, revised ATLAS capacity)
- Chart: actual and projected power needs, 2004 to 2012, according to projections made in 2007 (UPS power budget available to RACF, revised power projection)

… and its consequences

- Storage systems will use an increasingly larger fraction of electrical power
- An increasing emphasis on power reliability and UPS back-up to keep storage systems available
- Any disruptions will be felt beyond local site
- A reflection of the distributed computing model

A Model for Tier 2 sites

- Small team must be:
  - Multi-talented
  - Productive and efficient
  - Available off-hours (dedicated people not trivial to find!)
- Automatic problem discovery and resolution where possible (e.g., Nagios, emergency handling, etc.); sensible monitoring choices a MUST
- Leverage open-source tools and external expertise
- Do NOT overlook power and cooling issues

Summary

- A 2,000+ node cluster has been operated successfully by a group of ~4 people over the past decade
- An appropriate model for manpower-limited Tier 2 sites
- Some significant service disruptions over the past decade due to infrastructure issues
- Increased staff productivity through selective automation, mix of commercial/open-source software and smart monitoring