EDUCATOR EFFECTIVENESS IN WISCONSIN

Katharine Rainey, Director of Educator Effectiveness, Department of Public Instruction

Bradley Carl, Ph.D., Researcher and Associate Director, Value-Added Research Center

HOW WI DIFFERS

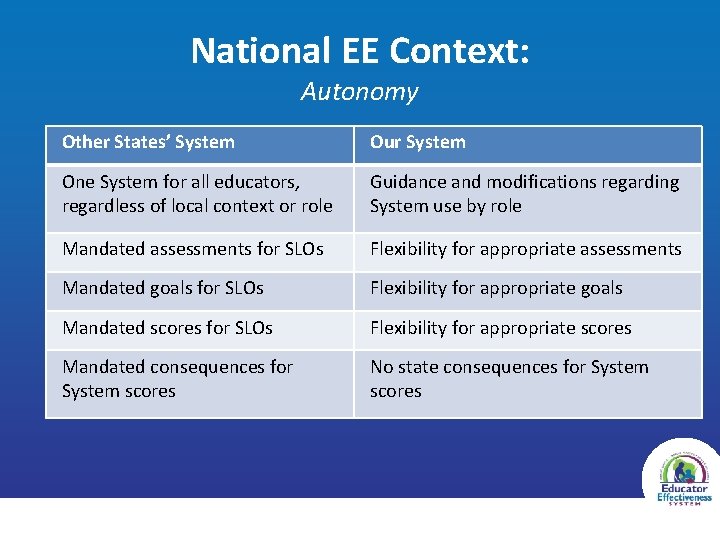

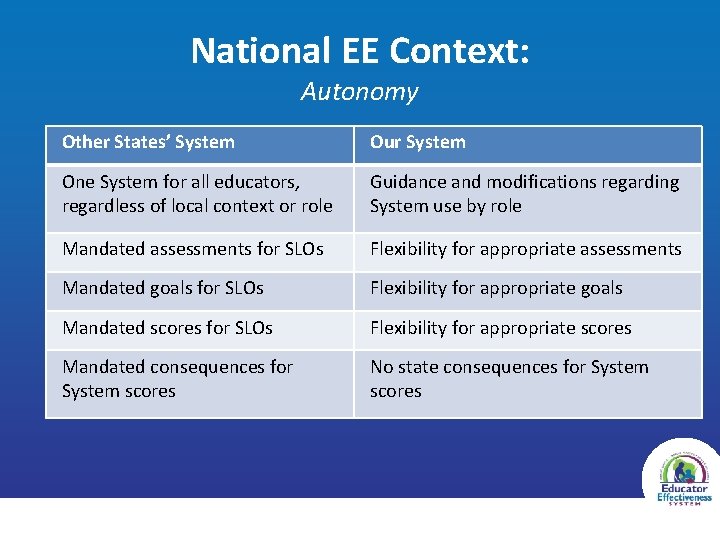

National EE Context: Autonomy

| Other States’ System | Our System |
|---|---|
| One System for all educators, regardless of local context or role | Guidance and modifications regarding System use by role |
| Mandated assessments for SLOs | Flexibility for appropriate assessments |
| Mandated goals for SLOs | Flexibility for appropriate goals |
| Mandated scores for SLOs | Flexibility for appropriate scores |
| Mandated consequences for System scores | No state consequences for System scores |

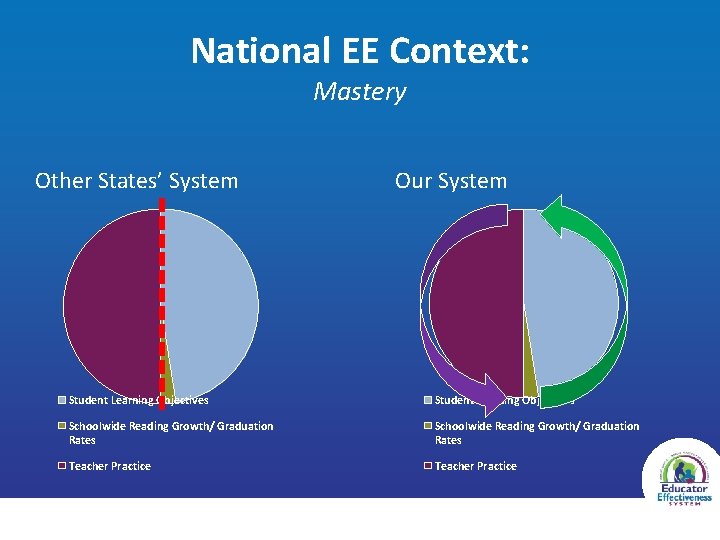

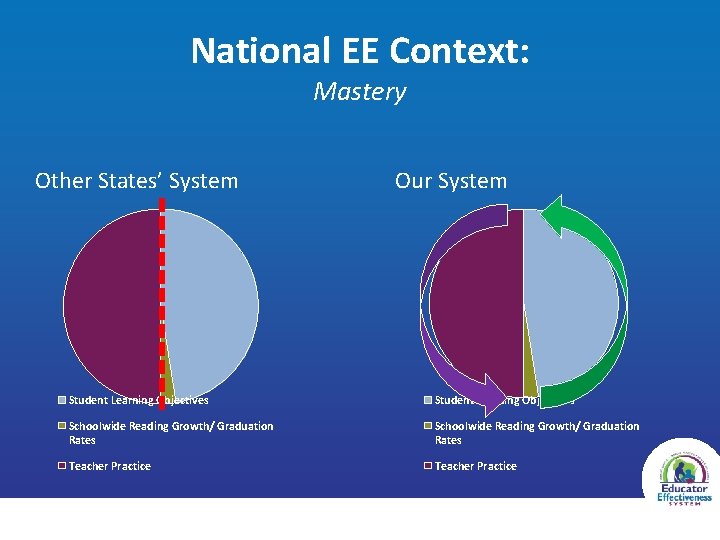

National EE Context: Mastery

Other States’ System vs. Our System
• Student Learning Objectives
• Schoolwide Reading Growth/Graduation Rates
• Teacher Practice

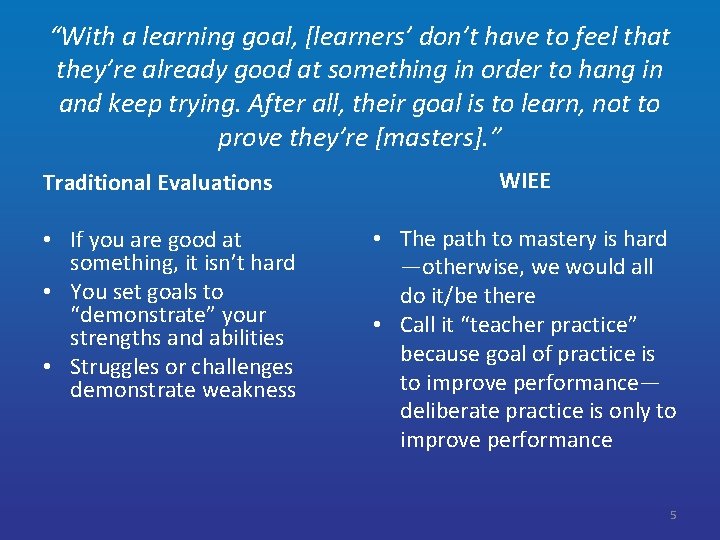

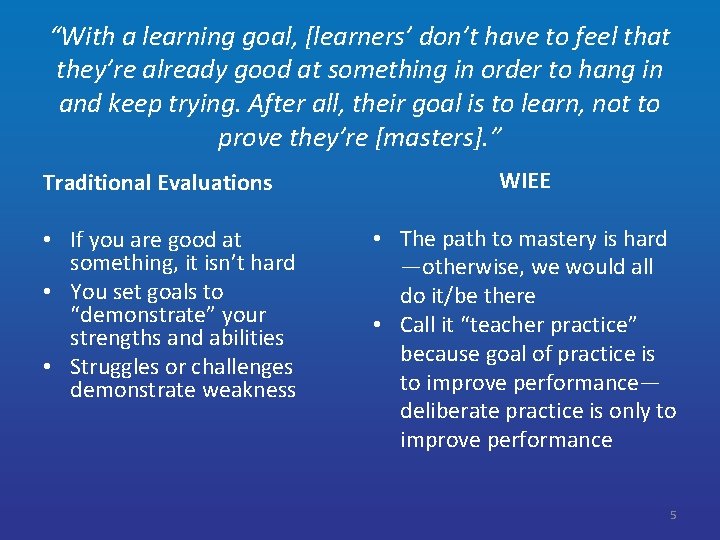

“With a learning goal, [learners] don’t have to feel that they’re already good at something in order to hang in and keep trying. After all, their goal is to learn, not to prove they’re [masters].”

Traditional Evaluations
• If you are good at something, it isn’t hard
• You set goals to “demonstrate” your strengths and abilities
• Struggles or challenges demonstrate weakness

WIEE
• The path to mastery is hard; otherwise, we would all already be there
• We call it “teacher practice” because the goal of practice is to improve performance; deliberate practice exists only to improve performance

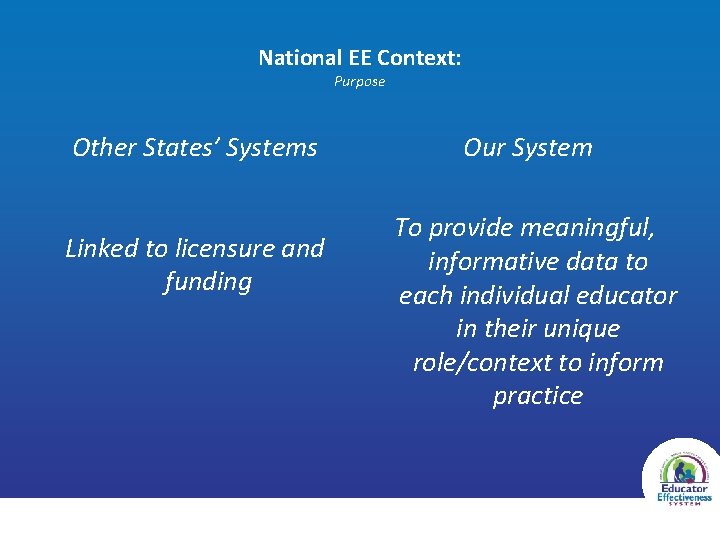

National EE Context: Purpose

| Other States’ Systems | Our System |
|---|---|
| Linked to licensure and funding | To provide meaningful, informative data to each individual educator, in their unique role and context, to inform practice |

WISCONSIN: Informing Others
• Other states
• USDE
• Other countries
• Teachscape
• Danielson Group

ABOUT TEACHSCAPE

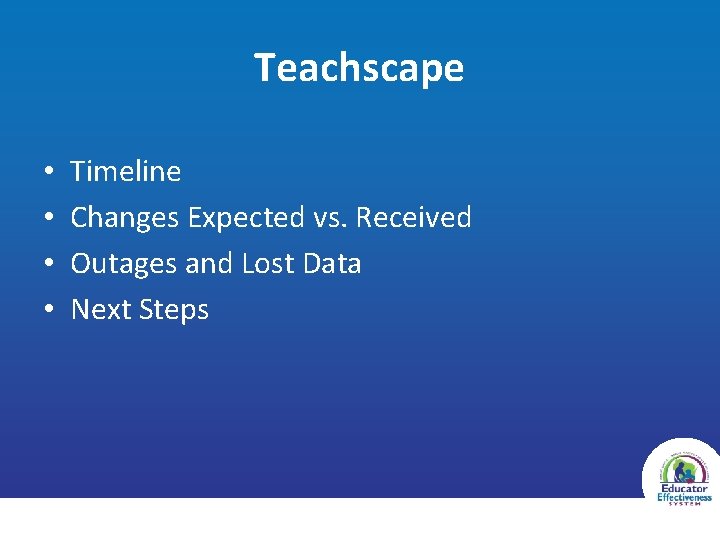

Teachscape
• Timeline Changes
• Expected vs. Received
• Outages and Lost Data
• Next Steps

THE EFFECTIVENESS CYCLE

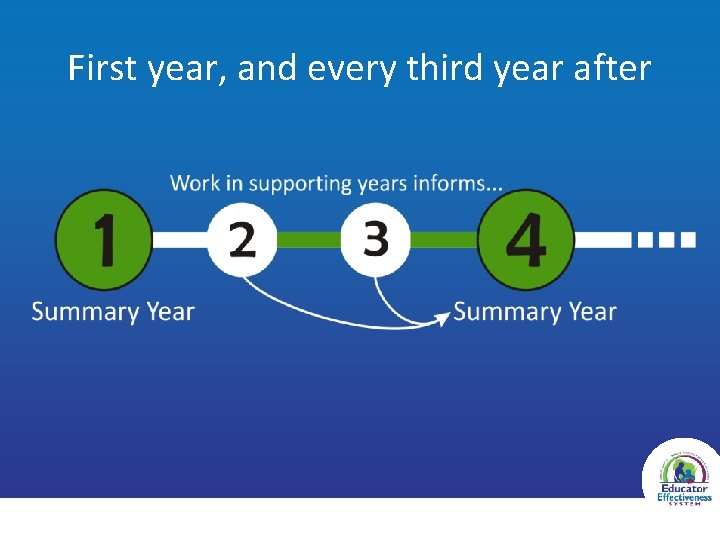

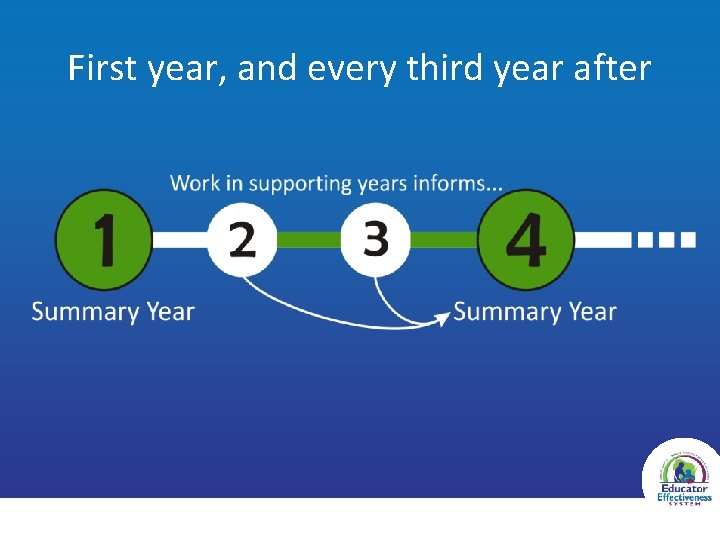

First year, and every third year after

MULTIPLE MEASURES

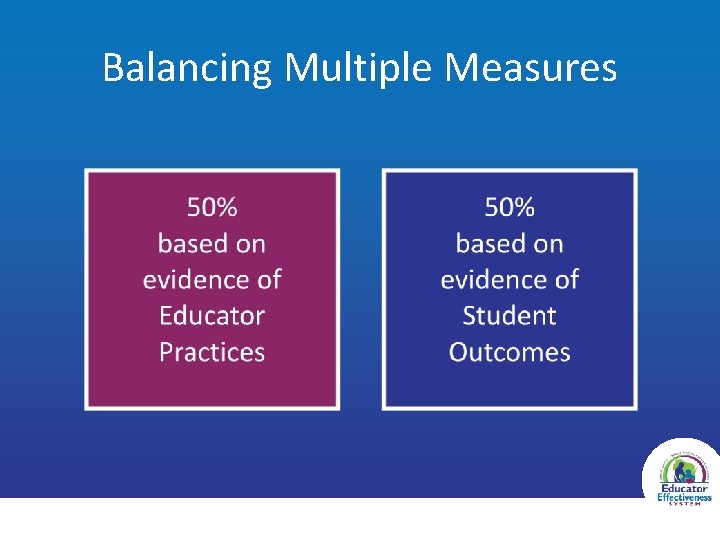

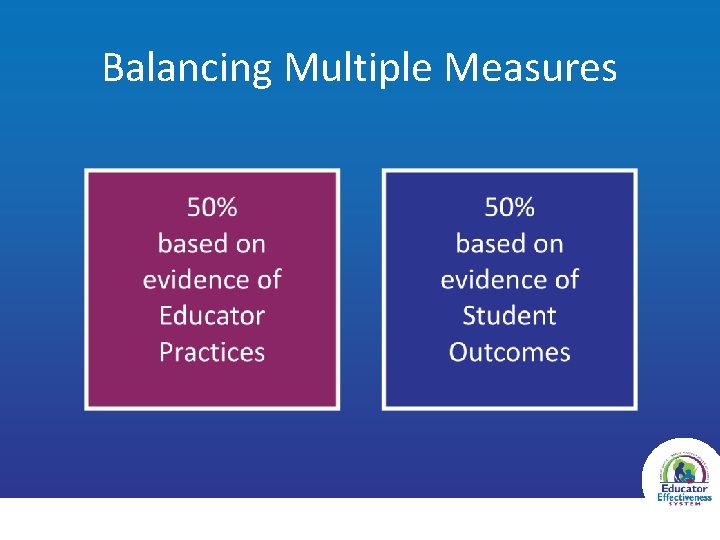

Balancing Multiple Measures

PRACTICE

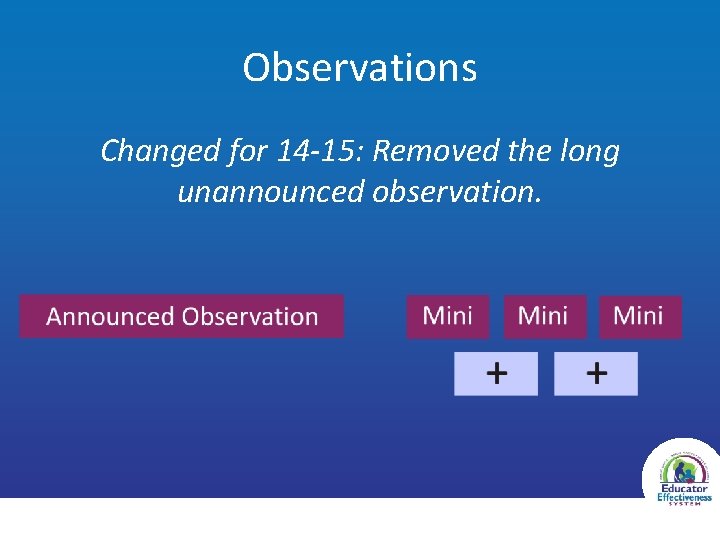

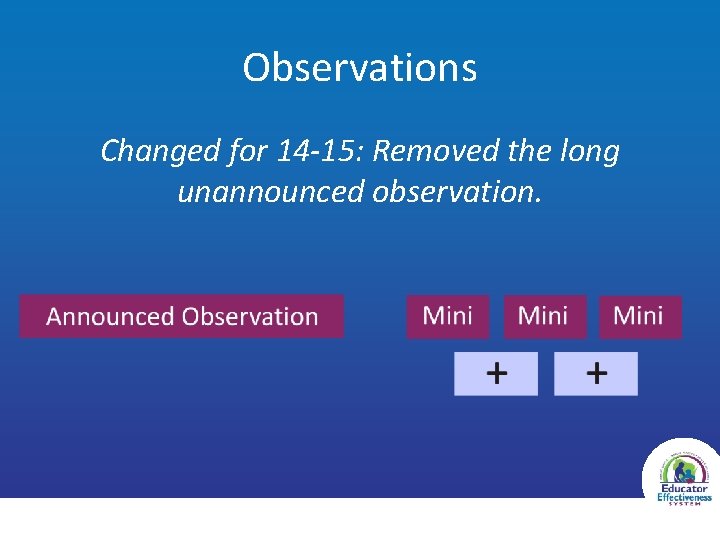

Observations

Changed for 2014-15: Removed the long unannounced observation.


STUDENT OUTCOMES

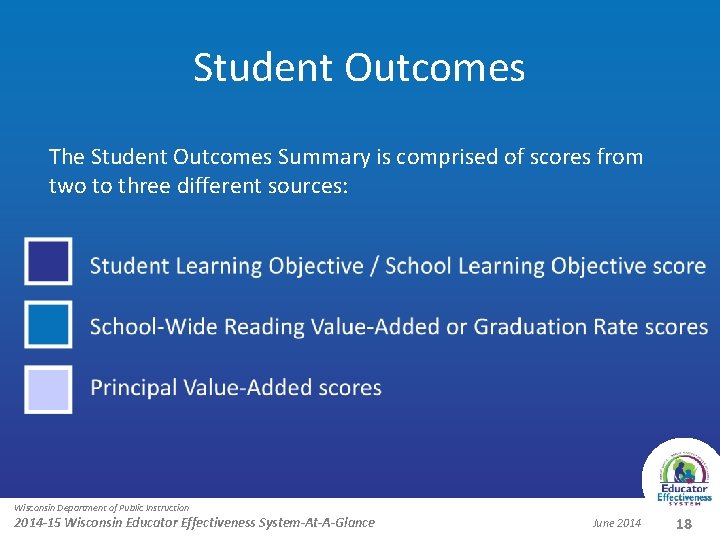

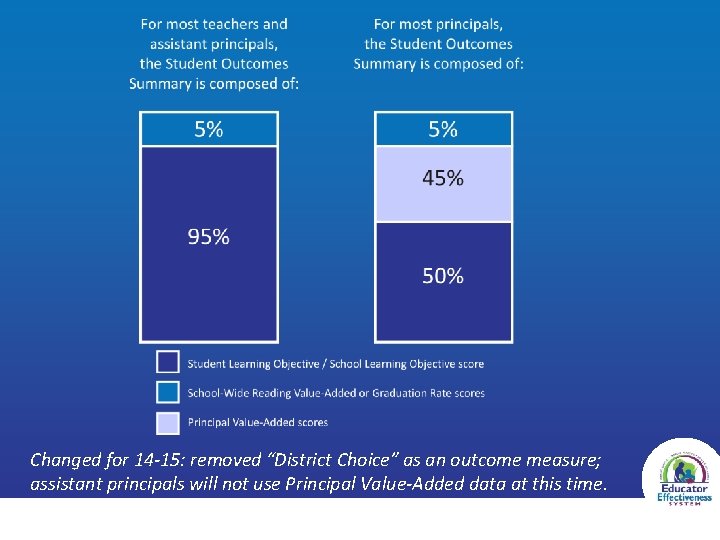

Student Outcomes

The Student Outcomes Summary comprises scores from two or three different sources:

Wisconsin Department of Public Instruction, 2014-15 Wisconsin Educator Effectiveness System-At-A-Glance, June 2014

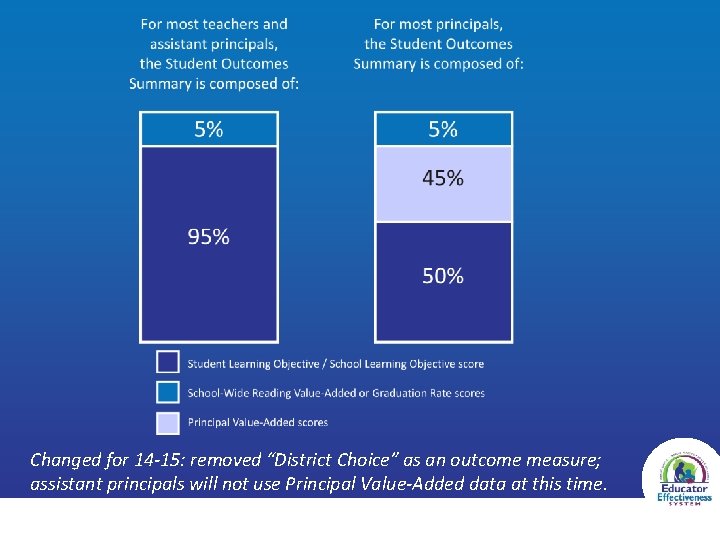

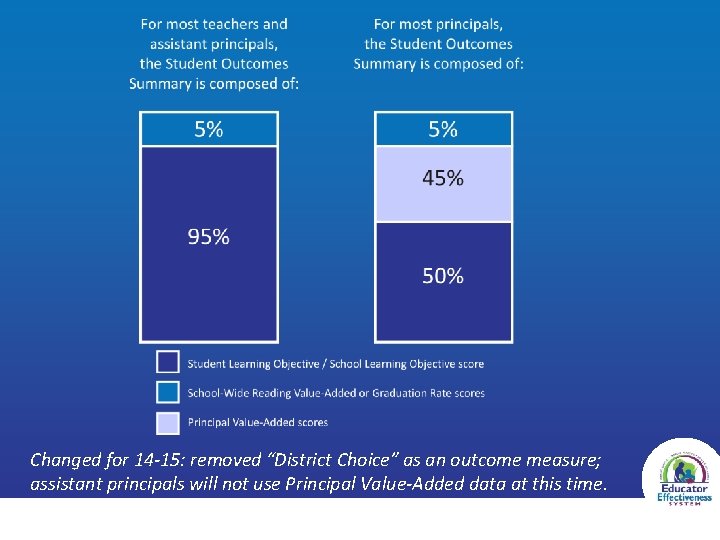

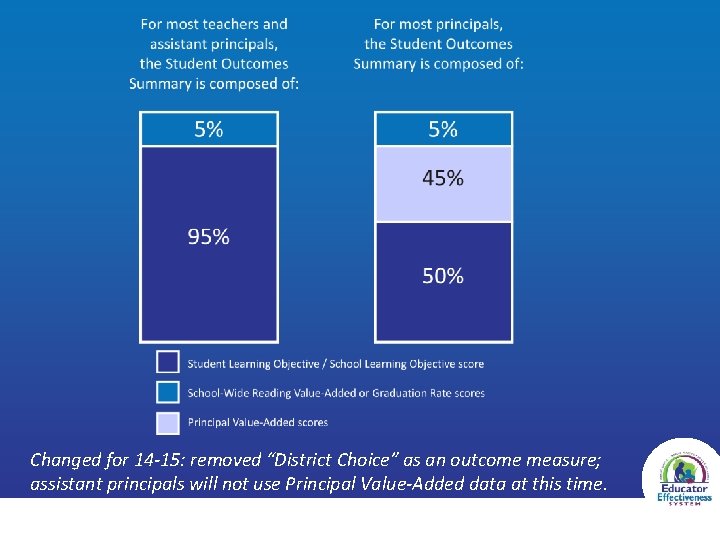

Changed for 2014-15: Removed “District Choice” as an outcome measure; assistant principals will not use Principal Value-Added data at this time.

Value-Added

Bradley Carl, Ph.D., Researcher and Associate Director, Value-Added Research Center (VARC), UW-Madison

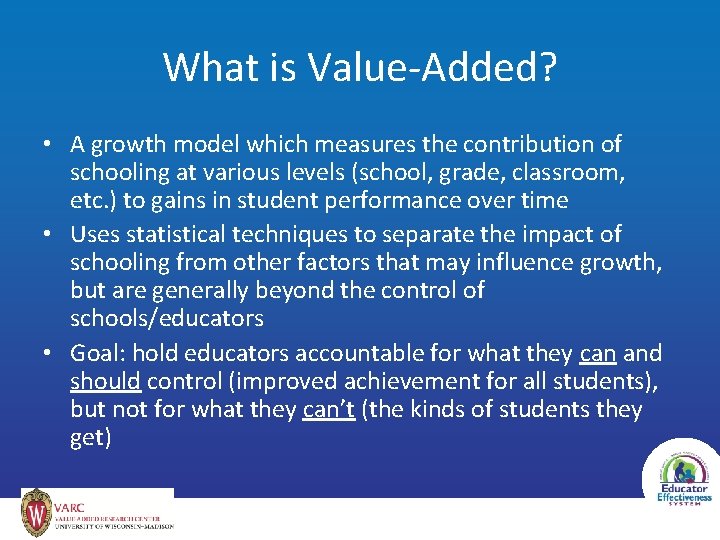

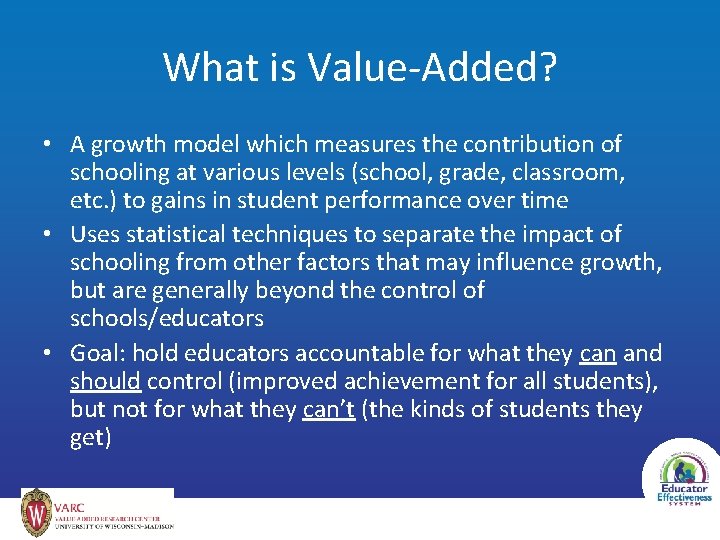

What is Value-Added?
• A growth model that measures the contribution of schooling at various levels (school, grade, classroom, etc.) to gains in student performance over time
• Uses statistical techniques to separate the impact of schooling from other factors that may influence growth but are generally beyond the control of schools and educators
• Goal: hold educators accountable for what they can and should control (improved achievement for all students), but not for what they can’t (the kinds of students they get)

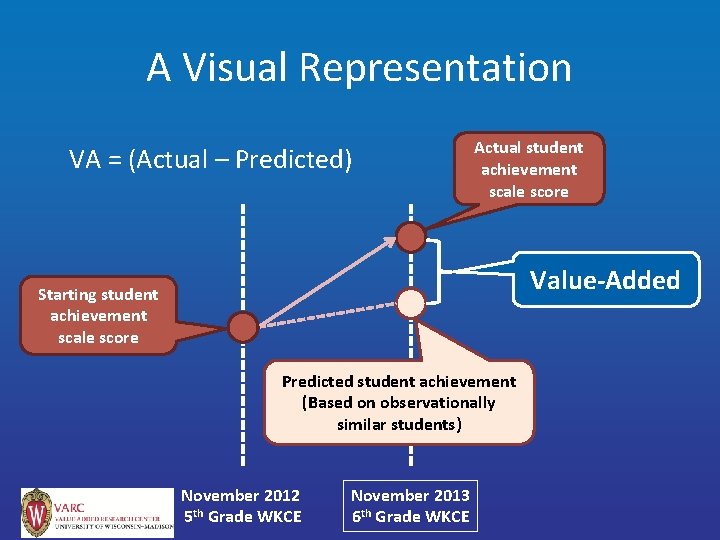

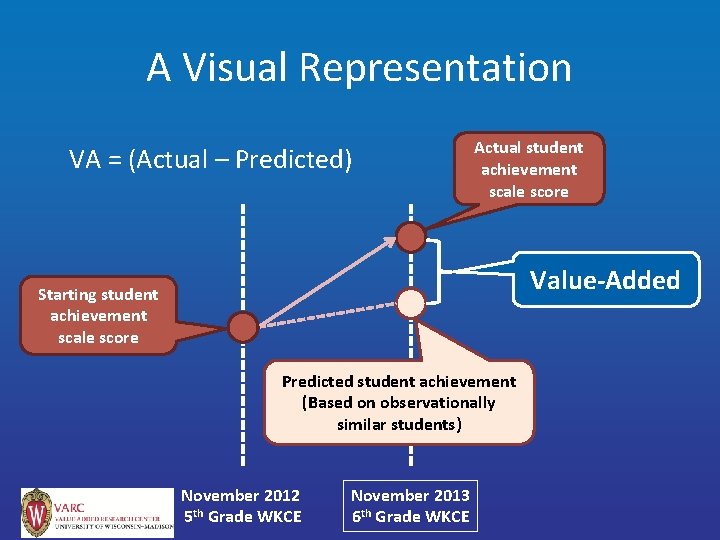

A Visual Representation

Value-Added (VA) = Actual − Predicted

A student’s starting achievement scale score (November 2012, 5th Grade WKCE) is used to predict their November 2013 (6th Grade WKCE) achievement scale score, based on observationally similar students. Value-added is the difference between the actual and the predicted scale score.
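To make the Actual − Predicted idea concrete, here is a minimal sketch in Python. It assumes a single-predictor linear regression of the current-year scale score on the prior-year scale score as a stand-in for “observationally similar students”; this is not VARC’s model, which adjusts for additional factors. The function names and all scores are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    return y_bar - b * x_bar, b


def value_added(students):
    """students: list of (group, prior_score, current_score) tuples.

    Predicts each current score from the prior score with one regression
    fitted on all students (a simplifying assumption), then averages
    (actual - predicted) within each group.
    """
    a, b = fit_line([s[1] for s in students], [s[2] for s in students])
    residuals = {}
    for group, prior, current in students:
        predicted = a + b * prior
        residuals.setdefault(group, []).append(current - predicted)
    return {group: mean(r) for group, r in residuals.items()}


# Hypothetical classrooms: (classroom, Nov 2012 grade 5 score, Nov 2013 grade 6 score)
students = [
    ("A", 400, 430), ("A", 420, 450),
    ("B", 400, 410), ("B", 420, 430),
]
print(value_added(students))  # {'A': 10.0, 'B': -10.0}
```

Because least-squares residuals average to zero across all students, value-added in this sketch is relative: classroom A’s students scored 10 points above prediction and classroom B’s 10 points below.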

Value-Added in Wisconsin’s EE System
• School-level value-added (Reading only)
• Principal value-added
• Teacher value-added

Value-Added in Wisconsin’s EE System
• School-level Reading (for most elementary and middle schools; 5% of the Student Outcomes Summary):
  – Reflects the wish of the EE Design Team to “message” shared responsibility for literacy instruction across the curriculum

Value-Added in Wisconsin’s EE System
• Principal value-added (PVA), for most elementary and middle school principals:
  – Differs from schoolwide value-added by:
    • Building in an “adjustment period” for principals who are new to their building (because principal impact on student performance is less direct, and initial years mostly reflect the effects of the prior principal)
    • Accounting for school starting point (to avoid creating disincentives to join lower-performing or higher-performing schools)
    • Incorporating PVA from previous assignments in WI, where available
  – See handout

Value-Added in Wisconsin’s EE System
• Teacher-level value-added (for teachers responsible for Reading and Math):
  – Will be added to the Student Outcomes Summary in the future, when data systems fully support precise associations between students, teachers, and courses

Student Learning Objectives/School Learning Objectives (SLOs)

Changed for 2014-15: Reduced from two SLOs annually to one; the evaluator no longer approves the EEP or SLO goals. (NOTE: The process should still be highly collaborative.)

Creating the SLO Score
• The SLO score is based on both outcome results and process, rather than on results only
• The educator owns the process in order to LEARN the process; the focus is on the process and formative conversations more than on the score

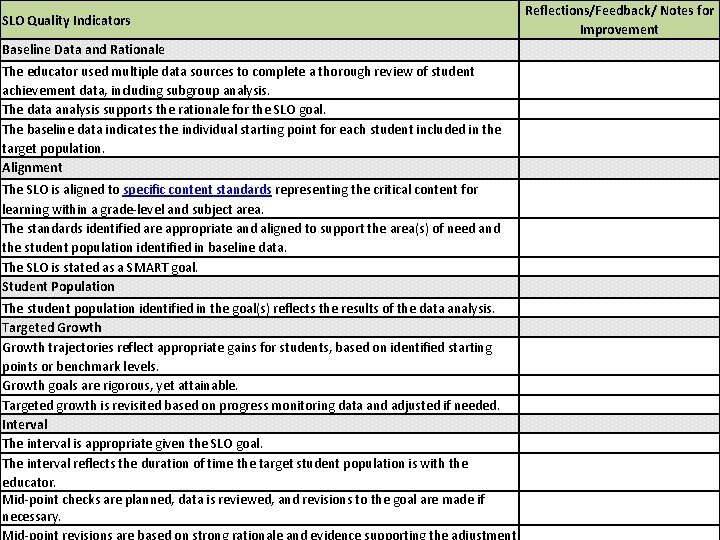

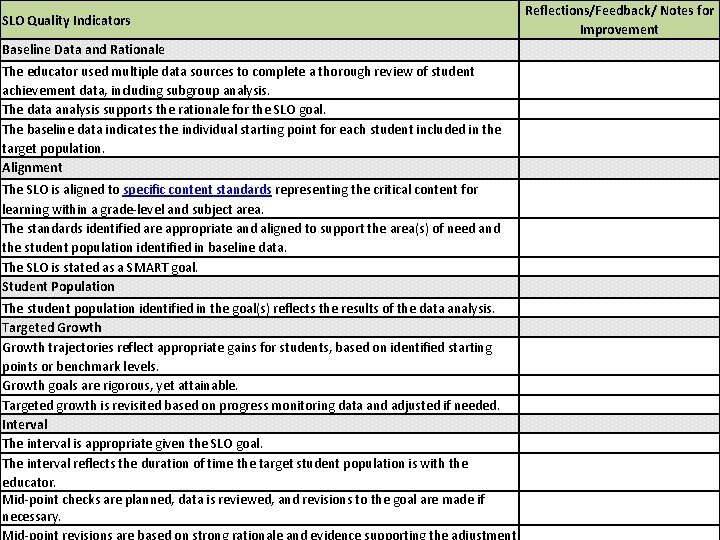

SLO Quality Indicators

| Indicator | Quality criteria | Reflections/Feedback/Notes for Improvement |
|---|---|---|
| Baseline Data and Rationale | The educator used multiple data sources to complete a thorough review of student achievement data, including subgroup analysis. The data analysis supports the rationale for the SLO goal. The baseline data indicates the individual starting point for each student included in the target population. | |
| Alignment | The SLO is aligned to specific content standards representing the critical content for learning within a grade level and subject area. The standards identified are appropriate and aligned to support the area(s) of need and the student population identified in the baseline data. The SLO is stated as a SMART goal. | |
| Student Population | The student population identified in the goal(s) reflects the results of the data analysis. | |
| Targeted Growth | Growth trajectories reflect appropriate gains for students, based on identified starting points or benchmark levels. Growth goals are rigorous, yet attainable. Targeted growth is revisited based on progress monitoring data and adjusted if needed. | |
| Interval | The interval is appropriate given the SLO goal. The interval reflects the duration of time the target student population is with the educator. Mid-point checks are planned, data is reviewed, and revisions to the goal are made if necessary. Mid-point revisions are based on strong rationale and evidence supporting the adjustment. | |

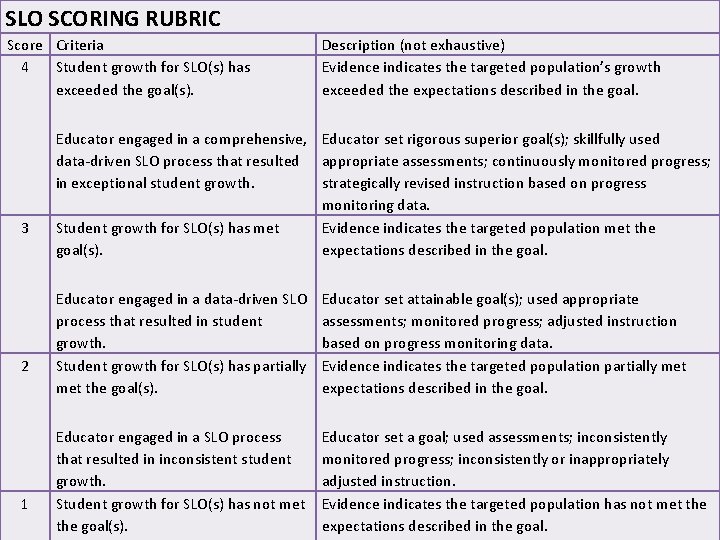

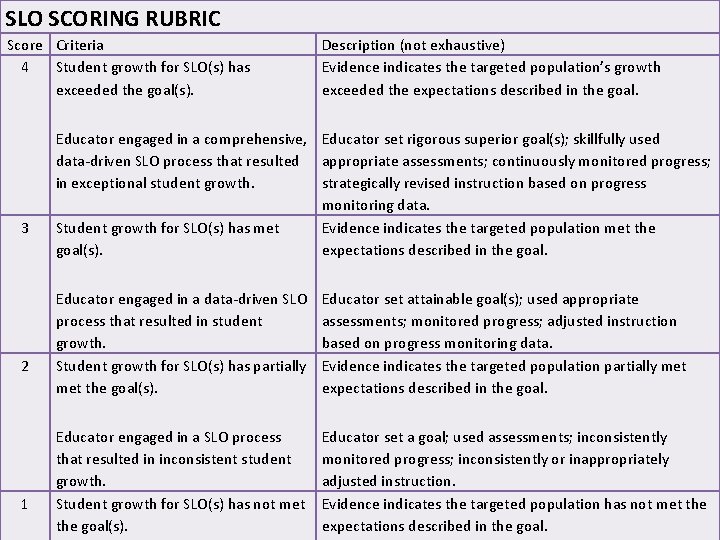

SLO SCORING RUBRIC

| Score | Criteria | Description (not exhaustive) |
|---|---|---|
| 4 | Student growth for SLO(s) has exceeded the goal(s). | Evidence indicates the targeted population’s growth exceeded the expectations described in the goal. Educator engaged in a comprehensive, data-driven SLO process that resulted in exceptional student growth. Educator set rigorous, superior goal(s); skillfully used appropriate assessments; continuously monitored progress; strategically revised instruction based on progress monitoring data. |
| 3 | Student growth for SLO(s) has met the goal(s). | Evidence indicates the targeted population met the expectations described in the goal. Educator engaged in a data-driven SLO process that resulted in student growth. Educator set attainable goal(s); used appropriate assessments; monitored progress; adjusted instruction based on progress monitoring data. |
| 2 | Student growth for SLO(s) has partially met the goal(s). | Evidence indicates the targeted population partially met the expectations described in the goal. Educator engaged in an SLO process that resulted in inconsistent student growth. Educator set a goal; used assessments; inconsistently monitored progress; inconsistently or inappropriately adjusted instruction. |
| 1 | Student growth for SLO(s) has not met the goal(s). | Evidence indicates the targeted population has not met the expectations described in the goal. |

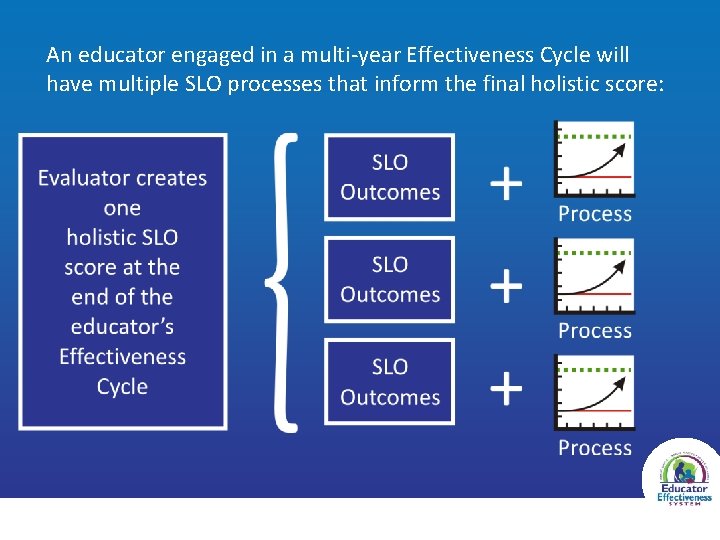

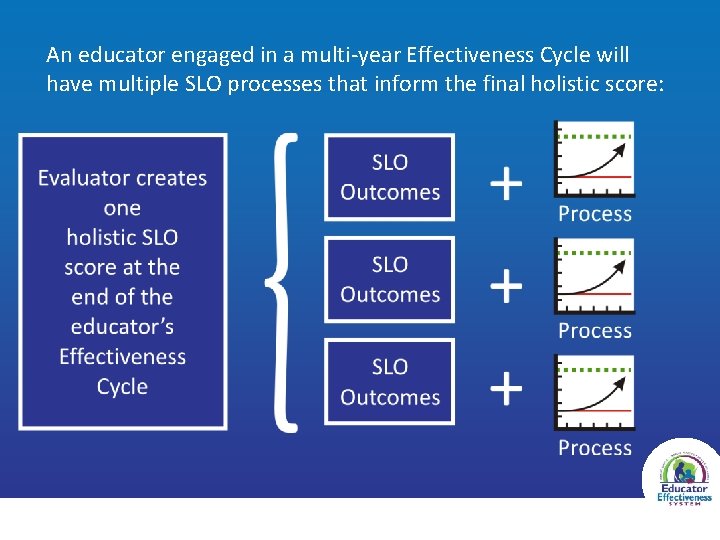

An educator engaged in a multi-year Effectiveness Cycle will have multiple SLO processes that inform the final holistic score:

SUMMARIZING THE EFFECTIVENESS CYCLE

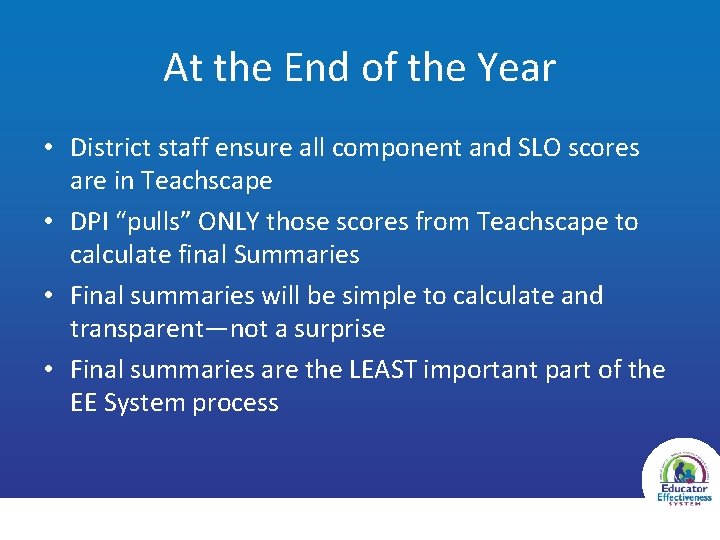

At the End of the Year
• District staff ensure all component and SLO scores are in Teachscape
• DPI “pulls” ONLY those scores from Teachscape to calculate final Summaries
• Final Summaries will be simple to calculate and transparent, not a surprise
• Final Summaries are the LEAST important part of the EE System process

Balancing Multiple Measures

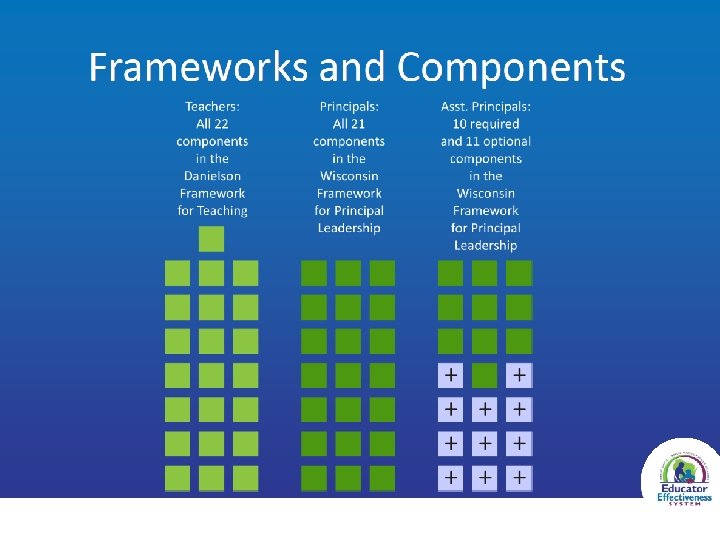

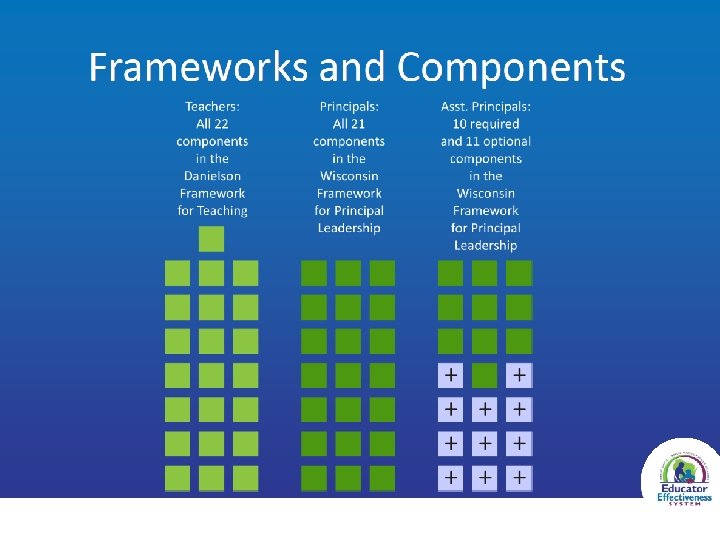

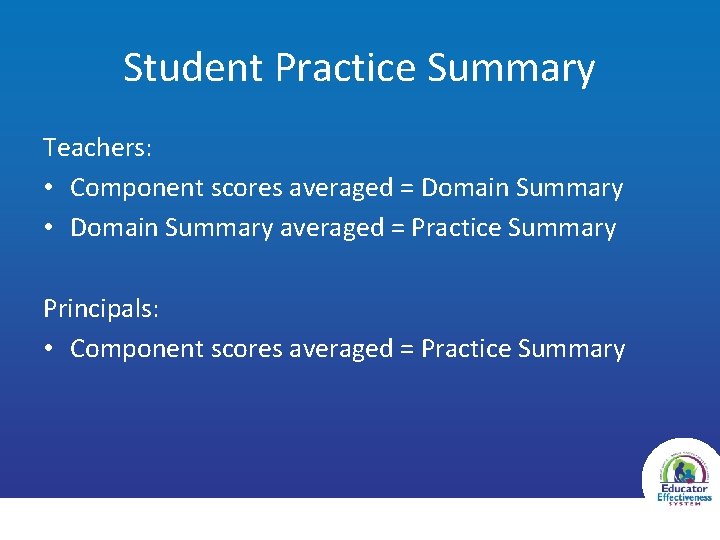

Practice Summary

Teachers:
• Component scores averaged = Domain Summary
• Domain Summaries averaged = Practice Summary

Principals:
• Component scores averaged = Practice Summary
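The two averaging rules can be sketched in Python as follows. This assumes plain unweighted means, as “averaged” suggests, and applies no rounding because none is specified for the Practice Summary. The domain names and component scores are hypothetical.

```python
from statistics import fmean


def teacher_practice_summary(domains):
    """domains: mapping of domain name -> list of component scores (1-4).

    Returns (practice_summary, domain_summaries).
    """
    # Component scores averaged = Domain Summary
    domain_summaries = {name: fmean(scores) for name, scores in domains.items()}
    # Domain Summaries averaged = Practice Summary
    return fmean(list(domain_summaries.values())), domain_summaries


def principal_practice_summary(component_scores):
    # Component scores averaged = Practice Summary (no domain step)
    return fmean(component_scores)


# Hypothetical component scores for one teacher
domains = {
    "Domain 1": [3, 3, 4, 2],
    "Domain 2": [3, 4],
    "Domain 3": [2, 3, 4],
    "Domain 4": [3, 3, 3],
}
practice, per_domain = teacher_practice_summary(domains)
print(per_domain)  # {'Domain 1': 3.0, 'Domain 2': 3.5, 'Domain 3': 3.0, 'Domain 4': 3.0}
print(practice)    # 3.125
```

Averaging domains rather than pooling all components means each domain counts equally. Domain 2 has only two components, but it carries the same weight as Domain 1.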


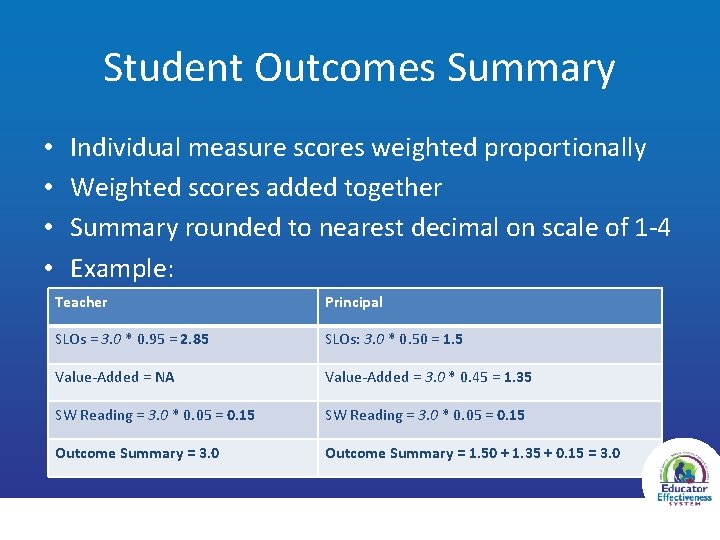

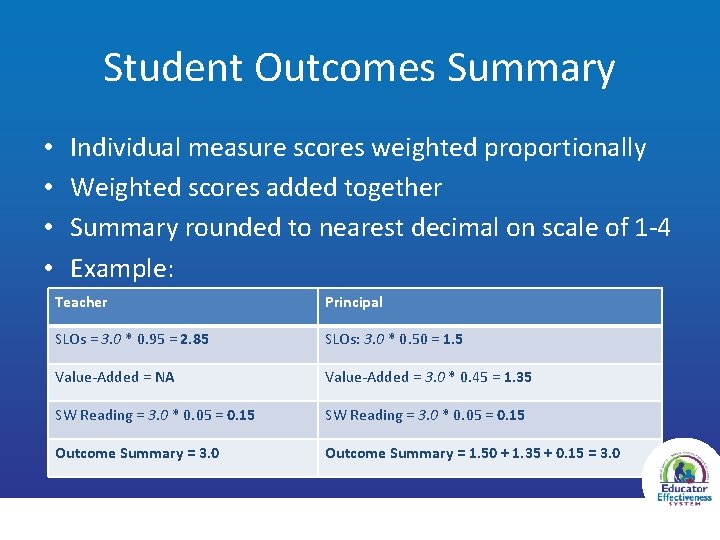

Student Outcomes Summary
• Individual measure scores are weighted proportionally
• Weighted scores are added together
• The Summary is rounded to one decimal place on a scale of 1-4

Example:

| Measure | Teacher | Principal |
|---|---|---|
| SLOs | 3.0 × 0.95 = 2.85 | 3.0 × 0.50 = 1.50 |
| Value-Added | NA | 3.0 × 0.45 = 1.35 |
| SW Reading | 3.0 × 0.05 = 0.15 | 3.0 × 0.05 = 0.15 |
| Outcome Summary | 2.85 + 0.15 = 3.0 | 1.50 + 1.35 + 0.15 = 3.0 |
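The weighting arithmetic for the Student Outcomes Summary can be sketched in Python. The sketch uses only the weights from the worked example: for teachers, SLOs 95% and schoolwide reading 5%; for principals, SLOs 50%, principal value-added 45%, and schoolwide reading 5%. The measure keys and the function are hypothetical, and the slide’s rounding step is interpreted as Python’s `round(x, 1)`.

```python
# Weights taken from the slide's worked example only; other configurations
# (for example, graduation rates in place of schoolwide reading) are not covered.
TEACHER_WEIGHTS = {"slo": 0.95, "schoolwide_reading": 0.05}
PRINCIPAL_WEIGHTS = {"slo": 0.50, "principal_value_added": 0.45, "schoolwide_reading": 0.05}


def outcome_summary(scores, weights):
    """Weight each measure score, add the weighted scores, round to one decimal.

    scores: mapping of measure name -> score on the 1-4 scale.
    weights: mapping of measure name -> proportional weight (must sum to 1).
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    weighted = sum(scores[measure] * w for measure, w in weights.items())
    # round() acts on the binary float value and breaks exact ties to even,
    # so results at x.x5 boundaries can differ from rounding by hand.
    return round(weighted, 1)


teacher = outcome_summary({"slo": 3.0, "schoolwide_reading": 3.0}, TEACHER_WEIGHTS)
principal = outcome_summary(
    {"slo": 3.0, "principal_value_added": 3.0, "schoolwide_reading": 3.0},
    PRINCIPAL_WEIGHTS,
)
print(teacher, principal)  # 3.0 3.0
```

Value-Added is marked NA for teachers, so it is absent from `TEACHER_WEIGHTS` rather than scored as zero. The remaining weights still sum to 1.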

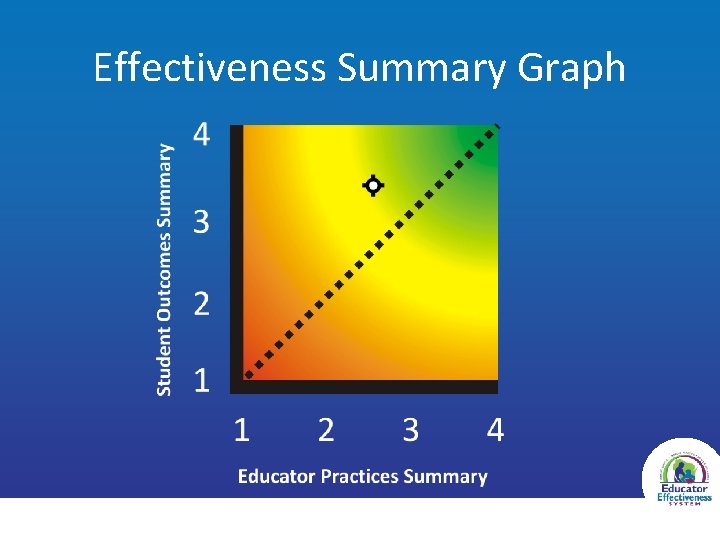

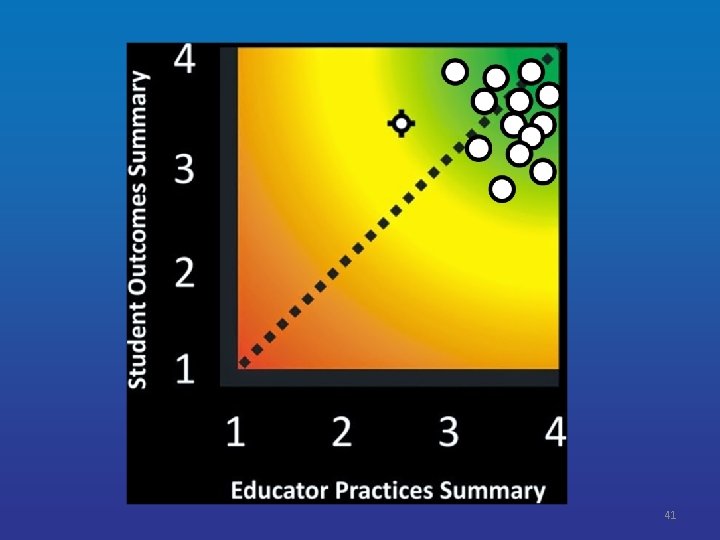

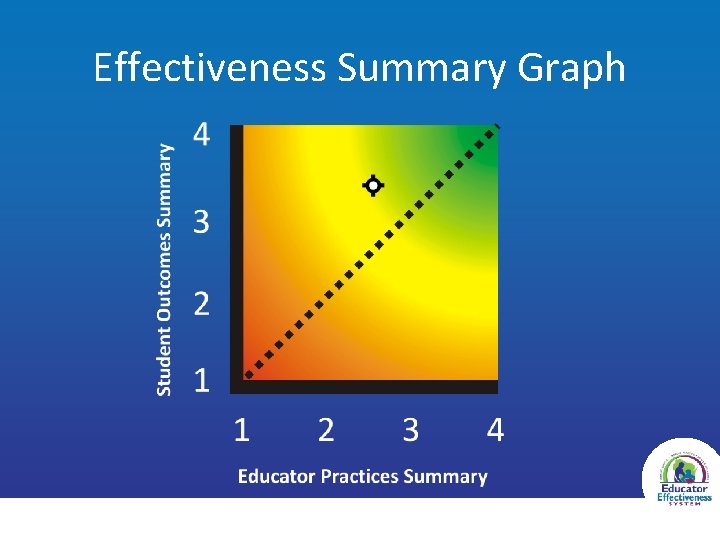

Summary Graph

The educator’s results will be reported visually as a coordinate pair on the Summary Graph, summarizing the data collected on their practice and outcomes across the Effectiveness Cycle.

Effectiveness Summary Graph

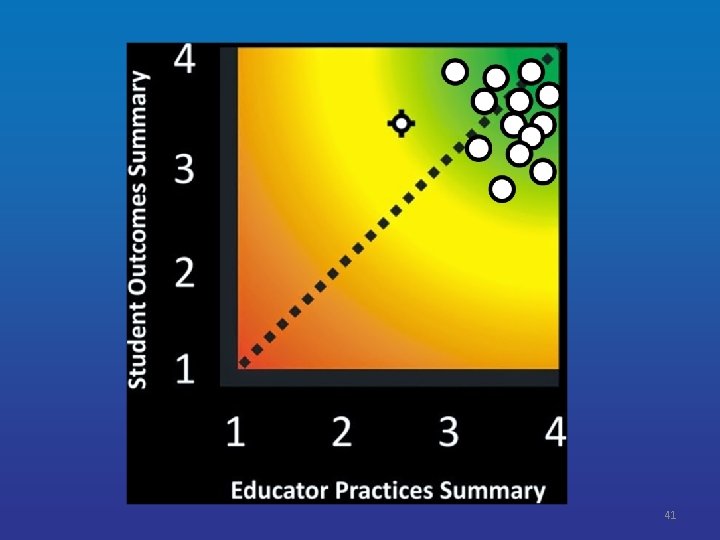

Data Summaries
• Remove use of the word “Reporting”
• Locally:
  – Provided in SAFE until WISEid becomes an available option
  – Visually/graphically provide all data in the most meaningful ways
  – Only between the educator and their administrators
• Federal:
  – USDE has approved this scoring process
  – USDE has provided initial approval for state-level reporting ONLY (for the coming year)


For more information and resources related to the Wisconsin Educator Effectiveness System, please visit the WIEE website at ee.dpi.wi.gov.

@Wis.DPI_EE
@Katharine.Rainey
dpiwis-ee.blogspot.com/

Nc educator effectiveness system

Nc educator effectiveness system Slo and ppg examples

Slo and ppg examples Joel rainey pastor

Joel rainey pastor Alisha gross

Alisha gross Katharine hepburn parkinsons

Katharine hepburn parkinsons Katharine kolcaba comfort theory

Katharine kolcaba comfort theory Katharine kolcaba biography

Katharine kolcaba biography Prefix and suffix examples

Prefix and suffix examples Birthday party by katherine brush

Birthday party by katherine brush Katharine gu

Katharine gu Taxonomic structure of comfort

Taxonomic structure of comfort Comfort nursing theory

Comfort nursing theory Dr katharine jones

Dr katharine jones Kolcava

Kolcava Alabama teacher code of ethics

Alabama teacher code of ethics Georgia professional standards commission code of ethics

Georgia professional standards commission code of ethics Tspc complaints

Tspc complaints Bureau of educator certification florida

Bureau of educator certification florida Health of education

Health of education Turing tumble simulator

Turing tumble simulator Msde educator portal

Msde educator portal Vumc educator portfolio

Vumc educator portfolio Ct teacher certification reciprocity

Ct teacher certification reciprocity Hbsp coursepack

Hbsp coursepack Microteaching lesson plan

Microteaching lesson plan Nevada educator performance framework

Nevada educator performance framework Nurse educator resume

Nurse educator resume Dlm educator portal

Dlm educator portal Comclassroom

Comclassroom Allied educator moe

Allied educator moe Teachers violating the code of ethics in georgia

Teachers violating the code of ethics in georgia Office of educator services

Office of educator services Georgia code of ethics for educators

Georgia code of ethics for educators Microsoft innovative educator trainer academy

Microsoft innovative educator trainer academy Joinmyquiz.comm

Joinmyquiz.comm Managerial behavior and effectiveness

Managerial behavior and effectiveness Cath lab cost effectiveness

Cath lab cost effectiveness Issues in curriculum development in india

Issues in curriculum development in india Debriifing

Debriifing External forms of social control

External forms of social control Measuring coaching effectiveness

Measuring coaching effectiveness Strategic profitability analysis

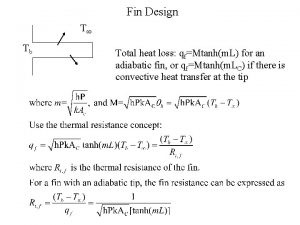

Strategic profitability analysis Total fin

Total fin