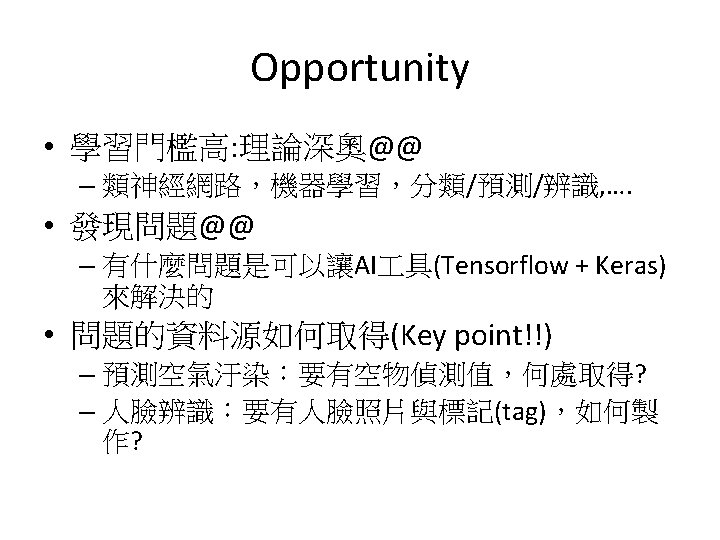

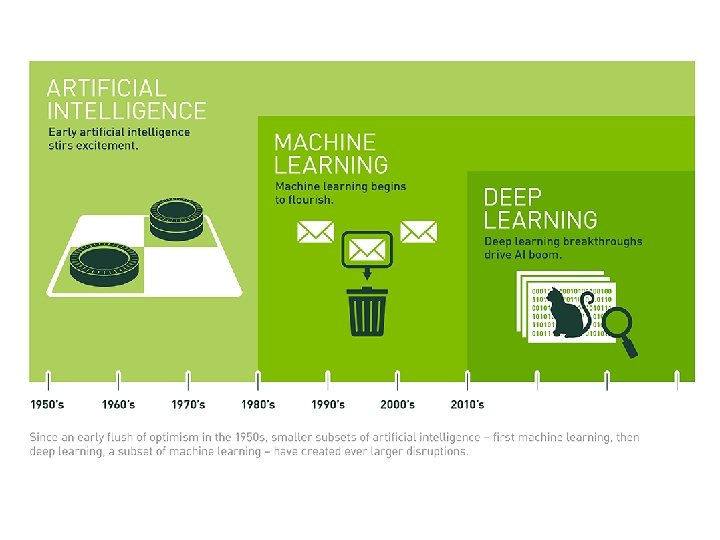

Deep Learning, Machine Learning, CNN, RNN, Opportunity

- Slides: 47


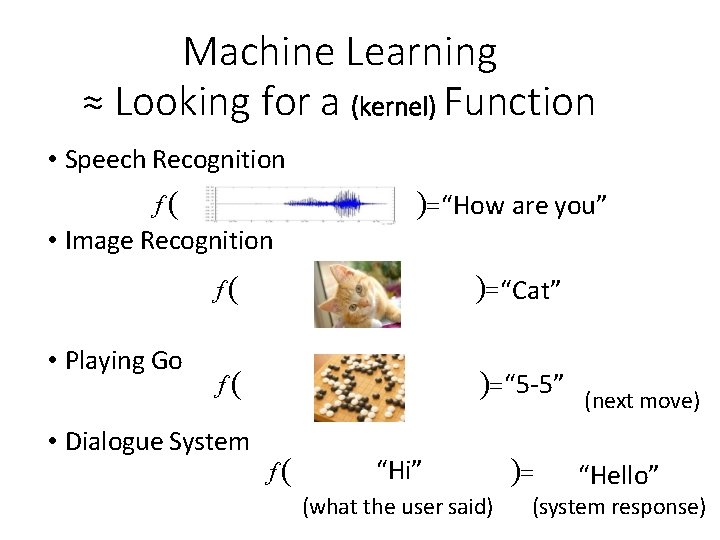

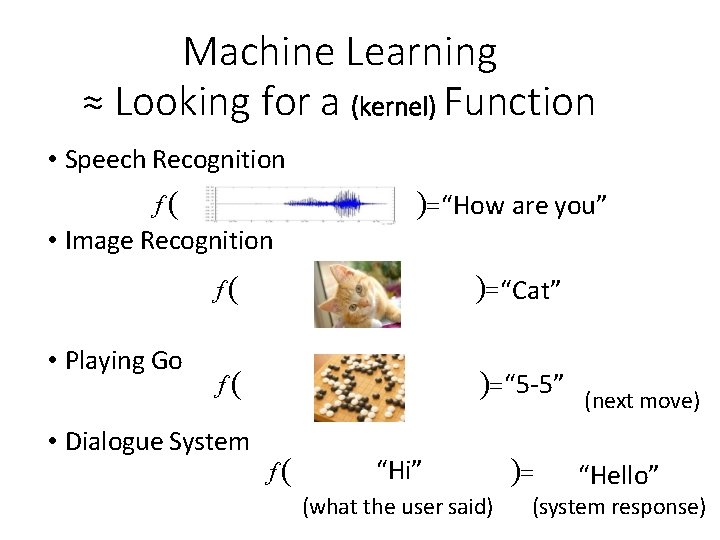

Machine Learning ≈ Looking for a (kernel) Function

- Speech Recognition: f(audio) = “How are you”
- Image Recognition: f(image) = “Cat”
- Playing Go: f(board) = “5-5” (next move)
- Dialogue System: f(“Hi”) = “Hello” (what the user said → system response)

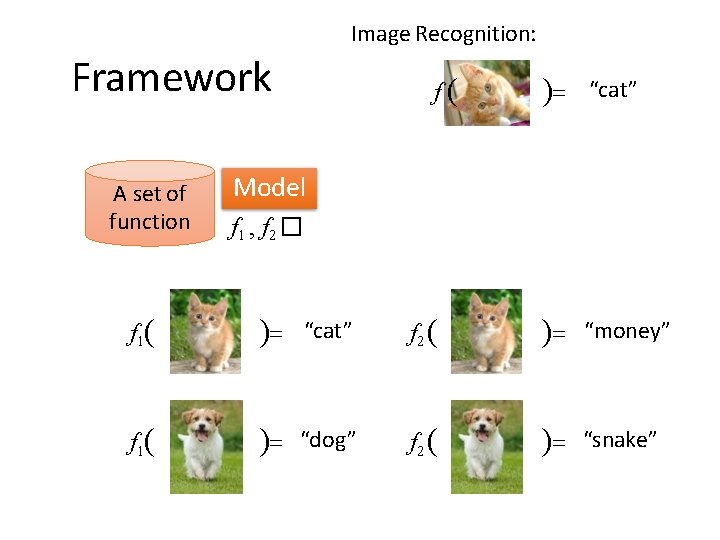

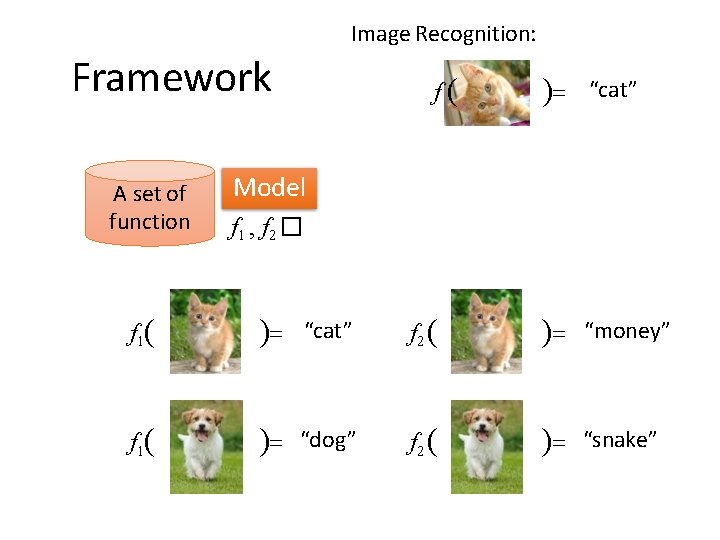

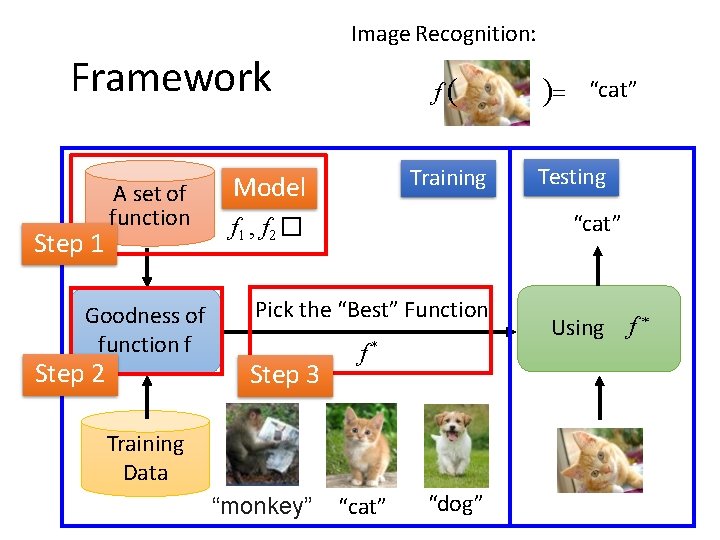

Image Recognition: Framework

- Model: a set of functions f1, f2, …
- For example, f1 outputs “cat” and “dog” on two images, while f2 outputs “money” and “snake”.

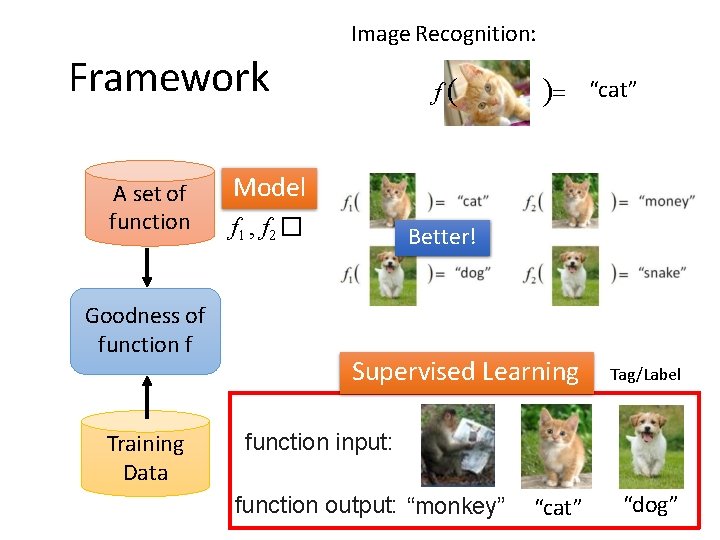

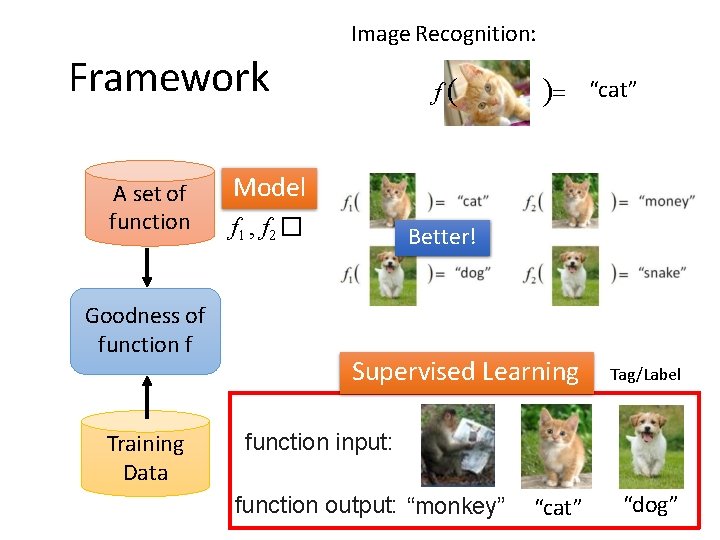

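Image Recognition: Framework

- Model: a set of functions f1, f2, …
- Goodness of function f: measured on the training data, so that one function can be judged better than another.
- Supervised learning: the training data pairs each function input (an image) with the desired function output (its tag/label): “monkey”, “cat”, “dog”.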

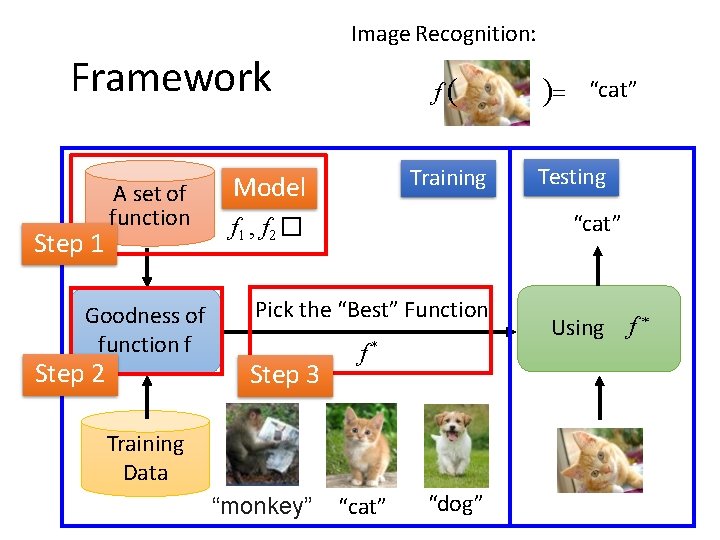

Image Recognition: Framework

- Step 1 (Training): define the model, a set of functions f1, f2, …
- Step 2 (Training): measure the goodness of each function f on the training data (“monkey”, “cat”, “dog”).
- Step 3 (Training): pick the “best” function f*.
- Testing: use f* on a new image, e.g. f*(image) = “cat”.

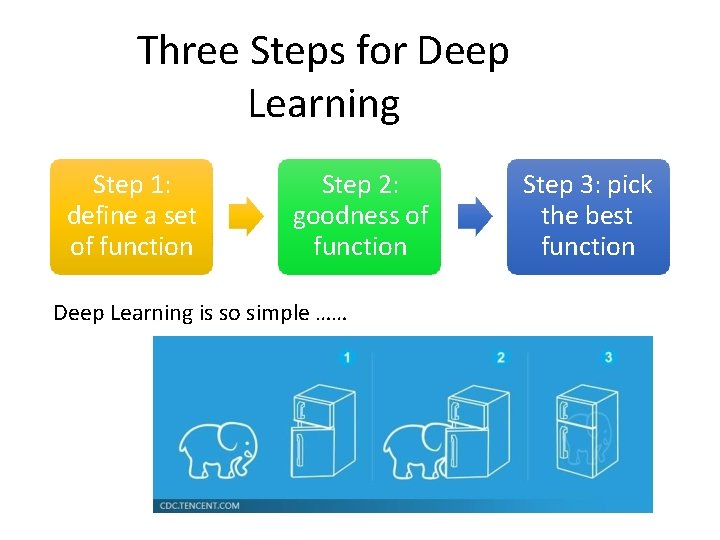

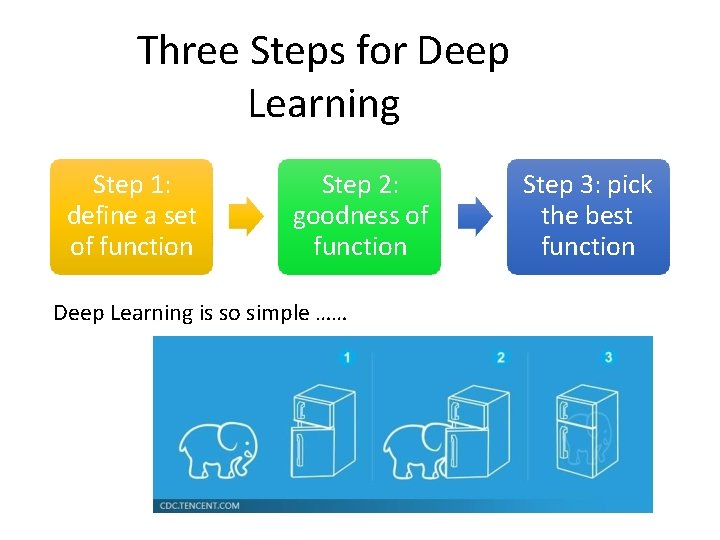

Three Steps for Deep Learning

- Step 1: define a set of functions
- Step 2: goodness of function
- Step 3: pick the best function

Deep Learning is so simple ……
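The three steps can be sketched on a toy problem. This is only an illustration under stated assumptions: the candidate functions `f(x) = w * x`, the weights in `candidate_weights`, the training pairs, and the squared-error measure are all hypothetical choices, not taken from the slides. A real network searches a far larger set of functions with gradient descent rather than by enumeration.

```python
# Step 1: define a set of functions (hypothetical: f(x) = w * x for a few w).
candidate_weights = [0.5, 1.0, 2.0, 3.0]


def make_function(w):
    """Return the candidate function f(x) = w * x."""
    return lambda x: w * x


# Hypothetical training data: (input, desired output) pairs.
training_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]


# Step 2: goodness of a function, here the total squared error on the
# training data (lower is better).
def total_loss(f, data):
    return sum((f(x) - y) ** 2 for x, y in data)


# Step 3: pick the best function f*.
best_w = min(
    candidate_weights,
    key=lambda w: total_loss(make_function(w), training_data),
)
f_star = make_function(best_w)

print("best weight:", best_w)
print("f*(4.0) =", f_star(4.0))  # testing: use f* on an unseen input
```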

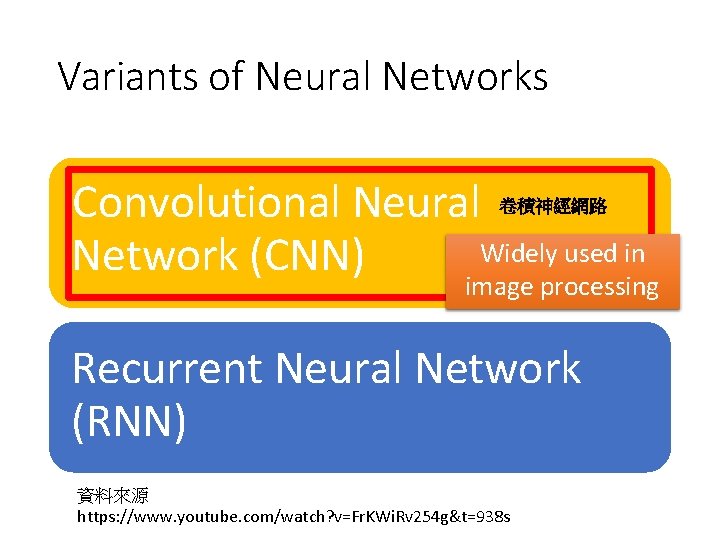

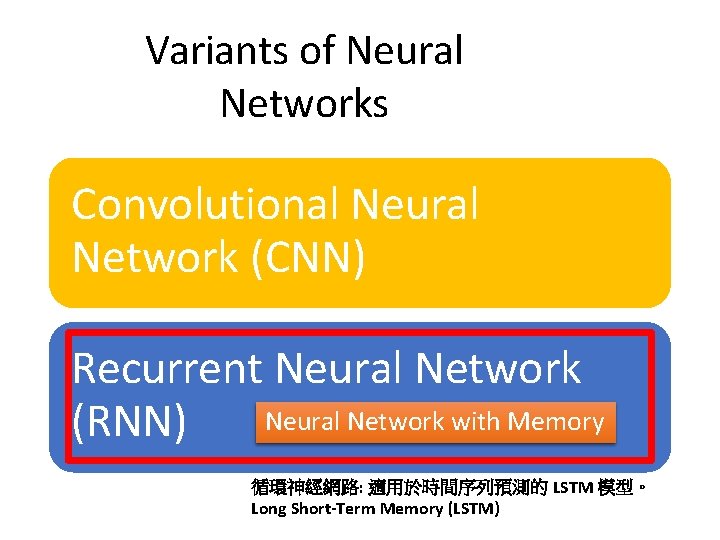

Variants of Neural Networks

- Convolutional Neural Network (CNN): widely used in image processing
- Recurrent Neural Network (RNN)

Source: https://www.youtube.com/watch?v=FrKWiRv254g&t=938s

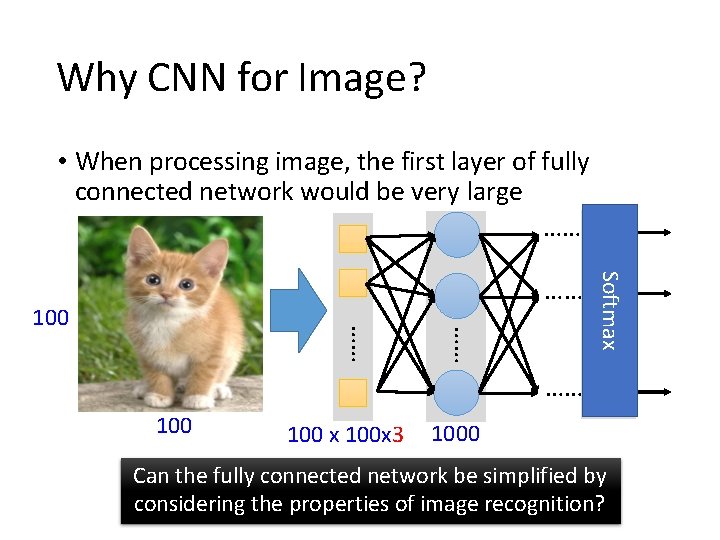

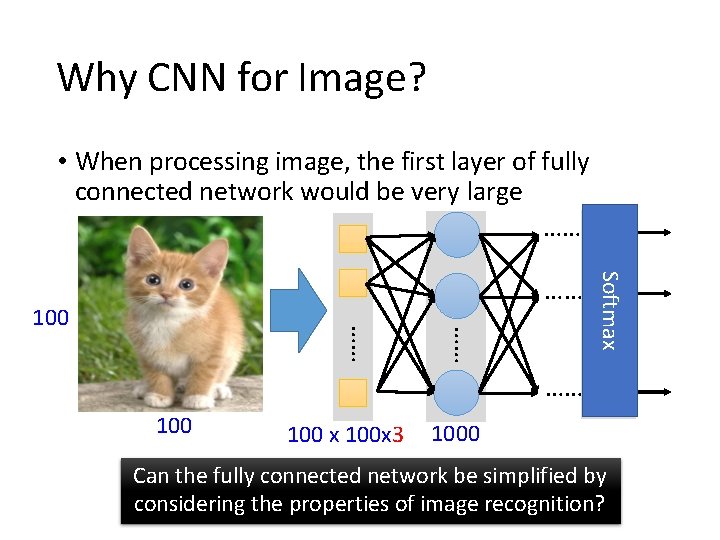

Why CNN for Image?

- When processing an image, the first layer of a fully connected network would be very large. The slide's figure shows a 100 x 100 x 3 input feeding a layer of 1000 neurons, with a softmax at the output.
- Can the fully connected network be simplified by considering the properties of image recognition?
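To make "very large" concrete, the arithmetic can be written out. This sketch assumes that the 1000 on the slide is the number of neurons in the first fully connected layer and that each neuron has one bias. The variable names are illustrative.

```python
# Input size taken from the slide: a 100 x 100 image with 3 color channels.
height, width, channels = 100, 100, 3

# Assumption: the first fully connected layer has 1000 neurons.
hidden_neurons = 1000

input_dim = height * width * channels   # every pixel value is one input
weights = input_dim * hidden_neurons    # one weight per (input, neuron) pair
biases = hidden_neurons                 # assumed: one bias per neuron

print("inputs:", input_dim)
print("first-layer weights:", weights)
print("first-layer parameters:", weights + biases)
```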

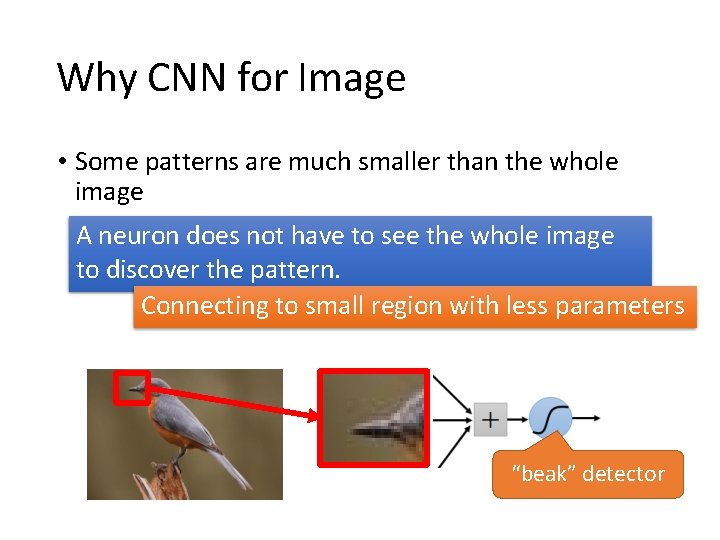

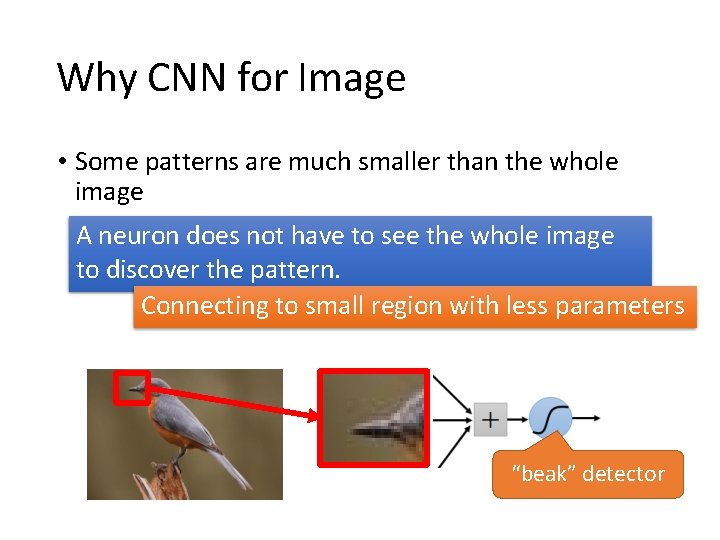

Why CNN for Image

- Some patterns are much smaller than the whole image.
- A neuron does not have to see the whole image to discover the pattern, e.g. a “beak” detector.
- Connecting to a small region takes fewer parameters.

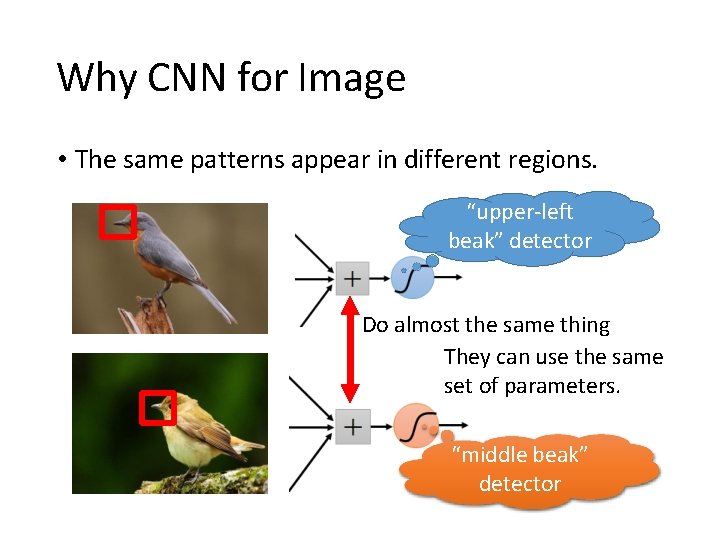

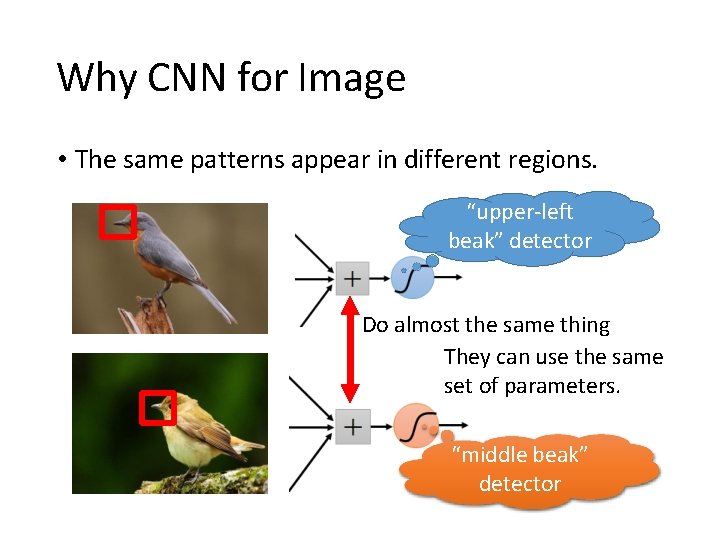

Why CNN for Image

- The same patterns appear in different regions.
- An “upper-left beak” detector and a “middle beak” detector do almost the same thing, so they can use the same set of parameters.

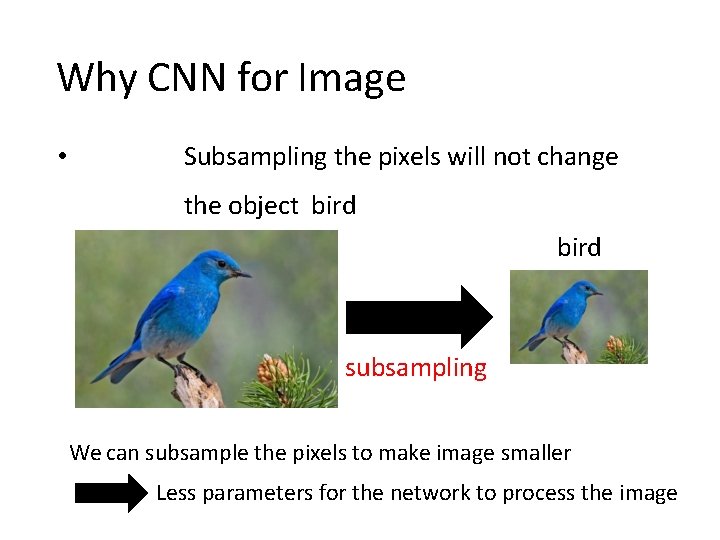

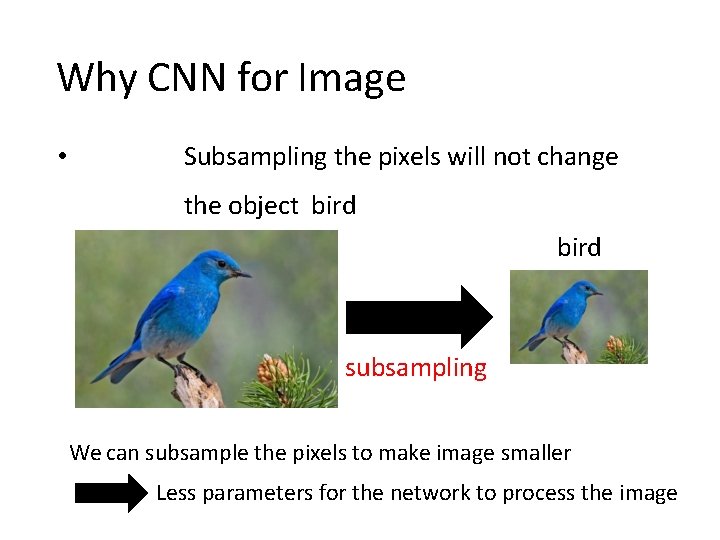

Why CNN for Image

- Subsampling the pixels will not change the object: a subsampled bird is still a bird.
- We can subsample the pixels to make the image smaller, so the network needs fewer parameters to process it.
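A minimal sketch of subsampling, assuming the simplest possible scheme of keeping every second row and column. The 4 x 4 `image` and the function name `subsample` are hypothetical. The CNN described later uses max pooling for this purpose rather than plain striding.

```python
def subsample(image, step=2):
    """Keep every `step`-th row and every `step`-th column of a 2D image."""
    return [row[::step] for row in image[::step]]


# Hypothetical 4 x 4 image (nested lists of pixel values).
image = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

small = subsample(image)
print(small)  # a 2 x 2 image with the same coarse layout
```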

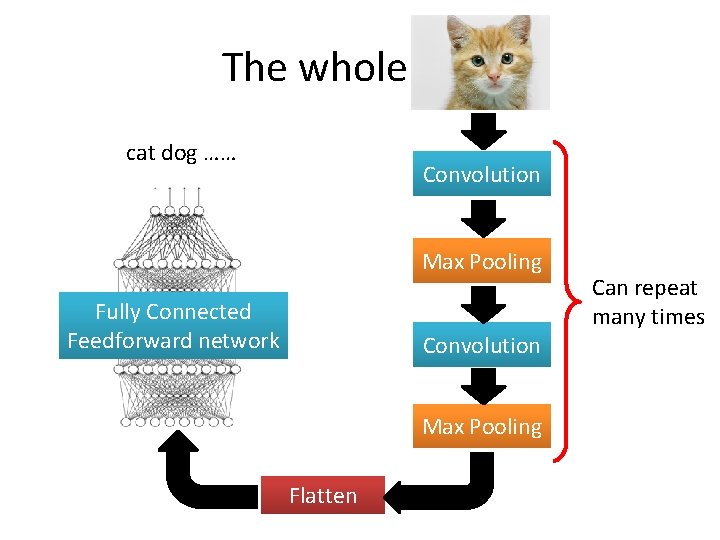

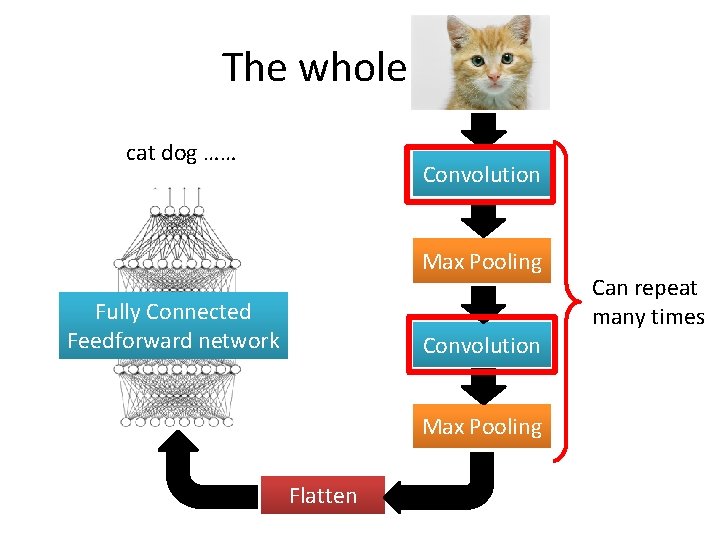

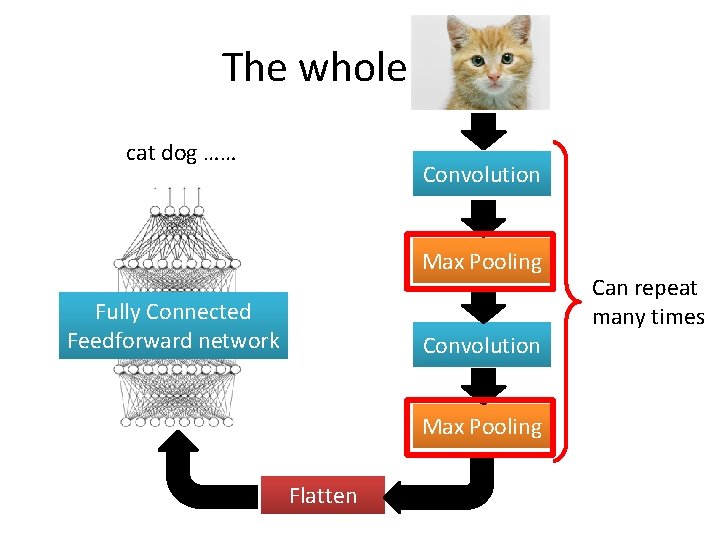

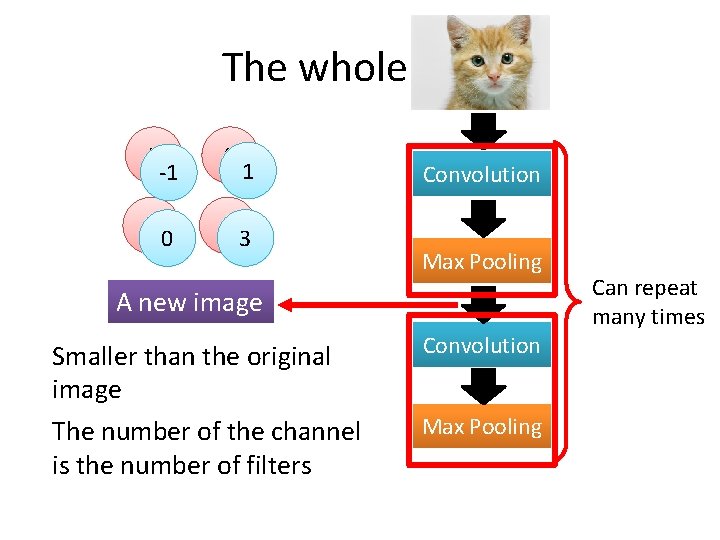

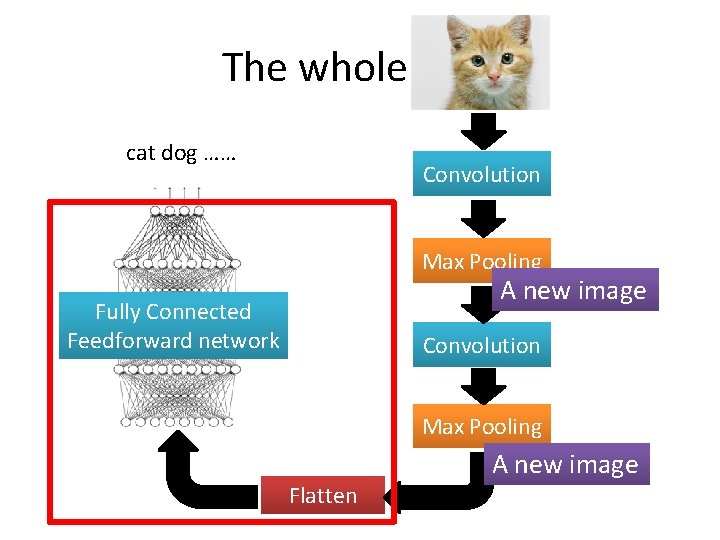

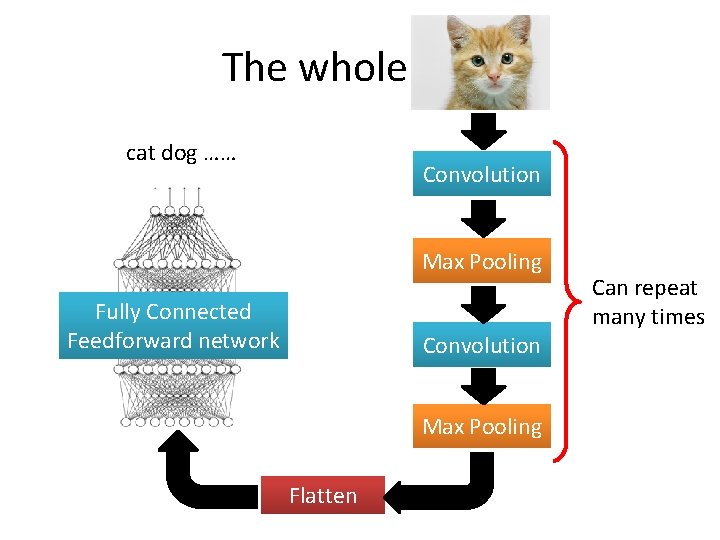

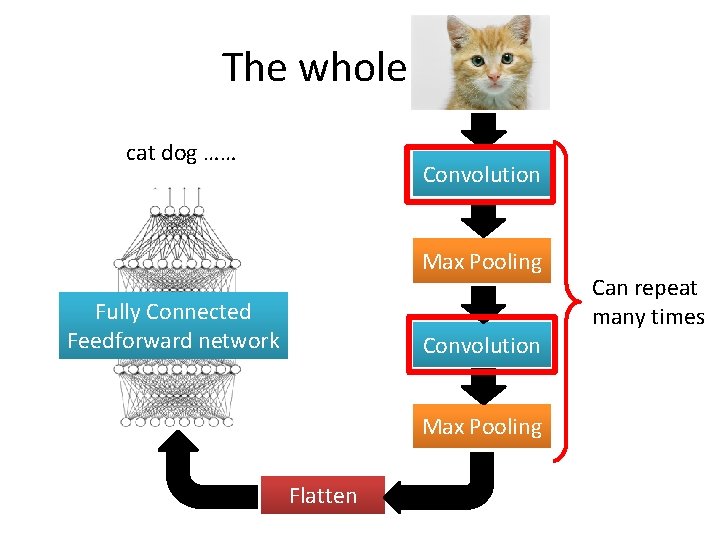

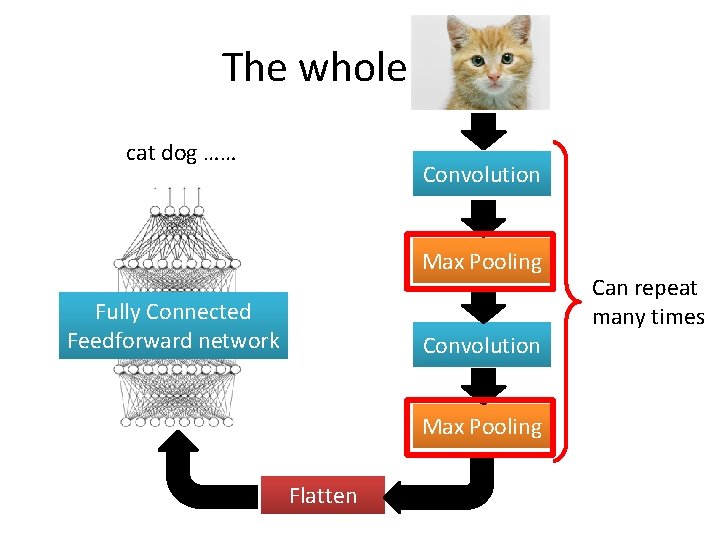

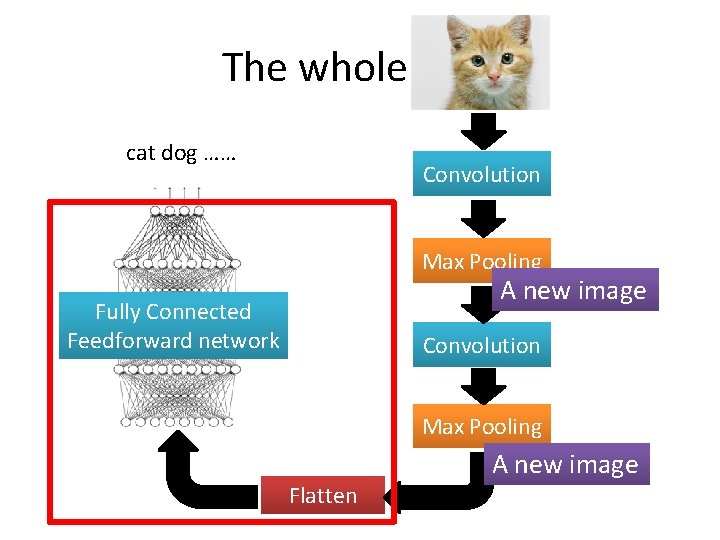

The whole CNN: image → Convolution → Max Pooling → Convolution → Max Pooling (this pair can repeat many times) → Flatten → Fully Connected Feedforward network → cat, dog, ……

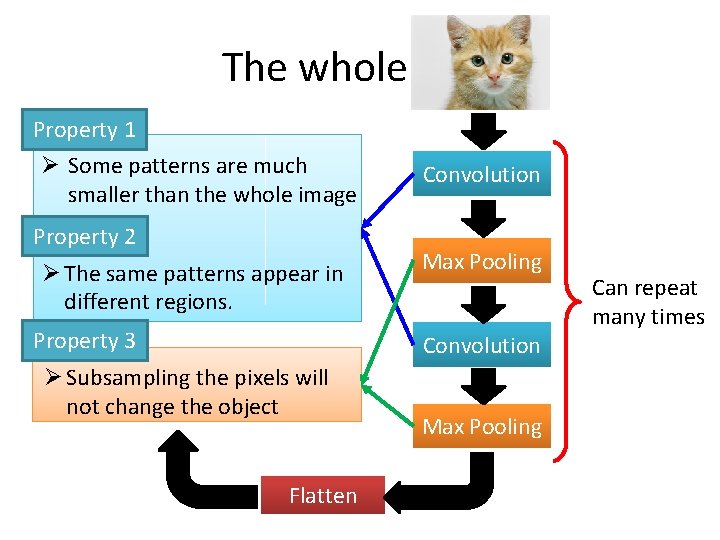

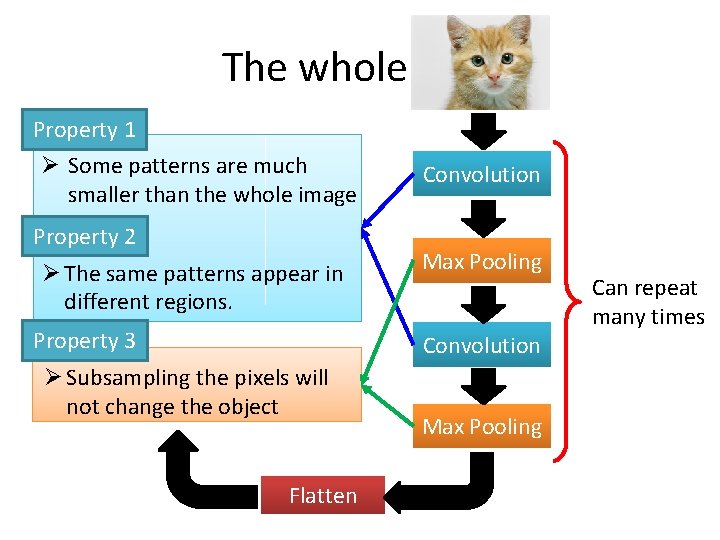

The whole CNN

- Property 1 (Convolution): some patterns are much smaller than the whole image.
- Property 2 (Convolution): the same patterns appear in different regions.
- Property 3 (Max Pooling): subsampling the pixels will not change the object.

Convolution and Max Pooling can repeat many times before Flatten.


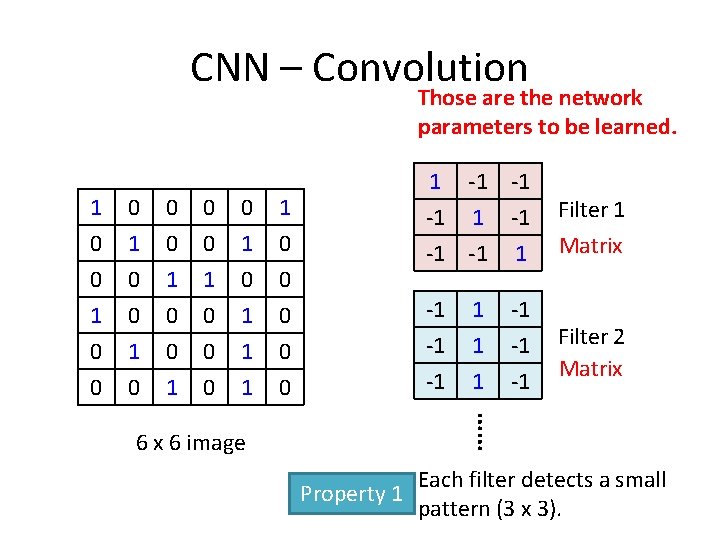

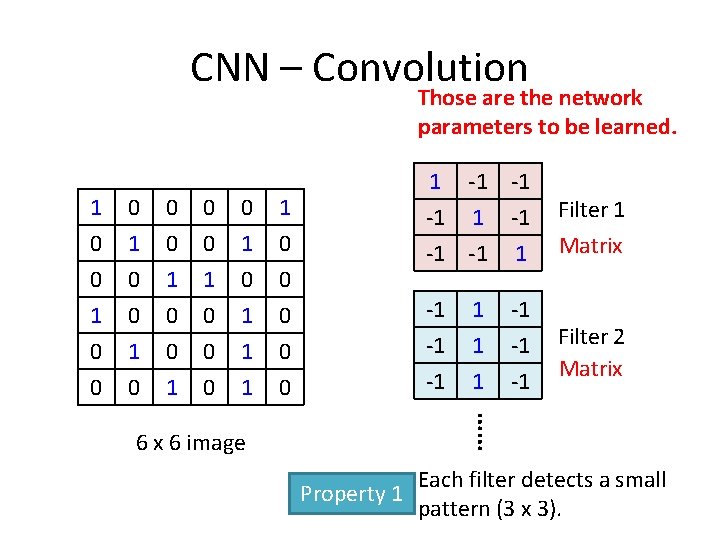

CNN – Convolution: a 6 x 6 image is processed by Filter 1, Filter 2, …, each a 3 x 3 matrix. The filter values are the network parameters to be learned. Each filter detects a small pattern (3 x 3), which is Property 1.

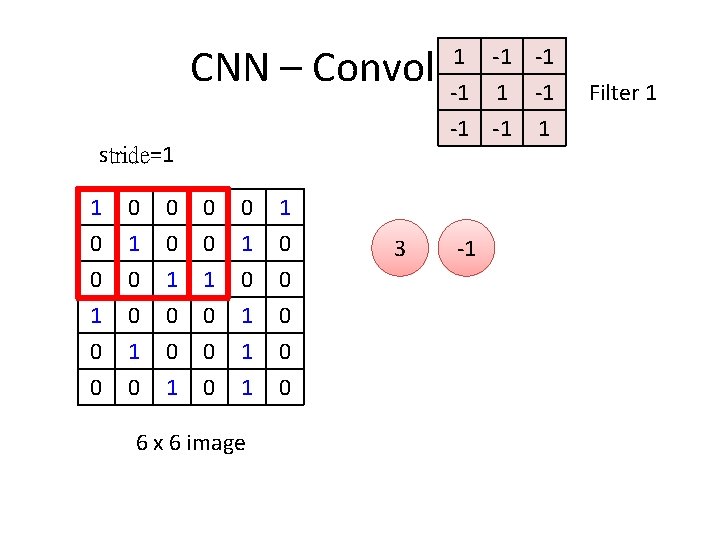

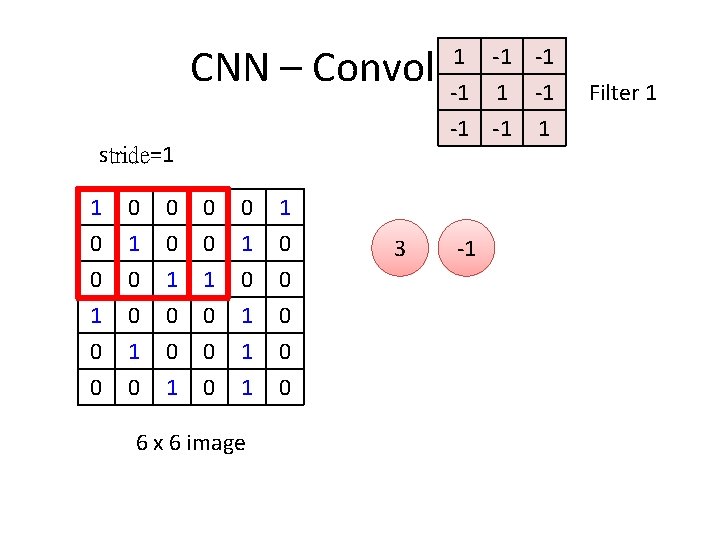

CNN – Convolution (stride=1): Filter 1 moves across the 6 x 6 image one pixel at a time. The first two outputs are 3 and -1.

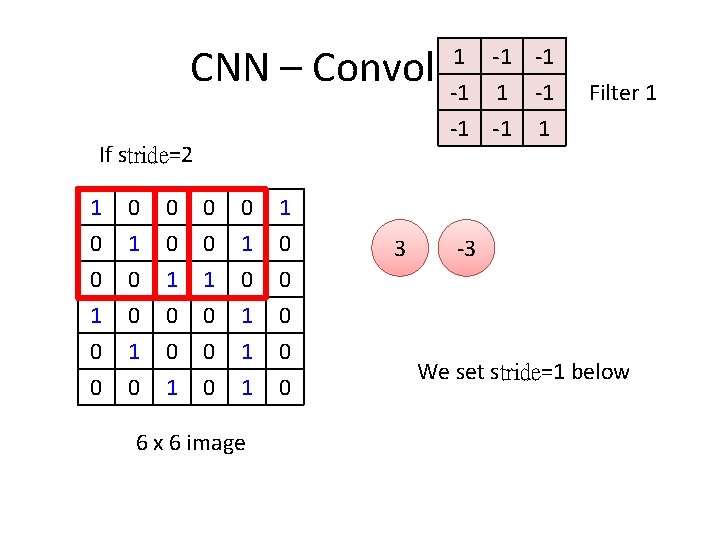

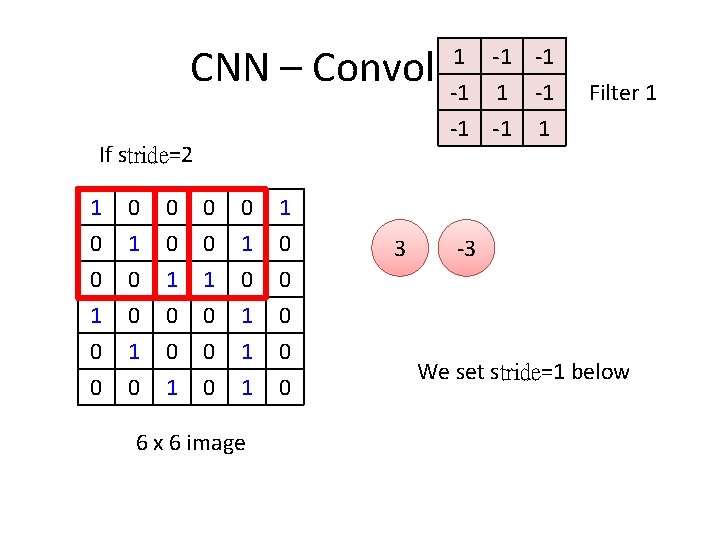

CNN – Convolution (if stride=2): Filter 1 moves across the 6 x 6 image two pixels at a time. The first two outputs are 3 and -3. We set stride=1 below.

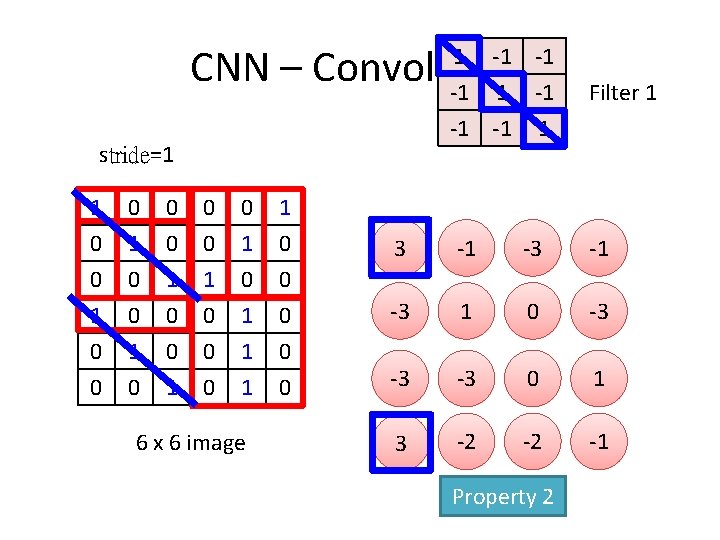

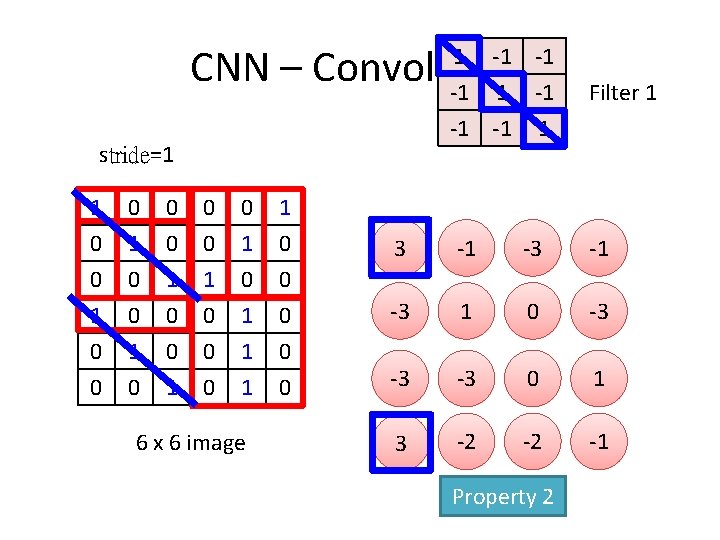

CNN – Convolution (stride=1): sliding Filter 1 over the whole 6 x 6 image produces a 4 x 4 grid of outputs. The value 3 appears wherever the filter's pattern occurs, in more than one region of the image (Property 2).
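The sliding-window computation can be sketched in plain Python. The extracted slide text preserves only part of the image and filter, so `IMAGE` and `FILTER_1` below are an assumed reconstruction, chosen to be consistent with the output values that are legible on the slides (3 and -1 for stride 1, 3 and -3 for stride 2). The function name `convolve2d` is illustrative, and like most CNN libraries it does not flip the kernel.

```python
# Assumed reconstruction of the 6 x 6 binary image from the slides.
IMAGE = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
]

# Assumed reconstruction of Filter 1 (a diagonal-line detector).
FILTER_1 = [
    [1, -1, -1],
    [-1, 1, -1],
    [-1, -1, 1],
]


def convolve2d(image, kernel, stride=1):
    """Slide a square kernel over a square image (no padding, no flip)."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            top, left = i * stride, j * stride
            # Inner product of the kernel with the window under it.
            row.append(sum(
                image[top + a][left + b] * kernel[a][b]
                for a in range(k) for b in range(k)
            ))
        output.append(row)
    return output


for row in convolve2d(IMAGE, FILTER_1, stride=1):
    print(row)
print(convolve2d(IMAGE, FILTER_1, stride=2))
```

With stride=1 the output is 4 x 4, and with stride=2 it shrinks to 2 x 2, because the window takes fewer positions along each axis.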

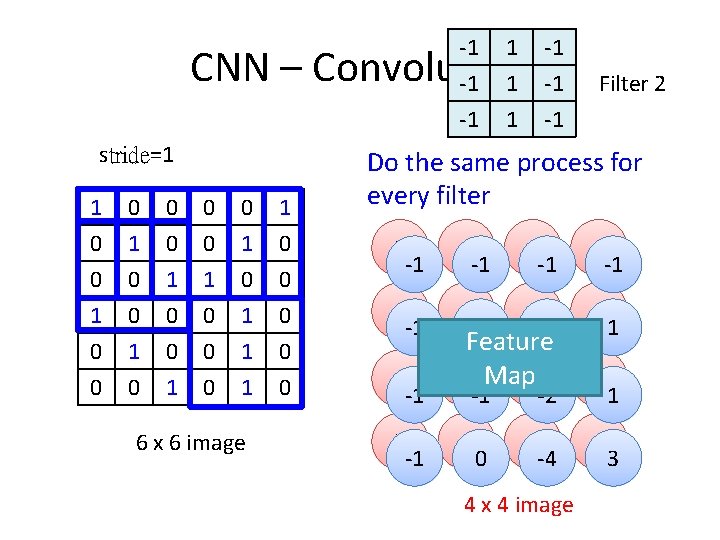

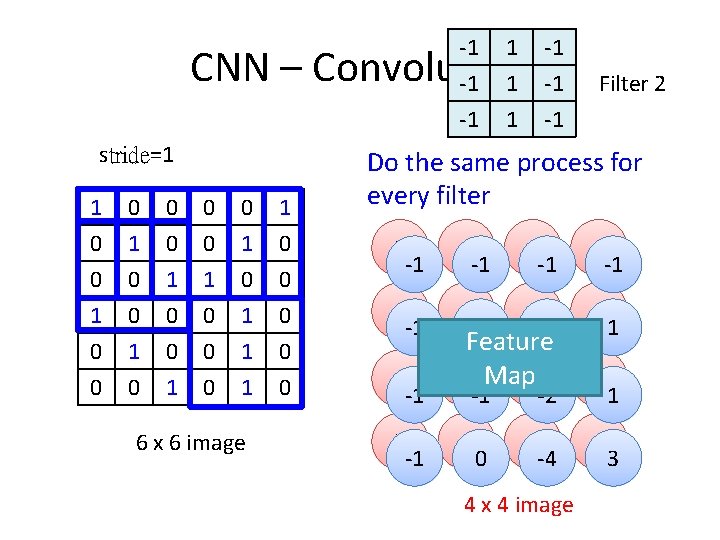

CNN – Convolution (stride=1): do the same process for every filter. Filter 2 applied to the 6 x 6 image gives a second 4 x 4 image. Together, the outputs of all filters form the Feature Map.

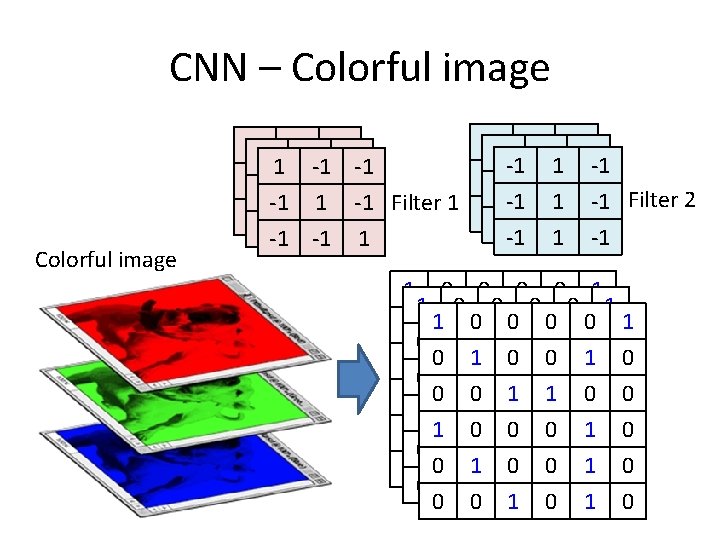

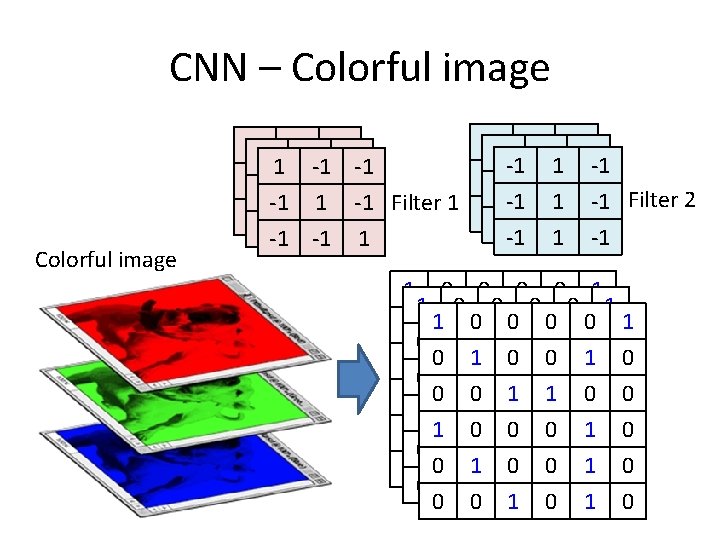

CNN – Colorful image: Filter 1 and Filter 2 applied to a color image. Both the image and each filter are stacks of matrices, one per color channel (figure).
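For a color image, one common formulation (assumed here, since the slide text gives no formula) is that a filter has one 2D slice per channel and the per-channel results are summed into a single output value. The 3 x 3 three-channel image and the 2 x 2 filter slices below are hypothetical, kept small so the result is easy to check by hand.

```python
# Hypothetical 3 x 3 image with three color channels (R, G, B).
IMAGE_RGB = [
    [[1, 0, 1], [0, 1, 0], [1, 0, 1]],   # R
    [[0, 1, 0], [1, 0, 1], [0, 1, 0]],   # G
    [[1, 1, 1], [1, 1, 1], [1, 1, 1]],   # B
]

# Hypothetical filter: one 2 x 2 slice per channel.
FILTER_RGB = [
    [[1, 0], [0, 1]],       # slice applied to R
    [[0, 1], [1, 0]],       # slice applied to G
    [[-1, -1], [-1, -1]],   # slice applied to B
]


def convolve_multichannel(channels, kernel_slices):
    """Stride-1, no-padding convolution summed over all channels."""
    k = len(kernel_slices[0])
    out_size = len(channels[0]) - k + 1
    output = [[0] * out_size for _ in range(out_size)]
    for channel, kernel in zip(channels, kernel_slices):
        for i in range(out_size):
            for j in range(out_size):
                output[i][j] += sum(
                    channel[i + a][j + b] * kernel[a][b]
                    for a in range(k) for b in range(k)
                )
    return output


print(convolve_multichannel(IMAGE_RGB, FILTER_RGB))
```

Each filter still produces one 2D output, regardless of how many input channels there are.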

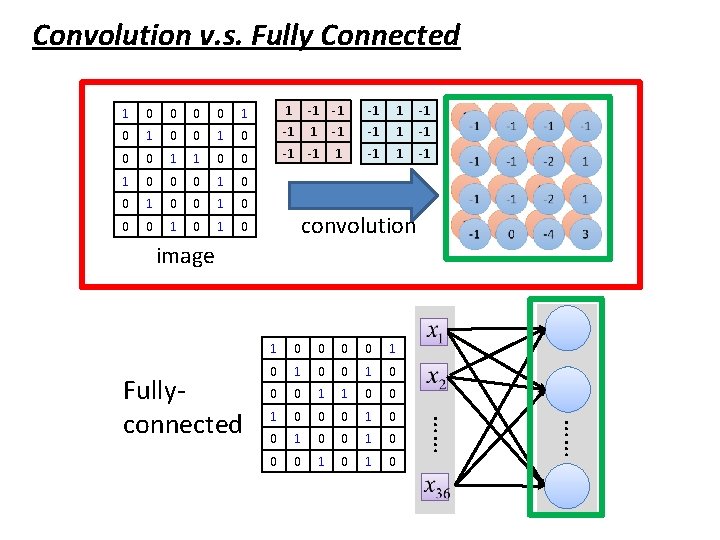

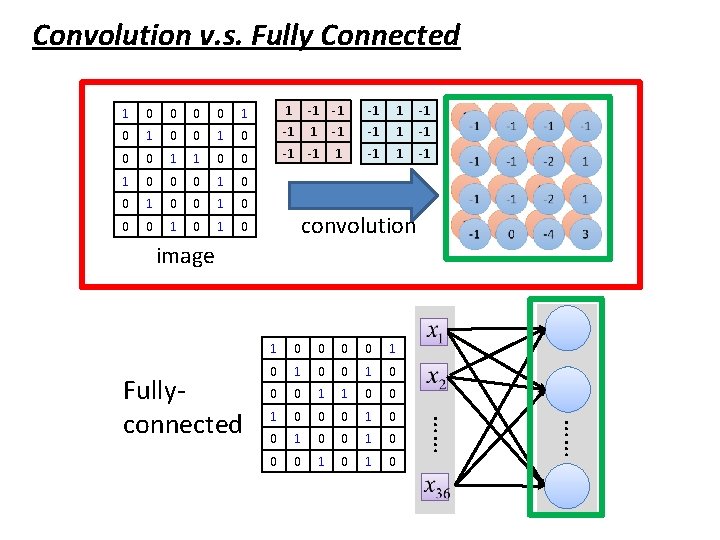

Convolution vs. Fully Connected: the figure contrasts convolving the 6 x 6 image with the filters against feeding the flattened image into a fully connected layer.

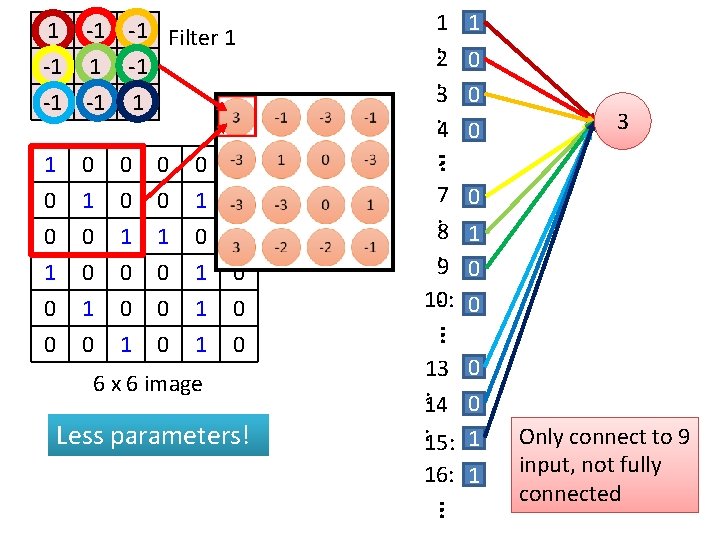

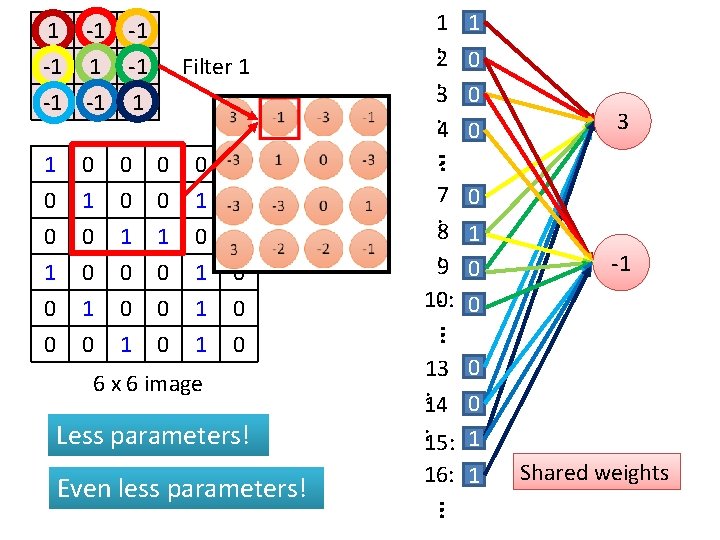

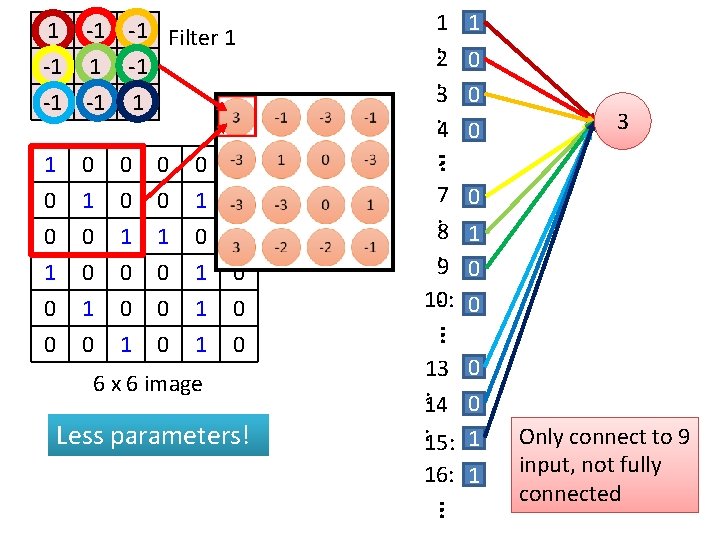

Filter 1 on the 6 x 6 image, viewed as a fully connected layer: flatten the image into 36 inputs, numbered 1, 2, 3, … The neuron whose output is 3 connects only to 9 inputs (1, 2, 3, 7, 8, 9, 13, 14, 15), not to all 36. It is not fully connected, so there are fewer parameters.

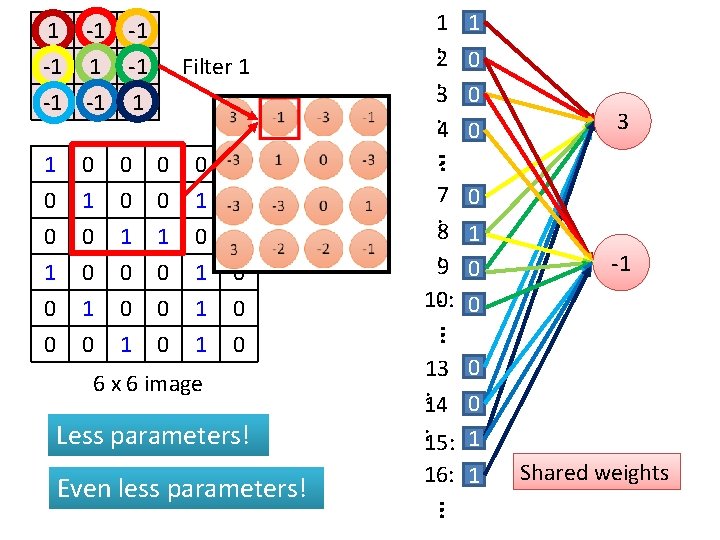

Shared weights: the neuron whose output is -1 covers the next window (inputs 2, 3, 4, 8, 9, 10, 14, 15, 16) and uses the same 9 weights as the neuron whose output is 3. Sparse connections give fewer parameters, and sharing the weights gives even fewer.
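The view of convolution as a sparsely connected layer with shared weights can be checked directly. As before, `IMAGE` and `FILTER_1` are an assumed reconstruction consistent with the legible slide values, and `build_connections` is an illustrative helper, not anything named in the slides.

```python
IMAGE = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
]
FILTER_1 = [[1, -1, -1], [-1, 1, -1], [-1, -1, 1]]


def build_connections(kernel, image_size):
    """For each output neuron, map flat input index -> shared kernel weight."""
    k = len(kernel)
    out_size = image_size - k + 1
    neurons = []
    for i in range(out_size):
        for j in range(out_size):
            connections = {}
            for a in range(k):
                for b in range(k):
                    flat_index = (i + a) * image_size + (j + b)
                    connections[flat_index] = kernel[a][b]
            neurons.append(connections)
    return neurons


flat_image = [pixel for row in IMAGE for pixel in row]   # 36 inputs
neurons = build_connections(FILTER_1, image_size=6)      # 16 neurons
outputs = [
    sum(weight * flat_image[index] for index, weight in conn.items())
    for conn in neurons
]

# 1-based input numbers, matching the numbering on the slide.
first_inputs = sorted(index + 1 for index in neurons[0])
second_inputs = sorted(index + 1 for index in neurons[1])

fully_connected_weights = len(flat_image) * len(neurons)       # 36 * 16
sparse_weights = sum(len(conn) for conn in neurons)            # 9 * 16
shared_weights = len(FILTER_1) * len(FILTER_1[0])              # 9

print(first_inputs, second_inputs)
print(outputs[:2])
print(fully_connected_weights, sparse_weights, shared_weights)
```

The three counts show the two savings separately: sparsity cuts the weights per neuron from 36 to 9, and sharing means all 16 neurons reuse one set of 9.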


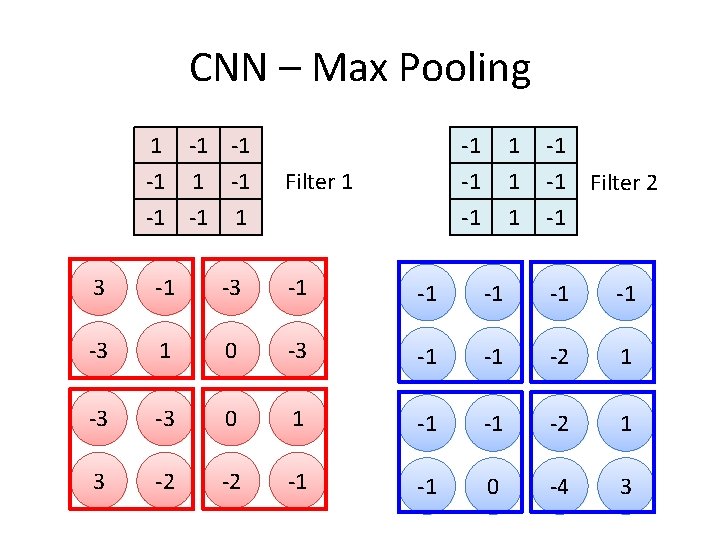

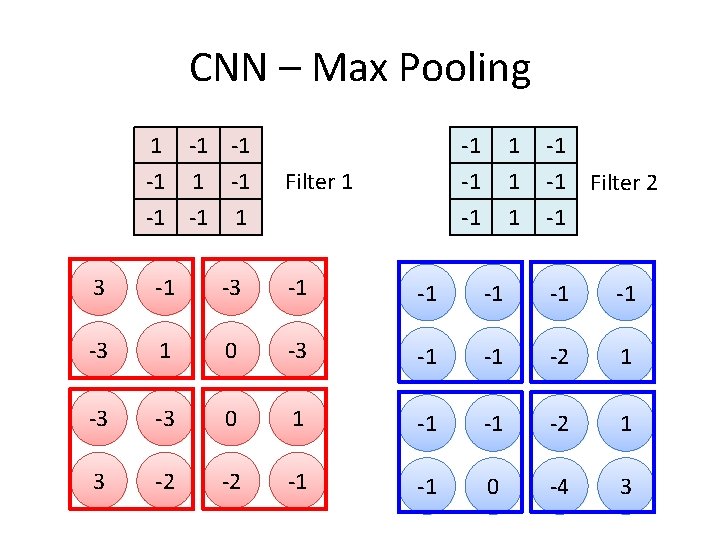

CNN – Max Pooling: the 4 x 4 outputs of Filter 1 and Filter 2 are each divided into 2 x 2 blocks, and only the maximum value of each block is kept.

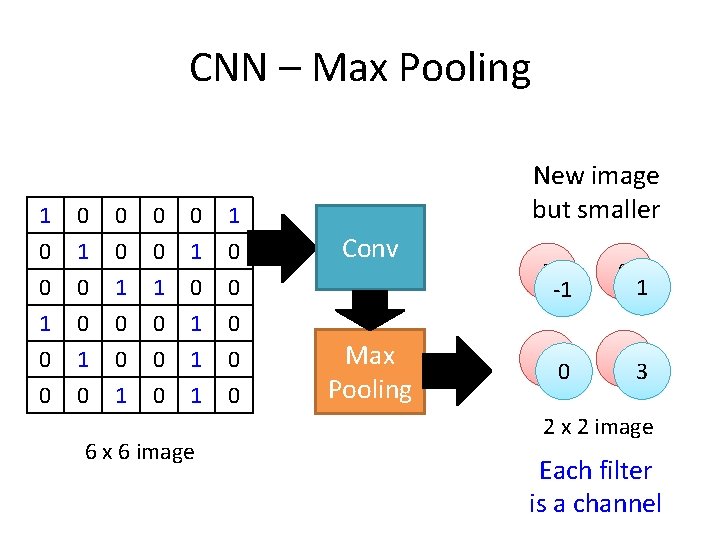

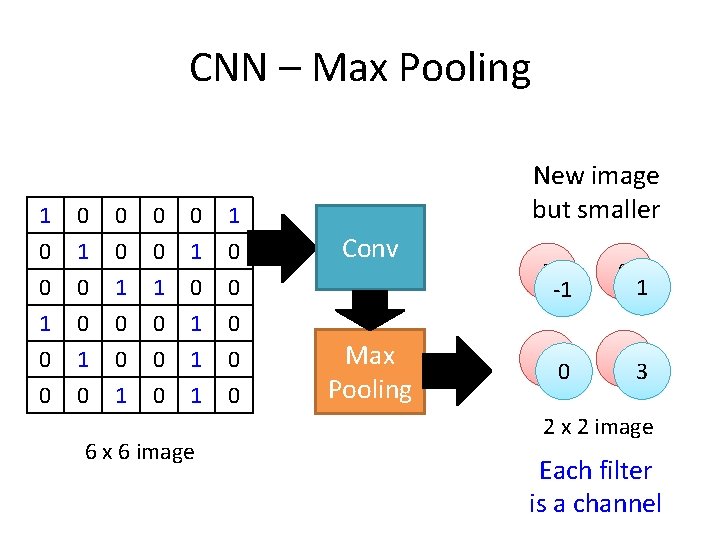

CNN – Max Pooling: Conv followed by Max Pooling turns the 6 x 6 image into a new but smaller 2 x 2 image. Each filter is a channel.
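A minimal sketch of 2 x 2 max pooling. The two feature maps below follow the values legible on the slides where possible, but the extraction is incomplete, so treat them as an assumed reconstruction. The function name `max_pool` is illustrative.

```python
# Assumed 4 x 4 outputs of Filter 1 and Filter 2.
FEATURE_MAP_1 = [
    [3, -1, -3, -1],
    [-3, 1, 0, -3],
    [-3, -3, 0, 1],
    [3, -2, -2, -1],
]
FEATURE_MAP_2 = [
    [-1, -1, -1, -1],
    [-1, -1, -2, 1],
    [-1, -1, -2, 1],
    [-1, 0, -4, 3],
]


def max_pool(feature_map, size=2):
    """Split the map into non-overlapping size x size blocks, keep each max."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            block = [
                feature_map[i + a][j + b]
                for a in range(size) for b in range(size)
            ]
            row.append(max(block))
        pooled.append(row)
    return pooled


# One pooled 2 x 2 channel per filter.
pooled_channels = [max_pool(FEATURE_MAP_1), max_pool(FEATURE_MAP_2)]
for channel in pooled_channels:
    print(channel)
```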

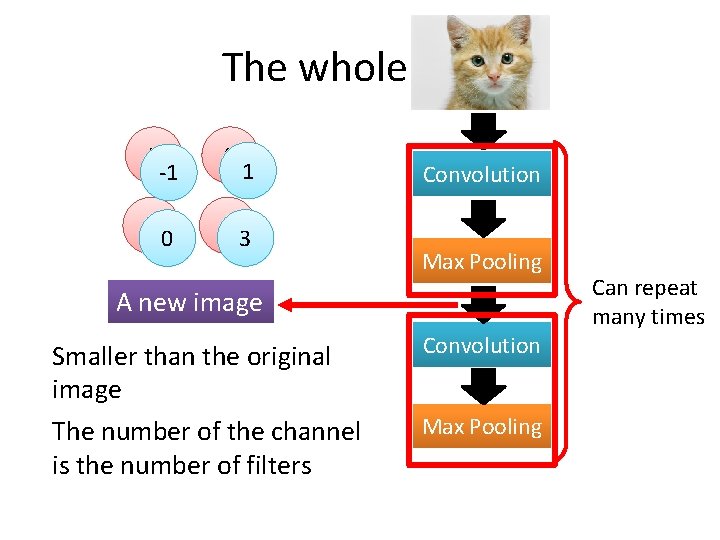

The whole CNN: each Convolution + Max Pooling stage outputs a new image that is smaller than the original image. The number of channels is the number of filters. The stage can repeat many times.
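How the image shape changes through repeated stages can be traced with a few helper functions. This sketch assumes no padding and non-overlapping pooling. The first trace uses the slide's sizes (6 x 6 image, two 3 x 3 filters, 2 x 2 pooling). The second trace, with a 28 x 28 input and 25 then 50 filters, is a hypothetical example of repeating the stage.

```python
def conv_shape(shape, num_filters, kernel_size, stride=1):
    """Output (height, width, channels) of a no-padding convolution."""
    height, width, _in_channels = shape
    out_h = (height - kernel_size) // stride + 1
    out_w = (width - kernel_size) // stride + 1
    # The number of output channels is the number of filters.
    return (out_h, out_w, num_filters)


def pool_shape(shape, pool_size=2):
    """Output shape of non-overlapping max pooling."""
    height, width, channels = shape
    return (height // pool_size, width // pool_size, channels)


def flatten_length(shape):
    height, width, channels = shape
    return height * width * channels


# Sizes from the slides: 6 x 6 image, two 3 x 3 filters, 2 x 2 pooling.
slide_conv = conv_shape((6, 6, 1), num_filters=2, kernel_size=3)
slide_pool = pool_shape(slide_conv)
print(slide_conv, slide_pool, flatten_length(slide_pool))

# Hypothetical deeper network: two Convolution + Max Pooling stages.
shape = (28, 28, 1)
trace = [shape]
for num_filters in (25, 50):
    shape = conv_shape(shape, num_filters, kernel_size=3)
    trace.append(shape)
    shape = pool_shape(shape)
    trace.append(shape)
print(trace, flatten_length(shape))
```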

The whole CNN: image → Convolution → Max Pooling → a new image → Convolution → Max Pooling → a new image → Flatten → Fully Connected Feedforward network → cat, dog, ……

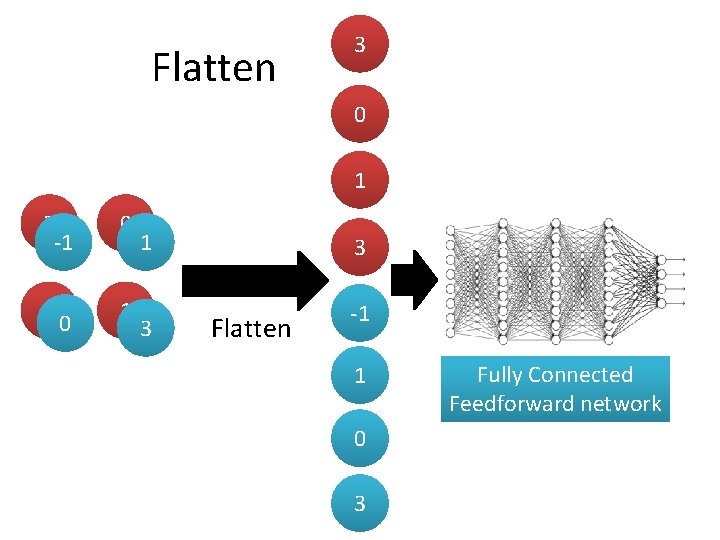

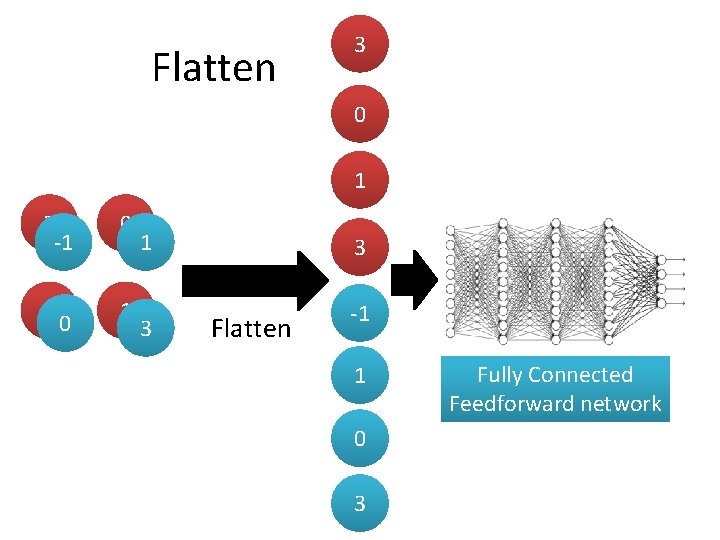

Flatten: the values of the pooled 2 x 2 channels are laid out as a single vector, which is the input to the Fully Connected Feedforward network.
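Flattening is just a reordering of values into one list. The pooled channels below are the assumed reconstruction of the slide's 2 x 2 outputs. The ordering used here (channel by channel, row by row) is one convention among several and may differ from the order drawn on the slide.

```python
# Assumed pooled outputs: one 2 x 2 channel per filter.
pooled_channels = [
    [[3, 0], [3, 1]],     # channel from Filter 1
    [[-1, 1], [0, 3]],    # channel from Filter 2
]


def flatten(channels):
    """Concatenate all values: channel by channel, row by row."""
    return [value for channel in channels for row in channel for value in row]


vector = flatten(pooled_channels)
print(vector)        # input vector for the fully connected network
print(len(vector))   # 2 channels * 2 rows * 2 columns
```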

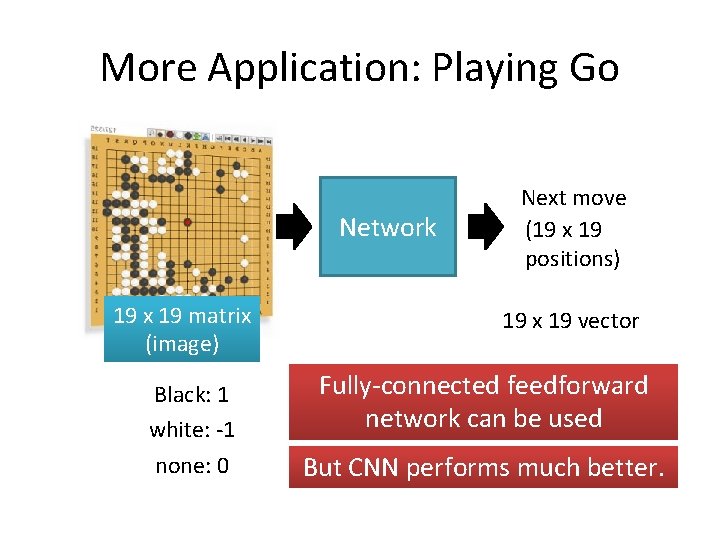

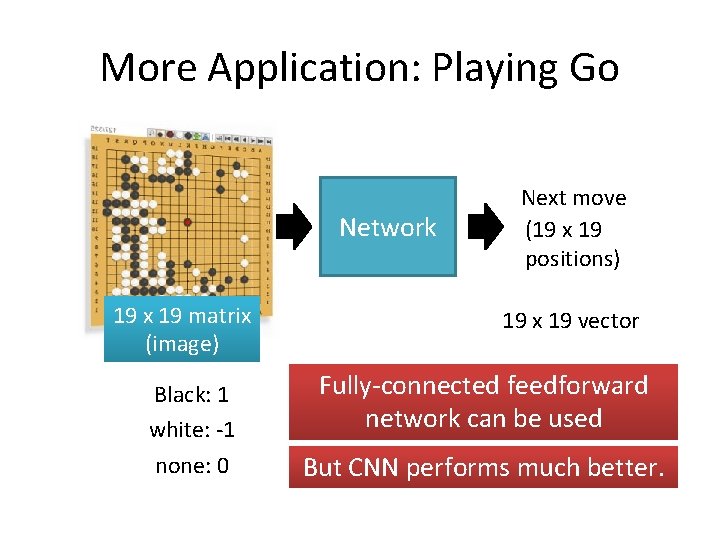

More Application: Playing Go

- Network input: the 19 x 19 board, either as a 19 x 19 vector or as a 19 x 19 matrix (image), with Black: 1, White: -1, none: 0.
- Network output: the next move (19 x 19 positions), a 19 x 19 vector.
- A fully-connected feedforward network can be used, but a CNN performs much better.

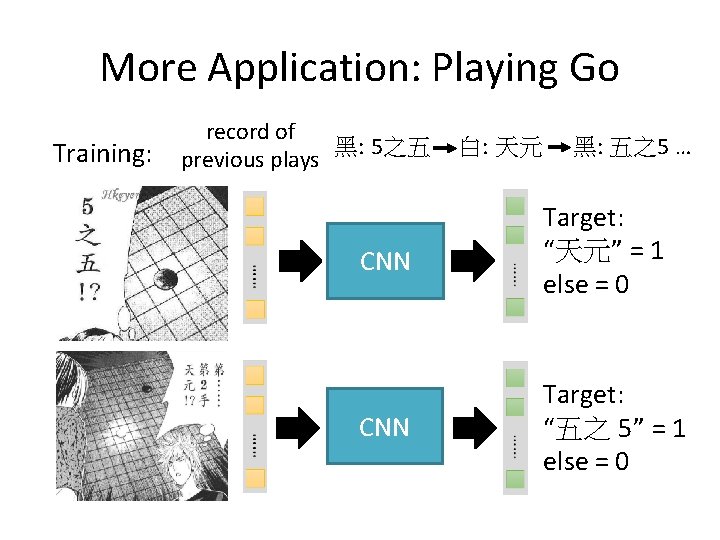

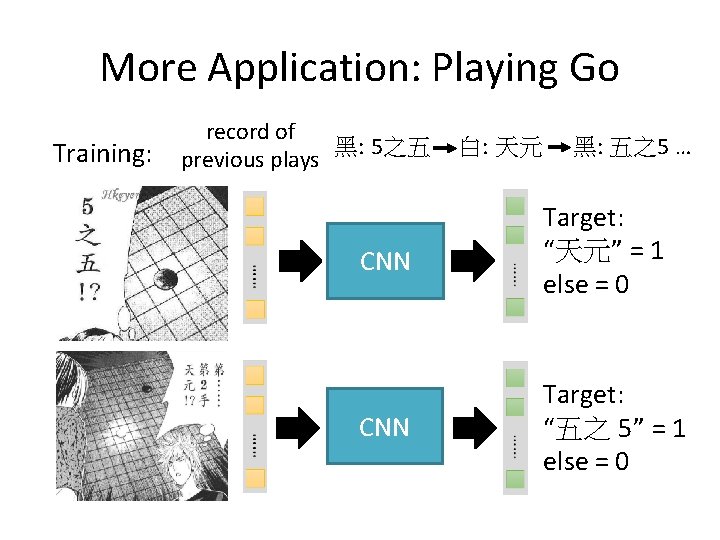

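More Application: Playing Go

- Training: records of previous plays, e.g. Black: 5-5, White: Tengen (the center point), Black: 5-5, …
- For the position before White's move, the CNN target is “Tengen” = 1, else = 0.
- For the position before Black's next move, the CNN target is “5-5” = 1, else = 0.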
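The board encoding and the one-hot targets can be sketched as follows. The encoding (Black 1, White -1, none 0) comes from the slides. The zero-based (row, column) coordinates, the choice of (4, 4) and (14, 14) for the two 5-5 points, and the helper names are hypothetical.

```python
BOARD_SIZE = 19
BLACK, WHITE, EMPTY = 1, -1, 0


def empty_board():
    return [[EMPTY] * BOARD_SIZE for _ in range(BOARD_SIZE)]


def one_hot_target(row, col):
    """Target vector: 1 at the played position, 0 everywhere else."""
    target = [0] * (BOARD_SIZE * BOARD_SIZE)
    target[row * BOARD_SIZE + col] = 1
    return target


def make_training_pairs(moves):
    """Turn a game record into (board before the move, target) pairs."""
    board = empty_board()
    pairs = []
    for color, row, col in moves:
        snapshot = [line[:] for line in board]   # copy the current position
        pairs.append((snapshot, one_hot_target(row, col)))
        board[row][col] = color                  # then play the move
    return pairs


# Hypothetical record: Black 5-5, White Tengen (center), Black 5-5.
game_record = [(BLACK, 4, 4), (WHITE, 9, 9), (BLACK, 14, 14)]
pairs = make_training_pairs(game_record)

board_before_white, target_for_white = pairs[1]
print(board_before_white[4][4])       # Black stone already on the board
print(target_for_white.index(1))      # flat index of the center point
```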

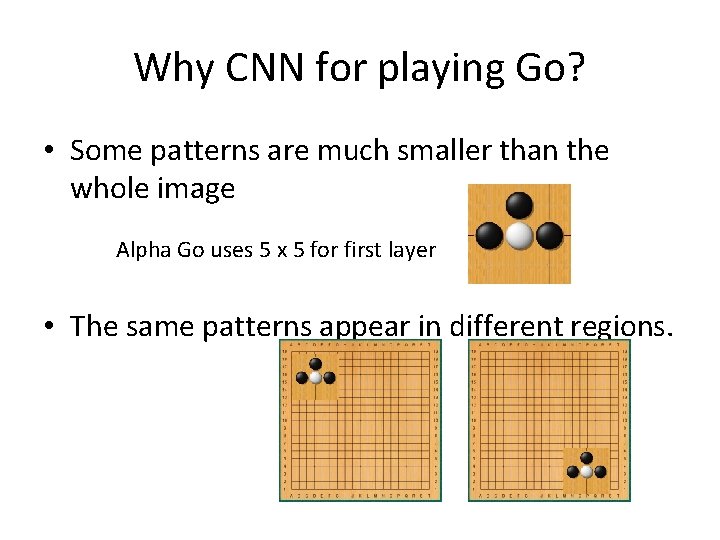

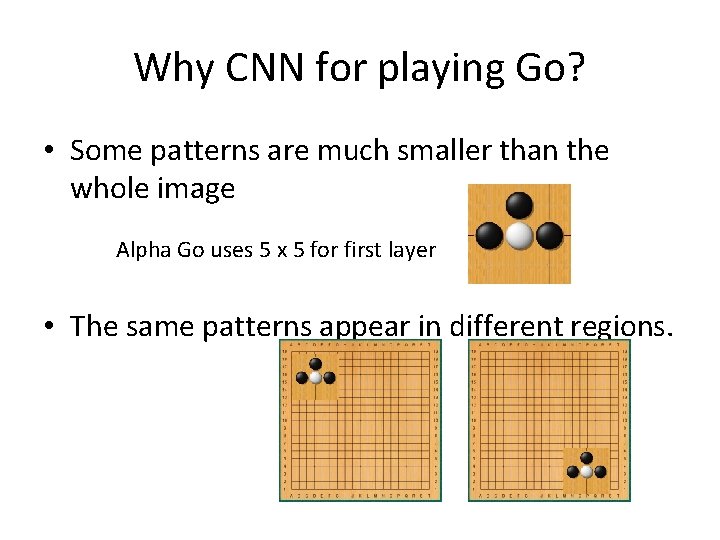

Why CNN for playing Go?

- Some patterns are much smaller than the whole image: Alpha Go uses 5 x 5 for the first layer.
- The same patterns appear in different regions.

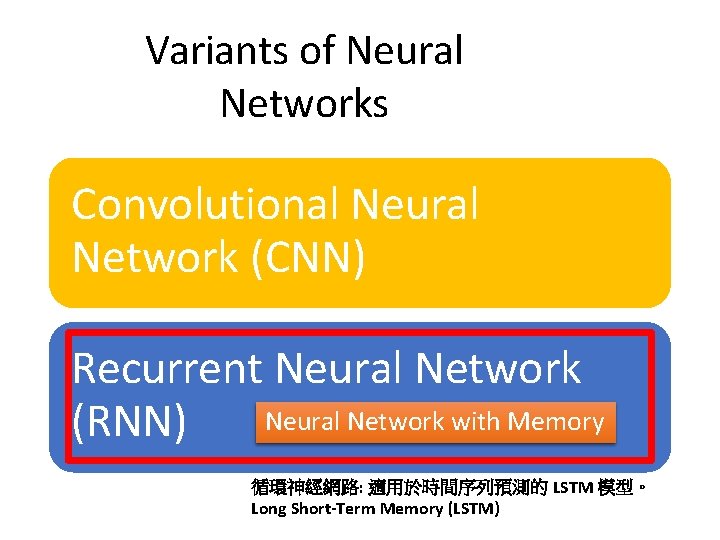

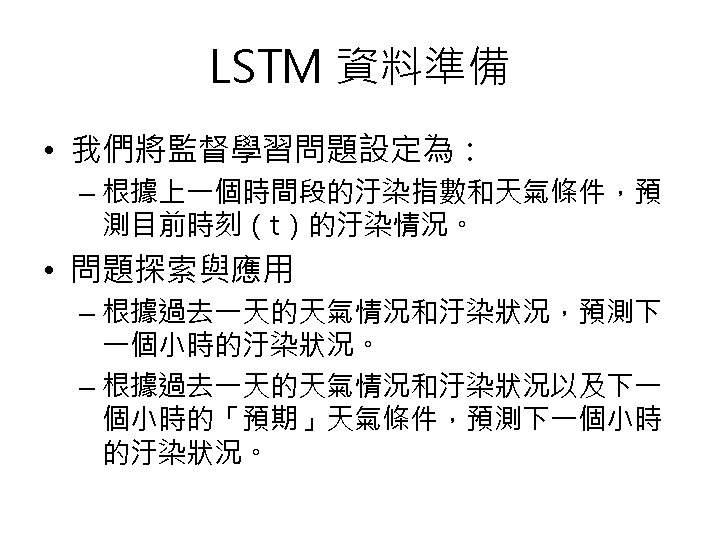

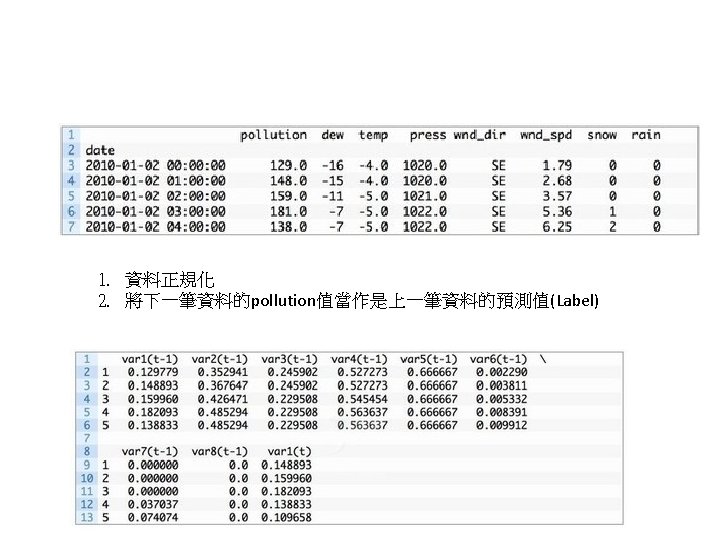

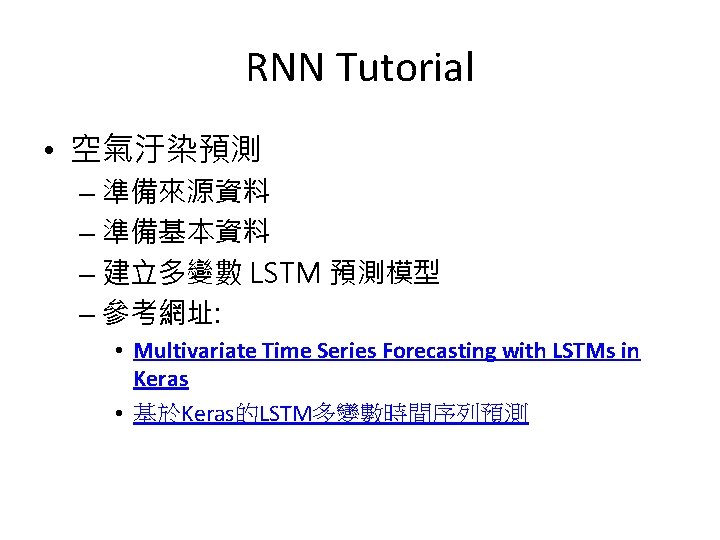

Variants of Neural Networks

- Convolutional Neural Network (CNN)
- Recurrent Neural Network (RNN): a neural network with memory. The Long Short-Term Memory (LSTM) model is an RNN suited to time-series prediction.

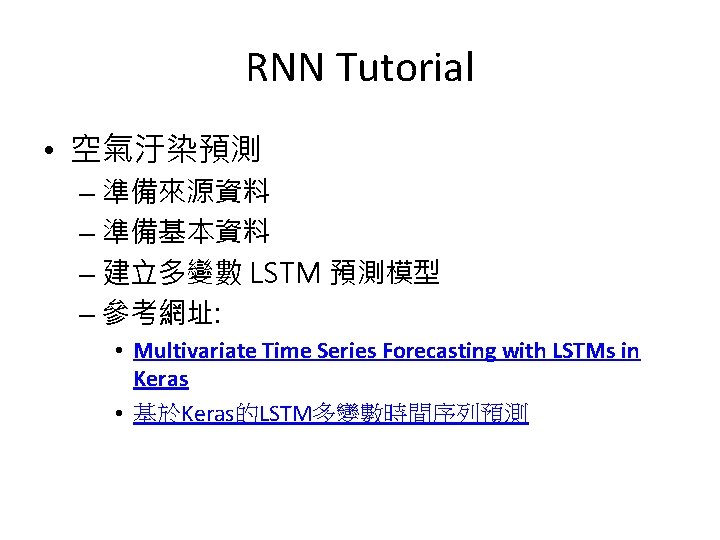

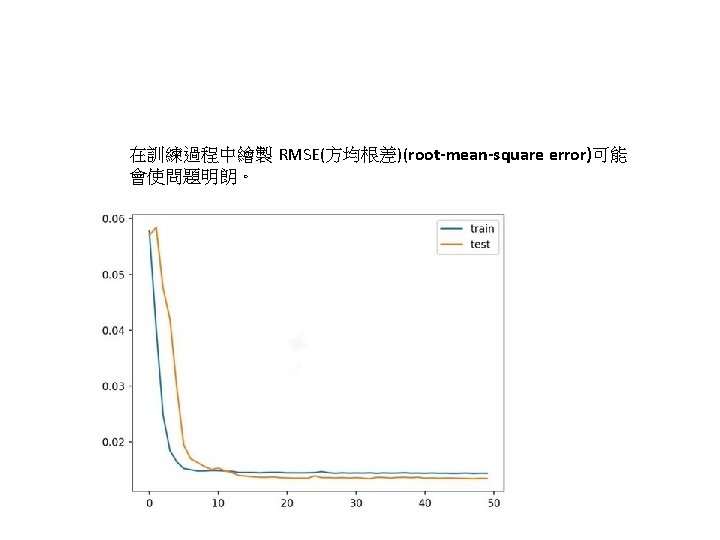

My Target: predict the stock market index value one day ahead. RMSE=100. Train set: 2013/01/08~2014/8/3. Test set: 2014/8/27~2017/7/23.

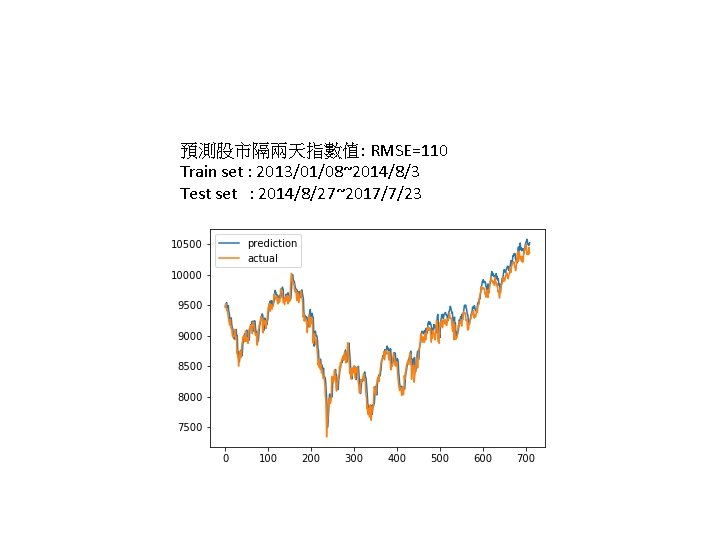

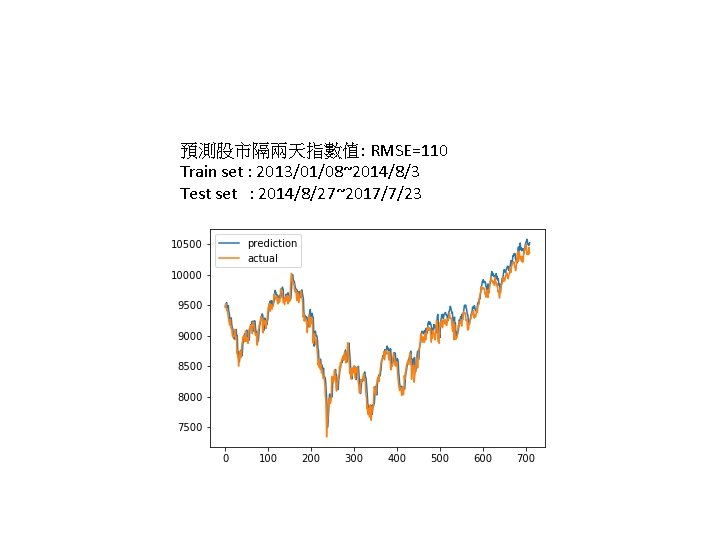

Predict the stock market index value two days ahead: RMSE=110. Train set: 2013/01/08~2014/8/3. Test set: 2014/8/27~2017/7/23.

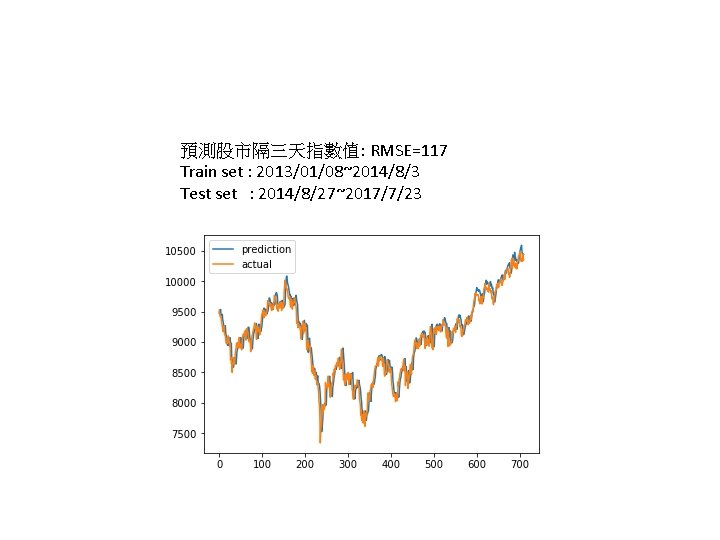

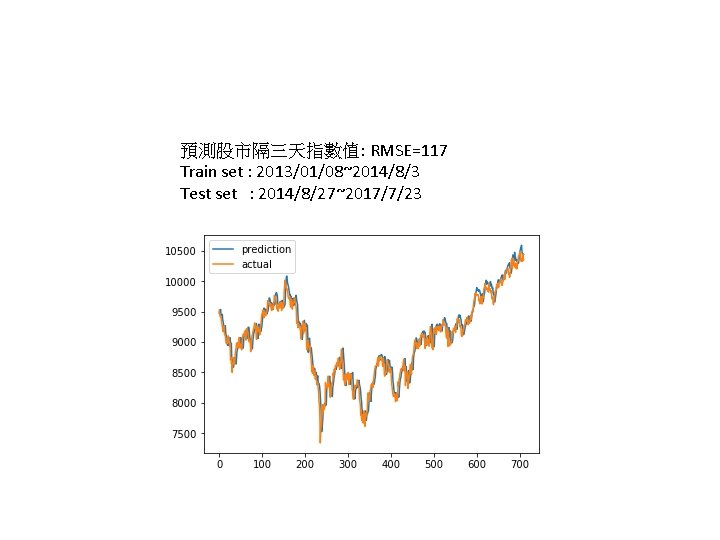

Predict the stock market index value three days ahead: RMSE=117. Train set: 2013/01/08~2014/8/3. Test set: 2014/8/27~2017/7/23.
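The slides report RMSE for predictions one, two, and three days ahead but do not show how the data were prepared. The sketch below gives the standard RMSE definition and one plausible way to build training pairs for a given horizon. The window length, the tiny `index_values` series, and the helper names are hypothetical, and nothing here reproduces the reported results.

```python
import math


def rmse(predictions, actuals):
    """Root mean squared error between two equal-length sequences."""
    squared_errors = [(p - a) ** 2 for p, a in zip(predictions, actuals)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def make_windows(series, window, horizon):
    """Pair each run of `window` values with the value `horizon` days later.

    horizon=1 predicts the next day, horizon=2 the day after, and so on.
    """
    pairs = []
    last_start = len(series) - window - horizon
    for start in range(last_start + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs


# Hypothetical index values, for illustration only.
index_values = [100, 102, 101, 105, 107, 110]

one_day = make_windows(index_values, window=3, horizon=1)
two_day = make_windows(index_values, window=3, horizon=2)
print(one_day)
print(two_day)
print(rmse([3.0, 3.0], [0.0, 0.0]))
```

Longer horizons leave fewer training pairs from the same series and are usually harder to predict, which is consistent with the RMSE rising from 100 to 117 across the three targets.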