Recurrent Neural Networks Adapted from Arun Mallya Source


Outline
• Sequential prediction problems
• Vanilla RNN unit
  – Forward and backward pass
  – Back-propagation through time (BPTT)
• Long Short-Term Memory (LSTM) unit
• Gated Recurrent Unit (GRU)
• Applications

Sequential prediction tasks
• So far, we have focused mainly on prediction problems with fixed-size inputs and outputs
• But what if the input and/or output is a variable-length sequence?

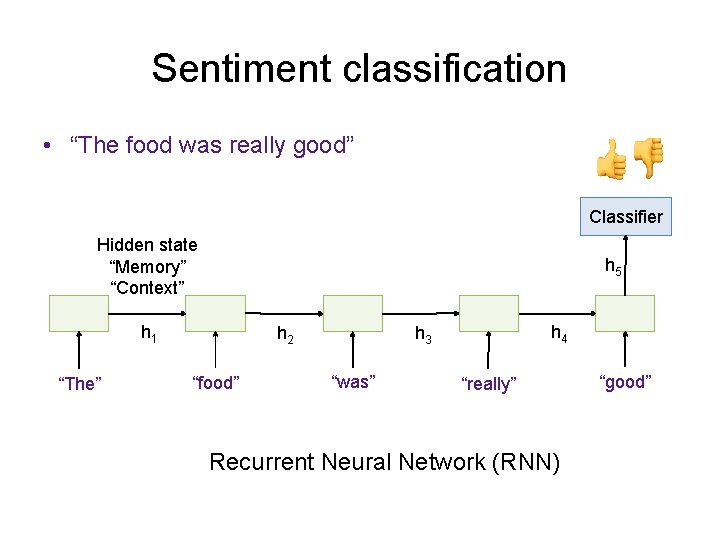

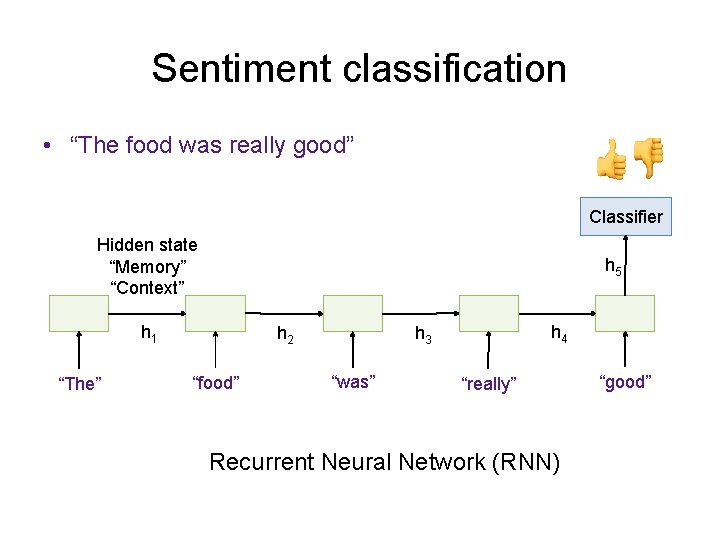

Text classification
• Sentiment classification: classify a restaurant, movie, or product review as positive or negative
  – “The food was really good”
  – “The vacuum cleaner broke within two weeks”
  – “The movie had slow parts, but overall was worth watching”
• What feature representation or predictor structure can we use for this problem?

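Sentiment classification • “The food was really good” [Figure: a Recurrent Neural Network (RNN) reads “The”, “food”, “was”, “really”, “good” one word at a time, producing hidden states $h_1, \dots, h_5$ (the “memory” or “context”); a classifier is applied to the final hidden state $h_5$]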

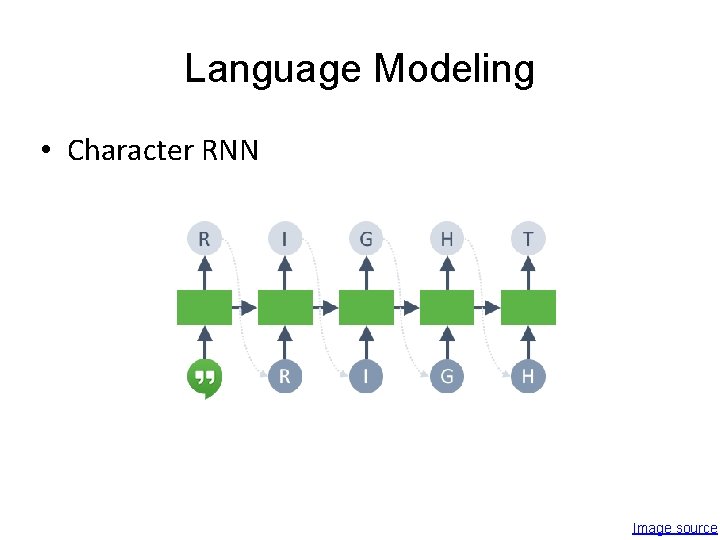

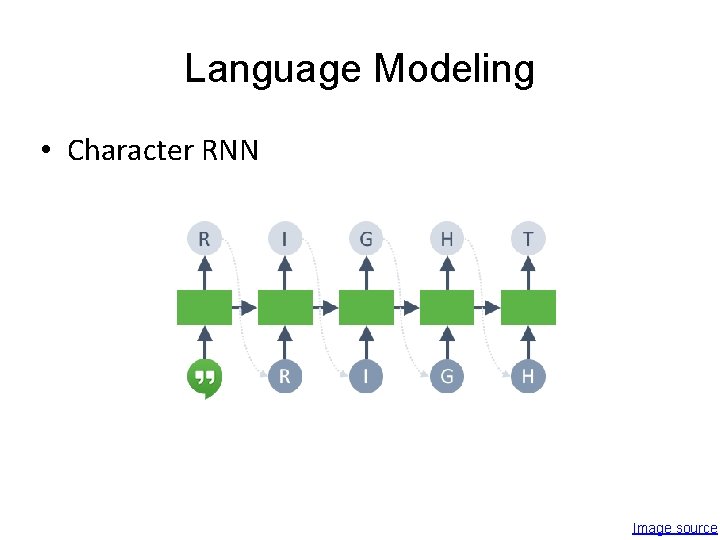

Language Modeling

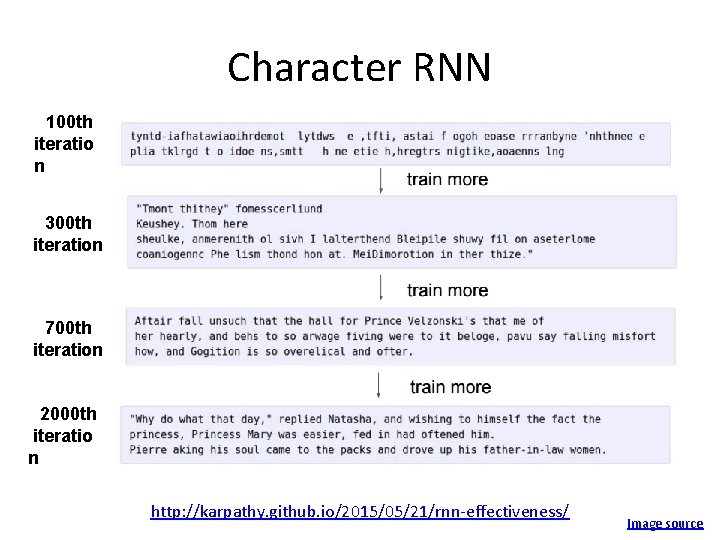

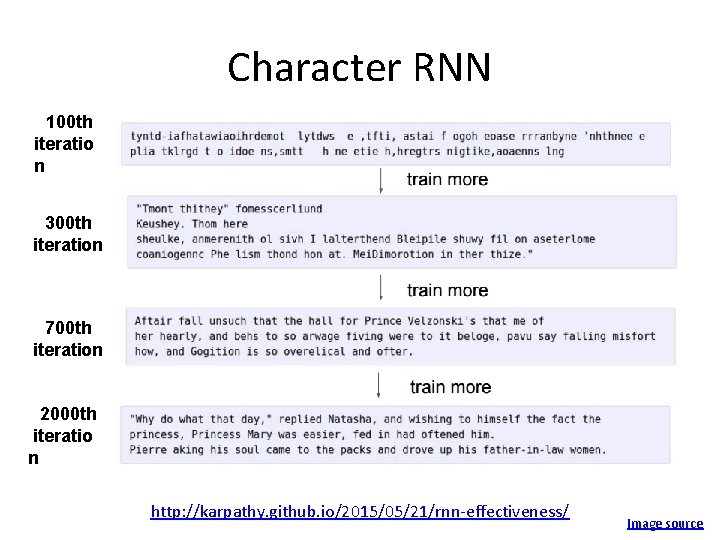

Language Modeling • Character RNN Image source

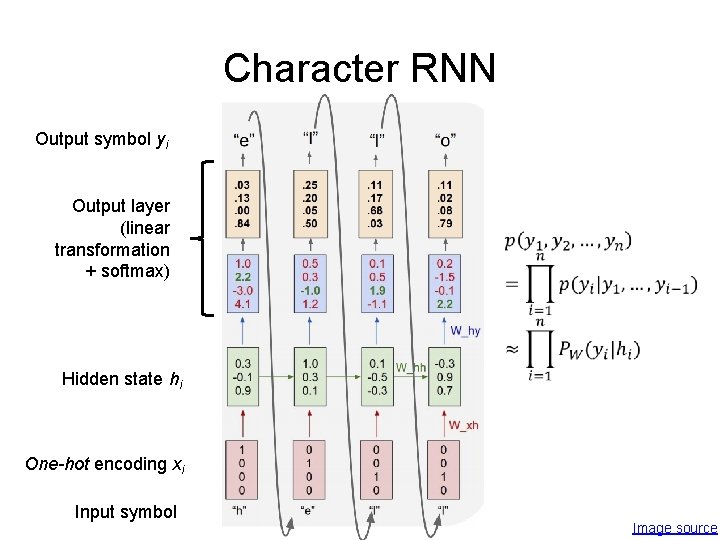

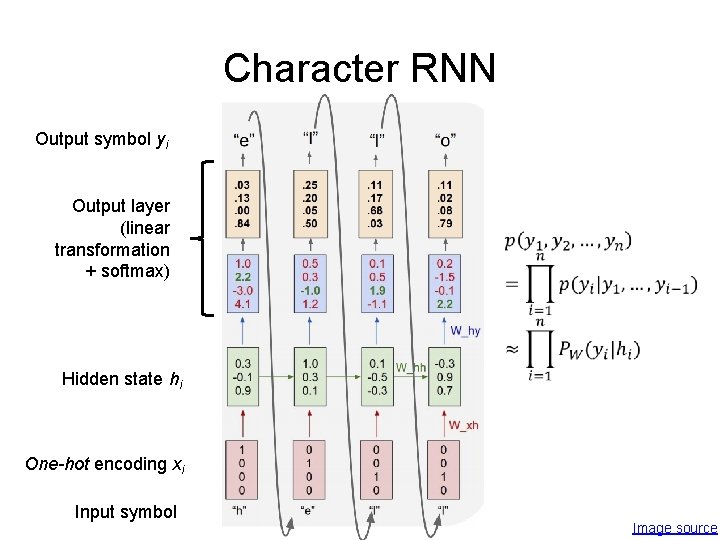

Character RNN [Figure: input symbol → one-hot encoding $x_i$ → hidden state $h_i$ → output layer (linear transformation + softmax) → output symbol $y_i$] Image source
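A minimal NumPy sketch of the character RNN described above, for illustration only. Assumptions not on the slide: the hidden update is $h_i = \tanh(W_{xh} x_i + W_{hh} h_{i-1})$ without biases, the weights are random and untrained, and the four-character vocabulary and the weight names `W_xh`, `W_hh`, `W_hy` are hypothetical. Generation feeds each sampled character back in as the next input.

```python
import numpy as np

def one_hot(index, vocab_size):
    """Encode a character index as a one-hot vector x_i."""
    x = np.zeros(vocab_size)
    x[index] = 1.0
    return x

def softmax(z):
    """Numerically stable softmax."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def char_rnn_step(x, h_prev, W_xh, W_hh, W_hy):
    """One step: update the hidden state h_i, then map it to a distribution over y_i."""
    h = np.tanh(W_xh @ x + W_hh @ h_prev)   # assumed tanh recurrence, no bias
    p = softmax(W_hy @ h)                   # output layer: linear transformation + softmax
    return h, p

# Hypothetical tiny vocabulary and random (untrained) weights.
vocab = ["h", "e", "l", "o"]
V, H = len(vocab), 8
rng = np.random.default_rng(0)
W_xh = rng.normal(scale=0.1, size=(H, V))
W_hh = rng.normal(scale=0.1, size=(H, H))
W_hy = rng.normal(scale=0.1, size=(V, H))

# Generate: sample a character from p and feed it back as the next input.
h = np.zeros(H)
idx = vocab.index("h")
generated = [vocab[idx]]
for _ in range(5):
    h, p = char_rnn_step(one_hot(idx, V), h, W_xh, W_hh, W_hy)
    idx = rng.choice(V, p=p)
    generated.append(vocab[idx])
print("".join(generated))  # arbitrary text, since the weights are untrained
```

Sampling from `p` (rather than always taking the most likely character) is what lets a trained character RNN produce varied text.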

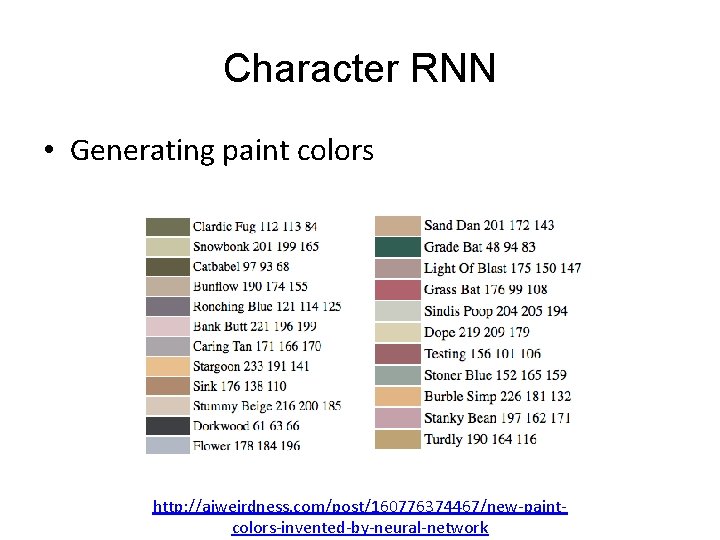

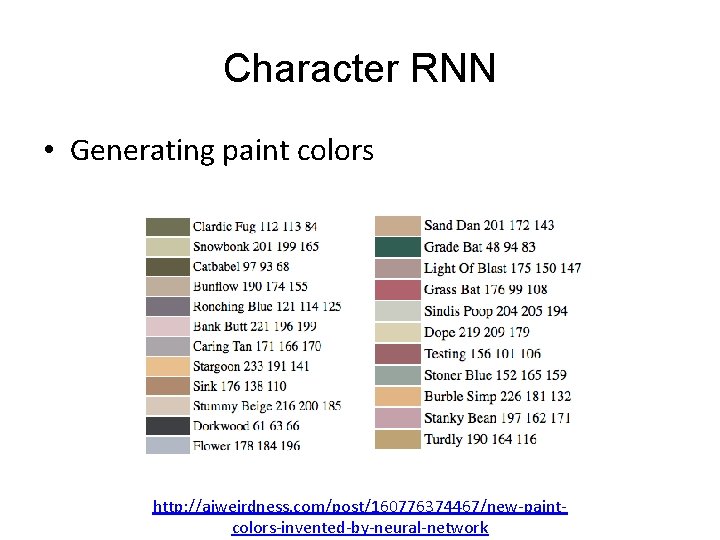

Character RNN • Generating paint colors http://aiweirdness.com/post/160776374467/new-paintcolors-invented-by-neural-network

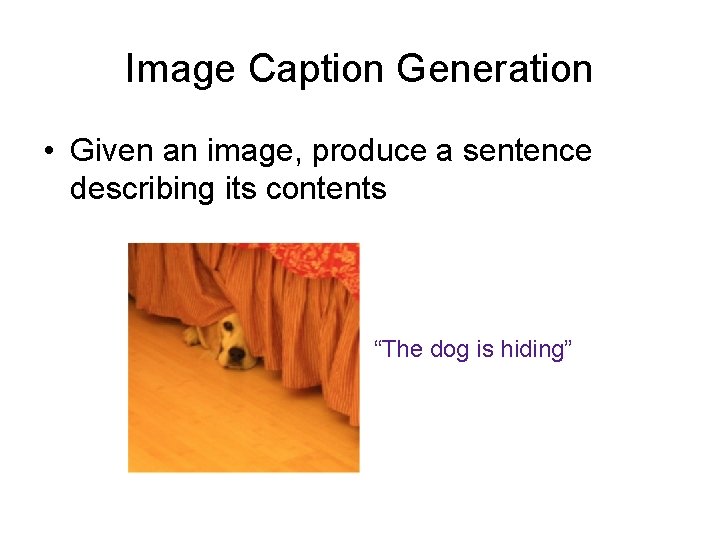

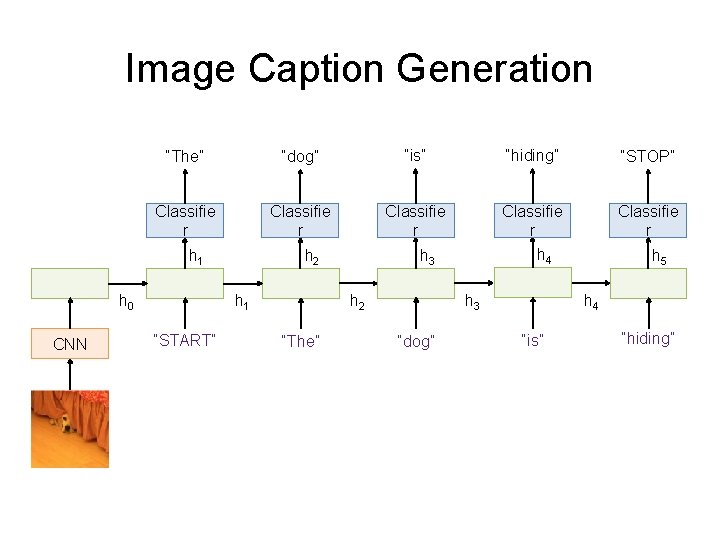

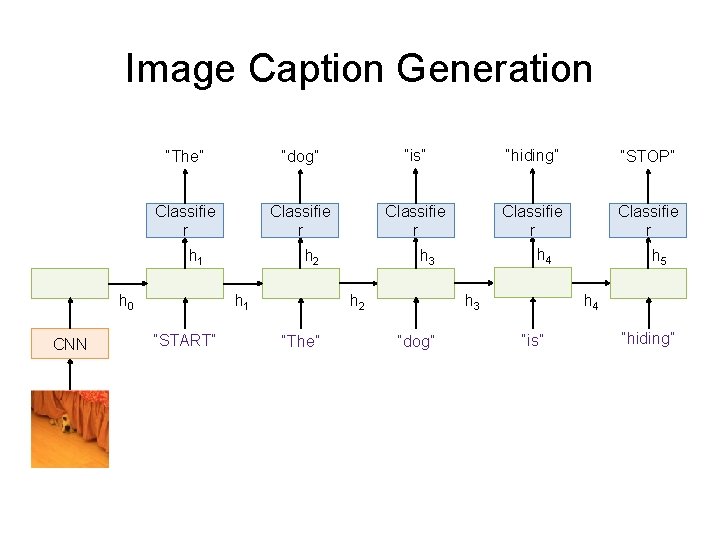

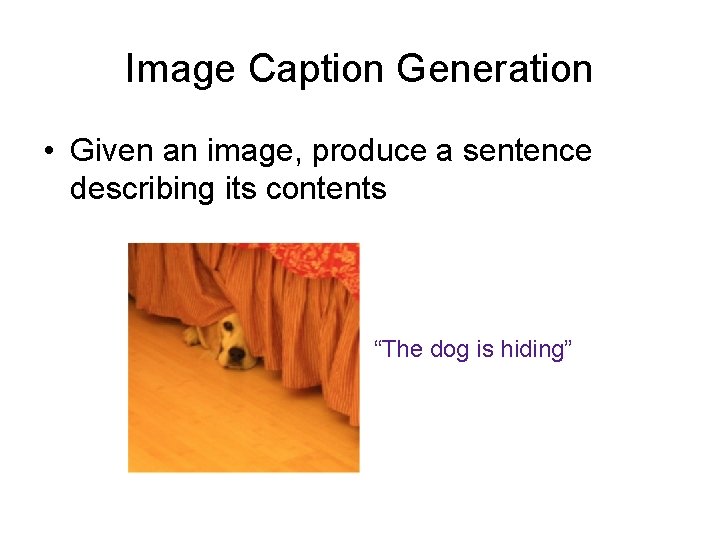

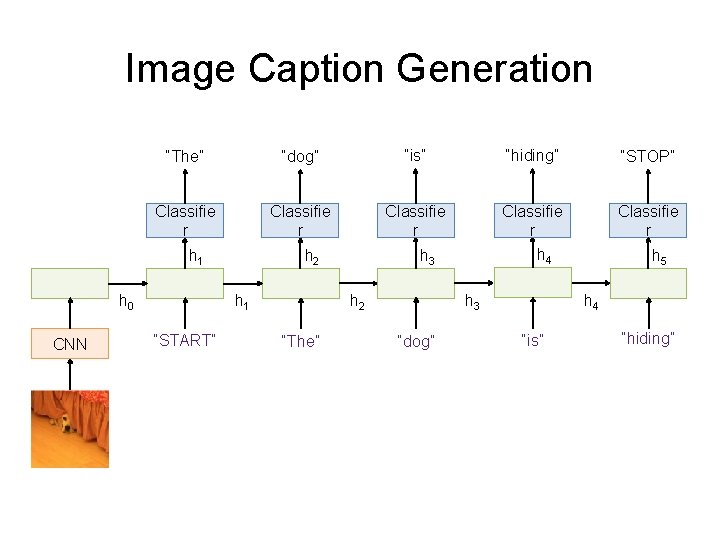

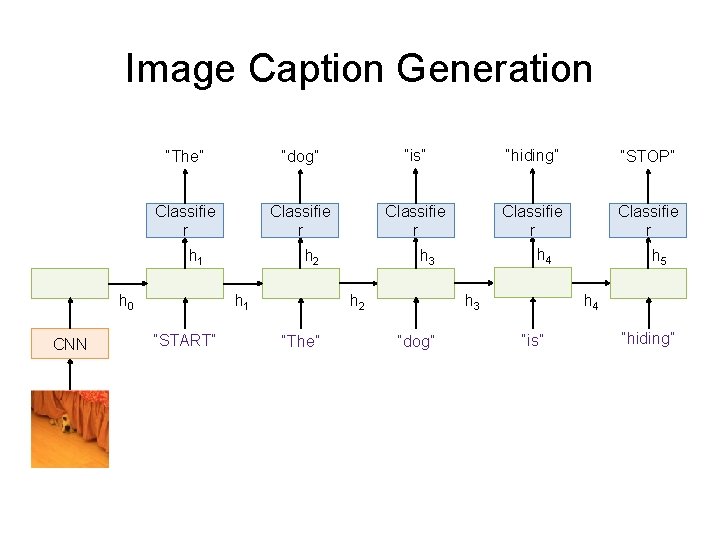

Image Caption Generation • Given an image, produce a sentence describing its contents, e.g. “The dog is hiding”

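Image Caption Generation [Figure: a CNN encodes the image into the initial hidden state $h_0$; starting from “START”, a classifier on each hidden state $h_1, \dots, h_5$ predicts the next word (“The”, “dog”, “is”, “hiding”, “STOP”), and each predicted word is fed back as the next input]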
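The decoding loop in the figure can be sketched as follows, under loudly stated assumptions: the CNN is replaced by a random feature vector, a hypothetical matrix `W_img` projects it into $h_0$, the recurrence is a bias-free tanh RNN, the weights are random, and the next word is chosen greedily (argmax) instead of by sampling or beam search. Decoding stops at “STOP” or after `max_len` words.

```python
import numpy as np

# Hypothetical vocabulary; "START" and "STOP" are the special tokens from the slide.
vocab = ["START", "STOP", "The", "dog", "is", "hiding"]
V, H, F = len(vocab), 16, 32  # vocab size, hidden size, CNN feature size (assumed)
rng = np.random.default_rng(1)
W_img = rng.normal(scale=0.1, size=(H, F))  # projects the CNN feature to h_0 (assumed)
W_xh = rng.normal(scale=0.1, size=(H, V))
W_hh = rng.normal(scale=0.1, size=(H, H))
W_hy = rng.normal(scale=0.1, size=(V, H))   # classifier over the vocabulary

def greedy_caption(cnn_feature, max_len=10):
    """Decode one word at a time, feeding each prediction back as the next input."""
    h = np.tanh(W_img @ cnn_feature)          # the image initializes the hidden state h_0
    token = vocab.index("START")
    words = []
    for _ in range(max_len):
        x = np.eye(V)[token]                  # one-hot encoding of the previous word
        h = np.tanh(W_xh @ x + W_hh @ h)
        token = int(np.argmax(W_hy @ h))      # greedy choice of the next word
        if vocab[token] == "STOP":
            break
        words.append(vocab[token])
    return words

caption = greedy_caption(rng.normal(size=F))
print(caption)  # untrained weights, so the words are arbitrary
```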

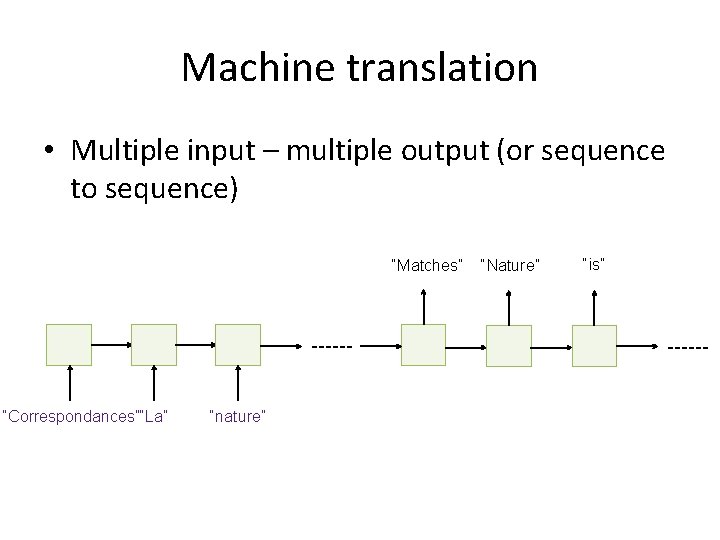

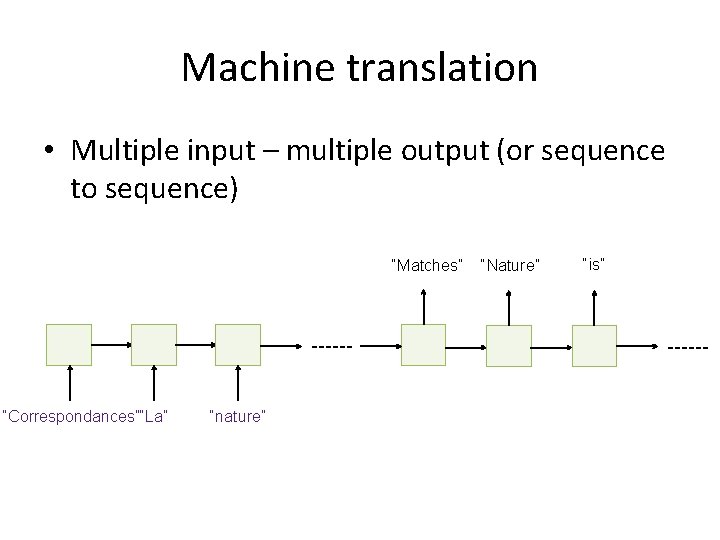

Machine translation https://translate.google.com/

Machine translation • Multiple input – multiple output (or sequence to sequence) [Figure: the French input “Correspondances”, “La”, “nature”, … is translated into the English output “Matches”, “Nature”, “is”, …]
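A minimal sequence-to-sequence sketch in NumPy, assuming the encoder-decoder arrangement: one RNN (the encoder) reads the whole source sequence, and its final state initializes a second RNN (the decoder) that emits target tokens until a stop token. The vocabulary sizes, token ids `START`/`STOP`, bias-free tanh cells, random weights, and greedy decoding are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
H, V_src, V_tgt = 8, 5, 6  # hidden size and vocabulary sizes (assumed)
enc = {"W_xh": rng.normal(scale=0.1, size=(H, V_src)),
       "W_hh": rng.normal(scale=0.1, size=(H, H))}
dec = {"W_xh": rng.normal(scale=0.1, size=(H, V_tgt)),
       "W_hh": rng.normal(scale=0.1, size=(H, H)),
       "W_hy": rng.normal(scale=0.1, size=(V_tgt, H))}
START, STOP = 0, 1  # hypothetical target token ids

def step(params, x, h):
    """Bias-free tanh RNN step shared by encoder and decoder (different weights)."""
    return np.tanh(params["W_xh"] @ x + params["W_hh"] @ h)

def translate(src_ids, max_len=10):
    # Encoder: read the whole source sequence; its final state summarizes it.
    h = np.zeros(H)
    for i in src_ids:
        h = step(enc, np.eye(V_src)[i], h)
    # Decoder: start from the encoder's final state and emit target tokens.
    out, token = [], START
    for _ in range(max_len):
        h = step(dec, np.eye(V_tgt)[token], h)
        token = int(np.argmax(dec["W_hy"] @ h))
        if token == STOP:
            break
        out.append(token)
    return out

print(translate([3, 1, 4]))  # arbitrary token ids, since the weights are untrained
```

Because the decoder only sees the encoder's final state, input and output lengths are decoupled, which is what makes the "multiple input – multiple output" setting possible.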

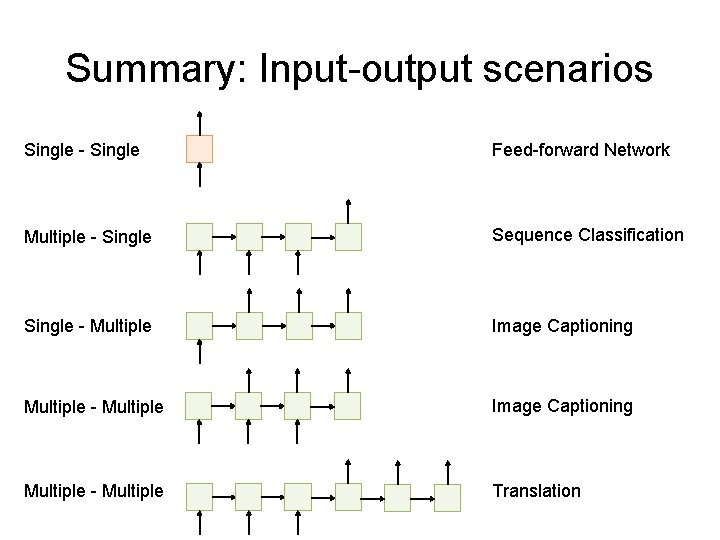

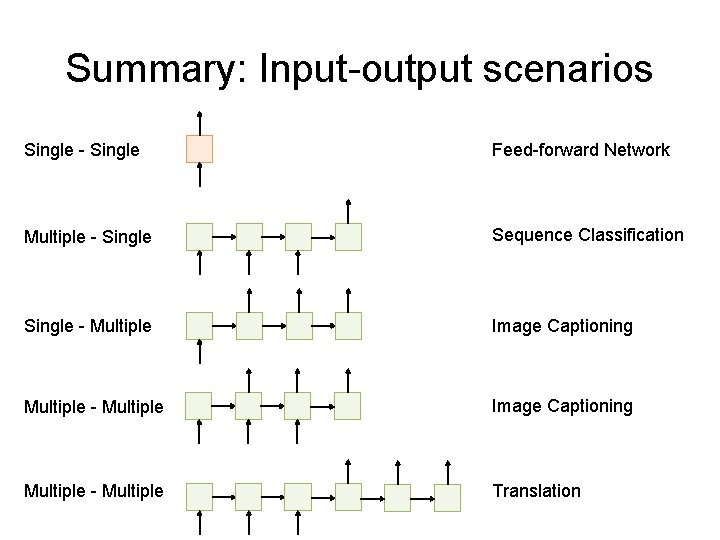

Summary: Input-output scenarios
• Single – Single: Feed-forward Network
• Multiple – Single: Sequence Classification
• Single – Multiple: Image Captioning
• Multiple – Multiple: Translation

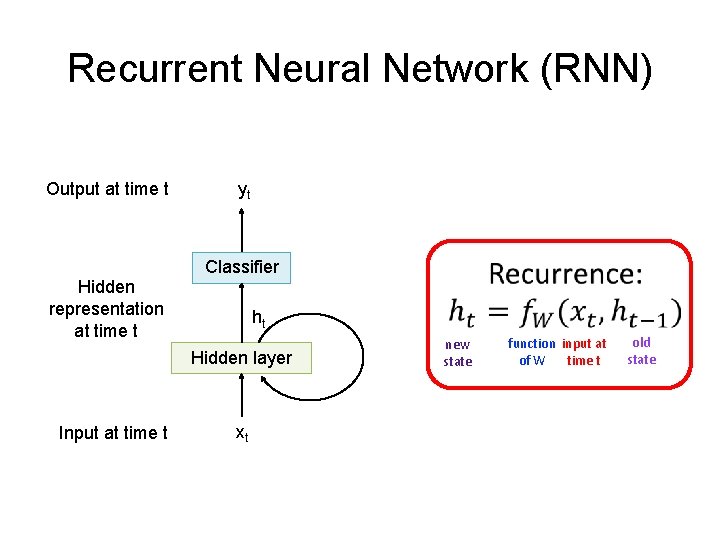

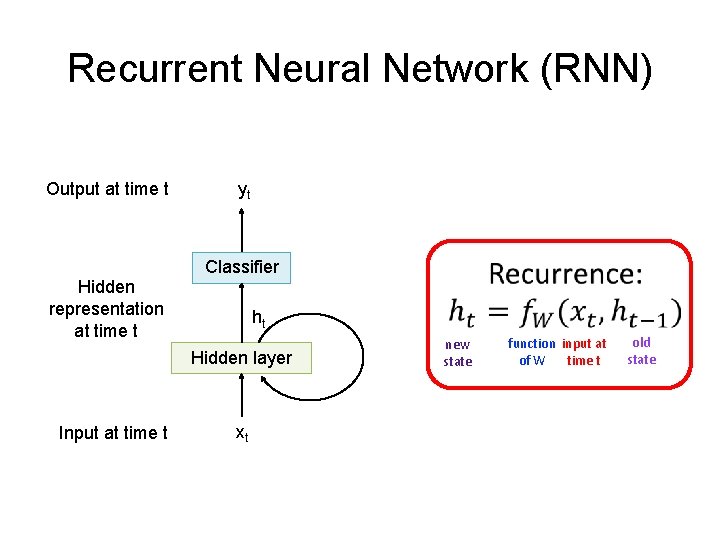

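Recurrent Neural Network (RNN) [Figure: at time $t$, the hidden layer takes the input $x_t$ and produces the hidden representation $h_t$; a classifier maps $h_t$ to the output $y_t$] $h_t = f_W(h_{t-1}, x_t)$: the new state $h_t$ is a function $f_W$, with parameters $W$, of the old state $h_{t-1}$ and the input at time $t$, $x_t$.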

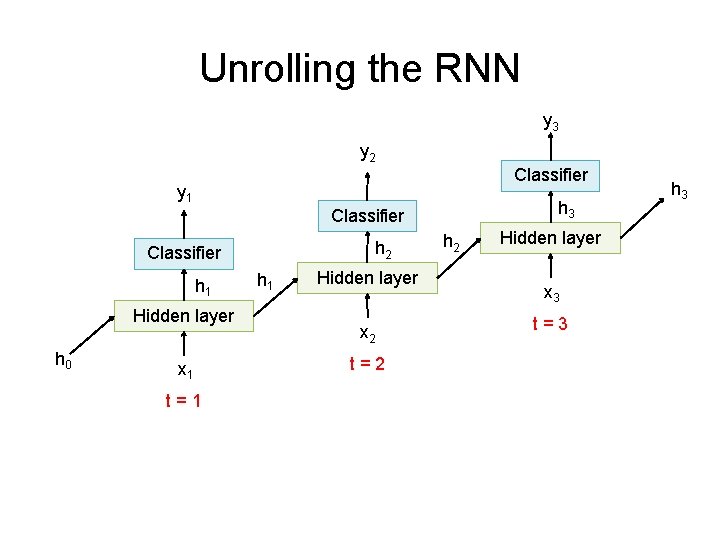

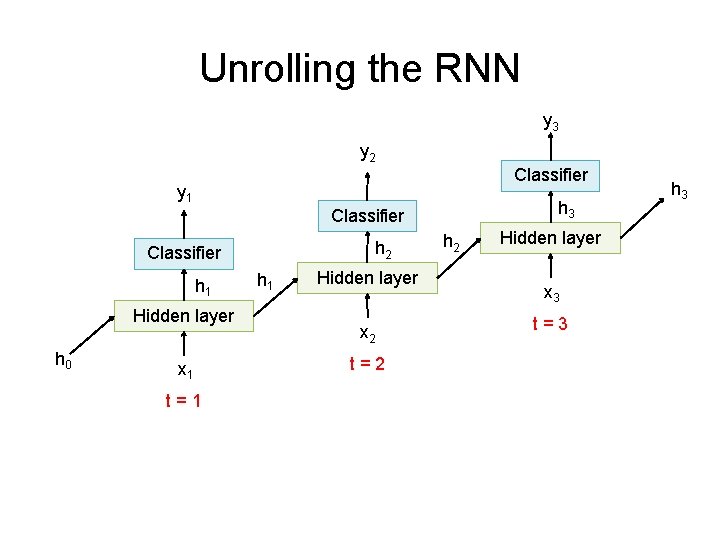

Unrolling the RNN [Figure: the same hidden layer applied at $t = 1, 2, 3$; starting from $h_0$, each step combines $x_t$ with $h_{t-1}$ to produce $h_t$, and a classifier maps $h_t$ to $y_t$]
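Unrolling amounts to a loop that applies one step function, with one set of weights, at every time step. The sketch below is generic in `step`; the toy "leaky running sum" used to exercise it is a hypothetical stand-in for $f_W$, chosen only because its outputs are easy to check by hand.

```python
def unroll(step, h0, xs):
    """Apply the same step function (same weights W) at every time step.

    Returns the hidden states h_1, ..., h_T.
    """
    hs = []
    h = h0
    for x in xs:
        h = step(h, x)  # h_t = f_W(h_{t-1}, x_t)
        hs.append(h)
    return hs

# Toy f_W (hypothetical): a leaky running sum, h_t = 0.5 * h_{t-1} + x_t
print(unroll(lambda h, x: 0.5 * h + x, 0.0, [1.0, 2.0, 3.0]))  # [1.0, 2.5, 4.25]
```

The sequence length only changes how many times the loop runs, not the number of parameters, which is why an RNN can handle variable-length inputs.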

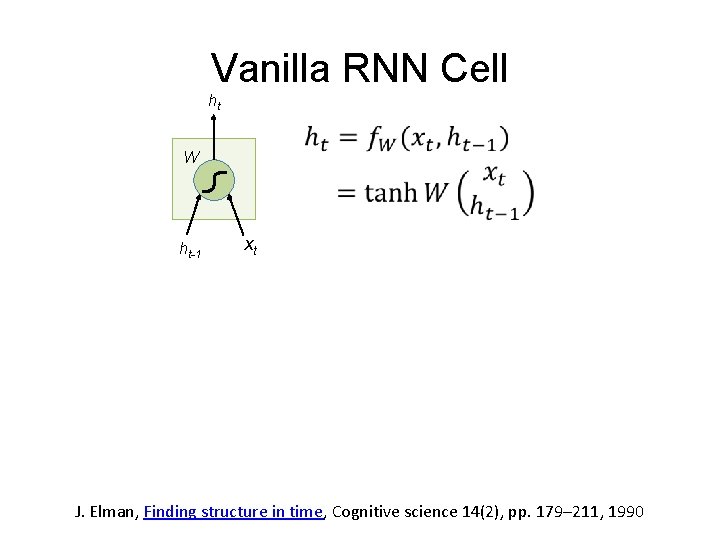

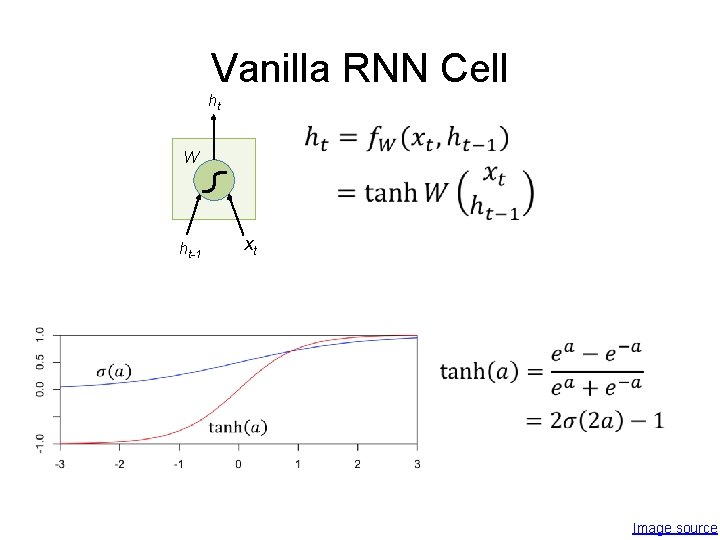

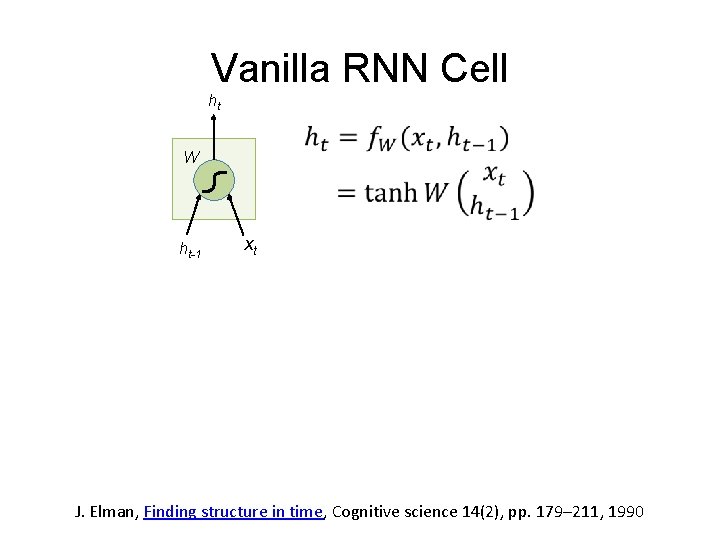

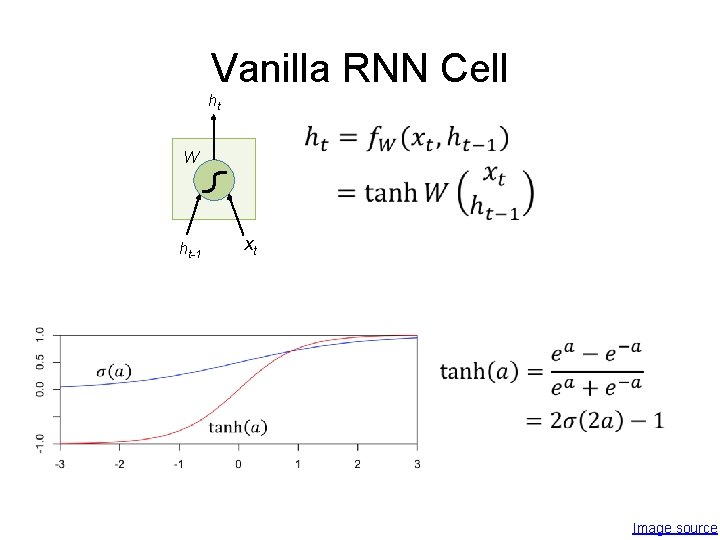

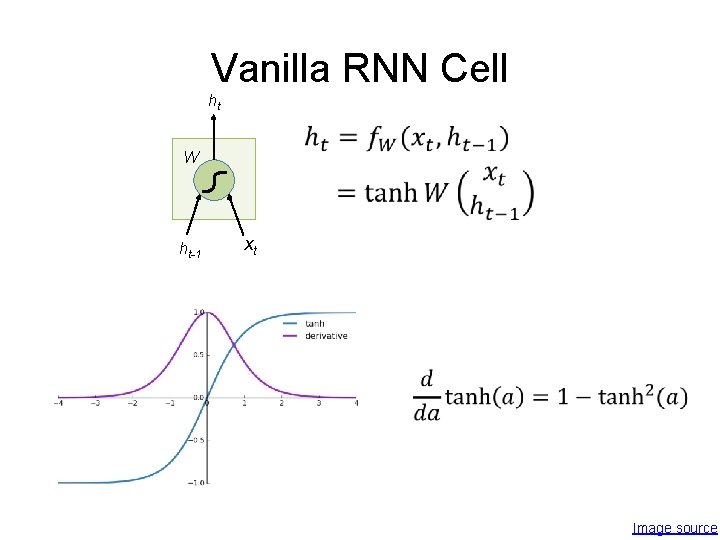

Vanilla RNN Cell: $h_t = \tanh\left(W \begin{pmatrix} x_t \\ h_{t-1} \end{pmatrix}\right)$ J. Elman, Finding structure in time, Cognitive Science 14(2), pp. 179–211, 1990

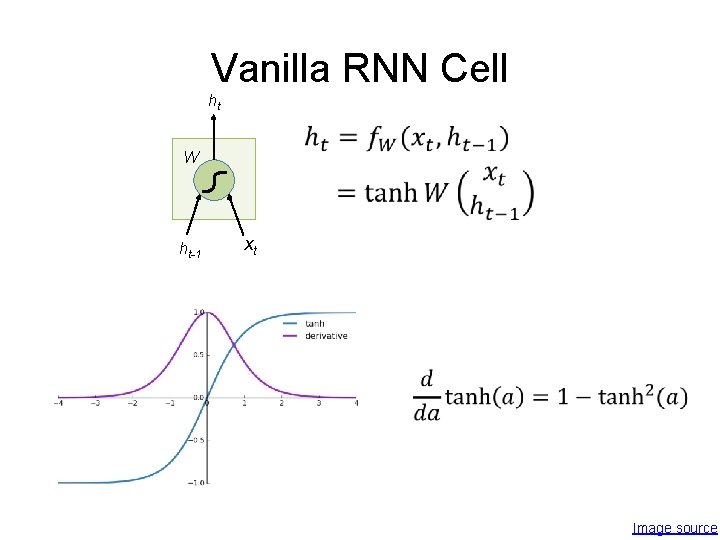

Vanilla RNN Cell [Figure: $x_t$ and $h_{t-1}$ are combined through $W$ to produce $h_t$] Image source

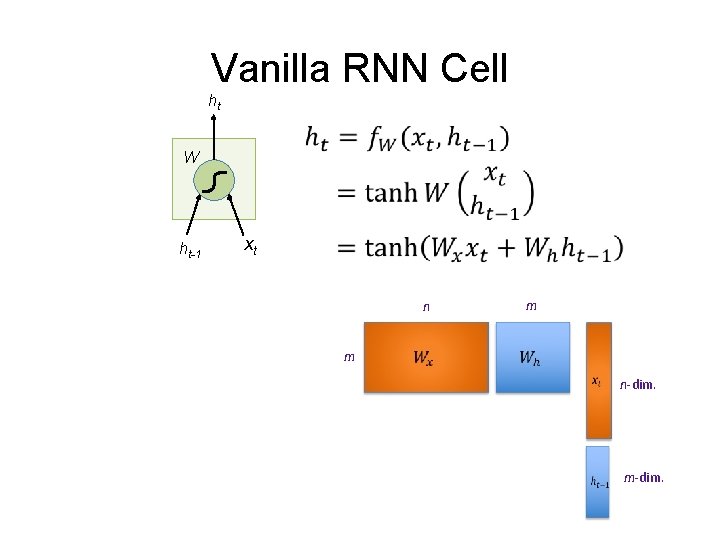

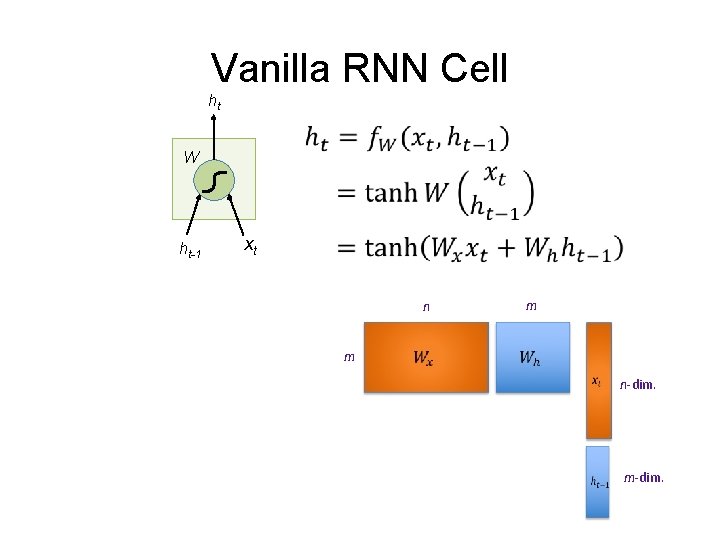

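Vanilla RNN Cell: $x_t$ is $n$-dimensional and $h_{t-1}$, $h_t$ are $m$-dimensional, so $W$ is an $m \times (n + m)$ matrix acting on the stacked vector $\begin{pmatrix} x_t \\ h_{t-1} \end{pmatrix}$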
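A minimal NumPy sketch of the vanilla cell $h_t = \tanh(W [x_t; h_{t-1}])$ with the dimensions above. It omits a bias term (as the slide's formula does) and uses random weights; the sizes $n = 3$, $m = 4$ and the sequence length are arbitrary choices for illustration.

```python
import numpy as np

def vanilla_rnn_cell(W, x_t, h_prev):
    """h_t = tanh(W [x_t; h_{t-1}]); x_t is n-dim, h_{t-1} and h_t are m-dim, W is (m, n + m)."""
    return np.tanh(W @ np.concatenate([x_t, h_prev]))

n, m = 3, 4
rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(m, n + m))
h = np.zeros(m)                      # h_0
for x_t in rng.normal(size=(5, n)):  # a length-5 input sequence
    h = vanilla_rnn_cell(W, x_t, h)  # the same W is reused at every step
print(h.shape)  # (4,)
```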

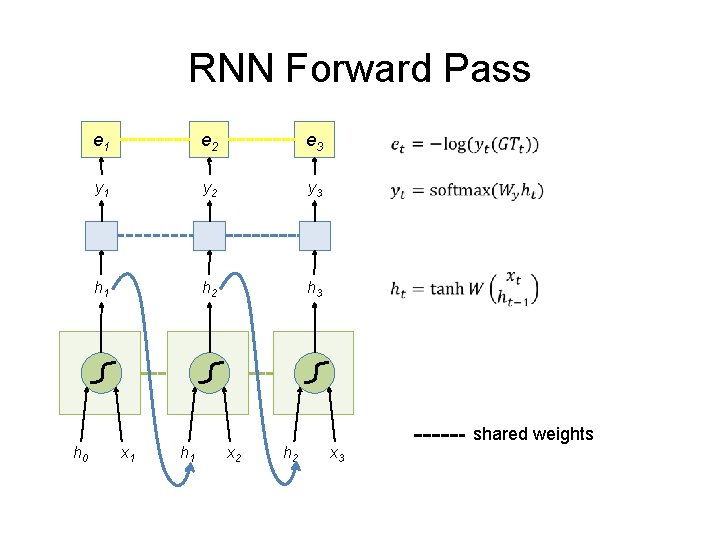

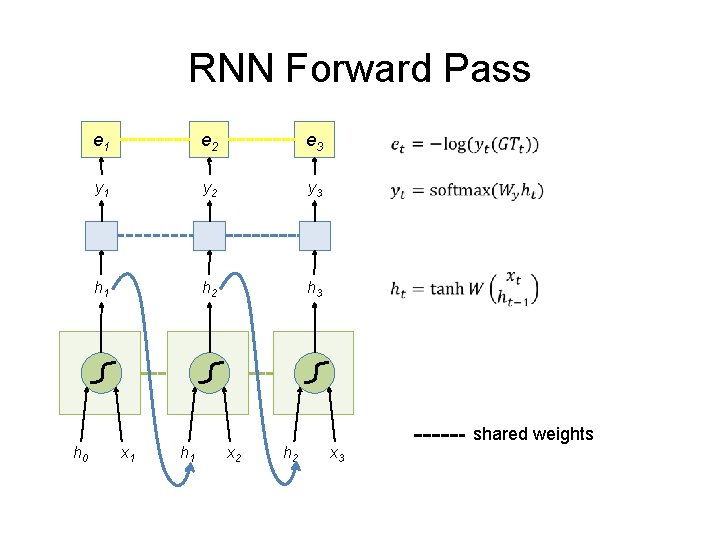

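RNN Forward Pass [Figure: starting from $h_0$, the cell maps $x_t$ and $h_{t-1}$ to $h_t$ for $t = 1, 2, 3$; each $h_t$ produces an output $y_t$ with error $e_t$; all steps use shared weights]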

Backpropagation Through Time (BPTT)
• Most common method used to train RNNs
• The unfolded network (used during the forward pass) is treated as one big feed-forward network that accepts the whole time series as input
• The weight updates are computed for each copy in the unfolded network, then summed (or averaged) and applied to the RNN weights
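The procedure above can be sketched in NumPy for a vanilla RNN. Assumptions for illustration: $W$ is split into input weights `Wx` and recurrent weights `Wh` (equivalent to $W [x_t; h_{t-1}]$ with $W = [W_x \; W_h]$), an output layer $y_t = W_y h_t$ and a squared error $e_t = \tfrac12 \lVert y_t - \text{target}_t \rVert^2$ are added, and biases are omitted. Each time step's contribution is added into one shared gradient, and a finite-difference check confirms one entry of the result.

```python
import numpy as np

def forward(Wx, Wh, Wy, xs, targets):
    """Unrolled forward pass: h_t = tanh(Wx x_t + Wh h_{t-1}), y_t = Wy h_t, summed squared error."""
    hs = [np.zeros(Wh.shape[0])]  # h_0
    loss = 0.0
    for x, tgt in zip(xs, targets):
        hs.append(np.tanh(Wx @ x + Wh @ hs[-1]))
        loss += 0.5 * np.sum((Wy @ hs[-1] - tgt) ** 2)
    return loss, hs

def bptt(Wx, Wh, Wy, xs, targets):
    """Gradients of the summed loss; every copy of the weights adds into one shared gradient."""
    _, hs = forward(Wx, Wh, Wy, xs, targets)
    dWx, dWh, dWy = np.zeros_like(Wx), np.zeros_like(Wh), np.zeros_like(Wy)
    dh_next = np.zeros(Wh.shape[0])     # gradient arriving from future time steps
    for t in reversed(range(len(xs))):
        h, h_prev = hs[t + 1], hs[t]
        dy = Wy @ h - targets[t]        # error from y_t
        dWy += np.outer(dy, h)
        dh = Wy.T @ dy + dh_next        # error from y_t plus error from future steps
        da = (1.0 - h ** 2) * dh        # back through tanh
        dWx += np.outer(da, xs[t])
        dWh += np.outer(da, h_prev)
        dh_next = Wh.T @ da             # propagate to the earlier time step
    return dWx, dWh, dWy

rng = np.random.default_rng(0)
n, m, k, T = 3, 4, 2, 5
Wx, Wh, Wy = (rng.normal(scale=0.5, size=s) for s in [(m, n), (m, m), (k, m)])
xs, targets = rng.normal(size=(T, n)), rng.normal(size=(T, k))
dWx, dWh, dWy = bptt(Wx, Wh, Wy, xs, targets)

# Central finite-difference check on one entry of the recurrent weights Wh.
eps = 1e-6
Wp, Wm = Wh.copy(), Wh.copy()
Wp[0, 1] += eps
Wm[0, 1] -= eps
numeric = (forward(Wx, Wp, Wy, xs, targets)[0] - forward(Wx, Wm, Wy, xs, targets)[0]) / (2 * eps)
print(abs(numeric - dWh[0, 1]))  # should be very small
```

Summing (rather than averaging) matches a loss defined as the sum of per-step errors; averaging only rescales the update.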

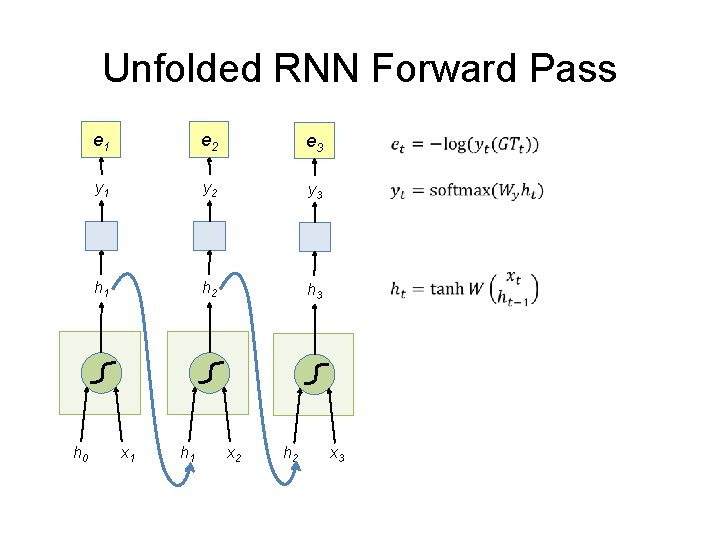

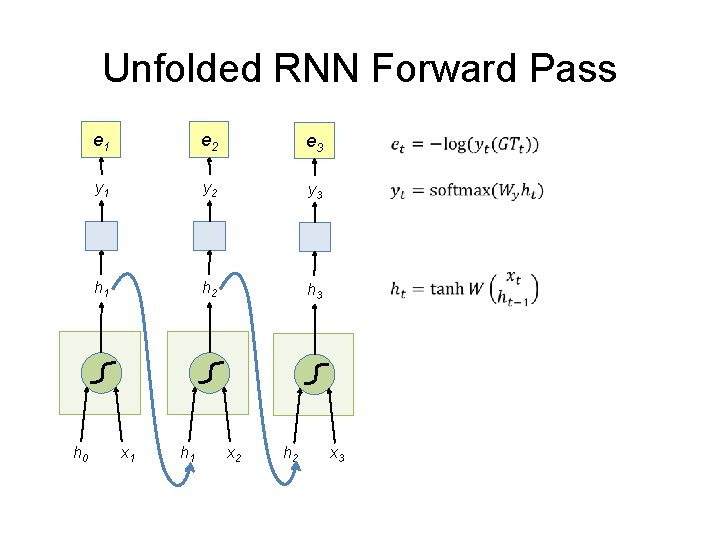

Unfolded RNN Forward Pass [Figure: the network unfolded over $t = 1, 2, 3$; inputs $x_t$ and states $h_{t-1}$ flow forward to states $h_t$, outputs $y_t$, and errors $e_t$]

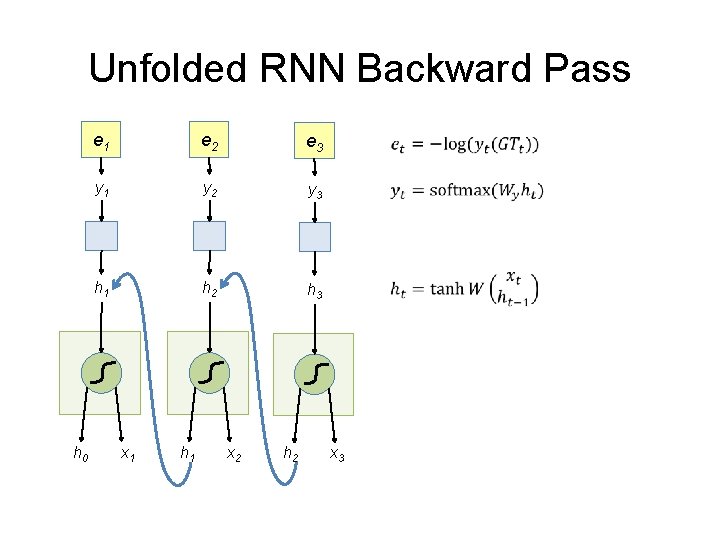

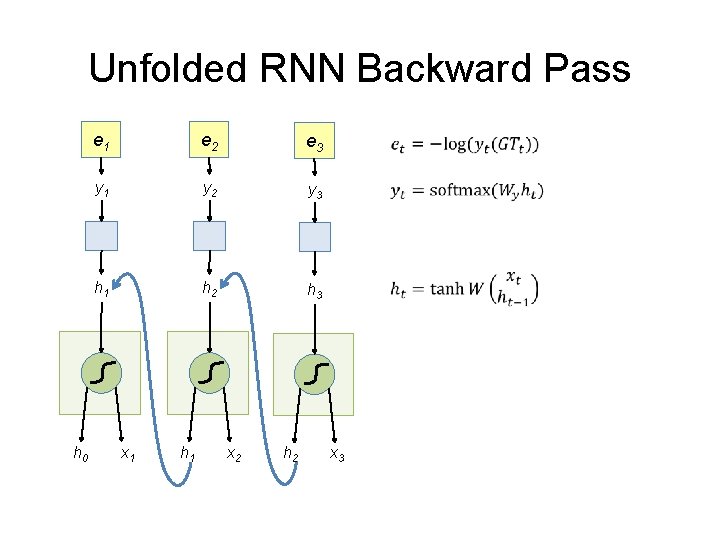

Unfolded RNN Backward Pass [Figure: the unfolded network over $t = 1, 2, 3$, with the errors $e_1, e_2, e_3$ propagated backward from $h_3$ toward $h_0$]

Backpropagation Through Time (BPTT) • https://machinelearningmastery.com/gentle-introduction-backpropagation-time/ • http://www.cs.utoronto.ca/~ilya/pubs/ilya_sutskever_phd_thesis.pdf

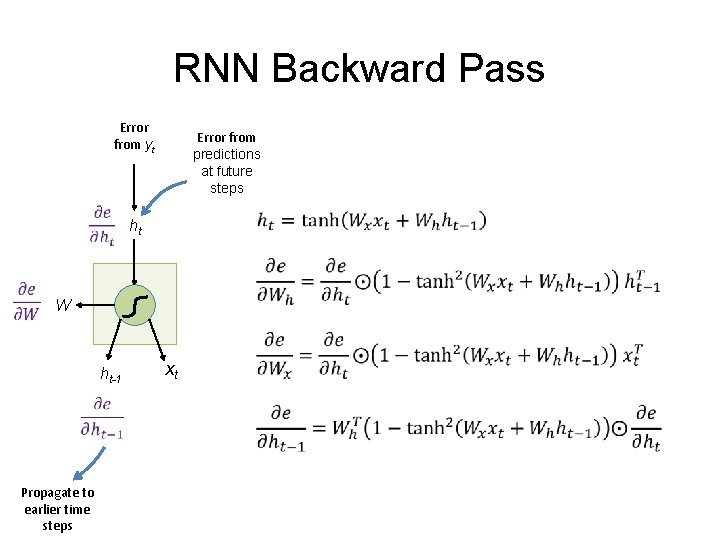

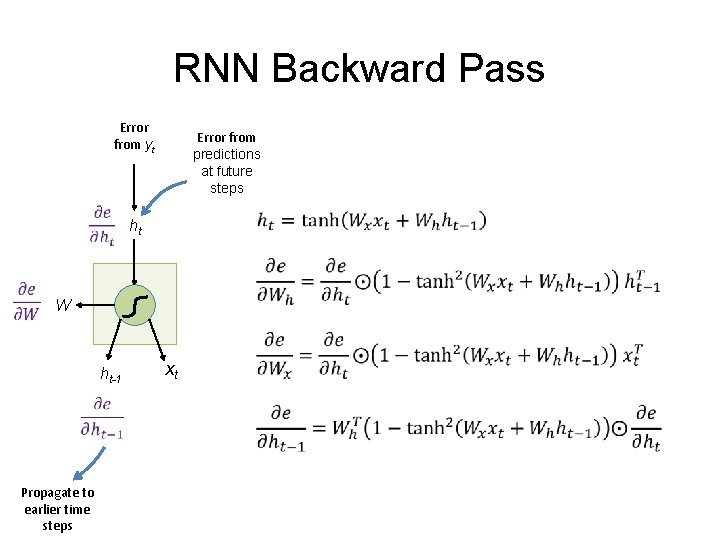

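RNN Backward Pass: the gradient at $h_t$ combines the error from $y_t$ with the error from predictions at future steps; it is then propagated through $W$ to earlier time steps via $h_{t-1}$ [Figure: cell with inputs $x_t$, $h_{t-1}$ and output $h_t$]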
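Written out for the vanilla cell $h_t = \tanh(W [x_t; h_{t-1}])$, splitting $W = [W_x \; W_h]$ (a notational assumption) and taking the total loss $L = \sum_t e_t$, the backward pass described on this slide is the recursion below. At the last step $T$ the future term is absent, and the gradient for the shared $W$ is a sum over all time steps.

$$
\frac{\partial L}{\partial h_t} = \frac{\partial e_t}{\partial h_t} + W_h^{\top}\left[\left(1 - h_{t+1}^{2}\right) \odot \frac{\partial L}{\partial h_{t+1}}\right],
\qquad
\frac{\partial L}{\partial W} = \sum_{t=1}^{T} \left[\left(1 - h_t^{2}\right) \odot \frac{\partial L}{\partial h_t}\right] \begin{pmatrix} x_t \\ h_{t-1} \end{pmatrix}^{\top}
$$

Here $h_t^2$ is taken element-wise; the first term is the "error from $y_t$" and the second is the "error from predictions at future steps".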

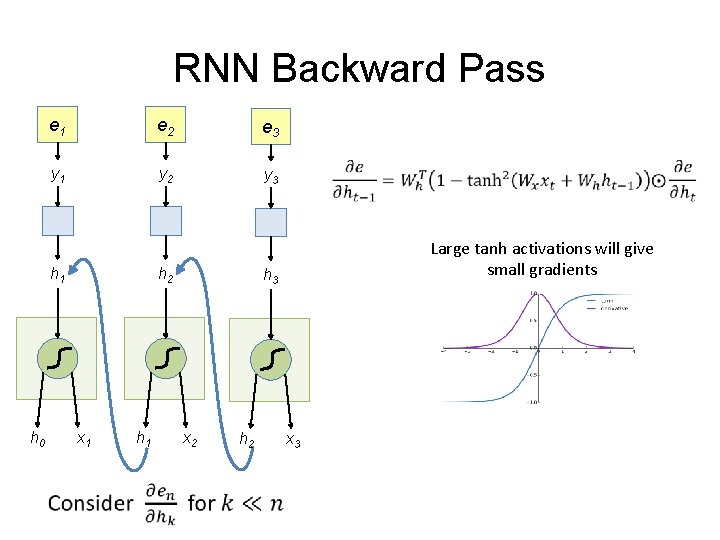

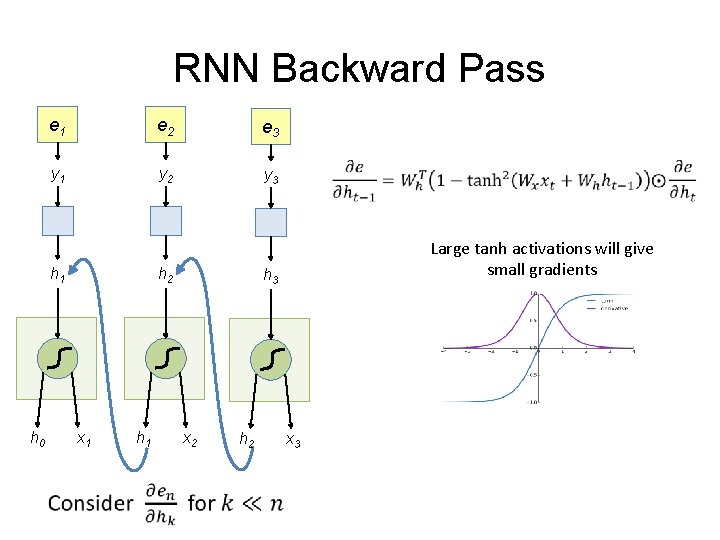

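RNN Backward Pass [Figure: errors $e_1, e_2, e_3$ flowing back through the unrolled network from $h_3$ to $h_0$] Large tanh activations will give small gradients, since $\frac{d}{da}\tanh(a) = 1 - \tanh^2(a)$ approaches 0 as $|a|$ grows; multiplying many such factors across time steps makes the gradient vanish.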
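A small NumPy demonstration of the point above, using a hypothetical scalar RNN $h_t = \tanh(w h_{t-1} + x_t)$: the derivative $\partial h_T / \partial h_0$ is a product of one factor $w \tanh'(a_t)$ per step, so when the pre-activations $a_t$ are large (saturated tanh) the product collapses toward zero. The weight $w = 1$ and the constant inputs are arbitrary illustrative choices.

```python
import numpy as np

def tanh_grad(a):
    """Derivative of tanh at pre-activation a: 1 - tanh(a)^2."""
    return 1.0 - np.tanh(a) ** 2

print(tanh_grad(np.array([0.0, 1.0, 3.0, 5.0])))  # shrinks quickly as |a| grows

def gradient_through_time(w, xs, h0=0.0):
    """dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        a = w * h + x
        grad *= w * tanh_grad(a)  # chain rule for one time step
        h = np.tanh(a)
    return grad

print(gradient_through_time(1.0, [0.1] * 20))  # moderate activations
print(gradient_through_time(1.0, [3.0] * 20))  # saturated activations: gradient collapses
```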

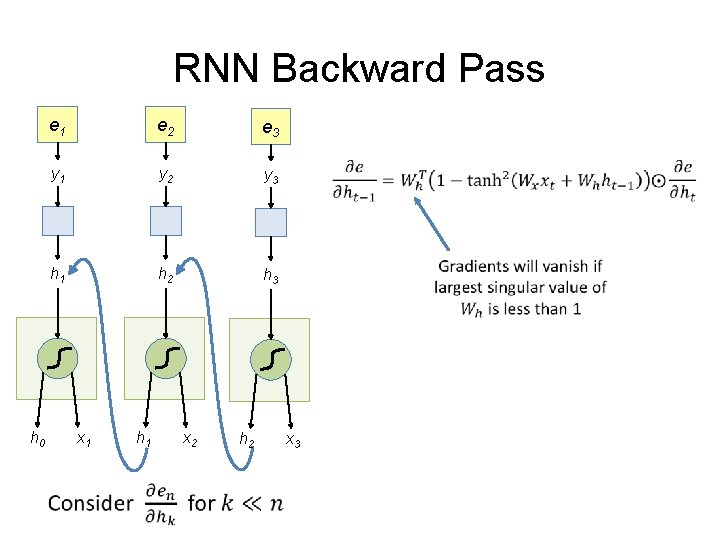

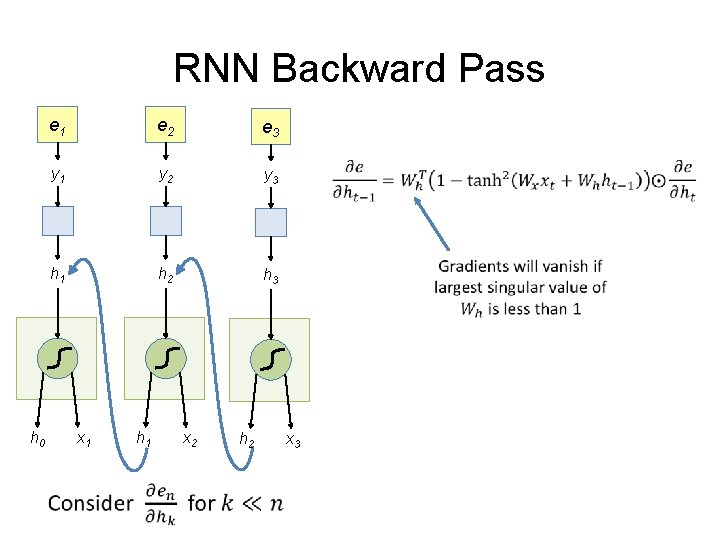

RNN Backward Pass [Figure: the errors $e_1, e_2, e_3$ propagated backward through the unrolled network, from $h_3$ to $h_0$]

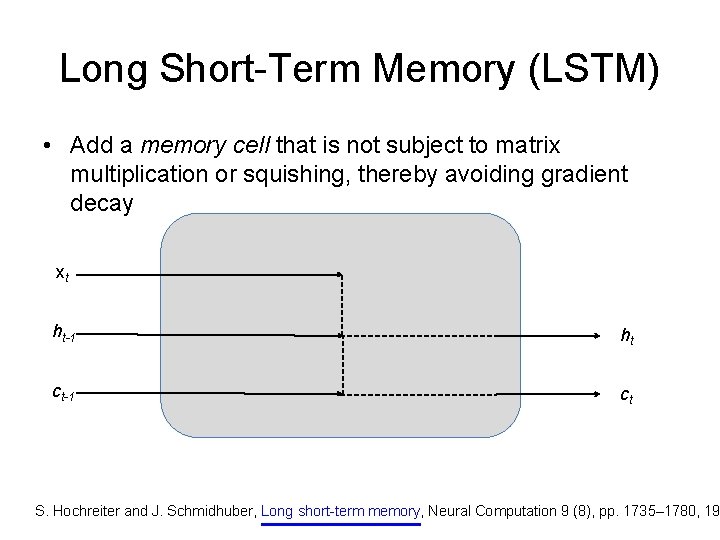

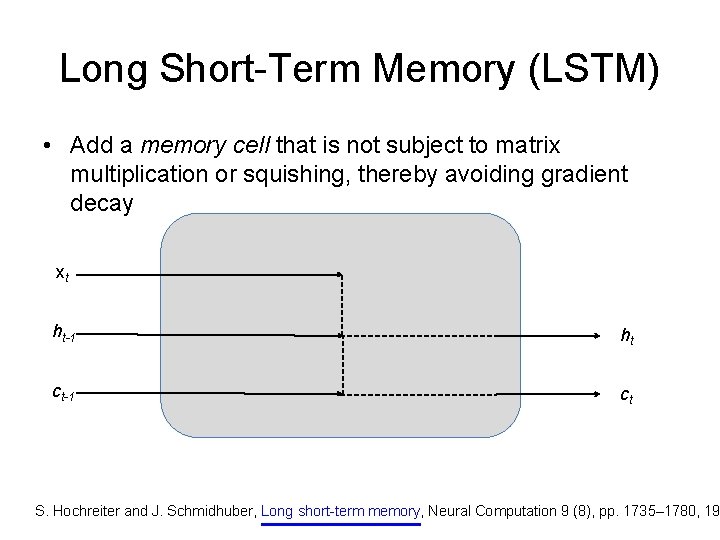

Long Short-Term Memory (LSTM) • Add a memory cell that is not subject to matrix multiplication or squishing, thereby avoiding gradient decay [Figure: LSTM cell with inputs $x_t$, $h_{t-1}$, $c_{t-1}$ and outputs $h_t$, $c_t$] S. Hochreiter and J. Schmidhuber, Long short-term memory, Neural Computation 9(8), pp. 1735–1780, 1997

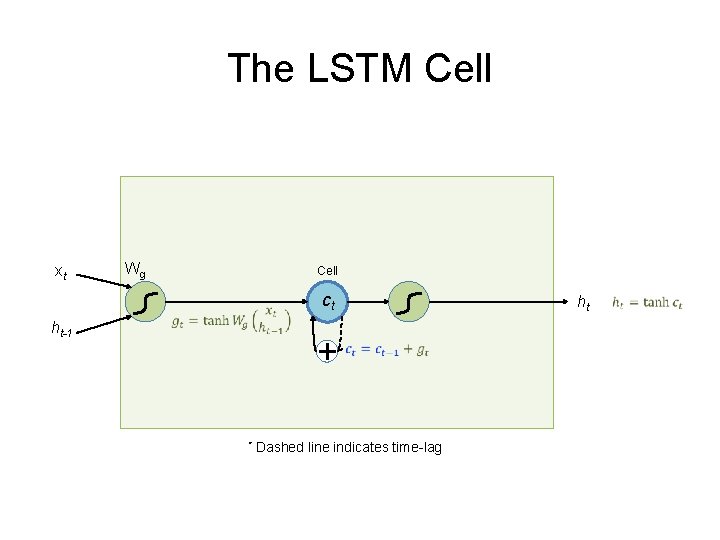

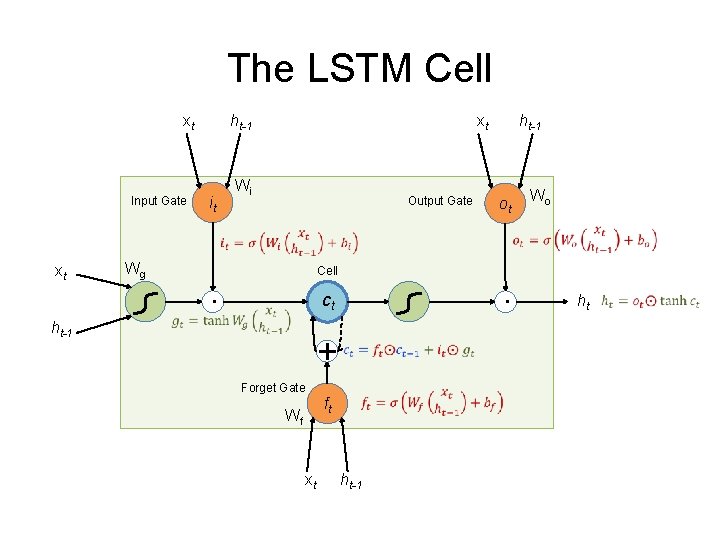

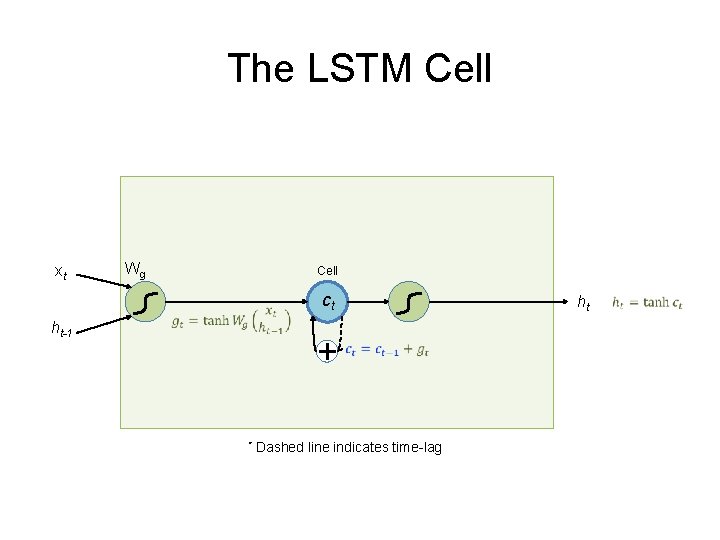

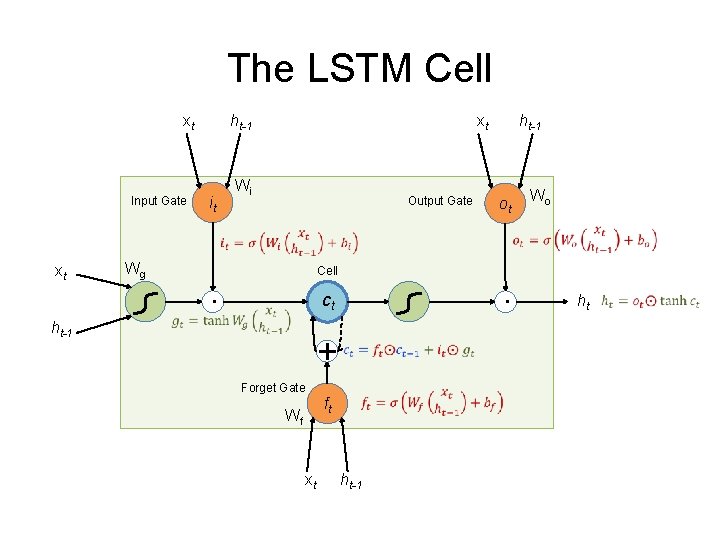

The LSTM Cell [Figure: $x_t$ and $h_{t-1}$ are combined through $W_g$ and fed into the cell $c_t$, which produces $h_t$] * Dashed line indicates time-lag

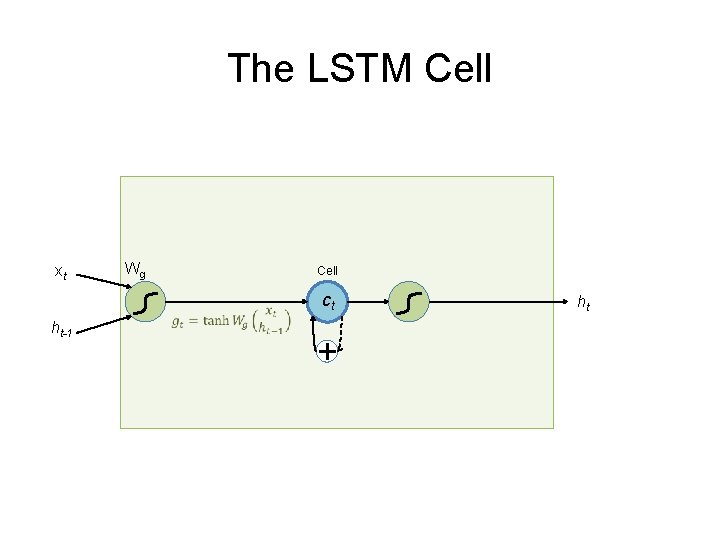

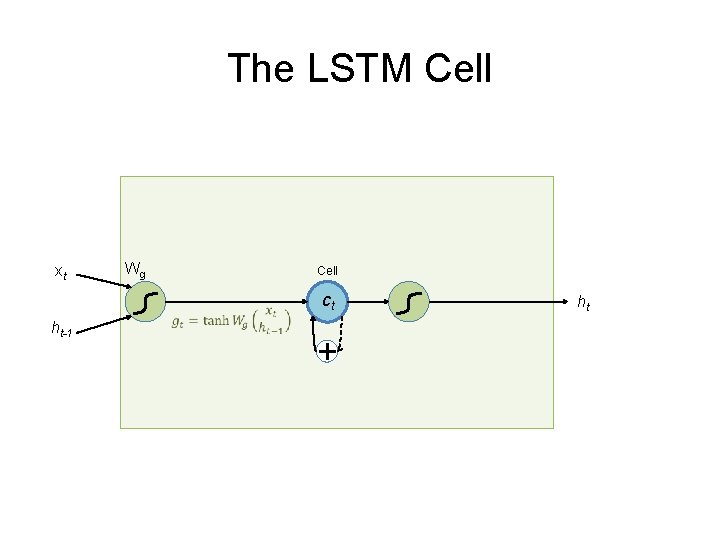


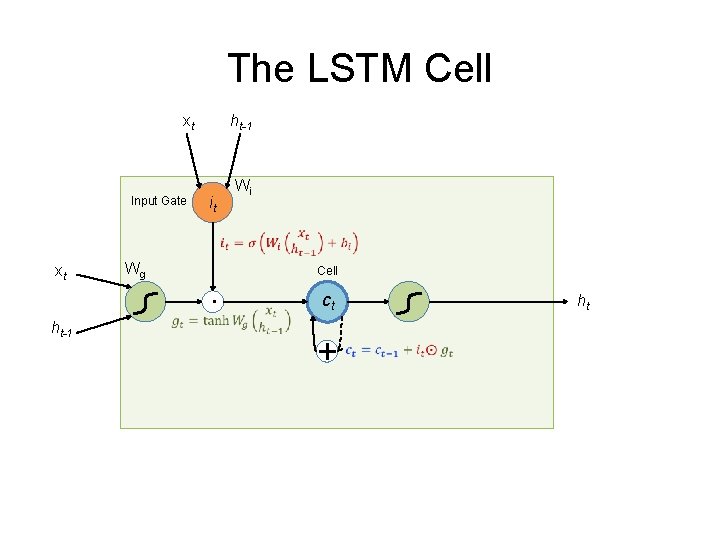

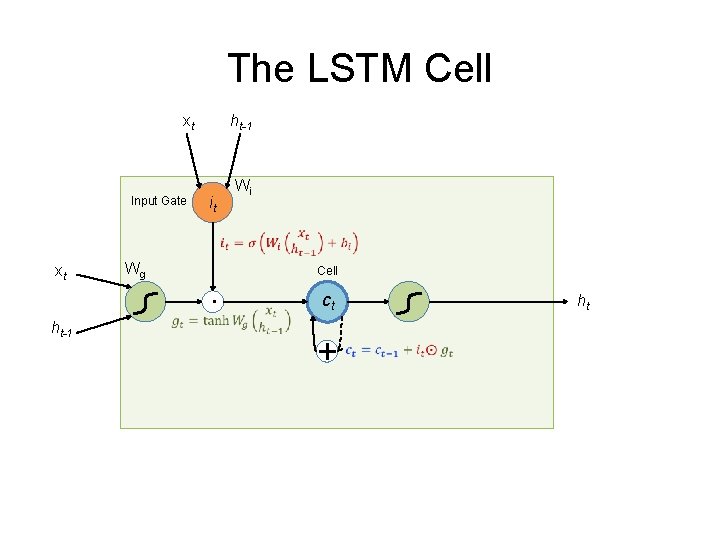

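The LSTM Cell: Input Gate [Figure: the input gate $i_t$, computed from $x_t$ and $h_{t-1}$ with weights $W_i$, multiplies the $W_g$ branch before it enters the cell $c_t$]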

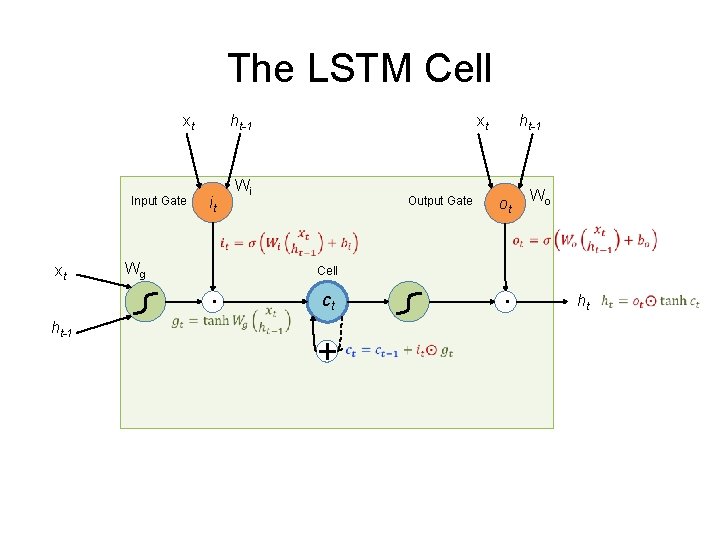

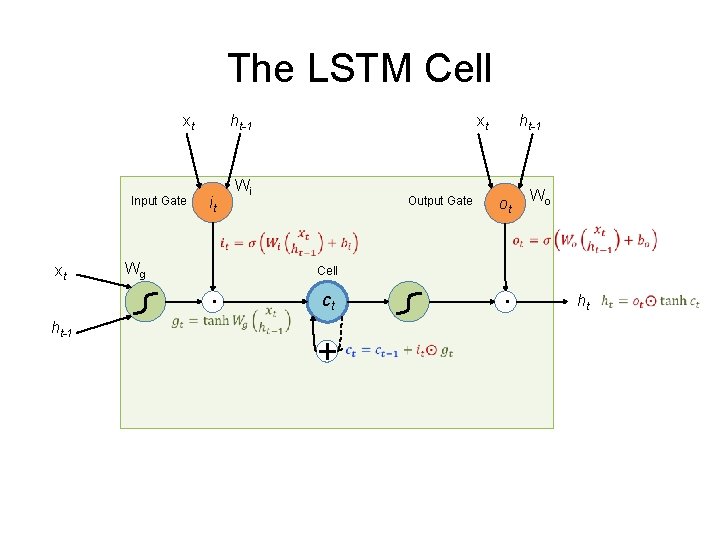

The LSTM Cell: Output Gate [Figure: adds the output gate $o_t$, computed from $x_t$ and $h_{t-1}$ with weights $W_o$, which multiplies the cell's output to produce $h_t$]

The LSTM Cell: Forget Gate [Figure: adds the forget gate $f_t$, computed from $x_t$ and $h_{t-1}$ with weights $W_f$, which multiplies the previous cell state $c_{t-1}$ on the cell's recurrent connection]

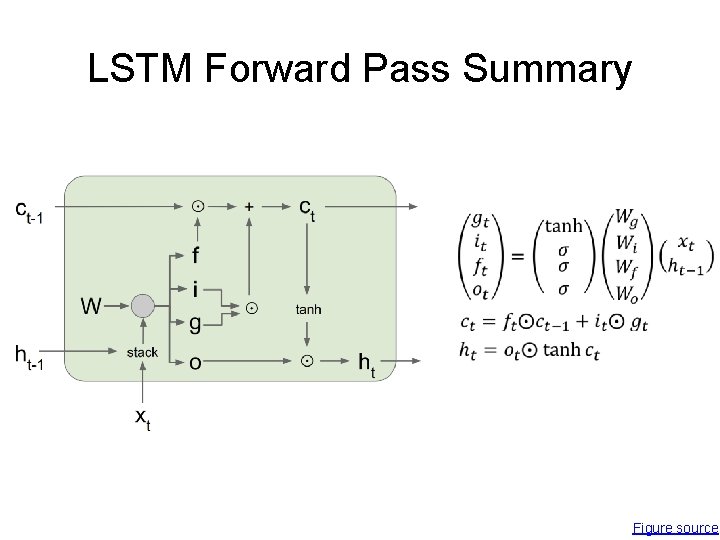

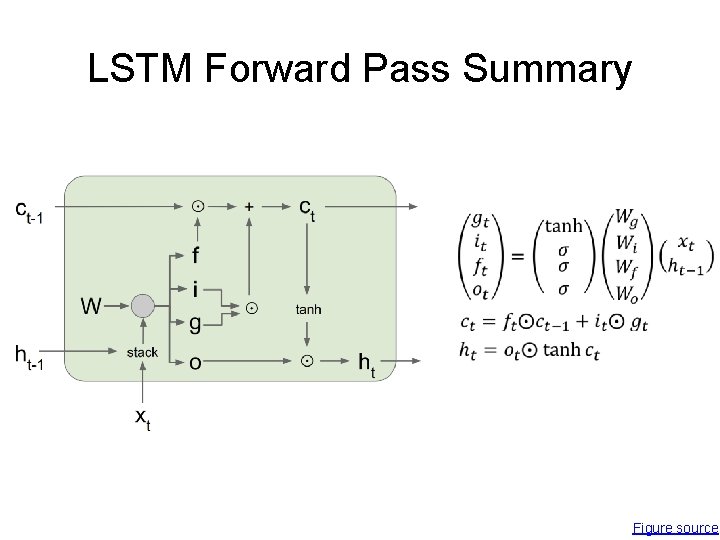

LSTM Forward Pass Summary • Figure source
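Since the summary figure is not reproduced here, a minimal NumPy sketch of one LSTM forward step may help, assuming the gate structure built up on the previous slides with biases omitted (an assumption): $g_t = \tanh(W_g [x_t; h_{t-1}])$, $i_t, f_t, o_t = \sigma(W_{i,f,o} [x_t; h_{t-1}])$, $c_t = f_t \odot c_{t-1} + i_t \odot g_t$, and $h_t = o_t \odot \tanh(c_t)$. The dictionary keys mirror the slides' weight names; sizes and random weights are illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_cell(params, x_t, h_prev, c_prev):
    """One LSTM step; params holds W_g, W_i, W_f, W_o, each of shape (m, n + m)."""
    z = np.concatenate([x_t, h_prev])
    g = np.tanh(params["W_g"] @ z)   # candidate cell update
    i = sigmoid(params["W_i"] @ z)   # input gate
    f = sigmoid(params["W_f"] @ z)   # forget gate
    o = sigmoid(params["W_o"] @ z)   # output gate
    c = f * c_prev + i * g           # memory cell: element-wise only, no matrix multiply on c_prev
    h = o * np.tanh(c)
    return h, c

n, m = 3, 4
rng = np.random.default_rng(0)
params = {k: rng.normal(scale=0.5, size=(m, n + m)) for k in ["W_g", "W_i", "W_f", "W_o"]}
h, c = np.zeros(m), np.zeros(m)
for x_t in rng.normal(size=(6, n)):
    h, c = lstm_cell(params, x_t, h, c)
print(h.shape, c.shape)
```

The line computing `c` is where the slide's "not subject to matrix multiplication or squishing" claim shows up: along that path, $\partial c_t / \partial c_{t-1}$ is just the forget gate $f_t$.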

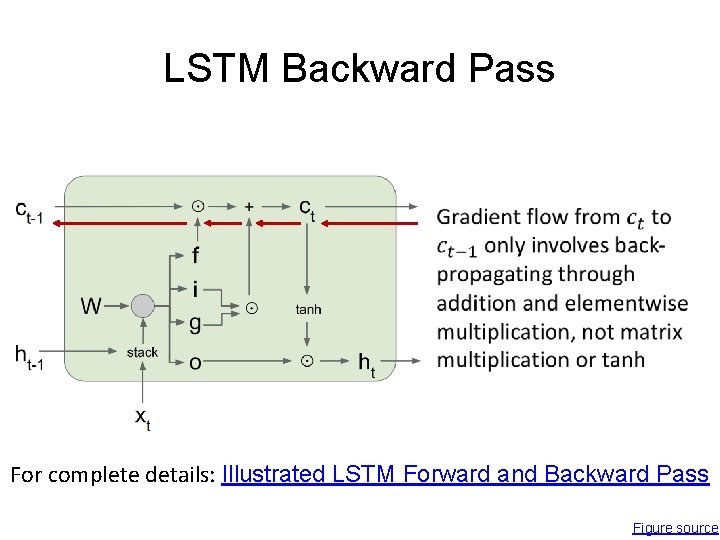

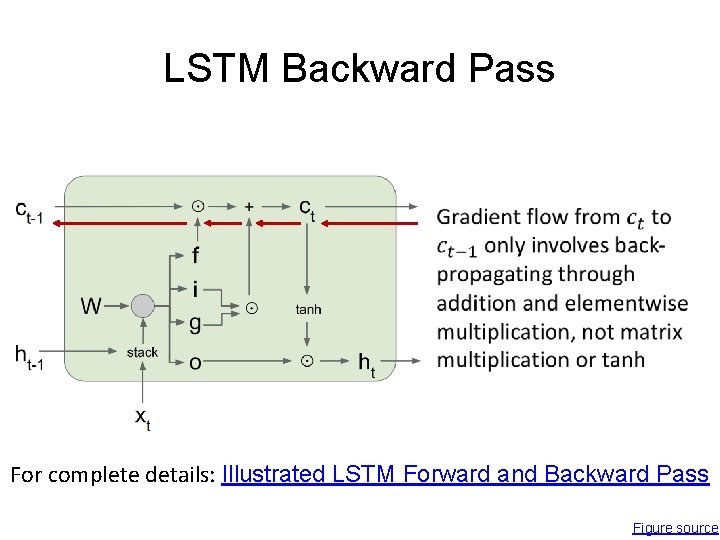

LSTM Backward Pass • For complete details, see Illustrated LSTM Forward and Backward Pass. Figure source

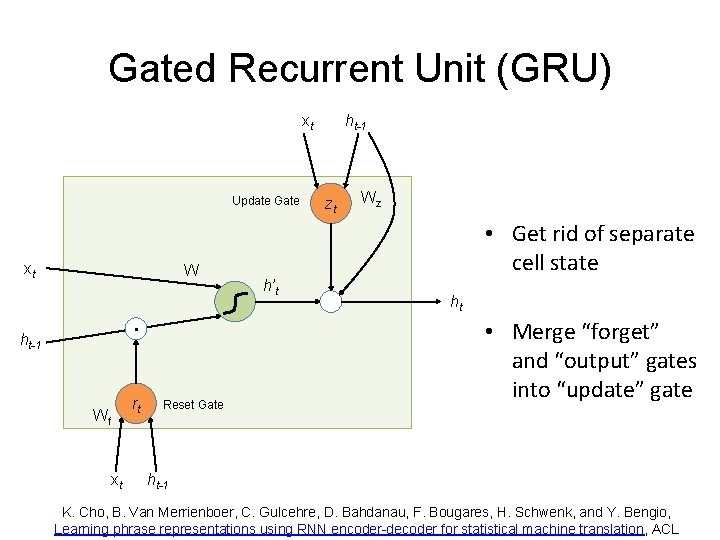

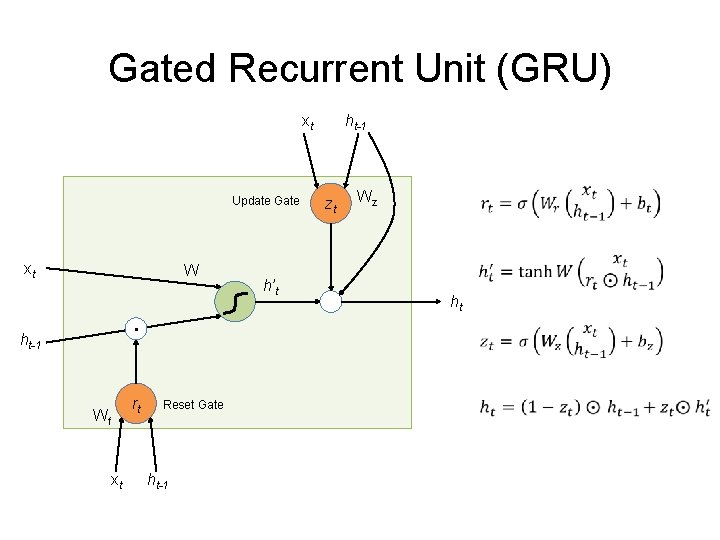

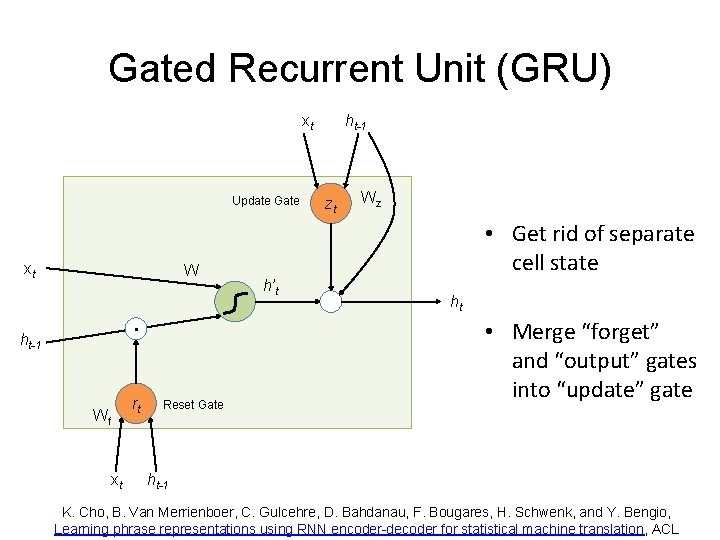

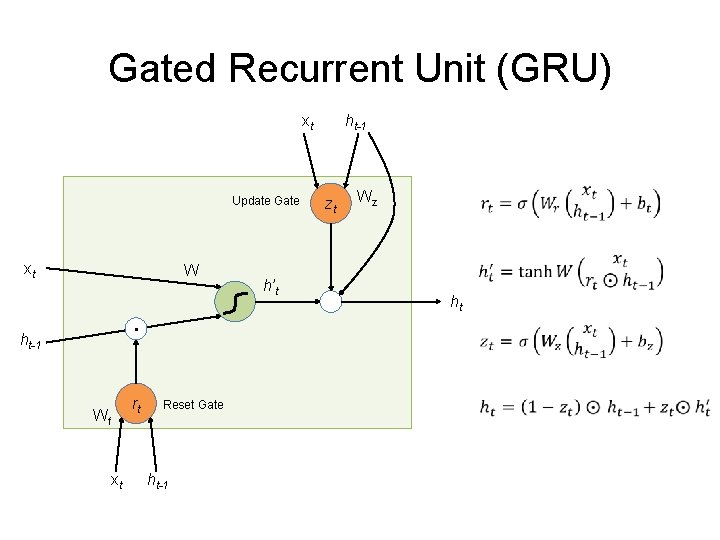

Gated Recurrent Unit (GRU) [Figure: a reset gate $r_t$ (weights $W_f$) and an update gate $z_t$ (weights $W_z$), both computed from $x_t$ and $h_{t-1}$, control the candidate state $h'_t$ (weights $W$) and the new state $h_t$] • Get rid of separate cell state • Merge “forget” and “input” gates into “update” gate K. Cho, B. Van Merrienboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, Learning phrase representations using RNN encoder-decoder for statistical machine translation, ACL

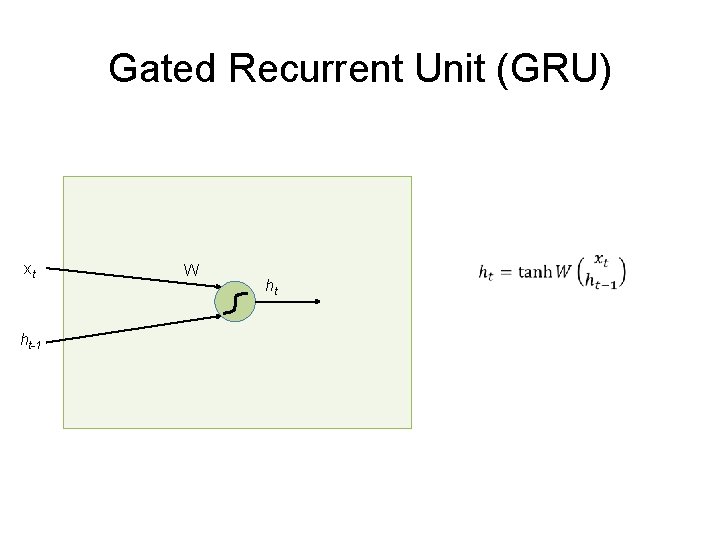

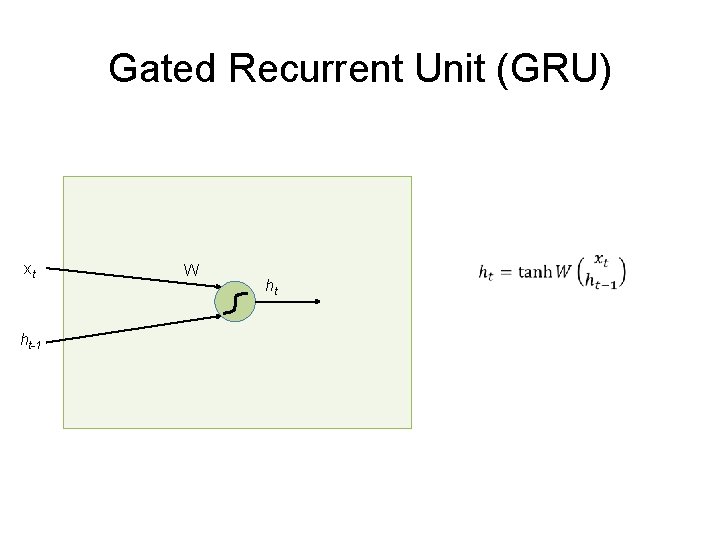

Gated Recurrent Unit (GRU) [Figure: $h_t$ computed from $x_t$ and $h_{t-1}$ through $W$]

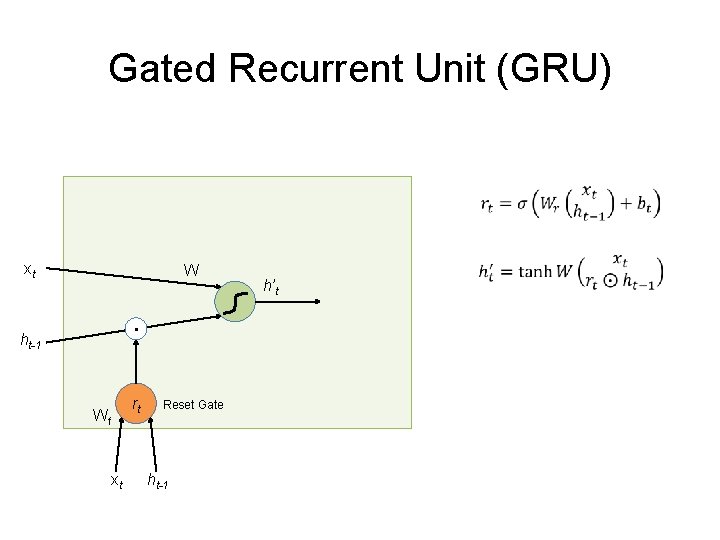

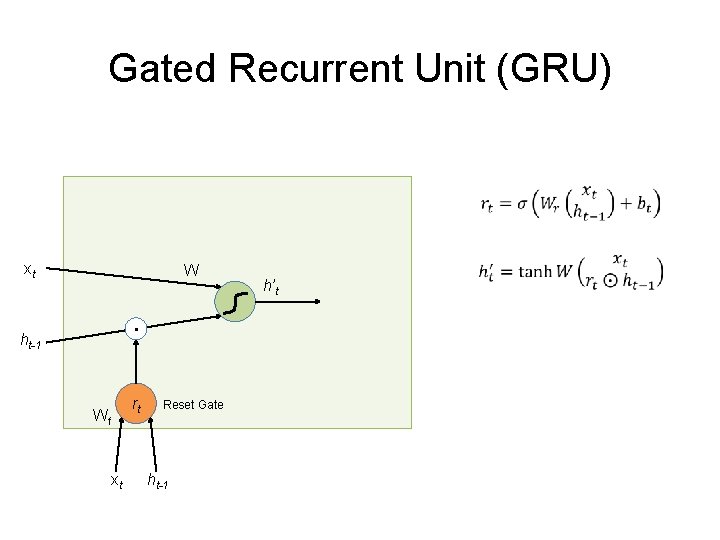

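Gated Recurrent Unit (GRU): Reset Gate [Figure: the reset gate $r_t$, computed from $x_t$ and $h_{t-1}$ with weights $W_f$, multiplies $h_{t-1}$ before the candidate state $h'_t$ is computed with $W$]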

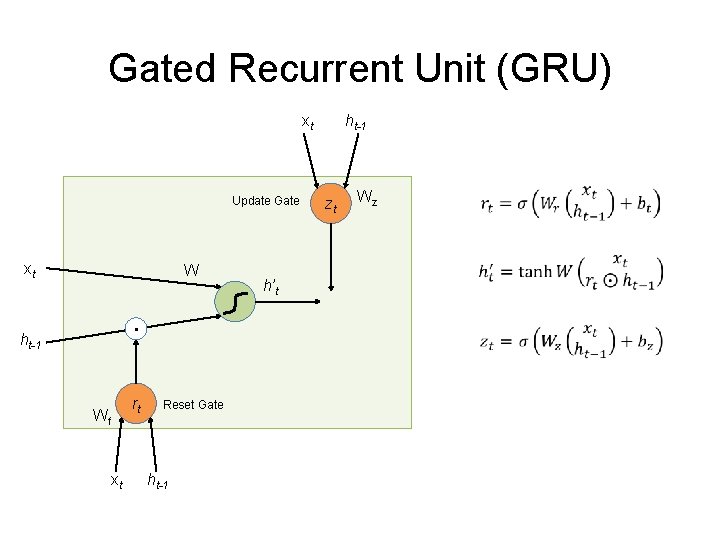

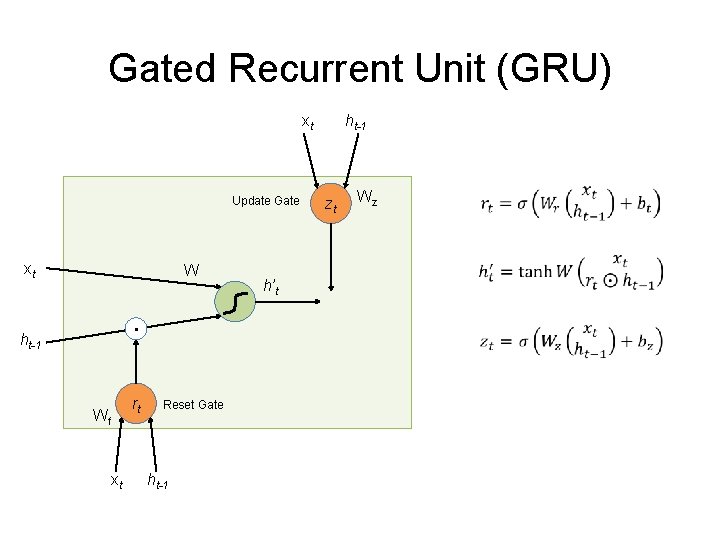

Gated Recurrent Unit (GRU): Update Gate [Figure: adds the update gate $z_t$, computed from $x_t$ and $h_{t-1}$ with weights $W_z$]

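Gated Recurrent Unit (GRU) [Figure: complete cell; the update gate $z_t$ (weights $W_z$) combines $h_{t-1}$ and the candidate $h'_t$ into the new state $h_t$, while the reset gate $r_t$ (weights $W_f$) gates $h_{t-1}$ inside the candidate]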
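A minimal NumPy sketch of one GRU step matching the diagram, with biases omitted (an assumption): $z_t = \sigma(W_z [x_t; h_{t-1}])$, $r_t = \sigma(W_r [x_t; h_{t-1}])$, $h'_t = \tanh(W [x_t; r_t \odot h_{t-1}])$, $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot h'_t$. The reset-gate weights are named `W_r` here although the figure labels them $W_f$. Some references, including Cho et al., swap the roles of $z_t$ and $1 - z_t$; the two conventions are equivalent up to relabelling the gate.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_cell(params, x_t, h_prev):
    """One GRU step (no separate cell state); every weight matrix has shape (m, n + m)."""
    xh = np.concatenate([x_t, h_prev])
    z = sigmoid(params["W_z"] @ xh)                                    # update gate
    r = sigmoid(params["W_r"] @ xh)                                    # reset gate
    h_cand = np.tanh(params["W"] @ np.concatenate([x_t, r * h_prev]))  # candidate h'_t
    return (1.0 - z) * h_prev + z * h_cand  # interpolate old state and candidate

n, m = 3, 4
rng = np.random.default_rng(0)
params = {k: rng.normal(scale=0.5, size=(m, n + m)) for k in ["W_z", "W_r", "W"]}
h = np.zeros(m)
for x_t in rng.normal(size=(6, n)):
    h = gru_cell(params, x_t, h)
print(h)
```

With a single update gate deciding how much of $h_{t-1}$ to keep and how much of $h'_t$ to write, the GRU needs three weight matrices instead of the LSTM's four.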

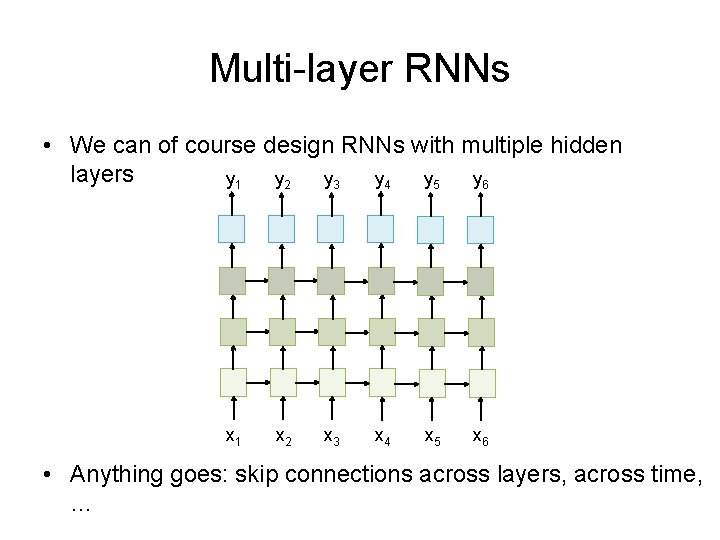

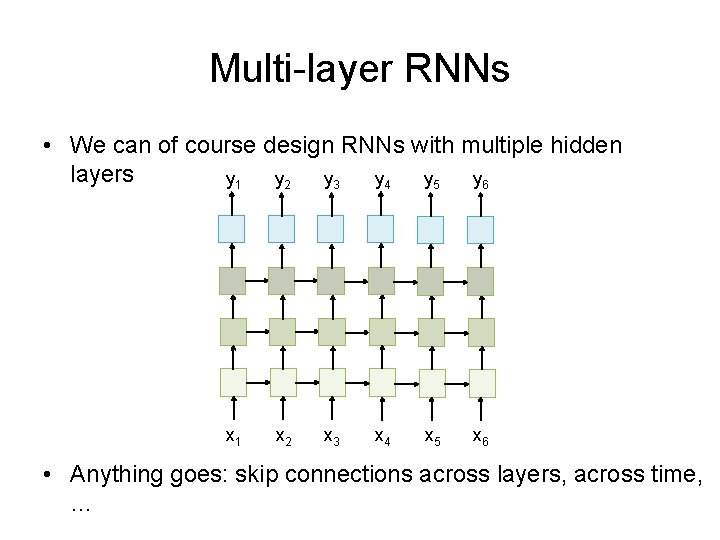

Multi-layer RNNs • We can of course design RNNs with multiple hidden layers [Figure: stacked recurrent layers mapping inputs $x_1, \dots, x_6$ to outputs $y_1, \dots, y_6$] • Anything goes: skip connections across layers, across time, …
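A minimal NumPy sketch of stacking, assuming bias-free vanilla tanh layers with random weights: the sequence of hidden states produced by one layer is used as the input sequence of the layer above. Skip connections are not shown. The first layer's weights act on $[x_t; h_{t-1}]$ (shape $m \times (n + m)$); higher layers act on $[h^{\text{below}}_t; h_{t-1}]$ (shape $m \times 2m$).

```python
import numpy as np

def run_layer(W, xs, m):
    """Run one vanilla RNN layer over a sequence and return all hidden states, shape (T, m)."""
    h, hs = np.zeros(m), []
    for x in xs:
        h = np.tanh(W @ np.concatenate([x, h]))
        hs.append(h)
    return np.array(hs)

def stacked_rnn(weights, xs, m):
    """Each layer's hidden-state sequence is the input sequence of the layer above."""
    seq = xs
    for W in weights:
        seq = run_layer(W, seq, m)
    return seq

n, m, T, L = 3, 4, 6, 2  # input dim, hidden dim, sequence length, number of layers
rng = np.random.default_rng(0)
weights = [rng.normal(scale=0.5, size=(m, n + m))] + [
    rng.normal(scale=0.5, size=(m, 2 * m)) for _ in range(L - 1)
]
top = stacked_rnn(weights, rng.normal(size=(T, n)), m)
print(top.shape)  # (6, 4)
```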

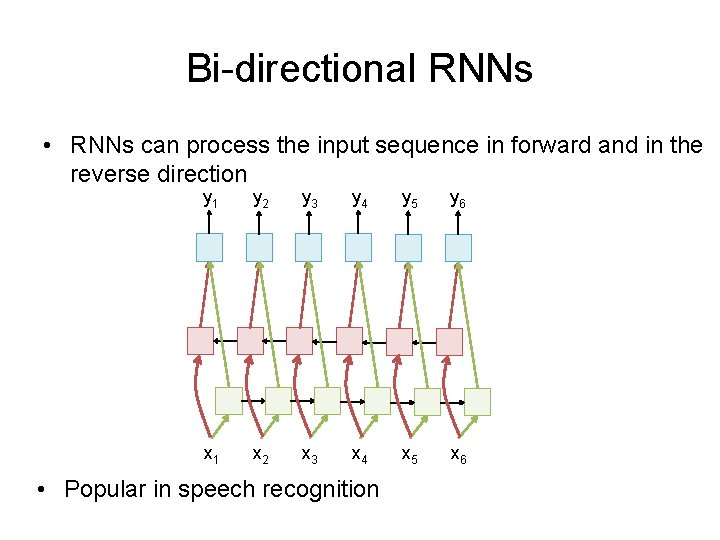

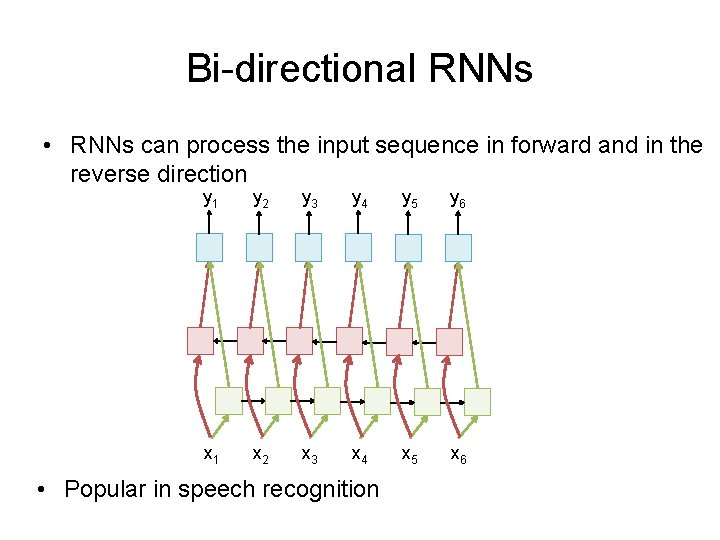

Bi-directional RNNs • RNNs can process the input sequence in the forward and in the reverse direction [Figure: forward and backward recurrent layers over inputs $x_1, \dots, x_6$, both feeding the outputs $y_1, \dots, y_6$] • Popular in speech recognition
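A minimal NumPy sketch of a bi-directional layer, assuming separate bias-free tanh RNNs for the two directions and concatenation of their states at each time step (a common choice; summing is another). The backward RNN runs over the reversed sequence, and its outputs are reversed again so that position $t$ of both halves refers to $x_t$.

```python
import numpy as np

def run_rnn(W, xs, m):
    """Vanilla tanh RNN over a sequence; returns hidden states of shape (T, m)."""
    h, hs = np.zeros(m), []
    for x in xs:
        h = np.tanh(W @ np.concatenate([x, h]))
        hs.append(h)
    return np.array(hs)

def bidirectional_rnn(W_fwd, W_bwd, xs, m):
    """Concatenate a forward pass with a pass over the reversed sequence, re-aligned in time."""
    fwd = run_rnn(W_fwd, xs, m)
    bwd = run_rnn(W_bwd, xs[::-1], m)[::-1]    # reverse back so bwd[t] lines up with x_t
    return np.concatenate([fwd, bwd], axis=1)  # shape (T, 2m)

n, m, T = 3, 4, 6
rng = np.random.default_rng(0)
xs = rng.normal(size=(T, n))
W_fwd = rng.normal(scale=0.5, size=(m, n + m))
W_bwd = rng.normal(scale=0.5, size=(m, n + m))
states = bidirectional_rnn(W_fwd, W_bwd, xs, m)
print(states.shape)  # (6, 8)
```

Each output position therefore sees the whole input: the forward half summarizes $x_1, \dots, x_t$ and the backward half summarizes $x_t, \dots, x_T$.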

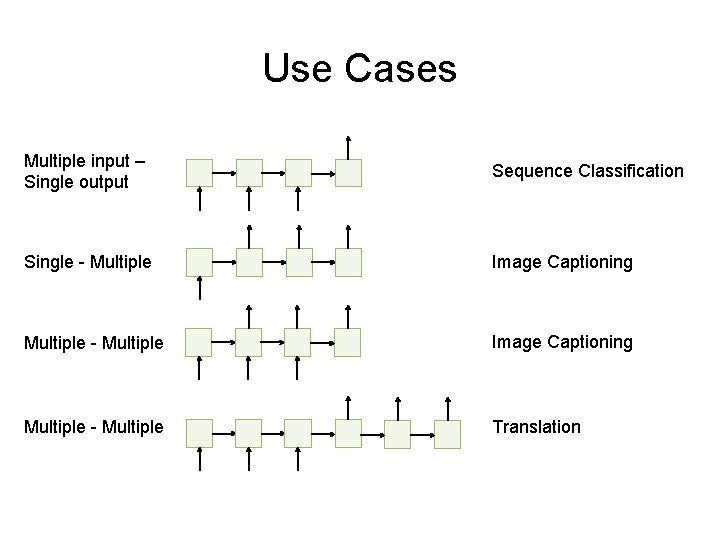

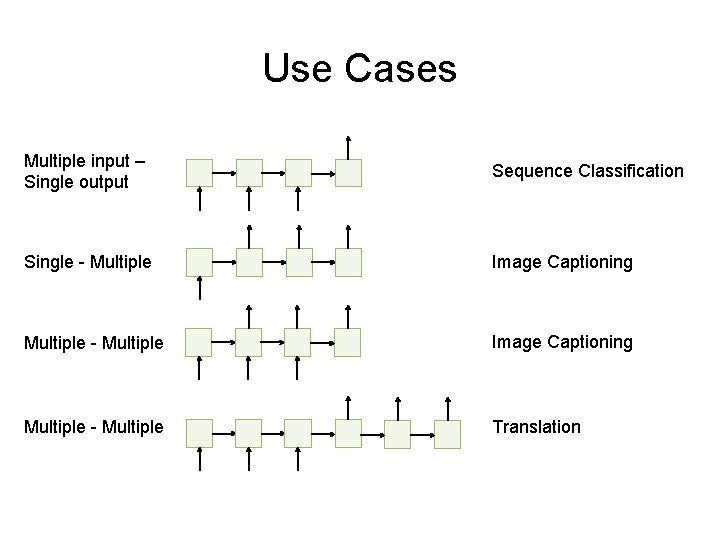

Use Cases
• Multiple input – Single output: Sequence Classification
• Single – Multiple: Image Captioning
• Multiple – Multiple: Translation

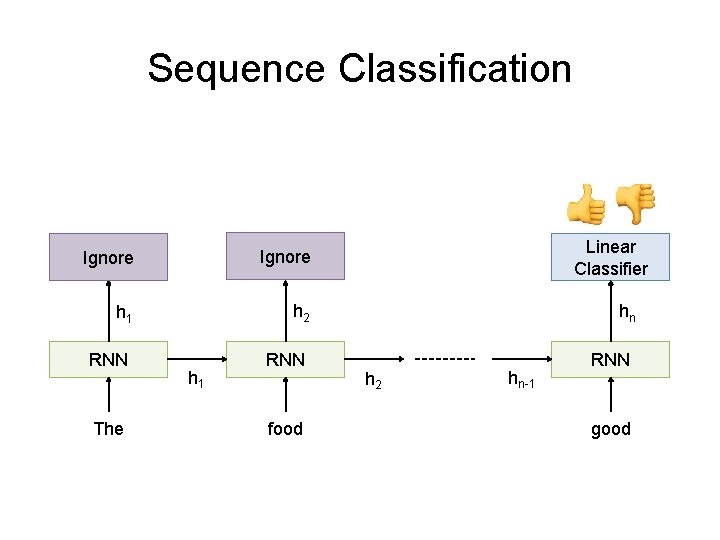

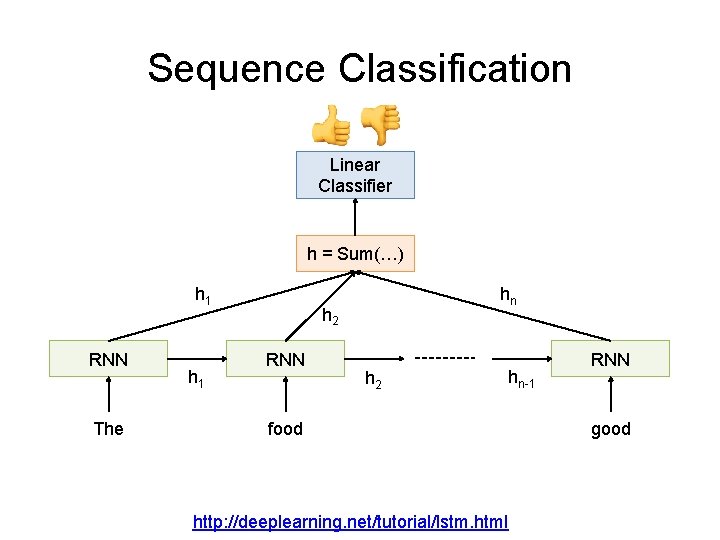

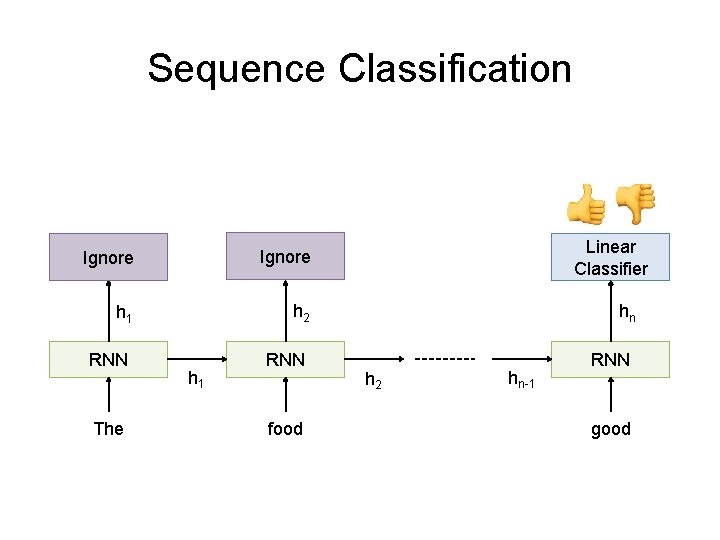

Sequence Classification [Figure: an RNN reads “The”, “food”, …, “good”, producing $h_1, h_2, \dots, h_n$; a linear classifier uses only the final state $h_n$ and ignores the earlier states]

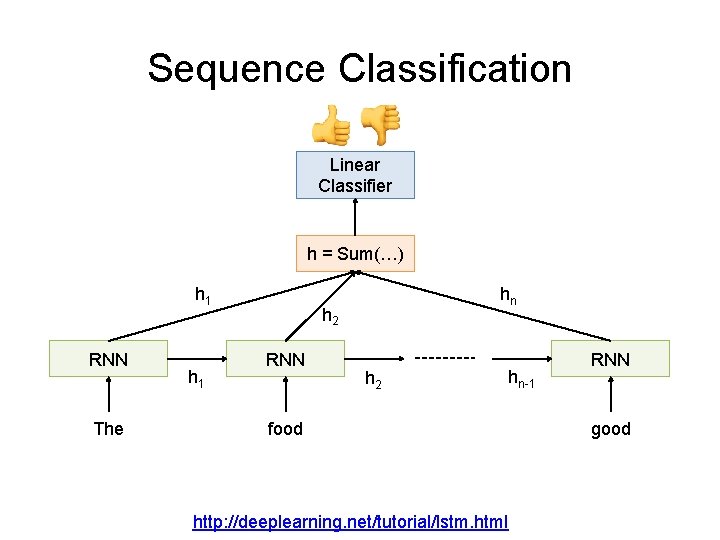

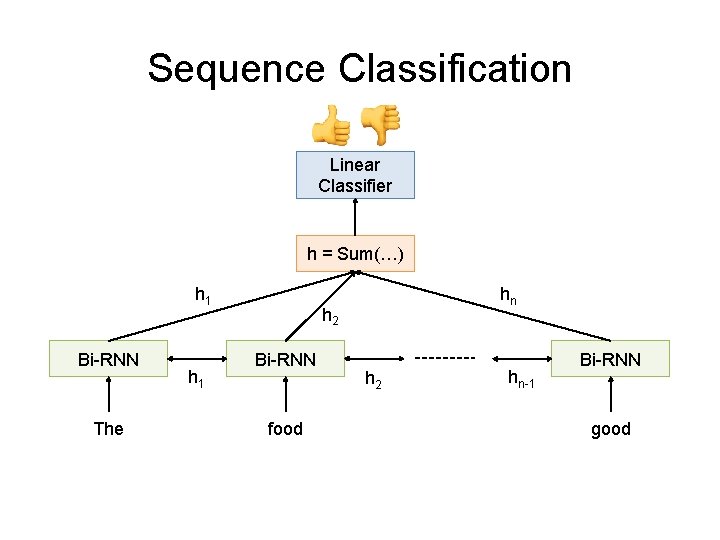

Sequence Classification [Figure: a linear classifier is applied to $h = \mathrm{Sum}(h_1, \dots, h_n)$, pooling the RNN states for “The”, “food”, …, “good”] http://deeplearning.net/tutorial/lstm.html
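The two readouts on these slides, "use only $h_n$" and "$h = \mathrm{Sum}(h_1, \dots, h_n)$", can be compared in a short NumPy sketch. Assumptions for illustration: random vectors stand in for word embeddings, the RNN is a bias-free tanh cell, the weights are untrained, and there are two hypothetical classes (e.g. negative/positive).

```python
import numpy as np

def rnn_states(W, xs, m):
    """Vanilla tanh RNN; returns h_1 ... h_n as an array of shape (T, m)."""
    h, hs = np.zeros(m), []
    for x in xs:
        h = np.tanh(W @ np.concatenate([x, h]))
        hs.append(h)
    return np.array(hs)

def classify(W, W_cls, xs, m, pooling="last"):
    """Linear classifier on either the final state or the sum of all states."""
    hs = rnn_states(W, xs, m)
    h = hs[-1] if pooling == "last" else hs.sum(axis=0)  # h_n only, or Sum(h_1, ..., h_n)
    scores = W_cls @ h
    return int(np.argmax(scores))

n, m, T, C = 5, 8, 4, 2   # embedding dim, hidden dim, sequence length, number of classes
rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(m, n + m))
W_cls = rng.normal(size=(C, m))
xs = rng.normal(size=(T, n))  # stand-in for embeddings of "The", "food", ..., "good"
print(classify(W, W_cls, xs, m, "last"), classify(W, W_cls, xs, m, "sum"))
```

Pooling over all states gives every word a direct path to the classifier, instead of relying on $h_n$ alone to carry information from the start of the sentence.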

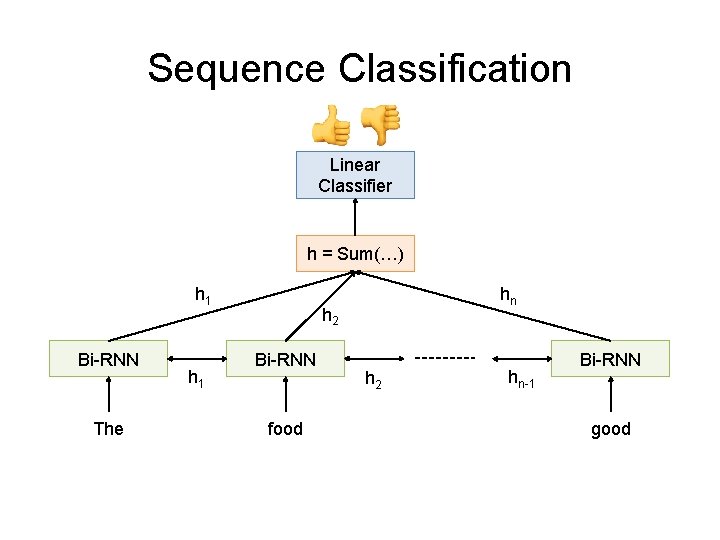

Sequence Classification [Figure: the same pooled classifier, $h = \mathrm{Sum}(h_1, \dots, h_n)$, with each RNN step replaced by a bi-directional RNN (Bi-RNN) over “The”, “food”, …, “good”]

Character RNN [Figure: text samples after the 100th, 300th, 700th, and 2000th training iterations] http://karpathy.github.io/2015/05/21/rnn-effectiveness/ Image source


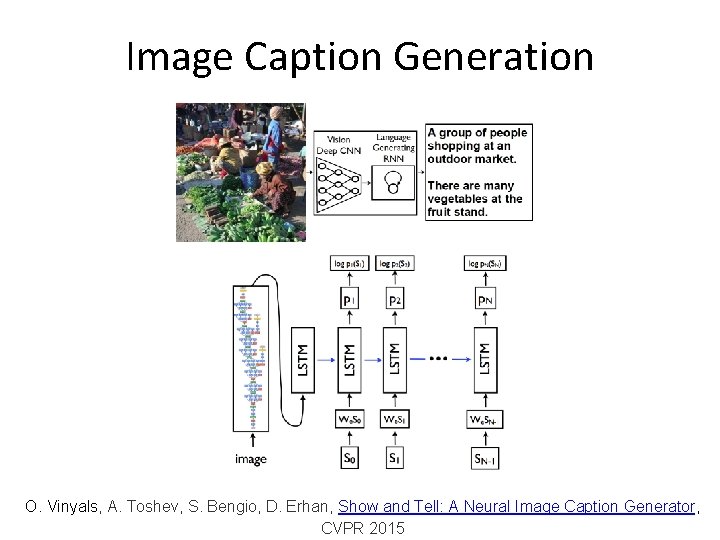

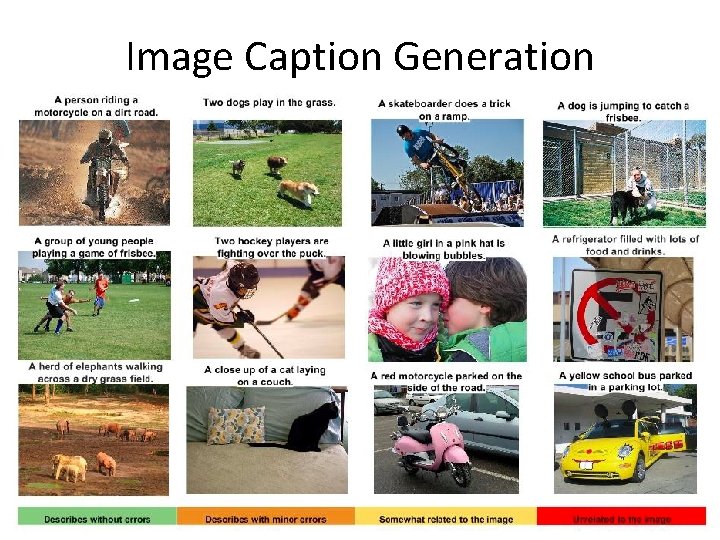

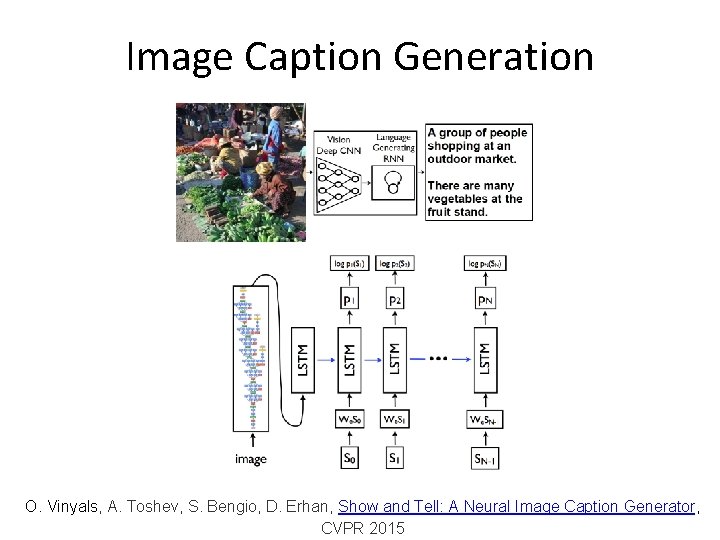

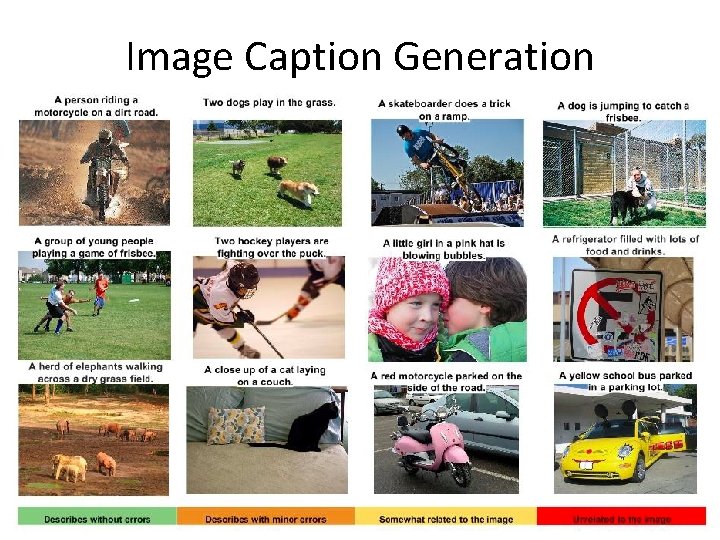

Image Caption Generation O. Vinyals, A. Toshev, S. Bengio, D. Erhan, Show and Tell: A Neural Image Caption Generator, CVPR 2015

Image Caption Generation

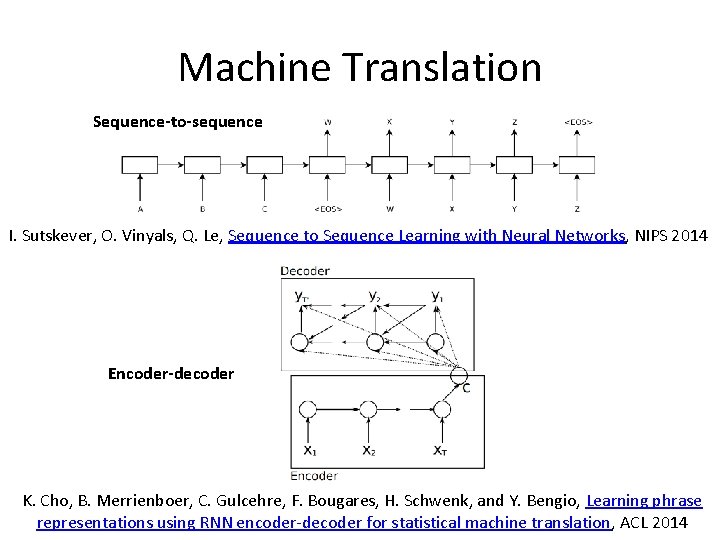

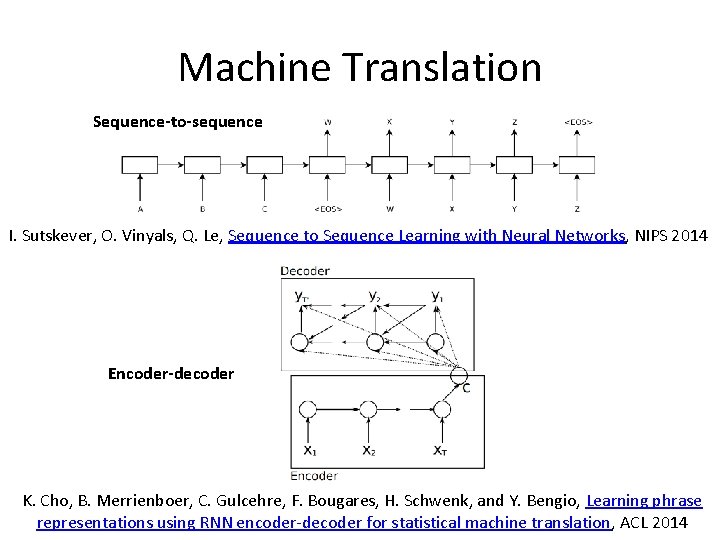

Machine Translation
• Sequence-to-sequence: I. Sutskever, O. Vinyals, Q. Le, Sequence to Sequence Learning with Neural Networks, NIPS 2014
• Encoder-decoder: K. Cho, B. Van Merrienboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, Learning phrase representations using RNN encoder-decoder for statistical machine translation, ACL 2014

Useful Resources / References
• http://cs231n.stanford.edu/slides/winter1516_lecture10.pdf
• http://www.cs.toronto.edu/~rgrosse/csc321/lec10.pdf
• R. Pascanu, T. Mikolov, and Y. Bengio, On the difficulty of training recurrent neural networks, ICML 2013
• S. Hochreiter and J. Schmidhuber, Long short-term memory, Neural Computation 9(8), pp. 1735–1780, 1997
• F. A. Gers and J. Schmidhuber, Recurrent nets that time and count, IJCNN 2000
• K. Greff, R. K. Srivastava, J. Koutník, B. R. Steunebrink, and J. Schmidhuber, LSTM: A search space odyssey, IEEE Transactions on Neural Networks and Learning Systems, 2016
• K. Cho, B. Van Merrienboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, Learning phrase representations using RNN encoder-decoder for statistical machine translation, ACL 2014
• R. Jozefowicz, W. Zaremba, and I. Sutskever, An empirical exploration of recurrent network architectures, JMLR 2015