
CS 276A: Text Information Retrieval, Mining, and Exploitation

Lecture 10, 7 Nov 2002

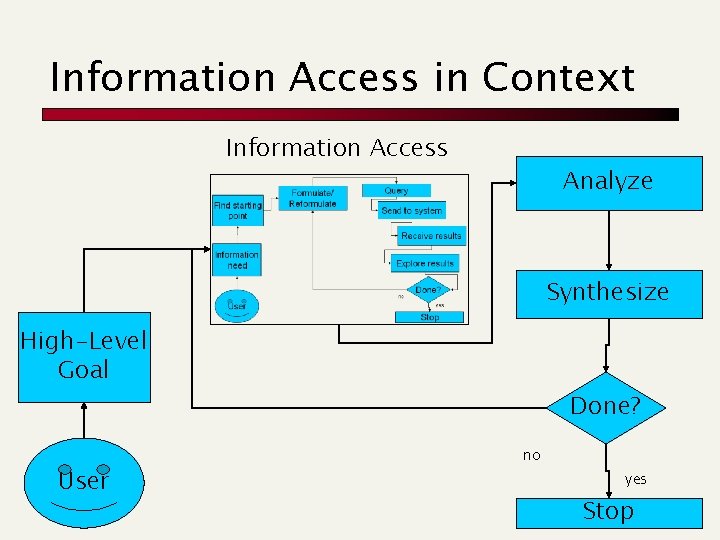

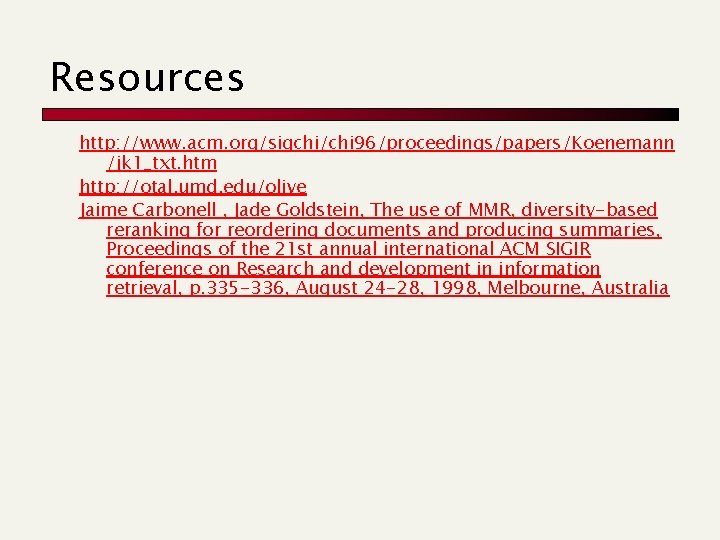

Information Access in Context

[Flowchart with nodes: High-Level Goal, User, Information Access, Analyze, Synthesize, Done? (no / yes), Stop]

Exercise

- Observe your own information seeking behavior
  - WWW
  - University library
  - Grocery store
- Are you a searcher or a browser?
- How do you reformulate your query?
  - Read bad hits, then minus terms
  - Read good hits, then plus terms
  - Try a completely different query
  - …

Correction: Address Field vs. Search Box

- Are users typing URLs into the search box ignorant?
- .com / .org / .net / international URLs
- cnn.com vs. www.cnn.com
- Full URL with protocol qualifier vs. partial URL

Today’s Topics

- Information design and visualization
- Evaluation measures and test collections
- Evaluation of interactive information retrieval
- Evaluation gotchas

Information Visualization and Exploration

- Tufte
- Shneiderman
- Information foraging: Xerox PARC / PARC Inc.

Edward Tufte

- Information design bible: The Visual Display of Quantitative Information
- The art and science of how to display (quantitative) information visually
- Significant influence on user interface design

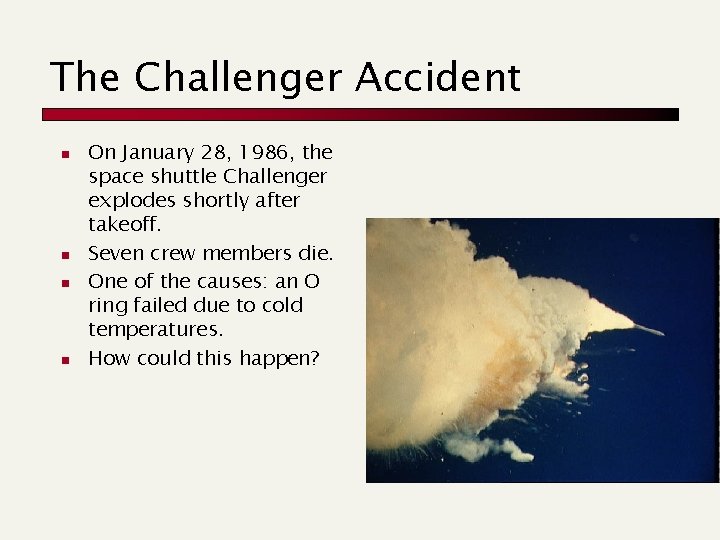

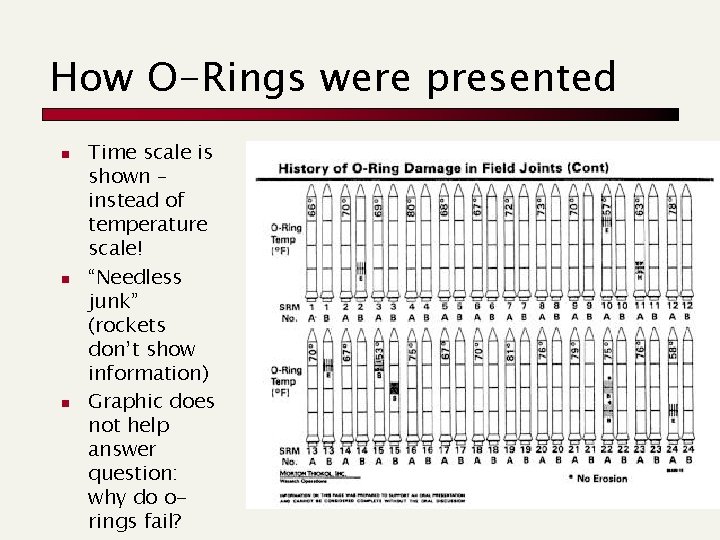

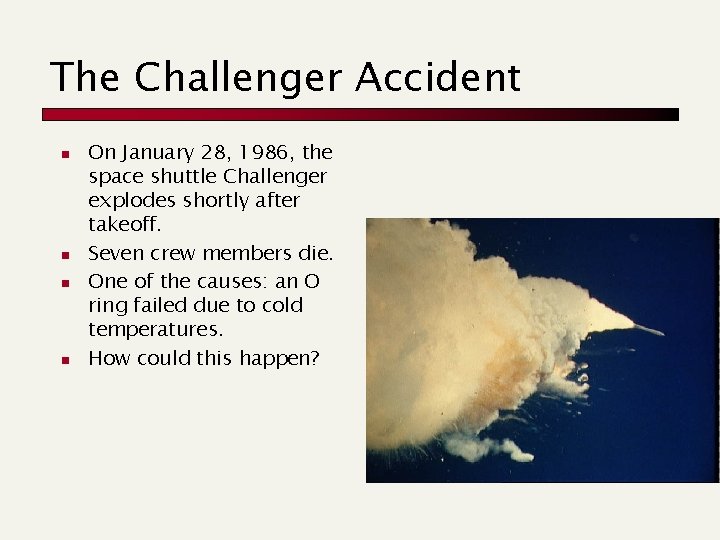

The Challenger Accident

- On January 28, 1986, the space shuttle Challenger explodes shortly after takeoff.
- Seven crew members die.
- One of the causes: an O-ring failed due to cold temperatures.
- How could this happen?

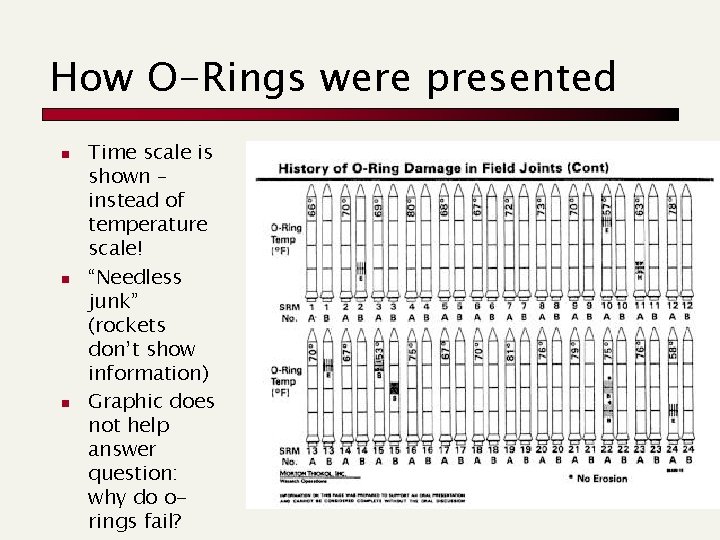

How O-Rings were presented

- Time scale is shown instead of temperature scale!
- “Needless junk” (rockets don’t show information)
- Graphic does not help answer the question: why do O-rings fail?

Tufte: Principles for Information Design

- Omit needless junk
- Show what you mean
- Don't obscure the meaning and order of scales
- Make comparisons of related images possible
- Claim authorship, and think twice when others don't
- Seek truth

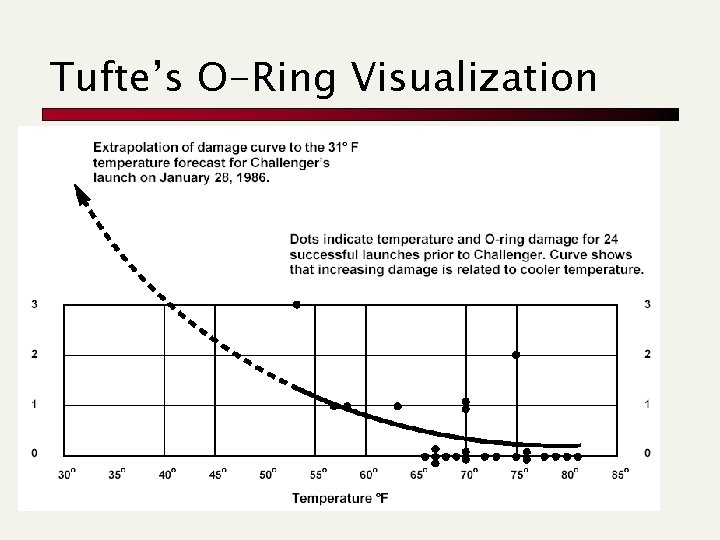

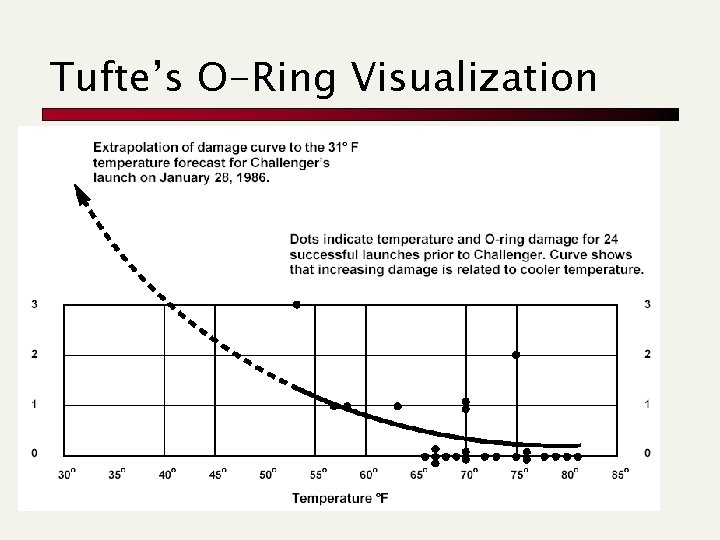

Tufte’s O-Ring Visualization

Tufte: Summary

1. “Like poor writing, bad graphical displays distort or obscure the data, make it harder to understand or compare, or otherwise thwart the communicative effect which the graph should convey.”
2. Bad decisions are made based on bad information design.
3. Tufte’s influence on UI design
4. Examples of the best and worst in information visualization: http://www.math.yorku.ca/SCS/Gallery/noframes.html

Shneiderman: Information Visualization

- How to design user interfaces
- How to engineer user interfaces for software
- Task by type taxonomy

Shneiderman on HCI

- Well-designed interactive computer systems:
  - Promote positive feelings of success, competence, and mastery.
  - Allow users to concentrate on their work, rather than on the system.

Credit: Marti Hearst

Task by Type Taxonomy: Data Types

- 1-D linear: SeeSoft
- 2-D map: multidimensional scaling (terms, docs, etc.)
- 3-D world: Cat-a-Cone
- Multi-dim: Table Lens
- Temporal: topic detection
- Tree: hierarchies à la Yahoo
- Network: network graphs of sites (KartOO)

Task by Type Taxonomy: Tasks

- Overview: gain an overview of the entire collection
- Zoom: zoom in on items of interest
- Filter: filter out uninteresting items
- Details-on-demand: select an item or group and get details when needed
- Relate: view relationships among items
- History: keep a history of actions to support undo and replay
- Extract: allow extraction of subcollections and the query parameters

Exercise

- If your project has a UI component:
  - Which data types are being displayed?
  - Which tasks are you supporting?

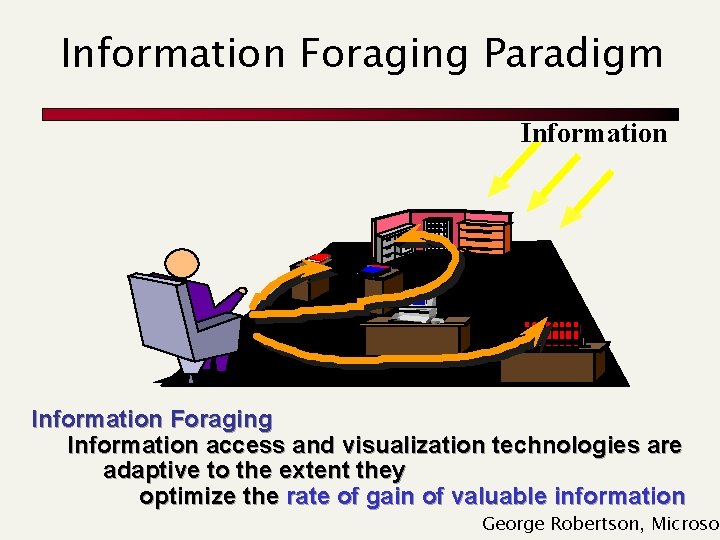

Xerox PARC: Information Foraging

- Metaphor from ecology/biology
- People looking for information = animals foraging for food
- Predictive model that allows a principled way of designing user interfaces
- The main focus is:
  - What will the user do next?
  - How can we support a good choice for the next action?
- Rather than:
  - Evaluation of a single user-system interaction

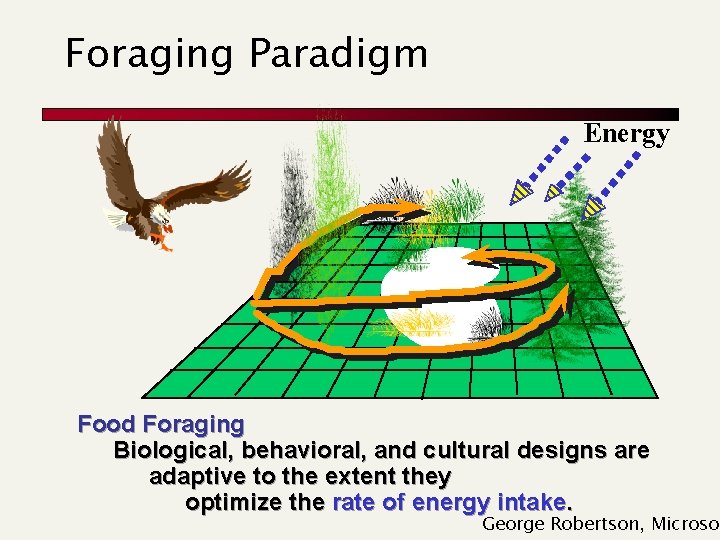

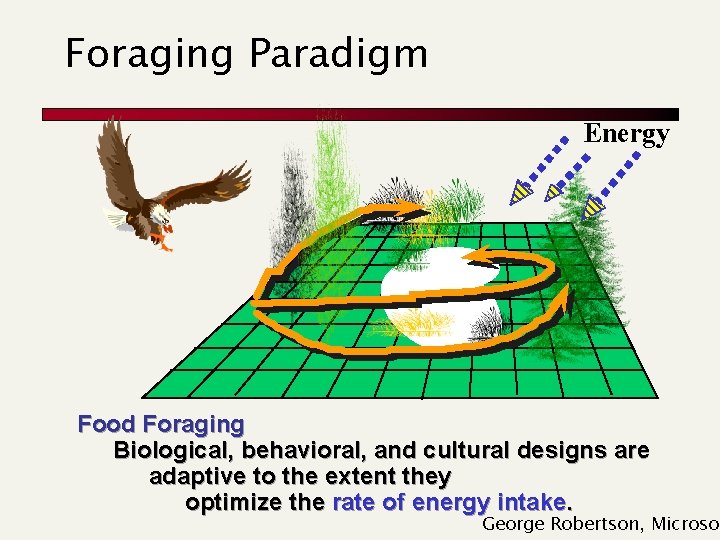

Foraging Paradigm

Food foraging (energy): Biological, behavioral, and cultural designs are adaptive to the extent they optimize the rate of energy intake.

George Robertson, Microsoft

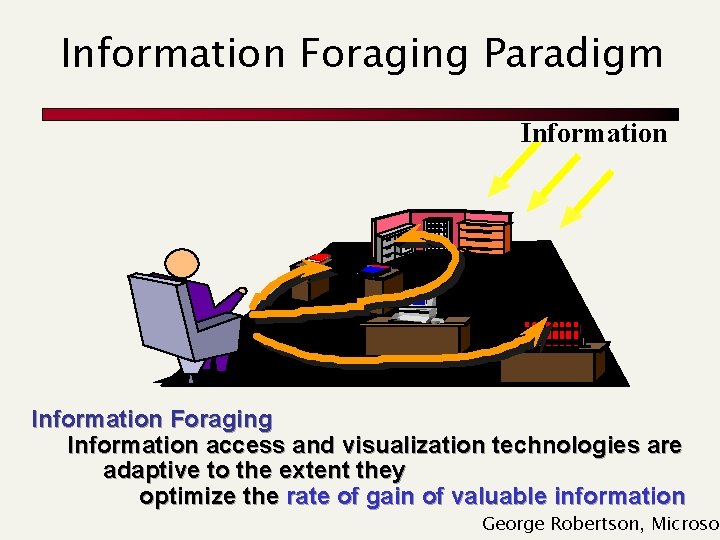

Information Foraging Paradigm

Information foraging: Information access and visualization technologies are adaptive to the extent they optimize the rate of gain of valuable information.

George Robertson, Microsoft

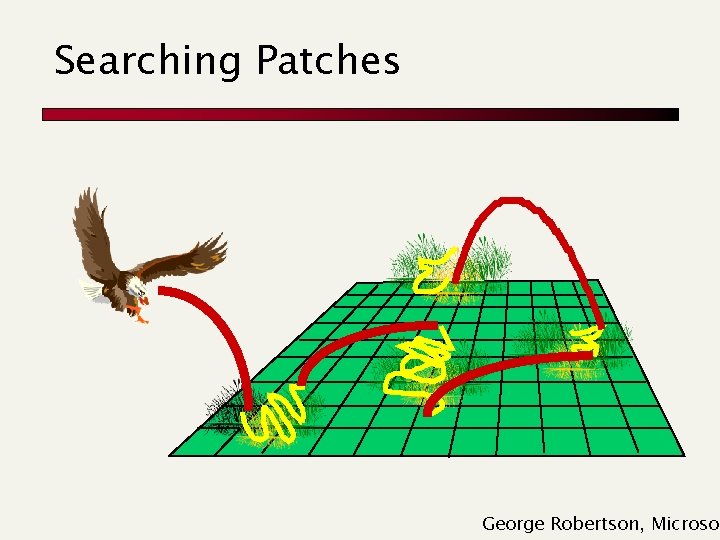

Searching Patches

George Robertson, Microsoft

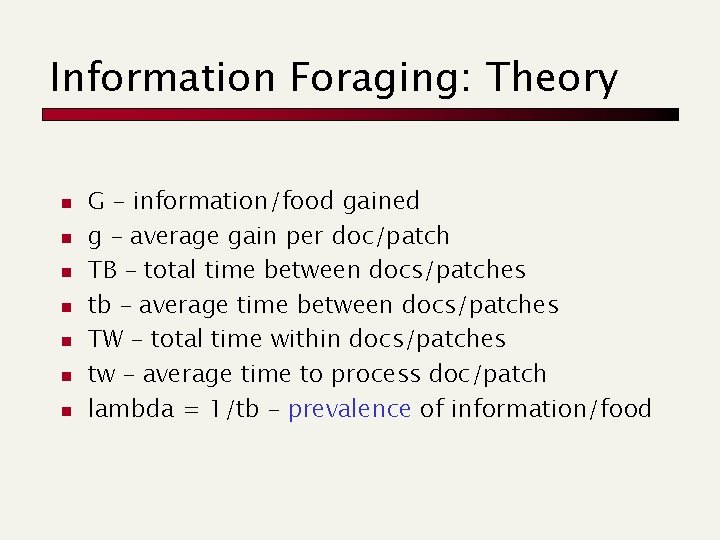

Information Foraging: Theory

- G: information/food gained
- g: average gain per doc/patch
- TB: total time between docs/patches
- tb: average time between docs/patches
- TW: total time within docs/patches
- tw: average time to process doc/patch
- lambda = 1/tb: prevalence of information/food

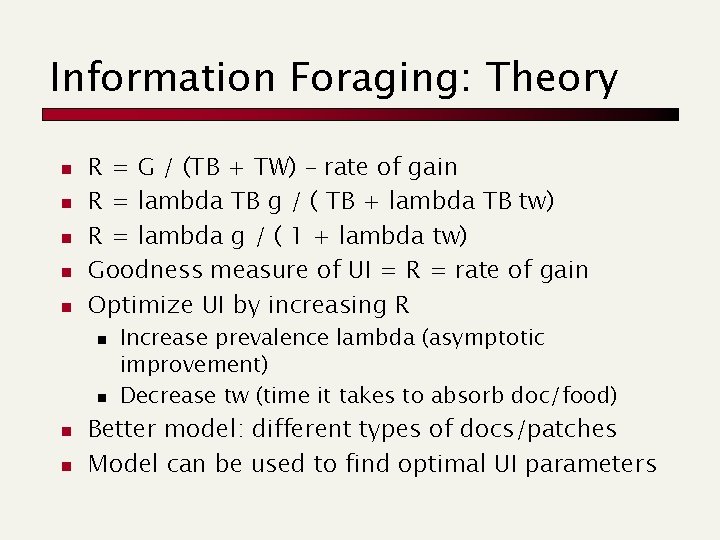

Information Foraging: Theory

- R = G / (TB + TW): rate of gain
- In time TB the forager encounters lambda TB docs/patches, so G = lambda TB g and TW = lambda TB tw, giving R = lambda TB g / (TB + lambda TB tw)
- R = lambda g / (1 + lambda tw)
- Goodness measure of UI = R = rate of gain
- Optimize UI by increasing R
  - Increase prevalence lambda (asymptotic improvement)
  - Decrease tw (time it takes to absorb doc/food)
- Better model: different types of docs/patches
- Model can be used to find optimal UI parameters
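The rate-of-gain formula can be checked numerically. The sketch below is illustrative only: the function names and the sample values are assumptions, not part of the lecture. It computes R both from the totals and from the per-patch form, and shows why raising lambda gives only an asymptotic improvement (R approaches g / tw).

```python
def rate_from_totals(G: float, TB: float, TW: float) -> float:
    """R = G / (TB + TW): total gain over total foraging time."""
    return G / (TB + TW)


def rate_of_gain(lam: float, g: float, tw: float) -> float:
    """R = lambda * g / (1 + lambda * tw), the per-patch form of the same rate."""
    if lam < 0 or tw < 0:
        raise ValueError("lambda and tw must be non-negative")
    return lam * g / (1.0 + lam * tw)


# Hypothetical numbers: one patch every 2 time units (lambda = 0.5),
# each patch yields g = 2 units of information and takes tw = 1 to process.
lam, g, tw = 0.5, 2.0, 1.0
TB = 100.0                # total time spent between patches
patches = lam * TB        # number of patches encountered in that time
print(rate_from_totals(G=patches * g, TB=TB, TW=patches * tw))
print(rate_of_gain(lam, g, tw))

# Increasing lambda helps less and less: R is bounded above by g / tw.
for lam_big in (1.0, 10.0, 1000.0):
    print(lam_big, rate_of_gain(lam_big, g, tw))
```

Decreasing tw, by contrast, raises the ceiling g / tw itself, which is one way to read the two optimization options on the slide.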

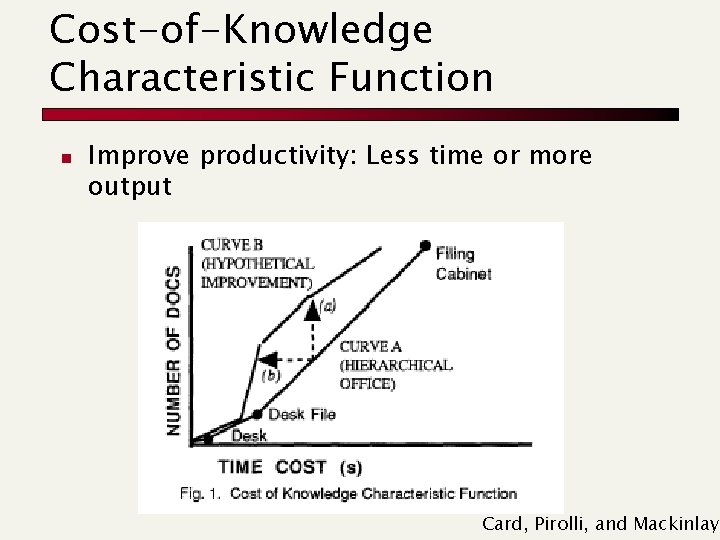

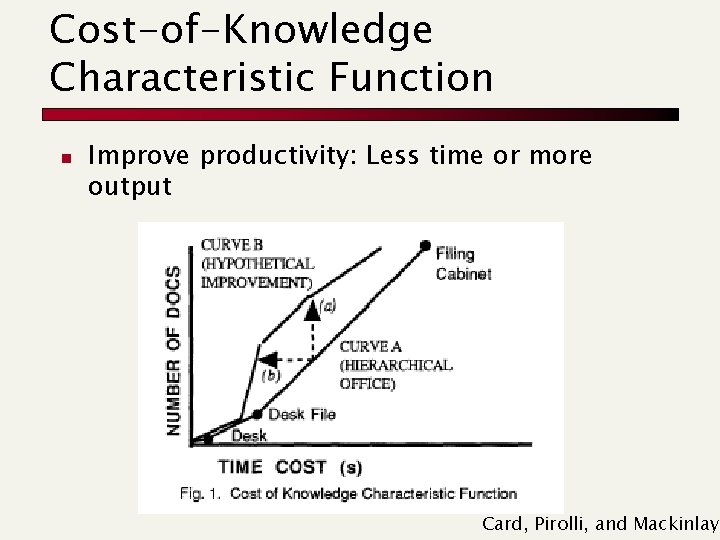

Cost-of-Knowledge Characteristic Function

- Improve productivity: Less time or more output

Card, Pirolli, and Mackinlay

Creating Test Collections for IR Evaluation

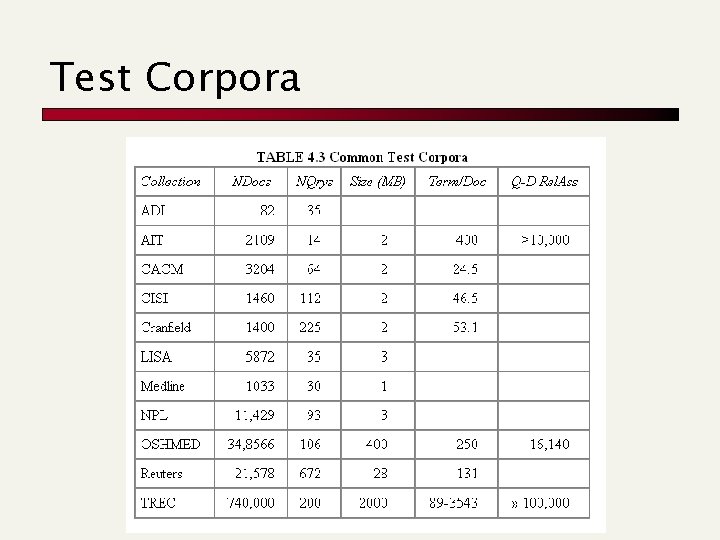

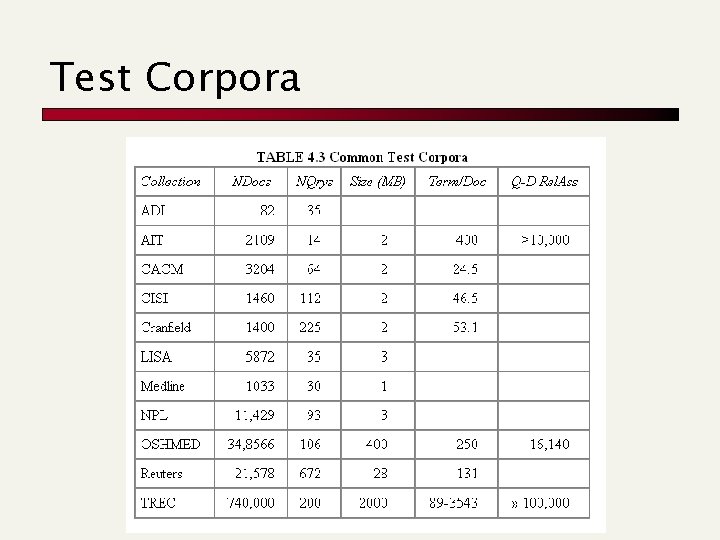

Test Corpora

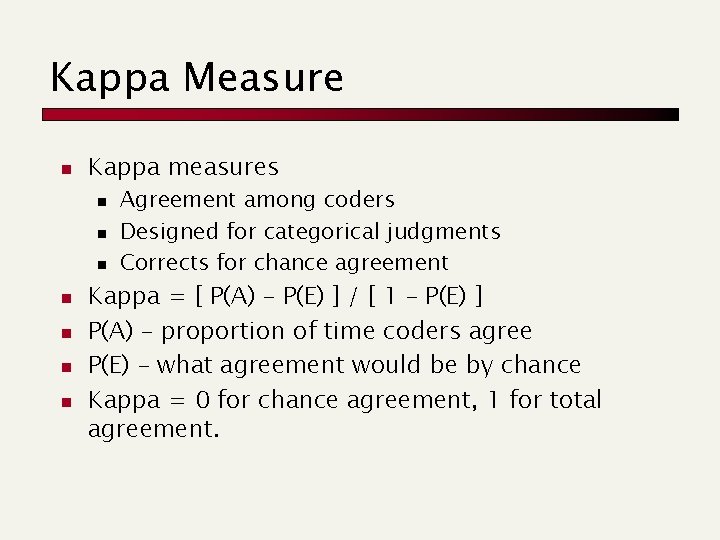

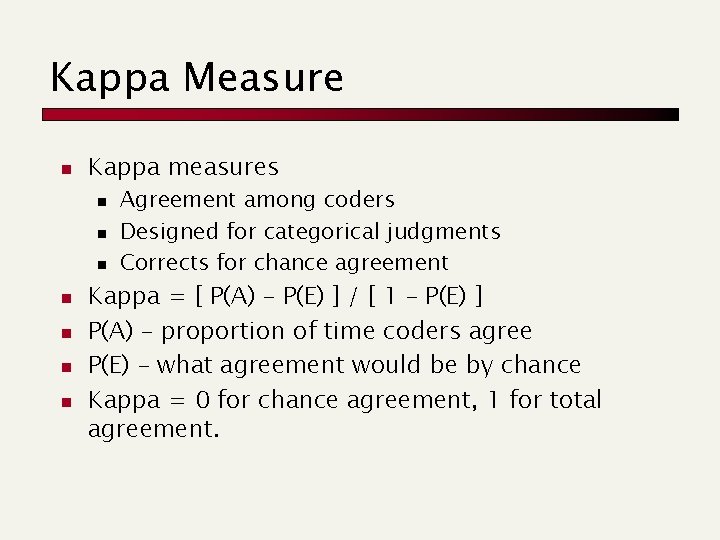

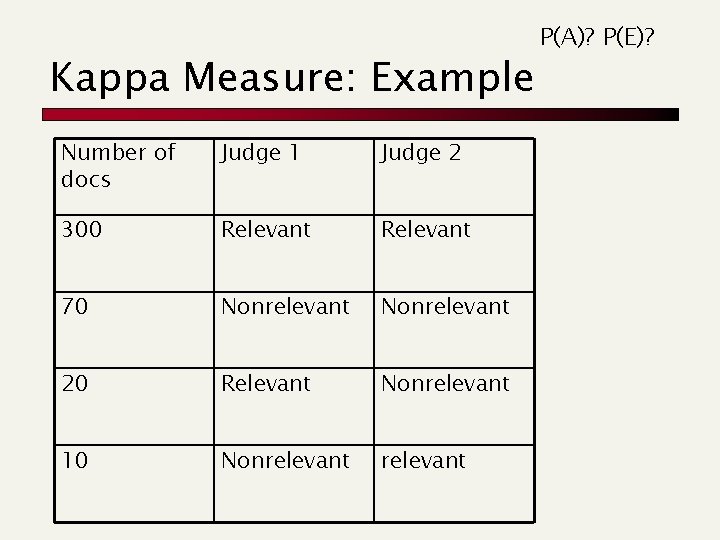

Kappa Measure

- Kappa measures
  - Agreement among coders
  - Designed for categorical judgments
  - Corrects for chance agreement
- Kappa = [ P(A) - P(E) ] / [ 1 - P(E) ]
- P(A): proportion of time coders agree
- P(E): what agreement would be by chance
- Kappa = 0 for chance agreement, 1 for total agreement.

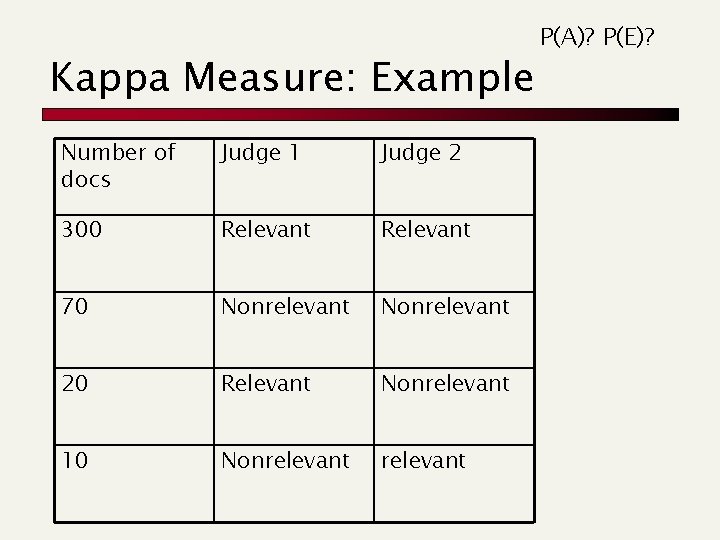

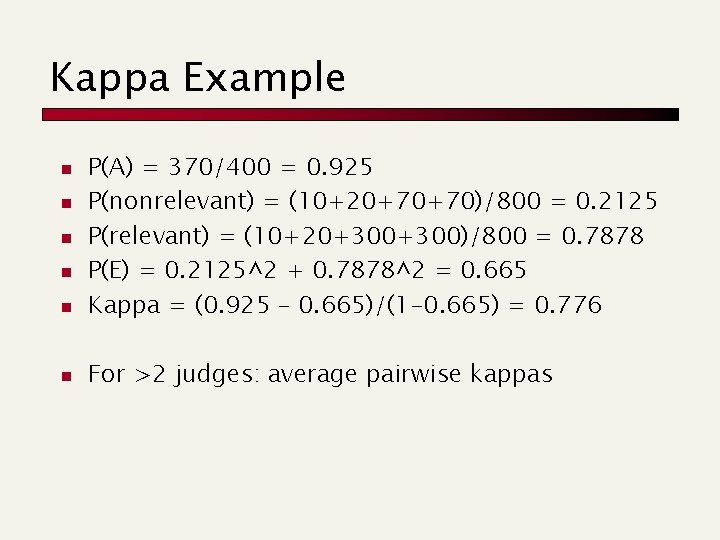

Kappa Measure: Example

| Number of docs | Judge 1 | Judge 2 |
| --- | --- | --- |
| 300 | Relevant | Relevant |
| 70 | Nonrelevant | Nonrelevant |
| 20 | Relevant | Nonrelevant |
| 10 | Nonrelevant | Relevant |

P(A)? P(E)?

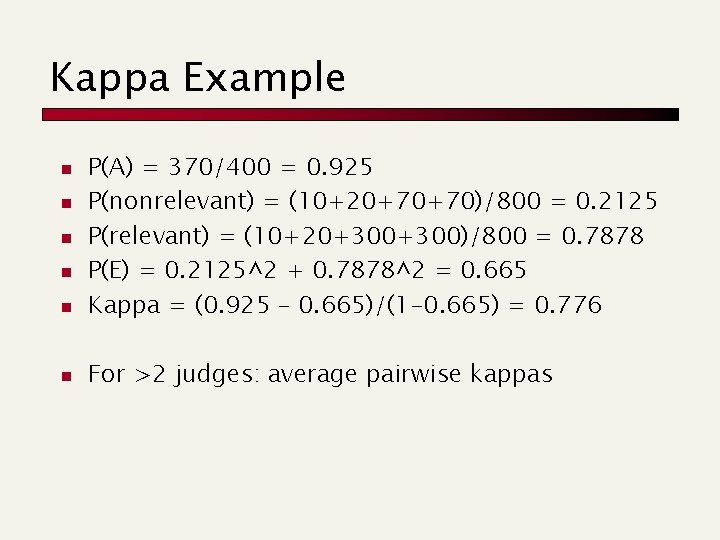

Kappa Example

- P(A) = 370/400 = 0.925
- P(nonrelevant) = (10+20+70+70)/800 = 0.2125
- P(relevant) = (10+20+300+300)/800 = 0.7875
- P(E) = 0.2125^2 + 0.7875^2 = 0.665
- Kappa = (0.925 - 0.665)/(1 - 0.665) = 0.776
- For >2 judges: average pairwise kappas
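The arithmetic of the example can be reproduced with a few lines of Python. This is a minimal sketch, assuming the pooled marginals used on the slide (both judges' relevance votes are combined over 800 judgments); the function name and argument names are hypothetical.

```python
def pooled_kappa(both_rel: int, both_nonrel: int,
                 only_j1_rel: int, only_j2_rel: int) -> float:
    """Kappa for two judges and two categories, with pooled marginals."""
    n = both_rel + both_nonrel + only_j1_rel + only_j2_rel
    p_agree = (both_rel + both_nonrel) / n
    # Each judge contributes n judgments, so there are 2n votes in total.
    p_rel = (2 * both_rel + only_j1_rel + only_j2_rel) / (2 * n)
    p_nonrel = 1.0 - p_rel
    p_chance = p_rel ** 2 + p_nonrel ** 2
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_agree - p_chance) / (1.0 - p_chance)


# Counts from the example: 300 agreed relevant, 70 agreed nonrelevant,
# 20 where only judge 1 said relevant, 10 where only judge 2 did.
print(round(pooled_kappa(300, 70, 20, 10), 3))  # 0.776
```

Note that some definitions of kappa estimate chance agreement from each judge's own marginals instead of the pooled ones; the two variants can give slightly different values.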

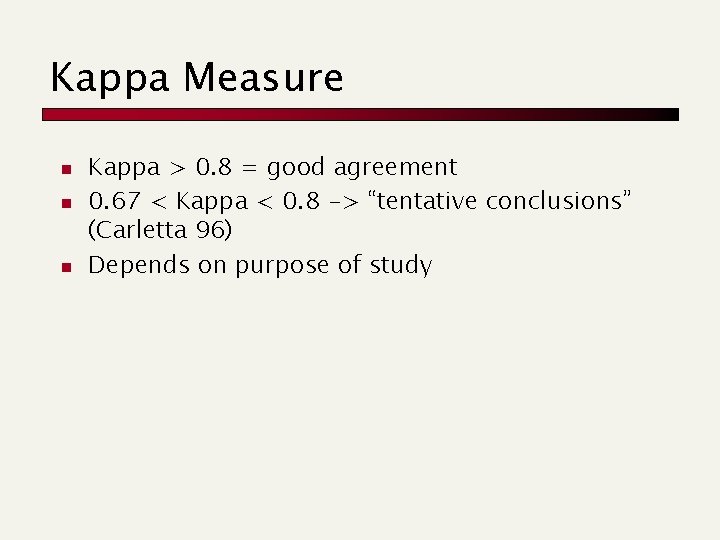

Kappa Measure

- Kappa > 0.8 = good agreement
- 0.67 < Kappa < 0.8 -> “tentative conclusions” (Carletta 96)
- Depends on purpose of study

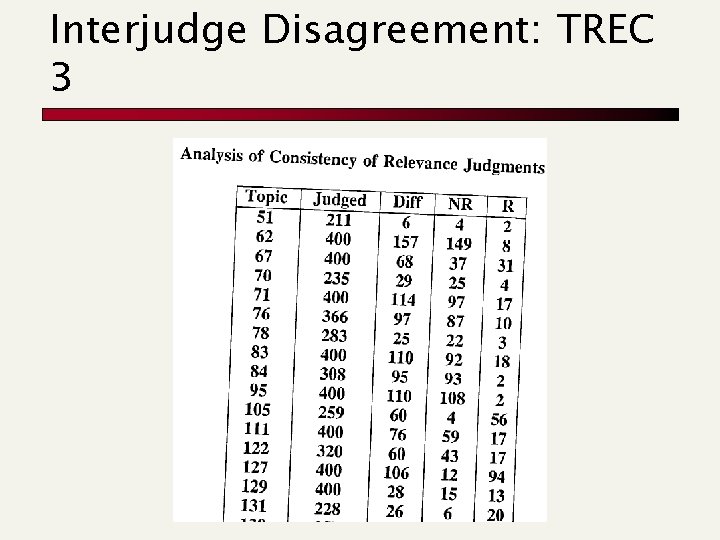

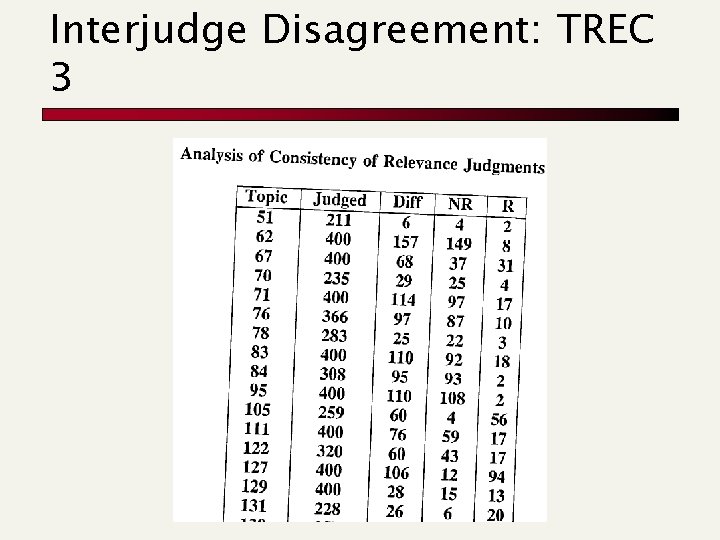

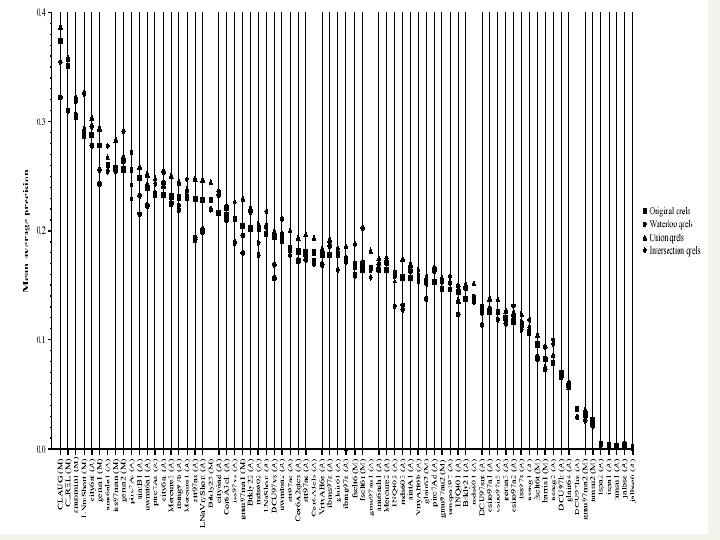

Interjudge Disagreement: TREC 3

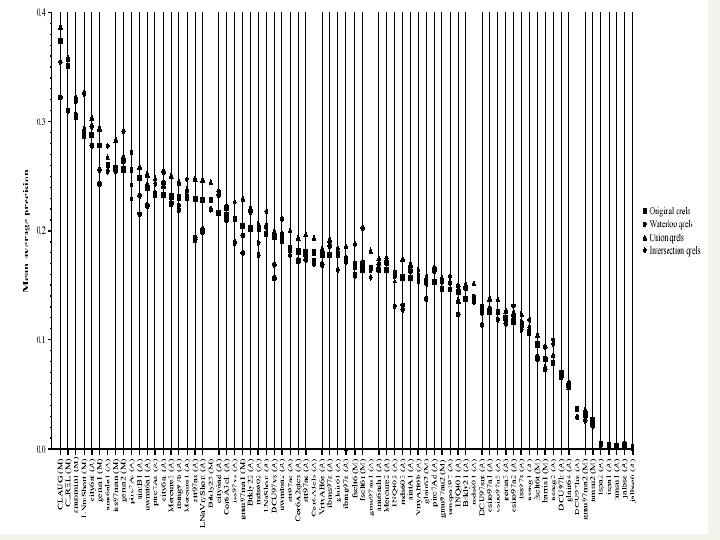

Impact of Interjudge Disagreement

- Impact on absolute performance measure can be significant (0.32 vs 0.39)
- Little impact on ranking of different systems or relative performance

Evaluation Measures

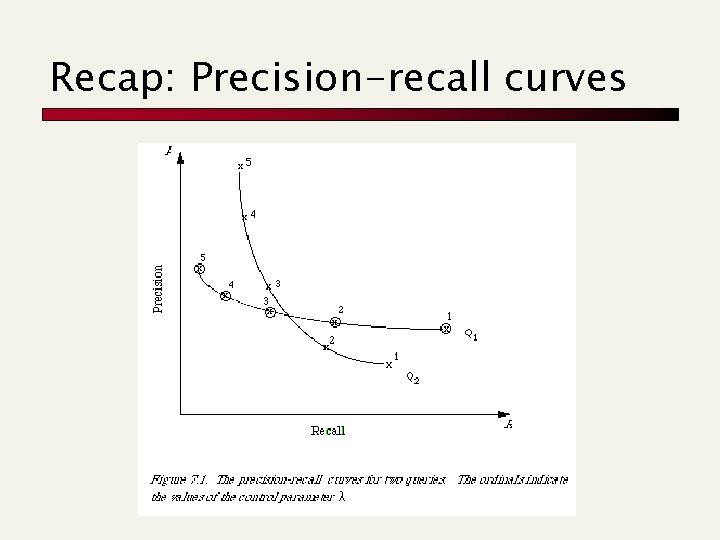

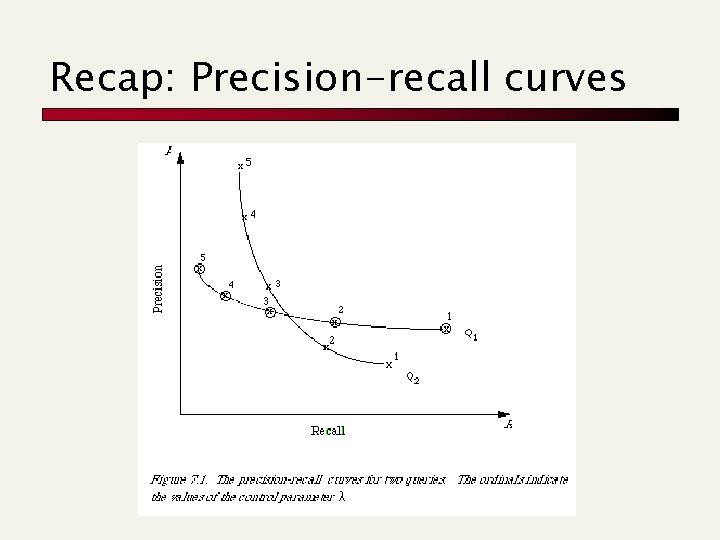

Recap: Precision/Recall

- Evaluation of ranked results:
  - You can return any number of results ordered by similarity
  - By taking various numbers of documents (levels of recall), you can produce a precision-recall curve
- Precision: #correct&retrieved/#retrieved
- Recall: #correct&retrieved/#correct
- The truth, the whole truth, and nothing but the truth. Recall 1.0 = the whole truth, precision 1.0 = nothing but the truth.
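As a small illustration of how a precision-recall curve is produced from a ranking, the sketch below walks down a ranked list and records (recall, precision) after each document. The document IDs and the function name are made up for the example.

```python
from typing import Iterable, List, Sequence, Tuple


def precision_recall_points(ranking: Sequence[str],
                            relevant: Iterable[str]) -> List[Tuple[float, float]]:
    """Return (recall, precision) after each rank position of the ranking."""
    relevant_set = set(relevant)
    if not relevant_set:
        raise ValueError("need at least one relevant document")
    hits = 0
    points = []
    for k, doc in enumerate(ranking, start=1):
        if doc in relevant_set:
            hits += 1
        # recall = hits / #correct, precision = hits / #retrieved so far
        points.append((hits / len(relevant_set), hits / k))
    return points


# Hypothetical ranking; d9 is relevant but never retrieved.
for recall, precision in precision_recall_points(
        ["d1", "d2", "d3", "d4"], {"d1", "d3", "d9"}):
    print(f"recall={recall:.2f} precision={precision:.2f}")
```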

Recap: Precision-recall curves

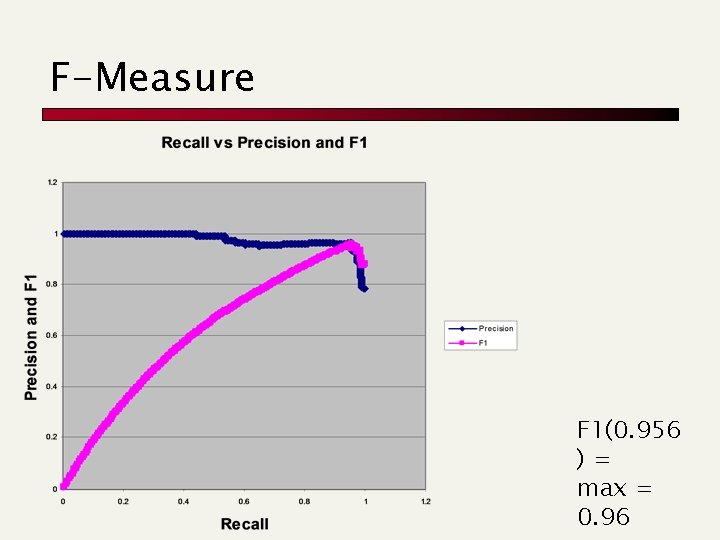

F Measure

- F measure is the harmonic mean of precision and recall (strictly speaking F1)
- 1/F = ½ (1/P + 1/R)
- Use F measure if you need to optimize a single measure that balances precision and recall.
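Solving 1/F = ½ (1/P + 1/R) for F gives F = 2PR / (P + R). A minimal sketch (function name and sample values are illustrative) shows how the harmonic mean penalizes an imbalance that the arithmetic mean would hide:

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0 if either is 0."""
    if precision == 0.0 or recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Both pairs have arithmetic mean 0.5, but very different F1.
print(f1(0.5, 0.5))  # balanced
print(f1(0.9, 0.1))  # unbalanced: F1 is pulled toward the smaller value
```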

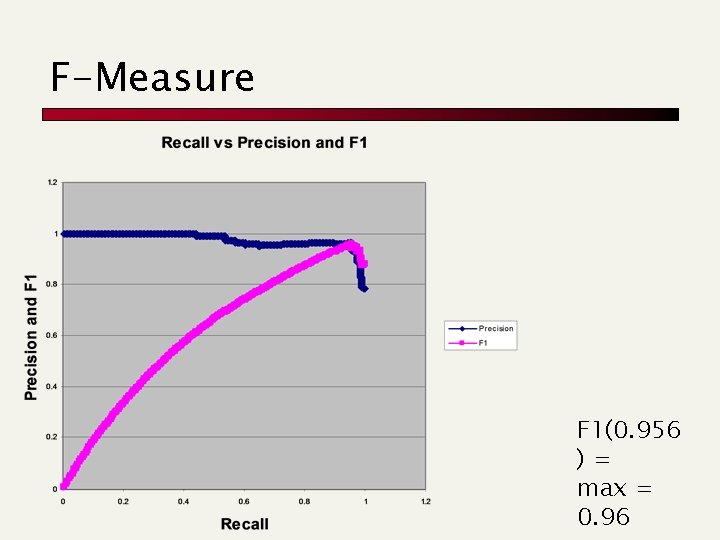

F-Measure

F1(0.956) = max = 0.96

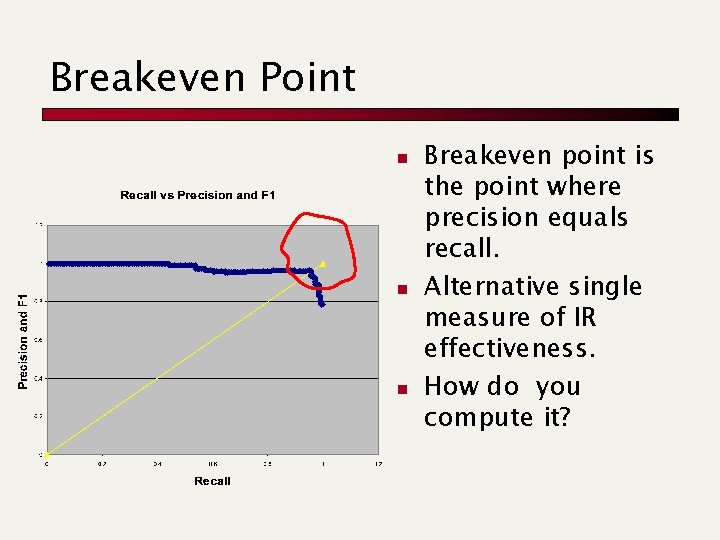

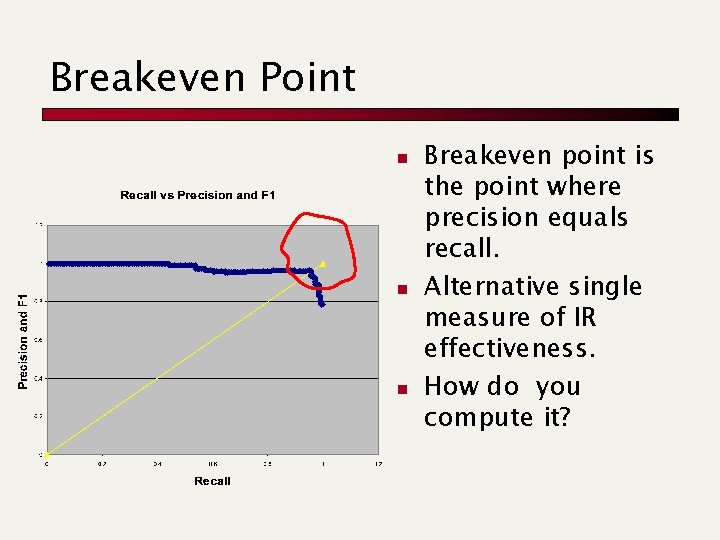

Breakeven Point

- Breakeven point is the point where precision equals recall.
- Alternative single measure of IR effectiveness.
- How do you compute it?
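One possible answer, offered as a sketch rather than the lecture's official one: cut the ranking at rank k = number of relevant documents. At that rank precision (hits / k) and recall (hits / #relevant) have the same denominator, so they are equal. The function name and the sample data are assumptions.

```python
from typing import Iterable, Sequence


def breakeven_point(ranking: Sequence[str], relevant: Iterable[str]) -> float:
    """Precision (= recall) at rank k, where k is the number of relevant docs."""
    relevant_set = set(relevant)
    k = len(relevant_set)
    if k == 0:
        raise ValueError("need at least one relevant document")
    if len(ranking) < k:
        raise ValueError("ranking must contain at least k documents")
    hits = sum(1 for doc in ranking[:k] if doc in relevant_set)
    return hits / k


# Three relevant documents, two of them in the top three results.
print(breakeven_point(["d1", "d2", "d3", "d4"], {"d1", "d3", "d9"}))
```

Other approaches interpolate between neighboring points of the precision-recall curve; this version avoids interpolation at the cost of fixing the cutoff.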

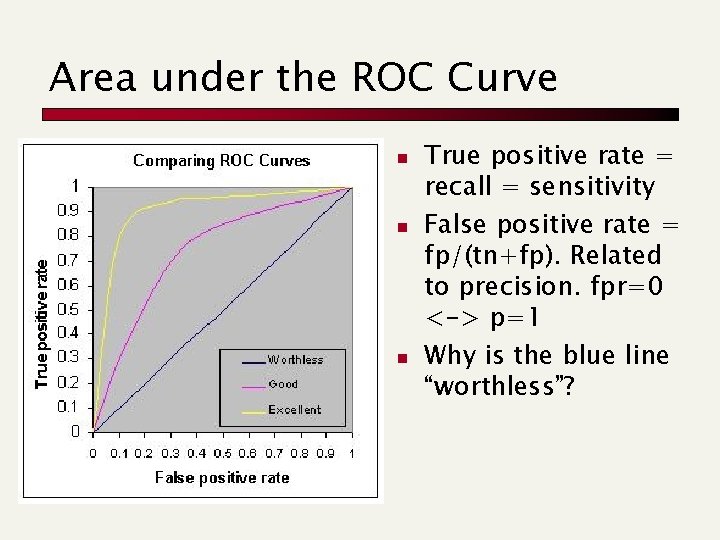

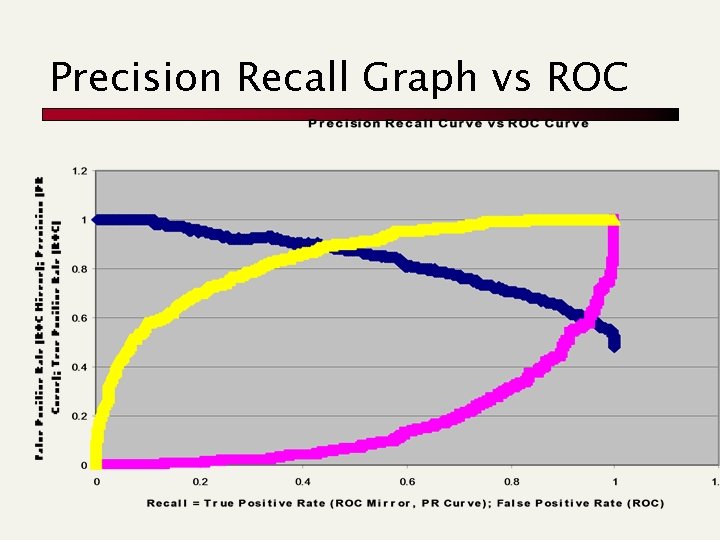

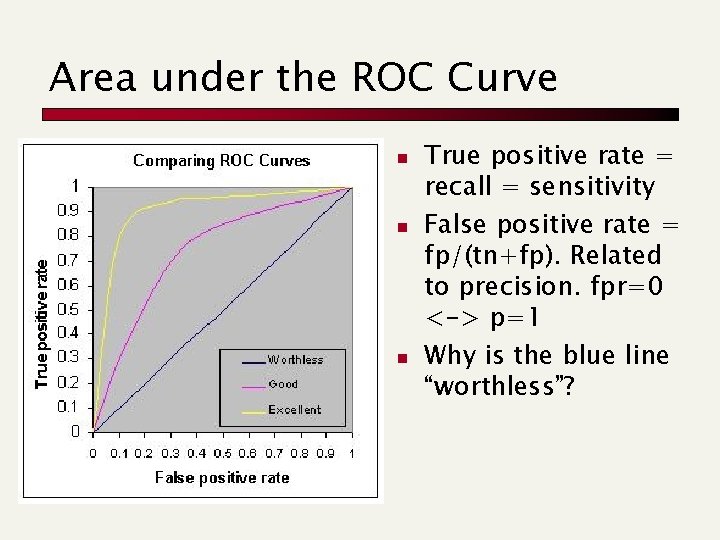

Area under the ROC Curve

- True positive rate = recall = sensitivity
- False positive rate = fp/(tn+fp). Related to precision. fpr = 0 <-> p = 1
- Why is the blue line “worthless”?
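For a ranked list with no ties, the area under the ROC curve can be accumulated directly while walking down the ranking. The sketch below assumes the input is a list of booleans in rank order (True = relevant); the function name is hypothetical.

```python
from typing import Sequence


def roc_auc(ranked_labels: Sequence[bool]) -> float:
    """Area under the ROC curve for a tie-free ranking, best-ranked item first."""
    positives = sum(1 for label in ranked_labels if label)
    negatives = len(ranked_labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one relevant and one nonrelevant item")
    true_positives = 0
    area = 0.0
    for is_relevant in ranked_labels:
        if is_relevant:
            true_positives += 1          # curve moves up: tpr increases
        else:
            # curve moves right by 1/negatives at the current tpr height
            area += true_positives / positives
    return area / negatives


print(roc_auc([True, True, False, False]))   # perfect ranking
print(roc_auc([True, False, True, False]))   # partly mixed ranking
print(roc_auc([False, False, True, True]))   # worst possible ranking
```

The value equals the fraction of (relevant, nonrelevant) pairs that the ranking orders correctly, so a random ordering scores about 0.5 on average, which corresponds to the diagonal of the ROC plot.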

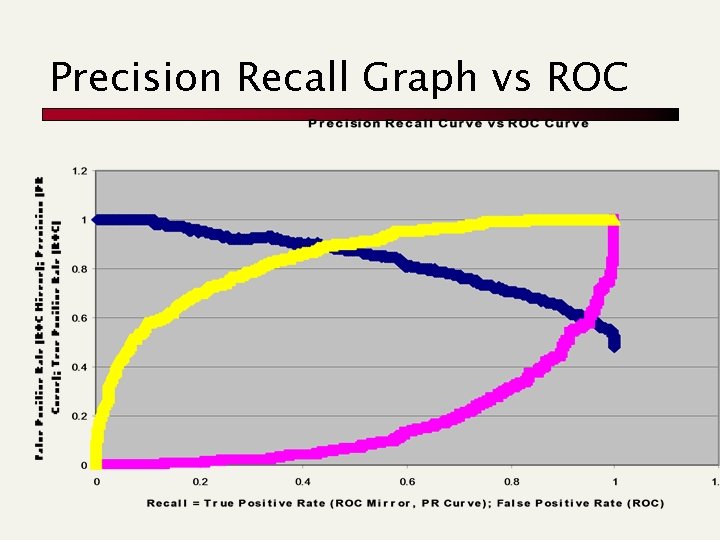

Precision Recall Graph vs ROC

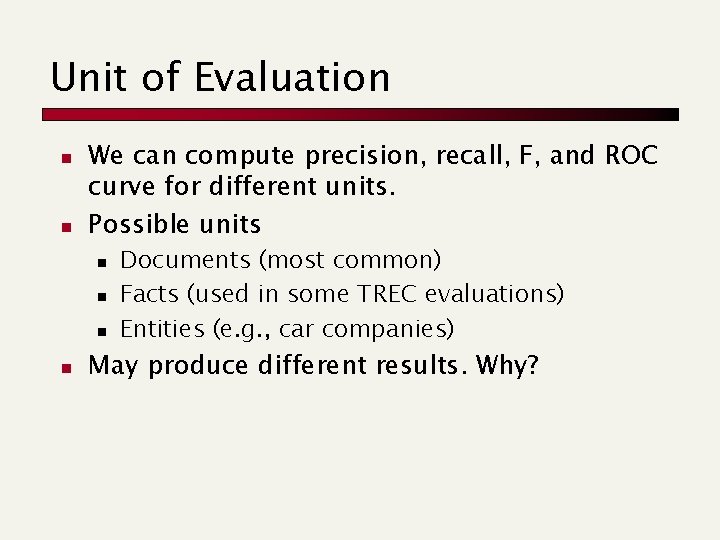

Unit of Evaluation

- We can compute precision, recall, F, and ROC curve for different units.
- Possible units
  - Documents (most common)
  - Facts (used in some TREC evaluations)
  - Entities (e.g., car companies)
- May produce different results. Why?

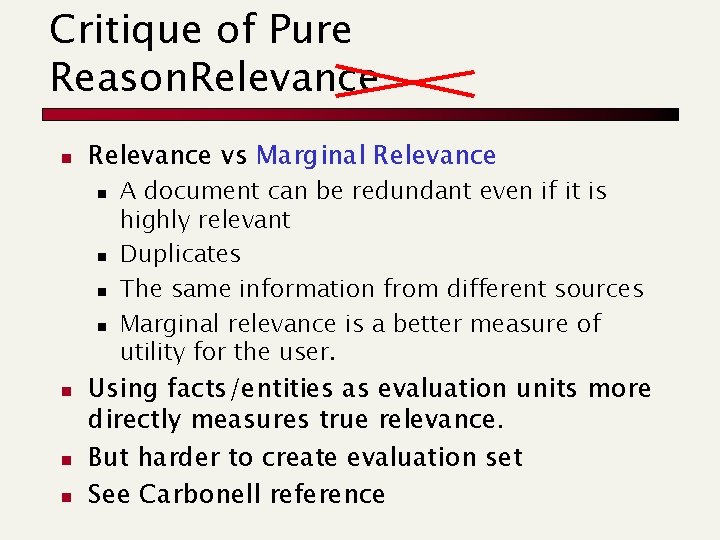

Critique of Pure Reason. Relevance

- Relevance vs Marginal Relevance
  - A document can be redundant even if it is highly relevant
  - Duplicates
  - The same information from different sources
  - Marginal relevance is a better measure of utility for the user.
- Using facts/entities as evaluation units more directly measures true relevance.
- But harder to create evaluation set
- See Carbonell reference

Evaluation of Interactive Information Retrieval

Evaluating Interactive IR

- Evaluating interactive IR poses special challenges
  - Obtaining experimental data is more expensive
  - Experiments involving humans require careful design.
    - Control for confounding variables
    - Questionnaire to collect relevant subject data
    - Ensure that experimental setup is close to intended real world scenario
    - Approval for human subjects research

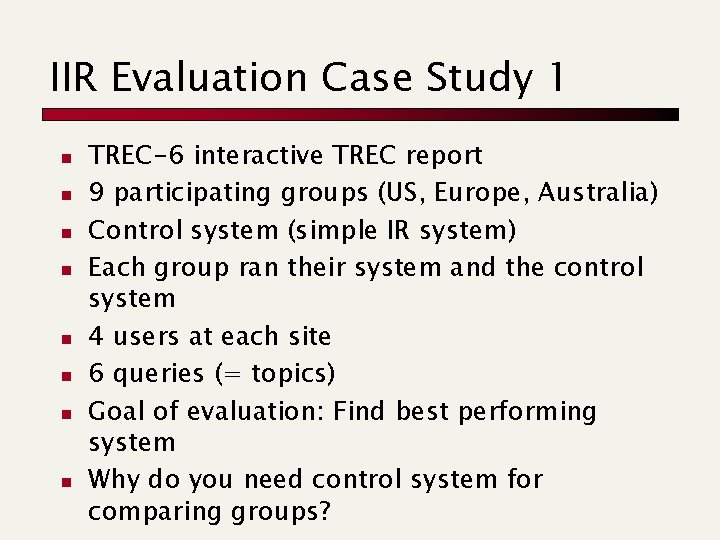

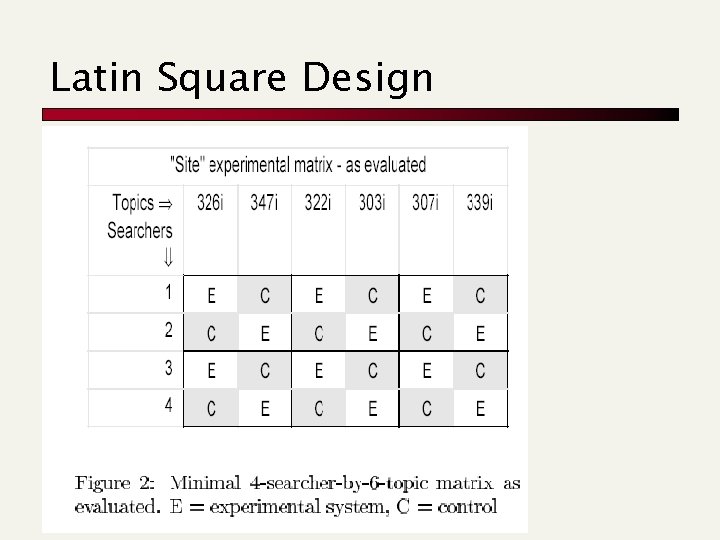

IIR Evaluation Case Study 1

- TREC-6 interactive track report
- 9 participating groups (US, Europe, Australia)
- Control system (simple IR system)
- Each group ran their system and the control system
- 4 users at each site
- 6 queries (= topics)
- Goal of evaluation: Find best performing system
- Why do you need a control system for comparing groups?

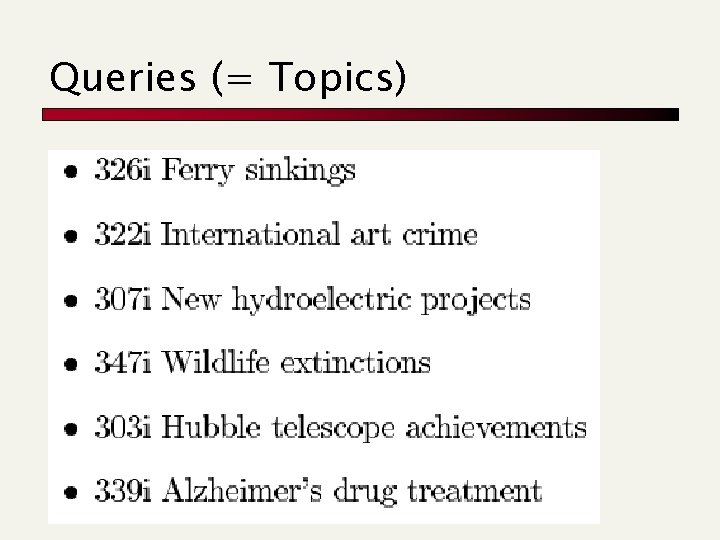

Queries (= Topics)

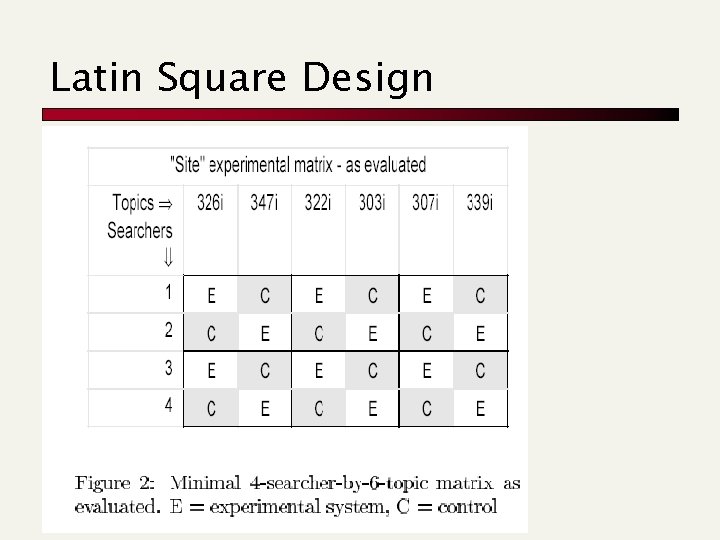

Latin Square Design

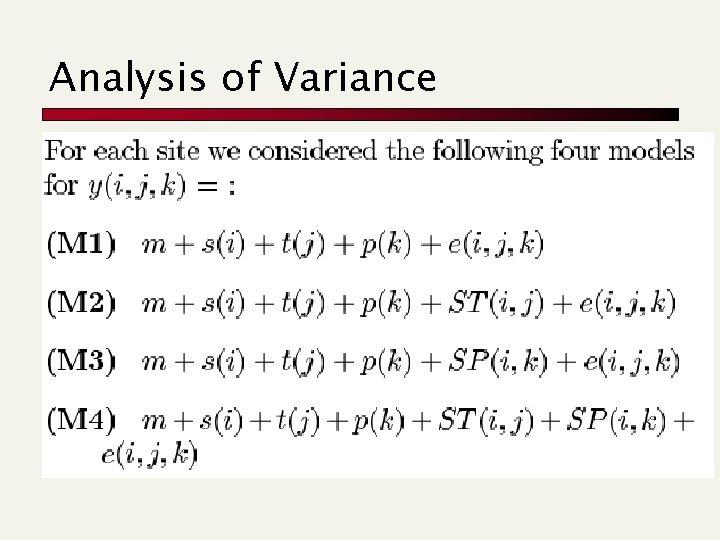

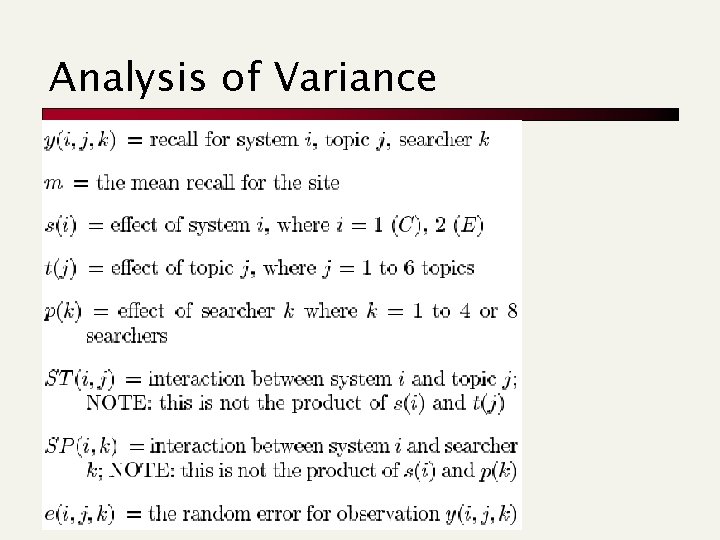

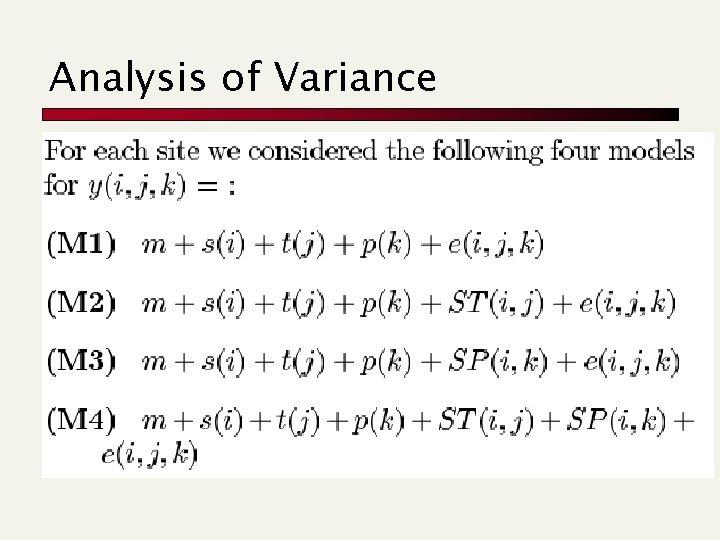

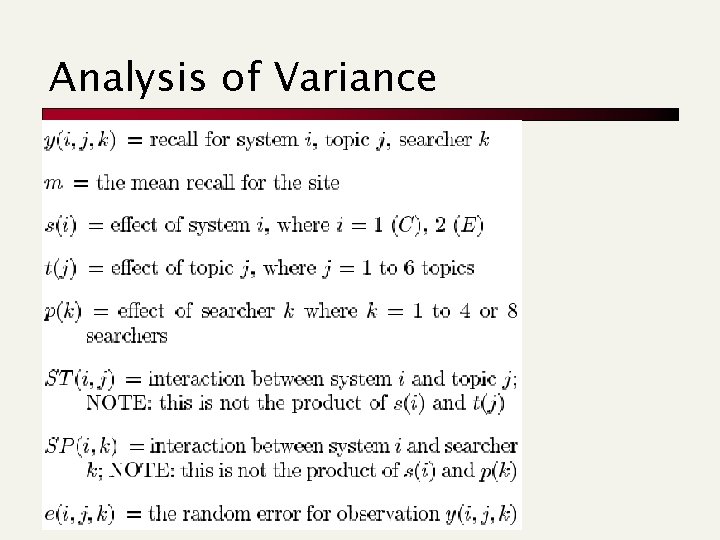

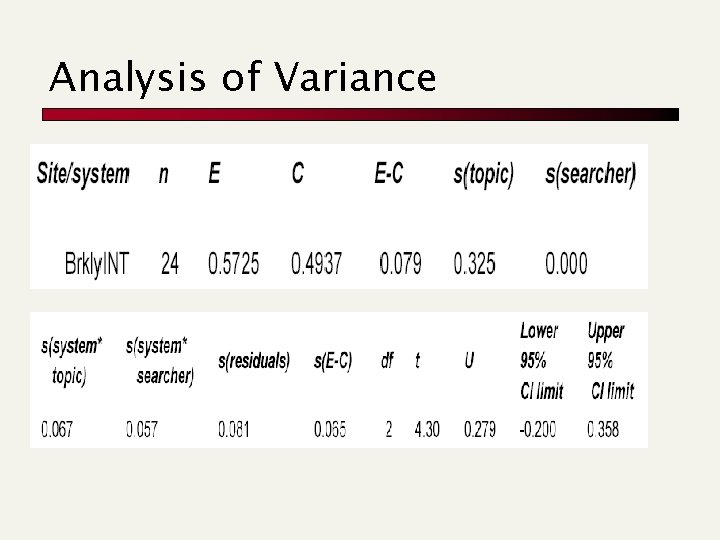

Analysis of Variance

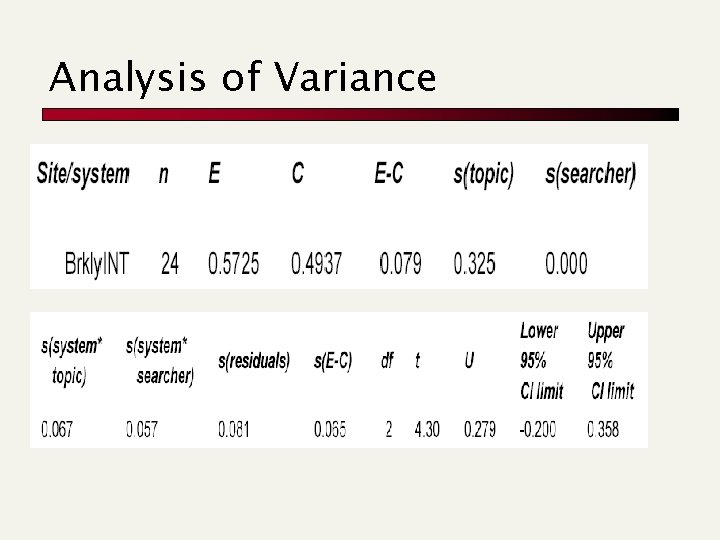

Analysis of Variance

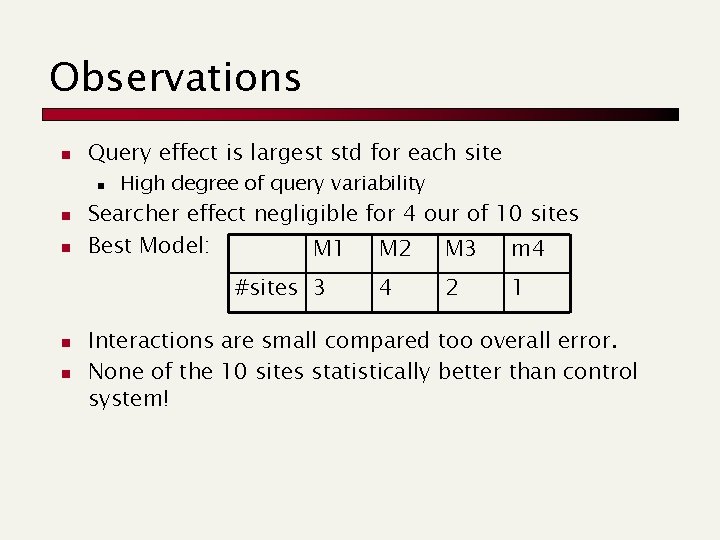

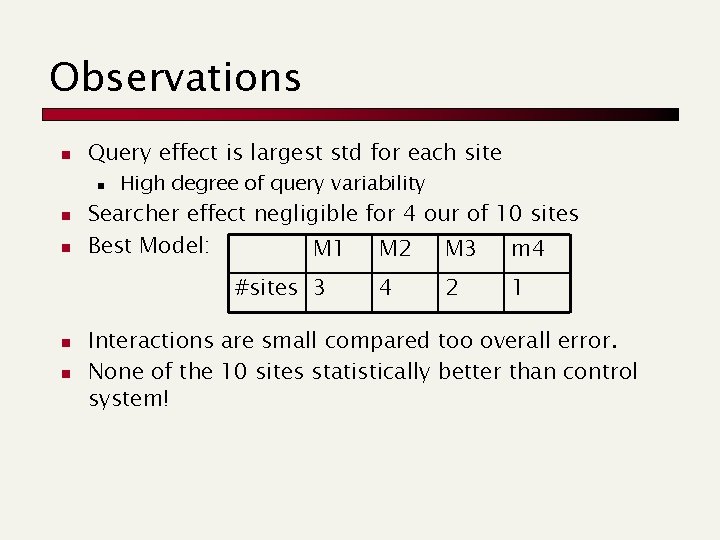

Observations

- Query effect has the largest standard deviation at each site
  - High degree of query variability
- Searcher effect negligible for 4 out of 10 sites
- Best model (number of sites for which each model fits best):

| Model | M1 | M2 | M3 | M4 |
| --- | --- | --- | --- | --- |
| #sites | 3 | 4 | 2 | 1 |

- Interactions are small compared to overall error.
- None of the 10 sites statistically better than control system!

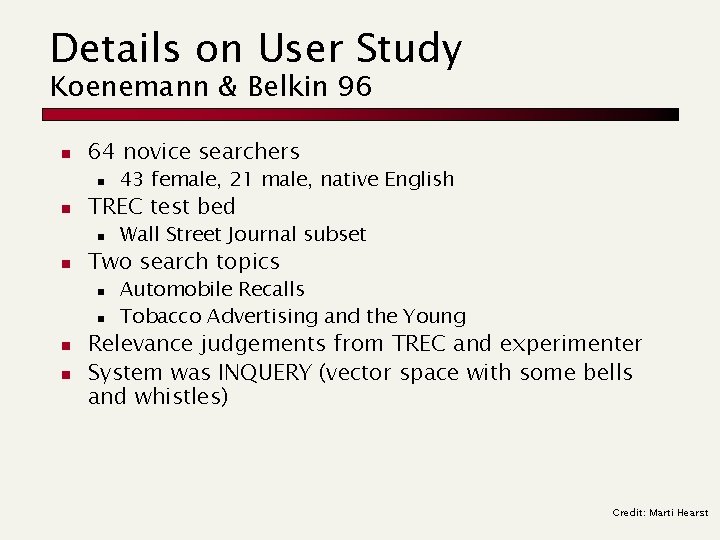

IIR Evaluation Case Study 2

- Evaluation of relevance feedback
- Koenemann & Belkin 1996

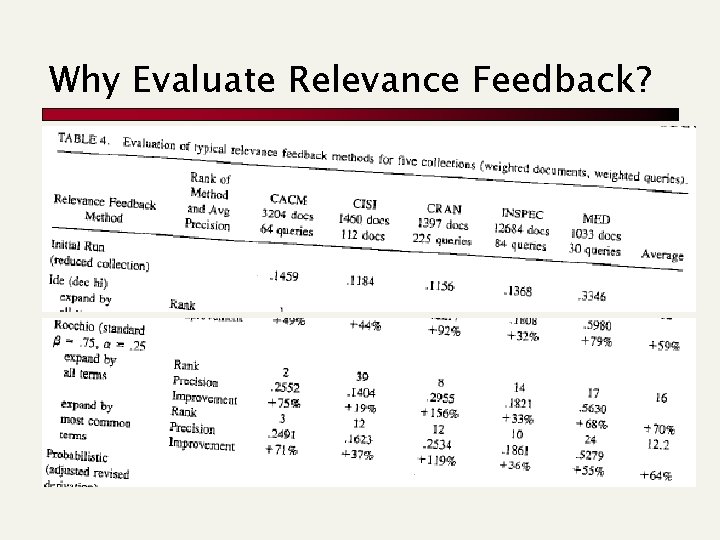

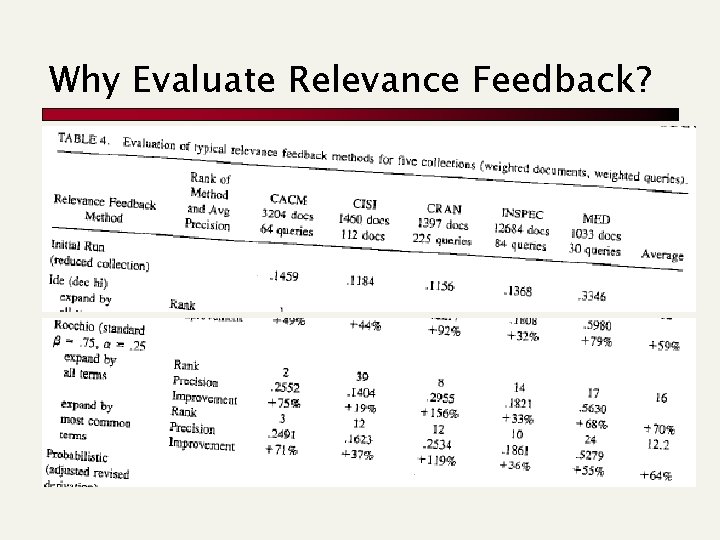

Why Evaluate Relevance Feedback?

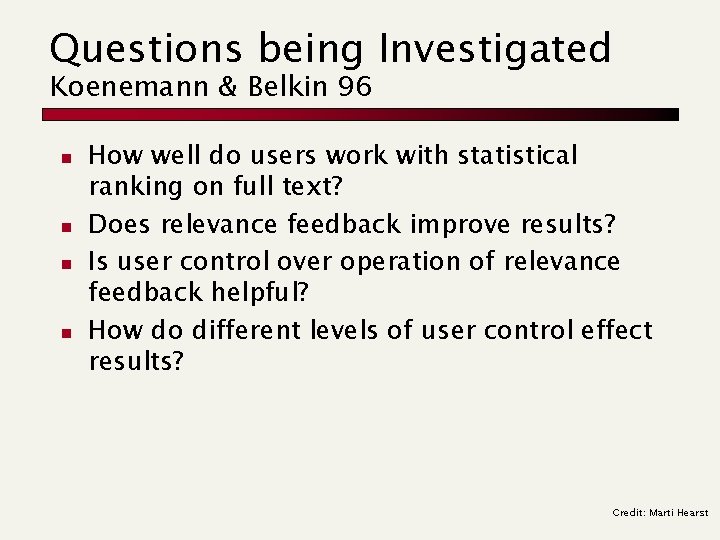

Questions being Investigated (Koenemann & Belkin 96)

- How well do users work with statistical ranking on full text?
- Does relevance feedback improve results?
- Is user control over operation of relevance feedback helpful?
- How do different levels of user control affect results?

Credit: Marti Hearst

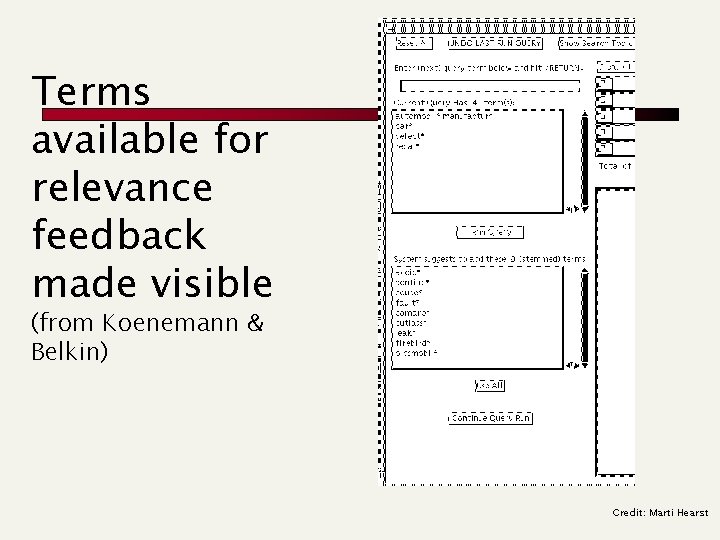

How much of the guts should the user see?

- Opaque (black box)
  - (like web search engines)
- Transparent
  - (see available terms after the r.f.)
- Penetrable
  - (see suggested terms before the r.f.)
- Which do you think worked best?

Credit: Marti Hearst

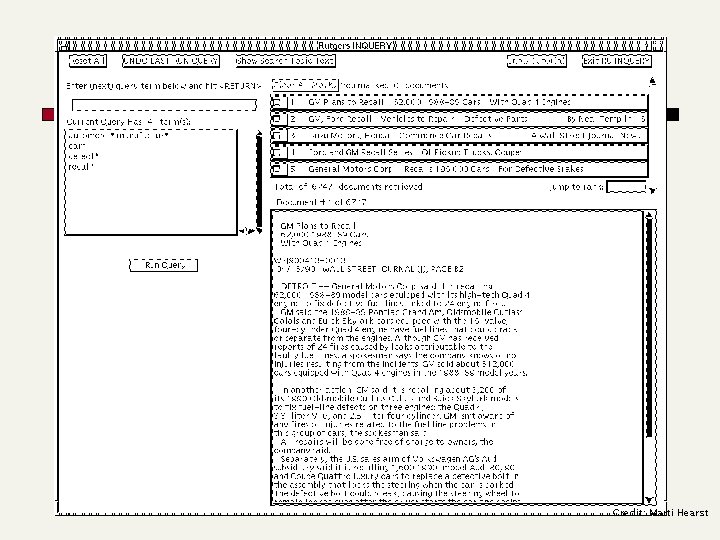

Credit: Marti Hearst

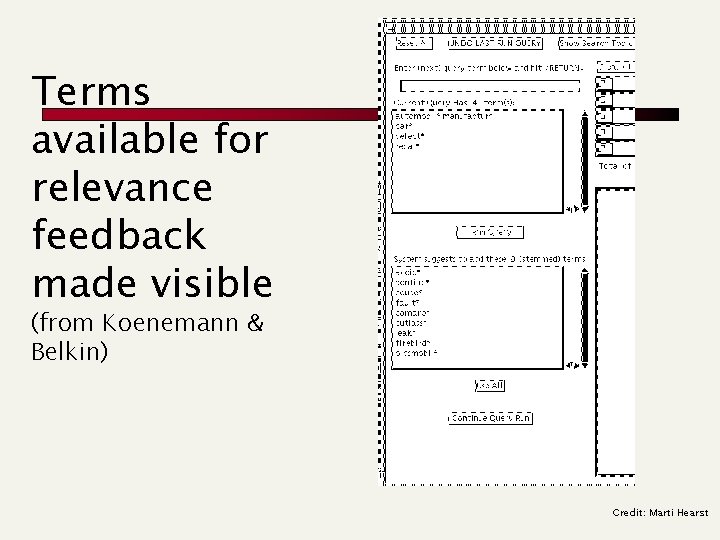

Terms available for relevance feedback made visible (from Koenemann & Belkin) Credit: Marti Hearst

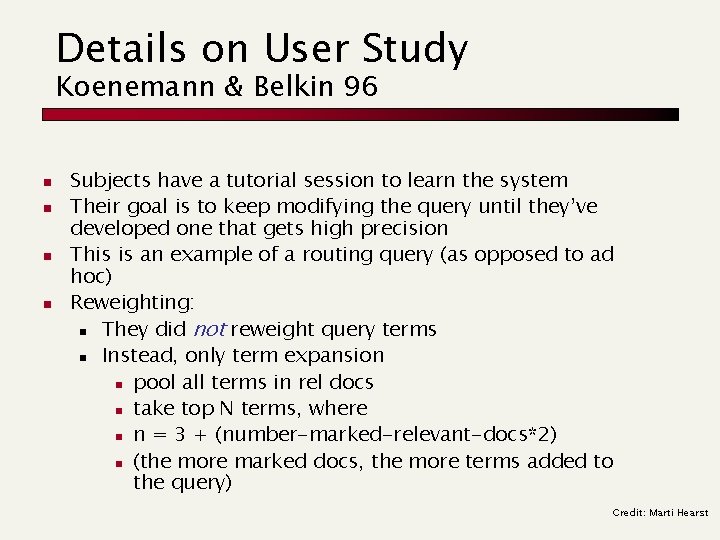

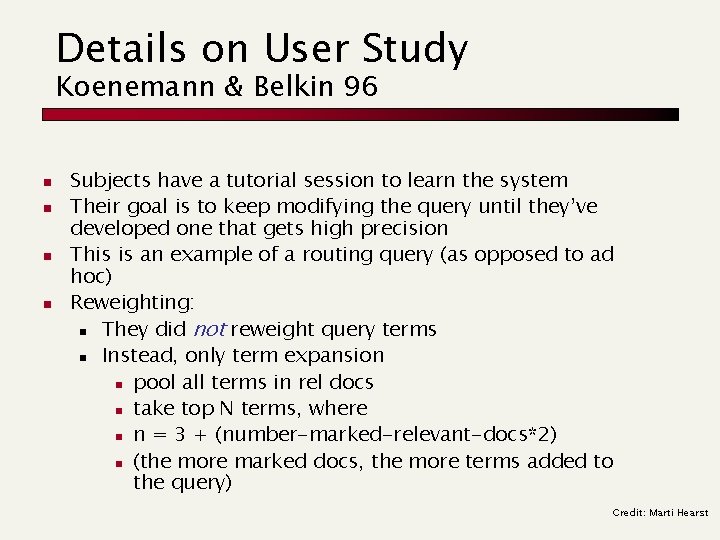

Details on User Study (Koenemann & Belkin 96)

- Subjects have a tutorial session to learn the system
- Their goal is to keep modifying the query until they’ve developed one that gets high precision
- This is an example of a routing query (as opposed to ad hoc)
- Reweighting:
  - They did not reweight query terms
  - Instead, only term expansion
    - pool all terms in rel docs
    - take top N terms, where N = 3 + (number-marked-relevant-docs * 2)
    - (the more marked docs, the more terms added to the query)

Credit: Marti Hearst
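The expansion rule can be sketched in a few lines. The slide does not say how the pooled terms are ranked, so the example below assumes plain term frequency across the marked documents; that ranking criterion, the function names, and the sample tokens are all illustrative assumptions, not the procedure used in the study.

```python
from collections import Counter
from typing import List, Sequence, Set


def num_expansion_terms(num_marked_relevant: int) -> int:
    """N = 3 + 2 * (number of documents marked relevant), as on the slide."""
    return 3 + 2 * num_marked_relevant


def expansion_terms(marked_docs: Sequence[Sequence[str]],
                    query_terms: Set[str]) -> List[str]:
    """Pool terms from the marked documents and keep the top N new ones.

    Assumption: terms are ranked by raw frequency in the pool.
    """
    pooled = Counter()
    for doc in marked_docs:
        pooled.update(term for term in doc if term not in query_terms)
    top_n = num_expansion_terms(len(marked_docs))
    return [term for term, _count in pooled.most_common(top_n)]


# One marked document -> N = 5 expansion terms.
doc = ["car", "recall", "brake", "brake", "safety", "defect", "ford", "tire"]
print(expansion_terms([doc], query_terms={"recall"}))
```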

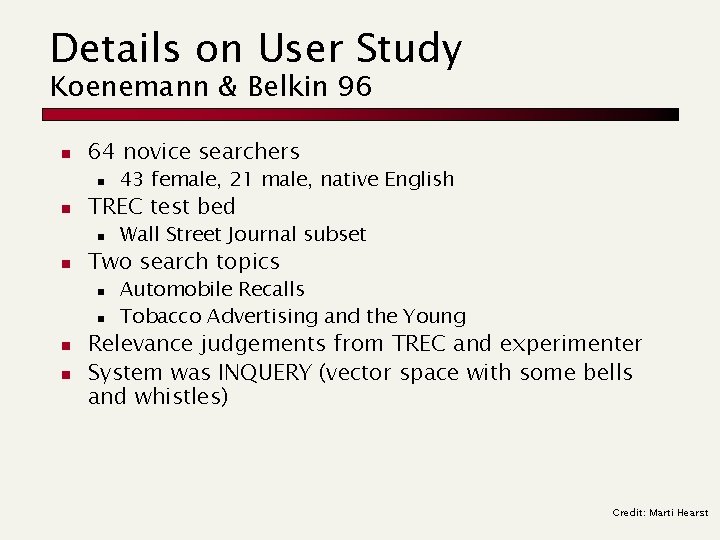

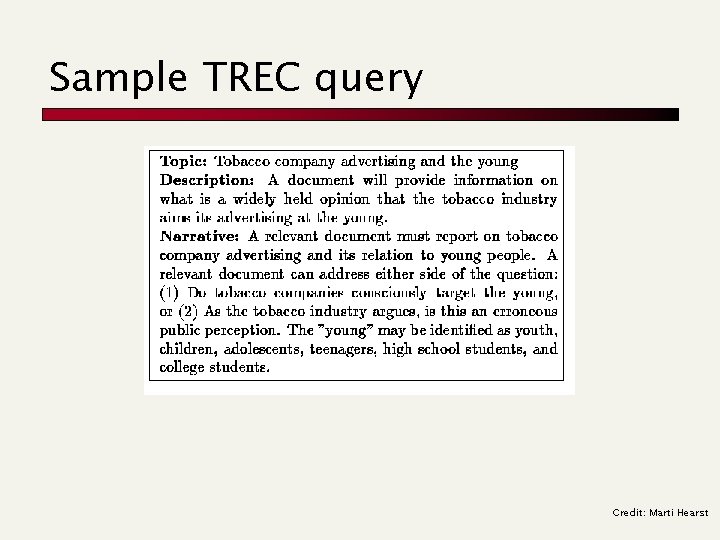

Details on User Study (Koenemann & Belkin 96)

- 64 novice searchers
  - 43 female, 21 male, native English
- TREC test bed
  - Wall Street Journal subset
- Two search topics
  - Automobile Recalls
  - Tobacco Advertising and the Young
- Relevance judgements from TREC and experimenter
- System was INQUERY (vector space with some bells and whistles)

Credit: Marti Hearst

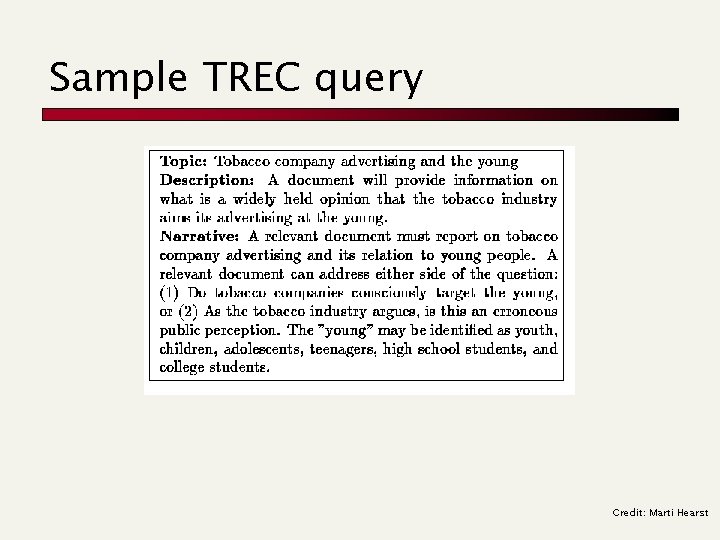

Sample TREC query Credit: Marti Hearst

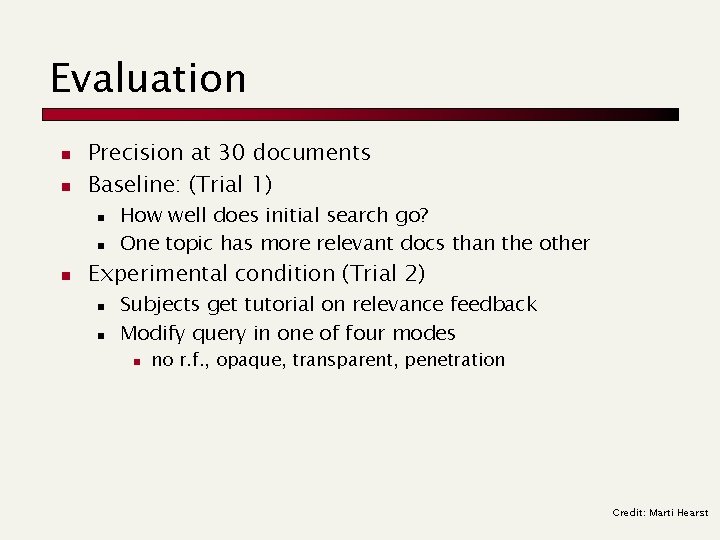

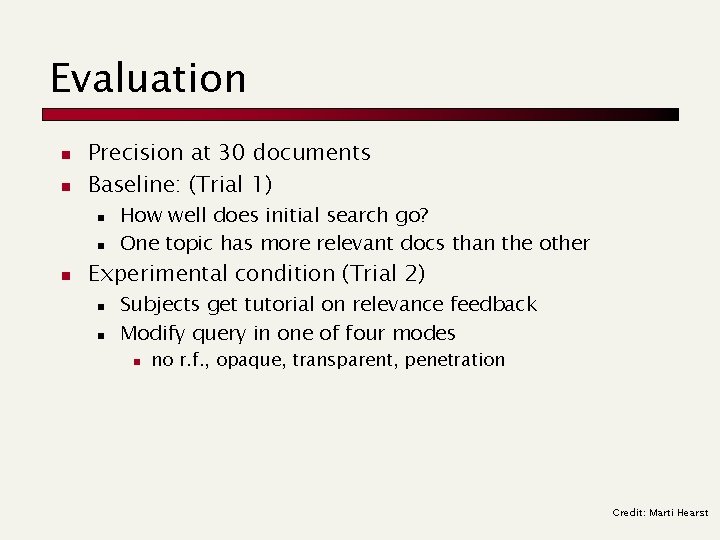

Evaluation

- Precision at 30 documents
- Baseline (Trial 1)
  - How well does initial search go?
  - One topic has more relevant docs than the other
- Experimental condition (Trial 2)
  - Subjects get tutorial on relevance feedback
  - Modify query in one of four modes
    - no r.f., opaque, transparent, penetrable

Credit: Marti Hearst

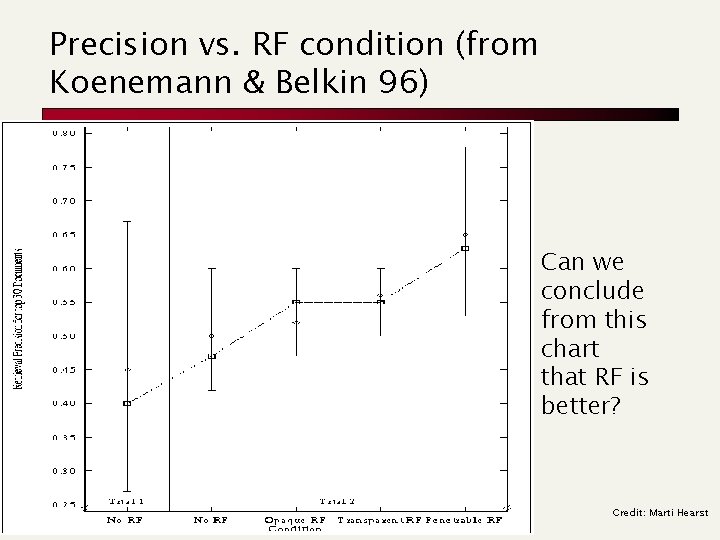

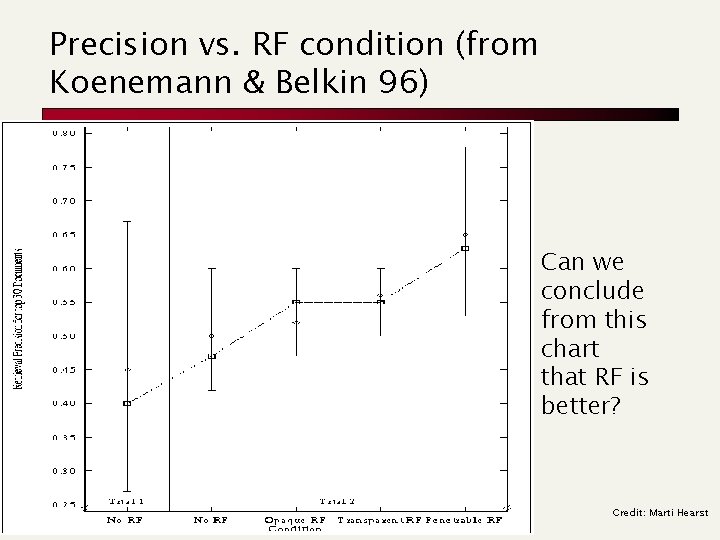

Precision vs. RF condition (from Koenemann & Belkin 96)

Can we conclude from this chart that RF is better?

Credit: Marti Hearst

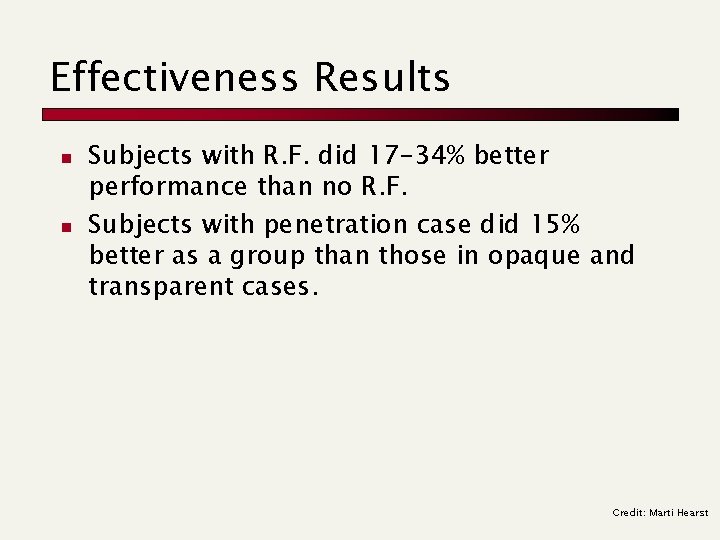

Effectiveness Results

- Subjects with R.F. performed 17-34% better than those with no R.F.
- Subjects in the penetrable case did 15% better as a group than those in the opaque and transparent cases.

Credit: Marti Hearst

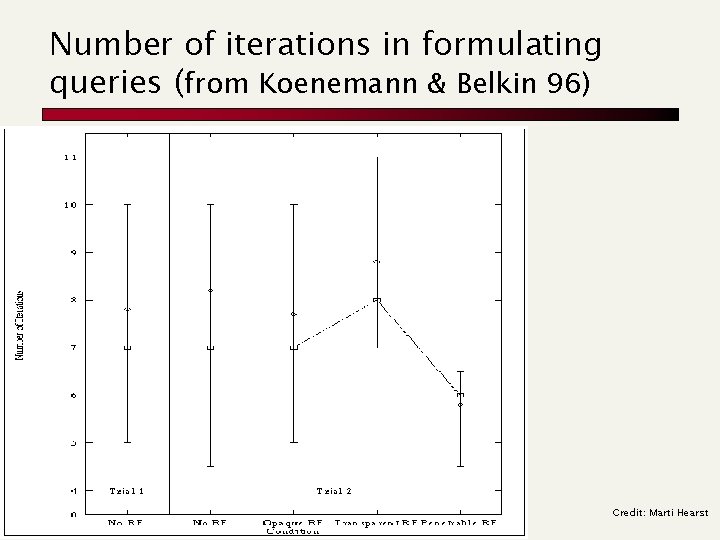

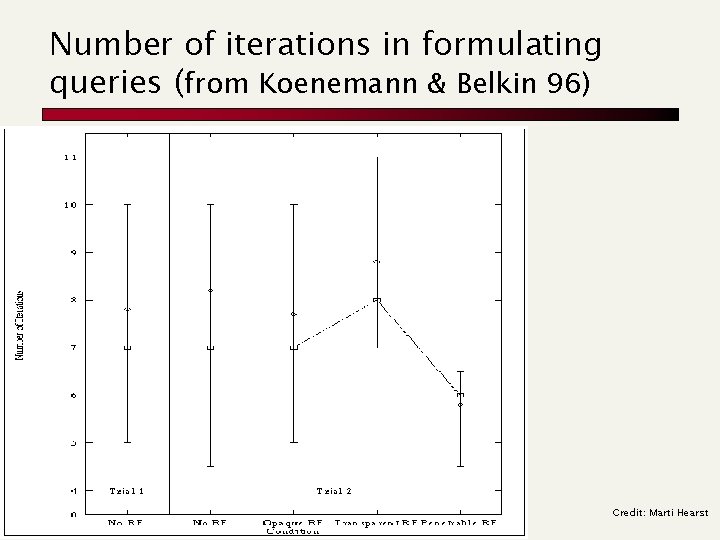

Number of iterations in formulating queries (from Koenemann & Belkin 96) Credit: Marti Hearst

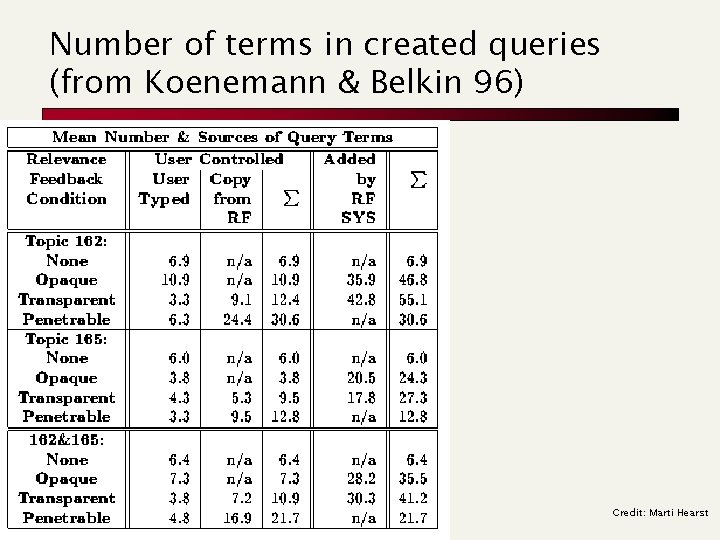

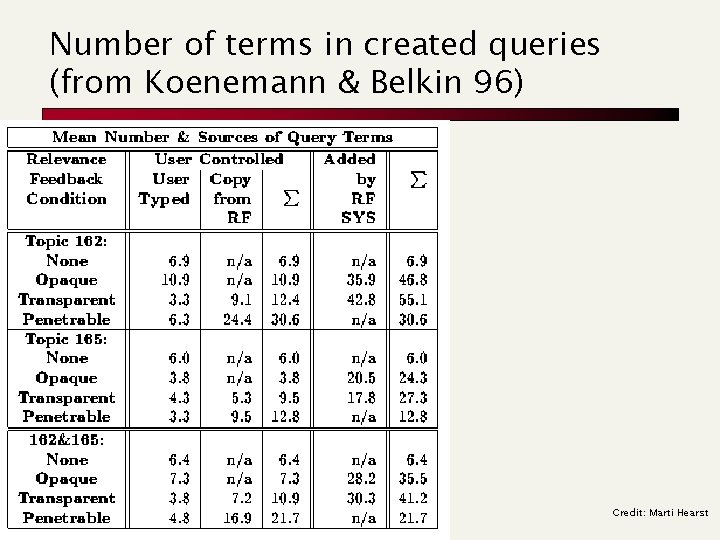

Number of terms in created queries (from Koenemann & Belkin 96) Credit: Marti Hearst

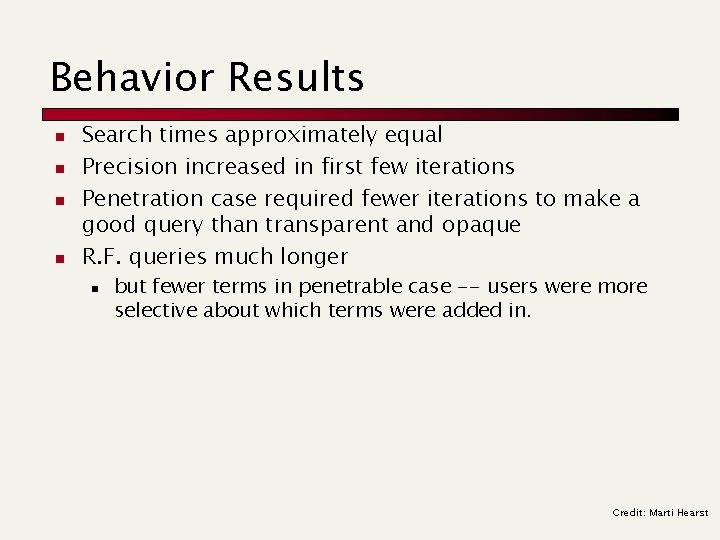

Behavior Results

- Search times approximately equal
- Precision increased in first few iterations
- Penetrable case required fewer iterations to make a good query than transparent and opaque
- R.F. queries much longer
  - but fewer terms in penetrable case: users were more selective about which terms were added in.

Credit: Marti Hearst

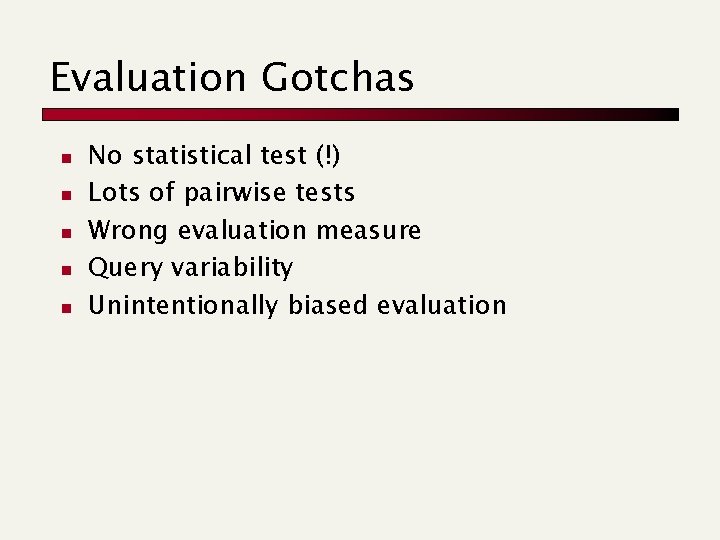

Evaluation Gotchas

- No statistical test (!)
- Lots of pairwise tests
- Wrong evaluation measure
- Query variability
- Unintentionally biased evaluation

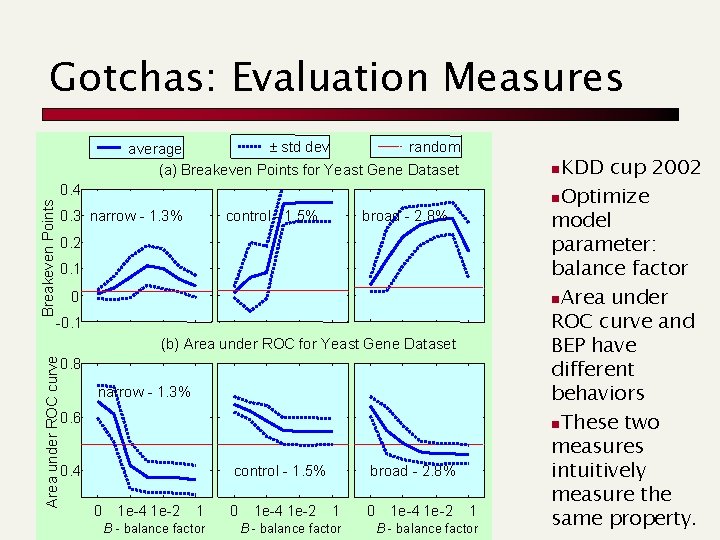

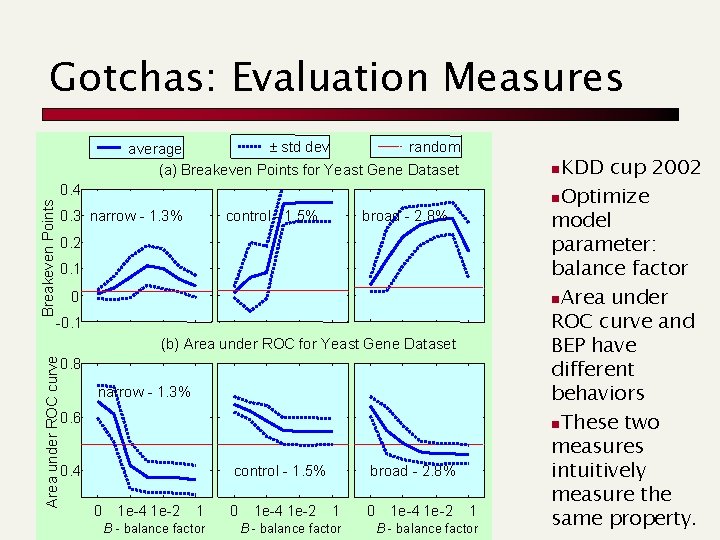

Gotchas: Evaluation Measures

[Figure, KDD Cup 2002, yeast gene dataset: (a) breakeven points and (b) area under the ROC curve, each plotted against the balance factor B (0, 1e-4, 1e-2, 1) for the narrow (1.3%), control (1.5%), and broad (2.8%) classes; legend: average ± std dev, random.]

- Optimize model parameter: balance factor
- Area under ROC curve and BEP have different behaviors
- These two measures intuitively measure the same property.

Gotchas: Query variability

- Eichmann et al. claim that for their approach to CLIR French is harder than Spanish.
- French average precision: 0.149
- Spanish average precision: 0.173

Gotchas: Query variability

| Queries with | > baseline | = baseline | < baseline |
| --- | --- | --- | --- |
| Spanish | 14 | 40 | 53 |
| French | 20 | 22 | 64 |

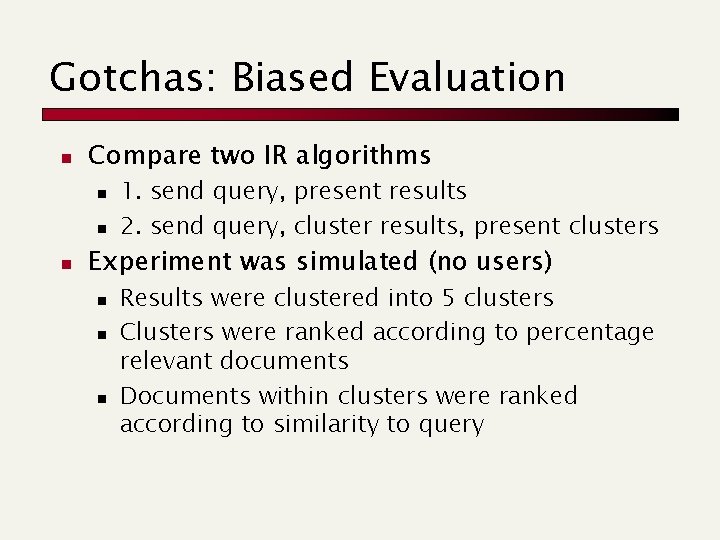

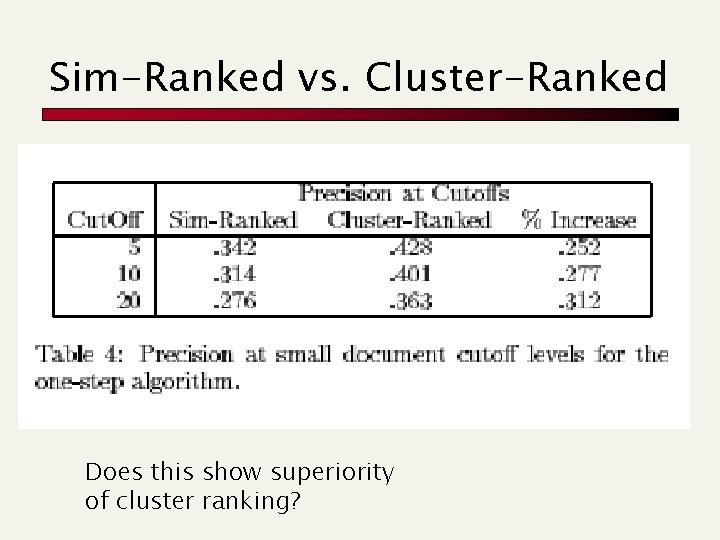

Gotchas: Biased Evaluation

- Compare two IR algorithms
  - 1. send query, present results
  - 2. send query, cluster results, present clusters
- Experiment was simulated (no users)
  - Results were clustered into 5 clusters
  - Clusters were ranked according to percentage of relevant documents
  - Documents within clusters were ranked according to similarity to query

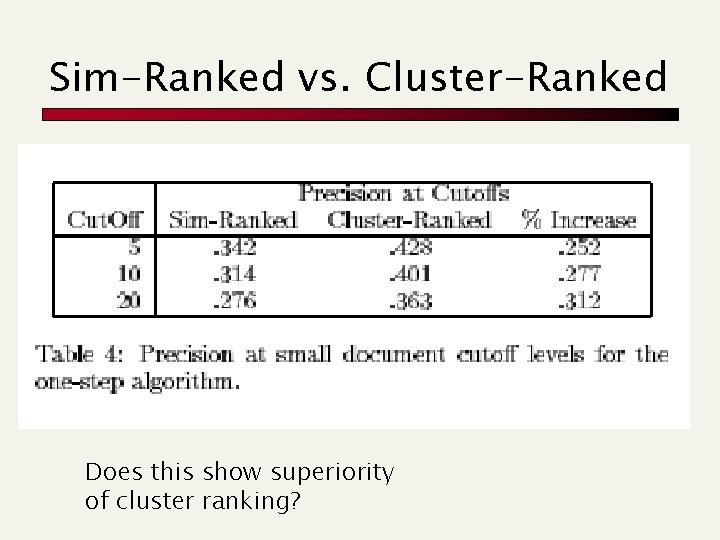

Sim-Ranked vs. Cluster-Ranked

Does this show superiority of cluster ranking?

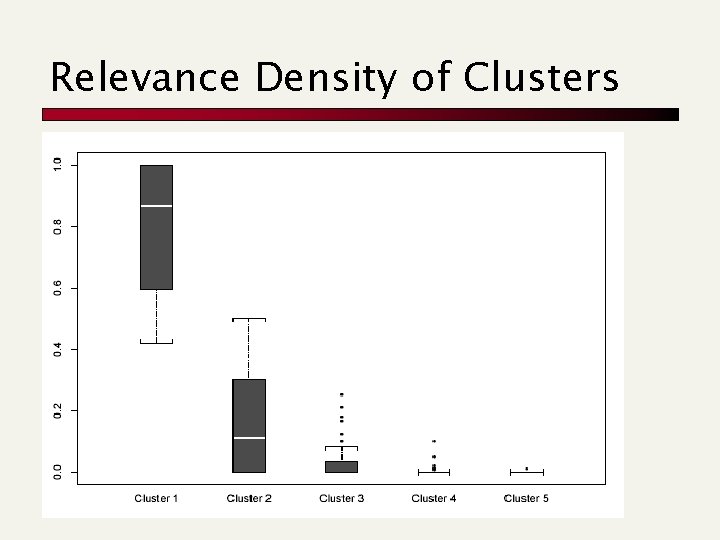

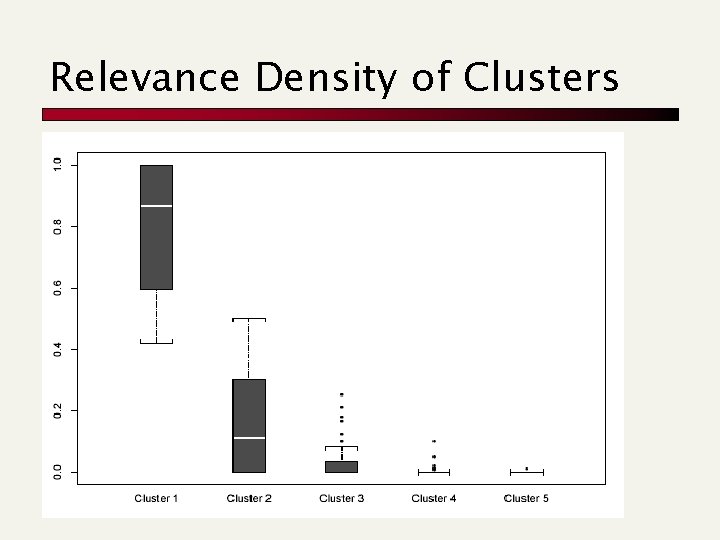

Relevance Density of Clusters

Summary

- Information Visualization: A good visualization is worth a thousand pictures.
- But to make information visualization work for text is hard.
- Evaluation Measures: F measure, break-even point, area under the ROC curve
- Evaluating interactive systems is harder than evaluating algorithms.
- Evaluation gotchas: Begin with the end in mind

Resources

- FOA 4.3
- MIR Ch. 10.8-10.10
- Ellen Voorhees, Variations in Relevance Judgments and the Measurement of Retrieval Effectiveness, ACM SIGIR 98
- Harman, D. K. Overview of the Third Text REtrieval Conference (TREC-3). In: Overview of The Third Text REtrieval Conference (TREC-3). Harman, D. K. (Ed.). NIST Special Publication 500-225, 1995, pp. 1-19.
- Jean Carletta, "Assessing agreement on classification tasks: the kappa statistic", Computational Linguistics 22(2): 249-254, 1996
- Marti A. Hearst, Jan O. Pedersen, Reexamining the Cluster Hypothesis: Scatter/Gather on Retrieval Results, Proceedings of SIGIR-96, 1996
- http://gim.unmc.edu/dxtests/ROC3.htm
- Pirolli, P. and Card, S. K. (1999). Information Foraging. Psychological Review 106(4): 643-675.
- Paul Over, TREC-6 Interactive Track Report, NIST, 1998.
- http://www.acm.org/sigchi/chi96/proceedings/papers/Koenemann/jk1_txt.htm
- http://otal.umd.edu/olive
- Jaime Carbonell, Jade Goldstein, The use of MMR, diversity-based reranking for reordering documents and producing summaries, Proceedings of the 21st annual international ACM SIGIR conference on Research and development in information retrieval, p. 335-336, August 24-28, 1998, Melbourne, Australia