CS 2750 Machine Learning Linear Models for Classification

- Slides: 47

CS 2750: Machine Learning. Linear Models for Classification. Prof. Adriana Kovashka, University of Pittsburgh, February 15, 2016

Plan for Today
- Regression for classification
- Fisher's linear discriminant
- Perceptron
- Logistic regression
- Multi-way classification
- Generative vs discriminative models

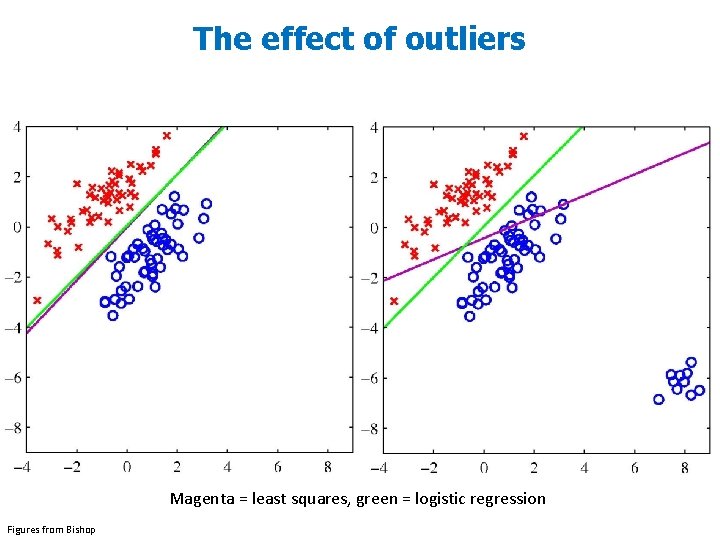

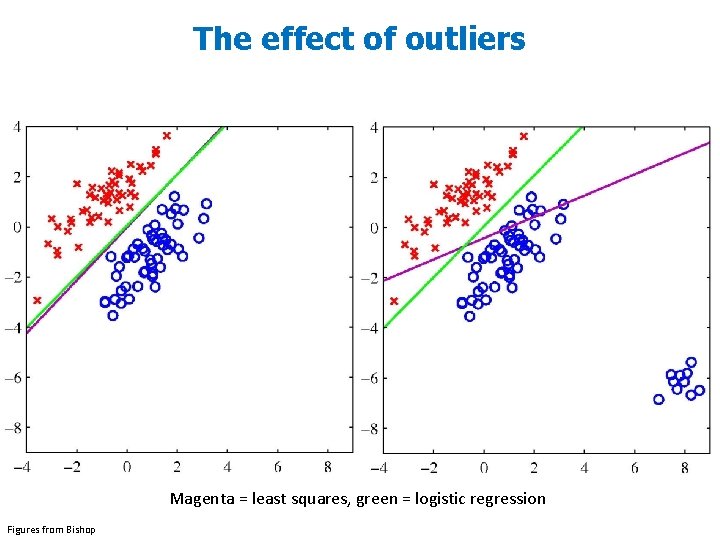

The effect of outliers: magenta = least squares, green = logistic regression

Figures from Bishop
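Using regression for classification means treating the class labels as real-valued targets and fitting a linear function by least squares. The sketch below is a minimal pure-Python illustration, assuming binary targets t ∈ {−1, +1}, a prepended bias feature, and classification by the sign of the fitted function; the helper names `solve`, `fit_least_squares`, and `predict_sign` are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_least_squares(points, targets):
    """Minimize sum_n (w . [1, x_n] - t_n)^2 via the normal equations X^T X w = X^T t."""
    x = [[1.0] + list(p) for p in points]  # bias feature first
    d = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(d)] for i in range(d)]
    xtt = [sum(row[i] * t for row, t in zip(x, targets)) for i in range(d)]
    return solve(xtx, xtt)


def predict_sign(w, point):
    """Assign +1 if the fitted linear function is >= 0, else -1."""
    score = w[0] + sum(wi * xi for wi, xi in zip(w[1:], point))
    return 1 if score >= 0 else -1


pts = [(0, 0), (1, 0), (0, 1), (3, 3), (4, 3), (3, 4)]
ts = [-1, -1, -1, 1, 1, 1]
w = fit_least_squares(pts, ts)
print([predict_sign(w, p) for p in pts])  # [-1, -1, -1, 1, 1, 1]
```

Because the squared error also penalizes points that lie far on the *correct* side of the boundary, a few distant points can drag the fitted boundary toward them, which is the outlier effect shown in the figure.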

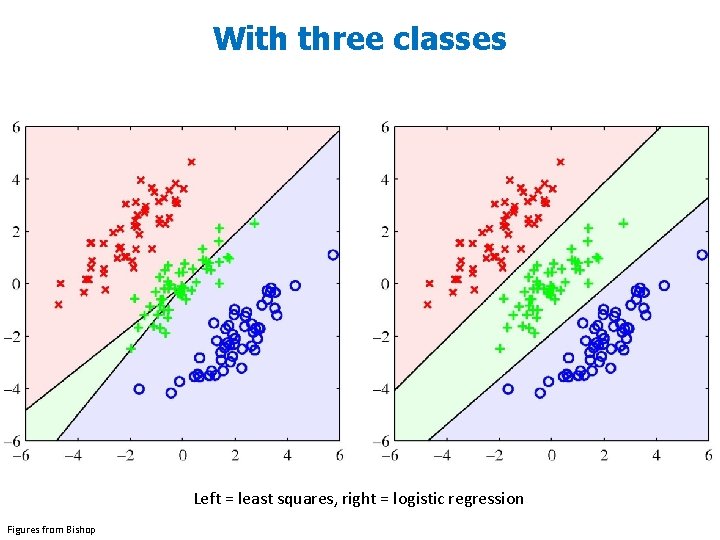

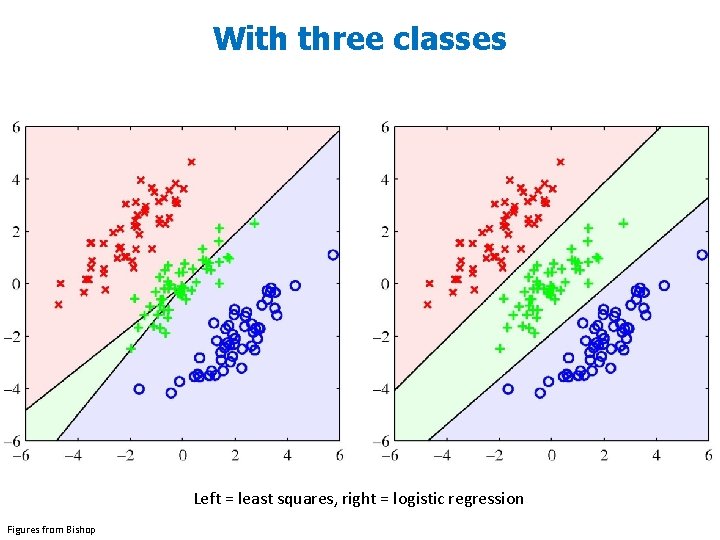

With three classes: left = least squares, right = logistic regression

Figures from Bishop

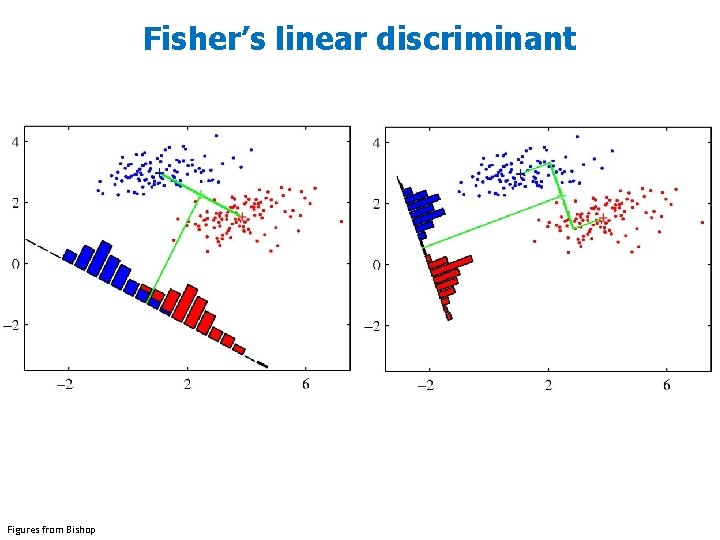

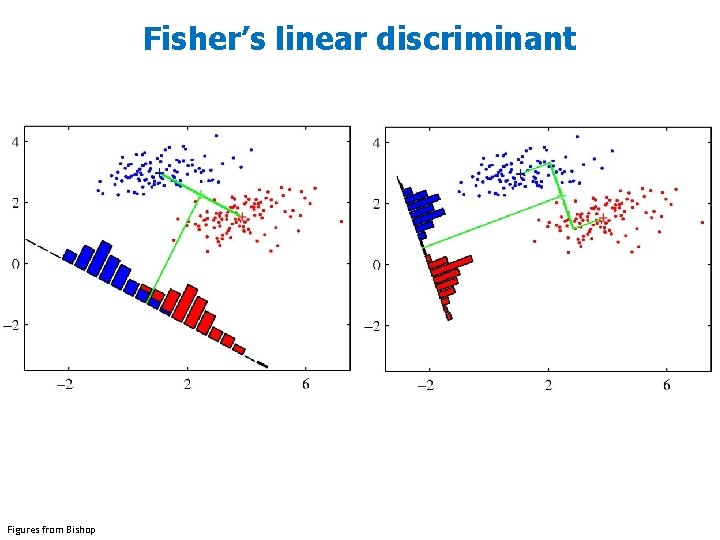

Fisher's linear discriminant

Figures from Bishop
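Fisher's linear discriminant projects the data onto a direction that separates the class means while keeping each class compact; the standard closed-form solution is w ∝ S_W⁻¹(m₂ − m₁), where m₁, m₂ are the class means and S_W is the within-class scatter matrix. The sketch below is a minimal pure-Python version restricted to 2-D inputs (so the 2×2 inverse can be written out by hand); the function names are hypothetical, and it assumes S_W is invertible.

```python
def mean2(points):
    """Mean of a list of 2-D points."""
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def scatter2(points, m):
    """Scatter matrix sum_n (x_n - m)(x_n - m)^T for 2-D points."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = (p[0] - m[0], p[1] - m[1])
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class1, class2):
    """Unit vector along S_W^{-1} (m2 - m1) for two classes of 2-D points."""
    m1, m2 = mean2(class1), mean2(class2)
    s1, s2 = scatter2(class1, m1), scatter2(class2, m2)
    sw = [[s1[i][j] + s2[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]  # assumes S_W is invertible
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = (m2[0] - m1[0], m2[1] - m1[1])
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    norm = (w[0] ** 2 + w[1] ** 2) ** 0.5
    return [w[0] / norm, w[1] / norm]


# Two classes that are spread out along x but separated along y.
c1 = [(0, 0), (4, 0), (0, 1), (4, 1)]
c2 = [(1, 2), (5, 2), (1, 3), (5, 3)]
w = fisher_direction(c1, c2)
```

Here the means differ by (1, 2), but because both classes are widely spread along x, the Fisher direction turns almost entirely toward the y axis, where the classes do not overlap after projection. Note that Fisher's criterion only supplies the direction; a threshold on the projected value wᵀx still has to be chosen to classify.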


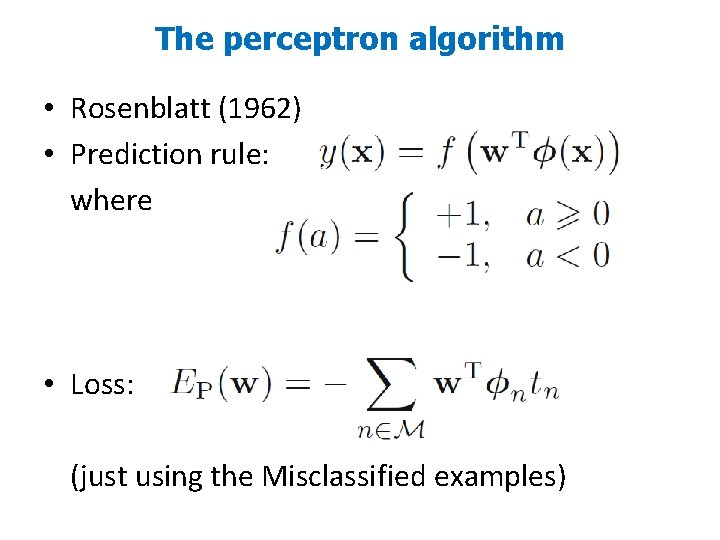

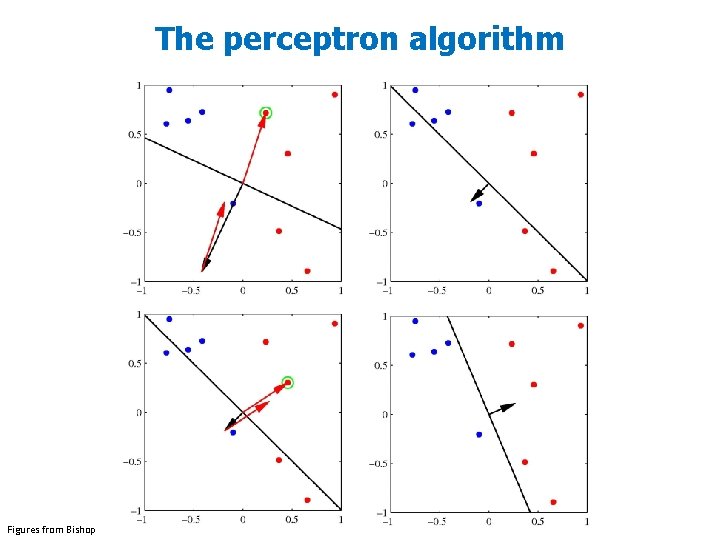

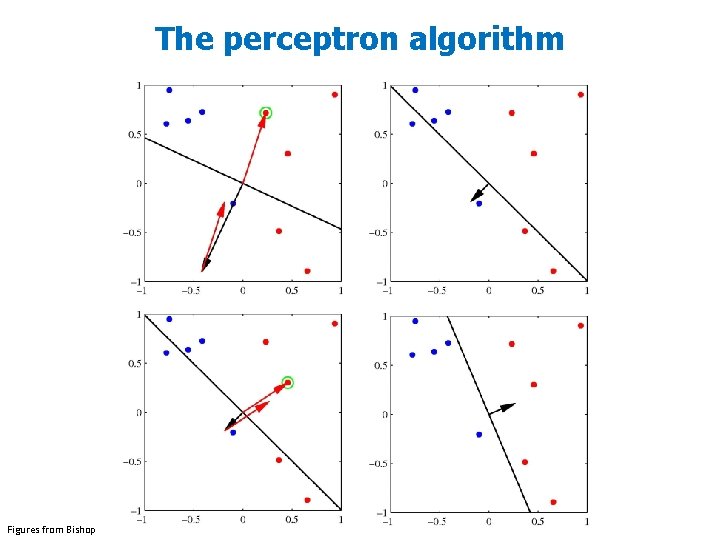

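The perceptron algorithm
- Rosenblatt (1962)
- Prediction rule: $y(\mathbf{x}) = f(\mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x}))$, where $f(a) = +1$ if $a \ge 0$ and $f(a) = -1$ otherwise, with targets $t_n \in \{-1, +1\}$
- Loss (using just the set $\mathcal{M}$ of misclassified examples): $E_P(\mathbf{w}) = -\sum_{n \in \mathcal{M}} \mathbf{w}^\top \boldsymbol{\phi}_n t_n$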

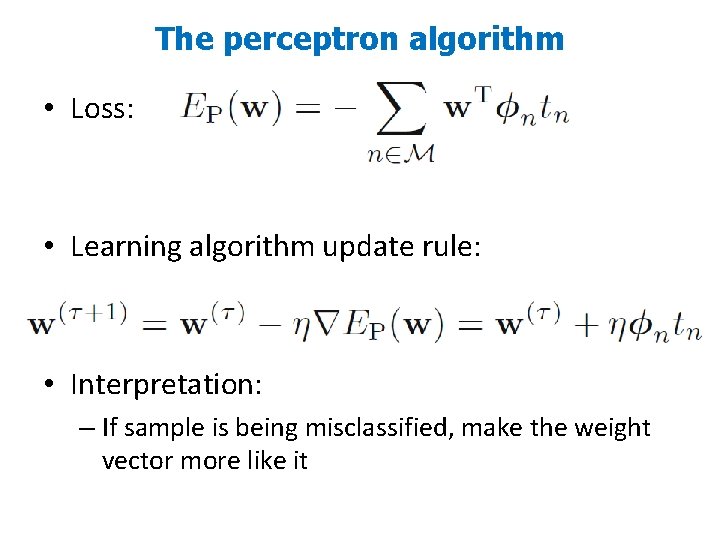

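The perceptron algorithm
- Loss, summed over the set $\mathcal{M}$ of misclassified examples: $E_P(\mathbf{w}) = -\sum_{n \in \mathcal{M}} \mathbf{w}^\top \boldsymbol{\phi}_n t_n$
- Learning algorithm update rule (stochastic gradient descent, one misclassified sample $n$ at a time, learning rate $\eta$): $\mathbf{w}^{(\tau+1)} = \mathbf{w}^{(\tau)} - \eta \nabla E_P(\mathbf{w}) = \mathbf{w}^{(\tau)} + \eta \boldsymbol{\phi}_n t_n$
- Interpretation:
  - If a sample is misclassified, make the weight vector more like it (when $t_n = +1$) or less like it (when $t_n = -1$)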
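The update rule w ← w + η φₙ tₙ can be sketched in a few lines. This is a minimal pure-Python illustration, assuming targets t ∈ {−1, +1}, φ(x) = [1, x] (a bias feature followed by the raw inputs), and that a point lying exactly on the boundary (wᵀφ tₙ = 0) counts as misclassified so that training can start from w = 0; the names `perceptron` and `predict` and the `max_epochs` cap are hypothetical choices.

```python
def perceptron(points, targets, eta=1.0, max_epochs=100):
    """Cycle through the data, applying w <- w + eta * phi_n * t_n to each mistake."""
    w = [0.0] * (len(points[0]) + 1)  # bias weight first
    for _ in range(max_epochs):
        mistakes = 0
        for x, t in zip(points, targets):
            phi = [1.0] + list(x)
            a = sum(wi * pi for wi, pi in zip(w, phi))
            if a * t <= 0:  # misclassified, or exactly on the boundary
                w = [wi + eta * pi * t for wi, pi in zip(w, phi)]
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: stop
            break
    return w


def predict(w, x):
    """Prediction rule f(w^T phi(x)): +1 if the activation is >= 0, else -1."""
    a = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1 if a >= 0 else -1


pts = [(0, 0), (1, 0), (0, 1), (3, 3), (4, 3), (3, 4)]
ts = [-1, -1, -1, 1, 1, 1]
w = perceptron(pts, ts)
print(w)  # [-3.0, 2.0, 2.0]
```

On linearly separable data like this, the perceptron convergence theorem guarantees that a pass with no mistakes is eventually reached; on non-separable data the updates never settle, which is why the sketch caps the number of epochs.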

The perceptron algorithm

Figures from Bishop


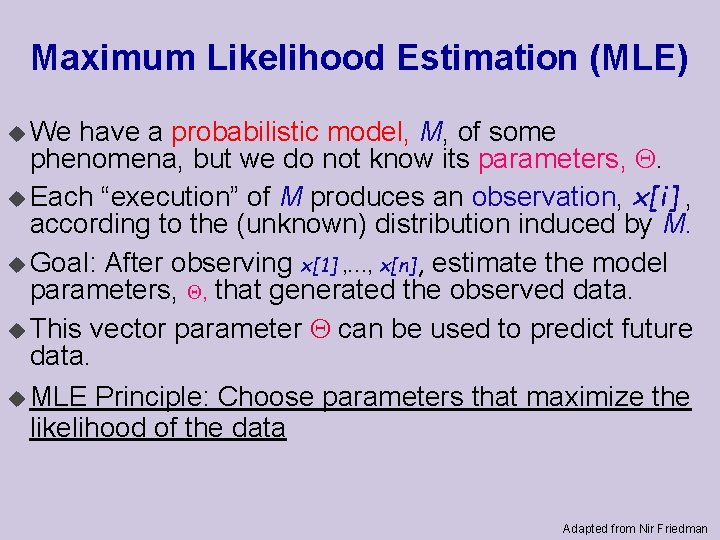

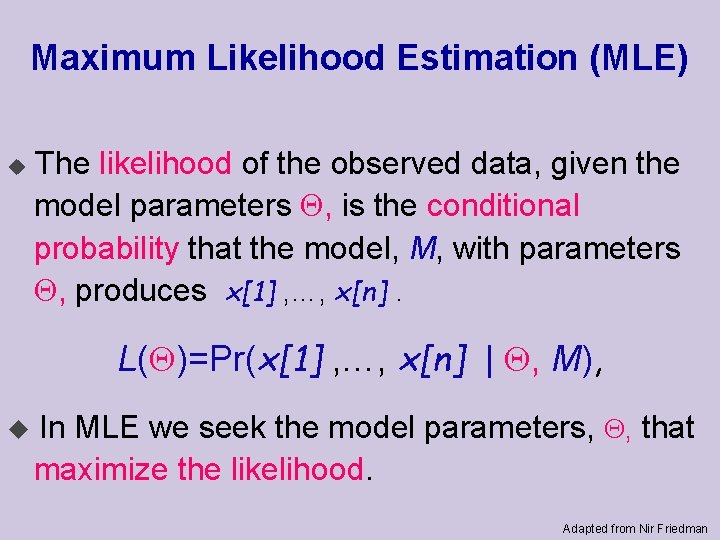

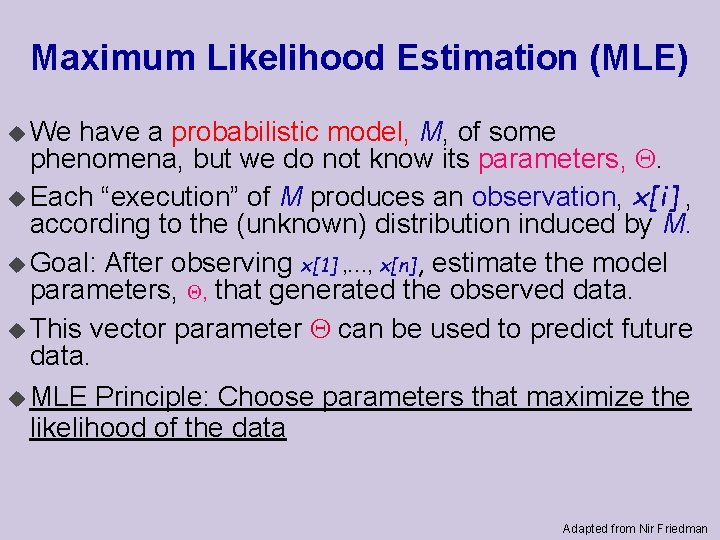

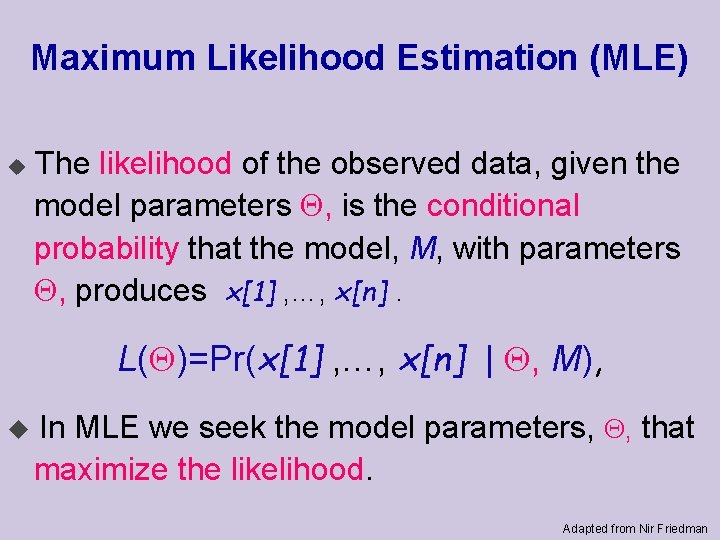

Maximum Likelihood Estimation (MLE)
- We have a probabilistic model, M, of some phenomenon, but we do not know its parameters, $\theta$.
- Each "execution" of M produces an observation, x[i], according to the (unknown) distribution induced by M.
- Goal: after observing x[1], …, x[n], estimate the model parameters, $\theta$, that generated the observed data.
- This parameter vector can then be used to predict future data.
- MLE principle: choose the parameters that maximize the likelihood of the data.

Adapted from Nir Friedman

Maximum Likelihood Estimation (MLE)
- The likelihood of the observed data, given the model parameters $\theta$, is the conditional probability that the model M with parameters $\theta$ produces x[1], …, x[n]: $L(\theta) = \Pr(x[1], \ldots, x[n] \mid \theta, M)$
- In MLE we seek the model parameters $\theta$ that maximize the likelihood: $\hat{\theta} = \arg\max_\theta L(\theta)$

Adapted from Nir Friedman
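As one illustration of the MLE principle applied to classification, logistic regression can be fitted by maximizing the log-likelihood of the training labels. The sketch below assumes targets t ∈ {0, 1}, the model p(t = 1 | x) = σ(wᵀφ(x)) with φ(x) = [1, x], and plain batch gradient ascent using the gradient Σₙ (tₙ − yₙ) φₙ; the learning rate, step count, and function names are hypothetical choices, not a prescribed training procedure.

```python
import math


def sigmoid(a):
    """Logistic function 1 / (1 + exp(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def log_likelihood(w, points, targets):
    """sum_n t_n ln y_n + (1 - t_n) ln(1 - y_n), with y_n = sigmoid(w . [1, x_n])."""
    ll = 0.0
    for x, t in zip(points, targets):
        y = sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))
        ll += t * math.log(y) + (1 - t) * math.log(1 - y)
    return ll


def fit_logistic(points, targets, lr=0.05, steps=2000):
    """Batch gradient ascent on the log-likelihood; gradient is sum_n (t_n - y_n) phi_n."""
    w = [0.0] * (len(points[0]) + 1)  # bias weight first
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, t in zip(points, targets):
            phi = [1.0] + list(x)
            y = sigmoid(sum(wi * pi for wi, pi in zip(w, phi)))
            for i, pi in enumerate(phi):
                grad[i] += (t - y) * pi
        w = [wi + lr * gi for wi, gi in zip(w, grad)]
    return w


# Overlapping 1-D classes, so the maximum-likelihood weights stay finite.
xs = [(0.0,), (1.0,), (2.0,), (3.0,), (4.0,), (5.0,)]
ts = [0, 0, 1, 0, 1, 1]
w = fit_logistic(xs, ts)
```

The example data deliberately overlap: on linearly separable data the likelihood keeps increasing as ‖w‖ grows, so the MLE does not exist and the weights would diverge, which is one form of the overconfidence issue raised later.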

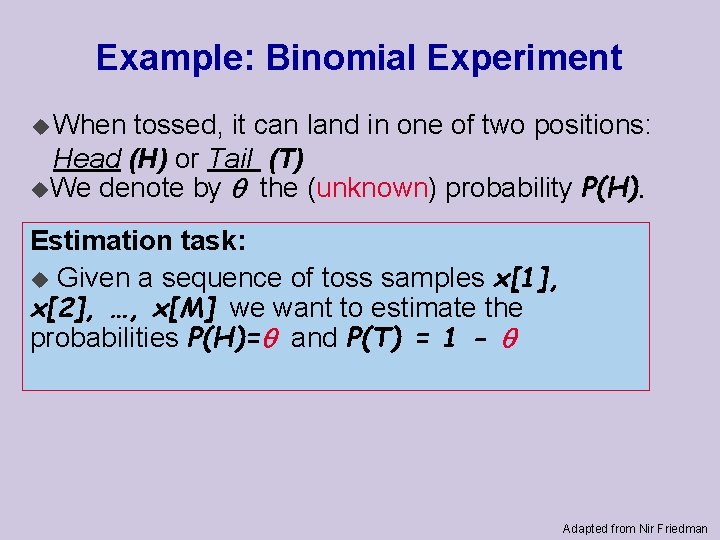

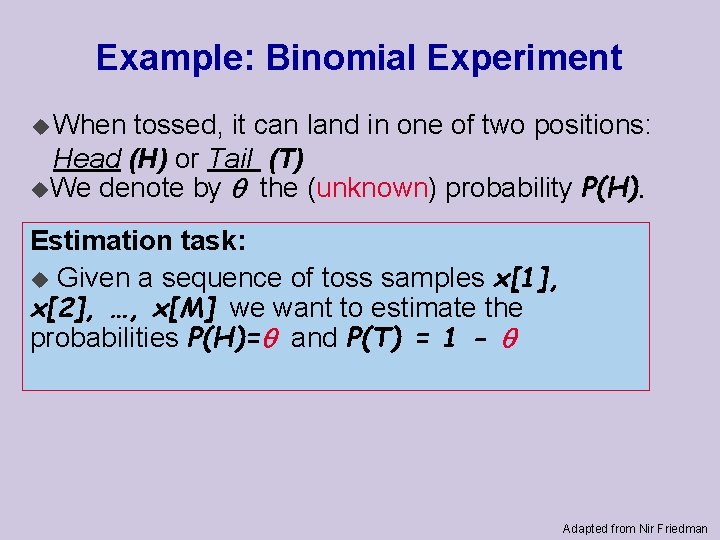

Example: Binomial Experiment
- When tossed, it can land in one of two positions: Head (H) or Tail (T).
- We denote by $\theta$ the (unknown) probability P(H).

Estimation task:
- Given a sequence of toss samples x[1], x[2], …, x[M], we want to estimate the probabilities P(H) = $\theta$ and P(T) = $1 - \theta$.

Adapted from Nir Friedman

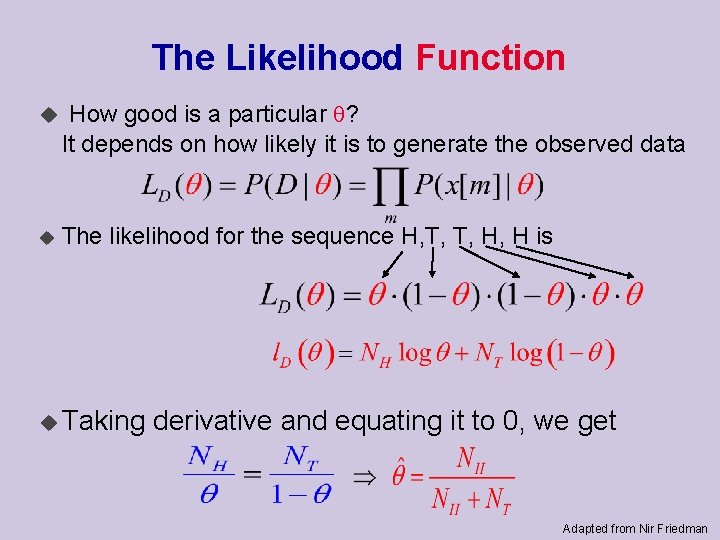

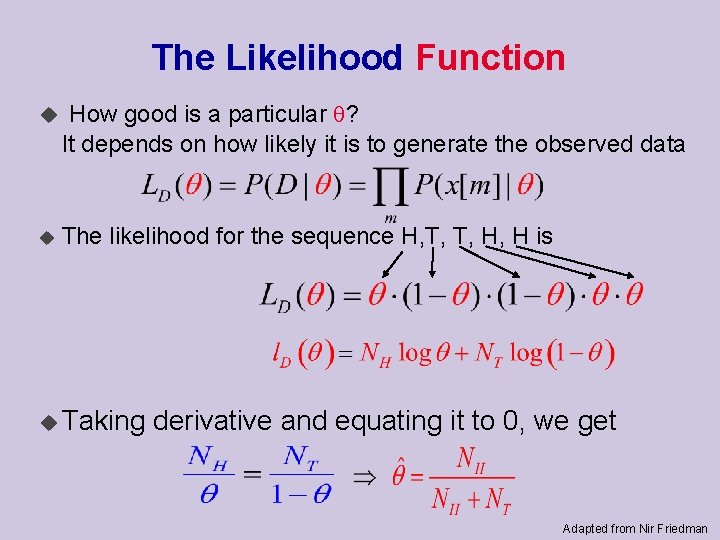

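The Likelihood Function
- How good is a particular $\theta$? It depends on how likely it is to generate the observed data.
- The likelihood for the sequence H, T, T, H, H is $L(\theta) = \theta \cdot (1-\theta) \cdot (1-\theta) \cdot \theta \cdot \theta = \theta^3 (1-\theta)^2$
- Taking the derivative and setting it to 0, we get $\frac{dL}{d\theta} = \theta^2 (1-\theta)(3 - 5\theta) = 0$, whose interior solution is $\hat{\theta} = 3/5$, the fraction of heads in the sequence.

Adapted from Nir Friedman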
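The closed form generalizes to θ̂ = N_H / (N_H + N_T), the fraction of heads. The sketch below computes it exactly with `fractions.Fraction` and cross-checks it against a brute-force grid search over the likelihood, assuming independent tosses encoded as the strings "H" and "T"; the function names and the grid resolution are hypothetical.

```python
from fractions import Fraction


def mle_heads(tosses):
    """MLE of theta = P(H): the fraction of heads, N_H / (N_H + N_T)."""
    n_heads = sum(1 for x in tosses if x == "H")
    return Fraction(n_heads, len(tosses))


def likelihood(theta, tosses):
    """L(theta) for independent tosses: theta per head, (1 - theta) per tail."""
    result = 1.0
    for x in tosses:
        result *= theta if x == "H" else 1 - theta
    return result


seq = ["H", "T", "T", "H", "H"]
theta_hat = mle_heads(seq)
print(theta_hat)  # 3/5

# Sanity check: no theta on a fine grid gives a higher likelihood.
grid = [i / 1000 for i in range(1001)]
best = max(grid, key=lambda th: likelihood(th, seq))
print(best)  # 0.6
```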

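Issues
- Overconfidence: with few samples the MLE can be extreme (e.g., three heads in three tosses gives $\hat{\theta} = 1$, declaring tails impossible)
- Better: Maximum-a-posteriori (MAP) estimation, which also takes a prior over $\theta$ into account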
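One common way to do MAP for the coin example (an assumption here; the slide does not name a prior) is to put a Beta(α, β) prior on θ. The posterior is then Beta(N_H + α, N_T + β), and its mode gives θ_MAP = (N_H + α − 1) / (N_H + N_T + α + β − 2). The sketch below compares this with the MLE for the three-heads case; the default α = β = 2 is a hypothetical choice.

```python
from fractions import Fraction


def mle(n_heads, n_tails):
    """Maximum likelihood estimate of P(H)."""
    return Fraction(n_heads, n_heads + n_tails)


def map_estimate(n_heads, n_tails, alpha=2, beta=2):
    """Mode of the Beta(n_heads + alpha, n_tails + beta) posterior.

    Assumes alpha, beta >= 1; alpha = beta = 1 (a uniform prior) recovers the MLE.
    """
    return Fraction(n_heads + alpha - 1, n_heads + n_tails + alpha + beta - 2)


print(mle(3, 0))           # 1
print(map_estimate(3, 0))  # 4/5
```

The prior acts like α − 1 extra heads and β − 1 extra tails observed in advance, which pulls the estimate away from the extremes when data are scarce; as the number of tosses grows, the MAP and ML estimates converge.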


Multi-class problems
- Instead of just two classes, we now have K classes
- E.g., predict which movie genre a viewer likes best
- Possible answers: action, drama, indie, thriller, etc.
- Two approaches:
  - One-vs-all
  - One-vs-one

Multi-class problems
- One-vs-all (a.k.a. one-vs-others)
  - Train K classifiers
  - In classifier i, positives = data from class i, negatives = data from all classes other than i
  - The class with the most confident prediction wins
  - Example:
    - You have 4 classes, so train 4 classifiers
    - 1 vs others: score 3.5
    - 2 vs others: score 6.2
    - 3 vs others: score 1.4
    - 4 vs others: score 5.5
    - Final prediction: class 2
  - Issues?
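The one-vs-all decision step is an argmax over the per-class scores. This minimal sketch assumes the K classifiers have already been trained and that their scores arrive as a dictionary from class label to score (a hypothetical interface), and reproduces the example above.

```python
def one_vs_all_predict(scores):
    """scores maps each class label to its 'class vs others' score; return the argmax label."""
    return max(scores, key=scores.get)


scores = {1: 3.5, 2: 6.2, 3: 1.4, 4: 5.5}
print(one_vs_all_predict(scores))  # 2
```

Taking the argmax assumes that scores from independently trained classifiers are on a comparable scale, which nothing in the training procedure guarantees; in addition, each classifier sees many more negatives than positives.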

Multi-class problems
- One-vs-one (a.k.a. all-vs-all)
  - Train K(K-1)/2 binary classifiers (all pairs of classes)
  - They all vote for the label
  - Example:
    - You have 4 classes, so train 6 classifiers: 1 vs 2, 1 vs 3, 1 vs 4, 2 vs 3, 2 vs 4, 3 vs 4
    - Votes: 1, 1, 4, 2, 4, 4
    - Final prediction: class 4
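The voting step can be sketched with `itertools.combinations` (which enumerates the K(K−1)/2 pairs in the same order as the example) and `collections.Counter`. The sketch assumes each pairwise classifier has already produced its winning label; the function name is hypothetical.

```python
from collections import Counter
from itertools import combinations


def one_vs_one_predict(pair_winners):
    """pair_winners maps each class pair (i, j) to the label its classifier voted for."""
    votes = Counter(pair_winners.values())
    return votes.most_common(1)[0][0]  # ties go to the label counted first


classes = [1, 2, 3, 4]
pairs = list(combinations(classes, 2))  # (1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4)
winners = dict(zip(pairs, [1, 1, 4, 2, 4, 4]))
print(one_vs_one_predict(winners))  # 4
```

Ties between labels with equal vote counts are broken arbitrarily here (by first occurrence), which is one of the ambiguities of the voting scheme.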

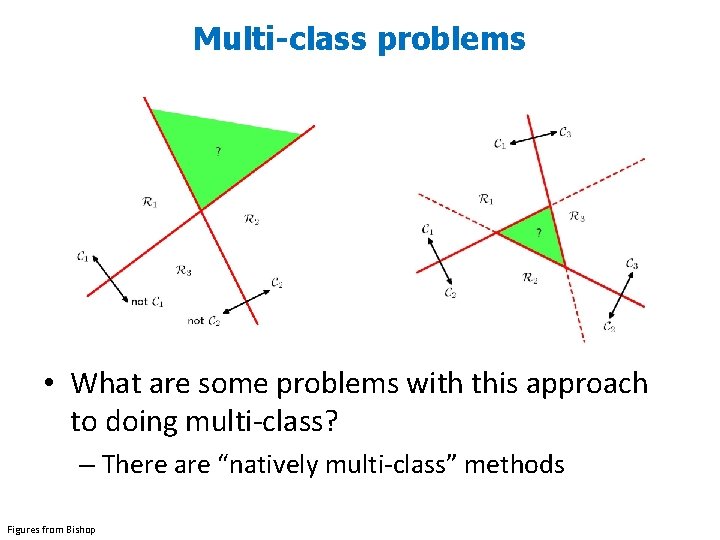

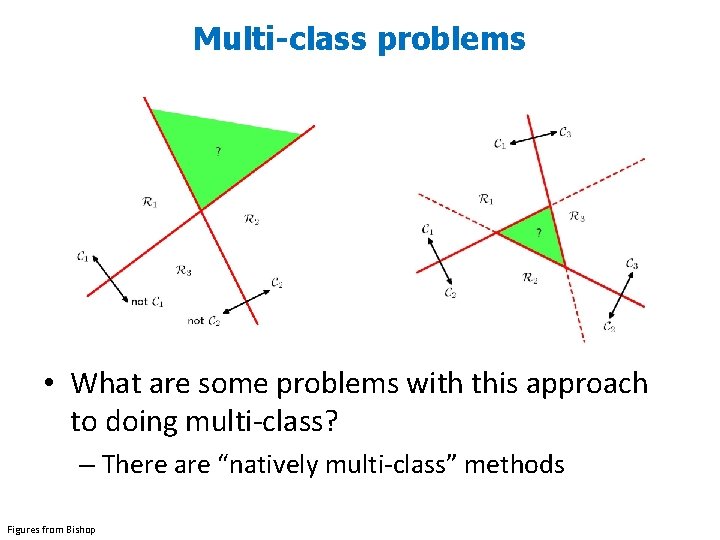

Multi-class problems
- What are some problems with these approaches to multi-class classification?
  - There are "natively multi-class" methods

Figures from Bishop


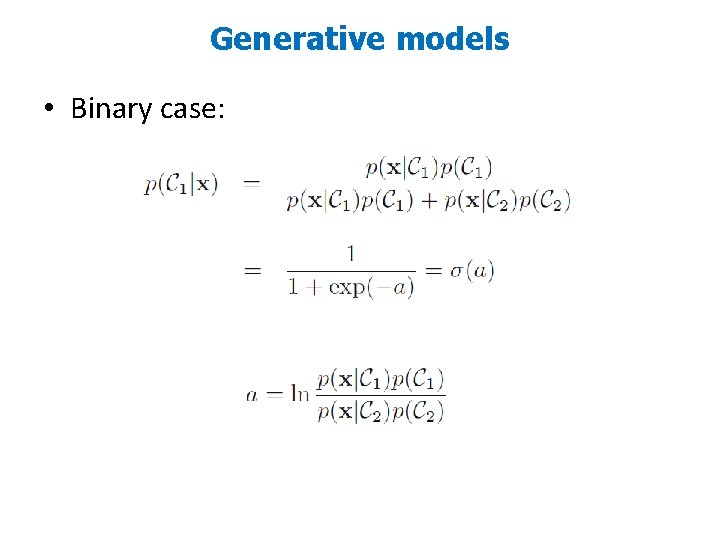

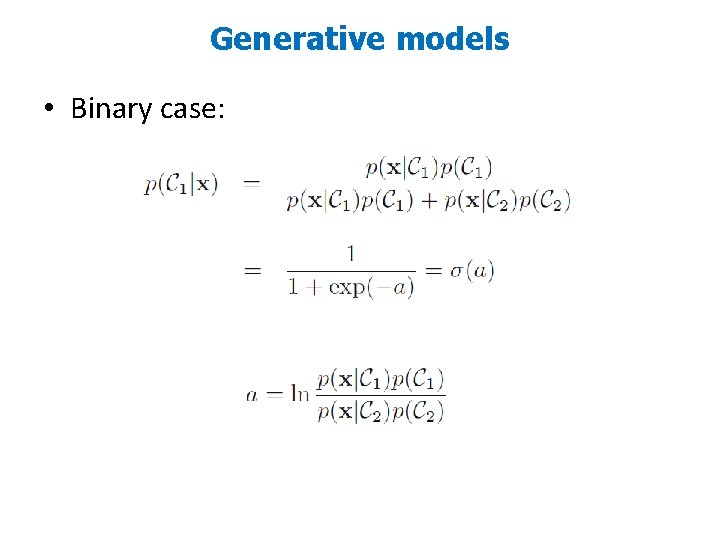

Generative models
- Binary case:
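A generative classifier models the class-conditional densities p(x | C_k) and the priors p(C_k), then obtains the posterior by Bayes' rule. In the two-class case this can be written p(C₁ | x) = σ(a) with a = ln [p(x | C₁) p(C₁) / (p(x | C₂) p(C₂))]. The sketch below assumes 1-D Gaussian class-conditional densities with a shared standard deviation (a hypothetical modeling choice) and works with log densities to avoid underflow; the function names are hypothetical.

```python
import math


def log_gaussian(x, mu, sigma):
    """Log of the 1-D normal density N(x | mu, sigma^2)."""
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


def posterior_c1(x, prior1, mu1, mu2, sigma):
    """p(C1 | x) = sigmoid(a), a = ln p(x|C1)p(C1) - ln p(x|C2)p(C2)."""
    a = (log_gaussian(x, mu1, sigma) + math.log(prior1)) - (
        log_gaussian(x, mu2, sigma) + math.log(1 - prior1)
    )
    return 1.0 / (1.0 + math.exp(-a))


# Equal priors, means 0 and 2: the midpoint x = 1 is equally likely under both classes.
print(posterior_c1(1.0, 0.5, 0.0, 2.0, 1.0))  # 0.5
```

With a shared variance the quadratic terms in x cancel, so a is linear in x and the generative posterior takes exactly the logistic-regression form σ(w x + w₀).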

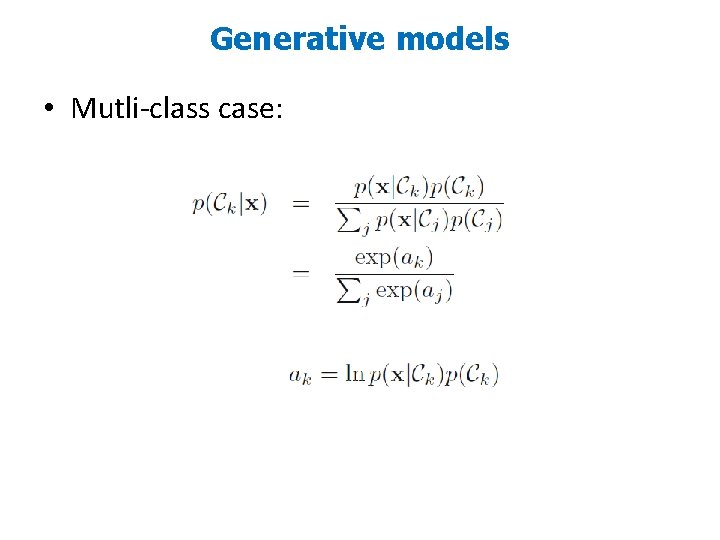

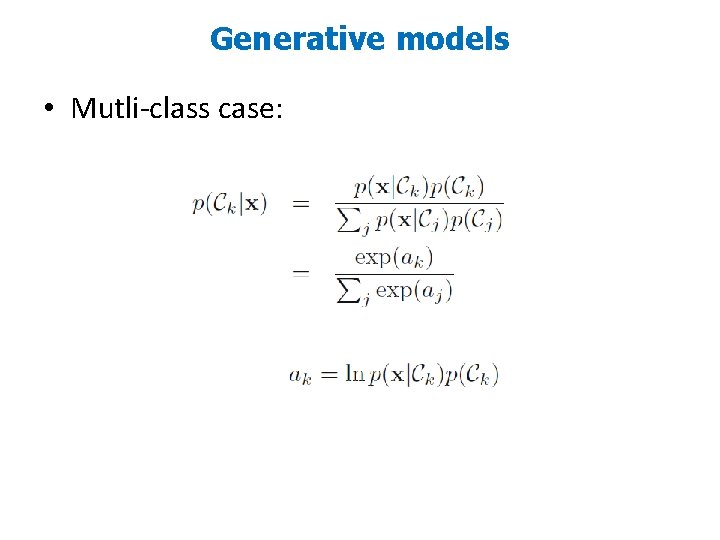

Generative models
- Multi-class case:
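With K classes, Bayes' rule gives a softmax over a_k = ln p(x | C_k) p(C_k): p(C_k | x) = exp(a_k) / Σ_j exp(a_j). The sketch below again assumes 1-D Gaussian class-conditionals with a shared standard deviation (a hypothetical choice) and subtracts max_k a_k before exponentiating, which leaves the result unchanged but avoids overflow; the function names are hypothetical.

```python
import math


def log_gaussian(x, mu, sigma):
    """Log of the 1-D normal density N(x | mu, sigma^2)."""
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


def class_posteriors(x, priors, means, sigma):
    """Softmax of a_k = ln p(x | C_k) + ln p(C_k) over the K classes."""
    a = [log_gaussian(x, mu, sigma) + math.log(p) for mu, p in zip(means, priors)]
    m = max(a)  # shift for numerical stability; cancels in the ratio
    exps = [math.exp(ak - m) for ak in a]
    z = sum(exps)
    return [e / z for e in exps]


post = class_posteriors(0.1, [1 / 3, 1 / 3, 1 / 3], [0.0, 2.0, 4.0], 1.0)
```

For K = 2 the softmax reduces to the logistic sigmoid of a₁ − a₂, so the binary case is a special case of this formula.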

Generative models
- Why are these called generative?
- We can use them to generate new samples x
- Perhaps this is overkill?
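Because a generative model specifies both p(C_k) and p(x | C_k), new samples can be drawn by first picking a class from the prior and then drawing x from that class's density. The sketch below assumes the same hypothetical 1-D Gaussian class-conditionals as above and uses the standard library's `random.Random.choices` and `random.Random.gauss`; the function name and seed are hypothetical.

```python
import random


def sample_generative(n, priors, means, sigma, seed=0):
    """Draw n (class, x) pairs: k ~ p(C_k), then x ~ N(means[k], sigma^2)."""
    rng = random.Random(seed)  # seeded for reproducibility
    labels = rng.choices(range(len(priors)), weights=priors, k=n)
    return [(k, rng.gauss(means[k], sigma)) for k in labels]


samples = sample_generative(1000, [0.5, 0.3, 0.2], [0.0, 2.0, 4.0], 0.5)
```

A discriminative model such as logistic regression models only p(C_k | x), so it cannot produce new x this way; whether the extra modeling effort pays off depends on whether p(x) itself is needed.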