CS 161 Design and Architecture of Computer Systems

- Slides: 56

CS 161 – Design and Architecture of Computer Systems: Technology Trends and Performance Evaluation

Quick recap: What is computer architecture? The science and art of designing, selecting, and interconnecting hardware components, and of designing the hardware/software interface, to create a computing system that meets functional, performance, energy consumption, cost, and other specific goals.

Technology trends: MOORE’S LAW, AN ENABLER FOR COMP ARCH

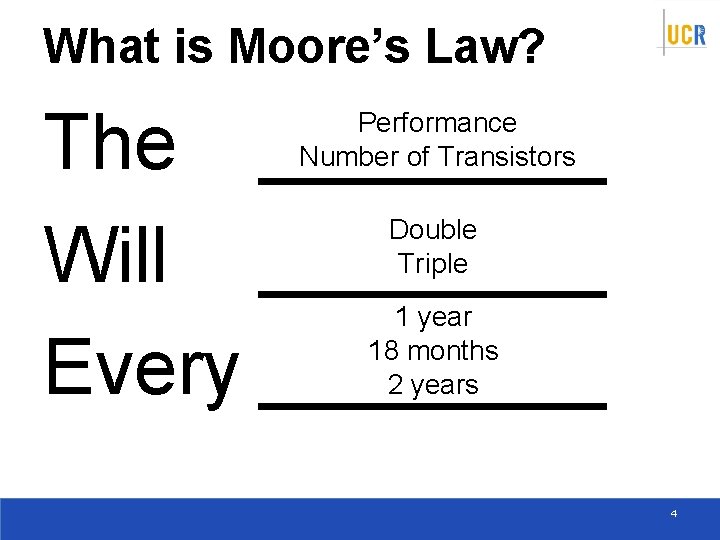

What is Moore’s Law? The ____ will ____ every ____. (Options: performance / number of transistors; double / triple; 1 year / 18 months / 2 years)


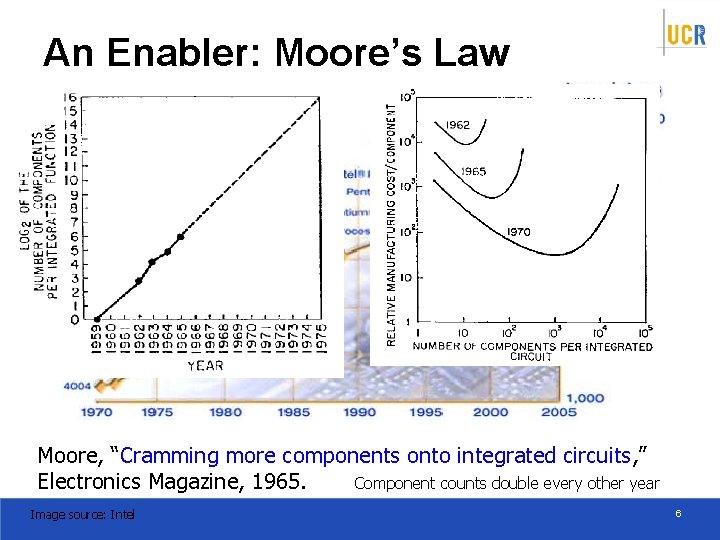

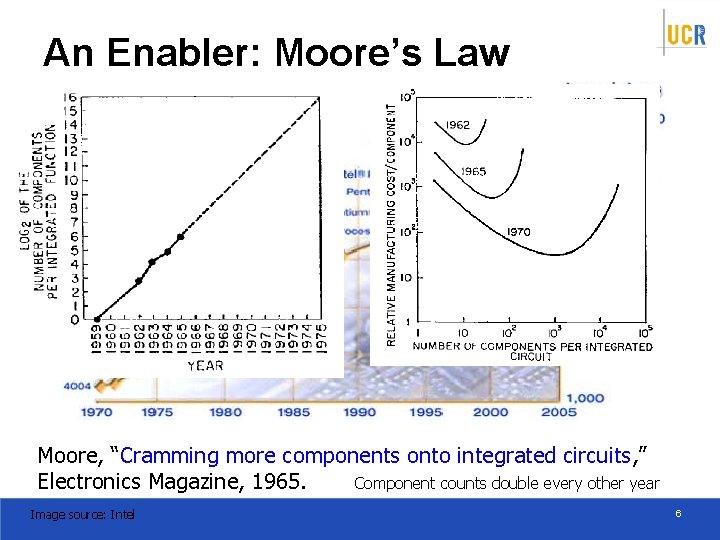

An enabler: Moore’s Law. Moore, “Cramming more components onto integrated circuits,” Electronics Magazine, 1965. Component counts double every other year. (Image source: Intel)

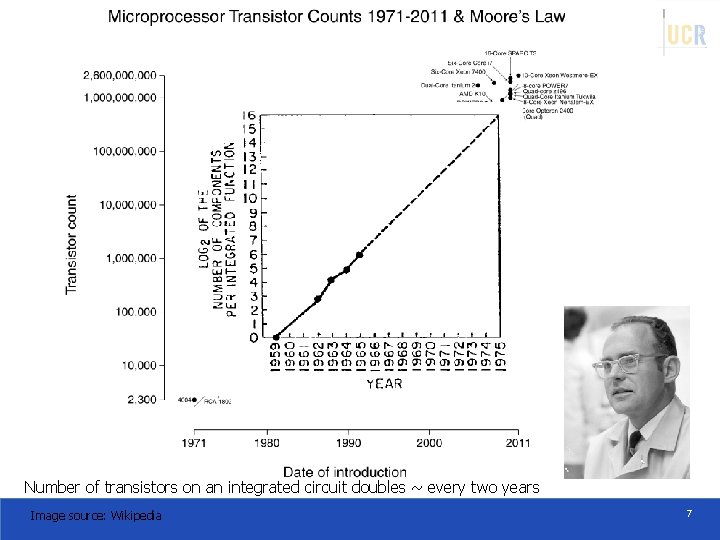

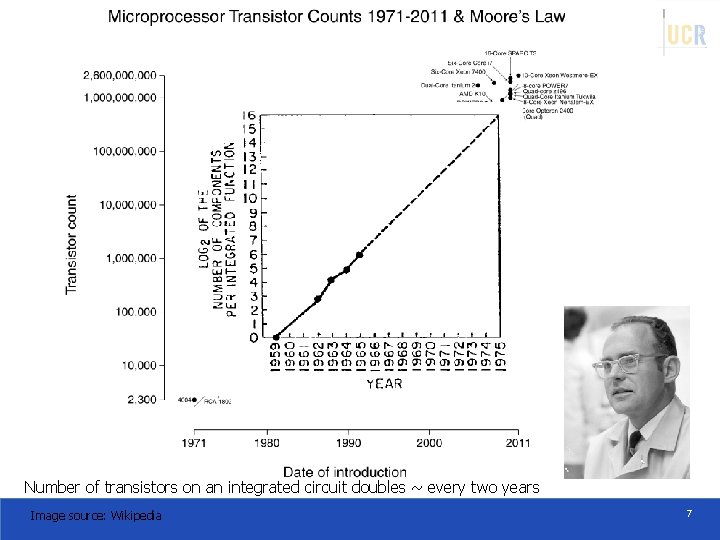

The number of transistors on an integrated circuit doubles roughly every two years. (Image source: Wikipedia)
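A doubling period compounds quickly. The sketch below assumes an idealized, constant two-year doubling period (real transistor counts only follow this trend approximately) and shows that it amounts to a 2^5 = 32× increase per decade. The function name `projected_transistors` is hypothetical.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming a fixed doubling period."""
    return initial_count * 2 ** (years / doubling_period_years)

# Growth factor over one decade with a 2-year doubling period: 2**5 = 32
decade_factor = projected_transistors(1.0, 10)
print(f"Growth over 10 years: x{decade_factor:.0f}")  # Growth over 10 years: x32
```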

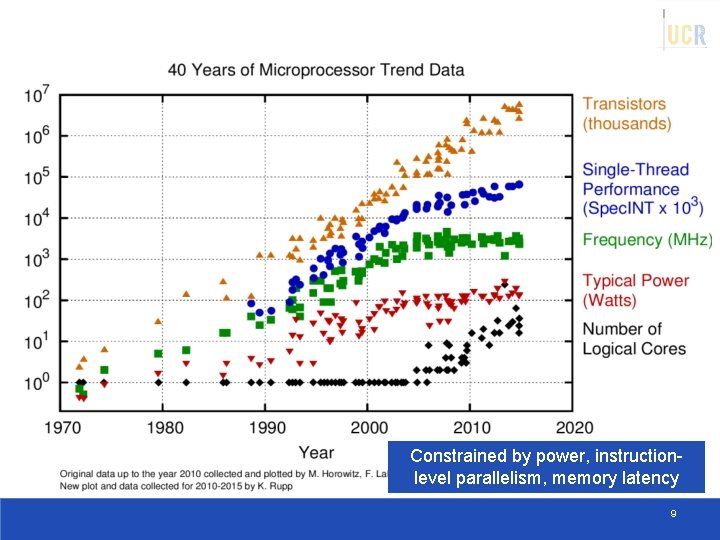

Number of transistors == performance?

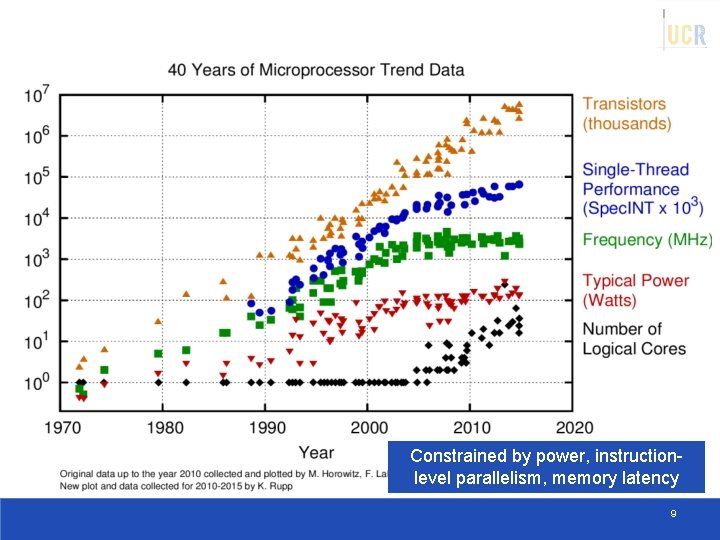

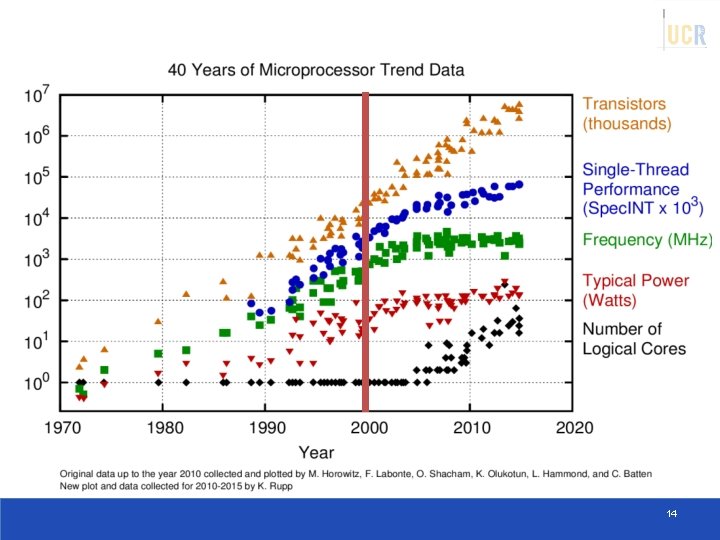

Performance growth is constrained by power, instruction-level parallelism, and memory latency.

Technology trends: MEMORY SCALING

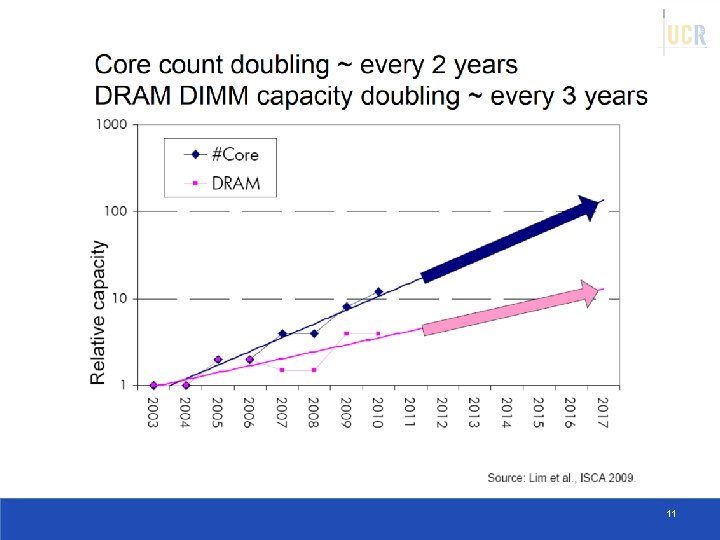

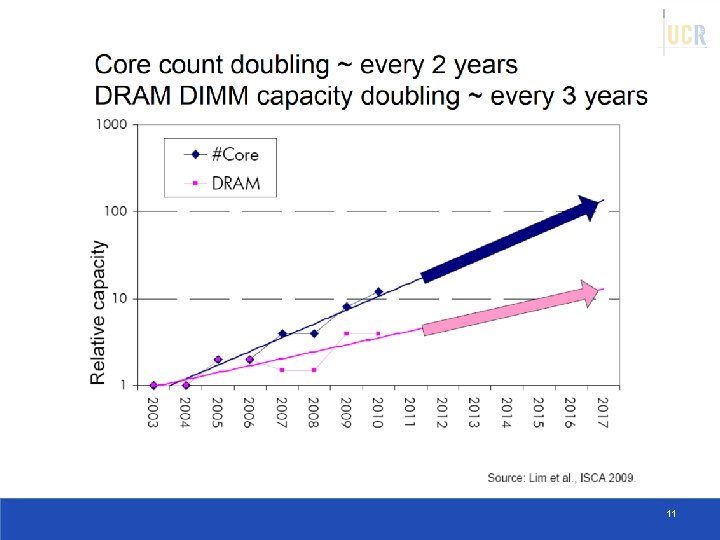


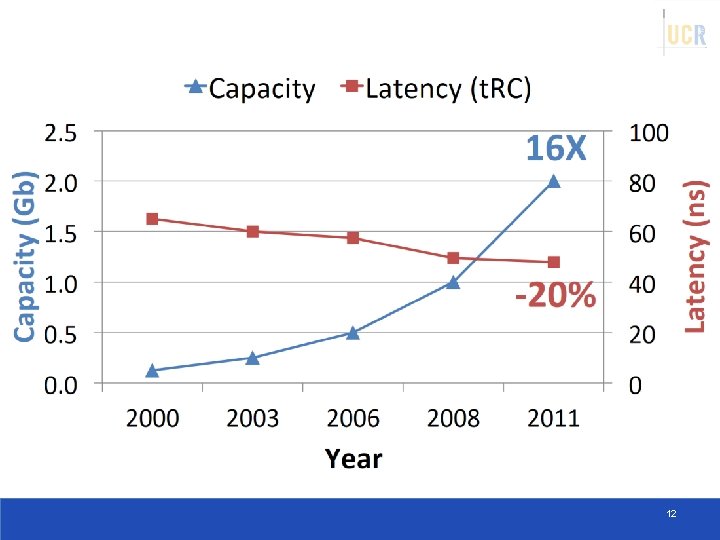

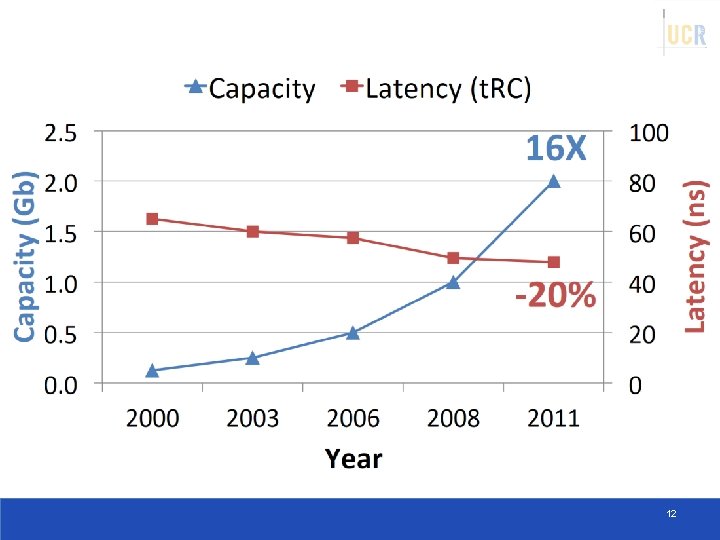


Technology trends: THE POWER WALL

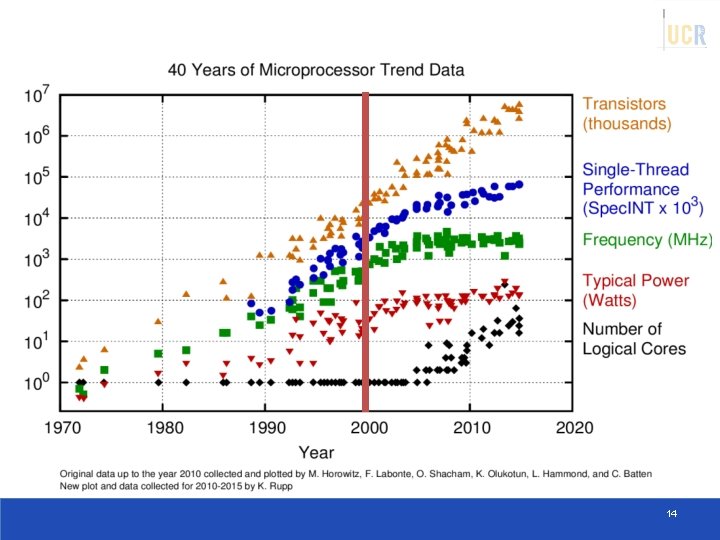


FUNDAMENTAL CONCEPTS

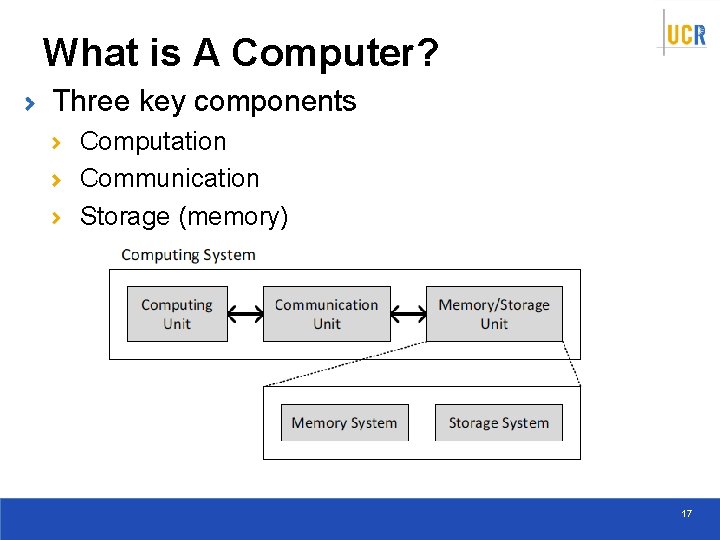

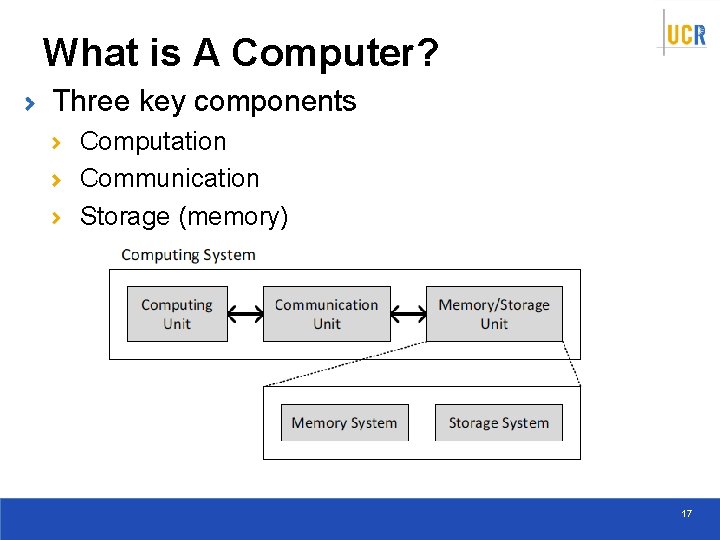

What is a computer? Three key components: computation, communication, and storage (memory).

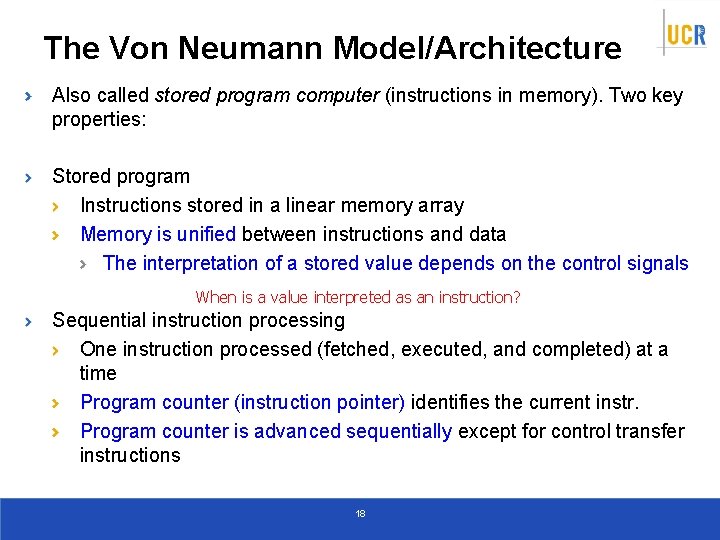

The Von Neumann Model/Architecture. Also called the stored-program computer (instructions are kept in memory). Two key properties:
- Stored program
  - Instructions are stored in a linear memory array
  - Memory is unified between instructions and data
  - The interpretation of a stored value depends on the control signals. When is a value interpreted as an instruction?
- Sequential instruction processing
  - One instruction is processed (fetched, executed, and completed) at a time
  - The program counter (instruction pointer) identifies the current instruction
  - The program counter is advanced sequentially, except for control transfer instructions

The Von Neumann Model (of a Computer). Q: Is this the only way that a computer can operate? A: No. Qualified answer: but it has been the dominant way, i.e., the dominant paradigm for computing, for N decades.
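The two Von Neumann properties can be made concrete with a toy stored-program machine. This is a minimal sketch with a hypothetical accumulator instruction set (`LOAD`, `ADD`, `STORE`, `JUMP`, `HALT` are illustrative names, not any real ISA): instructions and data share one memory list, a value is treated as an instruction only because the program counter fetches it, and the program counter advances sequentially unless a `JUMP` transfers control.

```python
# A toy stored-program (Von Neumann) machine with a hypothetical accumulator ISA.
# Instructions (tuples) and data (ints) share one linear memory array.

def run(memory: list) -> list:
    pc = 0   # program counter: address of the current instruction
    acc = 0  # accumulator register
    while True:
        instr = memory[pc]  # fetch: whatever pc points at is treated as an instruction
        op = instr[0]
        pc += 1             # default: advance sequentially to the next address
        if op == "LOAD":
            acc = memory[instr[1]]      # same memory, now read as data
        elif op == "ADD":
            acc += memory[instr[1]]
        elif op == "STORE":
            memory[instr[1]] = acc
        elif op == "JUMP":
            pc = instr[1]               # control transfer overrides sequential order
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")

program = [
    ("LOAD", 6),   # 0: acc = mem[6]
    ("ADD", 7),    # 1: acc += mem[7]
    ("STORE", 8),  # 2: mem[8] = acc
    ("JUMP", 5),   # 3: skip address 4
    ("STORE", 6),  # 4: never executed, so mem[6] keeps its value
    ("HALT",),     # 5
    2,             # 6: data
    3,             # 7: data
    0,             # 8: result
]

memory = run(program)
print(memory[8])  # 5
```

Nothing in the memory itself marks addresses 6 to 8 as data; they are only "data" because the program counter never reaches them.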

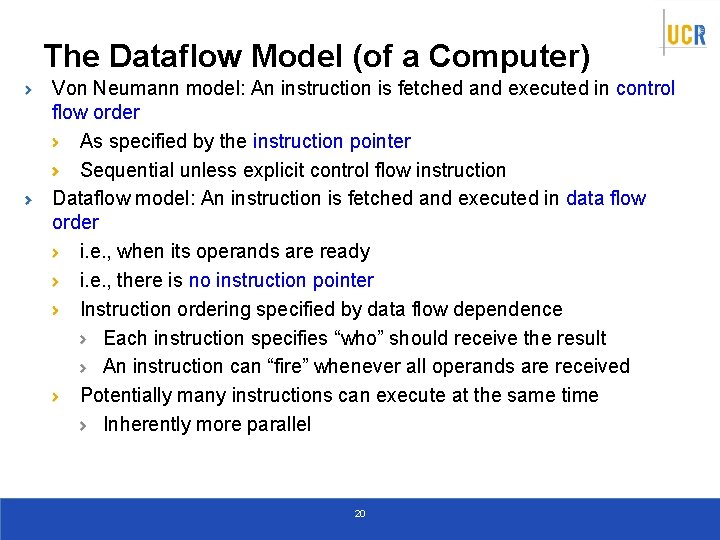

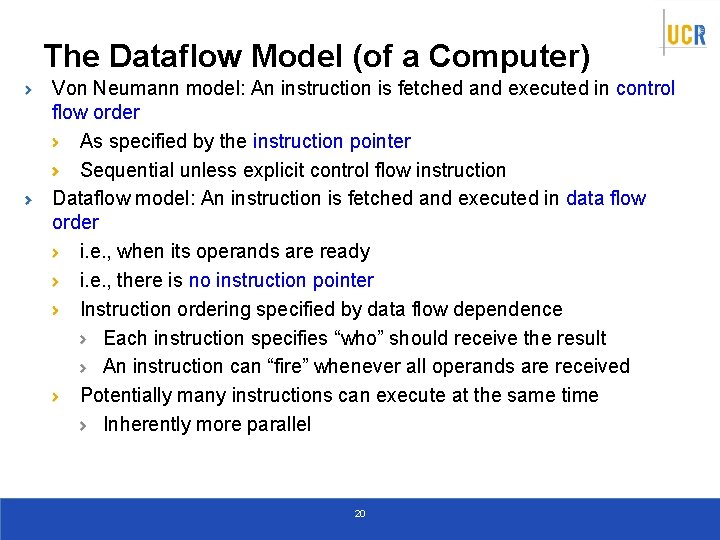

The Dataflow Model (of a Computer)
- Von Neumann model: an instruction is fetched and executed in control flow order, as specified by the instruction pointer; execution is sequential unless there is an explicit control flow instruction.
- Dataflow model: an instruction is fetched and executed in data flow order, i.e., when its operands are ready; there is no instruction pointer.
  - Instruction ordering is specified by data flow dependence: each instruction specifies “who” should receive the result, and an instruction can “fire” whenever all its operands are received.
  - Potentially many instructions can execute at the same time, so the model is inherently more parallel.

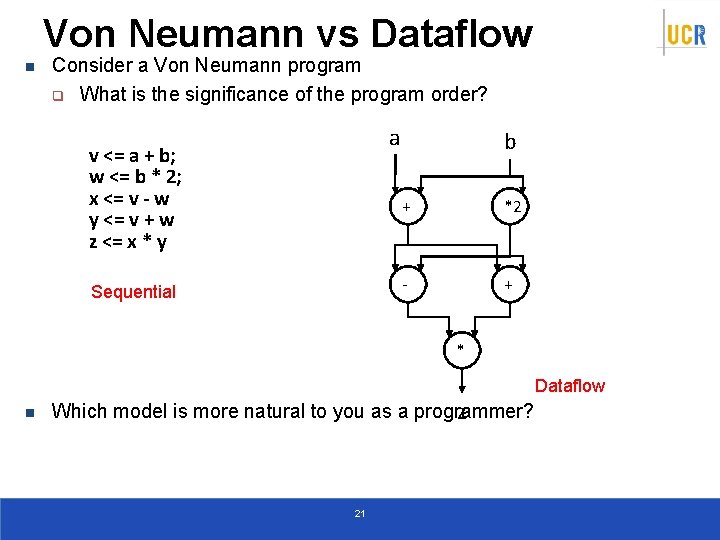

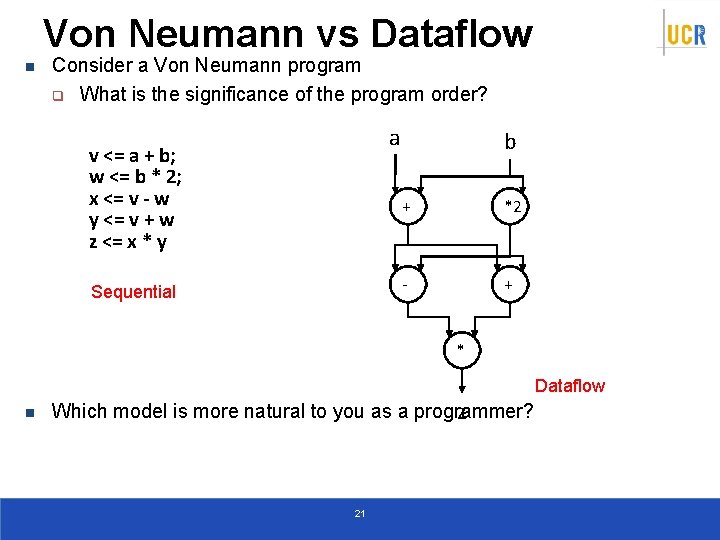

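Von Neumann vs. Dataflow. Consider a Von Neumann program: v <= a + b; w <= b * 2; x <= v - w; y <= v + w; z <= x * y; What is the significance of the program order? Sequential: the statements execute one at a time in the order written. Dataflow: inputs a and b feed the + node (v) and the *2 node (w); v and w feed the - node (x) and the + node (y); x and y feed the final * node, which produces z. Which model is more natural to you as a programmer?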
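The dataflow version of this program can be simulated by repeatedly firing every instruction whose operands have all arrived. This is a minimal sketch, not a model of any real dataflow machine (there is no token-matching hardware here); `GRAPH` and `run_dataflow` are hypothetical names. The graph is deliberately listed in reverse program order to show that the schedule comes only from data dependences.

```python
# Each instruction: result name -> (operation, operand names). Listed out of
# program order on purpose; dataflow execution ignores this ordering.
GRAPH = {
    "z": (lambda x, y: x * y, ["x", "y"]),
    "y": (lambda v, w: v + w, ["v", "w"]),
    "x": (lambda v, w: v - w, ["v", "w"]),
    "w": (lambda b: b * 2, ["b"]),
    "v": (lambda a, b: a + b, ["a", "b"]),
}

def run_dataflow(graph, inputs):
    values = dict(inputs)   # operand tokens available so far
    pending = dict(graph)   # instructions that have not fired yet
    steps = []              # which instructions fired together in each step
    while pending:
        # Every instruction whose operands are all available fires in this step.
        ready = [name for name, (_, deps) in pending.items()
                 if all(d in values for d in deps)]
        if not ready:
            raise RuntimeError("no instruction can fire; an operand never arrives")
        for name in ready:
            op, deps = pending.pop(name)
            values[name] = op(*(values[d] for d in deps))
        steps.append(sorted(ready))
    return values, steps

values, steps = run_dataflow(GRAPH, {"a": 3, "b": 4})
print(steps)        # [['v', 'w'], ['x', 'y'], ['z']]
print(values["z"])  # -15
```

Five sequential instructions collapse into three steps, because v and w, and then x and y, are independent of each other.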

WHAT IS PERFORMANCE?

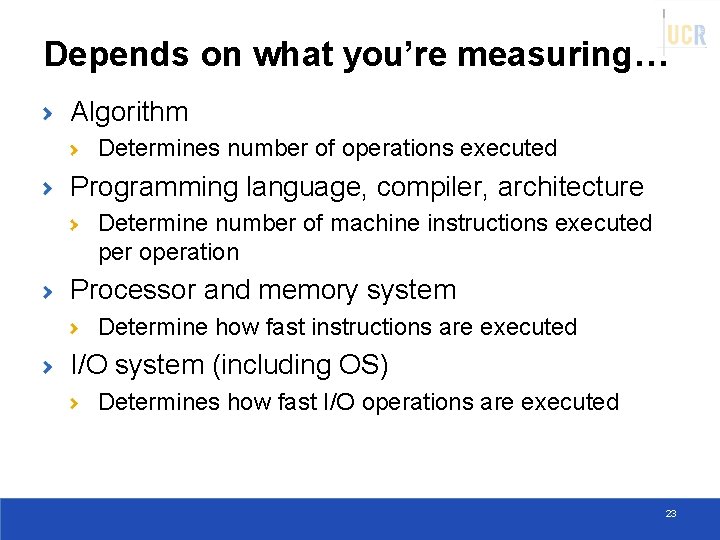

Depends on what you’re measuring…
- Algorithm: determines the number of operations executed
- Programming language, compiler, architecture: determine the number of machine instructions executed per operation
- Processor and memory system: determine how fast instructions are executed
- I/O system (including OS): determines how fast I/O operations are executed

Performance analogy
- Car: top speed (mph), acceleration (0-60), torque, horsepower, MPG
- Processor: frequency, response time / latency, throughput / bandwidth, power

Response time and throughput
- Response time: how long it takes to do a task
- Throughput: total work done per unit time, e.g., tasks/transactions/… per hour

How are response time and throughput affected by replacing the processor with a faster version? By adding more processors? We’ll focus on response time (aka execution time) for now.
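One way to answer the two questions is a small idealized model. The sketch below assumes a hypothetical 60 s task, independent tasks, a queue that always has work waiting, and no contention between processors; the function names are illustrative only. Under these assumptions a faster processor improves both metrics, while adding processors improves only throughput.

```python
def response_time_s(task_s: float, speed_factor: float = 1.0) -> float:
    """Time for one task on a processor that is `speed_factor` times faster."""
    return task_s / speed_factor

def throughput_per_hour(task_s: float, processors: int = 1,
                        speed_factor: float = 1.0) -> float:
    """Tasks completed per hour, assuming every processor is always busy."""
    return processors * 3600.0 / response_time_s(task_s, speed_factor)

TASK_S = 60.0  # hypothetical task length in seconds

baseline = (response_time_s(TASK_S), throughput_per_hour(TASK_S))
faster_cpu = (response_time_s(TASK_S, speed_factor=2.0),
              throughput_per_hour(TASK_S, speed_factor=2.0))
more_cpus = (response_time_s(TASK_S), throughput_per_hour(TASK_S, processors=2))

for label, (rt, tp) in [("baseline", baseline), ("2x faster CPU", faster_cpu),
                        ("2 CPUs", more_cpus)]:
    print(f"{label}: response {rt:.0f} s, throughput {tp:.0f} tasks/hour")
```

In practice extra processors can also shorten response time when there is a queue of waiting tasks, which this model deliberately ignores.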

Performance: EXECUTION TIME

Measuring execution time. Elapsed time is the total response time, including all aspects: processing, I/O, OS overhead, and idle time. Two common measurements: wall clock time and CPU time.

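Measuring execution time
- Wall clock time: real time to complete the job (seconds)
- CPU time: time spent processing a given job; it discounts I/O time and other jobs’ shares, and comprises user CPU time and system CPU time

Example: $ time make > /dev/null 2>&1 reports real 1m14.115s, user 0m57.853s, sys 0m10.853s. Here user + sys = 68.706 s, less than the 74.115 s of wall clock time; the difference is time the job was not running on the CPU (e.g., waiting for I/O).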
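The same distinction can be observed from inside a program. This sketch uses Python's standard `time.perf_counter()` (elapsed real time) and `time.process_time()` (user plus system CPU time of the current process); `measure`, `compute`, and `wait` are hypothetical helpers. A CPU-bound call accumulates CPU time, while a sleeping call accumulates wall clock time but almost no CPU time.

```python
import time

def measure(fn):
    """Return (wall_clock_s, cpu_s) for one call of fn."""
    wall_start = time.perf_counter()   # wall clock (elapsed real time)
    cpu_start = time.process_time()    # user + system CPU time of this process
    fn()
    return time.perf_counter() - wall_start, time.process_time() - cpu_start

def compute():
    sum(i * i for i in range(2_000_000))   # keeps the CPU busy

def wait():
    time.sleep(0.2)                         # real time passes without using the CPU

wall_c, cpu_c = measure(compute)
wall_w, cpu_w = measure(wait)
print(f"compute: wall {wall_c:.3f} s, CPU {cpu_c:.3f} s")
print(f"wait:    wall {wall_w:.3f} s, CPU {cpu_w:.3f} s")
```

Exact numbers vary by machine and load; only the pattern (CPU time close to wall time for `compute`, close to zero for `wait`) is expected to hold.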

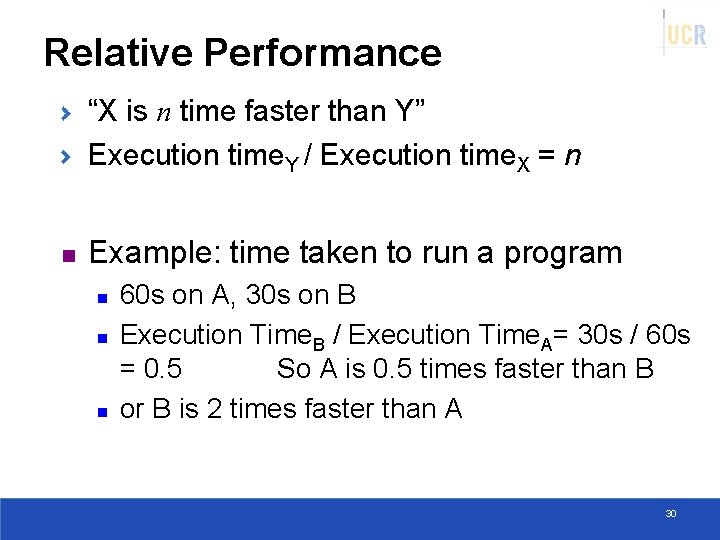

Relative performance. “X is n times faster than Y” means

$$\frac{\text{Execution time}_Y}{\text{Execution time}_X} = n$$

Example: a program takes 10 s on A and 15 s on B. Then Execution time_B / Execution time_A = 15 s / 10 s = 1.5, so A is 1.5 times faster than B.

Relative performance, second example: a program takes 60 s on A and 30 s on B. Then Execution time_B / Execution time_A = 30 s / 60 s = 0.5, so A is “0.5 times faster” than B; it is clearer to say that B is 2 times faster than A.

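Performance is defined as:

$$\text{Performance} = \frac{1}{\text{Execution time}}$$

More generally:

$$\text{Speedup} = \frac{\text{Performance}_{\text{new}}}{\text{Performance}_{\text{old}}} = \frac{\text{Execution time}_{\text{old}}}{\text{Execution time}_{\text{new}}}$$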
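The definitions translate directly into code. A minimal sketch (function names are hypothetical) that reproduces both relative-performance examples and checks that the performance ratio equals the inverse execution-time ratio:

```python
import math

def performance(execution_time_s: float) -> float:
    """Performance = 1 / Execution time."""
    return 1.0 / execution_time_s

def times_faster(time_x_s: float, time_y_s: float) -> float:
    """n in 'X is n times faster than Y' = Execution time_Y / Execution time_X."""
    return time_y_s / time_x_s

# Example 1: 10 s on A, 15 s on B -> A is 1.5 times faster than B
a_vs_b = times_faster(10.0, 15.0)
# Example 2: 60 s on A, 30 s on B -> B is 2 times faster than A
b_vs_a = times_faster(30.0, 60.0)

print(f"A vs B (example 1): {a_vs_b:.1f}x")  # 1.5x
print(f"B vs A (example 2): {b_vs_a:.1f}x")  # 2.0x
```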

Performance: CLOCK CYCLES

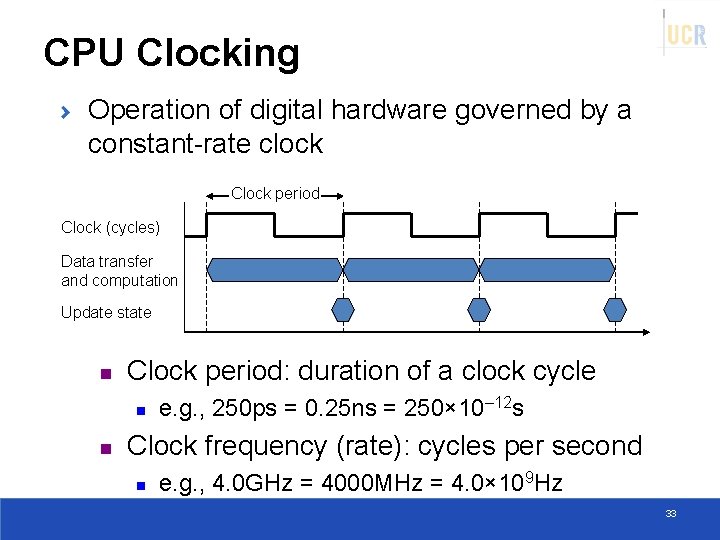

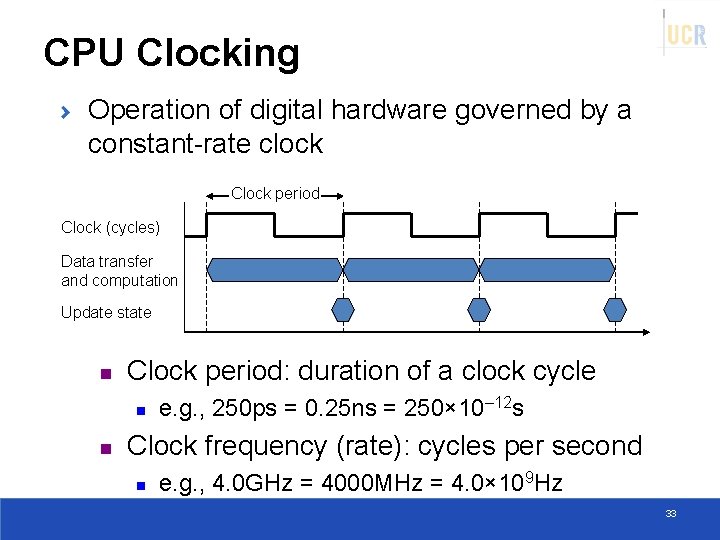

CPU clocking. The operation of digital hardware is governed by a constant-rate clock. (Figure: clock waveform; within each clock cycle, data transfer and computation take place, then state is updated.)
- Clock period: duration of a clock cycle, e.g., 250 ps = 0.25 ns = 250×10⁻¹² s
- Clock frequency (rate): cycles per second, e.g., 4.0 GHz = 4000 MHz = 4.0×10⁹ Hz

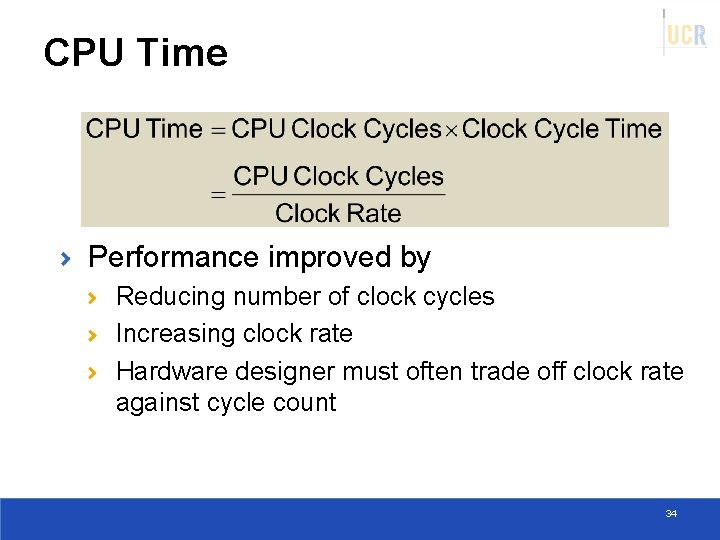

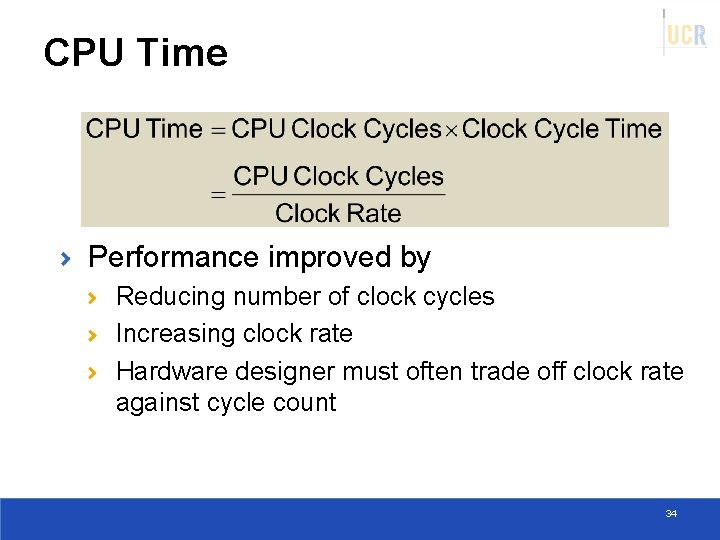

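CPU time. CPU time = CPU clock cycles × clock cycle time = CPU clock cycles / clock rate. Performance is improved by reducing the number of clock cycles or by increasing the clock rate; the hardware designer must often trade off clock rate against cycle count.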

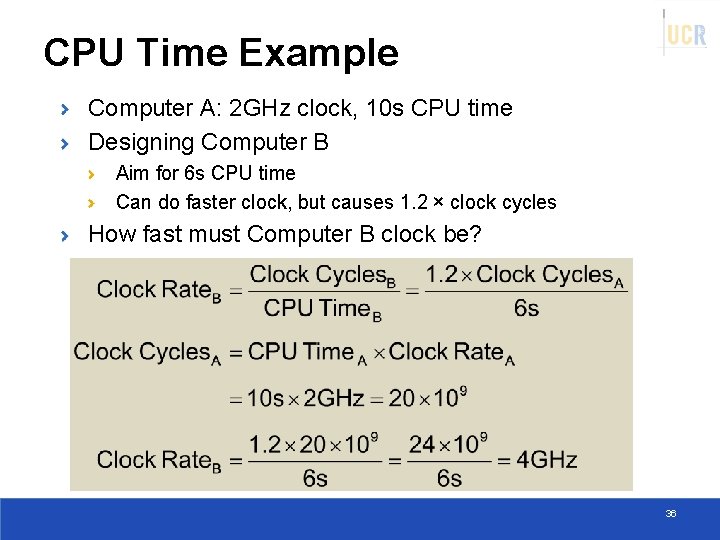

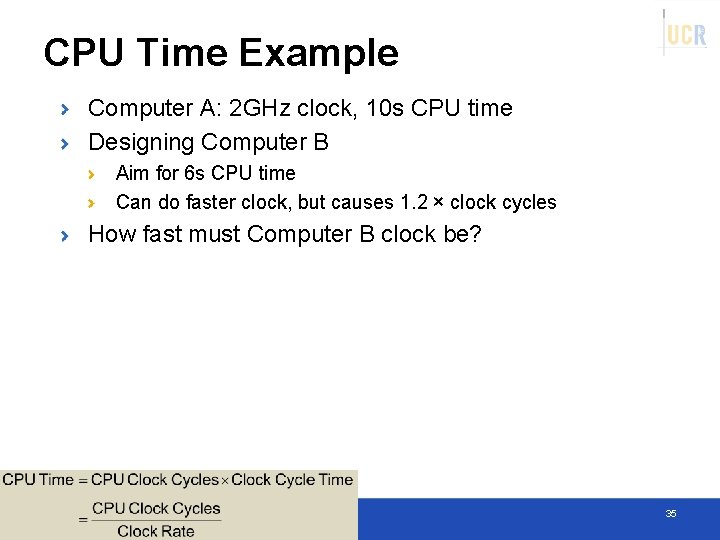

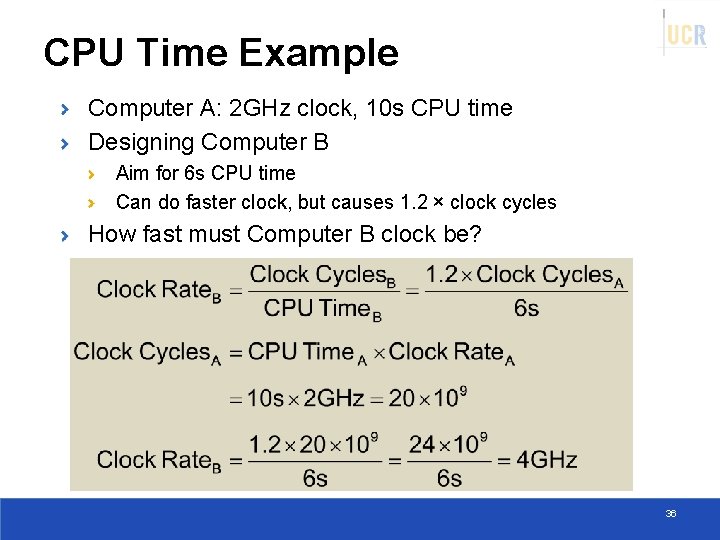

CPU time example. Computer A: 2 GHz clock, 10 s CPU time. Designing Computer B: aim for 6 s CPU time. B can use a faster clock, but this causes 1.2× as many clock cycles. How fast must Computer B’s clock be?


Performance: CPI

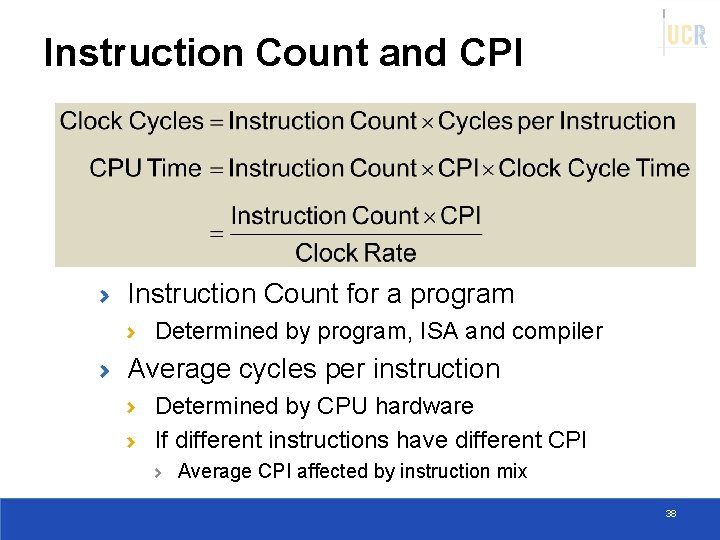

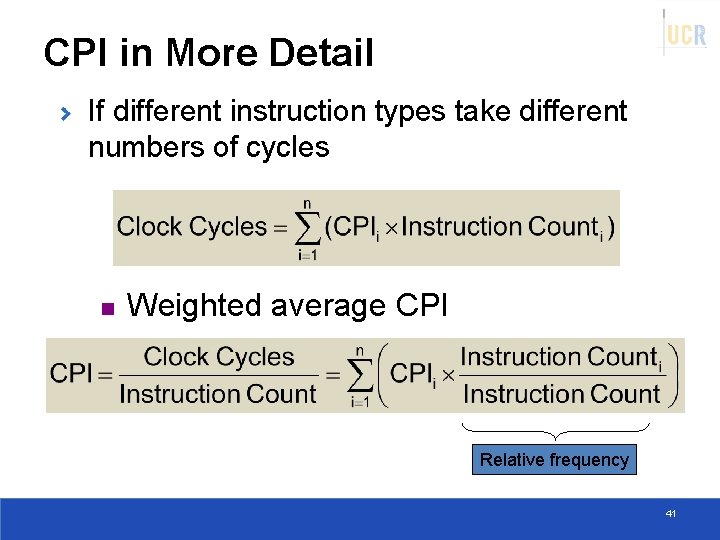

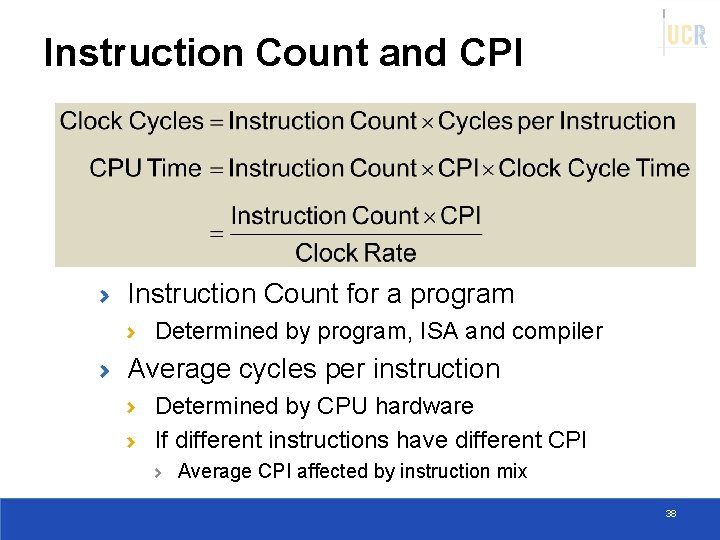

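Instruction count and CPI. Clock cycles = instruction count (IC) × cycles per instruction (CPI), so CPU time = IC × CPI × clock cycle time = IC × CPI / clock rate.
- Instruction count for a program: determined by the program, ISA, and compiler
- Average cycles per instruction: determined by the CPU hardware
- If different instructions have different CPIs, the average CPI is affected by the instruction mix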

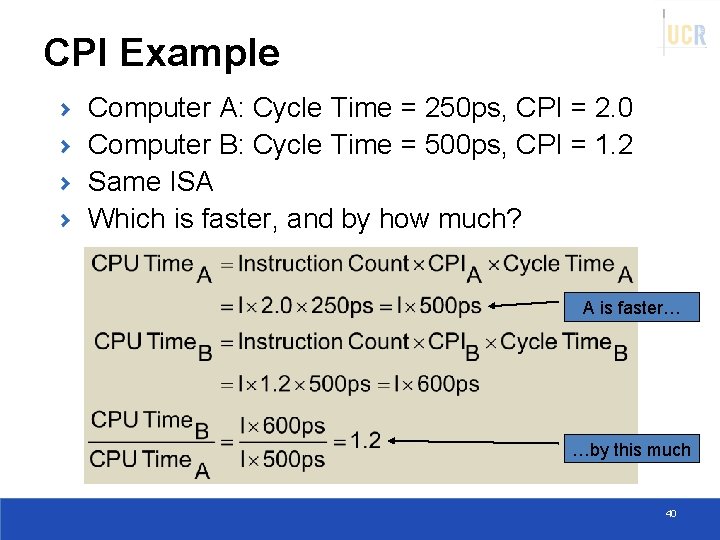

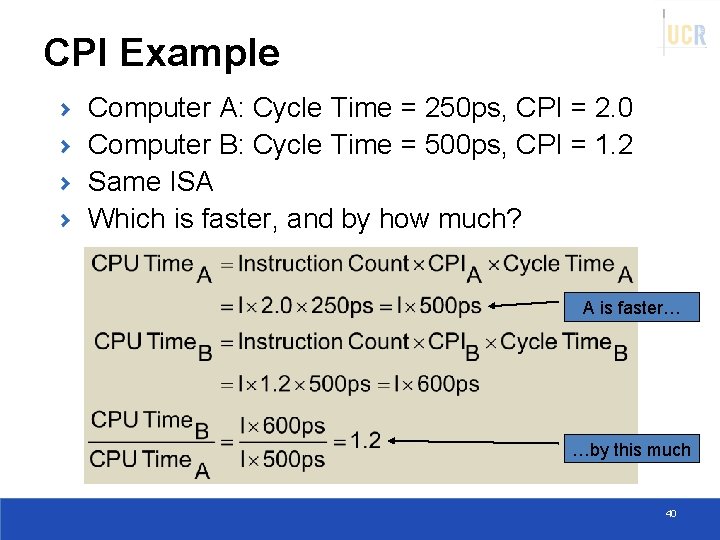


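CPI example. Computer A: cycle time = 250 ps, CPI = 2.0. Computer B: cycle time = 500 ps, CPI = 1.2. Same ISA. Which is faster, and by how much? A is faster, by a factor of 1.2: with the same instruction count, each instruction takes on average 2.0 × 250 ps = 500 ps on A and 1.2 × 500 ps = 600 ps on B, and 600 / 500 = 1.2.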

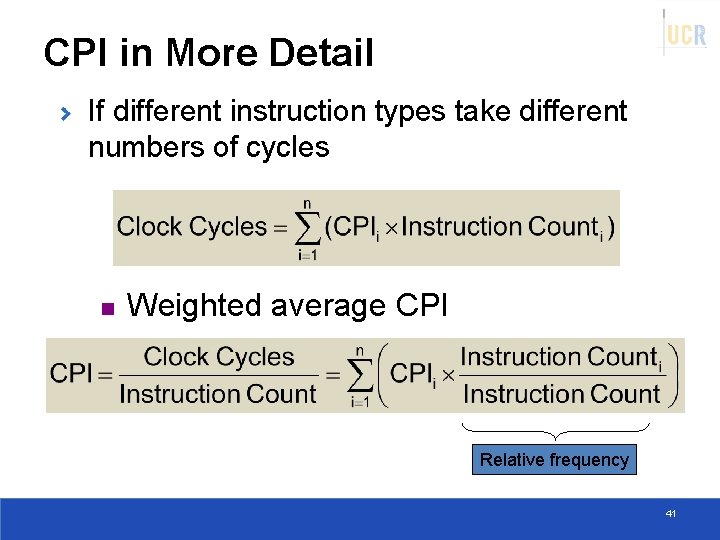

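CPI in more detail. If different instruction types take different numbers of cycles:

$$\text{Clock cycles} = \sum_{i=1}^{n} \text{CPI}_i \times \text{IC}_i$$

Weighted average CPI:

$$\text{CPI} = \frac{\text{Clock cycles}}{\text{IC}} = \sum_{i=1}^{n} \text{CPI}_i \times \frac{\text{IC}_i}{\text{IC}}$$

where IC_i / IC is the relative frequency of instruction type i.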

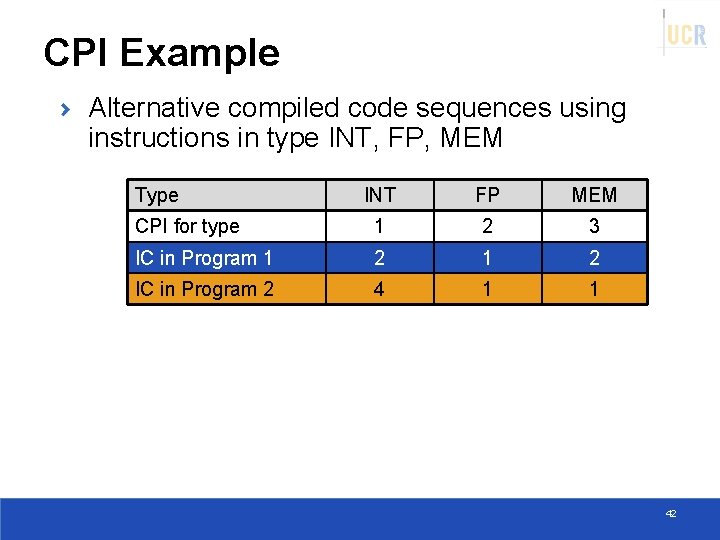

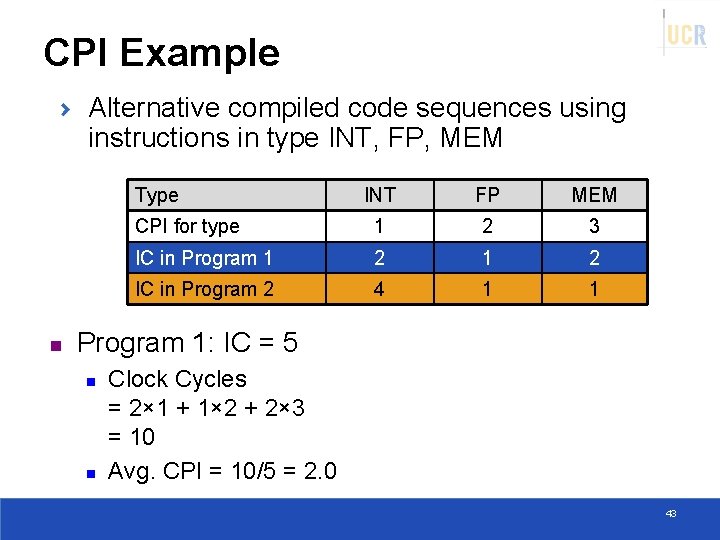

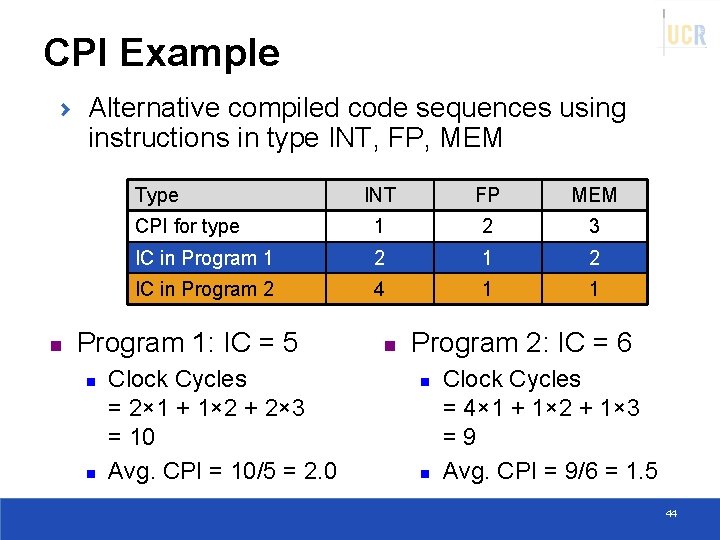

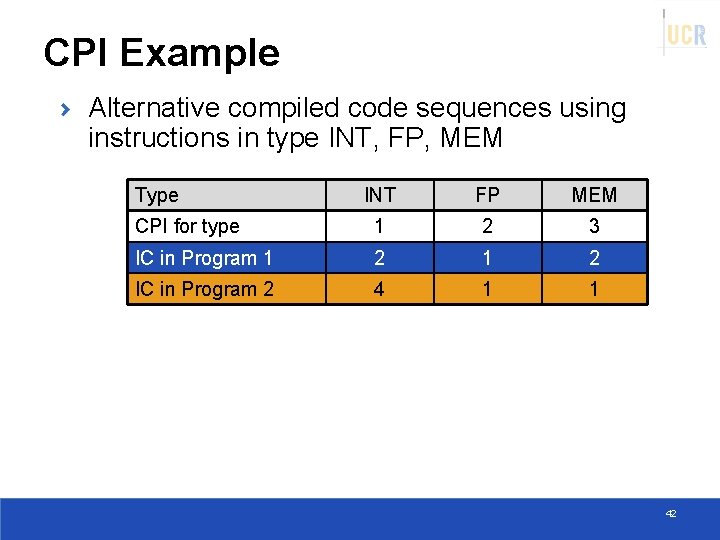

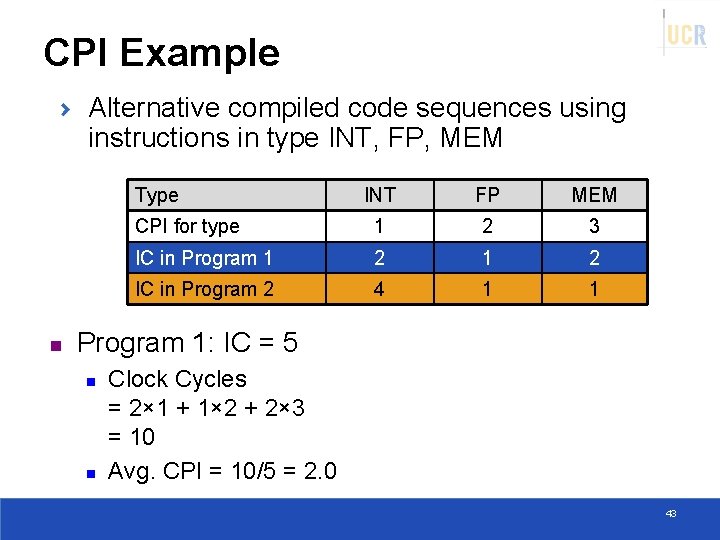

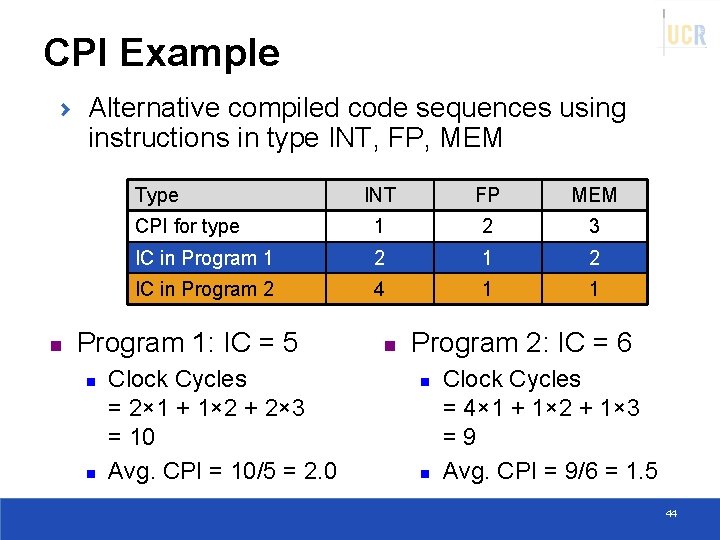



CPI example. Alternative compiled code sequences using instructions of types INT, FP, and MEM:

| Type | INT | FP | MEM |
|---|---|---|---|
| CPI for type | 1 | 2 | 3 |
| IC in Program 1 | 2 | 1 | 2 |
| IC in Program 2 | 4 | 1 | 1 |

- Program 1: IC = 5; clock cycles = 2×1 + 1×2 + 2×3 = 10; avg. CPI = 10/5 = 2.0
- Program 2: IC = 6; clock cycles = 4×1 + 1×2 + 1×3 = 9; avg. CPI = 9/6 = 1.5
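The weighted-average CPI formula applied to the table, as a minimal sketch (dictionary and function names are hypothetical). Note that Program 2 executes more instructions yet needs fewer clock cycles, so on the same clock it is the faster sequence.

```python
CPI_BY_TYPE = {"INT": 1, "FP": 2, "MEM": 3}

program_1 = {"INT": 2, "FP": 1, "MEM": 2}
program_2 = {"INT": 4, "FP": 1, "MEM": 1}

def clock_cycles(counts: dict) -> int:
    """Sum of CPI_i x IC_i over instruction types."""
    return sum(CPI_BY_TYPE[t] * n for t, n in counts.items())

def average_cpi(counts: dict) -> float:
    """Clock cycles / total instruction count."""
    return clock_cycles(counts) / sum(counts.values())

for name, prog in [("Program 1", program_1), ("Program 2", program_2)]:
    print(f"{name}: IC={sum(prog.values())}, cycles={clock_cycles(prog)}, "
          f"CPI={average_cpi(prog):.1f}")
```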

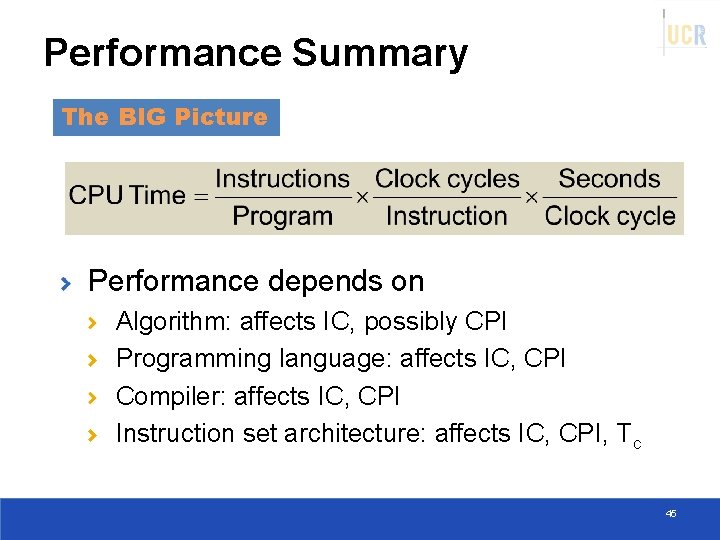

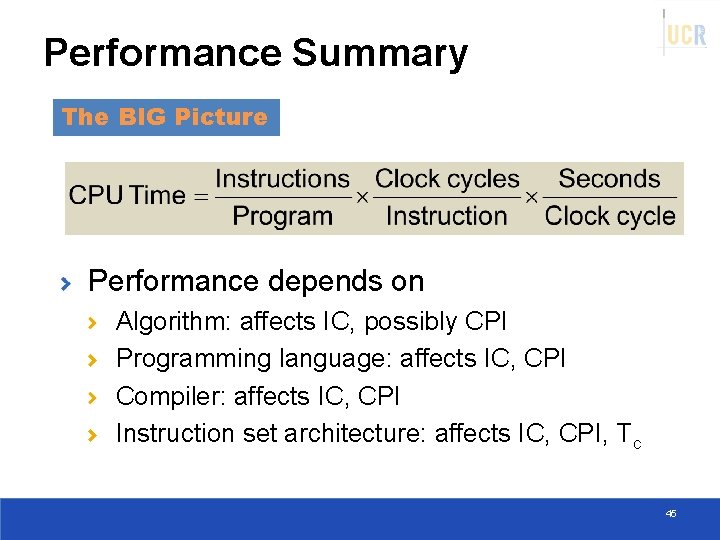

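Performance summary: the BIG picture

$$\text{CPU Time} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Clock cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Clock cycle}} = \text{IC} \times \text{CPI} \times T_c$$

Performance depends on:
- Algorithm: affects IC, possibly CPI
- Programming language: affects IC, CPI
- Compiler: affects IC, CPI
- Instruction set architecture: affects IC, CPI, T_c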

POWER

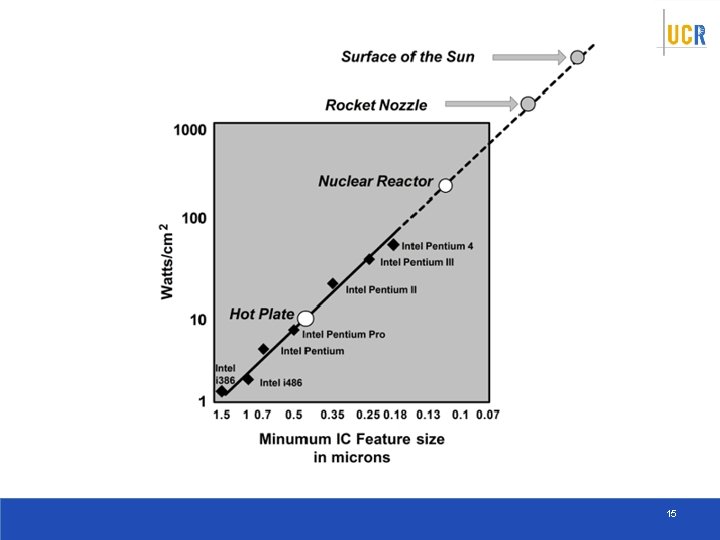

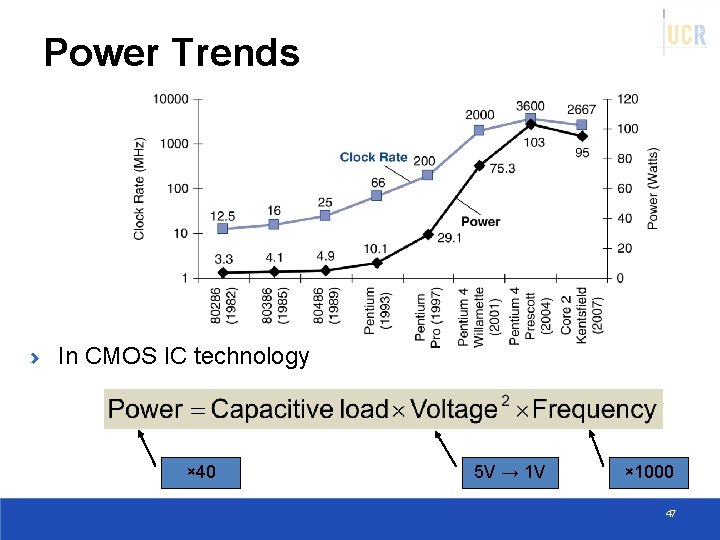

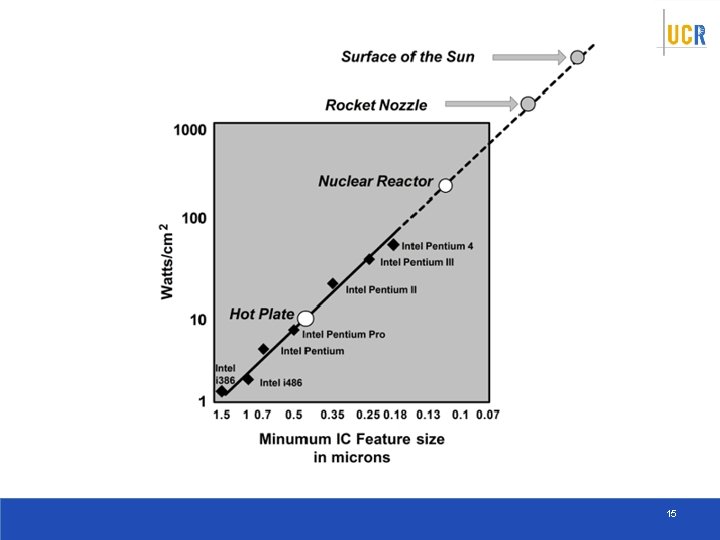

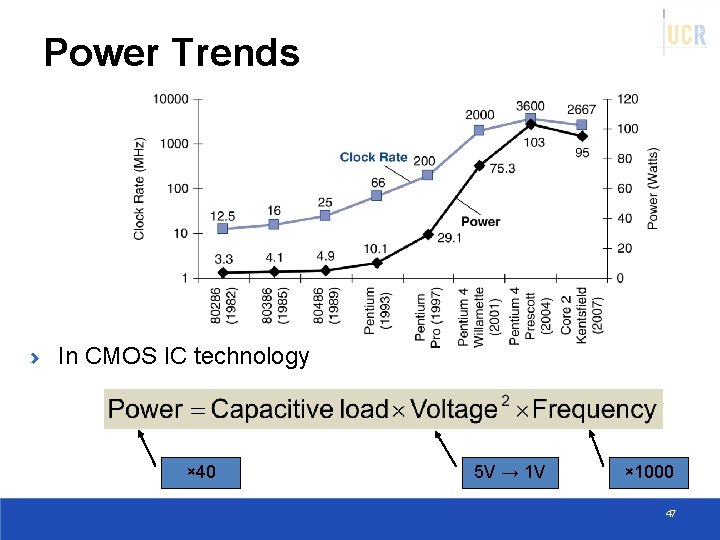

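Power trends. In CMOS IC technology, dynamic power = capacitive load × voltage² × frequency. (Figure annotations: ×40, 5 V → 1 V, ×1000. Clock rate could grow about ×1000 while power grew only about ×40 because supply voltage dropped from 5 V to 1 V, and power scales with the square of the voltage.)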

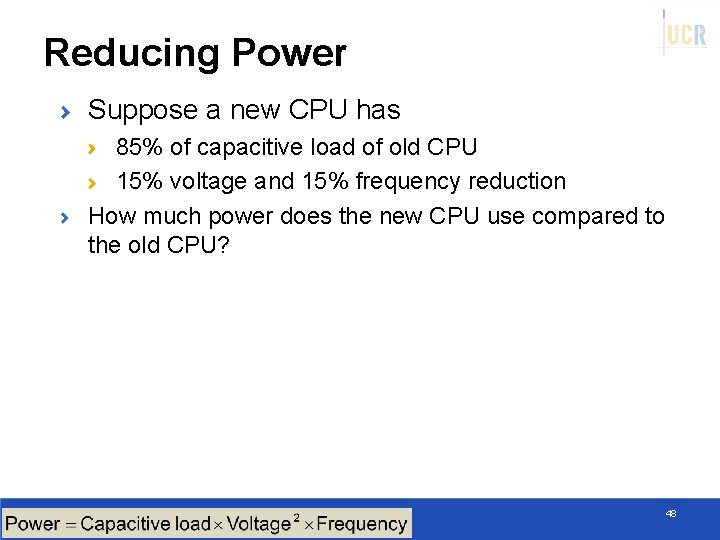

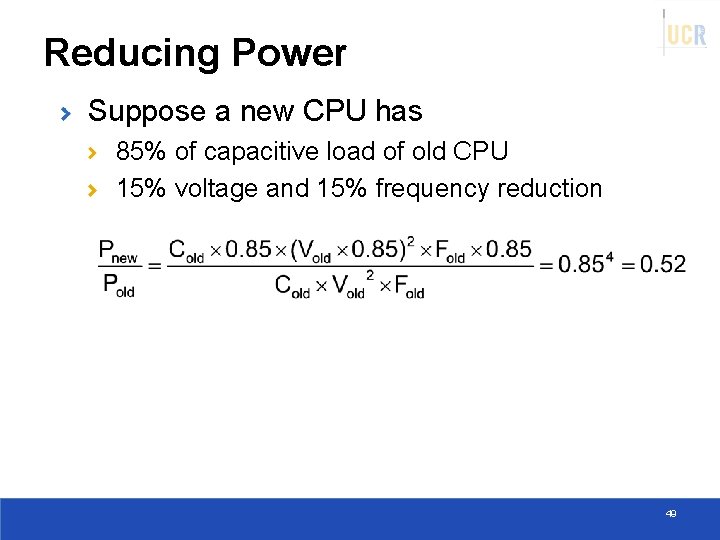

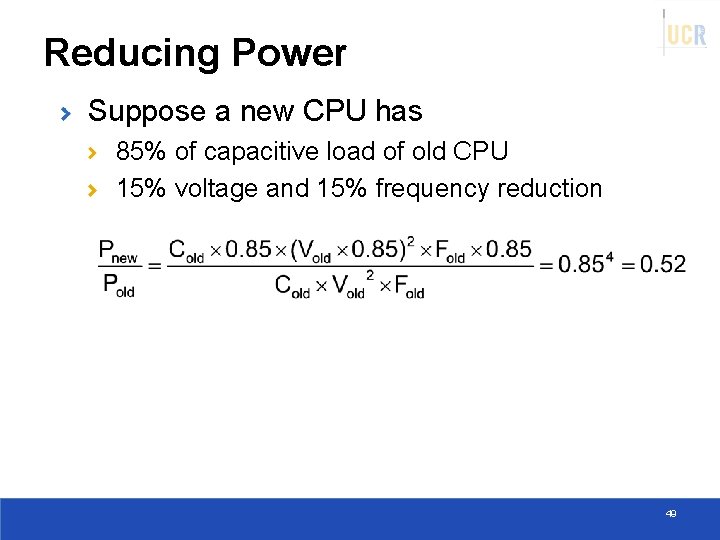

Reducing power. Suppose a new CPU has 85% of the capacitive load of the old CPU, along with a 15% voltage reduction and a 15% frequency reduction. How much power does the new CPU use compared to the old CPU?


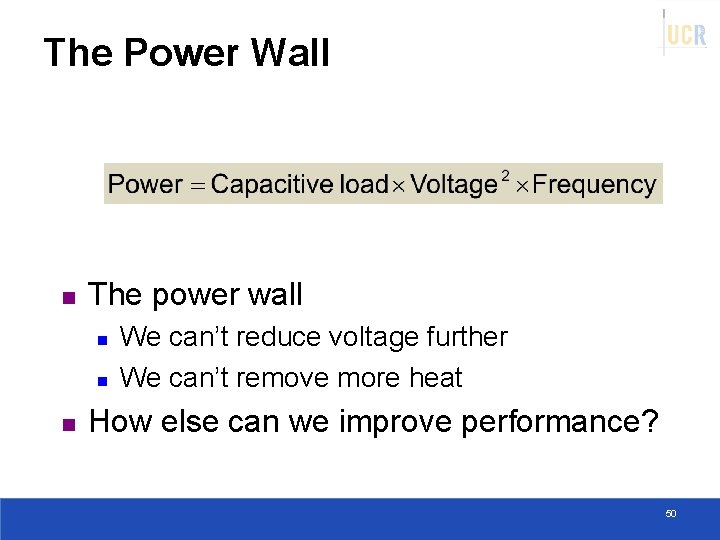

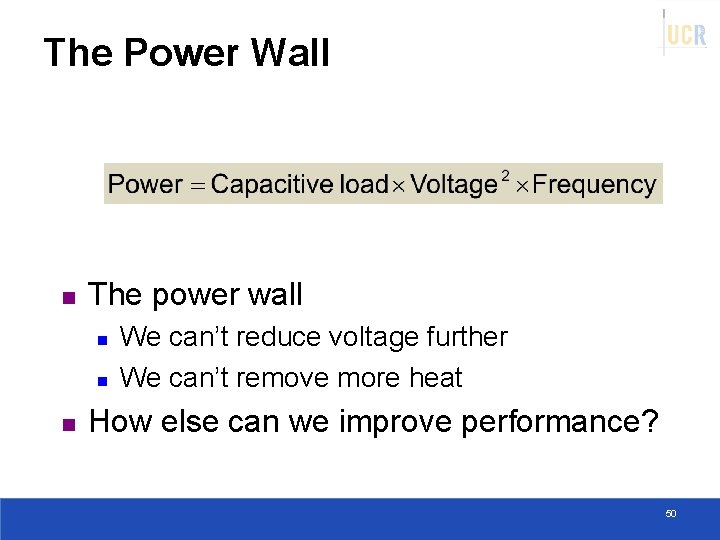

The power wall
- We can’t reduce voltage further
- We can’t remove more heat

How else can we improve performance?

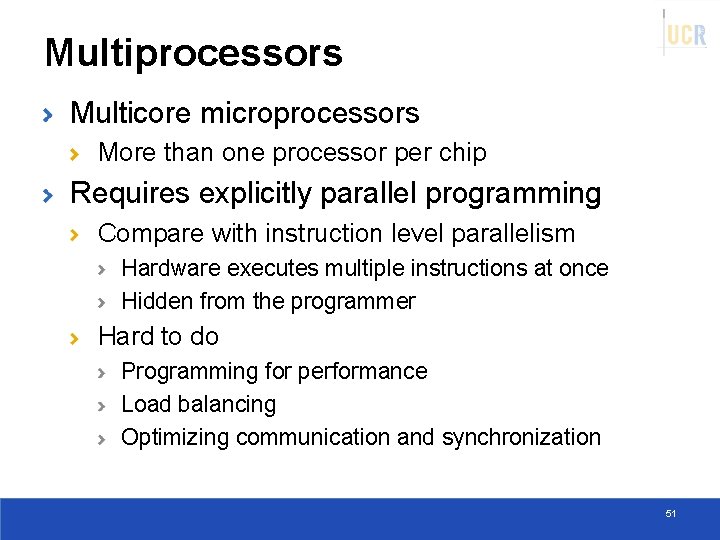

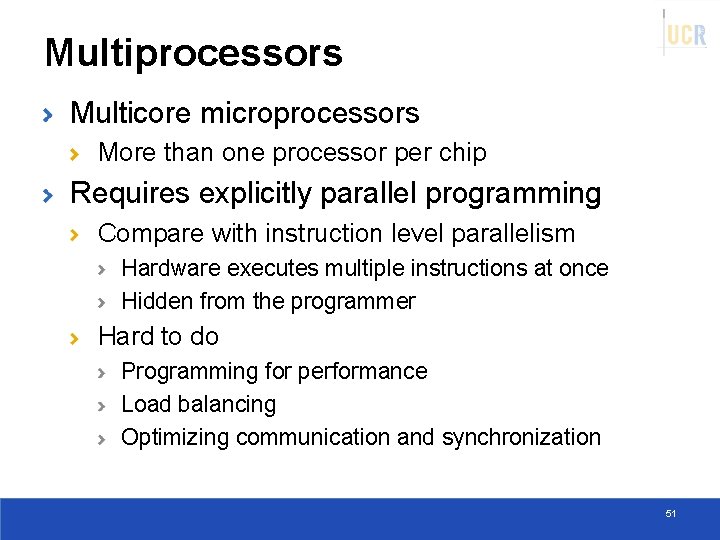

Multiprocessors
- Multicore microprocessors: more than one processor per chip
- Require explicitly parallel programming
  - Compare with instruction-level parallelism: the hardware executes multiple instructions at once, hidden from the programmer
- Hard to do:
  - Programming for performance
  - Load balancing
  - Optimizing communication and synchronization

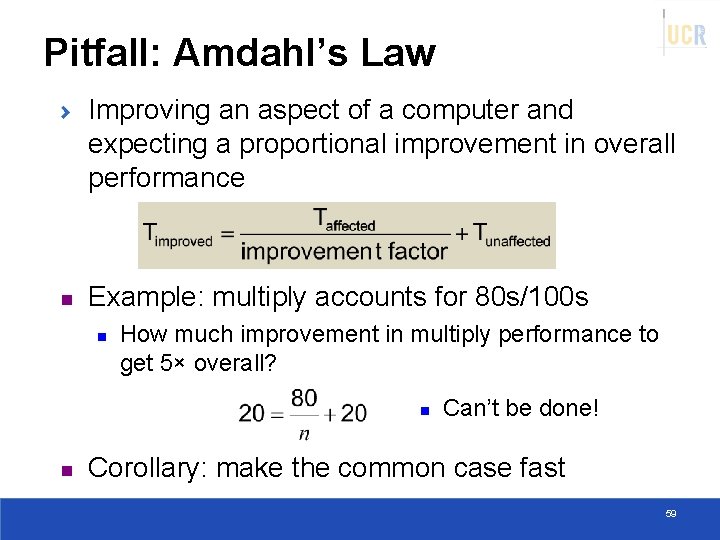

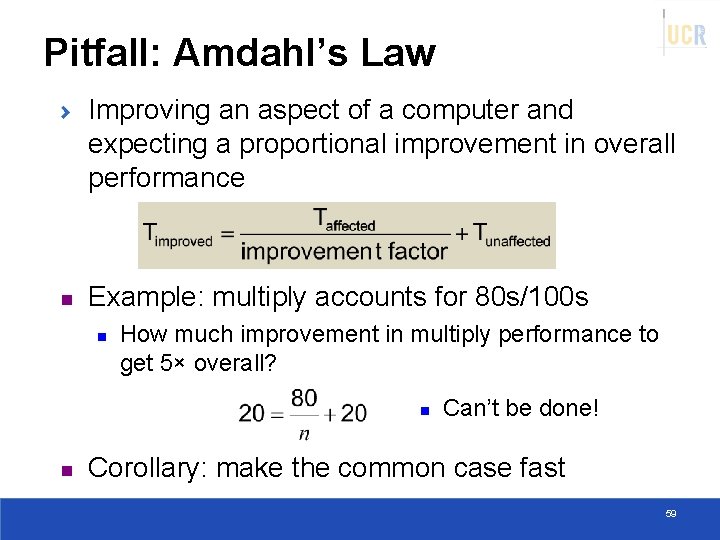

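Pitfall: Amdahl’s Law. Improving one aspect of a computer and expecting a proportional improvement in overall performance.
- Example: multiply accounts for 80 s of a 100 s run. How much must multiply performance improve to get 5× overall? A 5× speedup means a 20 s run, but the 20 s spent outside multiply does not change, so we would need 80 s / n + 20 s = 20 s, i.e., n → ∞. It can’t be done!
- Corollary: make the common case fast.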

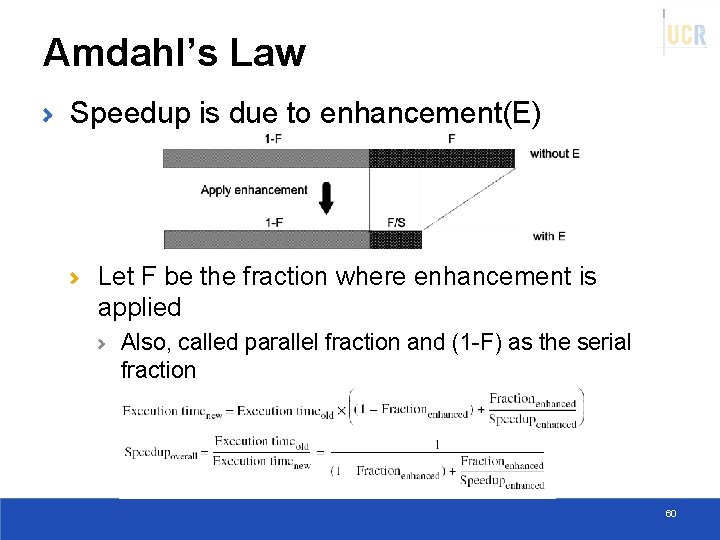

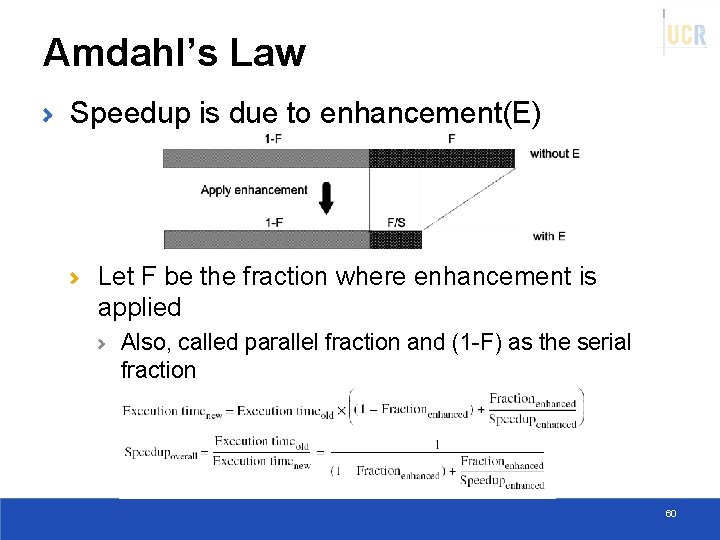

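Amdahl’s Law. Let E be an enhancement, F the fraction of execution time to which E applies (also called the parallel fraction, with 1 - F called the serial fraction), and S the speedup of that fraction. The overall speedup due to E is

$$\text{Speedup}(E) = \frac{\text{Execution time without } E}{\text{Execution time with } E} = \frac{1}{(1 - F) + F/S}$$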
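The formula also explains the multiply pitfall: with F = 0.8, the overall speedup is bounded by 1 / (1 - F) = 5 no matter how large S gets. A minimal sketch (`amdahl_speedup` is a hypothetical helper name):

```python
def amdahl_speedup(fraction_enhanced: float, enhancement_speedup: float) -> float:
    """Overall speedup = 1 / ((1 - F) + F / S)."""
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / enhancement_speedup)

# Multiply is 80 s of a 100 s run, so F = 0.8; the limit is 1 / (1 - 0.8) = 5.
for s in (2, 10, 100, 1000):
    print(f"multiply {s}x faster -> overall {amdahl_speedup(0.8, s):.3f}x")
```

Even a 1000× faster multiplier yields only about 4.98× overall.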

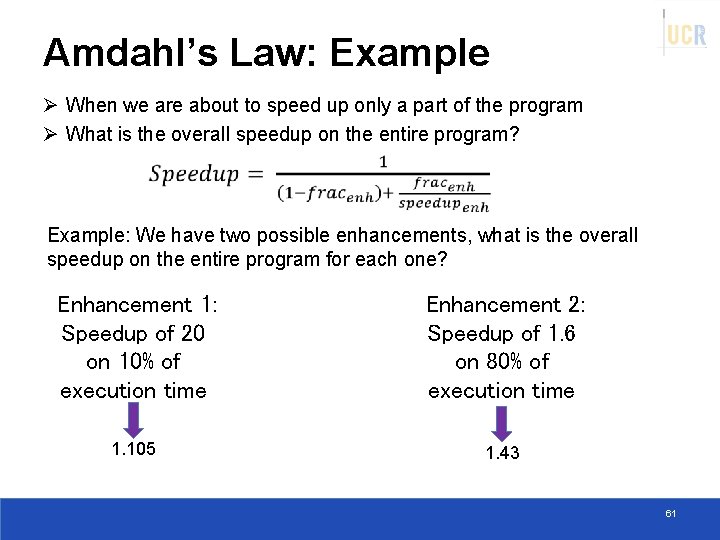

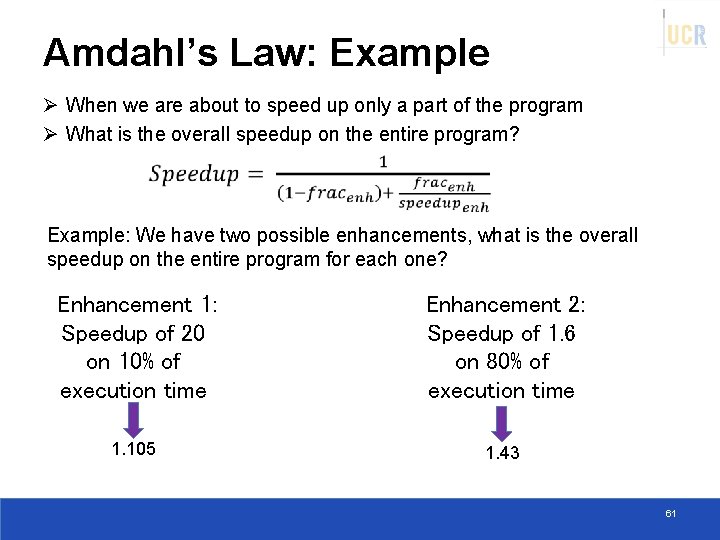

Amdahl’s Law: example. When we speed up only part of a program, what is the overall speedup on the entire program? We have two possible enhancements:
- Enhancement 1: speedup of 20 on 10% of execution time; overall speedup = 1 / (0.9 + 0.1/20) ≈ 1.105
- Enhancement 2: speedup of 1.6 on 80% of execution time; overall speedup = 1 / (0.2 + 0.8/1.6) ≈ 1.43
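Checking both enhancements numerically (again a minimal sketch with a hypothetical helper name) confirms that the modest speedup on the large fraction beats the large speedup on the small fraction, which is the "make the common case fast" corollary in action:

```python
def amdahl_speedup(fraction_enhanced: float, enhancement_speedup: float) -> float:
    """Overall speedup = 1 / ((1 - F) + F / S)."""
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / enhancement_speedup)

enh1 = amdahl_speedup(0.10, 20)    # 20x on 10% of execution time
enh2 = amdahl_speedup(0.80, 1.6)   # 1.6x on 80% of execution time

print(f"Enhancement 1: {enh1:.3f}x")  # 1.105x
print(f"Enhancement 2: {enh2:.2f}x")  # 1.43x
```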

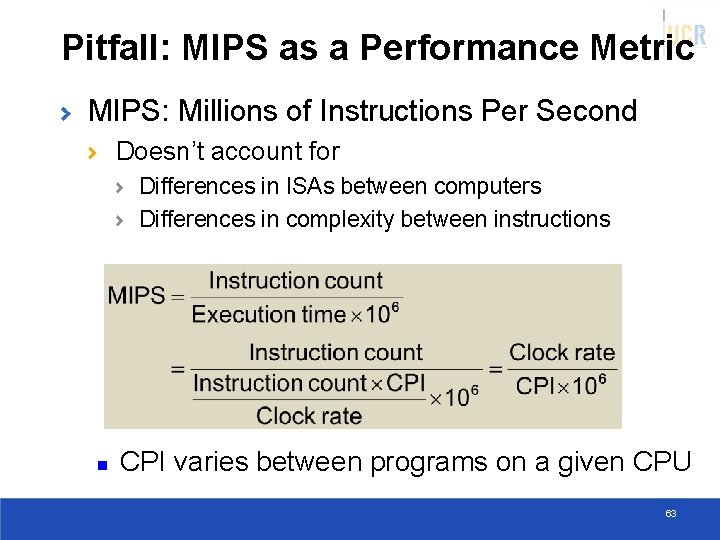

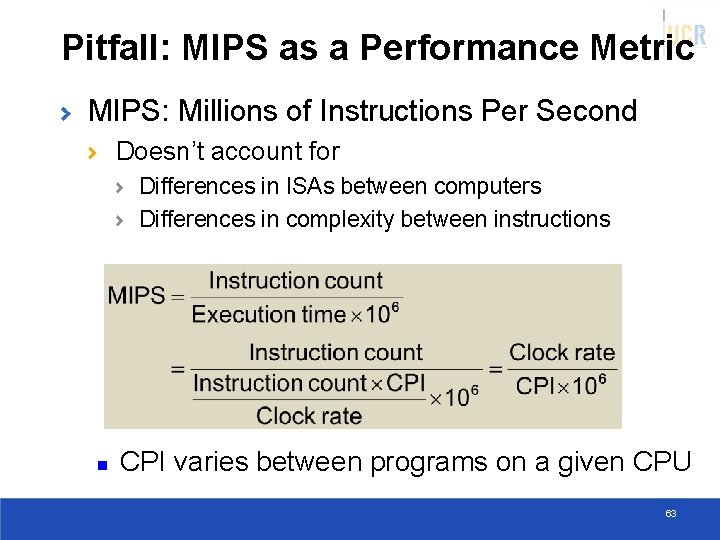

Pitfall: MIPS as a performance metric. MIPS (millions of instructions per second) = IC / (execution time × 10⁶). It doesn’t account for:
- differences in ISAs between computers
- differences in complexity between instructions

Also, CPI varies between programs on a given CPU.
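A small hypothetical comparison shows how MIPS can rank two options backwards. The sketch assumes a 1 GHz CPU and two invented code sequences for the same task: X uses many simple instructions (CPI 1.0), Y uses fewer, more complex ones (CPI 2.0). None of these numbers come from the slides.

```python
import math

CLOCK_HZ = 1e9  # hypothetical 1 GHz CPU

def exec_time_s(ic: int, cpi: float) -> float:
    """Execution time = IC x CPI / clock rate."""
    return ic * cpi / CLOCK_HZ

def mips(ic: int, time_s: float) -> float:
    """MIPS = IC / (execution time x 10^6)."""
    return ic / (time_s * 1e6)

# Two hypothetical code sequences for the same task on the same CPU
x_ic, x_cpi = 10_000_000, 1.0   # many simple instructions
y_ic, y_cpi = 4_000_000, 2.0    # fewer, more complex instructions

t_x = exec_time_s(x_ic, x_cpi)
t_y = exec_time_s(y_ic, y_cpi)
mips_x = mips(x_ic, t_x)
mips_y = mips(y_ic, t_y)

print(f"X: {t_x * 1e3:.0f} ms, {mips_x:.0f} MIPS")  # X: 10 ms, 1000 MIPS
print(f"Y: {t_y * 1e3:.0f} ms, {mips_y:.0f} MIPS")  # Y: 8 ms, 500 MIPS
```

X has twice the MIPS rating, yet Y finishes first; execution time is the metric that reflects what the user actually waits for.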

Concluding remarks
- Cost/performance is improving, due to underlying technology development
- Instruction set architecture: the hardware/software interface
- Execution time: the best performance measure
- Power is a limiting factor; use parallelism to improve performance
