CPS 110: Address translation

Landon Cox

- Slides: 42

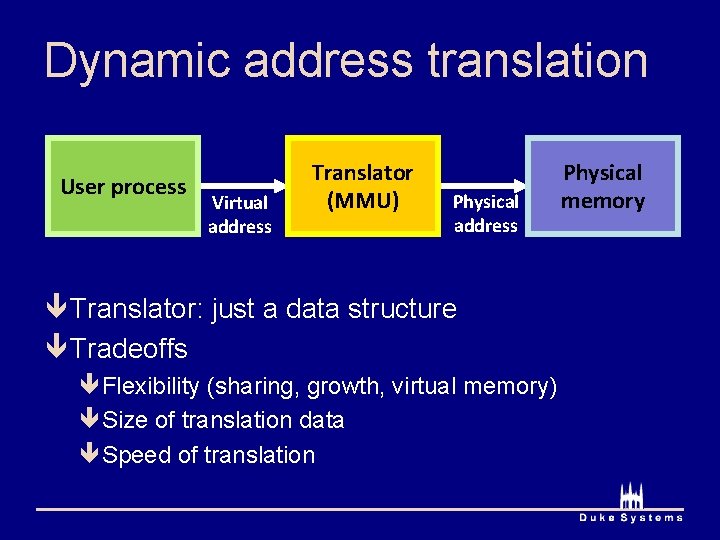

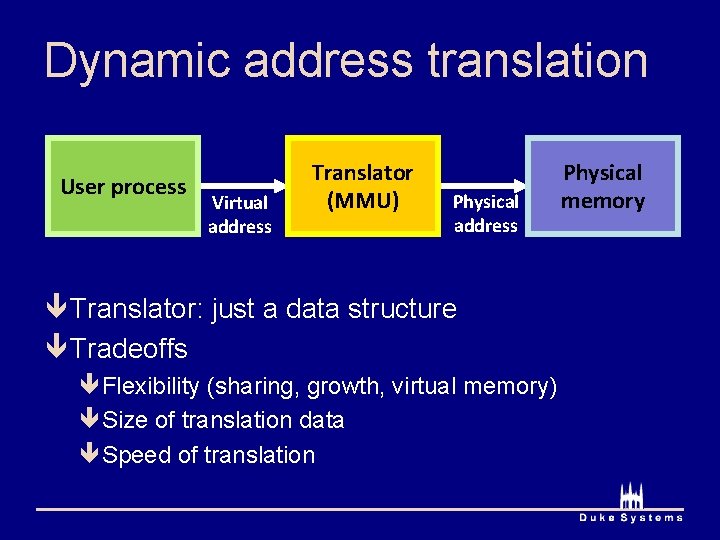

Dynamic address translation

Diagram: user process, virtual address, translator (MMU), physical address, physical memory.

- Translator: just a data structure
- Tradeoffs
  - Flexibility (sharing, growth, virtual memory)
  - Size of translation data
  - Speed of translation

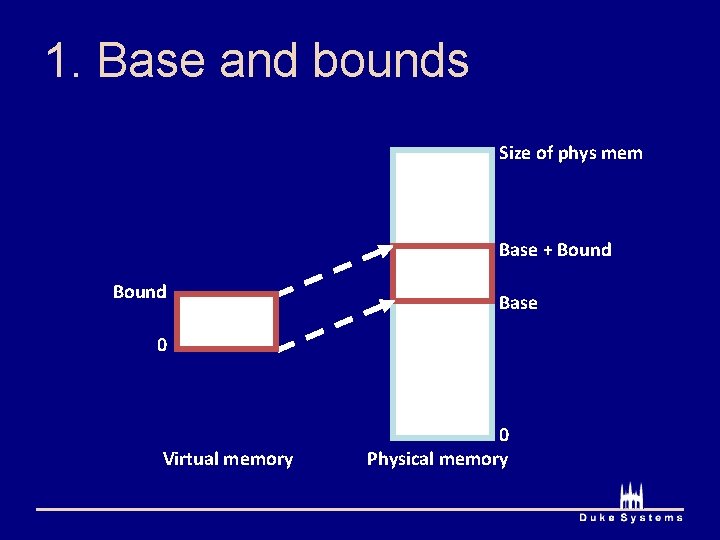

1. Base and bounds

- For each process
  - Single contiguous region of physical memory
  - Not allowed to access outside of region
  - Illusion of own physical memory: [0, bound)

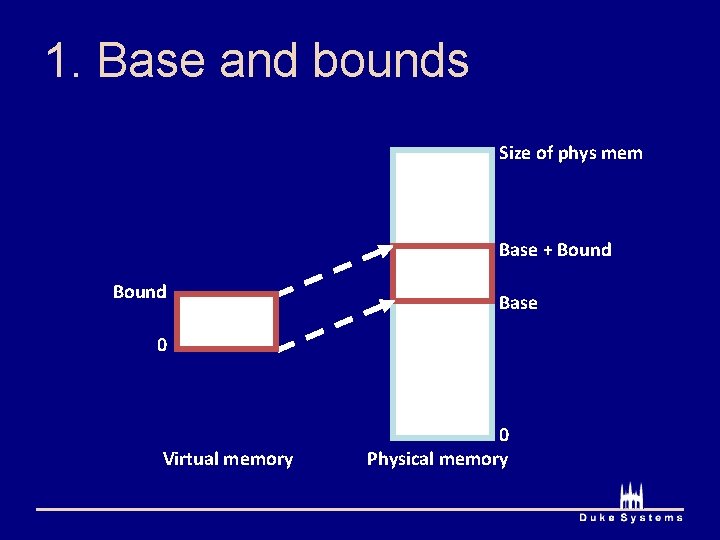

1. Base and bounds

Figure: virtual memory starts at 0; physical memory runs from 0 to the size of physical memory, with the process's region running from base to base + bound.

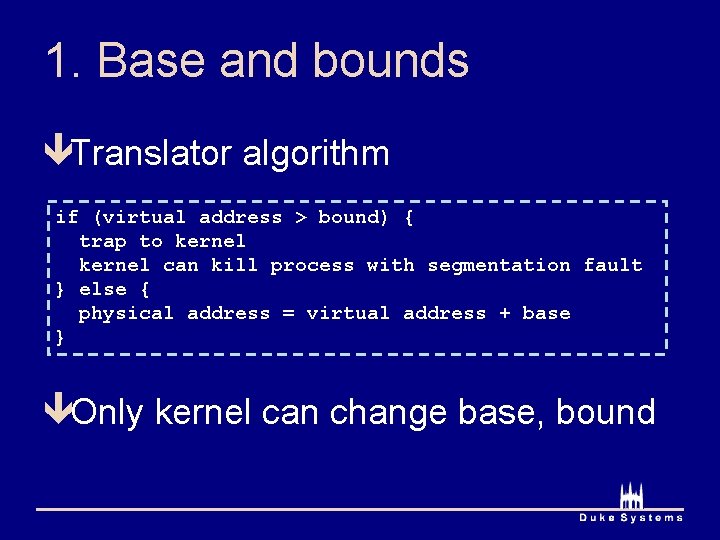

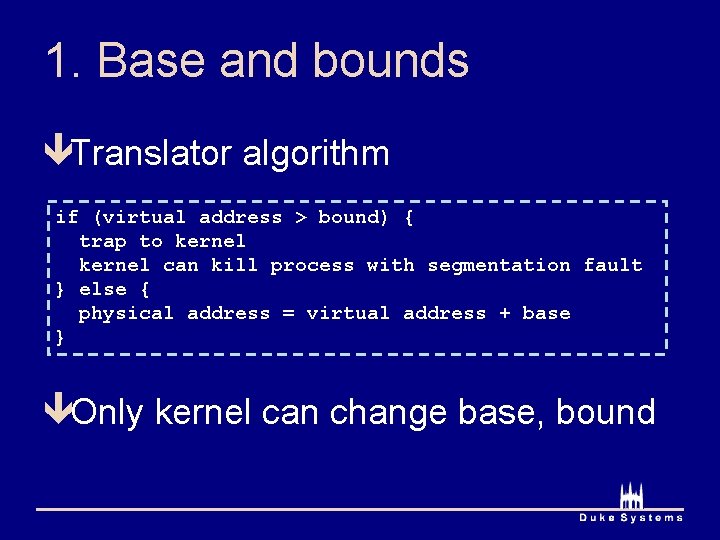

1. Base and bounds

- Translator algorithm

      if (virtual address >= bound) {
          trap to kernel
          (kernel can kill process with segmentation fault)
      } else {
          physical address = virtual address + base
      }

- Only the kernel can change base and bound
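
The translator algorithm above can be sketched in Python. This is a minimal illustration, not the hardware's actual logic: the names `translate` and `SegmentationFault` are hypothetical, and the check treats `bound` as exclusive, which matches the [0, bound) range from the earlier slide.

```python
class SegmentationFault(Exception):
    """Stands in for the trap to the kernel (hypothetical name)."""


def translate(virtual_address: int, base: int, bound: int) -> int:
    """Translate one virtual address under base and bounds.

    Valid virtual addresses are [0, bound); anything else traps.
    """
    if virtual_address < 0 or virtual_address >= bound:
        raise SegmentationFault(hex(virtual_address))
    return virtual_address + base


# Example values are assumptions chosen for illustration.
physical = translate(0x6FF, base=0x4000, bound=0x700)
print(hex(physical))
```

Only the kernel would be allowed to change `base` and `bound`; here they are plain arguments to keep the sketch short.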

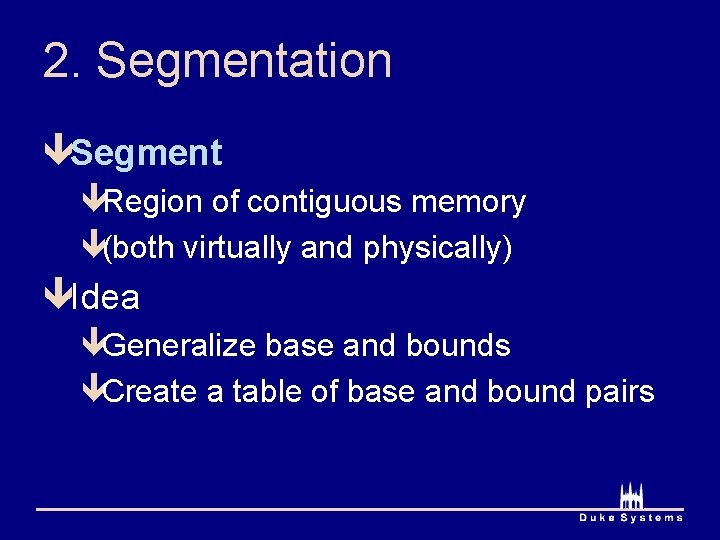

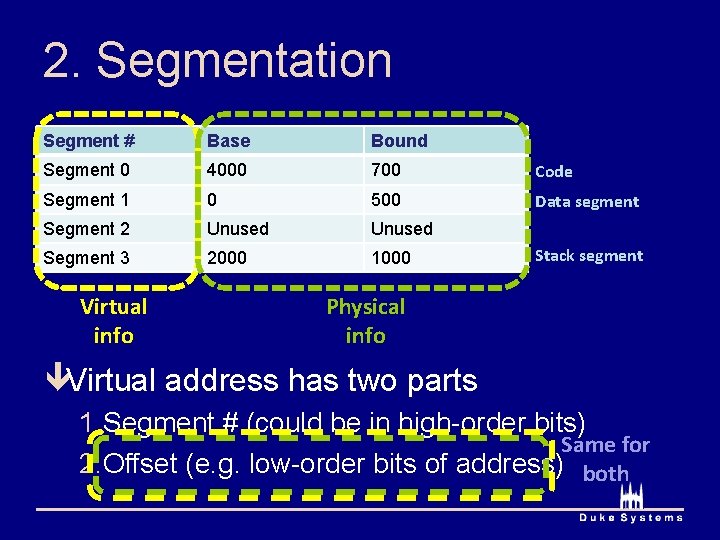

2. Segmentation

- Segment
  - Region of contiguous memory (both virtually and physically)
- Idea
  - Generalize base and bounds
  - Create a table of base and bound pairs

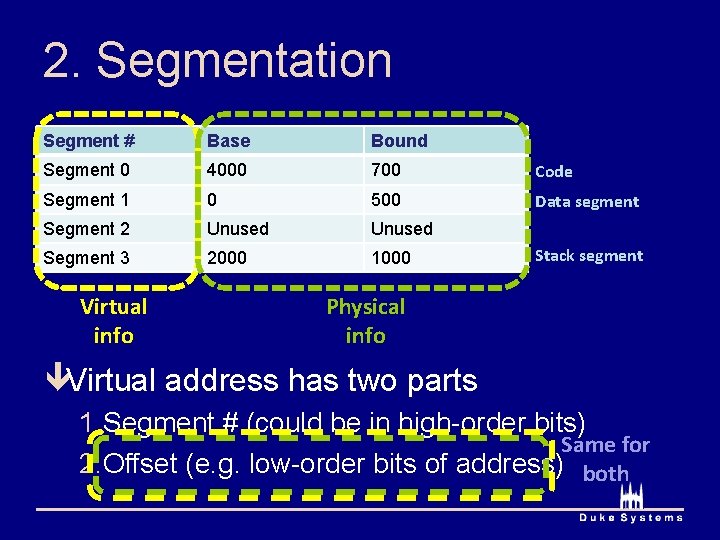

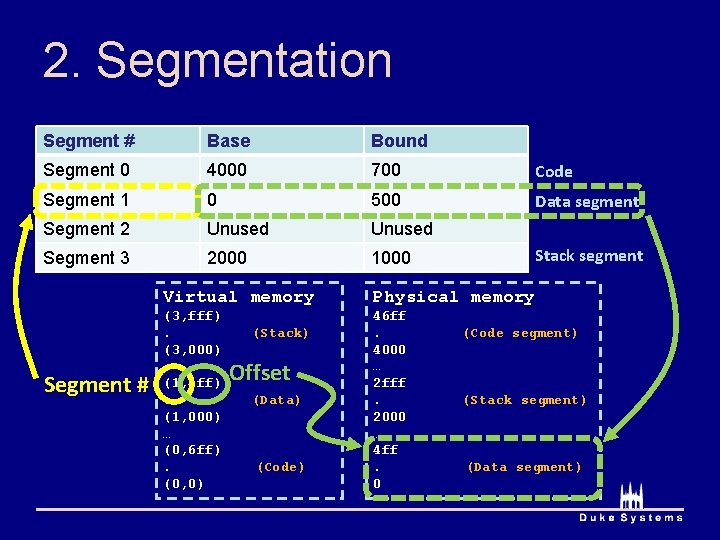

2. Segmentation

| Segment # (virtual info) | Base (physical info) | Bound (physical info) | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 0 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 2000 | 1000 | Stack segment |

- Virtual address has two parts
  1. Segment # (could be in high-order bits)
  2. Offset (e.g. low-order bits of address)

(Callout on the slide: same for both.)

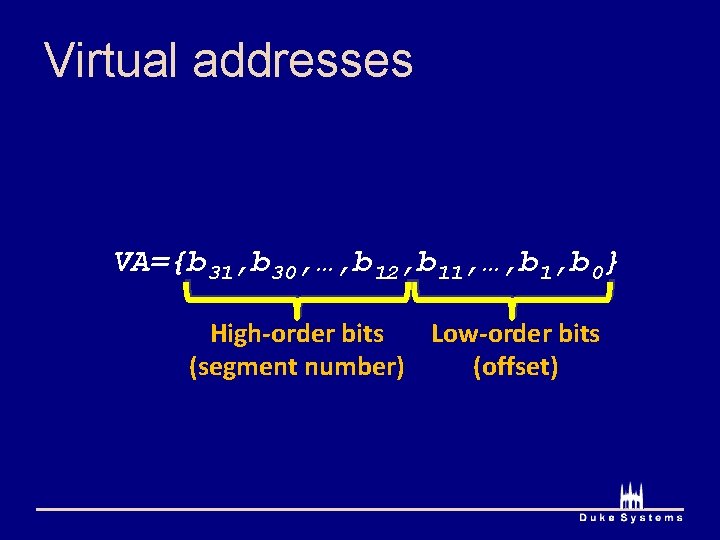

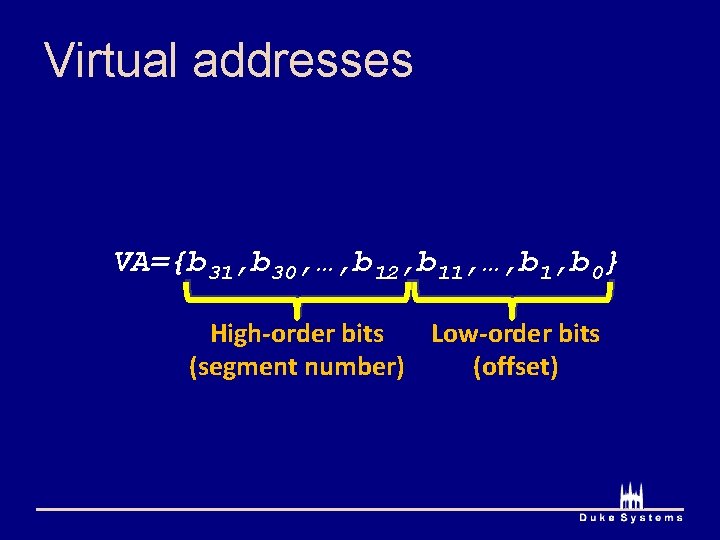

Virtual addresses

VA = {b31, b30, …, b12, b11, …, b1, b0}

- High-order bits (b31, …, b12): segment number
- Low-order bits (b11, …, b0): offset

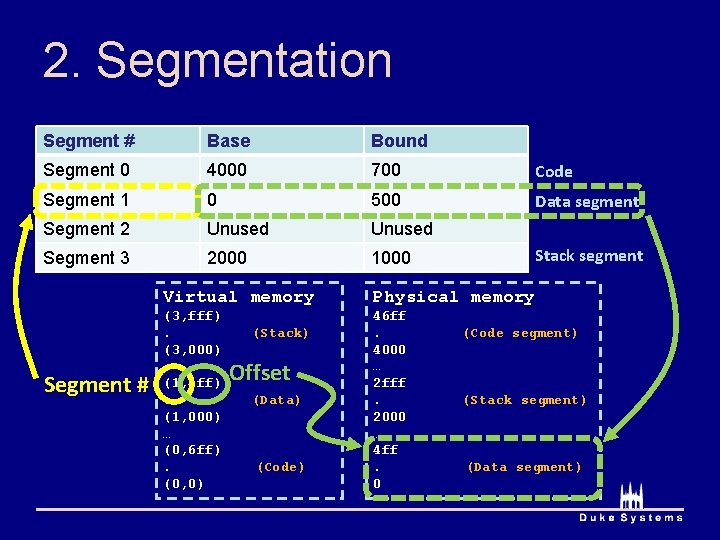

2. Segmentation

| Segment # | Base | Bound | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 0 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 2000 | 1000 | Stack segment |

Virtual memory, as (segment #, offset):

- (3, 000) to (3, fff): stack
- (1, 000) to (1, 4ff): data
- (0, 0) to (0, 6ff): code

Physical memory:

- 4000 to 46ff: code segment
- 2000 to 2fff: stack segment
- 0 to 4ff: data segment
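
The segment table on this slide can be turned into a small Python sketch of the lookup. It assumes a 12-bit offset with the segment number in the bits above it (consistent with offsets up to fff on the slide) and reads the table values as hexadecimal; `SEGMENT_TABLE`, `translate`, and `SegmentationFault` are hypothetical names.

```python
OFFSET_BITS = 12  # assumption: low 12 bits hold the offset

# Segment number -> (base, bound), hex values from the slide.
# Segment 2 is unused, so it has no entry.
SEGMENT_TABLE = {
    0: (0x4000, 0x700),   # code segment
    1: (0x0000, 0x500),   # data segment
    3: (0x2000, 0x1000),  # stack segment
}


class SegmentationFault(Exception):
    """Stands in for the trap to the kernel (hypothetical name)."""


def translate(virtual_address: int) -> int:
    """Split the address into (segment #, offset) and apply base and bound."""
    segment = virtual_address >> OFFSET_BITS
    offset = virtual_address & ((1 << OFFSET_BITS) - 1)
    entry = SEGMENT_TABLE.get(segment)
    if entry is None:
        raise SegmentationFault(f"unused segment {segment}")
    base, bound = entry
    if offset >= bound:
        raise SegmentationFault(f"offset {offset:#x} outside segment {segment}")
    return base + offset
```

With these assumptions, virtual address (0, 6ff) lands at physical 46ff and (3, fff) at 2fff, matching the layout above, while any address in segment 2 traps.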

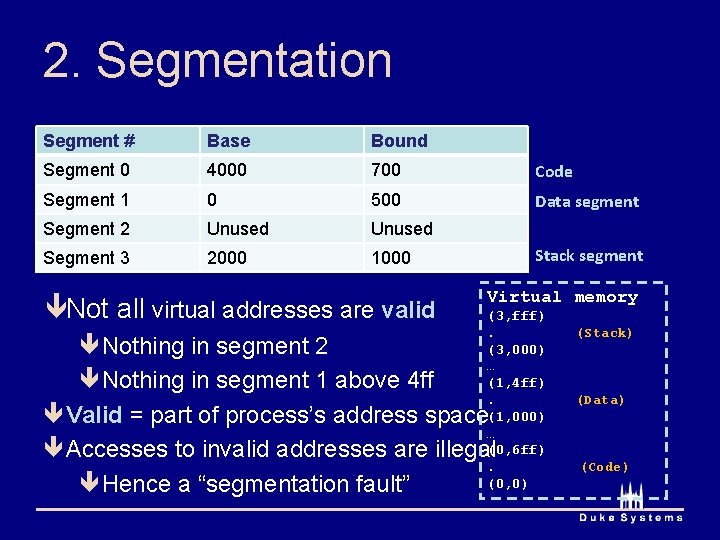

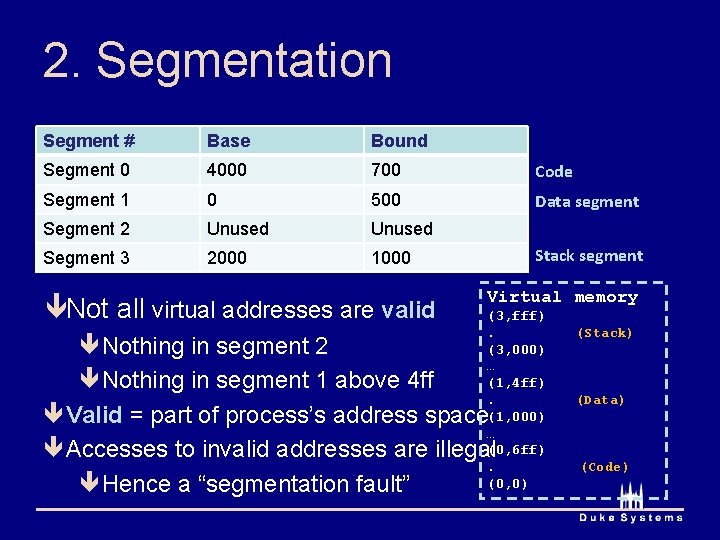

2. Segmentation

| Segment # | Base | Bound | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 0 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 2000 | 1000 | Stack segment |

Virtual memory, as (segment #, offset):

- (3, 000) to (3, fff): stack
- (1, 000) to (1, 4ff): data
- (0, 0) to (0, 6ff): code

- Not all virtual addresses are valid
  - Nothing in segment 2
  - Nothing in segment 1 above 4ff
- Valid = part of process’s address space
- Accesses to invalid addresses are illegal
  - Hence a “segmentation fault”

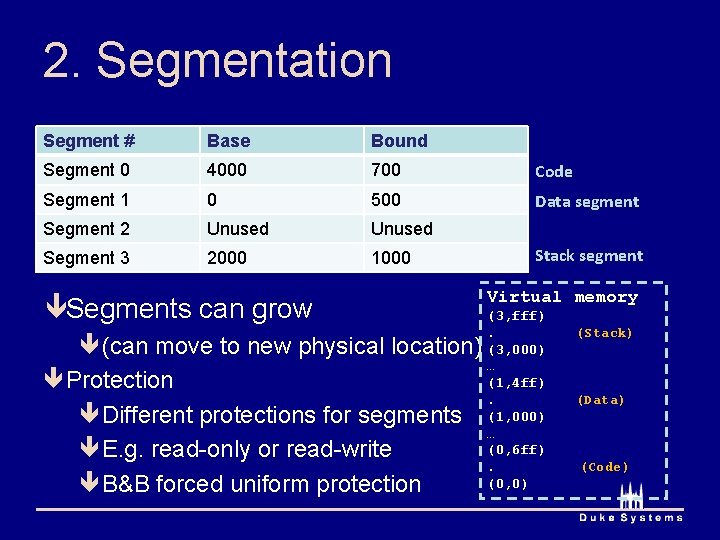

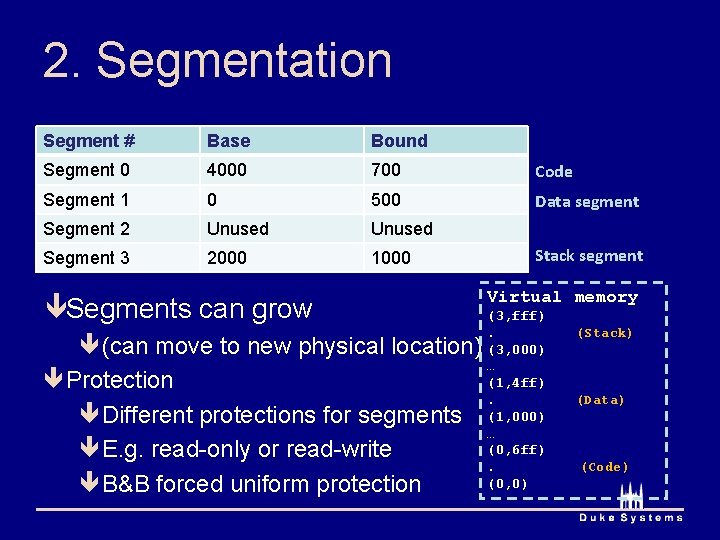

2. Segmentation

| Segment # | Base | Bound | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 0 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 2000 | 1000 | Stack segment |

Virtual memory, as (segment #, offset):

- (3, 000) to (3, fff): stack
- (1, 000) to (1, 4ff): data
- (0, 0) to (0, 6ff): code

- Segments can grow
  - (can move to new physical location)
- Protection
  - Different protections for segments
  - E.g. read-only or read-write
  - Base and bounds forced uniform protection

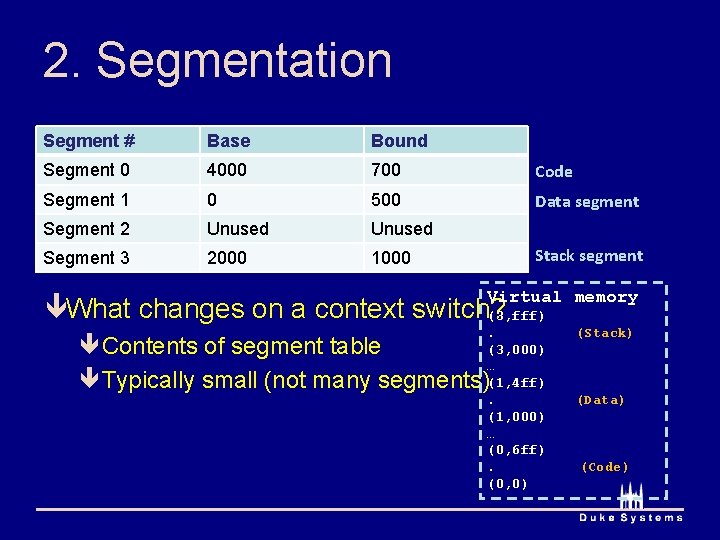

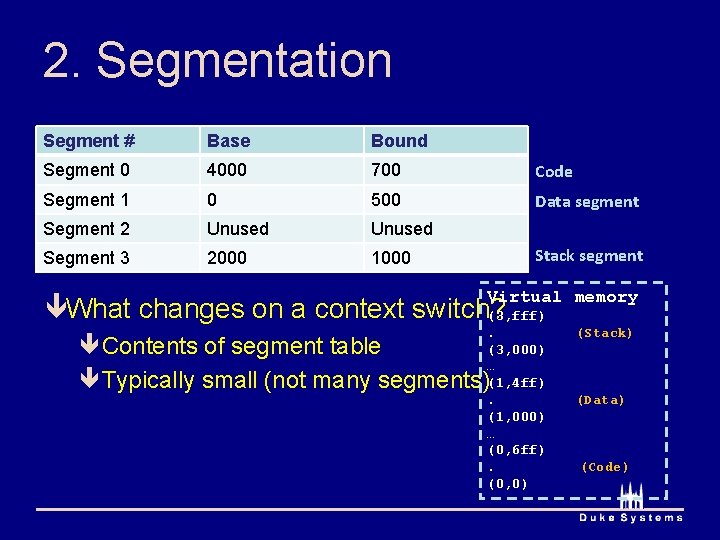

2. Segmentation

| Segment # | Base | Bound | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 0 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 2000 | 1000 | Stack segment |

Virtual memory, as (segment #, offset):

- (3, 000) to (3, fff): stack
- (1, 000) to (1, 4ff): data
- (0, 0) to (0, 6ff): code

- What changes on a context switch?
  - Contents of segment table
  - Typically small (not many segments)

Segmentation pros and cons

- Pros
  - Multiple areas of address space can grow separately
  - Easy to share parts of address space
    - (can share code segment)

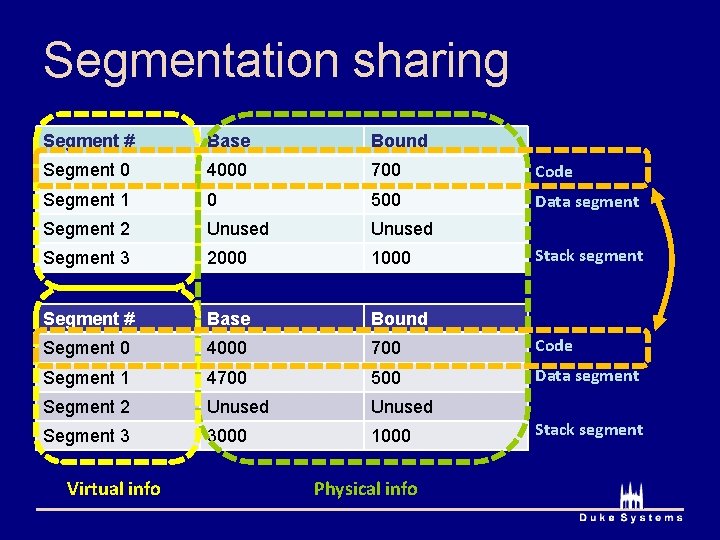

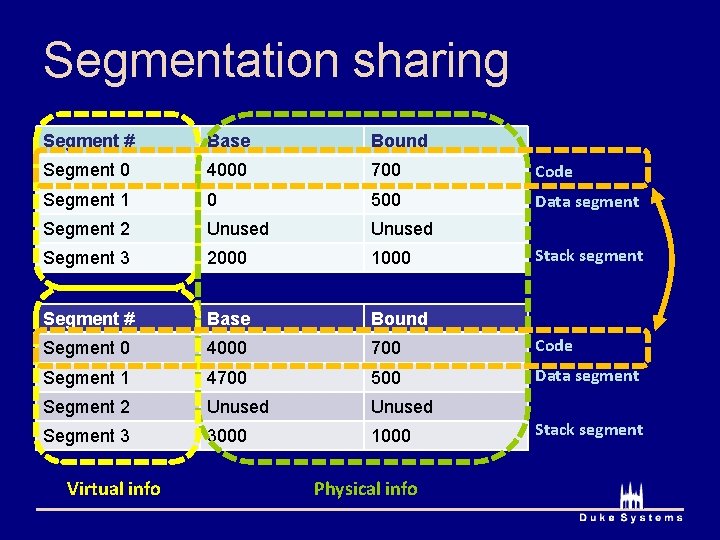

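Segmentation sharing

First segment table:

| Segment # | Base | Bound | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 0 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 2000 | 1000 | Stack segment |

Second segment table:

| Segment # | Base | Bound | Use |
| --- | --- | --- | --- |
| Segment 0 | 4000 | 700 | Code segment |
| Segment 1 | 4700 | 500 | Data segment |
| Segment 2 | Unused | | |
| Segment 3 | 3000 | 1000 | Stack segment |

Segment # is virtual info; base and bound are physical info. Both tables give segment 0 the same base and bound, so the code segment is shared, while the data and stack segments map to different physical regions.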

Segmentation pros and cons

- Pros
  - Multiple areas of address space can grow separately
  - Easy to share parts of address space
    - (can share code segment)
- Cons
  - Complex memory allocation
    - (still have external fragmentation)

2. Segmentation

- Do we get virtual memory?
  - (can an address space be larger than physical memory?)
  - Segments must be smaller than physical memory
  - Address space can contain multiple segments
  - Can swap segments in and out
- What makes this tricky?
  - Performance (segments are relatively large)
  - Complexity (odd segment sizes make this a packing problem)
- Solution: fixed-size segments called pages!

3. Paging

- Very similar to segmentation
  - Allocate memory in fixed-size chunks
  - Chunks are called pages
- Why fixed-size pages?
  - Allocation is greatly simplified
  - Just keep a list of free physical pages
  - Any physical page can store any virtual page

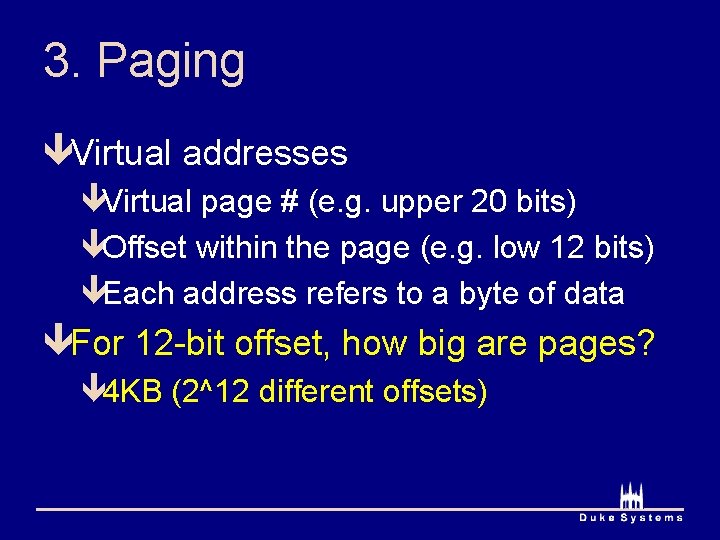

3. Paging

- Virtual addresses
  - Virtual page # (e.g. upper 20 bits)
  - Offset within the page (e.g. low 12 bits)
- Each address refers to a byte of data
- For a 12-bit offset, how big are pages?
  - 4 KB (2^12 different offsets)
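
The 20-bit/12-bit split above is just a shift and a mask. A minimal Python sketch, assuming 32-bit virtual addresses; `split` is a hypothetical helper name.

```python
OFFSET_BITS = 12
PAGE_SIZE = 1 << OFFSET_BITS  # 2^12 byte offsets per page, i.e. 4 KB


def split(virtual_address: int) -> tuple[int, int]:
    """Return (virtual page #, offset within the page)."""
    virtual_page = virtual_address >> OFFSET_BITS
    offset = virtual_address & (PAGE_SIZE - 1)
    return virtual_page, offset


page, offset = split(0x12345678)
print(hex(page), hex(offset))
```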

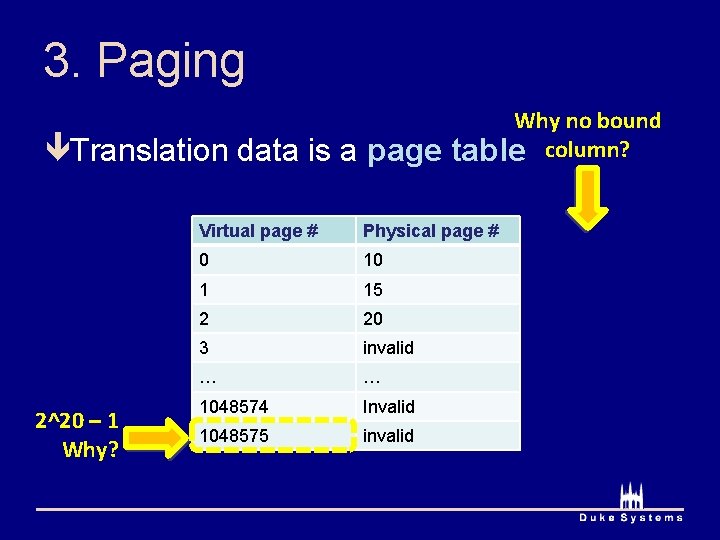

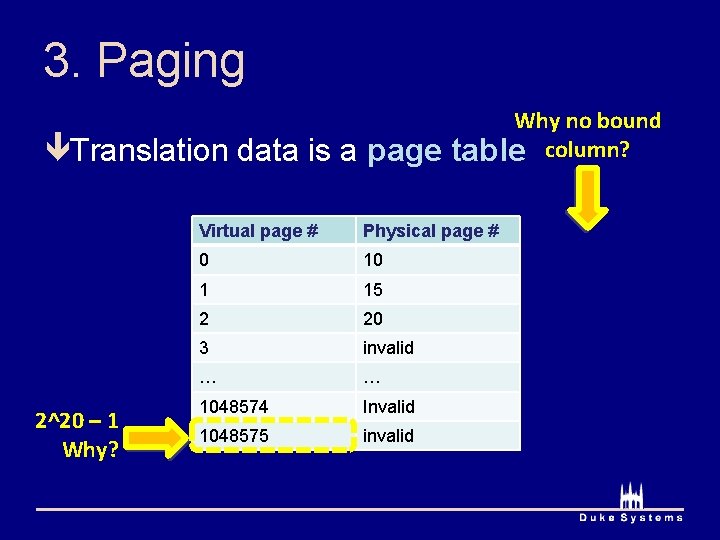

3. Paging

- Translation data is a page table

| Virtual page # | Physical page # |
| --- | --- |
| 0 | 10 |
| 1 | 15 |
| 2 | 20 |
| 3 | invalid |
| … | … |
| 1048574 | invalid |
| 1048575 | invalid |

- The last virtual page # is 2^20 – 1. Why?
- Why no bound column?

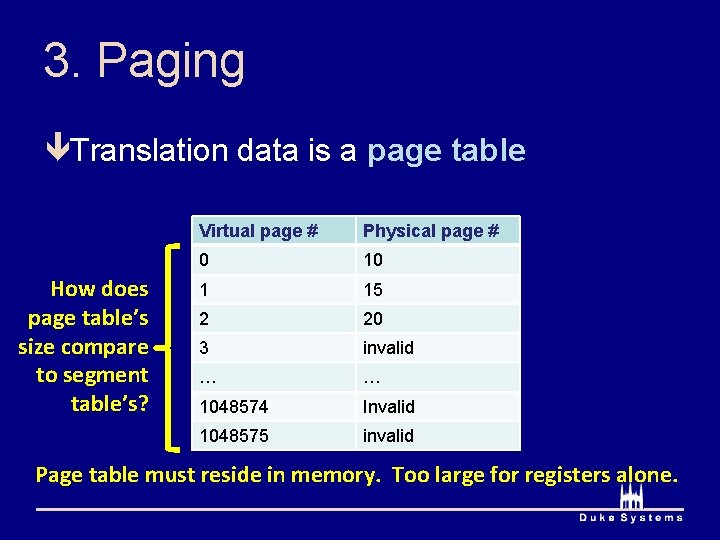

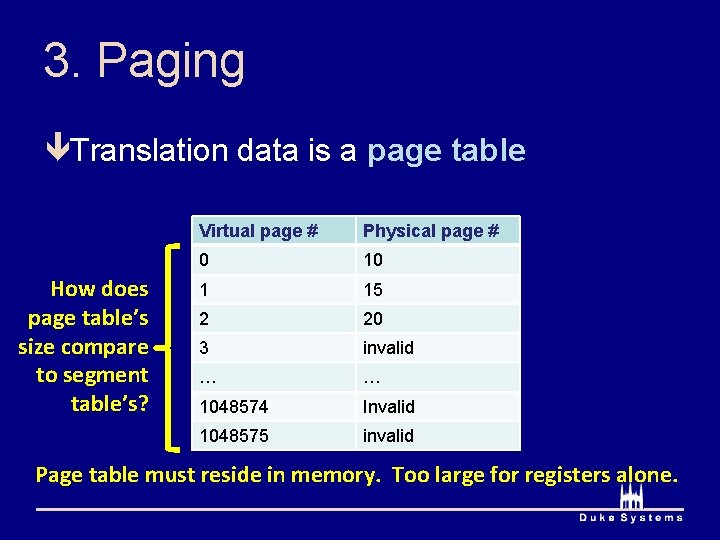

3. Paging

- Translation data is a page table

| Virtual page # | Physical page # |
| --- | --- |
| 0 | 10 |
| 1 | 15 |
| 2 | 20 |
| 3 | invalid |
| … | … |
| 1048574 | invalid |
| 1048575 | invalid |

- How does the page table’s size compare to the segment table’s?
- Page table must reside in memory. Too large for registers alone.

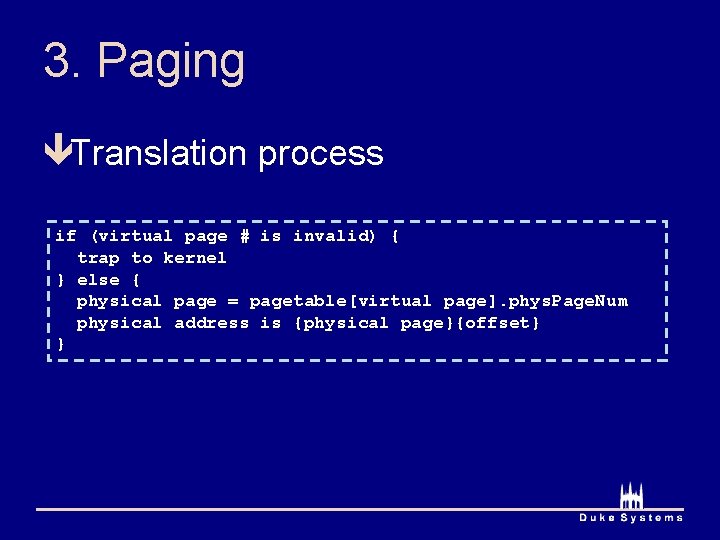

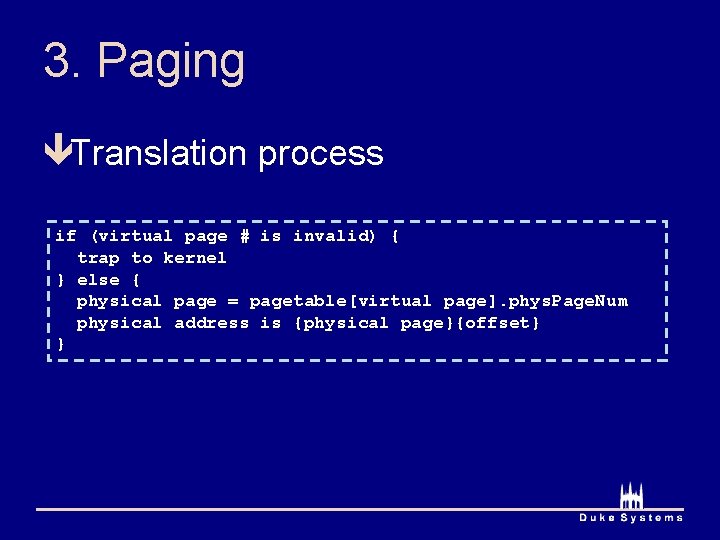

3. Paging

- Translation process

      if (virtual page # is invalid) {
          trap to kernel
      } else {
          physical page = pagetable[virtual page].physPageNum
          physical address is {physical page}{offset}
      }
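
The translation process above can be sketched in Python using the example mapping from the page table slide (0 to 10, 1 to 15, 2 to 20, everything else invalid). The 12-bit offset follows the earlier slide; `PAGE_TABLE`, `translate`, and `InvalidPageTrap` are hypothetical names, and a dict stands in for the flat array.

```python
OFFSET_BITS = 12

# Virtual page # -> physical page #; pages missing from the dict are invalid.
PAGE_TABLE = {0: 10, 1: 15, 2: 20}


class InvalidPageTrap(Exception):
    """Stands in for the trap to the kernel (hypothetical name)."""


def translate(virtual_address: int) -> int:
    virtual_page = virtual_address >> OFFSET_BITS
    offset = virtual_address & ((1 << OFFSET_BITS) - 1)
    if virtual_page not in PAGE_TABLE:
        raise InvalidPageTrap(hex(virtual_address))
    physical_page = PAGE_TABLE[virtual_page]
    # {physical page}{offset}: concatenate the two bit fields.
    return (physical_page << OFFSET_BITS) | offset
```

Note that there is no bound check on the offset: every offset within a page is valid, which is why the page table has no bound column.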

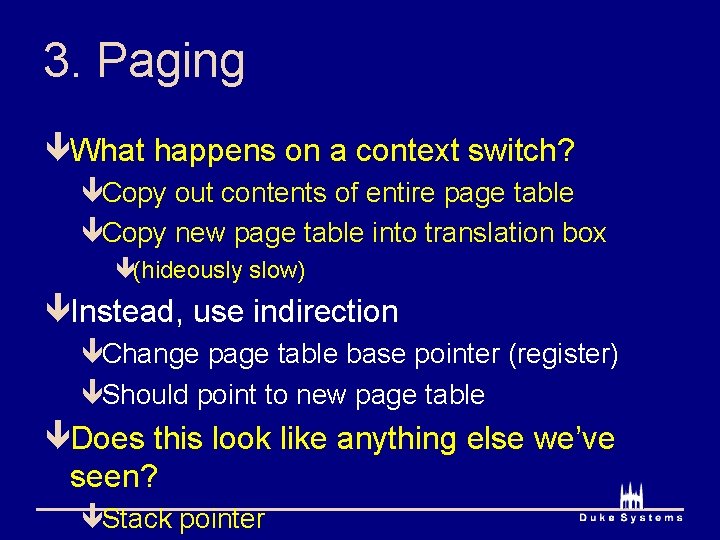

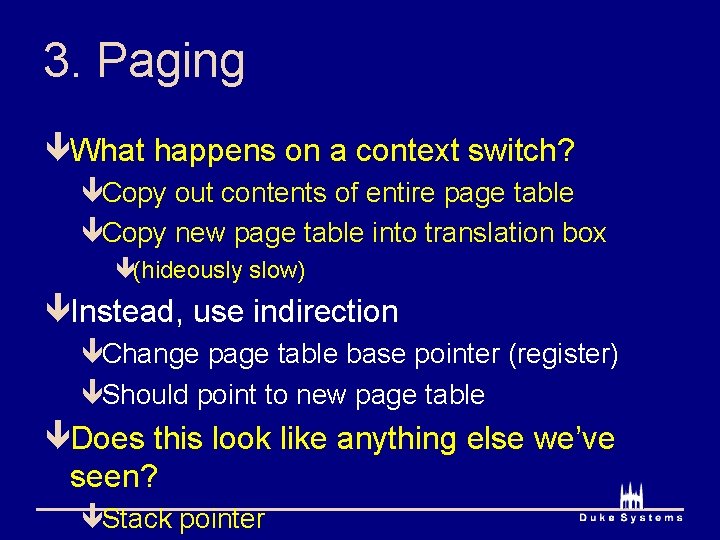

3. Paging

- What happens on a context switch?
  - Copy out contents of entire page table
  - Copy new page table into translation box
  - (hideously slow)
- Instead, use indirection
  - Change page table base pointer (register)
  - Should point to new page table
- Does this look like anything else we’ve seen?
  - Stack pointer

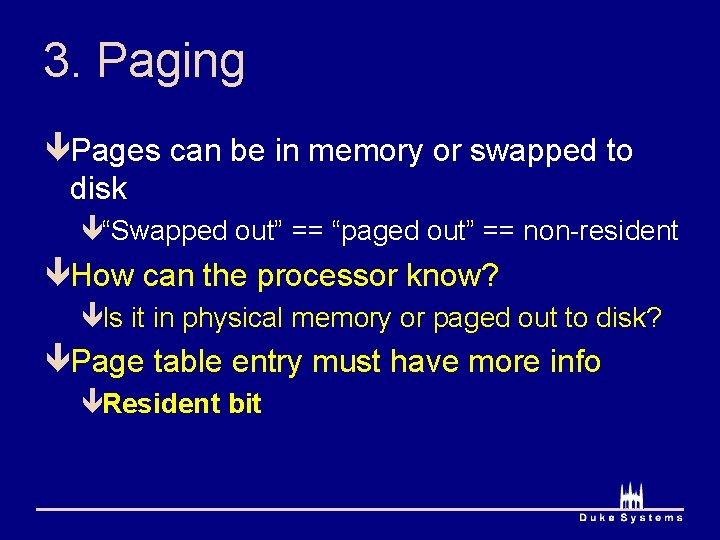

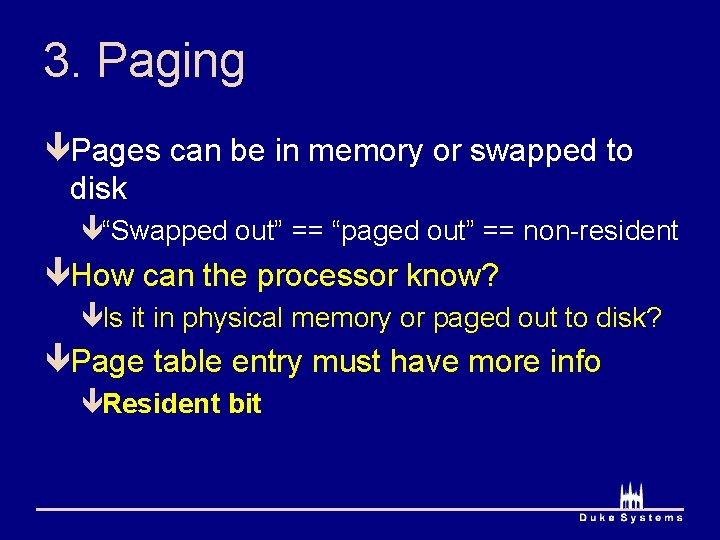

3. Paging

- Pages can be in memory or swapped to disk
  - “Swapped out” == “paged out” == non-resident
- How can the processor know?
  - Is it in physical memory or paged out to disk?
- Page table entry must have more info
  - Resident bit

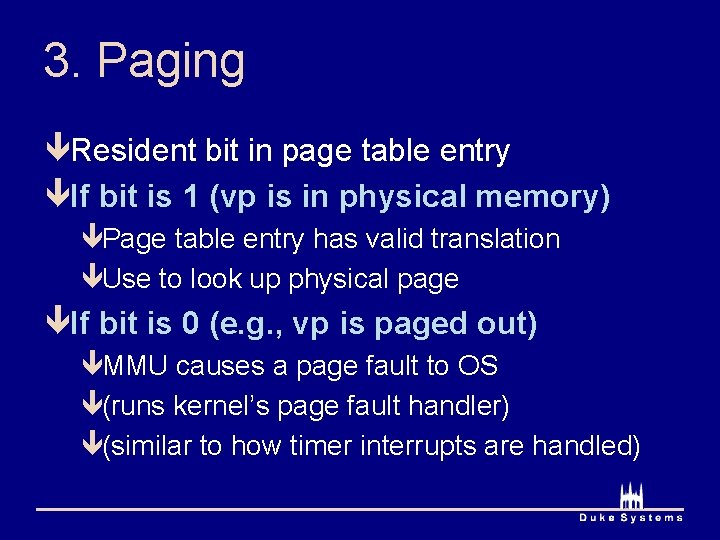

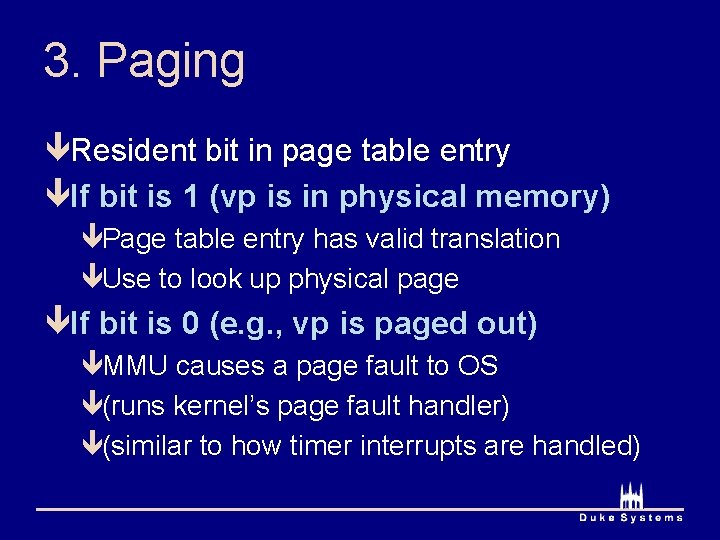

3. Paging

- Resident bit in page table entry
- If bit is 1 (virtual page is in physical memory)
  - Page table entry has valid translation
  - Use it to look up the physical page
- If bit is 0 (e.g., virtual page is paged out)
  - MMU causes a page fault to OS
  - (runs kernel’s page fault handler)
  - (similar to how timer interrupts are handled)

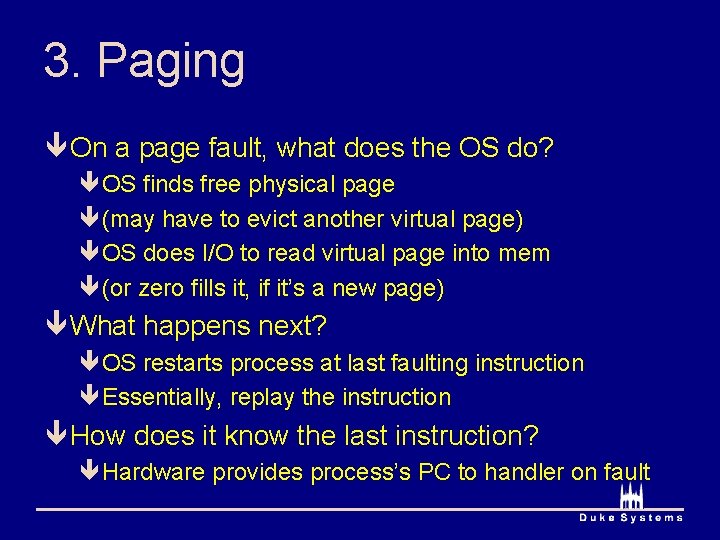

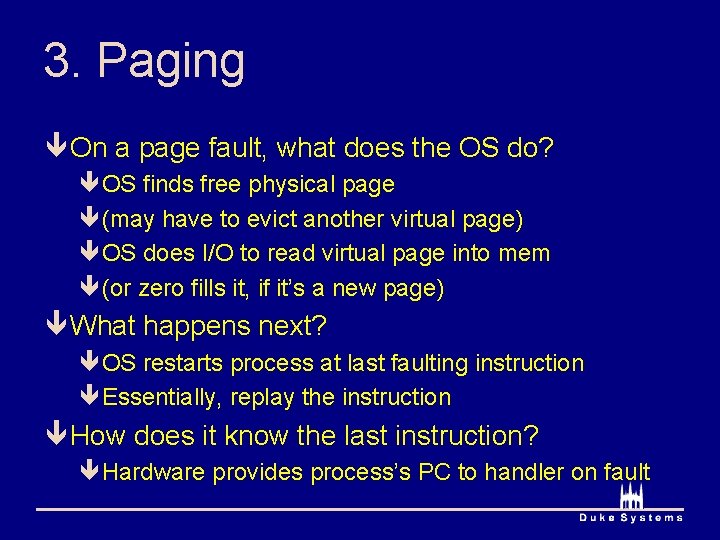

3. Paging

- On a page fault, what does the OS do?
  - OS finds free physical page
  - (may have to evict another virtual page)
  - OS does I/O to read virtual page into memory
  - (or zero fills it, if it’s a new page)
- What happens next?
  - OS restarts process at last faulting instruction
  - Essentially, replay the instruction
- How does it know the last instruction?
  - Hardware provides process’s PC to handler on fault
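
The fault-handling steps above can be sketched as a toy simulation. Everything here is an assumption made for illustration: the class and field names are hypothetical, "disk" is a dict, the eviction victim is simply the longest-resident page, and invalid pages are left out entirely.

```python
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096


@dataclass
class PTE:
    resident: bool = False
    phys_page: Optional[int] = None


class PageFault(Exception):
    def __init__(self, vpn: int):
        super().__init__(vpn)
        self.vpn = vpn


class ToyMemory:
    def __init__(self, num_phys_pages: int):
        self.page_table = {}  # virtual page # -> PTE (valid pages only)
        self.free_pages = list(range(num_phys_pages))
        self.frames = {}      # physical page # -> bytearray contents
        self.owner = {}       # physical page # -> virtual page # stored there
        self.disk = {}        # virtual page # -> bytes of a paged-out page

    def mmu_lookup(self, vpn: int) -> int:
        pte = self.page_table[vpn]
        if not pte.resident:          # resident bit is 0: fault to the OS
            raise PageFault(vpn)
        return pte.phys_page

    def evict(self) -> None:
        frame, victim = next(iter(self.owner.items()))
        self.disk[victim] = bytes(self.frames.pop(frame))  # write victim out
        del self.owner[frame]
        self.page_table[victim] = PTE()                    # now non-resident
        self.free_pages.append(frame)

    def handle_fault(self, vpn: int) -> None:
        if not self.free_pages:       # may have to evict another virtual page
            self.evict()
        frame = self.free_pages.pop()
        saved = self.disk.pop(vpn, None)
        # Read the page back in, or zero-fill it if it is a new page.
        self.frames[frame] = bytearray(saved) if saved is not None else bytearray(PAGE_SIZE)
        self.owner[frame] = vpn
        self.page_table[vpn] = PTE(resident=True, phys_page=frame)

    def access(self, vpn: int) -> int:
        while True:                   # replay the access after each fault
            try:
                return self.mmu_lookup(vpn)
            except PageFault as fault:
                self.handle_fault(fault.vpn)
```

The retry loop in `access` plays the role of restarting the faulting instruction; a real kernel resumes at the program counter that the hardware saved.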

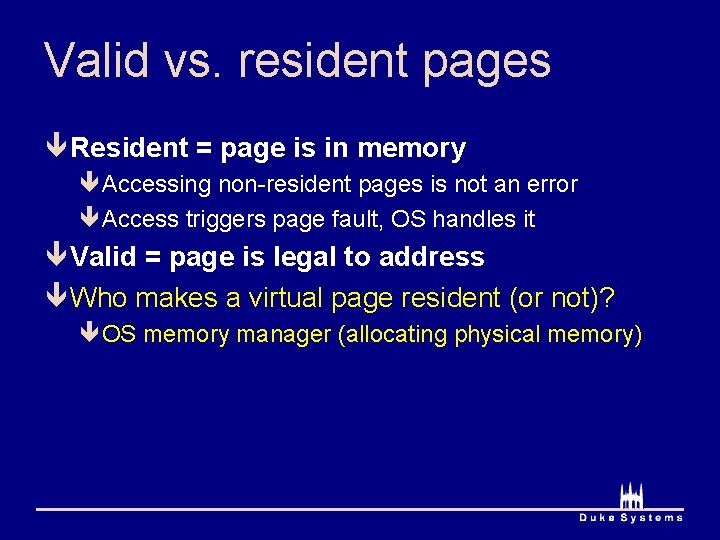

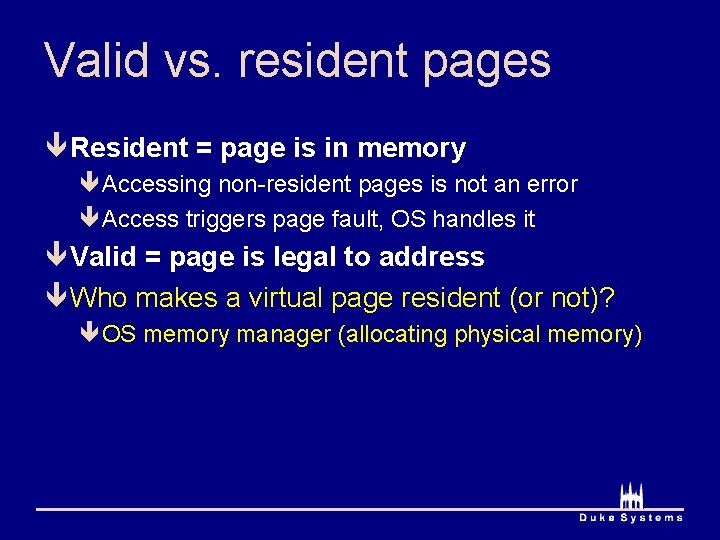

Valid vs. resident pages

- Resident = page is in memory
  - Accessing non-resident pages is not an error
  - Access triggers page fault, OS handles it
- Valid = page is legal to address
- Who makes a virtual page resident (or not)?
  - OS memory manager (allocating physical memory)

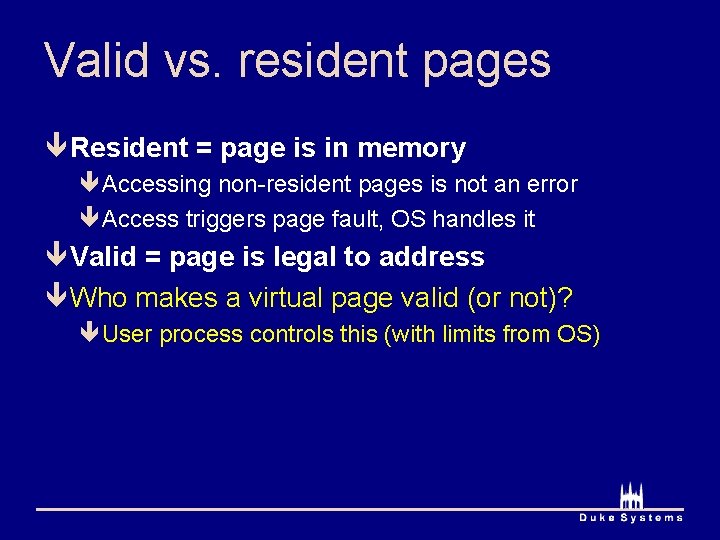

Valid vs. resident pages

- Resident = page is in memory
  - Accessing non-resident pages is not an error
  - Access triggers page fault, OS handles it
- Valid = page is legal to address
- Who makes a virtual page valid (or not)?
  - User process controls this (with limits from OS)

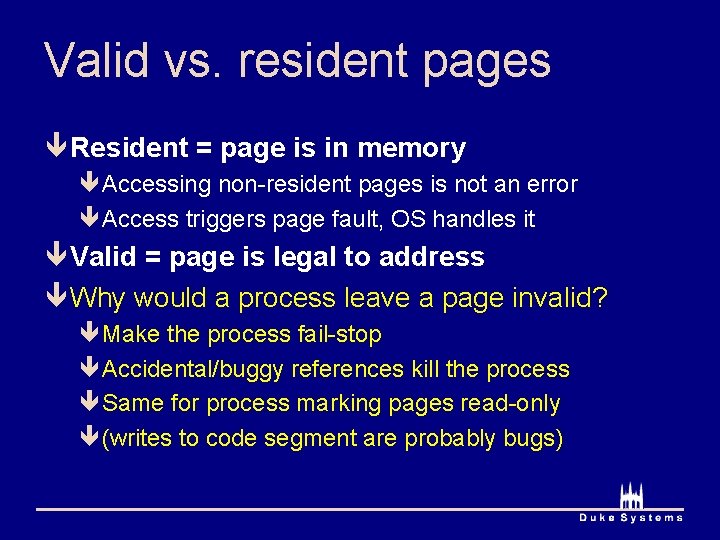

Valid vs. resident pages

- Resident = page is in memory
  - Accessing non-resident pages is not an error
  - Access triggers page fault, OS handles it
- Valid = page is legal to address
- Why would a process leave a page invalid?
  - Make the process fail-stop
  - Accidental/buggy references kill the process
  - Same for process marking pages read-only
  - (writes to code segment are probably bugs)

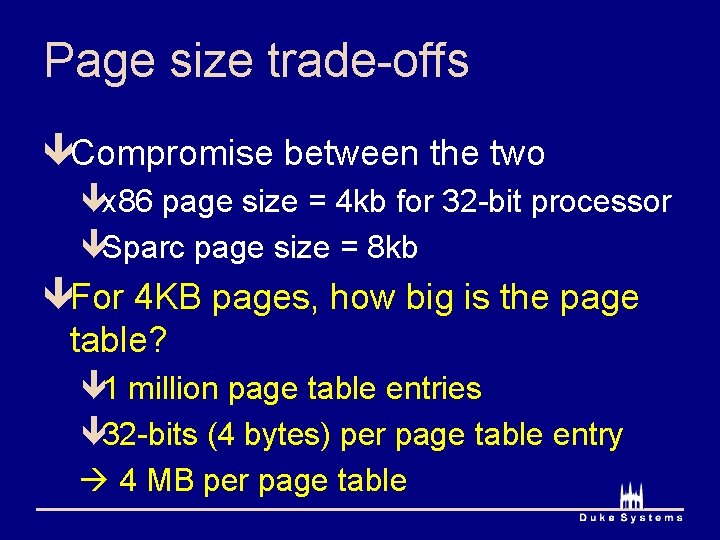

Page size trade-offs

- If page size is too small
  - Lots of page table entries
  - Big page table
- If we use 4-byte pages with 4-byte PTEs
  - Num PTEs = 2^30 = 1 billion
  - 1 billion PTEs * 4 bytes/PTE = 4 GB
  - Would take up entire address space!

Page size trade-offs

- What if we use really big (1 GB) pages?
  - Internal fragmentation
  - Wasted space within the page
- Recall external fragmentation
  - (wasted space between pages/segments)

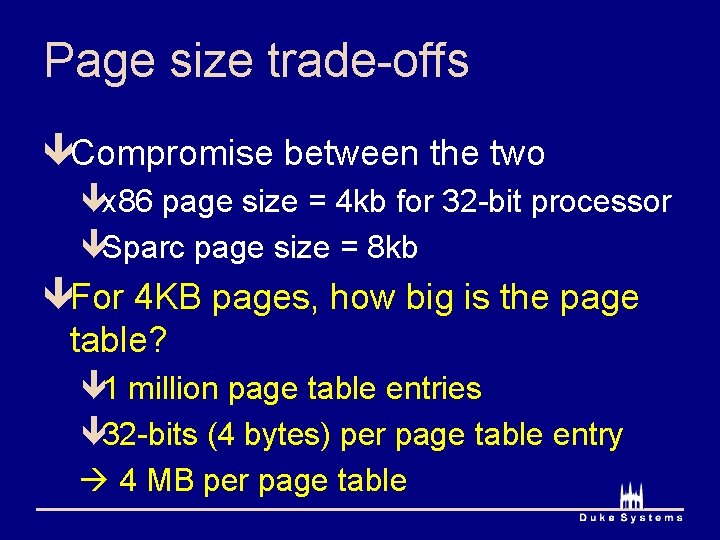

Page size trade-offs

- Compromise between the two
  - x86 page size = 4 KB for 32-bit processor
  - SPARC page size = 8 KB
- For 4 KB pages, how big is the page table?
  - 1 million page table entries
  - 32 bits (4 bytes) per page table entry
  - 4 MB per page table
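
The page table size arithmetic on these slides can be checked with a short Python sketch. It assumes a 32-bit virtual address space and a flat, single-level table; `flat_page_table_bytes` is a hypothetical helper name.

```python
MB = 1 << 20
GB = 1 << 30


def flat_page_table_bytes(address_bits: int, page_size: int, pte_bytes: int = 4) -> int:
    """Size of a flat page table with one PTE per virtual page."""
    num_ptes = (1 << address_bits) // page_size
    return num_ptes * pte_bytes


# 4 KB pages: 2^20 (about 1 million) PTEs of 4 bytes each.
print(flat_page_table_bytes(32, 4096) // MB, "MB")
# 4-byte pages: 2^30 (about 1 billion) PTEs of 4 bytes each.
print(flat_page_table_bytes(32, 4) // GB, "GB")
```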

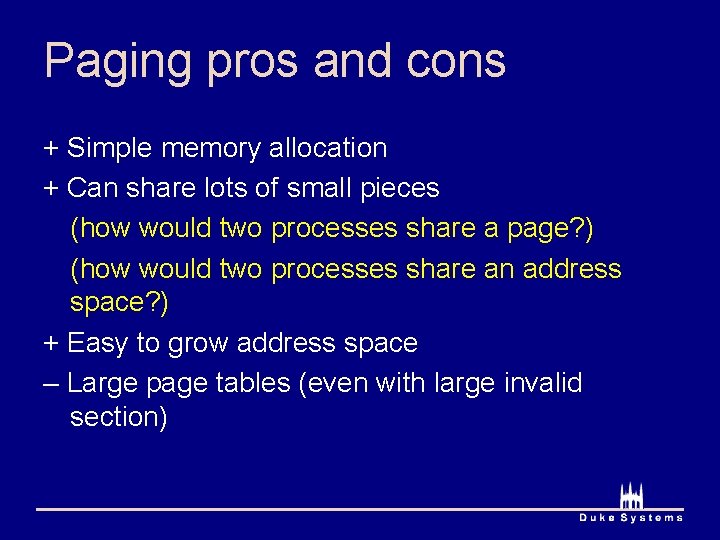

Paging pros and cons

- Pros
  - Simple memory allocation
  - Can share lots of small pieces
    - (how would two processes share a page?)
    - (how would two processes share an address space?)
  - Easy to grow address space
- Cons
  - Large page tables (even with large invalid section)

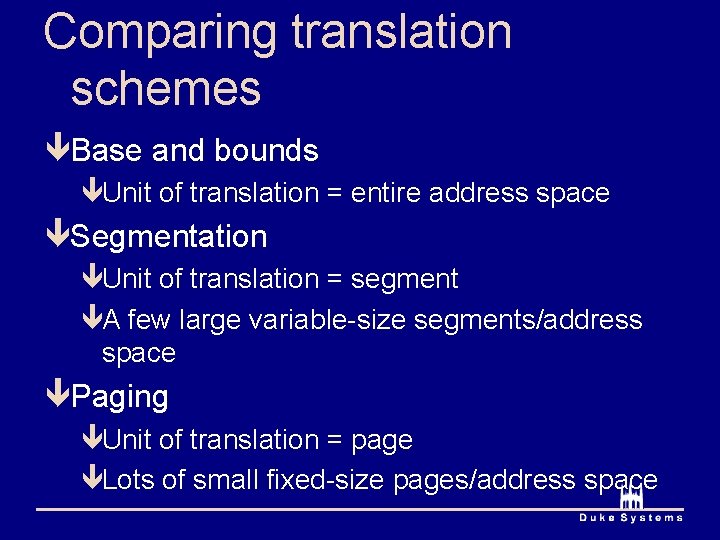

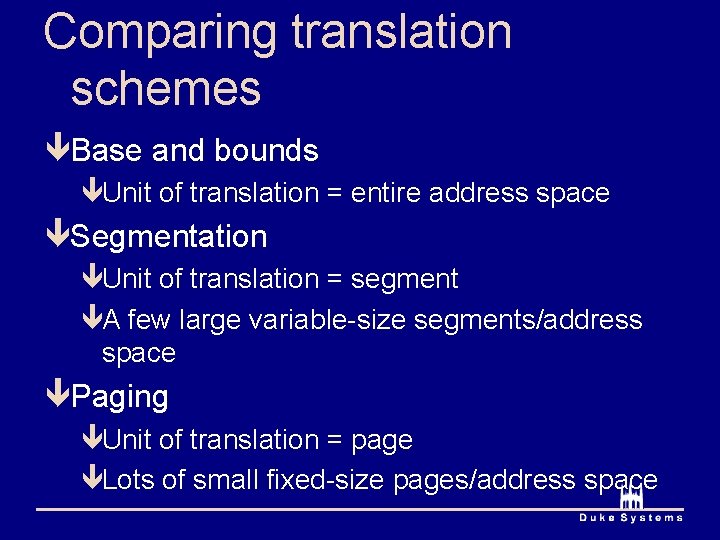

Comparing translation schemes

- Base and bounds
  - Unit of translation = entire address space
- Segmentation
  - Unit of translation = segment
  - A few large variable-size segments per address space
- Paging
  - Unit of translation = page
  - Lots of small fixed-size pages per address space

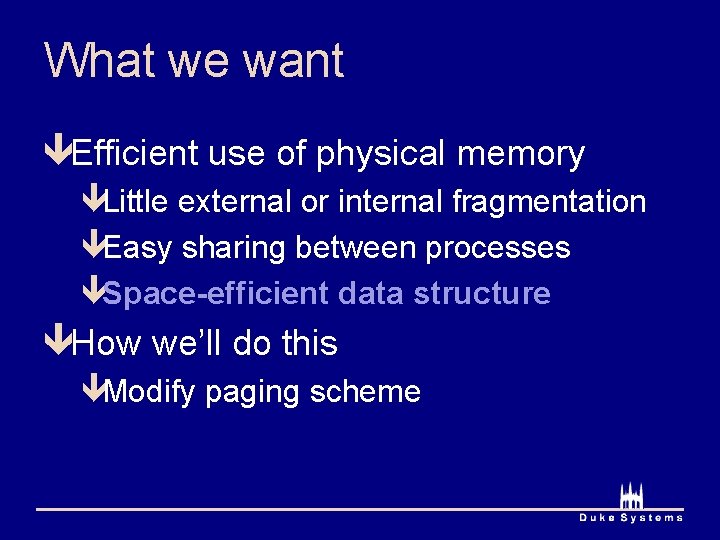

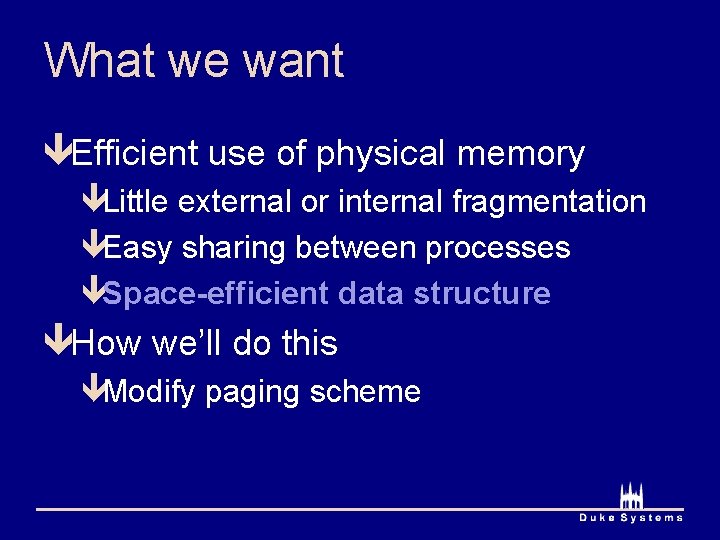

What we want

- Efficient use of physical memory
  - Little external or internal fragmentation
- Easy sharing between processes
- Space-efficient data structure
- How we’ll do this
  - Modify paging scheme

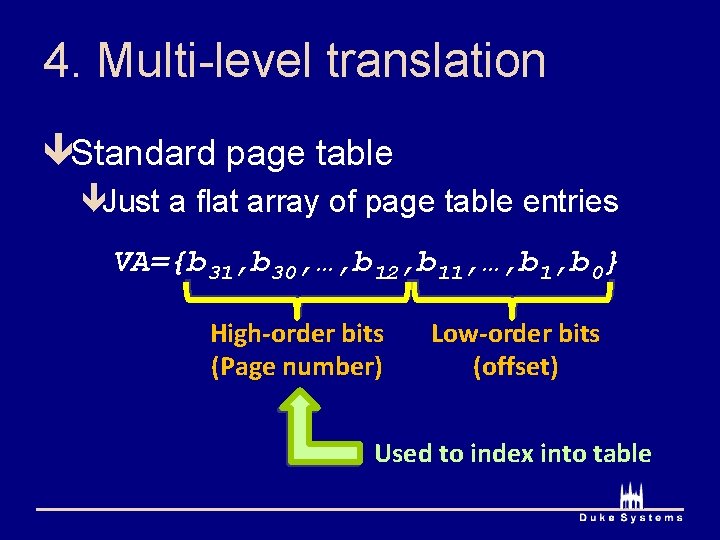

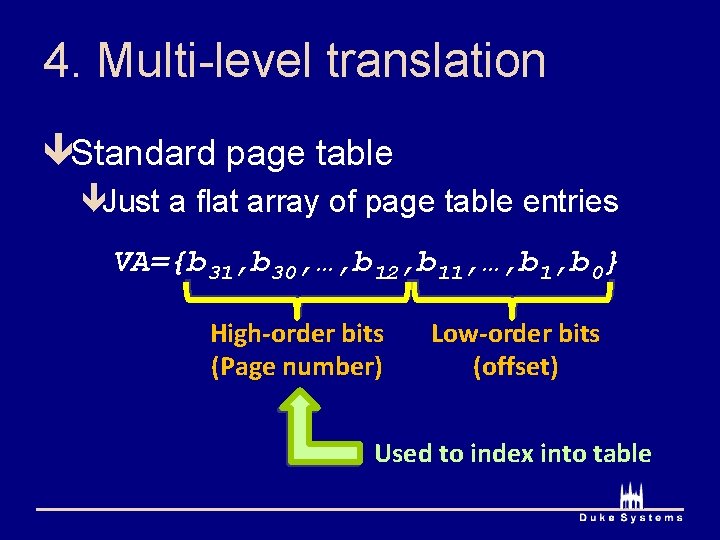

4. Multi-level translation

- Standard page table
  - Just a flat array of page table entries

VA = {b31, b30, …, b12, b11, …, b1, b0}

- High-order bits (b31, …, b12): page number, used to index into table
- Low-order bits (b11, …, b0): offset

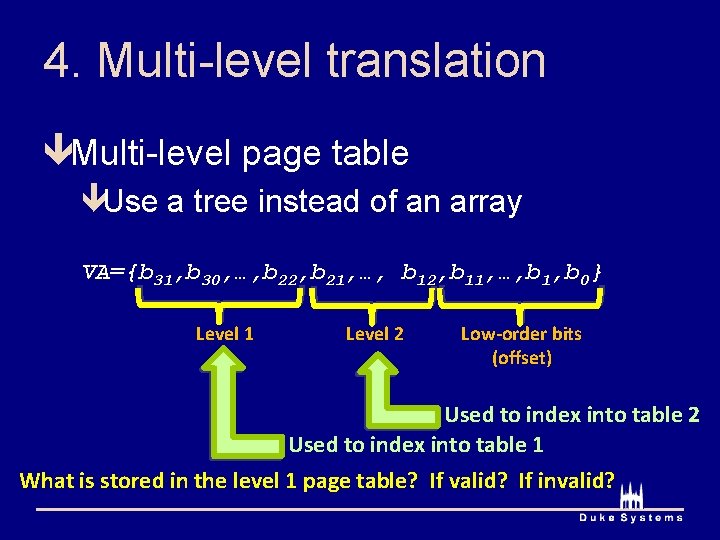

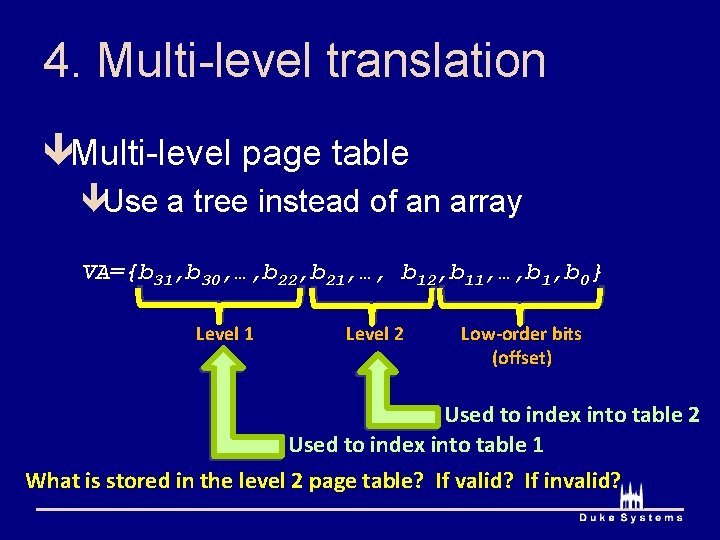

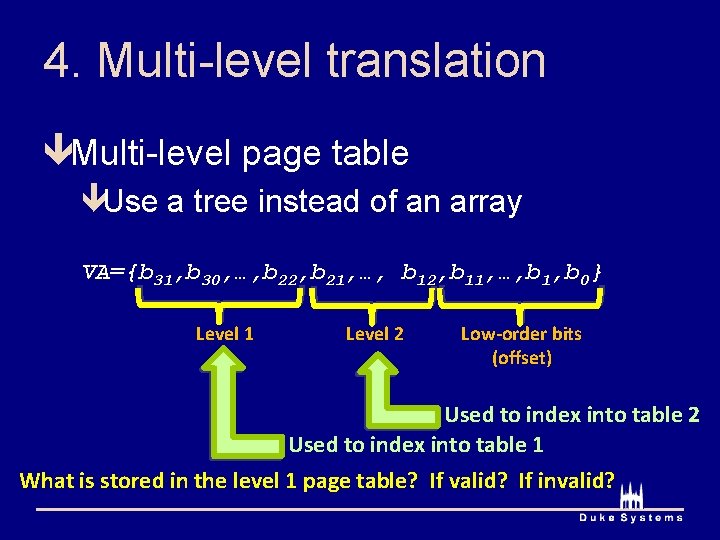

4. Multi-level translation

- Multi-level page table
  - Use a tree instead of an array

VA = {b31, b30, …, b22, b21, …, b12, b11, …, b1, b0}

- Level 1 (b31, …, b22): used to index into table 1
- Level 2 (b21, …, b12): used to index into table 2
- Low-order bits (b11, …, b0): offset

What is stored in the level 1 page table? If valid? If invalid?

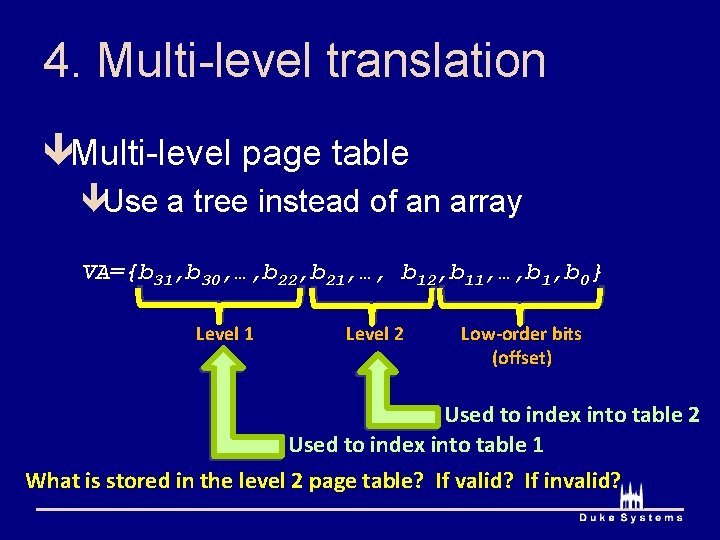

4. Multi-level translation

- Multi-level page table
  - Use a tree instead of an array

VA = {b31, b30, …, b22, b21, …, b12, b11, …, b1, b0}

- Level 1 (b31, …, b22): used to index into table 1
- Level 2 (b21, …, b12): used to index into table 2
- Low-order bits (b11, …, b0): offset

What is stored in the level 2 page table? If valid? If invalid?

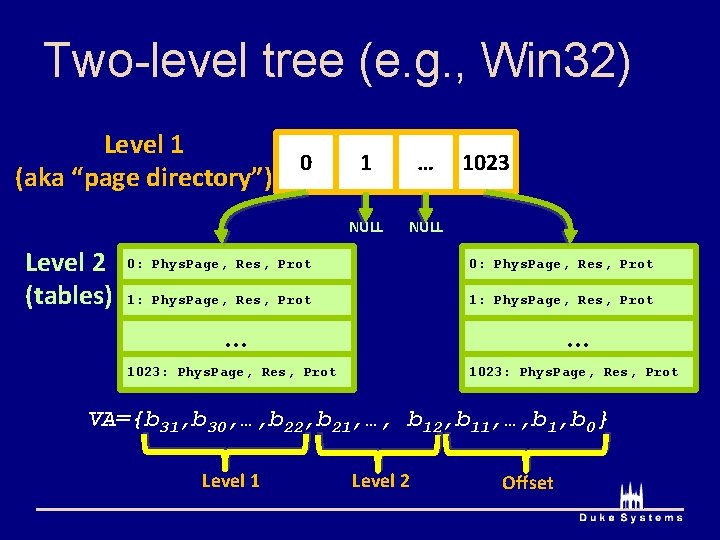

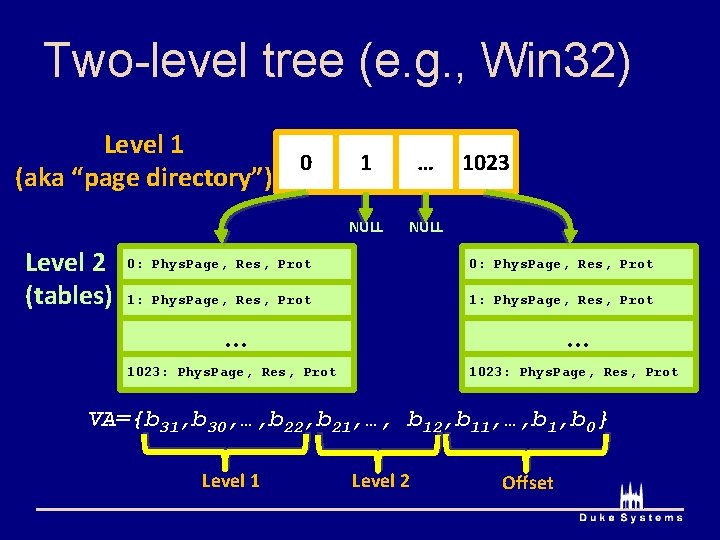

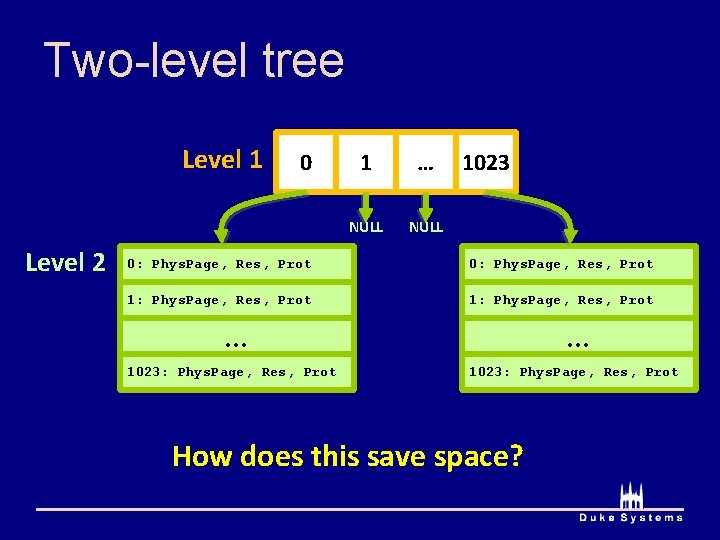

Two-level tree (e.g., Win32)

Figure: a two-level tree.

- Level 1 (aka “page directory”): entries 0, 1, …, 1023; an entry points to a level 2 table or is NULL
- Level 2 (tables): entries 0, 1, …, 1023, each holding PhysPage, Res, Prot

VA = {b31, b30, …, b22, b21, …, b12, b11, …, b1, b0}: level 1 index, level 2 index, offset

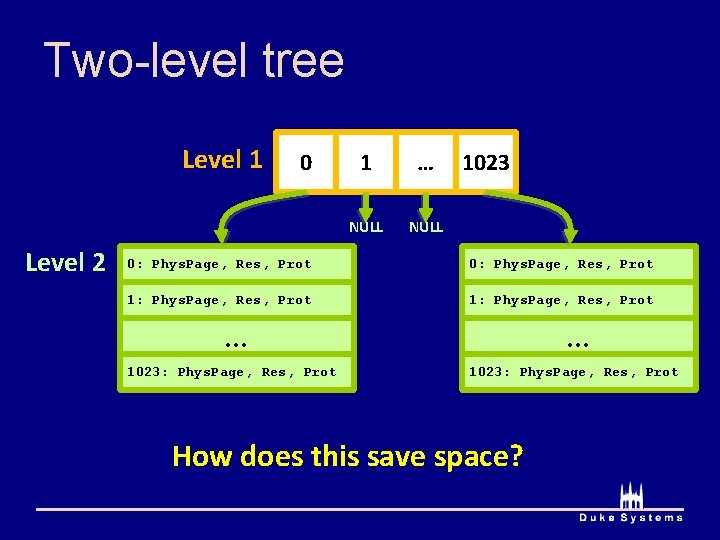

Two-level tree

Figure: level 1 entries 0, 1, …, 1023 (some NULL); level 2 tables with entries 0, 1, …, 1023, each holding PhysPage, Res, Prot.

How does this save space?
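
One way to see the space saving is a toy two-level table in Python. This sketch assumes the 10/10/12 bit split shown on the earlier slide, uses `None` for NULL, and stores only a physical page number in each level 2 entry (no Res or Prot fields); all names are hypothetical.

```python
L2_BITS = 10
OFFSET_BITS = 12
ENTRIES = 1 << 10  # 1024 entries per table at either level


class TwoLevelPageTable:
    def __init__(self):
        # Level 1 ("page directory"): each entry is None or a level 2 table.
        self.directory = [None] * ENTRIES

    def map(self, virtual_page: int, phys_page: int) -> None:
        l1, l2 = virtual_page >> L2_BITS, virtual_page & (ENTRIES - 1)
        if self.directory[l1] is None:
            self.directory[l1] = [None] * ENTRIES  # allocate on demand
        self.directory[l1][l2] = phys_page

    def translate(self, virtual_address: int) -> int:
        l1 = virtual_address >> (L2_BITS + OFFSET_BITS)
        l2 = (virtual_address >> OFFSET_BITS) & (ENTRIES - 1)
        offset = virtual_address & ((1 << OFFSET_BITS) - 1)
        table = self.directory[l1]
        if table is None or table[l2] is None:
            raise LookupError(hex(virtual_address))  # invalid: trap to kernel
        return (table[l2] << OFFSET_BITS) | offset

    def allocated_entries(self) -> int:
        """Entries actually allocated: the directory plus each level 2 table."""
        level2 = sum(ENTRIES for t in self.directory if t is not None)
        return ENTRIES + level2
```

Mapping one page at the bottom of the address space and one at the top allocates the directory plus two level 2 tables, 3 * 1024 entries in total, compared with 2^20 entries for a flat table; NULL directory entries cover the large invalid region in between.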

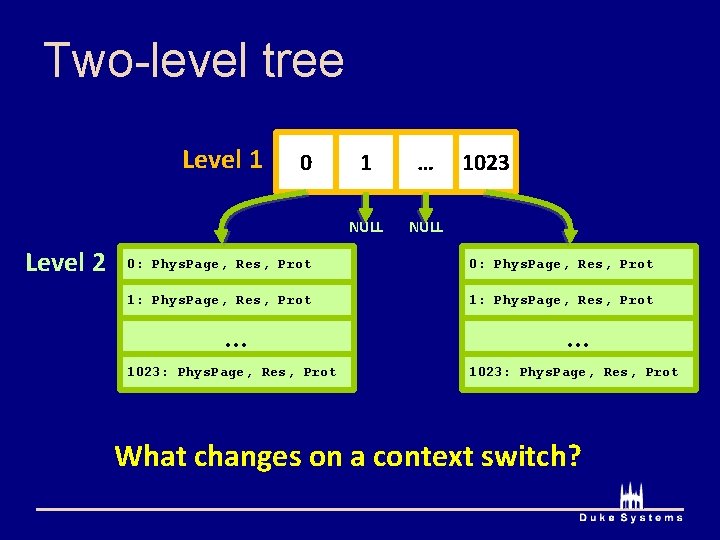

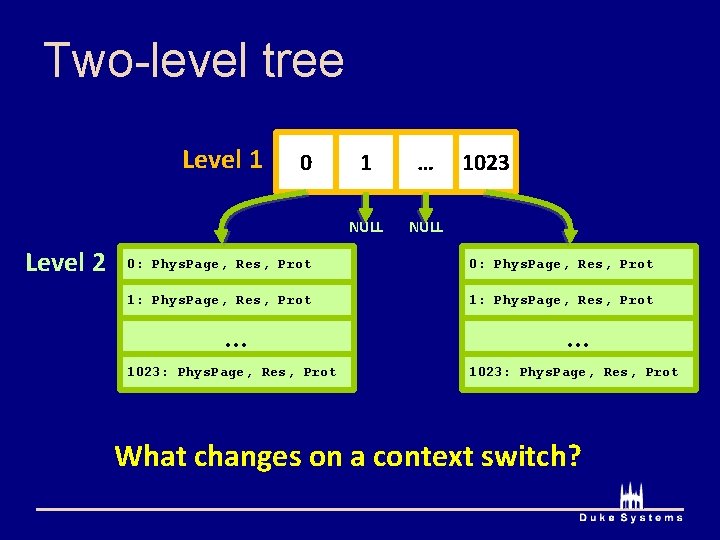

Two-level tree

Figure: level 1 entries 0, 1, …, 1023 (some NULL); level 2 tables with entries 0, 1, …, 1023, each holding PhysPage, Res, Prot.

What changes on a context switch?

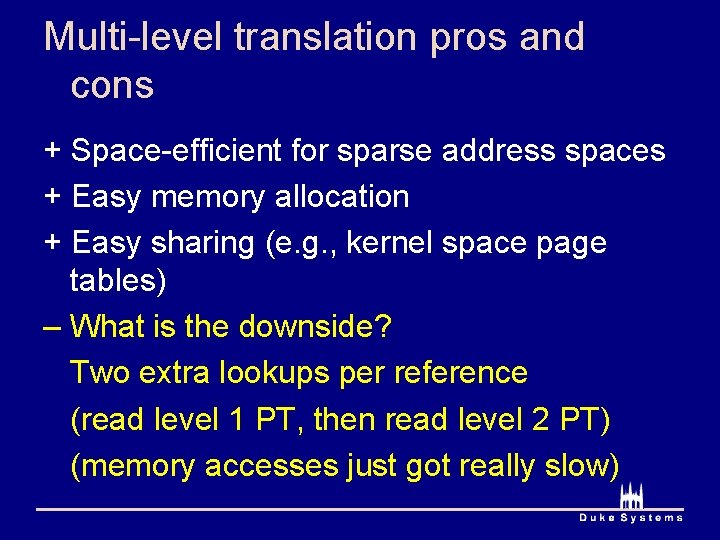

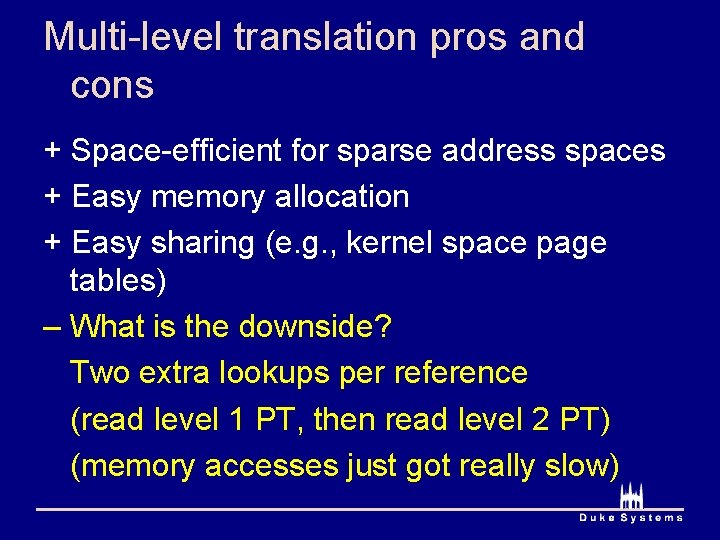

Multi-level translation pros and cons

- Pros
  - Space-efficient for sparse address spaces
  - Easy memory allocation
  - Easy sharing (e.g., kernel space page tables)
- Cons
  - What is the downside?
  - Two extra lookups per reference (read level 1 PT, then read level 2 PT)
  - (memory accesses just got really slow)

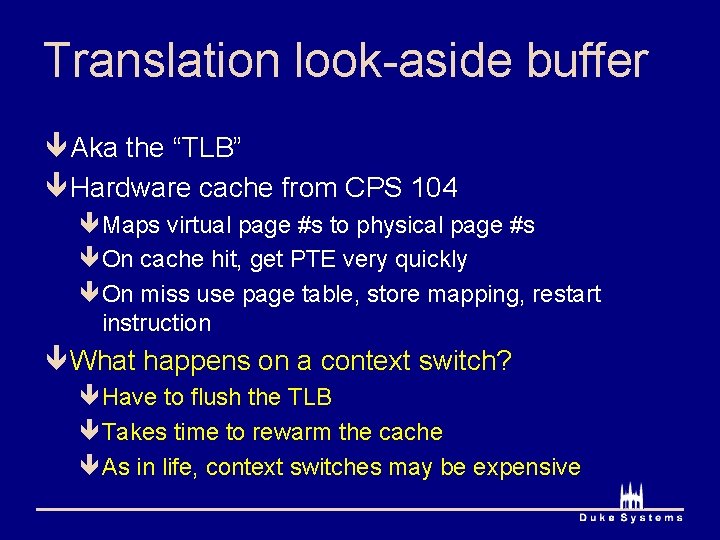

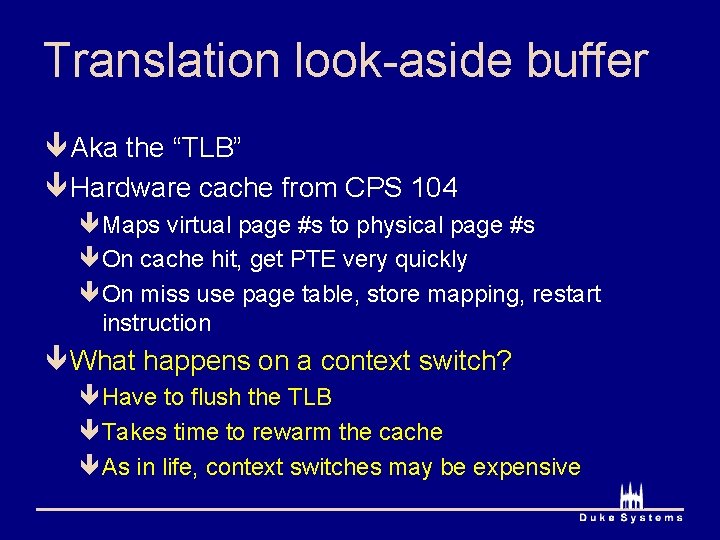

Translation look-aside buffer

- Aka the “TLB”
- Hardware cache from CPS 104
  - Maps virtual page #s to physical page #s
  - On cache hit, get PTE very quickly
  - On miss, use page table, store mapping, restart instruction
- What happens on a context switch?
  - Have to flush the TLB
  - Takes time to rewarm the cache
  - As in life, context switches may be expensive
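
The hit, miss, and flush behavior above can be sketched as a small software model. This is only an illustration: a real TLB is a fixed-size hardware cache, whereas this toy version is an unbounded dict with no replacement policy, and the class and method names are hypothetical.

```python
class TLB:
    def __init__(self, page_table: dict):
        self.page_table = page_table  # virtual page # -> physical page #
        self.cache = {}               # cached subset of the page table
        self.hits = 0
        self.misses = 0

    def lookup(self, virtual_page: int) -> int:
        if virtual_page in self.cache:
            self.hits += 1            # fast path: no page table access
            return self.cache[virtual_page]
        self.misses += 1
        phys_page = self.page_table[virtual_page]  # slow path: use page table
        self.cache[virtual_page] = phys_page       # store mapping for next time
        return phys_page

    def context_switch(self, new_page_table: dict) -> None:
        self.cache.clear()            # flush: old mappings belong to the old process
        self.page_table = new_page_table
```

After `context_switch`, the first reference to each page misses again, which is the cost of rewarming the cache.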
