

CPS 110: Dual-mode operation Landon Cox March 4, 2008

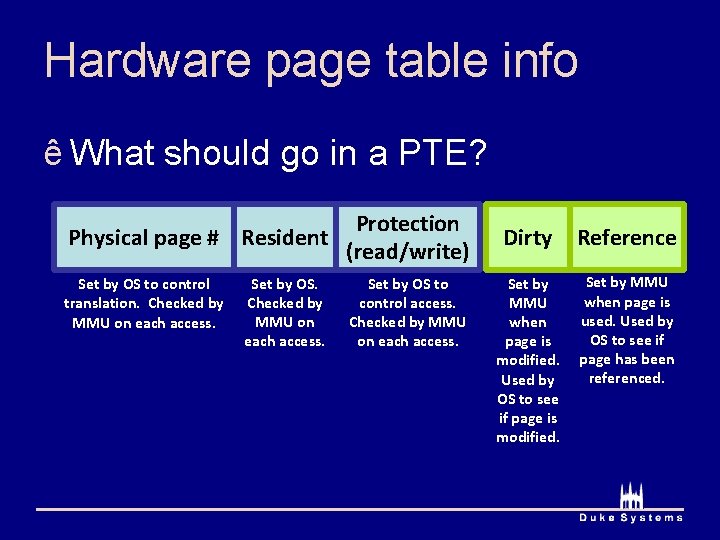

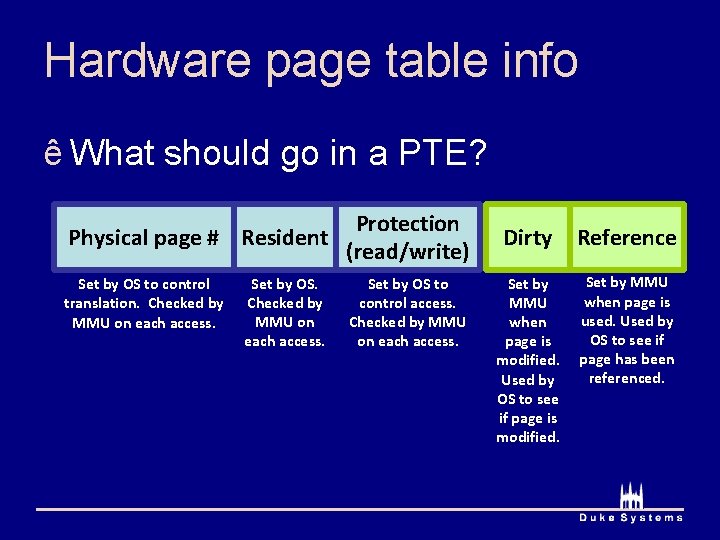

Hardware page table info

- What should go in a PTE?
  - Physical page #: set by OS to control translation. Checked by MMU on each access.
  - Protection (read/write) and Resident: set by OS to control access. Checked by MMU on each access.
  - Dirty: set by MMU when page is modified. Used by OS to see if page is modified.
  - Reference: set by MMU when page is used. Used by OS to see if page has been referenced.

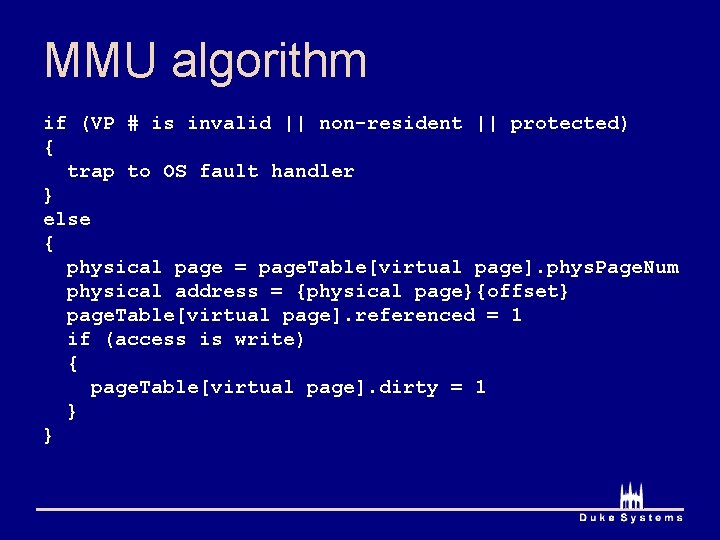

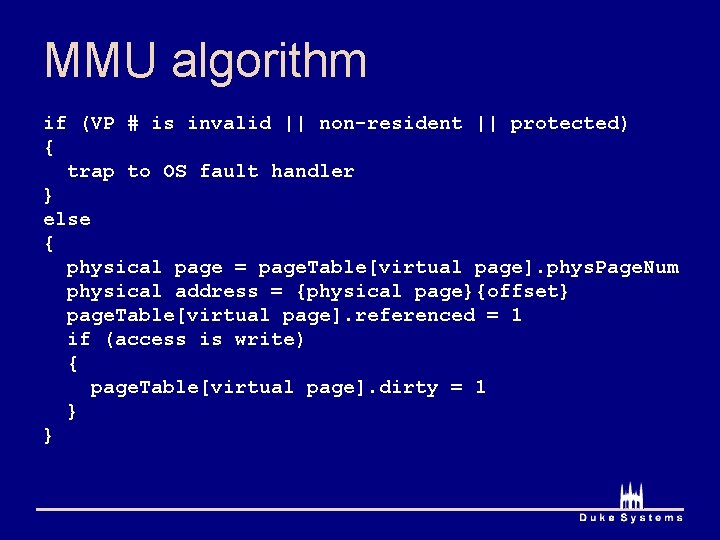

MMU algorithm

    if (VP # is invalid || non-resident || protected) {
        trap to OS fault handler
    } else {
        physical page = pageTable[virtual page].physPageNum
        physical address = {physical page}{offset}
        pageTable[virtual page].referenced = 1
        if (access is write) {
            pageTable[virtual page].dirty = 1
        }
    }
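The MMU algorithm above can be sketched as a small Python simulation. This is only an illustration under stated assumptions: the `PTE` fields, the `PageFault` exception, the dictionary-based page table, and the 4096-byte `PAGE_SIZE` are hypothetical names chosen for the sketch, not part of the slides or of any real hardware interface.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed page size for this sketch


class PageFault(Exception):
    """Stands in for the trap to the OS fault handler."""


@dataclass
class PTE:
    phys_page_num: int = 0
    resident: bool = False
    readable: bool = False
    writable: bool = False
    referenced: bool = False
    dirty: bool = False


def translate(page_table, vaddr, is_write):
    """Return the physical address for vaddr, or raise PageFault."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    pte = page_table.get(vpn)  # a missing entry means an invalid virtual page
    if pte is None or not pte.resident:
        raise PageFault(vpn)
    allowed = pte.writable if is_write else pte.readable
    if not allowed:
        raise PageFault(vpn)  # protected against this kind of access
    pte.referenced = True
    if is_write:
        pte.dirty = True
    return pte.phys_page_num * PAGE_SIZE + offset
```

A read of a resident, readable page returns the physical address and sets only the referenced bit; a write to the same read-only page raises `PageFault`, which is where an OS handler would take over.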

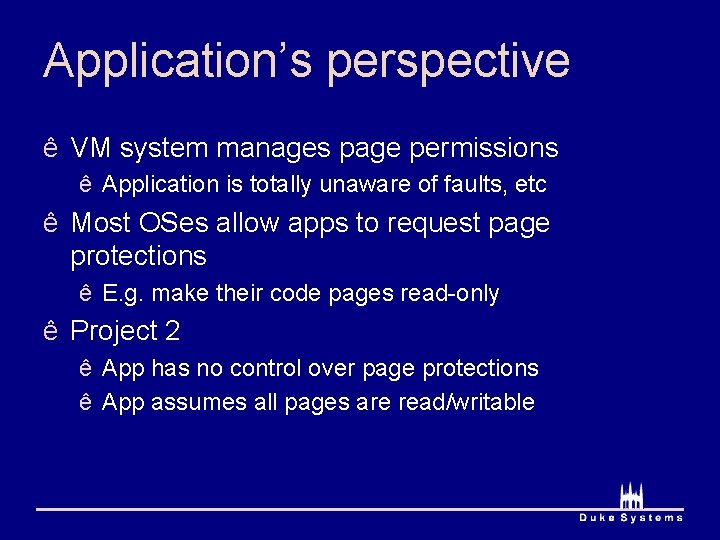

Application’s perspective

- VM system manages page permissions
  - Application is totally unaware of faults, etc.
- Most OSes allow apps to request page protections
  - E.g. make their code pages read-only
- Project 2
  - App has no control over page protections
  - App assumes all pages are read/writable

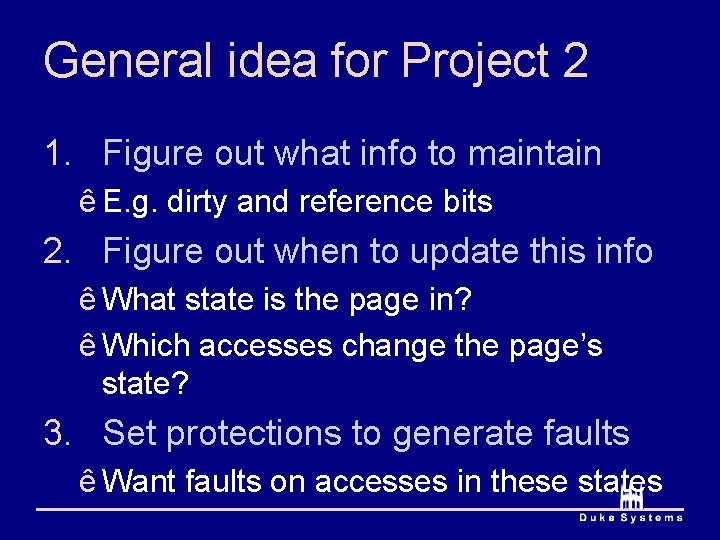

General idea for Project 2

1. Figure out what info to maintain
   - E.g. dirty and reference bits
2. Figure out when to update this info
   - What state is the page in?
   - Which accesses change the page’s state?
3. Set protections to generate faults
   - Want faults on accesses in these states

Where to store translation data?

1. Could be kept in physical memory
   - How the MMU accesses it
   - E.g. PTBR points to physical memory
2. Could be in kernel virtual address space
   - A little weird, but what is done in practice
   - How to keep translation data in a place that must be translated?
     - Translation for user address space is in kernel virtual memory
     - Kernel’s translation data stays in physical memory (pinned)
   - Does anything else need to be pinned (and why)?
     - Kernel’s page fault handler code also has to be pinned.

- Project 2: translation data is stored in “kernel” virtual memory

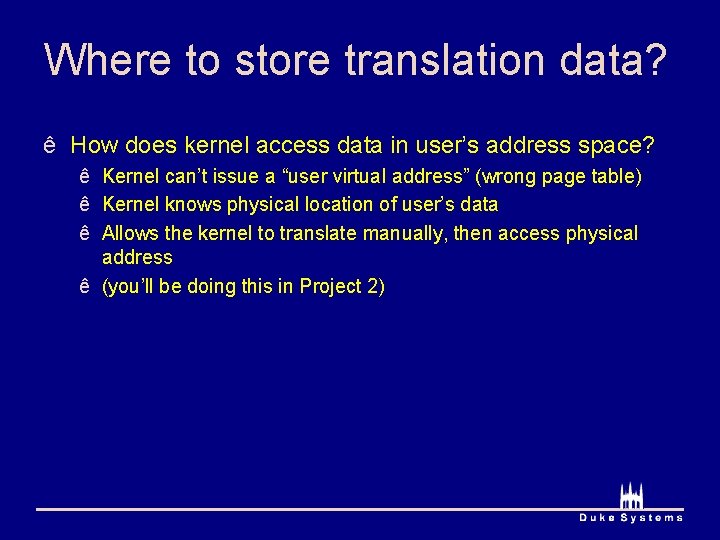

Where to store translation data?

- How does kernel access data in user’s address space?
  - Kernel can’t issue a “user virtual address” (wrong page table)
- Kernel knows physical location of user’s data
  - Allows the kernel to translate manually, then access physical address
  - (you’ll be doing this in Project 2)

Kernel vs. user mode

- Who sets up the data used by the MMU?
  - Can’t be the user process
  - Otherwise could access anything
- Only kernel is allowed to modify any memory
- Processor must know to allow kernel to
  - Update the translator
  - Execute privileged instructions (halt, do I/O)

Kernel vs. user mode

- How does machine know kernel is running?
  - This requires hardware support
  - Processor supports two modes, kernel and user
  - Mode is indicated by a hardware register
  - Mode bit

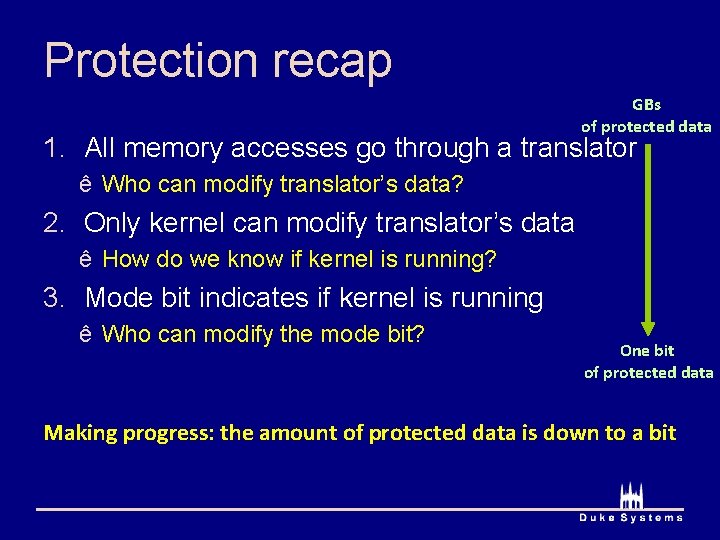

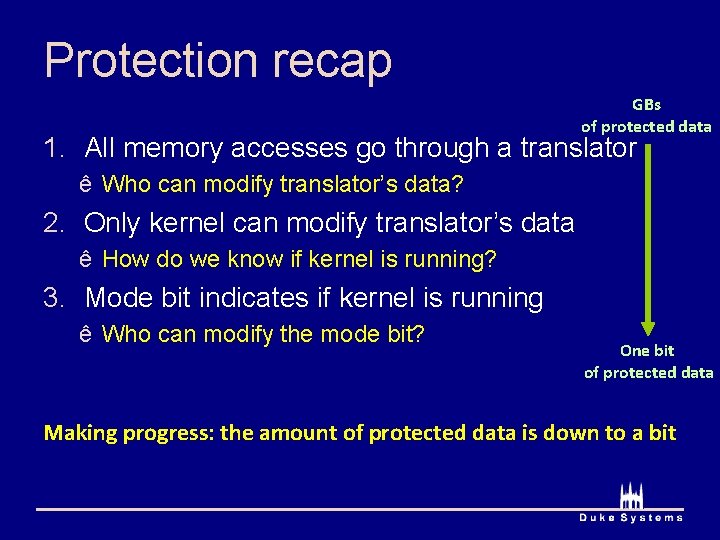

Protection recap

1. All memory accesses go through a translator
   - Who can modify translator’s data?
2. Only kernel can modify translator’s data
   - How do we know if kernel is running?
3. Mode bit indicates if kernel is running
   - Who can modify the mode bit?

Making progress: the amount of protected data is down to a bit (from GBs of protected data to one bit of protected data)

Protecting the mode bit

- Can kernel change the mode bit?
  - Yes. Kernel is completely trusted.
- Can user process change the mode bit?
  - Not directly
- User programs need to invoke the kernel
  - Must be able to initiate a change

When to transition from user to kernel?

1. Exceptions (interrupts, traps)
   - Access something out of your valid address space (e.g. segmentation fault)
   - Disk I/O finishes, causes interrupt
   - Timer pre-emption, causes interrupt
   - Page faults
2. System calls
   - Similar in purpose to a function call

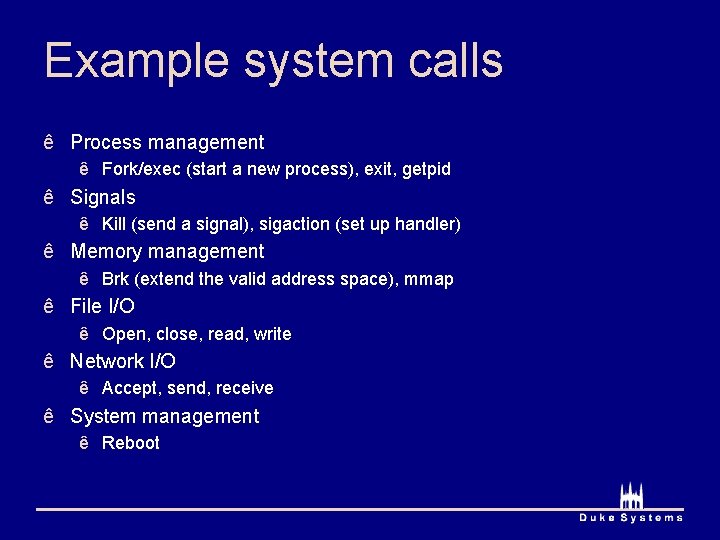

Example system calls

- Process management
  - fork/exec (start a new process), exit, getpid
- Signals
  - kill (send a signal), sigaction (set up handler)
- Memory management
  - brk (extend the valid address space), mmap
- File I/O
  - open, close, read, write
- Network I/O
  - accept, send, receive
- System management
  - reboot

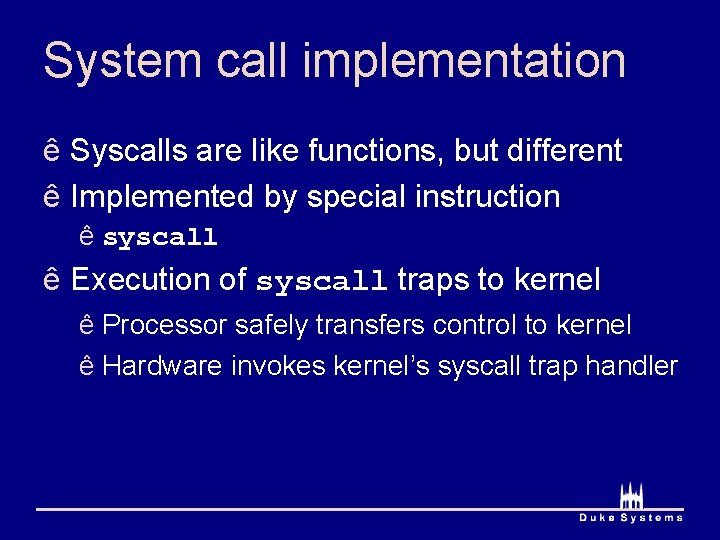

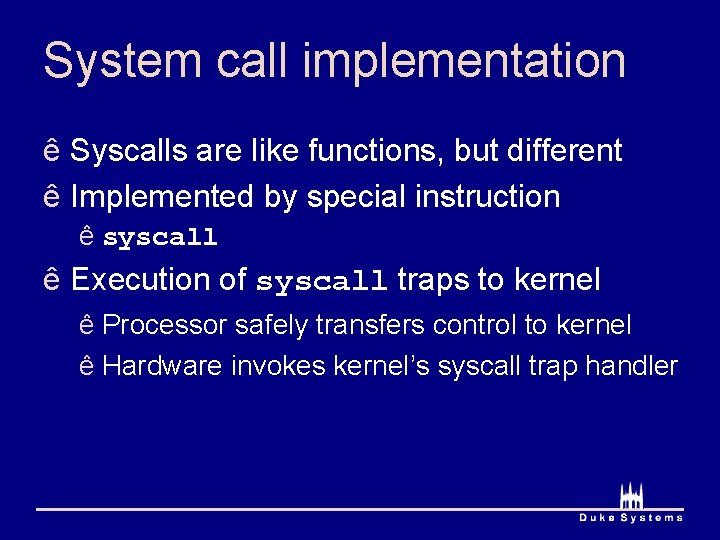

System call implementation

- Syscalls are like functions, but different
- Implemented by special instruction
  - syscall
- Execution of syscall traps to kernel
  - Processor safely transfers control to kernel
  - Hardware invokes kernel’s syscall trap handler

System call implementation

- libc wraps system calls
- C++/Java make calls into libc

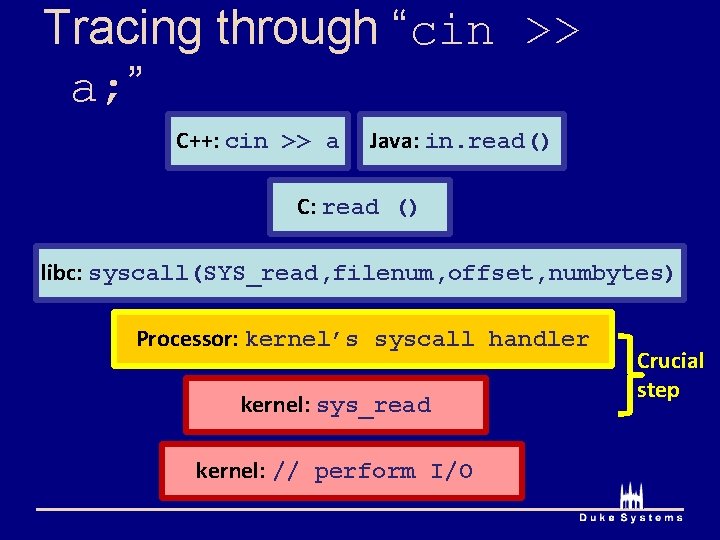

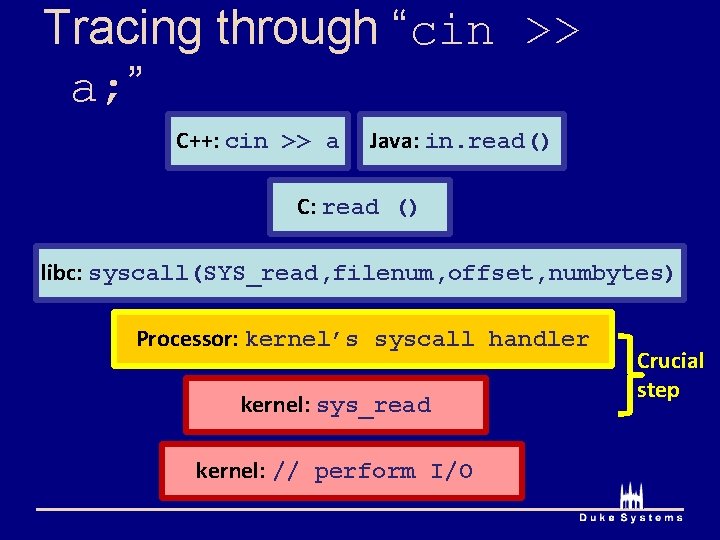

Tracing through “cin >> a;”

- C++: cin >> a (Java: in.read())
- C: read()
- libc: syscall(SYS_read, fd, buf, size)
- Processor: kernel’s syscall handler (crucial step)
- kernel: sys_read
- kernel: // perform I/O

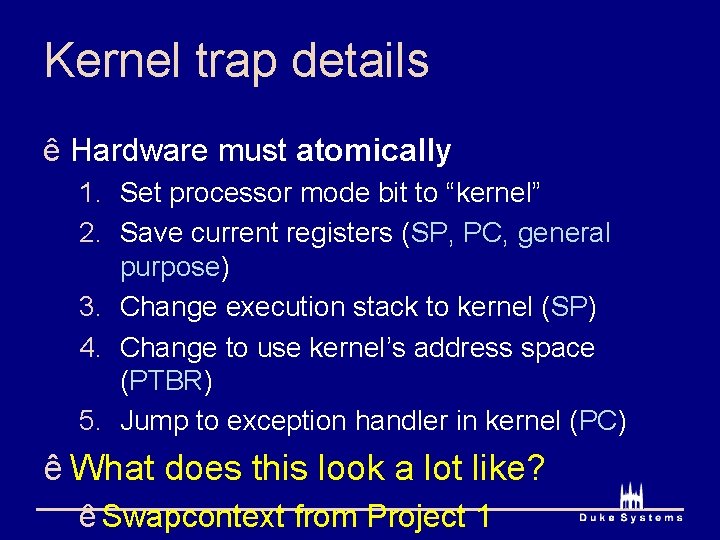

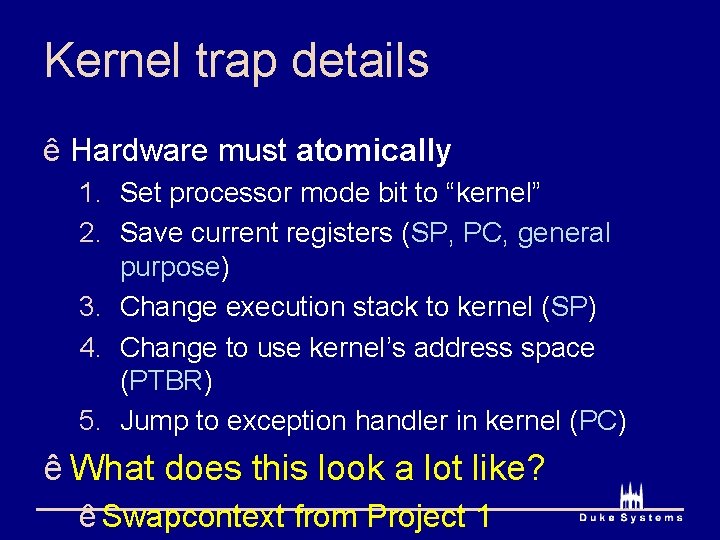

Kernel trap details

- Hardware must atomically
  1. Set processor mode bit to “kernel”
  2. Save current registers (SP, PC, general purpose)
  3. Change execution stack to kernel (SP)
  4. Change to use kernel’s address space (PTBR)
  5. Jump to exception handler in kernel (PC)
- What does this look a lot like?
  - swapcontext from Project 1

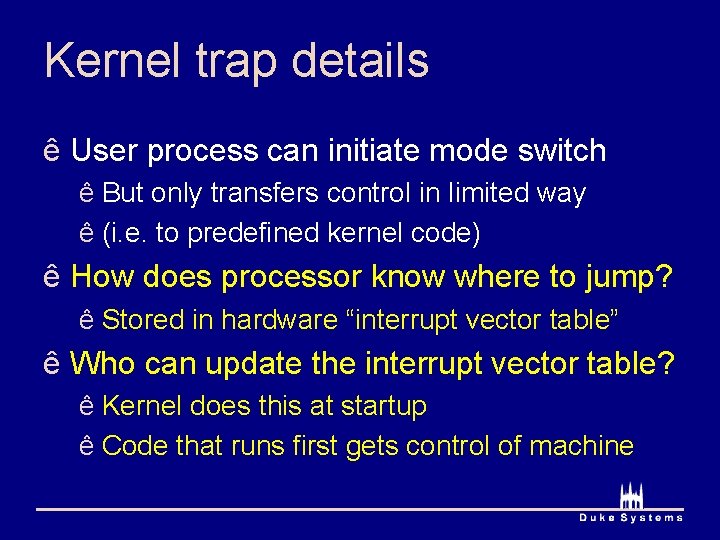

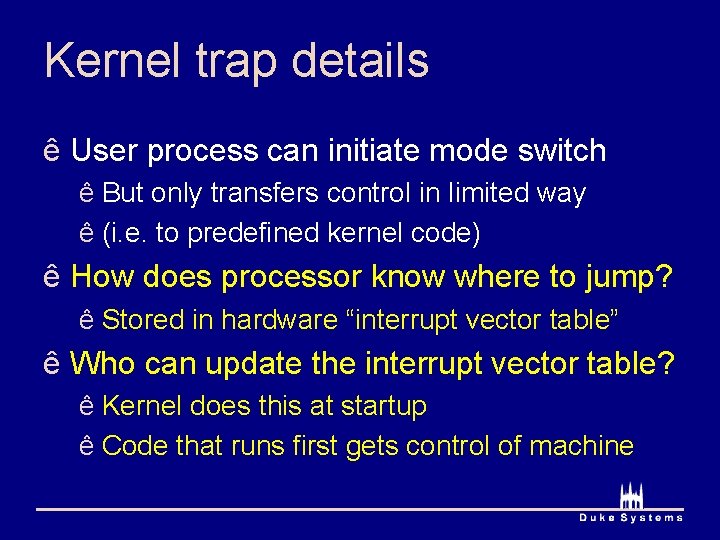

Kernel trap details

- User process can initiate mode switch
  - But only transfers control in limited way
  - (i.e. to predefined kernel code)
- How does processor know where to jump?
  - Stored in hardware “interrupt vector table”
- Who can update the interrupt vector table?
  - Kernel does this at startup
  - Code that runs first gets control of machine
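The interplay between the mode bit and the interrupt vector table can be illustrated with a toy model. This is a sketch under assumptions, not how any real processor is programmed: the `CPU` class, its method names, and `ProtectionError` are hypothetical, and a real trap also saves registers and switches stacks and address spaces.

```python
class ProtectionError(Exception):
    """Raised when user-mode code attempts a privileged operation."""


class CPU:
    def __init__(self):
        # Code that runs first (the kernel) starts in kernel mode.
        self.mode = "kernel"
        self.vector_table = {}

    def set_handler(self, trap_name, handler):
        """Privileged: only kernel-mode code may update the vector table."""
        if self.mode != "kernel":
            raise ProtectionError("vector table is kernel-only")
        self.vector_table[trap_name] = handler

    def enter_user_mode(self):
        self.mode = "user"

    def trap(self, trap_name, *args):
        """Switch to kernel mode and jump only to the registered handler."""
        saved_mode = self.mode
        self.mode = "kernel"
        try:
            return self.vector_table[trap_name](*args)
        finally:
            self.mode = saved_mode  # returning from the trap restores the mode
```

User code can call `trap` to enter the kernel, but it lands only in a handler the kernel installed earlier; calling `set_handler` from user mode fails.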

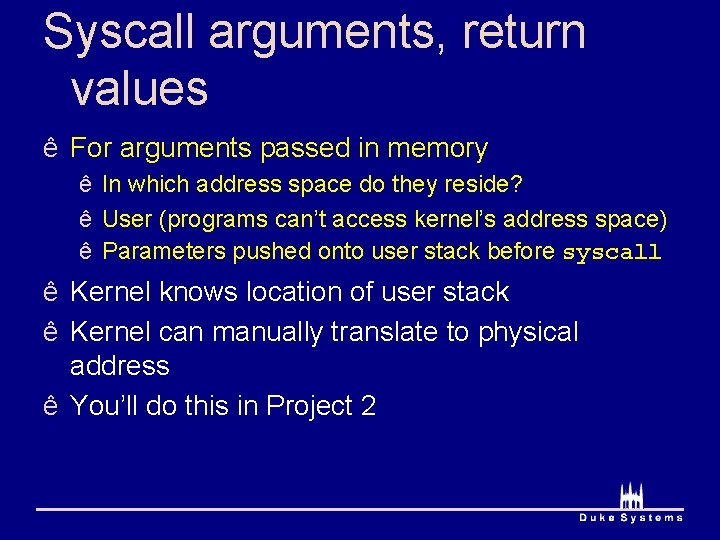

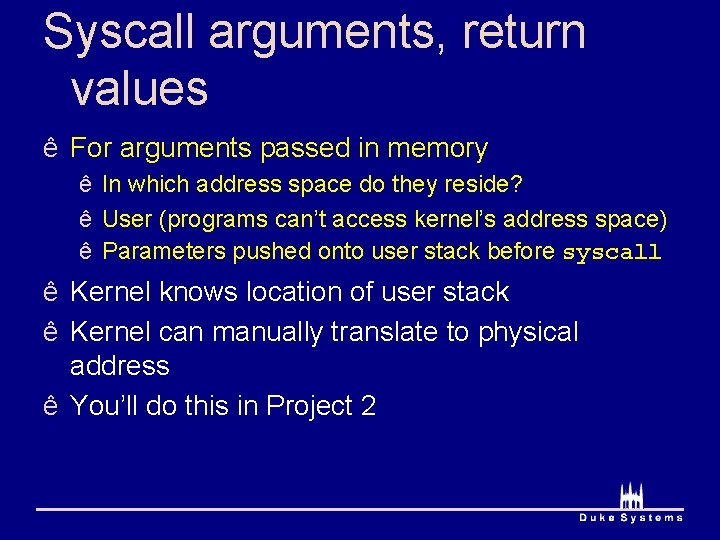

Syscall arguments, return values

- For arguments passed in memory
  - In which address space do they reside?
  - User (programs can’t access kernel’s address space)
- Parameters pushed onto user stack before syscall
  - Kernel knows location of user stack
  - Kernel can manually translate to physical address
  - You’ll do this in Project 2

Tracing through read()

- C: read(int fd, void *buf, size_t size)
- libc: push fd, buf, size onto user’s stack (note: buf is a pointer!)
- kernel: verify arguments!

    sys_read() {
        verify fd is an open file
        verify [buf, buf+size) valid, writable
        read data from file (e.g. disk)
        find physical location of buf
        copy data to buf’s physical location
    }
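The checks in `sys_read` can be sketched as a simulation in which the "kernel" validates the arguments and then translates the user buffer by hand. Everything here is an assumption made for illustration: the dictionary page table mapping a virtual page number to `(physical page number, writable)`, the `bytearray` standing in for physical memory, the `open_files` dictionary, and the tiny 16-byte page size.

```python
PAGE_SIZE = 16  # tiny assumed page size so the example is easy to follow


def sys_read(page_table, phys_mem, open_files, fd, buf, size):
    """Copy up to `size` bytes of file data to user virtual address `buf`.

    Returns the number of bytes copied, or -1 if an argument is invalid.
    """
    # Verify fd is an open file and the range is sensible.
    if fd not in open_files or buf < 0 or size < 0:
        return -1
    if size == 0:
        return 0
    # Verify that every page in [buf, buf + size) is mapped and writable.
    first_vpn = buf // PAGE_SIZE
    last_vpn = (buf + size - 1) // PAGE_SIZE
    for vpn in range(first_vpn, last_vpn + 1):
        entry = page_table.get(vpn)
        if entry is None or not entry[1]:
            return -1
    data = open_files[fd][:size]  # stands in for reading from disk
    # Translate manually, byte by byte; the buffer may span several pages
    # that are not contiguous in physical memory.
    for i, byte in enumerate(data):
        vpn, offset = divmod(buf + i, PAGE_SIZE)
        ppn = page_table[vpn][0]
        phys_mem[ppn * PAGE_SIZE + offset] = byte
    return len(data)
```

Validating the whole range before copying means a bad pointer is rejected without any partial write into the user's memory.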

Course administration

- Project 2
  - Was posted last week
  - Will go over basics in discussion section
  - Due March 25th
- Extra office hours week after spring break
- Any other questions?

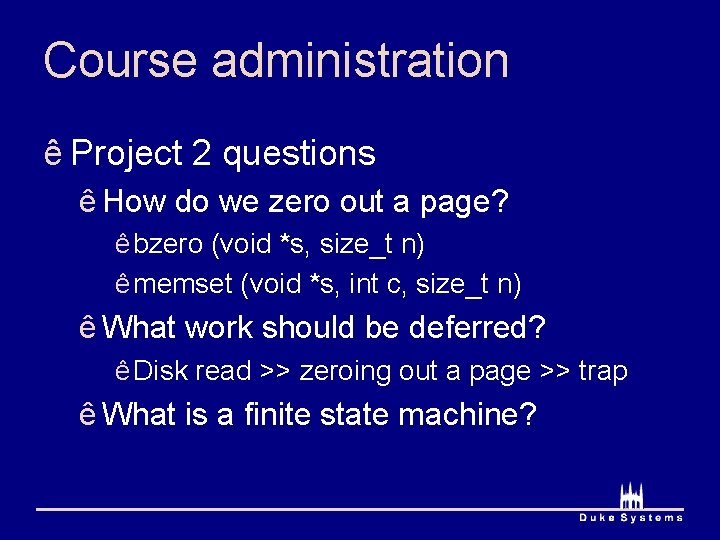

Course administration

- Project 2 questions
  - How do we zero out a page?
    - bzero(void *s, size_t n)
    - memset(void *s, int c, size_t n)
  - What work should be deferred?
    - Disk read >> zeroing out a page >> trap
  - What is a finite state machine?

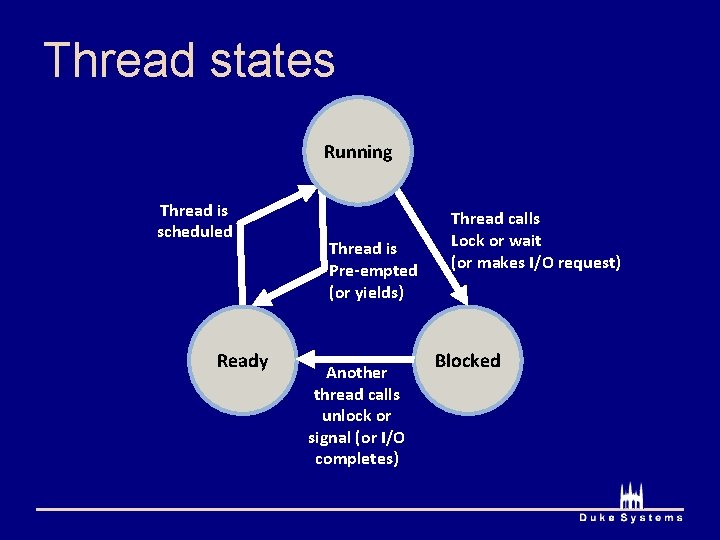

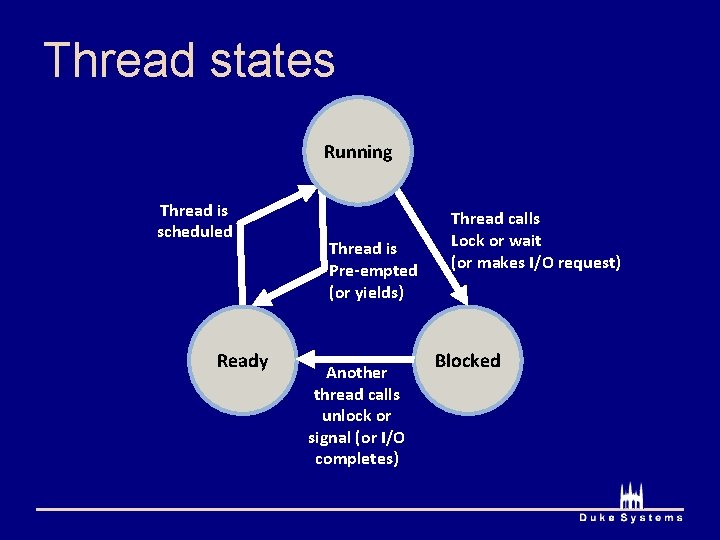

Thread states

- Ready to Running: thread is scheduled
- Running to Ready: thread is pre-empted (or yields)
- Running to Blocked: thread calls lock or wait (or makes I/O request)
- Blocked to Ready: another thread calls unlock or signal (or I/O completes)

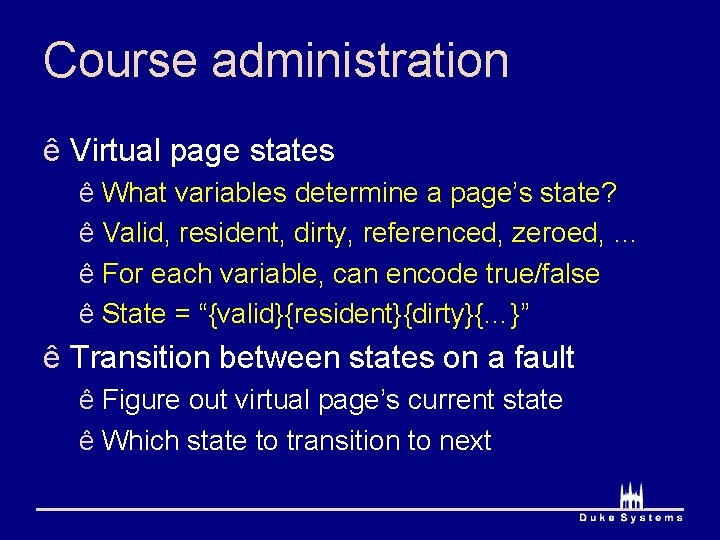

Course administration

- Virtual page states
  - What variables determine a page’s state?
    - Valid, resident, dirty, referenced, zeroed, …
  - For each variable, can encode true/false
    - State = “{valid}{resident}{dirty}{…}”
  - Transition between states on a fault
    - Figure out virtual page’s current state
    - Which state to transition to next
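One way to picture the state encoding above is a small sketch that turns a page's flags into a bit string and computes the next state on a fault. The flag subset and order in `FLAGS`, and the `on_write_fault` transition, are hypothetical choices for illustration; they are not the Project 2 specification, and a real pager tracks more state (for example, zeroed).

```python
FLAGS = ("valid", "resident", "dirty", "referenced")  # assumed subset and order


def encode_state(page):
    """Encode a page's boolean flags as a string such as '1100'."""
    return "".join("1" if page[flag] else "0" for flag in FLAGS)


def on_write_fault(page):
    """Return the next state after a write fault on a valid page.

    Assumption: the handler makes the page resident if needed, then
    records that it has been both referenced and modified.
    """
    if not page["valid"]:
        raise ValueError("write to an invalid page")
    next_page = dict(page)  # leave the caller's state untouched
    next_page["resident"] = True
    next_page["referenced"] = True
    next_page["dirty"] = True
    return next_page
```

For a valid, resident, clean, unreferenced page, `encode_state` gives `"1100"`, and the state after `on_write_fault` encodes as `"1111"`.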

Creating, starting a process

1. Allocate process control block
2. Read program code from disk
3. Store program code in memory (could be demand-loaded too)
   - Physical or virtual memory?
   - (physical: no virtual memory for process yet)
4. Initialize machine registers for new process
5. Initialize translator data for new address space
   - E.g. page table and PTBR
   - Virtual addresses of code segment point to correct physical locations
6. Set processor mode bit to “user”
7. Jump to start of program

Steps 6 and 7 need hardware support.

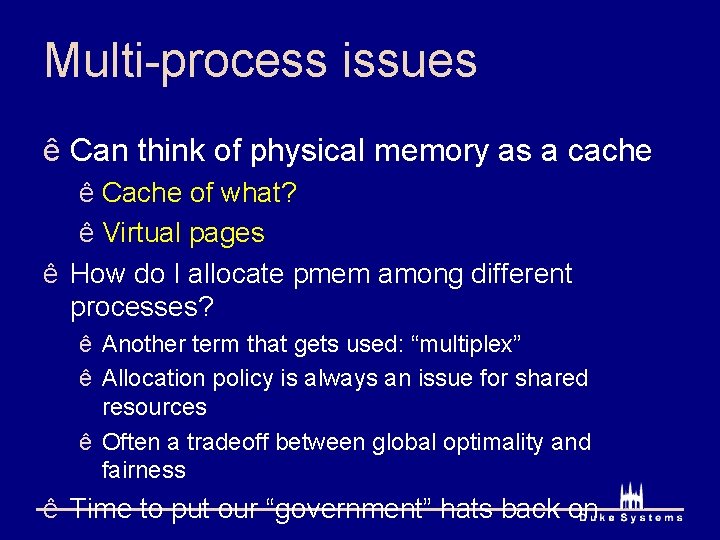

Multi-process issues

- Can think of physical memory as a cache
  - Cache of what?
  - Virtual pages
- How do I allocate pmem among different processes?
  - Another term that gets used: “multiplex”
- Allocation policy is always an issue for shared resources
  - Often a tradeoff between global optimality and fairness
  - Time to put our “government” hats back on

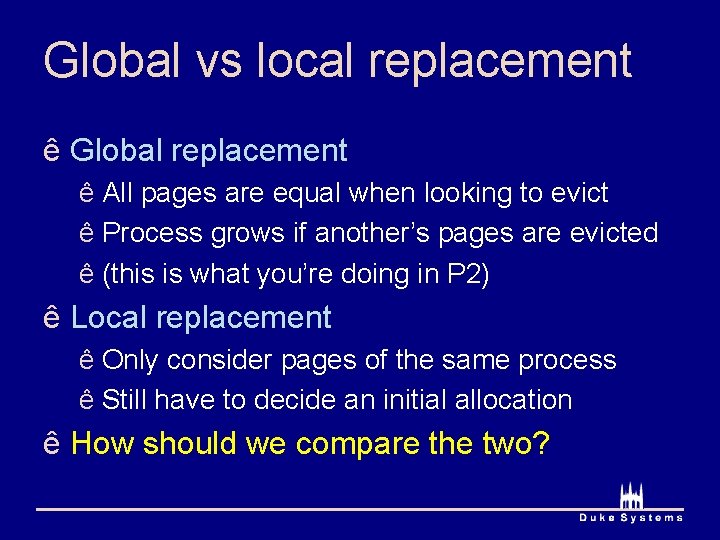

Global vs local replacement

- Global replacement
  - All pages are equal when looking to evict
  - Process grows if another’s pages are evicted
  - (this is what you’re doing in P2)
- Local replacement
  - Only consider pages of the same process
  - Still have to decide an initial allocation
- How should we compare the two?

Thrashing

- What happens if we have lots of big processes?
  - They all need a lot of physical memory to run
  - Switching between processes will cause lots of disk I/O
- For most replacement algorithms
  - Performance degrades rapidly if not everything fits
  - OPT degrades more gracefully
- This is called “thrashing”

Why thrashing is so bad

- Average access time
  - (hit rate * hit time) + (miss rate * miss time)
  - Hit time = 0.0001 milliseconds (100 ns)
  - Miss time = 10 milliseconds (disk I/O)
- What happens if hit rate goes from 100% to 99%?
  - 100% hit rate: avg access time = 0.0001 ms
  - 99% hit rate: avg access time = 0.99*0.0001 + 0.01*10 ≈ 0.1 ms
  - 90% hit rate: avg access time = 0.90*0.0001 + 0.1*10 ≈ 1 ms
- 1% drop in hit rate leads to 1000 times increase!
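The arithmetic above is easy to check with a few lines of Python. This is a minimal sketch; the function name `avg_access_time_ms` is made up for the example, and the default hit and miss times are the ones quoted on the slide.

```python
def avg_access_time_ms(hit_rate, hit_ms=0.0001, miss_ms=10.0):
    """Average access time = (hit rate * hit time) + (miss rate * miss time)."""
    miss_rate = 1.0 - hit_rate
    return hit_rate * hit_ms + miss_rate * miss_ms


if __name__ == "__main__":
    for rate in (1.0, 0.99, 0.90):
        print(f"{rate:.0%} hit rate: {avg_access_time_ms(rate):.6f} ms")
```

Because the miss time is 100,000 times the hit time, the miss term dominates as soon as the miss rate is nonzero, which is why the slide rounds the results to 0.1 ms and 1 ms.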

Why thrashing is so bad

- Easy to induce thrashing with LRU and clock
  - Even if they are 99% accurate, thrashing kills
- Another way to look at it
  - 1 ms per instruction
  - 1 cycle per instruction
  - Every few instructions accesses memory
  - End up with a 3 GHz processor running at 1 kHz

What to do about thrashing

- Best solution
  - Throw money at the problem (buy more RAM)
- No solution
  - One process actively uses more pages than fit
- What if only the sum of pages doesn’t fit?
  - Run fewer at a time (i.e. swap processes out)

What to do about thrashing

- Four processes: A, B, C, and D
  - Each uses 500 MB of memory
  - System has 1 GB of physical memory
- Don’t run A, B, C and D together
  - Run A and B together
  - Then run C and D together
- How would this help?

What to do about thrashing

- Another solution
  - Run each process for a longer slice
  - Amortize cost of initial page faults
- Extend the fast phase of execution
  - i.e. the part after its pages are in pmem

What do I mean by actively using?

- Working set (T)
  - All pages used in last T seconds
- Larger working sets require more pmem
- Sum of all working sets must fit in pmem
  - Otherwise you’ll have thrashing

Working sets

- Want to run a “balance set” of processes
- Balance set
  - Subset of all processes
  - Sum of working sets fits in physical memory
- Intuition
  - Run processes whose T-working sets fit
  - Can go T seconds without getting a fault
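Choosing balance sets can be sketched as a simple packing problem. The following is one possible approach, assuming a first-fit policy in the order the processes are given; the slides do not prescribe a policy, and `balance_sets` and its parameters are hypothetical names.

```python
def balance_sets(working_sets_mb, pmem_mb):
    """Group processes so that each group's working sets fit in pmem.

    working_sets_mb maps process name -> working set size in MB.
    Uses first-fit: each process joins the first group with room.
    """
    batches = []  # each entry is [list of names, total size in MB]
    for name, size in working_sets_mb.items():
        if size > pmem_mb:
            raise ValueError(f"{name} cannot fit even when run alone")
        for batch in batches:
            if batch[1] + size <= pmem_mb:
                batch[0].append(name)
                batch[1] += size
                break
        else:
            batches.append([[name], size])
    return [names for names, _ in batches]
```

With the earlier example of four 500 MB processes on a 1 GB (1024 MB) machine, `balance_sets({"A": 500, "B": 500, "C": 500, "D": 500}, 1024)` groups A with B and C with D, matching the schedule suggested on the slide.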

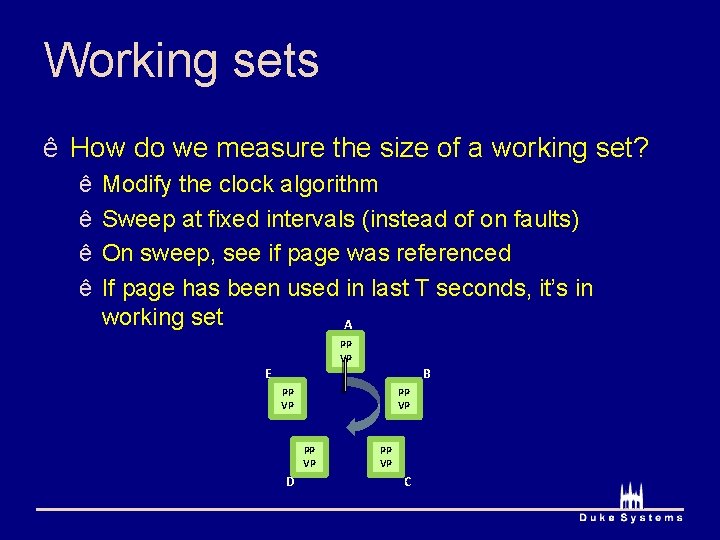

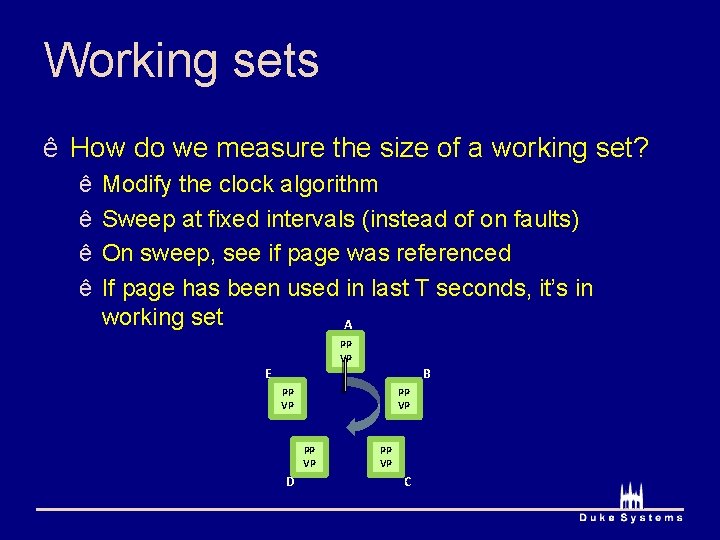

Working sets

- How do we measure the size of a working set?
  - Modify the clock algorithm
  - Sweep at fixed intervals (instead of on faults)
  - On sweep, see if page was referenced
  - If page has been used in last T seconds, it’s in working set

(Figure: clock diagram of pages A through E, each entry pairing a physical page (PP) with a virtual page (VP).)
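The modified clock sweep can be sketched as follows. This is a simplified model under assumptions: each page is a dictionary with a `referenced` bit (set by the simulated MMU) and a `last_used` timestamp, time is passed in explicitly, and a page referenced since the previous sweep is treated as used at the time of the current sweep. The names are hypothetical.

```python
def sweep(pages, now, T):
    """Run one fixed-interval sweep and return the current working set.

    pages maps page name -> {"referenced": bool, "last_used": float}.
    """
    working_set = set()
    for name, page in pages.items():
        if page["referenced"]:
            # Used since the last sweep: record the time, clear the bit.
            page["last_used"] = now
            page["referenced"] = False
        if now - page["last_used"] < T:
            working_set.add(name)
    return working_set
```

The size of the returned set estimates the process's working set; the estimate is only as fine-grained as the sweep interval, since the referenced bit records that a page was used but not exactly when.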

Putting it all together

- Unix fork, exec, and shell
- How does Unix create processes?
  1. Fork
     - New (child) process with one thread
     - Address space is an exact copy of parent
  2. Exec
     - New (child) process with one thread
     - Address space is initialized from new program

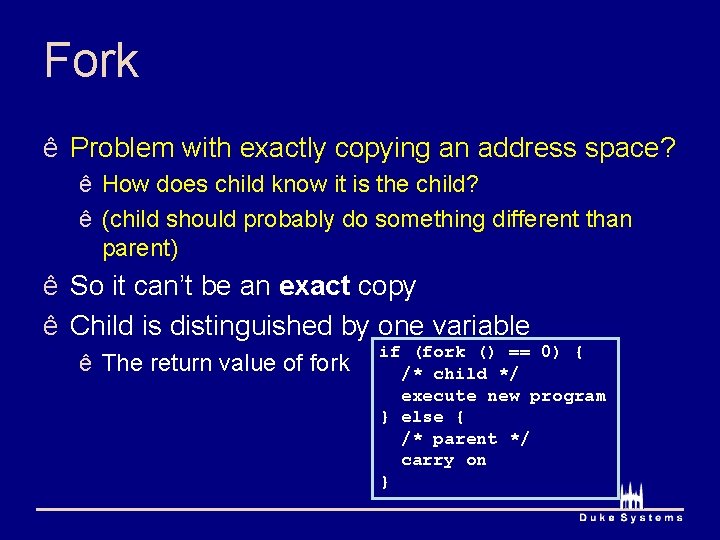

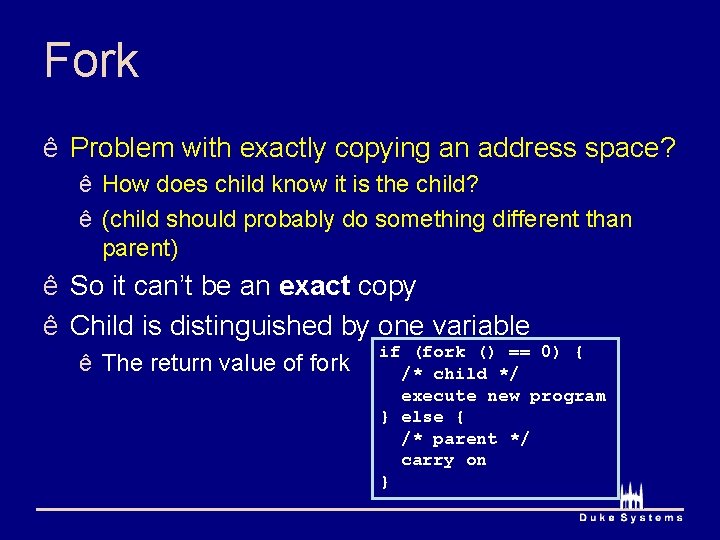

Fork

- Problem with exactly copying an address space?
  - How does child know it is the child?
  - (child should probably do something different than parent)
- So it can’t be an exact copy
- Child is distinguished by one variable
  - The return value of fork

    if (fork() == 0) {
        /* child */
        execute new program
    } else {
        /* parent */
        carry on
    }
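The return-value convention can be tried directly from Python, whose `os.fork` is a thin wrapper over the Unix call. This sketch assumes a Unix-like system; `fork_demo` is a made-up name, and the pipe is only there so the parent can observe that the child took the other branch.

```python
import os


def fork_demo():
    """Fork once; the child reports through a pipe, the parent collects it."""
    read_end, write_end = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: fork returned 0.
        os.close(read_end)
        os.write(write_end, b"child")
        os._exit(0)  # exit at once, without running the parent's cleanup
    # Parent: fork returned the child's process ID.
    os.close(write_end)
    message = os.read(read_end, 16)
    os.close(read_end)
    _, status = os.waitpid(pid, 0)
    return message, os.WEXITSTATUS(status)
```

Both processes return from the same `os.fork()` call with identical memory contents; only the value of `pid` tells them apart.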


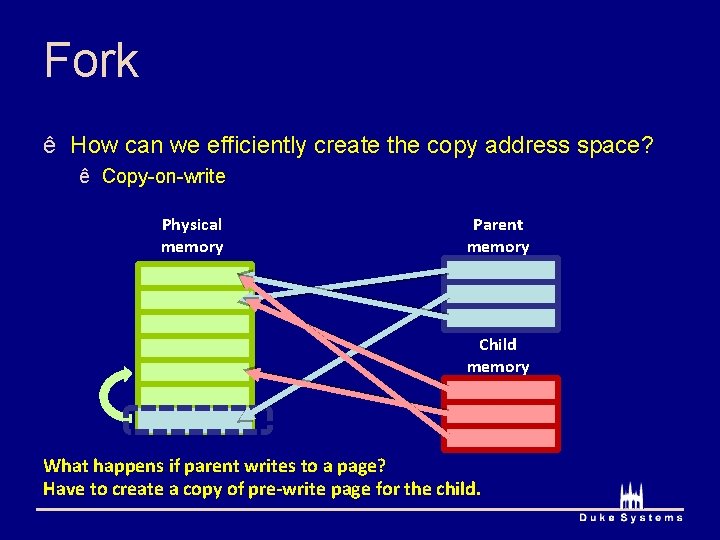

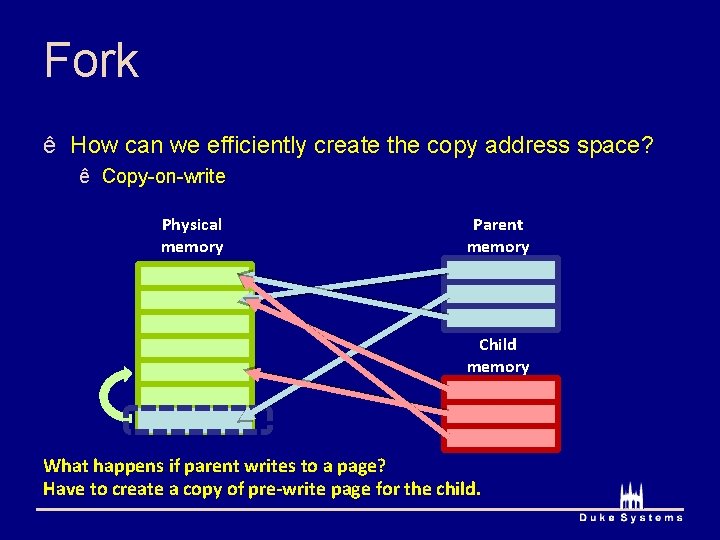


Fork

- How can we efficiently create the copy address space?
  - Copy-on-write
- What happens if parent writes to a page?
  - Have to create a copy of pre-write page for the child.

(Figure: physical memory, parent memory, child memory.)
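Copy-on-write can be modeled with reference-counted frames. This is an illustrative sketch, not a kernel implementation: the `Frame` and `AddressSpace` classes are hypothetical, and the write-protection fault that triggers the copy in a real system is collapsed into an explicit check inside `write`.

```python
class Frame:
    """A physical page plus a count of address spaces that map it."""

    def __init__(self, data):
        self.data = bytearray(data)
        self.refcount = 1


class AddressSpace:
    def __init__(self, pages):
        self.pages = pages  # virtual page number -> Frame

    def fork(self):
        """Share every frame with the child instead of copying it."""
        for frame in self.pages.values():
            frame.refcount += 1
        return AddressSpace(dict(self.pages))

    def read(self, vpn):
        return bytes(self.pages[vpn].data)

    def write(self, vpn, data):
        frame = self.pages[vpn]
        if frame.refcount > 1:
            # Shared frame: the writer gets a private copy, so the other
            # process keeps the pre-write contents.
            frame.refcount -= 1
            frame = Frame(frame.data)
            self.pages[vpn] = frame
        frame.data[:] = data
```

After `child = parent.fork()`, both address spaces map the same frame. A `parent.write(0, b"new")` gives the parent a private copy, while the child still reads the old bytes from the original frame.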

Why use fork?

- Why copy the parent’s address space?
  - You’re just going to throw it out anyway
- Sometimes you want a new copy of same process
- Separating fork and exec provides flexibility
- Also, the child can inherit some kernel state
  - E.g. open files, stdin, stdout
  - Used by shells to redirect input/output

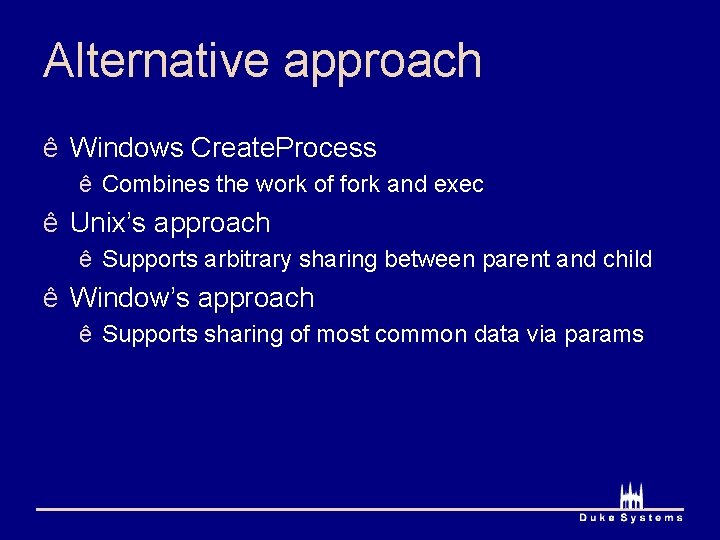

Alternative approach

- Windows CreateProcess
  - Combines the work of fork and exec
- Unix’s approach
  - Supports arbitrary sharing between parent and child
- Windows’s approach
  - Supports sharing of most common data via params

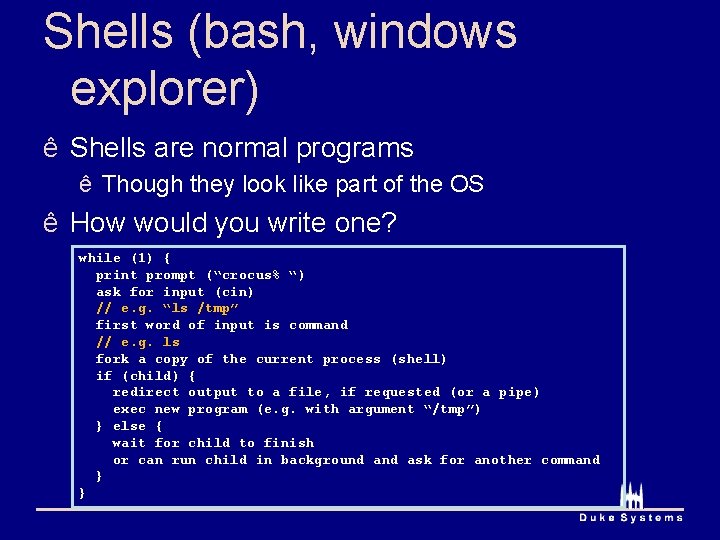

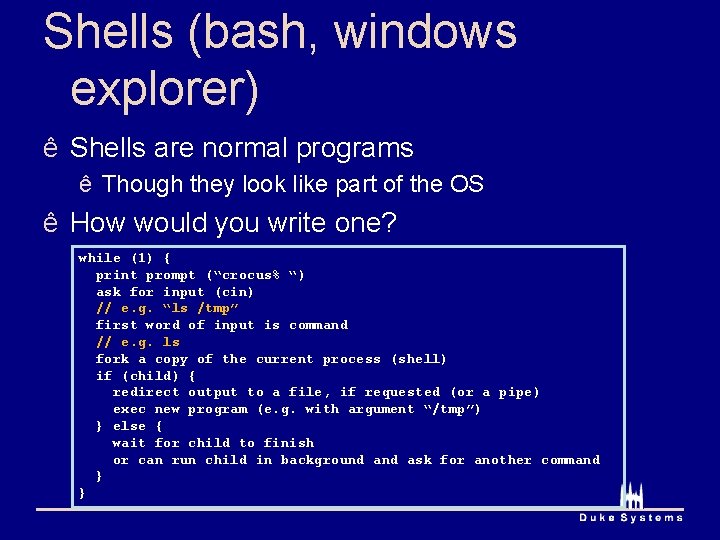

Shells (bash, Windows Explorer)

- Shells are normal programs
  - Though they look like part of the OS
- How would you write one?

    while (1) {
        print prompt (“crocus% ”)
        ask for input (cin)                 // e.g. “ls /tmp”
        first word of input is command      // e.g. ls
        fork a copy of the current process (shell)
        if (child) {
            redirect output to a file, if requested (or a pipe)
            exec new program (e.g. with argument “/tmp”)
        } else {
            wait for child to finish
            or can run child in background
            ask for another command
        }
    }
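The body of the shell loop above (fork, optional redirect, exec, wait) can be sketched with Python's `os` module on a Unix-like system. The helper name `run_command` and the exit code 127 for a failed exec are choices made for this example; prompt handling, parsing, pipes, and background jobs are left out.

```python
import os


def run_command(argv, stdout_path=None):
    """Fork, optionally redirect the child's stdout, exec argv, and wait.

    Returns the child's exit status.
    """
    pid = os.fork()
    if pid == 0:
        # Child: set up the redirect, then replace this process image.
        try:
            if stdout_path is not None:
                flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
                fd = os.open(stdout_path, flags, 0o644)
                os.dup2(fd, 1)  # file descriptor 1 is stdout
                os.close(fd)
            os.execvp(argv[0], argv)
        finally:
            os._exit(127)  # reached only if the redirect or exec failed
    # Parent (the shell): wait for the child to finish.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status)
```

For example, `run_command(["ls", "/tmp"], stdout_path="listing.txt")` behaves like `ls /tmp > listing.txt`. The redirect works because the exec'd program inherits the child's open file descriptors, which is the kernel-state inheritance that separating fork from exec makes possible.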
