
COSMO strategy for Verification

Adriano Raspanti
COSMO WG 5 Coordinator, “Verification and Case studies”
Head of Verification Section at Italian Met Service (raspanti@meteoam.it)
with contributions by WG 4 (Interpretation-PP) and WG 5 people
Dubrovnik - EWGLAM/SRNWP 8-11/10/2007

COSMO strategy for Verification
MAIN PLANS (or projects)

• Advanced interpretation and verification of very high resolution models (project by Pierre Eckert)
• Conditional Verification: VERSUS project
• COSI “The global Score” (COSMO Index)

Advanced interpretation and verification of very high resolution models
Background

The increase in model resolution will lead to a “proliferation” of grid points and also to more noise in the forecasts. The effects of the so-called “double penalty” will also increase for events that are not predicted at exactly the right place and the right time. Ways have to be found to extract the most valuable information from high density fields. This project will explore the connection with various fuzzy verification methods.

Advanced interpretation and verification of very high resolution models
MAIN Goal of the project

Data with very high spatial (and temporal) variability, such as precipitation, have to be treated with special care in order to avoid the double penalty syndrome. The following methods have been identified in a first stage: fuzzy verification, Contiguous Rain Area (CRA), neighborhood methods, fractions skill score, intensity-scale technique and similar.

When the aggregation region is small, the scores are usually poor; as the averaging area increases, the scores become very good. The goal is to find the smallest area at which the benefit of running a very high resolution model is still present. This will be called the reliable scale.

Not only will the verification be carried out at this “optimal” scale; the products for forecasters and customers should also be designed at this scale (or scales).
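The search for a reliable scale can be illustrated with the fractions skill score, one of the methods listed above. The sketch below is not the project's implementation: it uses the commonly published definition of the score (one minus the mean squared difference of neighborhood event fractions, normalised by the sum of their mean squares), zero padding at the domain edge, and a hypothetical target value of 0.5. The function names and the toy fields are assumptions made for illustration only.

```python
def neighbourhood_fractions(binary, n):
    """Fraction of event points in the n x n window centred on each grid point.

    Points outside the domain count as non-events (zero padding, an assumption).
    """
    rows, cols = len(binary), len(binary[0])
    half = n // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            total = 0
            for ii in range(max(0, i - half), min(rows, i + half + 1)):
                for jj in range(max(0, j - half), min(cols, j + half + 1)):
                    total += binary[ii][jj]
            out[i][j] = total / (n * n)
    return out


def fss(forecast, observed, threshold, n):
    """Fractions skill score for one threshold and one (odd) window size n."""
    fb = [[1 if v >= threshold else 0 for v in row] for row in forecast]
    ob = [[1 if v >= threshold else 0 for v in row] for row in observed]
    pf = neighbourhood_fractions(fb, n)
    po = neighbourhood_fractions(ob, n)
    num = sum((a - b) ** 2 for rf, ro in zip(pf, po) for a, b in zip(rf, ro))
    den = sum(a * a + b * b for rf, ro in zip(pf, po) for a, b in zip(rf, ro))
    return 1.0 - num / den if den > 0 else float("nan")


def smallest_useful_scale(forecast, observed, threshold, windows, target=0.5):
    """First window size whose score reaches the (hypothetical) target, else None."""
    for n in windows:
        if fss(forecast, observed, threshold, n) >= target:
            return n
    return None


# Toy case: one rain cell, forecast two grid points east of the observation.
observed = [[0.0] * 7 for _ in range(7)]
forecast = [[0.0] * 7 for _ in range(7)]
observed[2][2] = 5.0
forecast[2][4] = 5.0

for n in (1, 3, 5, 7):
    print(n, round(fss(forecast, observed, 1.0, n), 3))
print("smallest useful window:", smallest_useful_scale(forecast, observed, 1.0, (1, 3, 5, 7)))
```

At the grid scale the displaced cell scores zero (the double penalty); the score rises as the window grows, and the first window that reaches the target plays the role of the reliable scale in this toy setting.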

Advanced interpretation and verification of very high resolution models
Other aspects of the project

1. Application of the “boosting” method for the detection of “special” weather parameters
• This method finds optimal choices among predictors proposed by the meteorologists. Good results are expected for weather parameters not directly included in the model, such as fog or visibility.
2. Use of very high resolution precipitation as input to hydrological models. Studies and verification of the impact of this coupling.

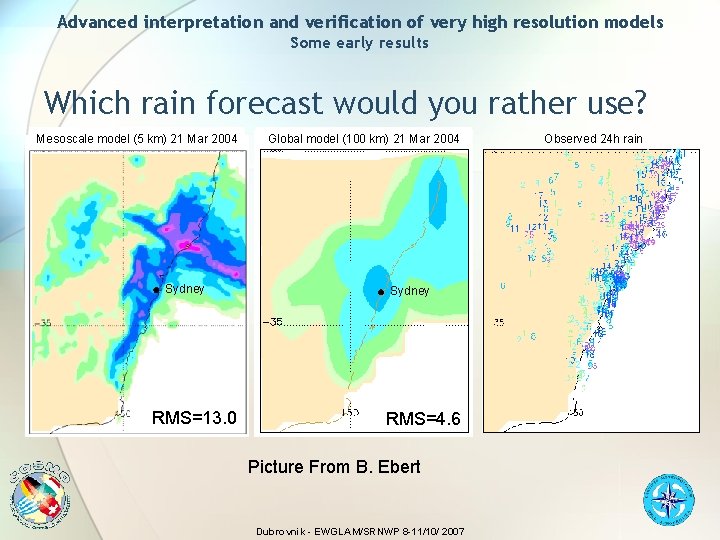

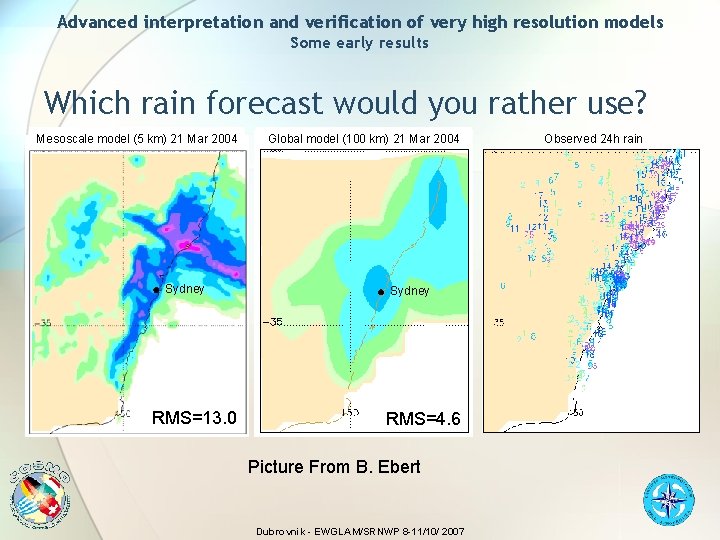

Advanced interpretation and verification of very high resolution models
Some early results

Which rain forecast would you rather use?
• Mesoscale model (5 km), 21 Mar 2004, Sydney: RMS = 13.0
• Global model (100 km), 21 Mar 2004, Sydney: RMS = 4.6

Figure panels: the two forecasts and the observed 24 h rain. Picture from B. Ebert.

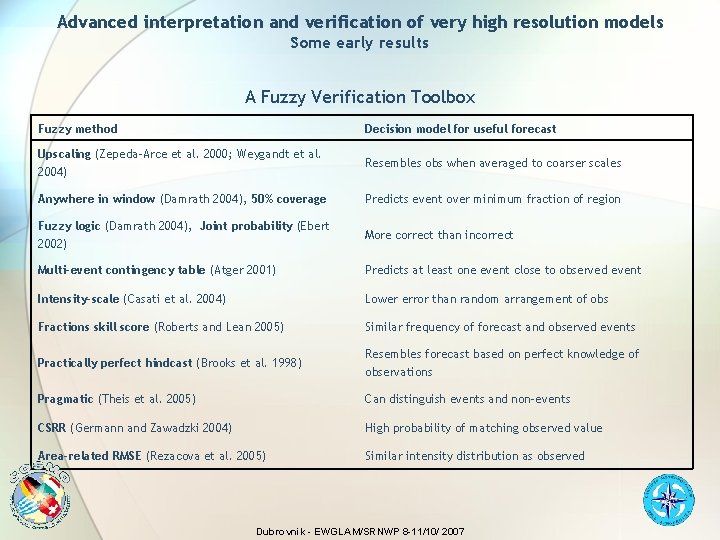

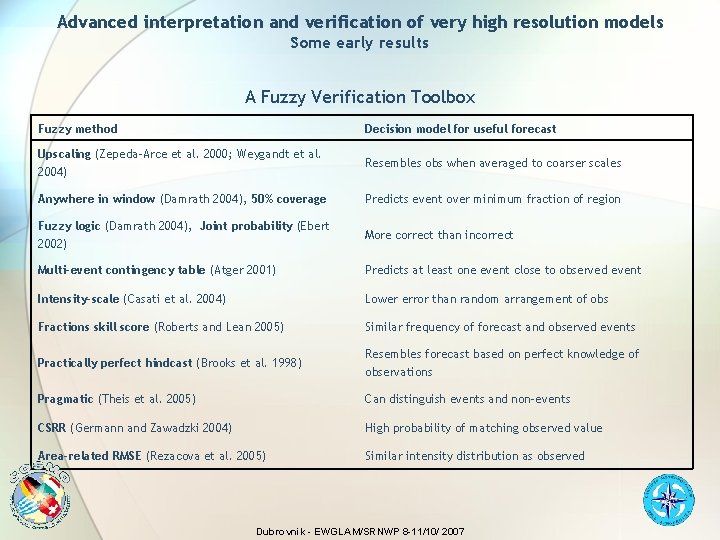

Advanced interpretation and verification of very high resolution models
Some early results: a Fuzzy Verification Toolbox

| Fuzzy method | Decision model for useful forecast |
|---|---|
| Upscaling (Zepeda-Arce et al. 2000; Weygandt et al. 2004) | Resembles obs when averaged to coarser scales |
| Anywhere in window (Damrath 2004), 50% coverage | Predicts event over minimum fraction of region |
| Fuzzy logic (Damrath 2004), Joint probability (Ebert 2002) | More correct than incorrect |
| Multi-event contingency table (Atger 2001) | Predicts at least one event close to observed event |
| Intensity-scale (Casati et al. 2004) | Lower error than random arrangement of obs |
| Fractions skill score (Roberts and Lean 2005) | Similar frequency of forecast and observed events |
| Practically perfect hindcast (Brooks et al. 1998) | Resembles forecast based on perfect knowledge of observations |
| Pragmatic (Theis et al. 2005) | Can distinguish events and non-events |
| CSRR (Germann and Zawadzki 2004) | High probability of matching observed value |
| Area-related RMSE (Rezacova et al. 2005) | Similar intensity distribution as observed |

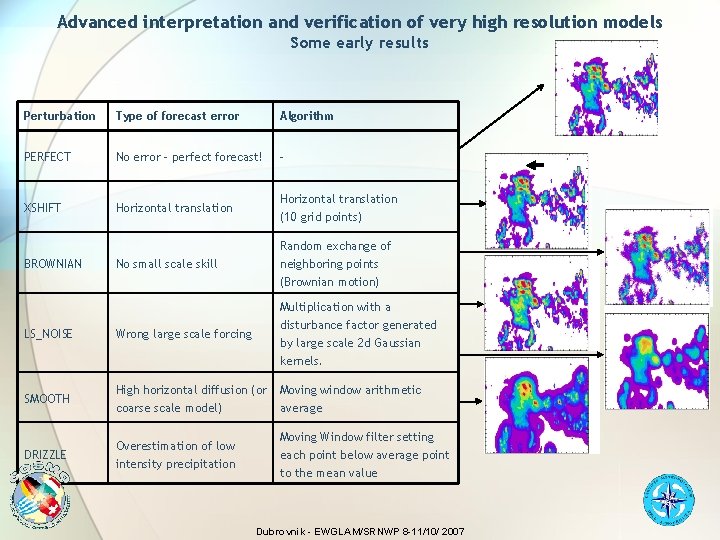

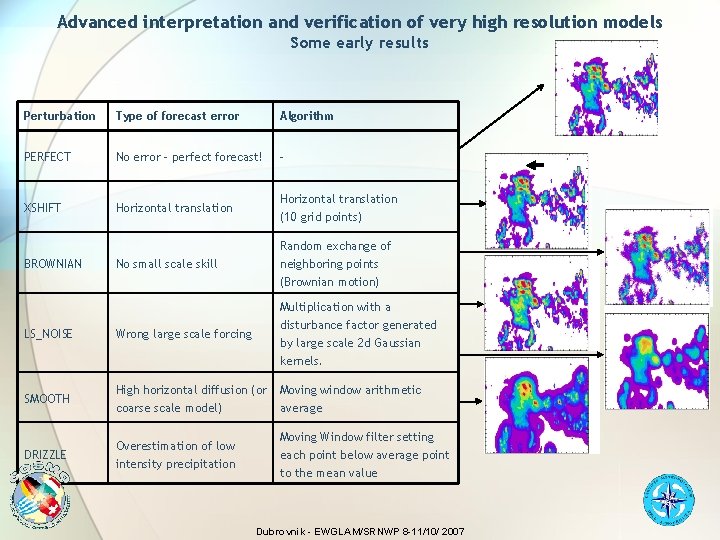

Advanced interpretation and verification of very high resolution models
Some early results

| Perturbation | Type of forecast error | Algorithm |
|---|---|---|
| PERFECT | No error: perfect forecast! | - |
| XSHIFT | Horizontal translation | Horizontal translation (10 grid points) |
| BROWNIAN | No small scale skill | Random exchange of neighboring points (Brownian motion) |
| LS_NOISE | Wrong large scale forcing | Multiplication with a disturbance factor generated by large scale 2D Gaussian kernels |
| SMOOTH | High horizontal diffusion (or coarse scale model) | Moving window arithmetic average |
| DRIZZLE | Overestimation of low intensity precipitation | Moving window filter setting each point below the window average to the mean value |
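Three of the perturbations listed above are simple enough to sketch directly. The code below is an illustration, not the code used for the results shown in these slides: the function names, the window half-width and the zero fill at the domain edge are assumptions, and BROWNIAN and LS_NOISE are left out because the slides do not give enough detail to reproduce them.

```python
def xshift(field, shift=10, fill=0.0):
    """XSHIFT: translate the field by `shift` grid points along each row.

    Points shifted in from outside the domain get `fill` (an assumption).
    """
    cols = len(field[0])
    return [[row[j - shift] if 0 <= j - shift < cols else fill
             for j in range(cols)] for row in field]


def window_mean(field, i, j, half):
    """Arithmetic mean over the window around (i, j), clipped at the domain edge."""
    rows, cols = len(field), len(field[0])
    vals = [field[ii][jj]
            for ii in range(max(0, i - half), min(rows, i + half + 1))
            for jj in range(max(0, j - half), min(cols, j + half + 1))]
    return sum(vals) / len(vals)


def smooth(field, half=1):
    """SMOOTH: replace every point by its moving-window average."""
    return [[window_mean(field, i, j, half) for j in range(len(field[0]))]
            for i in range(len(field))]


def drizzle(field, half=1):
    """DRIZZLE: raise every point that lies below its window mean to that mean."""
    return [[max(field[i][j], window_mean(field, i, j, half))
             for j in range(len(field[0]))] for i in range(len(field))]


# Toy field: a single 9 mm cell in a dry 3 x 3 domain.
field = [[0.0, 0.0, 0.0],
         [0.0, 9.0, 0.0],
         [0.0, 0.0, 0.0]]

print(xshift(field, shift=1))
print(smooth(field))
print(drizzle(field))
```

SMOOTH lowers the peak and spreads it over the window, while DRIZZLE keeps the peak and adds light precipitation around it, which matches the two error types named in the table.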

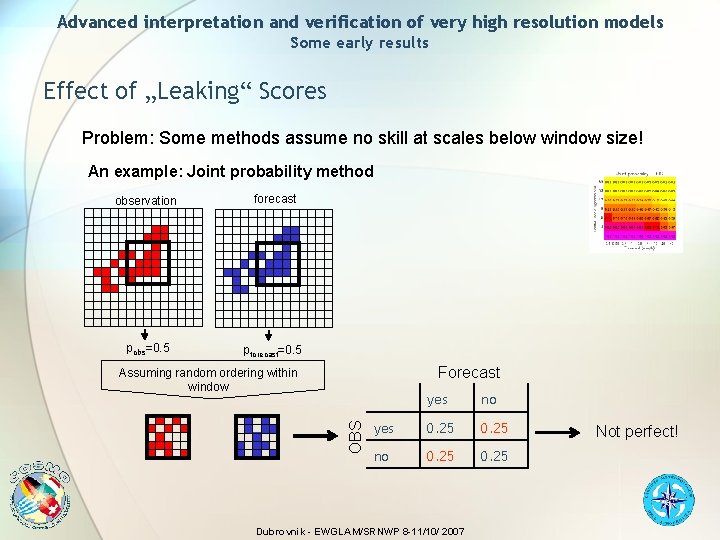

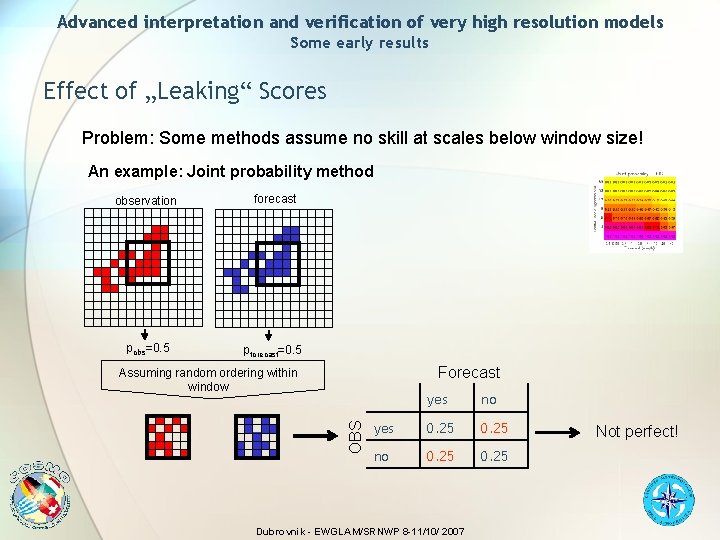

Advanced interpretation and verification of very high resolution models
Some early results: effect of “leaking” scores

Problem: some methods assume no skill at scales below the window size!

An example: the joint probability method
• Observation: p_obs = 0.5; forecast: p_forecast = 0.5
• Assuming random ordering within the window, the forecast/observation contingency table (yes/no) contains entries of 0.25
• Result: not perfect!
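The arithmetic behind this example can be written out in a few lines. The sketch assumes that “random ordering within the window” means forecast and observed events are treated as independent, so every cell of the 2 x 2 table is a product of the two marginal probabilities; the function names are hypothetical.

```python
def random_ordering_table(p_forecast, p_obs):
    """2 x 2 contingency table when forecast and observed events are
    assumed independent inside the window (no skill below window size)."""
    return {
        "hits": p_forecast * p_obs,
        "false_alarms": p_forecast * (1 - p_obs),
        "misses": (1 - p_forecast) * p_obs,
        "correct_negatives": (1 - p_forecast) * (1 - p_obs),
    }


def proportion_correct(table):
    """Share of cases in which forecast and observation agree."""
    return table["hits"] + table["correct_negatives"]


# Table produced by the random-ordering assumption for p = 0.5 in both fields.
leaking = random_ordering_table(0.5, 0.5)

# Table a point-by-point match would give for the same window.
perfect = {"hits": 0.5, "false_alarms": 0.0, "misses": 0.0, "correct_negatives": 0.5}

print(leaking)
print(proportion_correct(leaking), proportion_correct(perfect))
```

Even when the forecast matches the observation point by point, the independence assumption moves half of the probability mass into false alarms and misses, which is why such a score cannot report a perfect forecast as perfect.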

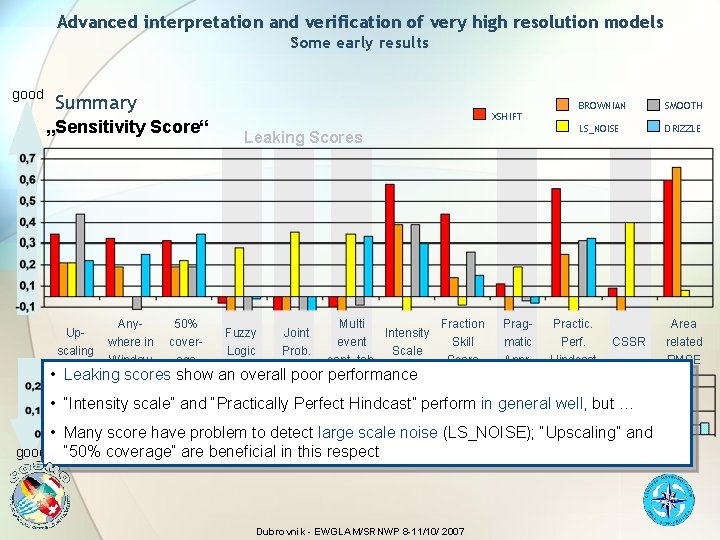

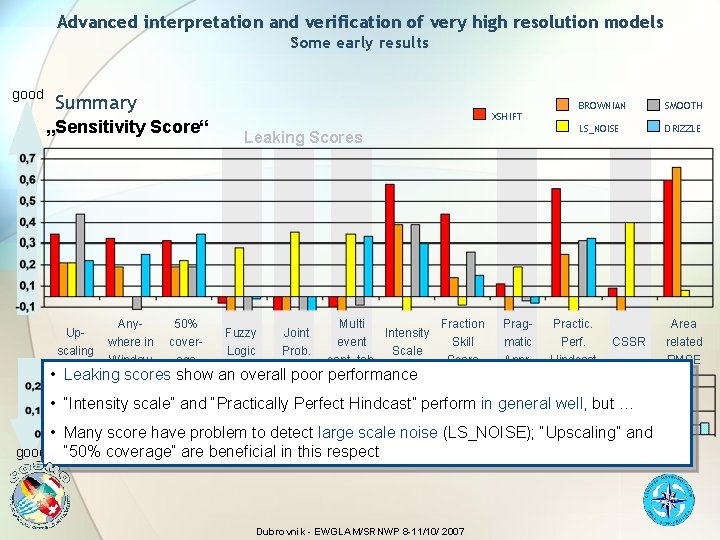

Advanced interpretation and verification of very high resolution models
Some early results: summary “Sensitivity Score”

Figure: sensitivity of each score (Upscaling, Anywhere in Window, 50% coverage, Fuzzy Logic, Joint Prob., Multi event cont. tab., Fraction Skill Score, Intensity Scale, Pragmatic Appr., Practic. Perf. Hindcast, CSRR, Area related RMSE) to each perturbation (XSHIFT, BROWNIAN, SMOOTH, LS_NOISE, DRIZZLE). Figure labels: “good”, “STD”, “Leaking Scores”.

• Leaking scores show an overall poor performance
• “Intensity scale” and “Practically Perfect Hindcast” perform well in general, but …
• Many scores have problems detecting large scale noise (LS_NOISE); “Upscaling” and “50% coverage” are beneficial in this respect

CV Project - VERSUS - Verification System Unified Survey
MAIN Goal of the VERSUS project

Development of a common and unified verification “package” including a Conditional Verification (CV) tool.

METHOD
The typical approach to CV consists of selecting one or several forecast products and one or several mask variables or conditions, which are used to define thresholds for the product verification (e.g. verification of T2M only for grid points with zero cloud cover in both model and observations). Once the desired conditions have been selected, a classical verification tool for statistical indexes can be used. The most flexible way to select forecasts and observations is to use an “ad hoc” database, planned and designed for this purpose, in which the mask or filter can be a simple or complex SQL statement.
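The T2M example can be sketched with an in-memory SQLite database. This is only an illustration of the idea of using an SQL condition as the mask: the table name `pairs`, its columns and the sample values are hypothetical and do not reflect the VERSUS schema, and the condition string is assumed to come from a trusted configuration.

```python
import math
import sqlite3


def conditional_scores(conn, condition="1=1"):
    """Mean error and RMSE of T2M for the forecast/observation pairs that
    satisfy `condition`, an SQL expression used as the mask (trusted input)."""
    query = f"SELECT fc_t2m - ob_t2m FROM pairs WHERE {condition}"
    errors = [row[0] for row in conn.execute(query)]
    if not errors:
        return None
    n = len(errors)
    return {
        "n": n,
        "me": sum(errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
    }


# Hypothetical matched pairs: station, forecast/observed T2M, forecast/observed cloud cover.
rows = [
    (1, 10.0, 12.0, 0, 0),
    (2, 15.0, 14.0, 0, 0),
    (3, 8.0, 8.0, 8, 7),
    (4, 5.0, 9.0, 0, 4),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE pairs (station INTEGER, fc_t2m REAL, ob_t2m REAL, "
    "fc_cloud INTEGER, ob_cloud INTEGER)"
)
conn.executemany("INSERT INTO pairs VALUES (?, ?, ?, ?, ?)", rows)

# Condition: zero cloud cover in both model and observations.
clear_sky = conditional_scores(conn, "fc_cloud = 0 AND ob_cloud = 0")
all_cases = conditional_scores(conn)
print(clear_sky)
print(all_cases)
```

Keeping the mask as an SQL expression means a new condition needs no new application code, only a different `WHERE` clause.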

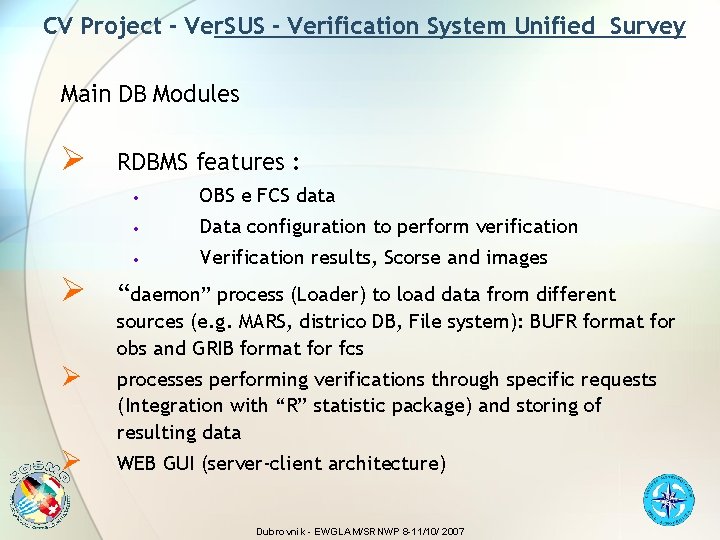

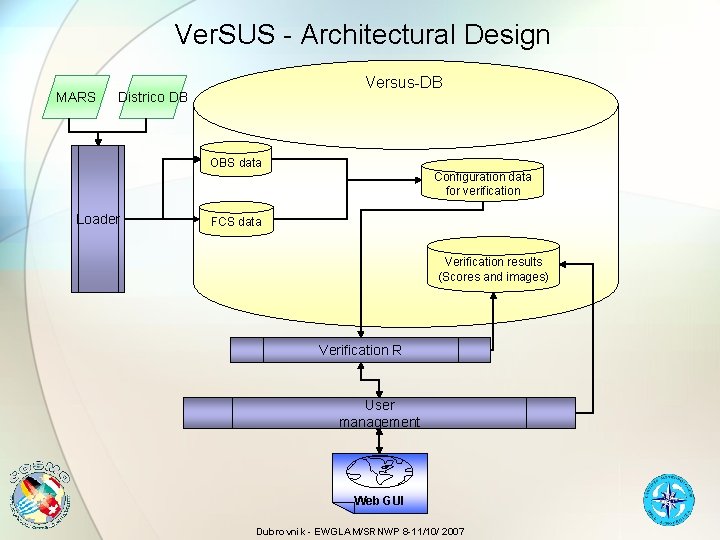

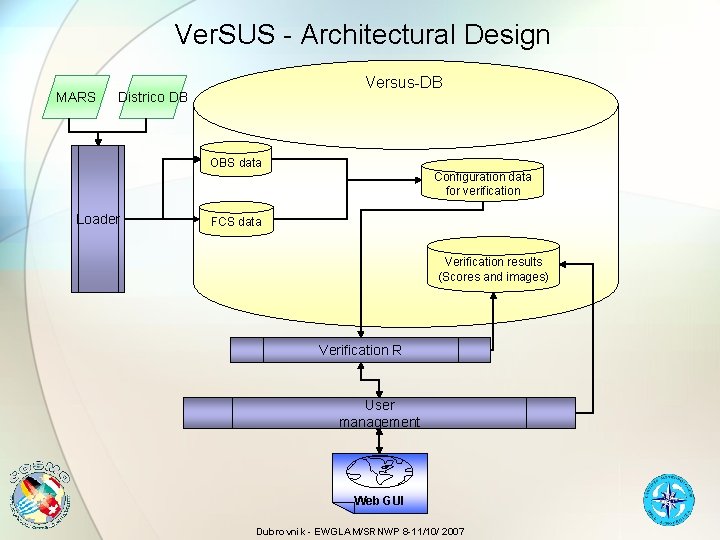

CV Project - VERSUS - Verification System Unified Survey
Main DB Modules

• RDBMS features:
  - OBS and FCS data
  - Data configuration to perform verification
  - Verification results, scores and images
• “Daemon” process (Loader) to load data from different sources (e.g. MARS, Districo DB, file system): BUFR format for obs and GRIB format for fcs
• Processes performing verifications through specific requests (integration with the “R” statistics package) and storing the resulting data
• WEB GUI (server-client architecture)

VERSUS - Architectural Design

Diagram components: MARS; Districo DB; Loader; Versus-DB holding OBS data, FCS data, configuration data for verification, and verification results (scores and images); Verification (R); User management; Web GUI.

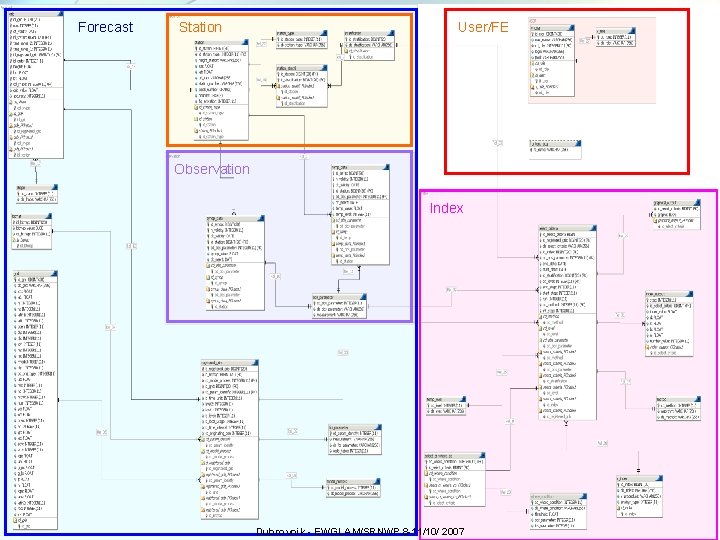

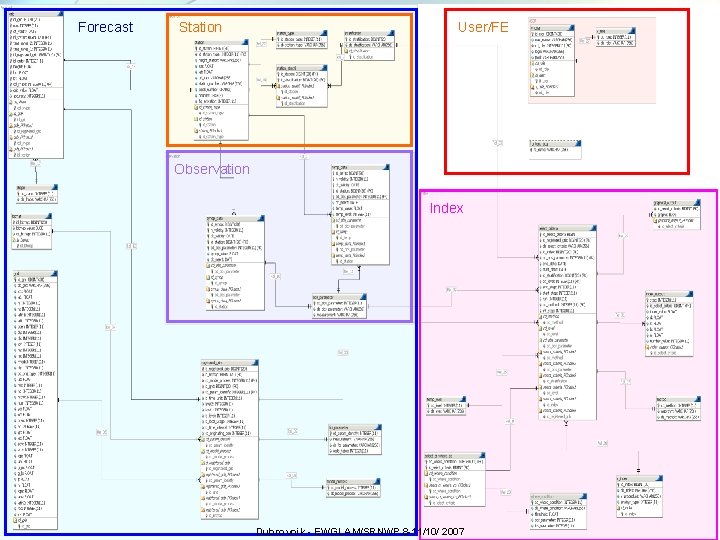

Diagram labels: Forecast, Station, User/FE, Observation, Index

CV Project - VERSUS - Verification System Unified Survey

The VERSUS DB has the following main areas:
• User management area.
• Front-End area for setting up the Front-Ends. There are two main FEs: the loader FE for data ingestion, and the scores FE for computing verification indexes by means of the “R” package library.
• Meteorological data area, for handling observations (surface and upper air), forecast data and their lookup tables.
• Score criteria area, which manages the definition of scores and their application.
• Output area, which stores the scores and graphical output.

CV Project - VERSUS - Verification System Unified Survey

Main lookup tables:
• Station: the list of point meteorological stations that provide surface or upper air observation data to the VERSUS system. The attributes are name, nationality, latitude, longitude, height, and the WMO and/or ICAO code of the station (if they exist). In addition, there is a unique station identifier that the VERSUS DB assigns automatically when a new station is defined through the Graphical User Interface (GUI).
• Obs_type: the list of observation types (templates) such as synop, temp, or any other observation data coded in BUFR format. This table is modified through a GUI.
• Obs_parameter: the list of BUFR parameter codes, with their meaning and input unit of measurement. This table is updated automatically whenever a new BUFR parameter code arrives in the system.
• Model: the list of meteorological models verified by VERSUS.
• Grid: the list of grids, as defined in section 3 of the GRIB.
• Fcs_parameter: data defined in section 1 of the GRIB.

The lookup tables are managed by the GUI or, automatically, by the loader FE of the system whenever a new instance occurs.

CV Project - VERSUS - Verification System Unified Survey

The selection criteria for the forecast and observation data are set up by means of a GUI. The information that must be defined is:
• Stratification (lat/lon, WMO name, morphological, …)
• The list of R verification indexes to apply
• The observed parameter and its condition/filter, if any
• The forecast parameter (model, grid, parameter) and its condition/filter, if any
• The method of getting forecast data, such as nearest point, mean over a given radius, …
• The start date and end date of the data, or the frequency (monthly, weekly, seasonal)
• Steps
• Pressure levels (for upper air)

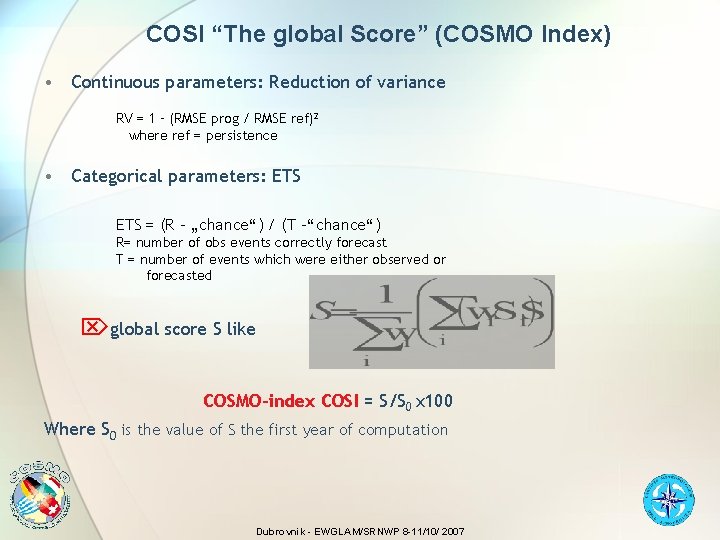

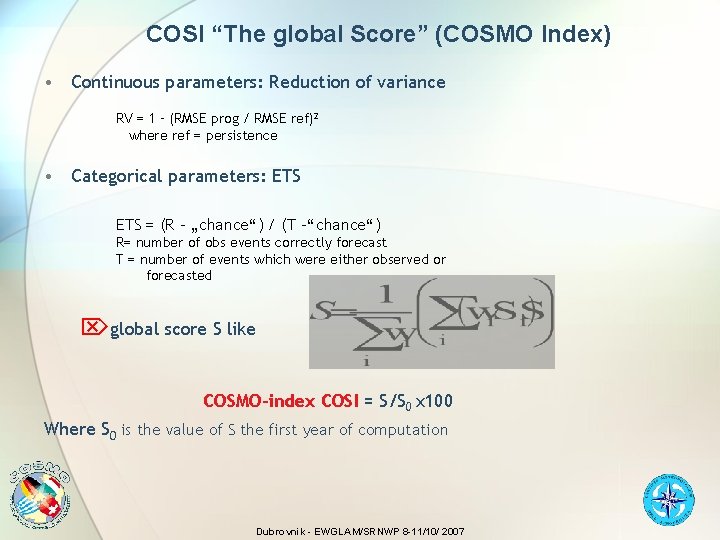

COSI “The global Score” (COSMO Index)

• Continuous parameters: reduction of variance, $RV = 1 - (RMSE_{prog} / RMSE_{ref})^2$, where ref = persistence
• Categorical parameters: $ETS = (R - \text{chance}) / (T - \text{chance})$, where R = number of observed events correctly forecast and T = number of events which were either observed or forecast
• A global score S is expressed as the COSMO index: $COSI = S / S_0 \times 100$, where $S_0$ is the value of S in the first year of computation
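The three formulas above translate directly into code. In this sketch the “chance” term is taken from the commonly used definition of the equitable threat score (number of hits expected from a random forecast with the same event frequencies), which the slide does not spell out; the way the individual RV and ETS values are combined into S is not given in the slides and is therefore not shown. All function names and sample numbers are illustrative.

```python
def reduction_of_variance(rmse_prog, rmse_ref):
    """RV = 1 - (RMSE_prog / RMSE_ref)^2, with persistence as reference."""
    return 1.0 - (rmse_prog / rmse_ref) ** 2


def equitable_threat_score(hits, false_alarms, misses, correct_negatives):
    """ETS = (R - chance) / (T - chance).

    R = hits, T = hits + false alarms + misses. `chance` uses the usual
    random-forecast definition (an assumption, not stated on the slide).
    """
    n = hits + false_alarms + misses + correct_negatives
    chance = (hits + misses) * (hits + false_alarms) / n
    return (hits - chance) / (hits + false_alarms + misses - chance)


def cosmo_index(s, s0):
    """COSI = S / S0 * 100, with S0 the value of S in the first year."""
    return s / s0 * 100.0


# Illustrative numbers only.
print(reduction_of_variance(rmse_prog=2.0, rmse_ref=4.0))
print(equitable_threat_score(hits=30, false_alarms=10, misses=20, correct_negatives=40))
print(cosmo_index(s=0.30, s0=0.25))
```

A COSI above 100 then means the global score has improved relative to the first year of computation, and a value below 100 means it has degraded.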

COSI “The global Score” (COSMO Index)
Parameters

• Total cloud amount [thresholds: 0-2, 3-6, 7-8]
• Temperature [t2m; later: tmin, tmax]
• 10 m wind vector
• Precipitation [thresholds: 0.2, 2, 10 mm/6 h]

COSI “The global Score” (COSMO Index)
Verification frequency

• Every 3 h: T2m, 10 m wind and cloudiness, at 00, 03, …, 18, 21 UTC
• Later on: tmin and tmax over 12 h
• 6 h sums: precipitation

COSI “The global Score” (COSMO Index)
Which models? Aggregation?

• Start with COSMO-7
• But programming also for COSMO-2
• Temperature and wind speed: 1 grid point
• Precipitation: mean in a radius of 15 km
• Cloudiness: mean in a radius of 30 km
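The two aggregation rules above (a single grid point, or a mean within a radius around the station) can be sketched as follows. The great-circle distance uses the haversine formula with a mean Earth radius of 6371 km; the data layout (a list of `(lat, lon, value)` tuples), the function names and the sample coordinates are assumptions for illustration, not the COSI implementation.

```python
import math

EARTH_RADIUS_KM = 6371.0


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_point_value(station, grid):
    """Value at the grid point closest to the station (temperature, wind speed)."""
    lat, lon = station
    return min(grid, key=lambda p: distance_km(lat, lon, p[0], p[1]))[2]


def radius_mean(station, grid, radius_km):
    """Mean of all grid values within radius_km of the station, or None if empty."""
    lat, lon = station
    vals = [v for (glat, glon, v) in grid
            if distance_km(lat, lon, glat, glon) <= radius_km]
    return sum(vals) / len(vals) if vals else None


# Hypothetical station and grid points: (lat, lon, value).
station = (46.0, 8.0)
grid = [
    (46.0, 8.0, 2.0),    # at the station
    (46.1, 8.0, 4.0),    # about 11 km away
    (46.0, 8.3, 10.0),   # about 23 km away
    (47.0, 8.0, 100.0),  # about 111 km away
]

print(nearest_point_value(station, grid))
print(radius_mean(station, grid, 15.0))   # precipitation-style aggregation
print(radius_mean(station, grid, 30.0))   # cloudiness-style aggregation
```

The larger radius pulls in the third grid point, so the same field yields a different matched forecast value depending on the parameter's aggregation rule.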

COSI “The global Score” (COSMO Index)

List of stations:
• Starting point: EWGLAM station list for verification
• Selection based on availability of cloudiness every 3 h each day
• Plus “some more” representative stations for COSMO countries

THE_Score will be computed for each COSMO country and for different regions (W/N/E/S-Europe, Alps, smallest common region of all COSMO-xx, …)

COSMO strategy for Verification
Conclusions: Advanced interpretation and verification of very high resolution models

• Search for the “optimal scale” for verification and for representation of precipitation fields
• Fuzzy verification scores are a promising framework for the verification of high resolution precipitation forecasts
• Not all scores indicate a perfect forecast by a perfect score (leaking scores)
• Choice of scores: Upscaling, Intensity scale, Fraction skill score (?)
• End of the project expected in 2008

COSMO strategy for Verification
Conclusions: VERSUS project

• One tool for Verification and Conditional Verification
• Powerful DB
• No “ad hoc” application needed to create verifications: only simple selections
• R integration (to add statistical indexes, only the “Verification Package” needs to be updated): community knowledge
• User configurable through the GUI (Graphical User Interface)
• Web-based GUI
• End of the project expected in 2008 (delivery of the package)

COSMO strategy for Verification
Conclusions: COSI “The global Score” (COSMO Index)

• Implementation planned for the near future
• Included in the Common Verification Suite package (common Fortran package for standard verifications, delivered to the COSMO community in 2006)
• Will be included in the VERSUS package
• First results hopefully in time for the COSMO GM of 2008
