Computer-Aided Language Processing Ruslan Mitkov University of Wolverhampton

- Slides: 37

The rise and fall of Natural Language Processing (NLP)?
- Automatic NLP: expectations fulfilled?
- Many practical applications, such as IR, shy away from NLP techniques
- Performance accurate?
- In many applications, such as word alignment, anaphora resolution and term extraction, accuracy can be well below 100%
- Dramatic improvements feasible in the foreseeable future?

Context
- Promising NLP projects and results, but
- In the vast majority of real-world applications, fully automatic NLP is still far from delivering reliable results.

Alternative: computer-aided language processing (CALP)
- Computer-aided scenario:
- Processing is not done entirely by computers
- Human intervention improves, post-edits or validates the output of the computer program.

Historical perspective
- Martin Kay’s (1980) paper on machine-aided translation

Machine-Aided Translation The translator sends the simple sentences for translation to the computer and translates the more difficult, complex ones him(her)self.

CALP examples
- Machine-aided Translation
- Summarisation (Orasan, Mitkov and Hasler 2003)
- Generation of multiple-choice tests (Mitkov and Ha 2003; Mitkov, Ha and Karamanis 2006)
- Information extraction (Cunningham et al. 2002)
- Acquisition of semantic lexicons (Riloff and Schmelzenbach 1998)
- Annotation of corpora (Orasan 2005)
- Translation Memory

Translation Memory
A Translation Memory is a linguistic database that collects all your translations and their target language equivalents as you translate.
Match 87%: linguistic → linguistische

CALP applications in focus
- Machine-aided Translation
- Translation Memory
- Annotation tools
- Computer-aided Summarisation
- Computer-aided Generation of Multiple-Choice Tests

MAT: the Penang experiment
- Books/manuals averaging about 250 pages translated manually by a translation bureau and by a Machine-Aided Translation program (SISKEP).
- Manual translation took 360 hours on average
- Translation by a Machine-Aided Translation program needed 200 hours on average
- Efficiency rate: 1.8

Translation Memory
- A case study (Webb 1998)
- Client saves 40% money, 70% time
- Translator / translation agency saves 69% money, 70% time
- Efficiency rate: 3.3
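The efficiency rates quoted on these slides appear to be the ratio of unaided time to computer-aided time. That reading is an assumption, not something the slides state. A minimal Python sketch under that assumption, using the Penang hours and the 70% time saving from the Translation Memory case study (the function names are illustrative):

```python
def efficiency_rate(unaided_time: float, aided_time: float) -> float:
    """Ratio of unaided to computer-aided time (assumed definition)."""
    if aided_time <= 0:
        raise ValueError("aided_time must be positive")
    return unaided_time / aided_time


def efficiency_from_saving(time_saving: float) -> float:
    """Efficiency rate implied by a fractional time saving, e.g. 0.70."""
    if not 0 <= time_saving < 1:
        raise ValueError("time_saving must be in [0, 1)")
    return 1.0 / (1.0 - time_saving)


# Penang experiment: 360 hours manual vs 200 hours machine-aided.
mat_rate = efficiency_rate(360, 200)
# Translation Memory case study: 70% of the time saved.
tm_rate = efficiency_from_saving(0.70)

print(f"MAT: {mat_rate:.1f}, TM: {tm_rate:.1f}")
```

Under this reading both reported rates (1.8 and 3.3) are reproduced to one decimal place.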

PALinkA: multi-task annotation tool
- Employed in a number of corpora annotation tasks
- (Semi-automatic) mark-up of coreference
- (Semi-automatic) mark-up of centering
- (Semi-automatic) mark-up of events

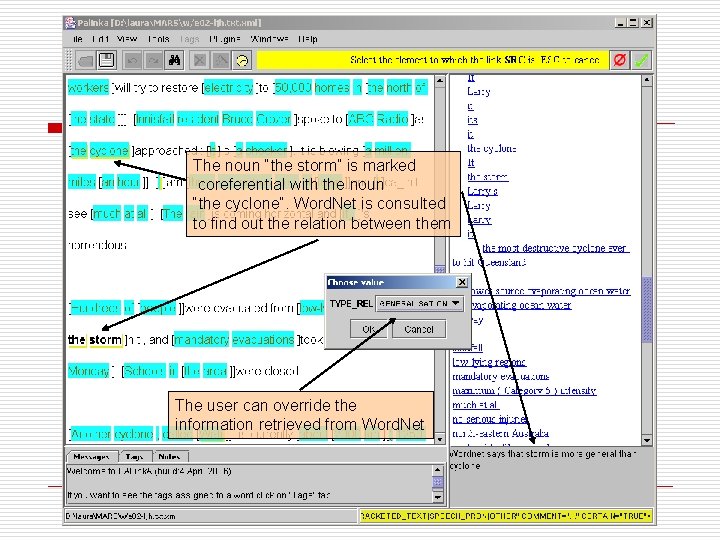

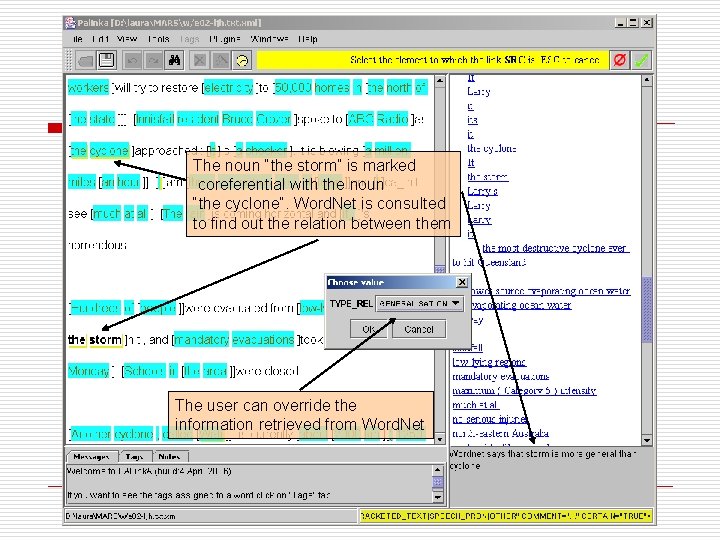

The noun “the storm” is marked coreferential with the noun “the cyclone”. WordNet is consulted to find out the relation between them. The user can override the information retrieved from WordNet.

PALinkA: multi-task annotation tool (II)
- Webpage: http://clg.wlv.ac.uk/projects/PALinkA
- Old version: over 500 downloads; used in several projects
- New version: supports plugins (not available for download yet)

Further CALP experiments (evaluations) at the University of Wolverhampton
- Computer-aided summarisation
- Computer-aided generation of multiple-choice tests
- Efficiency and quality evaluated in both cases

Computer-aided summarisation
- CAST: computer-aided summarisation tool (Orasan, Mitkov and Hasler 2003)
- Combines automatic methods with human input
- Relies on automatic methods to identify the important information
- Humans can decide to include this information and/or additional information
- Humans post-edit the information to produce a coherent summary

Evaluation (Orasan and Hasler 2007)
- Time for producing summaries with and without CAST
- Consistent familiarity-effect-extinguished model: the same texts were summarised manually and with the help of the program at intervals of 1 year
- Human judges had to choose the better summary when presented with a pair of summaries

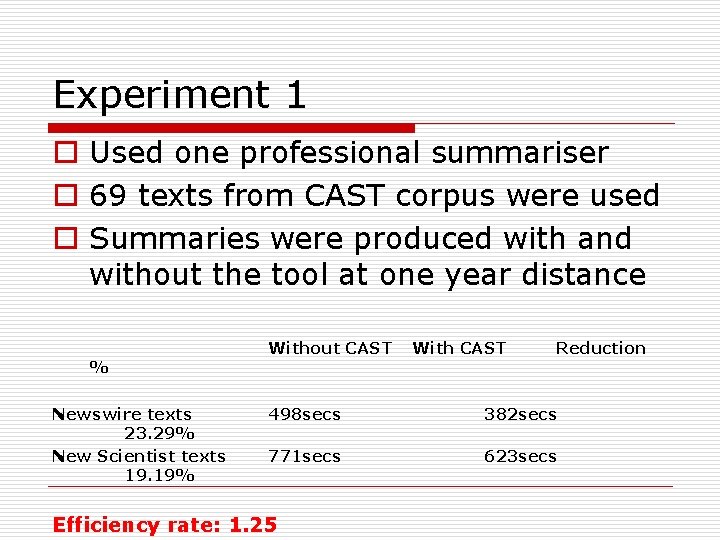

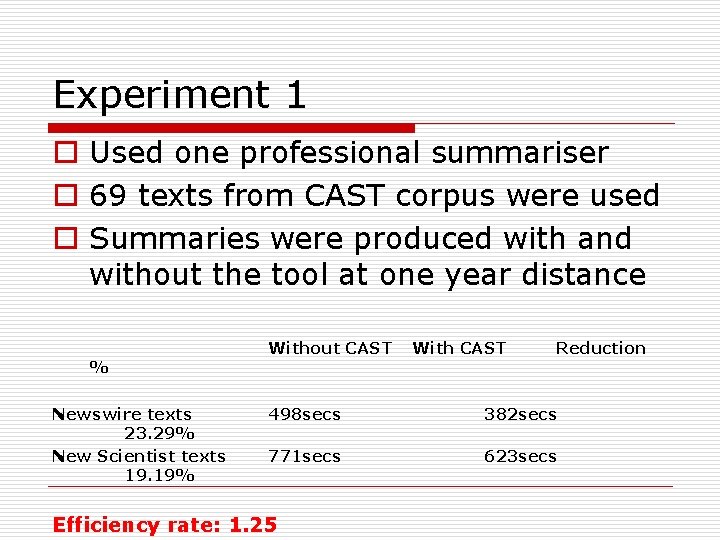

Experiment 1
- Used one professional summariser
- 69 texts from the CAST corpus were used
- Summaries were produced with and without the tool, one year apart

| | Without CAST | With CAST | Reduction |
|---|---|---|---|
| Newswire texts | 498 secs | 382 secs | 23.29% |
| New Scientist texts | 771 secs | 623 secs | 19.19% |

Efficiency rate: 1.25
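The reduction figures for Experiment 1 can be checked from the reported timings. The sketch below assumes the reduction is the relative drop in time per summary, (without - with) / without. The slides do not spell this out, and the helper name is illustrative.

```python
def time_reduction(without_tool: float, with_tool: float) -> float:
    """Percentage reduction in time when the tool is used."""
    return 100.0 * (without_tool - with_tool) / without_tool


# Average seconds per summary (without CAST, with CAST), as reported.
timings = {
    "Newswire": (498, 382),
    "New Scientist": (771, 623),
}

reductions = {
    genre: time_reduction(without, with_cast)
    for genre, (without, with_cast) in timings.items()
}

for genre, pct in reductions.items():
    print(f"{genre}: {pct:.2f}% reduction")
```

The recomputed values agree with the reported 23.29% and 19.19% to within about 0.01 percentage points. The small difference for New Scientist texts is consistent with the timings having been rounded to whole seconds.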

Experiment 1
- The term-based summariser used in the process was also evaluated
- Correlation between the success of the automatic summariser and the time reduction

Experiment 2
- Turing-like experiment where humans were asked to pick the better summary in a pair
- Each pair contained one summary produced with CAST and one without CAST
- 17 judges were each shown 4 randomly selected pairs

Experiment 2
- In 41 pairs the summary produced with CAST was preferred
- In 27 pairs the summary produced without CAST was preferred
- A chi-square test shows no statistically significant difference at the 0.05 level
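One plausible reading of this test is a chi-square goodness-of-fit test of the 41/27 split against an even split of the 68 judgements (1 degree of freedom). The slides do not say exactly which variant was run, so the sketch below is an assumption. It uses only the standard library, and the function name is illustrative.

```python
import math


def chi_square_even_split(count_a: int, count_b: int):
    """Chi-square goodness-of-fit test against a 50/50 split (df = 1).

    Returns the test statistic and its p-value.
    """
    expected = (count_a + count_b) / 2
    statistic = ((count_a - expected) ** 2 + (count_b - expected) ** 2) / expected
    # For 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


statistic, p_value = chi_square_even_split(41, 27)
significant = p_value < 0.05

print(f"chi-square = {statistic:.3f}, p = {p_value:.3f}, significant: {significant}")
```

The statistic (about 2.88) falls below the 3.84 critical value for 1 degree of freedom at the 0.05 level, which agrees with the conclusion on the slide.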

Discussion
- Computer-aided summarisation works for professional summarisers
- Reduces the time necessary to produce summaries by about 20%
- Quality of summaries not compromised

Computer-aided generation of multiple-choice tests (Mitkov and Ha 2003)
- Multiple-choice test: an effective way to measure student achievements.
- Fact: development of multiple-choice tests is a time-consuming and labour-intensive task
- Alternative: computer-aided multiple-choice test generation based on a novel NLP methodology
- How does it work?

Methodology
- The system identifies the important concepts in text
- Generates questions focusing on these concepts
- Chooses semantically closest distractors

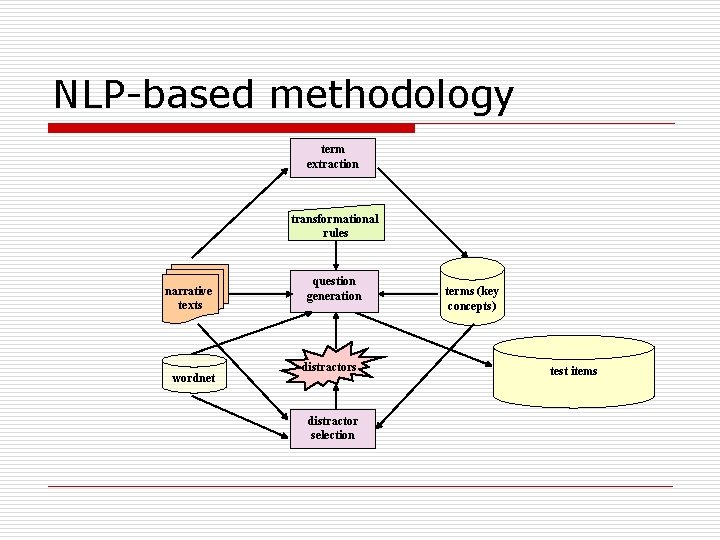

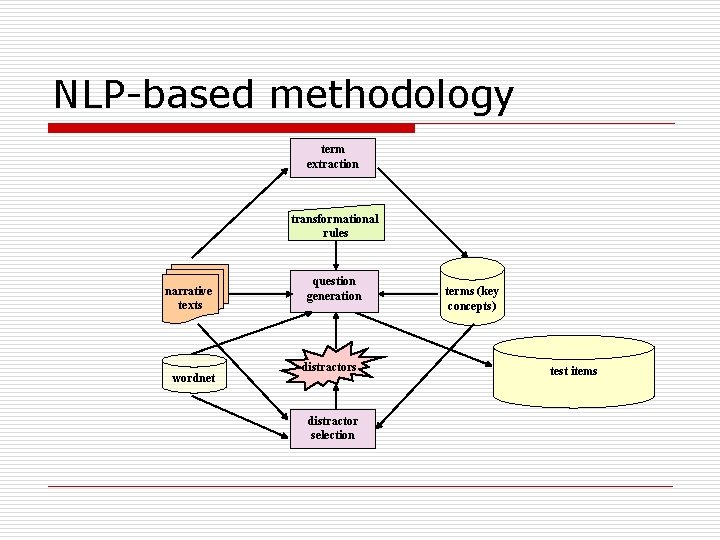

NLP-based methodology
[Diagram components: narrative texts; term extraction; terms (key concepts); transformational rules; question generation; WordNet; distractor selection; distractors; test items]
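To make the distractor-selection step concrete, here is a toy Python sketch. It assumes that "semantically closest" can be approximated by coordinate terms, that is, terms sharing a hypernym with the correct answer. The tiny taxonomy is hand-made for illustration and stands in for a WordNet lookup; it is not the system's actual resource or algorithm.

```python
# Hypothetical mini-taxonomy: term -> hypernym (stand-in for WordNet).
TOY_HYPERNYMS = {
    "noun": "part of speech",
    "verb": "part of speech",
    "adjective": "part of speech",
    "adverb": "part of speech",
    "phoneme": "linguistic unit",
    "morpheme": "linguistic unit",
}


def select_distractors(answer: str, hypernyms: dict, k: int = 3) -> list:
    """Return up to k terms that share a hypernym with the correct answer."""
    parent = hypernyms.get(answer)
    if parent is None:
        return []  # unknown term: leave distractor choice to the test developer
    siblings = sorted(
        term for term, hyper in hypernyms.items()
        if hyper == parent and term != answer
    )
    return siblings[:k]


distractors = select_distractors("noun", TOY_HYPERNYMS)
print(distractors)
```

In a computer-aided setting the returned list is only a proposal; the test developer still accepts, replaces or post-edits the candidates.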

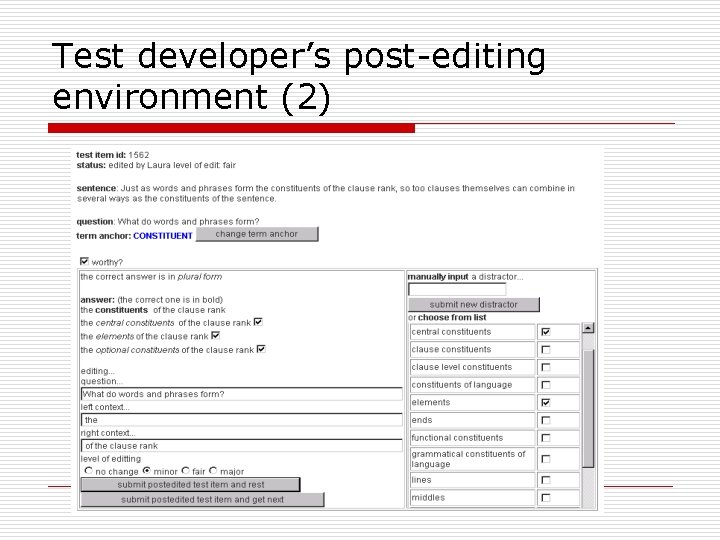

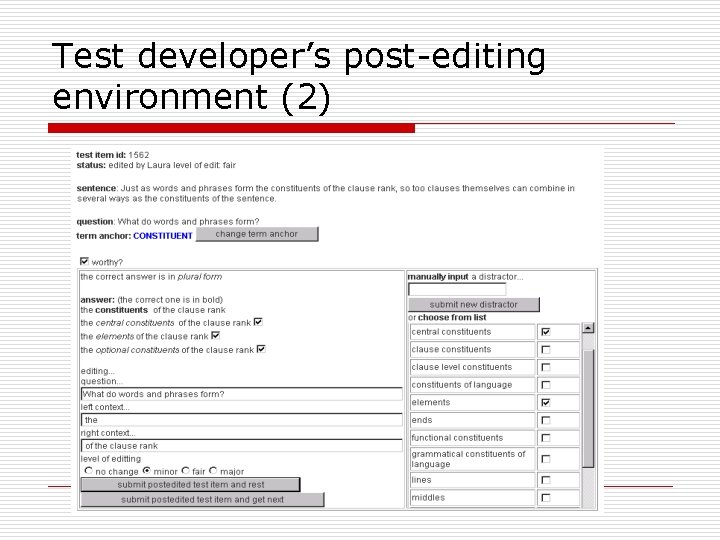

Test developer’s post-editing environment
- First version of system: 3 distractors generated to be post-edited
- Current version of system: long list of distractors generated, from which the user chooses 3

Test developer’s post-editing environment (2)

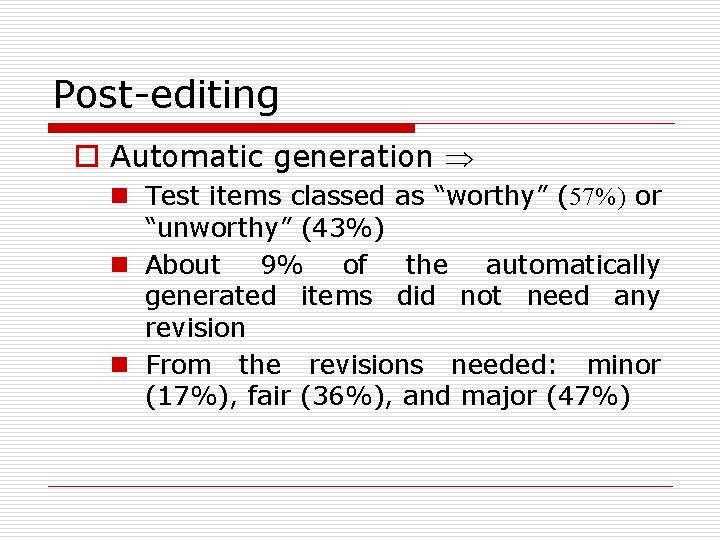

Post-editing
- Automatic generation
  - Test items classed as “worthy” (57%) or “unworthy” (43%)
  - About 9% of the automatically generated items did not need any revision
  - Of the revisions needed: minor (17%), fair (36%), and major (47%)

In-class experiments
- Controlled set of test items administered
- First experiment: 24 items constructed with the help of the first version of the system
- Second experiment: another 18 items constructed with the help of the current version of the system
- Further 12 manually produced items included
- 113 undergraduate students took the test
  - 45 in first experiment
  - 78 in second experiment
  - subset of second group (30) replied to manually produced test

Evaluation
- Efficiency of the procedure
- Quality of the test items

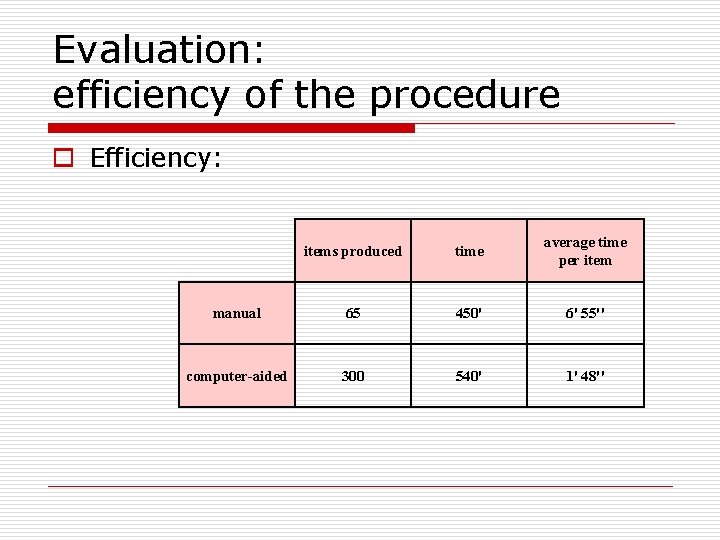

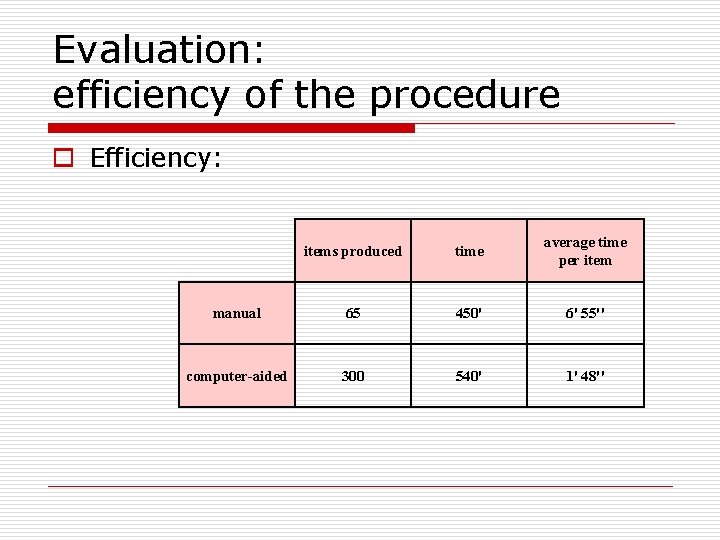

Evaluation: efficiency of the procedure
- Efficiency:

| | Items produced | Time | Average time per item |
|---|---|---|---|
| Manual | 65 | 450' | 6' 55'' |
| Computer-aided | 300 | 540' | 1' 48'' |
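The per-item averages follow directly from the totals. A short Python check, assuming the times are in minutes (as the ' notation suggests) and with an illustrative helper name:

```python
def average_time_per_item(total_minutes: float, items: int):
    """Average time per item as (minutes, seconds), rounded to the nearest second."""
    seconds = round(total_minutes * 60 / items)
    return divmod(seconds, 60)


manual = average_time_per_item(450, 65)    # manual construction
aided = average_time_per_item(540, 300)    # computer-aided construction

# Ratio of average times per item (manual over computer-aided).
speed_up = (450 / 65) / (540 / 300)

print(f"manual: {manual[0]}' {manual[1]}''")
print(f"computer-aided: {aided[0]}' {aided[1]}''")
print(f"ratio: {speed_up:.2f}")
```

The ratio comes out at roughly 3.85, in line with the efficiency rate of 3.8 reported for multiple-choice test generation.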

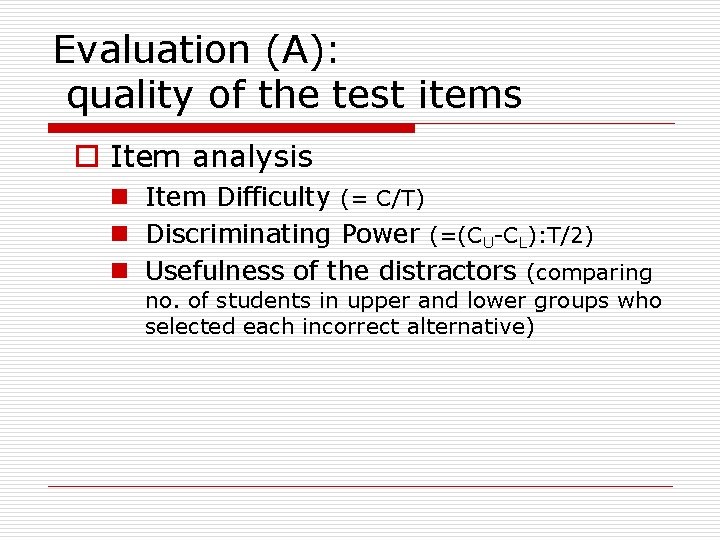

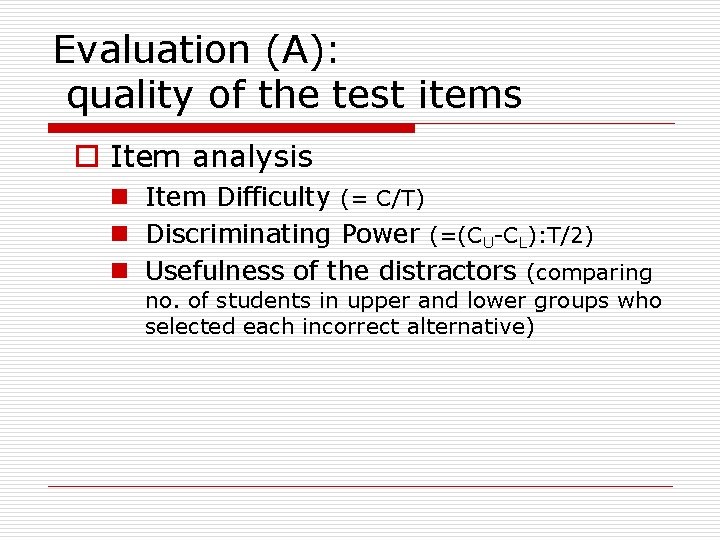

Evaluation (A): quality of the test items
- Item analysis
  - Item Difficulty (= C / T)
  - Discriminating Power (= (CU - CL) / (T/2))
  - Usefulness of the distractors (comparing no. of students in upper and lower groups who selected each incorrect alternative)
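The item-analysis measures can be sketched in a few lines of Python. The symbol meanings are assumptions based on standard classical item analysis, since the slide does not define them: C is the number of students answering correctly, T the total number of students, and CU and CL the numbers answering correctly in the upper and lower groups. The counts in the example are invented for illustration.

```python
def item_difficulty(correct: int, total: int) -> float:
    """C / T: proportion of students who answered the item correctly."""
    return correct / total


def discriminating_power(correct_upper: int, correct_lower: int, total: int) -> float:
    """(CU - CL) / (T / 2): how well the item separates upper from lower group."""
    return (correct_upper - correct_lower) / (total / 2)


def distractor_difference(lower_picks: int, upper_picks: int) -> int:
    """Positive when a distractor attracts more lower-group than upper-group students."""
    return lower_picks - upper_picks


# Hypothetical item: 30 students, 18 correct (12 in upper group, 6 in lower).
difficulty = item_difficulty(18, 30)
power = discriminating_power(12, 6, 30)
# Hypothetical distractor chosen by 5 lower-group and 2 upper-group students.
difference = distractor_difference(5, 2)

print(f"difficulty = {difficulty:.2f}, discriminating power = {power:.2f}, "
      f"distractor difference = {difference}")
```

Under these definitions a negative discriminating power means the lower group outperformed the upper group on the item, and a distractor with a non-positive difference is a candidate for revision.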

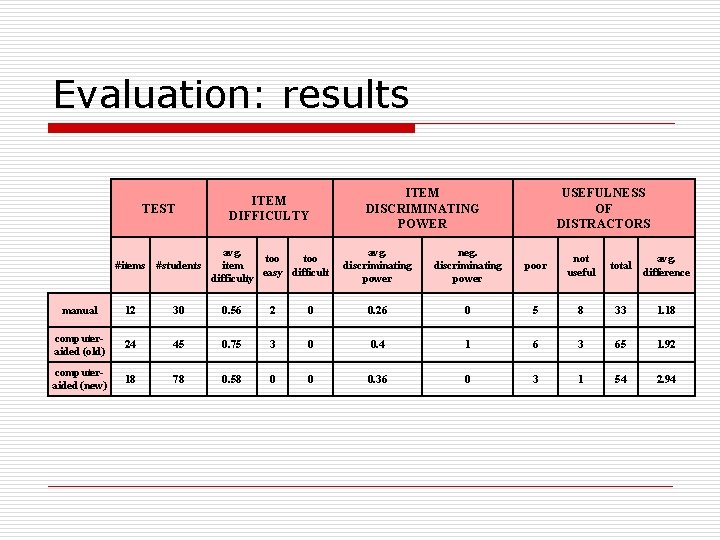

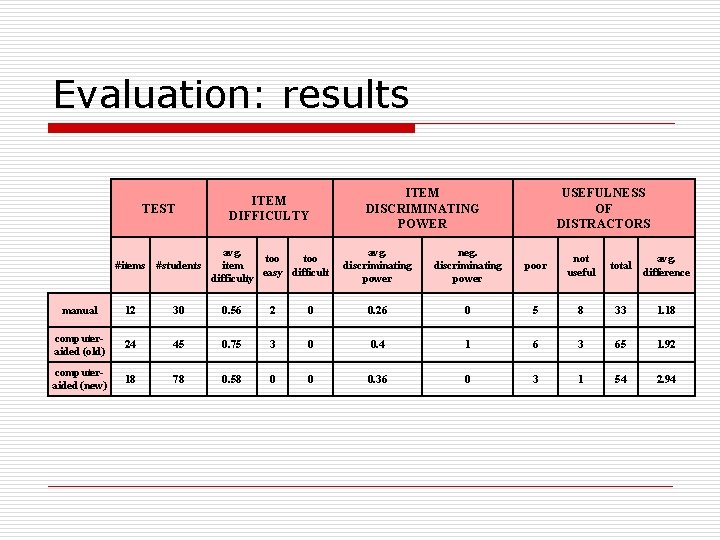

Evaluation: results

| Test | #items | #students | Item difficulty: avg. | Item difficulty: too easy | Item difficulty: too difficult | Discriminating power: avg. | Discriminating power: negative | Distractors: poor | Distractors: not useful | Distractors: total | Distractors: avg. difference |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Manual | 12 | 30 | 0.56 | 2 | 0 | 0.26 | 0 | 5 | 8 | 33 | 1.18 |
| Computer-aided (old) | 24 | 45 | 0.75 | 3 | 0 | 0.4 | 1 | 6 | 3 | 65 | 1.92 |
| Computer-aided (new) | 18 | 78 | 0.58 | 0 | 0 | 0.36 | 0 | 3 | 1 | 54 | 2.94 |

Discussion
- Computer-aided construction of multiple-choice test items is much more efficient than purely manual construction (efficiency rate 3.8)
- Quality of test items produced with the help of the program is not compromised in exchange for time and labour savings

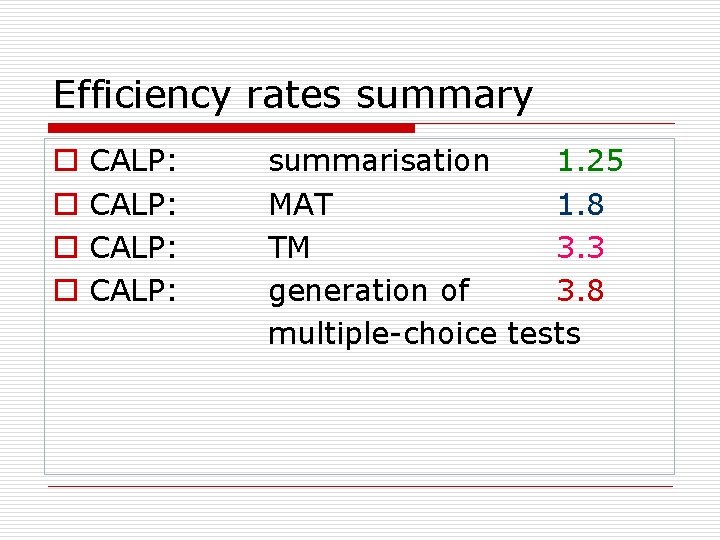

Efficiency rates summary
- CALP:
  - Summarisation: 1.25
  - MAT: 1.8
  - TM: 3.3
  - Generation of multiple-choice tests: 3.8

Conclusions
- CALP: attractive alternative to automatic NLP
- CALP: significant efficiency gains (time and labour)
- CALP: no compromise of quality

Further information
- My web page: www.wlv.ac.uk/~le1825
- The Research Group in Computational Linguistics: clg.wlv.ac.uk