CMU TEAM-A in TDT 2004 Topic Tracking


CMU TEAM-A in TDT 2004 Topic Tracking

Yiming Yang
School of Computer Science
Carnegie Mellon University

Carnegie Mellon School of Computer Science, Language Technologies Institute
CMU Team-1 in TDT 2004 Workshop

CMU Team A

- Jaime Carbonell (PI)
- Yiming Yang (Co-PI)
- Ralf Brown
- Jian Zhang
- Nianli Ma
- Shinjae Yoo
- Bryan Kisiel, Monica Rogati, Yi Chang

Participated Tasks in TDT 2004

- Topic Tracking (Nianli Ma et al.)
- Supervised Adaptive Tracking (Yiming Yang et al.)
- New Event Detection (Jian Zhang et al.)
- Link Detection (Ralf Brown)
- Hierarchical Topic Detection (did not participate)

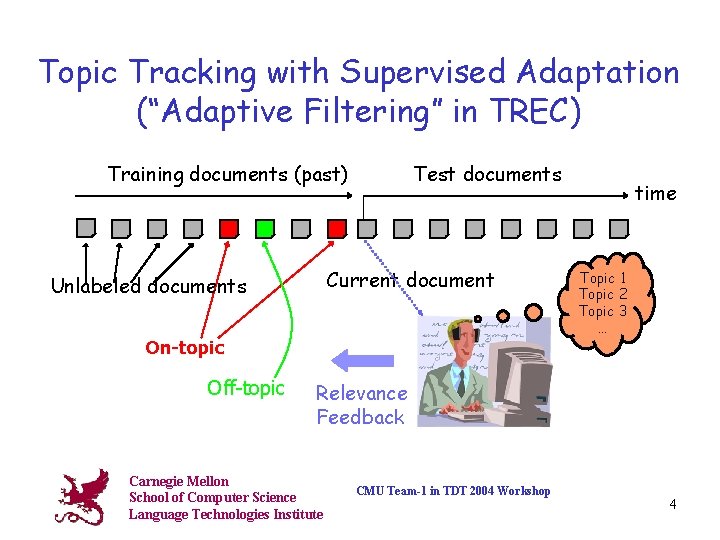

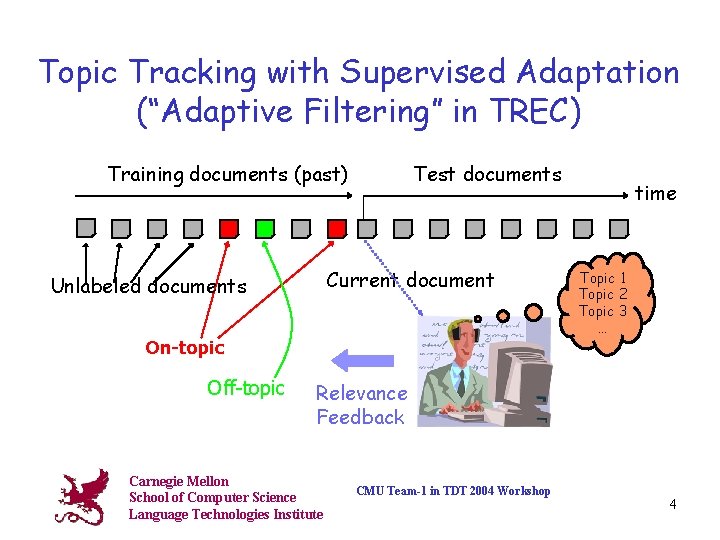

Topic Tracking with Supervised Adaptation (“Adaptive Filtering” in TREC)

[Diagram labels: time axis; Topic 1, Topic 2, Topic 3, …; training documents (past), test documents, current document, unlabeled documents; on-topic and off-topic markers; relevance feedback.]

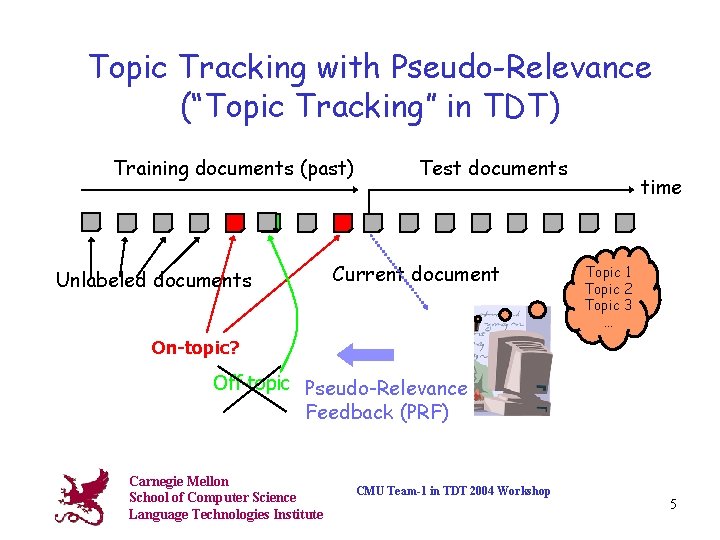

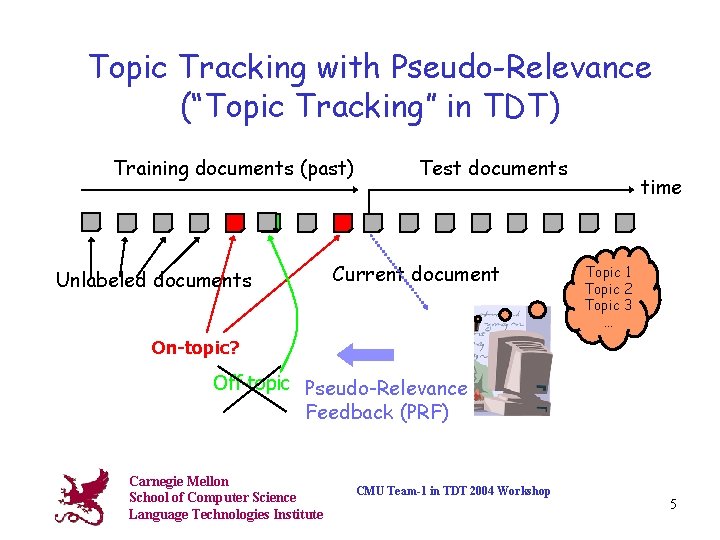

Topic Tracking with Pseudo-Relevance (“Topic Tracking” in TDT)

[Diagram labels: time axis; Topic 1, Topic 2, Topic 3, …; training documents (past), unlabeled documents, test documents, current document; "On-topic?" and off-topic markers; pseudo-relevance feedback (PRF).]

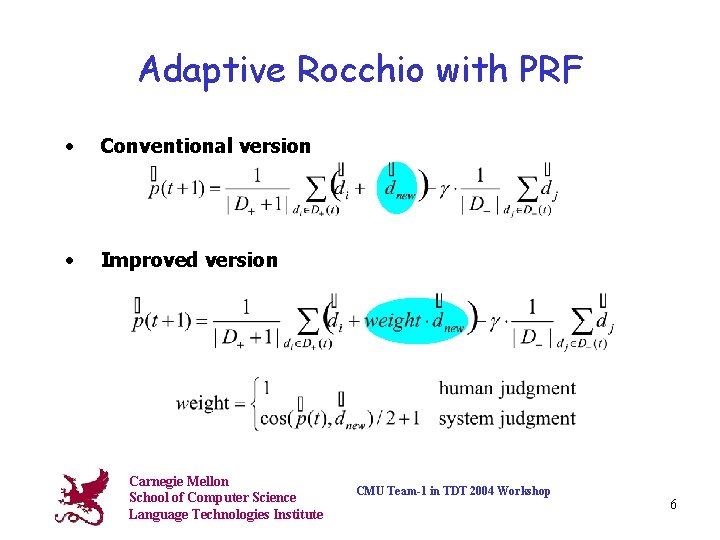

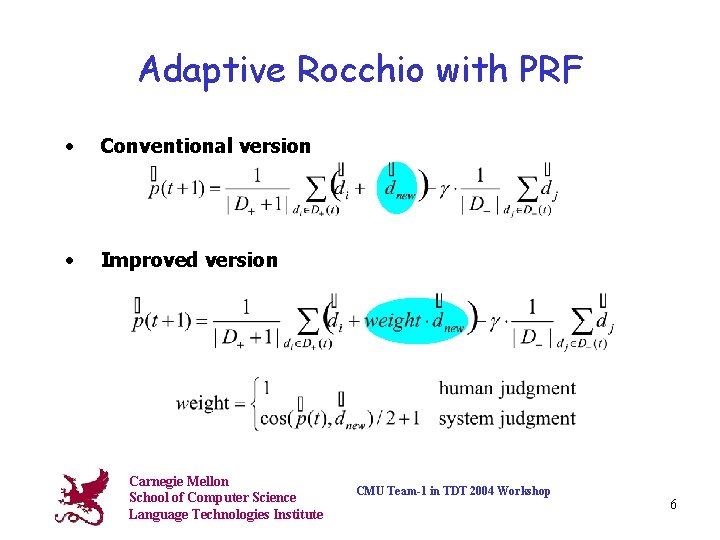

Adaptive Rocchio with PRF

- Conventional version
- Improved version
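The update formulas for the two versions appeared as slide images and are not reproduced in this text. The following is only a minimal sketch of how Rocchio-style tracking with pseudo-relevance feedback might look. It assumes sparse term-weight vectors, cosine scoring, and that the "improved" version weights each pseudo-relevant document by its own score; the names `track_with_prf`, `alpha`, and `weighted` are hypothetical and not taken from the slides.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse vectors (dict: term -> weight)."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def add_scaled(profile, doc, scale):
    """Return profile + scale * doc without mutating the inputs."""
    out = dict(profile)
    for t, w in doc.items():
        out[t] = out.get(t, 0.0) + scale * w
    return out

def track_with_prf(seed_docs, stream, threshold, alpha=0.5, weighted=True):
    """Track one topic over `stream`, adapting the profile with PRF.

    seed_docs: on-topic training documents; stream: unlabeled test documents.
    alpha, threshold, and the score-based weight are illustrative assumptions.
    """
    profile = {}
    for d in seed_docs:
        profile = add_scaled(profile, d, 1.0 / len(seed_docs))
    decisions = []
    for doc in stream:
        score = cosine(profile, doc)
        on_topic = score >= threshold
        decisions.append((score, on_topic))
        if on_topic:
            # No true label is available: the system's own decision is the feedback.
            # Assumed "weighted" variant: trust high-scoring documents more.
            weight = alpha * score if weighted else alpha
            profile = add_scaled(profile, doc, weight)
    return decisions
```

Setting `weighted=False` gives the equal-weight behaviour assumed here for the conventional version, which makes the two variants easy to compare on the same stream.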

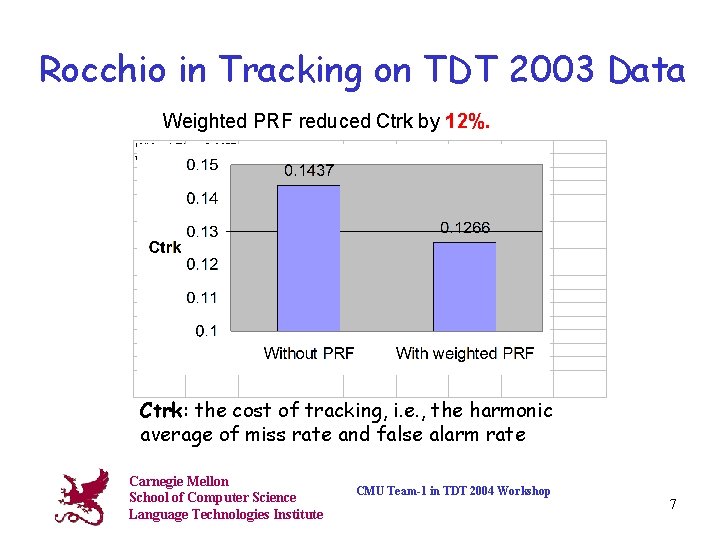

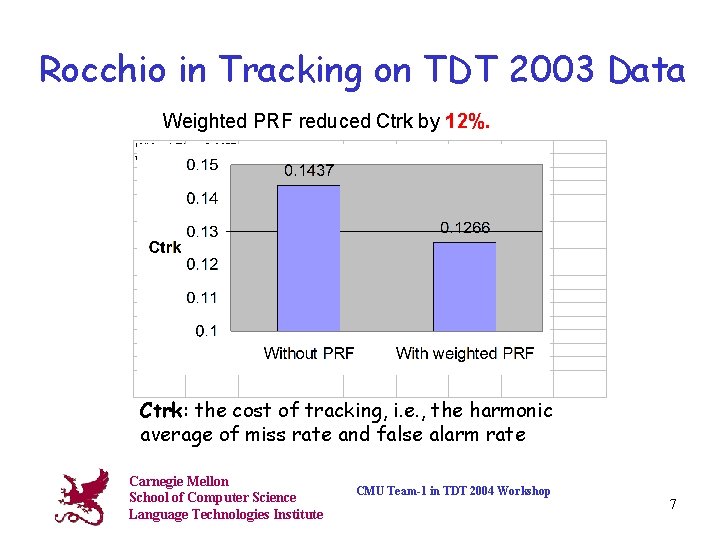

Rocchio in Tracking on TDT 2003 Data

Weighted PRF reduced Ctrk by 12%.

Ctrk: the cost of tracking, i.e., a weighted combination of the miss rate and the false alarm rate.
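For readers unfamiliar with the metric, the sketch below computes a normalized detection cost as a weighted sum of the miss and false-alarm rates. The default constants (`c_miss=1.0`, `c_fa=0.1`, `p_target=0.02`) are assumed here to be the usual TDT evaluation settings; the slides do not state them, so treat them as placeholders to check against the evaluation plan.

```python
def normalized_tracking_cost(misses, false_alarms, n_on_topic, n_off_topic,
                             c_miss=1.0, c_fa=0.1, p_target=0.02):
    """Normalized detection cost for one topic (lower is better).

    misses: on-topic documents the system rejected.
    false_alarms: off-topic documents the system accepted.
    The cost constants and prior are assumptions, not taken from the slides.
    """
    p_miss = misses / n_on_topic
    p_fa = false_alarms / n_off_topic
    cost = c_miss * p_miss * p_target + c_fa * p_fa * (1.0 - p_target)
    # Normalize by the cheaper of the two trivial systems
    # (reject everything vs. accept everything).
    norm = min(c_miss * p_target, c_fa * (1.0 - p_target))
    return cost / norm
```

Under these assumed constants the normalized cost reduces to `p_miss + 4.9 * p_fa`, so a system that rejects every document scores exactly 1.0.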

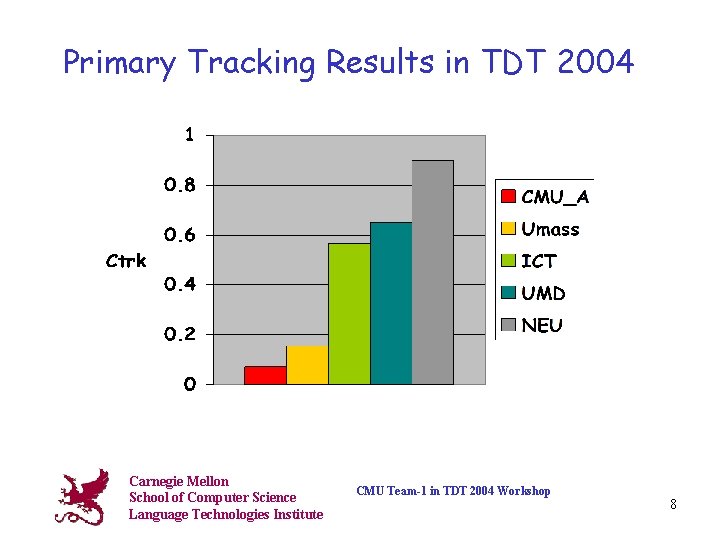

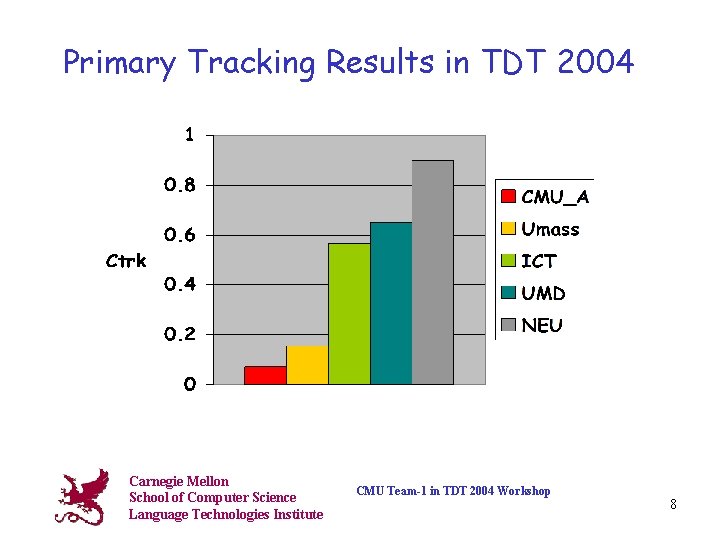

Primary Tracking Results in TDT 2004

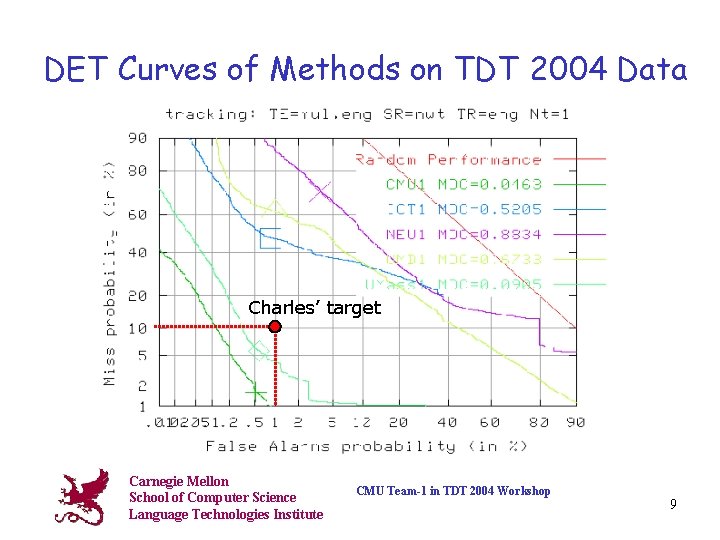

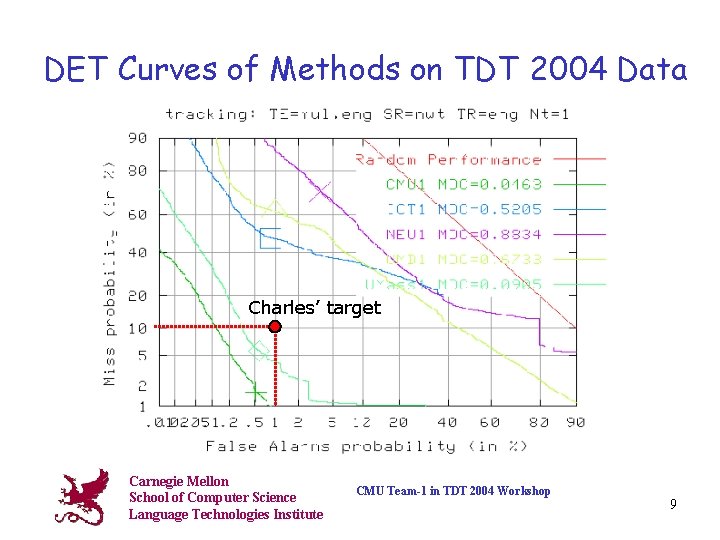

DET Curves of Methods on TDT 2004 Data

[Figure annotation: Charles’ target]

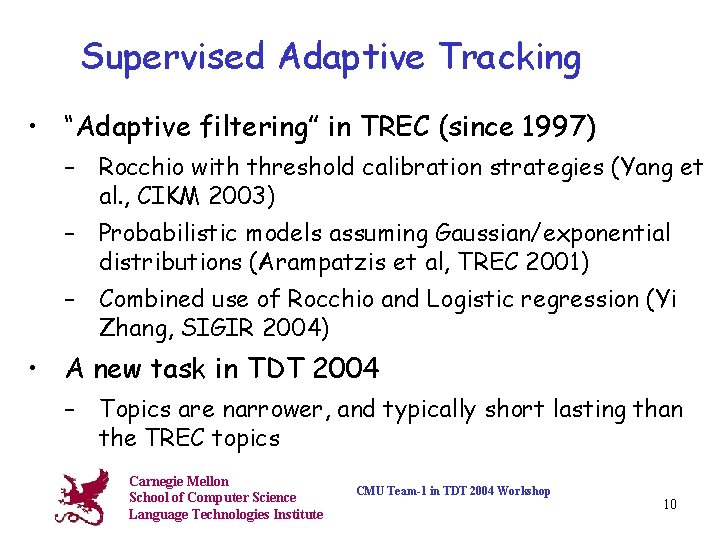

Supervised Adaptive Tracking

- “Adaptive filtering” in TREC (since 1997)
  - Rocchio with threshold calibration strategies (Yang et al., CIKM 2003)
  - Probabilistic models assuming Gaussian/exponential distributions (Arampatzis et al., TREC 2001)
  - Combined use of Rocchio and logistic regression (Yi Zhang, SIGIR 2004)
- A new task in TDT 2004
  - Topics are narrower and typically shorter-lived than the TREC topics

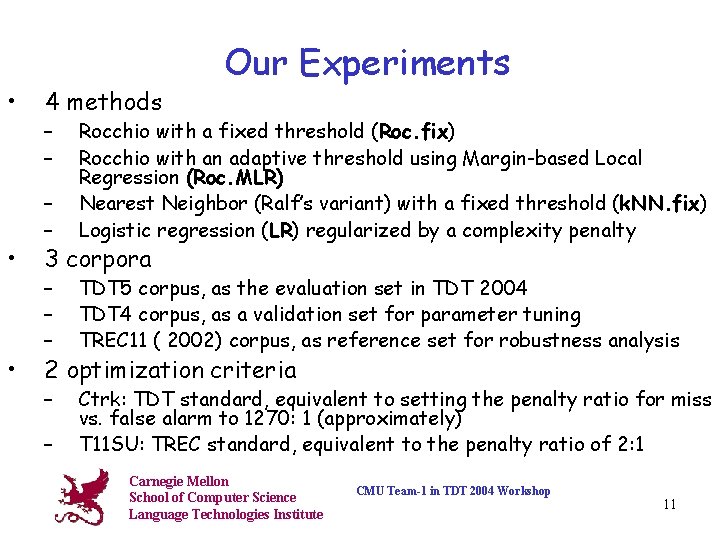

Our Experiments

- 4 methods
  - Rocchio with a fixed threshold (Roc.fix)
  - Rocchio with an adaptive threshold using Margin-based Local Regression (Roc.MLR)
  - Nearest Neighbor (Ralf’s variant) with a fixed threshold (kNN.fix)
  - Logistic regression (LR) regularized by a complexity penalty
- 3 corpora
  - TDT5 corpus, as the evaluation set in TDT 2004
  - TDT4 corpus, as a validation set for parameter tuning
  - TREC-11 (2002) corpus, as a reference set for robustness analysis
- 2 optimization criteria
  - Ctrk: TDT standard, equivalent to setting the penalty ratio for miss vs. false alarm to approximately 1270:1
  - T11SU: TREC standard, equivalent to a penalty ratio of 2:1

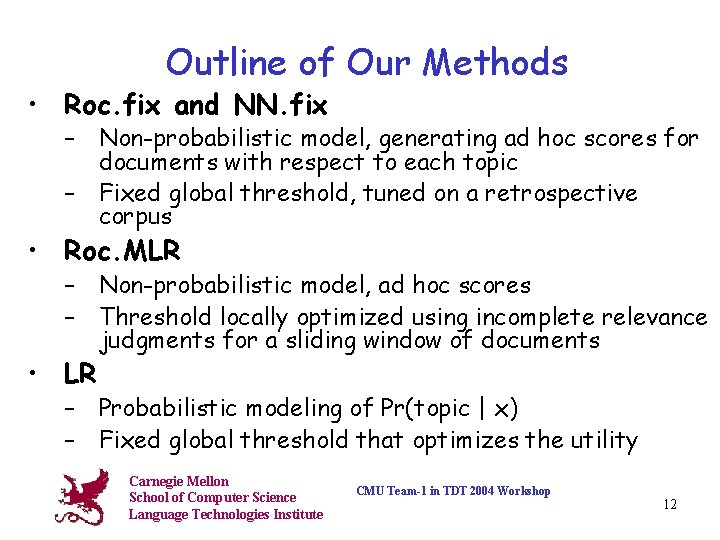

Outline of Our Methods

- Roc.fix and kNN.fix
  - Non-probabilistic model, generating ad hoc scores for documents with respect to each topic
  - Fixed global threshold, tuned on a retrospective corpus
- Roc.MLR
  - Non-probabilistic model, ad hoc scores
  - Threshold locally optimized using incomplete relevance judgments for a sliding window of documents
- LR
  - Probabilistic modeling of Pr(topic | x)
  - Fixed global threshold that optimizes the utility
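One way to picture the "fixed global threshold, tuned on a retrospective corpus" step is a simple sweep over candidate thresholds on validation scores pooled across topics. The sketch below is an assumption about how such tuning could be done, not the team's procedure; `tune_global_threshold` and the default cost (miss rate plus 4.9 times false-alarm rate) are hypothetical choices for illustration.

```python
def default_cost(misses, false_alarms, n_on, n_off):
    """Assumed cost: miss rate + 4.9 * false-alarm rate (illustrative weights)."""
    p_miss = misses / max(n_on, 1)
    p_fa = false_alarms / max(n_off, 1)
    return p_miss + 4.9 * p_fa

def tune_global_threshold(scored, cost_fn=default_cost):
    """Pick the threshold that minimizes cost on a labeled validation set.

    scored: non-empty list of (score, is_on_topic) pairs pooled over all topics.
    A document is accepted when score >= threshold.
    Returns (best_threshold, best_cost).
    """
    candidates = sorted({s for s, _ in scored})
    candidates.append(candidates[-1] + 1.0)  # "reject everything" option
    n_on = sum(1 for _, y in scored if y)
    n_off = len(scored) - n_on
    best = None
    for th in candidates:
        misses = sum(1 for s, y in scored if y and s < th)
        false_alarms = sum(1 for s, y in scored if not y and s >= th)
        cost = cost_fn(misses, false_alarms, n_on, n_off)
        if best is None or cost < best[1]:
            best = (th, cost)
    return best
```

The chosen threshold is then frozen and applied unchanged to the evaluation stream, which is what distinguishes the fixed-threshold runs from Roc.MLR, where the threshold is re-estimated over a sliding window.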

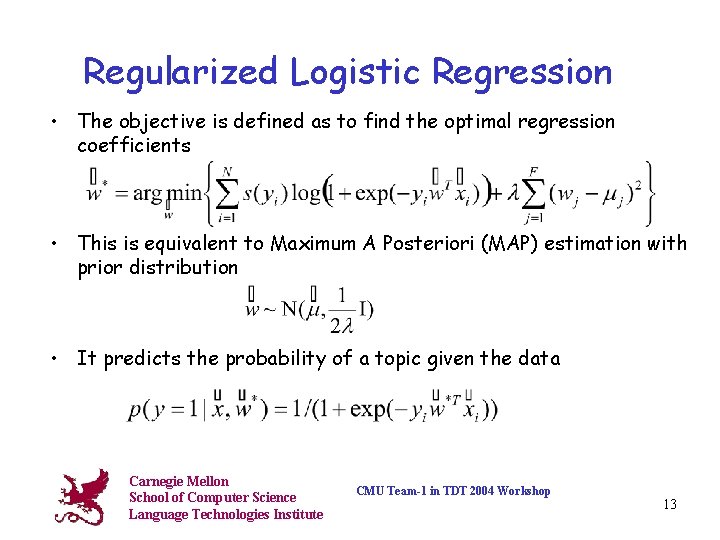

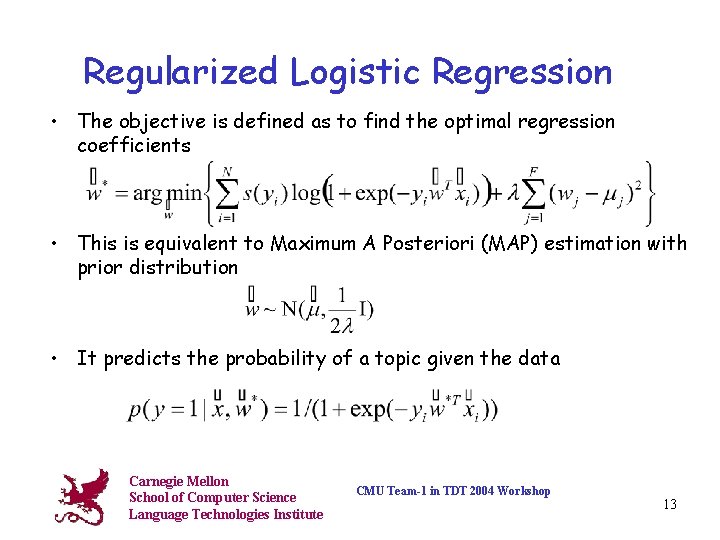

Regularized Logistic Regression

- The objective is to find the optimal regression coefficients
- This is equivalent to Maximum A Posteriori (MAP) estimation with a prior distribution over the coefficients
- It predicts the probability of a topic given the data
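The objective and prior were shown as slide images and are not recoverable from this text. As a hedged illustration only, the sketch below trains logistic regression with a squared-L2 penalty, which corresponds to MAP estimation under a zero-mean Gaussian prior; whether the team used this particular penalty, optimizer, or parameterization is an assumption. The names `train_regularized_lr`, `lam`, and `lr` are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_regularized_lr(X, y, lam=0.1, lr=0.5, epochs=500):
    """Minimize mean log-loss + lam * ||w||^2 by batch gradient descent.

    X: list of feature lists (include a constant 1.0 for the bias term).
    y: list of 0/1 labels (1 = on-topic).
    lam, lr, and epochs are illustrative values, not tuned settings.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [2.0 * lam * wj for wj in w]  # gradient of the penalty term
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(d):
                grad[j] += err * xi[j] / n   # gradient of the mean log-loss
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def predict_proba(w, x):
    """Estimated Pr(topic | x) under the fitted coefficients."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
```

Because the output is a probability, a single global threshold on `predict_proba` can be chosen to optimize whichever utility or cost is being targeted.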

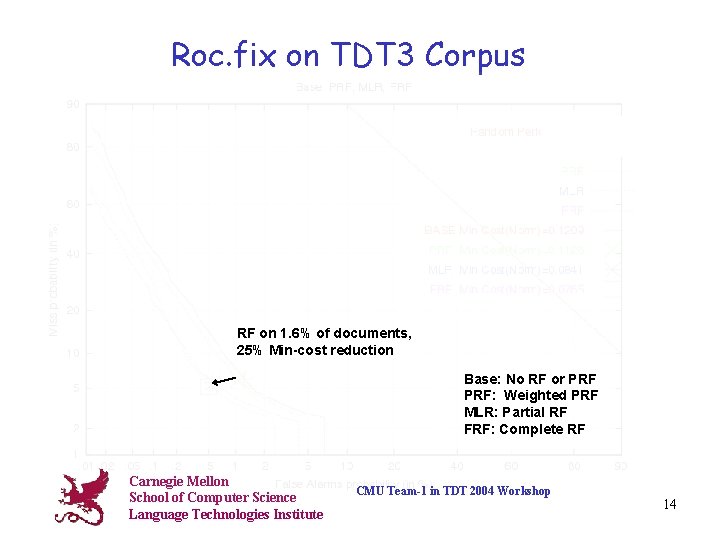

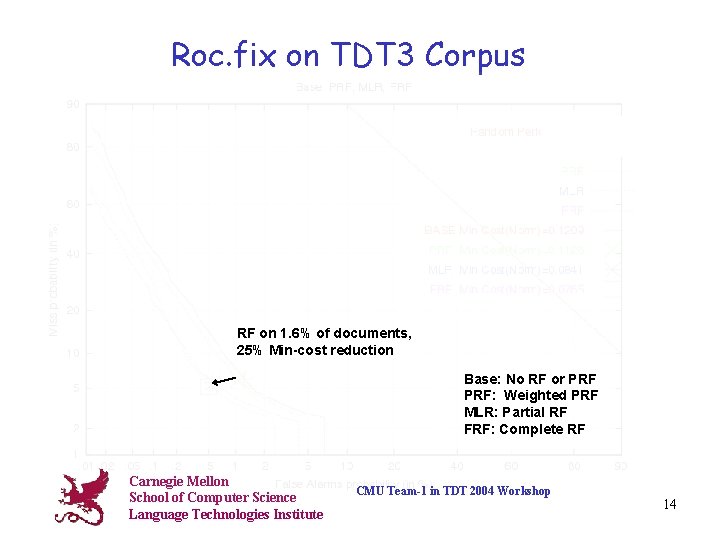

Roc.fix on TDT3 Corpus

RF on 1.6% of documents yielded a 25% reduction in minimum cost.

Legend: Base: no RF or PRF; PRF: weighted PRF; MLR: partial RF; FRF: complete RF.

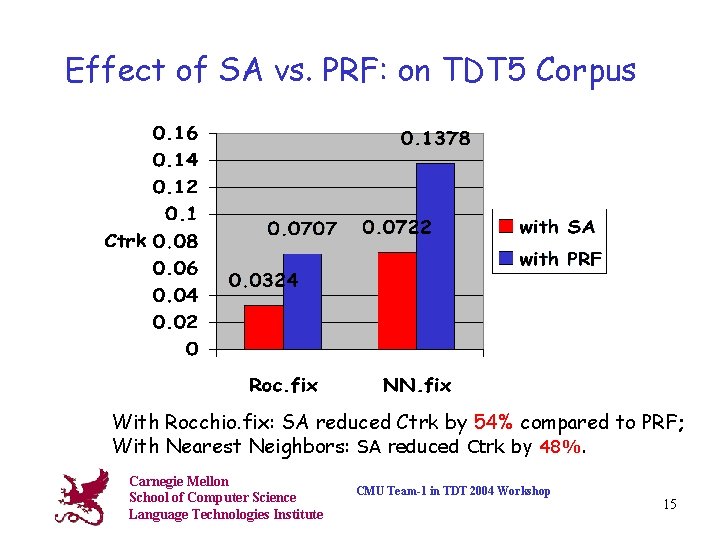

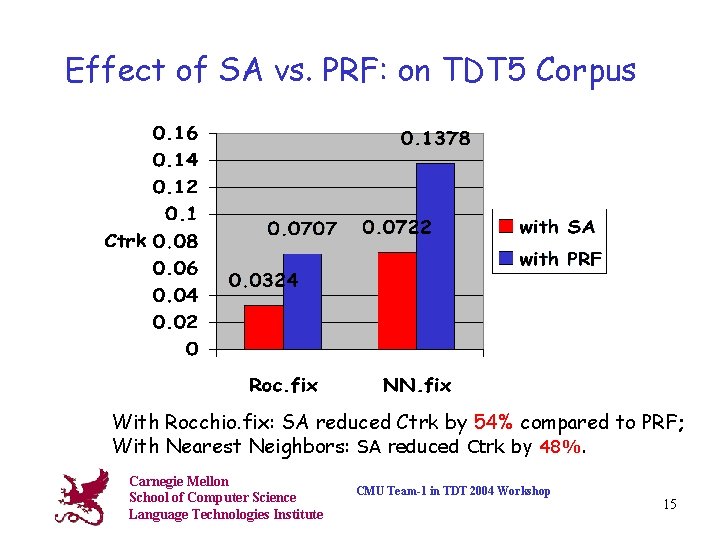

Effect of SA vs. PRF on TDT5 Corpus

- With Roc.fix: SA reduced Ctrk by 54% compared to PRF
- With Nearest Neighbors: SA reduced Ctrk by 48%

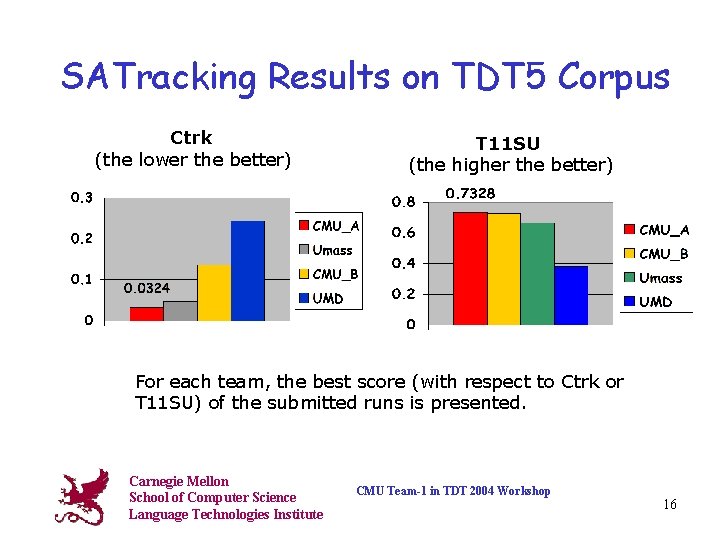

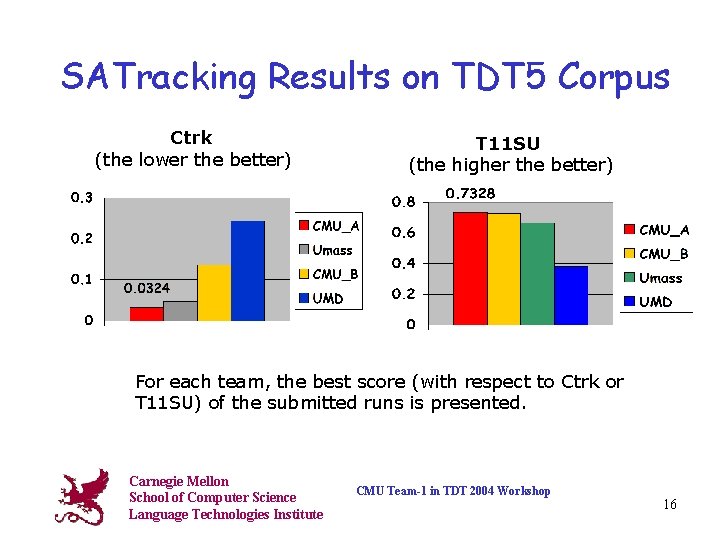

SA Tracking Results on TDT5 Corpus

[Figure panels: Ctrk (the lower the better); T11SU (the higher the better).]

For each team, the best score (with respect to Ctrk or T11SU) of the submitted runs is presented.

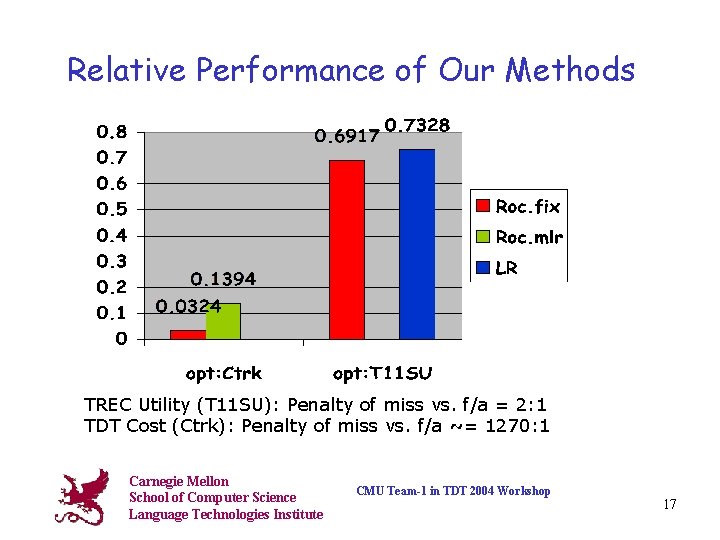

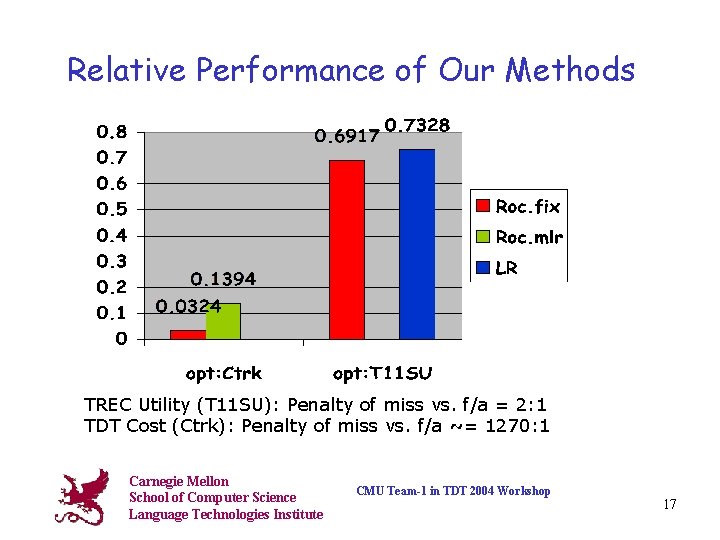

Relative Performance of Our Methods

- TREC Utility (T11SU): penalty of miss vs. false alarm = 2:1
- TDT Cost (Ctrk): penalty of miss vs. false alarm ≈ 1270:1
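To make the 2:1 ratio concrete, the sketch below computes the TREC-11 scaled utility for a single topic, assuming the standard filtering-track definition (linear utility of +2 per relevant and -1 per non-relevant delivered document, normalized by the maximum utility and floored at -0.5). The function name `t11su` is a label chosen here, and the per-topic values would be averaged over topics in an evaluation.

```python
def t11su(rel_delivered, nonrel_delivered, total_relevant, min_nu=-0.5):
    """Scaled TREC-11 utility for one topic (higher is better, range 0..1).

    rel_delivered: relevant documents the system accepted.
    nonrel_delivered: non-relevant documents the system accepted.
    total_relevant: relevant documents in the stream (assumed >= 1).
    """
    t11u = 2 * rel_delivered - nonrel_delivered   # linear utility
    max_u = 2 * total_relevant                    # utility of a perfect run
    t11nu = t11u / max_u                          # normalized utility
    # Floor very negative utilities, then rescale to the [0, 1] interval.
    return (max(t11nu, min_nu) - min_nu) / (1.0 - min_nu)
```

A system that delivers nothing scores 1/3 on this scale, so T11SU values below that level indicate a run doing worse than silence.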

Main Observations

- Encouraging results: a small amount of relevance feedback (on 1-2% of documents) yielded a significant performance improvement.
- Puzzling point: Rocchio without any threshold calibration works surprisingly well in both Ctrk and T11SU, which is inconsistent with our observations on TREC data. Why?
- Scaling issue: a significant challenge for the learning algorithms, including LR and MLR, in the TDT domain.

Temporal Nature of Topics/Events

[Figure panels: TDT Event: Nov. APEC Meeting; Broadcast News Topic: Kidnappings; TREC Topic: Elections.]

Topics for Future Research

- Keep up with new algorithms/theories
- Exploit domain knowledge, e.g., predefined topics (and super topics) in a hierarchical setting
- Investigate topic-conditioned event tracking with predictive features (including Named Entities)
- Develop algorithms to detect and exploit temporal trends
- TDT in cross-lingual settings

References

- Y. Yang and B. Kisiel. Margin-based Local Regression for Adaptive Filtering. ACM CIKM 2003 (Conference on Information and Knowledge Management).
- J. Zhang and Y. Yang. Robustness of regularized linear classification methods in text categorization. ACM SIGIR 2003, pp. 190-197.
- J. Zhang, R. Jin, Y. Yang and A. Hauptmann. Modified logistic regression: an approximation to SVM and its application in large-scale text categorization. ICML 2003 (International Conference on Machine Learning), pp. 888-897.
- N. Ma, Y. Yang and M. Rogati. Cross-Language Event Tracking. Asia Information Retrieval Symposium (AIRS), 2004.