Clustering Paolo Ferragina Dipartimento di Informatica Universit di

- Slides: 24

Clustering Paolo Ferragina Dipartimento di Informatica Università di Pisa

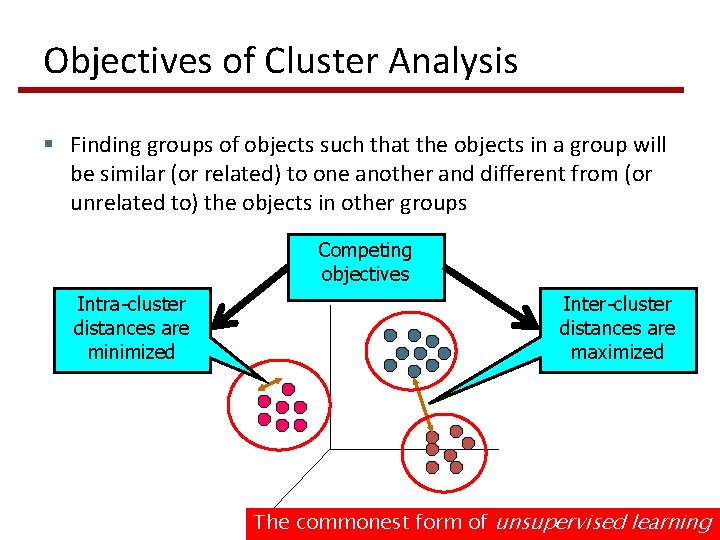

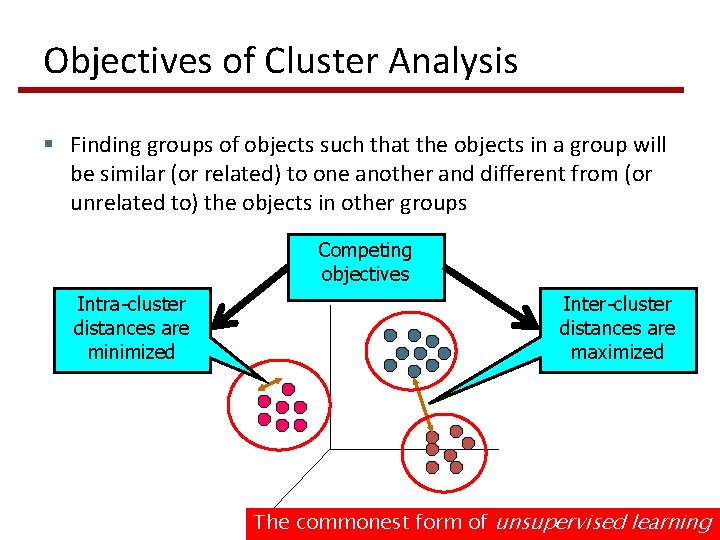

Objectives of Cluster Analysis § Finding groups of objects such that the objects in a group will be similar (or related) to one another and different from (or unrelated to) the objects in other groups Competing objectives Intra-cluster distances are minimized Inter-cluster distances are maximized The commonest form of unsupervised learning

Notion of similarity/distance § Ideal: semantic similarity § Practical: term-statistical similarity § Docs as vectors § Similarity = cosine similarity, LSH, …

Clustering Algorithms § Flat algorithms § Create a set of clusters § Usually start with a random (partial) partitioning § Refine it iteratively § K means clustering § Hierarchical algorithms § Create a hierarchy of clusters (dendogram) § Bottom-up, agglomerative § Top-down, divisive

Hard vs. soft clustering § Hard clustering: Each document belongs to exactly one cluster § More common and easier to do § Soft clustering: Each document can belong to more than one cluster. § Makes more sense for applications like creating browsable hierarchies § News is a proper example § Search results is another example

Flat & Partitioning Algorithms § Given: a set of n documents and the number K § Find: a partition in K clusters that optimizes the chosen partitioning criterion § Globally optimal § Intractable for many objective functions § Ergo, exhaustively enumerate all partitions § Locally optimal § Effective heuristic methods: K-means and K-medoids algorithms

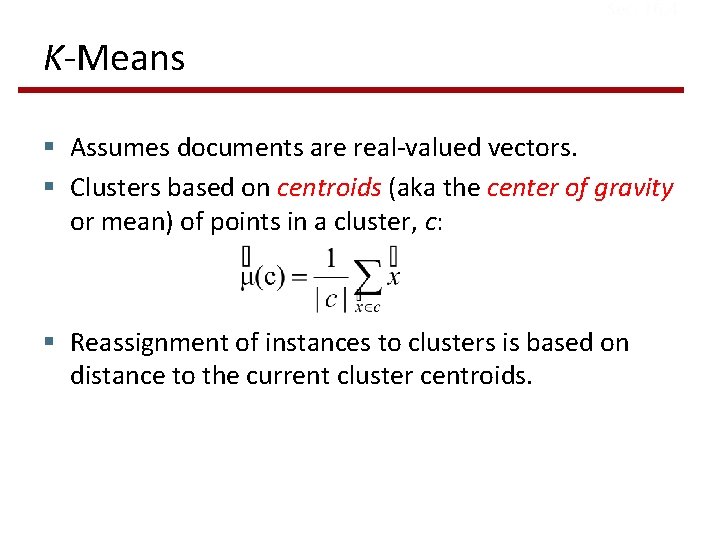

Sec. 16. 4 K-Means § Assumes documents are real-valued vectors. § Clusters based on centroids (aka the center of gravity or mean) of points in a cluster, c: § Reassignment of instances to clusters is based on distance to the current cluster centroids.

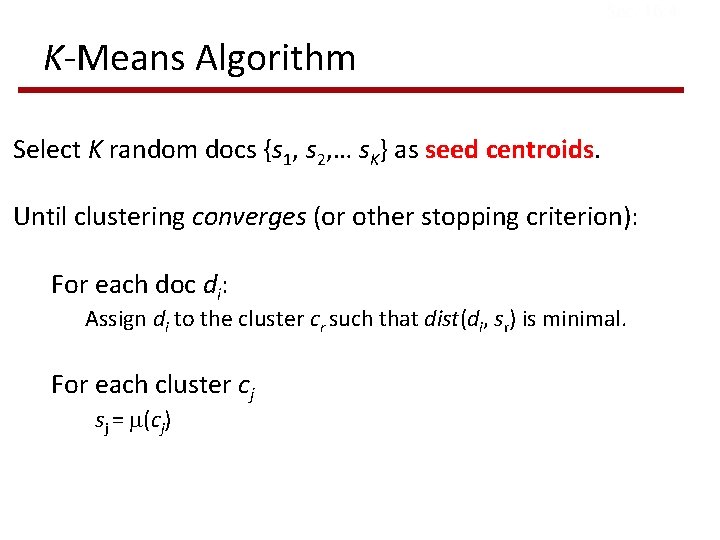

Sec. 16. 4 K-Means Algorithm Select K random docs {s 1, s 2, … s. K} as seed centroids. Until clustering converges (or other stopping criterion): For each doc di: Assign di to the cluster cr such that dist(di, sr) is minimal. For each cluster cj sj = (cj)

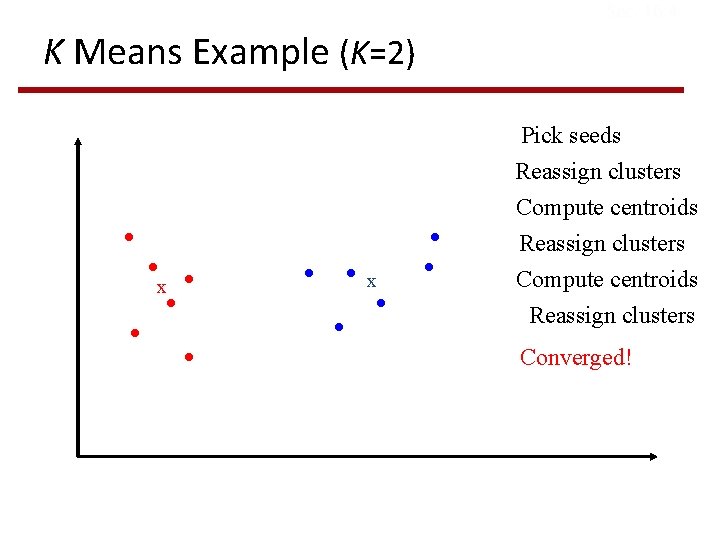

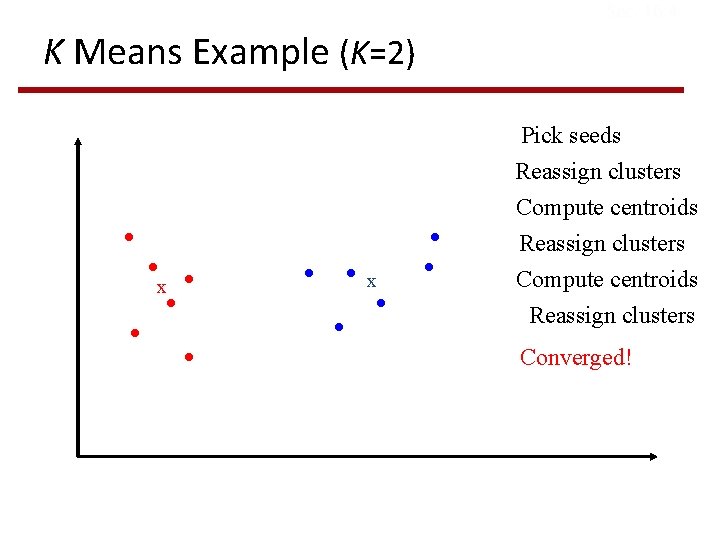

Sec. 16. 4 K Means Example (K=2) Pick seeds x x Reassign clusters Compute centroids Reassign clusters Converged!

Sec. 16. 4 Termination conditions § Several possibilities, e. g. , § A fixed number of iterations. § Doc partition unchanged. § Centroid positions don’t change.

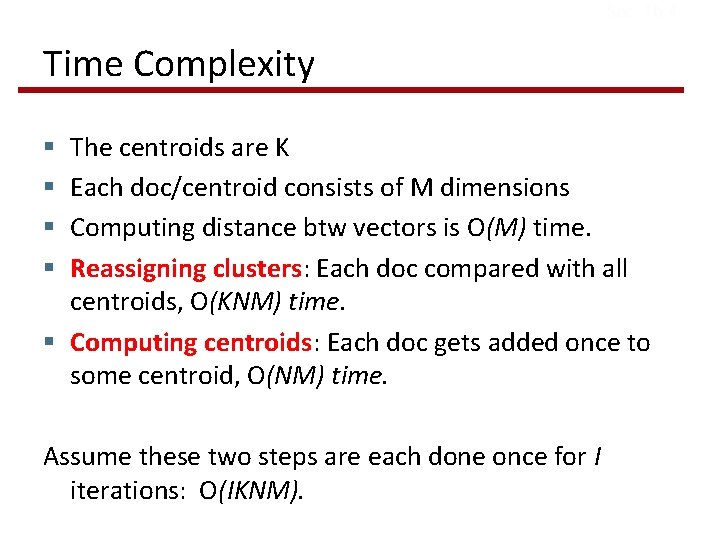

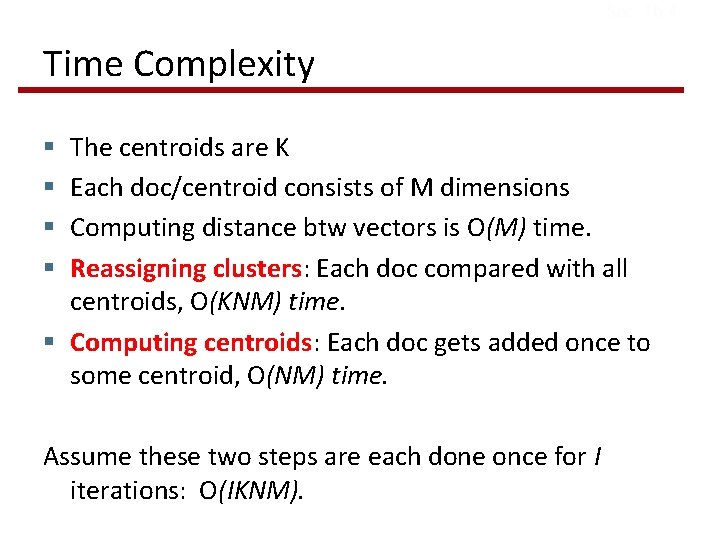

Sec. 16. 4 Time Complexity The centroids are K Each doc/centroid consists of M dimensions Computing distance btw vectors is O(M) time. Reassigning clusters: Each doc compared with all centroids, O(KNM) time. § Computing centroids: Each doc gets added once to some centroid, O(NM) time. § § Assume these two steps are each done once for I iterations: O(IKNM).

How Many Clusters? § Number of clusters K is given § Partition n docs into predetermined number of clusters § Finding the “right” number of clusters is part of the problem § Can usually take an algorithm for one flavor and convert to the other.

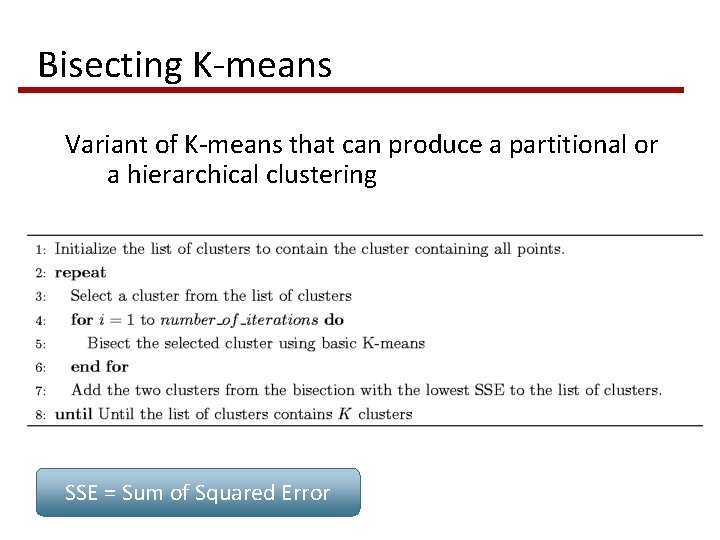

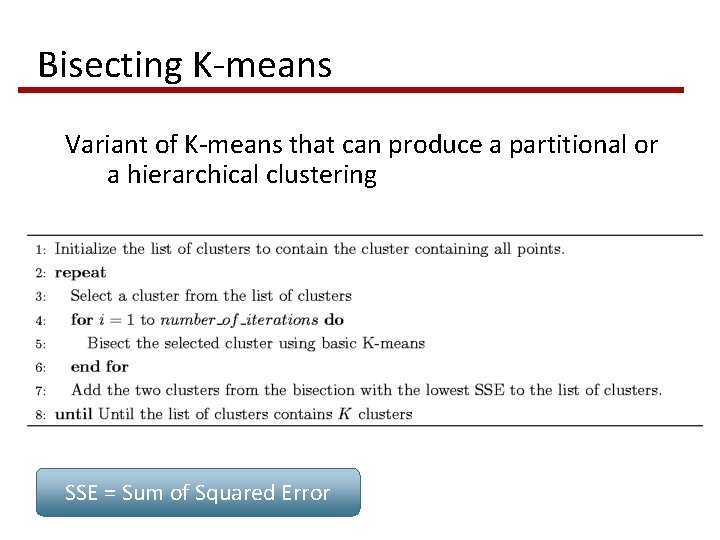

Bisecting K-means Variant of K-means that can produce a partitional or a hierarchical clustering SSE = Sum of Squared Error

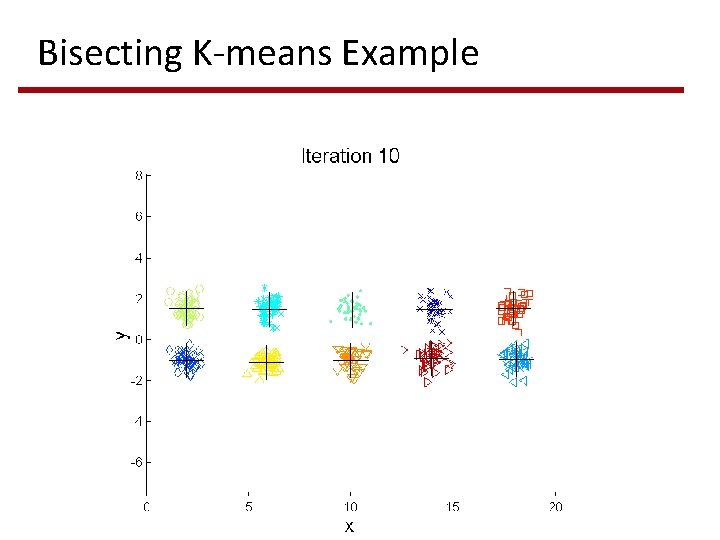

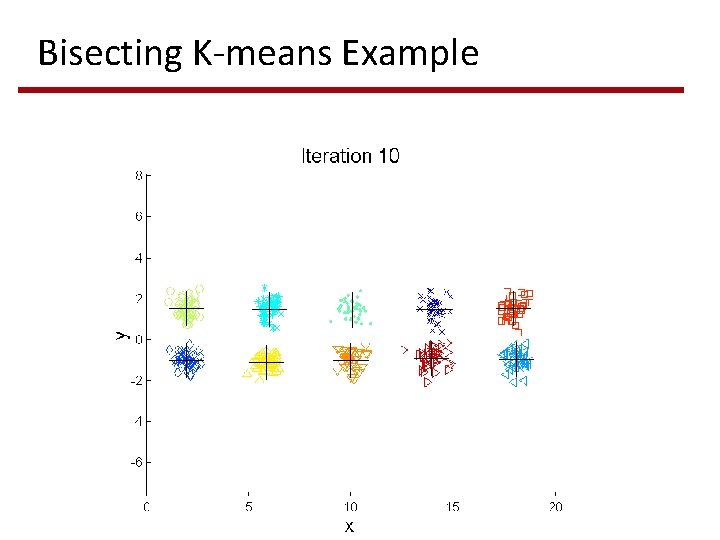

Bisecting K-means Example

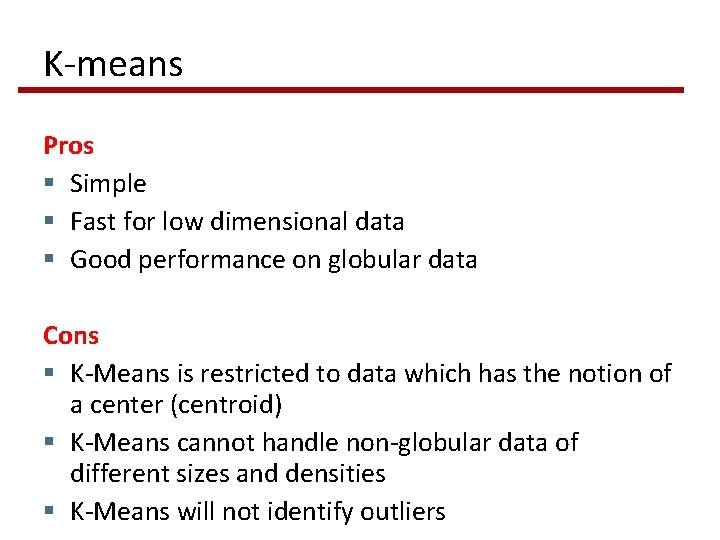

K-means Pros § Simple § Fast for low dimensional data § Good performance on globular data Cons § K-Means is restricted to data which has the notion of a center (centroid) § K-Means cannot handle non-globular data of different sizes and densities § K-Means will not identify outliers

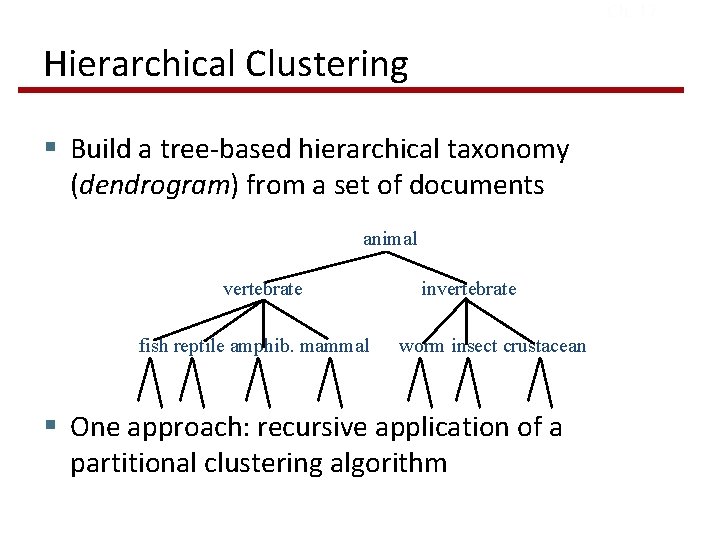

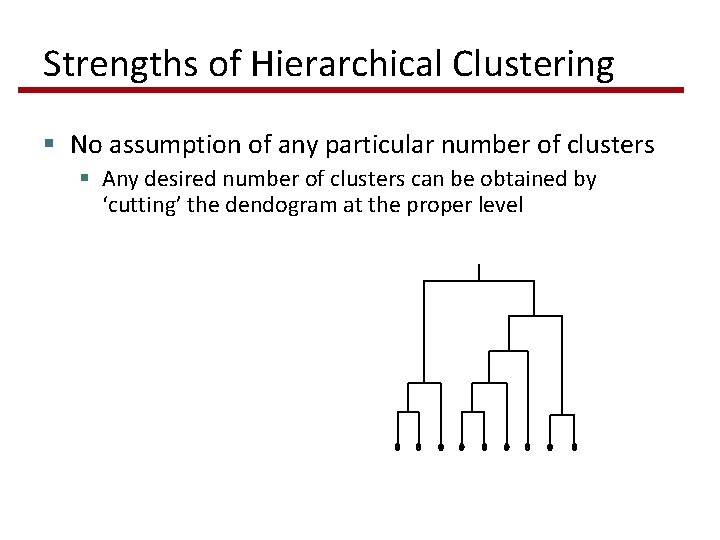

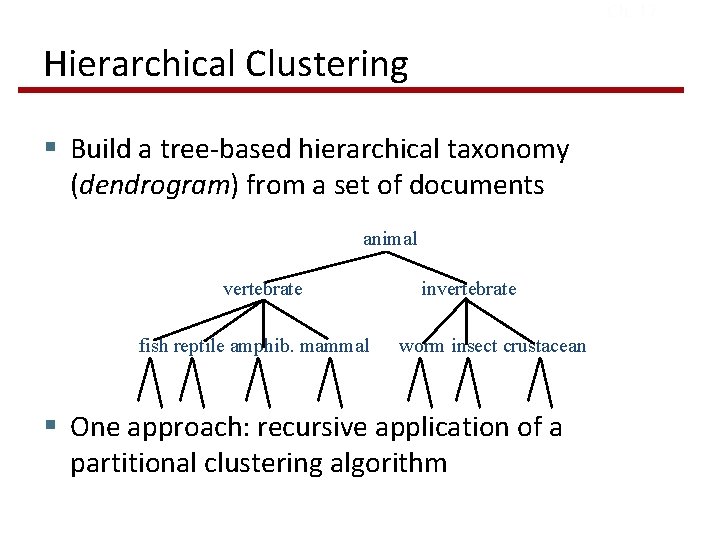

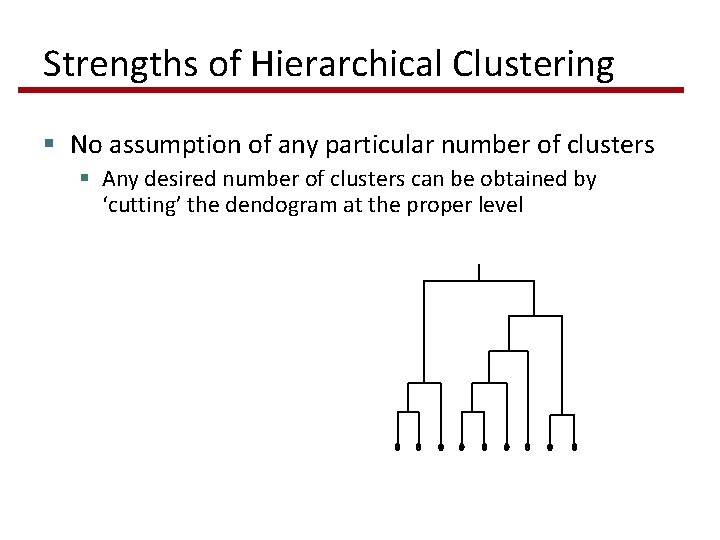

Ch. 17 Hierarchical Clustering § Build a tree-based hierarchical taxonomy (dendrogram) from a set of documents animal vertebrate fish reptile amphib. mammal invertebrate worm insect crustacean § One approach: recursive application of a partitional clustering algorithm

Strengths of Hierarchical Clustering § No assumption of any particular number of clusters § Any desired number of clusters can be obtained by ‘cutting’ the dendogram at the proper level

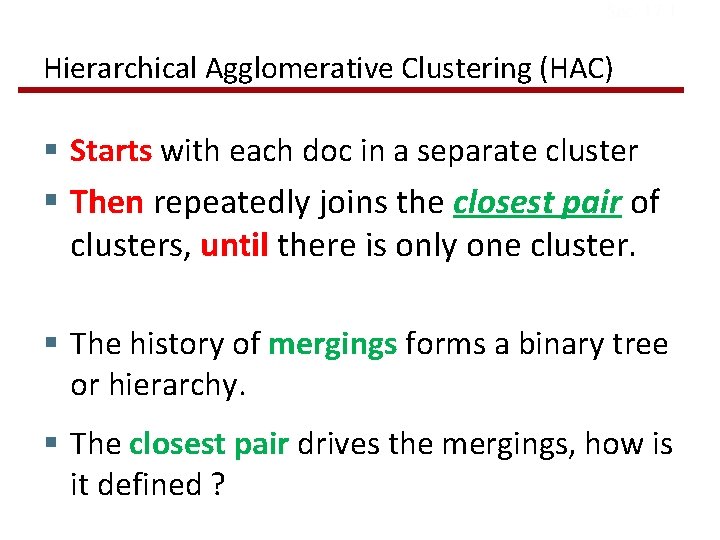

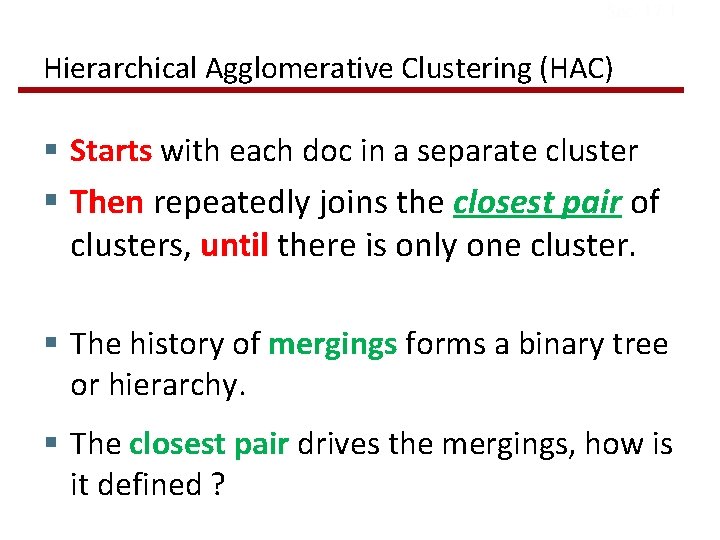

Sec. 17. 1 Hierarchical Agglomerative Clustering (HAC) § Starts with each doc in a separate cluster § Then repeatedly joins the closest pair of clusters, until there is only one cluster. § The history of mergings forms a binary tree or hierarchy. § The closest pair drives the mergings, how is it defined ?

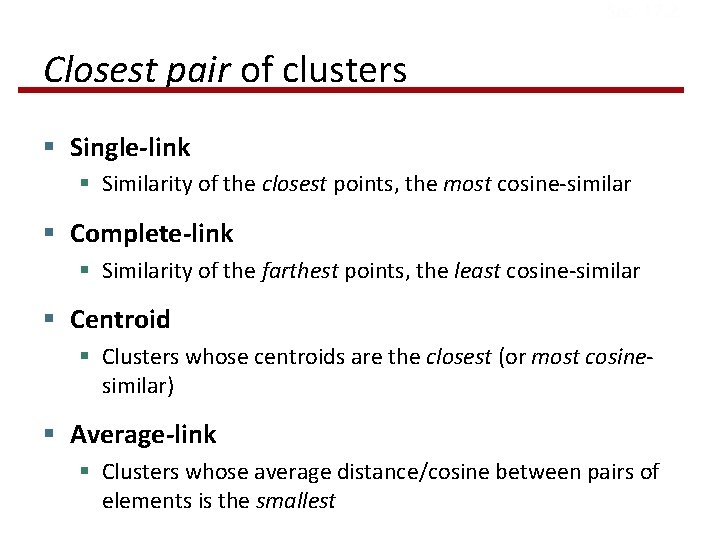

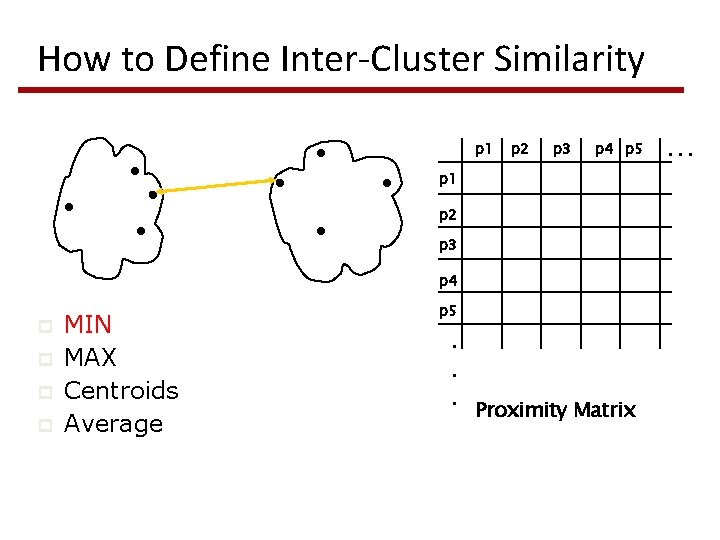

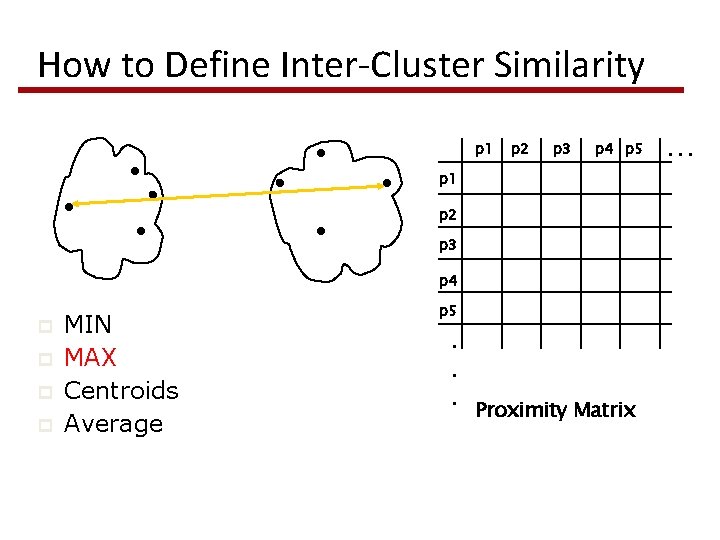

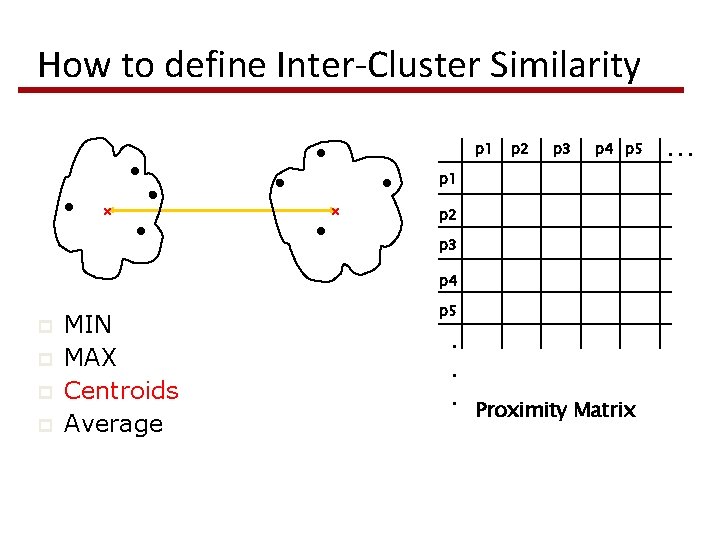

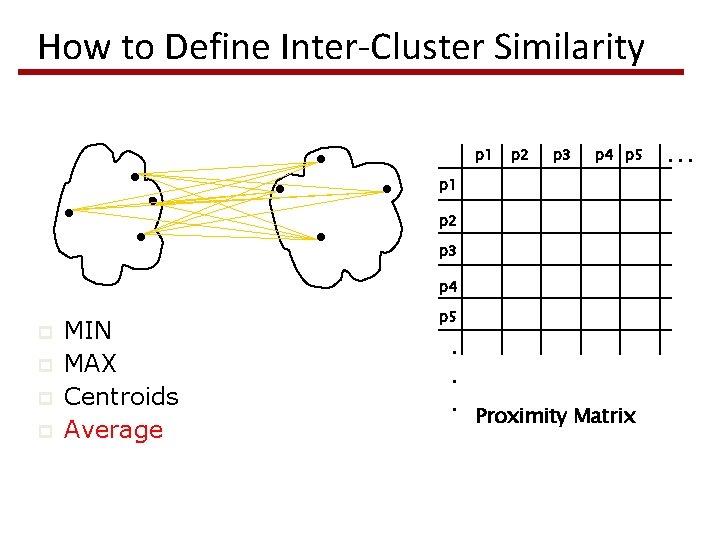

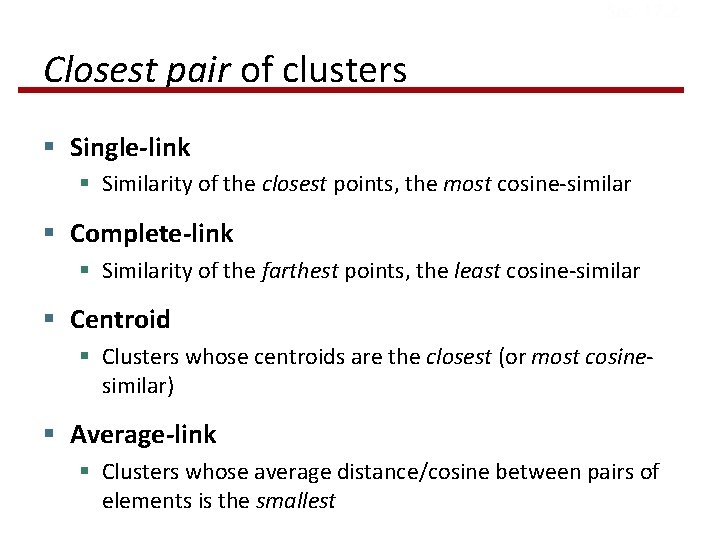

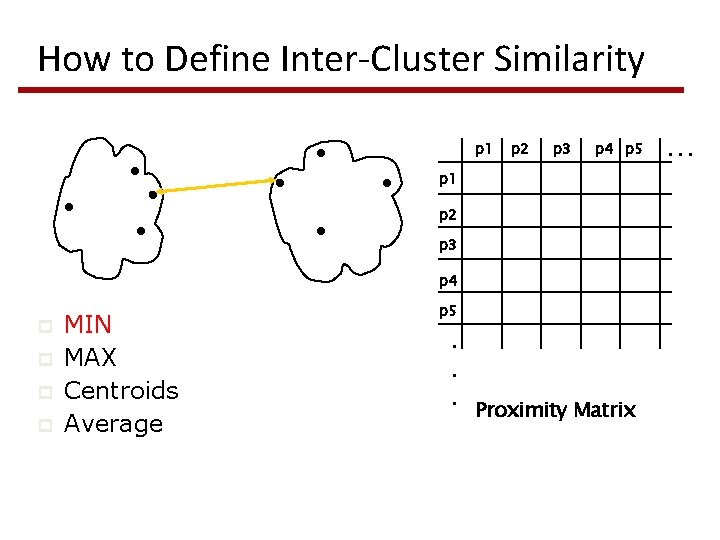

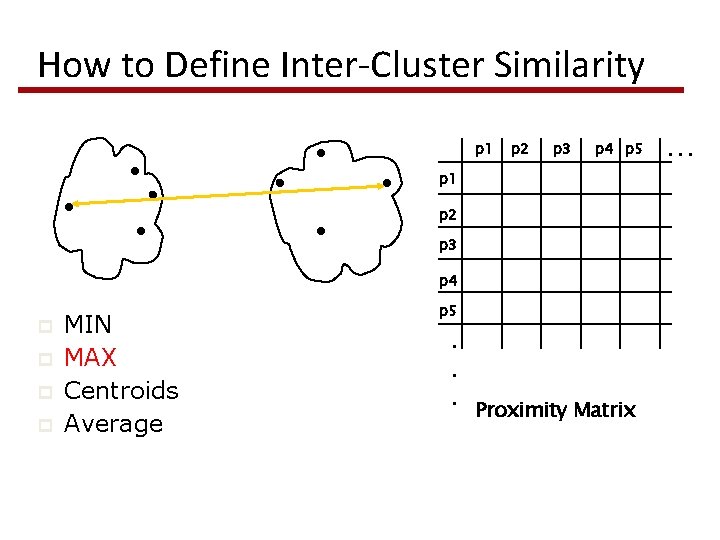

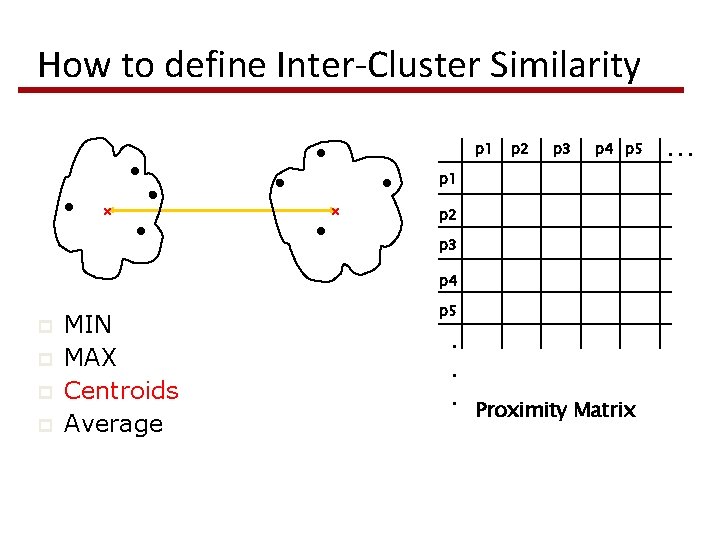

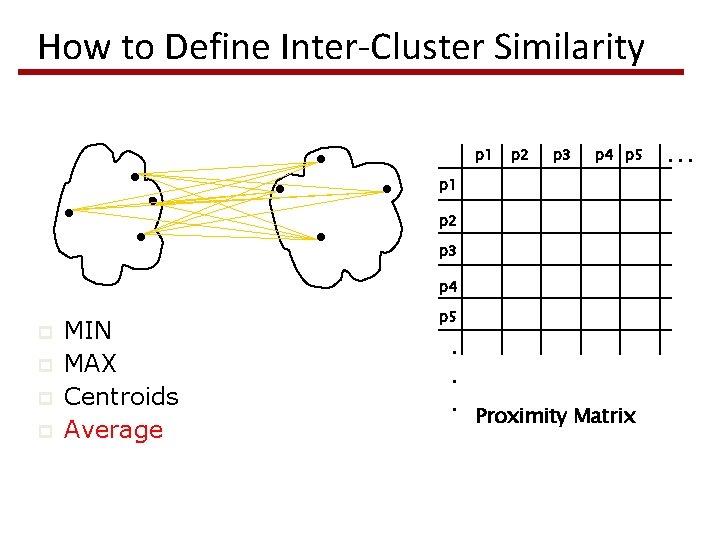

Sec. 17. 2 Closest pair of clusters § Single-link § Similarity of the closest points, the most cosine-similar § Complete-link § Similarity of the farthest points, the least cosine-similar § Centroid § Clusters whose centroids are the closest (or most cosinesimilar) § Average-link § Clusters whose average distance/cosine between pairs of elements is the smallest

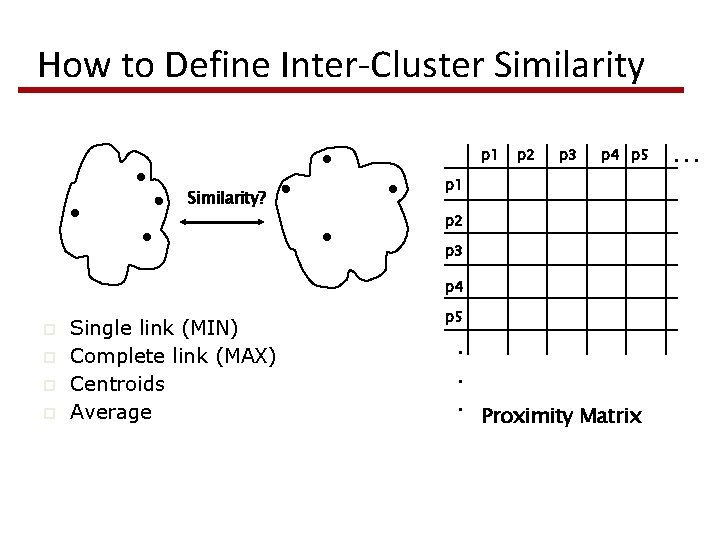

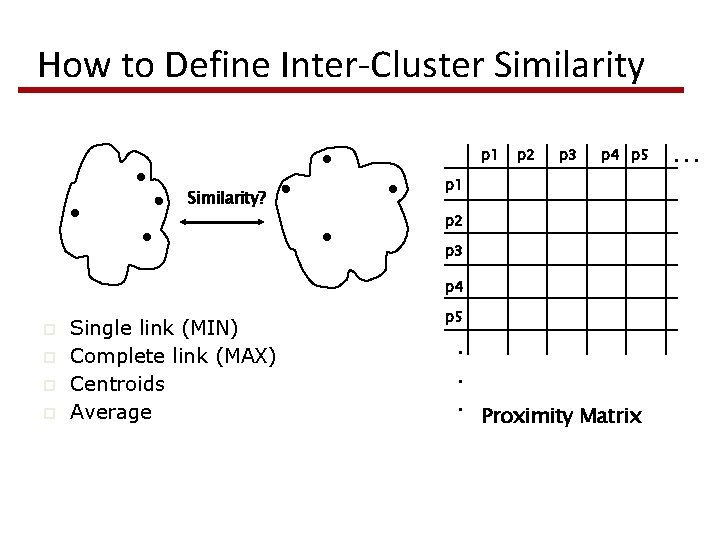

How to Define Inter-Cluster Similarity p 1 Similarity? p 2 p 3 p 4 p 5 p 1 p 2 p 3 p 4 p p Single link (MIN) Complete link (MAX) Centroids Average p 5 . . . Proximity Matrix . . .

How to Define Inter-Cluster Similarity p 1 p 2 p 3 p 4 p 5 p 1 p 2 p 3 p 4 p p MIN MAX Centroids Average p 5 . . . Proximity Matrix . . .

How to Define Inter-Cluster Similarity p 1 p 2 p 3 p 4 p 5 p 1 p 2 p 3 p 4 p p MIN MAX Centroids Average p 5 . . . Proximity Matrix . . .

How to define Inter-Cluster Similarity p 1 p 2 p 3 p 4 p 5 p 1 p 2 p 3 p 4 p p MIN MAX Centroids Average p 5 . . . Proximity Matrix . . .

How to Define Inter-Cluster Similarity p 1 p 2 p 3 p 4 p 5 p 1 p 2 p 3 p 4 p p MIN MAX Centroids Average p 5 . . . Proximity Matrix . . .

Paolo ferragina

Paolo ferragina Paolo ferragina

Paolo ferragina Paolo ferragina

Paolo ferragina Paolo ferragina

Paolo ferragina Flat and hierarchical clustering

Flat and hierarchical clustering L

L Flat clustering vs hierarchical clustering

Flat clustering vs hierarchical clustering Rotterdam university economics

Rotterdam university economics Universit of london

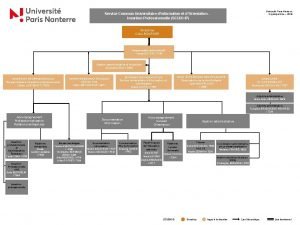

Universit of london Nanterre universit

Nanterre universit Universit

Universit Universit sherbrooke

Universit sherbrooke Unipr dipartimento economia

Unipr dipartimento economia Dipartimento organizzazione giudiziaria

Dipartimento organizzazione giudiziaria Gecos protezione civile

Gecos protezione civile Dipartimento delle istituzioni

Dipartimento delle istituzioni Dipartimento di fisica ferrara

Dipartimento di fisica ferrara Scuola chicago

Scuola chicago Dipartimento scienze economiche e aziendali

Dipartimento scienze economiche e aziendali Dipartimento medicina perugia

Dipartimento medicina perugia Dipartimento di economia pisa

Dipartimento di economia pisa Dipartimento di scienze mediche traslazionali

Dipartimento di scienze mediche traslazionali Dipartimento di chimica pavia

Dipartimento di chimica pavia Ingegneria civile unical

Ingegneria civile unical Ingegneria ferrara

Ingegneria ferrara