- Slides: 55

Crawling. Paolo Ferragina, Dipartimento di Informatica, Università di Pisa. Reading: 20.1, 20.2 and 20.3

Spidering
- 24 hours a day, 7 days a week, “walking” over a graph
- What about the graph?
  - Bow-tie structure
  - Directed graph $G = (N, E)$
  - N changes (insert, delete): $\gg 50 \times 10^9$ nodes
  - E changes (insert, delete): > 10 links per node
- $10 \times 50 \times 10^9 = 500 \times 10^9$ 1-entries in the adjacency matrix

Crawling Issues
- How to crawl?
  - Quality: “best” pages first
  - Efficiency: avoid duplication (or near duplication)
  - Etiquette: robots.txt, server load concerns (minimize load)
- How much to crawl? How much to index?
  - Coverage: How big is the Web? How much do we cover?
  - Relative coverage: How much do competitors have?
- How often to crawl?
  - Freshness: How much has changed?
- How to parallelize the process

Page selection
- Given a page P, define how “good” P is.
- Several metrics:
  - BFS, DFS, Random
  - Popularity driven (PageRank, full vs partial)
  - Topic driven or focused crawling
  - Combined

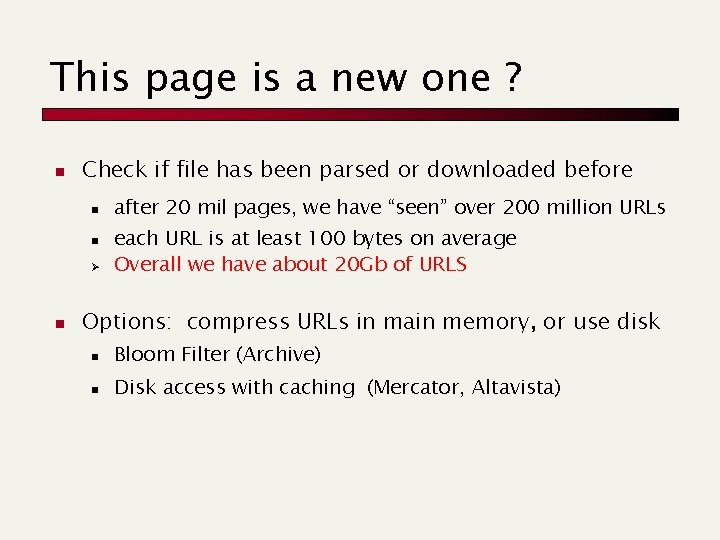

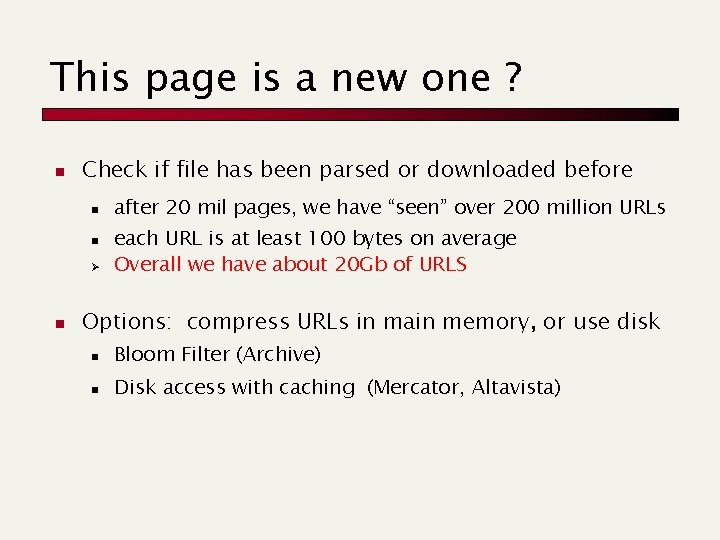

Is this page a new one?
- Check if the file has been parsed or downloaded before
  - after 20 million pages, we have “seen” over 200 million URLs
  - each URL is at least 100 bytes on average
  - overall we have about 20 GB of URLs
- Options: compress URLs in main memory, or use disk
  - Bloom Filter (Archive)
  - Disk access with caching (Mercator, Altavista)
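A minimal sketch of the Bloom filter option for the "already seen URL" test, assuming a fixed-size bit array and double hashing derived from one SHA-256 digest. The class name `BloomFilter` and the parameters `num_bits` and `num_hashes` are illustrative choices, not taken from the slides or from any specific crawler.

```python
import hashlib


class BloomFilter:
    """Fixed-size bit array probed by several hash positions (no deletions)."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, url: str):
        # Assumption: derive all positions from two 64-bit halves of one
        # SHA-256 digest (double hashing: h1 + i * h2).
        digest = hashlib.sha256(url.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, url: str) -> None:
        for pos in self._positions(url):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, url: str) -> bool:
        # True may be a false positive; False is always correct.
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(url))


seen = BloomFilter(num_bits=1 << 20, num_hashes=7)
seen.add("http://www.di.unipi.it/")
```

A crawler would test `url in seen` before enqueueing a URL. A false positive means a new page is skipped, so the bit array should be sized for the expected number of URLs.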

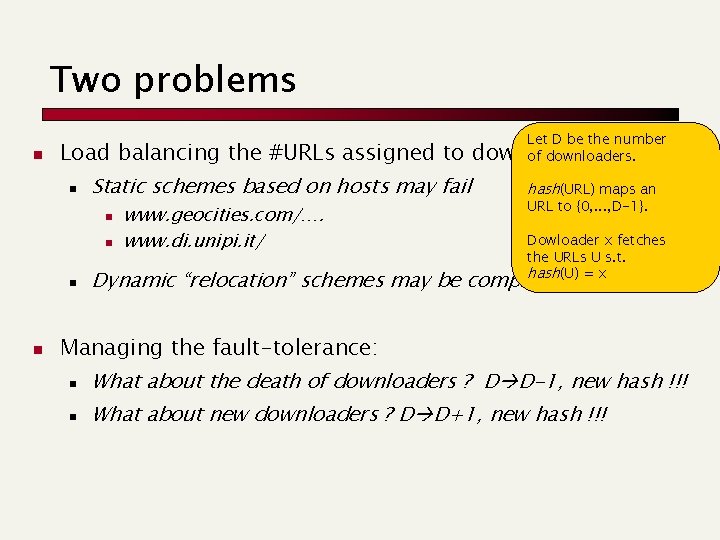

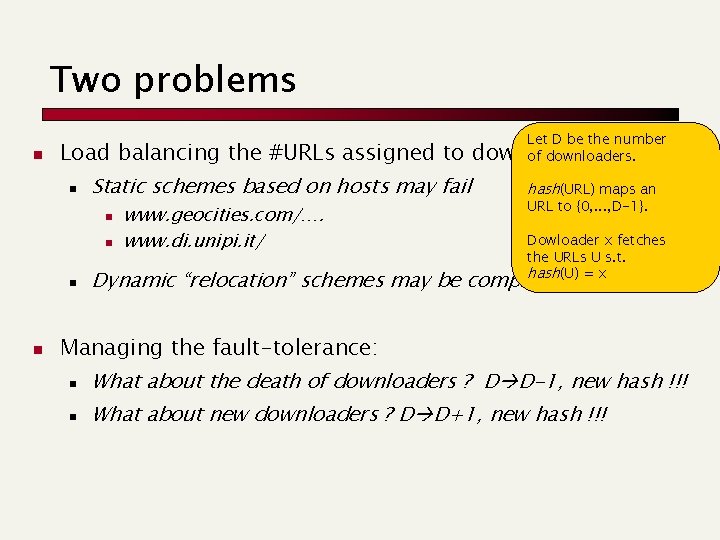

Crawler “cycle of life”

[Figure: Link Extractor → PQ (Priority Queue) → Crawler Manager → AR (Assigned Repository) → Downloaders → PR (Page Repository) → Link Extractor]

Link Extractor:

    while (<Page Repository is not empty>) {
        <take a page p (check if it is new)>
        <extract links contained in p within href>
        <extract links contained in javascript>
        <extract …>
        <insert these links into the Priority Queue>
    }

Downloaders:

    while (<Assigned Repository is not empty>) {
        <extract url u>
        <download page(u)>
        <send page(u) to the Page Repository>
        <store page(u) in a proper archive, possibly compressed>
    }

Crawler Manager:

    while (<Priority Queue is not empty>) {
        <extract some URLs u having the highest priority>
        foreach u extracted {
            if ( (u ∉ “Already Seen Page”) ||
                 (u ∈ “Already Seen Page” && <u’s version on the Web is more recent>) ) {
                <resolve u wrt DNS>
                <send u to the Assigned Repository>
            }
        }
    }

Parallel Crawlers

The Web is too big to be crawled by a single crawler; work should be divided while avoiding duplication.
- Dynamic assignment
  - A central coordinator dynamically assigns URLs to crawlers
  - Links are given to the central coordinator (bottleneck?)
- Static assignment
  - The Web is statically partitioned and assigned to crawlers
  - Each crawler only crawls its part of the Web

Two problems

Let D be the number of downloaders. hash(URL) maps a URL to {0, ..., D-1}. Downloader x fetches the URLs U s.t. hash(U) = x.
- Load balancing the #URLs assigned to downloaders:
  - Static schemes based on hosts may fail
    - www.geocities.com/….
    - www.di.unipi.it/
  - Dynamic “relocation” schemes may be complicated
- Managing the fault-tolerance:
  - What about the death of downloaders? D → D-1, new hash!!!
  - What about new downloaders? D → D+1, new hash!!!
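To see why "new hash!!!" is a problem, the following sketch measures how many URLs change downloader when D goes from 10 to 11 under a plain `hash(URL) mod D` assignment. The helper `downloader_for`, the synthetic URLs and the use of SHA-256 as the hash are assumptions made for illustration only.

```python
import hashlib


def downloader_for(url: str, num_downloaders: int) -> int:
    """Static assignment: hash the URL into {0, ..., D-1}."""
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_downloaders


# Synthetic URLs, used only to exercise the hash.
urls = [f"http://host{i}.example/page" for i in range(10_000)]

# Count URLs whose downloader changes when one downloader is added.
moved = sum(downloader_for(u, 10) != downloader_for(u, 11) for u in urls)
fraction_moved = moved / len(urls)
```

With a well-mixed hash, a URL keeps its downloader only when its hash value has the same remainder modulo 10 and modulo 11, which happens for about 1 in 11 values. Roughly 10/11 of the URLs are therefore reassigned, which is the cost consistent hashing is meant to avoid.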

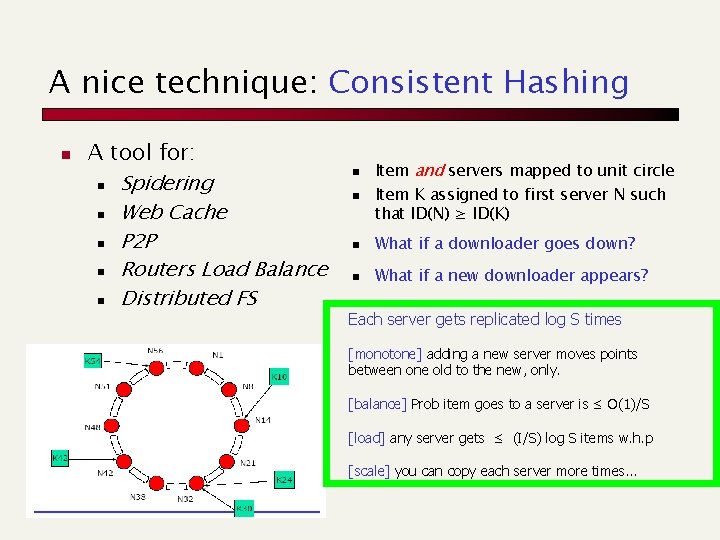

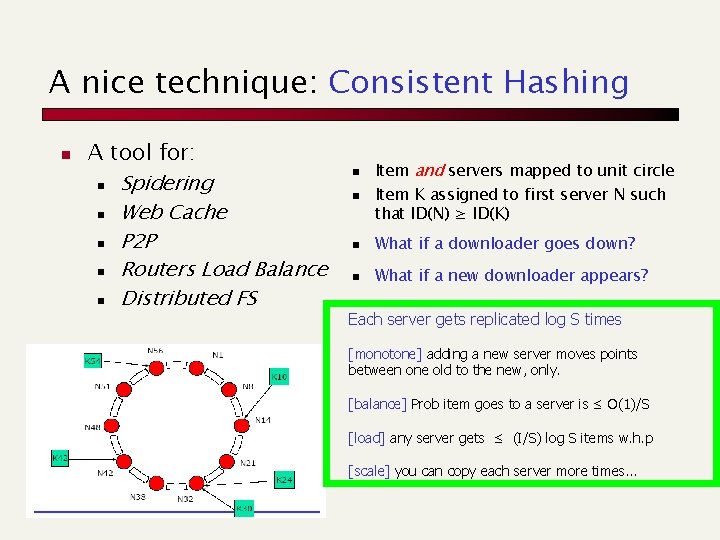

A nice technique: Consistent Hashing
- A tool for:
  - Spidering
  - Web cache
  - P2P
  - Router load balancing
  - Distributed FS
- Items and servers are mapped to the unit circle
- Item K is assigned to the first server N such that ID(N) ≥ ID(K)
- What if a downloader goes down?
- What if a new downloader appears?
- Each server gets replicated log S times
  - [monotone] adding a new server moves points only from one old server to the new one
  - [balance] the probability that an item goes to a given server is ≤ O(1)/S
  - [load] any server gets ≤ (I/S) log S items w.h.p.
  - [scale] you can copy each server more times...
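A minimal sketch of the ring described above, assuming 64-bit positions taken from SHA-256 in place of the unit circle. The names `ConsistentHashRing`, `ring_position` and the `replicas` parameter (standing in for the "log S" copies per server) are hypothetical and chosen for illustration.

```python
import bisect
import hashlib


def ring_position(key: str) -> int:
    """Map a key to a point on the ring (64-bit integer instead of [0, 1))."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class ConsistentHashRing:
    def __init__(self, servers, replicas: int = 16) -> None:
        self.replicas = replicas
        self._points = []  # sorted list of (position, server)
        for server in servers:
            self.add_server(server)

    def add_server(self, server: str) -> None:
        # Each server is placed on the ring `replicas` times.
        for r in range(self.replicas):
            bisect.insort(self._points, (ring_position(f"{server}#{r}"), server))

    def remove_server(self, server: str) -> None:
        self._points = [p for p in self._points if p[1] != server]

    def server_for(self, item: str) -> str:
        # First server point with ID(N) >= ID(K), wrapping around the ring.
        idx = bisect.bisect_left(self._points, (ring_position(item), ""))
        return self._points[idx % len(self._points)][1]


urls = [f"http://host{i}.example/page" for i in range(5_000)]
ring = ConsistentHashRing([f"downloader-{i}" for i in range(10)])
before = {u: ring.server_for(u) for u in urls}
ring.add_server("downloader-10")
after = {u: ring.server_for(u) for u in urls}
moved = [u for u in urls if before[u] != after[u]]
```

In this sketch every URL in `moved` ends up on the new downloader, which is the [monotone] property; all other assignments are untouched.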

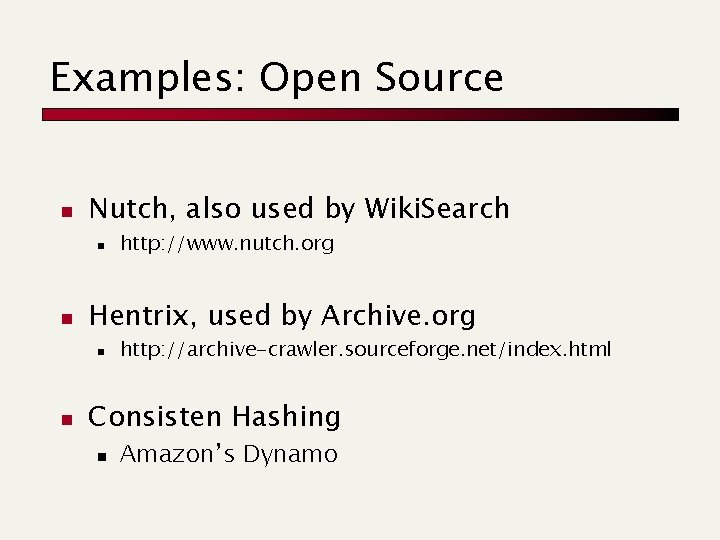

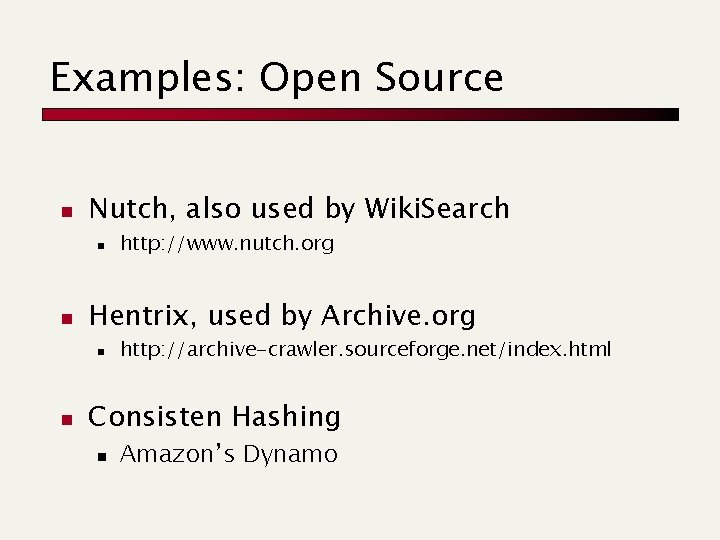

Examples: Open Source
- Nutch, also used by WikiSearch
  - http://www.nutch.org
- Heritrix, used by Archive.org
  - http://archive-crawler.sourceforge.net/index.html
- Consistent Hashing
  - Amazon’s Dynamo

Ranking: Link-based Ranking (2nd generation). Reading: 21

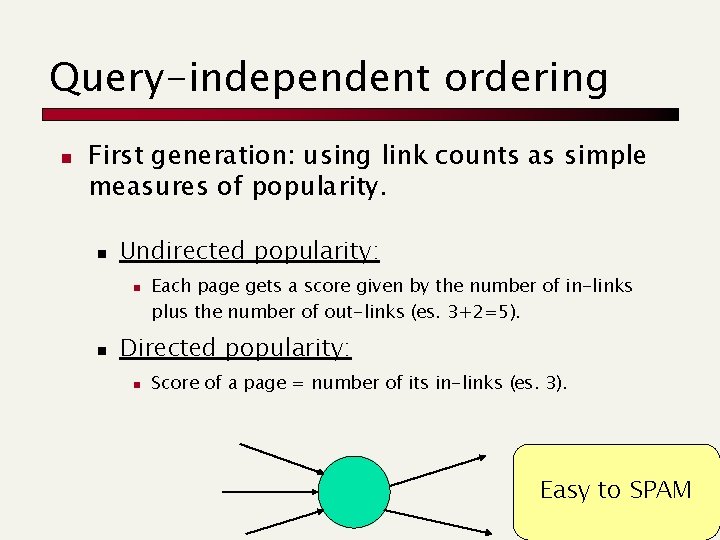

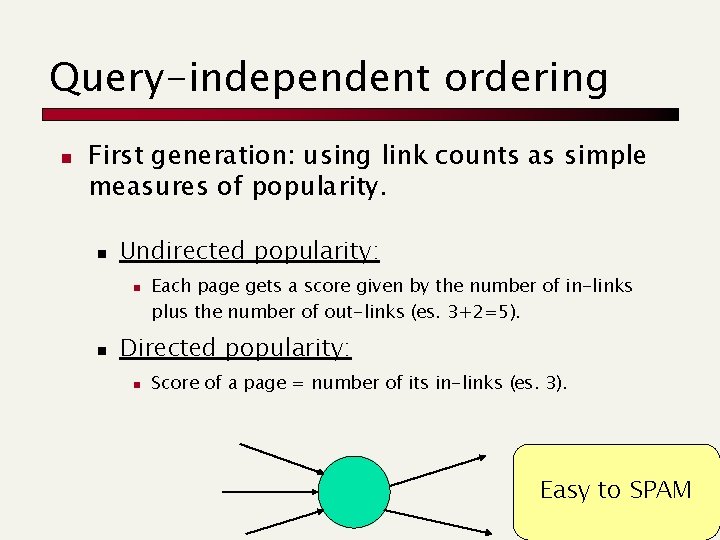

Query-independent ordering
- First generation: using link counts as simple measures of popularity.
- Undirected popularity:
  - Each page gets a score given by the number of in-links plus the number of out-links (e.g. 3+2=5).
- Directed popularity:
  - Score of a page = number of its in-links (e.g. 3).
- Easy to SPAM

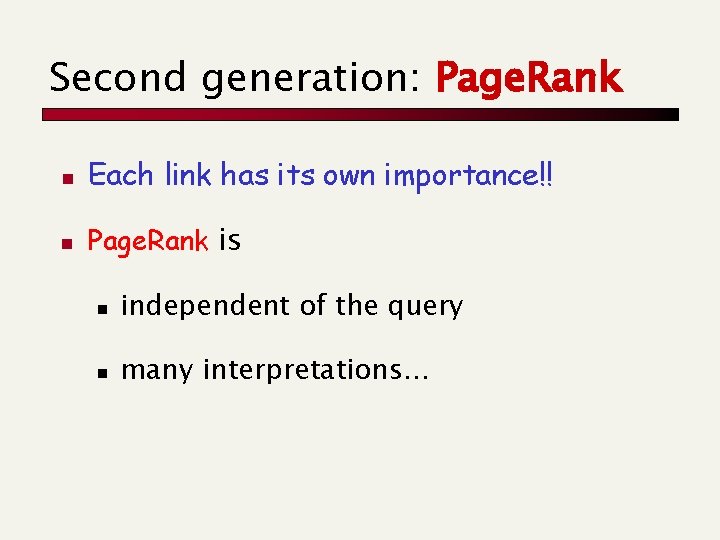

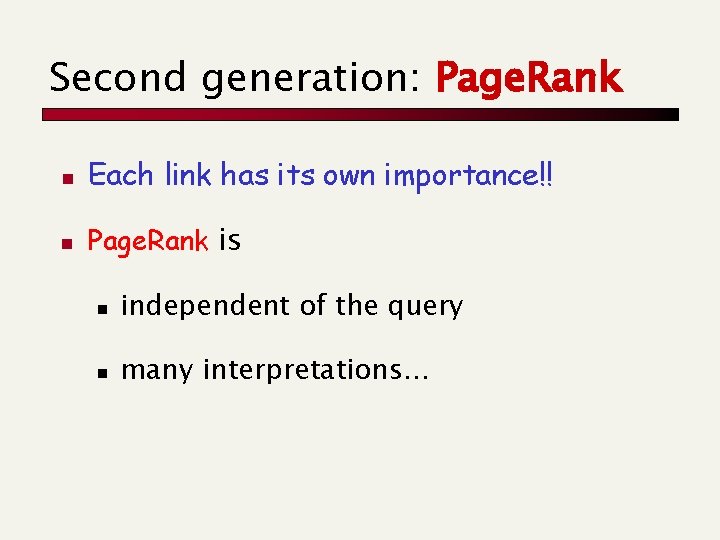

Second generation: PageRank
- Each link has its own importance!!
- PageRank
  - is independent of the query
  - has many interpretations…

Basic Intuition… What about nodes with no in/out links?

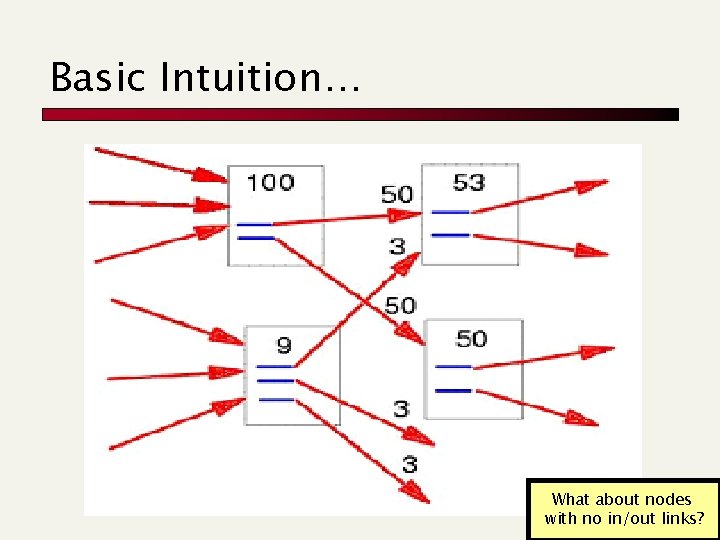

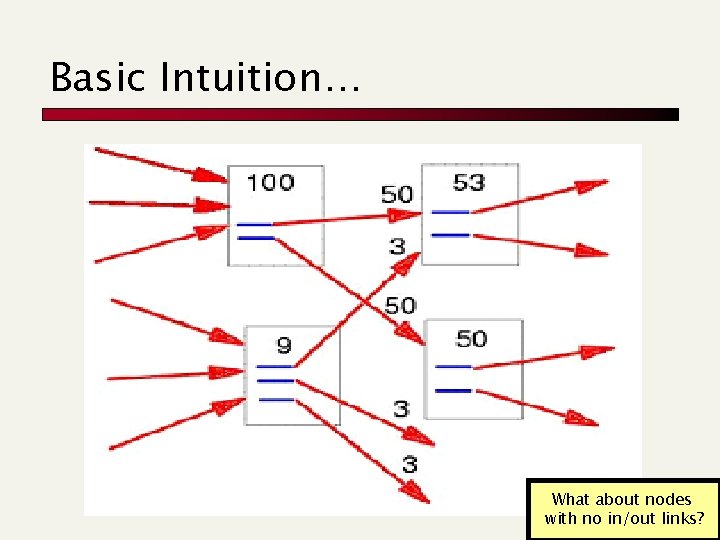

Google’s PageRank
- Random jump
- Principal eigenvector: $r = [\alpha P^T + (1 - \alpha)\, e e^T] \times r$
- B(i): set of pages linking to i.
- #out(j): number of outgoing links from j.
- e: vector of components $1/\sqrt{N}$.
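The eigenvector equation can be approximated by power iteration. The sketch below assumes the usual per-page reading, $r(i) = \alpha \sum_{j \in B(i)} r(j)/\#out(j) + (1-\alpha)/N$, which follows from the matrix form when the entries of r sum to 1. The function name `pagerank`, the default `alpha=0.85` and the uniform redistribution of score from pages without out-links are assumptions, not taken from the slides.

```python
def pagerank(out_links, alpha=0.85, iters=100):
    """out_links[j] is the list of pages that page j links to."""
    n = len(out_links)
    r = [1.0 / n] * n
    for _ in range(iters):
        # Random-jump term: (1 - alpha) / N for every page.
        nxt = [(1.0 - alpha) / n] * n
        for j, targets in enumerate(out_links):
            if targets:
                # Page j gives alpha * r[j] / #out(j) to each page it links to.
                share = alpha * r[j] / len(targets)
                for i in targets:
                    nxt[i] += share
            else:
                # Assumption: a page with no out-links spreads its score uniformly.
                share = alpha * r[j] / n
                for i in range(n):
                    nxt[i] += share
        r = nxt
    return r


# Pages 0 and 1 both link to page 2; page 2 links back to page 0.
scores = pagerank([[2], [2], [0]])
```

Only the relative order of the scores matters for ranking; here page 2 collects links from both other pages, and page 1 has no in-links at all.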

Three different interpretations
- Graph (intuitive interpretation)
  - Co-citation
- Matrix (easy for computation)
  - Eigenvector computation or a linear system solution
- Markov Chain (useful to prove convergence)
  - a sort of usage simulation
  - “In the steady state” each page has a long-term visit rate: use this as the page’s score.

[Figure: with probability $\alpha$ the walk moves to one of the neighbors; with probability $1 - \alpha$ it jumps to any node.]

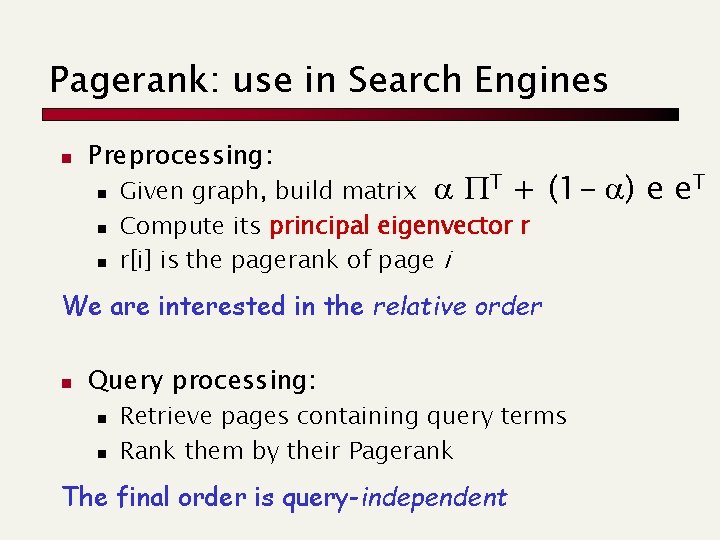

PageRank: use in Search Engines
- Preprocessing:
  - Given the graph, build the matrix $\alpha P^T + (1 - \alpha)\, e e^T$
  - Compute its principal eigenvector r
  - r[i] is the PageRank of page i
  - We are interested in the relative order
- Query processing:
  - Retrieve pages containing query terms
  - Rank them by their PageRank
  - The final order is query-independent

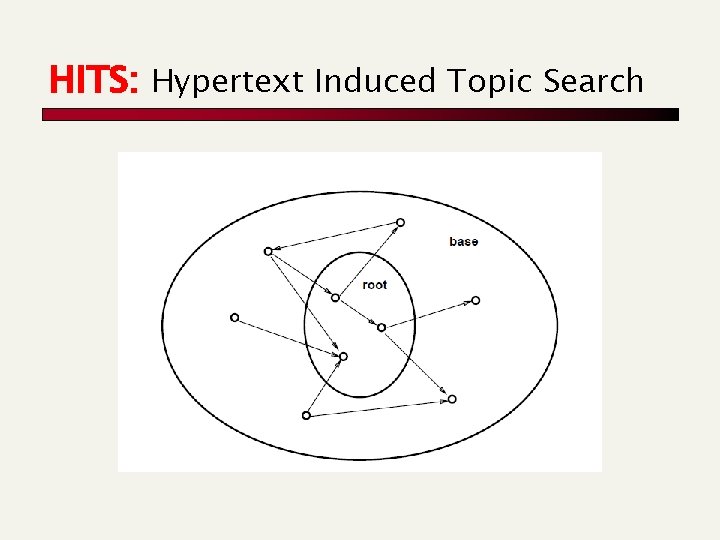

HITS: Hypertext Induced Topic Search

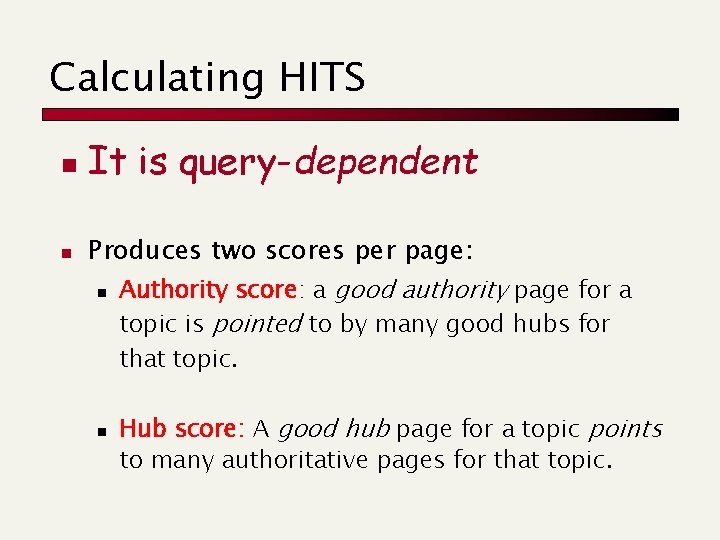

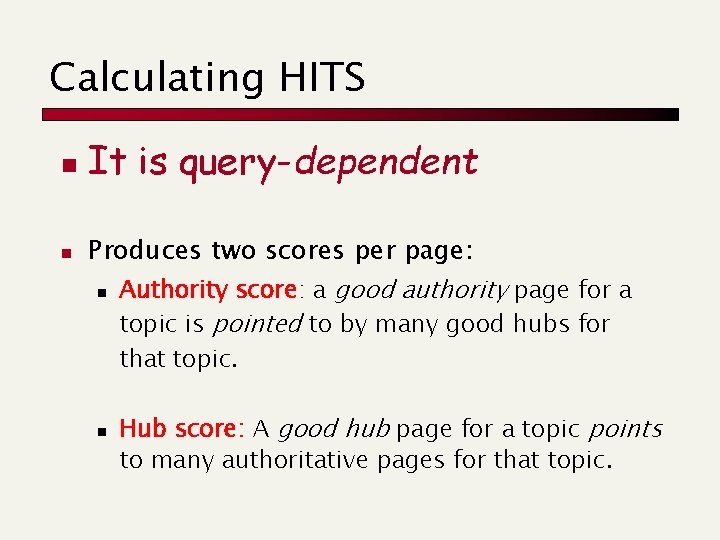

Calculating HITS
- It is query-dependent
- Produces two scores per page:
  - Authority score: a good authority page for a topic is pointed to by many good hubs for that topic.
  - Hub score: a good hub page for a topic points to many authoritative pages for that topic.

Authority and Hub scores

[Figure: nodes 2, 3 and 4 point to node 1; node 1 points to nodes 5, 6 and 7.]
- a(1) = h(2) + h(3) + h(4)
- h(1) = a(5) + a(6) + a(7)

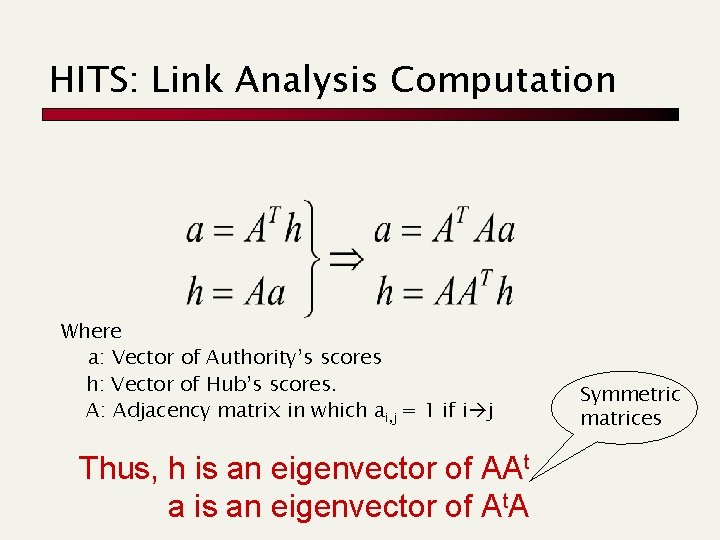

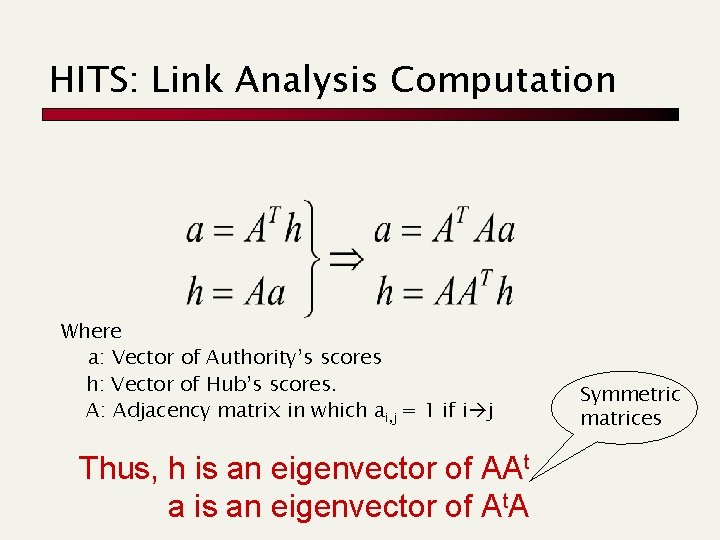

HITS: Link Analysis Computation

$a = A^t h$ and $h = A a$, where
- a: vector of authority scores
- h: vector of hub scores
- A: adjacency matrix in which $a_{i,j} = 1$ if $i \to j$

Thus, h is an eigenvector of $AA^t$ and a is an eigenvector of $A^tA$ (both are symmetric matrices).
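A small sketch of the iterative computation, assuming the update rules a = Aᵗh and h = Aa implied by the authority and hub equations, with a Euclidean normalization after each round. The function name `hits`, the iteration count and the normalization choice are illustrative assumptions. The example graph is the one suggested by the equations a(1) = h(2) + h(3) + h(4) and h(1) = a(5) + a(6) + a(7), with node k stored at index k-1.

```python
import math


def hits(adj, iters=50):
    """adj[i][j] == 1 if page i links to page j. Returns (authority, hub)."""
    n = len(adj)
    a = [1.0] * n
    h = [1.0] * n
    for _ in range(iters):
        # a = A^t h: authority of j sums the hub scores of pages pointing to j.
        a = [sum(h[i] for i in range(n) if adj[i][j]) for j in range(n)]
        # h = A a: hub score of i sums the authority of pages i points to.
        h = [sum(a[j] for j in range(n) if adj[i][j]) for i in range(n)]
        norm_a = math.sqrt(sum(x * x for x in a)) or 1.0
        norm_h = math.sqrt(sum(x * x for x in h)) or 1.0
        a = [x / norm_a for x in a]
        h = [x / norm_h for x in h]
    return a, h


# Nodes 2, 3, 4 -> node 1; node 1 -> nodes 5, 6, 7 (node k is index k-1).
edges = [(2, 1), (3, 1), (4, 1), (1, 5), (1, 6), (1, 7)]
adj = [[0] * 7 for _ in range(7)]
for src, dst in edges:
    adj[src - 1][dst - 1] = 1

authority, hub = hits(adj)
```

In this toy graph node 1 receives the highest authority score, while nodes 5, 6 and 7 have hub score 0 because they link to nothing.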

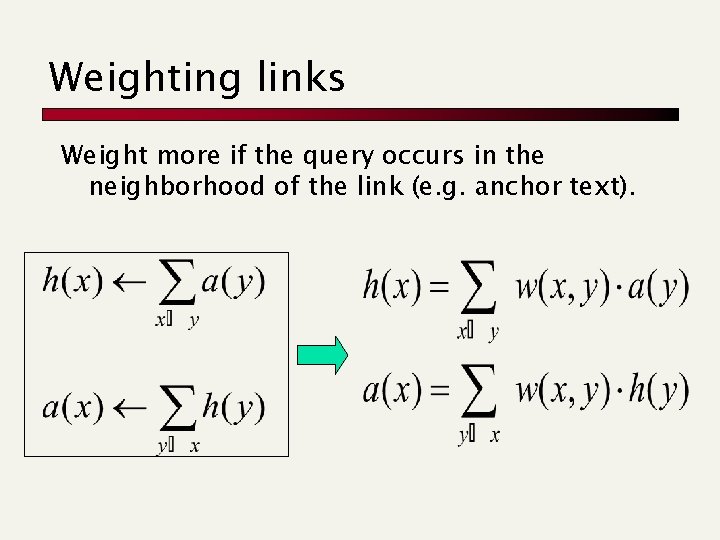

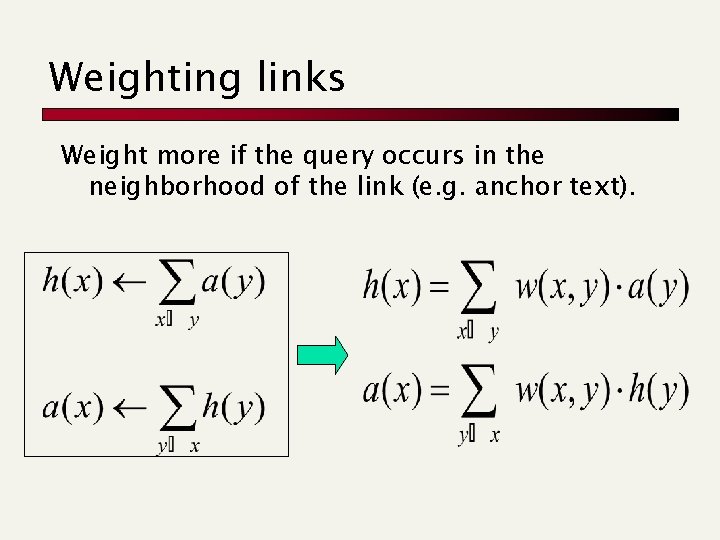

Weighting links
- Weight a link more if the query occurs in the neighborhood of the link (e.g. anchor text).

Latent Semantic Indexing (mapping onto a smaller space of latent concepts). Reading: 18

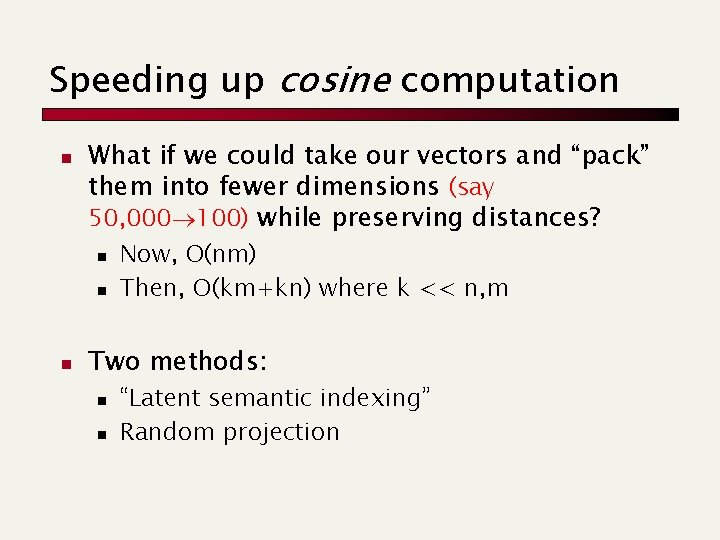

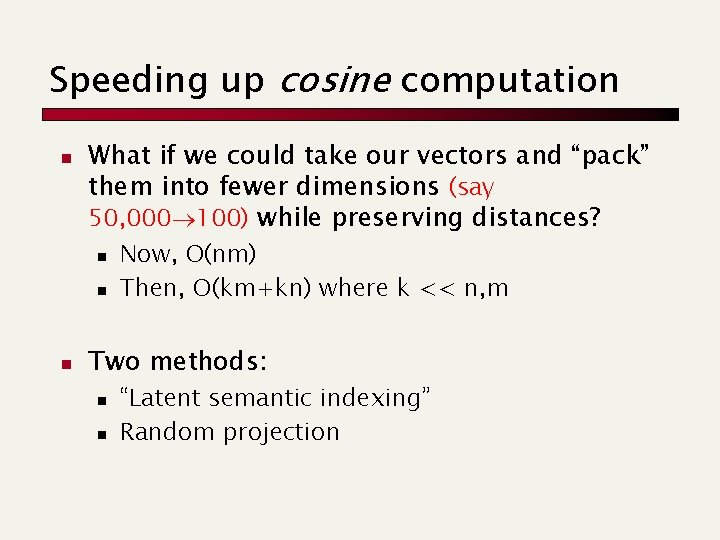

Speeding up cosine computation
- What if we could take our vectors and “pack” them into fewer dimensions (say 50,000 → 100) while preserving distances?
  - Now, O(nm)
  - Then, O(km+kn) where k << n, m
- Two methods:
  - “Latent semantic indexing”
  - Random projection

A sketch
- LSI is data-dependent
  - Create a k-dim subspace by eliminating redundant axes
  - Pull together “related” axes, hopefully
    - car and automobile
  - What about polysemy?
- Random projection is data-independent
  - Choose a k-dim subspace that guarantees good stretching properties with high probability between pairs of points.

Notions from linear algebra
- Matrix A, vector v
- Matrix transpose ($A^t$)
- Matrix product
- Rank
- Eigenvalues $\lambda$ and eigenvector v: $Av = \lambda v$

Overview of LSI
- Pre-process docs using a technique from linear algebra called Singular Value Decomposition
- Create a new (smaller) vector space
- Queries handled (faster) in this new space

Singular-Value Decomposition
- Recall the $m \times n$ matrix of terms × docs, A.
  - A has rank $r \le m, n$
- Define the term-term correlation matrix $T = AA^t$
  - T is a square, symmetric $m \times m$ matrix
  - Let P be the $m \times r$ matrix of eigenvectors of T
- Define the doc-doc correlation matrix $D = A^tA$
  - D is a square, symmetric $n \times n$ matrix
  - Let R be the $n \times r$ matrix of eigenvectors of D

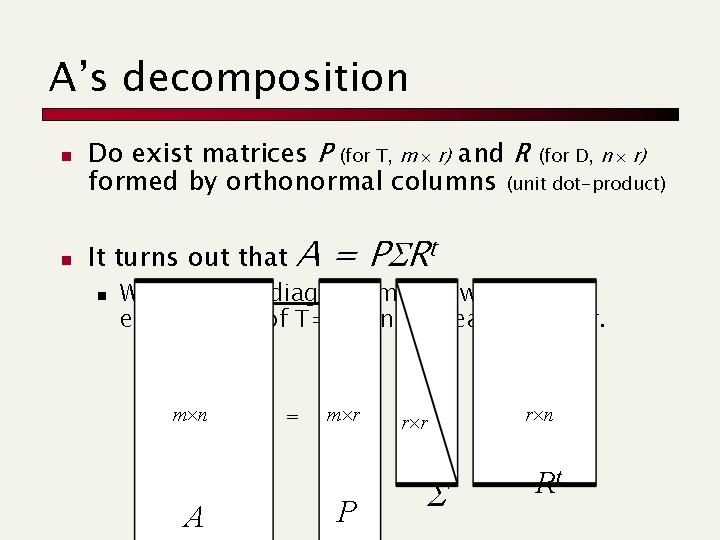

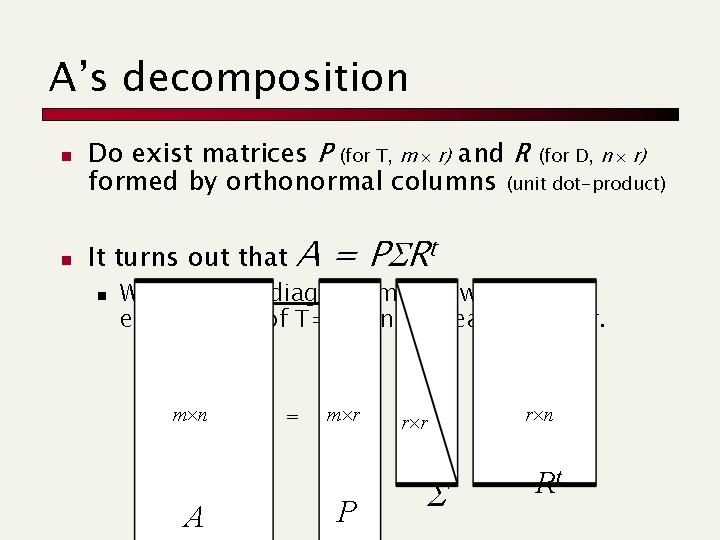

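A’s decomposition
- There exist matrices P (for T, $m \times r$) and R (for D, $n \times r$) formed by orthonormal columns (unit dot-product)
- It turns out that $A = P S R^t$
  - where S is an $r \times r$ diagonal matrix holding the singular values of A (the square roots of the eigenvalues of $T = AA^t$) in decreasing order.

[Figure: A ($m \times n$) = P ($m \times r$) · S ($r \times r$) · $R^t$ ($r \times n$)]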

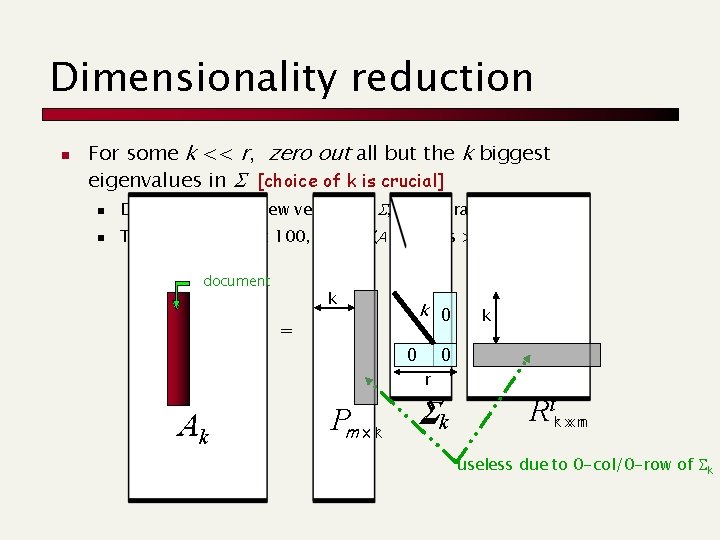

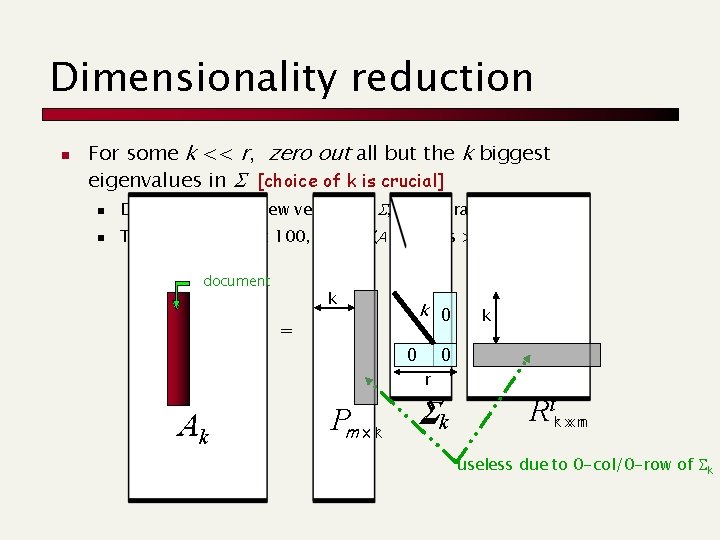

Dimensionality reduction
- For some k << r, zero out all but the k biggest singular values in S (its diagonal entries) [choice of k is crucial]
  - Denote by $S_k$ this new version of S, having rank k
  - Typically k is about 100, while r (A’s rank) is > 10,000

[Figure: $A_k = P_{m \times k}\, S_k\, R^t_{k \times n}$; the remaining r-k columns of P and rows of $R^t$ are useless due to the 0-columns/0-rows of $S_k$.]

Guarantee
- $A_k$ is a pretty good approximation to A:
  - Relative distances are (approximately) preserved
  - Of all $m \times n$ matrices of rank k, $A_k$ is the best approximation to A wrt the following measures:
    - $\min_{B,\ \mathrm{rank}(B)=k} \|A-B\|_2 = \|A-A_k\|_2 = \sigma_{k+1}$
    - $\min_{B,\ \mathrm{rank}(B)=k} \|A-B\|_F^2 = \|A-A_k\|_F^2 = \sigma_{k+1}^2 + \sigma_{k+2}^2 + \dots + \sigma_r^2$
  - Frobenius norm: $\|A\|_F^2 = \sigma_1^2 + \sigma_2^2 + \dots + \sigma_r^2$

Reduction
- $X_k = S_k R^t$ is the doc-matrix, of size $k \times n$, hence reduced to k dimensions
- Take the doc-correlation matrix:
  - It is $D = A^t A = (P S R^t)^t (P S R^t) = (S R^t)^t (S R^t)$
  - Approximate S with $S_k$, thus get $A^t A \approx X_k^t X_k$ (both are $n \times n$ matrices)
- We use $X_k$ to define A’s projection:
  - R, P are formed by orthonormal eigenvectors of the matrices D, T
  - $X_k = S_k R^t$; substitute $R^t = S^{-1} P^t A$, so get $X_k = P_k^t A$
  - In fact, $S_k S^{-1} P^t = P_k^t$, which is a $k \times m$ matrix
- This means that to reduce a doc/query vector it is enough to multiply it by $P_k^t$
- Cost of sim(q, d), for all d, is O(kn+km) instead of O(mn)

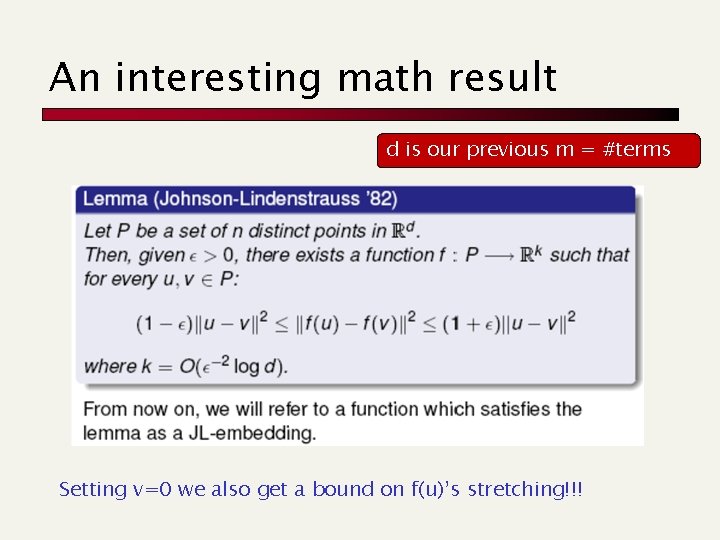

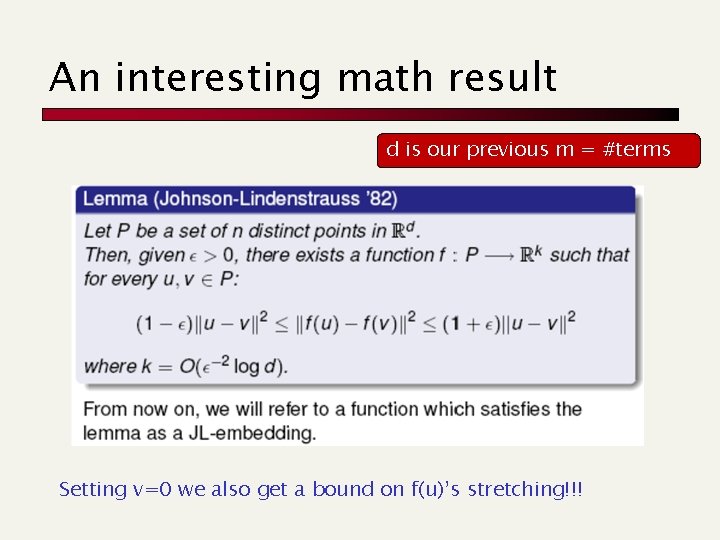

Which are the concepts?
- c-th concept = c-th row of $P_k^t$ (which is $k \times m$)
- Denote it by $P_k^t[c]$, whose size is m = #terms
- $P_k^t[c][i]$ = strength of association between the c-th concept and the i-th term
- Projected document: $d'_j = P_k^t d_j$
  - $d'_j[c]$ = strength of concept c in $d_j$
- Projected query: $q' = P_k^t q$
  - $q'[c]$ = strength of concept c in q

Random Projections. Slides only!

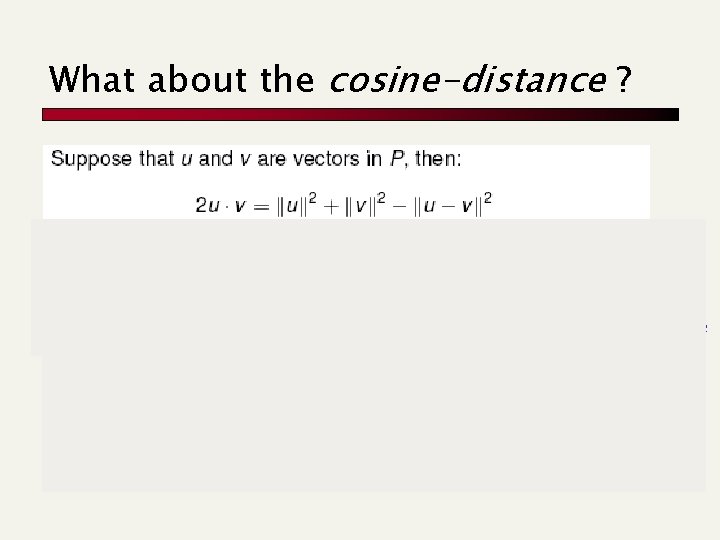

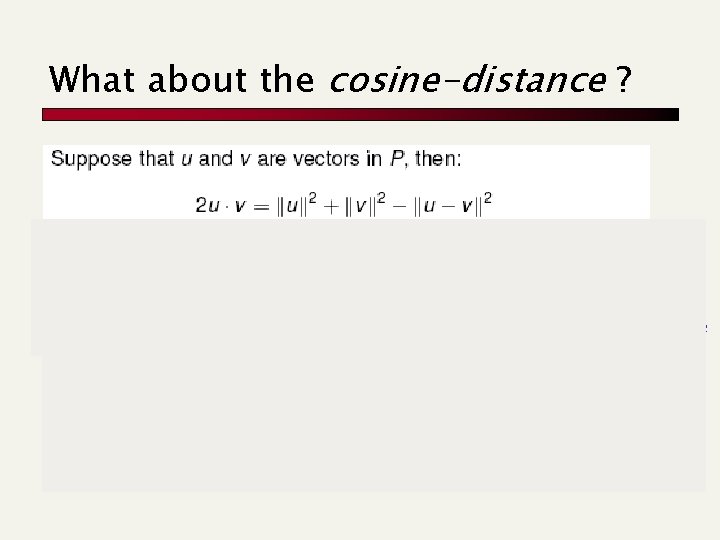

An interesting math result
- d is our previous m = #terms
- Setting v=0 we also get a bound on f(u)’s stretching!!!

What about the cosine-distance?
- f(u)’s, f(v)’s stretching
- substituting the formula above

![A practical-theoretical idea: E[r_{i,j}] = 0, Var[r_{i,j}] = 1](https://slidetodoc.com/presentation_image_h/8bf85068b99f987d854fe72a3664ee60/image-37.jpg)

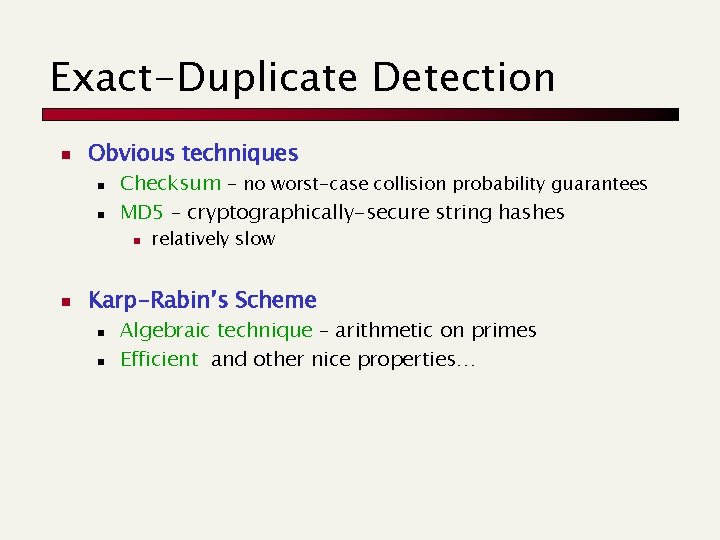

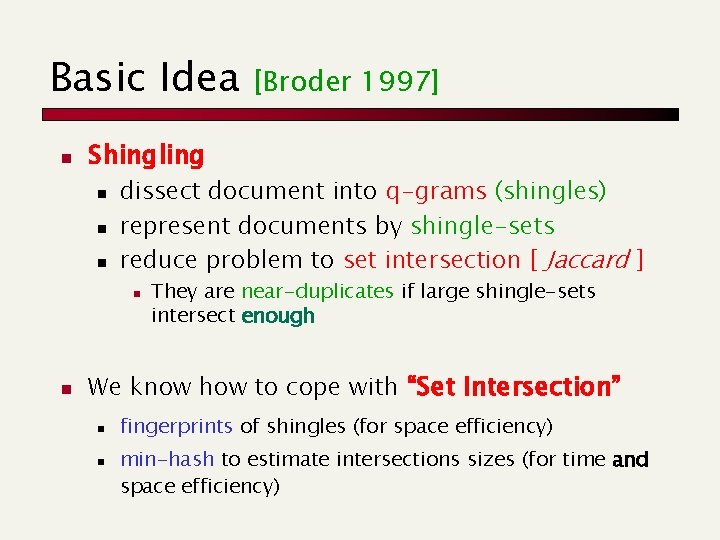

A practical-theoretical idea!!! $E[r_{i,j}] = 0$, $\mathrm{Var}[r_{i,j}] = 1$
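One way to read the condition on the entries $r_{i,j}$ is to fill a $k \times d$ matrix with independent ±1 values (mean 0, variance 1) and map u to $f(u) = R u / \sqrt{k}$. The sketch below assumes that construction; the helper names `random_projection_matrix` and `project` and the sizes d = 1000, k = 400 are illustrative choices, not taken from the slides.

```python
import math
import random


def random_projection_matrix(k, d, rng):
    """k x d matrix with i.i.d. entries in {-1, +1}: mean 0, variance 1."""
    return [[rng.choice((-1.0, 1.0)) for _ in range(d)] for _ in range(k)]


def project(R, u):
    """f(u) = (1 / sqrt(k)) * R u, mapping d dimensions down to k."""
    scale = 1.0 / math.sqrt(len(R))
    return [scale * sum(r_ij * u_j for r_ij, u_j in zip(row, u)) for row in R]


def norm(x):
    return math.sqrt(sum(v * v for v in x))


rng = random.Random(0)
d, k = 1000, 400
u = [rng.gauss(0.0, 1.0) for _ in range(d)]
v = [rng.gauss(0.0, 1.0) for _ in range(d)]
R = random_projection_matrix(k, d, rng)

# f is linear, so f(u) - f(v) = f(u - v); compare distances before and after.
diff = [a - b for a, b in zip(u, v)]
ratio = norm(project(R, diff)) / norm(diff)
```

The value `ratio` measures how much the distance between u and v is stretched; it concentrates around 1 as k grows, independently of the data.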

Finally...
- Random projections hide large constants
  - $k \approx (1/\varepsilon)^2 \log d$, so it may be large…
  - it is simple and fast to compute
- LSI is intuitive and may scale to any k
  - optimal under various metrics
  - but costly to compute

Document duplication (exact or approximate). Slides only!

Duplicate documents (Sec. 19.6)
- The web is full of duplicated content
  - Few cases of exact duplicates
  - Many cases of near duplicates
    - E.g., the last-modified date is the only difference between two copies of a page

Natural Approaches
- Fingerprinting:
  - only works for exact matches
- Random Sampling
  - sample substrings (phrases, sentences, etc.)
  - hope: similar documents → similar samples
  - but even samples of the same document will differ
- Edit-distance
  - metric for approximate string-matching
  - expensive, even for one pair of strings
  - impossible for $10^{32}$ web documents

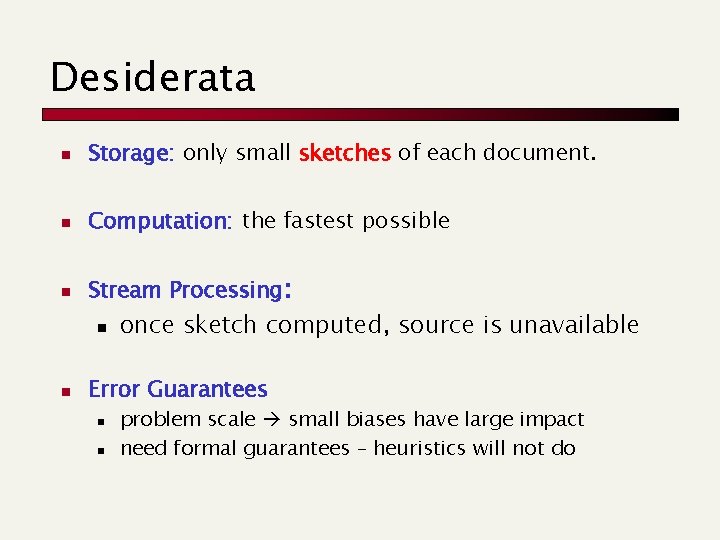

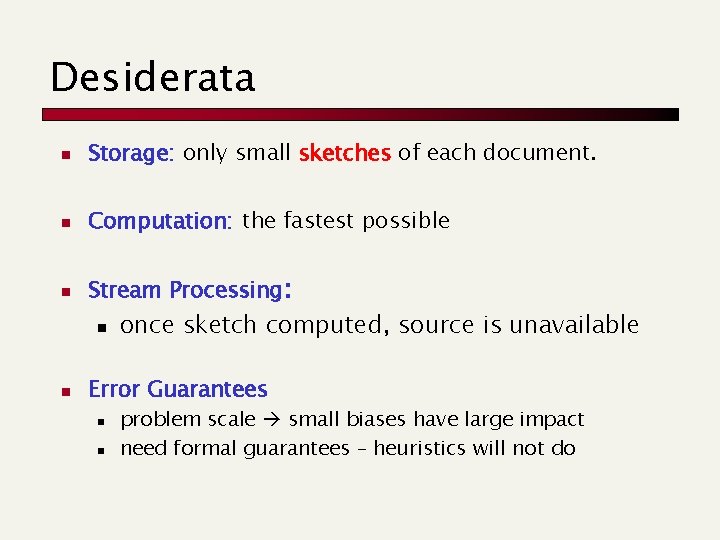

Exact-Duplicate Detection
- Obvious techniques
  - Checksum: no worst-case collision probability guarantees
  - MD5: cryptographically-secure string hashes
    - relatively slow
- Karp-Rabin’s Scheme
  - Algebraic technique: arithmetic on primes
  - Efficient, with other nice properties…

Karp-Rabin Fingerprints
- Consider an m-bit string $A = a_1 a_2 \dots a_m$
- Assume $a_1 = 1$ and fixed-length strings (wlog)
- Basic values:
  - Choose a prime p in the universe U, such that 2p uses few memory-words (hence $U \approx 2^{64}$)
  - Set $h = d^{m-1} \bmod p$
- Fingerprints: $f(A) = A \bmod p$
  - Nice property: if $B = a_2 \dots a_m a_{m+1}$, then $f(B) = [d\,(A - a_1 h) + a_{m+1}] \bmod p$
- Prob[false hit] = Prob[p divides (A-B)] = #div(A-B)/U ≈ (log (A+B))/U = m/U
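The rolling update can be sketched as follows, assuming d is the base in which the string is read (d = 2 for bit strings). The function name `rolling_fingerprints` and the tiny prime p = 13 in the example are illustrative; a real deployment would pick a large random prime as the slide suggests.

```python
def rolling_fingerprints(bits, m, p, d=2):
    """Yield f(W) = W mod p for every length-m window W of `bits`."""
    if len(bits) < m:
        return
    h = pow(d, m - 1, p)  # h = d^(m-1) mod p
    f = 0
    for b in bits[:m]:
        f = (d * f + b) % p  # Horner's rule for the first window
    yield f
    for i in range(m, len(bits)):
        # Drop the leading digit a_1, shift by d, append the new digit a_{m+1}.
        f = (d * (f - bits[i - m] * h) + bits[i]) % p
        yield f


bits = [1, 0, 1, 1, 0, 1, 0, 0, 1]
fingerprints = list(rolling_fingerprints(bits, m=4, p=13))
```

Each update costs a constant number of arithmetic operations, regardless of the window length m.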

Near-Duplicate Detection
- Problem
  - Given a large collection of documents
  - Identify the near-duplicate documents
- Web search engines
  - Proliferation of near-duplicate documents
    - Legitimate: mirrors, local copies, updates, …
    - Malicious: spam, spider-traps, dynamic URLs, …
    - Mistaken: spider errors
  - 30% of web-pages are near-duplicates [1997]

Desiderata
- Storage: only small sketches of each document.
- Computation: the fastest possible
- Stream Processing:
  - once the sketch is computed, the source is unavailable
- Error Guarantees
  - problem scale → small biases have large impact
  - need formal guarantees: heuristics will not do

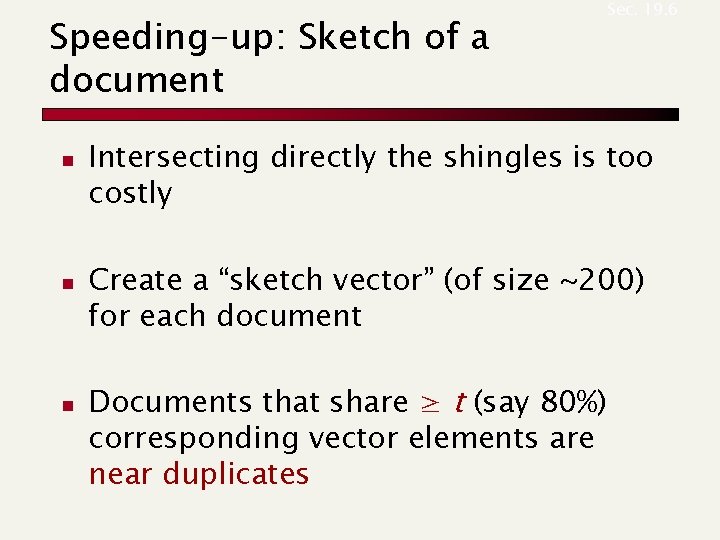

Basic Idea [Broder 1997]
- Shingling
  - dissect document into q-grams (shingles)
  - represent documents by shingle-sets
  - reduce problem to set intersection [Jaccard]
- They are near-duplicates if large shingle-sets intersect enough
- We know how to cope with “Set Intersection”
  - fingerprints of shingles (for space efficiency)
  - min-hash to estimate intersection sizes (for time and space efficiency)

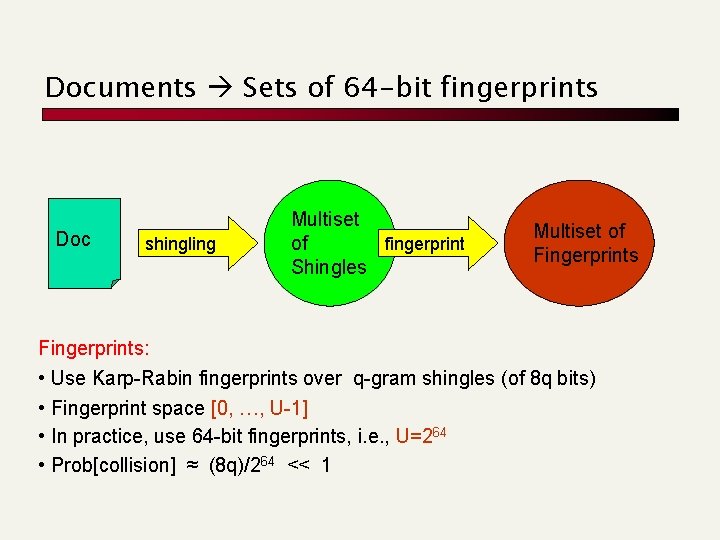

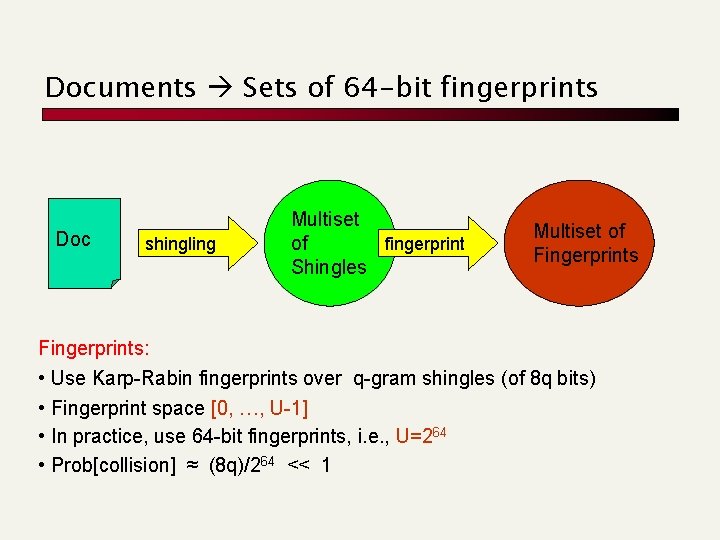

Documents → Sets of 64-bit fingerprints

[Figure: Doc → shingling → multiset of shingles → fingerprint → multiset of fingerprints]

Fingerprints:
- Use Karp-Rabin fingerprints over q-gram shingles (of 8q bits)
- Fingerprint space [0, …, U-1]
- In practice, use 64-bit fingerprints, i.e., $U = 2^{64}$
- Prob[collision] ≈ $(8q)/2^{64} \ll 1$

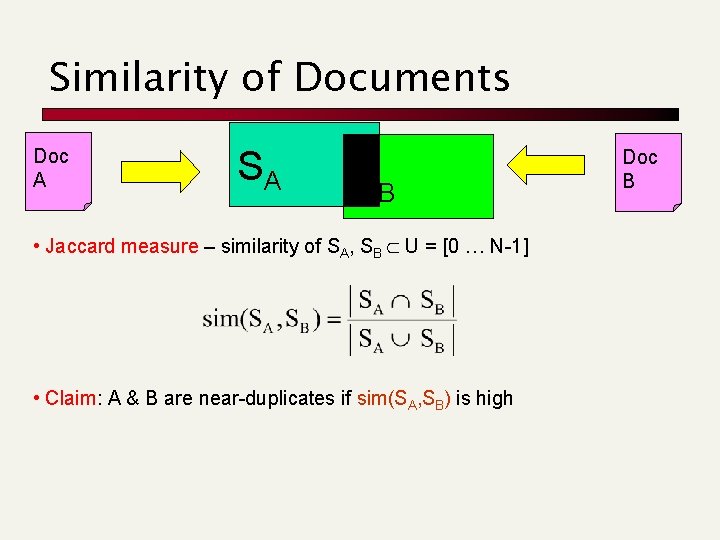

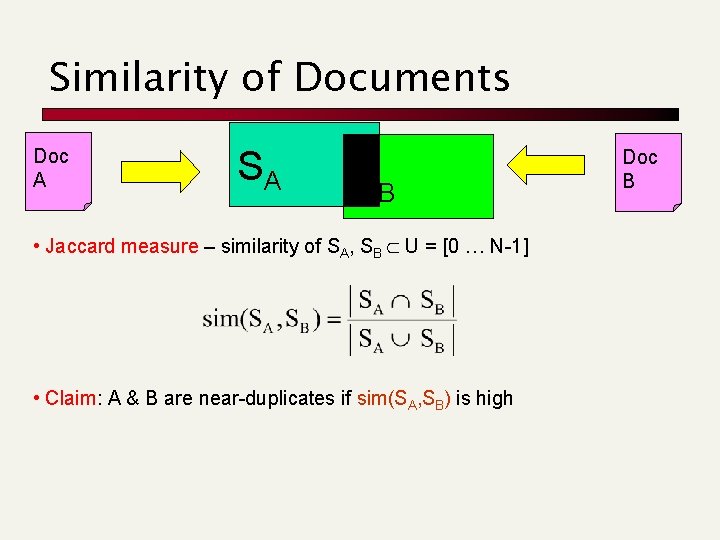

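Similarity of Documents

[Figure: Doc A and Doc B mapped to their shingle sets $S_A$ and $S_B$ inside the universe U = [0 … N-1]]
- Jaccard measure, the similarity of $S_A$, $S_B$: $\mathrm{sim}(S_A, S_B) = |S_A \cap S_B| / |S_A \cup S_B|$
- Claim: A & B are near-duplicates if $\mathrm{sim}(S_A, S_B)$ is high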
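A short sketch of shingling followed by the Jaccard measure, assuming character q-grams and plain Python sets of strings rather than 64-bit fingerprints. The function names `shingles` and `jaccard` are illustrative.

```python
def shingles(text: str, q: int) -> set:
    """Set of all character q-grams (shingles) of `text`."""
    return {text[i:i + q] for i in range(len(text) - q + 1)}


def jaccard(s_a: set, s_b: set) -> float:
    """|S_A ∩ S_B| / |S_A ∪ S_B|; two empty sets count as identical."""
    if not s_a and not s_b:
        return 1.0
    return len(s_a & s_b) / len(s_a | s_b)


s_a = shingles("abcd", 2)  # {"ab", "bc", "cd"}
s_b = shingles("abce", 2)  # {"ab", "bc", "ce"}
similarity = jaccard(s_a, s_b)
```

Here the two sets share 2 of the 4 distinct shingles, so the similarity is 0.5. Computing this exactly for every pair of documents is what the sketching technique is meant to avoid.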

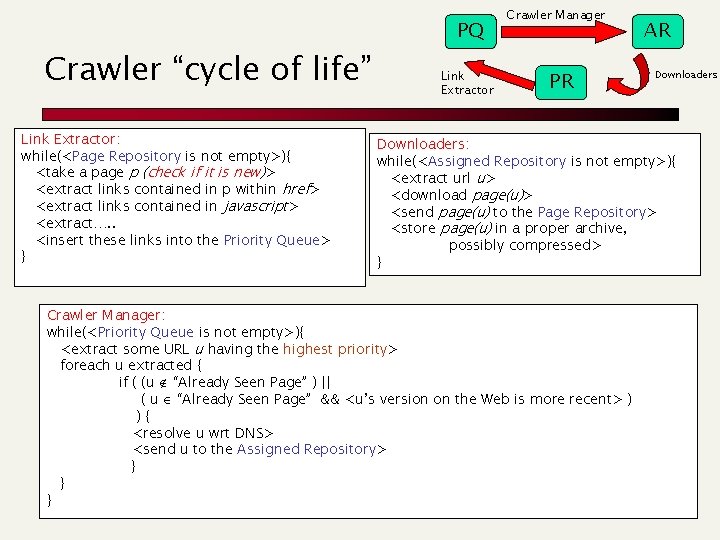

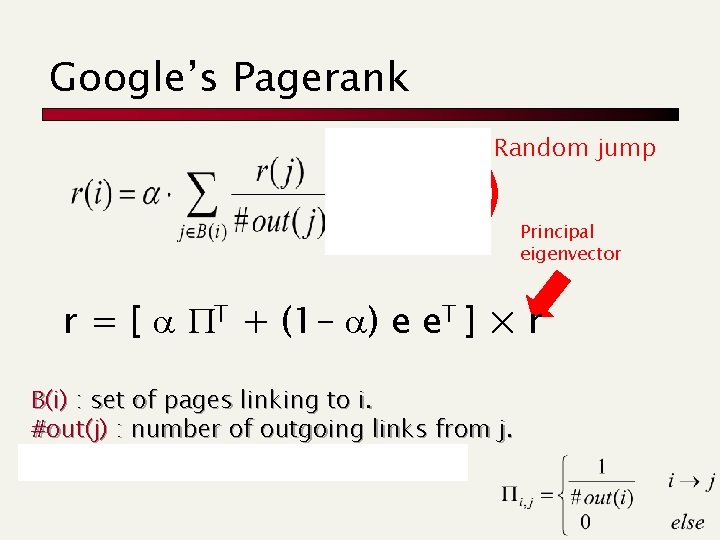

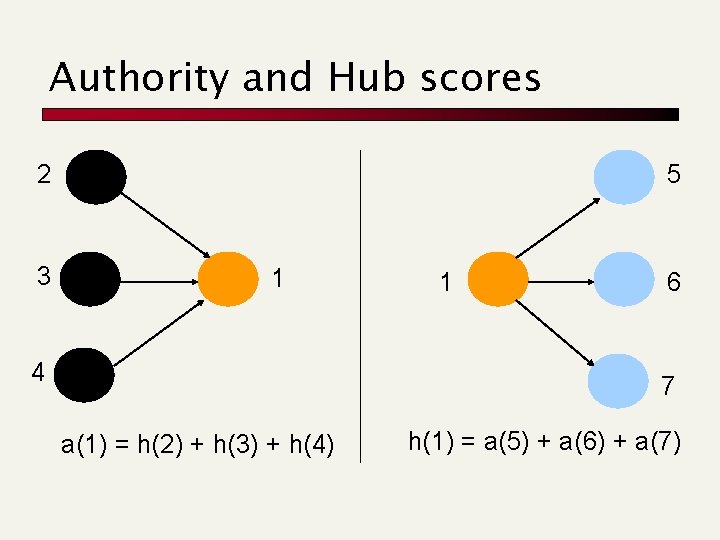

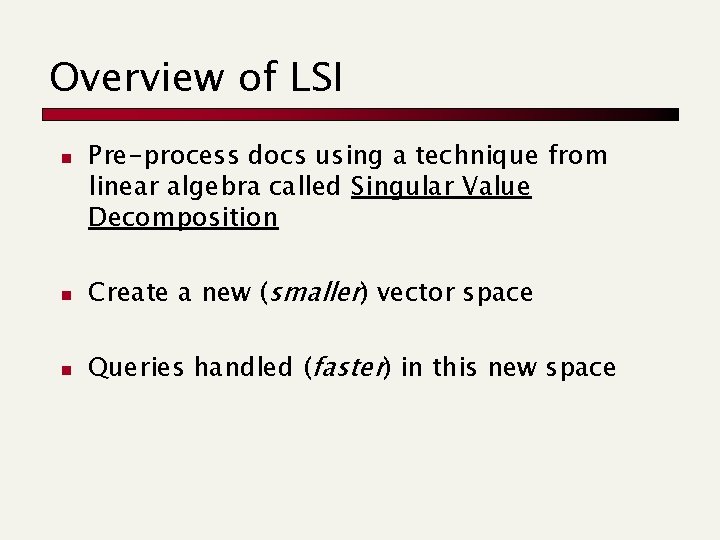

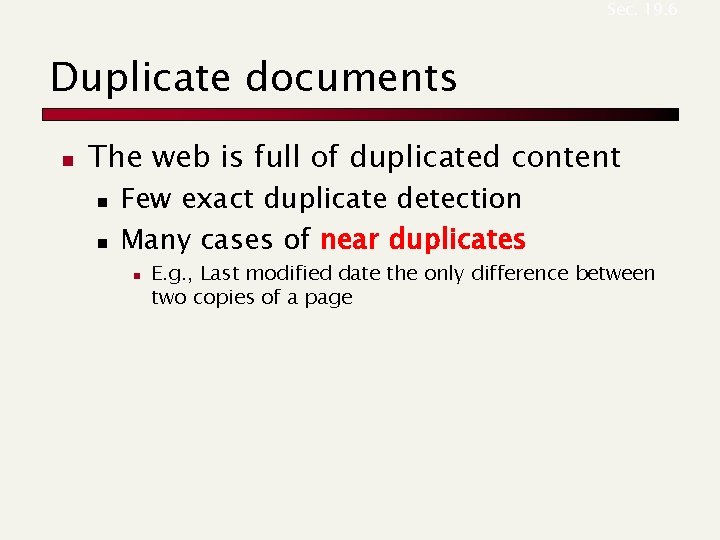

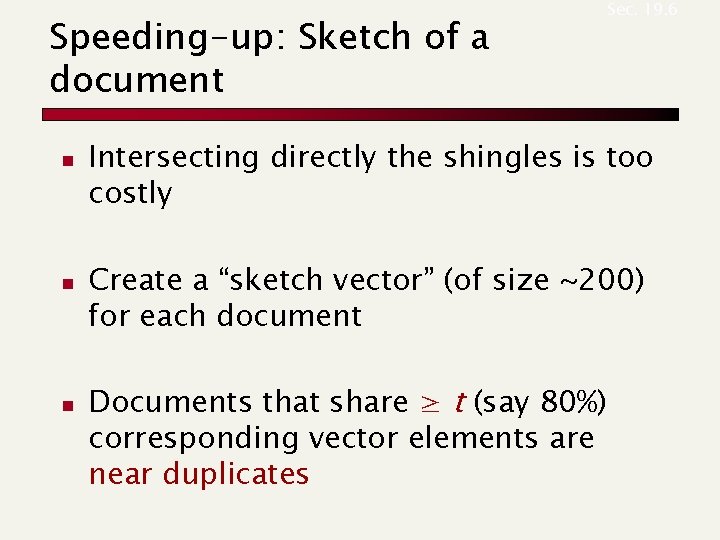

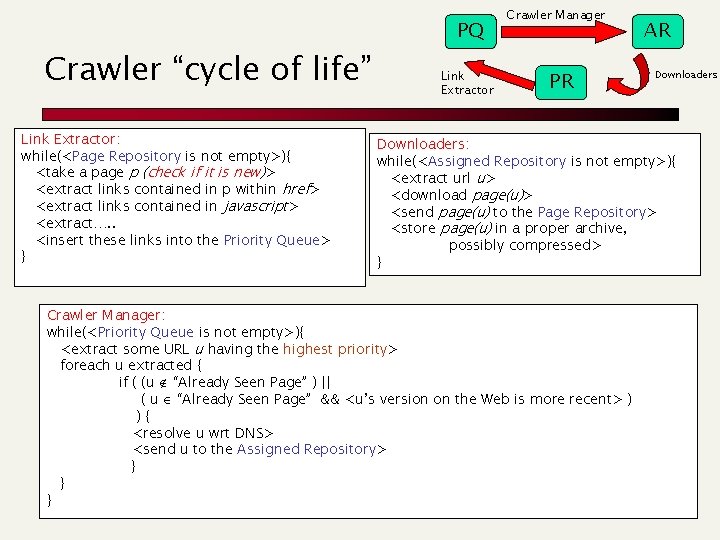

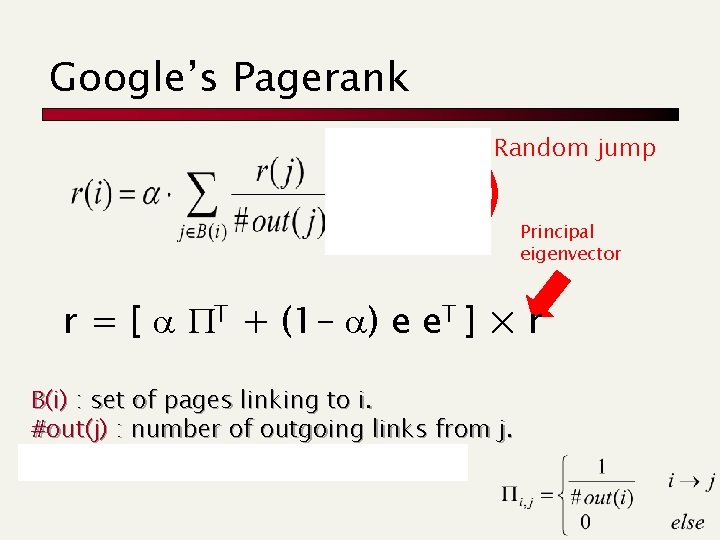

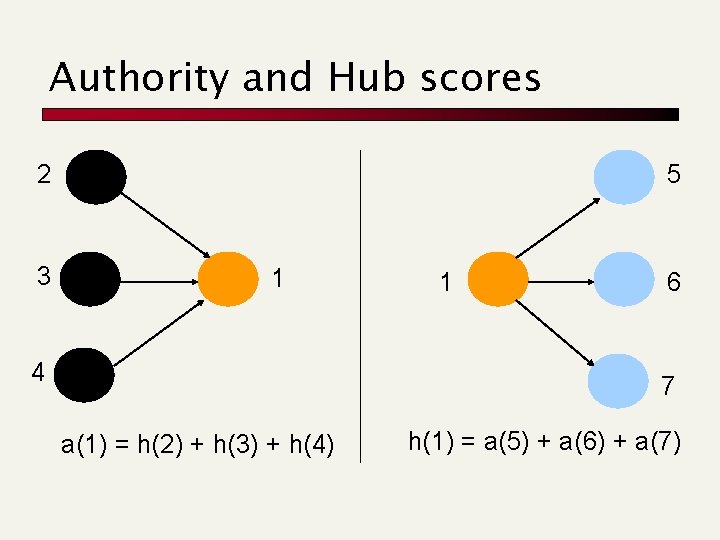

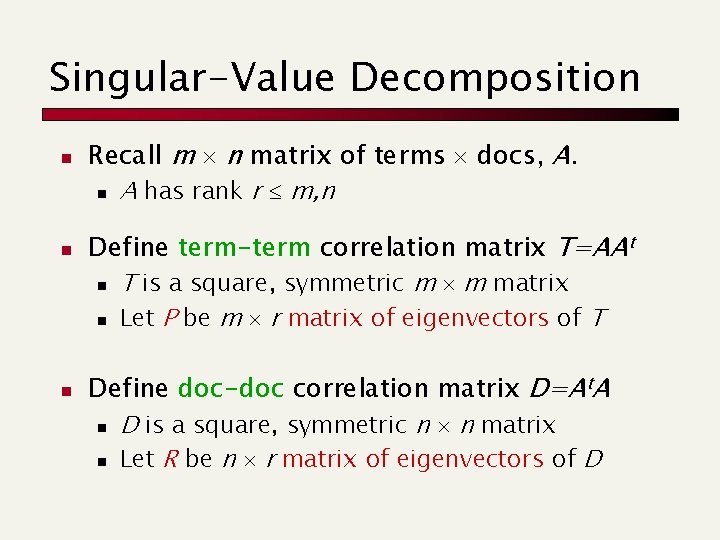

Speeding-up: Sketch of a document (Sec. 19.6)
- Intersecting the shingles directly is too costly
- Create a “sketch vector” (of size ~200) for each document
- Documents that share ≥ t (say 80%) corresponding vector elements are near duplicates

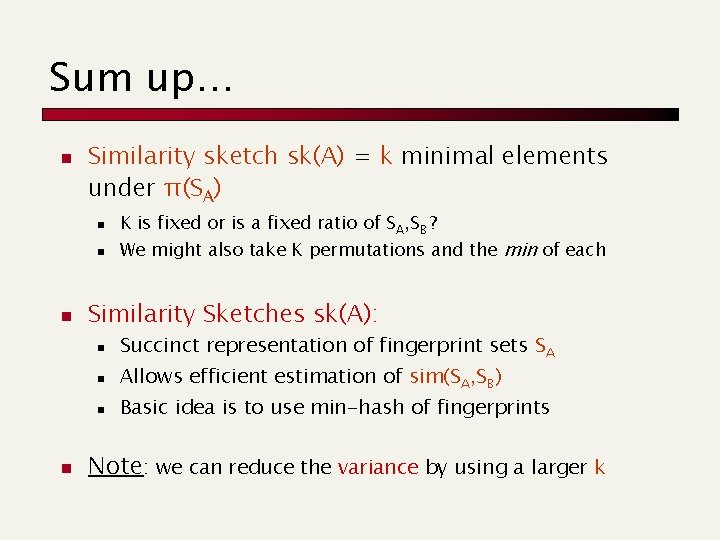

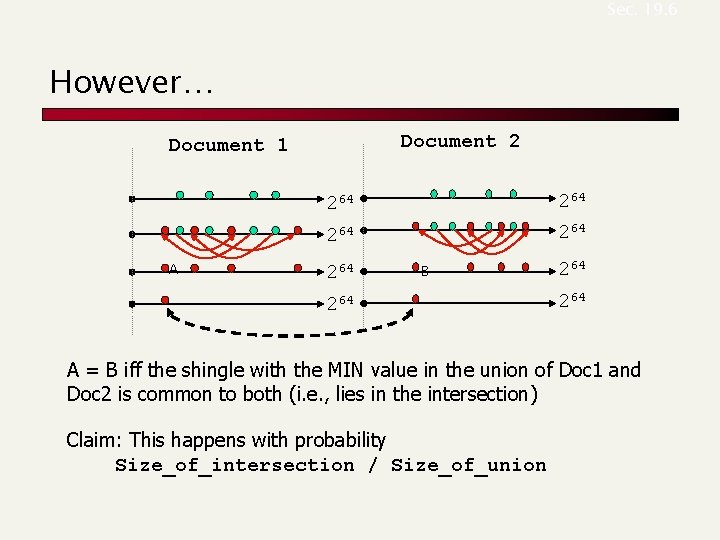

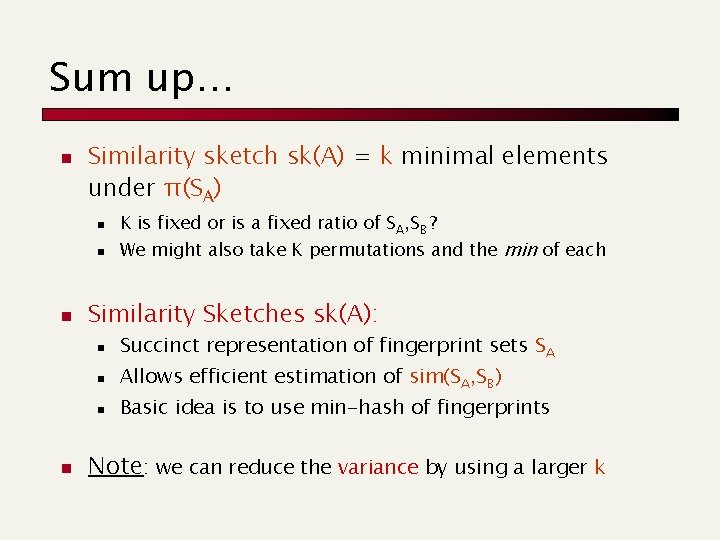

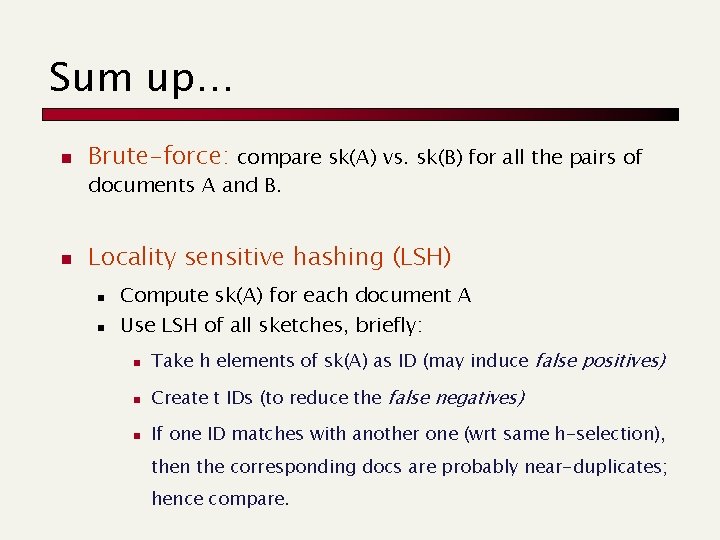

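Sketching by Min-Hashing
- Consider $S_A, S_B \subseteq P$
- Pick a random permutation $\pi$ of P (such as $ax + b \bmod |P|$)
- Define $\alpha = \pi^{-1}(\min\{\pi(S_A)\})$, $\beta = \pi^{-1}(\min\{\pi(S_B)\})$
  - the minimal element under permutation $\pi$
- Lemma: $\mathrm{Prob}[\alpha = \beta] = |S_A \cap S_B| / |S_A \cup S_B|$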

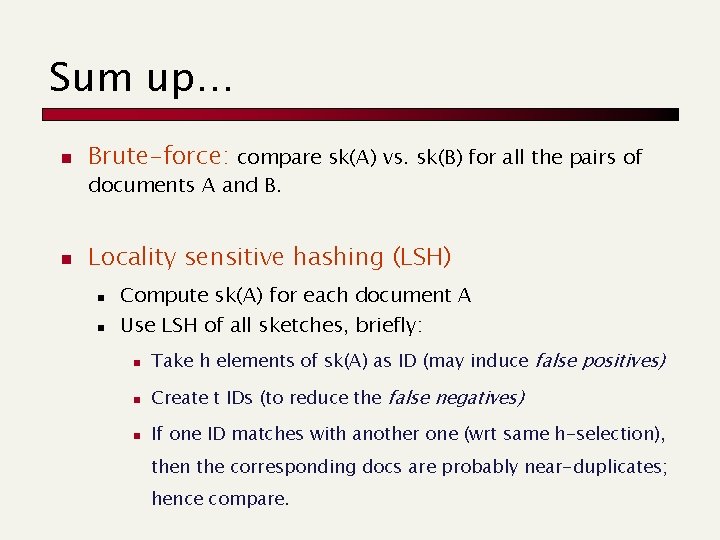

Sum up…
- Similarity sketch sk(A) = k minimal elements under $\pi(S_A)$
  - Is K fixed, or is it a fixed ratio of $S_A$, $S_B$?
  - We might also take K permutations and the min of each
- Similarity sketches sk(A):
  - Succinct representation of fingerprint sets $S_A$
  - Allows efficient estimation of $\mathrm{sim}(S_A, S_B)$
  - Basic idea is to use min-hash of fingerprints
- Note: we can reduce the variance by using a larger k
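A sketch of the "K permutations and the min of each" variant, assuming linear maps $x \mapsto (ax + b) \bmod p$ over the Mersenne prime $p = 2^{61} - 1$ as stand-ins for random permutations. The names `make_permutations`, `sketch` and `estimate_similarity`, the choice of p and the synthetic fingerprint sets are assumptions for illustration.

```python
import random

P = (1 << 61) - 1  # a Mersenne prime; fingerprints are assumed to lie in [0, P)


def make_permutations(k, rng):
    """k random linear maps x -> (a*x + b) mod P with a != 0."""
    return [(rng.randrange(1, P), rng.randrange(0, P)) for _ in range(k)]


def sketch(fingerprints, perms):
    """Sketch[i] = minimum of the i-th permuted fingerprint set."""
    return [min((a * x + b) % P for x in fingerprints) for a, b in perms]


def estimate_similarity(sk_a, sk_b):
    """Fraction of sketch positions on which the two documents agree."""
    return sum(x == y for x, y in zip(sk_a, sk_b)) / len(sk_a)


rng = random.Random(42)
perms = make_permutations(200, rng)

# Synthetic fingerprint sets with |intersection| = 500 and |union| = 1500.
universe = rng.sample(range(10**12), 1500)
s_a = set(universe[:1000])
s_b = set(universe[500:])

estimate = estimate_similarity(sketch(s_a, perms), sketch(s_b, perms))
```

The true Jaccard similarity of these two sets is 500/1500 = 1/3, and `estimate` approximates it from 200 numbers per document. Linear maps are only approximately min-wise independent, so the estimate carries a small bias in addition to sampling noise.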

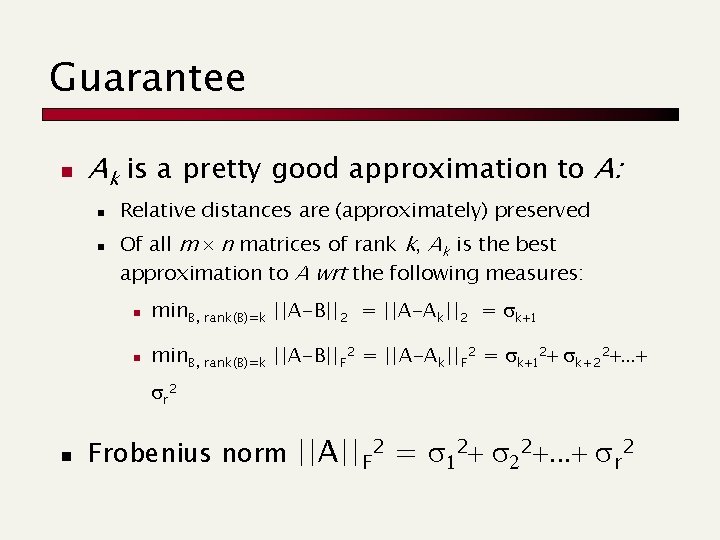

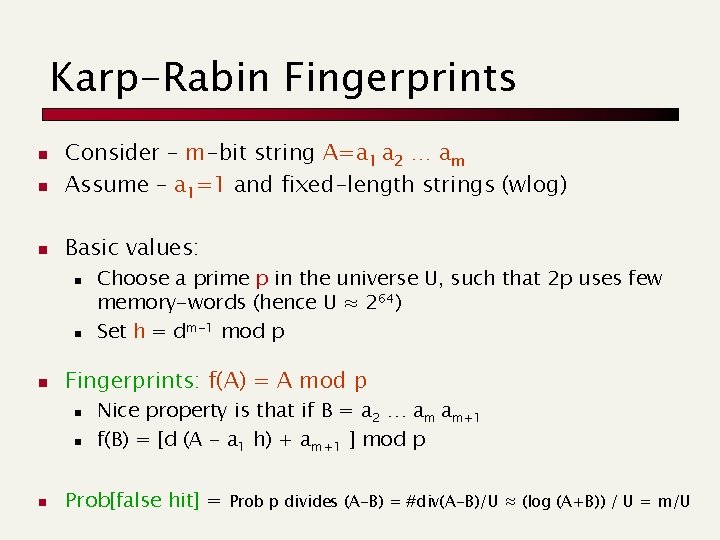

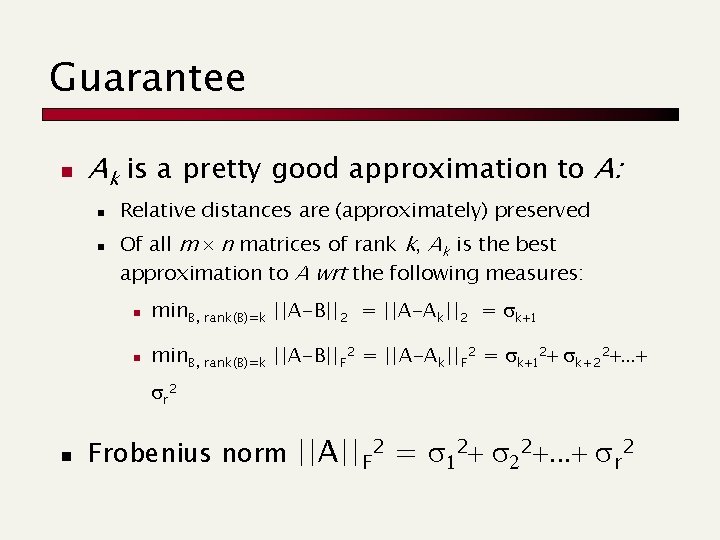

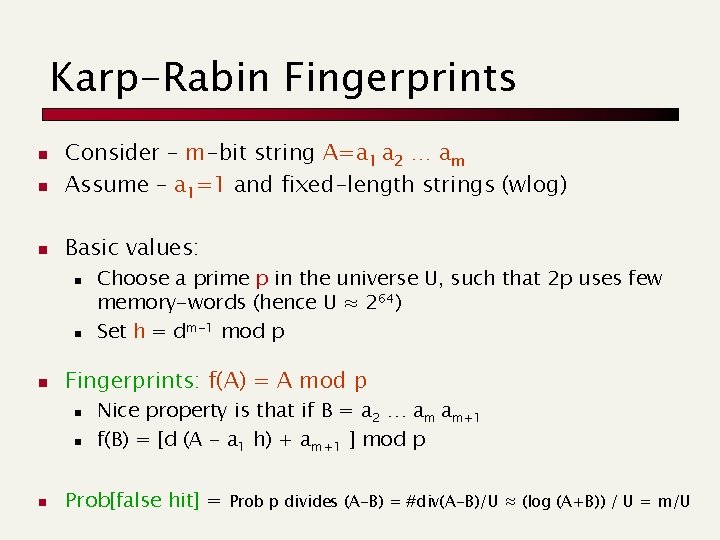

![Sec. 19.6: Computing Sketch[i] for Doc1](https://slidetodoc.com/presentation_image_h/8bf85068b99f987d854fe72a3664ee60/image-52.jpg)

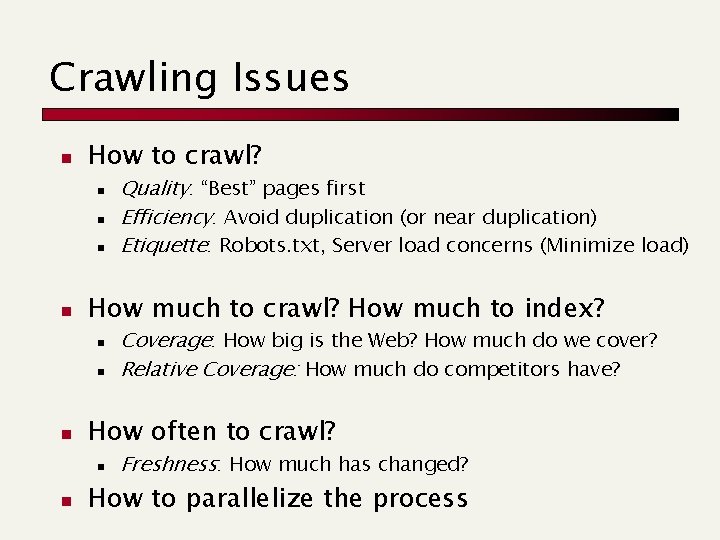

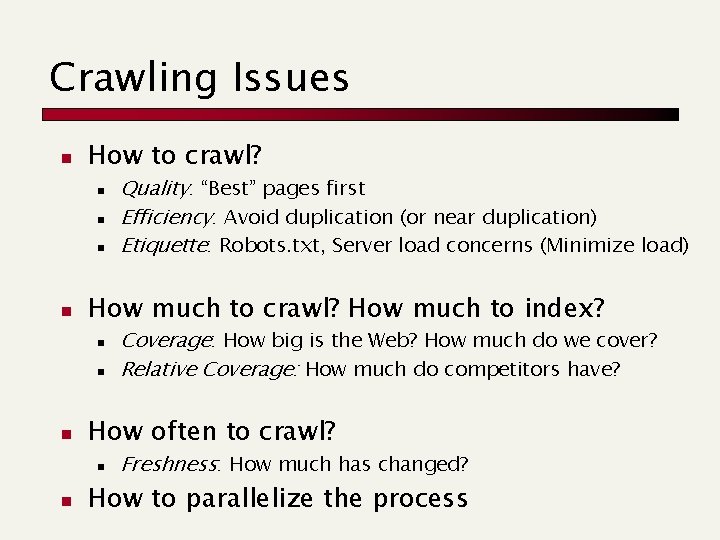

Computing Sketch[i] for Doc1 (Sec. 19.6)

[Figure: Document 1 shown on number lines over $[0, 2^{64})$]
- Start with 64-bit f(shingles)
- Permute on the number line with $\pi_i$
- Pick the min value

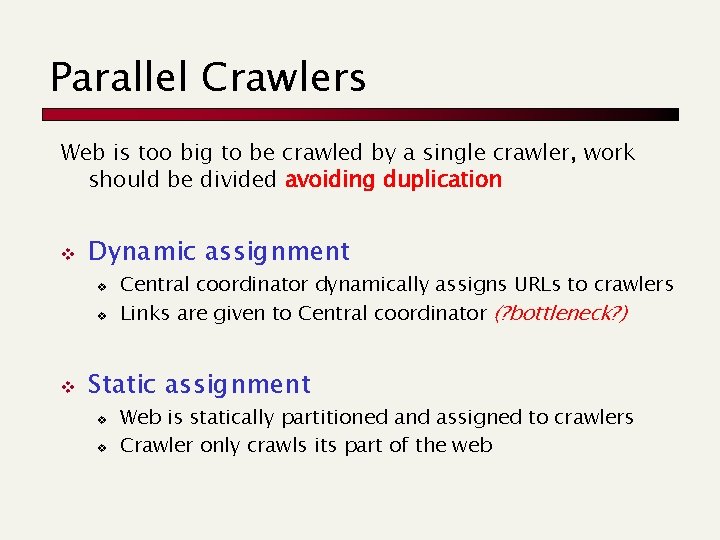

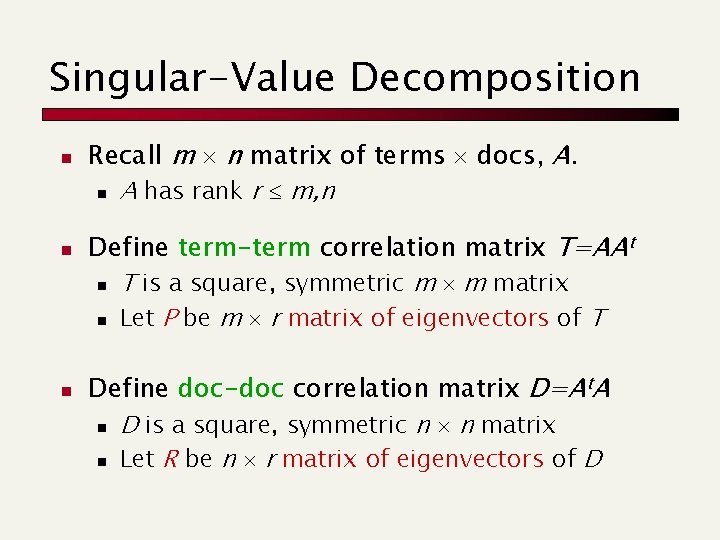

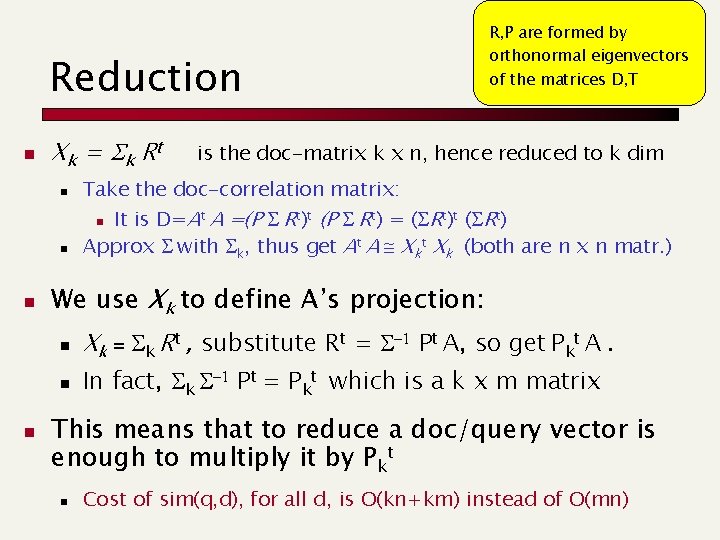

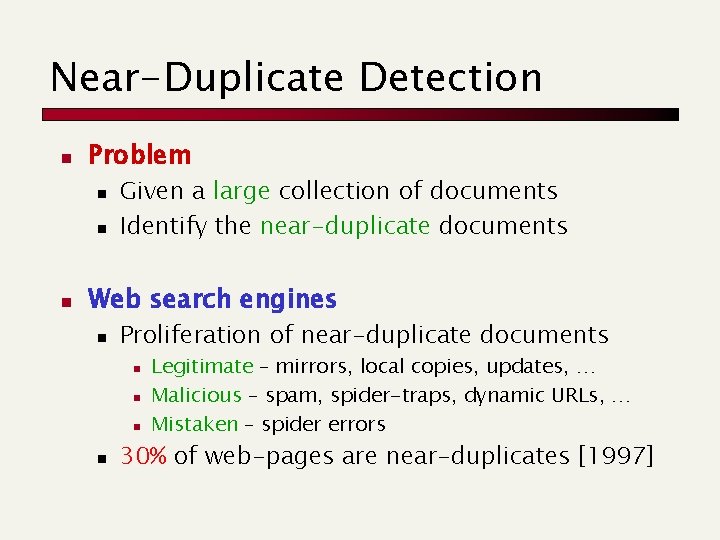

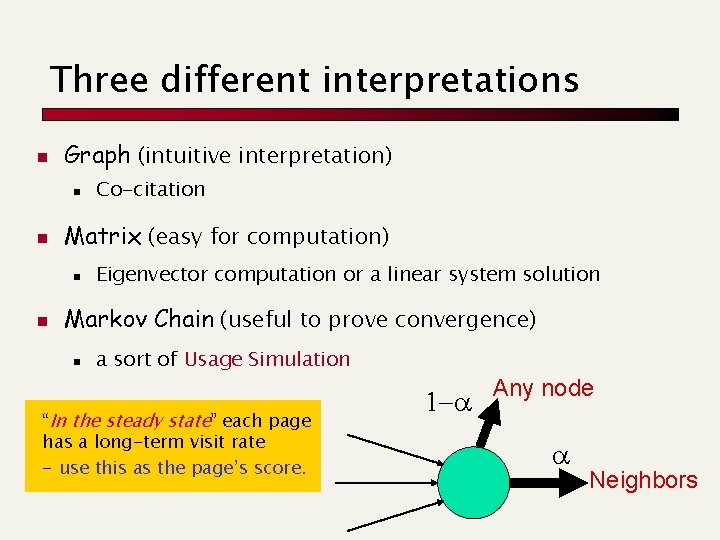

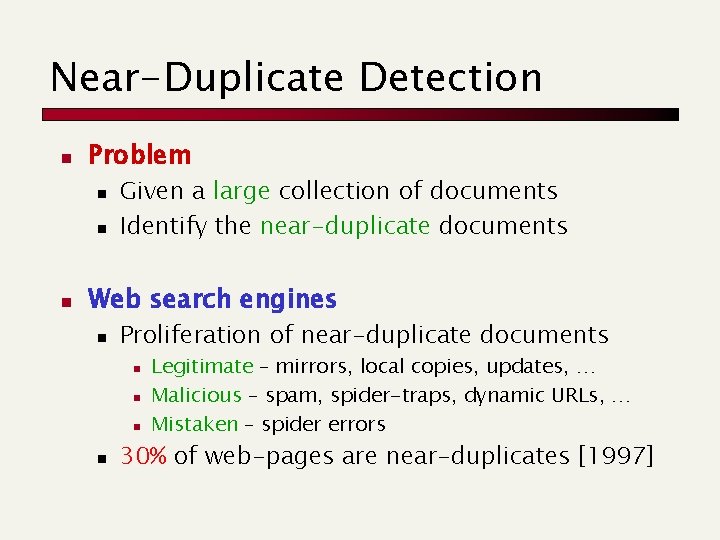

![Sec. 19.6: Test if Doc1.Sketch[i] = Doc2.Sketch[i]](https://slidetodoc.com/presentation_image_h/8bf85068b99f987d854fe72a3664ee60/image-53.jpg)

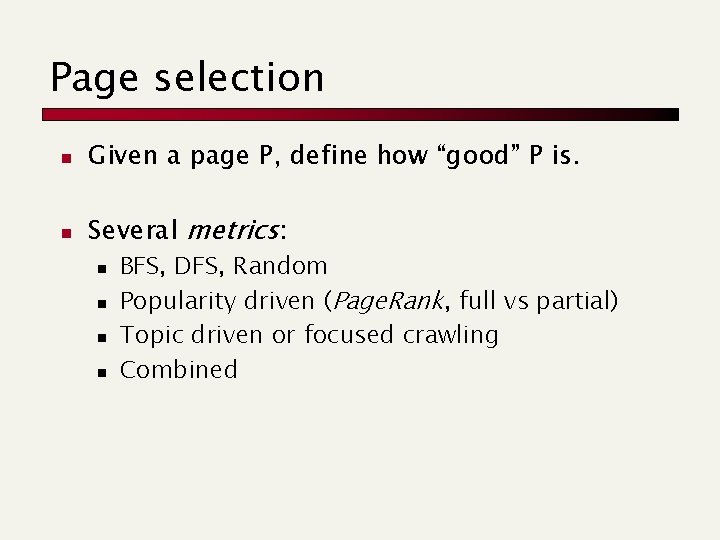

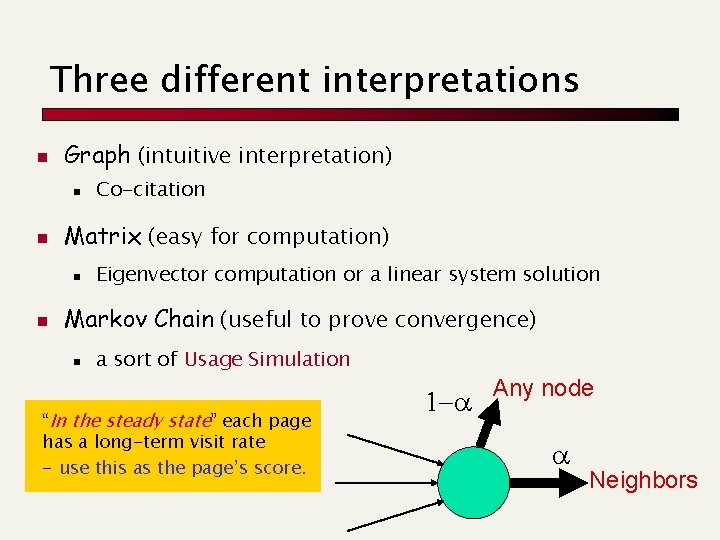

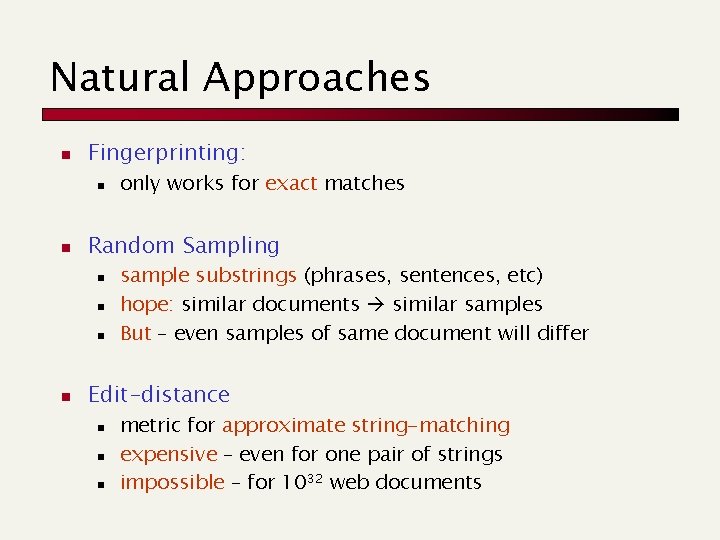

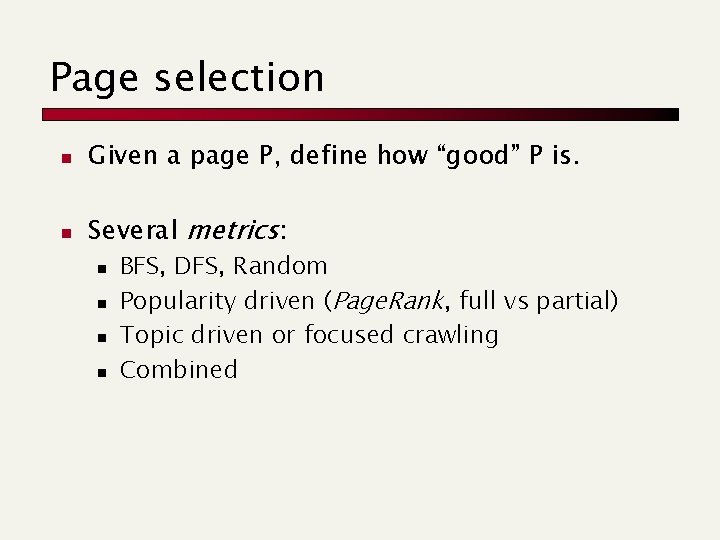

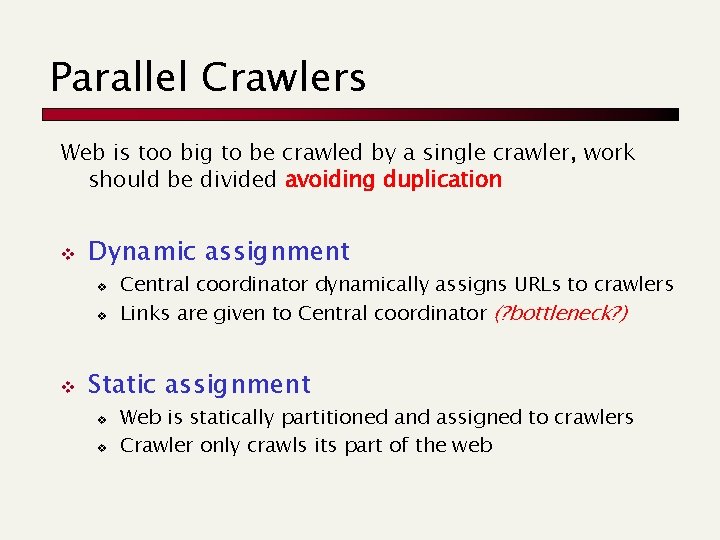

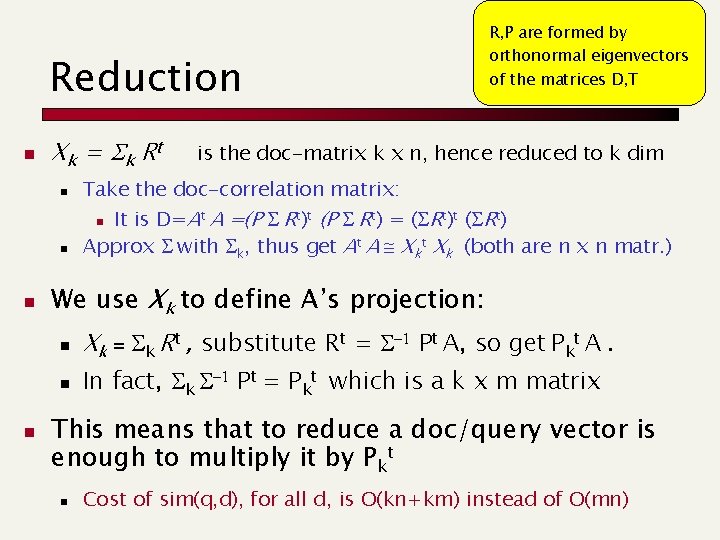

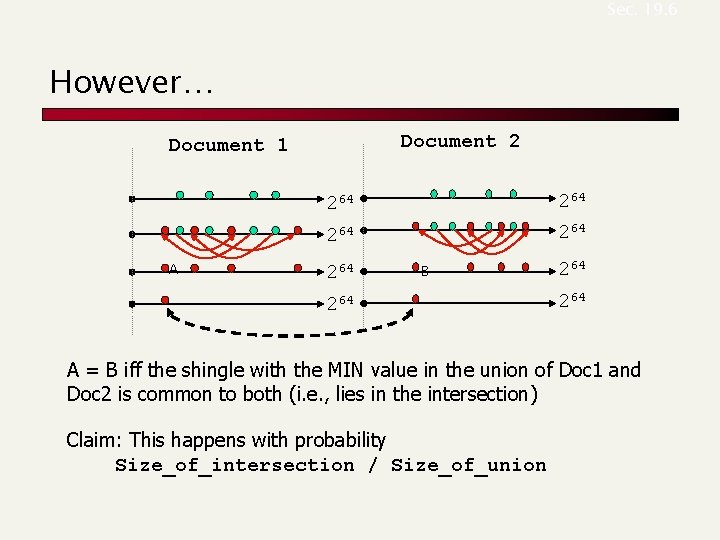

Test if Doc1.Sketch[i] = Doc2.Sketch[i] (Sec. 19.6)

[Figure: Document 1 and Document 2 shown on number lines over $[0, 2^{64})$, with minimum values A and B]
- Are these equal?
- Test for 200 random permutations: $\pi_1, \pi_2, \dots, \pi_{200}$

However… (Sec. 19.6)

[Figure: Document 1 and Document 2 shown on number lines over $[0, 2^{64})$, with minimum values A and B]
- A = B iff the shingle with the MIN value in the union of Doc1 and Doc2 is common to both (i.e., lies in the intersection)
- Claim: This happens with probability Size_of_intersection / Size_of_union

Sum up…
- Brute-force: compare sk(A) vs. sk(B) for all the pairs of documents A and B.
- Locality sensitive hashing (LSH)
  - Compute sk(A) for each document A
  - Use LSH of all sketches, briefly:
    - Take h elements of sk(A) as ID (may induce false positives)
    - Create t IDs (to reduce the false negatives)
    - If one ID matches with another one (wrt same h-selection), then the corresponding docs are probably near-duplicates; hence compare.
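The LSH step can be sketched as follows, assuming each of the t IDs is built from h consecutive sketch positions (one possible "h-selection"). The function name `candidate_pairs` and the toy sketches are hypothetical and serve only to illustrate the bucketing.

```python
from collections import defaultdict
from itertools import combinations


def candidate_pairs(sketches, h, t):
    """sketches: dict doc_id -> list of at least h*t min-hash values.

    Returns the set of doc pairs that agree on at least one of the t IDs.
    """
    buckets = defaultdict(set)
    for doc_id, sk in sketches.items():
        for band in range(t):
            # ID number `band` = the h sketch values in positions band*h .. band*h+h-1.
            key = (band, tuple(sk[band * h:(band + 1) * h]))
            buckets[key].add(doc_id)
    pairs = set()
    for docs in buckets.values():
        for a, b in combinations(sorted(docs), 2):
            pairs.add((a, b))
    return pairs


toy_sketches = {
    "d1": [1, 2, 3, 4, 5, 6],
    "d2": [1, 2, 3, 9, 9, 9],
    "d3": [7, 7, 7, 8, 8, 8],
}
pairs = candidate_pairs(toy_sketches, h=3, t=2)
```

Only the pairs returned here need the full sketch comparison. A larger h makes each ID more selective (fewer false positives), while a larger t gives similar documents more chances to collide (fewer false negatives).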