Chinese Whispers: an Efficient Graph Clustering Algorithm and its Application to Natural Language Processing Problems

- Slides: 25

Chinese Whispers: an Efficient Graph Clustering Algorithm and its Application to Natural Language Processing Problems

Chris Biemann

University of Leipzig, NLP-Dept., Leipzig, Germany

June 9, 2006, TextGraphs 06, NYC, USA

Outline

- Introduction to Graph Clustering
- Chinese Whispers Algorithm
- Experiments with Synthetic Data
- Application of CW to
  - Language Separation
  - POS clustering
  - Word Sense Induction
- Extensions

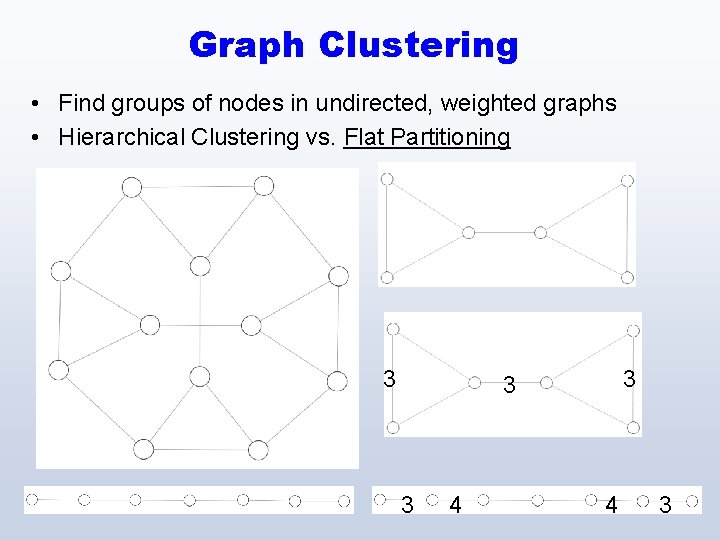

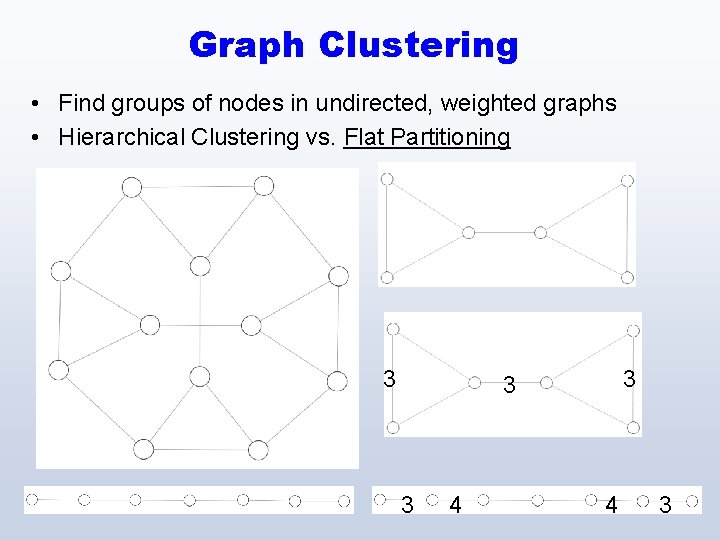

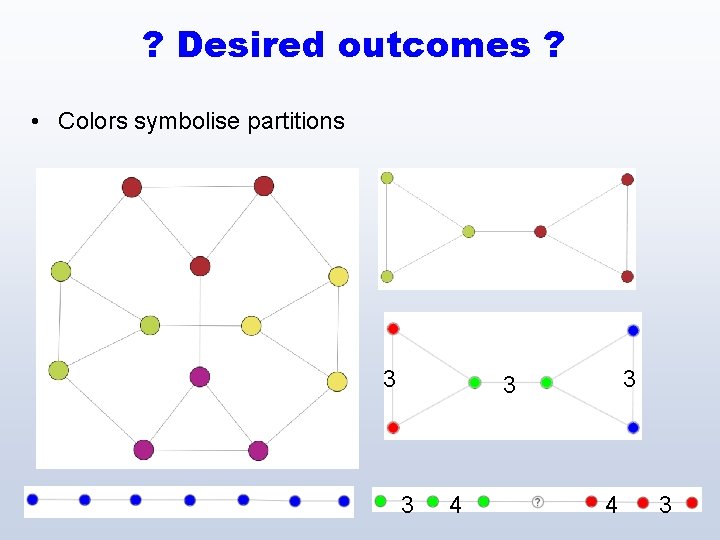

Graph Clustering

- Find groups of nodes in undirected, weighted graphs
- Hierarchical Clustering vs. Flat Partitioning

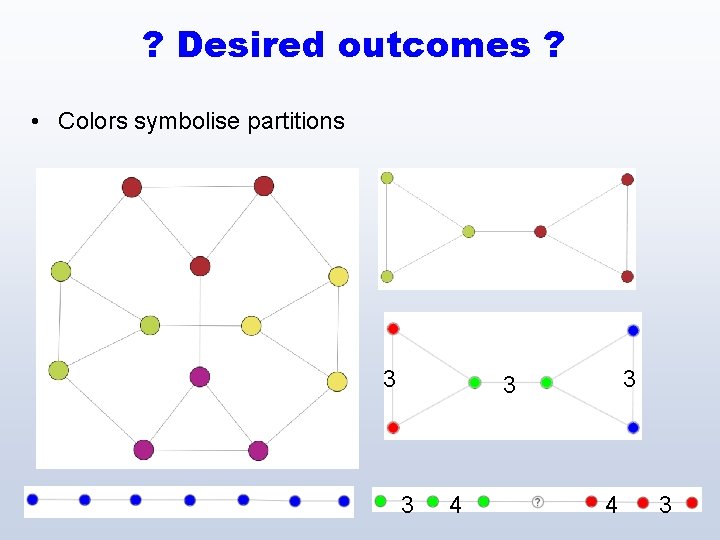

Desired outcomes?

- Colors symbolise partitions

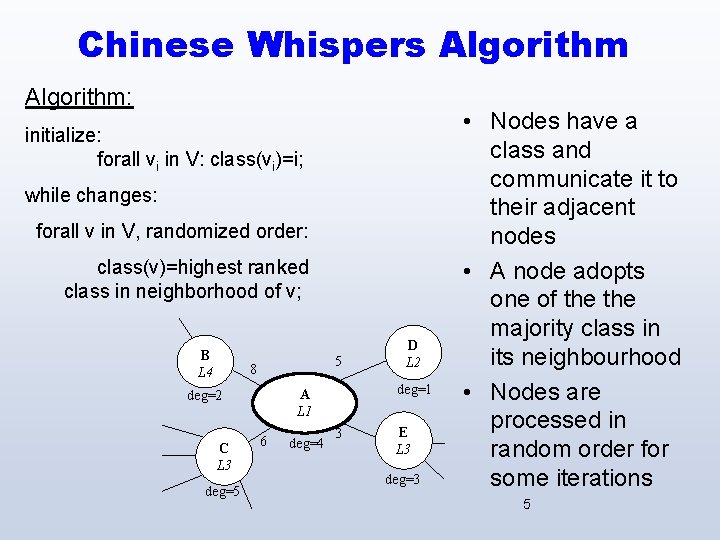

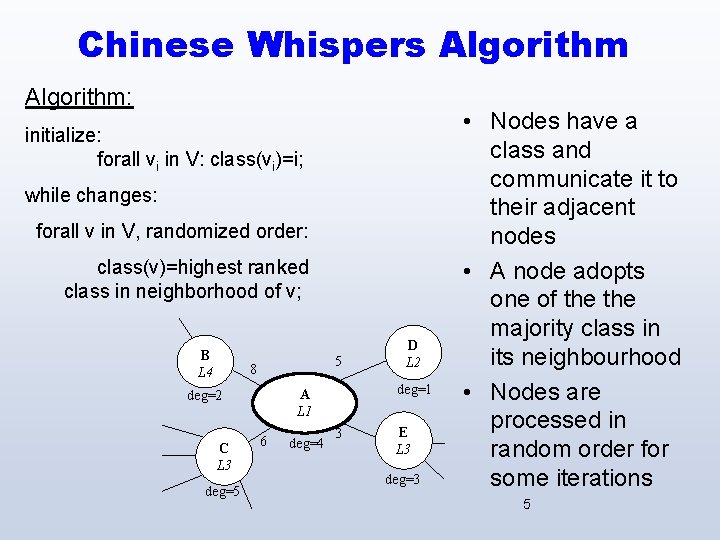

Chinese Whispers Algorithm

    initialize:
        forall vi in V: class(vi) = i;
    while changes:
        forall v in V, randomized order:
            class(v) = highest ranked class in neighborhood of v;

(Figure: example graph with nodes A to E, class labels L1 to L4, node degrees, and weighted edges.)

- Nodes have a class and communicate it to their adjacent nodes
- A node adopts the majority class in its neighbourhood (one of them in case of a tie)
- Nodes are processed in random order for some iterations
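The pseudocode above can be sketched in Python as follows. This is a minimal illustration, not the author's implementation. The function name `chinese_whispers`, the `(u, v, weight)` edge format, the `iterations` cap, and the rule that a node keeps its current class when that class is tied for the top score are all assumptions made for this sketch. Class changes take effect immediately within an iteration.

```python
import random
from collections import defaultdict


def chinese_whispers(edges, iterations=20, seed=None):
    """Cluster an undirected, weighted graph given as (u, v, weight) triples.

    Returns a dict mapping each node to its class label (an integer).
    """
    rng = random.Random(seed)

    # Build a symmetric adjacency map: neighbours[u][v] = weight.
    neighbours = defaultdict(dict)
    for u, v, w in edges:
        neighbours[u][v] = w
        neighbours[v][u] = w

    # initialize: every node starts in its own class.
    nodes = list(neighbours)
    label = {v: i for i, v in enumerate(nodes)}

    for _ in range(iterations):
        changed = False
        rng.shuffle(nodes)  # randomized processing order
        for v in nodes:
            # Sum the edge weights per class in the neighbourhood of v.
            scores = defaultdict(float)
            for u, w in neighbours[v].items():
                scores[label[u]] += w
            best = max(scores.values())
            top = [c for c, s in scores.items() if s == best]
            # Assumption: keep the current class on a tie, else pick randomly.
            new = label[v] if label[v] in top else rng.choice(top)
            if new != label[v]:
                label[v] = new
                changed = True
        if not changed:  # "while changes" stop criterion
            break
    return label
```

Calling `chinese_whispers([("a", "b", 1.0), ("c", "d", 2.0)])` puts `a` and `b` into one class and `c` and `d` into another, since class labels can only spread along edges. On larger graphs the result depends on the random order, so fixing `seed` is useful for repeatable runs.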

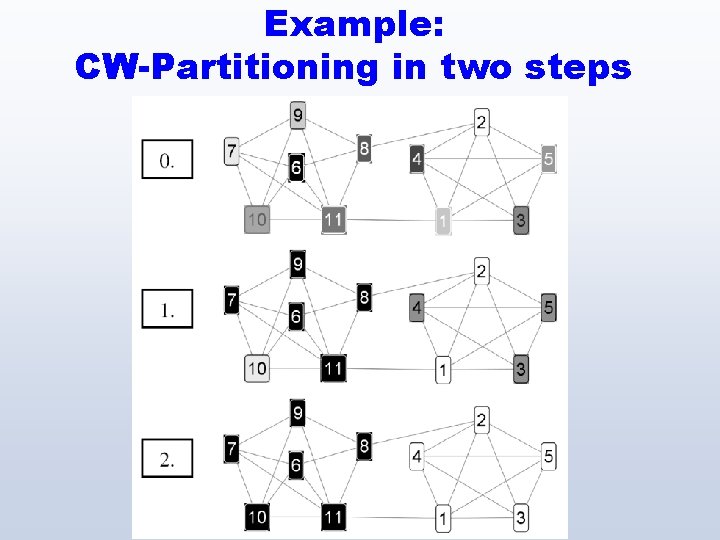

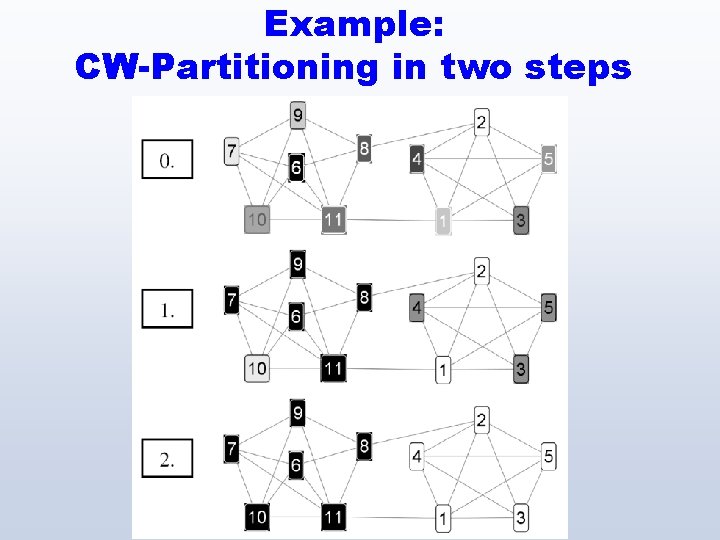

Example: CW-Partitioning in two steps

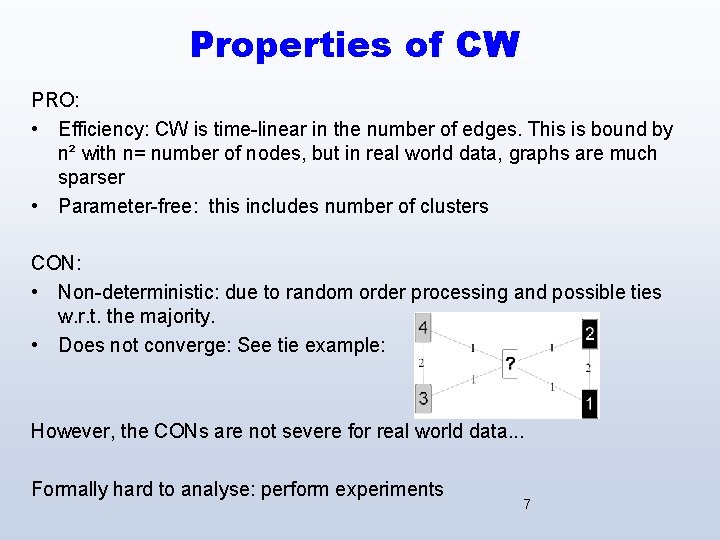

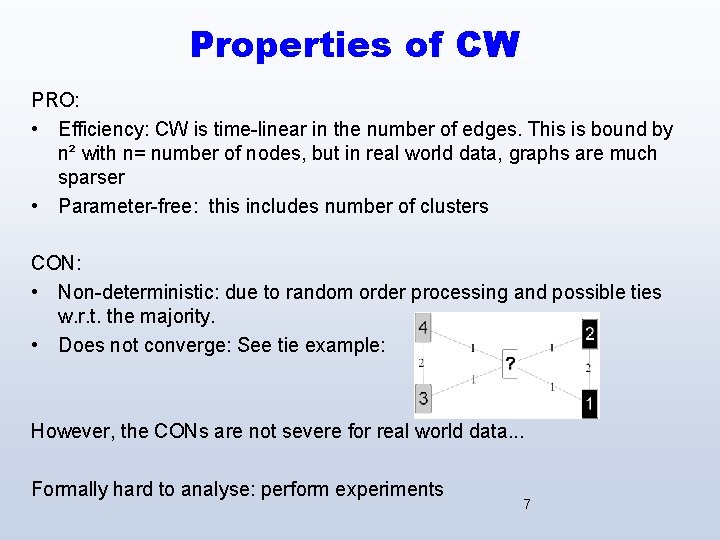

Properties of CW

PRO:

- Efficiency: CW is time-linear in the number of edges. The number of edges is bounded by n², with n = number of nodes, but real-world graphs are much sparser
- Parameter-free: this includes the number of clusters

CON:

- Non-deterministic: due to random-order processing and possible ties w.r.t. the majority
- Does not converge in general: a node with a tie can keep changing class (see tie example)

However, the CONs are not severe for real-world data.

Formally hard to analyse: perform experiments
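The efficiency claim can be spelled out as a short count. It assumes a simple undirected graph in which each node update inspects every incident edge once. The symbols deg(v) and |E| are standard graph notation and do not appear on the slides.

$$
\text{cost of one iteration} \;\propto\; \sum_{v \in V} \deg(v) \;=\; 2\,|E| \;\le\; n(n-1) \;<\; n^2
$$

With a fixed number of iterations, the total running time therefore grows linearly with |E|, which is far below the n² bound for sparse graphs.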

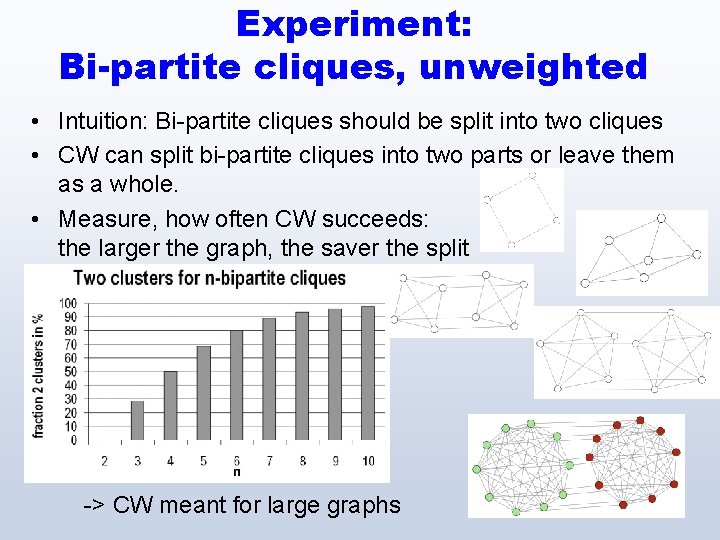

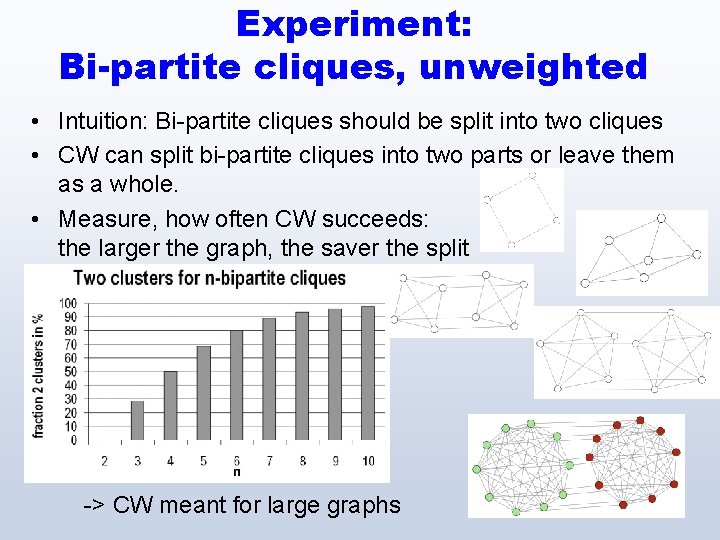

Experiment: Bi-partite cliques, unweighted

- Intuition: bi-partite cliques should be split into two cliques
- CW can split a bi-partite clique into two parts or leave it as a whole
- Measure how often CW succeeds: the larger the graph, the safer the split -> CW is meant for large graphs

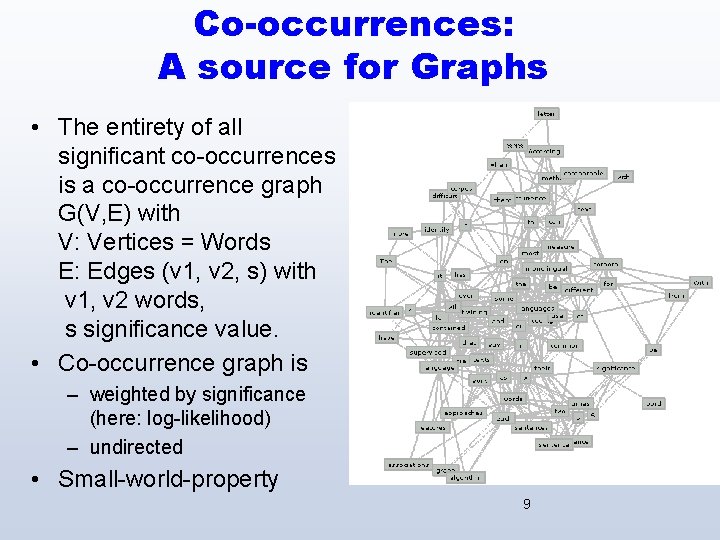

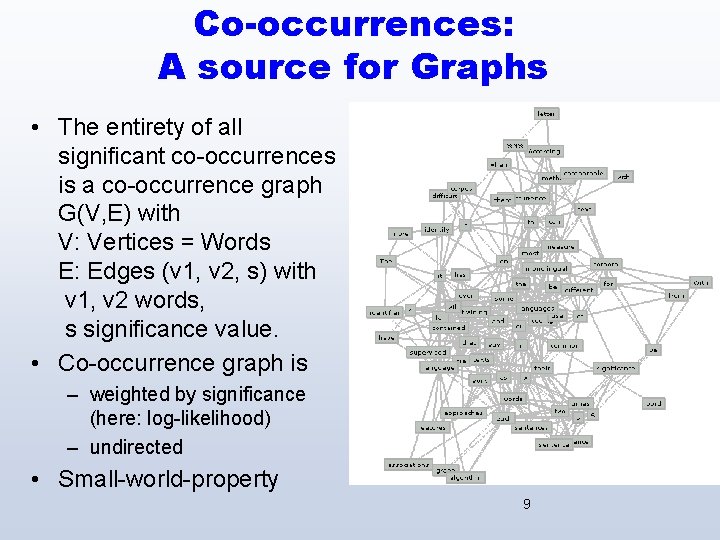

Co-occurrences: A Source for Graphs

- The entirety of all significant co-occurrences is a co-occurrence graph G(V, E) with
  - V: vertices = words
  - E: edges (v1, v2, s) with v1, v2 words and s a significance value
- The co-occurrence graph is
  - weighted by significance (here: log-likelihood)
  - undirected
- Small-world property
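As a rough illustration of how such a graph could be built, the sketch below counts sentence-level co-occurrences and weights each word pair with Dunning's log-likelihood ratio (G²). The slides only say "log-likelihood", so the exact formula, the sentence window, the threshold of 3.84 (the 5% critical value of a chi-square distribution with one degree of freedom), and the names `g2` and `cooccurrence_graph` are all assumptions.

```python
import math
from collections import Counter
from itertools import combinations


def g2(k11, k12, k21, k22):
    """Dunning's log-likelihood ratio for a 2x2 contingency table.

    k11: both words present, k12: only the first, k21: only the second,
    k22: neither.
    """
    n = k11 + k12 + k21 + k22
    cells = (
        (k11, k11 + k12, k11 + k21),
        (k12, k11 + k12, k12 + k22),
        (k21, k21 + k22, k11 + k21),
        (k22, k21 + k22, k12 + k22),
    )
    total = 0.0
    for observed, row_sum, col_sum in cells:
        if observed > 0:  # empty cells contribute nothing
            total += observed * math.log(observed * n / (row_sum * col_sum))
    return 2.0 * total


def cooccurrence_graph(sentences, threshold=3.84):
    """Return edges (v1, v2, s) for significantly co-occurring word pairs."""
    n = len(sentences)
    word_freq = Counter()
    pair_freq = Counter()
    for sentence in sentences:
        words = sorted(set(sentence))  # count each word once per sentence
        word_freq.update(words)
        pair_freq.update(combinations(words, 2))

    edges = []
    for (a, b), k11 in pair_freq.items():
        k12 = word_freq[a] - k11
        k21 = word_freq[b] - k11
        k22 = n - k11 - k12 - k21
        s = g2(k11, k12, k21, k22)
        # Keep only pairs that co-occur more often than expected by chance.
        if s >= threshold and k11 * n > word_freq[a] * word_freq[b]:
            edges.append((a, b, s))
    return edges
```

The returned triples have the same (v1, v2, s) shape as the edges described above, so they can be passed directly to a graph clustering routine.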

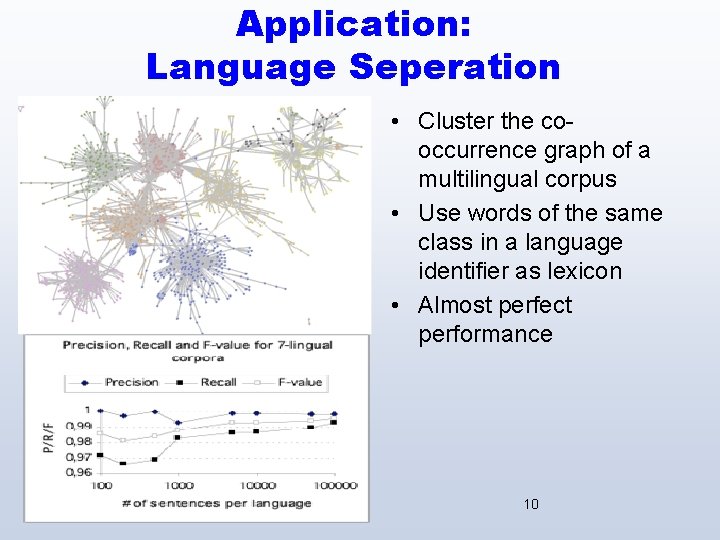

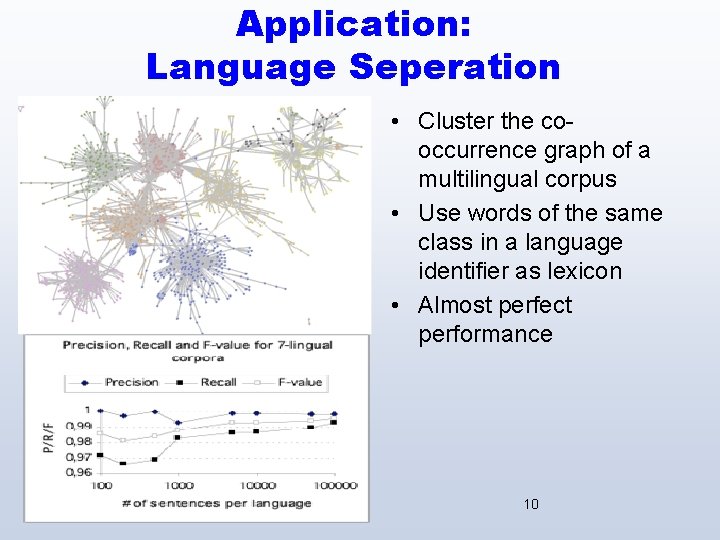

Application: Language Separation

- Cluster the co-occurrence graph of a multilingual corpus
- Use the words of each class as the lexicon of a language identifier
- Almost perfect performance

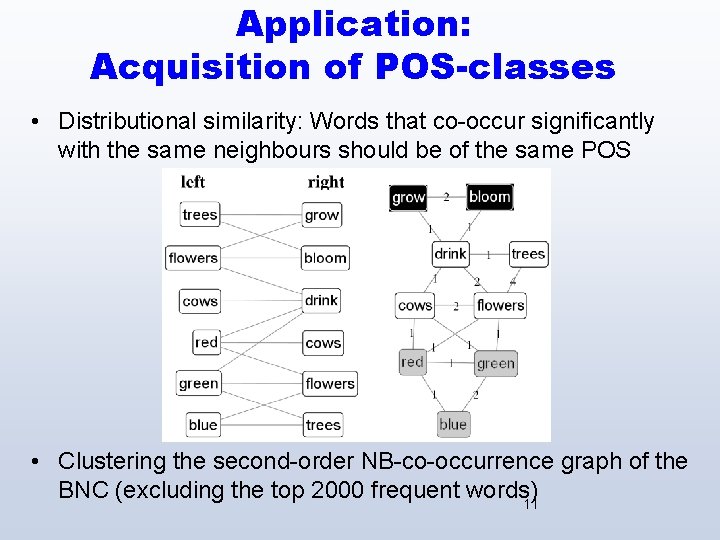

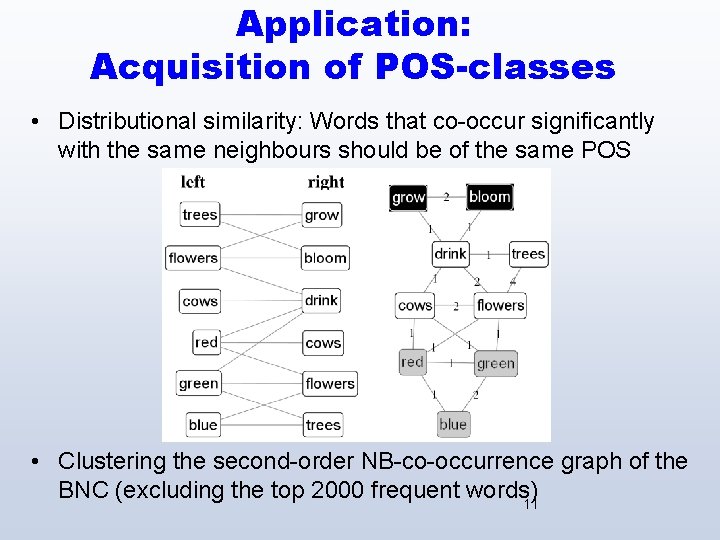

Application: Acquisition of POS-classes

- Distributional similarity: words that co-occur significantly with the same neighbours should be of the same POS
- Clustering the second-order NB-co-occurrence graph of the BNC (excluding the 2000 most frequent words)
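One way to read "second-order graph" is that two words are linked when they share neighbours in the first-order co-occurrence graph. The sketch below follows that reading and uses the number of shared neighbours as the edge weight. The slides do not state the weighting, so this choice, the `min_shared` parameter, and the function name `second_order_edges` are assumptions.

```python
from collections import defaultdict
from itertools import combinations


def second_order_edges(first_order_edges, min_shared=1):
    """Link words that share neighbours in the first-order graph.

    first_order_edges: iterable of (u, v, significance) triples.
    Returns (a, b, number_of_shared_neighbours) triples.
    """
    neighbours = defaultdict(set)
    for u, v, _ in first_order_edges:
        neighbours[u].add(v)
        neighbours[v].add(u)

    # Every pair of words around a common neighbour gains one shared count.
    shared = defaultdict(int)
    for nbrs in neighbours.values():
        for a, b in combinations(sorted(nbrs), 2):
            shared[(a, b)] += 1

    return [(a, b, count) for (a, b), count in shared.items()
            if count >= min_shared]
```

In a toy graph where "cat" and "dog" both co-occur with "the" and "a", the two nouns end up connected with weight 2, and so do the two determiners, which matches the distributional intuition.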

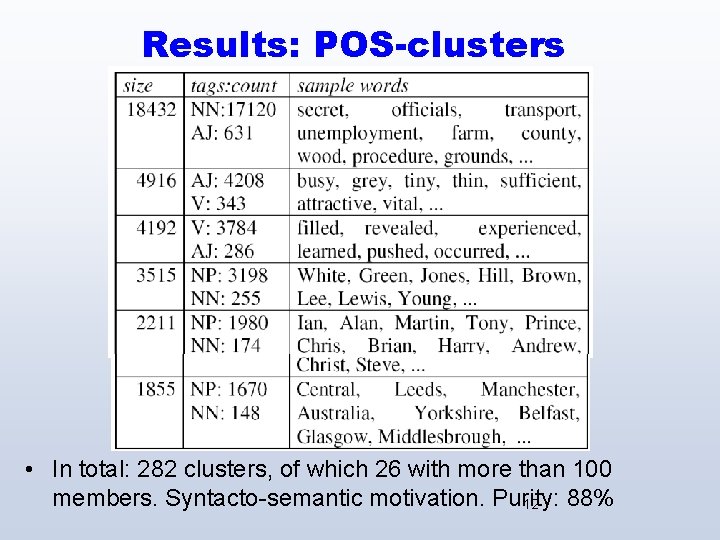

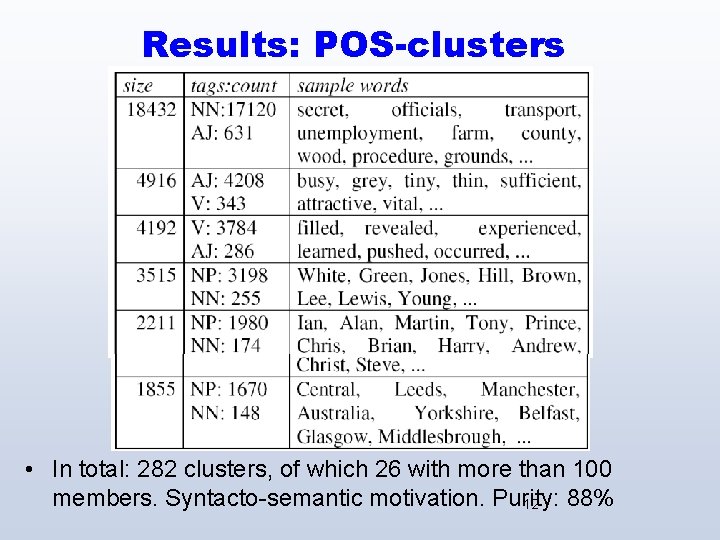

Results: POS-clusters

- In total: 282 clusters, of which 26 have more than 100 members
- Syntacto-semantic motivation
- Purity: 88%
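The slides do not define purity. The sketch below assumes the common definition: each cluster is credited with the count of its most frequent gold-standard tag, and the credits are divided by the total number of clustered words. The function name `purity` and the data layout are hypothetical.

```python
from collections import Counter


def purity(clusters, gold):
    """Fraction of words whose gold tag is the majority tag of their cluster.

    clusters: iterable of word collections; gold: dict word -> tag.
    """
    correct = 0
    total = 0
    for members in clusters:
        members = list(members)
        if not members:
            continue  # empty clusters contribute nothing
        tag_counts = Counter(gold[word] for word in members)
        correct += tag_counts.most_common(1)[0][1]
        total += len(members)
    return correct / total
```

For example, the clusters `[["run", "walk", "house"], ["tree", "car"]]` with two verbs and three nouns give a purity of 4/5 = 0.8, because only "house" falls outside the majority tag of its cluster.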

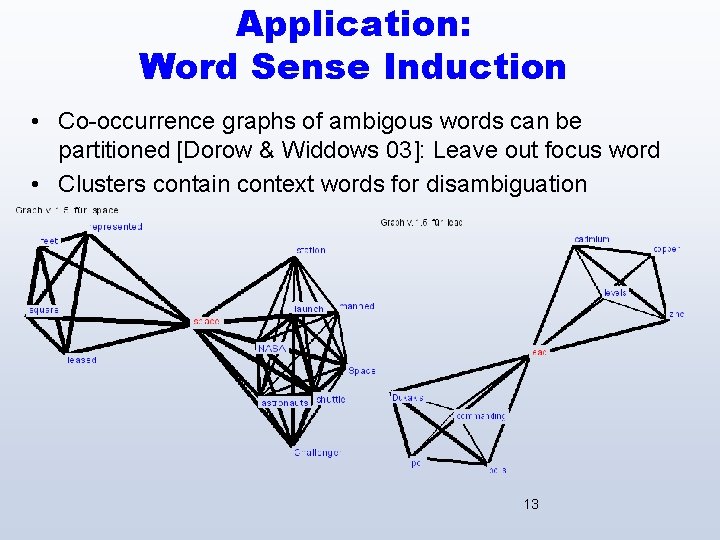

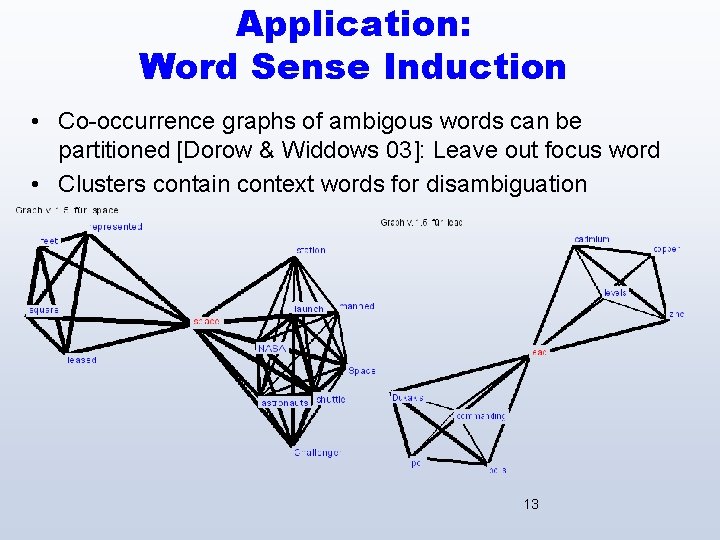

Application: Word Sense Induction

- Co-occurrence graphs of ambiguous words can be partitioned [Dorow & Widdows 03]: leave out the focus word
- Clusters contain context words for disambiguation
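The "leave out the focus word" step can be illustrated with a small helper that keeps only the edges among the neighbours of the focus word. The helper name `context_graph` and the `(u, v, weight)` edge format are assumptions for this sketch. The resulting subgraph is what would then be handed to a clustering algorithm.

```python
def context_graph(edges, focus):
    """Return the edges among the neighbours of `focus`, without `focus`.

    edges: iterable of (u, v, weight) triples of an undirected graph.
    """
    edges = list(edges)

    # Collect every word directly connected to the focus word.
    context = set()
    for u, v, _ in edges:
        if u == focus:
            context.add(v)
        elif v == focus:
            context.add(u)
    context.discard(focus)  # guards against self-loops on the focus word

    # Keep only edges whose two endpoints are both context words.
    return [(u, v, w) for u, v, w in edges if u in context and v in context]
```

Because the focus word would otherwise connect all its neighbours, removing it lets the context words of different senses fall apart into separate, weakly connected groups.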

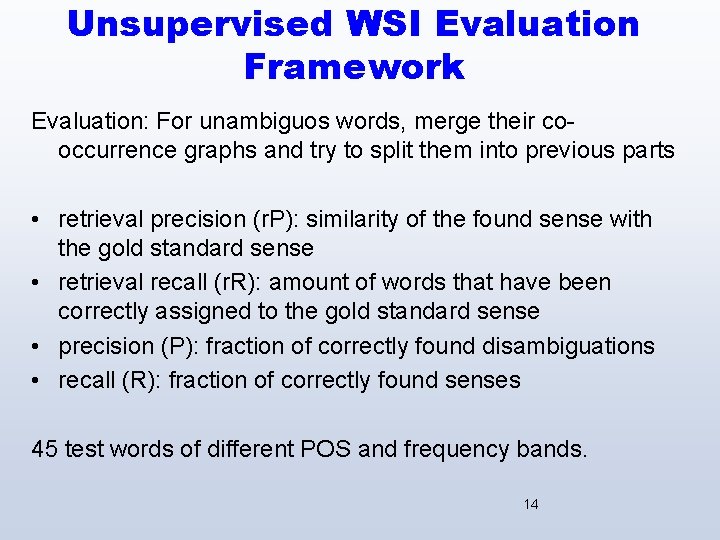

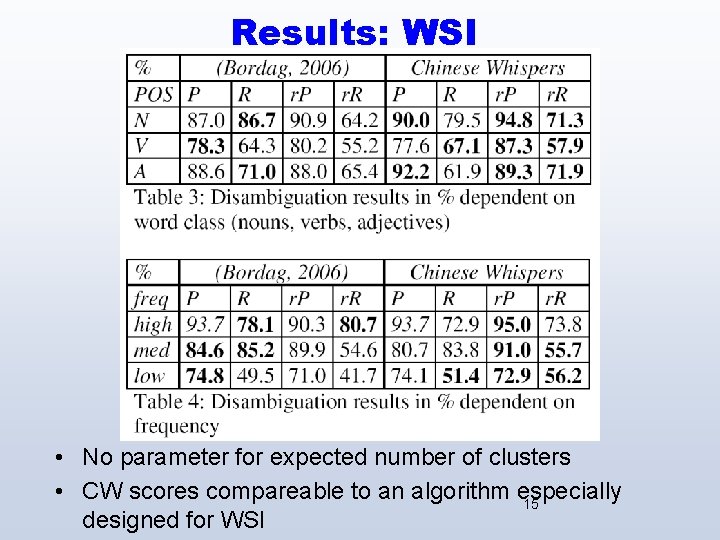

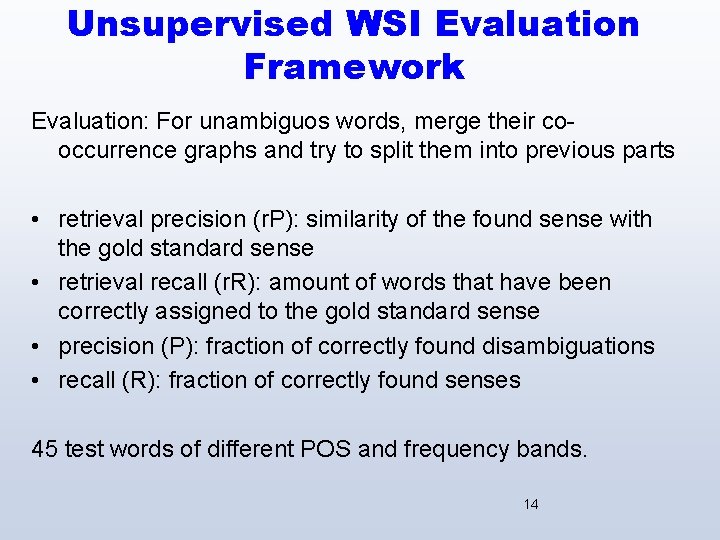

Unsupervised WSI Evaluation Framework

Evaluation: for unambiguous words, merge their co-occurrence graphs and try to split the merged graph back into its original parts

- retrieval precision (rP): similarity of the found sense with the gold standard sense
- retrieval recall (rR): number of words that have been correctly assigned to the gold standard sense
- precision (P): fraction of correctly found disambiguations
- recall (R): fraction of correctly found senses

45 test words of different POS and frequency bands.

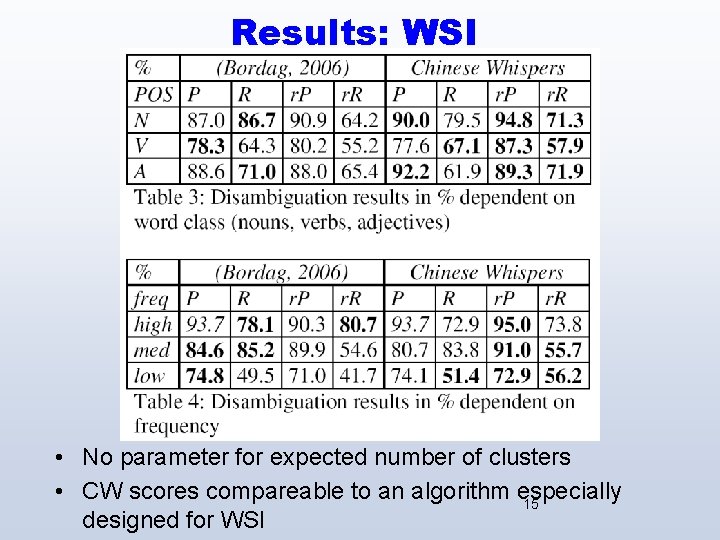

Results: WSI

- No parameter for expected number of clusters
- CW scores comparable to an algorithm specifically designed for WSI

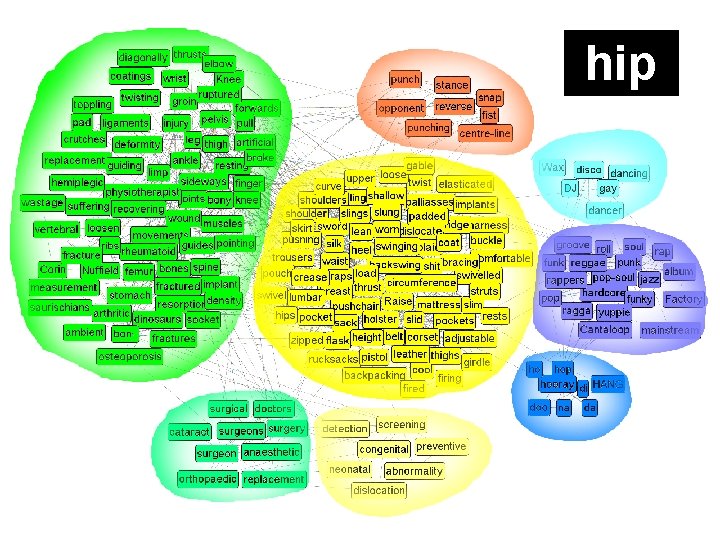

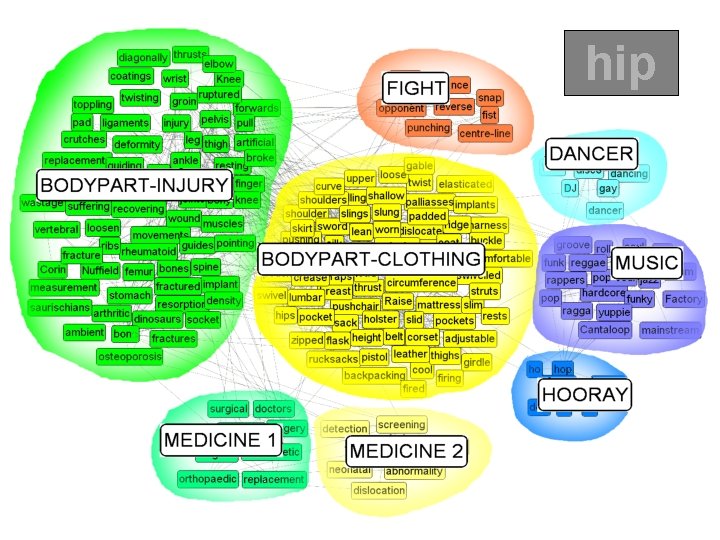

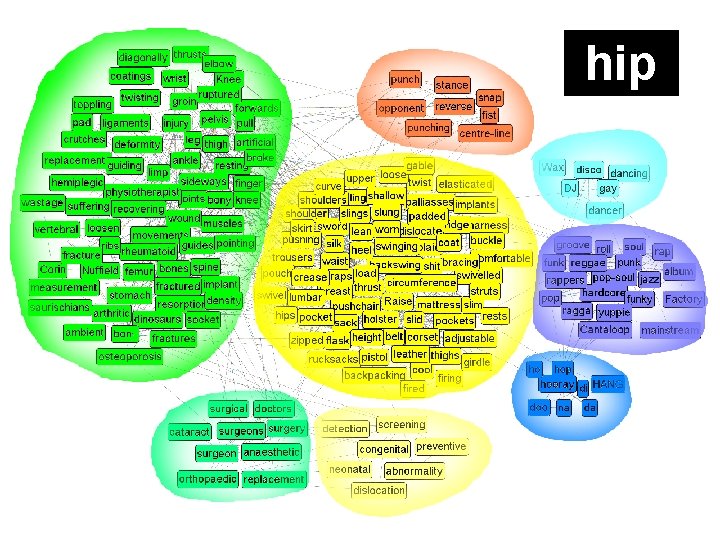

hip

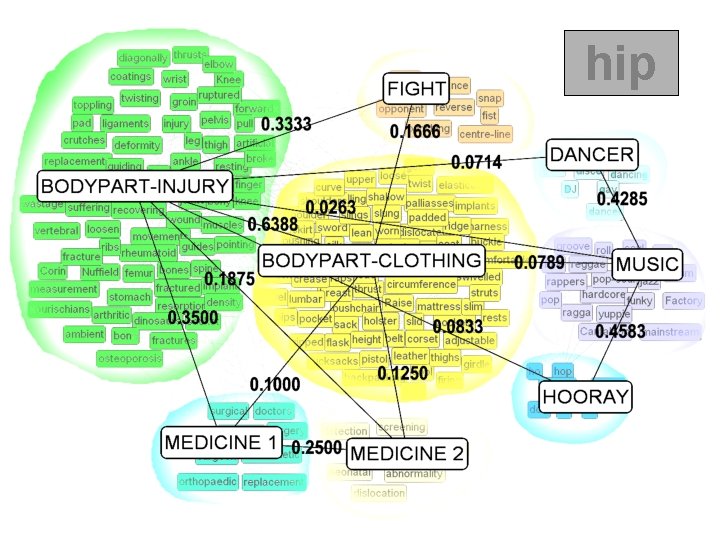

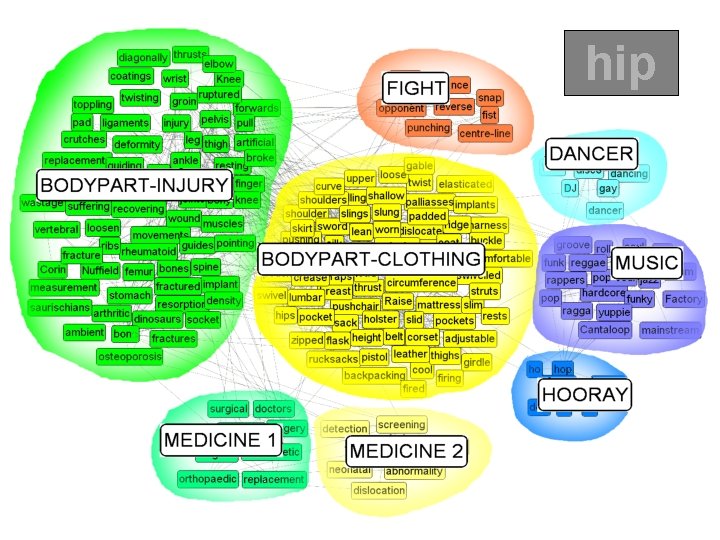

hip

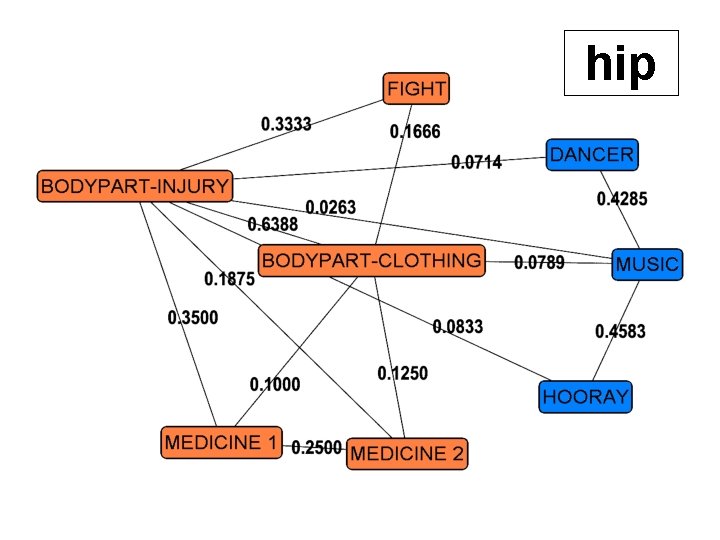

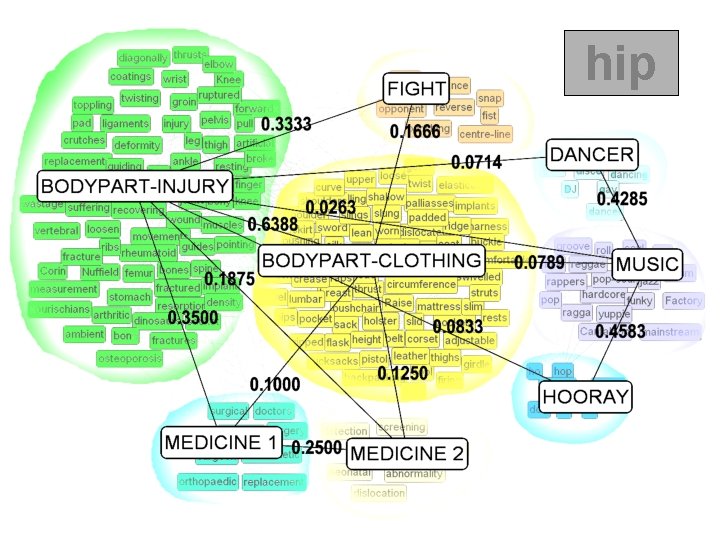

hip

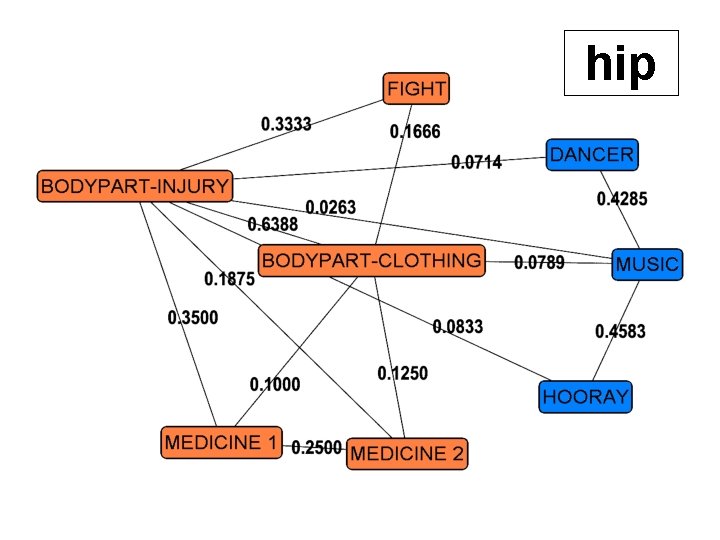

hip

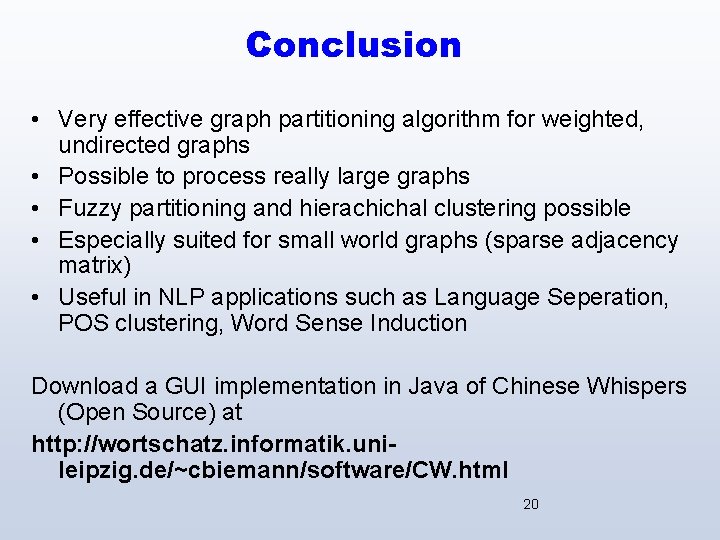

Conclusion

- Very effective graph partitioning algorithm for weighted, undirected graphs
- Possible to process really large graphs
- Fuzzy partitioning and hierarchical clustering possible
- Especially suited for small-world graphs (sparse adjacency matrix)
- Useful in NLP applications such as Language Separation, POS clustering, Word Sense Induction

Download a GUI implementation in Java of Chinese Whispers (Open Source) at http://wortschatz.informatik.uni-leipzig.de/~cbiemann/software/CW.html

Questions? Thank you.

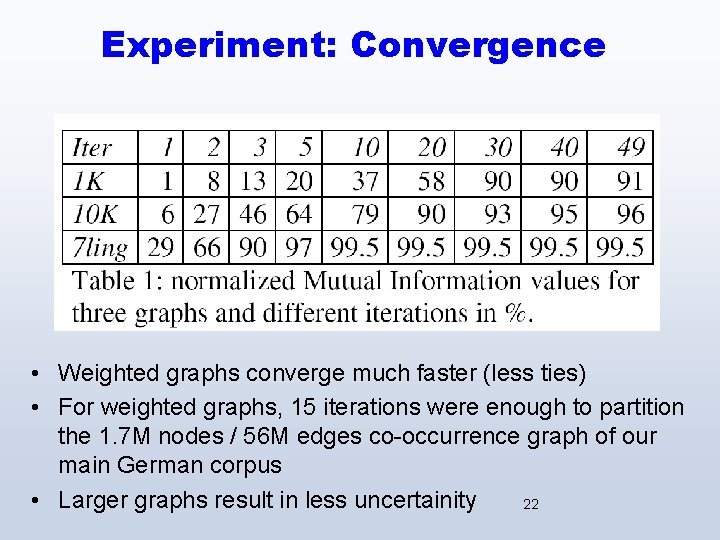

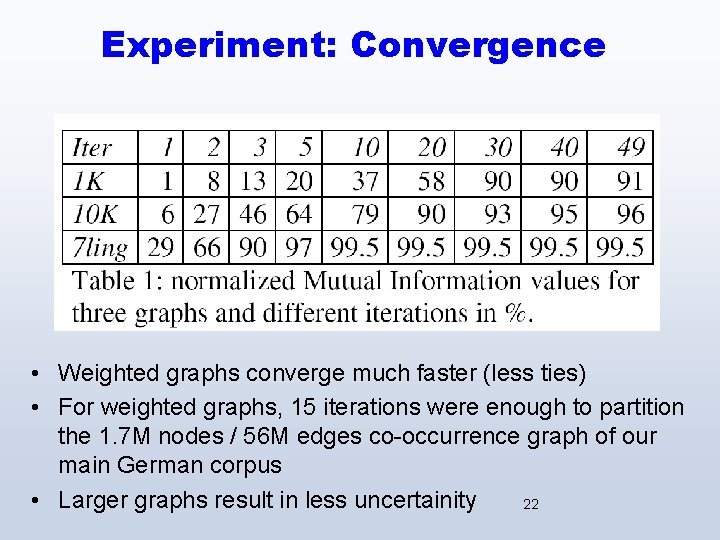

Experiment: Convergence

- Weighted graphs converge much faster (fewer ties)
- For weighted graphs, 15 iterations were enough to partition the 1.7 M nodes / 56 M edges co-occurrence graph of our main German corpus
- Larger graphs result in less uncertainty

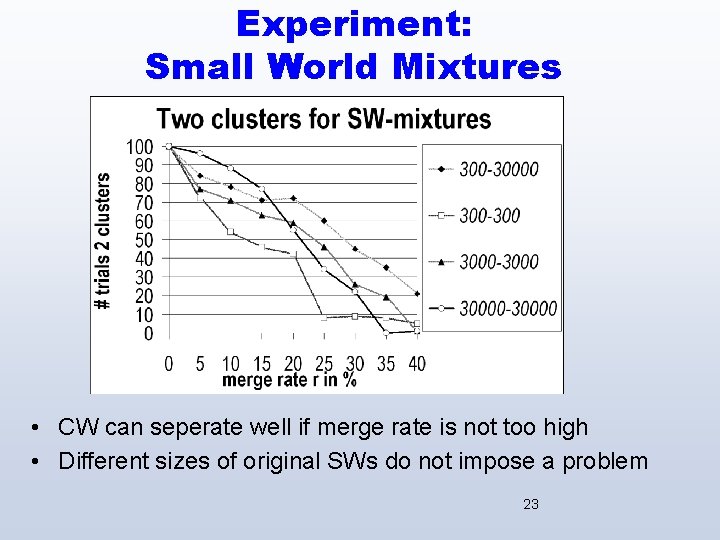

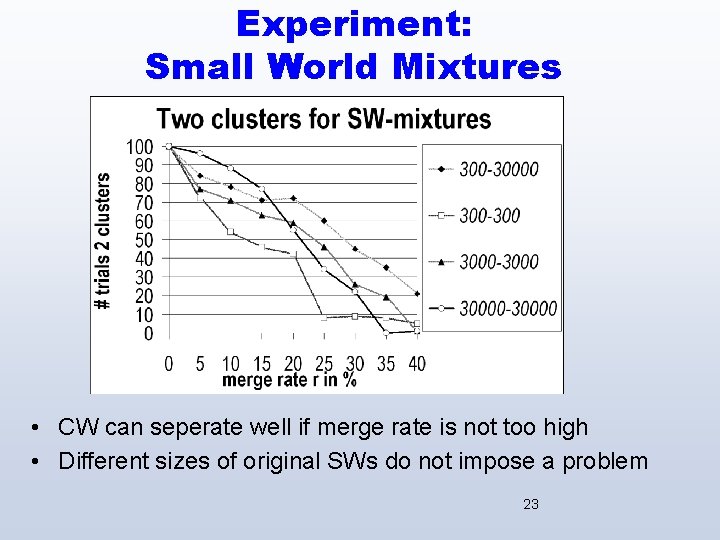

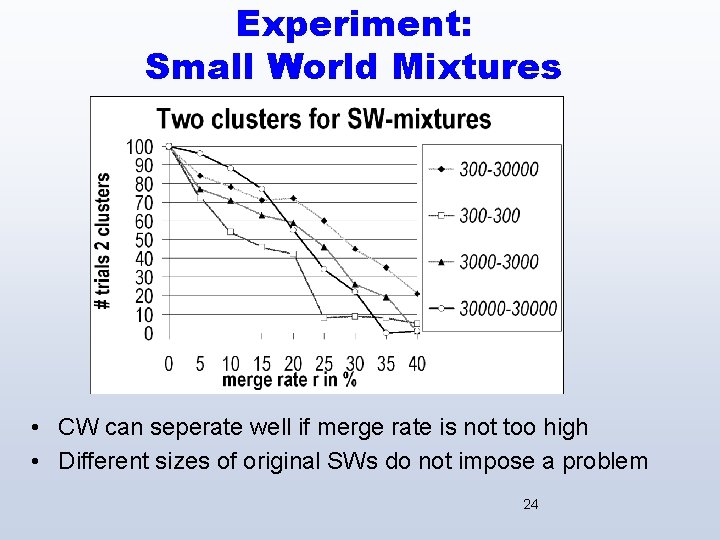

Experiment: Small World Mixtures

- CW can separate well if the merge rate is not too high
- Different sizes of the original SWs do not pose a problem

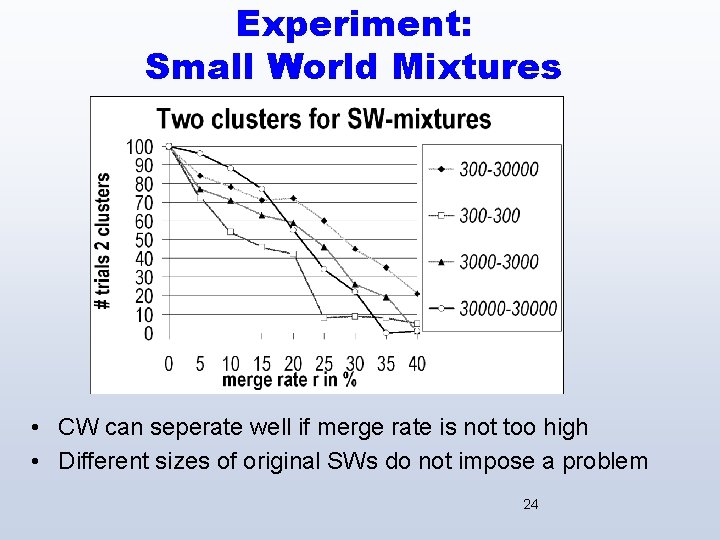


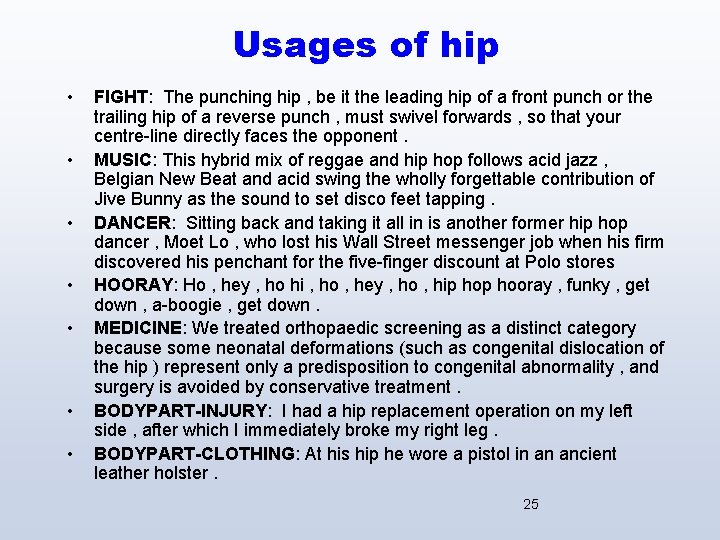

Usages of hip

- FIGHT: The punching hip, be it the leading hip of a front punch or the trailing hip of a reverse punch, must swivel forwards, so that your centre-line directly faces the opponent.
- MUSIC: This hybrid mix of reggae and hip hop follows acid jazz, Belgian New Beat and acid swing the wholly forgettable contribution of Jive Bunny as the sound to set disco feet tapping.
- DANCER: Sitting back and taking it all in is another former hip hop dancer, Moet Lo, who lost his Wall Street messenger job when his firm discovered his penchant for the five-finger discount at Polo stores.
- HOORAY: Ho, hey, ho hi, ho, hey, ho, hip hooray, funky, get down, a-boogie, get down.
- MEDICINE: We treated orthopaedic screening as a distinct category because some neonatal deformations (such as congenital dislocation of the hip) represent only a predisposition to congenital abnormality, and surgery is avoided by conservative treatment.
- BODYPART-INJURY: I had a hip replacement operation on my left side, after which I immediately broke my right leg.
- BODYPART-CLOTHING: At his hip he wore a pistol in an ancient leather holster.