Chapter 9 Syntax Analysis Winter 2007 SEG 2101

- Slides: 73


Contents • Context free grammars • Top-down parsing • Bottom-up parsing • Attribute grammars • Dynamic semantics • Tools for syntax analysis • Chomsky’s hierarchy

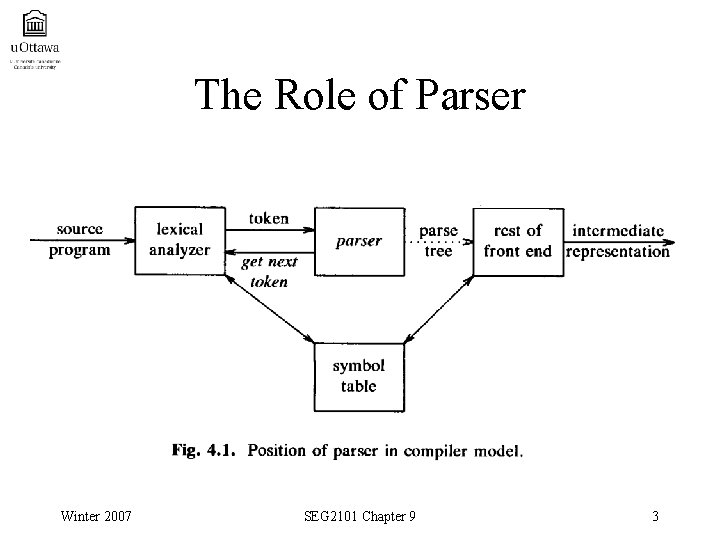

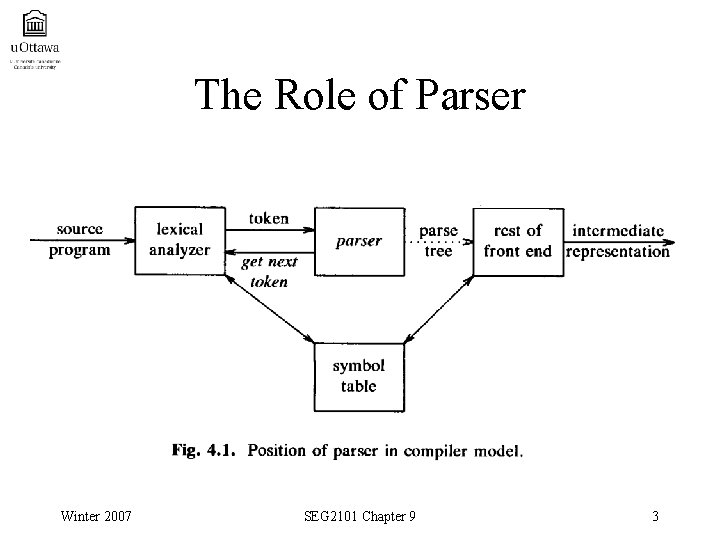

The Role of the Parser

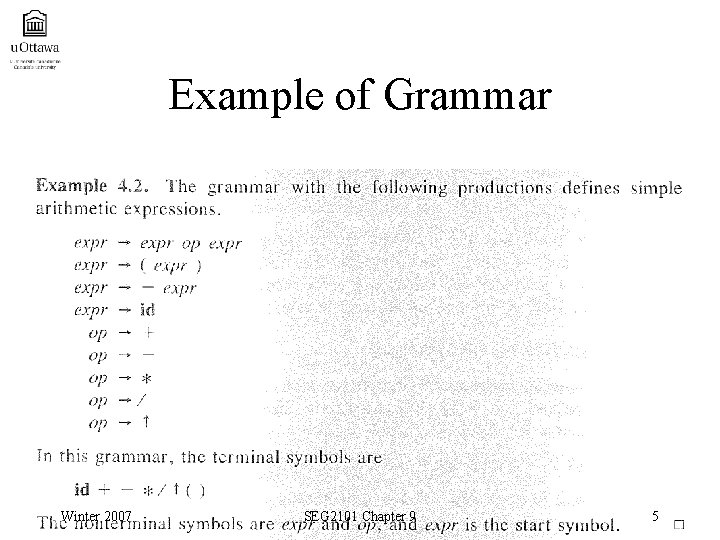

9.1: Context Free Grammars • A context free grammar consists of terminals, nonterminals, a start symbol, and productions. • Terminals are the basic symbols from which strings are formed. • Nonterminals are syntactic variables that denote sets of strings. • One nonterminal is distinguished as the start symbol. • The productions of a grammar specify the manner in which the terminals and nonterminals can be combined to form strings. • A language that can be generated by a grammar is said to be a context-free language.

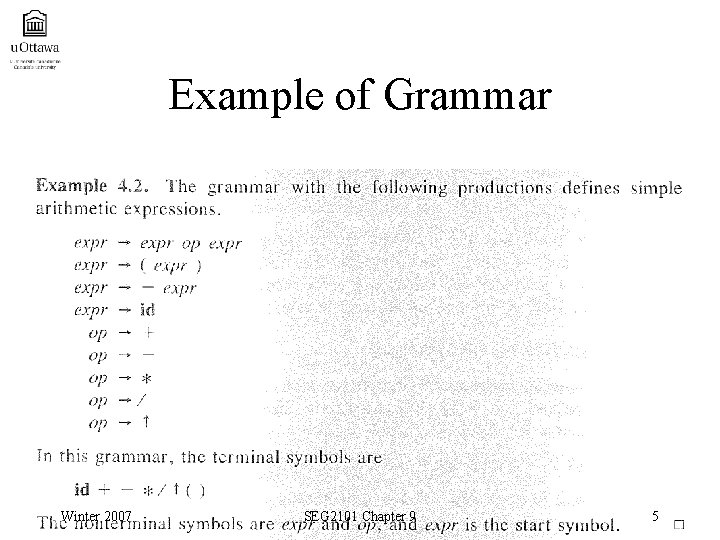

Example of Grammar

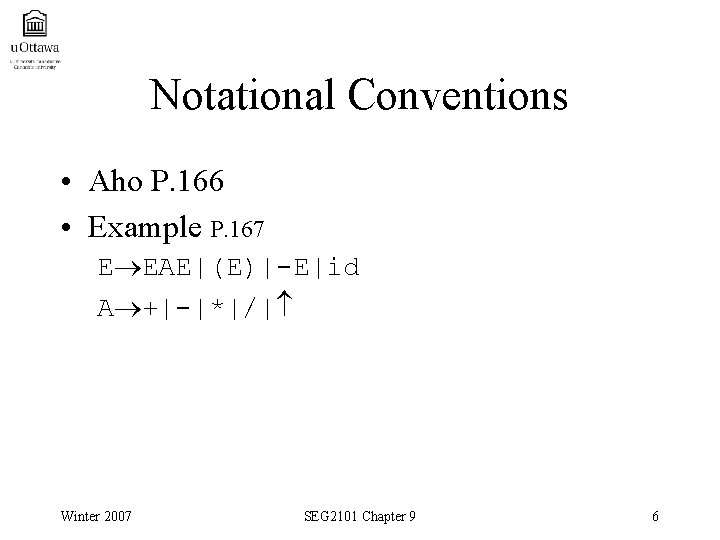

Notational Conventions • Aho p. 166 • Example p. 167: E → E A E | ( E ) | - E | id, A → + | - | * | / | ↑

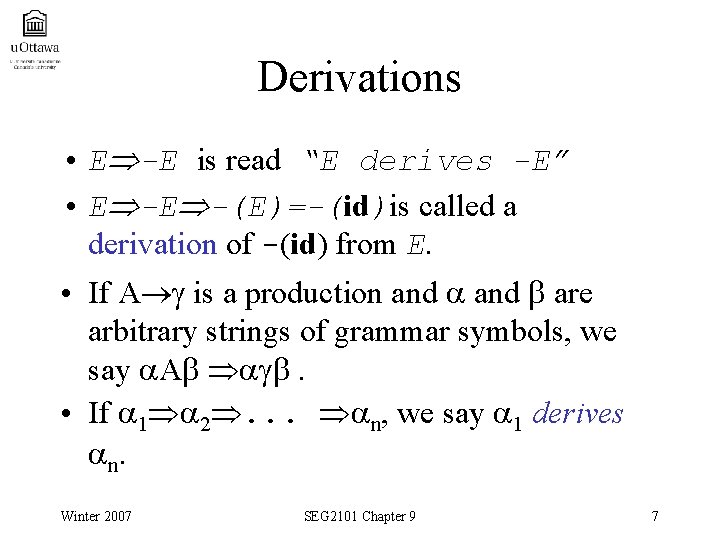

Derivations • E ⇒ -E is read “E derives -E”. • E ⇒ -E ⇒ -(E) ⇒ -(id) is called a derivation of -(id) from E. • If A → γ is a production and α and β are arbitrary strings of grammar symbols, we say αAβ ⇒ αγβ. • If α1 ⇒ α2 ⇒ ... ⇒ αn, we say α1 derives αn.

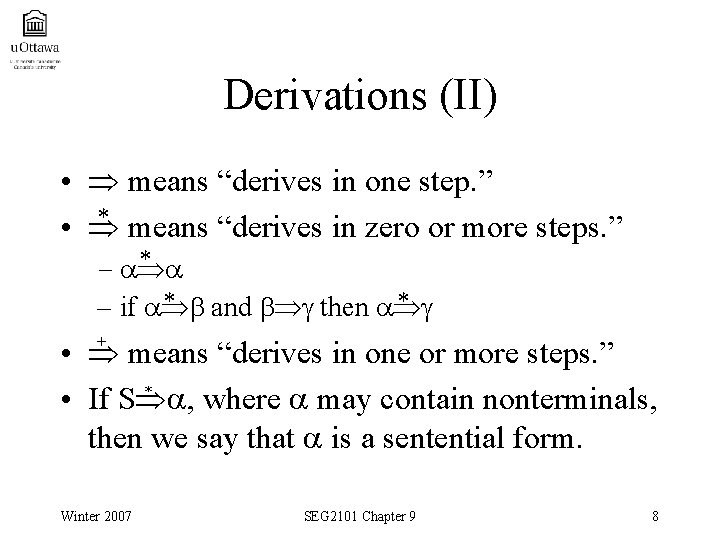

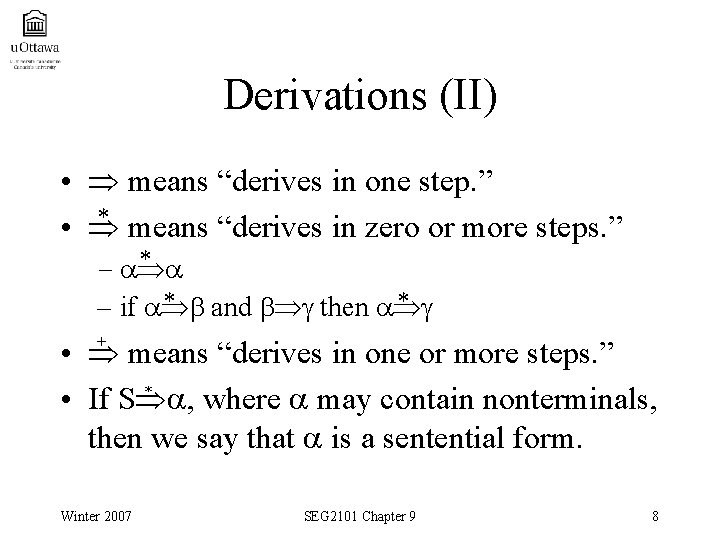

Derivations (II) • ⇒ means “derives in one step.” • ⇒* means “derives in zero or more steps.” – α ⇒* α for any string α – if α ⇒* β and β ⇒ γ then α ⇒* γ • ⇒+ means “derives in one or more steps.” • If S ⇒* α, where α may contain nonterminals, then we say that α is a sentential form.

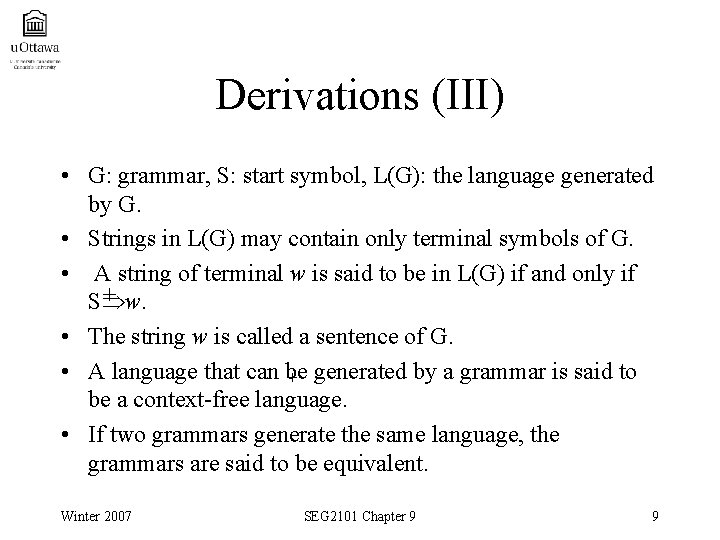

Derivations (III) • G: grammar, S: start symbol, L(G): the language generated by G. • Strings in L(G) may contain only terminal symbols of G. • A string of terminals w is said to be in L(G) if and only if S ⇒+ w. • The string w is called a sentence of G. • A language that can be generated by a grammar is said to be a context-free language. • If two grammars generate the same language, the grammars are said to be equivalent.

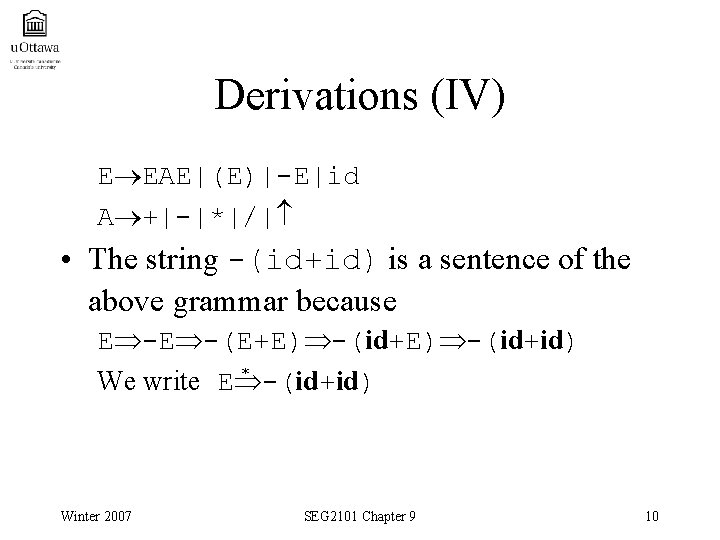

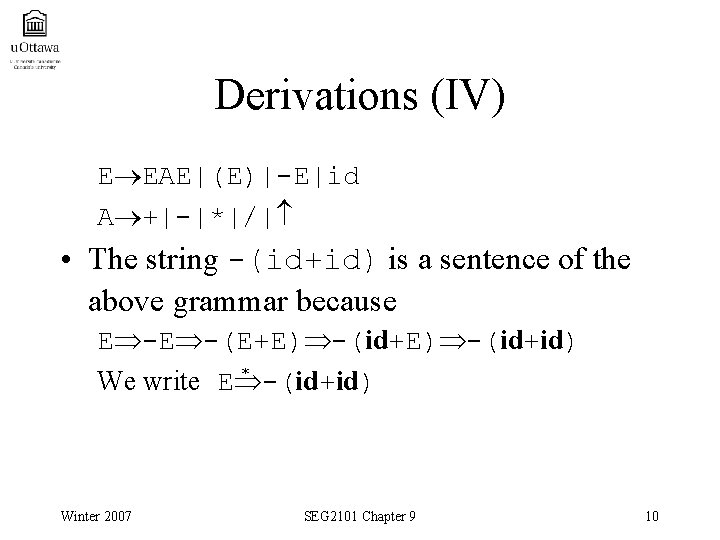

Derivations (IV) • Grammar: E → E A E | ( E ) | - E | id, A → + | - | * | / | ↑ • The string -(id+id) is a sentence of the above grammar because E ⇒ -E ⇒* -(E+E) ⇒* -(id+id). • We write E ⇒* -(id+id).

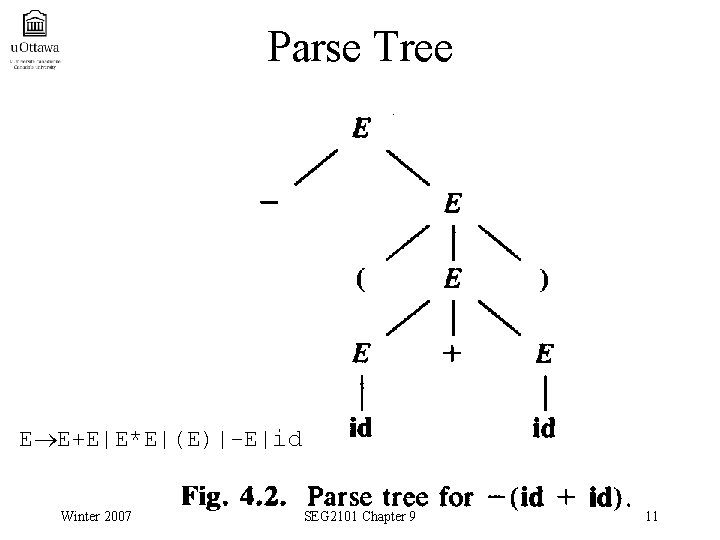

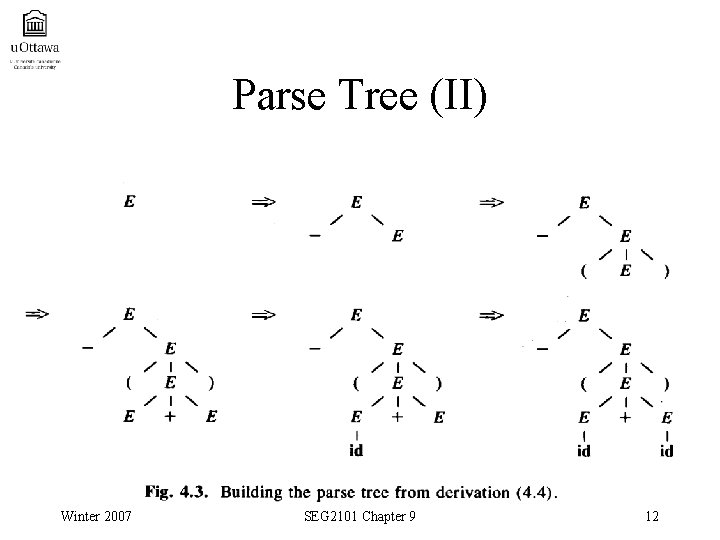

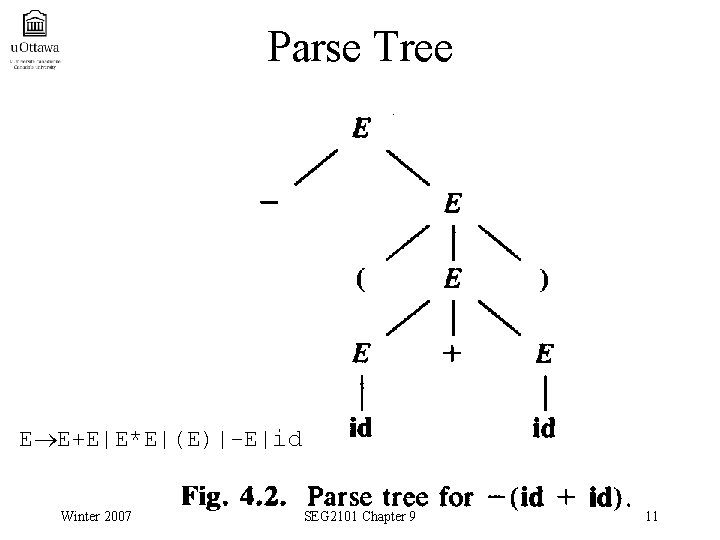

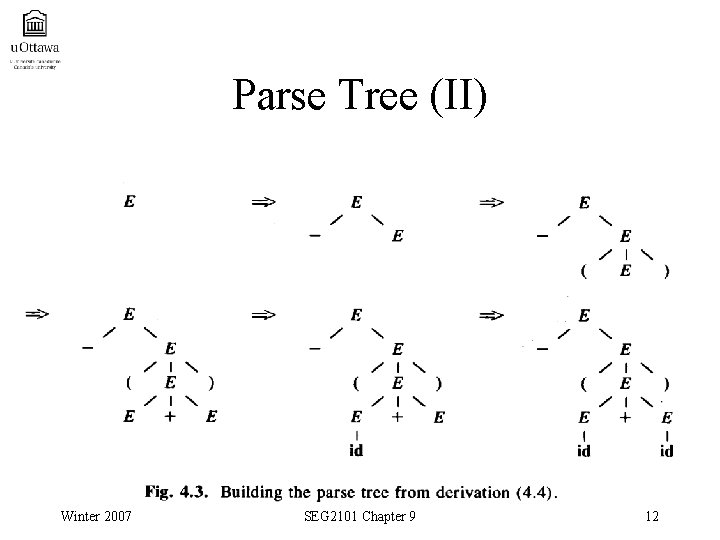

Parse Tree • Grammar: E → E+E | E*E | (E) | -E | id

Parse Tree (II)

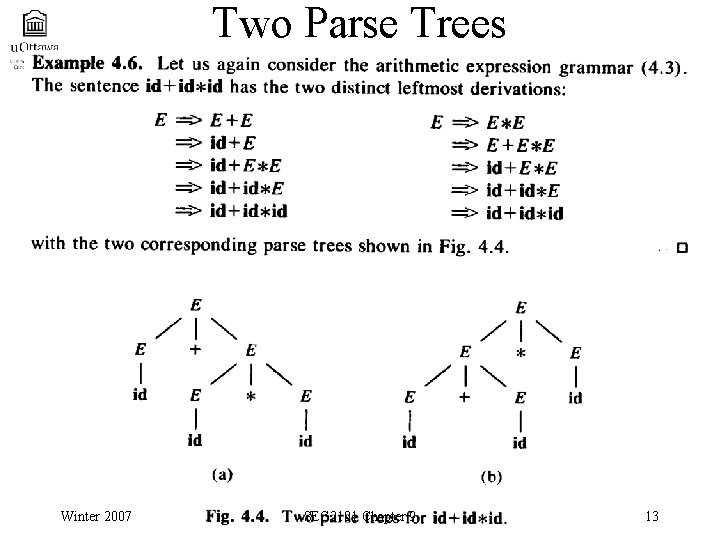

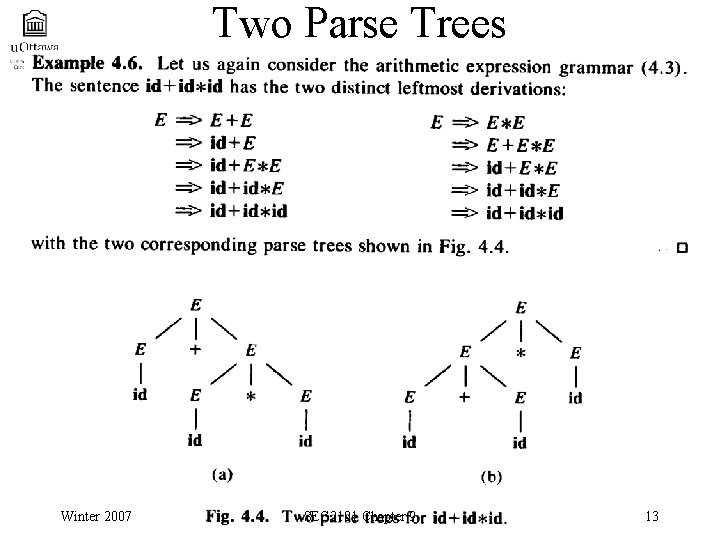

Two Parse Trees

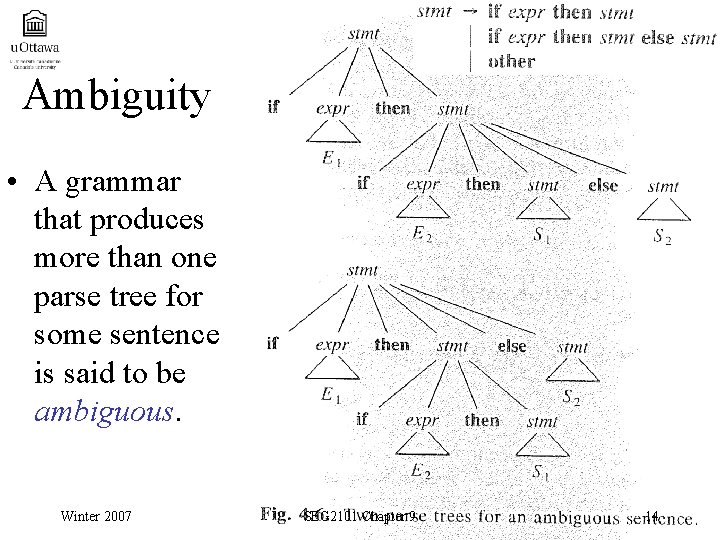

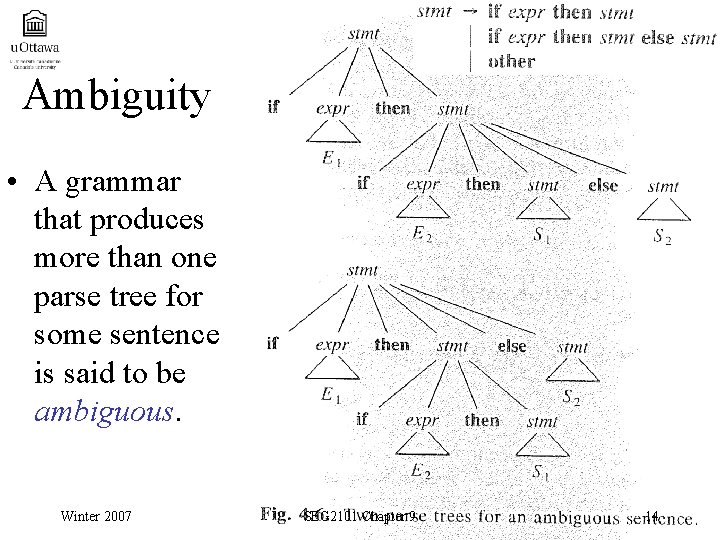

Ambiguity • A grammar that produces more than one parse tree for some sentence is said to be ambiguous.
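
To make the definition concrete, the sketch below counts the distinct parse trees a token sequence has under the grammar E → E+E | E*E | (E) | -E | id from the Parse Tree slide. The function name `count_parse_trees` and the list-of-strings token format are assumptions made for illustration; a count greater than 1 means the sentence witnesses the ambiguity.

```python
from functools import lru_cache


def count_parse_trees(tokens):
    """Count parse trees for tokens under E -> E+E | E*E | (E) | -E | id."""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def count(i, j):
        # Number of distinct parse trees deriving tokens[i:j] from E.
        if j - i < 1:
            return 0
        if j - i == 1:
            return 1 if tokens[i] == "id" else 0
        total = 0
        # E -> ( E )
        if tokens[i] == "(" and tokens[j - 1] == ")":
            total += count(i + 1, j - 1)
        # E -> - E
        if tokens[i] == "-":
            total += count(i + 1, j)
        # E -> E + E and E -> E * E: try every operator as the root.
        for k in range(i + 1, j - 1):
            if tokens[k] in ("+", "*"):
                total += count(i, k) * count(k + 1, j)
        return total

    return count(0, len(tokens))


# id + id * id has two parse trees, so the grammar is ambiguous.
print(count_parse_trees(["id", "+", "id", "*", "id"]))
```

Parenthesizing the input, as in ( id + id ) * id, leaves a single tree, which is why explicit grouping removes the ambiguity for a given sentence but not for the grammar.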

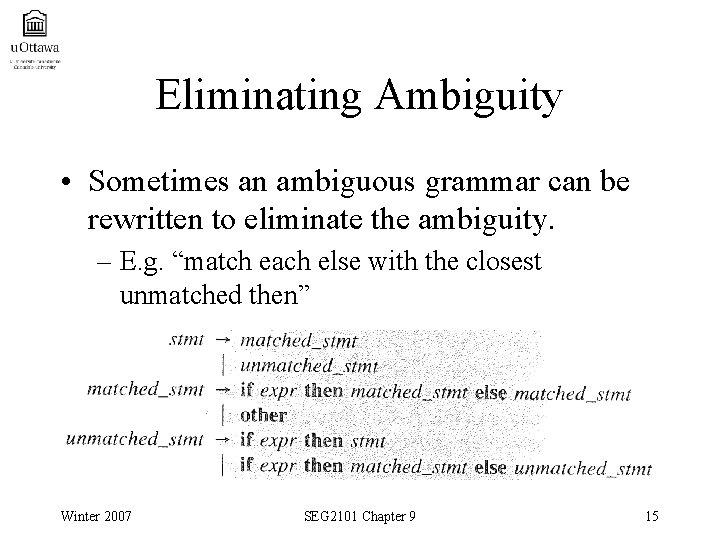

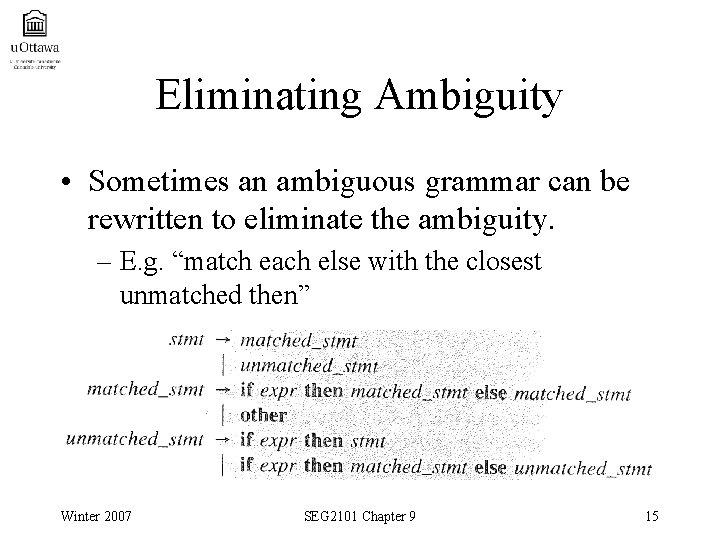

Eliminating Ambiguity • Sometimes an ambiguous grammar can be rewritten to eliminate the ambiguity. – E.g., “match each else with the closest unmatched then”

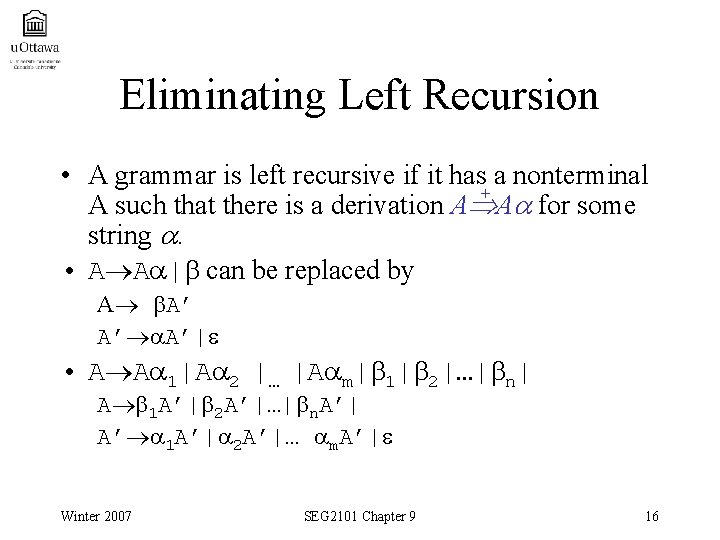

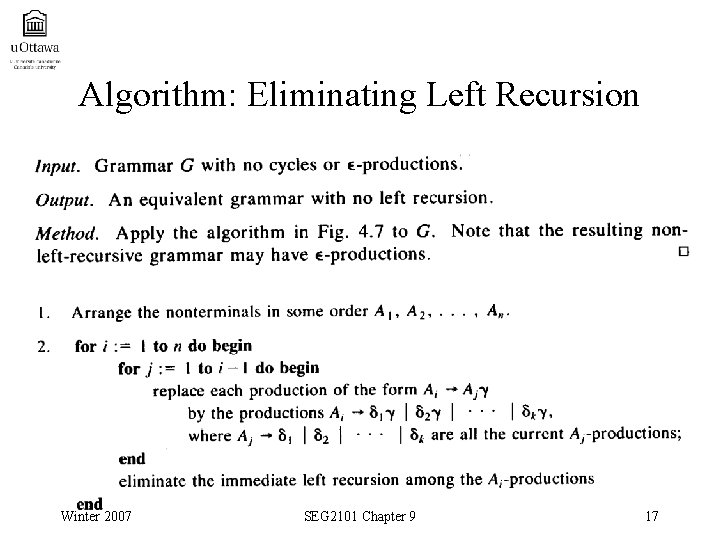

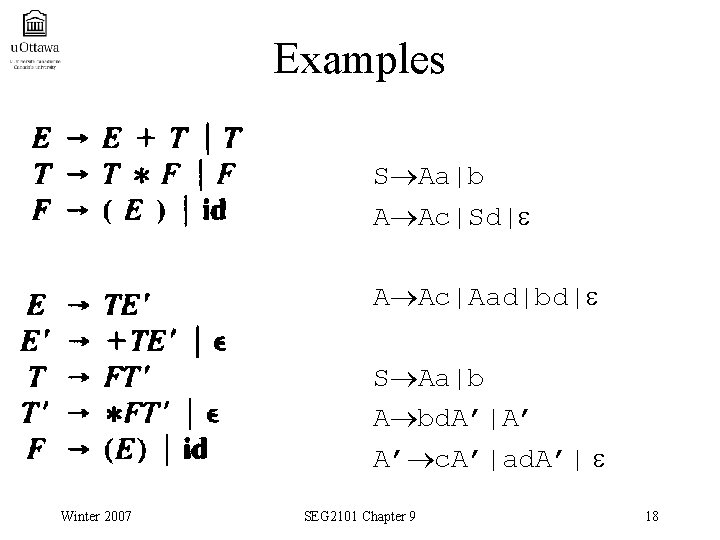

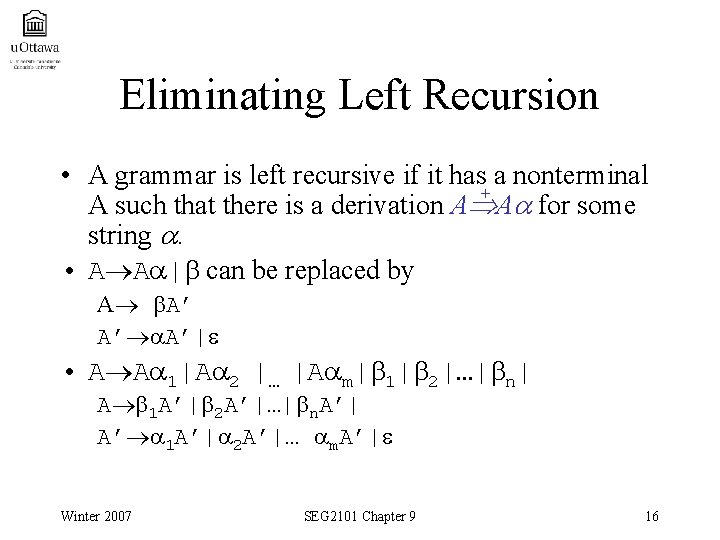

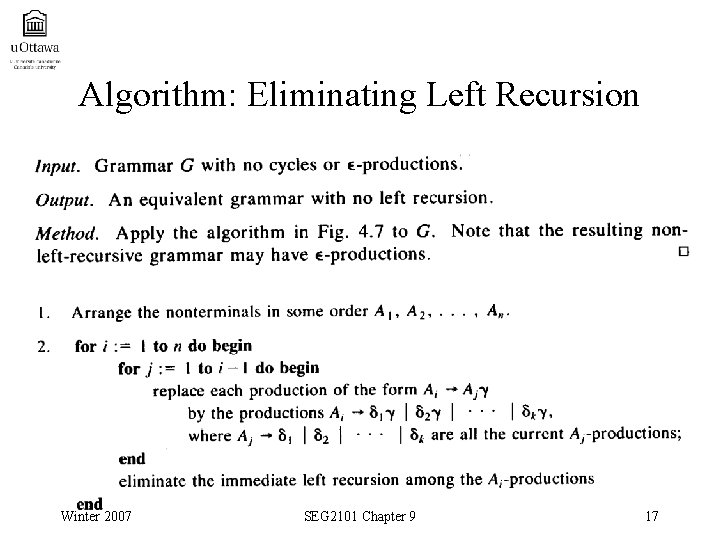

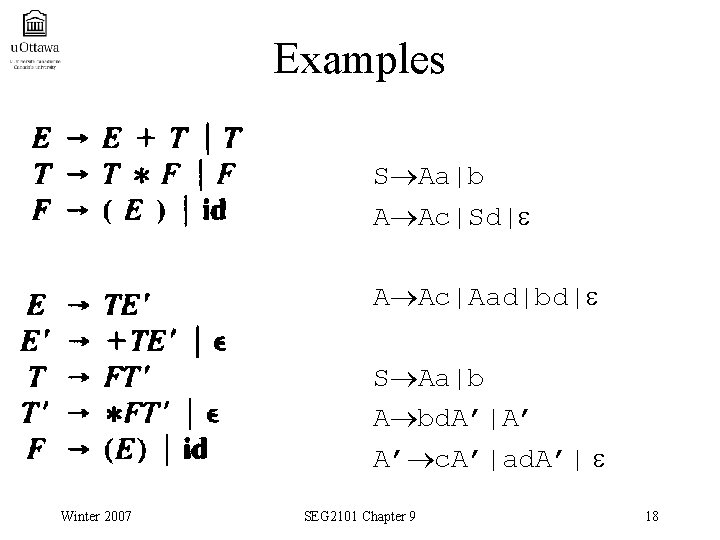

Eliminating Left Recursion • A grammar is left recursive if it has a nonterminal A such that there is a derivation A ⇒+ Aα for some string α. • A → Aα | β can be replaced by A → βA’, A’ → αA’ | ε • A → Aα1 | Aα2 | … | Aαm | β1 | β2 | … | βn can be replaced by A → β1A’ | β2A’ | … | βnA’, A’ → α1A’ | α2A’ | … | αmA’ | ε

Algorithm: Eliminating Left Recursion

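Examples • Original grammar: S → Aa | b, A → Ac | Sd | ε • Substituting the S-productions into A → Sd: A → Ac | Aad | bd | ε • After eliminating the immediate left recursion: S → Aa | b, A → bdA’ | A’, A’ → cA’ | adA’ | ε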
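
The last step of this example (removing immediate left recursion from the A-productions) can be sketched as follows. This is a minimal illustration, not the full algorithm from the previous slide: the function name and the list-of-lists grammar encoding are assumptions, the empty list stands for ε, and the input is assumed to contain no production A → A.

```python
def eliminate_immediate_left_recursion(nonterminal, alternatives):
    """Rewrite A -> A a1 | ... | A am | b1 | ... | bn without left recursion.

    Each alternative is a list of symbols; the empty list stands for epsilon.
    Returns a dict mapping A and the new nonterminal A' to their alternatives.
    """
    # The alpha_i: what follows the leading A in each left-recursive alternative.
    recursive = [alt[1:] for alt in alternatives if alt and alt[0] == nonterminal]
    # The beta_j: alternatives that do not start with A.
    others = [alt for alt in alternatives if not alt or alt[0] != nonterminal]
    if not recursive:
        return {nonterminal: alternatives}
    primed = nonterminal + "'"
    return {
        nonterminal: [beta + [primed] for beta in others],
        primed: [alpha + [primed] for alpha in recursive] + [[]],
    }


# A -> Ac | Aad | bd | epsilon
result = eliminate_immediate_left_recursion(
    "A", [["A", "c"], ["A", "a", "d"], ["b", "d"], []]
)
for head, alts in result.items():
    print(head, "->", " | ".join(" ".join(alt) or "ε" for alt in alts))
```

The printed productions correspond to A → bdA’ | A’ and A’ → cA’ | adA’ | ε.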

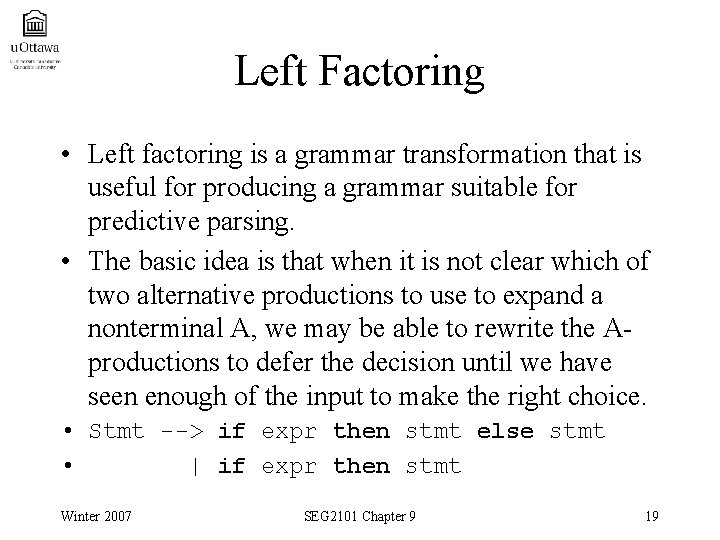

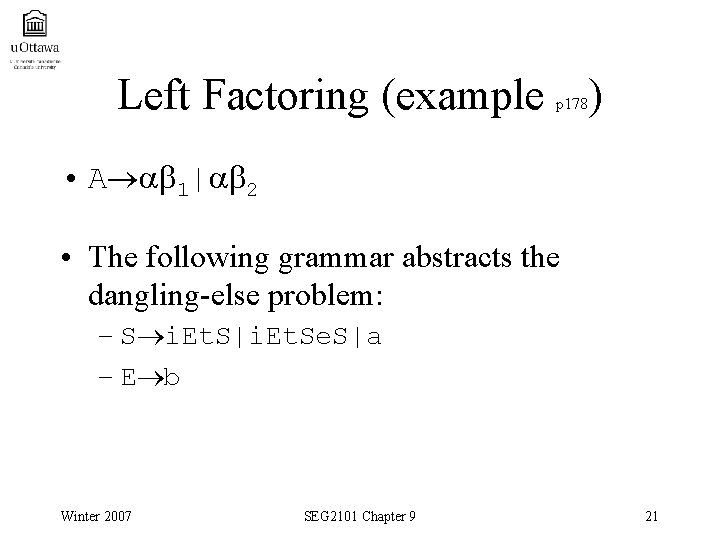

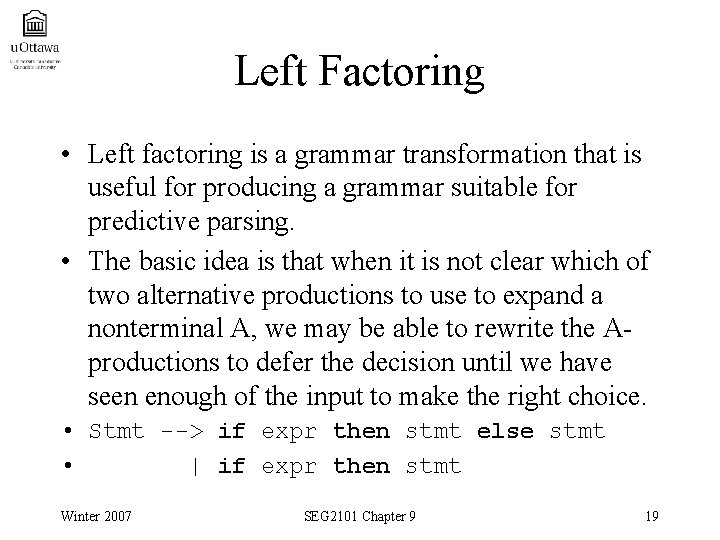

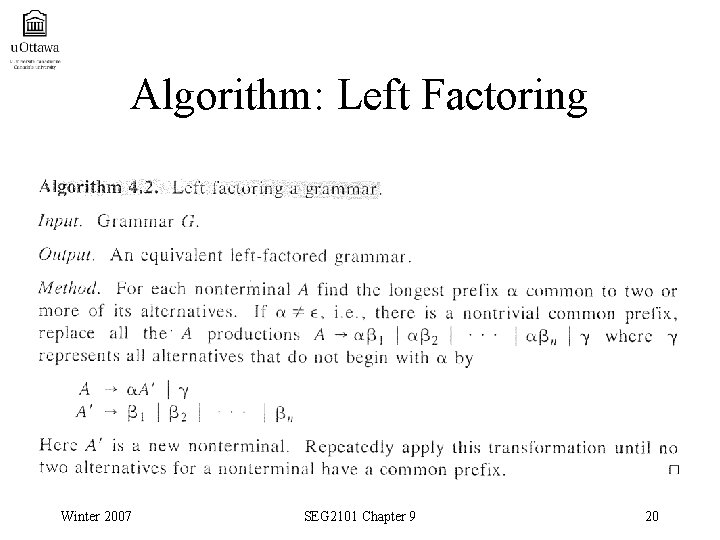

Left Factoring • Left factoring is a grammar transformation that is useful for producing a grammar suitable for predictive parsing. • The basic idea is that when it is not clear which of two alternative productions to use to expand a nonterminal A, we may be able to rewrite the A-productions to defer the decision until we have seen enough of the input to make the right choice. • stmt → if expr then stmt else stmt | if expr then stmt

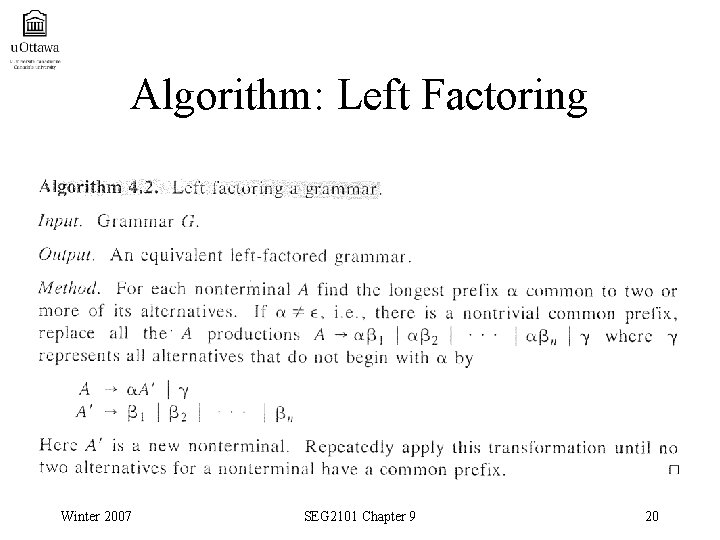

Algorithm: Left Factoring

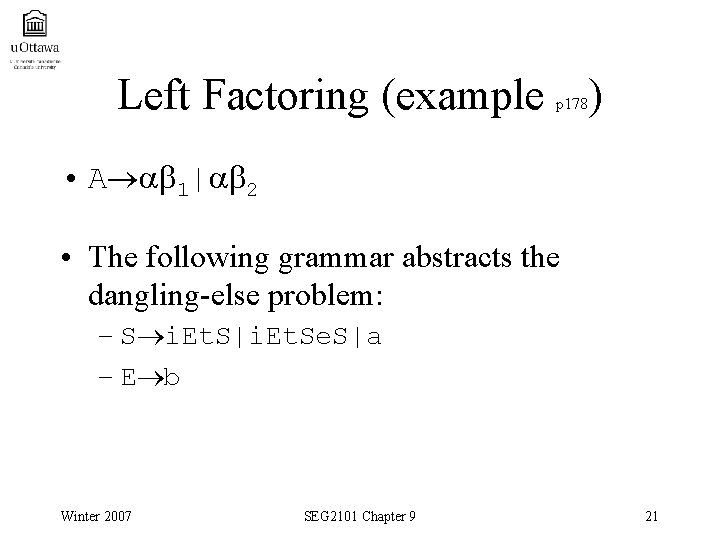

Left Factoring (example), p. 178 • A → αβ1 | αβ2 • The following grammar abstracts the dangling-else problem: – S → iEtS | iEtSeS | a – E → b
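
One round of left factoring on the S-productions above can be sketched as follows. The helper names and the list-of-lists encoding are assumptions for illustration, and the sketch performs a single pass only; the full algorithm repeats until no two alternatives of any nonterminal share a prefix.

```python
def common_prefix(sequences):
    """Longest prefix shared by all symbol sequences."""
    prefix = []
    for symbols in zip(*sequences):
        if len(set(symbols)) != 1:
            break
        prefix.append(symbols[0])
    return prefix


def left_factor_once(nonterminal, alternatives):
    """Factor out the common prefix of alternatives that start alike.

    A -> alpha beta1 | alpha beta2 becomes A -> alpha A', A' -> beta1 | beta2.
    The empty list stands for epsilon.
    """
    groups = {}
    for alt in alternatives:
        groups.setdefault(alt[0] if alt else None, []).append(alt)
    result = {nonterminal: []}
    primed = nonterminal
    for first_symbol, group in groups.items():
        if first_symbol is None or len(group) == 1:
            result[nonterminal].extend(group)  # nothing to factor
            continue
        prefix = common_prefix(group)
        primed += "'"  # fresh name: S', S'', ...
        result[nonterminal].append(prefix + [primed])
        result[primed] = [alt[len(prefix):] for alt in group]
    return result


# S -> iEtS | iEtSeS | a
factored = left_factor_once(
    "S", [list("iEtS"), list("iEtSeS"), ["a"]]
)
for head, alts in factored.items():
    print(head, "->", " | ".join("".join(alt) or "ε" for alt in alts))
```

The output corresponds to S → iEtSS’ | a and S’ → ε | eS, so the choice between the two if-forms is deferred until the parser sees whether an e follows.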

9.2: Top Down Parsing • Recursive-descent parsing • Predictive parsers • Nonrecursive predictive parsing • FIRST and FOLLOW • Construction of predictive parsing table • LL(1) grammars • Error recovery in predictive parsing (if time permits)

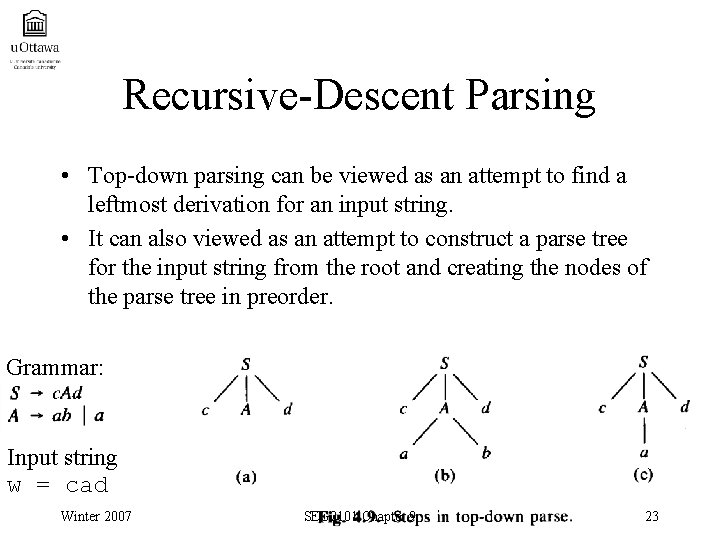

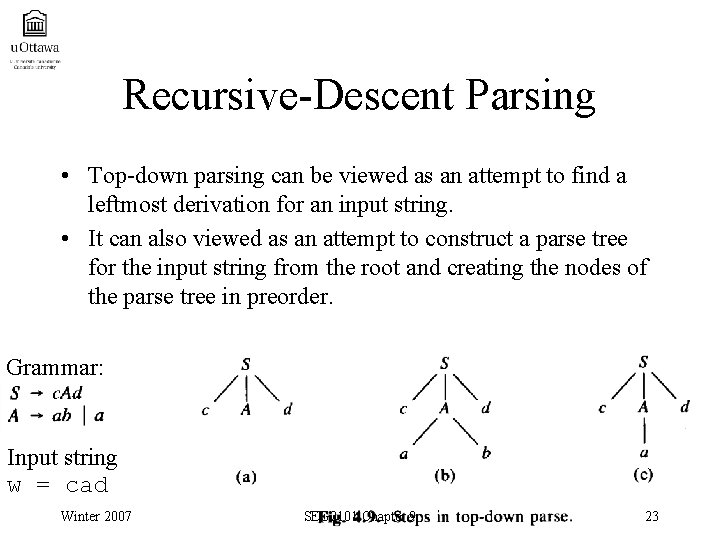

Recursive-Descent Parsing • Top-down parsing can be viewed as an attempt to find a leftmost derivation for an input string. • It can also be viewed as an attempt to construct a parse tree for the input string, starting from the root and creating the nodes of the parse tree in preorder. • Grammar: • Input string: w = cad

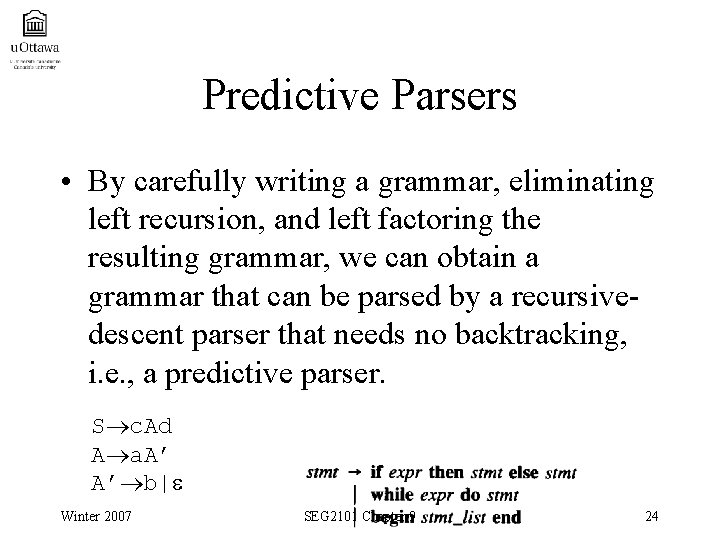

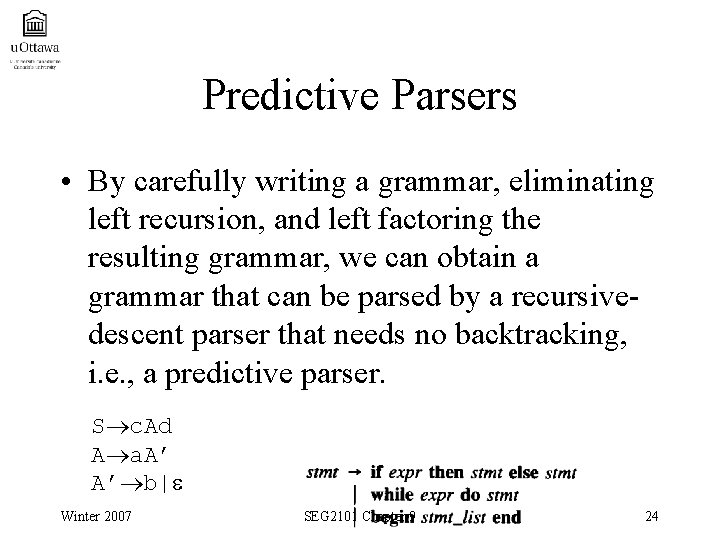

Predictive Parsers • By carefully writing a grammar, eliminating left recursion, and left factoring the resulting grammar, we can obtain a grammar that can be parsed by a recursive-descent parser that needs no backtracking, i.e., a predictive parser. • S → cAd, A → aA’, A’ → b | ε

Predictive Parser (II) • Recursive-descent parsing is a top-down method of syntax analysis in which we execute a set of recursive procedures to process the input. • A procedure is associated with each nonterminal of a grammar. • Predictive parsing is the special case in which the look-ahead symbol unambiguously determines the procedure selected for each nonterminal. • The sequence of procedures called in processing the input implicitly defines a parse tree for the input.
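
A minimal sketch of such a parser for the grammar S → cAd, A → aA’, A’ → b | ε from the previous slide is shown below, with one method per nonterminal. The class and function names are assumptions for illustration, tokens are taken to be single characters, and `None` is used as an end-of-input marker.

```python
END = None  # end-of-input marker (an assumption of this sketch)


class PredictiveParser:
    def __init__(self, text):
        self.tokens = list(text) + [END]
        self.pos = 0

    @property
    def lookahead(self):
        return self.tokens[self.pos]

    def match(self, terminal):
        """Consume the lookahead if it equals the expected terminal."""
        if self.lookahead != terminal:
            raise SyntaxError(f"expected {terminal!r}, found {self.lookahead!r}")
        self.pos += 1

    def S(self):
        # S -> c A d
        self.match("c")
        self.A()
        self.match("d")

    def A(self):
        # A -> a A'
        self.match("a")
        self.A_prime()

    def A_prime(self):
        # A' -> b | epsilon: the lookahead alone decides, no backtracking.
        if self.lookahead == "b":
            self.match("b")


def accepts(text):
    parser = PredictiveParser(text)
    try:
        parser.S()
        parser.match(END)  # the whole input must be consumed
        return True
    except SyntaxError:
        return False


print(accepts("cad"), accepts("cabd"), accepts("cd"))
```

The chain of calls S, A, A_prime made while reading cad mirrors the parse tree for that input, which is the sense in which the procedure calls implicitly define the tree.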

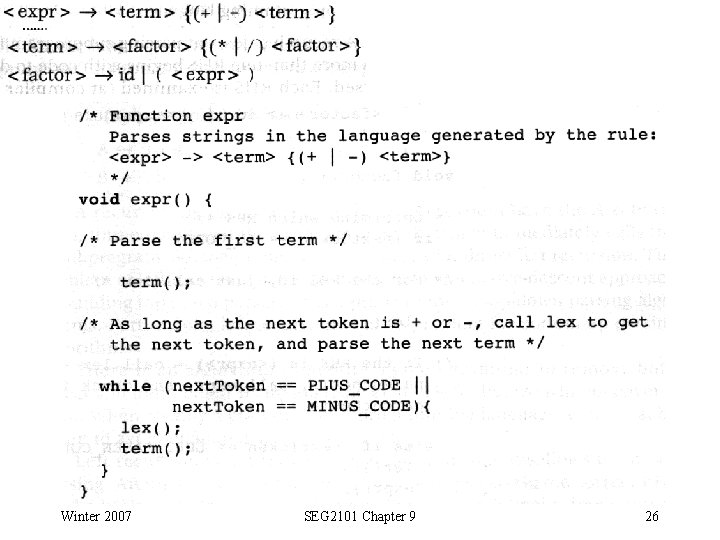

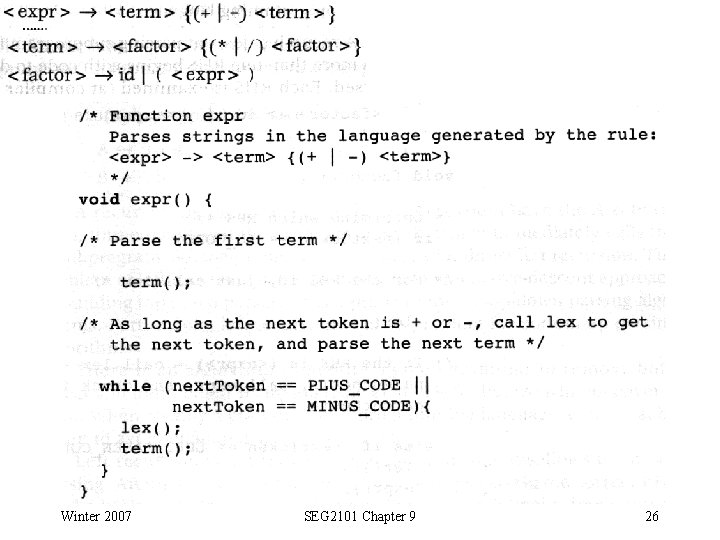


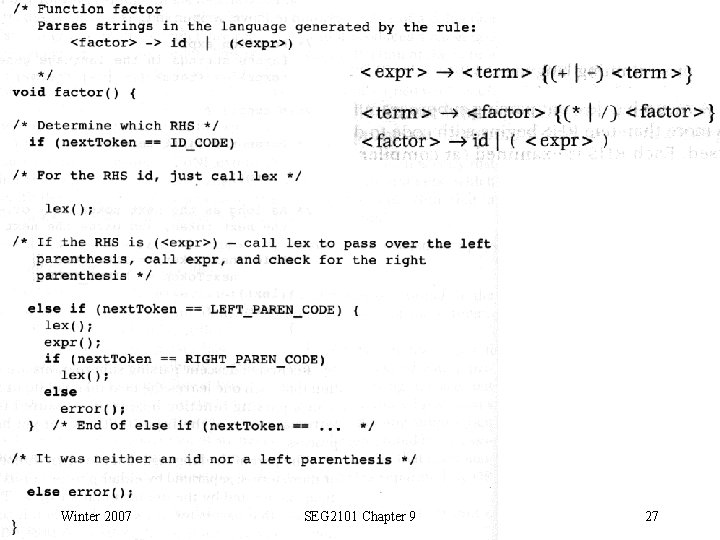

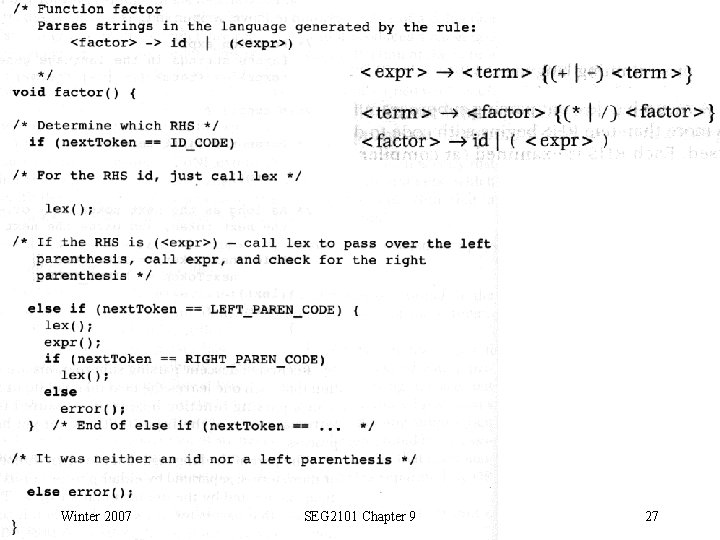


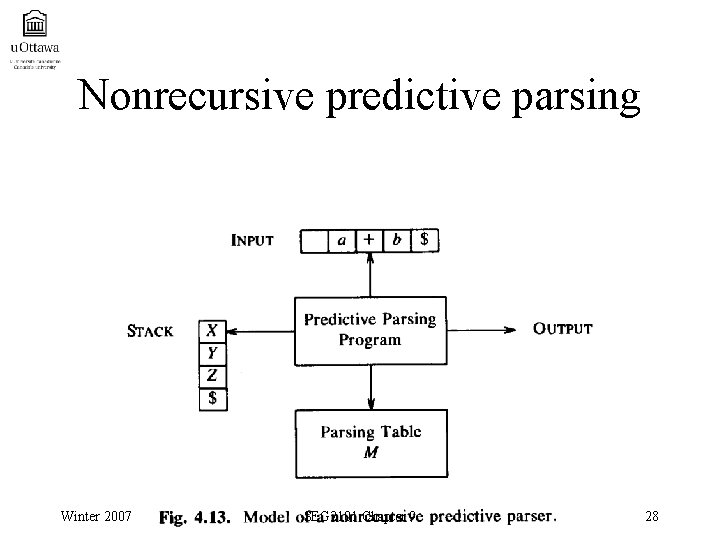

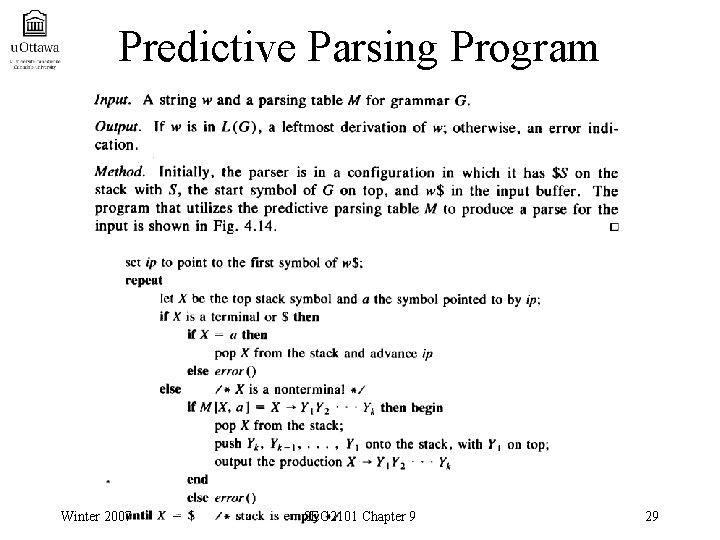

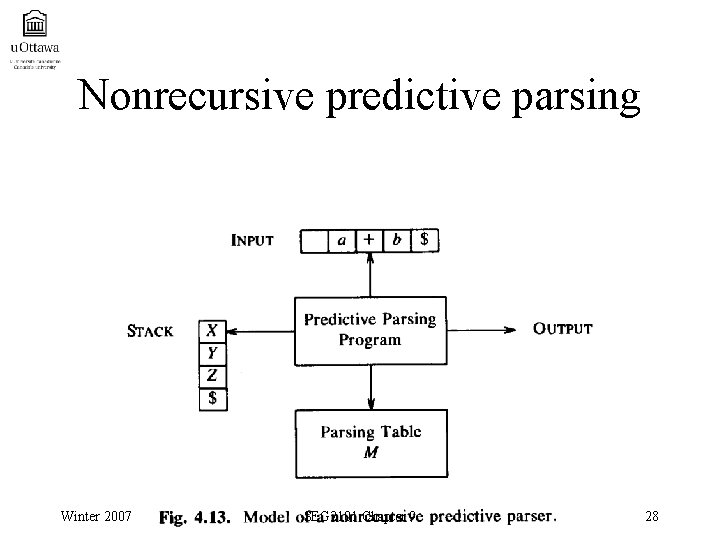

Nonrecursive Predictive Parsing

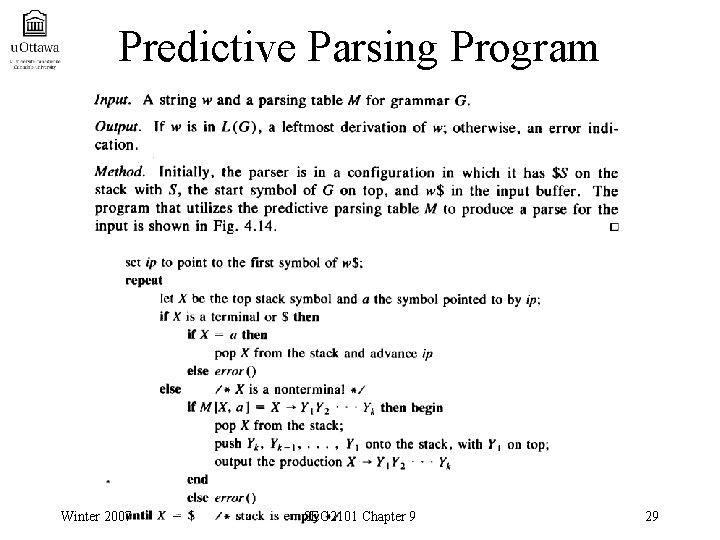

Predictive Parsing Program

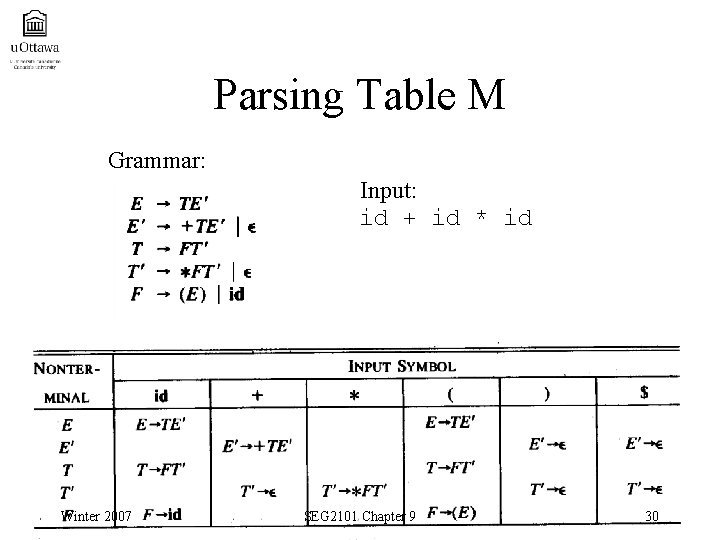

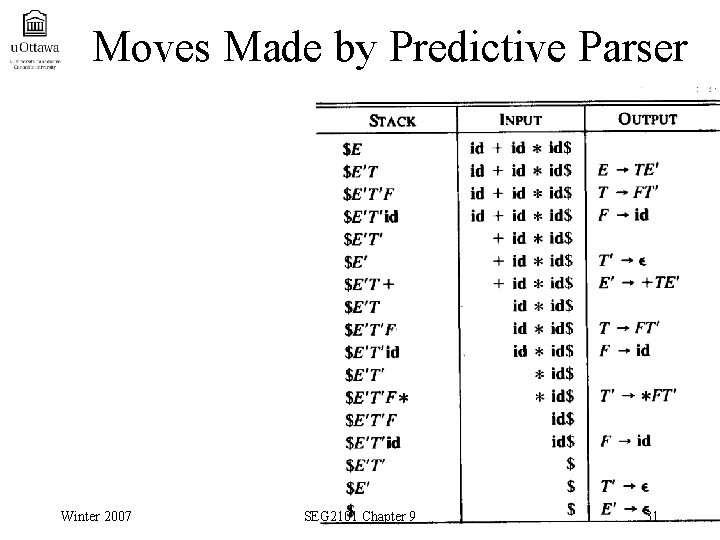

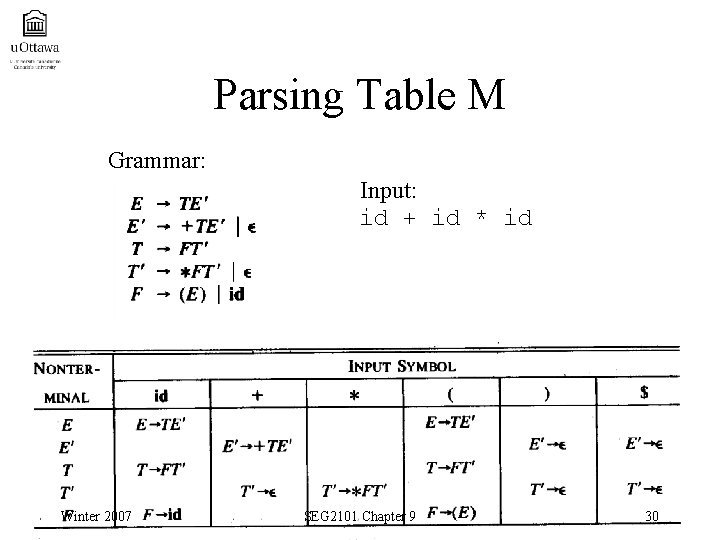

Parsing Table M • Grammar: • Input: id + id * id

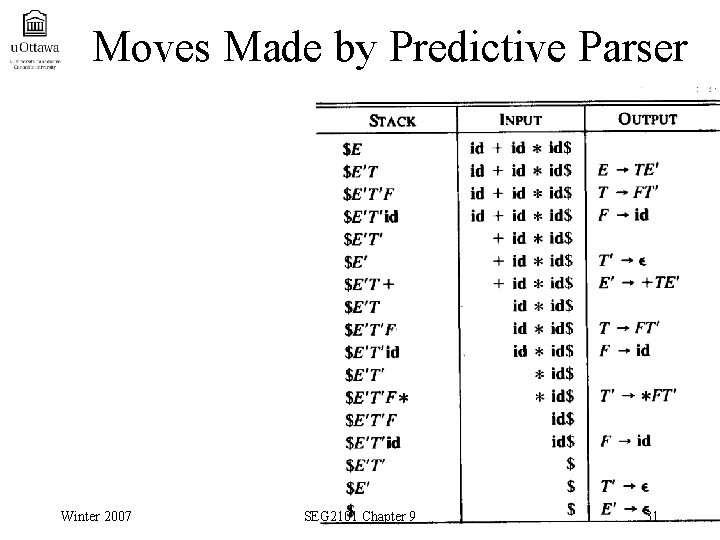

Moves Made by Predictive Parser

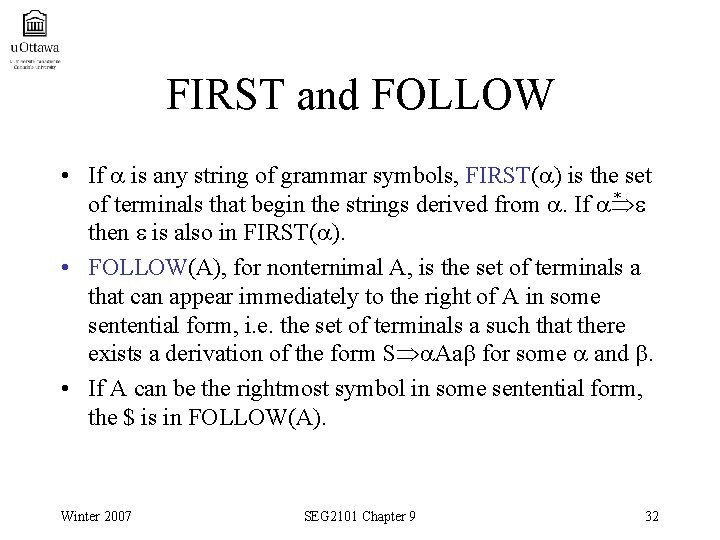

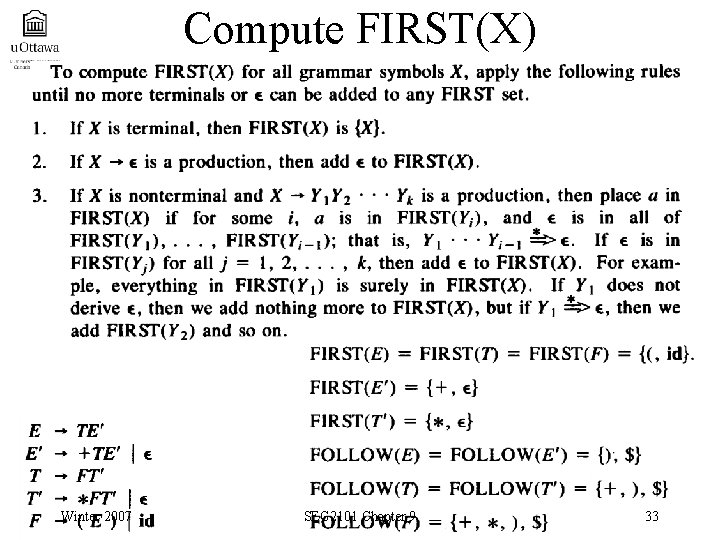

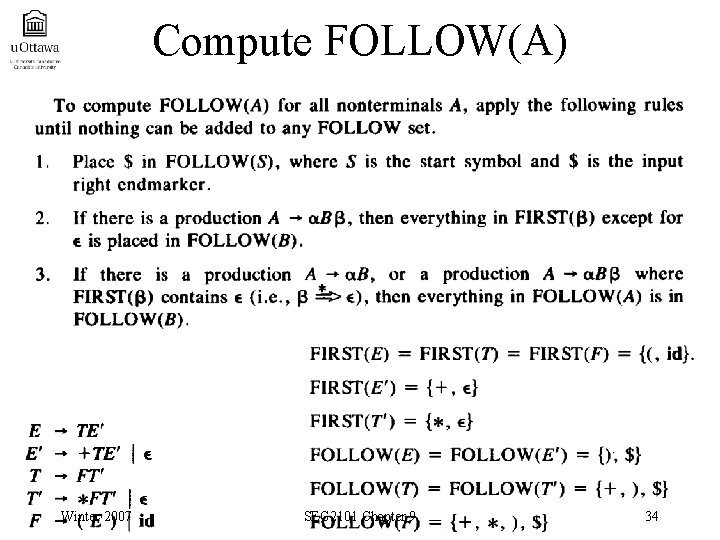

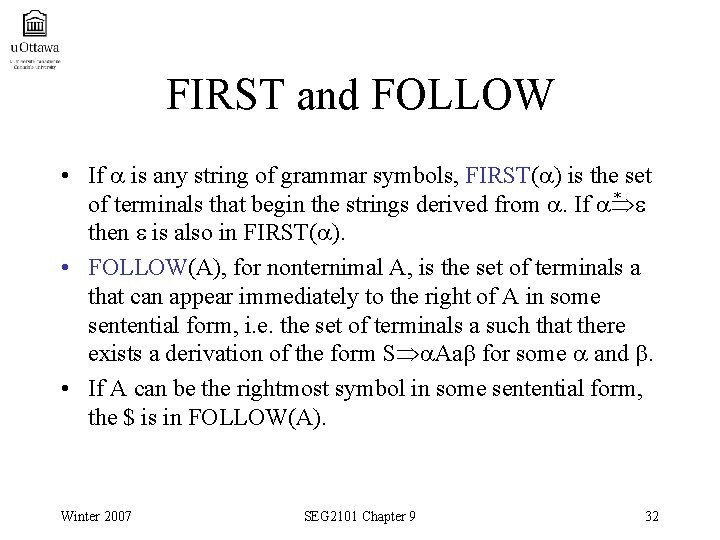
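
The stack-driven parsing loop can be sketched as below. The grammar and table are assumptions, since the slide shows them only as figures: the sketch uses the usual textbook expression grammar E → TE’, E’ → +TE’ | ε, T → FT’, T’ → *FT’ | ε, F → (E) | id. The names `TABLE` and `predictive_parse` are illustrative.

```python
# Parsing table M[nonterminal, lookahead] -> production body ([] is epsilon).
TABLE = {
    ("E", "id"): ["T", "E'"], ("E", "("): ["T", "E'"],
    ("E'", "+"): ["+", "T", "E'"], ("E'", ")"): [], ("E'", "$"): [],
    ("T", "id"): ["F", "T'"], ("T", "("): ["F", "T'"],
    ("T'", "+"): [], ("T'", "*"): ["*", "F", "T'"],
    ("T'", ")"): [], ("T'", "$"): [],
    ("F", "id"): ["id"], ("F", "("): ["(", "E", ")"],
}
NONTERMINALS = {"E", "E'", "T", "T'", "F"}


def predictive_parse(tokens, start="E"):
    """Return the productions applied, in order, or raise SyntaxError."""
    tokens = list(tokens) + ["$"]
    stack = ["$", start]  # top of the stack is the end of the list
    pos = 0
    output = []
    while stack:
        top = stack.pop()
        lookahead = tokens[pos]
        if top in NONTERMINALS:
            body = TABLE.get((top, lookahead))
            if body is None:
                raise SyntaxError(f"no entry M[{top}, {lookahead}]")
            output.append((top, body))
            stack.extend(reversed(body))  # leftmost body symbol ends on top
        elif top == lookahead:
            pos += 1  # terminal (or $) matched: advance the input
        else:
            raise SyntaxError(f"expected {top}, found {lookahead}")
    return output


for head, body in predictive_parse(["id", "+", "id", "*", "id"]):
    print(head, "->", " ".join(body) or "ε")
```

The productions printed, read top to bottom, form a leftmost derivation of id + id * id.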

FIRST and FOLLOW • If α is any string of grammar symbols, FIRST(α) is the set of terminals that begin the strings derived from α. If α ⇒* ε, then ε is also in FIRST(α). • FOLLOW(A), for nonterminal A, is the set of terminals a that can appear immediately to the right of A in some sentential form, i.e., the set of terminals a such that there exists a derivation of the form S ⇒* αAaβ for some α and β. • If A can be the rightmost symbol in some sentential form, then $ is in FOLLOW(A).

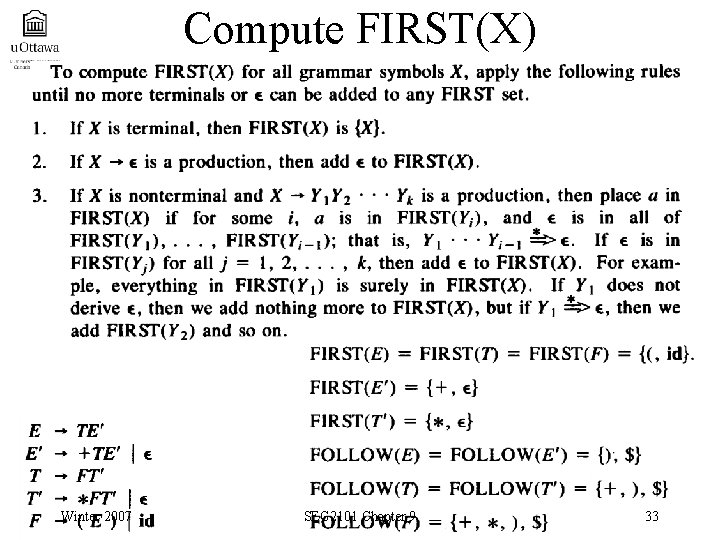

Compute FIRST(X)

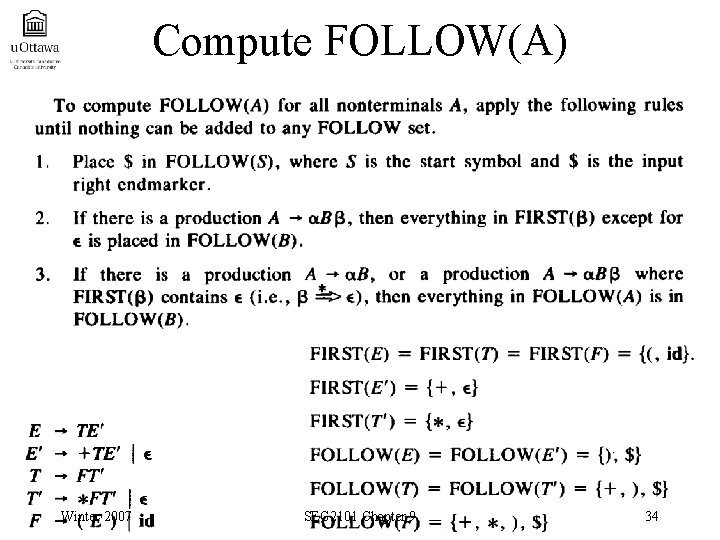

Compute FOLLOW(A)

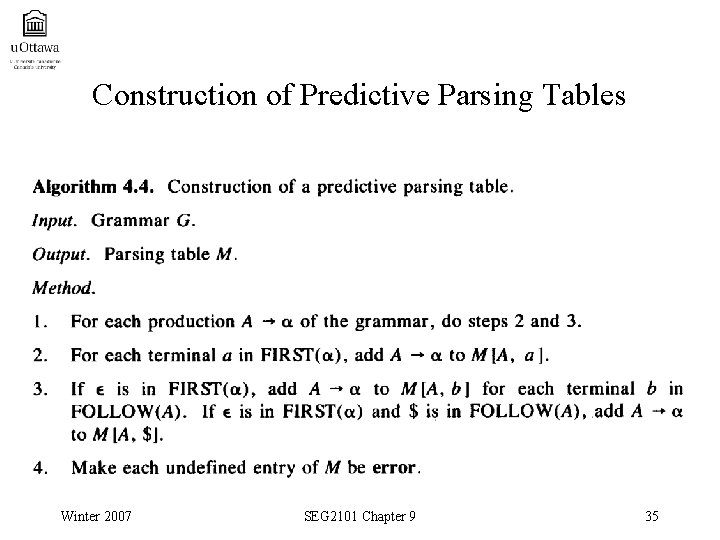

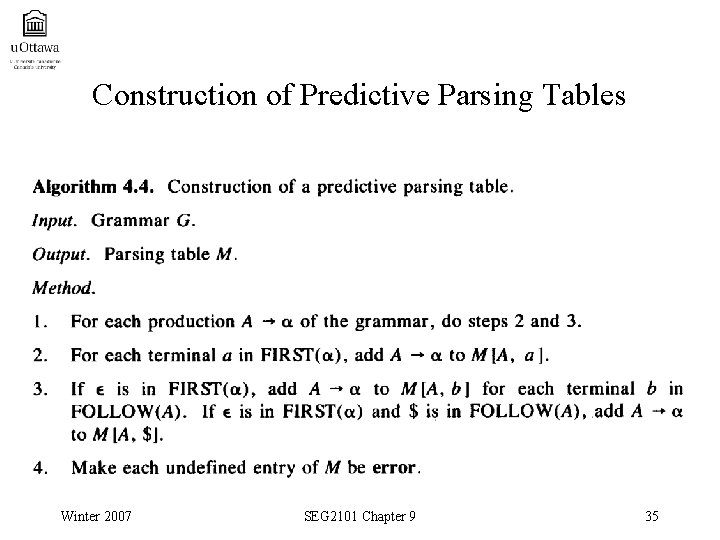
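
Both sets can be computed by applying the rules repeatedly until nothing changes. The sketch below is one way to do that, not necessarily the exact procedure in the figures; the dict-based grammar encoding, the function names, and the sample expression grammar are assumptions for illustration.

```python
EPS = "ε"
END = "$"

# Assumed sample grammar: the usual textbook expression grammar.
GRAMMAR = {
    "E": [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T": [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F": [["(", "E", ")"], ["id"]],
}


def first_of_string(symbols, first):
    """FIRST of a symbol sequence, given FIRST of each single symbol."""
    result = set()
    for sym in symbols:
        result |= first[sym] - {EPS}
        if EPS not in first[sym]:
            return result
    result.add(EPS)  # every symbol (or the empty sequence) can vanish
    return result


def compute_first_follow(grammar, start):
    nonterminals = set(grammar)
    terminals = {s for alts in grammar.values() for alt in alts for s in alt}
    terminals -= nonterminals
    first = {t: {t} for t in terminals}
    first.update({n: set() for n in nonterminals})
    follow = {n: set() for n in nonterminals}
    follow[start].add(END)
    changed = True
    while changed:  # iterate to a fixed point
        changed = False
        for head, alts in grammar.items():
            for alt in alts:
                new_first = first_of_string(alt, first)
                if not new_first <= first[head]:
                    first[head] |= new_first
                    changed = True
                # For head -> alpha B beta: FIRST(beta) minus epsilon goes into
                # FOLLOW(B); if beta can vanish, FOLLOW(head) goes in as well.
                for i, sym in enumerate(alt):
                    if sym not in nonterminals:
                        continue
                    tail = first_of_string(alt[i + 1:], first)
                    new_follow = tail - {EPS}
                    if EPS in tail:
                        new_follow |= follow[head]
                    if not new_follow <= follow[sym]:
                        follow[sym] |= new_follow
                        changed = True
    return first, follow


first, follow = compute_first_follow(GRAMMAR, "E")
for n in GRAMMAR:
    print(n, sorted(first[n]), sorted(follow[n]))
```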

Construction of Predictive Parsing Tables

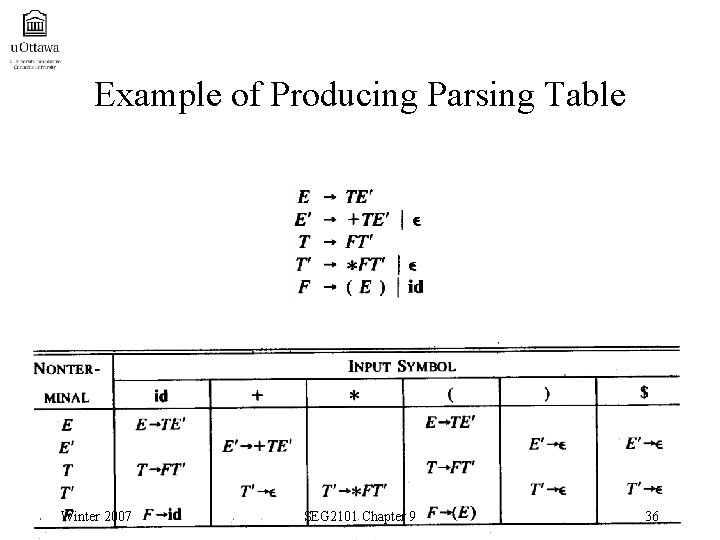

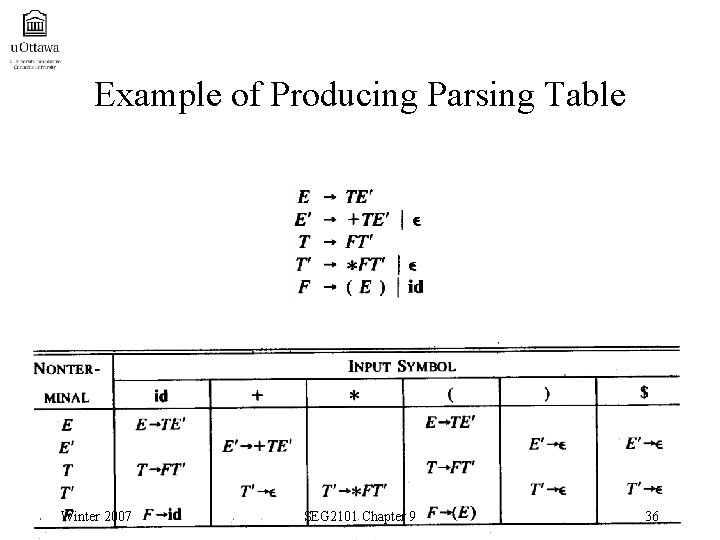

Example of Producing Parsing Table

LL(1) Grammars • A grammar whose parsing table has no multiply-defined entries is said to be LL(1). • First L: scanning the input from left to right • Second L: producing a leftmost derivation • 1: using one input symbol of lookahead at each step to make parsing action decisions.

Properties of LL(1) • No ambiguous or left recursive grammar can be LL(1). • Grammar G is LL(1) iff whenever A → α | β are two distinct productions of G: – For no terminal a do both α and β derive strings beginning with a: FIRST(α) ∩ FIRST(β) = ∅ – At most one of α and β can derive the empty string. – If β ⇒* ε, then α does not derive any string beginning with a terminal in FOLLOW(A): FIRST(α) ∩ FOLLOW(A) = ∅
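
These conditions amount to saying that the parsing table has at most one production per cell. The sketch below fills the table from FIRST and FOLLOW sets and reports multiply-defined cells. The function names are illustrative, the grammar is an assumed left-factored form of the dangling-else grammar (S → iEtSS’ | a, S’ → eS | ε, E → b), and the FIRST and FOLLOW sets are supplied by hand rather than computed.

```python
EPS = "ε"


def build_table(productions, first_of_body, follow):
    """Fill M[A, a] with every production A -> alpha that applies on a.

    A -> alpha goes into M[A, a] for each terminal a in FIRST(alpha), and
    for each a in FOLLOW(A) when epsilon is in FIRST(alpha).
    """
    table = {}
    for prod in productions:
        head, _body = prod
        lookaheads = first_of_body[prod] - {EPS}
        if EPS in first_of_body[prod]:
            lookaheads |= follow[head]
        for a in lookaheads:
            table.setdefault((head, a), []).append(prod)
    return table


def conflicts(table):
    """Cells holding more than one production; empty iff the grammar is LL(1)."""
    return {cell: prods for cell, prods in table.items() if len(prods) > 1}


# Assumed left-factored dangling-else grammar; bodies are tuples of symbols.
PRODUCTIONS = [
    ("S", ("i", "E", "t", "S", "S'")),
    ("S", ("a",)),
    ("S'", ("e", "S")),
    ("S'", ()),
    ("E", ("b",)),
]
# Hand-computed FIRST of each body and FOLLOW of each nonterminal.
FIRST_OF_BODY = dict(zip(PRODUCTIONS, [{"i"}, {"a"}, {"e"}, {EPS}, {"b"}]))
FOLLOW = {"S": {"e", "$"}, "S'": {"e", "$"}, "E": {"t"}}

table = build_table(PRODUCTIONS, FIRST_OF_BODY, FOLLOW)
for cell, prods in conflicts(table).items():
    print(cell, prods)
```

The only conflict is in M[S’, e], where both S’ → eS and S’ → ε apply. Resolving it in favor of S’ → eS implements the rule of matching each else with the closest unmatched then.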

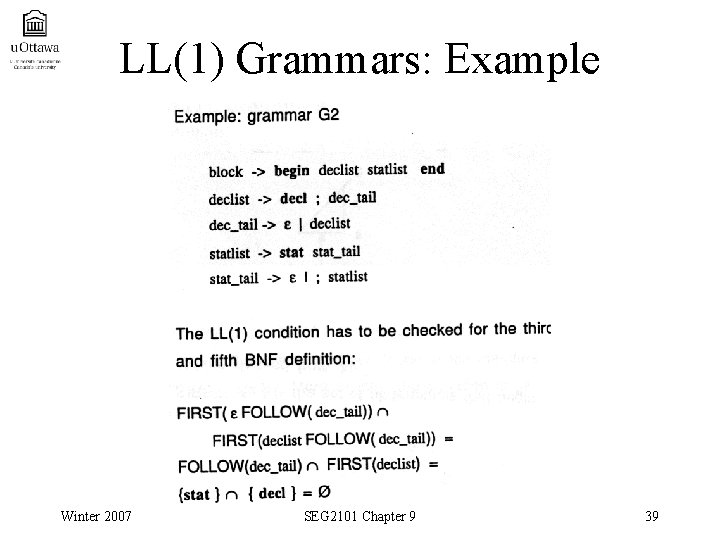

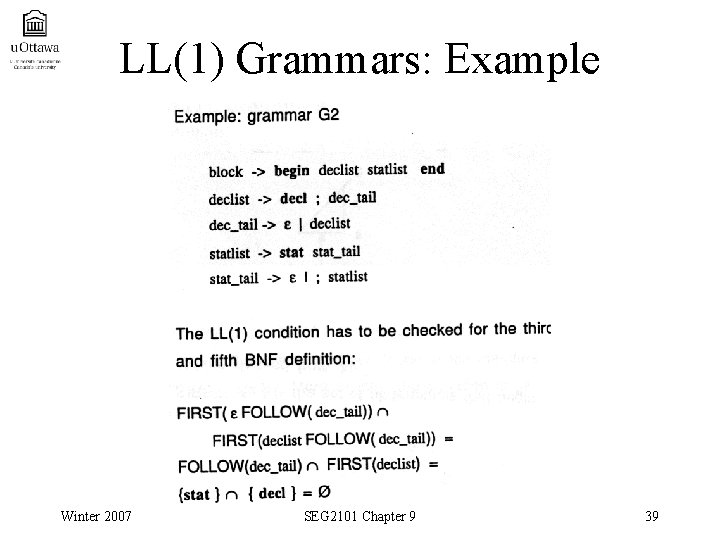

LL(1) Grammars: Example

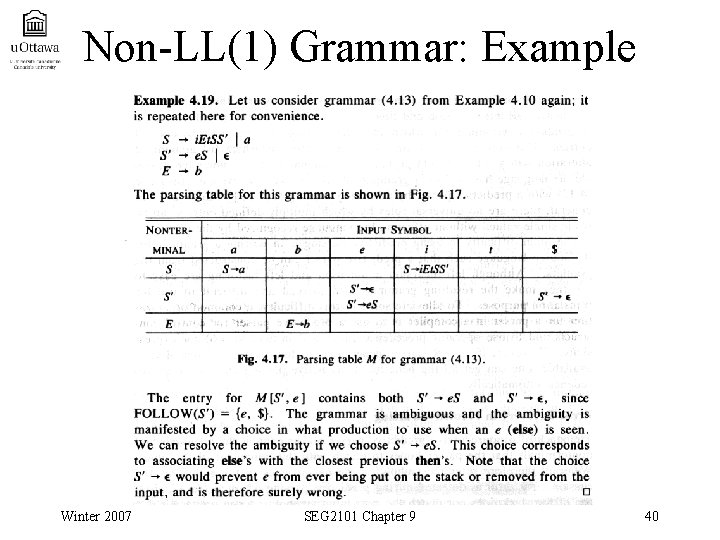

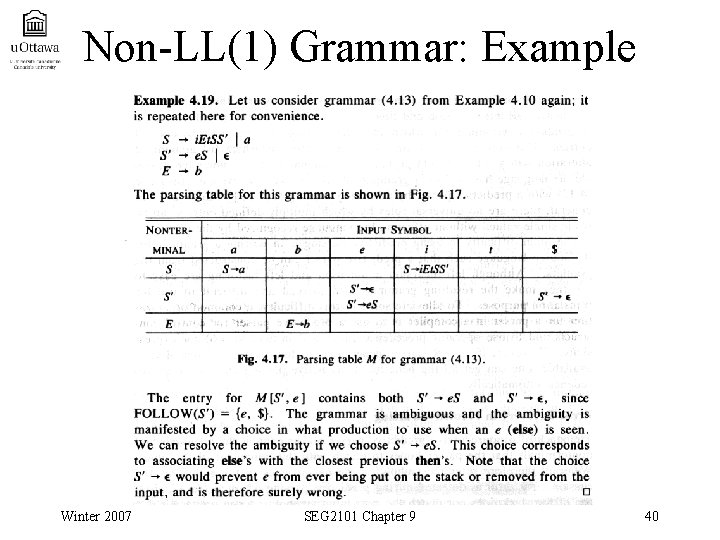

Non-LL(1) Grammar: Example

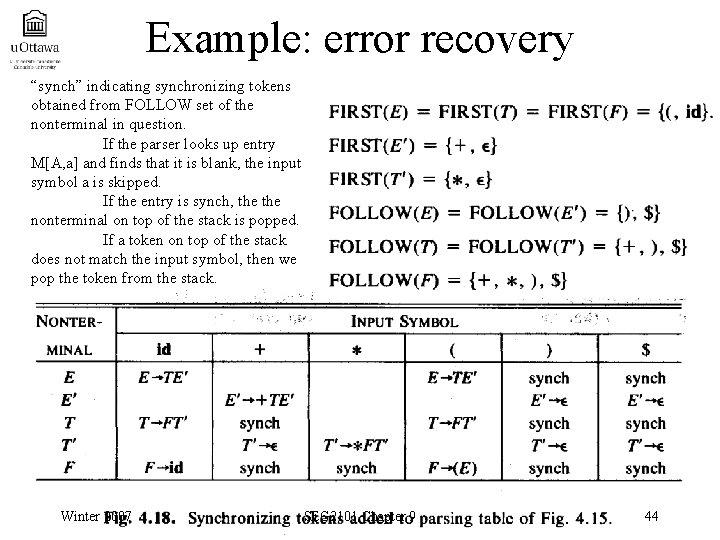

Error Recovery in Predictive Parsing • An error is detected during predictive parsing when the terminal on top of the stack does not match the next input symbol, or when nonterminal A is on top of the stack, a is the next input symbol, and parsing table entry M[A, a] is empty. • Panic-mode error recovery is based on the idea of skipping symbols on the input until a token in a selected set of synchronizing tokens appears.

How to select synchronizing set? • Place all symbols in FOLLOW(A) into the synchronizing set for nonterminal A. If we skip tokens until an element of FOLLOW(A) is seen and pop A from the stack, it is likely that parsing can continue. • We might add keywords that begin statements to the synchronizing sets for the nonterminals generating expressions.

How to select synchronizing set? (II) • If a nonterminal can generate the empty string, then the production deriving ε can be used as a default. This may postpone some error detection, but cannot cause an error to be missed. This approach reduces the number of nonterminals that have to be considered during error recovery. • If a terminal on top of the stack cannot be matched, a simple idea is to pop the terminal and issue a message saying that the terminal was inserted.

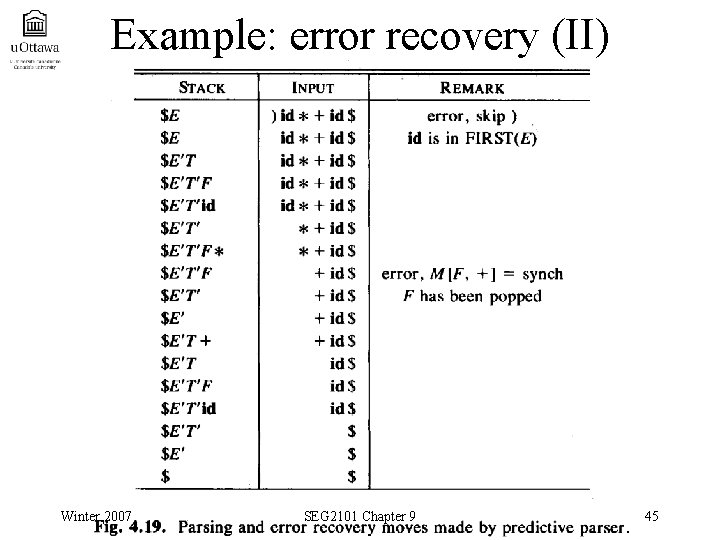

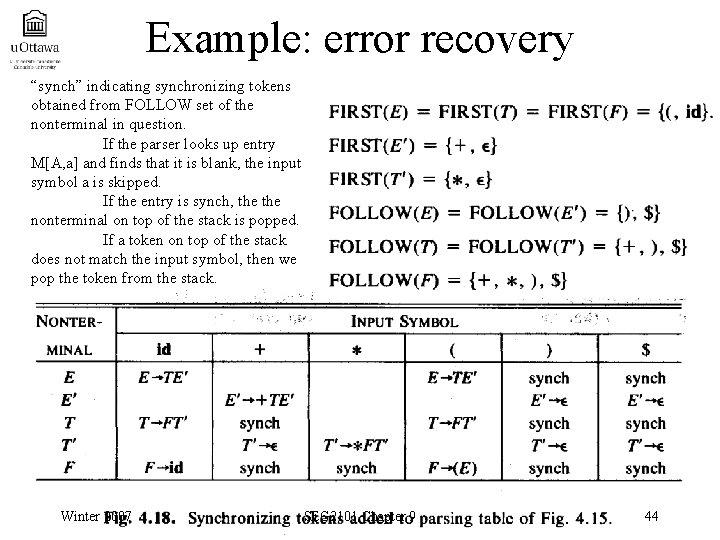

Example: error recovery • “synch” indicates synchronizing tokens obtained from the FOLLOW set of the nonterminal in question. • If the parser looks up entry M[A, a] and finds that it is blank, the input symbol a is skipped. • If the entry is synch, the nonterminal on top of the stack is popped. • If a token on top of the stack does not match the input symbol, then we pop the token from the stack.

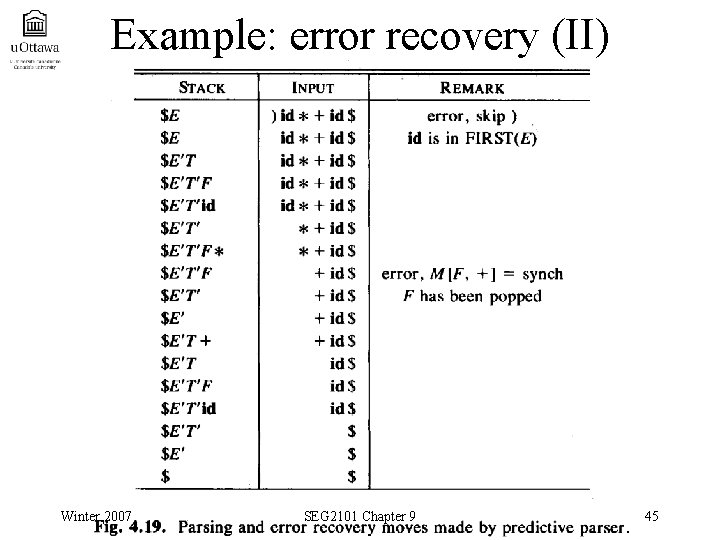

Example: error recovery (II)

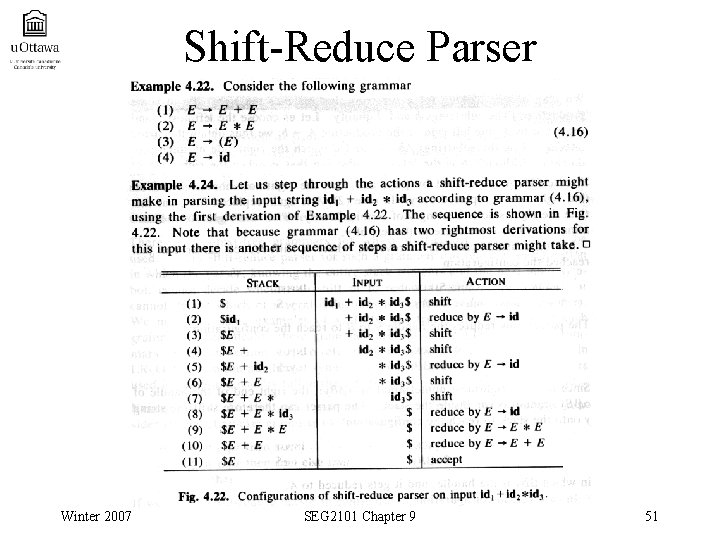

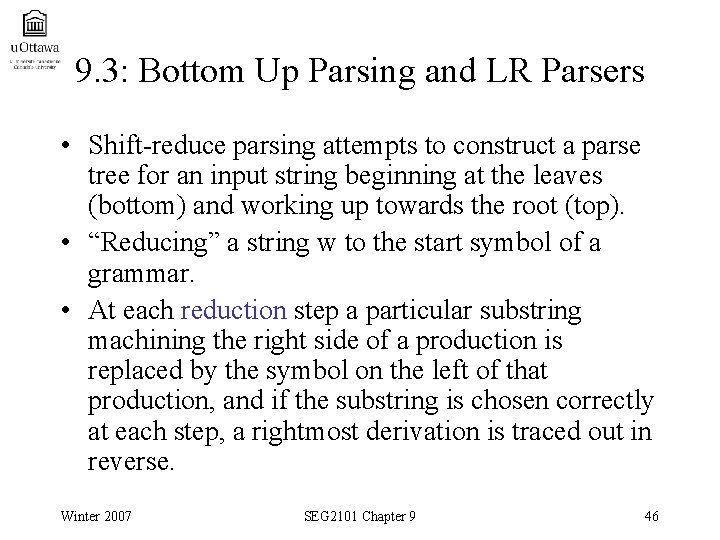

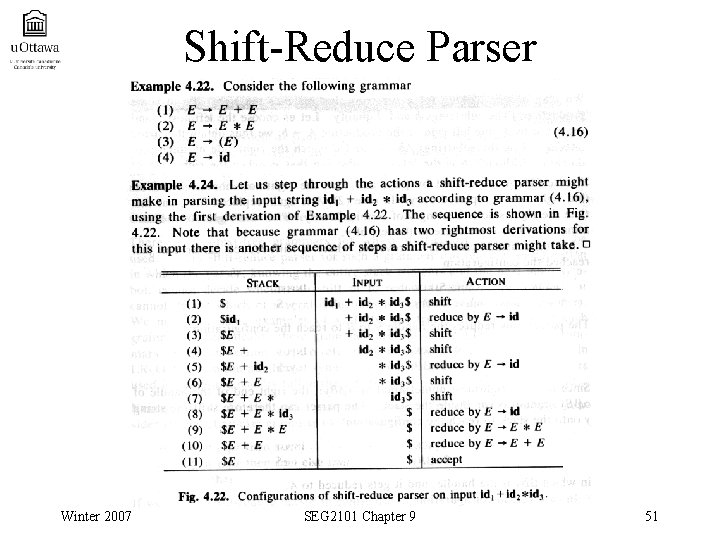

9.3: Bottom Up Parsing and LR Parsers • Shift-reduce parsing attempts to construct a parse tree for an input string beginning at the leaves (bottom) and working up towards the root (top). • It can be viewed as “reducing” a string w to the start symbol of a grammar. • At each reduction step a particular substring matching the right side of a production is replaced by the symbol on the left of that production, and if the substring is chosen correctly at each step, a rightmost derivation is traced out in reverse.

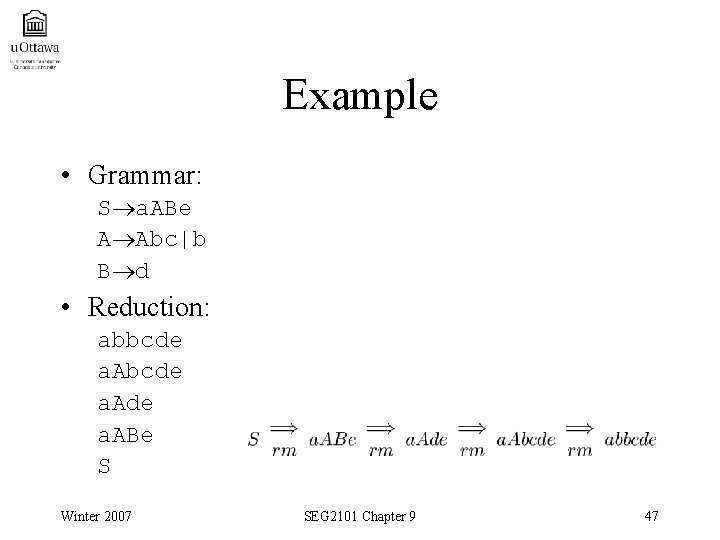

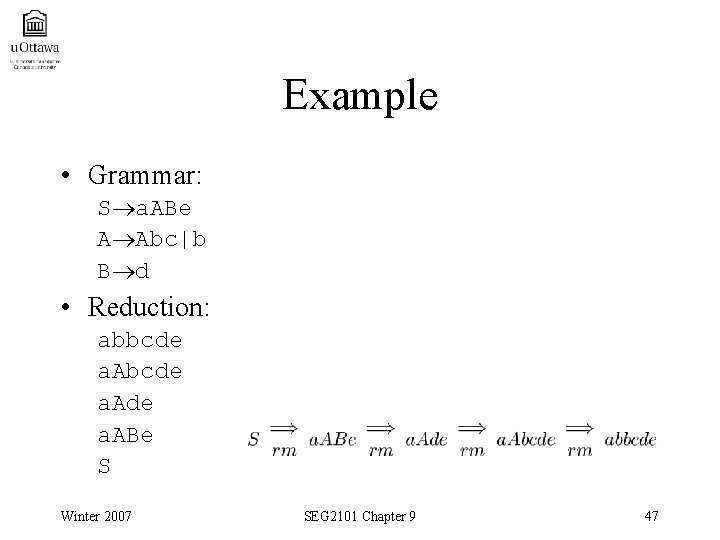

Example • Grammar: S → aABe, A → Abc | b, B → d • Reduction: abbcde, aAbcde, aAde, aABe, S
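
The reduction sequence for abbcde can be replayed step by step, which makes the reversed rightmost derivation visible. The sketch below does not choose the handles itself (that is the parser's job); the positions are supplied by hand, the function names are illustrative, and every grammar symbol is assumed to be a single character.

```python
def reduce_at(form, position, head, body):
    """Replace the occurrence of `body` starting at `position` by `head`."""
    if form[position:position + len(body)] != body:
        raise ValueError(f"{body!r} does not occur at position {position} in {form!r}")
    return form[:position] + head + form[position + len(body):]


def replay(sentence, steps):
    """Apply the given reductions in order and return every intermediate form."""
    forms = [sentence]
    for position, head, body in steps:
        forms.append(reduce_at(forms[-1], position, head, body))
    return forms


# Hand-chosen handles for the grammar S -> aABe, A -> Abc | b, B -> d.
STEPS = [
    (1, "A", "b"),     # abbcde -> aAbcde
    (1, "A", "Abc"),   # aAbcde -> aAde
    (2, "B", "d"),     # aAde   -> aABe
    (0, "S", "aABe"),  # aABe   -> S
]

forms = replay("abbcde", STEPS)
print(" , ".join(forms))
print(" => ".join(reversed(forms)))  # the rightmost derivation, read forwards
```

Note that in aAbcde the second b is not reduced to A even though A → b matches it: doing so would give aAAcde, which cannot be reduced to S. Choosing the right substring at each step is what the parsing tables are for.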

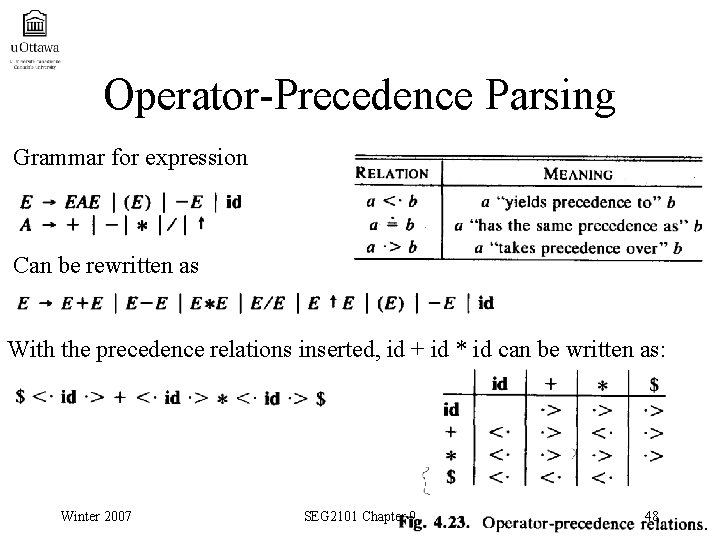

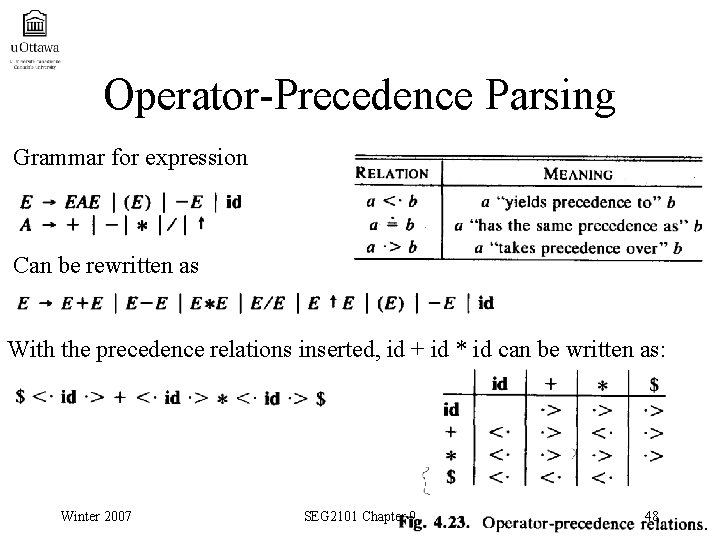

Operator-Precedence Parsing • Grammar for expressions: • Can be rewritten as: • With the precedence relations inserted, id + id * id can be written as:

LR(k) Parsers • L: left-to-right scanning of the input • R: constructing a rightmost derivation in reverse • k: the number of input symbols of lookahead that are used in making parsing decisions.

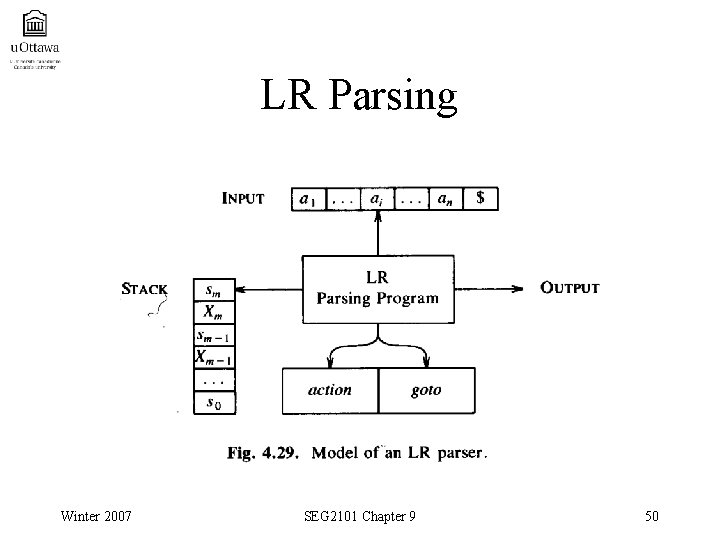

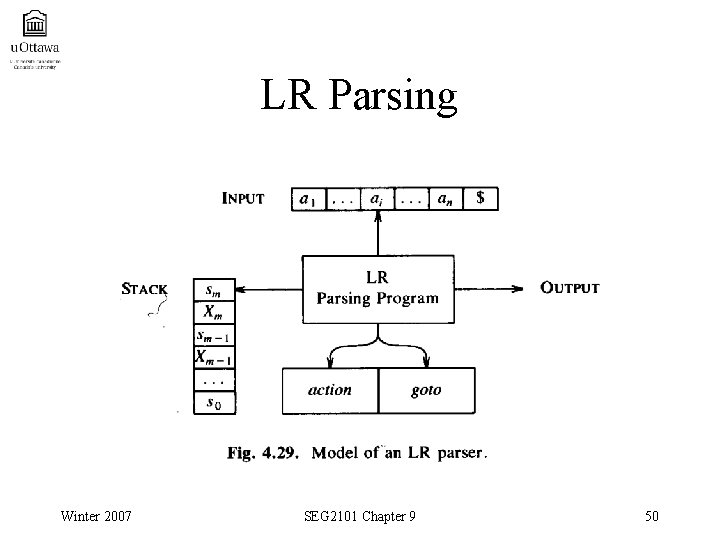

LR Parsing

Shift-Reduce Parser

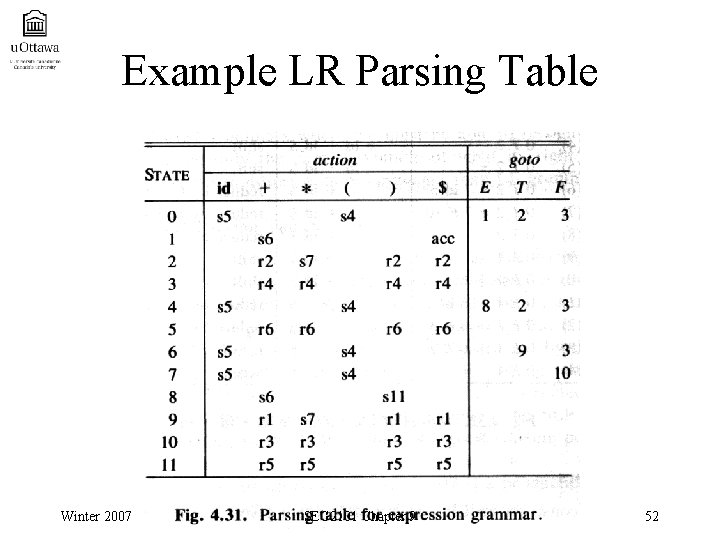

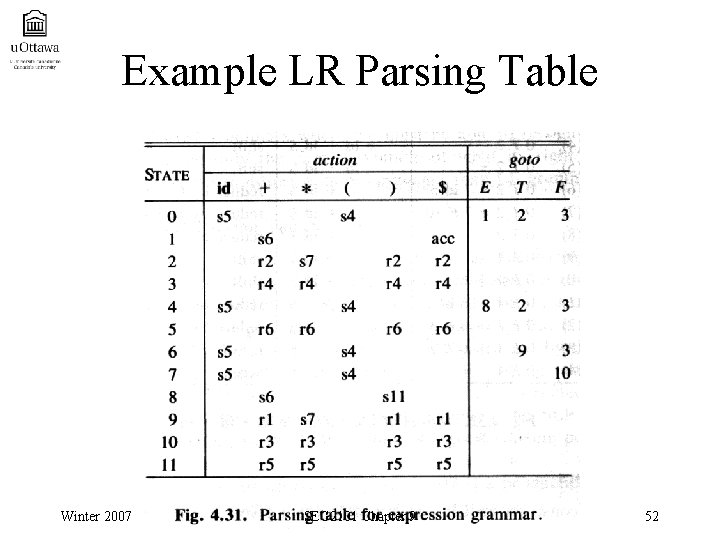

Example LR Parsing Table

9.4: Attribute Grammars • An attribute grammar is a device used to describe more of the structure of a programming language than is possible with a context-free grammar. • Some semantic properties can be evaluated at compile time; they are called "static semantics". Other properties are determined at execution time; they are called "dynamic semantics". • The static semantics is often represented by semantic attributes which are associated with the nonterminals.

Attribute Grammars • Grammars with added attributes, attribute computation functions, and predicate functions. • Attributes: similar to variables • Attribute computation functions: specify how attribute values are computed • Predicate functions: state some of the syntax and static semantic rules of the language

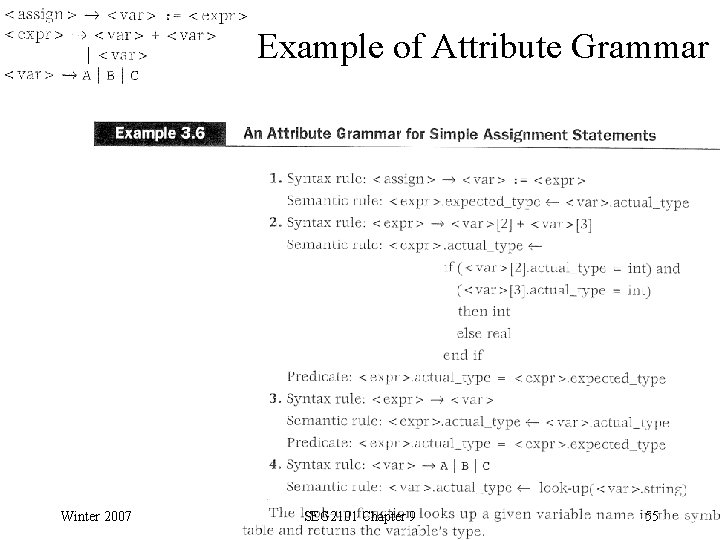

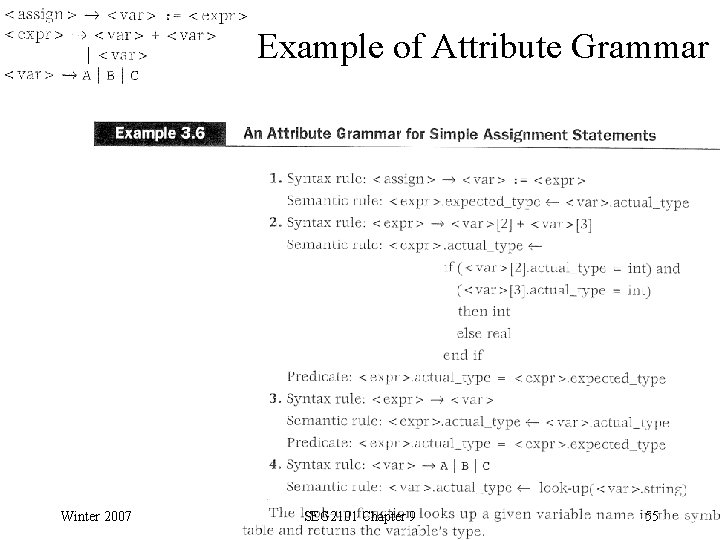

Example of Attribute Grammar

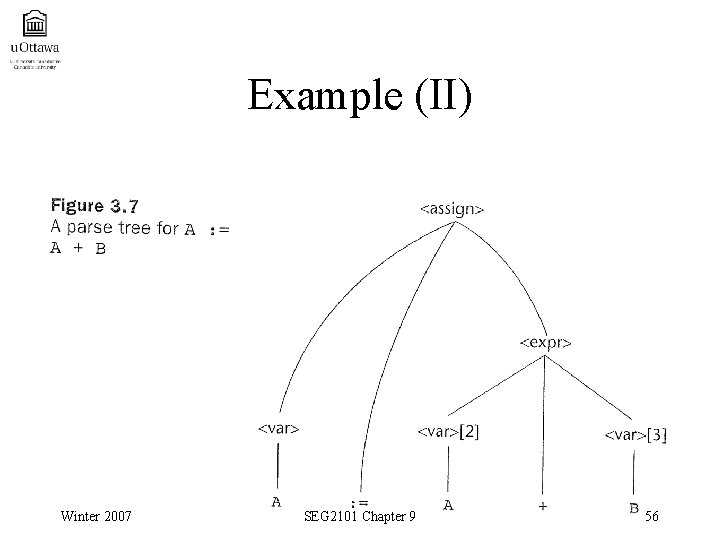

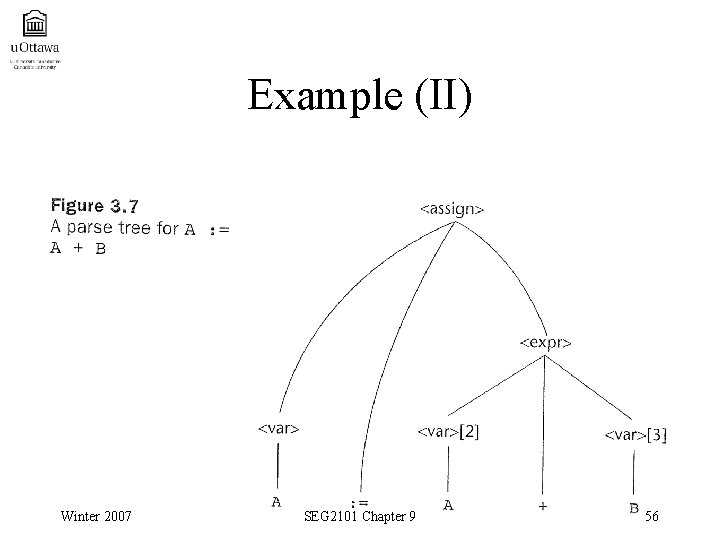

Example (II)

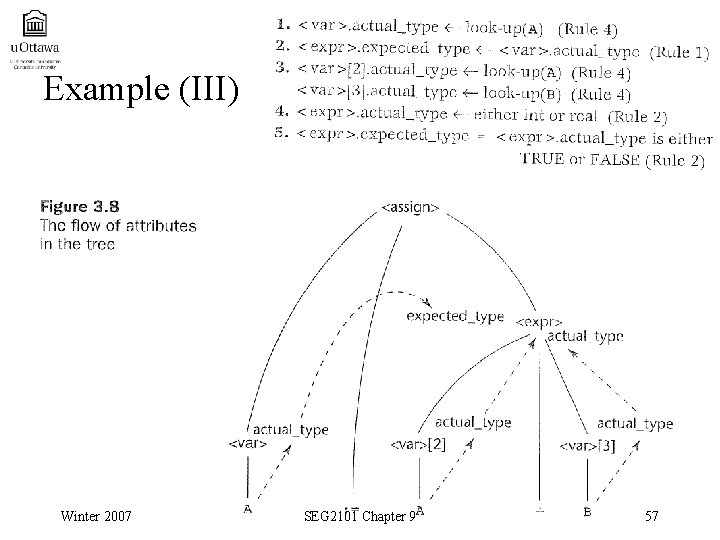

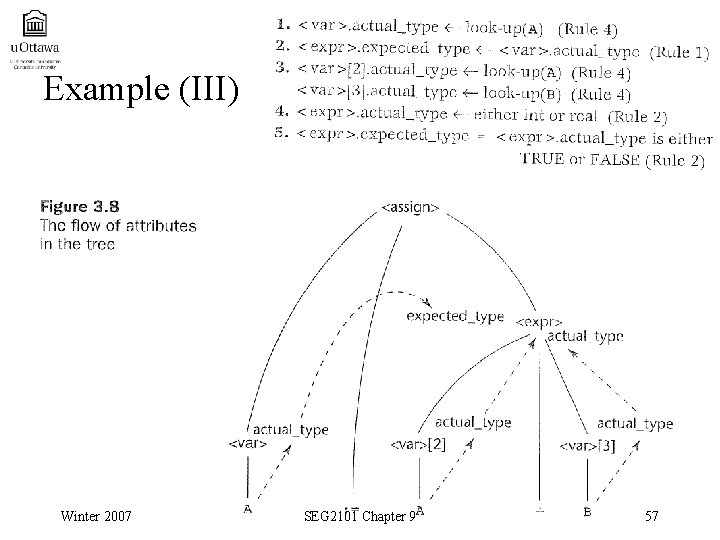

Example (III)

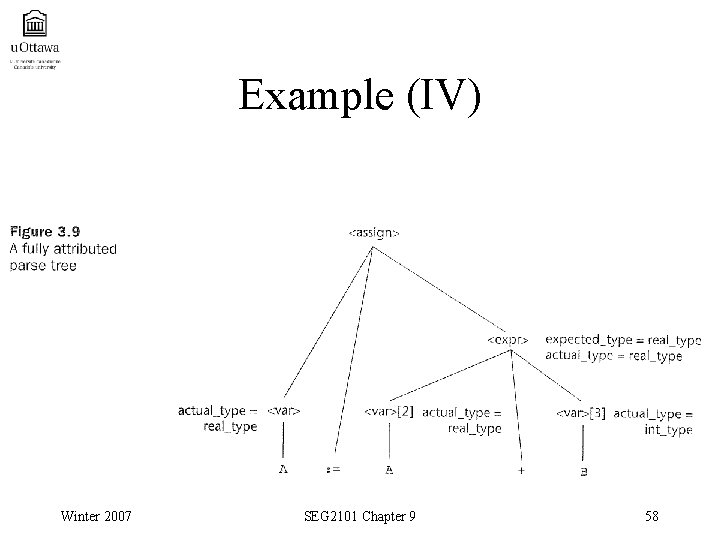

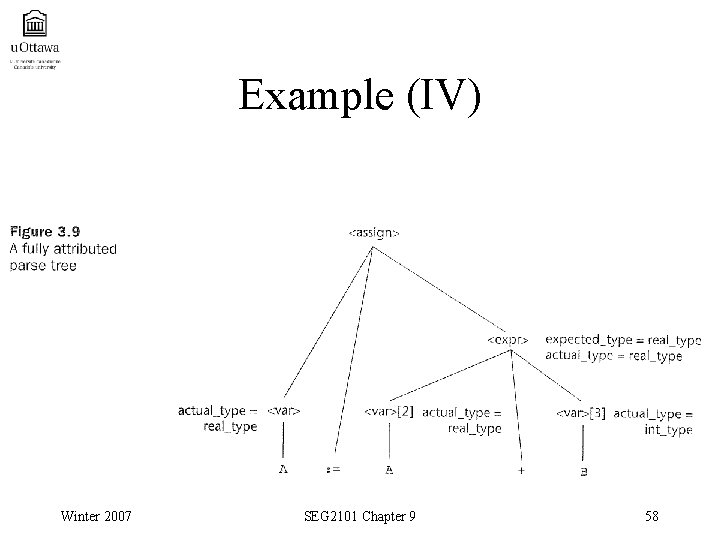

Example (IV)

9.5: Dynamic Semantics • Informal definition: Only informal explanations in natural language define the meaning of programs (e.g., language reference manuals). • Operational semantics: The meaning of the constructs of the programming language is defined in terms of their translation into another, lower-level language and the semantics of this lower-level language. Usually only the translation is defined formally; the semantics of the lower-level language is defined informally.

Axiomatic Semantics • Axiomatic semantics was defined to prove the correctness of programs. • This approach is related to the approach of defining the semantics of a procedure (independently of its code) in terms of pre- and post-conditions that define properties of input and output parameters and values of state variables. • Weakest precondition: For a given statement, and a given postcondition that should hold after its execution, the weakest precondition is the weakest condition which ensures, when it holds before the execution of the statement, that the given postcondition holds afterwards.

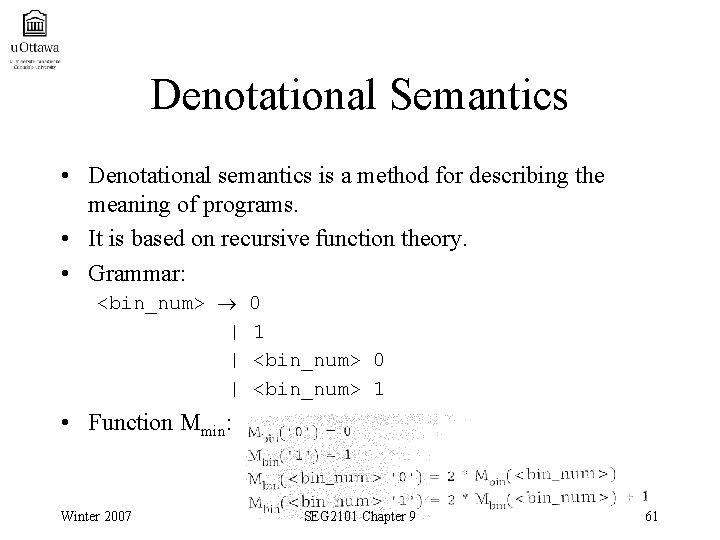

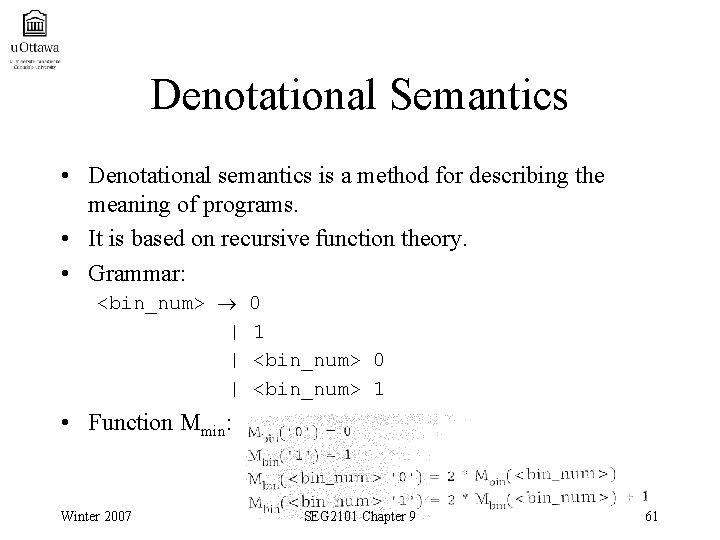

Denotational Semantics • Denotational semantics is a method for describing the meaning of programs. • It is based on recursive function theory. • Grammar: <bin_num> → 0 | 1 | <bin_num> 0 | <bin_num> 1 • Function Mbin:
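
A meaning function for this grammar maps each binary numeral to the number it denotes, with one case per production. The sketch below follows the usual textbook definition, which is an assumption here because the slide gives the function only as a figure; the name `m_bin` is likewise illustrative.

```python
def m_bin(numeral):
    """Meaning of a binary numeral, defined by recursion on the grammar.

    <bin_num> -> 0            denotes 0
    <bin_num> -> 1            denotes 1
    <bin_num> -> <bin_num> 0  denotes 2 * M(<bin_num>)
    <bin_num> -> <bin_num> 1  denotes 2 * M(<bin_num>) + 1
    """
    if numeral == "0":
        return 0
    if numeral == "1":
        return 1
    if numeral and numeral[-1] in "01":
        return 2 * m_bin(numeral[:-1]) + int(numeral[-1])
    raise ValueError(f"not a binary numeral: {numeral!r}")


print(m_bin("110"))
```

Each recursive call strips the last digit, so the structure of the function follows the structure of the parse tree of the numeral.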

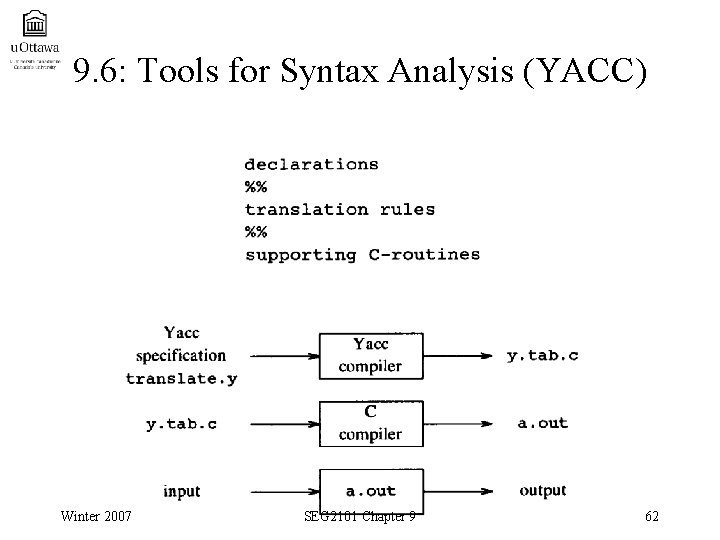

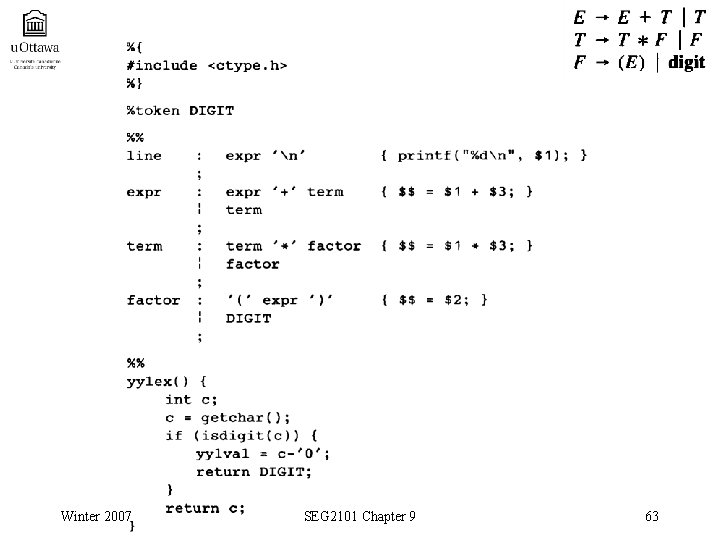

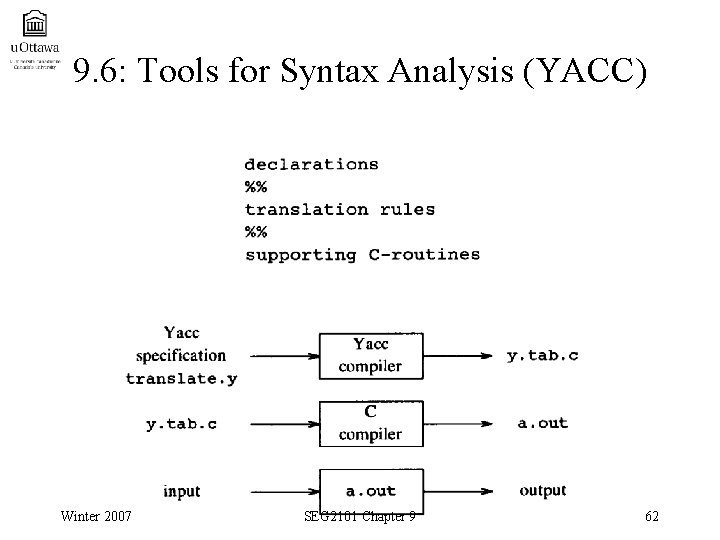

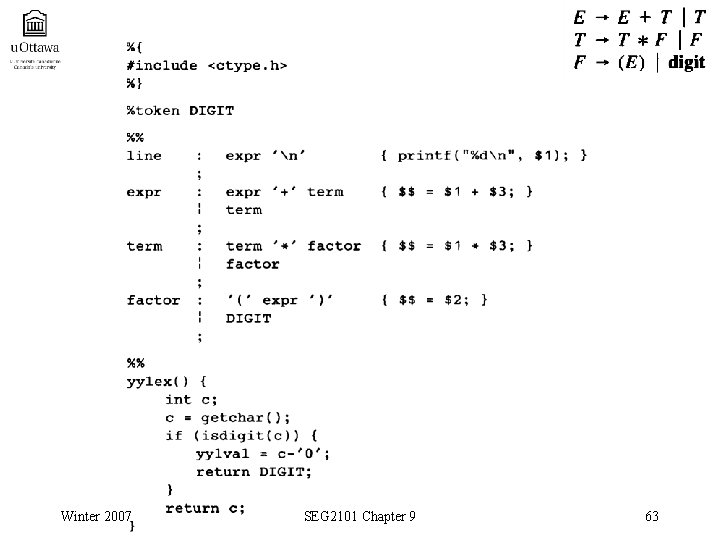

9.6: Tools for Syntax Analysis (YACC)


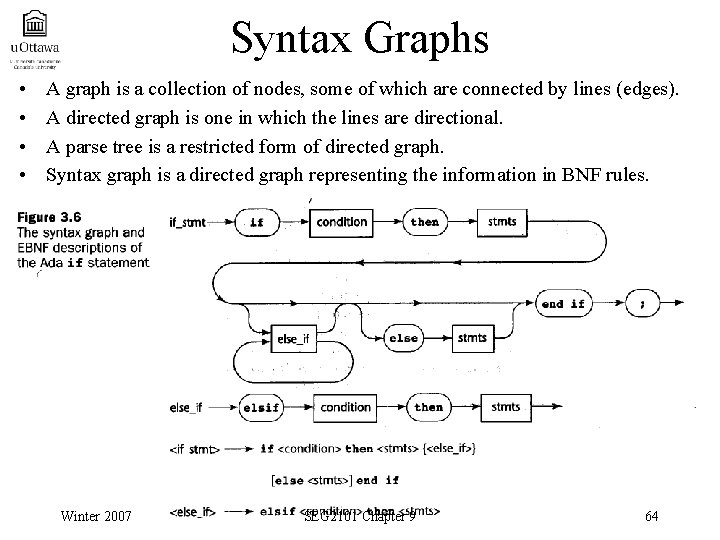

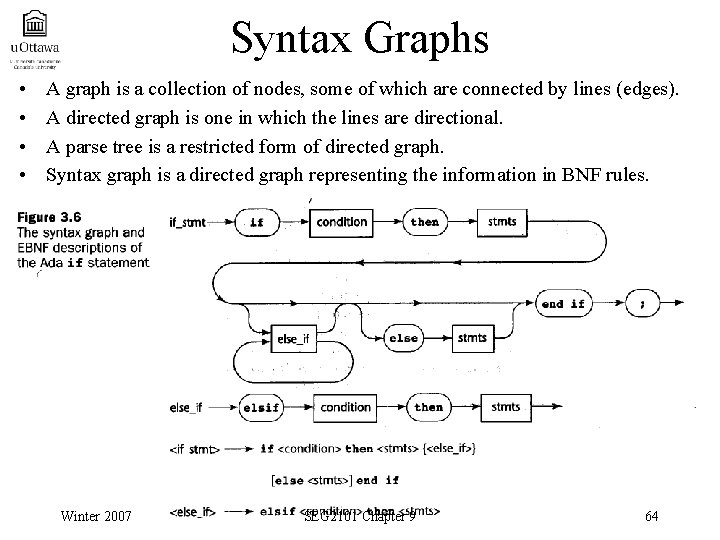

Syntax Graphs • A graph is a collection of nodes, some of which are connected by lines (edges). • A directed graph is one in which the lines are directional. • A parse tree is a restricted form of directed graph. • A syntax graph is a directed graph representing the information in BNF rules.

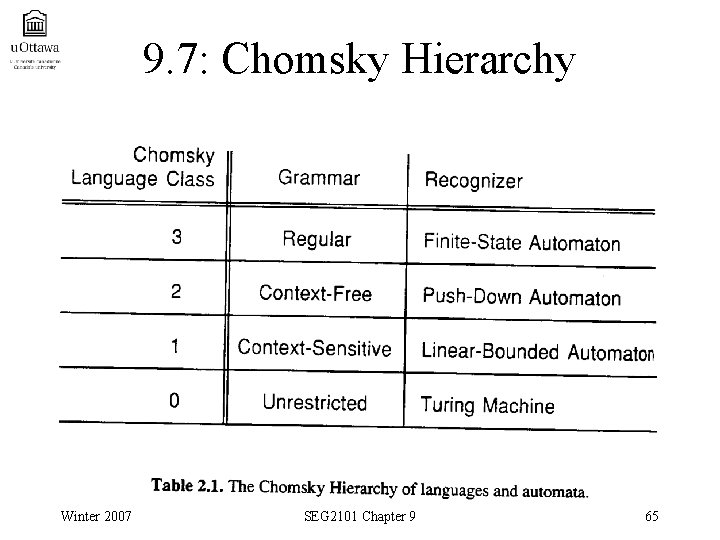

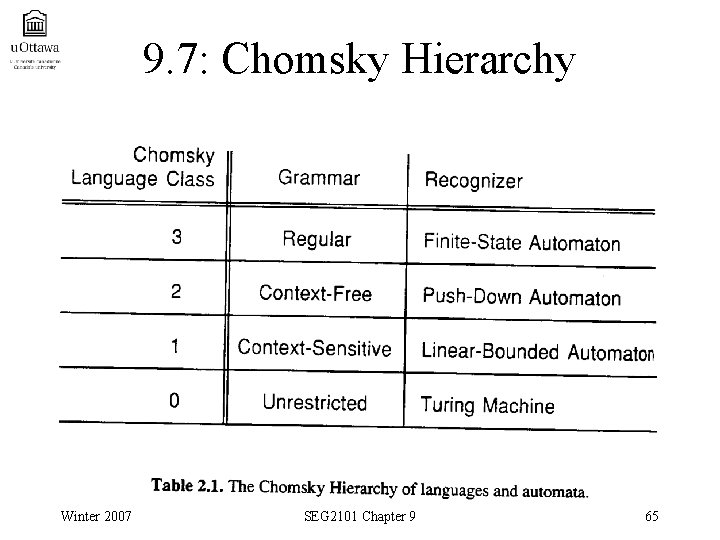

9.7: Chomsky Hierarchy

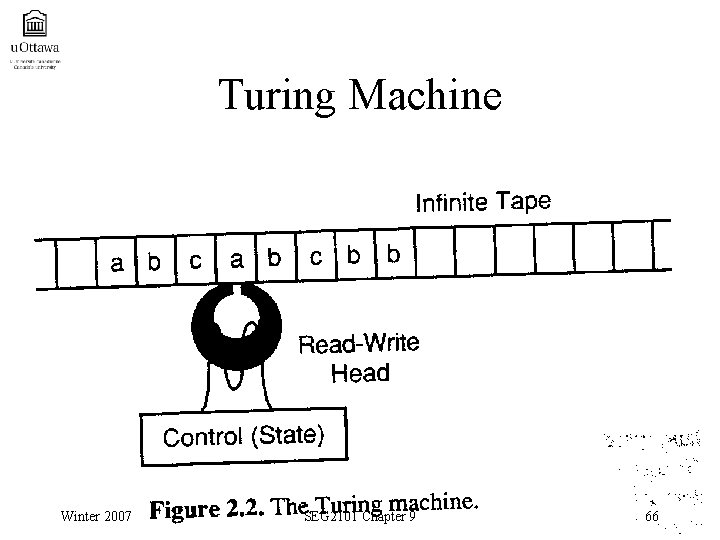

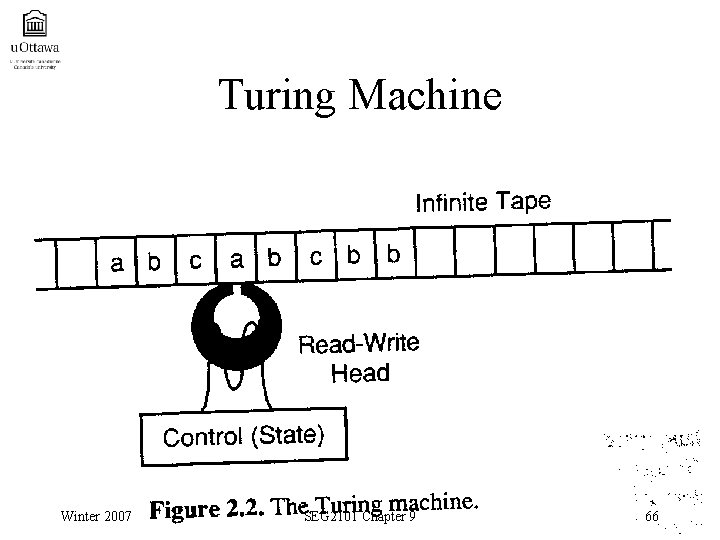

Turing Machine

Turing Machine (II) • Unrestricted grammar • Recognized by a Turing machine • A Turing machine consists of a read-write head that can be positioned anywhere along an infinite tape. • This is not a useful class of languages for compiler design.

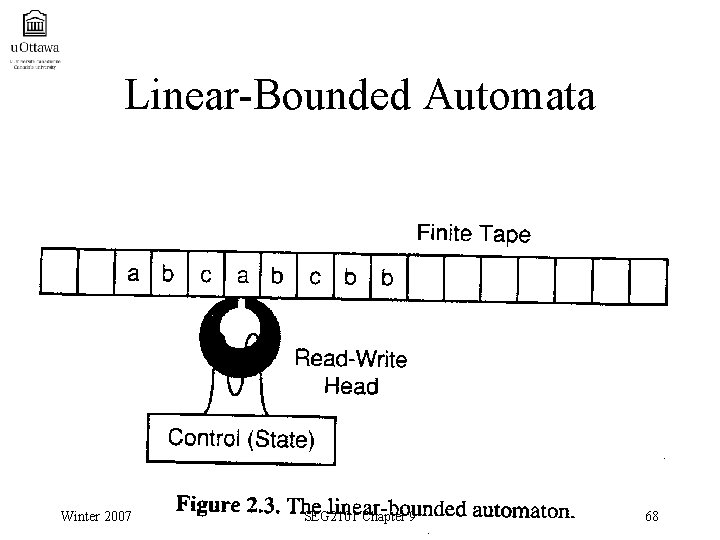

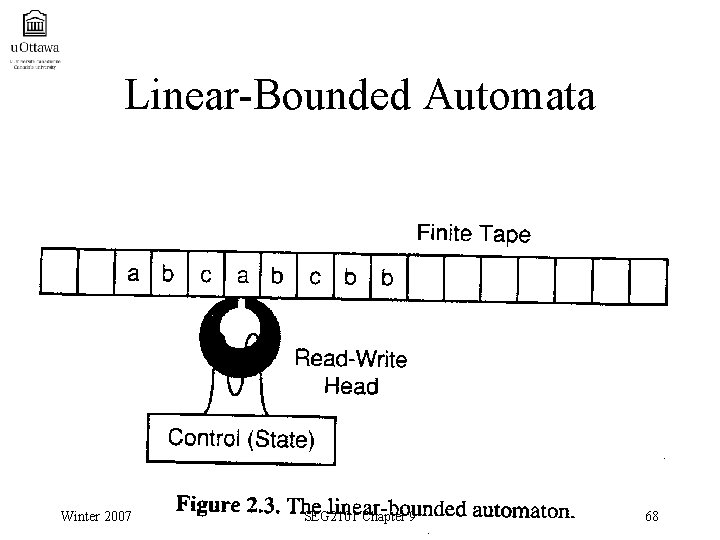

Linear-Bounded Automata

Linear-Bounded Automata • Context-sensitive • Restrictions – The left-hand side of each production must have at least one nonterminal in it – The right-hand side must not have fewer symbols than the left – There can be no empty productions (N → ε)

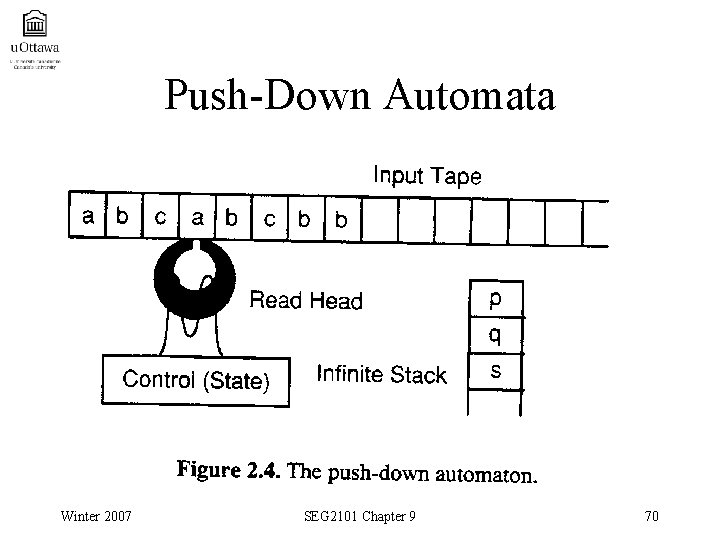

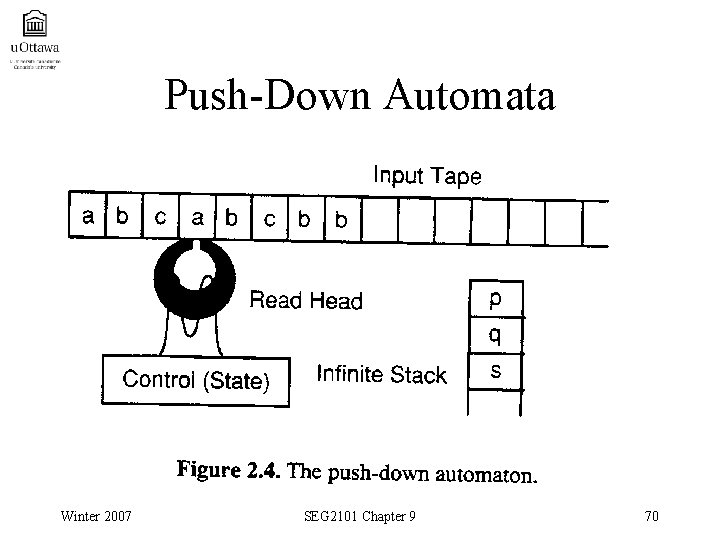

Push-Down Automata

Push-Down Automata (II) • Context-free • Recognized by push-down automata • A push-down automaton can only read its input tape, but has a stack that can grow to arbitrary depth where it can save information. • An automaton with a read-only tape and two independent stacks is equivalent to a Turing machine. • The grammar allows at most a single nonterminal (and no terminal) on the left-hand side of each production.

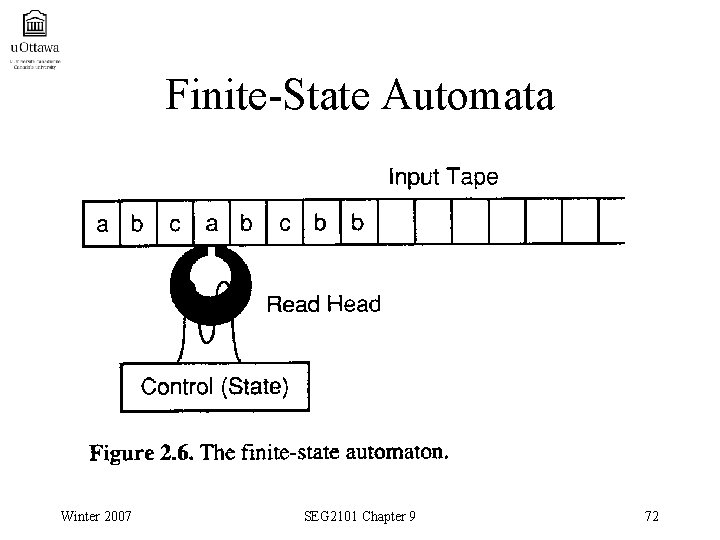

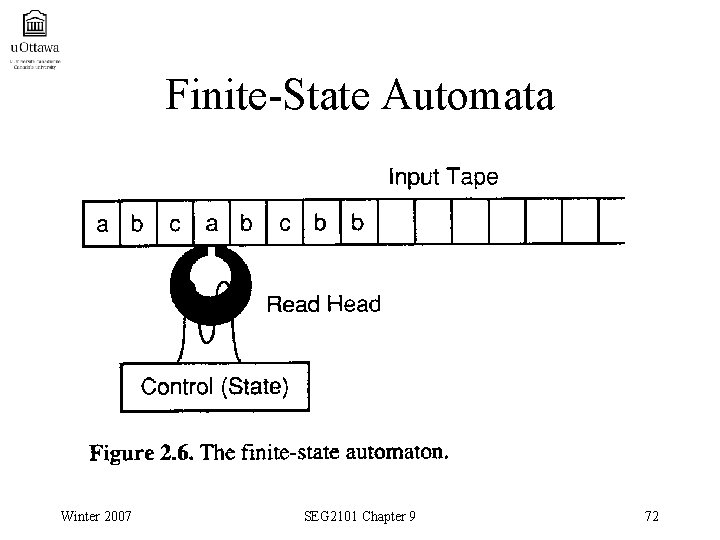

Finite-State Automata

Finite-State Automata (II) • Regular language • Anything that must be remembered about the context of a symbol on the input tape must be preserved in the state of the machine. • The grammar allows only one symbol (a nonterminal) on the left-hand side, and only one or two symbols on the right.