Chapter 4: Lexical and Syntax Analysis


Outline

This chapter covers the following major topics:

- An introduction to lexical analysis, including a simple example.
- The parsing problem.
- The primary approaches to parsing.
- The recursive-descent implementation technique for LL parsers.
- Bottom-up parsing and the LR parsing algorithm.

Introduction

There are three approaches to implementing programming languages:

1. Compilation
2. Pure interpretation
3. Hybrid implementation

Compilation Approach

- Uses a program called a compiler, which translates programs written in a high-level programming language into machine code.

Interpretation Approach

- Performs no translation.
- Programs are interpreted in their original form by a software interpreter.
- Used for smaller systems in which execution efficiency is not critical.

Hybrid Approach

- Translates programs written in a high-level language into an intermediate form, which is then interpreted.
- Programs run more slowly than under a compiler system.

Compilers separate the task of analyzing syntax into two parts:

1. Lexical analysis: deals with small-scale constructs (names, numeric literals).
2. Syntax analysis: deals with large-scale constructs (expressions, statements, program units).

Reasons for separating lexical analysis from syntax analysis:

1. Simplicity
2. Efficiency
3. Portability

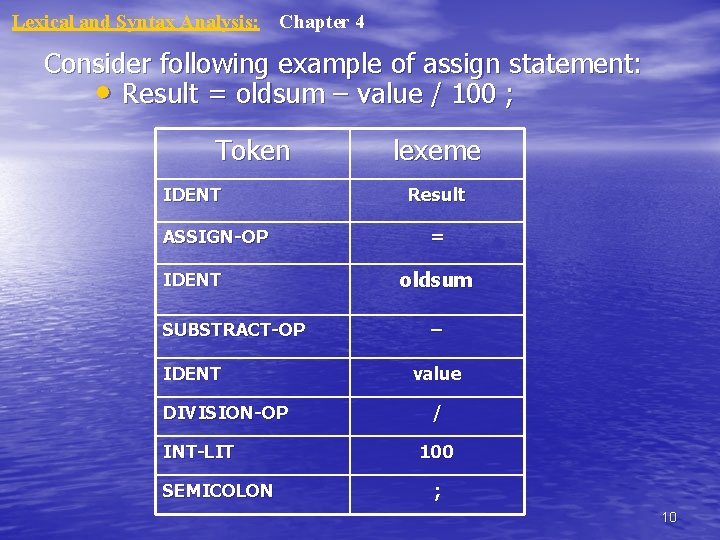

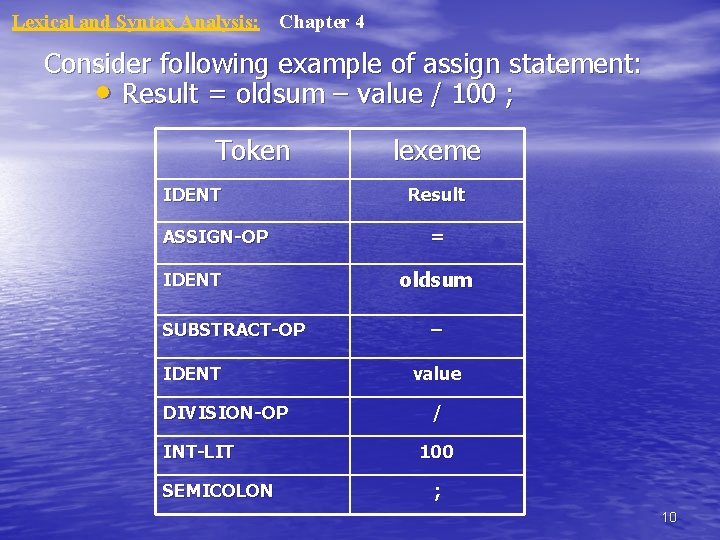

Lexical Analysis

- Lexical analysis is a part of syntax analysis.
- The lexical analyzer collects characters into logical groupings (lexemes) and assigns internal codes (tokens) to the categories of those groupings.

Consider the following example of an assignment statement:

    Result = oldsum - value / 100;

| Token | Lexeme |
|---|---|
| IDENT | Result |
| ASSIGN-OP | = |
| IDENT | oldsum |
| SUBTRACT-OP | - |
| IDENT | value |
| DIVISION-OP | / |
| INT-LIT | 100 |
| SEMICOLON | ; |

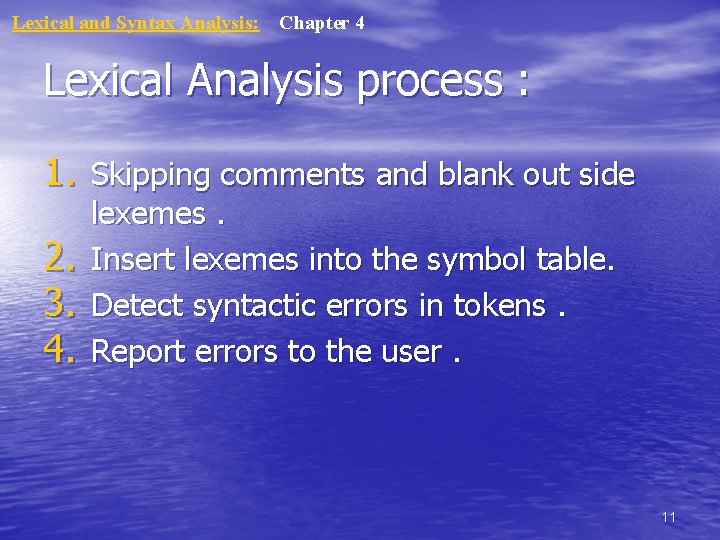

The lexical analysis process:

1. Skip comments and blanks outside lexemes.
2. Insert lexemes into the symbol table.
3. Detect syntactic errors in tokens.
4. Report errors to the user.

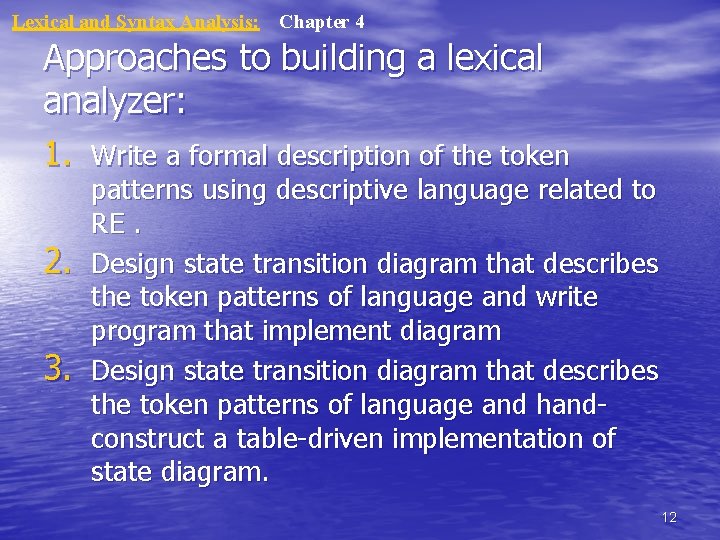

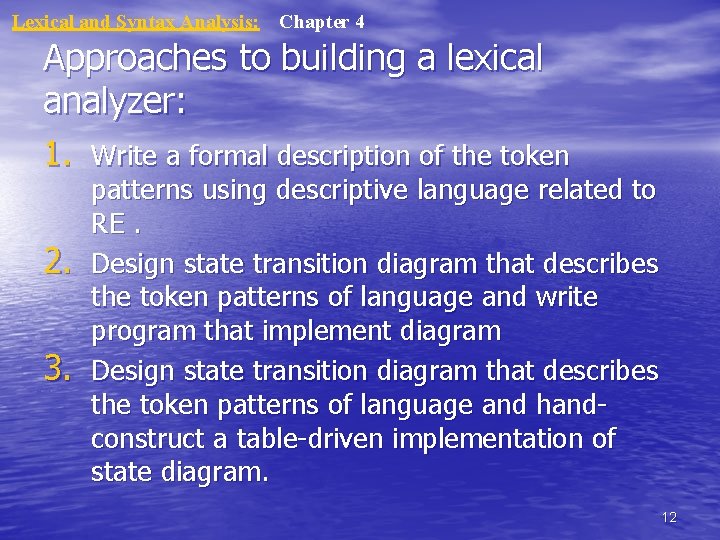

Approaches to building a lexical analyzer:

1. Write a formal description of the token patterns using a descriptive language related to regular expressions.
2. Design a state transition diagram that describes the token patterns of the language and write a program that implements the diagram.
3. Design a state transition diagram that describes the token patterns of the language and hand-construct a table-driven implementation of the state diagram.

A state diagram could include states and transitions for each and every token pattern, but the result would be a very large and complex diagram. The lexical analyzer in this example therefore needs to recognize only:

- Names
- Reserved words
- Integer literals

- The lexical analyzer uses a table of reserved words to determine which names are reserved words.
- This allows a much more compact state diagram.
- Use a single transition for any character in a class (for example, any letter or any digit) instead of one transition per character.
- Define utility subprograms for the common tasks.
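The reserved-word table mentioned above might be sketched in C as follows. This is only an illustration: the token codes, the three reserved words, and the linear search are assumptions chosen for brevity, not definitions taken from the slides.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical token codes; the numeric values are arbitrary. */
enum { IDENT = 11, FOR_CODE = 30, IF_CODE = 31, WHILE_CODE = 32 };

/* Table of reserved words (an assumed, tiny set), searched linearly. */
static const struct { const char *word; int token; } reserved[] = {
    { "for",   FOR_CODE   },
    { "if",    IF_CODE    },
    { "while", WHILE_CODE },
};

/* Return the reserved-word token for lexeme, or IDENT if the lexeme
   is an ordinary name. */
int lookup(const char *lexeme) {
    assert(lexeme != NULL);
    size_t n = sizeof reserved / sizeof reserved[0];
    for (size_t i = 0; i < n; i++) {
        if (strcmp(lexeme, reserved[i].word) == 0)
            return reserved[i].token;
    }
    return IDENT;
}
```

Because reserved words are resolved after the lexeme has been collected, the state diagram needs only one path for all names.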

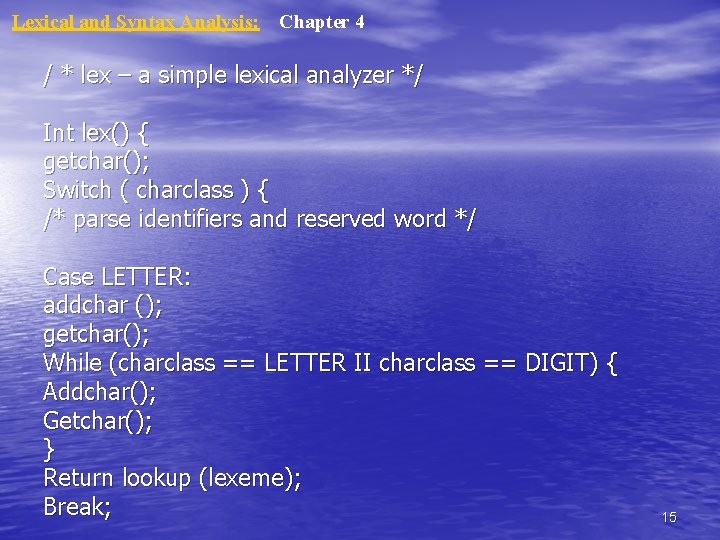

A simple lexical analyzer (first part):

    /* lex - a simple lexical analyzer */
    int lex() {
        getChar();
        switch (charClass) {
            /* parse identifiers and reserved words */
            case LETTER:
                addChar();
                getChar();
                while (charClass == LETTER || charClass == DIGIT) {
                    addChar();
                    getChar();
                }
                return lookup(lexeme);
                break;

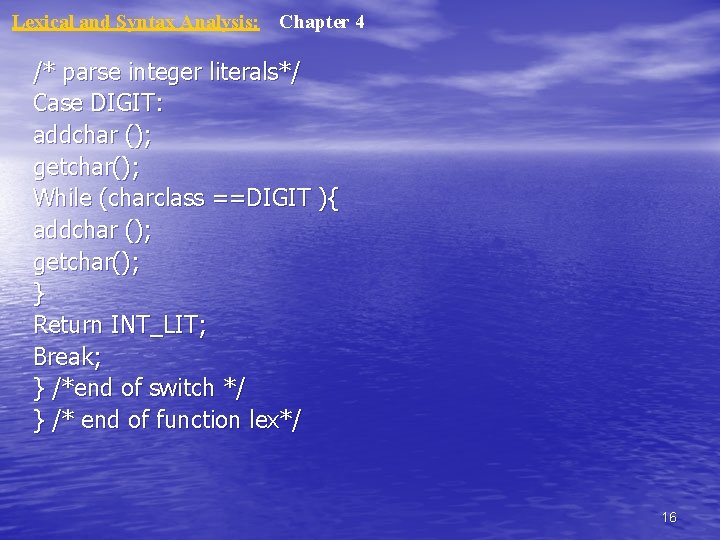

A simple lexical analyzer (second part):

            /* parse integer literals */
            case DIGIT:
                addChar();
                getChar();
                while (charClass == DIGIT) {
                    addChar();
                    getChar();
                }
                return INT_LIT;
                break;
        } /* end of switch */
    } /* end of function lex */
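The slide code leaves the helper subprograms undefined. A self-contained variant, assuming the input is a C string, could look like the sketch below. The token codes, the `lexInit` function, the `OTHER` token, and the buffer size are all assumptions. Unlike the slide code, this version keeps one character of lookahead between calls, so the character that ends a lexeme is not lost, and it returns `IDENT` for every name rather than consulting a reserved-word table.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

enum { LETTER, DIGIT, UNKNOWN, END };                 /* character classes */
enum { INT_LIT = 10, IDENT = 11, OTHER = 12, END_OF_INPUT = -1 };

static const char *input;   /* unread part of the input */
static char nextChar;       /* lookahead character */
static int  charClass;      /* class of nextChar */
static char lexeme[100];    /* lexeme being collected */
static int  lexLen;

/* Read the next character into nextChar and classify it. */
static void getChar(void) {
    nextChar = *input;
    if (nextChar == '\0') { charClass = END; return; }
    input++;
    if (isalpha((unsigned char)nextChar))      charClass = LETTER;
    else if (isdigit((unsigned char)nextChar)) charClass = DIGIT;
    else                                       charClass = UNKNOWN;
}

/* Append nextChar to lexeme; characters beyond the buffer are dropped. */
static void addChar(void) {
    if (lexLen < (int)sizeof lexeme - 1) {
        lexeme[lexLen++] = nextChar;
        lexeme[lexLen] = '\0';
    }
}

/* Start analyzing a new input string and prime the lookahead. */
void lexInit(const char *text) {
    assert(text != NULL);
    input = text;
    getChar();
}

/* Collect the next lexeme and return its token code. */
int lex(void) {
    lexLen = 0;
    lexeme[0] = '\0';
    while (charClass != END && isspace((unsigned char)nextChar))
        getChar();                          /* skip blanks between lexemes */
    switch (charClass) {
    case LETTER:                            /* names and reserved words */
        do { addChar(); getChar(); }
        while (charClass == LETTER || charClass == DIGIT);
        return IDENT;
    case DIGIT:                             /* integer literals */
        do { addChar(); getChar(); } while (charClass == DIGIT);
        return INT_LIT;
    case END:
        return END_OF_INPUT;
    default:                                /* any other single character */
        addChar();
        getChar();
        return OTHER;
    }
}
```

Calling `lexInit` once and then `lex` repeatedly yields one token per call until `END_OF_INPUT` is returned; the text of the most recent token is left in `lexeme`.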

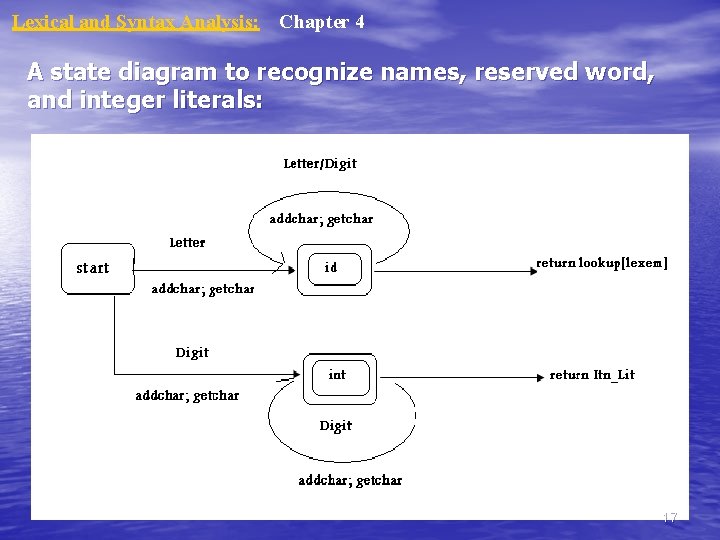

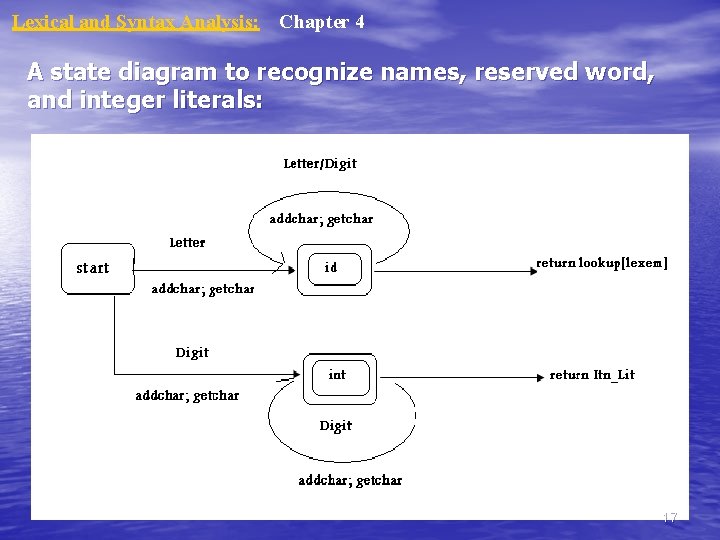

State diagram to recognize names, reserved words, and integer literals.
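Since the diagram itself is not reproduced here, the following C sketch shows one plausible table-driven encoding of such a diagram. The state names, the `FAIL` state, and the token codes are assumptions. Each table entry stands for one arrow of the diagram, with one transition per character class rather than per character.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

enum { LETTER, DIGIT, OTHER, NUM_CLASSES };          /* character classes */
enum { START, IN_NAME, IN_INT, FAIL, NUM_STATES };   /* diagram states */
enum { TOK_NONE = 0, TOK_NAME = 1, TOK_INT = 2 };    /* assumed token codes */

/* next[state][class]: FAIL marks a missing arrow in the diagram. */
static const int next[NUM_STATES][NUM_CLASSES] = {
    /*              LETTER    DIGIT    OTHER */
    /* START   */ { IN_NAME,  IN_INT,  FAIL },
    /* IN_NAME */ { IN_NAME,  IN_NAME, FAIL },
    /* IN_INT  */ { FAIL,     IN_INT,  FAIL },
    /* FAIL    */ { FAIL,     FAIL,    FAIL },
};

/* Map a character to its class. */
static int classify(char c) {
    if (isalpha((unsigned char)c)) return LETTER;
    if (isdigit((unsigned char)c)) return DIGIT;
    return OTHER;
}

/* Run the diagram over the whole string and report which kind of
   token it is, or TOK_NONE if it matches no pattern. */
int recognize(const char *s) {
    assert(s != NULL);
    int state = START;
    for (; *s != '\0'; s++)
        state = next[state][classify(*s)];
    if (state == IN_NAME) return TOK_NAME;
    if (state == IN_INT)  return TOK_INT;
    return TOK_NONE;
}
```

A reserved word follows the same path as a name; it would be told apart afterwards by a table lookup on the collected lexeme.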

The Parsing Problem

- Syntax analysis is also called parsing.
- Syntax analysis has two goals:
  1. Check the input program to determine whether it is syntactically correct.
  2. Produce either a complete parse tree or at least a trace of the structure of the complete parse tree.
- Parsers for programming languages construct parse trees for given programs.

- Syntax analyzers (parsers) are based on a formal description of the syntax of programs, called a context-free grammar, or BNF.

Advantages of using BNF:

1. BNF descriptions are clear and concise.
2. They can be used as the direct basis for the syntax analyzer.
3. The implementations are easy to maintain.

Notational conventions for grammar symbols:

1. Terminal symbols: lowercase letters at the beginning of the alphabet (a, b, c, ...).
2. Nonterminal symbols: uppercase letters at the beginning of the alphabet (A, B, C, ...).
3. Terminals or nonterminals: uppercase letters at the end of the alphabet (W, X, Y, Z).
4. Strings of terminals: lowercase letters at the end of the alphabet (w, x, y, z).
5. Mixed strings (terminals and/or nonterminals): lowercase Greek letters (α, β, γ, δ).

Categories of parsing algorithms:

1. Top-down: the tree is built from the root downward to the leaves.
2. Bottom-up: the tree is built from the leaves upward to the root.

Complexity of Parsing

- Parsing algorithms that work for any unambiguous grammar are complex and inefficient.
- Their complexity is O(n^3): the time they take is on the order of the cube of the length of the string to be parsed.
- Such algorithms must back up and reparse part of the sentence being analyzed.
- Reparsing is required when the parser has made a mistake in the parsing process.
- Backing up requires that part of the parse tree being constructed (or its trace) be dismantled and rebuilt.

Top-Down Parsers

- A top-down parser checks whether a string can be generated by a grammar by building a parse tree that starts at the start symbol and works down to the leaves.
- The parser uses symbol lookahead to choose the next rule, which lets it proceed without backtracking.
- LL parsers are examples of top-down parsers.

Recursive-Descent Parsing

- A recursive-descent parser consists of a collection of subprograms, many of which are recursive, and produces a parse tree in top-down (descending) order.
- The syntax of these structures is described with recursive grammar rules.
- EBNF is well suited to recursive-descent parsers.

Consider the following EBNF description of arithmetic expressions:

    <expr>   → <term> {(+ | -) <term>}
    <term>   → <factor> {(* | /) <factor>}
    <factor> → id | ( <expr> )

- The grammar must ensure that the code generation process, which is driven by syntax analysis, produces code that adheres to the associativity rules of the language.
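One subprogram per nonterminal is the core of the technique. The C sketch below is a recognizer for the three EBNF rules above under simplifying assumptions: every token is a single character, an id is a single letter, and the function names and the `ok` error flag are hypothetical. It reports only whether the input is a valid expression; it builds no parse tree.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

static const char *p;   /* next unread character */
static int ok;          /* cleared when a syntax error is found */

static void skipBlanks(void) { while (*p == ' ') p++; }
static void expr(void);

/* <factor> -> id | ( <expr> ) */
static void factor(void) {
    skipBlanks();
    if (isalpha((unsigned char)*p)) {
        p++;                              /* id: one letter in this sketch */
    } else if (*p == '(') {
        p++;
        expr();
        skipBlanks();
        if (*p == ')') p++; else ok = 0;  /* missing right parenthesis */
    } else {
        ok = 0;                           /* neither id nor ( */
    }
}

/* <term> -> <factor> {(* | /) <factor>} */
static void term(void) {
    factor();
    skipBlanks();
    while (ok && (*p == '*' || *p == '/')) {
        p++;
        factor();
        skipBlanks();
    }
}

/* <expr> -> <term> {(+ | -) <term>} */
static void expr(void) {
    term();
    skipBlanks();
    while (ok && (*p == '+' || *p == '-')) {
        p++;
        term();
        skipBlanks();
    }
}

/* Return 1 if text is exactly one <expr>, 0 otherwise. */
int parseExpr(const char *text) {
    assert(text != NULL);
    p = text;
    ok = 1;
    expr();
    skipBlanks();
    return ok && *p == '\0';
}
```

The EBNF braces become `while` loops, which is why EBNF maps so directly onto this style of parser.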

The LL Grammar Class

- An LL parser is a top-down parser for a subset of the context-free grammars. The first L means that it parses the input from left to right; the second L means that it constructs a leftmost derivation of the sentence.

Left Recursion

- In recursive-descent parsing, a subprogram can recurse forever if the grammar is left recursive: the subprogram for a nonterminal calls itself again before consuming any input.

Left Recursion

Consider the following rules:

    A → A + b      (direct left recursion)

    A → B a A      (indirect left recursion)
    B → A b

- The algorithms that remove direct and indirect left recursion are not covered here.

Pairwise Disjointness Test

- The test requires the ability to compute a set, called FIRST, for each RHS of a given nonterminal symbol in a grammar.
- If a nonterminal has more than one RHS, the first terminal symbol that each RHS can generate must be unique to that RHS.

Consider the following rules:

    A → aB | bAb | c

- The FIRST sets for the RHSs are {a}, {b}, and {c}, which are clearly disjoint, so the rule passes the pairwise disjointness test.

    A → aB | aAb

- The FIRST sets for the RHSs are {a} and {a}, which are clearly not disjoint, so the rule fails the test.
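For rules like the ones above, where every RHS begins with a terminal, the test reduces to checking that no two RHSs share a first symbol. The C sketch below covers only that restricted case; the function name and the convention of one lowercase letter per terminal are assumptions. An RHS that begins with a nonterminal would require a full FIRST-set computation, which is not shown.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Return 1 if the RHSs of one nonterminal pass the pairwise
   disjointness test, 0 if two of them begin with the same terminal.
   Assumes every RHS is a nonempty string whose first symbol is a
   terminal written as a lowercase letter. */
int pairwiseDisjoint(const char *rhs[], size_t count) {
    int seen[26] = {0};                 /* seen[t]: terminal already used */
    assert(rhs != NULL);
    for (size_t i = 0; i < count; i++) {
        assert(rhs[i] != NULL && islower((unsigned char)rhs[i][0]));
        int t = rhs[i][0] - 'a';        /* FIRST of this RHS is {rhs[i][0]} */
        if (seen[t])
            return 0;                   /* FIRST sets overlap */
        seen[t] = 1;
    }
    return 1;
}
```

With the RHSs of a rule passed as an array of strings, a rule whose FIRST sets are {a}, {b}, {c} yields 1, and a rule with two RHSs that both begin with a yields 0.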

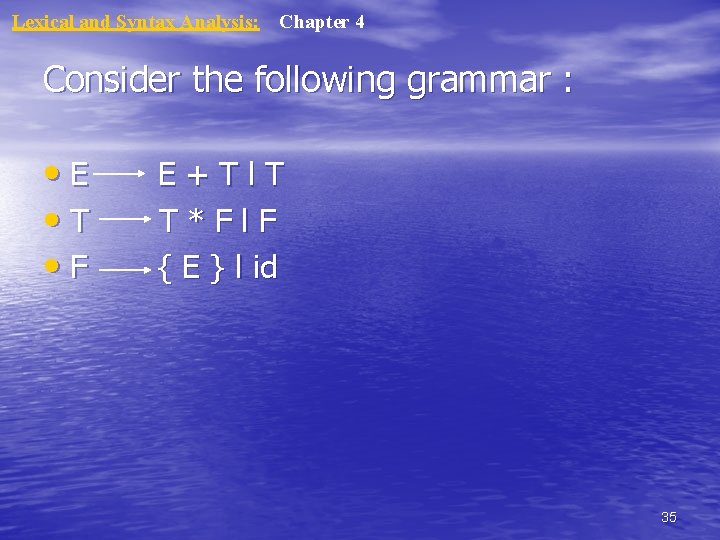

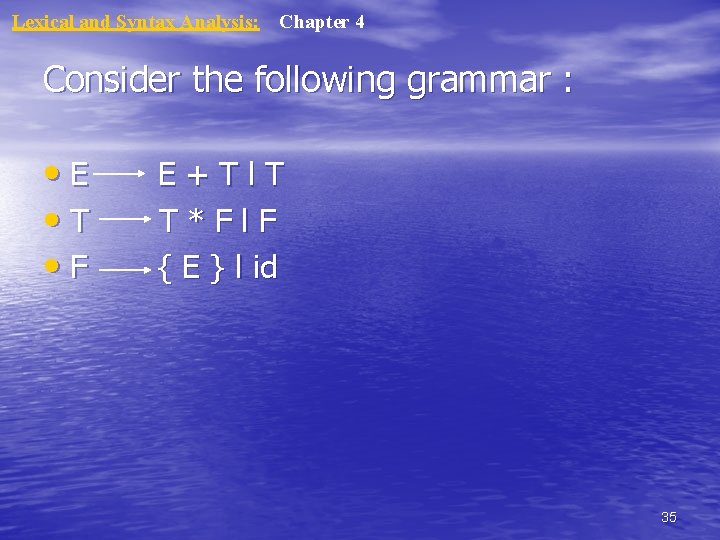

Left Factoring

- Left factoring is a process that rewrites rules that fail the pairwise disjointness test because their RHSs begin with the same terminal.
- The following example illustrates left factoring.


Consider the rule:

    <variable> → ident | ident [<expr>]

- This rule does not pass the pairwise disjointness test, because both RHSs begin with ident.
- Left factoring solves the problem. The two RHSs can be replaced by:

    <variable> → ident <new>
    <new>      → ε | [<expr>]
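After left factoring, a single lookahead character is enough to choose between the two RHSs of `<new>`. The following C sketch illustrates this. The function names are hypothetical, whitespace is not handled, and `<expr>` is replaced by a stand-in that accepts only an identifier or an integer literal, which is an assumption made to keep the example self-contained.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

static const char *p;   /* next unread character */

/* ident: a letter followed by letters or digits. Returns 1 on success. */
static int ident(void) {
    if (!isalpha((unsigned char)*p)) return 0;
    while (isalnum((unsigned char)*p)) p++;
    return 1;
}

/* Stand-in for <expr> (an assumption): an integer literal or an ident. */
static int exprStub(void) {
    if (isdigit((unsigned char)*p)) {
        while (isdigit((unsigned char)*p)) p++;
        return 1;
    }
    return ident();
}

/* <new> -> ε | [ <expr> ]   The lookahead character selects the RHS. */
static int newPart(void) {
    if (*p != '[') return 1;      /* ε alternative: consume nothing */
    p++;
    if (!exprStub()) return 0;
    if (*p != ']') return 0;
    p++;
    return 1;
}

/* <variable> -> ident <new>   Returns 1 if text is exactly one variable. */
int parseVariable(const char *text) {
    assert(text != NULL);
    p = text;
    return ident() && newPart() && *p == '\0';
}
```

The parser commits to `ident` first and defers the choice until it sees whether a `[` follows, which is exactly what the original, unfactored rule made impossible.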

Bottom-Up Parsing

- A bottom-up parser checks whether a string can be generated from a grammar by building a parse tree from the leaves and working up toward the root.
- Left-recursive grammars are acceptable to bottom-up parsers.
- Grammars for bottom-up parsers normally do not include metasymbols.
- The process of bottom-up parsing produces the reverse of a rightmost derivation.
- LR parsers are examples of bottom-up parsers.

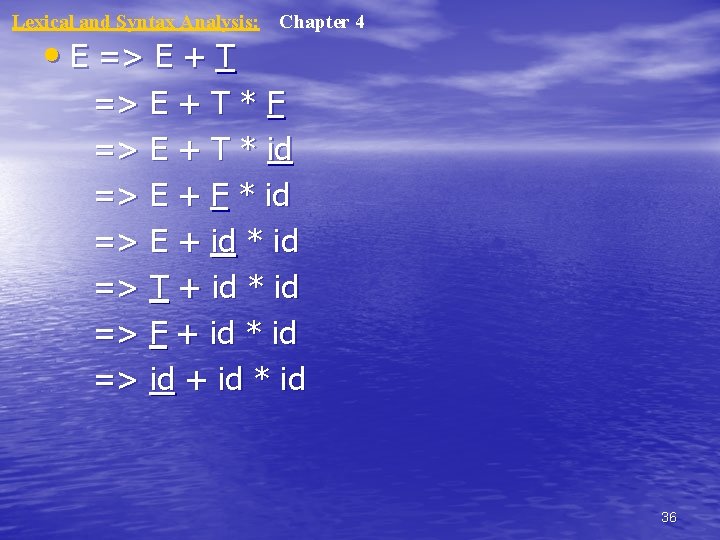

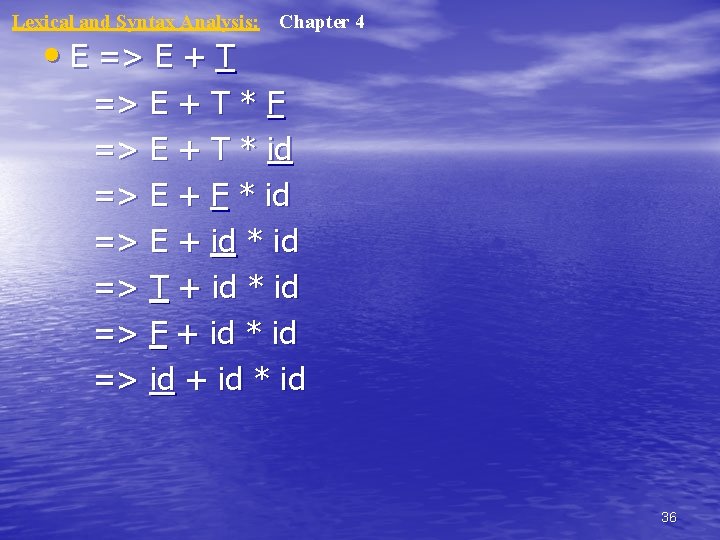

Consider the following grammar:

    E → E + T | T
    T → T * F | F
    F → ( E ) | id

    E => E + T
      => E + T * F
      => E + T * id
      => E + F * id
      => E + id * id
      => T + id * id
      => F + id * id
      => id + id * id

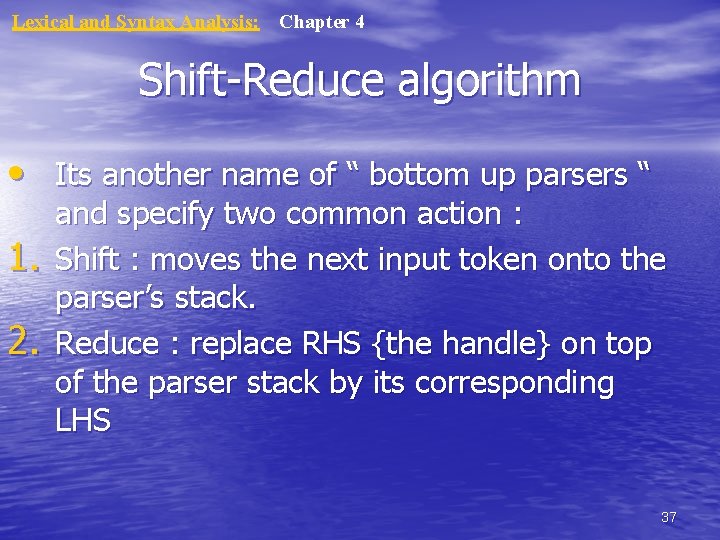

Shift-Reduce Algorithms

Bottom-up parsers are often called shift-reduce algorithms, because shift and reduce are their two most common actions:

1. Shift: moves the next input token onto the parser's stack.
2. Reduce: replaces an RHS (the handle) on top of the parser's stack with its corresponding LHS.

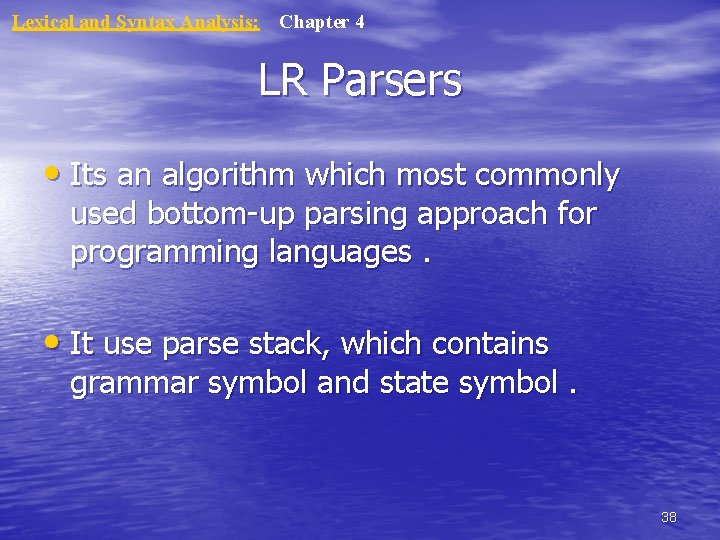

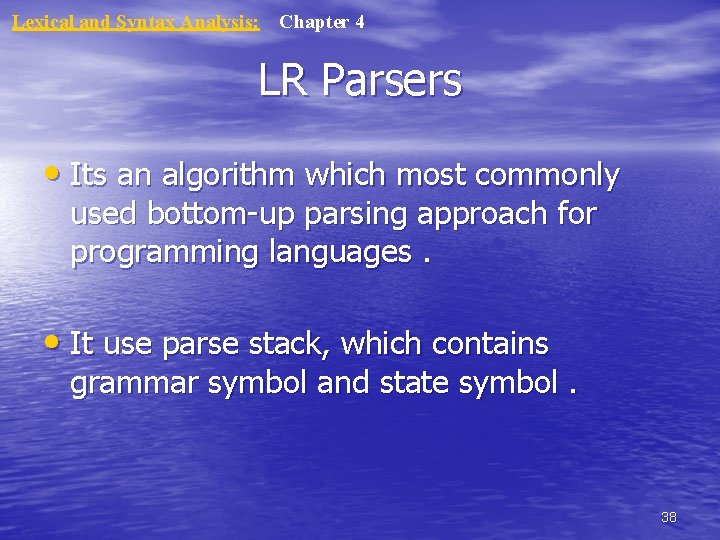

LR Parsers

- LR parsing is the most commonly used bottom-up parsing approach for programming languages.
- It uses a parse stack, which contains grammar symbols and state symbols.

Advantages of LR parsers:

1. They work for nearly all grammars that describe programming languages.
2. They work on a larger class of grammars than other bottom-up algorithms, and they are just as efficient.
3. They can detect syntax errors as soon as possible.
4. The LR class of grammars is a superset of the class parsable by LL parsers.

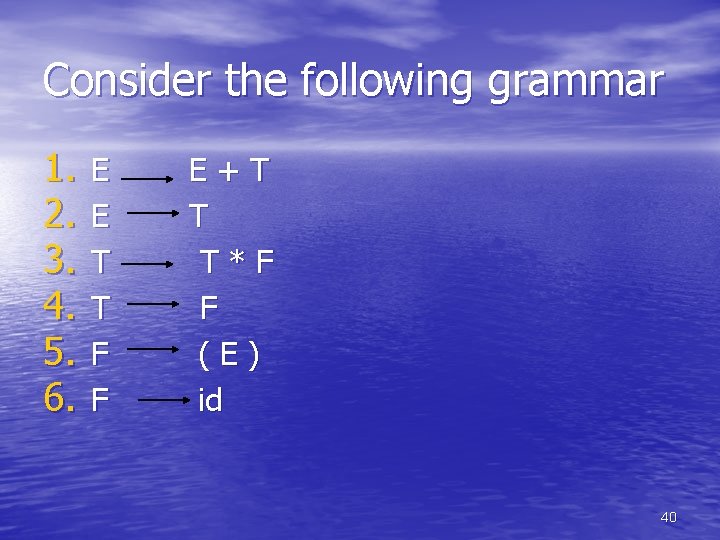

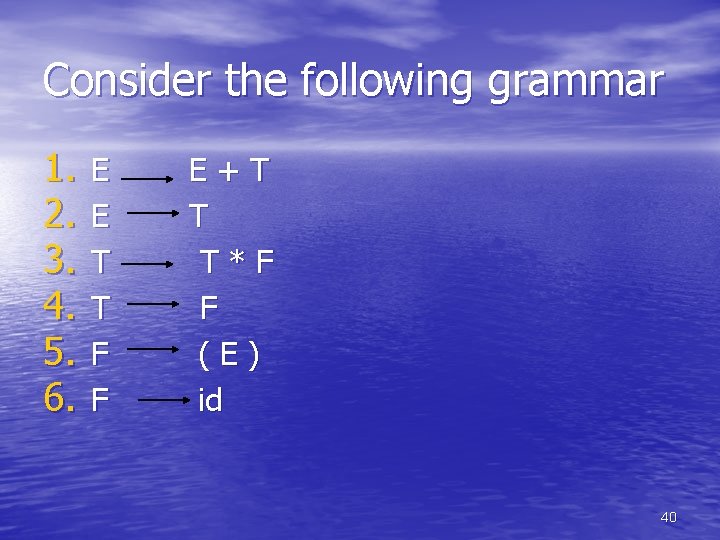

Consider the following grammar:

1. E → E + T
2. E → T
3. T → T * F
4. T → F
5. F → ( E )
6. F → id

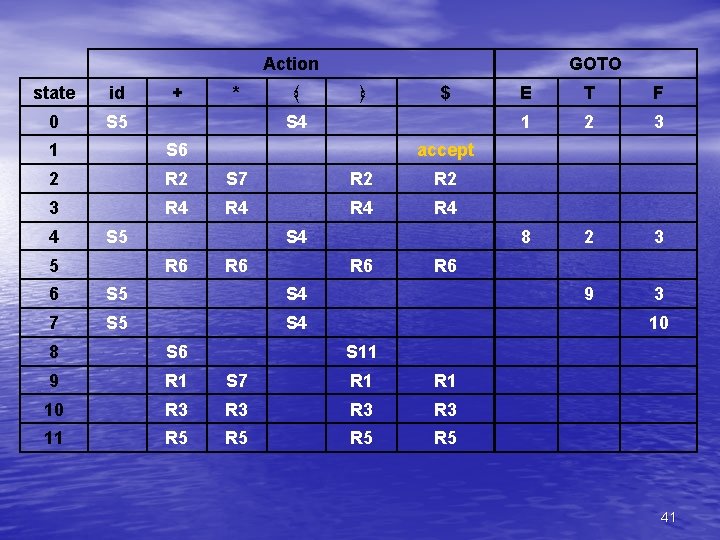

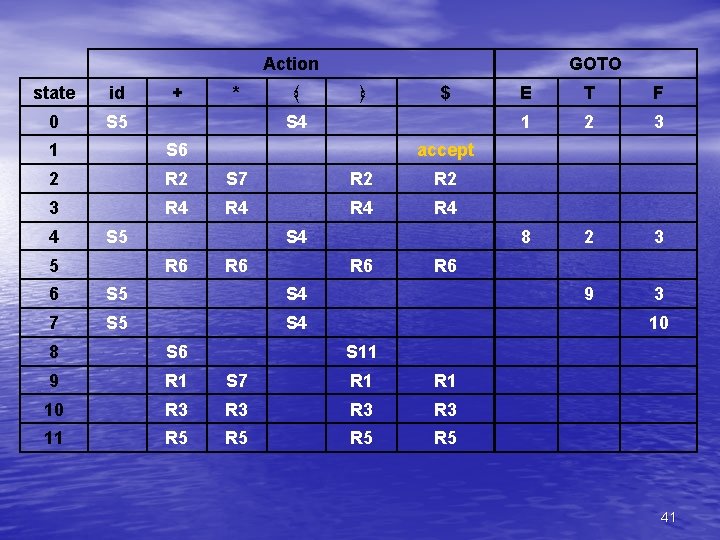

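LR parsing table for this grammar. The columns id through $ form the ACTION part and the columns E, T, and F form the GOTO part. S n means shift and go to state n; R n means reduce using rule n; a blank ACTION entry is a syntax error.

| State | id | + | * | ( | ) | $ | E | T | F |
|---|---|---|---|---|---|---|---|---|---|
| 0 | S5 | | | S4 | | | 1 | 2 | 3 |
| 1 | | S6 | | | | accept | | | |
| 2 | | R2 | S7 | | R2 | R2 | | | |
| 3 | | R4 | R4 | | R4 | R4 | | | |
| 4 | S5 | | | S4 | | | 8 | 2 | 3 |
| 5 | | R6 | R6 | | R6 | R6 | | | |
| 6 | S5 | | | S4 | | | | 9 | 3 |
| 7 | S5 | | | S4 | | | | | 10 |
| 8 | | S6 | | | S11 | | | | |
| 9 | | R1 | S7 | | R1 | R1 | | | |
| 10 | | R3 | R3 | | R3 | R3 | | | |
| 11 | | R5 | R5 | | R5 | R5 | | | |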

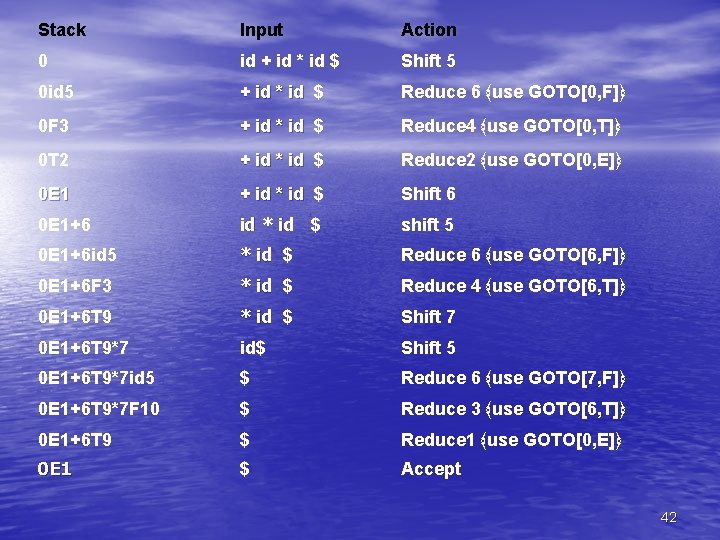

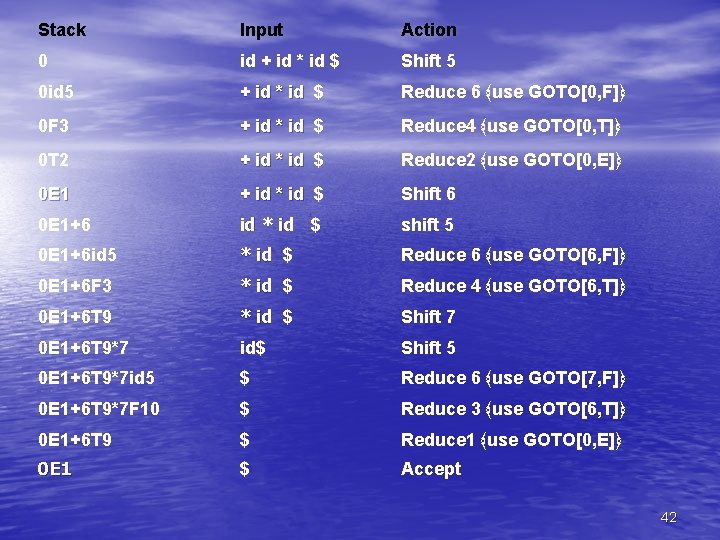

| Stack | Input | Action |
|---|---|---|
| 0 | id + id * id $ | Shift 5 |
| 0 id 5 | + id * id $ | Reduce 6 (use GOTO[0, F]) |
| 0 F 3 | + id * id $ | Reduce 4 (use GOTO[0, T]) |
| 0 T 2 | + id * id $ | Reduce 2 (use GOTO[0, E]) |
| 0 E 1 | + id * id $ | Shift 6 |
| 0 E 1 + 6 | id * id $ | Shift 5 |
| 0 E 1 + 6 id 5 | * id $ | Reduce 6 (use GOTO[6, F]) |
| 0 E 1 + 6 F 3 | * id $ | Reduce 4 (use GOTO[6, T]) |
| 0 E 1 + 6 T 9 | * id $ | Shift 7 |
| 0 E 1 + 6 T 9 * 7 | id $ | Shift 5 |
| 0 E 1 + 6 T 9 * 7 id 5 | $ | Reduce 6 (use GOTO[7, F]) |
| 0 E 1 + 6 T 9 * 7 F 10 | $ | Reduce 3 (use GOTO[6, T]) |
| 0 E 1 + 6 T 9 | $ | Reduce 1 (use GOTO[0, E]) |
| 0 E 1 | $ | Accept |
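The trace above can be reproduced by a small table-driven driver. The C sketch below is one possible encoding, not the slides' own code: the integer encoding of the actions, the array names, and the return convention are assumptions, and the table holds the standard ACTION and GOTO entries for the six-rule expression grammar. Only states are kept on the stack, because the grammar symbols shown in the trace are implied by the states.

```c
#include <assert.h>
#include <stddef.h>

enum { ID, PLUS, STAR, LPAREN, RPAREN, END, NUM_TOKENS };
enum { ACC = 99 };  /* n > 0: shift to state n; n < 0: reduce by rule -n; 0: error */

static const int action[12][NUM_TOKENS] = {
    /*         id   +   *   (   )    $  */
    /*  0 */ {  5,  0,  0,  4,  0,   0 },
    /*  1 */ {  0,  6,  0,  0,  0, ACC },
    /*  2 */ {  0, -2,  7,  0, -2,  -2 },
    /*  3 */ {  0, -4, -4,  0, -4,  -4 },
    /*  4 */ {  5,  0,  0,  4,  0,   0 },
    /*  5 */ {  0, -6, -6,  0, -6,  -6 },
    /*  6 */ {  5,  0,  0,  4,  0,   0 },
    /*  7 */ {  5,  0,  0,  4,  0,   0 },
    /*  8 */ {  0,  6,  0,  0, 11,   0 },
    /*  9 */ {  0, -1,  7,  0, -1,  -1 },
    /* 10 */ {  0, -3, -3,  0, -3,  -3 },
    /* 11 */ {  0, -5, -5,  0, -5,  -5 },
};

/* gotoTable[state][nonterminal] with E = 0, T = 1, F = 2; 0 means no entry. */
static const int gotoTable[12][3] = {
    {1, 2, 3}, {0, 0, 0}, {0, 0, 0}, {0, 0, 0}, {8, 2, 3}, {0, 0, 0},
    {0, 9, 3}, {0, 0, 10}, {0, 0, 0}, {0, 0, 0}, {0, 0, 0}, {0, 0, 0},
};

/* For rules 1..6: length of the RHS and index of the LHS nonterminal. */
static const int rhsLen[7] = { 0, 3, 1, 3, 1, 3, 1 };
static const int lhs[7]    = { 0, 0, 0, 1, 1, 2, 2 };

/* Parse a token sequence that ends with END. Returns the number of
   reductions performed if the input is accepted, or -1 on a syntax error. */
int lrParse(const int *tokens) {
    int stack[64];                       /* stack of states */
    int top = 0, reductions = 0;
    assert(tokens != NULL);
    stack[0] = 0;
    for (;;) {
        int act = action[stack[top]][*tokens];
        if (act == ACC)
            return reductions;
        if (act > 0) {                   /* shift: push state, consume token */
            assert(top + 1 < 64);
            stack[++top] = act;
            tokens++;
        } else if (act < 0) {            /* reduce: pop handle, push GOTO state */
            int rule = -act;
            top -= rhsLen[rule];
            int exposed = stack[top];
            stack[++top] = gotoTable[exposed][lhs[rule]];
            reductions++;
        } else {
            return -1;                   /* blank ACTION entry */
        }
    }
}
```

For the token sequence id + id * id $, the driver performs the same shifts and the same eight reductions listed in the trace before reaching the accept entry.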