CDA 3101 Spring 2016 Computer Storage Practical Aspects

- Slides: 37

CDA 3101 Spring 2016 Computer Storage: Practical Aspects (29 and 31 March 2016). Copyright © 2011 Prabhat Mishra

Storage Systems
- Introduction
- Disk Storage
- Dependability and Reliability
- I/O Performance
- Server Computers
- Conclusion

Case for Storage
- Shift in focus from computation to communication and storage of information
  - “The Computing Revolution” (1960s to 1980s): IBM, Control Data Corp., Cray Research
  - “The Information Age” (1990 to today): Google, Yahoo, Amazon, …
- Storage emphasizes reliability and scalability as well as cost-performance
  - A program crash is frustrating
  - Data loss is unacceptable, so dependability is the key concern
- Which software determines hardware features?
  - Operating system for storage
  - Compiler for processor

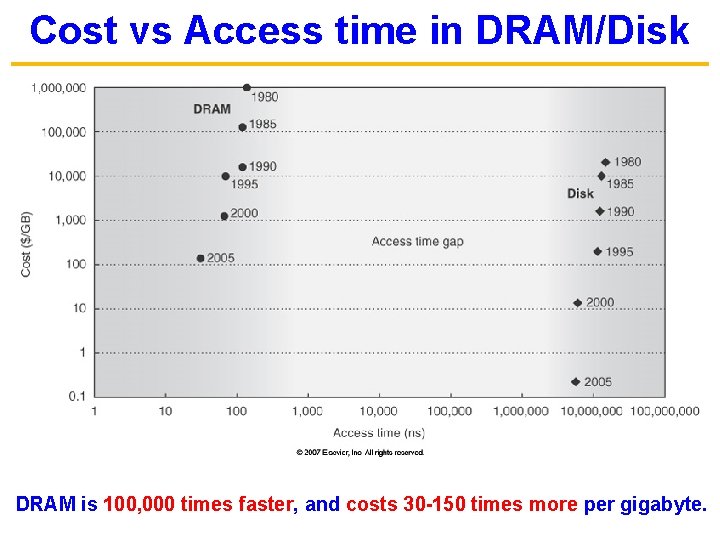

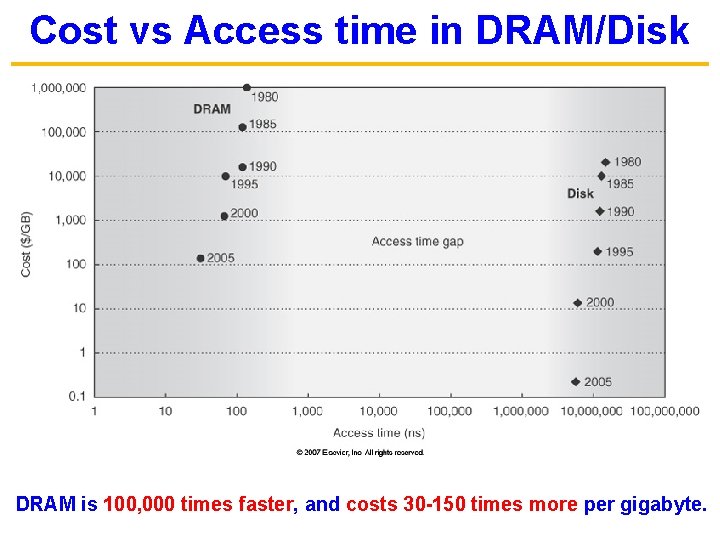

Cost vs. Access Time in DRAM/Disk
- DRAM is 100,000 times faster and costs 30 to 150 times more per gigabyte.

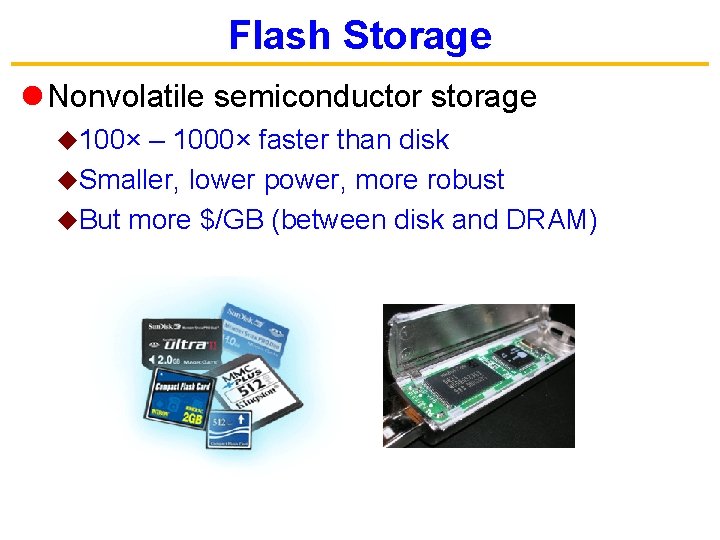

Flash Storage
- Nonvolatile semiconductor storage
  - 100× to 1000× faster than disk
  - Smaller, lower power, more robust
  - But more $/GB (between disk and DRAM)

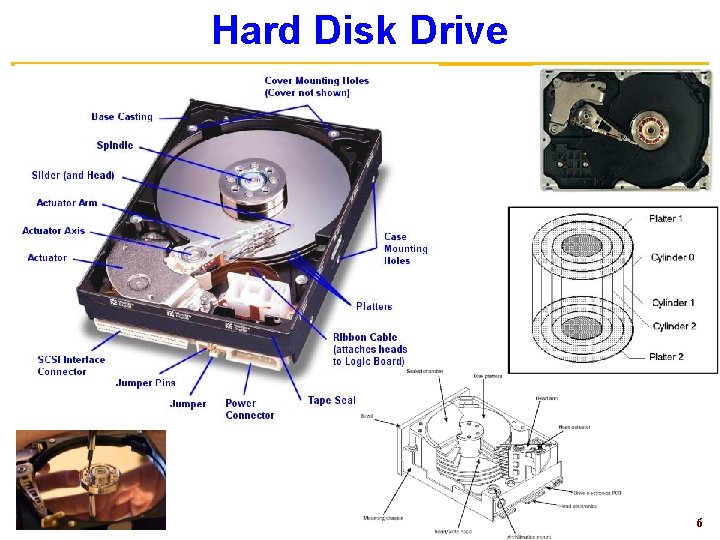

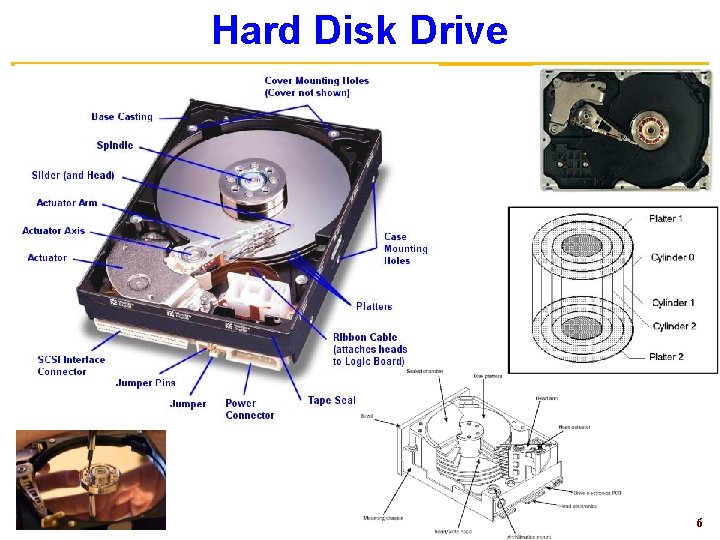

Hard Disk Drive

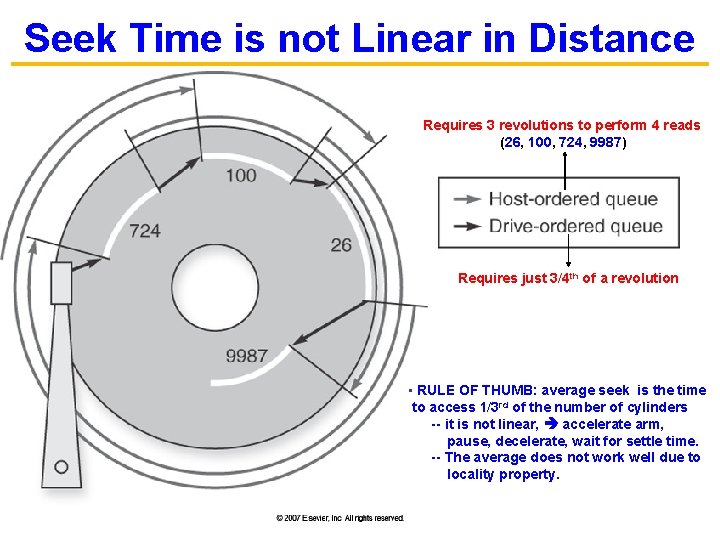

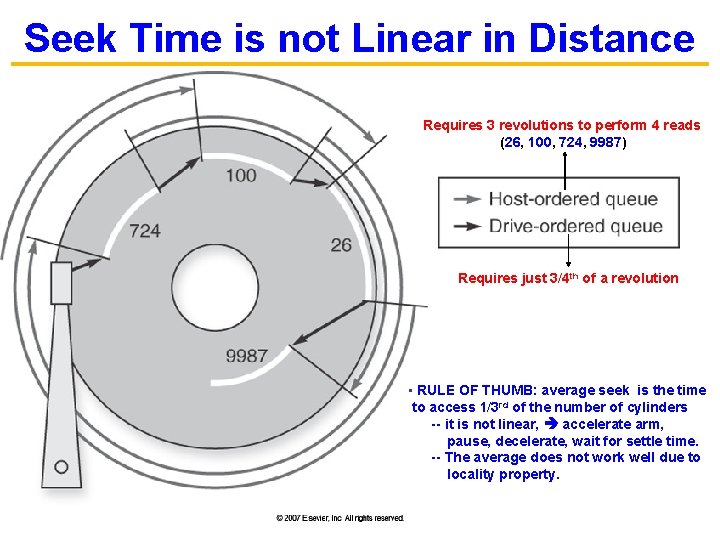

Seek Time is not Linear in Distance
- Host-ordered: performing 4 reads (26, 100, 724, 9987) in the order the host issued them requires 3 revolutions
- Drive-ordered: the same 4 reads require just 3/4 of a revolution
- RULE OF THUMB: the average seek is the time to move across 1/3 of the cylinders
  - Seek time is not linear in distance: the arm must accelerate, pause, decelerate, and wait for settle time
  - The average is a poor predictor in practice because of locality: successive accesses tend to be close together
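
The 1/3 rule of thumb follows from a simple model: if the starting and target cylinders are independent and uniformly distributed over N cylinders, the mean distance between them is (N^2 - 1)/(3N), or about N/3. The sketch below checks that closed form against brute force. It covers seek *distance* only (the mapping from distance to time is nonlinear, as noted above), and the uniform, independent-request model is exactly the assumption that locality violates. The function names are illustrative.

```python
from fractions import Fraction

def mean_seek_distance(cylinders):
    """Mean |i - j| over all ordered pairs of cylinders (brute force)."""
    total = sum(abs(i - j) for i in range(cylinders) for j in range(cylinders))
    return Fraction(total, cylinders * cylinders)

def closed_form(cylinders):
    # E|i - j| for i, j independent and uniform on {0, ..., N-1} is (N^2 - 1) / (3N).
    n = cylinders
    return Fraction(n * n - 1, 3 * n)

for n in (10, 100, 1000):
    d = mean_seek_distance(n)
    # Fraction of the cylinder count covered by an average seek; approaches 1/3.
    print(n, float(d), float(d / n))
```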

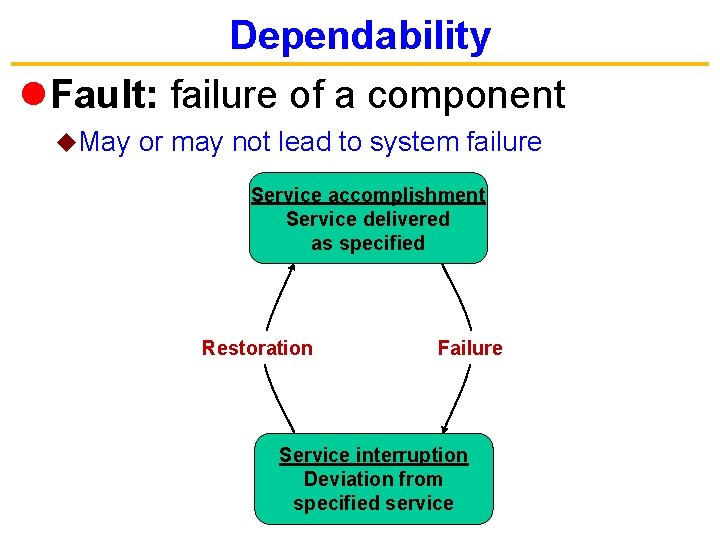

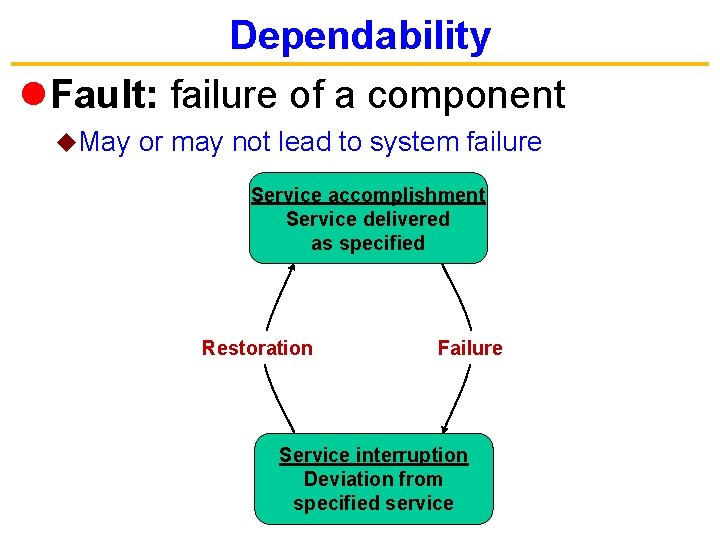

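Dependability
- Fault: failure of a component
  - May or may not lead to system failure
- The system alternates between two states:
  - Service accomplishment: service delivered as specified
  - Service interruption: deviation from specified service
  - A failure moves the system from accomplishment to interruption; restoration moves it back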

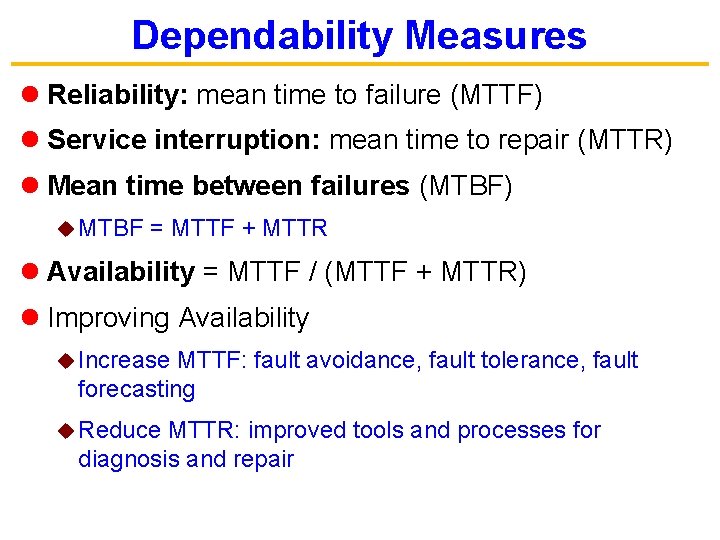

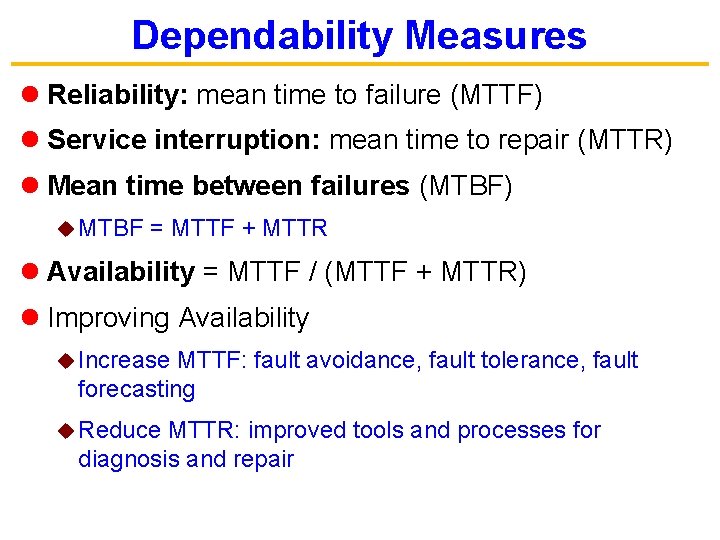

Dependability Measures
- Reliability: mean time to failure (MTTF)
- Service interruption: mean time to repair (MTTR)
- Mean time between failures (MTBF)
  - MTBF = MTTF + MTTR
- Availability = MTTF / (MTTF + MTTR)
- Improving availability
  - Increase MTTF: fault avoidance, fault tolerance, fault forecasting
  - Reduce MTTR: improved tools and processes for diagnosis and repair
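
Both formulas are easy to evaluate directly. This minimal sketch uses hypothetical numbers (MTTF = 50,000 hours, MTTR = 24 hours) chosen only for illustration; it also shows that shortening the repair time raises availability even when MTTF is unchanged.

```python
def mtbf(mttf_hours, mttr_hours):
    # Mean time between failures = time up + time down.
    return mttf_hours + mttr_hours

def availability(mttf_hours, mttr_hours):
    # Fraction of time the service is delivered as specified.
    return mttf_hours / (mttf_hours + mttr_hours)

# Hypothetical component: MTTF = 50,000 h, MTTR = 24 h.
print(f"MTBF = {mtbf(50_000, 24)} h, availability = {availability(50_000, 24):.5f}")
# Reducing MTTR to 2 h (better repair tools) with the same MTTF:
print(f"availability with 2 h repair = {availability(50_000, 2):.5f}")
```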

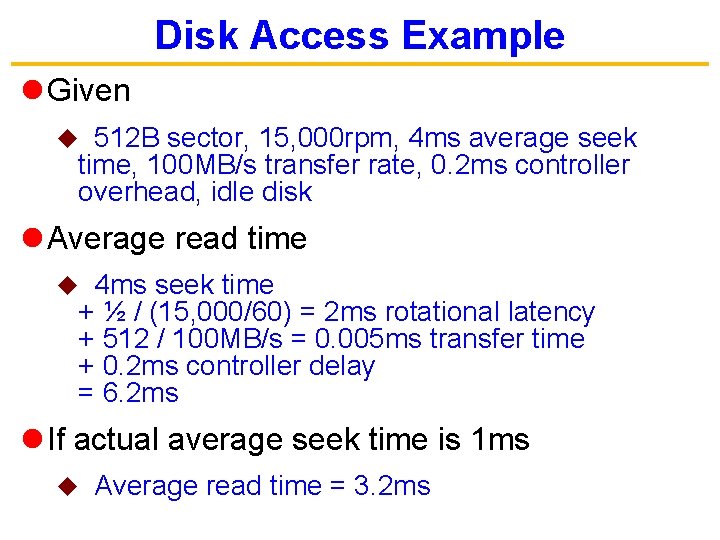

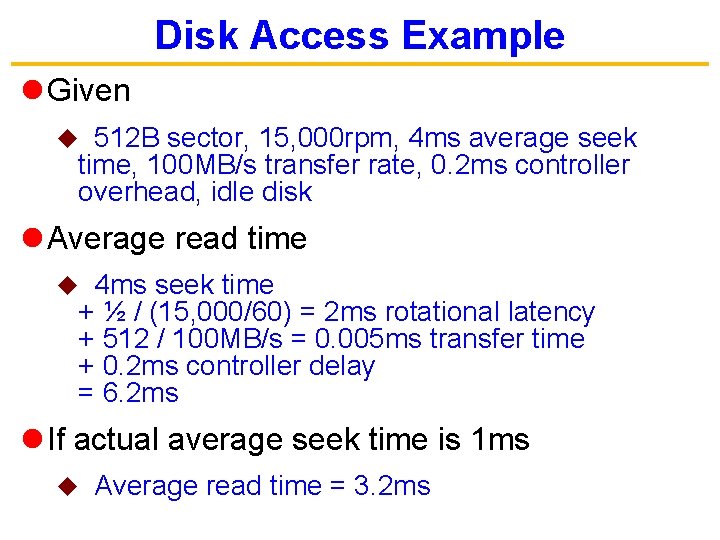

Disk Access Example
- Given: 512 B sector, 15,000 rpm, 4 ms average seek time, 100 MB/s transfer rate, 0.2 ms controller overhead, idle disk
- Average read time:
  - 4 ms seek time
  - + ½ rotation / (15,000/60 rotations per second) = 2 ms rotational latency
  - + 512 B / 100 MB/s = 0.005 ms transfer time
  - + 0.2 ms controller delay
  - = 6.2 ms
- If the actual average seek time is 1 ms:
  - Average read time = 3.2 ms
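
The same calculation as a small function. It assumes 1 MB/s = 10^6 bytes/s and an average rotational latency of half a revolution; the parameter defaults are the slide's numbers, and the function name is illustrative.

```python
def average_read_time_ms(seek_ms, rpm=15_000, sector_bytes=512,
                         transfer_bytes_per_s=100e6, controller_ms=0.2):
    # On average the desired sector is half a revolution away.
    rotational_ms = 0.5 / (rpm / 60) * 1000
    # Time to move one sector past the head at the transfer rate.
    transfer_ms = sector_bytes / transfer_bytes_per_s * 1000
    return seek_ms + rotational_ms + transfer_ms + controller_ms

print(round(average_read_time_ms(4.0), 1))  # 6.2
print(round(average_read_time_ms(1.0), 1))  # 3.2
```

The transfer term (about 0.005 ms) is negligible here; seek and rotation dominate, which is why a better real-world seek time changes the total so much.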

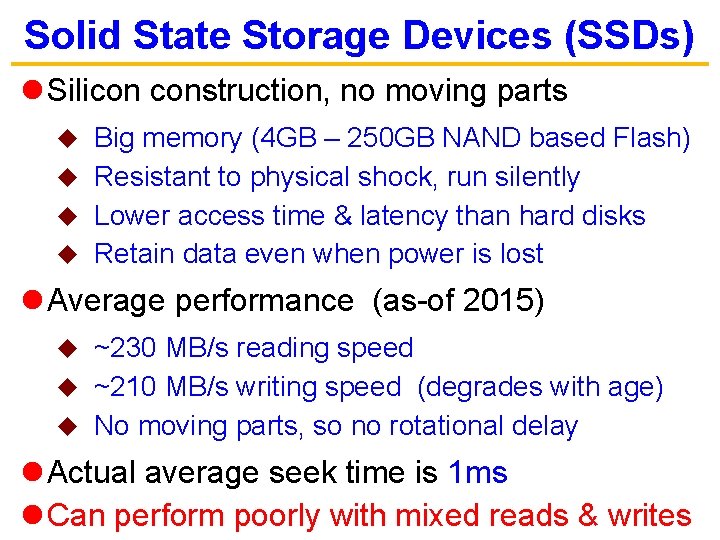

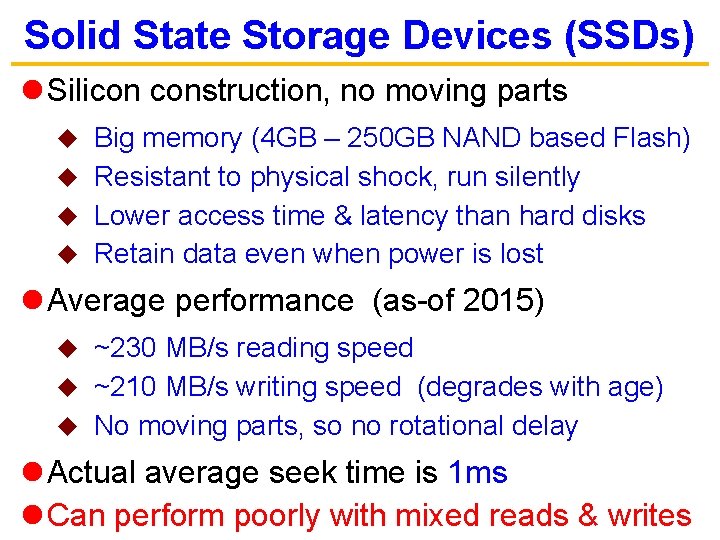

Solid State Storage Devices (SSDs)
- Silicon construction, no moving parts
  - Big memory (4 GB to 250 GB of NAND-based flash)
  - Resistant to physical shock, run silently
  - Lower access time and latency than hard disks
  - Retain data even when power is lost
- Average performance (as of 2015)
  - ~230 MB/s reading speed
  - ~210 MB/s writing speed (degrades with age)
  - No moving parts, so no rotational delay
- Actual average seek time is 1 ms
- Can perform poorly with mixed reads and writes

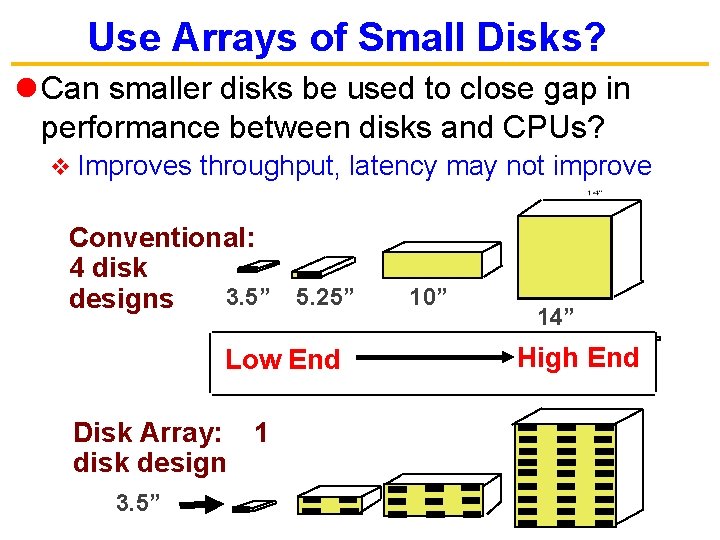

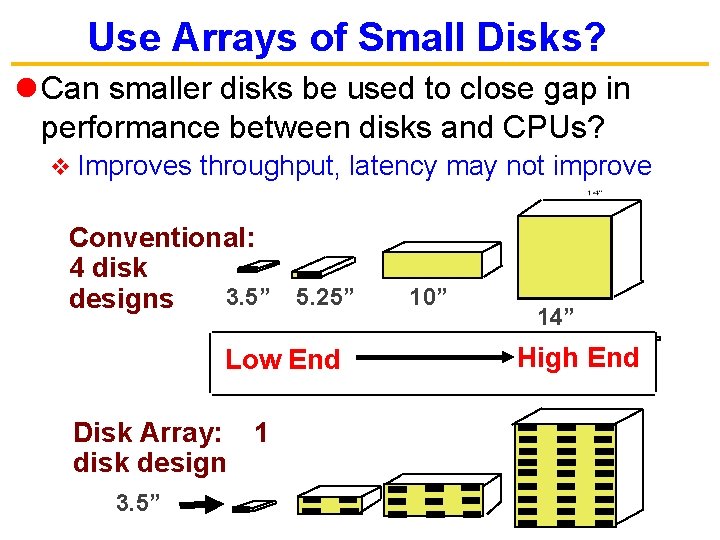

Use Arrays of Small Disks?
- Can smaller disks be used to close the gap in performance between disks and CPUs?
  - Improves throughput; latency may not improve
- Conventional: 4 disk designs (3.5”, 5.25”, 10”, 14”) spanning low end to high end
- Disk array: 1 disk design (3.5”)

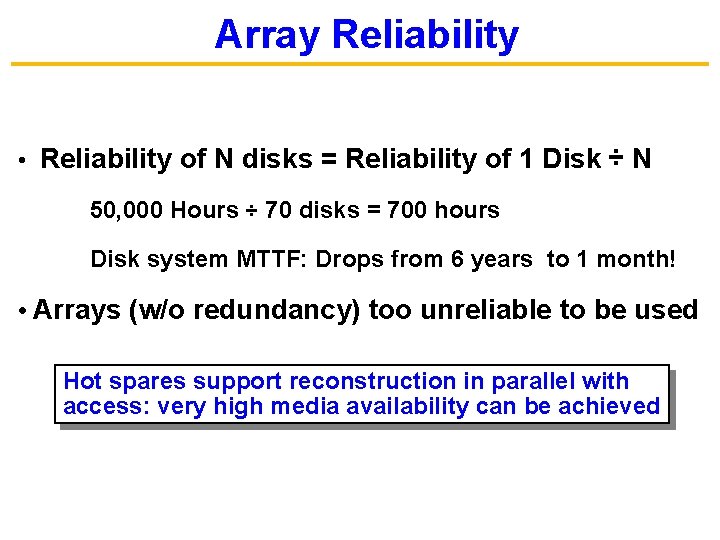

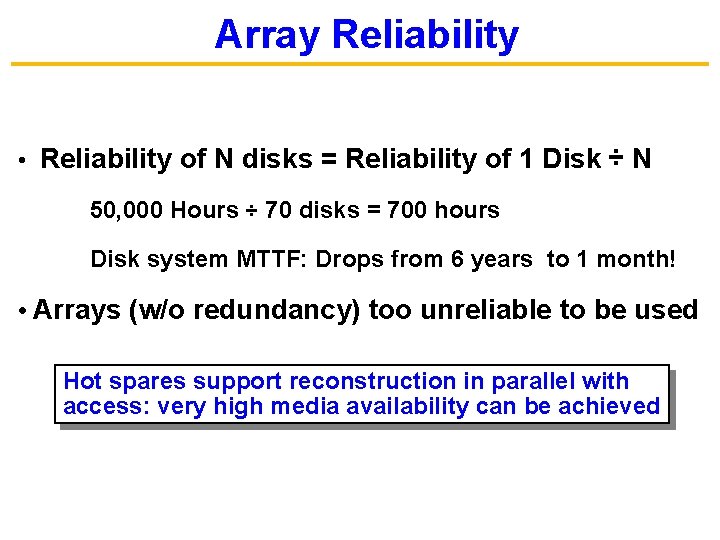

Array Reliability
- Reliability of N disks = reliability of 1 disk ÷ N
  - 50,000 hours ÷ 70 disks ≈ 700 hours
  - Disk system MTTF drops from 6 years to 1 month!
- Arrays without redundancy are too unreliable to be used
- Hot spares support reconstruction in parallel with access: very high media availability can be achieved
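
The division rule assumes independent failures at a constant rate, so the failure rates of the disks add up. A minimal sketch reproducing the slide's numbers (the year and month conversions assume 365-day years; the names are illustrative):

```python
HOURS_PER_YEAR = 24 * 365
HOURS_PER_MONTH = HOURS_PER_YEAR / 12

def array_mttf_hours(disk_mttf_hours, num_disks):
    # Without redundancy, any single disk failure takes down the array,
    # so (with independent, constant failure rates) the rates add up.
    return disk_mttf_hours / num_disks

single = 50_000
array = array_mttf_hours(single, 70)
print(f"one disk: {single / HOURS_PER_YEAR:.1f} years")  # 5.7
print(f"70 disks: {array:.0f} hours = {array / HOURS_PER_MONTH:.2f} months")
```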

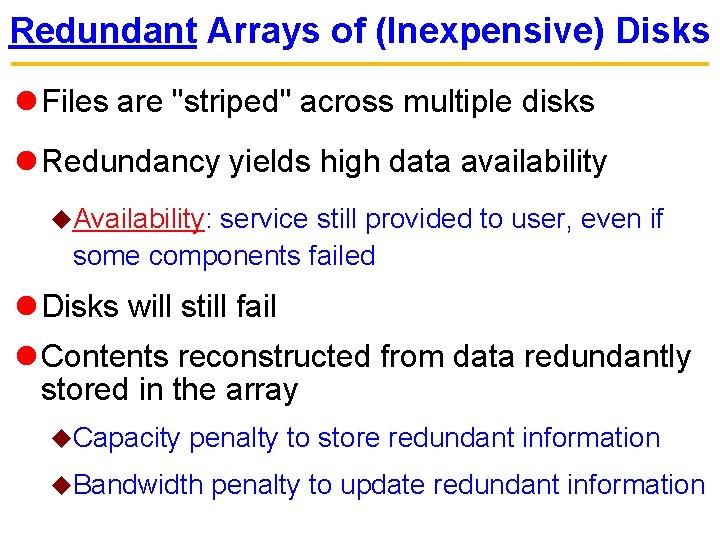

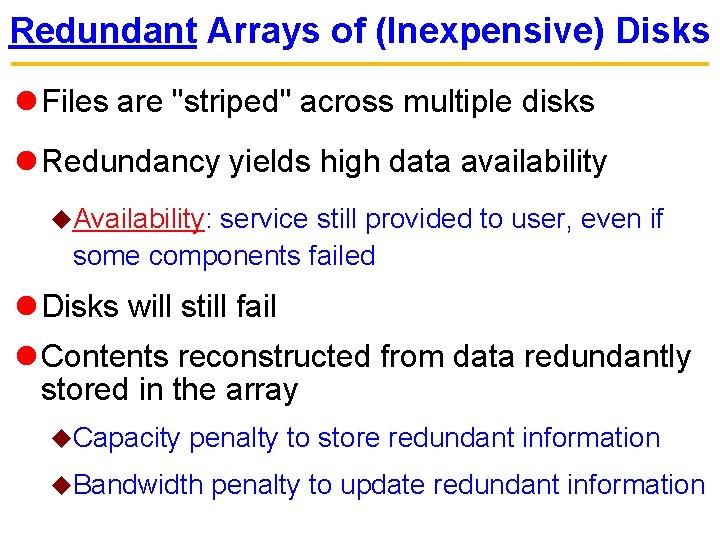

Redundant Arrays of (Inexpensive) Disks
- Files are "striped" across multiple disks
- Redundancy yields high data availability
  - Availability: service is still provided to the user even if some components have failed
- Disks will still fail
- Contents are reconstructed from data stored redundantly in the array
  - Capacity penalty to store redundant information
  - Bandwidth penalty to update redundant information
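
Striping is a fixed arithmetic mapping from a logical block to a disk. A minimal sketch, assuming round-robin placement with a configurable stripe unit and no redundancy (the function name and parameters are illustrative):

```python
def stripe_location(logical_block, num_disks, stripe_unit_blocks=1):
    """Map a logical block to (disk, block offset on that disk) for plain striping."""
    unit, within = divmod(logical_block, stripe_unit_blocks)  # which stripe unit, and where in it
    disk = unit % num_disks                                   # units go round-robin over the disks
    row = unit // num_disks                                   # how many full rounds came before
    return disk, row * stripe_unit_blocks + within

# 4 disks, 1-block stripe unit: consecutive blocks land on consecutive disks,
# so a large sequential transfer keeps all disks busy at once.
print([stripe_location(b, 4) for b in range(6)])
```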

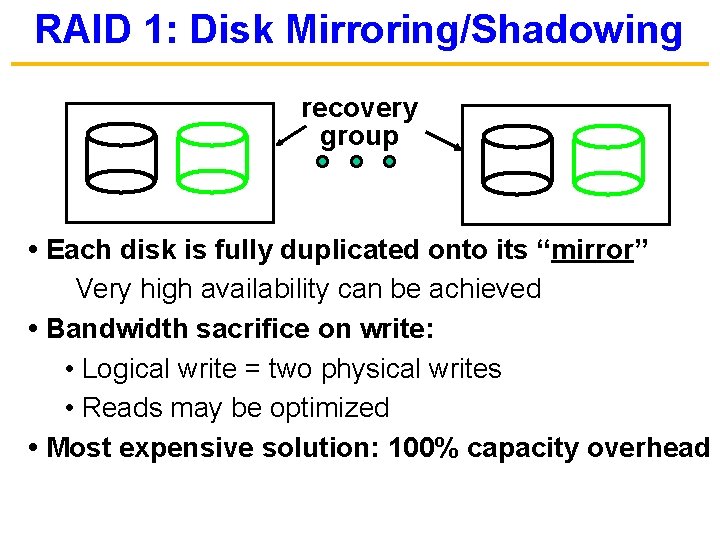

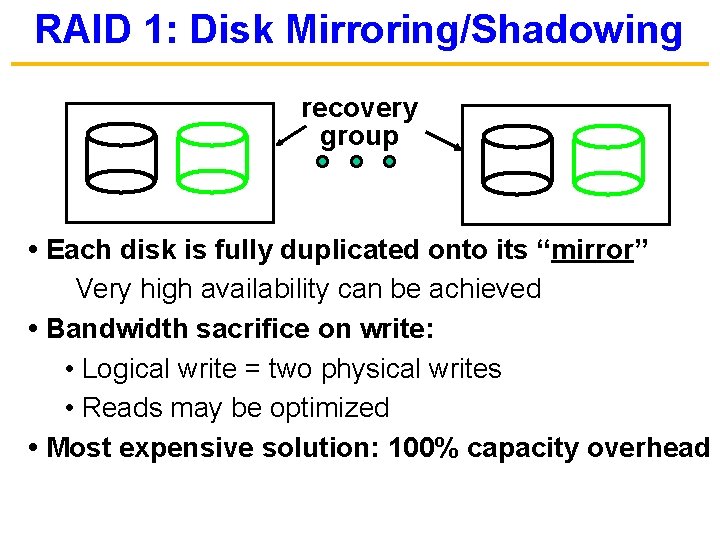

RAID 1: Disk Mirroring/Shadowing
- Each disk is fully duplicated onto its “mirror” (the pair forms a recovery group)
  - Very high availability can be achieved
- Bandwidth sacrifice on write: one logical write = two physical writes
  - Reads may be optimized
- Most expensive solution: 100% capacity overhead

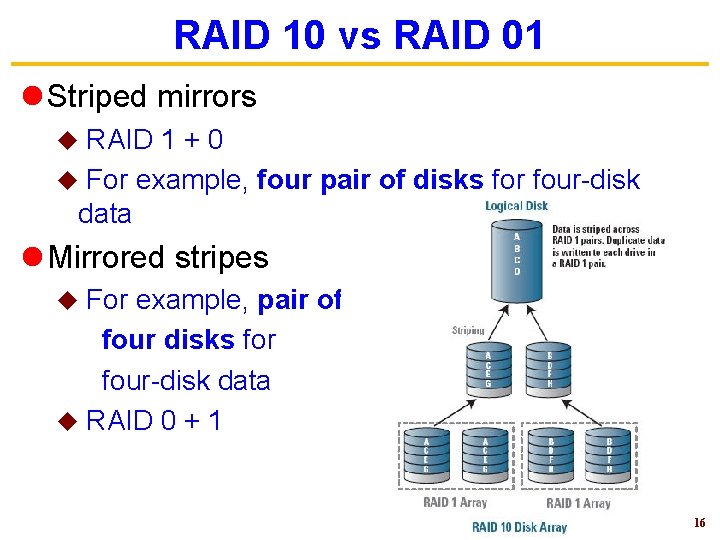

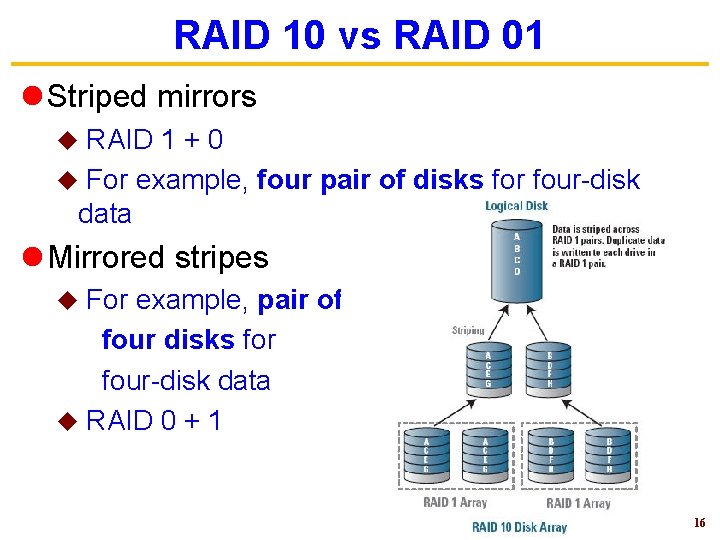

RAID 10 vs RAID 01
- Striped mirrors: RAID 1 + 0
  - For example, four pairs of disks for four-disk data
- Mirrored stripes: RAID 0 + 1
  - For example, a pair of four-disk stripe sets for four-disk data
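
The slide only names the two layouts. One way to see why the nesting order matters is to count which two-disk failures each 8-disk layout from the example survives. This sketch assumes the common simplification that a RAID 0 + 1 stripe set goes offline as soon as any of its members fails; the function names are illustrative.

```python
from itertools import combinations

NUM_PAIRS = 4                   # four-disk data, as in the slide's example
DISKS = range(2 * NUM_PAIRS)    # 8 physical disks, numbered 0..7

def raid10_survives(failed):
    # RAID 1 + 0: disks (0,1), (2,3), ... are mirror pairs; data is striped over the pairs.
    # Data is lost only if both disks of the same pair fail.
    return all(not {2 * p, 2 * p + 1} <= failed for p in range(NUM_PAIRS))

def raid01_survives(failed):
    # RAID 0 + 1: disks 0..3 form stripe set A, disks 4..7 form its mirror B.
    # Assumption: a stripe set is unusable once any of its members fails.
    a_ok = not any(d < NUM_PAIRS for d in failed)
    b_ok = not any(d >= NUM_PAIRS for d in failed)
    return a_ok or b_ok

def count_survivable(survives, k=2):
    """Return (failure patterns survived, total patterns) for k simultaneous failures."""
    cases = [set(c) for c in combinations(DISKS, k)]
    return sum(survives(c) for c in cases), len(cases)

print("RAID 10:", count_survivable(raid10_survives))  # (24, 28)
print("RAID 01:", count_survivable(raid01_survives))  # (12, 28)
```

Both layouts use the same 8 disks and survive any single failure, but under this model striped mirrors tolerate twice as many double failures.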

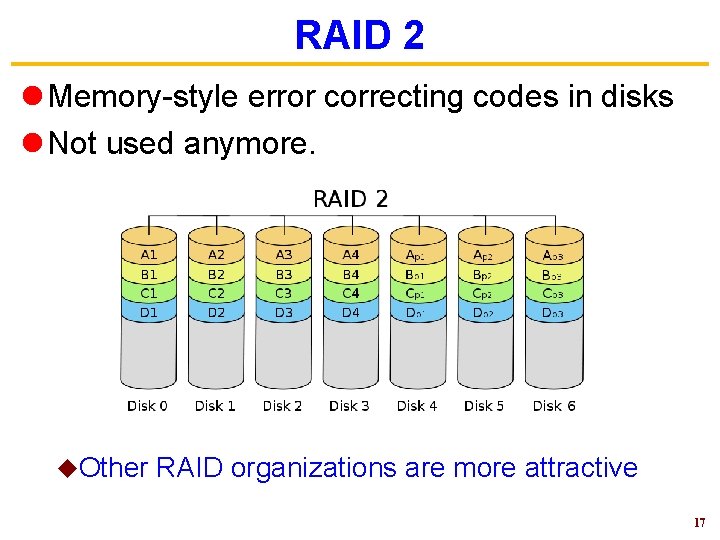

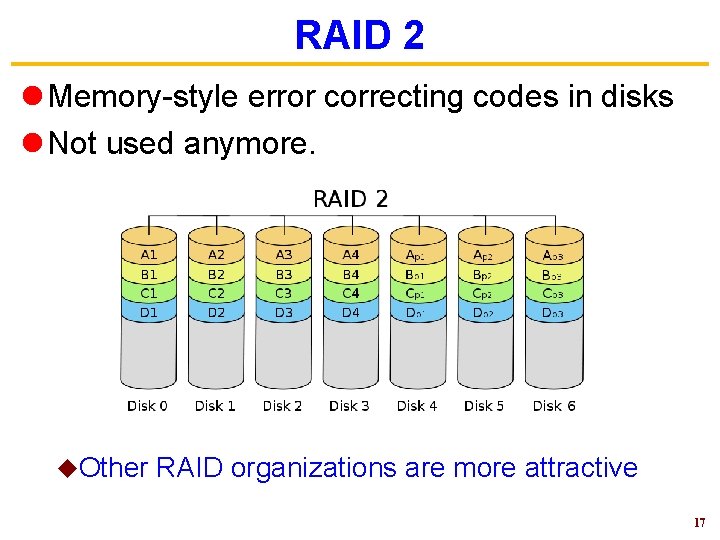

RAID 2
- Memory-style error-correcting codes in disks
- Not used anymore
  - Other RAID organizations are more attractive

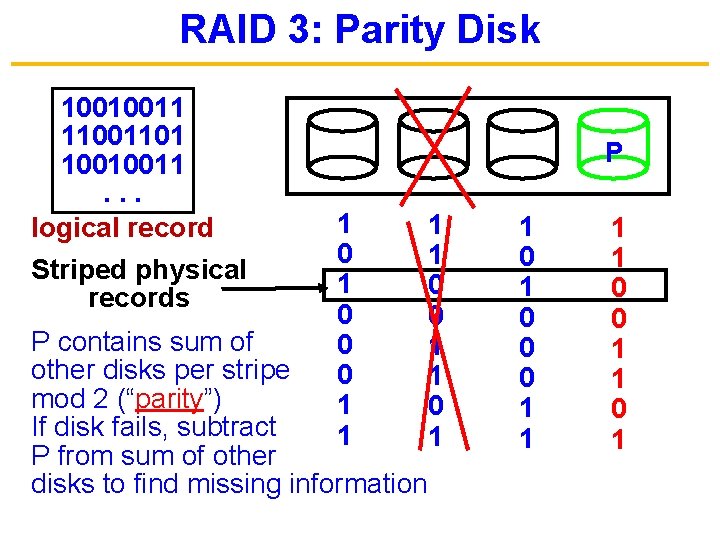

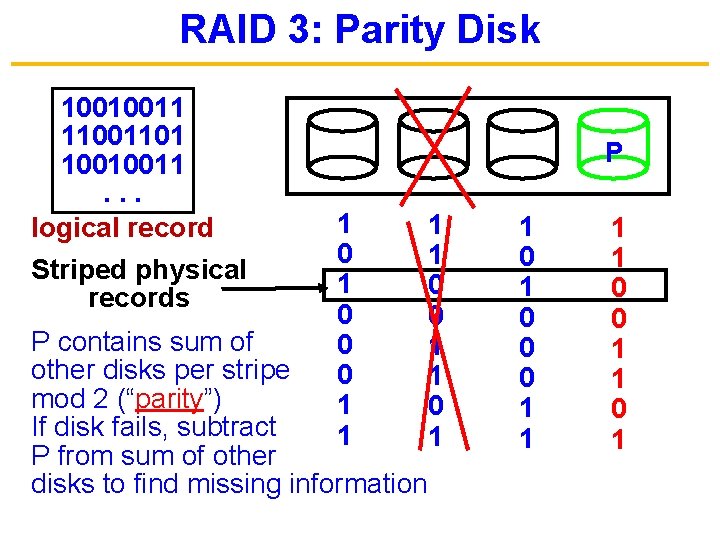

RAID 3: Parity Disk
- A logical record (10010011 11001101 10010011 …) is striped across the data disks as physical records, plus a parity disk P
- P contains the sum of the other disks per stripe, mod 2 (“parity”)
- If a disk fails, subtract P from the sum of the other disks to find the missing information
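
Because parity is a sum mod 2, both computing P and recovering a lost block reduce to bitwise XOR (mod-2 subtraction is the same as addition). A minimal sketch that treats the three bytes of the logical record above as three hypothetical data blocks; the function names are illustrative:

```python
from functools import reduce
from operator import xor

def parity(blocks):
    """Bitwise sum mod 2 (XOR) of equal-length byte strings."""
    return bytes(reduce(xor, column) for column in zip(*blocks))

def reconstruct(surviving, p):
    # XOR-ing P with the surviving blocks cancels them out, leaving the missing block.
    return parity(list(surviving) + [p])

data = [bytes([0b10010011]), bytes([0b11001101]), bytes([0b10010011])]
p = parity(data)
# Simulate losing the disk that held data[1] and rebuild it.
print(reconstruct([data[0], data[2]], p) == data[1])  # True
```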

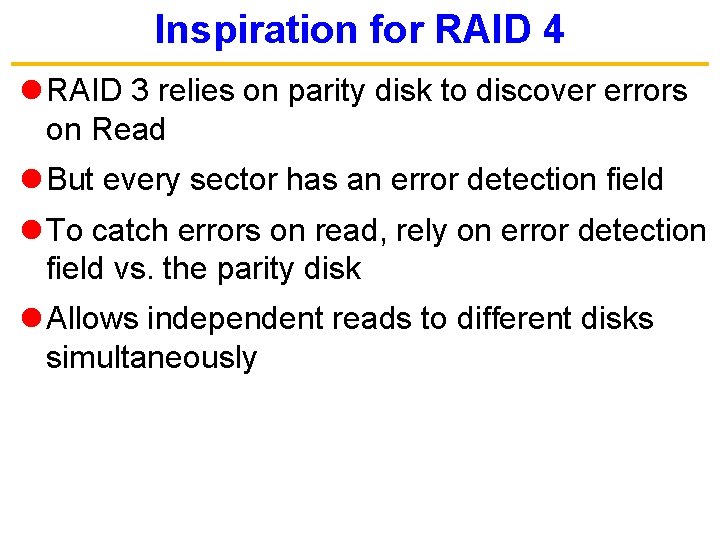

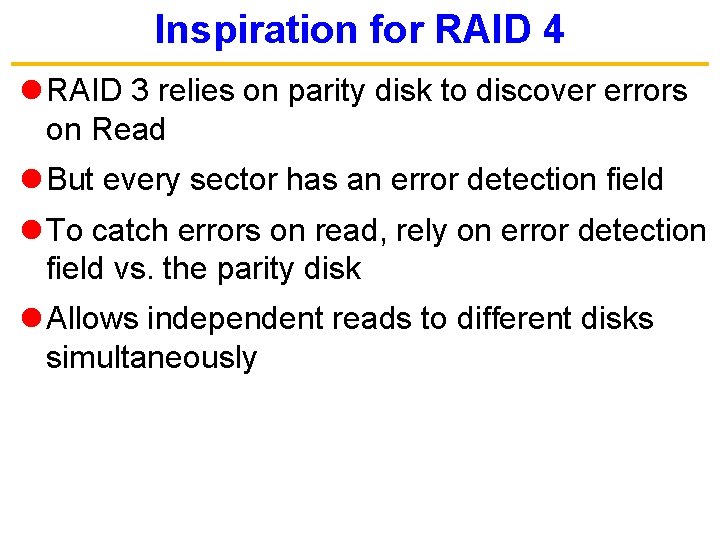

Inspiration for RAID 4
- RAID 3 relies on the parity disk to discover errors on a read
- But every sector has an error detection field
- To catch errors on a read, rely on the error detection field rather than the parity disk
- This allows independent reads to different disks simultaneously

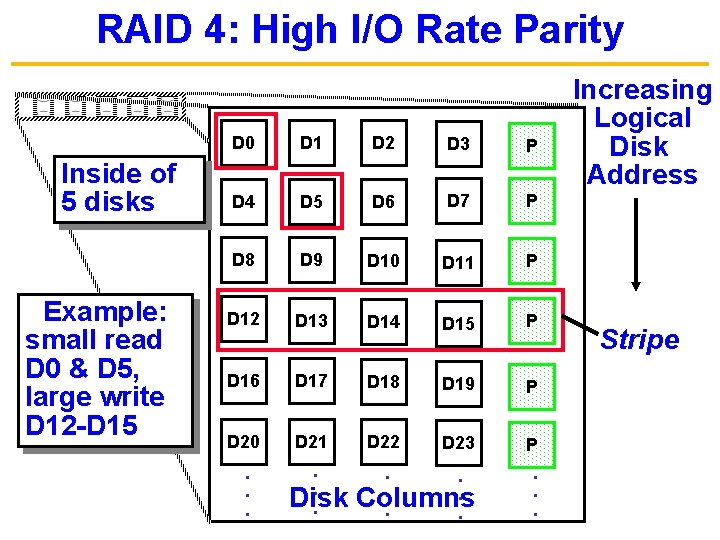

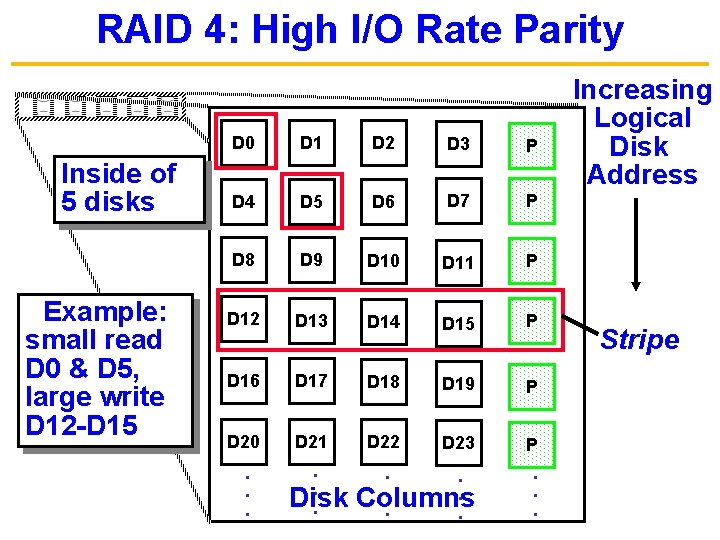

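RAID 4: High I/O Rate Parity
- Parity is kept inside a group of 5 disks: each row below is one stripe, each column is one disk, and logical disk addresses increase down the stripes
- Example: small reads of D0 and D5; large write of D12 to D15

| Disk 1 | Disk 2 | Disk 3 | Disk 4 | Disk 5 |
|---|---|---|---|---|
| D0 | D1 | D2 | D3 | P |
| D4 | D5 | D6 | D7 | P |
| D8 | D9 | D10 | D11 | P |
| D12 | D13 | D14 | D15 | P |
| D16 | D17 | D18 | D19 | P |
| D20 | D21 | D22 | D23 | P |
| … | … | … | … | … |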

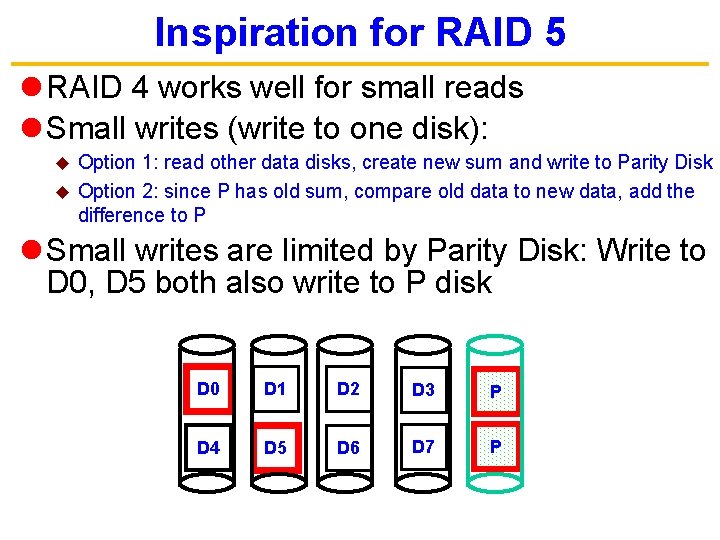

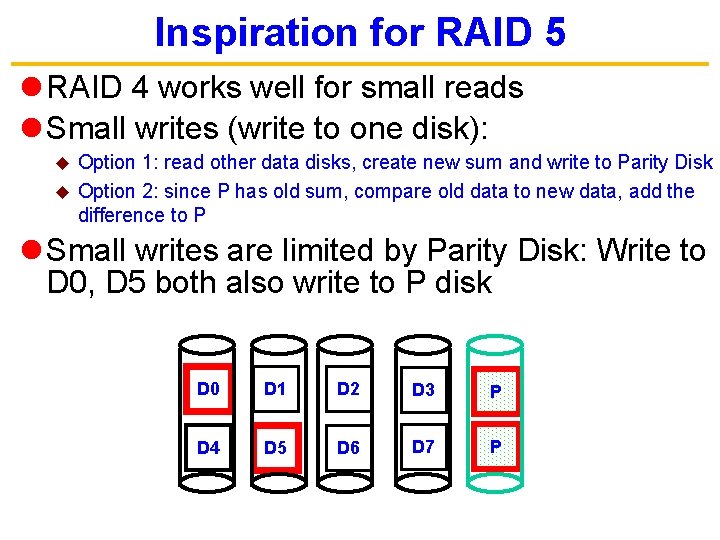

Inspiration for RAID 5
- RAID 4 works well for small reads
- Small writes (write to one disk):
  - Option 1: read the other data disks, create the new sum, and write it to the parity disk
  - Option 2: since P holds the old sum, compare the old data to the new data and add the difference to P
- Small writes are limited by the parity disk: writes to D0 and D5 must both also write to the P disk (stripe 0 is D0 D1 D2 D3 P, stripe 1 is D4 D5 D6 D7 P, with P on the same disk)
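
Option 2 works because, mod 2, the new parity equals the old parity plus the change in the data. With N data disks, Option 1 needs N - 1 reads plus 2 writes, whereas Option 2 always needs 2 reads (old data, old parity) and 2 writes (new data, new parity). A minimal sketch with hypothetical 4-bit blocks that checks the shortcut against a full recomputation:

```python
from functools import reduce
from operator import xor

def full_stripe_parity(data_blocks):
    # Option 1: read every data block in the stripe and recompute the sum mod 2.
    return reduce(xor, data_blocks, 0)

def small_write_parity(old_parity, old_data, new_data):
    # Option 2: (old_data XOR new_data) is the change; adding it to the old
    # parity gives the new parity without touching the other data disks.
    return old_parity ^ old_data ^ new_data

stripe = [0b1001, 0b1100, 0b0110, 0b0011]   # hypothetical blocks D0..D3
p = full_stripe_parity(stripe)
new_d0 = 0b1111
p_fast = small_write_parity(p, stripe[0], new_d0)
stripe[0] = new_d0
print(p_fast == full_stripe_parity(stripe))  # True
```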

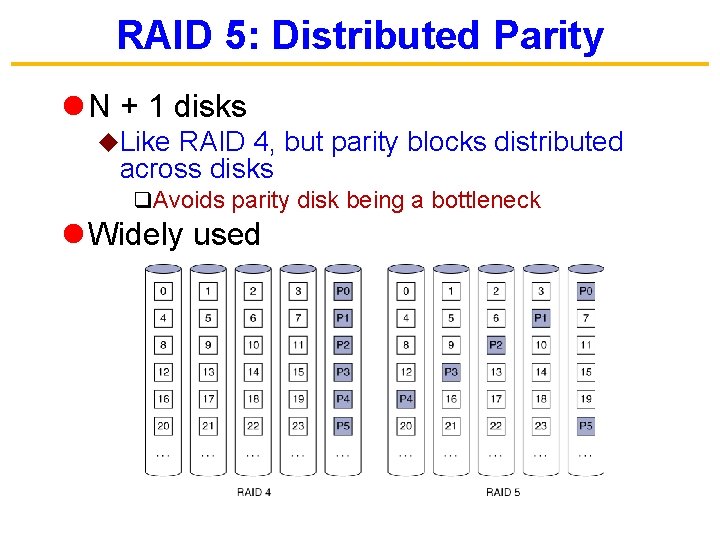

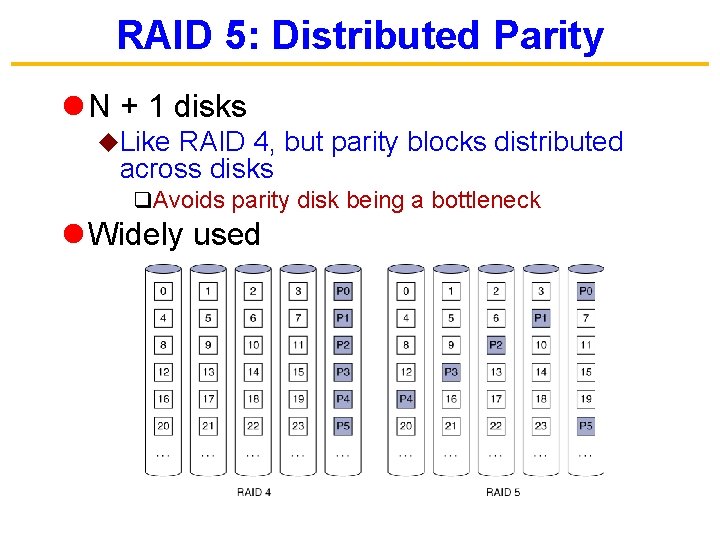

RAID 5: Distributed Parity
- N + 1 disks
  - Like RAID 4, but parity blocks are distributed across the disks
    - Avoids the parity disk being a bottleneck
- Widely used
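
To see why distributing parity helps, the sketch below maps each logical data block to its data disk and parity disk for both layouts, using the 4 + 1 disk example from the RAID 4 slide. The RAID 5 rotation used here (parity moves one disk to the left on each successive stripe) is one common choice and an assumption; real controllers support several layouts. The function names are illustrative.

```python
DATA_PER_STRIPE = 4                  # N data blocks per stripe
NUM_DISKS = DATA_PER_STRIPE + 1      # N + 1 disks

def raid4_location(block):
    """Return (data disk, parity disk) for logical data block `block` in RAID 4."""
    _stripe, idx = divmod(block, DATA_PER_STRIPE)
    return idx, NUM_DISKS - 1        # parity is always on the last disk

def raid5_location(block):
    """Same for RAID 5, with parity rotating one disk to the left per stripe."""
    stripe, idx = divmod(block, DATA_PER_STRIPE)
    parity = (NUM_DISKS - 1) - stripe % NUM_DISKS
    data = idx if idx < parity else idx + 1   # skip over the parity slot
    return data, parity

def disks_for_small_write(location, block):
    # A small write updates the data block and its stripe's parity block.
    return set(location(block))

for name, loc in (("RAID 4", raid4_location), ("RAID 5", raid5_location)):
    shared = disks_for_small_write(loc, 0) & disks_for_small_write(loc, 5)
    print(name, "disks shared by writes to D0 and D5:", shared or "none")
```

With RAID 4 the two small writes collide on disk 4 (the parity disk); with the rotated layout they touch four different disks and can proceed in parallel.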

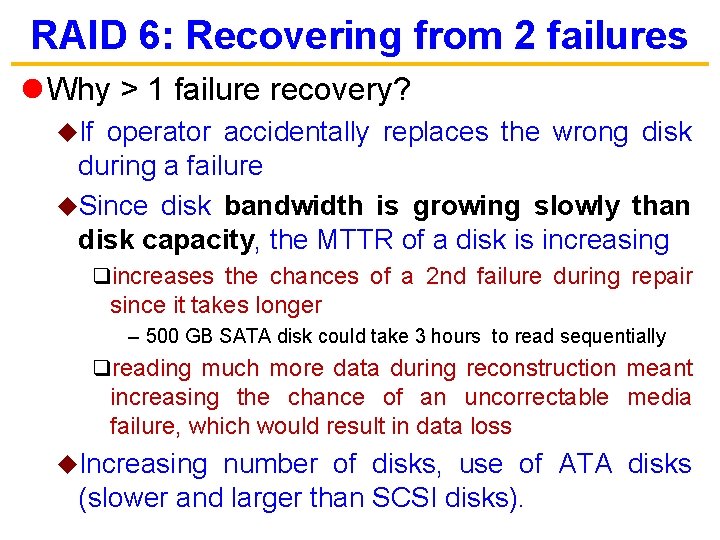

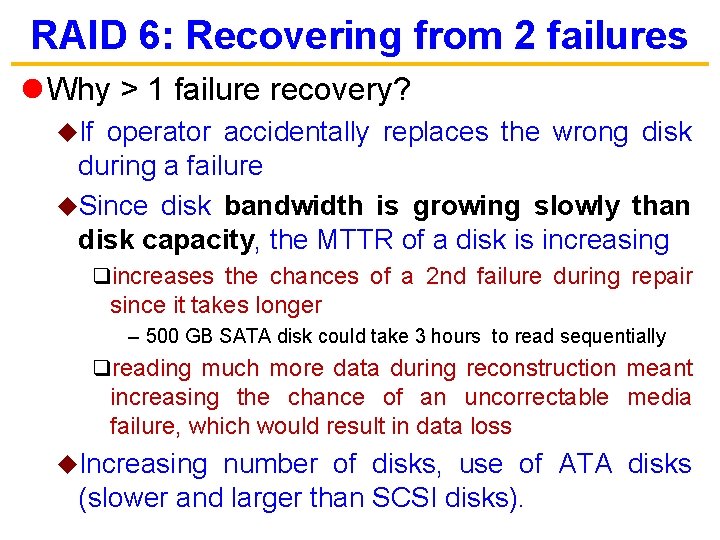

RAID 6: Recovering from 2 failures
- Why recover from more than 1 failure?
  - An operator may accidentally replace the wrong disk during a failure
  - Since disk bandwidth is growing more slowly than disk capacity, the MTTR of a disk is increasing
    - This increases the chance of a 2nd failure during repair, since repair takes longer: a 500 GB SATA disk could take 3 hours to read sequentially
    - Reading much more data during reconstruction also increases the chance of an uncorrectable media failure, which would result in data loss
  - Increasing numbers of disks, and the use of ATA disks (slower and larger than SCSI disks)
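
The argument is quantitative: rebuild time is bounded below by capacity divided by bandwidth, and a longer repair window raises the chance that another disk fails before it closes. This sketch assumes a sustained bandwidth of 50 MB/s, an 8-disk array (7 survivors), a 50,000-hour disk MTTF, and independent, exponentially distributed lifetimes; only the 500 GB capacity comes from the slide, and the function names are illustrative.

```python
import math

def sequential_read_hours(capacity_bytes, bandwidth_bytes_per_s):
    """Lower bound on rebuild time: the whole disk must be read (or written) once."""
    return capacity_bytes / bandwidth_bytes_per_s / 3600

def p_another_failure(remaining_disks, repair_hours, disk_mttf_hours):
    # Assumes independent, exponentially distributed disk lifetimes.
    return 1 - math.exp(-remaining_disks * repair_hours / disk_mttf_hours)

hours = sequential_read_hours(500e9, 50e6)   # assumed 50 MB/s sustained
print(f"500 GB at 50 MB/s: {hours:.1f} h")
for repair in (hours, 4 * hours):            # e.g. a slower rebuild under load
    p = p_another_failure(remaining_disks=7, repair_hours=repair, disk_mttf_hours=50_000)
    print(f"repair {repair:.1f} h -> P(second failure) = {p:.5f}")
```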

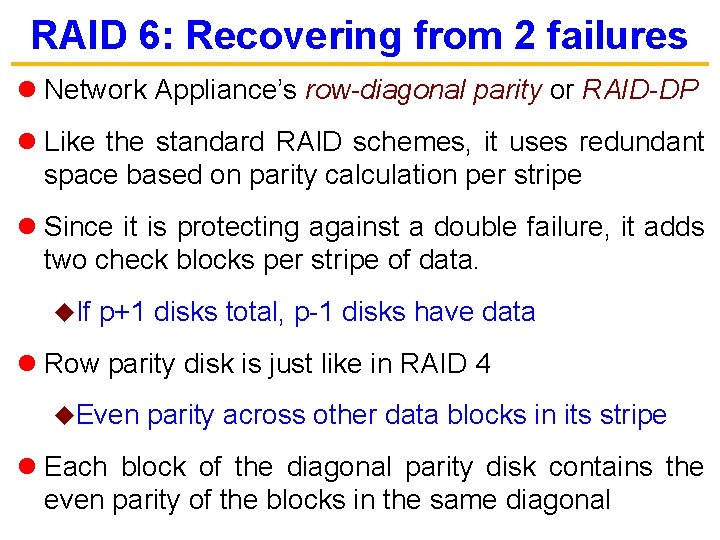

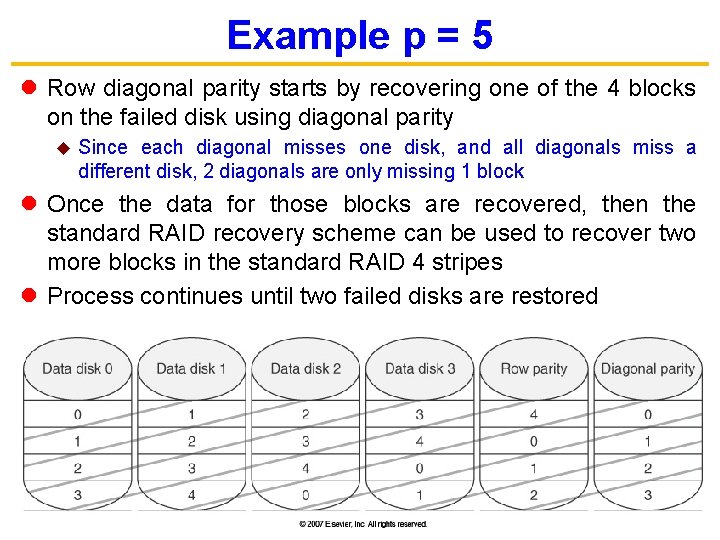

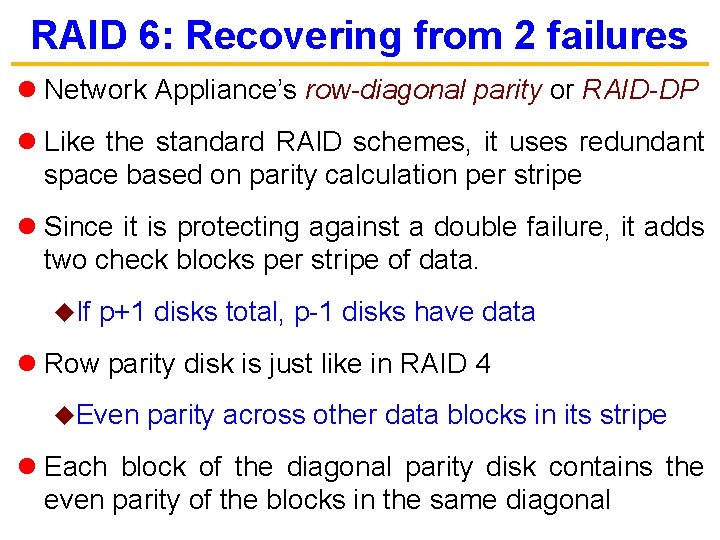

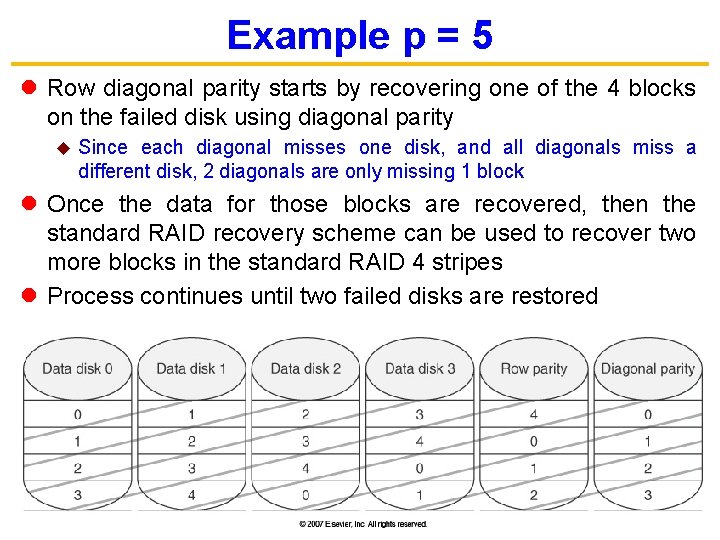

RAID 6: Recovering from 2 failures
- Network Appliance’s row-diagonal parity, or RAID-DP
- Like the standard RAID schemes, it uses redundant space based on a parity calculation per stripe
- Since it protects against a double failure, it adds two check blocks per stripe of data
  - If there are p + 1 disks in total, p - 1 disks hold data
- The row parity disk is just like in RAID 4
  - Even parity across the other data blocks in its stripe
- Each block of the diagonal parity disk contains the even parity of the blocks in the same diagonal

Example p = 5
- Row-diagonal parity starts by recovering one of the 4 blocks on a failed disk using diagonal parity
  - Since each diagonal misses one disk, and all diagonals miss a different disk, 2 diagonals are missing only 1 block
- Once the data for those blocks is recovered, the standard RAID recovery scheme can be used to recover two more blocks in the standard RAID 4 stripes
- The process continues until both failed disks are restored
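
The recovery process described above can be sketched as a peeling decoder: every row and every stored diagonal gives an XOR equation, and any equation with exactly one lost block solves that block, which in turn can unlock another equation. This is a minimal sketch of row-diagonal parity for p = 5, assuming blocks are small integers, disks 0 to p-2 hold data, disk p-1 holds row parity, disk p holds diagonal parity, and block (row r, disk d) lies on diagonal (r + d) mod p, with diagonal p-1 left unstored. It is written for clarity, not efficiency, and is not NetApp's implementation.

```python
import random
from functools import reduce
from itertools import combinations
from operator import xor

P = 5                 # must be prime; p = 5 as in the example
ROWS = P - 1          # blocks per disk in one stripe
DATA_DISKS = P - 1    # disks 0 .. p-2 hold data
ROW_PARITY = P - 1    # disk p-1 holds row parity (as in RAID 4)
DIAG_PARITY = P       # disk p holds diagonal parity

def encode(data):
    """data[r][d]: ints for r < ROWS, d < DATA_DISKS. Returns rows of P + 1 blocks."""
    grid = [row[:] + [reduce(xor, row, 0)] for row in data]   # append row parity
    diag = [0] * ROWS
    for r in range(ROWS):
        for d in range(P):                 # diagonals also cover the row-parity disk
            k = (r + d) % P
            if k != P - 1:                 # diagonal p-1 is never stored
                diag[k] ^= grid[r][d]
    for r in range(ROWS):
        grid[r].append(diag[r])            # parity block for diagonal r
    return grid

def equations():
    """Each equation lists cells (row, disk) whose XOR must be zero."""
    eqs = [[(r, d) for d in range(P)] for r in range(ROWS)]             # row parity
    for k in range(P - 1):                                               # diagonal parity
        cells = [(r, d) for r in range(ROWS) for d in range(P) if (r + d) % P == k]
        eqs.append(cells + [(k, DIAG_PARITY)])
    return eqs

def recover(grid, failed):
    """Rebuild the blocks of the failed disks in place; True if fully recovered."""
    unknown = {(r, d) for r in range(ROWS) for d in failed}
    for r, d in unknown:
        grid[r][d] = 0                     # contents are lost
    eqs = equations()
    progress = True
    while unknown and progress:
        progress = False
        for eq in eqs:
            missing = [c for c in eq if c in unknown]
            if len(missing) == 1:          # one unknown: XOR of the rest solves it
                r, d = missing[0]
                grid[r][d] = reduce(xor, (grid[i][j] for i, j in eq if (i, j) != (r, d)), 0)
                unknown.discard((r, d))
                progress = True
    return not unknown

random.seed(0)
data = [[random.randrange(256) for _ in range(DATA_DISKS)] for _ in range(ROWS)]
good = encode(data)
for failed in combinations(range(P + 1), 2):   # every pair of failed disks
    damaged = [row[:] for row in good]
    assert recover(damaged, failed) and damaged == good
print("all double failures recovered")
```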

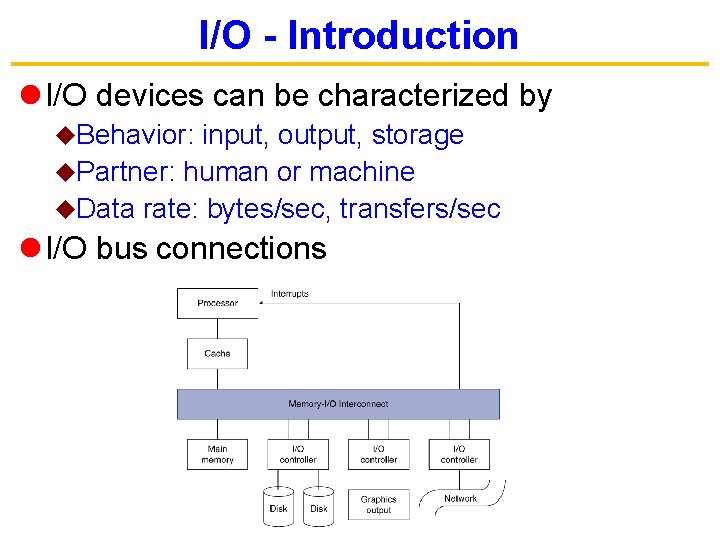

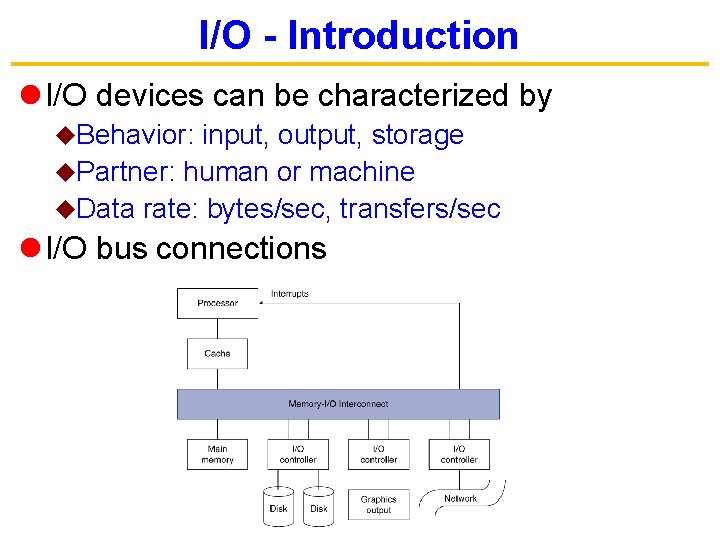

I/O: Introduction
- I/O devices can be characterized by
  - Behavior: input, output, storage
  - Partner: human or machine
  - Data rate: bytes/sec, transfers/sec
- I/O bus connections

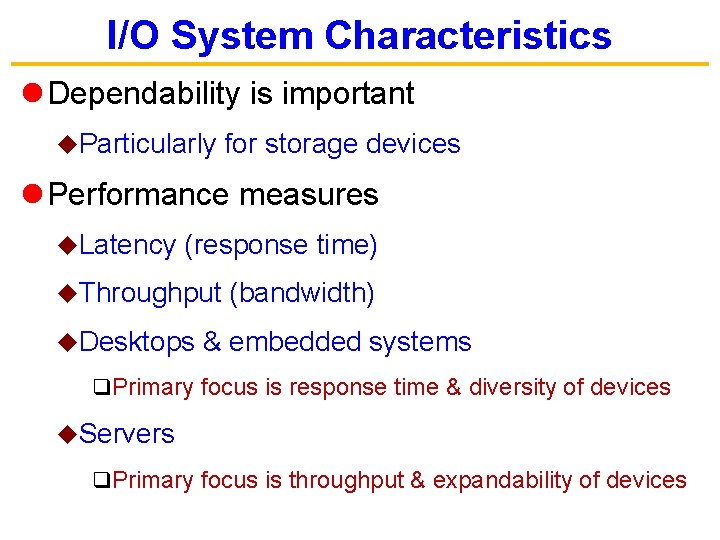

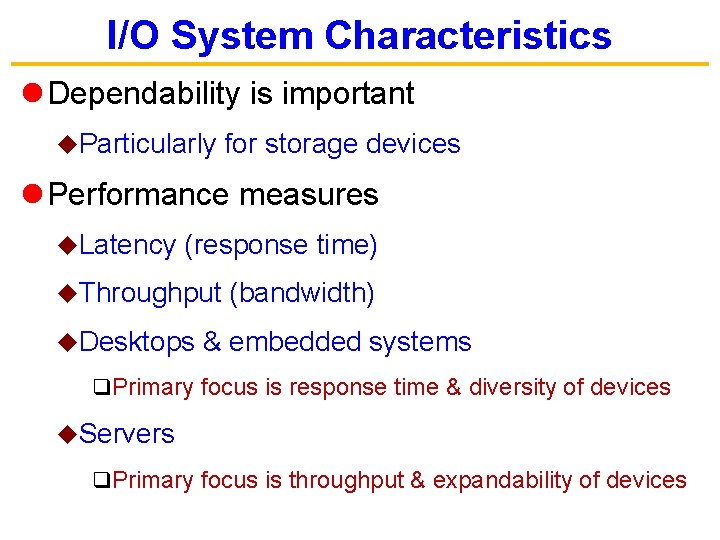

I/O System Characteristics
- Dependability is important
  - Particularly for storage devices
- Performance measures
  - Latency (response time)
  - Throughput (bandwidth)
  - Desktops and embedded systems
    - Primary focus is response time and diversity of devices
  - Servers
    - Primary focus is throughput and expandability of devices

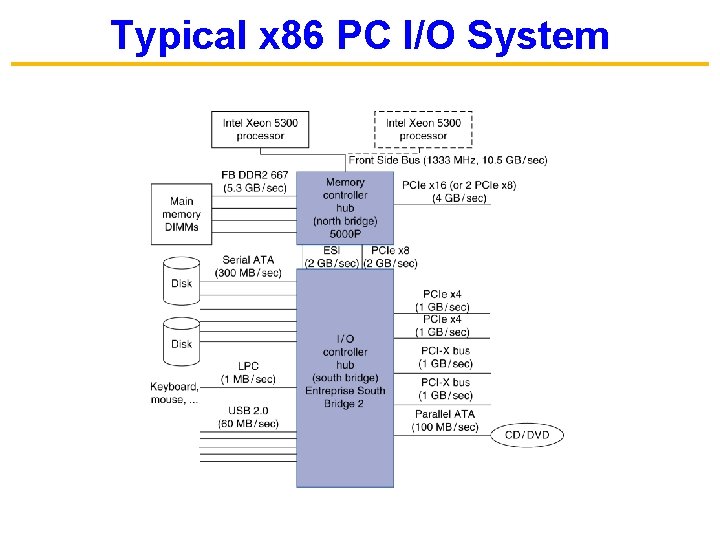

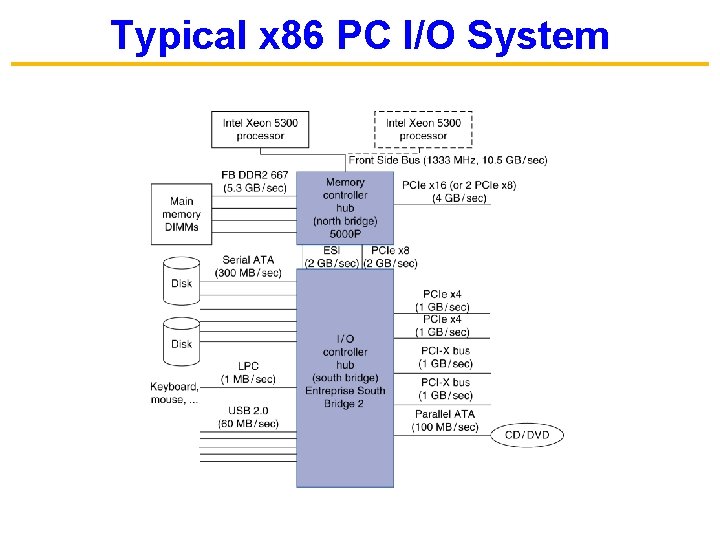

Typical x86 PC I/O System

I/O Register Mapping
- Memory-mapped I/O
  - Registers are addressed in the same space as memory
  - An address decoder distinguishes between them
  - The OS uses the address translation mechanism to make them accessible only to the kernel
- I/O instructions
  - Separate instructions to access I/O registers
  - Can only be executed in kernel mode
  - Example: x86
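
Memory-mapped I/O means one address space with two destinations. The following simulation, with a hypothetical device, hypothetical register offsets, and a hypothetical I/O address window, shows an address decoder routing ordinary loads and stores either to RAM or to device registers. It omits the kernel-only protection that the OS would add through address translation.

```python
class Uart:
    """Hypothetical device with a STATUS register (offset 0) and a DATA register (offset 4)."""
    def __init__(self):
        self.sent = []

    def read(self, offset):
        return 0x1 if offset == 0 else 0   # STATUS: always "ready" in this sketch

    def write(self, offset, value):
        if offset == 4:                    # writing DATA transmits a value
            self.sent.append(value)

class Bus:
    IO_BASE, IO_SIZE = 0xF000, 0x100       # hypothetical I/O window in the address space

    def __init__(self, ram_words, device):
        self.ram = [0] * ram_words
        self.device = device

    def _is_io(self, addr):
        # The address decoder: the same load/store goes to RAM or to the device.
        return self.IO_BASE <= addr < self.IO_BASE + self.IO_SIZE

    def load(self, addr):
        if self._is_io(addr):
            return self.device.read(addr - self.IO_BASE)
        return self.ram[addr]

    def store(self, addr, value):
        if self._is_io(addr):
            self.device.write(addr - self.IO_BASE, value)
        else:
            self.ram[addr] = value

uart = Uart()
bus = Bus(1024, uart)
bus.store(16, 7)          # ordinary memory write
bus.store(0xF004, 65)     # same kind of store, but it reaches the device's DATA register
print(bus.load(16), uart.sent, bus.load(0xF000))
```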

Polling
- Periodically check the I/O status register
  - If the device is ready, do the operation
  - If there is an error, take action
- Common in small or low-performance real-time embedded systems
  - Predictable timing
  - Low hardware cost
- In other systems, wastes CPU time
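
The polling loop can be simulated in a few lines. The device, its status bits, and its timing are hypothetical; the point is the structure of the loop (check status, act on an error, do the operation when ready) and the iterations the CPU spends doing nothing useful while it waits.

```python
from dataclasses import dataclass

READY, ERROR = 0x1, 0x2   # hypothetical status-register bits

@dataclass
class SimulatedDevice:
    """Hypothetical device whose status turns READY after `latency` status reads."""
    latency: int
    data: int = 0
    fail: bool = False
    reads: int = 0

    def status(self):
        self.reads += 1
        if self.fail:
            return ERROR
        return READY if self.reads >= self.latency else 0

def poll_read(dev, max_polls=1_000_000):
    """Busy-wait on the status register; return (data, number of wasted polls)."""
    wasted = 0
    for _ in range(max_polls):
        st = dev.status()
        if st & ERROR:
            raise RuntimeError("device reported an error")  # "take action"
        if st & READY:
            return dev.data, wasted                           # "do operation"
        wasted += 1                                           # CPU time spent waiting
    raise TimeoutError("device never became ready")

print(poll_read(SimulatedDevice(latency=5, data=42)))  # (42, 4)
```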

Interrupts
- When a device is ready or an error occurs
  - The controller interrupts the CPU
- An interrupt is like an exception
  - But not synchronized to instruction execution
  - Can invoke the handler between instructions
  - Cause information often identifies the interrupting device
- Priority interrupts
  - Devices needing more urgent attention get higher priority
  - Can interrupt the handler for a lower-priority interrupt
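
Priority interrupts require choosing which pending request to service next. This sketch models only that selection step with a priority queue; it does not model a higher-priority interrupt preempting a running handler. The controller class and the device names are hypothetical.

```python
import heapq

class InterruptController:
    """Hypothetical controller: queues requests and dispatches the highest priority first."""
    def __init__(self):
        self._pending = []
        self._seq = 0   # tie-breaker: equal priorities are served in arrival order

    def raise_irq(self, priority, device):
        # heapq is a min-heap, so store the negated priority.
        heapq.heappush(self._pending, (-priority, self._seq, device))
        self._seq += 1

    def dispatch_all(self):
        order = []
        while self._pending:
            _, _, device = heapq.heappop(self._pending)
            order.append(device)   # a real handler would run here, between instructions
        return order

ctrl = InterruptController()
for prio, dev in ((1, "keyboard"), (5, "disk"), (7, "timer"), (5, "network")):
    ctrl.raise_irq(prio, dev)
print(ctrl.dispatch_all())  # ['timer', 'disk', 'network', 'keyboard']
```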

I/O Data Transfer
- Polling and interrupt-driven I/O
  - The CPU transfers data between memory and I/O data registers
  - Time-consuming for high-speed devices
- Direct memory access (DMA)
  - The OS provides the starting address in memory
  - The I/O controller transfers to/from memory autonomously
  - The controller interrupts on completion or error

Server Computers
- Applications are increasingly run on servers
  - Web search, office apps, virtual worlds, …
- Requires large data center servers
  - Multiple processors, network connections, massive storage
  - Space and power constraints
- Server equipment is built for 19” racks
  - Multiples of 1.75” (1U) high

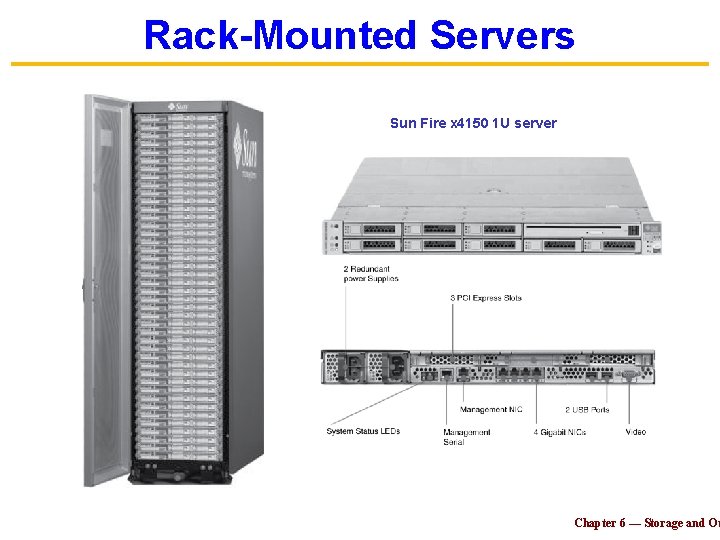

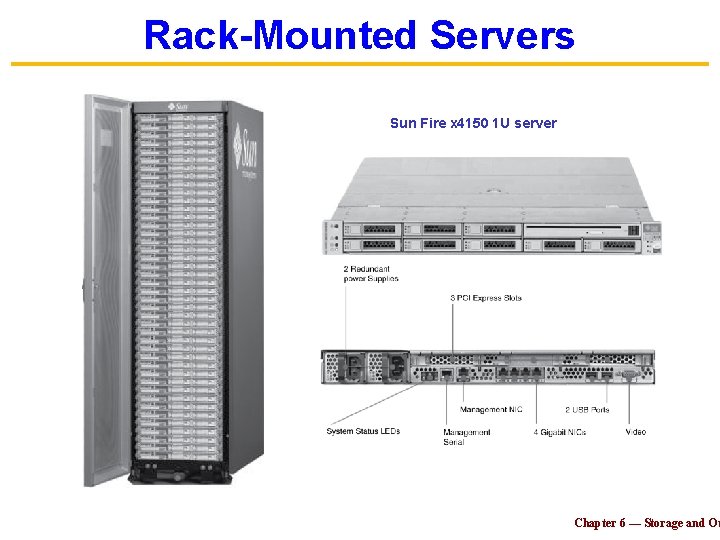

Rack-Mounted Servers: Sun Fire x4150 1U server

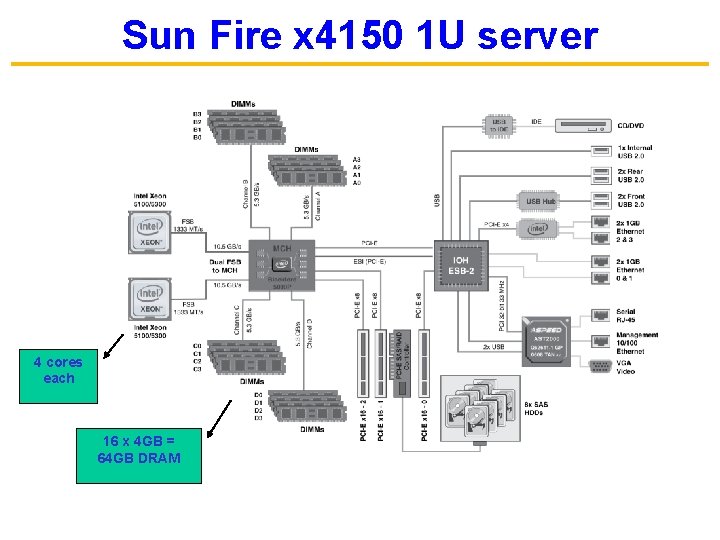

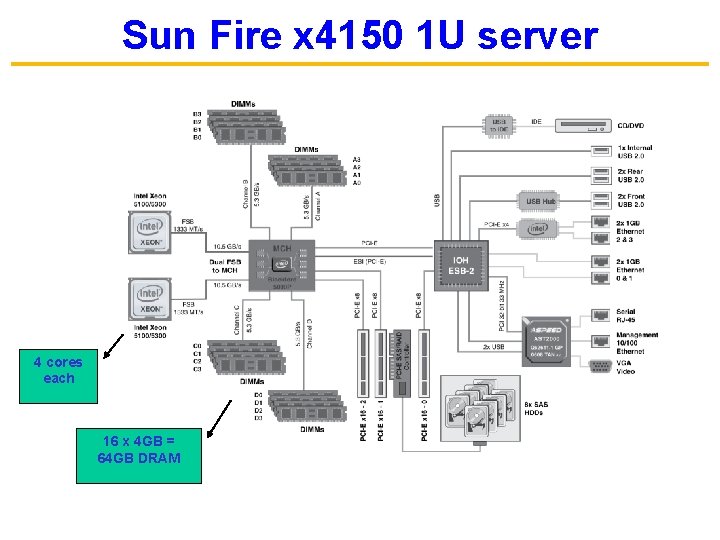

Sun Fire x4150 1U server: 4 cores each; 16 × 4 GB = 64 GB DRAM

Concluding Remarks
- I/O performance measures
  - Throughput, response time
  - Dependability and cost are also important
- Buses are used to connect the CPU, memory, and I/O controllers
  - Polling, interrupts, DMA
- RAID
  - Improves performance and dependability

THINK: Weekend!! “The best way to predict the future is to create it.” (Peter Drucker)